- Slides: 23

Method evaluation

Martijn Schuemie, PhD, Janssen Research and Development

Selected research topics

- Topic 1: Method evaluation
  - Have initiated the Method Evaluation Task Force
- Topic 3: Smooshed comparators
  - Collaboration between UPenn and J&J
- Topic 5: Heterogeneous treatment effects
  - Will be put on hold until Topic 1 is completed

Method evaluation

- Population-level effect size estimation:
  - What is the relative risk of outcome X when using drug A?
  - What is the relative risk of outcome X when using drug A compared to drug B?
- How accurate and reliable are the estimates of a particular method?
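To make the second question concrete, here is a minimal sketch of a crude (unadjusted) relative risk for drug A versus drug B, with a Wald interval on the log scale. The function name, the counts, and the choice of interval are assumptions for illustration; the slides do not specify how any particular method computes its estimate.

```python
import math


def relative_risk(events_a, n_a, events_b, n_b, z=1.96):
    """Crude relative risk of an outcome for drug A versus drug B.

    events_a, events_b: number of persons with the outcome in each group
    n_a, n_b: number of persons exposed in each group
    z: normal quantile for the interval (1.96 gives roughly 95%)
    Returns (rr, lower, upper). Illustrative only: no confounding adjustment.
    """
    risk_a = events_a / n_a
    risk_b = events_b / n_b
    rr = risk_a / risk_b
    # Standard error of log(RR) for two independent binomial proportions.
    se_log_rr = math.sqrt(1 / events_a - 1 / n_a + 1 / events_b - 1 / n_b)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper


# Hypothetical counts, not taken from any study.
rr, lower, upper = relative_risk(events_a=30, n_a=1000, events_b=20, n_b=1000)
print(f"RR = {rr:.2f} ({lower:.2f} - {upper:.2f})")
```

Method evaluation then asks how far such an estimate, and its interval, can be trusted when the true effect size is known.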

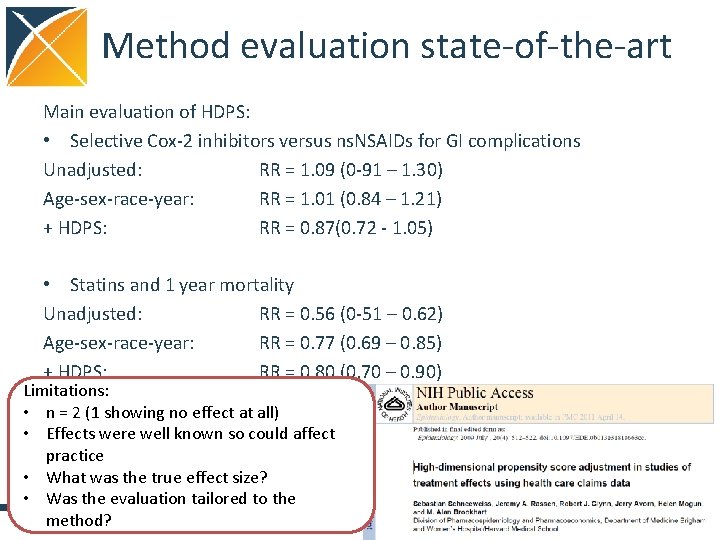

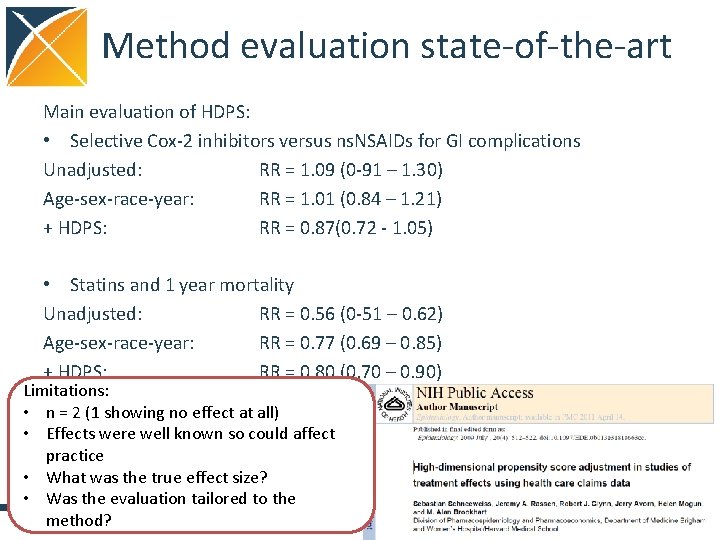

Method evaluation state-of-the-art

Main evaluation of HDPS:

- Selective Cox-2 inhibitors versus nsNSAIDs for GI complications
  - Unadjusted: RR = 1.09 (0.91-1.30)
  - Age-sex-race-year: RR = 1.01 (0.84-1.21)
  - + HDPS: RR = 0.87 (0.72-1.05)
- Statins and 1-year mortality
  - Unadjusted: RR = 0.56 (0.51-0.62)
  - Age-sex-race-year: RR = 0.77 (0.69-0.85)
  - + HDPS: RR = 0.80 (0.70-0.90)

Limitations:

- n = 2 (1 showing no effect at all)
- Effects were well known, so could affect practice
- What was the true effect size?
- Was the evaluation tailored to the method?

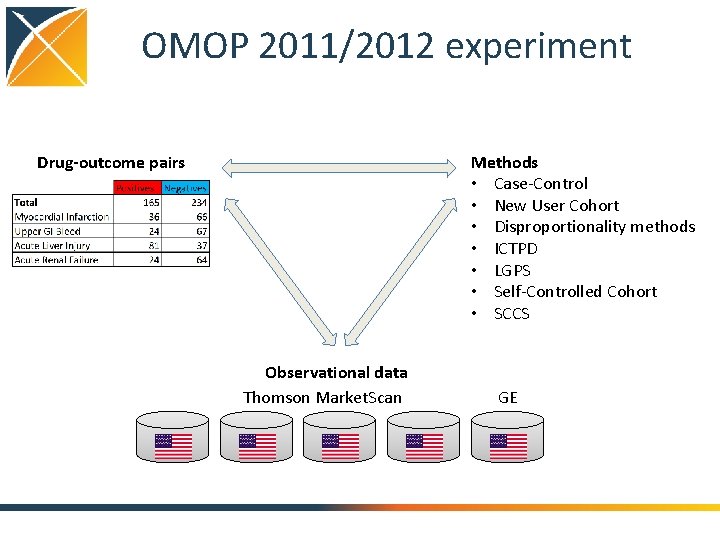

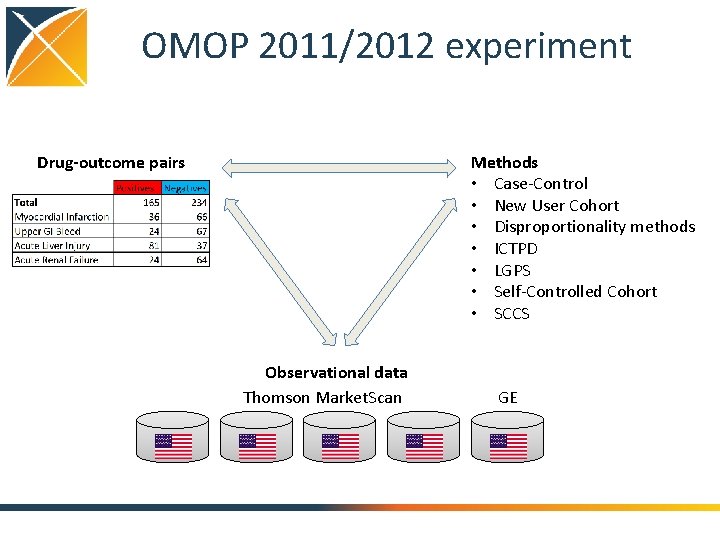

OMOP 2011/2012 experiment

Methods

- Case-Control
- New User Cohort
- Disproportionality methods
- ICTPD
- LGPS
- Self-Controlled Cohort
- SCCS

Drug-outcome pairs

Observational data

- Thomson MarketScan
- GE

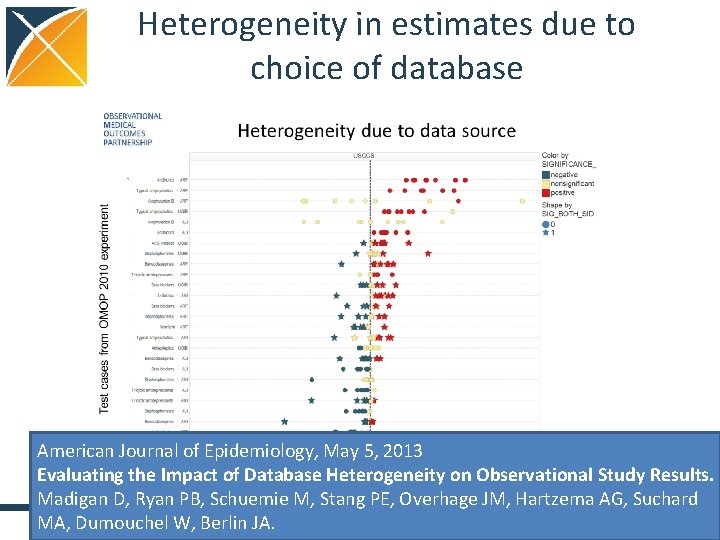

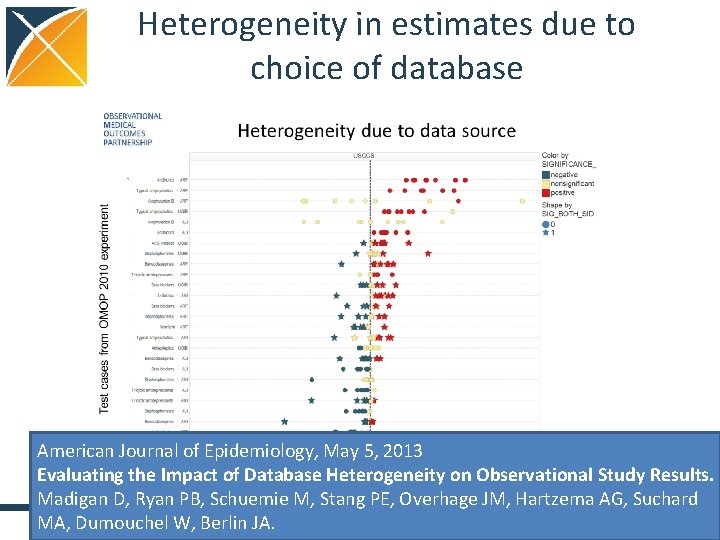

Heterogeneity in estimates due to choice of database

Madigan D, Ryan PB, Schuemie M, Stang PE, Overhage JM, Hartzema AG, Suchard MA, Dumouchel W, Berlin JA. Evaluating the Impact of Database Heterogeneity on Observational Study Results. American Journal of Epidemiology, May 5, 2013.

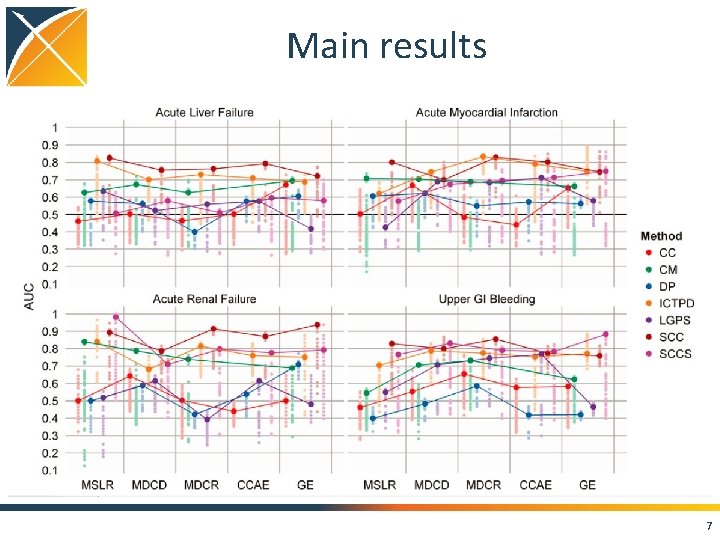

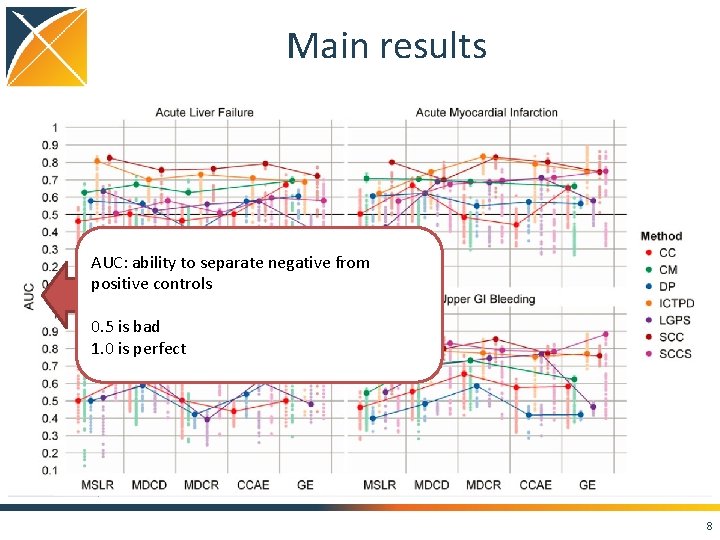

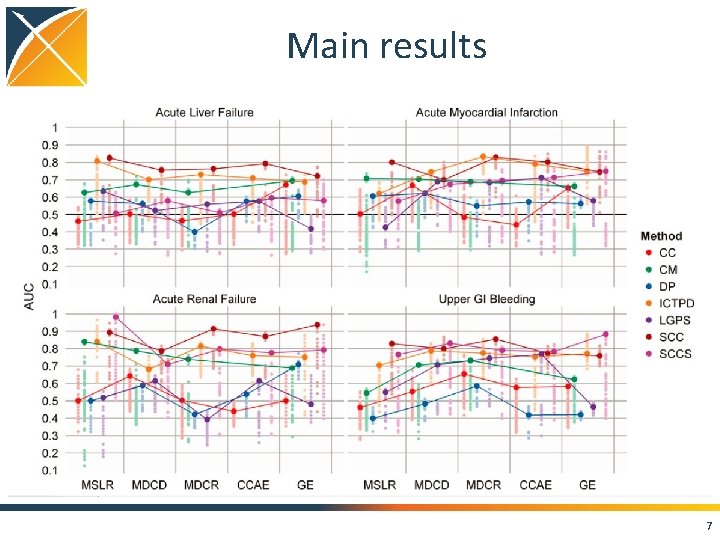

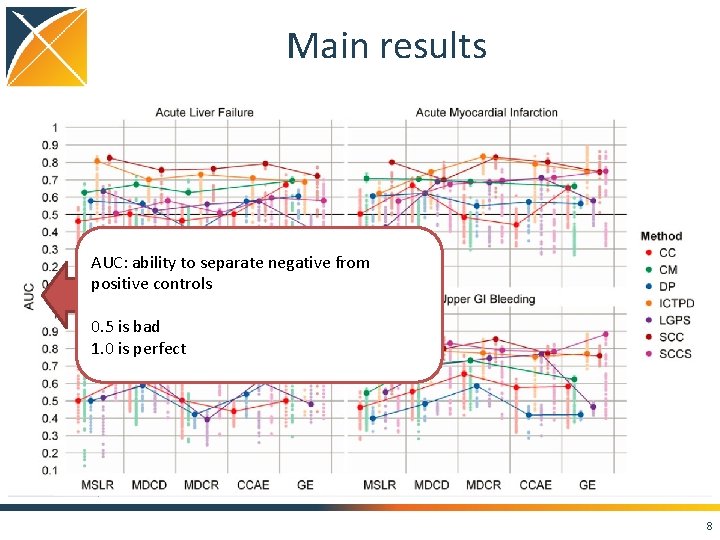

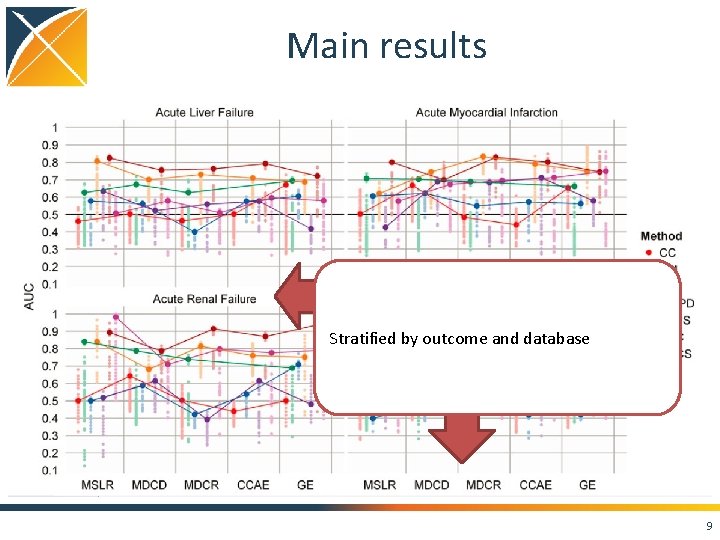

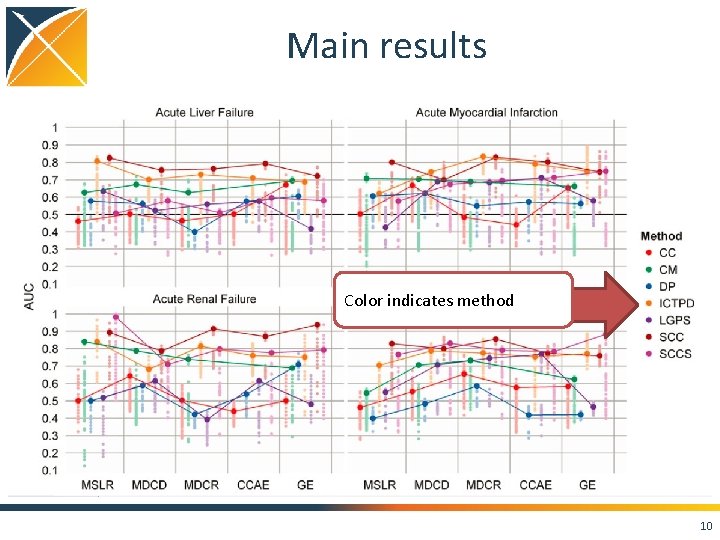

Main results

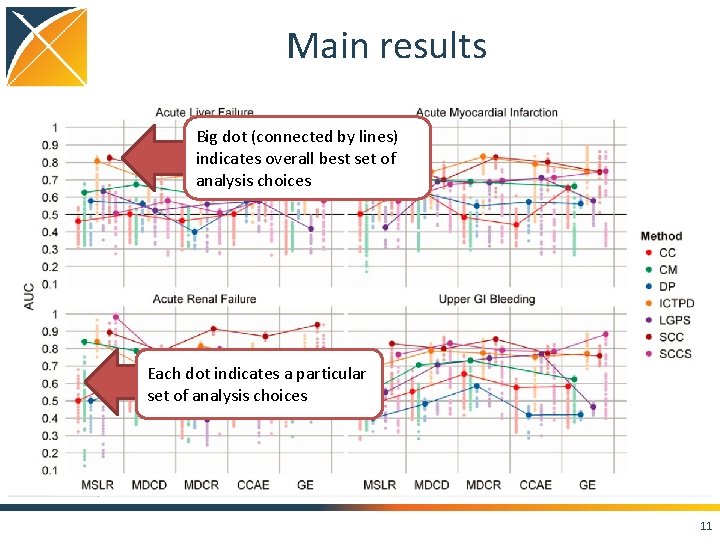

Main results

AUC: ability to separate negative from positive controls

- 0.5 is bad
- 1.0 is perfect
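As a rough illustration of how the AUC summarizes this separation, the sketch below computes it as the fraction of (positive control, negative control) pairs in which the positive control received the larger estimate, counting ties as half. The function name and the example estimates are hypothetical, and this pairwise formulation is one standard way to compute an AUC, not necessarily the one used in the OMOP experiment.

```python
def auc(negative_control_estimates, positive_control_estimates):
    """Probability that a random positive control outranks a random negative control.

    Both arguments are lists of effect estimates (for example relative risks)
    produced by one method. Ties count as half a win.
    """
    wins = 0.0
    for pos in positive_control_estimates:
        for neg in negative_control_estimates:
            if pos > neg:
                wins += 1.0
            elif pos == neg:
                wins += 0.5
    n_pairs = len(positive_control_estimates) * len(negative_control_estimates)
    return wins / n_pairs


# Hypothetical estimates for illustration only.
negatives = [0.9, 1.0, 1.1, 1.3]
positives = [1.2, 1.5, 2.0, 3.0]
print(auc(negatives, positives))
```

Note that the AUC only measures ranking: a method can separate the two groups well while still producing biased effect size estimates.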

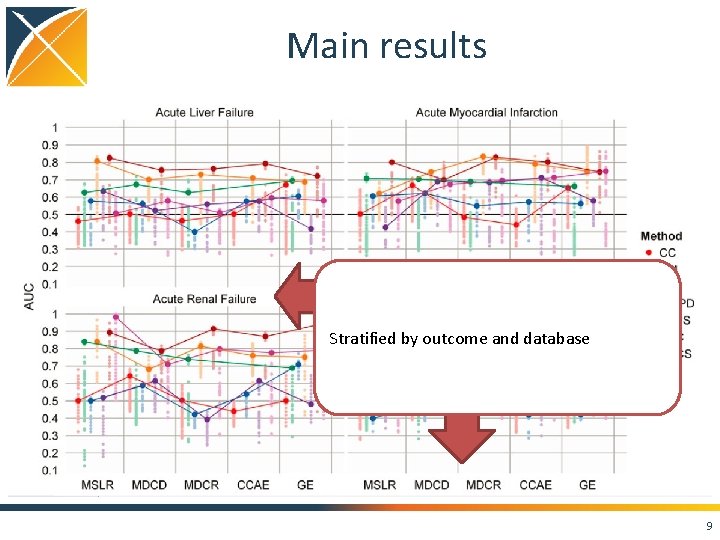

Main results

Stratified by outcome and database

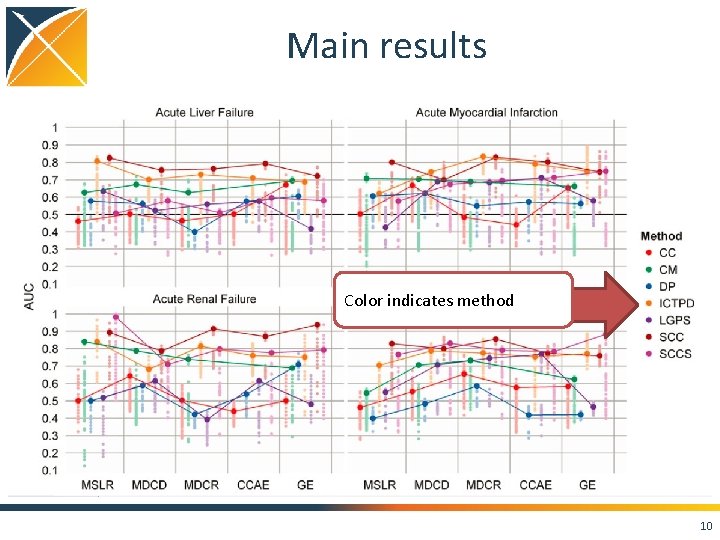

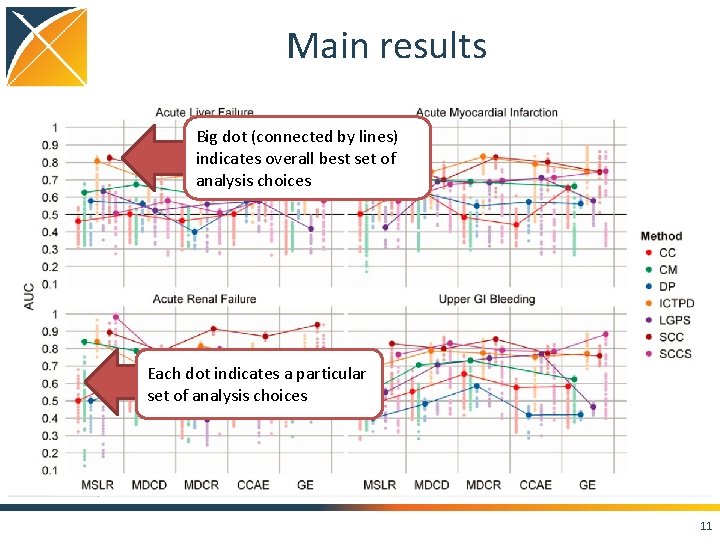

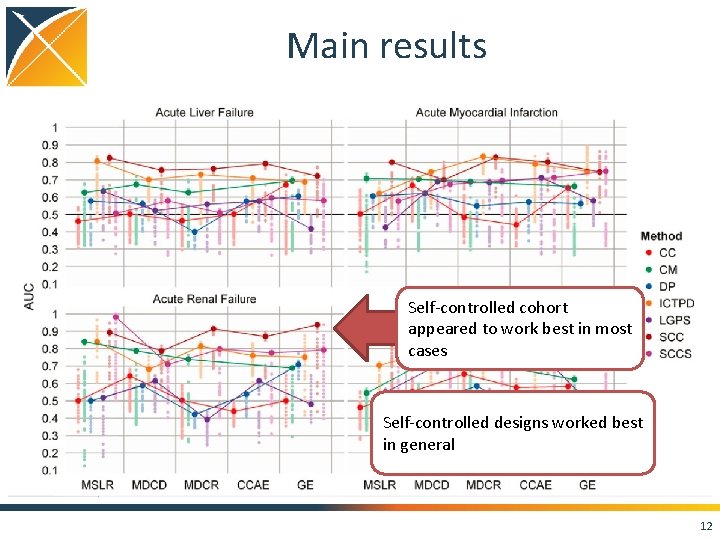

Main results

Color indicates method

Main results

- Each dot indicates a particular set of analysis choices
- Big dot (connected by lines) indicates the overall best set of analysis choices

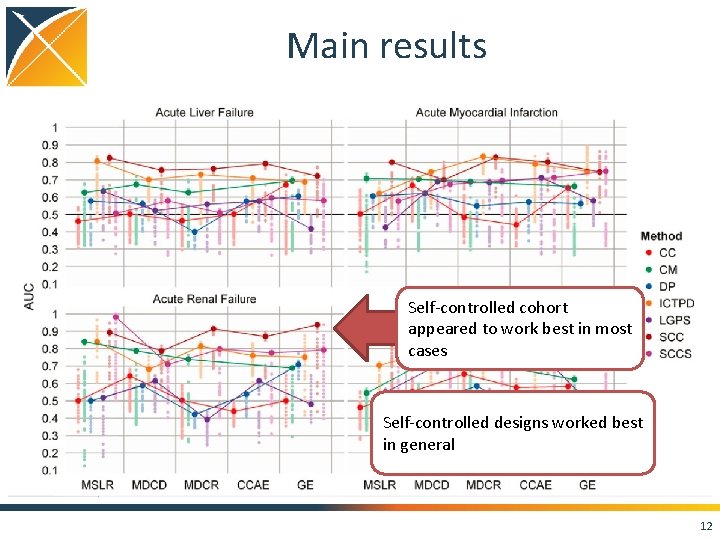

Main results

- Self-controlled cohort appeared to work best in most cases
- Self-controlled designs worked best in general

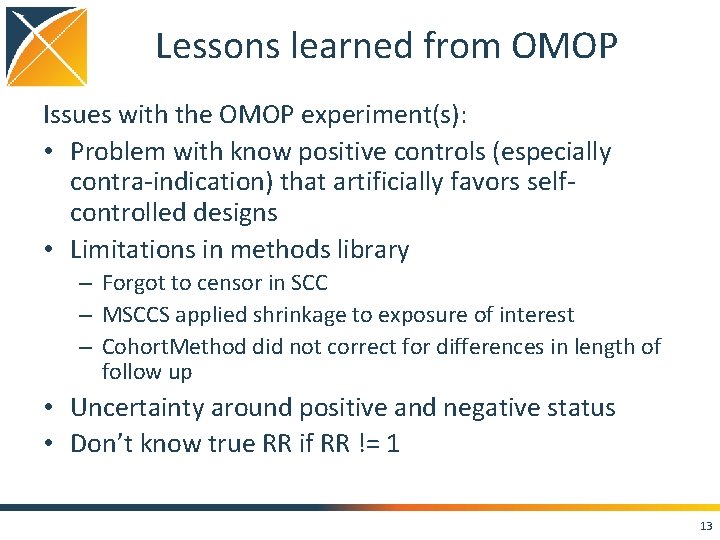

Lessons learned from OMOP

Issues with the OMOP experiment(s):

- Problem with known positive controls (especially contra-indication) that artificially favors self-controlled designs
- Limitations in methods library
  - Forgot to censor in SCC
  - MSCCS applied shrinkage to exposure of interest
  - CohortMethod did not correct for differences in length of follow-up
- Uncertainty around positive and negative status
- Don't know true RR if RR != 1

Method Evaluation Task Force

Objectives

- Develop the methodology for evaluating methods
- Use the developed methodology to systematically evaluate a large set of study designs and design choices

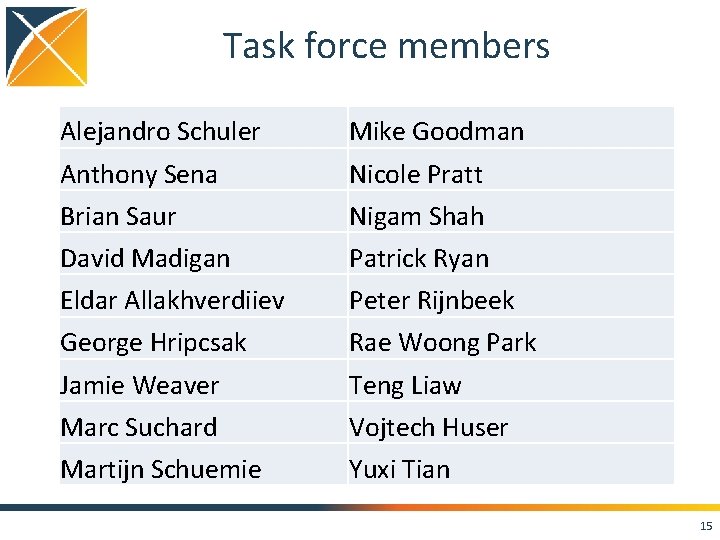

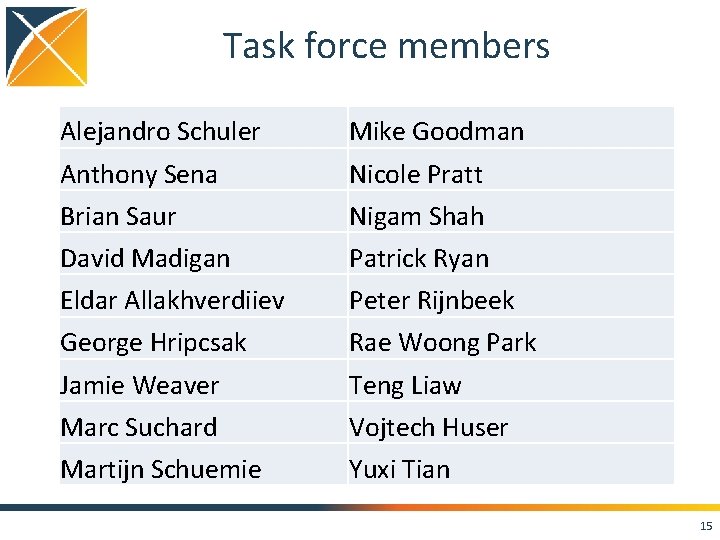

Task force members

- Alejandro Schuler
- Anthony Sena
- Brian Saur
- David Madigan
- Eldar Allakhverdiiev
- George Hripcsak
- Jamie Weaver
- Marc Suchard
- Martijn Schuemie
- Mike Goodman
- Nicole Pratt
- Nigam Shah
- Patrick Ryan
- Peter Rijnbeek
- Rae Woong Park
- Teng Liaw
- Vojtech Huser
- Yuxi Tian

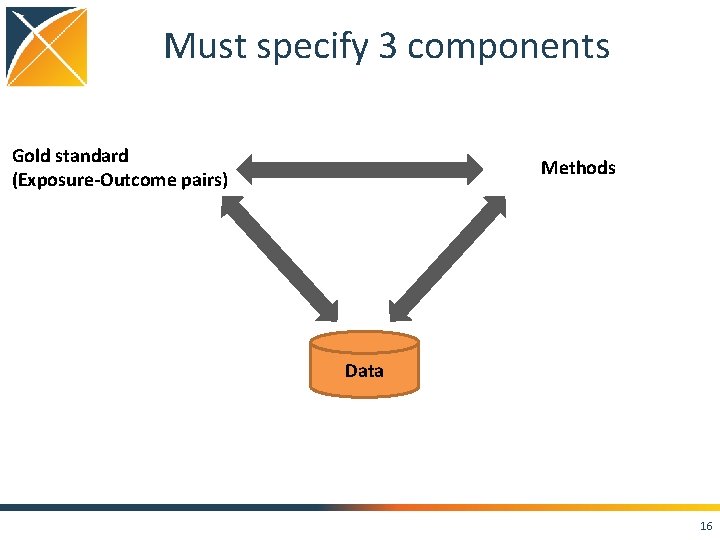

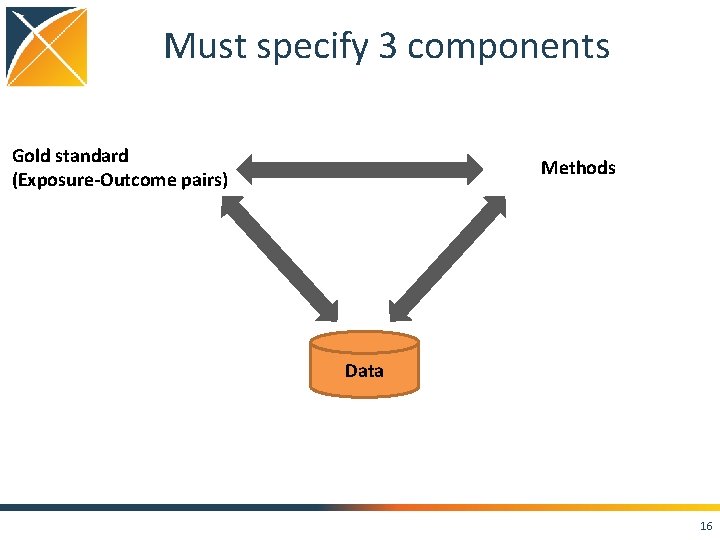

Must specify 3 components

- Gold standard (exposure-outcome pairs)
- Methods
- Data

Gold standard

- Real negative controls
- Synthetic positive controls
- Recent RCTs: use only data from before

Negative controls

Pro:

- Probably unbiased
- Includes measured and unmeasured confounding

Con:

- Null effects only

Synthetic positive controls

Pro:

- Probably unbiased

Con:

- Injection doesn't preserve unmeasured confounding
  - May be addressed by simulating unmeasured confounding by removing confounders from the data
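The idea of injecting outcomes can be sketched as follows: start from a negative control (assumed true RR = 1) and add outcomes during exposed time until the exposed outcome count is inflated by the target relative risk. This is a deliberately simplified sketch. The function name and data layout are hypothetical, and picking the persons who receive injected outcomes uniformly at random is an assumption made here for brevity, not a description of the task force's synthesis procedure.

```python
import random


def inject_outcomes(exposed_outcome_counts, target_rr, seed=0):
    """Turn a negative control into a synthetic positive control.

    exposed_outcome_counts: outcomes observed per person during exposure
    target_rr: desired true relative risk (>= 1)
    Returns a new list of per-person counts whose total is approximately
    target_rr times the original total.
    ASSUMPTION: injected outcomes are assigned uniformly at random.
    """
    if target_rr < 1:
        raise ValueError("injection can only increase the outcome count")
    rng = random.Random(seed)
    observed = sum(exposed_outcome_counts)
    n_to_inject = round(observed * (target_rr - 1))
    new_counts = list(exposed_outcome_counts)
    for _ in range(n_to_inject):
        person = rng.randrange(len(new_counts))
        new_counts[person] += 1
    return new_counts


# Hypothetical negative control: 10 exposed persons, 4 observed outcomes.
counts = [1, 0, 0, 1, 0, 0, 2, 0, 0, 0]
synthetic = inject_outcomes(counts, target_rr=2.0)
print(sum(counts), sum(synthetic))
```

Because the true effect size is set by construction, a method's estimate for the synthetic control can be compared directly against `target_rr`.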

RCTs

Pro:

- Most convincing evidence for most people

Con:

- RCTs themselves are likely biased due to non-random acts after the moment of randomization
- RCTs typically have limited sample size
- Even though we would like to have RCTs with observational data preceding the moment the effect was known, the effect was probably already known long before the trial

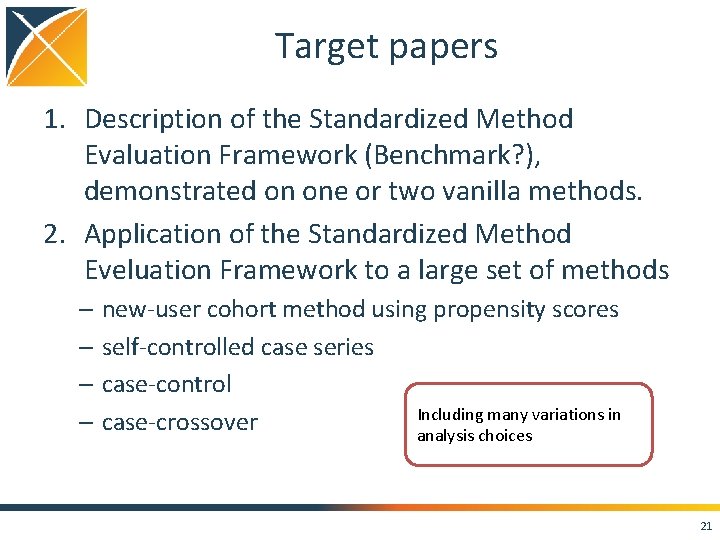

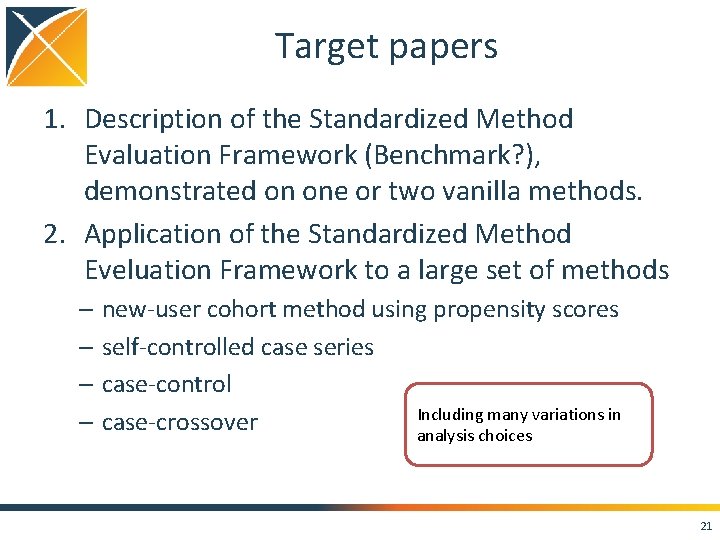

Target papers

1. Description of the Standardized Method Evaluation Framework (Benchmark?), demonstrated on one or two vanilla methods.
2. Application of the Standardized Method Evaluation Framework to a large set of methods, including many variations in analysis choices:
   - new-user cohort method using propensity scores
   - self-controlled case series
   - case-control
   - case-crossover

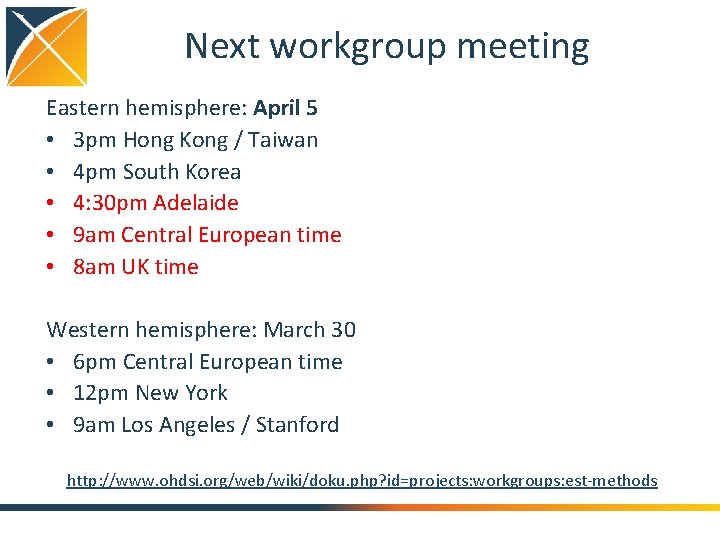

Method evaluation tasks

- Identify exposures of interest and negative controls
- Refine approach to positive control synthesis
- Evaluate effect of unmeasured confounding in positive control synthesis
- Identify RCTs and implement inclusion criteria
- Implement case-crossover / case-time-control
- Define universe of methods to evaluate
- Identify list of databases to run on
- Develop evaluation metrics
- Implement and execute evaluation
- Write paper

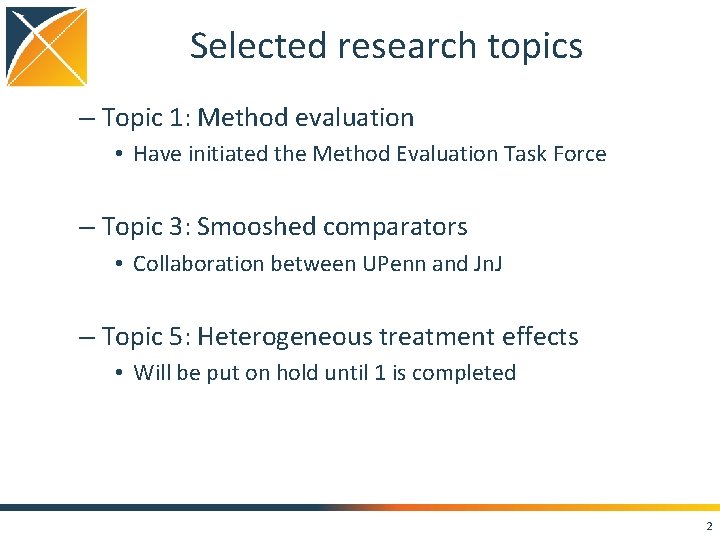

Next workgroup meeting

Eastern hemisphere: April 5

- 3 pm Hong Kong / Taiwan
- 4 pm South Korea
- 4:30 pm Adelaide
- 9 am Central European time
- 8 am UK time

Western hemisphere: March 30

- 6 pm Central European time
- 12 pm New York
- 9 am Los Angeles / Stanford

http://www.ohdsi.org/web/wiki/doku.php?id=projects:workgroups:est-methods