Magnetic Disks: tracks, platter, spindle, read/write head, actuator

- Slides: 21

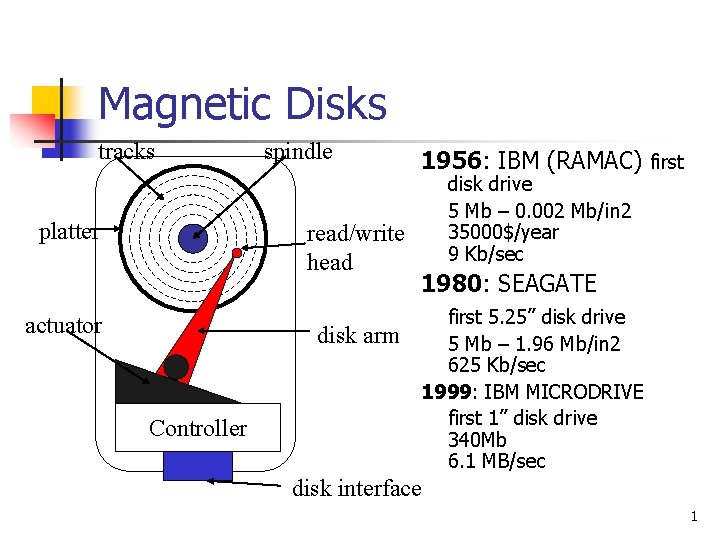

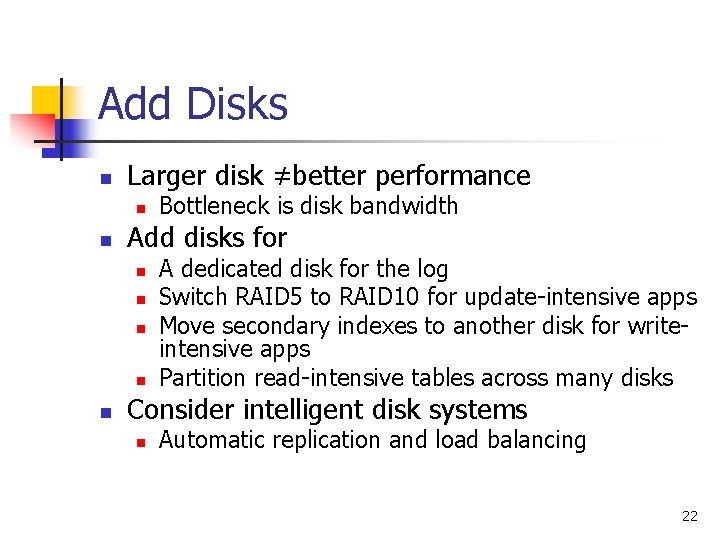

Magnetic Disks
- Diagram labels: tracks, platter, spindle, read/write head, actuator, disk arm, controller, disk interface
- 1956: IBM (RAMAC), first disk drive: 5 Mb, 0.002 Mb/in², $35,000/year, 9 Kb/sec
- 1980: Seagate, first 5.25'' disk drive: 5 Mb, 1.96 Mb/in², 625 Kb/sec
- 1999: IBM Microdrive, first 1'' disk drive: 340 Mb, 6.1 MB/sec

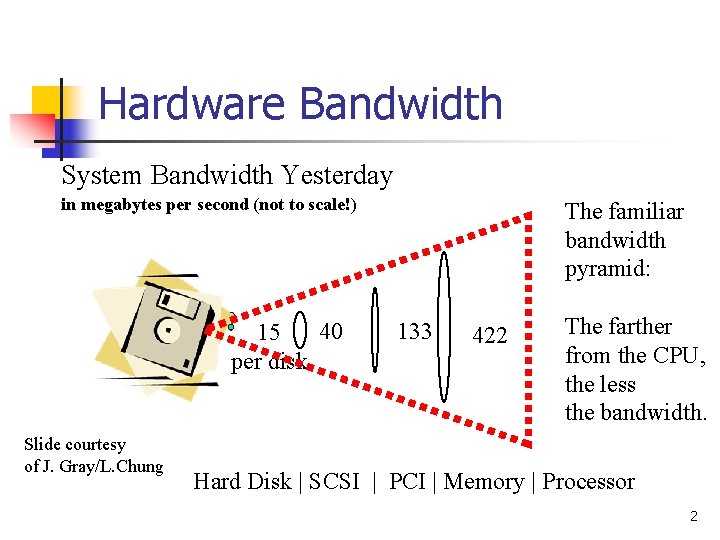

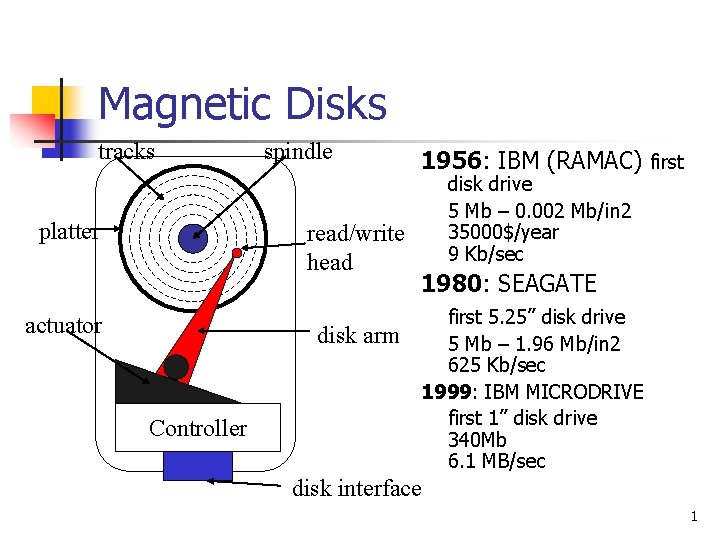

Hardware Bandwidth
- System bandwidth yesterday, in megabytes per second (not to scale!)
- Stages shown: Hard Disk | SCSI | PCI | Memory | Processor
- Values labeled in the figure: 40, 15 per disk, 133, 422
- The familiar bandwidth pyramid: the farther from the CPU, the less the bandwidth.
- Slide courtesy of J. Gray/L. Chung

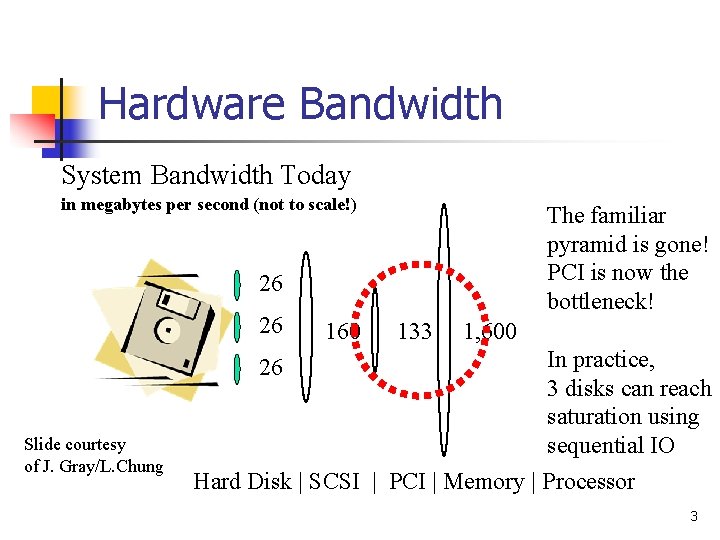

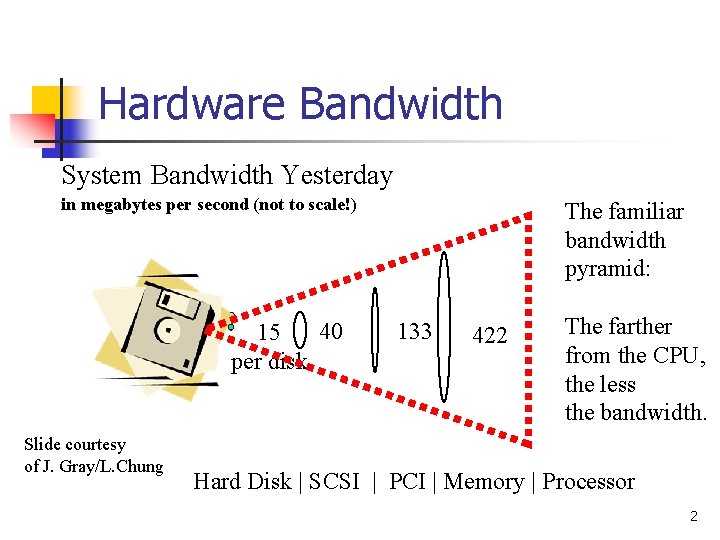

Hardware Bandwidth
- System bandwidth today, in megabytes per second (not to scale!)
- Stages shown: Hard Disk | SCSI | PCI | Memory | Processor
- Values labeled in the figure: 26, 26, 26, 160, 133, 1,600
- The familiar pyramid is gone! PCI is now the bottleneck!
- In practice, 3 disks can reach saturation using sequential I/O.
- Slide courtesy of J. Gray/L. Chung

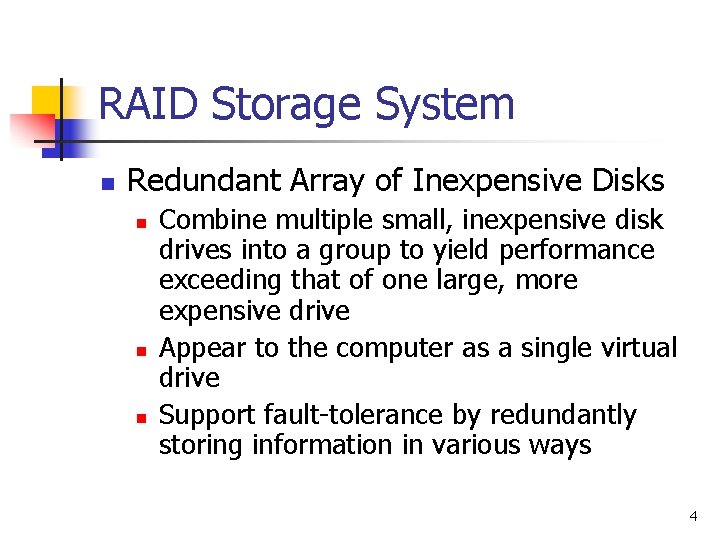

RAID Storage System
- Redundant Array of Inexpensive Disks
  - Combines multiple small, inexpensive disk drives into a group that yields performance exceeding that of one large, more expensive drive
  - Appears to the computer as a single virtual drive
  - Supports fault tolerance by storing information redundantly in various ways

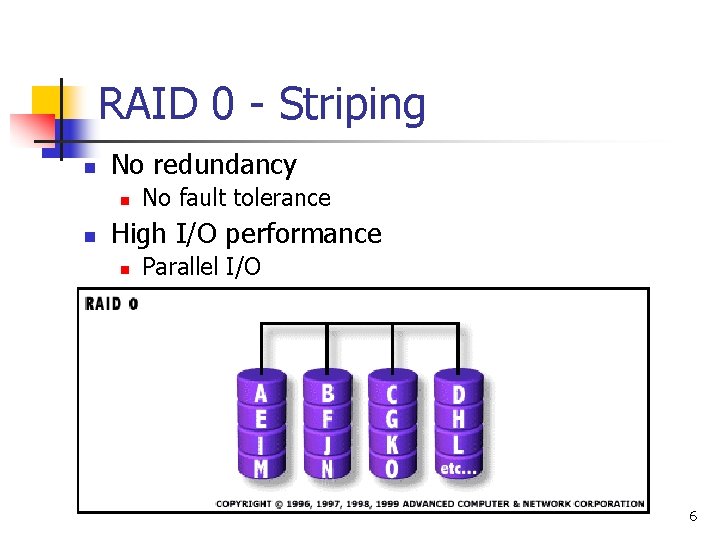

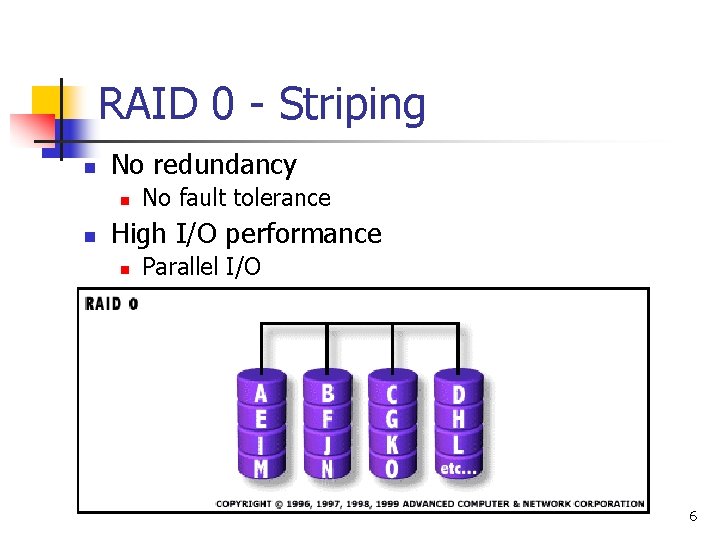

RAID 0 - Striping
- No redundancy
  - No fault tolerance
- High I/O performance
  - Parallel I/O
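
How striping enables parallel I/O can be illustrated with a minimal sketch. It assumes a stripe unit of exactly one block and round-robin placement; `locate_block` is a hypothetical helper name, and real controllers use larger, configurable stripe units.

```python
def locate_block(logical_block: int, num_disks: int) -> tuple[int, int]:
    """Map a logical block number to (disk index, block offset on that disk).

    Assumption: one block per stripe unit, placed round-robin across disks.
    """
    if num_disks < 1:
        raise ValueError("num_disks must be at least 1")
    if logical_block < 0:
        raise ValueError("logical_block must be non-negative")
    return logical_block % num_disks, logical_block // num_disks


# Eight consecutive logical blocks on a 4-disk array:
# neighbours land on different disks, so they can be transferred in parallel.
layout = [locate_block(block, 4) for block in range(8)]
for block, (disk, offset) in enumerate(layout):
    print(f"logical block {block} -> disk {disk}, offset {offset}")
```

Because there is no redundant copy or parity anywhere in this mapping, losing any one disk loses every stripe.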

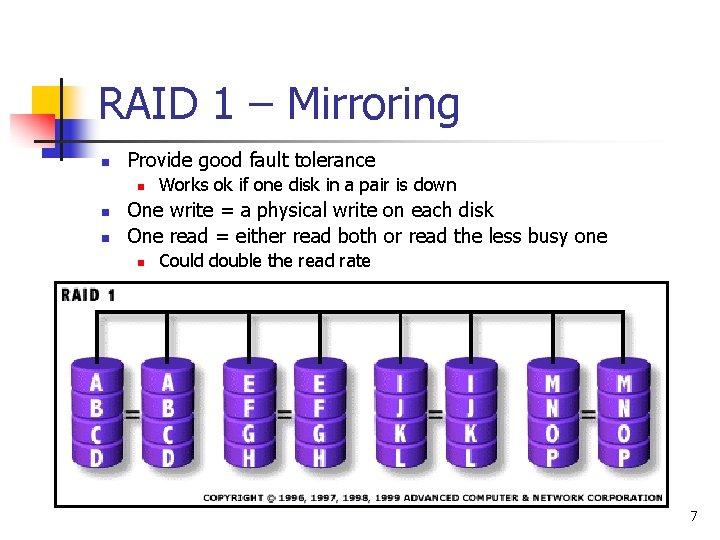

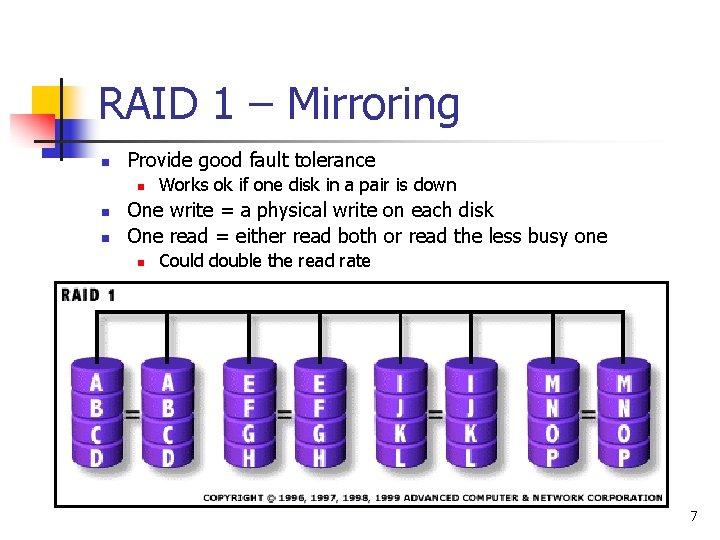

RAID 1 – Mirroring
- Provides good fault tolerance
  - Works OK if one disk in a pair is down
- One write = a physical write on each disk
- One read = either read both or read the less busy one
  - Could double the read rate

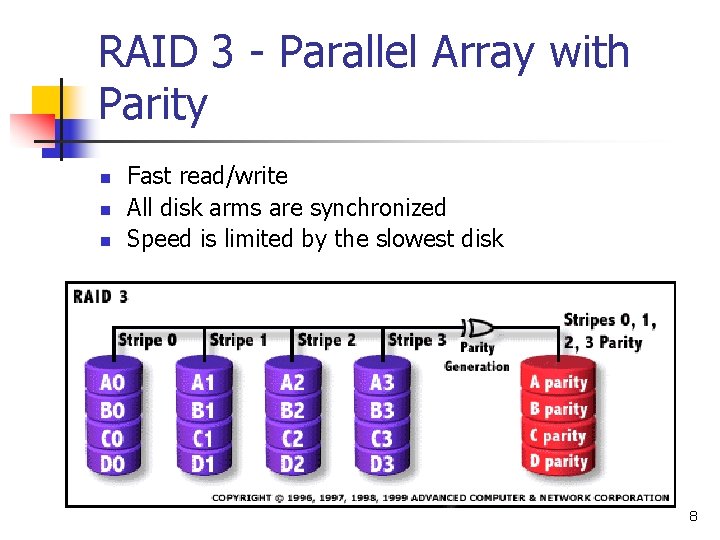

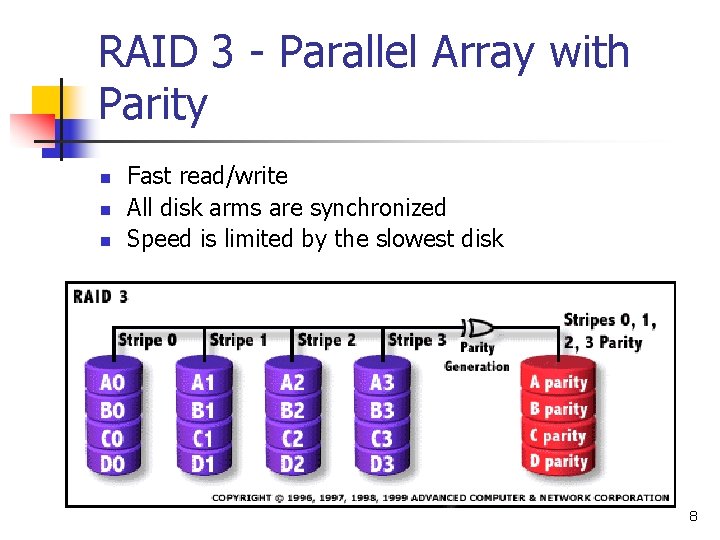

RAID 3 - Parallel Array with Parity
- Fast read/write
- All disk arms are synchronized
- Speed is limited by the slowest disk

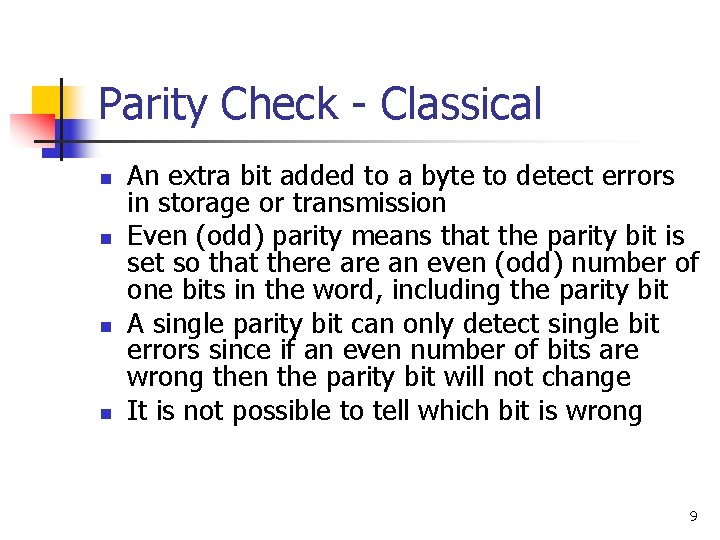

Parity Check - Classical
- An extra bit added to a byte to detect errors in storage or transmission
- Even (odd) parity means that the parity bit is set so that there is an even (odd) number of one bits in the word, including the parity bit
- A single parity bit can only detect single-bit errors: if an even number of bits are wrong, the parity does not change and the error goes unnoticed
- It is not possible to tell which bit is wrong
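
The even-parity rule above can be sketched in a few lines. The function names are hypothetical and the example byte is arbitrary.

```python
def even_parity_bit(byte: int) -> int:
    """Return the parity bit that makes the total number of 1 bits even."""
    return bin(byte & 0xFF).count("1") % 2


def passes_even_parity(byte: int, parity: int) -> bool:
    """Check that data bits plus parity bit contain an even number of 1s."""
    return (bin(byte & 0xFF).count("1") + parity) % 2 == 0


data = 0b1011_0010                 # four 1 bits
parity = even_parity_bit(data)     # stored alongside the data

one_flip = data ^ 0b0000_0001      # a single corrupted bit
two_flips = data ^ 0b0000_0011     # two corrupted bits

print("clean word passes:", passes_even_parity(data, parity))
print("one flipped bit passes:", passes_even_parity(one_flip, parity))
print("two flipped bits pass:", passes_even_parity(two_flips, parity))
```

The single flip fails the check, while the double flip passes it unnoticed. In neither case does the check say which bit changed.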

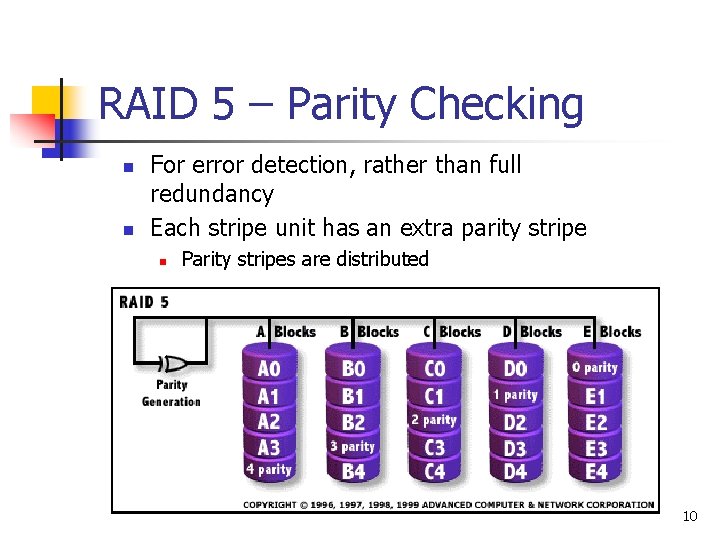

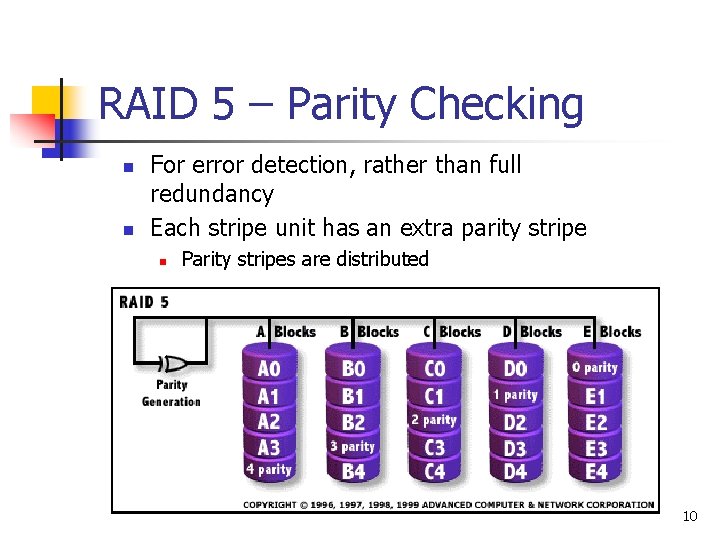

RAID 5 – Parity Checking
- For error detection, rather than full redundancy
- Each stripe unit has an extra parity stripe
  - Parity stripes are distributed across the disks
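
One way to picture distributed parity is a rotation in which the parity unit moves to a different disk on every stripe. The sketch below assumes a simple rotation that starts on the last disk; actual controllers use several different rotation schemes, and `parity_disk` and `stripe_layout` are hypothetical names.

```python
def parity_disk(stripe: int, num_disks: int) -> int:
    """Disk index holding the parity unit of a stripe (assumed rotation)."""
    if num_disks < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    return (num_disks - 1) - (stripe % num_disks)


def stripe_layout(stripe: int, num_disks: int) -> list[str]:
    """Return 'P' for the parity unit and 'D' for data units, per disk."""
    p = parity_disk(stripe, num_disks)
    return ["P" if disk == p else "D" for disk in range(num_disks)]


for stripe in range(4):
    print(f"stripe {stripe}: {' '.join(stripe_layout(stripe, 4))}")
```

Rotating the parity unit keeps any single disk from absorbing every parity update.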

RAID 5 Read/Write
- Read: parallel stripes read from multiple disks
  - Good performance
- Write: 2 reads + 2 writes
  - Read old data stripe; read parity stripe (2 reads)
  - XOR old data stripe with new data stripe.
  - XOR result into parity stripe.
  - Write new data stripe and new parity stripe (2 writes).
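
The write steps above can be sketched with plain byte strings standing in for stripe units. This is an illustration under assumed names (`xor_blocks`, `small_write`) and made-up data, not a controller implementation.

```python
def xor_blocks(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equally sized blocks."""
    if len(a) != len(b):
        raise ValueError("blocks must have the same length")
    return bytes(x ^ y for x, y in zip(a, b))


def small_write(old_data: bytes, old_parity: bytes, new_data: bytes) -> bytes:
    """Return the new parity for a single-unit update (the 2 reads are the inputs)."""
    delta = xor_blocks(old_data, new_data)   # XOR old data with new data
    return xor_blocks(old_parity, delta)     # XOR the result into the parity


# A stripe with three data units and one parity unit.
units = [b"\x0f\xf0", b"\x33\x33", b"\x55\xaa"]
parity = xor_blocks(xor_blocks(units[0], units[1]), units[2])

# Update unit 1 without touching units 0 and 2.
new_unit = b"\xff\x00"
new_parity = small_write(units[1], parity, new_unit)

# The incrementally updated parity matches a full recomputation.
full_parity = xor_blocks(xor_blocks(units[0], new_unit), units[2])

# The same XOR rebuilds a lost unit from the survivors plus parity.
rebuilt = xor_blocks(xor_blocks(units[0], units[2]), new_parity)
print(new_parity == full_parity, rebuilt == new_unit)
```

The update reads only the old data and old parity, which is why a small write costs 2 reads and 2 writes regardless of how many disks are in the array.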

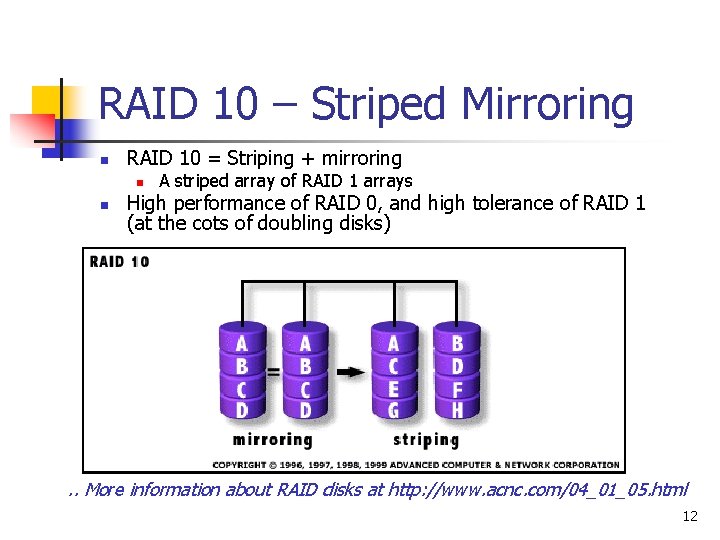

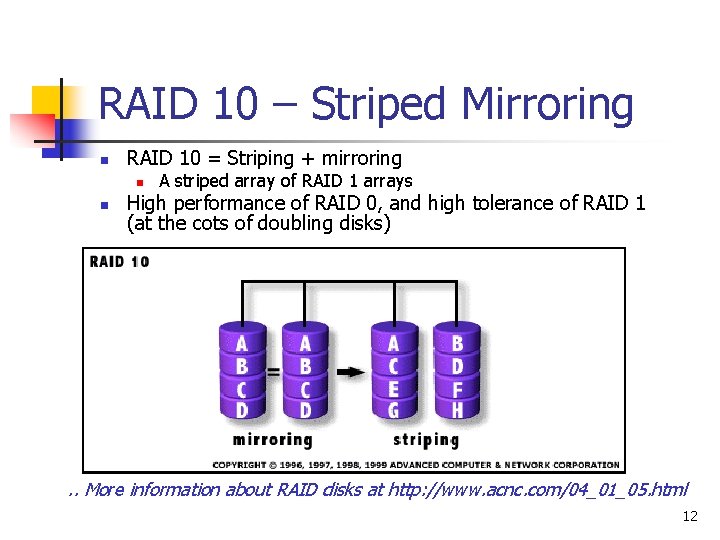

RAID 10 – Striped Mirroring
- RAID 10 = striping + mirroring
  - A striped array of RAID 1 arrays
  - High performance of RAID 0, and high fault tolerance of RAID 1 (at the cost of doubling the disks)
- More information about RAID disks at http://www.acnc.com/04_01_05.html

Hardware vs. Software RAID
- Software RAID: runs on the server's CPU
  - Directly dependent on server CPU performance and load
  - Occupies host system memory and CPU cycles, degrading server performance
- Hardware RAID: runs on the RAID controller's CPU
  - Does not occupy any host system memory
  - Is not operating system dependent
  - Host CPU can execute applications while the array adapter's processor simultaneously executes array functions: true hardware multi-tasking

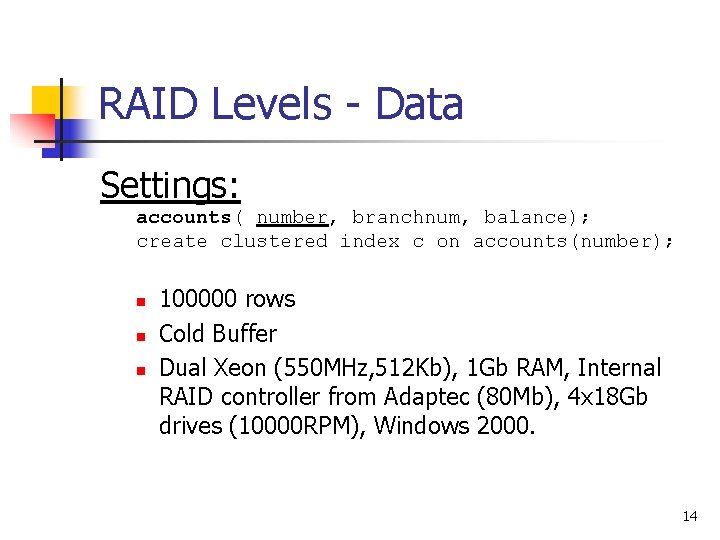

RAID Levels - Data
- Settings:
  accounts(number, branchnum, balance);
  create clustered index c on accounts(number);
- 100000 rows
- Cold buffer
- Dual Xeon (550 MHz, 512 Kb), 1 Gb RAM, internal RAID controller from Adaptec (80 Mb), 4 x 18 Gb drives (10000 RPM), Windows 2000.

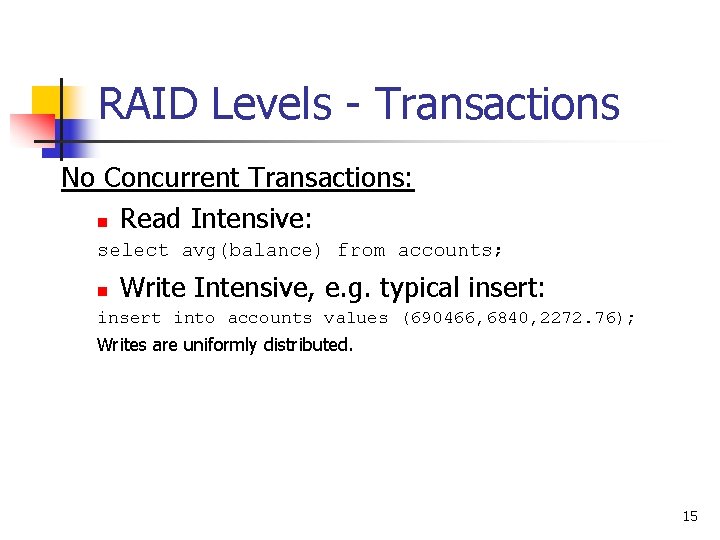

RAID Levels - Transactions
- No concurrent transactions:
  - Read intensive: select avg(balance) from accounts;
  - Write intensive, e.g. typical insert: insert into accounts values (690466, 6840, 2272.76);
- Writes are uniformly distributed.

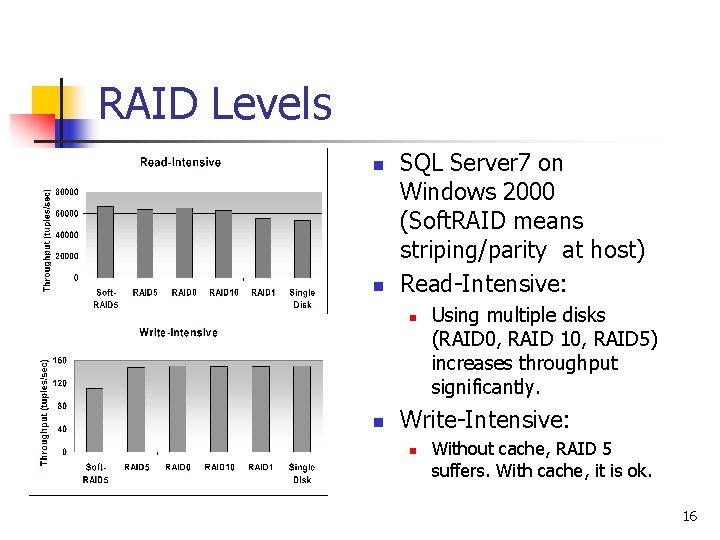

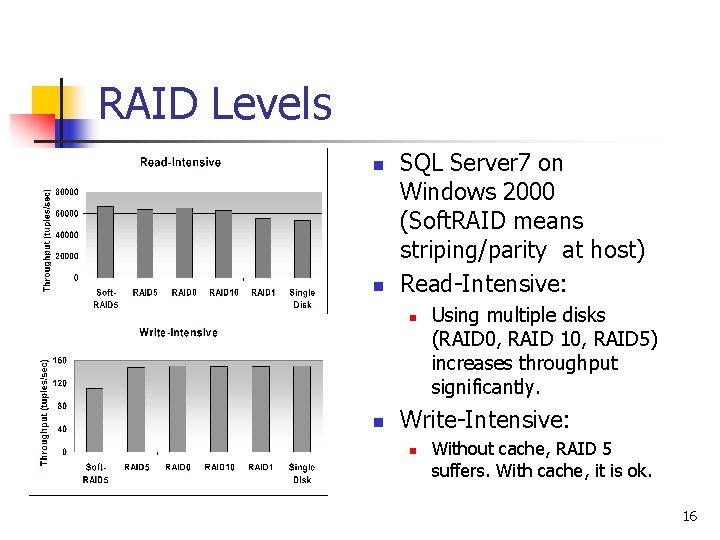

RAID Levels
- SQL Server 7 on Windows 2000 (SoftRAID means striping/parity at host)
- Read-intensive:
  - Using multiple disks (RAID 0, RAID 10, RAID 5) increases throughput significantly.
- Write-intensive:
  - Without cache, RAID 5 suffers. With cache, it is OK.

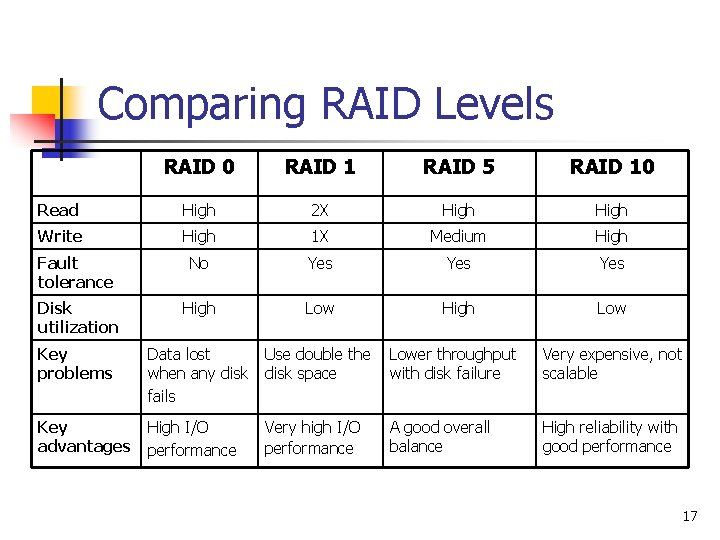

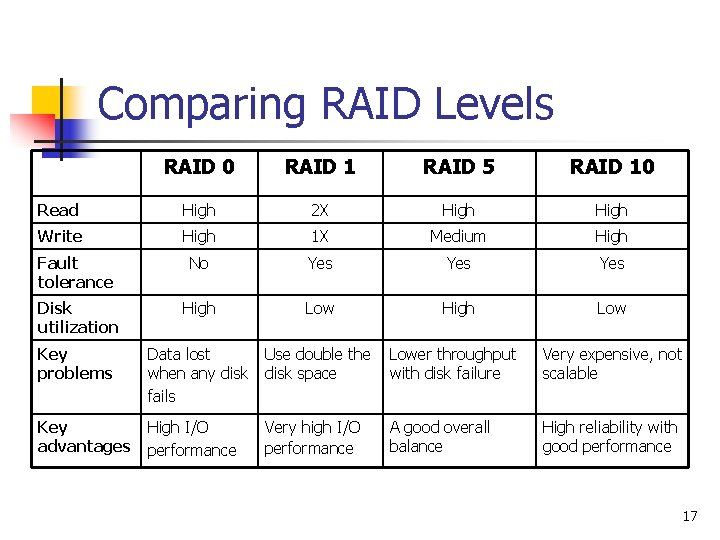

Comparing RAID Levels
- Levels compared, in column order: RAID 0, RAID 1, RAID 5, RAID 10
- Read: High, 2X, High
- Write: High (RAID 0), 1X (RAID 1), Medium (RAID 5), High (RAID 10)
- Fault tolerance: No, Yes, Yes
- Disk utilization: High, Low
- Key problems:
  - RAID 0: data lost when any disk fails
  - RAID 1: uses double the disk space
  - RAID 5: lower throughput with disk failure
  - RAID 10: very expensive, not scalable
- Key advantages:
  - RAID 0: high I/O performance
  - RAID 1: very high I/O performance
  - RAID 5: a good overall balance
  - RAID 10: high reliability with good performance
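
The disk utilization row can be made concrete with a small calculation. The sketch assumes two-way mirroring for RAID 1 and RAID 10 and one parity unit per stripe for RAID 5; `usable_fraction` is a hypothetical helper, and the 4 x 18 Gb figures are borrowed from the test configuration described earlier.

```python
def usable_fraction(level: str, num_disks: int) -> float:
    """Fraction of raw capacity available for data (assumptions in comments)."""
    if level == "RAID 0":
        return 1.0                            # no redundancy at all
    if level in ("RAID 1", "RAID 10"):
        if num_disks % 2 != 0:
            raise ValueError("mirroring needs an even number of disks")
        return 0.5                            # assumes two-way mirrors
    if level == "RAID 5":
        if num_disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return (num_disks - 1) / num_disks    # one parity unit per stripe
    raise ValueError(f"unknown level: {level}")


disks, size_gb = 4, 18
for level in ("RAID 0", "RAID 1", "RAID 5", "RAID 10"):
    usable = usable_fraction(level, disks) * disks * size_gb
    print(f"{level}: {usable:.0f} Gb usable of {disks * size_gb} Gb raw")
```

RAID 5's overhead shrinks as disks are added, while mirroring always costs half the raw capacity.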

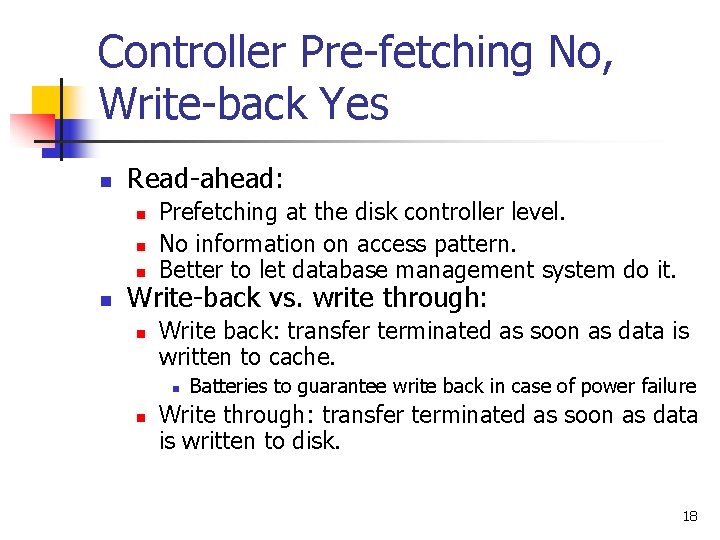

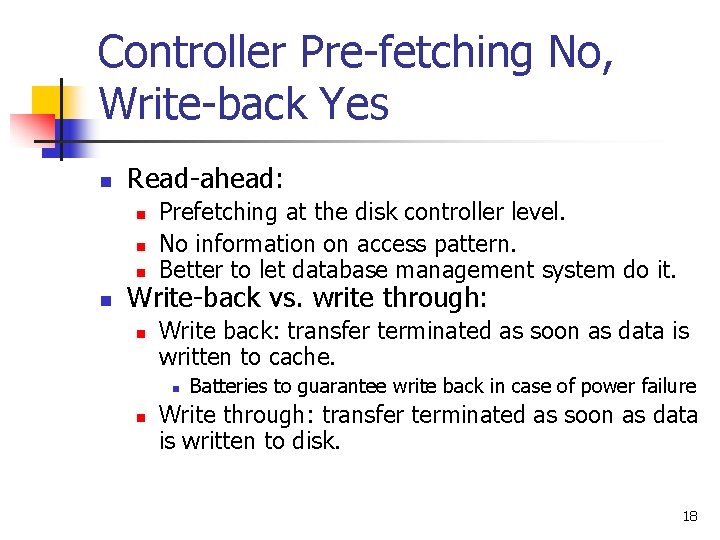

Controller Pre-fetching No, Write-back Yes
- Read-ahead:
  - Prefetching at the disk controller level.
  - No information on access pattern.
  - Better to let the database management system do it.
- Write-back vs. write-through:
  - Write-back: transfer terminated as soon as data is written to cache.
    - Batteries to guarantee write-back in case of power failure
  - Write-through: transfer terminated as soon as data is written to disk.
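
The difference between the two write policies comes down to when the controller reports the write as done. The toy model below is only a sketch: `ToyController` is a hypothetical class, and dictionaries stand in for the controller cache and the disk.

```python
class ToyController:
    """Toy model of a controller's write policy (not a real device API)."""

    def __init__(self, write_back: bool) -> None:
        self.write_back = write_back
        self.dirty: dict[int, bytes] = {}   # cached blocks not yet on disk
        self.disk: dict[int, bytes] = {}    # blocks that reached the platters

    def write(self, block: int, data: bytes) -> str:
        if self.write_back:
            # Acknowledge once the data is in cache; disk is updated later.
            self.dirty[block] = data
            return "acknowledged after cache write"
        # Write-through: acknowledge only after the disk write completes.
        self.disk[block] = data
        return "acknowledged after disk write"

    def flush(self) -> None:
        """Destage cached writes; battery backup keeps this possible after power loss."""
        self.disk.update(self.dirty)
        self.dirty.clear()


wb = ToyController(write_back=True)
print(wb.write(7, b"new row"), "| on disk yet:", 7 in wb.disk)
wb.flush()
print("after flush, on disk:", 7 in wb.disk)

wt = ToyController(write_back=False)
print(wt.write(7, b"new row"), "| on disk yet:", 7 in wt.disk)
```

Between the acknowledgement and the flush, the write-back data exists only in the cache, which is the window the batteries are there to cover.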

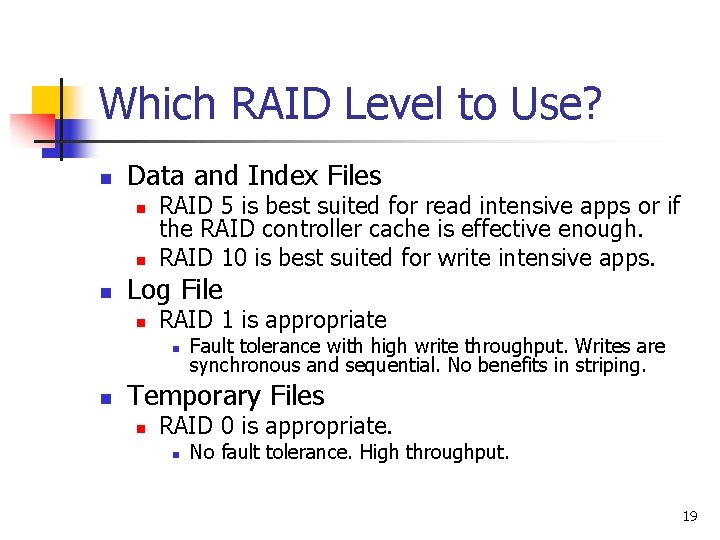

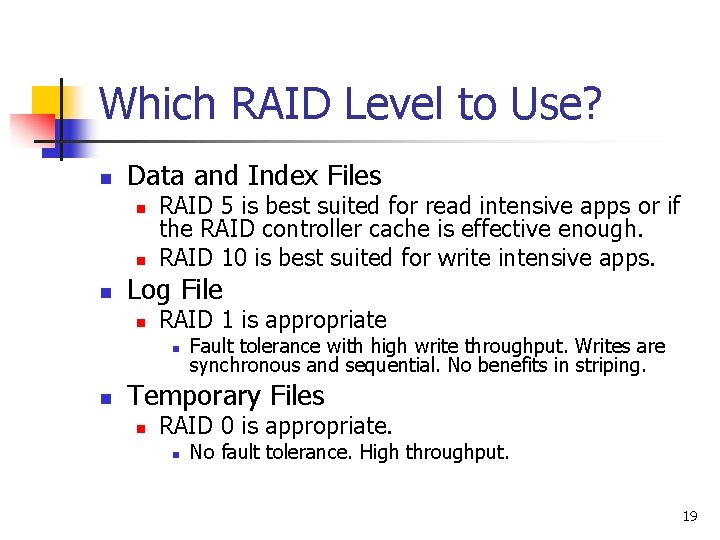

Which RAID Level to Use?
- Data and index files
  - RAID 5 is best suited for read-intensive apps, or if the RAID controller cache is effective enough.
  - RAID 10 is best suited for write-intensive apps.
- Log file
  - RAID 1 is appropriate
    - Fault tolerance with high write throughput. Writes are synchronous and sequential. No benefits in striping.
- Temporary files
  - RAID 0 is appropriate.
    - No fault tolerance. High throughput.

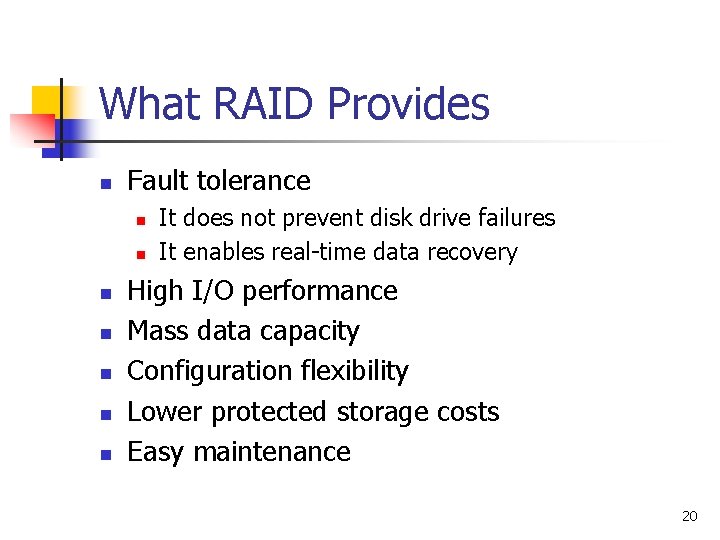

What RAID Provides
- Fault tolerance
  - It does not prevent disk drive failures
  - It enables real-time data recovery
- High I/O performance
- Mass data capacity
- Configuration flexibility
- Lower protected storage costs
- Easy maintenance

Enhancing Hardware Config.
- Add memory
  - Cheapest option to get better performance
  - Can be used to enlarge the DB buffer pool
    - Better hit ratio
  - If used to enlarge the OS buffer (as disk cache), it benefits other apps as well
- Add disks
- Add processors

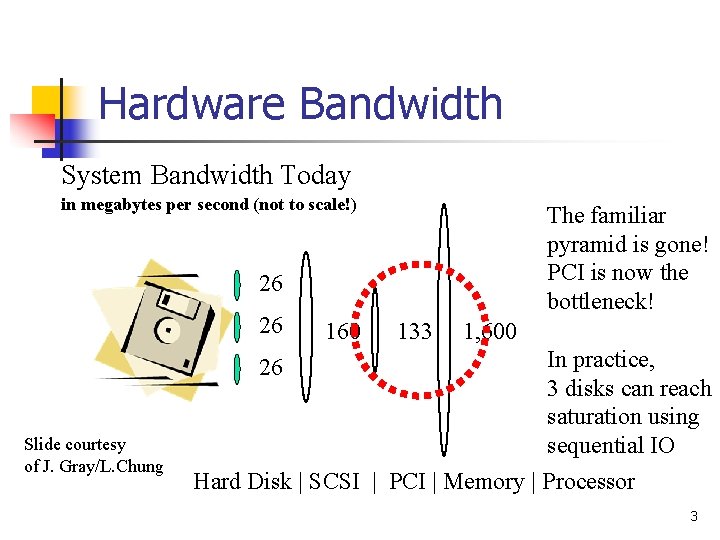

Add Disks
- Larger disk ≠ better performance
  - Bottleneck is disk bandwidth
- Add disks for
  - A dedicated disk for the log
  - Switch RAID 5 to RAID 10 for update-intensive apps
  - Move secondary indexes to another disk for write-intensive apps
  - Partition read-intensive tables across many disks
- Consider intelligent disk systems
  - Automatic replication and load balancing