Lecture 16: Large Cache Innovations

- Slides: 20

- Today: large cache design and other cache innovations
- Midterm scores
  - 91-80: 17 students
  - 79-75: 14 students
  - 74-68: 8 students
  - 63-54: 5 students
- Q1 (HM), Q2 (bpred), Q3 (stalls), Q7 (loops): mostly correct
- Q4 (ooo): 50% correct; many didn't stall renaming
- Q5 (multi-core): fewer than a handful got 8+ points
- Q6 (memdep): fewer than a handful got part (b) right and correctly articulated the effect on power/energy

Shared vs. Private Caches in Multi-Core

- Advantages of a shared cache:
  - Space is dynamically allocated among cores
  - No space is wasted on replicated data
  - Potentially faster cache coherence (and data is easier to locate on a miss)
- Advantages of a private cache:
  - A small L2 has a faster access time
  - A private bus to the L2 means less contention

UCA and NUCA

- The small caches discussed so far have all used uniform cache access (UCA): the latency of any access is constant, no matter where the data is found
- For a large multi-megabyte cache, it is expensive to make every access pay the worst-case delay; hence, non-uniform cache architecture (NUCA)

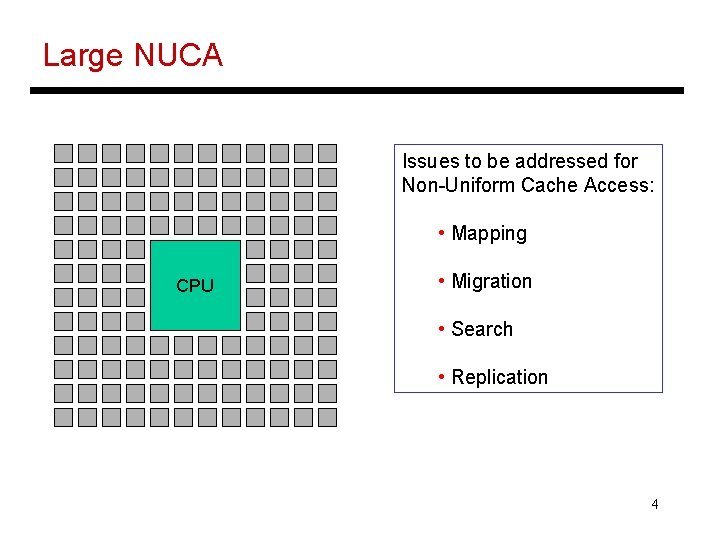

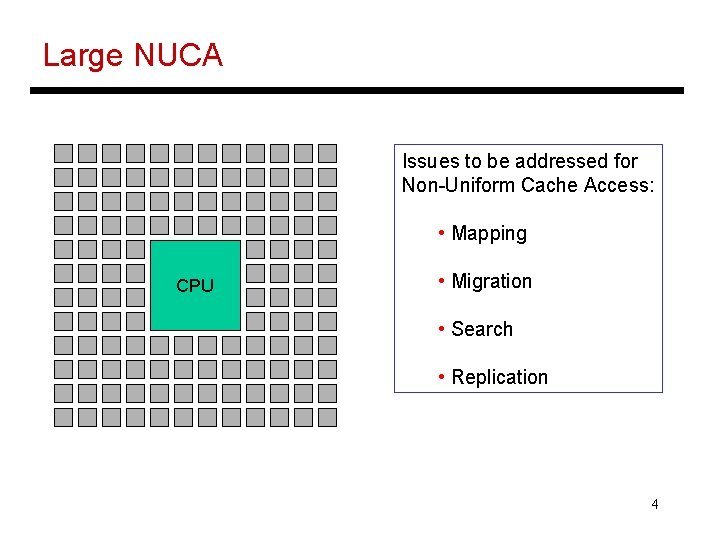

Large NUCA

Issues to be addressed for non-uniform cache access:

- Mapping
- Migration
- Search
- Replication

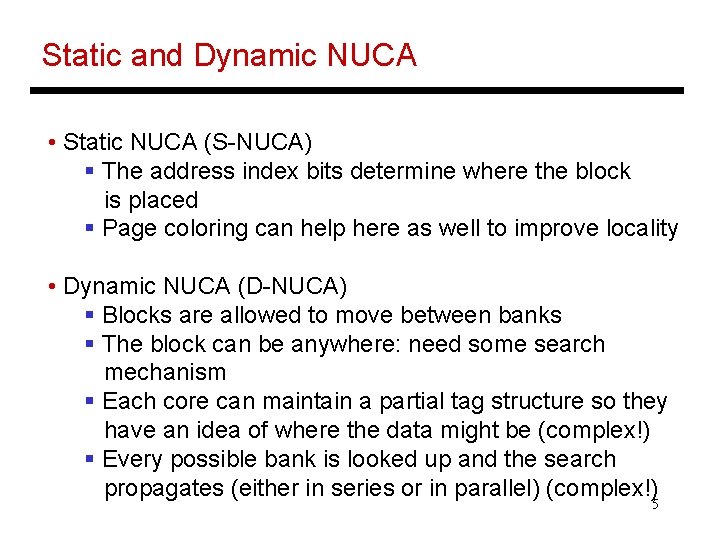

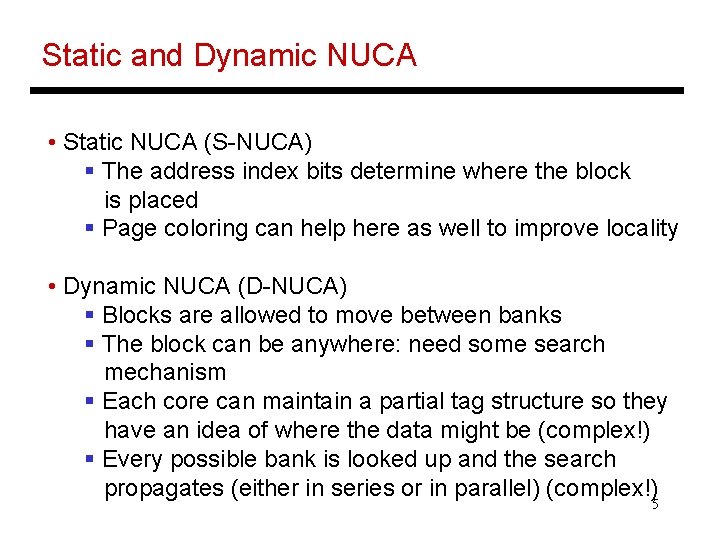

Static and Dynamic NUCA

- Static NUCA (S-NUCA)
  - The address index bits determine where the block is placed
  - Page coloring can help here as well to improve locality
- Dynamic NUCA (D-NUCA)
  - Blocks are allowed to move between banks
  - The block can be anywhere, so some search mechanism is needed
  - Each core can maintain a partial tag structure so that it has an idea of where the data might be (complex!)
  - Alternatively, every possible bank is looked up and the search propagates, either in series or in parallel (complex!)
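The S-NUCA rule that the index bits fix the bank can be sketched in a few lines of C. The 64-byte block size and the function name `snuca_bank` are assumptions made for illustration; the slides do not specify them.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed for illustration: 64-byte cache blocks (6 offset bits). */
enum { BLOCK_OFFSET_BITS = 6 };

/* S-NUCA: the bank is a fixed function of the address index bits,
 * so no search is needed. num_banks must be a power of two. */
static unsigned snuca_bank(uint64_t paddr, unsigned num_banks)
{
    assert(num_banks != 0 && (num_banks & (num_banks - 1)) == 0);
    return (unsigned)((paddr >> BLOCK_OFFSET_BITS) & (num_banks - 1));
}
```

With 16 banks, consecutive 64-byte blocks land in consecutive banks and wrap around after block 15. A D-NUCA design has no such closed-form mapping, which is why it needs the search mechanisms listed above.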

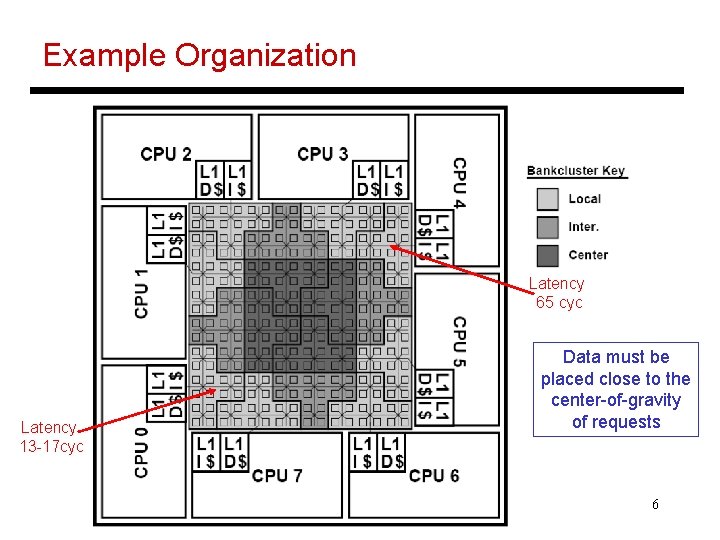

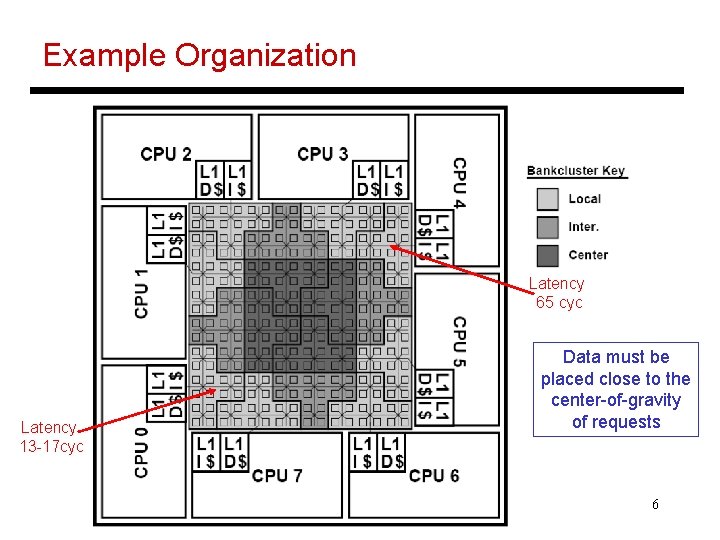

Example Organization

- Figure labels: latency 65 cycles; latency 13-17 cycles
- Data must be placed close to the center-of-gravity of requests
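One way to read "center-of-gravity" is as the bank that minimizes the request-weighted distance to all requesters. The sketch below assumes banks laid out on a `width` x `height` grid and Manhattan (hop-count) distance; the grid, the `Requester` type, and `best_bank` are hypothetical names, not something the slides define.

```c
#include <assert.h>
#include <limits.h>
#include <stdlib.h>

/* Hypothetical: a requester at grid position (x, y) that issued
 * `requests` accesses to the block in question. */
typedef struct { int x, y; unsigned long requests; } Requester;

/* Return the index (row-major) of the bank whose request-weighted
 * Manhattan distance to all requesters is smallest. */
static int best_bank(const Requester *r, int n, int width, int height)
{
    assert(r != NULL && n > 0 && width > 0 && height > 0);
    int best = 0;
    unsigned long best_cost = ULONG_MAX;
    for (int by = 0; by < height; by++) {
        for (int bx = 0; bx < width; bx++) {
            unsigned long cost = 0;
            for (int i = 0; i < n; i++)
                cost += r[i].requests *
                        (unsigned long)(abs(r[i].x - bx) + abs(r[i].y - by));
            if (cost < best_cost) {
                best_cost = cost;
                best = by * width + bx;
            }
        }
    }
    return best;
}
```

A block requested mostly by one core ends up next to that core, while a block shared evenly by several cores ends up between them.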

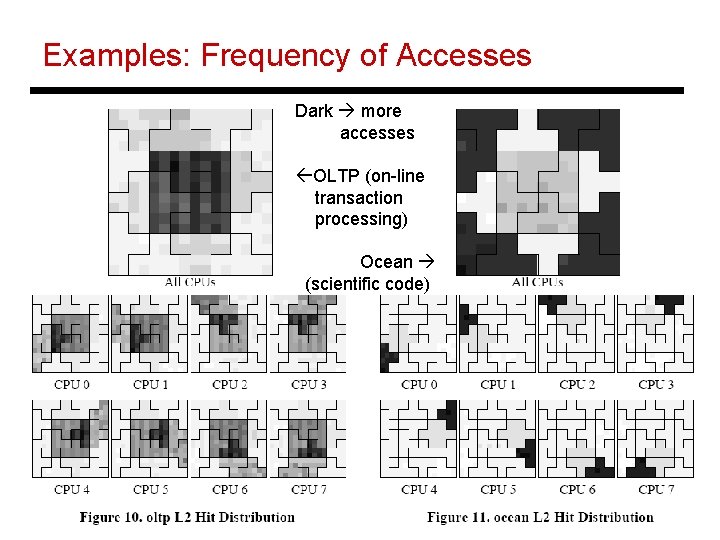

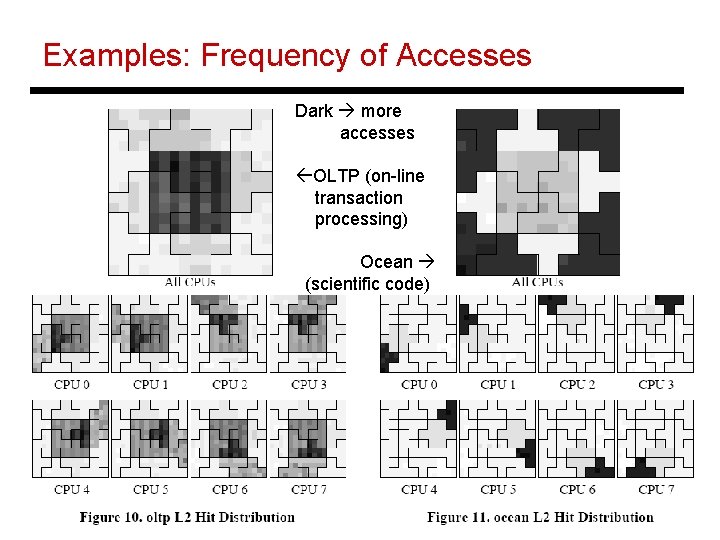

Examples: Frequency of Accesses

- Darker shading indicates more accesses
- Panels: OLTP (on-line transaction processing) and Ocean (scientific code)

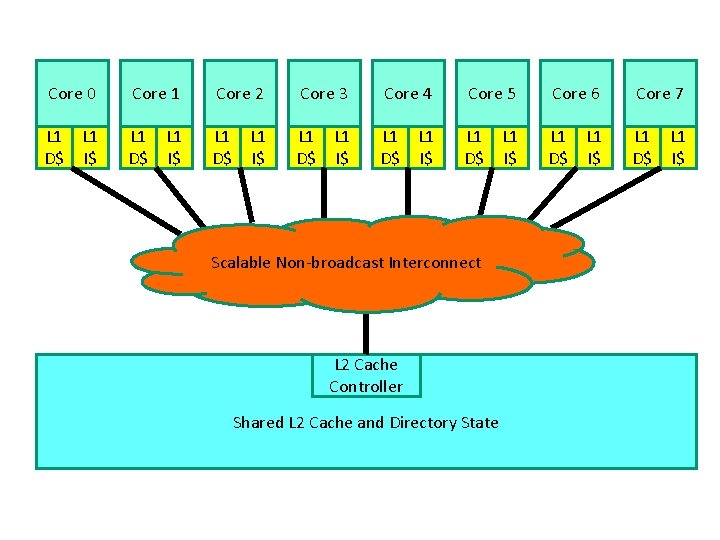

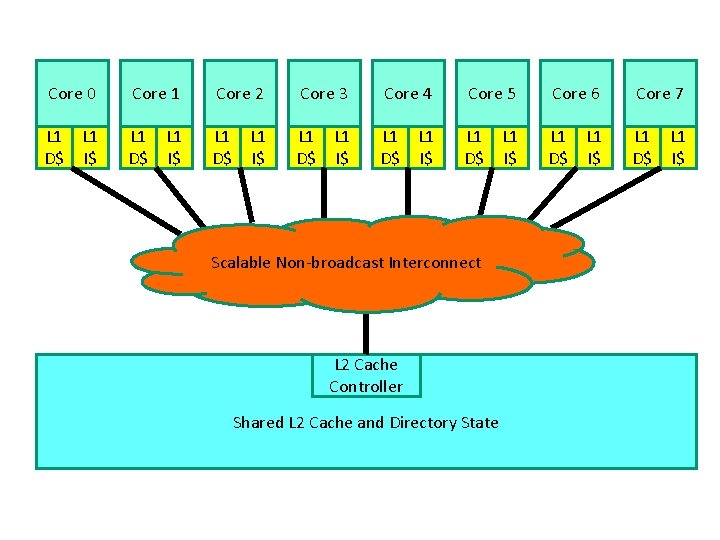

Figure: Core 0 to Core 7, each with an L1 D$ and an L1 I$, connected by a scalable non-broadcast interconnect to an L2 cache controller and a shared L2 cache with directory state.

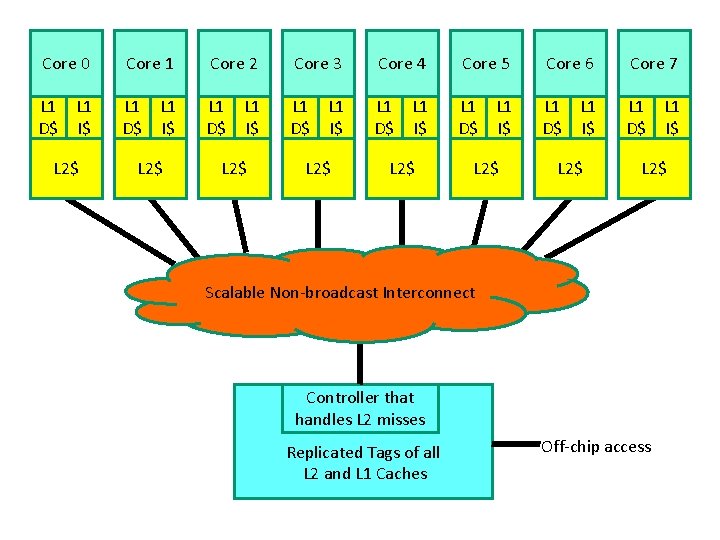

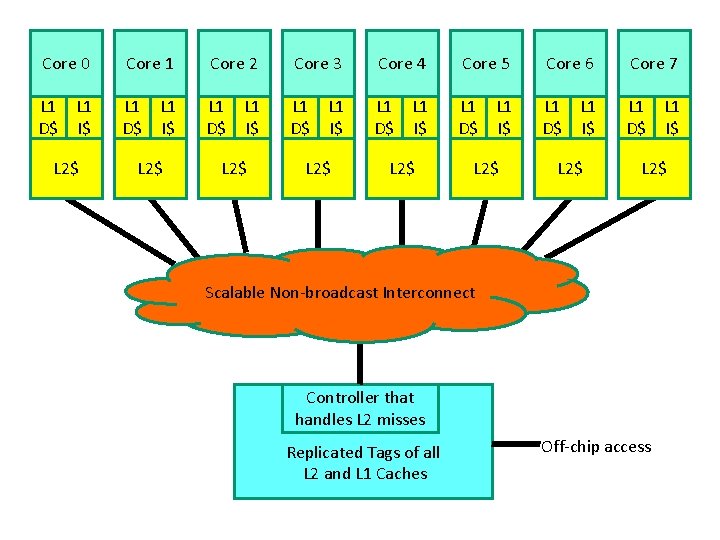

Figure: Core 0 to Core 7, each with an L1 D$, an L1 I$, and an L2$, connected by a scalable non-broadcast interconnect. Other labels: controller that handles L2 misses; replicated tags of all L2 and L1 caches; off-chip access.

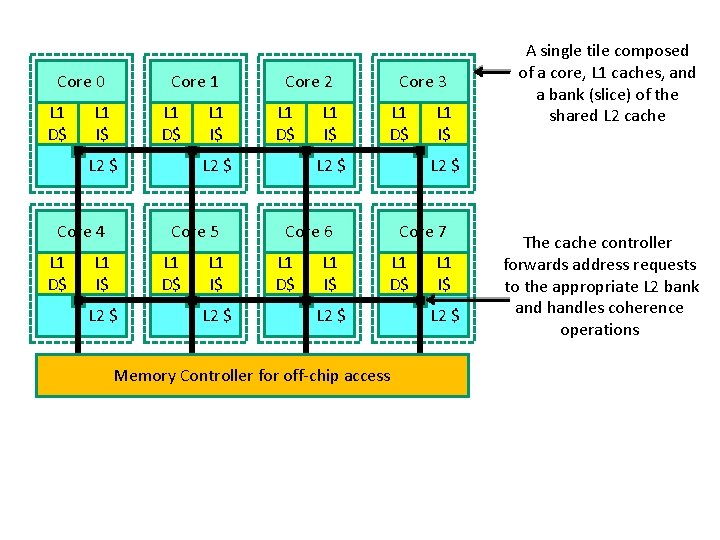

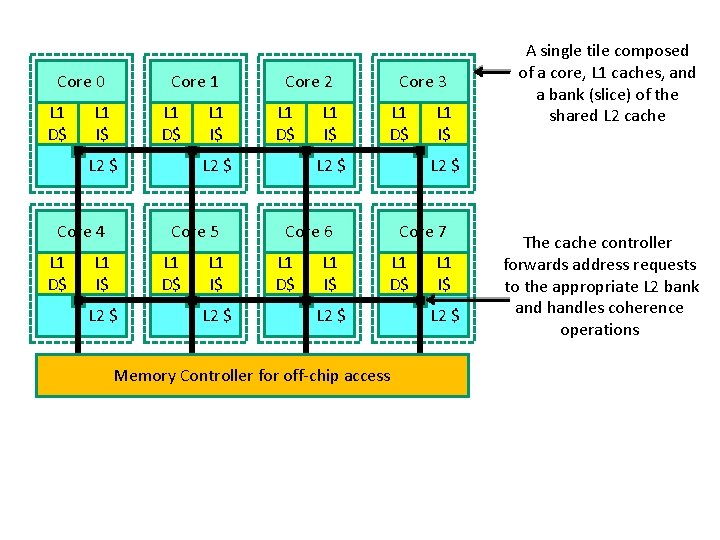

Figure: Core 0 to Core 7 arranged as tiles, with a memory controller for off-chip access.

- A single tile is composed of a core, L1 caches, and a bank (slice) of the shared L2 cache
- The cache controller forwards address requests to the appropriate L2 bank and handles coherence operations

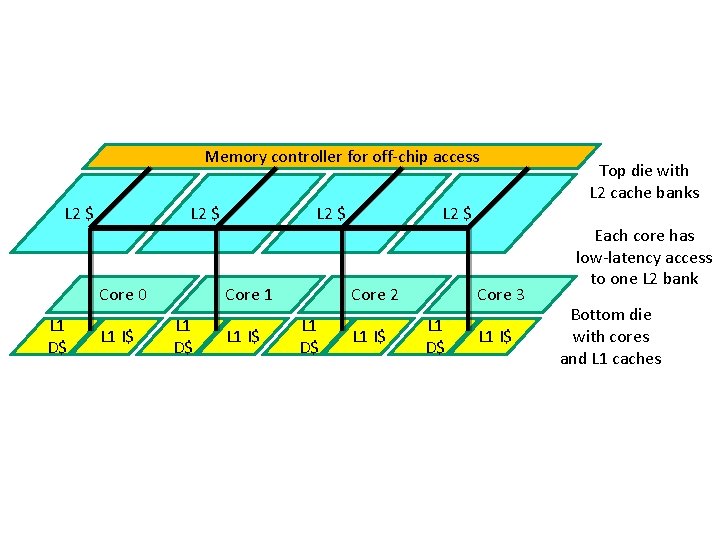

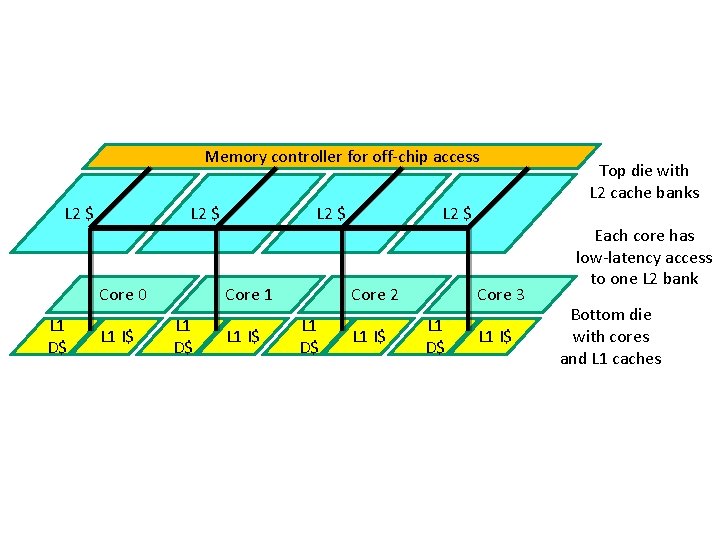

Figure: two stacked dies, with a memory controller for off-chip access.

- Top die with L2 cache banks
- Bottom die with cores (Core 0 to Core 3) and L1 caches
- Each core has low-latency access to one L2 bank

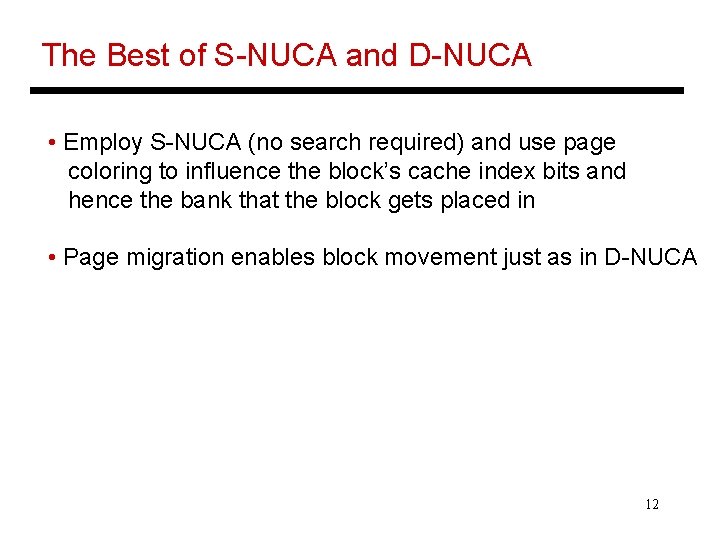

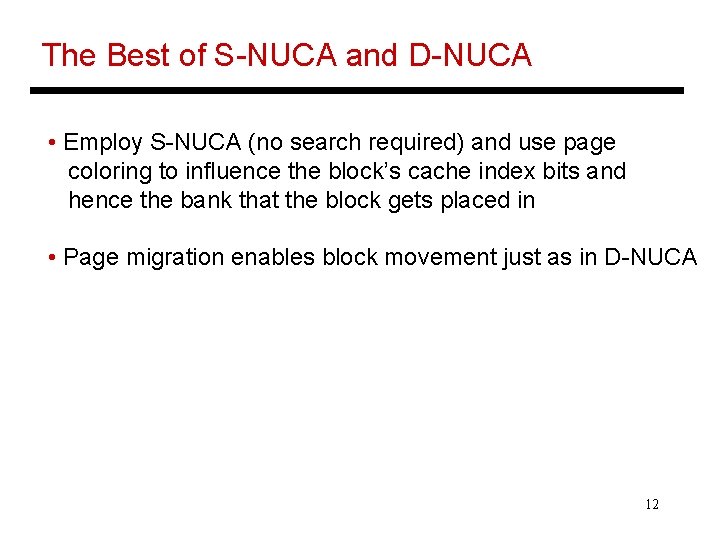

The Best of S-NUCA and D-NUCA

- Employ S-NUCA (no search required) and use page coloring to influence the block's cache index bits, and hence the bank in which the block is placed
- Page migration enables block movement just as in D-NUCA
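Page coloring works because some of the bank-select bits come from the physical page number, which the OS chooses. The sketch below assumes 4 KB pages, 16 banks, and bank-select bits taken from the low bits of the physical frame number; `pick_frame` and the free-frame array are hypothetical stand-ins for a real allocator.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed for illustration: 4 KB pages, 16 banks. */
enum { PAGE_BITS = 12, BANK_BITS = 4 };

/* Bank selected by the low bits of the physical frame number. */
static unsigned bank_of_paddr(uint64_t paddr)
{
    return (unsigned)((paddr >> PAGE_BITS) & ((1u << BANK_BITS) - 1u));
}

/* The "color" of a physical frame is the bank it maps to. */
static unsigned color_of_frame(uint64_t pfn)
{
    return (unsigned)(pfn & ((1u << BANK_BITS) - 1u));
}

/* Return the index of the first free frame whose color matches the
 * desired bank, or -1 if none is available. */
static long pick_frame(const uint64_t *free_frames, size_t n, unsigned bank)
{
    assert(bank < (1u << BANK_BITS));
    for (size_t i = 0; i < n; i++)
        if (color_of_frame(free_frames[i]) == bank)
            return (long)i;
    return -1;
}
```

Under these assumptions, migrating a page amounts to copying it into a frame of a different color, after which every block in the page maps to the new bank without any hardware search.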

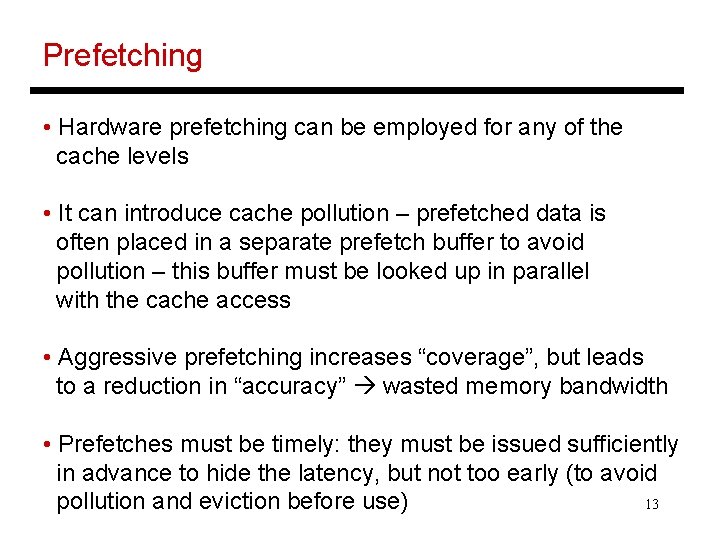

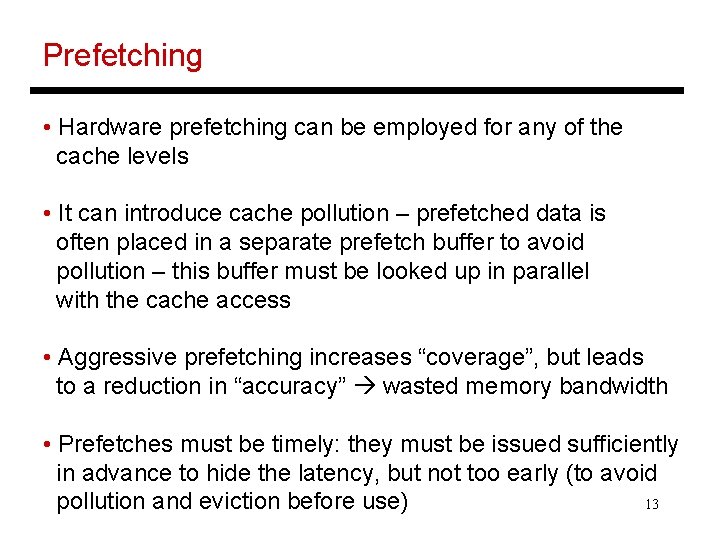

Prefetching

- Hardware prefetching can be employed at any of the cache levels
- It can introduce cache pollution; prefetched data is often placed in a separate prefetch buffer to avoid pollution, and this buffer must be looked up in parallel with the cache access
- Aggressive prefetching increases "coverage" but reduces "accuracy", which wastes memory bandwidth
- Prefetches must be timely: they must be issued sufficiently in advance to hide the latency, but not too early (to avoid pollution and eviction before use)
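The slide uses "coverage" and "accuracy" without defining them. The usual definitions, stated here as an assumption about what the lecture intends, are:

$$
\text{coverage} = \frac{\text{misses eliminated by prefetching}}{\text{misses without prefetching}},
\qquad
\text{accuracy} = \frac{\text{prefetches that are used}}{\text{prefetches issued}}
$$

As a made-up example, suppose a program incurs 100 misses without prefetching, and a prefetcher issues 200 prefetches of which 50 are used before eviction. Coverage is 50/100 = 50% and accuracy is 50/200 = 25%; the other 150 prefetches consumed memory bandwidth for nothing.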

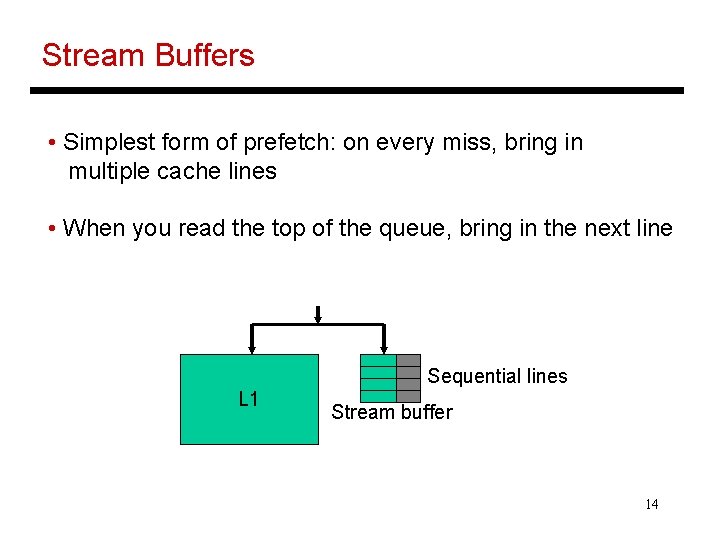

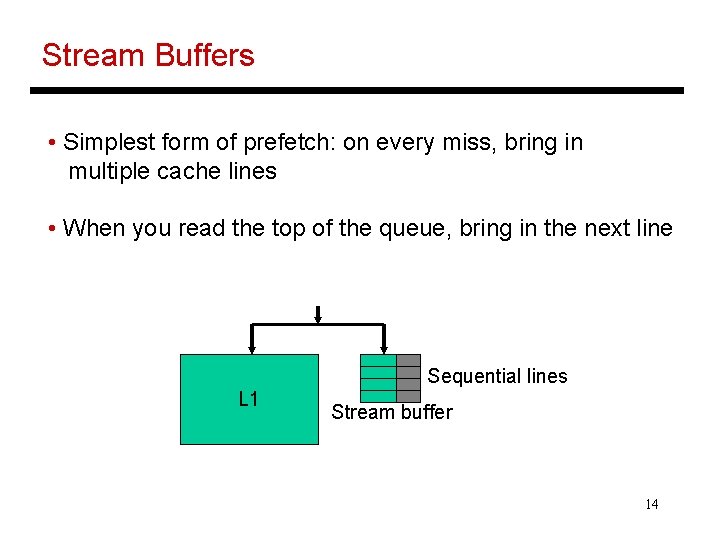

Stream Buffers

- Simplest form of prefetch: on every miss, bring in multiple cache lines
- When you read the top of the queue, bring in the next line
- Figure labels: L1, stream buffer, sequential lines
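A minimal software model of a single stream buffer, following the two bullets above. The depth of 4, the flush-and-refill policy on a non-matching miss, and all names (`StreamBuffer`, `sb_on_l1_miss`) are assumptions for illustration; addresses are cache-line numbers.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

enum { SB_DEPTH = 4 };  /* assumed buffer depth */

typedef struct {
    uint64_t line[SB_DEPTH]; /* FIFO of prefetched line numbers */
    int head;                /* index of the oldest entry (top of queue) */
    uint64_t next_line;      /* next sequential line to prefetch */
    bool valid;
} StreamBuffer;

/* On a miss that the buffer cannot serve, fetch the following lines. */
static void sb_refill(StreamBuffer *sb, uint64_t miss_line)
{
    for (int i = 0; i < SB_DEPTH; i++)
        sb->line[i] = miss_line + 1 + (uint64_t)i;
    sb->head = 0;
    sb->next_line = miss_line + 1 + SB_DEPTH;
    sb->valid = true;
}

/* Called on an L1 miss. Returns true if the top of the queue holds
 * the line; in that case the slot is reused for the next line. */
static bool sb_on_l1_miss(StreamBuffer *sb, uint64_t miss_line)
{
    assert(sb != NULL);
    if (sb->valid && sb->line[sb->head] == miss_line) {
        sb->line[sb->head] = sb->next_line++;
        sb->head = (sb->head + 1) % SB_DEPTH;
        return true;
    }
    sb_refill(sb, miss_line);
    return false;
}
```

A sequential walk misses once, after which every later L1 miss is served from the top of the queue; a jump to an unrelated line flushes the buffer and starts a new stream.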

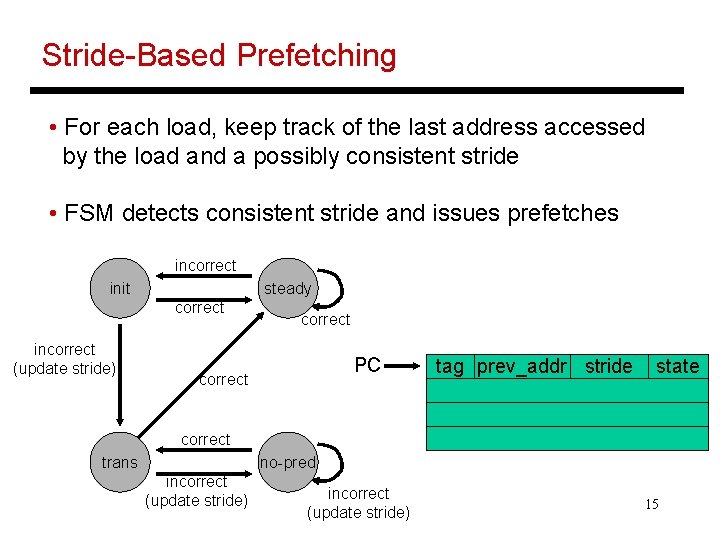

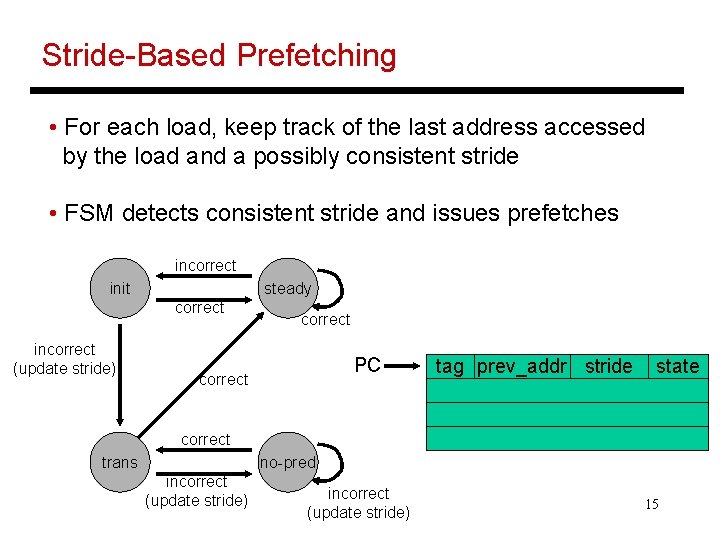

Stride-Based Prefetching

- For each load, keep track of the last address accessed by the load and a possibly consistent stride
- An FSM detects a consistent stride and issues prefetches
- Figure, table entry selected by the load's PC: tag, prev_addr, stride, state
- Figure, FSM states: init, trans, steady, no-pred; transitions are labeled correct, incorrect, or incorrect (update stride)
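The exact arrows of the FSM are not recoverable from the slide text, so the sketch below assumes the transitions of the commonly described reference prediction table scheme: a correct prediction moves toward steady, an incorrect one moves away from it, and the stride is updated on every incorrect prediction except when leaving steady. Issuing prefetches only in the steady state is a further simplifying assumption, and the names `RptEntry` and `rpt_access` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { INIT, TRANSIENT, STEADY, NO_PRED } State;

typedef struct {
    uint64_t tag;        /* PC of the load */
    uint64_t prev_addr;  /* last address accessed by this load */
    int64_t stride;
    State state;
    bool valid;
} RptEntry;

/* Update the entry for one executed load. Returns true and sets
 * *prefetch_addr when a prefetch should be issued. */
static bool rpt_access(RptEntry *e, uint64_t pc, uint64_t addr,
                       uint64_t *prefetch_addr)
{
    assert(e != NULL && prefetch_addr != NULL);
    if (!e->valid || e->tag != pc) {          /* allocate a fresh entry */
        e->tag = pc; e->prev_addr = addr; e->stride = 0;
        e->state = INIT; e->valid = true;
        return false;
    }
    int64_t observed = (int64_t)(addr - e->prev_addr);
    bool correct = (observed == e->stride);
    switch (e->state) {
    case INIT:
        if (correct) e->state = STEADY;
        else { e->stride = observed; e->state = TRANSIENT; }
        break;
    case TRANSIENT:
        if (correct) e->state = STEADY;
        else { e->stride = observed; e->state = NO_PRED; }
        break;
    case STEADY:
        if (!correct) e->state = INIT;        /* stride is kept */
        break;
    case NO_PRED:
        if (correct) e->state = TRANSIENT;
        else e->stride = observed;            /* stay in NO_PRED */
        break;
    }
    e->prev_addr = addr;
    if (e->state == STEADY) {
        *prefetch_addr = addr + (uint64_t)e->stride;
        return true;
    }
    return false;
}
```

For a load that walks an array with stride 8, the second repeat of the stride puts the entry in steady and prefetches begin. A single irregular access drops it back to init without forgetting the stride, so the stream resumes quickly.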

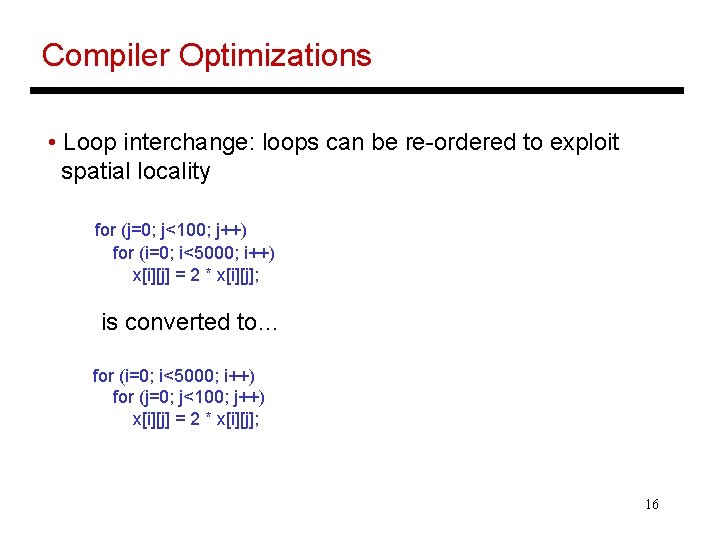

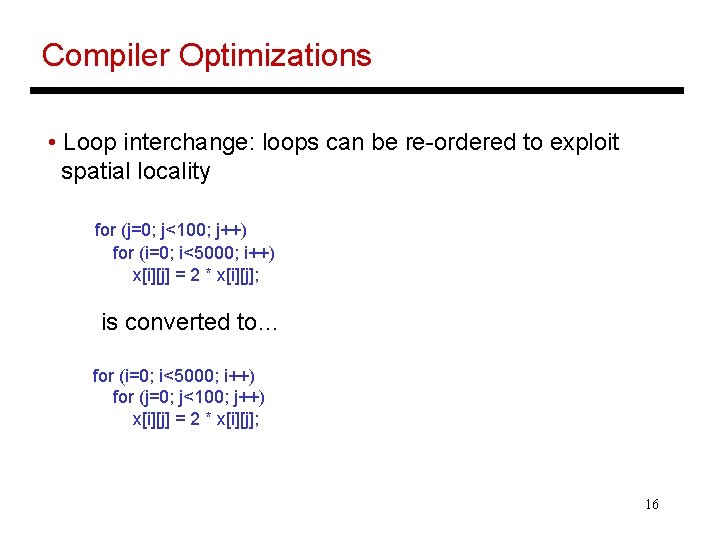

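Compiler Optimizations

- Loop interchange: loops can be re-ordered to exploit spatial locality

The loop nest

    for (j = 0; j < 100; j++)
        for (i = 0; i < 5000; i++)
            x[i][j] = 2 * x[i][j];

is converted to

    for (i = 0; i < 5000; i++)
        for (j = 0; j < 100; j++)
            x[i][j] = 2 * x[i][j];

C stores arrays in row-major order, so the second version touches consecutive addresses in its inner loop, whereas the first strides through memory one full row at a time.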

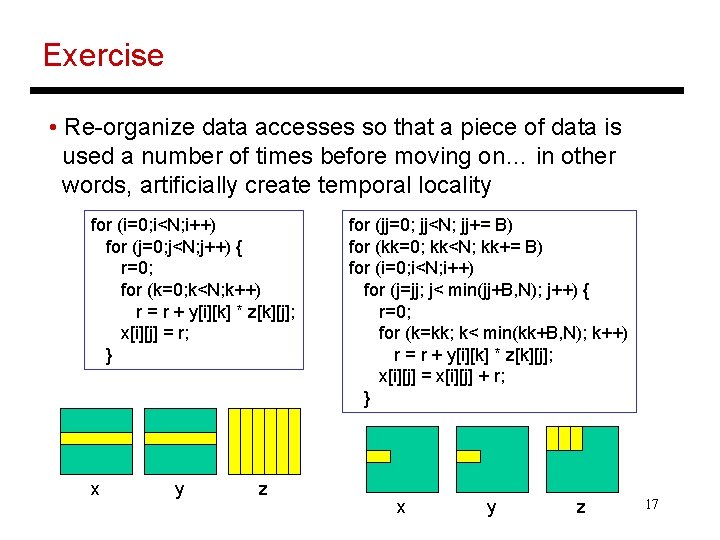

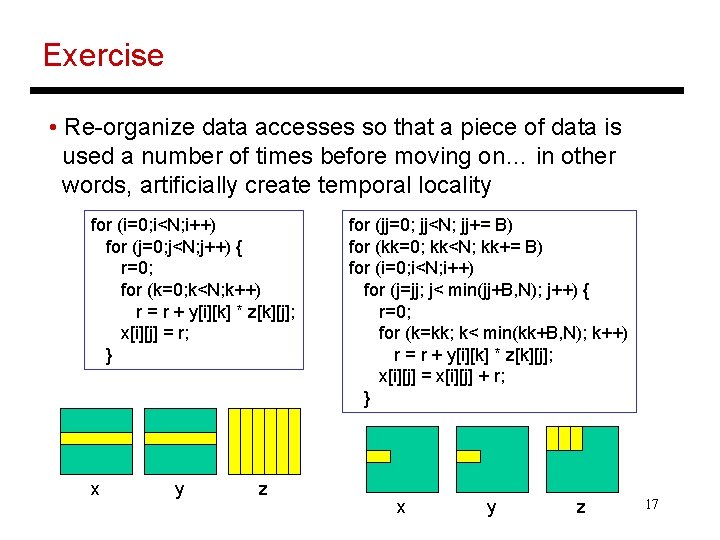

Exercise

- Re-organize data accesses so that a piece of data is used a number of times before moving on; in other words, artificially create temporal locality

Original loop nest (arrays x, y, z):

    for (i = 0; i < N; i++)
        for (j = 0; j < N; j++) {
            r = 0;
            for (k = 0; k < N; k++)
                r = r + y[i][k] * z[k][j];
            x[i][j] = r;
        }

Blocked version with blocking factor B (x must start at zero, because each block accumulates into it):

    for (jj = 0; jj < N; jj += B)
        for (kk = 0; kk < N; kk += B)
            for (i = 0; i < N; i++)
                for (j = jj; j < min(jj + B, N); j++) {
                    r = 0;
                    for (k = kk; k < min(kk + B, N); k++)
                        r = r + y[i][k] * z[k][j];
                    x[i][j] = x[i][j] + r;
                }

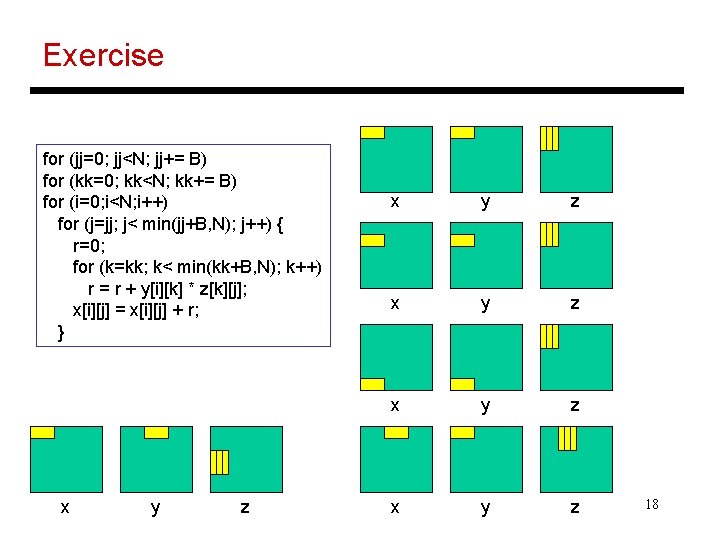

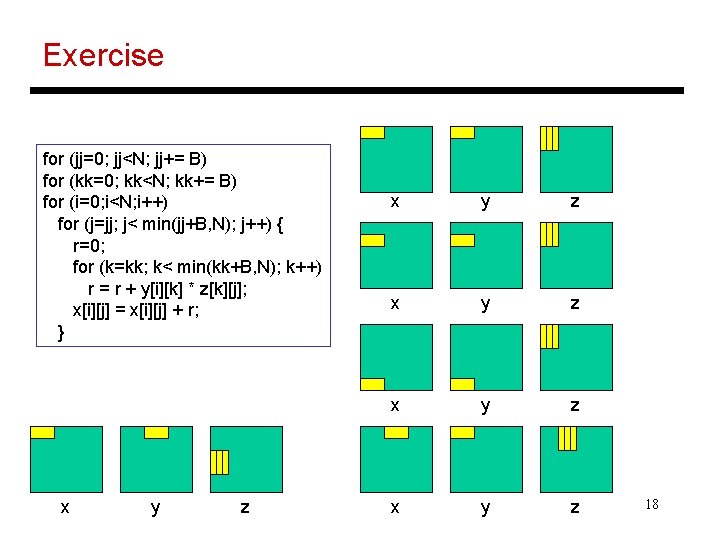

Exercise (continued)

The blocked loop nest from the previous slide is repeated here alongside figures of the arrays x, y, and z.

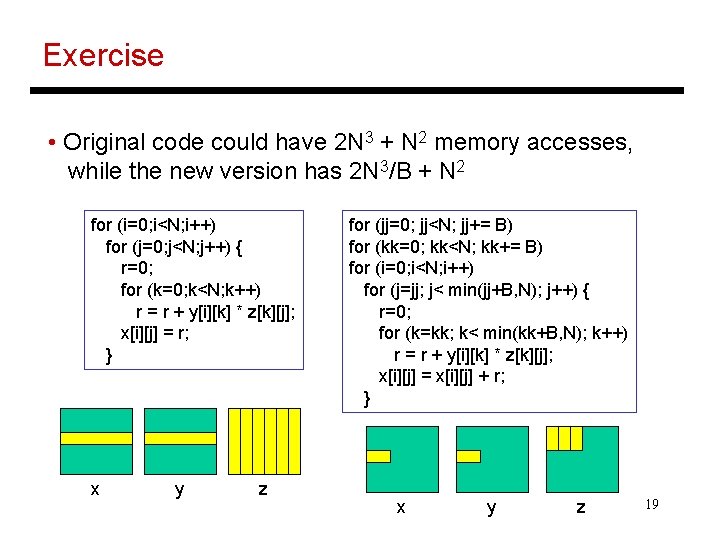

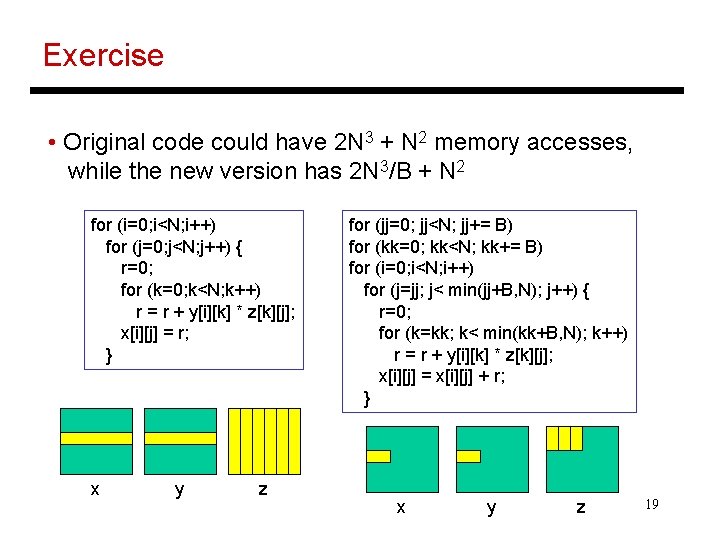

Exercise (continued)

- The original code could have as many as $2N^3 + N^2$ memory accesses, while the blocked version with blocking factor $B$ has $2N^3/B + N^2$
- Both loop nests are the ones shown on the earlier Exercise slide
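To check that blocking changes only the order of the work, not the result, here is a small self-contained C sketch of both loop nests. The fixed size `N = 6`, the helper `min_int`, and the function names are assumptions for illustration; the blocked version zeroes x first because each block accumulates into it.

```c
#include <assert.h>

enum { N = 6 };  /* small size chosen for illustration */

static int min_int(int a, int b) { return a < b ? a : b; }

/* Original loop nest: x = y * z. */
static void matmul_naive(double x[N][N], double y[N][N], double z[N][N])
{
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++) {
            double r = 0;
            for (int k = 0; k < N; k++)
                r = r + y[i][k] * z[k][j];
            x[i][j] = r;
        }
}

/* Blocked loop nest with blocking factor B: a B-by-B block of z is
 * reused for every row i before moving on to the next block. */
static void matmul_blocked(double x[N][N], double y[N][N], double z[N][N],
                           int B)
{
    assert(B > 0);
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++)
            x[i][j] = 0;  /* partial sums accumulate into x */
    for (int jj = 0; jj < N; jj += B)
        for (int kk = 0; kk < N; kk += B)
            for (int i = 0; i < N; i++)
                for (int j = jj; j < min_int(jj + B, N); j++) {
                    double r = 0;
                    for (int k = kk; k < min_int(kk + B, N); k++)
                        r = r + y[i][k] * z[k][j];
                    x[i][j] = x[i][j] + r;
                }
}
```

Choosing B so that a B-by-B block of z plus the matching strips of x and y fit in the cache is what turns the $2N^3$ term into $2N^3/B$. With floating-point inputs that are not exactly representable, the two versions may differ in the last bits because the summation order changes.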
