
Measuring differentiation in knowledge production at SA universities

Johann Mouton, CREST
HESA Conference on Research and Innovation, 4 April 2012

Preliminary comments

- Given the different institutional histories, missions and capacities, a high degree of differentiation in key research production dimensions is only to be expected.
- The differentiation constructs and associated indicators presented and discussed here are not independent of each other (in statistical terms, there are multiple “interaction effects”).

Unpacking the concept of “research differentiation”

We still need a proper conceptualisation of the notion of research differentiation. As a first attempt, I would distinguish the following six types or categories of differentiation, in terms of:

- Volume of research production
- Shape of research production (differences in the distribution of output by scientific field)
- Site of publication (comparative journal indexes)
- Research collaboration
- Research impact (high or low visibility or recognition)
- Demographics (differences in the distribution of output by gender, race, qualification and age)

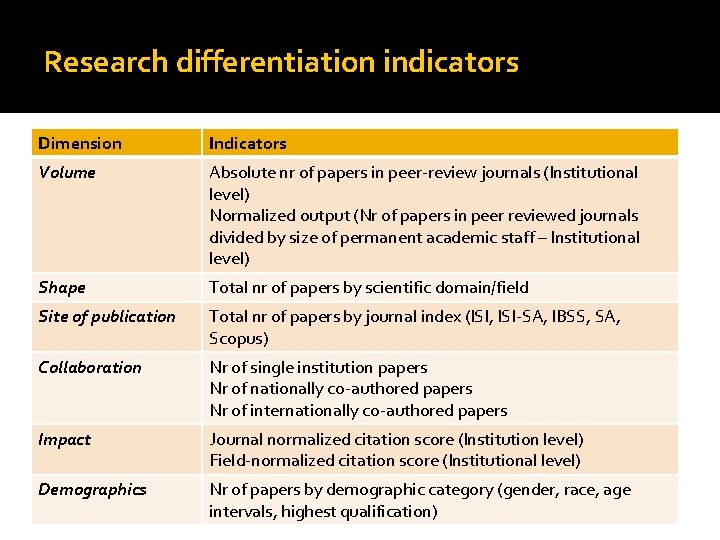

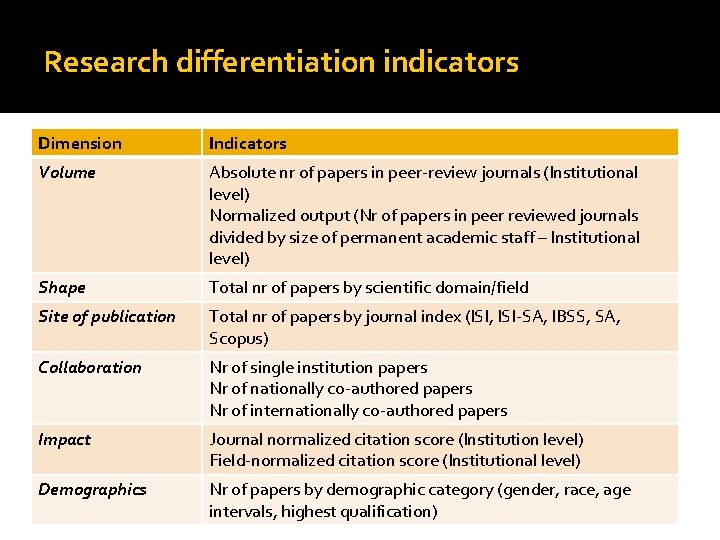

Research differentiation indicators

| Dimension | Indicators |
|---|---|
| Volume | Absolute number of papers in peer-reviewed journals (institutional level); normalized output: number of papers in peer-reviewed journals divided by the size of the permanent academic staff (institutional level) |
| Shape | Total number of papers by scientific domain/field |
| Site of publication | Total number of papers by journal index (ISI, ISI-SA, IBSS, SA, Scopus) |
| Collaboration | Number of single-institution papers; number of nationally co-authored papers; number of internationally co-authored papers |
| Impact | Journal-normalized citation score (institutional level); field-normalized citation score (institutional level) |
| Demographics | Number of papers by demographic category (gender, race, age intervals, highest qualification) |

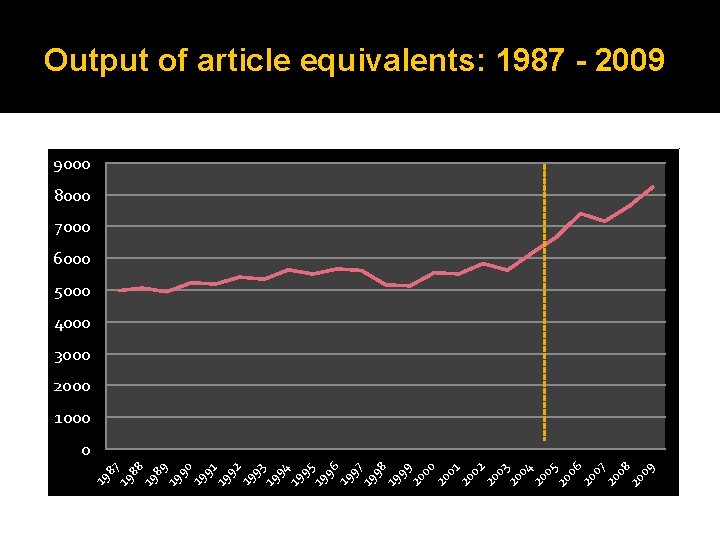

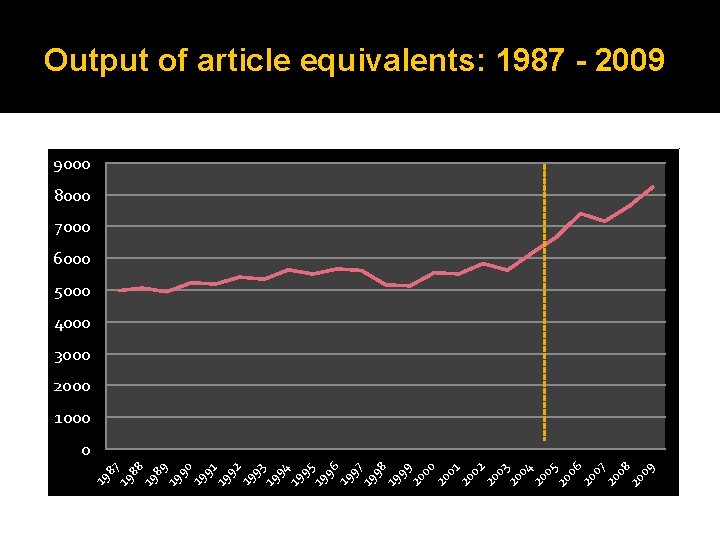

Proposition 1

University research production, since the introduction of a national research subsidy scheme in 1987, initially remained quite stable (between 5000 and 5500 article units a year from 1988 to 2003) but then increased dramatically, reaching more than 8000 units in 2010. The best explanation for this increase is the new research funding framework introduced in 2003 (which came into effect in 2005): it provided a much more significant financial reward for research units and so gave institutions a strong incentive to increase their output.

Figure: Output of article equivalents, 1987–2009 (vertical axis 0 to 9000 units)

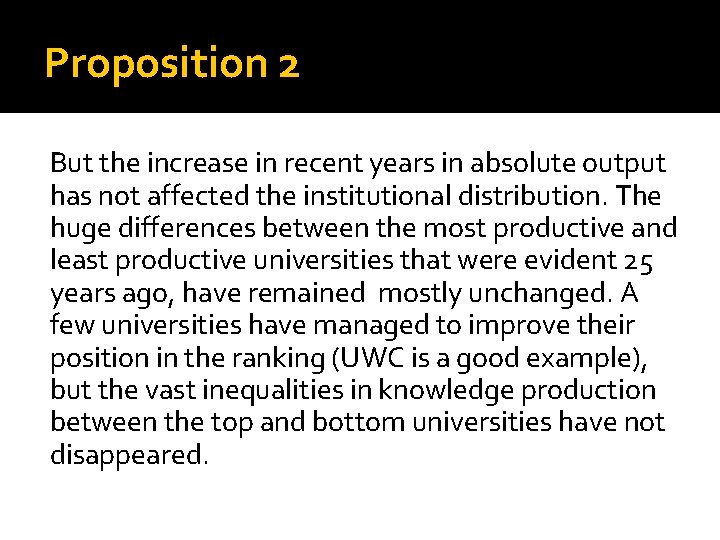

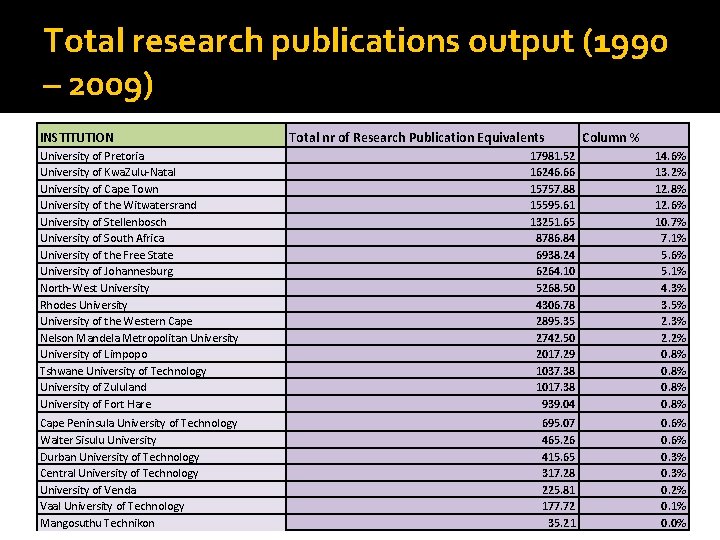

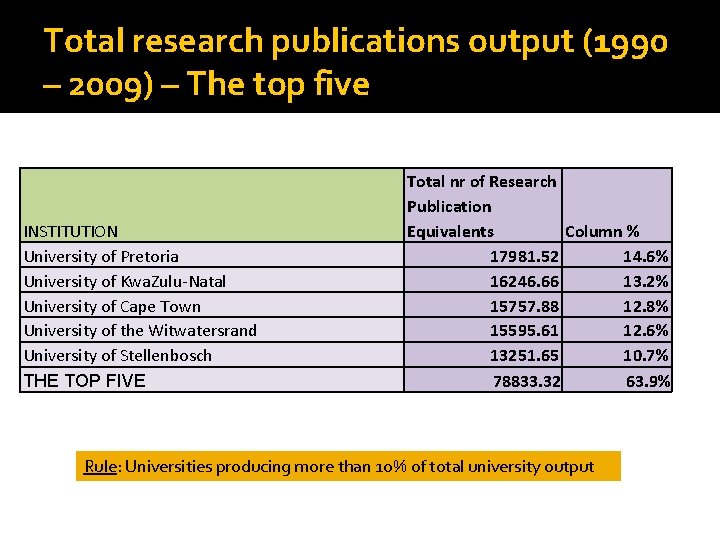

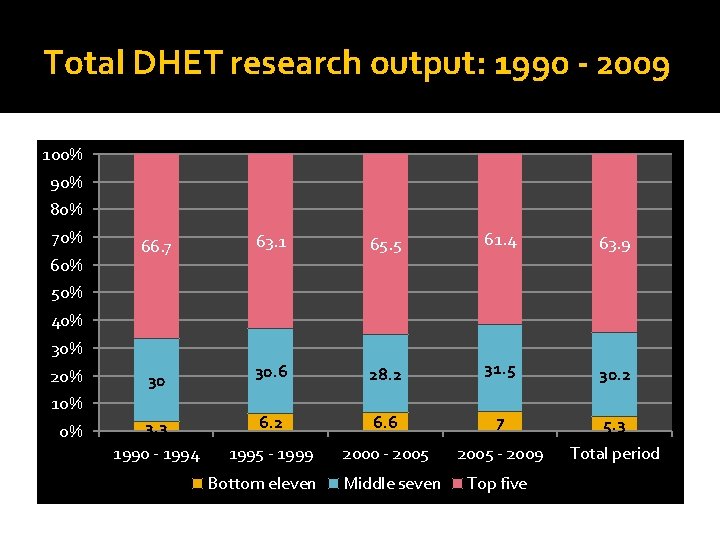

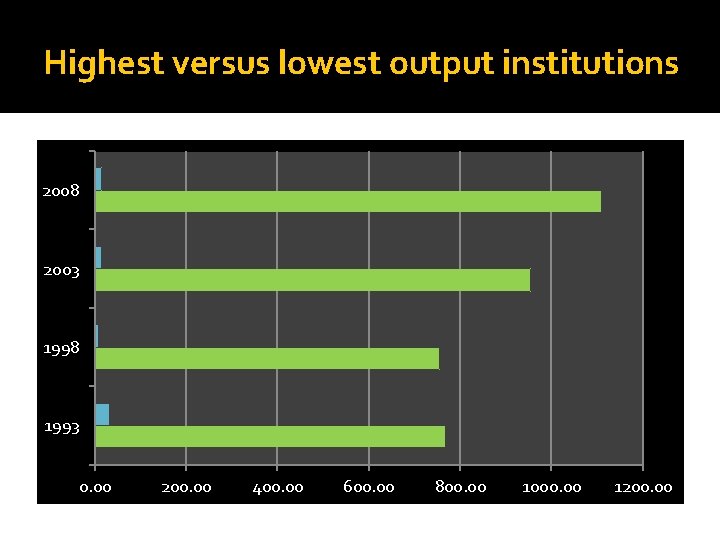

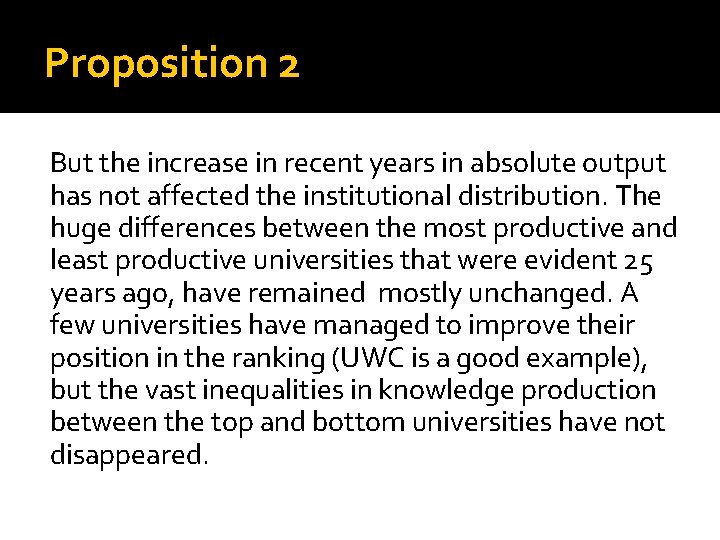

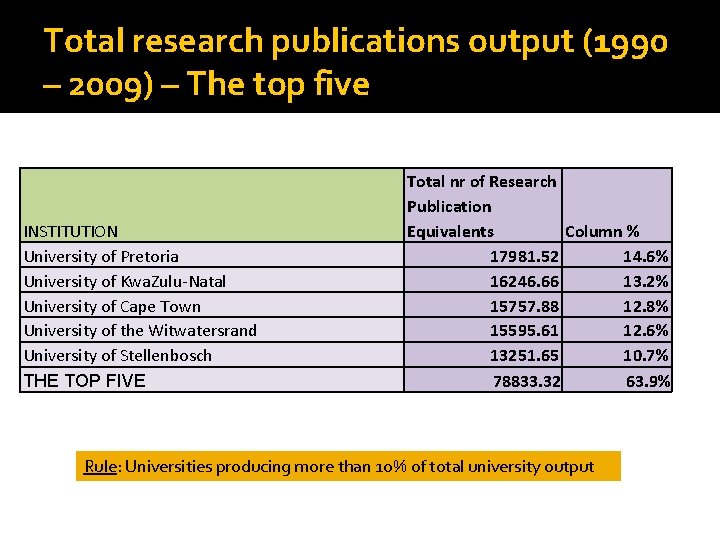

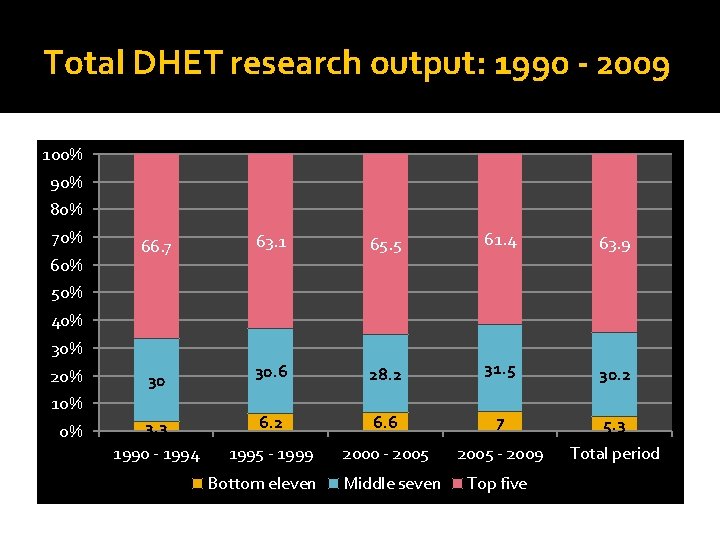

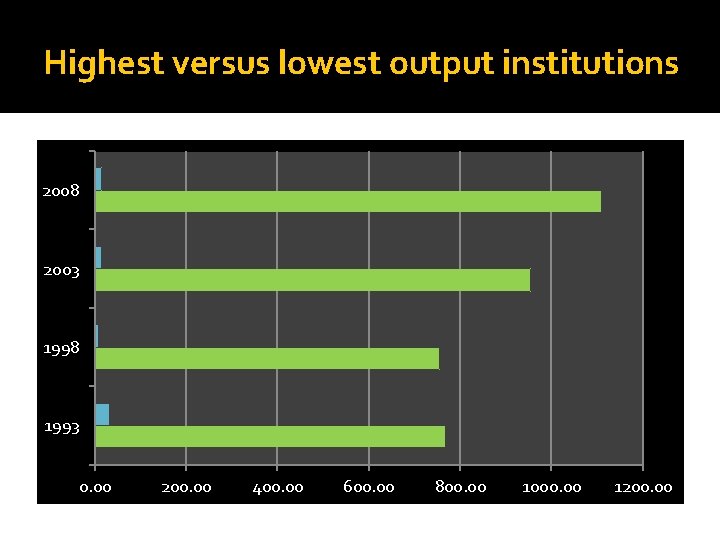

Proposition 2

But the recent increase in absolute output has not changed the institutional distribution. The huge differences between the most and least productive universities that were evident 25 years ago have remained largely unchanged. A few universities have managed to improve their position in the ranking (UWC is a good example), but the vast inequalities in knowledge production between the top and bottom universities have not disappeared.

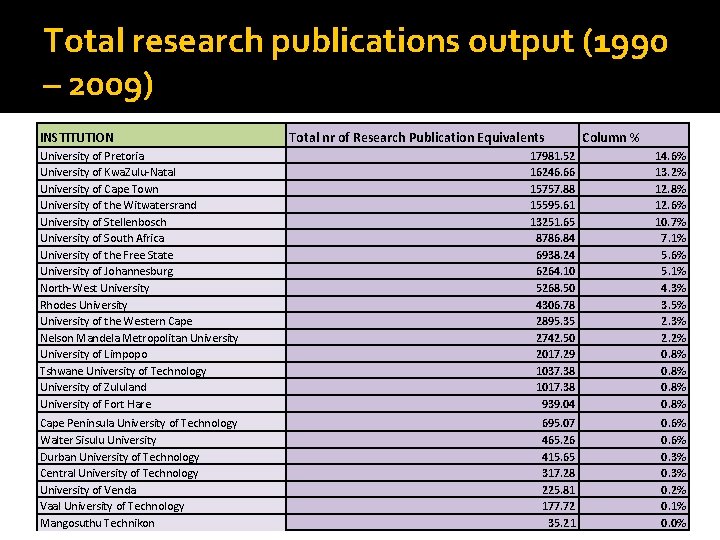

Total research publications output (1990–2009)

| Institution | Total nr of research publication equivalents | Column % |
|---|---|---|
| University of Pretoria | 17981.52 | 14.6% |
| University of KwaZulu-Natal | 16246.66 | 13.2% |
| University of Cape Town | 15757.88 | 12.8% |
| University of the Witwatersrand | 15595.61 | 12.6% |
| University of Stellenbosch | 13251.65 | 10.7% |
| University of South Africa | 8786.84 | 7.1% |
| University of the Free State | 6938.24 | 5.6% |
| University of Johannesburg | 6264.10 | 5.1% |
| North-West University | 5268.50 | 4.3% |
| Rhodes University | 4306.78 | 3.5% |
| University of the Western Cape | 2895.35 | 2.3% |
| Nelson Mandela Metropolitan University | 2742.50 | 2.2% |
| University of Limpopo | 2017.29 | 0.8% |
| Tshwane University of Technology | 1037.38 | 0.8% |
| University of Zululand | 1017.38 | 0.8% |
| University of Fort Hare | 939.04 | 0.8% |
| Cape Peninsula University of Technology | 695.07 | 0.6% |
| Walter Sisulu University | 465.26 | 0.6% |
| Durban University of Technology | 415.65 | 0.3% |
| Central University of Technology | 317.28 | 0.3% |
| University of Venda | 225.81 | 0.2% |
| Vaal University of Technology | 177.72 | 0.1% |
| Mangosuthu Technikon | 35.21 | 0.0% |

Total research publications output (1990–2009): the top five

| Institution | Total nr of research publication equivalents | Column % |
|---|---|---|
| University of Pretoria | 17981.52 | 14.6% |
| University of KwaZulu-Natal | 16246.66 | 13.2% |
| University of Cape Town | 15757.88 | 12.8% |
| University of the Witwatersrand | 15595.61 | 12.6% |
| University of Stellenbosch | 13251.65 | 10.7% |
| The top five | 78833.32 | 63.9% |

Rule: universities producing more than 10% of total university output.

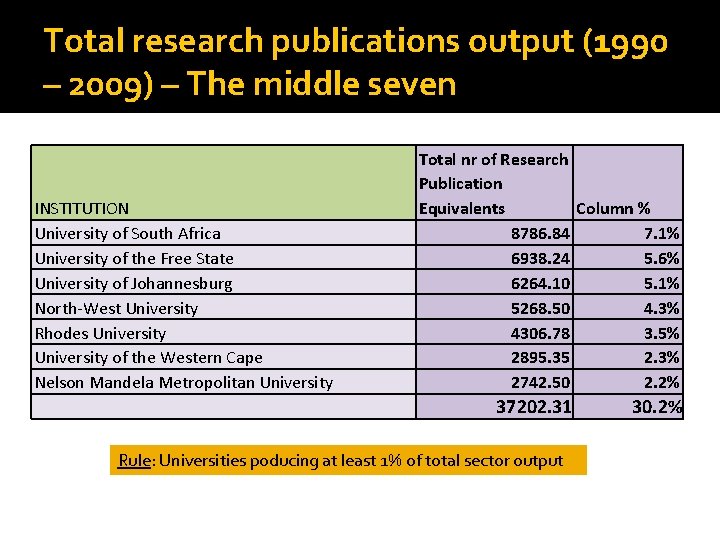

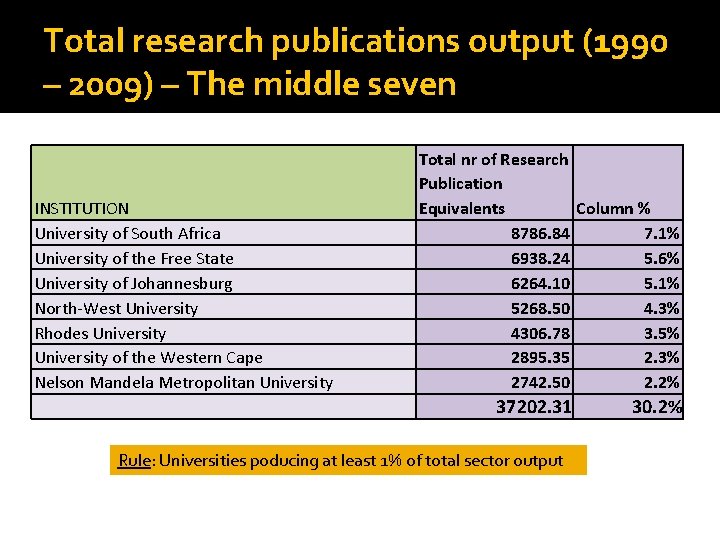

Total research publications output (1990–2009): the middle seven

| Institution | Total nr of research publication equivalents | Column % |
|---|---|---|
| University of South Africa | 8786.84 | 7.1% |
| University of the Free State | 6938.24 | 5.6% |
| University of Johannesburg | 6264.10 | 5.1% |
| North-West University | 5268.50 | 4.3% |
| Rhodes University | 4306.78 | 3.5% |
| University of the Western Cape | 2895.35 | 2.3% |
| Nelson Mandela Metropolitan University | 2742.50 | 2.2% |
| The middle seven | 37202.31 | 30.2% |

Rule: universities producing at least 1% of total sector output.

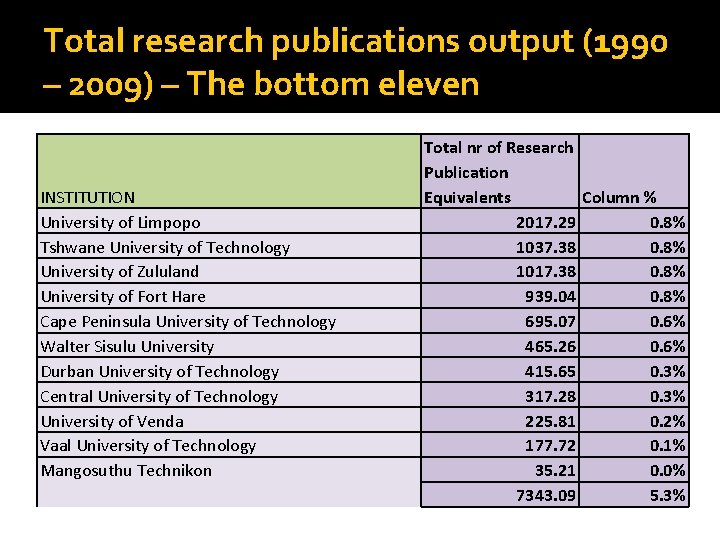

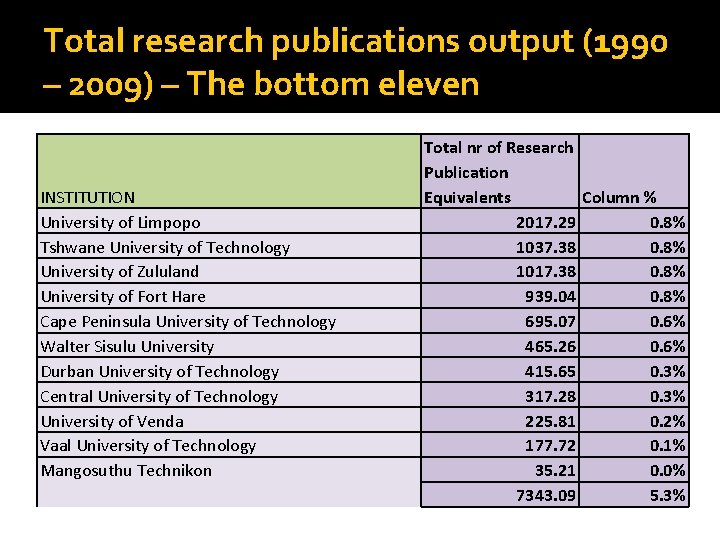

Total research publications output (1990–2009): the bottom eleven

| Institution | Total nr of research publication equivalents | Column % |
|---|---|---|
| University of Limpopo | 2017.29 | 0.8% |
| Tshwane University of Technology | 1037.38 | 0.8% |
| University of Zululand | 1017.38 | 0.8% |
| University of Fort Hare | 939.04 | 0.8% |
| Cape Peninsula University of Technology | 695.07 | 0.6% |
| Walter Sisulu University | 465.26 | 0.6% |
| Durban University of Technology | 415.65 | 0.3% |
| Central University of Technology | 317.28 | 0.3% |
| University of Venda | 225.81 | 0.2% |
| Vaal University of Technology | 177.72 | 0.1% |
| Mangosuthu Technikon | 35.21 | 0.0% |
| The bottom eleven | 7343.09 | 5.3% |
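The grouping rules stated on these slides (more than 10% of total output for the top group, at least 1% for the middle group) can be applied mechanically. The Python sketch below assumes the sector total is simply the sum of the 23 institutional totals listed in the tables; the dictionary name and helper functions are illustrative, not part of the original analysis.

```python
"""Sketch: each institution's share of 1990-2009 sector output and the
group implied by the thresholds stated on the slides."""

# Total research publication equivalents, 1990-2009 (values from the tables above).
OUTPUT_1990_2009 = {
    "University of Pretoria": 17981.52,
    "University of KwaZulu-Natal": 16246.66,
    "University of Cape Town": 15757.88,
    "University of the Witwatersrand": 15595.61,
    "University of Stellenbosch": 13251.65,
    "University of South Africa": 8786.84,
    "University of the Free State": 6938.24,
    "University of Johannesburg": 6264.10,
    "North-West University": 5268.50,
    "Rhodes University": 4306.78,
    "University of the Western Cape": 2895.35,
    "Nelson Mandela Metropolitan University": 2742.50,
    "University of Limpopo": 2017.29,
    "Tshwane University of Technology": 1037.38,
    "University of Zululand": 1017.38,
    "University of Fort Hare": 939.04,
    "Cape Peninsula University of Technology": 695.07,
    "Walter Sisulu University": 465.26,
    "Durban University of Technology": 415.65,
    "Central University of Technology": 317.28,
    "University of Venda": 225.81,
    "Vaal University of Technology": 177.72,
    "Mangosuthu Technikon": 35.21,
}


def shares(totals: dict[str, float]) -> dict[str, float]:
    """Return each institution's percentage of the summed output."""
    grand_total = sum(totals.values())
    return {name: 100.0 * value / grand_total for name, value in totals.items()}


def tier(share_pct: float) -> str:
    """Map a percentage share onto the slide thresholds (>10%, >=1%, rest)."""
    if share_pct > 10.0:
        return "top"
    if share_pct >= 1.0:
        return "middle"
    return "bottom"


if __name__ == "__main__":
    ranked = sorted(shares(OUTPUT_1990_2009).items(), key=lambda kv: kv[1], reverse=True)
    for name, pct in ranked:
        print(f"{name:40s} {pct:5.1f}%  {tier(pct)}")
```

The summed total is 123378.72 units, which gives the University of Pretoria about 14.6%. Computed this way, the University of Limpopo's 2017.29 units come to about 1.6% of the sector total, which is above the 1% threshold stated for the middle group.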

Total DHET research output, 1990–2009: share of output (%) by institutional group

| Group | 1990–1994 | 1995–1999 | 2000–2004 | 2005–2009 | Total period |
|---|---|---|---|---|---|
| Top five | 66.7 | 63.1 | 65.5 | 61.4 | 63.9 |
| Middle seven | 30.0 | 30.6 | 28.2 | 31.5 | 30.2 |
| Bottom eleven | 3.3 | 6.2 | 6.6 | 7.0 | 5.3 |

Figure: Highest versus lowest output institutions (1993, 1998, 2003 and 2008; horizontal axis 0 to 1200)

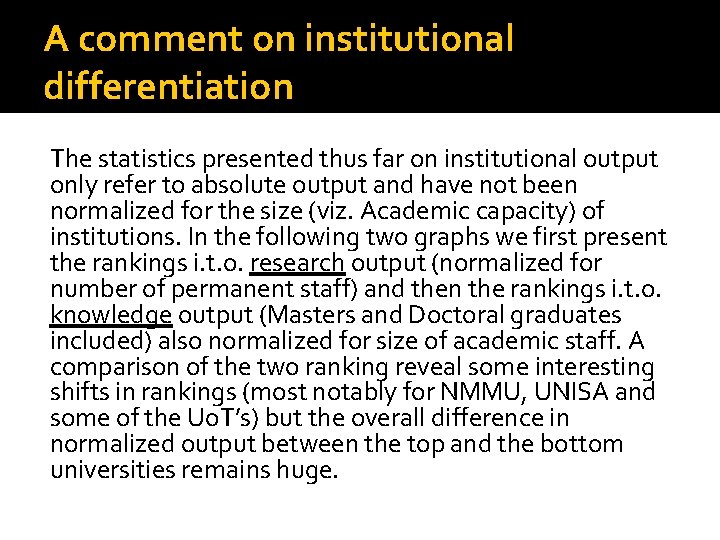

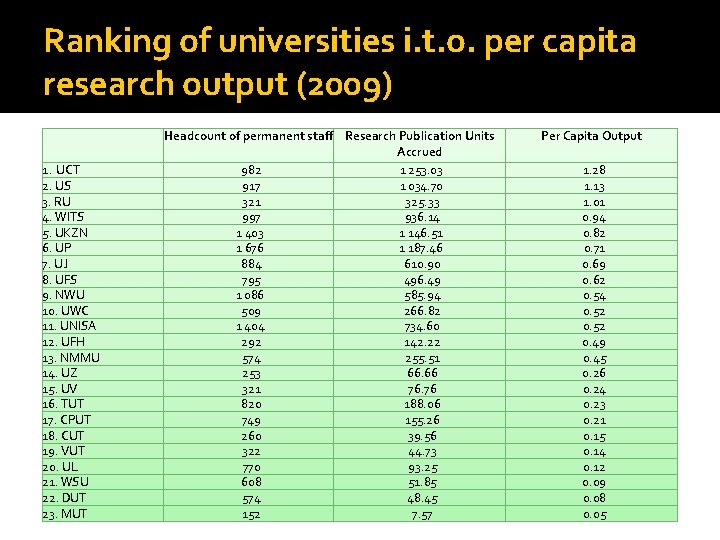

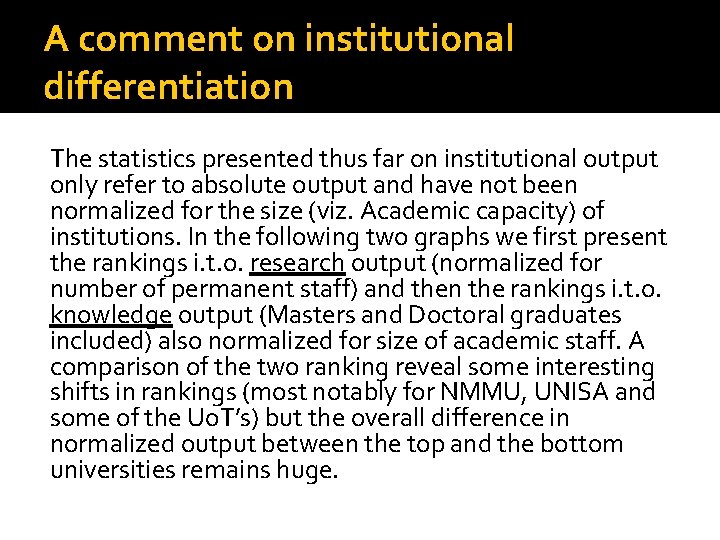

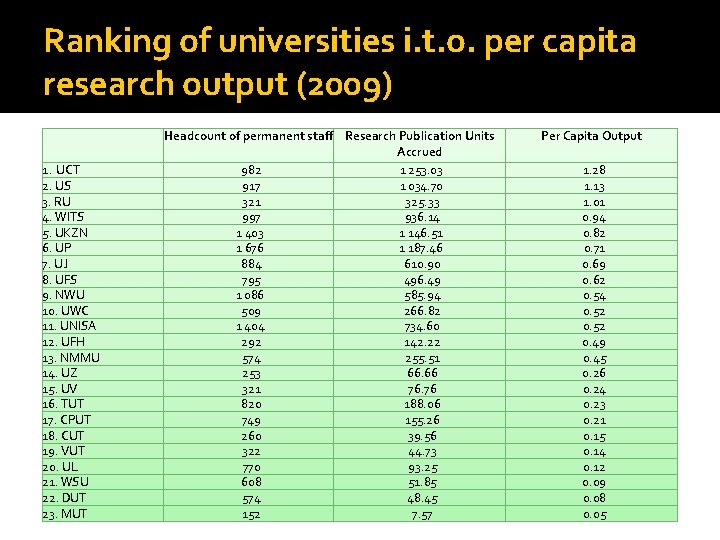

A comment on institutional differentiation

The statistics presented thus far refer only to absolute institutional output; they have not been normalized for the size (viz. academic capacity) of institutions. In the following two slides we first present the rankings in terms of research output (normalized for the number of permanent staff) and then the rankings in terms of knowledge output (including Masters and Doctoral graduates), also normalized for the size of the academic staff. A comparison of the two rankings reveals some interesting shifts (most notably for NMMU, UNISA and some of the universities of technology), but the overall difference in normalized output between the top and bottom universities remains huge.

Ranking of universities in terms of per capita research output (2009)

| Rank | University | Headcount of permanent staff | Research publication units accrued | Per capita output |
|---|---|---|---|---|
| 1 | UCT | 982 | 1 253.03 | 1.28 |
| 2 | US | 917 | 1 034.70 | 1.13 |
| 3 | RU | 321 | 325.33 | 1.01 |
| 4 | WITS | 997 | 936.14 | 0.94 |
| 5 | UKZN | 1 403 | 1 146.51 | 0.82 |
| 6 | UP | 1 676 | 1 187.46 | 0.71 |
| 7 | UJ | 884 | 610.90 | 0.69 |
| 8 | UFS | 795 | 496.49 | 0.62 |
| 9 | NWU | 1 086 | 585.94 | 0.54 |
| 10 | UWC | 509 | 266.82 | 0.52 |
| 11 | UNISA | 1 404 | 734.60 | 0.52 |
| 12 | UFH | 292 | 142.22 | 0.49 |
| 13 | NMMU | 574 | 255.51 | 0.45 |
| 14 | UZ | 253 | 66.66 | 0.26 |
| 15 | UV | 321 | 76.76 | 0.24 |
| 16 | TUT | 820 | 188.06 | 0.23 |
| 17 | CPUT | 749 | 155.26 | 0.21 |
| 18 | CUT | 260 | 39.56 | 0.15 |
| 19 | VUT | 322 | 44.73 | 0.14 |
| 20 | UL | 770 | 93.25 | 0.12 |
| 21 | WSU | 608 | 51.85 | 0.09 |
| 22 | DUT | 574 | 48.45 | 0.08 |
| 23 | MUT | 152 | 7.57 | 0.05 |
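Per capita output in this table is research publication units divided by the headcount of permanent staff. A minimal Python sketch of that normalisation and the resulting ranking, using the 2009 figures above (the constant and function names are illustrative):

```python
"""Sketch: per capita research output (publication units per permanent staff member)."""

# University -> (headcount of permanent staff, research publication units accrued), 2009.
STAFF_AND_UNITS_2009 = {
    "UCT": (982, 1253.03),
    "US": (917, 1034.70),
    "RU": (321, 325.33),
    "WITS": (997, 936.14),
    "UKZN": (1403, 1146.51),
    "UP": (1676, 1187.46),
    "UJ": (884, 610.90),
    "UFS": (795, 496.49),
    "NWU": (1086, 585.94),
    "UWC": (509, 266.82),
    "UNISA": (1404, 734.60),
    "UFH": (292, 142.22),
    "NMMU": (574, 255.51),
    "UZ": (253, 66.66),
    "UV": (321, 76.76),
    "TUT": (820, 188.06),
    "CPUT": (749, 155.26),
    "CUT": (260, 39.56),
    "VUT": (322, 44.73),
    "UL": (770, 93.25),
    "WSU": (608, 51.85),
    "DUT": (574, 48.45),
    "MUT": (152, 7.57),
}


def per_capita(data: dict[str, tuple[int, float]]) -> dict[str, float]:
    """Divide publication units by permanent staff headcount."""
    return {uni: units / staff for uni, (staff, units) in data.items()}


def ranking(data: dict[str, tuple[int, float]]) -> list[str]:
    """Universities ordered from highest to lowest per capita output."""
    pc = per_capita(data)
    return sorted(pc, key=pc.get, reverse=True)


if __name__ == "__main__":
    pc = per_capita(STAFF_AND_UNITS_2009)
    for rank, uni in enumerate(ranking(STAFF_AND_UNITS_2009), start=1):
        print(f"{rank:2d}. {uni:6s} {pc[uni]:.2f}")
```

Running it reproduces the order and the rounded per capita values in the table, including 0.52 for both UWC and UNISA (UWC is marginally higher before rounding).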

Ranking of universities according to average normed knowledge production (2007–2009)

| Rank (per capita research output rank, 2009) | University | Average annual weighted output 2007–2009 | Average annual normed output 2007–2009 |
|---|---|---|---|
| 1 (2) | STELLENBOSCH | 1833 | 177 |
| 2 (1) | CAPE TOWN | 1926 | 166 |
| 3 (3) | RHODES | 550 | 140 |
| 4 (4) | WITWATERSRAND | 1609 | 131 |
| 5 (6) | PRETORIA | 2216 | 110 |
| 6 (7) | JOHANNESBURG | 847 | 107 |
| 7 (5) | KWAZULU-NATAL | 1768 | 103 |
| 8 (13) | NELSON MANDELA | 482 | 99 |
| 9 (9) | NORTH WEST | 1110 | 94 |
| 10 (8) | FREE STATE | 898 | 94 |
| 11 (10) | WESTERN CAPE | 505 | 82 |
| 12 (16) | TSHWANE UT | 277 | 75 |
| 13 (18) | CENTRAL UT | 74 | 61 |
| 14 (11) | SOUTH AFRICA | 938 | 60 |
| 15 (17) | CAPE PENINSULA UT | 184 | 60 |
| 16 (12) | FORT HARE | 199 | 53 |
| 17 (14) | ZULULAND | 146 | 49 |
| 18 (19) | VAAL UT | 43 | 33 |
| 19 (22) | DURBAN UT | 82 | 30 |
| 20 (20) | LIMPOPO | 243 | 25 |
| 21 (15) | VENDA | 80 | 24 |
| 22 (21) | WALTER SISULU | 24 | 6 |
| 23 (23) | MANGOSUTHU | 2 | 4 |

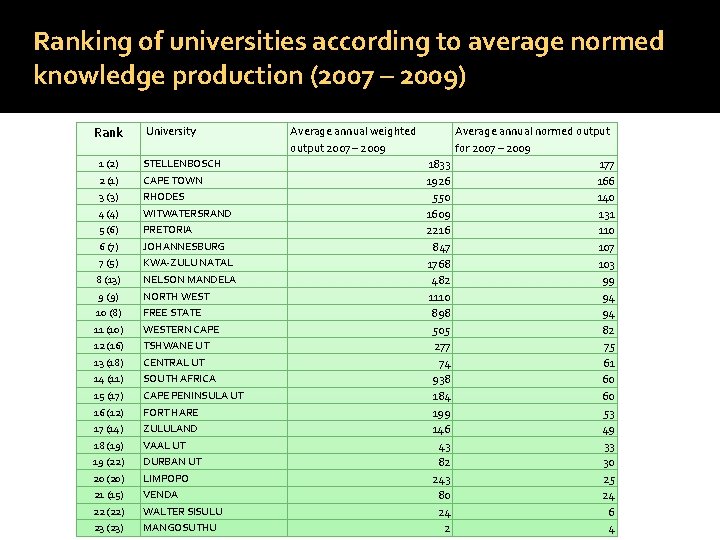

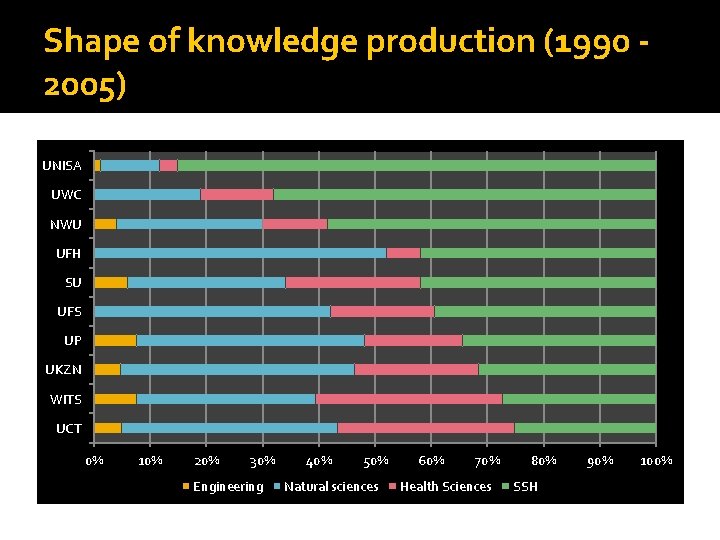

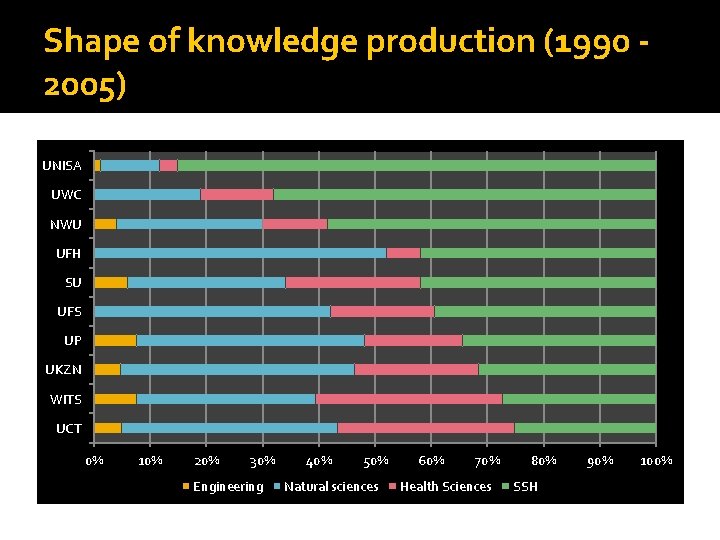

Proposition 3

SA universities vary hugely in the “shape” of their knowledge production. The large differences in the scientific field profiles of the universities are clearly a function of institutional histories (e.g. having a medical school or a faculty of theology) and institutional missions (research-intensive universities versus more teaching-oriented universities and ex-technikons).

Figure: Shape of knowledge production (1990–2005): distribution of output across Engineering, Natural sciences, Health sciences and SSH (social sciences and humanities) for UNISA, UWC, NWU, UFH, SU, UFS, UP, UKZN, WITS and UCT

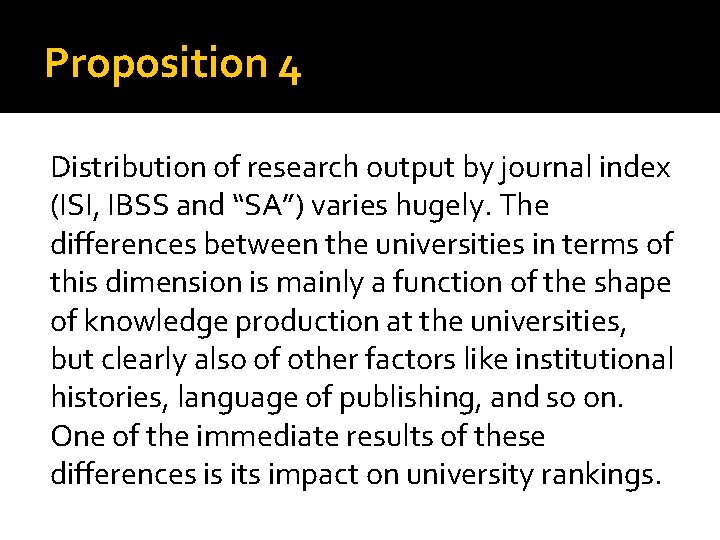

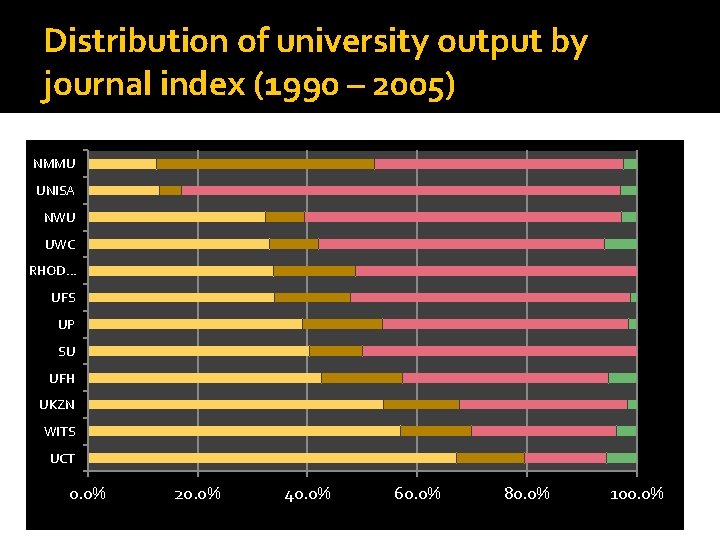

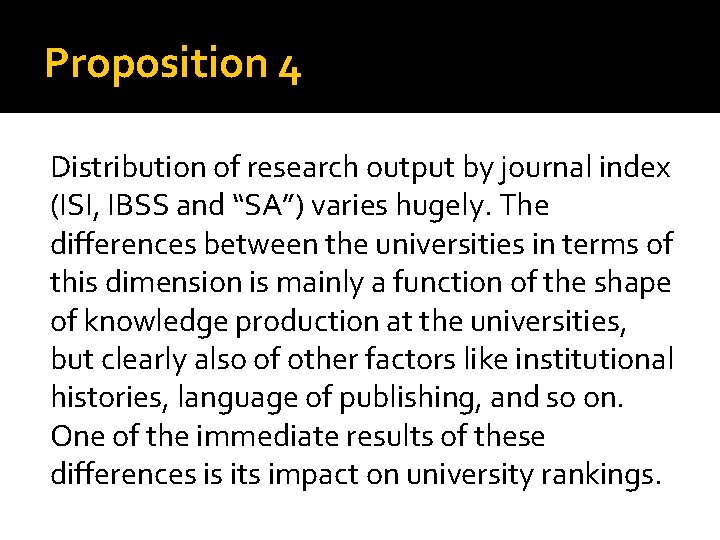

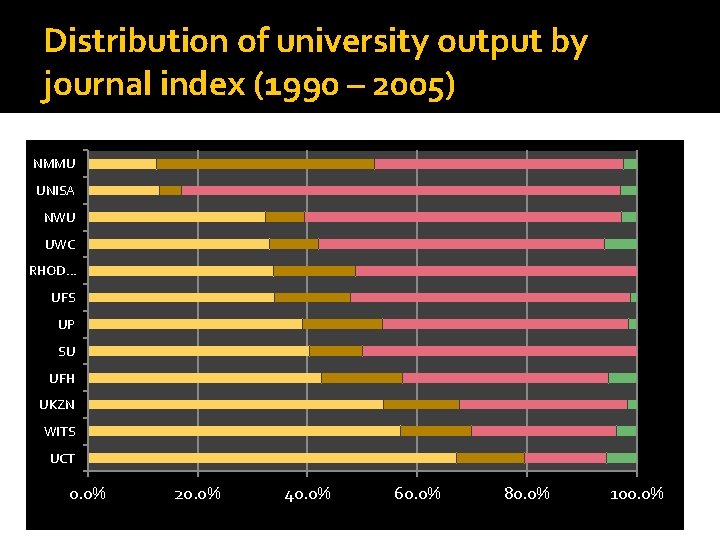

Proposition 4

The distribution of research output by journal index (ISI, IBSS and “SA”) varies hugely. The differences between universities on this dimension are mainly a function of the shape of their knowledge production, but clearly also of other factors such as institutional history and the language of publication. One immediate consequence of these differences is their effect on university rankings.

Figure: Distribution of university output by journal index (1990–2005) for NMMU, UNISA, NWU, UWC, Rhodes, UFS, UP, SU, UFH, UKZN, WITS and UCT

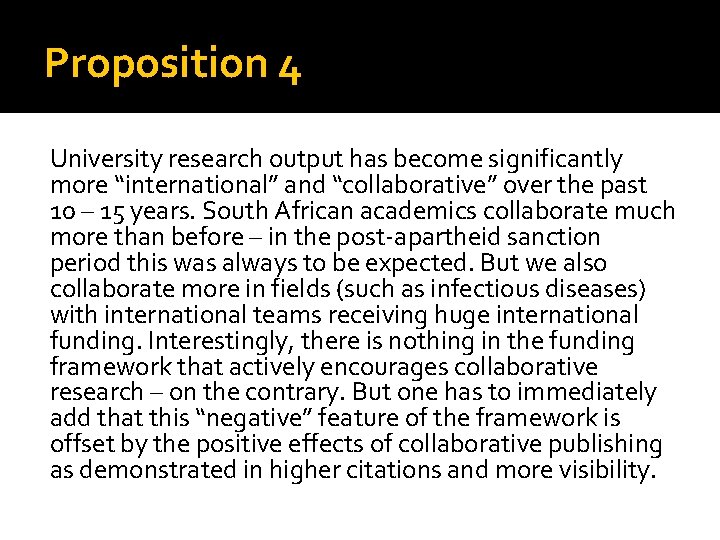

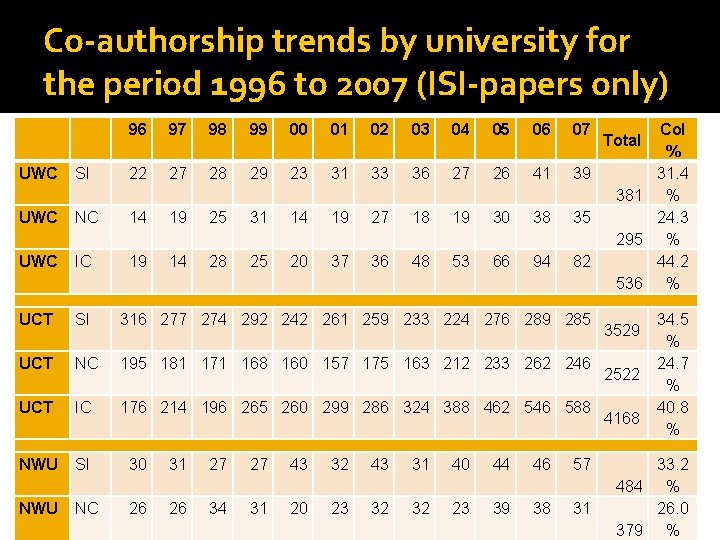

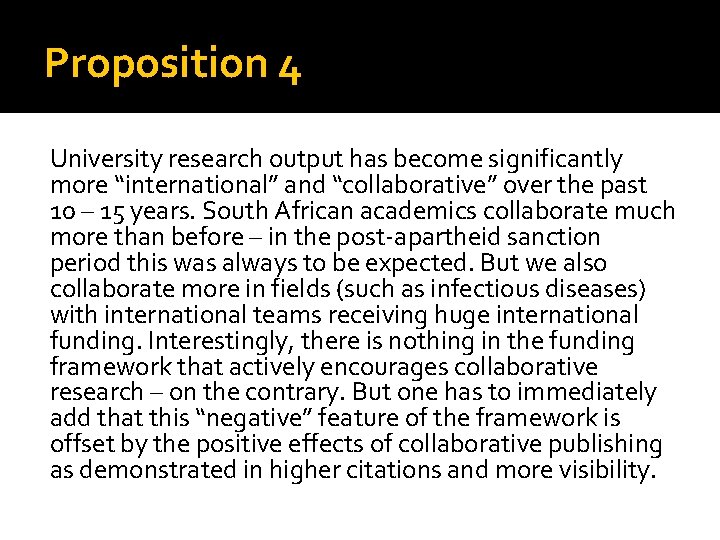

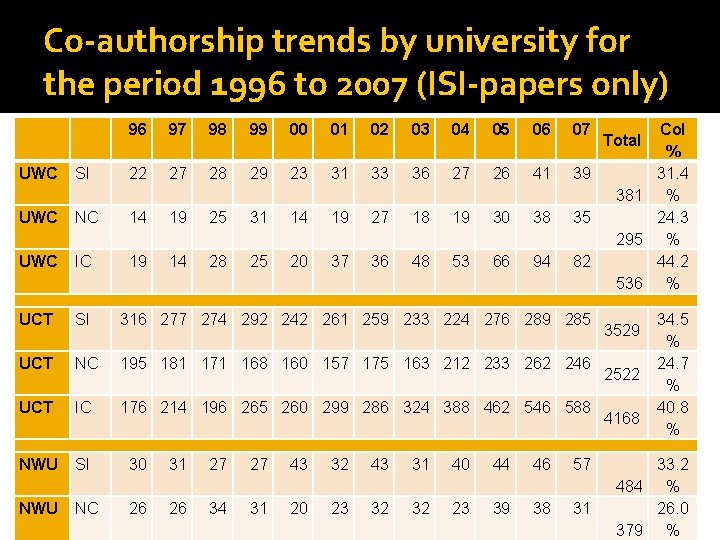

Proposition 4

University research output has become significantly more “international” and “collaborative” over the past 10 to 15 years. South African academics collaborate much more than before, which was always to be expected in the post-apartheid, post-sanctions period. But we also collaborate more in fields (such as infectious diseases) where international teams receive substantial international funding. Interestingly, nothing in the funding framework actively encourages collaborative research; on the contrary. One must immediately add, however, that this “negative” feature of the framework is offset by the positive effects of collaborative publishing, as shown by higher citations and greater visibility.

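Co-authorship trends by university, 1996–2007 (ISI papers only)

SI = single-institution papers; NC = nationally co-authored papers; IC = internationally co-authored papers.

| University | Type | 1996 | 1997 | 1998 | 1999 | 2000 | 2001 | 2002 | 2003 | 2004 | 2005 | 2006 | 2007 | Total | Col % |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| UWC | SI | 22 | 27 | 28 | 29 | 23 | 31 | 33 | 36 | 27 | 26 | 41 | 39 | 381 | 31.4 |
| UWC | NC | 14 | 19 | 25 | 31 | 14 | 19 | 27 | 18 | 19 | 30 | 38 | 35 | 295 | 24.3 |
| UWC | IC | 19 | 14 | 28 | 25 | 20 | 37 | 36 | 48 | 53 | 66 | 94 | 82 | 536 | 44.2 |
| UCT | SI | 316 | 277 | 274 | 292 | 242 | 261 | 259 | 233 | 224 | 276 | 289 | 285 | 3529 | 34.5 |
| UCT | NC | 195 | 181 | 171 | 168 | 160 | 157 | 175 | 163 | 212 | 233 | 262 | 246 | 2522 | 24.7 |
| UCT | IC | 176 | 214 | 196 | 265 | 260 | 299 | 286 | 324 | 388 | 462 | 546 | 588 | 4168 | 40.8 |
| NWU | SI | 30 | 31 | 27 | 27 | 43 | 32 | 43 | 31 | 40 | 44 | 46 | 57 | 484 | 33.2 |
| NWU | NC | 26 | 26 | 34 | 31 | 20 | 23 | 32 | 32 | 23 | 39 | 38 | 31 | 379 | 26.0 |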
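The three co-authorship categories can be assigned from each paper's author affiliations and then expressed as percentages. The Python sketch below assumes a paper is represented as a list of hypothetical (institution, country code) pairs; it illustrates the category logic only and is not necessarily the procedure used to build the table.

```python
"""Sketch: classify papers as SI / NC / IC and express the counts as percentages."""
from collections import Counter


def coauthorship_type(affiliations: list[tuple[str, str]], home: str = "ZA") -> str:
    """Classify one paper from its (institution, country code) affiliations.

    SI: a single institution; IC: at least one affiliation outside the home
    country; NC: several institutions, all in the home country.
    """
    institutions = {inst for inst, _ in affiliations}
    if len(institutions) == 1:
        return "SI"
    if any(country != home for _, country in affiliations):
        return "IC"
    return "NC"


def tally(papers: list[list[tuple[str, str]]]) -> Counter:
    """Count papers per co-authorship type."""
    return Counter(coauthorship_type(p) for p in papers)


def column_shares(counts: dict[str, int]) -> dict[str, float]:
    """Convert category counts to percentages of their total."""
    total = sum(counts.values())
    return {kind: 100.0 * n / total for kind, n in counts.items()}


if __name__ == "__main__":
    # UCT counts for 1996 and 2007, taken from the table above.
    uct = {1996: {"SI": 316, "NC": 195, "IC": 176},
           2007: {"SI": 285, "NC": 246, "IC": 588}}
    for year, counts in uct.items():
        print(year, {k: round(v, 1) for k, v in column_shares(counts).items()})
```

For UCT, the internationally co-authored share of ISI papers rises from about 25.6% in 1996 to about 52.5% in 2007.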

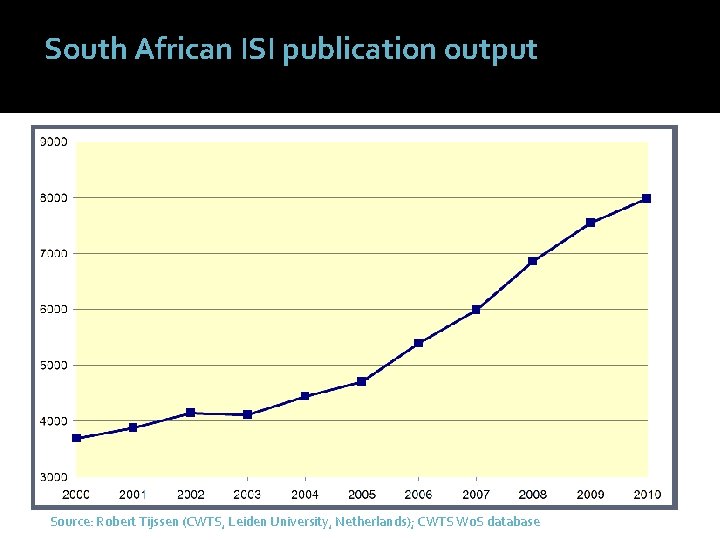

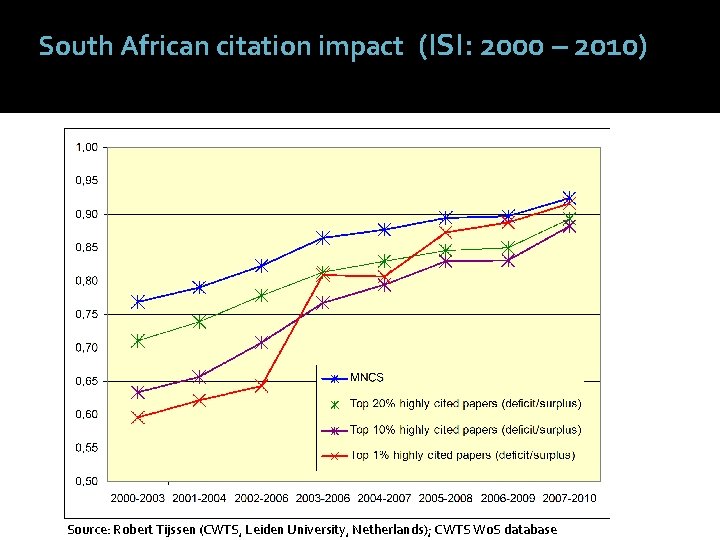

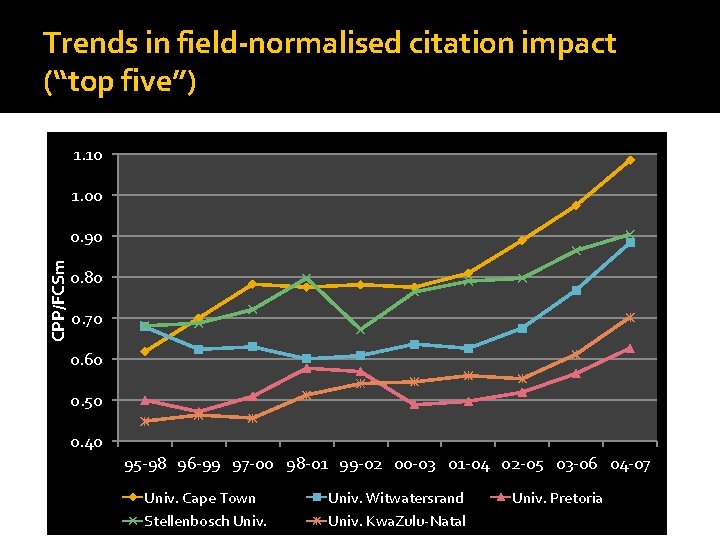

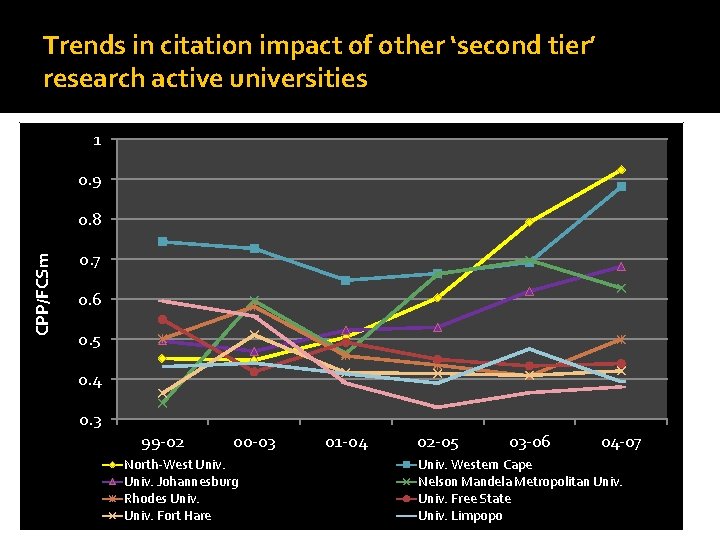

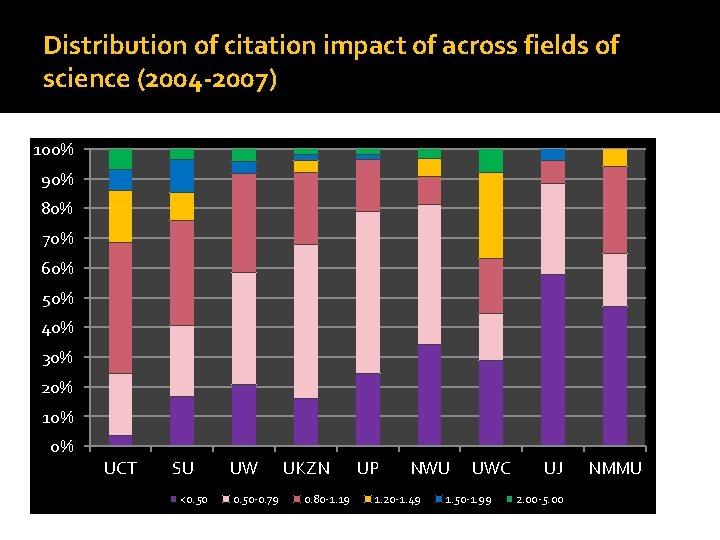

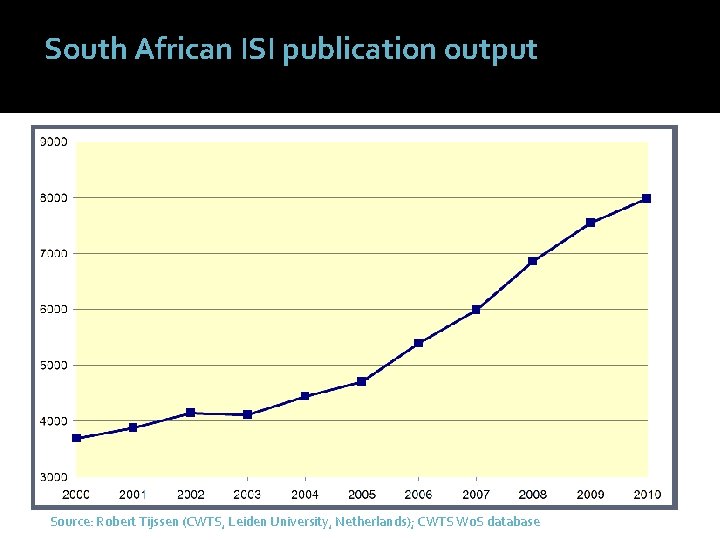

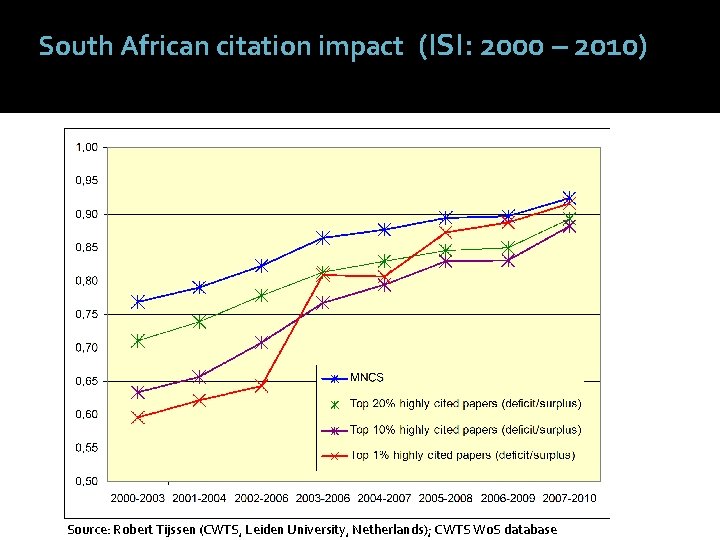

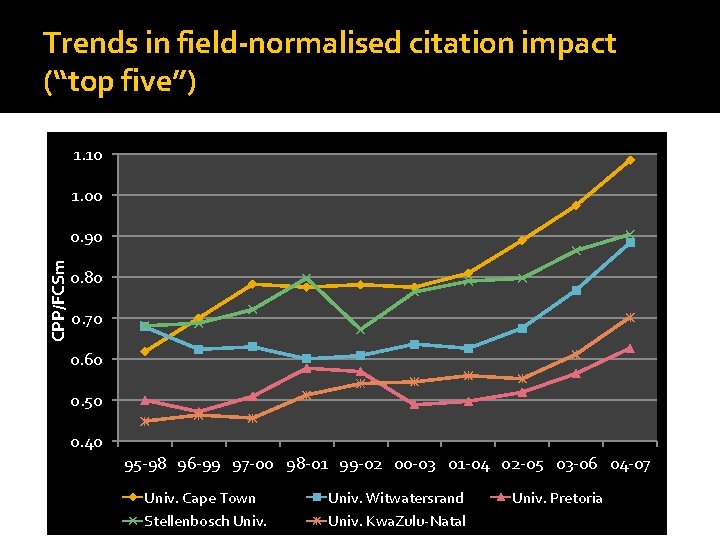

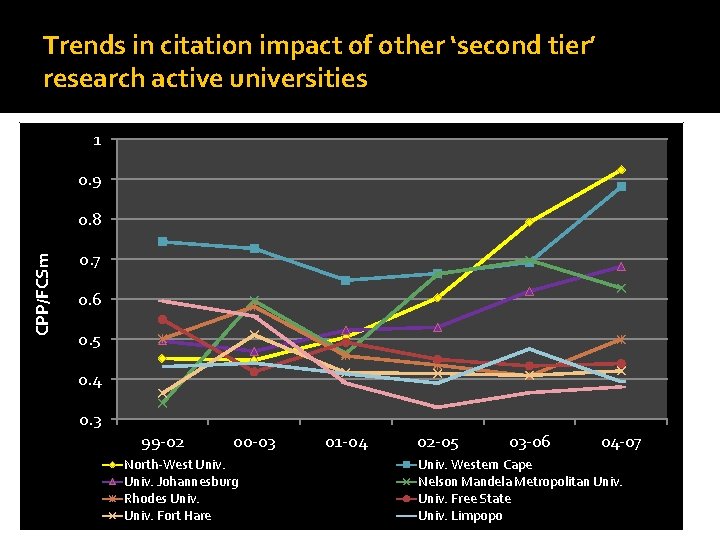

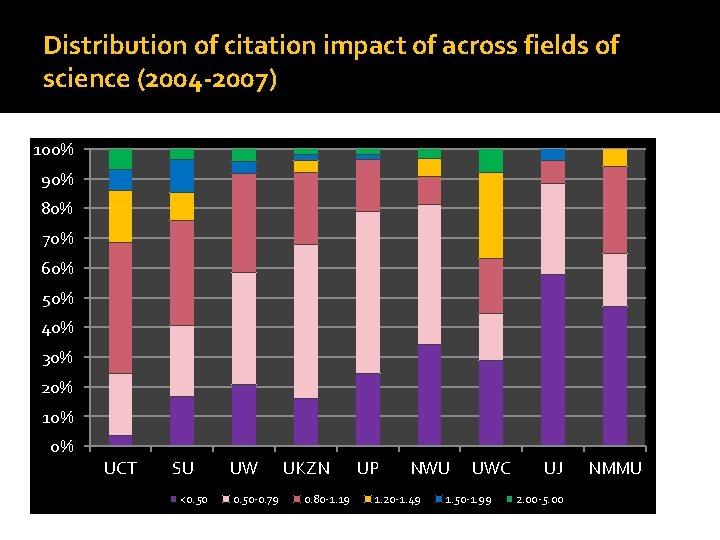

Proposition 5

The impact of SA’s research production has increased significantly over the past 15 years, mostly because of collaborative publishing (in high-impact journals) and possibly also because of increased research in highly visible research areas. This holds at the country level, but impact levels differ greatly between institutions.

South African ISI publication output. Source: Robert Tijssen (CWTS, Leiden University, Netherlands); CWTS WoS database

South African citation impact (ISI: 2000–2010). Source: Robert Tijssen (CWTS, Leiden University, Netherlands); CWTS WoS database

Figure: Trends in field-normalised citation impact (CPP/FCSm) of the “top five”, 1995–98 to 2004–07: Univ. Cape Town, Stellenbosch, Univ. Witwatersrand, Univ. KwaZulu-Natal and Univ. Pretoria (vertical axis 0.40 to 1.10)

Figure: Trends in citation impact (CPP/FCSm) of other ‘second tier’ research-active universities, 1999–02 to 2004–07: North-West, Johannesburg, Rhodes, Fort Hare, Western Cape, Nelson Mandela Metropolitan, Free State and Limpopo (vertical axis 0.3 to 1.0)
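CPP/FCSm, the field-normalised indicator plotted in these charts, is citations per publication (CPP) divided by the mean field citation score (FCSm) of the fields the papers belong to; a value of 1.0 means citation impact at the world average for those fields. Because both means run over the same set of papers, the ratio reduces to total citations over total expected citations. The sketch below assumes each paper's field baseline is already known, and the sample numbers are hypothetical. For contrast it also computes the mean of per-paper ratios (the style of the later CWTS MNCS indicator), which can differ noticeably.

```python
"""Sketch: CPP/FCSm versus a mean-of-ratios indicator for a set of papers."""


def cpp_fcsm(papers: list[tuple[int, float]]) -> float:
    """papers: (citations, expected citations for the paper's field/year).

    CPP = mean citations; FCSm = mean field baseline over the same papers,
    so CPP/FCSm equals total citations divided by total expected citations.
    """
    if not papers:
        raise ValueError("need at least one paper")
    total_citations = sum(c for c, _ in papers)
    total_expected = sum(e for _, e in papers)
    return total_citations / total_expected


def mean_normalised_score(papers: list[tuple[int, float]]) -> float:
    """Mean of per-paper ratios c / e (an MNCS-style indicator), for contrast."""
    if not papers:
        raise ValueError("need at least one paper")
    return sum(c / e for c, e in papers) / len(papers)


if __name__ == "__main__":
    # Hypothetical papers: (citations received, field baseline).
    sample = [(10, 5.0), (0, 2.0), (4, 4.0)]
    print(round(cpp_fcsm(sample), 2), round(mean_normalised_score(sample), 2))
```

For the hypothetical sample, CPP/FCSm is 14/11 (about 1.27) while the mean of ratios is 1.0, because the ratio-of-averages form gives more weight to papers in fields with high baselines.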

Figure: Distribution of citation impact across fields of science (2004–2007), by CPP/FCSm class (<0.50, 0.50–0.79, 0.80–1.19, 1.20–1.49, 1.50–1.99, 2.00–5.00), for UCT, SU, UW, UKZN, UP, NWU, UWC, UJ and NMMU
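This chart groups each university's fields of science into CPP/FCSm classes. A small Python sketch of that binning, assuming the class boundaries shown in the legend and, as an additional assumption, counting any score above 5.00 in the top class:

```python
"""Sketch: assign field-level CPP/FCSm scores to the impact classes in the legend."""
from bisect import bisect_right

# Lower bounds of the second to sixth classes; scores below 0.50 fall in the first.
BOUNDARIES = [0.50, 0.80, 1.20, 1.50, 2.00]
LABELS = ["<0.50", "0.50-0.79", "0.80-1.19", "1.20-1.49", "1.50-1.99", "2.00-5.00"]


def impact_class(score: float) -> str:
    """Return the legend class for one field's CPP/FCSm score.

    Assumption: scores above 5.00 are counted in the top class.
    """
    return LABELS[bisect_right(BOUNDARIES, score)]


def class_distribution(scores: list[float]) -> dict[str, float]:
    """Percentage of fields falling in each class (every class is reported)."""
    if not scores:
        raise ValueError("need at least one score")
    counts = {label: 0 for label in LABELS}
    for s in scores:
        counts[impact_class(s)] += 1
    return {label: 100.0 * k / len(scores) for label, k in counts.items()}
```

`bisect_right` places a score equal to a boundary (for example exactly 0.80) in the higher class, which matches legend ranges that start at that value.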

Enablers of productivity and impact

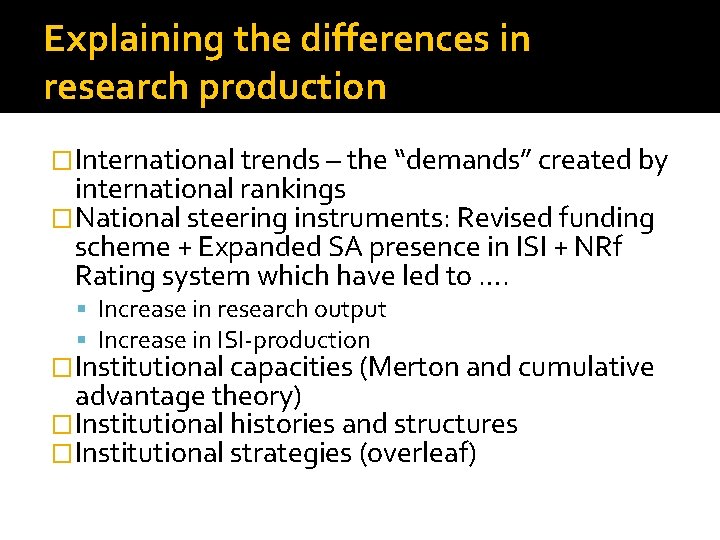

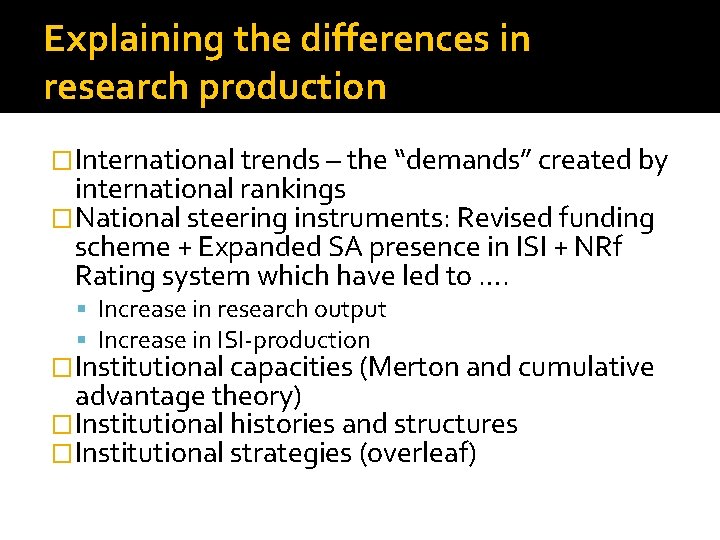

Explaining the differences in research production

- International trends: the “demands” created by international rankings
- National steering instruments (the revised funding scheme, the expanded SA presence in ISI and the NRF rating system), which have led to an increase in research output and an increase in ISI production
- Institutional capacities (Merton and cumulative advantage theory)
- Institutional histories and structures
- Institutional strategies (overleaf)

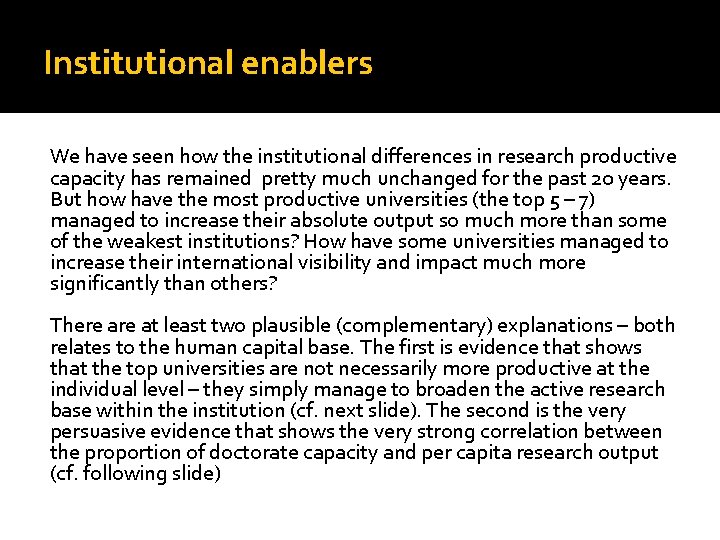

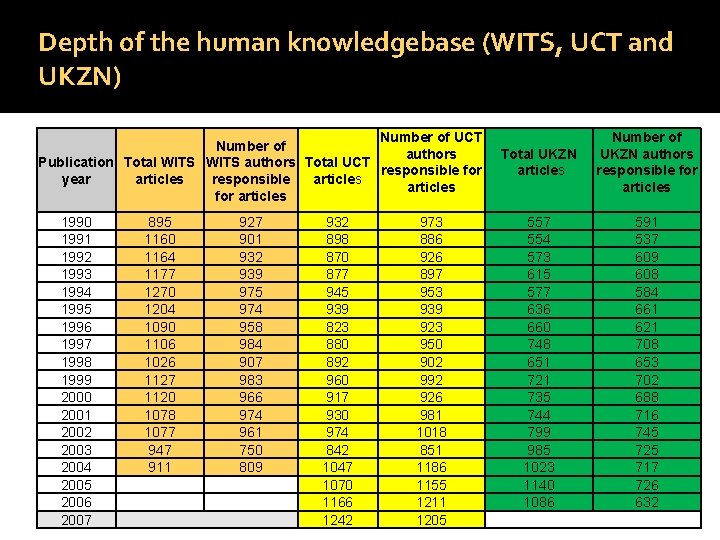

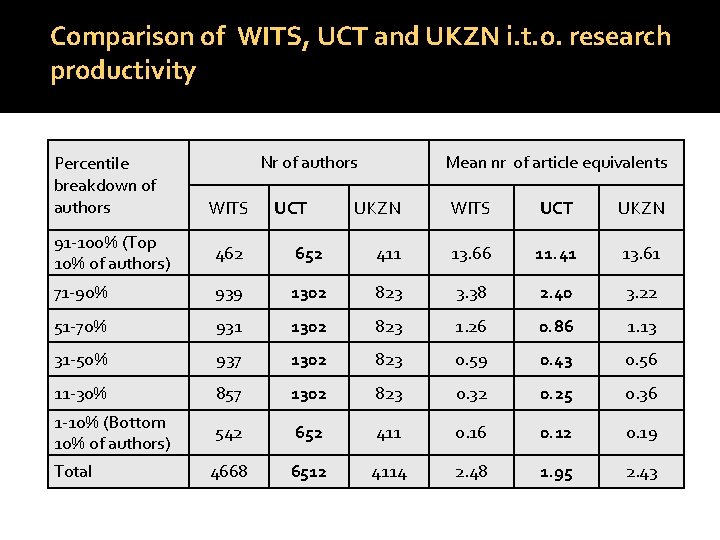

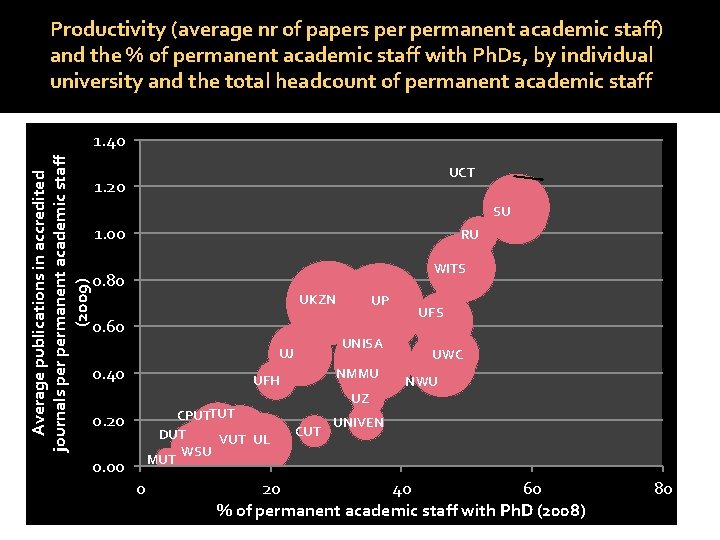

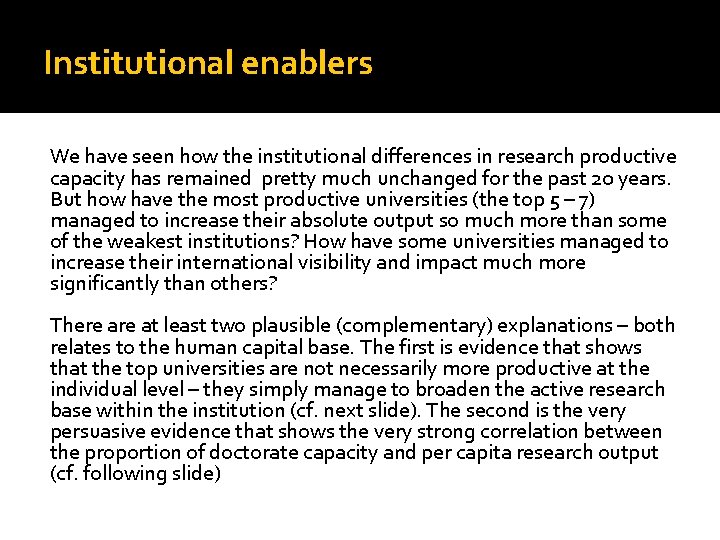

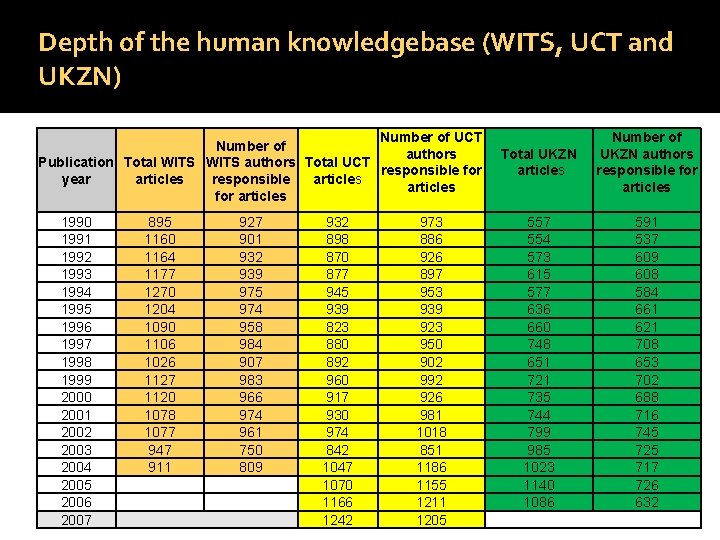

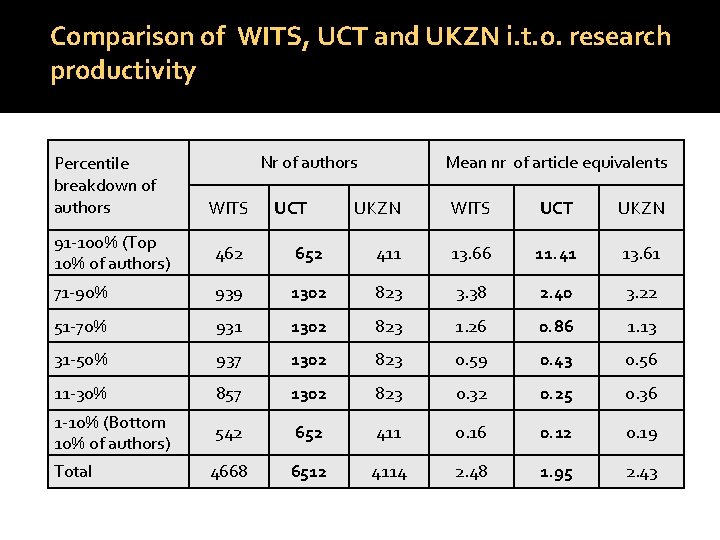

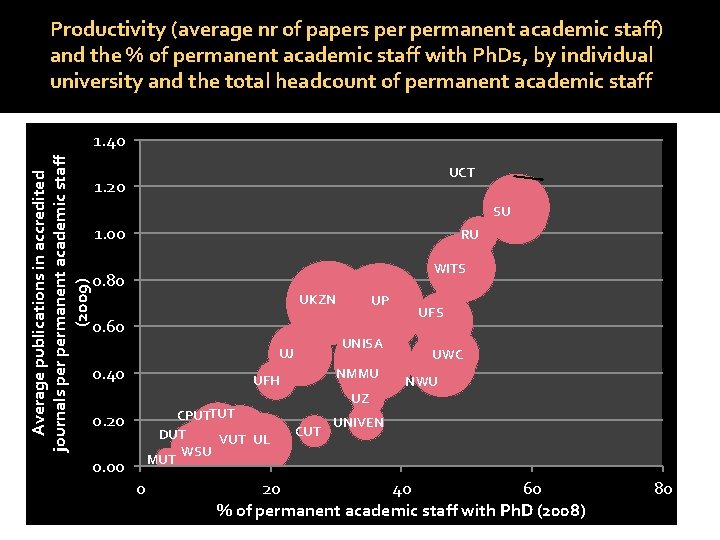

Institutional enablers

We have seen that the institutional differences in research-productive capacity have remained largely unchanged for the past 20 years. But how have the most productive universities (the top five to seven) managed to increase their absolute output so much more than some of the weakest institutions? How have some universities managed to increase their international visibility and impact much more than others? There are at least two plausible (complementary) explanations, both relating to the human capital base. The first is evidence that the top universities are not necessarily more productive at the individual level; rather, they manage to broaden the active research base within the institution (cf. next slide). The second is the very persuasive evidence of a strong correlation between the proportion of permanent academic staff with doctorates and per capita research output (cf. following slide).

Table: Depth of the human knowledge base (WITS, UCT and UKZN): total articles and number of authors responsible for articles, by publication year (1990–2007)

Comparison of WITS, UCT and UKZN in terms of research productivity

| Percentile band of authors | WITS authors | UCT authors | UKZN authors | WITS mean article equivalents | UCT mean article equivalents | UKZN mean article equivalents |
|---|---|---|---|---|---|---|
| 91–100% (top 10% of authors) | 462 | 652 | 411 | 13.66 | 11.41 | 13.61 |
| 71–90% | 939 | 1302 | 823 | 3.38 | 2.40 | 3.22 |
| 51–70% | 931 | 1302 | 823 | 1.26 | 0.86 | 1.13 |
| 31–50% | 937 | 1302 | 823 | 0.59 | 0.43 | 0.56 |
| 11–30% | 857 | 1302 | 823 | 0.32 | 0.25 | 0.36 |
| 1–10% (bottom 10% of authors) | 542 | 652 | 411 | 0.16 | 0.12 | 0.19 |
| Total | 4668 | 6512 | 4114 | 2.48 | 1.95 | 2.43 |
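Because each row gives the number of authors and their mean output, the band totals, the overall mean and the share of output produced by the top 10% of authors follow directly. A Python sketch using the figures above; it treats the rounded band means as exact, so the results are approximate, and the names are illustrative:

```python
"""Sketch: how concentrated article output is among authors, per university."""

# Bands are ordered 91-100%, 71-90%, 51-70%, 31-50%, 11-30%, 1-10% (top first).
AUTHORS = {
    "WITS": [462, 939, 931, 937, 857, 542],
    "UCT": [652, 1302, 1302, 1302, 1302, 652],
    "UKZN": [411, 823, 823, 823, 823, 411],
}

# Mean number of article equivalents per author in each band.
MEAN_OUTPUT = {
    "WITS": [13.66, 3.38, 1.26, 0.59, 0.32, 0.16],
    "UCT": [11.41, 2.40, 0.86, 0.43, 0.25, 0.12],
    "UKZN": [13.61, 3.22, 1.13, 0.56, 0.36, 0.19],
}


def band_totals(uni: str) -> list[float]:
    """Approximate article equivalents produced by each band (authors x mean)."""
    return [n * m for n, m in zip(AUTHORS[uni], MEAN_OUTPUT[uni])]


def overall_mean(uni: str) -> float:
    """Author-weighted mean output across all bands."""
    return sum(band_totals(uni)) / sum(AUTHORS[uni])


def top_decile_share(uni: str) -> float:
    """Fraction of all article equivalents produced by the top 10% of authors."""
    totals = band_totals(uni)
    return totals[0] / sum(totals)


if __name__ == "__main__":
    for uni in AUTHORS:
        print(f"{uni}: mean {overall_mean(uni):.2f}, top 10% share {top_decile_share(uni):.0%}")
```

With these figures, the top 10% of authors account for roughly 55% (WITS), 59% (UCT) and 56% (UKZN) of article equivalents, and the recomputed means (2.48, 1.94 and 2.43) match the table's totals to within rounding.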

Figure: Productivity (average number of publications in accredited journals per permanent academic staff member, 2009) against the % of permanent academic staff with PhDs (2008), by individual university and total headcount of permanent academic staff. Universities shown: UCT, SU, RU, WITS, UKZN, UNISA, UJ, NMMU, UFH, CPUT, TUT, DUT, VUT, UL, WSU, MUT, UP, UFS, UWC, NWU, UZ, CUT and UNIVEN (vertical axis 0.00 to 1.40; horizontal axis 0 to 80%)
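The strength of the association shown in this chart is usually summarised with Pearson's correlation coefficient. The per-university PhD percentages appear only graphically, so no values are reproduced here; this sketch just shows the computation in Python (the function name is illustrative).

```python
"""Sketch: Pearson correlation between two equally long series, e.g. the % of
permanent staff with a PhD and per capita research output per university."""
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Return Pearson's r; raises ValueError for unusable input."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant series has no defined correlation")
    return sxy / sqrt(sxx * syy)
```

On Python 3.10 and later, `statistics.correlation` computes the same coefficient.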

In conclusion

- We undoubtedly have a highly differentiated university sector when it is assessed in terms of key and relevant indicators.
- Some of the “causes” of these differences reflect the path dependency of historical factors, missions and structures. Other differences result from more recent institutional responses to international and national policies, strategies and incentives.
- I have argued that the trends presented reveal identifiable enabling mechanisms and drivers of greater productivity and international impact, even within a differentiated system.

The end
