
Interactive Deduplication using Active Learning
Sunita Sarawagi and Anuradha Bhamidipaty
Presented by Doug Downey

Active Learning for de-duplication
• De-duplication systems try to learn a function $f: D \times D \to \{\text{duplicate}, \text{non-duplicate}\}$, where $D$ is the data set.
  – $f$ is learned using a labeled training set $L_p$ of record pairs.
  – Normally $D$ is large, so many different sets $L_p$ are possible.
• Choosing a representative and useful $L_p$ is hard.
• Instead of a fixed set $L_p$, in Active Learning the learner interactively chooses pairs from $D \times D$ to be labeled and added to $L_p$.

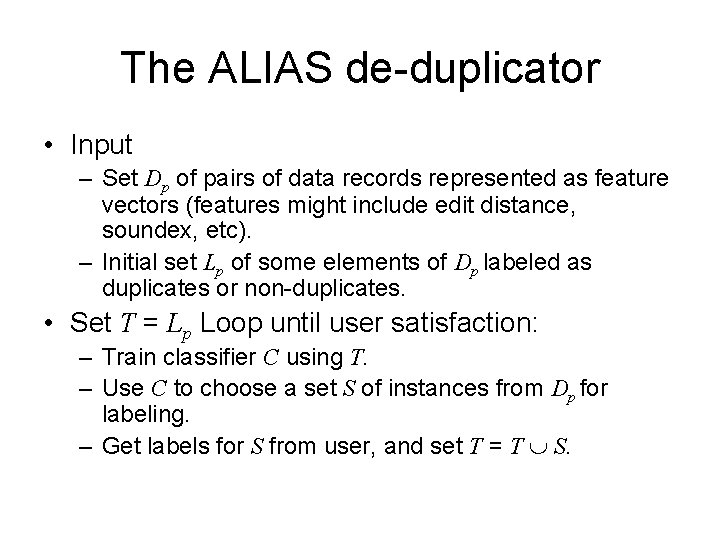

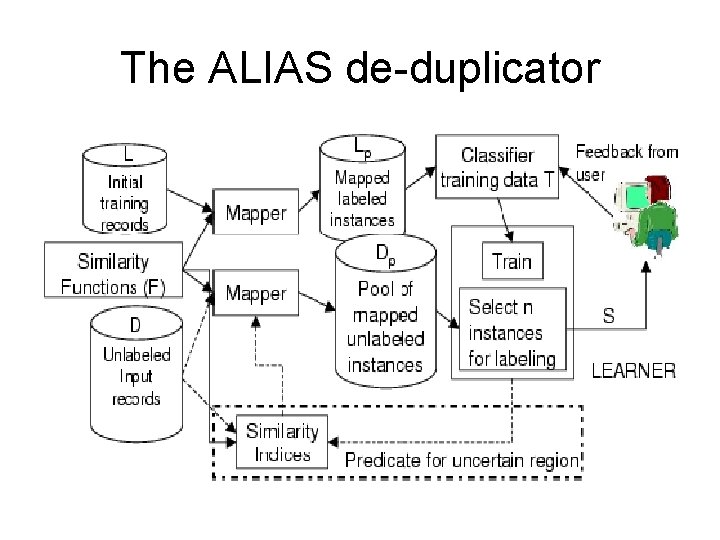

The ALIAS de-duplicator
• Input
  – Set $D_p$ of pairs of data records, represented as feature vectors (features might include edit distance, Soundex, etc.).
  – Initial set $L_p$ of some elements of $D_p$, labeled as duplicates or non-duplicates.
• Set $T = L_p$.
• Loop until the user is satisfied:
  – Train classifier $C$ using $T$.
  – Use $C$ to choose a set $S$ of instances from $D_p$ for labeling.
  – Get labels for $S$ from the user, and set $T = T \cup S$.
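A minimal Python sketch of the loop above, under stated assumptions: `alias_loop`, `train_threshold`, `most_uncertain` and the simulated user `oracle` are hypothetical names, not the ALIAS implementation. A fixed number of rounds stands in for "until the user is satisfied", and the toy classifier simply thresholds a single similarity feature.

```python
from typing import Callable, List, Sequence, Tuple

Features = Tuple[float, ...]               # one record pair as a feature vector
Labeled = Tuple[Features, int]             # (features, label): 1 = duplicate, 0 = non-duplicate
Classifier = Callable[[Features], float]   # signed score: > 0 means "duplicate"


def alias_loop(
    pool: Sequence[Features],                                  # D_p
    initial: List[Labeled],                                    # L_p
    train: Callable[[List[Labeled]], Classifier],
    select: Callable[[Classifier, List[Features]], List[Features]],
    ask_user: Callable[[Features], int],
    rounds: int,
) -> Tuple[Classifier, List[Labeled]]:
    T = list(initial)                                          # T = L_p
    labeled = {x for x, _ in T}
    for _ in range(rounds):        # fixed budget stands in for "until user satisfaction"
        C = train(T)                                           # train C using T
        candidates = [x for x in pool if x not in labeled]
        if not candidates:
            break
        S = select(C, candidates)                              # choose S from D_p
        for x in S:                                            # T = T ∪ S, with user labels
            T.append((x, ask_user(x)))
            labeled.add(x)
    return train(T), T


# Hypothetical toy learner on one similarity feature: threshold halfway between the
# highest-scoring non-duplicate and the lowest-scoring duplicate (needs one of each).
def train_threshold(T: List[Labeled]) -> Classifier:
    neg = max(x[0] for x, y in T if y == 0)
    pos = min(x[0] for x, y in T if y == 1)
    t = (neg + pos) / 2
    return lambda x: x[0] - t


def most_uncertain(C: Classifier, candidates: List[Features], n: int = 1) -> List[Features]:
    # Smallest |score| = closest to the decision boundary = most uncertain.
    return sorted(candidates, key=lambda x: abs(C(x)))[:n]


def oracle(x: Features) -> int:
    # Simulated user with made-up ground truth: duplicate iff similarity >= 0.62.
    return int(x[0] >= 0.62)


# Usage: 101 pairs with similarity 0.00..1.00, starting from one non-dup and one dup.
pool = [(i / 100,) for i in range(101)]
initial = [((0.0,), 0), ((1.0,), 1)]
C, T = alias_loop(pool, initial, train_threshold, most_uncertain, oracle, rounds=10)
```

Keeping `train` and `select` as parameters lets the same loop wrap different classifiers, as in the evaluation with decision trees, Naïve Bayes and SVMs.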

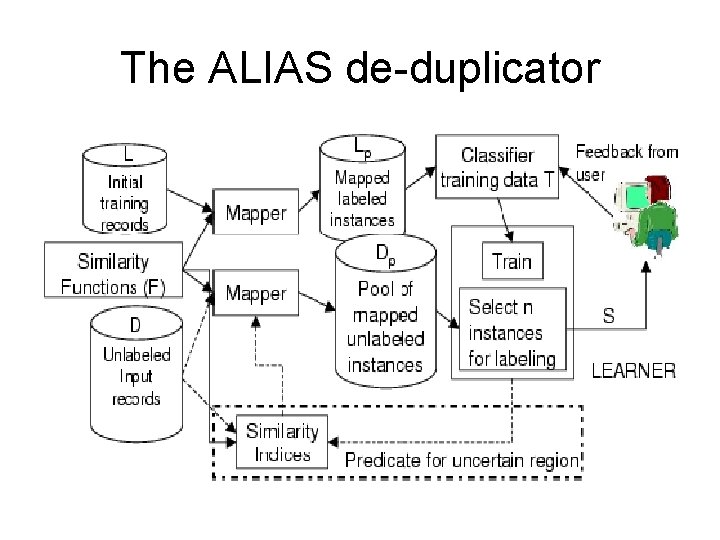

The ALIAS de-duplicator

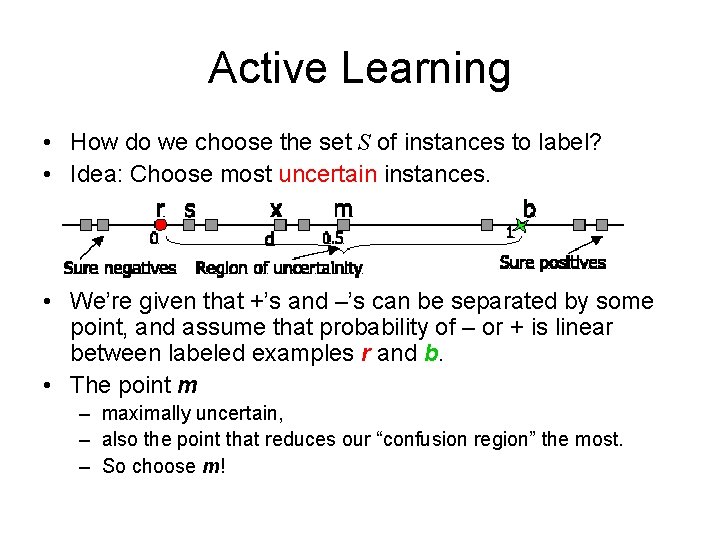

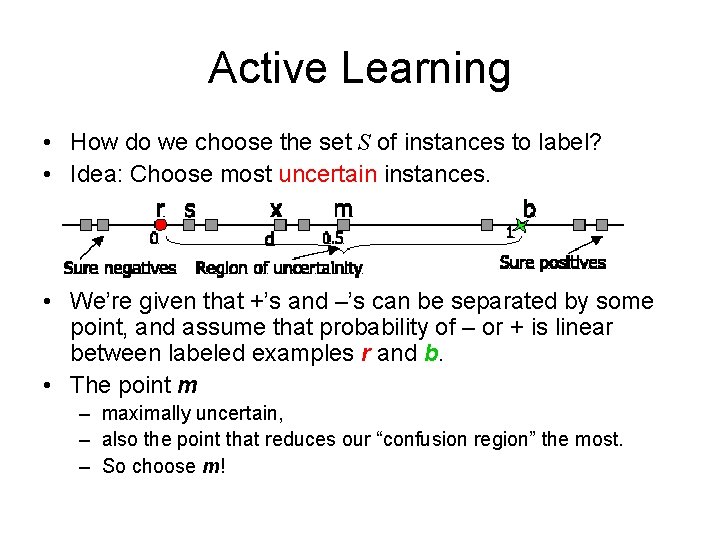

Active Learning
• How do we choose the set $S$ of instances to label?
• Idea: choose the most uncertain instances.
• We are given that the +'s and –'s can be separated by some point, and we assume that the probability of – or + varies linearly between the labeled examples $r$ and $b$.
• The point $m$, halfway between $r$ and $b$:
  – is maximally uncertain (+ and – are equally likely),
  – is also the point that shrinks our "confusion region" (the interval between $r$ and $b$) the most in the worst case.
  – So choose $m$!
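The midpoint argument can be checked numerically. This sketch assumes `r` is the labeled – example and `b` the labeled + example, with `r < b`; the values and candidate points are made up. Under the linear model, the candidate whose probability is closest to 0.5 is the same one that minimizes the worst-case width of the remaining confusion region.

```python
def p_positive(x: float, r: float, b: float) -> float:
    # Linear model from the slide: P(+) rises from 0 at r (labeled -) to 1 at b (labeled +).
    return (x - r) / (b - r)


def worst_case_region(x: float, r: float, b: float) -> float:
    # Labeling x splits the confusion region [r, b]: a "-" label leaves [x, b],
    # a "+" label leaves [r, x]. Return the larger of the two widths.
    return max(b - x, x - r)


r, b = 2.0, 10.0                                   # hypothetical labeled points
candidates = [2.5 + 0.5 * i for i in range(15)]    # unlabeled points 2.5, 3.0, ..., 9.5

most_uncertain = min(candidates, key=lambda x: abs(p_positive(x, r, b) - 0.5))
best_split = min(candidates, key=lambda x: worst_case_region(x, r, b))
print(most_uncertain, best_split, worst_case_region(best_split, r, b))  # prints 6.0 6.0 4.0
```

Each query at the midpoint halves the confusion region whatever label comes back, which is why uncertainty sampling behaves like binary search in this one-dimensional setting.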

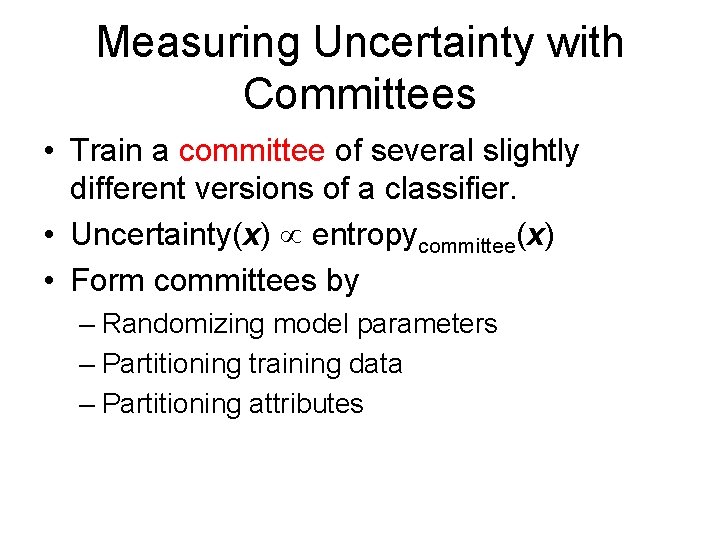

Measuring Uncertainty with Committees
• Train a committee of several slightly different versions of a classifier.
• Measure $\mathrm{Uncertainty}(x)$ by $\mathrm{entropy}_{\text{committee}}(x)$, the entropy of the committee members' predictions on $x$.
• Form committees by
  – Randomizing model parameters
  – Partitioning training data
  – Partitioning attributes
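A small sketch of committee-based uncertainty as vote entropy, assuming binary votes (1 = duplicate). The committee is hypothetical: five threshold rules on one similarity score, made "slightly different" by perturbing the threshold parameter deterministically rather than randomly, so the result is reproducible.

```python
import math
from collections import Counter
from typing import Callable, List, Sequence


def vote_entropy(votes: Sequence[int]) -> float:
    """Entropy (in bits) of the label distribution among the committee's votes."""
    n = len(votes)
    return -sum((c / n) * math.log2(c / n) for c in Counter(votes).values())


# Hypothetical committee: five threshold classifiers on one similarity score.
committee: List[Callable[[float], int]] = [
    (lambda s, t=t: int(s >= t)) for t in (0.55, 0.60, 0.65, 0.70, 0.75)
]


def uncertainty(score: float) -> float:
    # Unanimous votes give entropy 0; an even split gives the maximum.
    return vote_entropy([member(score) for member in committee])


scores = [0.30, 0.62, 0.90]                 # three hypothetical unlabeled pairs
most_uncertain = max(scores, key=uncertainty)
```

Here the pairs scored 0.30 and 0.90 get unanimous votes (entropy 0), while 0.62 splits the committee 2 to 3 and is chosen for labeling.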

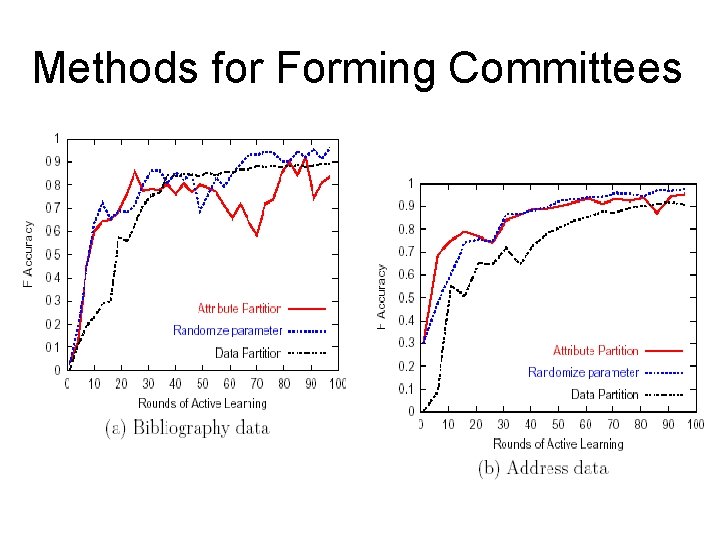

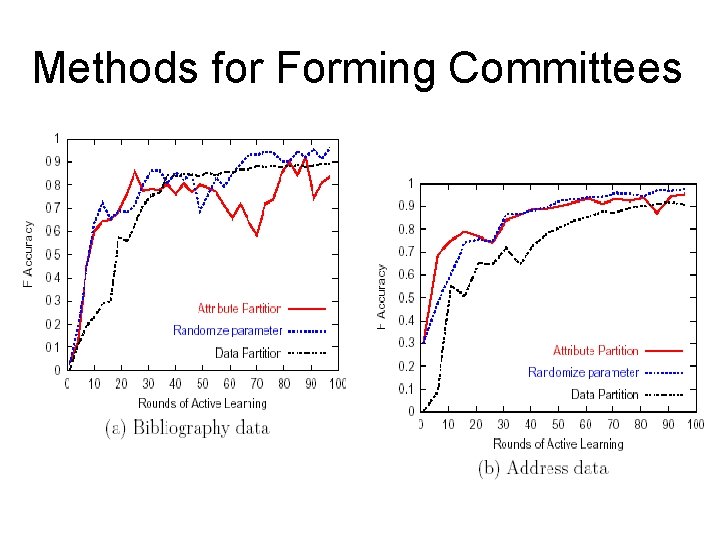

Methods for Forming Committees

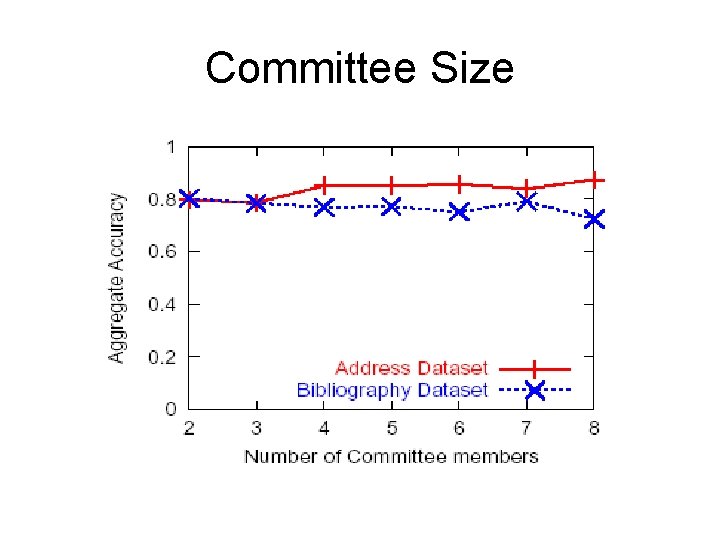

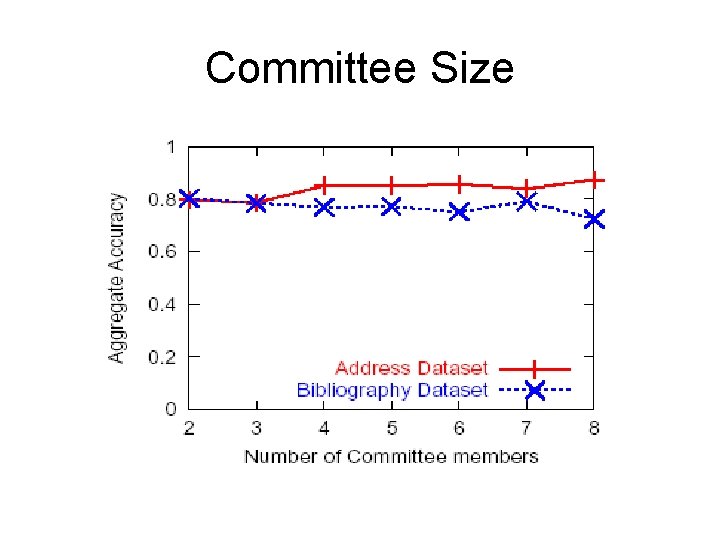

Committee Size

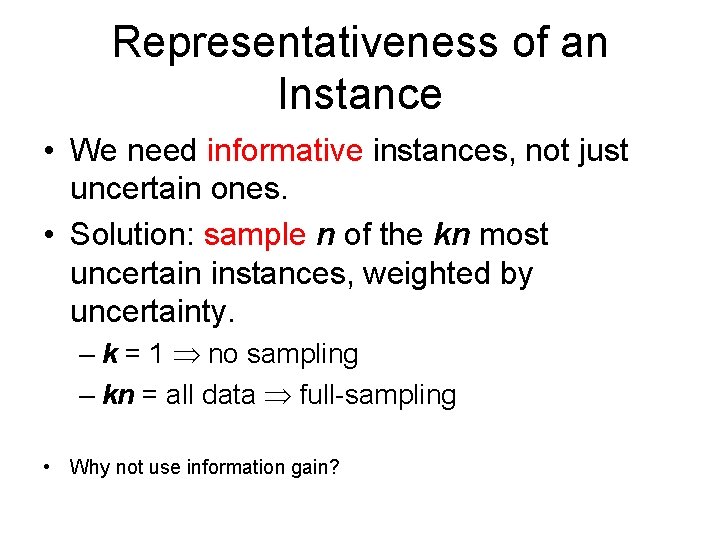

Representativeness of an Instance
• We need informative instances, not just uncertain ones.
• Solution: sample $n$ of the $kn$ most uncertain instances, weighted by uncertainty.
  – $k = 1$: no sampling (simply take the $n$ most uncertain).
  – $kn$ = all data: full sampling.
• Why not use information gain?
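One way to implement the sampling step, assuming weighted sampling without replacement (the slide does not say which variant is used). `sample_representative` and the uncertainty scores are hypothetical; `k = 1` reduces to taking the `n` most uncertain instances, and a large enough `k` samples from all the data.

```python
import random
from typing import Dict, Hashable, List


def sample_representative(
    uncertainty: Dict[Hashable, float], n: int, k: int, rng: random.Random
) -> List[Hashable]:
    """Draw n instances, without replacement, from the k*n most uncertain ones,
    with probability proportional to each instance's uncertainty."""
    pool = sorted(uncertainty, key=uncertainty.get, reverse=True)[: k * n]
    chosen = []
    for _ in range(min(n, len(pool))):
        weights = [uncertainty[x] for x in pool]
        if sum(weights) > 0:
            pick = rng.choices(pool, weights=weights, k=1)[0]
        else:                                   # all-zero weights: fall back to uniform
            pick = rng.choice(pool)
        chosen.append(pick)
        pool.remove(pick)
    return chosen


# Hypothetical uncertainty scores for ten unlabeled pairs.
scores = {f"pair{i}": i / 10 for i in range(10)}
rng = random.Random(0)
no_sampling = sample_representative(scores, n=3, k=1, rng=rng)       # k = 1
partial = sample_representative(scores, n=3, k=2, rng=rng)           # from the top 6
full = sample_representative(scores, n=3, k=len(scores), rng=rng)    # kn covers all data
```

Weighting by uncertainty still favors informative pairs, while drawing from a wider pool than the top `n` spreads the chosen pairs over more of the data.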

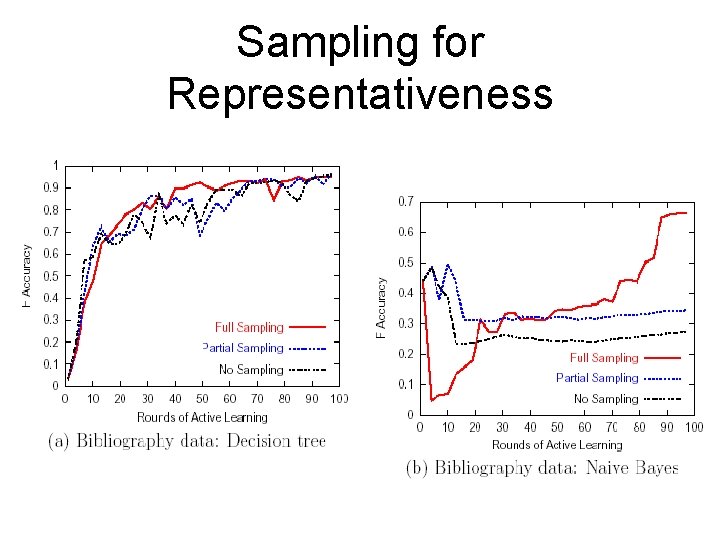

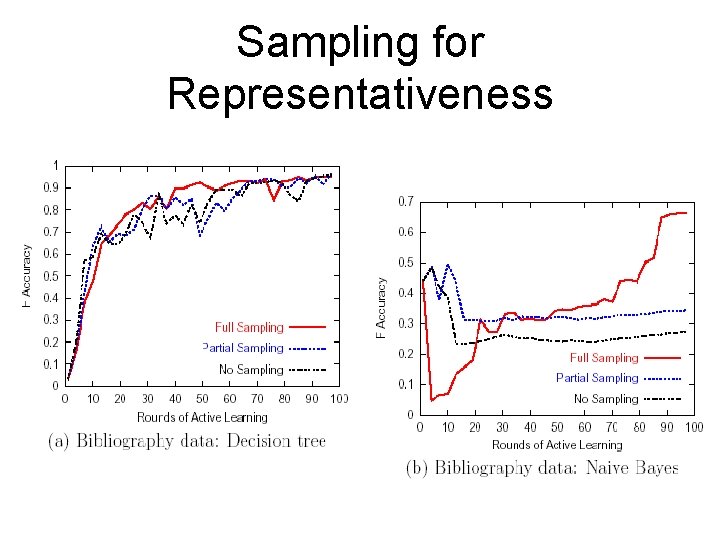

Sampling for Representativeness

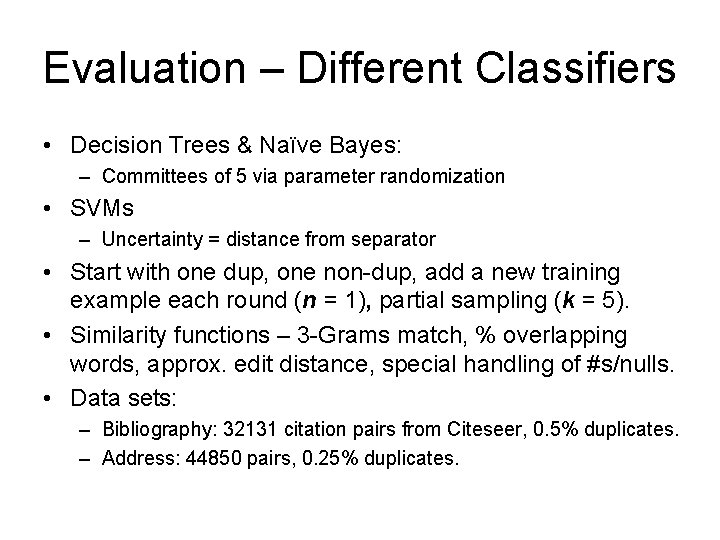

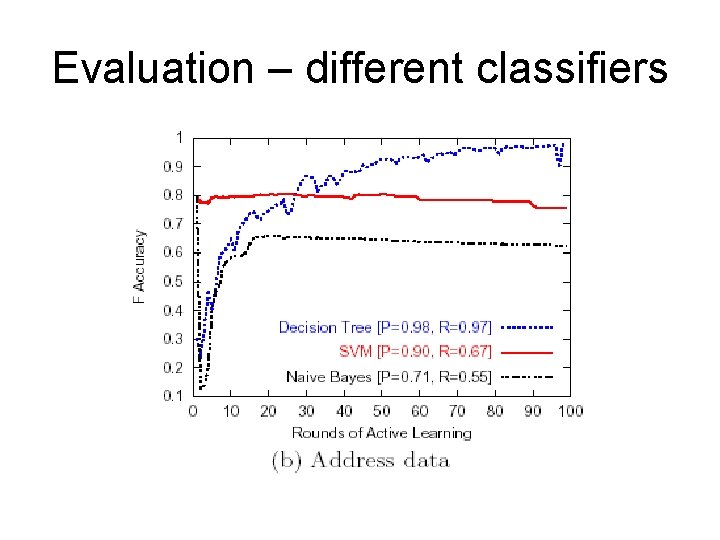

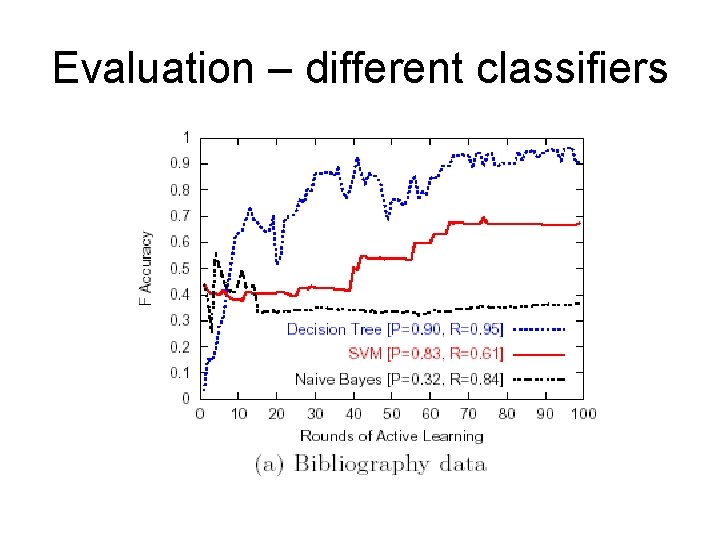

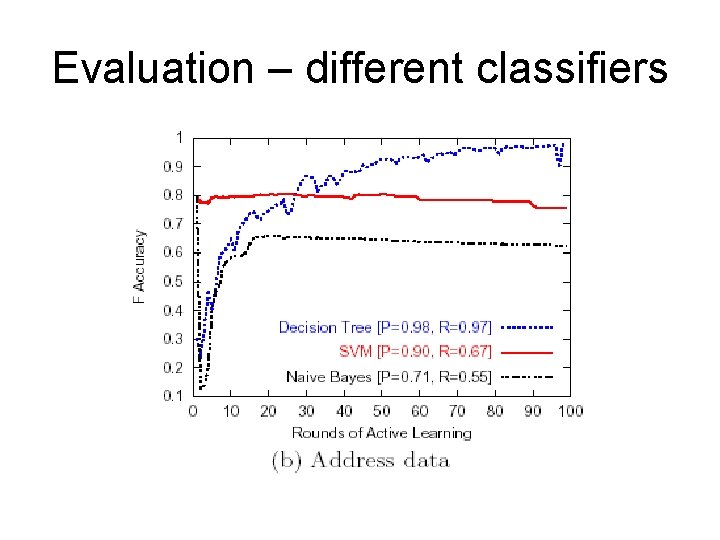

Evaluation – Different Classifiers
• Decision Trees & Naïve Bayes:
  – Committees of 5 via parameter randomization.
• SVMs:
  – Uncertainty measured by distance from the separator (closer means more uncertain).
• Start with one duplicate and one non-duplicate, add a new training example each round ($n = 1$), partial sampling ($k = 5$).
• Similarity functions:
  – 3-gram match, % overlapping words, approximate edit distance, special handling of numbers and nulls.
• Data sets:
  – Bibliography: 32,131 citation pairs from Citeseer, 0.5% duplicates.
  – Address: 44,850 pairs, 0.25% duplicates.
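A sketch of three of the similarity functions, assuming plain strings as record fields. The exact definitions used in the experiments are not given on the slide, so these are assumptions: `trigram_match` is Jaccard overlap of character 3-grams, `word_overlap` divides shared words by the shorter field's word count, and `edit_distance` is exact Levenshtein rather than an approximation. Special handling of numbers and nulls is not modeled.

```python
from typing import Dict, Set


def trigrams(s: str) -> Set[str]:
    return {s[i : i + 3] for i in range(len(s) - 2)}


def trigram_match(a: str, b: str) -> float:
    # Assumed definition: Jaccard overlap of character 3-grams.
    ga, gb = trigrams(a), trigrams(b)
    return len(ga & gb) / len(ga | gb) if ga | gb else 1.0


def word_overlap(a: str, b: str) -> float:
    # Assumed definition: share of the shorter field's words that appear in the other.
    wa, wb = set(a.split()), set(b.split())
    shorter = min(len(wa), len(wb))
    return len(wa & wb) / shorter if shorter else 0.0


def edit_distance(a: str, b: str) -> int:
    # Exact Levenshtein distance by dynamic programming (the slides mention an
    # approximate edit distance; this exact version is for illustration only).
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute or match
        prev = cur
    return prev[-1]


def pair_features(a: str, b: str) -> Dict[str, float]:
    # Turn one record pair into a feature vector (compared case-insensitively).
    a, b = a.lower(), b.lower()
    longest = max(len(a), len(b)) or 1
    return {
        "trigram_match": trigram_match(a, b),
        "word_overlap": word_overlap(a, b),
        "edit_similarity": 1 - edit_distance(a, b) / longest,
    }


features = pair_features("J. Smith", "John Smith")
```

Vectors like `features` are the kind of input the ALIAS loop expects in $D_p$; each classifier then learns how to combine them.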

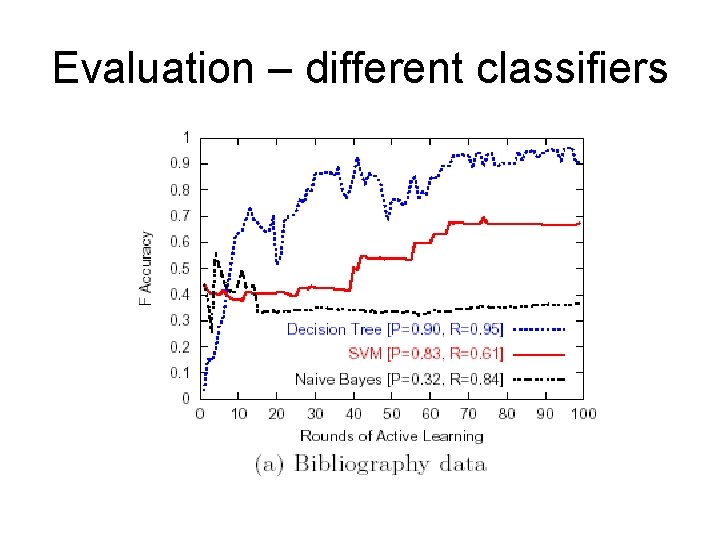

Evaluation – different classifiers

Evaluation – different classifiers

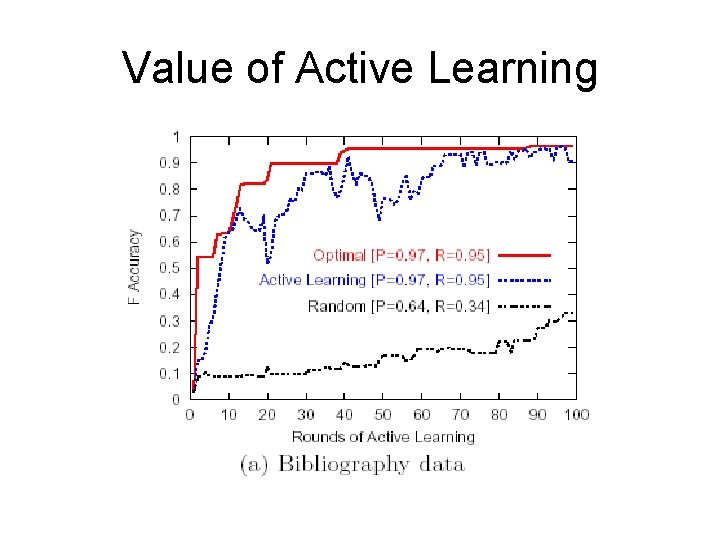

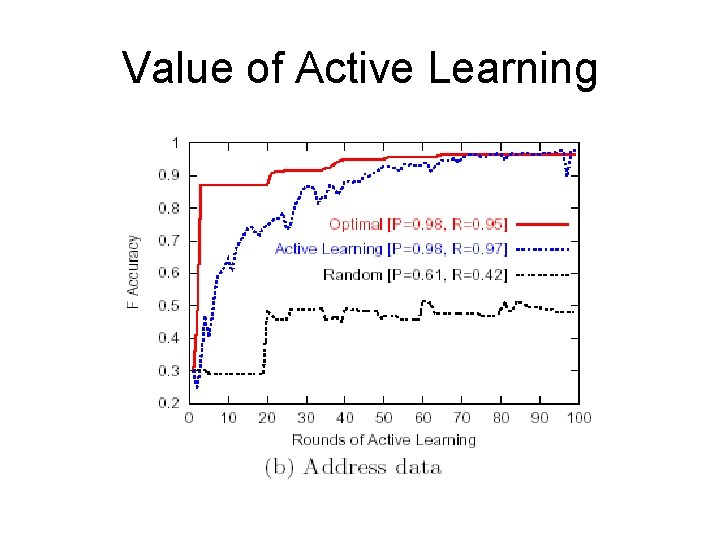

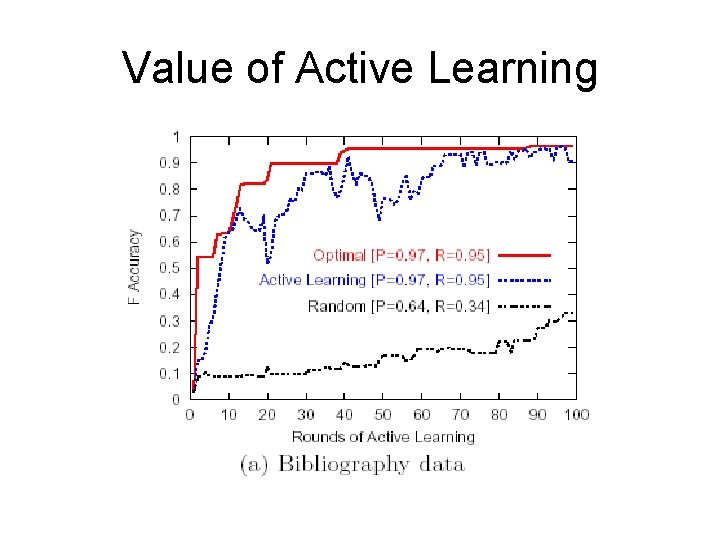

Value of Active Learning

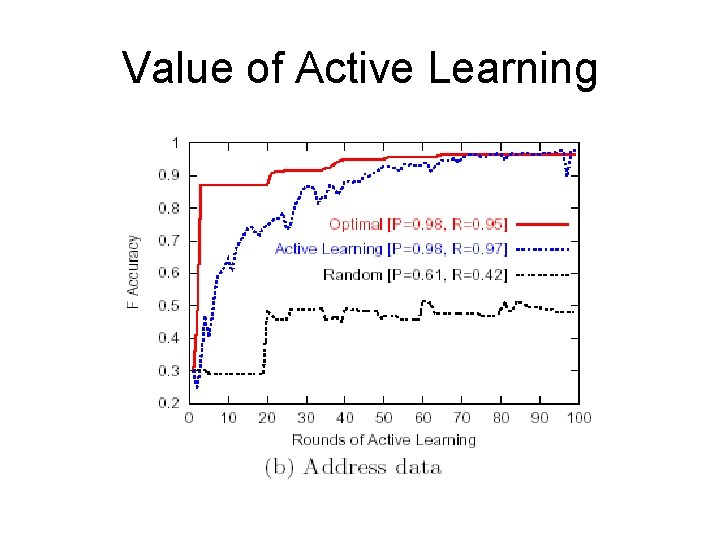

Value of Active Learning

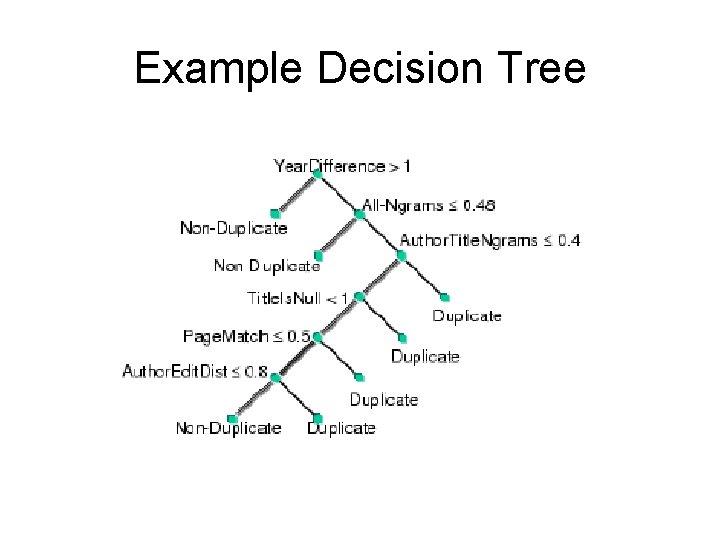

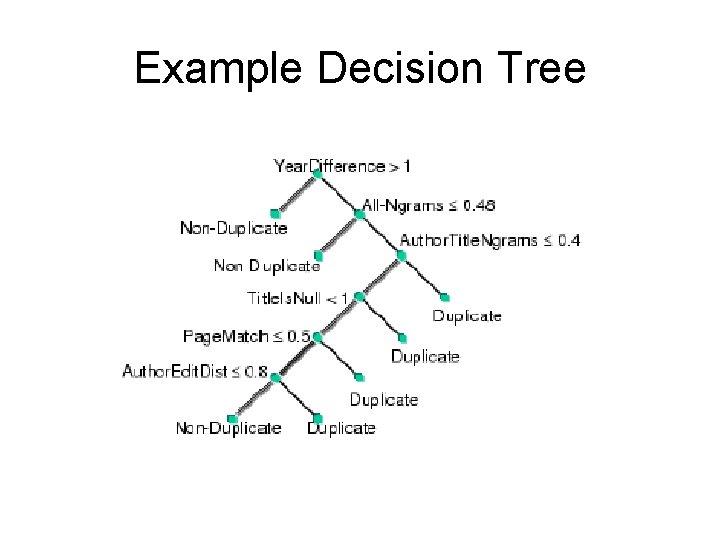

Example Decision Tree

Conclusions
• Active Learning improves performance over random selection.
  – It uses two orders of magnitude less training data.
  – Note: the gain is not due just to a change in the mix of duplicates (+) and non-duplicates (–) in the training set.
• In these experiments, Decision Trees outperformed SVMs and Naïve Bayes.