Intelligent Assistants: A Decision-Theoretic Model (Sriraam Natarajan)

- Slides: 43

Intelligent Assistants: A Decision-Theoretic Model
Sriraam Natarajan*
Joint work with Prasad Tadepalli, Alan Fern, Kshitij Judah
School of EECS, Oregon State University
* Currently at AIC, SRI International

Motivation
- Several assistant systems have been proposed to
  - Assist users in daily tasks
  - Reduce their cognitive load
- Examples: CALO (CALO 2003), COACH (Boger et al. 2005), Electric Elves (Varakantham et al. 2005), etc.
- Problems with previous work
  - Fine-tuned to particular application domains
  - Utilize specialized technologies
  - Lack an overarching framework

Goals
- Decision-theoretic model
  - General notion of assistance
  - Evaluate on a real-world domain: Folder Predictor
- Problems with the model
  - Rationality assumption
  - Flat user goals
- Relational hierarchical model
  - Remove the assumption
  - Augment with prior knowledge
  - Combines ideas from decision theory, logical models, and probabilistic methods

Outline
- Decision-Theoretic Model of Assistance
- Experiments – Folder Predictor
- Incorporating Relational Hierarchies
- Experiments
- Conclusion

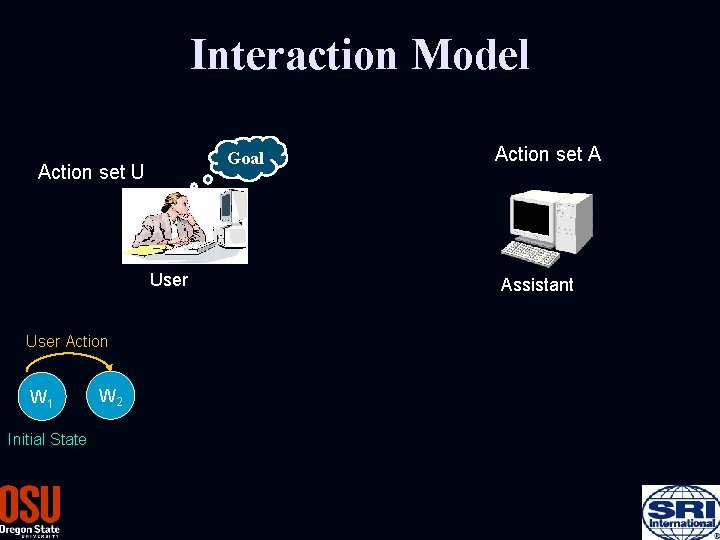

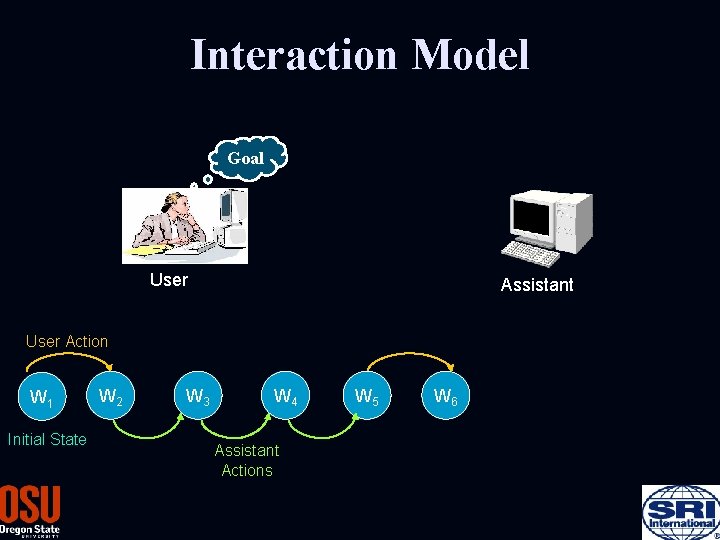

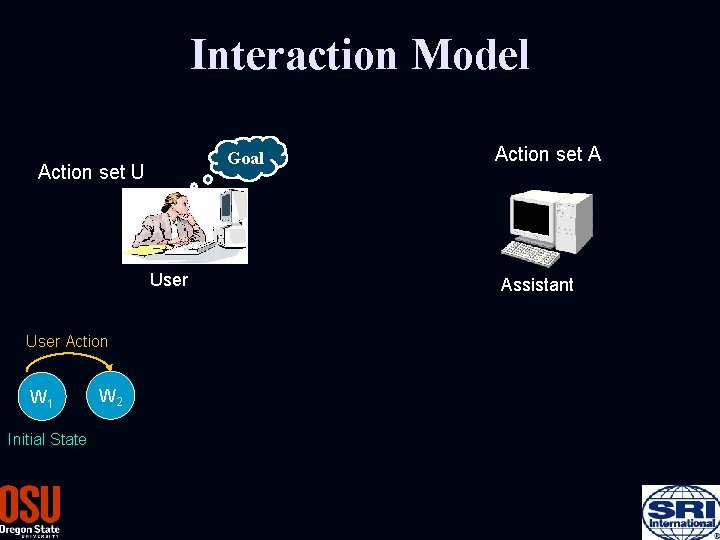

Interaction Model (diagram): the user has a goal and an action set U; the assistant has an action set A. A user action moves the world from the initial state W1 to W2.

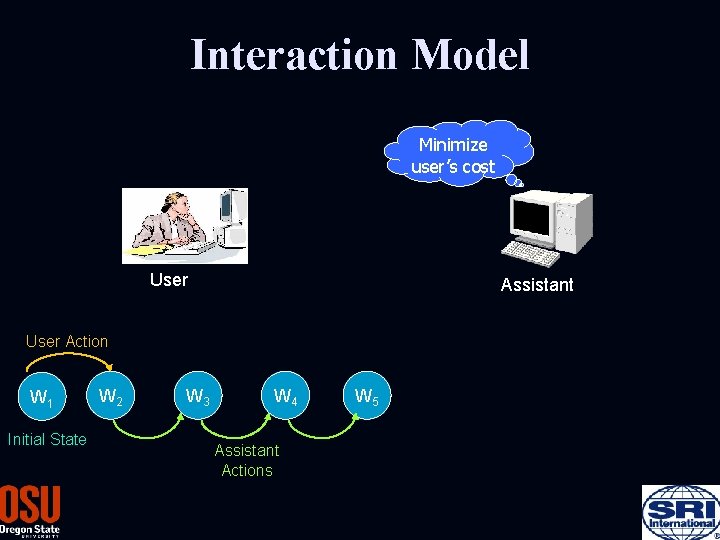

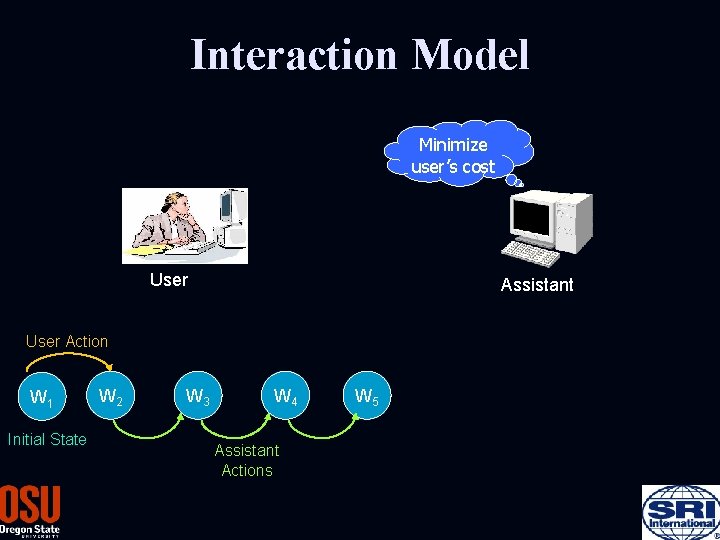

Interaction Model (diagram): the objective is to minimize the user's cost. States W1 (initial state) through W5 are shown, reached through a user action and subsequent assistant actions.

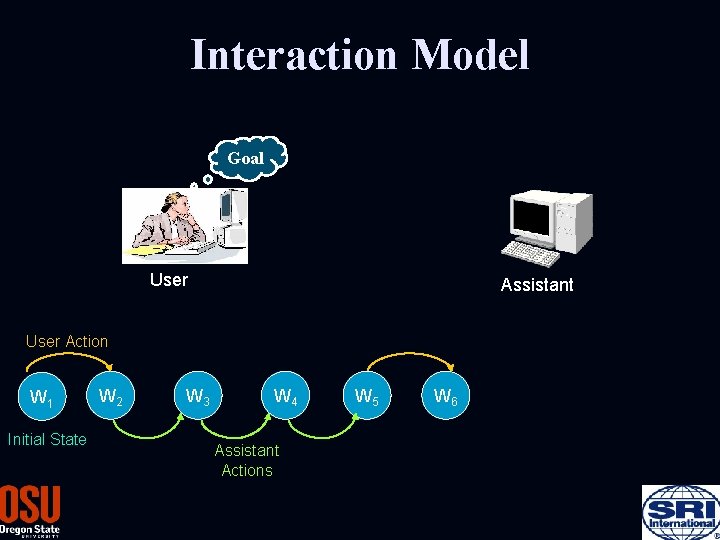

Interaction Model (diagram): the user has a goal. States W1 (initial state) through W6 are shown, reached through a user action and assistant actions.

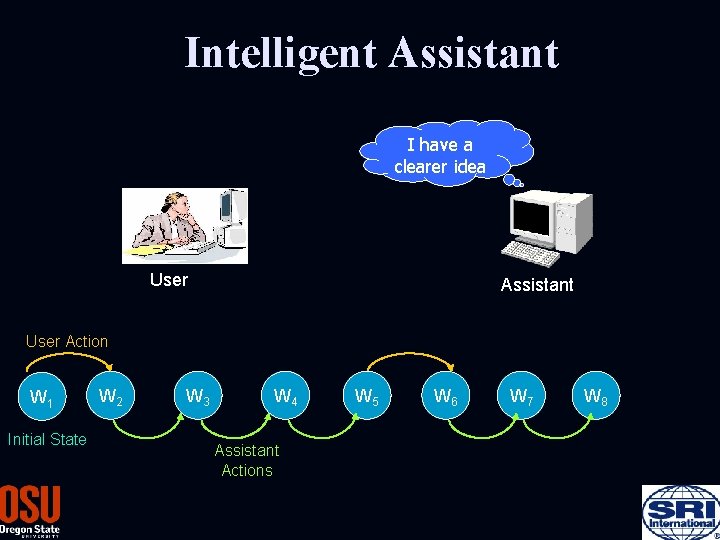

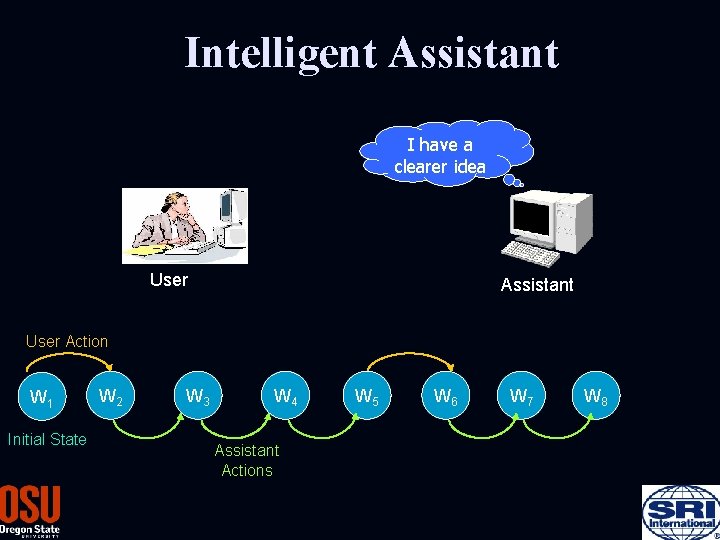

Intelligent Assistant (diagram): states W1 (initial state) through W8, reached through a user action and assistant actions. Speech bubble: "I have a clearer idea."

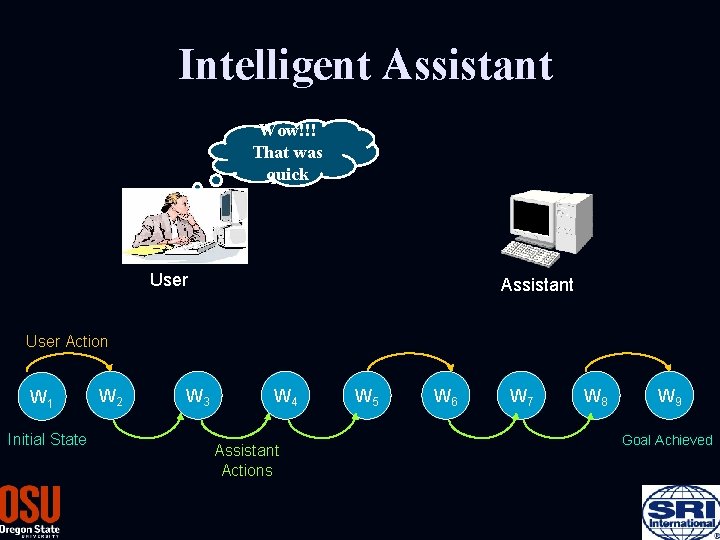

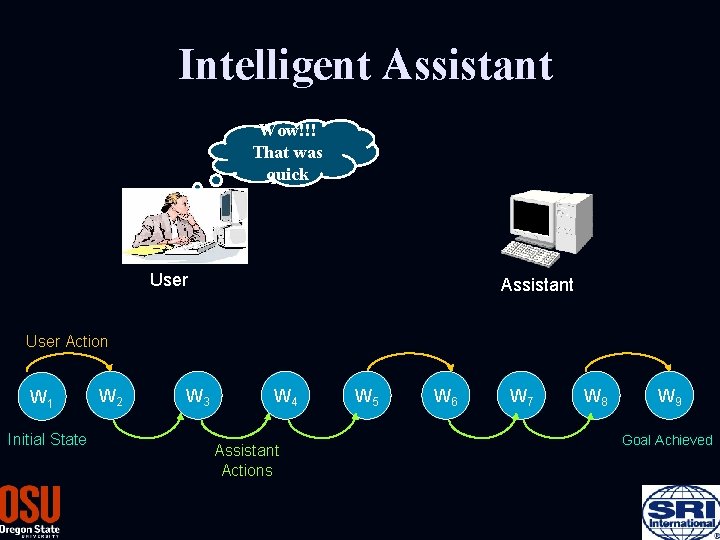

Intelligent Assistant (diagram): states W1 (initial state) through W9, ending with "Goal Achieved." Speech bubble: "Wow!!! That was quick."

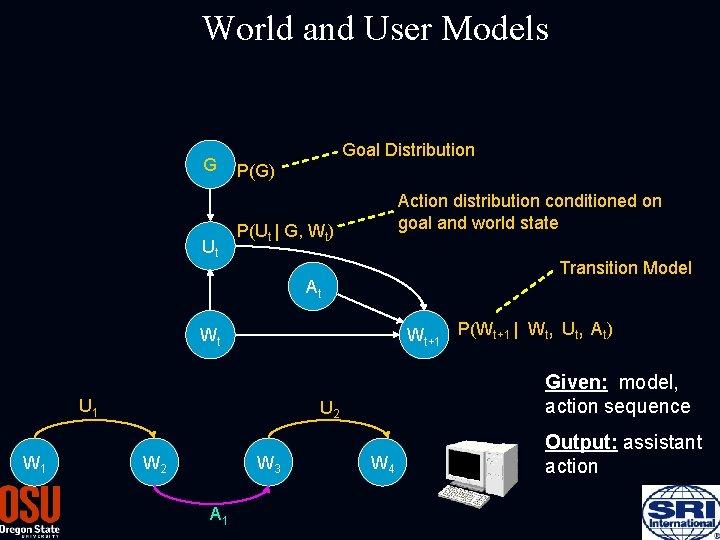

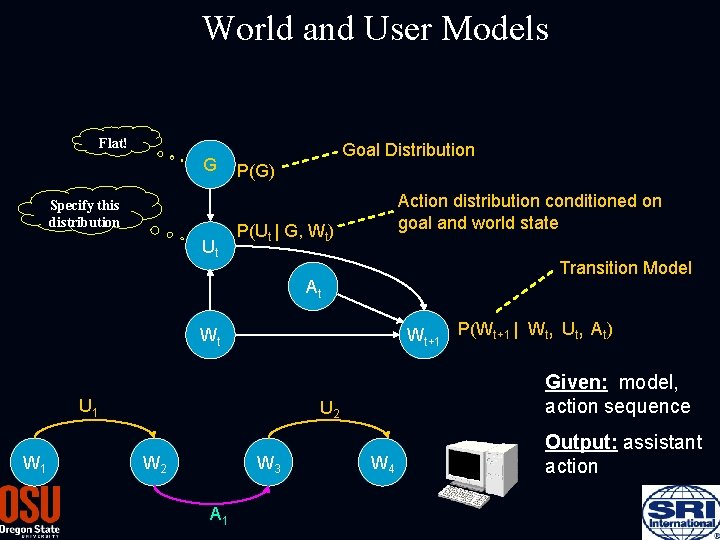

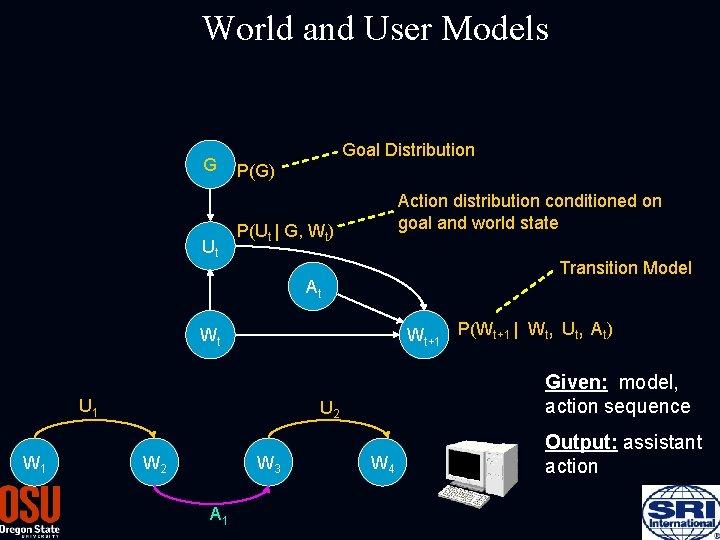

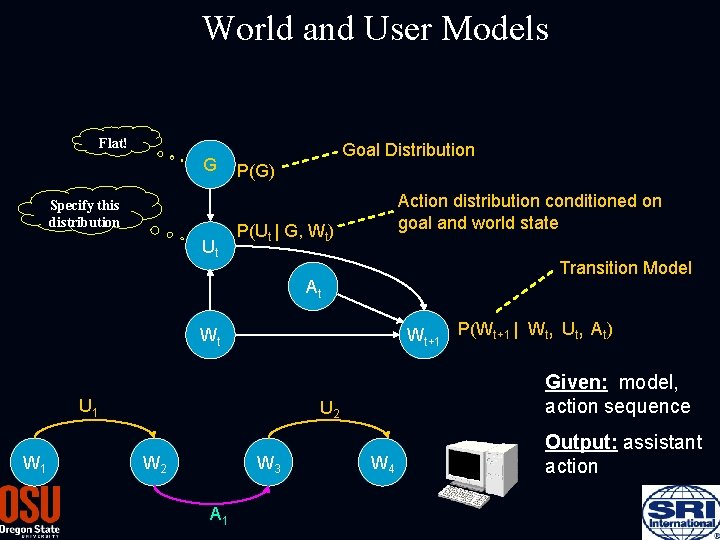

World and User Models
- Goal distribution: $P(G)$
- Action distribution conditioned on goal and world state: $P(U_t \mid G, W_t)$
- Transition model: $P(W_{t+1} \mid W_t, U_t, A_t)$
- Given: the model and an action sequence (the diagram shows a sequence of user actions, assistant actions, and world states)
- Output: assistant action

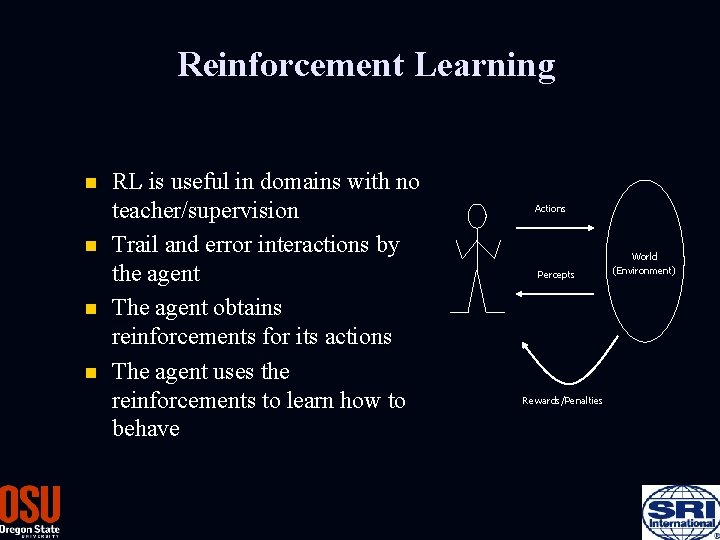

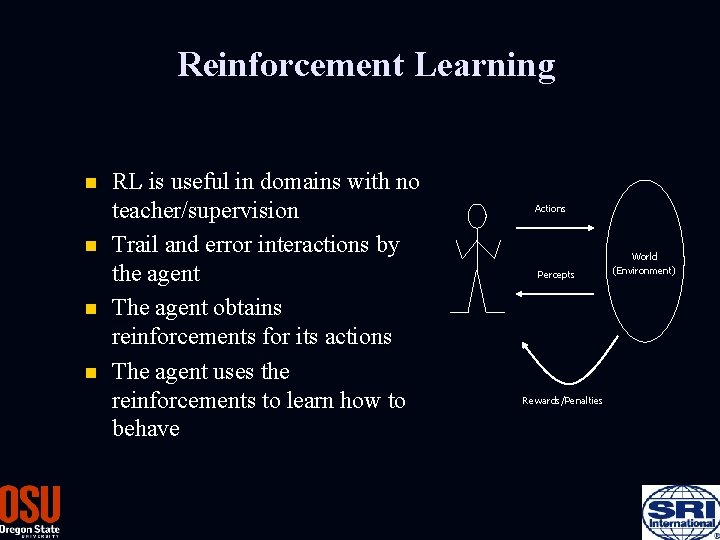

Reinforcement Learning
- RL is useful in domains with no teacher or supervision
- The agent learns through trial-and-error interactions
- The agent obtains reinforcements for its actions
- The agent uses the reinforcements to learn how to behave
- Diagram: the agent sends actions to the world (environment) and receives percepts and rewards/penalties

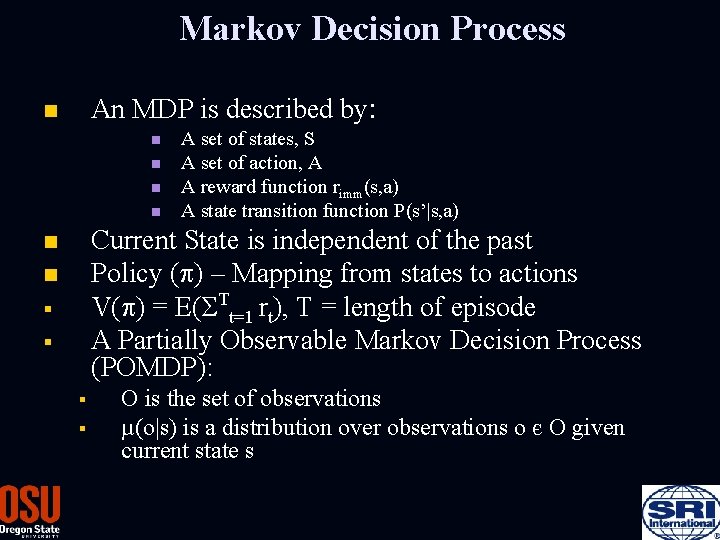

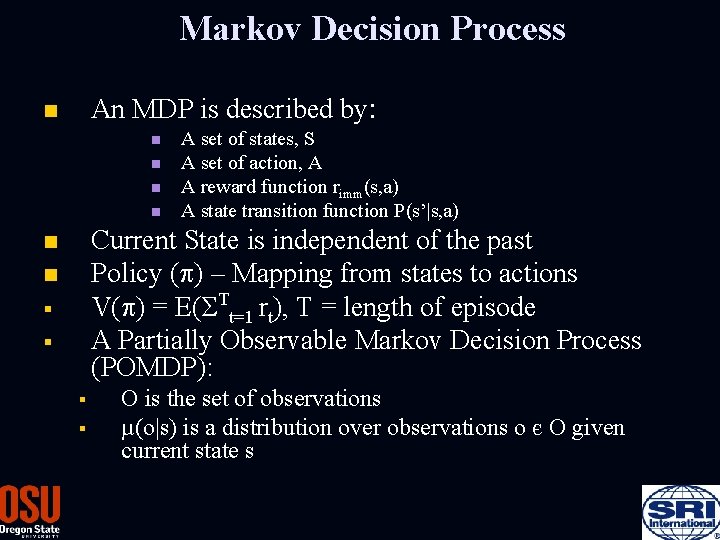

Markov Decision Process
An MDP is described by:
- A set of states, $S$
- A set of actions, $A$
- An immediate reward function $r(s, a)$
- A state transition function $P(s' \mid s, a)$
- Markov property: given the current state and action, the next state is independent of the past
- Policy $\pi$: a mapping from states to actions
- Value of a policy: $V(\pi) = E\left[\sum_{t=1}^{T} r_t\right]$, where $T$ is the length of the episode

A Partially Observable Markov Decision Process (POMDP) adds:
- $O$, the set of observations
- $\mu(o \mid s)$, a distribution over observations $o \in O$ given the current state $s$
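As a rough illustration of the policy value defined on this slide, the sketch below computes the expected total reward over T steps for a fixed deterministic policy by pushing the state distribution forward one step at a time. The two-state model, the state and action names, and the function name `policy_value` are all hypothetical and chosen only for this example.

```python
def policy_value(P, r, policy, start, T):
    """Expected total reward E[sum_{t=1..T} r_t] of a deterministic policy.

    P[s][a] maps next states to probabilities, r[s][a] is the immediate
    reward, and policy[s] is the action chosen in state s.
    """
    dist = {start: 1.0}  # probability of each state at the current step
    total = 0.0
    for _ in range(T):
        next_dist = {}
        for s, p_s in dist.items():
            a = policy[s]
            total += p_s * r[s][a]  # expected reward collected at this step
            for s2, p in P[s][a].items():
                next_dist[s2] = next_dist.get(s2, 0.0) + p_s * p
        dist = next_dist
    return total


# Hypothetical two-state example: "move" reaches the goal with probability 0.5
# and costs 1 per attempt; the goal state is absorbing and free.
P = {
    "far": {"move": {"goal": 0.5, "far": 0.5}},
    "goal": {"move": {"goal": 1.0}},
}
r = {"far": {"move": -1.0}, "goal": {"move": 0.0}}
policy = {"far": "move", "goal": "move"}

value = policy_value(P, r, policy, start="far", T=2)
print(value)
```

The calculation is exact for small models because it sums over the state distribution instead of sampling episodes.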

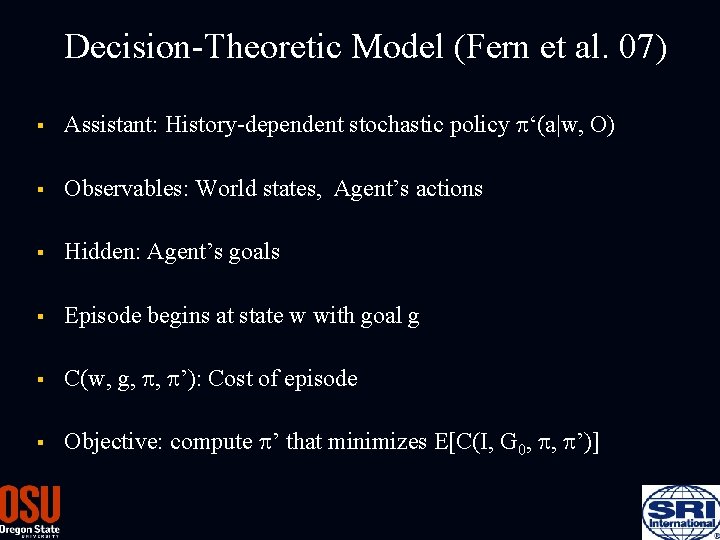

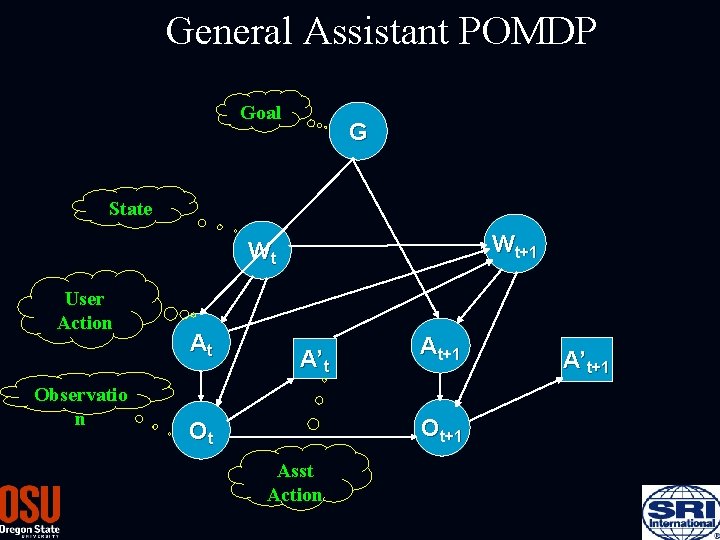

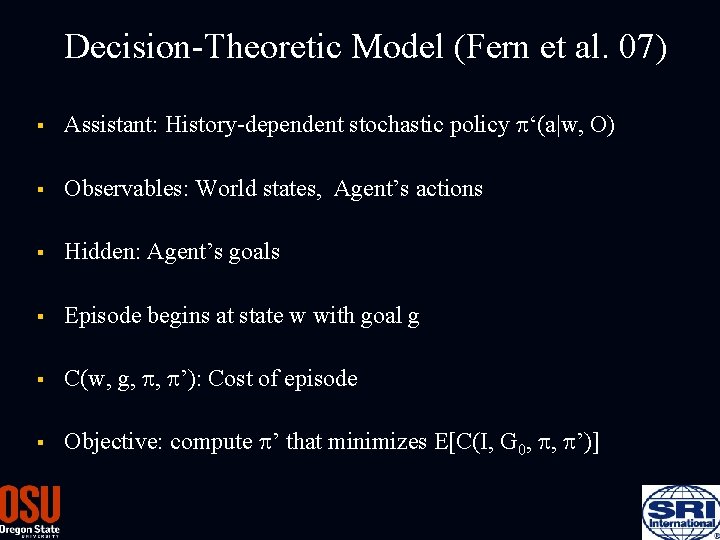

Decision-Theoretic Model (Fern et al. 2007)
- Assistant: history-dependent stochastic policy $\pi'(a \mid w, O)$
- Observables: world states, agent's actions
- Hidden: agent's goals
- Episode begins at state $w$ with goal $g$
- $C(w, g, \pi, \pi')$: cost of the episode
- Objective: compute $\pi'$ that minimizes $E[C(I, G_0, \pi, \pi')]$

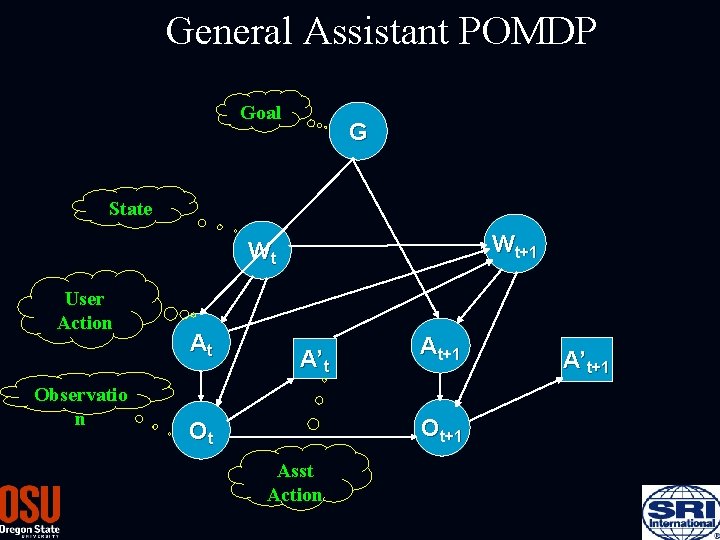

General Assistant POMDP (diagram): a two-slice network over the goal G, world states Wt and Wt+1, user actions At and At+1, assistant actions A't and A't+1, and the observation Ot.

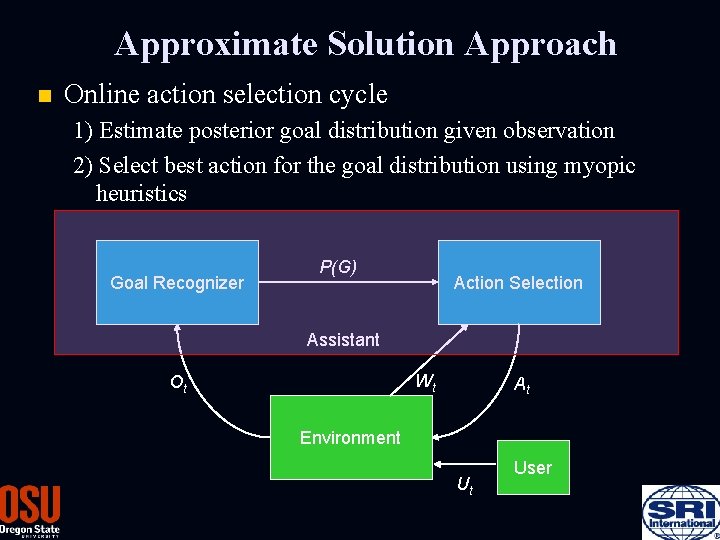

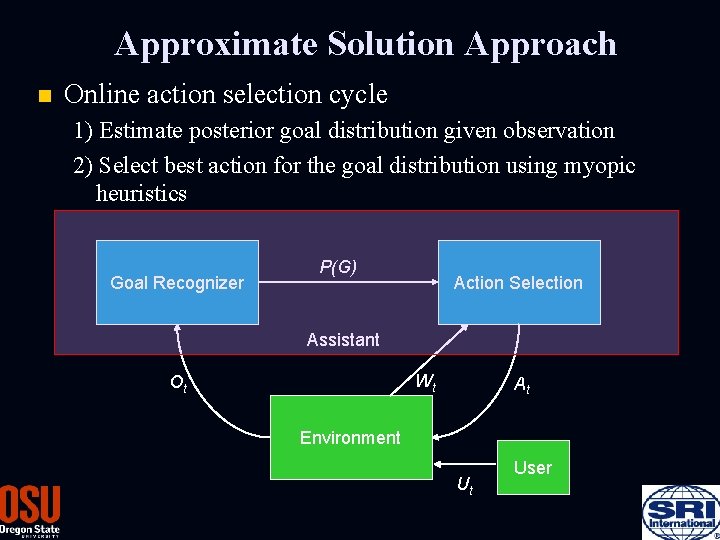

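Approximate Solution Approach
- Online action selection cycle:
  1) Estimate the posterior goal distribution given the observation
  2) Select the best action for the goal distribution using myopic heuristics
- Diagram: inside the assistant, a goal recognizer produces P(G) for action selection; the assistant receives the observation Ot and outputs the action At, while the user acts (Ut) on the environment (Wt).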

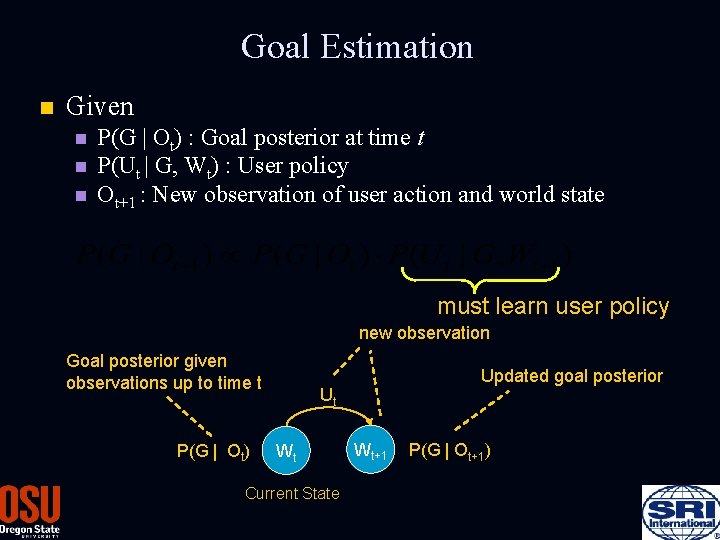

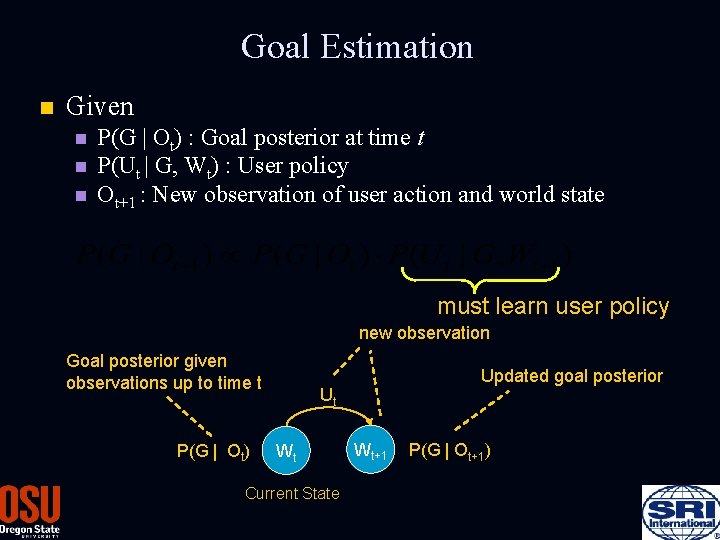

Goal Estimation
- Given:
  - $P(G \mid O_t)$: goal posterior given observations up to time $t$
  - $P(U_t \mid G, W_t)$: user policy (must be learned)
  - $O_{t+1}$: new observation of the user action and world state
- Output: updated goal posterior $P(G \mid O_{t+1})$
- Diagram: from the current state $W_t$, the user action $U_t$ leads to $W_{t+1}$
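One common way to carry out this update is a Bayes-rule step, $P(G \mid O_{t+1}) \propto P(U_t \mid G, W_t)\, P(G \mid O_t)$. This form assumes the goal stays fixed during the episode and the world transition does not depend on the goal. The sketch below follows that assumption; the goal names, the toy user policy, and the function name `update_goal_posterior` are hypothetical.

```python
def update_goal_posterior(posterior, user_policy, world_state, user_action):
    """One Bayes step: reweight each goal by how likely the observed action is."""
    unnormalized = {
        g: p * user_policy(user_action, g, world_state)
        for g, p in posterior.items()
    }
    z = sum(unnormalized.values())
    if z == 0.0:
        # The observed action is impossible under every goal; keep the old belief.
        return dict(posterior)
    return {g: v / z for g, v in unnormalized.items()}


def toy_user_policy(action, goal, world_state):
    """Assumed user model: the user heads toward the goal 80% of the time."""
    return 0.8 if action == "toward_" + goal else 0.2


prior = {"A": 0.5, "B": 0.5}
posterior = update_goal_posterior(prior, toy_user_policy, world_state=None,
                                  user_action="toward_A")
print(posterior)
```

Repeating the call with each new user action accumulates evidence, so the posterior sharpens as the episode proceeds.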

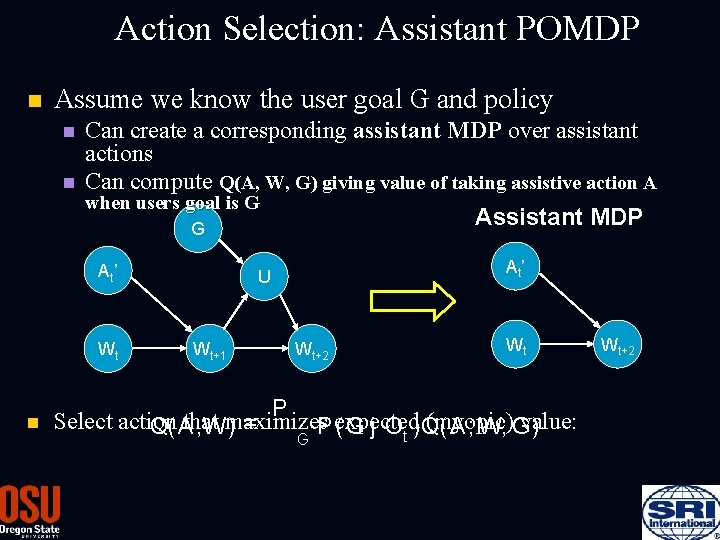

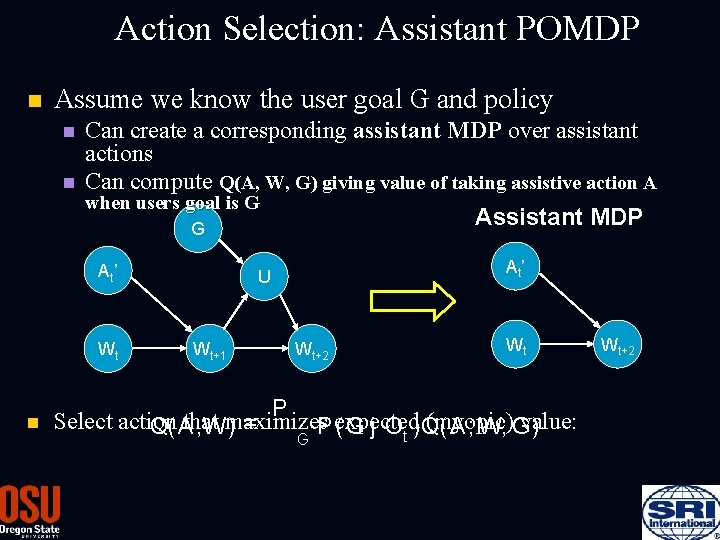

Action Selection: Assistant POMDP
- Assume we know the user's goal $G$ and policy
  - Can create a corresponding assistant MDP over assistant actions
  - Can compute $Q(A, W, G)$, the value of taking assistive action $A$ when the user's goal is $G$
- Select the action that maximizes the (myopic) expected value: $\sum_{G} P(G \mid O_t)\, Q(A, W, G)$
- Diagram (assistant MDP): goal G, assistant actions A't, user action U, and states Wt, Wt+1, Wt+2
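The myopic rule on this slide can be sketched as an argmax over assistant actions of the posterior-weighted Q-values. The Q-function below is a hand-written stand-in (in practice it would come from solving each assistant MDP), and the action and goal names are hypothetical.

```python
def select_action(posterior, q, world_state, actions):
    """Pick the action maximizing sum_G P(G | O_t) * Q(A, W, G)."""
    def expected_q(action):
        return sum(p * q(action, world_state, g) for g, p in posterior.items())
    return max(actions, key=expected_q)


def toy_q(action, world_state, goal):
    """Assumed values: helping with the right goal pays 1, the wrong one costs 1."""
    if action == "noop":
        return 0.1
    return 1.0 if action == "open_" + goal else -1.0


actions = ["noop", "open_A", "open_B"]
confident = select_action({"A": 0.8, "B": 0.2}, toy_q, None, actions)
uncertain = select_action({"A": 0.5, "B": 0.5}, toy_q, None, actions)
print(confident, uncertain)
```

With these assumed values the assistant helps only once the posterior is concentrated enough; under a uniform posterior the expected value of either open action is 0, so noop is preferred.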

Outline: Decision-Theoretic Model; Experiments – Folder Predictor; Incorporating Relational Hierarchies; Experiments; Conclusion

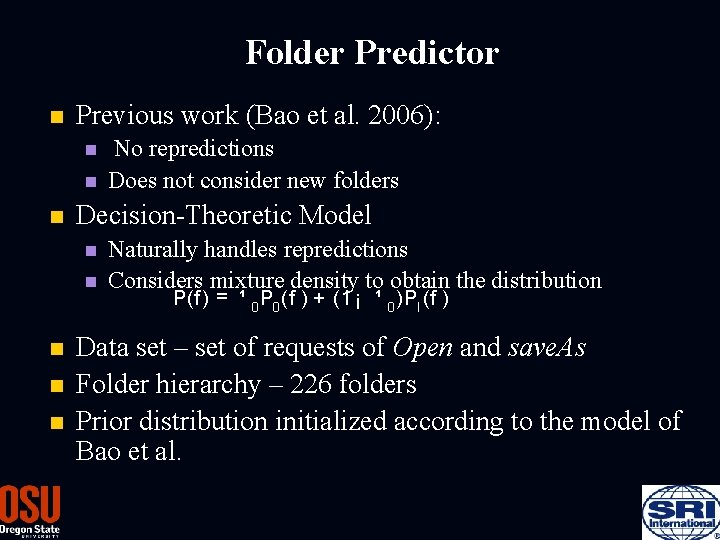

Folder Predictor
- Previous work (Bao et al. 2006):
  - No repredictions
  - Does not consider new folders
- Decision-theoretic model:
  - Naturally handles repredictions
  - Considers a mixture density to obtain the distribution: $P(f) = \mu_0 P_0(f) + (1 - \mu_0) P_l(f)$
- Data set: a set of Open and SaveAs requests
- Folder hierarchy: 226 folders
- Prior distribution initialized according to the model of Bao et al.
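The mixture on this slide, $P(f) = \mu_0 P_0(f) + (1 - \mu_0) P_l(f)$, can be computed directly from two distributions over folders. In the sketch below the folder names and probabilities are invented, and the slide does not spell out what each component represents, so any reading of them (for example, a prior versus a learned distribution) is an assumption.

```python
def mixture(p0, pl, mu0):
    """Combine two folder distributions: mu0 * P0(f) + (1 - mu0) * Pl(f)."""
    folders = set(p0) | set(pl)
    return {
        f: mu0 * p0.get(f, 0.0) + (1.0 - mu0) * pl.get(f, 0.0)
        for f in folders
    }


# Hypothetical component distributions over two folders.
p0 = {"papers": 0.5, "talks": 0.5}
pl = {"papers": 1.0}

p = mixture(p0, pl, mu0=0.25)
print(p)
```

Because both components sum to one and the weights sum to one, the result is again a distribution; a folder missing from one component simply contributes zero mass from that component.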

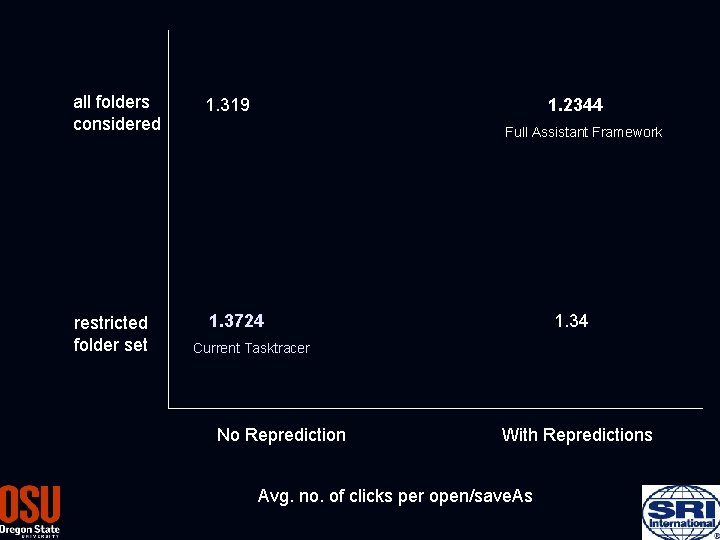

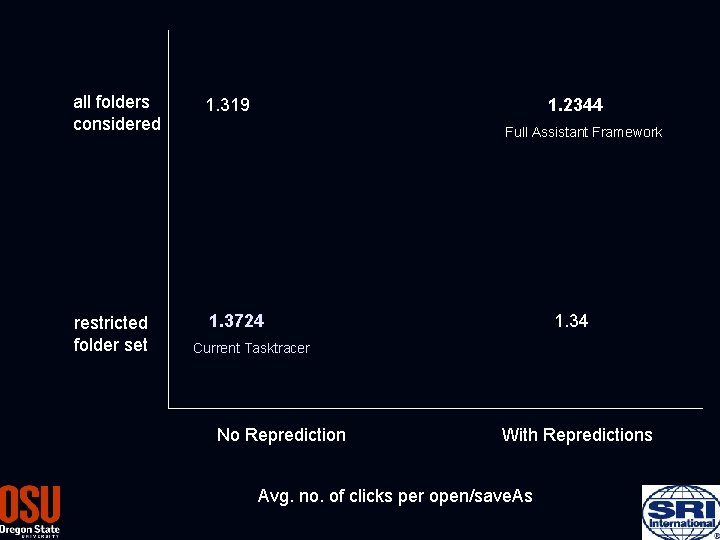

Figure: average number of clicks per Open/SaveAs, comparing the restricted folder set with all folders considered, with and without repredictions. Plotted values: 1.3724, 1.34, 1.319, 1.2344. The "Current TaskTracer" and "Full Assistant Framework" configurations are marked on the chart.

Outline: Decision-Theoretic Model; Experiments – Folder Predictor; Incorporating Relational Hierarchies; Experiments; Conclusion

Motivation – Early Assistance
- Models need to be specified for early assistance
- Assumption: the user is "nearly rational"
- Assumption: flat user policy, which is unreasonable in many domains
- Goal: remove these assumptions by using a relational hierarchical goal structure
- The goal structure and probabilistic relationships serve as prior knowledge

World and User Models
- Goal distribution: $P(G)$
- Action distribution conditioned on goal and world state: $P(U_t \mid G, W_t)$
- Transition model: $P(W_{t+1} \mid W_t, U_t, A_t)$
- Given: the model and an action sequence
- Output: assistant action
- Slide annotations: "Flat!" and "Specify this distribution"

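Goal: Biasing the Assistant with Prior Knowledge
- Initialize the assistant with hierarchical relational task knowledge
- Diagram: relational hierarchical prior knowledge is supplied to the assistant, whose goal recognizer produces P(G) for action selection; the assistant receives the observation Ot and outputs the action At, while the user acts (Ut) on the environment (Wt).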

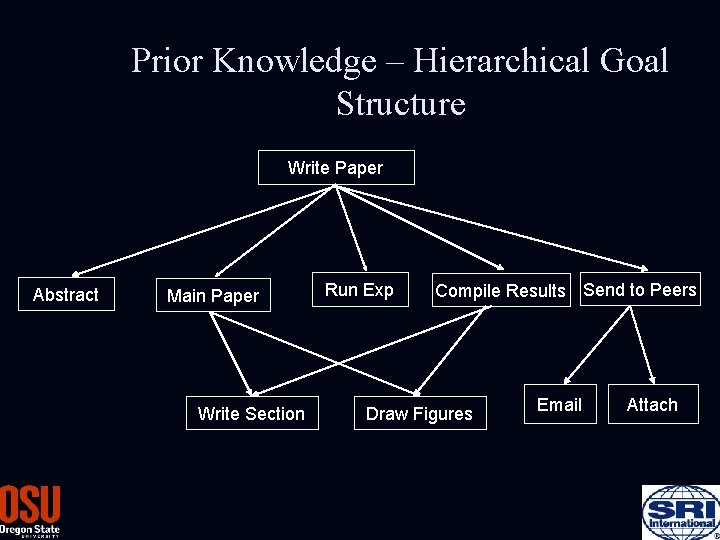

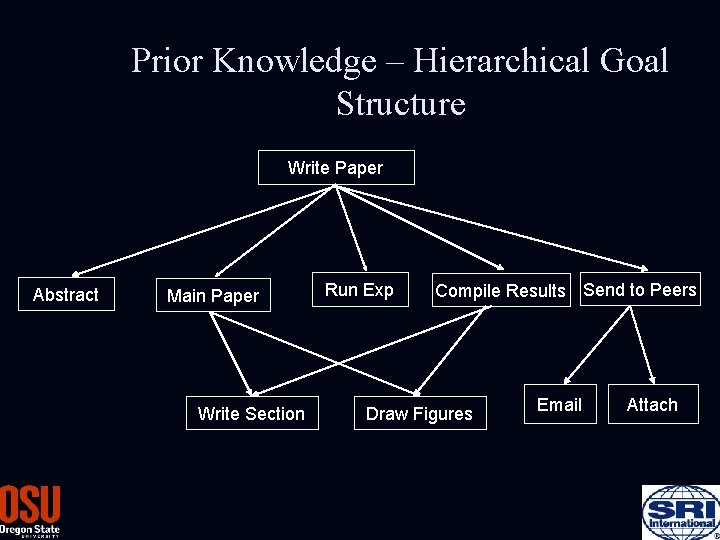

Prior Knowledge – Hierarchical Goal Structure (tree diagram with nodes: Write Paper, Abstract, Main Paper, Write Section, Run Exp, Compile Results, Send to Peers, Draw Figures, Email, Attach)

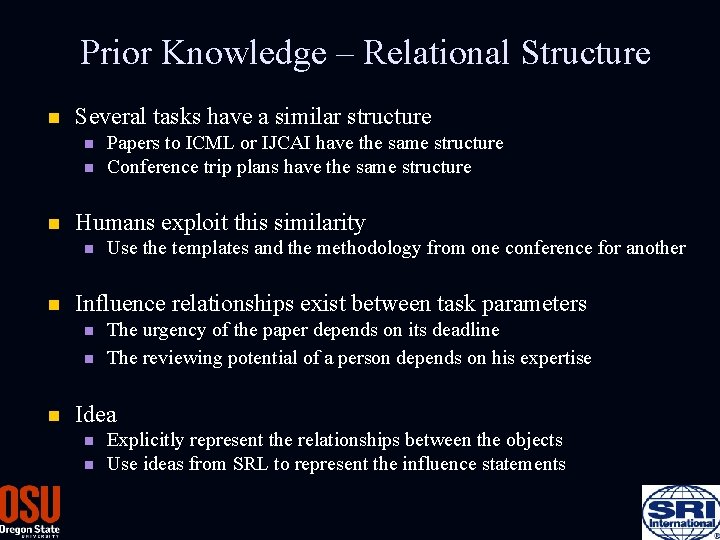

Prior Knowledge – Relational Structure
- Several tasks have a similar structure
  - Papers to ICML or IJCAI have the same structure
  - Conference trip plans have the same structure
- Humans exploit this similarity
  - Use the templates and the methodology from one conference for another
- Influence relationships exist between task parameters
  - The urgency of the paper depends on its deadline
  - The reviewing potential of a person depends on their expertise
- Idea
  - Explicitly represent the relationships between the objects
  - Use ideas from SRL to represent the influence statements

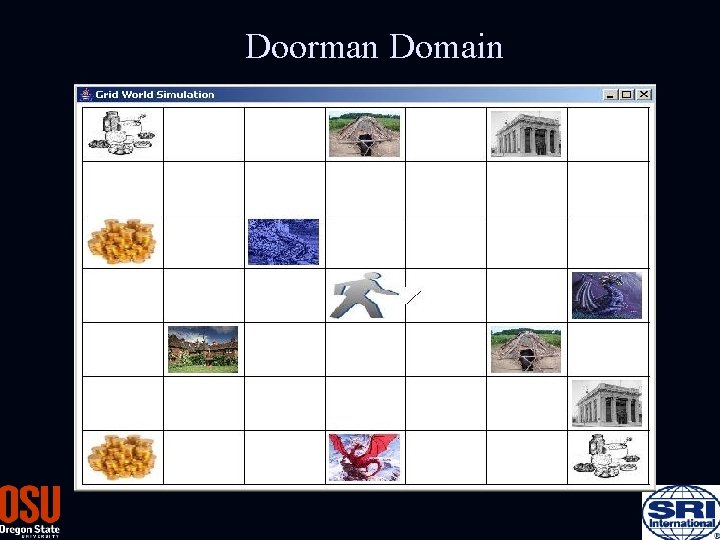

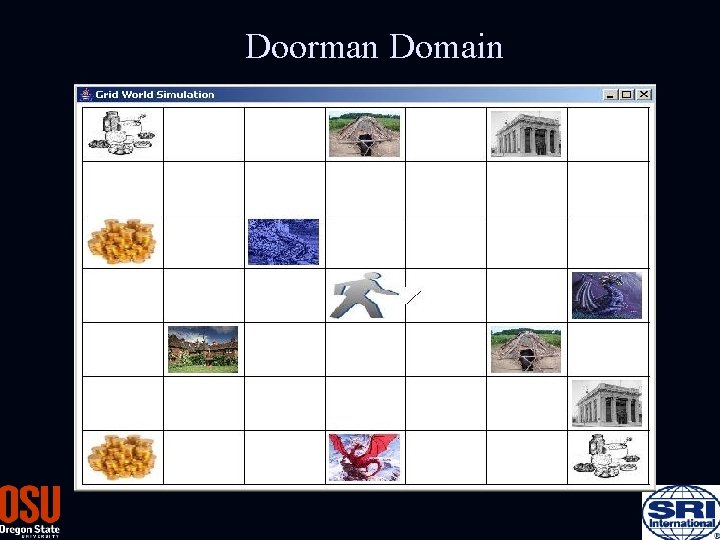

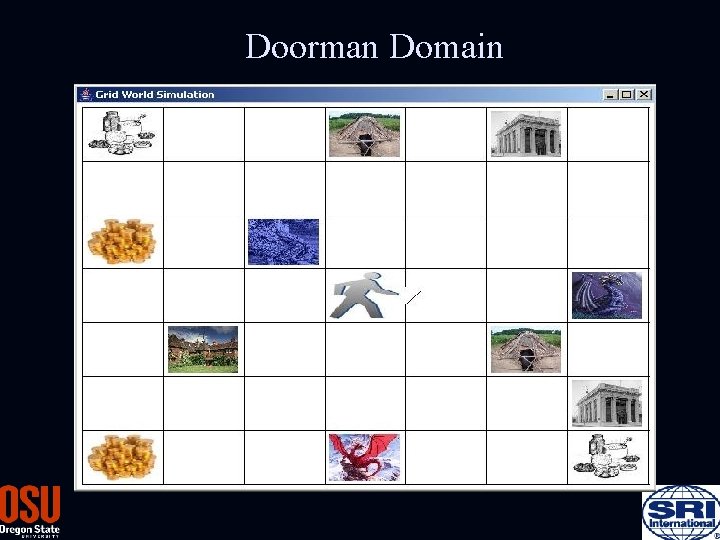

Doorman Domain

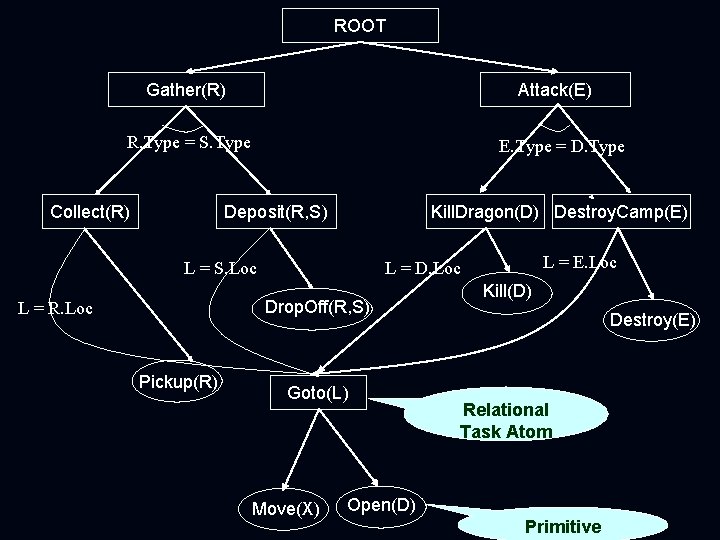

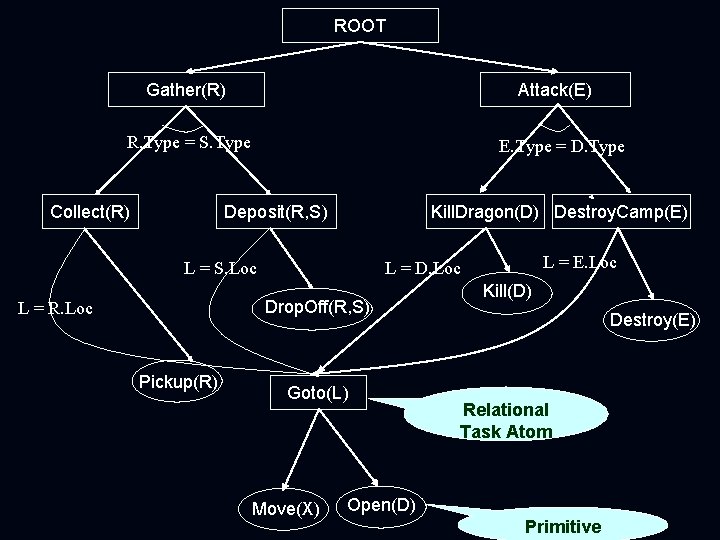

Relational task hierarchy (diagram)
- Nodes: ROOT, Gather(R), Attack(E), Collect(R), Deposit(R, S), KillDragon(D), DestroyCamp(E), DropOff(R, S), Pickup(R), Goto(L), Move(X), Kill(D), Destroy(E), Open(D)
- Constraints on edges: R.Type = S.Type, E.Type = D.Type, L = S.Loc, L = R.Loc, L = E.Loc, L = D.Loc
- Legend: Relational Task Atom, Primitive

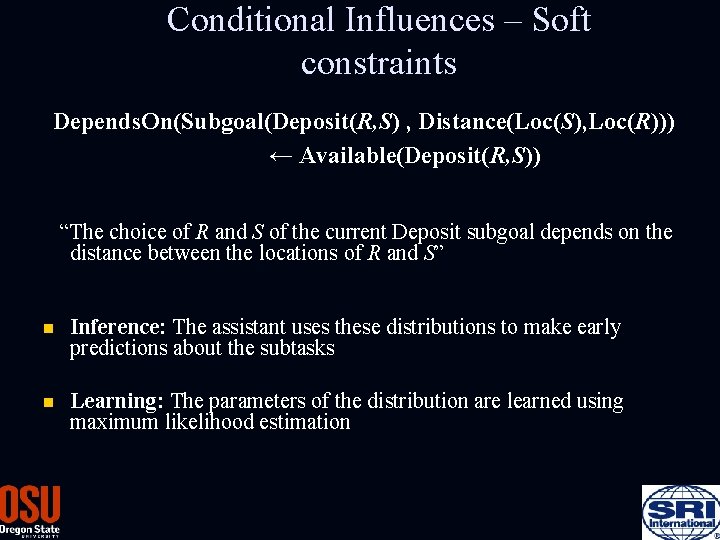

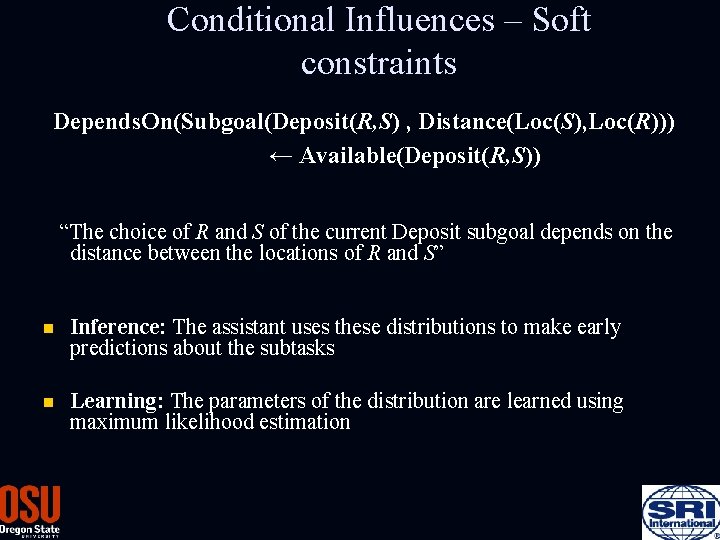

Conditional Influences – Soft constraints
DependsOn(Subgoal(Deposit(R, S), Distance(Loc(S), Loc(R))) ← Available(Deposit(R, S))
"The choice of R and S of the current Deposit subgoal depends on the distance between the locations of R and S"
- Inference: the assistant uses these distributions to make early predictions about the subtasks
- Learning: the parameters of the distribution are learned using maximum likelihood estimation
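To make the learning and inference bullets concrete, here is a minimal sketch under strong assumptions: distances are discretized into three buckets, the influence is modeled as a categorical distribution over those buckets, and its maximum likelihood estimate is simply the relative frequency of each bucket among past choices. The bucket edges, the object names, and the heuristic of scoring candidate (R, S) pairs by their bucket probability are illustrative and not taken from the slides.

```python
from collections import Counter

LABELS = ("near", "mid", "far")


def bucket(distance, edges=(2.0, 5.0)):
    """Map a distance to a coarse label (the edges are illustrative)."""
    if distance < edges[0]:
        return "near"
    if distance < edges[1]:
        return "mid"
    return "far"


def fit_mle(chosen_distances):
    """MLE of a categorical distribution is the relative frequency per bucket."""
    counts = Counter(bucket(d) for d in chosen_distances)
    n = sum(counts.values())
    if n == 0:
        return {label: 1.0 / len(LABELS) for label in LABELS}
    return {label: counts[label] / n for label in LABELS}


def predict(params, candidates):
    """Score each candidate (R, S) pair by its bucket probability, then normalize."""
    scores = {pair: params[bucket(d)] for pair, d in candidates.items()}
    z = sum(scores.values())
    if z == 0.0:
        return {pair: 1.0 / len(scores) for pair in scores}
    return {pair: s / z for pair, s in scores.items()}


# Distances between R and S observed in past Deposit subgoals (made-up data).
params = fit_mle([1.0, 1.5, 3.0, 6.0])
prediction = predict(params, {("gold", "store1"): 1.0, ("wood", "store2"): 7.0})
print(params, prediction)
```

The resulting scores let an assistant rank likely subgoal parameters before the user has acted on them, which is the kind of early prediction the inference bullet refers to.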

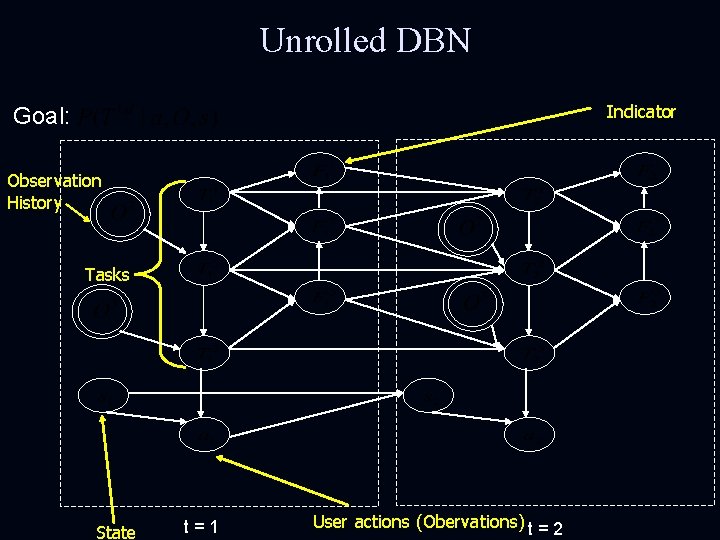

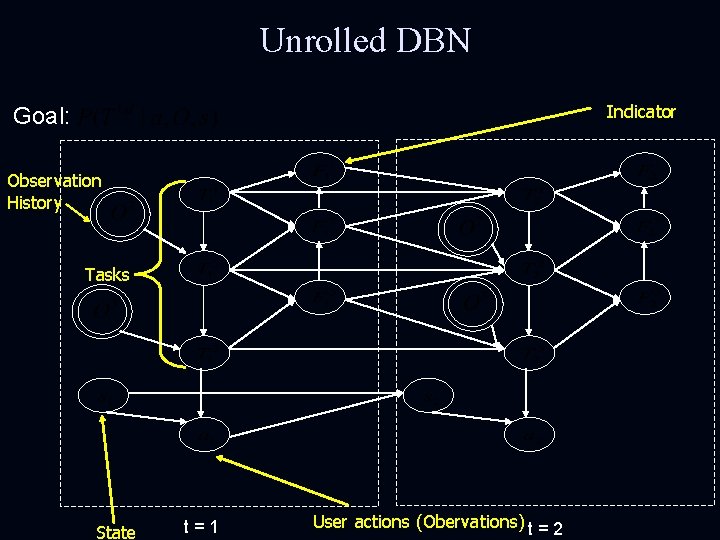

Unrolled DBN (diagram): time slices t = 1 and t = 2, with nodes labeled Indicator, Goal, Observation History, Tasks, State, and User actions (Observations).

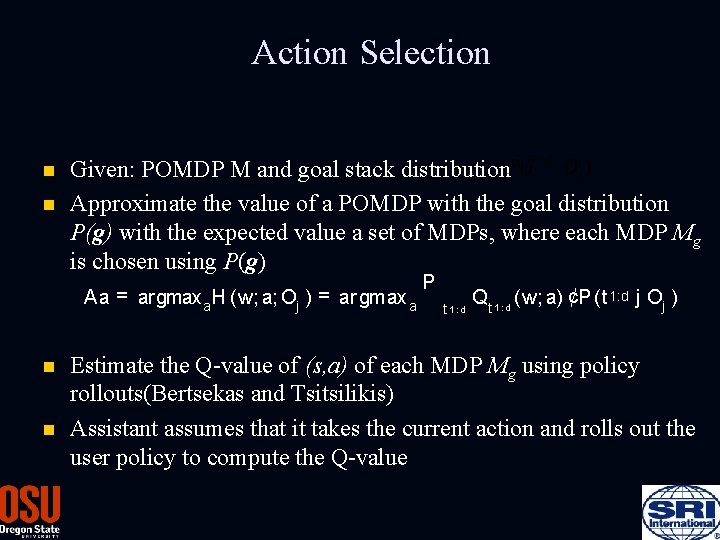

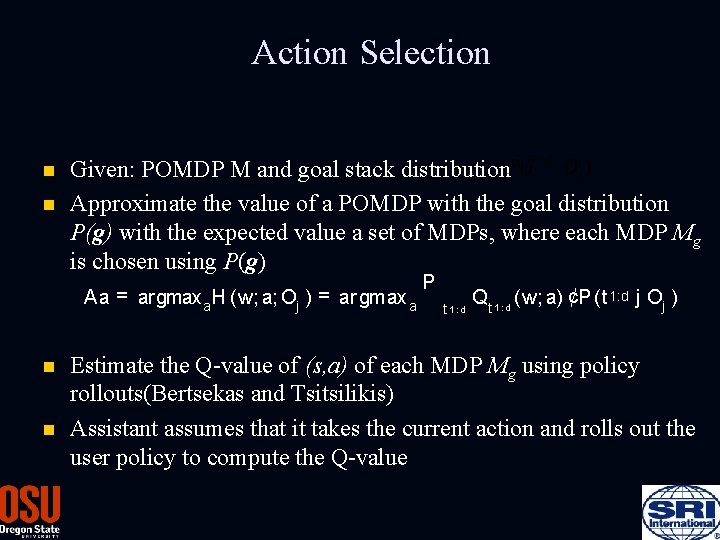

Action Selection
- Given: POMDP $M$ and a goal stack distribution
- Approximate the value of the POMDP under the goal distribution $P(g)$ with the expected value of a set of MDPs, where each MDP $M_g$ is chosen using $P(g)$:
  $a^* = \arg\max_a H(w, a, O_j) = \arg\max_a \sum_{t_{1:d}} Q_{t_{1:d}}(w, a)\, P(t_{1:d} \mid O_j)$
- Estimate the Q-value of $(s, a)$ for each MDP $M_g$ using policy rollouts (Bertsekas and Tsitsiklis)
- The assistant assumes that it takes the current action and then rolls out the user policy to compute the Q-value
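The rollout idea can be sketched as follows: for each candidate goal, apply the assistant action once, then simulate the user policy until the goal is reached, and average the accumulated reward. The sketch sums over a flat set of goals, whereas the slide sums over task stacks, and the one-dimensional corridor model with its step functions is entirely hypothetical.

```python
import random


def rollout_q(state, action, goal, assist_step, user_step, is_done,
              n_rollouts=20, horizon=50, rng=None):
    """Estimate Q(state, action) for one goal by rolling out the user policy."""
    rng = rng or random.Random(0)
    total = 0.0
    for _ in range(n_rollouts):
        s = assist_step(state, action, rng)  # assistant acts once
        ret = 0.0
        for _ in range(horizon):
            if is_done(s, goal):
                break
            s, reward = user_step(s, goal, rng)  # then the user policy runs
            ret += reward
        total += ret
    return total / n_rollouts


def select_action(state, actions, goal_posterior, **model):
    """Argmax over actions of the posterior-weighted rollout Q-values."""
    def expected_q(a):
        return sum(p * rollout_q(state, a, g, **model)
                   for g, p in goal_posterior.items())
    return max(actions, key=expected_q)


# Hypothetical corridor: states are integers and the goal is a target cell.
def assist_step(s, a, rng):
    return s + 1 if a == "right" else s - 1 if a == "left" else s


def user_step(s, goal, rng):
    return s + (1 if goal > s else -1), -1.0  # each user step costs 1


def is_done(s, goal):
    return s == goal


model = dict(assist_step=assist_step, user_step=user_step, is_done=is_done)
q_right = rollout_q(0, "right", 3, **model)
best = select_action(0, ["noop", "left", "right"], {3: 0.7, -3: 0.3}, **model)
print(q_right, best)
```

The toy model is deterministic, so every rollout returns the same value; with a stochastic user policy the average over `n_rollouts` becomes a Monte Carlo estimate.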

Outline: Decision-Theoretic Model; Incorporating Relational Hierarchies; Experiments; Conclusion

Doorman Domain

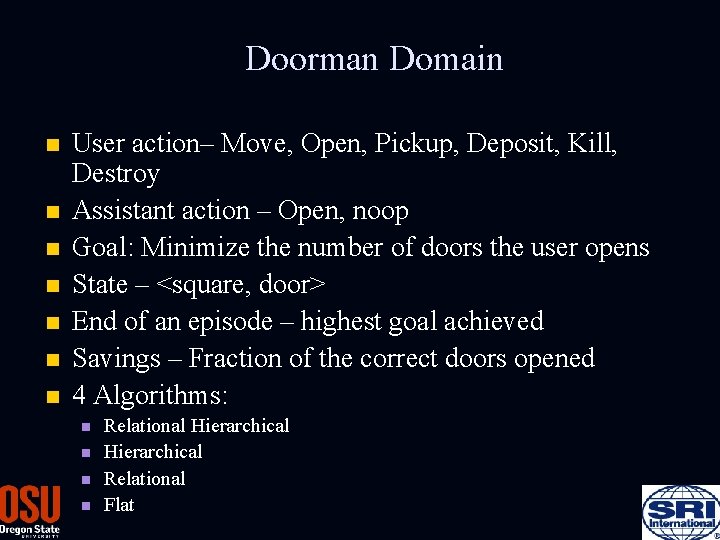

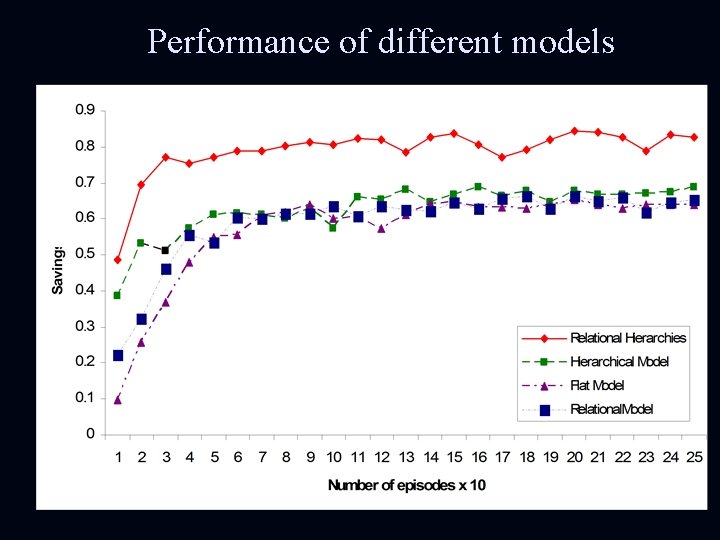

Doorman Domain
- User actions: Move, Open, Pickup, Deposit, Kill, Destroy
- Assistant actions: Open, Noop
- Goal: minimize the number of doors the user opens
- State: <square, door>
- End of an episode: highest goal achieved
- Savings: fraction of the correct doors opened
- 4 algorithms compared, including Relational Hierarchical, Relational, and Flat variants

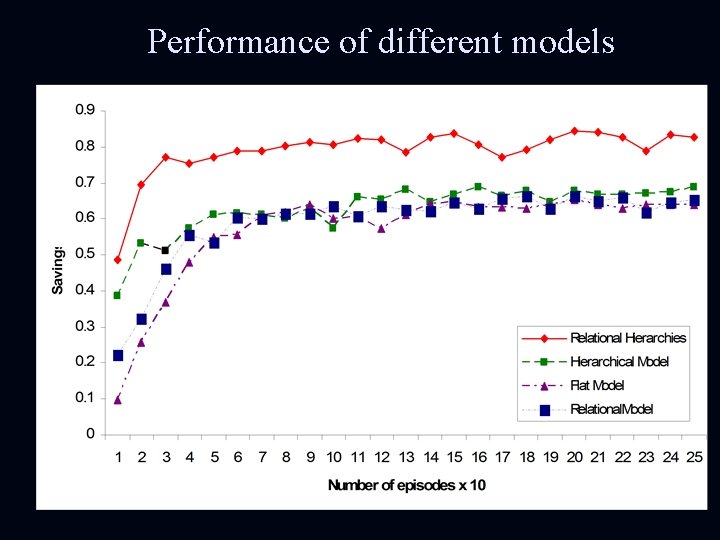

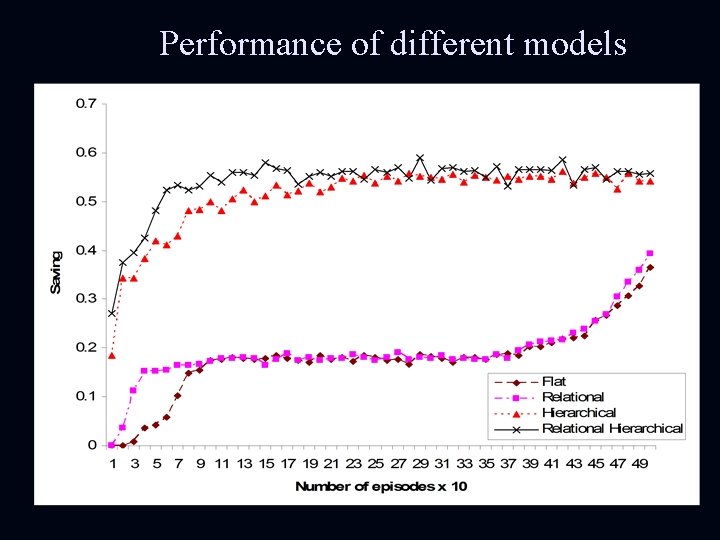

Performance of different models

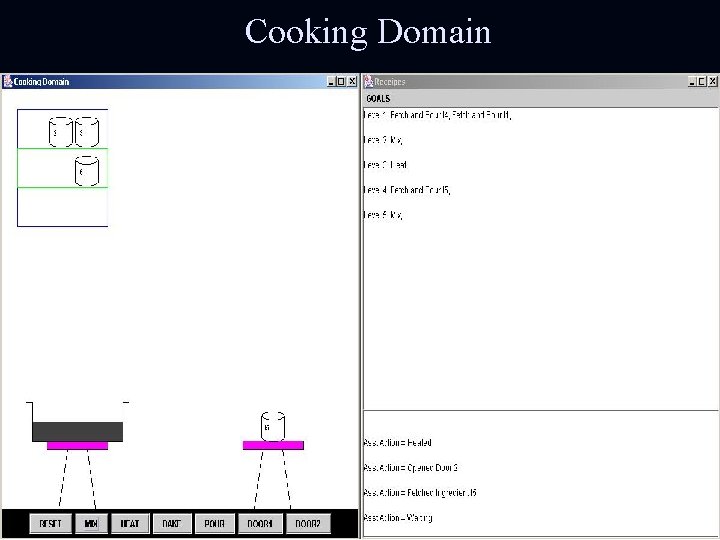

Cooking Domain

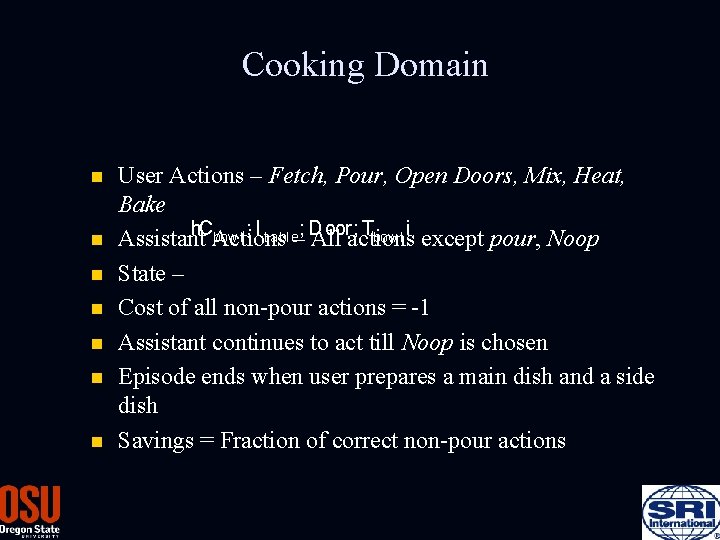

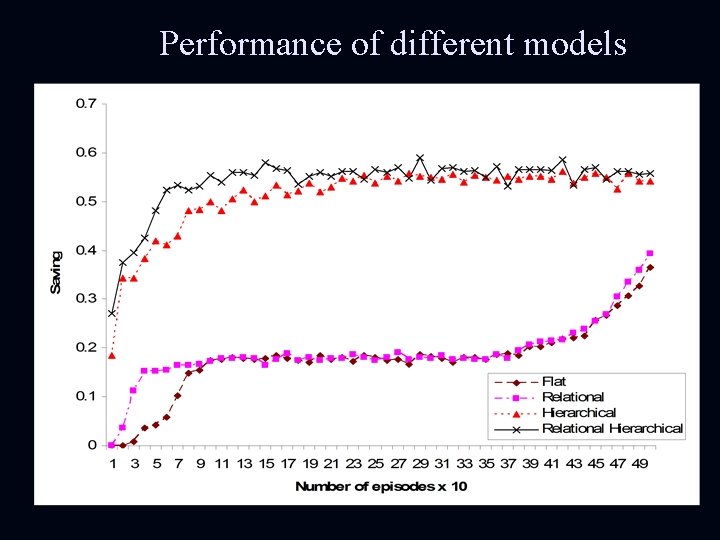

Cooking Domain
- User actions: Fetch, Pour, Open Doors, Mix, Heat, Bake
- Assistant actions: all actions except Pour, plus Noop
- State: $\langle C_{bowl}, I_{table}, Door, T_{bowl} \rangle$
- Cost of all non-pour actions = -1
- The assistant continues to act until Noop is chosen
- Episode ends when the user prepares a main dish and a side dish
- Savings = fraction of correct non-pour actions

Performance of different models

Outline: Decision-Theoretic Model; Incorporating Relational Hierarchies; Experiments; Conclusion

Conclusion
- Exploit the combination of probability, logical models, and decision theory to perform effective assistance
- Proposed a general model of assistance
- Evaluated the system on a real-world domain
- Proposed parameterized task hierarchies to capture hierarchical goal structure
- Demonstrated effective early assistance in two domains
- Provided a method to incorporate actions into SRL methods

Future work
- Evaluate the framework on real-world domains (CALO)
- Inference in the current work could be inefficient due to grounding
- Currently:
  - Working with Hung Bui on a Logical Hierarchical Hidden Markov Model (LoHiHMM)
  - Track the user's actions in CALO
  - Idea: convert the relational task hierarchy to a LoHiHMM
  - Use RBPF or a similar algorithm to perform inference
- Generalize the framework as a way to combine hierarchies and relations in RL
- Incorporate sophisticated user models

Thank you!!!

Sriraam natarajan

Sriraam natarajan Sriraam natarajan

Sriraam natarajan Sriraam natarajan

Sriraam natarajan Objectives of decision making

Objectives of decision making Dividend decision in financial management

Dividend decision in financial management Making best use of teaching assistants

Making best use of teaching assistants Maximising the impact of teaching assistants

Maximising the impact of teaching assistants Scaffolding framework for teaching assistants

Scaffolding framework for teaching assistants Summarize three obstructions to professionalism

Summarize three obstructions to professionalism Pricewaterhouse

Pricewaterhouse Decision support systems and intelligent systems

Decision support systems and intelligent systems G natarajan advocate

G natarajan advocate G natarajan advocate

G natarajan advocate Anand natarajan dtu

Anand natarajan dtu Madhu natarajan

Madhu natarajan Dr suma natarajan

Dr suma natarajan Madhu natarajan

Madhu natarajan Decision table and decision tree examples

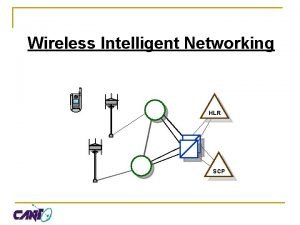

Decision table and decision tree examples Any time interrogation call flow

Any time interrogation call flow Intelligence vs intelligent

Intelligence vs intelligent Vni4140

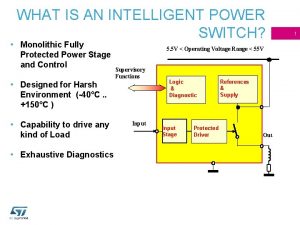

Vni4140 . intelligent sharing of power is done among

. intelligent sharing of power is done among Intelligent customer routing

Intelligent customer routing Contoh peas kecerdasan buatan

Contoh peas kecerdasan buatan Intelligent techniques adalah

Intelligent techniques adalah Structure of intelligent agents

Structure of intelligent agents Automatic workload management

Automatic workload management Ace web application firewall

Ace web application firewall Intelligent data storage

Intelligent data storage Intelligent process automation in audit

Intelligent process automation in audit Mathsbot differentiated

Mathsbot differentiated Coreils

Coreils Peas model example

Peas model example Faith is the bird that feels the light meaning

Faith is the bird that feels the light meaning Superlative form for hot

Superlative form for hot Comfortably comparative and superlative

Comfortably comparative and superlative Structure of intelligent agents

Structure of intelligent agents Intelligent disk subsystem

Intelligent disk subsystem Intelligent platform management interface market demand

Intelligent platform management interface market demand Jared peet

Jared peet Teori evolusi intelligent design

Teori evolusi intelligent design A smart grid for intelligent energy use

A smart grid for intelligent energy use Porsche intelligent performance

Porsche intelligent performance Form of intelligent humor

Form of intelligent humor