INFSCI 2480 RSS Feeds Document Filtering Yiling Lin

- Slides: 35

INFSCI 2480 RSS Feeds Document Filtering Yi-ling Lin 02/02/2011

Feed? RSS? Atom?
- RSS = Rich Site Summary
- RSS = RDF (Resource Description Framework) Site Summary
- RSS = Really Simple Syndication
- Atom

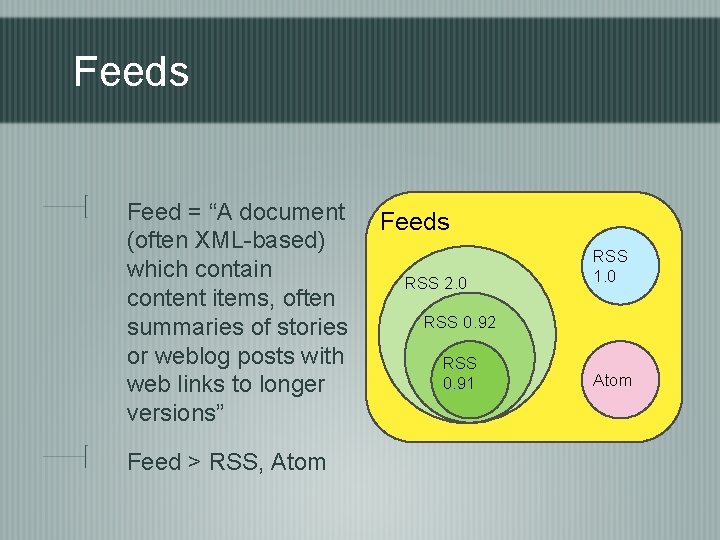

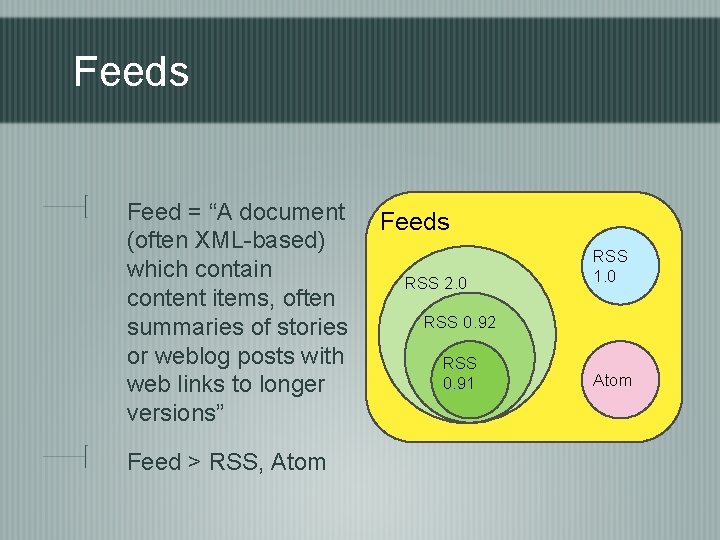

Feeds
- Feed = “A document (often XML-based) which contains content items, often summaries of stories or weblog posts with web links to longer versions”
- Feed is the umbrella term: RSS and Atom are both kinds of feed
- Feed formats: RSS 2.0, RSS 1.0, RSS 0.92, RSS 0.91, Atom

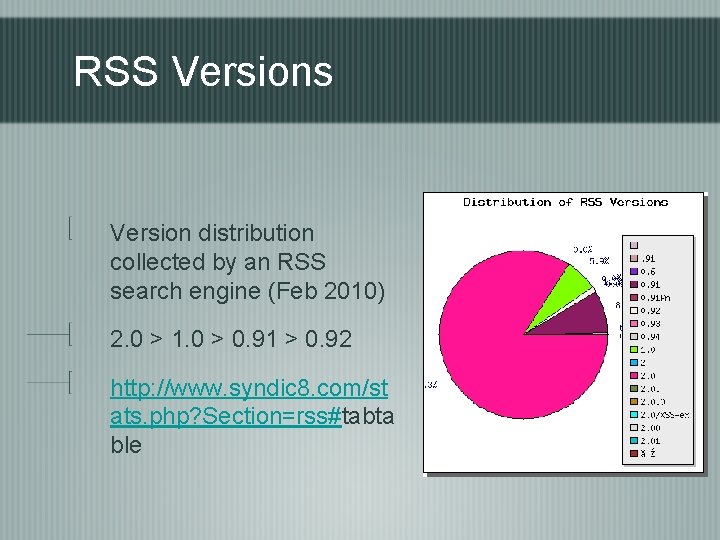

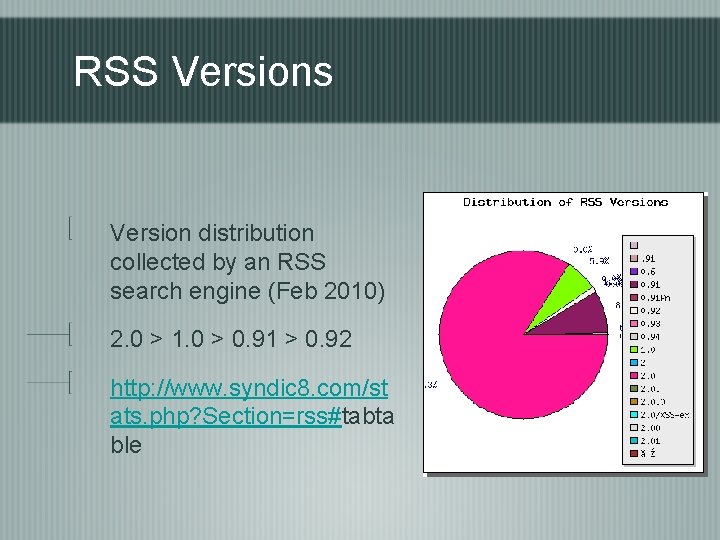

RSS Versions
- Version distribution collected by an RSS search engine (Feb 2010): 2.0 > 1.0 > 0.91 > 0.92
- Source: http://www.syndic8.com/stats.php?Section=rss#tabtable

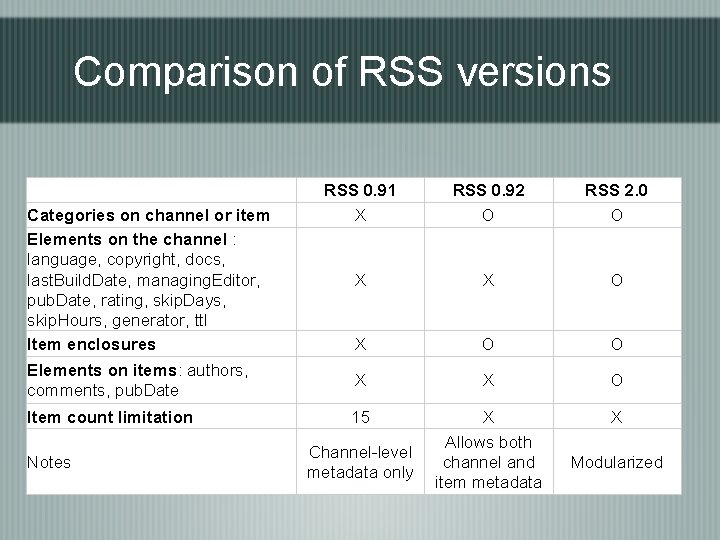

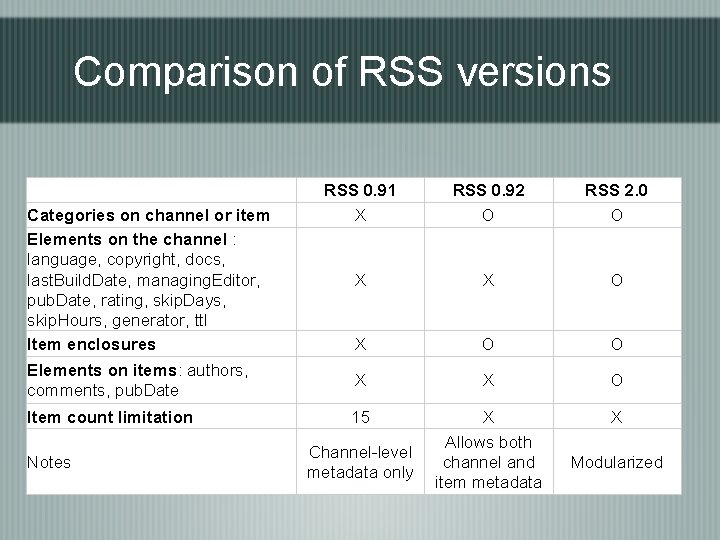

Comparison of RSS versions (RSS 0.91, RSS 0.92, RSS 2.0; O = supported, X = not supported)
- Elements on the channel: language, copyright, docs, lastBuildDate, managingEditor, pubDate, rating, skipDays, skipHours, generator, ttl
- Item enclosures
- Categories on channel or item
- Elements on items: author, comments, pubDate (RSS 0.91: X, RSS 0.92: X, RSS 2.0: O)
- Item count limitation: 15 items in RSS 0.91; no limit (X) in later versions
- Allows both channel and item metadata: X in RSS 0.91
- Notes: RSS 0.91 has channel-level metadata only; RSS 2.0 is modularized

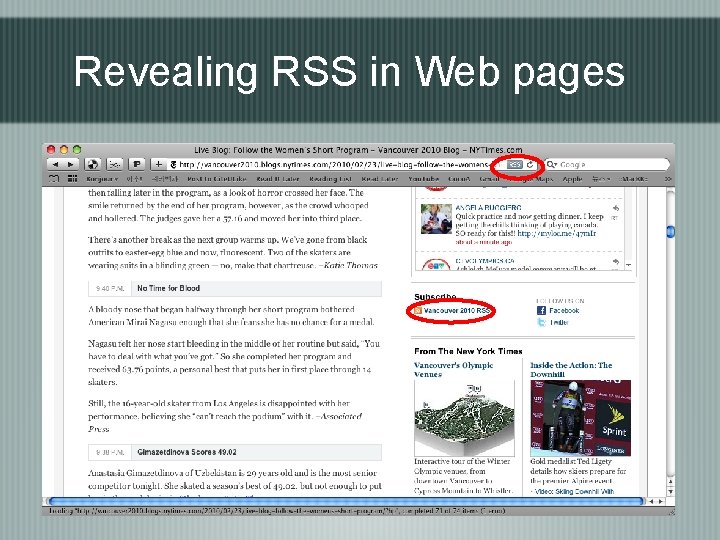

Revealing RSS in Web pages
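
One widely used way for a page to reveal its feeds is RSS/Atom autodiscovery: a `<link rel="alternate" type="application/rss+xml" href="...">` (or `application/atom+xml`) element in the HTML head, which browsers and feed readers look for. The sketch below finds such links with Python's standard `html.parser`; the helper names and the sample page are hypothetical, and it covers only this one mechanism.

```python
from html.parser import HTMLParser

# MIME types used by RSS/Atom autodiscovery links
FEED_TYPES = {"application/rss+xml", "application/atom+xml"}


class FeedLinkFinder(HTMLParser):
    """Collects hrefs of <link rel="alternate" type="<feed MIME type>"> tags."""

    def __init__(self):
        super().__init__()
        self.feed_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)  # a value is None if the attribute was written without "="
        rels = (attr.get("rel") or "").lower().split()
        mime = (attr.get("type") or "").lower()
        if "alternate" in rels and mime in FEED_TYPES and attr.get("href"):
            self.feed_links.append(attr["href"])


def find_feed_links(html_text):
    """Return the feed URLs advertised in an HTML page, in document order."""
    finder = FeedLinkFinder()
    finder.feed(html_text)
    finder.close()
    return finder.feed_links


page = """<html><head>
<link rel="stylesheet" href="style.css">
<link rel="alternate" type="application/rss+xml" title="RSS" href="http://example.com/rss.xml">
<link rel="alternate" type="application/atom+xml" href="/atom.xml" />
</head><body>Hello</body></html>"""

print(find_feed_links(page))  # → ['http://example.com/rss.xml', '/atom.xml']
```

Relative hrefs such as `/atom.xml` would still need to be resolved against the page URL (for example with `urllib.parse.urljoin`).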

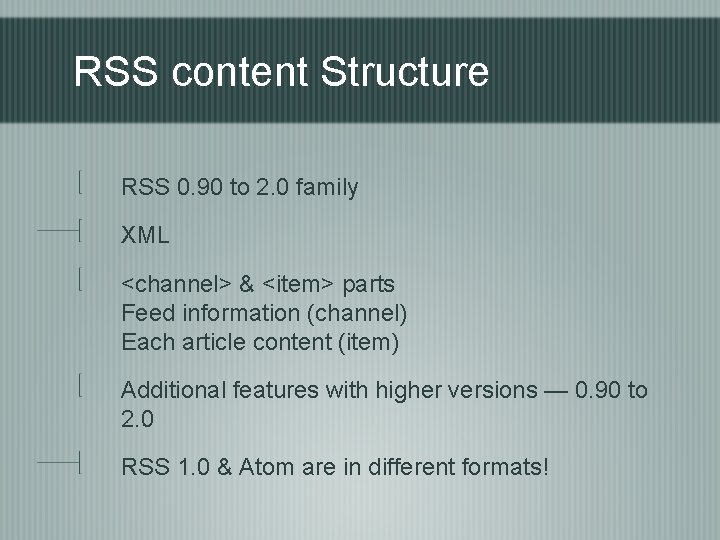

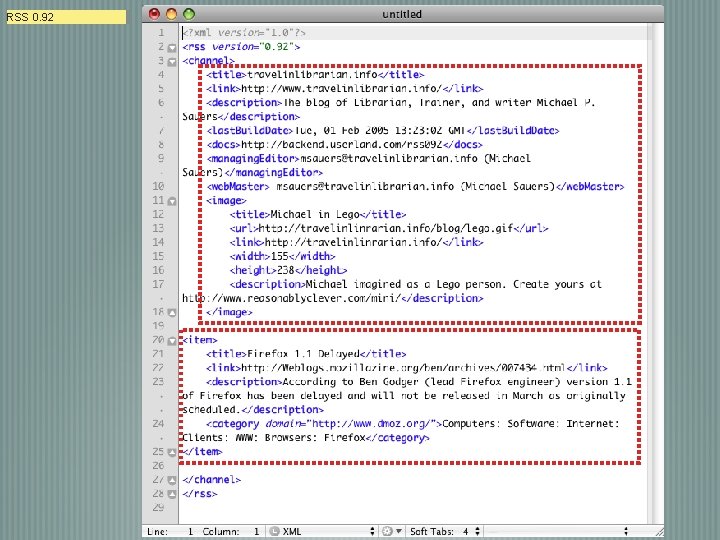

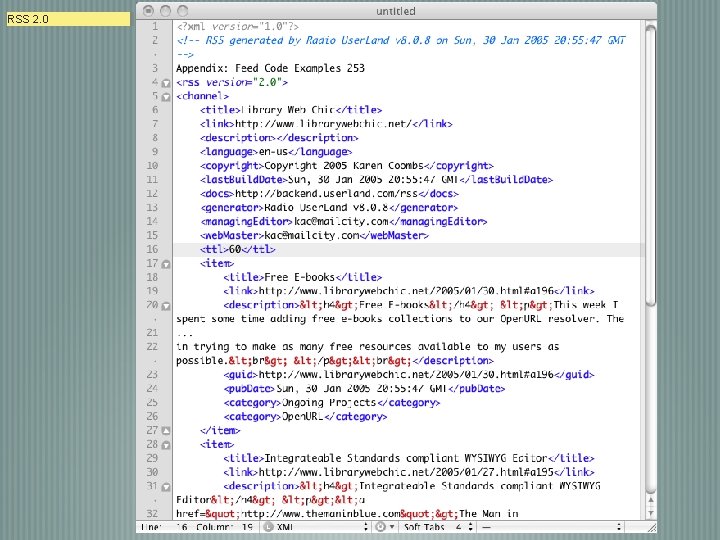

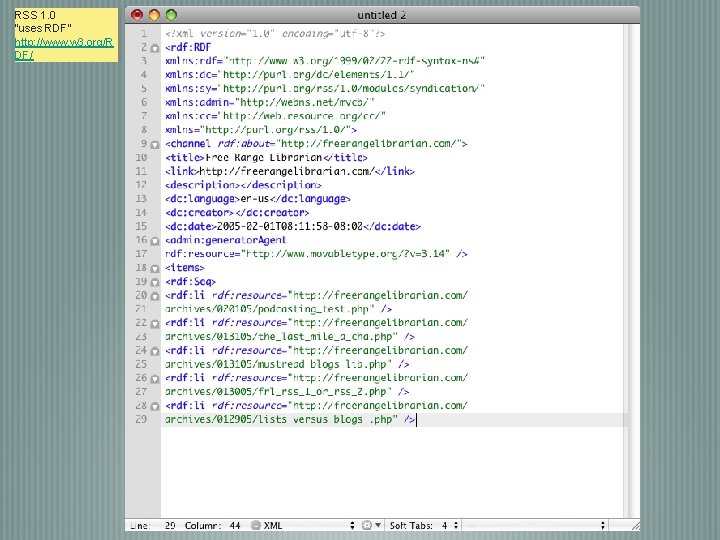

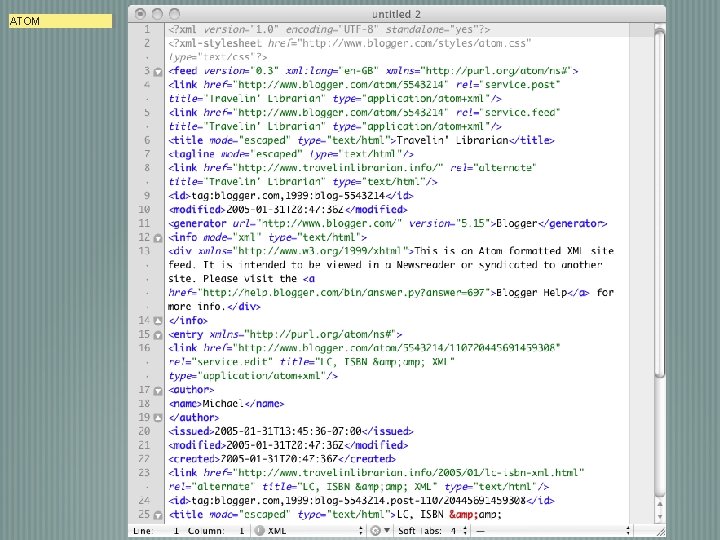

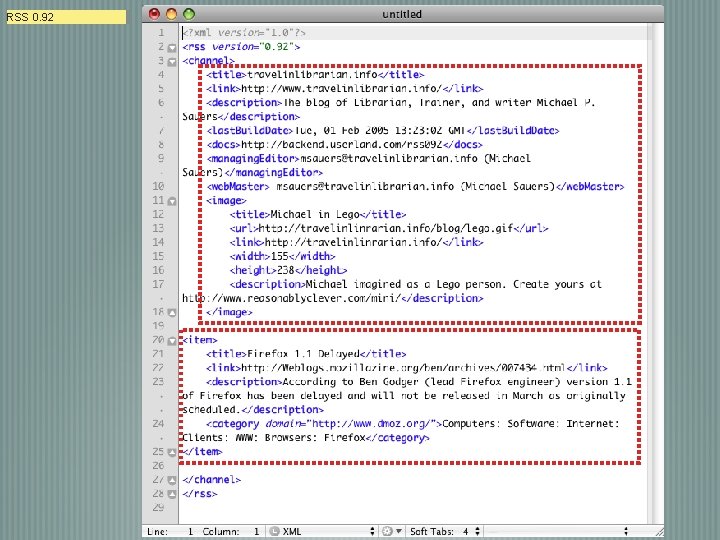

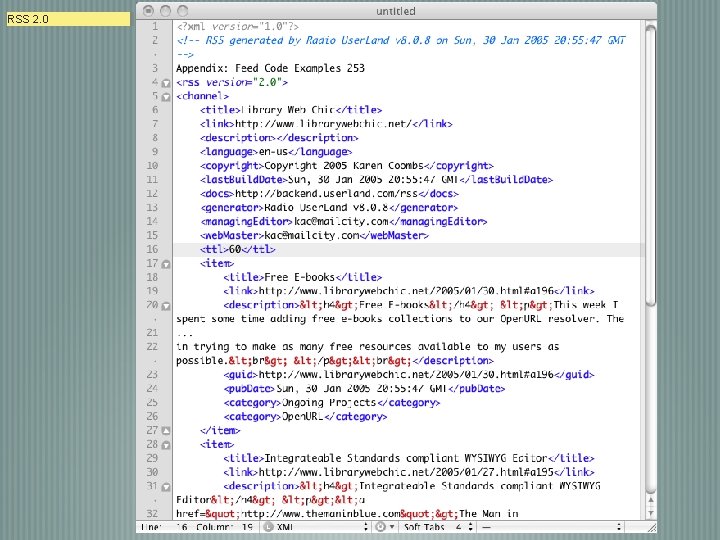

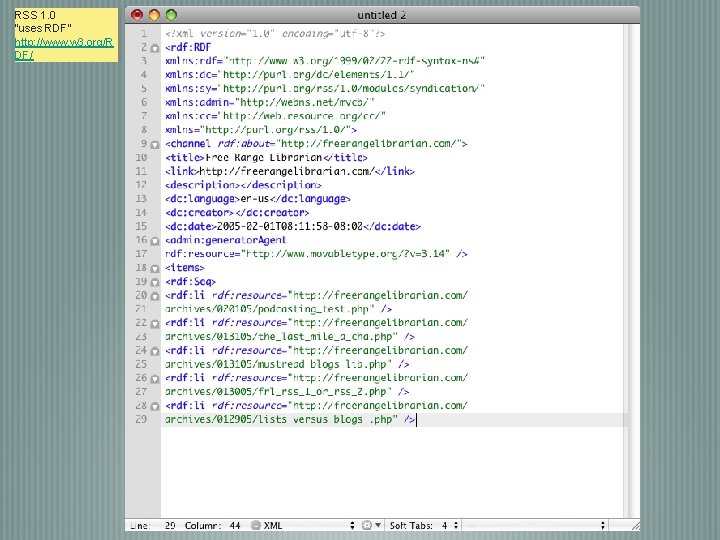

RSS content structure
- RSS 0.90 to 2.0 family: XML with `<channel>` and `<item>` parts
  - Feed information (channel)
  - Content of each article (item)
  - Additional features in higher versions (0.90 to 2.0)
- RSS 1.0 and Atom use different formats!
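
As a rough illustration of the structural difference, the sketch below (standard-library `xml.etree.ElementTree`) tells the families apart by their root element: an un-namespaced `<rss>` for the 0.9x/2.0 family, `<rdf:RDF>` for RSS 1.0, and `<feed>` in the Atom namespace. It then reads the `<channel>` and `<item>` parts of an RSS 2.0 document. The function names and sample feeds are made up for this example, and real-world feeds can deviate from these shapes.

```python
import xml.etree.ElementTree as ET

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
ATOM_NS = "http://www.w3.org/2005/Atom"


def feed_family(xml_text):
    """Guess the feed family from the root element."""
    root = ET.fromstring(xml_text)
    if root.tag == "rss":  # RSS 0.91/0.92/2.0: <rss version="...">
        return ("rss", root.get("version"))
    if root.tag == f"{{{RDF_NS}}}RDF":  # RDF-based feeds such as RSS 1.0
        return ("rdf", None)
    if root.tag == f"{{{ATOM_NS}}}feed":  # Atom 1.0
        return ("atom", None)
    return ("unknown", None)


def channel_and_items(xml_text):
    """For <rss> feeds: the channel title plus the title of each <item>."""
    channel = ET.fromstring(xml_text).find("channel")
    return channel.findtext("title"), [item.findtext("title") for item in channel.findall("item")]


rss_doc = """<rss version="2.0"><channel>
<title>Example feed</title><link>http://example.com/</link><description>Demo</description>
<item><title>First post</title><link>http://example.com/1</link></item>
<item><title>Second post</title><link>http://example.com/2</link></item>
</channel></rss>"""
atom_doc = '<feed xmlns="http://www.w3.org/2005/Atom"><title>Example feed</title></feed>'
rdf_doc = ('<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#" '
           'xmlns="http://purl.org/rss/1.0/"><channel rdf:about="http://example.com/"/></rdf:RDF>')

print(feed_family(rss_doc), feed_family(atom_doc), feed_family(rdf_doc))
print(channel_and_items(rss_doc))
```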

RSS 0.92

RSS 2.0

RSS 1.0 “uses RDF”: http://www.w3.org/RDF/

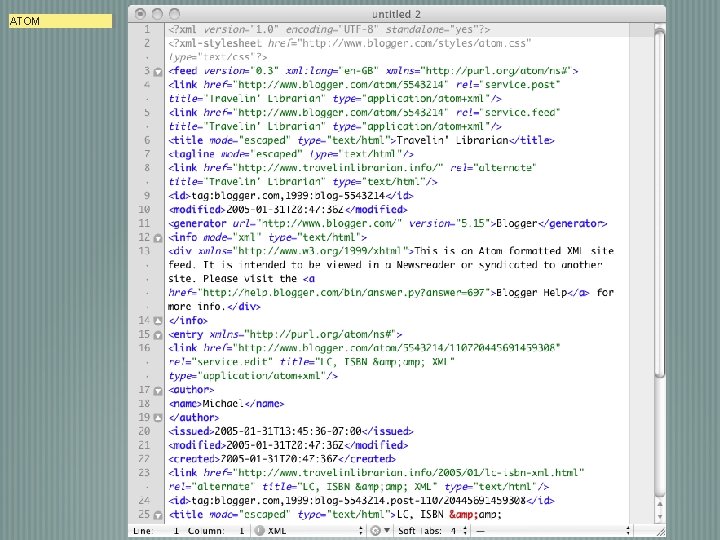

Atom

In more detail... Specifications
- RSS 0.91: http://www.rssboard.org/rss-0-9-1-netscape
- RSS 2.0: http://cyber.law.harvard.edu/rss.html

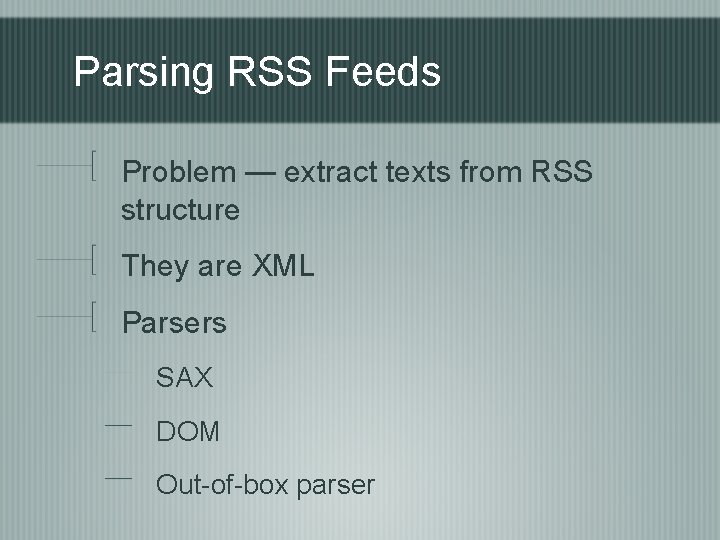

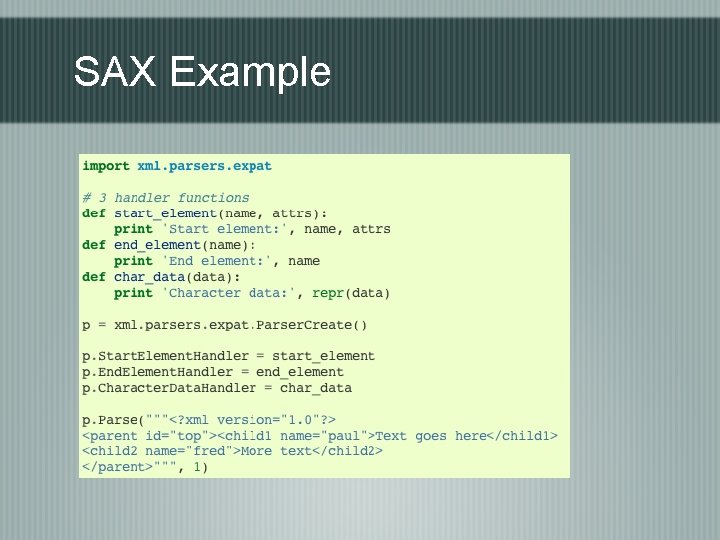

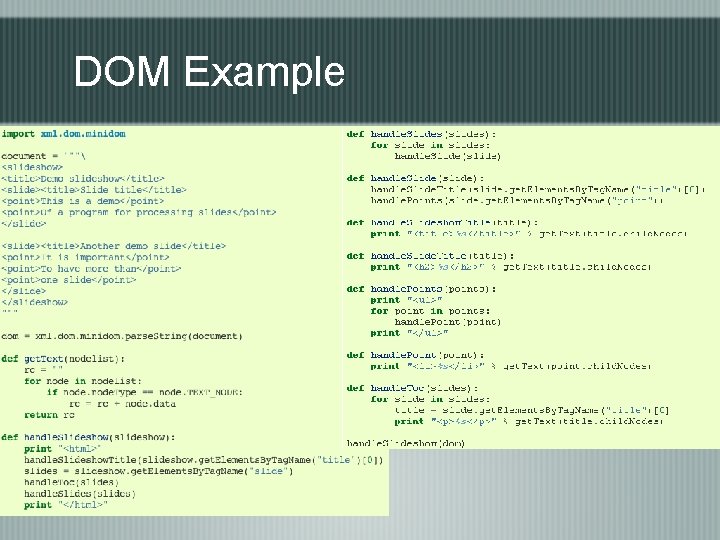

Parsing RSS Feeds
- Problem: extract text from the RSS structure
- RSS feeds are XML, so XML parsers apply:
  - SAX
  - DOM
- Out-of-the-box (ready-made) feed parser

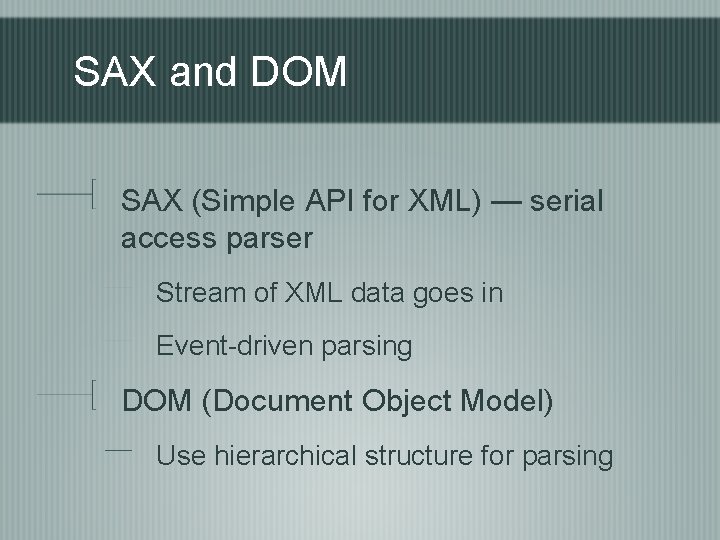

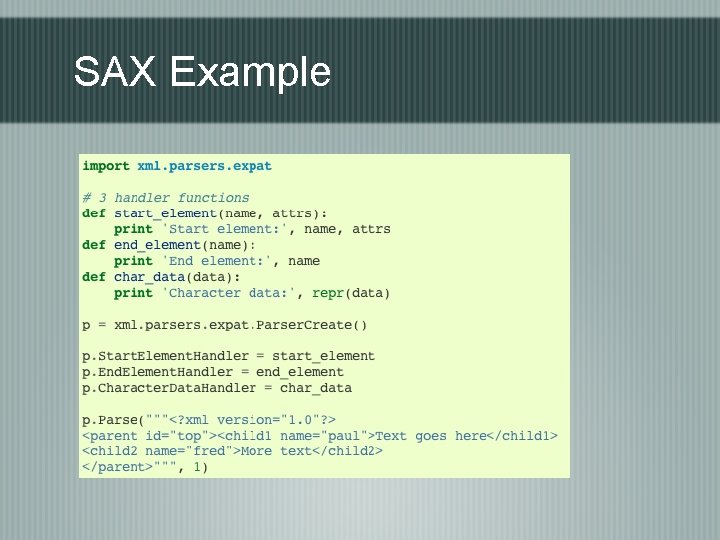

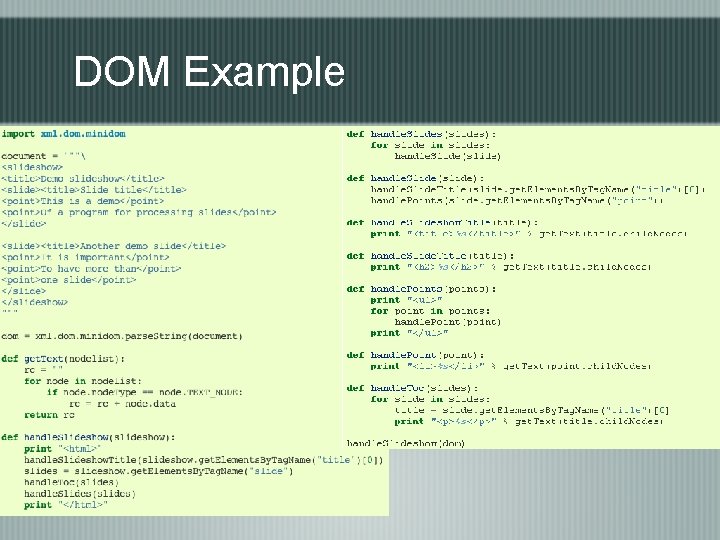

SAX and DOM
- SAX (Simple API for XML): serial-access parser
  - A stream of XML data goes in
  - Event-driven parsing
- DOM (Document Object Model)
  - Uses the hierarchical (tree) structure of the document for parsing

SAX Example
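
A minimal SAX sketch using Python's standard `xml.sax` module: the parser pushes start-tag, text and end-tag events to a handler, which keeps just enough state to collect item titles. The handler class, helper name and sample feed are hypothetical; note that the text of one element can arrive in several `characters()` calls, so it is buffered.

```python
import xml.sax


class ItemTitleHandler(xml.sax.ContentHandler):
    """Event-driven handler: collects the text of each <title> inside an <item>."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_item = False
        self._in_title = False
        self._chunks = []

    def startElement(self, name, attrs):  # called for every opening tag
        if name == "item":
            self._in_item = True
        elif name == "title" and self._in_item:
            self._in_title = True
            self._chunks = []

    def characters(self, content):  # text may arrive in several chunks
        if self._in_title:
            self._chunks.append(content)

    def endElement(self, name):  # called for every closing tag
        if name == "title" and self._in_title:
            self.titles.append("".join(self._chunks).strip())
            self._in_title = False
        elif name == "item":
            self._in_item = False


def sax_item_titles(xml_text):
    handler = ItemTitleHandler()
    xml.sax.parseString(xml_text.encode("utf-8"), handler)
    return handler.titles


rss_doc = """<rss version="2.0"><channel><title>Example feed</title>
<item><title>First post</title></item>
<item><title>Second &amp; last post</title></item>
</channel></rss>"""

print(sax_item_titles(rss_doc))  # → ['First post', 'Second & last post']
```

The channel's own `<title>` is skipped because it is not inside an `<item>`; the handler never holds more than the current element's text.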

DOM Example
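
For comparison, a DOM sketch using the standard `xml.dom.minidom`: the whole document is parsed into a tree first, and the code then walks down from `<channel>` to each `<item>`. Names and sample data are hypothetical; `minidom` keeps the entire tree in memory, which is fine for typical feeds but not for very large files.

```python
from xml.dom import Node, minidom


def text_of(element):
    """Concatenate the text (and CDATA) children of a DOM element."""
    return "".join(
        child.data
        for child in element.childNodes
        if child.nodeType in (Node.TEXT_NODE, Node.CDATA_SECTION_NODE)
    ).strip()


def dom_feed_summary(xml_text):
    doc = minidom.parseString(xml_text)  # the whole tree is built in memory
    channel = doc.documentElement.getElementsByTagName("channel")[0]
    items = []
    for item in channel.getElementsByTagName("item"):  # walk down the hierarchy
        title = item.getElementsByTagName("title")[0]
        link = item.getElementsByTagName("link")[0]
        items.append((text_of(title), text_of(link)))
    # channel title: the <title> element that is a direct child of <channel>
    channel_title = next(
        text_of(n) for n in channel.childNodes
        if n.nodeType == Node.ELEMENT_NODE and n.tagName == "title"
    )
    return channel_title, items


rss_doc = """<rss version="2.0"><channel><title>Example feed</title>
<item><title>First post</title><link>http://example.com/1</link></item>
<item><title><![CDATA[Second <b>post</b>]]></title><link>http://example.com/2</link></item>
</channel></rss>"""

print(dom_feed_summary(rss_doc))
```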

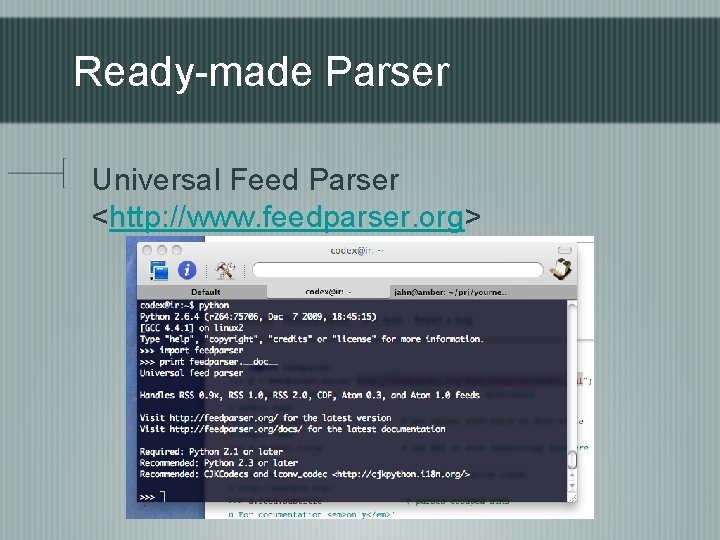

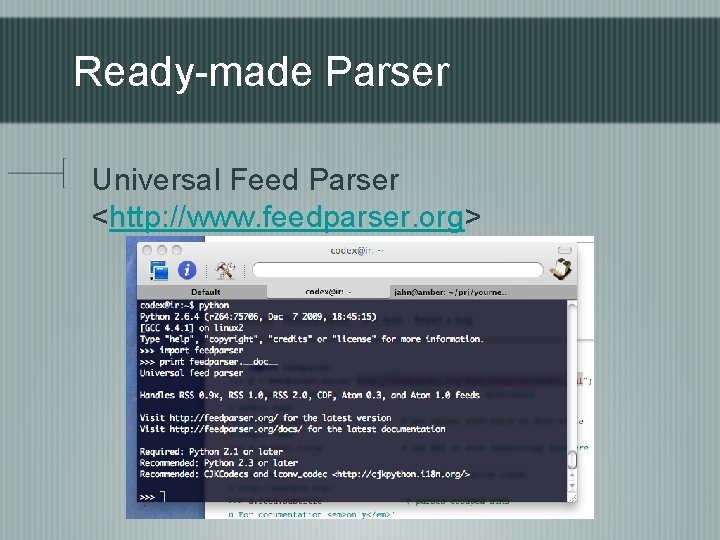

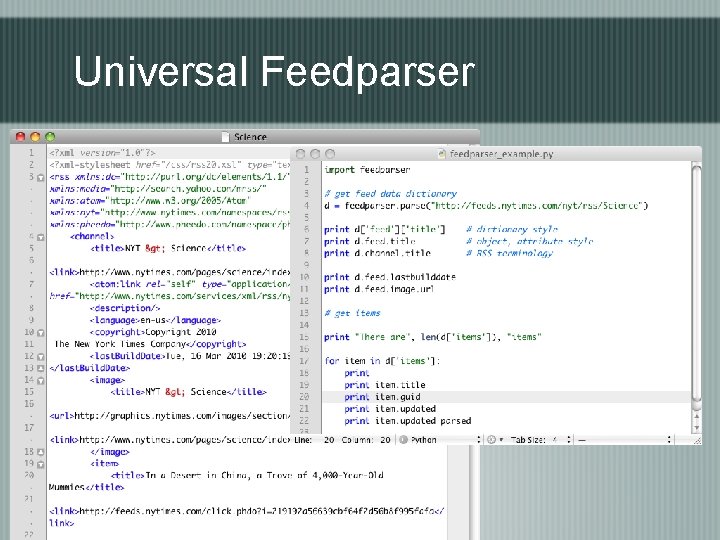

Ready-made Parser: Universal Feed Parser <http://www.feedparser.org>

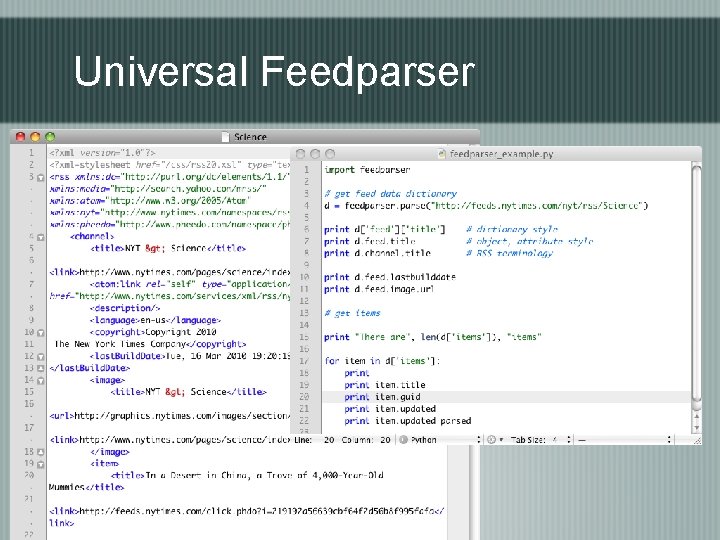

Universal Feedparser

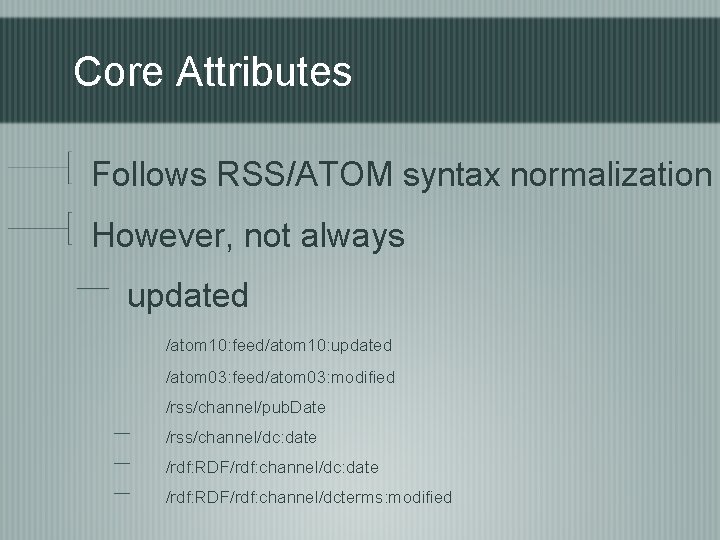

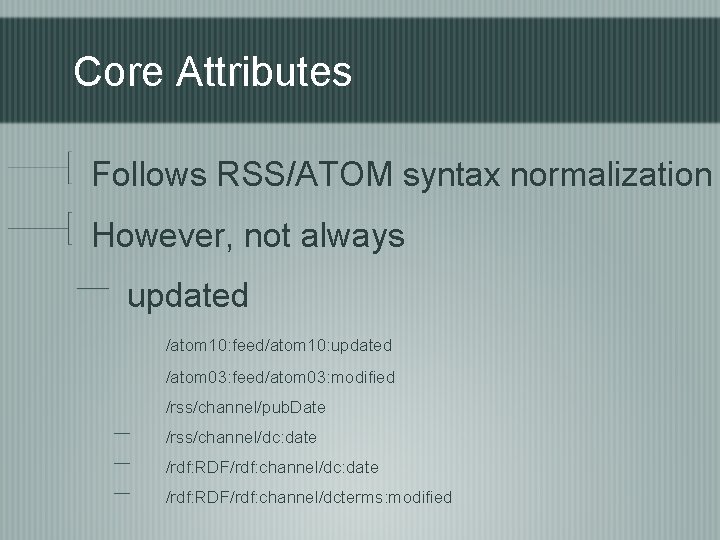

Core Attributes
- Normalized across RSS/Atom syntaxes
- However, not always available; e.g., the feed's update date can come from any of:
  - /atom10:feed/atom10:updated
  - /atom03:feed/atom03:modified
  - /rss/channel/pubDate
  - /rss/channel/dc:date
  - /rdf:RDF/rdf:channel/dcterms:modified
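
The normalization idea can be sketched with the standard library: check the root element, try the candidate paths listed above in order, and return the first value found. This only illustrates the concept and is not Universal Feed Parser's code; `feed_updated`, the `CANDIDATES` table and the sample feeds are hypothetical. One adjustment is assumed: in actual RSS 1.0 documents `<channel>` is in the RSS 1.0 namespace (`http://purl.org/rss/1.0/`) rather than the RDF one, so the sketch looks it up there.

```python
import xml.etree.ElementTree as ET

# Namespace prefixes used in the paths above
NS = {
    "atom10": "http://www.w3.org/2005/Atom",
    "atom03": "http://purl.org/atom/ns#",
    "dc": "http://purl.org/dc/elements/1.1/",
    "dcterms": "http://purl.org/dc/terms/",
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "rss10": "http://purl.org/rss/1.0/",  # RSS 1.0 <channel> lives in this namespace
}

# (root element, path below the root), tried in this order
CANDIDATES = [
    (f"{{{NS['atom10']}}}feed", "atom10:updated"),
    (f"{{{NS['atom03']}}}feed", "atom03:modified"),
    ("rss", "channel/pubDate"),
    ("rss", "channel/dc:date"),
    (f"{{{NS['rdf']}}}RDF", "rss10:channel/dcterms:modified"),
]


def feed_updated(xml_text):
    """Return the raw update-date string from the first candidate that exists, else None."""
    root = ET.fromstring(xml_text)
    for root_tag, path in CANDIDATES:
        if root.tag == root_tag:
            value = root.findtext(path, namespaces=NS)
            if value and value.strip():
                return value.strip()
    return None  # not every feed carries an update date


samples = {
    "rss": '<rss version="2.0"><channel><pubDate>Wed, 02 Feb 2011 10:00:00 GMT</pubDate></channel></rss>',
    "rss_dc": ('<rss version="2.0" xmlns:dc="http://purl.org/dc/elements/1.1/">'
               '<channel><dc:date>2011-02-02</dc:date></channel></rss>'),
    "atom": '<feed xmlns="http://www.w3.org/2005/Atom"><updated>2011-02-02T10:00:00Z</updated></feed>',
    "rdf": ('<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#" '
            'xmlns="http://purl.org/rss/1.0/" xmlns:dcterms="http://purl.org/dc/terms/">'
            '<channel rdf:about="http://example.com/">'
            '<dcterms:modified>2011-02-02T10:00:00Z</dcterms:modified></channel></rdf:RDF>'),
    "none": '<rss version="2.0"><channel><title>No date</title></channel></rss>',
}
for name, doc in samples.items():
    print(name, feed_updated(doc))
```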

Advanced features
- Date parsing
- HTML sanitization
- Content normalization
- Namespace handling
- and more...
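
Date parsing shows why this matters: RSS 2.0 `pubDate` uses RFC 822-style dates, while Atom and `dc:date` use ISO 8601 (W3C-DTF). A minimal standard-library sketch that maps both to one timezone-aware UTC `datetime` follows. `parse_feed_date` is a hypothetical helper, treating dates without a zone as UTC is an assumption, and it does not cope with the many malformed dates a real feed parser handles.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def parse_feed_date(text):
    """Normalize an RFC 822 or ISO 8601 feed date to an aware UTC datetime."""
    text = text.strip()
    try:
        dt = parsedate_to_datetime(text)  # e.g. "Wed, 02 Feb 2011 10:00:00 GMT"
    except (TypeError, ValueError):  # not RFC 822; the exception type depends on the Python version
        # e.g. "2011-02-02T10:00:00Z"; older fromisoformat() versions reject a trailing "Z"
        dt = datetime.fromisoformat(text.replace("Z", "+00:00"))
    if dt.tzinfo is None:
        dt = dt.replace(tzinfo=timezone.utc)  # assumption: dates without a zone are UTC
    return dt.astimezone(timezone.utc)


for raw in ["Wed, 02 Feb 2011 10:00:00 GMT", "2011-02-02T10:00:00Z", "2011-02-02T05:00:00-05:00"]:
    print(raw, "->", parse_feed_date(raw).isoformat())
```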

Document classification

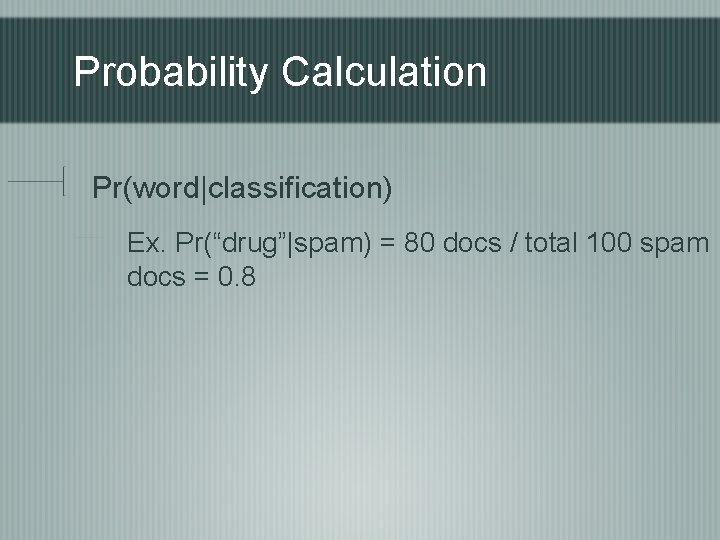

Probability Calculation
- Pr(word|classification)
- Ex. Pr(“drug”|spam) = 80 docs / 100 spam docs in total = 0.8
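
The count-based estimate can be sketched in a few lines of Python. The names `fc` and `cc` are borrowed from the later persistence slide; their layout here (`fc[word][category]` = number of documents in the category containing the word, `cc[category]` = number of documents in the category) and the helpers `train` and `fprob` are assumptions for illustration.

```python
from collections import defaultdict

fc = defaultdict(lambda: defaultdict(int))  # fc[word][category]: docs in category containing word
cc = defaultdict(int)                        # cc[category]: docs seen in category


def train(words, category):
    for word in set(words):  # count each word at most once per document
        fc[word][category] += 1
    cc[category] += 1


def fprob(word, category):
    """Pr(word|category) = (# docs in category with word) / (# docs in category)."""
    if cc[category] == 0:
        return 0.0
    return fc[word][category] / cc[category]


# The slide's example: 100 spam docs, 80 of which contain "drug"
for i in range(100):
    train(["drug", "cheap"] if i < 80 else ["cheap"], "spam")

print(fprob("drug", "spam"))  # → 0.8
```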

Weighted Probability
- Training docs: Doc 1 (spam): “… money …”; Doc 2 (spam): “… money …”; Doc 3 (spam): “… money …”; Doc 4 (spam): “……”; Doc 5 (no-spam): “……”
- Pr(“money”|spam) = 3/4 = 0.75
- Pr(“money”|no-spam) = 0/1 = 0
- Pr = 0.5 (“we don’t know”) may be better than Pr = 0 (“never”)
- Ex. After finding one spam instance
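
One common way to get the “0.5 instead of 0” behavior is to blend an assumed probability with the observed one, weighted by how much evidence there is. The sketch assumes an assumed probability of 0.5 and a weight of 1 (one document's worth), and counts evidence as the number of training documents of any category that contain the word; these choices and the name `weighted_prob` are assumptions, not necessarily the exact formula on the slide.

```python
def weighted_prob(basic_prob, n_seen, assumed_prob=0.5, weight=1.0):
    """Blend an assumed probability with the observed one.

    basic_prob   -- observed Pr(word|category)
    n_seen       -- number of training docs (any category) that contain the word
    assumed_prob -- value used when there is no evidence ("we don't know")
    weight       -- how many documents' worth of trust the assumed value gets
    """
    return (weight * assumed_prob + n_seen * basic_prob) / (weight + n_seen)


# Slide data: "money" occurs in Doc 1-3 (all spam); 4 spam docs and 1 no-spam doc
n_money = 3
print(weighted_prob(3 / 4, n_money))  # spam:    (0.5 + 3 * 0.75) / 4 → 0.6875
print(weighted_prob(0 / 1, n_money))  # no-spam: (0.5 + 3 * 0.0) / 4 → 0.125

# With a single supporting document the estimate stays close to 0.5
print(weighted_prob(1.0, 1), weighted_prob(0.0, 1))  # → 0.75 0.25
```

The no-spam estimate is now small but not zero, so one unseen word can no longer force a whole document's probability to 0.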

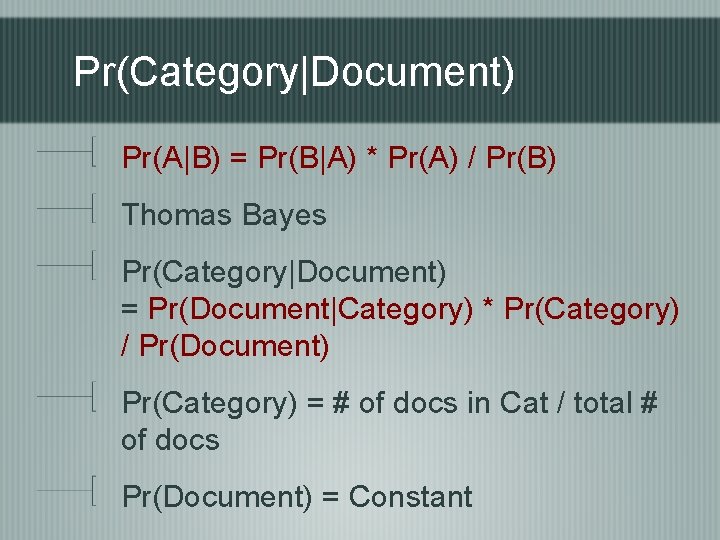

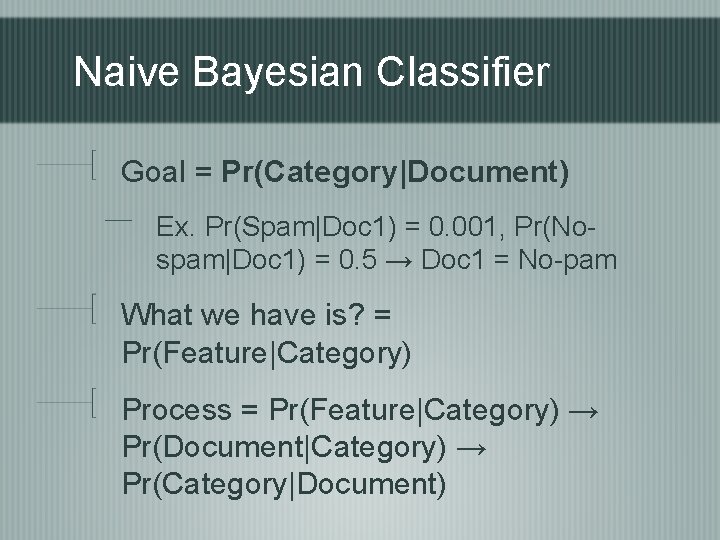

Naive Bayesian Classifier
- Goal = Pr(Category|Document)
  - Ex. Pr(Spam|Doc 1) = 0.001, Pr(No-spam|Doc 1) = 0.5 → Doc 1 = No-spam
- What we have: Pr(Feature|Category)
- Process: Pr(Feature|Category) → Pr(Document|Category) → Pr(Category|Document)

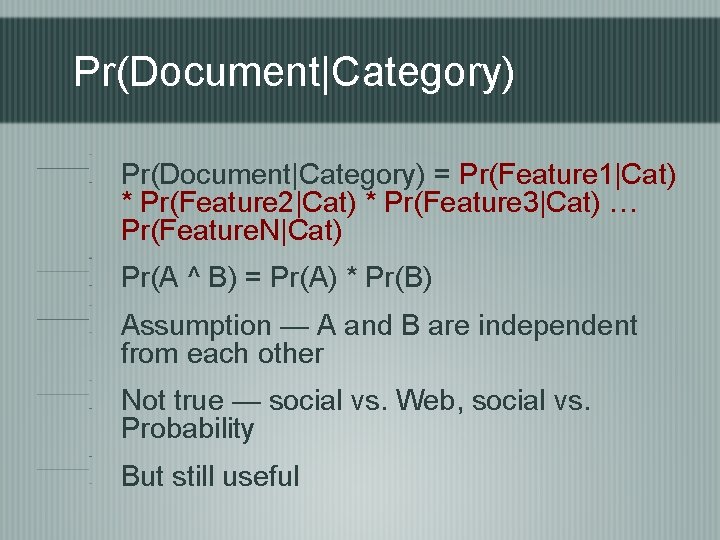

Pr(Document|Category)
- Pr(Document|Category) = Pr(Feature 1|Cat) * Pr(Feature 2|Cat) * Pr(Feature 3|Cat) * … * Pr(Feature N|Cat)
- This relies on Pr(A ∧ B) = Pr(A) * Pr(B)
  - Assumption: A and B are independent of each other
  - Often not true for words (e.g., “social” vs. “Web”, “social” vs. “probability”)
  - But still useful
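
A sketch of the product rule above; `feature_prob` stands for any Pr(Feature|Category) estimate (such as a weighted probability), and the probability table is hypothetical. The log-space variant is a common practical addition, since multiplying many small probabilities can underflow to 0.0; it requires every probability to be greater than 0.

```python
import math


def doc_prob(features, category, feature_prob):
    """Pr(Document|Category) as the product of Pr(Feature_i|Category) (independence assumed)."""
    p = 1.0
    for f in features:
        p *= feature_prob(f, category)
    return p


def log_doc_prob(features, category, feature_prob):
    """Same quantity in log space, avoiding underflow for long documents (needs probabilities > 0)."""
    return sum(math.log(feature_prob(f, category)) for f in features)


# Hypothetical per-word probabilities for one category
probs = {("money", "spam"): 0.5, ("drug", "spam"): 0.25, ("cheap", "spam"): 0.5}


def lookup(feature, category):
    return probs[(feature, category)]


words = ["money", "drug", "cheap"]
print(doc_prob(words, "spam", lookup))  # → 0.0625
print(math.exp(log_doc_prob(words, "spam", lookup)))
```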

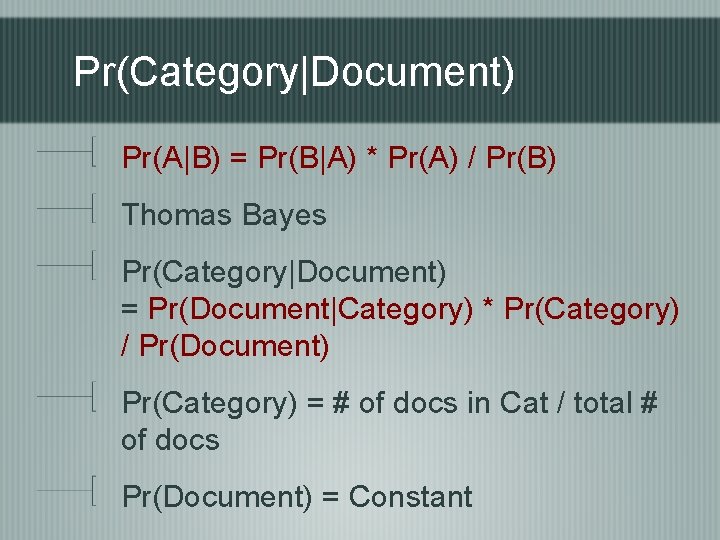

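Pr(Category|Document)
- Bayes' theorem (Thomas Bayes): Pr(A|B) = Pr(B|A) * Pr(A) / Pr(B)
- Applied here: Pr(Category|Document) = Pr(Document|Category) * Pr(Category) / Pr(Document)
  - Pr(Category) = # of docs in Cat / total # of docs
  - Pr(Document) = constant: it is the same for every category, so it can be ignored when comparing categories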
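
Because Pr(Document) is the same for every category, comparing Pr(Document|Category) * Pr(Category) is enough to rank categories. The sketch below computes these unnormalized scores, with Pr(Category) taken from document counts; the function names and numbers are hypothetical. Dividing by the sum of the scores gives probabilities that add up to 1, assuming the categories cover all documents.

```python
def category_scores(doc_probs, doc_counts):
    """Pr(Category|Document) up to the constant factor 1/Pr(Document).

    doc_probs  -- {category: Pr(Document|Category)}
    doc_counts -- {category: number of training docs in that category}
    """
    total = sum(doc_counts.values())
    return {c: doc_probs[c] * doc_counts[c] / total for c in doc_probs}


def normalize(scores):
    """Rescale so the scores sum to 1 (assumes the categories are exhaustive)."""
    s = sum(scores.values())
    return {c: v / s for c, v in scores.items()}


# Hypothetical numbers: 4 spam and 1 no-spam training docs
scores = category_scores({"spam": 0.0625, "nospam": 0.125}, {"spam": 4, "nospam": 1})
print(scores)             # spam: 0.0625 * 4/5, nospam: 0.125 * 1/5
print(normalize(scores))  # spam about 2/3, nospam about 1/3
```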

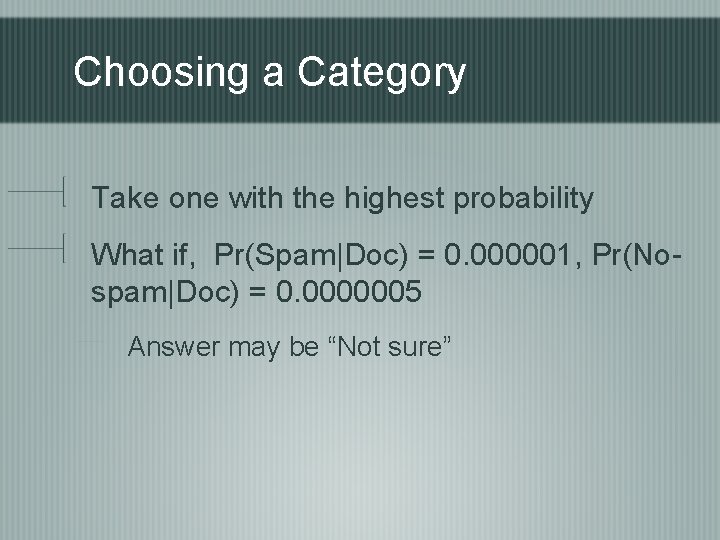

Choosing a Category
- Take the one with the highest probability
- What if Pr(Spam|Doc) = 0.000001 and Pr(No-spam|Doc) = 0.0000005?
- The answer may be “Not sure”

Choosing a Category: Thresholding
- If Pr(Spam|Doc) > 3 * Pr(No-spam|Doc), then spam
- → more reasonable
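
A sketch of thresholded classification: the top category is returned only if it beats every other category by its threshold factor (default 1.0), otherwise the answer is “not sure”. The function name, the `"unknown"` label and the threshold table are assumptions; the calls reuse the probabilities from the slides above.

```python
def classify(scores, thresholds, default="unknown"):
    """Return the top category only if it beats every other one by its threshold factor.

    scores     -- {category: Pr(Category|Document)}, normalized or not
    thresholds -- {category: factor}; categories not listed use 1.0
    """
    best = max(scores, key=scores.get)
    factor = thresholds.get(best, 1.0)
    for cat, p in scores.items():
        if cat != best and scores[best] <= factor * p:
            return default  # not enough margin: "not sure"
    return best


thresholds = {"spam": 3.0}  # spam only if Pr(Spam|Doc) > 3 * Pr(No-spam|Doc)
print(classify({"spam": 0.001, "nospam": 0.5}, thresholds))           # → nospam
print(classify({"spam": 0.000001, "nospam": 0.0000005}, thresholds))  # → unknown
print(classify({"spam": 0.000004, "nospam": 0.000001}, thresholds))   # → spam
```

Putting the threshold only on spam makes false positives (good mail marked as spam) harder than false negatives.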

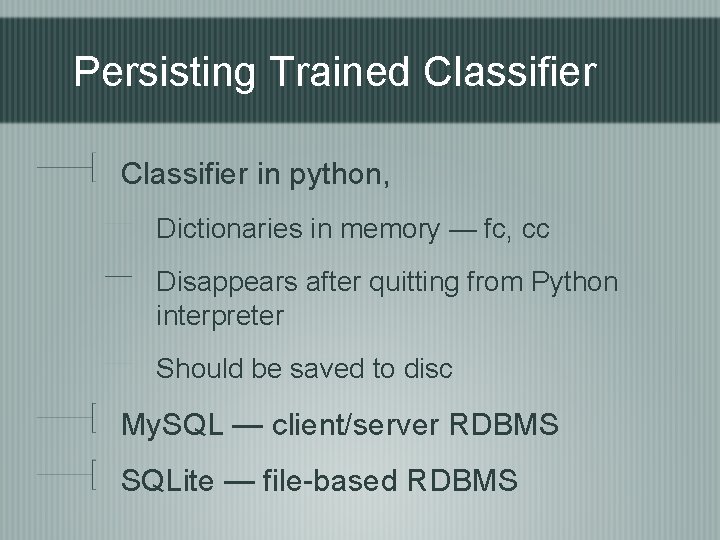

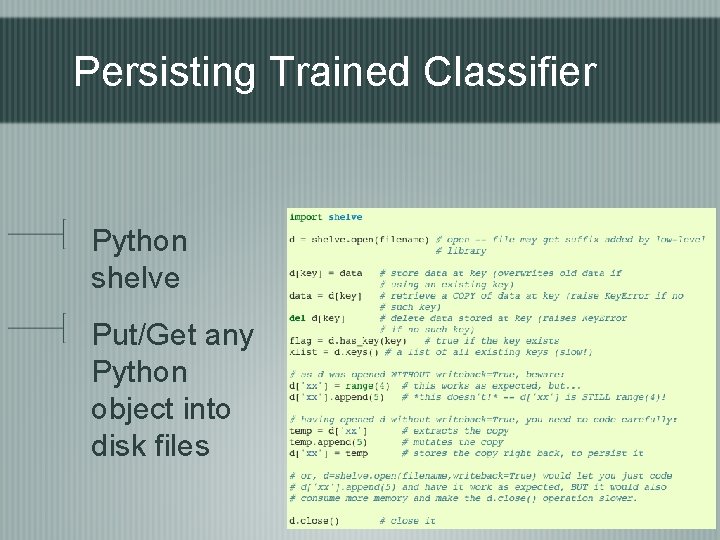

Persisting Trained Classifier
- In Python, the counts live in in-memory dictionaries: fc (feature counts), cc (category counts)
  - They disappear after quitting the Python interpreter
  - They should be saved to disk
- MySQL: client/server RDBMS
- SQLite: file-based RDBMS
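
A minimal sketch of the SQLite option, using Python's built-in `sqlite3` module: the two count dictionaries become two tables, and the counts survive closing and reopening the database file. Table and column names and the helper functions are hypothetical; a MySQL version would look similar but needs a running server and a third-party driver.

```python
import os
import sqlite3
import tempfile


def open_store(path):
    """Open (or create) a file-based SQLite store for the fc/cc counts."""
    con = sqlite3.connect(path)
    con.execute("CREATE TABLE IF NOT EXISTS fc (feature TEXT, category TEXT, n INTEGER, "
                "PRIMARY KEY (feature, category))")
    con.execute("CREATE TABLE IF NOT EXISTS cc (category TEXT PRIMARY KEY, n INTEGER)")
    return con


def train(con, words, category):
    for word in set(words):
        con.execute("INSERT OR IGNORE INTO fc VALUES (?, ?, 0)", (word, category))
        con.execute("UPDATE fc SET n = n + 1 WHERE feature = ? AND category = ?", (word, category))
    con.execute("INSERT OR IGNORE INTO cc VALUES (?, 0)", (category,))
    con.execute("UPDATE cc SET n = n + 1 WHERE category = ?", (category,))
    con.commit()  # make the counts durable on disk


def fcount(con, word, category):
    row = con.execute("SELECT n FROM fc WHERE feature = ? AND category = ?",
                      (word, category)).fetchone()
    return row[0] if row else 0


def catcount(con, category):
    row = con.execute("SELECT n FROM cc WHERE category = ?", (category,)).fetchone()
    return row[0] if row else 0


# Train, close the connection, then reopen the same file: the counts survive
db_path = os.path.join(tempfile.mkdtemp(), "classifier.db")
con = open_store(db_path)
train(con, ["cheap", "drug"], "spam")
train(con, ["drug", "money"], "spam")
con.close()

con = open_store(db_path)
drug_spam, spam_docs = fcount(con, "drug", "spam"), catcount(con, "spam")
print(drug_spam, spam_docs)  # → 2 2
con.close()
```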

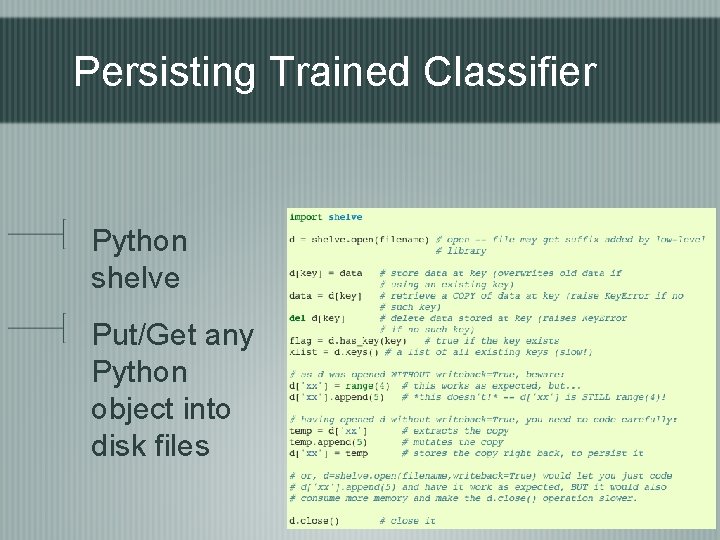

Persisting Trained Classifier
- Python shelve
- Put/get any (picklable) Python object into/from disk files
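
A sketch of the shelve approach: a shelf behaves like a dictionary whose values are pickled to a disk file. The helper names, file name and stored counts are hypothetical. Only picklable objects can be stored, so, for example, a `defaultdict` created with a lambda factory must be converted to a plain dict first.

```python
import os
import shelve
import tempfile


def save_counts(path, fc, cc):
    """Store the count dictionaries in a shelve file (values are pickled)."""
    with shelve.open(path) as db:
        db["fc"] = fc
        db["cc"] = cc


def load_counts(path):
    """Read them back; returns empty dicts if nothing was saved yet."""
    with shelve.open(path) as db:
        return db.get("fc", {}), db.get("cc", {})


path = os.path.join(tempfile.mkdtemp(), "classifier")
save_counts(path, {"drug": {"spam": 80}}, {"spam": 100})  # plain dicts pickle fine
fc, cc = load_counts(path)  # e.g. in a later interpreter session
print(fc["drug"]["spam"], cc["spam"])  # → 80 100
```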

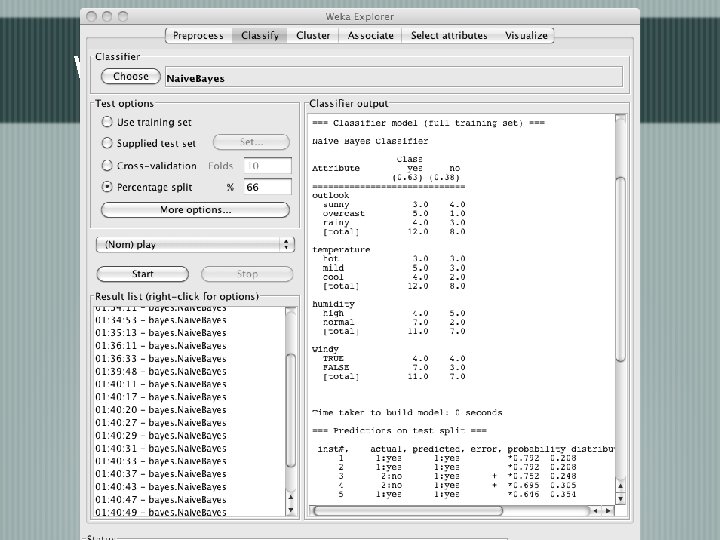

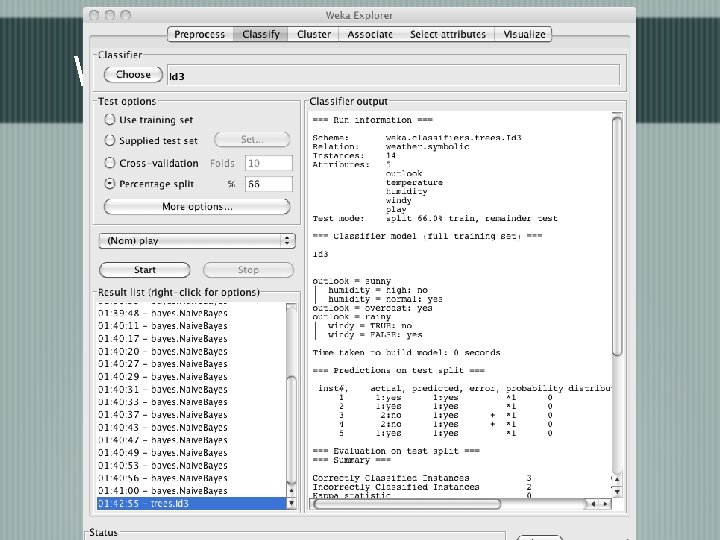

Alternative Methods
- Supervised learning methods
  - Neural network
  - Support Vector Machine
  - Decision Tree
- Software packages
  - Weka, R, SPSS Clementine, etc.

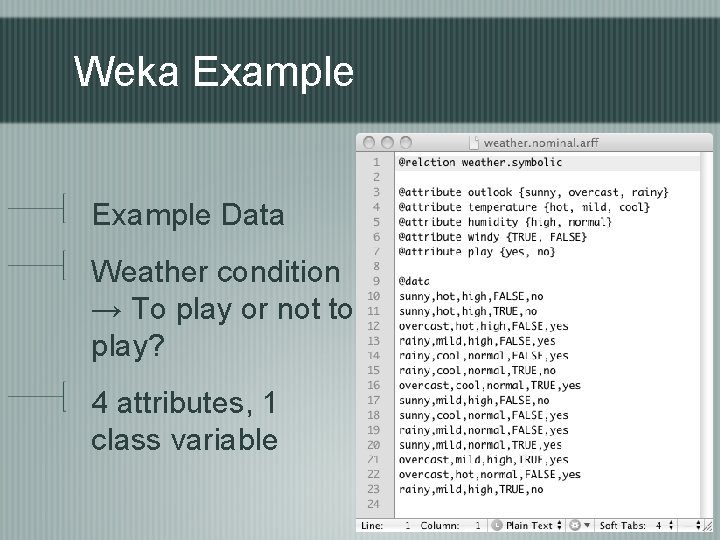

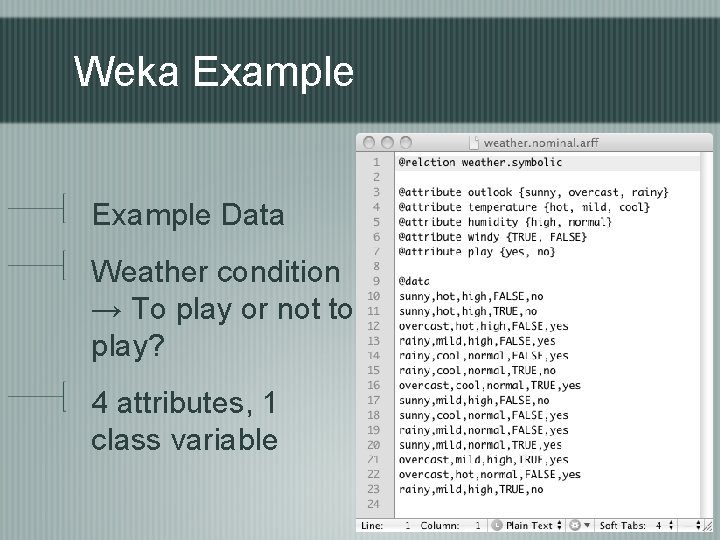

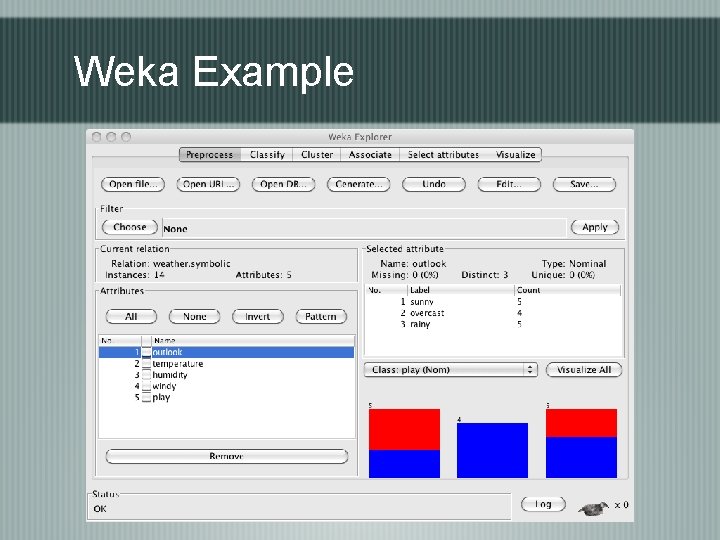

Weka Example
- Data: weather conditions → to play or not to play?
- 4 attributes, 1 class variable

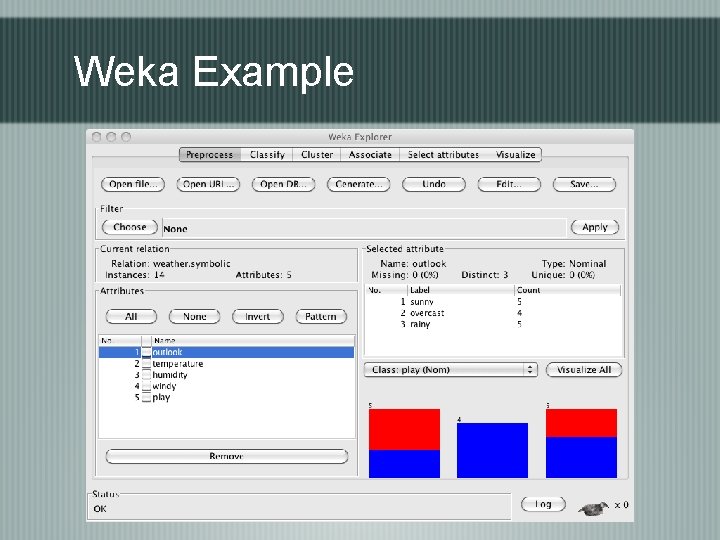

Weka Example

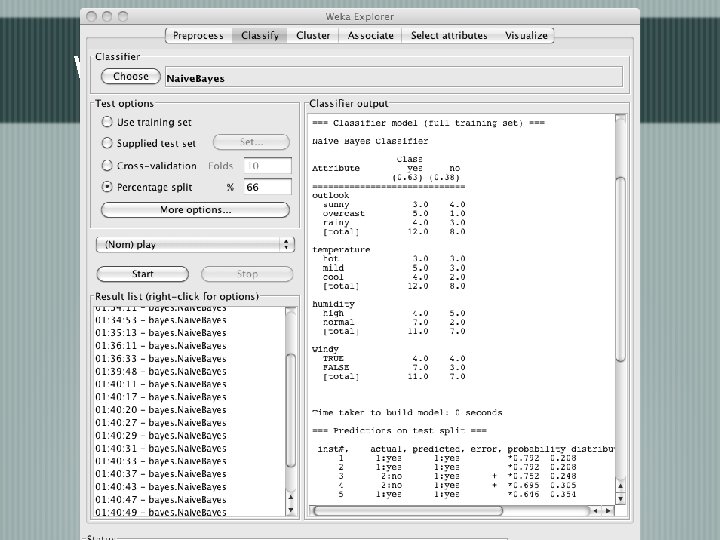

Weka Example

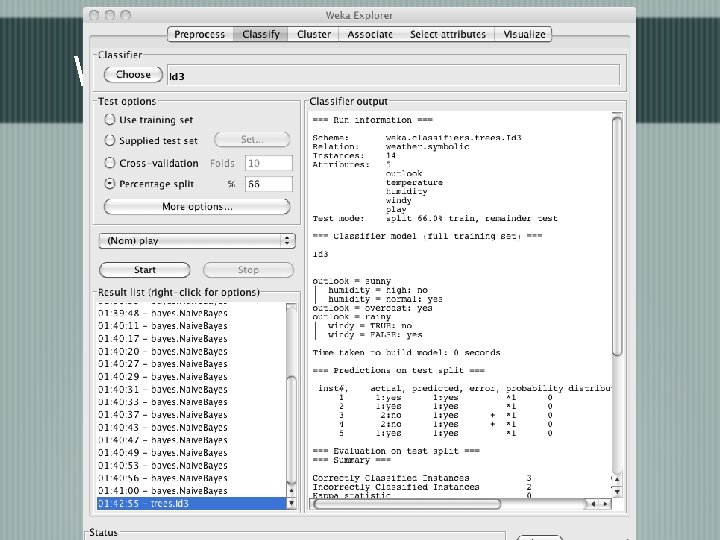

Weka Example