Future Trends in Nuclear Physics Computing David Skinner

- Slides: 18

David Skinner, Strategic Partnerships Lead, National Energy Research Scientific Computing Center (NERSC), Lawrence Berkeley National Lab

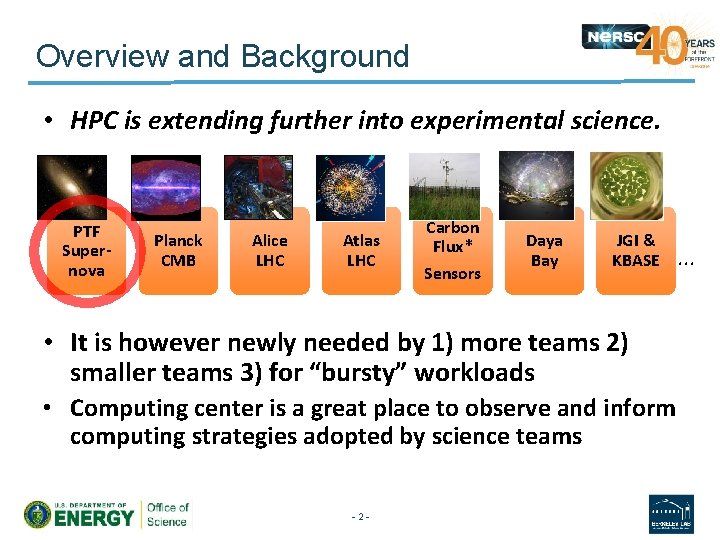

Overview and Background
• HPC is extending further into experimental science, e.g. PTF supernovae, Planck CMB, ALICE and ATLAS at the LHC, carbon flux sensors, Daya Bay, and JGI & KBase.
• However, HPC is now newly needed by 1) more teams, 2) smaller teams, and 3) “bursty” workloads.
• A computing center is a great place to observe and inform the computing strategies adopted by science teams.

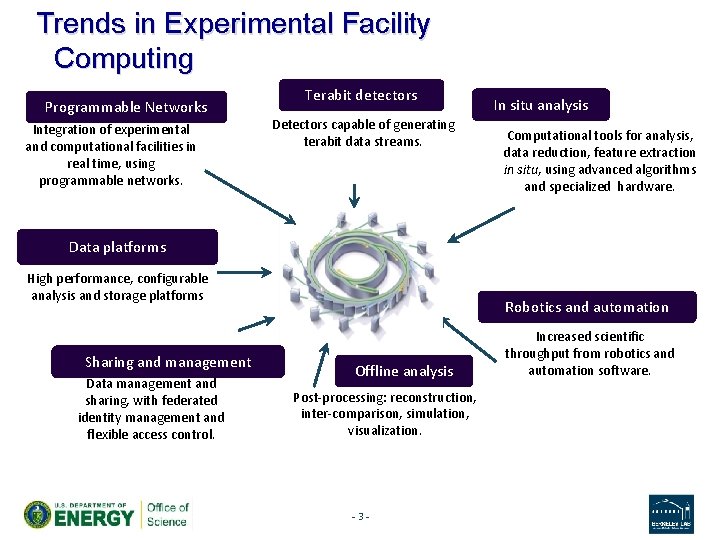

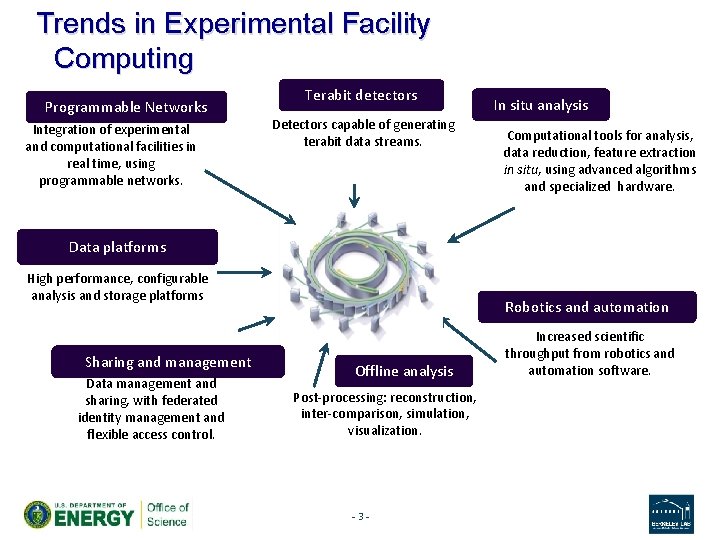

Trends in Experimental Facility Computing
• Programmable networks: integration of experimental and computational facilities in real time, using programmable networks.
• Terabit detectors: detectors capable of generating terabit data streams.
• In situ analysis: computational tools for analysis, data reduction, and feature extraction in situ, using advanced algorithms and specialized hardware.
• Data platforms: high-performance, configurable analysis and storage platforms.
• Sharing and management: data management and sharing, with federated identity management and flexible access control.
• Robotics and automation: increased scientific throughput from robotics and automation software.
• Offline analysis: post-processing, including reconstruction, inter-comparison, simulation, and visualization.
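The “in situ analysis” trend amounts to reducing data where it is produced instead of storing and shipping every raw value. A minimal sketch of that idea, assuming a simple scalar stream, is a single-pass (Welford) reduction: it keeps three numbers no matter how long the stream is. The generator `fake_detector_stream` is a hypothetical stand-in for detector output, not part of any facility pipeline.

```python
import math
from typing import Iterable, Tuple


def streaming_stats(samples: Iterable[float]) -> Tuple[int, float, float]:
    """Single-pass (Welford) count, mean, and sample standard deviation.

    Only n, mean, and m2 are kept in memory, so the raw stream never needs
    to be stored or moved.
    """
    n = 0
    mean = 0.0
    m2 = 0.0  # running sum of squared deviations from the current mean
    for x in samples:
        n += 1
        delta = x - mean
        mean += delta / n
        m2 += delta * (x - mean)
    std = math.sqrt(m2 / (n - 1)) if n > 1 else 0.0
    return n, mean, std


def fake_detector_stream(count: int):
    """Hypothetical stand-in for a detector: yields one value at a time."""
    for i in range(count):
        yield float(i % 10)


if __name__ == "__main__":
    n, mean, std = streaming_stats(fake_detector_stream(1_000_000))
    print(n, round(mean, 3), round(std, 3))
```

Because the stream is consumed lazily, memory use and the volume of data handed downstream stay constant as the stream grows; richer reductions (histograms, feature extraction) follow the same pattern.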

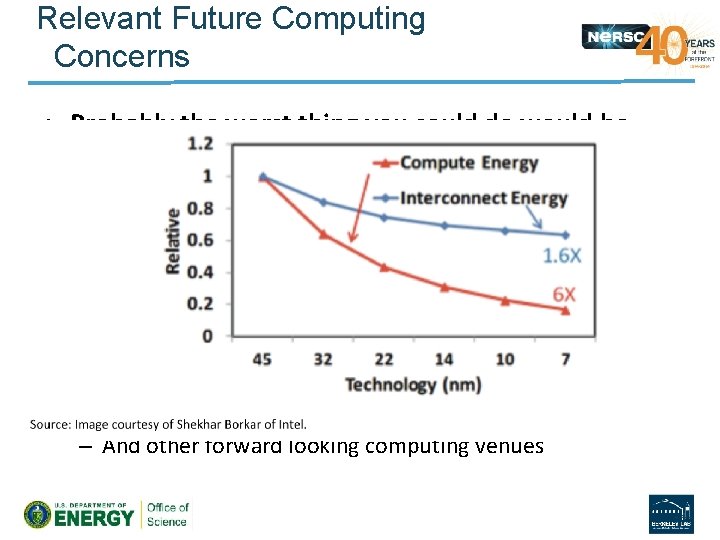

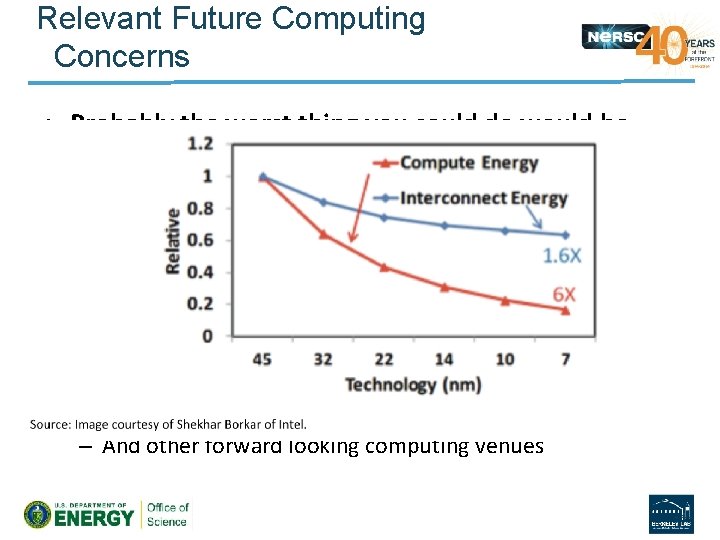

Relevant Future Computing Concerns
• Probably the worst thing you could do would be premature technology selection.
  – Moore’s Law is ending. What comes next is… lower power.
• Find the key algorithms that involve data motion in the end-to-end workflow.
  – How do these scale?
  – Would you like better algorithms?
• Track and participate in the ASCR Requirements Reviews
  – and other forward-looking computing venues.

Free Advice
• Presuppose little about computing other than its having a power budget.
• Focus instead on the metrics for success of the overall instrument, some of which will probably involve a computer.
• In 2040, computers and networks will be different. Focus on the power demands of the end-to-end workflow.
• Focus on algorithms that minimize data movement.
• Pay attention to the plumbing.
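The advice to minimize data movement can be made concrete with a back-of-envelope model comparing shipping raw data against reducing it at the source first. This is a sketch under stated assumptions: the link speed, dataset size, and reduction factor are hypothetical, and the model counts only transfer time, not energy or protocol overhead, which would need measured numbers.

```python
from dataclasses import dataclass


@dataclass
class Link:
    """A network link with a hypothetical sustained throughput."""
    name: str
    gbit_per_s: float


def transfer_hours(num_bytes: float, link: Link) -> float:
    """Hours needed to move num_bytes over link at its sustained rate."""
    bits = num_bytes * 8
    seconds = bits / (link.gbit_per_s * 1e9)
    return seconds / 3600


def raw_vs_reduced(raw_bytes: float, reduction_factor: float, link: Link) -> dict:
    """Compare shipping raw data with reducing it at the source first."""
    reduced_bytes = raw_bytes / reduction_factor
    return {
        "raw_hours": transfer_hours(raw_bytes, link),
        "reduced_hours": transfer_hours(reduced_bytes, link),
    }


if __name__ == "__main__":
    # Hypothetical: 150 TB of raw data, a 10 Gbit/s link, 100x in situ reduction.
    wan = Link("hypothetical-wan", 10.0)
    print(raw_vs_reduced(150e12, 100.0, wan))
```

With these assumed numbers, the raw transfer takes about 33 hours and the reduced one about 20 minutes; the ratio is simply the reduction factor, which is why algorithms that shrink data early dominate end-to-end cost.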

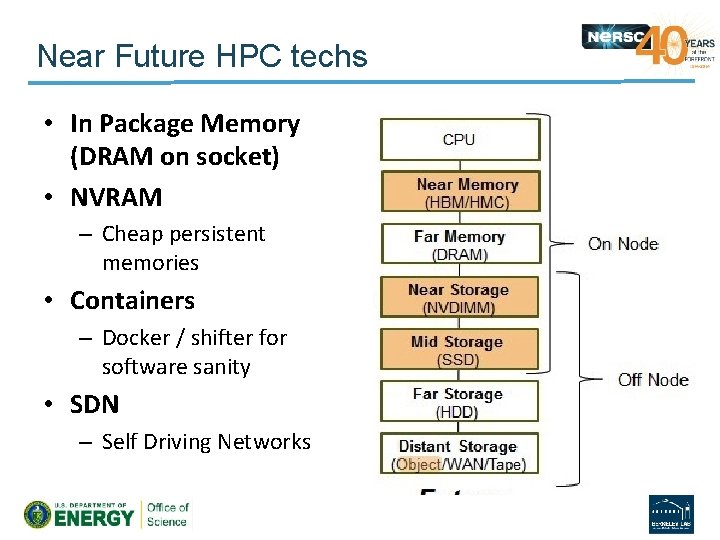

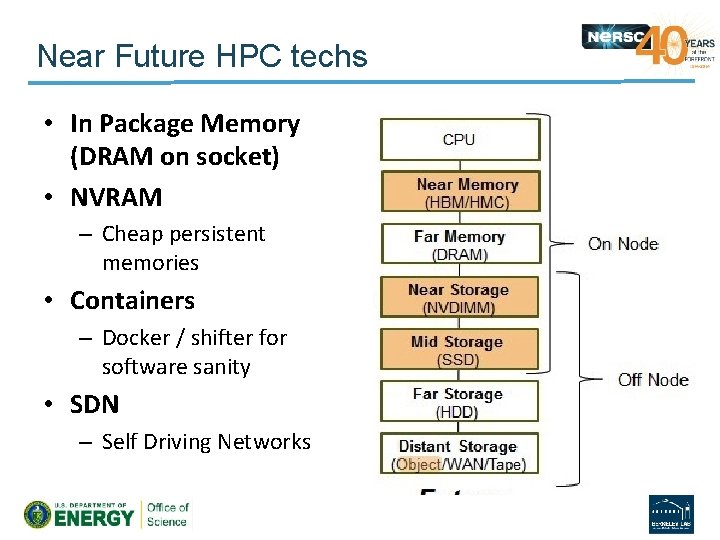

Near-Future HPC Technologies
• In-package memory (DRAM on the socket)
• NVRAM – cheap persistent memory
• Containers – Docker / Shifter for software sanity
• SDN – self-driving networks

Three Examples: PTF, JGI, and LCLS

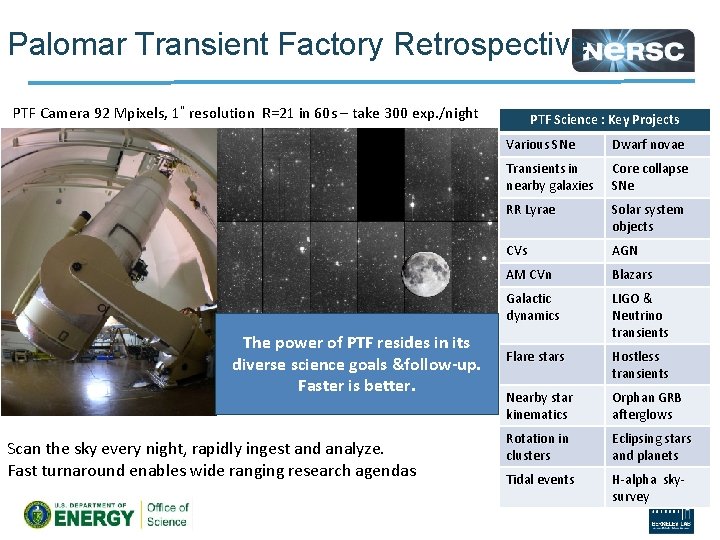

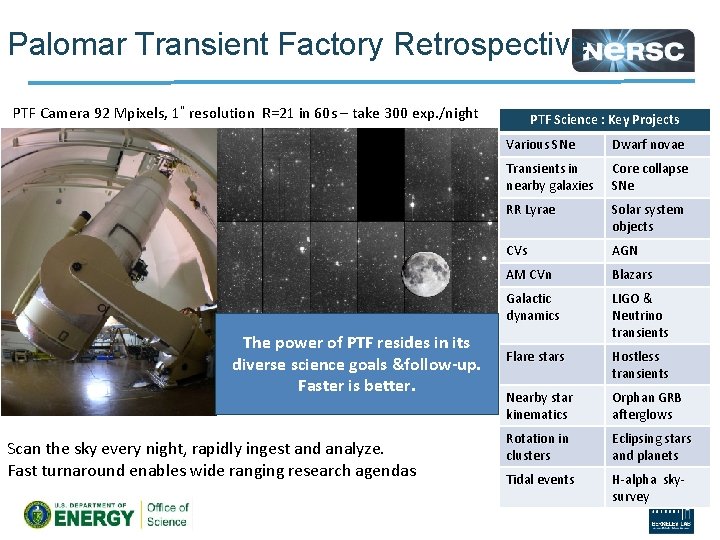

Palomar Transient Factory Retrospective
• PTF camera: 92 Mpixels, 1″ resolution, reaching R = 21 in 60 s; takes 300 exposures per night.
• The power of PTF resides in its diverse science goals and follow-up. Faster is better: scan the sky every night, then rapidly ingest and analyze.
• Fast turnaround enables wide-ranging research agendas.
• PTF Science Key Projects: various SNe, dwarf novae, transients in nearby galaxies, core-collapse SNe, RR Lyrae, solar system objects, CVs, AGN, AM CVn, blazars, Galactic dynamics, LIGO & neutrino transients, flare stars, hostless transients, nearby star kinematics, orphan GRB afterglows, rotation in clusters, eclipsing stars and planets, tidal events, H-alpha sky survey.

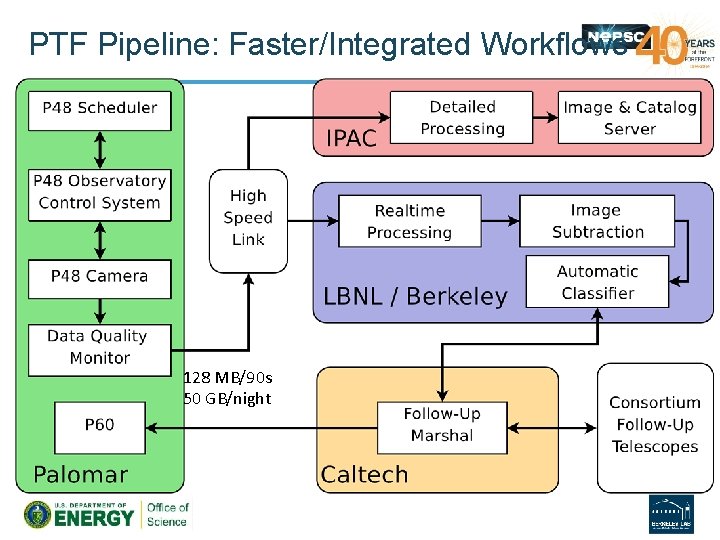

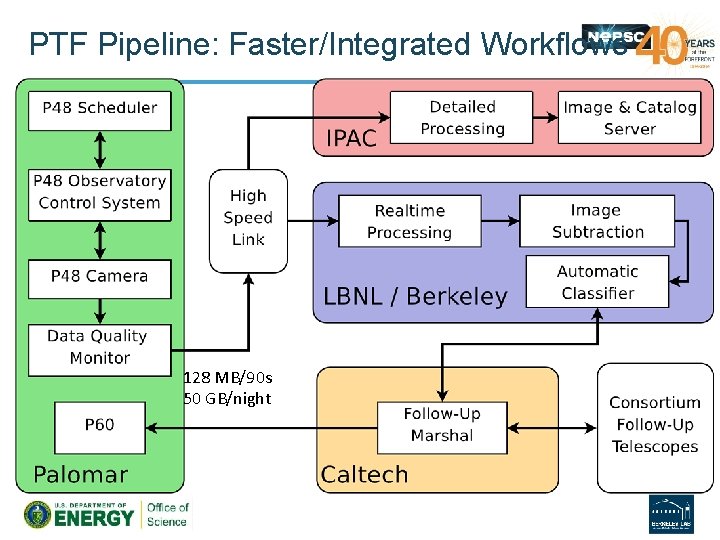

PTF Pipeline: Faster, Integrated Workflows
• 128 MB every 90 s; about 50 GB per night
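The pipeline figures can be checked with a little arithmetic. This sketch uses the slide's 128 MB per 90 s and the 300 exposures per night quoted for the PTF camera, and assumes decimal units (1 MB = 10^6 bytes). Science frames alone come to roughly 38 GB over a 7.5-hour observing window; the slide's 50 GB/night presumably includes data beyond the raw science frames, which is an assumption not broken down here.

```python
MB = 1e6  # decimal megabyte (assumption: the slide uses decimal units)
GB = 1e9

FRAME_BYTES = 128 * MB      # one camera readout, from the slide
CADENCE_S = 90              # one readout every 90 s, from the slide
EXPOSURES_PER_NIGHT = 300   # from the PTF camera slide

sustained_mb_per_s = FRAME_BYTES / CADENCE_S / MB
science_gb_per_night = FRAME_BYTES * EXPOSURES_PER_NIGHT / GB
observing_hours = EXPOSURES_PER_NIGHT * CADENCE_S / 3600

if __name__ == "__main__":
    print(f"sustained rate:   {sustained_mb_per_s:.2f} MB/s")        # 1.42 MB/s
    print(f"science frames:   {science_gb_per_night:.1f} GB/night")  # 38.4 GB/night
    print(f"observing window: {observing_hours:.1f} h")              # 7.5 h
```

The sustained rate is modest; what matters for the science is latency, i.e. how quickly each 128 MB frame is ingested and analyzed after readout.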

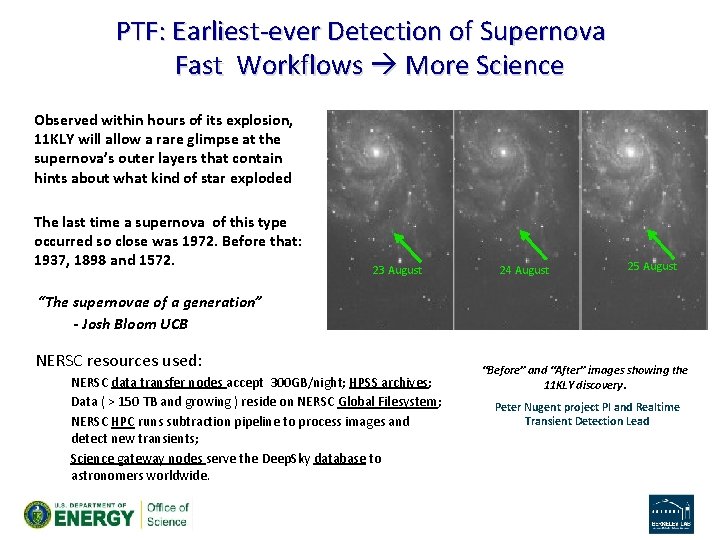

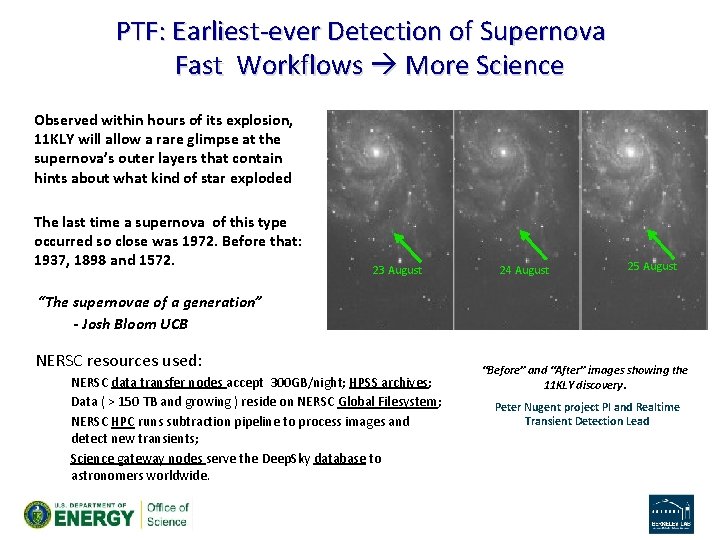

PTF: Earliest-Ever Detection of a Supernova
Fast Workflows, More Science
• Observed within hours of its explosion, PTF 11kly will allow a rare glimpse of the supernova’s outer layers, which contain hints about what kind of star exploded.
• The last time a supernova of this type occurred so close was 1972. Before that: 1937, 1898, and 1572.
• “The supernova of a generation” – Josh Bloom, UC Berkeley
NERSC resources used:
• NERSC data transfer nodes accept 300 GB/night.
• HPSS archives.
• Data (> 150 TB and growing) reside on the NERSC Global Filesystem.
• NERSC HPC runs the subtraction pipeline to process images and detect new transients.
• Science gateway nodes serve the DeepSky database to astronomers worldwide.
“Before” and “After” images showing the PTF 11kly discovery (panels dated 23, 24, and 25 August).
Peter Nugent, project PI and Realtime Transient Detection Lead
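The core idea of a subtraction pipeline is difference imaging: subtract a reference image of the same field from the new image and flag pixels that brighten significantly. The sketch below is a deliberately naive version, not the PTF pipeline: production difference-imaging pipelines generally register the images and match their point-spread functions before subtracting, which this sketch assumes has already been done. The 5-sigma threshold and the MAD-based noise estimate are illustrative choices.

```python
import statistics
from typing import List, Tuple

Image = List[List[float]]


def difference_image(new: Image, ref: Image) -> Image:
    """Pixel-wise new minus reference.

    Assumes the two images are already registered and PSF-matched; real
    difference-imaging pipelines do that work first, and this sketch skips it.
    """
    return [[n - r for n, r in zip(row_n, row_r)] for row_n, row_r in zip(new, ref)]


def detect_candidates(new: Image, ref: Image, k: float = 5.0) -> List[Tuple[int, int, float]]:
    """Return (row, col, value) for difference pixels more than k sigma above the median.

    The noise sigma is estimated robustly from the median absolute deviation
    (MAD), so a few bright transients do not inflate it.
    """
    diff = difference_image(new, ref)
    flat = [v for row in diff for v in row]
    med = statistics.median(flat)
    mad = statistics.median(abs(v - med) for v in flat)
    sigma = 1.4826 * mad or 1e-12  # avoid dividing by zero on noiseless toy data
    return [
        (y, x, v)
        for y, row in enumerate(diff)
        for x, v in enumerate(row)
        if (v - med) / sigma > k
    ]
```

Each flagged pixel is only a candidate; in practice candidates are grouped into sources and vetted before anyone is alerted.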

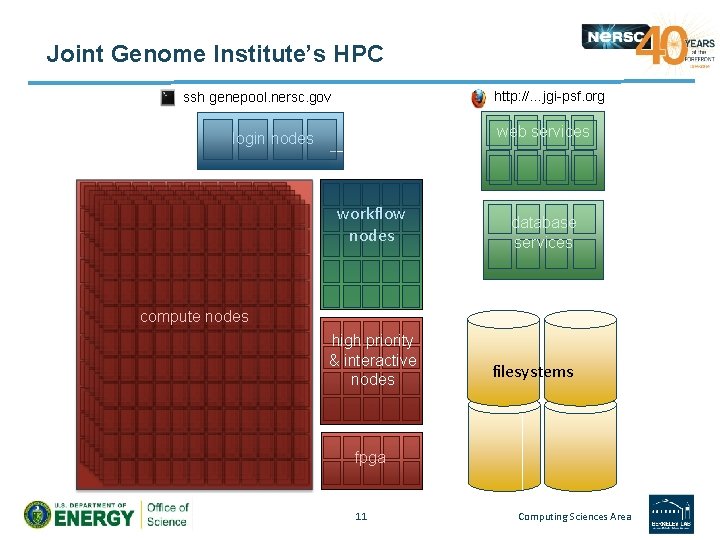

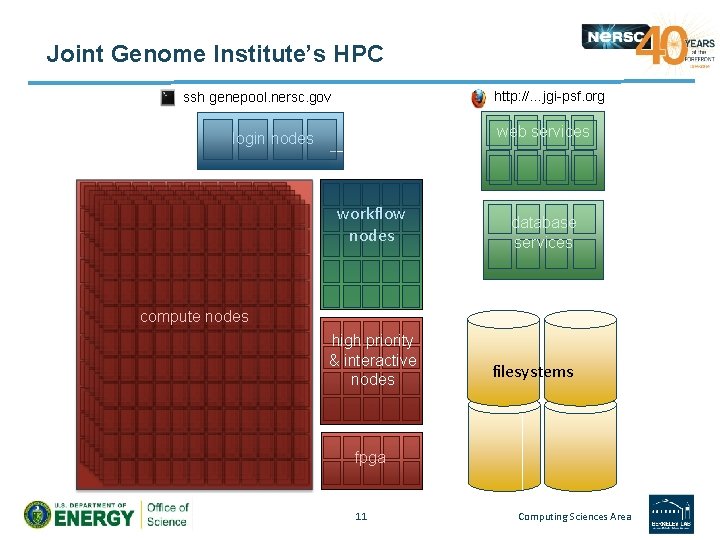

Joint Genome Institute’s HPC at NERSC
• http://…jgi-psf.org
• ssh genepool.nersc.gov
• Components: web services, login nodes, workflow nodes, database services, compute nodes, high-priority & interactive nodes, filesystems, FPGA
Computing Sciences Area

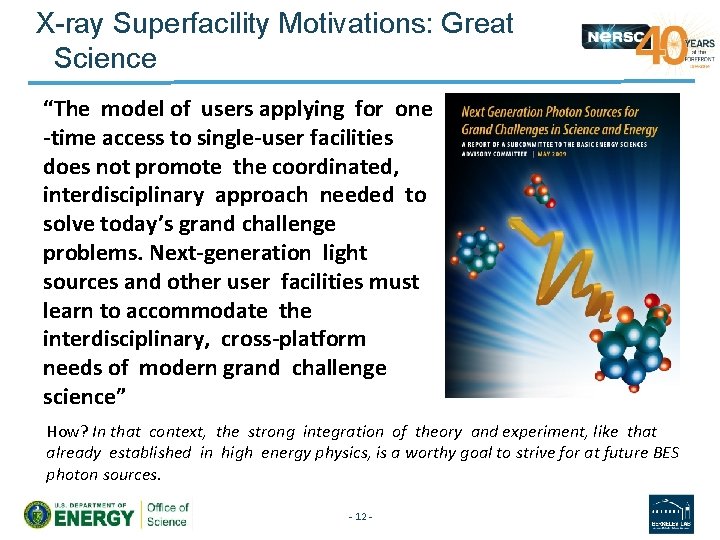

X-ray Superfacility Motivations: Great Science
“The model of users applying for one-time access to single-user facilities does not promote the coordinated, interdisciplinary approach needed to solve today’s grand challenge problems. Next-generation light sources and other user facilities must learn to accommodate the interdisciplinary, cross-platform needs of modern grand challenge science.”
How? In that context, the strong integration of theory and experiment, like that already established in high energy physics, is a worthy goal to strive for at future BES photon sources.

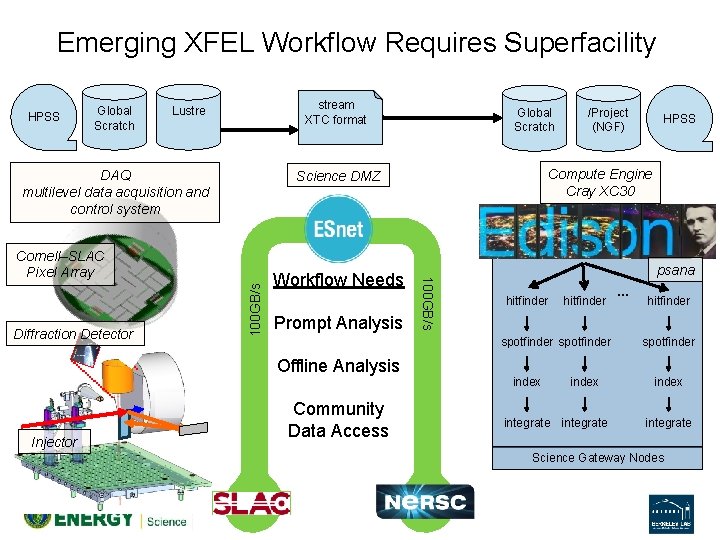

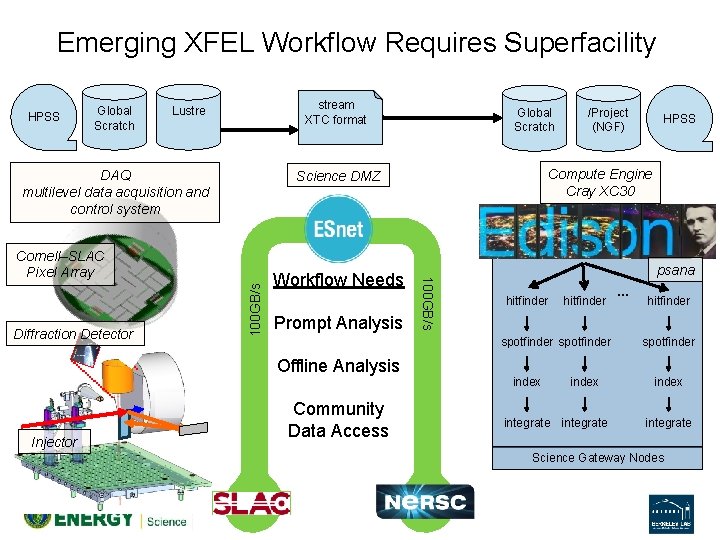

Emerging XFEL Workflow Requires a Superfacility
Diagram components: Cornell–SLAC Pixel Array Diffraction Detector; injector; DAQ multilevel data acquisition and control system (100 GB/s); XTC-format stream to Lustre; prompt analysis and offline analysis with psana (hitfinder, spotfinder, index, integrate); Science DMZ; Cray XC30 compute engine; Global Scratch; /project (NGF); HPSS; Science Gateway Nodes for community data access; workflow needs.
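The hitfinder stage in the diagram reflects a common data-reduction step in serial crystallography: most detector frames contain no diffraction, so cheap per-frame tests discard them before the expensive spotfinding, indexing, and integration stages. The sketch below uses one simple test, counting pixels above a threshold. It is an illustration only: it is not psana's API, and the threshold and minimum pixel count are hypothetical tuning parameters.

```python
from typing import List, Sequence

Frame = Sequence[Sequence[float]]


def count_bright_pixels(frame: Frame, threshold: float) -> int:
    """Number of pixels whose value exceeds threshold."""
    return sum(1 for row in frame for value in row if value > threshold)


def find_hits(frames: Sequence[Frame], threshold: float = 100.0, min_pixels: int = 3) -> List[int]:
    """Indices of frames with at least min_pixels pixels above threshold.

    Only these frames would be passed on to the more expensive spotfinding,
    indexing, and integration stages.
    """
    return [
        i
        for i, frame in enumerate(frames)
        if count_bright_pixels(frame, threshold) >= min_pixels
    ]
```

Because the test runs independently on each frame, it parallelizes trivially, which is one reason this kind of filtering can keep pace with a high-rate detector stream.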

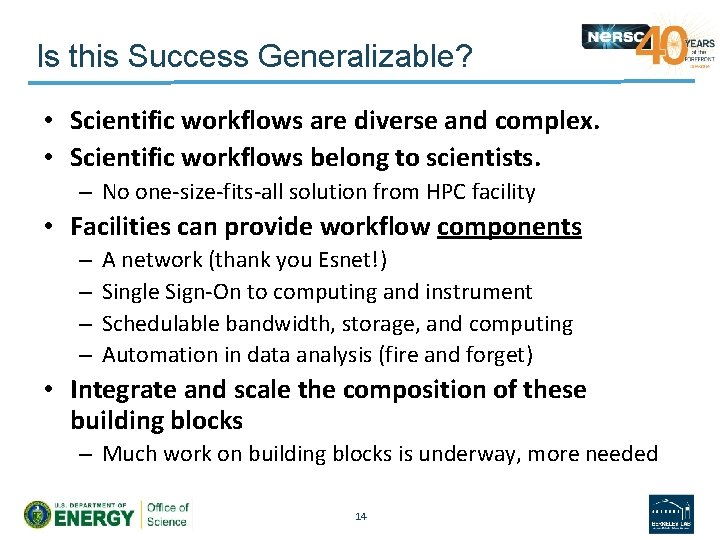

Is This Success Generalizable?
• Scientific workflows are diverse and complex.
• Scientific workflows belong to scientists.
  – There is no one-size-fits-all solution from the HPC facility.
• Facilities can provide workflow components:
  – A network (thank you, ESnet!)
  – Single sign-on to computing and instrument
  – Schedulable bandwidth, storage, and computing
  – Automation in data analysis (fire and forget)
• Integrate and scale the composition of these building blocks.
  – Much work on building blocks is underway; more is needed.

Superfacility: Emerging Areas of Focus
• Automated data logistics
  – Your data is where you need it, automated by policies.
• Federated identity
  – Single sign-on is a big win for researchers. Global federated identity we may leave to others.
• Real-time HPC
  – “Real-time” means different things, but given enough insight into your workflow, we intend to make the computing happen when you need it.
  – Bursty workloads are a great place to exercise this new capability.
• APIs for facilities
  – Interoperation through REST, not email. Please.
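“Interoperation through REST, not email” means a workflow asks the facility a question programmatically instead of waiting on a human reply. A minimal sketch using only the Python standard library is below. Everything about the service is hypothetical: the base URL, the `/status/<system>` path, bearer-token authentication, and the `"status": "active"` field are illustrative assumptions, not any facility's actual API.

```python
import json
import urllib.request


# Hypothetical facility API: the base URL, path layout, bearer-token auth and
# the "status" field are illustrative assumptions, not a real service's API.
def build_status_request(base_url: str, system: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for one system's status."""
    url = f"{base_url.rstrip('/')}/status/{system}"
    return urllib.request.Request(
        url,
        headers={"Accept": "application/json", "Authorization": f"Bearer {token}"},
        method="GET",
    )


def is_available(response_body: str) -> bool:
    """Interpret a JSON status document such as {"status": "active"}."""
    return json.loads(response_body).get("status") == "active"


if __name__ == "__main__":
    req = build_status_request("https://api.example.org/v1", "compute", "TOKEN")
    print(req.get_method(), req.full_url)
    # Against a real endpoint, the request would be sent with:
    # with urllib.request.urlopen(req) as resp:
    #     print(is_available(resp.read().decode("utf-8")))
```

Separating request construction from response interpretation keeps both pieces testable without network access, and a workflow manager can poll such an endpoint before launching a bursty job.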

Superfacility Concepts for Lightsources
Photon science has growing data challenges. An HPC data analysis capability has the potential to:
• Accelerate existing x-ray beamline science by transparently connecting HPC capabilities to the beamline workflow.
• Broaden the impact of x-ray beamline data by exporting data for offline analysis and community reuse on a shared platform.
• Expand the role of simulation in experimental photon science by leveraging leading-edge applied mathematics and HPC architectures to combine experiment and simulation.
Let’s examine one recent example of applying these concepts.

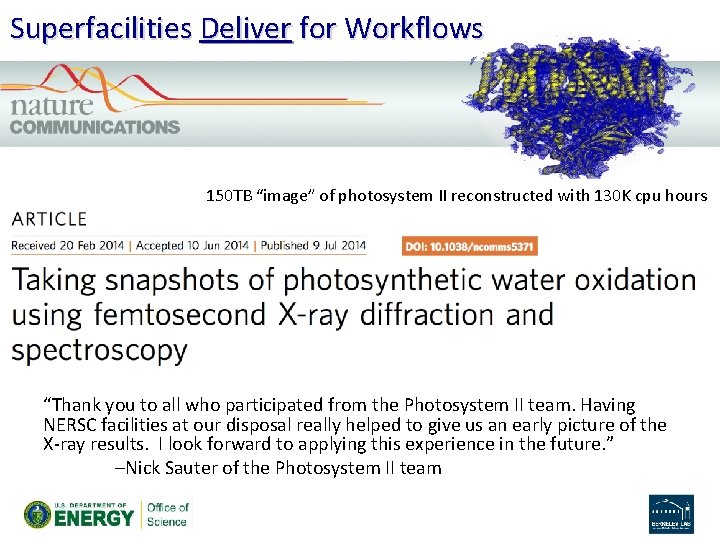

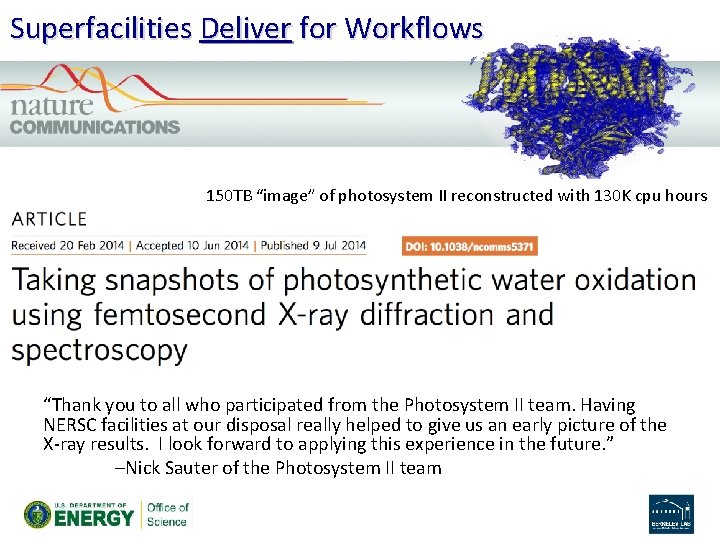

Superfacilities Deliver for Workflows
• A 150 TB “image” of photosystem II was reconstructed with 130K CPU hours.
“Thank you to all who participated from the Photosystem II team. Having NERSC facilities at our disposal really helped to give us an early picture of the X-ray results. I look forward to applying this experience in the future.” – Nick Sauter of the Photosystem II team
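The 130K CPU hours figure translates into turnaround time only once you know how many cores ran concurrently, which the slide does not state. The sketch below makes that arithmetic explicit; the core counts and parallel efficiency are hypothetical, while 150 TB and 130K CPU hours come from the slide.

```python
def wall_clock_hours(cpu_hours: float, cores: int, efficiency: float = 1.0) -> float:
    """Elapsed hours if cpu_hours of work is spread over cores at the given parallel efficiency."""
    return cpu_hours / (cores * efficiency)


def gb_per_cpu_hour(terabytes: float, cpu_hours: float) -> float:
    """Average data volume processed per CPU hour (decimal units)."""
    return terabytes * 1000 / cpu_hours


if __name__ == "__main__":
    # 150 TB and 130K CPU hours are from the slide; the core counts are hypothetical.
    for cores in (1_000, 10_000):
        print(cores, "cores ->", wall_clock_hours(130_000, cores), "h")
    print(round(gb_per_cpu_hour(150, 130_000), 2), "GB per CPU hour")
```

At an assumed 10,000 cores the job finishes in about 13 hours, versus about 130 hours at 1,000 cores, which illustrates why access to a large shared machine gave the team an early picture of the results.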

Thank You