Floating Point vs. Fixed Point for FPGA

- Slides: 21


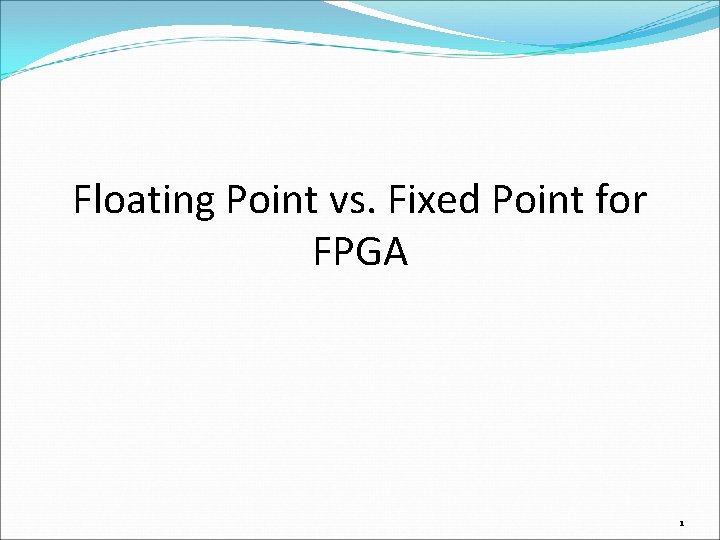

Applications

- Digital Signal Processing
  - Encoders/Decoders
  - Compression
  - Encryption
- Control
  - Automotive/Aerospace
  - Industrial
  - Space

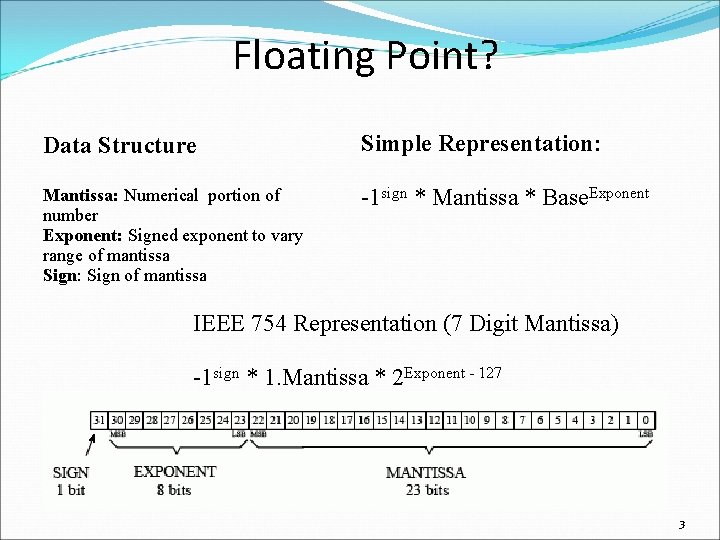

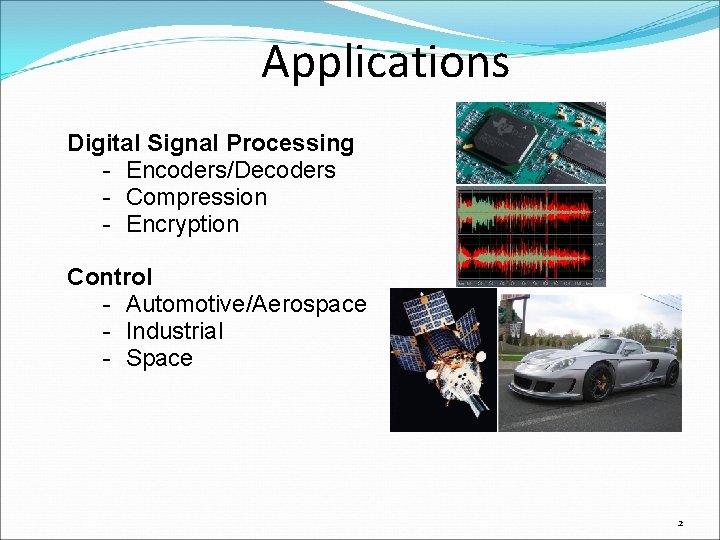

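Floating Point?

Data structure, simple representation:

- Mantissa: numerical portion of the number
- Exponent: signed exponent that varies the range of the mantissa
- Sign: sign of the mantissa

$(-1)^{\text{sign}} \times \text{mantissa} \times \text{base}^{\text{exponent}}$

IEEE 754 single-precision representation (about 7 decimal digits of mantissa):

$(-1)^{\text{sign}} \times 1.\text{mantissa} \times 2^{\text{exponent} - 127}$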
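To make the IEEE 754 formula concrete, the following minimal sketch splits a single-precision value into its three fields and rebuilds it. The function names `decode_float32` and `field_value` are illustrative and not from the slides, and the rebuild step assumes a normalized number (no zeros, denormals, infinities, or NaNs).

```python
import struct

def decode_float32(x):
    """Split x (rounded to IEEE 754 single precision) into (sign, exponent, mantissa)."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                  # 1 bit
    exponent = (bits >> 23) & 0xFF     # 8 bits, stored with a bias of 127
    mantissa = bits & 0x7FFFFF         # 23 fraction bits (the leading 1 is implied)
    return sign, exponent, mantissa

def field_value(sign, exponent, mantissa):
    """Evaluate (-1)^sign * 1.mantissa * 2^(exponent - 127) for a normalized number."""
    return (-1) ** sign * (1 + mantissa / 2**23) * 2.0 ** (exponent - 127)

sign, exponent, mantissa = decode_float32(-6.5)
print(sign, exponent, mantissa)                # 1 129 5242880
print(field_value(sign, exponent, mantissa))   # -6.5
```

Here -6.5 is -1.625 × 2^2, so the stored exponent is 2 + 127 = 129 and the fraction field holds 0.625 × 2^23.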

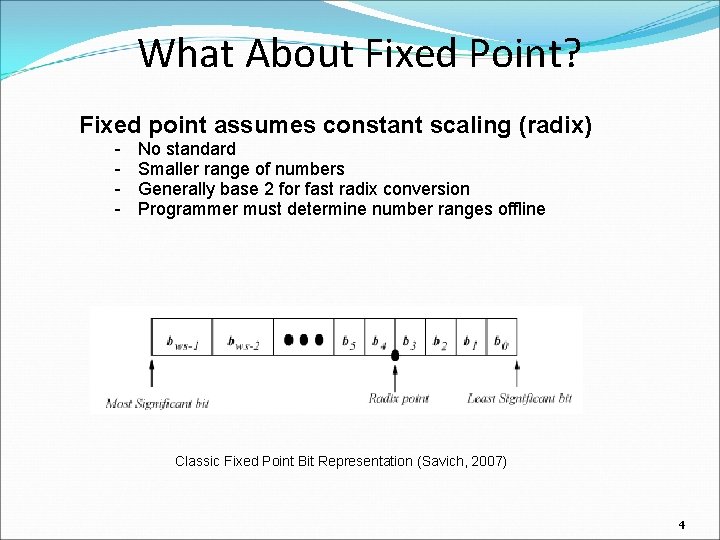

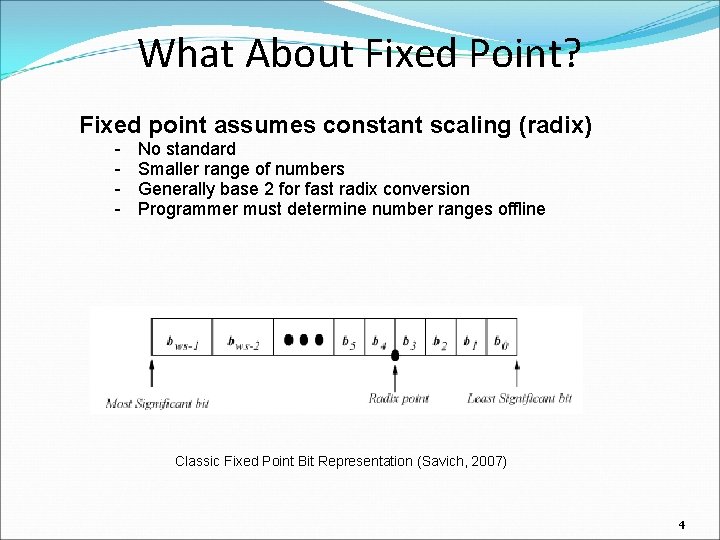

What About Fixed Point?

- Fixed point assumes constant scaling (radix)
- No standard
- Smaller range of numbers
- Generally base 2 for fast radix conversion
- Programmer must determine number ranges offline

Classic Fixed Point Bit Representation (Savich, 2007)
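As a rough illustration of constant base 2 scaling, the sketch below stores a real number as a signed integer with an implied radix point. The 16-bit word with 8 fractional bits is an assumption chosen for the example, not a format taken from the slides, and `to_fixed` / `to_float` are hypothetical helper names.

```python
WORD_BITS = 16   # assumed word size
FRAC_BITS = 8    # assumed number of fractional bits (scaling of 2**-8)

def to_fixed(x, frac_bits=FRAC_BITS, word_bits=WORD_BITS):
    """Scale x by 2**frac_bits and round to the nearest integer code."""
    raw = round(x * (1 << frac_bits))
    lo, hi = -(1 << (word_bits - 1)), (1 << (word_bits - 1)) - 1
    if not lo <= raw <= hi:
        # The range has to be known ahead of time; nothing adapts at run time.
        raise OverflowError(f"{x} does not fit in the chosen format")
    return raw

def to_float(raw, frac_bits=FRAC_BITS):
    """Undo the scaling to recover the represented value."""
    return raw / (1 << frac_bits)

code = to_fixed(3.14159)
print(code, to_float(code))   # 804 3.140625
```

With these assumed sizes the representable range is only about -128 to +128 in steps of 1/256, which is why the number ranges have to be worked out offline.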

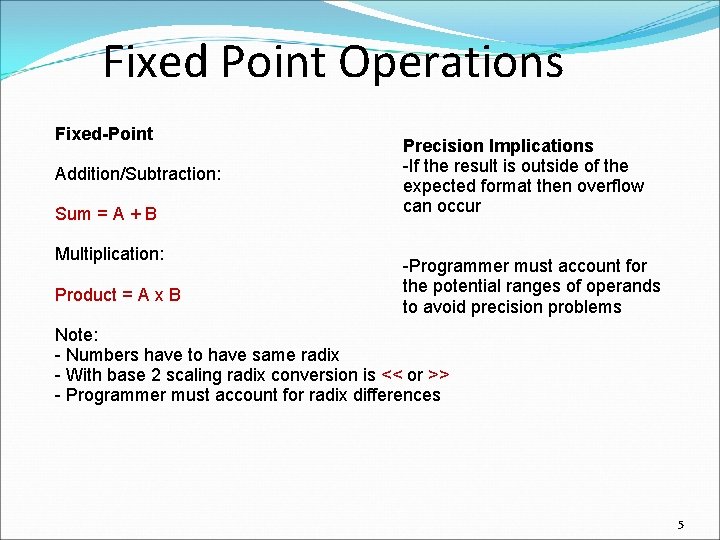

Fixed Point Operations

Fixed-point arithmetic:

- Addition/Subtraction: Sum = A + B
- Multiplication: Product = A × B

Precision implications:

- If the result is outside the expected format, overflow can occur
- Programmer must account for the potential ranges of operands to avoid precision problems

Note:

- Numbers have to have the same radix
- With base 2 scaling, radix conversion is a shift (<< or >>)
- Programmer must account for radix differences
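The notes above can be sketched with plain integers. In this hedged example each operand is a pair of a raw integer and its number of fractional bits; the helper names (`align`, `fixed_add`, `fixed_mul`, `fits`) are made up for illustration.

```python
def align(raw, from_frac, to_frac):
    """Radix conversion: shift left to gain fractional bits, right to drop them."""
    shift = to_frac - from_frac
    return raw << shift if shift >= 0 else raw >> -shift

def fixed_add(a, a_frac, b, b_frac):
    """Bring both operands to the same radix, then add as integers."""
    frac = max(a_frac, b_frac)
    return align(a, a_frac, frac) + align(b, b_frac, frac), frac

def fixed_mul(a, a_frac, b, b_frac):
    """Multiply as integers; the fractional bit counts add."""
    return a * b, a_frac + b_frac

def fits(raw, word_bits):
    """True if raw is representable as a signed word of word_bits bits."""
    return -(1 << (word_bits - 1)) <= raw < (1 << (word_bits - 1))

a, a_frac = 24, 4     # 1.5  with 4 fractional bits
b, b_frac = 576, 8    # 2.25 with 8 fractional bits

total, t_frac = fixed_add(a, a_frac, b, b_frac)
prod, p_frac = fixed_mul(a, a_frac, b, b_frac)
print(total / 2**t_frac)   # 3.75
print(prod / 2**p_frac)    # 3.375
print(fits(prod, 16))      # True
```

The product carries 12 fractional bits, so a design that keeps a 16-bit result with 8 fractional bits would need one more right shift and a range check before storing it.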

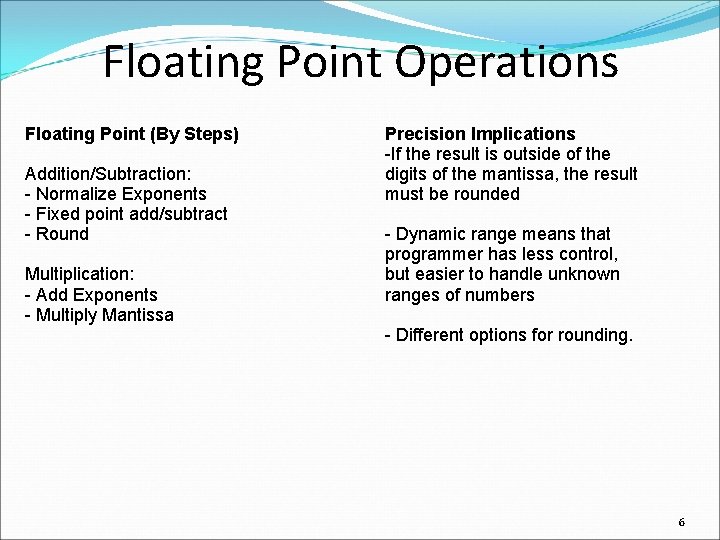

Floating Point Operations

Floating point (by steps):

- Addition/Subtraction:
  - Normalize exponents
  - Fixed point add/subtract
  - Round
- Multiplication:
  - Add exponents
  - Multiply mantissas

Precision implications:

- If the result is outside the digits of the mantissa, the result must be rounded
- Dynamic range means the programmer has less control, but unknown ranges of numbers are easier to handle
- Different options exist for rounding
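The steps listed above can be walked through with a toy format. This is a sketch under stated assumptions, not a hardware design: a number is a pair `(mantissa, exponent)` meaning mantissa × 2^exponent, the mantissa is limited to an assumed 8 bits, rounding is round-half-to-even, and alignment shifts left using Python's unbounded integers where real hardware would shift the smaller operand right and keep guard bits.

```python
MANT_BITS = 8   # assumed toy mantissa width

def normalize(mant, exp, bits=MANT_BITS):
    """Round mant * 2**exp so that |mant| fits in `bits` bits (round half to even)."""
    sign = -1 if mant < 0 else 1
    mag = abs(mant)
    excess = mag.bit_length() - bits
    if excess > 0:
        half = 1 << (excess - 1)
        remainder = mag & ((1 << excess) - 1)
        mag >>= excess
        exp += excess
        if remainder > half or (remainder == half and mag & 1):
            mag += 1
            if mag.bit_length() > bits:   # rounding carried into a new bit
                mag >>= 1
                exp += 1
    return sign * mag, exp

def fp_add(a, b):
    """Align exponents, add the mantissas as integers, then round."""
    (ma, ea), (mb, eb) = a, b
    exp = min(ea, eb)
    return normalize((ma << (ea - exp)) + (mb << (eb - exp)), exp)

def fp_mul(a, b):
    """Add exponents, multiply mantissas, then round."""
    (ma, ea), (mb, eb) = a, b
    return normalize(ma * mb, ea + eb)

x = (200, 0)    # 200.0
y = (3, -2)     # 0.75
print(fp_add(x, y))   # (201, 0): 200.75 rounded to 8 mantissa bits
print(fp_mul(x, y))   # (150, 0): 150.0 is exact
```

The addition shows the precision implication directly: the exact sum 200.75 needs 10 bits, so it is rounded to 201.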

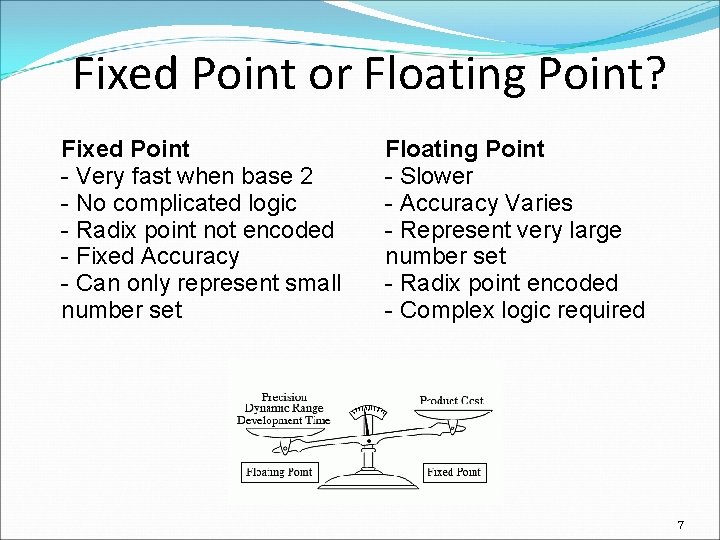

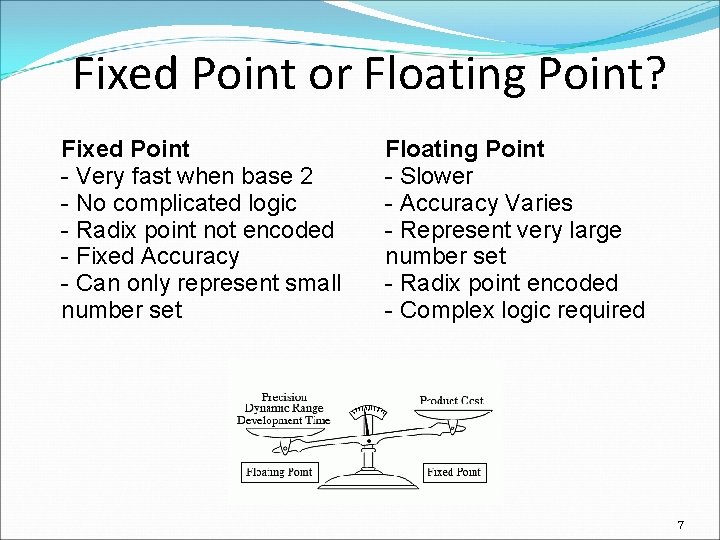

Fixed Point or Floating Point?

Fixed point:

- Very fast when base 2
- No complicated logic
- Radix point not encoded
- Fixed accuracy
- Can only represent a small number set

Floating point:

- Slower
- Accuracy varies
- Represents a very large number set
- Radix point encoded
- Complex logic required
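The "fixed accuracy" versus "accuracy varies" contrast can be seen by comparing step sizes. The sketch below assumes a fixed-point format with 16 fractional bits and IEEE 754 single precision (24-bit significand including the implied bit); both function names are illustrative, and `float32_step` only covers normalized values.

```python
import math

def fixed_step(frac_bits):
    """Distance between adjacent fixed-point values: constant everywhere."""
    return 2.0 ** -frac_bits

def float32_step(x):
    """Distance between adjacent single-precision values near a normalized x."""
    _, e = math.frexp(x)       # x = m * 2**e with 0.5 <= |m| < 1
    return 2.0 ** (e - 24)     # 24 significant bits

for x in (0.001, 1.0, 1000.0, 1.0e6):
    print(x, fixed_step(16), float32_step(x))
```

Near 1.0 the single-precision step is 2^-23, finer than the assumed fixed-point step of 2^-16, but near 1.0e6 it has grown to 0.0625.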

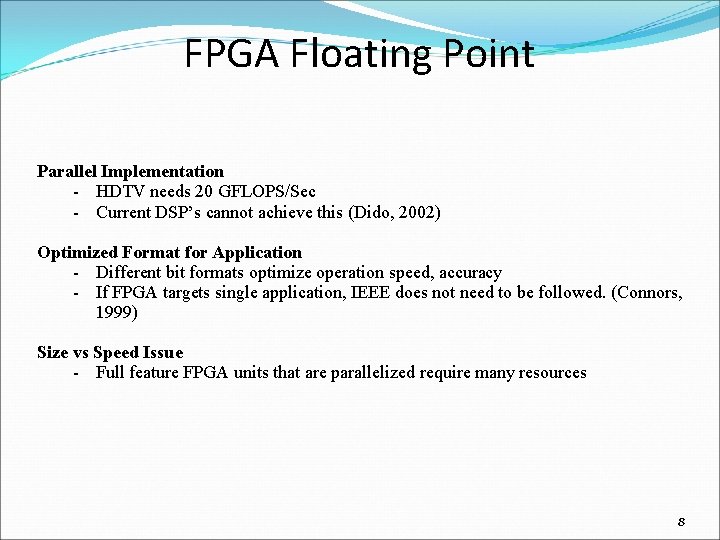

FPGA Floating Point

- Parallel implementation
  - HDTV needs 20 GFLOPS
  - Current DSPs cannot achieve this (Dido, 2002)
- Optimized format for the application
  - Different bit formats optimize operation speed and accuracy
  - If the FPGA targets a single application, IEEE 754 does not need to be followed (Connors, 1999)
- Size vs. speed issue
  - Full-featured floating point units that are parallelized on an FPGA require many resources
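To get a feel for how a non-IEEE bit format trades accuracy for size, the sketch below rounds a value to a chosen number of mantissa fraction bits. It is only an illustration: `quantize_mantissa` is a hypothetical helper, the exponent range is left unlimited, and it does not reproduce any specific format from the cited papers.

```python
import math

def quantize_mantissa(x, frac_bits):
    """Round x to a significand with `frac_bits` fraction bits plus an implied leading 1."""
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)               # x = m * 2**e with 0.5 <= |m| < 1
    scale = 1 << (frac_bits + 1)       # +1 accounts for the implied leading bit
    return math.ldexp(round(m * scale) / scale, e)

for bits in (4, 10, 16, 23):
    q = quantize_mantissa(math.pi, bits)
    print(bits, q, abs(q - math.pi))
```

With 23 fraction bits the result matches the single-precision value of pi, while 4 fraction bits give 3.125; a narrower mantissa means smaller adders and multipliers at the cost of accuracy.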

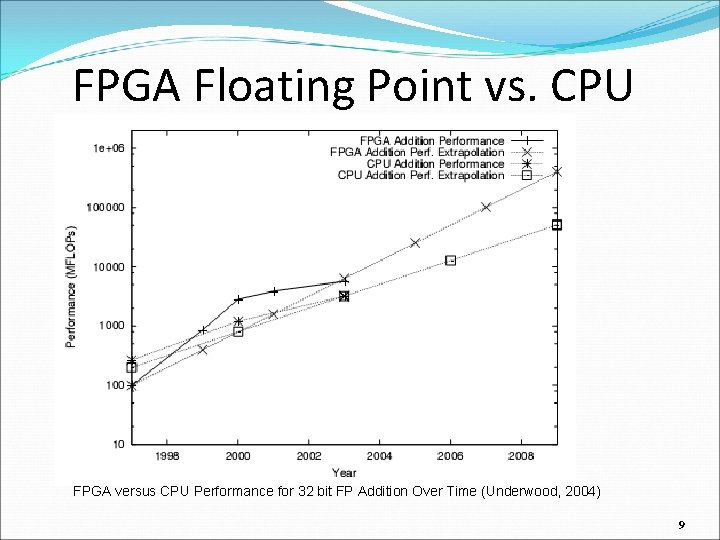

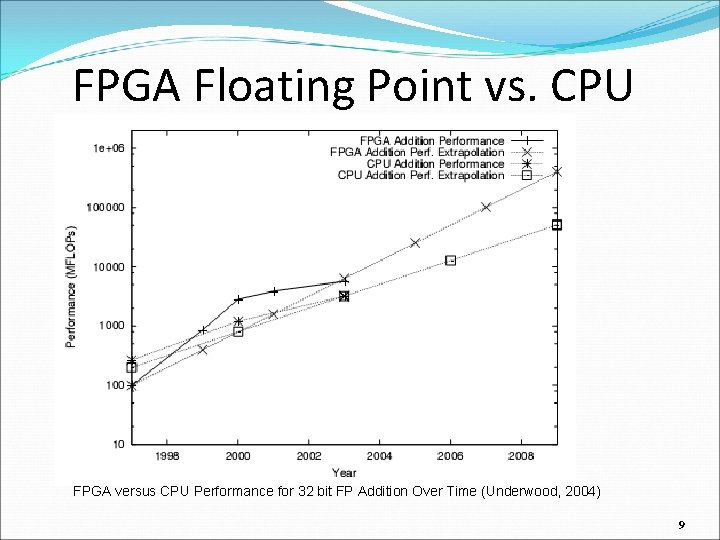

FPGA Floating Point vs. CPU

FPGA versus CPU Performance for 32-bit FP Addition Over Time (Underwood, 2004)

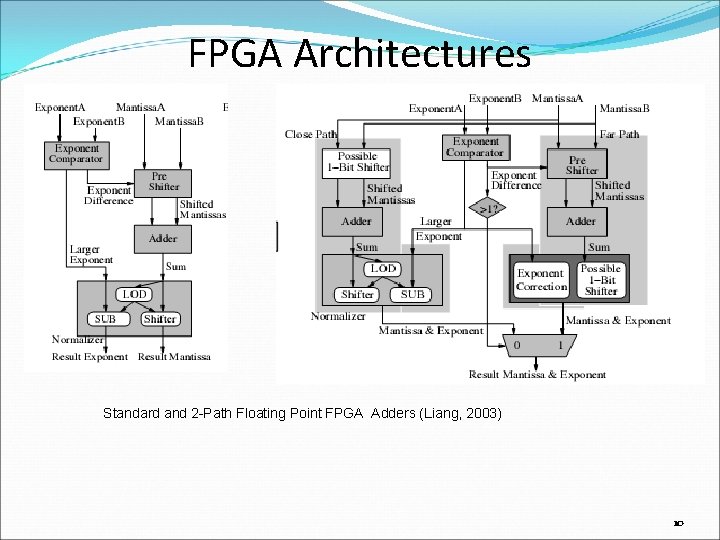

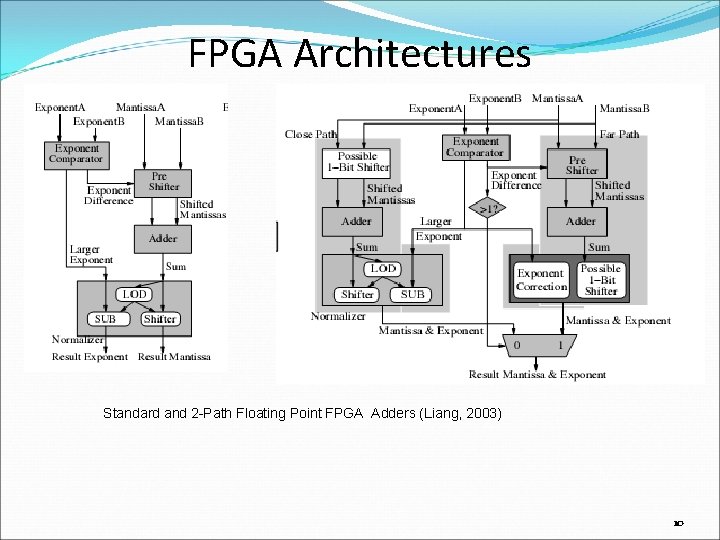

FPGA Architectures

Standard and 2-Path Floating Point FPGA Adders (Liang, 2003)

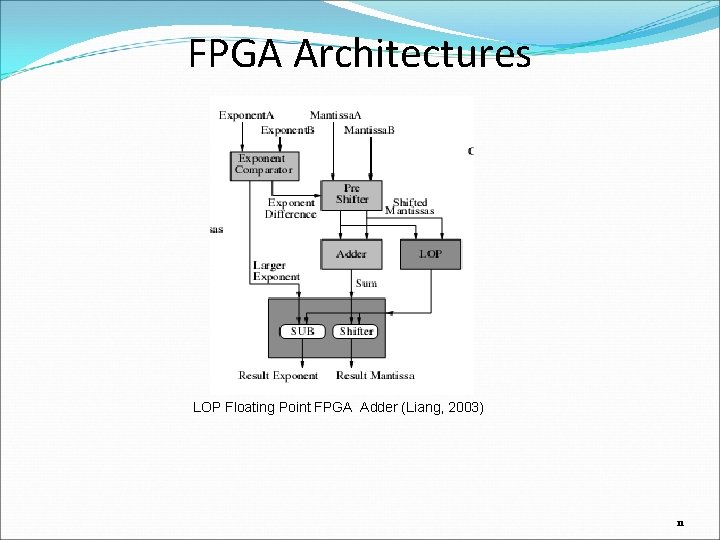

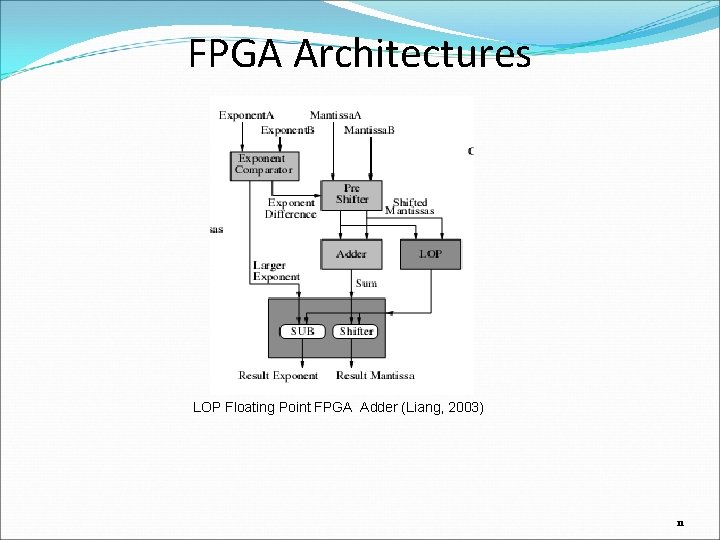

FPGA Architectures

LOP Floating Point FPGA Adder (Liang, 2003)

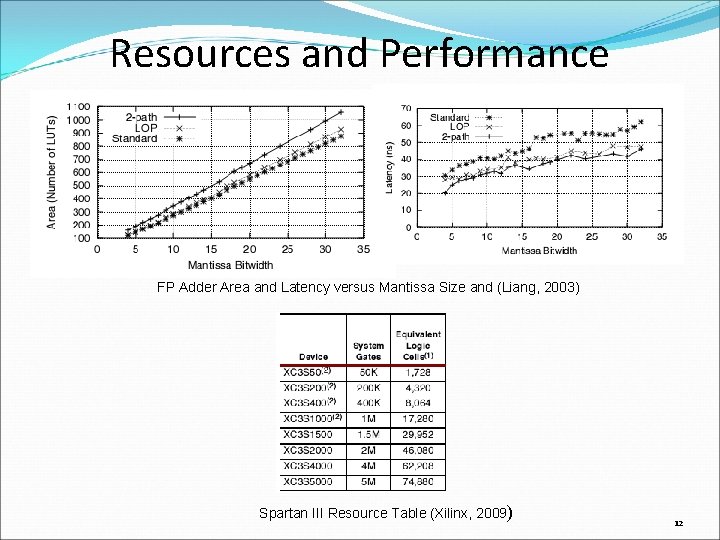

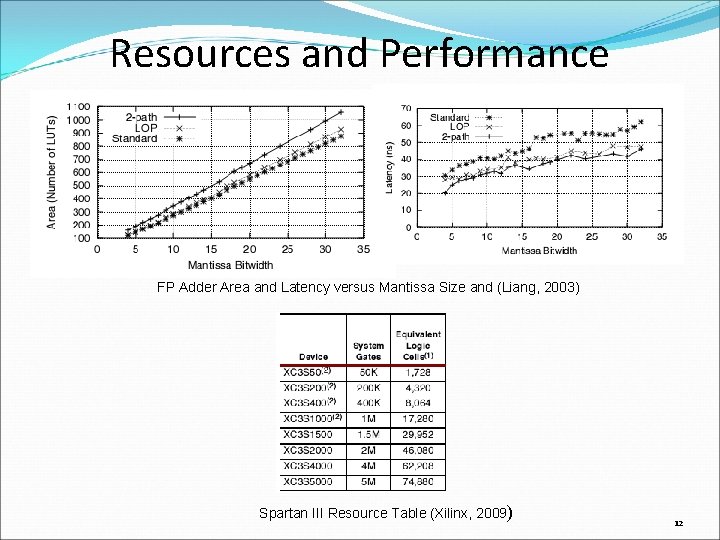

Resources and Performance

FP Adder Area and Latency versus Mantissa Size (Liang, 2003)

Spartan III Resource Table (Xilinx, 2009)

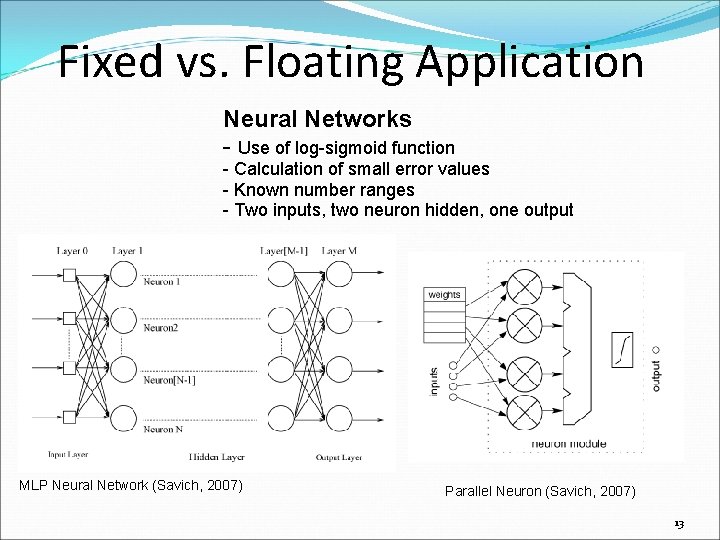

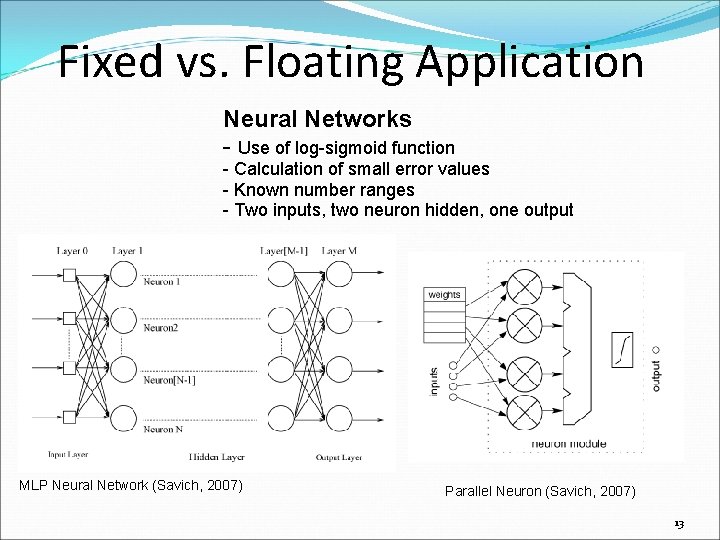

Fixed vs. Floating Application

Neural Networks

- Use of log-sigmoid function
- Calculation of small error values
- Known number ranges
- Two inputs, two hidden neurons, one output

MLP Neural Network (Savich, 2007)

Parallel Neuron (Savich, 2007)
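The interaction between the log-sigmoid function and small error values can be sketched as follows. The input value 8.0 and the 8-bit and 16-bit fractional widths are assumptions picked for illustration; they are not figures from Savich (2007).

```python
import math

def log_sigmoid(x):
    """Log-sigmoid activation, 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))

def quantize_fixed(x, frac_bits):
    """Round x to the nearest multiple of 2**-frac_bits."""
    step = 2.0 ** -frac_bits
    return round(x / step) * step

# A neuron that is almost saturated leaves only a tiny error term.
error = 1.0 - log_sigmoid(8.0)        # roughly 0.000335

print(error)
print(quantize_fixed(error, 8))       # 0.0: the error vanishes
print(quantize_fixed(error, 16))      # small but non-zero
```

With too few fractional bits the error rounds to zero and training would stall on that sample, which is why the known number ranges still have to be checked against the chosen resolution.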

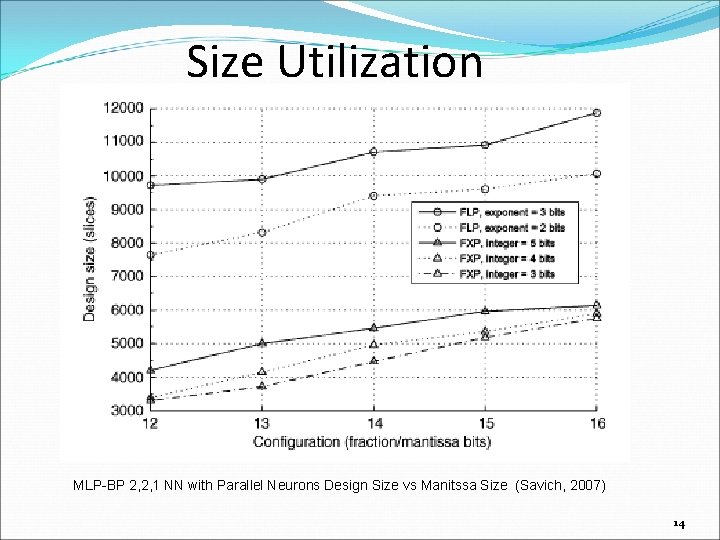

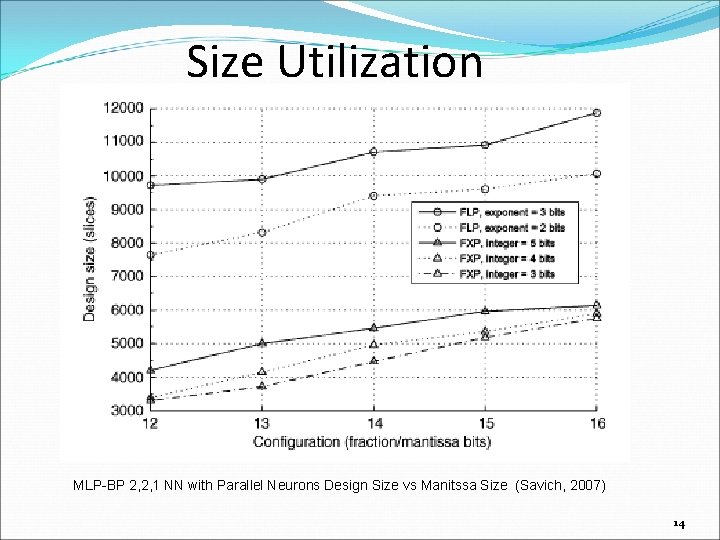

Size Utilization

MLP-BP 2, 2, 1 NN with Parallel Neurons: Design Size vs. Mantissa Size (Savich, 2007)

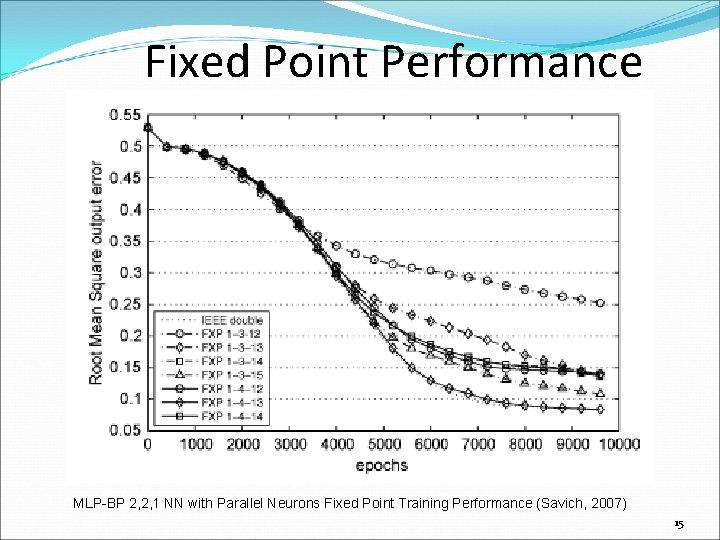

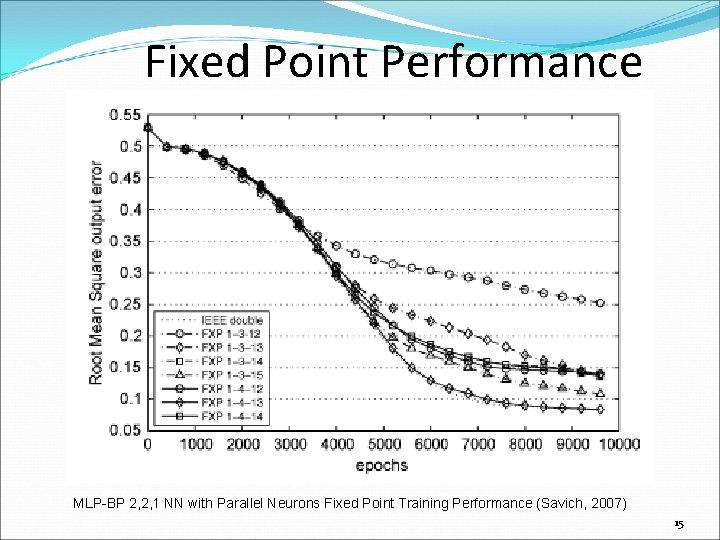

Fixed Point Performance

MLP-BP 2, 2, 1 NN with Parallel Neurons: Fixed Point Training Performance (Savich, 2007)

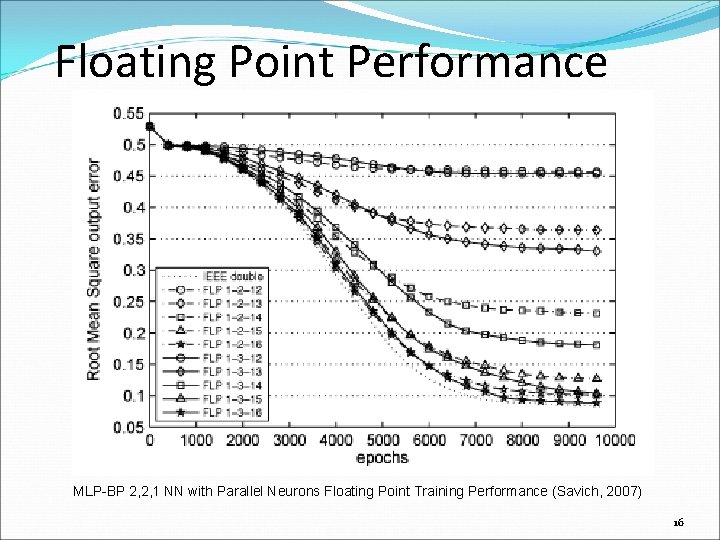

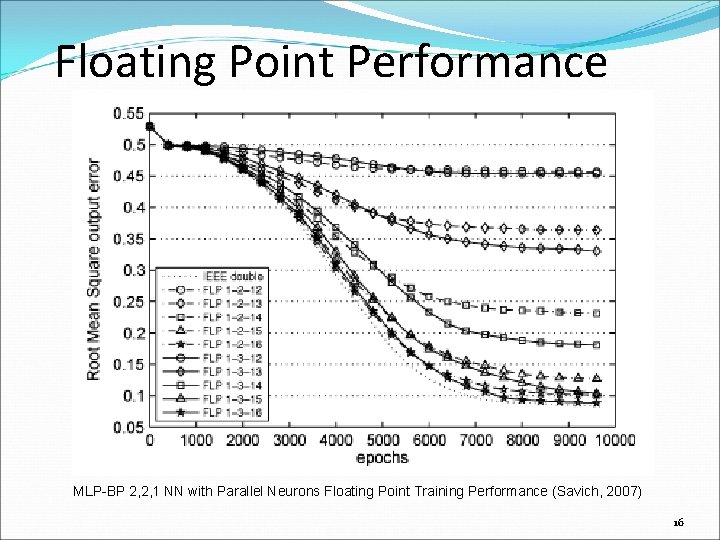

Floating Point Performance

MLP-BP 2, 2, 1 NN with Parallel Neurons: Floating Point Training Performance (Savich, 2007)

When do we NEED Floating Point?

1. Accuracy is paramount
   - Accuracy at small numbers while operating on large numbers
2. Range of numbers is unpredictable
   - Fixed point programs must anticipate number ranges or errors will occur
3. Development time is very short
   - With fixed point, time must be spent analyzing the algorithm at a low level to determine number ranges
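Point 2 can be made concrete with a sketch of what happens when a fixed-point sum leaves the anticipated range. It assumes a 16-bit two's complement word with 8 fractional bits and wraparound on overflow (saturating hardware would behave differently); `wrap16` is a hypothetical helper.

```python
FRAC_BITS = 8   # assumed: 16-bit word, 8 fractional bits, range about -128 to +128

def wrap16(raw):
    """Keep the low 16 bits and reinterpret them as a signed two's complement value."""
    raw &= 0xFFFF
    return raw - 0x10000 if raw & 0x8000 else raw

a = 100 * (1 << FRAC_BITS)   # 100.0 as a raw fixed-point code (25600)
b = 100 * (1 << FRAC_BITS)

fixed_sum = wrap16(a + b) / (1 << FRAC_BITS)
float_sum = 100.0 + 100.0

print(fixed_sum)   # -56.0
print(float_sum)   # 200.0
```

The floating point sum simply moves to a larger exponent, while the fixed-point result silently wraps because 200 lies outside the range the format was sized for.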

Floating Point Application

Military Radar

- Compute complex integrals at high speed
- Accuracy is required due to obvious safety implications
- Floating point lowers the noise introduced while executing an FFT

High Performance DSP

- More favorable signal-to-noise ratio due to high accuracy at low values
- Signal-to-noise ratio for floating point is 30 × 10^6 to 1 versus 30,000 to 1 for fixed point
- A high-resolution ADC (20 bits or more) requires floating point; fixed point registers are too small for that accuracy
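For readers who think in decibels, the two signal-to-noise figures above can be converted as in the sketch below. It assumes the ratios are amplitude ratios, which the slide does not state; if they were power ratios the factor would be 10 instead of 20.

```python
import math

def ratio_to_db(amplitude_ratio):
    """Convert an amplitude ratio to decibels (assumption: amplitude, not power)."""
    return 20.0 * math.log10(amplitude_ratio)

floating_db = ratio_to_db(30e6)     # 30 x 10^6 to 1
fixed_db = ratio_to_db(30_000)      # 30,000 to 1

print(round(floating_db, 1))             # 149.5
print(round(fixed_db, 1))                # 89.5
print(round(floating_db - fixed_db, 1))  # 60.0
```

Under that assumption the factor of 1000 between the two ratios corresponds to a 60 dB advantage for floating point.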

Conclusions

Fixed point is preferable for most applications:

- Low resources
- Low gate delays
- Simple implementation of HW components

Floating point is useful when:

- Accuracy over a large range of numbers is required
- It is impossible or too hard to estimate number ranges
- Programming time is severely limited
- The floating point architecture is best customized via FPGA

References

Dido, J., Geraudie, N., Loiseau, L., Payeur, O., Savaria, Y., & Poirier, D. (2002). A flexible floating-point format for optimizing data-paths and operators in FPGA based DSPs. FPGA '02: Proceedings of the 2002 ACM/SIGDA Tenth International Symposium on Field-Programmable Gate Arrays, Monterey, California, USA. 50-55.

Liang, J., Tessier, R., & Mencer, O. (2003). Floating point unit generation and evaluation for FPGAs. FCCM '03: Proceedings of the 11th Annual IEEE Symposium on Field-Programmable Custom Computing Machines, 185.

Savich, A. W., Moussa, M., & Areibi, S. (2007). The impact of arithmetic representation on implementing MLP-BP on FPGAs: A study. Neural Networks, IEEE Transactions on, 18(1), 240-252.

Underwood, K. (2004). FPGAs vs. CPUs: Trends in peak floating-point performance. FPGA '04: Proceedings of the 2004 ACM/SIGDA 12th International Symposium on Field Programmable Gate Arrays, Monterey, California, USA. 171-180.

Xilinx. (2009). Xilinx DS099 Spartan-3 FPGA family data sheet. Retrieved 02/20, 2010, from www.xilinx.com/support/documentation/data_sheets/ds099.pdf

Yoji, D. C., Connors, D. A., Yamada, Y., & Hwu, W. W. (1998). A software-oriented floating-point format for enhancing automotive control systems. Control Systems, Workshop on Compiler and Architecture Support for Embedded Computing Systems,

Thank You

Questions?