Expressive Graphical Models in Variational Approximations: Chain-Graphs and Hidden Variables

- Slides: 22

Expressive Graphical Models in Variational Approximations: Chain-Graphs and Hidden Variables. Tal El-Hay & Nir Friedman, School of Computer Science & Engineering, Hebrew University.

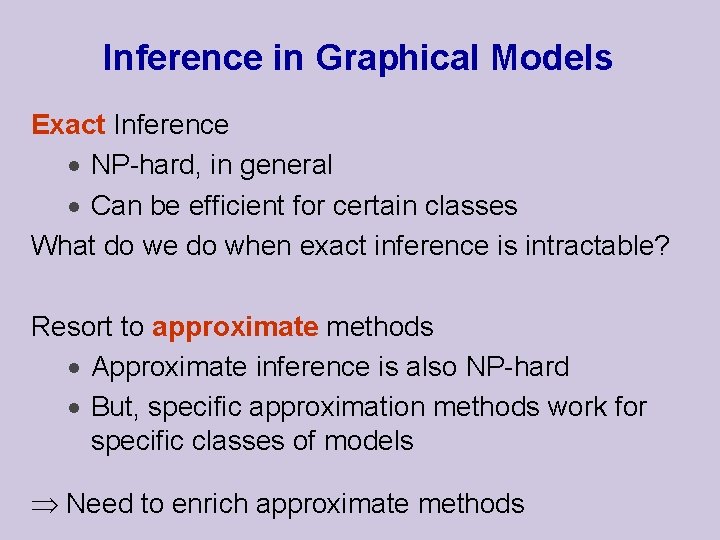

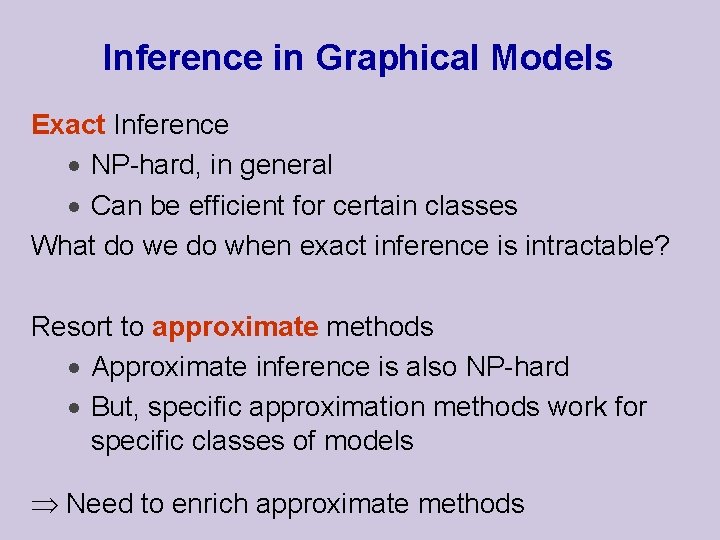

Inference in Graphical Models

- Exact inference
  - NP-hard, in general
  - Can be efficient for certain classes
- What do we do when exact inference is intractable? Resort to approximate methods
  - Approximate inference is also NP-hard
  - But specific approximation methods work for specific classes of models
- Need to enrich approximate methods

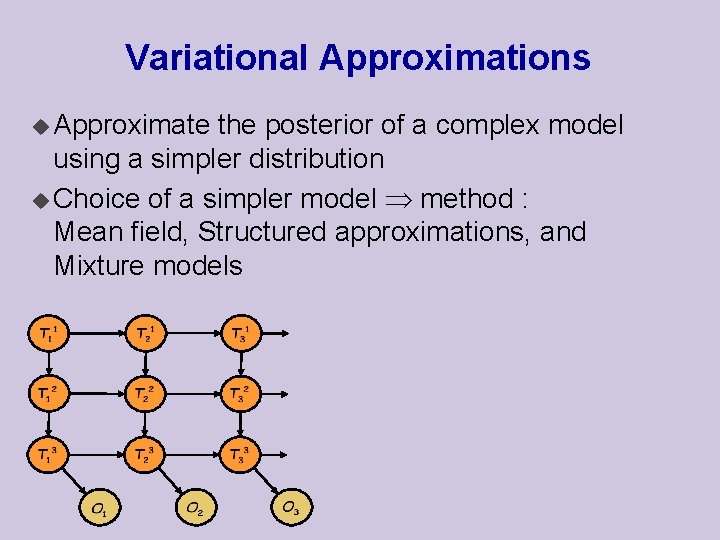

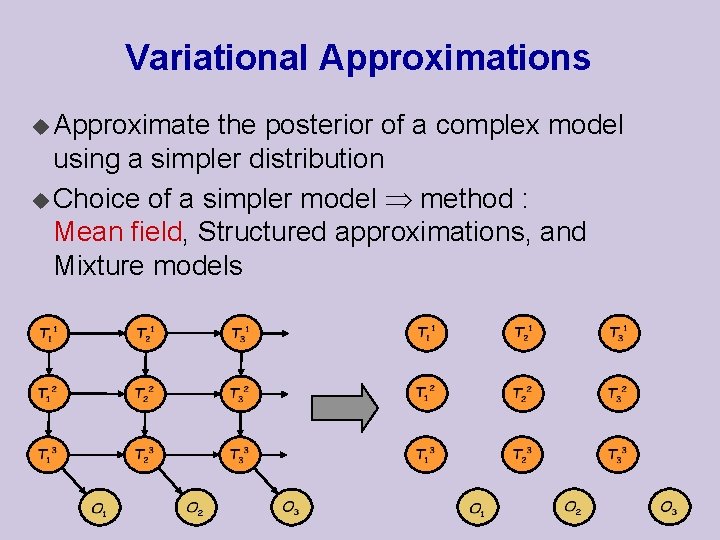

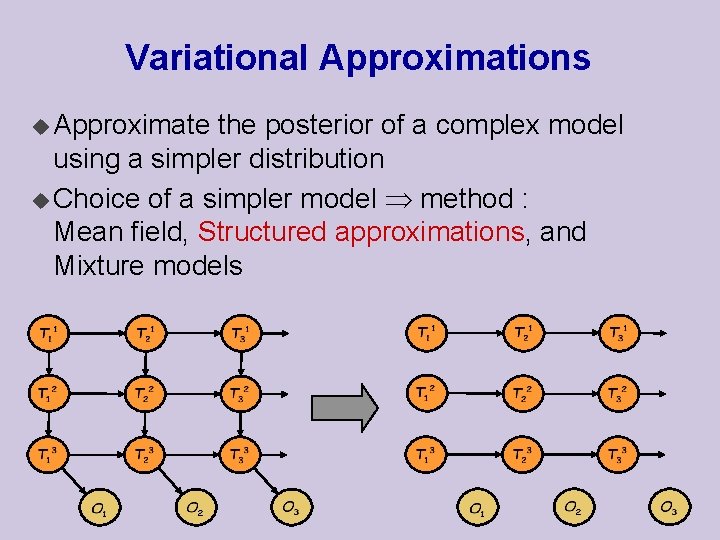

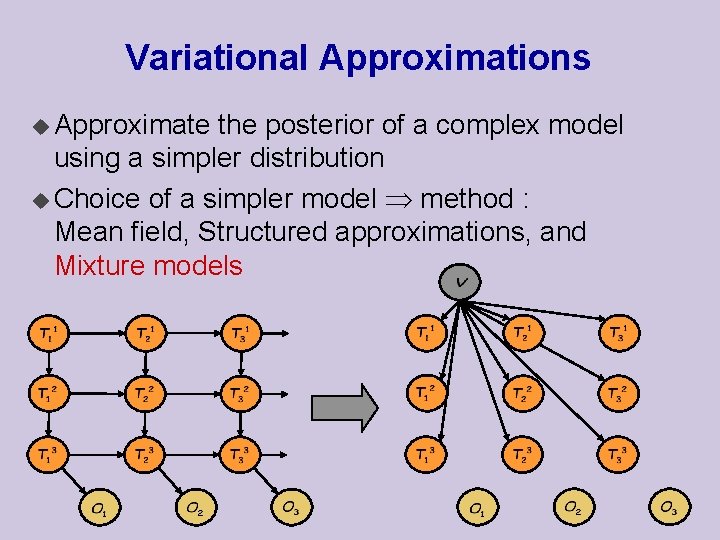

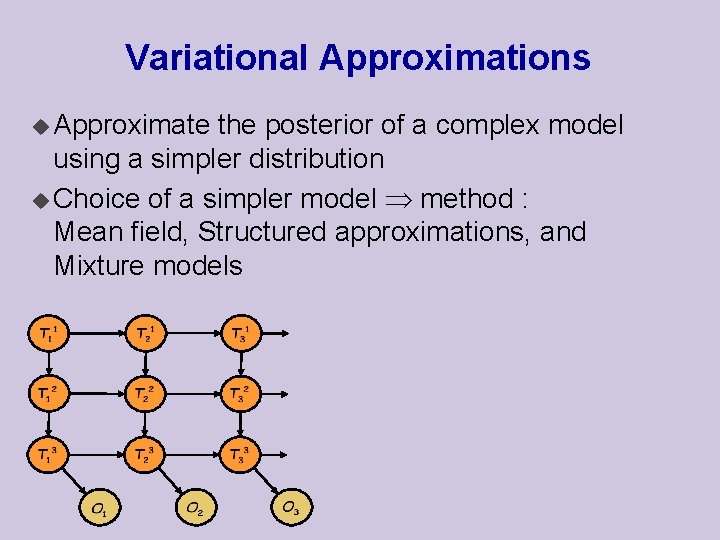

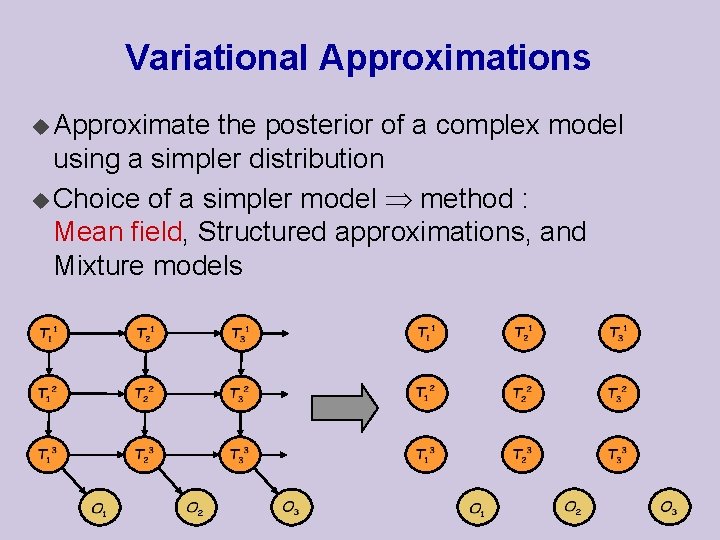

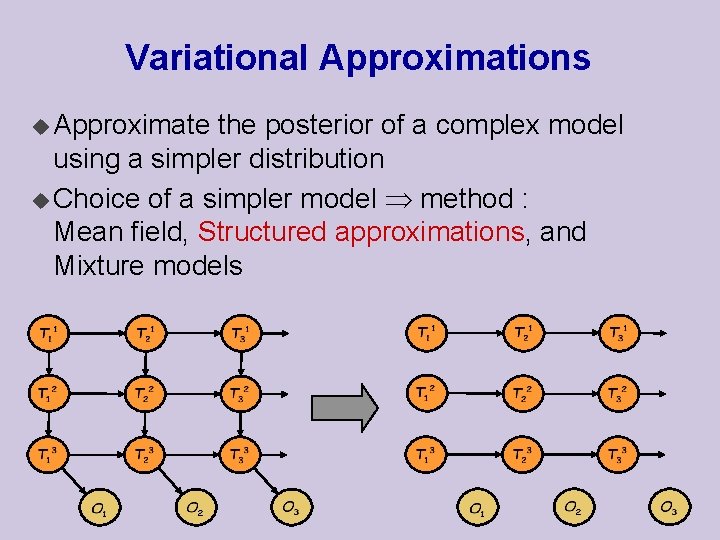

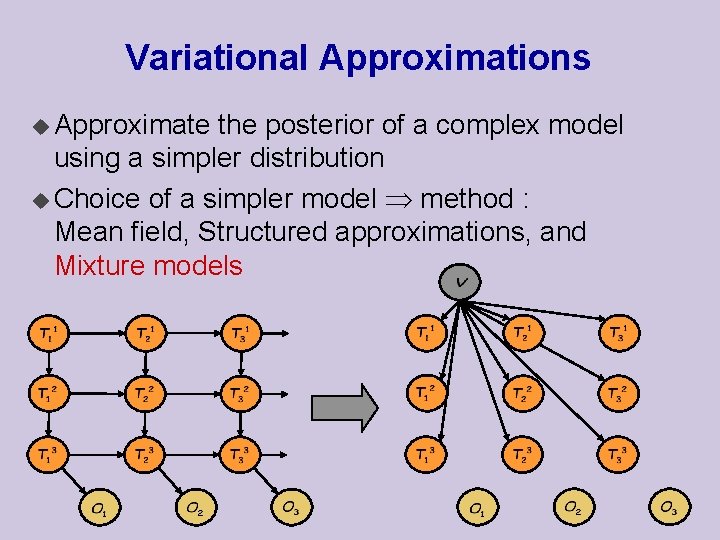

Variational Approximations

- Approximate the posterior of a complex model using a simpler distribution
- The choice of the simpler model determines the method: mean field, structured approximations, and mixture models

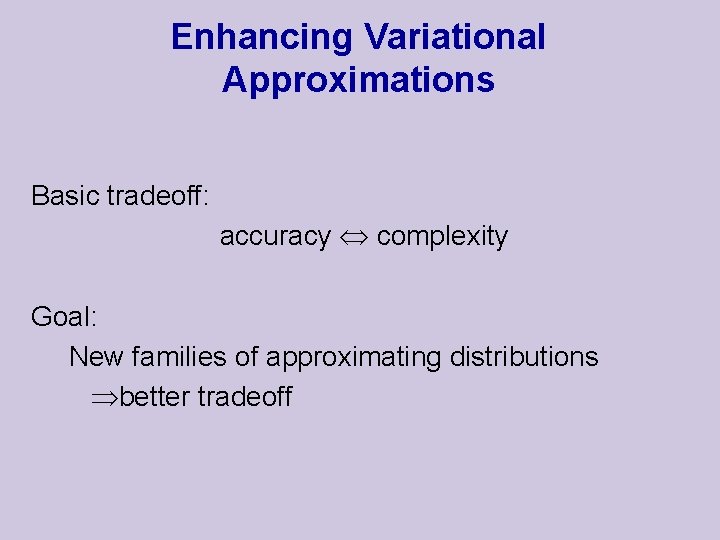

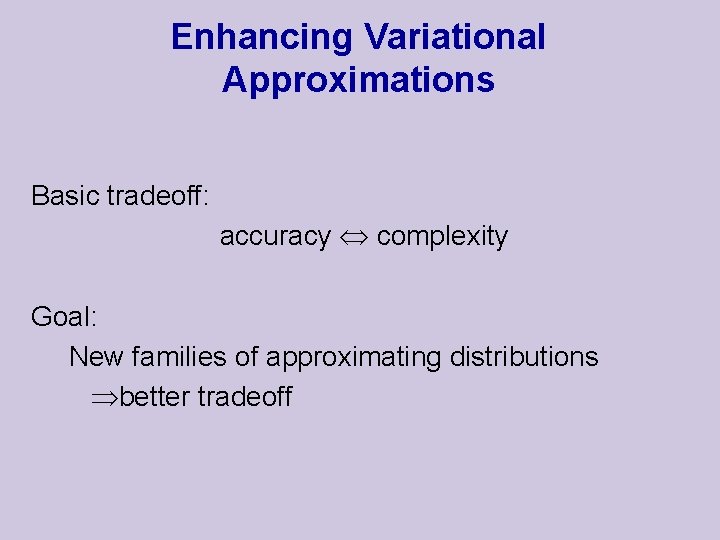

Enhancing Variational Approximations

- Basic tradeoff: accuracy vs. complexity
- Goal: new families of approximating distributions that give a better tradeoff

![Outline](https://slidetodoc.com/presentation_image_h2/8ec22e2daa376428955e024b535f4f85/image-8.jpg)

Outline

- Structured variational approximations [review]
- Using chain-graphs
- Adding hidden variables
- Discussion

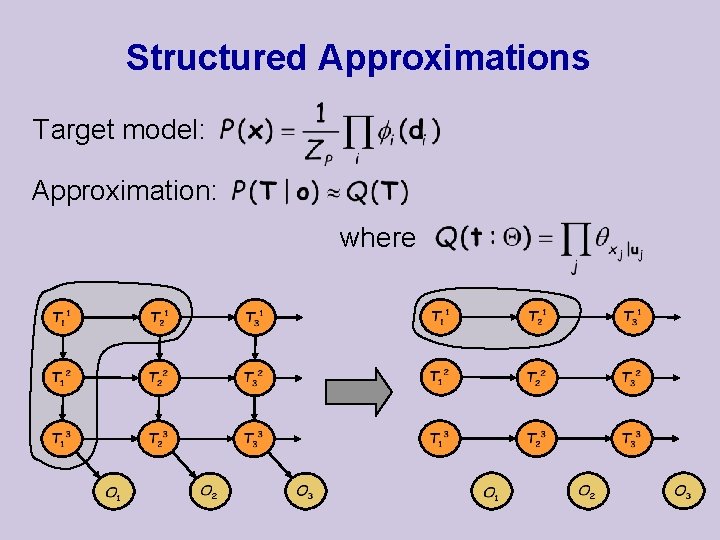

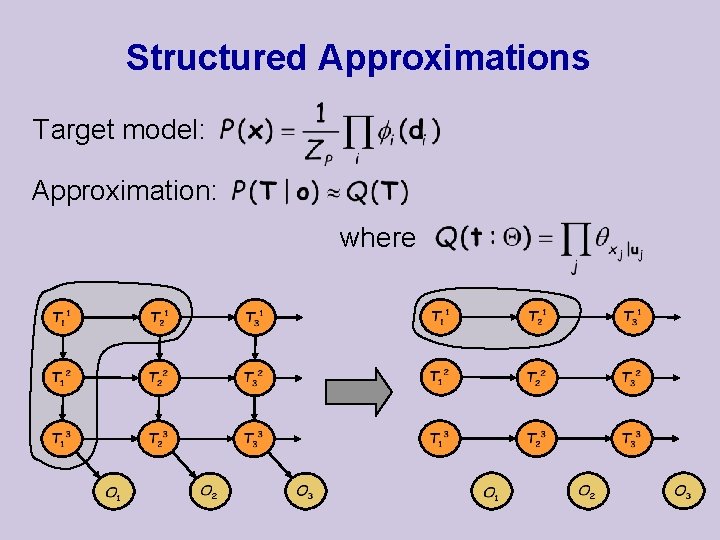

Structured Approximations Target model: Approximation: where

![Structured Approximations: the lower bound F[Q]](https://slidetodoc.com/presentation_image_h2/8ec22e2daa376428955e024b535f4f85/image-10.jpg)

Structured Approximations

- Goal: maximize the functional F[Q], which equals the log-likelihood minus the KL distance between Q and the posterior
- KL distance ≥ 0, so F[Q] is a lower bound on the log-likelihood
- If Q is tractable, then F[Q] might be tractable
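The relation between F[Q], the KL distance, and the log-likelihood can be checked numerically. The sketch below is not taken from the slides: it assumes a toy joint `p_joint` over two binary hidden variables with the evidence already fixed, and a fully factorized `q` as the tractable approximation. All names and numbers are hypothetical.

```python
import math
from itertools import product

# Hypothetical joint P(t1, t2, o) with the evidence o held fixed.
p_joint = {(0, 0): 0.30, (0, 1): 0.05, (1, 0): 0.10, (1, 1): 0.15}

p_evidence = sum(p_joint.values())          # P(o)
log_likelihood = math.log(p_evidence)
posterior = {t: p / p_evidence for t, p in p_joint.items()}


def lower_bound(q):
    """F[Q] = E_Q[log P(T, o)] - E_Q[log Q(T)]."""
    return sum(q[t] * (math.log(p_joint[t]) - math.log(q[t]))
               for t in q if q[t] > 0)


def kl(q, p):
    """KL(Q || P) over the shared state space."""
    return sum(q[t] * (math.log(q[t]) - math.log(p[t]))
               for t in q if q[t] > 0)


# A fully factorized Q(t1) Q(t2), chosen arbitrarily for illustration.
q1 = [0.6, 0.4]
q2 = [0.7, 0.3]
q = {(a, b): q1[a] * q2[b] for a, b in product(range(2), repeat=2)}

f_q = lower_bound(q)
gap = kl(q, posterior)

print(f"log P(o) = {log_likelihood:.4f}")
print(f"F[Q]     = {f_q:.4f}")
print(f"KL       = {gap:.4f}")
```

In this toy setting `f_q + gap` matches `log_likelihood` up to rounding, and the bound is tight only when `q` equals the posterior.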

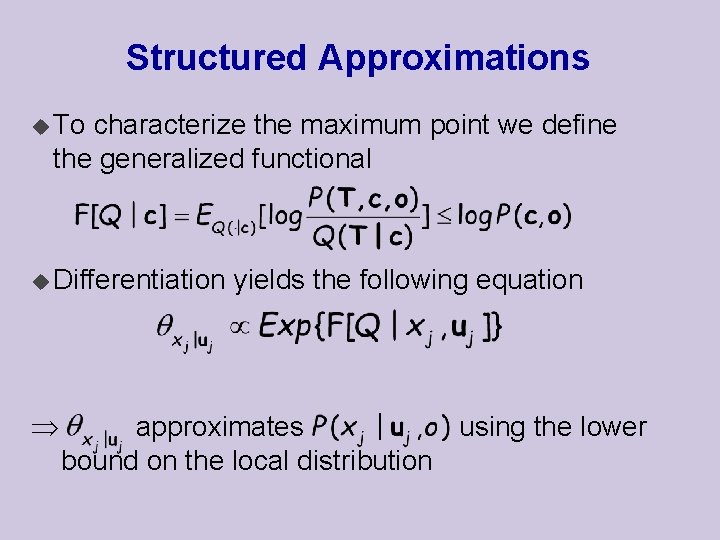

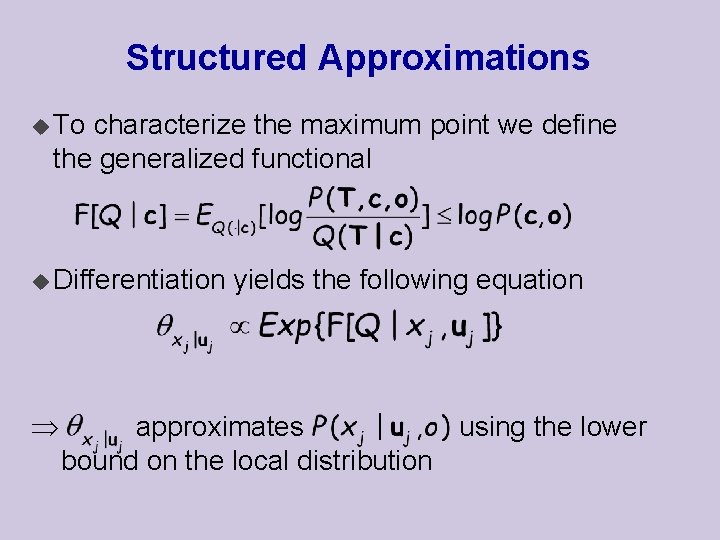

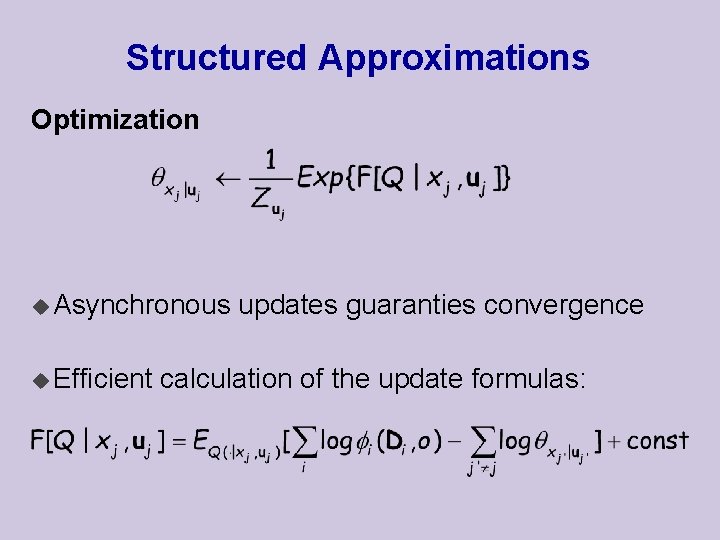

Structured Approximations

- To characterize the maximum point we define the generalized functional
- Differentiation yields the following equation
- approximates using the lower bound on the local distribution

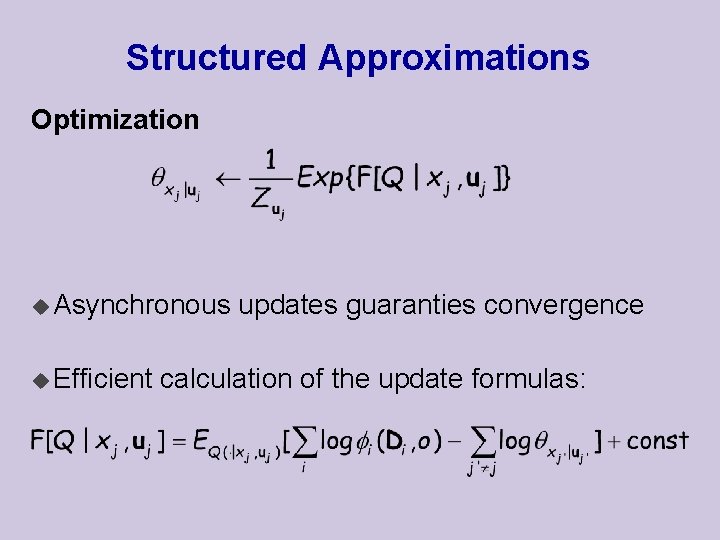

Structured Approximations: Optimization

- Asynchronous updates guarantee convergence
- Efficient calculation of the update formulas:
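To illustrate why asynchronous updates cannot decrease the bound, here is a minimal sketch using the simplest special case, a fully factorized (mean-field) Q. It does not reproduce the slides' structured update formulas; the model `log_p`, its parameters, and the helper names are all hypothetical. Each step replaces one factor by its optimum with the others held fixed.

```python
import math
from itertools import product

N = 3  # number of binary hidden variables (toy size)


def log_p(t):
    """Hypothetical unnormalized log P(t, o): a chain with attractive couplings."""
    field = [0.5, -0.3, 0.2]
    coupling = 1.0
    score = sum(field[i] * t[i] for i in range(N))
    score += sum(coupling * (t[i] == t[i + 1]) for i in range(N - 1))
    return score


STATES = list(product(range(2), repeat=N))


def q_prob(q, t):
    """Probability of state t under the factorized Q."""
    return math.prod(q[i][t[i]] for i in range(N))


def lower_bound(q):
    """F[Q] = E_Q[log P(T, o)] + H(Q) for a fully factorized Q."""
    expected = sum(q_prob(q, t) * log_p(t) for t in STATES)
    entropy = -sum(p * math.log(p) for qi in q for p in qi if p > 0)
    return expected + entropy


def update(q, i):
    """Replace q[i] by its optimum with all other factors held fixed."""
    scores = []
    for x in range(2):
        total = 0.0
        for t in STATES:
            if t[i] != x:
                continue
            weight = math.prod(q[j][t[j]] for j in range(N) if j != i)
            total += weight * log_p(t)
        scores.append(total)
    shift = max(scores)                      # for numerical stability
    unnorm = [math.exp(s - shift) for s in scores]
    z = sum(unnorm)
    q[i] = [u / z for u in unnorm]


q = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]     # arbitrary starting point
history = [lower_bound(q)]
for sweep in range(10):
    for i in range(N):                       # asynchronous: one factor at a time
        update(q, i)
        history.append(lower_bound(q))

print(f"initial F = {history[0]:.4f}, final F = {history[-1]:.4f}")
```

Because every step is an exact coordinate-wise maximization, `history` is non-decreasing and stays below the log of the normalizing constant. Enumerating all states is only feasible at toy sizes.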

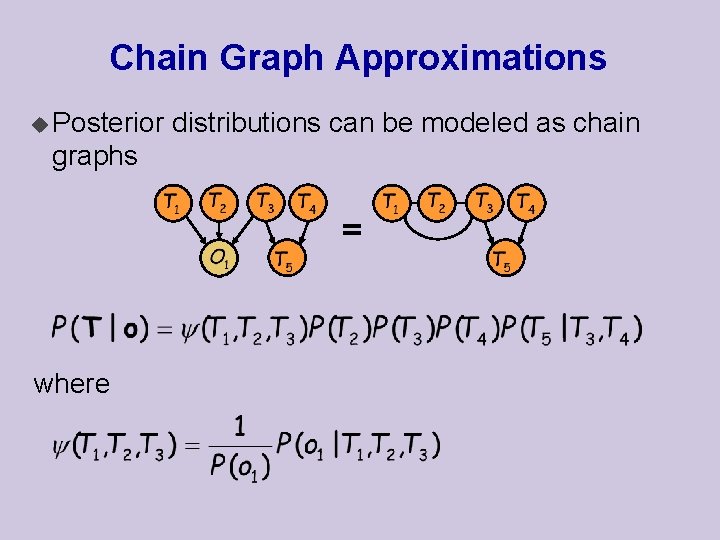

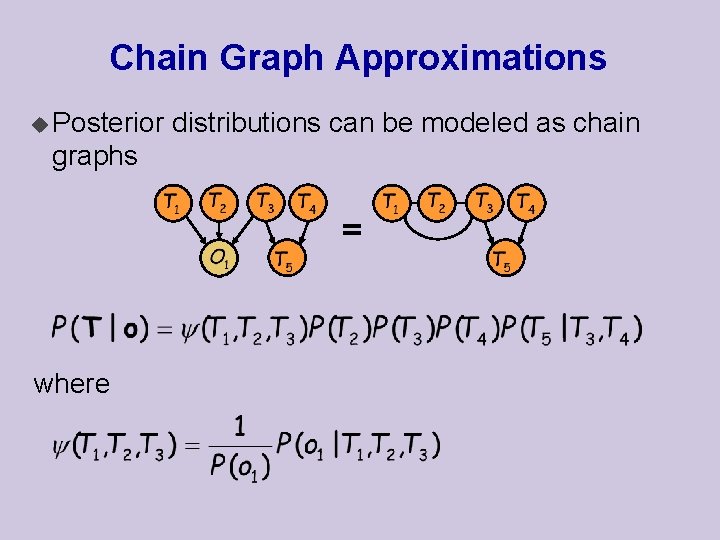

Chain Graph Approximations

- Posterior distributions can be modeled as chain graphs

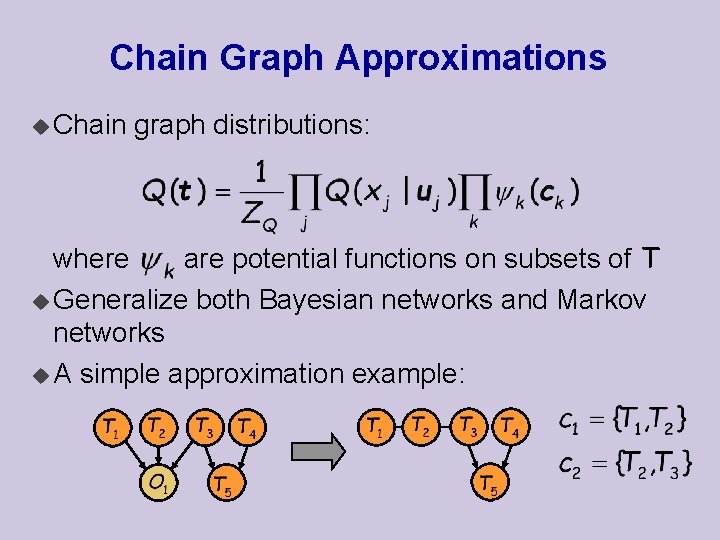

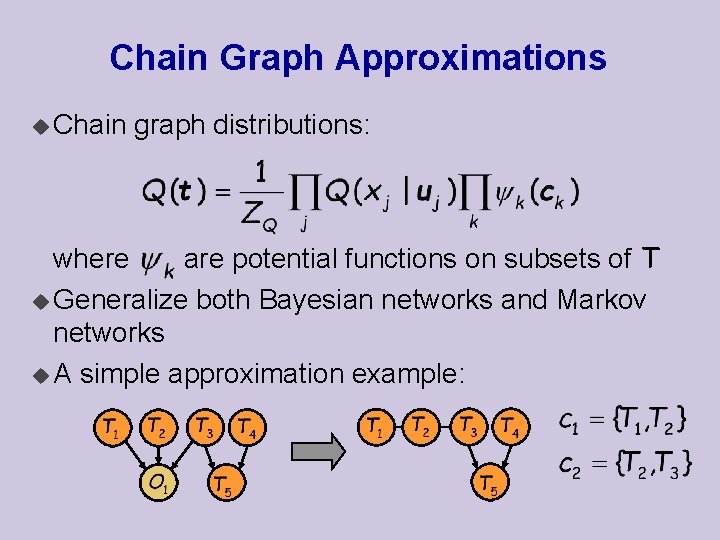

Chain Graph Approximations

- Chain graph distributions: where are potential functions on subsets of T
- Generalize both Bayesian networks and Markov networks
- A simple approximation example:
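As a concrete illustration of how a chain graph distribution is assembled, the sketch below uses the standard chain-graph factorization: a product over chain components, each a product of potentials normalized separately for every value of the component's parents. The graph, the potentials `phi_ab` and `phi_bc`, and all numbers are hypothetical and are not the example from the slides.

```python
from itertools import product

# Hypothetical chain graph over binary A, B, C:
#   component {A} has no parents; component {B, C} has parent A,
#   with an undirected edge B - C inside the component.
p_a = [0.4, 0.6]


def phi_ab(a, b):
    """Potential tying B to its parent A."""
    return 2.0 if a == b else 1.0


def phi_bc(b, c):
    """Potential on the undirected edge B - C."""
    return 3.0 if b == c else 1.0


def component_bc(a):
    """P(b, c | a): product of potentials, normalized separately per parent value."""
    weights = {(b, c): phi_ab(a, b) * phi_bc(b, c)
               for b, c in product(range(2), repeat=2)}
    z = sum(weights.values())                # the normalizer depends on a
    return {bc: w / z for bc, w in weights.items()}


def joint(a, b, c):
    """Chain-graph factorization: product of normalized component conditionals."""
    return p_a[a] * component_bc(a)[(b, c)]


total = sum(joint(a, b, c) for a, b, c in product(range(2), repeat=3))
print(f"sum over all states = {total:.6f}")
```

If every component holds a single variable, this reduces to a Bayesian network; with one component and no parents, it is a Markov network.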

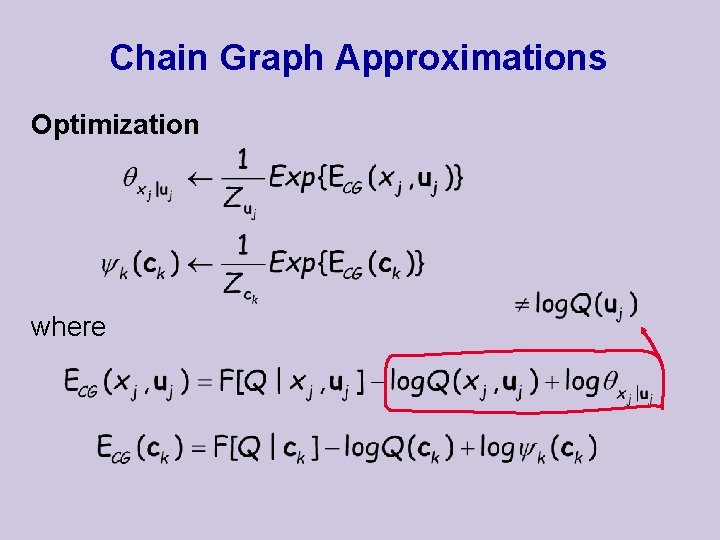

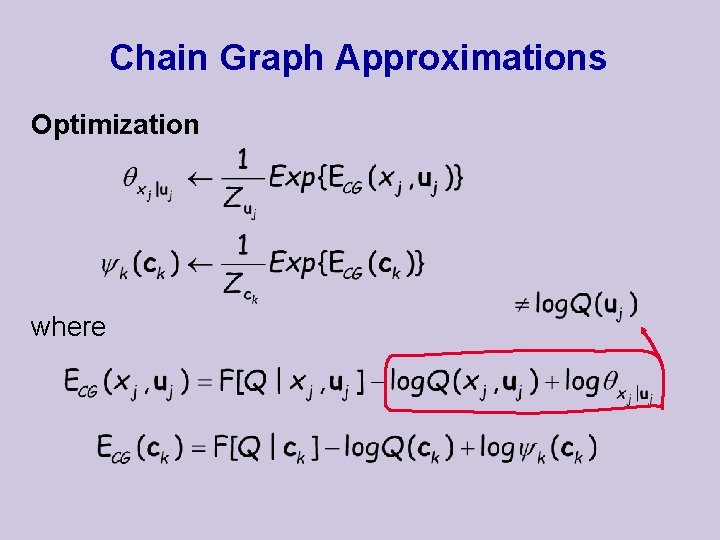

Chain Graph Approximations Optimization where

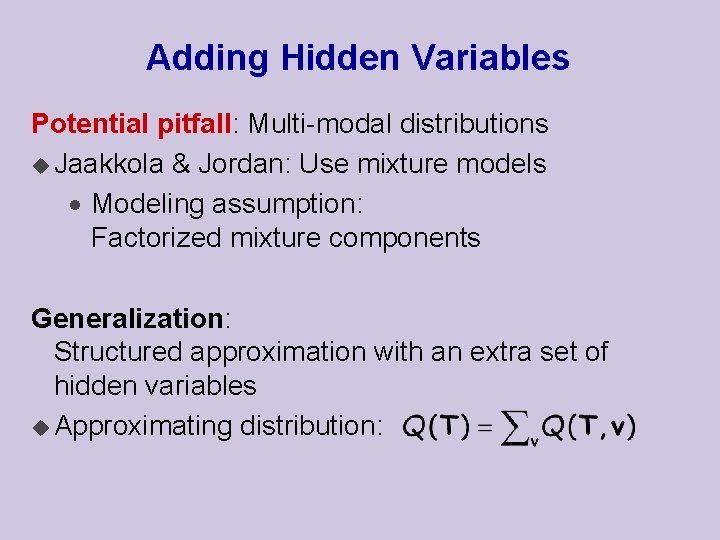

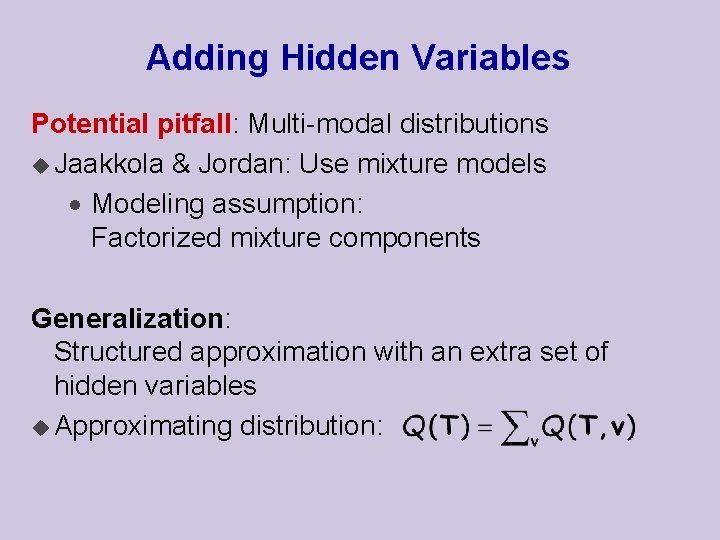

Adding Hidden Variables

- Potential pitfall: multi-modal distributions
- Jaakkola & Jordan: use mixture models
  - Modeling assumption: factorized mixture components
- Generalization: structured approximation with an extra set of hidden variables
- Approximating distribution:

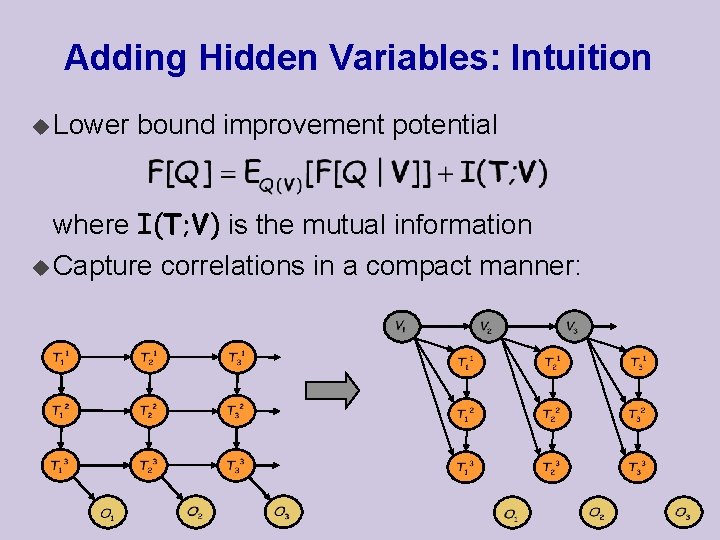

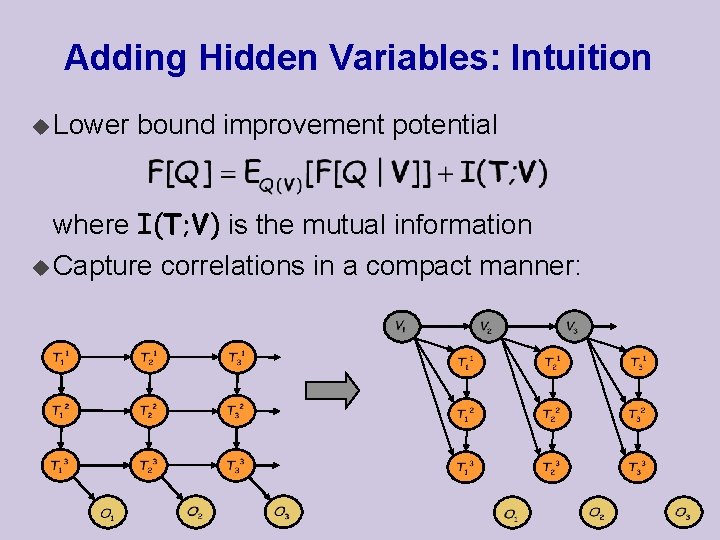

Adding Hidden Variables: Intuition

- Potential improvement of the lower bound, where I(T; V) is the mutual information
- Capture correlations in a compact manner:
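The role of the mutual information can be seen in a small numeric check. The sketch assumes the simplest case, a mixture of factorized components indexed by one extra hidden variable V, and verifies that the mixture's bound equals the average bound of its components plus I(T; V). The two-mode target `p_joint` and the mixture parameters are hypothetical.

```python
import math

# Hypothetical joint P(t1, t2, o) with two well-separated modes.
p_joint = {(0, 0): 0.40, (0, 1): 0.02, (1, 0): 0.03, (1, 1): 0.35}
STATES = list(p_joint)

# Mixture Q(T) = sum_v Q(v) Q(t1 | v) Q(t2 | v), one factorized component per mode.
q_v = [0.5, 0.5]
q_t_given_v = [
    ([0.9, 0.1], [0.9, 0.1]),   # component v = 0 leans toward (0, 0)
    ([0.1, 0.9], [0.1, 0.9]),   # component v = 1 leans toward (1, 1)
]


def component(v):
    """Full table of the factorized component Q(T | v)."""
    q1, q2 = q_t_given_v[v]
    return {t: q1[t[0]] * q2[t[1]] for t in STATES}


def lower_bound(q):
    """F[Q] = E_Q[log P(T, o)] - E_Q[log Q(T)]."""
    return sum(q[t] * (math.log(p_joint[t]) - math.log(q[t])) for t in STATES)


components = [component(v) for v in range(2)]
mixture = {t: sum(q_v[v] * components[v][t] for v in range(2)) for t in STATES}

# I(T; V) = sum_v Q(v) KL(Q(T | v) || Q(T))
mutual_info = sum(
    q_v[v] * components[v][t] * math.log(components[v][t] / mixture[t])
    for v in range(2) for t in STATES
)

average_component_bound = sum(q_v[v] * lower_bound(components[v]) for v in range(2))
mixture_bound = lower_bound(mixture)

print(f"average component bound = {average_component_bound:.4f}")
print(f"I(T; V)                 = {mutual_info:.4f}")
print(f"mixture bound           = {mixture_bound:.4f}")
```

The improvement over the average single-component bound is exactly `mutual_info`, which is non-negative. It is largest when the components cover clearly different regions of the state space.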

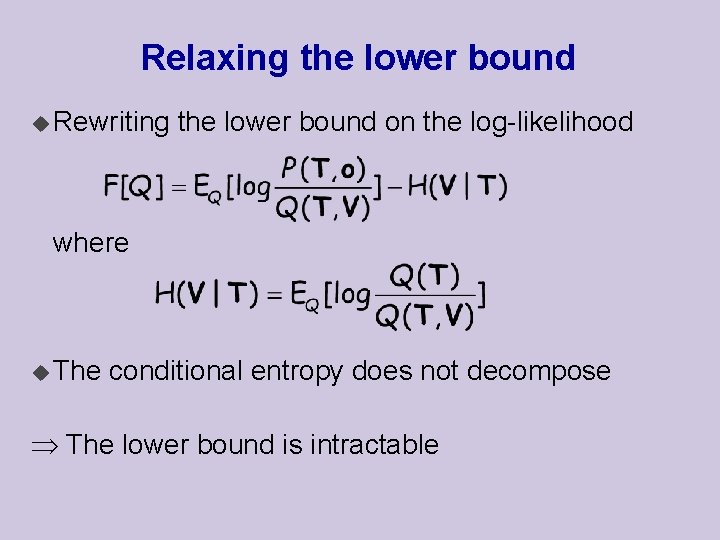

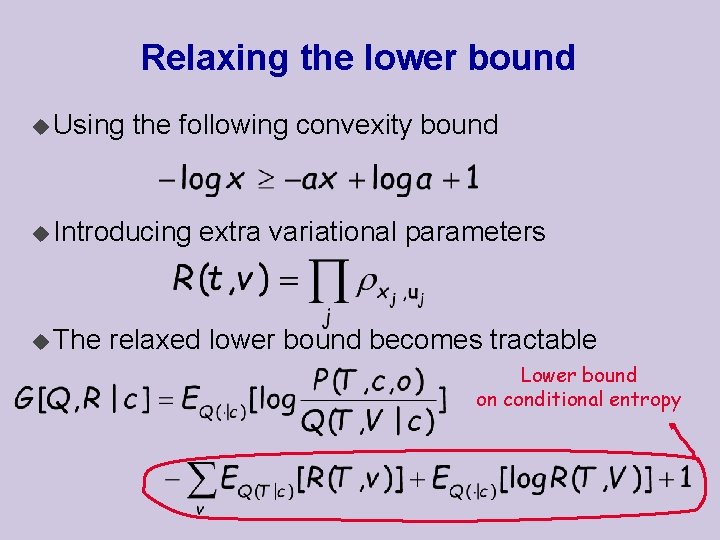

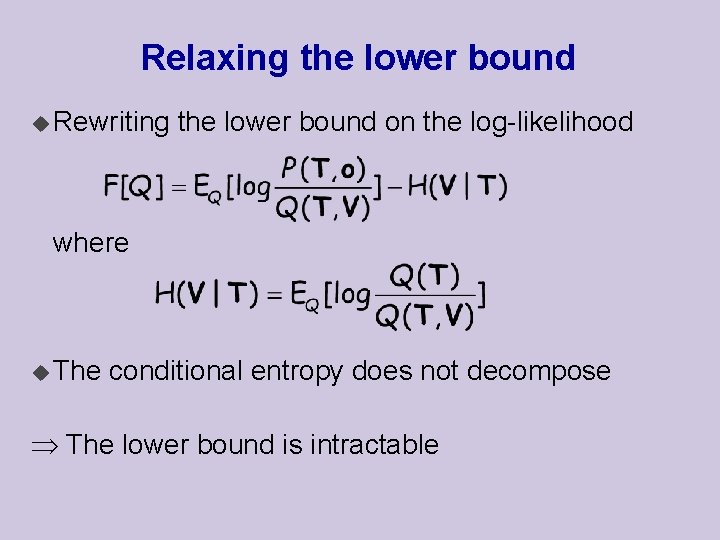

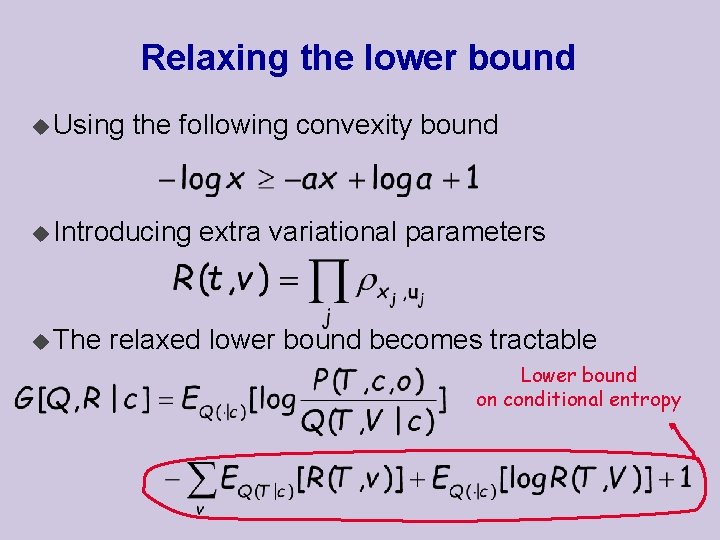

Relaxing the lower bound

- Rewriting the lower bound on the log-likelihood
- The conditional entropy does not decompose, so the lower bound is intractable

Relaxing the lower bound

- Using the following convexity bound
- Introducing extra variational parameters
- The relaxed lower bound becomes tractable
- Result: a lower bound on the conditional entropy
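The slide's formula is not preserved in the text. The sketch below assumes the convexity bound is the standard tangent bound on the negative logarithm, -log(x) ≥ -λx + log(λ) + 1, which holds for any λ > 0 and is tight at λ = 1/x. The function name `relaxed_neg_log` and the test values are hypothetical.

```python
import math


def relaxed_neg_log(x, lam):
    """Linear lower bound on -log(x), valid for any lam > 0."""
    return -lam * x + math.log(lam) + 1.0


x = 0.25
exact = -math.log(x)
for lam in (1.0, 2.0, 4.0, 8.0):
    bound = relaxed_neg_log(x, lam)
    print(f"lambda = {lam:4.1f}  bound = {bound:.4f}  exact = {exact:.4f}")
```

Each λ plays the role of an extra variational parameter: the bound is linear in x, so its expectation needs only the expectation of x, at the price of a gap whenever λ differs from 1/x.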

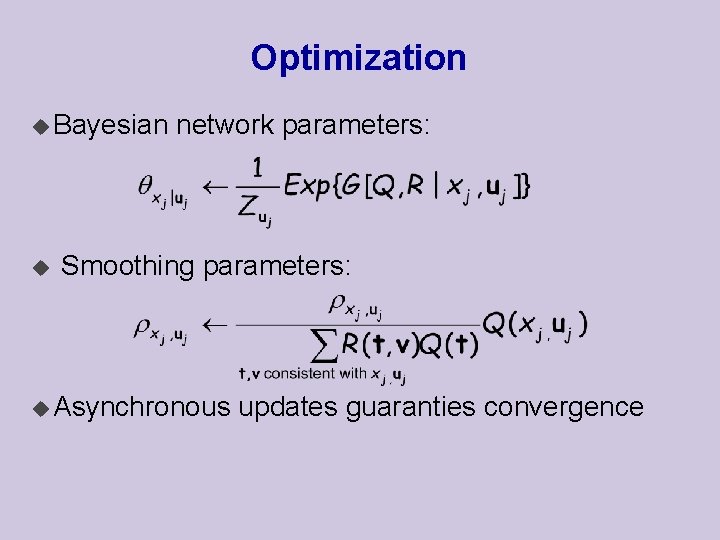

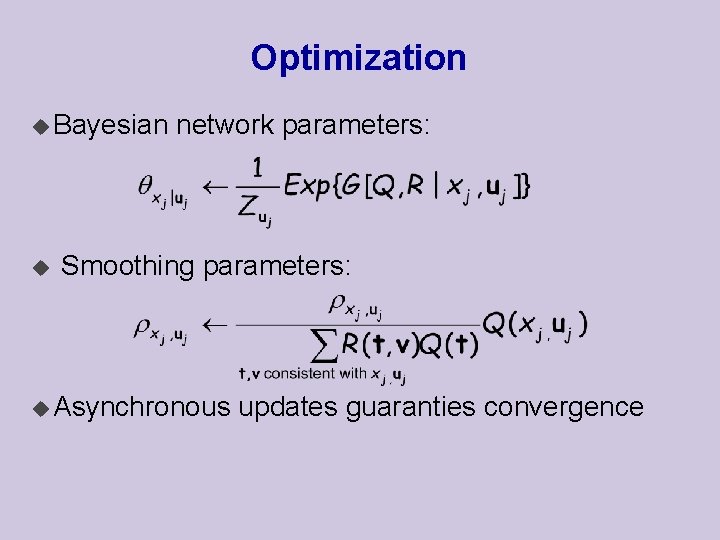

Optimization

- Bayesian network parameters:
- Smoothing parameters:
- Asynchronous updates guarantee convergence

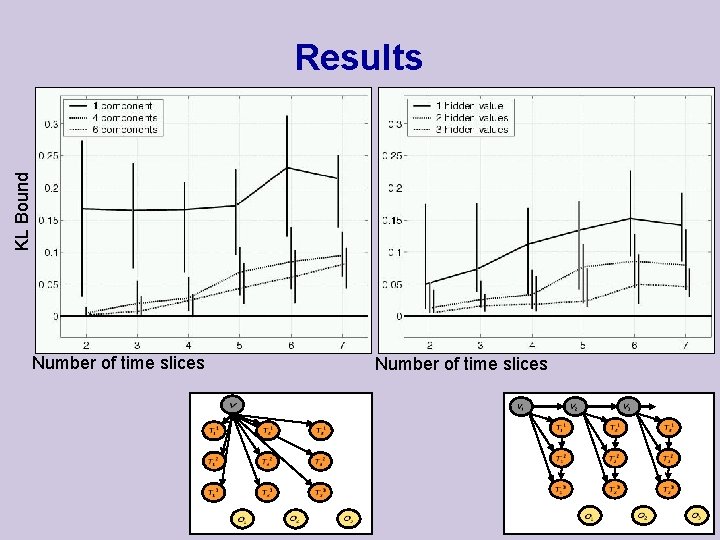

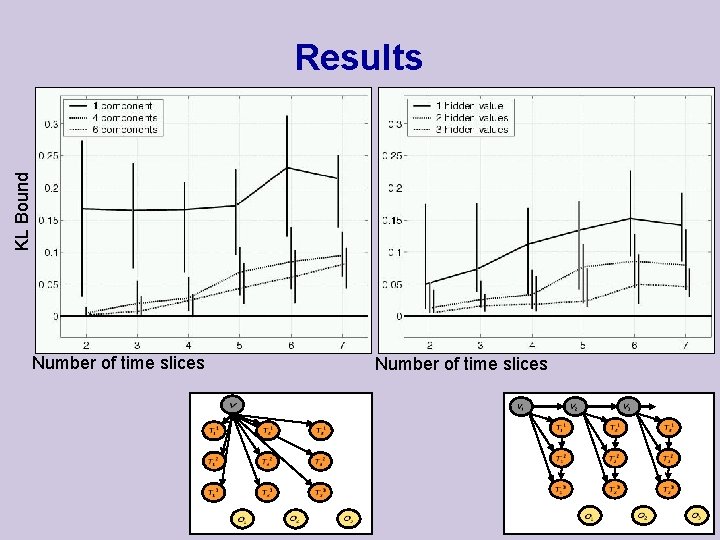

Results (figure): KL bound vs. number of time slices

Discussion

- Extending representational features of approximating distributions: better tradeoff?
- Addition of hidden variables improves approximation
- Derivations of different methods use a uniform machinery

Future directions

- Saving computations by planning the order of updates
- Structure of the approximating distribution