EE 384 Packet Switch Architectures Winter 2012 Lecture

- Slides: 38

EE 384 Packet Switch Architectures, Winter 2012, Lecture 8a: Packet Buffers with Latency (Sundar Iyer)

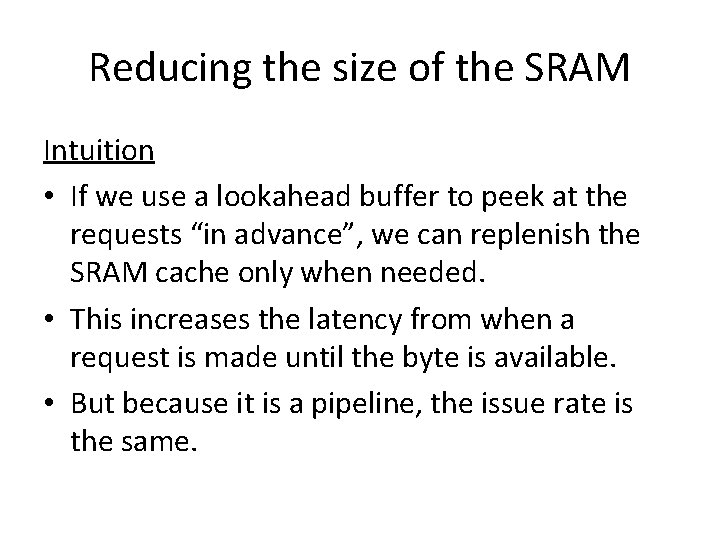

Reducing the size of the SRAM: Intuition

- If we use a lookahead buffer to peek at the requests “in advance”, we can replenish the SRAM cache only when needed.
- This increases the latency from when a request is made until the byte is available.
- But because it is a pipeline, the issue rate is the same.

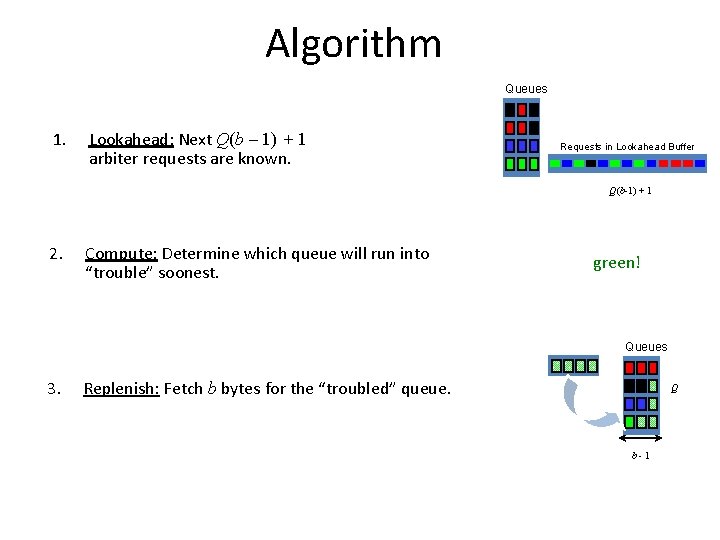

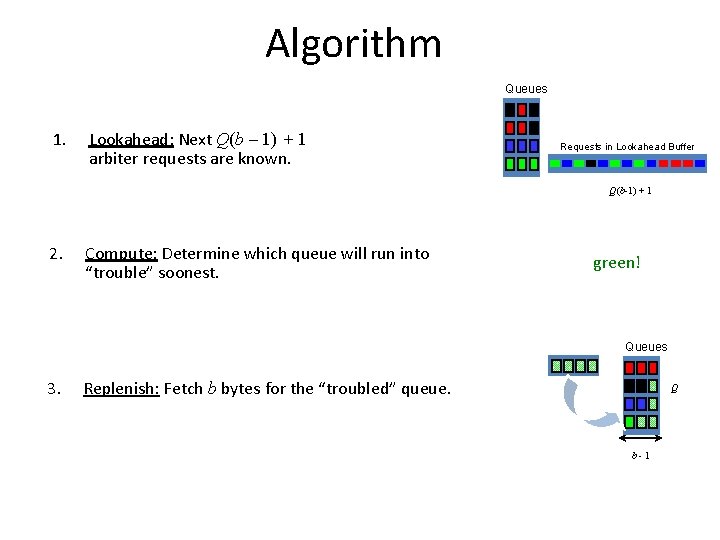

Algorithm

Figure: Q queues and a lookahead buffer holding the next Q(b – 1) + 1 requests; in the example shown, the green queue is the one in “trouble”.

1. Lookahead: The next Q(b – 1) + 1 arbiter requests are known.
2. Compute: Determine which queue will run into “trouble” soonest.
3. Replenish: Fetch b bytes for the “troubled” queue.
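A minimal Python sketch of steps 2 and 3, under simplifying assumptions that are not on the slide: each request is for one byte, cache occupancies and the lookahead contents are plain lists, and a replenishment of b bytes lands in SRAM immediately. The function names are hypothetical. A queue is in “trouble” at the first lookahead request it cannot serve from its cached bytes.

```python
from typing import List, Optional


def earliest_critical_queue(cache: List[int], lookahead: List[int]) -> Optional[int]:
    """Return the queue whose SRAM head cache would underflow first, or None.

    cache[q]  -- bytes of queue q currently held in the SRAM head cache
    lookahead -- queue ids of the pending arbiter requests, in service order
    """
    pending = [0] * len(cache)       # requests seen so far, per queue
    for q in lookahead:              # walk the lookahead buffer from head to tail
        pending[q] += 1
        if pending[q] > cache[q]:    # q's cache would be empty for this request
            return q                 # the queue in "trouble" soonest
    return None                      # every visible request can be served from SRAM


def replenish(cache: List[int], lookahead: List[int], b: int) -> Optional[int]:
    """One decision, made every b timeslots: fetch b bytes for the troubled queue."""
    q = earliest_critical_queue(cache, lookahead)
    if q is not None:
        cache[q] += b                # b bytes arrive from DRAM (modeled as instant)
    return q


cache = [1, 0, 2, 3]                 # Q = 4 queues
lookahead = [0, 3, 0, 1, 2]          # queue 0 runs dry at its 2nd request, before queue 1
print(replenish(cache, lookahead, b=4), cache)   # 0 [5, 0, 2, 3]
```

Scanning in service order is what makes the choice the *earliest* critical queue: queue 1 is also short of bytes in this example, but queue 0 runs dry first.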

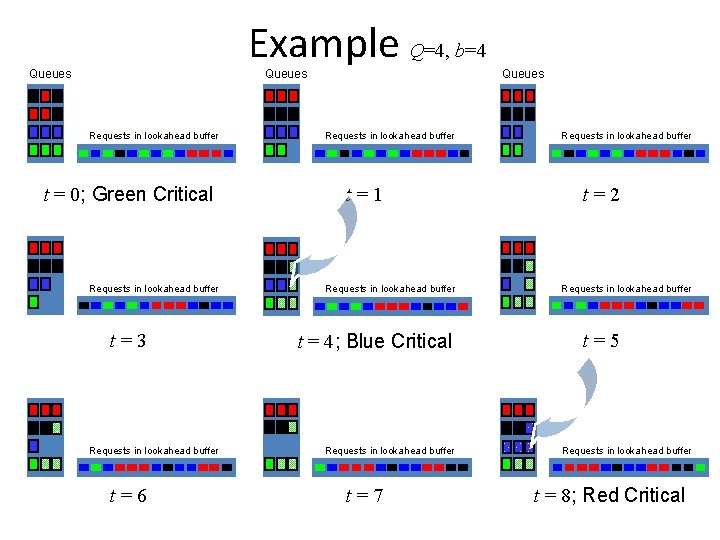

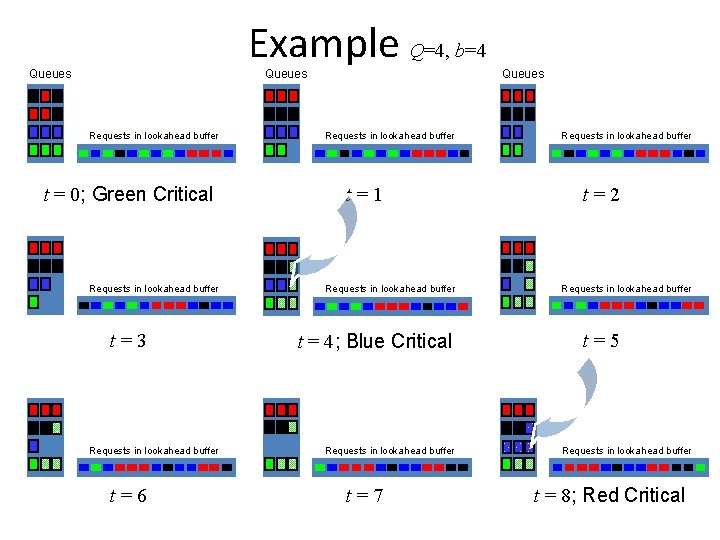

Example (Q = 4, b = 4)

Figure: snapshots of the queues and the requests in the lookahead buffer at t = 0 through t = 8. At t = 0 the green queue is critical, at t = 4 the blue queue is critical, and at t = 8 the red queue is critical.

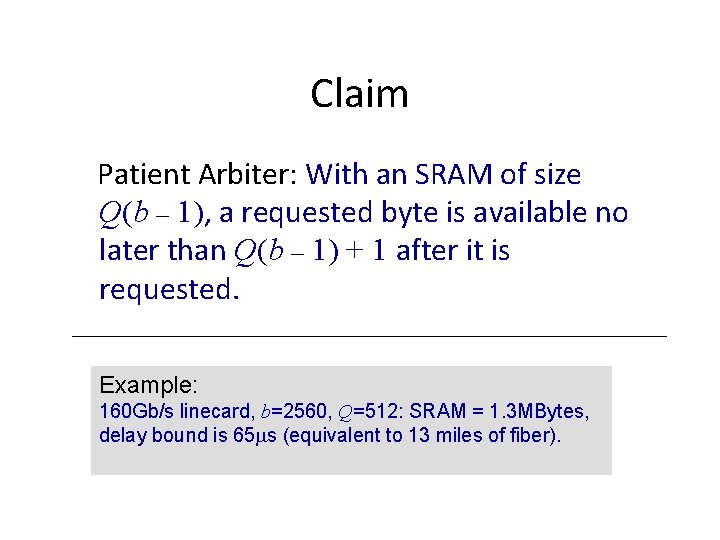

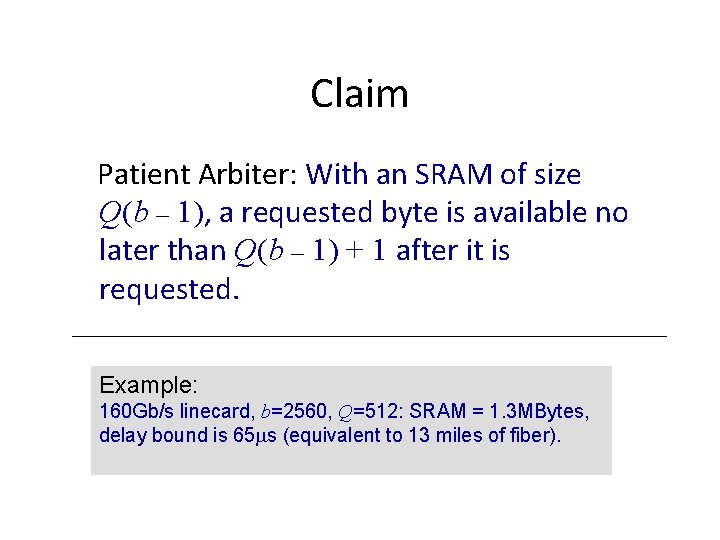

Claim

Patient Arbiter: With an SRAM of size Q(b – 1), a requested byte is available no later than Q(b – 1) + 1 timeslots after it is requested.

Example: for a 160 Gb/s linecard with b = 2560 and Q = 512, the SRAM is 1.3 MBytes and the delay bound is 65 µs (equivalent to roughly 13 km of fiber).
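The example numbers can be checked with a few lines of arithmetic. This sketch assumes that one timeslot is the time to transmit one byte at the line rate and that light travels at roughly 2 × 10^8 m/s in fiber; both are assumptions, not values from the slide.

```python
Q = 512              # number of queues
b = 2560             # bytes per DRAM access
R = 160e9            # line rate, bits/s

sram_bytes = Q * (b - 1)          # SRAM size from the claim
delay_slots = Q * (b - 1) + 1     # worst-case request-to-byte delay, in timeslots
slot_s = 8 / R                    # assumption: one timeslot = time to send one byte
delay_s = delay_slots * slot_s
fiber_m = delay_s * 2e8           # assumption: ~2e8 m/s propagation speed in fiber

print(f"SRAM  = {sram_bytes / 1e6:.2f} MB")   # 1.31 MB
print(f"delay = {delay_s * 1e6:.1f} us")      # 65.5 us
print(f"fiber = {fiber_m / 1e3:.1f} km")      # 13.1 km
```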

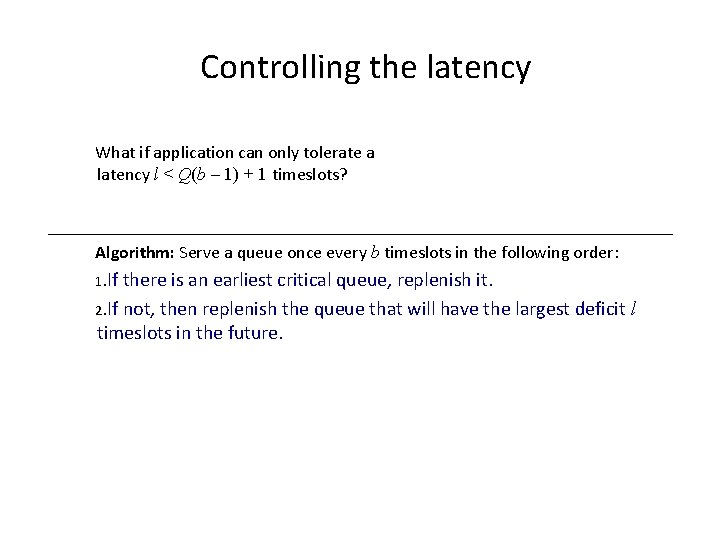

Controlling the latency

What if the application can only tolerate a latency l < Q(b – 1) + 1 timeslots?

Algorithm: Serve a queue once every b timeslots in the following order:
1. If there is an earliest critical queue, replenish it.
2. If not, replenish the queue that will have the largest deficit l timeslots in the future.
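One way to express this two-rule policy, as a sketch: only the next l requests are visible (the parameter is called `latency` below), and the “deficit” of a queue is assumed here to mean the number of its requests among those l minus the bytes it currently has cached. The slide does not define the deficit precisely, so treat that definition and the helper names as hypothetical.

```python
from typing import List, Optional


def earliest_critical_queue(cache: List[int], window: List[int]) -> Optional[int]:
    """First queue whose cached bytes run out while serving `window` in order, else None."""
    pending = [0] * len(cache)
    for q in window:
        pending[q] += 1
        if pending[q] > cache[q]:
            return q
    return None


def select_queue(cache: List[int], lookahead: List[int], latency: int) -> int:
    """Queue to replenish when only the next `latency` requests may be examined."""
    window = lookahead[:latency]
    q = earliest_critical_queue(cache, window)
    if q is not None:
        return q                                   # rule 1: earliest critical queue
    # Rule 2, with an assumed deficit definition: requests for the queue in the
    # window minus the bytes it has cached now (how far it will be drained).
    pending = [0] * len(cache)
    for r in window:
        pending[r] += 1
    return max(range(len(cache)), key=lambda i: pending[i] - cache[i])


cache = [2, 3]
print(select_queue(cache, [0, 1, 1, 1, 1], latency=2))  # 0: none critical, queue 0 has the larger deficit
print(select_queue(cache, [0, 1, 1, 1, 1], latency=5))  # 1: queue 1 becomes critical at its 4th request
```

With a short window, the policy falls back to the deficit rule more often, which is why a smaller tolerated latency needs a larger SRAM.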

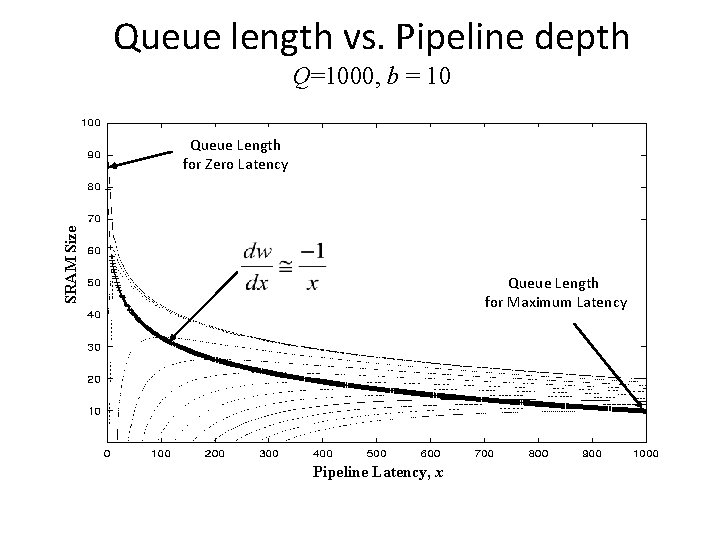

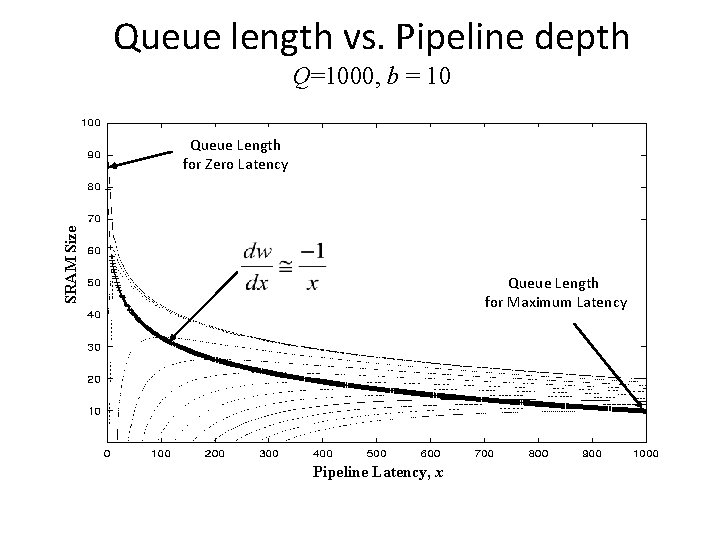

Figure: Queue length vs. pipeline depth (Q = 1000, b = 10). The SRAM size is plotted against the pipeline latency x, between the queue length for zero latency and the queue length for maximum latency.

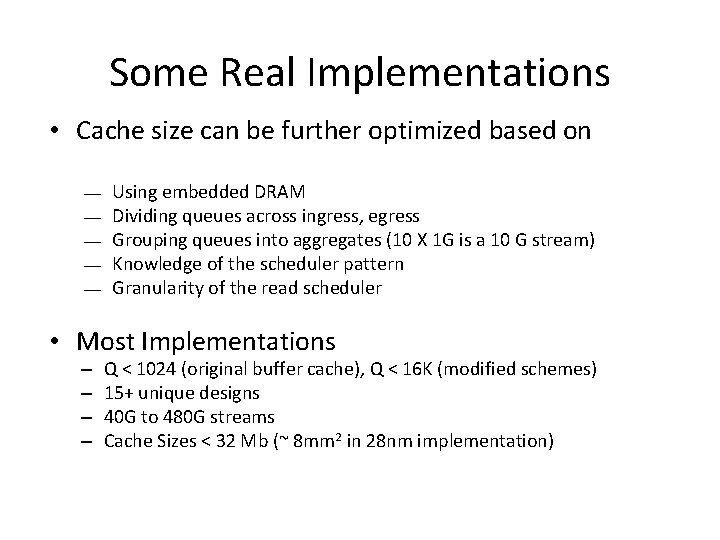

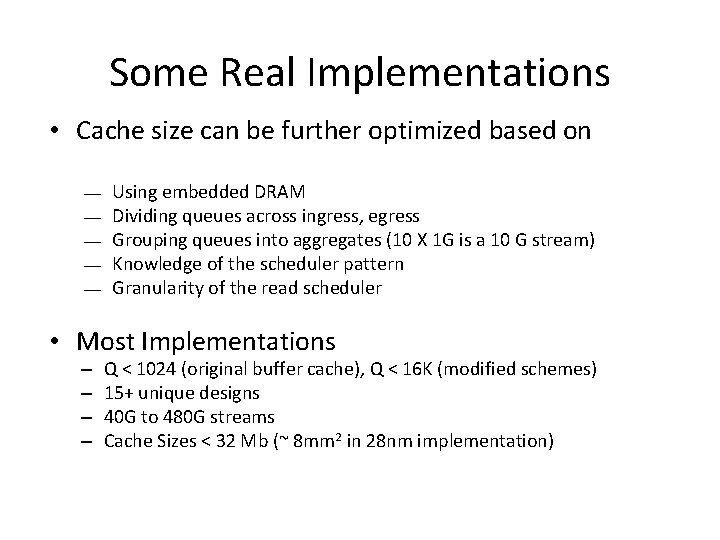

Some Real Implementations

- Cache size can be further optimized based on:
  - Using embedded DRAM
  - Dividing queues across ingress and egress
  - Grouping queues into aggregates (10 × 1G is a 10G stream)
  - Knowledge of the scheduler pattern
  - Granularity of the read scheduler
- Most implementations:
  - Q < 1024 (original buffer cache), Q < 16K (modified schemes)
  - 15+ unique designs
  - 40G to 480G streams
  - Cache sizes < 32 Mb (~8 mm² in a 28 nm implementation)

EE 384 Packet Switch Architectures, Winter 2012, Lecture 8b: Statistics Counters (Sundar Iyer)

What is a Statistics Counter?

- When a packet arrives, it is first classified to determine the type of the packet.
- Depending on the type(s), one or more counters corresponding to the criteria are updated (incremented).

Examples of Statistics Counters

- Packet switches maintain counters for:
  - Route prefixes, ACLs, TCP connections
  - SNMP MIBs
- Counters are required for:
  - Traffic engineering
  - Policing & shaping
  - Intrusion detection
  - Performance monitoring (RMON)
  - Network tracing

Motivation: Build high-speed counters

Determine and analyze techniques for building very high speed (>100 Gb/s) statistics counters that support an extremely large number of counters.

- OC-192c = 10 Gb/s
- Counters per packet (C) = 10
- R_eff = 100 Gb/s
- Total counters (N) = 1 million
- Counter size (M) = 64 bits
- Counter memory = N × M = 64 Mb
- MOPS = 150 M reads, 150 M writes
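The headline numbers follow from simple arithmetic. The update-rate line is a hedged reconstruction: it assumes minimum-size 64-byte packets plus 20 bytes of preamble and inter-packet gap (84 bytes per packet on the wire), which the slide does not state but which lands close to its 150 M figure.

```python
R = 10e9               # OC-192c line rate, bits/s
C = 10                 # counters updated per packet
N = 1_000_000          # total counters
M = 64                 # bits per counter

R_eff = R * C                          # effective counter-update rate: 100 Gb/s
counter_memory_bits = N * M            # 64 Mb

# Assumption (not on the slide): 84 bytes on the wire per minimum-size packet.
pkts_per_s = R / (84 * 8)
updates_per_s = pkts_per_s * C         # each update is one read plus one write

print(f"R_eff   = {R_eff / 1e9:.0f} Gb/s")
print(f"memory  = {counter_memory_bits / 1e6:.0f} Mb")
print(f"updates = ~{updates_per_s / 1e6:.0f} M reads + ~{updates_per_s / 1e6:.0f} M writes per second")
```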

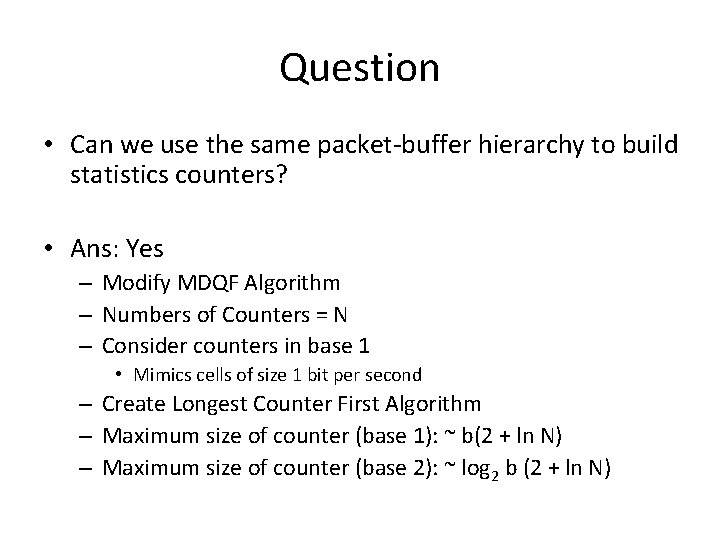

Question

- Can we use the same packet-buffer hierarchy to build statistics counters?
- Ans: Yes.
  - Modify the MDQF algorithm.
  - Number of counters = N.
  - Consider counters in base 1 (this mimics cells of size 1 bit per second).
  - Create the Longest Counter First (LCF) algorithm.
  - Maximum size of a counter (base 1): $\sim b(2 + \ln N)$
  - Maximum size of a counter (base 2): $\sim \log_2 [b(2 + \ln N)]$
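A small simulation sketch of the idea, under assumptions that are not on the slide: one counter increment arrives per timeslot, the DRAM can absorb one read-modify-write every b timeslots, and that update is always spent on the largest SRAM counter (Longest Counter First). The class and method names are hypothetical, and the bound printed for comparison is the base-1 form of the f(b, N) result from the theorem on minimum SRAM counter size.

```python
import math
import random


class LCFCounters:
    """Hybrid SRAM/DRAM statistics counters managed by Longest Counter First (sketch)."""

    def __init__(self, n: int, b: int) -> None:
        self.b = b
        self.sram = [0] * n          # small, fast partial counts
        self.dram = [0] * n          # full-width counters in slow memory
        self.t = 0                   # timeslot counter
        self.max_sram = 0            # largest SRAM value seen: sets the SRAM counter width

    def increment(self, i: int) -> None:
        self.sram[i] += 1
        self.max_sram = max(self.max_sram, self.sram[i])
        self.t += 1
        if self.t % self.b == 0:     # one DRAM update every b timeslots
            j = max(range(len(self.sram)), key=self.sram.__getitem__)  # longest counter first
            self.dram[j] += self.sram[j]
            self.sram[j] = 0

    def read(self, i: int) -> int:
        return self.dram[i] + self.sram[i]   # true value = DRAM part + SRAM part


N, b = 64, 8
ctr = LCFCounters(N, b)
truth = [0] * N
rng = random.Random(1)
for _ in range(100_000):
    i = rng.randrange(N)
    ctr.increment(i)
    truth[i] += 1

bound = math.log(b * N) / math.log(b / (b - 1))   # largest SRAM count allowed by f(b, N)
print(ctr.max_sram, round(bound, 1))
```

Reading a counter adds its DRAM and SRAM parts, so the SRAM only needs to be wide enough for the largest partial count it ever holds, which is what `max_sram` tracks.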

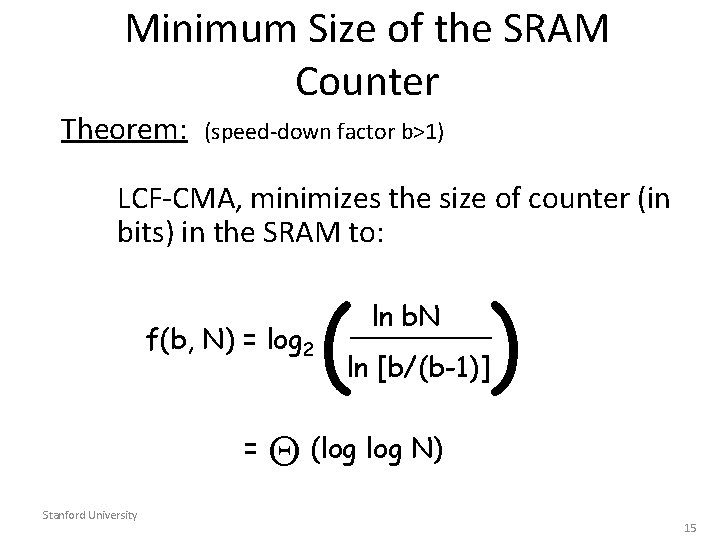

Optimality of LCF-CMA

Can we do better? Theorem: LCF-CMA is optimal in the sense that it minimizes the size of the counter maintained in SRAM.

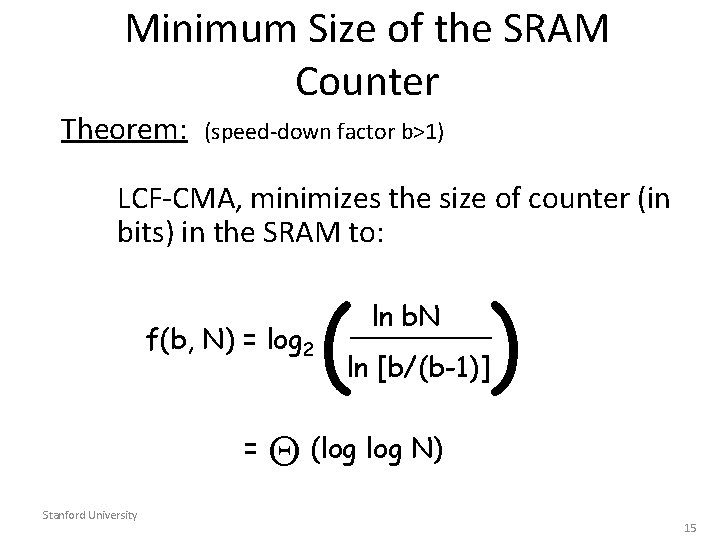

Minimum Size of the SRAM Counter

Theorem (speed-down factor b > 1): LCF-CMA minimizes the size of the counter (in bits) in the SRAM to

$$f(b, N) = \log_2 \left( \frac{\ln(bN)}{\ln[b/(b-1)]} \right) = O(\log \log N)$$
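Evaluating f(b, N) directly shows how slowly it grows with N. This sketch rounds up to whole bits, which is an assumption about how the formula is applied; for b = 20 it gives the 8-bit and 9-bit widths used in the implementation examples. The function name is hypothetical.

```python
import math


def lcf_sram_bits(b: float, n: int) -> int:
    """SRAM counter width f(b, N) = log2(ln(bN) / ln(b/(b-1))), rounded up to whole bits."""
    return math.ceil(math.log2(math.log(b * n) / math.log(b / (b - 1))))


b = 20
for n in (1000, 1_000_000, 10**9):
    f = lcf_sram_bits(b, n)
    print(f"N = {n:>13,}: f = {f} bits/counter, SRAM = {n * f:,} bits")
```

A million-fold increase in N (from 1000 to 10^9) adds only one bit per counter.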

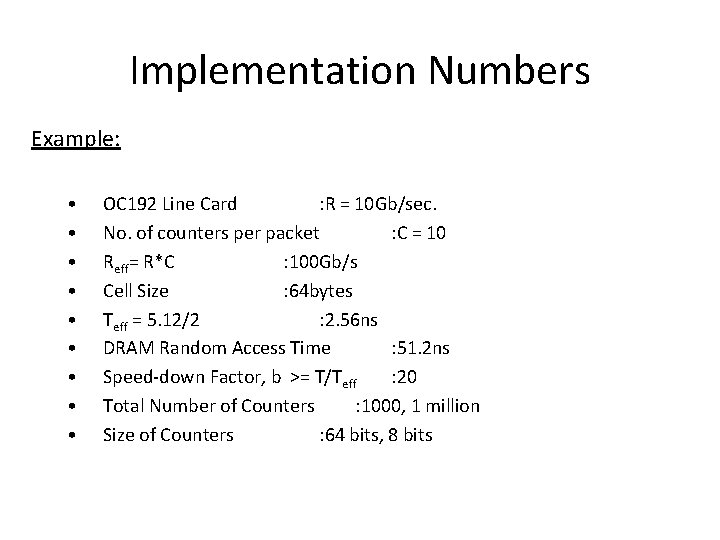

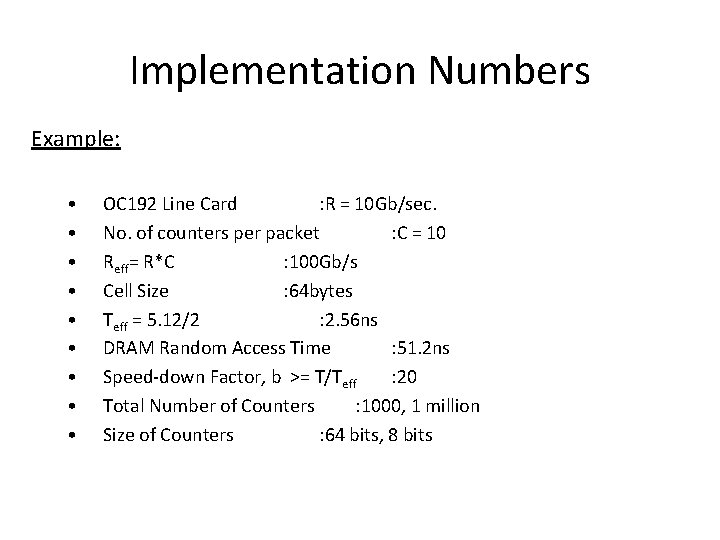

Implementation Numbers

Example:
- OC-192 line card: R = 10 Gb/s
- Number of counters per packet: C = 10
- R_eff = R × C = 100 Gb/s
- Cell size: 64 bytes
- T_eff = 5.12/2 = 2.56 ns (a 64-byte cell takes 5.12 ns at 100 Gb/s, and each counter update needs a read and a write)
- DRAM random access time: T = 51.2 ns
- Speed-down factor: b ≥ T/T_eff = 20
- Total number of counters: 1000, 1 million
- Size of counters: 64 bits, 8 bits
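The derived values can be recomputed with integer arithmetic in picoseconds, which keeps floating-point rounding out of the ceiling for b. Halving the cell time because each update is a read plus a write is an assumption consistent with the numbers listed.

```python
R_GBPS = 10              # OC-192 line rate, Gb/s (= bits per ns)
C = 10                   # counters updated per packet
CELL_BITS = 64 * 8       # 64-byte cells
T_DRAM_PS = 51_200       # DRAM random access time: 51.2 ns, in picoseconds

r_eff_gbps = R_GBPS * C                        # 100 Gb/s
t_cell_ps = CELL_BITS * 1000 // r_eff_gbps     # 5120 ps = 5.12 ns per cell
t_eff_ps = t_cell_ps // 2                      # 2560 ps: one read + one write per update
b = -(-T_DRAM_PS // t_eff_ps)                  # ceil(T / T_eff)

print(r_eff_gbps, t_cell_ps, t_eff_ps, b)      # 100 5120 2560 20
```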

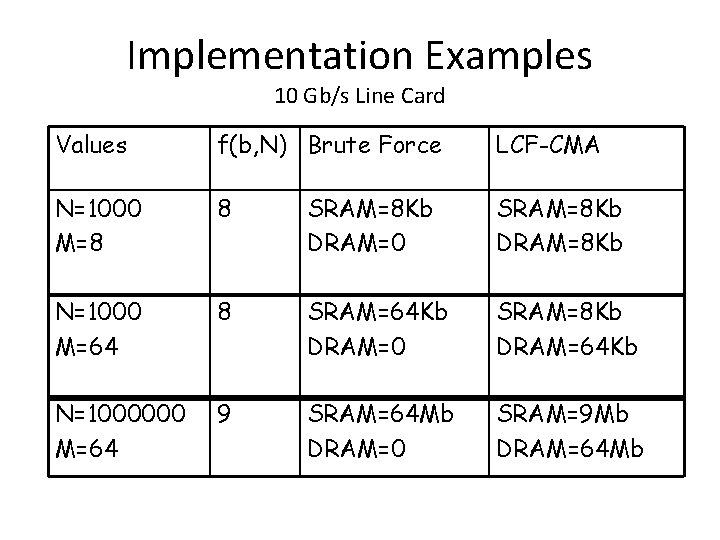

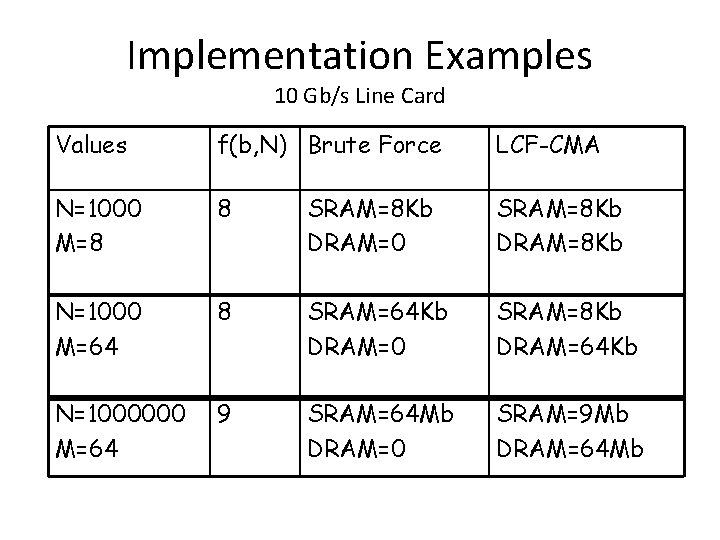

Implementation Examples: 10 Gb/s Line Card

| Values | f(b, N) | Brute force | LCF-CMA |
|---|---|---|---|
| N = 1000, M = 8 | 8 | SRAM = 8 Kb, DRAM = 0 | SRAM = 8 Kb, DRAM = 8 Kb |
| N = 1000, M = 64 | 8 | SRAM = 64 Kb, DRAM = 0 | SRAM = 8 Kb, DRAM = 64 Kb |
| N = 1,000,000, M = 64 | 9 | SRAM = 64 Mb, DRAM = 0 | SRAM = 9 Mb, DRAM = 64 Mb |

Conclusion

- The SRAM size required by LCF-CMA is a very slow-growing function of N.
- There is a minimal tradeoff in SRAM memory size.
- The LCF-CMA technique allows a designer to arbitrarily scale the speed of a statistics counter architecture using existing memory technology.

EE 384 Packet Switch Architectures, Winter 2012, Lecture 8c: QoS and PIFO (Sundar Iyer)

Contents

1. Parallel routers: work-conserving (recap)
2. Parallel routers: delay guarantees

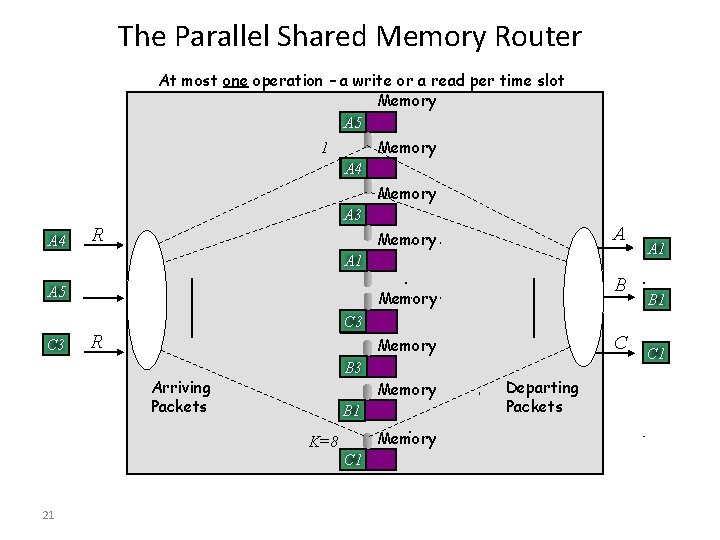

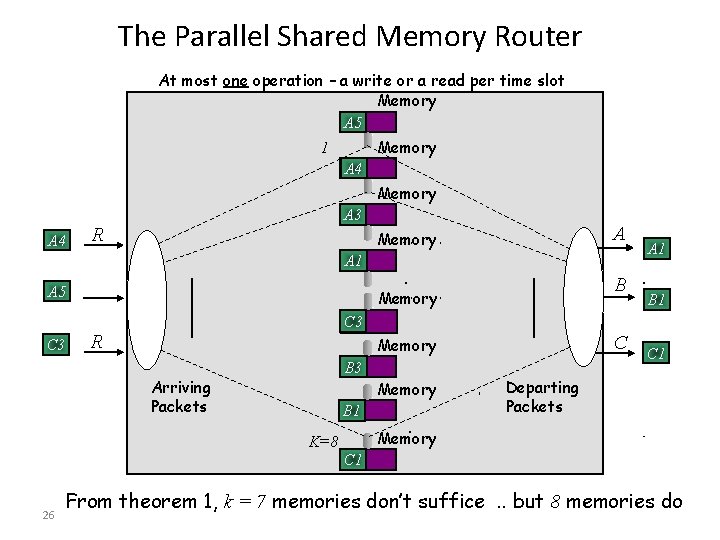

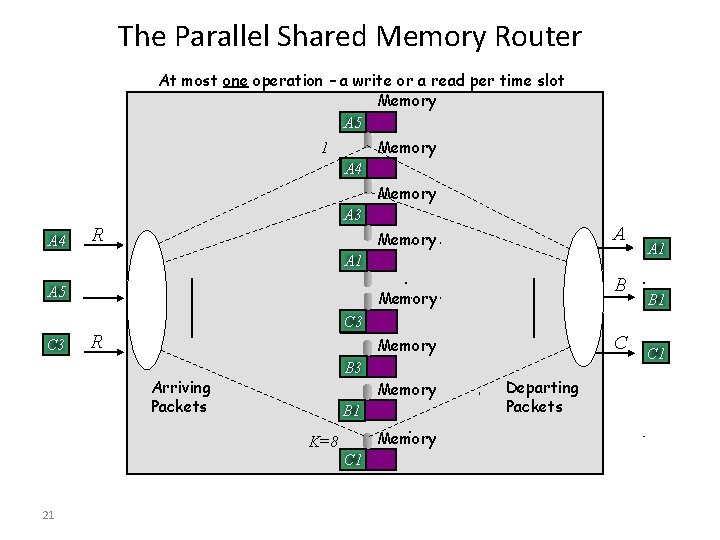

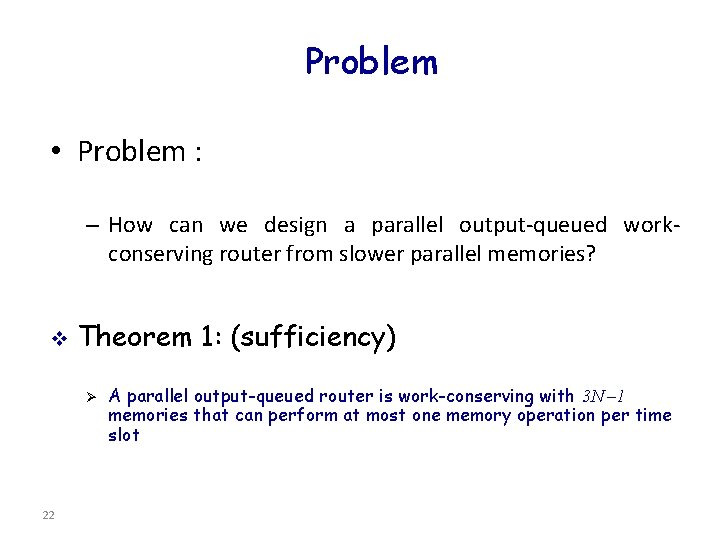

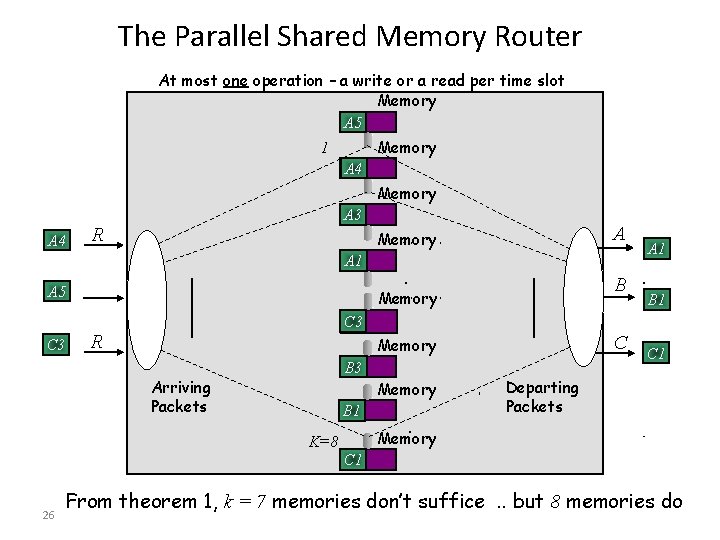

The Parallel Shared Memory Router

At most one operation, a write or a read, per time slot.

Figure: arriving packets for outputs A, B, and C (each at rate R) are written into K = 8 memories, from which departing packets are read.

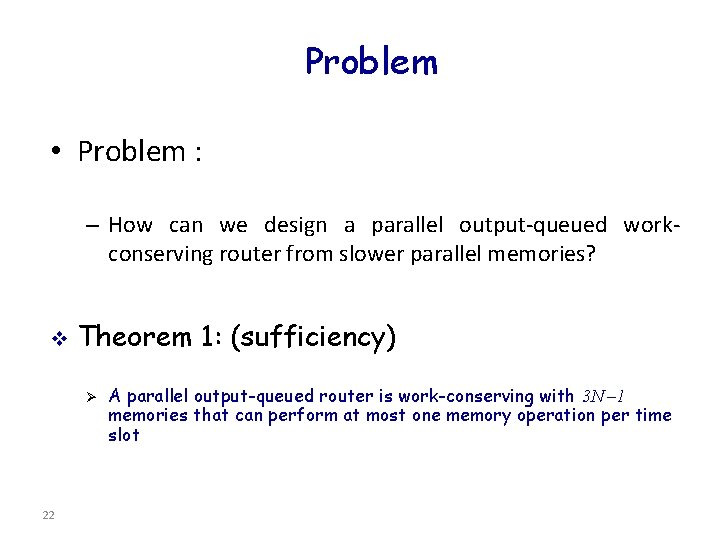

Problem

- Problem: How can we design a parallel output-queued work-conserving router from slower parallel memories?
- Theorem 1 (sufficiency): A parallel output-queued router is work-conserving with 3N – 1 memories that can perform at most one memory operation per time slot.

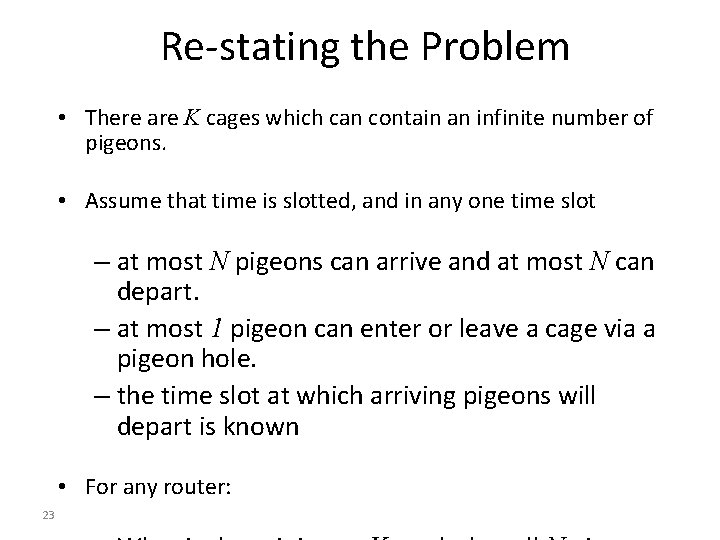

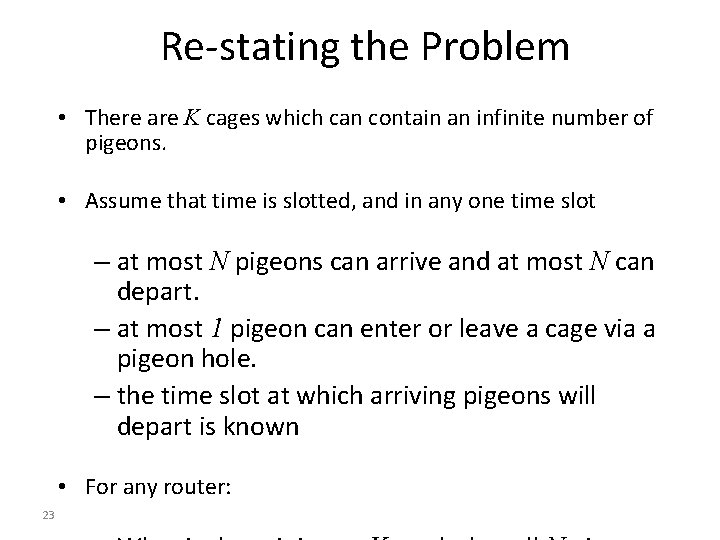

Re-stating the Problem

- There are K cages which can contain an infinite number of pigeons.
- Assume that time is slotted, and in any one time slot:
  - at most N pigeons can arrive and at most N can depart;
  - at most 1 pigeon can enter or leave a cage via a pigeon hole;
  - the time slot at which arriving pigeons will depart is known.
- For any router:

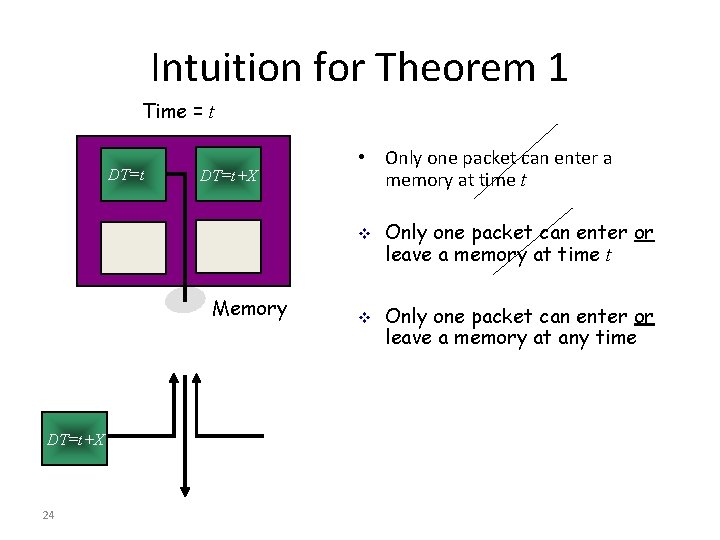

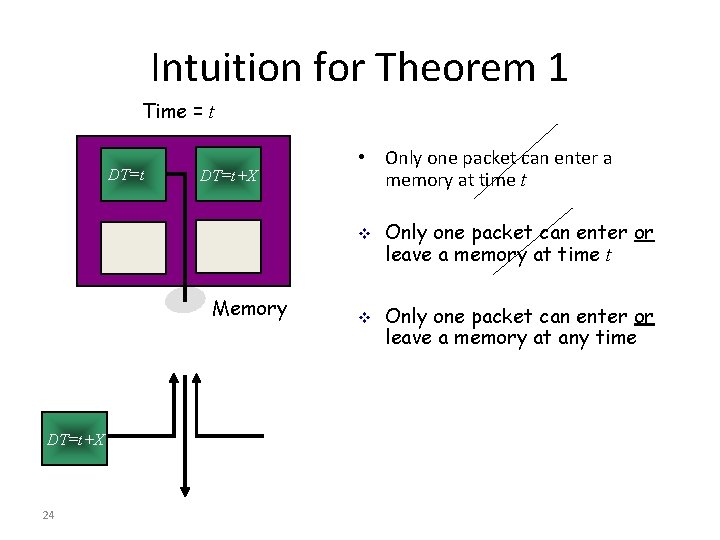

Intuition for Theorem 1

Figure: a packet arrives at time t with departure time DT = t + X.

- Only one packet can enter a memory at time t.
- Only one packet can enter or leave a memory at time t.
- Only one packet can enter or leave a memory at any time.

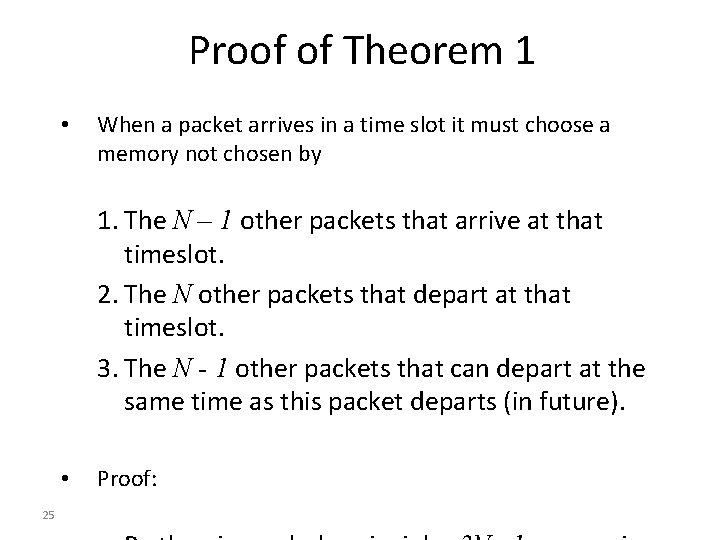

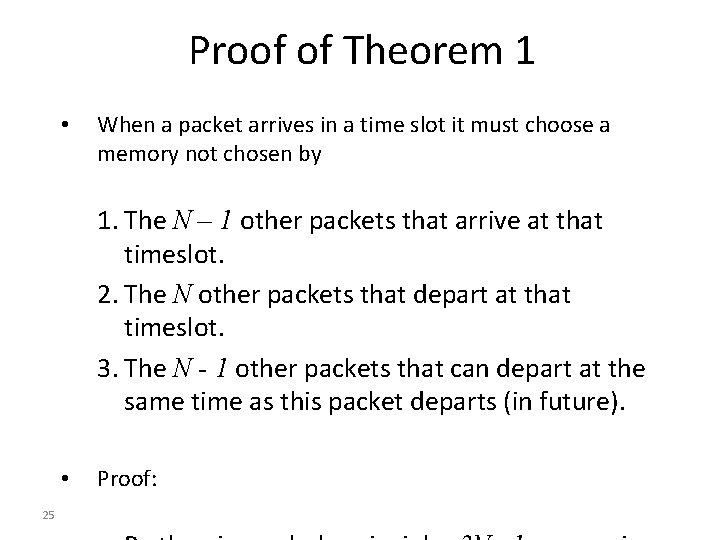

Proof of Theorem 1

When a packet arrives in a time slot, it must choose a memory not chosen by:
1. The N – 1 other packets that arrive at that time slot.
2. The N other packets that depart at that time slot.
3. The N – 1 other packets that can depart at the same time as this packet departs (in the future).

Proof: At most (N – 1) + N + (N – 1) = 3N – 2 memories are ruled out, so with 3N – 1 memories at least one is always available (pigeonhole principle).
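The counting argument can be exercised with a small simulation. It is a sketch under assumed conditions: FIFO output queues (so each departure time is known on arrival), random arrivals to uniformly random outputs, and a greedy lowest-numbered-memory choice; the function name is hypothetical. With K = 3N – 1 memories, the search for a free memory should never fail.

```python
import random
from collections import defaultdict


def simulate_pshm(n: int, k: int, slots: int, load: float = 0.9, seed: int = 0) -> int:
    """Assign cells to memories in a parallel shared-memory OQ router (sketch).

    Each arriving cell takes the lowest-numbered memory that is not
      1. written by another cell arriving in this slot,
      2. read by a cell departing in this slot, or
      3. holding another cell with the same departure time.
    Returns the number of cells written; raises RuntimeError if no memory is free.
    """
    rng = random.Random(seed)
    next_free = [0] * n                   # next unused departure slot per output
    by_departure = defaultdict(list)      # departure slot -> memories read in that slot
    written = 0
    for t in range(slots):
        reads = set(by_departure.pop(t, []))     # at most n cells depart per slot
        writes = set()
        for _ in range(n):                       # each input gets a cell with prob. `load`
            if rng.random() >= load:
                continue
            out = rng.randrange(n)
            dep = max(t + 1, next_free[out])     # FIFO output-queued departure time
            next_free[out] = dep + 1
            busy = reads | writes | set(by_departure[dep])
            # Pigeonhole: |busy| <= (n-1) + n + (n-1) = 3n - 2, so k = 3n - 1 suffices.
            mem = next((m for m in range(k) if m not in busy), None)
            if mem is None:
                raise RuntimeError(f"slot {t}: all {k} memories busy")
            writes.add(mem)
            by_departure[dep].append(mem)
            written += 1
    return written


for n in (2, 3, 4, 8):
    cells = simulate_pshm(n, k=3 * n - 1, slots=2000, seed=n)
    print(f"N = {n}: K = {3 * n - 1} memories, {cells} cells each found a free memory")
```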

The Parallel Shared Memory Router (revisited)

In the same example (N = 3 outputs A, B, C), K = 7 memories don't suffice, but 8 memories do; Theorem 1 guarantees that 3N – 1 = 8 memories are enough.

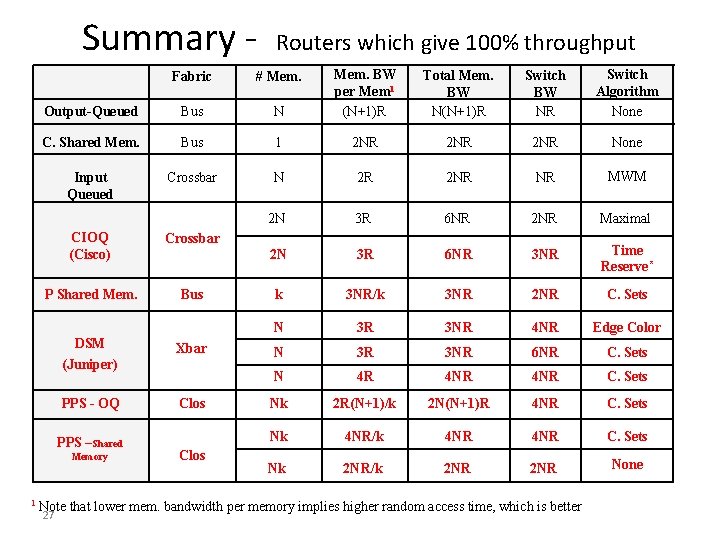

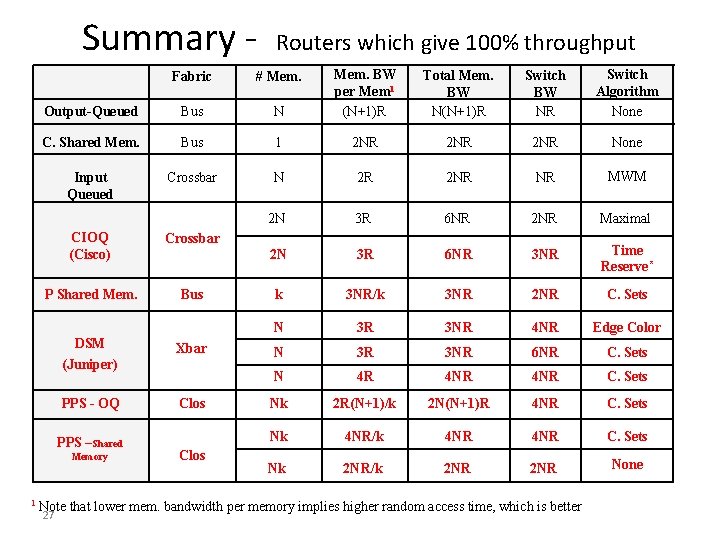

Summary: Routers which give 100% throughput

N Mem. BW per Mem 1 (N+1)R Total Mem. BW N(N+1)R Switch BW NR Switch Algorithm None Bus 1 2NR 2NR None Crossbar N 2R 2NR NR MWM 2N 3R 6NR 2NR Maximal 2N 3R 6NR 3NR Time Reserve* k 3NR/k 3NR 2NR C. Sets N 3R 3NR 4NR Edge Color N 3R 3NR 6NR C. Sets N 4R 4NR C. Sets Nk 2R(N+1)/k 2N(N+1)R 4NR C. Sets Nk 4NR/k 4NR C. Sets Nk 2NR/k 2NR None Fabric # Mem. Output-Queued Bus C. Shared Mem. Input Queued CIOQ (Cisco) Crossbar P. Shared Mem. Bus DSM (Juniper) Xbar PPS – OQ Clos PPS – Shared Memory 1 Clos

Note that a lower bandwidth per memory permits a longer random access time (i.e., slower memories), which is better.

Contents

1. Parallel routers: work-conserving
2. Parallel routers: delay guarantees

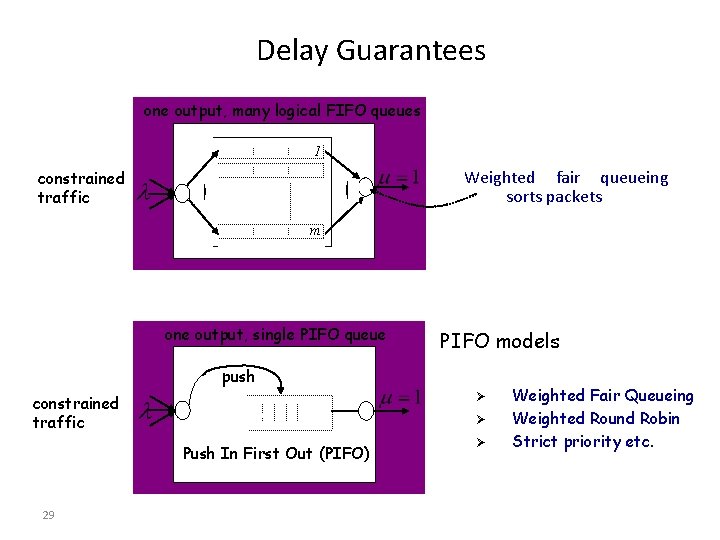

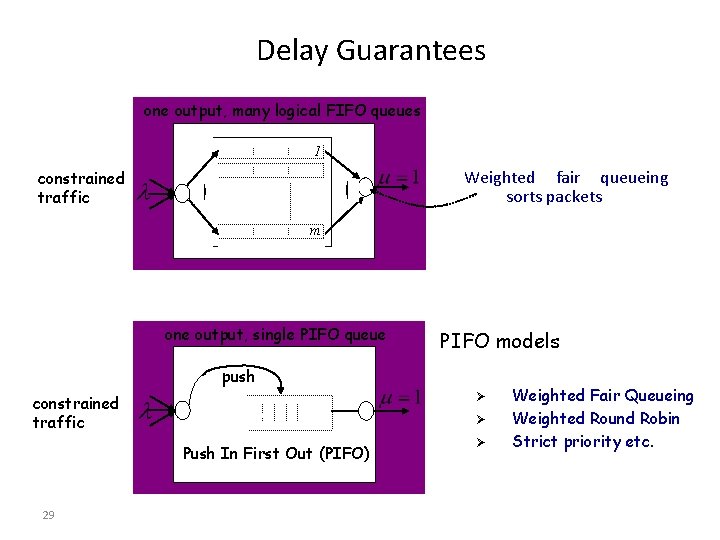

Delay Guarantees

Figure: one output with m logical FIFO queues of constrained traffic, where weighted fair queueing sorts packets, is modeled as one output with a single PIFO queue.

- Push In First Out (PIFO) models: weighted fair queueing, weighted round robin, strict priority, etc.
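A PIFO can be sketched as a priority queue keyed by rank, with an arrival counter that keeps FIFO order among equal ranks. Below, strict priority uses the class as the rank, and a deliberately simplified weighted fair queueing tags each packet with its flow's virtual finish time (all packets arrive at time 0 and have length 1; the weights are hypothetical). This illustrates the abstraction; it is not a full WFQ implementation.

```python
import heapq
import itertools
from typing import Any, List


class PIFO:
    """Push-In First-Out queue: a packet is pushed in at a position set by its rank,
    and packets always leave from the head (lowest rank first, FIFO among ties)."""

    def __init__(self) -> None:
        self._heap: List[tuple] = []
        self._seq = itertools.count()          # arrival counter used as tie-breaker

    def push(self, rank: float, packet: Any) -> None:
        heapq.heappush(self._heap, (rank, next(self._seq), packet))

    def pop(self) -> Any:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


# Strict priority: rank = priority class (0 is highest).
sp = PIFO()
for prio, name in [(2, "bulk"), (0, "voice"), (1, "video"), (0, "voice2")]:
    sp.push(prio, name)
sp_order = [sp.pop() for _ in range(len(sp))]
print(sp_order)    # ['voice', 'voice2', 'video', 'bulk']

# Simplified WFQ: rank = flow's previous finish time + length / weight.
weights = {"A": 2, "B": 1}
last_finish = {"A": 0.0, "B": 0.0}
count = {"A": 0, "B": 0}
wfq = PIFO()
for flow in ["A", "B", "A", "B", "A", "B"]:
    count[flow] += 1
    last_finish[flow] += 1 / weights[flow]
    wfq.push(last_finish[flow], f"{flow}{count[flow]}")
wfq_order = [wfq.pop() for _ in range(len(wfq))]
print(wfq_order)   # ['A1', 'B1', 'A2', 'A3', 'B2', 'B3']
```

Both policies share one data structure and differ only in how ranks are computed, which is the sense in which a single PIFO models them. Note that a later push can land ahead of cells already queued, so a cell's departure time is not fixed on arrival.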

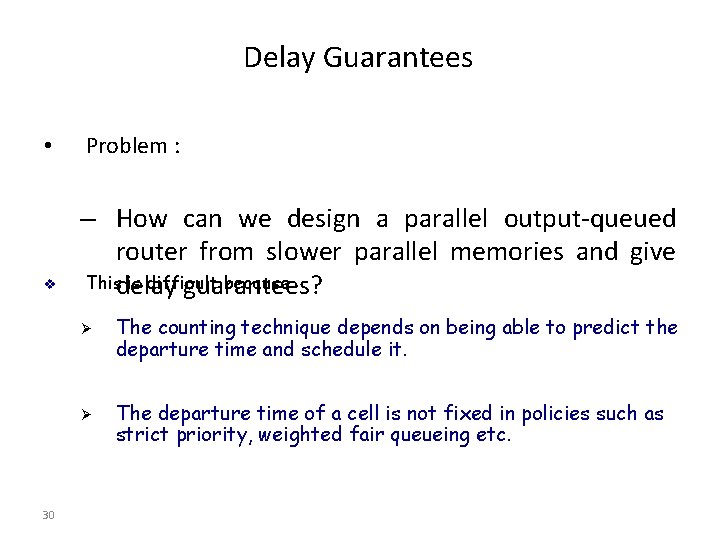

Delay Guarantees

- Problem: How can we design a parallel output-queued router from slower parallel memories and give delay guarantees?
- This is difficult because:
  - The counting technique depends on being able to predict the departure time and schedule it.
  - The departure time of a cell is not fixed in policies such as strict priority, weighted fair queueing, etc.

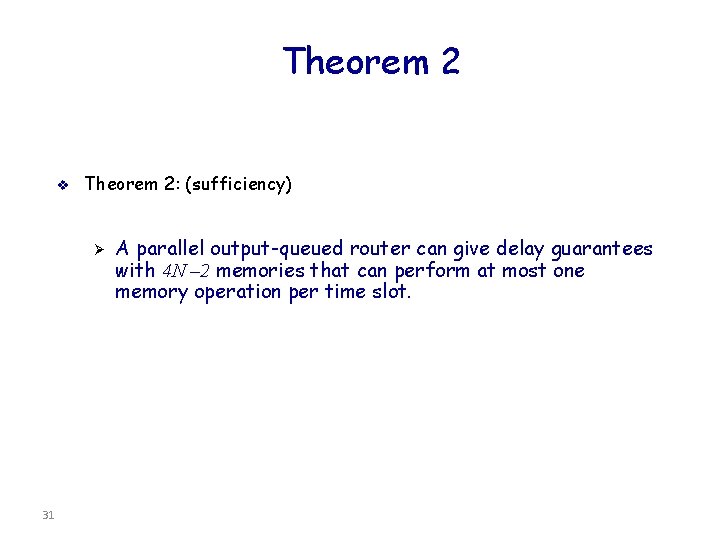

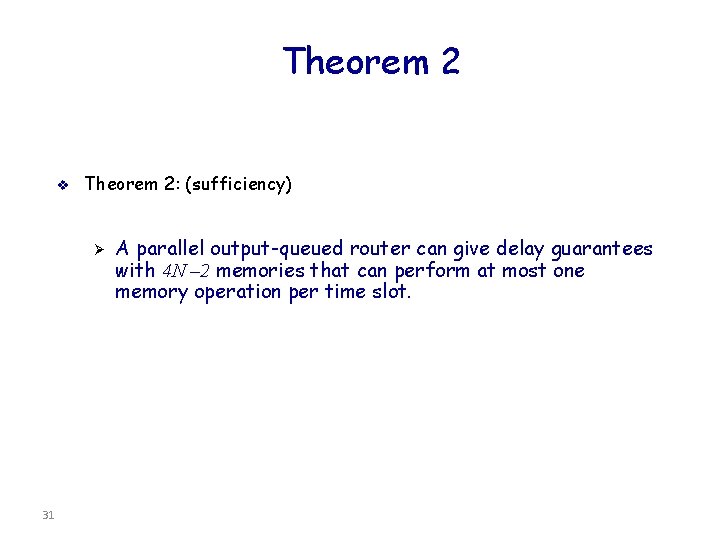

Theorem 2

Theorem 2 (sufficiency): A parallel output-queued router can give delay guarantees with 4N – 2 memories that can perform at most one memory operation per time slot.

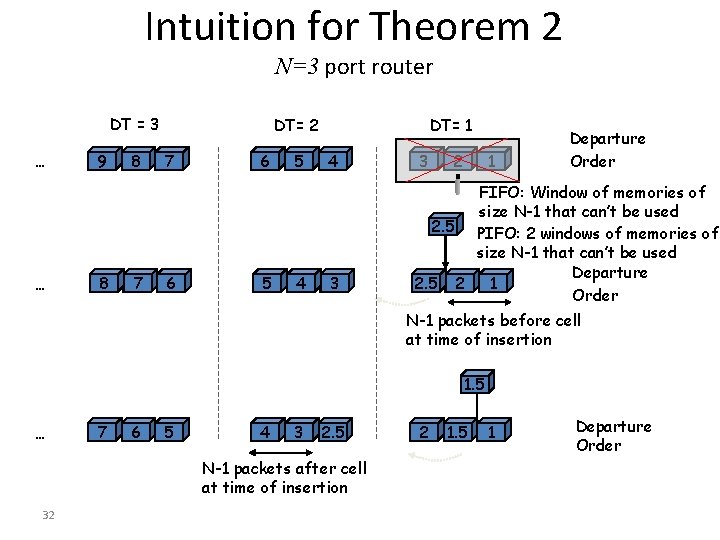

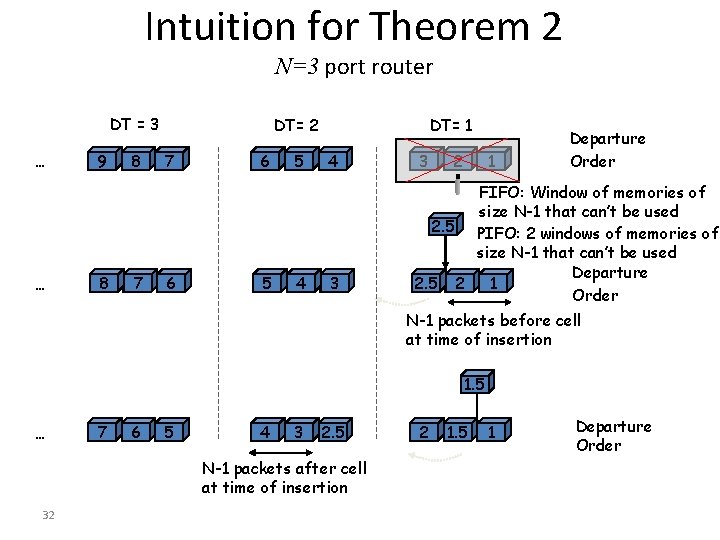

Intuition for Theorem 2

Figure: an N = 3 port router; cells are numbered in departure order, with N cells leaving at each departure time (DT = 1, 2, 3, …). A newly arriving cell (e.g., 2.5) may be pushed in between cells already queued.

- FIFO: a window of N – 1 memories can't be used.
- PIFO: 2 windows of N – 1 memories can't be used: those of the N – 1 packets before the cell at the time of insertion, and those of the N – 1 packets after it.

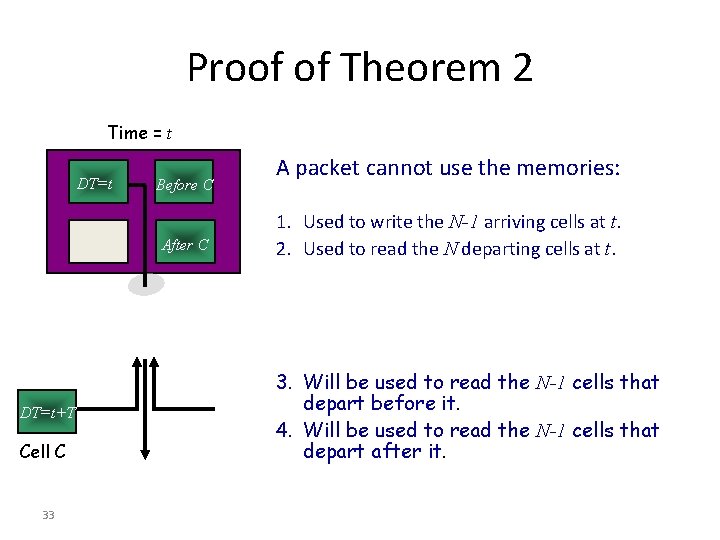

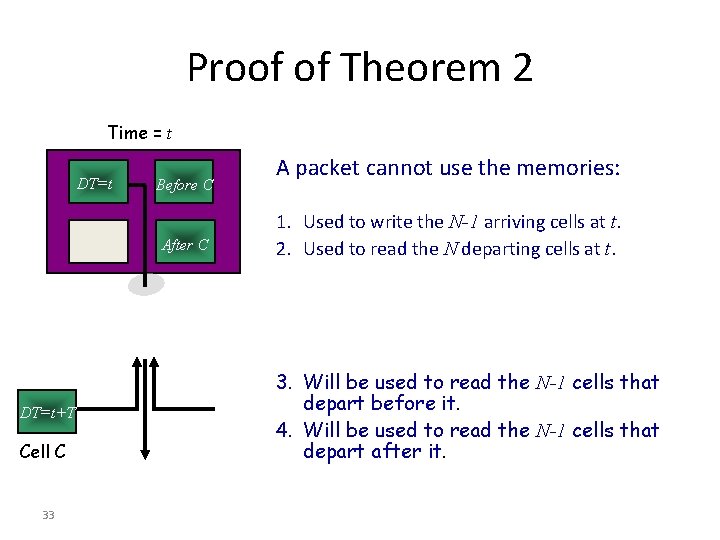

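Proof of Theorem 2

Figure: cell C arrives at time t and departs at DT = t + T; other cells depart before C and after C.

A packet cannot use the memories:
1. Used to write the N – 1 other cells arriving at t.
2. Used to read the N cells departing at t.
3. That will be used to read the N – 1 cells that depart before it.
4. That will be used to read the N – 1 cells that depart after it.

At most (N – 1) + N + (N – 1) + (N – 1) = 4N – 3 memories are ruled out, so 4N – 2 memories always leave one free.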
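The exclusion rule can be written as a helper that picks a memory for a cell pushed into the departure order. This is a sketch, not a full router simulation: the caller is assumed to supply the memories holding queued cells in departure order and the memories already busy in the current slot; the names are hypothetical.

```python
from typing import Iterable, List


def pick_memory_pifo(order_mems: List[int], pos: int, busy_now: Iterable[int],
                     n: int, k: int) -> int:
    """Choose a memory for a cell pushed in at index `pos` of the departure order.

    order_mems[i] -- memory holding the i-th queued cell in departure order
    busy_now      -- memories read (<= N departing cells) or written
                     (<= N - 1 other arrivals) in the current time slot
    """
    before = order_mems[max(0, pos - (n - 1)):pos]   # N - 1 cells departing just before
    after = order_mems[pos:pos + (n - 1)]            # N - 1 cells departing just after
    forbidden = set(busy_now) | set(before) | set(after)
    # At most (N-1) + N + (N-1) + (N-1) = 4N - 3 memories are forbidden.
    for m in range(k):
        if m not in forbidden:
            return m
    raise RuntimeError("no free memory: need at least 4N - 2 memories")


# N = 3, K = 4N - 2 = 10: memories 0-4 busy this slot, 8 and 5 hold the cells just
# before the insertion point, 6 and 7 the cells just after it.
print(pick_memory_pifo([8, 5, 6, 7, 9], pos=2, busy_now=[0, 1, 2, 3, 4], n=3, k=10))  # 9
```

Memory 9 already holds a queued cell, but that cell sits outside both windows, so it cannot be read in the same slot as the new cell.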

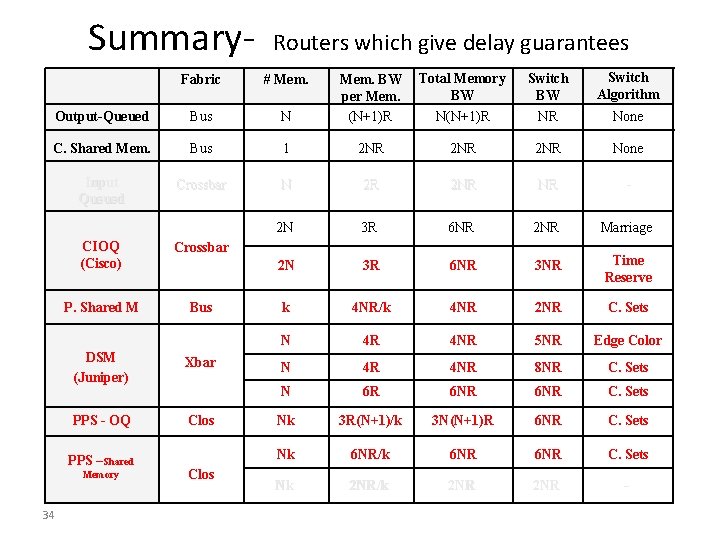

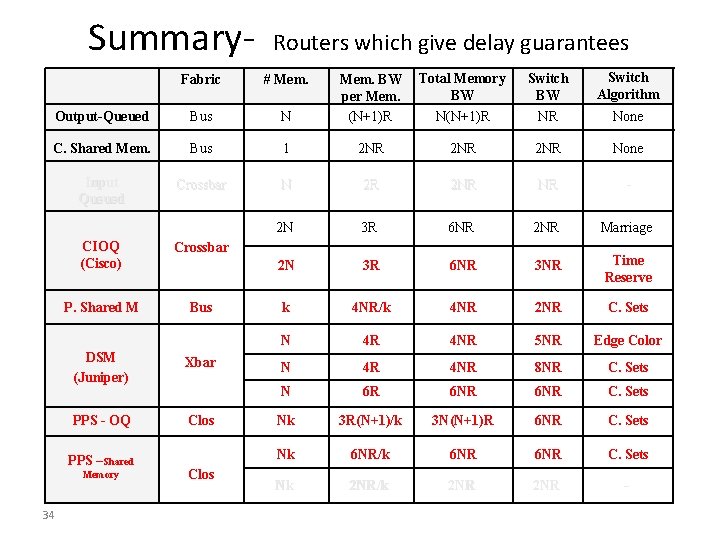

Summary: Routers which give delay guarantees

Total Memory BW N(N+1)R Switch BW NR Switch Algorithm N Mem. BW per Mem. (N+1)R Bus 1 2NR 2NR None Crossbar N 2R 2NR NR - 2N 3R 6NR 2NR Marriage 2N 3R 6NR 3NR Time Reserve k 4NR/k 4NR 2NR C. Sets N 4R 4NR 5NR Edge Color N 4R 4NR 8NR C. Sets N 6R 6NR C. Sets Nk 3R(N+1)/k 3N(N+1)R 6NR C. Sets Nk 6NR/k 6NR C. Sets Nk 2NR/k 2NR - Fabric # Mem. Output-Queued Bus C. Shared Mem. Input Queued CIOQ (Cisco) Crossbar P. Shared M Bus DSM (Juniper) Xbar PPS – OQ Clos PPS – Shared Memory Clos None

Backups

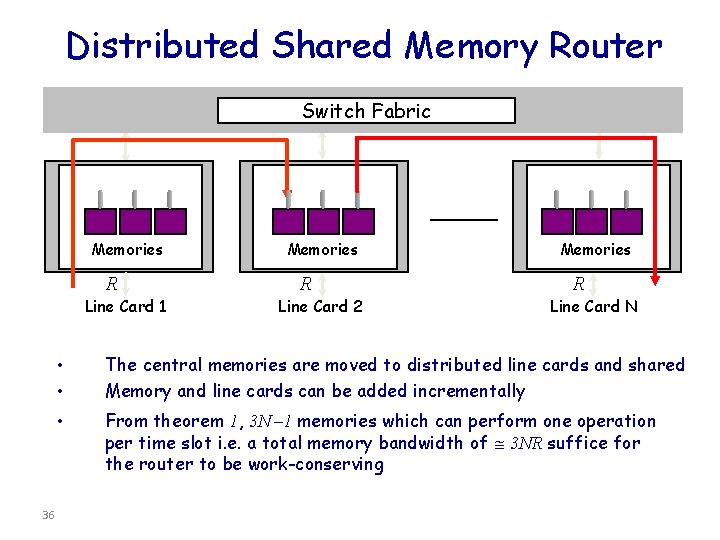

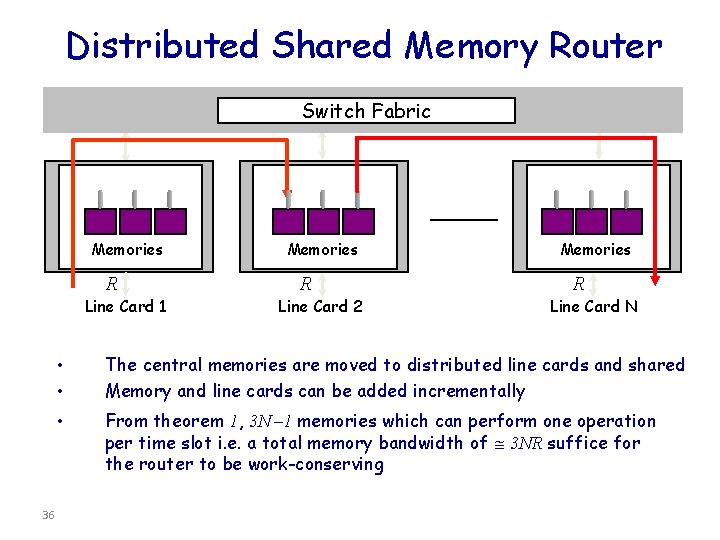

Distributed Shared Memory Router

Figure: a switch fabric connects line cards 1 to N; each line card runs at rate R and holds its own memories.

- The central memories are moved to distributed line cards and shared.
- Memory and line cards can be added incrementally.
- From Theorem 1, 3N – 1 memories which can perform one operation per time slot, i.e., a total memory bandwidth of 3NR, suffice for the router to be work-conserving.

Corollary 1

- Problem: What is the switch bandwidth for a work-conserving DSM router?
- Corollary 1 (sufficiency): A switch bandwidth of 4NR is sufficient for a distributed shared memory router to be work-conserving.
- Proof:

Corollary 2

- Problem: What is the switching algorithm for a work-conserving DSM router?
  - Bus: no algorithm needed, but impractical.
  - Crossbar: an algorithm is needed because only permutations are allowed.
- Corollary 2 (existence): An edge coloring algorithm can switch packets for a work-conserving distributed shared memory router.
- Proof: Follows from König's theorem: any bipartite graph with maximum degree Δ has an edge coloring with Δ colors.
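A constructive version of König's theorem recolors alternating paths. The sketch below assumes that the cells to move in one round are given as (source line card, destination line card) pairs; each color class is then a matching, i.e., one crossbar configuration, and at most Δ configurations are needed. The slide does not say which edge-coloring algorithm is used; this is the textbook alternating-path method, and the names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def bipartite_edge_coloring(edges: List[Tuple[int, int]]) -> List[int]:
    """Color a bipartite multigraph's edges with max-degree colors (König).

    edges[i] = (u, v): u is a left vertex (source), v a right vertex (destination).
    Returns colors[i] in range(max_degree); edges sharing an endpoint differ in color.
    """
    deg: Dict[tuple, int] = defaultdict(int)
    for u, v in edges:
        deg[("L", u)] += 1
        deg[("R", v)] += 1
    delta = max(deg.values(), default=0)
    at: Dict[tuple, Dict[int, tuple]] = defaultdict(dict)  # vertex -> {color: (edge, other end)}
    colors: List[int] = [-1] * len(edges)

    def free(x: tuple) -> int:
        # A vertex with an uncolored edge has fewer than delta colored edges.
        return next(c for c in range(delta) if c not in at[x])

    for i, (u, v) in enumerate(edges):
        x, y = ("L", u), ("R", v)
        a = free(x)
        if a in at[y]:
            # a is taken at y: swap colors a/b along the alternating path from y.
            # In a bipartite graph this path never reaches x, so a stays free at x.
            b = free(y)
            path, node, c = [], y, a
            while c in at[node]:
                e, nxt = at[node][c]
                path.append((e, node, nxt, c))
                node, c = nxt, (b if c == a else a)
            for e, p, q, c in path:
                del at[p][c], at[q][c]
            for e, p, q, c in path:
                nc = b if c == a else a
                colors[e] = nc
                at[p][nc] = (e, q)
                at[q][nc] = (e, p)
        colors[i] = a
        at[x][a] = (i, y)
        at[y][a] = (i, x)
    return colors


# Hypothetical cells to move in one round: (source line card, destination line card).
demand = [(0, 1), (0, 2), (1, 1), (1, 0), (2, 2), (2, 1), (0, 1)]
colors = bipartite_edge_coloring(demand)
for c in sorted(set(colors)):
    # each color class is a matching, i.e. one valid crossbar configuration
    print(c, [d for d, col in zip(demand, colors) if col == c])
```

Line card 1 is the destination of four cells here, so Δ = 4 and the round needs exactly four crossbar configurations.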