EE384x Packet Switch Architectures, Spring 2012

- Slides: 35

EE384x Packet Switch Architectures, Spring 2012. Lecture 2, 3: Output Queueing. Nick McKeown

Outline

1. Output Queued Switches
2. Rate and Delay guarantees

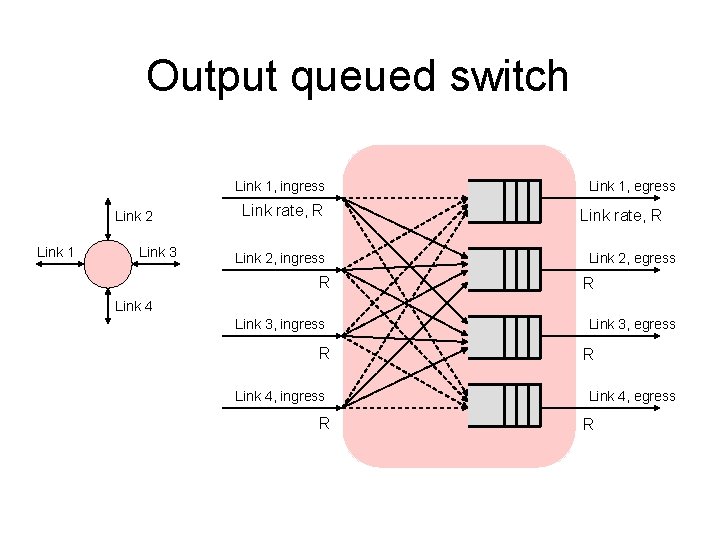

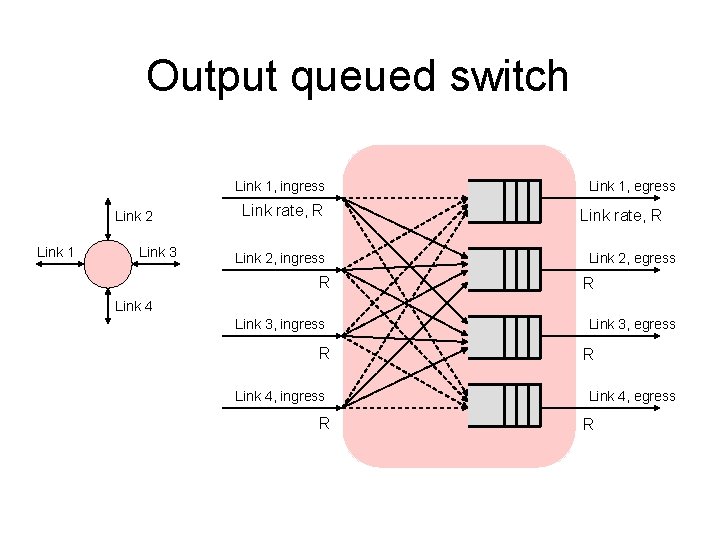

Output queued switch

[Figure: four links, Link 1 to Link 4, each with an ingress side and an egress side running at link rate R.]

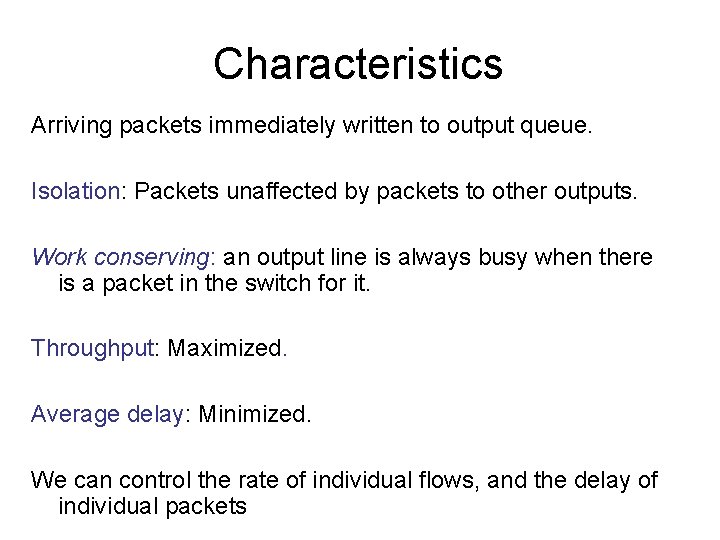

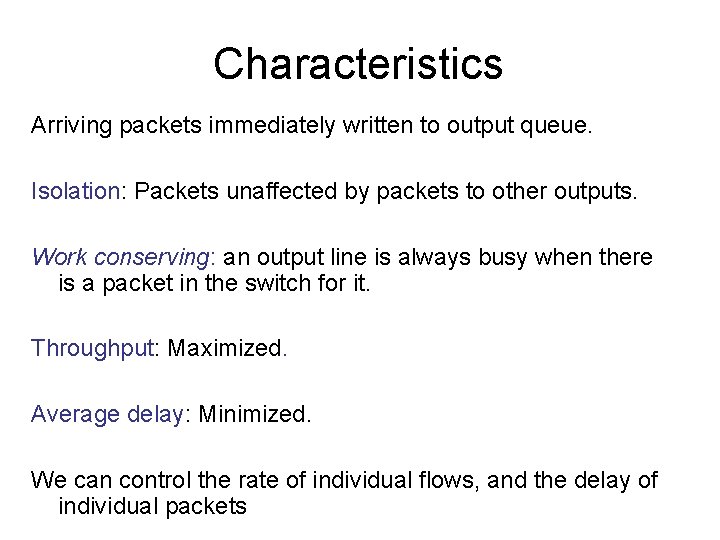

Characteristics

• Arriving packets are immediately written to the output queue.
• Isolation: packets are unaffected by packets to other outputs.
• Work conserving: an output line is always busy when there is a packet in the switch for it.
• Throughput: maximized.
• Average delay: minimized.
• We can control the rate of individual flows and the delay of individual packets.

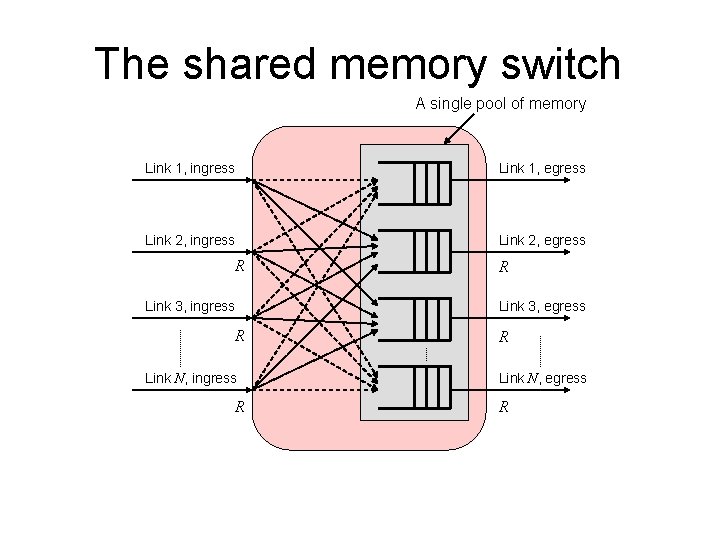

The shared memory switch

[Figure: N ingress links and N egress links, each at rate R, all attached to a single pool of memory.]

Questions

• Is a shared memory switch work conserving?
• How does average packet delay compare to an output queued switch?
• Packet loss?

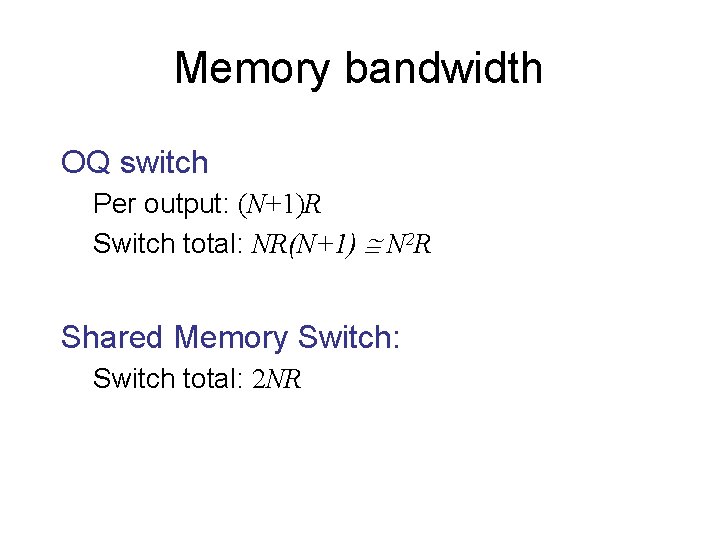

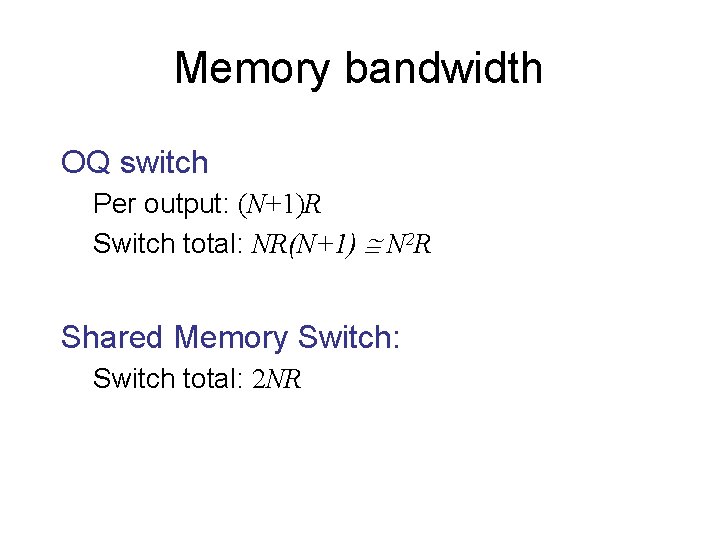

Memory bandwidth

OQ switch:
• Per output: $(N+1)R$
• Switch total: $NR(N+1) \approx N^2 R$

Shared memory switch:
• Switch total: $2NR$
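The two totals above can be compared numerically. The sketch below is only an illustration: the function names and the example values (32 ports at 10 Gb/s) are assumptions, not figures from the lecture.

```python
def oq_memory_bandwidth(n_ports, rate):
    """Memory bandwidth of an output queued switch.

    Each output memory must absorb up to N simultaneous writes plus one read,
    so it needs (N + 1) * R; the whole switch needs N times that.
    """
    per_output = (n_ports + 1) * rate
    return per_output, n_ports * per_output


def shared_memory_bandwidth(n_ports, rate):
    """One shared memory sees N writes and N reads per packet time: 2 * N * R."""
    return 2 * n_ports * rate


# Illustrative values only (assumed): 32 ports, 10 Gb/s per link.
per_output, oq_total = oq_memory_bandwidth(32, 10)
shared_total = shared_memory_bandwidth(32, 10)
print(per_output, oq_total, shared_total)  # prints: 330 10560 640
```

The OQ total grows roughly as N squared, while the shared memory total grows linearly in N, which is the point of the comparison.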

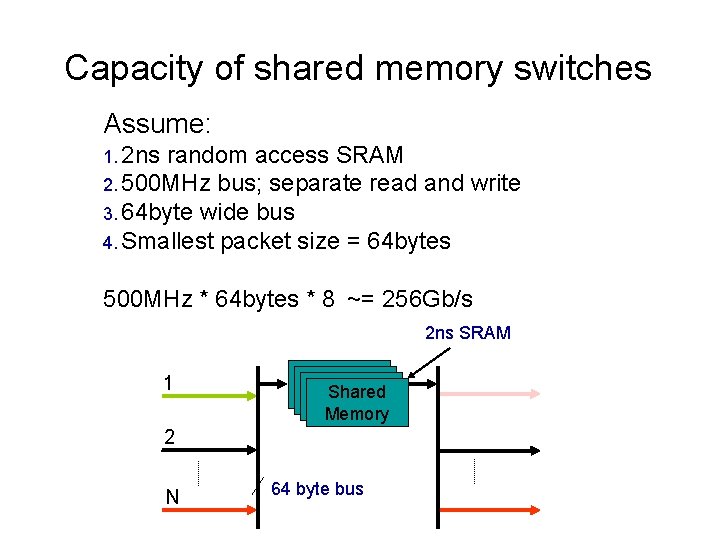

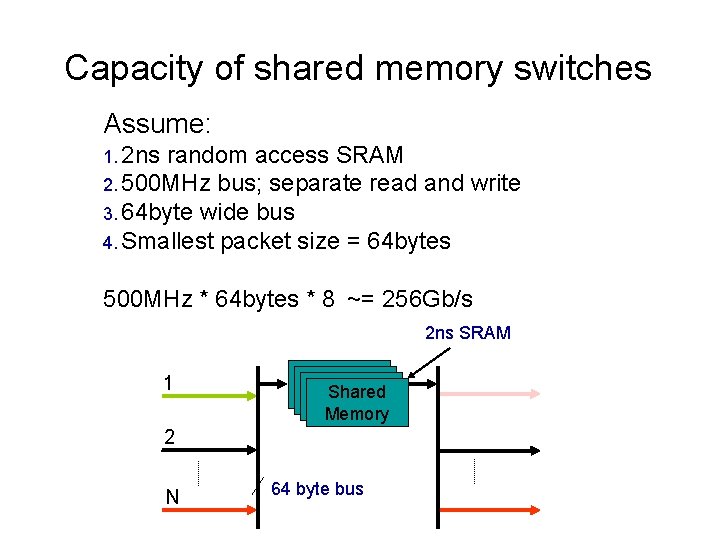

Capacity of shared memory switches

Assume:
1. 2 ns random access SRAM
2. 500 MHz bus; separate read and write
3. 64 byte wide bus
4. Smallest packet size = 64 bytes

500 MHz × 64 bytes × 8 bits/byte = 256 Gb/s

[Figure: ports 1, 2, …, N connected over a 64 byte bus to a shared memory built from 2 ns SRAM.]
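The arithmetic on this slide can be checked with a few lines. This is a rough sketch under a stated assumption: the separate read and write buses each carry the full aggregate line rate NR, so the bus rate bounds NR. The helper `max_ports` and the 10 Gb/s link rate are hypothetical additions for illustration.

```python
BUS_CLOCK_HZ = 500e6    # 500 MHz bus, matching one 2 ns SRAM access per cycle
BUS_WIDTH_BYTES = 64    # one minimum-size packet per bus cycle

# Throughput of one bus direction, in bits per second.
bus_rate_bps = BUS_CLOCK_HZ * BUS_WIDTH_BYTES * 8


def max_ports(link_rate_bps):
    """Ports supported if N * R must fit in one bus direction (assumption)."""
    return int(bus_rate_bps // link_rate_bps)


print(bus_rate_bps / 1e9)   # prints: 256.0
print(max_ports(10e9))      # prints: 25
```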


Rate Guarantees

Problem #1: In a FIFO queue, all packets and flows receive the same service rate.

Solution: Place each flow in its own output queue; serve each queue at a different rate.

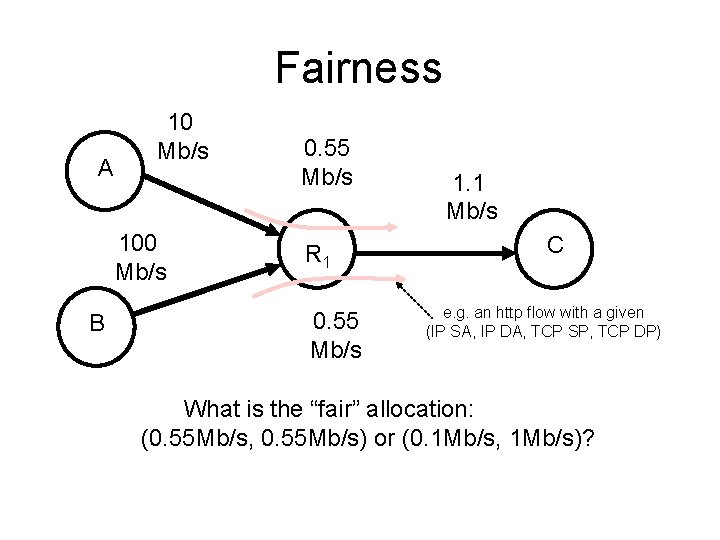

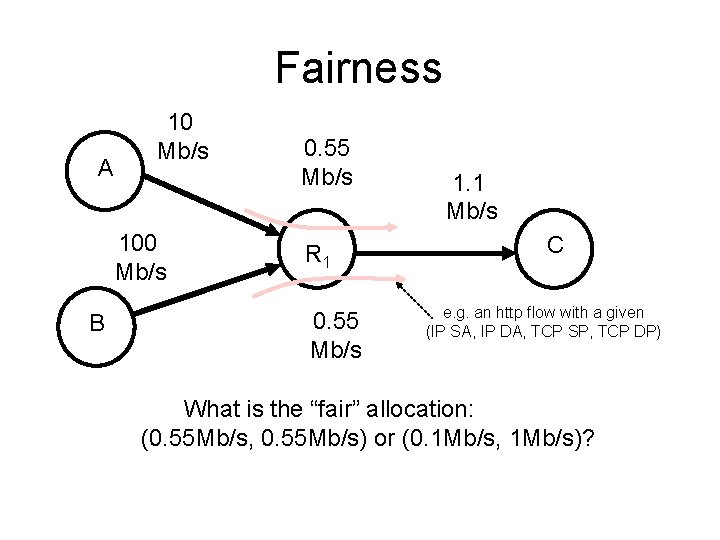

Fairness

[Figure: hosts A (10 Mb/s link) and B (100 Mb/s link) send through router R1 onto a 1.1 Mb/s link towards C; each flow is shown receiving 0.55 Mb/s. A flow is, e.g., an HTTP flow with a given (IP SA, IP DA, TCP SP, TCP DP).]

What is the “fair” allocation: (0.55 Mb/s, 0.55 Mb/s) or (0.1 Mb/s, 1 Mb/s)?

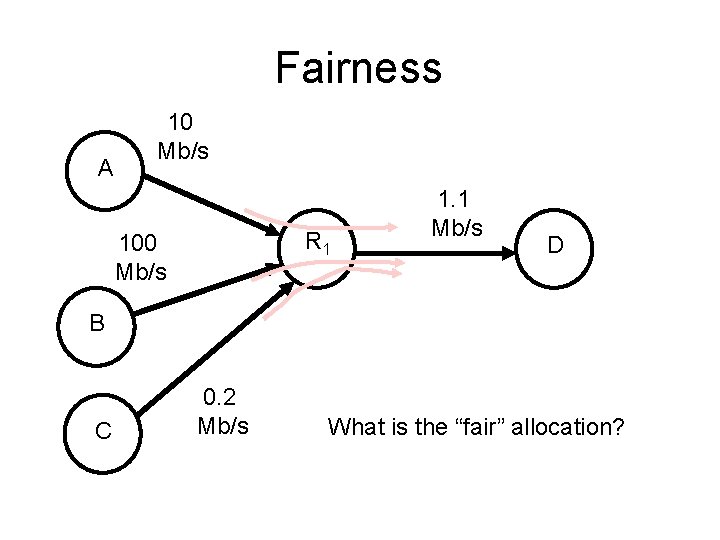

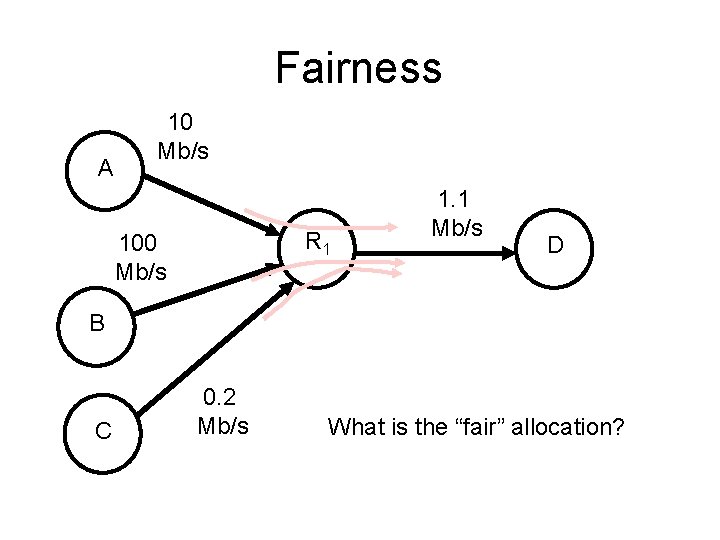

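Fairness

[Figure: hosts A (10 Mb/s link) and B (100 Mb/s link) attach to router R1, which also connects to C and D; the remaining link labels are 1.1 Mb/s and 0.2 Mb/s.]

What is the “fair” allocation?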

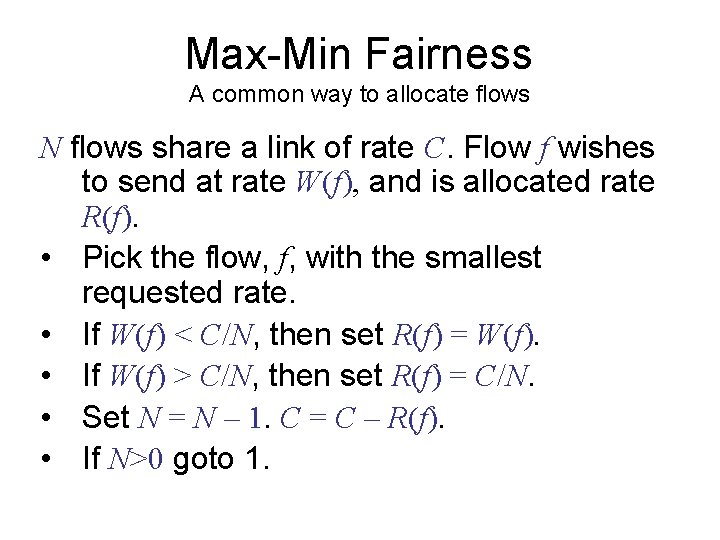

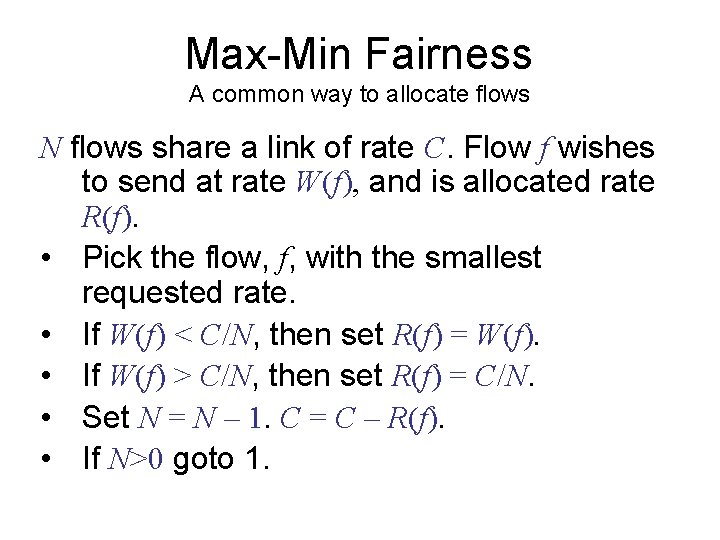

Max-Min Fairness

A common way to allocate rates to flows. N flows share a link of rate C. Flow f wishes to send at rate W(f), and is allocated rate R(f).

1. Pick the flow, f, with the smallest requested rate.
2. If W(f) ≤ C/N, then set R(f) = W(f).
3. If W(f) > C/N, then set R(f) = C/N.
4. Set N = N – 1 and C = C – R(f).
5. If N > 0, go to 1.

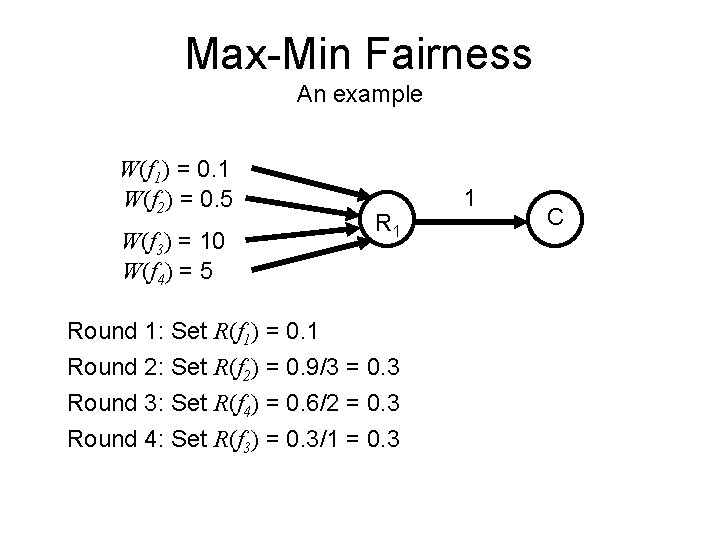

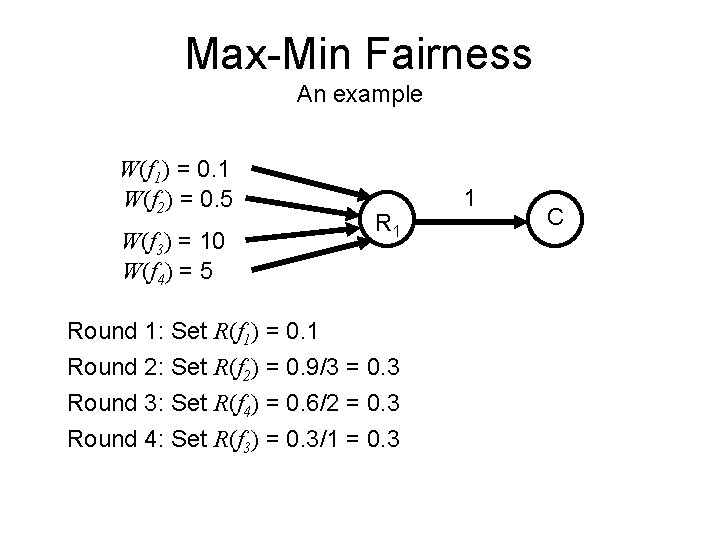

Max-Min Fairness: an example

Four flows share a link of rate C = 1 at router R1:

$W(f_1) = 0.1$, $W(f_2) = 0.5$, $W(f_3) = 10$, $W(f_4) = 5$

Round 1: Set $R(f_1) = 0.1$
Round 2: Set $R(f_2) = 0.9/3 = 0.3$
Round 3: Set $R(f_4) = 0.6/2 = 0.3$
Round 4: Set $R(f_3) = 0.3/1 = 0.3$
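The max-min procedure and the four-flow example can be reproduced with a short function. This is a minimal sketch: the name `max_min_allocation` and the dictionary interface are illustrative choices, not part of the lecture.

```python
def max_min_allocation(demands, capacity):
    """Max-min fair rates for flows sharing one link.

    demands maps flow name -> requested rate W(f); capacity is the link rate C.
    Flows are visited in order of increasing demand, as in the slide.
    """
    remaining = capacity
    flows_left = len(demands)
    allocation = {}
    for flow, wanted in sorted(demands.items(), key=lambda item: item[1]):
        fair_share = remaining / flows_left          # C / N for this round
        allocation[flow] = min(wanted, fair_share)   # R(f) = min(W(f), C/N)
        remaining -= allocation[flow]                # C = C - R(f)
        flows_left -= 1                              # N = N - 1
    return allocation


rates = max_min_allocation({"f1": 0.1, "f2": 0.5, "f3": 10, "f4": 5}, capacity=1.0)
for flow in sorted(rates):
    print(flow, round(rates[flow], 3))
```

Flow f1 gets its full request of 0.1, and the other three split the remaining 0.9 equally.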

Max-Min Fairness

How can a router “allocate” different rates to different flows? First, let’s see how a router can allocate the “same” rate to different flows…

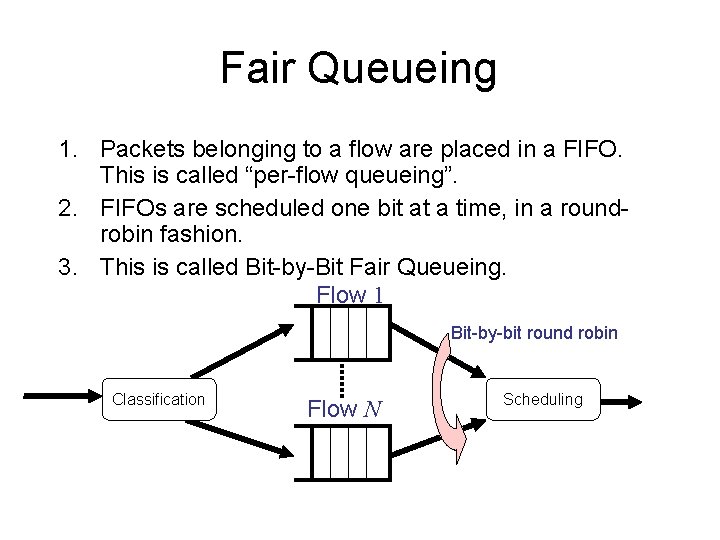

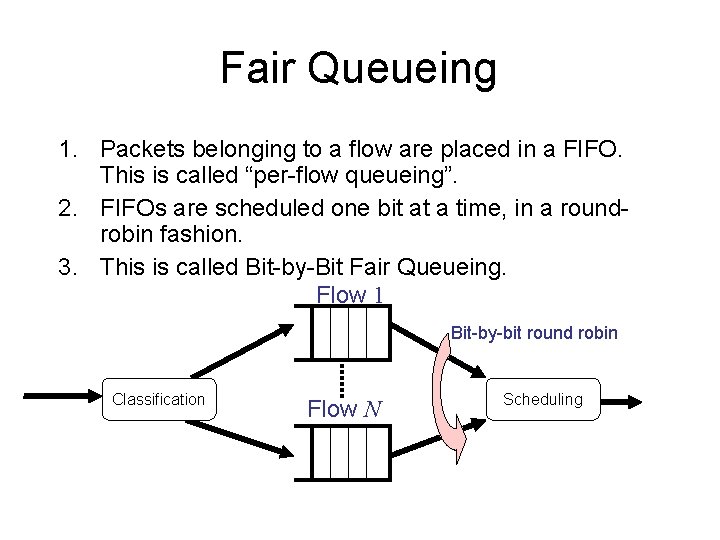

Fair Queueing

1. Packets belonging to a flow are placed in a FIFO. This is called “per-flow queueing”.
2. FIFOs are scheduled one bit at a time, in a round-robin fashion.
3. This is called Bit-by-Bit Fair Queueing.

[Figure: arriving packets are classified into per-flow FIFOs, Flow 1 to Flow N, which the scheduler serves bit-by-bit round robin.]

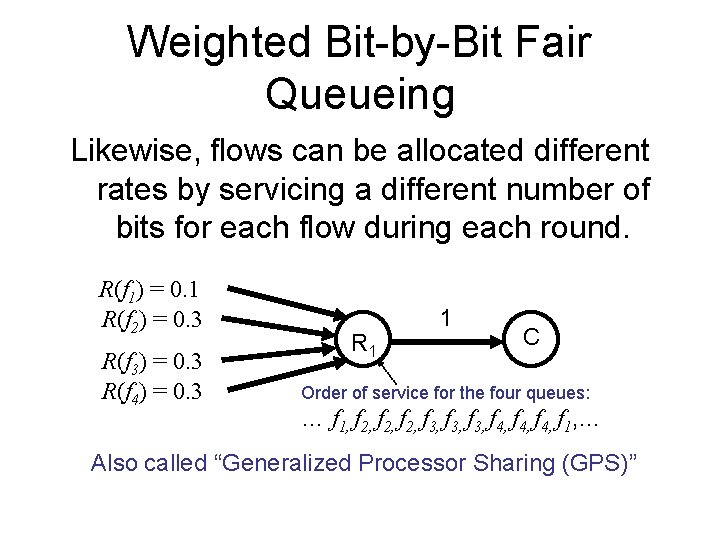

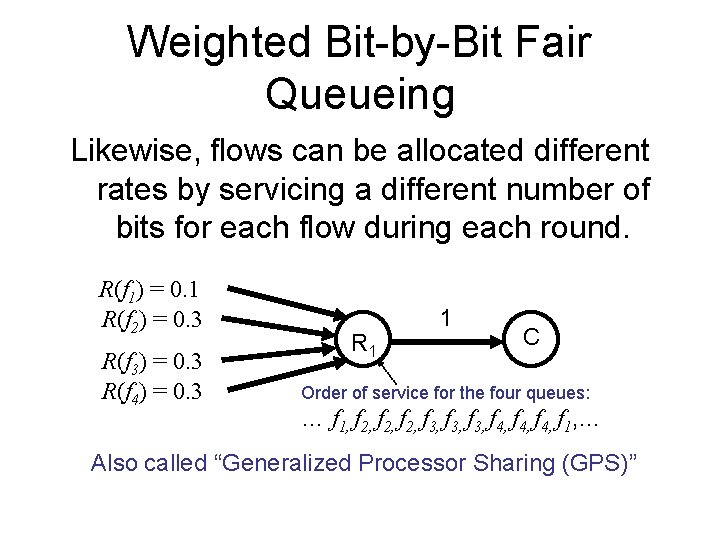

Weighted Bit-by-Bit Fair Queueing

Likewise, flows can be allocated different rates by servicing a different number of bits for each flow during each round.

Example on a link of rate C = 1 at router R1: $R(f_1) = 0.1$, $R(f_2) = 0.3$, $R(f_3) = 0.3$, $R(f_4) = 0.3$

Order of service for the four queues: … f1, f2, f3, f4, f1, …

Also called “Generalized Processor Sharing (GPS)”.
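One way to picture weighted bit-by-bit service is a generator that visits the queues in a fixed order and serves a per-flow number of bits on each visit. The sketch below assumes all four queues stay backlogged and that the rates 0.1 : 0.3 : 0.3 : 0.3 map to 1, 3, 3 and 3 bits per round; that mapping and the function name are illustrative assumptions.

```python
from itertools import islice


def weighted_bit_round_robin(bits_per_round):
    """Yield the flow served for each successive bit.

    bits_per_round maps flow -> bits served per visit (its weight).
    Assumes every flow always has data queued.
    """
    while True:
        for flow, bits in bits_per_round.items():
            for _ in range(bits):
                yield flow


weights = {"f1": 1, "f2": 3, "f3": 3, "f4": 3}   # assumed integer weights
first_bits = list(islice(weighted_bit_round_robin(weights), 11))
print(first_bits)
```

Over many rounds f1 receives 1 bit in every 10, i.e. a tenth of the link, and each of the other flows receives three tenths.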

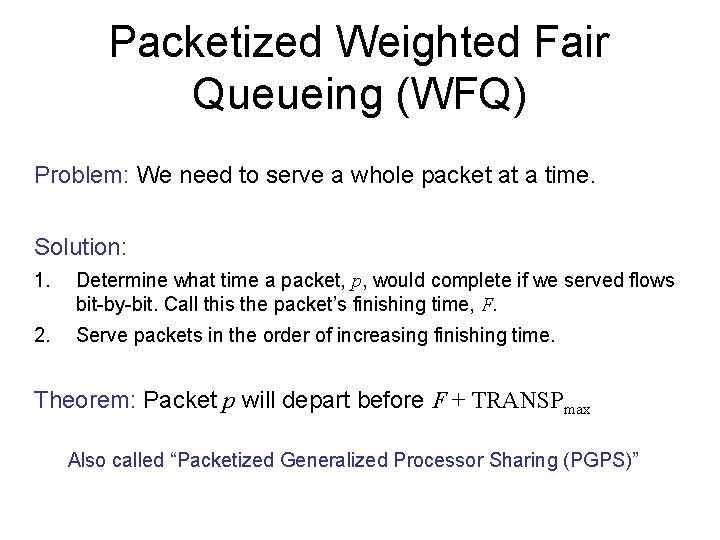

Packetized Weighted Fair Queueing (WFQ)

Problem: We need to serve a whole packet at a time.

Solution:
1. Determine what time a packet, p, would complete if we served flows bit-by-bit. Call this the packet’s finishing time, F.
2. Serve packets in the order of increasing finishing time.

Theorem: Packet p will depart before $F + \mathrm{TRANSP}_{\max}$, where $\mathrm{TRANSP}_{\max}$ is the transmission time of a maximum-length packet.

Also called “Packetized Generalized Processor Sharing (PGPS)”.

Intuition behind Packetized WFQ

1. Consider packet p that arrives and immediately enters service under WFQ.
2. Potentially, there are packets Q = {q, r, …} that arrive after p that would have completed service before p under bit-by-bit WFQ. These packets are delayed by the duration of p’s service.
3. Because the amount of data in Q that could have departed before p must be less than or equal to the length of p, their ordering is simply changed.
4. Packets in Q are delayed by the maximum duration of p.

(Detailed proof in Parekh and Gallager.)

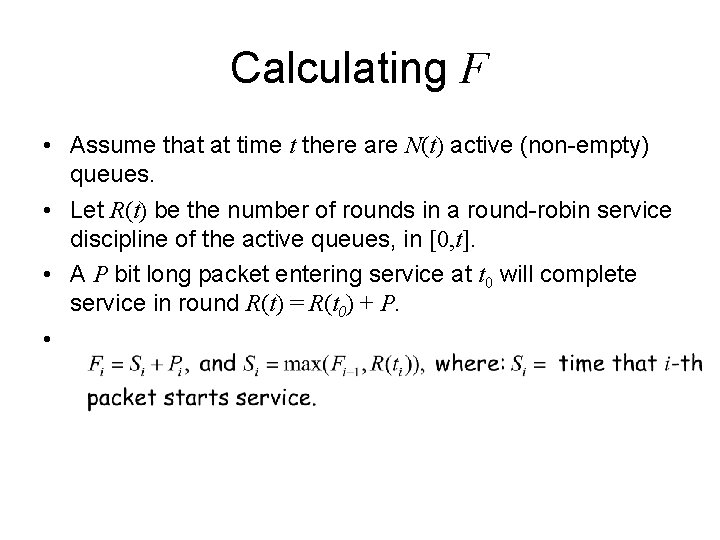

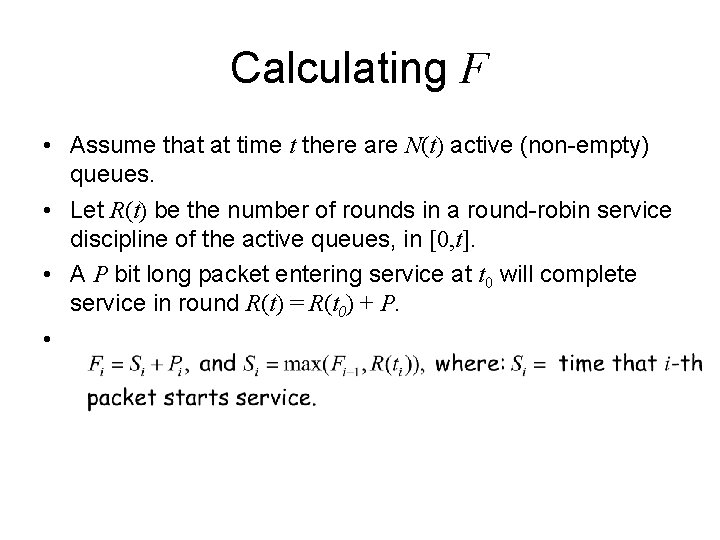

Calculating F

• Assume that at time t there are N(t) active (non-empty) queues.
• Let R(t) be the number of rounds in a round-robin service discipline of the active queues, in [0, t].
• A P bit long packet entering service at $t_0$ will complete service in round $R(t) = R(t_0) + P$.

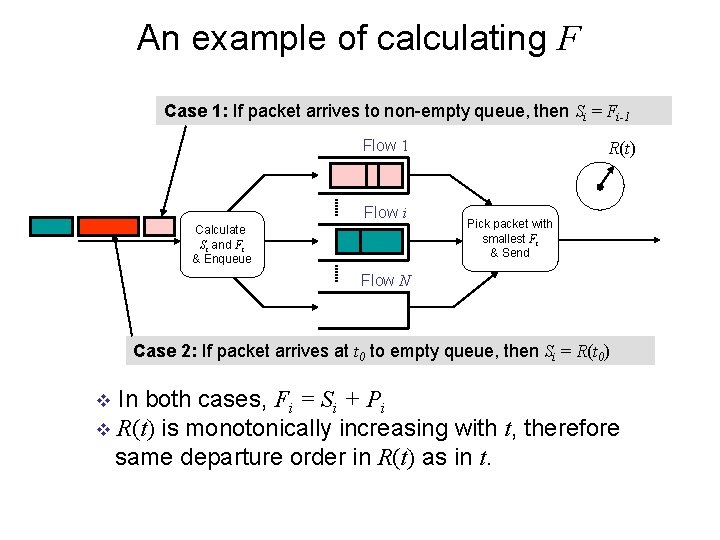

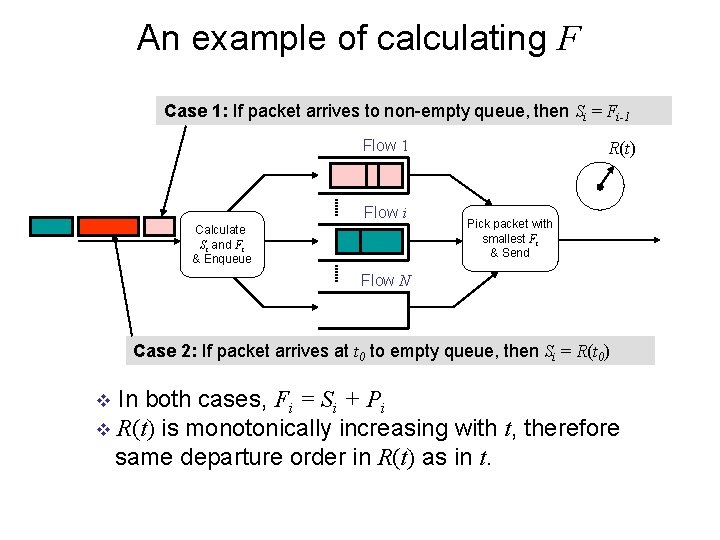

An example of calculating F

[Figure: packets for Flow i to Flow N arrive; the scheduler calculates $S_i$ and $F_i$ using R(t) and enqueues each packet, then picks the packet with the smallest $F_i$ and sends it.]

Case 1: If a packet arrives to a non-empty queue, then $S_i = F_{i-1}$.

Case 2: If a packet arrives at $t_0$ to an empty queue, then $S_i = R(t_0)$.

In both cases, $F_i = S_i + P_i$.

• R(t) is monotonically increasing with t, therefore the departure order is the same in R(t) as in t.
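The two cases can be turned into a small scheduler sketch. It is not a full WFQ implementation: tracking the round number R(t) is left to the caller, who passes it in as `round_now` (an assumption made to keep the example short), and the class and method names are hypothetical.

```python
import heapq


class FinishTimeScheduler:
    """Tags packets with S and F as in Case 1 / Case 2 and serves smallest F first."""

    def __init__(self):
        self.last_finish = {}   # flow -> F of the most recently enqueued packet
        self.backlog = {}       # flow -> packets enqueued but not yet sent
        self.heap = []          # entries: (F, sequence number, flow, length)
        self.sequence = 0       # tie-breaker so equal F values keep arrival order

    def enqueue(self, flow, length_bits, round_now):
        if self.backlog.get(flow, 0) > 0:
            start = self.last_finish[flow]   # Case 1: queue non-empty, S_i = F_{i-1}
        else:
            start = round_now                # Case 2: queue empty, S_i = R(t0)
        finish = start + length_bits         # F_i = S_i + P_i
        self.last_finish[flow] = finish
        self.backlog[flow] = self.backlog.get(flow, 0) + 1
        heapq.heappush(self.heap, (finish, self.sequence, flow, length_bits))
        self.sequence += 1
        return start, finish

    def dequeue(self):
        finish, _, flow, length_bits = heapq.heappop(self.heap)
        self.backlog[flow] -= 1
        return flow, length_bits, finish


sched = FinishTimeScheduler()
sched.enqueue("a", 100, round_now=0)    # S = 0,   F = 100
sched.enqueue("a", 50, round_now=10)    # S = 100, F = 150 (queue was non-empty)
sched.enqueue("b", 60, round_now=10)    # S = 10,  F = 70
print([sched.dequeue()[0] for _ in range(3)])  # prints: ['b', 'a', 'a']
```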

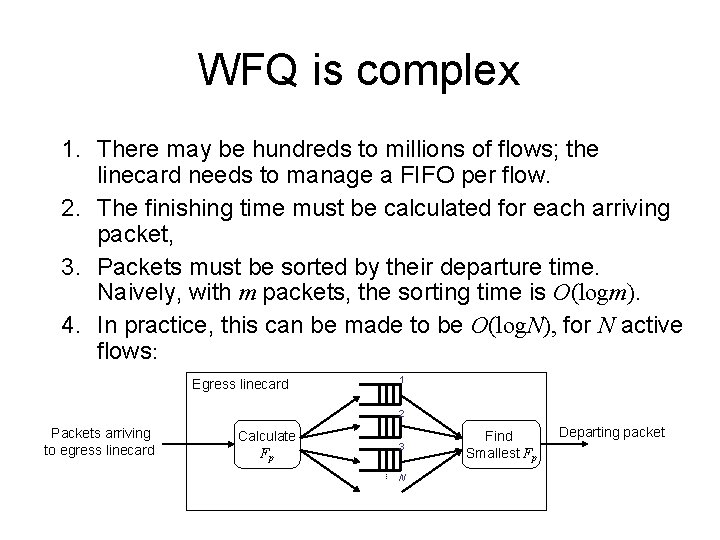

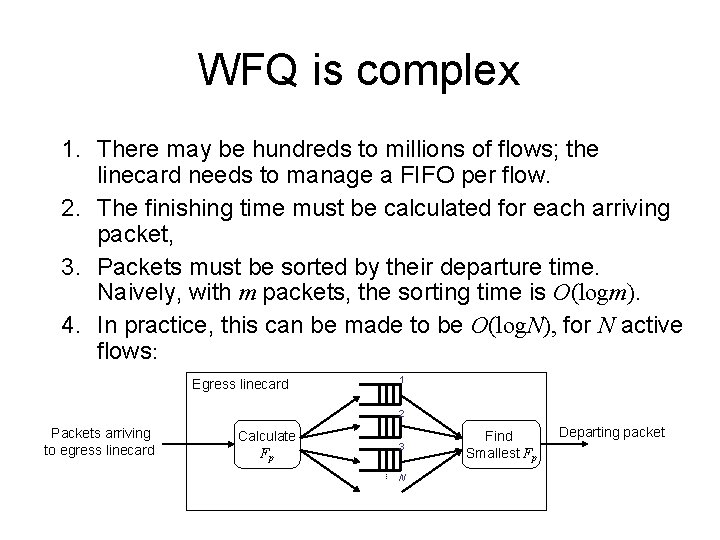

WFQ is complex

1. There may be hundreds to millions of flows; the linecard needs to manage a FIFO per flow.
2. The finishing time must be calculated for each arriving packet.
3. Packets must be sorted by their departure time. Naively, with m packets, the sorting time is O(log m) per packet.
4. In practice, this can be made to be O(log N), for N active flows.

[Figure: egress linecard. Packets arriving to the egress linecard have $F_p$ calculated and are placed in per-flow queues 1 to N; the departing packet is the one with the smallest $F_p$.]
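One plausible reading of the O(log N) claim is that finishing times within a flow never decrease, so only the head packet of each per-flow FIFO has to take part in the search for the smallest finishing time. The sketch below illustrates that idea under that assumption; the class name and interface are hypothetical, and finishing times are supplied by the caller.

```python
import heapq
from collections import deque


class HeadOfLineSelector:
    """Per-flow FIFOs plus a heap holding one entry per non-empty FIFO."""

    def __init__(self):
        self.fifos = {}   # flow -> deque of (finish_time, packet)
        self.heads = []   # heap of (finish_time, flow), at most one per flow

    def push(self, flow, finish_time, packet):
        fifo = self.fifos.setdefault(flow, deque())
        if not fifo:
            # Flow becomes active: its new head enters the heap, O(log N).
            heapq.heappush(self.heads, (finish_time, flow))
        fifo.append((finish_time, packet))

    def pop(self):
        _, flow = heapq.heappop(self.heads)
        fifo = self.fifos[flow]
        _, packet = fifo.popleft()
        if fifo:
            # Promote the flow's next packet to the heap, O(log N).
            heapq.heappush(self.heads, (fifo[0][0], flow))
        return flow, packet


selector = HeadOfLineSelector()
selector.push("a", 100, "a1")
selector.push("a", 150, "a2")
selector.push("b", 70, "b1")
print([selector.pop() for _ in range(3)])
# prints: [('b', 'b1'), ('a', 'a1'), ('a', 'a2')]
```

The heap never holds more than N entries, however many packets are queued in total.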

Delay Guarantees

Problem #2: In a FIFO queue, the delay of a packet is determined by the number of packets ahead of it (from other flows).

Solution: Control the departure time of each packet.

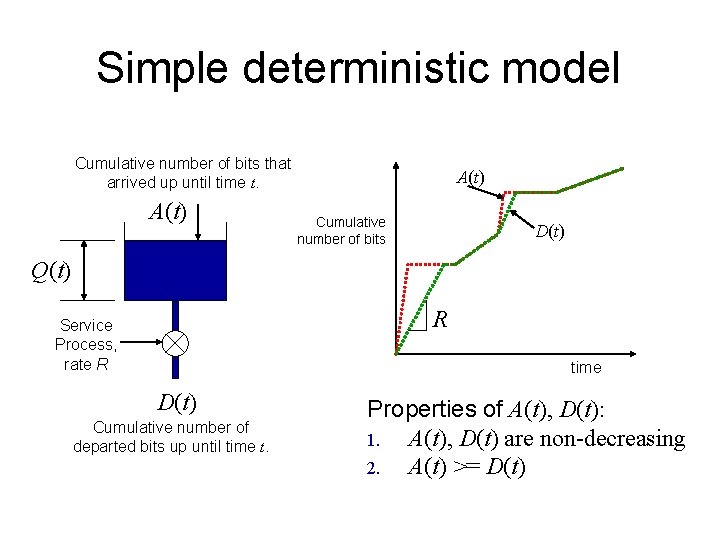

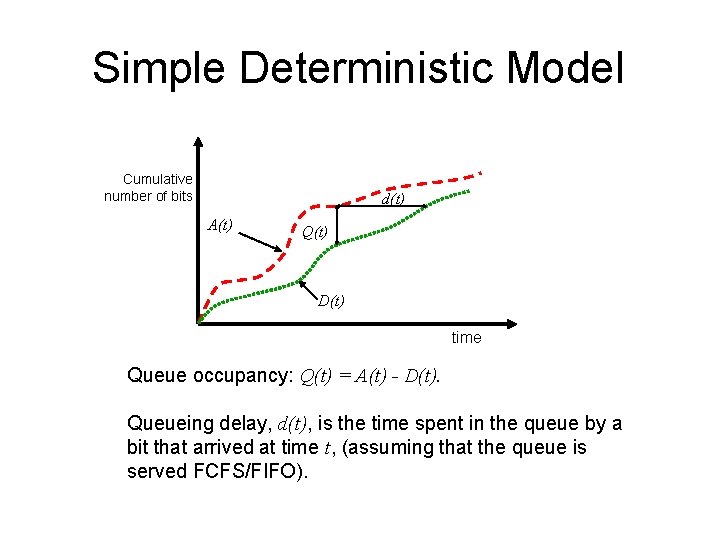

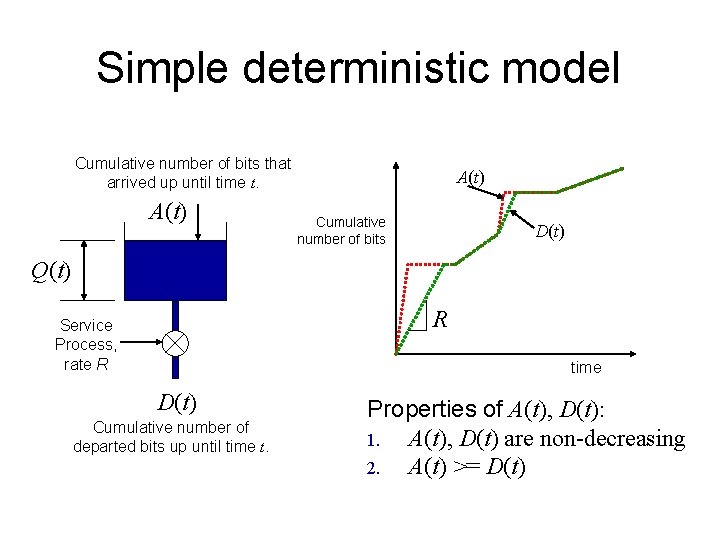

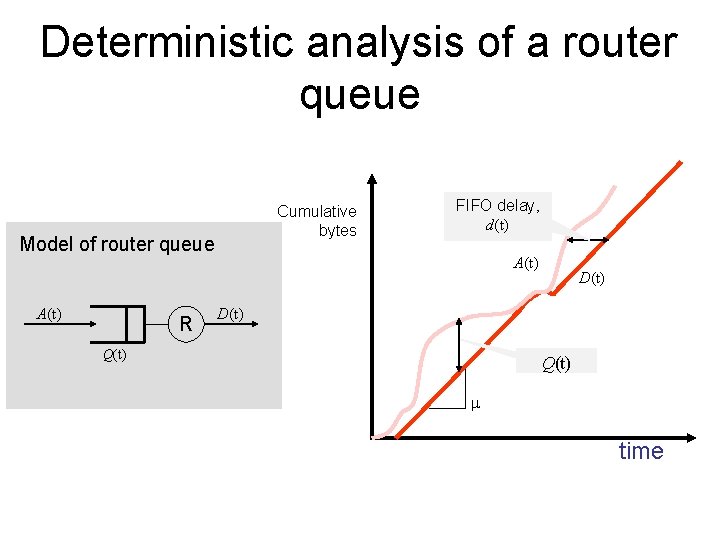

Simple deterministic model

[Figure: arrivals A(t) enter a queue with occupancy Q(t), served by a service process of rate R, producing departures D(t); plot of cumulative number of bits versus time.]

• A(t): cumulative number of bits that arrived up until time t.
• D(t): cumulative number of departed bits up until time t.

Properties of A(t), D(t):
1. A(t), D(t) are non-decreasing.
2. A(t) ≥ D(t).

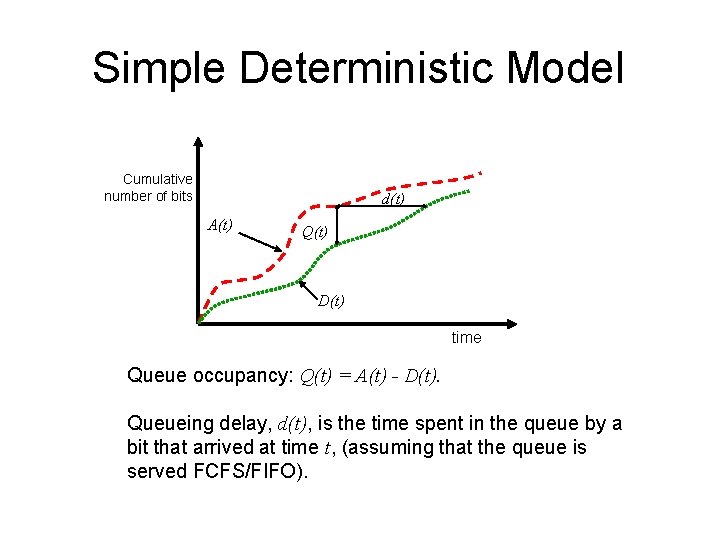

Simple Deterministic Model

[Figure: cumulative number of bits versus time, showing A(t) and D(t); the vertical gap between the curves is Q(t) and the horizontal gap is d(t).]

Queue occupancy: Q(t) = A(t) - D(t).

Queueing delay, d(t), is the time spent in the queue by a bit that arrived at time t (assuming that the queue is served FCFS/FIFO).
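A discrete-time version of this model is easy to compute. The sketch below assumes time is divided into slots, that bits arriving in a slot can be served in the same slot, and that at most `rate` bits depart per slot; the function names are illustrative.

```python
from itertools import accumulate


def simulate_queue(arrivals, rate):
    """Return cumulative arrivals A, cumulative departures D and occupancy Q per slot."""
    A = list(accumulate(arrivals))
    D = []
    departed = 0
    for arrived_so_far in A:
        # Serve at most `rate` bits per slot, never more than has arrived.
        departed = min(arrived_so_far, departed + rate)
        D.append(departed)
    Q = [a - d for a, d in zip(A, D)]
    return A, D, Q


def fifo_delay(A, D, t):
    """Slots until the last bit that arrived by slot t has departed (FIFO).

    Returns None if that bit is still queued at the end of the trace.
    """
    for wait in range(len(D) - t):
        if D[t + wait] >= A[t]:
            return wait
    return None


A, D, Q = simulate_queue([5, 0, 0, 3], rate=2)
print(A, D, Q)              # prints: [5, 5, 5, 8] [2, 4, 5, 7] [3, 1, 0, 1]
print(fifo_delay(A, D, 0))  # prints: 2
```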

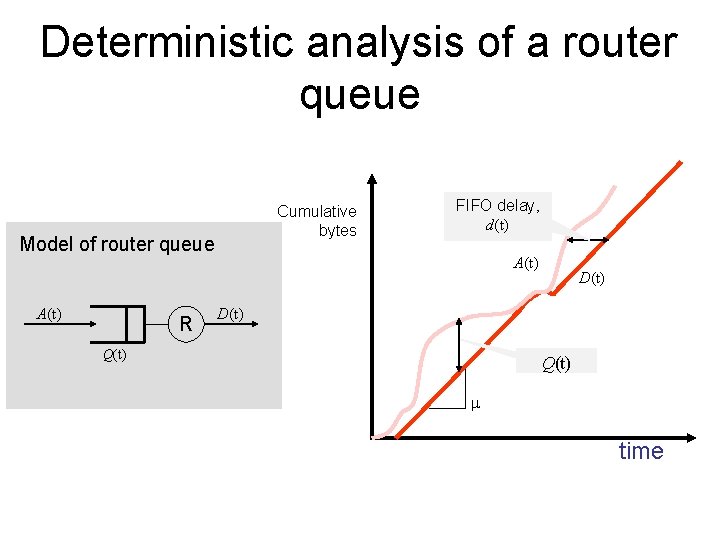

Deterministic analysis of a router queue

[Figure: model of a router queue with arrivals A(t) served at rate R; plot of cumulative bytes versus time showing A(t), D(t), the queue occupancy Q(t) and the FIFO delay d(t).]

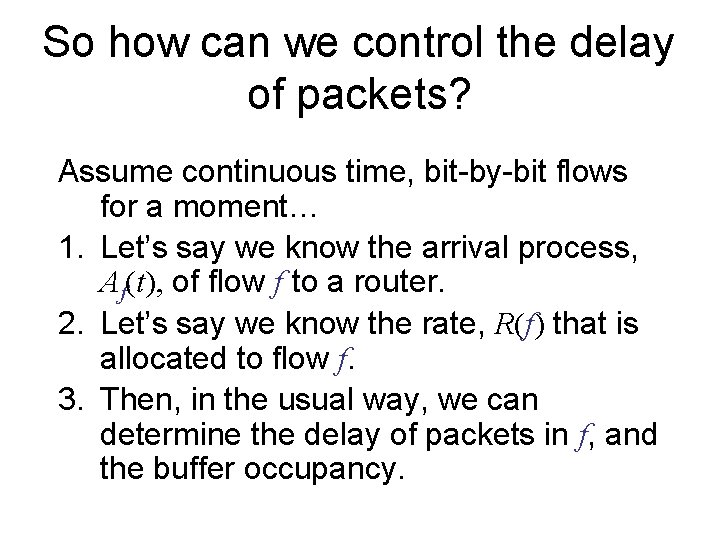

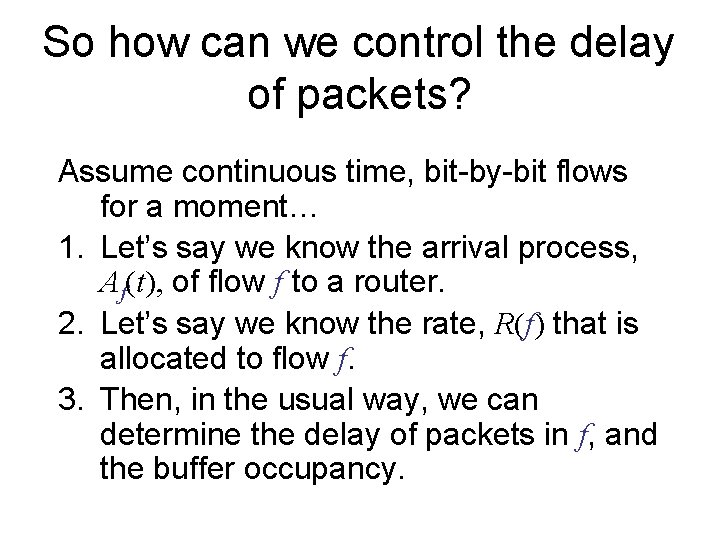

So how can we control the delay of packets?

Assume continuous time, bit-by-bit flows for a moment…

1. Let’s say we know the arrival process, $A_f(t)$, of flow f to a router.
2. Let’s say we know the rate, R(f), that is allocated to flow f.
3. Then, in the usual way, we can determine the delay of packets in f, and the buffer occupancy.

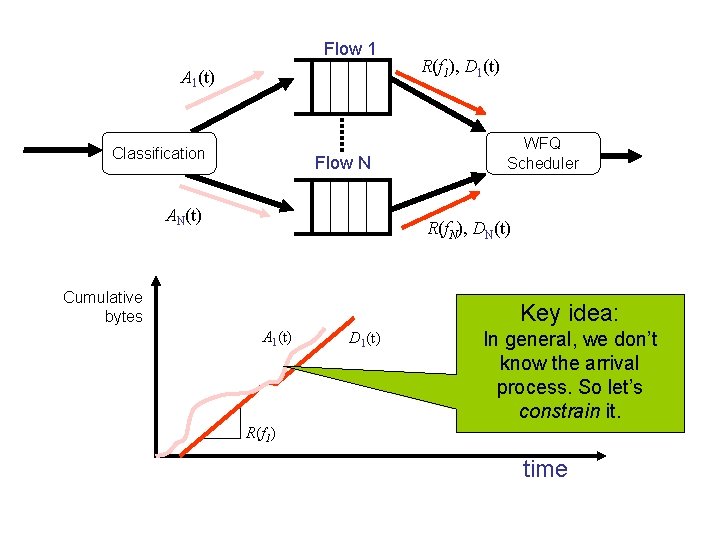

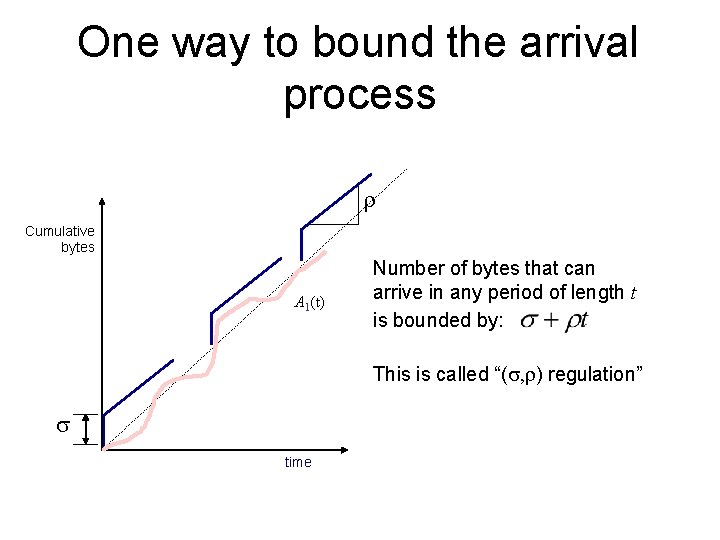

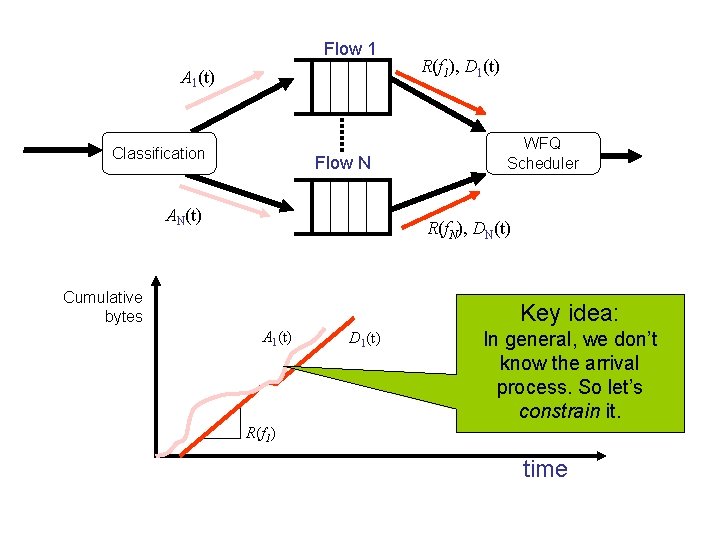

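[Figure: Flow 1 to Flow N, with arrival processes $A_1(t)$ to $A_N(t)$, are classified into per-flow queues and served by a WFQ scheduler at rates $R(f_1)$ to $R(f_N)$, giving departures $D_1(t)$ to $D_N(t)$; plot of cumulative bytes versus time showing $A_1(t)$ and $D_1(t)$, the latter with slope $R(f_1)$.]

Key idea: In general, we don’t know the arrival process. So let’s constrain it.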

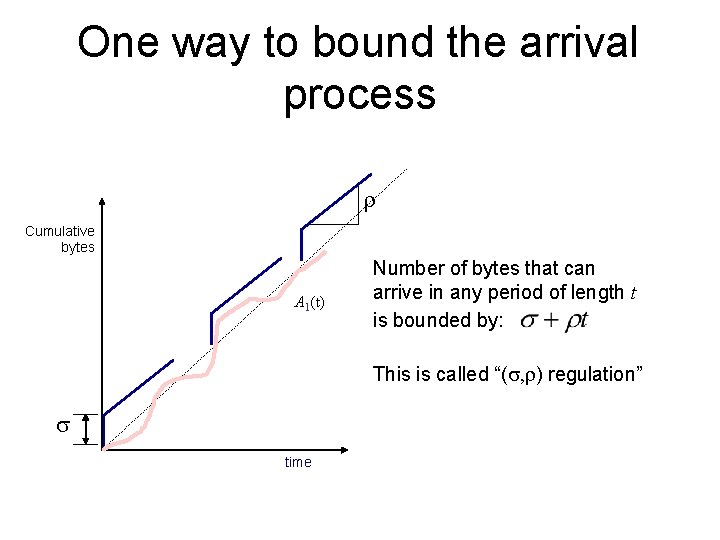

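One way to bound the arrival process

[Figure: cumulative bytes versus time; $A_1(t)$ stays below a line with intercept s and slope r.]

The number of bytes that can arrive in any period of length t is bounded by $s + r\,t$.

This is called “(s, r) regulation”; Parekh and Gallager write the parameters as $(\sigma, \rho)$.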
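For a recorded trace, conformance to an (s, r) bound can be checked directly by testing every window. The sketch below works in discrete slots and treats the bound as s + r·t for a window of t slots; the function name and the brute-force approach are illustrative choices.

```python
from itertools import accumulate


def conforms(arrivals, s, r):
    """True if the bytes in every window of t slots are at most s + r * t."""
    cumulative = [0] + list(accumulate(arrivals))
    slots = len(arrivals)
    for start in range(slots):
        for end in range(start + 1, slots + 1):
            window_bytes = cumulative[end] - cumulative[start]
            if window_bytes > s + r * (end - start):
                return False
    return True


print(conforms([10, 2, 2, 2], s=8, r=2))  # prints: True
print(conforms([11, 0, 0], s=8, r=2))     # prints: False
```

Checking all windows is quadratic in the trace length, which is fine for illustration but not how a router would enforce the bound.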

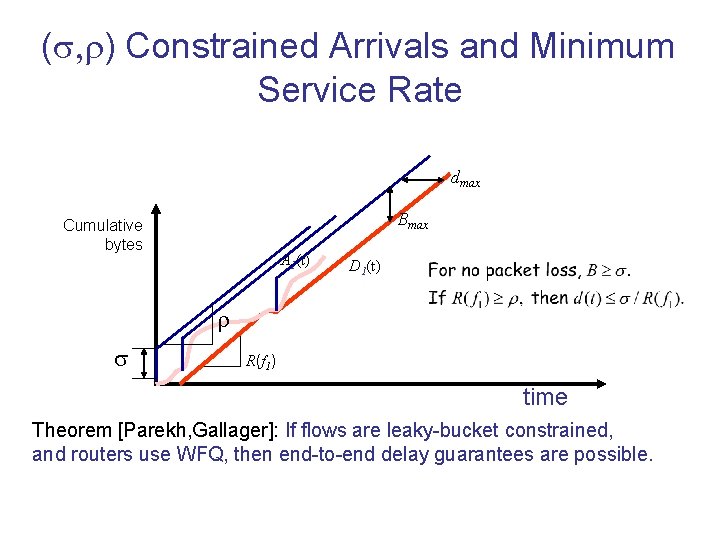

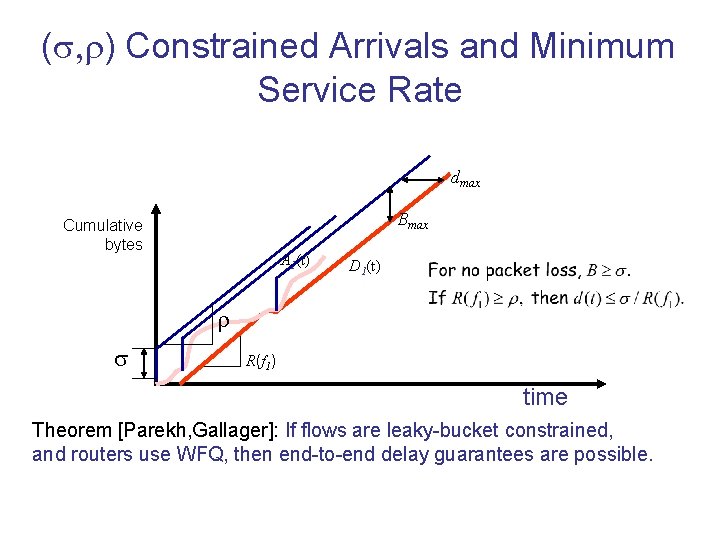

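(s, r) Constrained Arrivals and Minimum Service Rate

[Figure: cumulative bytes versus time; the arrival curve $A_1(t)$, bounded by a line with intercept s and slope r, and the departure curve $D_1(t)$ with slope $R(f_1)$, with the maximum delay $d_{max}$ and the maximum backlog $B_{max}$ marked.]

Theorem [Parekh, Gallager]: If flows are leaky-bucket constrained, and routers use WFQ, then end-to-end delay guarantees are possible.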
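For a single queue in the bit-by-bit (fluid) setting, the quantities $B_{max}$ and $d_{max}$ in the figure can be bounded in a few lines. This is a sketch under the assumptions that flow 1 is (s, r) constrained, that it is served at rate at least $R(f_1)$ whenever it is backlogged, and that $R(f_1) \ge r$; it is not the end-to-end result of Parekh and Gallager.

```latex
\begin{align*}
A_1(\tau + t) - A_1(\tau) &\le s + r\,t
  && \text{($(s, r)$ constraint, any window of length $t$)} \\
B_{\max} &\le \max_{t \ge 0}\,\bigl(s + r\,t - R(f_1)\,t\bigr) = s
  && \text{(since $R(f_1) \ge r$)} \\
d_{\max} &\le \frac{B_{\max}}{R(f_1)} \le \frac{s}{R(f_1)}
  && \text{(FIFO within the flow)}
\end{align*}
```

The backlog never exceeds the burst size s, and the last bit of a burst waits at most the time needed to drain s at rate $R(f_1)$.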

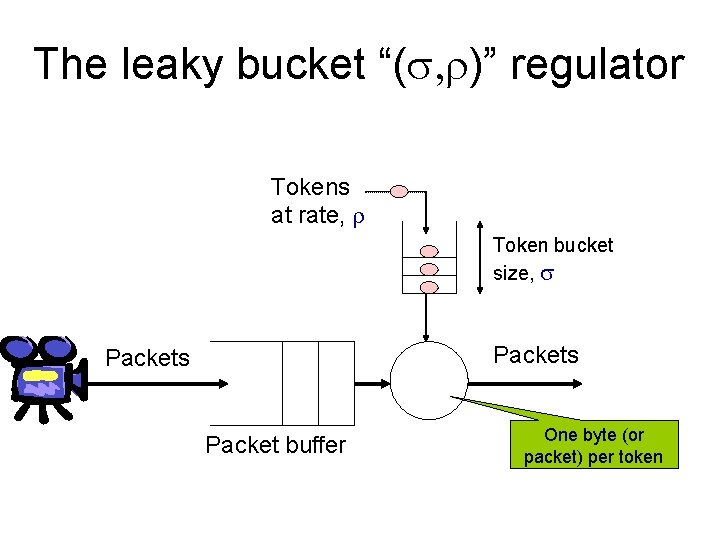

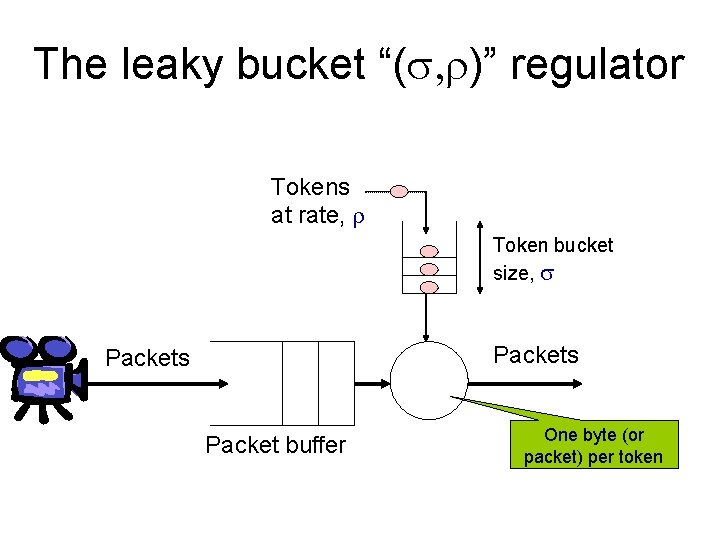

The leaky bucket “(s, r)” regulator

• Tokens arrive at rate r.
• Token bucket size: s.
• Packets wait in a packet buffer.
• One byte (or packet) per token.

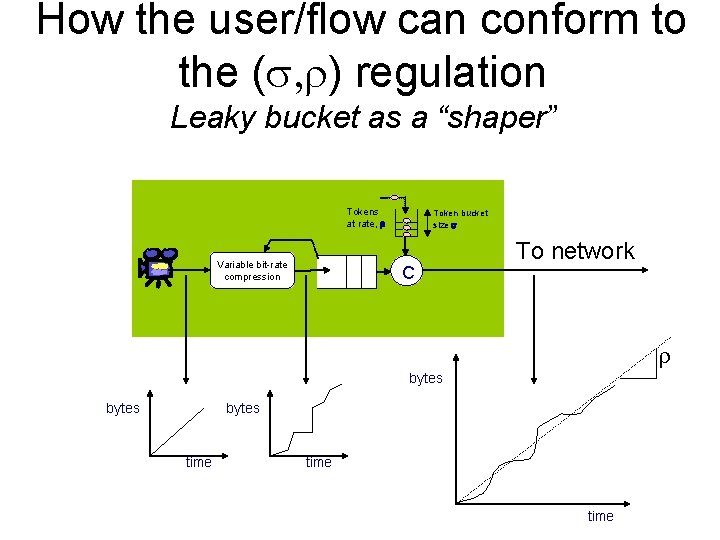

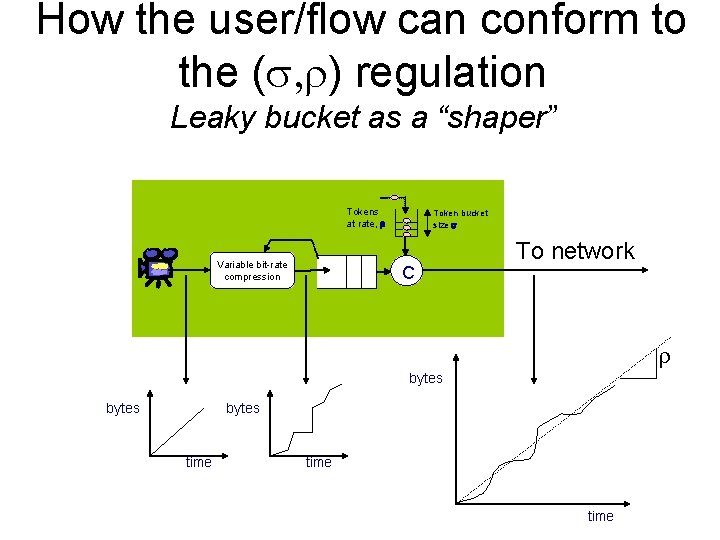

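How the user/flow can conform to the (s, r) regulation

Leaky bucket as a “shaper”

[Figure: a variable bit-rate compression source feeds a leaky bucket (tokens at rate r, token bucket size s) before traffic goes to the network; plots of bytes versus time, with slopes labelled C and r.]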
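A shaper delays traffic until tokens are available, so nothing is dropped. The sketch below is a discrete-time approximation with several assumptions: one token per byte, the bucket starts full, tokens are added at the end of each slot, and the outgoing link (rate C in the figure) is fast enough not to limit the output. The function name is illustrative.

```python
def shape(arrivals, s, r):
    """Return the bytes released in each slot by an (s, r) token bucket shaper."""
    tokens = s        # bucket starts full (assumption)
    backlog = 0       # bytes waiting in the packet buffer
    released = []
    for arrived in arrivals:
        backlog += arrived
        sent = min(backlog, tokens)          # one token per byte
        released.append(sent)
        backlog -= sent
        tokens = min(s, tokens - sent + r)   # refill at rate r, capped at s
    return released


print(shape([20, 0, 0, 0, 0], s=8, r=2))  # prints: [8, 2, 2, 2, 2]
```

A burst of 20 bytes leaves as an initial burst of s = 8 bytes followed by r = 2 bytes per slot, which matches the shape of the bound.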

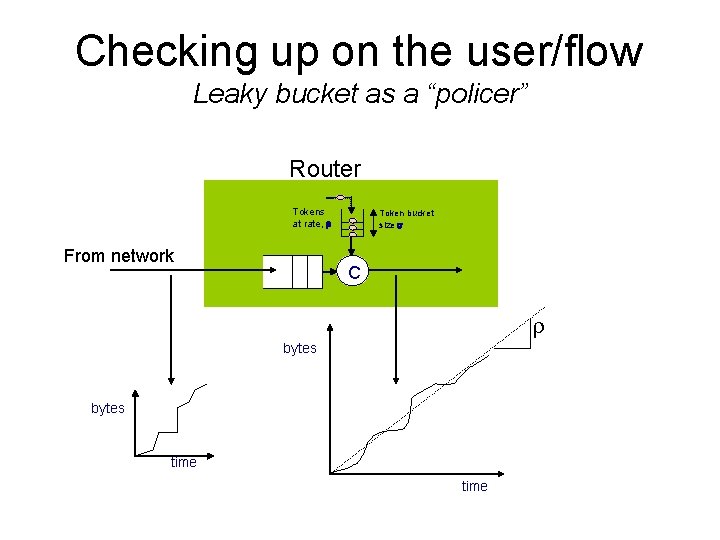

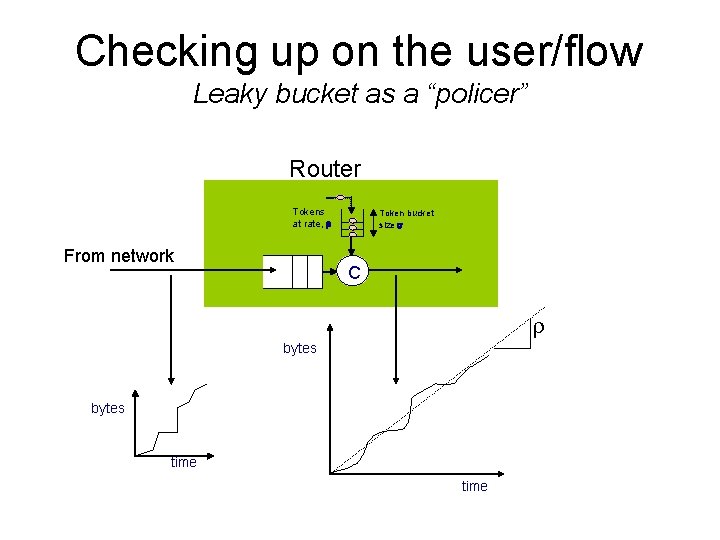

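Checking up on the user/flow

Leaky bucket as a “policer”

[Figure: traffic from the network enters a router that applies a leaky bucket (tokens at rate r, token bucket size s); plot of bytes versus time, with slopes labelled C and r.]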
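A policer uses the same token bucket but has no packet buffer: each arriving packet either finds enough tokens or is flagged. The sketch below only reports conformance, since what a router does with a non-conforming packet (drop it, mark it) is not stated on the slide. The function name, the (time, size) input format and the one-token-per-byte rule are assumptions.

```python
def police(packets, s, r):
    """Classify packets against an (s, r) token bucket.

    packets is a list of (arrival_time, size_bytes) in arrival order.
    Returns one boolean per packet: True if it conforms.
    """
    tokens = s            # bucket starts full (assumption)
    last_time = 0.0
    verdicts = []
    for arrival_time, size in packets:
        # Add the tokens accumulated since the previous packet, capped at s.
        tokens = min(s, tokens + r * (arrival_time - last_time))
        last_time = arrival_time
        if size <= tokens:
            tokens -= size
            verdicts.append(True)
        else:
            verdicts.append(False)   # non-conforming: no tokens are consumed
    return verdicts


print(police([(0, 6), (0, 6), (2, 4), (10, 8)], s=8, r=1))
# prints: [True, False, True, True]
```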

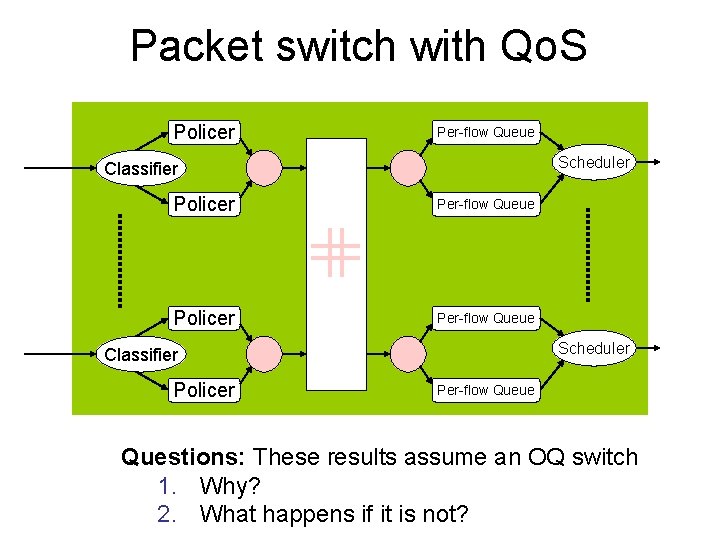

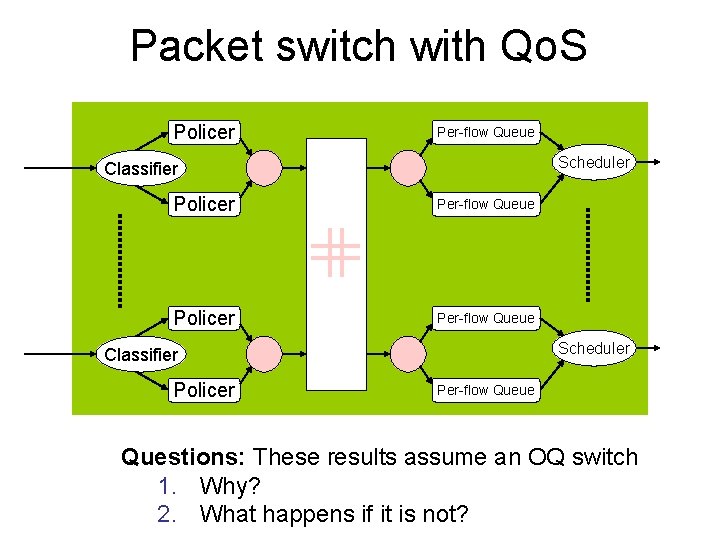

Packet switch with QoS

[Figure: a classifier feeds per-flow policers and per-flow queues, which are served by a scheduler.]

Questions: These results assume an OQ switch.
1. Why?
2. What happens if it is not?

References

1. Abhay K. Parekh and R. Gallager, “A Generalized Processor Sharing Approach to Flow Control in Integrated Services Networks: The Single Node Case”, IEEE/ACM Transactions on Networking, June 1993.
2. M. Shreedhar and G. Varghese, “Efficient Fair Queueing using Deficit Round Robin”, ACM Sigcomm, 1995.