ECE 454 Computer Systems Programming Compiler and Optimization

- Slides: 41

ECE 454 Computer Systems Programming: Compiler and Optimization (II)
Ding Yuan, ECE Dept., University of Toronto
http://www.eecg.toronto.edu/~yuan

Content
• Compiler Basics (last lecture)
• Understanding Compiler Optimization (last lecture)
• Manual Optimization
• Advanced Optimizations
• Parallel Unrolling
• Profile-Directed Feedback

Manual Optimization Example: Vector Sum Function

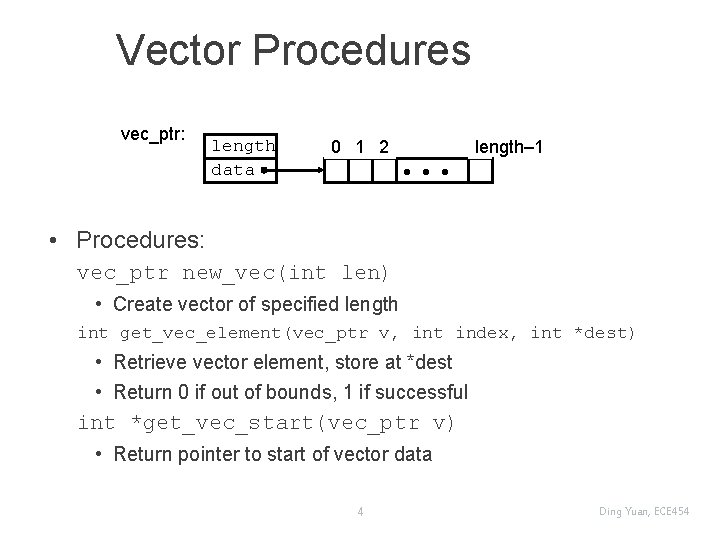

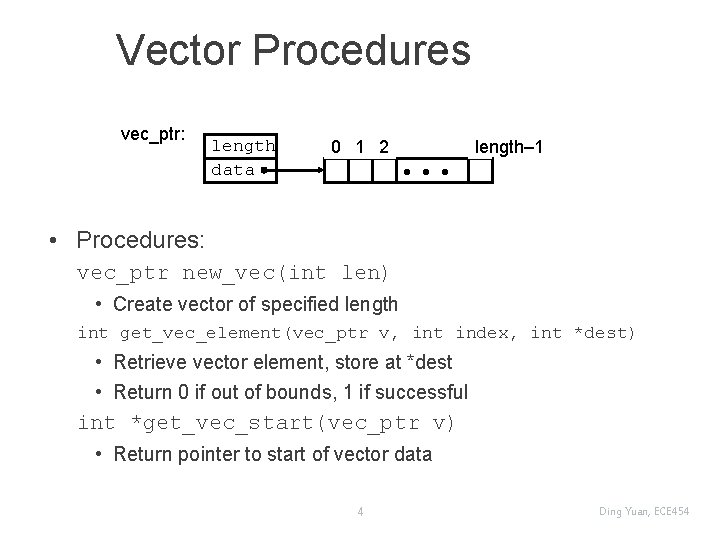

Vector Procedures
• vec_ptr refers to a record holding the vector's length and its data (elements 0, 1, 2, ..., length-1)
• Procedures:
  • vec_ptr new_vec(int len)
    • Create vector of specified length
  • int get_vec_element(vec_ptr v, int index, int *dest)
    • Retrieve vector element, store at *dest
    • Return 0 if out of bounds, 1 if successful
  • int *get_vec_start(vec_ptr v)
    • Return pointer to start of vector data
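The interface above could be backed by something like the following. This is a minimal sketch, not the course's actual implementation: the struct layout, the field names, and the body of vec_length() are assumptions, since the slides show only the procedure signatures.

```c
#include <stdlib.h>

/* Assumed layout: the slide only names "length" and "data". */
typedef struct {
    int  length;
    int *data;
} vec_rec, *vec_ptr;

/* Create a zero-filled vector of the specified length (NULL on failure). */
vec_ptr new_vec(int len) {
    if (len < 0) return NULL;
    vec_ptr v = malloc(sizeof(vec_rec));
    if (v == NULL) return NULL;
    v->length = len;
    v->data = NULL;
    if (len > 0) {
        v->data = calloc((size_t)len, sizeof(int));
        if (v->data == NULL) { free(v); return NULL; }
    }
    return v;
}

/* Assumed helper used by the vsum examples. */
int vec_length(vec_ptr v) { return v->length; }

/* Bounds-checked read: returns 0 if out of bounds, 1 if successful. */
int get_vec_element(vec_ptr v, int index, int *dest) {
    if (index < 0 || index >= v->length) return 0;
    *dest = v->data[index];
    return 1;
}

/* Unchecked access to the underlying array. */
int *get_vec_start(vec_ptr v) { return v->data; }
```

Note the bounds check inside get_vec_element(): it runs on every call, which is part of what the later manual inlining removes.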

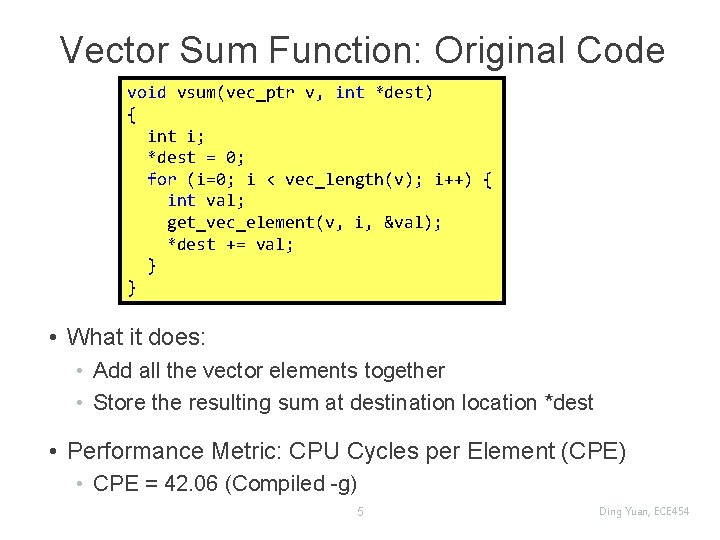

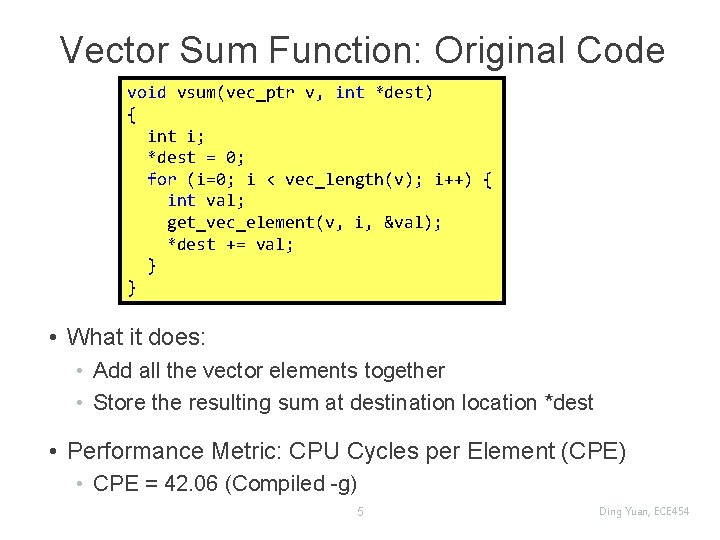

Vector Sum Function: Original Code

    void vsum(vec_ptr v, int *dest)
    {
      int i;
      *dest = 0;
      for (i = 0; i < vec_length(v); i++) {
        int val;
        get_vec_element(v, i, &val);
        *dest += val;
      }
    }

• What it does:
  • Add all the vector elements together
  • Store the resulting sum at destination location *dest
• Performance Metric: CPU Cycles per Element (CPE)
  • CPE = 42.06 (compiled with -g)

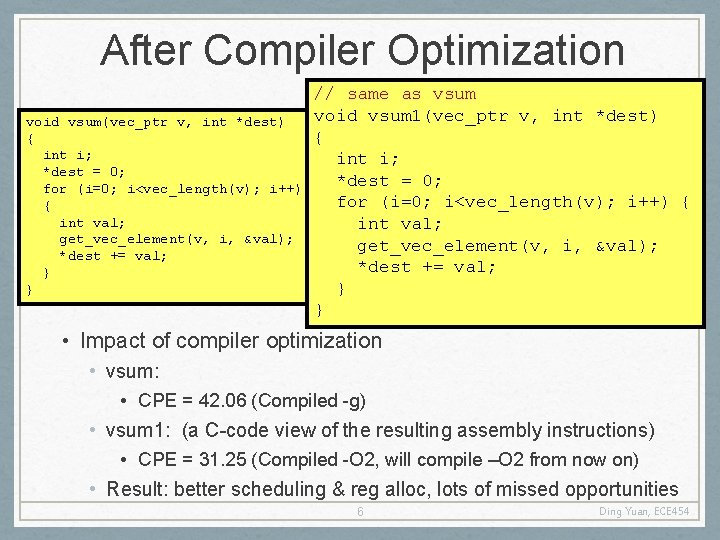

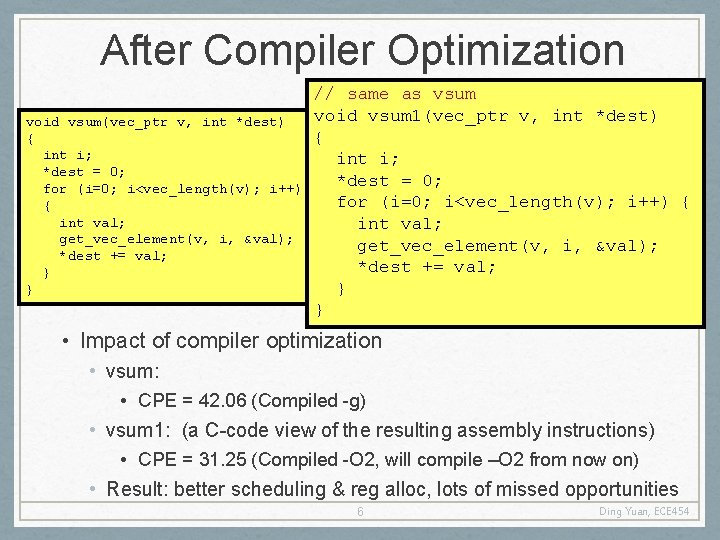

After Compiler Optimization

    // vsum1: a C-code view of the resulting assembly instructions;
    // the C source is the same as vsum
    void vsum1(vec_ptr v, int *dest)
    {
      int i;
      *dest = 0;
      for (i = 0; i < vec_length(v); i++) {
        int val;
        get_vec_element(v, i, &val);
        *dest += val;
      }
    }

• Impact of compiler optimization
  • vsum: CPE = 42.06 (compiled with -g)
  • vsum1: CPE = 31.25 (compiled with -O2; all later versions are compiled with -O2)
• Result: better scheduling and register allocation, but lots of missed opportunities

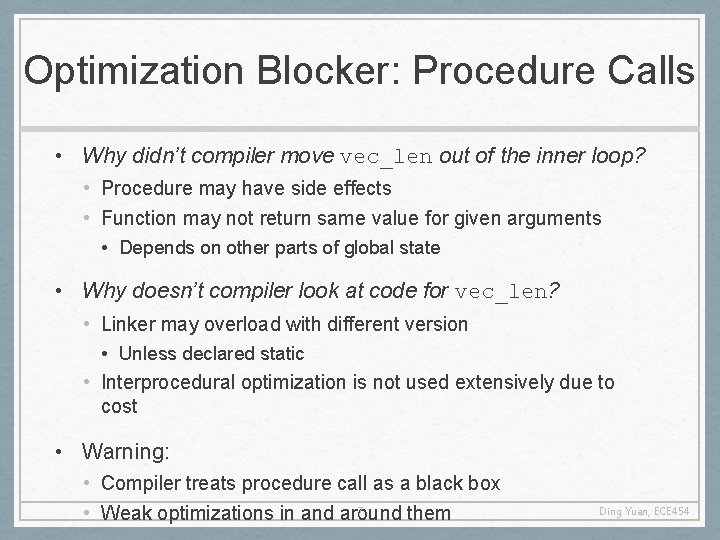

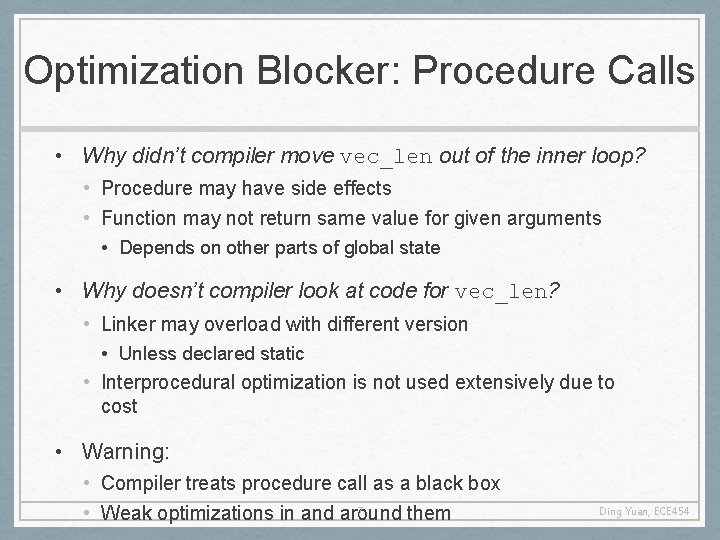

Optimization Blocker: Procedure Calls
• Why didn't the compiler move vec_length out of the loop?
  • Procedure may have side effects
  • Function may not return the same value for given arguments
    • Depends on other parts of global state
• Why doesn't the compiler look at the code for vec_length?
  • Linker may overload it with a different version
    • Unless declared static
  • Interprocedural optimization is not used extensively due to cost
• Warning:
  • Compiler treats a procedure call as a black box
  • Weak optimizations in and around them
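To see why hoisting a call is not always safe, consider a hypothetical length function that also updates global state. The names counted_length, calls, sum_unhoisted, and sum_hoisted are invented for this sketch and do not appear in the course code. Moving the call out of the loop changes how many times the side effect happens, so a compiler that cannot see the callee has to leave the call where it is.

```c
/* Hypothetical global state touched by the callee. */
static int calls = 0;

/* A "length" function with a side effect: it counts its invocations. */
int counted_length(const int *a, int n) {
    (void)a;
    calls++;
    return n;
}

/* The call sits in the loop test, so it runs n + 1 times. */
int sum_unhoisted(const int *a, int n) {
    int sum = 0;
    for (int i = 0; i < counted_length(a, n); i++)
        sum += a[i];
    return sum;
}

/* The programmer hoists the call by hand: it runs exactly once. */
int sum_hoisted(const int *a, int n) {
    int len = counted_length(a, n);
    int sum = 0;
    for (int i = 0; i < len; i++)
        sum += a[i];
    return sum;
}

int  call_count(void)  { return calls; }
void reset_calls(void) { calls = 0; }
```

Both versions return the same sum, but the observable call count differs (5 versus 1 for a four-element array). Only the programmer knows whether that difference matters.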

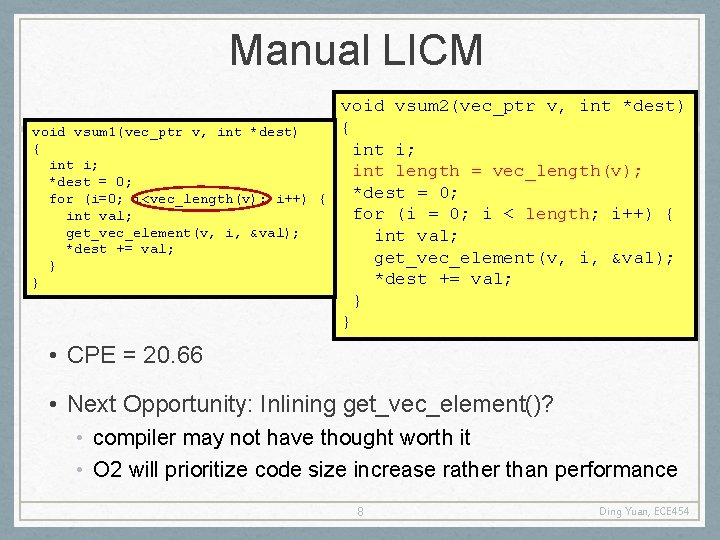

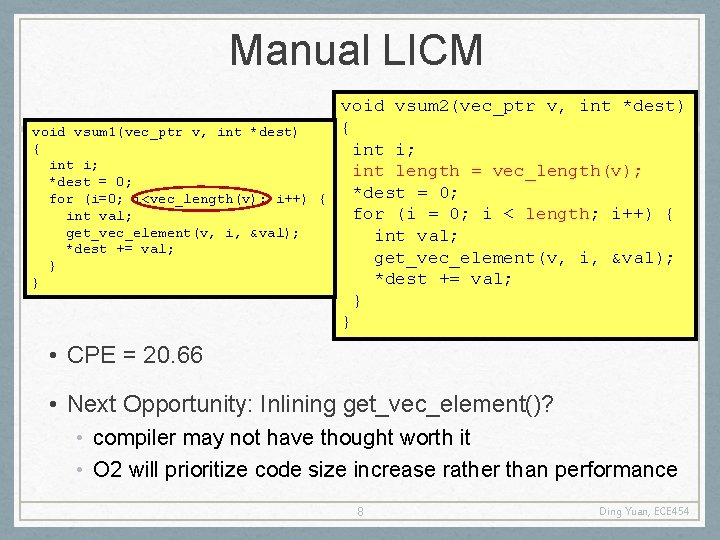

Manual LICM
• vsum1 calls vec_length(v) in every loop test; vsum2 hoists the call out of the loop:

    void vsum2(vec_ptr v, int *dest)
    {
      int i;
      int length = vec_length(v);
      *dest = 0;
      for (i = 0; i < length; i++) {
        int val;
        get_vec_element(v, i, &val);
        *dest += val;
      }
    }

• CPE = 20.66
• Next opportunity: inlining get_vec_element()?
  • The compiler may not have thought it worth it
  • -O2 weighs the code-size increase more heavily than the performance gain

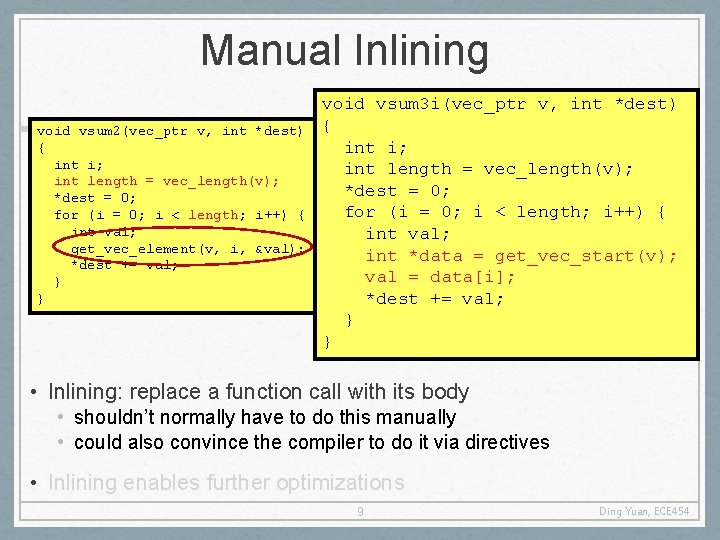

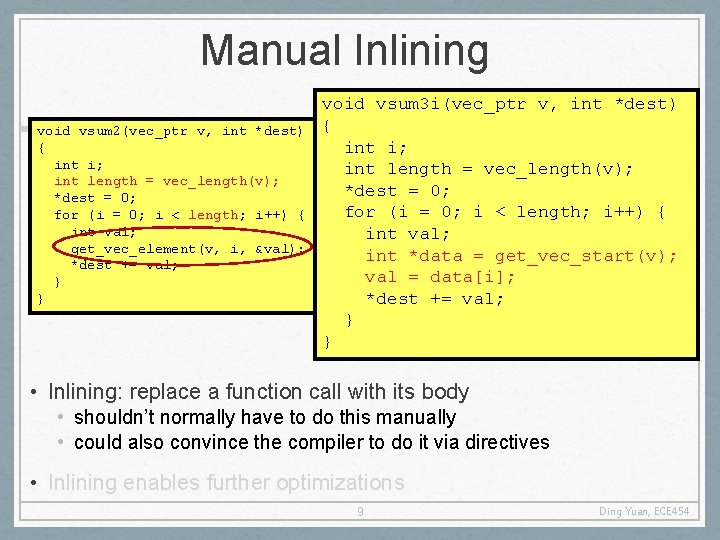

Manual Inlining
• vsum2 calls get_vec_element() in the loop; vsum3i replaces the call with its body:

    void vsum3i(vec_ptr v, int *dest)
    {
      int i;
      int length = vec_length(v);
      *dest = 0;
      for (i = 0; i < length; i++) {
        int val;
        int *data = get_vec_start(v);
        val = data[i];
        *dest += val;
      }
    }

• Inlining: replace a function call with its body
  • Shouldn't normally have to do this manually
  • Could also convince the compiler to do it via directives
• Inlining enables further optimizations
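As an illustration of the "directives" route, the sketch below marks small accessor functions so that the compiler can, or must, inline them. The helper names are hypothetical. `static inline` is standard C99; `__attribute__((always_inline))` is a GCC/Clang extension, so the second helper assumes one of those compilers.

```c
/* Standard C: a static inline definition is visible at every call site
   in this translation unit, so the compiler is free to inline it. */
static inline int get_elem(const int *data, int i) {
    return data[i];
}

/* GCC/Clang extension (assumption: one of those compilers is used):
   request inlining regardless of the optimizer's own heuristics. */
static inline __attribute__((always_inline))
int get_elem_forced(const int *data, int i) {
    return data[i];
}

/* Sum using both helpers; after inlining, each call becomes a plain load. */
int sum_with_helpers(const int *data, int length) {
    int sum = 0;
    int i;
    for (i = 0; i + 1 < length; i += 2) {
        sum += get_elem(data, i);
        sum += get_elem_forced(data, i + 1);
    }
    if (i < length)               /* odd length: one element left over */
        sum += get_elem(data, i);
    return sum;
}
```

Whether the plain `static inline` helper is actually inlined remains the compiler's decision; inspecting the generated assembly is the way to confirm.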

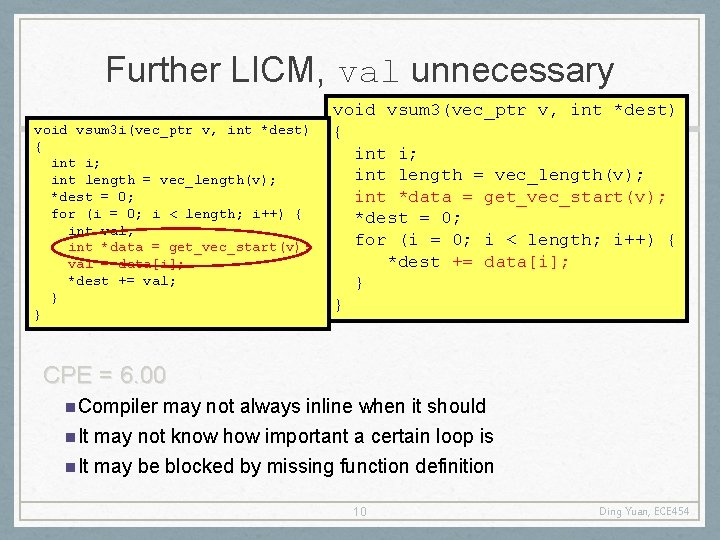

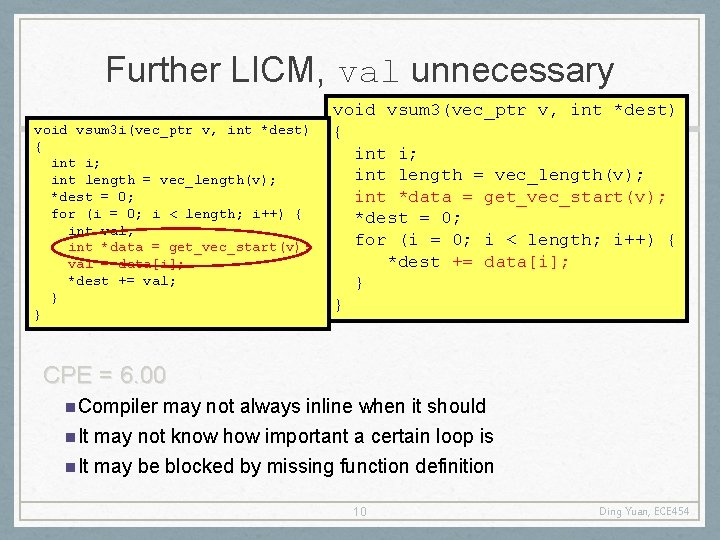

Further LICM, val unnecessary
• In vsum3i, get_vec_start(v) is loop-invariant and val is only a temporary; vsum3 hoists the former and drops the latter:

    void vsum3(vec_ptr v, int *dest)
    {
      int i;
      int length = vec_length(v);
      int *data = get_vec_start(v);
      *dest = 0;
      for (i = 0; i < length; i++) {
        *dest += data[i];
      }
    }

• CPE = 6.00
• Compiler may not always inline when it should
  • It may not know how important a certain loop is
  • It may be blocked by a missing function definition

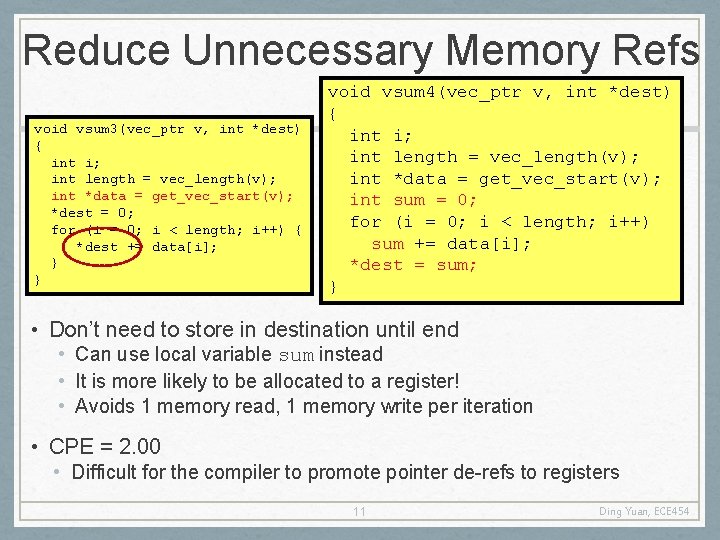

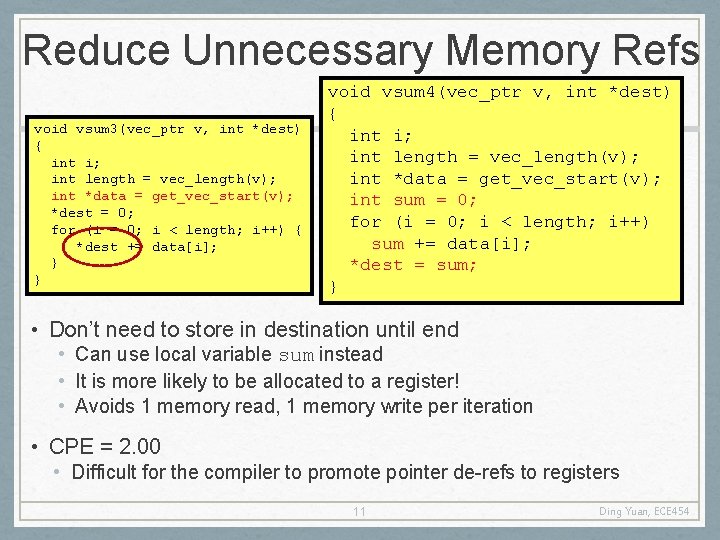

Reduce Unnecessary Memory Refs
• vsum3 updates *dest in every iteration; vsum4 accumulates in a local variable:

    void vsum4(vec_ptr v, int *dest)
    {
      int i;
      int length = vec_length(v);
      int *data = get_vec_start(v);
      int sum = 0;
      for (i = 0; i < length; i++)
        sum += data[i];
      *dest = sum;
    }

• Don't need to store in destination until the end
• Can use local variable sum instead
  • It is more likely to be allocated to a register!
• Avoids 1 memory read and 1 memory write per iteration
• CPE = 2.00
• Difficult for the compiler to promote pointer dereferences to registers

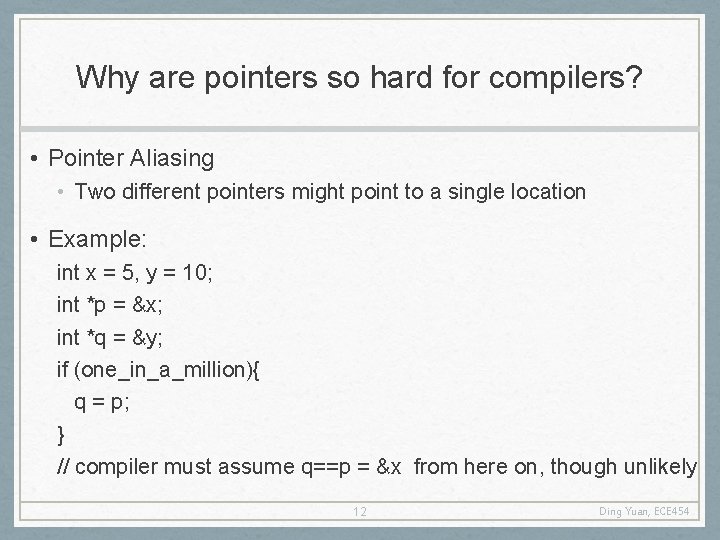

Why are pointers so hard for compilers?
• Pointer Aliasing
  • Two different pointers might point to a single location
• Example:

    int x = 5, y = 10;
    int *p = &x;
    int *q = &y;
    if (one_in_a_million) {
      q = p;
    }
    // from here on the compiler must assume q may equal p (= &x),
    // even though that is unlikely
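Aliasing is also why the compiler cannot turn vsum3 into vsum4 on its own. The sketch below restates the two loops on plain arrays (the function names are made up for illustration) and shows that they give different answers when dest points into the array being summed.

```c
/* vsum3-style: reads and writes *dest on every iteration. */
void sum_via_dest(int *data, int length, int *dest) {
    *dest = 0;
    for (int i = 0; i < length; i++)
        *dest += data[i];
}

/* vsum4-style: accumulates in a local, stores once at the end. */
void sum_via_local(int *data, int length, int *dest) {
    int sum = 0;
    for (int i = 0; i < length; i++)
        sum += data[i];
    *dest = sum;
}
```

With data = {1, 2, 3} and dest = &data[0], sum_via_dest first zeroes data[0] and ends with 5, while sum_via_local ends with 6. When dest does not alias the array, both produce 6. Because the two functions are not equivalent for every legal call, the compiler must keep the memory traffic in the first version unless it can prove that no aliasing occurs.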

Minimizing the impact of Pointers
• Easy to over-use pointers in C/C++
  • Since allowed to do address arithmetic
  • Direct access to storage structures
• Get in the habit of introducing local variables
  • E.g., when accumulating within loops
  • Your way of telling the compiler not to worry about aliasing

Understanding Instruction-Level Parallelism: Unrolling and Software Pipelining

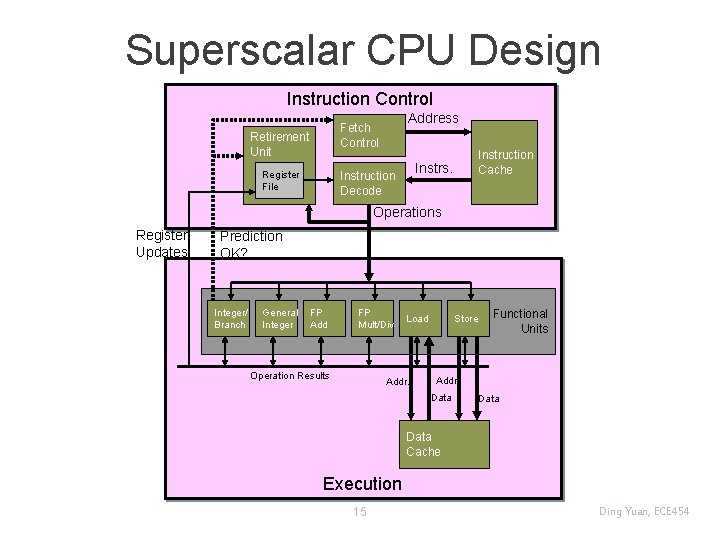

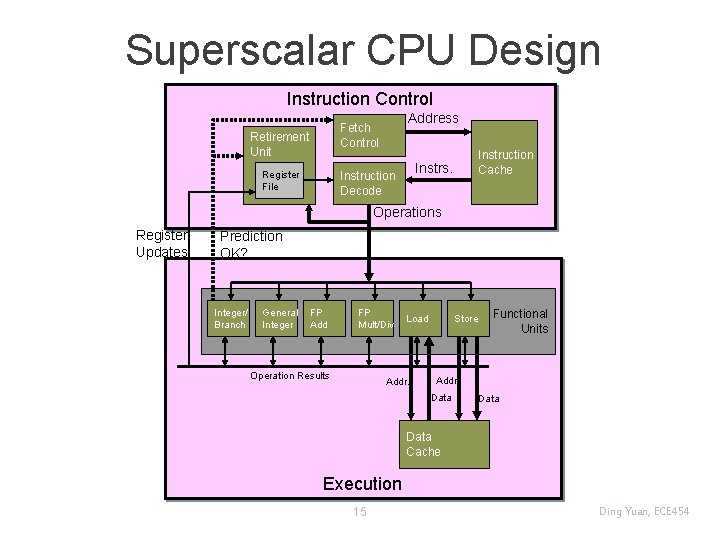

Superscalar CPU Design
[Figure: block diagram of a superscalar CPU. Instruction Control (fetch control, instruction cache, instruction decode, retirement unit, register file) sends operations to Execution (functional units: integer/branch, general integer, FP add, FP mult/div, load, store, backed by the data cache). Operation results flow back as register updates and "prediction OK?" signals.]

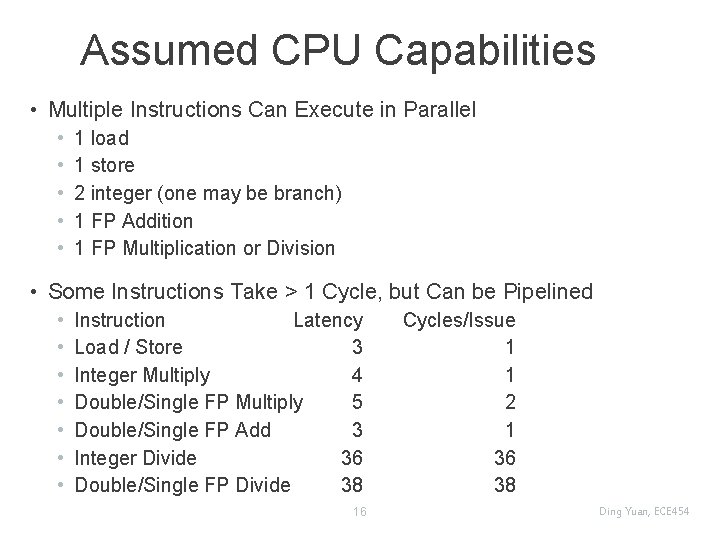

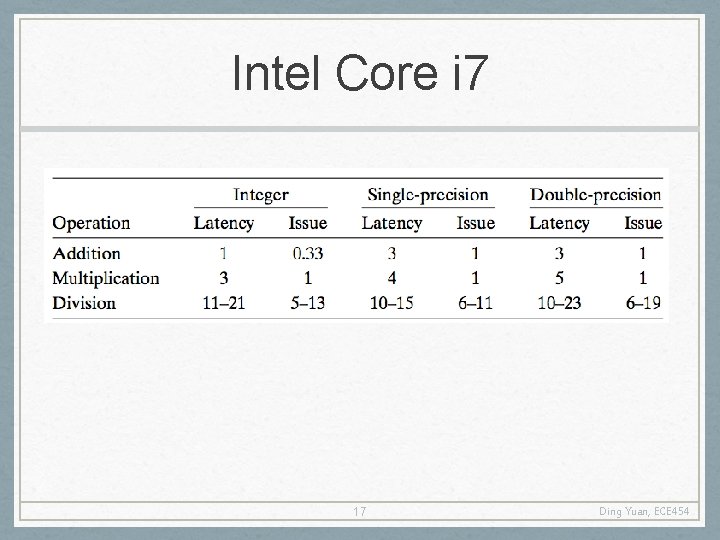

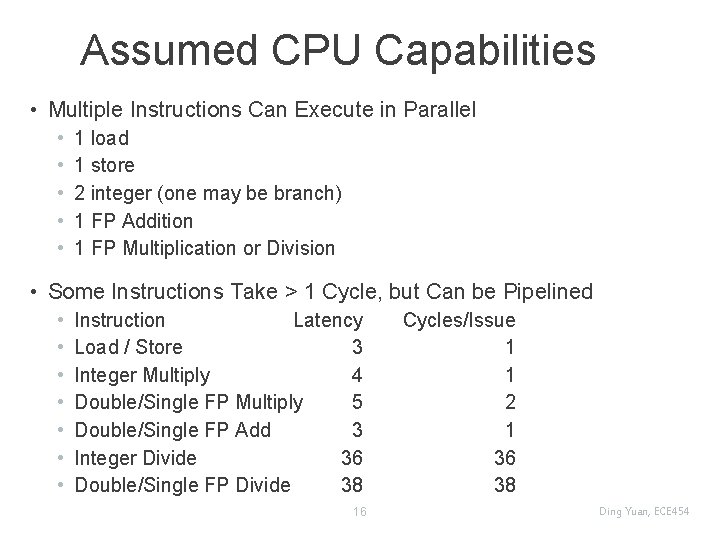

Assumed CPU Capabilities
• Multiple instructions can execute in parallel
  • 1 load
  • 1 store
  • 2 integer (one may be branch)
  • 1 FP addition
  • 1 FP multiplication or division
• Some instructions take > 1 cycle, but can be pipelined

| Instruction | Latency (cycles) | Cycles/Issue |
|---|---|---|
| Load / Store | 3 | 1 |
| Integer Multiply | 4 | 1 |
| Double/Single FP Multiply | 5 | 2 |
| Double/Single FP Add | 3 | 1 |
| Integer Divide | 36 | 36 |
| Double/Single FP Divide | 38 | 38 |

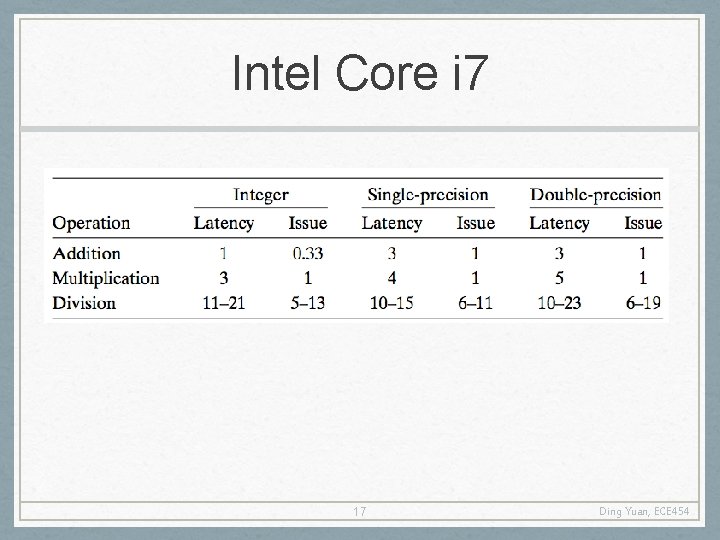

Intel Core i7

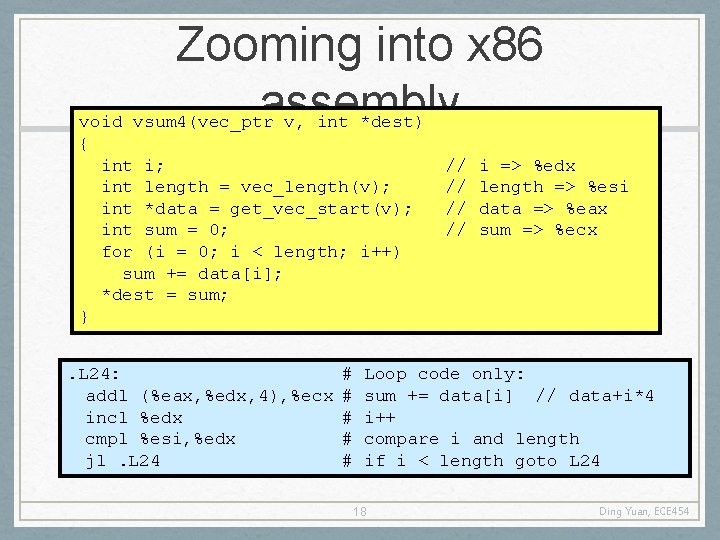

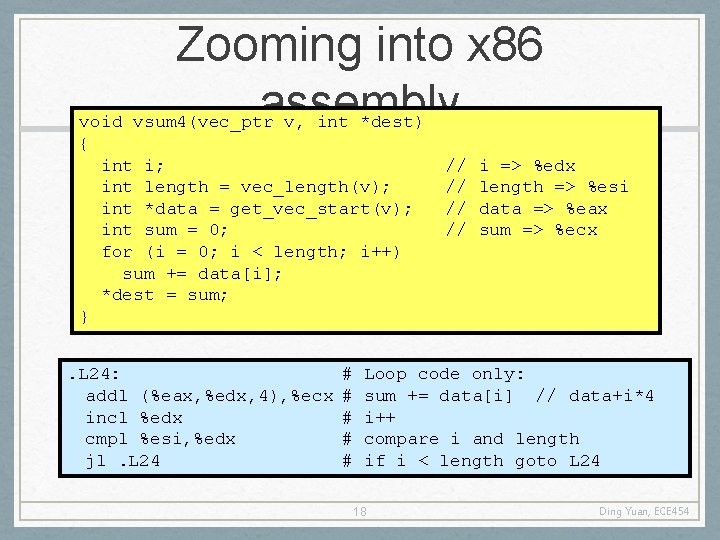

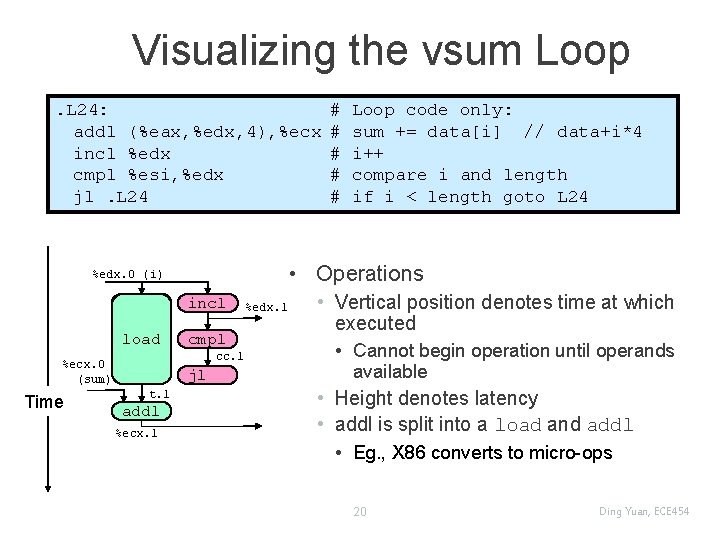

Zooming into x86 assembly

    void vsum4(vec_ptr v, int *dest)
    {
      int i;                          // i      => %edx
      int length = vec_length(v);     // length => %esi
      int *data = get_vec_start(v);   // data   => %eax
      int sum = 0;                    // sum    => %ecx
      for (i = 0; i < length; i++)
        sum += data[i];
      *dest = sum;
    }

Loop code only:

    .L24:
      addl (%eax,%edx,4), %ecx   # sum += data[i]   // data + i*4
      incl %edx                  # i++
      cmpl %esi, %edx            # compare i and length
      jl   .L24                  # if i < length goto .L24

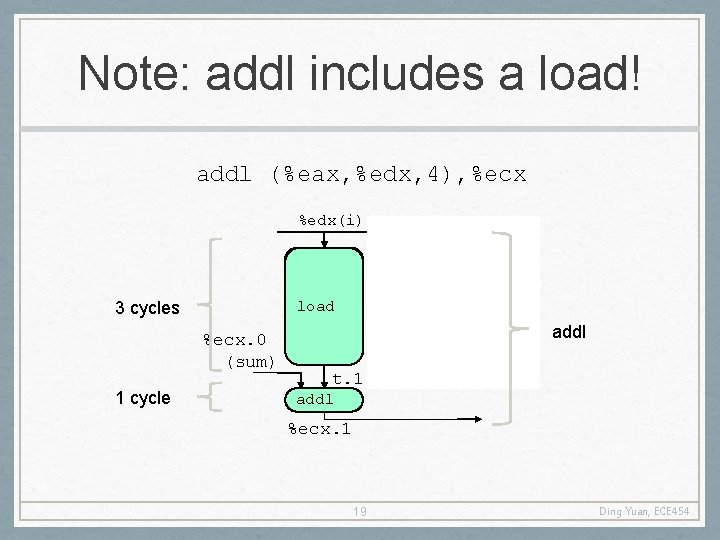

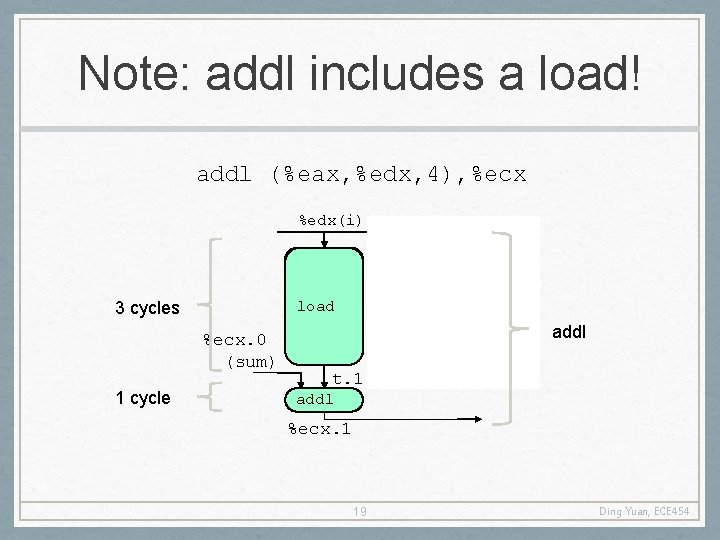

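Note: addl includes a load!
• addl (%eax,%edx,4), %ecx is executed as two operations:
  • load (3 cycles): reads data[i] using %edx (i), producing temporary t.1
  • addl (1 cycle): adds t.1 to %ecx.0 (sum), producing %ecx.1
[Figure: dataflow graph of one loop iteration with load, addl, incl, cmpl and jl; incl produces %edx.1, cmpl produces cc.1.]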

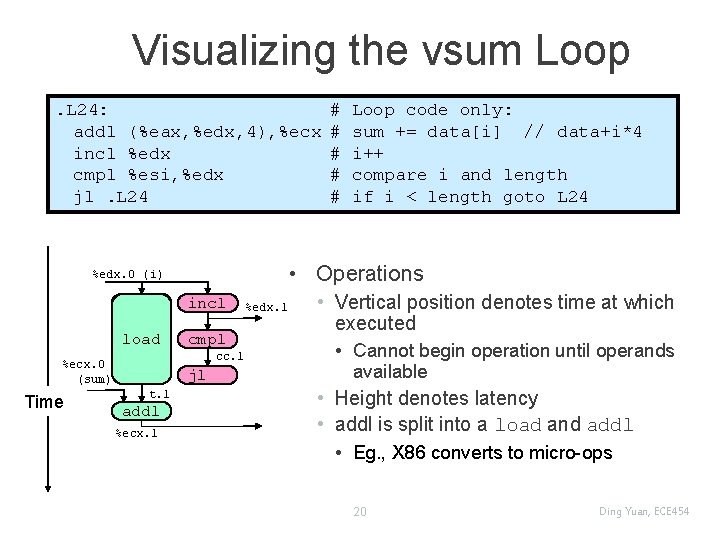

Visualizing the vsum Loop

    .L24:
      addl (%eax,%edx,4), %ecx   # sum += data[i]   // data + i*4
      incl %edx                  # i++
      cmpl %esi, %edx            # compare i and length
      jl   .L24                  # if i < length goto .L24

[Figure: dataflow graph of one iteration over time. %edx.0 (i) feeds load and incl; load produces t.1; addl combines t.1 with %ecx.0 (sum) into %ecx.1; incl produces %edx.1; cmpl produces cc.1; jl consumes cc.1.]
• Operations
  • Vertical position denotes the time at which an operation executes
    • Cannot begin an operation until its operands are available
  • Height denotes latency
• addl is split into a load and an addl
  • E.g., x86 converts instructions to micro-ops

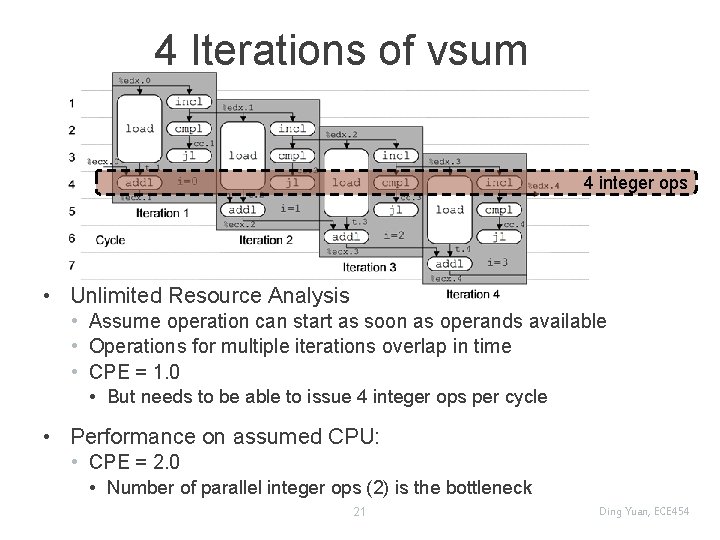

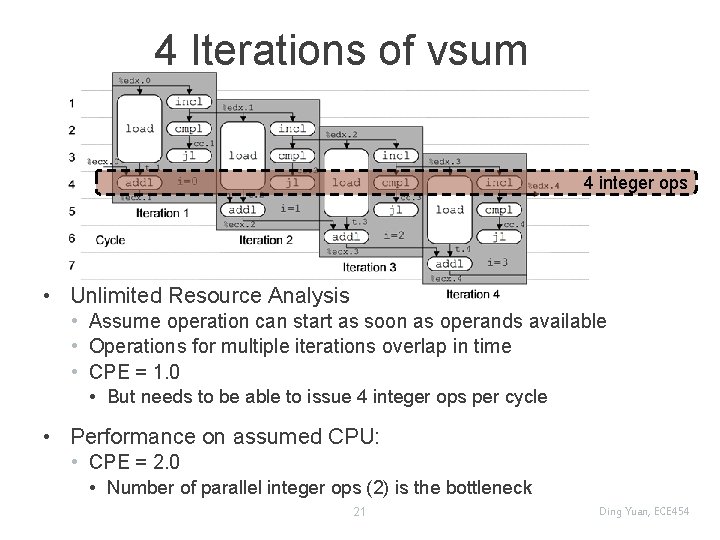

4 Iterations of vsum
[Figure: four overlapped loop iterations; in steady state, 4 integer ops are active per cycle.]
• Unlimited Resource Analysis
  • Assume an operation can start as soon as its operands are available
  • Operations for multiple iterations overlap in time
  • CPE = 1.0
    • But the CPU would need to issue 4 integer ops per cycle
• Performance on the assumed CPU:
  • CPE = 2.0
  • The number of parallel integer ops (2) is the bottleneck

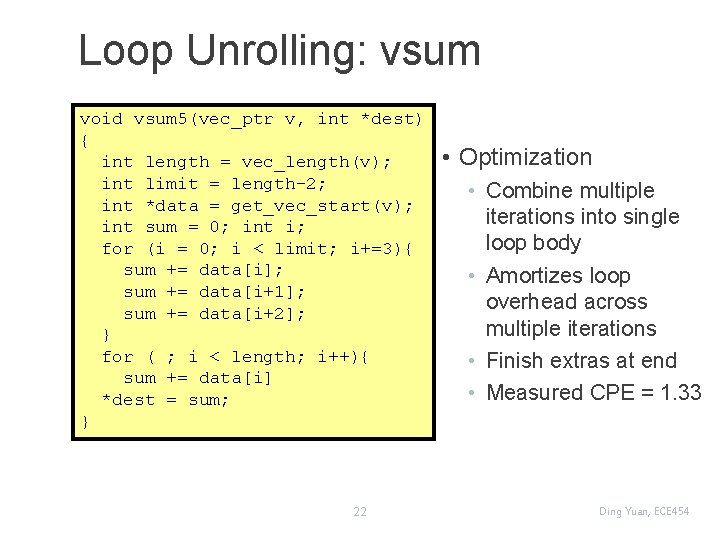

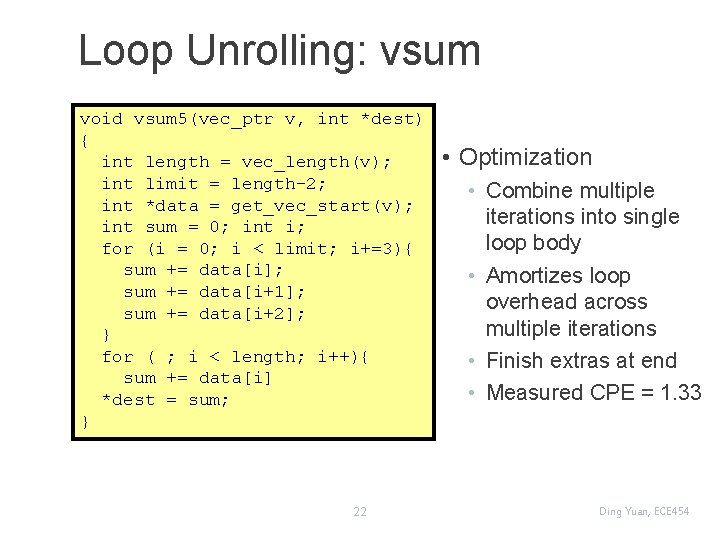

Loop Unrolling: vsum

    void vsum5(vec_ptr v, int *dest)
    {
      int length = vec_length(v);
      int limit = length-2;
      int *data = get_vec_start(v);
      int sum = 0;
      int i;
      /* main loop: three elements per iteration */
      for (i = 0; i < limit; i+=3) {
        sum += data[i];
        sum += data[i+1];
        sum += data[i+2];
      }
      /* finish any remaining elements */
      for ( ; i < length; i++) {
        sum += data[i];
      }
      *dest = sum;
    }

• Optimization
  • Combine multiple iterations into a single loop body
  • Amortizes loop overhead across multiple iterations
  • Finish extras at the end
  • Measured CPE = 1.33

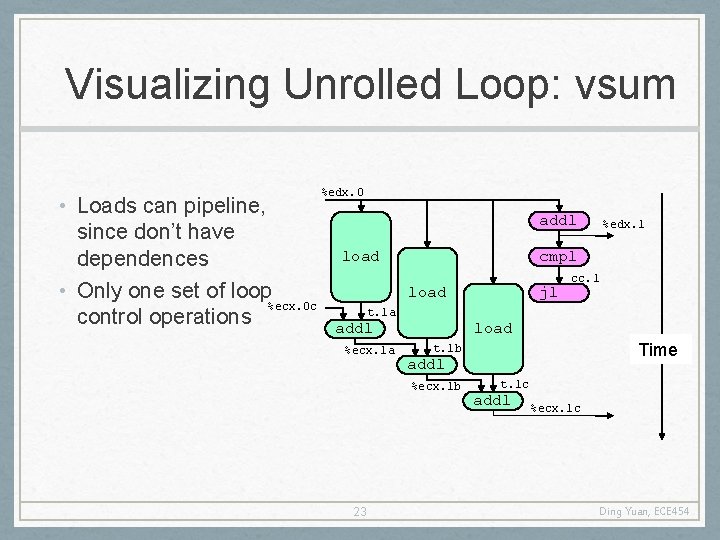

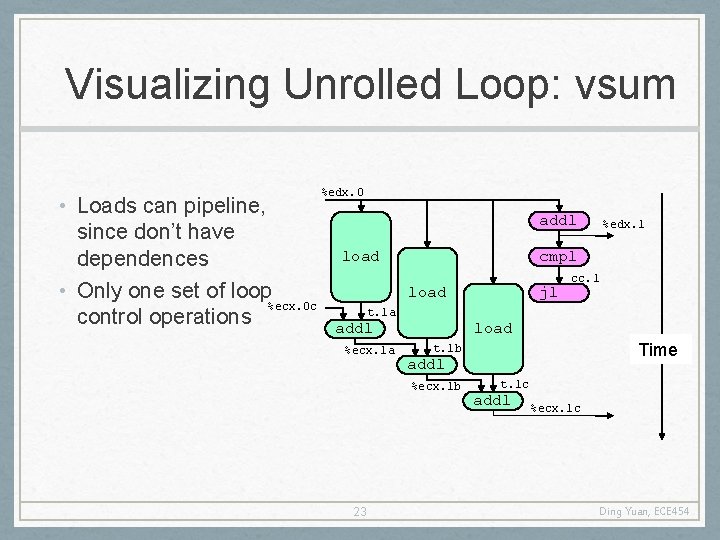

Visualizing Unrolled Loop: vsum
• Loads can pipeline, since they don't have dependences
• Only one set of loop control operations
[Figure: dataflow graph of one unrolled iteration over time. Three loads produce t.1a, t.1b, t.1c; three chained addl operations produce %ecx.1a, %ecx.1b, %ecx.1c; a single addl on %edx.0 produces %edx.1, followed by cmpl (cc.1) and jl.]

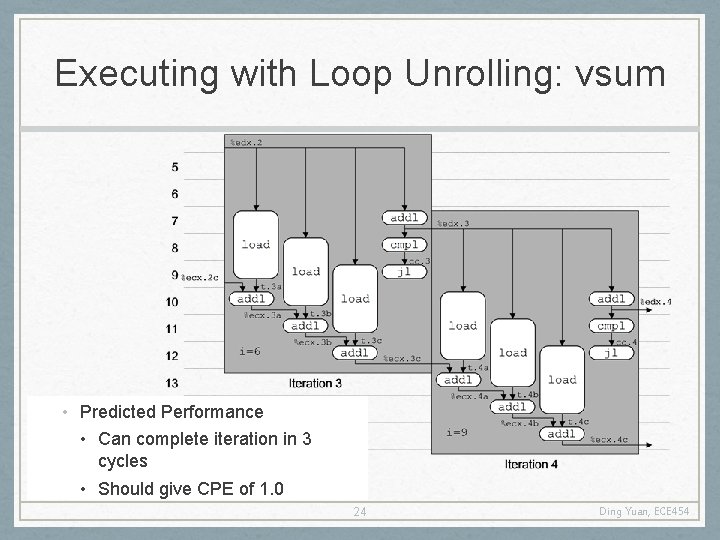

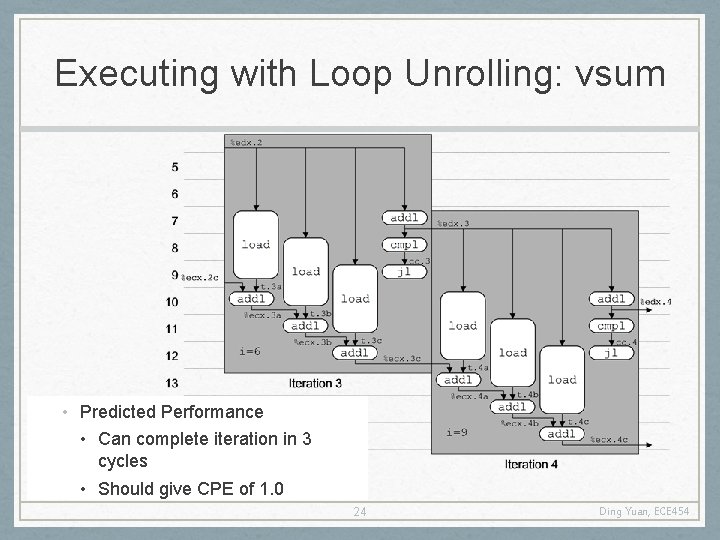

Executing with Loop Unrolling: vsum
• Predicted Performance
  • Can complete an iteration in 3 cycles
  • Should give a CPE of 1.0
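The prediction can be sanity-checked with a rough issue-limit model. It assumes the CPU described earlier (two integer operations per cycle, loads handled by a separate unit) and counts only the integer operations in the loop body. The symbol k for the unrolling degree is introduced here for illustration, and the model is a simplification rather than a full scheduling analysis.

```latex
\begin{align*}
\text{integer ops per iteration} &= \underbrace{k}_{\text{sum adds}}
    + \underbrace{3}_{\text{index update, cmpl, jl}} \\
\text{cycles per iteration} &\ge \left\lceil \frac{k+3}{2} \right\rceil \\
\text{CPE} &\ge \frac{1}{k}\left\lceil \frac{k+3}{2} \right\rceil \\[4pt]
k = 1:\quad \text{CPE} &\ge \tfrac{1}{1}\lceil 2 \rceil = 2.0 \\
k = 3:\quad \text{CPE} &\ge \tfrac{1}{3}\lceil 3 \rceil = 1.0
\end{align*}
```

The k = 1 bound matches the CPE of 2.0 for vsum4, and k = 3 gives the predicted 1.0. The measured CPE for vsum5 is 1.33, so effects outside this model also shape the actual schedule.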

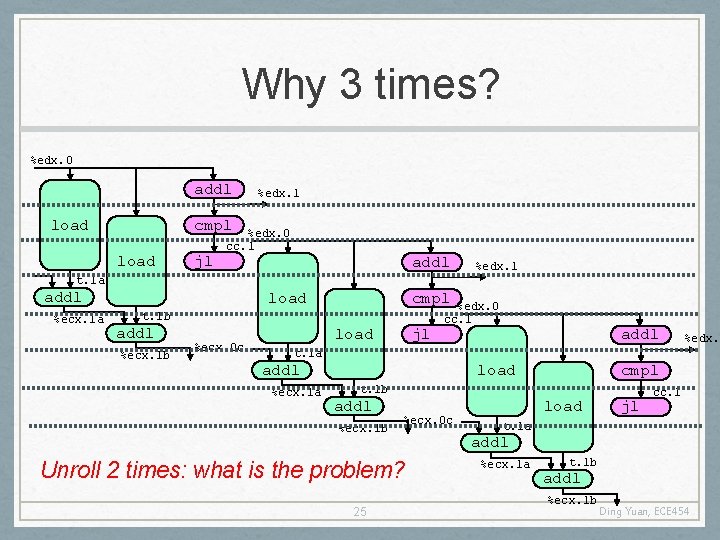

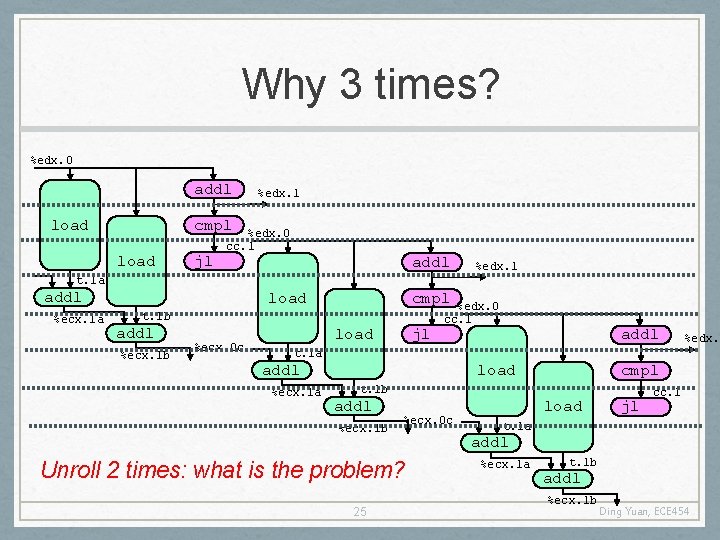

Why 3 times?
[Figure: scheduling diagrams of successive unrolled iterations (loads, chained addl operations, and one set of addl/cmpl/jl loop control per iteration), comparing the unroll-by-3 schedule with an unroll-by-2 schedule.]
• Unroll 2 times: what is the problem?

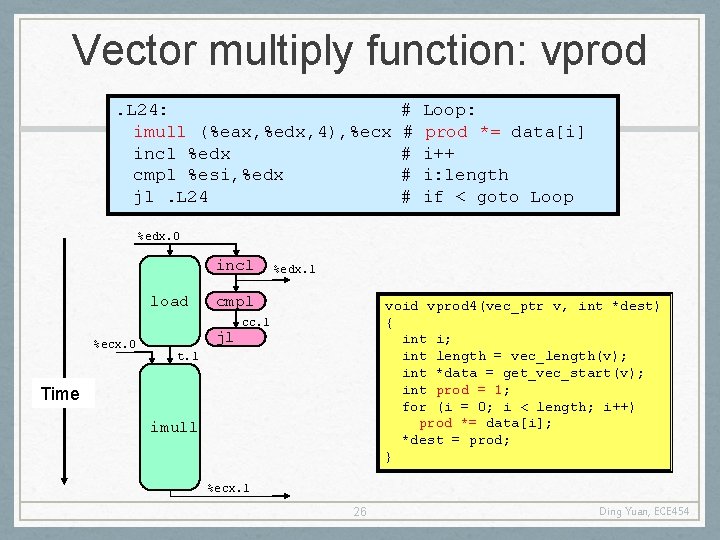

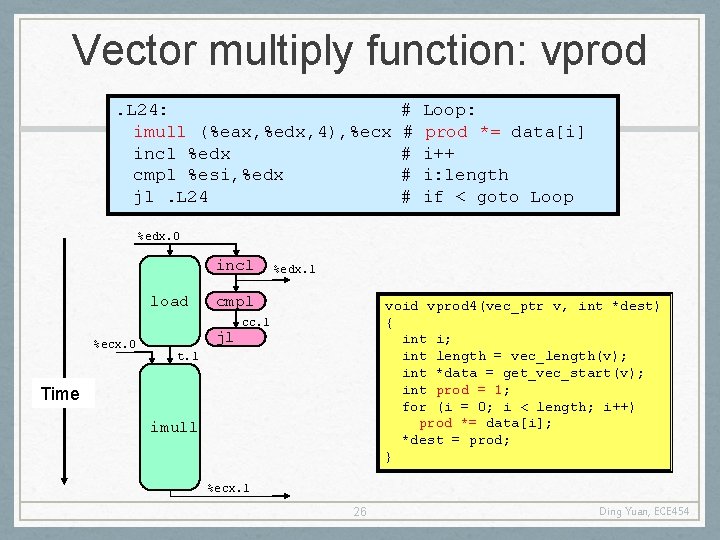

Vector multiply function: vprod

    void vprod4(vec_ptr v, int *dest)
    {
      int i;
      int length = vec_length(v);
      int *data = get_vec_start(v);
      int prod = 1;
      for (i = 0; i < length; i++)
        prod *= data[i];
      *dest = prod;
    }

Loop code:

    .L24:                          # Loop:
      imull (%eax,%edx,4), %ecx    # prod *= data[i]
      incl  %edx                   # i++
      cmpl  %esi, %edx             # i:length
      jl    .L24                   # if < goto Loop

[Figure: dataflow graph of one iteration over time. %edx.0 feeds load and incl; load produces t.1; imull combines t.1 with %ecx.0 into %ecx.1; incl produces %edx.1; cmpl produces cc.1; jl consumes cc.1.]

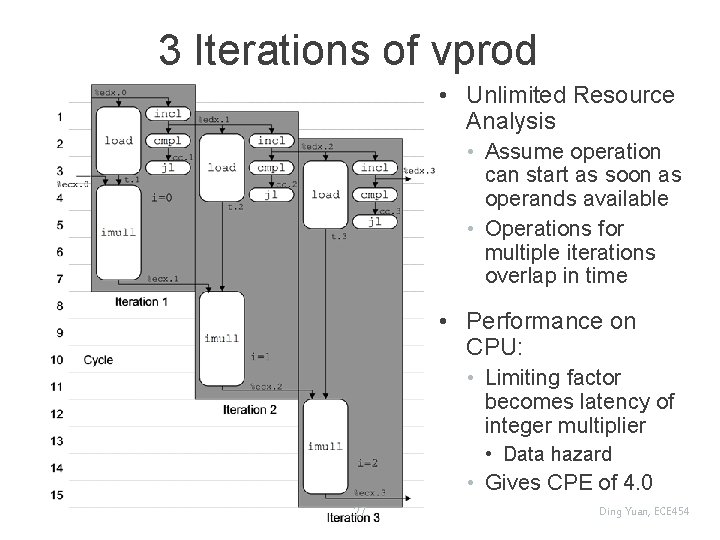

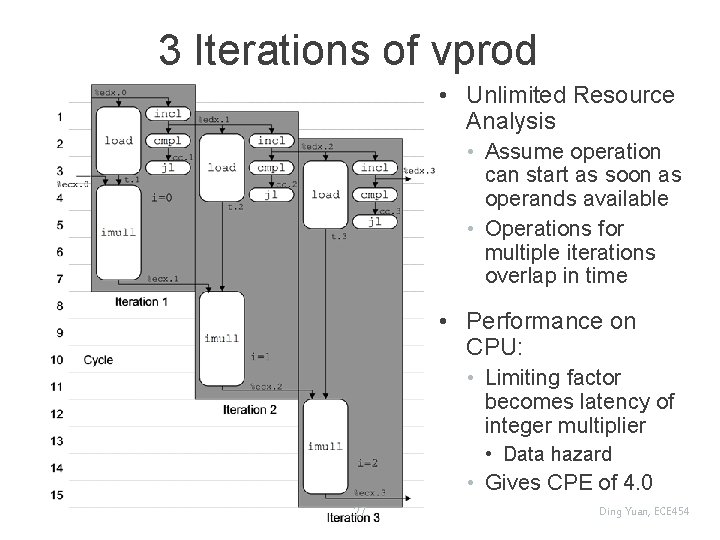

3 Iterations of vprod
• Unlimited Resource Analysis
  • Assume an operation can start as soon as its operands are available
  • Operations for multiple iterations overlap in time
• Performance on CPU:
  • Limiting factor becomes the latency of the integer multiplier
    • Data hazard
  • Gives a CPE of 4.0

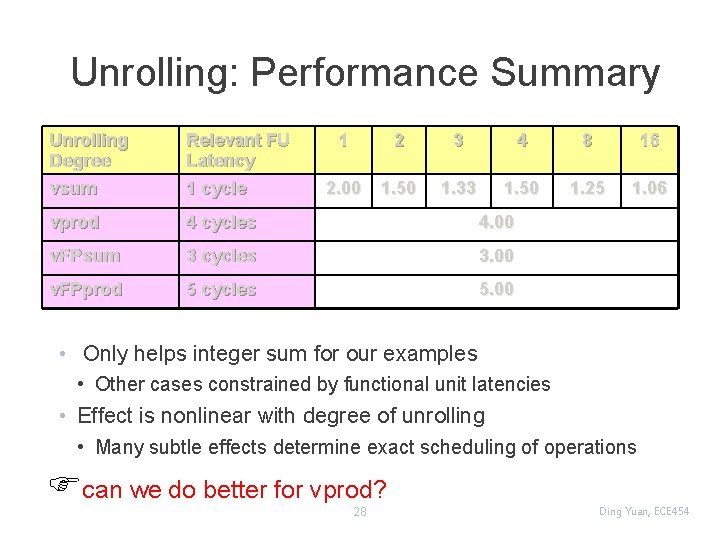

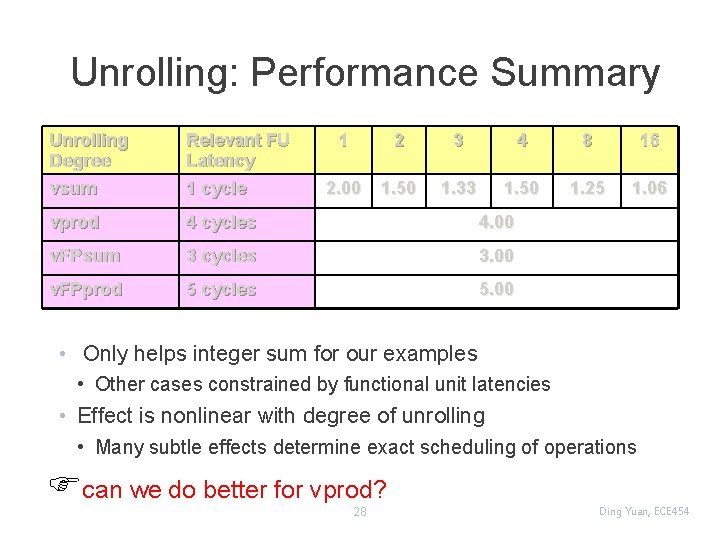

Unrolling: Performance Summary

CPE by unrolling degree:

| Version | Relevant FU latency | 1 | 2 | 3 | 4 | 8 | 16 |
|---|---|---|---|---|---|---|---|
| vsum | 1 cycle | 2.00 | 1.50 | 1.33 | 1.50 | 1.25 | 1.06 |
| vprod | 4 cycles | 4.00 | | | | | |
| vFPsum | 3 cycles | 3.00 | | | | | |
| vFPprod | 5 cycles | 5.00 | | | | | |

• Only helps integer sum for our examples
  • Other cases are constrained by functional unit latencies
• Effect is nonlinear with the degree of unrolling
  • Many subtle effects determine the exact scheduling of operations
• Can we do better for vprod?

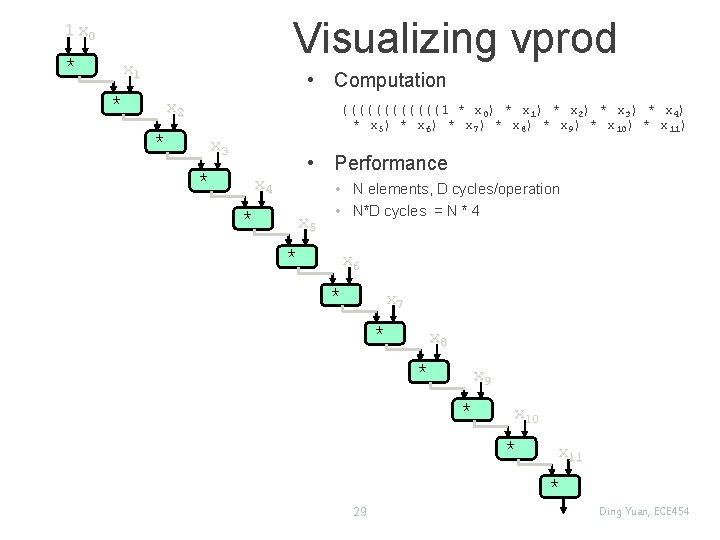

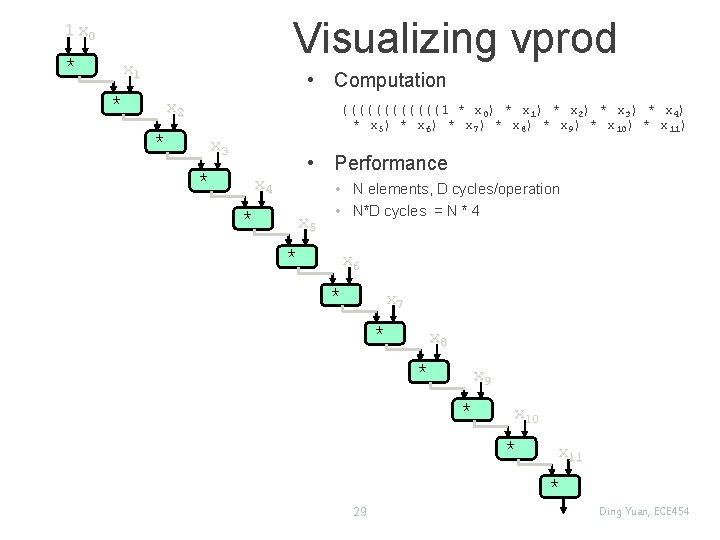

Visualizing vprod
• Computation
  ((((((((((((1 * x0) * x1) * x2) * x3) * x4) * x5) * x6) * x7) * x8) * x9) * x10) * x11)
• Performance
  • N elements, D cycles/operation
  • N*D cycles = N * 4
[Figure: a single chain of 12 multiplications, starting from 1 and x0 and folding in x1 through x11 one at a time.]

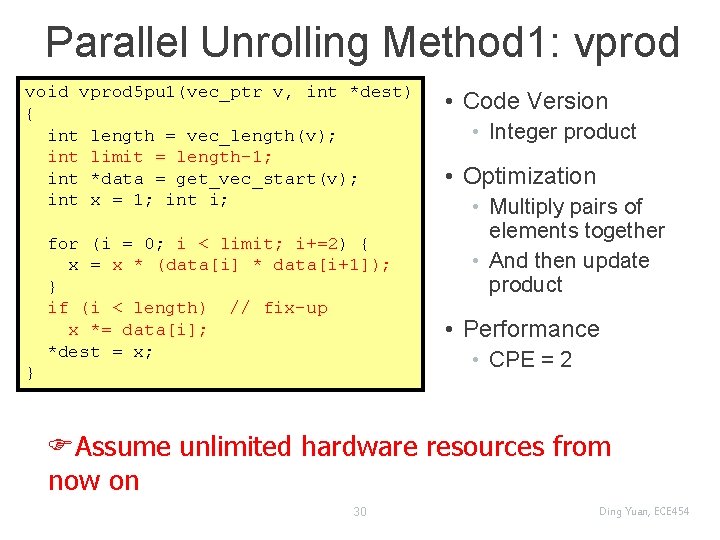

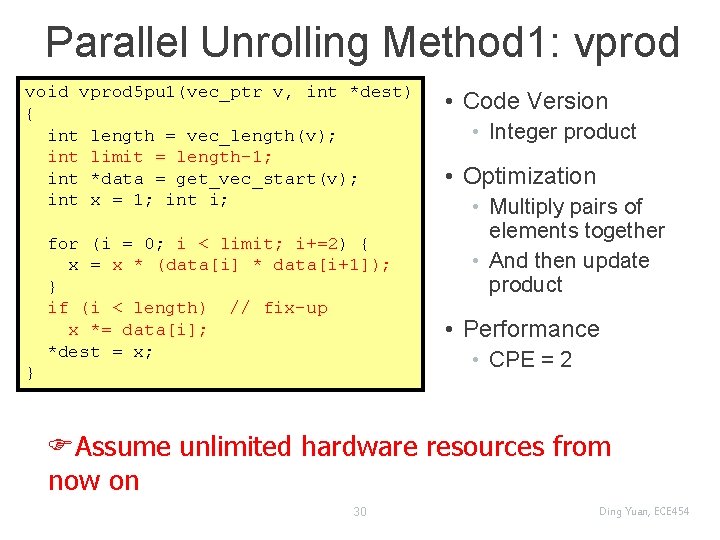

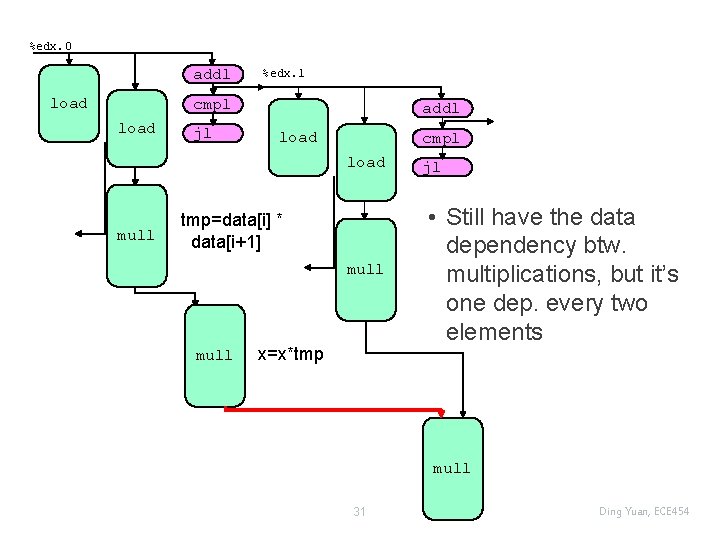

Parallel Unrolling Method 1: vprod

    void vprod5pu1(vec_ptr v, int *dest)
    {
      int length = vec_length(v);
      int limit = length-1;
      int *data = get_vec_start(v);
      int x = 1;
      int i;
      for (i = 0; i < limit; i+=2) {
        x = x * (data[i] * data[i+1]);
      }
      if (i < length) // fix-up
        x *= data[i];
      *dest = x;
    }

• Code Version
  • Integer product
• Optimization
  • Multiply pairs of elements together
  • And then update the product
• Performance
  • CPE = 2
• Assume unlimited hardware resources from now on

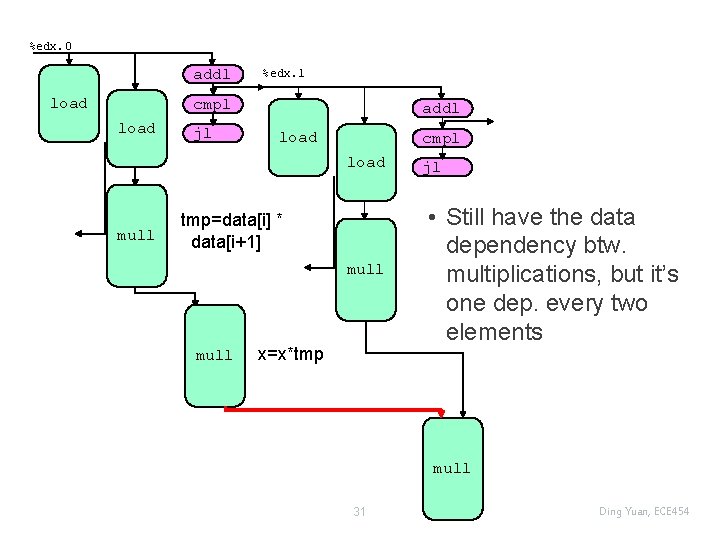

[Figure: dataflow graph of vprod5pu1. In each iteration two loads feed one mull (tmp = data[i] * data[i+1]), and a second mull computes x = x * tmp; addl, cmpl and jl form the loop control.]
• Still have the data dependency between multiplications, but it is only one dependency for every two elements
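The CPE of 2 follows from the length of the dependence chain. This is a back-of-the-envelope sketch under the unlimited-resource assumption stated above, with N the number of elements and D = 4 the integer multiply latency from the assumed CPU:

```latex
\begin{align*}
\text{sequential (vprod4):}\quad
  T &\approx N \cdot D, & \text{CPE} &\approx D = 4 \\
\text{paired (vprod5pu1):}\quad
  T &\approx \frac{N}{2} \cdot D, & \text{CPE} &\approx \frac{D}{2} = \frac{4}{2} = 2
\end{align*}
```

The multiplication tmp = data[i] * data[i+1] does not depend on x, so it can overlap with the previous iteration's update of x. Only the x = x * tmp multiplications remain on the critical path, and there is one of those for every two elements.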

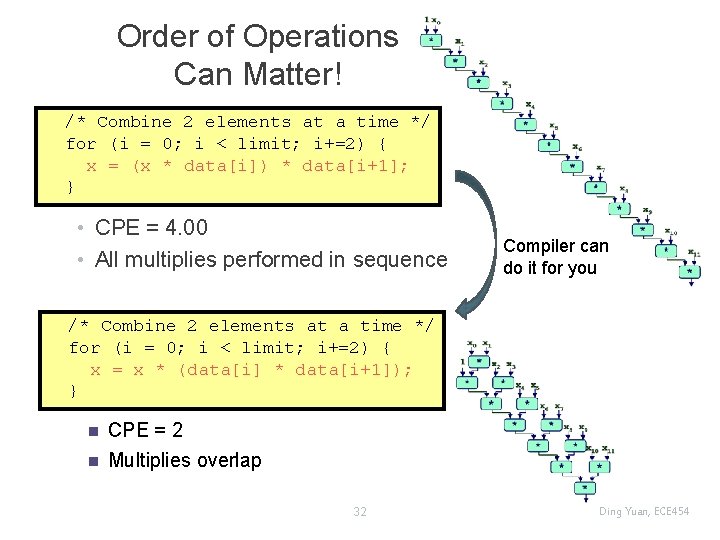

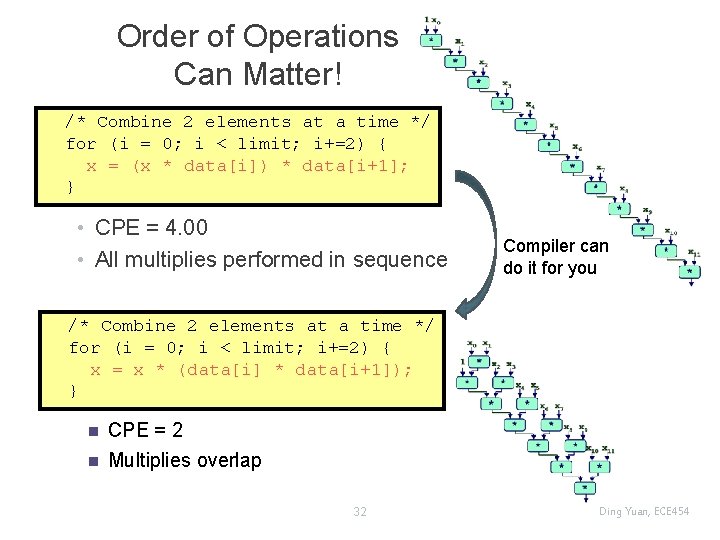

Order of Operations Can Matter!

    /* Combine 2 elements at a time */
    for (i = 0; i < limit; i+=2) {
      x = (x * data[i]) * data[i+1];
    }

• CPE = 4.00
• All multiplies performed in sequence

    /* Combine 2 elements at a time */
    for (i = 0; i < limit; i+=2) {
      x = x * (data[i] * data[i+1]);
    }

• CPE = 2
• Multiplies overlap
• Compiler can do it for you

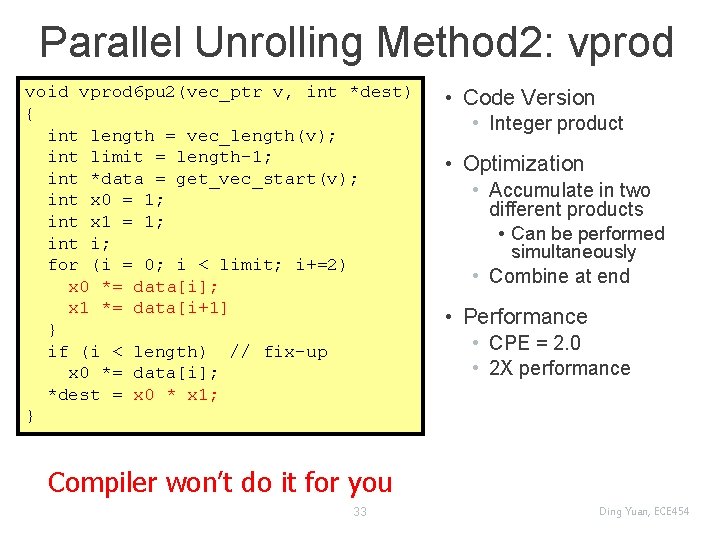

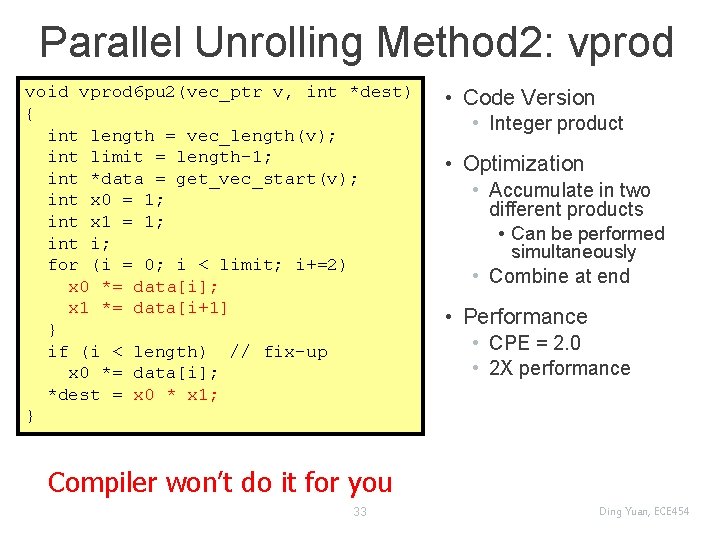

Parallel Unrolling Method 2: vprod

    void vprod6pu2(vec_ptr v, int *dest)
    {
      int length = vec_length(v);
      int limit = length-1;
      int *data = get_vec_start(v);
      int x0 = 1;
      int x1 = 1;
      int i;
      for (i = 0; i < limit; i+=2) {
        x0 *= data[i];
        x1 *= data[i+1];
      }
      if (i < length) // fix-up
        x0 *= data[i];
      *dest = x0 * x1;
    }

• Code Version
  • Integer product
• Optimization
  • Accumulate in two different products
    • Can be performed simultaneously
  • Combine at end
• Performance
  • CPE = 2.0
  • 2X performance
• Compiler won't do it for you
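A quick way to gain confidence in a rewrite like this is to compare it with the straightforward loop on the same input. The sketch below restates both versions on plain arrays so that it stands alone; prod_seq and prod_two_acc are illustrative names, not part of the course code.

```c
/* Sequential product: every multiply waits for the previous one. */
int prod_seq(const int *data, int length) {
    int x = 1;
    for (int i = 0; i < length; i++)
        x *= data[i];
    return x;
}

/* Two independent accumulators, in the style of vprod6pu2. */
int prod_two_acc(const int *data, int length) {
    int limit = length - 1;
    int x0 = 1;                 /* product of even-indexed elements */
    int x1 = 1;                 /* product of odd-indexed elements  */
    int i;
    for (i = 0; i < limit; i += 2) {
        x0 *= data[i];
        x1 *= data[i + 1];
    }
    if (i < length)             /* fix-up for odd length */
        x0 *= data[i];
    return x0 * x1;
}
```

For {1, 2, 3, 4, 5} both functions return 120, and both return 1 for an empty array. The fix-up branch is the part most worth testing, using odd lengths and a length of 1.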

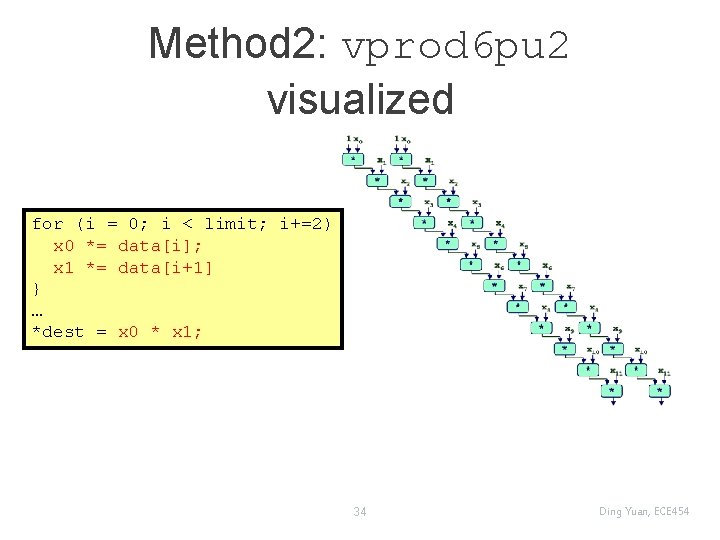

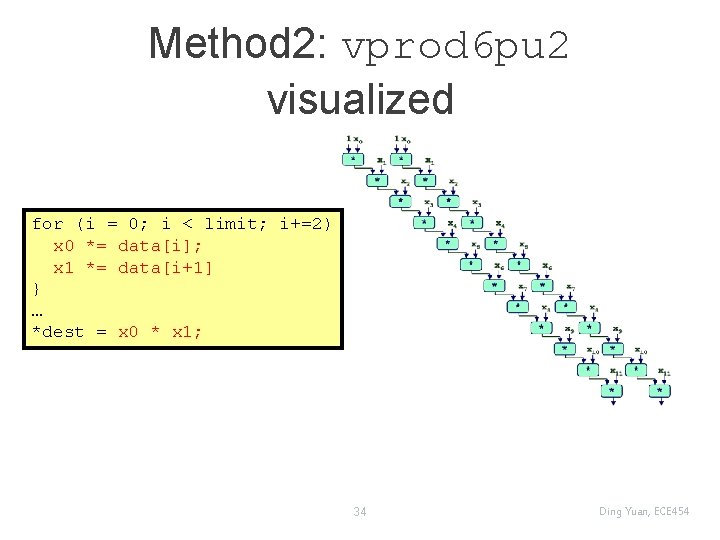

Method 2: vprod6pu2 visualized

    for (i = 0; i < limit; i+=2) {
      x0 *= data[i];
      x1 *= data[i+1];
    }
    …
    *dest = x0 * x1;

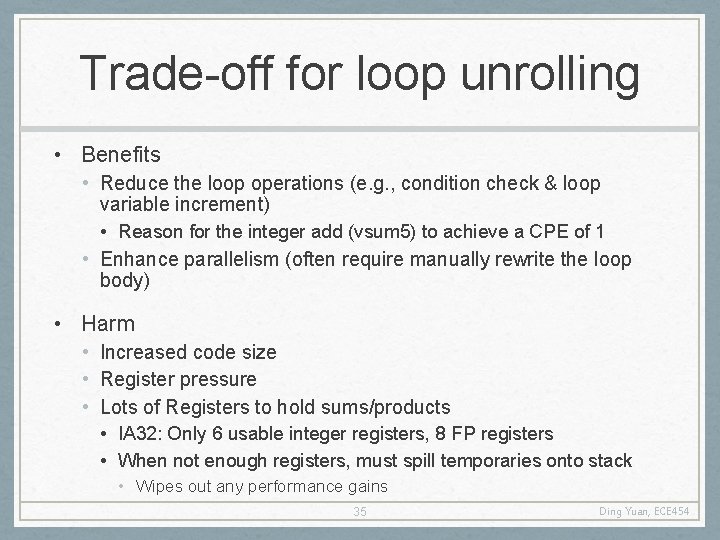

Trade-off for loop unrolling
• Benefits
  • Reduces the loop operations (e.g., condition check and loop variable increment)
    • This is why the integer sum (vsum5) can approach a CPE of 1
  • Enhances parallelism (often requires manually rewriting the loop body)
• Harm
  • Increased code size
  • Register pressure
    • Lots of registers needed to hold sums/products
    • IA32: only 6 usable integer registers, 8 FP registers
    • When there are not enough registers, temporaries must be spilled onto the stack
      • Wipes out any performance gains

Needed for Effective Parallel Unrolling:
• Mathematical
  • Combining operation must be associative and commutative
    • OK for integer multiplication
    • Not strictly true for floating point
      • OK for most applications
• Hardware
  • Pipelined functional units, superscalar execution
  • Lots of registers to hold sums/products
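The floating-point caveat is easy to demonstrate. The sketch below (function names are illustrative) adds the same three doubles in two groupings. The volatile temporaries are only there to force each intermediate result to be rounded to double, so the outcome does not depend on extended-precision registers.

```c
/* Computes (a + b) + c. */
double sum_left(double a, double b, double c) {
    volatile double t = a + b;   /* rounded to double here */
    return t + c;
}

/* Computes a + (b + c). */
double sum_right(double a, double b, double c) {
    volatile double t = b + c;   /* rounded to double here */
    return a + t;
}
```

With a = 1e20, b = -1e20, c = 1.0, sum_left gives 1.0, while sum_right gives 0.0 because -1e20 + 1.0 rounds back to -1e20. Reassociating a floating-point reduction can therefore change the result, which is why a compiler will not normally do it unless explicitly allowed to.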

Summary

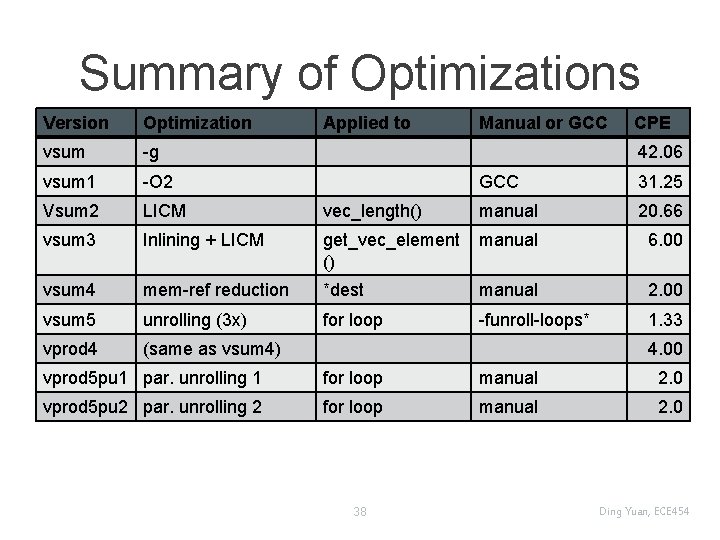

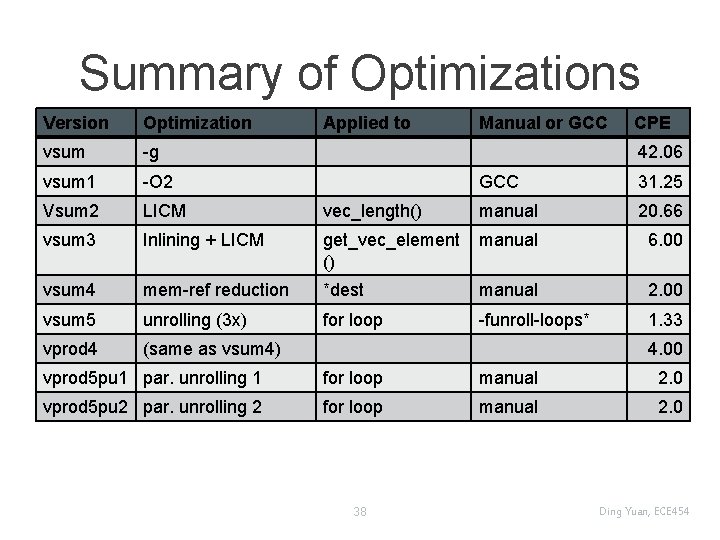

Summary of Optimizations

| Version | Optimization | Applied to | Manual or GCC | CPE |
|---|---|---|---|---|
| vsum | -g | | | 42.06 |
| vsum1 | -O2 | | GCC | 31.25 |
| vsum2 | LICM | vec_length() | manual | 20.66 |
| vsum3 | Inlining + LICM | get_vec_element() | manual | 6.00 |
| vsum4 | mem-ref reduction | *dest | manual | 2.00 |
| vsum5 | unrolling (3x) | for loop | -funroll-loops* | 1.33 |
| vprod4 | (same as vsum4) | | | 4.00 |
| vprod5pu1 | par. unrolling 1 | for loop | manual | 2.0 |
| vprod6pu2 | par. unrolling 2 | for loop | manual | 2.0 |

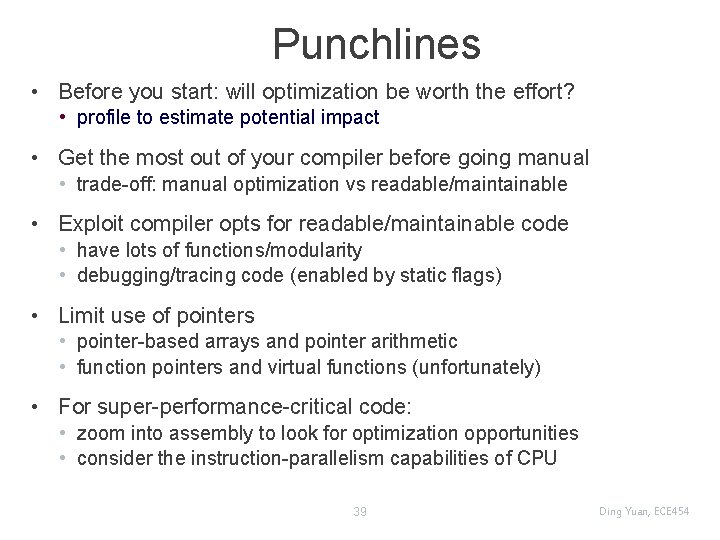

Punchlines
• Before you start: will optimization be worth the effort?
  • Profile to estimate potential impact
• Get the most out of your compiler before going manual
  • Trade-off: manual optimization vs. readable/maintainable code
• Exploit compiler optimizations for readable/maintainable code
  • Have lots of functions/modularity
  • Debugging/tracing code (enabled by static flags)
• Limit use of pointers
  • Pointer-based arrays and pointer arithmetic
  • Function pointers and virtual functions (unfortunately)
• For super-performance-critical code:
  • Zoom into assembly to look for optimization opportunities
  • Consider the instruction-parallelism capabilities of the CPU

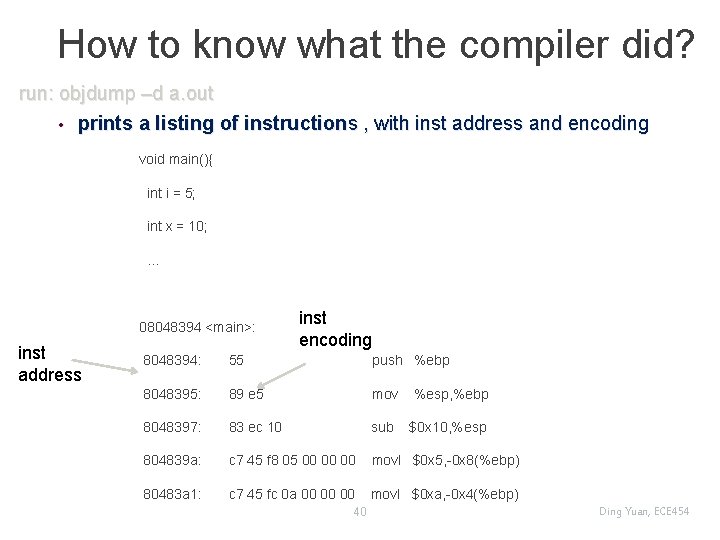

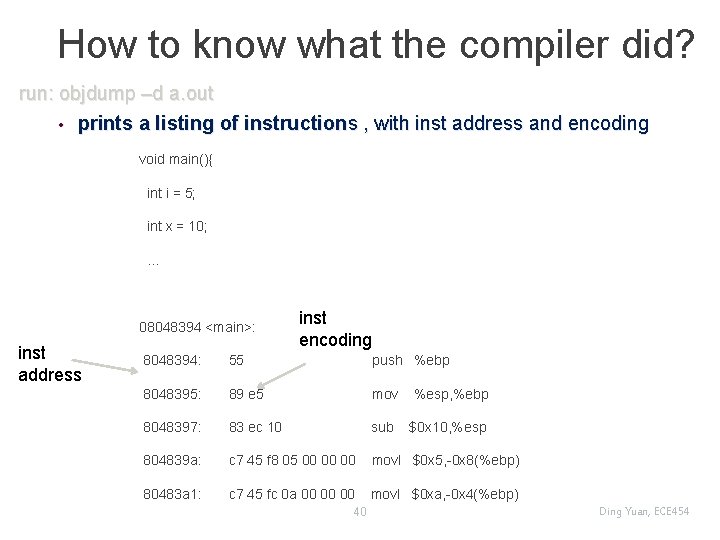

How to know what the compiler did?
• run: objdump -d a.out
• prints a listing of instructions, with instruction address and encoding

Source:

    void main(){
      int i = 5;
      int x = 10;
      …

Listing (columns: instruction address, instruction encoding, instruction):

    08048394 <main>:
     8048394:  55                     push %ebp
     8048395:  89 e5                  mov  %esp,%ebp
     8048397:  83 ec 10               sub  $0x10,%esp
     804839a:  c7 45 f8 05 00 00 00   movl $0x5,-0x8(%ebp)
     80483a1:  c7 45 fc 0a 00 00 00   movl $0xa,-0x4(%ebp)

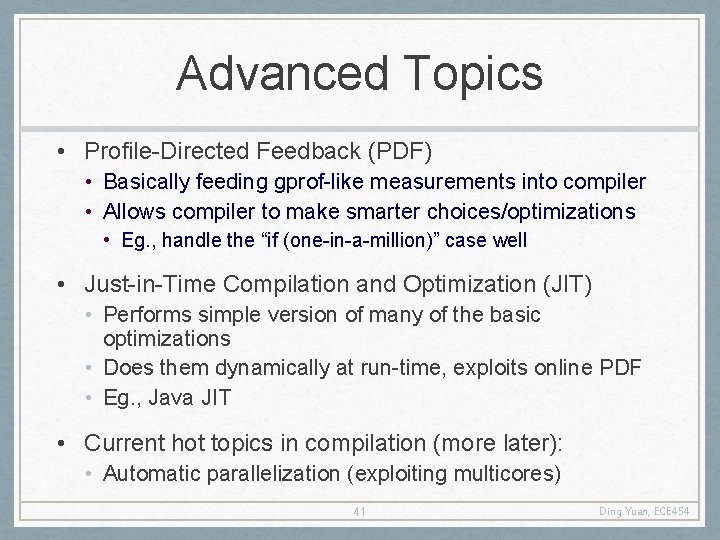

Advanced Topics
• Profile-Directed Feedback (PDF)
  • Basically feeding gprof-like measurements into the compiler
  • Allows the compiler to make smarter choices/optimizations
    • E.g., handle the "if (one-in-a-million)" case well
• Just-in-Time Compilation and Optimization (JIT)
  • Performs simple versions of many of the basic optimizations
  • Does them dynamically at run-time, exploits online PDF
  • E.g., Java JIT
• Current hot topics in compilation (more later):
  • Automatic parallelization (exploiting multicores)
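In GCC, profile-directed feedback is driven by the -fprofile-generate and -fprofile-use options: build with the first, run a representative workload, then rebuild with the second. When no profile is available, a programmer can state the same kind of expectation by hand. The sketch below uses the GCC/Clang builtin __builtin_expect for that purpose. It is a manual stand-in for PDF rather than PDF itself, and the UNLIKELY macro and the function are hypothetical names chosen for illustration.

```c
/* GCC/Clang builtin: hint that cond is expected to be false. */
#define UNLIKELY(cond) __builtin_expect(!!(cond), 0)

/* Sums the non-negative elements; negative values are assumed to be
   the rare "one in a million" case and are only counted. */
long sum_nonnegative(const int *data, int length, int *rare_hits) {
    long sum = 0;
    *rare_hits = 0;
    for (int i = 0; i < length; i++) {
        if (UNLIKELY(data[i] < 0)) {   /* cold path */
            (*rare_hits)++;
            continue;
        }
        sum += data[i];                /* hot path */
    }
    return sum;
}
```

The hint affects only code layout and scheduling decisions, never the result. A wrong hint costs performance, not correctness, which is one reason measured profiles are usually preferable to hand-written hints.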