Derivation of Recursive Least Squares Given that is

![The best way to prove this is to multiply both sides by [A+BCD] Now, The best way to prove this is to multiply both sides by [A+BCD] Now,](https://slidetodoc.com/presentation_image/1801133c52f7b994d015a5397781440f/image-5.jpg)

- Slides: 10

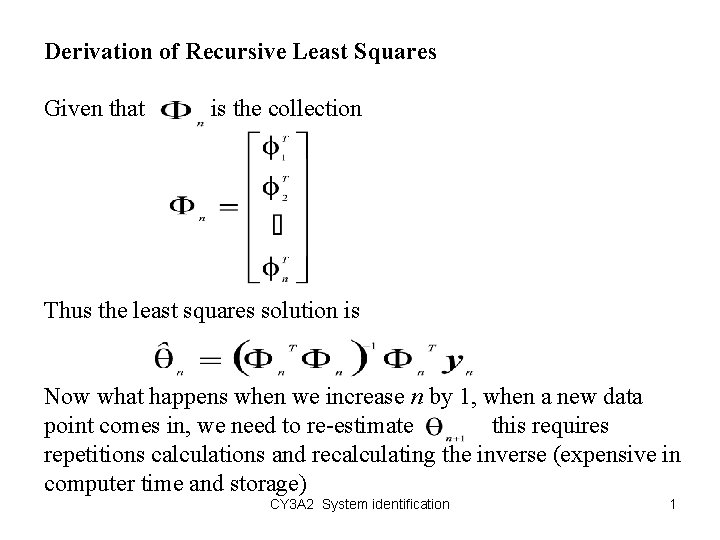

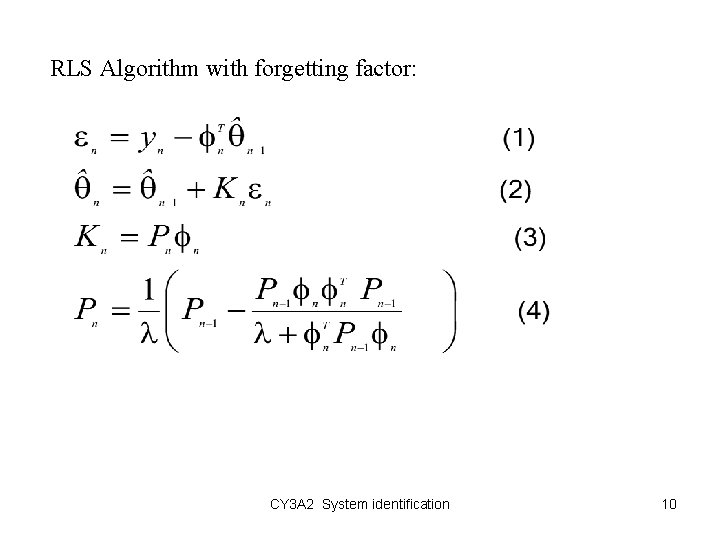

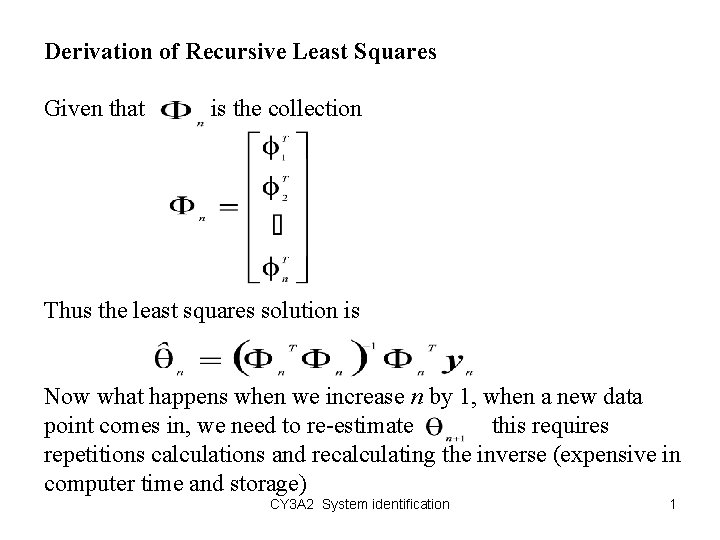

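Derivation of Recursive Least Squares

Given that

$$X_n = \begin{bmatrix} \mathbf{x}^T(1) \\ \vdots \\ \mathbf{x}^T(n) \end{bmatrix}, \qquad \mathbf{y}_n = \begin{bmatrix} y(1) \\ \vdots \\ y(n) \end{bmatrix}$$

is the collection of the first $n$ regressor vectors $\mathbf{x}(k)$ and outputs $y(k)$ of the model $y(k) = \mathbf{x}^T(k)\,\theta + e(k)$, the least squares solution is

$$\hat{\theta}_n = \left(X_n^T X_n\right)^{-1} X_n^T \mathbf{y}_n .$$

Now, what happens when we increase $n$ by 1? When a new data point comes in, we need to re-estimate $\hat{\theta}$, which requires repeating the calculation and recomputing the inverse (expensive in computer time and storage).

CY3A2 System identification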
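
A minimal sketch of the batch estimate, assuming NumPy and hypothetical data from a two-parameter model (the function name `batch_ls` and the data are illustrative only). It solves the normal equations with `np.linalg.solve` rather than forming the inverse explicitly. Note that absorbing one extra point still means rebuilding $X^T X$ and solving again from scratch, which is the cost RLS avoids.

```python
import numpy as np


def batch_ls(X, y):
    """Batch least squares estimate theta_n = (X^T X)^{-1} X^T y.

    X is n x p, with row k equal to the regressor x^T(k); y holds the n outputs.
    The normal equations are solved directly instead of inverting X^T X.
    """
    return np.linalg.solve(X.T @ X, X.T @ y)


# Hypothetical data: y(k) = 2.0*u(k) - 0.5*u(k-1) + small noise
rng = np.random.default_rng(0)
true_theta = np.array([2.0, -0.5])
u = rng.standard_normal(201)
X = np.column_stack([u[1:], u[:-1]])          # regressor x(k) = [u(k), u(k-1)]
y = X @ true_theta + 0.01 * rng.standard_normal(200)

theta_n = batch_ls(X, y)

# One new data point arrives: the batch approach reprocesses all n + 1 rows.
x_new = np.array([0.3, -1.2])
y_new = x_new @ true_theta
theta_n1 = batch_ls(np.vstack([X, x_new]), np.append(y, y_new))
```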

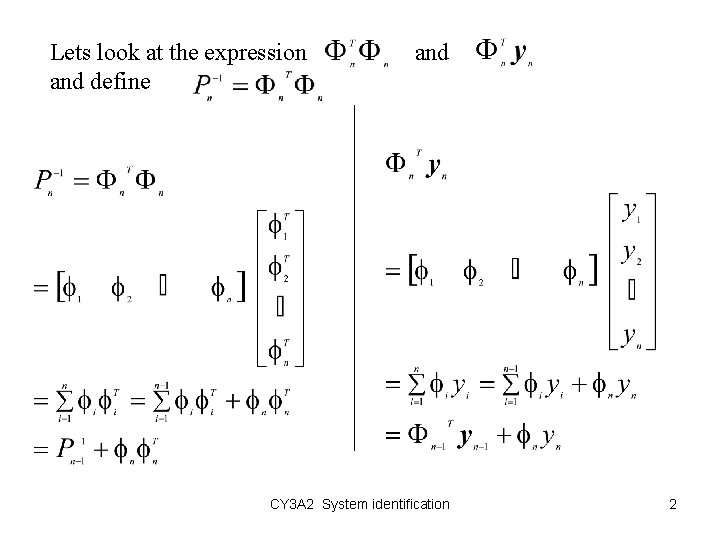

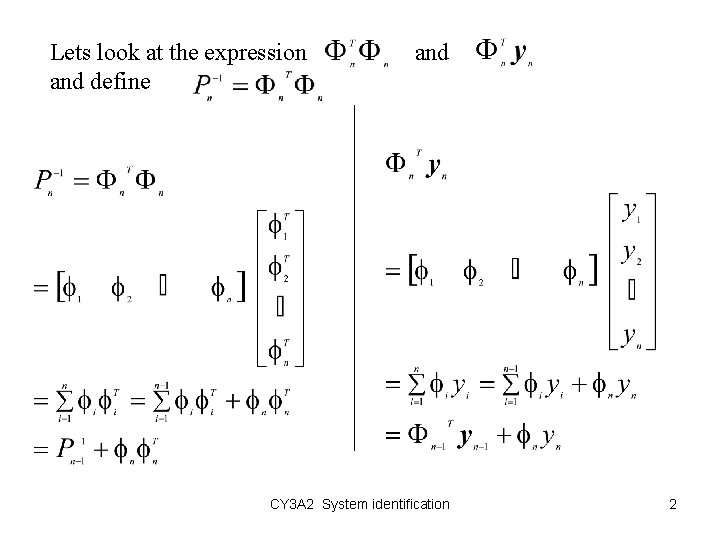

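Let's look at the expression for $\hat{\theta}_n$ and define

$$P_n = \left(X_n^T X_n\right)^{-1} \qquad \text{and} \qquad \mathbf{b}_n = X_n^T \mathbf{y}_n ,$$

so that $\hat{\theta}_n = P_n\,\mathbf{b}_n$.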

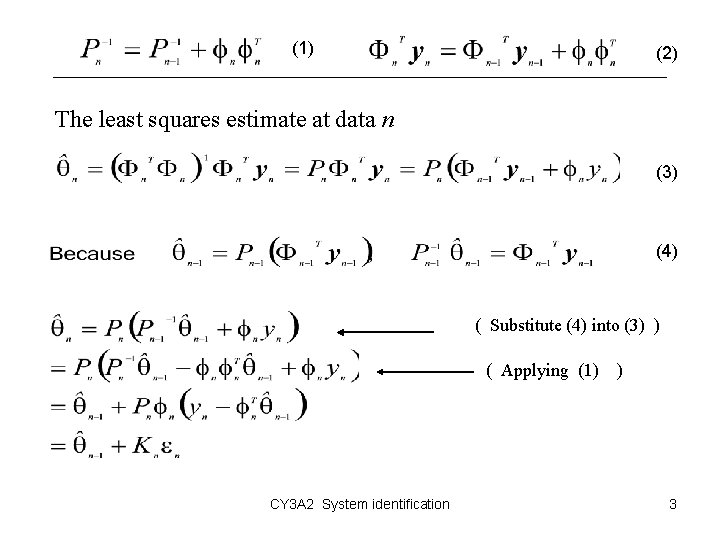

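Because $X_{n+1}$ is $X_n$ with the row $\mathbf{x}^T(n+1)$ appended,

$$P_{n+1}^{-1} = X_{n+1}^T X_{n+1} = P_n^{-1} + \mathbf{x}(n+1)\,\mathbf{x}^T(n+1) \qquad (1)$$

$$\mathbf{b}_{n+1} = X_{n+1}^T \mathbf{y}_{n+1} = \mathbf{b}_n + \mathbf{x}(n+1)\,y(n+1) \qquad (2)$$

The least squares estimate at data point $n+1$ is

$$\hat{\theta}_{n+1} = P_{n+1}\,\mathbf{b}_{n+1} = P_{n+1}\left[\mathbf{b}_n + \mathbf{x}(n+1)\,y(n+1)\right] \qquad (3)$$

while the estimate at data point $n$, $\hat{\theta}_n = P_n\,\mathbf{b}_n$, gives

$$\mathbf{b}_n = P_n^{-1}\,\hat{\theta}_n \qquad (4)$$

Substituting (4) into (3):

$$\hat{\theta}_{n+1} = P_{n+1}\left[P_n^{-1}\,\hat{\theta}_n + \mathbf{x}(n+1)\,y(n+1)\right] \qquad (5)$$

Applying (1), i.e. $P_n^{-1} = P_{n+1}^{-1} - \mathbf{x}(n+1)\,\mathbf{x}^T(n+1)$:

$$\hat{\theta}_{n+1} = \hat{\theta}_n + P_{n+1}\,\mathbf{x}(n+1)\left[y(n+1) - \mathbf{x}^T(n+1)\,\hat{\theta}_n\right] \qquad (6)$$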
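
The recursion can be checked numerically. The sketch below (NumPy, hypothetical random data) forms $P_{n+1}$ from $P_{n+1}^{-1} = P_n^{-1} + \mathbf{x}\mathbf{x}^T$ with an explicit inverse, applies the recursive estimate update, and compares the result with a fresh batch solution over all $n+1$ points.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.standard_normal((50, 3))                     # hypothetical regressors, row k = x^T(k)
y = X @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.standard_normal(50)

n = 40
P_n = np.linalg.inv(X[:n].T @ X[:n])                 # P_n = (X_n^T X_n)^{-1}
theta_n = P_n @ (X[:n].T @ y[:n])                    # theta_n = P_n b_n

x_new, y_new = X[n], y[n]                            # data point n + 1
# P_{n+1}^{-1} = P_n^{-1} + x x^T (this version still needs an explicit inverse)
P_n1 = np.linalg.inv(np.linalg.inv(P_n) + np.outer(x_new, x_new))
# Recursive update: old estimate plus P_{n+1} x times the prediction error
theta_n1 = theta_n + (P_n1 @ x_new) * (y_new - x_new @ theta_n)

# Reference: batch least squares over the first n + 1 points
theta_batch = np.linalg.solve(X[:n + 1].T @ X[:n + 1], X[:n + 1].T @ y[:n + 1])
```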

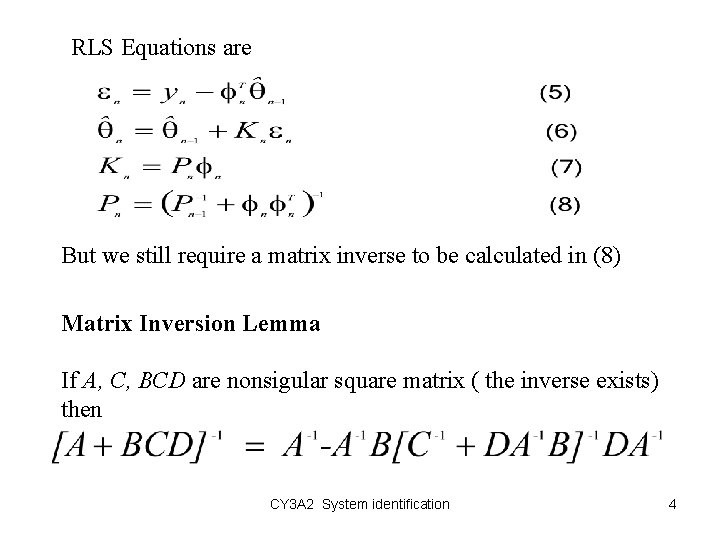

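The RLS equations are

$$\hat{\theta}_{n+1} = \hat{\theta}_n + P_{n+1}\,\mathbf{x}(n+1)\left[y(n+1) - \mathbf{x}^T(n+1)\,\hat{\theta}_n\right] \qquad (7)$$

$$P_{n+1} = \left[P_n^{-1} + \mathbf{x}(n+1)\,\mathbf{x}^T(n+1)\right]^{-1} \qquad (8)$$

But we still need to calculate a matrix inverse in (8).

Matrix Inversion Lemma: if $A$, $C$ and $A + BCD$ are nonsingular square matrices (i.e. their inverses exist), then

$$\left[A + BCD\right]^{-1} = A^{-1} - A^{-1}B\left[C^{-1} + DA^{-1}B\right]^{-1}DA^{-1}$$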
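
A quick numerical check of the lemma, assuming NumPy and randomly generated matrices (nothing here comes from the slides; `inversion_lemma` is a hypothetical helper). `A` is 4×4 but `C` is only 2×2, which illustrates the point of the lemma: once $A^{-1}$ is known, the right-hand side needs only a 2×2 inverse.

```python
import numpy as np


def inversion_lemma(A_inv, B, C, D):
    """Right-hand side of the lemma: A^{-1} - A^{-1} B [C^{-1} + D A^{-1} B]^{-1} D A^{-1}."""
    inner = np.linalg.inv(np.linalg.inv(C) + D @ A_inv @ B)   # only a small (2 x 2) inverse
    return A_inv - A_inv @ B @ inner @ D @ A_inv


rng = np.random.default_rng(2)
A = 3.0 * np.eye(4) + 0.1 * rng.standard_normal((4, 4))      # nonsingular 4 x 4
B = rng.standard_normal((4, 2))
C = np.diag([1.0, 2.0])                                        # nonsingular 2 x 2
D = rng.standard_normal((2, 4))

lhs = np.linalg.inv(A + B @ C @ D)
rhs = inversion_lemma(np.linalg.inv(A), B, C, D)
```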

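The best way to prove this is to multiply both sides by $[A + BCD]$. Now, in (8), identify

$$A = P_n^{-1}, \qquad B = \mathbf{x}(n+1), \qquad C = 1, \qquad D = \mathbf{x}^T(n+1)$$

which gives

$$P_{n+1} = P_n - P_n\,\mathbf{x}(n+1)\left[1 + \mathbf{x}^T(n+1)\,P_n\,\mathbf{x}(n+1)\right]^{-1}\mathbf{x}^T(n+1)\,P_n$$

The bracketed term is a scalar, so no matrix inverse is required.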
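
With $C = 1$ the lemma reduces to a rank-one update that needs only a scalar division. A minimal NumPy sketch, assuming $P_n$ is symmetric (true for $P_n = (X_n^T X_n)^{-1}$) so that $\mathbf{x}^T P_n = (P_n\mathbf{x})^T$; `rls_P_update` is a hypothetical helper name, and the result is compared with the explicit inverse in (8).

```python
import numpy as np


def rls_P_update(P, x):
    """P_{n+1} = P_n - P_n x x^T P_n / (1 + x^T P_n x), for symmetric P_n."""
    Px = P @ x
    return P - np.outer(Px, Px) / (1.0 + x @ Px)


rng = np.random.default_rng(3)
X = rng.standard_normal((20, 3))            # hypothetical past regressors
P_n = np.linalg.inv(X.T @ X)
x = rng.standard_normal(3)                  # new regressor x(n+1)

P_lemma = rls_P_update(P_n, x)
P_direct = np.linalg.inv(np.linalg.inv(P_n) + np.outer(x, x))   # equation (8)
```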

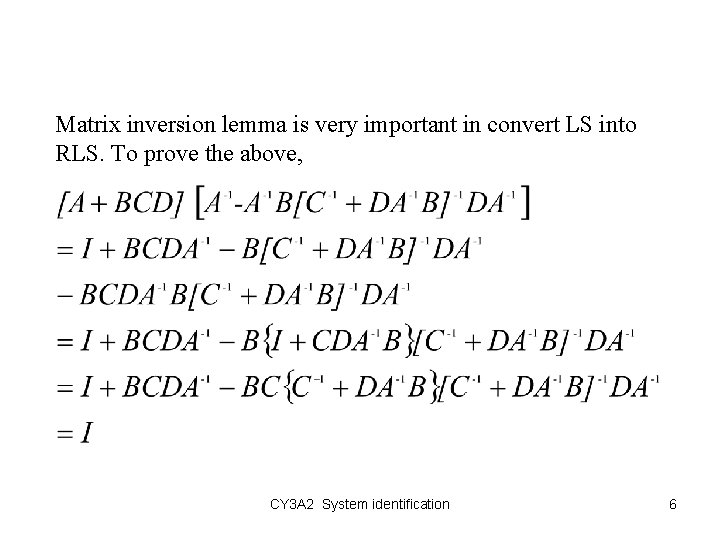

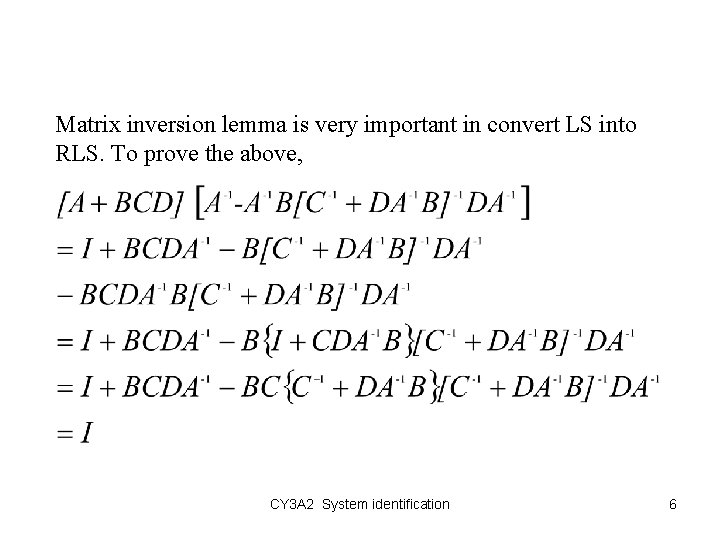

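The matrix inversion lemma is very important for converting LS into RLS. To prove the lemma, multiply the right-hand side by $[A + BCD]$:

$$\begin{aligned}
[A + BCD]\left\{A^{-1} - A^{-1}B\left[C^{-1} + DA^{-1}B\right]^{-1}DA^{-1}\right\}
&= I + BCDA^{-1} - B\left[I + CDA^{-1}B\right]\left[C^{-1} + DA^{-1}B\right]^{-1}DA^{-1} \\
&= I + BCDA^{-1} - BC\left[C^{-1} + DA^{-1}B\right]\left[C^{-1} + DA^{-1}B\right]^{-1}DA^{-1} \\
&= I + BCDA^{-1} - BCDA^{-1} = I
\end{aligned}$$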

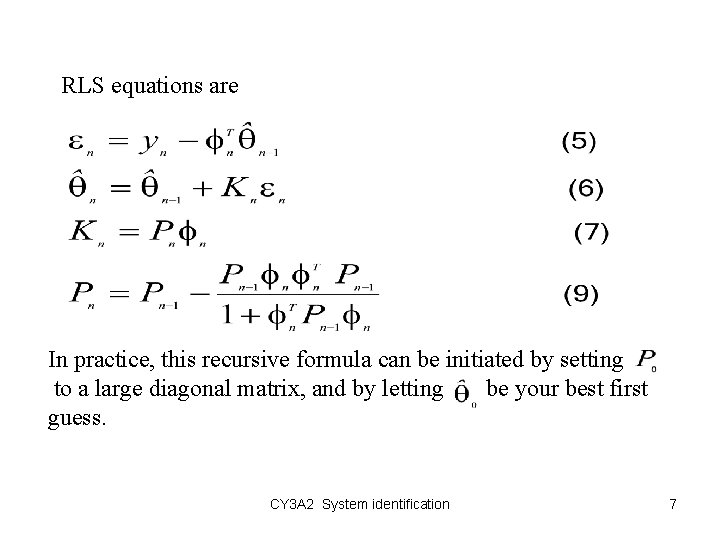

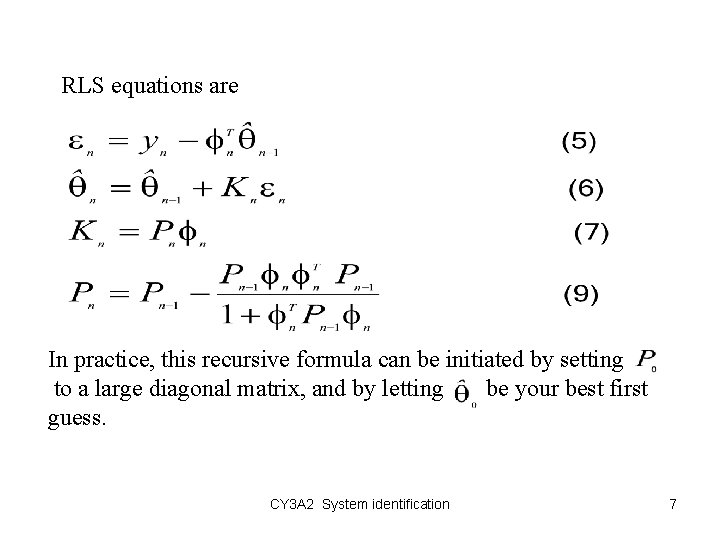

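The RLS equations are

$$P_{n+1} = P_n - \frac{P_n\,\mathbf{x}(n+1)\,\mathbf{x}^T(n+1)\,P_n}{1 + \mathbf{x}^T(n+1)\,P_n\,\mathbf{x}(n+1)}$$

$$\hat{\theta}_{n+1} = \hat{\theta}_n + P_{n+1}\,\mathbf{x}(n+1)\left[y(n+1) - \mathbf{x}^T(n+1)\,\hat{\theta}_n\right]$$

In practice, this recursion can be initialised by setting $P_0$ to a large diagonal matrix and letting $\hat{\theta}_0$ be your best first guess. A large $P_0$ expresses little confidence in $\hat{\theta}_0$, so the incoming data quickly dominate the estimate.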
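
The two RLS equations can be sketched as a loop over the data, assuming NumPy, a hypothetical helper `rls`, and hypothetical data. Following the slide, $P_0 = 10^6 I$ is used as the "large diagonal matrix" (the value $10^6$ is an arbitrary choice) and $\hat{\theta}_0 = 0$ as the first guess; the final estimate is compared with the batch solution.

```python
import numpy as np


def rls(X, y, theta0, P0):
    """Recursive least squares over the rows of X (x^T(k)) and outputs y(k).

    Each step uses the rank-one P update, so no matrix is ever inverted.
    Returns the final estimate and P.
    """
    theta = np.array(theta0, dtype=float)
    P = np.array(P0, dtype=float)
    for x, yk in zip(X, y):
        Px = P @ x
        P = P - np.outer(Px, Px) / (1.0 + x @ Px)      # P_{n+1} from P_n
        theta = theta + (P @ x) * (yk - x @ theta)       # uses the new P_{n+1}
    return theta, P


rng = np.random.default_rng(4)
true_theta = np.array([0.8, -0.3, 1.5])                 # hypothetical parameters
X = rng.standard_normal((300, 3))
y = X @ true_theta + 0.05 * rng.standard_normal(300)

# P_0: large diagonal matrix; theta_0 = 0 as the "best first guess"
theta_rls, P_rls = rls(X, y, np.zeros(3), 1e6 * np.eye(3))
theta_batch = np.linalg.solve(X.T @ X, X.T @ y)
```

Because $P_0^{-1} = 10^{-6} I$ is tiny compared with $X^T X$, the recursive and batch estimates agree closely; a smaller $P_0$ would pull the early estimates towards $\hat{\theta}_0$.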

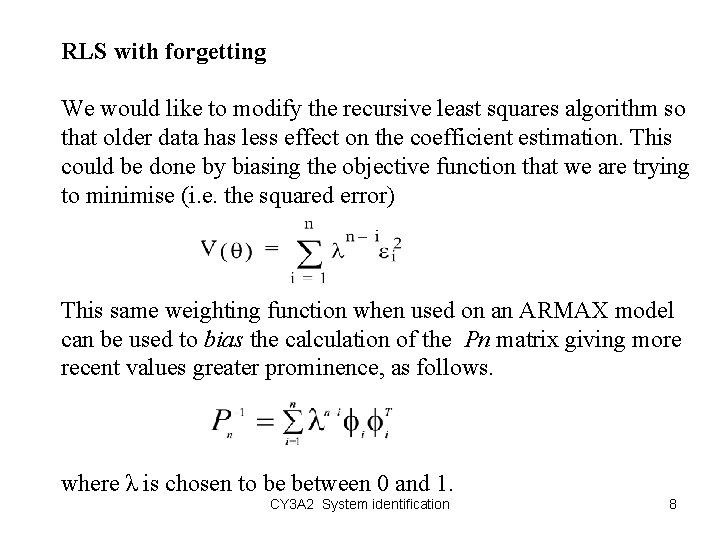

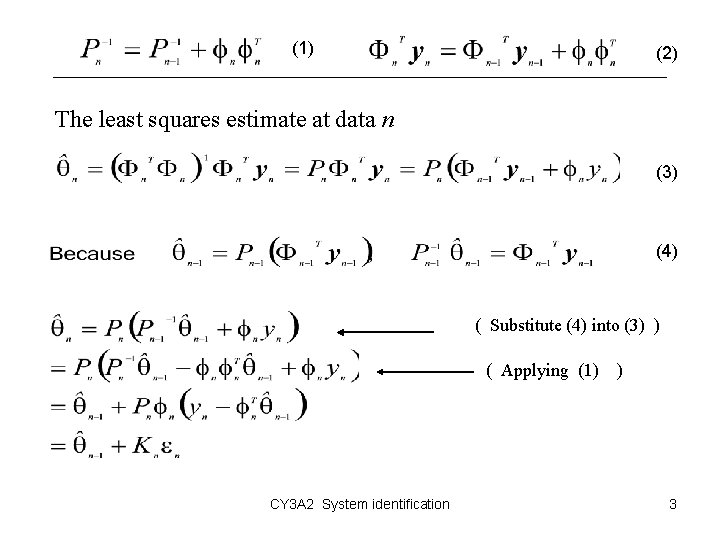

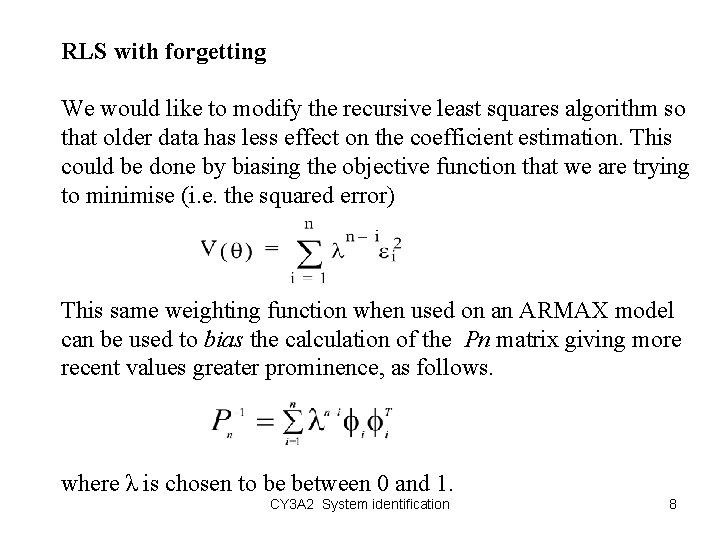

RLS with forgetting

We would like to modify the recursive least squares algorithm so that older data have less effect on the coefficient estimates. This can be done by biasing the objective function that we are trying to minimise (i.e. the squared error):

$$J_n = \sum_{k=1}^{n} \lambda^{\,n-k}\left[y(k) - \mathbf{x}^T(k)\,\theta\right]^2$$

The same weighting function, when used on an ARMAX model, can be used to bias the calculation of the $P_n$ matrix so that more recent values are given greater prominence, as follows:

$$P_n = \left[\sum_{k=1}^{n} \lambda^{\,n-k}\,\mathbf{x}(k)\,\mathbf{x}^T(k)\right]^{-1}$$

where λ is chosen to be between 0 and 1.
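
To see what the weighting does, here is a batch (non-recursive) sketch of the weighted cost $J_n$, assuming NumPy, a hypothetical helper `weighted_ls`, and hypothetical noise-free data whose parameters jump halfway through the record. With λ = 1 the estimate averages the two regimes; with λ = 0.9 it follows the recent data.

```python
import numpy as np


def weighted_ls(X, y, lam):
    """Minimise J_n = sum_k lam^(n-k) [y(k) - x^T(k) theta]^2.

    The newest sample (k = n) gets weight 1; older samples are discounted
    geometrically. The weighted normal-equation matrix is P_n^{-1}.
    """
    n = len(y)
    w = lam ** np.arange(n - 1, -1, -1)      # lam^(n-1), ..., lam^1, lam^0
    Xw = X * w[:, None]                        # scale row k by its weight
    P_inv = Xw.T @ X                           # sum_k lam^(n-k) x(k) x^T(k)
    return np.linalg.solve(P_inv, Xw.T @ y)


# Hypothetical data whose parameters jump halfway through the record
rng = np.random.default_rng(5)
X = rng.standard_normal((400, 2))
theta_old, theta_new = np.array([1.0, 0.0]), np.array([0.0, 1.0])
y = np.concatenate([X[:200] @ theta_old, X[200:] @ theta_new])

est_equal = weighted_ls(X, y, 1.0)    # lambda = 1: every sample counts equally
est_forget = weighted_ls(X, y, 0.9)   # lambda < 1: recent samples dominate
```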

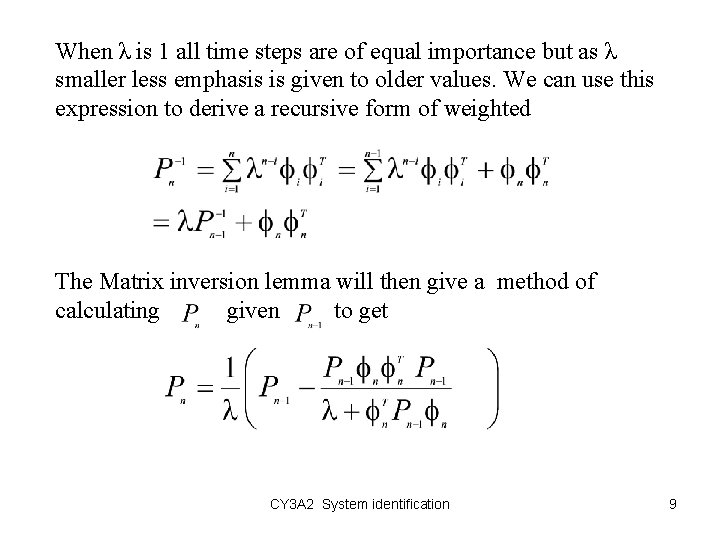

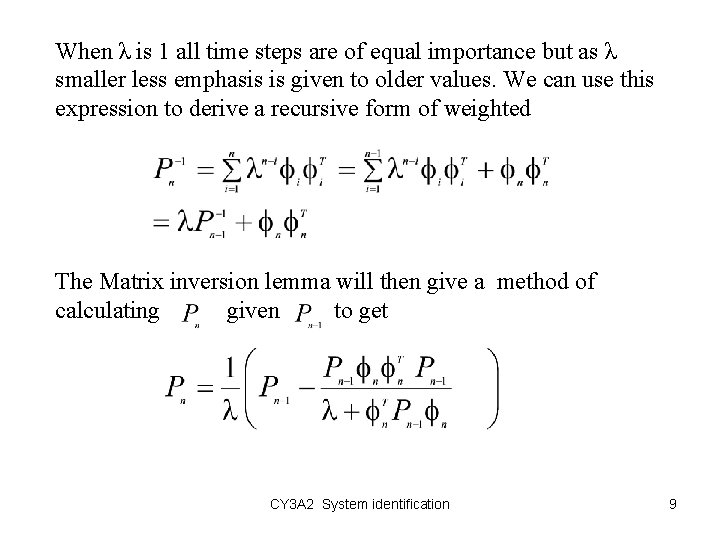

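When λ = 1, all time steps are of equal importance, but as λ is made smaller, less emphasis is given to older values. We can use this expression to derive a recursive form of the weighted $P_n$:

$$P_{n+1}^{-1} = \lambda\,P_n^{-1} + \mathbf{x}(n+1)\,\mathbf{x}^T(n+1)$$

The matrix inversion lemma, with $A = \lambda P_n^{-1}$, $B = \mathbf{x}(n+1)$, $C = 1$ and $D = \mathbf{x}^T(n+1)$, then gives a method of calculating $P_{n+1}$ given $P_n$:

$$P_{n+1} = \frac{1}{\lambda}\left[P_n - \frac{P_n\,\mathbf{x}(n+1)\,\mathbf{x}^T(n+1)\,P_n}{\lambda + \mathbf{x}^T(n+1)\,P_n\,\mathbf{x}(n+1)}\right]$$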
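
A numerical check that the recursive weighted update reproduces the directly computed weighted $P_n$. This is a sketch assuming NumPy, hypothetical helpers `rls_P_update_forgetting` and `weighted_P`, random regressors, λ = 0.95, and a symmetric $P_n$. The recursion starts from an exact $P_5$ and is run up to $n = 30$.

```python
import numpy as np


def rls_P_update_forgetting(P, x, lam):
    """P_{n+1} = (1/lam) [P_n - P_n x x^T P_n / (lam + x^T P_n x)], for symmetric P_n."""
    Px = P @ x
    return (P - np.outer(Px, Px) / (lam + x @ Px)) / lam


def weighted_P(X, lam):
    """Direct computation: P_n = [sum_k lam^(n-k) x(k) x^T(k)]^{-1}."""
    w = lam ** np.arange(len(X) - 1, -1, -1)
    return np.linalg.inv((X * w[:, None]).T @ X)


rng = np.random.default_rng(6)
lam = 0.95
X = rng.standard_normal((30, 3))            # hypothetical regressors

P = weighted_P(X[:5], lam)                  # start from an exact P_5
for x in X[5:]:
    P = rls_P_update_forgetting(P, x, lam)  # then recurse for k = 6, ..., 30
P_direct = weighted_P(X, lam)
```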

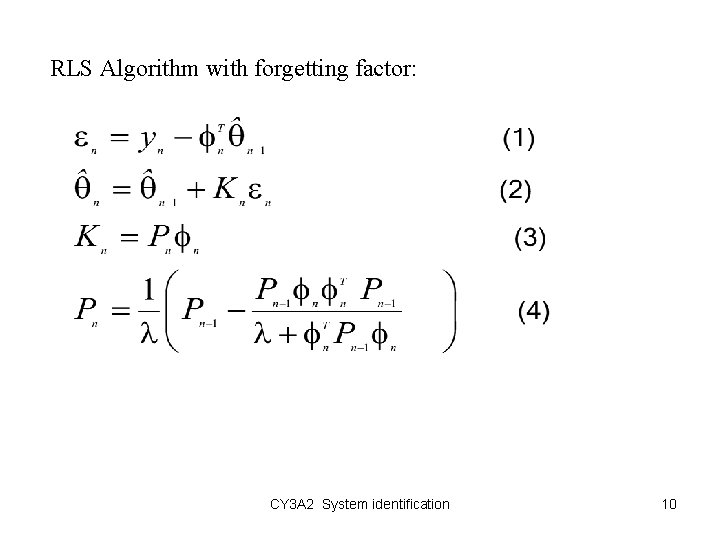

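RLS algorithm with forgetting factor:

$$P_{n+1} = \frac{1}{\lambda}\left[P_n - \frac{P_n\,\mathbf{x}(n+1)\,\mathbf{x}^T(n+1)\,P_n}{\lambda + \mathbf{x}^T(n+1)\,P_n\,\mathbf{x}(n+1)}\right]$$

$$\hat{\theta}_{n+1} = \hat{\theta}_n + P_{n+1}\,\mathbf{x}(n+1)\left[y(n+1) - \mathbf{x}^T(n+1)\,\hat{\theta}_n\right]$$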
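
A minimal sketch of the algorithm with forgetting, assuming NumPy, a hypothetical helper `rls_forgetting`, and hypothetical noise-free data whose parameters jump halfway through. The initial values ($P_0 = 10^6 I$, $\hat{\theta}_0 = 0$) and the choice λ = 0.9 are illustrative. The estimate update is the same as in ordinary RLS; only the $P$ update changes.

```python
import numpy as np


def rls_forgetting(X, y, lam, theta0, P0):
    """RLS with forgetting factor lam (0 < lam <= 1); returns the estimate after every step."""
    theta = np.array(theta0, dtype=float)
    P = np.array(P0, dtype=float)
    history = []
    for x, yk in zip(X, y):
        Px = P @ x
        P = (P - np.outer(Px, Px) / (lam + x @ Px)) / lam   # weighted P update
        theta = theta + (P @ x) * (yk - x @ theta)           # same estimate update as before
        history.append(theta.copy())
    return np.array(history)


# Hypothetical system whose parameters jump halfway through the record
rng = np.random.default_rng(7)
X = rng.standard_normal((400, 2))
theta_old, theta_new = np.array([1.0, 0.0]), np.array([0.0, 1.0])
y = np.concatenate([X[:200] @ theta_old, X[200:] @ theta_new])

track_forget = rls_forgetting(X, y, 0.9, np.zeros(2), 1e6 * np.eye(2))
track_equal = rls_forgetting(X, y, 1.0, np.zeros(2), 1e6 * np.eye(2))
```

With λ = 0.9 the estimate settles on the new parameters after the jump, while λ = 1 (ordinary RLS) ends up averaging the two regimes. Smaller λ tracks faster but makes the estimate more sensitive to noise.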