Data Warehouse Introduction n Data warehousing provides architectures

![Defining Star Schema in DMQL define cube sales_star [time, item, branch, location]: dollars_sold = Defining Star Schema in DMQL define cube sales_star [time, item, branch, location]: dollars_sold =](https://slidetodoc.com/presentation_image_h2/9caf1f754edbd0fdba7c5a85f974d2c9/image-18.jpg)

![Defining Snowflake Schema in DMQL define cube sales_snowflake [time, item, branch, location]: dollars_sold = Defining Snowflake Schema in DMQL define cube sales_snowflake [time, item, branch, location]: dollars_sold =](https://slidetodoc.com/presentation_image_h2/9caf1f754edbd0fdba7c5a85f974d2c9/image-19.jpg)

![Defining Fact Constellation in DMQL define cube sales [time, item, branch, location]: dollars_sold = Defining Fact Constellation in DMQL define cube sales [time, item, branch, location]: dollars_sold =](https://slidetodoc.com/presentation_image_h2/9caf1f754edbd0fdba7c5a85f974d2c9/image-20.jpg)

- Slides: 69

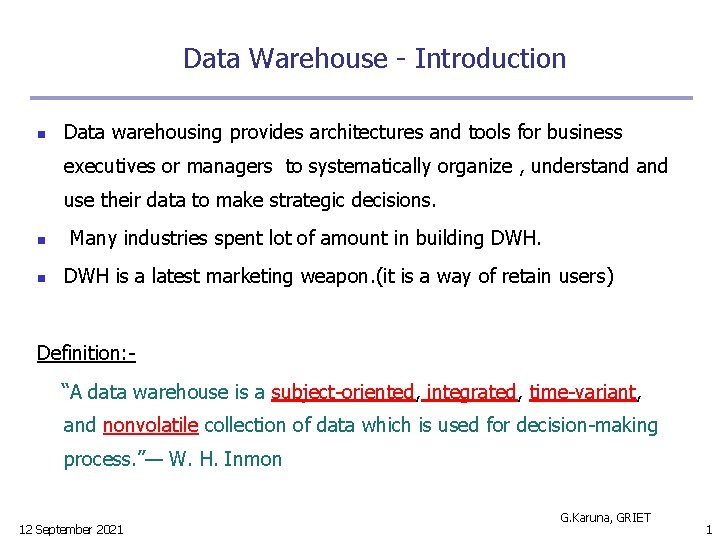

Data Warehouse - Introduction n Data warehousing provides architectures and tools for business executives or managers to systematically organize , understand use their data to make strategic decisions. n n Many industries spent lot of amount in building DWH is a latest marketing weapon. (it is a way of retain users) Definition: “A data warehouse is a subject-oriented, integrated, time-variant, and nonvolatile collection of data which is used for decision-making process. ”— W. H. Inmon 12 September 2021 G. Karuna, GRIET 1

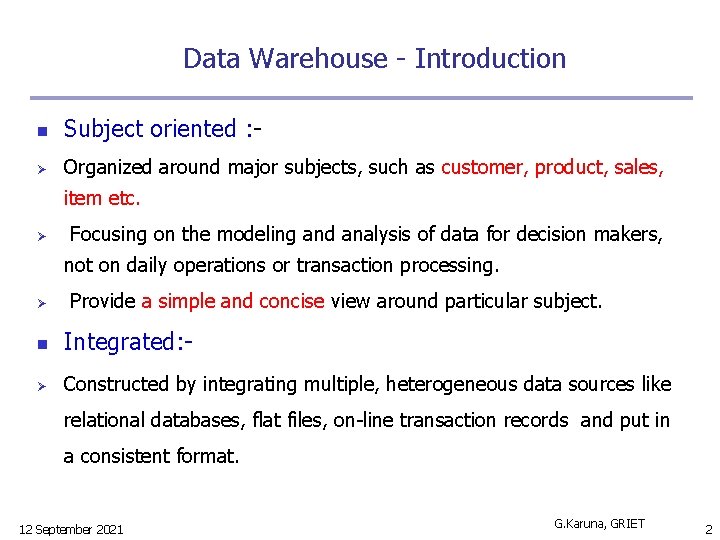

Data Warehouse - Introduction n Subject oriented : - Ø Organized around major subjects, such as customer, product, sales, item etc. Ø Focusing on the modeling and analysis of data for decision makers, not on daily operations or transaction processing. Ø Provide a simple and concise view around particular subject. n Integrated: - Ø Constructed by integrating multiple, heterogeneous data sources like relational databases, flat files, on-line transaction records and put in a consistent format. 12 September 2021 G. Karuna, GRIET 2

Data Warehouse - Introduction n Time-variant: - Ø The Data are stored to provide information from a historical perspective. Ø Implicitly or explicitly the key structure in DWH contains an element of time. n Non-volatile : - Ø DWH is always a physically separate store of data, transformed from application data found in operational environment. Ø Operational update of data does not occur in the data warehouse environment. Only we can extract data, but we do not modify. 12 September 2021 G. Karuna, GRIET 3

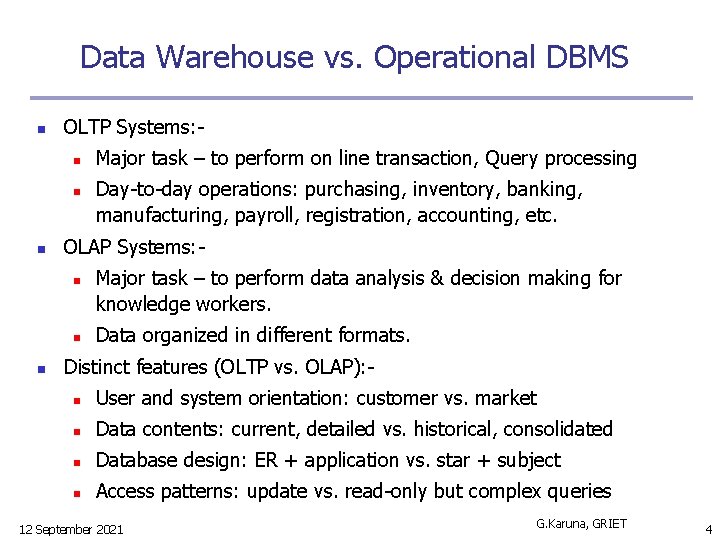

Data Warehouse vs. Operational DBMS n OLTP Systems: n n n Day-to-day operations: purchasing, inventory, banking, manufacturing, payroll, registration, accounting, etc. OLAP Systems: n n n Major task – to perform on line transaction, Query processing Major task – to perform data analysis & decision making for knowledge workers. Data organized in different formats. Distinct features (OLTP vs. OLAP): n User and system orientation: customer vs. market n Data contents: current, detailed vs. historical, consolidated n Database design: ER + application vs. star + subject n Access patterns: update vs. read-only but complex queries 12 September 2021 G. Karuna, GRIET 4

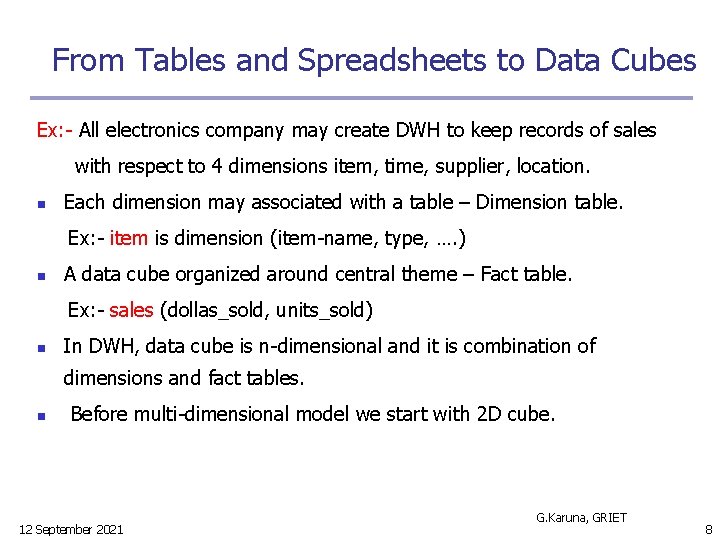

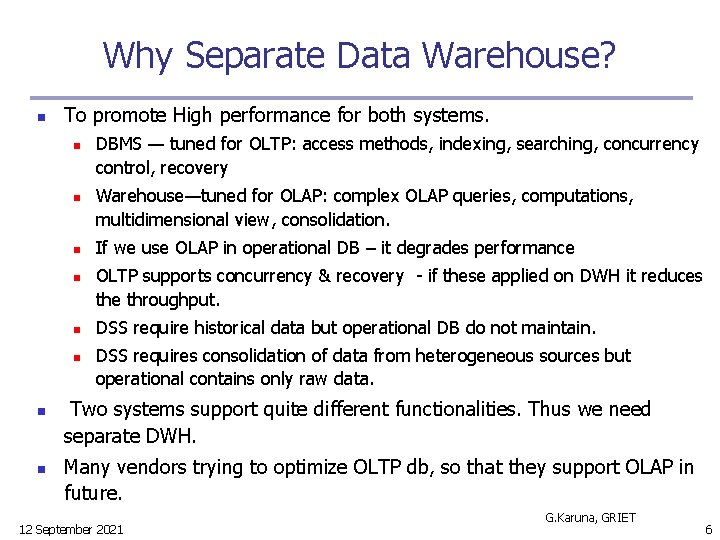

OLTP vs. OLAP S. No. Feature 1 2 3 4 5 6 Definition Characteristic Orientation users Function DB Design 7 Data 8 9 10 11 12 13 14 15 16 17 View Unit of work Access No. Records Users Db size Priority Metrics Focus Operations 12 September 2021 OLTP Online Transaction Processing Operational processing Transaction DBA, DB professional Day to day opearation ER based Design & Application oriented Dynamic, current, relational, detailed, up to date Detailed view Short & simple transaction Read / Write Tens/Hundreds Thousands 100 MB to GB High performance Transaction throughput data in Indexing, hashing OLAP Online analytical Processing Informational processing Analysis Knowledge workers (Manager) Historical (or) Decision Support Star/Snowflake schemas & Subject-oriented Static, historical, summarized, consolidated, multidimentional Summarized view Complex queries required Mostly read Millions Hundreds 100 GB to TB High flexibility Query throughput Information out Lots of scans for analysis G. Karuna, GRIET 5

Why Separate Data Warehouse? n To promote High performance for both systems. n n n n DBMS — tuned for OLTP: access methods, indexing, searching, concurrency control, recovery Warehouse—tuned for OLAP: complex OLAP queries, computations, multidimensional view, consolidation. If we use OLAP in operational DB – it degrades performance OLTP supports concurrency & recovery - if these applied on DWH it reduces the throughput. DSS require historical data but operational DB do not maintain. DSS requires consolidation of data from heterogeneous sources but operational contains only raw data. Two systems support quite different functionalities. Thus we need separate DWH. Many vendors trying to optimize OLTP db, so that they support OLAP in future. 12 September 2021 G. Karuna, GRIET 6

From Tables and Spreadsheets to Data Cubes n A data warehouse & OLAP tools are based on a multidimensional data model which views data in the form of a data cube. n A data cube, allows data to be modeled and viewed in multiple dimensions. i. e data cube is defined by dimensions and facts. n Dimension tables, such as item (item_name, brand, type), or time(day, week, month, quarter, year) n Fact table contains measures (such as dollars_sold) and keys to each of the related dimension tables. n n The physical structure of DWH is a data cube. Data cube provides multidimensional view and allows pre-computation and fast accessing of consolidated data. 12 September 2021 G. Karuna, GRIET 7

From Tables and Spreadsheets to Data Cubes Ex: - All electronics company may create DWH to keep records of sales with respect to 4 dimensions item, time, supplier, location. n Each dimension may associated with a table – Dimension table. Ex: - item is dimension (item-name, type, …. ) n A data cube organized around central theme – Fact table. Ex: - sales (dollas_sold, units_sold) n In DWH, data cube is n-dimensional and it is combination of dimensions and fact tables. n Before multi-dimensional model we start with 2 D cube. 12 September 2021 G. Karuna, GRIET 8

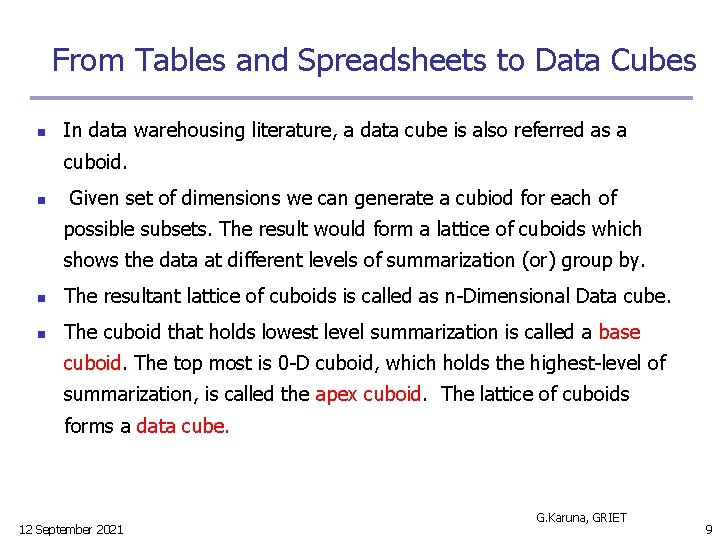

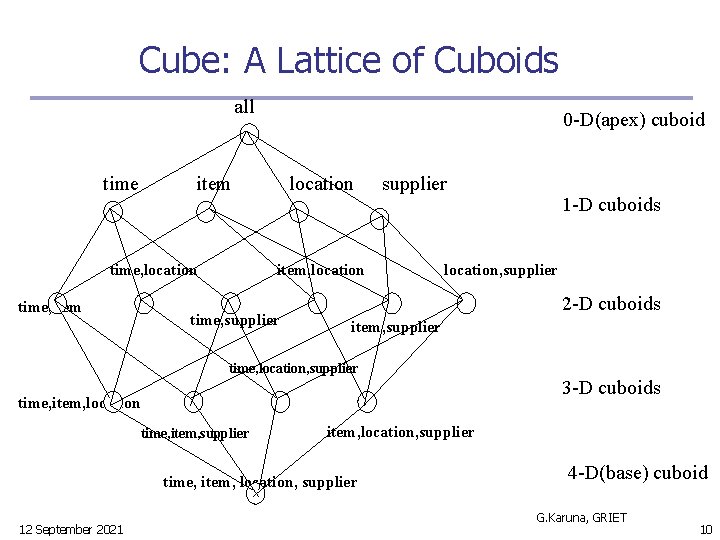

From Tables and Spreadsheets to Data Cubes n In data warehousing literature, a data cube is also referred as a cuboid. n Given set of dimensions we can generate a cubiod for each of possible subsets. The result would form a lattice of cuboids which shows the data at different levels of summarization (or) group by. n The resultant lattice of cuboids is called as n-Dimensional Data cube. n The cuboid that holds lowest level summarization is called a base cuboid. The top most is 0 -D cuboid, which holds the highest-level of summarization, is called the apex cuboid. The lattice of cuboids forms a data cube. 12 September 2021 G. Karuna, GRIET 9

Cube: A Lattice of Cuboids all time item time, location time, item 0 -D(apex) cuboid location supplier item, location time, supplier 1 -D cuboids location, supplier 2 -D cuboids item, supplier time, location, supplier 3 -D cuboids time, item, location time, item, supplier item, location, supplier time, item, location, supplier 12 September 2021 4 -D(base) cuboid G. Karuna, GRIET 10

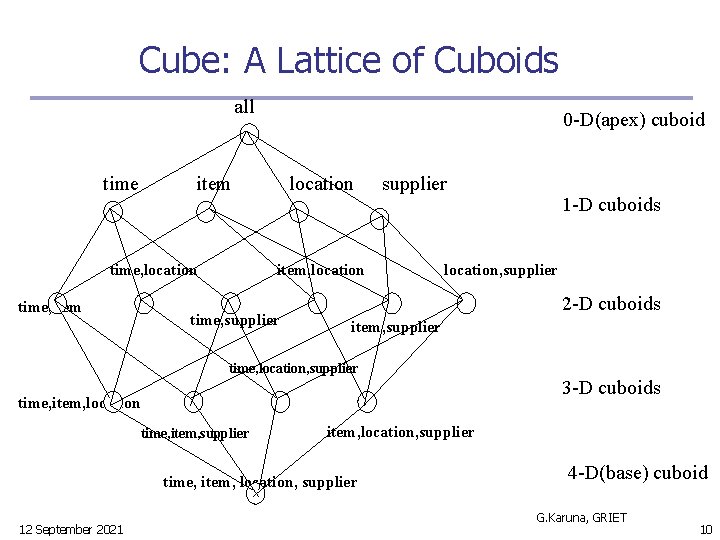

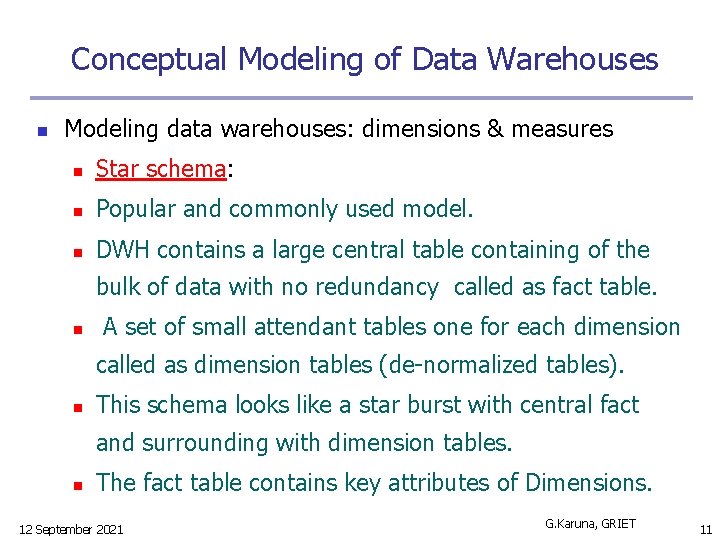

Conceptual Modeling of Data Warehouses n Modeling data warehouses: dimensions & measures n Star schema: n Popular and commonly used model. n DWH contains a large central table containing of the bulk of data with no redundancy called as fact table. n A set of small attendant tables one for each dimension called as dimension tables (de-normalized tables). n This schema looks like a star burst with central fact and surrounding with dimension tables. n The fact table contains key attributes of Dimensions. 12 September 2021 G. Karuna, GRIET 11

Example of Star Schema time item time_key day_of_the_week month quarter year Sales Fact Table time_key item_key branch_key branch_name branch_type location_key units_sold dollars_sold avg_sales item_key item_name brand type supplier_type location_key street city state_or_province country Measures 12 September 2021 G. Karuna, GRIET 12

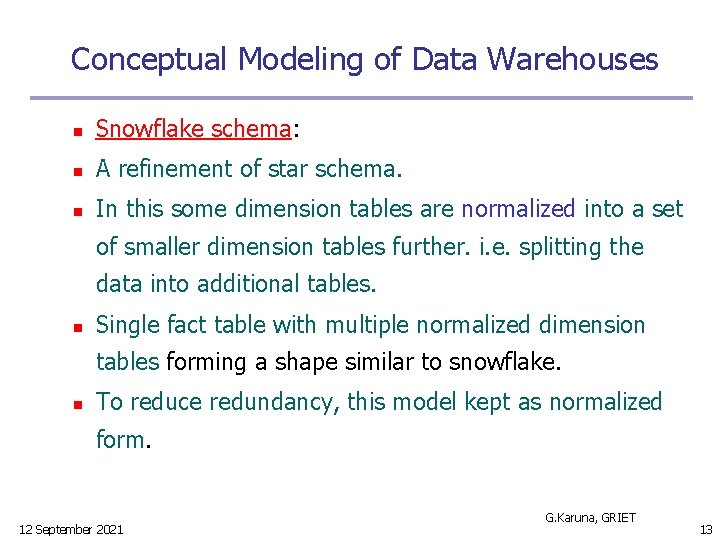

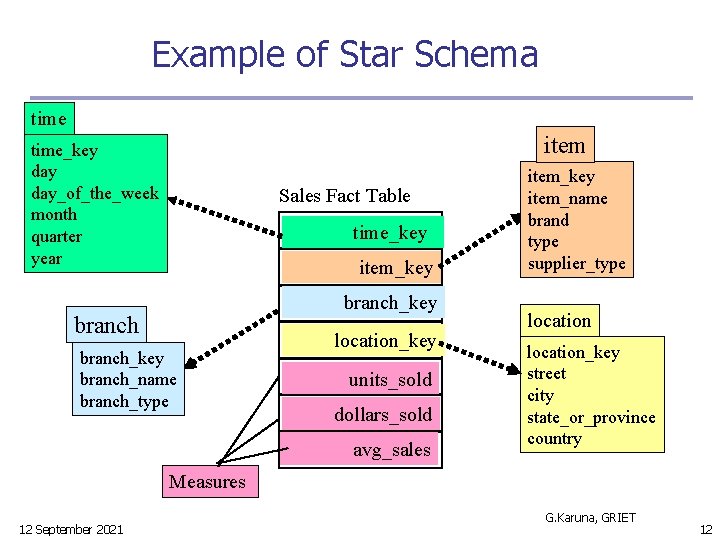

Conceptual Modeling of Data Warehouses n Snowflake schema: n A refinement of star schema. n In this some dimension tables are normalized into a set of smaller dimension tables further. i. e. splitting the data into additional tables. n Single fact table with multiple normalized dimension tables forming a shape similar to snowflake. n To reduce redundancy, this model kept as normalized form. 12 September 2021 G. Karuna, GRIET 13

Example of Snowflake Schema time_key day_of_the_week month quarter year item Sales Fact Table time_key item_key branch location_key branch_name branch_type units_sold dollars_sold avg_sales Measures 12 September 2021 item_key item_name brand type supplier_key supplier_type location_key street city_key city state_or_province country G. Karuna, GRIET 14

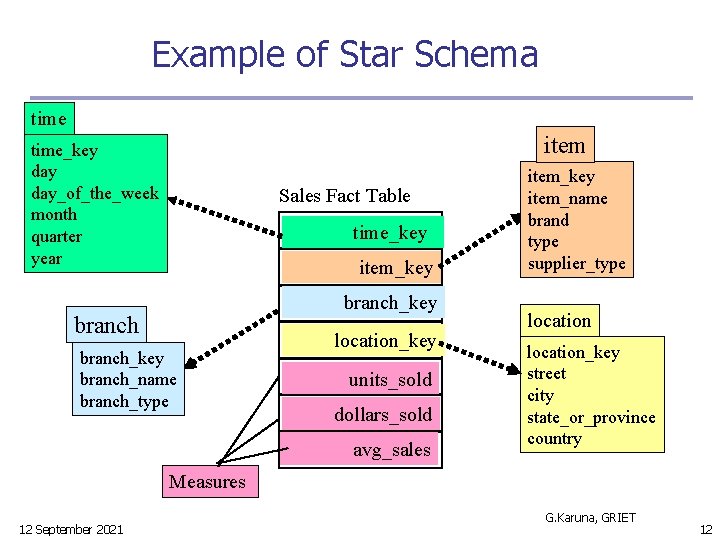

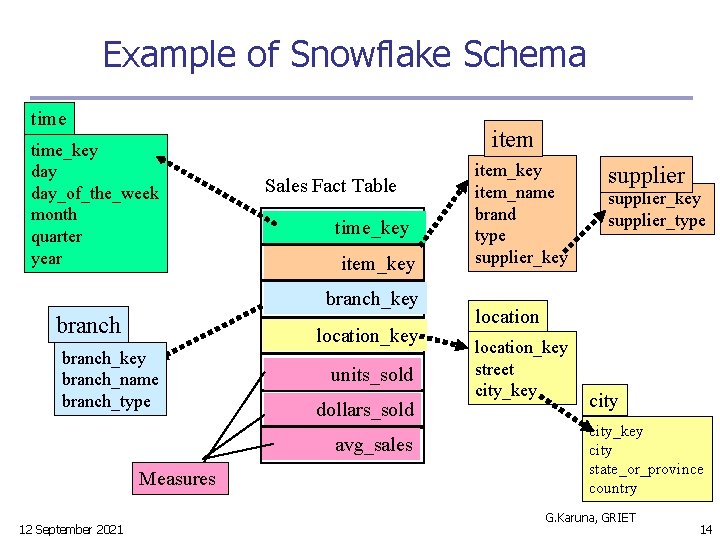

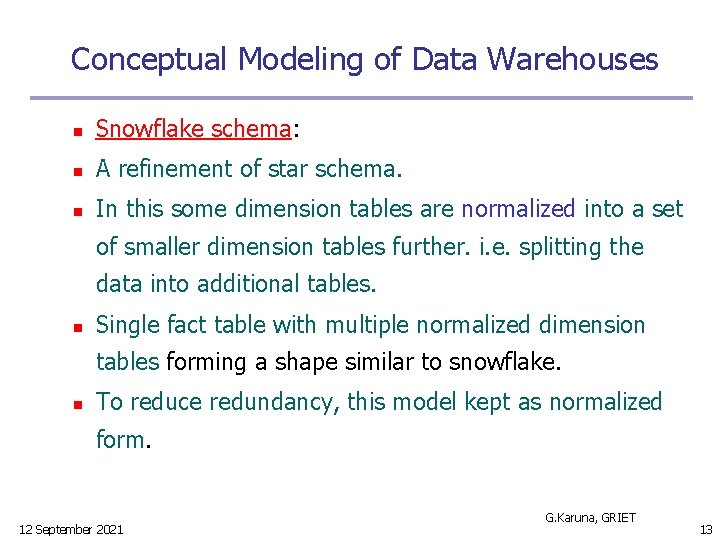

Conceptual Modeling of Data Warehouses n n Fact constellation schema: Sophisticated applications may require multiple fact tables to share multiple dimension tables. n Multiple fact tables with multiple dimension tables, viewed as a collection of stars, therefore called galaxy schema or fact constellation. 12 September 2021 G. Karuna, GRIET 15

Example of Fact Constellation time_key day_of_the_week month quarter year item Sales Fact Table time_key item_key item_name brand type supplier_type location_key branch_name branch_type units_sold dollars_sold avg_sales item_key shipper_key location to_location_key street city province_or_state country dollars_cost Measures 12 September 2021 time_key from_location branch_key branch Shipping Fact Table G. Karuna, GRIET units_shipped shipper_key shipper_name location_key shipper_type 16

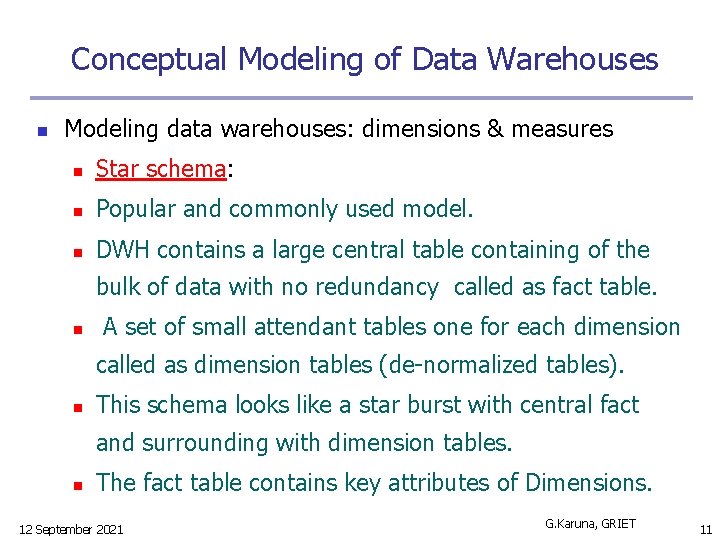

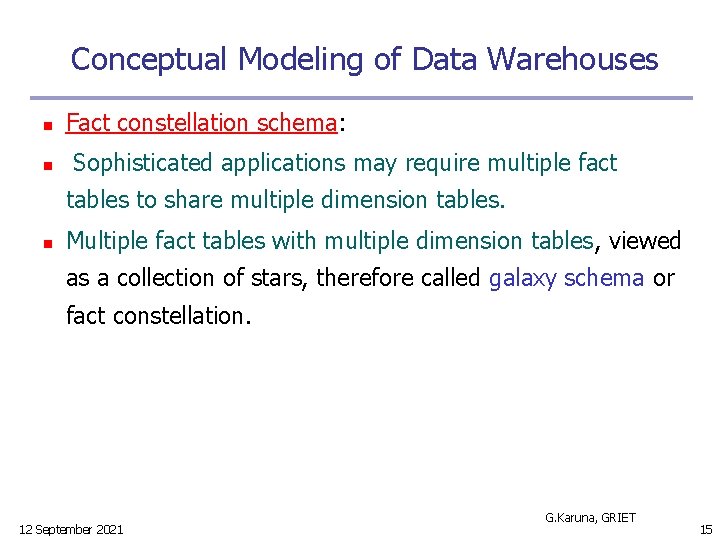

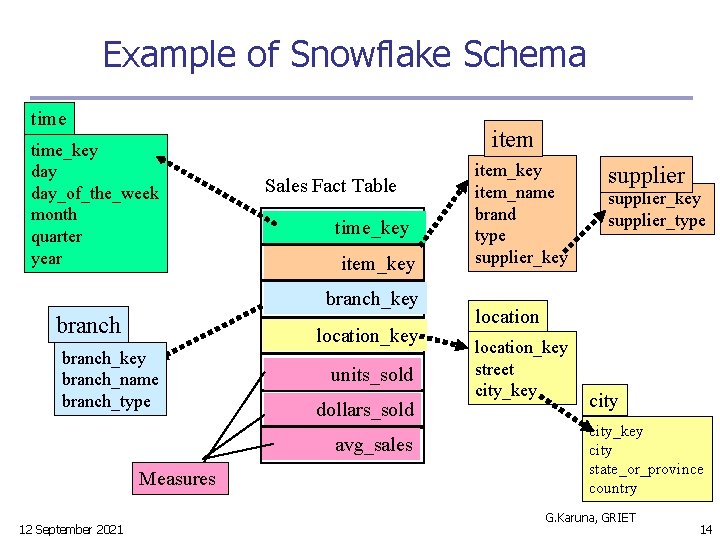

Cube Definition Syntax in DMQL used to specify tasks for DWH like SQL for DBMS. n DWH can be defined using 2 language primitives. Cube Definition (Fact Table) define cube <cube_name> [<dimension_list>]: <measure_list> Dimension Definition (Dimension Table) define dimension <dimension_name> as (<attribute_or_subdimension_list>) 12 September 2021 G. Karuna, GRIET 17

![Defining Star Schema in DMQL define cube salesstar time item branch location dollarssold Defining Star Schema in DMQL define cube sales_star [time, item, branch, location]: dollars_sold =](https://slidetodoc.com/presentation_image_h2/9caf1f754edbd0fdba7c5a85f974d2c9/image-18.jpg)

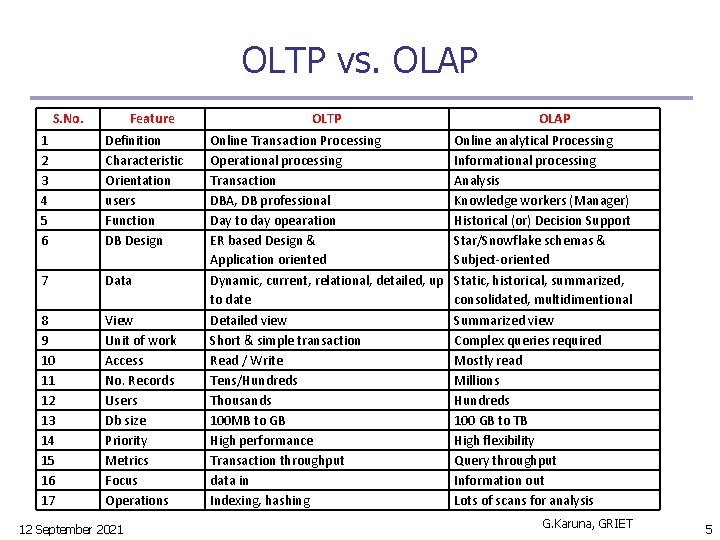

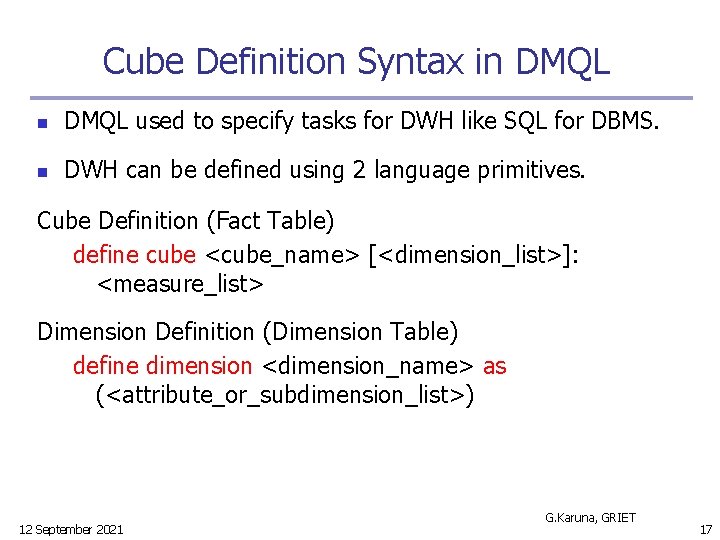

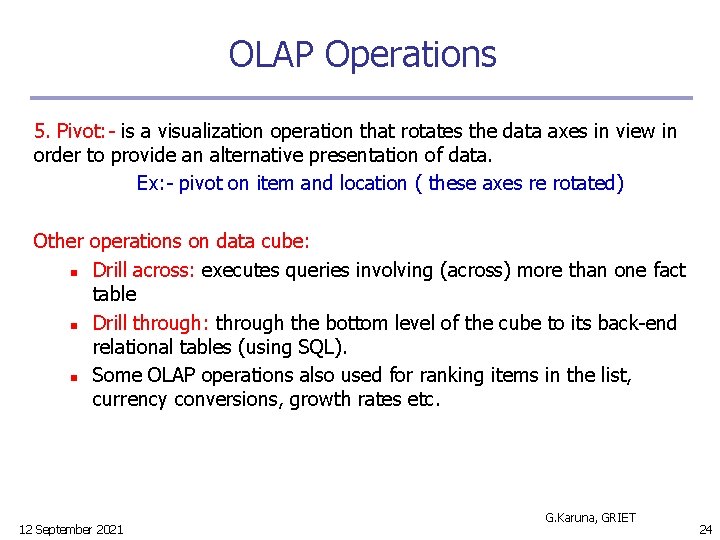

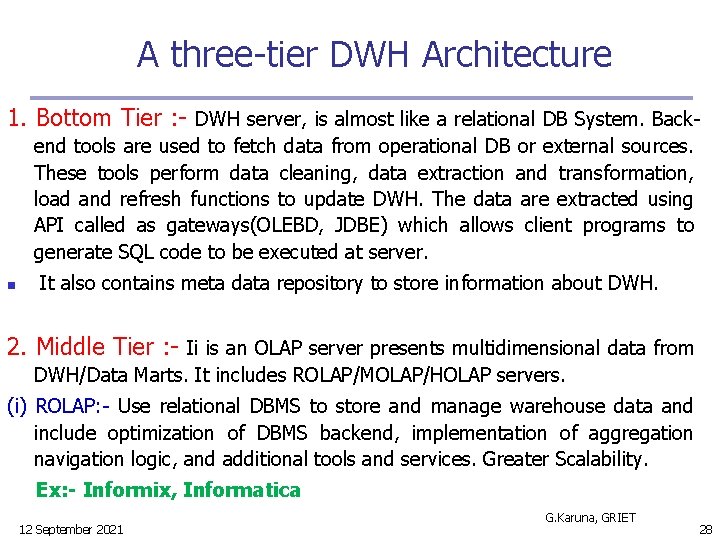

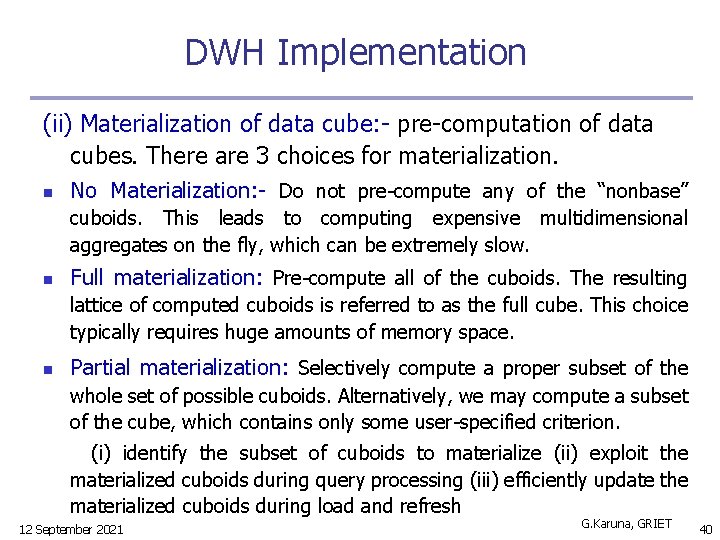

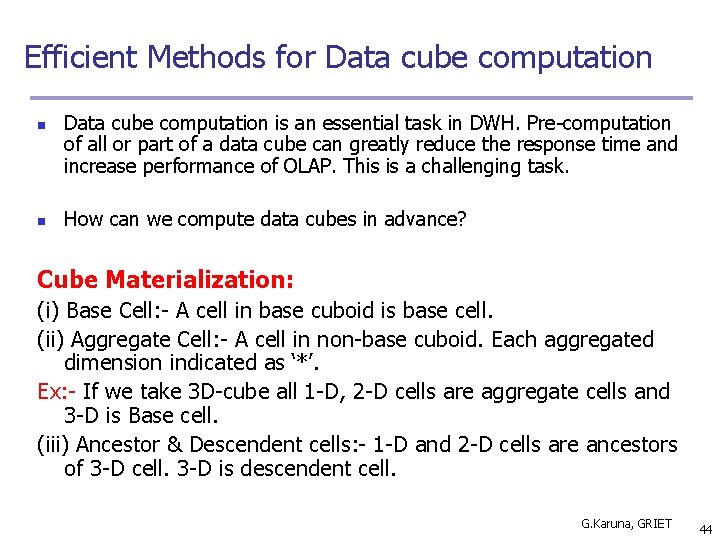

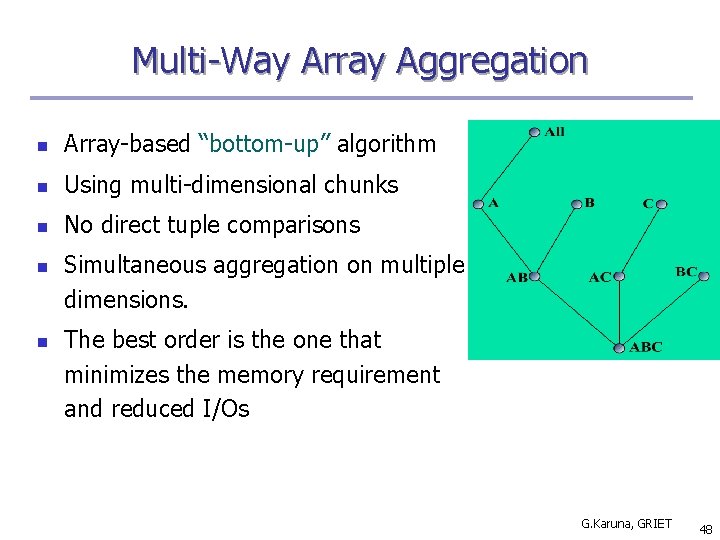

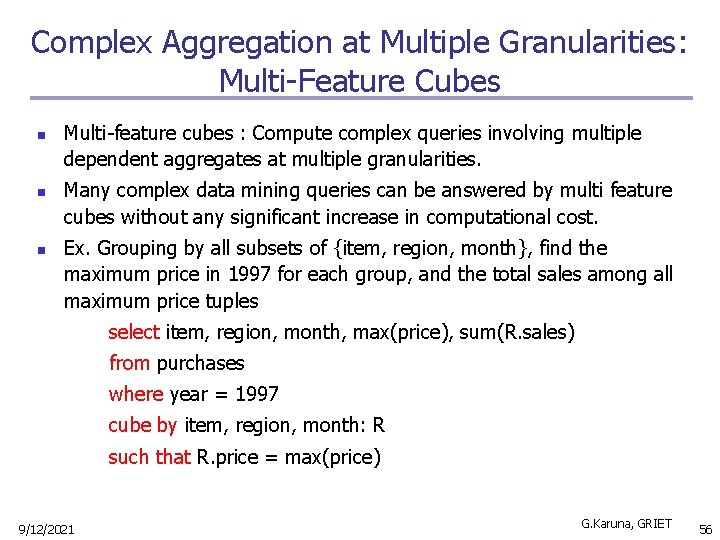

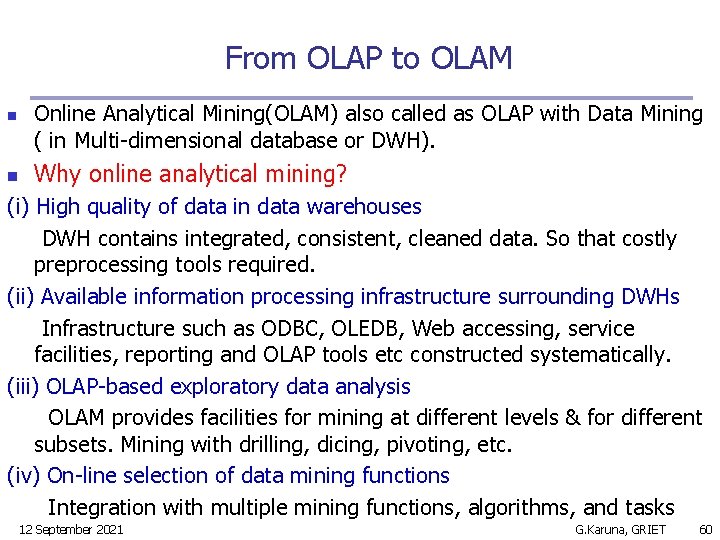

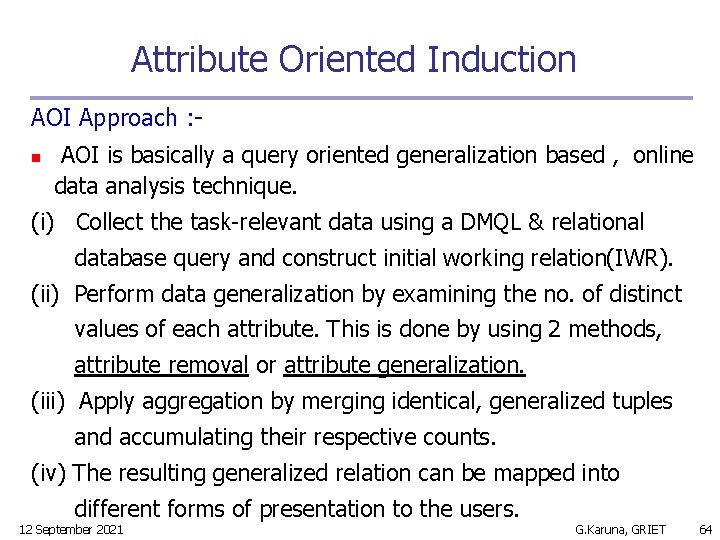

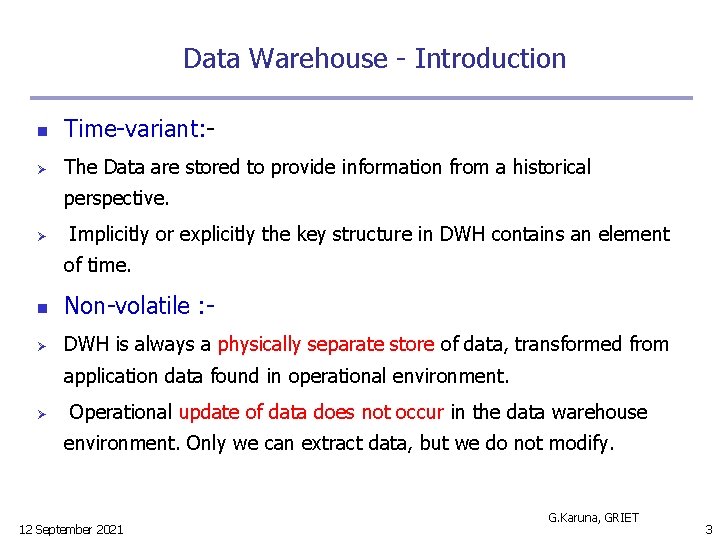

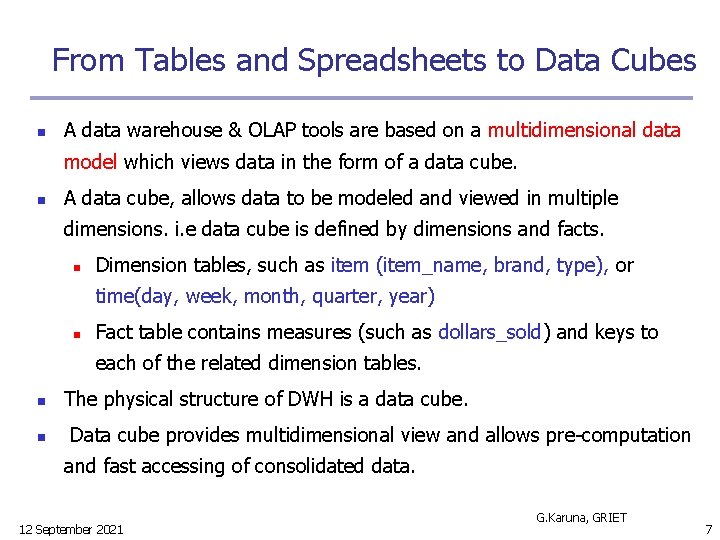

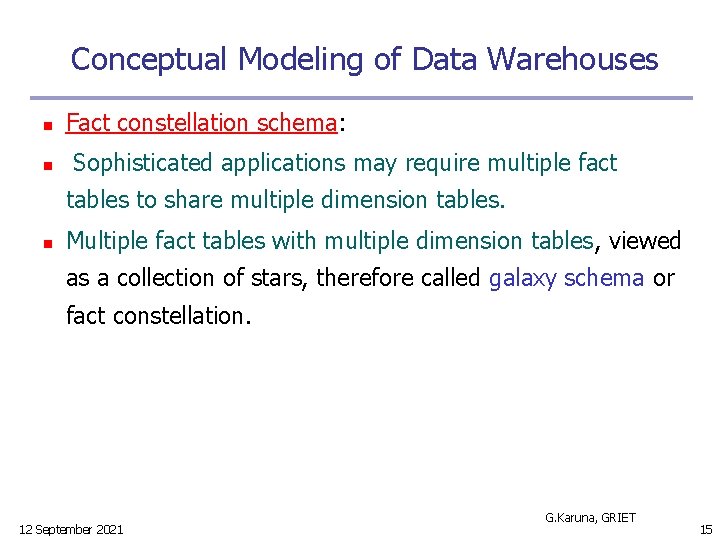

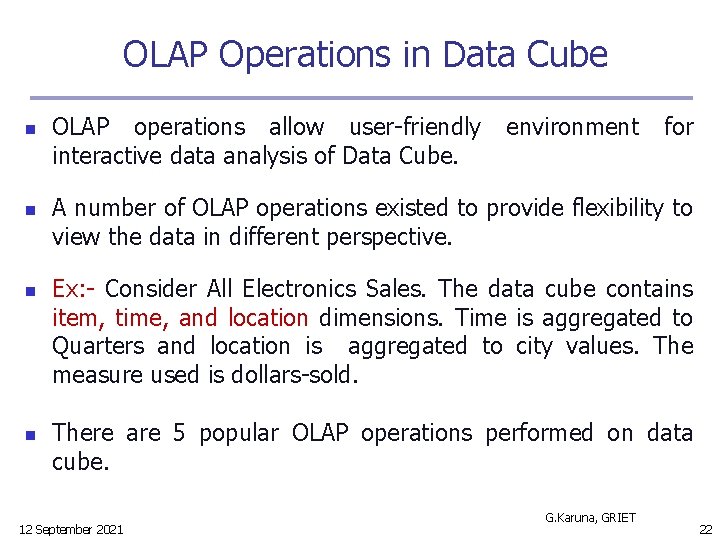

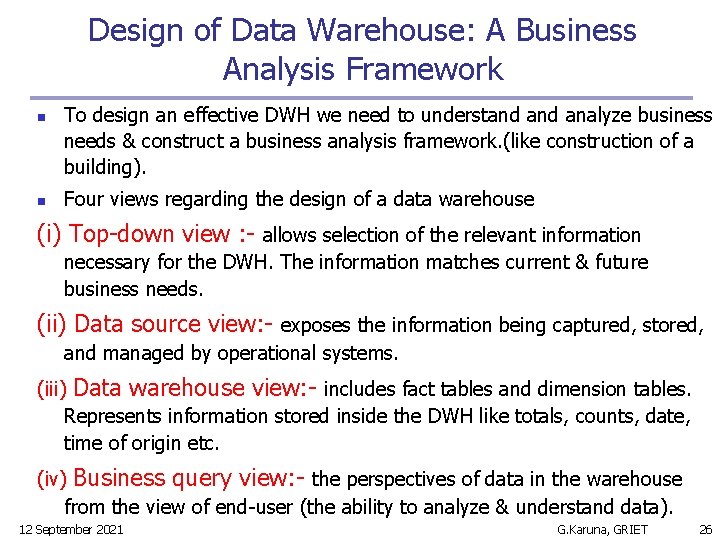

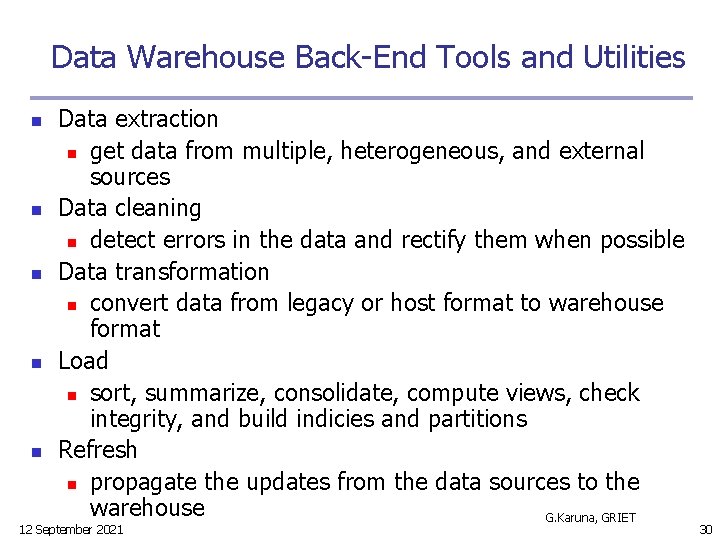

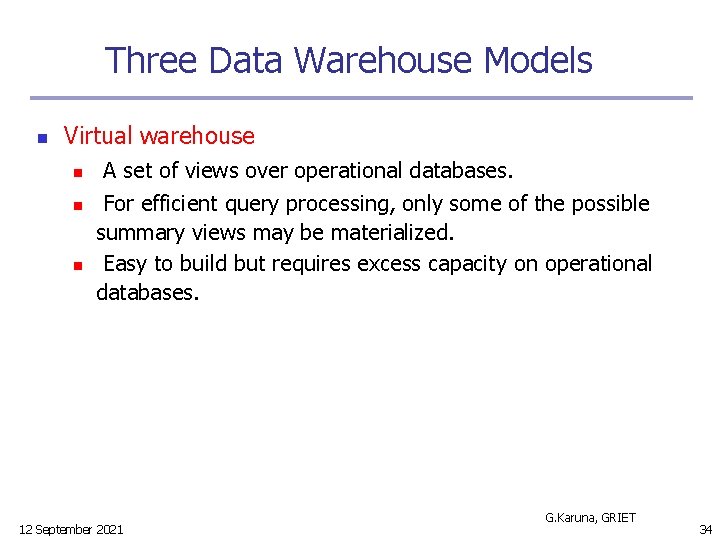

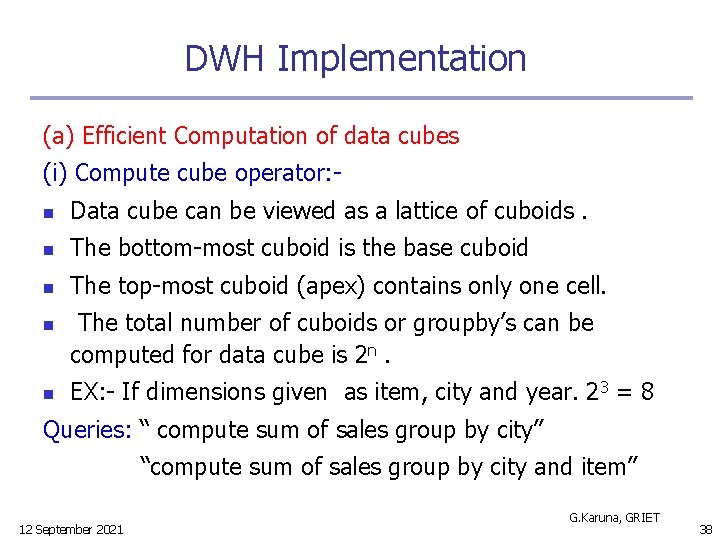

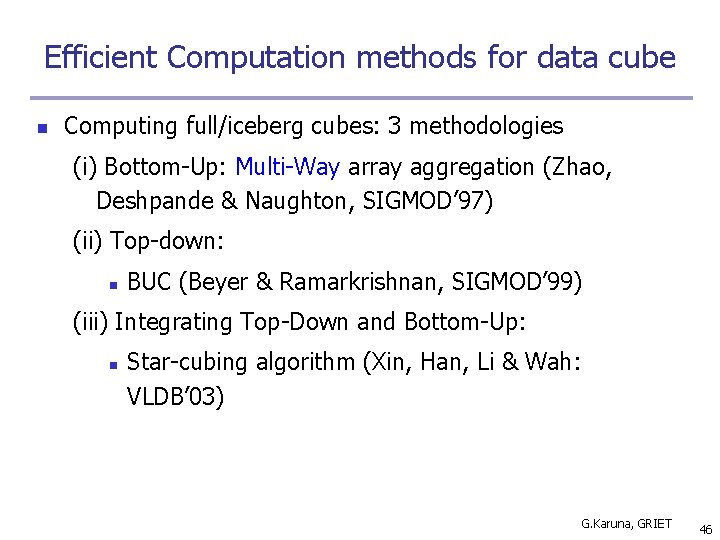

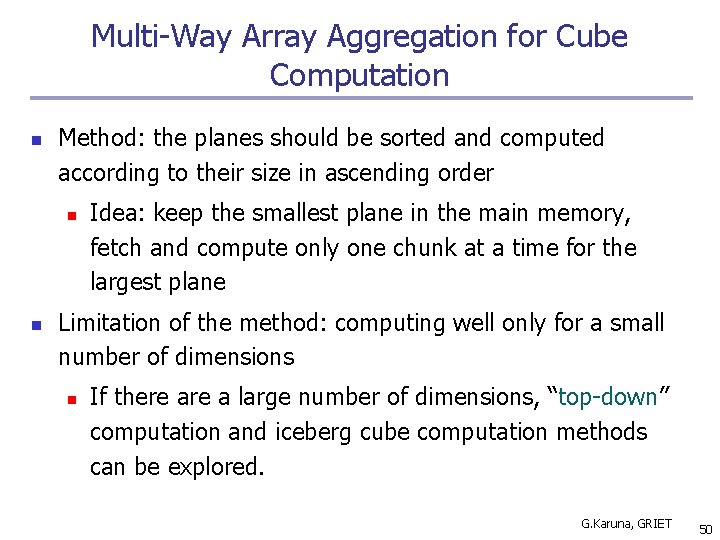

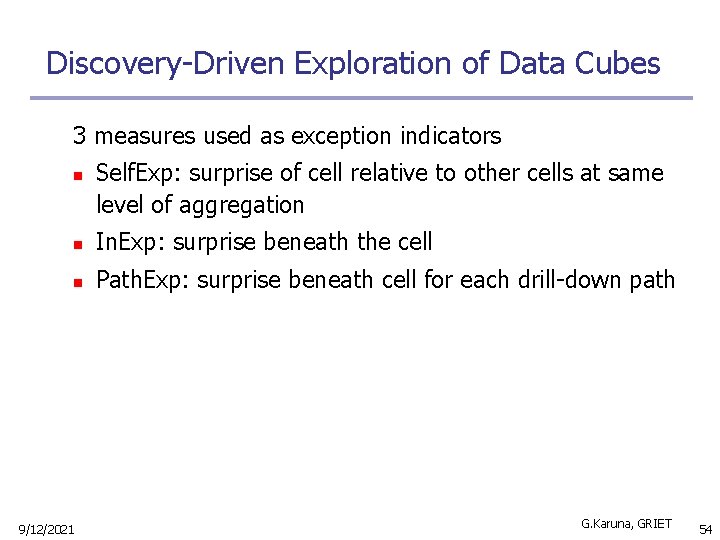

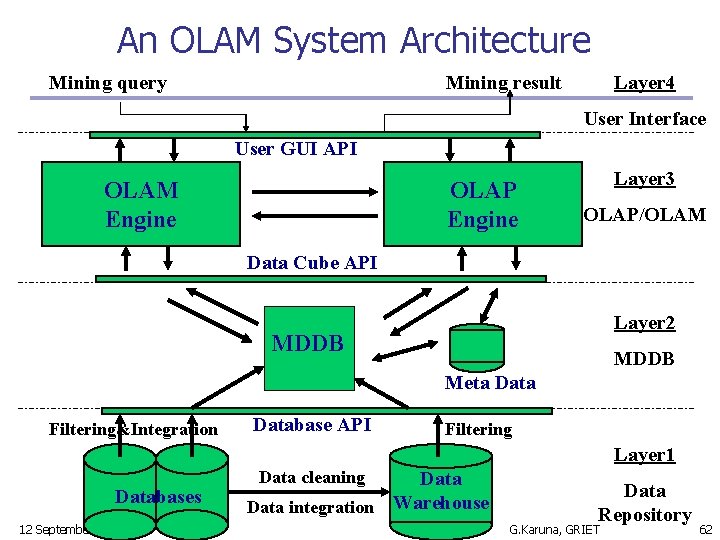

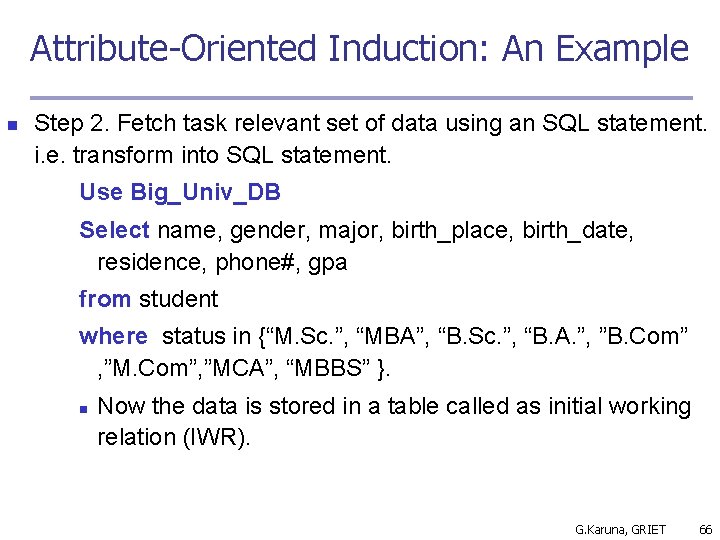

Defining Star Schema in DMQL define cube sales_star [time, item, branch, location]: dollars_sold = sum(sales_in_dollars), avg_sales = avg(sales_in_dollars), units_sold = count(*) define dimension time as (time_key, day_of_week, month, quarter, year) define dimension item as (item_key, item_name, brand, type, supplier_type) define dimension branch as (branch_key, branch_name, branch_type) define dimension location as (location_key, street, city, province_or_state, country) 12 September 2021 G. Karuna, GRIET 18

![Defining Snowflake Schema in DMQL define cube salessnowflake time item branch location dollarssold Defining Snowflake Schema in DMQL define cube sales_snowflake [time, item, branch, location]: dollars_sold =](https://slidetodoc.com/presentation_image_h2/9caf1f754edbd0fdba7c5a85f974d2c9/image-19.jpg)

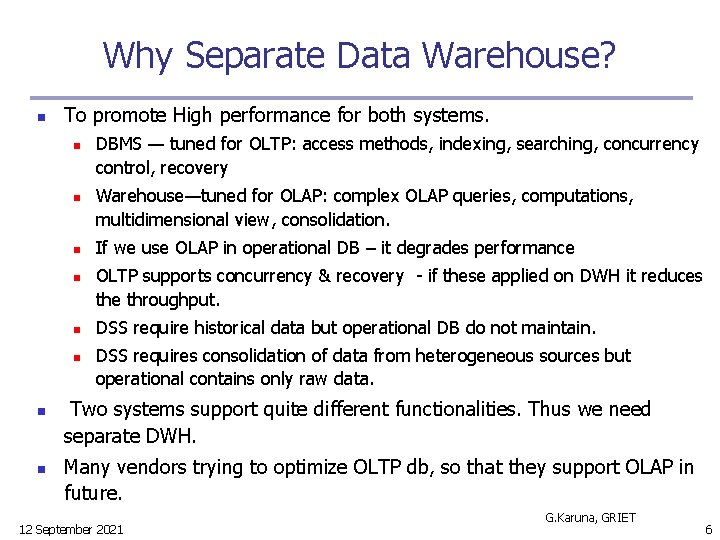

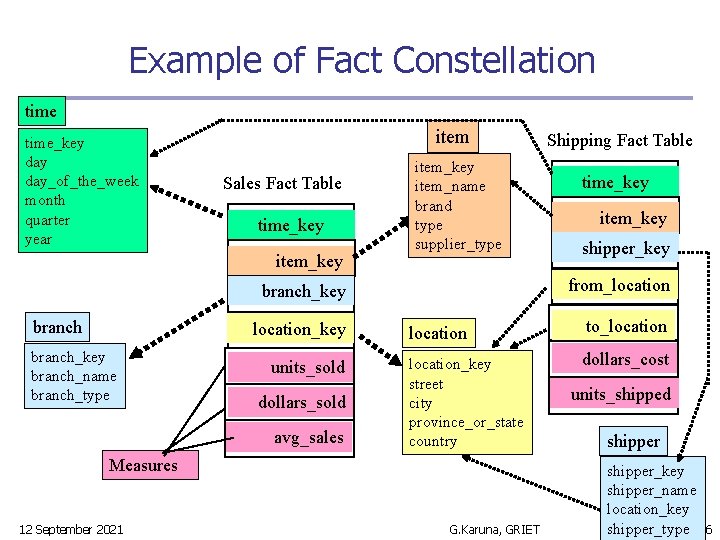

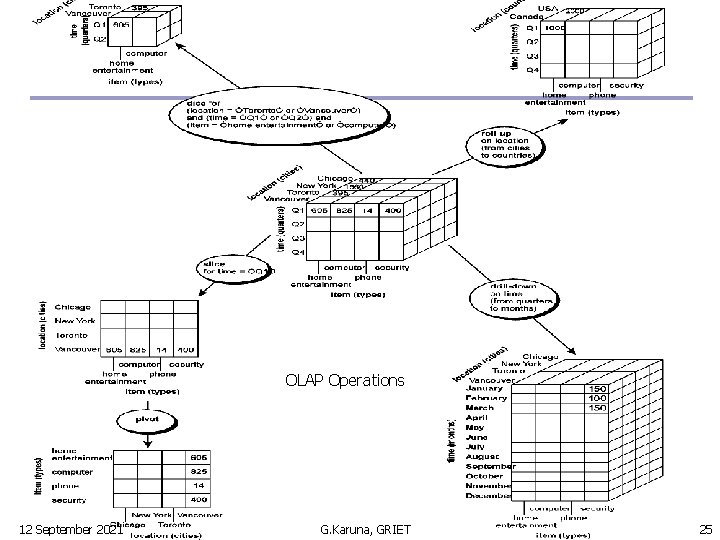

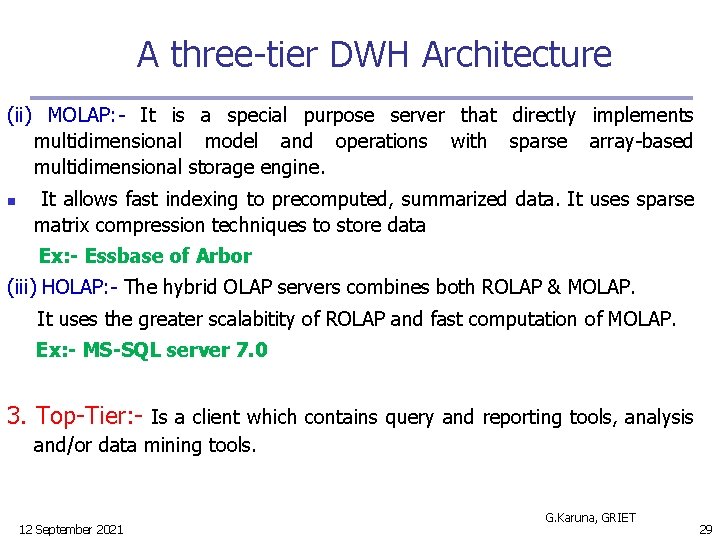

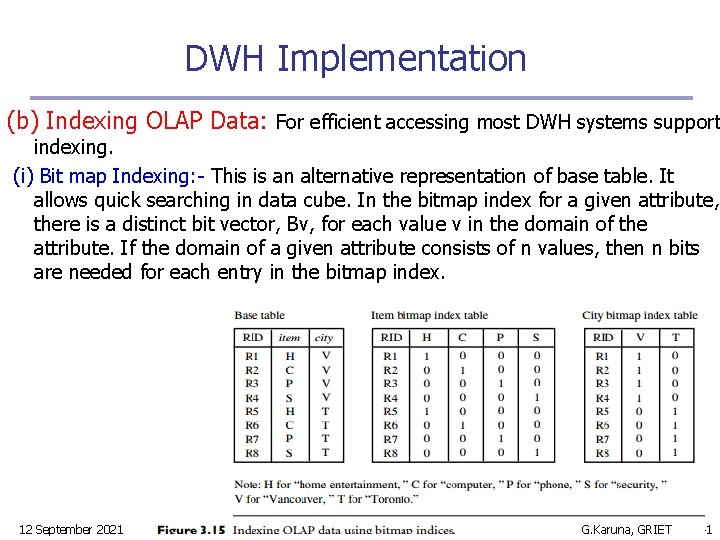

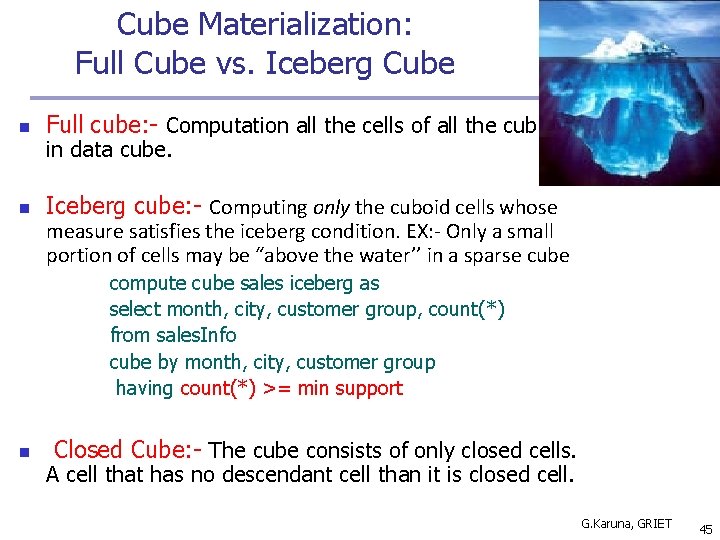

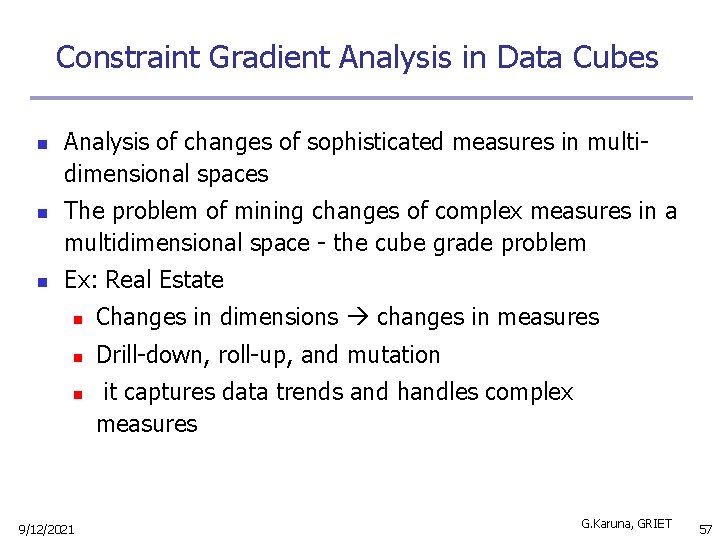

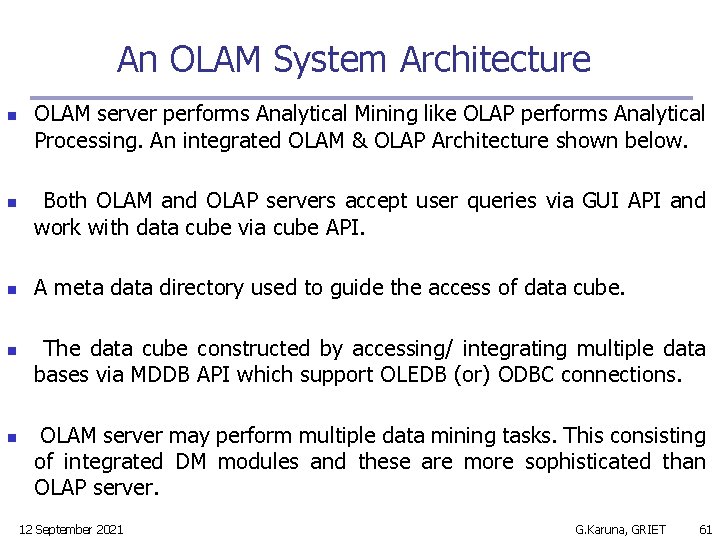

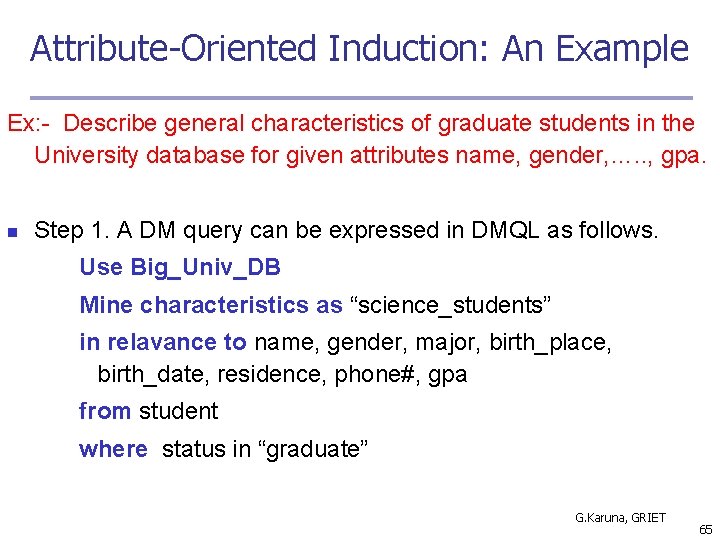

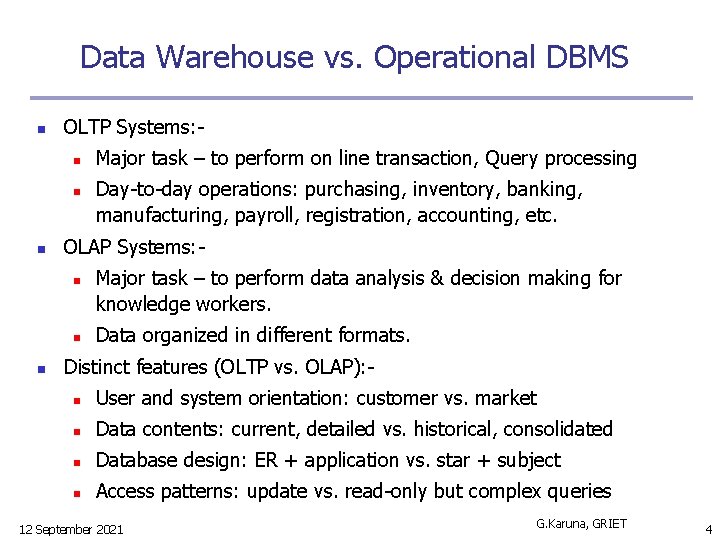

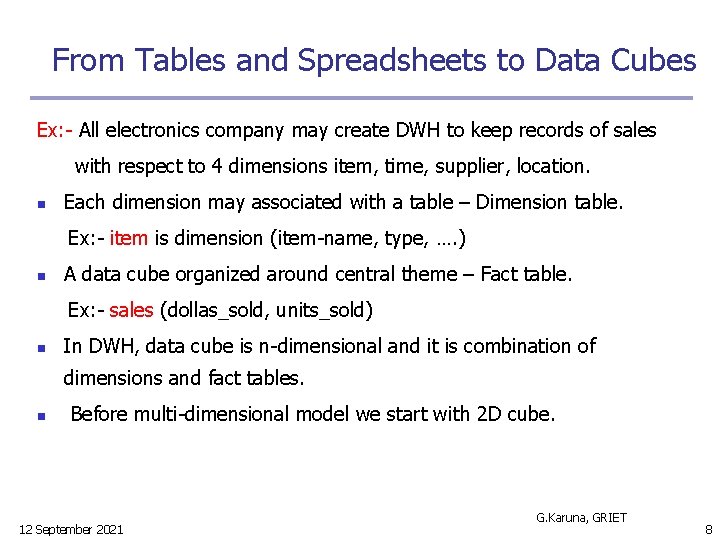

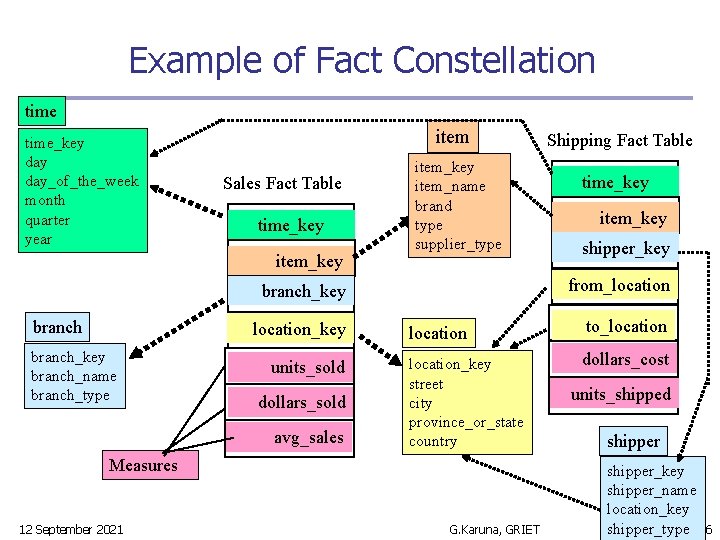

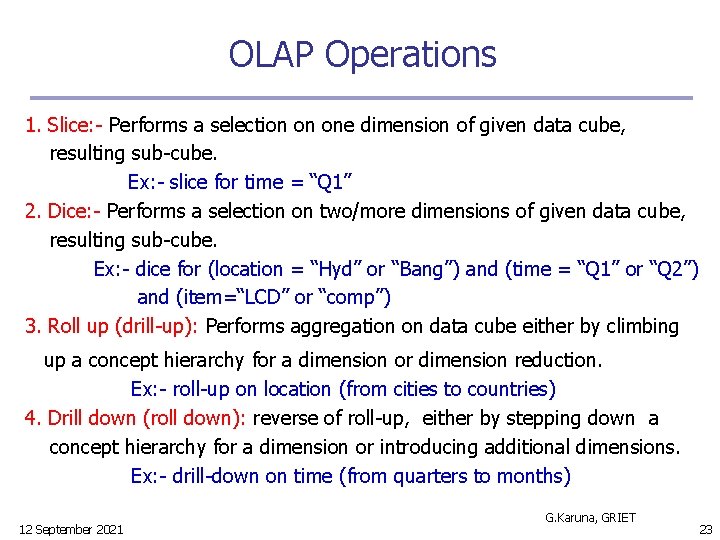

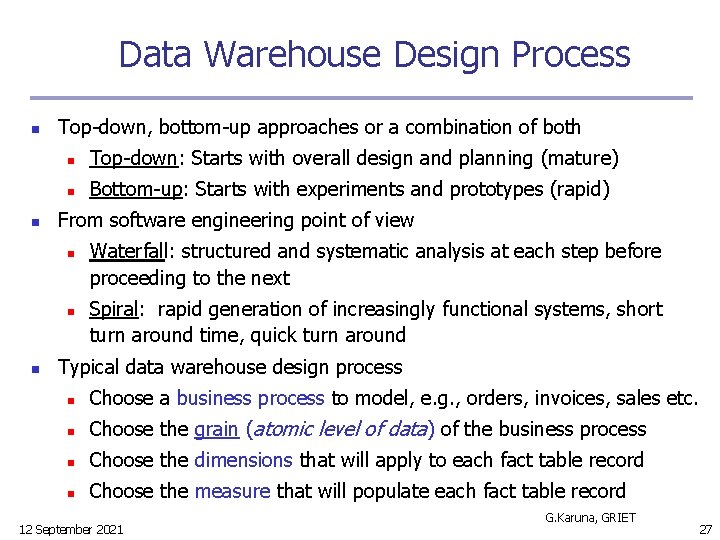

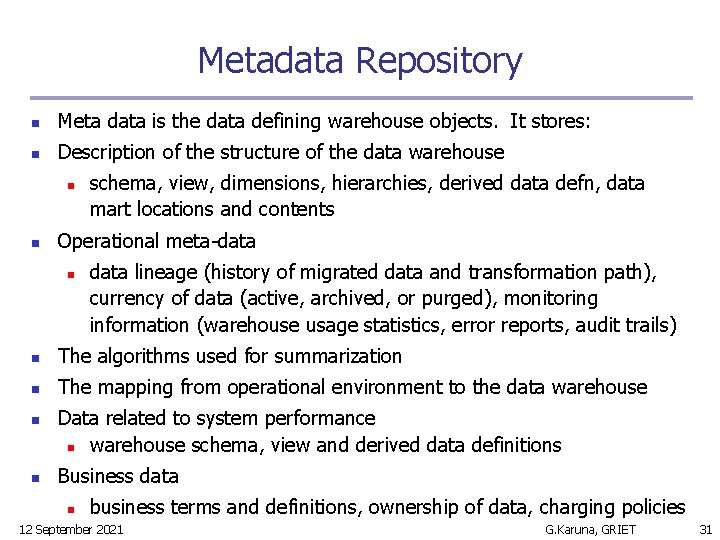

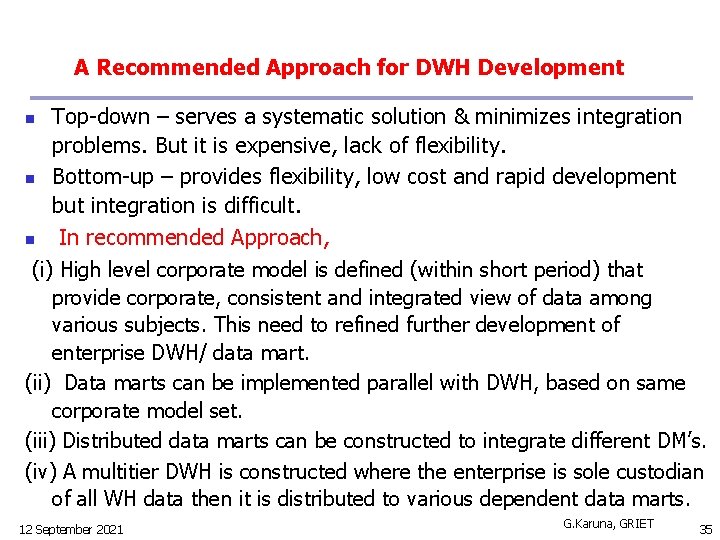

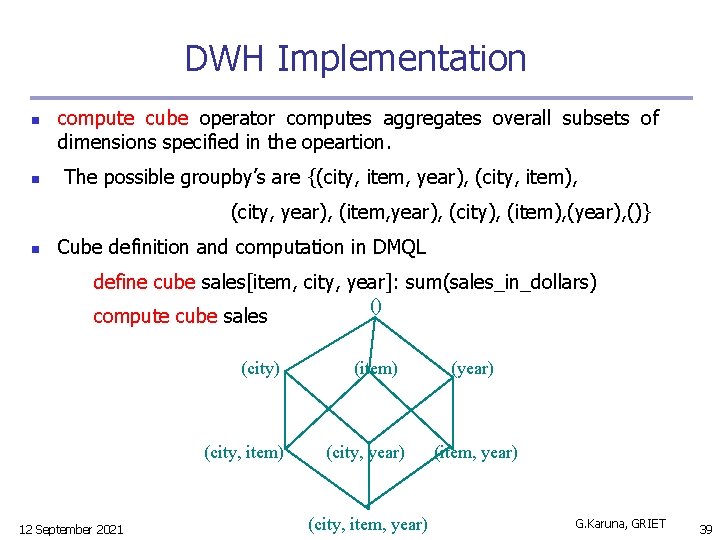

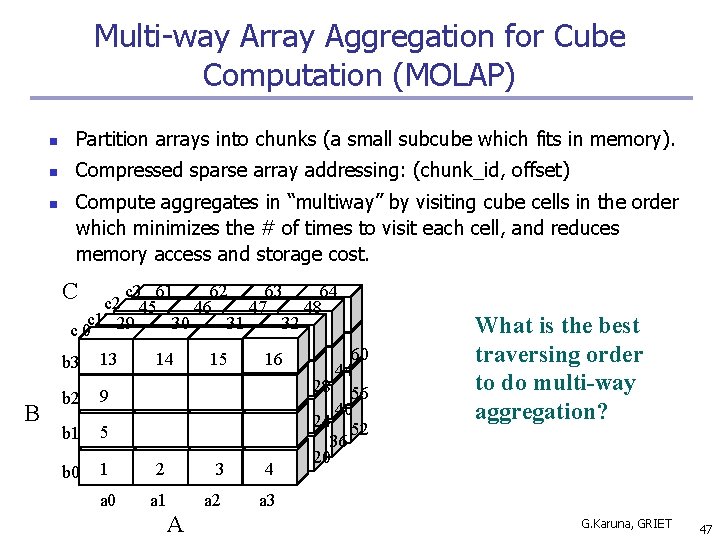

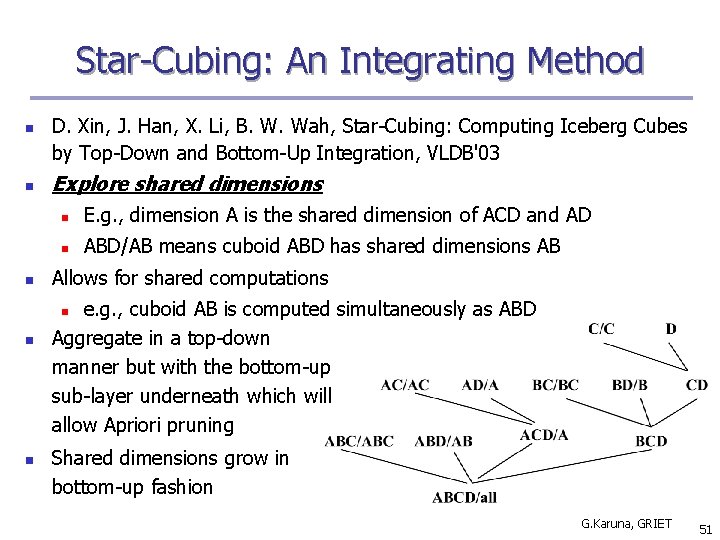

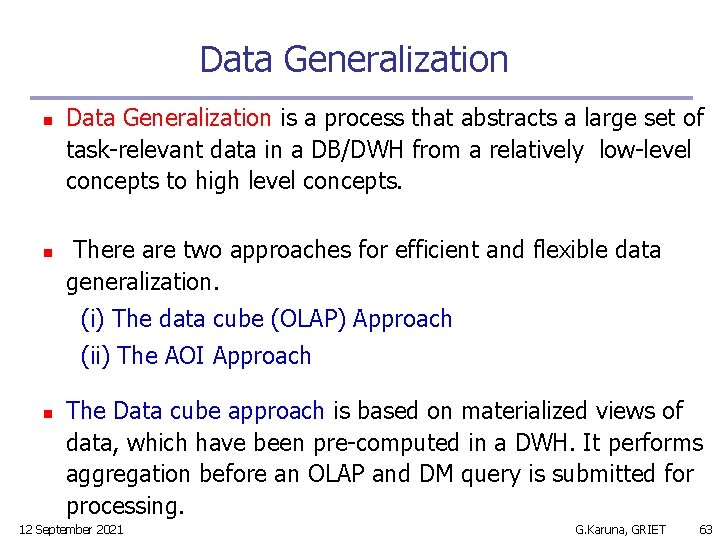

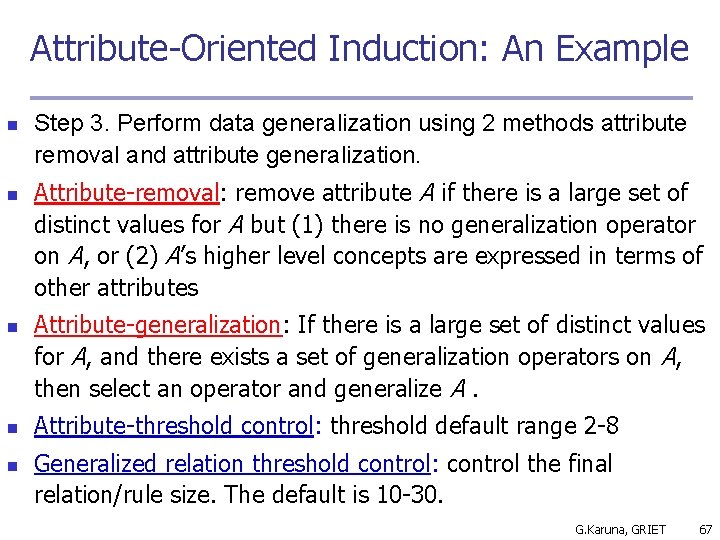

Defining Snowflake Schema in DMQL define cube sales_snowflake [time, item, branch, location]: dollars_sold = sum(sales_in_dollars), avg_sales = avg(sales_in_dollars), units_sold = count(*) define dimension time as (time_key, day_of_week, month, quarter, year) define dimension item as (item_key, item_name, brand, type, supplier(supplier_key, supplier_type)) define dimension branch as (branch_key, branch_name, branch_type) define dimension location as (location_key, street, city(city_key, province_or_state, country)) 12 September 2021 G. Karuna, GRIET 19

![Defining Fact Constellation in DMQL define cube sales time item branch location dollarssold Defining Fact Constellation in DMQL define cube sales [time, item, branch, location]: dollars_sold =](https://slidetodoc.com/presentation_image_h2/9caf1f754edbd0fdba7c5a85f974d2c9/image-20.jpg)

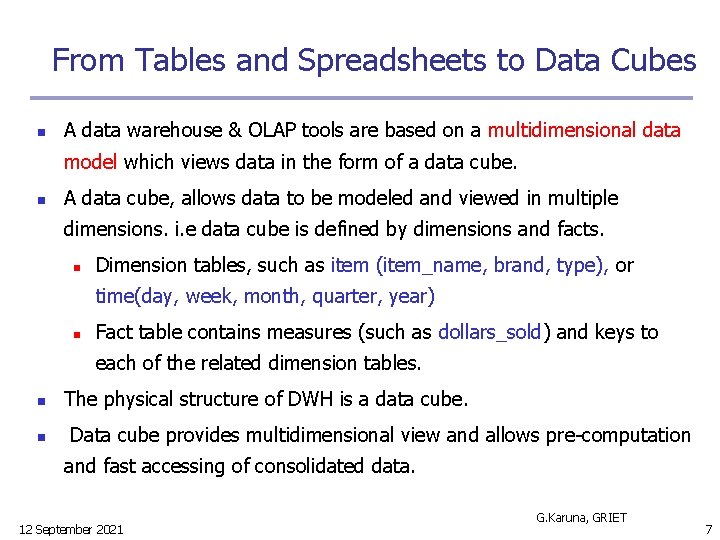

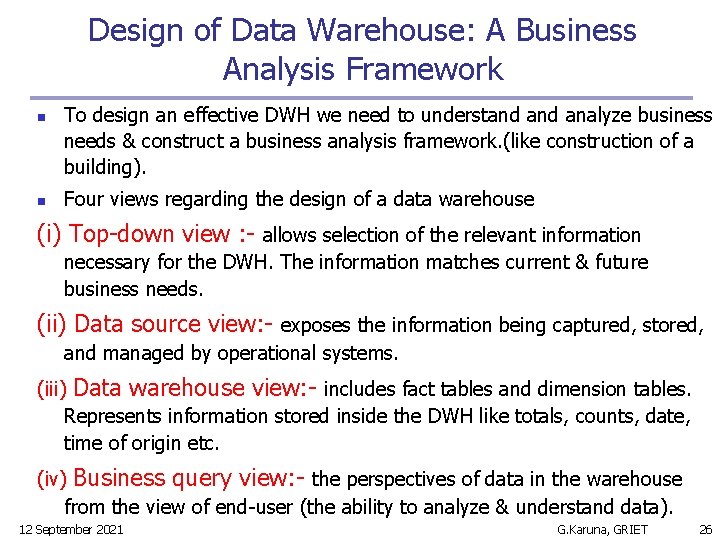

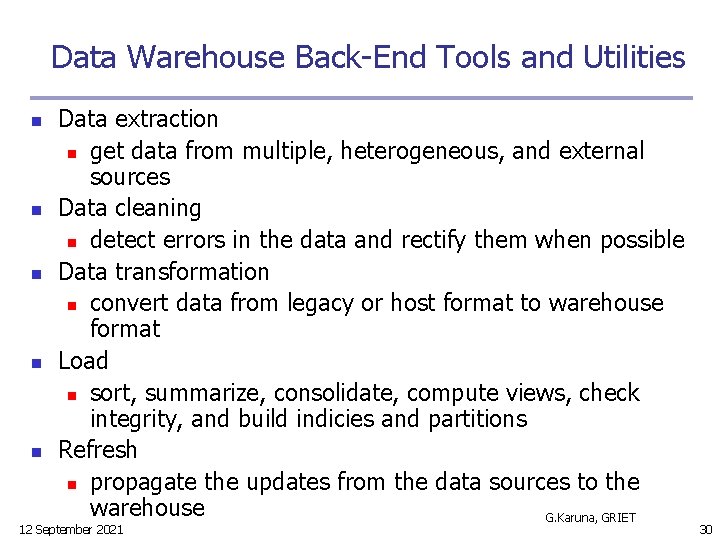

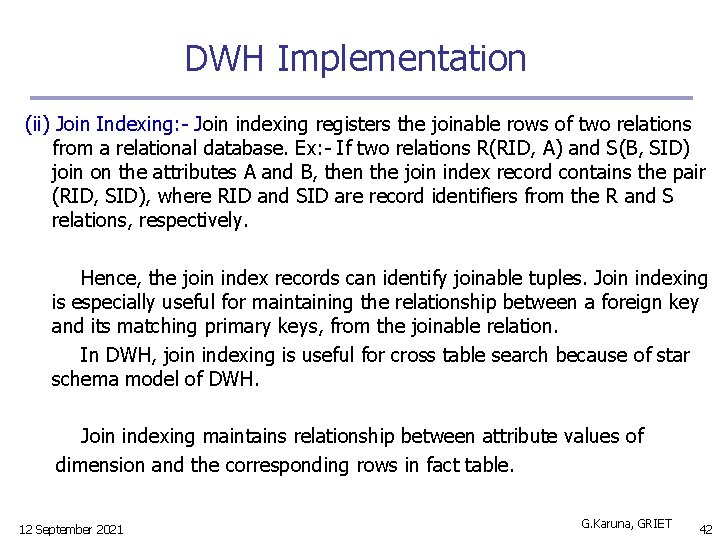

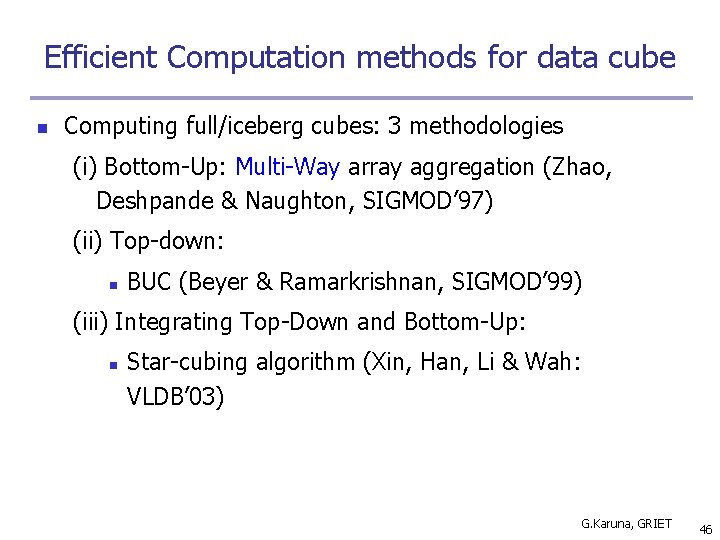

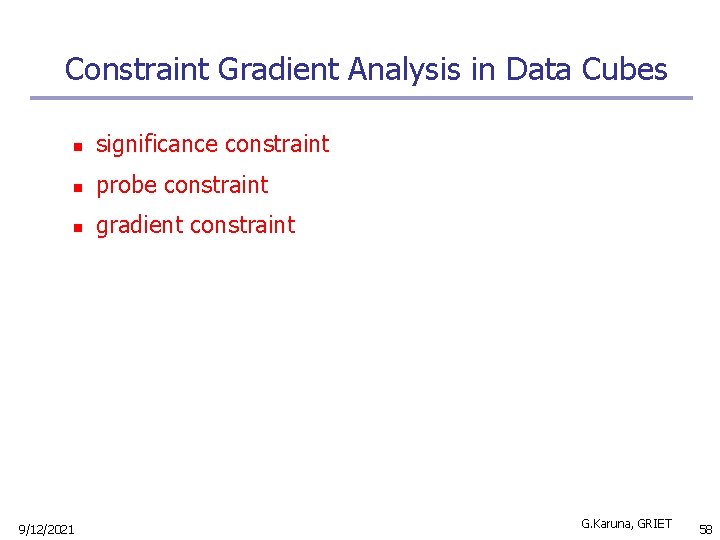

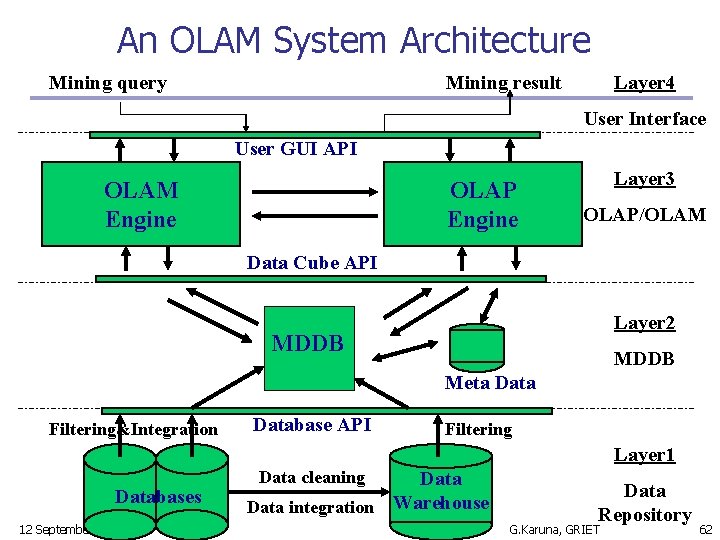

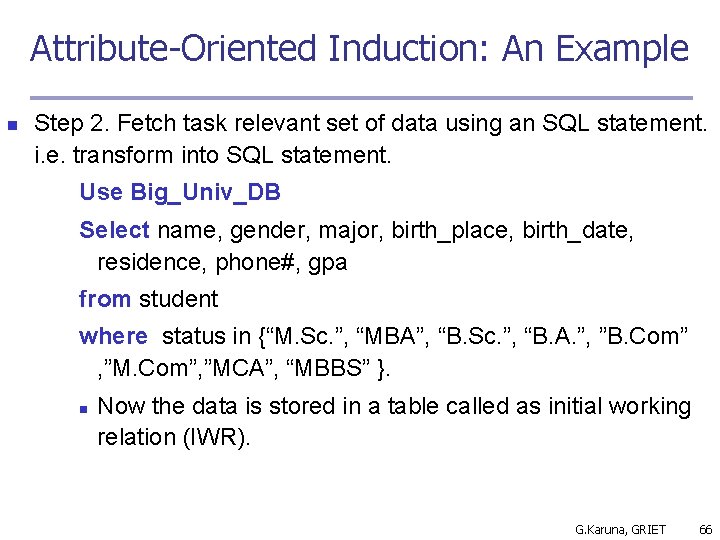

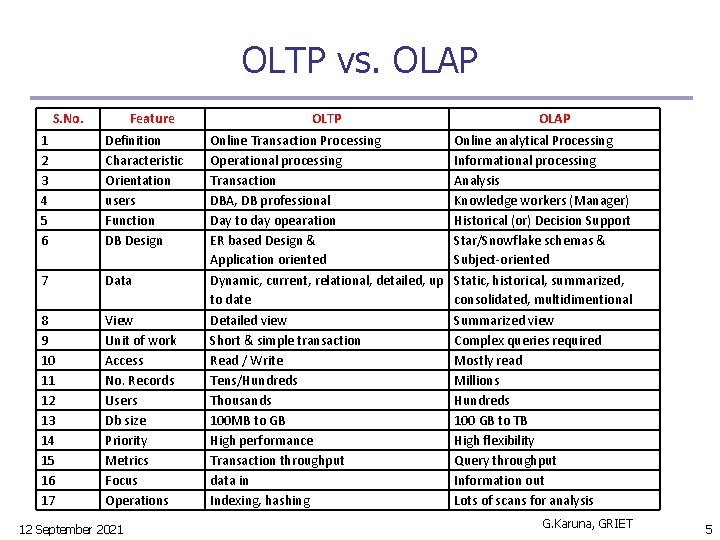

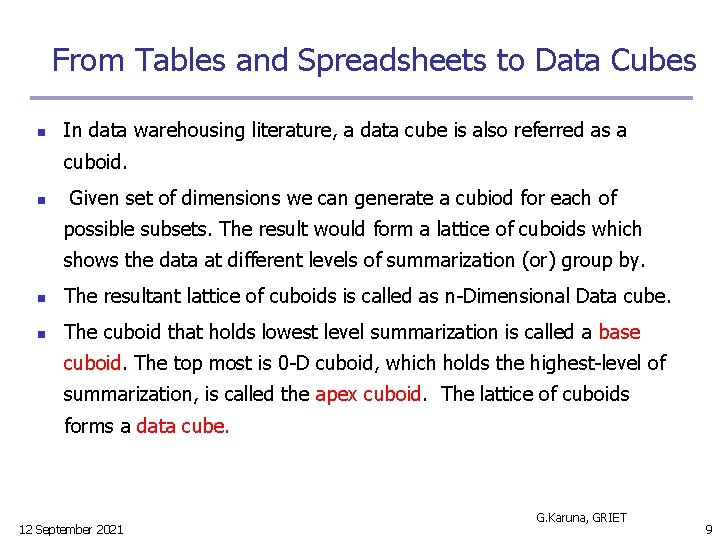

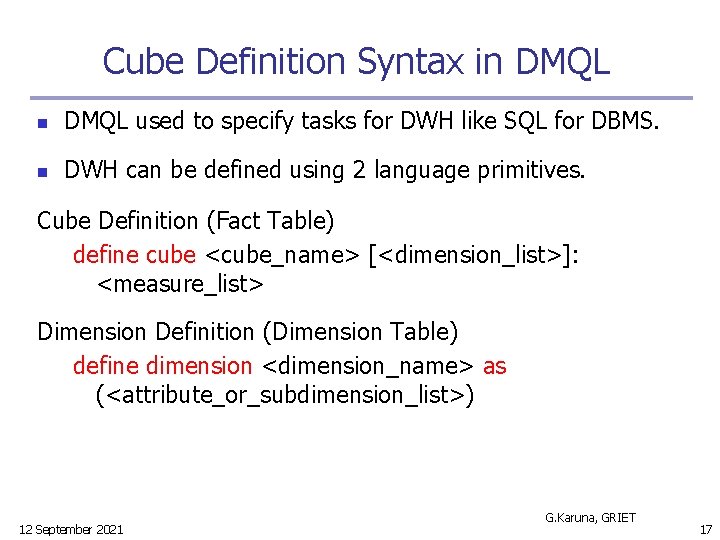

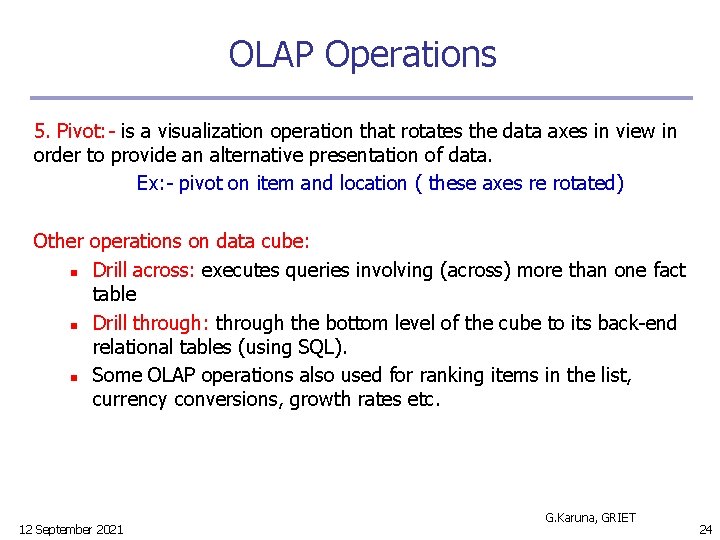

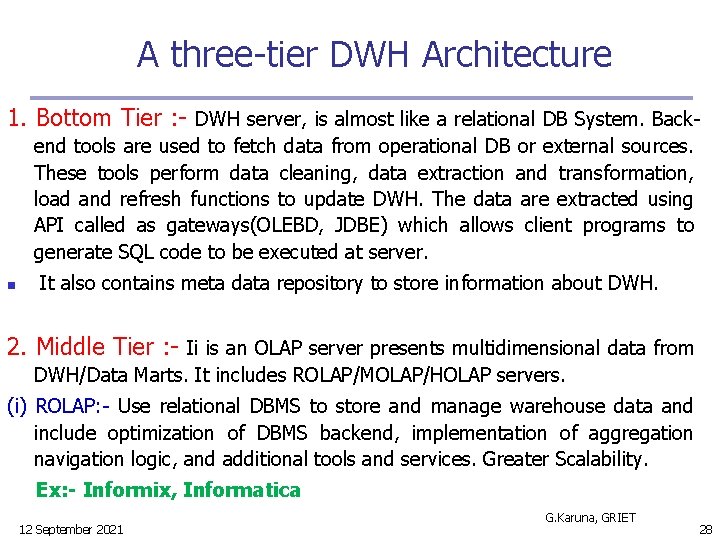

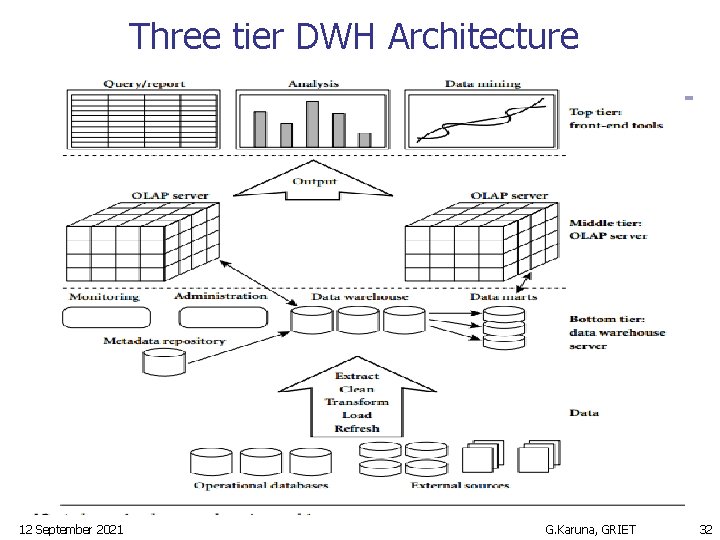

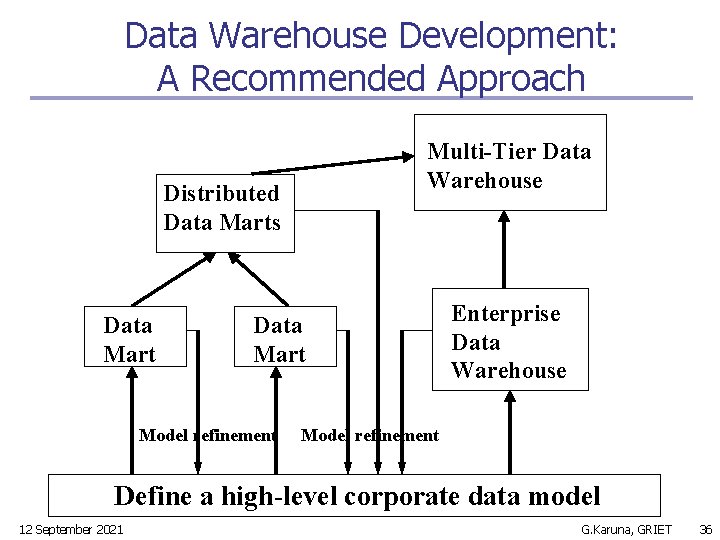

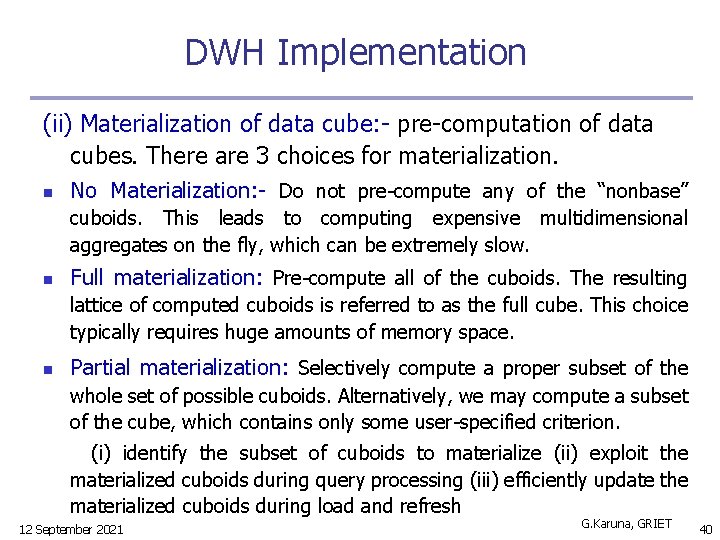

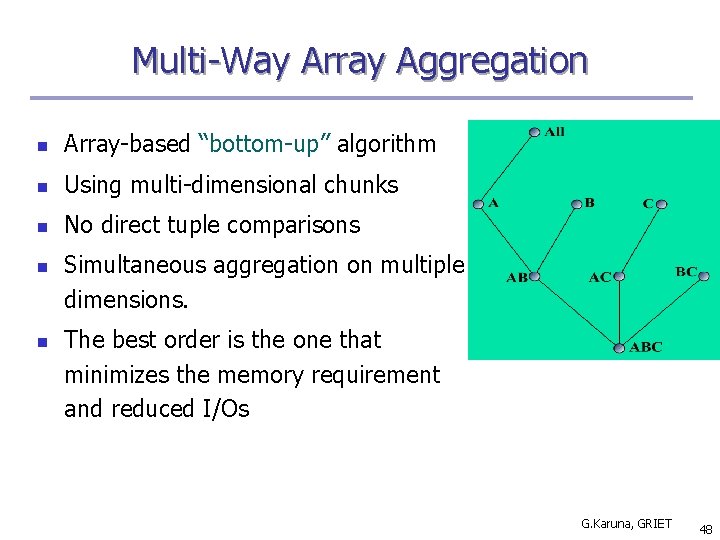

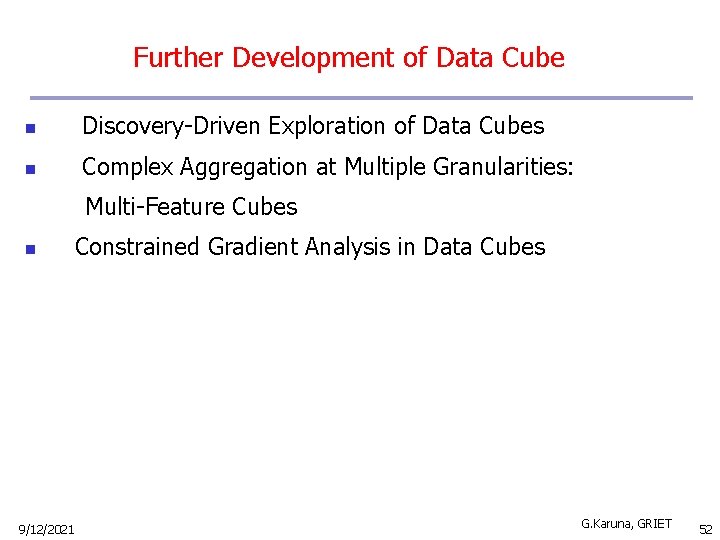

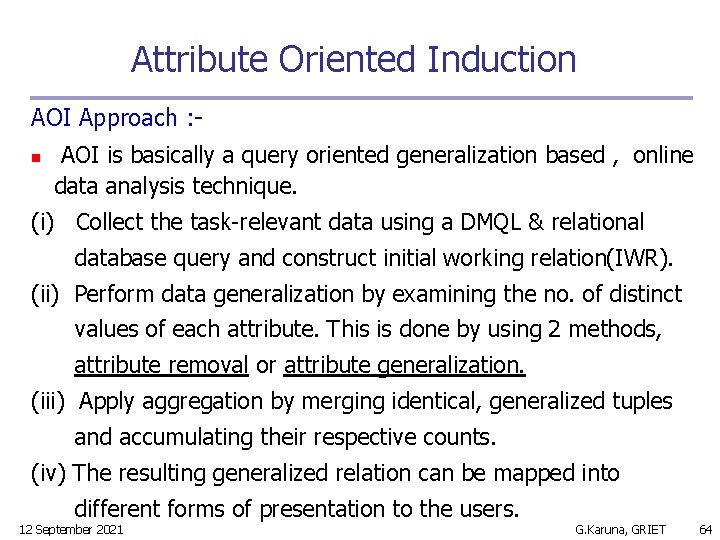

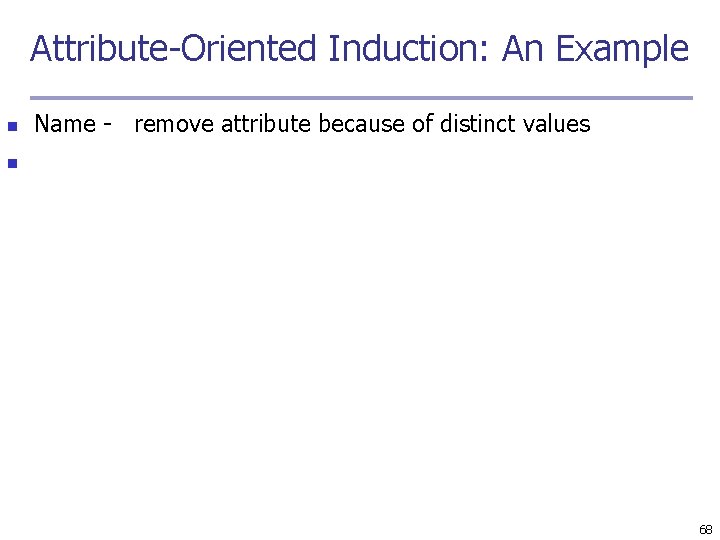

Defining Fact Constellation in DMQL define cube sales [time, item, branch, location]: dollars_sold = sum(sales_in_dollars), avg_sales = avg(sales_in_dollars), units_sold = count(*) define dimension time as (time_key, day_of_week, month, quarter, year) define dimension item as (item_key, item_name, brand, type, supplier_type) define dimension branch as (branch_key, branch_name, branch_type) define dimension location as (location_key, street, city, province_or_state, country) define cube shipping [time, item, shipper, from_location, to_location]: dollar_cost = sum(cost_in_dollars), unit_shipped = count(*) define dimension time as time in cube sales define dimension item as item in cube sales define dimension shipper as (shipper_key, shipper_name, location as location in cube sales, shipper_type) define dimension from_location as location in cube sales define dimension to_location as location in cube sales 12 September 2021 G. Karuna, GRIET 20

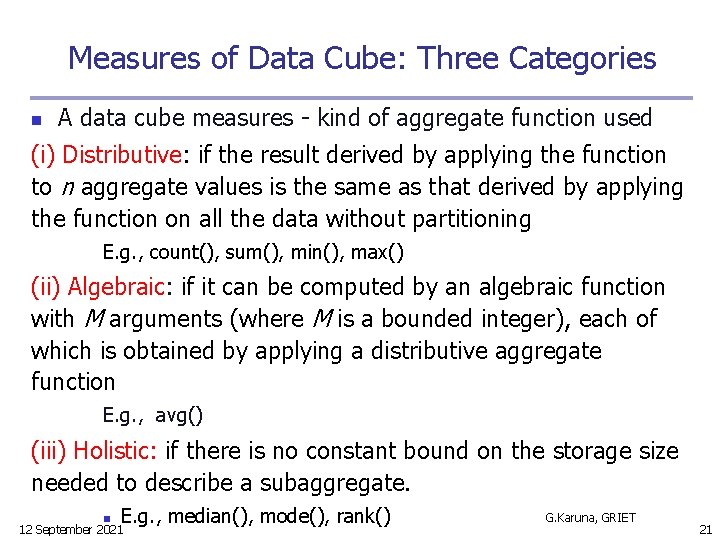

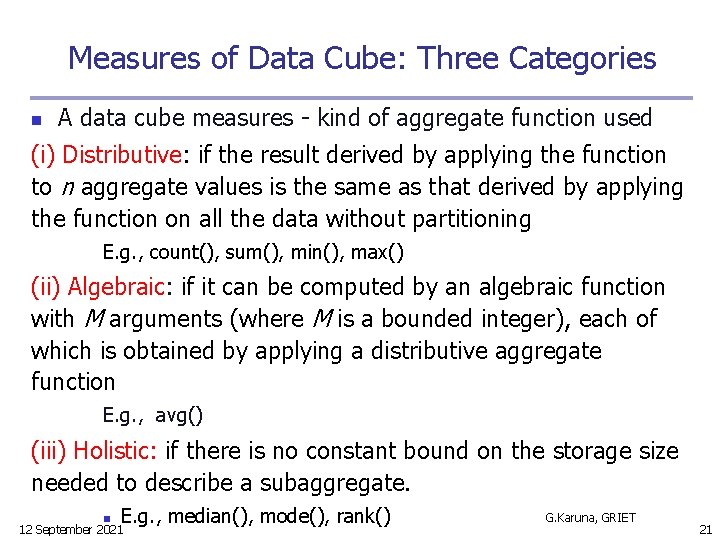

Measures of Data Cube: Three Categories n A data cube measures - kind of aggregate function used (i) Distributive: if the result derived by applying the function to n aggregate values is the same as that derived by applying the function on all the data without partitioning E. g. , count(), sum(), min(), max() (ii) Algebraic: if it can be computed by an algebraic function with M arguments (where M is a bounded integer), each of which is obtained by applying a distributive aggregate function E. g. , avg() (iii) Holistic: if there is no constant bound on the storage size needed to describe a subaggregate. n E. g. , median(), mode(), rank() 12 September 2021 G. Karuna, GRIET 21

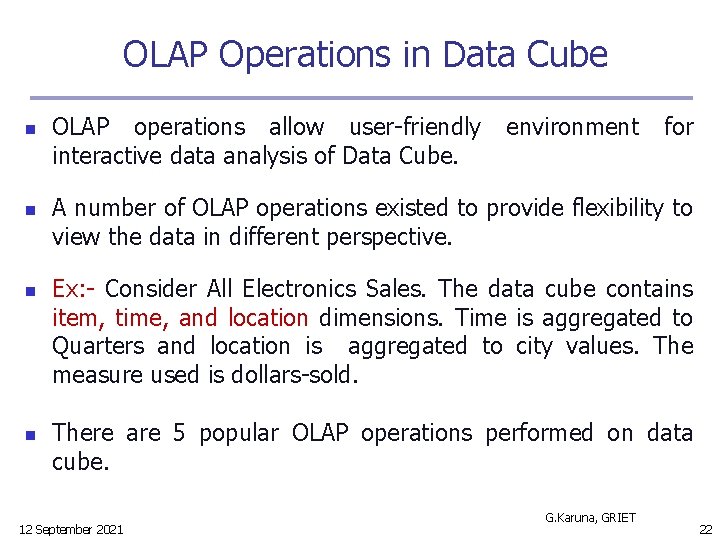

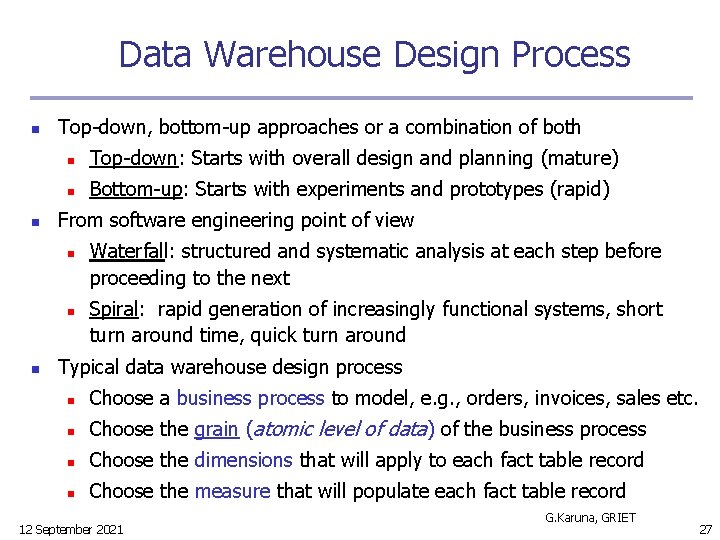

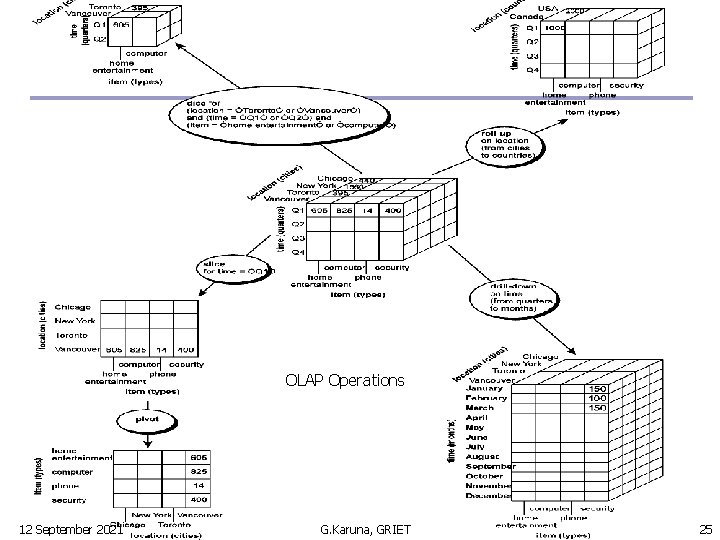

OLAP Operations in Data Cube n n OLAP operations allow user-friendly interactive data analysis of Data Cube. environment for A number of OLAP operations existed to provide flexibility to view the data in different perspective. Ex: - Consider All Electronics Sales. The data cube contains item, time, and location dimensions. Time is aggregated to Quarters and location is aggregated to city values. The measure used is dollars-sold. There are 5 popular OLAP operations performed on data cube. 12 September 2021 G. Karuna, GRIET 22

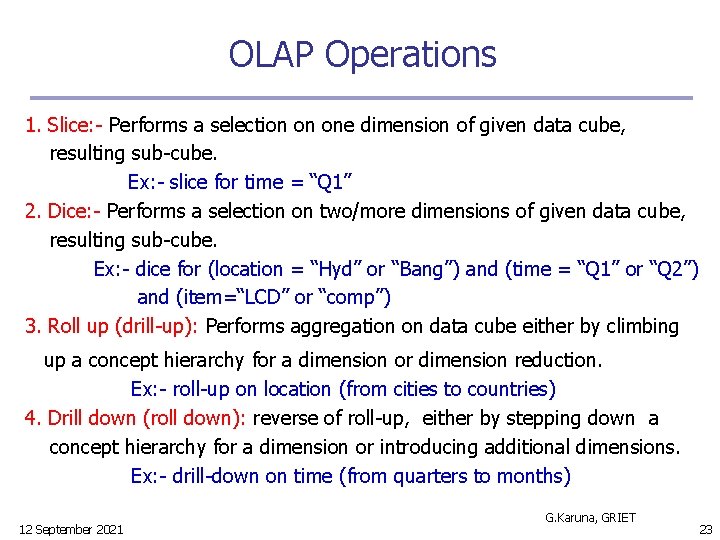

OLAP Operations 1. Slice: - Performs a selection on one dimension of given data cube, resulting sub-cube. Ex: - slice for time = “Q 1” 2. Dice: - Performs a selection on two/more dimensions of given data cube, resulting sub-cube. Ex: - dice for (location = “Hyd” or “Bang”) and (time = “Q 1” or “Q 2”) and (item=“LCD” or “comp”) 3. Roll up (drill-up): Performs aggregation on data cube either by climbing up a concept hierarchy for a dimension or dimension reduction. Ex: - roll-up on location (from cities to countries) 4. Drill down (roll down): reverse of roll-up, either by stepping down a concept hierarchy for a dimension or introducing additional dimensions. Ex: - drill-down on time (from quarters to months) 12 September 2021 G. Karuna, GRIET 23

OLAP Operations 5. Pivot: - is a visualization operation that rotates the data axes in view in order to provide an alternative presentation of data. Ex: - pivot on item and location ( these axes re rotated) Other operations on data cube: n Drill across: executes queries involving (across) more than one fact table n Drill through: through the bottom level of the cube to its back-end relational tables (using SQL). n Some OLAP operations also used for ranking items in the list, currency conversions, growth rates etc. 12 September 2021 G. Karuna, GRIET 24

OLAP Operations 12 September 2021 G. Karuna, GRIET 25

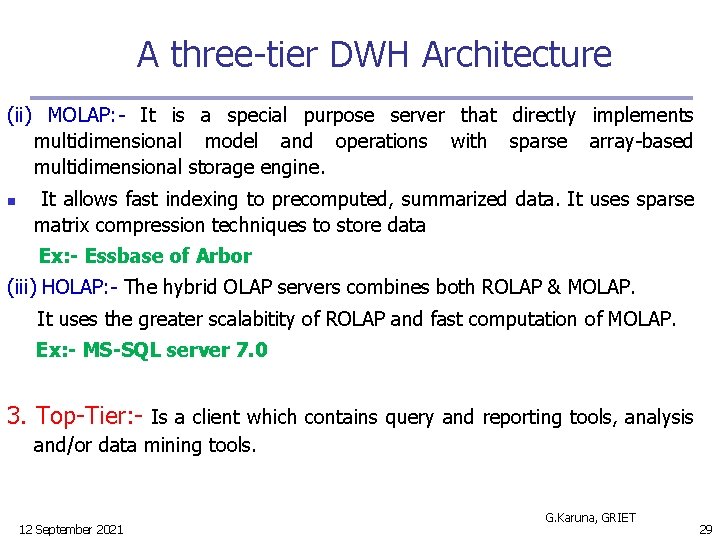

Design of Data Warehouse: A Business Analysis Framework n n To design an effective DWH we need to understand analyze business needs & construct a business analysis framework. (like construction of a building). Four views regarding the design of a data warehouse (i) Top-down view : - allows selection of the relevant information necessary for the DWH. The information matches current & future business needs. (ii) Data source view: - exposes the information being captured, stored, and managed by operational systems. (iii) Data warehouse view: - includes fact tables and dimension tables. Represents information stored inside the DWH like totals, counts, date, time of origin etc. (iv) Business query view: - the perspectives of data in the warehouse from the view of end-user (the ability to analyze & understand data). 12 September 2021 G. Karuna, GRIET 26

Data Warehouse Design Process n n Top-down, bottom-up approaches or a combination of both n Top-down: Starts with overall design and planning (mature) n Bottom-up: Starts with experiments and prototypes (rapid) From software engineering point of view n n n Waterfall: structured and systematic analysis at each step before proceeding to the next Spiral: rapid generation of increasingly functional systems, short turn around time, quick turn around Typical data warehouse design process n Choose a business process to model, e. g. , orders, invoices, sales etc. n Choose the grain (atomic level of data) of the business process n Choose the dimensions that will apply to each fact table record n Choose the measure that will populate each fact table record 12 September 2021 G. Karuna, GRIET 27

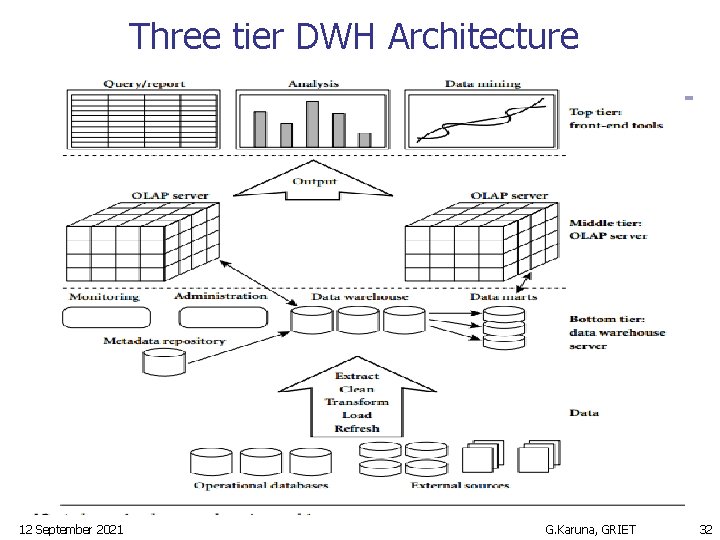

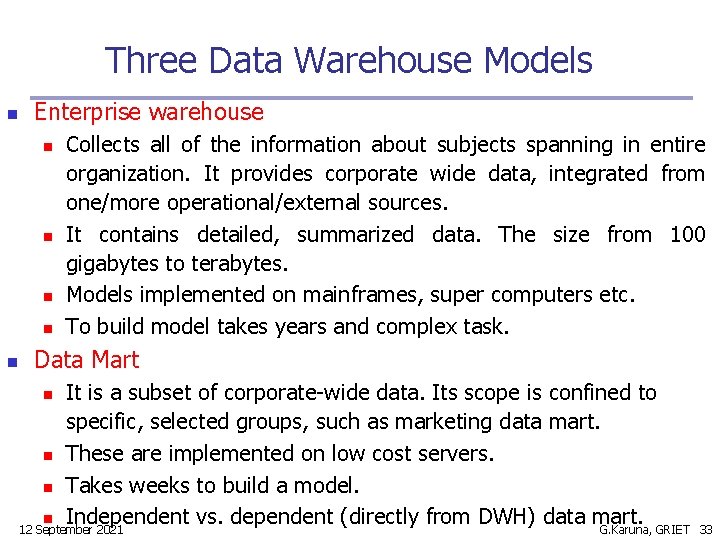

A three-tier DWH Architecture 1. Bottom Tier : - DWH server, is almost like a relational DB System. Backend tools are used to fetch data from operational DB or external sources. These tools perform data cleaning, data extraction and transformation, load and refresh functions to update DWH. The data are extracted using API called as gateways(OLEBD, JDBE) which allows client programs to generate SQL code to be executed at server. n It also contains meta data repository to store information about DWH. 2. Middle Tier : - Ii is an OLAP server presents multidimensional data from DWH/Data Marts. It includes ROLAP/MOLAP/HOLAP servers. (i) ROLAP: - Use relational DBMS to store and manage warehouse data and include optimization of DBMS backend, implementation of aggregation navigation logic, and additional tools and services. Greater Scalability. Ex: - Informix, Informatica 12 September 2021 G. Karuna, GRIET 28

A three-tier DWH Architecture (ii) MOLAP: - It is a special purpose server that directly implements multidimensional model and operations with sparse array-based multidimensional storage engine. n It allows fast indexing to precomputed, summarized data. It uses sparse matrix compression techniques to store data Ex: - Essbase of Arbor (iii) HOLAP: - The hybrid OLAP servers combines both ROLAP & MOLAP. It uses the greater scalabitity of ROLAP and fast computation of MOLAP. Ex: - MS-SQL server 7. 0 3. Top-Tier: - Is a client which contains query and reporting tools, analysis and/or data mining tools. 12 September 2021 G. Karuna, GRIET 29

Data Warehouse Back-End Tools and Utilities n n n Data extraction n get data from multiple, heterogeneous, and external sources Data cleaning n detect errors in the data and rectify them when possible Data transformation n convert data from legacy or host format to warehouse format Load n sort, summarize, consolidate, compute views, check integrity, and build indicies and partitions Refresh n propagate the updates from the data sources to the warehouse G. Karuna, GRIET 12 September 2021 30

Metadata Repository n Meta data is the data defining warehouse objects. It stores: n Description of the structure of the data warehouse n n schema, view, dimensions, hierarchies, derived data defn, data mart locations and contents Operational meta-data n data lineage (history of migrated data and transformation path), currency of data (active, archived, or purged), monitoring information (warehouse usage statistics, error reports, audit trails) n The algorithms used for summarization n The mapping from operational environment to the data warehouse n n Data related to system performance n warehouse schema, view and derived data definitions Business data n business terms and definitions, ownership of data, charging policies 12 September 2021 G. Karuna, GRIET 31

Three tier DWH Architecture 12 September 2021 G. Karuna, GRIET 32

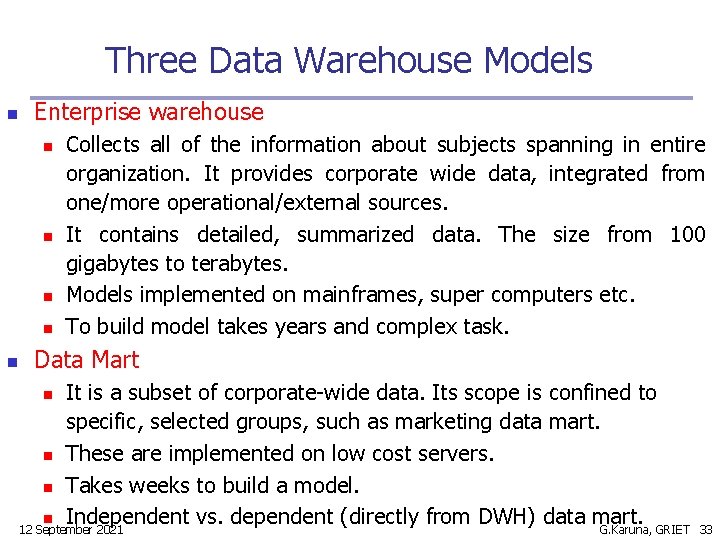

Three Data Warehouse Models n Enterprise warehouse n n n Collects all of the information about subjects spanning in entire organization. It provides corporate wide data, integrated from one/more operational/external sources. It contains detailed, summarized data. The size from 100 gigabytes to terabytes. Models implemented on mainframes, super computers etc. To build model takes years and complex task. Data Mart It is a subset of corporate-wide data. Its scope is confined to specific, selected groups, such as marketing data mart. n These are implemented on low cost servers. n Takes weeks to build a model. n Independent vs. dependent (directly from DWH) data mart. G. Karuna, GRIET 12 September 2021 n 33

Three Data Warehouse Models n Virtual warehouse n n n A set of views over operational databases. For efficient query processing, only some of the possible summary views may be materialized. Easy to build but requires excess capacity on operational databases. 12 September 2021 G. Karuna, GRIET 34

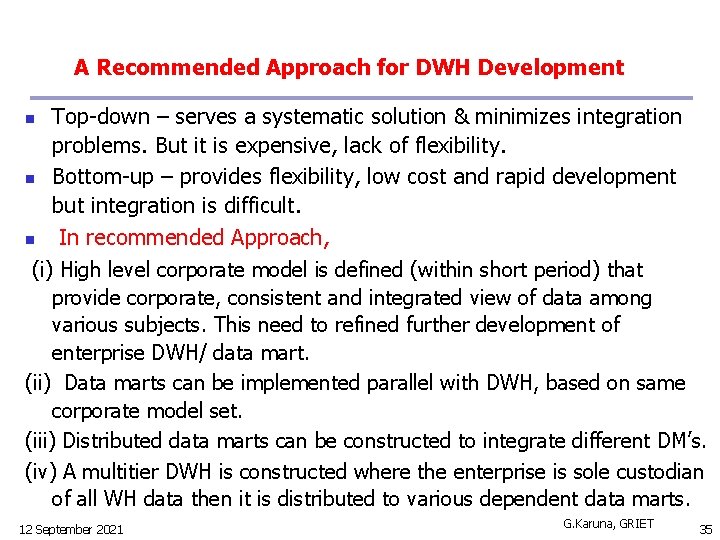

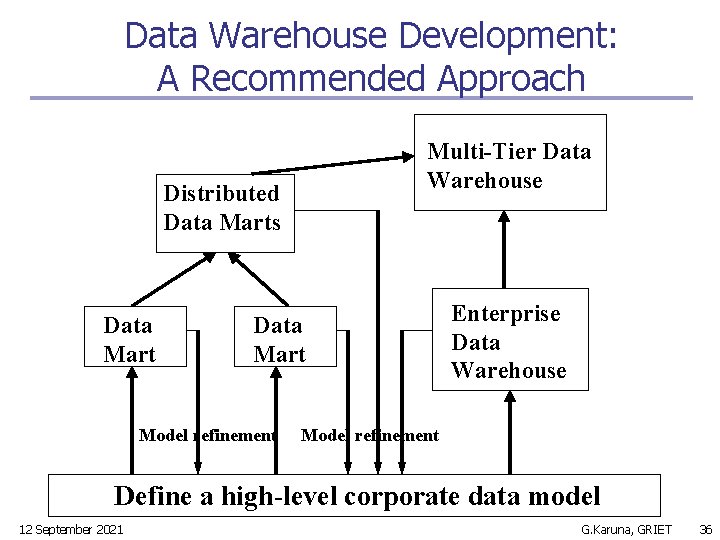

A Recommended Approach for DWH Development Top-down – serves a systematic solution & minimizes integration problems. But it is expensive, lack of flexibility. n Bottom-up – provides flexibility, low cost and rapid development but integration is difficult. n In recommended Approach, (i) High level corporate model is defined (within short period) that provide corporate, consistent and integrated view of data among various subjects. This need to refined further development of enterprise DWH/ data mart. (ii) Data marts can be implemented parallel with DWH, based on same corporate model set. (iii) Distributed data marts can be constructed to integrate different DM’s. (iv) A multitier DWH is constructed where the enterprise is sole custodian of all WH data then it is distributed to various dependent data marts. n 12 September 2021 G. Karuna, GRIET 35

Data Warehouse Development: A Recommended Approach Multi-Tier Data Warehouse Distributed Data Marts Data Mart Model refinement Enterprise Data Warehouse Model refinement Define a high-level corporate data model 12 September 2021 G. Karuna, GRIET 36

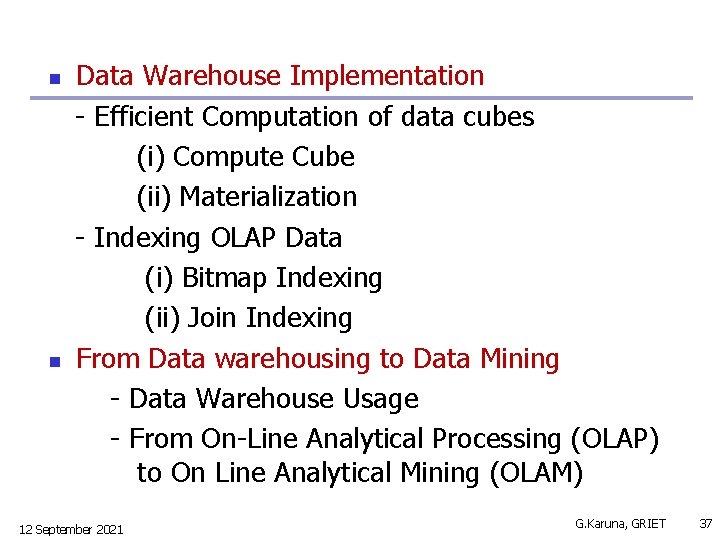

n n Data Warehouse Implementation - Efficient Computation of data cubes (i) Compute Cube (ii) Materialization - Indexing OLAP Data (i) Bitmap Indexing (ii) Join Indexing From Data warehousing to Data Mining - Data Warehouse Usage - From On-Line Analytical Processing (OLAP) to On Line Analytical Mining (OLAM) 12 September 2021 G. Karuna, GRIET 37

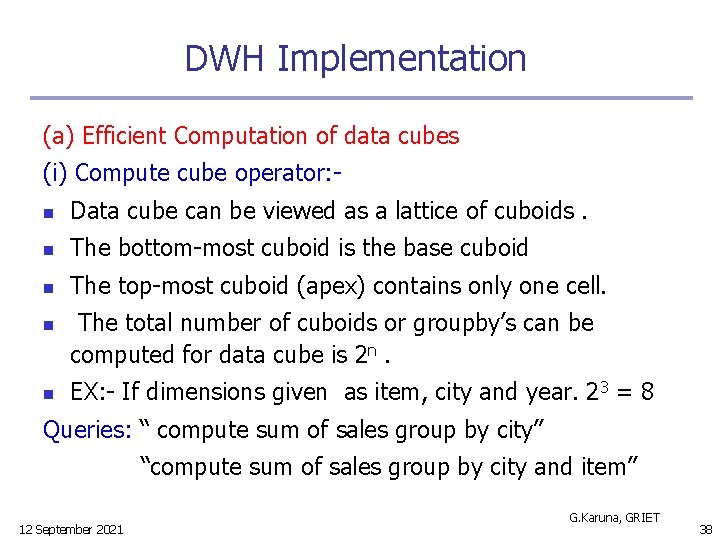

DWH Implementation (a) Efficient Computation of data cubes (i) Compute cube operator: n Data cube can be viewed as a lattice of cuboids. n The bottom-most cuboid is the base cuboid n The top-most cuboid (apex) contains only one cell. n n The total number of cuboids or groupby’s can be computed for data cube is 2 n. EX: - If dimensions given as item, city and year. 23 = 8 Queries: “ compute sum of sales group by city” “compute sum of sales group by city and item” 12 September 2021 G. Karuna, GRIET 38

DWH Implementation n n compute cube operator computes aggregates overall subsets of dimensions specified in the opeartion. The possible groupby’s are {(city, item, year), (city, item), (city, year), (item, year), (city), (item), (year), ()} n Cube definition and computation in DMQL define cube sales[item, city, year]: sum(sales_in_dollars) compute cube sales (city) (city, item) 12 September 2021 () (item) (city, year) (city, item, year) (item, year) G. Karuna, GRIET 39

DWH Implementation (ii) Materialization of data cube: - pre-computation of data cubes. There are 3 choices for materialization. n No Materialization: - Do not pre-compute any of the “nonbase” cuboids. This leads to computing expensive multidimensional aggregates on the fly, which can be extremely slow. n Full materialization: Pre-compute all of the cuboids. The resulting lattice of computed cuboids is referred to as the full cube. This choice typically requires huge amounts of memory space. n Partial materialization: Selectively compute a proper subset of the whole set of possible cuboids. Alternatively, we may compute a subset of the cube, which contains only some user-specified criterion. (i) identify the subset of cuboids to materialize (ii) exploit the materialized cuboids during query processing (iii) efficiently update the materialized cuboids during load and refresh 12 September 2021 G. Karuna, GRIET 40

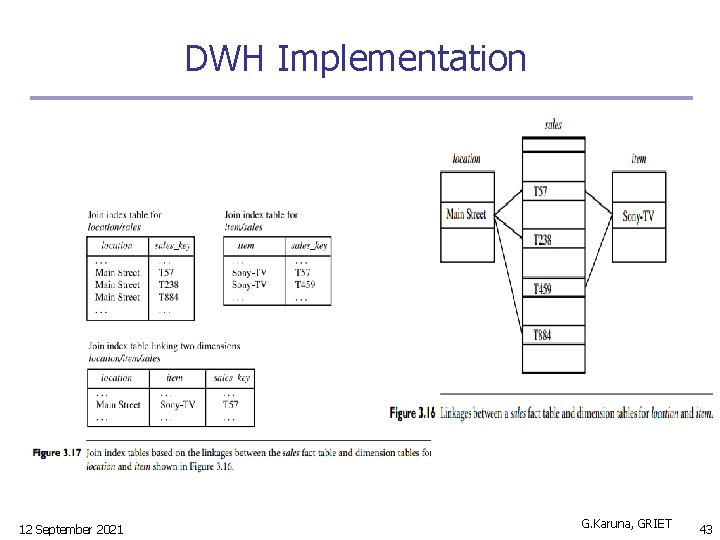

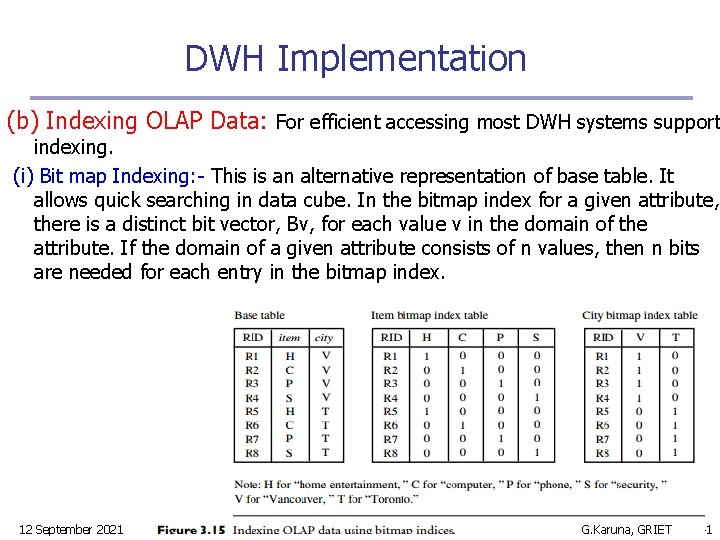

DWH Implementation (b) Indexing OLAP Data: For efficient accessing most DWH systems support indexing. (i) Bit map Indexing: - This is an alternative representation of base table. It allows quick searching in data cube. In the bitmap index for a given attribute, there is a distinct bit vector, Bv, for each value v in the domain of the attribute. If the domain of a given attribute consists of n values, then n bits are needed for each entry in the bitmap index. 12 September 2021 G. Karuna, GRIET 41

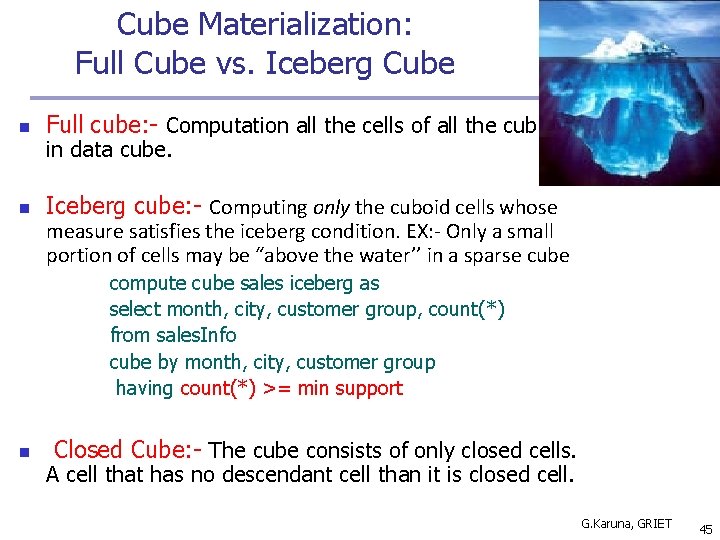

DWH Implementation (ii) Join Indexing: - Join indexing registers the joinable rows of two relations from a relational database. Ex: - If two relations R(RID, A) and S(B, SID) join on the attributes A and B, then the join index record contains the pair (RID, SID), where RID and SID are record identifiers from the R and S relations, respectively. Hence, the join index records can identify joinable tuples. Join indexing is especially useful for maintaining the relationship between a foreign key and its matching primary keys, from the joinable relation. In DWH, join indexing is useful for cross table search because of star schema model of DWH. Join indexing maintains relationship between attribute values of dimension and the corresponding rows in fact table. 12 September 2021 G. Karuna, GRIET 42

DWH Implementation 12 September 2021 G. Karuna, GRIET 43

Efficient Methods for Data cube computation n n Data cube computation is an essential task in DWH. Pre-computation of all or part of a data cube can greatly reduce the response time and increase performance of OLAP. This is a challenging task. How can we compute data cubes in advance? Cube Materialization: (i) Base Cell: - A cell in base cuboid is base cell. (ii) Aggregate Cell: - A cell in non-base cuboid. Each aggregated dimension indicated as ‘*’. Ex: - If we take 3 D-cube all 1 -D, 2 -D cells are aggregate cells and 3 -D is Base cell. (iii) Ancestor & Descendent cells: - 1 -D and 2 -D cells are ancestors of 3 -D cell. 3 -D is descendent cell. G. Karuna, GRIET 44

Cube Materialization: Full Cube vs. Iceberg Cube n Full cube: - Computation all the cells of all the cuboids n Iceberg cube: - Computing only the cuboid cells whose in data cube. measure satisfies the iceberg condition. EX: - Only a small portion of cells may be “above the water’’ in a sparse cube compute cube sales iceberg as select month, city, customer group, count(*) from sales. Info cube by month, city, customer group having count(*) >= min support n Closed Cube: - The cube consists of only closed cells. A cell that has no descendant cell than it is closed cell. G. Karuna, GRIET 45

Efficient Computation methods for data cube n Computing full/iceberg cubes: 3 methodologies (i) Bottom-Up: Multi-Way array aggregation (Zhao, Deshpande & Naughton, SIGMOD’ 97) (ii) Top-down: n BUC (Beyer & Ramarkrishnan, SIGMOD’ 99) (iii) Integrating Top-Down and Bottom-Up: n Star-cubing algorithm (Xin, Han, Li & Wah: VLDB’ 03) G. Karuna, GRIET 46

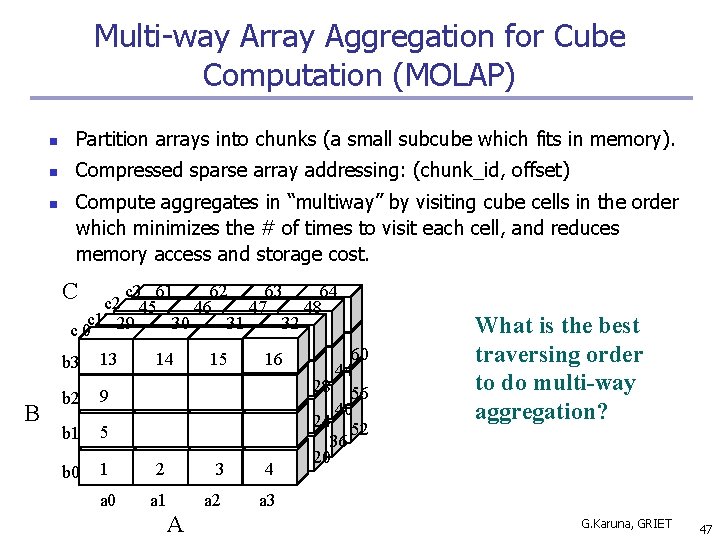

Multi-way Array Aggregation for Cube Computation (MOLAP) n Partition arrays into chunks (a small subcube which fits in memory). n Compressed sparse array addressing: (chunk_id, offset) n Compute aggregates in “multiway” by visiting cube cells in the order which minimizes the # of times to visit each cell, and reduces memory access and storage cost. C c 3 61 62 63 64 c 2 45 46 47 48 c 1 29 30 31 32 c 0 B b 3 B 13 b 2 9 b 1 5 b 0 14 15 16 1 2 3 4 a 0 a 1 a 2 a 3 A 60 44 28 56 40 24 52 36 20 What is the best traversing order to do multi-way aggregation? G. Karuna, GRIET 47

Multi-Way Array Aggregation n Array-based “bottom-up” algorithm n Using multi-dimensional chunks n No direct tuple comparisons n n Simultaneous aggregation on multiple dimensions. The best order is the one that minimizes the memory requirement and reduced I/Os G. Karuna, GRIET 48

12 September 2021 Data Mining: Concepts and Techniques 49

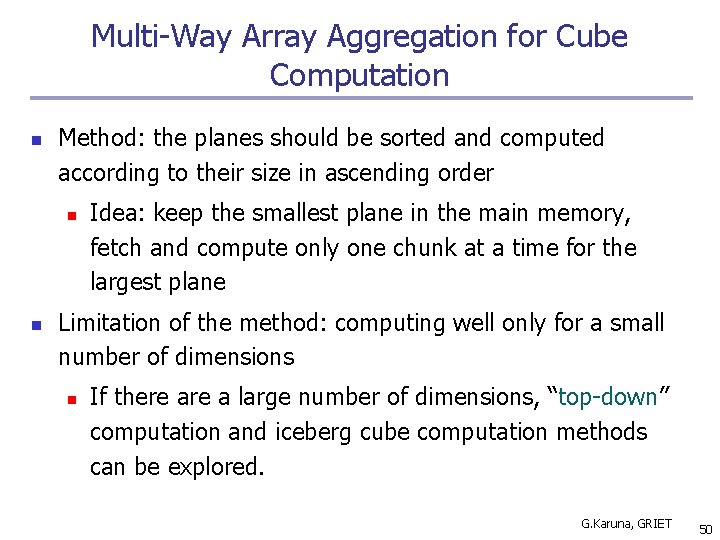

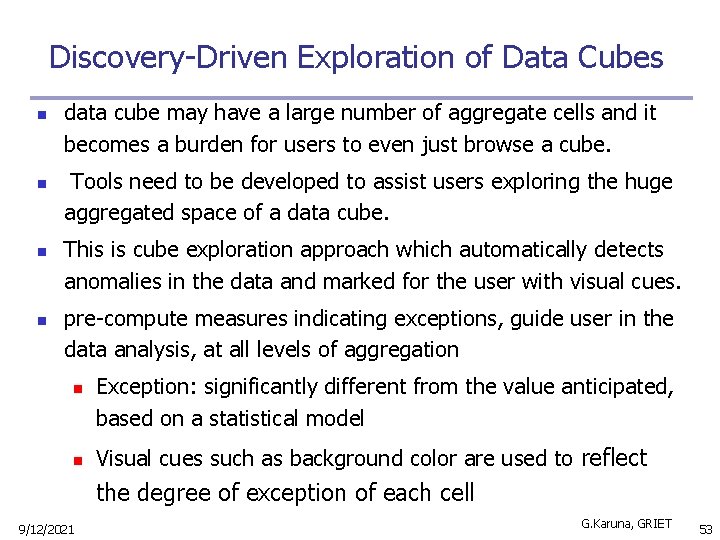

Multi-Way Array Aggregation for Cube Computation n Method: the planes should be sorted and computed according to their size in ascending order n n Idea: keep the smallest plane in the main memory, fetch and compute only one chunk at a time for the largest plane Limitation of the method: computing well only for a small number of dimensions n If there a large number of dimensions, “top-down” computation and iceberg cube computation methods can be explored. G. Karuna, GRIET 50

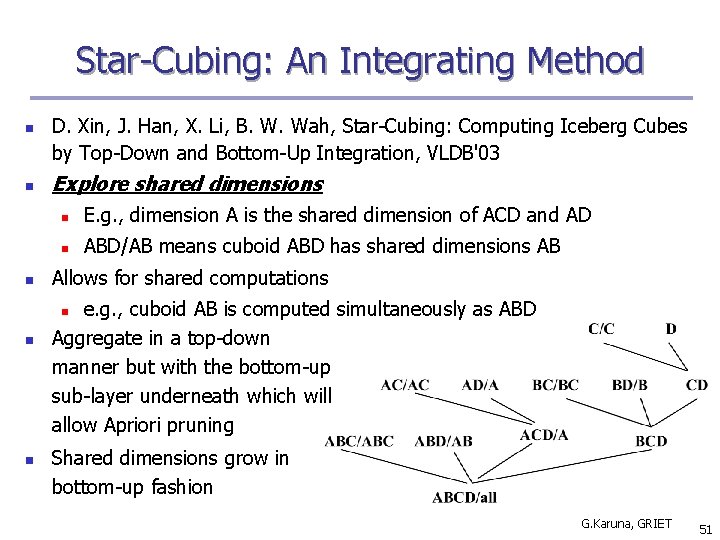

Star-Cubing: An Integrating Method n n n D. Xin, J. Han, X. Li, B. W. Wah, Star-Cubing: Computing Iceberg Cubes by Top-Down and Bottom-Up Integration, VLDB'03 Explore shared dimensions n E. g. , dimension A is the shared dimension of ACD and AD n ABD/AB means cuboid ABD has shared dimensions AB Allows for shared computations e. g. , cuboid AB is computed simultaneously as ABD Aggregate in a top-down manner but with the bottom-up sub-layer underneath which will allow Apriori pruning n n n Shared dimensions grow in bottom-up fashion G. Karuna, GRIET 51

Further Development of Data Cube n Discovery-Driven Exploration of Data Cubes n Complex Aggregation at Multiple Granularities: Multi-Feature Cubes n 9/12/2021 Constrained Gradient Analysis in Data Cubes G. Karuna, GRIET 52

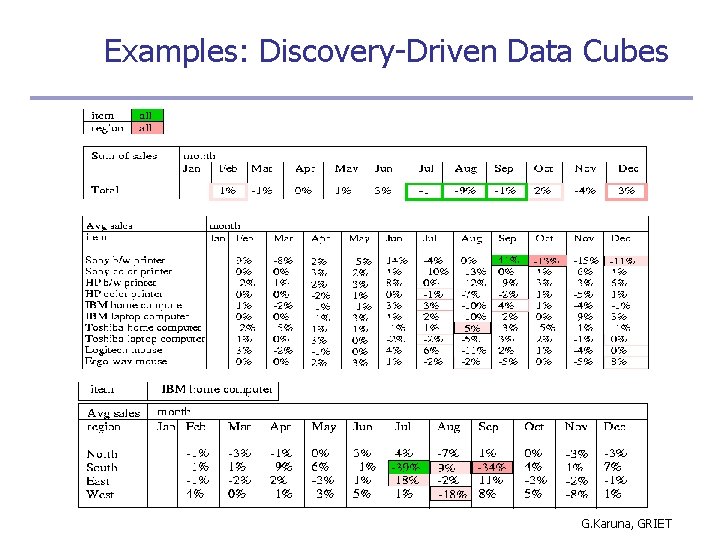

Discovery-Driven Exploration of Data Cubes n n data cube may have a large number of aggregate cells and it becomes a burden for users to even just browse a cube. Tools need to be developed to assist users exploring the huge aggregated space of a data cube. This is cube exploration approach which automatically detects anomalies in the data and marked for the user with visual cues. pre-compute measures indicating exceptions, guide user in the data analysis, at all levels of aggregation n n Exception: significantly different from the value anticipated, based on a statistical model Visual cues such as background color are used to reflect the degree of exception of each cell 9/12/2021 G. Karuna, GRIET 53

Discovery-Driven Exploration of Data Cubes 3 measures used as exception indicators n Self. Exp: surprise of cell relative to other cells at same level of aggregation n In. Exp: surprise beneath the cell n Path. Exp: surprise beneath cell for each drill-down path 9/12/2021 G. Karuna, GRIET 54

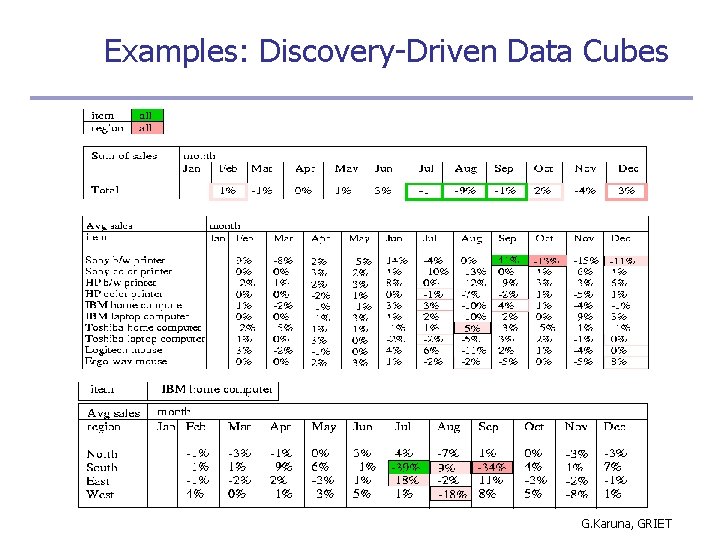

Examples: Discovery-Driven Data Cubes G. Karuna, GRIET

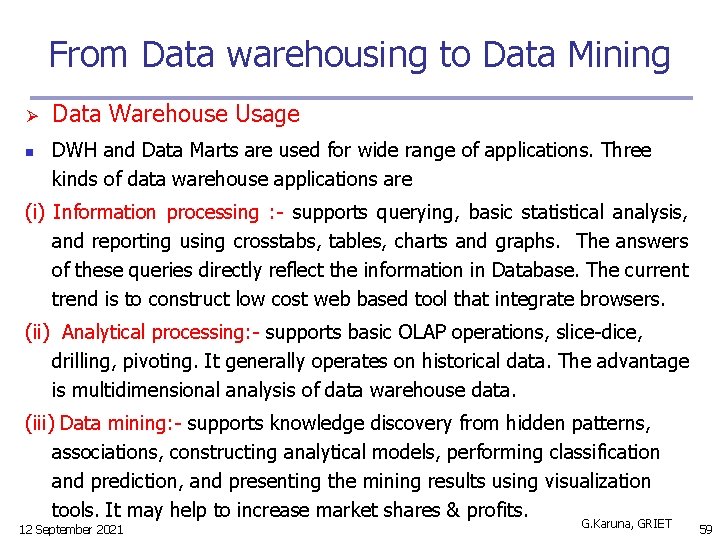

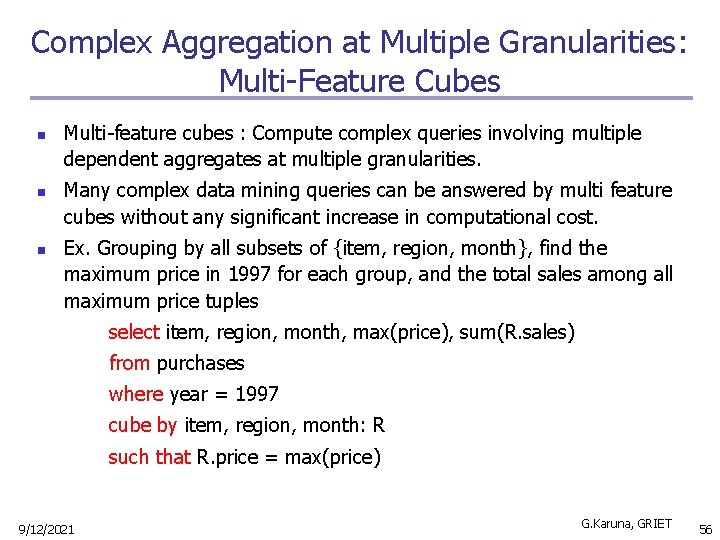

Complex Aggregation at Multiple Granularities: Multi-Feature Cubes n n n Multi-feature cubes : Compute complex queries involving multiple dependent aggregates at multiple granularities. Many complex data mining queries can be answered by multi feature cubes without any significant increase in computational cost. Ex. Grouping by all subsets of {item, region, month}, find the maximum price in 1997 for each group, and the total sales among all maximum price tuples select item, region, month, max(price), sum(R. sales) from purchases where year = 1997 cube by item, region, month: R such that R. price = max(price) 9/12/2021 G. Karuna, GRIET 56

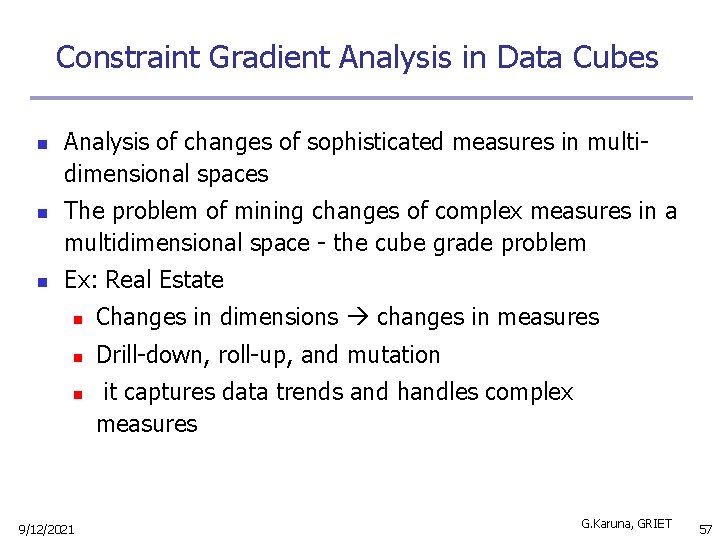

Constraint Gradient Analysis in Data Cubes n n n Analysis of changes of sophisticated measures in multidimensional spaces The problem of mining changes of complex measures in a multidimensional space - the cube grade problem Ex: Real Estate n Changes in dimensions changes in measures n Drill-down, roll-up, and mutation n 9/12/2021 it captures data trends and handles complex measures G. Karuna, GRIET 57

Constraint Gradient Analysis in Data Cubes n significance constraint n probe constraint n gradient constraint 9/12/2021 G. Karuna, GRIET 58

From Data warehousing to Data Mining Ø n Data Warehouse Usage DWH and Data Marts are used for wide range of applications. Three kinds of data warehouse applications are (i) Information processing : - supports querying, basic statistical analysis, and reporting using crosstabs, tables, charts and graphs. The answers of these queries directly reflect the information in Database. The current trend is to construct low cost web based tool that integrate browsers. (ii) Analytical processing: - supports basic OLAP operations, slice-dice, drilling, pivoting. It generally operates on historical data. The advantage is multidimensional analysis of data warehouse data. (iii) Data mining: - supports knowledge discovery from hidden patterns, associations, constructing analytical models, performing classification and prediction, and presenting the mining results using visualization tools. It may help to increase market shares & profits. 12 September 2021 G. Karuna, GRIET 59

From OLAP to OLAM n n Online Analytical Mining(OLAM) also called as OLAP with Data Mining ( in Multi-dimensional database or DWH). Why online analytical mining? (i) High quality of data in data warehouses DWH contains integrated, consistent, cleaned data. So that costly preprocessing tools required. (ii) Available information processing infrastructure surrounding DWHs Infrastructure such as ODBC, OLEDB, Web accessing, service facilities, reporting and OLAP tools etc constructed systematically. (iii) OLAP-based exploratory data analysis OLAM provides facilities for mining at different levels & for different subsets. Mining with drilling, dicing, pivoting, etc. (iv) On-line selection of data mining functions Integration with multiple mining functions, algorithms, and tasks 12 September 2021 G. Karuna, GRIET 60

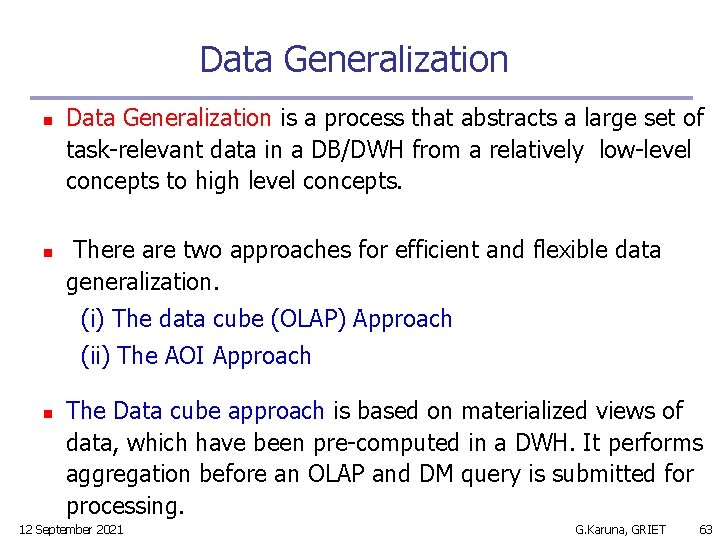

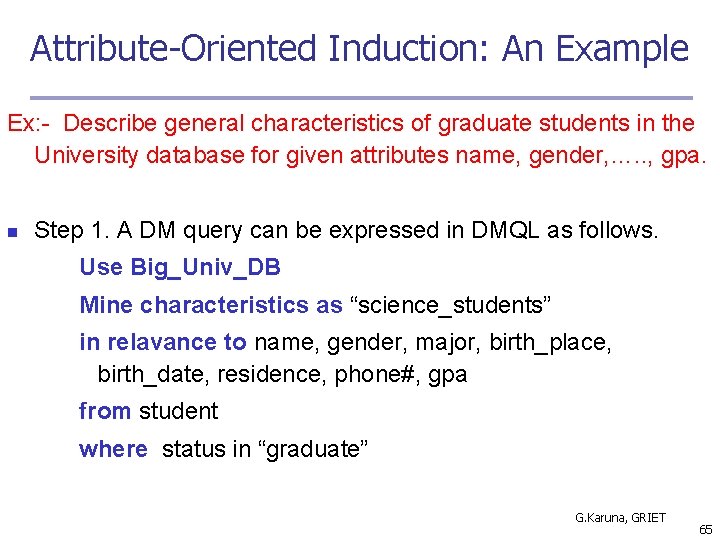

An OLAM System Architecture n n n OLAM server performs Analytical Mining like OLAP performs Analytical Processing. An integrated OLAM & OLAP Architecture shown below. Both OLAM and OLAP servers accept user queries via GUI API and work with data cube via cube API. A meta data directory used to guide the access of data cube. The data cube constructed by accessing/ integrating multiple data bases via MDDB API which support OLEDB (or) ODBC connections. OLAM server may perform multiple data mining tasks. This consisting of integrated DM modules and these are more sophisticated than OLAP server. 12 September 2021 G. Karuna, GRIET 61

An OLAM System Architecture Mining query Mining result Layer 4 User Interface User GUI API OLAM Engine OLAP Engine Layer 3 OLAP/OLAM Data Cube API Layer 2 MDDB Meta Data Filtering&Integration Database API Filtering Layer 1 Databases 12 September 2021 Data cleaning Data integration Warehouse Data Repository G. Karuna, GRIET 62

Data Generalization n n Data Generalization is a process that abstracts a large set of task-relevant data in a DB/DWH from a relatively low-level concepts to high level concepts. There are two approaches for efficient and flexible data generalization. (i) The data cube (OLAP) Approach (ii) The AOI Approach n The Data cube approach is based on materialized views of data, which have been pre-computed in a DWH. It performs aggregation before an OLAP and DM query is submitted for processing. 12 September 2021 G. Karuna, GRIET 63

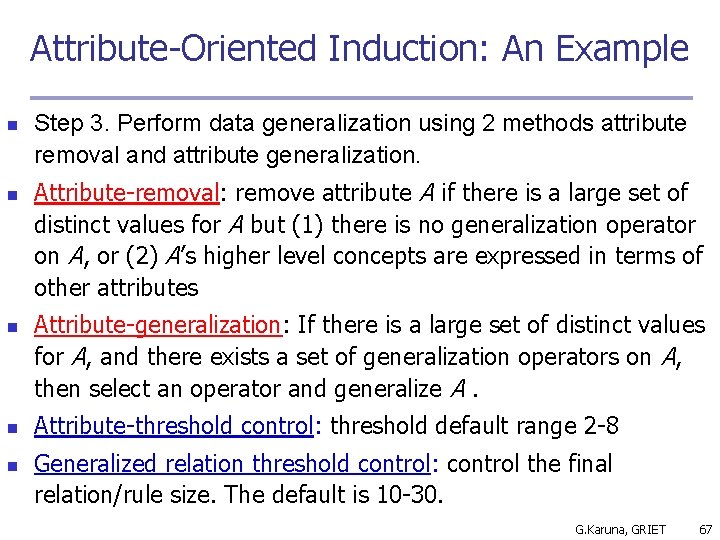

Attribute Oriented Induction AOI Approach : n AOI is basically a query oriented generalization based , online data analysis technique. (i) Collect the task-relevant data using a DMQL & relational database query and construct initial working relation(IWR). (ii) Perform data generalization by examining the no. of distinct values of each attribute. This is done by using 2 methods, attribute removal or attribute generalization. (iii) Apply aggregation by merging identical, generalized tuples and accumulating their respective counts. (iv) The resulting generalized relation can be mapped into different forms of presentation to the users. 12 September 2021 G. Karuna, GRIET 64

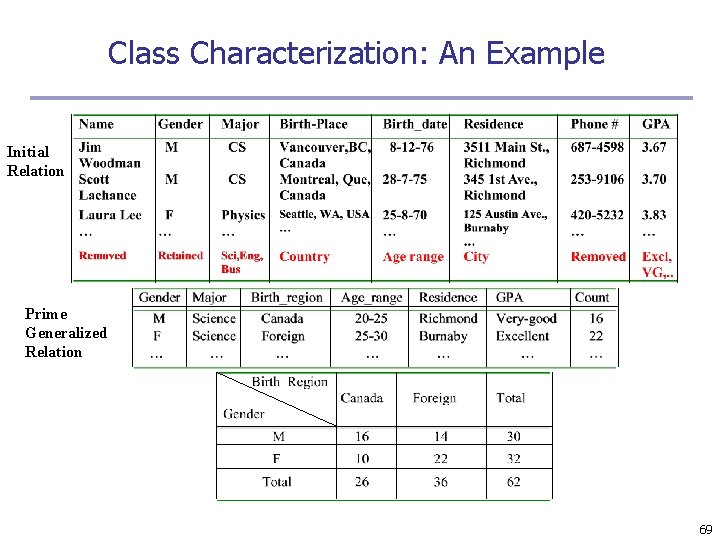

Attribute-Oriented Induction: An Example Ex: - Describe general characteristics of graduate students in the University database for given attributes name, gender, …. . , gpa. n Step 1. A DM query can be expressed in DMQL as follows. Use Big_Univ_DB Mine characteristics as “science_students” in relavance to name, gender, major, birth_place, birth_date, residence, phone#, gpa from student where status in “graduate” G. Karuna, GRIET 65

Attribute-Oriented Induction: An Example n Step 2. Fetch task relevant set of data using an SQL statement. i. e. transform into SQL statement. Use Big_Univ_DB Select name, gender, major, birth_place, birth_date, residence, phone#, gpa from student where status in {“M. Sc. ”, “MBA”, “B. Sc. ”, “B. A. ”, ”B. Com” , ”M. Com”, ”MCA”, “MBBS” }. n Now the data is stored in a table called as initial working relation (IWR). G. Karuna, GRIET 66

Attribute-Oriented Induction: An Example n n n Step 3. Perform data generalization using 2 methods attribute removal and attribute generalization. Attribute-removal: remove attribute A if there is a large set of distinct values for A but (1) there is no generalization operator on A, or (2) A’s higher level concepts are expressed in terms of other attributes Attribute-generalization: If there is a large set of distinct values for A, and there exists a set of generalization operators on A, then select an operator and generalize A. Attribute-threshold control: threshold default range 2 -8 Generalized relation threshold control: control the final relation/rule size. The default is 10 -30. G. Karuna, GRIET 67

Attribute-Oriented Induction: An Example n Name - remove attribute because of distinct values n 68

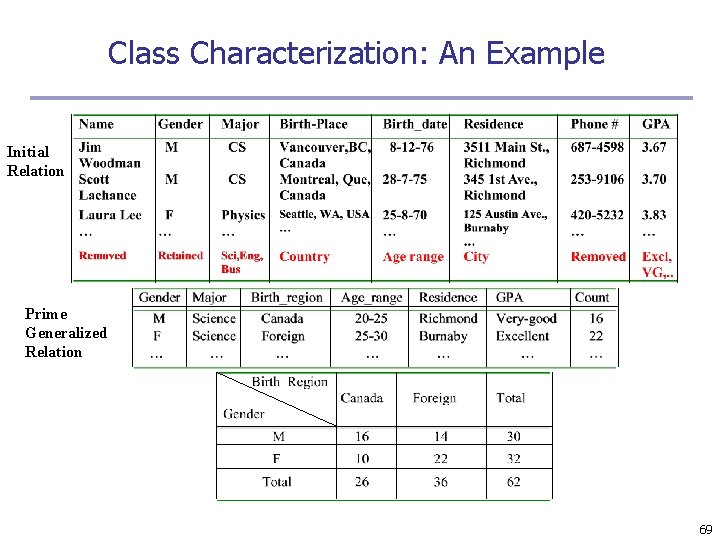

Class Characterization: An Example Initial Relation Prime Generalized Relation 69