Data Warehousing 1 Data Warehouse A data warehouse

- Slides: 30

Data Warehousing 1

Data Warehouse • A data warehouse is a huge database that stores historical data • Example: Store information about all sales of products in all branches of a supermarket • Example: Information about all telephone calls made through a certain company 2

OLTP vs. OLAP • The queries we have seen so far were Online Line Transaction Processing, i. e. , many small queries with results needed immediately • Typically, Online Line Analytical Processing is done against a data warehouse. – A lot of aggregation – Results aren’t needed immediately (queries can run for hours) – Used for decision support (more about this later) 3

Creating a Data Warehouse • Data from different sources is combined – need to transform data to a single schema – need to transform data to have same type of measurements (e. g. , same currency) • Occasionally, data is refreshed (get new data) • Occasionally, data is purged (throw out old data) • Need a meta-data repository to store information for the above 4

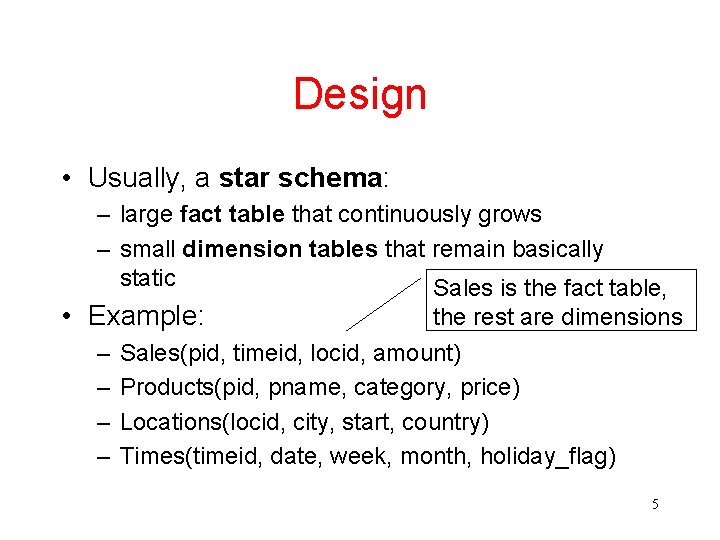

Design • Usually, a star schema: – large fact table that continuously grows – small dimension tables that remain basically static Sales is the fact table, • Example: – – the rest are dimensions Sales(pid, timeid, locid, amount) Products(pid, pname, category, price) Locations(locid, city, start, country) Times(timeid, date, week, month, holiday_flag) 5

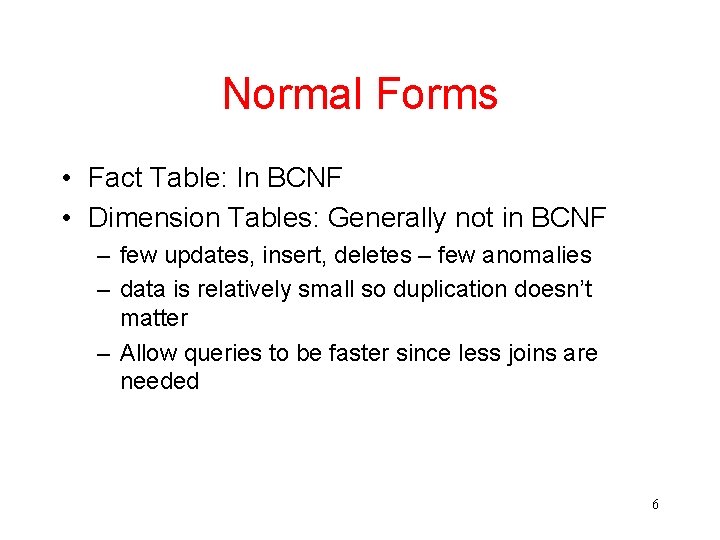

Normal Forms • Fact Table: In BCNF • Dimension Tables: Generally not in BCNF – few updates, insert, deletes – few anomalies – data is relatively small so duplication doesn’t matter – Allow queries to be faster since less joins are needed 6

Data Mining 7

Data Mining • Data Mining is the process of finding interesting trends or patterns in large datasets in order to guide future decisions. • Related to exploratory data analysis (area of statistics) and knowledge discovery (area in artificial intelligence, machine learning). • Data Mining is characterized by having VERY LARGE datasets. 8

Some Real Problems • Associations between products bought in a store – milk – beers and diapers • Deciding on product discounts • Figuring out how to keep customer at lowest cost to company • Personal recommendation page at Amazon • Stock market trends 9

Data Mining vs. Machine Learning • Size: Databases are usually very large so algorithms must scale well • Design Purpose: Databases are not usually designed for data mining (but for other purposes), and thus, may not have convenient attributes • Errors and Noise: Databases almost always contain errors 10

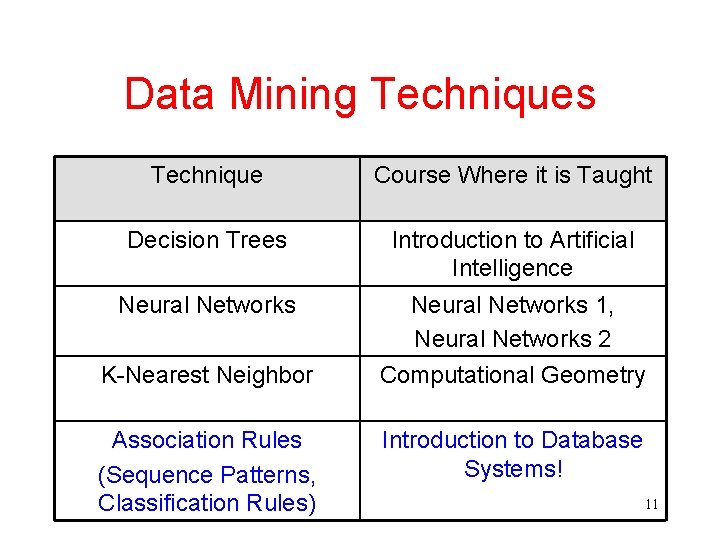

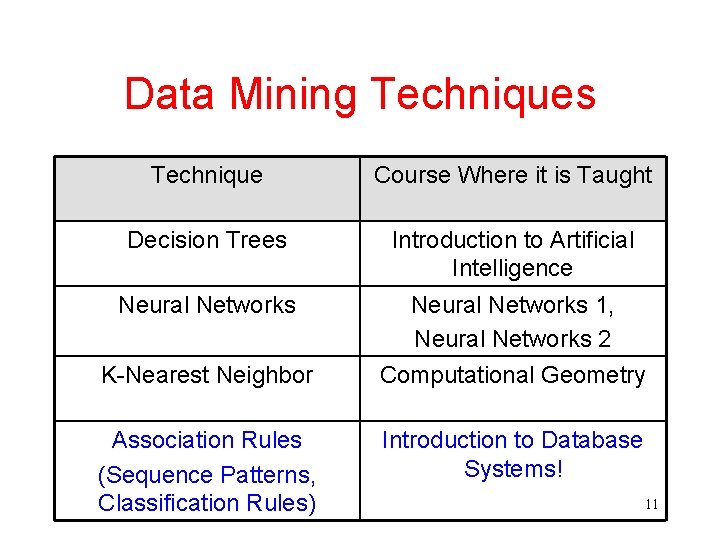

Data Mining Techniques Technique Course Where it is Taught Decision Trees Introduction to Artificial Intelligence Neural Networks 1, Neural Networks 2 Computational Geometry K-Nearest Neighbor Association Rules (Sequence Patterns, Classification Rules) Introduction to Database Systems! 11

Association Rules • A market basket is a collection of items bought by a single customer in a single customer transaction • Problem: Identify sets of items that are purchased together • Attempt to identify rules of the form {pen} {ink} Meaning: If a pen is bought in a transaction, it is likely that ink will also be bought 12

Example Purchases Table transid 111 111 item pen ink diary transid 113 114 item pen diary pen 111 112 112 soap pen ink diary 114 114 ink soap tissues 13

Measures for Association Rules • General form: L R, where L and R are sets of items • Support: The support for L R is the percentage of transactions containing all items from L and from R • Confidence: The confidence for L R are the percentage of transactions that contain R, from among the transactions that contain L 14

Examples • The rule {pen} {ink} has: – support: – confidence: • The rule {tissues} {ink} has: – support: – confidence: • The rule {pen} {soap} has: – support: – confidence: 15

Understanding the Measures: The Intuition • If a rule has low support then it might have arisen by chance. There is not enough evidence to draw a conclusion. • If a rule L R has low confidence then it is likely that there is no relationship between buying L and buying R • Note: L R and R L will always have the same support, but may have different confidence 16

Goal • Find association rules with high support and high confidence. Support and confidence values are specified by the user. • Remember: Finding such a rule does not mean that there must be a relationship between the left and right sides. A person must evaluate such rules by hand. – Example: {milk} {bread} 17

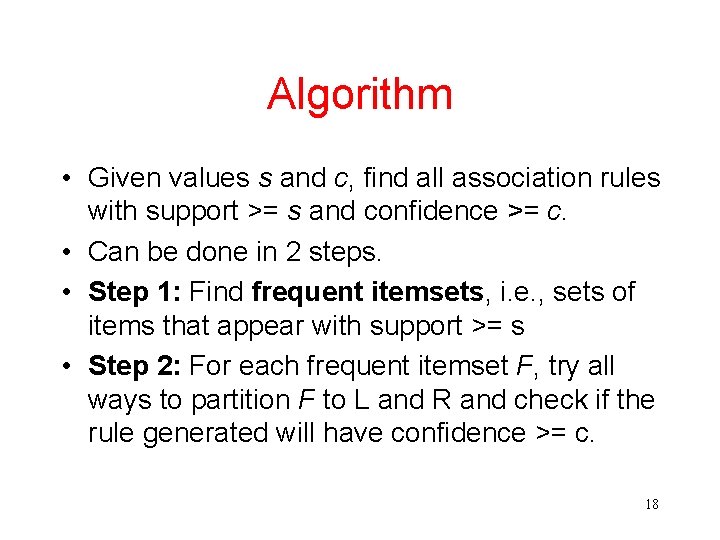

Algorithm • Given values s and c, find all association rules with support >= s and confidence >= c. • Can be done in 2 steps. • Step 1: Find frequent itemsets, i. e. , sets of items that appear with support >= s • Step 2: For each frequent itemset F, try all ways to partition F to L and R and check if the rule generated will have confidence >= c. 18

Finding Frequent Itemsets • How would you do this? Think! 19

Finding Frequent Itemsets • The A Priori Property: Every subset of a frequent itemset must also be a frequent itemset. • This means that we can create frequent itemsets iteratively, by taking frequent itemsets of size n, and extending them to frequent itemsets of size n+1. 20

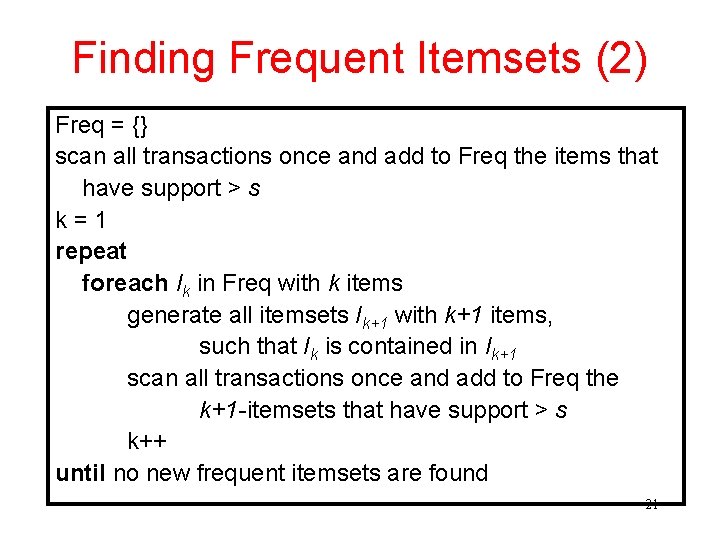

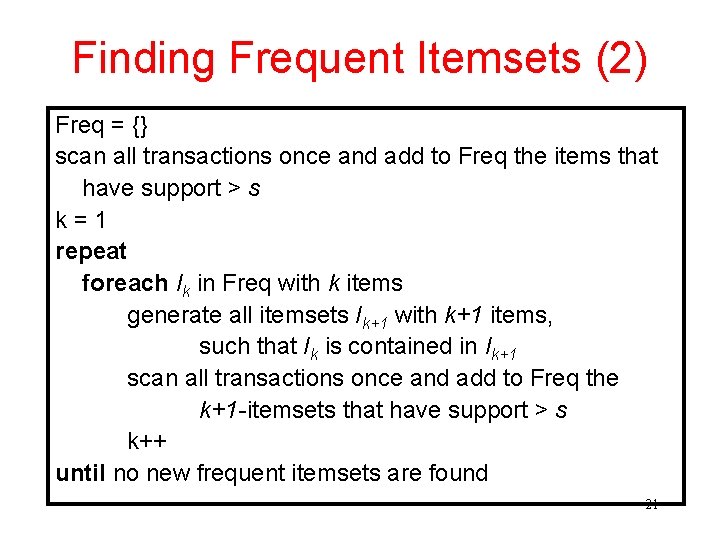

Finding Frequent Itemsets (2) Freq = {} scan all transactions once and add to Freq the items that have support > s k=1 repeat foreach Ik in Freq with k items generate all itemsets Ik+1 with k+1 items, such that Ik is contained in Ik+1 scan all transactions once and add to Freq the k+1 -itemsets that have support > s k++ until no new frequent itemsets are found 21

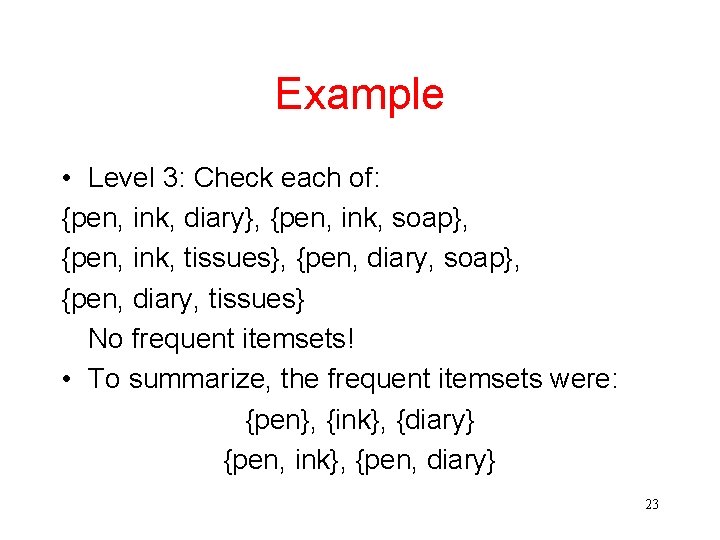

Example Run the algorithm with support = 0. 7 • Level 1: Find frequent itemsets: {pen}, {ink}, {diary} • Level 2: Check each of: {pen, ink}, {pen, diary}, {pen, soap}, {pen, tissues}, {ink, diary}, {ink, soap}, {ink, tissues}, {diary, soap}, {diary, tissues} The frequent itemsets are: {pen, ink}, {pen, diary} 22

Example • Level 3: Check each of: {pen, ink, diary}, {pen, ink, soap}, {pen, ink, tissues}, {pen, diary, soap}, {pen, diary, tissues} No frequent itemsets! • To summarize, the frequent itemsets were: {pen}, {ink}, {diary} {pen, ink}, {pen, diary} 23

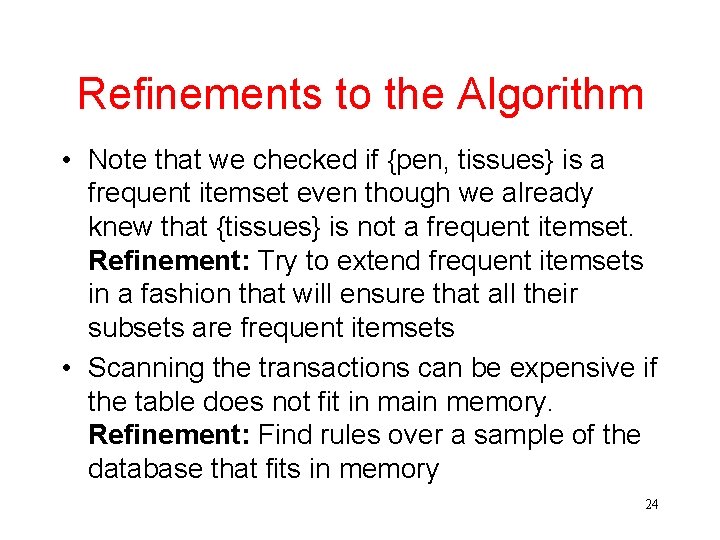

Refinements to the Algorithm • Note that we checked if {pen, tissues} is a frequent itemset even though we already knew that {tissues} is not a frequent itemset. Refinement: Try to extend frequent itemsets in a fashion that will ensure that all their subsets are frequent itemsets • Scanning the transactions can be expensive if the table does not fit in main memory. Refinement: Find rules over a sample of the database that fits in memory 24

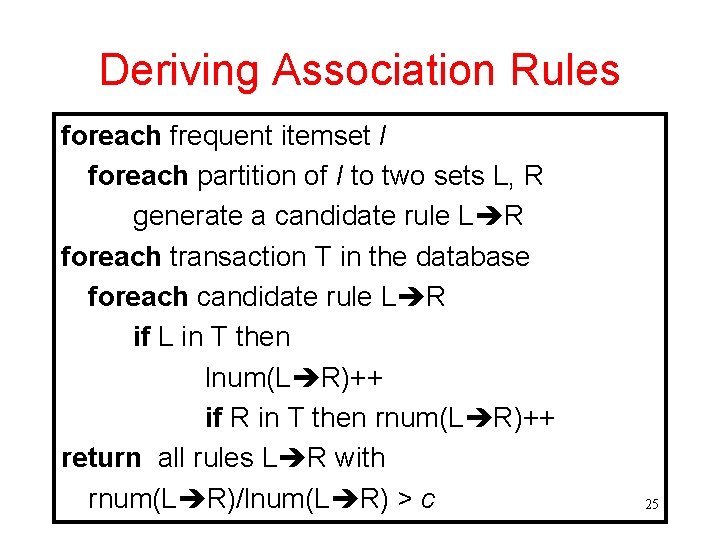

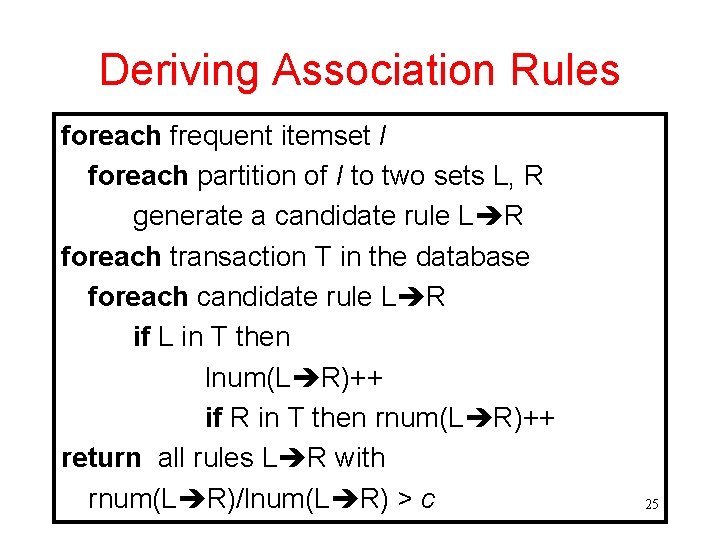

Deriving Association Rules foreach frequent itemset I foreach partition of I to two sets L, R generate a candidate rule L R foreach transaction T in the database foreach candidate rule L R if L in T then lnum(L R)++ if R in T then rnum(L R)++ return all rules L R with rnum(L R)/lnum(L R) > c 25

Other Types of Rules • Sequential Patterns – Each transaction for each customer is a set of items – A sequence of transactions is a sequence of sets. For example: {pen, ink, soap}, {pen, ink diary, soap} – A subsequence is derived from a sequence by deleting some itemsets and some items in other itemsets 26

Sequence Patterns (2) • {pen}, {ink, diary}, {pen, soap} is a subsequence of {pen, ink}, {shirt}, {milk, ink, diary}, {soap, pen, diary} • {pen}, {ink, diary}, {pen, soap} is not a subsequence of {pen, ink}, {shirt}, {soap, pen, diary}, {milk, ink, diary} 27

Sequence Patterns (3) • The support for a sequence pattern S is the percentage of customer sequences that have S as a subsequence. • A sequence s 1, s 2, . . . , sn with high support means that someone who buys s 1 is likely to buy s 2 later on, etc. • Finding sequence patterns: In a similar fashion to finding frequent itemsets. Start with small sequences and try to extend them 28

Classification Rules • Consider a table: Bank. Info(custid, balance, age, return. Loan) • Would like to find rules of the type: “If age is in a certain range and balance is in a certain range, then a customer is likely to not return a loan. ” • We can apply this rule to new customers who ask for a loan. 29

Classification Rules (2) • General Form: (l 1<X 1<h 1) and. . . and (lk < Xk < hk) Y=c • Use values for X 1, . . . , Xk to “predict” value for Y • We can define support and confidence as before. 30