CSCE 513 Computer Architecture Lecture 14 Speculation One


CSCE 513 Computer Architecture

Lecture 14: Speculation One More Time and Coarse Grain Thread Parallelism (POSIX Threads)

October 30, 2017

Topics

- Chapter 3: ROB, branch target buffers
- Gustafson's Law
- Chapter 4: data parallelism
- POSIX threads

Overview

Last Time

- Reorder buffers (ROB)
- Previous slides on the web on ROB …

Today's Lecture

- ROB one more time
- Gustafson's Law
- Speculation revisited
- Branch target buffers
- Interleaved memory
- Thread-level parallelism: POSIX threads, https://computing.llnl.gov/tutorials/pthreads/

Test 2: November 23

Readings: Chapter 3; Sections 4.1-4.2

CSCE 513 Fall 2017

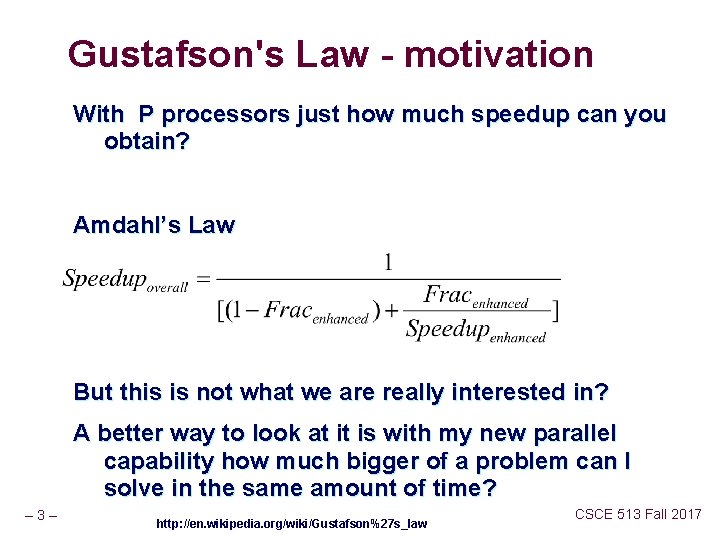

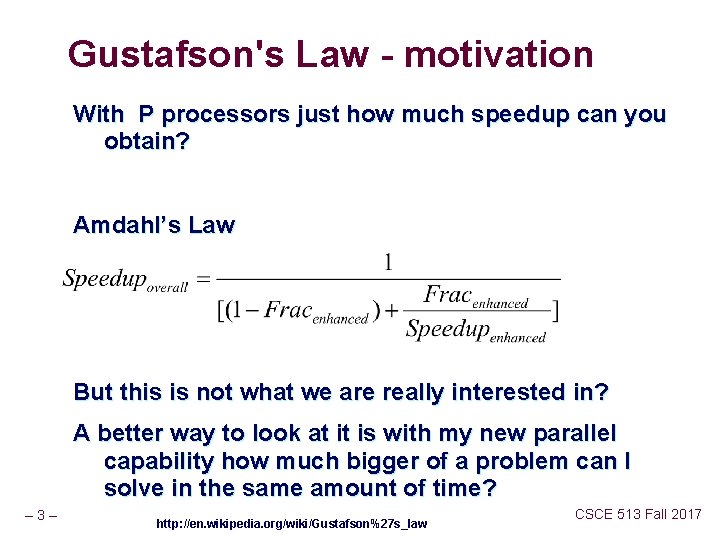

Gustafson's Law: motivation

With P processors, just how much speedup can you obtain? Amdahl's Law answers that question for a fixed problem size, but that is not what we are really interested in. A better way to look at it: with my new parallel capability, how much bigger a problem can I solve in the same amount of time?

http://en.wikipedia.org/wiki/Gustafson%27s_law

Gustafson's Law

- F_enhanced: fraction enhanced = fraction parallelizable
- The other portion is the serial fraction, α = 1 - F_parallelizable
- P: number of processors
- Time for a typical problem
- In the same time, what is the number of operations that can be performed with P processors?
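The formula itself appears only as an image on the slide, so the following is the standard statement of Gustafson's law written in the slide's notation, not a transcription. It assumes the parallel run is normalized to one time unit, with serial fraction α and parallel fraction 1 - α measured on the P-processor machine:

$$
S(P) \;=\; \frac{\alpha + P\,(1-\alpha)}{\alpha + (1-\alpha)} \;=\; \alpha + P\,(1-\alpha) \;=\; P - \alpha\,(P-1)
$$

The numerator is the time one processor would need for the same scaled workload (the parallel part takes P times longer); the denominator is the normalized time on P processors.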

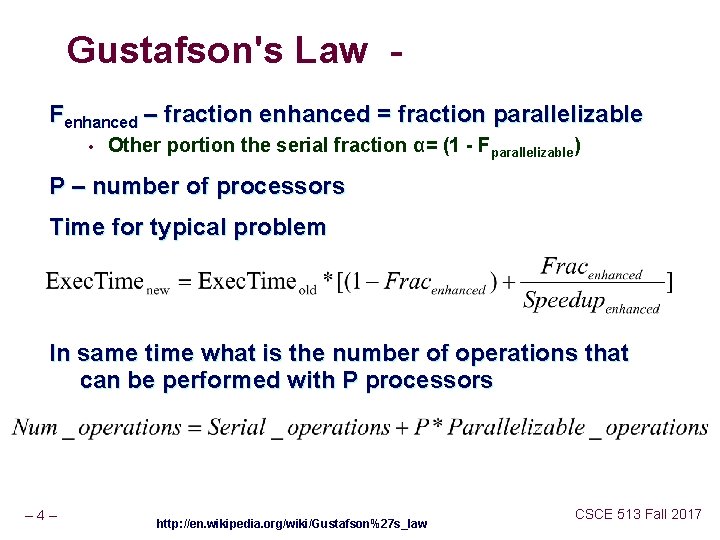

Gustafson's Law

"It is based on the idea that if the problem size is allowed to grow monotonically with P, then the sequential fraction of the workload would not ultimately come to dominate."

- Suppose you are multiplying n x n matrices.
- Parallel fraction = 80%; assume it is constant as n increases.
- Note: since I/O is O(n^2) and multiplying is O(n^3), the parallel fraction would in fact grow with n, so reality is better than this assumption.
- If a 100 x 100 matrix can be multiplied in 1 second with one core, what size matrix can be multiplied in 1 second with 100 processors, assuming no communication overhead?
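One way to work the matrix question is sketched below. It assumes the scaled speedup S = α + P(1 - α) with serial fraction α = 0.2, and that the operation count grows as n^3; the function names `gustafson_speedup` and `scaled_matrix_size` are made up for this illustration.

```c
#include <assert.h>

/* Scaled speedup for serial fraction alpha on p processors. */
double gustafson_speedup(double alpha, int p)
{
    assert(alpha >= 0.0 && alpha <= 1.0 && p >= 1);
    return alpha + (1.0 - alpha) * p;
}

/* Largest n whose n^3 operations fit in the time budget that one core
   needs for an n1 x n1 multiply, when p processors are available. */
long scaled_matrix_size(long n1, double alpha, int p)
{
    double budget = gustafson_speedup(alpha, p) * (double) n1 * n1 * n1;
    long n = n1;

    /* Integer search avoids needing the math library for a cube root. */
    while ((double) (n + 1) * (n + 1) * (n + 1) <= budget)
        ++n;
    return n;
}
```

With α = 0.2 and P = 100 the scaled speedup is 0.2 + 0.8 × 100 = 80.2, so `scaled_matrix_size(100, 0.2, 100)` yields 431: roughly a 431 x 431 matrix in the same second, since 431^3 is about 80.06 million operations against a budget of 80.2 million.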


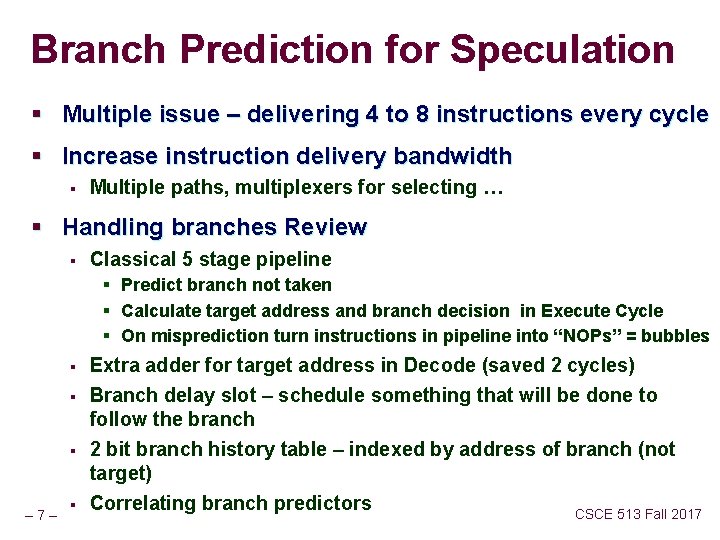

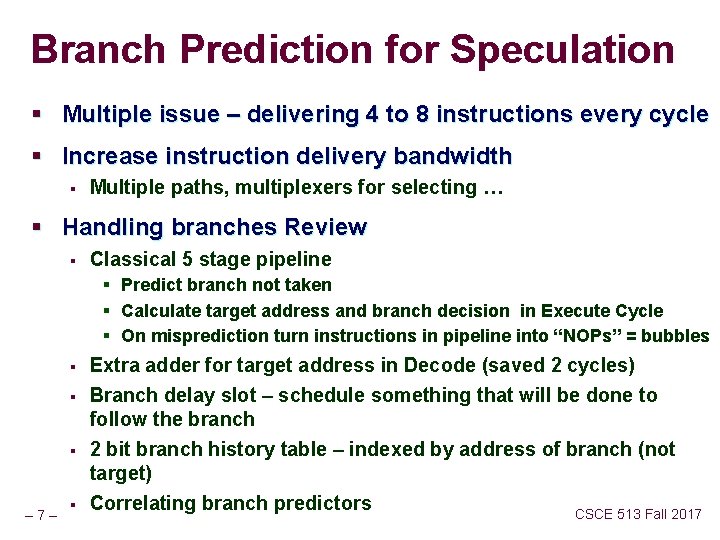

Branch Prediction for Speculation

- Multiple issue: delivering 4 to 8 instructions every cycle
- Increase instruction delivery bandwidth
- Multiple paths, multiplexers for selecting …
- Handling branches

Review

- Classical 5-stage pipeline
- Predict branch not taken
- Calculate target address and branch decision in the Execute cycle
- On misprediction, turn instructions in the pipeline into "NOPs" (bubbles)
- Extra adder for target address in Decode (saved 2 cycles)
- Branch delay slot: schedule something that will be done to follow the branch
- 2-bit branch history table: indexed by address of branch (not target)
- Correlating branch predictors
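The 2-bit branch history table from the review list can be sketched in software as an array of saturating counters. This is a minimal model, not hardware from the lecture: the table size `BHT_ENTRIES`, the function names, and the assumption of 4-byte aligned instructions are all choices made for the illustration.

```c
#include <assert.h>
#include <stdint.h>

#define BHT_ENTRIES 1024   /* hypothetical table size, must be a power of two */

/* One 2-bit saturating counter per entry:
   0 and 1 predict not taken, 2 and 3 predict taken. */
static uint8_t bht[BHT_ENTRIES];

static unsigned bht_index(uint32_t branch_pc)
{
    assert((BHT_ENTRIES & (BHT_ENTRIES - 1)) == 0);
    /* Index with the address of the branch itself, not its target.
       The two low bits are dropped, assuming 4-byte instructions. */
    return (branch_pc >> 2) & (BHT_ENTRIES - 1);
}

/* Returns 1 for "predict taken", 0 for "predict not taken". */
int bht_predict(uint32_t branch_pc)
{
    return bht[bht_index(branch_pc)] >= 2;
}

/* Trains the counter with the actual outcome once the branch resolves. */
void bht_update(uint32_t branch_pc, int taken)
{
    uint8_t *ctr = &bht[bht_index(branch_pc)];

    if (taken) {
        if (*ctr < 3)
            ++*ctr;
    } else {
        if (*ctr > 0)
            --*ctr;
    }
}
```

Because the counter must be wrong twice before the prediction flips, a loop branch that is taken many times and then falls through once is mispredicted at the exit but still predicted taken the next time the loop is entered.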

Creating a Data Memory Trace in C

Links: Threads, Unix Processes

1. https://computing.llnl.gov/tutorials/pthreads/
2. http://en.wikipedia.org/wiki/POSIX_Threads
3. http://download.oracle.com/javase/tutorial/essential/concurrency/procthread.html
4. http://www.cis.temple.edu/~ingargio/cis307/readings/system-commands.html
5. http://www.yolinux.com/TUTORIALS/LinuxTutorialPosixThreads.html

Unix System Related Commands

- ps, kill, lscpu
- top, nice, jobs, fg, bg
- /proc

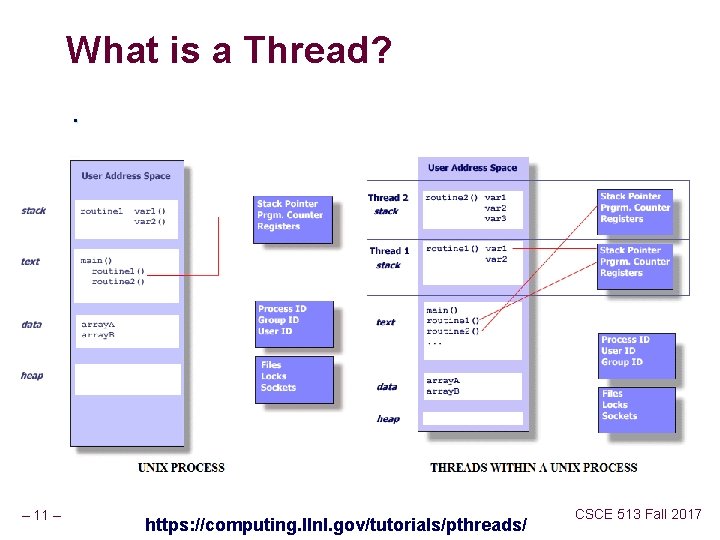

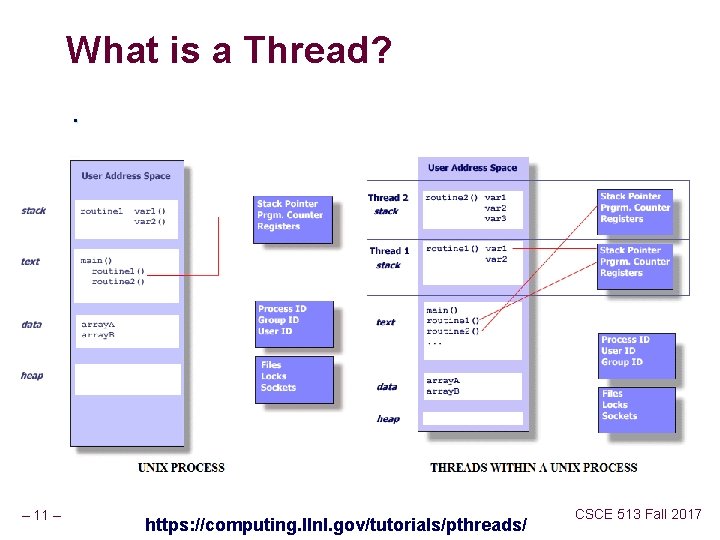

What is a Thread?

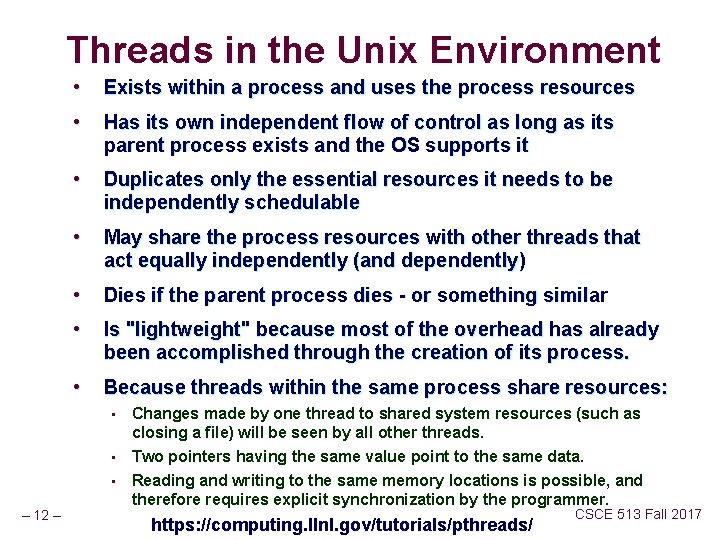

Threads in the Unix Environment

A thread:

- Exists within a process and uses the process resources
- Has its own independent flow of control as long as its parent process exists and the OS supports it
- Duplicates only the essential resources it needs to be independently schedulable
- May share the process resources with other threads that act equally independently (and dependently)
- Dies if the parent process dies, or something similar
- Is "lightweight" because most of the overhead has already been accomplished through the creation of its process

Threads Sharing of Data

Because threads within the same process share resources:

- Changes made by one thread to shared system resources (such as closing a file) will be seen by all other threads.
- Two pointers having the same value point to the same data.
- Reading and writing to the same memory locations is possible, and therefore requires explicit synchronization by the programmer.
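The point that two pointers with the same value refer to the same data can be seen in a small sketch. The helper names `set_to_42` and `value_seen_by_creator` are invented for this example; the only synchronization used is `pthread_join`, which guarantees the worker has finished before the creator reads the variable.

```c
#include <assert.h>
#include <pthread.h>

/* Worker thread: writes through the pointer it was handed. */
static void *set_to_42(void *arg)
{
    int *shared = arg;   /* same address as the creator's variable */

    *shared = 42;
    return NULL;
}

/* Creates one thread, waits for it, and returns what the creator sees. */
int value_seen_by_creator(void)
{
    int value = 0;
    pthread_t tid;
    int rc;

    rc = pthread_create(&tid, NULL, set_to_42, &value);
    assert(rc == 0);

    /* Joining orders the worker's write before the read below. */
    rc = pthread_join(tid, NULL);
    assert(rc == 0);

    return value;
}
```

Calling `value_seen_by_creator()` returns 42: the write made by the worker is visible to the creating thread because both operate on the same memory. Without the join (or some other synchronization) the read would race with the write.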

Sharing of Data between Processes

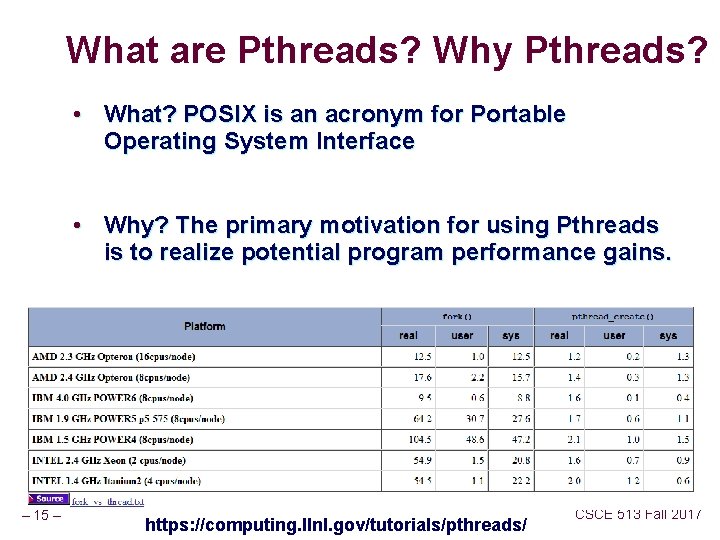

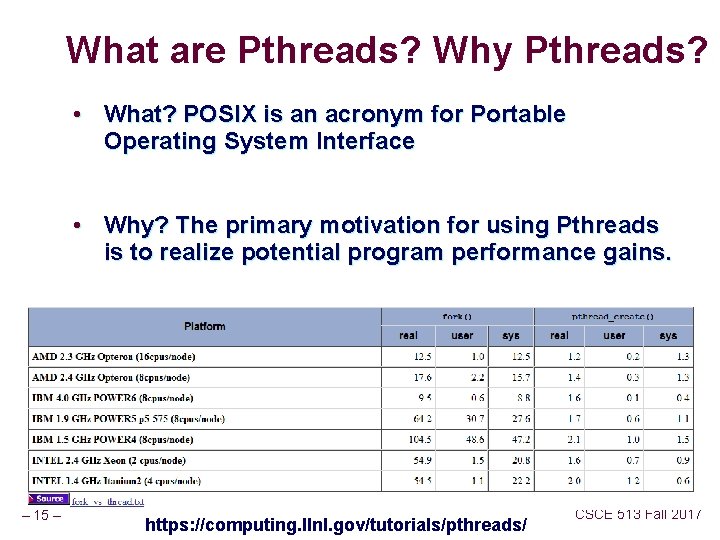

What are Pthreads? Why Pthreads?

- What? POSIX is an acronym for Portable Operating System Interface.
- Why? The primary motivation for using Pthreads is to realize potential program performance gains.

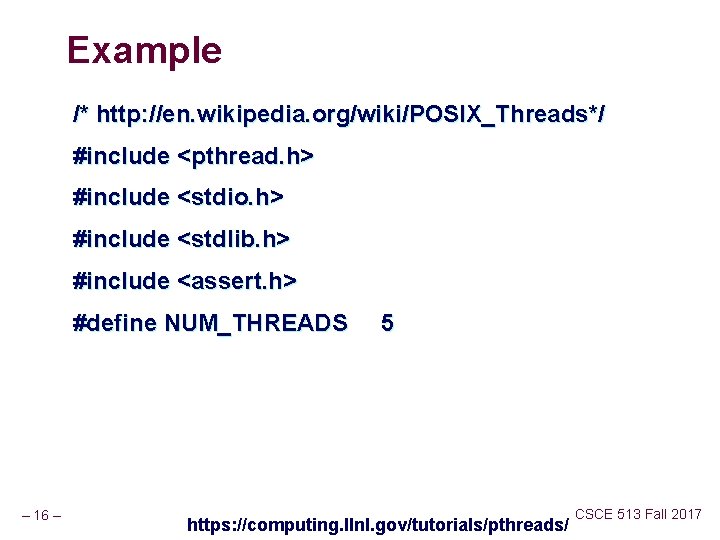

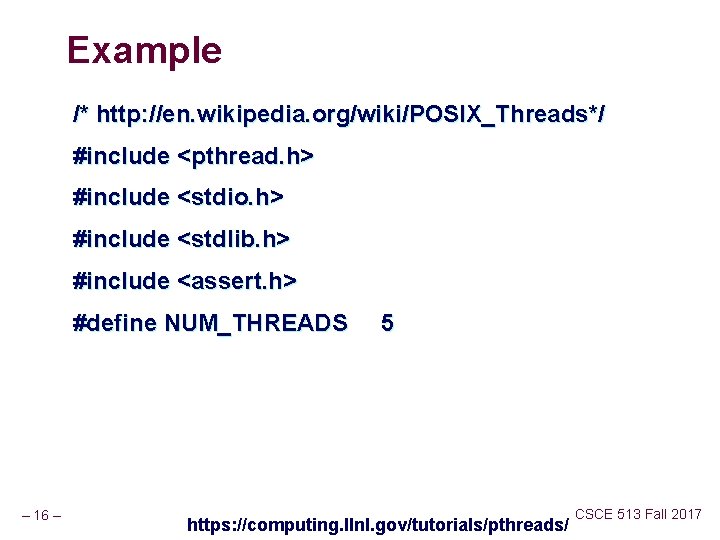

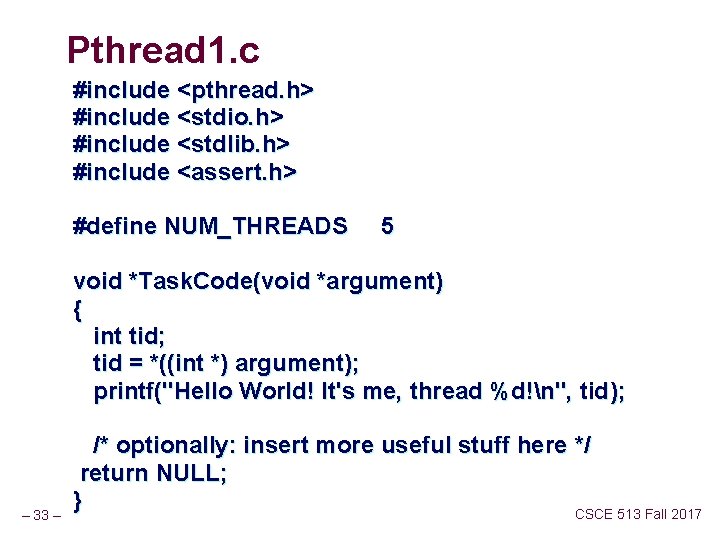

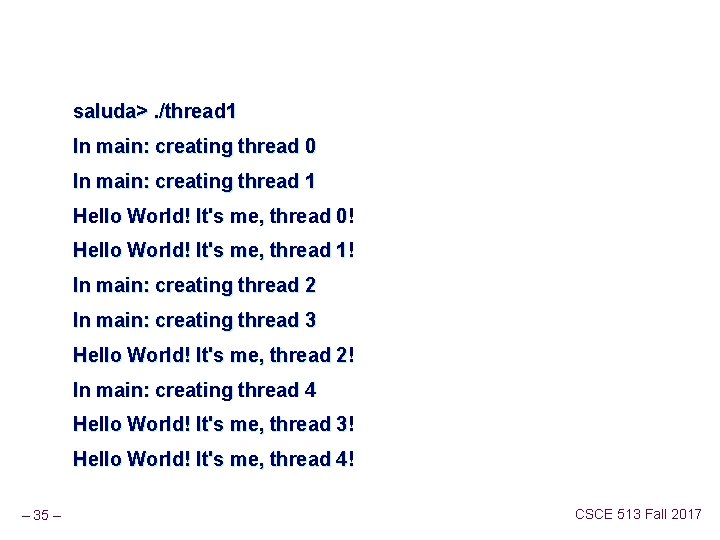

Example

    /* http://en.wikipedia.org/wiki/POSIX_Threads */
    #include <pthread.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <assert.h>

    #define NUM_THREADS 5

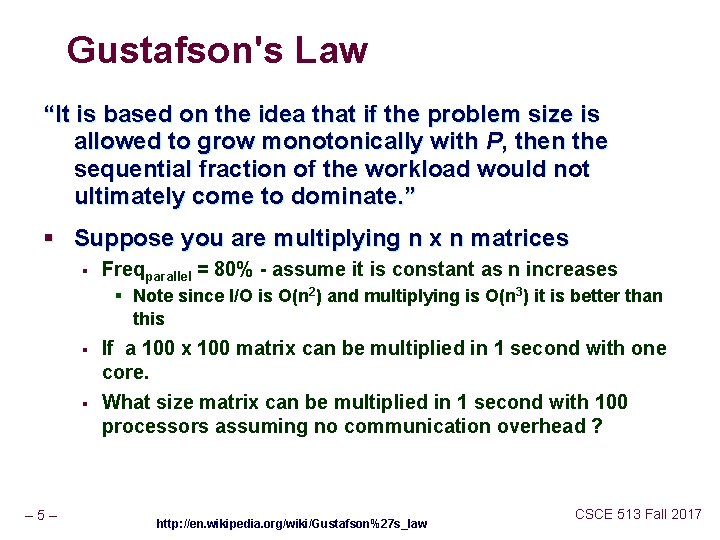

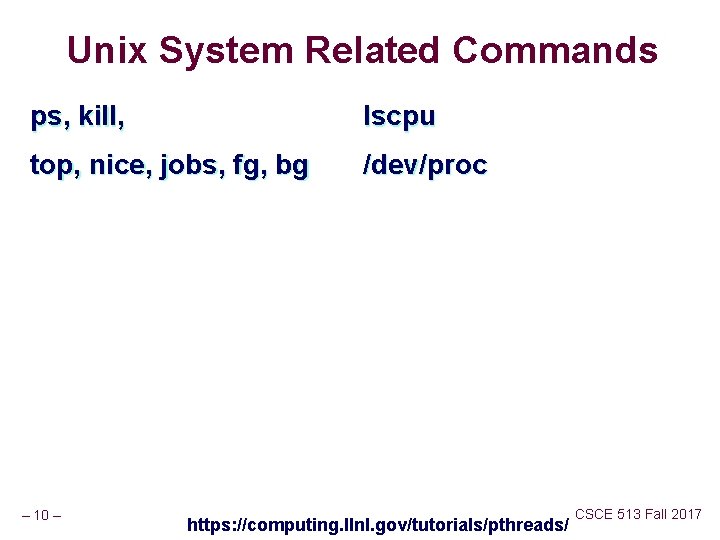

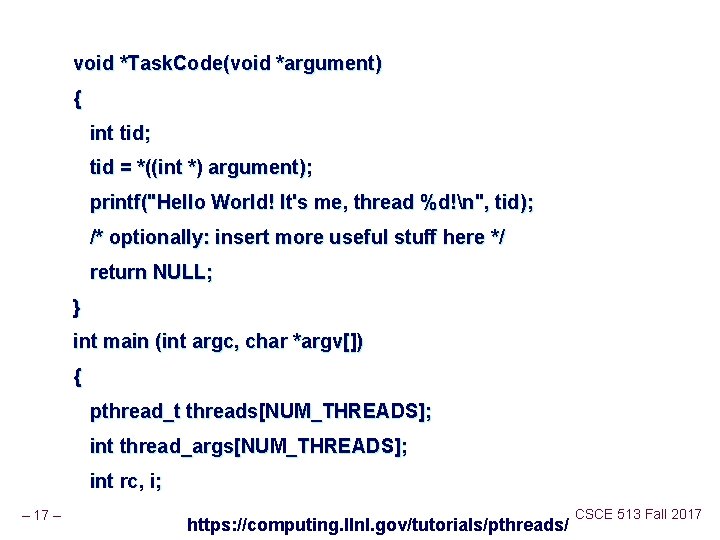

    void *TaskCode(void *argument)
    {
        int tid;

        tid = *((int *) argument);
        printf("Hello World! It's me, thread %d!\n", tid);

        /* optionally: insert more useful stuff here */

        return NULL;
    }

    int main (int argc, char *argv[])
    {
        pthread_t threads[NUM_THREADS];
        int thread_args[NUM_THREADS];
        int rc, i;


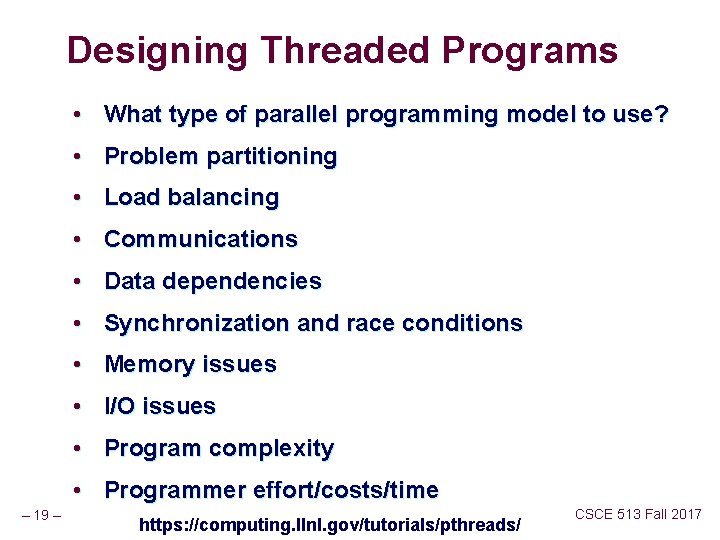

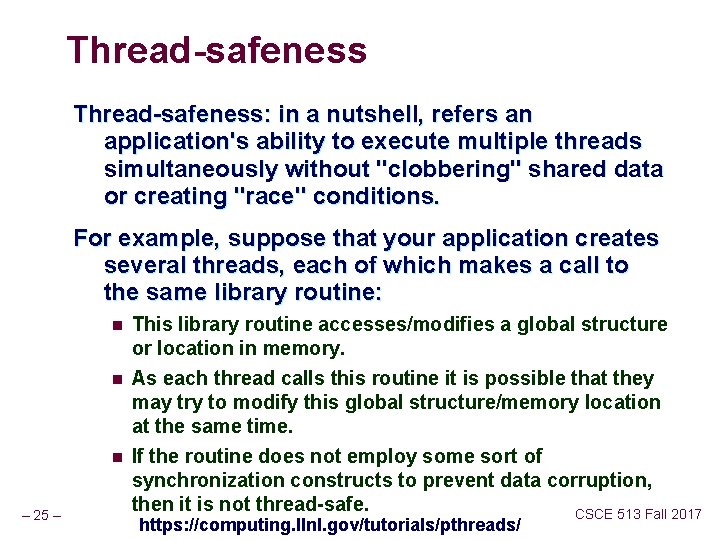

        /* create all threads */
        for (i=0; i<NUM_THREADS; ++i) {
            thread_args[i] = i;
            printf("In main: creating thread %d\n", i);
            rc = pthread_create(&threads[i], NULL, TaskCode, (void *) &thread_args[i]);
            assert(0 == rc);
        }

        /* wait for all threads to complete */
        for (i=0; i<NUM_THREADS; ++i) {
            rc = pthread_join(threads[i], NULL);
            assert(0 == rc);
        }

        exit(EXIT_SUCCESS);
    }

Designing Threaded Programs

- What type of parallel programming model to use?
- Problem partitioning
- Load balancing
- Communications
- Data dependencies
- Synchronization and race conditions
- Memory issues
- I/O issues
- Program complexity
- Programmer effort/costs/time

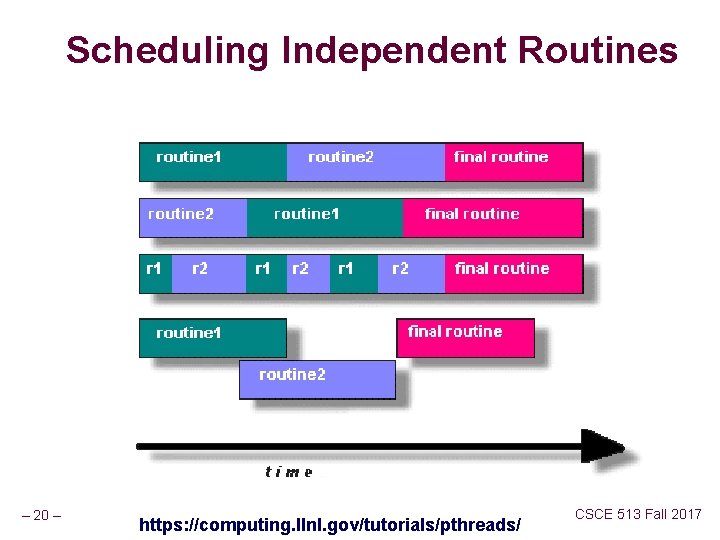

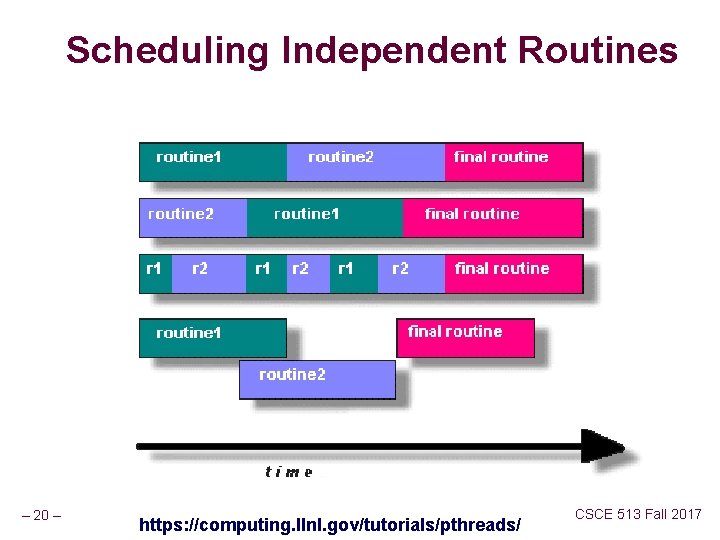

Scheduling Independent Routines

Programs suitable for Multithreading

Programs with the following characteristics may be well suited to threads:

- Work that can be executed, or data that can be operated on, by multiple tasks simultaneously
- Block for potentially long I/O waits
- Use many CPU cycles in some places but not others
- Must respond to asynchronous events
- Some work is more important than other work (priority interrupts)

Client-Server Applications

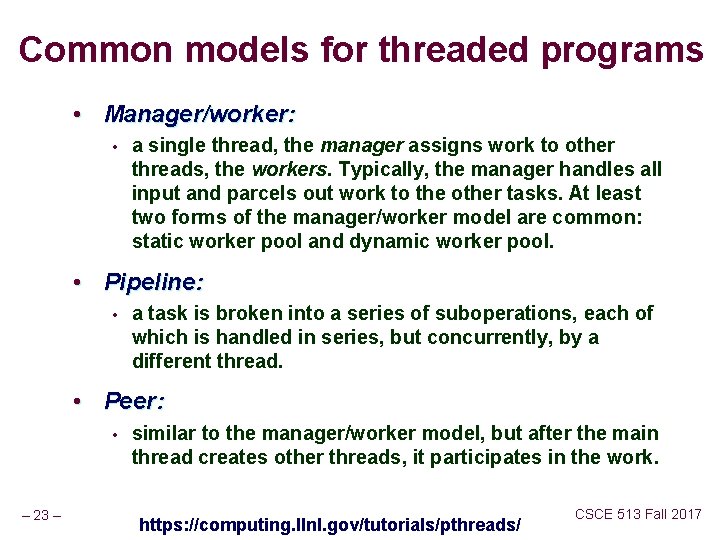

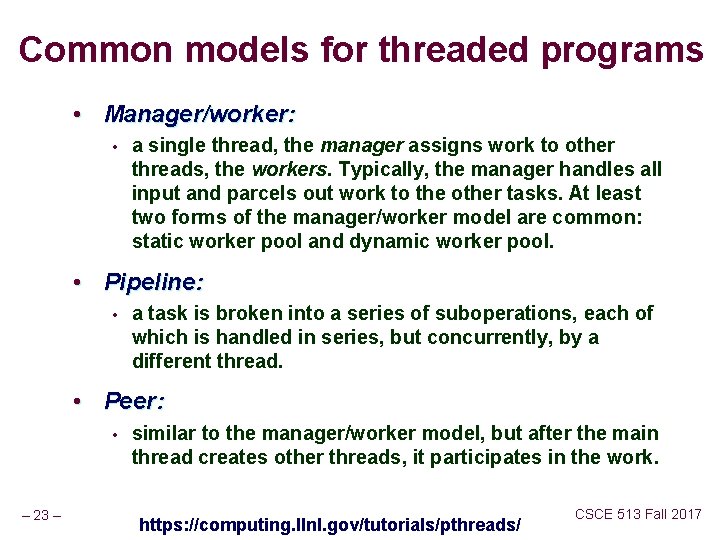

Common models for threaded programs

- Manager/worker: a single thread, the manager, assigns work to other threads, the workers. Typically, the manager handles all input and parcels out work to the other tasks. At least two forms of the manager/worker model are common: static worker pool and dynamic worker pool.
- Pipeline: a task is broken into a series of suboperations, each of which is handled in series, but concurrently, by a different thread.
- Peer: similar to the manager/worker model, but after the main thread creates other threads, it participates in the work.
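A static worker pool in the manager/worker style might look like the sketch below. The task (summing an array), the pool size `NUM_WORKERS`, and the names `struct slice`, `sum_slice`, and `manager_sum` are all assumptions chosen for illustration; the slides do not prescribe them.

```c
#include <assert.h>
#include <pthread.h>

#define NUM_WORKERS 4   /* hypothetical fixed pool size */

/* One unit of work handed from the manager to a worker. */
struct slice {
    const int *data;
    int begin, end;     /* half-open range [begin, end) */
    long sum;           /* result, written only by the owning worker */
};

static void *sum_slice(void *arg)
{
    struct slice *s = arg;
    int i;

    s->sum = 0;
    for (i = s->begin; i < s->end; ++i)
        s->sum += s->data[i];
    return NULL;
}

/* Manager: partitions the input, starts the workers, collects results. */
long manager_sum(const int *data, int n)
{
    pthread_t workers[NUM_WORKERS];
    struct slice slices[NUM_WORKERS];
    long total = 0;
    int w, rc;

    for (w = 0; w < NUM_WORKERS; ++w) {
        slices[w].data = data;
        slices[w].begin = (int) ((long) n * w / NUM_WORKERS);
        slices[w].end = (int) ((long) n * (w + 1) / NUM_WORKERS);
        slices[w].sum = 0;
        rc = pthread_create(&workers[w], NULL, sum_slice, &slices[w]);
        assert(rc == 0);
    }
    for (w = 0; w < NUM_WORKERS; ++w) {
        rc = pthread_join(workers[w], NULL);
        assert(rc == 0);
        total += slices[w].sum;
    }
    return total;
}
```

Each worker writes only to its own `struct slice`, so no mutex is needed here; the joins are the only synchronization. In the peer model the manager would also sum one of the slices itself instead of only waiting.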

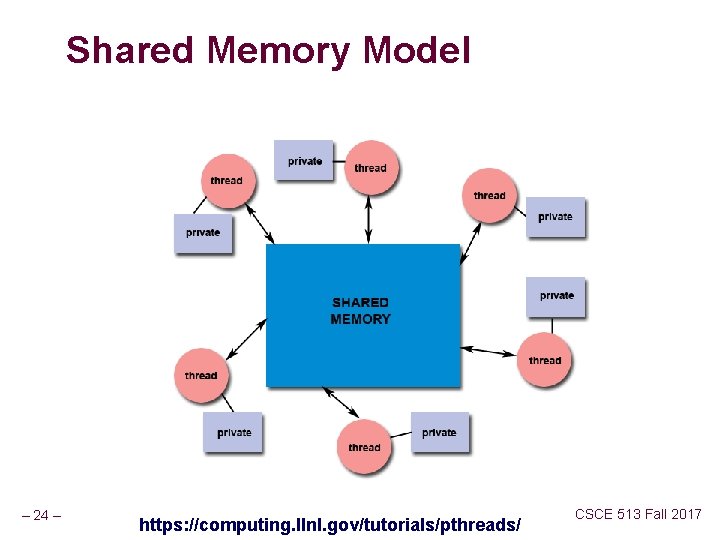

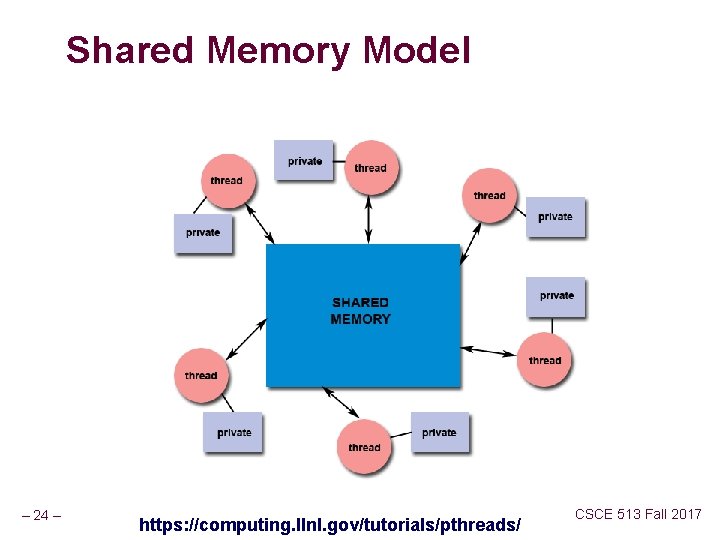

Shared Memory Model

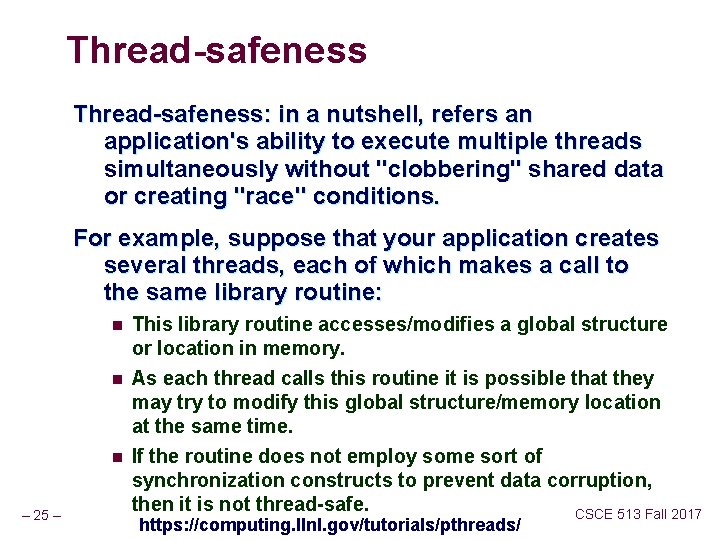

Thread-safeness

Thread-safeness, in a nutshell, refers to an application's ability to execute multiple threads simultaneously without "clobbering" shared data or creating "race" conditions. For example, suppose that your application creates several threads, each of which makes a call to the same library routine:

- This library routine accesses/modifies a global structure or location in memory.
- As each thread calls this routine, it is possible that they may try to modify this global structure/memory location at the same time.
- If the routine does not employ some sort of synchronization constructs to prevent data corruption, then it is not thread-safe.
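The library-routine scenario can be made concrete with a shared counter. In this sketch `counter_add` plays the role of the library routine and is made thread-safe with a mutex; the names, `MAX_THREADS`, and `ADDS_PER_THREAD` are hypothetical values picked for the demonstration.

```c
#include <assert.h>
#include <pthread.h>

#define MAX_THREADS 16           /* hypothetical upper bound for the demo */
#define ADDS_PER_THREAD 100000   /* hypothetical number of calls per thread */

static long counter;             /* the shared "global structure" */
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

/* The "library routine": safe to call from many threads at once. */
void counter_add(long amount)
{
    int rc = pthread_mutex_lock(&counter_lock);
    assert(rc == 0);
    counter += amount;           /* read-modify-write done under the lock */
    rc = pthread_mutex_unlock(&counter_lock);
    assert(rc == 0);
}

static void *caller(void *unused)
{
    int i;

    (void) unused;
    for (i = 0; i < ADDS_PER_THREAD; ++i)
        counter_add(1);
    return NULL;
}

/* Runs nthreads concurrent callers and returns the final counter value. */
long run_counter_demo(int nthreads)
{
    pthread_t threads[MAX_THREADS];
    int i, rc;

    assert(nthreads >= 1 && nthreads <= MAX_THREADS);
    counter = 0;
    for (i = 0; i < nthreads; ++i) {
        rc = pthread_create(&threads[i], NULL, caller, NULL);
        assert(rc == 0);
    }
    for (i = 0; i < nthreads; ++i) {
        rc = pthread_join(threads[i], NULL);
        assert(rc == 0);
    }
    return counter;
}
```

With the lock in place, `run_counter_demo(4)` always returns 400000. If the lock and unlock calls were removed from `counter_add`, the increments could interleave and updates could be lost, which is exactly the "clobbering" the definition warns about.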

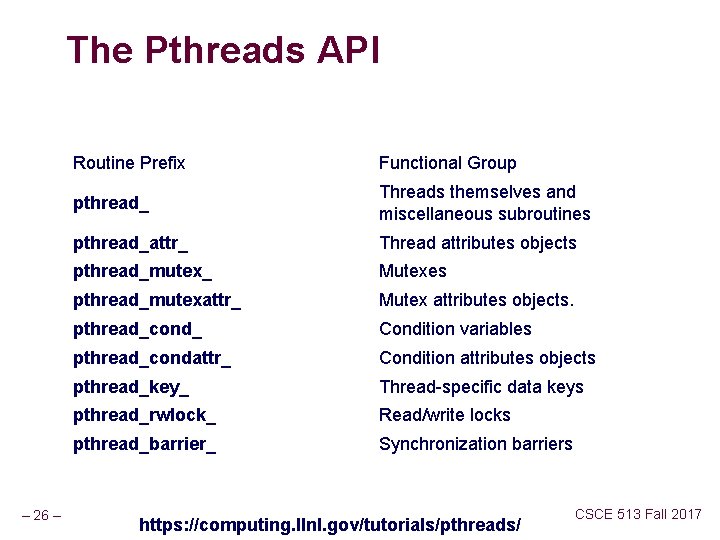

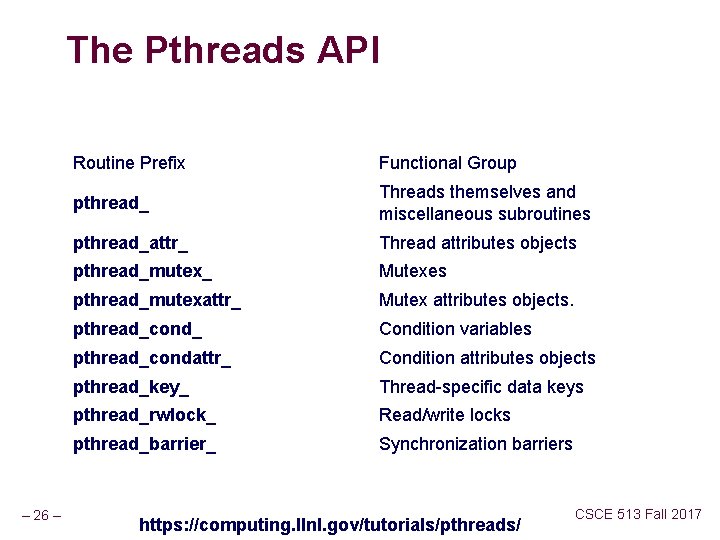

The Pthreads API

| Routine Prefix | Functional Group |
|---|---|
| pthread_ | Threads themselves and miscellaneous subroutines |
| pthread_attr_ | Thread attributes objects |
| pthread_mutex_ | Mutexes |
| pthread_mutexattr_ | Mutex attributes objects |
| pthread_cond_ | Condition variables |
| pthread_condattr_ | Condition attributes objects |
| pthread_key_ | Thread-specific data keys |
| pthread_rwlock_ | Read/write locks |
| pthread_barrier_ | Synchronization barriers |

Compiling Threaded Programs

Creating and Terminating Threads

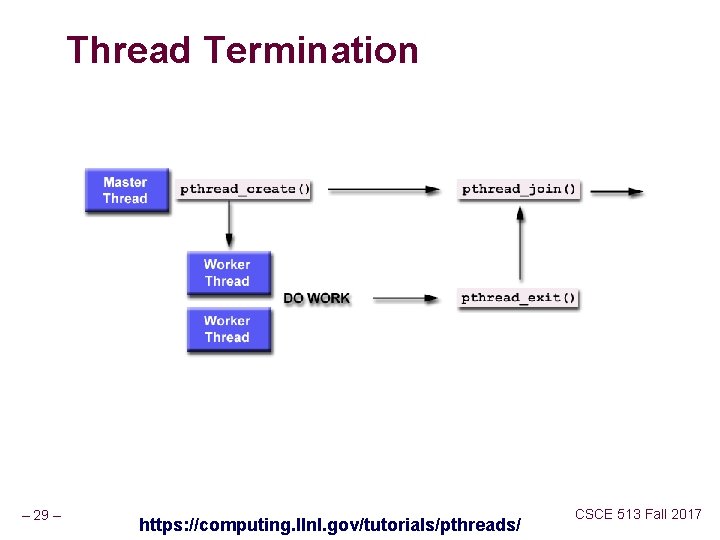

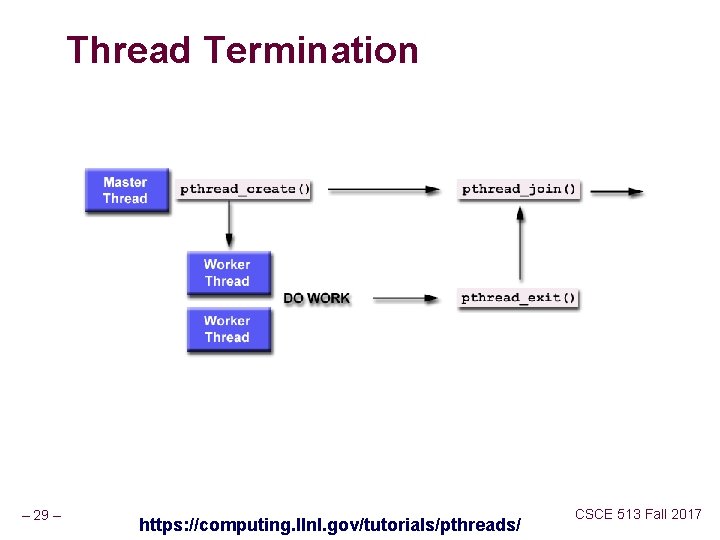

Thread Termination

Mutex Variables

- pthread_mutex_lock (mutex)
- pthread_mutex_trylock (mutex)
- pthread_mutex_unlock (mutex)

The pthread_mutex_lock() routine is used by a thread to acquire a lock on the specified mutex variable. If the mutex is already locked by another thread, this call will block the calling thread until the mutex is unlocked.

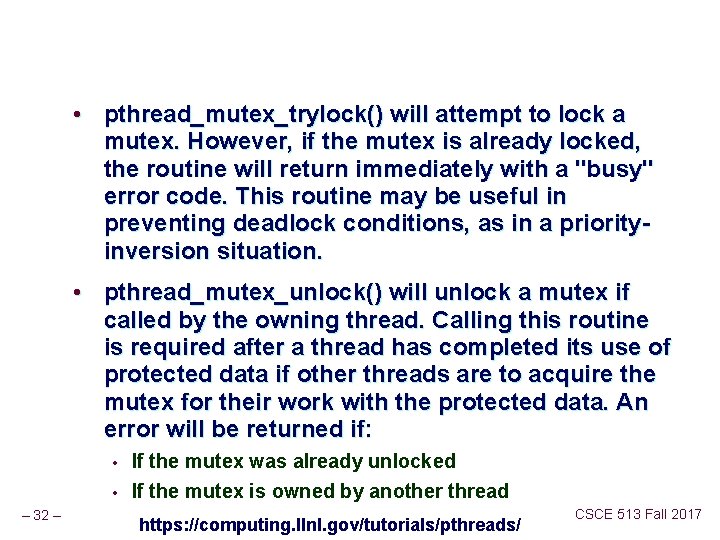

- pthread_mutex_trylock() will attempt to lock a mutex. However, if the mutex is already locked, the routine will return immediately with a "busy" error code. This routine may be useful in preventing deadlock conditions, as in a priority-inversion situation.
- pthread_mutex_unlock() will unlock a mutex if called by the owning thread. Calling this routine is required after a thread has completed its use of protected data if other threads are to acquire the mutex for their work with the protected data. An error will be returned if:
  - the mutex was already unlocked
  - the mutex is owned by another thread
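The return codes described above can be observed with a short sketch. One assumption matters here: the unlock error is only guaranteed for an error-checking mutex (type `PTHREAD_MUTEX_ERRORCHECK`); with a default mutex, unlocking a mutex you do not hold is undefined behavior, so the sketch creates an error-checking mutex explicitly. The helper names are invented for the example.

```c
#define _XOPEN_SOURCE 700   /* exposes PTHREAD_MUTEX_ERRORCHECK on strict compilers */
#include <assert.h>
#include <errno.h>
#include <pthread.h>

/* Initializes *m as an error-checking mutex. */
static void init_errorcheck_mutex(pthread_mutex_t *m)
{
    pthread_mutexattr_t attr;
    int rc;

    rc = pthread_mutexattr_init(&attr);
    assert(rc == 0);
    rc = pthread_mutexattr_settype(&attr, PTHREAD_MUTEX_ERRORCHECK);
    assert(rc == 0);
    rc = pthread_mutex_init(m, &attr);
    assert(rc == 0);
    pthread_mutexattr_destroy(&attr);
}

/* Result of pthread_mutex_trylock() on a mutex that is already locked. */
int trylock_when_held(void)
{
    pthread_mutex_t m;
    int rc;

    init_errorcheck_mutex(&m);
    rc = pthread_mutex_lock(&m);
    assert(rc == 0);
    rc = pthread_mutex_trylock(&m);   /* returns at once instead of blocking */
    pthread_mutex_unlock(&m);
    pthread_mutex_destroy(&m);
    return rc;
}

/* Result of pthread_mutex_unlock() on a mutex the caller does not hold. */
int unlock_when_not_held(void)
{
    pthread_mutex_t m;
    int rc;

    init_errorcheck_mutex(&m);
    rc = pthread_mutex_unlock(&m);    /* never locked, so this is an error */
    pthread_mutex_destroy(&m);
    return rc;
}
```

Here `trylock_when_held()` returns `EBUSY`, the "busy" code from the first bullet, and `unlock_when_not_held()` returns `EPERM`. A caller that gets `EBUSY` can back off and do other work rather than block, which is how trylock helps avoid deadlock.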

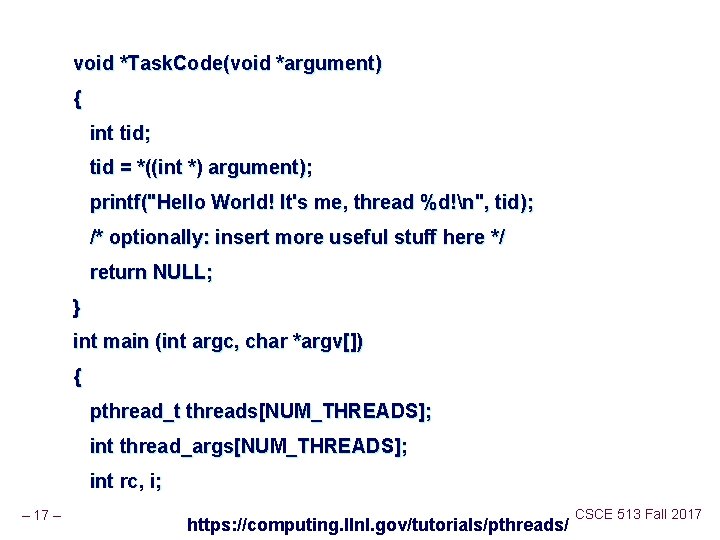

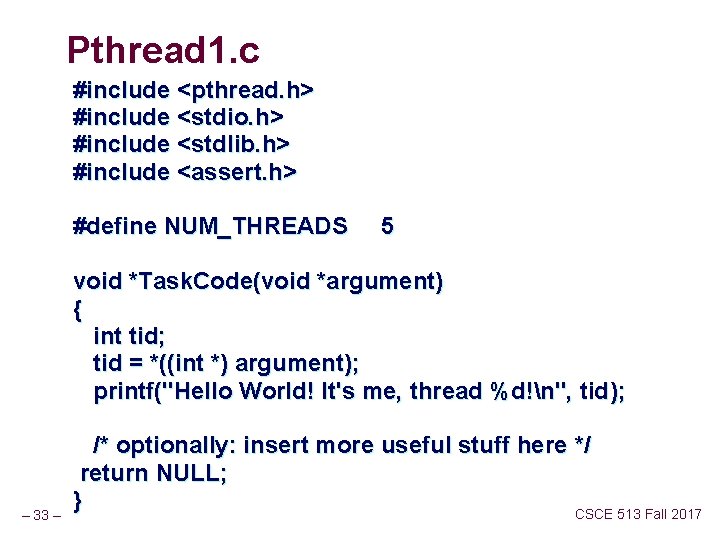

Pthread1.c

    #include <pthread.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <assert.h>

    #define NUM_THREADS 5

    void *TaskCode(void *argument)
    {
        int tid;

        tid = *((int *) argument);
        printf("Hello World! It's me, thread %d!\n", tid);

        /* optionally: insert more useful stuff here */

        return NULL;
    }


    int main (int argc, char *argv[])
    {
        pthread_t threads[NUM_THREADS];
        int thread_args[NUM_THREADS];
        int rc, i;

        /* create all threads */
        for (i=0; i<NUM_THREADS; ++i) {
            thread_args[i] = i;
            printf("In main: creating thread %d\n", i);
            rc = pthread_create(&threads[i], NULL, TaskCode, (void *) &thread_args[i]);
            assert(0 == rc);
        }

        /* wait for all threads to complete */
        for (i=0; i<NUM_THREADS; ++i) {
            rc = pthread_join(threads[i], NULL);
            assert(0 == rc);
        }

        exit(EXIT_SUCCESS);
    }

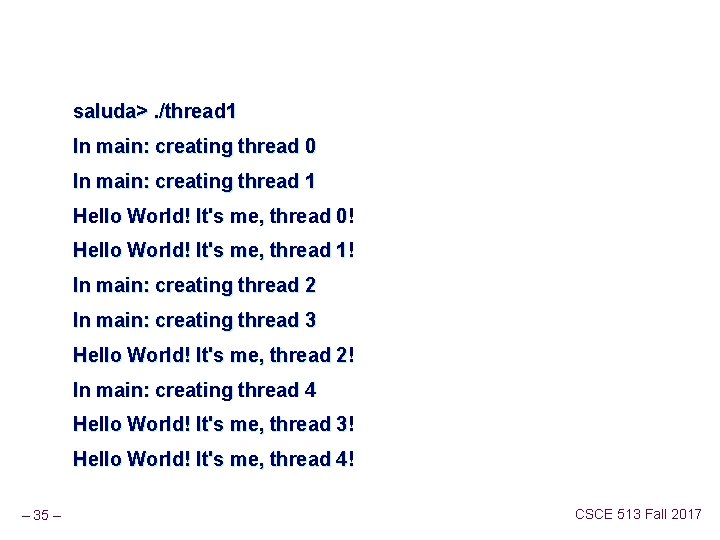

    saluda> ./thread1
    In main: creating thread 0
    In main: creating thread 1
    Hello World! It's me, thread 0!
    Hello World! It's me, thread 1!
    In main: creating thread 2
    In main: creating thread 3
    Hello World! It's me, thread 2!
    In main: creating thread 4
    Hello World! It's me, thread 3!
    Hello World! It's me, thread 4!
