CSCE 513 Computer Architecture Lecture 14 Instruction Level


CSCE 513 Computer Architecture Lecture 14: Instruction Level Parallelism: Static Scheduling, Hyper-threading and Limits

Topics
- Static scheduling
- Hardware threading
- Limits on ILP
- Readings

October 31, 2016

Overview

Last time
- Vector machines

New
- Chapter 3 topics revisited: multiple issue; Tomasulo's data hazards for the address field
- Hyperthreading
- Limits on ILP

– 2 – CSCE 513 Fall 2016

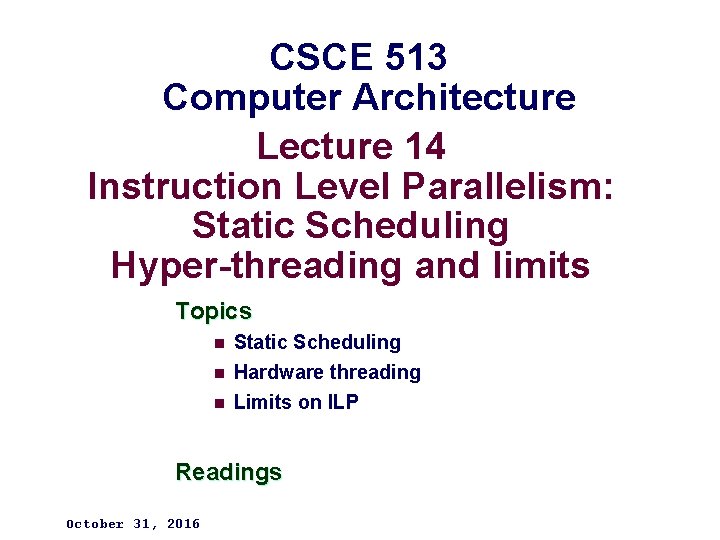

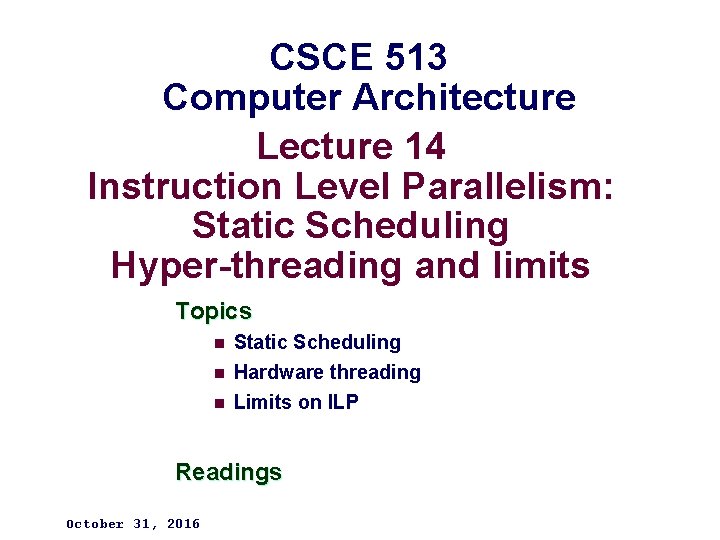

Loop Unrolling Latencies

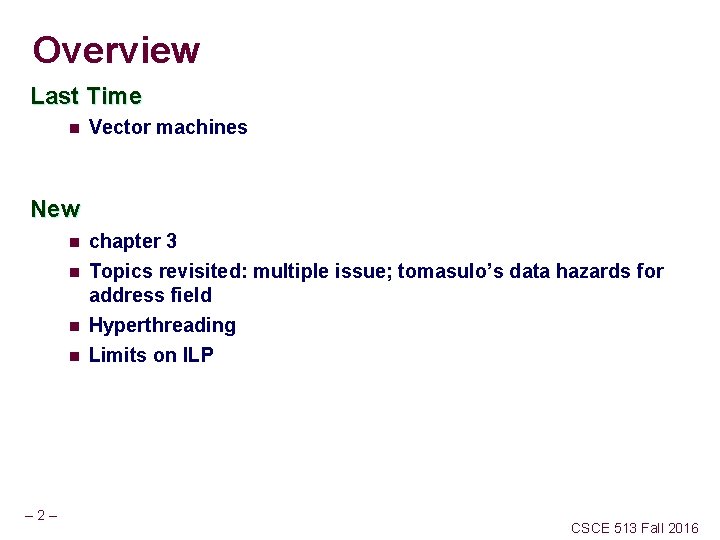

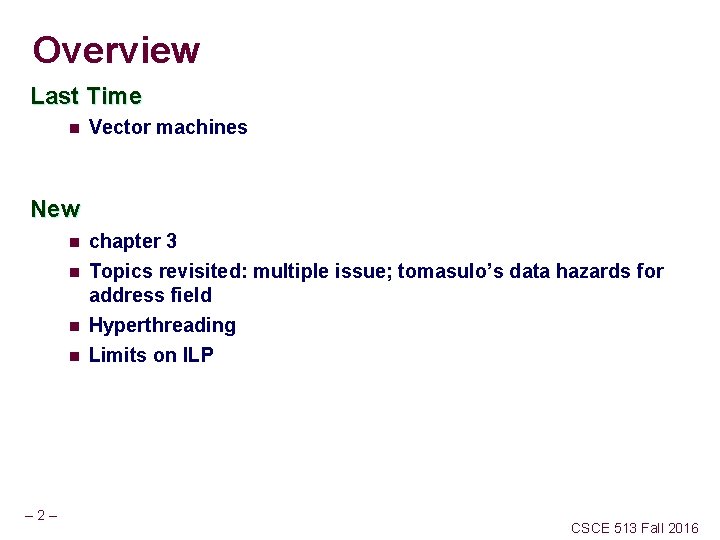

Add constant to array elements

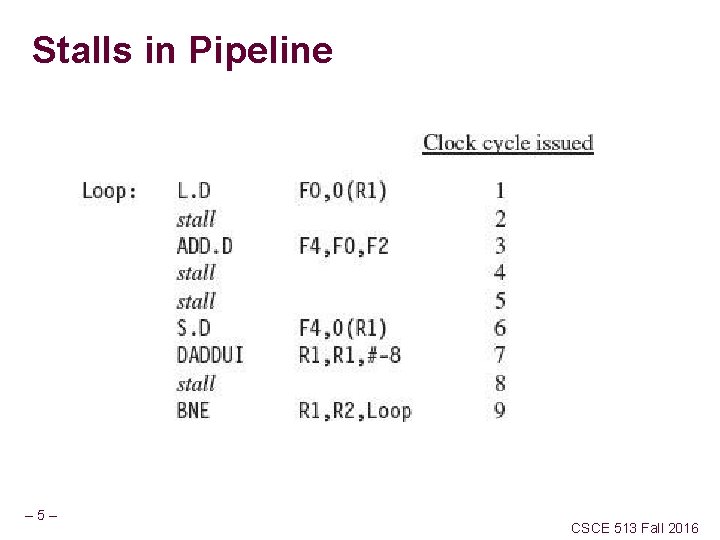

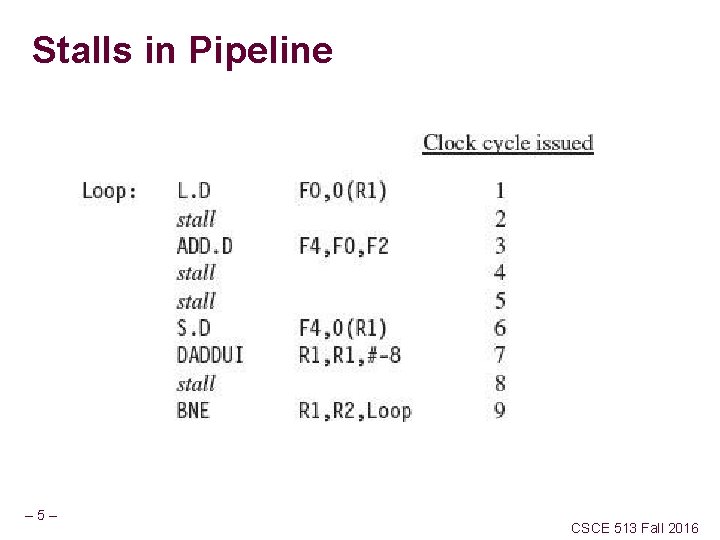

Stalls in Pipeline

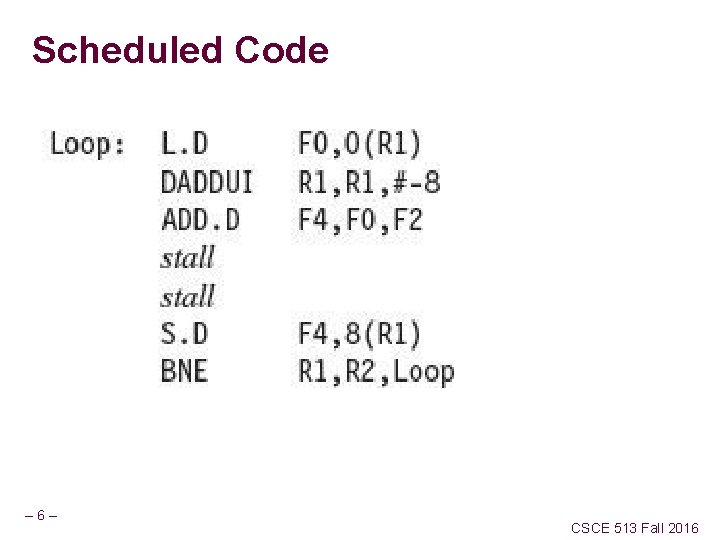

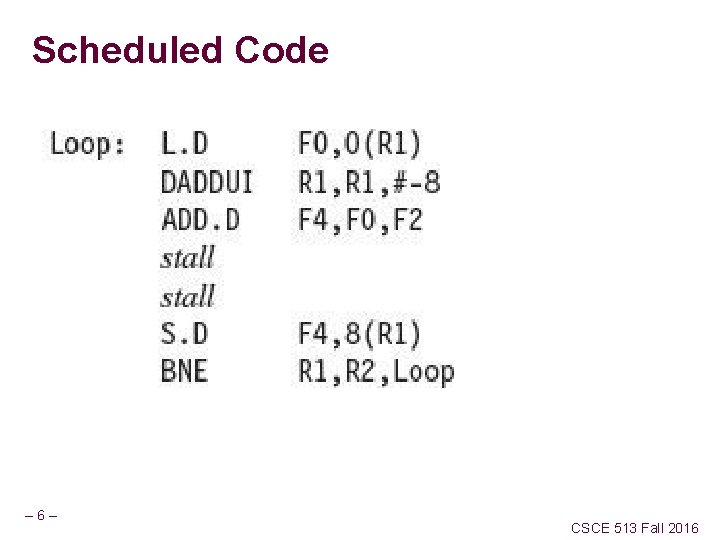

Scheduled Code

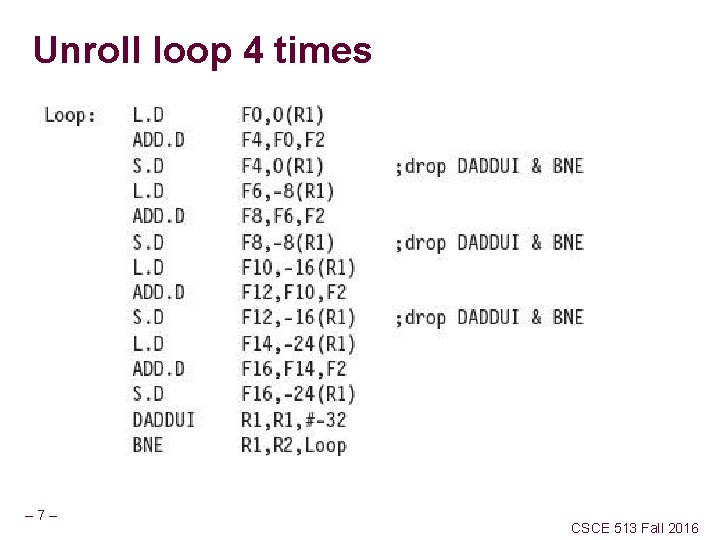

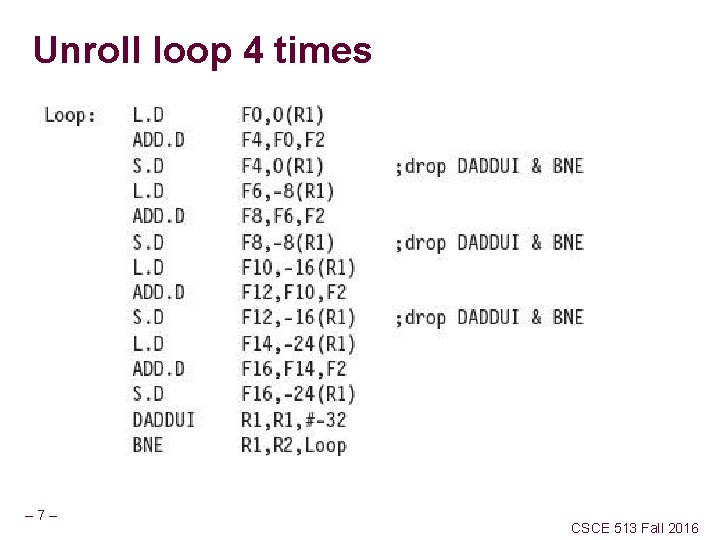

Unroll loop 4 times

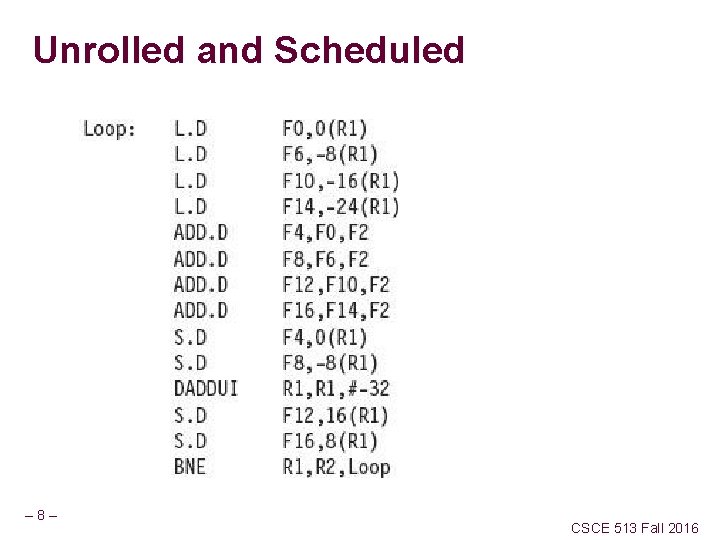

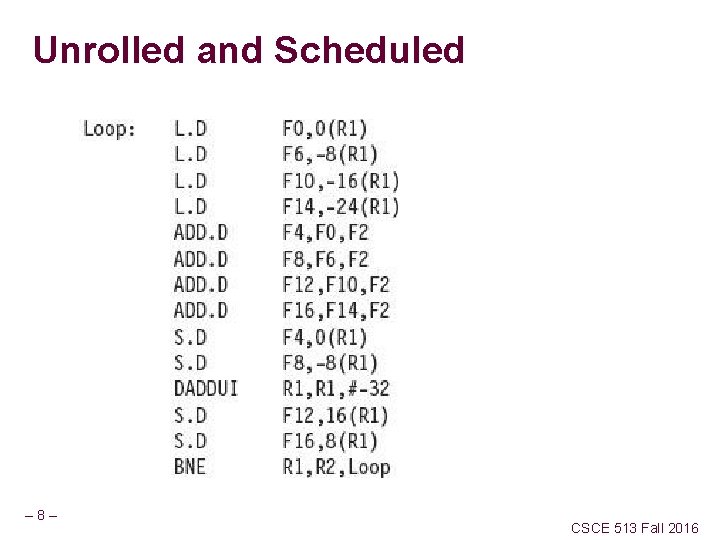

Unrolled and Scheduled

Limitations of Static Scheduling

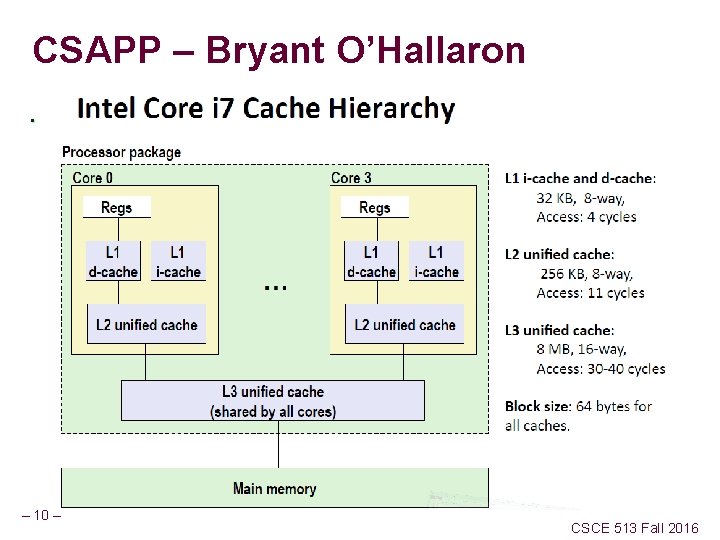

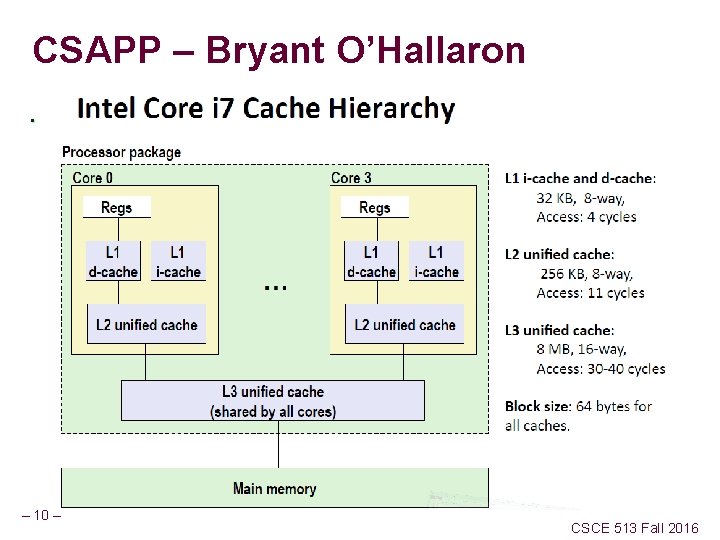

CSAPP: Bryant and O’Hallaron

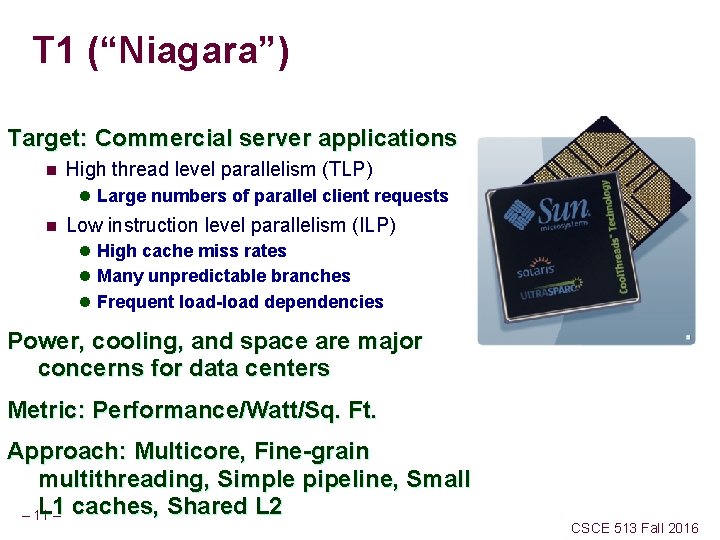

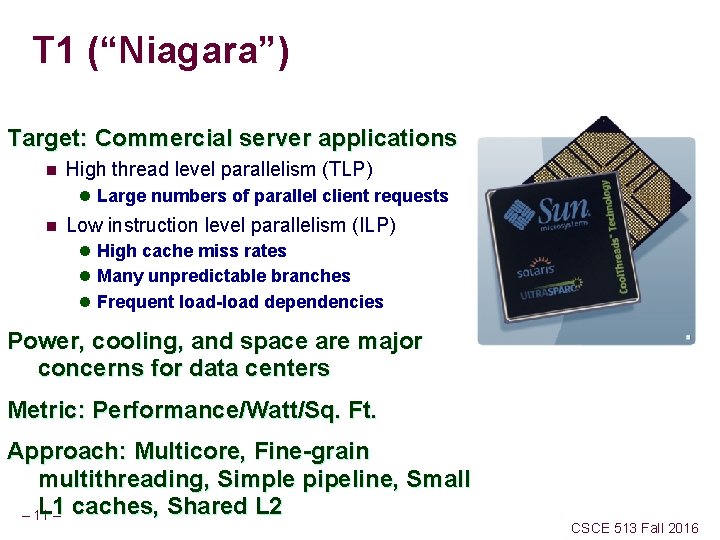

T1 (“Niagara”)

Target: commercial server applications
- High thread-level parallelism (TLP)
  - Large numbers of parallel client requests
- Low instruction-level parallelism (ILP)
  - High cache miss rates
  - Many unpredictable branches
  - Frequent load-load dependencies

Power, cooling, and space are major concerns for data centers.

Metric: Performance/Watt/Sq. Ft.

Approach: multicore, fine-grain multithreading, simple pipeline, small L1 caches, shared L2

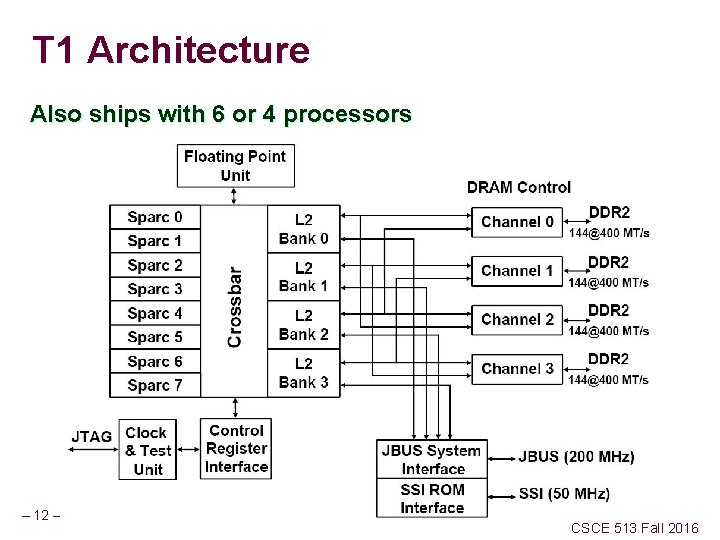

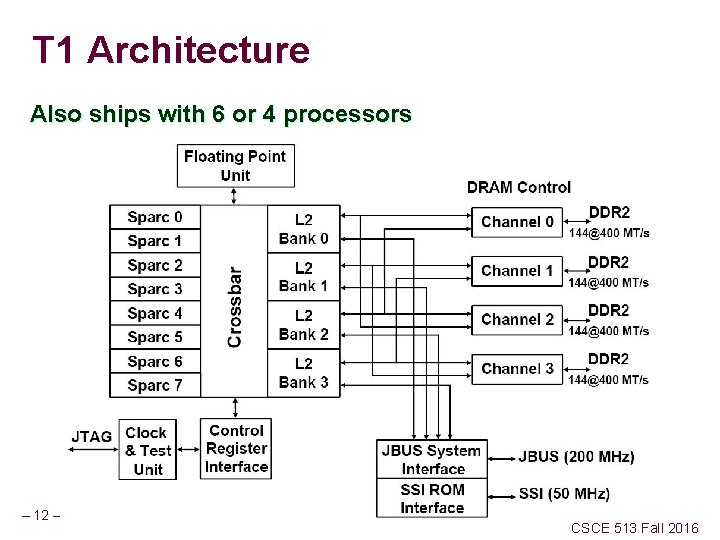

T1 Architecture

Also ships with 6 or 4 processors

12/25/2021 CS 252 s06 T1

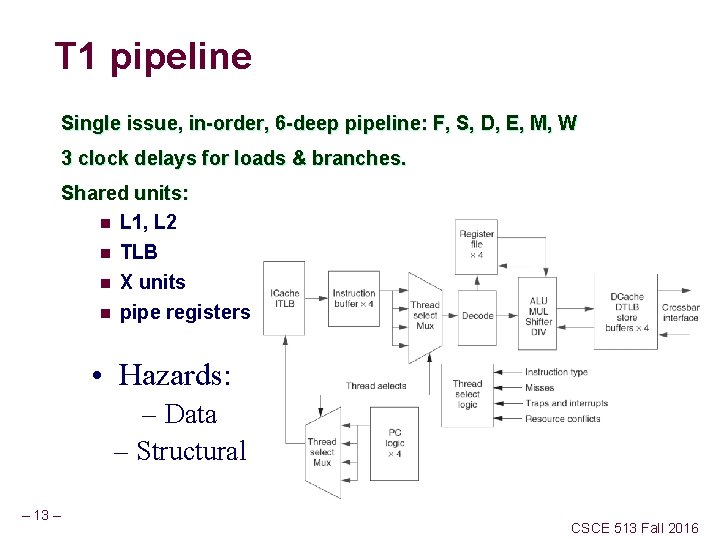

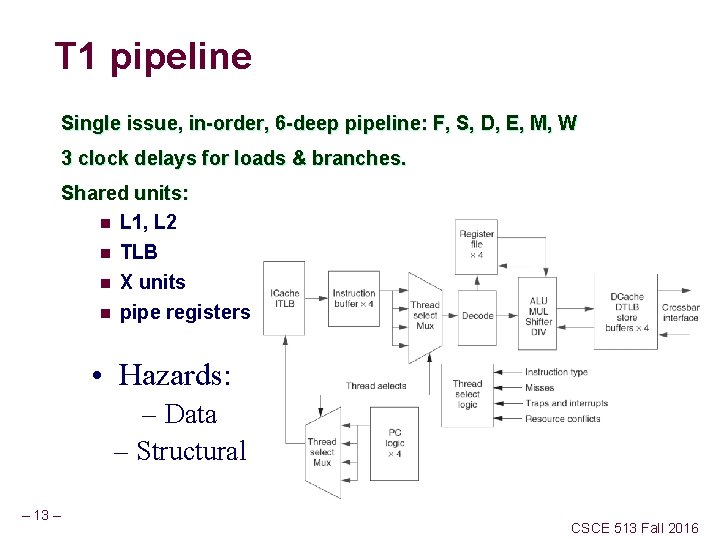

T1 pipeline

Single-issue, in-order, 6-deep pipeline: F, S, D, E, M, W
- 3 clock delays for loads and branches
- Shared units:
  - L1, L2
  - TLB
  - X units
  - pipe registers
- Hazards:
  - Data
  - Structural

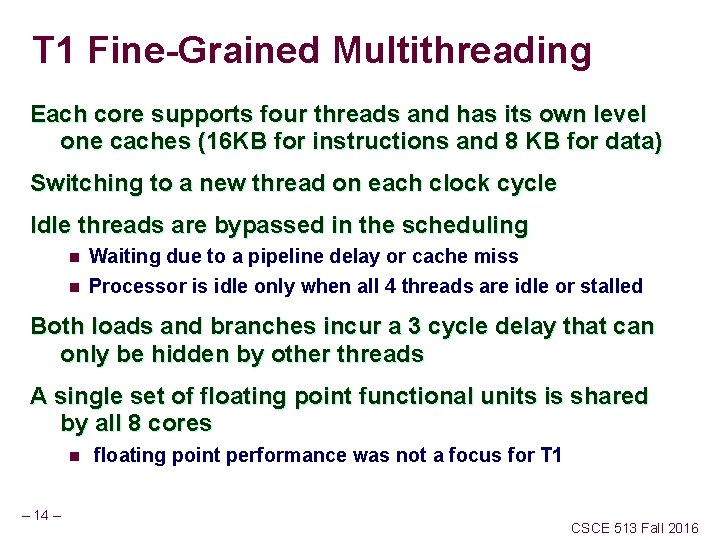

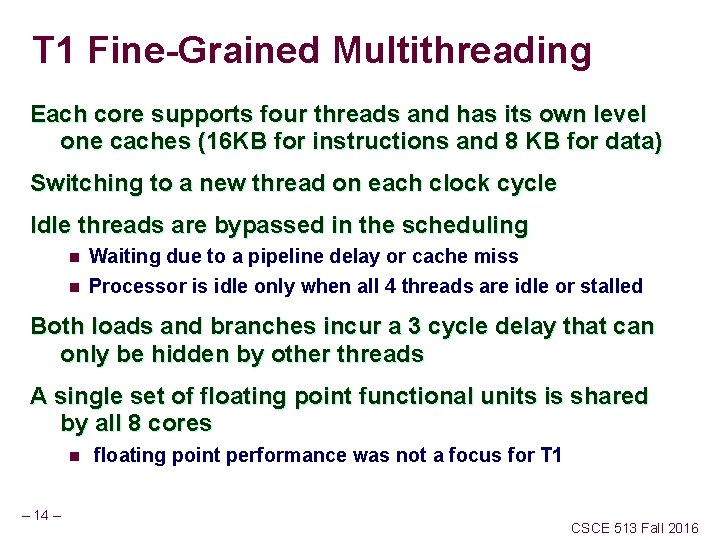

T1 Fine-Grained Multithreading

- Each core supports four threads and has its own level one caches (16 KB for instructions and 8 KB for data)
- The core switches to a new thread on each clock cycle
- Idle threads are bypassed in the scheduling
  - A thread is idle while waiting due to a pipeline delay or cache miss
  - The processor is idle only when all 4 threads are idle or stalled
- Both loads and branches incur a 3-cycle delay that can only be hidden by other threads
- A single set of floating-point functional units is shared by all 8 cores
  - Floating-point performance was not a focus for T1

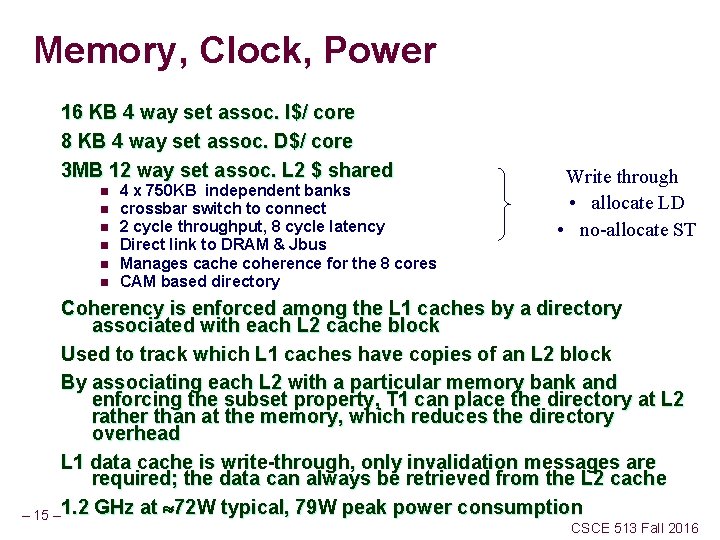

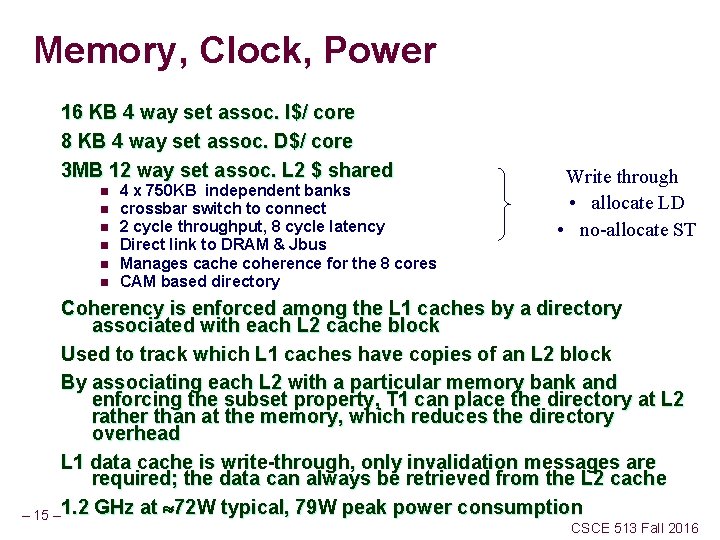

Memory, Clock, Power

- 16 KB 4-way set-associative I$ per core
- 8 KB 4-way set-associative D$ per core
- 3 MB 12-way set-associative shared L2 $
  - 4 x 750 KB independent banks
  - Crossbar switch to connect
  - 2-cycle throughput, 8-cycle latency
  - Direct link to DRAM and JBus
  - Manages cache coherence for the 8 cores
  - CAM-based directory
- Write-through
  - Allocate on LD
  - No-allocate on ST

Coherency is enforced among the L1 caches by a directory associated with each L2 cache block. The directory tracks which L1 caches have copies of an L2 block. T1 associates each L2 with a particular memory bank and enforces the subset property. This lets it place the directory at L2 rather than at the memory, which reduces the directory overhead. Because the L1 data cache is write-through, only invalidation messages are required; the data can always be retrieved from the L2 cache.

1.2 GHz at 72 W typical, 79 W peak power consumption

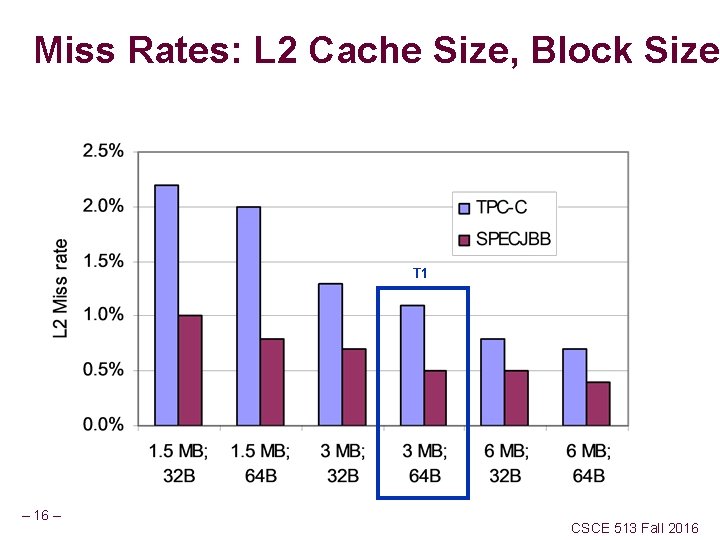

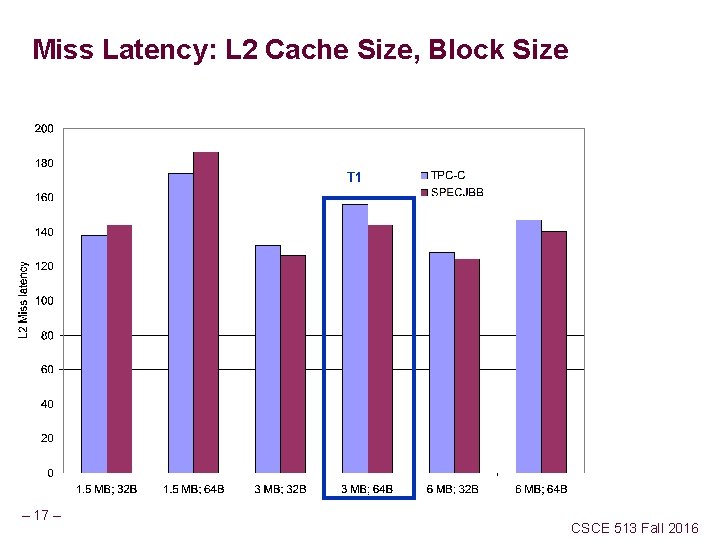

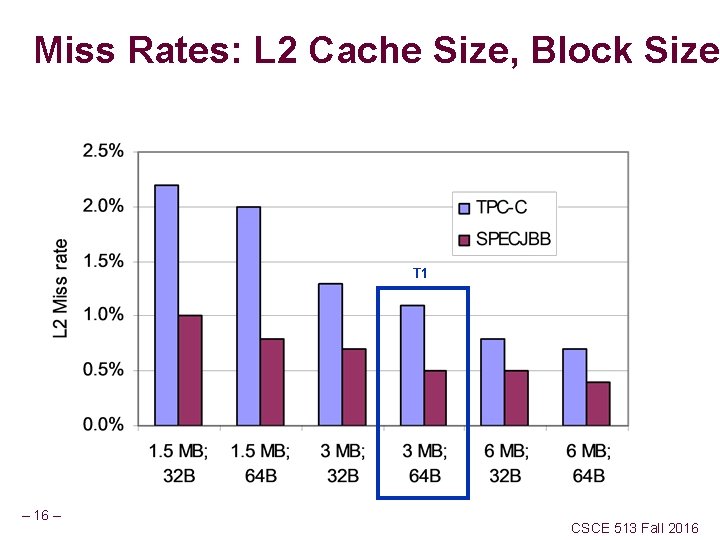

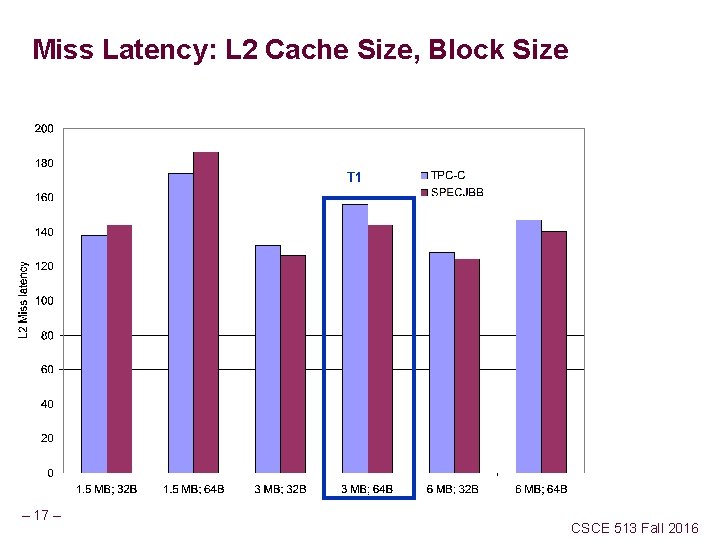

Miss Rates: L2 Cache Size, Block Size (T1)

Miss Latency: L2 Cache Size, Block Size (T1)

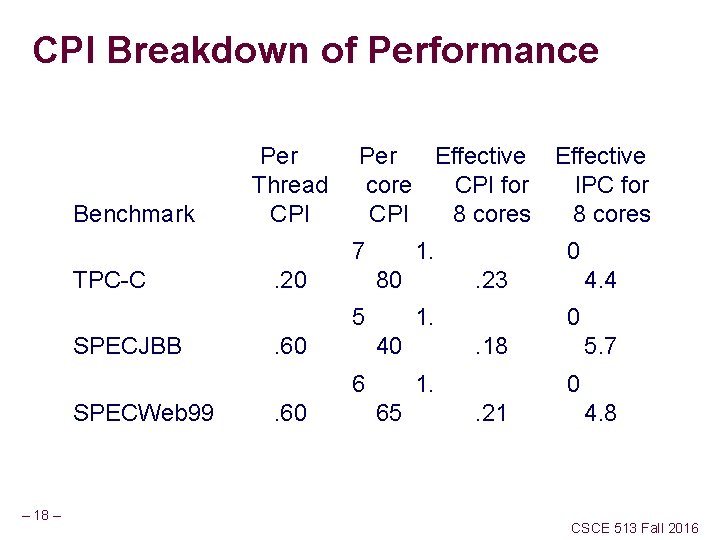

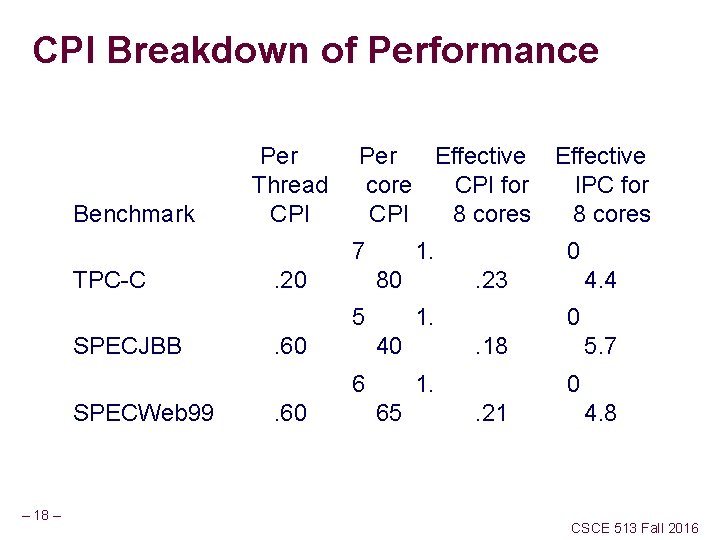

CPI Breakdown of Performance

| Benchmark | Per-thread CPI | Per-core CPI | Effective CPI for 8 cores | Effective IPC for 8 cores |
|---|---|---|---|---|
| TPC-C | 7.20 | 1.80 | 0.23 | 4.4 |
| SPECJBB | 5.60 | 1.40 | 0.18 | 5.7 |
| SPECWeb99 | 6.60 | 1.65 | 0.21 | 4.8 |

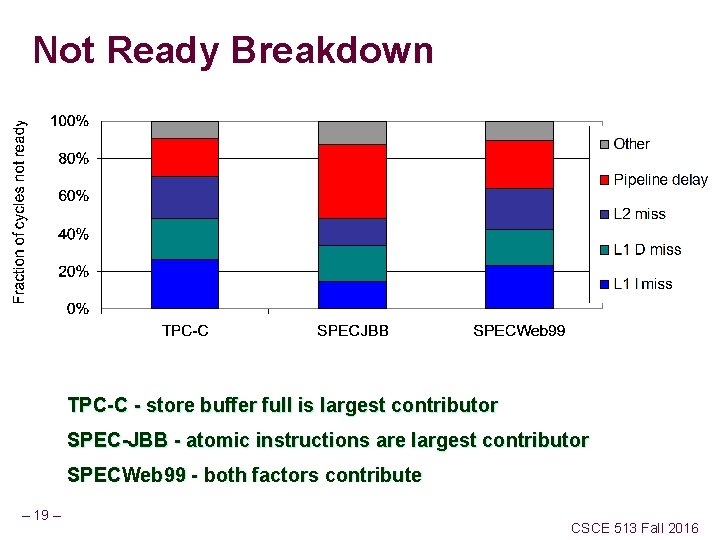

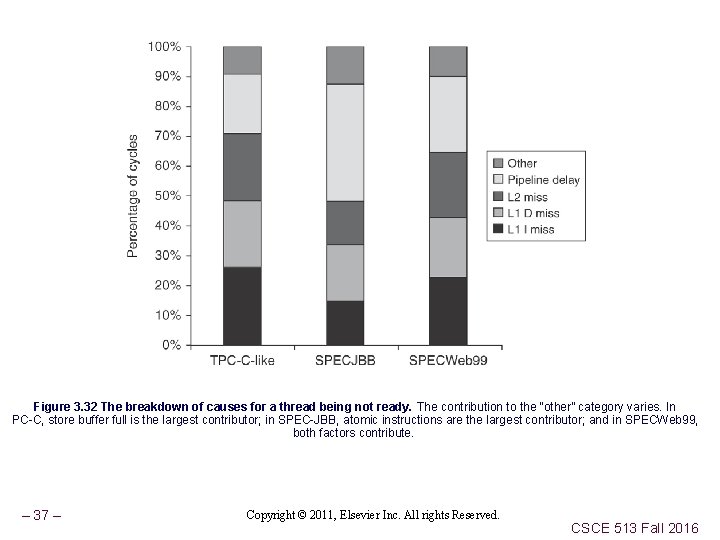

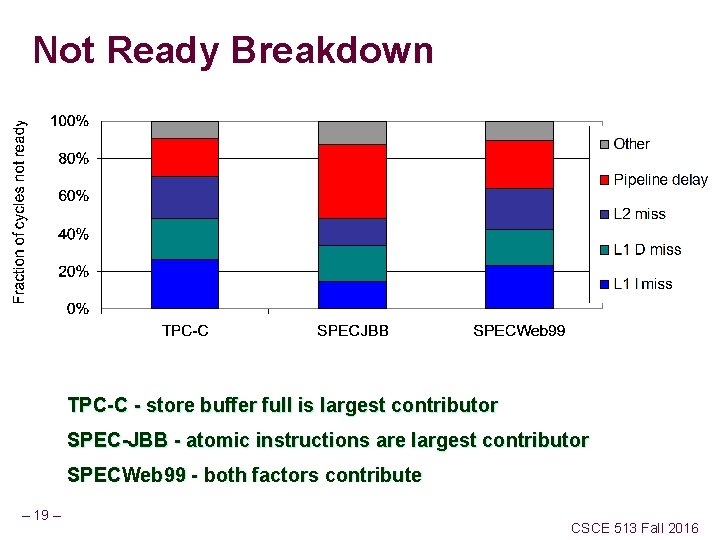

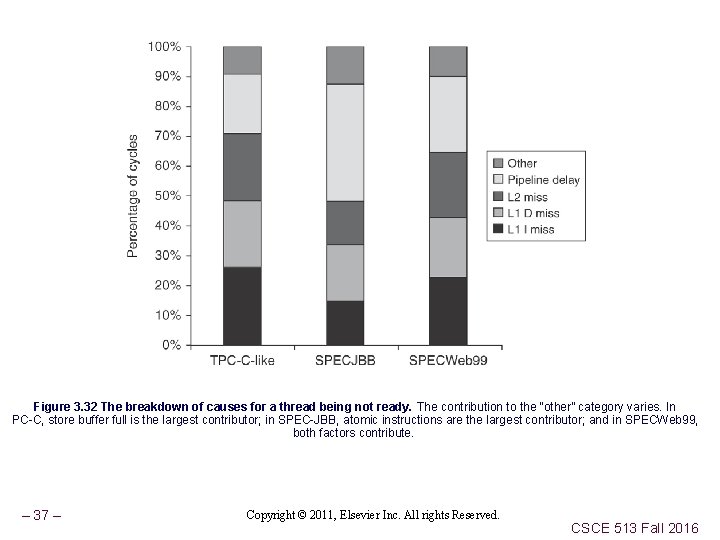

Not Ready Breakdown

- TPC-C: store buffer full is the largest contributor
- SPEC-JBB: atomic instructions are the largest contributor
- SPECWeb99: both factors contribute

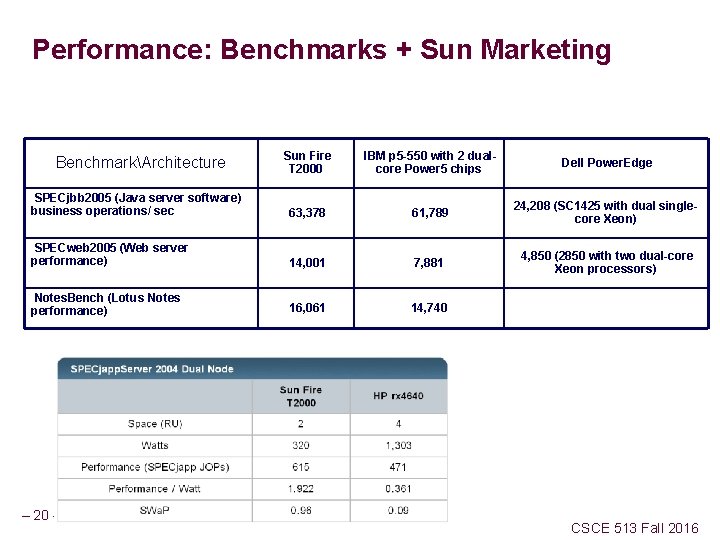

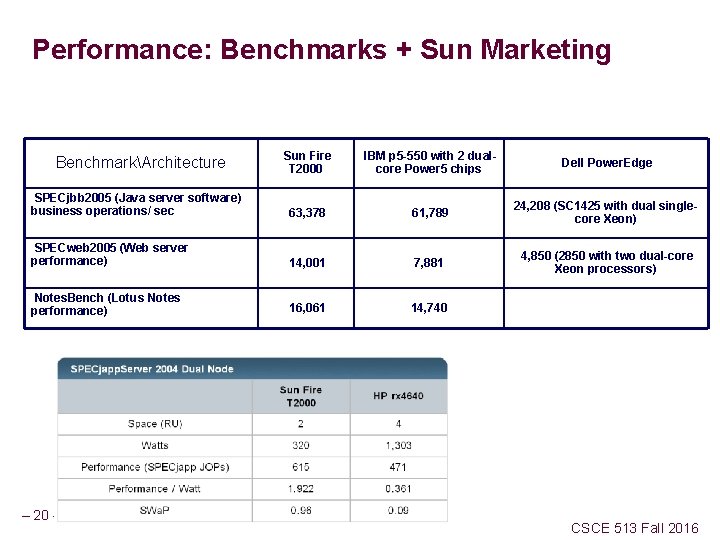

Performance: Benchmarks + Sun Marketing

| Benchmark / Architecture | Sun Fire T2000 | IBM p5-550 with 2 dual-core Power5 chips | Dell PowerEdge |
|---|---|---|---|
| SPECjbb2005 (Java server software), business operations/sec | 63,378 | 61,789 | 24,208 (SC1425 with dual single-core Xeon) |
| SPECweb2005 (Web server performance) | 14,001 | 7,881 | 4,850 (2850 with two dual-core Xeon processors) |
| NotesBench (Lotus Notes performance) | 16,061 | 14,740 | |

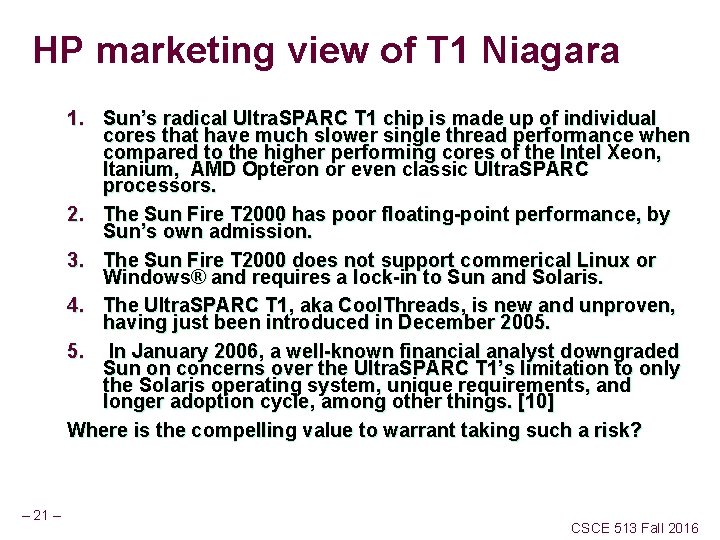

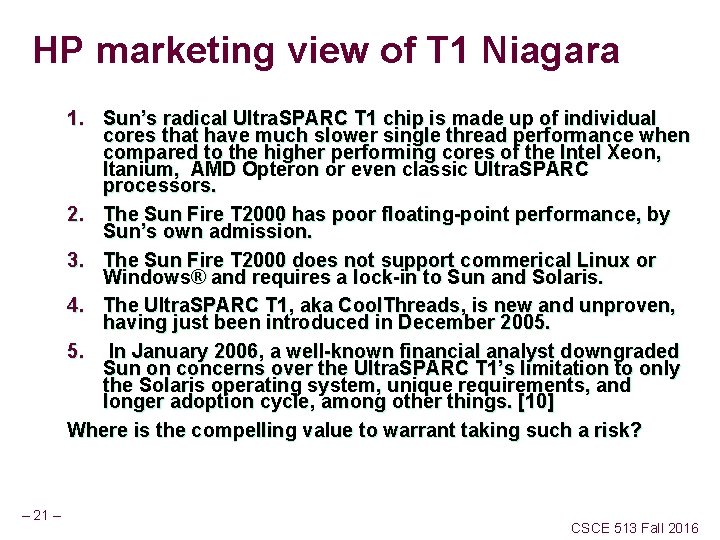

HP marketing view of T1 Niagara

1. Sun’s radical UltraSPARC T1 chip is made up of individual cores that have much slower single-thread performance when compared to the higher-performing cores of the Intel Xeon, Itanium, AMD Opteron or even classic UltraSPARC processors.
2. The Sun Fire T2000 has poor floating-point performance, by Sun’s own admission.
3. The Sun Fire T2000 does not support commercial Linux or Windows® and requires a lock-in to Sun and Solaris.
4. The UltraSPARC T1, aka CoolThreads, is new and unproven, having just been introduced in December 2005.
5. In January 2006, a well-known financial analyst downgraded Sun on concerns over the UltraSPARC T1’s limitation to only the Solaris operating system, unique requirements, and longer adoption cycle, among other things. [10]

Where is the compelling value to warrant taking such a risk?

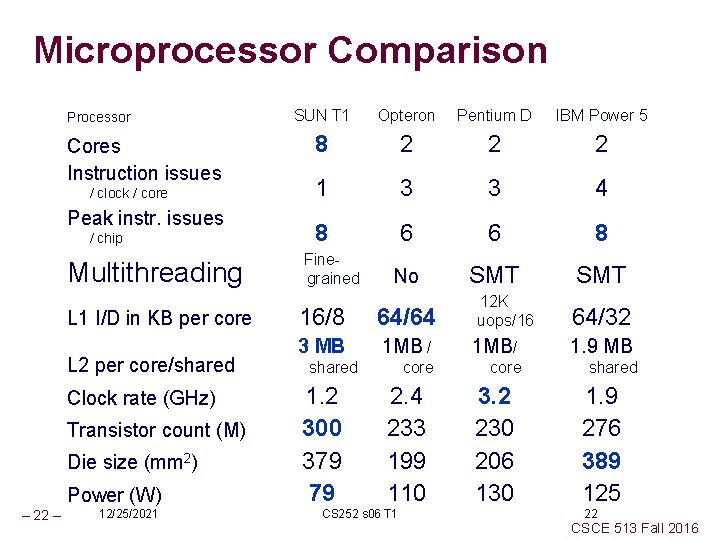

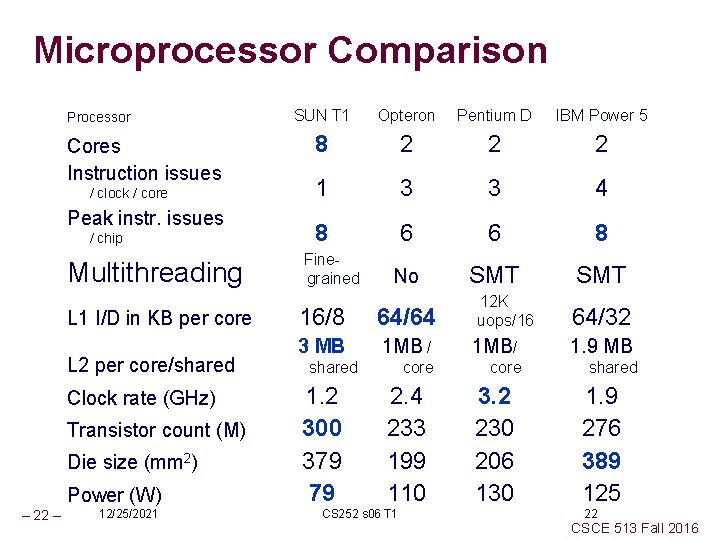

Microprocessor Comparison

| Processor | SUN T1 | Opteron | Pentium D | IBM Power5 |
|---|---|---|---|---|
| Cores | 8 | 2 | 2 | 2 |
| Instruction issues / clock / core | 1 | 3 | 3 | 4 |
| Peak instr. issues / chip | 8 | 6 | 6 | 8 |
| Multithreading | Fine-grained | No | SMT | SMT |
| L1 I/D in KB per core | 16/8 | 64/64 | 12K uops/16 | 64/32 |
| L2 per core/shared | 3 MB shared | 1 MB/core | 1 MB/core | 1.9 MB shared |
| Clock rate (GHz) | 1.2 | 2.4 | 3.2 | 1.9 |
| Transistor count (M) | 300 | 233 | 230 | 276 |
| Die size (mm²) | 379 | 199 | 206 | 389 |
| Power (W) | 79 | 110 | 130 | 125 |

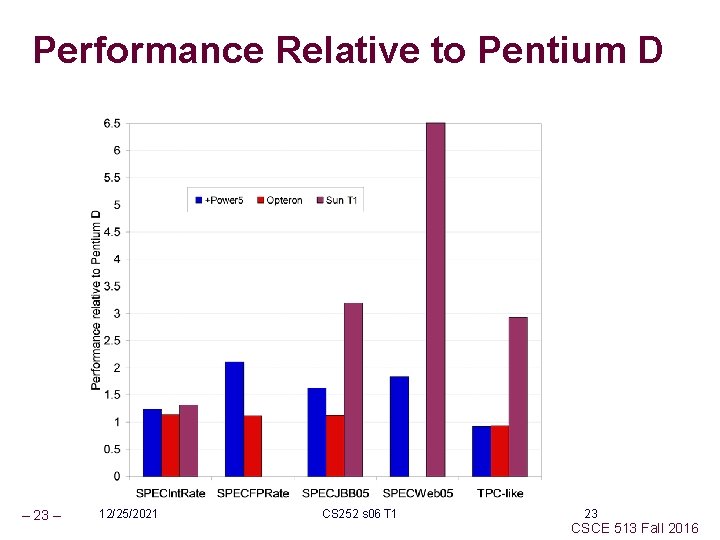

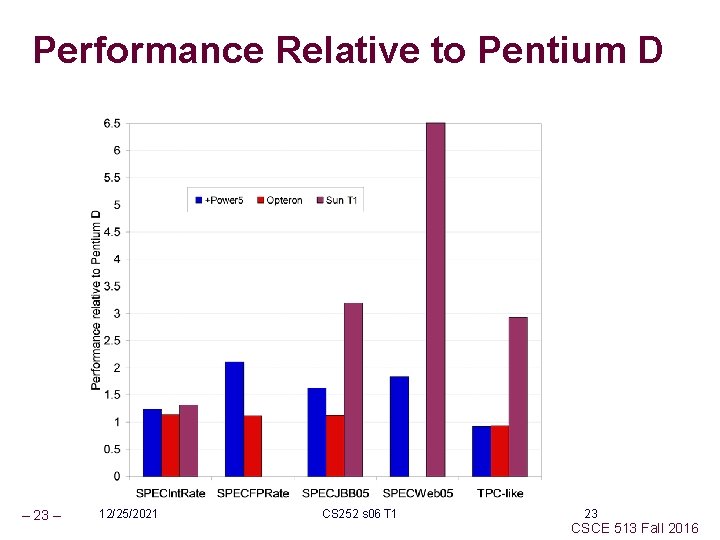

Performance Relative to Pentium D

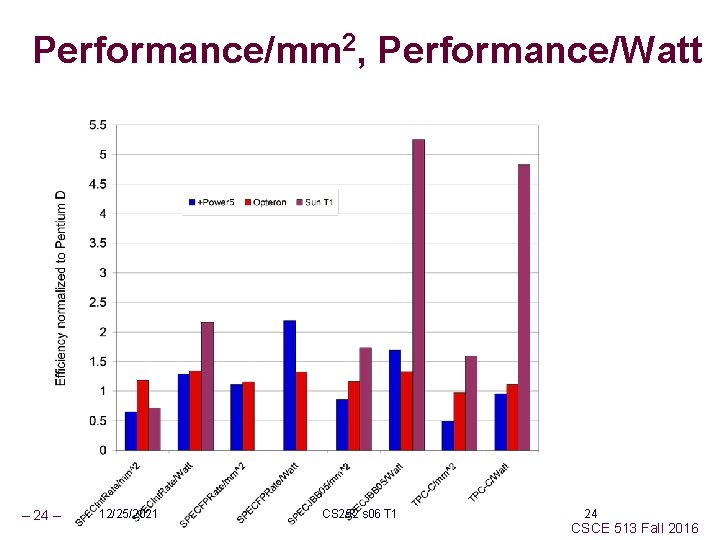

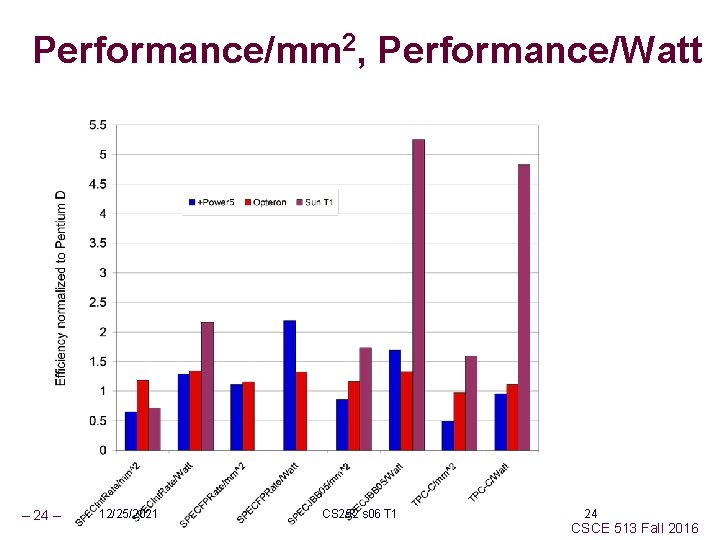

Performance/mm², Performance/Watt

Niagara 2

- Improve performance by increasing the threads supported per chip from 32 to 64
  - 8 cores x 8 threads per core
- Floating-point unit for each core, not for each chip
- Hardware support for the encryption standards AES and 3DES, and for elliptic-curve cryptography
- Niagara 2 will add a number of 8x PCI Express interfaces directly into the chip, in addition to integrated 10 Gigabit Ethernet XAUI interfaces and Gigabit Ethernet ports.
- Integrated memory controllers will shift support from DDR2 to FB-DIMMs and double the maximum amount of system memory.

Kevin Krewell, “Sun's Niagara Begins CMT Flood: The Sun UltraSPARC T1 Processor Released,” Microprocessor Report, January 3, 2006

Amdahl’s Law Paper

Gene Amdahl, "Validity of the Single Processor Approach to Achieving Large-Scale Computing Capabilities," AFIPS Conference Proceedings, (30), pp. 483-485, 1967.
- How long is the paper?
- How much of it is Amdahl’s Law?
- What other comments does it make about parallelism besides Amdahl’s Law?

Parallel Programmer Productivity

Lorin Hochstein et al., "Parallel Programmer Productivity: A Case Study of Novice Parallel Programmers," International Conference for High Performance Computing, Networking and Storage (SC'05), Nov. 2005.
- What did they study?
- What is the argument that novice parallel programmers are a good target for High Performance Computing?
- How can one account for variability in talent between programmers?
- Which programmers were studied?
- What programming styles were investigated?
- How big was the multiprocessor?
- How did they measure quality?
- How did they measure cost?

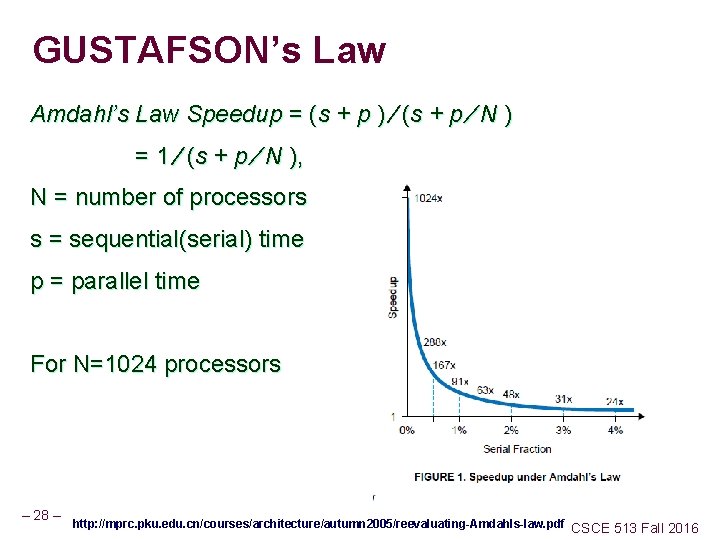

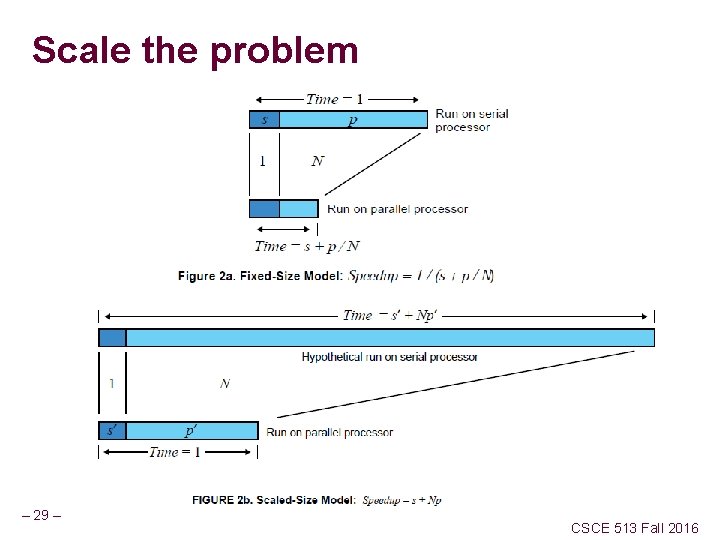

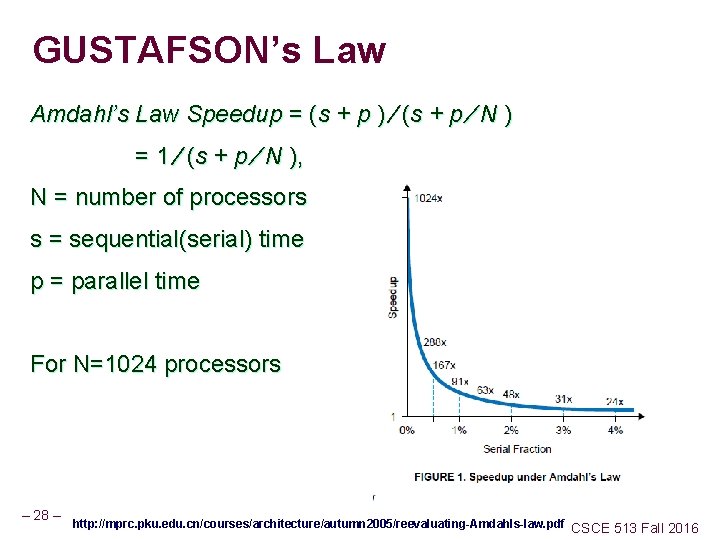

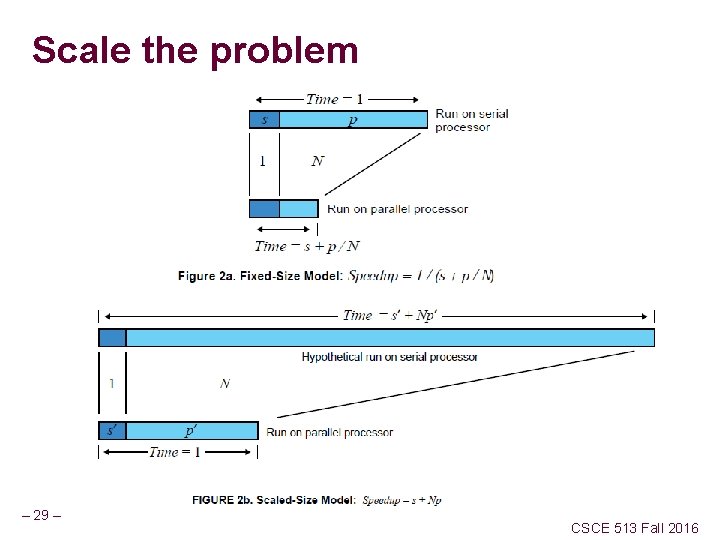

GUSTAFSON’s Law

Amdahl’s Law:

$$\text{Speedup} = \frac{s + p}{s + p/N} = \frac{1}{s + p/N}$$

where $N$ = number of processors, $s$ = sequential (serial) time, and $p$ = parallel time. The second form assumes the times are normalized so that $s + p = 1$.

For N = 1024 processors

http://mprc.pku.edu.cn/courses/architecture/autumn2005/reevaluating-Amdahls-law.pdf

Scale the problem

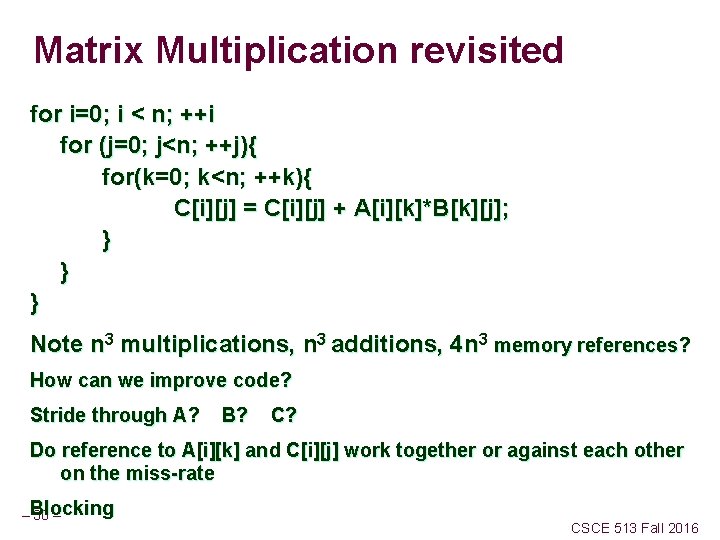

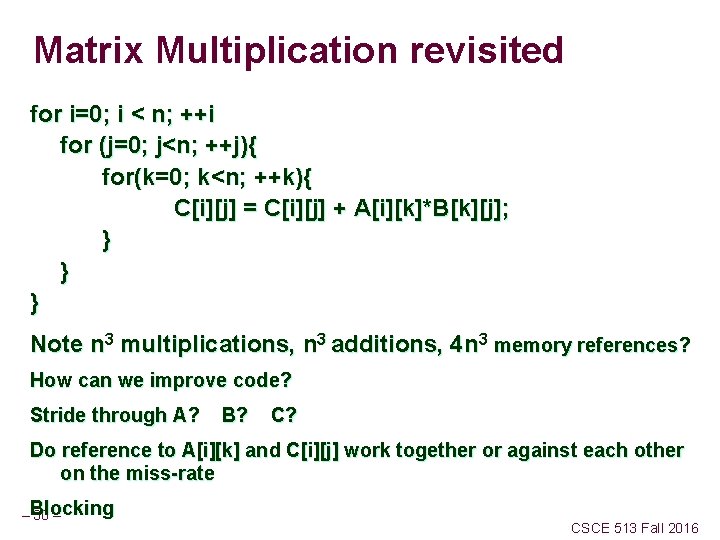

Matrix Multiplication revisited

`for (i = 0; i < n; ++i) for (j = 0; j < n; ++j) for (k = 0; k < n; ++k) C[i][j] = C[i][j] + A[i][k]*B[k][j];`

- Note: n³ multiplications, n³ additions, 4n³ memory references?
- How can we improve the code?
- Stride through A? B? C?
- Do the references to A[i][k] and C[i][j] work together or against each other on the miss rate?
- Blocking

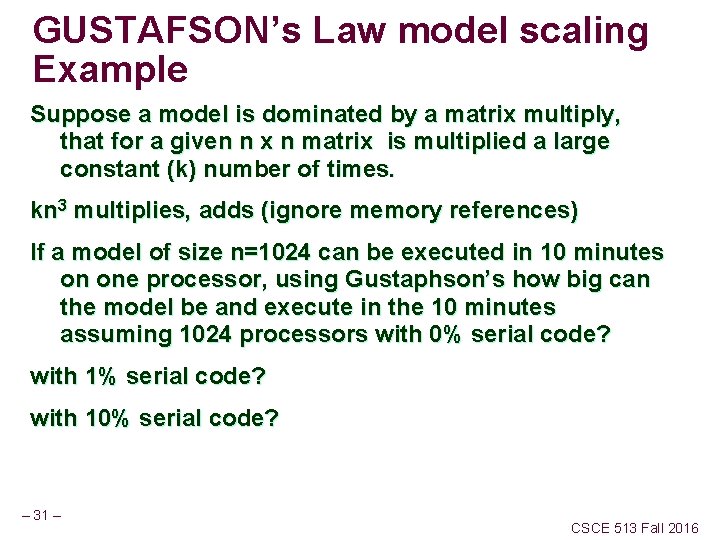

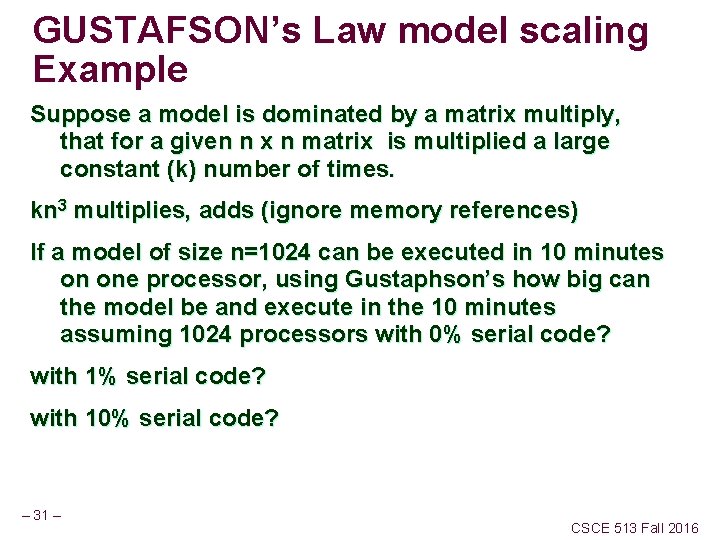

GUSTAFSON’s Law: model scaling example

Suppose a model is dominated by a matrix multiply in which a given n x n matrix is multiplied a large constant number (k) of times: k·n³ multiplies and adds (ignore memory references).

A model of size n = 1024 can be executed in 10 minutes on one processor. Using Gustafson’s Law, how big can the model be and still execute in 10 minutes on 1024 processors:
- with 0% serial code?
- with 10% serial code?


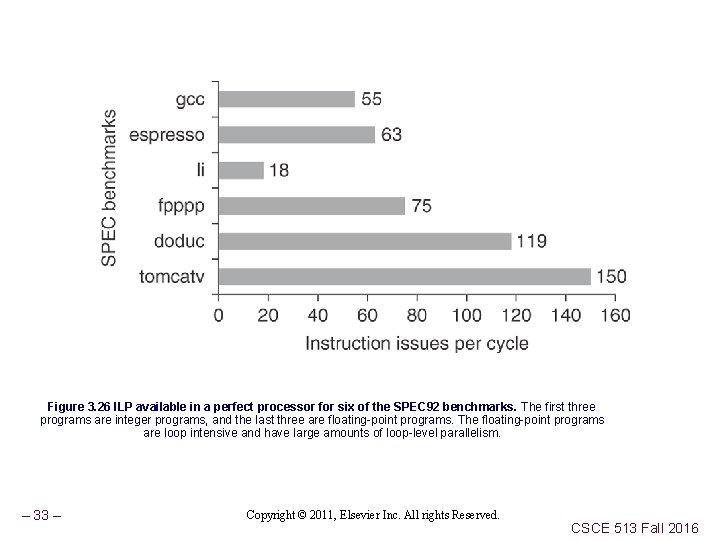

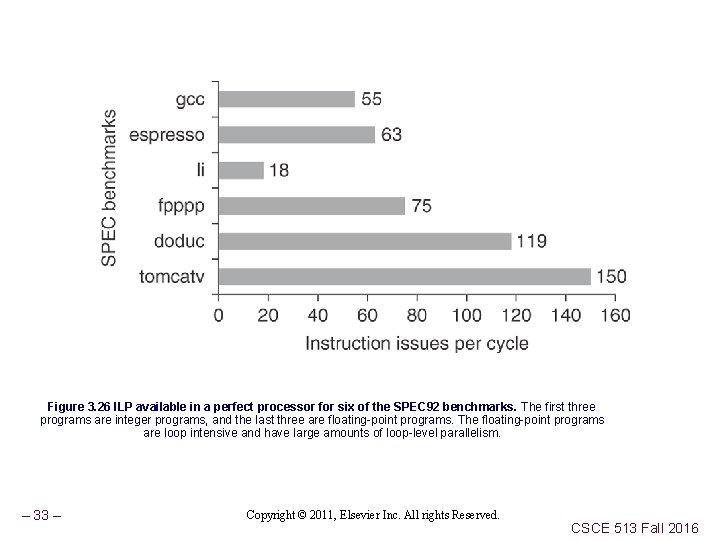

Figure 3.26 ILP available in a perfect processor for six of the SPEC92 benchmarks. The first three programs are integer programs, and the last three are floating-point programs. The floating-point programs are loop intensive and have large amounts of loop-level parallelism. Copyright © 2011, Elsevier Inc. All rights Reserved.

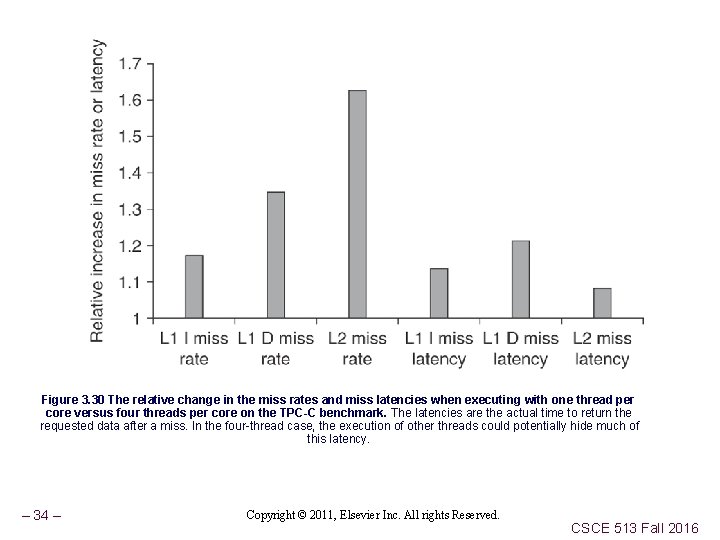

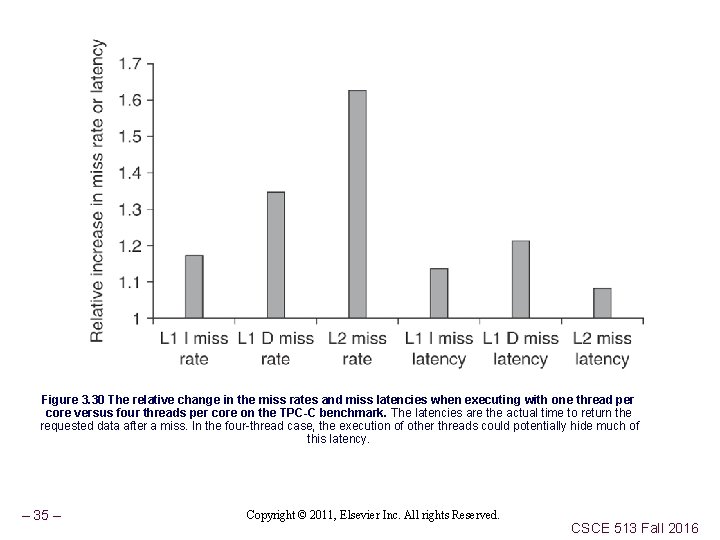

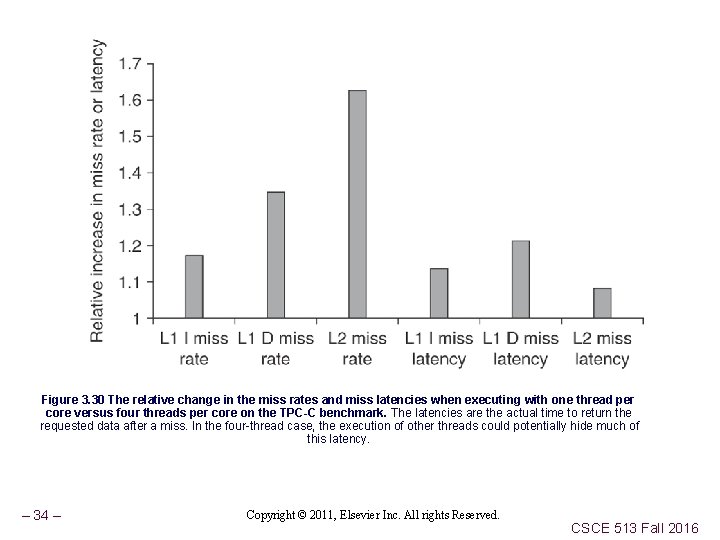

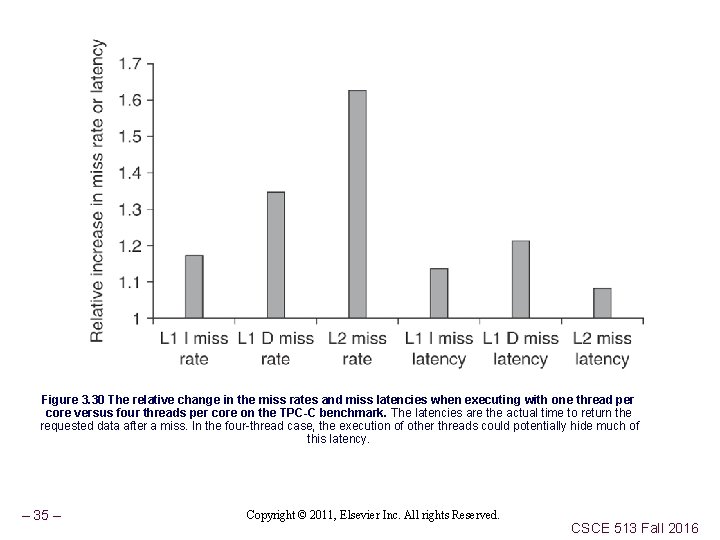

Figure 3.30 The relative change in the miss rates and miss latencies when executing with one thread per core versus four threads per core on the TPC-C benchmark. The latencies are the actual time to return the requested data after a miss. In the four-thread case, the execution of other threads could potentially hide much of this latency.


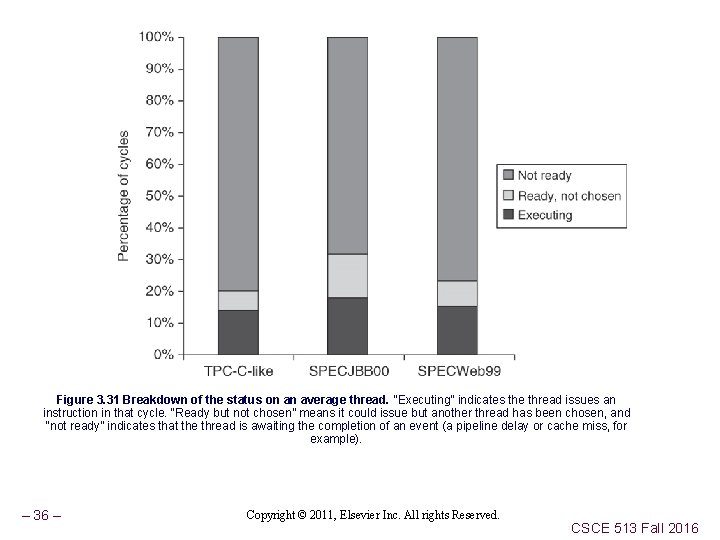

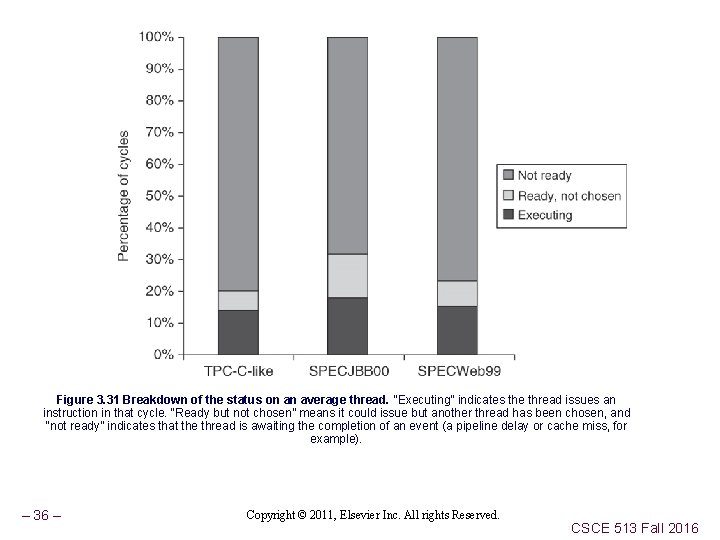

Figure 3.31 Breakdown of the status on an average thread. “Executing” indicates the thread issues an instruction in that cycle. “Ready but not chosen” means it could issue but another thread has been chosen, and “not ready” indicates that the thread is awaiting the completion of an event (a pipeline delay or cache miss, for example).

Figure 3.32 The breakdown of causes for a thread being not ready. The contribution to the “other” category varies. In TPC-C, store buffer full is the largest contributor; in SPEC-JBB, atomic instructions are the largest contributor; and in SPECWeb99, both factors contribute.

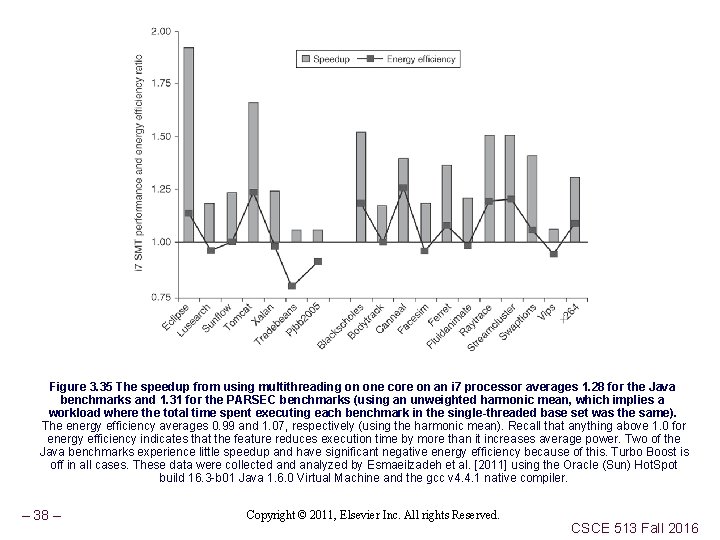

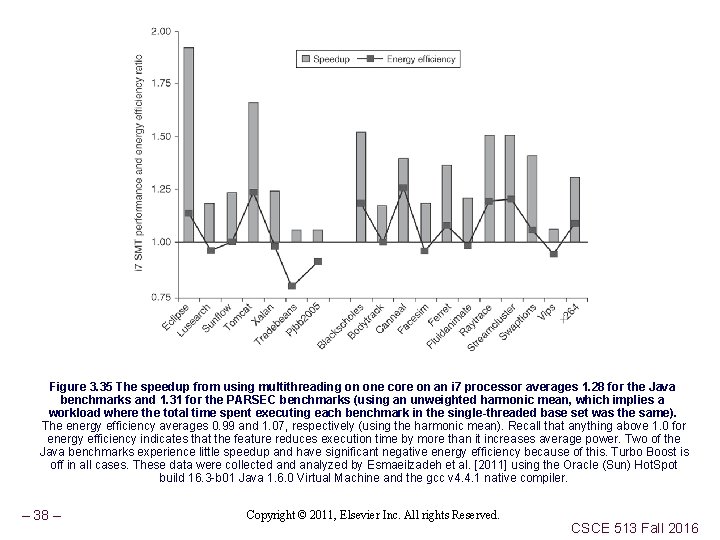

Figure 3.35 The speedup from using multithreading on one core on an i7 processor averages 1.28 for the Java benchmarks and 1.31 for the PARSEC benchmarks (using an unweighted harmonic mean, which implies a workload where the total time spent executing each benchmark in the single-threaded base set was the same). The energy efficiency averages 0.99 and 1.07, respectively (using the harmonic mean). Recall that anything above 1.0 for energy efficiency indicates that the feature reduces execution time by more than it increases average power. Two of the Java benchmarks experience little speedup and have significant negative energy efficiency because of this. Turbo Boost is off in all cases. These data were collected and analyzed by Esmaeilzadeh et al. [2011] using the Oracle (Sun) HotSpot build 16.3-b01 Java 1.6.0 Virtual Machine and the gcc v4.4.1 native compiler.

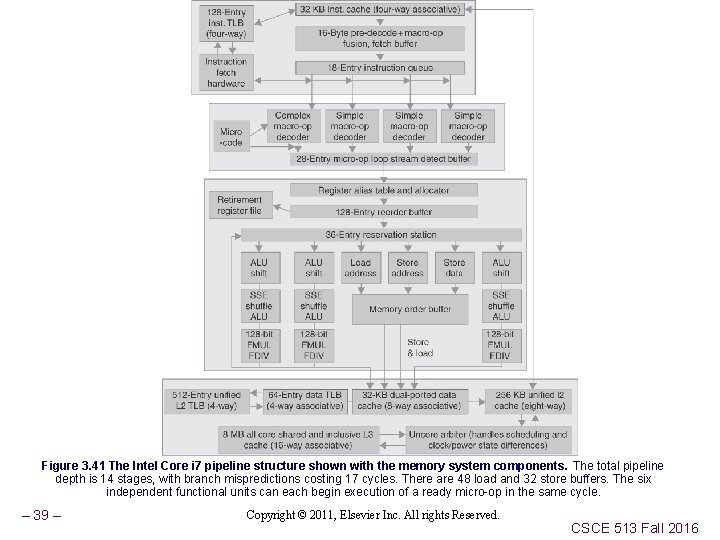

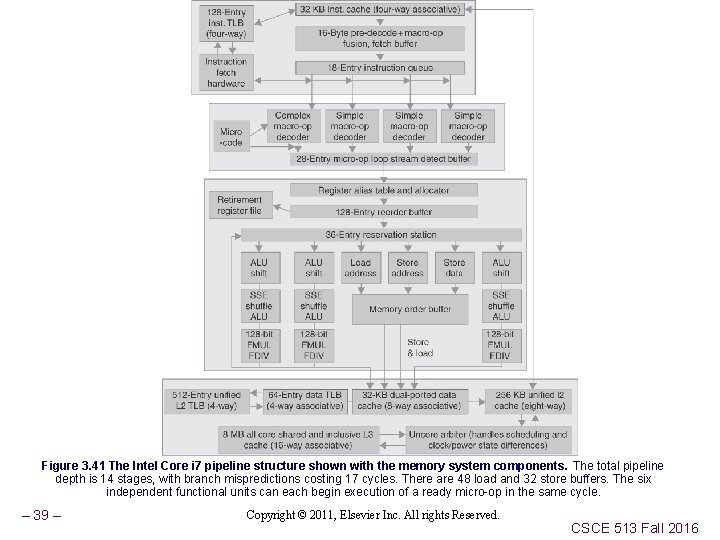

Figure 3.41 The Intel Core i7 pipeline structure shown with the memory system components. The total pipeline depth is 14 stages, with branch mispredictions costing 17 cycles. There are 48 load and 32 store buffers. The six independent functional units can each begin execution of a ready micro-op in the same cycle.

GPUs

Readings for GPU programming
- Stanford (iTunes): http://code.google.com/p/stanford-cs193gsp2010/
- UIUC ECE 498AL: Applied Parallel Programming
  - http://courses.engr.illinois.edu/ece498/al/
  - Book (online): David Kirk/NVIDIA and Wen-mei W. Hwu, 2007-2009, http://courses.engr.illinois.edu/ece498/al/Syllabus.html

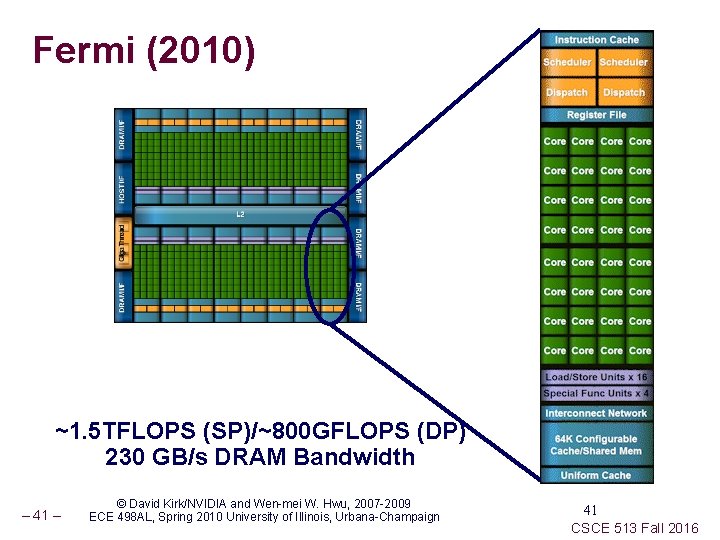

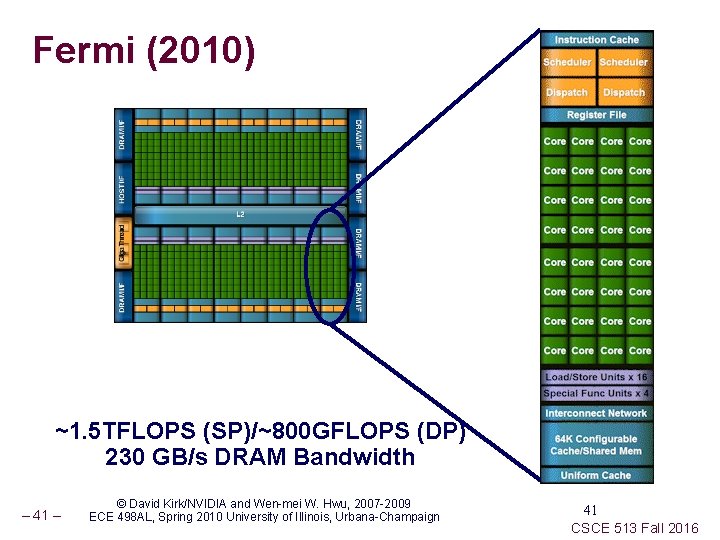

Fermi (2010): ~1.5 TFLOPS (SP) / ~800 GFLOPS (DP), 230 GB/s DRAM bandwidth

© David Kirk/NVIDIA and Wen-mei W. Hwu, 2007-2009, ECE 498AL, Spring 2010, University of Illinois, Urbana-Champaign