CSCE 513 Advanced Computer Architecture Lecture 16 Revisiting

![Race Conditions thread. Id: 0 thread. Id: 1917 // vector[0] was equal to 0 Race Conditions thread. Id: 0 thread. Id: 1917 // vector[0] was equal to 0](https://slidetodoc.com/presentation_image/3f9c1607bfee227a267c753d73468bae/image-36.jpg)

- Slides: 55

CSCE 513 Advanced Computer Architecture Lecture 16 Revisiting Strides, CUDA Threads… Topics n Strides through memory n Practical Performance considerations Readings November 6, 2017

Overview Last Time n Intro to CUDA/GPU programming Readings for today n Stanford – (Itunes)http: //code. google. com/p/stanford-cs 193 g-sp 2010/ n http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule n Book (online) David Kirk/NVIDIA and Wen-mei W. Hwu, 20072009 http: //courses. engr. illinois. edu/ece 498/al/Syllabus. html l Chapters 1 -3 New n n n – 2– Open. MP Examples – SC 2008 (link emailed Tuesday) Nvidia CUDA - example CSCE 513 Fall 2017

Nvidia NVIDIA Developer Zone - http: //developer. nvidia. com/cuda-toolkit-41 • CUDA Toolkit Downloads C/C++ compiler, CUDA-GDB, Visual Profiler, CUDA Memcheck, GPU-accelerated libraries, Other tools & Documentation Developer Drivers Downloads GPU Computing SDK Downloads – 3– CSCE 513 Fall 2017

Stanford CS 193 G http: //code. google. com/p/stanford-cs 193 gsp 2010/wiki/Tutorial. Prerequisites Vincent Natol “Kudos for CUDA, ” HPC Wire (2010) Patterson, David A. ; Hennessy, John L. (2011 -08 -01). Computer Architecture: A Quantitative Approach (The Morgan Kaufmann Series in Computer Architecture and Design) (Kindle Locations 75307532). Elsevier Science (reference). Kindle Edition. – 4– CSCE 513 Fall 2017

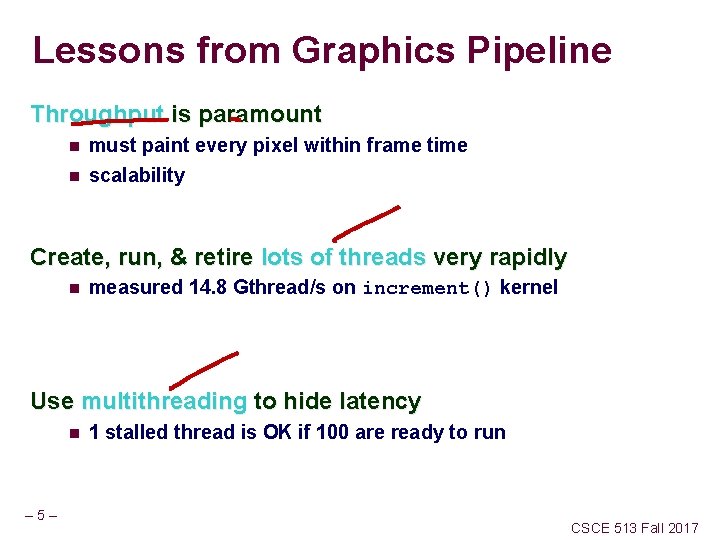

Lessons from Graphics Pipeline Throughput is paramount n must paint every pixel within frame time n scalability Create, run, & retire lots of threads very rapidly n measured 14. 8 Gthread/s on increment() kernel Use multithreading to hide latency n – 5– 1 stalled thread is OK if 100 are ready to run CSCE 513 Fall 2017

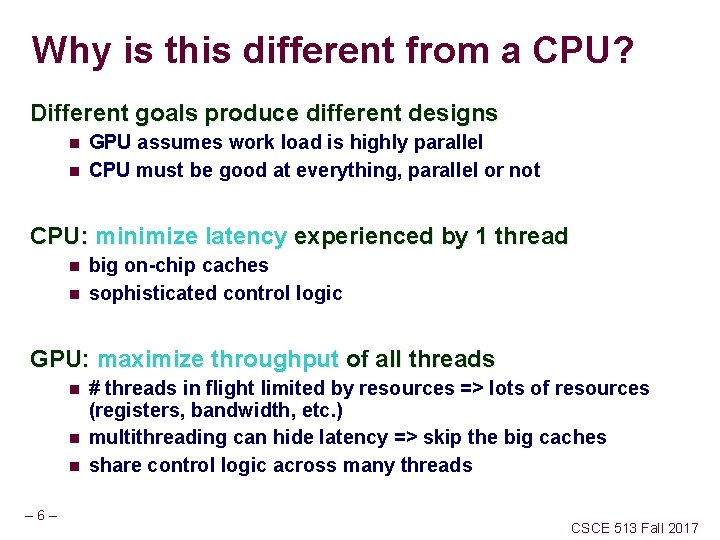

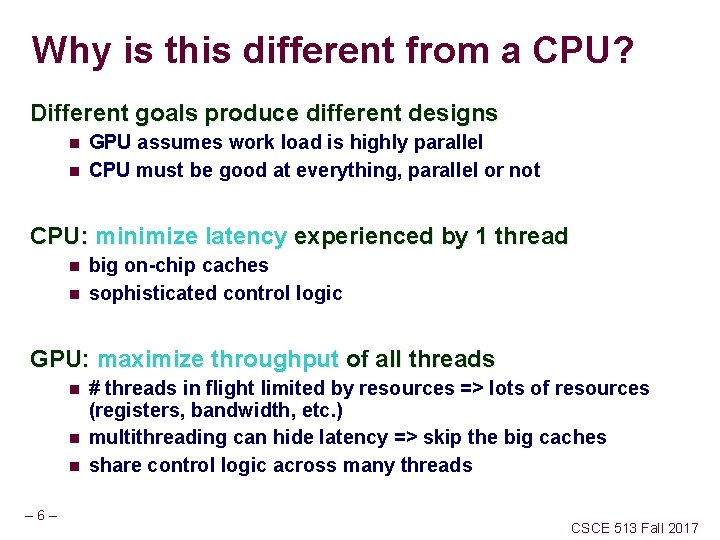

Why is this different from a CPU? Different goals produce different designs n n GPU assumes work load is highly parallel CPU must be good at everything, parallel or not CPU: minimize latency experienced by 1 thread n n big on-chip caches sophisticated control logic GPU: maximize throughput of all threads n n n – 6– # threads in flight limited by resources => lots of resources (registers, bandwidth, etc. ) multithreading can hide latency => skip the big caches share control logic across many threads CSCE 513 Fall 2017

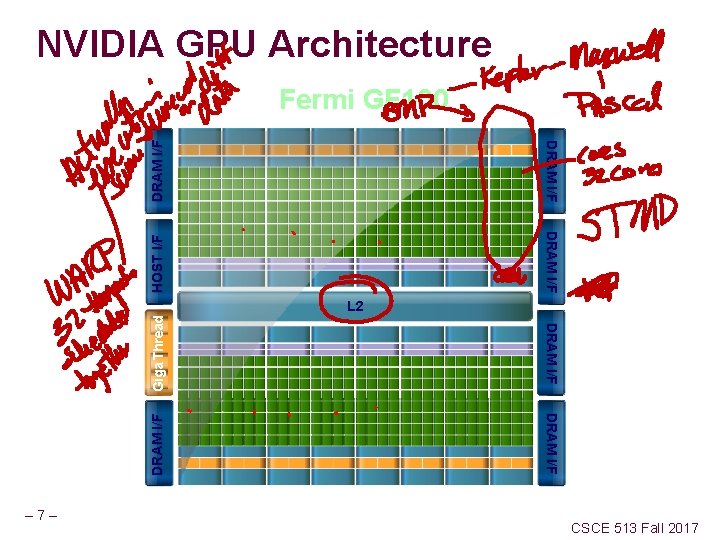

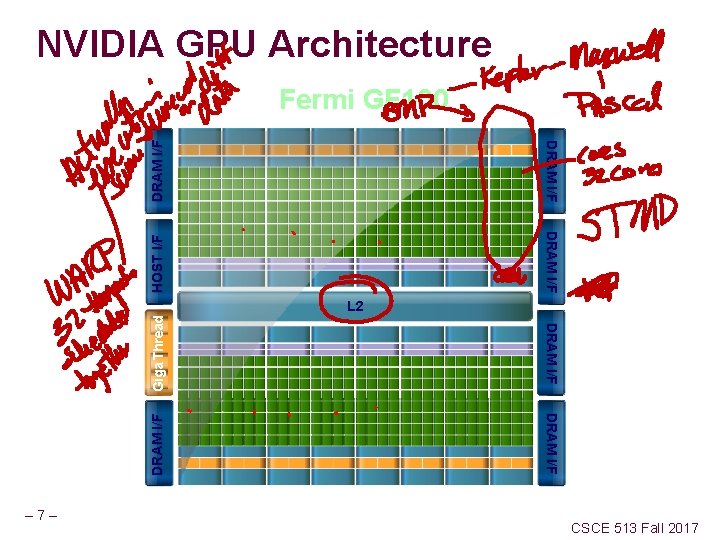

NVIDIA GPU Architecture DRAM I/F Fermi GF 100 HOST I/F DRAM I/F Giga Thread DRAM I/F – 7– DRAM I/F L 2 CSCE 513 Fall 2017

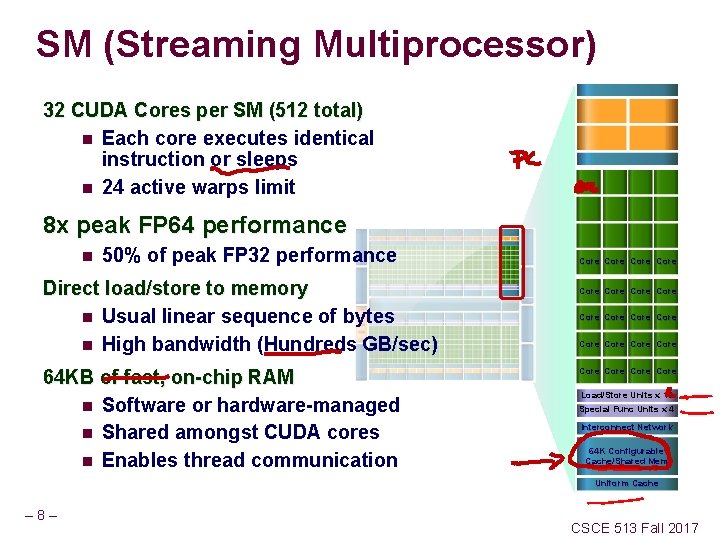

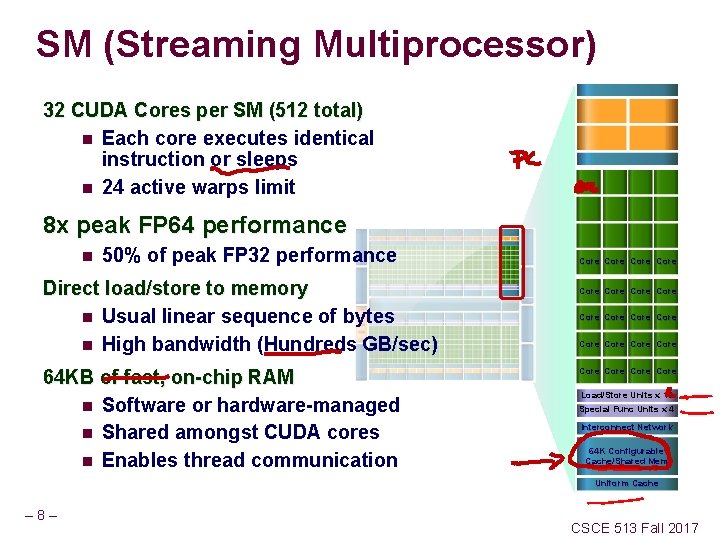

SM (Streaming Multiprocessor) 32 CUDA Cores per SM (512 total) n Each core executes identical instruction or sleeps n 24 active warps limit 8 x peak FP 64 performance n 50% of peak FP 32 performance Direct load/store to memory n Usual linear sequence of bytes n High bandwidth (Hundreds GB/sec) 64 KB of fast, on-chip RAM n Software or hardware-managed n Shared amongst CUDA cores n Enables thread communication Core Core Core Core Core Load/Store Units x 16 Special Func Units x 4 Interconnect Network 64 K Configurable Cache/Shared Mem Uniform Cache – 8– CSCE 513 Fall 2017

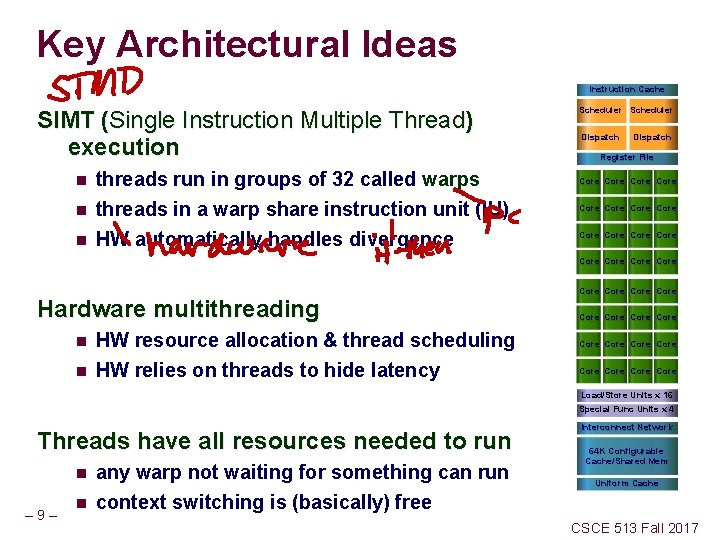

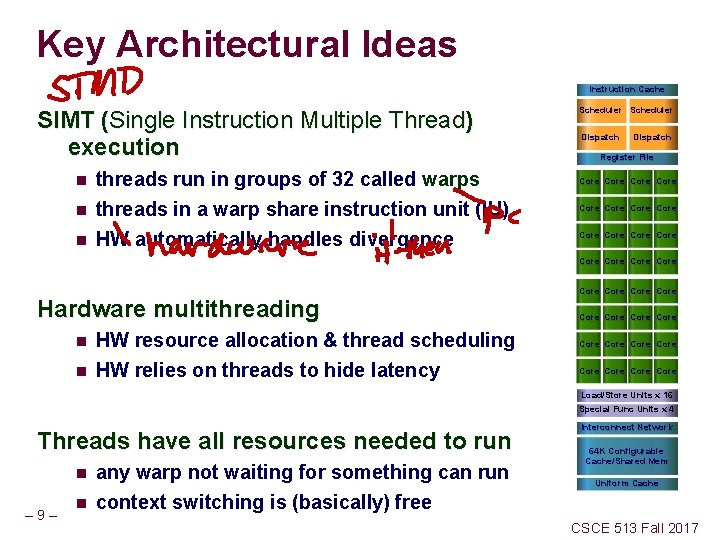

Key Architectural Ideas Instruction Cache SIMT (Single Instruction Multiple Thread) execution n threads run in groups of 32 called warps threads in a warp share instruction unit (IU) HW automatically handles divergence Scheduler Dispatch Register File Core Core Core Core Hardware multithreading n n HW resource allocation & thread scheduling HW relies on threads to hide latency Core Core Core Core Load/Store Units x 16 Special Func Units x 4 Threads have all resources needed to run n – 9– n any warp not waiting for something can run context switching is (basically) free Interconnect Network 64 K Configurable Cache/Shared Mem Uniform Cache CSCE 513 Fall 2017

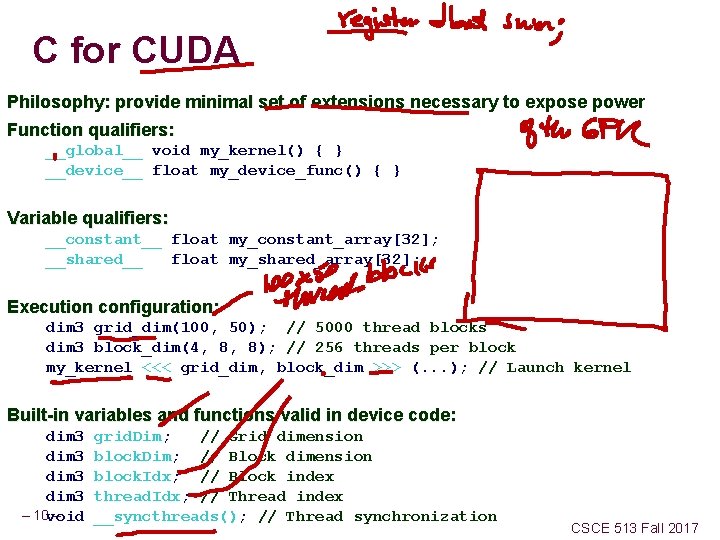

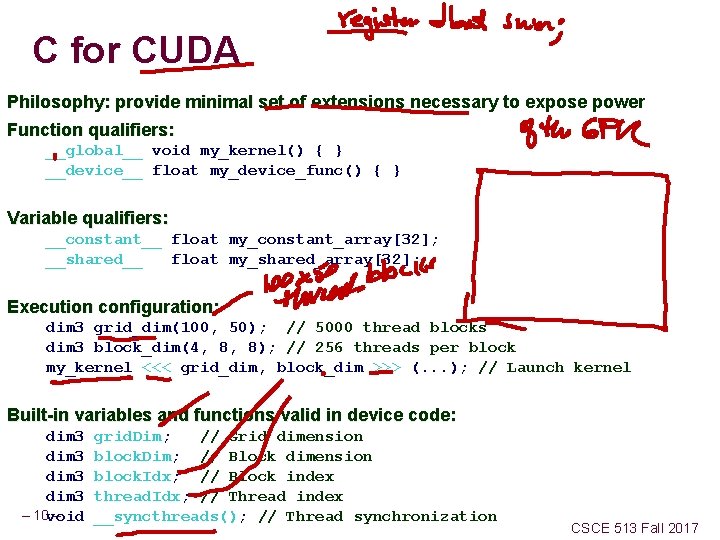

C for CUDA Philosophy: provide minimal set of extensions necessary to expose power Function qualifiers: __global__ void my_kernel() { } __device__ float my_device_func() { } Variable qualifiers: __constant__ float my_constant_array[32]; __shared__ float my_shared_array[32]; Execution configuration: dim 3 grid_dim(100, 50); // 5000 thread blocks dim 3 block_dim(4, 8, 8); // 256 threads per block my_kernel <<< grid_dim, block_dim >>> (. . . ); // Launch kernel Built-in variables and functions valid in device code: dim 3 – 10 void – grid. Dim; // Grid dimension block. Dim; // Block dimension block. Idx; // Block index thread. Idx; // Thread index __syncthreads(); // Thread synchronization CSCE 513 Fall 2017

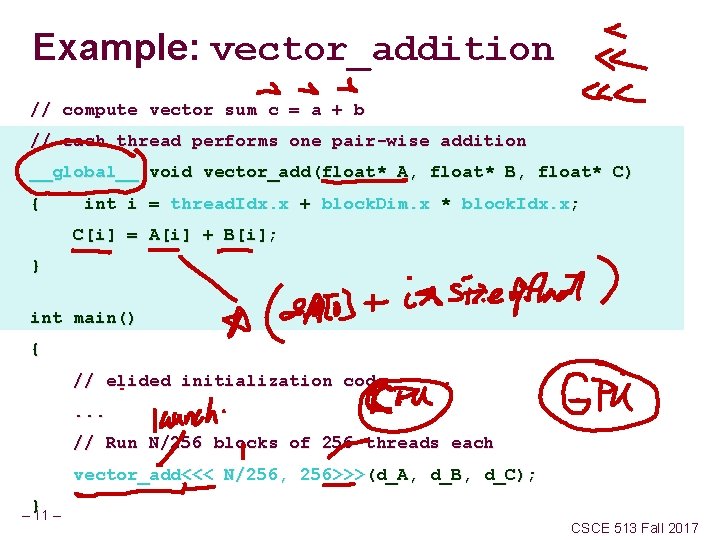

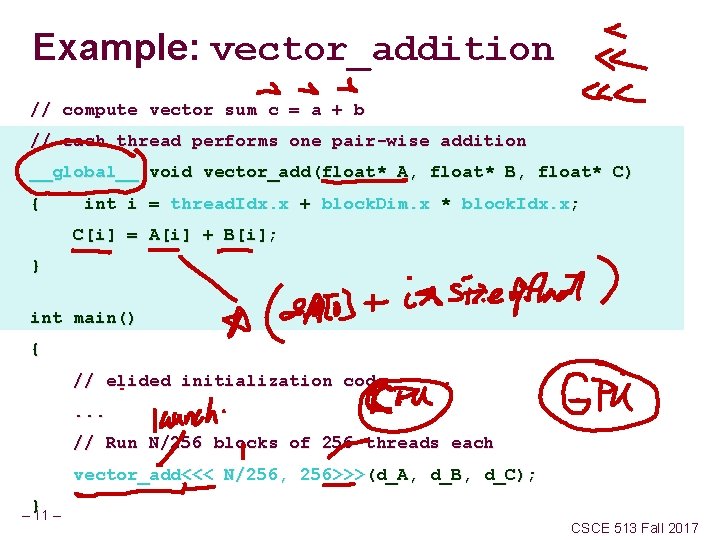

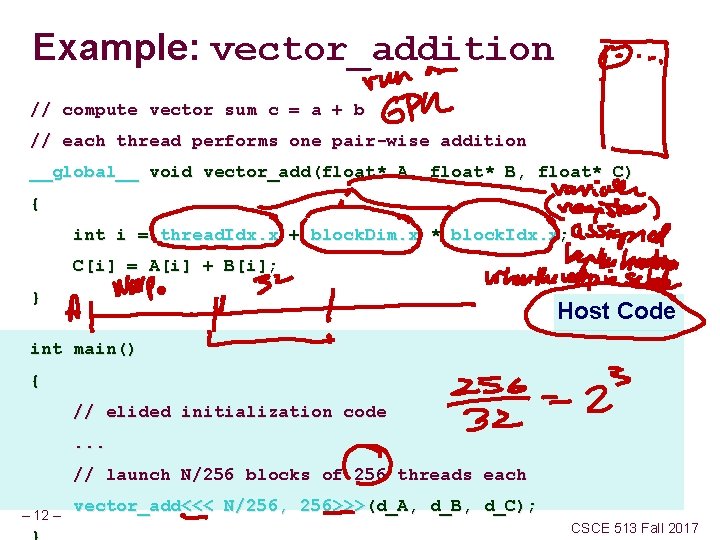

Example: vector_addition // compute vector sum c = a + b // each thread performs one pair-wise addition __global__ void vector_add(float* A, float* B, float* C) { int i = thread. Idx. x + block. Dim. x * block. Idx. x; C[i] = A[i] + B[i]; } int main() { // elided initialization code. . . // Run N/256 blocks of 256 threads each vector_add<<< N/256, 256>>>(d_A, d_B, d_C); } – 11 – CSCE 513 Fall 2017

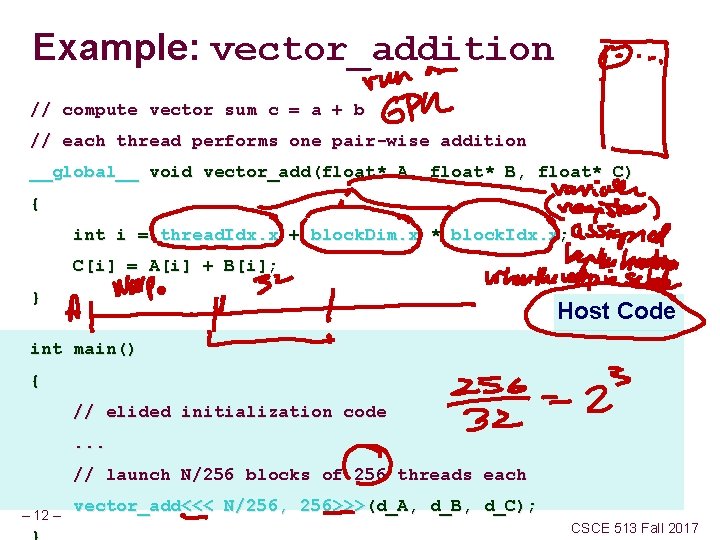

Example: vector_addition // compute vector sum c = a + b // each thread performs one pair-wise addition __global__ void vector_add(float* A, float* B, float* C) { int i = thread. Idx. x + block. Dim. x * block. Idx. x; C[i] = A[i] + B[i]; } Host Code int main() { // elided initialization code. . . // launch N/256 blocks of 256 threads each – 12 – vector_add<<< N/256, 256>>>(d_A, d_B, d_C); CSCE 513 Fall 2017

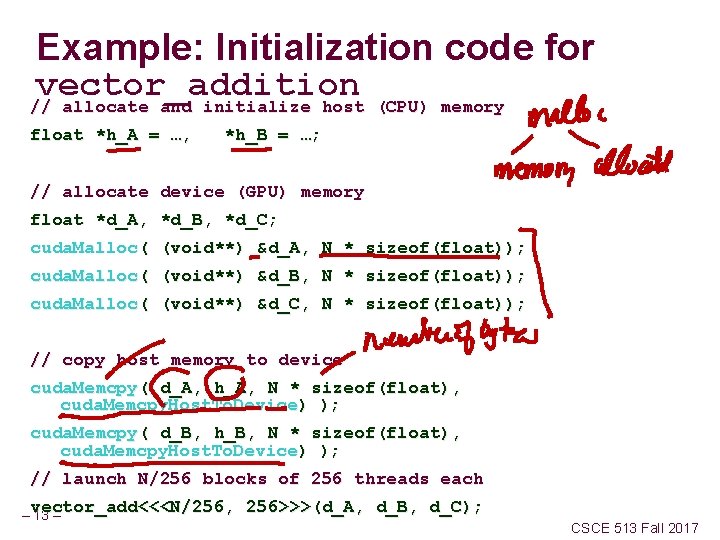

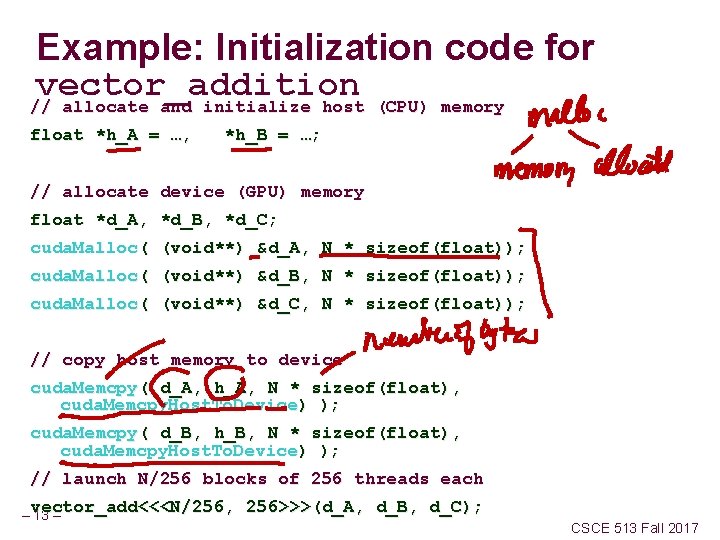

Example: Initialization code for vector_addition // allocate and initialize host (CPU) memory float *h_A = …, *h_B = …; // allocate device (GPU) memory float *d_A, *d_B, *d_C; cuda. Malloc( (void**) &d_A, N * sizeof(float)); cuda. Malloc( (void**) &d_B, N * sizeof(float)); cuda. Malloc( (void**) &d_C, N * sizeof(float)); // copy host memory to device cuda. Memcpy( d_A, h_A, N * sizeof(float), cuda. Memcpy. Host. To. Device) ); cuda. Memcpy( d_B, h_B, N * sizeof(float), cuda. Memcpy. Host. To. Device) ); // launch N/256 blocks of 256 threads each vector_add<<<N/256, 256>>>(d_A, d_B, d_C); – 13 – CSCE 513 Fall 2017

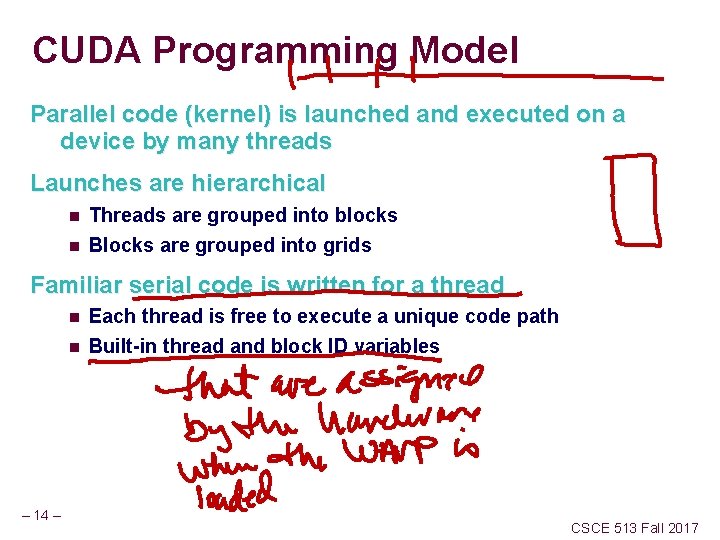

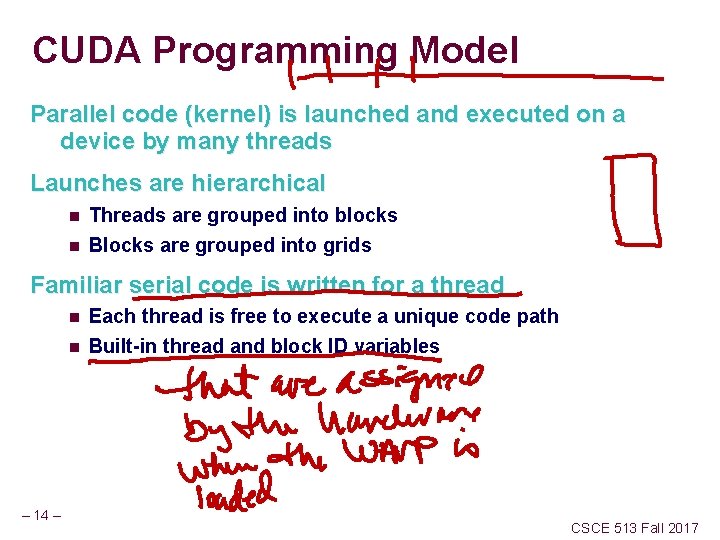

CUDA Programming Model Parallel code (kernel) is launched and executed on a device by many threads Launches are hierarchical n n Threads are grouped into blocks Blocks are grouped into grids Familiar serial code is written for a thread n n – 14 – Each thread is free to execute a unique code path Built-in thread and block ID variables CSCE 513 Fall 2017

DAXPY example in text – 15 – CSCE 513 Fall 2017

– 16 – CSCE 513 Fall 2017

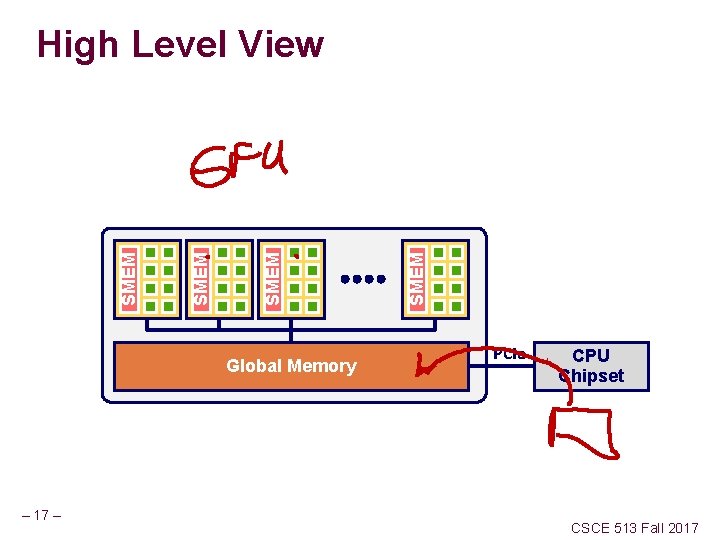

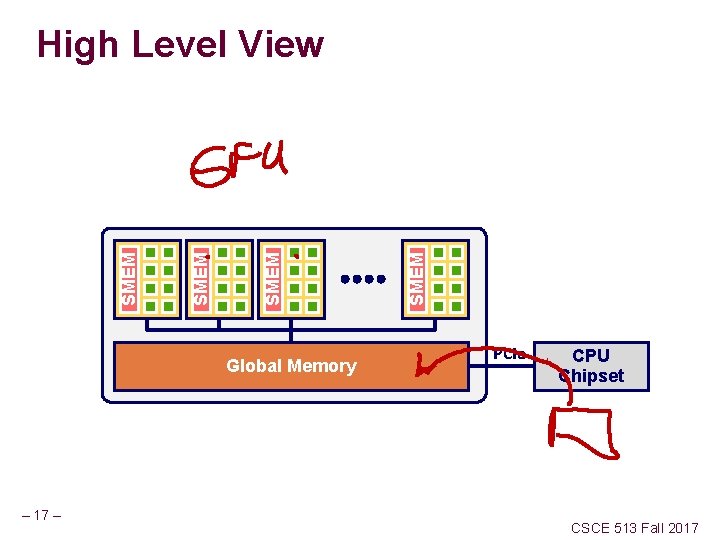

Global Memory – 17 – SMEM High Level View PCIe CPU Chipset CSCE 513 Fall 2017

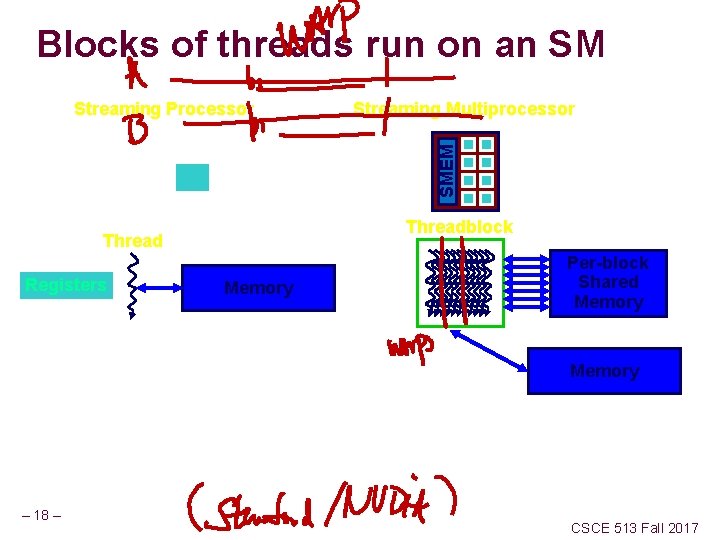

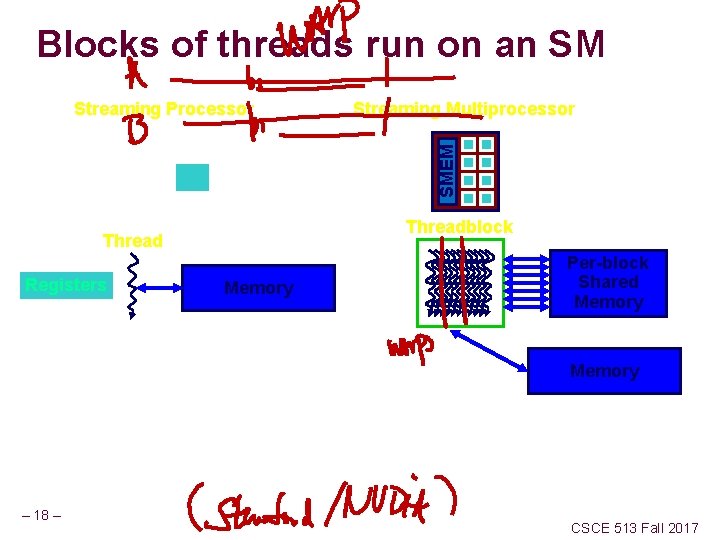

Blocks of threads run on an SM Streaming Multiprocessor SMEM Streaming Processor Threadblock Thread Registers Memory Per-block Shared Memory – 18 – CSCE 513 Fall 2017

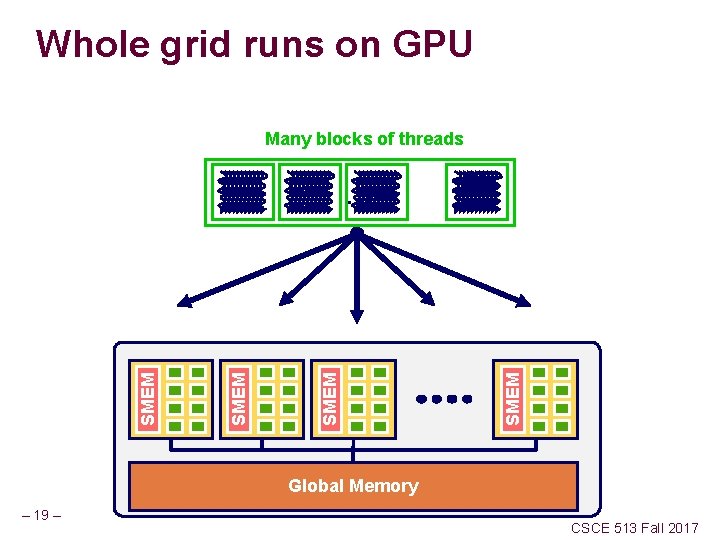

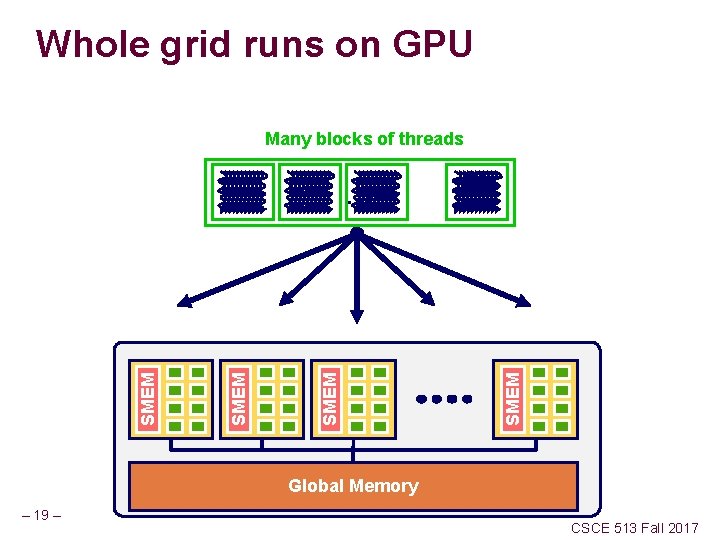

Whole grid runs on GPU Many blocks of threads SMEM . . . Global Memory – 19 – CSCE 513 Fall 2017

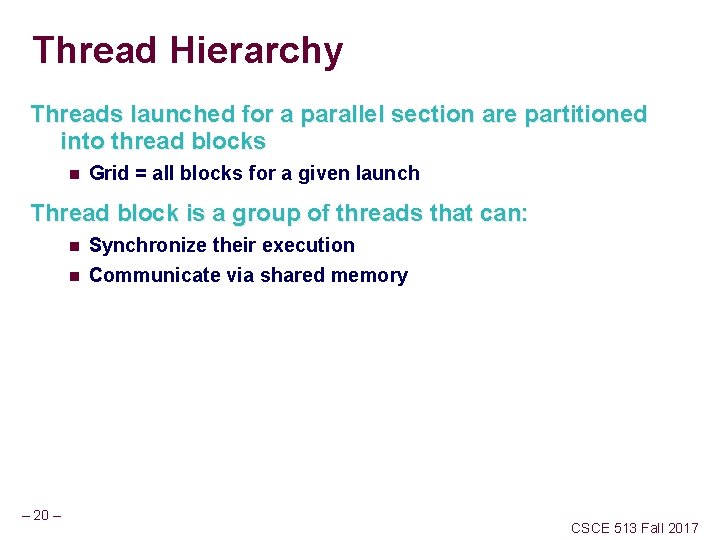

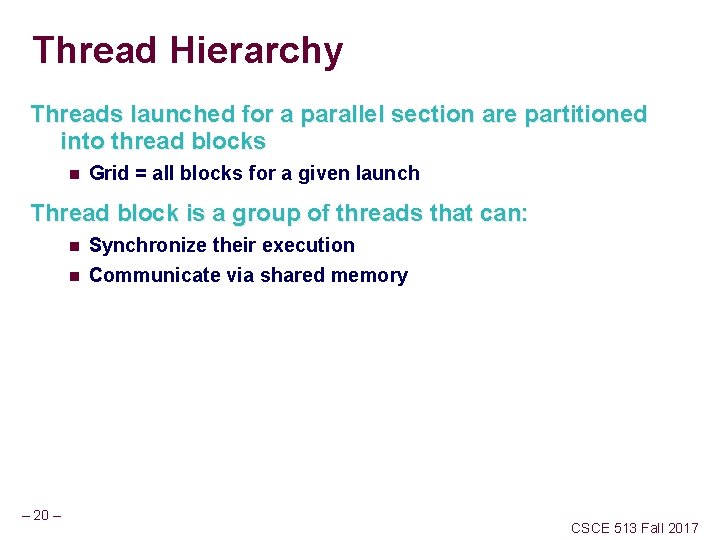

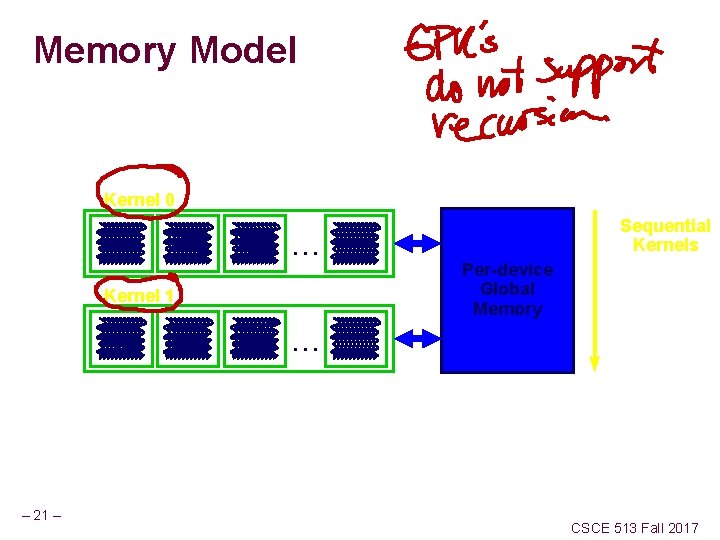

Thread Hierarchy Threads launched for a parallel section are partitioned into thread blocks n Grid = all blocks for a given launch Thread block is a group of threads that can: n n – 20 – Synchronize their execution Communicate via shared memory CSCE 513 Fall 2017

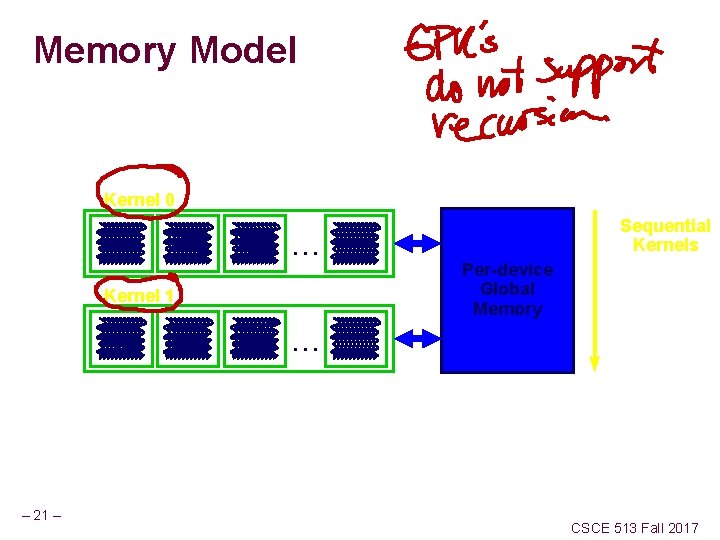

Memory Model Kernel 0 Sequential Kernels . . . Per-device Global Memory Kernel 1. . . – 21 – CSCE 513 Fall 2017

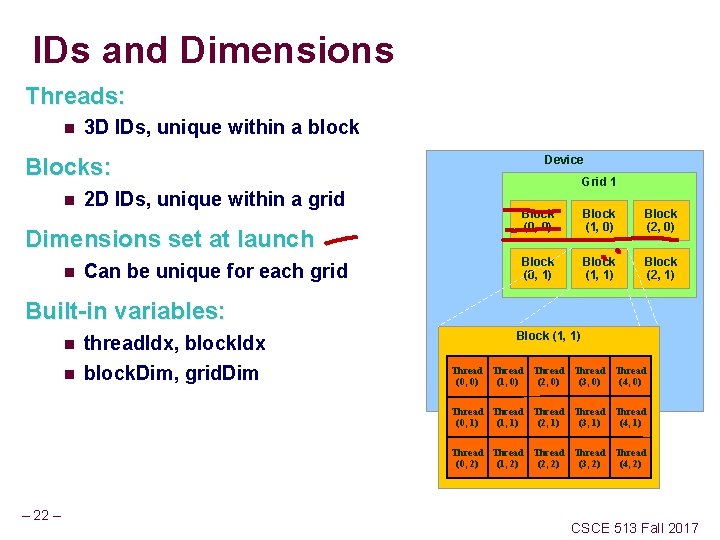

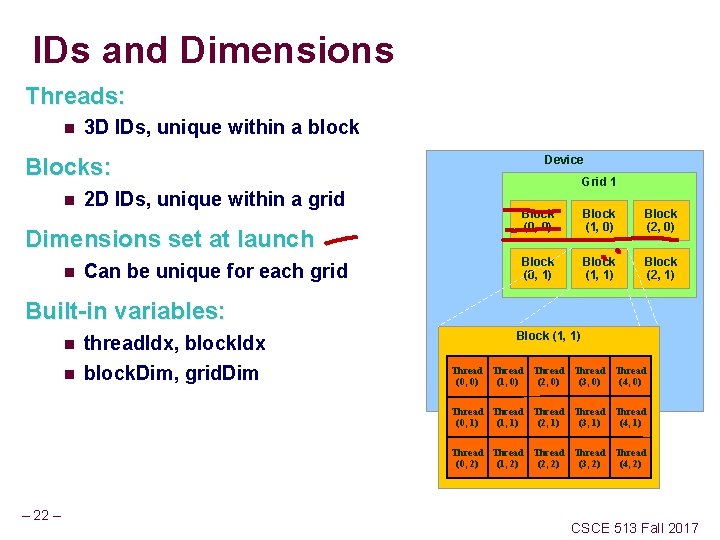

IDs and Dimensions Threads: n 3 D IDs, unique within a block Blocks: n 2 D IDs, unique within a grid Dimensions set at launch n Can be unique for each grid Device Grid 1 Block (0, 0) Block (1, 0) Block (2, 0) Block (0, 1) Block (1, 1) Block (2, 1) Built-in variables: n n thread. Idx, block. Idx block. Dim, grid. Dim Block (1, 1) Thread Thread (0, 0) (1, 0) (2, 0) (3, 0) (4, 0) Thread Thread (0, 1) (1, 1) (2, 1) (3, 1) (4, 1) Thread Thread (0, 2) (1, 2) (2, 2) (3, 2) (4, 2) – 22 – CSCE 513 Fall 2017

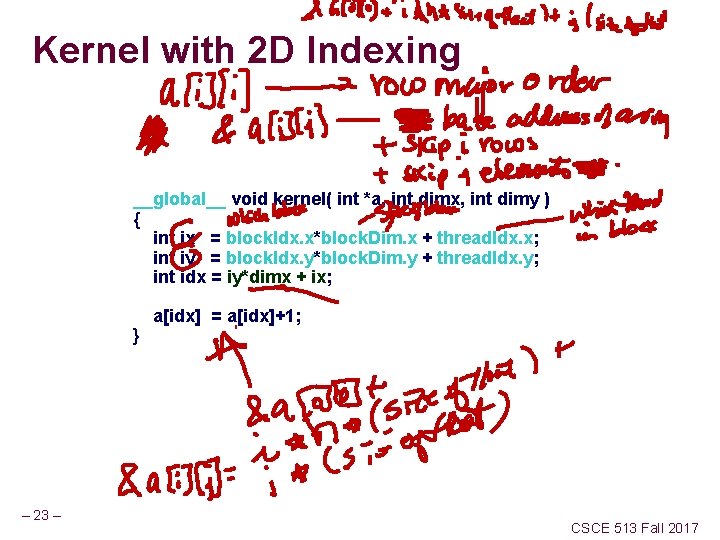

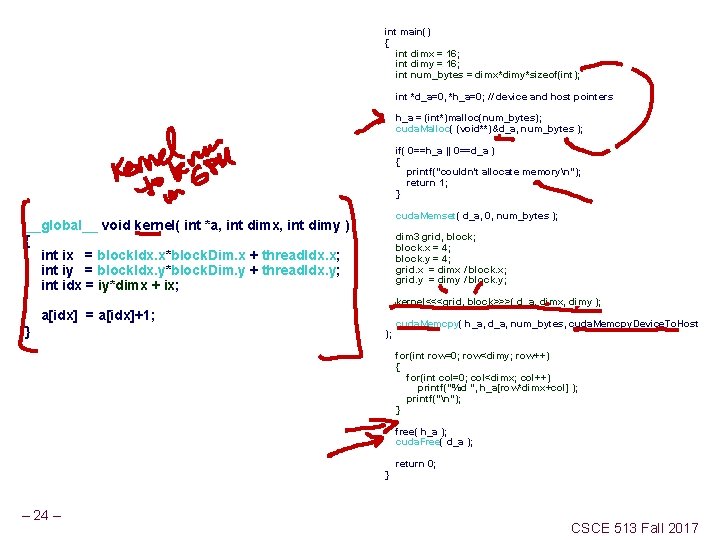

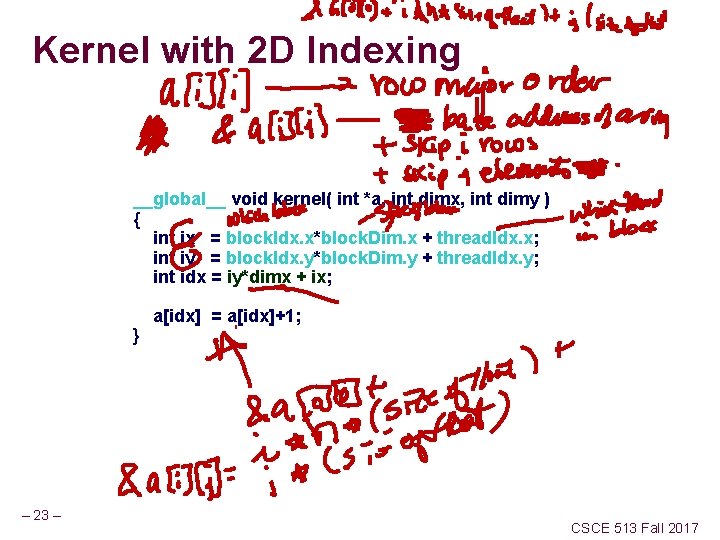

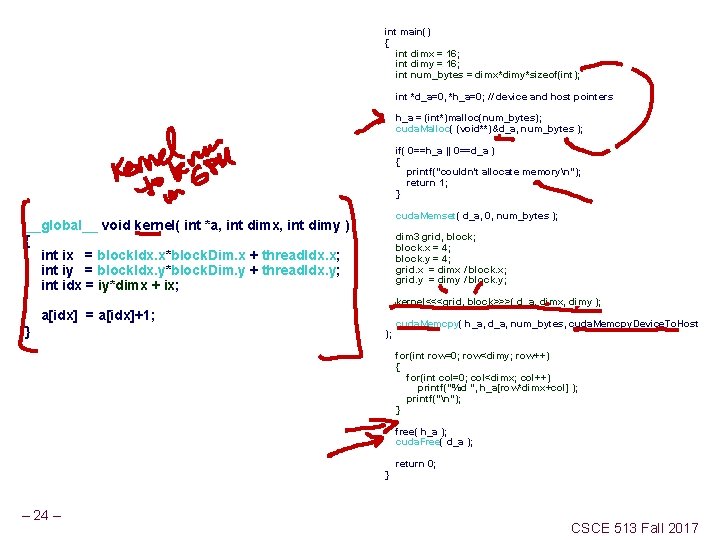

Kernel with 2 D Indexing __global__ void kernel( int *a, int dimx, int dimy ) { int ix = block. Idx. x*block. Dim. x + thread. Idx. x; int iy = block. Idx. y*block. Dim. y + thread. Idx. y; int idx = iy*dimx + ix; a[idx] = a[idx]+1; } – 23 – CSCE 513 Fall 2017

int main() { int dimx = 16; int dimy = 16; int num_bytes = dimx*dimy*sizeof(int); int *d_a=0, *h_a=0; // device and host pointers h_a = (int*)malloc(num_bytes); cuda. Malloc( (void**)&d_a, num_bytes ); if( 0==h_a || 0==d_a ) { printf("couldn't allocate memoryn"); return 1; } __global__ void kernel( int *a, int dimx, int dimy ) { int ix = block. Idx. x*block. Dim. x + thread. Idx. x; int iy = block. Idx. y*block. Dim. y + thread. Idx. y; int idx = iy*dimx + ix; cuda. Memset( d_a, 0, num_bytes ); dim 3 grid, block; block. x = 4; block. y = 4; grid. x = dimx / block. x; grid. y = dimy / block. y; kernel<<<grid, block>>>( d_a, dimx, dimy ); a[idx] = a[idx]+1; } cuda. Memcpy( h_a, d_a, num_bytes, cuda. Memcpy. Device. To. Host ); for(int row=0; row<dimy; row++) { for(int col=0; col<dimx; col++) printf("%d ", h_a[row*dimx+col] ); printf("n"); } free( h_a ); cuda. Free( d_a ); return 0; } – 24 – CSCE 513 Fall 2017

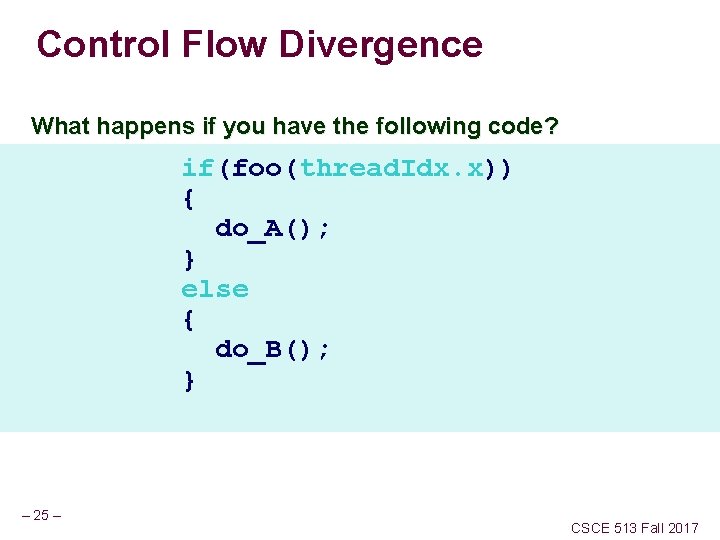

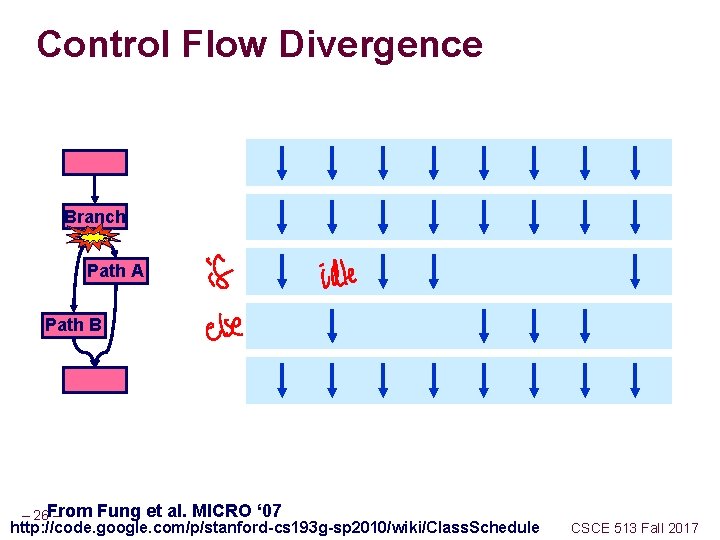

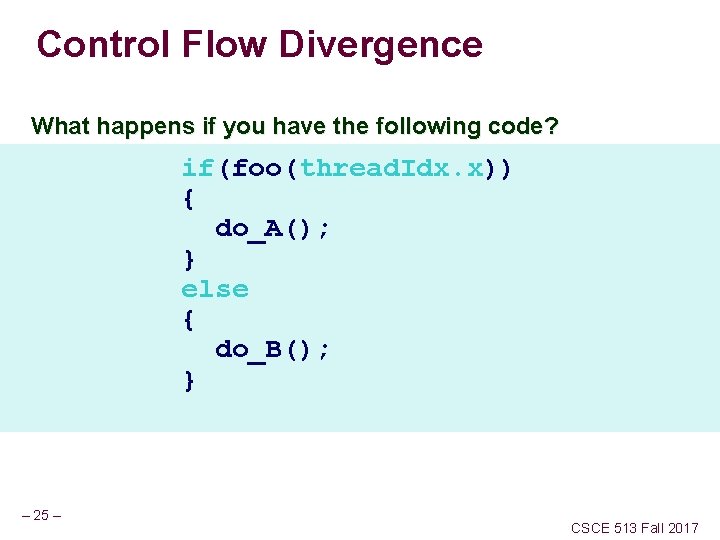

Control Flow Divergence What happens if you have the following code? if(foo(thread. Idx. x)) { do_A(); } else { do_B(); } – 25 – CSCE 513 Fall 2017

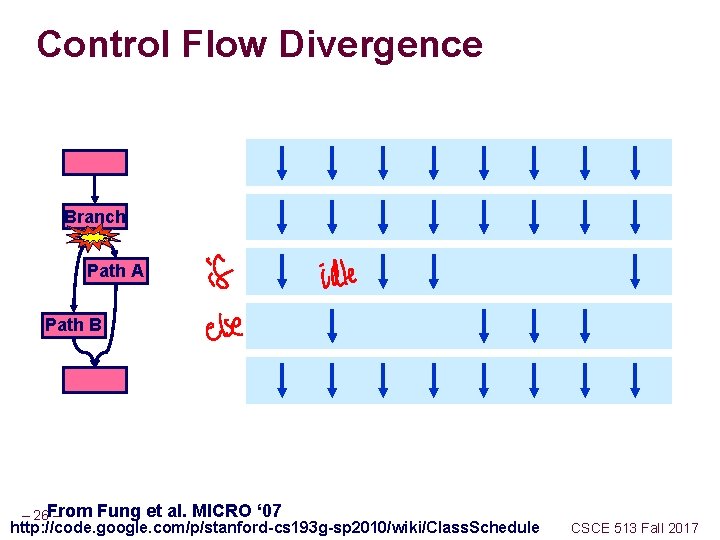

Control Flow Divergence Branch Path A Path B – 26 From Fung et al. MICRO ‘ 07 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

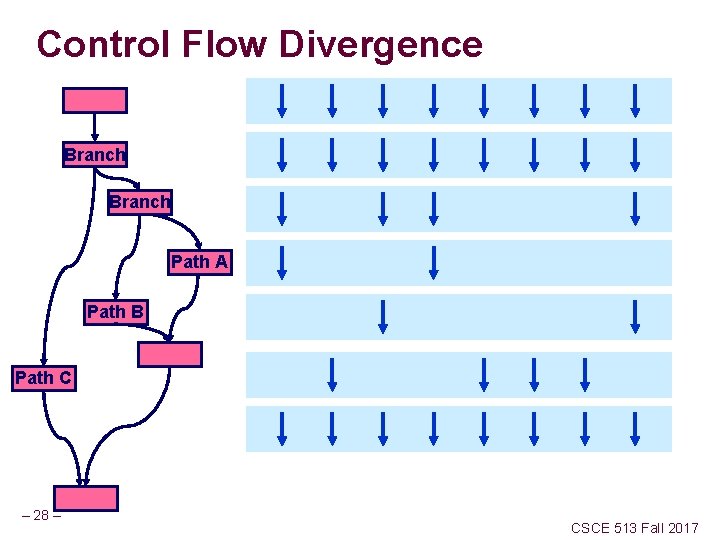

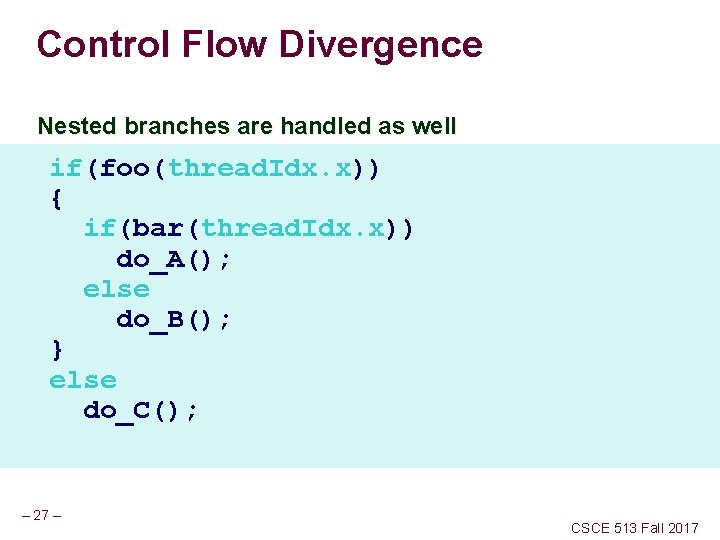

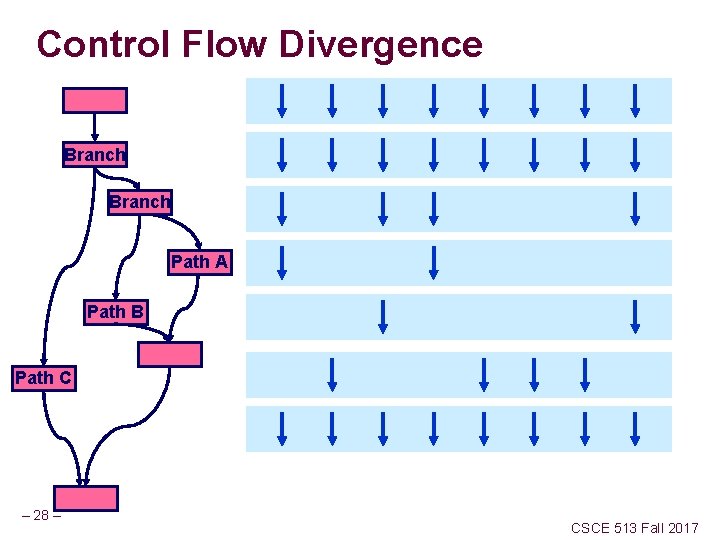

Control Flow Divergence Nested branches are handled as well if(foo(thread. Idx. x)) { if(bar(thread. Idx. x)) do_A(); else do_B(); } else do_C(); – 27 – CSCE 513 Fall 2017

Control Flow Divergence Branch Path A Path B Path C – 28 – CSCE 513 Fall 2017

Control Flow Divergence You don’t have to worry about divergence for correctness (*) You might have to think about it for performance n – 29 – Depends on your branch conditions http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

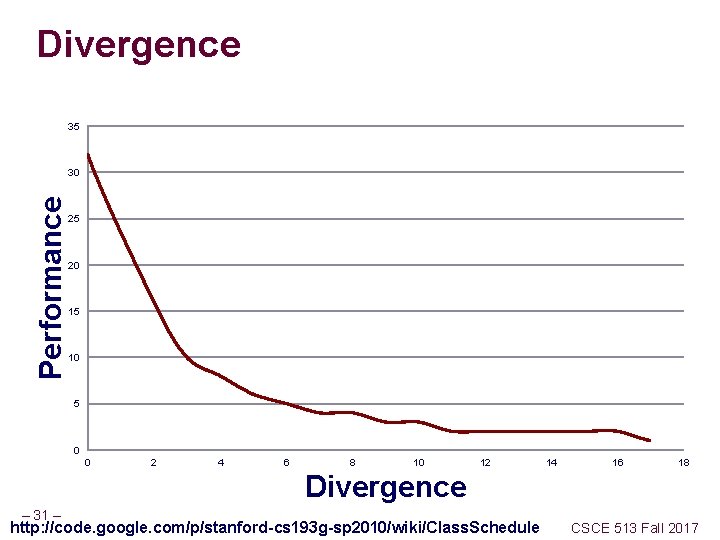

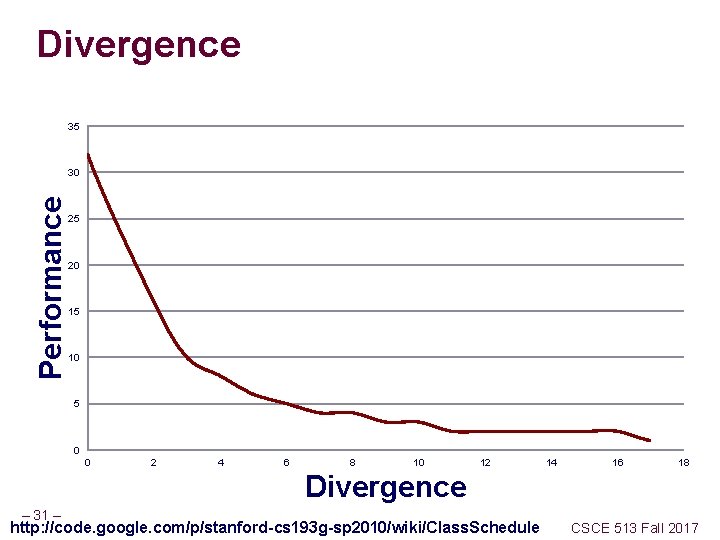

Control Flow Divergence Performance drops off with the degree of divergence switch(thread. Idx. x % N) { case 0: . . . case 1: . . . } – 30 – CSCE 513 Fall 2017

Divergence 35 Performance 30 25 20 15 10 5 0 0 2 4 6 8 10 12 14 16 18 Divergence – 31 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

The Problem How do you do global communication? Finish a grid and start a new one – 32 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

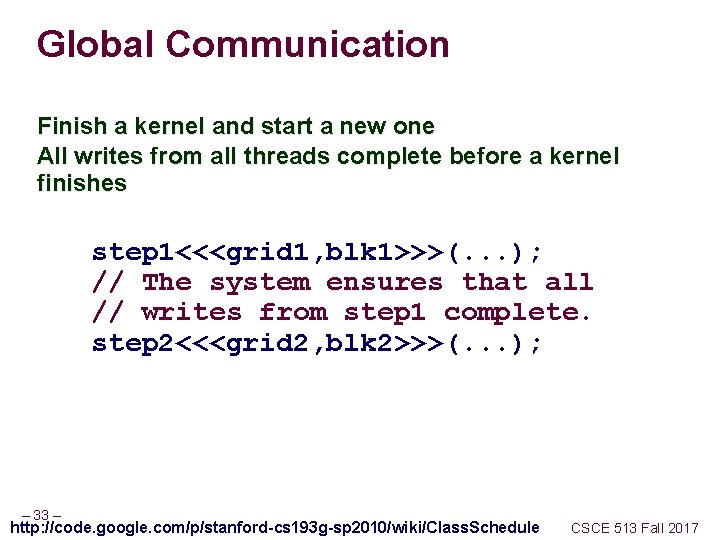

Global Communication Finish a kernel and start a new one All writes from all threads complete before a kernel finishes step 1<<<grid 1, blk 1>>>(. . . ); // The system ensures that all // writes from step 1 complete. step 2<<<grid 2, blk 2>>>(. . . ); – 33 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

Global Communication Would need to decompose kernels into before and after parts – 34 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

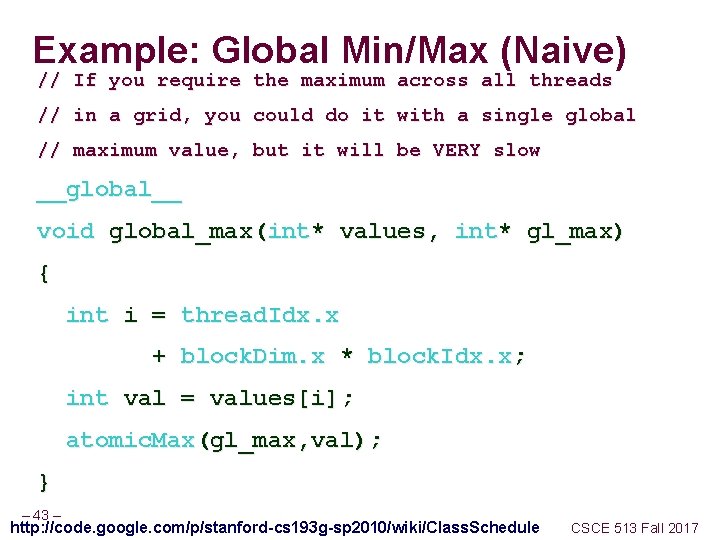

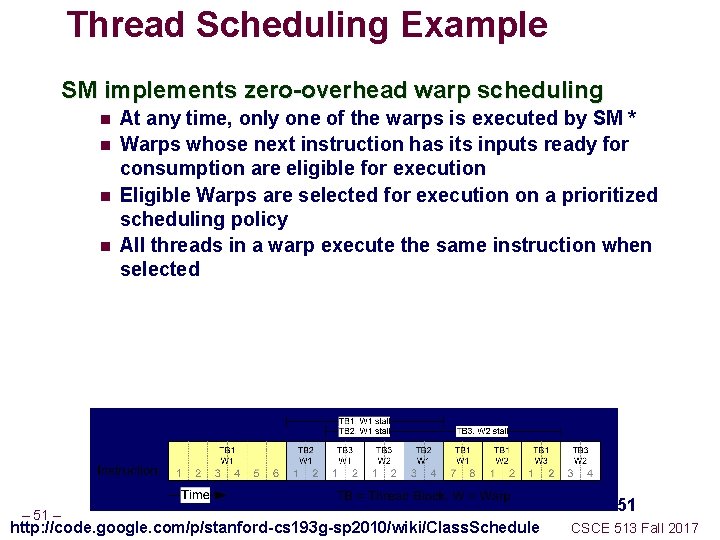

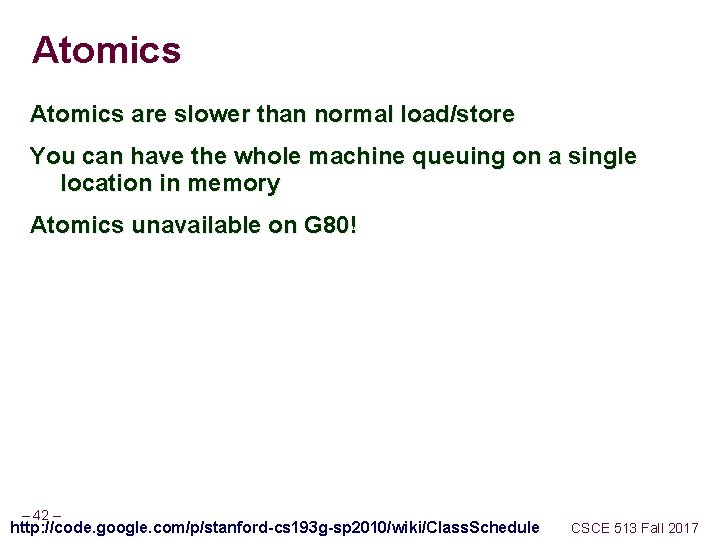

Race Conditions Or, write to a predefined memory location n – 35 – Race condition! Updates can be lost http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

![Race Conditions thread Id 0 thread Id 1917 vector0 was equal to 0 Race Conditions thread. Id: 0 thread. Id: 1917 // vector[0] was equal to 0](https://slidetodoc.com/presentation_image/3f9c1607bfee227a267c753d73468bae/image-36.jpg)

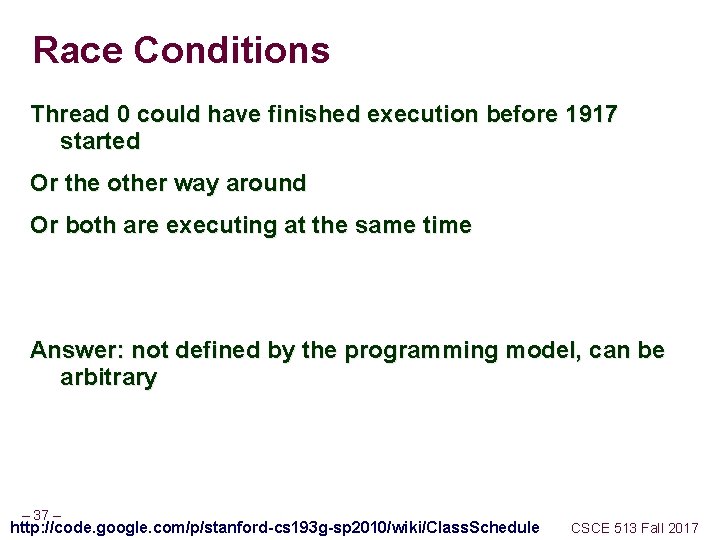

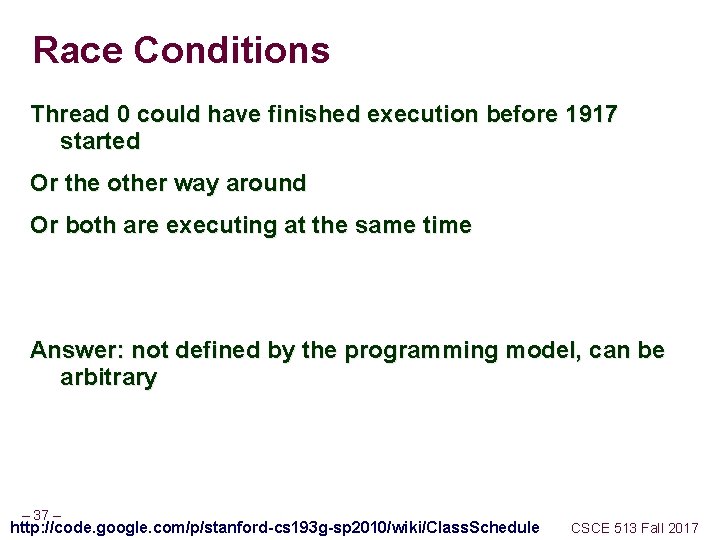

Race Conditions thread. Id: 0 thread. Id: 1917 // vector[0] was equal to 0 vector[0] += 5; += 1; . . . a = vector[0]; What is the value of a in thread 0? vector[0]. . . a = vector[0]; What is the value of a in thread 1917? – 36 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

Race Conditions Thread 0 could have finished execution before 1917 started Or the other way around Or both are executing at the same time Answer: not defined by the programming model, can be arbitrary – 37 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

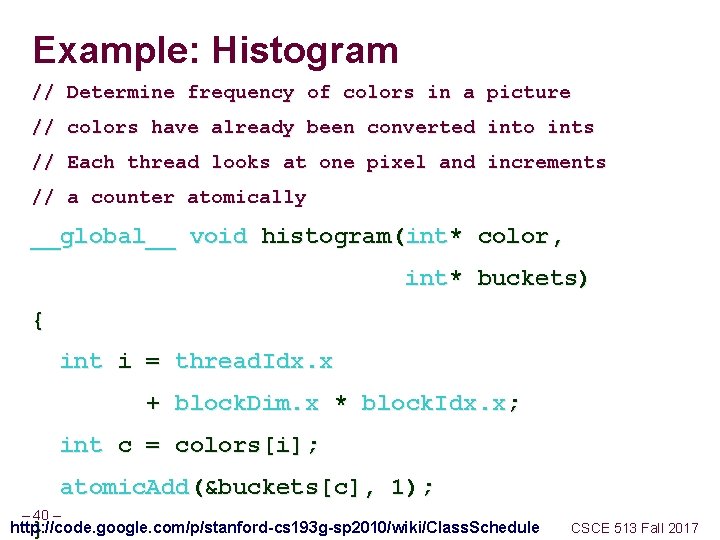

Atomics CUDA provides atomic operations to deal with this problem – 38 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

Atomics An atomic operation guarantees that only a single thread has access to a piece of memory while an operation completes The name atomic comes from the fact that it is uninterruptable No dropped data, but ordering is still arbitrary Different types of atomic instructions atomic{Add, Sub, Exch, Min, Max, Inc, Dec, CAS, And, Or, Xor} More types in fermi – 39 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

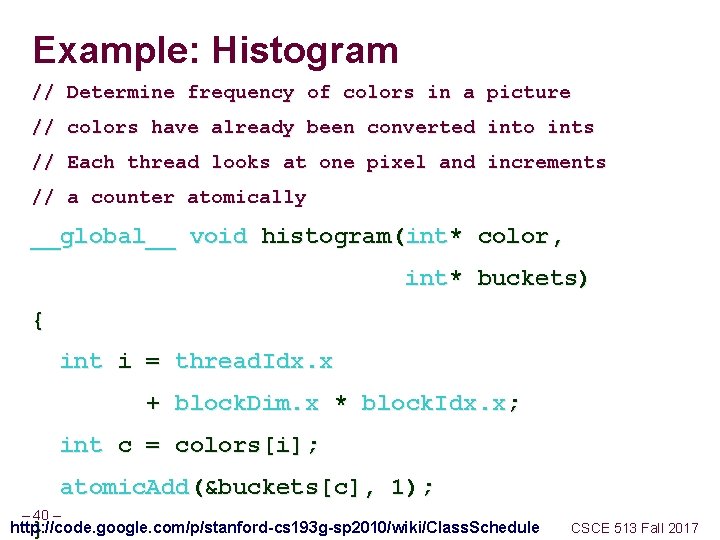

Example: Histogram // Determine frequency of colors in a picture // colors have already been converted into ints // Each thread looks at one pixel and increments // a counter atomically __global__ void histogram(int* color, int* buckets) { int i = thread. Idx. x + block. Dim. x * block. Idx. x; int c = colors[i]; atomic. Add(&buckets[c], 1); – 40 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule } CSCE 513 Fall 2017

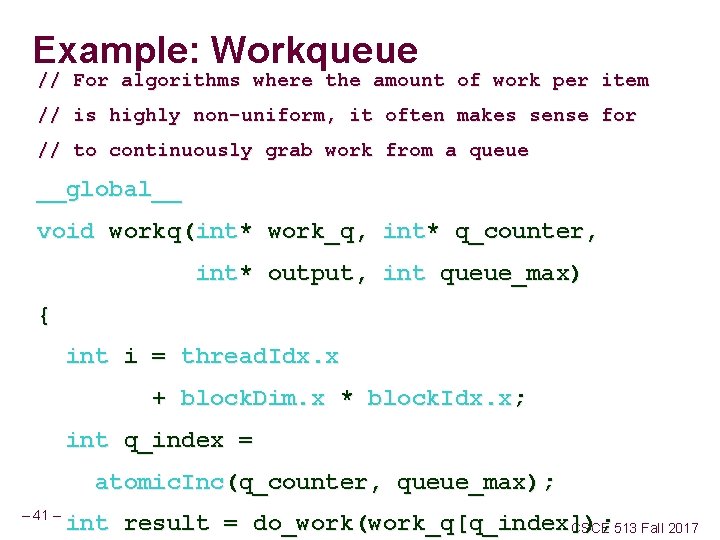

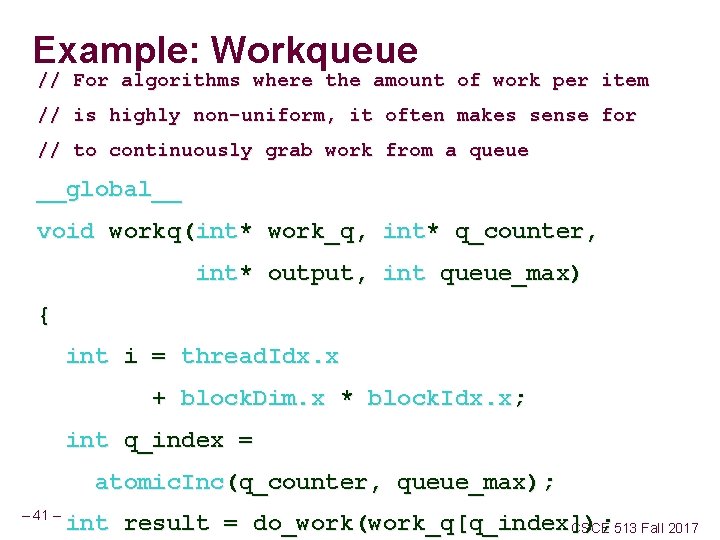

Example: Workqueue // For algorithms where the amount of work per item // is highly non-uniform, it often makes sense for // to continuously grab work from a queue __global__ void workq(int* work_q, int* q_counter, int* output, int queue_max) { int i = thread. Idx. x + block. Dim. x * block. Idx. x; int q_index = atomic. Inc(q_counter, queue_max); – 41 – int result = do_work(work_q[q_index]); CSCE 513 Fall 2017

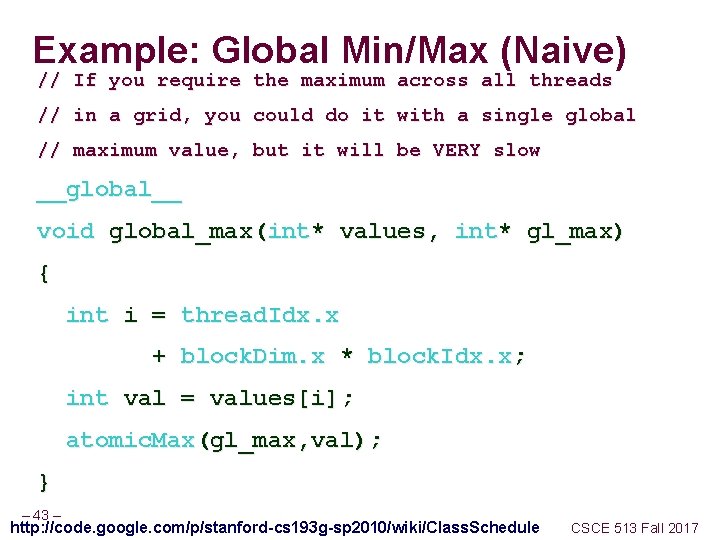

Atomics are slower than normal load/store You can have the whole machine queuing on a single location in memory Atomics unavailable on G 80! – 42 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

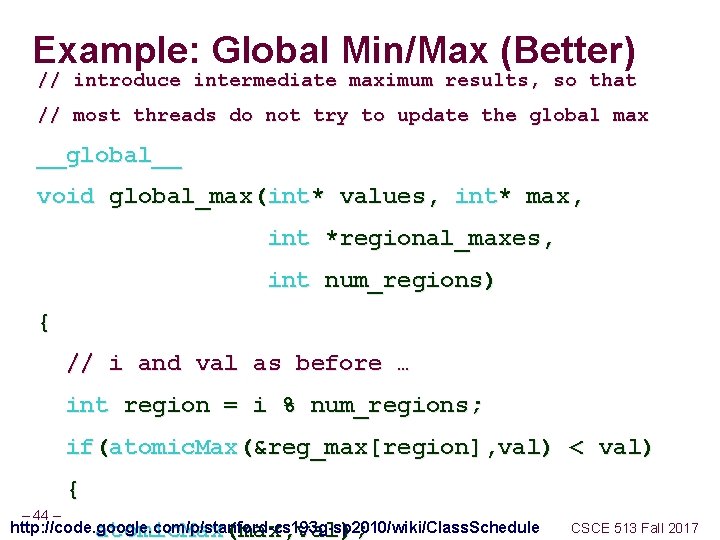

Example: Global Min/Max (Naive) // If you require the maximum across all threads // in a grid, you could do it with a single global // maximum value, but it will be VERY slow __global__ void global_max(int* values, int* gl_max) { int i = thread. Idx. x + block. Dim. x * block. Idx. x; int val = values[i]; atomic. Max(gl_max, val); } – 43 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

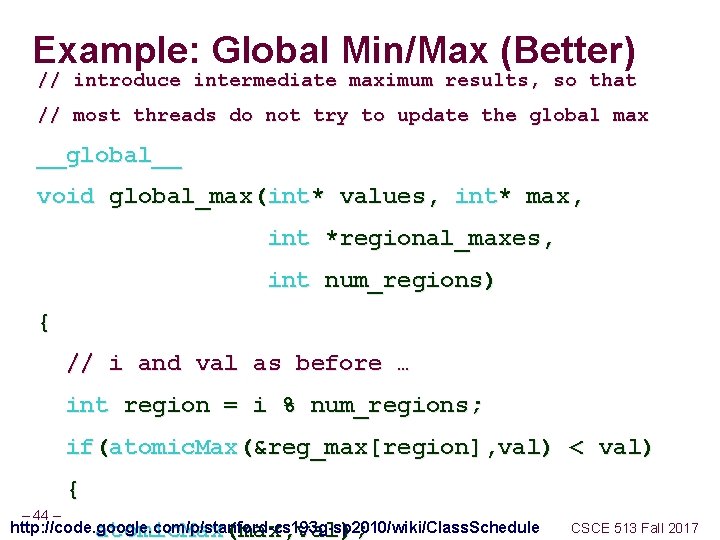

Example: Global Min/Max (Better) // introduce intermediate maximum results, so that // most threads do not try to update the global max __global__ void global_max(int* values, int* max, int *regional_maxes, int num_regions) { // i and val as before … int region = i % num_regions; if(atomic. Max(®_max[region], val) < val) { – 44 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule atomic. Max(max, val); CSCE 513 Fall 2017

Global Min/Max Single value causes serial bottleneck Create hierarchy of values for more parallelism Performance will still be slow, so use judiciously See next lecture for even better version! – 45 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

Summary Can’t use normal load/store for inter-thread communication because of race conditions Use atomic instructions for sparse and/or unpredictable global communication n See next lectures for shared memory and scan for other communication patterns Decompose data (very limited use of single global sum/max/min/etc. ) for more parallelism – 46 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

How an SM executes threads Overview of how a Stream Multiprocessor works SIMT Execution Divergence – 47 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

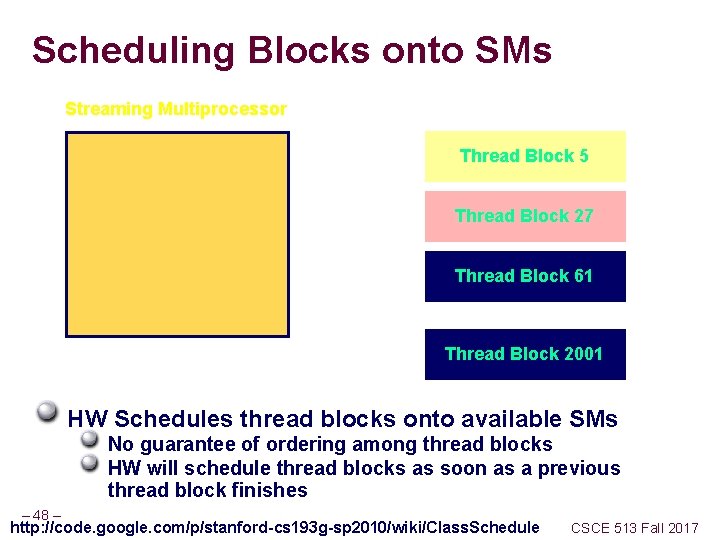

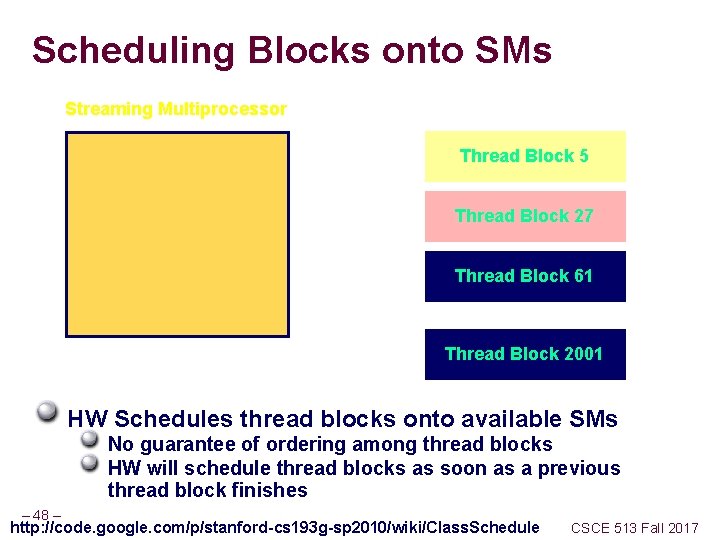

Scheduling Blocks onto SMs Streaming Multiprocessor Thread Block 5 Thread Block 27 Thread Block 61 Thread Block 2001 HW Schedules thread blocks onto available SMs No guarantee of ordering among thread blocks HW will schedule thread blocks as soon as a previous thread block finishes – 48 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

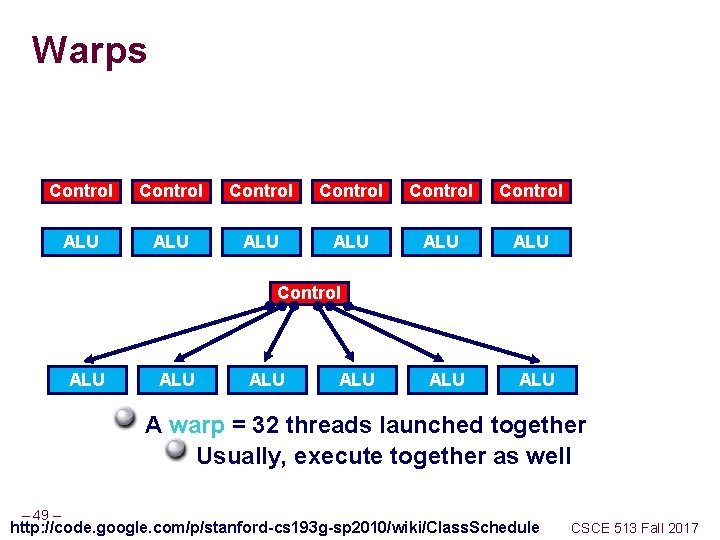

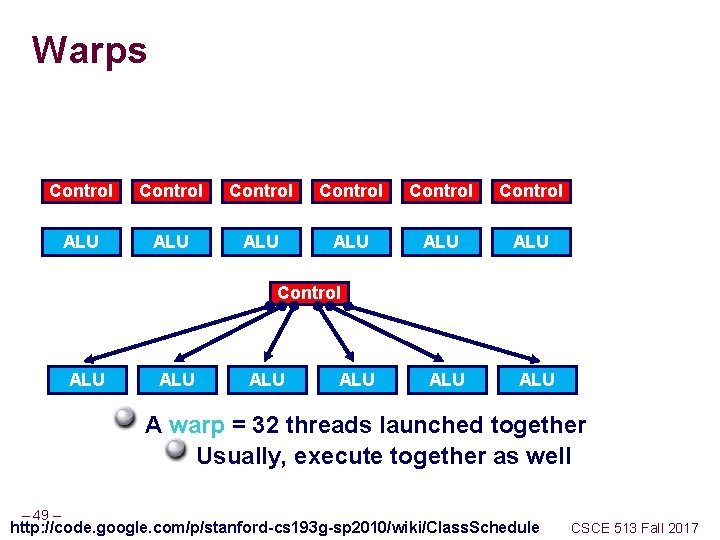

Warps Control Control ALU ALU ALU A warp = 32 threads launched together Usually, execute together as well – 49 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

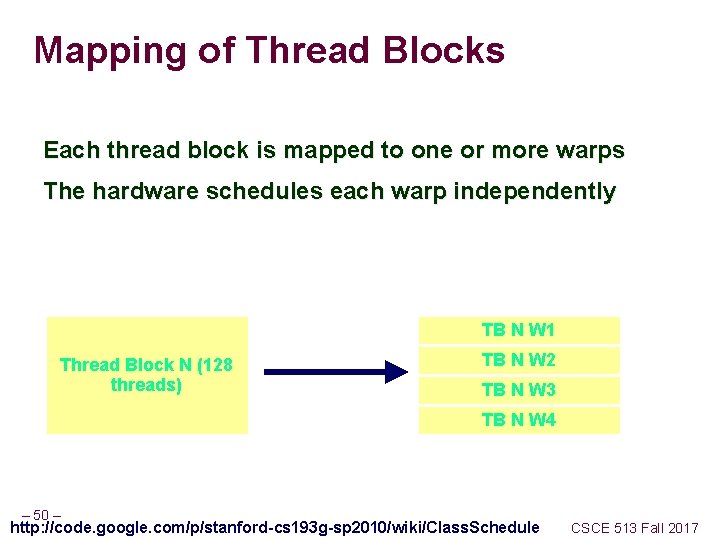

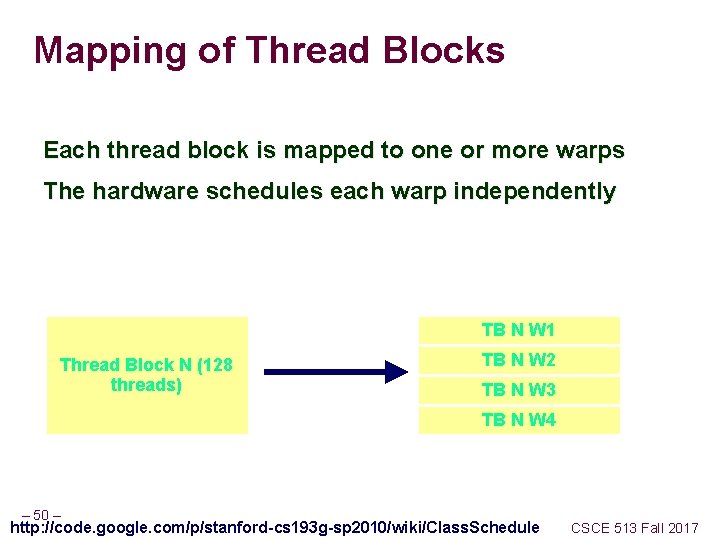

Mapping of Thread Blocks Each thread block is mapped to one or more warps The hardware schedules each warp independently TB N W 1 Thread Block N (128 threads) TB N W 2 TB N W 3 TB N W 4 – 50 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

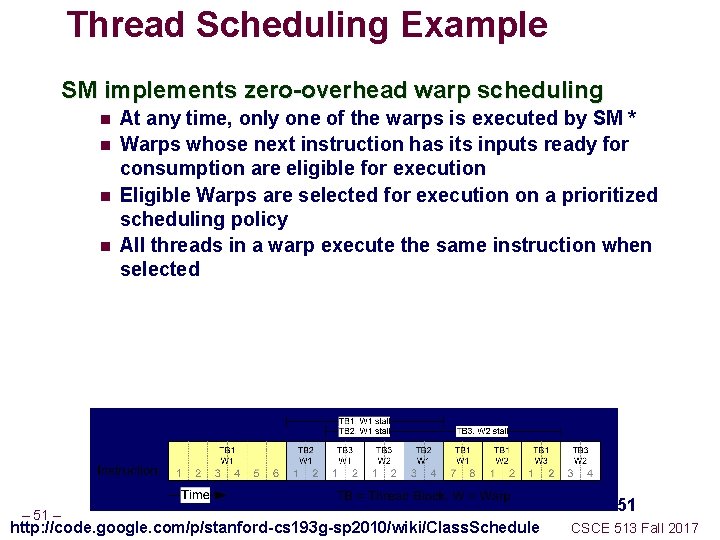

Thread Scheduling Example SM implements zero-overhead warp scheduling n n – 51 – At any time, only one of the warps is executed by SM * Warps whose next instruction has its inputs ready for consumption are eligible for execution Eligible Warps are selected for execution on a prioritized scheduling policy All threads in a warp execute the same instruction when selected http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule 51 CSCE 513 Fall 2017

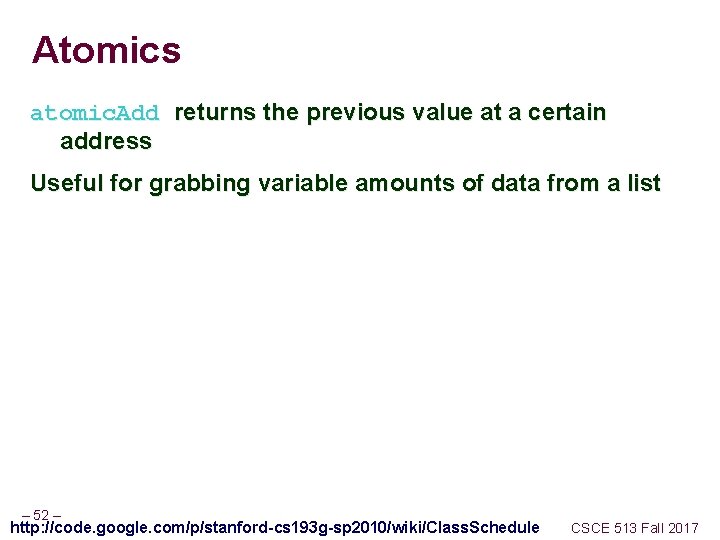

Atomics atomic. Add returns the previous value at a certain address Useful for grabbing variable amounts of data from a list – 52 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

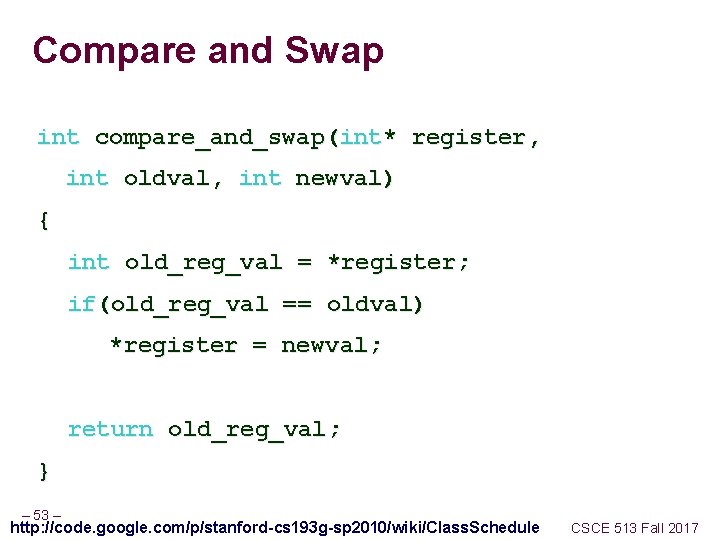

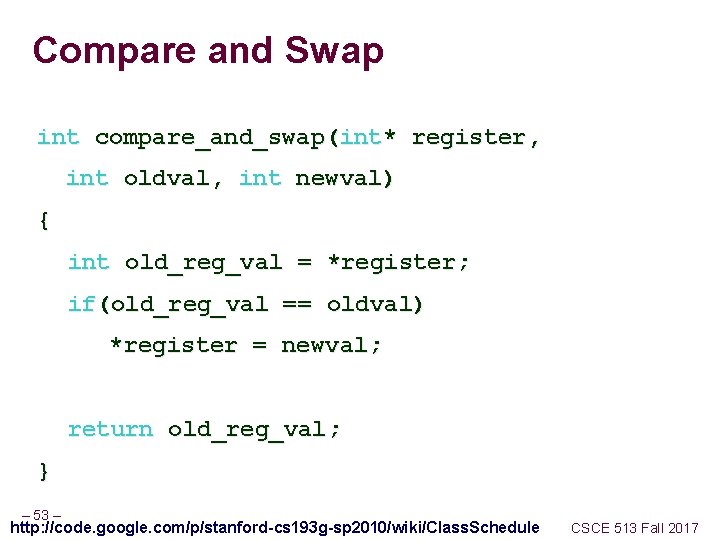

Compare and Swap int compare_and_swap(int* register, int oldval, int newval) { int old_reg_val = *register; if(old_reg_val == oldval) *register = newval; return old_reg_val; } – 53 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

Compare and Swap Most general type of atomic Can emulate all others with CAS – 54 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017

Locks Use very judiciously Always include a max_iter in your spinloop! Decompose your data and your locks – 55 – http: //code. google. com/p/stanford-cs 193 g-sp 2010/wiki/Class. Schedule CSCE 513 Fall 2017