CS 295 Modern Systems: Cache-Efficient Algorithms, Sang-Woo Jun


CS 295: Modern Systems. Cache-Efficient Algorithms. Sang-Woo Jun, Spring 2019

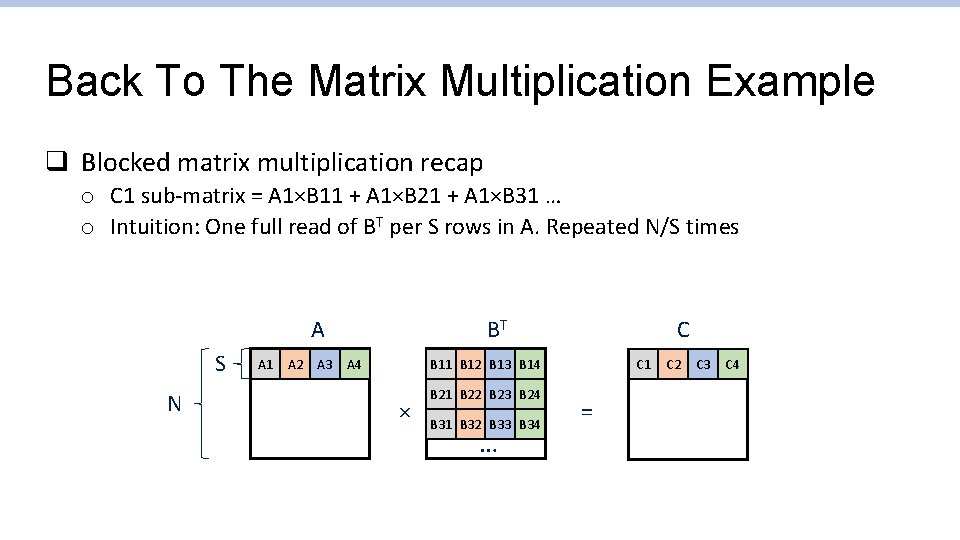

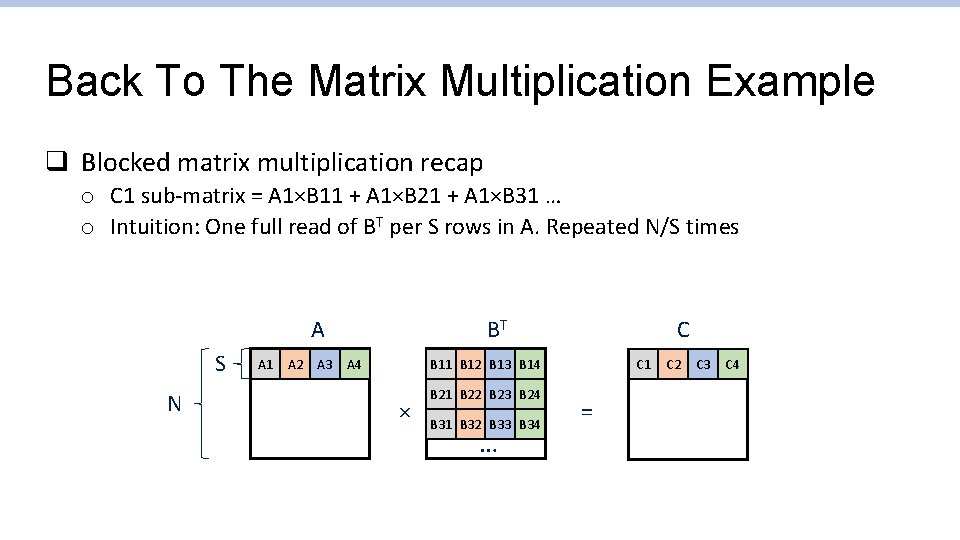

Back To The Matrix Multiplication Example

- Blocked matrix multiplication recap
  - C1 sub-matrix = A1×B11 + A1×B21 + A1×B31 + …
  - Intuition: one full read of Bᵀ per S rows of A, repeated N/S times

(Figure: A, of width N, divided into row blocks A1–A4 of height S; Bᵀ divided into blocks B11 … B34; the product C divided into row blocks C1–C4.)
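A minimal Python sketch of the blocked multiplication, assuming B is supplied already transposed (the parameter `BT`, with `BT[j][k] == B[k][j]`) so that both operands are read row by row, as in the figure. The function name and tile size `S` are illustrative; pure Python will not show the cache benefit, so the point here is only the loop structure over S × S tiles.

```python
def blocked_matmul(A, BT, S):
    """Return C = A x B for square list-of-lists matrices, where BT is B transposed.
    Work is done tile by tile so that, with a well-chosen S, the tiles being
    combined can stay in cache."""
    n = len(A)
    C = [[0] * n for _ in range(n)]
    for ii in range(0, n, S):            # S rows of A (and of C) at a time
        for jj in range(0, n, S):        # S rows of BT, i.e. S columns of C
            for kk in range(0, n, S):    # S-wide slice of the shared dimension
                for i in range(ii, min(ii + S, n)):
                    a_row, c_row = A[i], C[i]
                    for j in range(jj, min(jj + S, n)):
                        bt_row = BT[j]
                        acc = 0
                        for k in range(kk, min(kk + S, n)):
                            acc += a_row[k] * bt_row[k]   # both rows read contiguously
                        c_row[j] += acc
    return C

# usage: B = [[5, 6], [7, 8]] transposed
print(blocked_matmul([[1, 2], [3, 4]], [[5, 7], [6, 8]], S=1))  # [[19, 22], [43, 50]]
```

The tile loops (`ii`, `jj`, `kk`) only change the order in which the same products are accumulated, so the result is identical for any `S`, including values that do not divide `n`.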

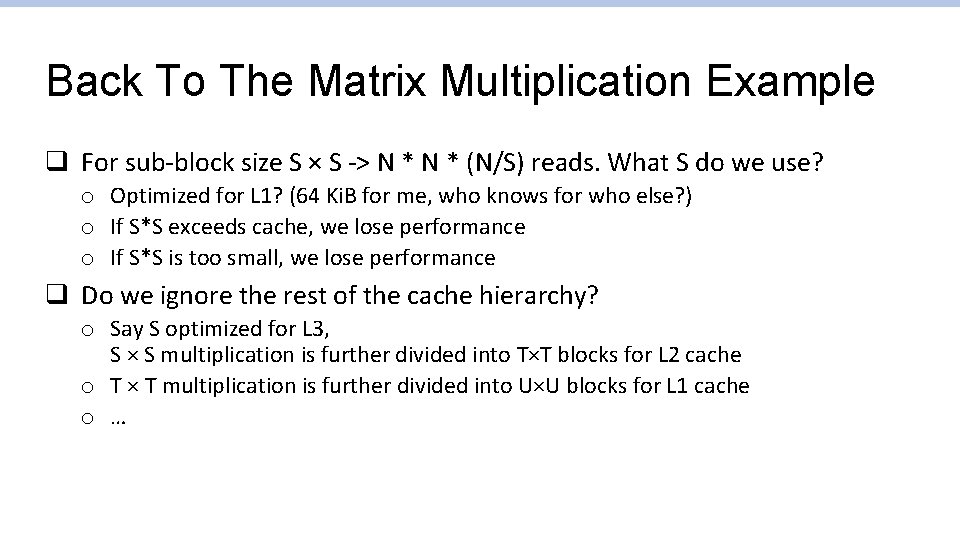

Back To The Matrix Multiplication Example

- For sub-block size S × S: N × (N/S) reads. What S do we use?
  - Optimized for L1? (64 KiB for me, but who knows for anyone else?)
  - If S × S exceeds the cache, we lose performance
  - If S × S is too small, we lose performance
- Do we ignore the rest of the cache hierarchy?
  - Say S is optimized for L3: the S × S multiplication is further divided into T × T blocks for the L2 cache
  - The T × T multiplication is further divided into U × U blocks for the L1 cache
  - …
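A back-of-the-envelope sketch of why one S is awkward. It assumes a rule of thumb that is not from the slide: one S × S tile each of A, B and C must stay resident at once. Under that assumption the largest usable S follows directly from the capacity of the targeted cache level. Only the 64 KiB figure comes from the slide; the L2 and L3 capacities are hypothetical.

```python
from math import isqrt

def max_tile(cache_bytes, elem_bytes=8, tiles_resident=3):
    """Largest S such that `tiles_resident` S x S tiles of `elem_bytes`-byte
    elements fit in `cache_bytes`. Rule-of-thumb assumption: ignores
    associativity, other data in the cache, and hardware prefetching."""
    return isqrt(cache_bytes // (elem_bytes * tiles_resident))

# L1 size from the slide; L2 and L3 sizes are hypothetical examples.
for level, size in [("L1", 64 * 1024), ("L2", 1024 * 1024), ("L3", 32 * 1024 * 1024)]:
    print(level, max_tile(size))   # L1 52, L2 209, L3 1182
```

Each level yields a different answer (S, T, U, …), and the values change again on every machine. That tuning burden is what the cache-oblivious approach tries to avoid.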

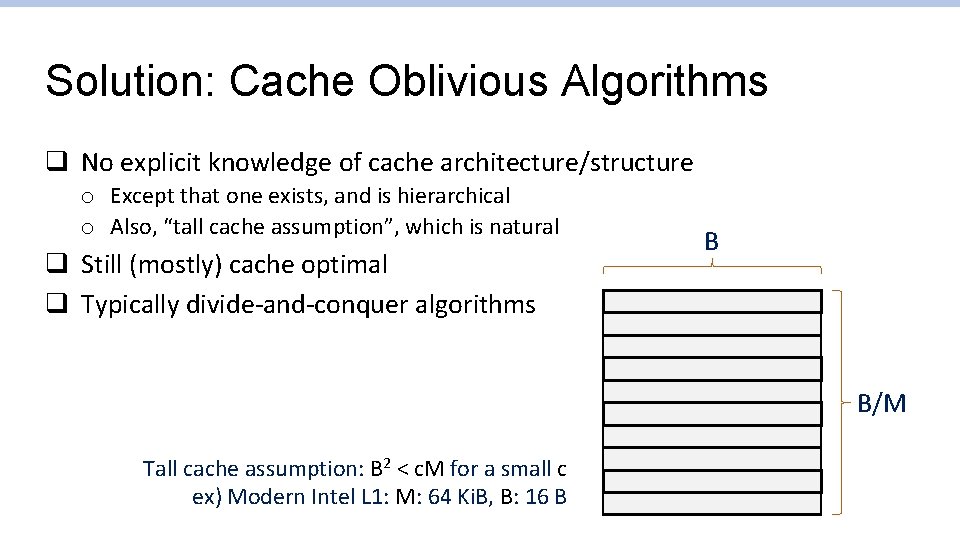

Solution: Cache-Oblivious Algorithms

- No explicit knowledge of cache architecture/structure
  - Except that one exists, and is hierarchical
  - Also, the “tall cache assumption”, which is natural
- Still (mostly) cache optimal
- Typically divide-and-conquer algorithms

(Figure: a cache of size M drawn as M/B lines of B bytes each.)

Tall cache assumption: B² < cM for a small constant c. Example: modern Intel L1, M = 64 KiB, B = 64 B.

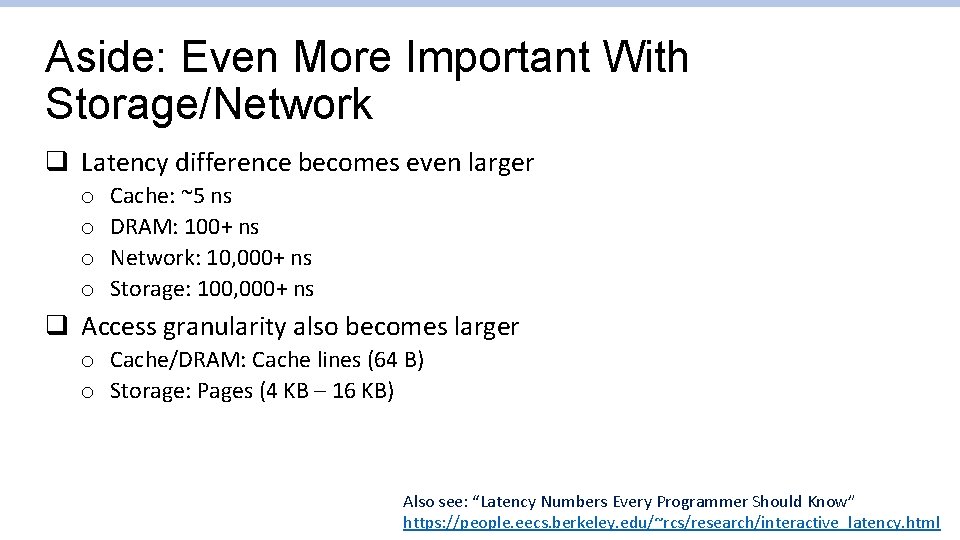

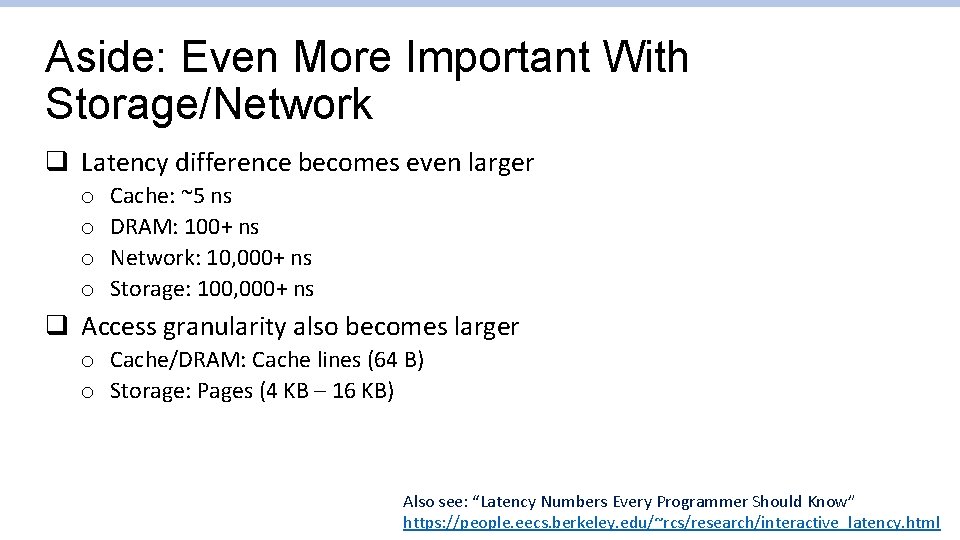

Aside: Even More Important With Storage/Network

- Latency difference becomes even larger
  - Cache: ~5 ns
  - DRAM: 100+ ns
  - Network: 10,000+ ns
  - Storage: 100,000+ ns
- Access granularity also becomes larger
  - Cache/DRAM: cache lines (64 B)
  - Storage: pages (4 KB – 16 KB)

Also see: “Latency Numbers Every Programmer Should Know”, https://people.eecs.berkeley.edu/~rcs/research/interactive_latency.html

Applications of Interest

- Matrix multiplication
- Merge Sort
- Stencil Computation
- Trees And Search

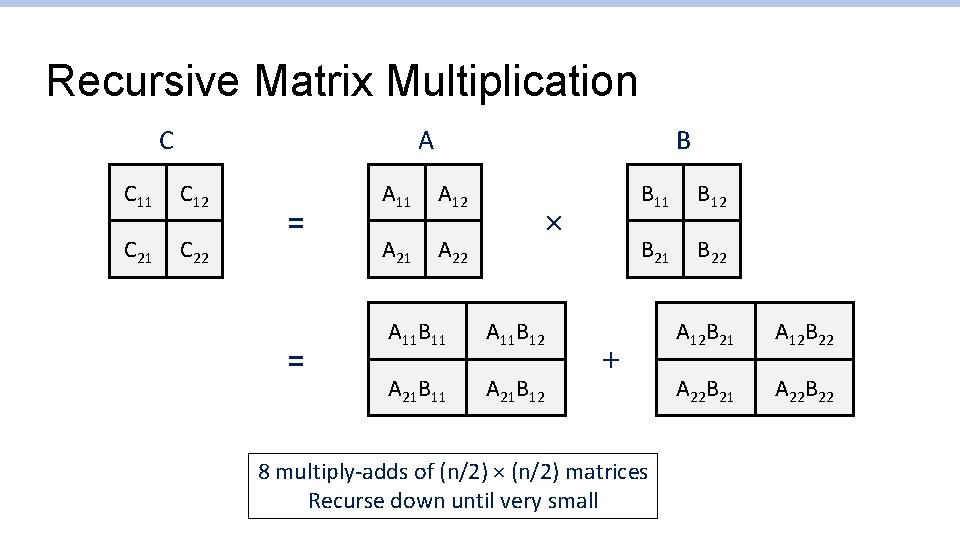

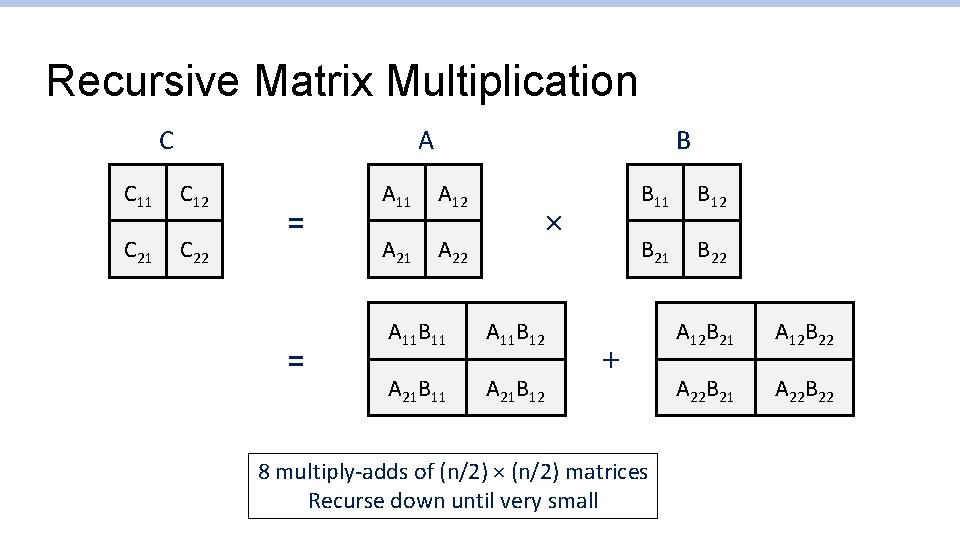

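Recursive Matrix Multiplication

$$
\begin{bmatrix} C_{11} & C_{12} \\ C_{21} & C_{22} \end{bmatrix}
=
\begin{bmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{bmatrix}
\times
\begin{bmatrix} B_{11} & B_{12} \\ B_{21} & B_{22} \end{bmatrix}
=
\begin{bmatrix} A_{11}B_{11} & A_{11}B_{12} \\ A_{21}B_{11} & A_{21}B_{12} \end{bmatrix}
+
\begin{bmatrix} A_{12}B_{21} & A_{12}B_{22} \\ A_{22}B_{21} & A_{22}B_{22} \end{bmatrix}
$$

- 8 multiply-adds of (n/2) × (n/2) matrices
- Recurse down until very small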
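A minimal Python sketch of the 8-way recursion, assuming n is a power of two and the matrices are lists of lists. Sub-matrices are identified by their top-left corners instead of being copied; the function name and the `base` cutoff (where the recursion switches to a plain triple loop) are illustrative choices.

```python
def matmul_add(A, B, C, n, a=(0, 0), b=(0, 0), c=(0, 0), base=8):
    """C[c] += A[a] x B[b] for the n x n sub-blocks whose top-left corners are
    a, b, c as (row, col). Assumes n is a power of two."""
    (ar, ac), (br, bc), (cr, cc) = a, b, c
    if n <= base:
        # Small enough: plain triple loop on the sub-blocks
        for i in range(n):
            for k in range(n):
                aik = A[ar + i][ac + k]
                for j in range(n):
                    C[cr + i][cc + j] += aik * B[br + k][bc + j]
        return
    h = n // 2
    # 8 half-size multiply-adds: C_ij += A_ik x B_kj for each i, j, k half
    for i in (0, h):
        for j in (0, h):
            for k in (0, h):
                matmul_add(A, B, C, h, (ar + i, ac + k), (br + k, bc + j),
                           (cr + i, cc + j), base)

# usage
C = [[0, 0], [0, 0]]
matmul_add([[1, 2], [3, 4]], [[5, 6], [7, 8]], C, 2, base=1)
print(C)  # [[19, 22], [43, 50]]
```

Nothing in the code mentions a cache size: once a sub-problem is small enough to fit in some level of the hierarchy, all of its recursive calls are served from that level.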

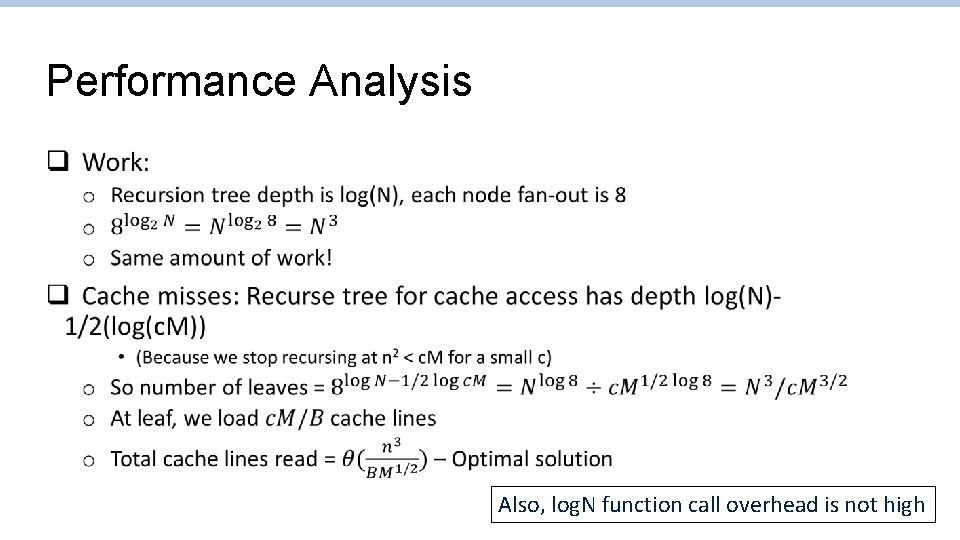

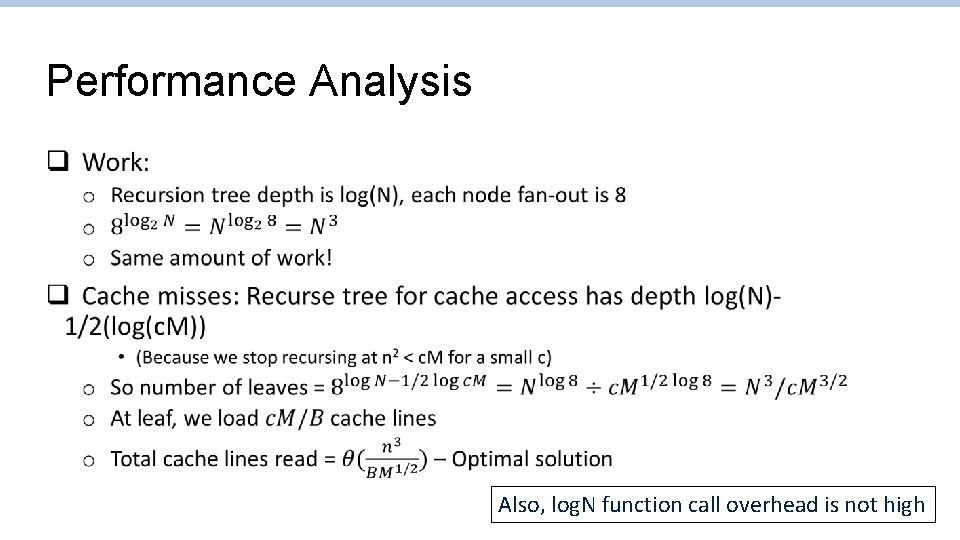

Performance Analysis

- Also, the function-call overhead of the log N-deep recursion is not high
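For reference, the standard cache-miss recurrence for this 8-way recursion can be written as follows. Here Q(n) is the number of cache misses for an n × n multiply-add. The base case assumes the tall-cache assumption, so a sub-problem with n² ≤ cM is loaded in Θ(n²/B) misses and then runs entirely in cache.

$$
Q(n) =
\begin{cases}
\Theta\!\left(n^2 / B\right) & \text{if } n^2 \le cM \\[4pt]
8\,Q(n/2) + \Theta(1) & \text{otherwise}
\end{cases}
\qquad\Longrightarrow\qquad
Q(n) = \underbrace{\Theta\!\left(\left(\frac{n}{\sqrt{M}}\right)^{3}\right)}_{\text{in-cache sub-problems}} \cdot \underbrace{\Theta\!\left(\frac{M}{B}\right)}_{\text{misses each}} = \Theta\!\left(\frac{n^3}{B\sqrt{M}}\right)
$$

The recursion stops being "expensive" at sub-problems of side Θ(√M). Because this holds for every level's M at once, one recursive algorithm is efficient for L1, L2 and L3 simultaneously.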

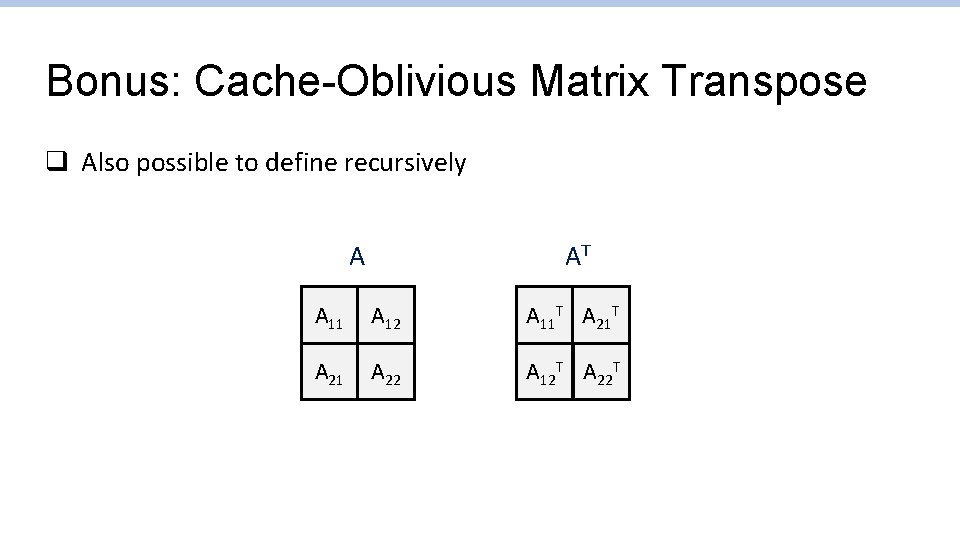

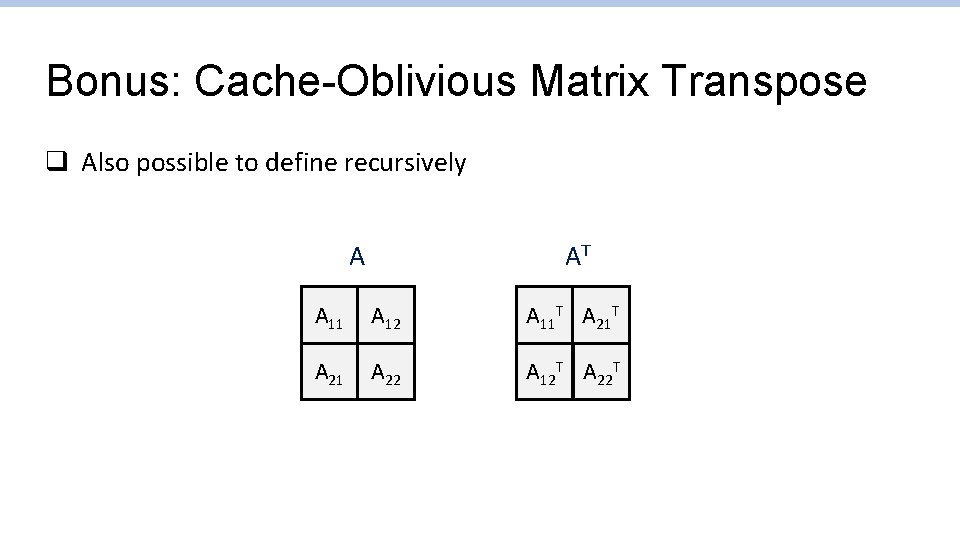

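Bonus: Cache-Oblivious Matrix Transpose

- Also possible to define recursively:

$$
A = \begin{bmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{bmatrix}
\quad\Longrightarrow\quad
A^T = \begin{bmatrix} A_{11}^T & A_{21}^T \\ A_{12}^T & A_{22}^T \end{bmatrix}
$$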
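A minimal Python sketch of a recursive out-of-place transpose. Instead of always cutting into four quadrants, it halves whichever side is longer. This is a common variant that applies the same block rule and also handles non-square matrices. The function names and the `base` cutoff are illustrative.

```python
def transpose_into(A, T, r0, r1, c0, c1, base=8):
    """Write the transpose of A[r0:r1][c0:c1] into T, so T[j][i] = A[i][j].
    Halves the longer side until the block is at most base x base."""
    rows, cols = r1 - r0, c1 - c0
    if rows <= base and cols <= base:
        for i in range(r0, r1):
            for j in range(c0, c1):
                T[j][i] = A[i][j]
    elif rows >= cols:
        mid = r0 + rows // 2                 # top half, then bottom half
        transpose_into(A, T, r0, mid, c0, c1, base)
        transpose_into(A, T, mid, r1, c0, c1, base)
    else:
        mid = c0 + cols // 2                 # left half, then right half
        transpose_into(A, T, r0, r1, c0, mid, base)
        transpose_into(A, T, r0, r1, mid, c1, base)

def transpose(A, base=8):
    m, n = len(A), len(A[0])
    T = [[None] * m for _ in range(n)]
    transpose_into(A, T, 0, m, 0, n, base)
    return T

print(transpose([[1, 2, 3], [4, 5, 6]]))  # [[1, 4], [2, 5], [3, 6]]
```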

Applications of Interest

- Matrix multiplication
- Trees And Search
- Merge Sort
- Stencil Computation

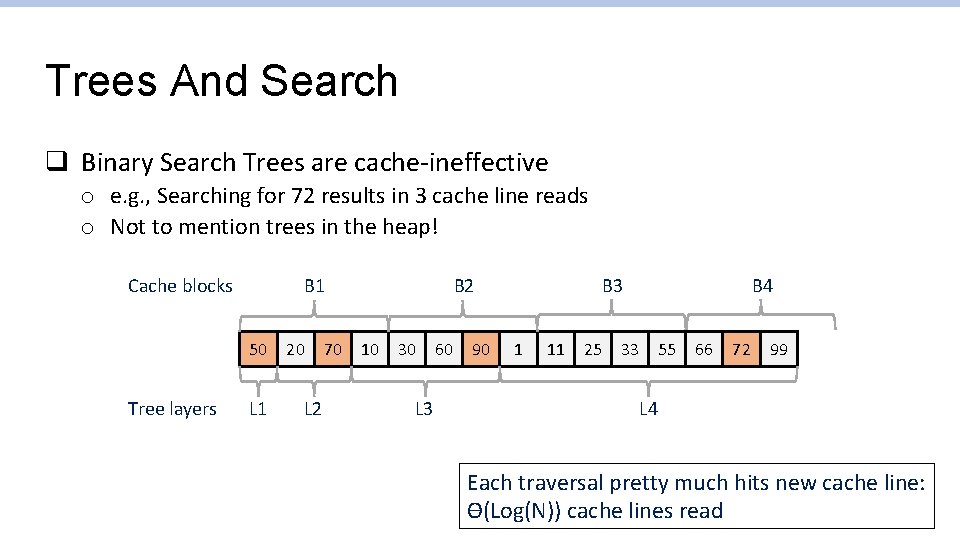

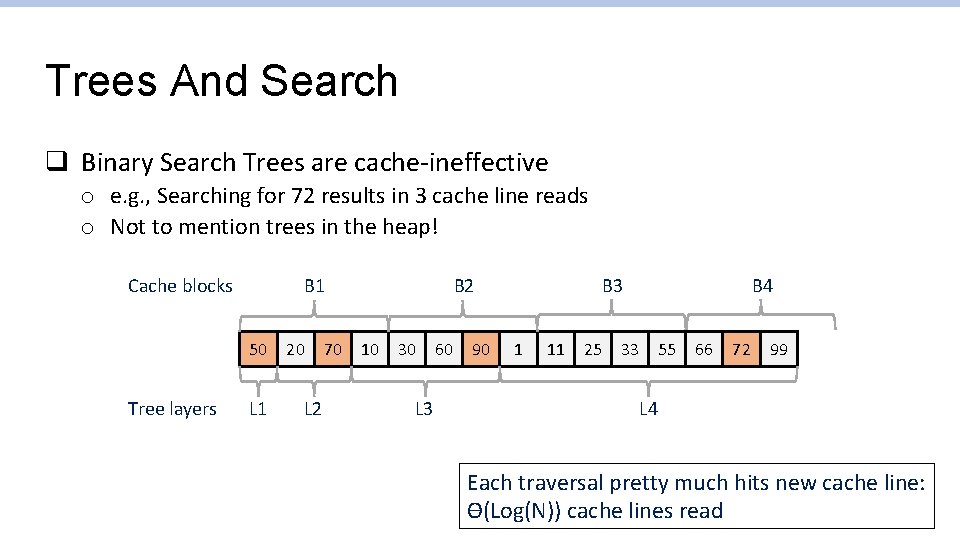

Trees And Search

- Binary search trees are cache-ineffective
  - e.g., searching for 72 results in 3 cache line reads
  - Not to mention pointer-based trees scattered across the heap!
- Each traversal step pretty much hits a new cache line: Θ(log N) cache lines read

(Figure: a 4-layer binary search tree, layers L1–L4 holding 50; 20, 70; 10, 30, 60, 90; 1, 11, 25, 33, 55, 66, 72, 99, stored layer by layer across cache blocks B1–B4.)
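A small Python model of the figure: the tree is stored breadth-first in an array (children of index i at 2i+1 and 2i+2), and a search counts which fixed-size blocks of that array it touches. The block size of 4 keys per cache line is a hypothetical choice, and the function names are illustrative. It still reproduces the 3 reads for key 72.

```python
def bfs_layout(sorted_keys):
    """Place 2**h - 1 sorted keys into a perfect BST stored in breadth-first
    (heap) order: the children of index i are 2i+1 and 2i+2."""
    n = len(sorted_keys)
    assert (n + 1) & n == 0, "expects a perfect tree: n = 2**h - 1"
    out = [None] * n
    def fill(i, lo, hi):
        if i < n and lo < hi:
            mid = (lo + hi) // 2
            out[i] = sorted_keys[mid]
            fill(2 * i + 1, lo, mid)
            fill(2 * i + 2, mid + 1, hi)
    fill(0, 0, n)
    return out

def blocks_touched(layout, key, block_size):
    """Array blocks (index // block_size) visited while searching for key."""
    touched, i = [], 0
    while i < len(layout):
        b = i // block_size
        if b not in touched:
            touched.append(b)
        if layout[i] == key:
            break
        i = 2 * i + 1 if key < layout[i] else 2 * i + 2
    return touched

keys = [1, 10, 11, 20, 25, 30, 33, 50, 55, 60, 66, 70, 72, 90, 99]
layout = bfs_layout(keys)
print(layout)                         # [50, 20, 70, 10, 30, 60, 90, 1, 11, 25, 33, 55, 66, 72, 99]
print(blocks_touched(layout, 72, 4))  # [0, 1, 3]: 3 cache lines
```

Only the top few levels share a block. Below them, every step lands in a new block, which is where the Θ(log N) cache-line count comes from.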

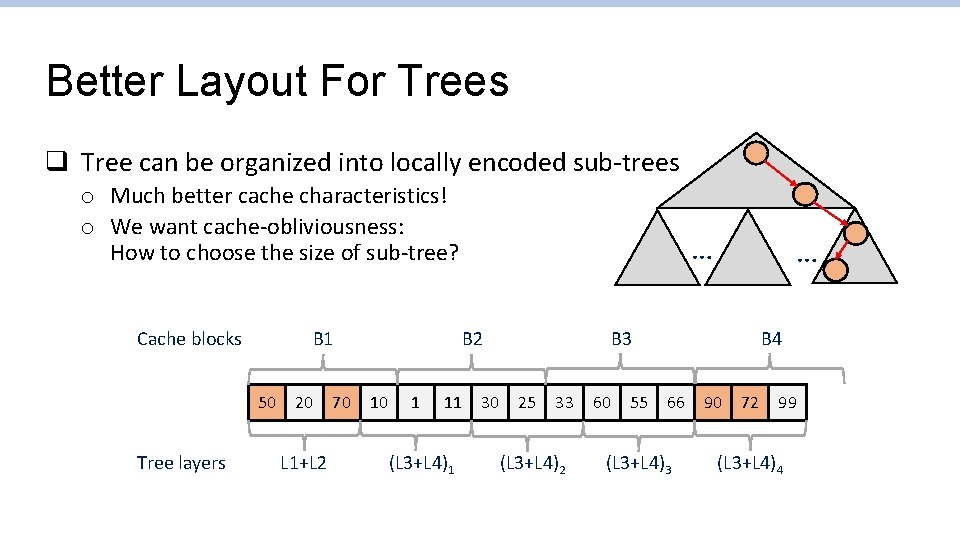

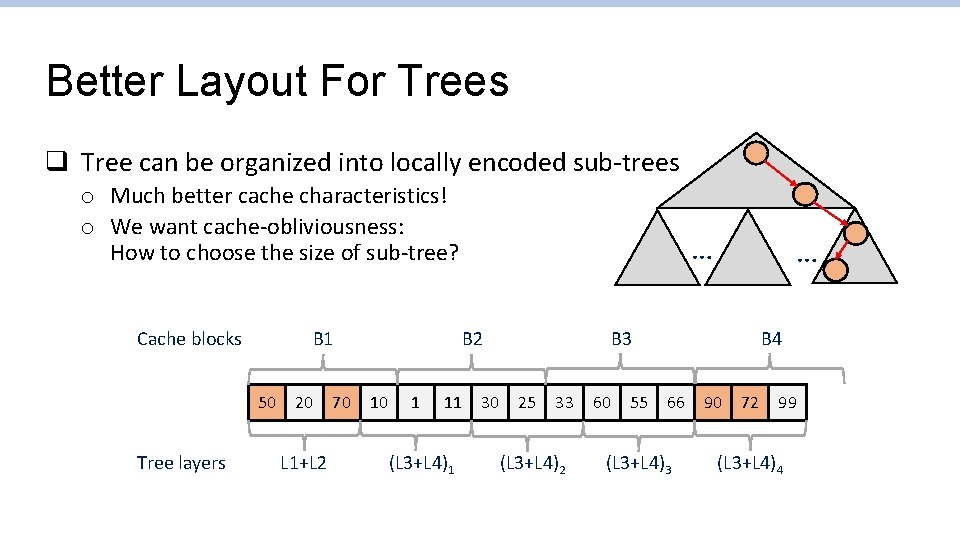

Better Layout For Trees

- The tree can be organized into sub-trees that are each stored locally (contiguously)
  - Much better cache characteristics!
  - We want cache-obliviousness: how do we choose the size of a sub-tree?

(Figure: the same tree split into a top sub-tree of layers L1+L2 (50, 20, 70) and four bottom sub-trees of layers L3+L4, (L3+L4)1 to (L3+L4)4: (10, 1, 11), (30, 25, 33), (60, 55, 66), (90, 72, 99), each stored contiguously; cache blocks B1–B4 are marked.)

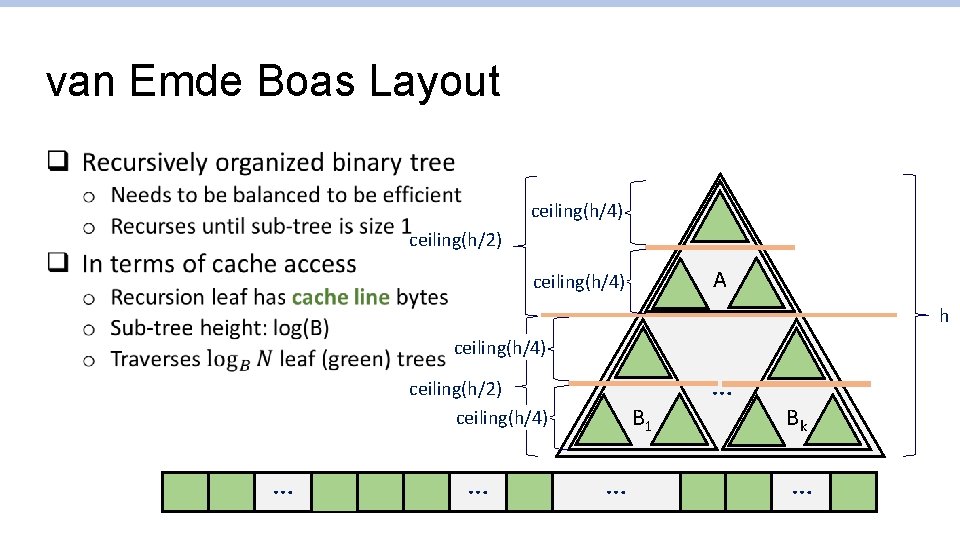

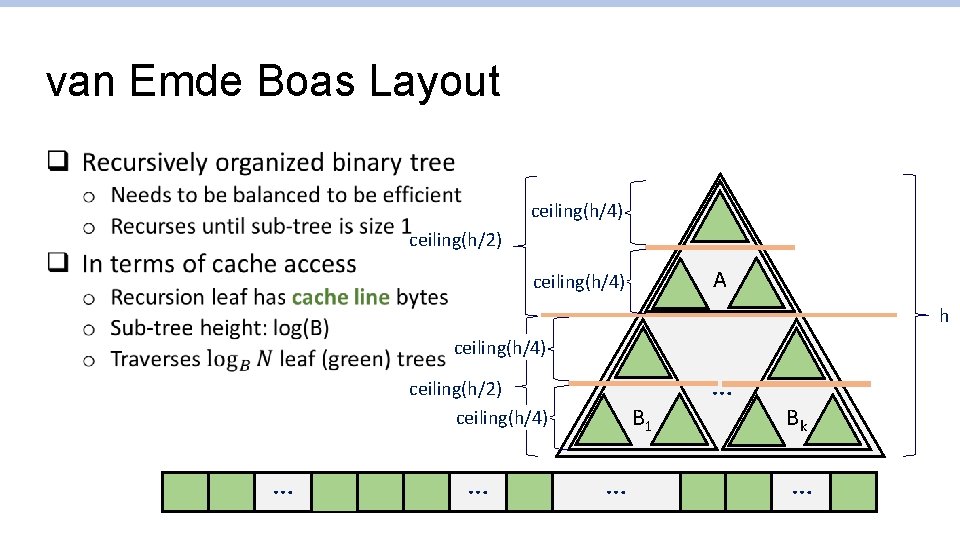

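van Emde Boas Layout

(Figure: a tree of height h is cut at half its height into a top sub-tree A and bottom sub-trees B1 … Bk, each of height about h/2, labeled ceiling(h/2). The pieces are stored contiguously in the order A, B1, …, Bk, and each piece is laid out the same way recursively, e.g. cut again into pieces of height about h/4, labeled ceiling(h/4).)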
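A minimal Python sketch that computes the van Emde Boas order of a perfect binary tree, using 1-based heap numbering (children of node i are 2i and 2i+1). It also counts the cache blocks touched on a root-to-node path under both layouts. Giving the top part floor(h/2) levels is an assumption, since conventions differ on how odd heights are split. The function names and the 4-node block size are hypothetical.

```python
def veb_order(h):
    """Heap indices (root = 1, children of i are 2i and 2i+1) of a perfect
    binary tree of height h, listed in van Emde Boas order."""
    def lay_out(root, height):
        if height == 1:
            return [root]
        top = height // 2                 # assumption: top part gets floor(h/2)
        bottom = height - top
        order = lay_out(root, top)        # top sub-tree A first ...
        first = root << top               # leftmost node `top` levels below root
        for r in range(first, first + (1 << top)):
            order += lay_out(r, bottom)   # ... then bottom sub-trees B1 .. Bk
        return order
    return lay_out(1, h)

def lines_on_path(position, node, block_size):
    """Number of distinct blocks touched walking from the root down to `node`."""
    blocks = set()
    while node >= 1:
        blocks.add(position[node] // block_size)
        node //= 2                        # parent in heap numbering
    return len(blocks)

order = veb_order(4)
veb_pos = {node: i for i, node in enumerate(order)}
bfs_pos = {node: node - 1 for node in order}   # breadth-first layout, for comparison
print(order)  # [1, 2, 3, 4, 8, 9, 5, 10, 11, 6, 12, 13, 7, 14, 15]
# Node 14 holds key 72 in the earlier figure.
print(lines_on_path(bfs_pos, 14, 4), lines_on_path(veb_pos, 14, 4))  # 3 2
```

For height 4 this gives exactly the grouping of the previous figure: (50, 20, 70) followed by the four 3-node bottom sub-trees. No block size appears in `veb_order`, so the locality holds at every granularity.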

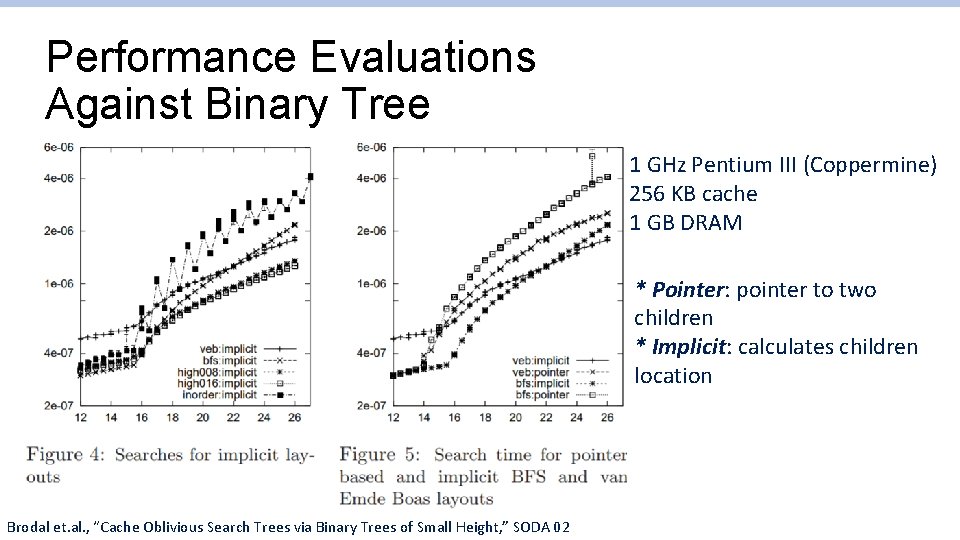

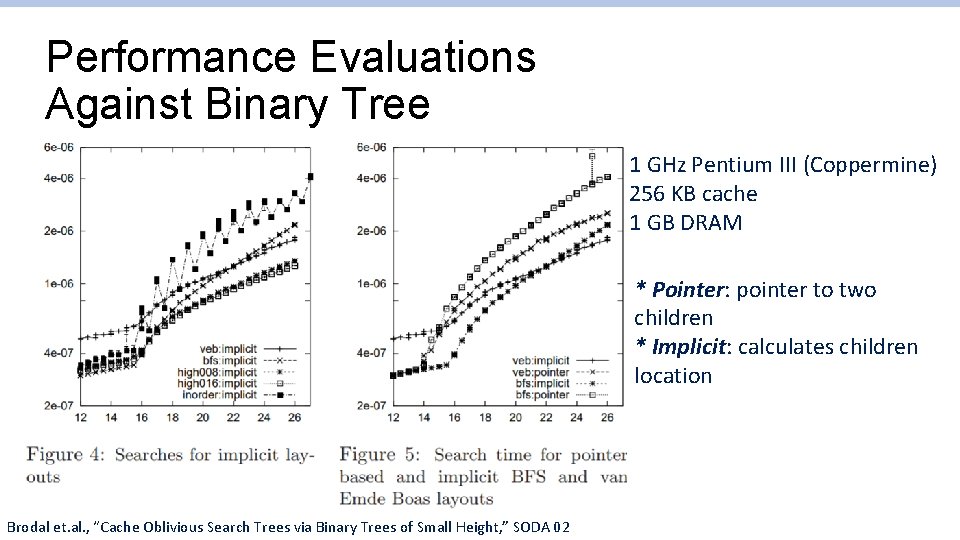

Performance Evaluations Against Binary Tree

- Platform: 1 GHz Pentium III (Coppermine), 256 KB cache, 1 GB DRAM
- Pointer: each node stores pointers to its two children
- Implicit: the children's locations are calculated

Source: Brodal et al., “Cache Oblivious Search Trees via Binary Trees of Small Height,” SODA ’02

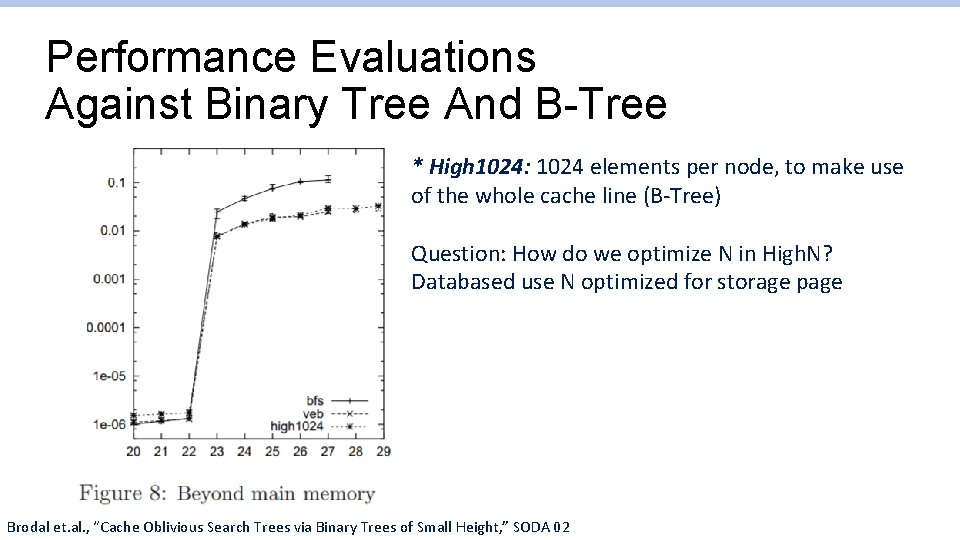

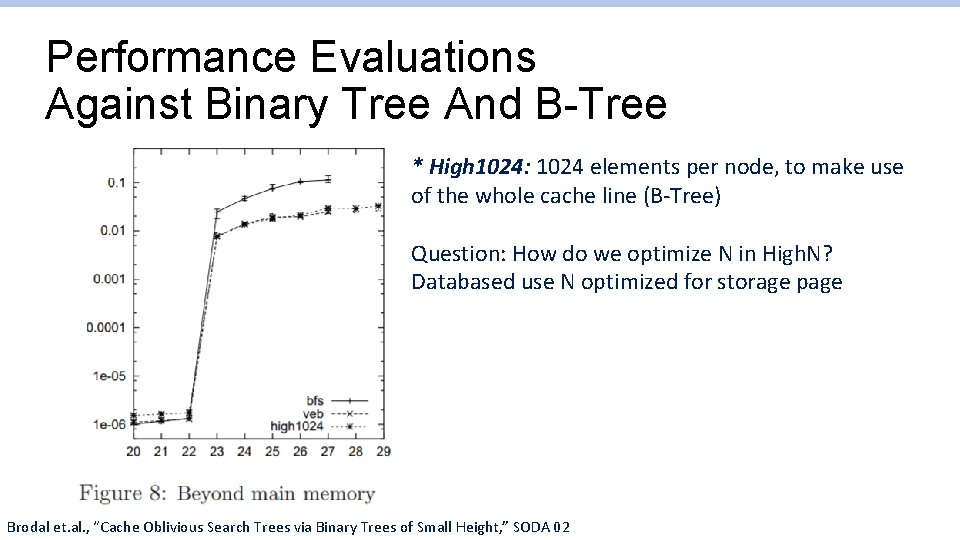

Performance Evaluations Against Binary Tree And B-Tree

- High1024: 1024 elements per node, to make use of the whole cache line (B-Tree)
- Question: how do we optimize N in HighN? Databases use an N optimized for the storage page

Source: Brodal et al., “Cache Oblivious Search Trees via Binary Trees of Small Height,” SODA ’02

More on the van Emde Boas-Layout Tree

- Actually a tricky data structure for inserts/deletions
  - The tree needs to stay balanced to be effective
  - van Emde Boas-layout trees with van Emde Boas-layout trees as leaves?
- A good thing to keep in the back of your head!

Applications of Interest

- Matrix multiplication
- Trees And Search
- Merge Sort
- Stencil Computation

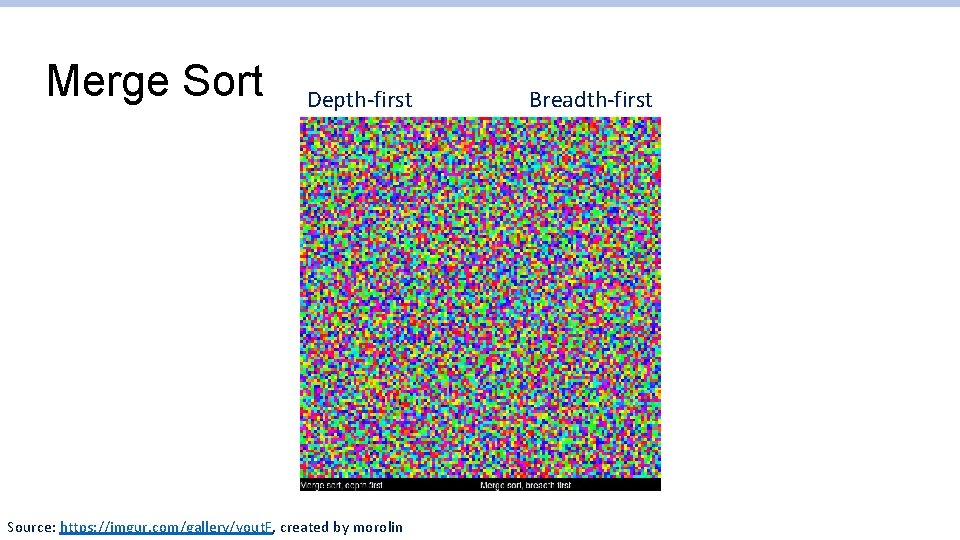

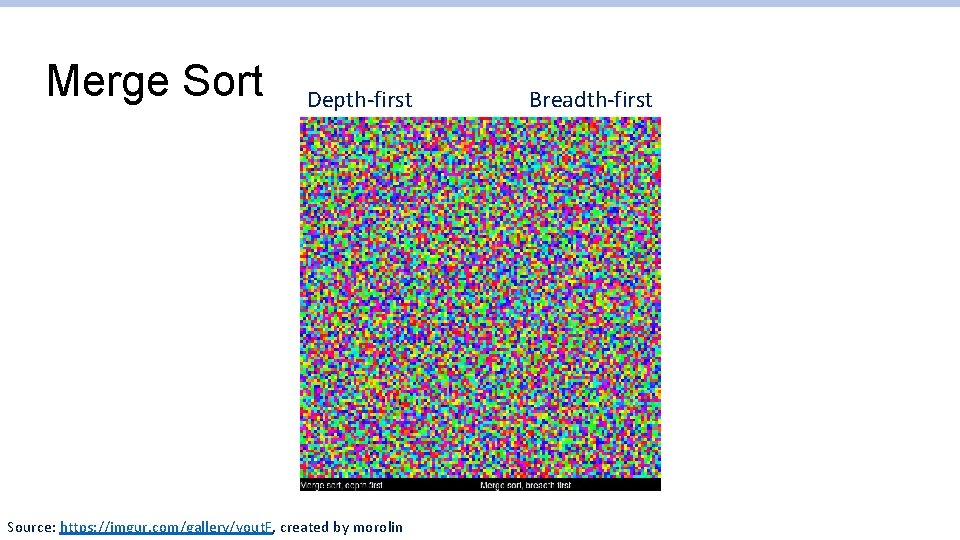

Merge Sort

(Figure: animations of depth-first and breadth-first merge sort. Source: https://imgur.com/gallery/voutF, created by morolin)
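A minimal Python sketch contrasting the two traversal orders in the animations (function names are illustrative). The depth-first version is ordinary top-down recursion: it finishes one half completely before touching the other, so once a sub-array fits in cache, the rest of its work stays there. The breadth-first version merges runs of width 1, then 2, then 4, and so on, so every pass sweeps the whole array. Both produce the same result; only the order in which data is touched differs.

```python
def merge(a, b):
    """Merge two sorted lists into one sorted list."""
    out, i, j = [], 0, 0
    while i < len(a) and j < len(b):
        if b[j] < a[i]:
            out.append(b[j]); j += 1
        else:
            out.append(a[i]); i += 1
    out.extend(a[i:]); out.extend(b[j:])
    return out

def mergesort_depth_first(xs):
    """Top-down: each half is fully sorted before the other half is touched."""
    if len(xs) <= 1:
        return list(xs)
    mid = len(xs) // 2
    return merge(mergesort_depth_first(xs[:mid]), mergesort_depth_first(xs[mid:]))

def mergesort_breadth_first(xs):
    """Bottom-up: each pass merges neighbouring runs across the whole array."""
    runs = [[x] for x in xs]
    while len(runs) > 1:
        runs = [merge(runs[i], runs[i + 1]) if i + 1 < len(runs) else runs[i]
                for i in range(0, len(runs), 2)]
    return runs[0] if runs else []

print(mergesort_depth_first([5, 2, 9, 1, 5, 6]))    # [1, 2, 5, 5, 6, 9]
print(mergesort_breadth_first([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```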

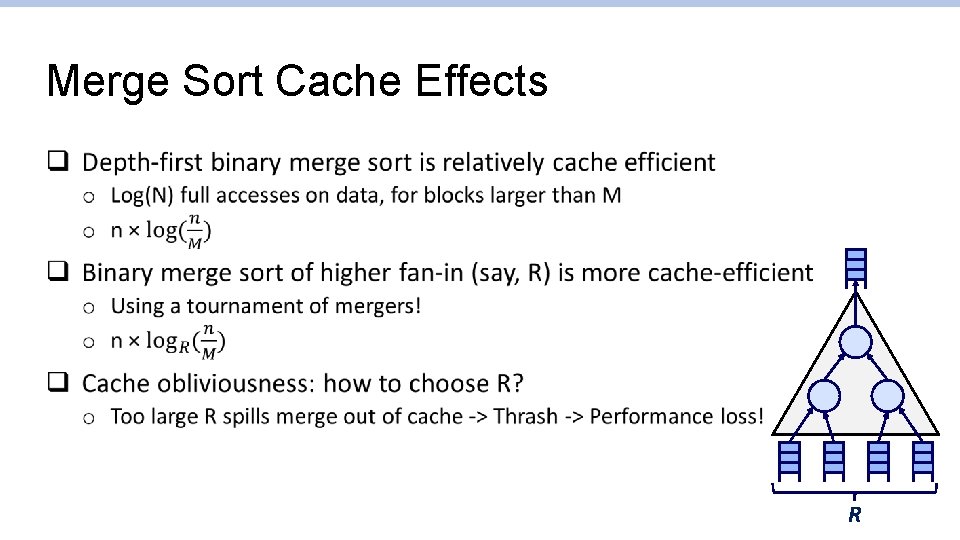

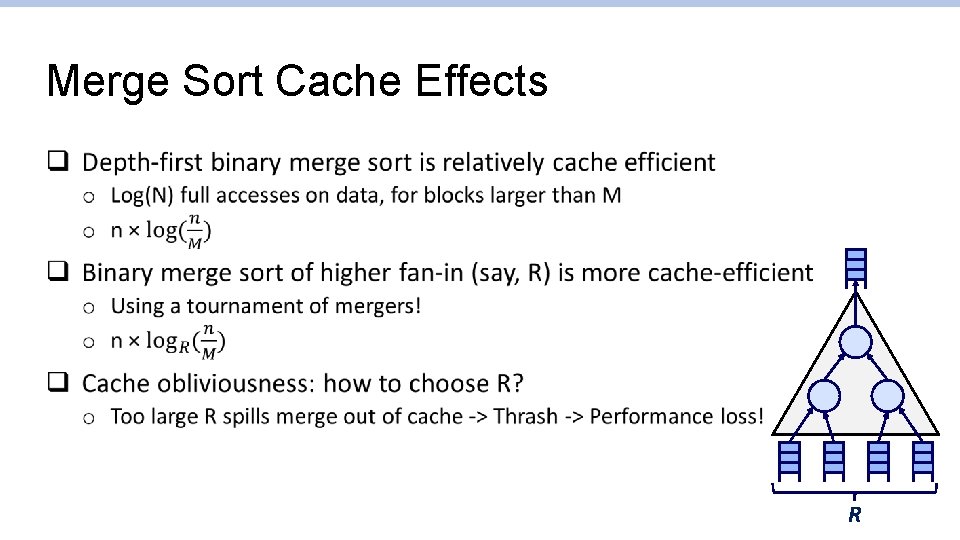

Merge Sort Cache Effects

- R

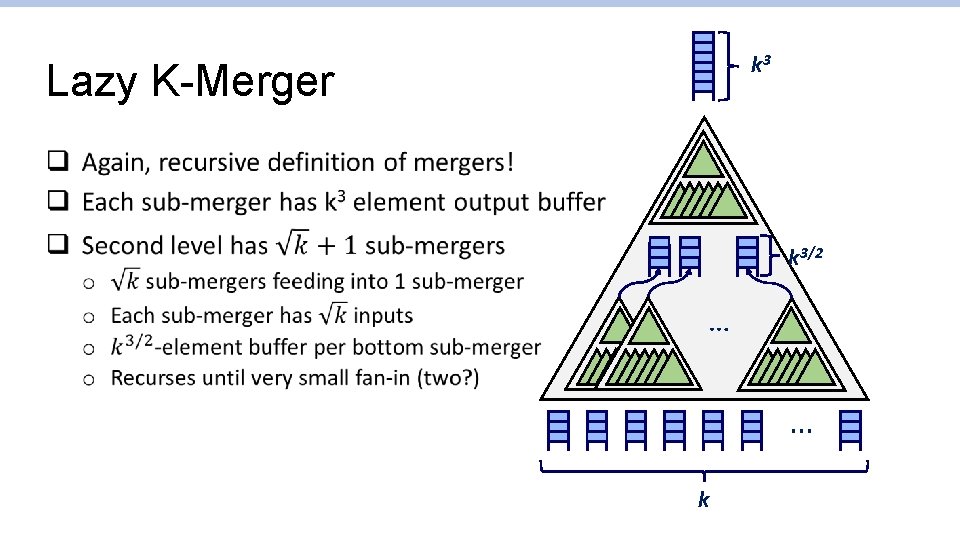

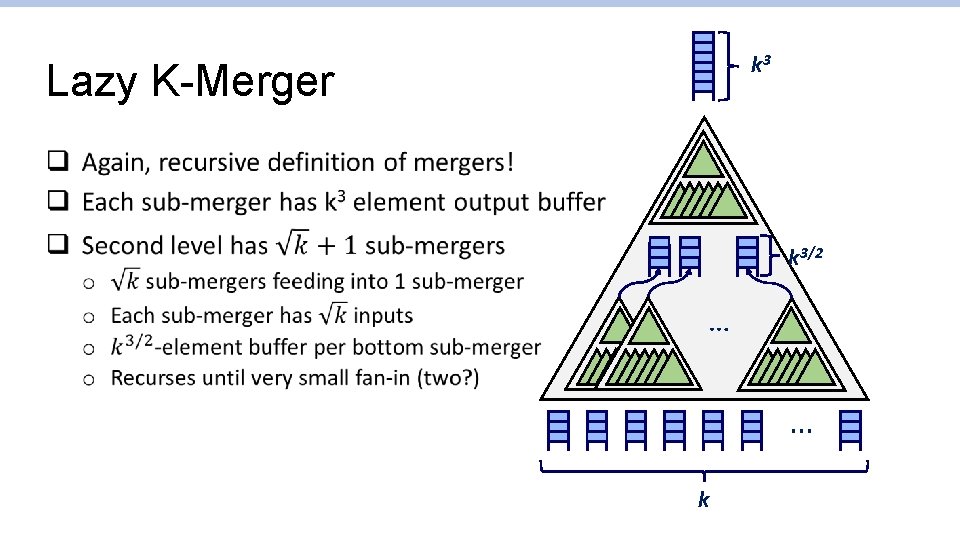

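Lazy K-Merger

(Figure: a k-merger that outputs k³ elements, built recursively from √k-mergers connected by buffers of size k^(3/2).)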

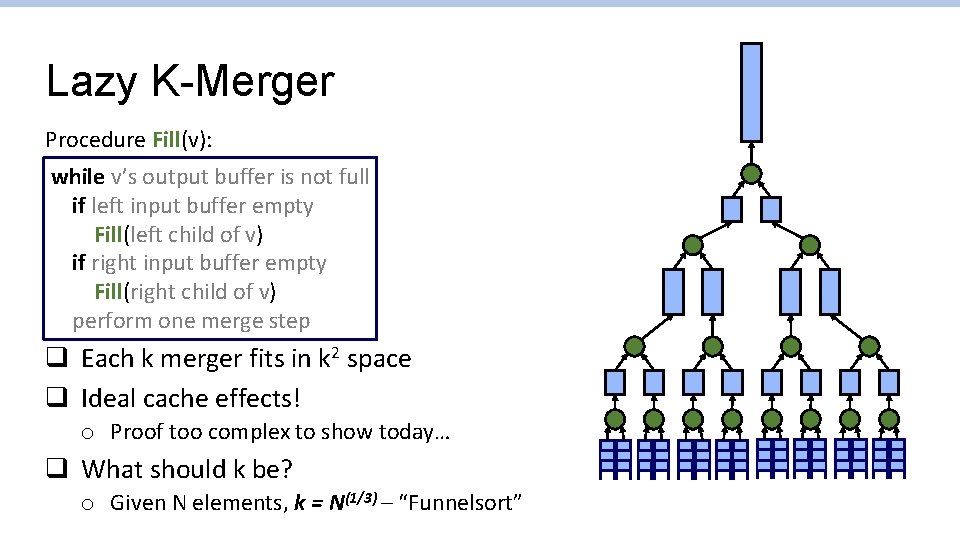

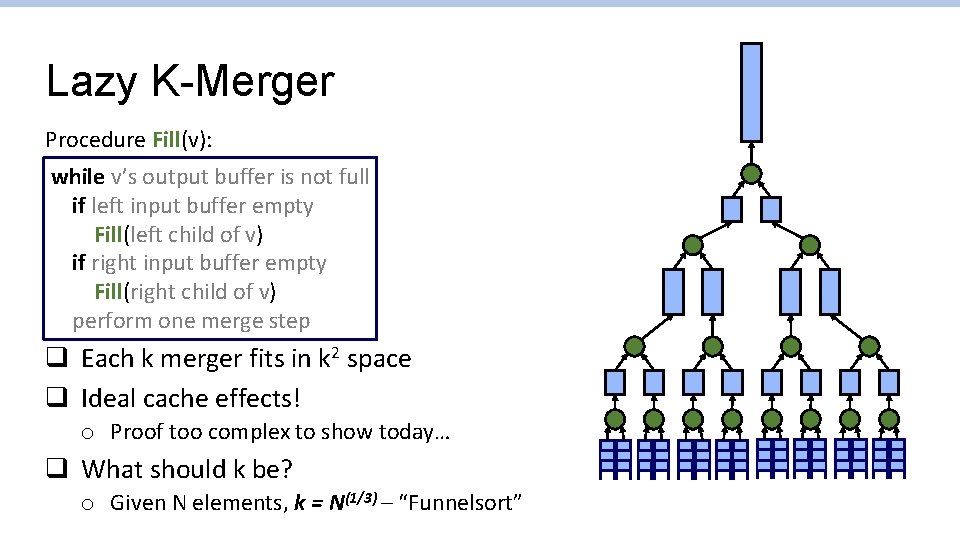

Lazy K-Merger

- Procedure Fill(v):
  - while v’s output buffer is not full:
    - if left input buffer empty: Fill(left child of v)
    - if right input buffer empty: Fill(right child of v)
    - perform one merge step
- Each k-merger fits in O(k²) space
- Ideal cache effects!
  - Proof too complex to show today…
- What should k be?
  - Given N elements, k = N^(1/3): “Funnelsort”
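A minimal, hypothetical Python sketch of the Fill(v) procedure on a binary tree of mergers. It simplifies funnelsort in an important way: every internal buffer has the same fixed `capacity`. A real k-merger instead sizes its buffers recursively (k^(3/2) between its √k-mergers) and stores the whole structure contiguously, which is where the cache behavior comes from. So this only illustrates the lazy, demand-driven control flow. The names `Leaf`, `Node`, `fill`, `build` and `lazy_merge` are illustrative.

```python
from collections import deque

class Leaf:
    """A sorted input stream; its buffer is simply the remaining elements."""
    def __init__(self, items):
        self.buf = deque(items)
        self.done = True              # nothing upstream to refill from

class Node:
    """A binary merger with a bounded output buffer (fixed capacity here)."""
    def __init__(self, left, right, capacity):
        self.left, self.right = left, right
        self.buf = deque()
        self.capacity = capacity
        self.done = False             # set once both inputs are exhausted

def fill(v):
    """Fill(v): merge until v's output buffer is full or its inputs run dry."""
    while len(v.buf) < v.capacity:
        for child in (v.left, v.right):
            if not child.buf and not child.done:
                fill(child)           # lazily refill an empty input buffer
        left, right = v.left.buf, v.right.buf
        if not left and not right:
            v.done = True
            return
        # one merge step: move the smaller head element to the output buffer
        if right and (not left or right[0] < left[0]):
            v.buf.append(right.popleft())
        else:
            v.buf.append(left.popleft())

def build(runs, capacity):
    """Pair up streams level by level into a balanced binary merger tree."""
    nodes = [Leaf(r) for r in runs]
    while len(nodes) > 1:
        paired = [Node(nodes[i], nodes[i + 1], capacity)
                  for i in range(0, len(nodes) - 1, 2)]
        if len(nodes) % 2:
            paired.append(nodes[-1])  # odd stream out moves up a level
        nodes = paired
    return nodes[0]

def lazy_merge(runs, capacity=4):
    """Merge sorted runs by repeatedly calling fill() on the root."""
    if not runs:
        return []
    root = build(runs, capacity)
    out = []
    while True:
        if not root.done:
            fill(root)
        if not root.buf:
            return out
        out.extend(root.buf)
        root.buf.clear()

print(lazy_merge([[1, 4, 9], [2, 3, 10], [0, 5], [6, 7, 8]], capacity=2))
# [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
```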

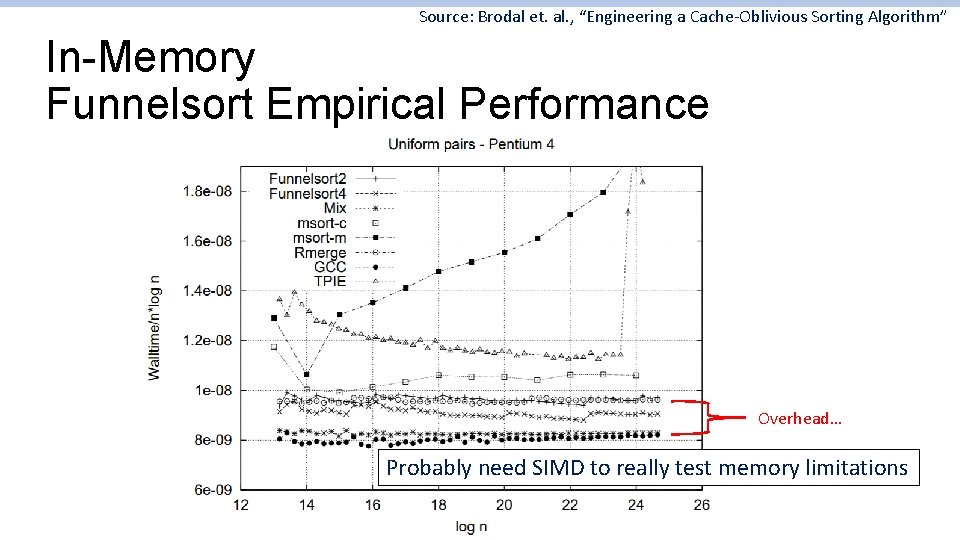

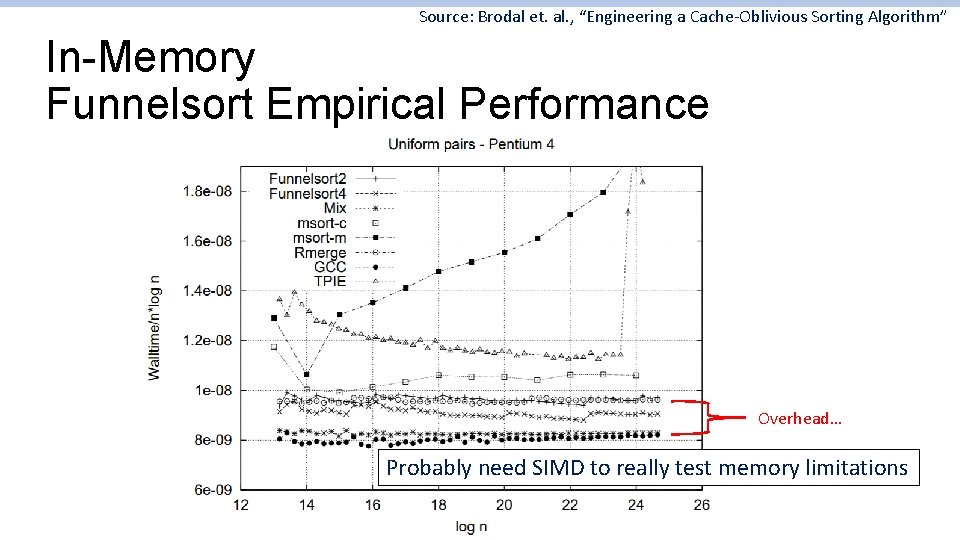

In-Memory Funnelsort Empirical Performance

- Overhead…
- Probably need SIMD to really test memory limitations

Source: Brodal et al., “Engineering a Cache-Oblivious Sorting Algorithm”

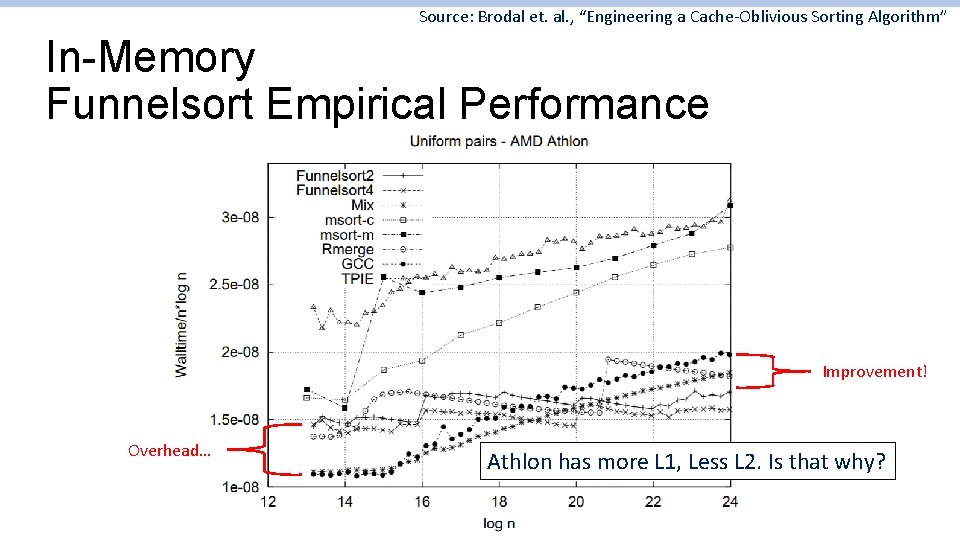

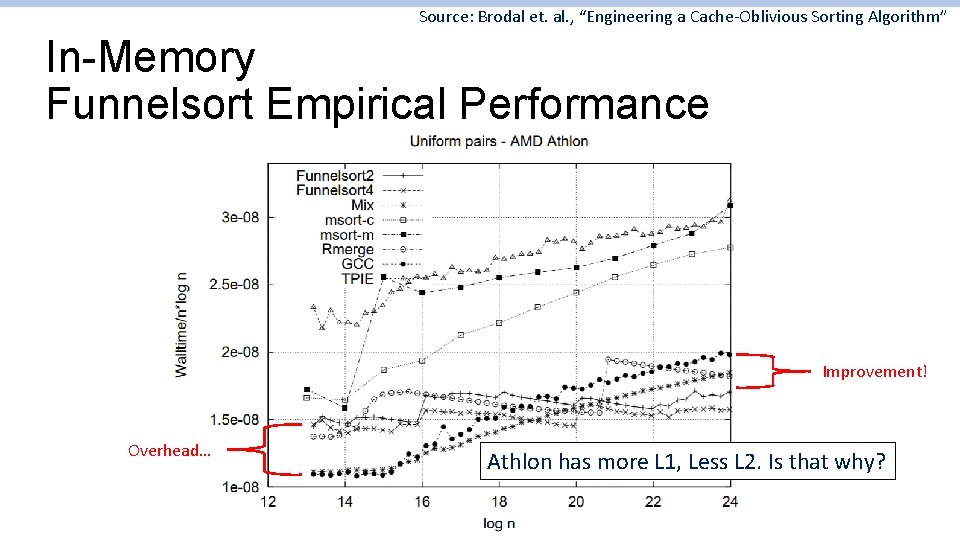

In-Memory Funnelsort Empirical Performance

- Improvement!
- Overhead…
- The Athlon has more L1 and less L2 cache. Is that why?

Source: Brodal et al., “Engineering a Cache-Oblivious Sorting Algorithm”

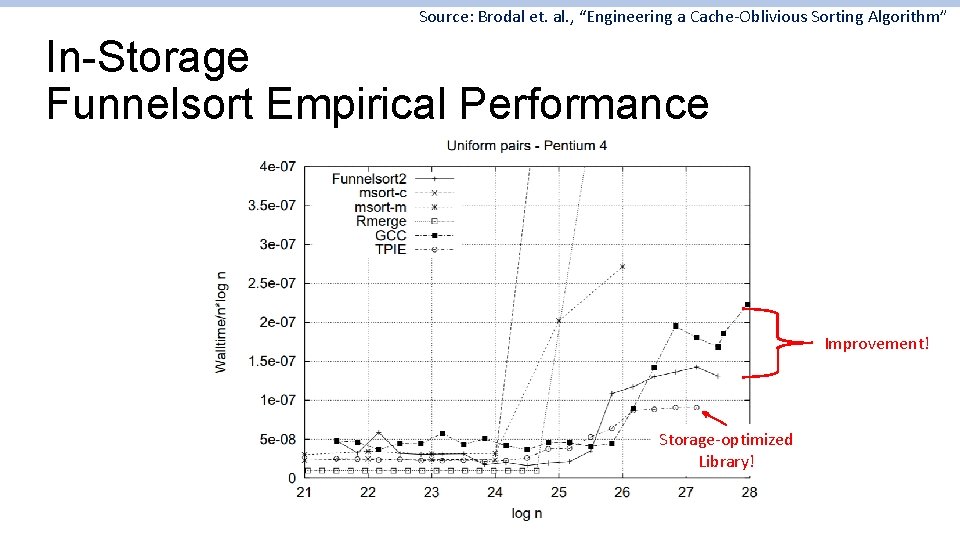

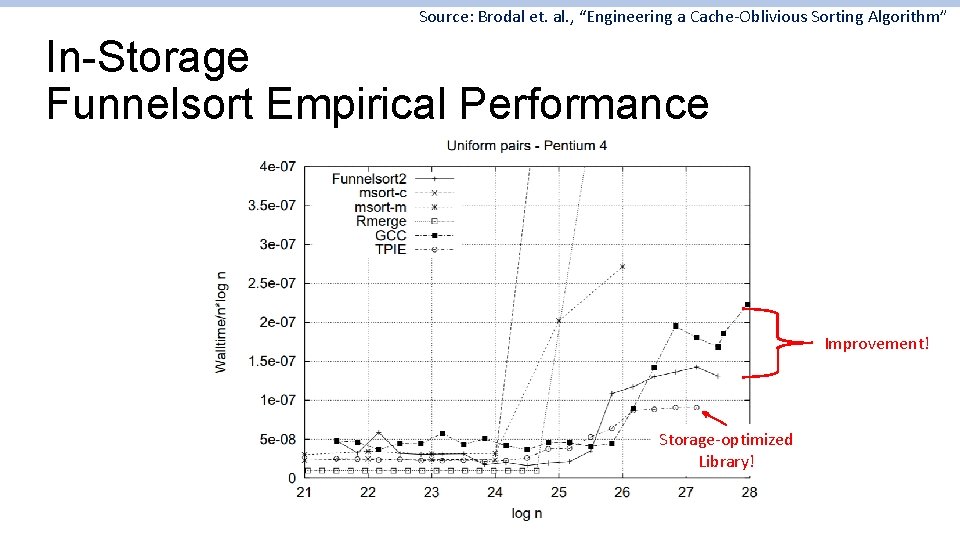

In-Storage Funnelsort Empirical Performance

- Improvement!
- Storage-optimized library!

Source: Brodal et al., “Engineering a Cache-Oblivious Sorting Algorithm”

Applications of Interest

- Matrix multiplication
- Trees And Search
- Merge Sort
- Stencil Computation

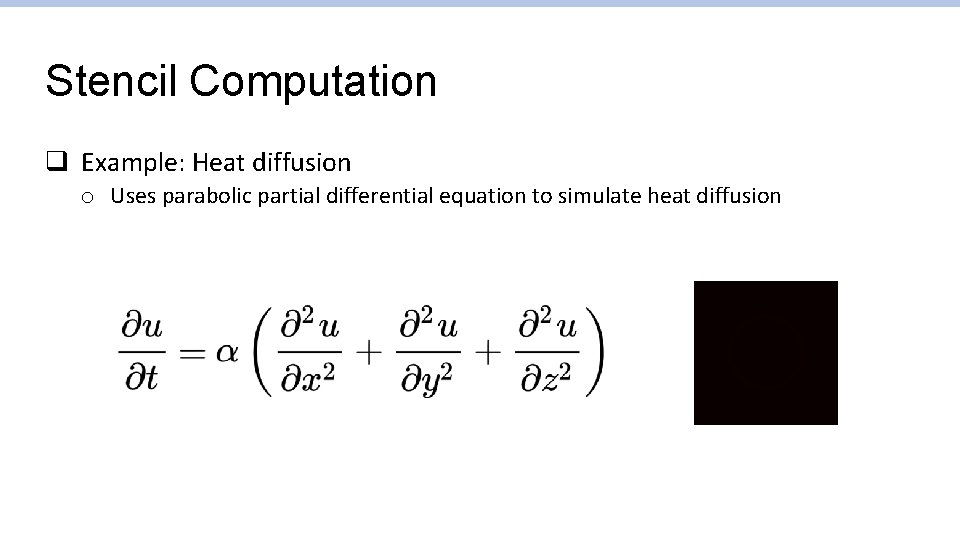

Stencil Computation

- Example: heat diffusion
  - Uses a parabolic partial differential equation to simulate heat diffusion

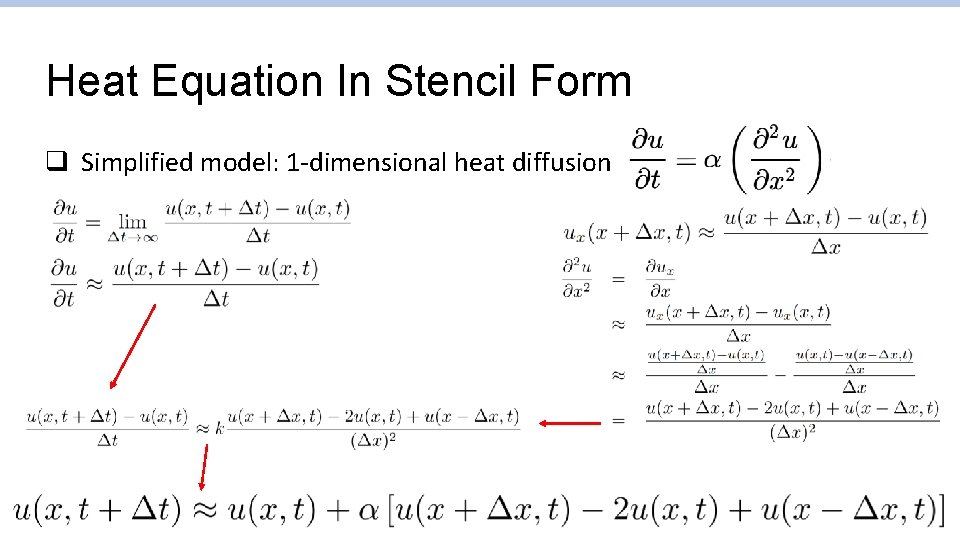

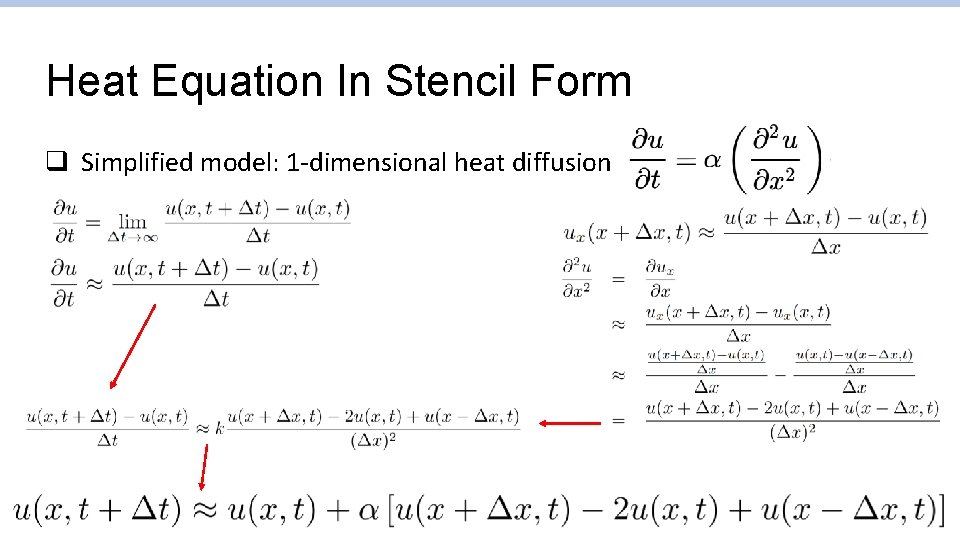

Heat Equation In Stencil Form

- Simplified model: 1-dimensional heat diffusion
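For reference, the 1D heat equation and its usual explicit (forward-time, centered-space) discretization are shown below. The symbol α for thermal diffusivity is an assumption of this sketch. The update reads exactly three neighbouring values from the previous time step, which is what makes it a 3-point stencil.

$$
\frac{\partial u}{\partial t} = \alpha\,\frac{\partial^2 u}{\partial x^2}
\quad\Longrightarrow\quad
u(x, t+\Delta t) = u(x, t) + \frac{\alpha\,\Delta t}{\Delta x^2}\Bigl(u(x+\Delta x, t) - 2\,u(x, t) + u(x-\Delta x, t)\Bigr)
$$

This explicit scheme is only numerically stable when αΔt/Δx² ≤ 1/2.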

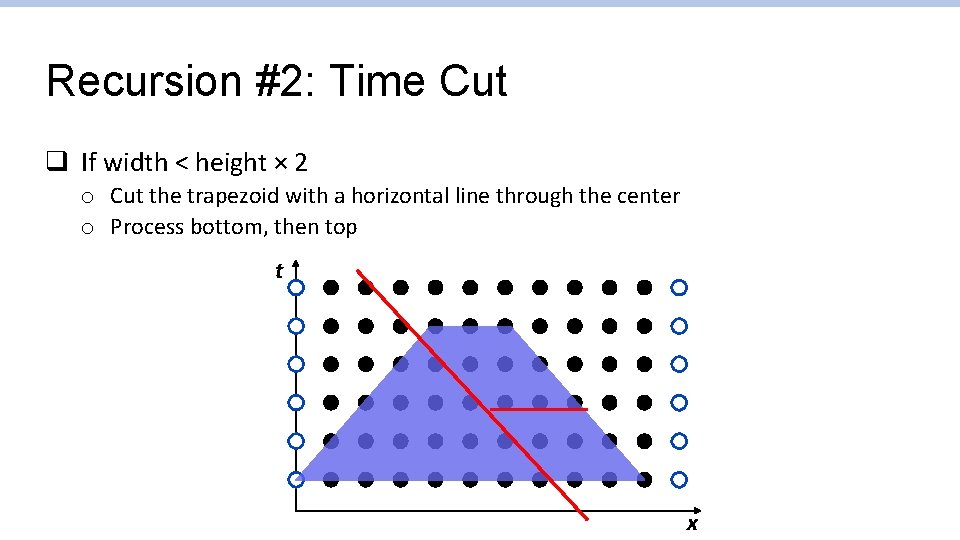

A 3-point Stencil

- u(x, t + Δt) can be calculated using u(x, t), u(x + Δx, t), and u(x - Δx, t)
- A “stencil” updates each position using the surrounding values from the previous time step as input
  - This is a 1D 3-point stencil
  - 2D 5-point, 2D 9-point, 3D 7-point, and 3D 25-point stencils are popular
  - Popular for simulations, including fluid dynamics, solving linear equations, and PDEs

(Figure labels: “Sentries”, x)
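A minimal Python sketch of the straightforward loop nest for the 1D 3-point heat stencil. The parameter `r` stands for αΔt/Δx², and holding the two end cells fixed is a boundary-condition assumption; both are illustrative. Two buffers hold the current and next time levels, and every time step sweeps the entire array once.

```python
def heat_1d_naive(u0, steps, r=0.25):
    """Apply the 1D 3-point stencil `steps` times using two time-level buffers.
    r stands for alpha*dt/dx**2; the two end cells are held fixed."""
    n = len(u0)
    cur, nxt = list(u0), list(u0)     # both copies carry the fixed boundary values
    for _ in range(steps):            # one full sweep over x per time step
        for x in range(1, n - 1):
            nxt[x] = cur[x] + r * (cur[x - 1] - 2 * cur[x] + cur[x + 1])
        cur, nxt = nxt, cur           # the new level becomes the current one
    return cur

print(heat_1d_naive([0, 0, 4, 0, 0], 1))  # [0, 1.0, 2.0, 1.0, 0]
```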

![Some Important Stencils: 19-point 3D stencil for Lattice Boltzmann Method flow simulation](https://slidetodoc.com/presentation_image_h2/5a929b8227f110e3f316bf224a8168a5/image-29.jpg)

Some Important Stencils

- 19-point 3D stencil for Lattice Boltzmann Method flow simulation
- 25-point 3D stencil for seismic wave propagation applications

Figure sources: [1] Peng et al., “High-Order Stencil Computations on Multicore Clusters”; [2] Gentryx, Wikipedia

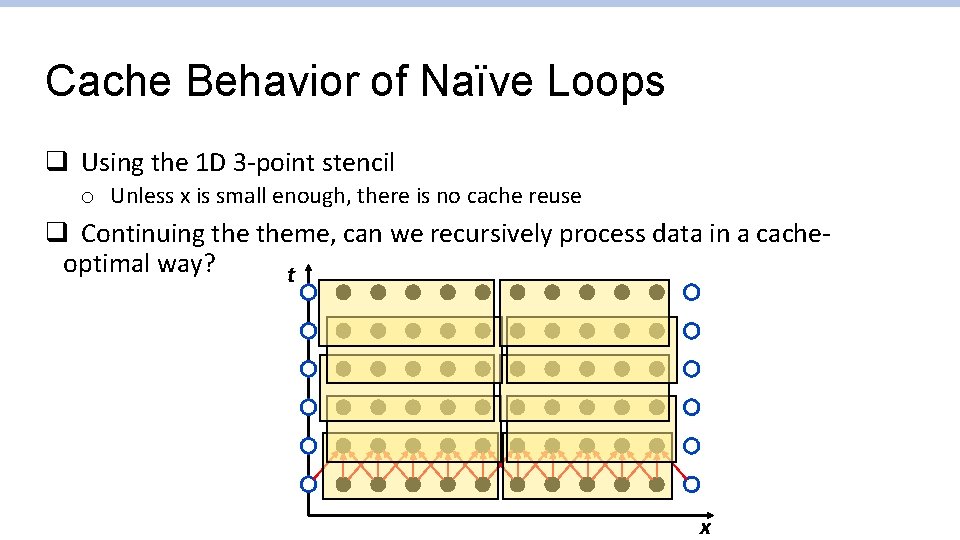

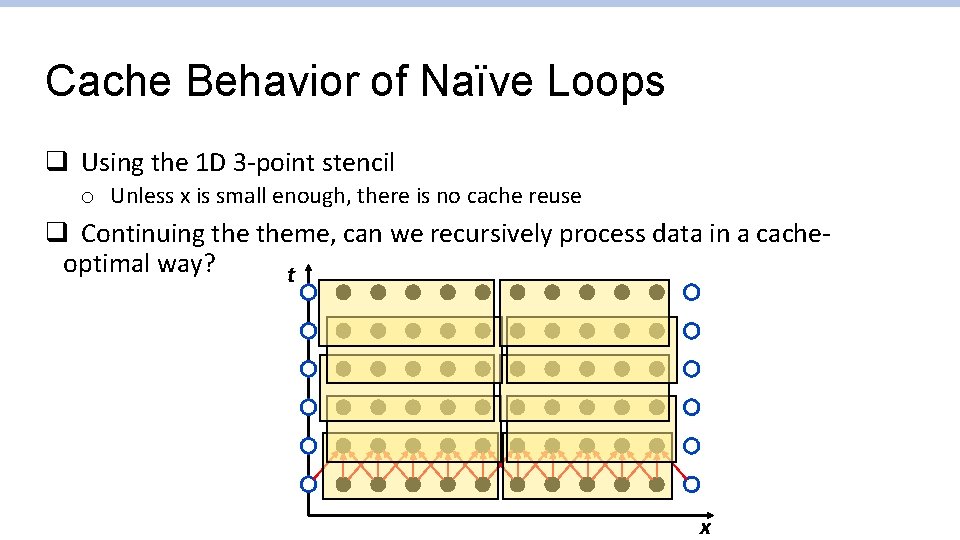

Cache Behavior of Naïve Loops

- Using the 1D 3-point stencil
  - Unless the x range is small enough to fit in cache, there is no cache reuse
- Continuing the theme, can we recursively process data in a cache-optimal way?

(Figure axes: t, x)

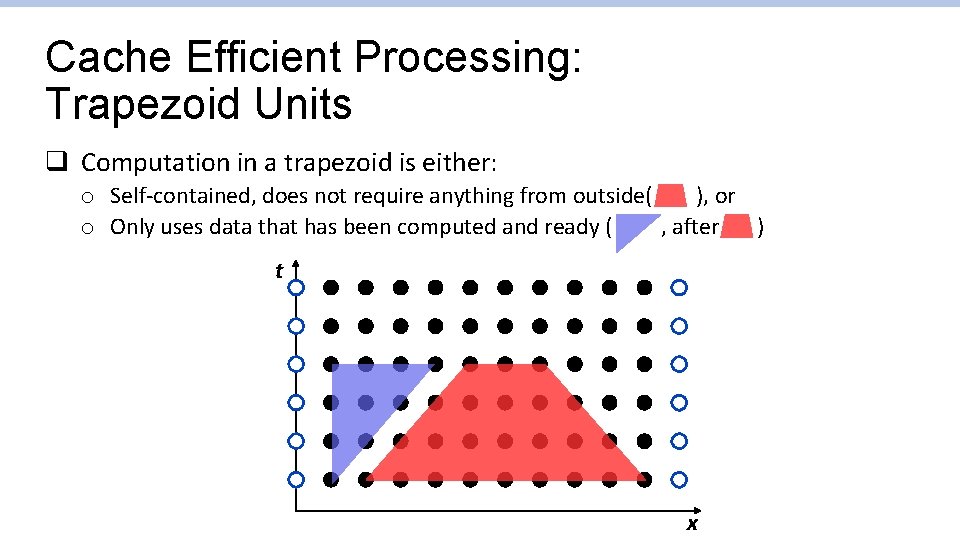

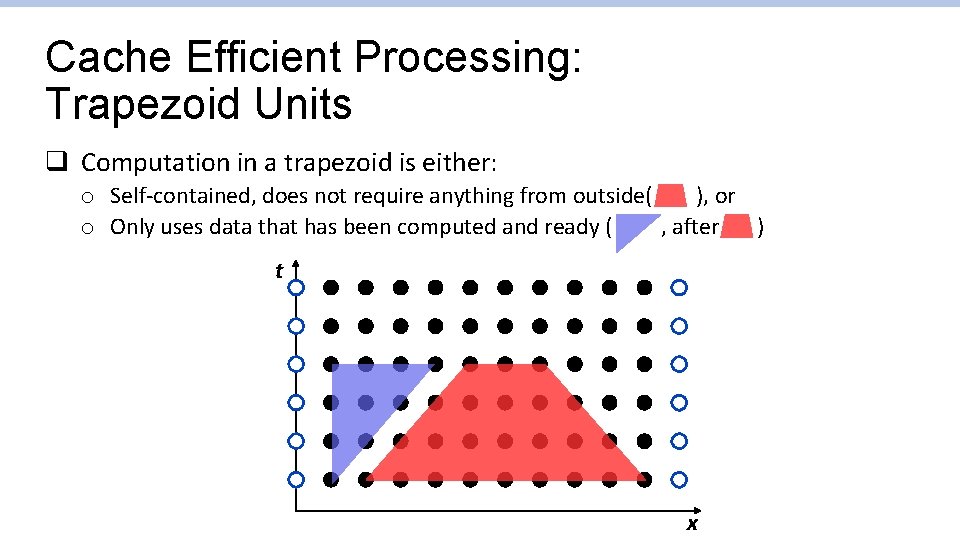

Cache-Efficient Processing: Trapezoid Units

- Computation in a trapezoid is either:
  - Self-contained: it does not require anything from outside the trapezoid, or
  - Only uses data that has already been computed and is ready (from trapezoids processed earlier)

(Figure axes: t, x)

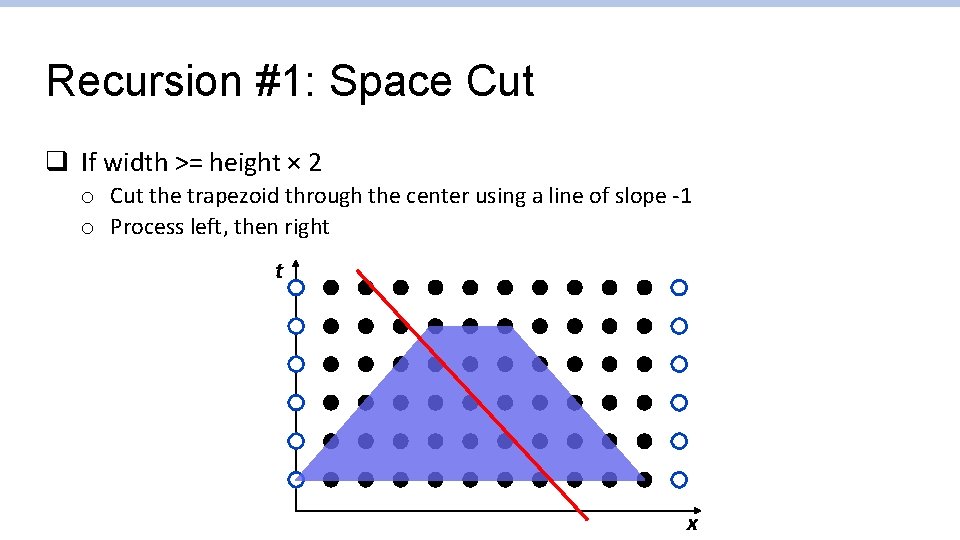

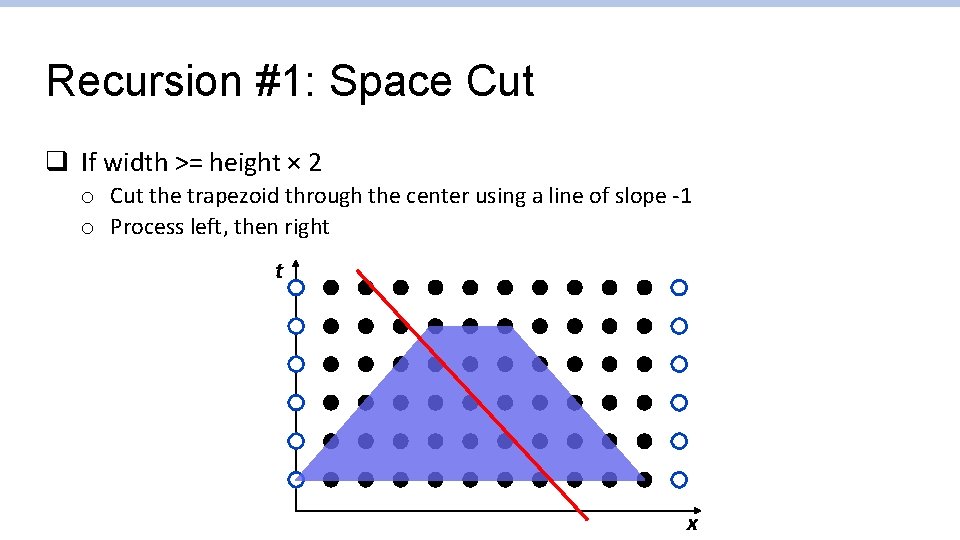

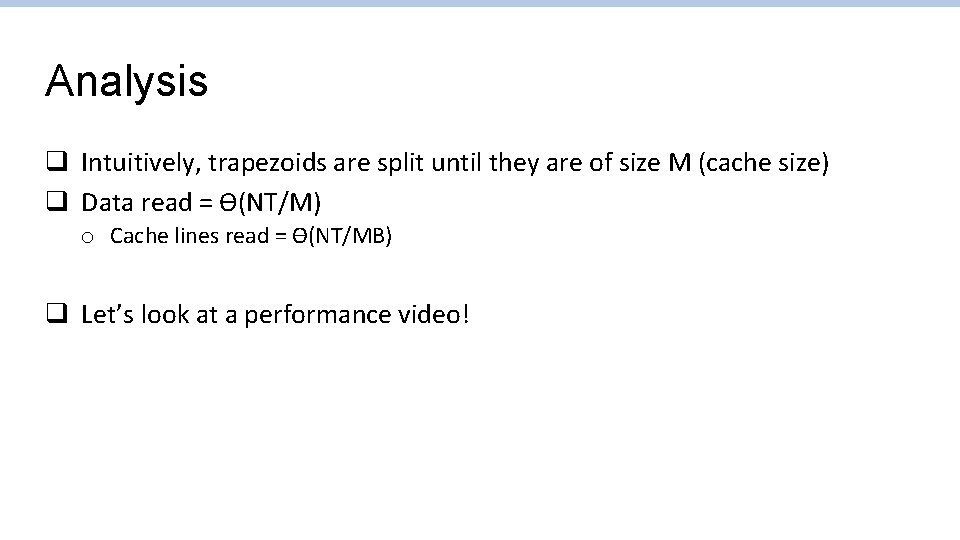

Recursion #1: Space Cut

- If width >= height × 2
  - Cut the trapezoid through the center using a line of slope -1
  - Process left, then right (points right of the cut depend on the left part, never the reverse)

(Figure axes: t, x)

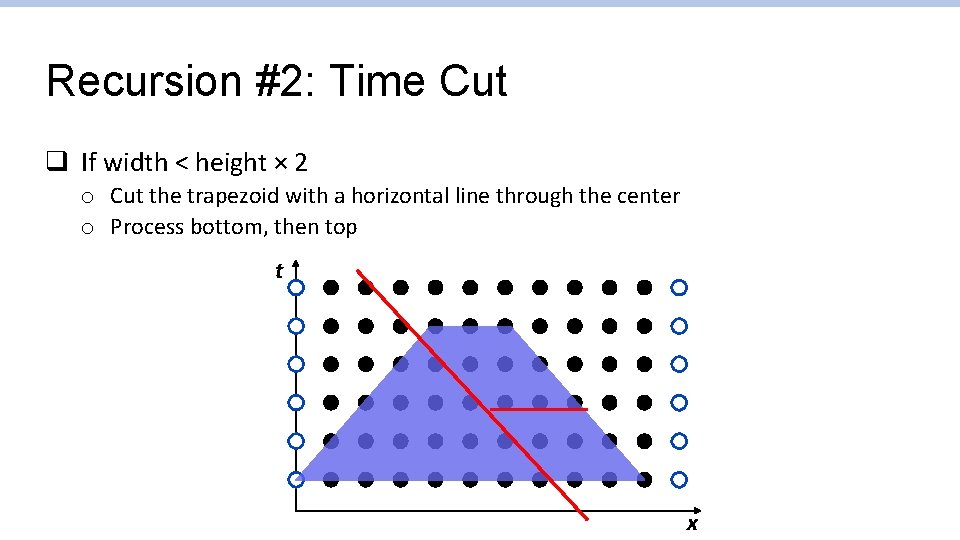

Recursion #2: Time Cut

- If width < height × 2
  - Cut the trapezoid with a horizontal line through the center
  - Process bottom, then top

(Figure axes: t, x)
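A Python sketch of the two cuts, following the structure of the 1D trapezoidal walk in Frigo and Strumpen's “Cache Oblivious Stencil Computations”. It is written here as an illustration, not as the course's code. A trapezoid covers t0 ≤ t < t1 and x0 + dx0·(t − t0) ≤ x < x1 + dx1·(t − t0). The test `2*(x1 - x0) + (dx1 - dx0)*dt >= 4*dt` is the "width at mid-height ≥ 2 × height" rule. The names, the parameter `r` (αΔt/Δx²), and the fixed end cells are assumptions. A naive sweep is included only to check that the reordering gives identical results.

```python
def heat_1d_naive(u0, steps, r=0.25):
    """Reference: one full sweep over x per time step (end cells fixed)."""
    cur, nxt = list(u0), list(u0)
    for _ in range(steps):
        for x in range(1, len(u0) - 1):
            nxt[x] = cur[x] + r * (cur[x - 1] - 2 * cur[x] + cur[x + 1])
        cur, nxt = nxt, cur
    return cur

def heat_1d_trapezoid(u0, steps, r=0.25):
    """Same result as the naive sweep, but (t, x) points are visited in a
    recursive trapezoid order; slopes dx0, dx1 are 0 or -1 here."""
    n = len(u0)
    u = [list(u0), list(u0)]          # u[t % 2] holds time level t; ends stay fixed

    def kernel(t, x):                 # compute level t+1 at x from level t
        cur = u[t % 2]
        u[(t + 1) % 2][x] = cur[x] + r * (cur[x - 1] - 2 * cur[x] + cur[x + 1])

    def walk(t0, t1, x0, dx0, x1, dx1):
        dt = t1 - t0
        if dt == 1:
            for x in range(x0, x1):
                kernel(t0, x)
        elif dt > 1:
            if 2 * (x1 - x0) + (dx1 - dx0) * dt >= 4 * dt:
                # space cut: slope -1 line through the centre; left, then right
                xm = (2 * (x0 + x1) + (2 + dx0 + dx1) * dt) // 4
                walk(t0, t1, x0, dx0, xm, -1)
                walk(t0, t1, xm, -1, x1, dx1)
            else:
                # time cut: horizontal line through the centre; bottom, then top
                s = dt // 2
                walk(t0, t0 + s, x0, dx0, x1, dx1)
                walk(t0 + s, t1, x0 + dx0 * s, dx0, x1 + dx1 * s, dx1)

    walk(0, steps, 1, 0, n - 1, 0)    # interior cells 1 .. n-2, vertical side edges
    return u[steps % 2]

u0 = [0.0] * 10 + [100.0] + [0.0] * 10
assert heat_1d_trapezoid(u0, 25) == heat_1d_naive(u0, 25)
```

Because every point is computed from the same inputs with the same expression, the two orders agree exactly. Only the memory-access pattern changes: small trapezoids reuse the same stretch of the array across many time steps.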

Analysis

- Intuitively, trapezoids are split until they are of size M (cache size)
- Data read = Θ(NT/M)
  - Cache lines read = Θ(NT/(MB))
- Let’s look at a performance video!
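Spelling out the intuition, under the assumption that each base trapezoid has width and height Θ(M): its working set then fits in cache, and it covers Θ(M²) of the NT space-time points while reading Θ(M) elements.

$$
\underbrace{\Theta\!\left(\frac{NT}{M^2}\right)}_{\text{base trapezoids}}
\times
\underbrace{\Theta(M)}_{\text{data read per trapezoid}}
= \Theta\!\left(\frac{NT}{M}\right)\ \text{elements}
\;\Longrightarrow\;
\Theta\!\left(\frac{NT}{MB}\right)\ \text{cache lines}
$$

By comparison, when N ≫ M the naive loop streams all N elements on each of the T steps, for Θ(NT/B) cache lines. The recursive order saves roughly a factor of M.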