Lecture 6 Smaller Network: RNN

- Slides: 39

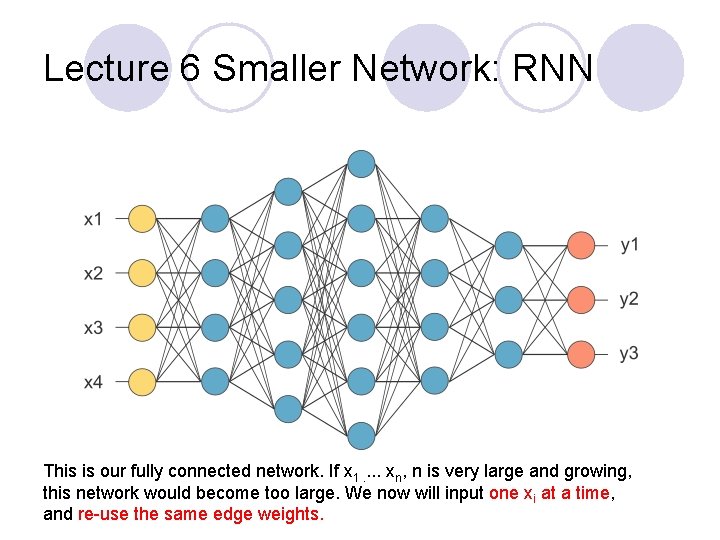

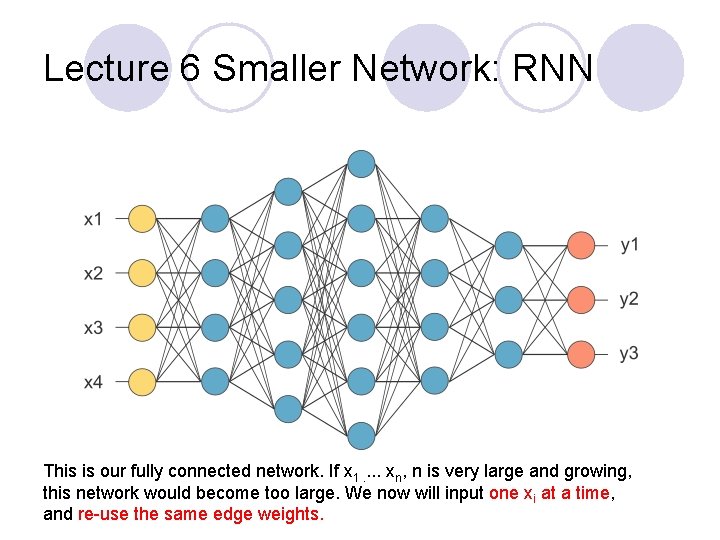

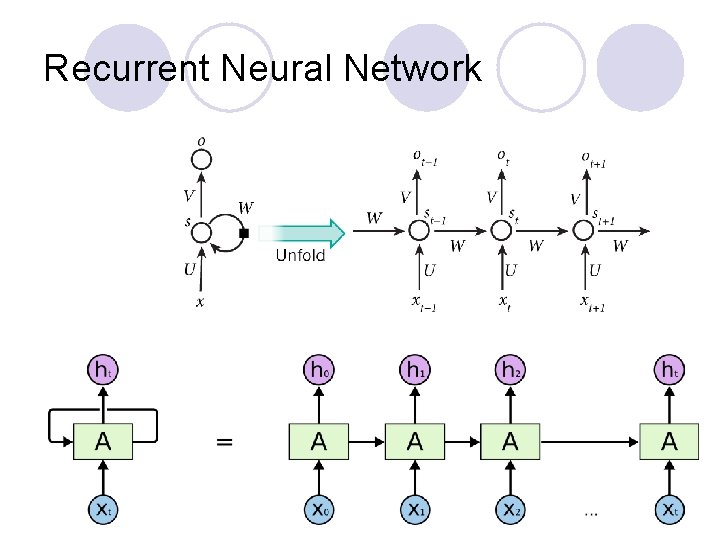

This is our fully connected network. If the input is $x_1, \dots, x_n$ and $n$ is very large and growing, this network becomes too large. Instead, we now input one $x_i$ at a time and reuse the same edge weights.

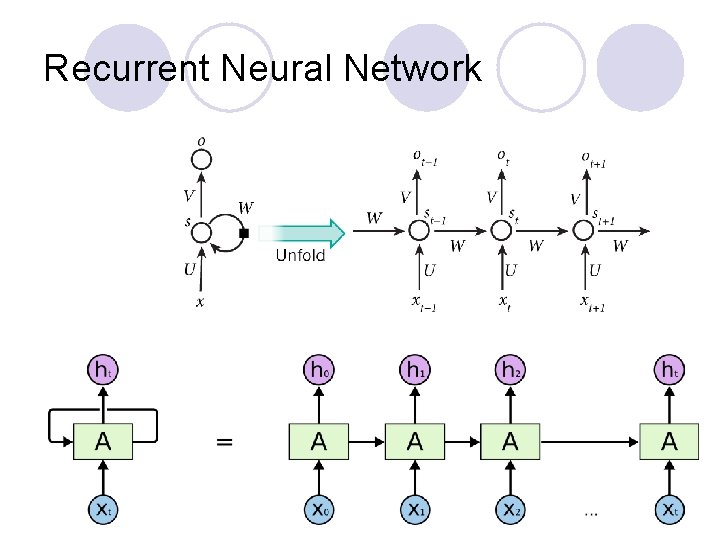

Recurrent Neural Network

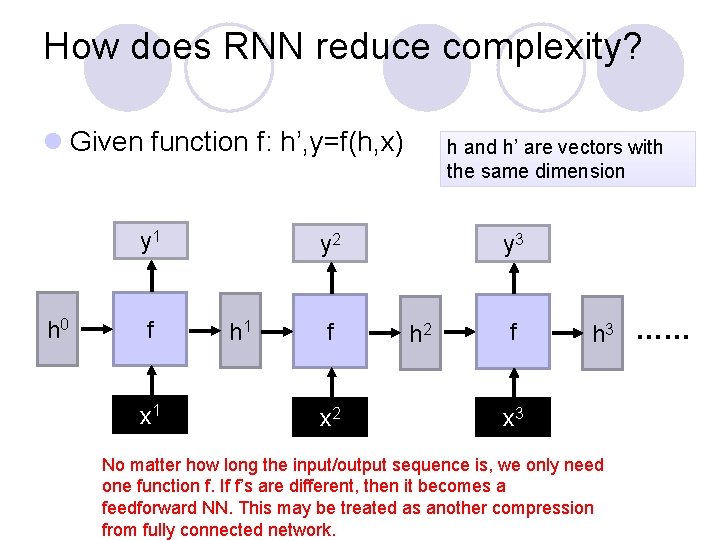

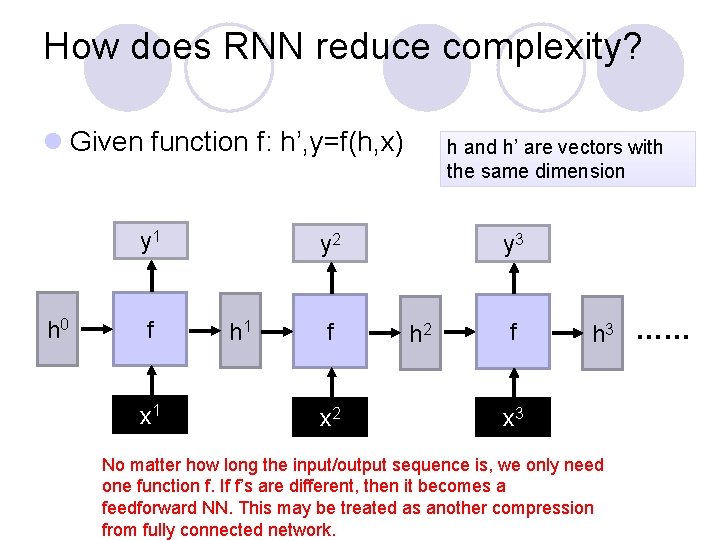

How does an RNN reduce complexity?

- Given a function $f$: $h', y = f(h, x)$, where $h$ and $h'$ are vectors with the same dimension.
- Unrolled over time: $h_1, y_1 = f(h_0, x_1)$, $h_2, y_2 = f(h_1, x_2)$, $h_3, y_3 = f(h_2, x_3)$, and so on.
- No matter how long the input/output sequence is, we only need one function $f$. If the $f$'s are different, then it becomes a feedforward NN.
- This may be treated as another compression of the fully connected network.
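
The point that one function $f$ serves any sequence length can be sketched as follows. This is only an illustration: the toy `f`, the weight names `W_h` and `W_x`, and the dimensions are assumptions, not something the slides specify.

```python
import numpy as np

def unroll(f, h0, xs):
    """Apply the same function f at every time step: h', y = f(h, x)."""
    h, ys = h0, []
    for x in xs:
        h, y = f(h, x)
        ys.append(y)
    return h, ys

# Hypothetical toy parameters; they are created once and reused at every step.
rng = np.random.default_rng(0)
W_h = rng.normal(size=(4, 4))   # state-to-state weights
W_x = rng.normal(size=(4, 3))   # input-to-state weights

def f(h, x):
    h_new = np.tanh(W_h @ h + W_x @ x)
    return h_new, h_new          # in this toy f the output y is just the new state

h0 = np.zeros(4)
short_seq = [rng.normal(size=3) for _ in range(5)]
long_seq = [rng.normal(size=3) for _ in range(500)]

_, ys_short = unroll(f, h0, short_seq)
_, ys_long = unroll(f, h0, long_seq)

n_params = W_h.size + W_x.size   # 28, regardless of sequence length
```

The same 28 weights process both the 5-step and the 500-step sequence, whereas a fully connected layer over the concatenated inputs would need weights proportional to the sequence length.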

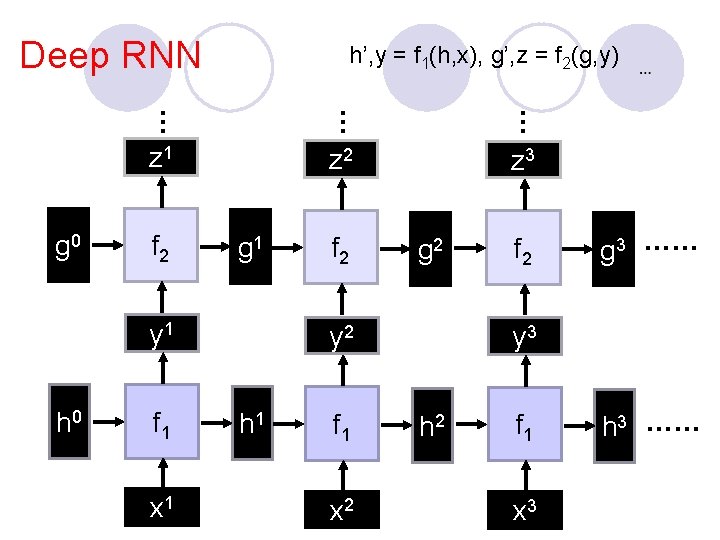

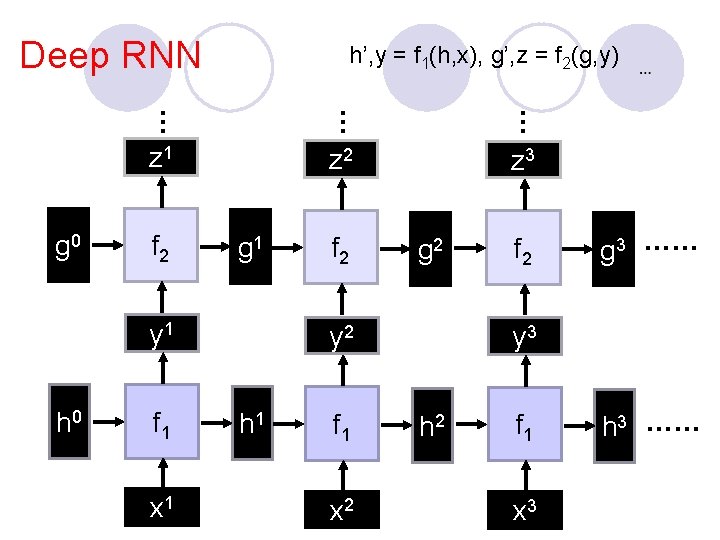

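Deep RNN: $h', y = f_1(h, x)$ and $g', z = f_2(g, y)$. The first layer $f_1$ carries the state $h_0, h_1, h_2, h_3, \dots$ over the inputs $x_1, x_2, x_3, \dots$ and emits $y_1, y_2, y_3, \dots$; the second layer $f_2$ carries the state $g_0, g_1, g_2, g_3, \dots$ over those outputs and emits $z_1, z_2, z_3, \dots$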
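
A minimal sketch of the two stacked recurrences $h', y = f_1(h, x)$ and $g', z = f_2(g, y)$. The helper `make_layer`, the tanh activation and all dimensions are illustrative assumptions.

```python
import numpy as np

def make_layer(rng, in_dim, state_dim):
    """Return a toy recurrent function: new_state, output = f(state, inp)."""
    W_s = rng.normal(scale=0.5, size=(state_dim, state_dim))
    W_x = rng.normal(scale=0.5, size=(state_dim, in_dim))
    def f(state, inp):
        new_state = np.tanh(W_s @ state + W_x @ inp)
        return new_state, new_state   # toy choice: output equals the new state
    return f

def deep_rnn(f1, f2, h0, g0, xs):
    h, g, zs = h0, g0, []
    for x in xs:
        h, y = f1(h, x)   # first layer:  h', y = f1(h, x)
        g, z = f2(g, y)   # second layer: g', z = f2(g, y)
        zs.append(z)
    return zs

rng = np.random.default_rng(0)
f1 = make_layer(rng, in_dim=3, state_dim=5)
f2 = make_layer(rng, in_dim=5, state_dim=2)
xs = [rng.normal(size=3) for _ in range(4)]
zs = deep_rnn(f1, f2, np.zeros(5), np.zeros(2), xs)
```

Each layer keeps its own state; only the output of the lower layer is passed upward at each time step.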

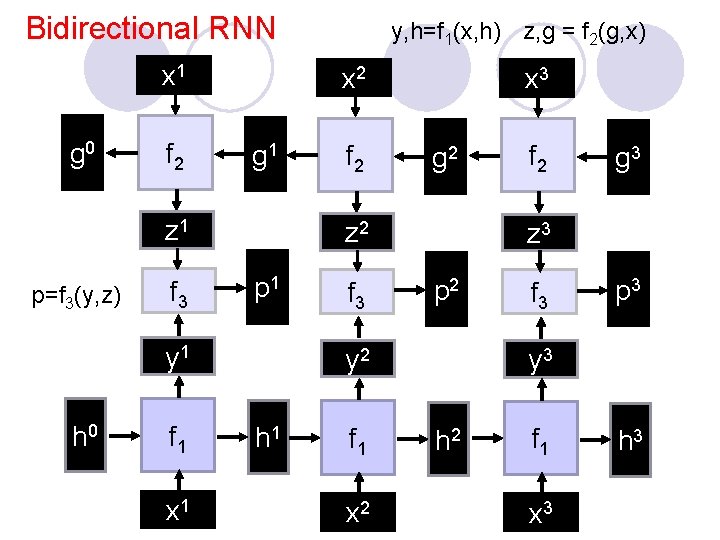

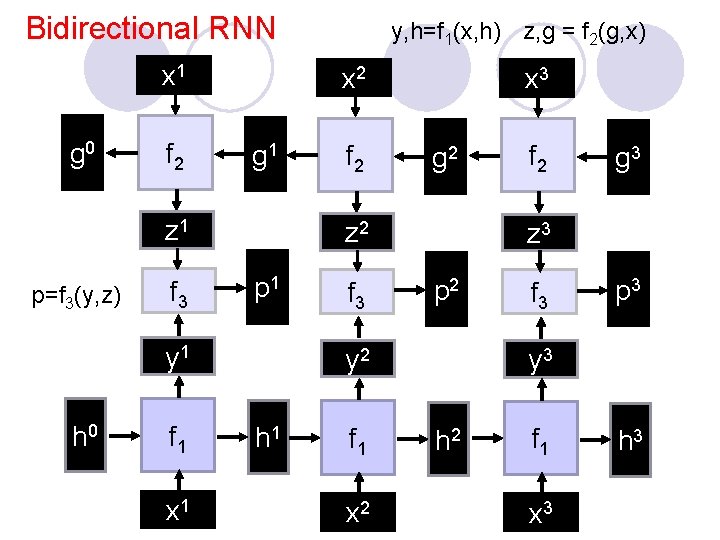

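Bidirectional RNN: $y, h = f_1(x, h)$, $z, g = f_2(g, x)$, $p = f_3(y, z)$. $f_1$ reads $x_1, x_2, x_3$ with states $h_0, \dots, h_3$ and emits $y_1, y_2, y_3$; $f_2$ reads the same inputs in the opposite direction with states $g_0, \dots, g_3$ and emits $z_1, z_2, z_3$; $f_3$ combines $y_t$ and $z_t$ into the output $p_t$.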
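
The three functions can be wired together as in the sketch below. The running-sum functions stand in for $f_1$ and $f_2$ purely so that the direction of each pass is visible in the result; they are hypothetical placeholders, not trained networks.

```python
def bidirectional_rnn(f1, f2, f3, h0, g0, xs):
    # Forward pass: y, h = f1(x, h), reading x_1 ... x_n.
    ys, h = [], h0
    for x in xs:
        y, h = f1(x, h)
        ys.append(y)
    # Backward pass: z, g = f2(g, x), reading x_n ... x_1.
    zs, g = [None] * len(xs), g0
    for t in reversed(range(len(xs))):
        zs[t], g = f2(g, xs[t])
    # Combine both directions at each position: p = f3(y, z).
    return [f3(y, z) for y, z in zip(ys, zs)]

def running_sum_fwd(x, h):
    s = h + x
    return s, s

def running_sum_bwd(g, x):
    s = g + x
    return s, s

ps = bidirectional_rnn(running_sum_fwd, running_sum_bwd,
                       lambda y, z: (y, z), 0, 0, [1, 2, 3])
# ps == [(1, 6), (3, 5), (6, 3)]
```

At every position the output sees a summary of everything before it (first element) and everything after it (second element).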

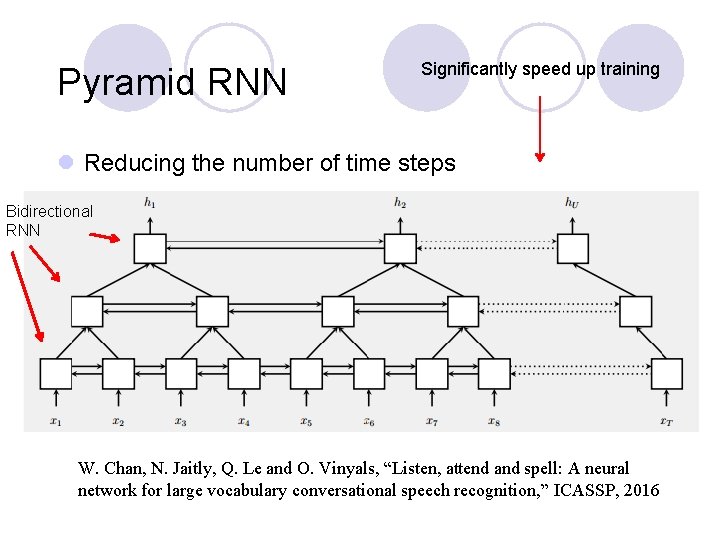

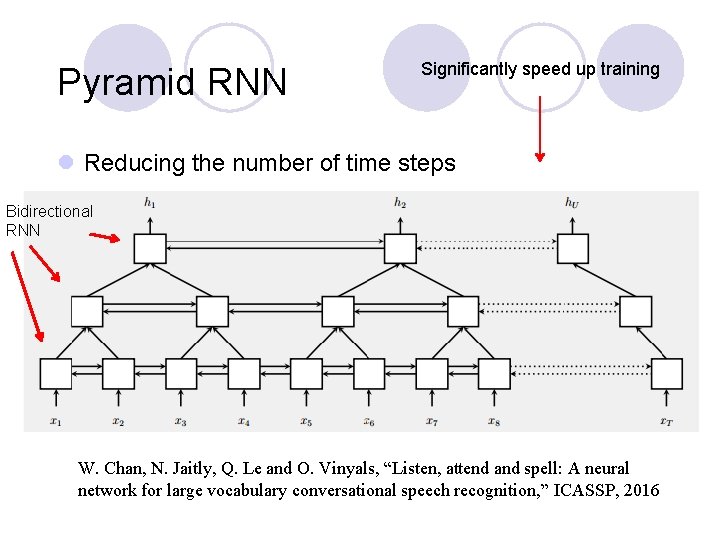

Pyramid RNN

- Built from bidirectional RNN layers.
- Reduces the number of time steps from layer to layer.
- Significantly speeds up training.

W. Chan, N. Jaitly, Q. Le and O. Vinyals, “Listen, attend and spell: A neural network for large vocabulary conversational speech recognition,” ICASSP, 2016
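
The slide does not spell out how the time steps are reduced. A minimal sketch, assuming the reduction is done by concatenating each pair of consecutive frames before the next layer (the function name `pyramid_reduce` is hypothetical):

```python
import numpy as np

def pyramid_reduce(hs):
    """Concatenate each pair of consecutive frames: (T, d) -> (T // 2, 2 * d)."""
    T, d = hs.shape
    T_even = T - (T % 2)          # drop a trailing frame if T is odd
    return hs[:T_even].reshape(T_even // 2, 2 * d)

frames = np.arange(8 * 3, dtype=float).reshape(8, 3)
level1 = pyramid_reduce(frames)   # shape (4, 6)
level2 = pyramid_reduce(level1)   # shape (2, 12)
```

Each level halves the number of time steps the next recurrent layer has to process, which is where the training speed-up would come from.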

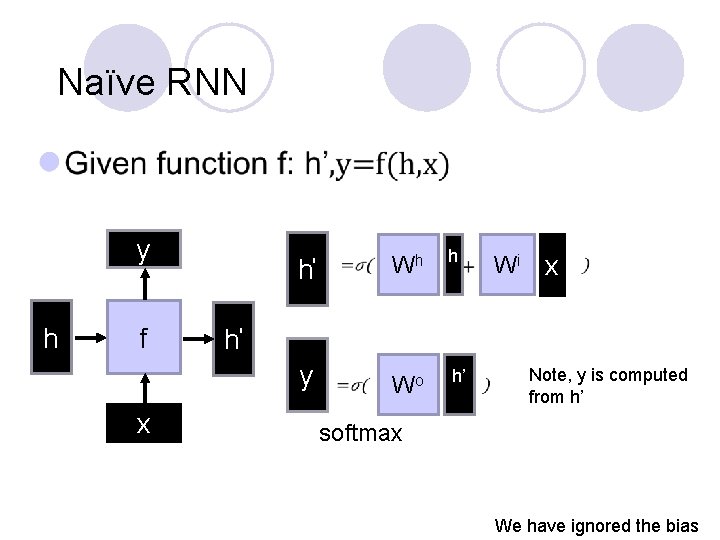

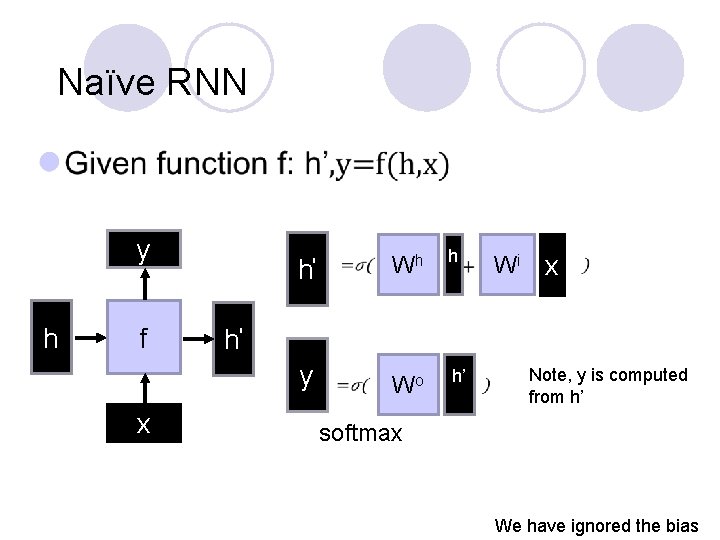

Naïve RNN

- $h' = \sigma(W^h h + W^i x)$
- $y = \mathrm{softmax}(W^o h')$

Note that $y$ is computed from $h'$. We have ignored the bias.
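
A minimal sketch of one naïve RNN step with the three weight matrices $W^h$, $W^i$, $W^o$ and no bias. The sigmoid on the hidden update follows the recurrent formula used later in the lecture; the dimensions and random weights are assumptions for illustration.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def softmax(a):
    e = np.exp(a - np.max(a))    # subtract the max for numerical stability
    return e / e.sum()

def naive_rnn_step(h, x, W_h, W_i, W_o):
    h_new = sigmoid(W_h @ h + W_i @ x)   # new hidden state from old state and input
    y = softmax(W_o @ h_new)             # y is computed from the new state h'
    return h_new, y

rng = np.random.default_rng(0)
W_h = rng.normal(size=(4, 4))
W_i = rng.normal(size=(4, 3))
W_o = rng.normal(size=(2, 4))

h = np.zeros(4)
outputs = []
for x in [rng.normal(size=3) for _ in range(3)]:
    h, y = naive_rnn_step(h, x, W_h, W_i, W_o)
    outputs.append(y)
```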

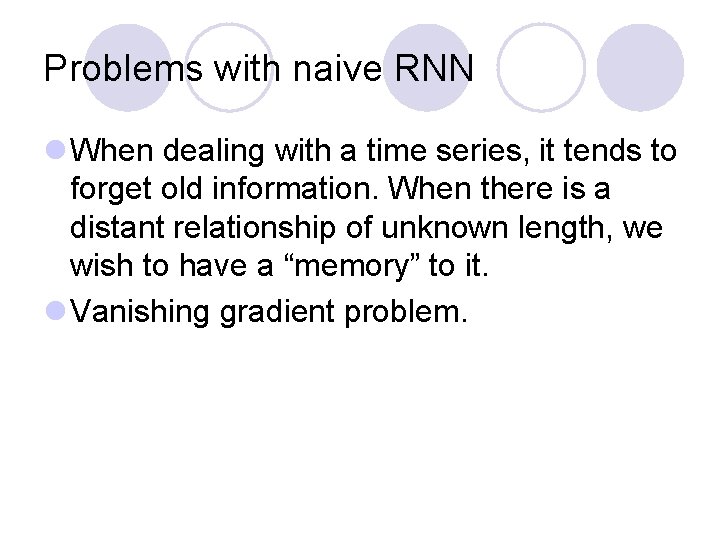

Problems with naïve RNN

- When dealing with a time series, it tends to forget old information. When there is a distant relationship of unknown length, we wish to have a “memory” of it.
- Vanishing gradient problem.
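
A toy illustration of the vanishing gradient, assuming sigmoid hidden units, no input term, and hypothetical constant weights. It accumulates the Jacobian $\partial h_t / \partial h_0$ by the chain rule and records its norm at each step.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

W_h = np.full((4, 4), 0.5)      # toy recurrent weights, spectral norm 2
h = np.full(4, 0.5)
J = np.eye(4)                   # accumulates d h_t / d h_0
norms = []
for t in range(20):
    h = sigmoid(W_h @ h)                      # input x omitted for brevity
    J = (np.diag(h * (1.0 - h)) @ W_h) @ J    # chain rule, one more step
    norms.append(np.linalg.norm(J, 2))
```

Because the sigmoid derivative is at most 0.25, each step here scales the Jacobian norm by at most $0.25 \times 2 = 0.5$, so the influence of $h_0$ on $h_t$ shrinks geometrically with $t$.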

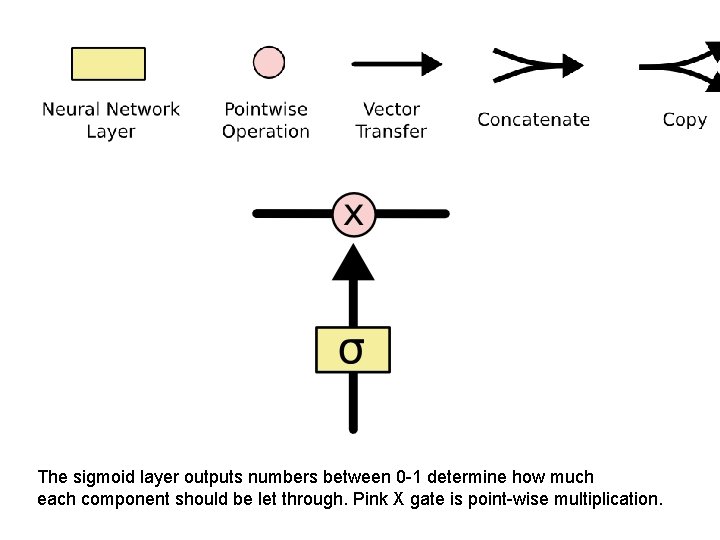

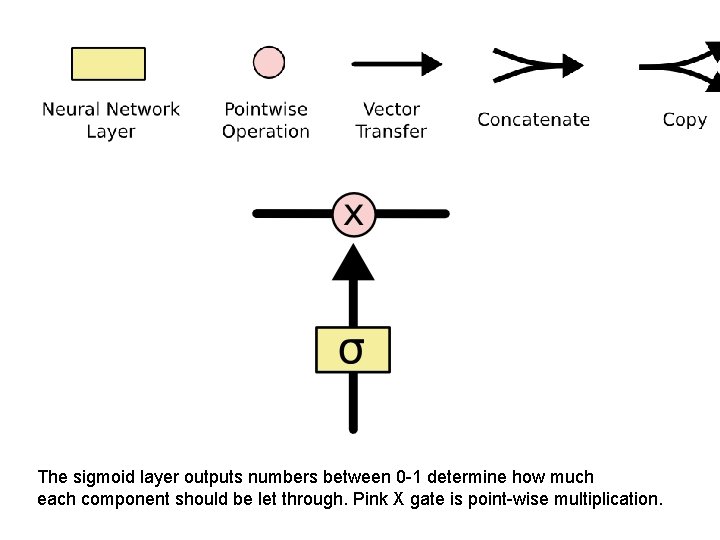

The sigmoid layer outputs numbers between 0 and 1 that determine how much of each component should be let through. The pink X gate is point-wise multiplication.

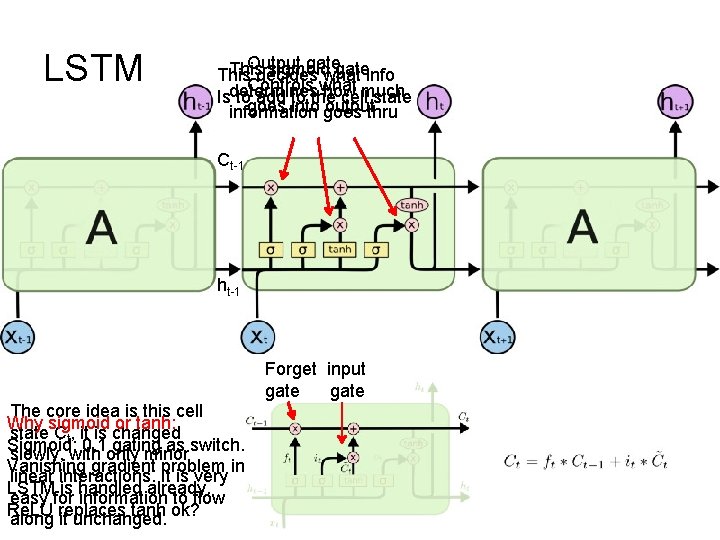

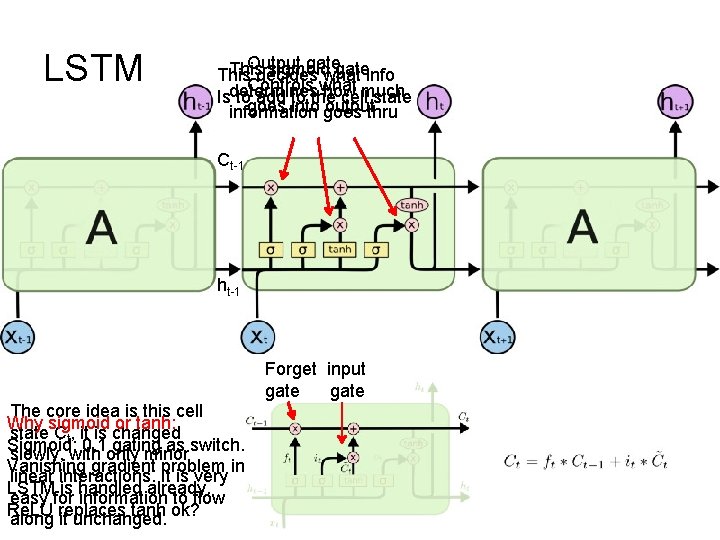

LSTM

- Forget gate: this sigmoid gate determines how much information goes through from $C_{t-1}$.
- Input gate: this decides what information is to be added to the cell state.
- Output gate: controls what goes into the output.
- The core idea is the cell state $C_t$. It is changed slowly, with only minor linear interactions, so it is very easy for information to flow along it unchanged.
- Why sigmoid or tanh: the sigmoid gives values between 0 and 1 and so acts as a gating switch. The vanishing gradient problem is already handled in the LSTM, so is it OK to replace tanh with ReLU?

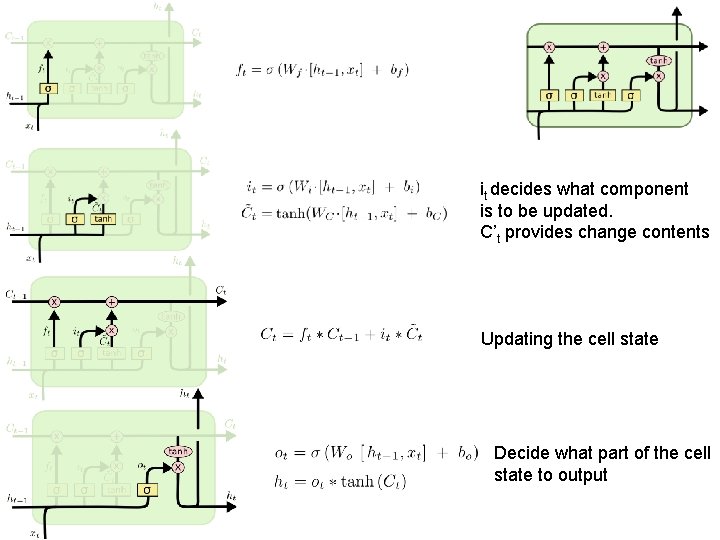

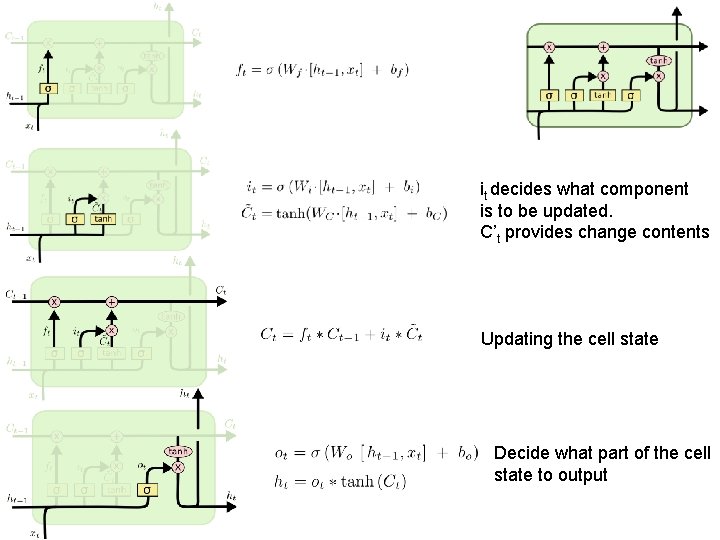

- $i_t$ decides which components are to be updated; $C'_t$ provides the new contents.
- Updating the cell state.
- Decide what part of the cell state to output.

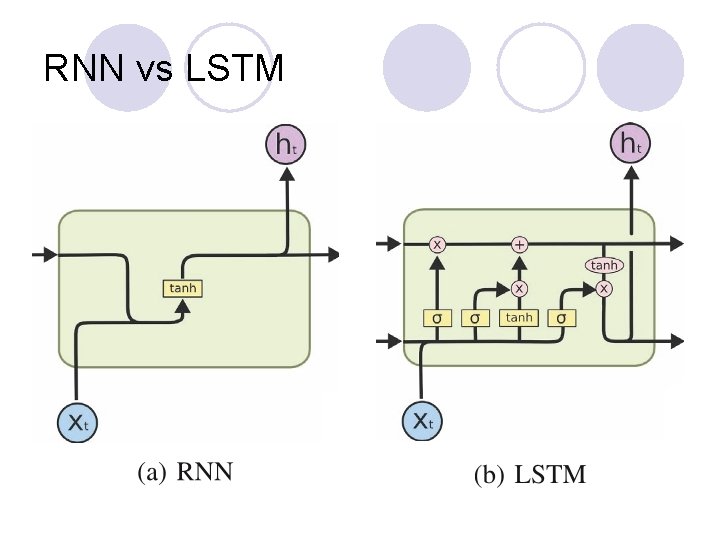

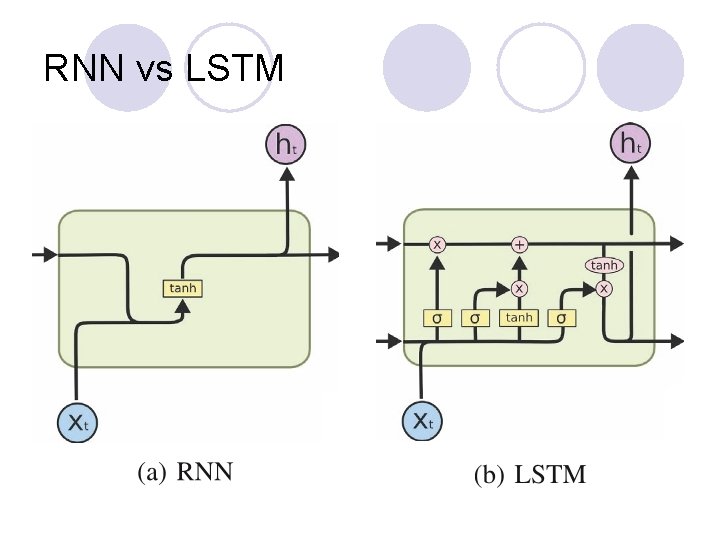

RNN vs LSTM

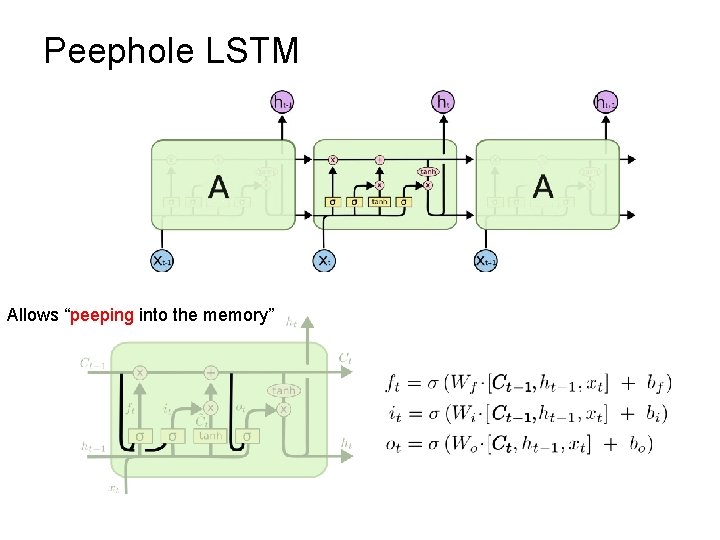

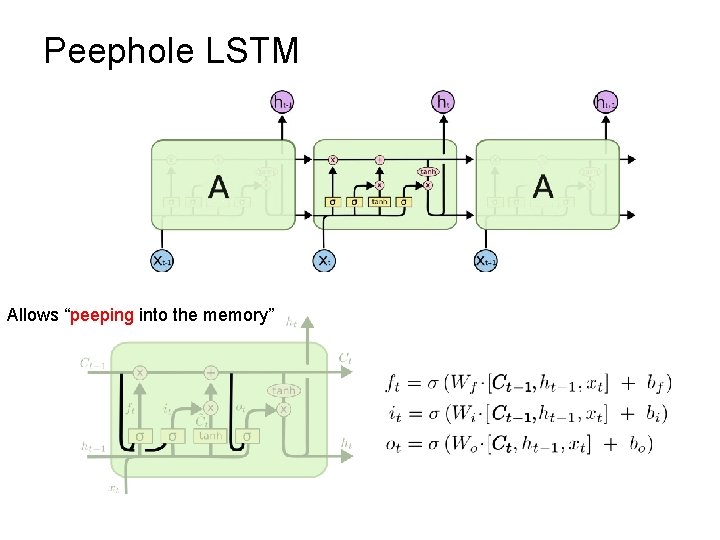

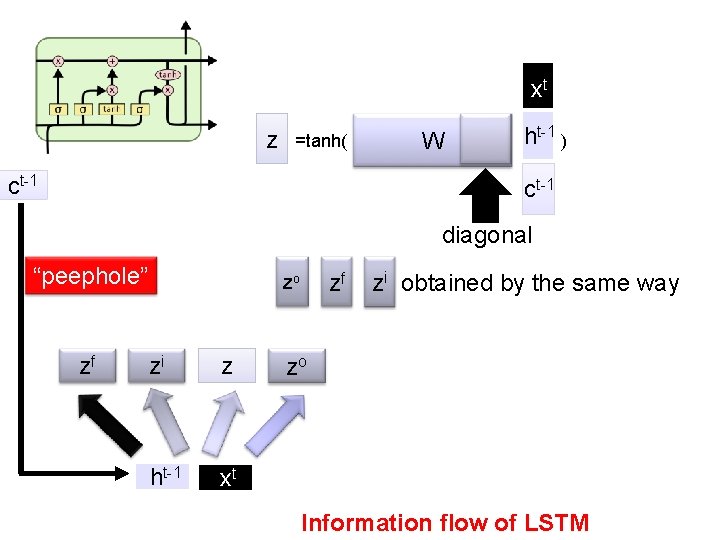

Peephole LSTM: allows “peeping into the memory”

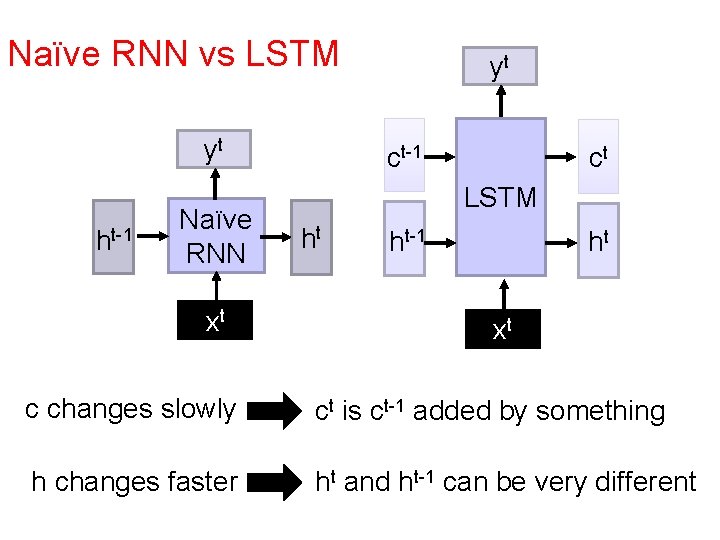

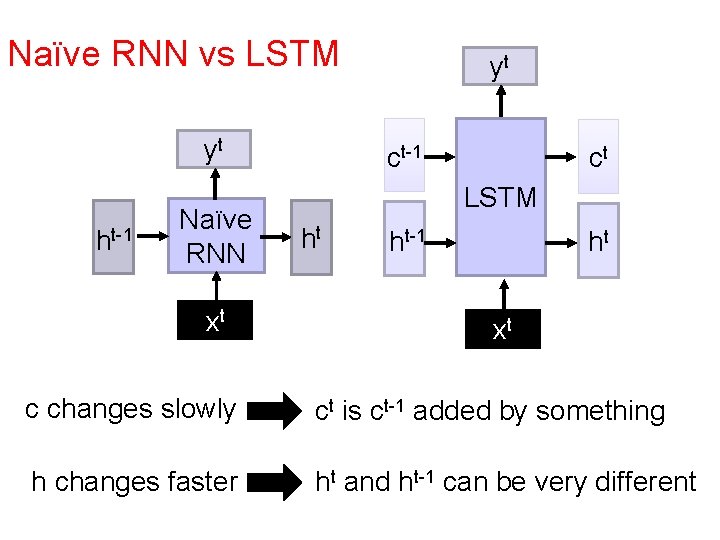

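Naïve RNN vs LSTM

- Naïve RNN: takes $x_t$ and $h_{t-1}$, produces $y_t$ and $h_t$.
- LSTM: takes $x_t$, $h_{t-1}$ and $c_{t-1}$, produces $y_t$, $h_t$ and $c_t$.
- $c$ changes slowly: $c_t$ is $c_{t-1}$ with something added.
- $h$ changes faster: $h_t$ and $h_{t-1}$ can be very different.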

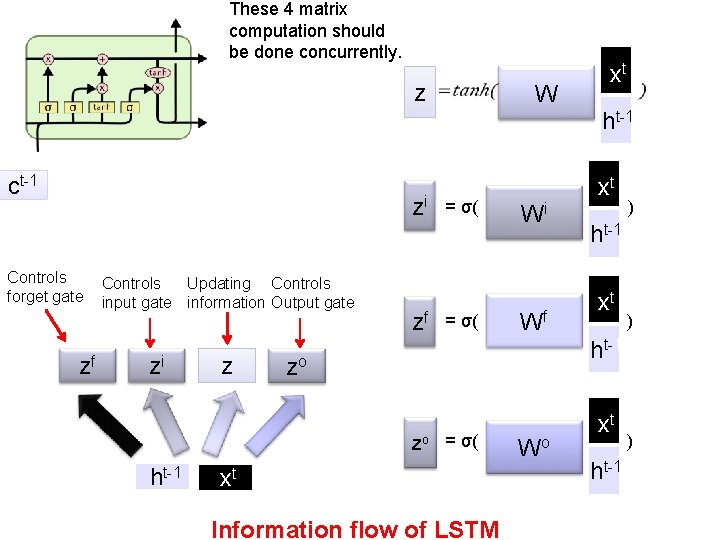

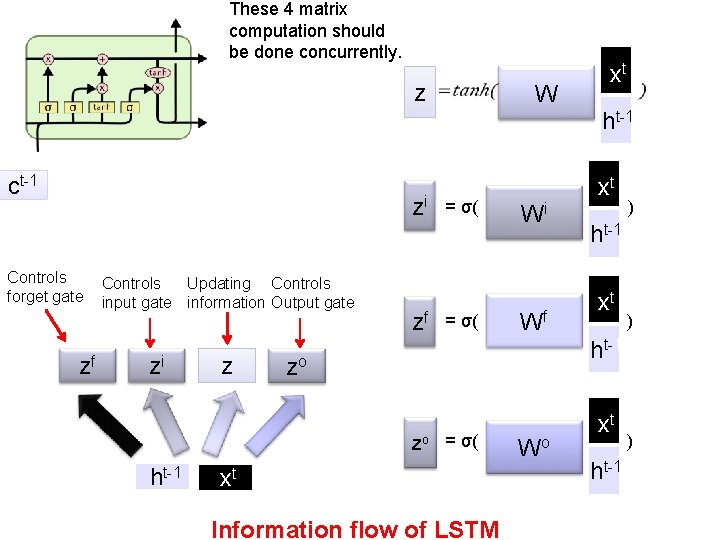

Information flow of LSTM. These 4 matrix computations should be done concurrently. Each one takes the stacked vector of $x_t$ and $h_{t-1}$:

- $z = \tanh(W [x_t; h_{t-1}])$: the updating information
- $z^i = \sigma(W^i [x_t; h_{t-1}])$: controls the input gate
- $z^f = \sigma(W^f [x_t; h_{t-1}])$: controls the forget gate
- $z^o = \sigma(W^o [x_t; h_{t-1}])$: controls the output gate

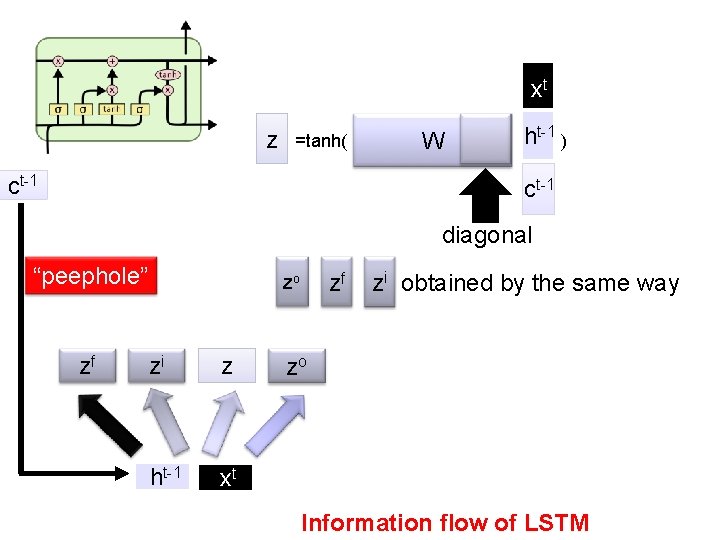

Information flow of LSTM with a “peephole”: $z = \tanh(W [x_t; h_{t-1}; c_{t-1}])$, where the block of $W$ that multiplies $c_{t-1}$ is diagonal. $z^f$, $z^i$ and $z^o$ are obtained in the same way.

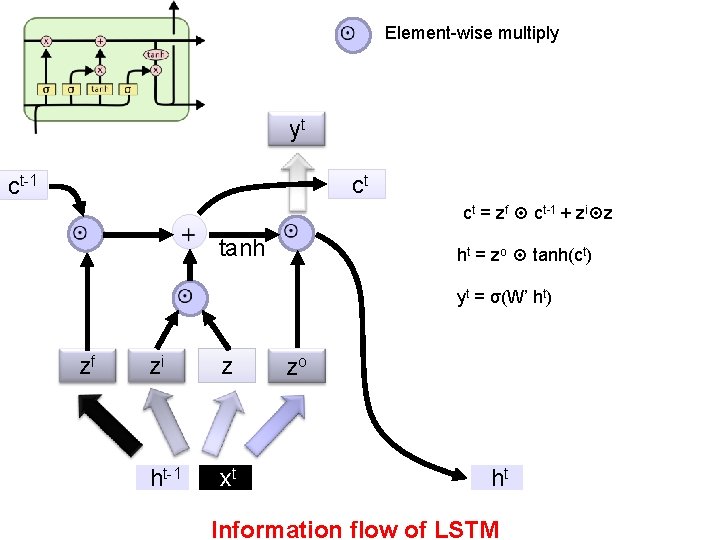

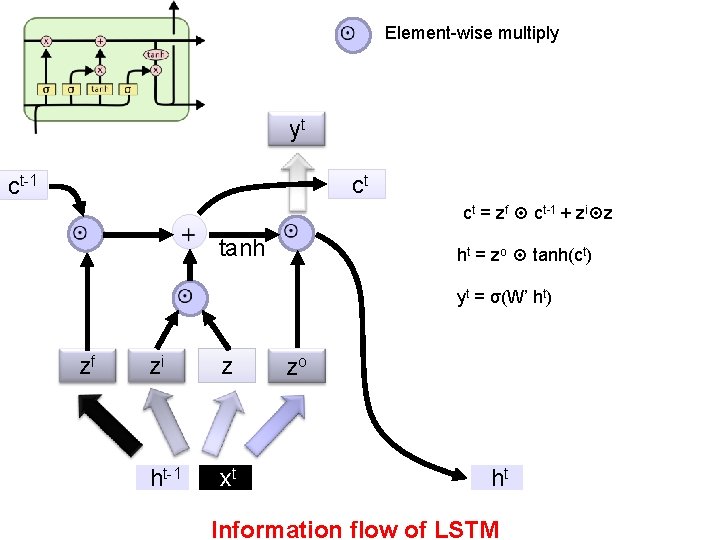

Information flow of LSTM ($\odot$ is element-wise multiply):

- $c_t = z^f \odot c_{t-1} + z^i \odot z$
- $h_t = z^o \odot \tanh(c_t)$
- $y_t = \sigma(W' h_t)$
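
The LSTM equations from these slides can be collected into a single step function. This is a sketch without the peephole and without biases; stacking the four matrices into one array `W_all` is an implementation assumption that lets the four computations run as one matrix-vector product, and `W_y` plays the role of $W'$.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def lstm_step(x_t, h_prev, c_prev, W_all, W_y):
    """One LSTM step; W_all stacks W, W^i, W^f, W^o row-wise."""
    v = np.concatenate([x_t, h_prev])
    pre_z, pre_i, pre_f, pre_o = np.split(W_all @ v, 4)
    z = np.tanh(pre_z)        # updating information
    z_i = sigmoid(pre_i)      # input gate
    z_f = sigmoid(pre_f)      # forget gate
    z_o = sigmoid(pre_o)      # output gate
    c_t = z_f * c_prev + z_i * z     # cell state: old memory plus gated update
    h_t = z_o * np.tanh(c_t)         # hidden state
    y_t = sigmoid(W_y @ h_t)         # output
    return y_t, h_t, c_t

n_in, n_hid, n_out = 3, 4, 2
rng = np.random.default_rng(0)
W_all = rng.normal(scale=0.5, size=(4 * n_hid, n_in + n_hid))
W_y = rng.normal(scale=0.5, size=(n_out, n_hid))

h, c = np.zeros(n_hid), np.zeros(n_hid)
for x in [rng.normal(size=n_in) for _ in range(5)]:
    y, h, c = lstm_step(x, h, c, W_all, W_y)
```

Note how $c_t$ is $c_{t-1}$ scaled by the forget gate plus an added term, while $h_t$ is recomputed from scratch at every step.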

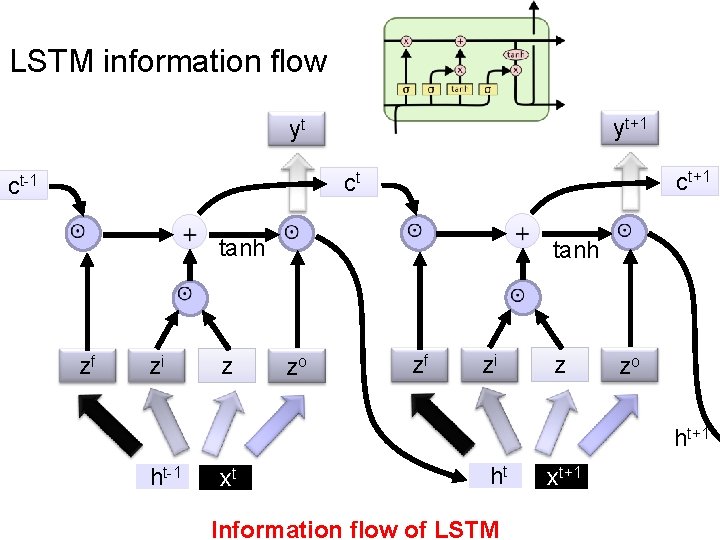

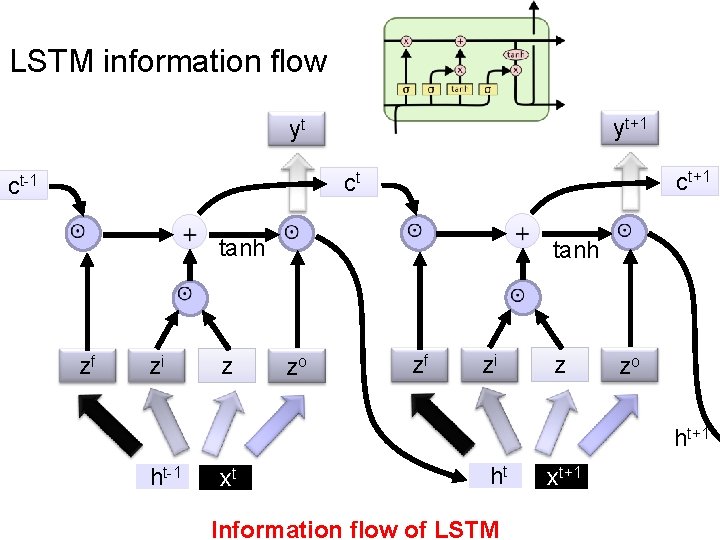

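LSTM information flow over two steps: the block at time $t$ takes $x_t$, $h_{t-1}$, $c_{t-1}$ and produces $y_t$, $h_t$, $c_t$; the block at time $t+1$ takes $x_{t+1}$, $h_t$, $c_t$ and produces $y_{t+1}$, $h_{t+1}$, $c_{t+1}$, using the same gates $z^f$, $z^i$, $z$, $z^o$.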

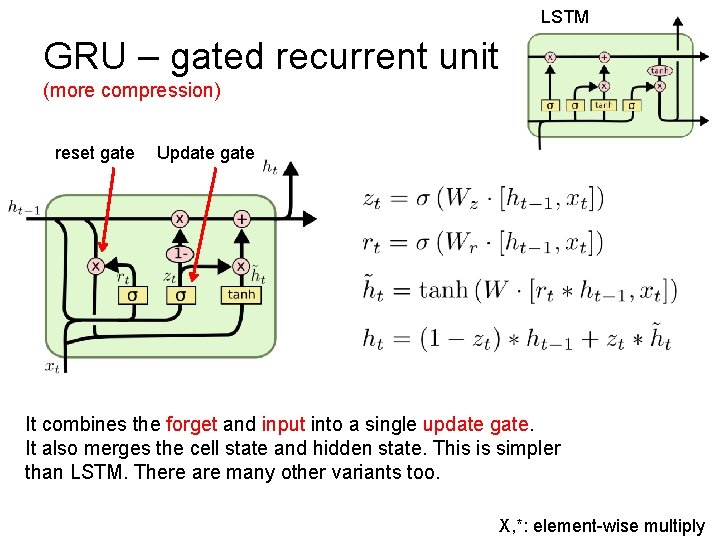

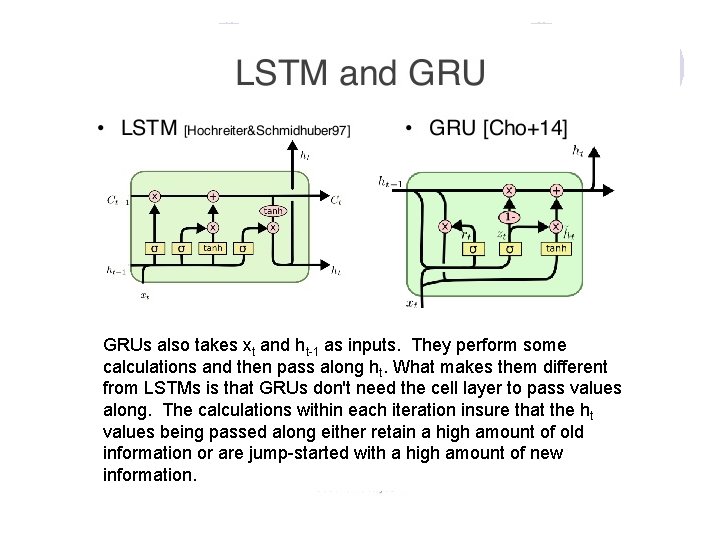

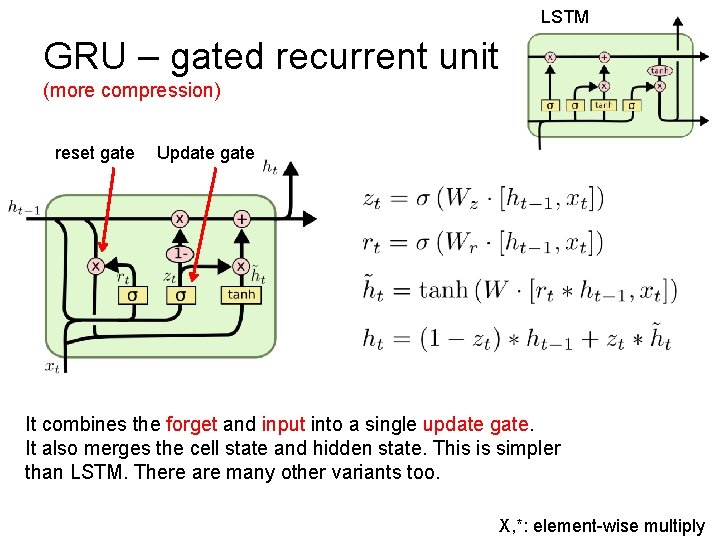

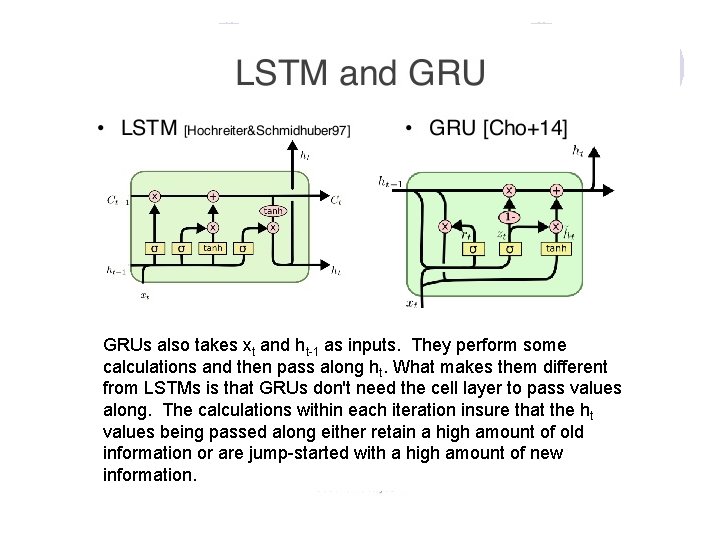

GRU: gated recurrent unit (more compression than LSTM). It has a reset gate and an update gate. It combines the forget and input gates into a single update gate. It also merges the cell state and hidden state. This is simpler than LSTM. There are many other variants too. (X, *: element-wise multiply)

GRUs also take $x_t$ and $h_{t-1}$ as inputs. They perform some calculations and then pass along $h_t$. What makes them different from LSTMs is that GRUs don't need a separate cell state to pass values along. The calculations within each iteration ensure that the $h_t$ values being passed along either retain a high amount of old information or are jump-started with a high amount of new information.
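
A minimal sketch of one GRU step, assuming the commonly used formulation with a reset gate `r` and an update gate `z`. The text of these slides does not give the equations, so the weight names `W_r`, `W_z`, `W_h`, the omission of biases, and the convention that `z` weights the old state (chosen to match the highway formula later in the lecture) are all assumptions.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(x_t, h_prev, W_r, W_z, W_h):
    v = np.concatenate([x_t, h_prev])
    r = sigmoid(W_r @ v)                                        # reset gate
    z = sigmoid(W_z @ v)                                        # update gate
    h_cand = np.tanh(W_h @ np.concatenate([x_t, r * h_prev]))   # candidate state
    return z * h_prev + (1.0 - z) * h_cand                      # mix old and new

n_in, n_hid = 3, 4
rng = np.random.default_rng(0)
W_r, W_z, W_h = (rng.normal(scale=0.5, size=(n_hid, n_in + n_hid)) for _ in range(3))

h = np.zeros(n_hid)
for x in [rng.normal(size=n_in) for _ in range(5)]:
    h = gru_step(x, h, W_r, W_z, W_h)
```

When `z` is close to 1 the step retains mostly old information; when it is close to 0 the state is replaced by the new candidate. There is no separate cell state, only `h`.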

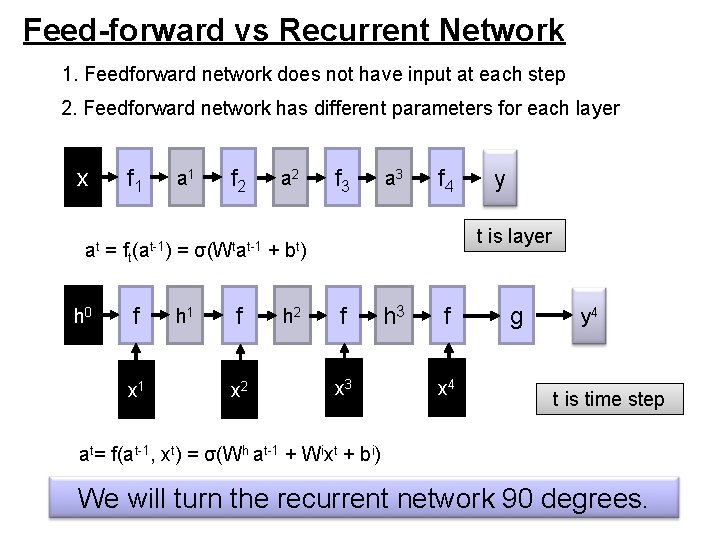

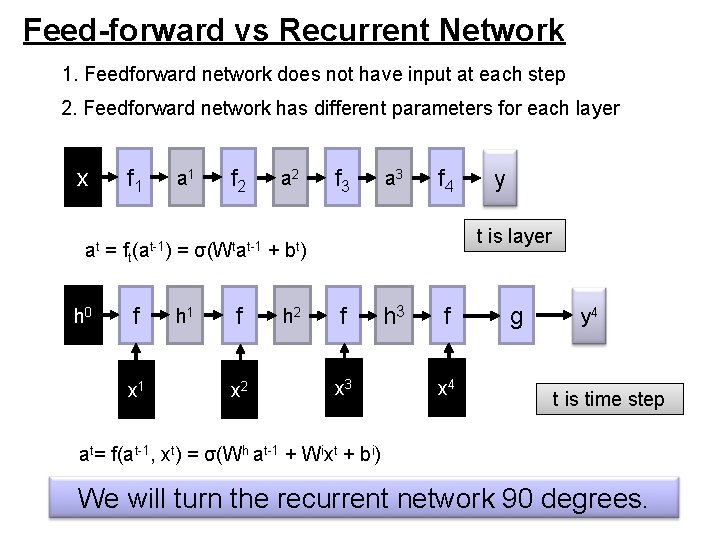

Feed-forward vs Recurrent Network

1. Feedforward network does not have input at each step
2. Feedforward network has different parameters for each layer

- Feedforward ($t$ is the layer): $a_t = f_t(a_{t-1}) = \sigma(W_t a_{t-1} + b_t)$
- Recurrent ($t$ is the time step): $a_t = f(a_{t-1}, x_t) = \sigma(W^h a_{t-1} + W^i x_t + b^i)$

We will turn the recurrent network 90 degrees.

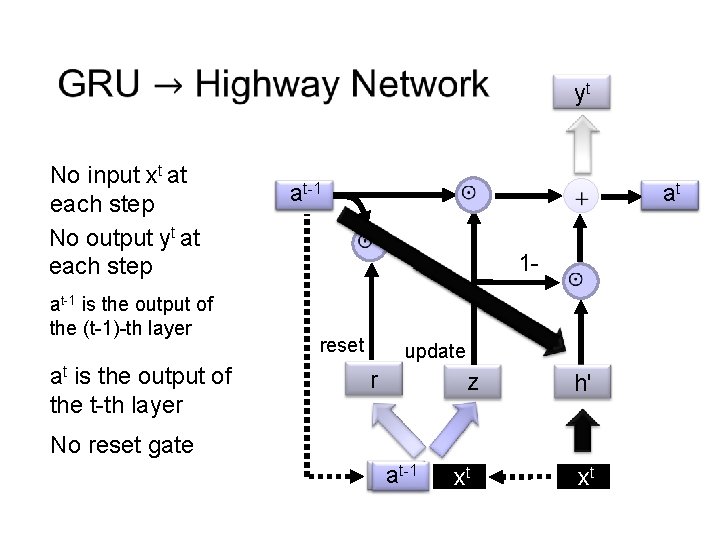

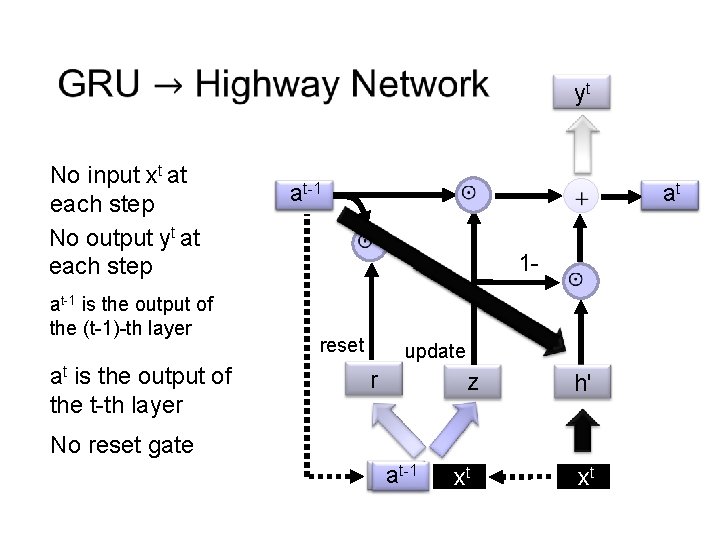

- No input $x_t$ at each step
- No output $y_t$ at each step
- $a_{t-1}$ is the output of the $(t-1)$-th layer; $a_t$ is the output of the $t$-th layer
- No reset gate; the update gate $z$ is kept

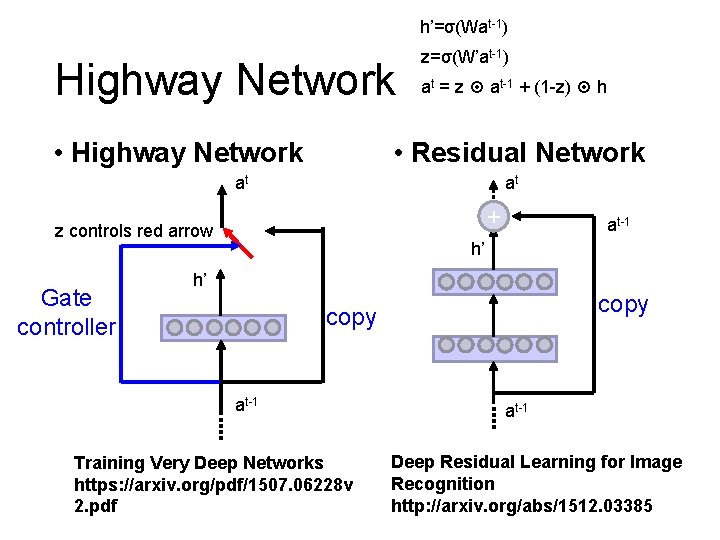

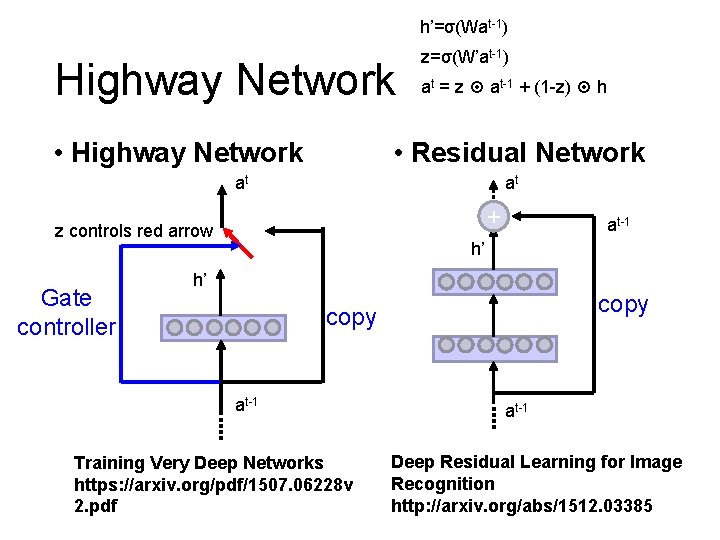

Highway Network and Residual Network

- Highway Network: $h' = \sigma(W a_{t-1})$, $z = \sigma(W' a_{t-1})$, $a_t = z \odot a_{t-1} + (1 - z) \odot h'$. The gate controller $z$ controls the red arrow in the figure. Training Very Deep Networks, https://arxiv.org/pdf/1507.06228v2.pdf
- Residual Network: $a_{t-1}$ is copied and added to $h'$ to give $a_t$. Deep Residual Learning for Image Recognition, http://arxiv.org/abs/1512.03385
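
The two layer types can be compared side by side. This is a simplified sketch: biases are omitted, `W_gate` stands for $W'$, and the residual layer reuses the same $h' = \sigma(W a_{t-1})$ as its transformed branch, which is an assumption made here for symmetry.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def highway_layer(a_prev, W, W_gate):
    h = sigmoid(W @ a_prev)            # transformed signal h'
    z = sigmoid(W_gate @ a_prev)       # gate controller z
    return z * a_prev + (1.0 - z) * h  # z decides how much of a_prev is copied

def residual_layer(a_prev, W):
    return a_prev + sigmoid(W @ a_prev)  # copy a_prev and add h'

a = np.array([1.0, -1.0])
W = np.zeros((2, 2))                   # toy weights so the result is easy to check
a_highway = highway_layer(a, W, W)     # [0.75, -0.25]
a_residual = residual_layer(a, W)      # [1.5, -0.5]
```

With zero weights both $h'$ and $z$ equal 0.5, so the highway layer returns $0.5 \, a_{t-1} + 0.25$ and the residual layer returns $a_{t-1} + 0.5$. In the highway layer, a gate value near 1 makes the layer pass its input through almost unchanged.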

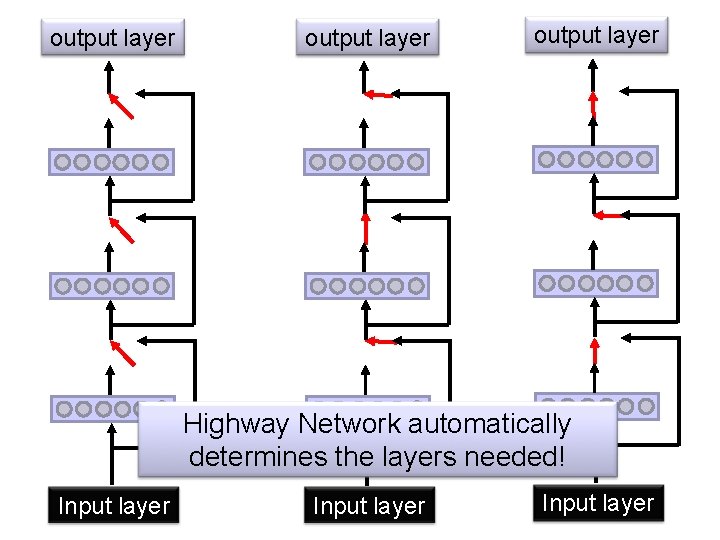

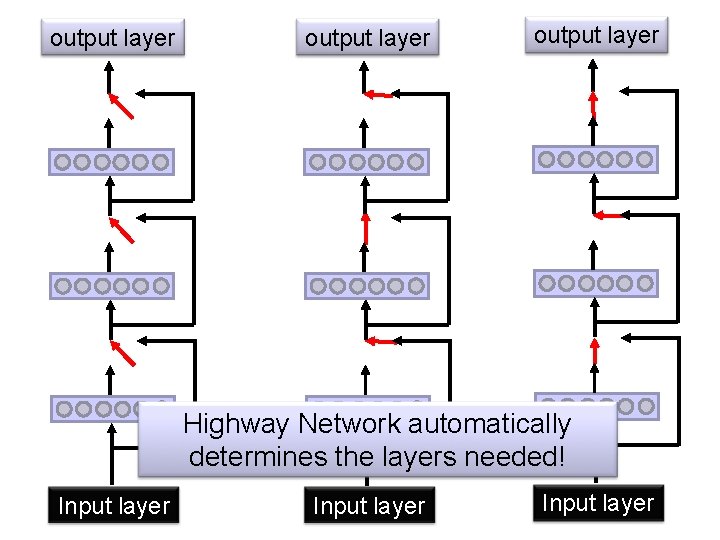

Highway Network automatically determines the layers needed! (Figure labels: input layer, output layer.)

Highway Network Experiments

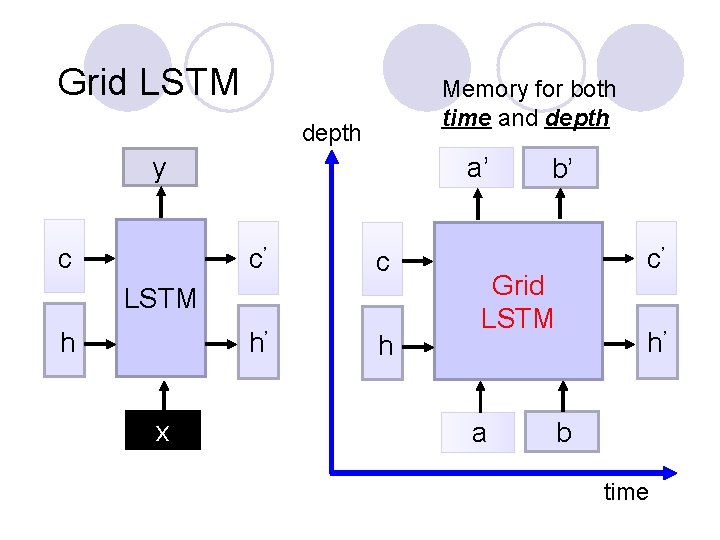

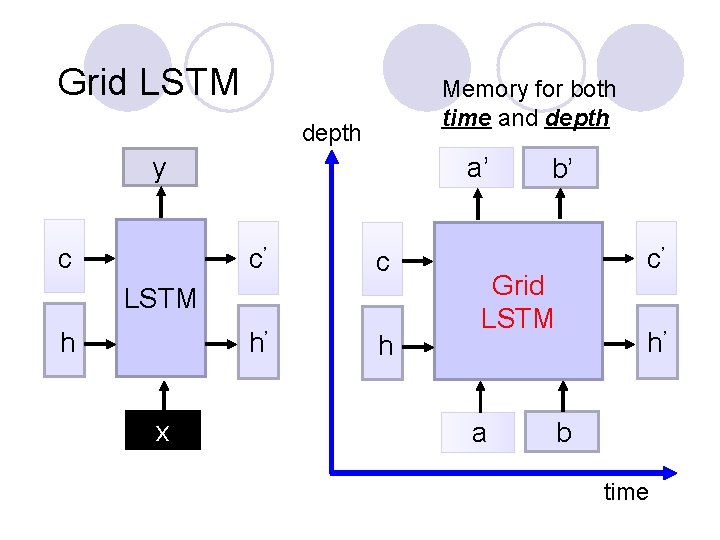

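Grid LSTM: memory for both time and depth. A standard LSTM takes $c$, $h$ and $x$ and produces $c'$, $h'$ and $y$. A Grid LSTM keeps $c \to c'$ and $h \to h'$ along time and adds a second memory pair $a \to a'$ and $b \to b'$ along depth.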

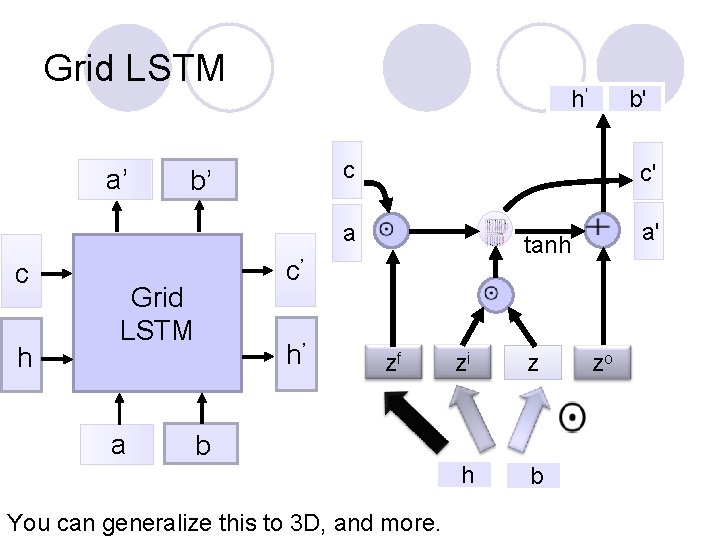

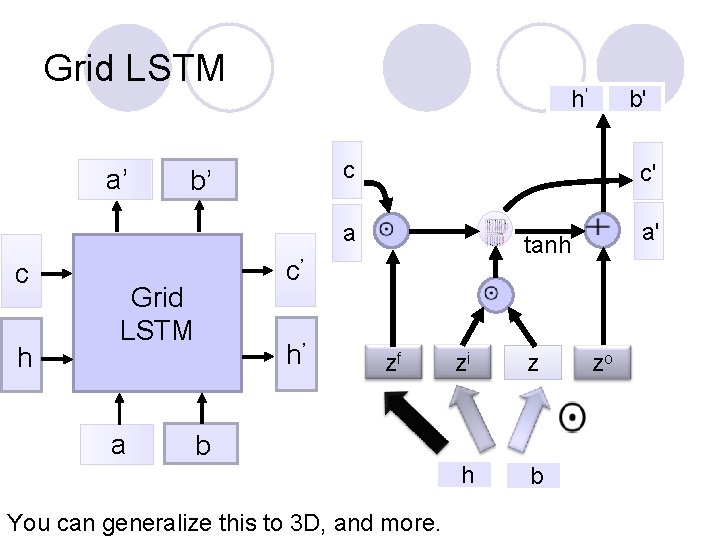

Grid LSTM: inside the block, the gates $z^f$, $z^i$, $z$, $z^o$ and the tanh are used as in the LSTM, taking $c$, $h$, $a$, $b$ in and producing $c'$, $h'$, $a'$, $b'$. You can generalize this to 3D, and more.

Applications of LSTM / RNN

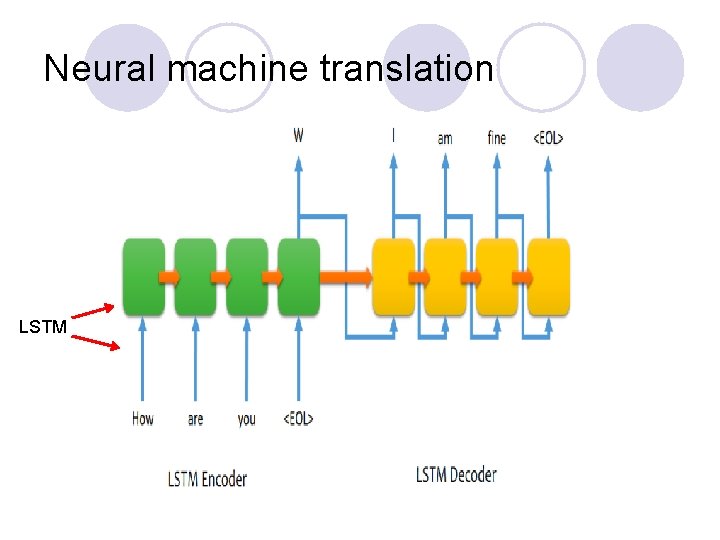

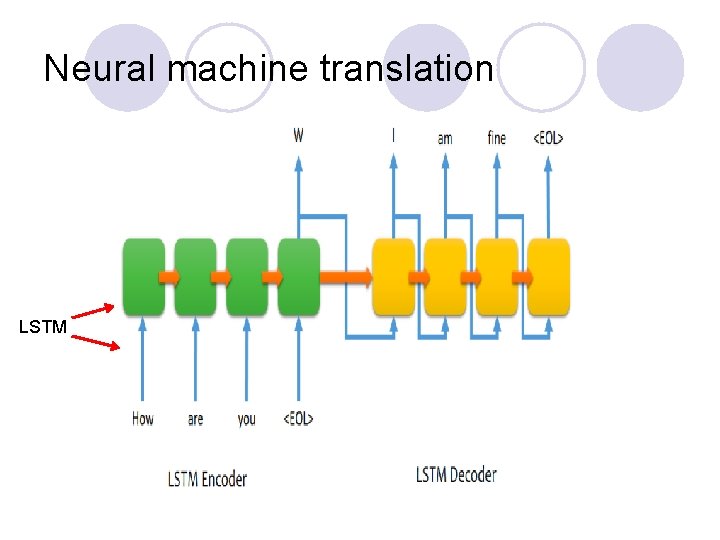

Neural machine translation LSTM

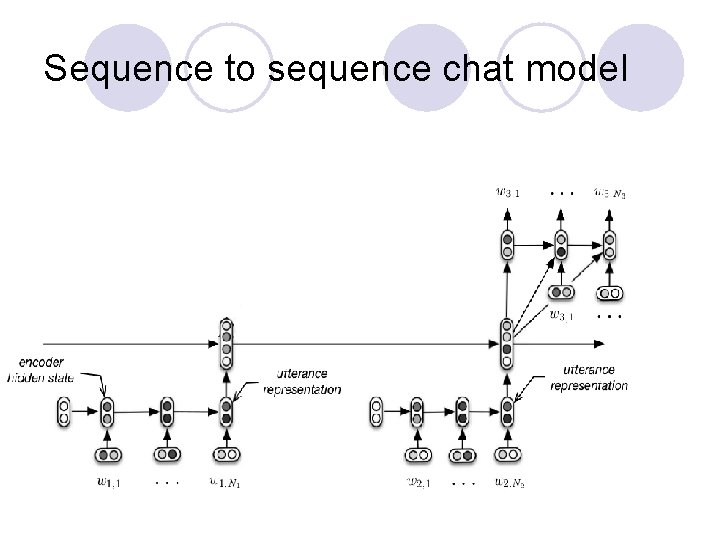

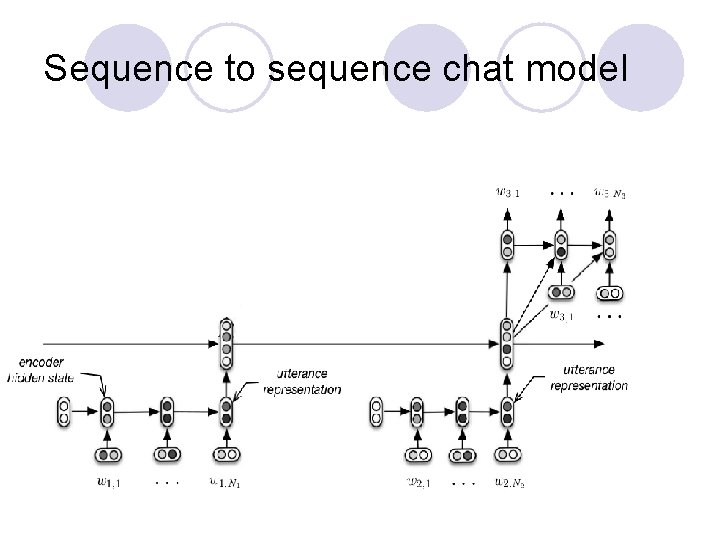

Sequence to sequence chat model

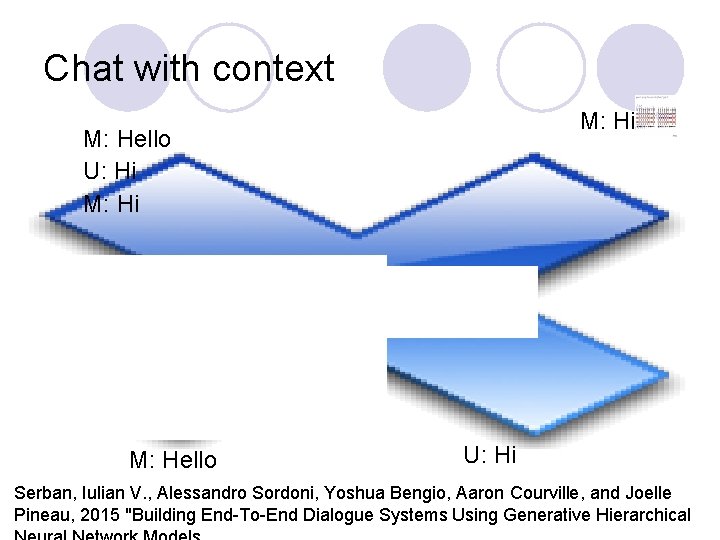

Chat with context (example turns: M: Hi, M: Hello, U: Hi). Serban, Iulian V., Alessandro Sordoni, Yoshua Bengio, Aaron Courville, and Joelle Pineau, 2015, "Building End-To-End Dialogue Systems Using Generative Hierarchical

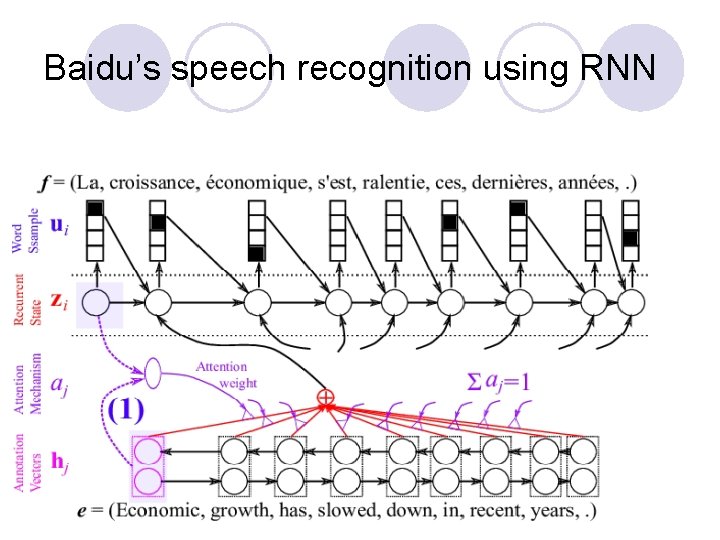

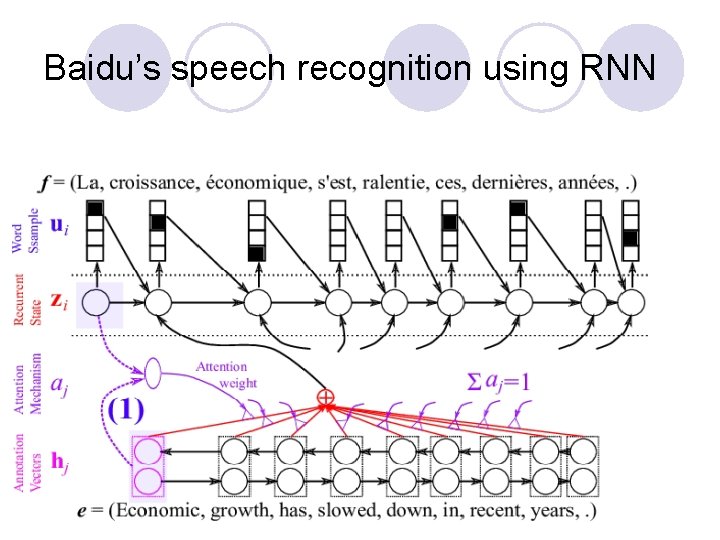

Baidu’s speech recognition using RNN

Attention

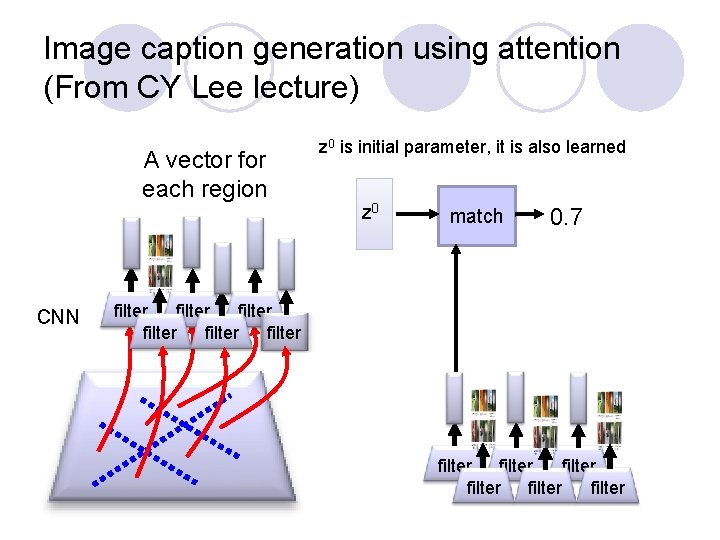

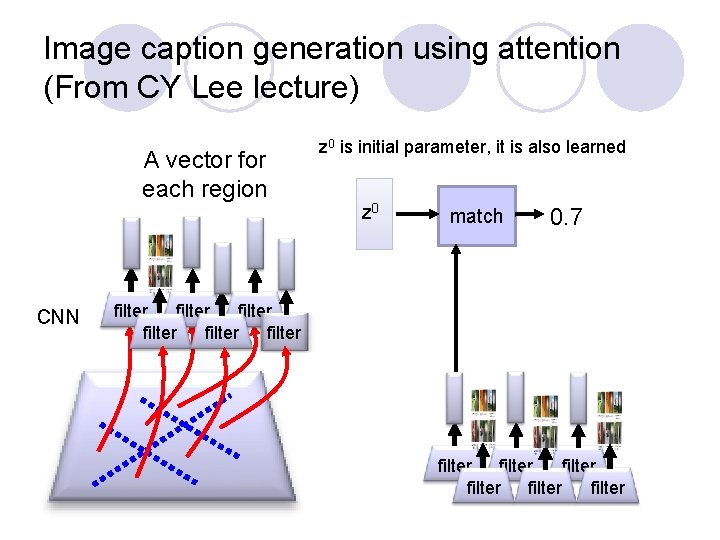

Image caption generation using attention (from CY Lee lecture). The CNN filters produce a vector for each image region. $z_0$ is the initial parameter; it is also learned. $z_0$ is matched against each region vector to give a score, e.g. 0.7.

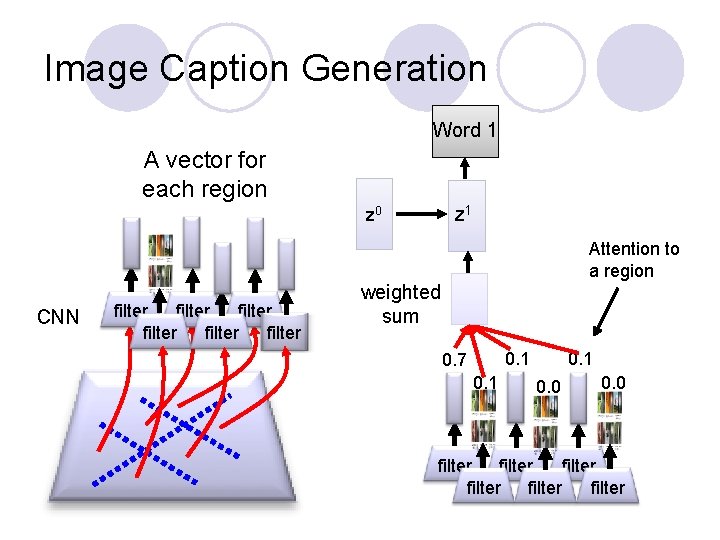

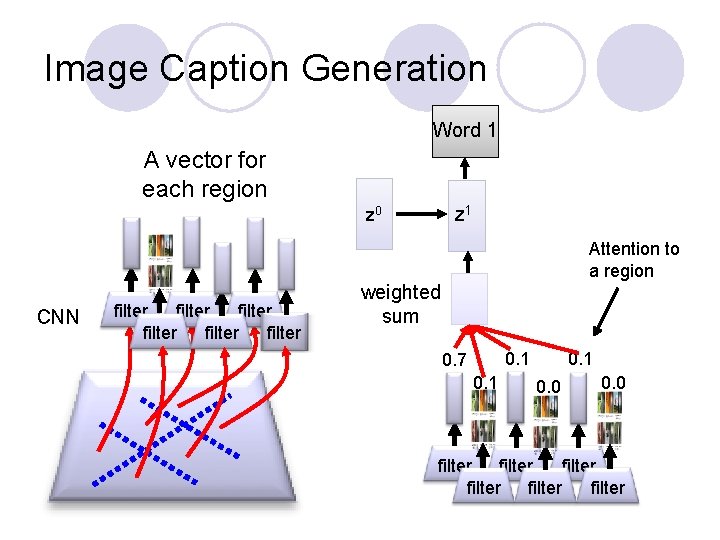

Image Caption Generation: attention to a region. The match scores for the region vectors (e.g. 0.1, 0.7, 0.1, 0.0) are used as weights. The weighted sum of the region vectors is used, together with $z_0$, to produce Word 1 and the next state $z_1$.

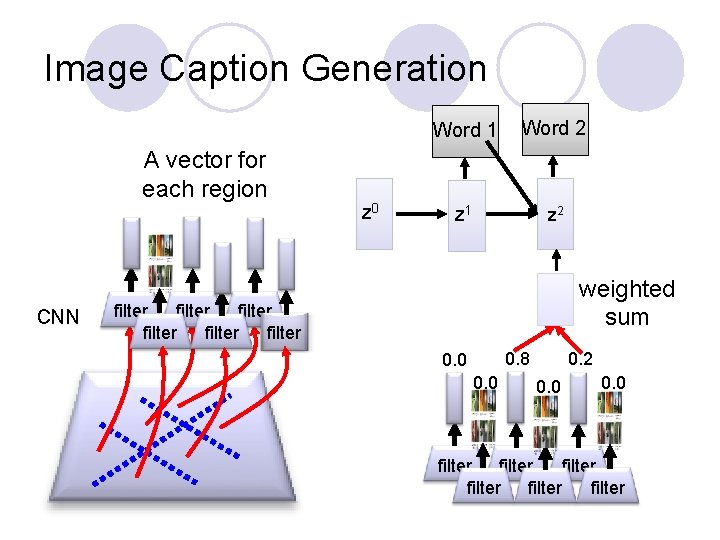

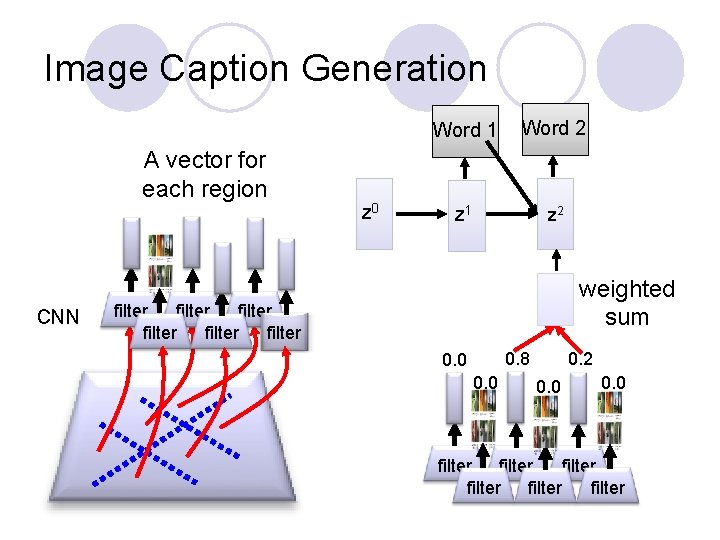

Image Caption Generation: the next step. $z_1$ is matched against the region vectors again, giving new weights (e.g. 0.8, 0.0, 0.2, 0.0). The new weighted sum is used to produce Word 2 and the state $z_2$.
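
The match-then-weighted-sum step can be sketched as follows. The slides do not say how the match score is computed or normalized, so the dot product and the softmax here are assumptions, and the region vectors are made-up numbers rather than CNN outputs.

```python
import numpy as np

def softmax(a):
    e = np.exp(a - np.max(a))
    return e / e.sum()

def attend(z, regions):
    """Score each region vector against z and return weights and weighted sum."""
    scores = regions @ z            # one match score per region (assumed: dot product)
    weights = softmax(scores)       # assumed normalization, weights sum to 1
    context = weights @ regions     # weighted sum of the region vectors
    return weights, context

# Four hypothetical region vectors of dimension 3 and a state z_0.
regions = np.array([[1.0, 0.0, 0.0],
                    [0.0, 1.0, 0.0],
                    [0.0, 0.0, 1.0],
                    [0.5, 0.5, 0.0]])
z0 = np.array([0.0, 3.0, 0.0])
weights, context = attend(z0, regions)
```

The region whose vector best matches the current state receives the largest weight, so the weighted sum passed to the word generator is dominated by that region. Repeating the call with $z_1$, $z_2$, ... moves the attention as the caption is generated.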

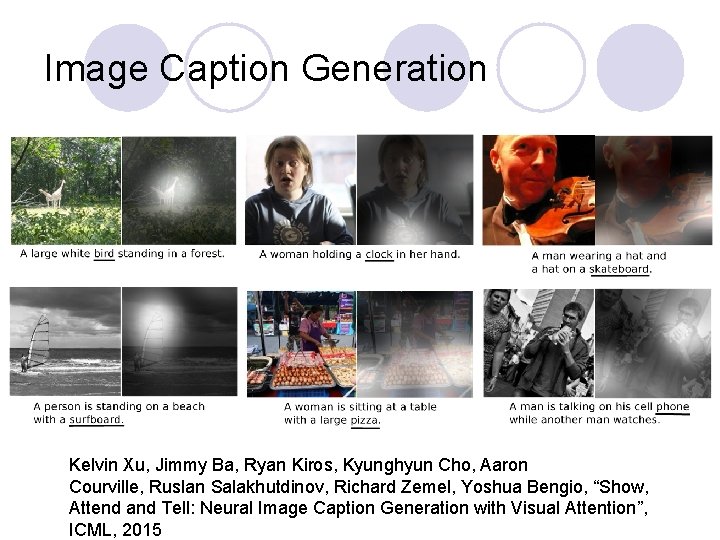

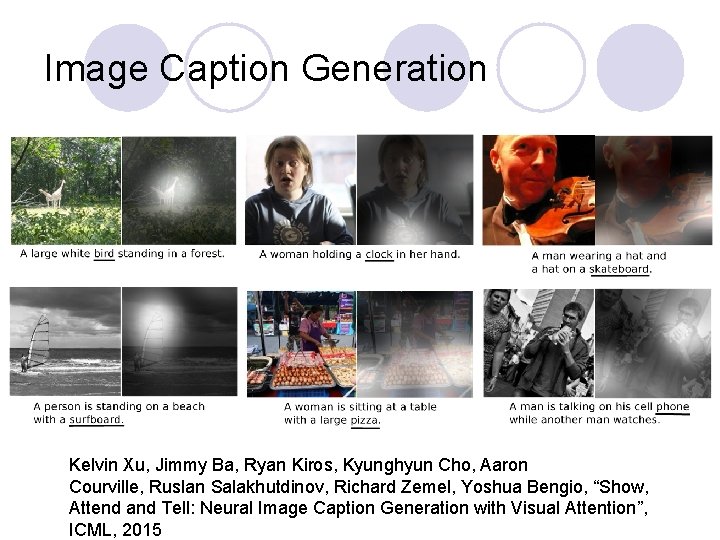

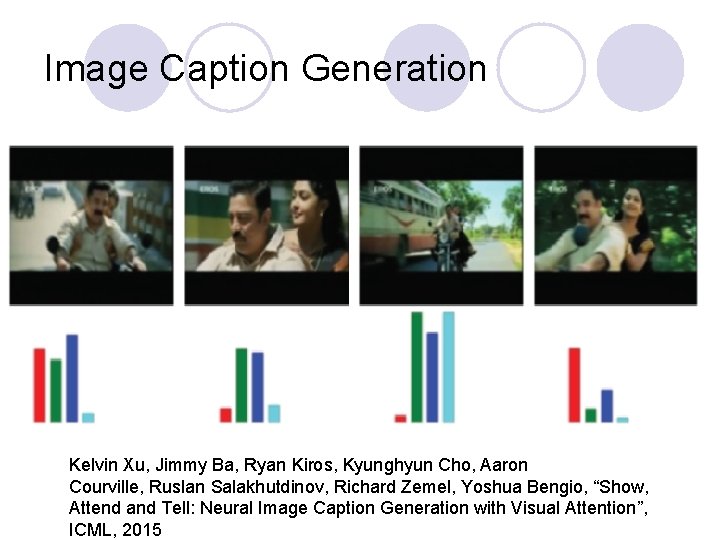

Image Caption Generation Kelvin Xu, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhutdinov, Richard Zemel, Yoshua Bengio, “Show, Attend and Tell: Neural Image Caption Generation with Visual Attention”, ICML, 2015

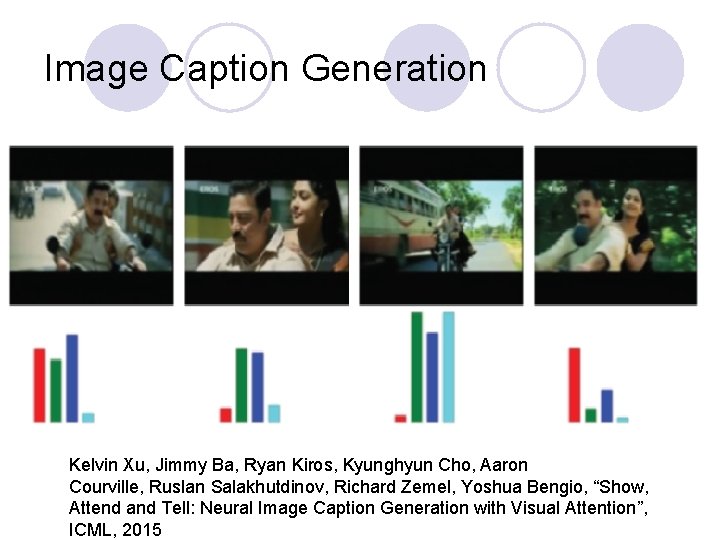

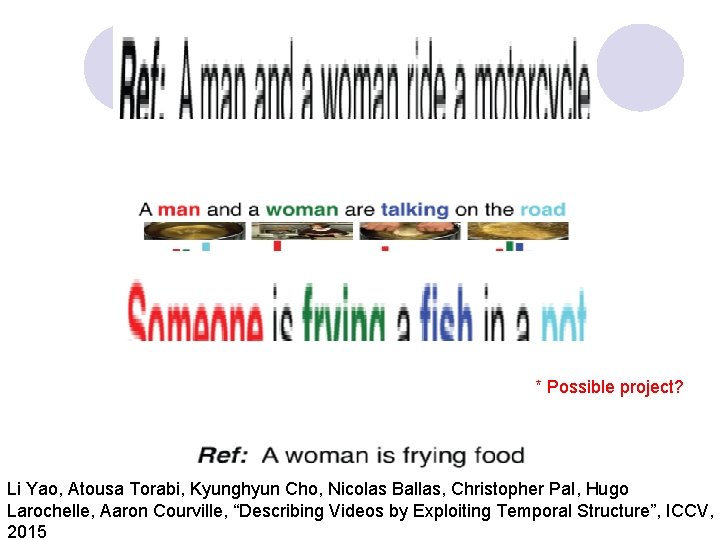

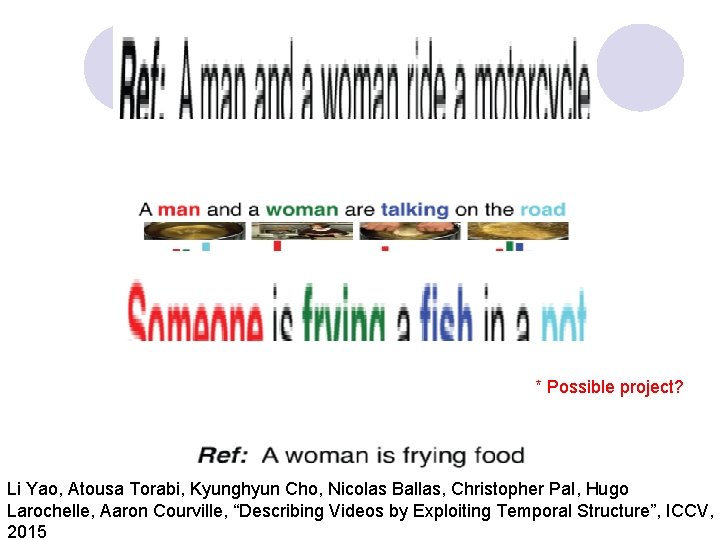

* Possible project? Li Yao, Atousa Torabi, Kyunghyun Cho, Nicolas Ballas, Christopher Pal, Hugo Larochelle, Aaron Courville, “Describing Videos by Exploiting Temporal Structure”, ICCV, 2015