CS 152 Computer Systems Architecture Processor Microarchitecture SangWoo


CS 152: Computer Systems Architecture
Processor Microarchitecture
Sang-Woo Jun, Winter 2019

Large amount of material adapted from MIT 6.004 “Computation Structures”, Morgan Kaufmann “Computer Organization and Design: The Hardware/Software Interface: RISC-V Edition”, and CS 152 slides by Isaac Scherson

Course outline
- Part 1: The Hardware-Software Interface
  - What makes a ‘good’ processor?
  - Assembly programming and conventions
- Part 2: Recap of digital design
  - Combinational and sequential circuits
  - How their restrictions influence processor design
- Part 3: Computer Architecture
  - Simple and pipelined processors
  - Computer Arithmetic
  - Caches and the memory hierarchy
- Part 4: Computer Systems
  - Operating systems, Virtual memory

How to build a computing machine?
- Pretend the computers we know and love have never existed
- We want to build an automatic computing machine to solve mathematical problems
- Starting from (almost) scratch: you have transistors and integrated circuits, but no existing microarchitecture
  - No PC, no register files, no ALU
- How would you do it? Would it look similar to what we have now?

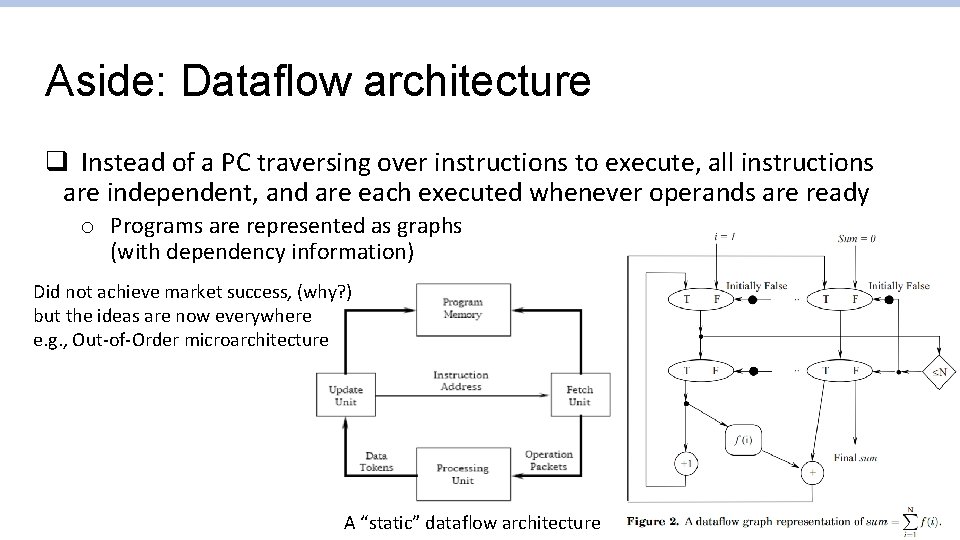

Aside: Dataflow architecture
- Instead of a PC traversing instructions to execute, all instructions are independent, and each is executed whenever its operands are ready
  - Programs are represented as graphs (with dependency information)
- Did not achieve market success (why?), but the ideas are now everywhere
  - e.g., Out-of-Order microarchitecture

Figure: A “static” dataflow architecture

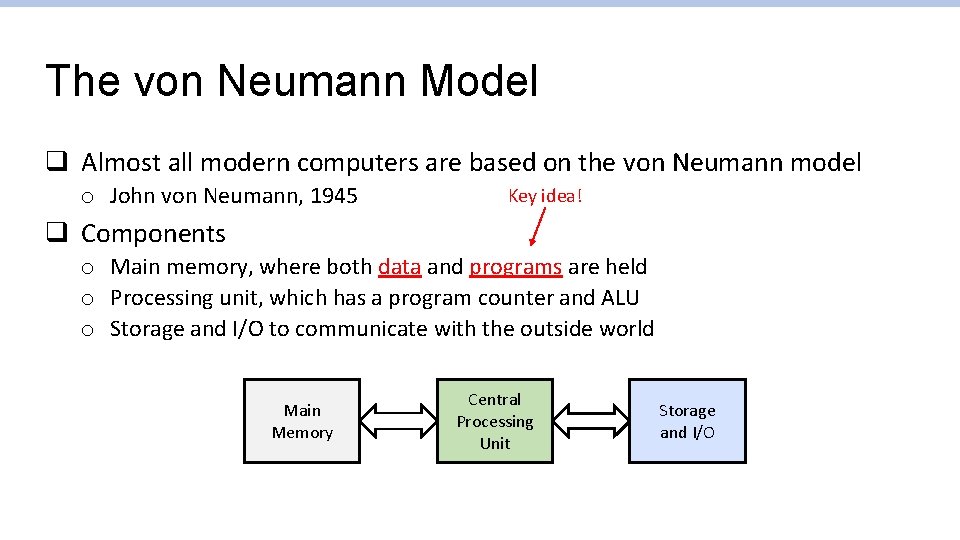

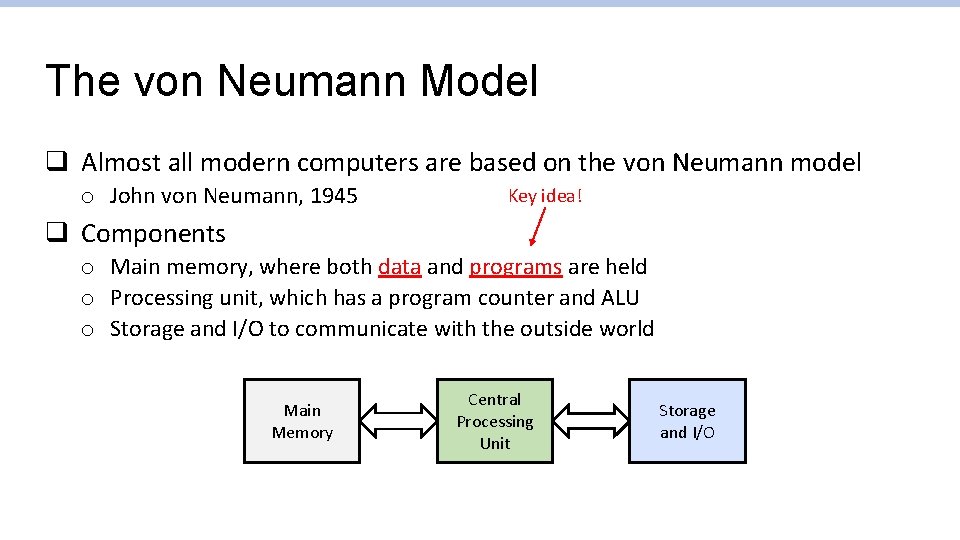

The von Neumann Model
- Almost all modern computers are based on the von Neumann model
  - John von Neumann, 1945
- Components
  - Main memory, where both data and programs are held (Key idea!)
  - Processing unit, which has a program counter and ALU
  - Storage and I/O to communicate with the outside world

Figure: Main Memory, Central Processing Unit, Storage and I/O

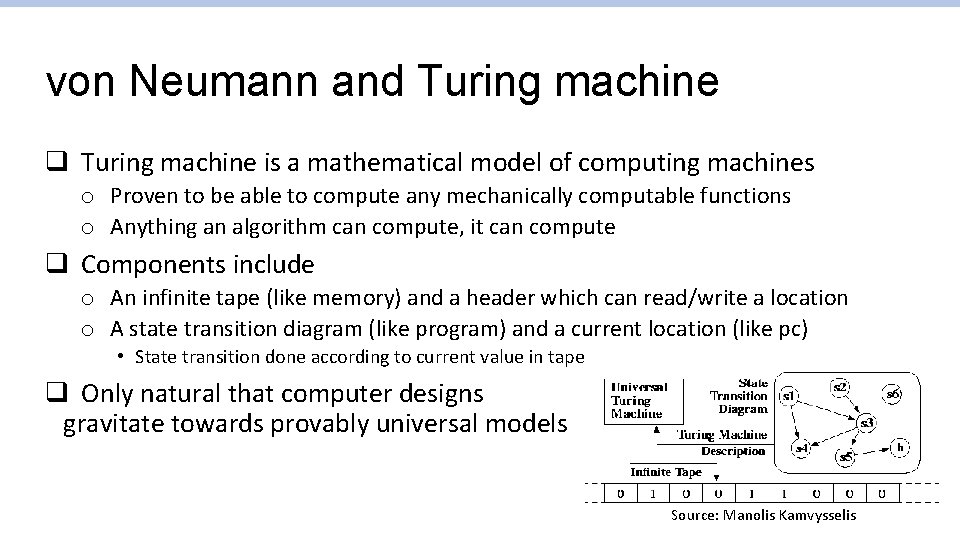

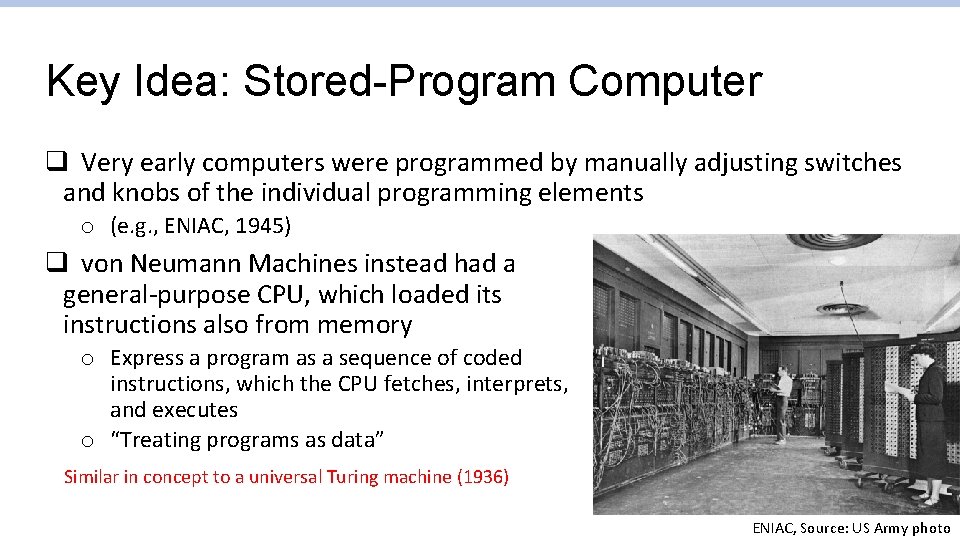

Key Idea: Stored-Program Computer
- Very early computers were programmed by manually adjusting switches and knobs of the individual programming elements
  - (e.g., ENIAC, 1945)
- von Neumann machines instead had a general-purpose CPU, which also loaded its instructions from memory
  - Express a program as a sequence of coded instructions, which the CPU fetches, interprets, and executes
  - “Treating programs as data”
- Similar in concept to a universal Turing machine (1936)

Figure: ENIAC. Source: US Army photo

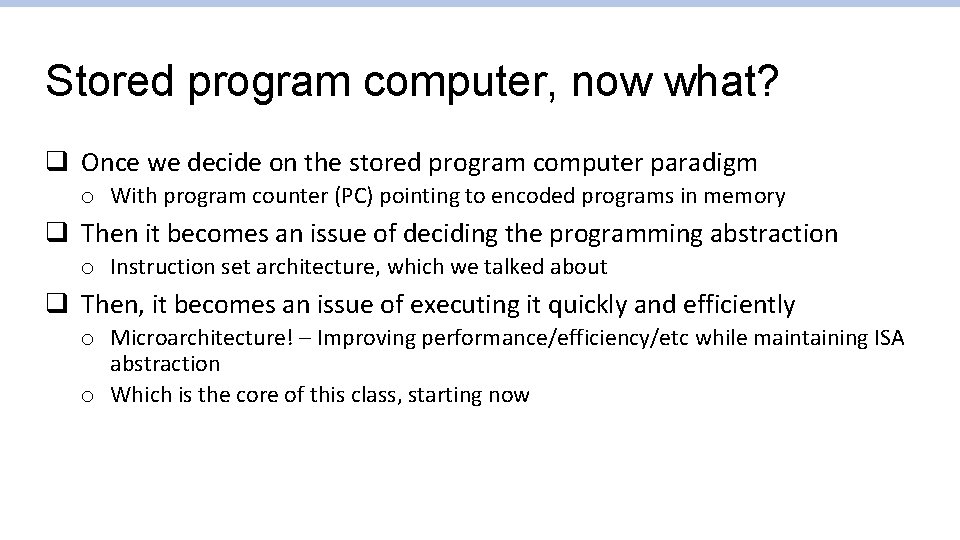

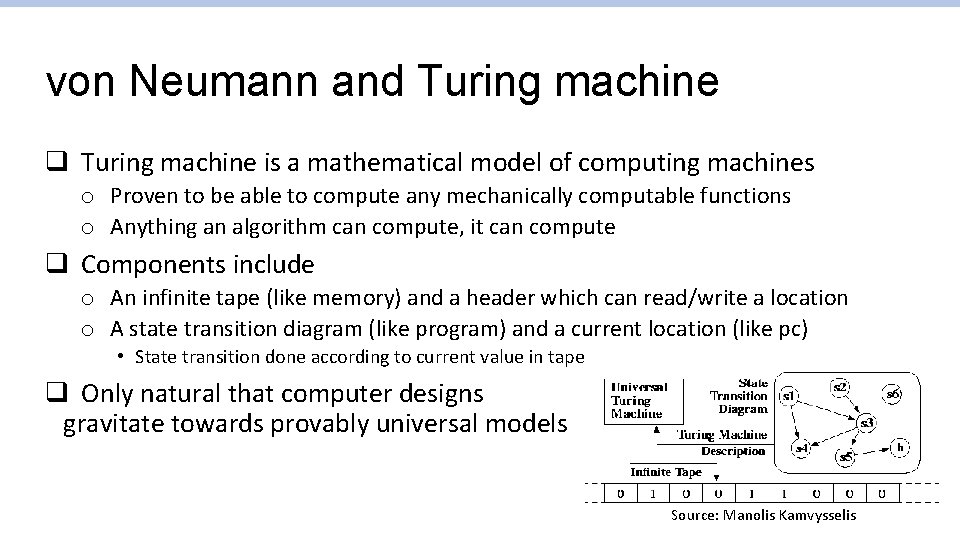

von Neumann and Turing machine
- A Turing machine is a mathematical model of computing machines
  - Proven to be able to compute any mechanically computable function
  - Anything an algorithm can compute, it can compute
- Components include
  - An infinite tape (like memory) and a head which can read/write a location
  - A state transition diagram (like a program) and a current location (like the PC)
    - State transitions are made according to the current value on the tape
- Only natural that computer designs gravitate towards provably universal models

Source: Manolis Kamvysselis

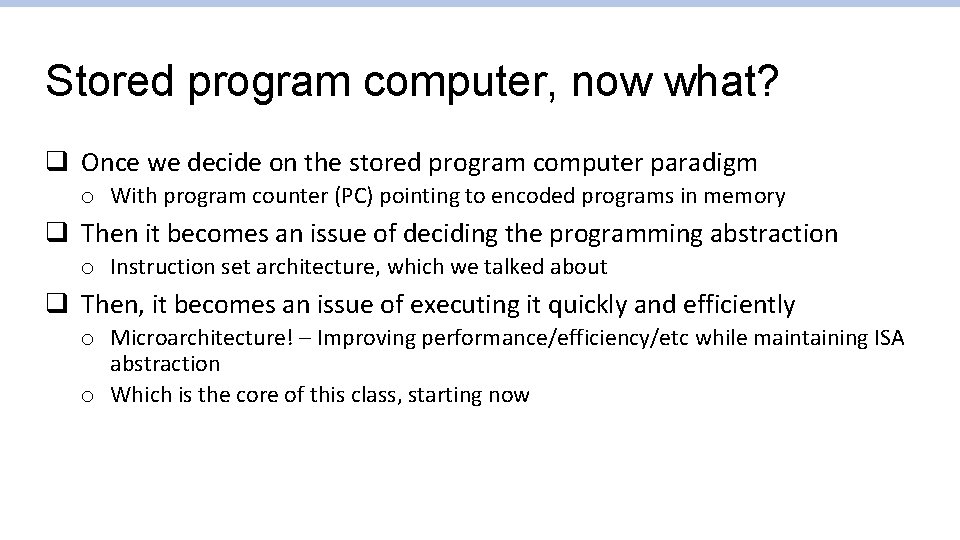

Stored program computer, now what?
- Once we decide on the stored-program computer paradigm
  - With a program counter (PC) pointing to encoded programs in memory
- Then it becomes an issue of deciding the programming abstraction
  - Instruction set architecture, which we talked about
- Then, it becomes an issue of executing it quickly and efficiently
  - Microarchitecture! Improving performance, efficiency, etc. while maintaining the ISA abstraction
  - Which is the core of this class, starting now

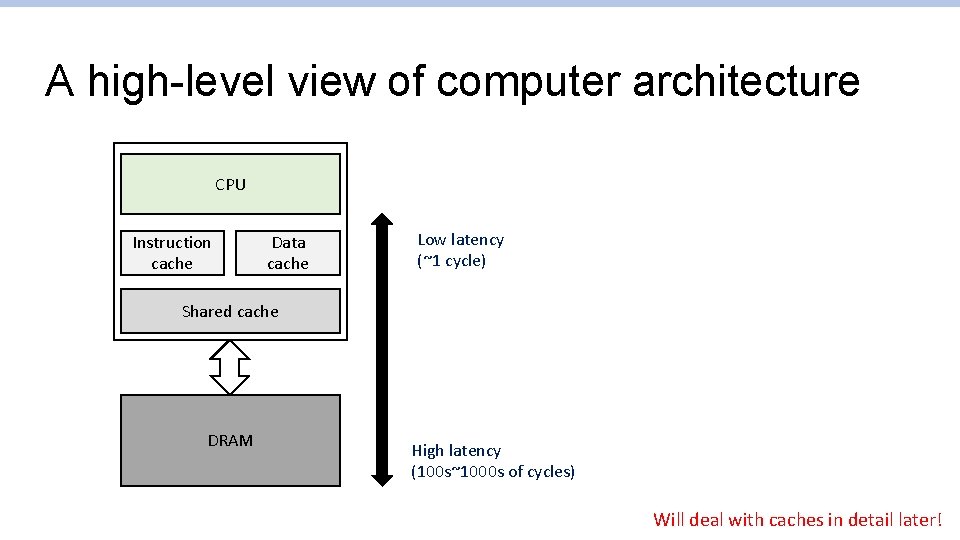

A high-level view of computer architecture
- CPU
- Instruction cache and data cache: low latency (~1 cycle)
- Shared cache
- DRAM: high latency (100s to 1000s of cycles)

Will deal with caches in detail later!

![Remember Super simplified processor operation inst memPC nextPC PC 4 if Remember: Super simplified processor operation inst = mem[PC] next_PC = PC + 4 if](https://slidetodoc.com/presentation_image_h2/e1998359120e1ded2d961ebd4f7cd509/image-10.jpg)

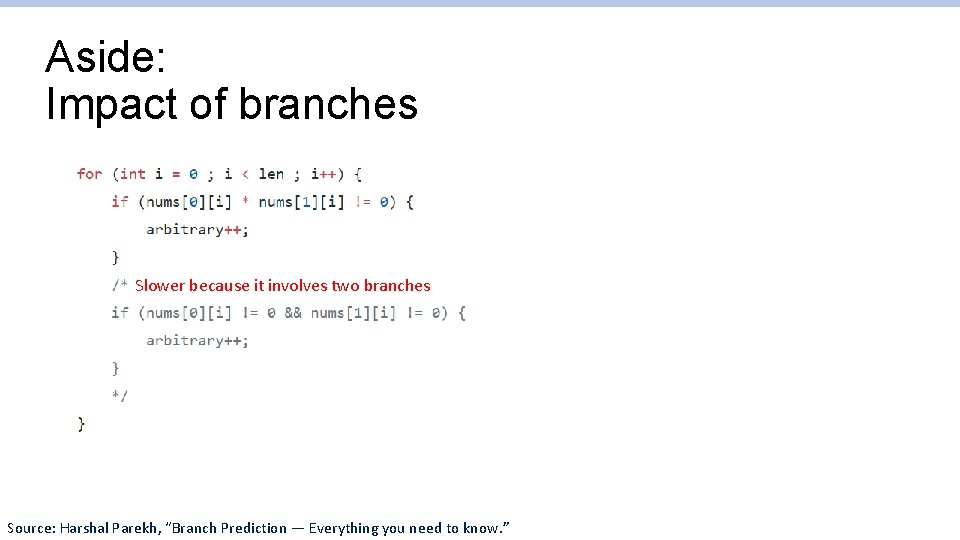

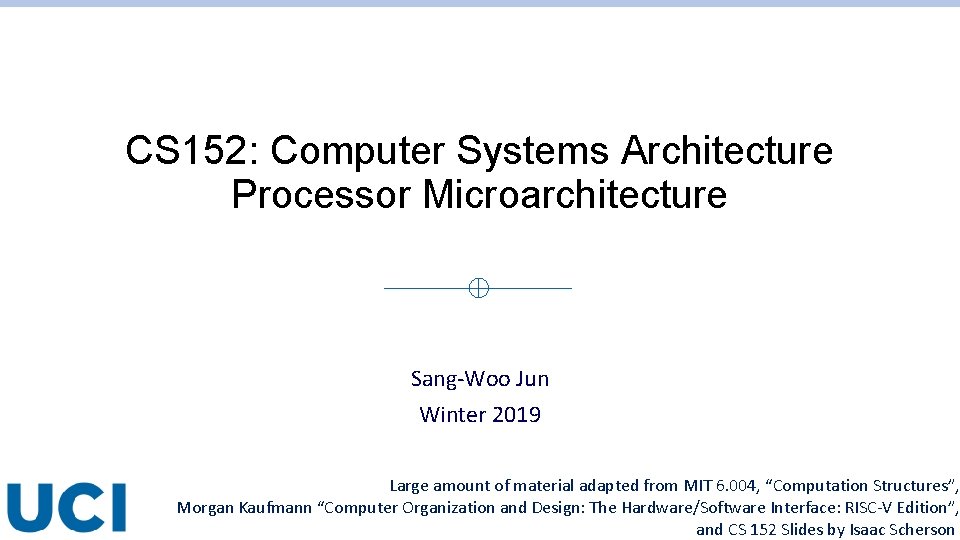

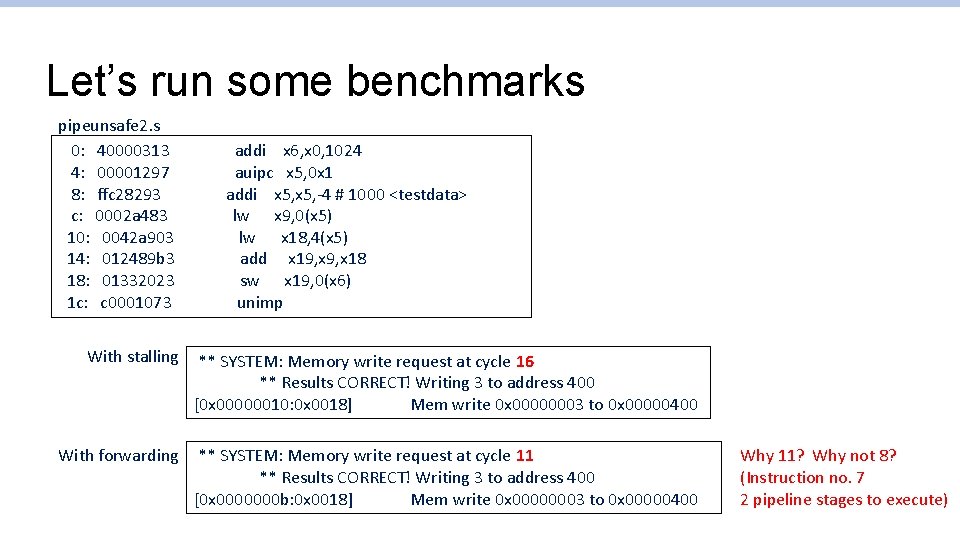

Remember: Super simplified processor operation

    inst = mem[PC]
    next_PC = PC + 4
    if ( inst.type == STORE ) mem[rf[inst.arg1]] = rf[inst.arg2]
    if ( inst.type == LOAD ) rf[inst.arg1] = mem[rf[inst.arg2]]
    if ( inst.type == ALU ) rf[inst.arg1] = alu(inst.op, rf[inst.arg2], rf[inst.arg3])
    if ( inst.type == COND ) next_PC = rf[inst.arg1]
    PC = next_PC
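The loop above can be turned into a small runnable model. The following is only a sketch under stated assumptions: the `Inst` record, the `alu` helper with just `add` and `sub`, and the split into separate instruction and data dictionaries are all hypothetical choices made for illustration (the slide uses a single `mem`).

```python
from dataclasses import dataclass


@dataclass
class Inst:
    # Hypothetical instruction record; field names mirror the slide's pseudocode.
    type: str          # "STORE", "LOAD", "ALU", or "COND"
    arg1: int = 0
    arg2: int = 0
    arg3: int = 0
    op: str = "add"


def alu(op, a, b):
    # Only two operations, enough for the demo below.
    if op == "add":
        return a + b
    if op == "sub":
        return a - b
    raise ValueError(f"unknown ALU op: {op}")


def step(pc, rf, imem, dmem):
    """Execute the instruction at `pc` and return the next PC."""
    inst = imem[pc]
    next_pc = pc + 4
    if inst.type == "STORE":
        dmem[rf[inst.arg1]] = rf[inst.arg2]
    elif inst.type == "LOAD":
        rf[inst.arg1] = dmem.get(rf[inst.arg2], 0)
    elif inst.type == "ALU":
        rf[inst.arg1] = alu(inst.op, rf[inst.arg2], rf[inst.arg3])
    elif inst.type == "COND":
        next_pc = rf[inst.arg1]
    return next_pc


def run_demo():
    rf = [0] * 32
    rf[1], rf[2], rf[4] = 1, 2, 1024
    imem = {
        0: Inst("ALU", 3, 1, 2, "add"),  # rf[3] = rf[1] + rf[2]
        4: Inst("STORE", 4, 3),          # dmem[rf[4]] = rf[3]
        8: Inst("LOAD", 5, 4),           # rf[5] = dmem[rf[4]]
    }
    dmem = {}
    pc = 0
    while pc in imem:                    # stop when PC leaves the program
        pc = step(pc, rf, imem, dmem)
    return rf, dmem
```

Calling `run_demo()` executes three instructions one at a time, which is exactly the behavior a pipelined design has to preserve while overlapping them.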

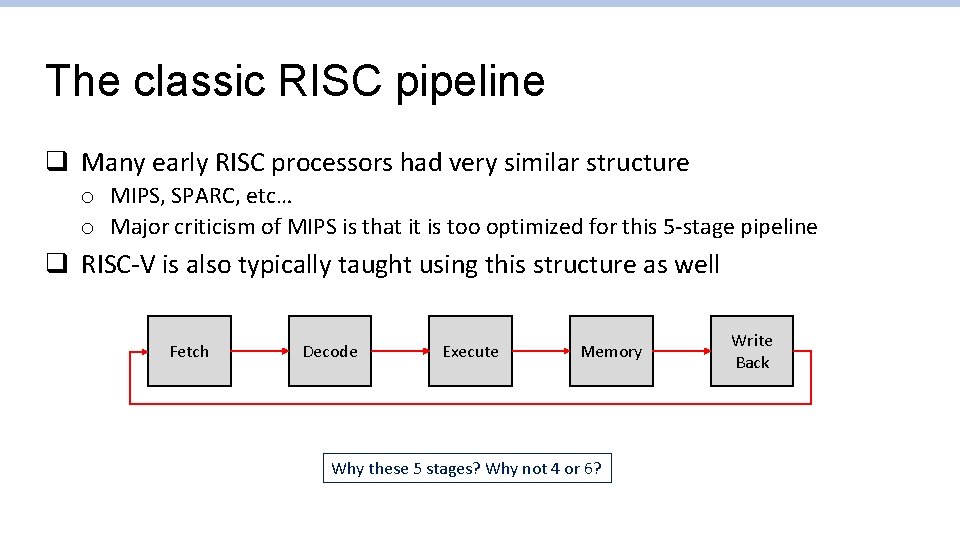

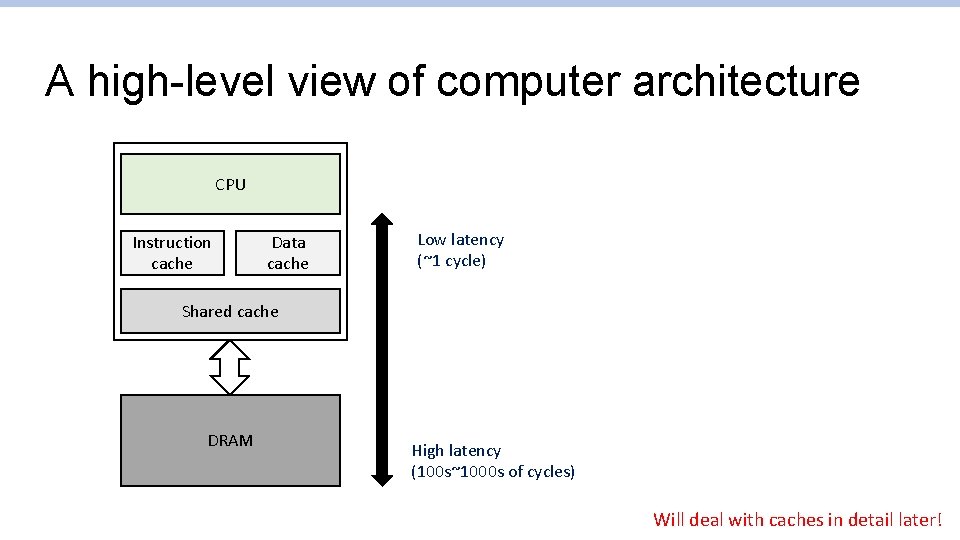

The classic RISC pipeline
- Many early RISC processors had very similar structure
  - MIPS, SPARC, etc.
  - A major criticism of MIPS is that it is too optimized for this 5-stage pipeline
- RISC-V is also typically taught using this structure

Fetch → Decode → Execute → Memory → Write Back

Why these 5 stages? Why not 4 or 6?

The classic RISC pipeline
- Fetch: Request instruction fetch from memory
- Decode: Instruction decode & register read
- Execute: Execute operation or calculate address
- Memory: Request memory read or write
- Writeback: Write result (either from execute or memory) back to register

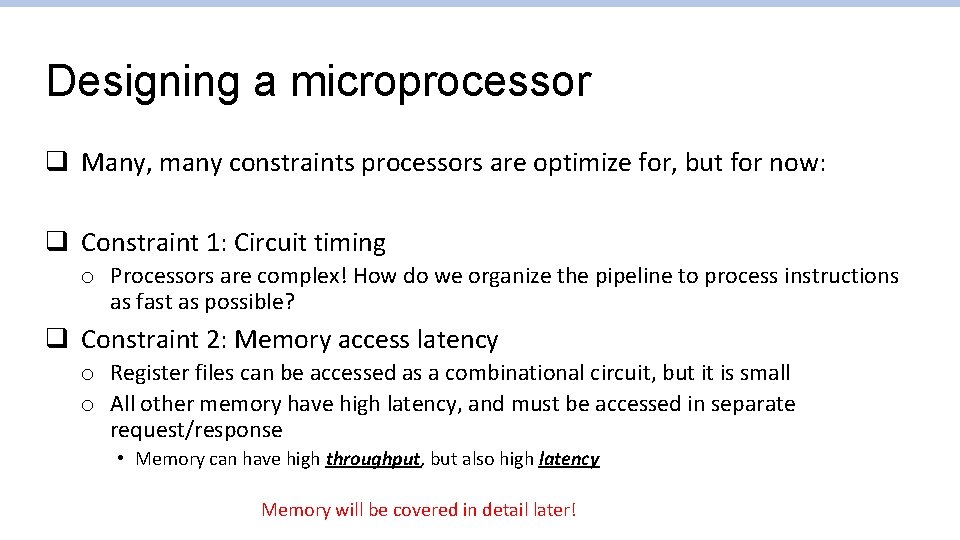

Designing a microprocessor
- Processors are optimized for many, many constraints, but for now:
- Constraint 1: Circuit timing
  - Processors are complex! How do we organize the pipeline to process instructions as fast as possible?
- Constraint 2: Memory access latency
  - The register file can be accessed as a combinational circuit, but it is small
  - All other memory has high latency, and must be accessed with a separate request and response
    - Memory can have high throughput, but also high latency

Memory will be covered in detail later!

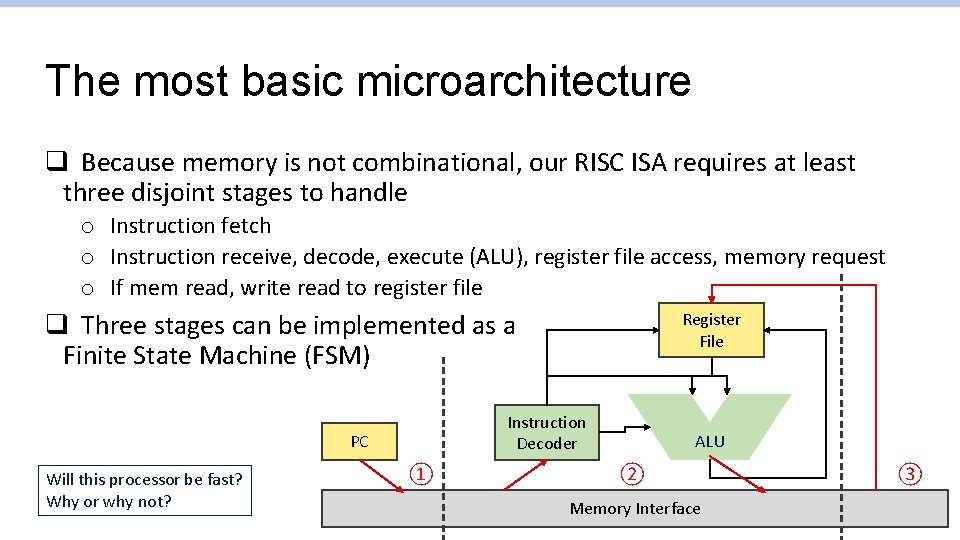

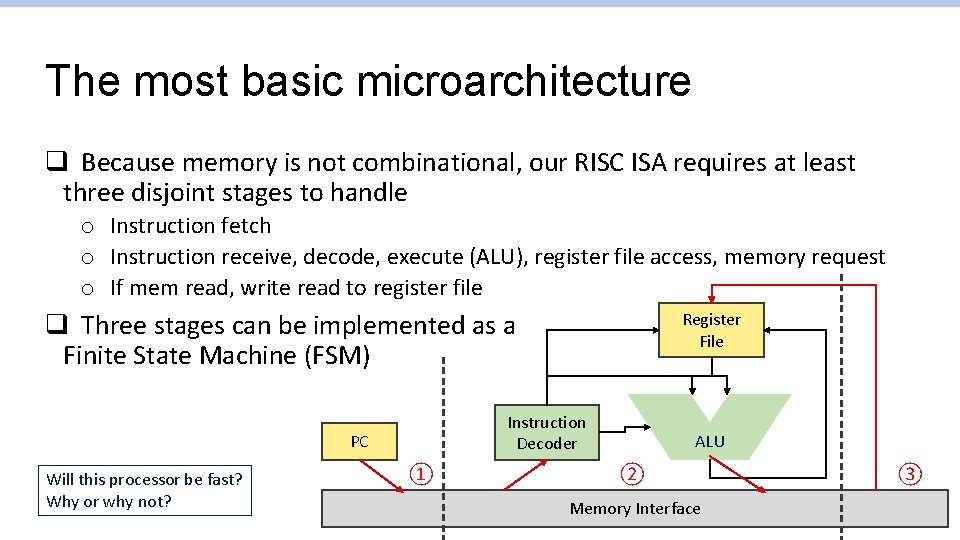

The most basic microarchitecture
- Because memory is not combinational, our RISC ISA requires at least three disjoint stages to handle
  - Instruction fetch
  - Instruction receive, decode, execute (ALU), register file access, memory request
  - If memory read, write the loaded value to the register file
- The three stages can be implemented as a Finite State Machine (FSM)

Figure: PC, Instruction Decoder, Register File, ALU, and Memory Interface, with stages ①, ②, ③ marked

Will this processor be fast? Why or why not?

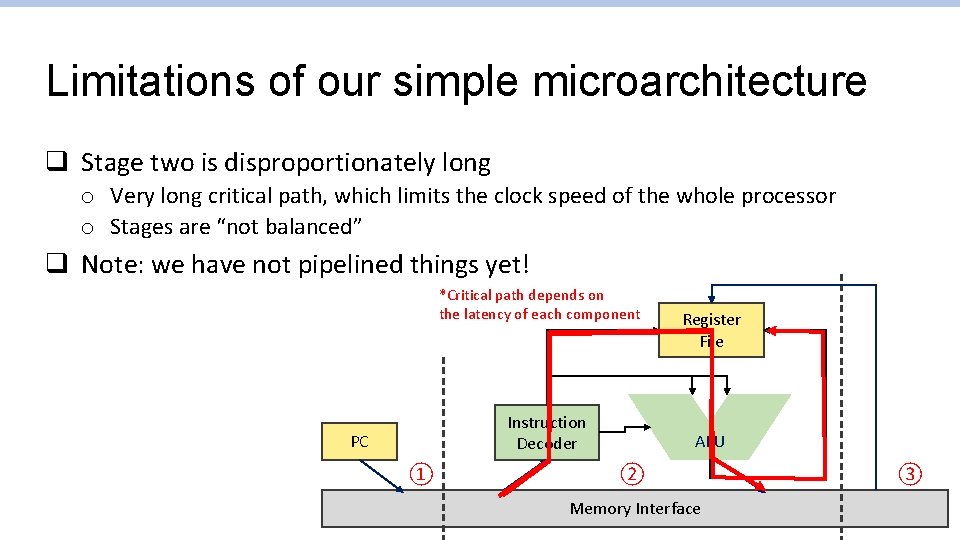

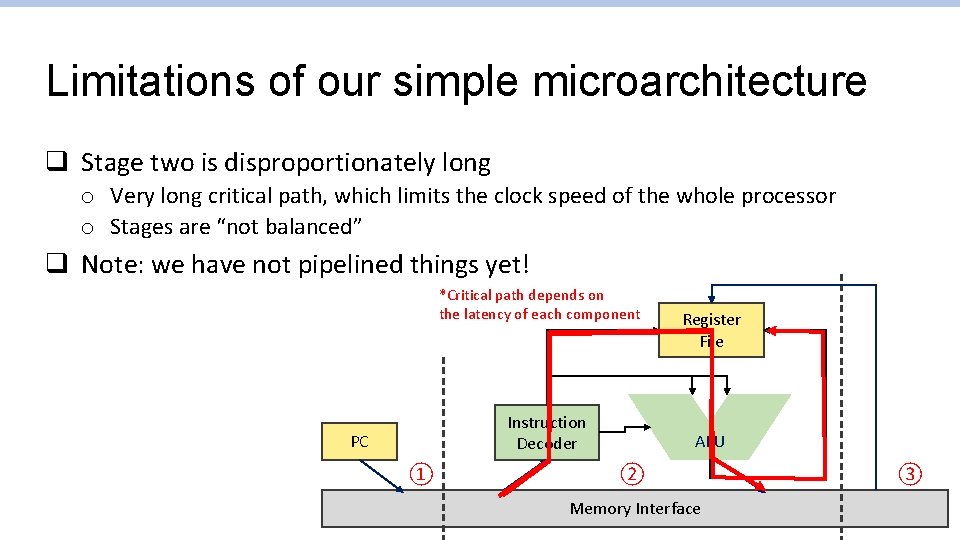

Limitations of our simple microarchitecture
- Stage two is disproportionately long
  - Very long critical path*, which limits the clock speed of the whole processor
  - Stages are “not balanced”
- Note: we have not pipelined things yet!

*Critical path depends on the latency of each component

Figure: PC, Instruction Decoder, Register File, ALU, and Memory Interface, with stages ①, ②, ③ marked

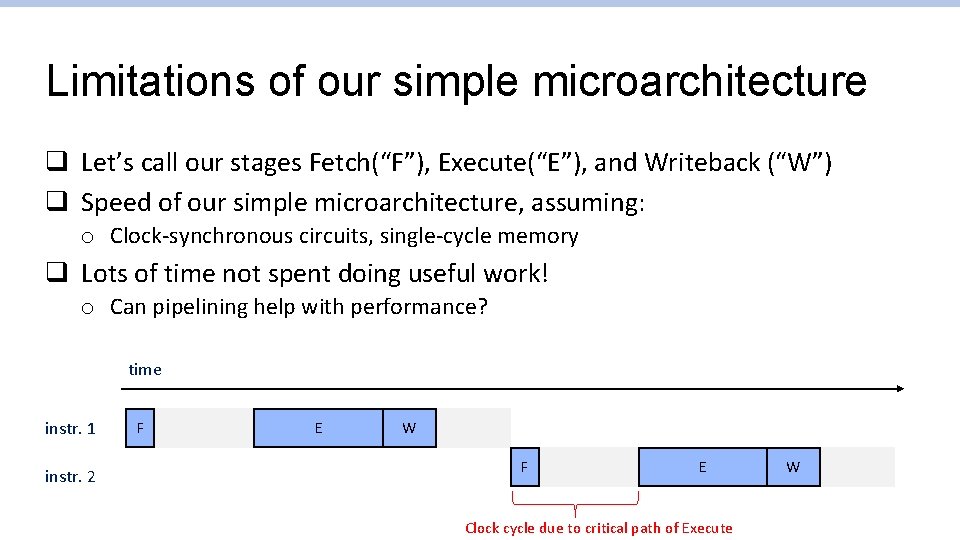

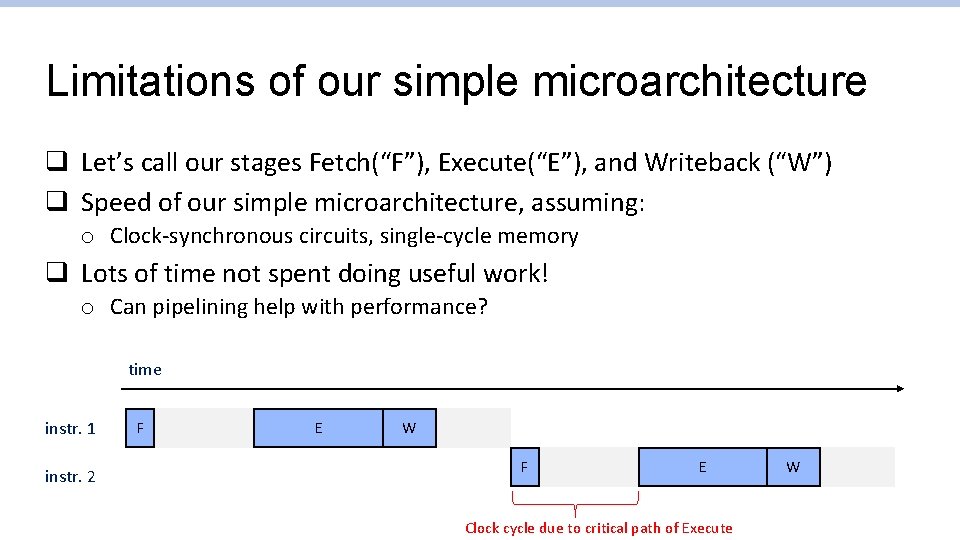

Limitations of our simple microarchitecture
- Let’s call our stages Fetch (“F”), Execute (“E”), and Writeback (“W”)
- Speed of our simple microarchitecture, assuming:
  - Clock-synchronous circuits, single-cycle memory
- Lots of time not spent doing useful work!
  - Can pipelining help with performance?

Timing diagram: instr. 1 runs F, E, W, and only then does instr. 2 run F, E, W. The clock cycle is set by the critical path of Execute.

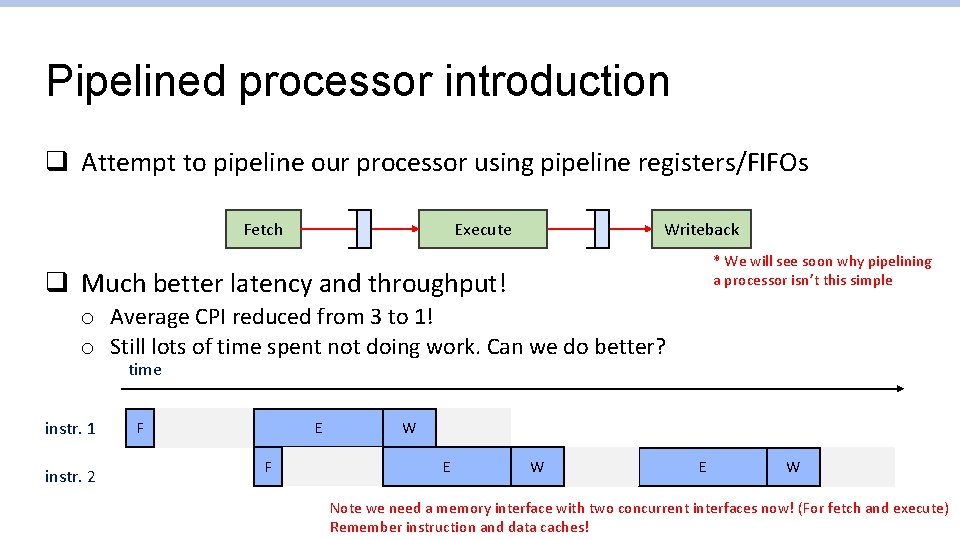

Pipelined processor introduction
- Attempt to pipeline our processor using pipeline registers/FIFOs: Fetch → Execute → Writeback*
- Much better latency and throughput!
  - Average CPI reduced from 3 to 1!
  - Still lots of time spent not doing work. Can we do better?

*We will see soon why pipelining a processor isn’t this simple

Timing diagram: instr. 1 and instr. 2 overlap, with each instruction starting F one cycle after the previous one.

Note: we now need a memory interface with two concurrent interfaces (for fetch and execute). Remember instruction and data caches!

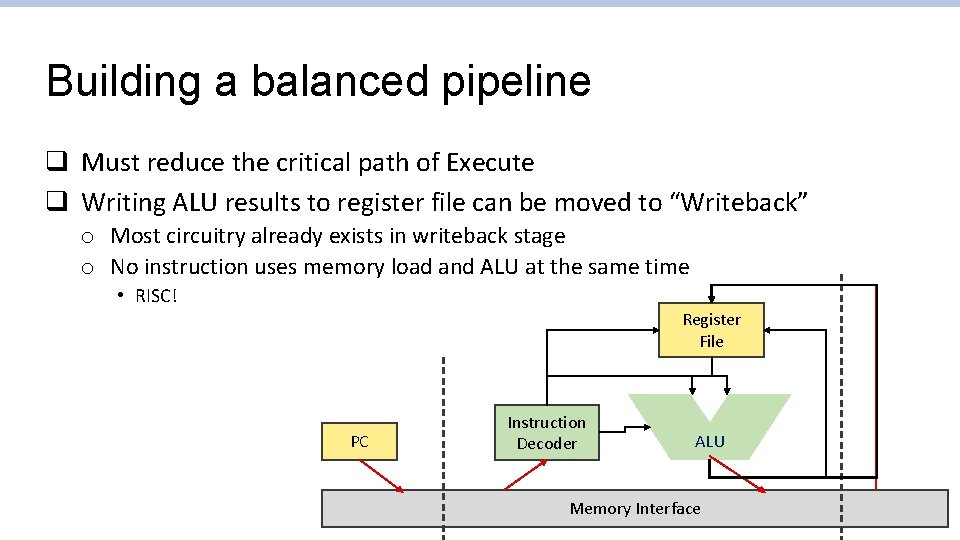

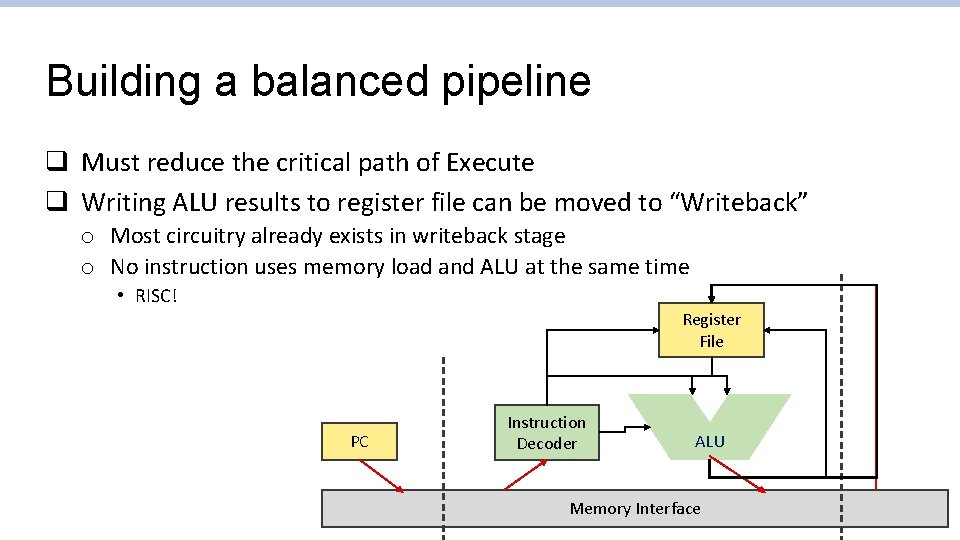

Building a balanced pipeline
- Must reduce the critical path of Execute
- Writing ALU results to the register file can be moved to “Writeback”
  - Most circuitry already exists in the writeback stage
  - No instruction uses memory load and ALU at the same time
    - RISC!

Figure: PC, Instruction Decoder, Register File, ALU, Memory Interface

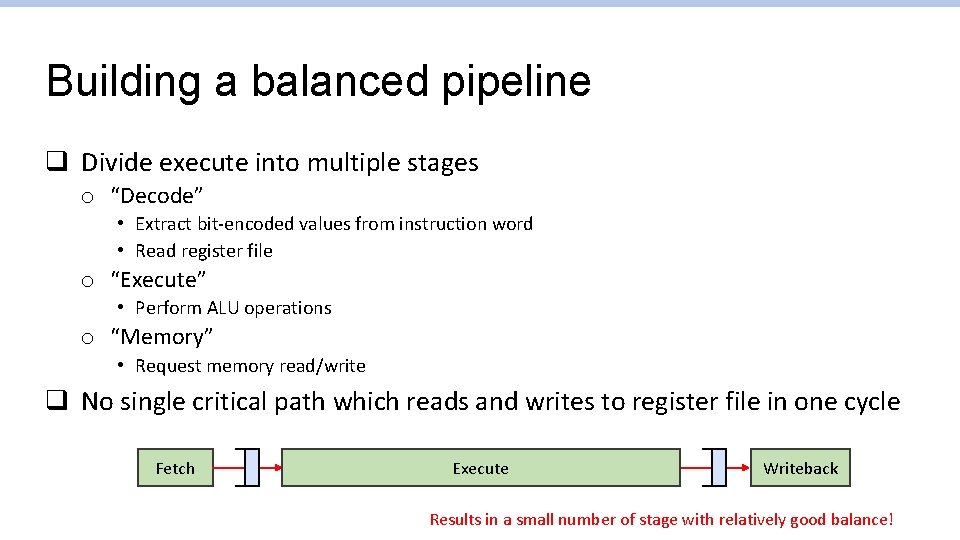

Building a balanced pipeline
- Divide execute into multiple stages
  - “Decode”
    - Extract bit-encoded values from instruction word
    - Read register file
  - “Execute”
    - Perform ALU operations
  - “Memory”
    - Request memory read/write
- No single critical path which reads and writes to the register file in one cycle

Fetch → Decode → Execute → Memory → Writeback

Results in a small number of stages with relatively good balance!

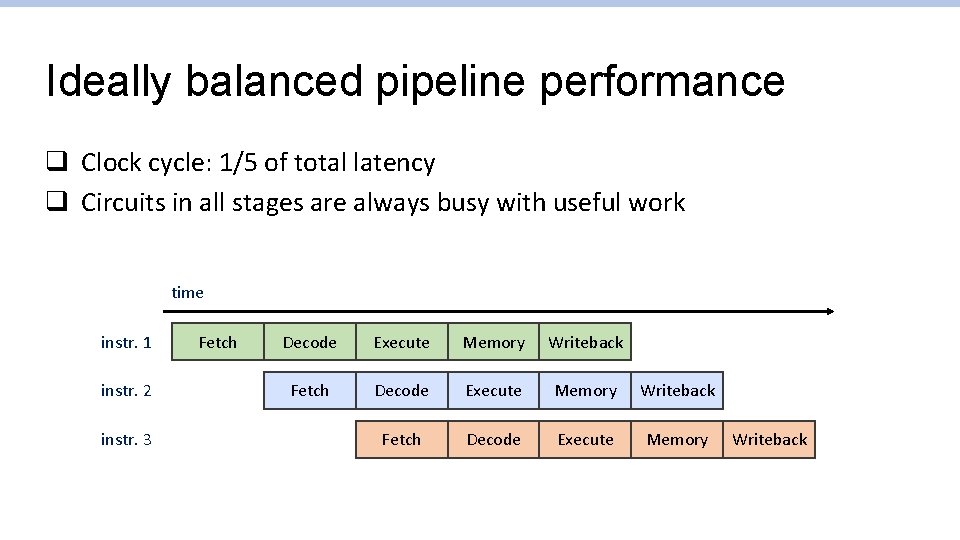

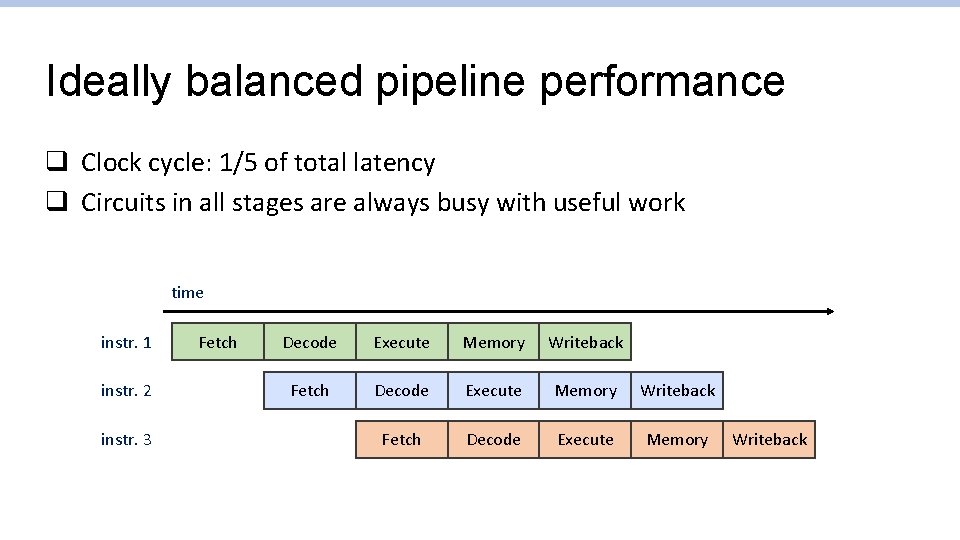

Ideally balanced pipeline performance
- Clock cycle: 1/5 of total latency
- Circuits in all stages are always busy with useful work

Timing diagram: instr. 1, instr. 2, and instr. 3 each pass through Fetch, Decode, Execute, Memory, Writeback, each starting one cycle after the previous instruction.
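A quick way to see the benefit is to count cycles. The sketch below assumes an idealized pipeline with no hazards or stalls and one instruction entering per cycle; the function names are made up for this illustration.

```python
def unpipelined_cycles(n_instr, n_stages):
    # Each instruction occupies every stage before the next one starts.
    return n_instr * n_stages


def pipelined_cycles(n_instr, n_stages):
    # The first instruction takes n_stages cycles to drain; every later
    # instruction finishes one cycle after its predecessor (no stalls assumed).
    if n_instr == 0:
        return 0
    return n_stages + (n_instr - 1)
```

For the three instructions in the diagram and five stages, this gives 7 cycles pipelined versus 15 unpipelined, and the ratio approaches the stage count as the instruction count grows.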

Aside: Real-world processors have a wide range of pipeline stages

| Name | Stages |
| --- | --- |
| AVR/PIC microcontrollers | 2 |
| ARM Cortex-M0 | 3 |
| Apple A9 (based on ARMv8) | 16 |
| Original Intel Pentium | 5 |
| Intel Pentium 4 | 30+ |
| Intel Core (i3, i5, i7, …) | 14+ |
| RISC-V Rocket | 6 |

Designs change based on requirements!

Achieving correct pipelining

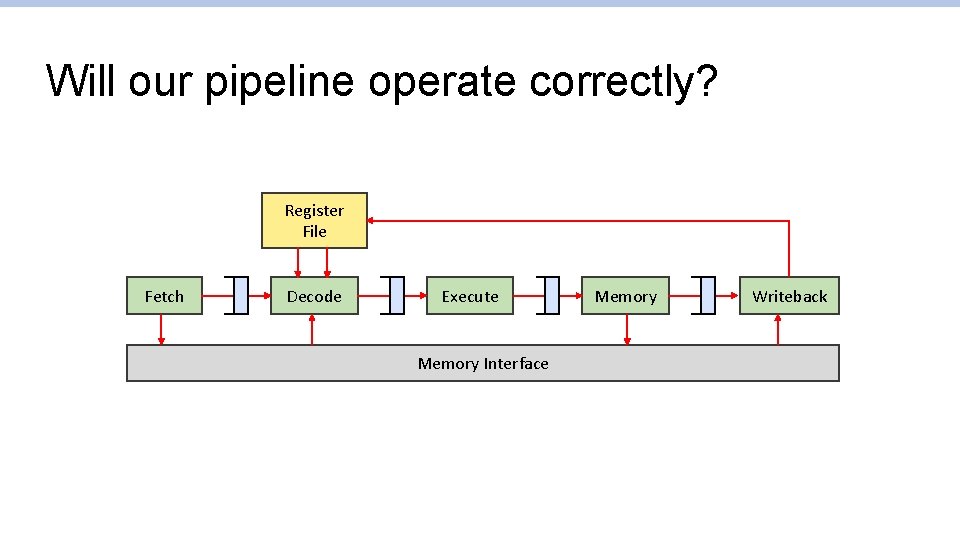

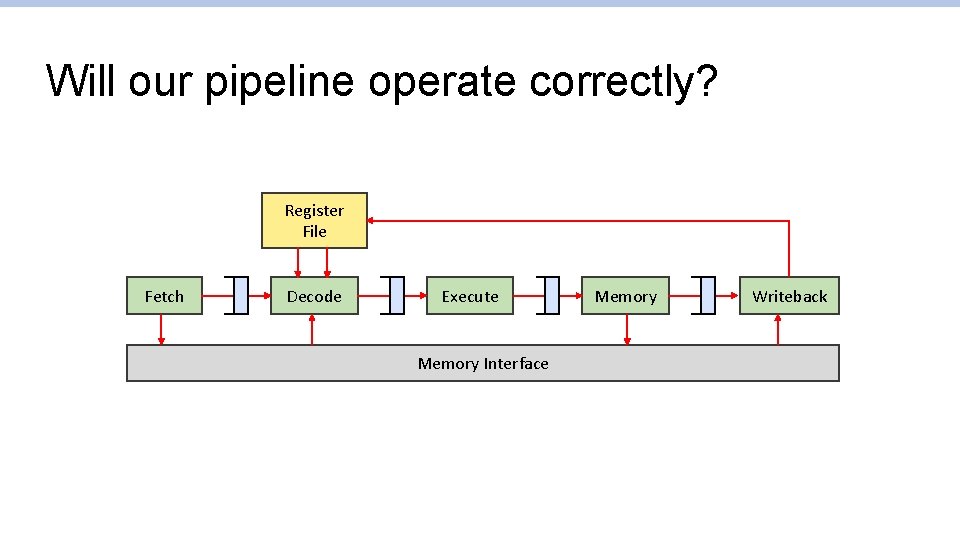

Will our pipeline operate correctly?

Figure: Fetch, Decode, Execute, Memory, Writeback, with the Register File and Memory Interface

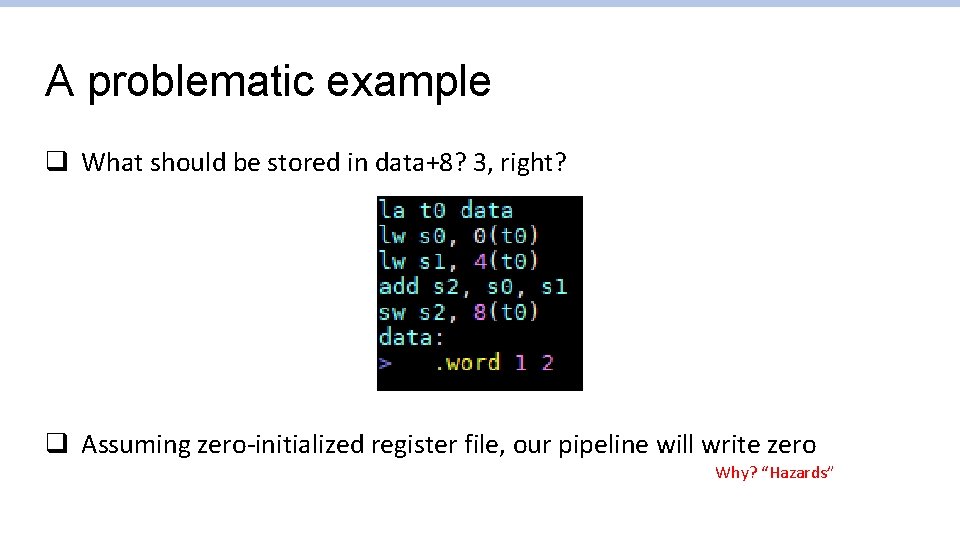

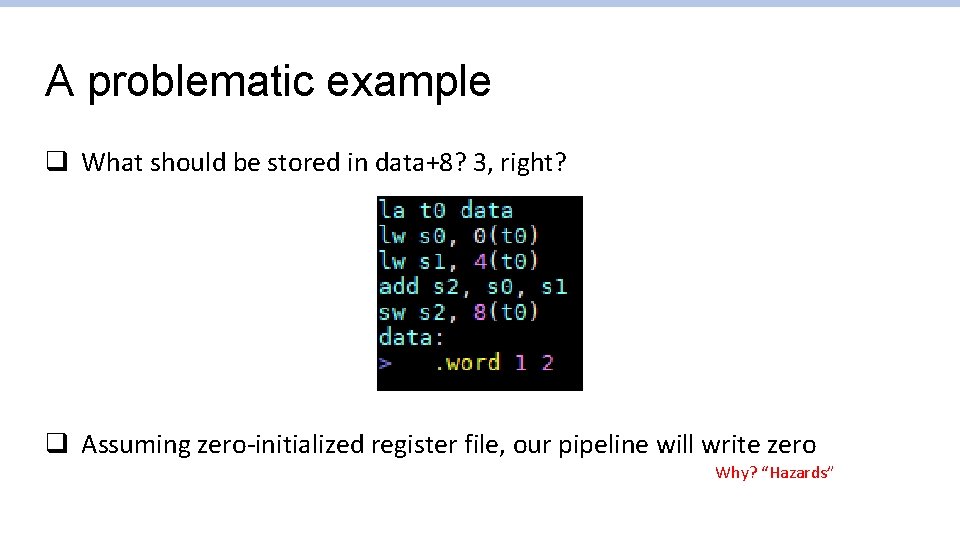

A problematic example
- What should be stored in data+8? 3, right?
- Assuming a zero-initialized register file, our pipeline will write zero

Why? “Hazards”

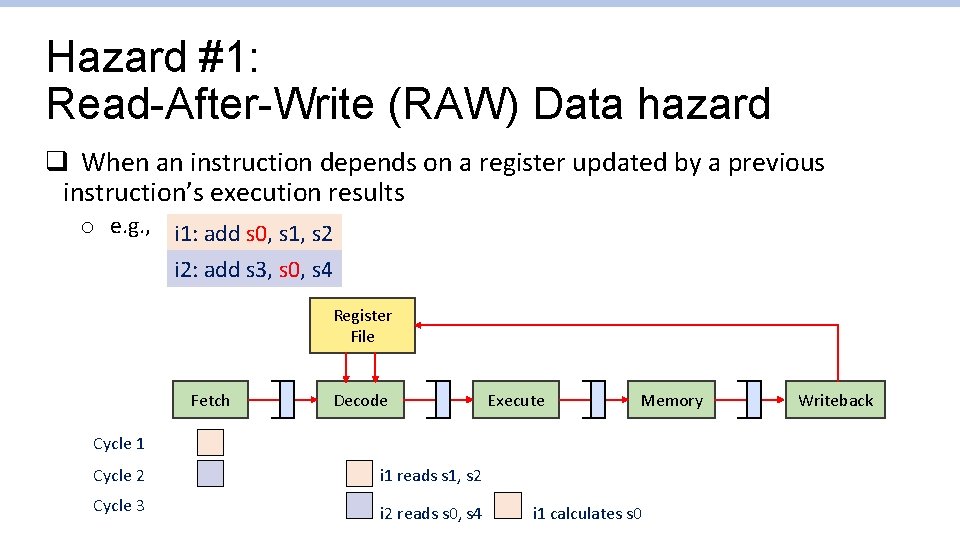

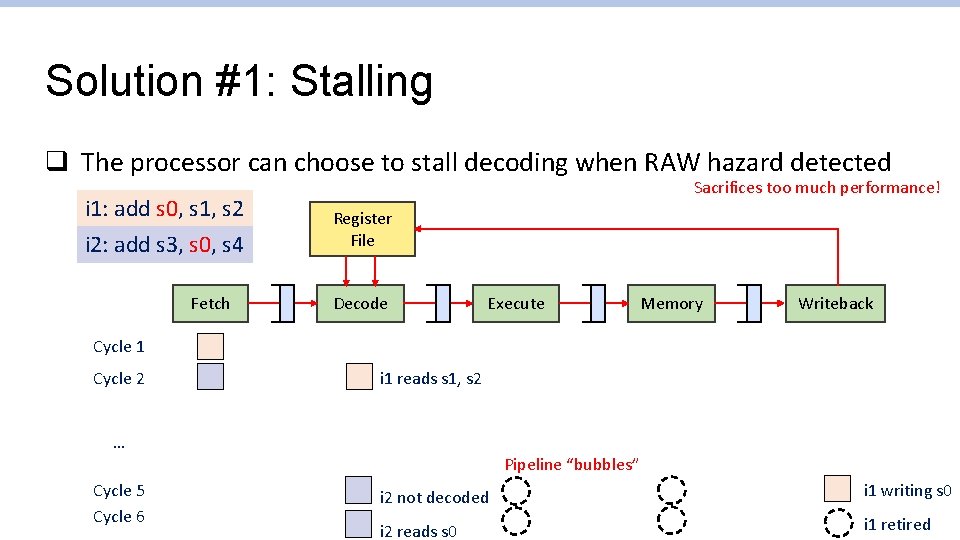

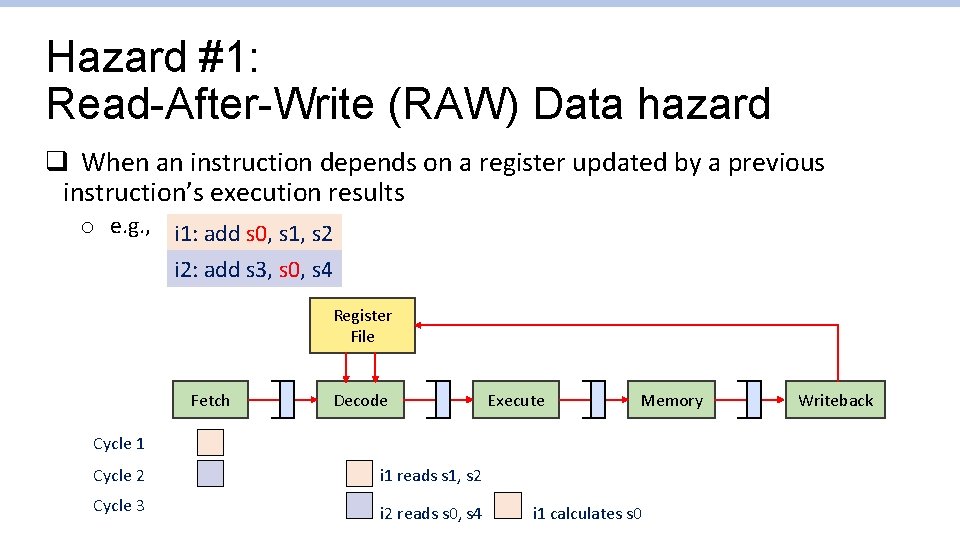

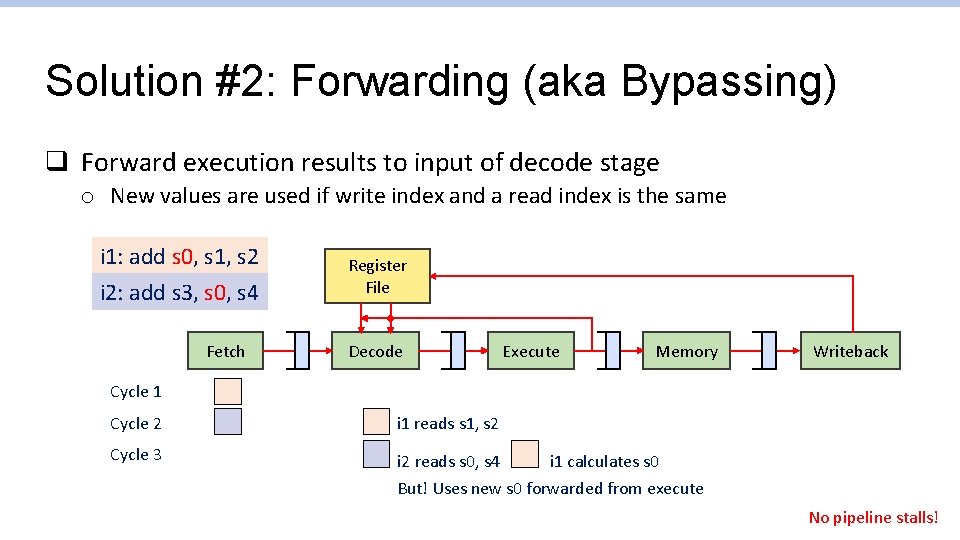

Hazard #1: Read-After-Write (RAW) Data hazard
- When an instruction depends on a register updated by a previous instruction’s execution results
  - e.g., i1: add s0, s1, s2 followed by i2: add s3, s0, s4

Pipeline diagram (Fetch, Decode, Execute, Memory, Writeback, with the Register File):
- Cycle 2: i1 reads s1, s2
- Cycle 3: i2 reads s0, s4 while i1 calculates s0

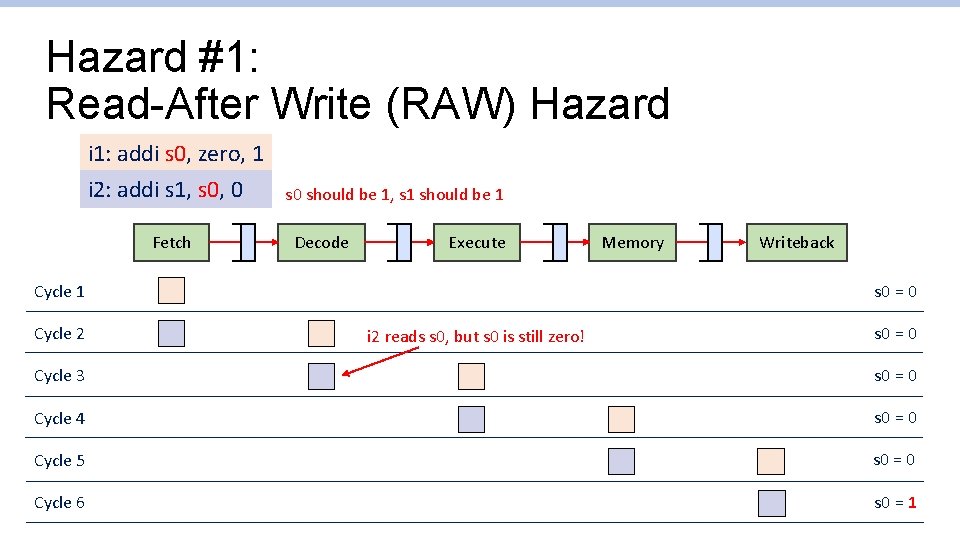

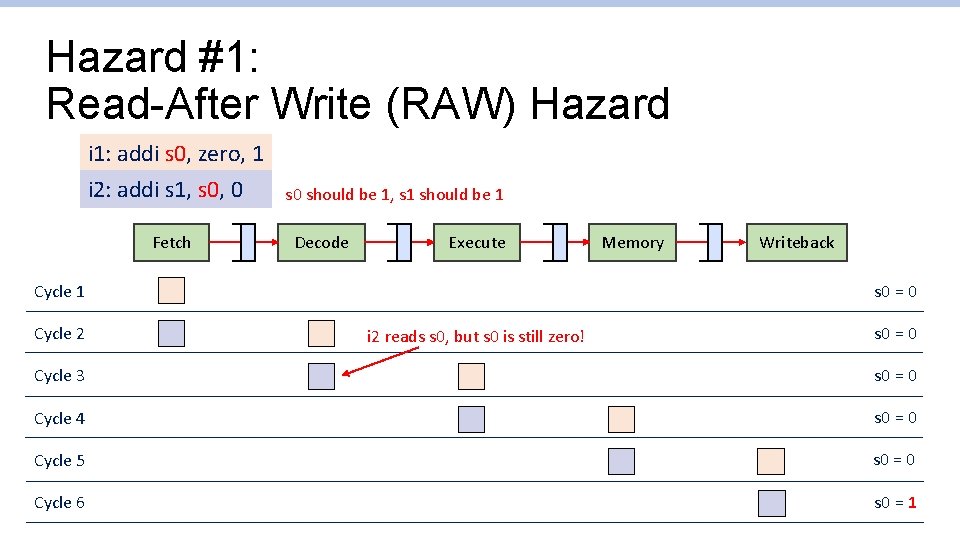

Hazard #1: Read-After-Write (RAW) Hazard
- i1: addi s0, zero, 1
- i2: addi s1, s0, 0
- s0 should be 1, s1 should be 1

Pipeline diagram (Fetch, Decode, Execute, Memory, Writeback), showing s0 in the register file:
- Cycles 1 to 5: s0 = 0
  - Cycle 3: i2 reads s0, but s0 is still zero!
- Cycle 6: s0 = 1

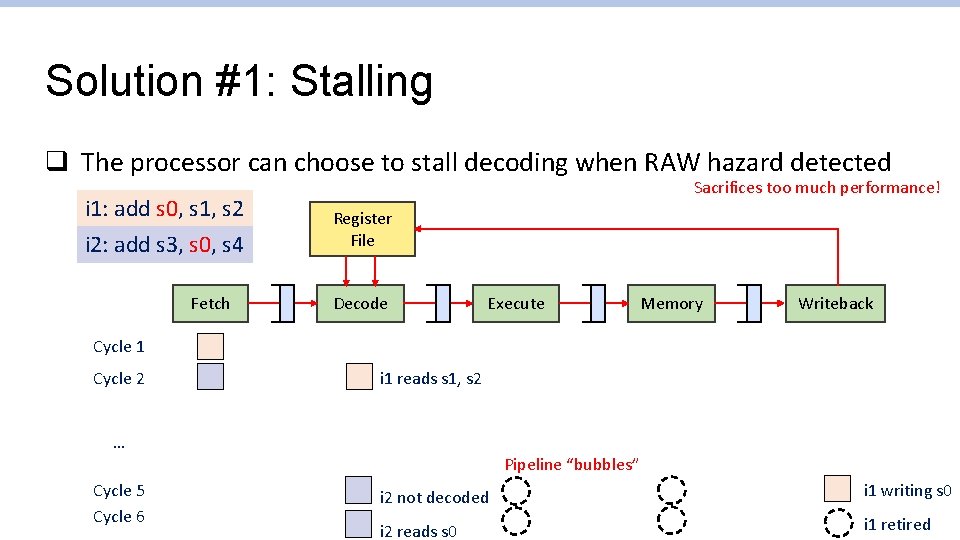

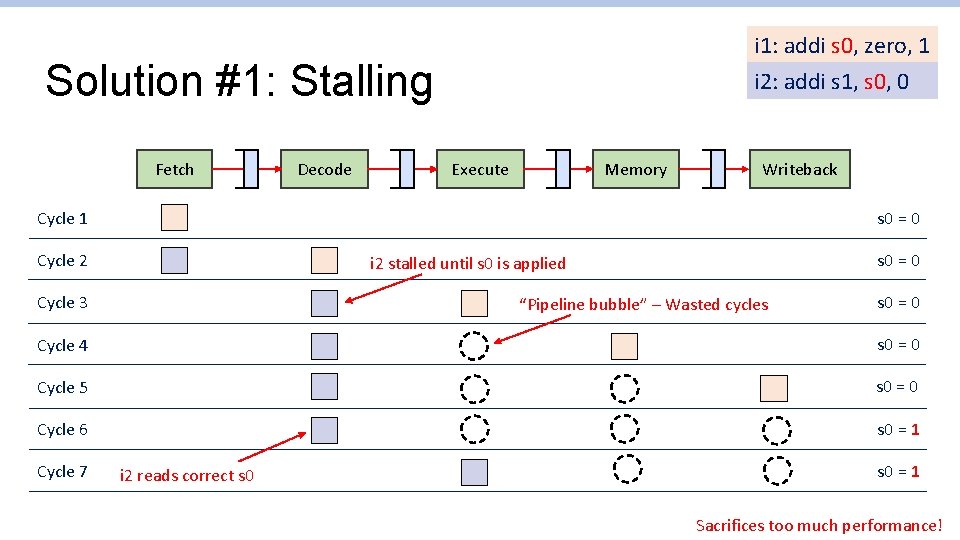

Solution #1: Stalling
- The processor can choose to stall decoding when a RAW hazard is detected
  - e.g., i1: add s0, s1, s2 followed by i2: add s3, s0, s4

Pipeline diagram (Fetch, Decode, Execute, Memory, Writeback, with the Register File):
- Cycle 2: i1 reads s1, s2
- … pipeline “bubbles”
- Cycle 5: i2 not decoded, i1 writing s0
- Cycle 6: i2 reads s0, i1 retired

Sacrifices too much performance!

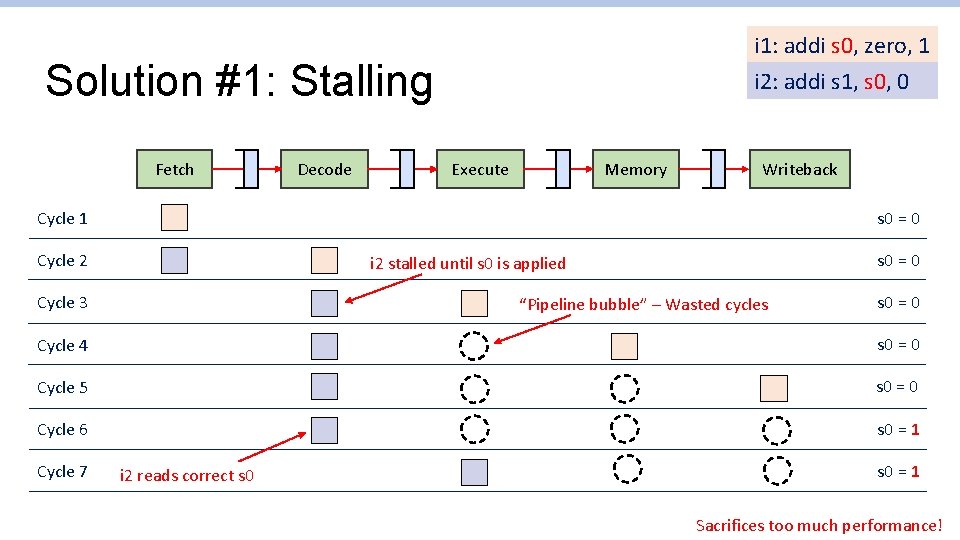

Solution #1: Stalling
- i1: addi s0, zero, 1
- i2: addi s1, s0, 0

Pipeline diagram (Fetch, Decode, Execute, Memory, Writeback), showing s0 in the register file:
- Cycles 1 to 5: s0 = 0
  - i2 is stalled until s0 is applied
  - “Pipeline bubble”: wasted cycles
- Cycles 6 and 7: s0 = 1, and i2 reads the correct s0 = 1

Sacrifices too much performance!

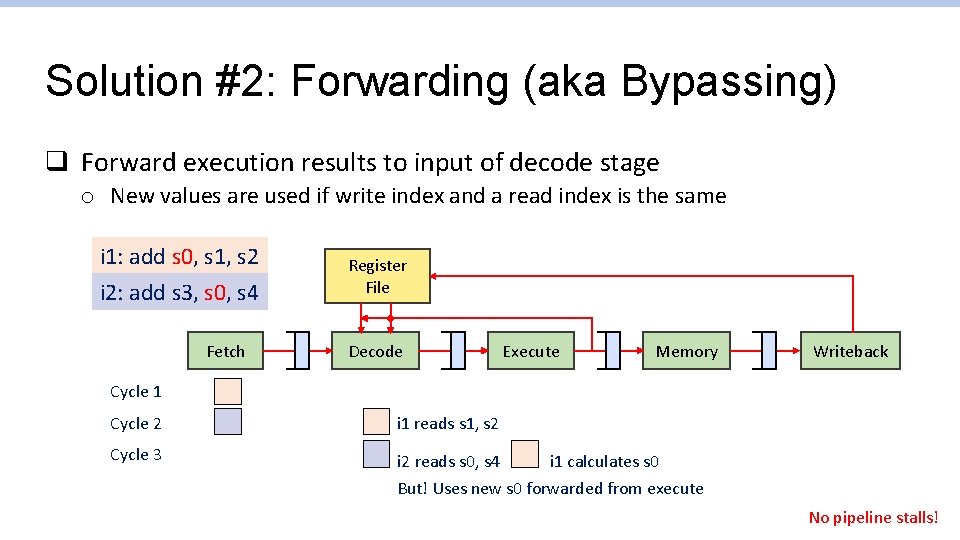

Solution #2: Forwarding (aka Bypassing)
- Forward execution results to the input of the decode stage
  - New values are used if the write index and a read index are the same
  - e.g., i1: add s0, s1, s2 followed by i2: add s3, s0, s4

Pipeline diagram (Fetch, Decode, Execute, Memory, Writeback, with the Register File):
- Cycle 2: i1 reads s1, s2
- Cycle 3: i2 reads s0, s4 while i1 calculates s0. But! i2 uses the new s0 forwarded from execute

No pipeline stalls!

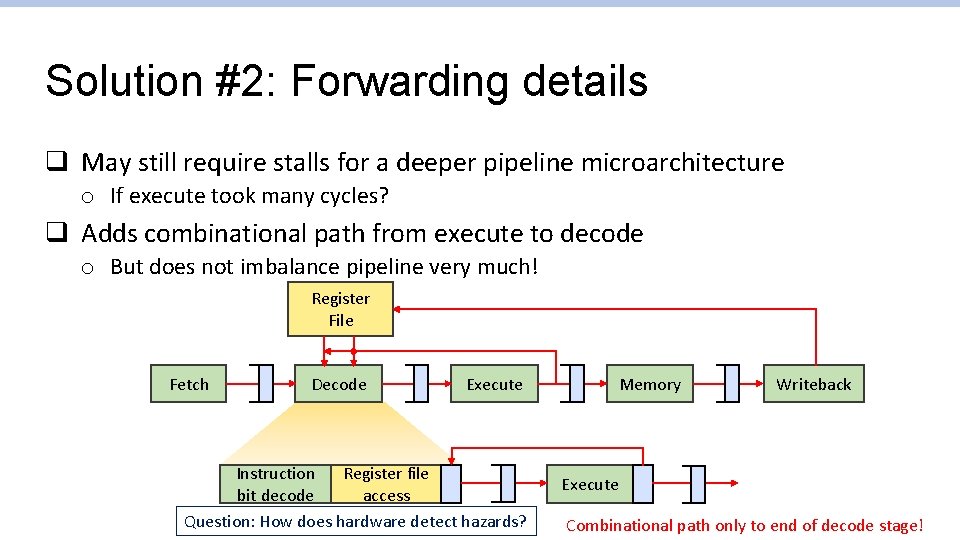

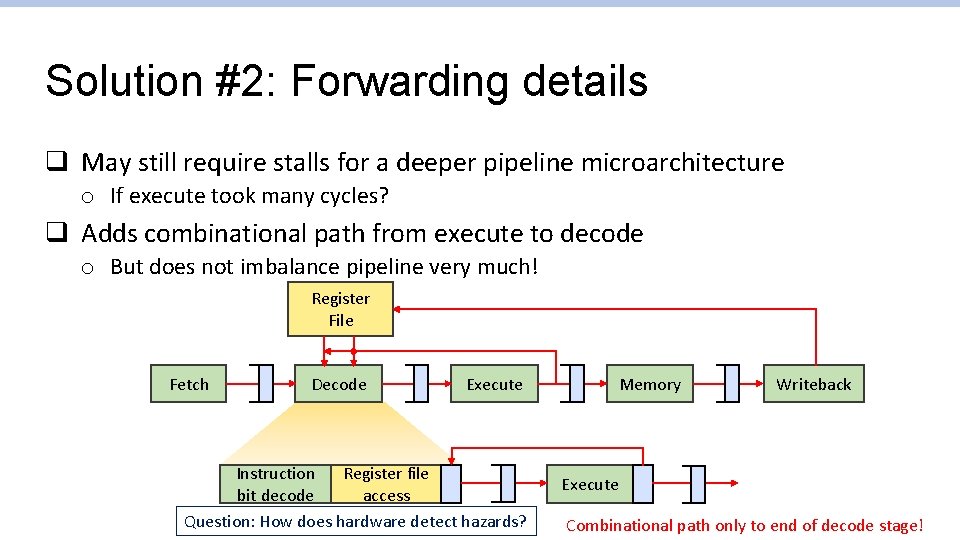

Solution #2: Forwarding details
- May still require stalls for a deeper pipeline microarchitecture
  - If execute took many cycles?
- Adds a combinational path from execute to decode
  - But does not imbalance the pipeline very much!
  - The combinational path goes only to the end of the decode stage (after instruction bit decode and register file access)

Question: How does hardware detect hazards?
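One way to picture the forwarding check is as a multiplexer in front of each decode operand: compare the read index against the index execute is writing this cycle. The sketch below is a software analogy only; `read_operand` and its parameters are hypothetical names, and treating register 0 as hard-wired zero follows the RISC-V convention.

```python
def read_operand(rf, src, fwd_valid, fwd_dst, fwd_value):
    """Return the value of register `src` as seen by the decode stage.

    fwd_valid / fwd_dst / fwd_value describe the result that the execute
    stage is producing in the same cycle (hypothetical signal names).
    """
    if src == 0:
        return 0                 # x0 always reads as zero in RISC-V
    if fwd_valid and fwd_dst == src:
        return fwd_value         # bypass the stale register file entry
    return rf[src]               # no match: the register file is up to date
```

With s0 mapped to register 8, `read_operand(rf, 8, True, 8, 1)` returns 1 even though `rf[8]` is still 0, which is the situation in the addi example.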

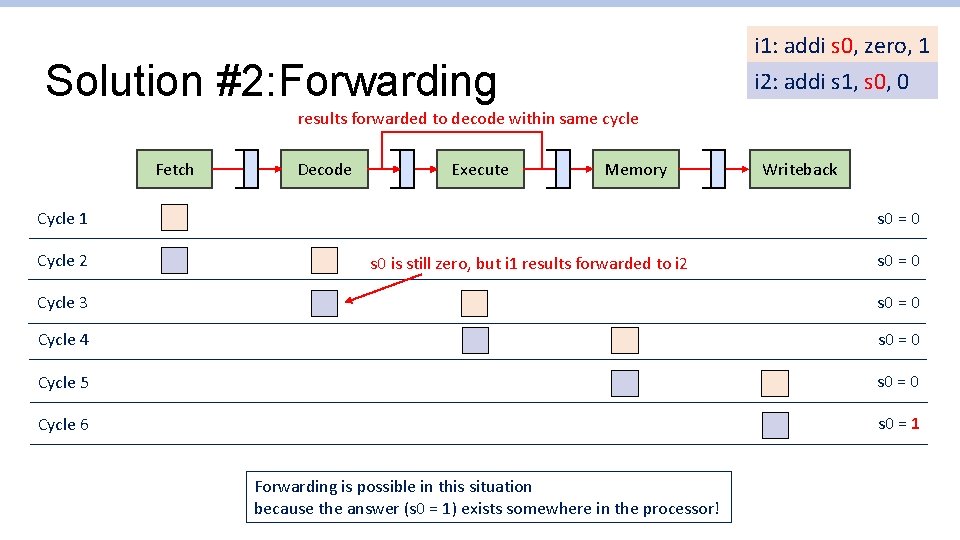

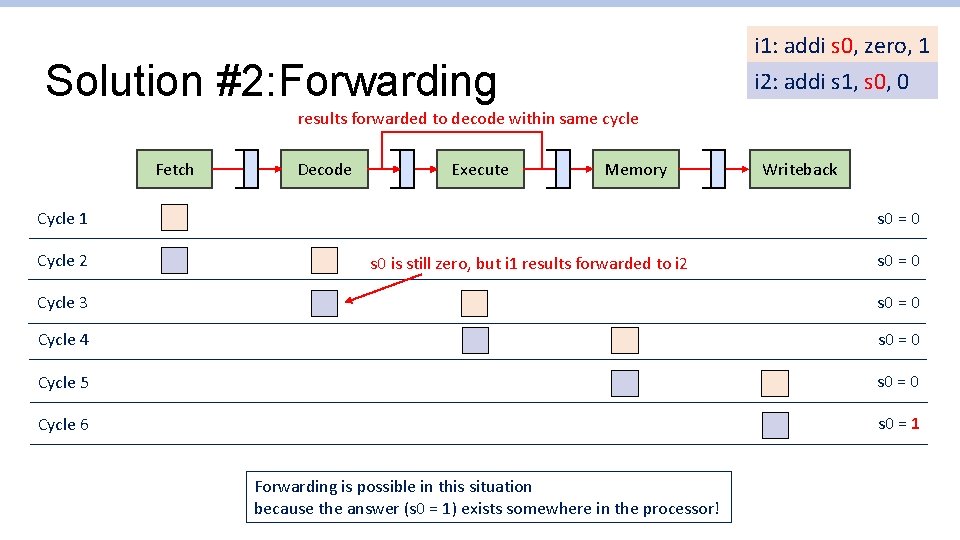

Solution #2: Forwarding
- i1: addi s0, zero, 1
- i2: addi s1, s0, 0
- Results are forwarded to decode within the same cycle

Pipeline diagram (Fetch, Decode, Execute, Memory, Writeback), showing s0 in the register file:
- Cycles 1 to 5: s0 = 0
  - Cycle 3: s0 is still zero, but i1 results are forwarded to i2
- Cycle 6: s0 = 1

Forwarding is possible in this situation because the answer (s0 = 1) exists somewhere in the processor!

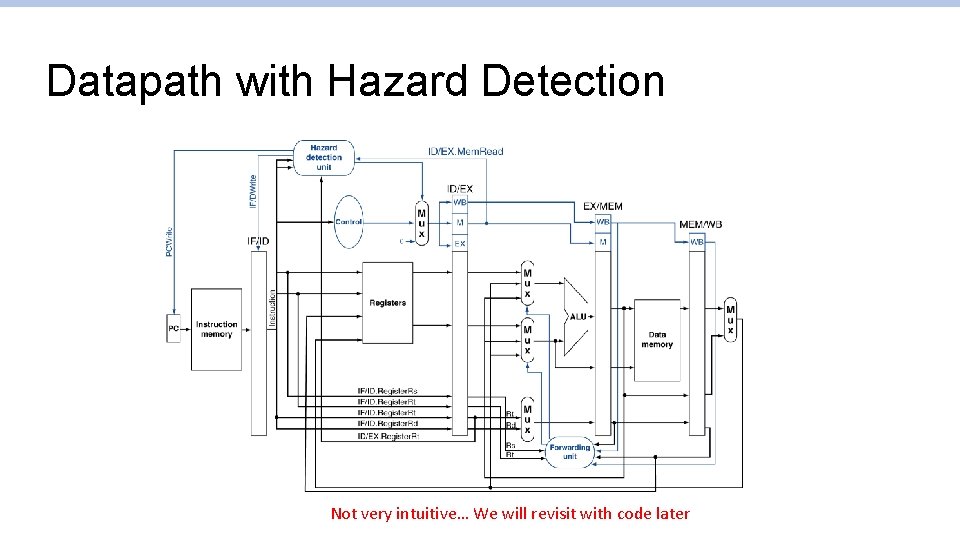

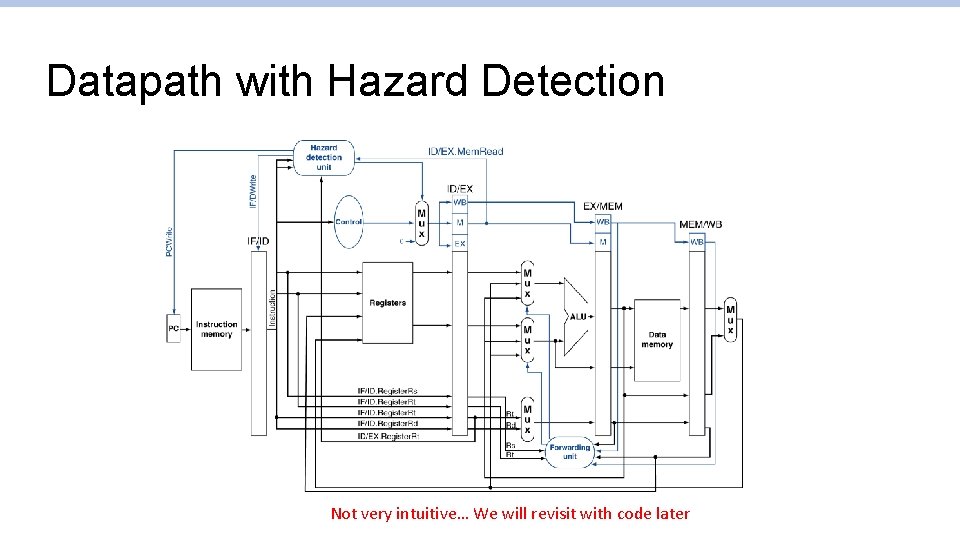

Datapath with Hazard Detection

Not very intuitive… We will revisit with code later

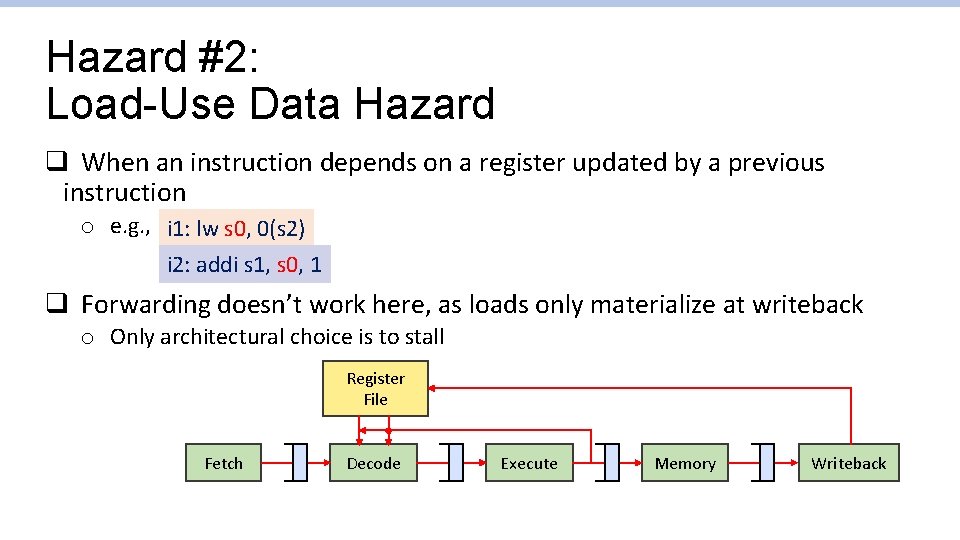

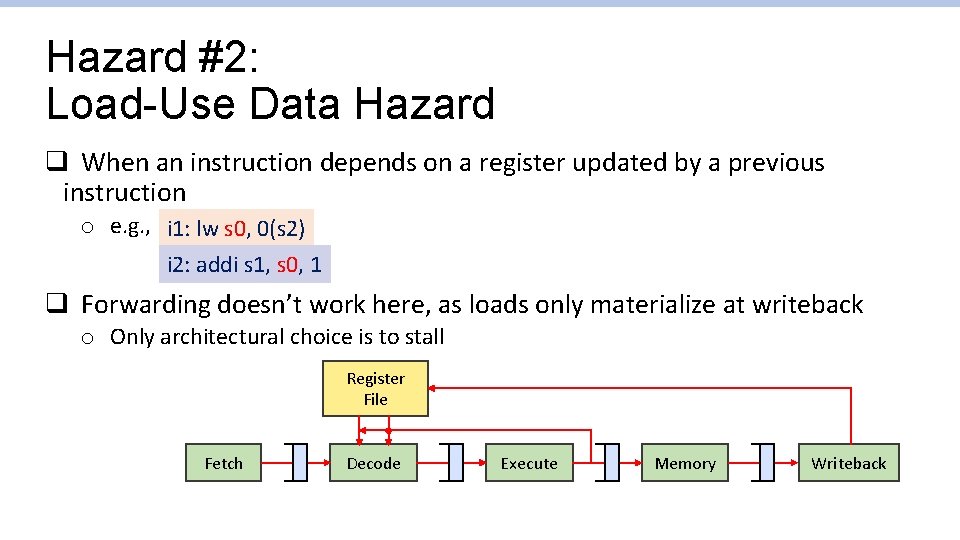

Hazard #2: Load-Use Data Hazard
- When an instruction depends on a register updated by a previous load instruction
  - e.g., i1: lw s0, 0(s2) followed by i2: addi s1, s0, 1
- Forwarding doesn’t work here, as loads only materialize at writeback
  - Only architectural choice is to stall

Figure: Fetch, Decode, Execute, Memory, Writeback, with the Register File

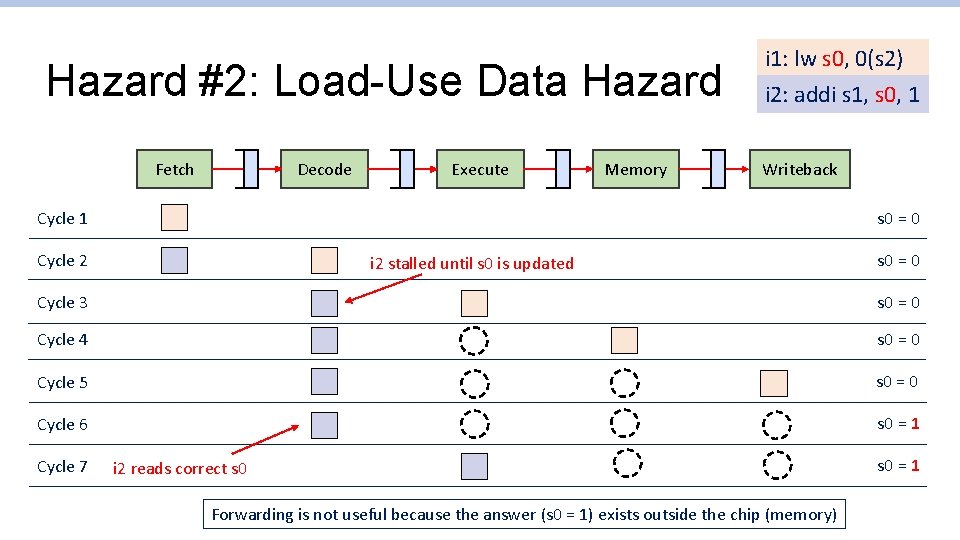

Hazard #2: Load-Use Data Hazard
- i1: lw s0, 0(s2)
- i2: addi s1, s0, 1

Pipeline diagram (Fetch, Decode, Execute, Memory, Writeback), showing s0 in the register file:
- Cycles 1 to 5: s0 = 0
  - i2 is stalled until s0 is updated
- Cycles 6 and 7: s0 = 1, and i2 reads the correct s0

Forwarding is not useful because the answer (s0 = 1) exists outside the chip (in memory)

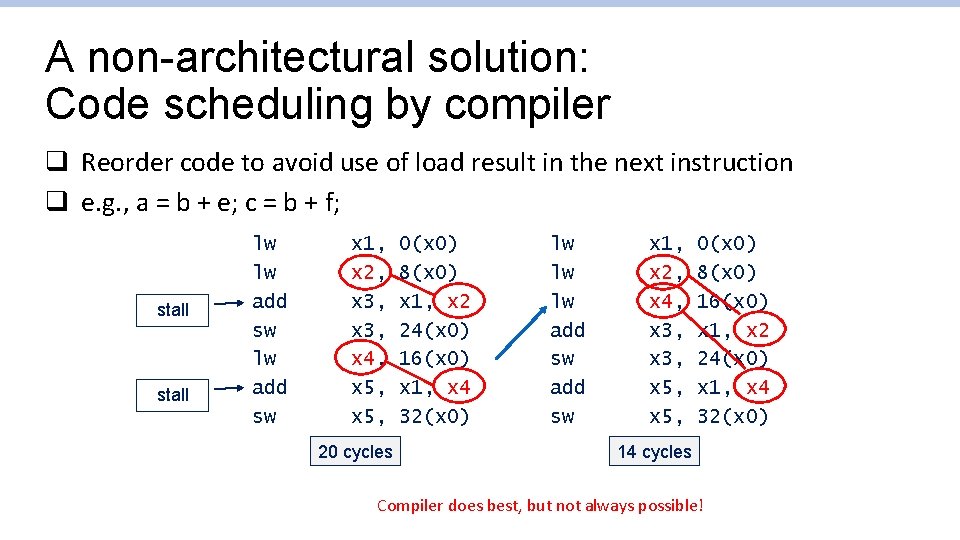

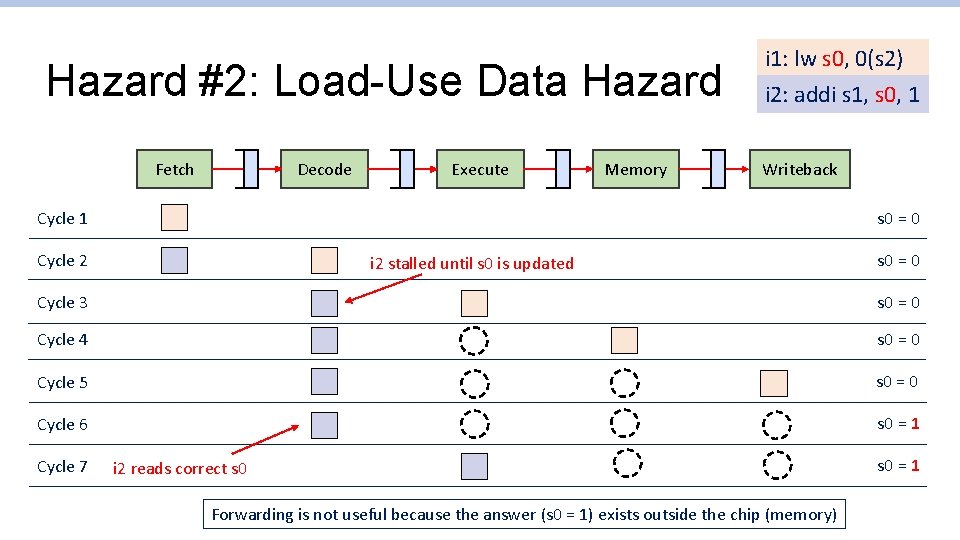

A non-architectural solution: Code scheduling by compiler
- Reorder code to avoid use of a load result in the next instruction
- e.g., a = b + e; c = b + f;

Original order (20 cycles):

    lw  x1, 0(x0)
    lw  x2, 8(x0)
    add x3, x1, x2    # stall
    sw  x3, 24(x0)
    lw  x4, 16(x0)
    add x5, x1, x4    # stall
    sw  x5, 32(x0)

Reordered (14 cycles):

    lw  x1, 0(x0)
    lw  x2, 8(x0)
    lw  x4, 16(x0)
    add x3, x1, x2
    sw  x3, 24(x0)
    add x5, x1, x4
    sw  x5, 32(x0)

Compiler does its best, but not always possible!
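To make the effect of reordering concrete, the sketch below counts load-use pairs in the a = b + e; c = b + f example before and after scheduling. It assumes a simplified model in which only a load immediately followed by a consumer of its result causes a stall; the tuple encoding and the function name are hypothetical, and the model does not try to reproduce the cycle totals quoted on the slide.

```python
def count_load_use_stalls(program):
    """Count instructions that use the result of the load directly before them.

    program: list of (op, dst, srcs) tuples, in program order.
    """
    stalls = 0
    for prev, cur in zip(program, program[1:]):
        prev_op, prev_dst, _ = prev
        _, _, cur_srcs = cur
        if prev_op == "lw" and prev_dst in cur_srcs:
            stalls += 1
    return stalls


# a = b + e; c = b + f, in the original instruction order
original = [
    ("lw", "x1", ["x0"]),
    ("lw", "x2", ["x0"]),
    ("add", "x3", ["x1", "x2"]),   # uses x2 right after its load
    ("sw", None, ["x3", "x0"]),
    ("lw", "x4", ["x0"]),
    ("add", "x5", ["x1", "x4"]),   # uses x4 right after its load
    ("sw", None, ["x5", "x0"]),
]

# Same instructions with the third load hoisted above the first add
reordered = [original[i] for i in (0, 1, 4, 2, 3, 5, 6)]
```

Under this model the original order has two load-use pairs and the reordered one has none, which is the gap the compiler is trying to close.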

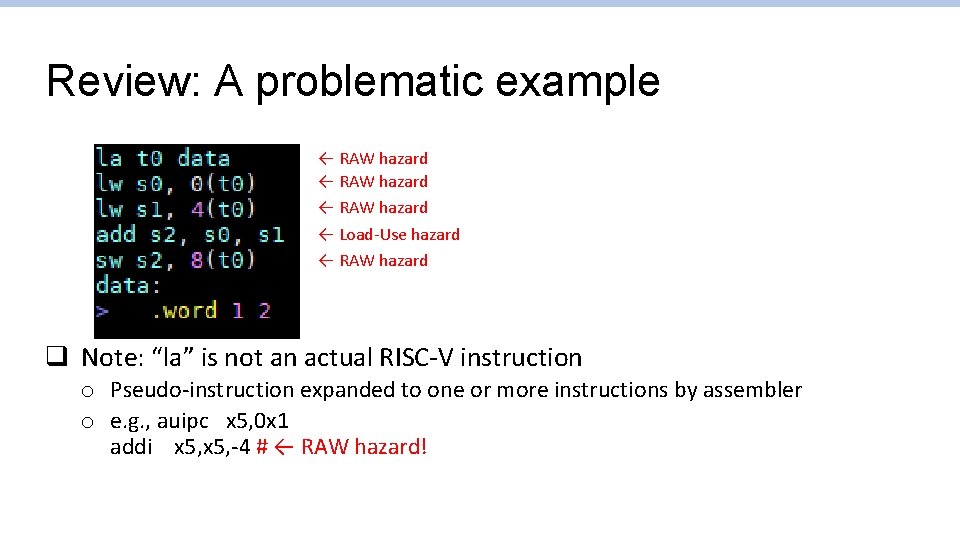

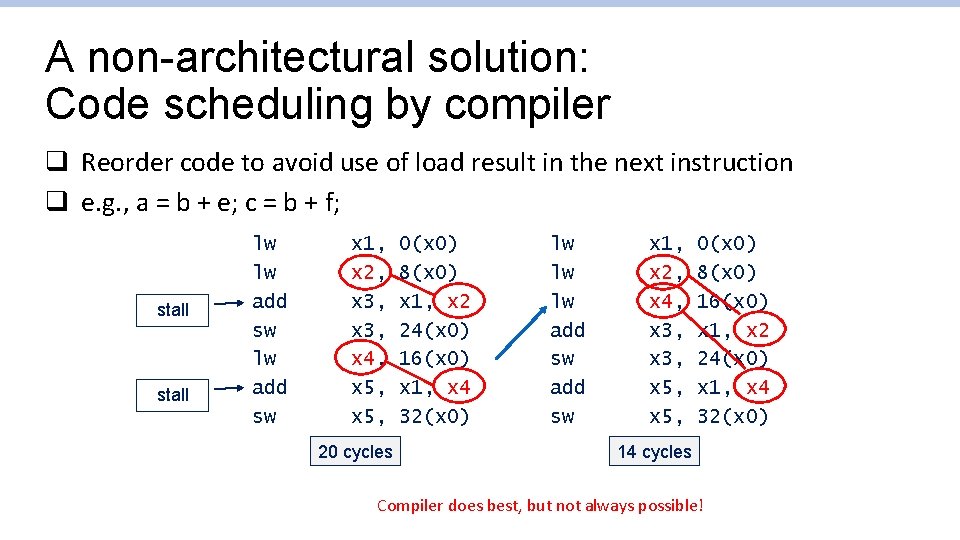

Review: A problematic example
- Annotations on the code listing, in order: ← RAW hazard, ← Load-Use hazard, ← RAW hazard
- Note: “la” is not an actual RISC-V instruction
  - Pseudo-instruction expanded to one or more instructions by the assembler
  - e.g., auipc x5, 0x1 followed by addi x5, -4 (← RAW hazard!)

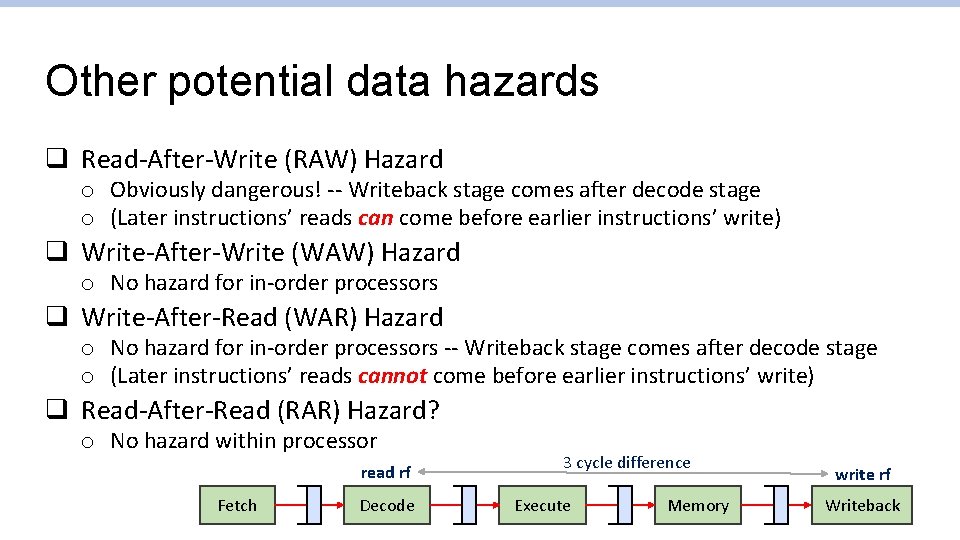

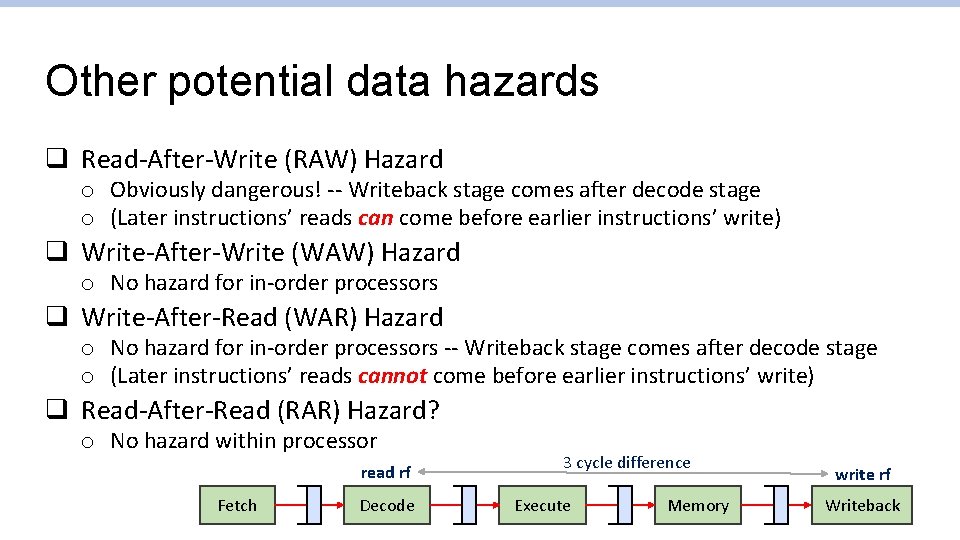

Other potential data hazards
- Read-After-Write (RAW) Hazard
  - Obviously dangerous! Writeback stage comes after decode stage
  - (Later instructions’ reads can come before earlier instructions’ writes)
- Write-After-Write (WAW) Hazard
  - No hazard for in-order processors
- Write-After-Read (WAR) Hazard
  - No hazard for in-order processors, because the writeback stage comes after the decode stage
  - (Later instructions’ writes cannot come before earlier instructions’ reads)
- Read-After-Read (RAR) Hazard?
  - No hazard within processor

Pipeline diagram (Fetch, Decode, Execute, Memory, Writeback): the register file is read in Decode and written in Writeback, a 3 cycle difference

Hazard #3: Control hazard
- Branch determines flow of control
  - Fetching the next instruction depends on the branch outcome
  - Pipeline can’t always fetch the correct instruction
    - e.g., still working on the decode stage of the branch

Example:
- i1: beq s0, zero, elsewhere
- i2: addi s1, s0, 1 (Should I load this or not?)

Pipeline diagram (PC, Fetch, Decode, Execute, Memory, Writeback): i1 is fetched in cycle 1, and Fetch must choose the next PC in cycle 2

Control hazard (partial) solutions
- Branch target address can be forwarded to the fetch stage
  - Without first being written to PC
  - Still may introduce bubbles (one fewer, but still)
- Decode stage can be augmented with logic to calculate the branch target
  - May imbalance the pipeline, reducing performance
  - Doesn’t help if instruction memory takes long (cache miss, for example)

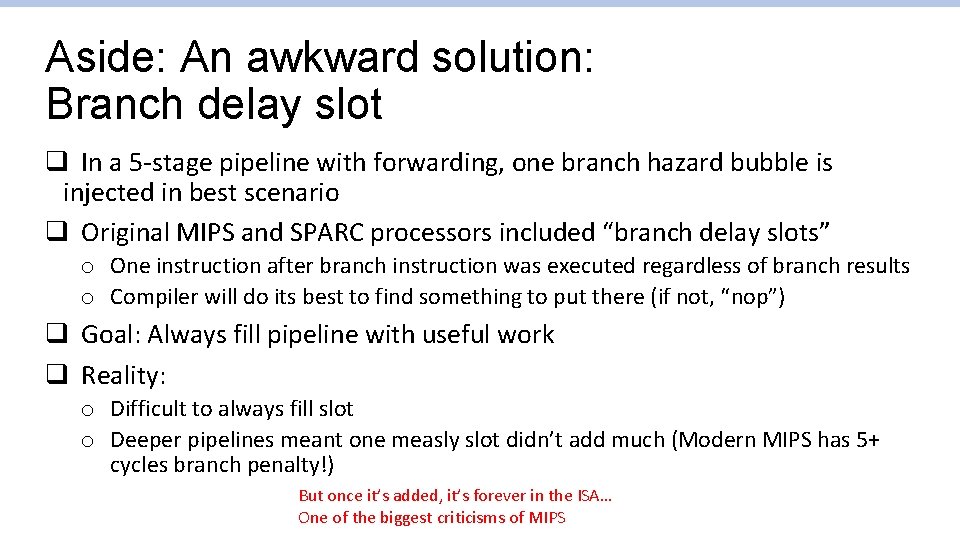

Aside: An awkward solution: Branch delay slot
- In a 5-stage pipeline with forwarding, one branch hazard bubble is injected in the best scenario
- Original MIPS and SPARC processors included “branch delay slots”
  - One instruction after the branch instruction is executed regardless of the branch result
  - Compiler will do its best to find something to put there (if not, “nop”)
- Goal: Always fill pipeline with useful work
- Reality:
  - Difficult to always fill slot
  - Deeper pipelines meant one measly slot didn’t add much (Modern MIPS has 5+ cycles branch penalty!)
- But once it’s added, it’s forever in the ISA… One of the biggest criticisms of MIPS

Eight great ideas
- Design for Moore’s Law
- Use abstraction to simplify design
- Make the common case fast
- Performance via parallelism
- Performance via pipelining
- Performance via prediction
- Hierarchy of memories
- Dependability via redundancy

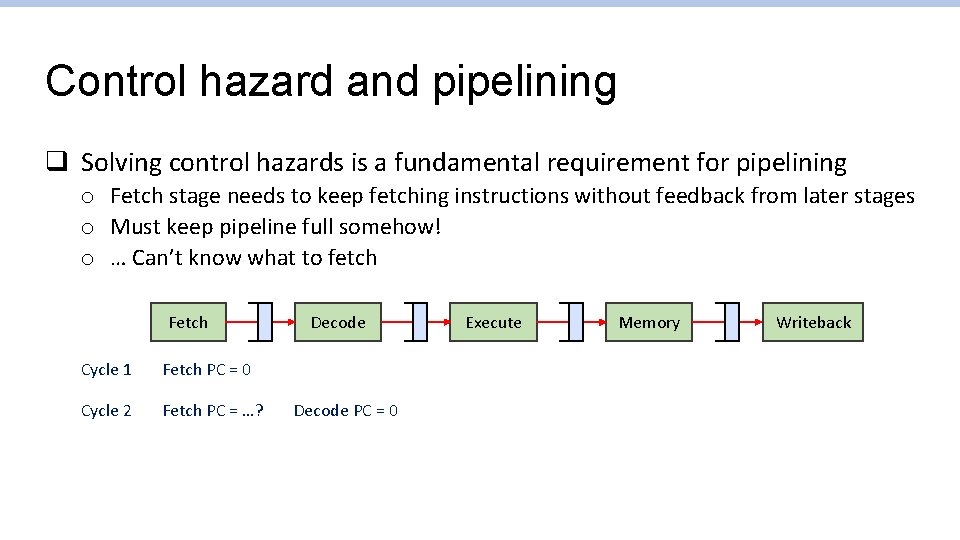

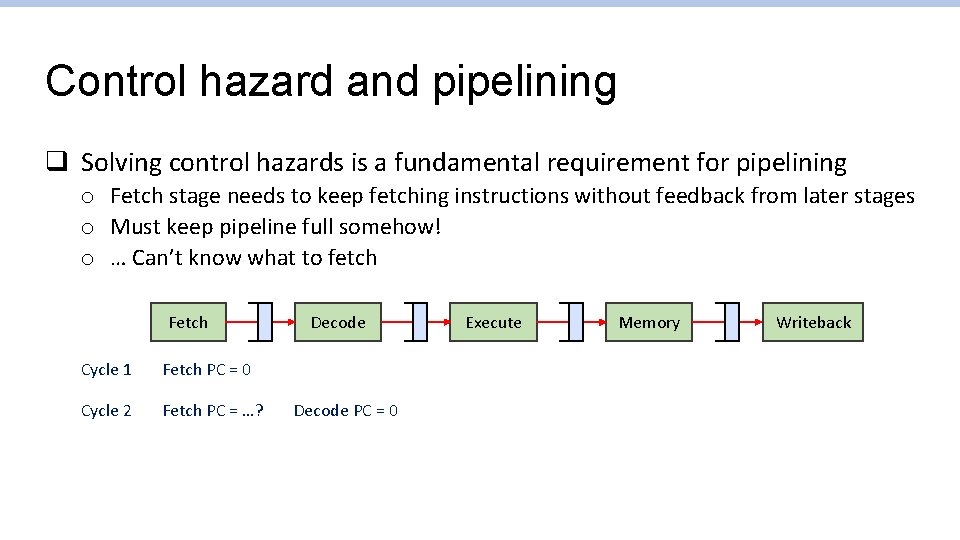

Control hazard and pipelining
- Solving control hazards is a fundamental requirement for pipelining
  - Fetch stage needs to keep fetching instructions without feedback from later stages
  - Must keep pipeline full somehow!
  - … Can’t know what to fetch

Pipeline diagram (Fetch, Decode, Execute, Memory, Writeback):
- Cycle 1: Fetch PC = 0
- Cycle 2: Fetch PC = …? while Decode holds PC = 0

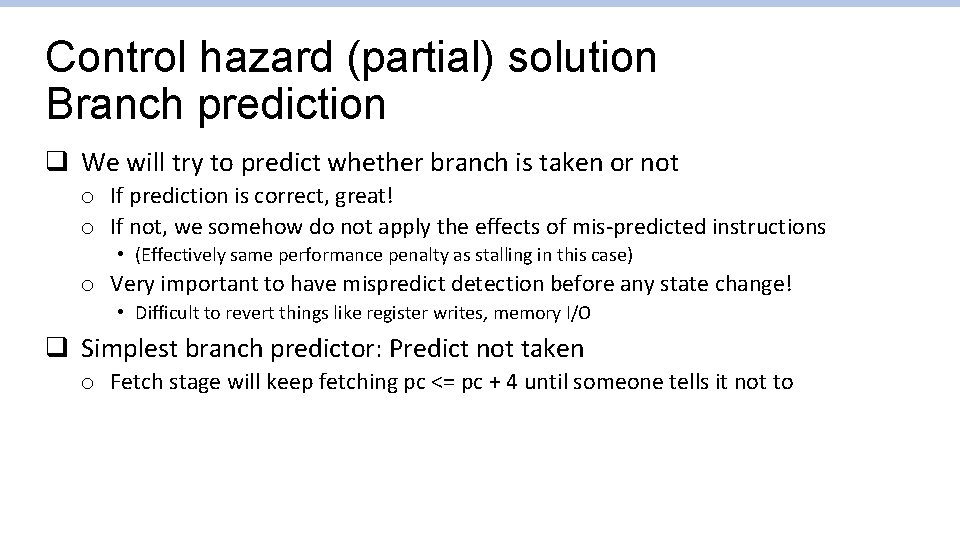

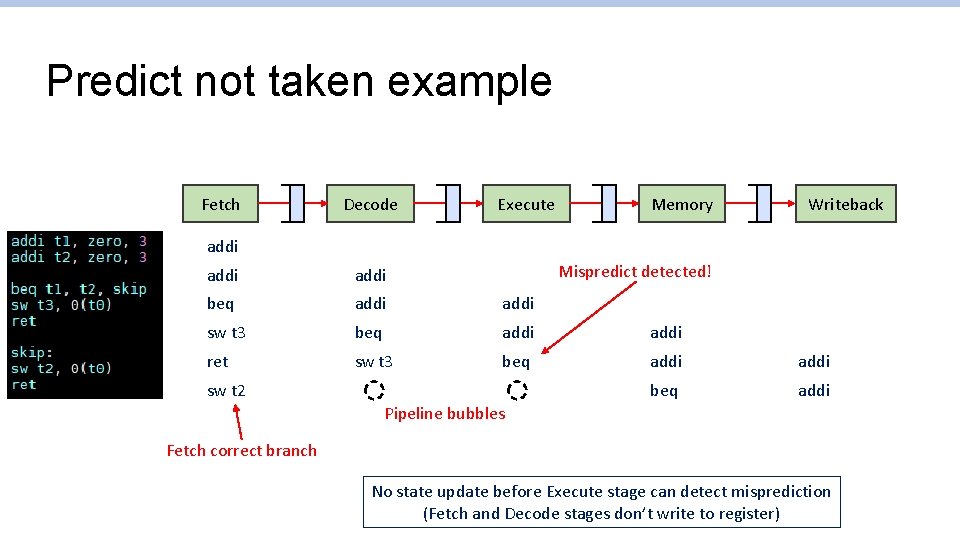

Control hazard (partial) solution: Branch prediction
- We will try to predict whether a branch is taken or not
  - If the prediction is correct, great!
  - If not, we somehow do not apply the effects of mis-predicted instructions
    - (Effectively same performance penalty as stalling in this case)
  - Very important to have mispredict detection before any state change!
    - Difficult to revert things like register writes, memory I/O
- Simplest branch predictor: Predict not taken
  - Fetch stage will keep fetching pc <= pc + 4 until someone tells it not to

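Predict not taken example

Pipeline diagram (Fetch, Decode, Execute, Memory, Writeback), one row per cycle:
- addi, beq, sw t3, and ret are fetched in order, following pc + 4
- Mispredict detected when beq reaches Execute!
- The wrongly fetched sw t3 and ret become pipeline bubbles, and Fetch continues at the correct branch (sw t2)

No state update before the Execute stage can detect misprediction (Fetch and Decode stages don’t write to registers)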

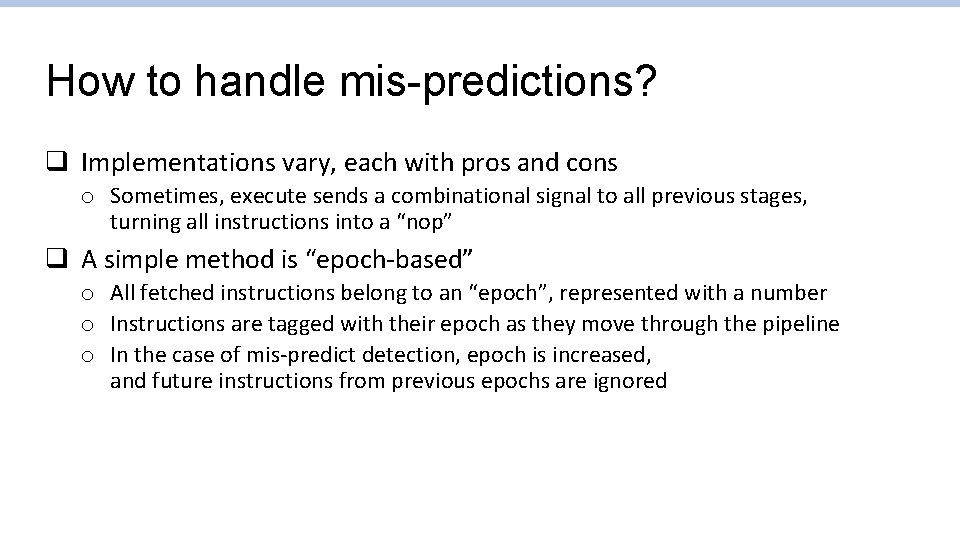

How to handle mis-predictions?
- Implementations vary, each with pros and cons
  - Sometimes, execute sends a combinational signal to all previous stages, turning all instructions into a “nop”
- A simple method is “epoch-based”
  - All fetched instructions belong to an “epoch”, represented with a number
  - Instructions are tagged with their epoch as they move through the pipeline
  - In the case of mis-predict detection, the epoch is increased, and future instructions from previous epochs are ignored
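The epoch scheme can be sketched in a few lines. This is a simplified model that only looks at the stream of instructions arriving at execute, under the assumption that each one carries the epoch it was fetched in and a flag saying whether it turns out to be a mispredicted branch; `execute_stream` and the tuple layout are made up for this illustration.

```python
def execute_stream(instrs):
    """Return the names of instructions whose effects are applied.

    instrs: list of (name, epoch_tag, mispredicts) in the order they
    arrive at the execute stage.
    """
    epoch = 0                      # execute stage's current epoch
    applied = []
    for name, tag, mispredicts in instrs:
        if tag != epoch:
            continue               # fetched down the wrong path: ignore
        applied.append(name)
        if mispredicts:
            epoch += 1             # everything still tagged with the old epoch is stale
    return applied


# Instruction order follows the predict-not-taken example in this deck.
stream = [
    ("addi", 0, False),
    ("beq", 0, True),      # predicted not taken, actually taken
    ("sw t3", 0, False),   # wrong path, fetched before the redirect
    ("ret", 0, False),     # wrong path
    ("sw t2", 1, False),   # correct path, fetched in the new epoch
    ("ret", 1, False),
]
```

Running `execute_stream(stream)` keeps addi, beq, sw t2, and the second ret, and silently drops the two wrong-path instructions.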

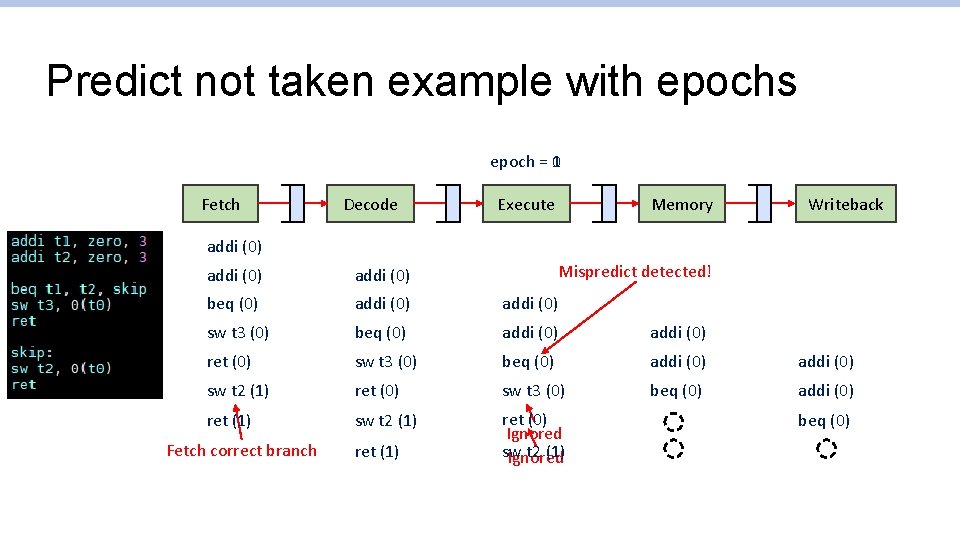

Predict not taken example with epochs

Pipeline diagram (Fetch, Decode, Execute, Memory, Writeback), one row per cycle, with each instruction tagged with its epoch in parentheses; the epoch changes from 0 to 1 at the mispredict:
- addi (0), beq (0), sw t3 (0), and ret (0) are fetched in epoch 0
- Mispredict detected when beq (0) reaches Execute! Fetch then fetches the correct branch: sw t2 (1), ret (1)
- sw t3 (0) and ret (0) continue down the pipeline but are ignored

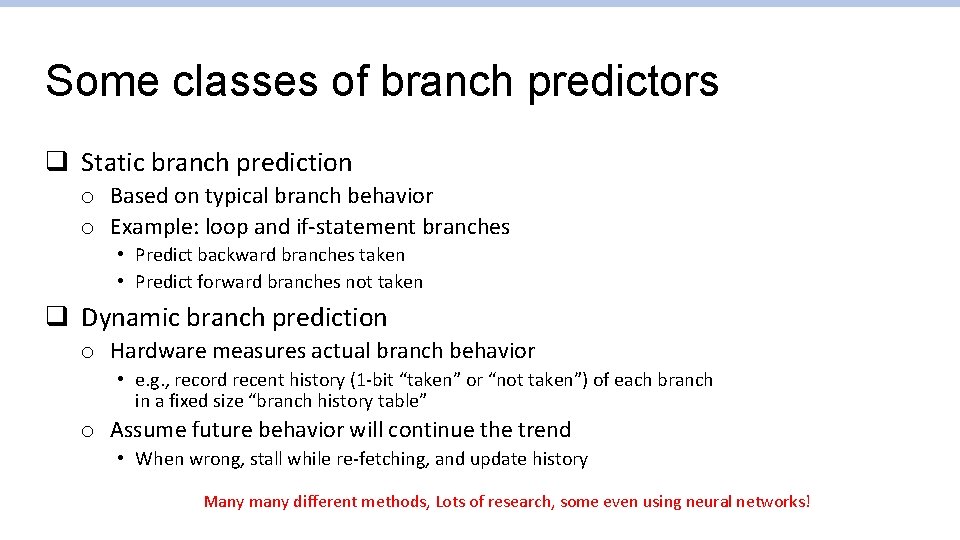

Some classes of branch predictors
- Static branch prediction
  - Based on typical branch behavior
  - Example: loop and if-statement branches
    - Predict backward branches taken
    - Predict forward branches not taken
- Dynamic branch prediction
  - Hardware measures actual branch behavior
    - e.g., record recent history (1-bit “taken” or “not taken”) of each branch in a fixed-size “branch history table”
  - Assume future behavior will continue the trend
    - When wrong, stall while re-fetching, and update history
- Many, many different methods and lots of research, some even using neural networks!
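A 1-bit branch history table is small enough to model directly. The sketch below is illustrative only: the table size, the indexing by `pc >> 2`, and the class and function names are assumptions, not a description of any particular processor.

```python
class OneBitPredictor:
    """Branch history table with one bit per entry: last outcome wins."""

    def __init__(self, entries=16):
        self.entries = entries
        self.table = [False] * entries   # False means "predict not taken"

    def _index(self, pc):
        # Drop the two always-zero low bits of a 4-byte-aligned PC (assumed).
        return (pc >> 2) % self.entries

    def predict(self, pc):
        return self.table[self._index(pc)]

    def update(self, pc, taken):
        self.table[self._index(pc)] = taken


def count_mispredicts(predictor, pc, outcomes):
    """Feed a sequence of actual outcomes for one branch; count wrong guesses."""
    wrong = 0
    for taken in outcomes:
        if predictor.predict(pc) != taken:
            wrong += 1
        predictor.update(pc, taken)
    return wrong
```

For a loop branch that is taken nine times and then falls through, this predictor is wrong twice per run of the loop: once on entry and once on exit.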

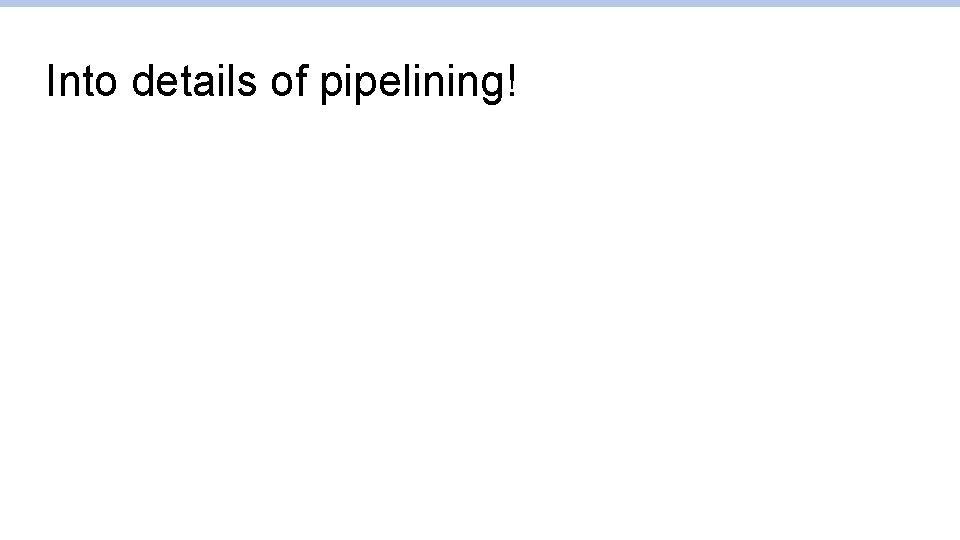

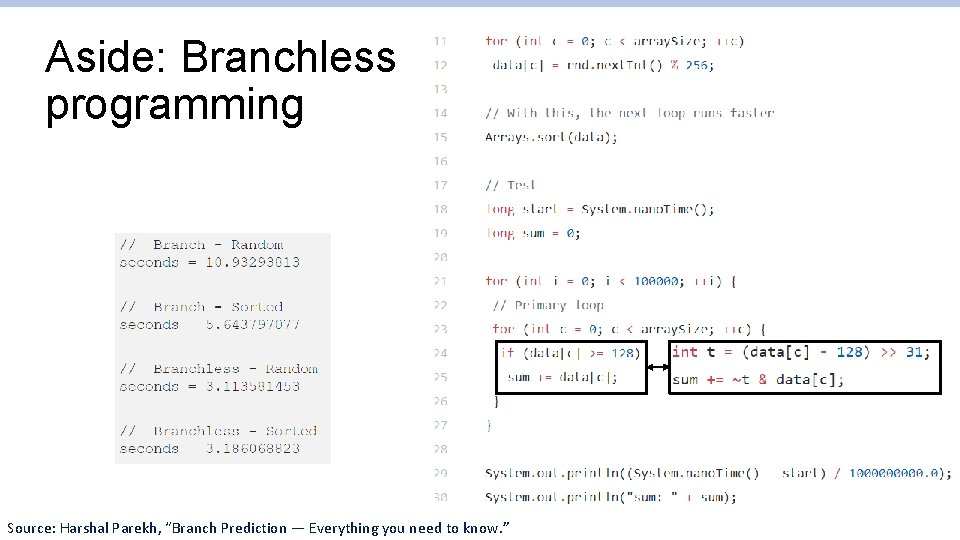

Branch prediction and performance
- Effectiveness of branch predictors is crucial for performance
  - Spoilers: On SPEC benchmarks, modern predictors routinely have 98+% accuracy
  - Of course, less-optimized code may have much worse behavior
- Branch-heavy software performance depends on a good match between software pattern and branch prediction
  - Some high-performance software is optimized for the branch predictors in the target hardware
  - Or, avoid branches altogether! (Branchless code)
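A back-of-the-envelope model shows why the last few percent of accuracy matter. The formula below is a common first-order approximation, not something taken from these slides, and the numbers in the usage note (20% branches, 15-cycle penalty) are made-up inputs chosen only for illustration.

```python
def effective_cpi(base_cpi, branch_fraction, accuracy, penalty_cycles):
    """First-order CPI estimate: every mispredicted branch costs a fixed penalty."""
    mispredict_rate = 1.0 - accuracy
    return base_cpi + branch_fraction * mispredict_rate * penalty_cycles
```

With a base CPI of 1, 20% branches, and a 15-cycle penalty, 98% accuracy gives roughly 1.06 cycles per instruction, while 90% accuracy gives roughly 1.3.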

![Aside Impact of branches This code takes 12 seconds to run But on commenting Aside: Impact of branches “[This code] takes ~12 seconds to run. But on commenting](https://slidetodoc.com/presentation_image_h2/e1998359120e1ded2d961ebd4f7cd509/image-49.jpg)

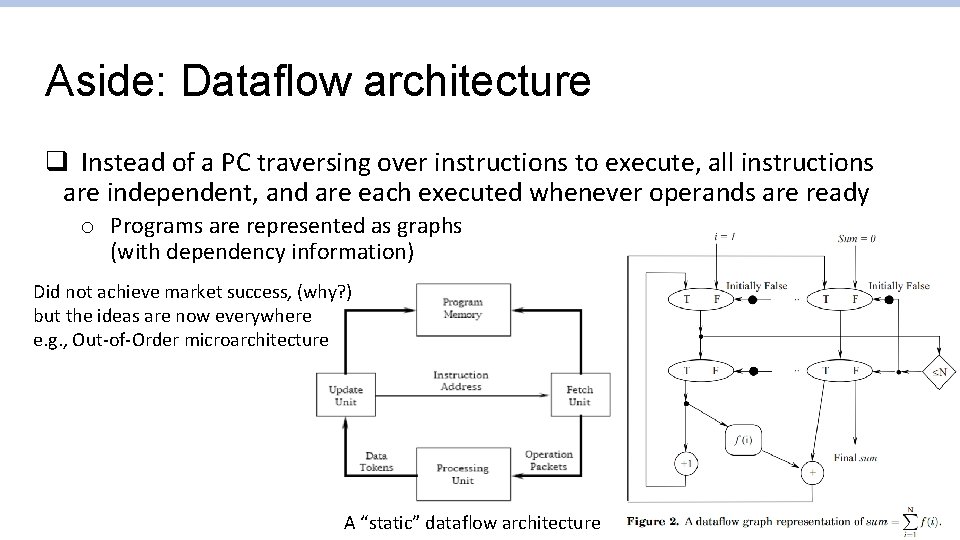

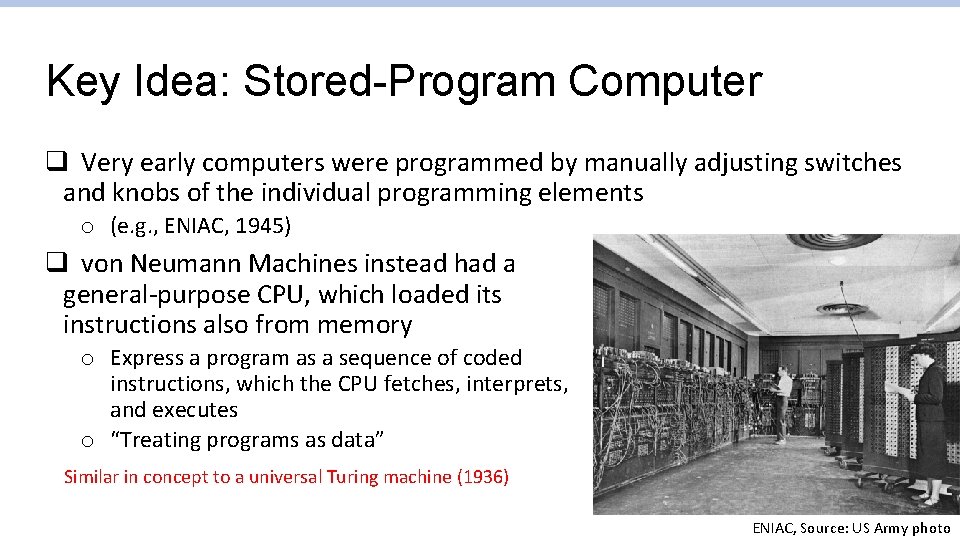

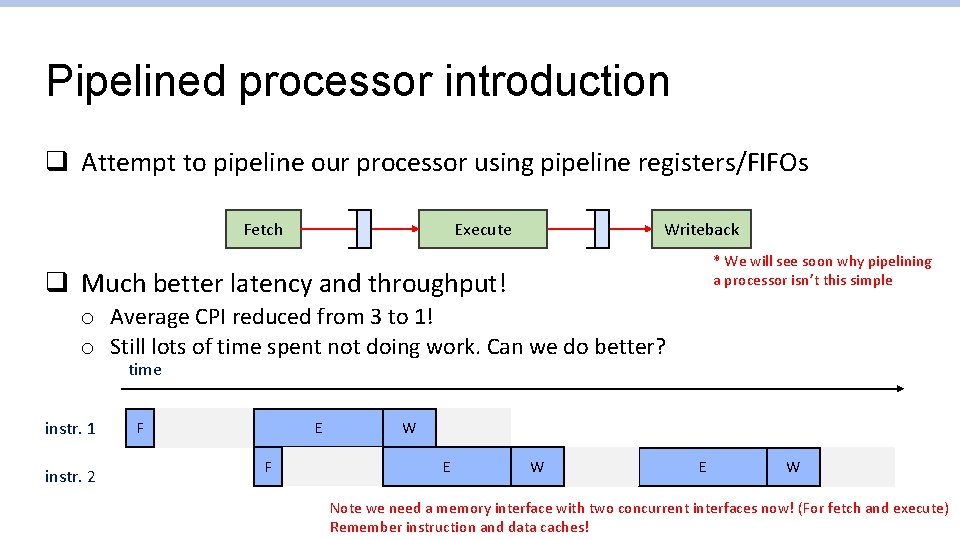

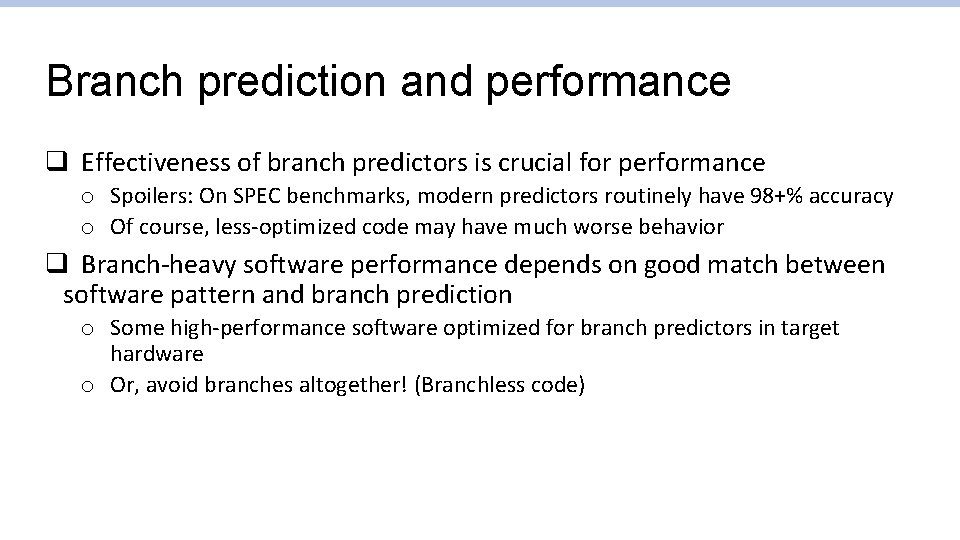

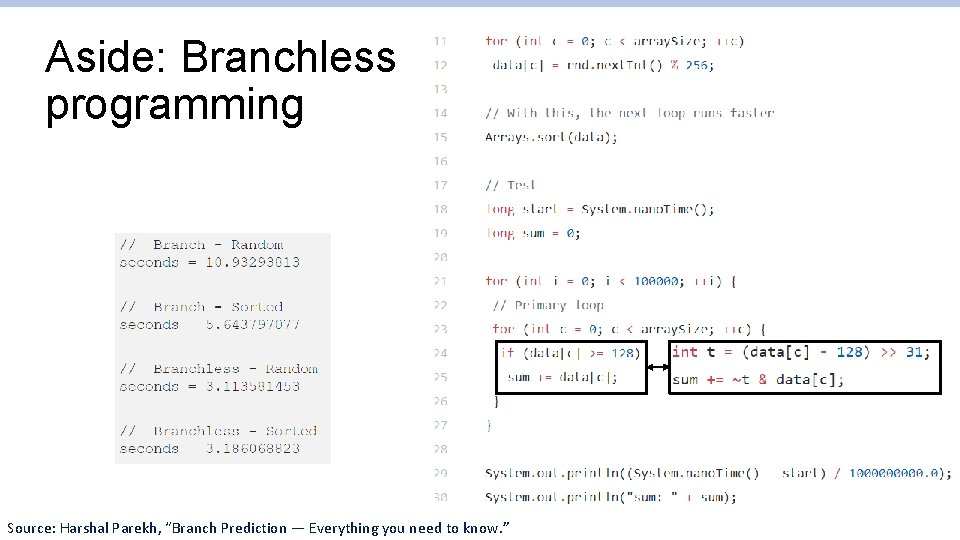

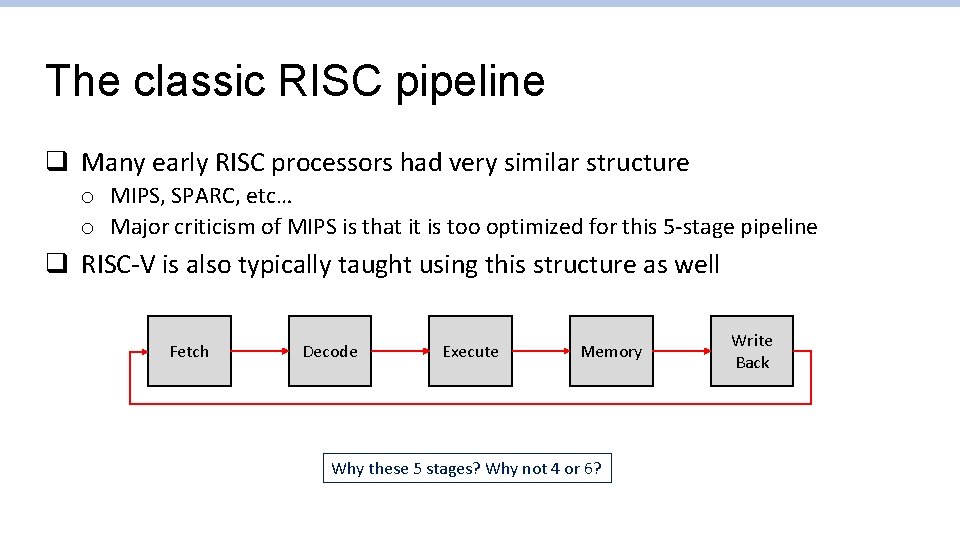

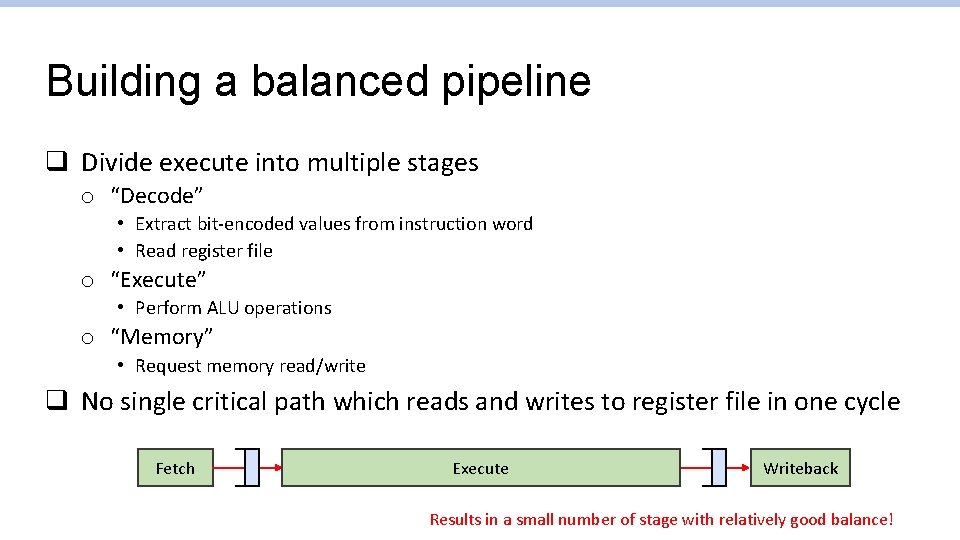

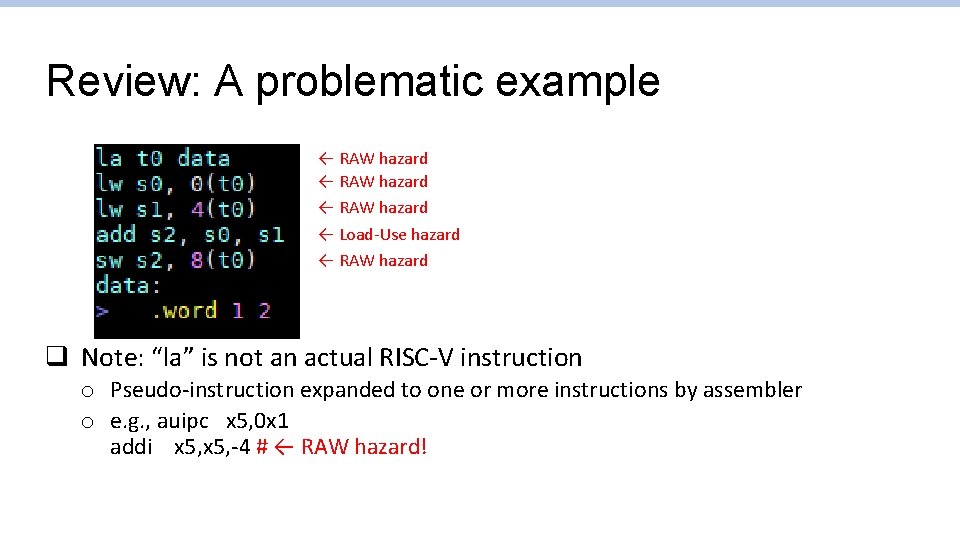

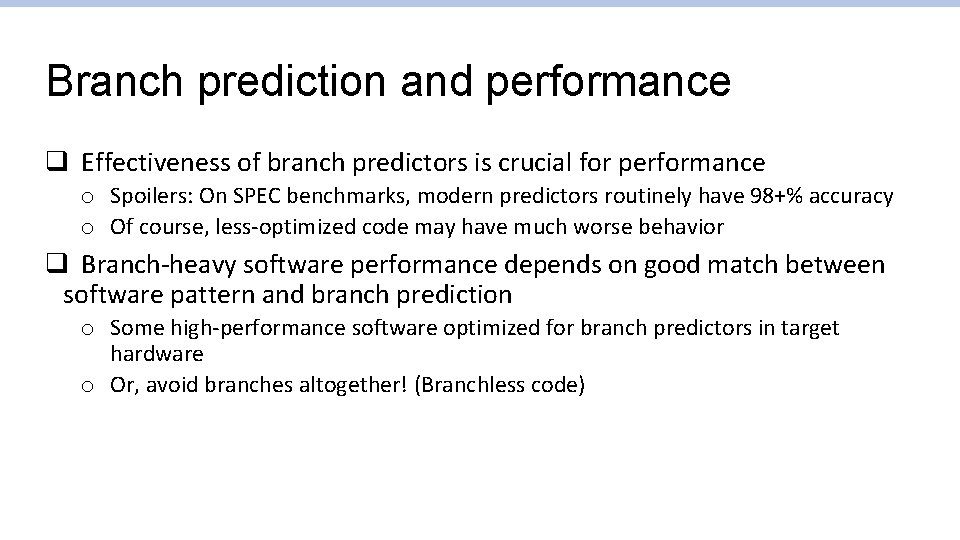

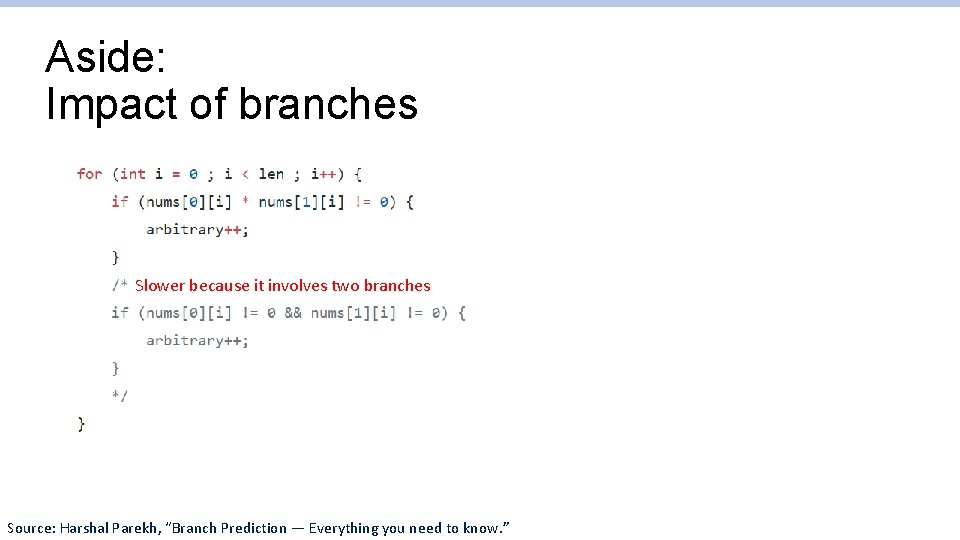

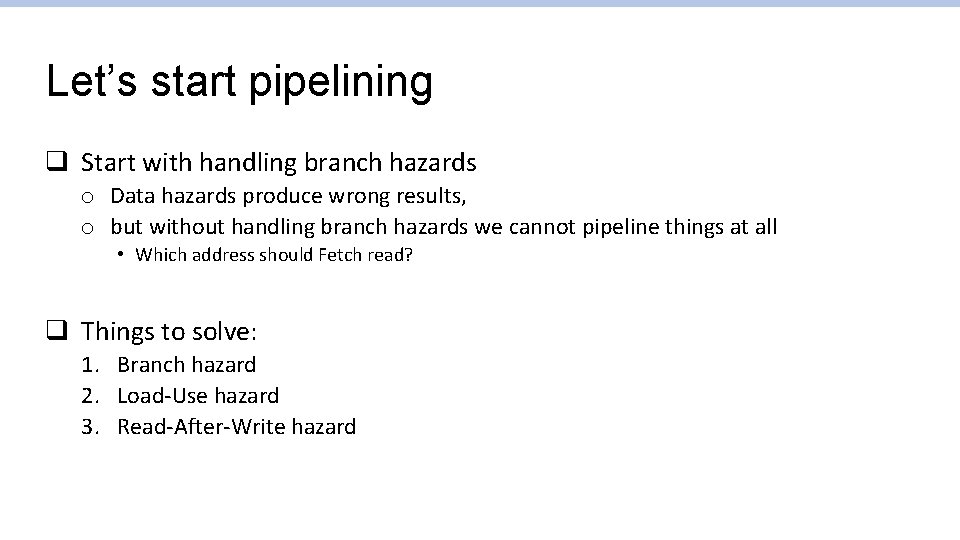

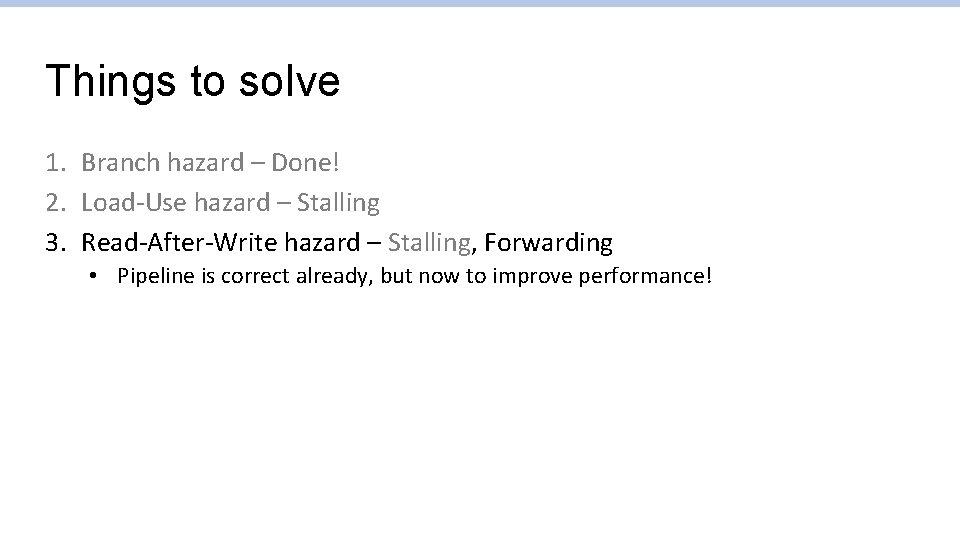

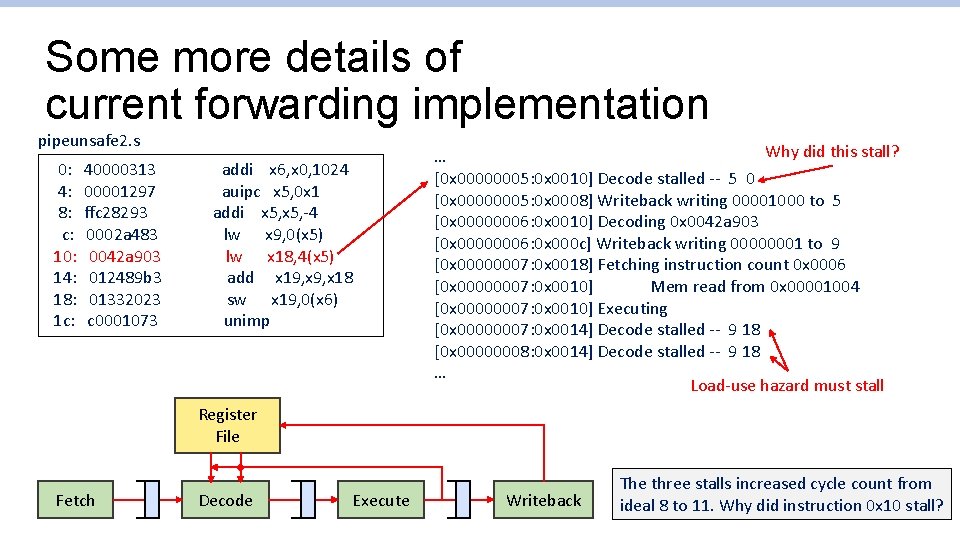

Aside: Impact of branches
- “[This code] takes ~12 seconds to run. But on commenting line 15, not touching the rest, the same code takes ~33 seconds to run.”
- “(running time may vary on different machines, but the proportion will stay the same).”

Source: Harshal Parekh, “Branch Prediction — Everything you need to know.”

Aside: Impact of branches
- Slower because it involves two branches

Source: Harshal Parekh, “Branch Prediction — Everything you need to know.”

Aside: Branchless programming

Source: Harshal Parekh, “Branch Prediction — Everything you need to know.”

Into details of pipelining!

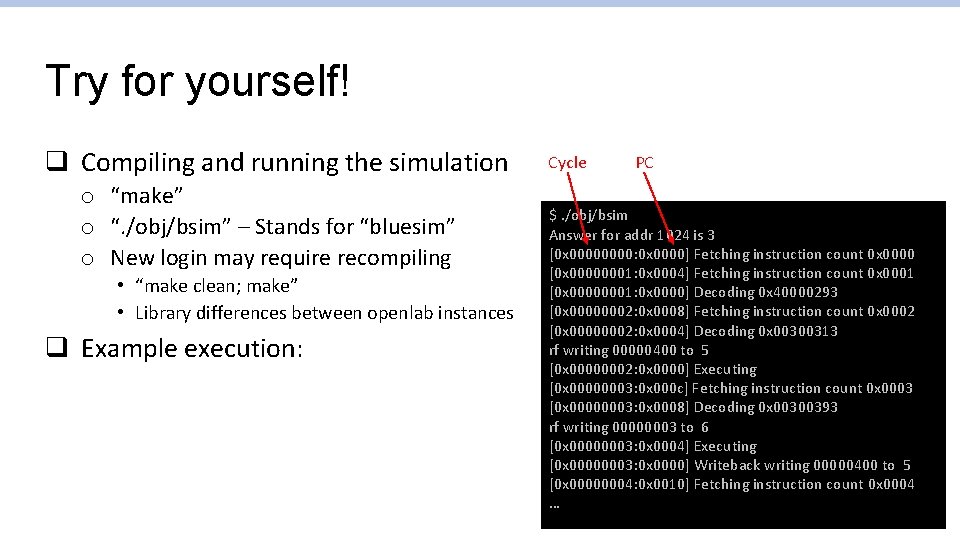

Try for yourself!
- Up-to-date code in openlab
  - /home/swjun/cs152/processor
  - Copy to your own directory!
- Vim syntax files for Bluespec
  - /home/swjun/cs152/vim-bsv
- Environment variables for Bluespec, etc.
  - /home/swjun/cs152/setup.sh
  - Either run “source /home/swjun/cs152/setup.sh” or add it to .bashrc
  - Required before you can run any Bluespec binaries!

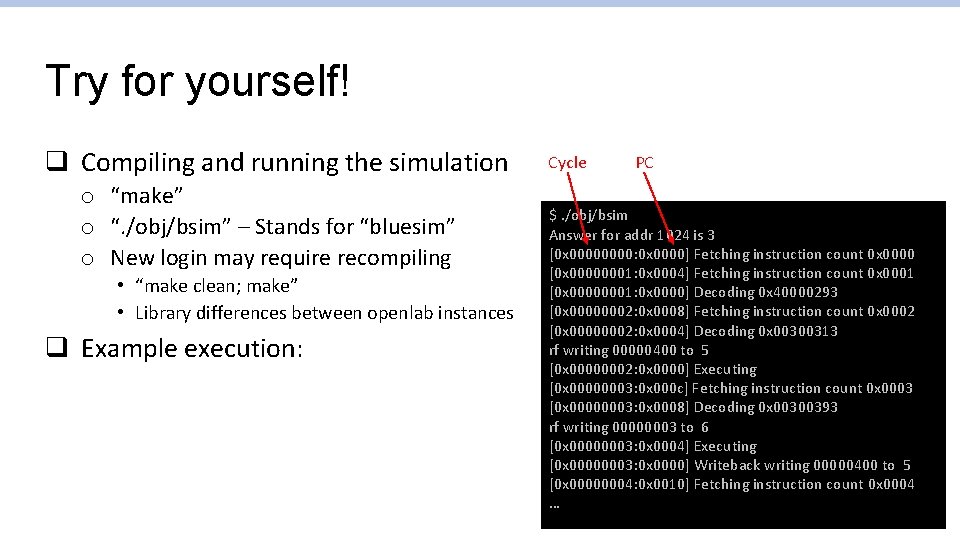

Try for yourself!
- Compiling and running the simulation
  - “make”
  - “./obj/bsim” (stands for “bluesim”)
  - A new login may require recompiling
    - “make clean; make”
    - Library differences between openlab instances
- Example execution (each log line is prefixed with [Cycle: PC]):

      $ ./obj/bsim
      Answer for addr 1024 is 3
      [0x0000: 0x0000] Fetching instruction count 0x0000
      [0x00000001: 0x0004] Fetching instruction count 0x0001
      [0x00000001: 0x0000] Decoding 0x40000293
      [0x00000002: 0x0008] Fetching instruction count 0x0002
      [0x00000002: 0x0004] Decoding 0x00300313
      rf writing 00000400 to 5
      [0x00000002: 0x0000] Executing
      [0x00000003: 0x000c] Fetching instruction count 0x0003
      [0x00000003: 0x0008] Decoding 0x00300393
      rf writing 00000003 to 6
      [0x00000003: 0x0004] Executing
      [0x00000003: 0x0000] Writeback writing 00000400 to 5
      [0x00000004: 0x0010] Fetching instruction count 0x0004
      …

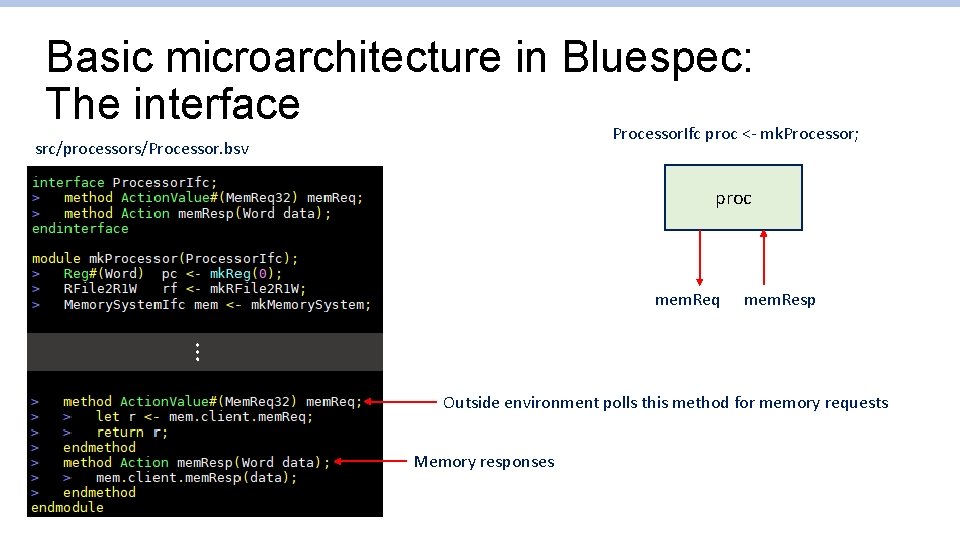

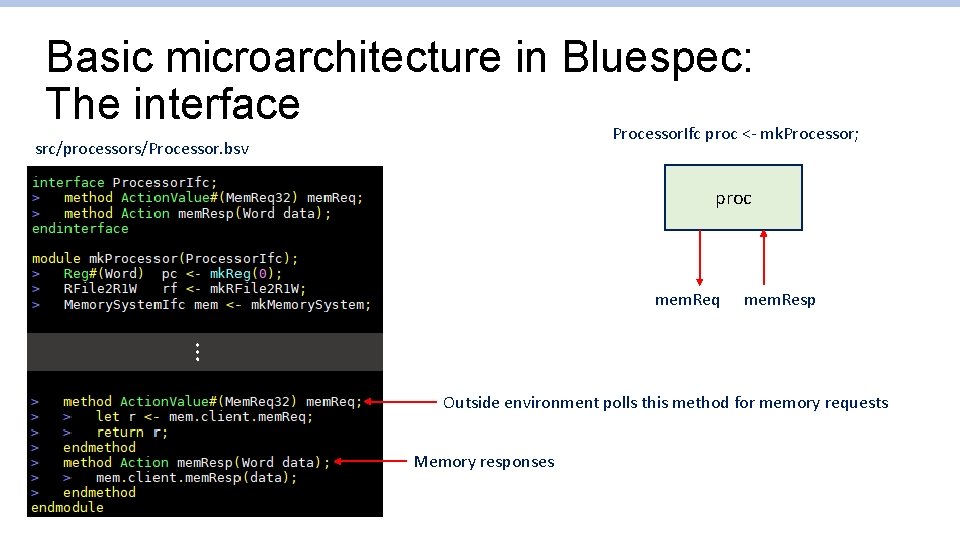

Basic microarchitecture in Bluespec: The interface
- ProcessorIfc proc <- mkProcessor; (src/processors/Processor.bsv)
- memReq: the outside environment polls this method for memory requests
- memResp: memory responses
- …

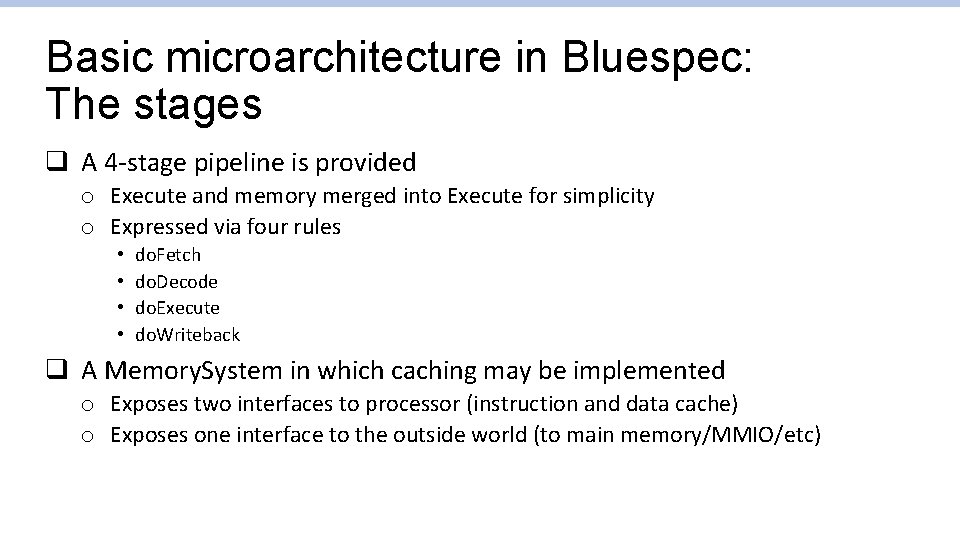

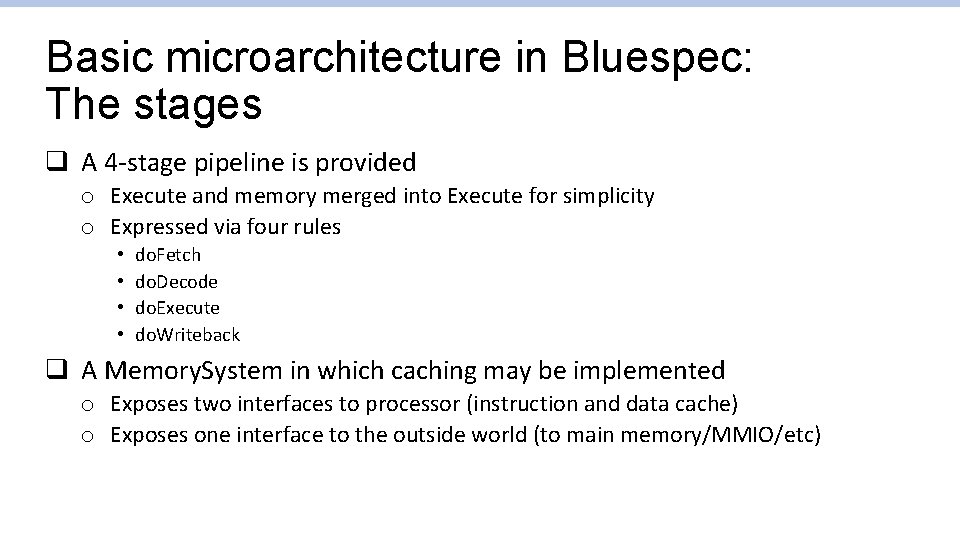

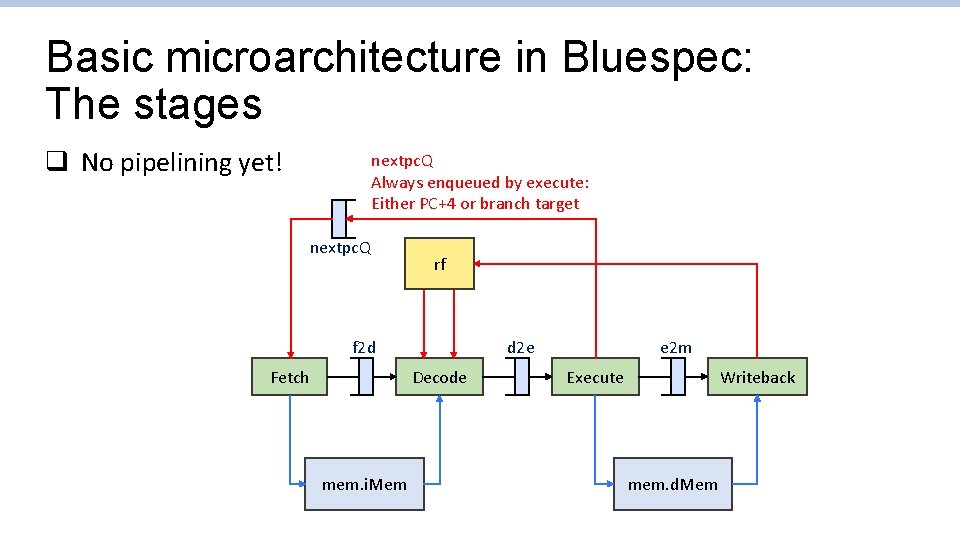

Basic microarchitecture in Bluespec: The stages
- A 4-stage pipeline is provided
  - Execute and memory merged into Execute for simplicity
  - Expressed via four rules
    - doFetch
    - doDecode
    - doExecute
    - doWriteback
- A MemorySystem in which caching may be implemented
  - Exposes two interfaces to the processor (instruction and data cache)
  - Exposes one interface to the outside world (to main memory/MMIO/etc.)

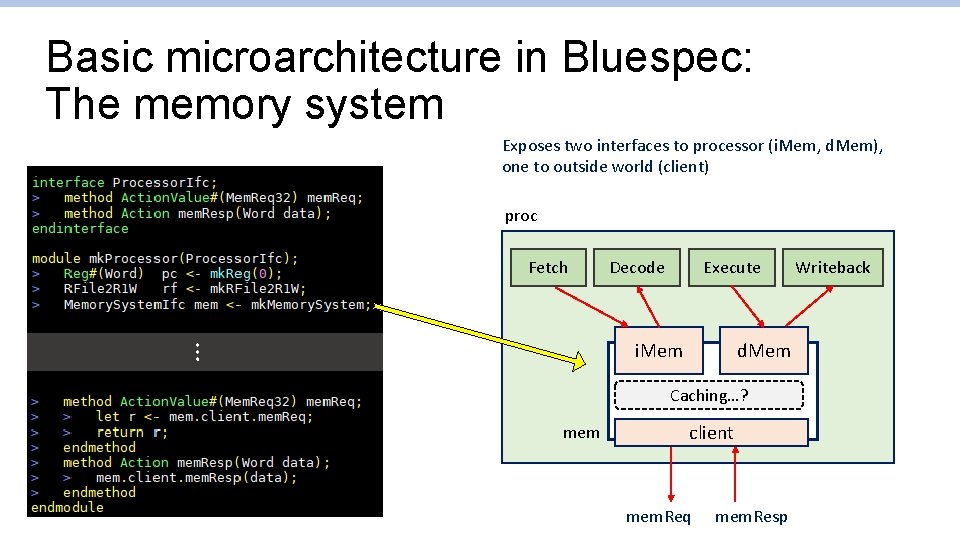

Basic microarchitecture in Bluespec: The memory system
- Exposes two interfaces to the processor (iMem, dMem), one to the outside world (client)

Figure: proc contains Fetch, Decode, Execute, Writeback, and mem. Inside mem (Caching…?), iMem and dMem face the pipeline stages, and client connects to memReq and memResp.

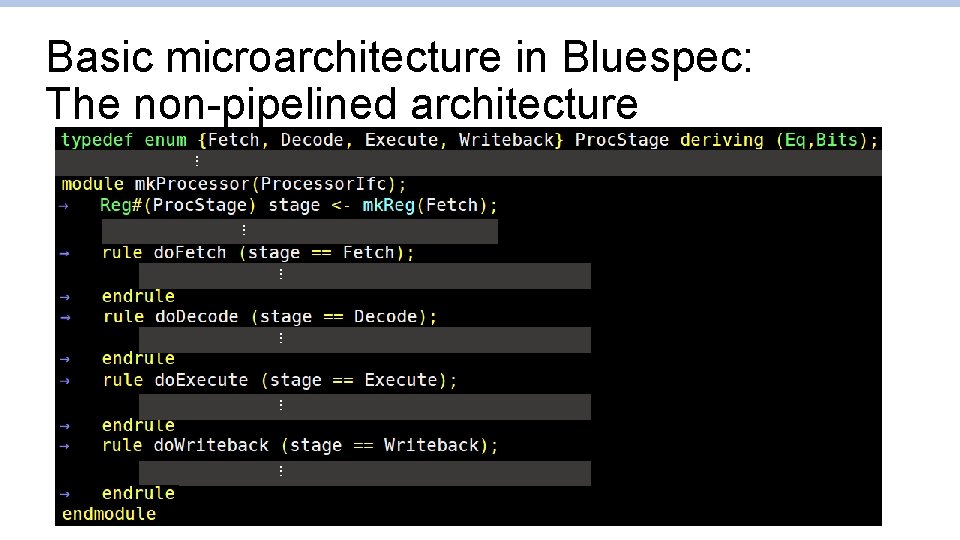

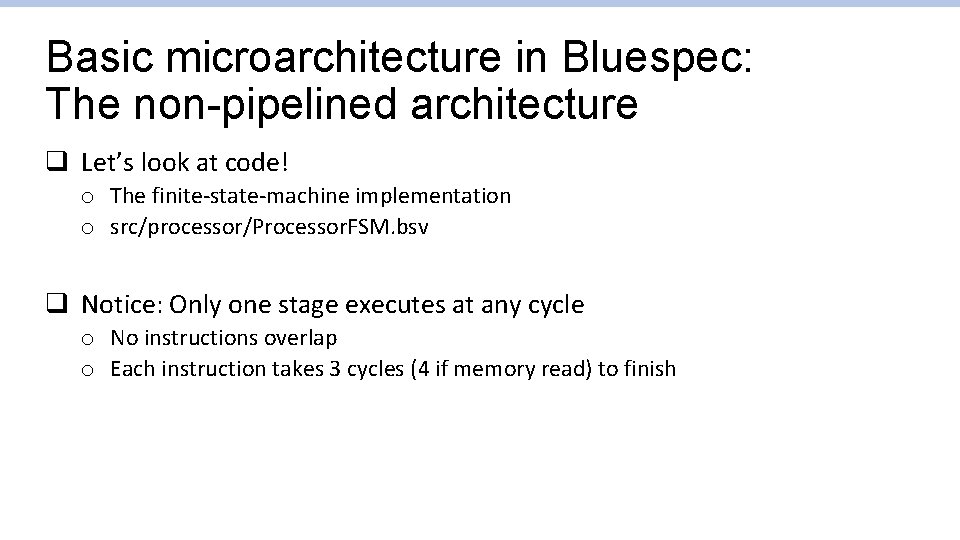

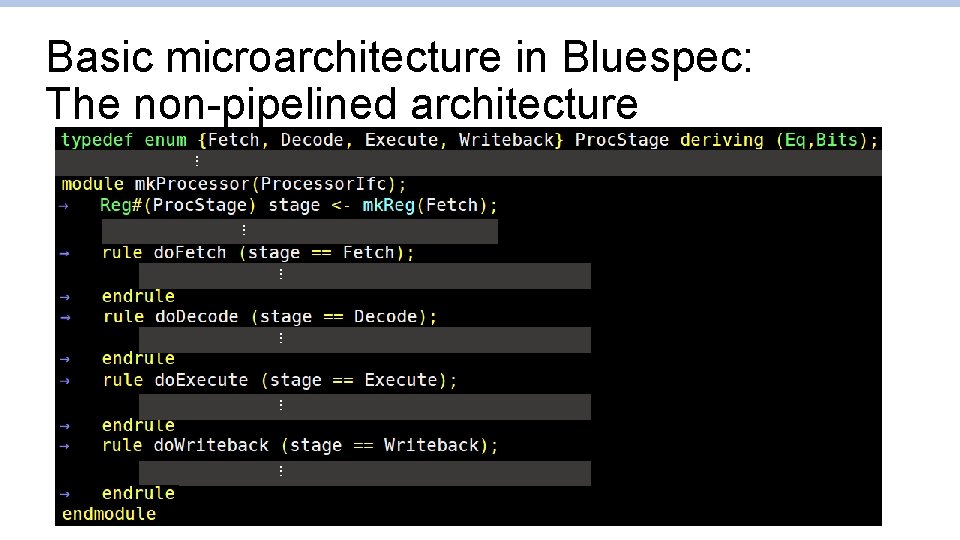

Basic microarchitecture in Bluespec: The non-pipelined architecture
- Let’s look at code!
  - The finite-state-machine implementation
  - src/processor/ProcessorFSM.bsv
- Notice: Only one stage executes at any cycle
  - No instructions overlap
  - Each instruction takes 3 cycles (4 if memory read) to finish

… … … Basic microarchitecture in Bluespec: The non-pipelined architecture

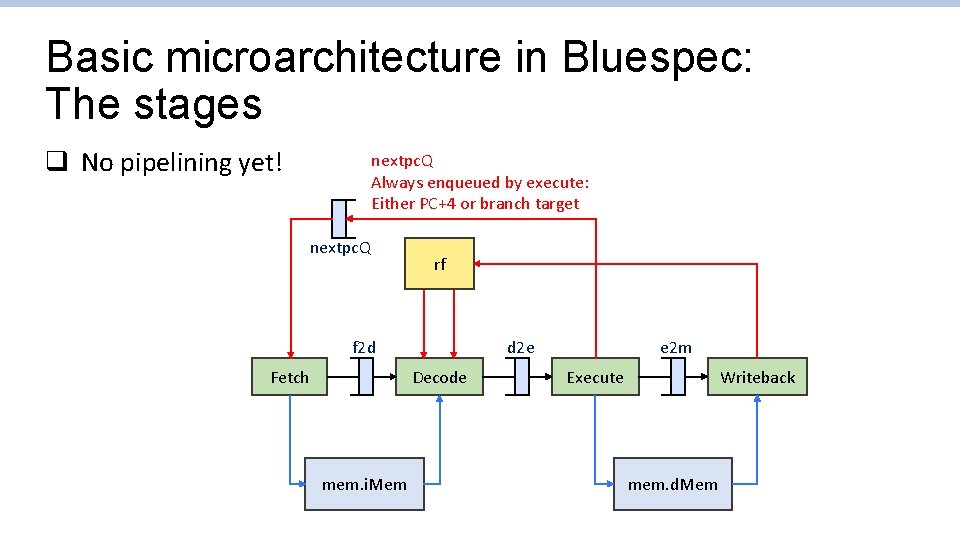

Basic microarchitecture in Bluespec: The stages
- No pipelining yet!
- nextpcQ: always enqueued by execute, with either PC+4 or the branch target

Figure: Fetch (mem.iMem) → f2d → Decode → d2e → Execute (mem.dMem) → e2m → Writeback, with rf and nextpcQ

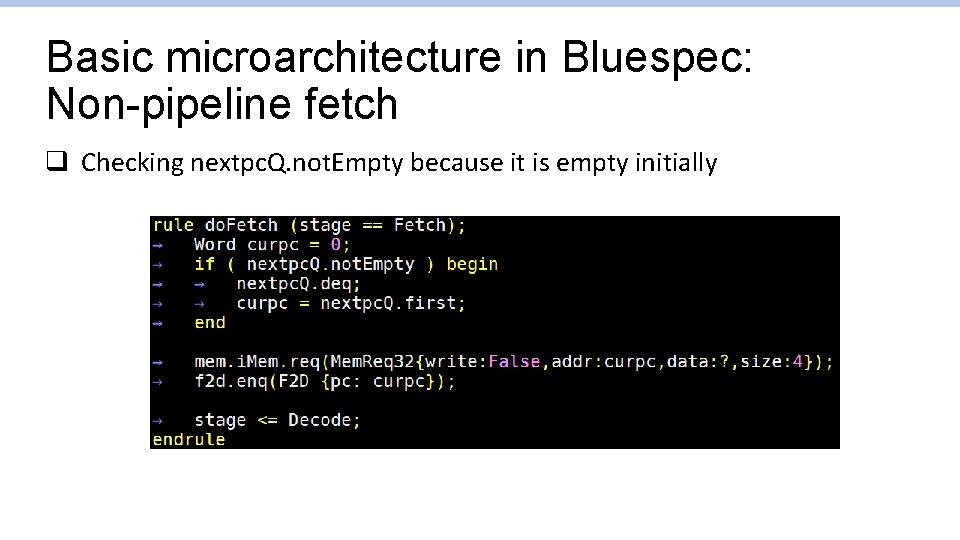

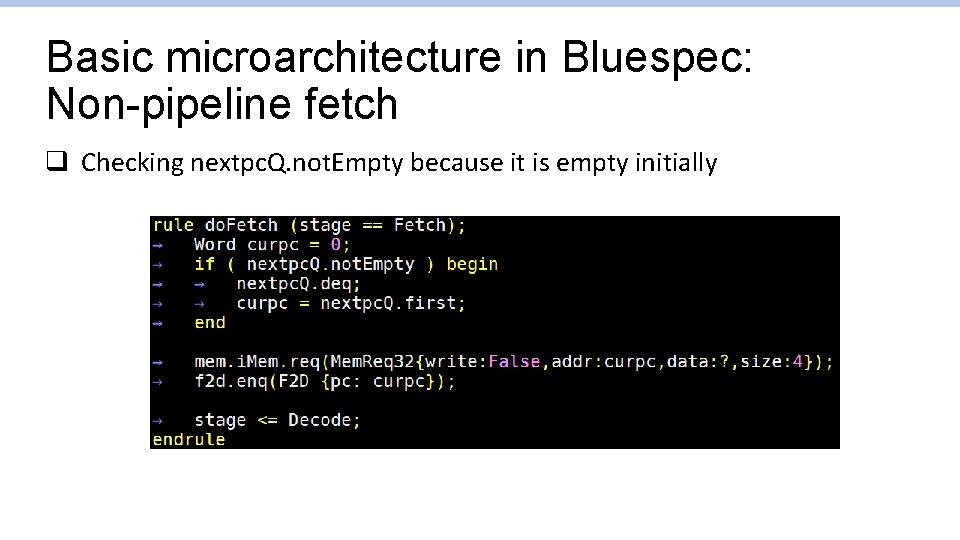

Basic microarchitecture in Bluespec: Non-pipeline fetch
- Checking nextpcQ.notEmpty because it is empty initially

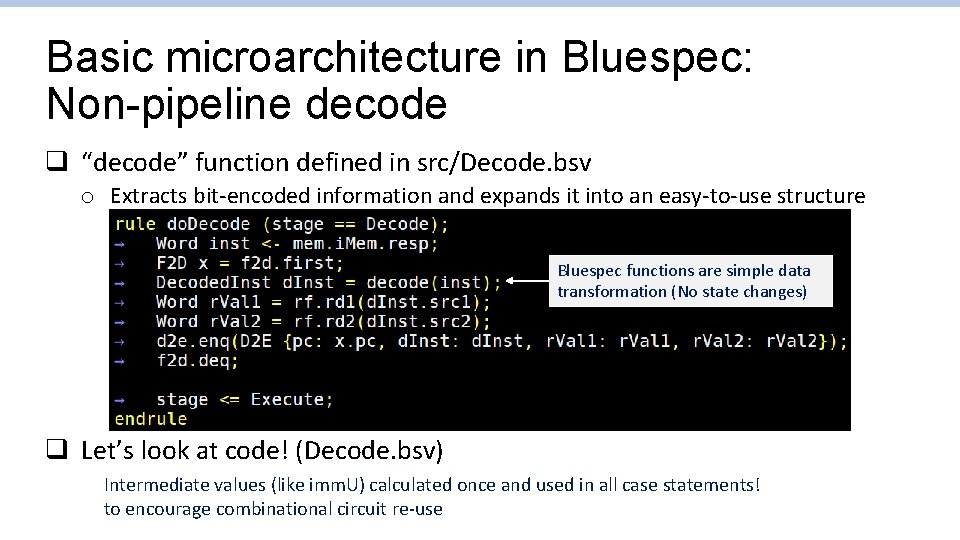

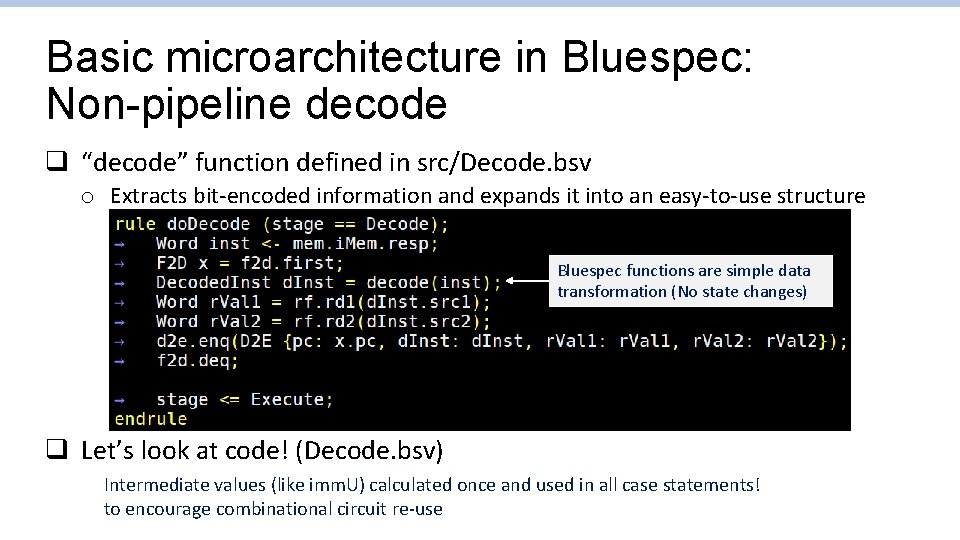

Basic microarchitecture in Bluespec: Non-pipeline decode
- “decode” function defined in src/Decode.bsv
  - Extracts bit-encoded information and expands it into an easy-to-use structure
  - Bluespec functions are simple data transformations (no state changes)
- Let’s look at code! (Decode.bsv)
  - Intermediate values (like immU) are calculated once and used in all case statements, to encourage combinational circuit re-use
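As a rough illustration of what "extracting bit-encoded information" means, the sketch below pulls apart a RISC-V I-type instruction word using the standard field positions. It is written in Python rather than Bluespec, it handles only the I-type format, and `decode_itype` with its dictionary result is a hypothetical stand-in, not the course's decode function.

```python
def decode_itype(word):
    """Split a 32-bit RISC-V I-type instruction word into its fields."""
    opcode = word & 0x7F            # bits 6:0
    rd = (word >> 7) & 0x1F         # bits 11:7
    funct3 = (word >> 12) & 0x7     # bits 14:12
    rs1 = (word >> 15) & 0x1F       # bits 19:15
    imm = (word >> 20) & 0xFFF      # bits 31:20
    if imm & 0x800:                 # sign-extend the 12-bit immediate
        imm -= 0x1000
    return {"opcode": opcode, "rd": rd, "funct3": funct3, "rs1": rs1, "imm": imm}
```

For example, `decode_itype(0x00300313)` yields opcode 0x13, rd 6, rs1 0, and immediate 3, that is, addi x6, x0, 3.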

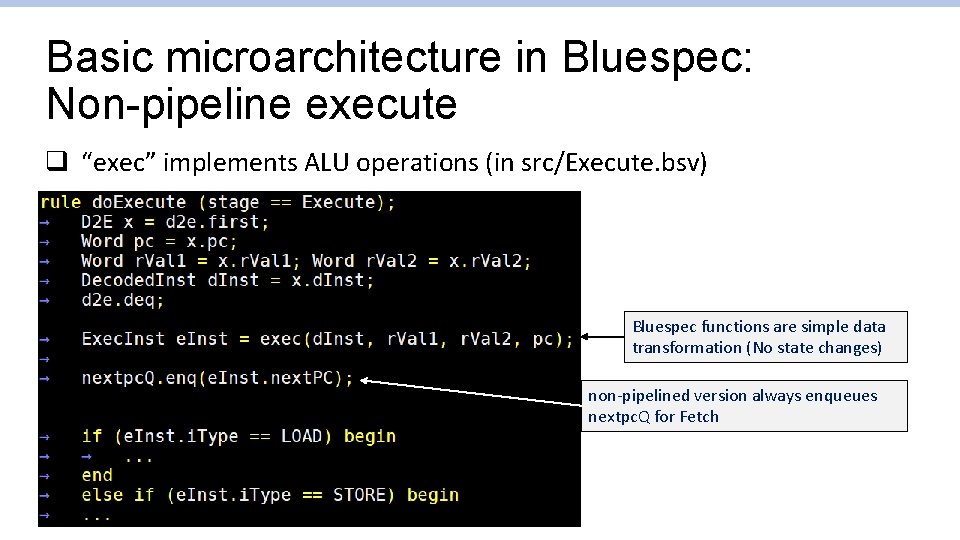

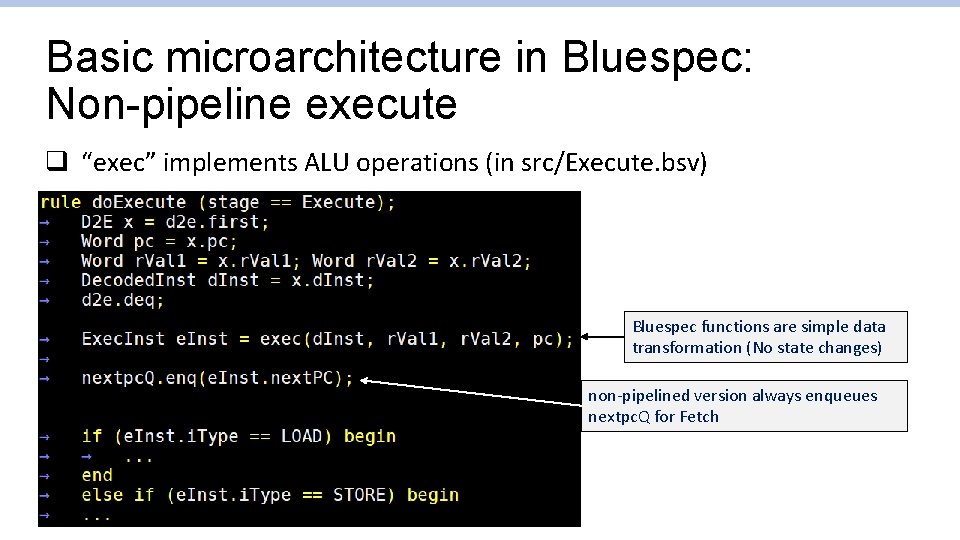

Basic microarchitecture in Bluespec: Non-pipeline execute
- “exec” implements ALU operations (in src/Execute.bsv)
  - Bluespec functions are simple data transformations (no state changes)
- The non-pipelined version always enqueues nextpcQ for Fetch

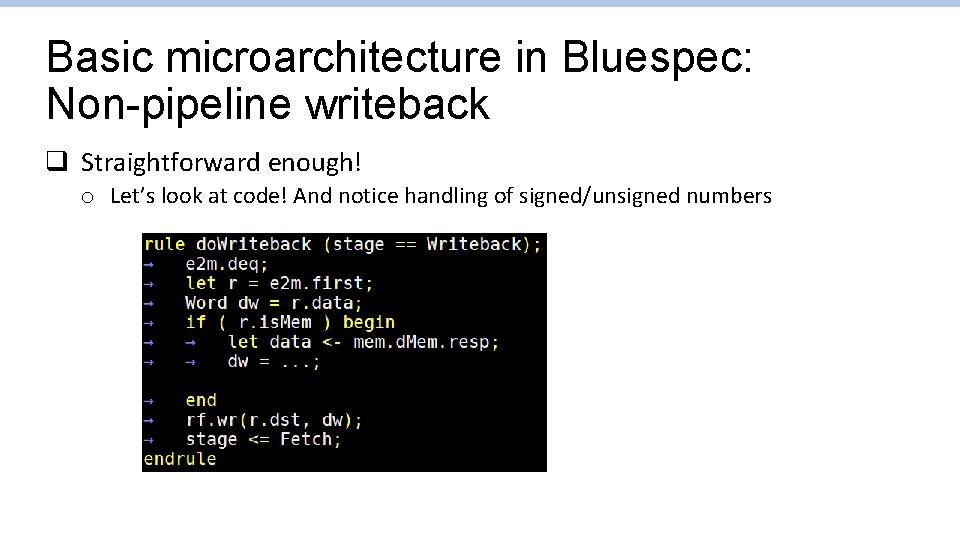

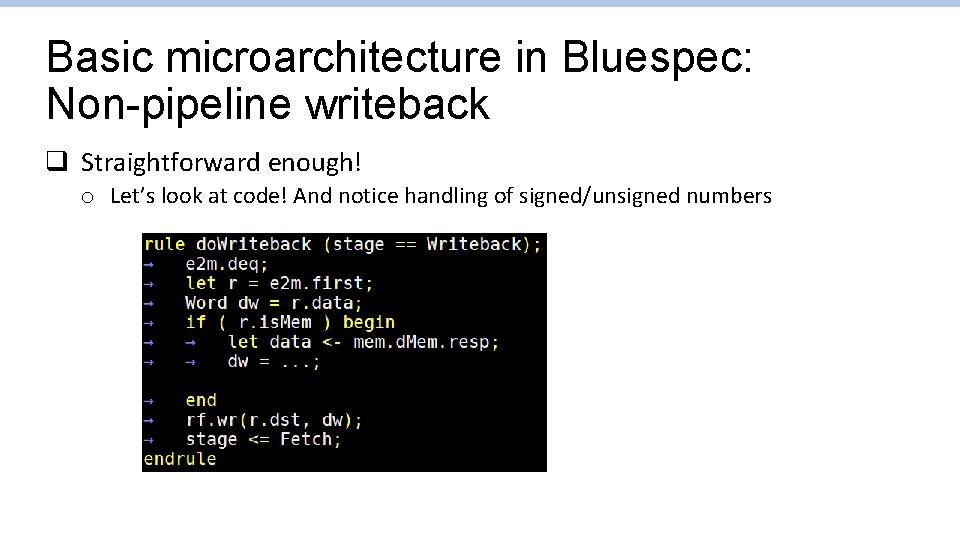

Basic microarchitecture in Bluespec: Non-pipeline writeback
- Straightforward enough!
  - Let’s look at code! And notice the handling of signed/unsigned numbers

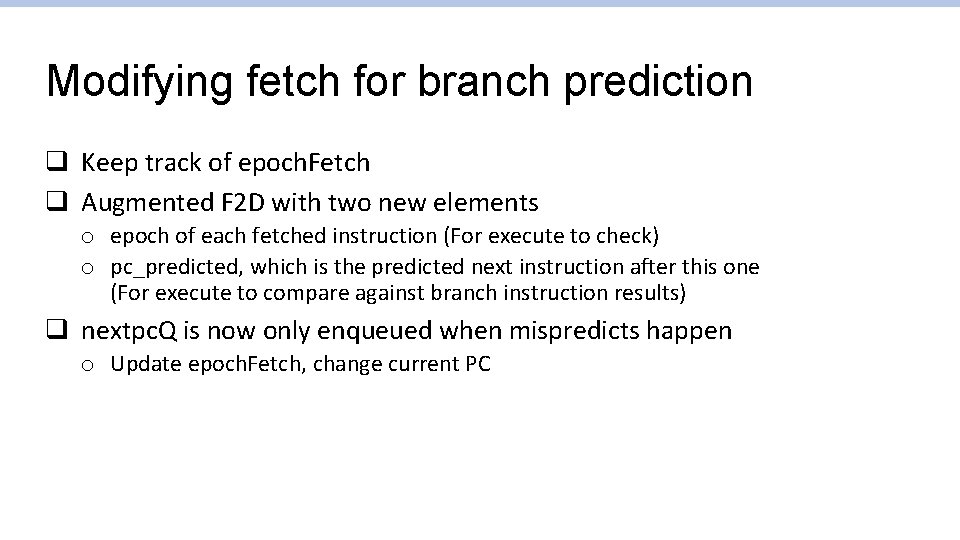

Let’s start pipelining
- Start with handling branch hazards
  - Data hazards produce wrong results, but without handling branch hazards we cannot pipeline things at all
    - Which address should Fetch read?
- Things to solve:
  1. Branch hazard
  2. Load-Use hazard
  3. Read-After-Write hazard

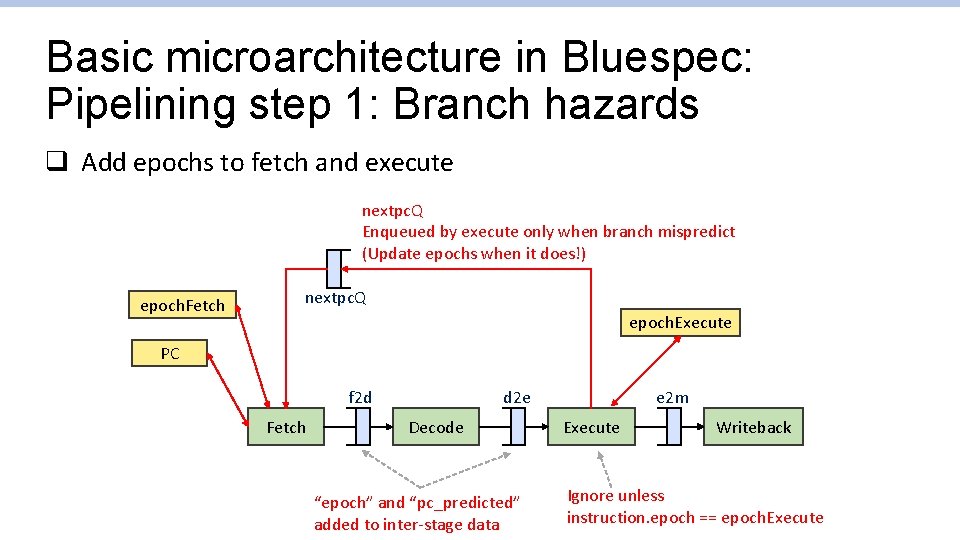

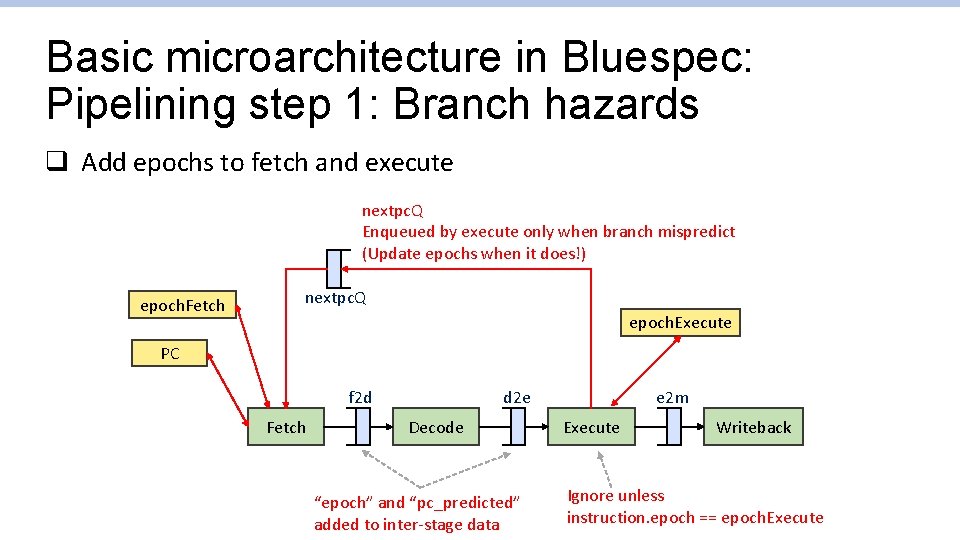

Basic microarchitecture in Bluespec: Pipelining step 1: Branch hazards
- Add epochs to fetch and execute (epochFetch, epochExecute)
- nextpcQ: enqueued by execute only on a branch mispredict (update epochs when it does!)
- “epoch” and “pc_predicted” added to inter-stage data
- Execute: ignore the instruction unless instruction.epoch == epochExecute

Figure: PC → Fetch → f2d → Decode → d2e → Execute → e2m → Writeback, with nextpcQ

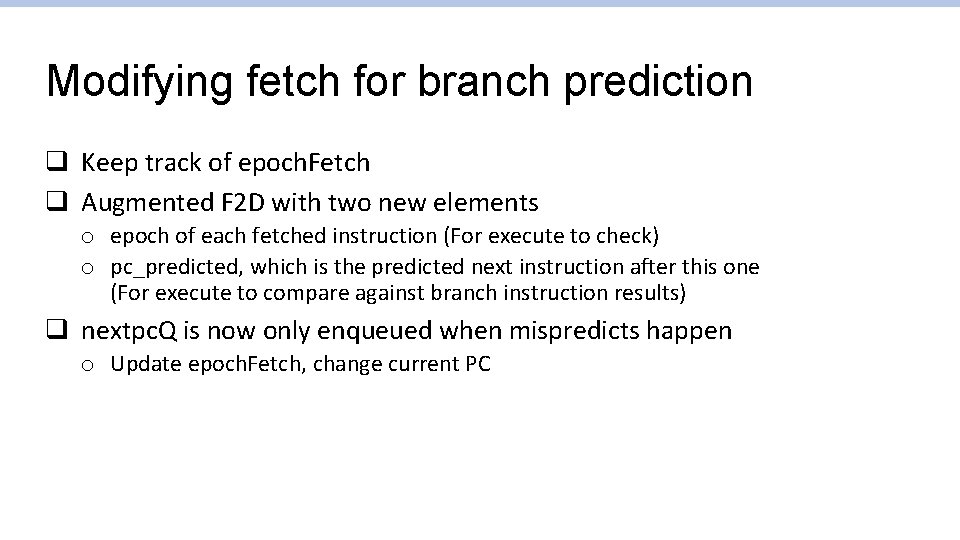

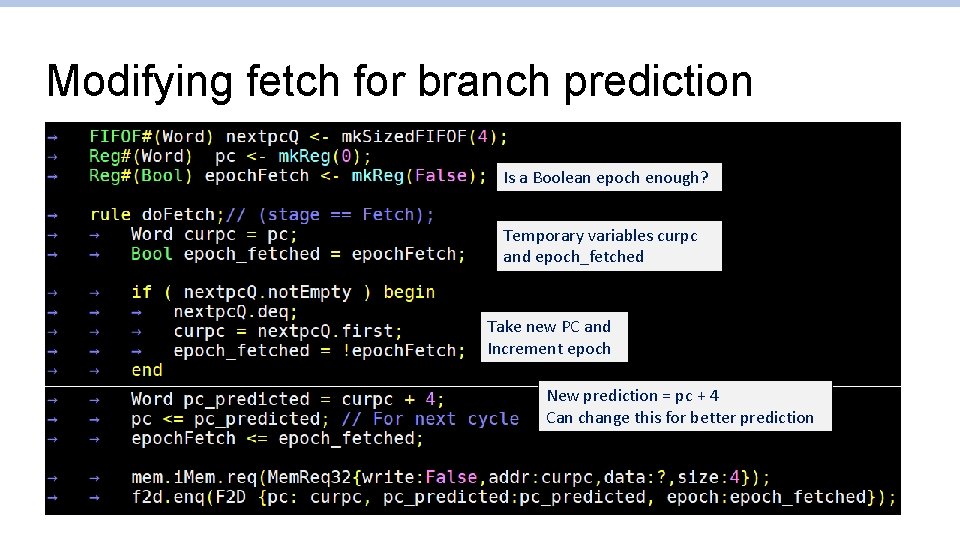

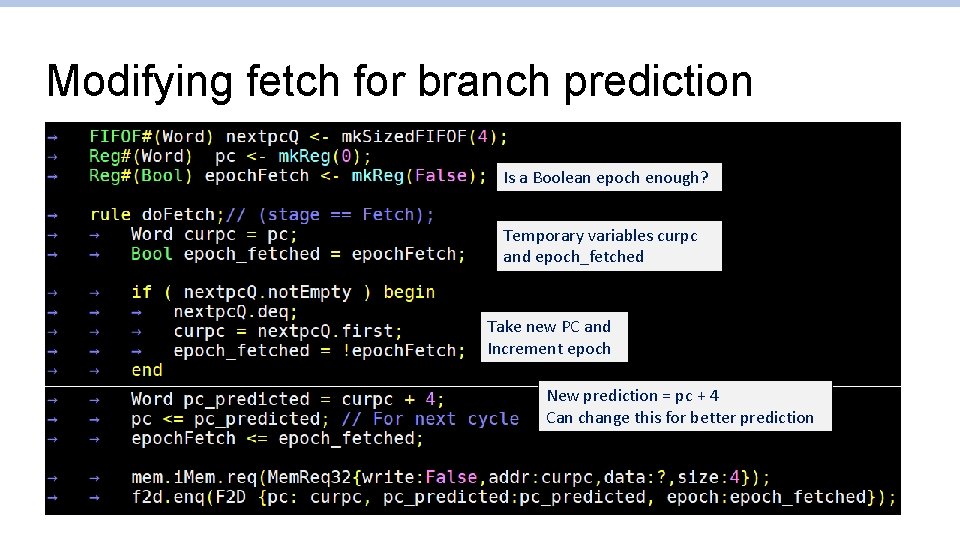

Modifying fetch for branch prediction
- Keep track of epochFetch
- Augment F2D with two new elements
  - The epoch of each fetched instruction (for execute to check)
  - pc_predicted, which is the predicted next instruction after this one (for execute to compare against branch instruction results)
- nextpcQ is now only enqueued when mispredicts happen
  - Update epochFetch, change the current PC

Modifying fetch for branch prediction
- Code annotations:
  - Temporary variables curpc and epoch_fetched
  - Take new PC and increment epoch
  - New prediction = pc + 4 (can change this for better prediction)
- Is a Boolean epoch enough?

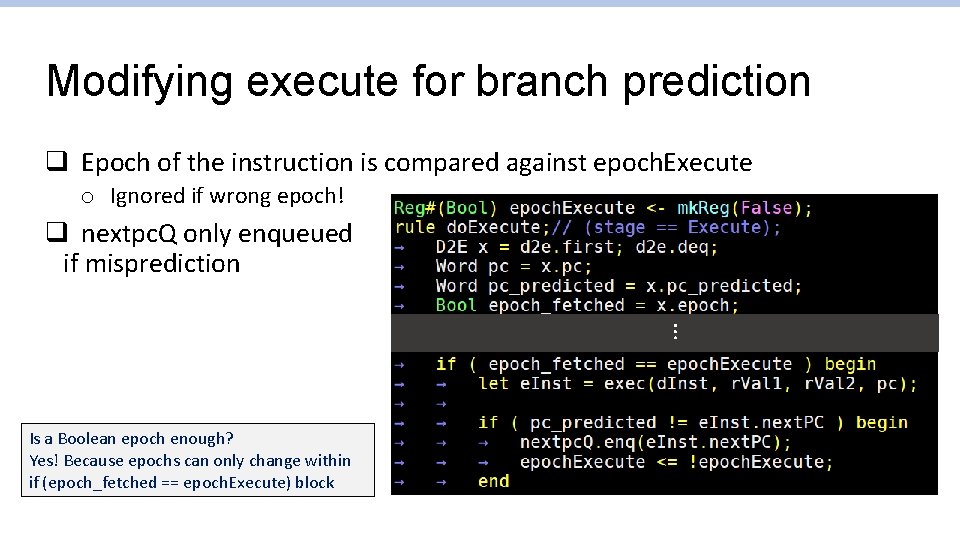

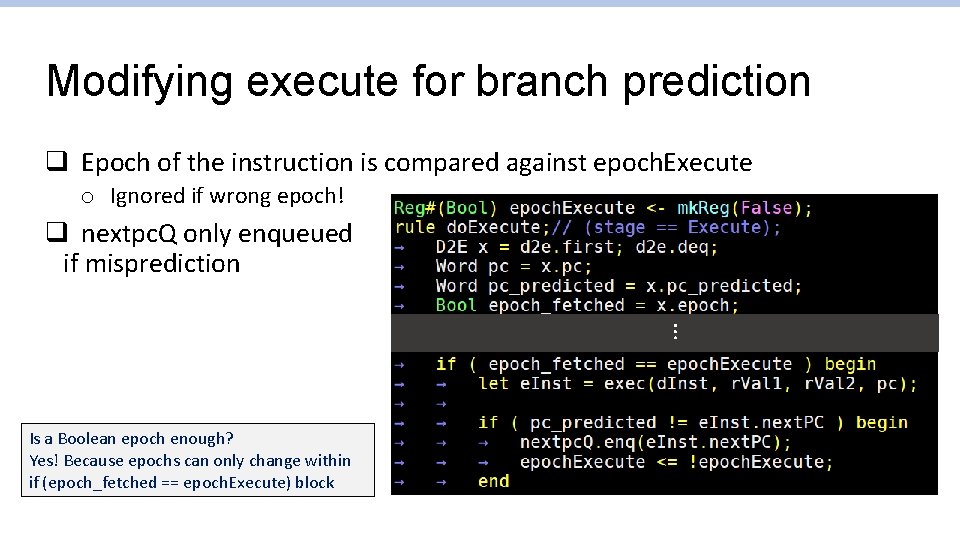

Modifying execute for branch prediction
- The epoch of the instruction is compared against epochExecute
  - Ignored if wrong epoch!
- nextpcQ only enqueued if misprediction
- Is a Boolean epoch enough?
  - Yes! Because epochs can only change within the if (epoch_fetched == epochExecute) block

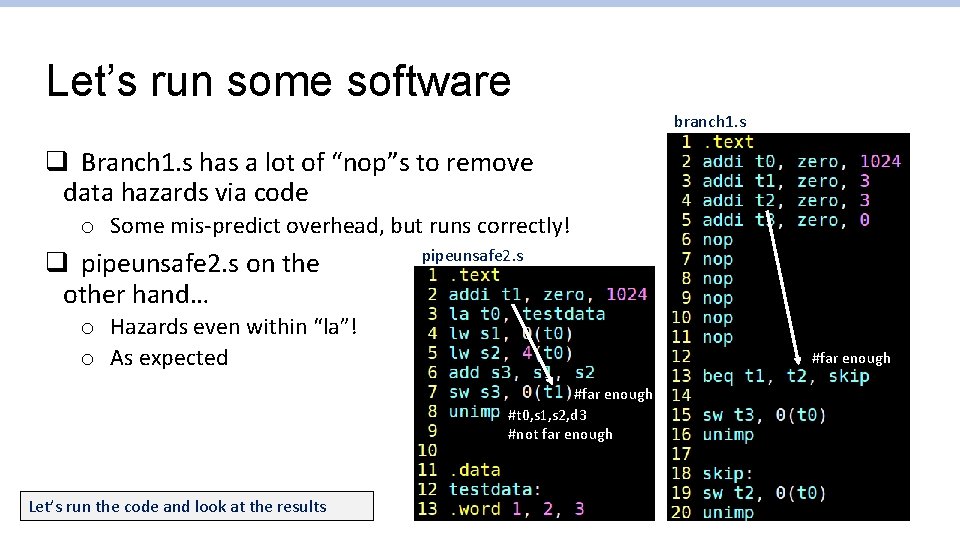

Basic microarchitecture in Bluespec: Pipelining step 1: Branch hazards
- Let’s look at code!
  - The finite-state-machine implementation
  - src/processor/Processor.bsv
- Notice: Now all stages fire at (almost) every cycle
  - Branches are handled correctly
  - Mis-predicts have performance overhead!
  - Data hazards not handled yet

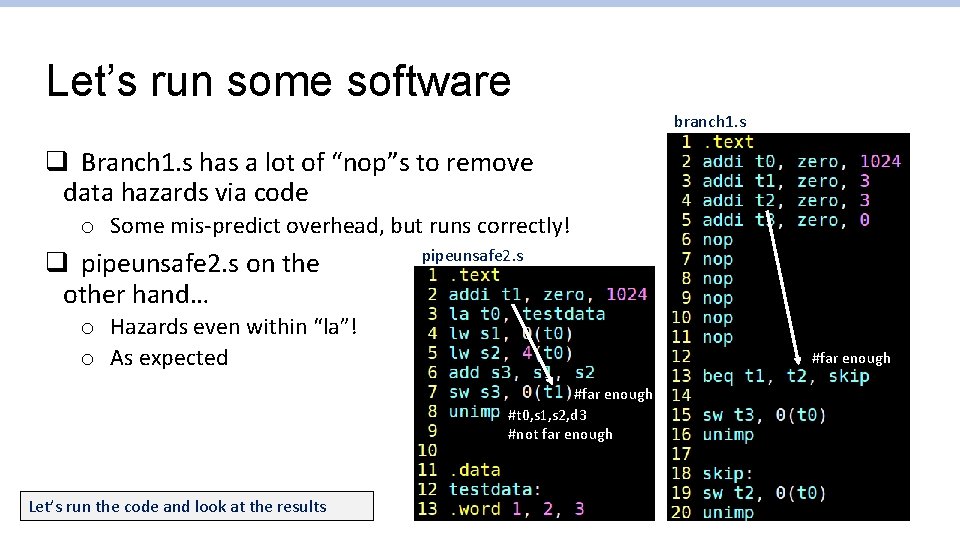

Let’s run some software
- The simulation environment is defined in mmap.txt
  - # Load sw/obj/branch1.bin to address 0, and MMIO to check if data written to 1024 is “3”
  - +0 sw/obj/branch1.bin
  - ? 1024 3
- Let’s try running branch1.bin, pipeunsafe2.bin

Let’s run some software
- branch1.s has a lot of “nop”s to remove data hazards via code
  - Some mis-predict overhead, but runs correctly!
- pipeunsafe2.s, on the other hand…
  - Hazards even within “la”!
  - As expected

Code listing comments (branch1.s, pipeunsafe2.s): “#far enough”, “#t0, s1, s2, d3”, “#not far enough”

Let’s run the code and look at the results

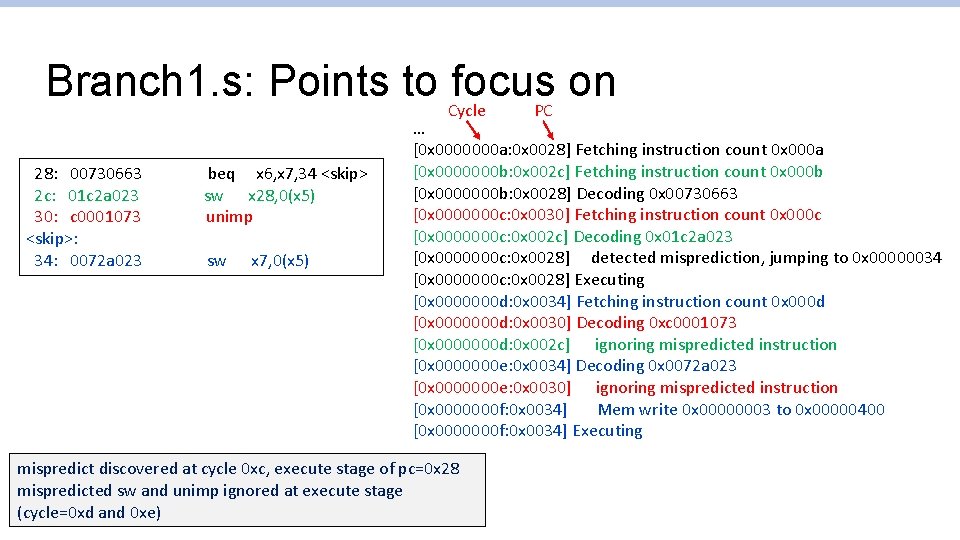

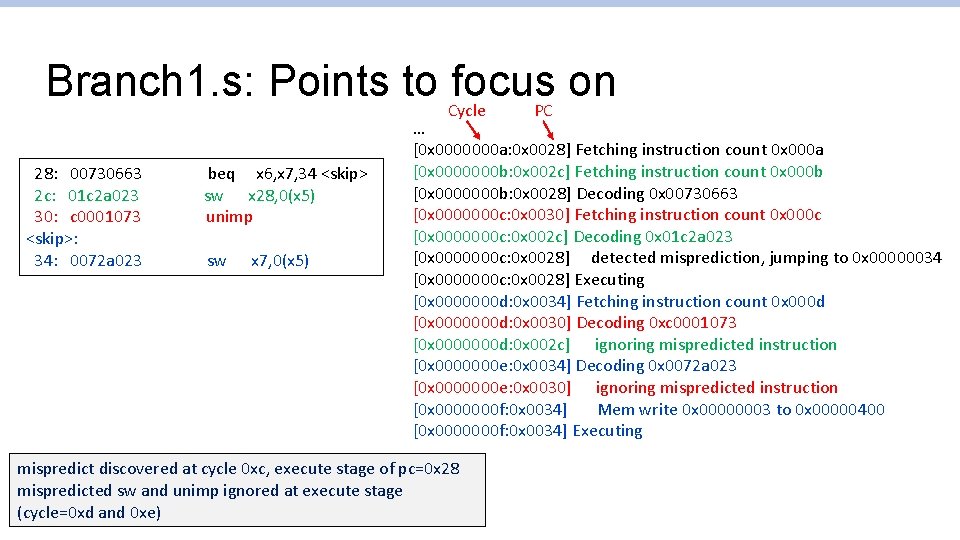

branch1.s: Points to focus on

    28: 00730663  beq x6, x7, 34 <skip>
    2c: 01c2a023  sw x28, 0(x5)
    30: c0001073  unimp
    <skip>:
    34: 0072a023  sw x7, 0(x5)

Log (each line is prefixed with [Cycle: PC]):

    …
    [0x0000000a: 0x0028] Fetching instruction count 0x000a
    [0x0000000b: 0x002c] Fetching instruction count 0x000b
    [0x0000000b: 0x0028] Decoding 0x00730663
    [0x0000000c: 0x0030] Fetching instruction count 0x000c
    [0x0000000c: 0x002c] Decoding 0x01c2a023
    [0x0000000c: 0x0028] detected misprediction, jumping to 0x00000034
    [0x0000000c: 0x0028] Executing
    [0x0000000d: 0x0034] Fetching instruction count 0x000d
    [0x0000000d: 0x0030] Decoding 0xc0001073
    [0x0000000d: 0x002c] ignoring mispredicted instruction
    [0x0000000e: 0x0034] Decoding 0x0072a023
    [0x0000000e: 0x0030] ignoring mispredicted instruction
    [0x0000000f: 0x0034] Mem write 0x00000003 to 0x00000400
    [0x0000000f: 0x0034] Executing

- Mispredict discovered at cycle 0xc, in the execute stage of pc=0x28
- Mispredicted sw and unimp ignored at the execute stage (cycle=0xd and 0xe)

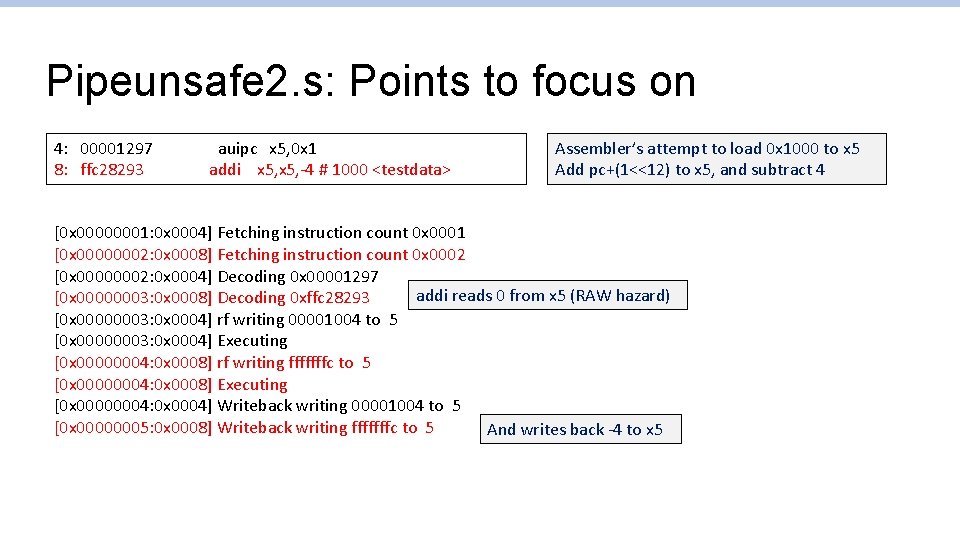

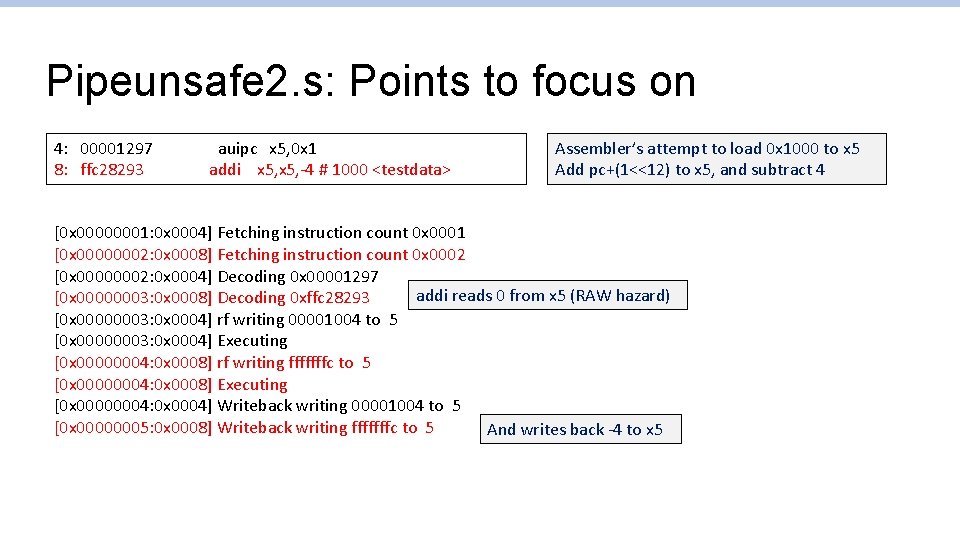

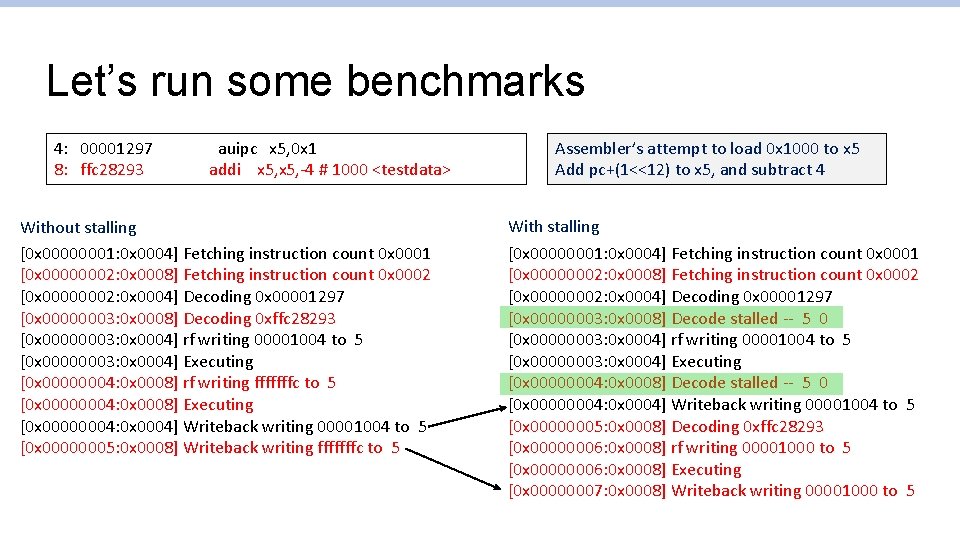

pipeunsafe2.s: Points to focus on

    4: 00001297  auipc x5, 0x1
    8: ffc28293  addi x5, -4 # 1000 <testdata>

- Assembler’s attempt to load 0x1000 to x5: add pc+(1<<12) to x5, and subtract 4

Log:

    [0x00000001: 0x0004] Fetching instruction count 0x0001
    [0x00000002: 0x0008] Fetching instruction count 0x0002
    [0x00000002: 0x0004] Decoding 0x00001297
    [0x00000003: 0x0008] Decoding 0xffc28293
    [0x00000003: 0x0004] rf writing 00001004 to 5
    [0x00000003: 0x0004] Executing
    [0x00000004: 0x0008] rf writing fffffffc to 5
    [0x00000004: 0x0008] Executing
    [0x00000004: 0x0004] Writeback writing 00001004 to 5
    [0x00000005: 0x0008] Writeback writing fffffffc to 5

- At cycle 3, addi reads 0 from x5 (RAW hazard)
- And writes back -4 to x5
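The two values in the log can be reproduced with a little 32-bit arithmetic. The sketch below is only a worked check of the numbers above; the helper names `auipc` and `addi` are made up for this example and model nothing beyond the add and the 32-bit wraparound.

```python
MASK32 = 0xFFFFFFFF


def auipc(pc, imm20):
    # auipc adds the 20-bit immediate, shifted left by 12, to the PC.
    return (pc + (imm20 << 12)) & MASK32


def addi(rs1_value, imm):
    # 32-bit wraparound addition of a sign-extended immediate.
    return (rs1_value + imm) & MASK32


# Intended result: auipc at pc=4 produces 0x1004, then addi subtracts 4.
correct = addi(auipc(0x4, 0x1), -4)

# With the RAW hazard, addi reads the stale x5 == 0 instead of 0x1004.
hazard = addi(0, -4)
```

Here `correct` is 0x1000 and `hazard` is 0xfffffffc, matching the "rf writing" values in the log with and without the hazard.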

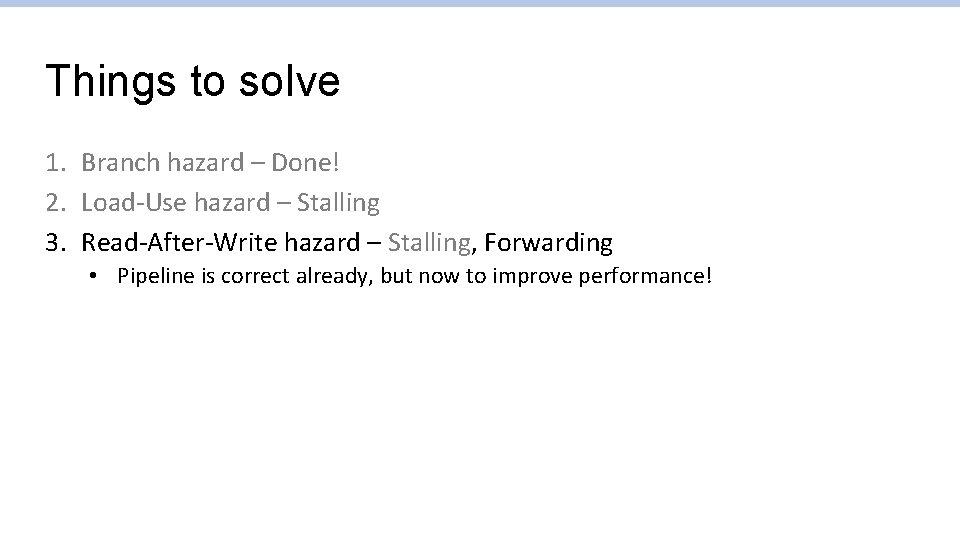

Things to solve
1. Branch hazard: Done!
2. Load-Use hazard: Stalling
3. Read-After-Write hazard: Stalling, Forwarding

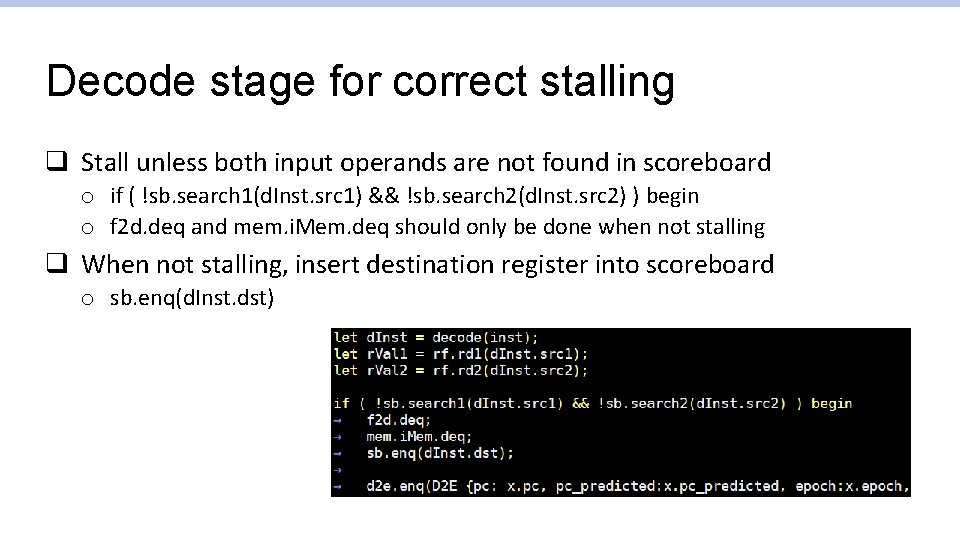

Solving data hazards!
- Step 1: Stalling
  - How to detect data hazards?
  - The decode stage must know whether a previous instruction incurs a data hazard
    - Will a previous instruction in flight write to a register I need to read from?
  - Restriction: Detection must happen combinationally, within the decode cycle
    - Otherwise, we will slow down the pipeline
- Step 2: Forwarding
  - To be continued

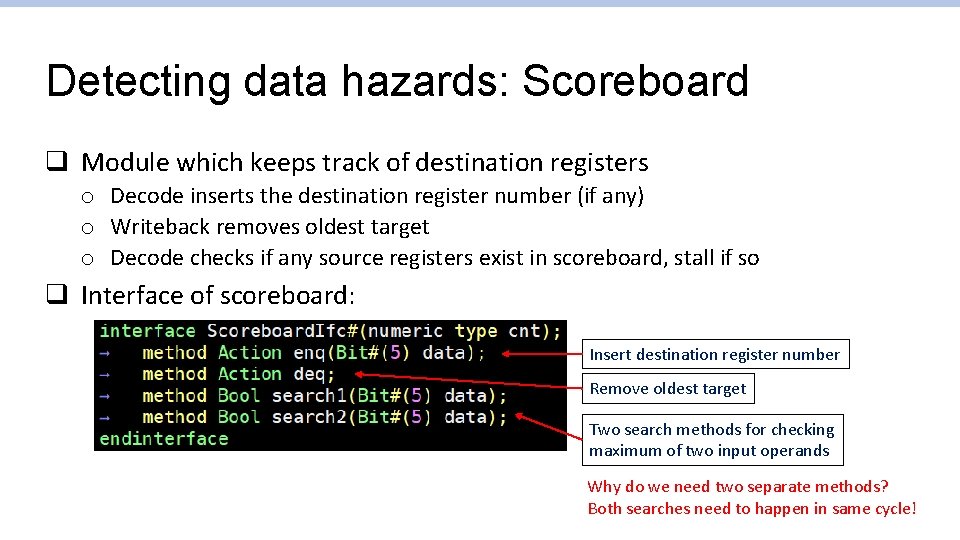

Detecting data hazards: Scoreboard
- Module which keeps track of destination registers
  - Decode inserts the destination register number (if any)
  - Writeback removes the oldest target
  - Decode checks if any source registers exist in the scoreboard, and stalls if so
- Interface of scoreboard:
  - Insert destination register number
  - Remove oldest target
  - Two search methods for checking a maximum of two input operands
- Why do we need two separate methods?
  - Both searches need to happen in the same cycle!
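A software analogy of the scoreboard is a FIFO of pending destination registers. The sketch below is a minimal model, not the Bluespec module itself: the method names follow the slides (`enq`, `deq`, `search1`, `search2`), but enqueueing `None` for instructions without a destination and never matching register 0 are assumptions made here.

```python
from collections import deque


class Scoreboard:
    """FIFO of destination registers for instructions still in flight."""

    def __init__(self):
        self._pending = deque()

    def enq(self, dst):
        # Decode inserts the destination; None stands for "no destination"
        # so that every instruction has exactly one entry to remove later.
        self._pending.append(dst)

    def deq(self):
        # Writeback removes the oldest entry (instructions retire in order).
        self._pending.popleft()

    def search1(self, src):
        # Assumption: x0 is never a hazard, since it always reads as zero.
        return src is not None and src != 0 and src in self._pending

    # In hardware the two searches are separate ports so both operands can be
    # checked in the same cycle; in software they are the same function.
    search2 = search1
```

Decode would stall whenever `sb.search1(src1) or sb.search2(src2)` is true, and otherwise call `sb.enq(dst)`.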

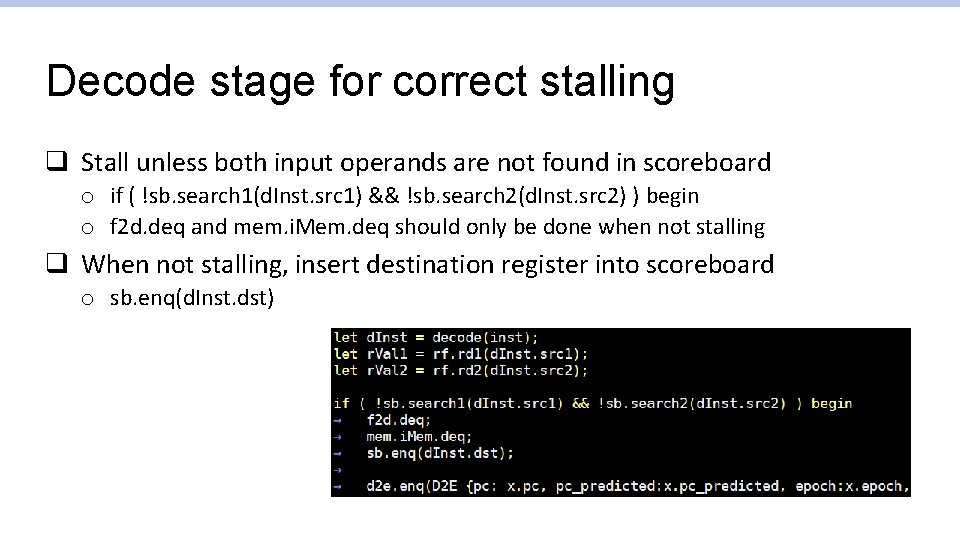

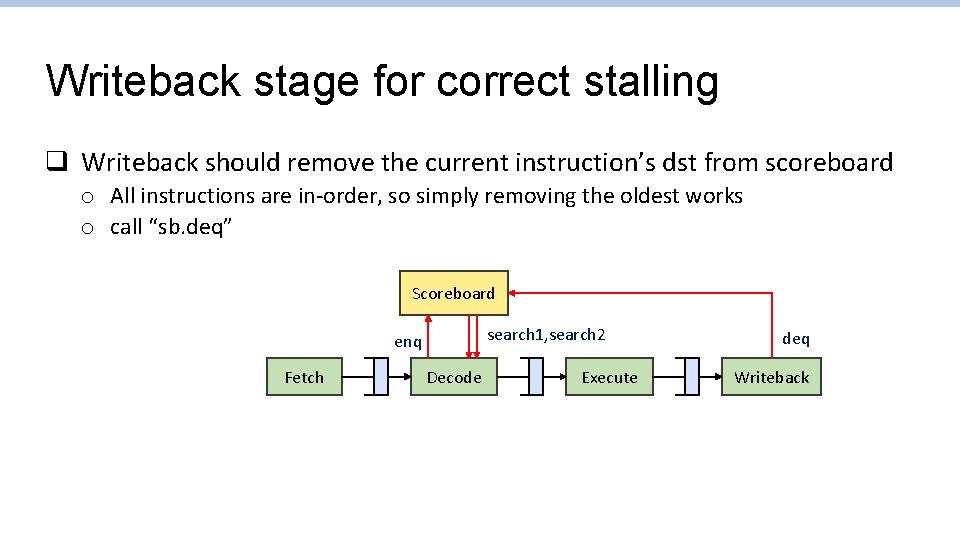

Decode stage for correct stalling
- Stall unless both input operands are not found in the scoreboard
  - if ( !sb.search1(dInst.src1) && !sb.search2(dInst.src2) ) begin
  - f2d.deq and mem.iMem.deq should only be done when not stalling
- When not stalling, insert the destination register into the scoreboard
  - sb.enq(dInst.dst)

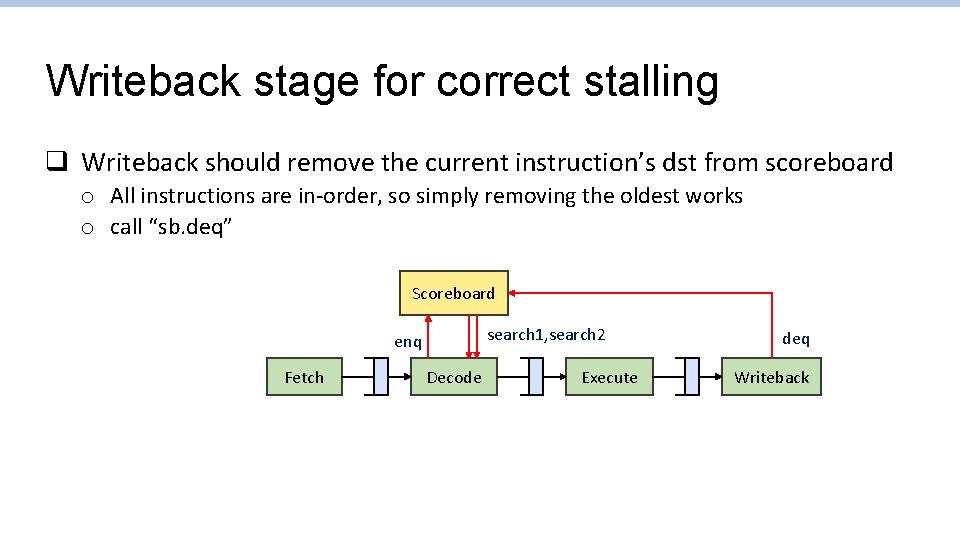

Writeback stage for correct stalling
- Writeback should remove the current instruction’s dst from the scoreboard
  - All instructions are in-order, so simply removing the oldest works
  - Call “sb.deq”

Figure: Decode calls search1, search2, and enq on the Scoreboard; Writeback calls deq (Fetch, Decode, Execute, Writeback)

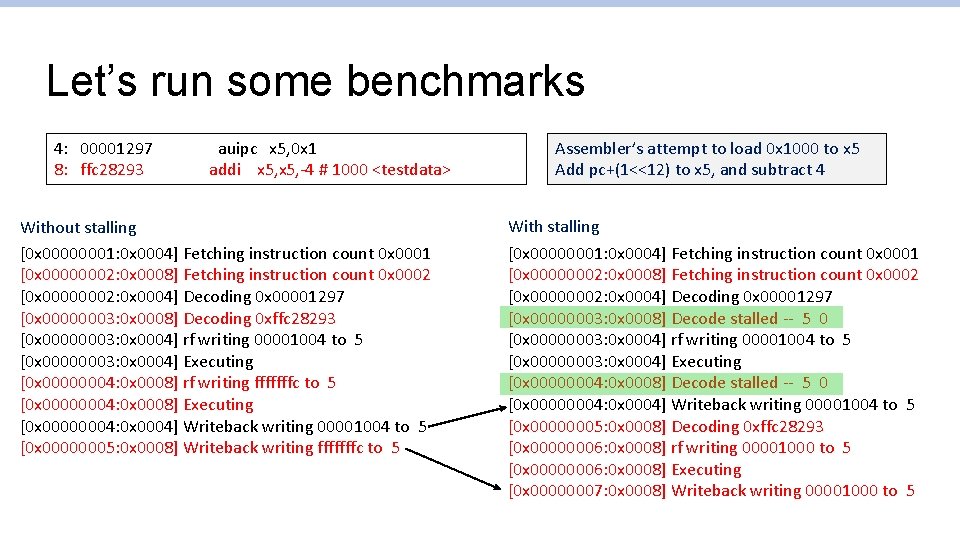

Let's run some benchmarks

    4: 00001297  auipc x5, 0x1
    8: ffc28293  addi x5, x5, -4  # 1000 <testdata>

This pair is the assembler's attempt to load 0x1000 into x5: auipc writes pc + (1<<12) to x5, and addi then subtracts 4.

Without stalling

    [0x00000001: 0x0004] Fetching instruction count 0x0001
    [0x00000002: 0x0008] Fetching instruction count 0x0002
    [0x00000002: 0x0004] Decoding 0x00001297
    [0x00000003: 0x0008] Decoding 0xffc28293
    [0x00000003: 0x0004] rf writing 00001004 to 5
    [0x00000003: 0x0004] Executing
    [0x00000004: 0x0008] rf writing fffffffc to 5
    [0x00000004: 0x0008] Executing
    [0x00000004: 0x0004] Writeback writing 00001004 to 5
    [0x00000005: 0x0008] Writeback writing fffffffc to 5

With stalling

    [0x00000001: 0x0004] Fetching instruction count 0x0001
    [0x00000002: 0x0008] Fetching instruction count 0x0002
    [0x00000002: 0x0004] Decoding 0x00001297
    [0x00000003: 0x0008] Decode stalled -- 5 0
    [0x00000003: 0x0004] rf writing 00001004 to 5
    [0x00000003: 0x0004] Executing
    [0x00000004: 0x0008] Decode stalled -- 5 0
    [0x00000004: 0x0004] Writeback writing 00001004 to 5
    [0x00000005: 0x0008] Decoding 0xffc28293
    [0x00000006: 0x0008] rf writing 00001000 to 5
    [0x00000006: 0x0008] Executing
    [0x00000007: 0x0008] Writeback writing 00001000 to 5

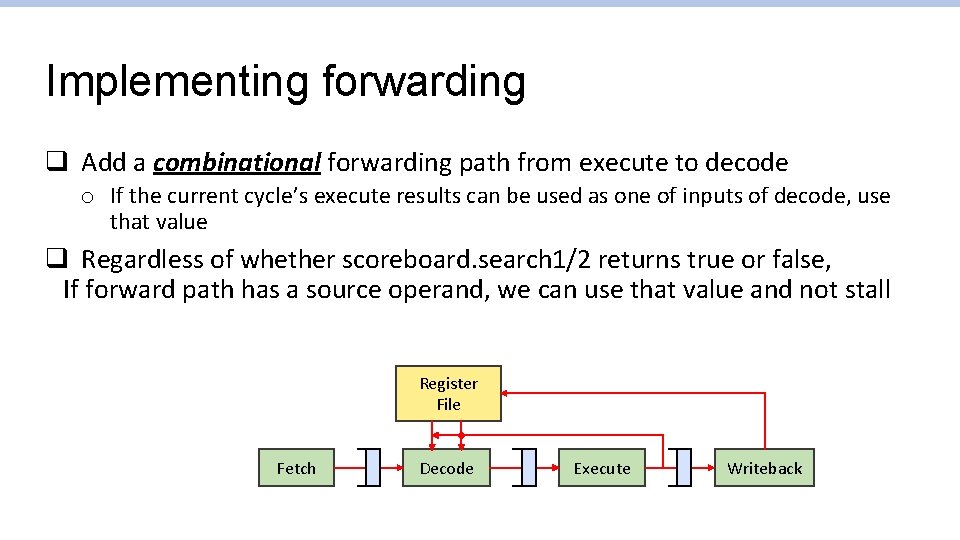

Things to solve
1. Branch hazard – Done!
2. Load-Use hazard – Stalling
3. Read-After-Write hazard – Stalling, Forwarding
- Pipeline is correct already, but now to improve performance!

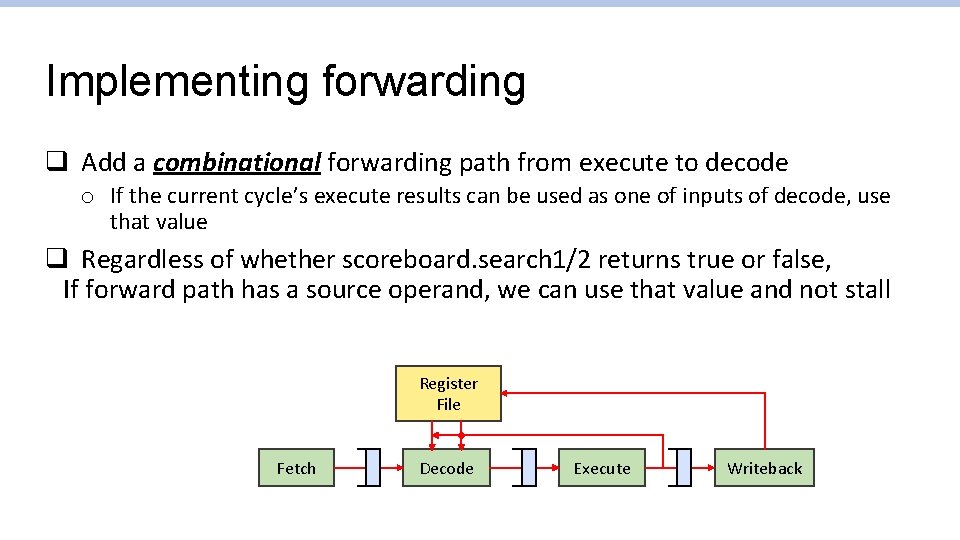

Implementing forwarding
- Add a combinational forwarding path from execute to decode
  - If the current cycle's execute result can be used as one of the inputs of decode, use that value
- Regardless of whether scoreboard.search1/2 returns true or false, if the forwarding path carries a source operand, we can use that value and not stall

Diagram: Fetch, Decode, Execute, Writeback pipeline with Register File and a forwarding path from Execute back to Decode.
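The operand selection rule can be sketched as a small function. This is an illustrative Python model under stated assumptions, not the course's Bluespec code: the function name `read_operand`, the `(dst, value)` tuple used for the bypass, and the plain list used for the in-flight destinations are all hypothetical.

```python
def read_operand(src, regfile, pending_dsts, bypass):
    """Pick the value for one source register in decode.

    src          -- source register number
    regfile      -- list of 32 register values
    pending_dsts -- destination registers of in-flight instructions
                    (what the scoreboard would search)
    bypass       -- (dst, value) produced by execute this cycle, or None

    Returns (value, stall). value is None when decode must stall.
    """
    # The forwarding path wins, regardless of what the scoreboard says.
    if bypass is not None and bypass[0] == src:
        return bypass[1], False
    # No forwarded value, and an in-flight instruction will write src: stall.
    if src in pending_dsts:
        return None, True
    # No hazard: the register file holds the current value.
    return regfile[src], False


regfile = [0] * 32
regfile[6] = 1024

# auipc x5 is in execute and produces 0x1004 while addi x5, x5, -4 is in decode.
forwarded = read_operand(5, regfile, pending_dsts=[5], bypass=(5, 0x1004))
# Same situation without a forwarding path.
stalled = read_operand(5, regfile, pending_dsts=[5], bypass=None)
# x6 has no in-flight writer, so it comes straight from the register file.
from_rf = read_operand(6, regfile, pending_dsts=[5], bypass=None)

print(forwarded, stalled, from_rf)
# prints: (4100, False) (None, True) (1024, False)
```

Checking the bypass first is safe in this in-order pipeline because the instruction in execute is the one immediately ahead of the instruction in decode, so it is the most recent writer of any register it targets.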

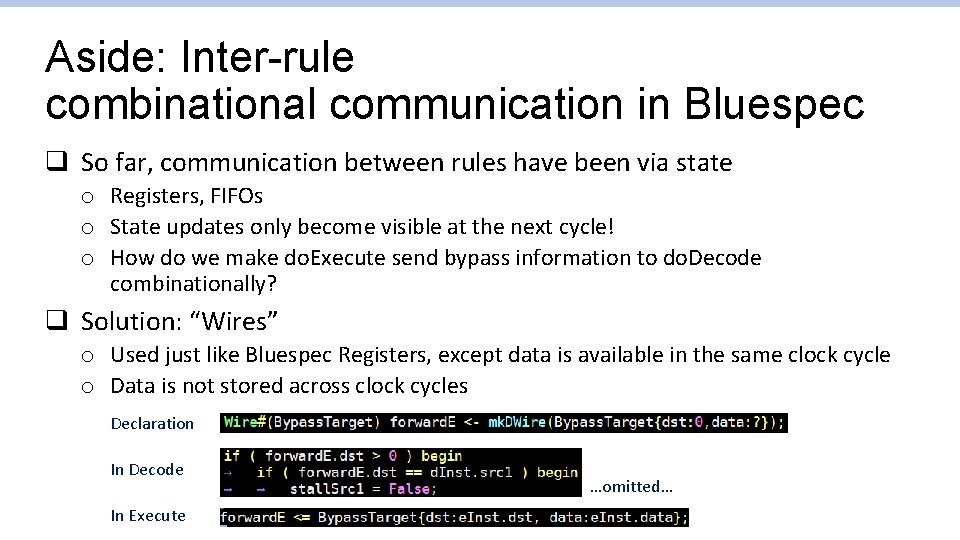

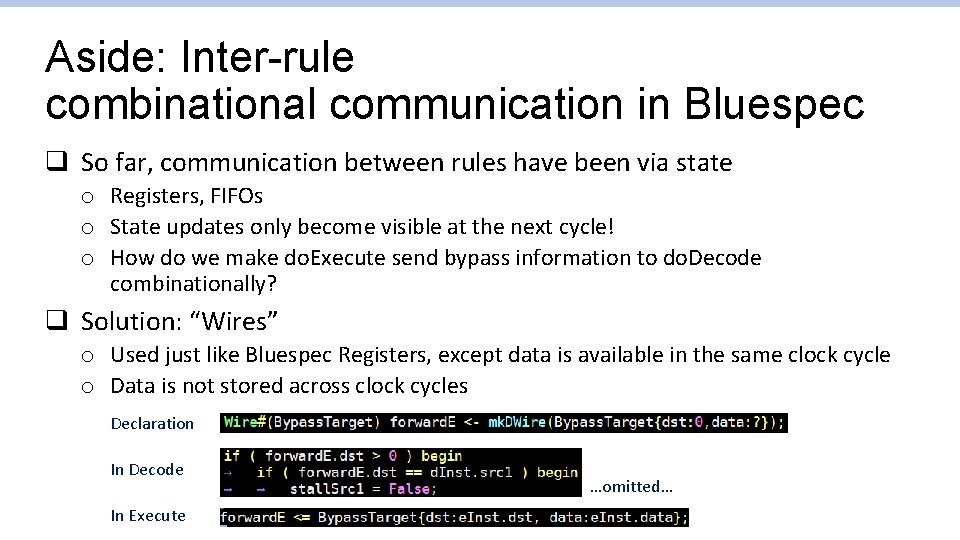

Aside: Inter-rule combinational communication in Bluespec
- So far, communication between rules has been via state
  - Registers, FIFOs
  - State updates only become visible at the next cycle!
  - How do we make doExecute send bypass information to doDecode combinationally?
- Solution: "Wires"
  - Used just like Bluespec Registers, except data is available in the same clock cycle
  - Data is not stored across clock cycles

Code shown on the slide (Declaration, In Decode, In Execute): …omitted…
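Since the Bluespec code is omitted here, the difference in visibility can be illustrated with a toy Python model. This is only a sketch of the timing semantics described in the bullets; the classes `Reg` and `Wire`, the `tick` method and the `run_cycle` helper are inventions of this example and are not Bluespec APIs.

```python
class Reg:
    """Register model: a write becomes visible only after the clock edge."""

    def __init__(self, init):
        self._cur = init
        self._next = init

    def write(self, value):
        self._next = value

    def read(self):
        return self._cur

    def tick(self):
        self._cur = self._next


class Wire:
    """Wire model: a write is visible in the same cycle and is not stored."""

    def __init__(self):
        self._val = None  # None means "nothing written this cycle"

    def write(self, value):
        self._val = value

    def read(self):
        return self._val

    def tick(self):
        self._val = None


def run_cycle(result_reg, bypass_wire, execute_result):
    """One clock cycle: execute produces a value, decode looks at it."""
    # "Execute" side: publish the result through state and through the wire.
    result_reg.write(execute_result)
    bypass_wire.write(execute_result)
    # "Decode" side, same cycle: what does it observe?
    seen = (result_reg.read(), bypass_wire.read())
    # Clock edge.
    result_reg.tick()
    bypass_wire.tick()
    return seen


reg, wire = Reg(0), Wire()
seen_via_reg, seen_via_wire = run_cycle(reg, wire, 0x1004)
print(hex(seen_via_reg), hex(seen_via_wire))  # prints: 0x0 0x1004
print(hex(reg.read()), wire.read())           # prints: 0x1004 None
```

In the same cycle the reader sees the old register contents but the new wire value; after the clock edge the register holds the value and the wire is empty again. The model assumes the writer runs before the reader within a cycle, which the sketch enforces simply by call order.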

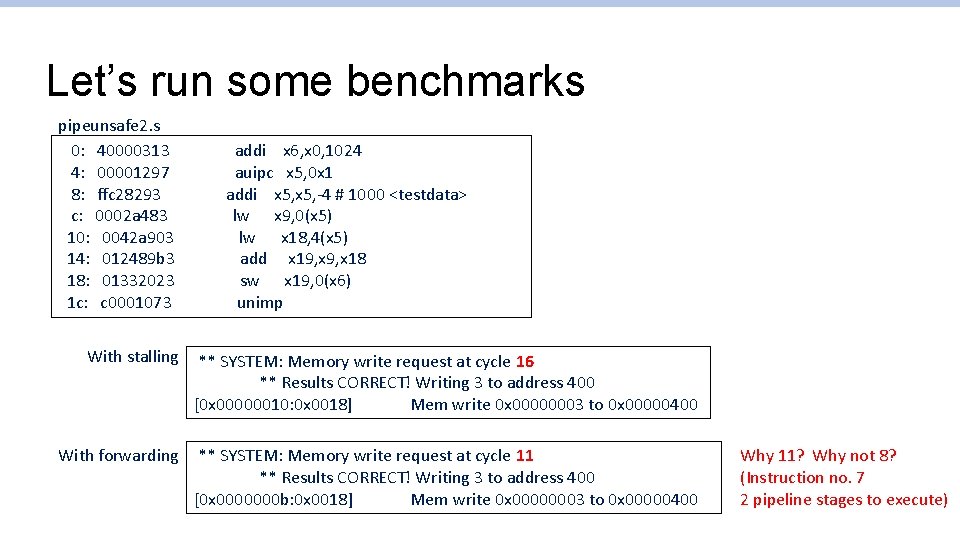

Let's run some benchmarks

pipeunsafe2.s

    0:  40000313  addi x6, x0, 1024
    4:  00001297  auipc x5, 0x1
    8:  ffc28293  addi x5, x5, -4  # 1000 <testdata>
    c:  0002a483  lw x9, 0(x5)
    10: 0042a903  lw x18, 4(x5)
    14: 012489b3  add x19, x9, x18
    18: 01332023  sw x19, 0(x6)
    1c: c0001073  unimp

With stalling

    ** SYSTEM: Memory write request at cycle 16
    ** Results CORRECT! Writing 3 to address 400
    [0x00000010: 0x0018] Mem write 0x00000003 to 0x00000400

With forwarding

    ** SYSTEM: Memory write request at cycle 11
    ** Results CORRECT! Writing 3 to address 400
    [0x0000000b: 0x0018] Mem write 0x00000003 to 0x00000400

Why 11? Why not 8? (The sw is instruction no. 7: with no stalls it is fetched in cycle 6 and needs 2 more pipeline stages to reach execute.)

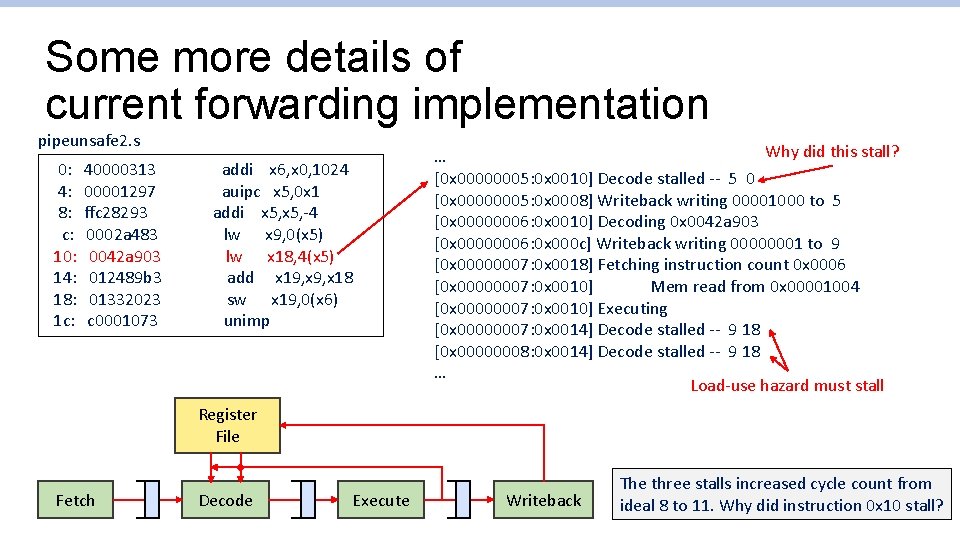

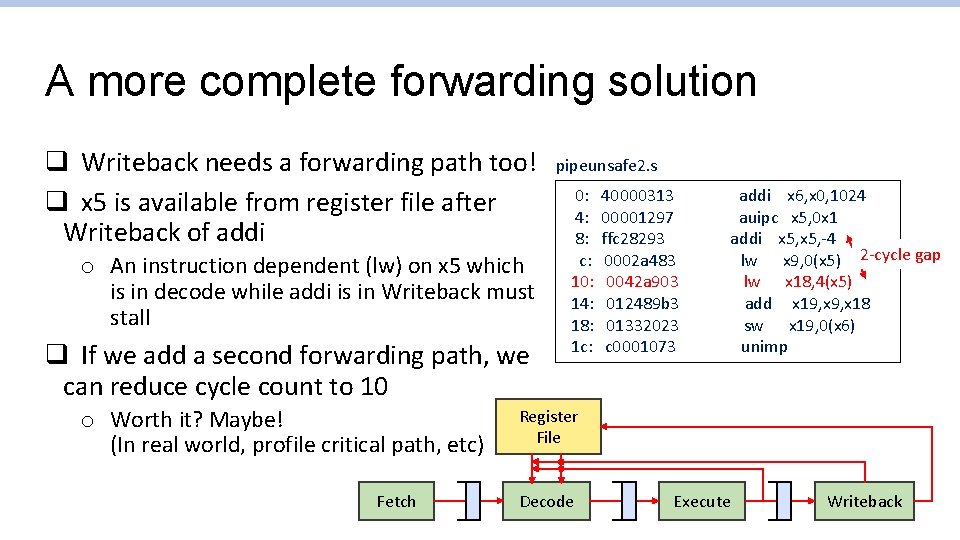

Some more details of current forwarding implementation

pipeunsafe2.s

    0:  40000313  addi x6, x0, 1024
    4:  00001297  auipc x5, 0x1
    8:  ffc28293  addi x5, x5, -4
    c:  0002a483  lw x9, 0(x5)
    10: 0042a903  lw x18, 4(x5)
    14: 012489b3  add x19, x9, x18
    18: 01332023  sw x19, 0(x6)
    1c: c0001073  unimp

    …
    [0x00000005: 0x0010] Decode stalled -- 5 0
    [0x00000005: 0x0008] Writeback writing 00001000 to 5
    [0x00000006: 0x0010] Decoding 0x0042a903
    [0x00000006: 0x000c] Writeback writing 00000001 to 9
    [0x00000007: 0x0018] Fetching instruction count 0x0006
    [0x00000007: 0x0010] Mem read from 0x00001004
    [0x00000007: 0x0010] Executing
    [0x00000007: 0x0014] Decode stalled -- 9 18
    [0x00000008: 0x0014] Decode stalled -- 9 18
    …

- Cycle 5, "Decode stalled -- 5 0": Why did this stall?
- Cycles 7 and 8, "Decode stalled -- 9 18": Load-use hazard must stall
- The three stalls increased the cycle count from the ideal 8 to 11. Why did the instruction at 0x10 stall?

Diagram: Fetch, Decode, Execute, Writeback pipeline with Register File.

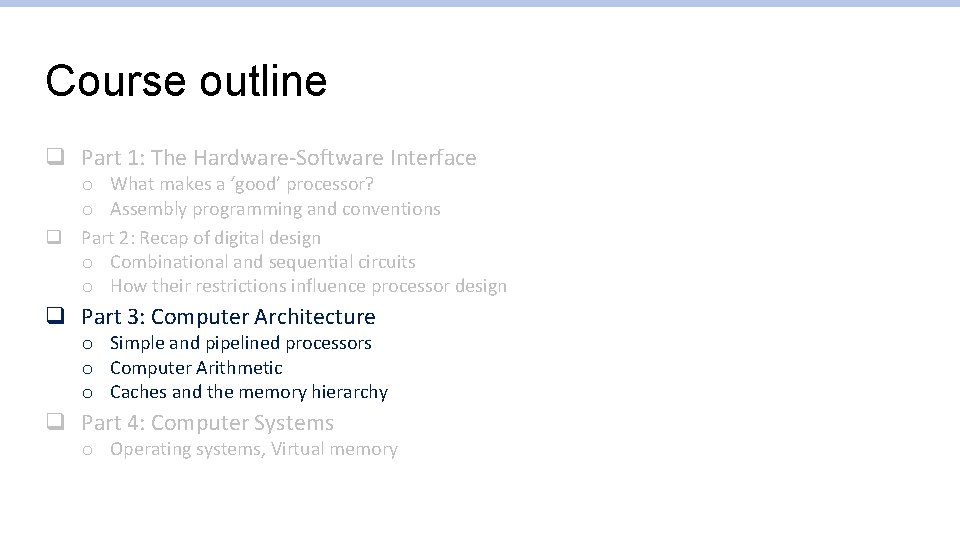

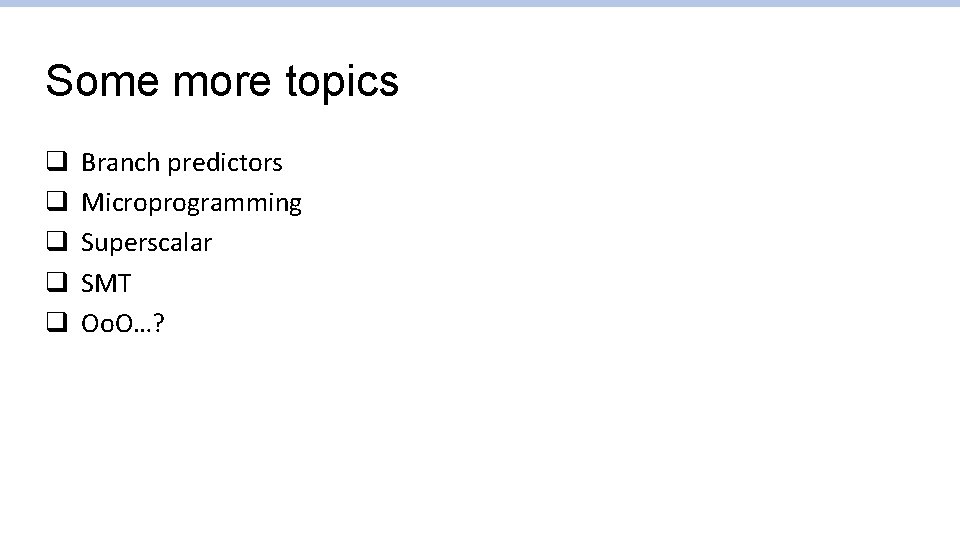

A more complete forwarding solution
- Writeback needs a forwarding path too!
- Without one, x5 is only available from the register file after the Writeback of addi
  - An instruction that depends on x5 (here an lw) and is in Decode while addi is in Writeback must stall
- If we add a second forwarding path, we can reduce the cycle count to 10
  - Worth it? Maybe! (In the real world: profile, check the critical path, etc.)

pipeunsafe2.s

    0:  40000313  addi x6, x0, 1024
    4:  00001297  auipc x5, 0x1
    8:  ffc28293  addi x5, x5, -4
    c:  0002a483  lw x9, 0(x5)
    10: 0042a903  lw x18, 4(x5)
    14: 012489b3  add x19, x9, x18
    18: 01332023  sw x19, 0(x6)
    1c: c0001073  unimp

2-cycle gap: the addi at 0x8 and the lw at 0x10 that reads its x5 are two instructions apart, so the lw is in Decode while the addi is in Writeback.

Diagram: Fetch, Decode, Execute, Writeback pipeline with Register File.
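The cycle counts quoted for pipeunsafe2.s (16 with stalling only, 11 with execute forwarding, 10 with a second path from Writeback) can be reproduced with a small timing model. This is a hedged Python sketch, not the course's implementation. It assumes that instruction i is fetched in cycle i, that decode, execute and writeback each take one cycle, that a written-back register becomes readable the cycle after Writeback, and that load results are never forwarded from either stage. The last assumption is inferred from the slide numbers; the names `Inst`, `operand_ready` and `execute_cycles` are made up for this example.

```python
from collections import namedtuple

# srcs lists only source registers that some earlier instruction writes.
Inst = namedtuple("Inst", "text dst srcs is_load")

PROGRAM = [
    Inst("addi x6, x0, 1024", 6, (), False),
    Inst("auipc x5, 0x1", 5, (), False),
    Inst("addi x5, x5, -4", 5, (5,), False),
    Inst("lw x9, 0(x5)", 9, (5,), True),
    Inst("lw x18, 4(x5)", 18, (5,), True),
    Inst("add x19, x9, x18", 19, (9, 18), False),
    Inst("sw x19, 0(x6)", None, (19, 6), False),
]


def operand_ready(cycle, producer_decode, is_load, fwd_exec, fwd_wb):
    """Can decode obtain the producer's result in this cycle?"""
    # Producer is in execute at decode+1 and in writeback at decode+2.
    if cycle >= producer_decode + 3:
        return True  # value is in the register file, scoreboard entry gone
    if is_load:
        return False  # assumption: load results are never forwarded
    if fwd_exec and cycle == producer_decode + 1:
        return True  # forwarded from execute
    if fwd_wb and cycle == producer_decode + 2:
        return True  # forwarded from writeback
    return False


def execute_cycles(program, fwd_exec=False, fwd_wb=False):
    """Return the cycle in which each instruction is in the execute stage."""
    last_writer = {}  # register -> (decode cycle, is_load) of latest writer
    prev_decode = 0
    cycles = []
    for i, inst in enumerate(program):
        # Fetched in cycle i, and decode is in order.
        cycle = max(i + 1, prev_decode + 1)
        while not all(
            operand_ready(cycle, *last_writer[s], fwd_exec, fwd_wb)
            for s in inst.srcs
            if s in last_writer
        ):
            cycle += 1  # decode stalls for one cycle
        if inst.dst is not None:
            last_writer[inst.dst] = (cycle, inst.is_load)
        prev_decode = cycle
        cycles.append(cycle + 1)
    return cycles


stall_only = execute_cycles(PROGRAM)[-1]
exec_fwd = execute_cycles(PROGRAM, fwd_exec=True)[-1]
both_fwd = execute_cycles(PROGRAM, fwd_exec=True, fwd_wb=True)[-1]
print(stall_only, exec_fwd, both_fwd)  # prints: 16 11 10
```

The last element is the cycle in which the sw executes, which is where the logs report the memory write. Under these assumptions the second forwarding path removes only the stall of the lw at 0x10; the two load-use stall cycles of the add remain, which is why the count drops to 10 rather than 9.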

Some more topics
- Branch predictors
- Microprogramming
- Superscalar, SMT, OoO…?