
Crawling

Paolo Ferragina, Dipartimento di Informatica, Università di Pisa

Reading 20.1, 20.2 and 20.3

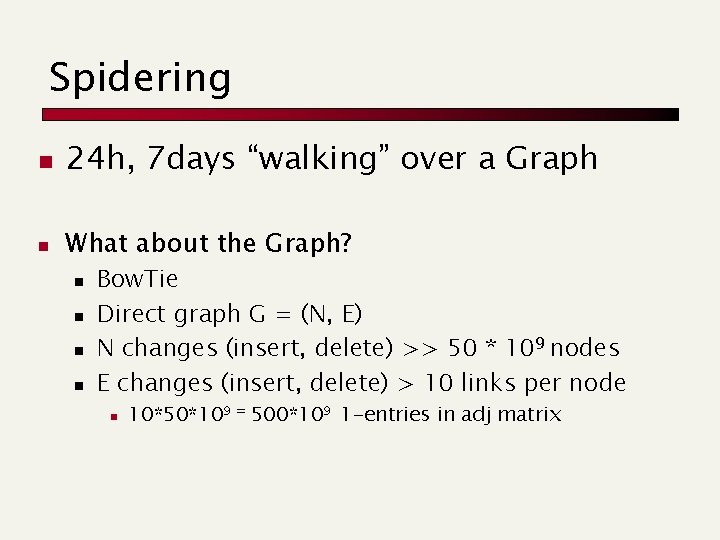

Spidering

- 24 h, 7 days "walking" over a graph
- What about the graph?
  - Bow-tie
  - Directed graph G = (N, E)
  - N changes (insert, delete): >> 50 * 10^9 nodes
  - E changes (insert, delete): > 10 links per node
  - 10 * 50 * 10^9 = 500 * 10^9 1-entries in the adjacency matrix

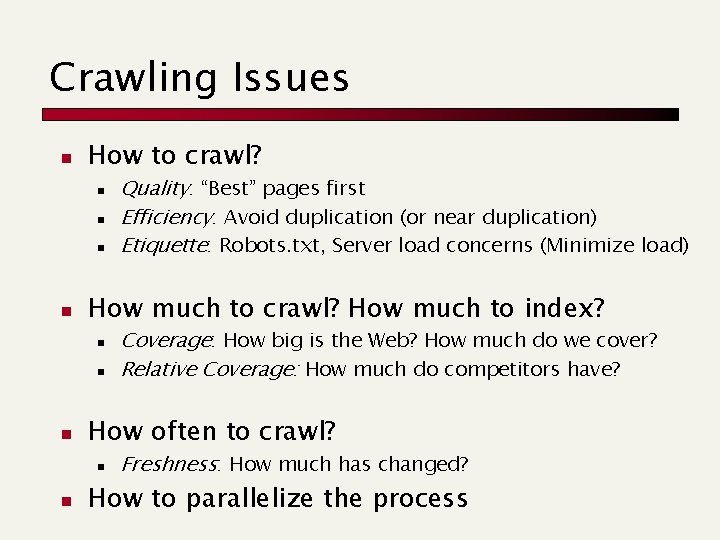

Crawling Issues

- How to crawl?
  - Quality: "Best" pages first
  - Efficiency: Avoid duplication (or near duplication)
  - Etiquette: robots.txt, server load concerns (minimize load)
- How much to crawl? How much to index?
  - Coverage: How big is the Web? How much do we cover?
  - Relative coverage: How much do competitors have?
- How often to crawl?
  - Freshness: How much has changed?
- How to parallelize the process

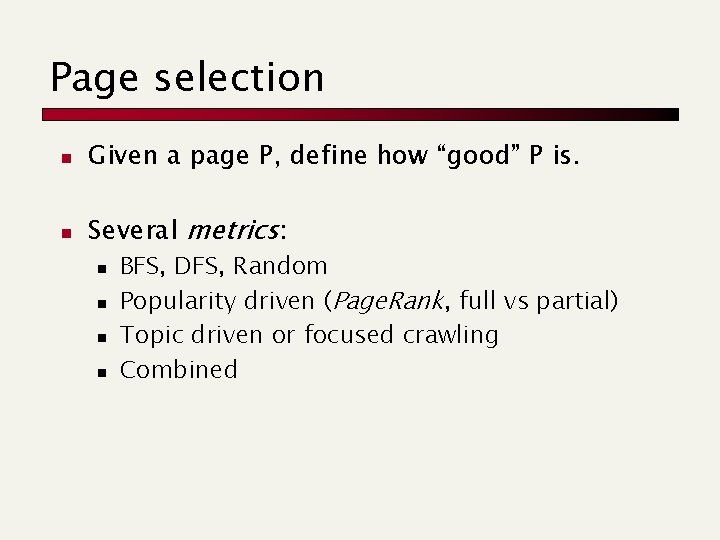

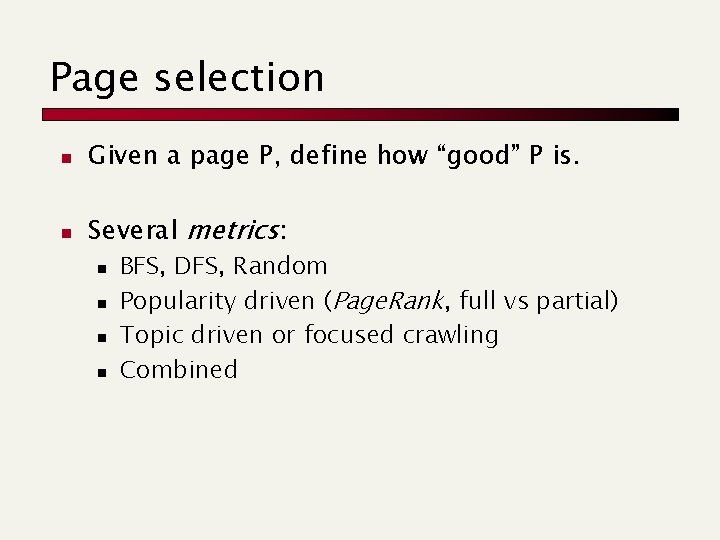

Page selection

- Given a page P, define how "good" P is.
- Several metrics:
  - BFS, DFS, Random
  - Popularity driven (PageRank, full vs partial)
  - Topic driven or focused crawling
  - Combined

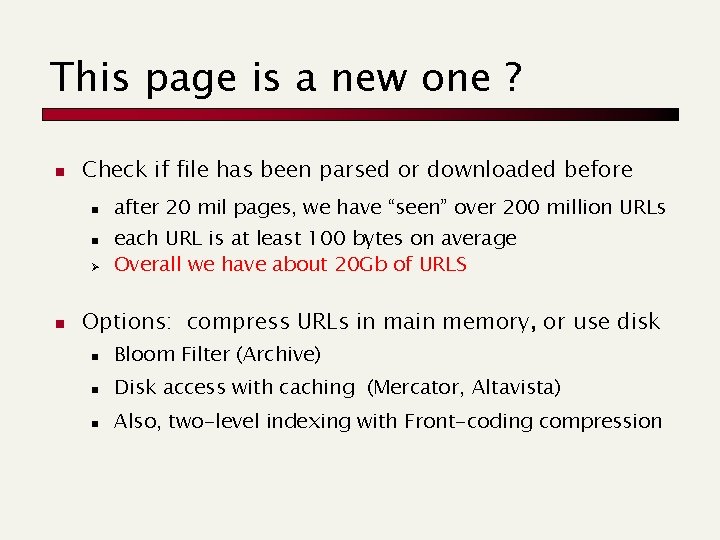

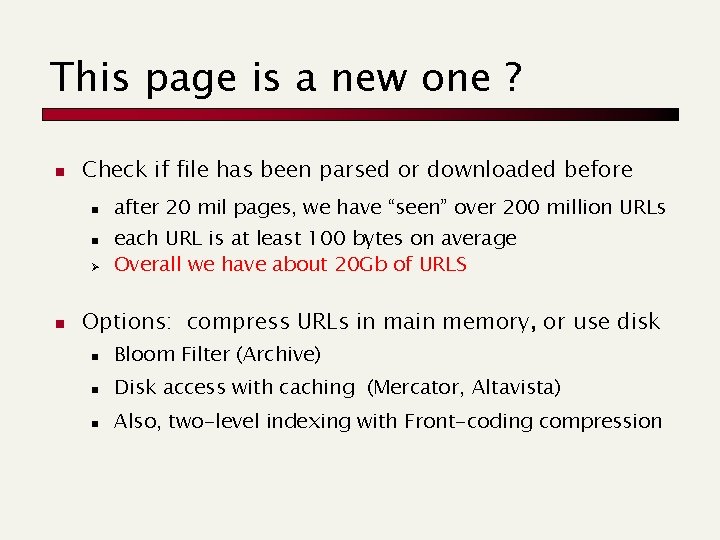

Is this page a new one?

- Check if the file has been parsed or downloaded before
  - after 20 million pages, we have "seen" over 200 million URLs
  - each URL is at least 100 bytes on average
  - overall we have about 20 GB of URLs
- Options: compress URLs in main memory, or use disk
  - Bloom Filter (Archive)
  - Disk access with caching (Mercator, Altavista)
  - Also, two-level indexing with front-coding compression
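To make the Bloom Filter option concrete, here is a minimal sketch of a "URL already seen?" test. The class name `BloomFilter`, the double-hashing scheme built on SHA-256, and the sizes are illustrative assumptions, not taken from the slides. A Bloom filter never misses a URL that was inserted, but it may occasionally report an unseen URL as seen.

```python
import hashlib


class BloomFilter:
    """Approximate set membership: no false negatives, some false positives."""

    def __init__(self, num_bits: int, num_hashes: int) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, url: str):
        # Assumption: derive the k bit positions from two 64-bit halves of a
        # single SHA-256 digest (double hashing). Any good hash family works.
        digest = hashlib.sha256(url.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1
        for i in range(self.num_hashes):
            yield (h1 + i * h2) % self.num_bits

    def add(self, url: str) -> None:
        for pos in self._positions(url):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, url: str) -> bool:
        return all(
            self.bits[pos // 8] & (1 << (pos % 8))
            for pos in self._positions(url)
        )


seen = BloomFilter(num_bits=10_000, num_hashes=7)
seen.add("http://nutch.apache.org/")
print("http://nutch.apache.org/" in seen)  # True: inserted URLs are never missed
```

With about 10 bits per URL and 7 hash functions, the false-positive rate is roughly 1%, so the 200 million URLs above would need about 250 MB instead of 20 GB, at the price of skipping a small fraction of genuinely new pages.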

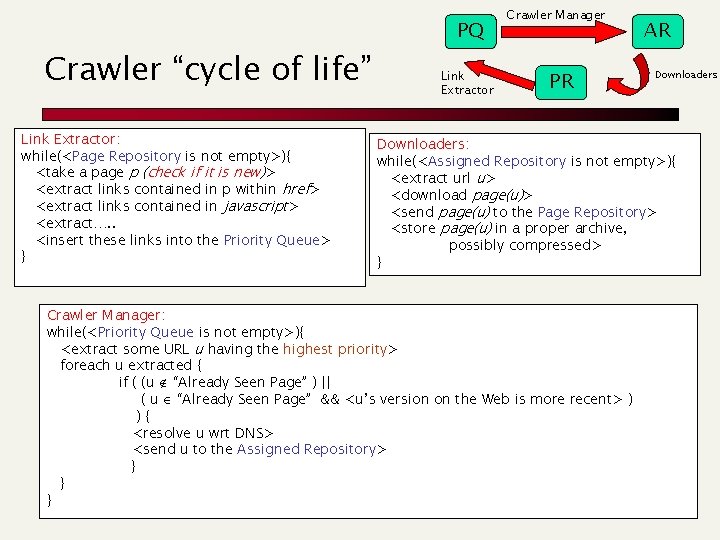

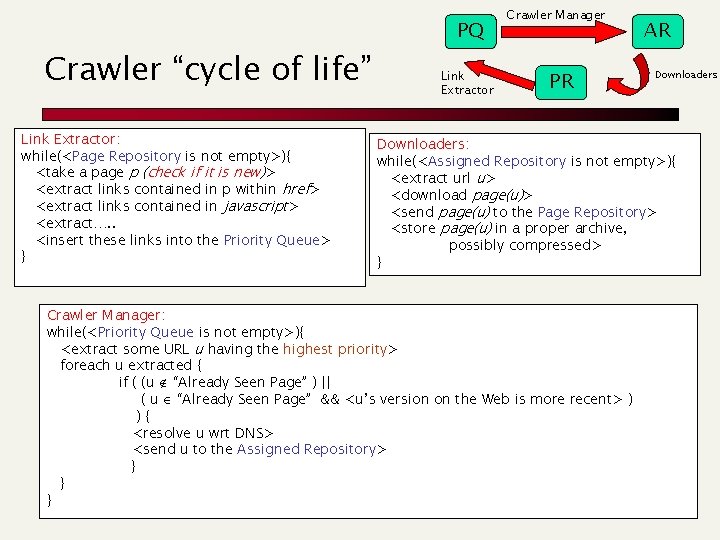

Crawler "cycle of life"

Components in the figure: Link Extractor, Crawler Manager and Downloaders, connected through the Priority Queue (PQ), the Page Repository (PR) and the Assigned Repository (AR).

Link Extractor:

    while (<Page Repository is not empty>) {
        <take a page p (check if it is new)>
        <extract links contained in p within href>
        <extract links contained in javascript>
        <extract…>
        <insert these links into the Priority Queue>
    }

Downloaders:

    while (<Assigned Repository is not empty>) {
        <extract url u>
        <download page(u)>
        <send page(u) to the Page Repository>
        <store page(u) in a proper archive, possibly compressed>
    }

Crawler Manager:

    while (<Priority Queue is not empty>) {
        <extract some URL u having the highest priority>
        foreach u extracted {
            if ( (u ∉ "Already Seen Page") ||
                 (u ∈ "Already Seen Page" && <u's version on the Web is more recent>) ) {
                <resolve u wrt DNS>
                <send u to the Assigned Repository>
            }
        }
    }
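The three loops above can be sketched in a single process as follows. This is a simplified illustration under stated assumptions: `TOY_WEB` is a hypothetical in-memory graph standing in for real HTTP downloads, the `priority` function is a placeholder, and the freshness check and the DNS resolution step are left out.

```python
import heapq

# Hypothetical in-memory "Web": URL -> list of out-links (replaces HTTP).
TOY_WEB = {
    "a": ["b", "c"],
    "b": ["c", "d"],
    "c": ["a"],
    "d": [],
}


def crawl(seeds, web, priority=lambda url: 0):
    """Run manager, downloader and link extractor in turn until PQ drains."""
    pq = [(priority(u), u) for u in seeds]  # Priority Queue (min-heap)
    heapq.heapify(pq)
    already_seen = set()                    # "Already Seen Page" set
    archive = {}                            # downloaded pages
    while pq:
        # Crawler Manager: take the best URL, skip it if already seen.
        _, url = heapq.heappop(pq)
        if url in already_seen:
            continue
        already_seen.add(url)
        # Downloader: fetch the page (here, a dictionary lookup).
        page_links = web.get(url, [])
        archive[url] = page_links
        # Link Extractor: push the out-links back into the Priority Queue.
        for link in page_links:
            if link not in already_seen:
                heapq.heappush(pq, (priority(link), link))
    return archive


pages = crawl(["a"], TOY_WEB)
print(sorted(pages))  # ['a', 'b', 'c', 'd']
```

In a real crawler the three roles run concurrently and communicate only through the queue and the two repositories, which is what allows many downloaders to work in parallel.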

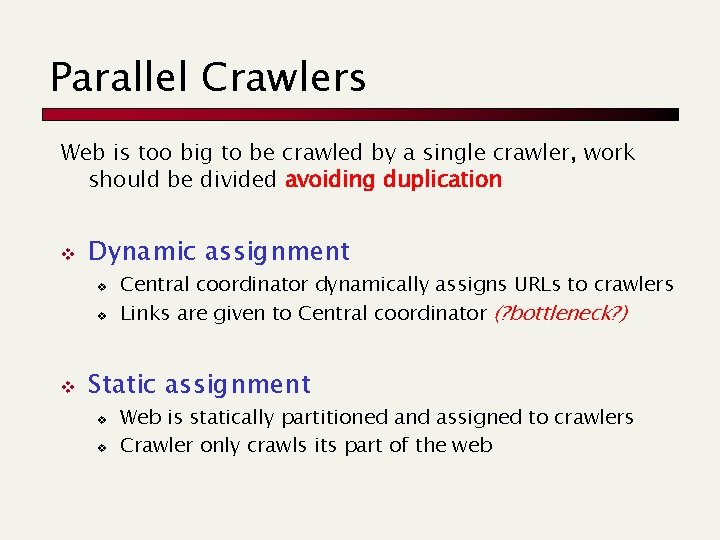

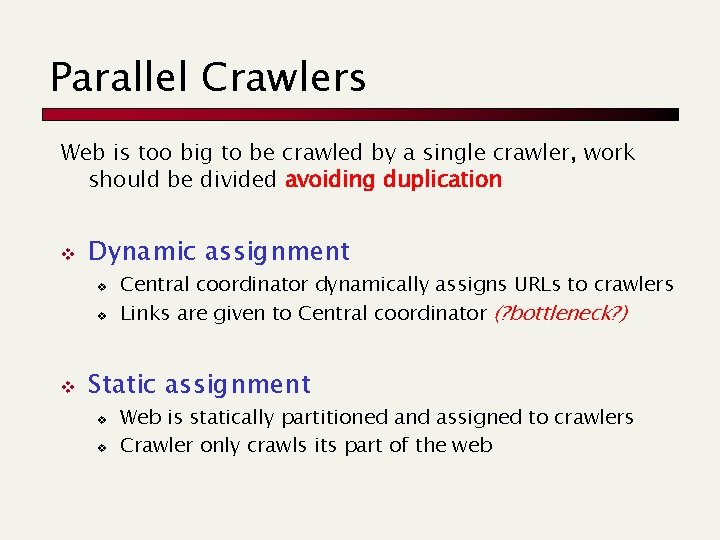

Parallel Crawlers

The Web is too big to be crawled by a single crawler; work should be divided while avoiding duplication.

- Dynamic assignment
  - Central coordinator dynamically assigns URLs to crawlers
  - Links are given to the central coordinator (bottleneck?)
- Static assignment
  - Web is statically partitioned and assigned to crawlers
  - Each crawler only crawls its part of the Web

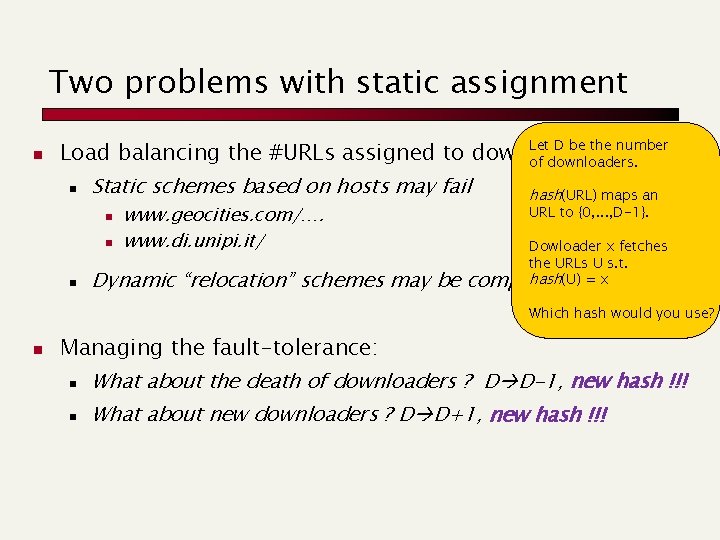

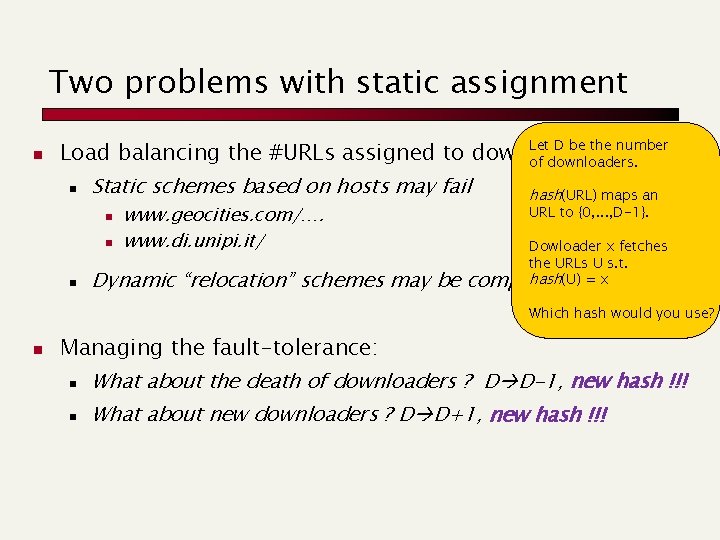

Two problems with static assignment

Let D be the number of downloaders. hash(URL) maps a URL to {0, ..., D-1}. Downloader x fetches the URLs U s.t. hash(U) = x. Which hash would you use?

- Load balancing the #URLs assigned to downloaders:
  - Static schemes based on hosts may fail (compare www.geocities.com/… with www.di.unipi.it/)
  - Dynamic "relocation" schemes may be complicated
- Managing the fault-tolerance:
  - What about the death of downloaders? D → D-1, new hash!!!
  - What about new downloaders? D → D+1, new hash!!!
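The fault-tolerance problem can be seen with a small experiment. The sketch below assumes a host-based scheme, hash(host) mod D, with SHA-256 as the hash; the function name `downloader_for` and the synthetic host names are hypothetical. When D changes, most URLs land on a different downloader.

```python
import hashlib
from urllib.parse import urlsplit


def downloader_for(url: str, num_downloaders: int) -> int:
    """Map a URL to a downloader in {0, ..., D-1} by hashing its host."""
    host = urlsplit(url).hostname or ""
    digest = hashlib.sha256(host.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_downloaders


# Synthetic hosts, used only to count reassignments.
urls = [f"http://site{i}.example/index.html" for i in range(1000)]
moved = sum(downloader_for(u, 10) != downloader_for(u, 9) for u in urls)
print(f"{moved} of {len(urls)} URLs change downloader when D goes from 10 to 9")
```

A value keeps its downloader only when it has the same remainder modulo 10 and modulo 9, which happens for about one hash value in ten, so roughly 90% of the URLs are expected to move.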

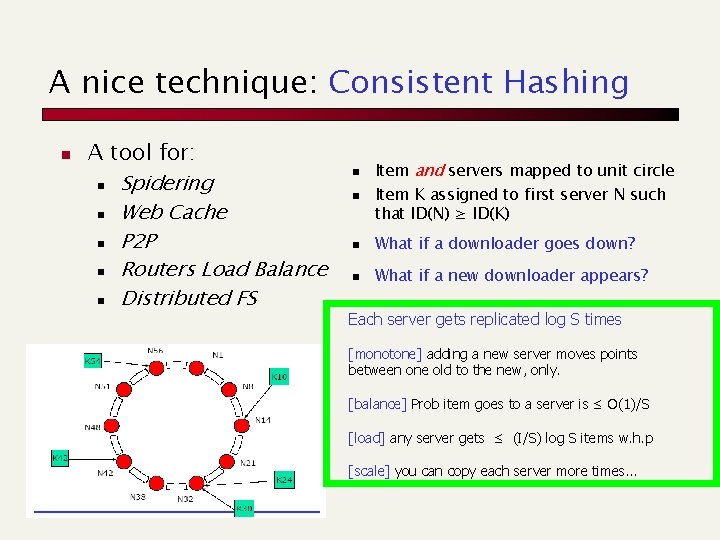

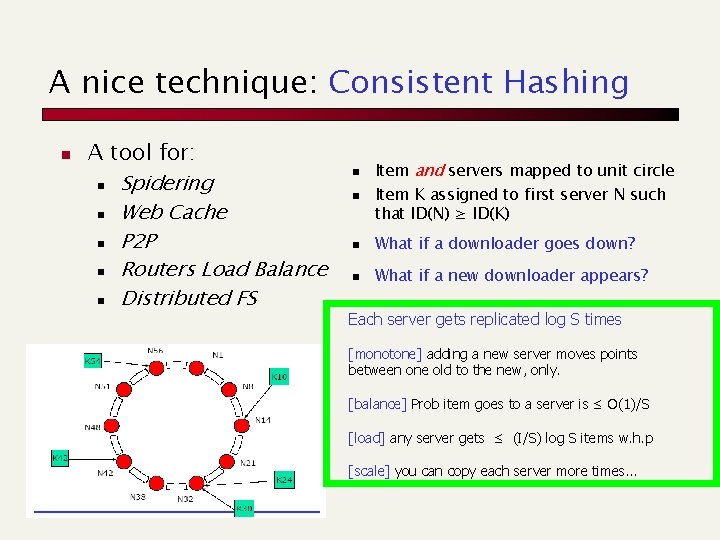

A nice technique: Consistent Hashing

- A tool for:
  - Spidering
  - Web Cache
  - P2P
  - Routers Load Balance
  - Distributed FS
- Items and servers are mapped to the unit circle
- Item K is assigned to the first server N such that ID(N) ≥ ID(K)
- What if a downloader goes down?
- What if a new downloader appears?

Each server gets replicated log S times:

- [monotone] adding a new server moves points only from an old server to the new one.
- [balance] the probability that an item goes to a given server is ≤ O(1)/S
- [load] any server gets ≤ (I/S) log S items w.h.p.
- [scale] you can copy each server more times...
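A minimal sketch of the ring described above, under a few assumptions: a 64-bit integer derived from SHA-256 stands in for a point on the unit circle, the class name `ConsistentHashRing` and the server names are hypothetical, and the number of replicas is a plain parameter rather than log S.

```python
import bisect
import hashlib


def ring_id(key: str) -> int:
    """Position on the ring (a 64-bit integer stands in for the unit circle)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


class ConsistentHashRing:
    def __init__(self, servers, replicas: int = 8) -> None:
        self.replicas = replicas
        self.points = []  # sorted list of (ring position, server name)
        for server in servers:
            self.add(server)

    def add(self, server: str) -> None:
        # Each server is placed on the ring `replicas` times.
        for r in range(self.replicas):
            bisect.insort(self.points, (ring_id(f"{server}#{r}"), server))

    def remove(self, server: str) -> None:
        self.points = [p for p in self.points if p[1] != server]

    def lookup(self, item: str) -> str:
        # First server point N with ID(N) >= ID(K), wrapping around the ring.
        idx = bisect.bisect_left(self.points, (ring_id(item), ""))
        return self.points[idx % len(self.points)][1]


ring = ConsistentHashRing(["d0", "d1", "d2", "d3"])
urls = [f"http://site{i}.example/" for i in range(1000)]
before = {u: ring.lookup(u) for u in urls}
ring.add("d4")
after = {u: ring.lookup(u) for u in urls}
moved = [u for u in urls if before[u] != after[u]]
# Monotone property: every URL that moved went to the new server.
print(len(moved), all(after[u] == "d4" for u in moved))
```

Removing `d4` again restores the previous assignment, so the death of a downloader only affects the URLs that downloader was responsible for.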

Examples: Open Source

- Nutch, also used by WikiSearch
  - http://nutch.apache.org/

Ranking

Link-based Ranking (2nd generation)

Reading 21

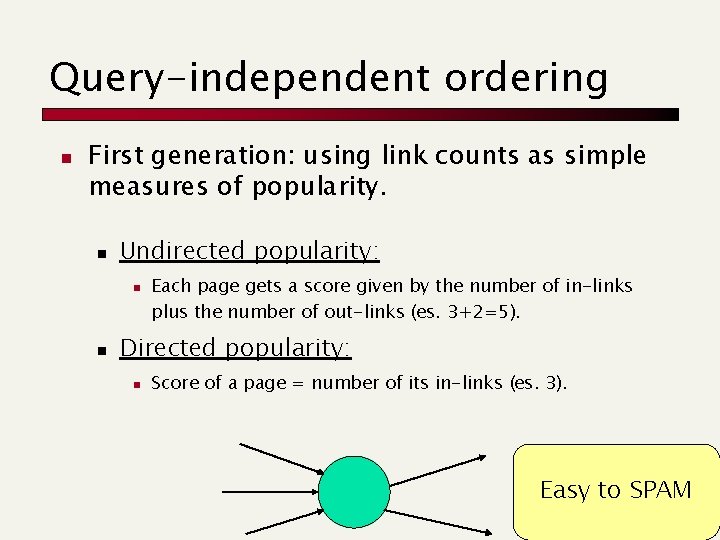

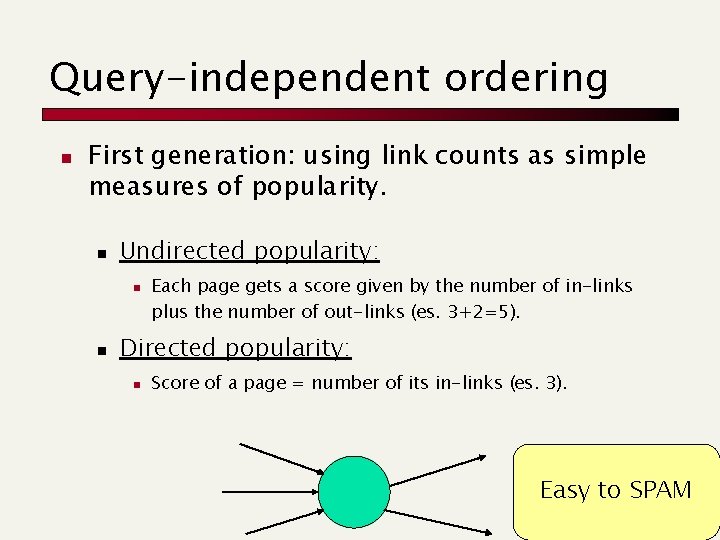

Query-independent ordering

- First generation: using link counts as simple measures of popularity.
- Undirected popularity:
  - Each page gets a score given by the number of in-links plus the number of out-links (e.g. 3+2=5).
- Directed popularity:
  - Score of a page = number of its in-links (e.g. 3).

Easy to SPAM
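The two first-generation scores reduce to degree counting. The sketch below uses a hypothetical edge list chosen so that page `c` reproduces the slide's example (3 in-links, 2 out-links), and then shows how a spammer inflates the directed score by creating pages that link to the target.

```python
from collections import Counter

# Hypothetical link graph as (source, destination) pairs.
edges = [("a", "c"), ("b", "c"), ("d", "c"), ("c", "a"), ("c", "e")]

in_links = Counter(dst for _, dst in edges)
out_links = Counter(src for src, _ in edges)

directed = in_links["c"]                     # 3
undirected = in_links["c"] + out_links["c"]  # 3 + 2 = 5
print(directed, undirected)

# Spam: 100 fake pages pointing to "e" raise its in-link score from 1 to 101.
spam_edges = edges + [(f"fake{i}", "e") for i in range(100)]
spam_in_links = Counter(dst for _, dst in spam_edges)
print(in_links["e"], spam_in_links["e"])
```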

Second generation: PageRank

- Each link has its own importance!!
- PageRank is
  - independent of the query
  - open to many interpretations…

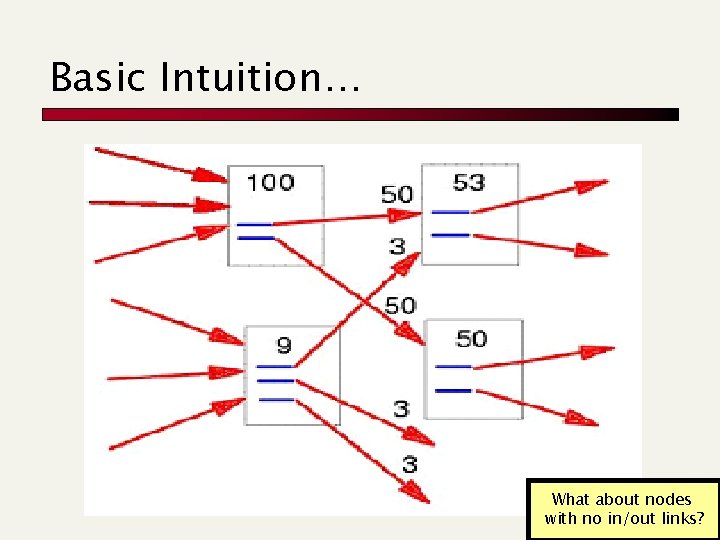

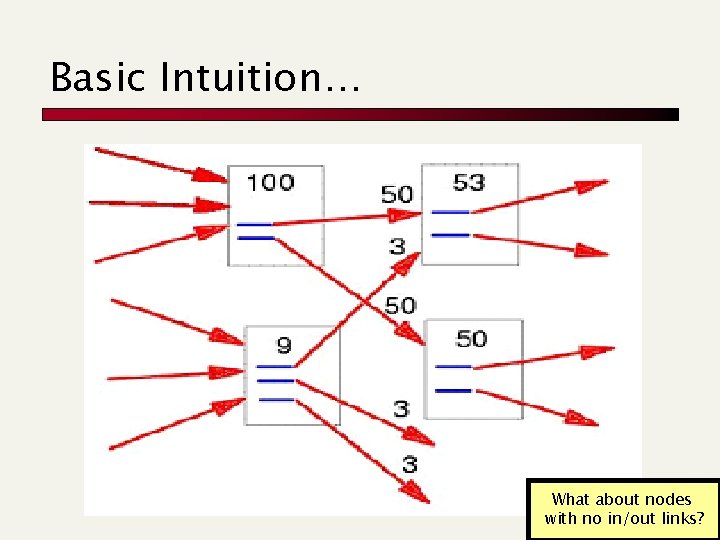

Basic Intuition…

What about nodes with no in/out links?

Google's PageRank

$$ r = \left[ \alpha P^T + (1 - \alpha)\, e\, e^T \right] \times r $$

- The term $(1 - \alpha)\, e\, e^T$ is the random jump; $r$ is the principal eigenvector.
- B(i): set of pages linking to i.
- #out(j): number of outgoing links from j.
- e: vector of components $1/\sqrt{N}$.

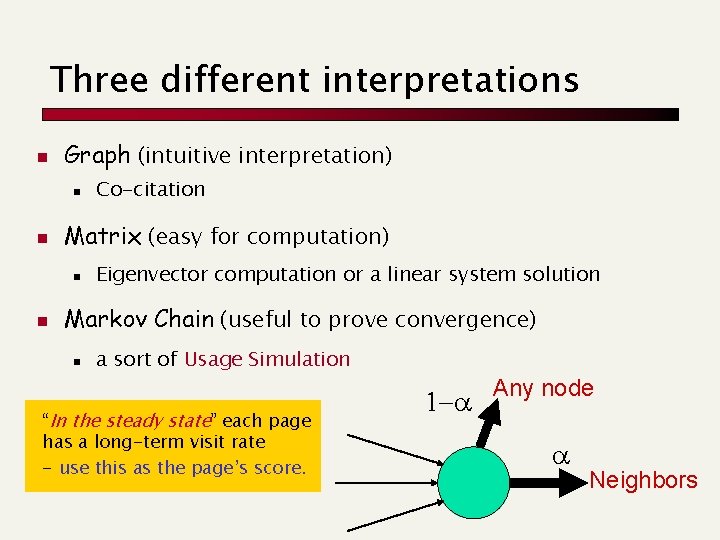

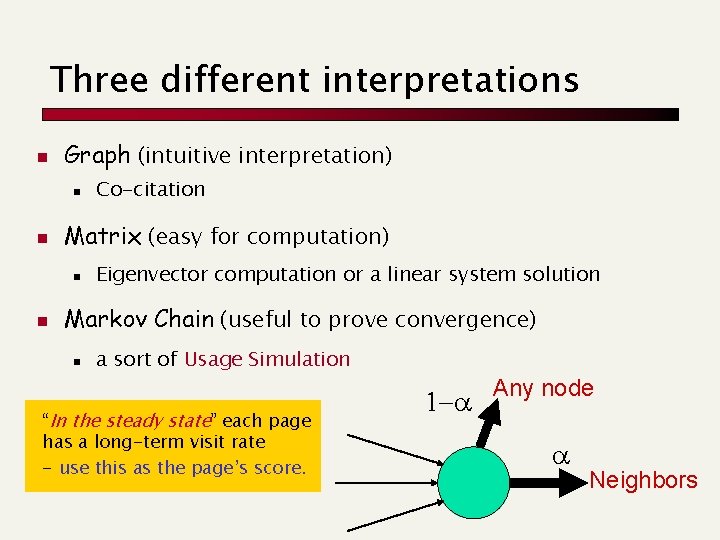

Three different interpretations

- Graph (intuitive interpretation)
  - Co-citation
- Matrix (easy for computation)
  - Eigenvector computation or a linear system solution
- Markov Chain (useful to prove convergence)
  - a sort of Usage Simulation

"In the steady state" each page has a long-term visit rate: use this as the page's score.

Figure labels: from any node, follow a link to one of its neighbors with probability α, or jump to any node with probability 1 - α.

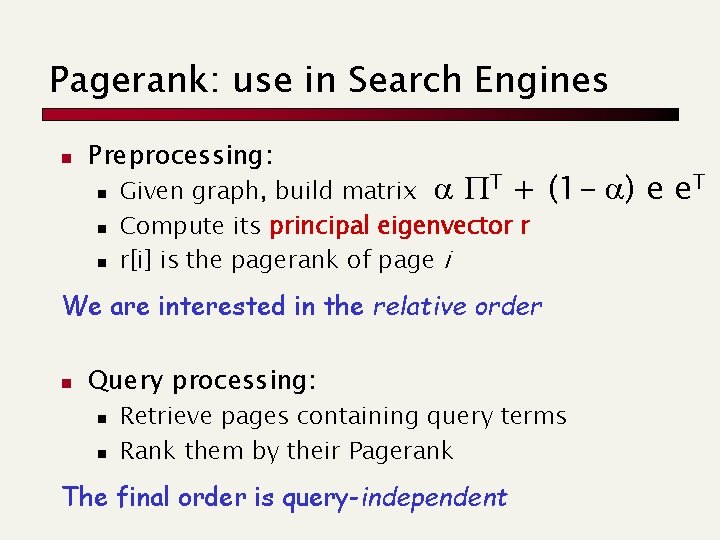

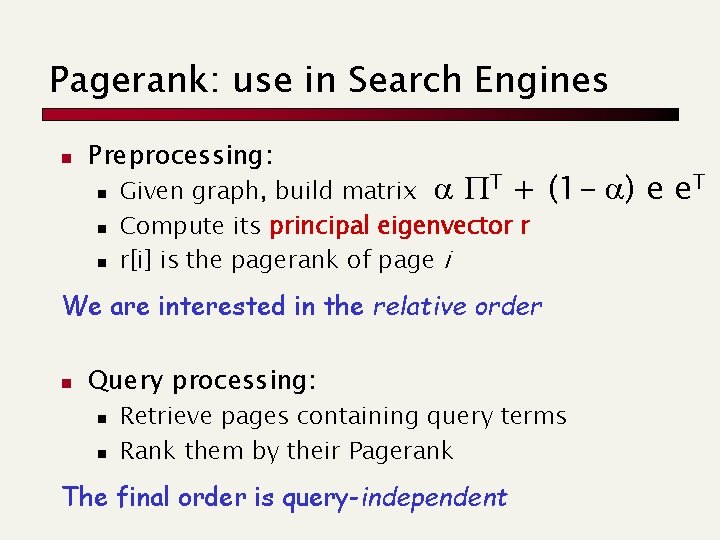

PageRank: use in Search Engines

- Preprocessing:
  - Given the graph, build the matrix $\alpha P^T + (1 - \alpha)\, e\, e^T$
  - Compute its principal eigenvector r
  - r[i] is the PageRank of page i
  - We are interested in the relative order
- Query processing:
  - Retrieve pages containing query terms
  - Rank them by their PageRank
  - The final order is query-independent
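The preprocessing step can be sketched with power iteration, which repeatedly applies the matrix to a rank vector without ever materializing it. Several choices below are assumptions rather than content of the slides: the damping value 0.85, the fixed iteration count, the toy graph, and the treatment of pages without out-links (their rank is spread uniformly).

```python
def pagerank(out_links, alpha=0.85, iterations=100):
    """Power iteration for r = [alpha P^T + (1 - alpha) e e^T] r."""
    nodes = sorted(out_links)
    n = len(nodes)
    rank = {u: 1.0 / n for u in nodes}
    for _ in range(iterations):
        # Random jump: every page receives (1 - alpha) / N.
        new_rank = {u: (1.0 - alpha) / n for u in nodes}
        for u in nodes:
            targets = out_links[u]
            if targets:
                # Follow a link: u shares alpha * r[u] among its out-links.
                share = alpha * rank[u] / len(targets)
                for v in targets:
                    new_rank[v] += share
            else:
                # Assumption: a page with no out-links spreads rank uniformly.
                for v in nodes:
                    new_rank[v] += alpha * rank[u] / n
        rank = new_rank
    return rank


# Hypothetical graph: page -> pages it links to (every target is also a key).
graph = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
r = pagerank(graph)
print(sorted(r, key=r.get, reverse=True))  # ['c', 'a', 'b', 'd']
```

Page `d` has no in-links, so its score is just the random-jump share (1 - alpha) / N; only the relative order of the scores matters at query time.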

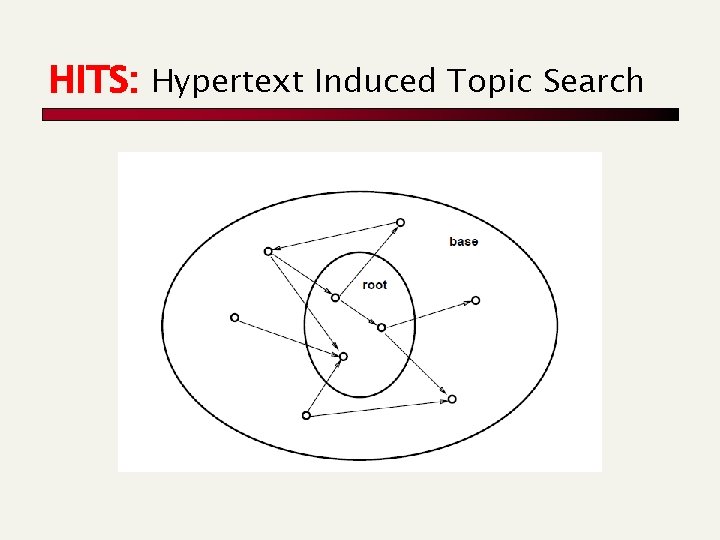

HITS: Hypertext Induced Topic Search

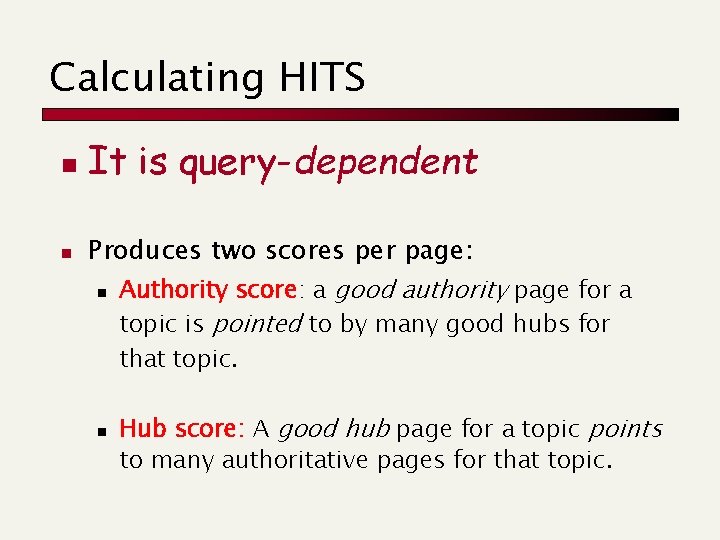

Calculating HITS

- It is query-dependent
- Produces two scores per page:
  - Authority score: a good authority page for a topic is pointed to by many good hubs for that topic.
  - Hub score: a good hub page for a topic points to many authoritative pages for that topic.

Authority and Hub scores

Figure: pages 2, 3 and 4 link to page 1; page 1 links to pages 5, 6 and 7.

- a(1) = h(2) + h(3) + h(4)
- h(1) = a(5) + a(6) + a(7)

HITS: Link Analysis Computation

Where

- a: vector of authority scores
- h: vector of hub scores
- A: adjacency matrix in which $a_{i,j} = 1$ if $i \to j$

Thus, h is an eigenvector of $A A^T$ and a is an eigenvector of $A^T A$ (both are symmetric matrices).
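In matrix form the two sums from the previous slide read a = Aᵀh and h = Aa, and iterating them converges toward the eigenvectors mentioned above. The sketch below is a minimal illustration: the L2 normalization and the fixed number of iterations are assumptions, and the edge list reproduces the small example graph (pages 2, 3, 4 link to 1; page 1 links to 5, 6, 7).

```python
import math


def hits(edges, iterations=50):
    """Iterate a = A^T h and h = A a, normalizing after each round."""
    nodes = sorted({u for edge in edges for u in edge})
    hub = {u: 1.0 for u in nodes}
    auth = {u: 1.0 for u in nodes}
    for _ in range(iterations):
        # Authority of a page: sum of the hub scores of pages linking to it.
        auth = {u: 0.0 for u in nodes}
        for src, dst in edges:
            auth[dst] += hub[src]
        # Hub score of a page: sum of the authority scores it links to.
        new_hub = {u: 0.0 for u in nodes}
        for src, dst in edges:
            new_hub[src] += auth[dst]
        hub = new_hub
        # Assumption: rescale to unit Euclidean length to avoid overflow.
        for scores in (auth, hub):
            norm = math.sqrt(sum(x * x for x in scores.values())) or 1.0
            for u in scores:
                scores[u] /= norm
    return auth, hub


edges = [(2, 1), (3, 1), (4, 1), (1, 5), (1, 6), (1, 7)]
auth, hub = hits(edges)
print(max(auth, key=auth.get))  # 1: the page pointed to by three hubs
```

In practice the iteration runs on the query-dependent subgraph only, which is why HITS scores, unlike PageRank, cannot be precomputed once for all queries.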

Weighting links

Weight a link more if the query occurs in the neighborhood of the link (e.g. anchor text).

Pfordelta

Pfordelta Paolo ferragina

Paolo ferragina Paolo ferragina

Paolo ferragina Paolo ferragina

Paolo ferragina Crawling informatica

Crawling informatica Crawling informatica

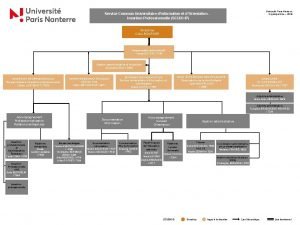

Crawling informatica Scuio ip nanterre

Scuio ip nanterre Universit

Universit Universit sherbrooke

Universit sherbrooke Rotterdam university economics

Rotterdam university economics Universit of london

Universit of london Crawling

Crawling Crawling

Crawling Crawling peg

Crawling peg Abnormal crawling

Abnormal crawling Dipartimento di chimica unipv

Dipartimento di chimica unipv Dipartimento di chimica pavia

Dipartimento di chimica pavia Dipartimento matematica pisa

Dipartimento matematica pisa Dipartimento del tesoro

Dipartimento del tesoro Dipartimento di psicologia vanvitelli

Dipartimento di psicologia vanvitelli Dipartimento di fisica ferrara

Dipartimento di fisica ferrara Scuola di chicago

Scuola di chicago Dipartimento psicologia torino

Dipartimento psicologia torino