Congestion Control and Resource Allocation
Outline:
• Overview
• Queuing Disciplines
• TCP Congestion Control
• Combined Techniques
Spring 2006 CS 332

Congestion Control: Overview

Congestion vs Allocation
• Congestion: packets contend at a router for use of a link. When too many packets contend for a given link, queues overflow and packets are dropped. When drops become common, the network is congested.
• Good allocation schemes can help avoid congestion, but allocating with precision is difficult because resources are distributed.

Two Sides of Same Coin
• Precise allocation (in both space and time) can completely avoid congestion.
  – As mentioned, difficult
• Schedule nothing (i.e., allocate nothing) and deal with congestion when it occurs
  – Easier approach
  – Disruptive: many packets discarded before congestion is controlled (and retransmits only add to the problem)
• Middle ground: make inexact allocation decisions and add some congestion recovery mechanisms

Who handles congestion?
• Network (Routers)?
  – Queueing disciplines decide what gets dropped
  – Queueing can also segregate traffic (your packets cannot affect mine, etc.)
• End Hosts?
  – Control speed at which packets are placed on network

Issues
• Resources to be allocated:
  – Link bandwidth
  – Buffer space in switches and routers
• Fairness: share pain among users
• Flow control is not congestion control
  – Flow control: keep fast sender from overrunning slow receiver
  – Congestion control: keep senders from sending too much data into network
• Congestion control is not routing!
  – Some routers cannot be routed around (bottleneck)

Our Model
• Packet-switched network
  – Excludes virtual circuit networks (though can have connection-oriented service at transport layer)
• Service model: best effort
• Connectionless flows
  – Flow: sequence of packets with same source/dest pair following same route through network
  – Does not imply any end-to-end semantics (such as reliable and ordered delivery)

Spectrum of Paradigms
• “Pure” connectionless:
  – Complete independence of datagrams
  – No state at routers
• “Pure” connection-oriented:
  – “Hard” state at routers: must be explicitly created and removed by signaling
• Connectionless flows (Internet):
  – Datagrams not completely independent
  – Soft state: state maintained for each flow that helps with resource allocation decisions. Correct operation of network NOT dependent on presence of soft state
  – Setup is implicit or explicit (why isn’t explicit setup the same as a virtual circuit in a connection-oriented network?)

Taxonomy of Congestion Control Mechanisms
• Router-Centric vs Host-Centric
• Reservation-Based vs Feedback-Based
• Window-Based vs Rate-Based

Taxonomy (cont.)
• Router-Centric vs Host-Centric
  – Router-centric: router decides when packets are forwarded and which are dropped, and informs hosts of how many packets they may send
  – Host-centric: hosts observe network conditions (e.g., packet success rate) and adjust behavior accordingly
• Reservation-Based vs Feedback-Based
  – Reservation-based: end host asks for capacity, router allocates resources if possible (router-centric)
  – Feedback-based:
    • Explicit feedback (typically router-centric)
    • Implicit feedback (typically host-centric)

Taxonomy (cont.)
• Window-based vs rate-based
  – Window-based: we’ve seen it
  – Rate-based: how many bits per second receiver or network is able to absorb
    • Still an open area of research
    • Logical choice for reservation-based systems supporting differentiated qualities of service (QoS)
• Typically two combinations used:
  – Host-centric, feedback-based, window-based (Internet)
  – Router-centric, reservation-based, rate-based (QoS service models)

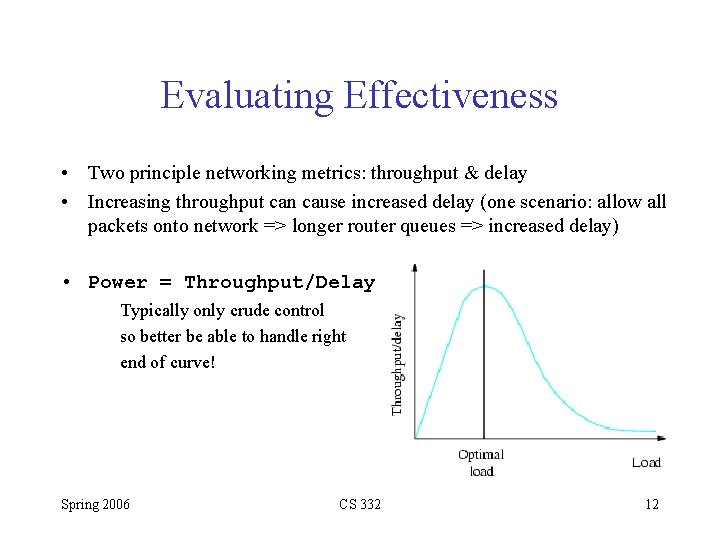

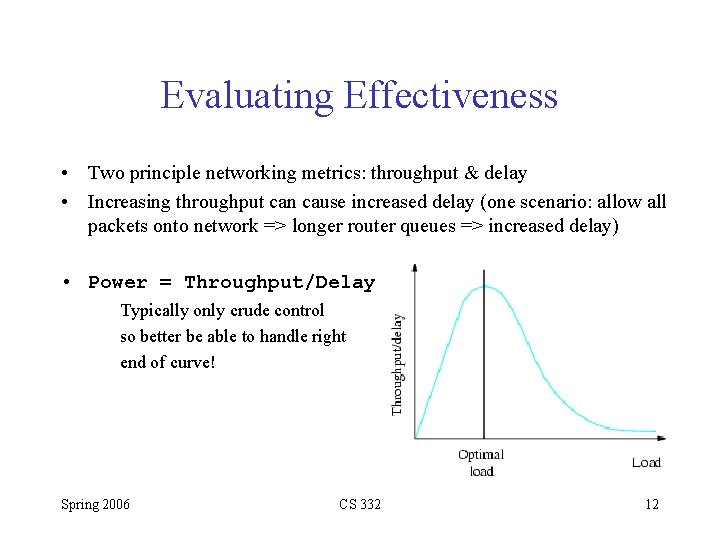

Evaluating Effectiveness
• Two principal networking metrics: throughput & delay
• Increasing throughput can cause increased delay (one scenario: allow all packets onto network => longer router queues => increased delay)
• Power = Throughput/Delay
• Typically only crude control is possible, so a scheme had better be able to handle the right end of the curve!

Problems with Power
• Derived from queuing theory under assumption of infinite queues.
• Defined relative to a single flow, so not clear how it extends to multiple competing flows.
• It’s got problems, but right now it’s the best we have, so it’s used.

Evaluating Fairness
• Murky waters
  – Reservation-based schemes provide an explicit way to create controlled unfairness.
• Is fair share the same as equal share?
  – Should path length be considered (i.e., how does one four-hop flow compare with three one-hop flows?)

Fairness metric
• Proposed by Raj Jain; assumes that fairness implies equality and that all paths are of equal length
• Given flow throughputs $(x_1, x_2, \ldots, x_n)$ (in bits/sec, say), assign fairness index
  $$f(x_1, x_2, \ldots, x_n) = \frac{\left(\sum_{i=1}^{n} x_i\right)^2}{n \sum_{i=1}^{n} x_i^2}$$
• Note:
  – n flows each receiving 1 bps => fairness 1
  – k of n receive equal throughput, others receive none => fairness k/n
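A minimal Python sketch of the fairness metric, assuming it is Jain's fairness index, (sum of throughputs)^2 / (n × sum of squared throughputs). The function name `jain_fairness` and the sample throughputs are illustrative only. It checks the two cases noted on the slide.

```python
def jain_fairness(throughputs):
    """Jain's fairness index: (sum x_i)^2 / (n * sum x_i^2)."""
    n = len(throughputs)
    total = sum(throughputs)
    sum_sq = sum(x * x for x in throughputs)
    if n == 0 or sum_sq == 0:
        raise ValueError("need at least one flow with nonzero throughput")
    return (total * total) / (n * sum_sq)

# n flows each receiving 1 bps
equal = jain_fairness([1, 1, 1, 1])
# k = 2 of n = 4 flows share equally, the others receive nothing
partial = jain_fairness([5, 5, 0, 0])
```

With these inputs `equal` is 1 and `partial` is k/n = 0.5.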

Queuing Disciplines

Queuing Disciplines
• These effectively allocate:
  – Bandwidth: which packets get transmitted
  – Buffer space: which packets get discarded
• Affects latency
• Two components:
  – Scheduling discipline: order in which packets are transmitted
  – Drop policy: which packets get dropped
• Two examples:
  – FIFO (also called first-come-first-served (FCFS))
  – “Fair queuing”

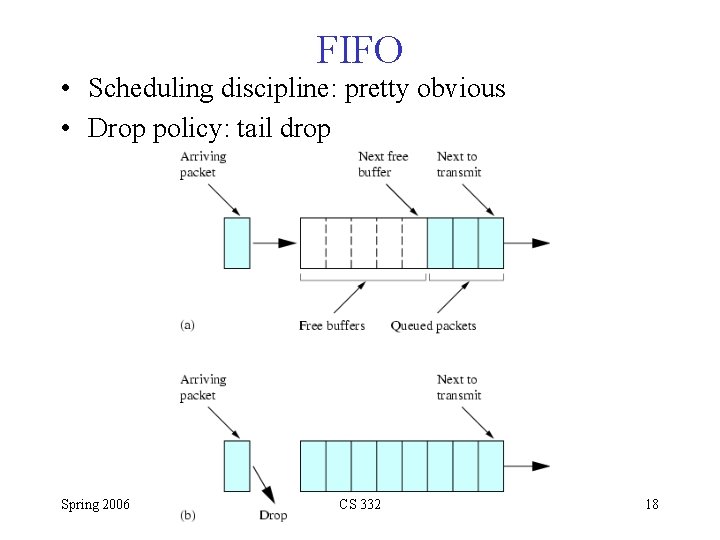

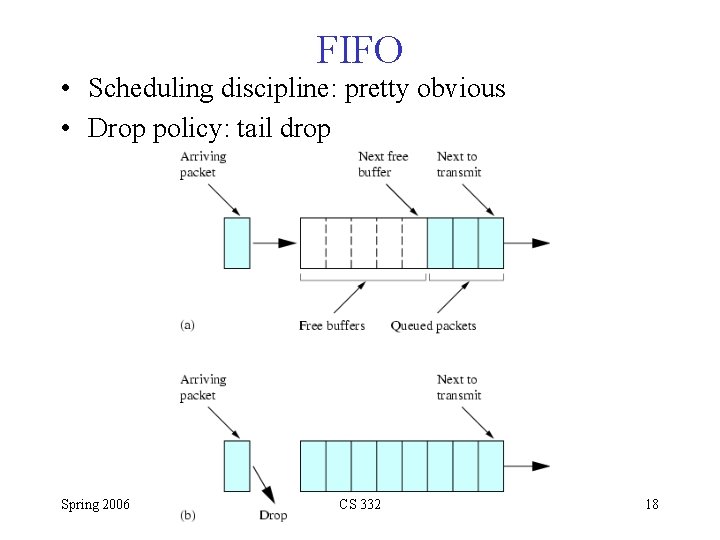

FIFO
• Scheduling discipline: pretty obvious
• Drop policy: tail drop
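A minimal sketch of FIFO scheduling with tail drop, assuming a fixed buffer measured in packets. The class name `FifoQueue` and its fields are hypothetical, chosen only for illustration: packets leave in arrival order, and a packet that arrives at a full buffer is the one discarded.

```python
from collections import deque

class FifoQueue:
    """FIFO scheduling with tail drop (illustrative sketch)."""

    def __init__(self, capacity):
        self.capacity = capacity      # buffer size in packets (assumed unit)
        self.packets = deque()
        self.dropped = 0

    def enqueue(self, packet):
        # Tail drop: an arriving packet is discarded when the buffer is full.
        if len(self.packets) >= self.capacity:
            self.dropped += 1
            return False
        self.packets.append(packet)
        return True

    def dequeue(self):
        # FIFO: the packet that arrived first is transmitted first.
        return self.packets.popleft() if self.packets else None

q = FifoQueue(capacity=2)
results = [q.enqueue(p) for p in ["a", "b", "c"]]   # "c" finds the buffer full
first_out = q.dequeue()
```

Note that the drop decision ignores which flow the packet belongs to.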

FIFO (cont.)
• Simplest of all queuing algorithms
• Most widely used in Internet
• Places all responsibility for congestion control and resource allocation at edges of network (routers don’t have any responsibility for detecting badness)
• A related mechanism: priority queuing
  – One queue for each priority level
  – Transmit packets from the highest-priority nonempty queue first
  – Who sets priority? What about “pushback”?

FIFO problems
• Doesn’t separate packets according to the flow to which they belong
  – Can any congestion control implemented entirely at the source work? (Remember, no help from routers!)
  – No means to police how well sources adhere to policies (what if they’re not using TCP as transport protocol?)
• Ill-behaved app can grab arbitrarily large fraction of network capacity
• Some apps do this now: Internet telephony

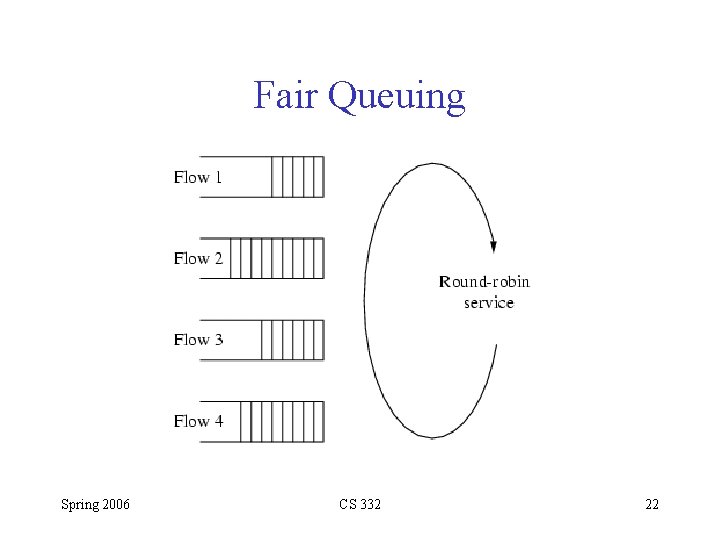

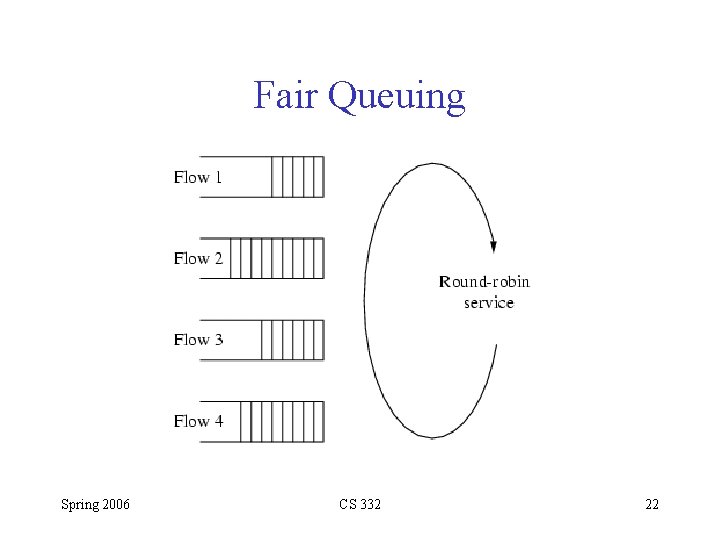

Fair Queuing (FQ)
• Maintain a separate queue for each flow and service these queues in round-robin manner
• Prevents any single flow from grabbing too much capacity, since it only floods its own queue
• Routers need not tell hosts anything about router state or limit the amount they send (i.e., designed to be used in conjunction with an end-to-end mechanism, but limits damage from ill-behaved sources)

Fair Queuing

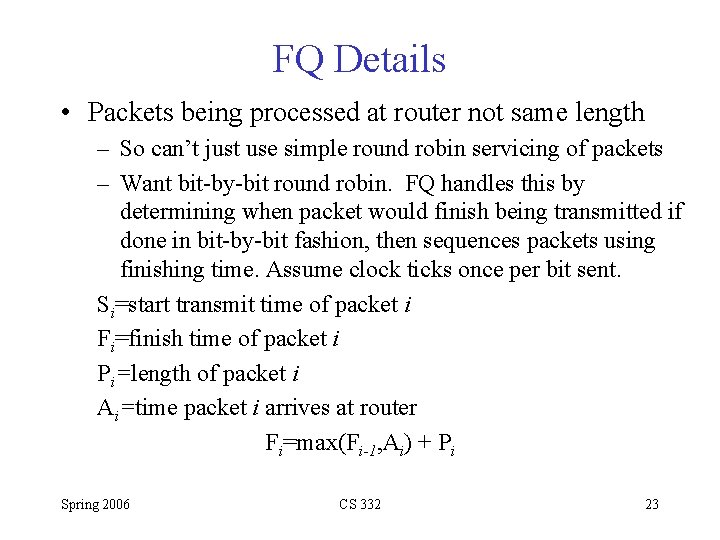

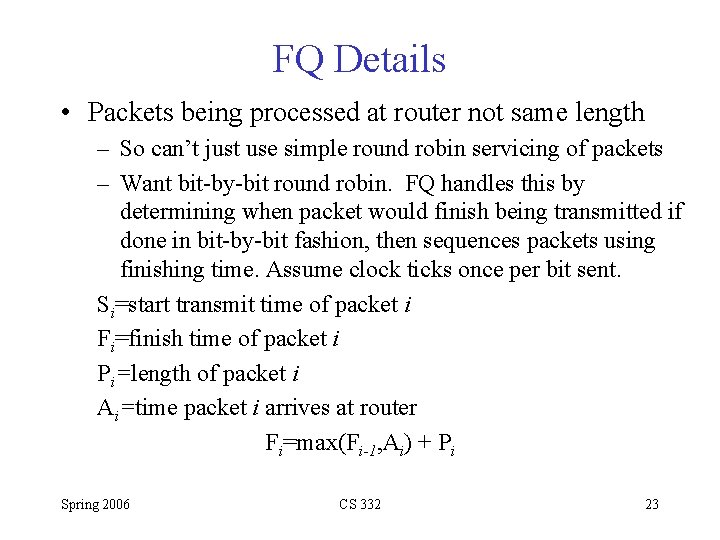

FQ Details
• Packets being processed at a router are not all the same length
  – So can’t just use simple round-robin servicing of packets
  – Want bit-by-bit round robin. FQ handles this by determining when a packet would finish being transmitted if sent in bit-by-bit fashion, then sequences packets by finishing time.
• Assume the clock ticks once per bit sent.
  – $S_i$ = start transmit time of packet $i$
  – $F_i$ = finish time of packet $i$
  – $P_i$ = length of packet $i$
  – $A_i$ = time packet $i$ arrives at router
  – $F_i = \max(F_{i-1}, A_i) + P_i$
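A short sketch of the finish-time recurrence F_i = max(F_{i-1}, A_i) + P_i for the packets of a single flow, with the clock ticking once per bit. The function name `finish_times` and the sample packets are made up for illustration.

```python
def finish_times(packets):
    """packets: list of (arrival_time, length_in_bits) for one flow, in arrival order.

    Returns the finish time F_i of each packet.
    """
    finishes = []
    prev_finish = 0                       # F_0: nothing sent yet
    for arrival, length in packets:
        start = max(prev_finish, arrival) # S_i: wait for the previous packet or for arrival
        prev_finish = start + length      # F_i = S_i + P_i
        finishes.append(prev_finish)
    return finishes

# (A_i, P_i) pairs: the second packet arrives while the first is still being sent,
# the third arrives after the link has gone idle for this flow.
flow = [(0, 100), (50, 200), (500, 100)]
times = finish_times(flow)
```

Here `times` is `[100, 300, 600]`: the second packet must wait for the first to finish, while the third starts at its own arrival time.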

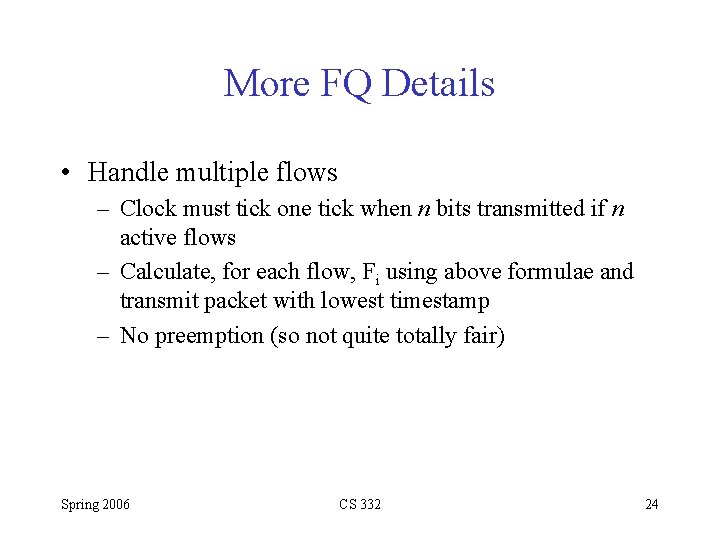

More FQ Details
• Handle multiple flows
  – Clock must advance one tick when n bits are transmitted if there are n active flows
  – Calculate, for each flow, F_i using the formula above and transmit the packet with the lowest timestamp
  – No preemption (so not quite totally fair)
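A simplified sketch of choosing the next packet across several flows by lowest finish timestamp. To sidestep the clock-scaling issue it assumes every packet is already queued at time 0, so each flow's timestamps reduce to F_i = F_{i-1} + P_i. The function name `fq_order`, the flow names, and the tie-break on flow name are assumptions for illustration.

```python
import heapq

def fq_order(flows):
    """flows: dict mapping flow name -> list of packet lengths, all queued at time 0.

    Returns (flow, packet_index, finish_timestamp) tuples in transmission order.
    """
    heap = []
    for name, lengths in flows.items():
        finish = 0
        for seq, length in enumerate(lengths):
            finish += length                       # F_i = F_{i-1} + P_i when A_i = 0
            heapq.heappush(heap, (finish, name, seq))
    order = []
    while heap:
        finish, name, seq = heapq.heappop(heap)    # lowest timestamp goes next
        order.append((name, seq, finish))
    return order

# Flow A sends three short packets, flow B one long packet.
order = fq_order({"A": [100, 100, 100], "B": [250]})
sent_flows = [name for name, _, _ in order]
```

With these lengths `sent_flows` is `["A", "A", "B", "A"]`: flow B's long packet is sent once its timestamp (250) is the lowest remaining, ahead of flow A's third packet (300).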

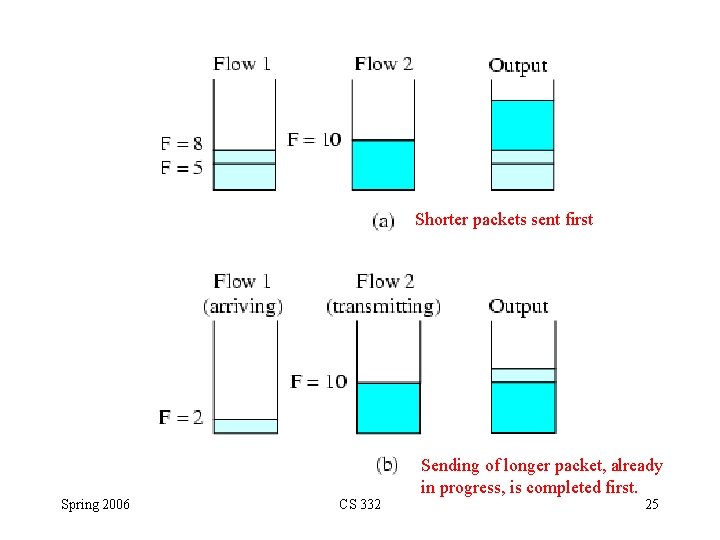

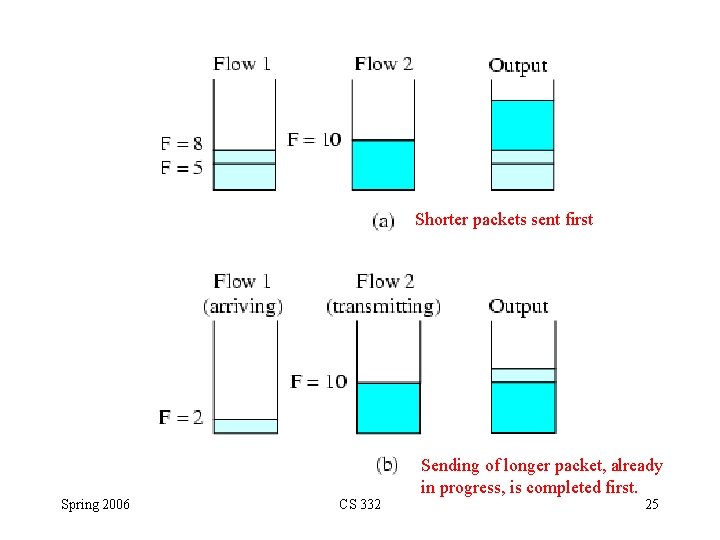

• Shorter packets are sent first
• Sending of a longer packet, already in progress, is completed first

Still More FQ
• FQ is work conserving: link is never left idle as long as there is a packet queued
  – So sharing the link with flows that are not sending data means that I can get a larger share of bandwidth
• n flows means I get 1/n of bandwidth. If I try to use more than that, my packets get larger timestamps and sit in the queue longer (which ones are dropped is not a concern of FQ, since it’s only a scheduling policy)
• Weighted Fair Queuing (WFQ): assign different weights to flows (see QoS stuff)

TCP Congestion Control
Thanks to thinkgeek.com. Check it out…

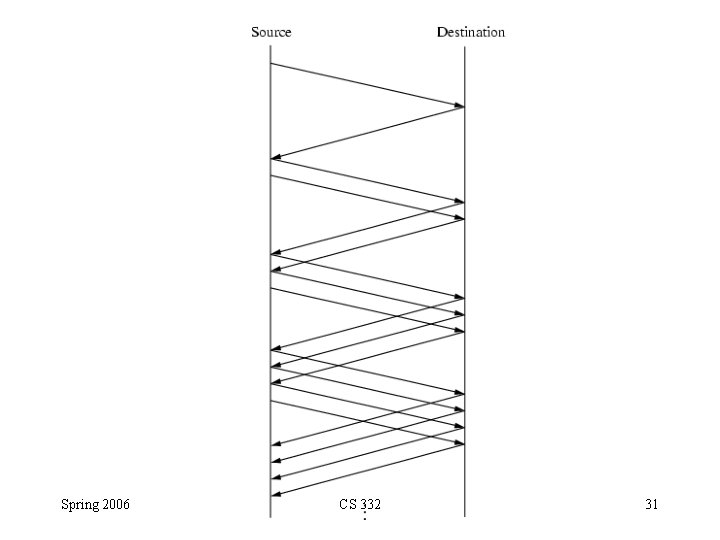

TCP Congestion Control
• End-to-end congestion control
• Assumes FIFO queuing, but works with FQ also
• Broadly:
  – Each source determines how much capacity is available in the network
  – When source has placed this many packets in network, ACKs signal that packets have left the network, so it is safe to transmit more (TCP is self-clocking)
• How do we determine network capacity (which changes over time)?!

TCP Congestion Control
• Additive increase/multiplicative decrease
• State variables:
  – CongestionWindow (measured in bytes, but think in terms of packets)
  – MaxWindow = MIN(CongestionWindow, AdvertisedWindow)
  – EffectiveWindow = MaxWindow - (LastByteSent - LastByteAcked)
• Who sets CongestionWindow?
  – TCP sets it based on the level of congestion it perceives
  – Decrease if congestion increases and vice versa
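A small sketch of the window arithmetic, using the slide's variable names in snake_case. The function name `effective_window`, the sample byte counts, and the clamp at zero are assumptions added for illustration.

```python
def effective_window(congestion_window, advertised_window,
                     last_byte_sent, last_byte_acked):
    """Bytes the sender may still put on the network right now."""
    # MaxWindow = MIN(CongestionWindow, AdvertisedWindow)
    max_window = min(congestion_window, advertised_window)
    # Data sent but not yet acknowledged
    in_flight = last_byte_sent - last_byte_acked
    # EffectiveWindow = MaxWindow - (LastByteSent - LastByteAcked);
    # clamping at 0 is an added guard, not part of the slide's formula.
    return max(0, max_window - in_flight)

# The congestion window (8000) is the limit here, not the receiver's window (16000).
window = effective_window(congestion_window=8000, advertised_window=16000,
                          last_byte_sent=5000, last_byte_acked=2000)
```

With 3000 bytes in flight, `window` is 5000 bytes.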

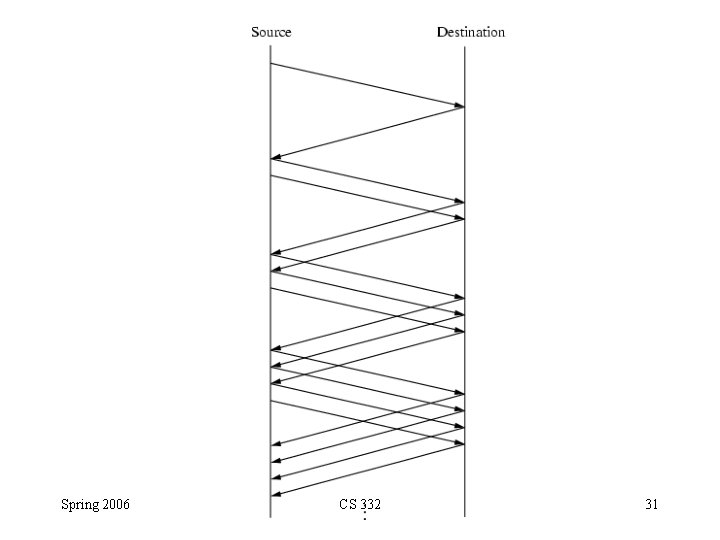

Setting CongestionWindow
• Observation: when packets are not delivered, the cause is congestion (except in rare instances)
• With any timeout, CongestionWindow is cut in half (multiplicative decrease)
  – In practice, not allowed to fall below maximum segment size (MSS)
• Each time source successfully sends a CongestionWindow’s worth of packets (each packet sent in last RTT is ACKed), it adds the equivalent of 1 packet to CongestionWindow (additive increase)

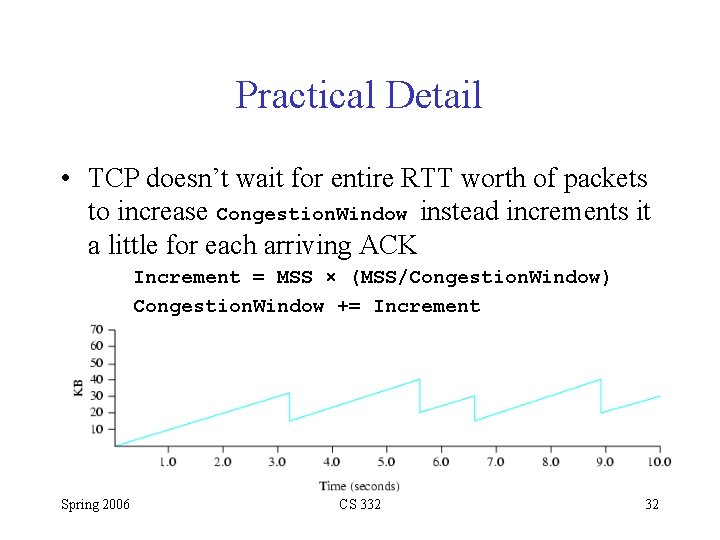

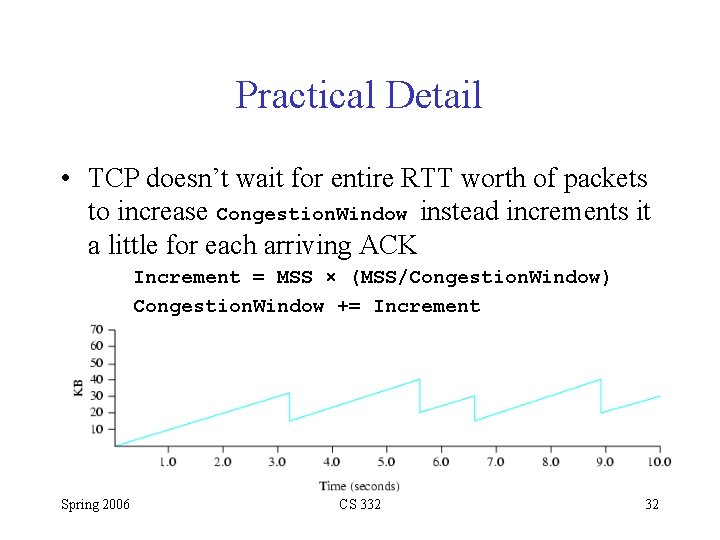

Practical Detail
• TCP doesn’t wait for an entire RTT’s worth of packets to increase CongestionWindow; instead it increments it a little for each arriving ACK:
  – Increment = MSS × (MSS/CongestionWindow)
  – CongestionWindow += Increment
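A sketch of additive increase/multiplicative decrease with the per-ACK increment, Increment = MSS × (MSS/CongestionWindow), plus halving on timeout with a floor of one MSS. The function names `on_ack` and `on_timeout` and the 1000-byte MSS are assumptions for illustration, not values from the slides.

```python
MSS = 1000  # bytes; assumed value for the example

def on_ack(cwnd, mss=MSS):
    """Additive increase, applied a little at a time for each arriving ACK."""
    return cwnd + mss * (mss / cwnd)

def on_timeout(cwnd, mss=MSS):
    """Multiplicative decrease: halve the window, but never below one MSS."""
    return max(cwnd / 2, mss)

cwnd = 4 * MSS
for _ in range(4):          # one RTT's worth of ACKs for a 4-packet window
    cwnd = on_ack(cwnd)
after_rtt = cwnd
after_timeout = on_timeout(after_rtt)
```

After four ACKs the window has grown by a little less than one MSS, because each increment is computed against an already larger window. The timeout then cuts it in half.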

TCP Congestion Control
• Note: source reduces congestion window much faster than it increases it
  – A (proven) necessary condition for stability
• Consequences of too large a window are much worse than those of too small a window: packets are dropped and retransmitted, adding to congestion, so it is important to leave this state quickly
• Note the need for an accurate timeout mechanism (because timeouts trigger congestion control)

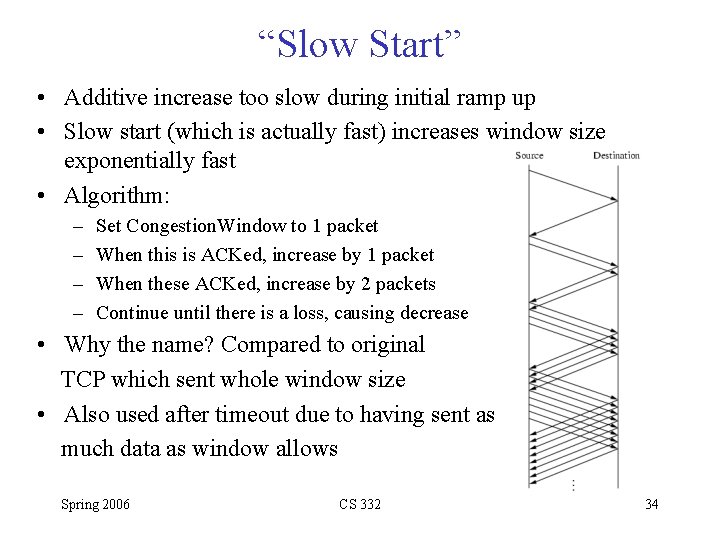

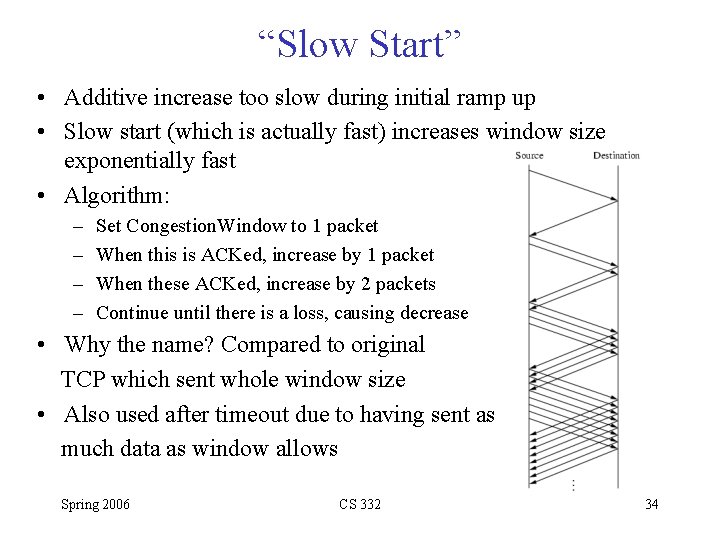

“Slow Start”
• Additive increase is too slow during initial ramp-up
• Slow start (which is actually fast) increases window size exponentially
• Algorithm:
  – Set CongestionWindow to 1 packet
  – When this is ACKed, increase by 1 packet
  – When these are ACKed, increase by 2 packets
  – Continue until there is a loss, causing decrease
• Why the name? It is slow compared to original TCP, which sent a whole window’s worth at once
• Also used after a timeout that occurs when the source has sent as much data as the window allows

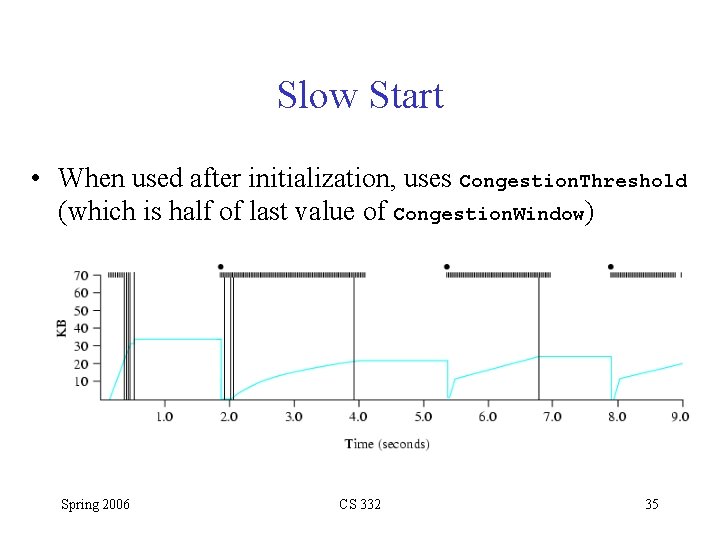

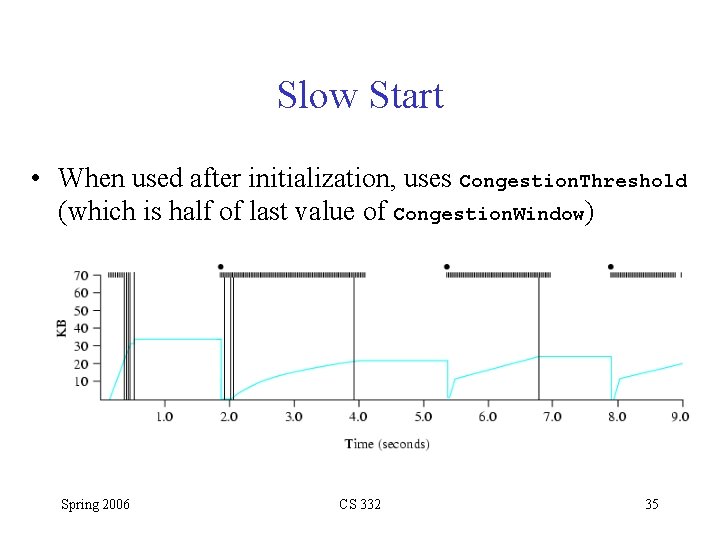

Slow Start
• When used after initialization, slow start uses CongestionThreshold (half of the last value of CongestionWindow) as its target
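A rough sketch of how the window might evolve per RTT, measured in packets: it doubles during slow start until it reaches CongestionThreshold, then grows by one packet per RTT. Switching to additive increase at the threshold is the usual TCP behavior as I understand it, not something this slide states. The function name `window_per_rtt` and capping the doubling at the threshold are simplifying assumptions.

```python
def window_per_rtt(threshold, rtts, start=1):
    """Congestion window (in packets) at the start of each RTT, with no losses."""
    cwnd = start
    history = []
    for _ in range(rtts):
        history.append(cwnd)
        if cwnd < threshold:
            # Slow start: one extra packet per ACK doubles the window each RTT.
            cwnd = min(cwnd * 2, threshold)   # cap at threshold (assumption)
        else:
            # Additive increase: one extra packet per RTT.
            cwnd += 1
    return history

# Suppose the window was 16 packets when the timeout hit, so the threshold is 8.
history = window_per_rtt(threshold=16 // 2, rtts=7)
```

Here `history` is `[1, 2, 4, 8, 9, 10, 11]`: exponential growth up to the threshold, linear growth after it.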

“Packet-pair”
• Slow start has potential to cause lots of dropped packets (what if window is 16K?)
• Packet-pair: send groups of packets and see how many make it through
  – Specifically, send a pair of packets with no spacing between them and measure the difference in return time of their ACKs
  – Promising, but too new to know how effective it may be

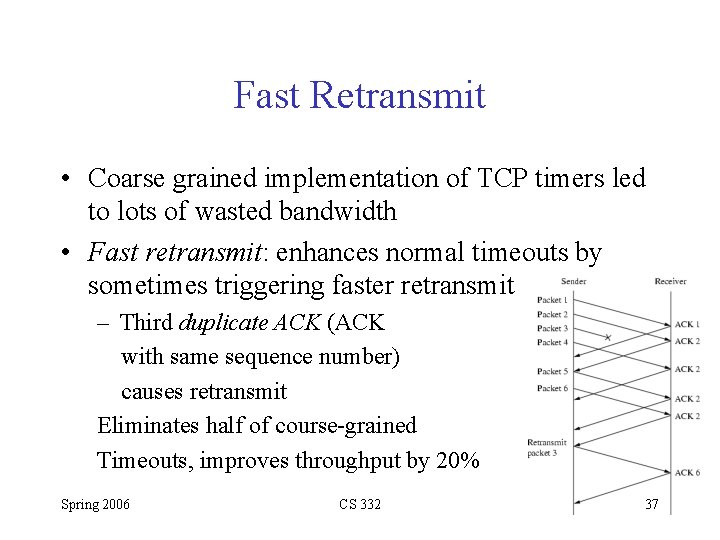

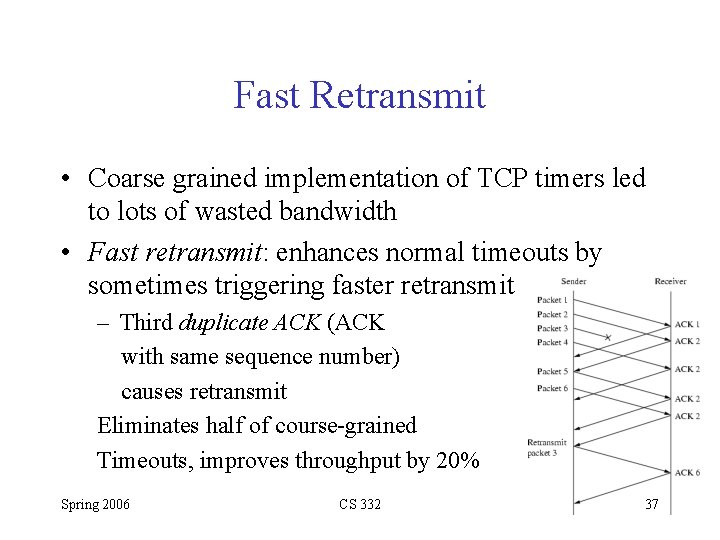

Fast Retransmit
• Coarse-grained implementation of TCP timers led to lots of wasted bandwidth
• Fast retransmit: enhances normal timeouts by sometimes triggering a faster retransmit
  – Third duplicate ACK (ACK with same sequence number) causes retransmit
• Eliminates half of coarse-grained timeouts, improves throughput by 20%
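A minimal sketch of the duplicate-ACK rule: the sender counts repeated ACK numbers and retransmits when the third duplicate arrives, without waiting for the timer. The function name `fast_retransmit_events` and the ACK stream are made up for illustration; a real TCP tracks considerably more state.

```python
def fast_retransmit_events(acks, dup_threshold=3):
    """Return the ACK numbers whose third duplicate would trigger a retransmit."""
    retransmits = []
    last_ack = None
    dup_count = 0
    for ack in acks:
        if ack == last_ack:
            dup_count += 1                   # same ACK number seen again
            if dup_count == dup_threshold:
                retransmits.append(ack)      # third duplicate: retransmit now
        else:
            last_ack = ack                   # new data acknowledged, reset counter
            dup_count = 0
    return retransmits

# ACK 2 arrives once, then three more times as duplicates.
events = fast_retransmit_events([1, 2, 2, 2, 2, 3, 4])
```

Here `events` is `[2]`. A stream with only two duplicates, such as `[1, 2, 2, 2, 3]`, triggers nothing and would fall back on the regular timeout.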