Congestion Control

- Slides: 34

Outline
- Queuing Discipline
- Reacting to Congestion
- Avoiding Congestion
- Quality of Service

Fall 2006, CS 561

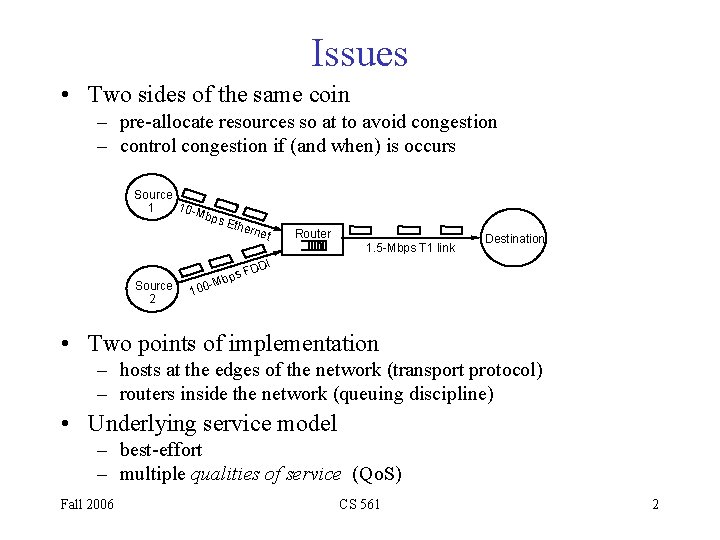

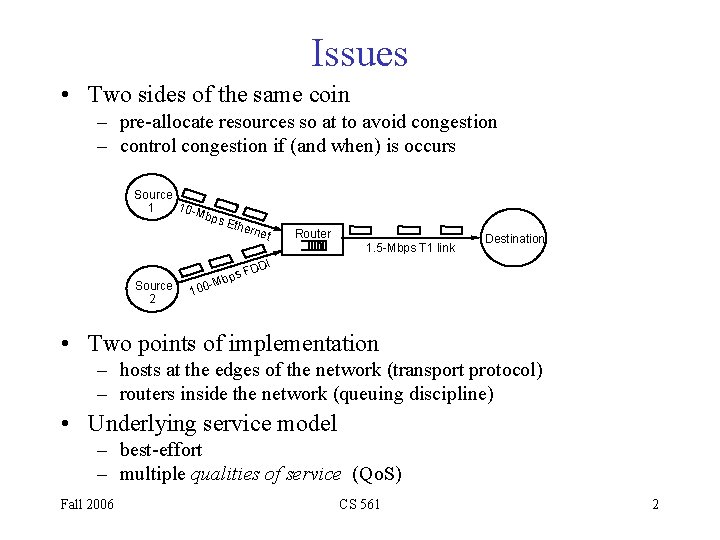

Issues
- Two sides of the same coin
  - pre-allocate resources so as to avoid congestion
  - control congestion if (and when) it occurs
- Two points of implementation
  - hosts at the edges of the network (transport protocol)
  - routers inside the network (queuing discipline)
- Underlying service model
  - best-effort
  - multiple qualities of service (QoS)

(Figure: Source 1 on a 10-Mbps Ethernet and Source 2 on a 100-Mbps FDDI ring feed a router whose 1.5-Mbps T1 link leads to the destination.)

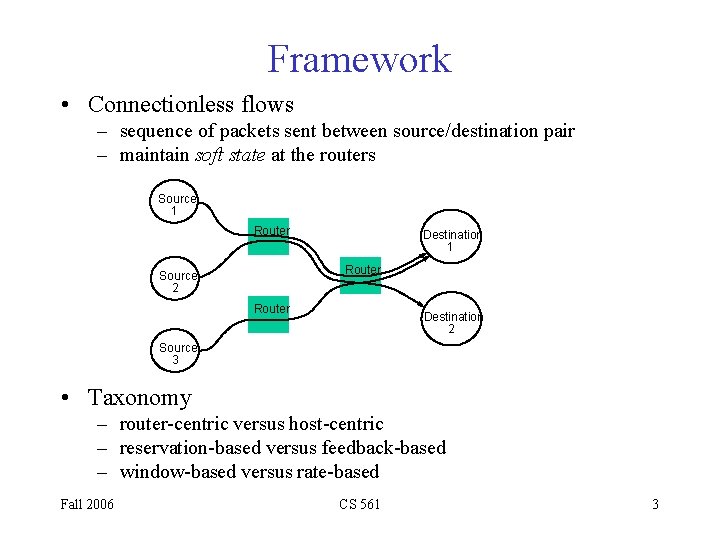

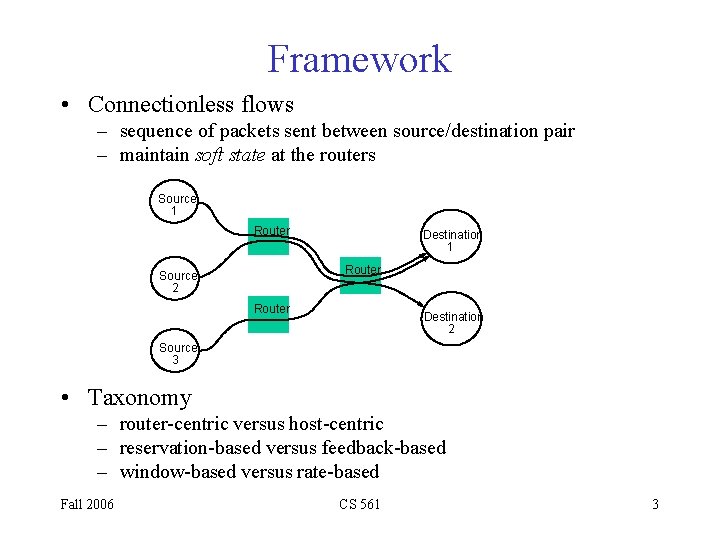

Framework
- Connectionless flows
  - sequence of packets sent between a source/destination pair
  - maintain soft state at the routers
- Taxonomy
  - router-centric versus host-centric
  - reservation-based versus feedback-based
  - window-based versus rate-based

(Figure: Sources 1 to 3 send flows through a network of routers to Destinations 1 and 2.)

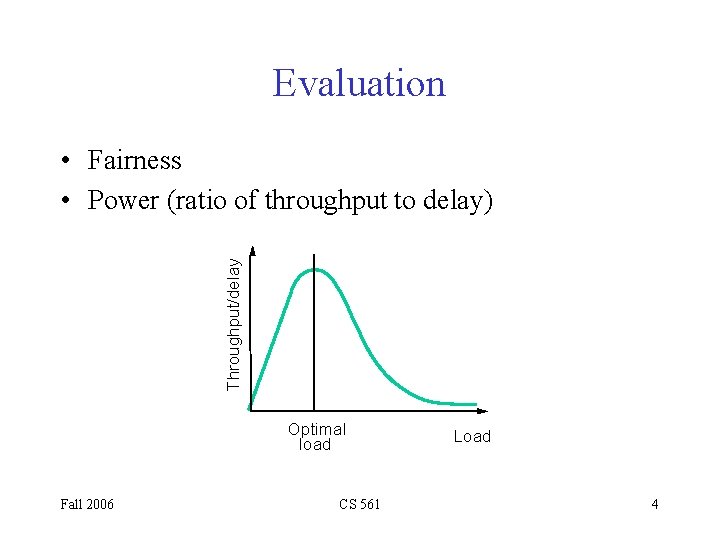

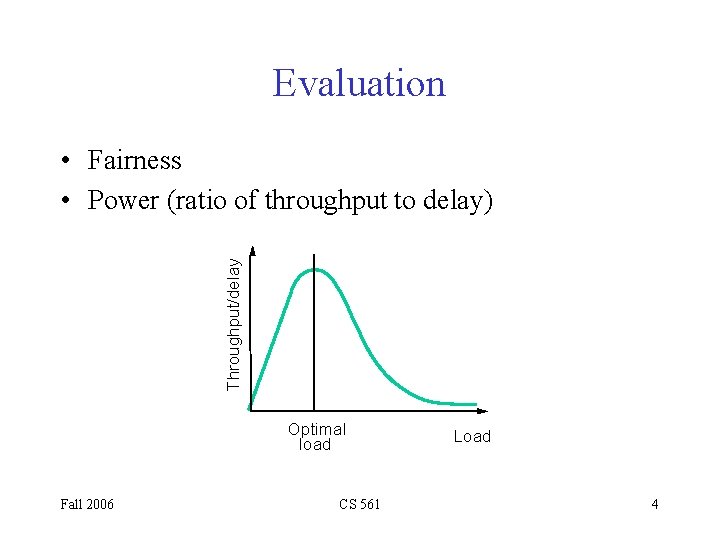

Evaluation
- Fairness
- Power (ratio of throughput to delay)

(Figure: throughput/delay plotted against load, peaking at the optimal load.)
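The slide names the two metrics without formulas. The sketch below computes power exactly as defined above (throughput divided by delay); for fairness it uses Jain's fairness index, a widely used measure that the slide itself does not name, so treat that choice as an assumption. The function names are hypothetical.

```python
from typing import Sequence

def power(throughput: float, delay: float) -> float:
    """Power as defined on the slide: throughput divided by delay."""
    if delay <= 0:
        raise ValueError("delay must be positive")
    return throughput / delay

def jain_fairness(throughputs: Sequence[float]) -> float:
    """Jain's fairness index: (sum x)^2 / (n * sum x^2).
    Equals 1.0 when every flow gets the same throughput and 1/n
    when a single flow gets everything."""
    n = len(throughputs)
    total = sum(throughputs)
    squares = sum(x * x for x in throughputs)
    if n == 0 or squares == 0:
        raise ValueError("need at least one nonzero throughput")
    return total * total / (n * squares)

# Hypothetical numbers, for illustration only.
print(power(throughput=10.0, delay=2.0))   # 5.0
print(jain_fairness([10.0, 10.0, 10.0]))   # 1.0
print(jain_fairness([30.0, 0.0, 0.0]))     # 0.3333333333333333
```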

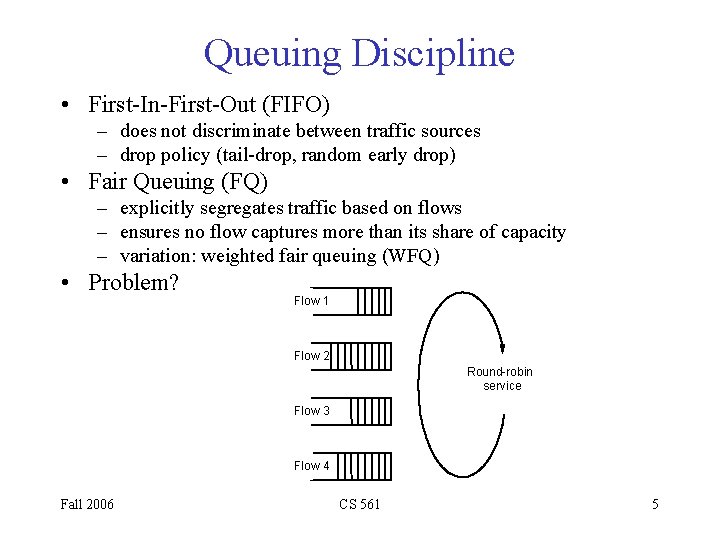

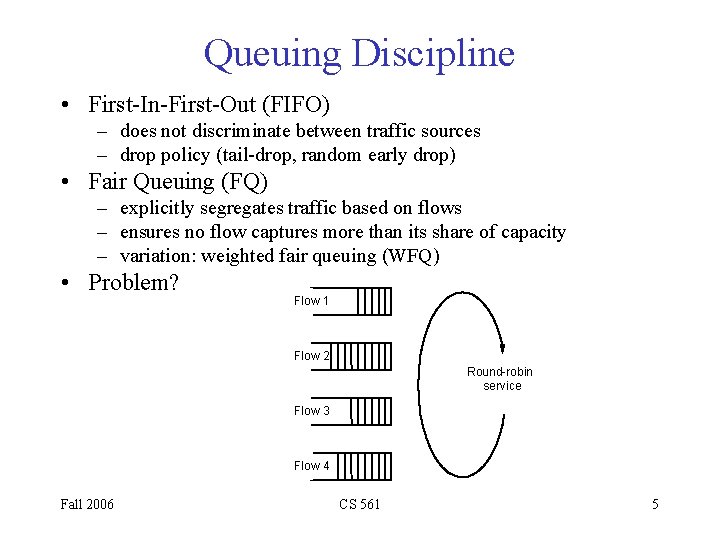

Queuing Discipline
- First-In-First-Out (FIFO)
  - does not discriminate between traffic sources
  - drop policy (tail drop, random early drop)
- Fair Queuing (FQ)
  - explicitly segregates traffic based on flows
  - ensures no flow captures more than its share of capacity
  - variation: weighted fair queuing (WFQ)
- Problem?

(Figure: Flows 1 to 4 each have their own queue at the router, and the queues receive round-robin service.)
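One way to see a problem with plain round-robin (an interpretation; the slide only asks the question) is that serving one *packet* per flow per round is not the same as serving one *byte* per flow: a flow with larger packets gets more bandwidth. A minimal sketch, with hypothetical flow names and packet sizes:

```python
from collections import deque
from typing import Dict, List

def round_robin(queues: Dict[str, List[int]], rounds: int) -> Dict[str, int]:
    """Serve one packet per non-empty flow queue per round.
    queues maps a flow name to its packet lengths in bytes;
    returns the number of bytes each flow got through."""
    pending = {flow: deque(lengths) for flow, lengths in queues.items()}
    sent = {flow: 0 for flow in queues}
    for _ in range(rounds):
        for flow, q in pending.items():
            if q:
                sent[flow] += q.popleft()
    return sent

# Flow A sends 1000-byte packets, flow B sends 250-byte packets.
print(round_robin({"A": [1000] * 10, "B": [250] * 10}, rounds=4))
# {'A': 4000, 'B': 1000}
```

Both flows get the same number of turns, yet A moves four times as many bytes, which is what the bit-by-bit accounting of FQ is designed to correct.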

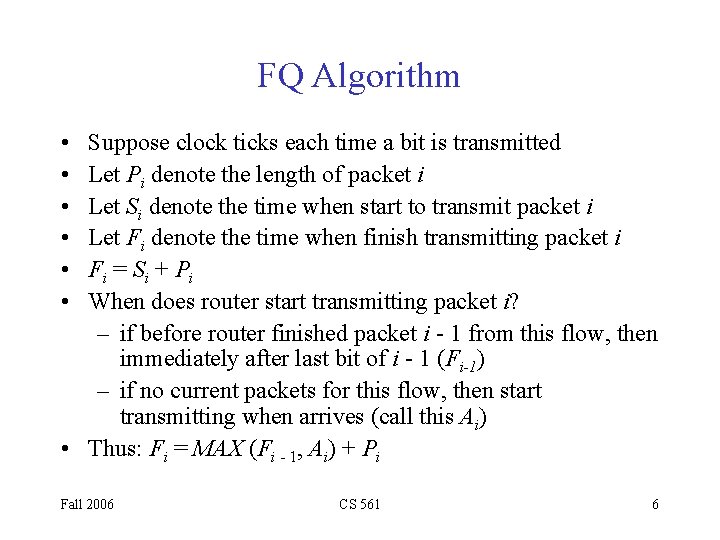

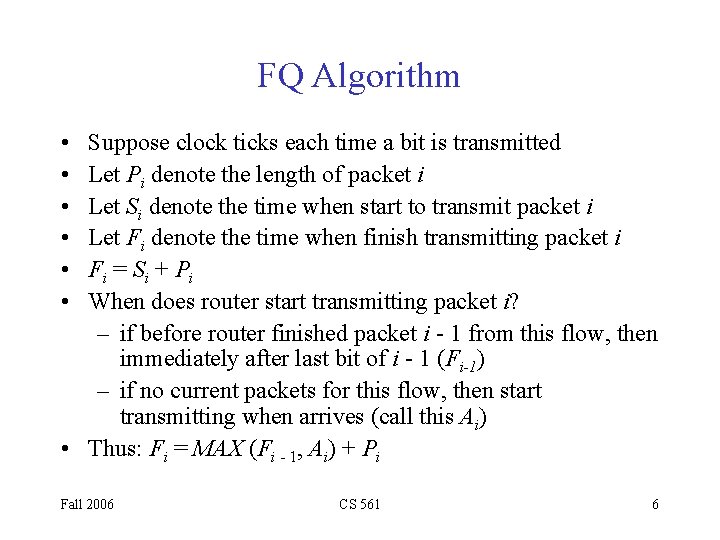

FQ Algorithm
- Suppose the clock ticks each time a bit is transmitted
- Let $P_i$ denote the length of packet $i$
- Let $S_i$ denote the time when the router starts to transmit packet $i$
- Let $F_i$ denote the time when the router finishes transmitting packet $i$
- $F_i = S_i + P_i$
- When does the router start transmitting packet $i$?
  - if packet $i$ arrives before the router has finished packet $i-1$ from this flow, then immediately after the last bit of $i-1$ (at time $F_{i-1}$)
  - if there are no current packets for this flow, then when packet $i$ arrives (call this time $A_i$)
- Thus: $F_i = \max(F_{i-1}, A_i) + P_i$
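The per-flow recurrence $F_i = \max(F_{i-1}, A_i) + P_i$ can be computed in a single pass. This sketch follows the slide's simplification that the clock advances one tick per transmitted bit and assumes $F_0 = 0$; practical FQ implementations instead track a virtual clock that advances more slowly when many flows are active, which is not modeled here. The function name is hypothetical.

```python
from typing import List

def finish_times(arrivals: List[int], lengths: List[int]) -> List[int]:
    """F_i = max(F_{i-1}, A_i) + P_i for one flow, with F_0 = 0.
    arrivals are A_i and lengths are P_i, both in bit-ticks."""
    finishes = []
    prev_finish = 0
    for a, p in zip(arrivals, lengths):
        f = max(prev_finish, a) + p   # start after previous packet or on arrival
        finishes.append(f)
        prev_finish = f
    return finishes

print(finish_times(arrivals=[0, 2, 20], lengths=[5, 3, 4]))  # [5, 8, 24]
```

The second packet arrives at tick 2 but must wait until tick 5; the third arrives after the flow has gone idle, so it starts at its own arrival time.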

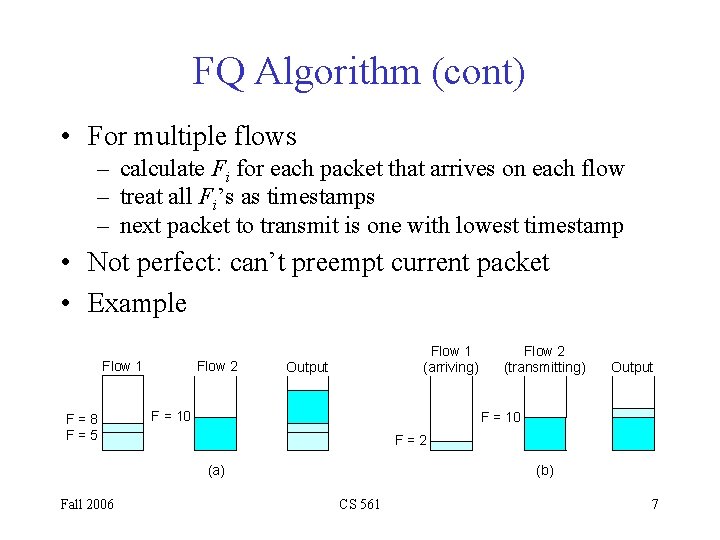

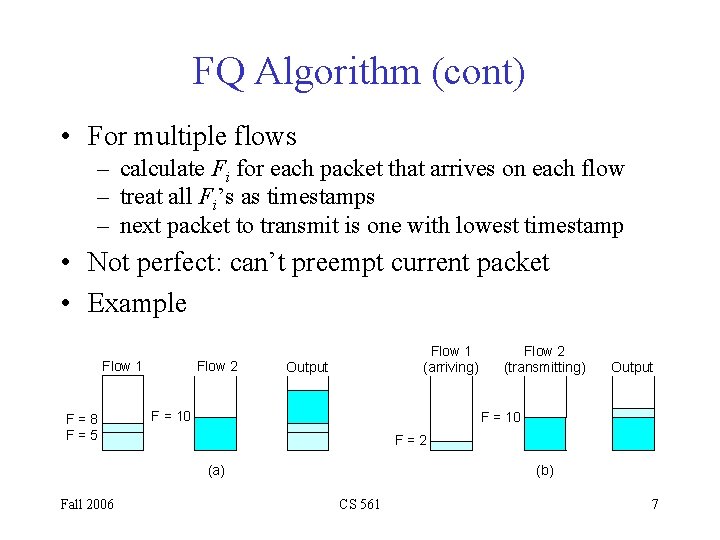

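FQ Algorithm (cont)
- For multiple flows
  - calculate $F_i$ for each packet that arrives on each flow
  - treat all $F_i$’s as timestamps
  - the next packet to transmit is the one with the lowest timestamp
- Not perfect: can’t preempt the current packet
- Example

(Figure: (a) Flow 1 has queued packets with F = 5 and F = 8 and Flow 2 a packet with F = 10; packets with earlier finishing times are sent first. (b) A Flow 1 packet with F = 2 arrives while a Flow 2 packet is being transmitted; the transmission in progress is completed first.)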
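Combining the timestamps across flows, with the "can’t preempt" rule, gives a small discrete-event sketch of one output link. Assumptions: times and lengths are in bit-ticks as on the previous slide, each flow's packets are listed in arrival order, and the flow names are hypothetical.

```python
import heapq
from typing import Dict, List, Tuple

def fq_schedule(flows: Dict[str, List[Tuple[int, int]]]) -> List[Tuple[str, int]]:
    """Non-preemptive FQ on one link.
    flows maps a flow name to (arrival_time, length) pairs in arrival order.
    Returns (flow, F) pairs in transmission order."""
    # Tag every packet with F_i = max(F_{i-1}, A_i) + P_i, per flow.
    packets = []  # (arrival, F, flow, length)
    for flow, pkts in flows.items():
        prev_f = 0
        for arrival, length in pkts:
            prev_f = max(prev_f, arrival) + length
            packets.append((arrival, prev_f, flow, length))
    packets.sort()

    order: List[Tuple[str, int]] = []
    ready: list = []      # min-heap keyed on the F timestamp
    clock, k = 0, 0
    while k < len(packets) or ready:
        # Admit every packet that has arrived by the time the link is free.
        while k < len(packets) and packets[k][0] <= clock:
            _, f, flow, length = packets[k]
            heapq.heappush(ready, (f, flow, length))
            k += 1
        if not ready:                 # link idle: jump to the next arrival
            clock = packets[k][0]
            continue
        f, flow, length = heapq.heappop(ready)
        order.append((flow, f))
        clock += length               # whole packet is sent; no preemption
    return order

# Case (a): lowest timestamp first.
print(fq_schedule({"1": [(0, 5), (0, 3)], "2": [(0, 10)]}))
# [('1', 5), ('1', 8), ('2', 10)]
# Case (b): a packet with F = 2 arrives during a transmission and must wait.
print(fq_schedule({"2": [(0, 10)], "1": [(1, 1)]}))
# [('2', 10), ('1', 2)]
```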

TCP Congestion Control
- Idea
  - assumes a best-effort network (FIFO or FQ routers)
  - each source determines network capacity for itself
  - uses implicit feedback
  - ACKs pace transmission (self-clocking)
- Challenge
  - determining the available capacity in the first place
  - adjusting to changes in the available capacity

Additive Increase/Multiplicative Decrease
- Objective: adjust to changes in the available capacity
- New state variable per connection: `CongestionWindow`
  - limits how much data the source has in transit
  - `MaxWin = MIN(CongestionWindow, AdvertisedWindow)`
  - `EffWin = MaxWin - (LastByteSent - LastByteAcked)`
- Idea:
  - increase `CongestionWindow` when congestion goes down
  - decrease `CongestionWindow` when congestion goes up
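The two window formulas translate directly into code. This is a minimal sketch with all quantities in bytes; the clamp at zero (for when more data is in flight than the current limit allows) is an addition not stated on the slide, and the function name is hypothetical.

```python
def effective_window(congestion_window: int, advertised_window: int,
                     last_byte_sent: int, last_byte_acked: int) -> int:
    """Bytes the sender may still put in flight."""
    max_win = min(congestion_window, advertised_window)   # MaxWin
    in_flight = last_byte_sent - last_byte_acked
    return max(0, max_win - in_flight)                     # EffWin, never negative

print(effective_window(8000, 16000, 12000, 6000))  # 2000
print(effective_window(8000, 4000, 12000, 6000))   # 0
```

In the second call the receiver's advertised window is the tighter limit, and the sender already has more than that in transit.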

AIMD (cont)
- Question: how does the source determine whether or not the network is congested?
- Answer: a timeout occurs
  - a timeout signals that a packet was lost
  - packets are seldom lost due to transmission error
  - a lost packet implies congestion

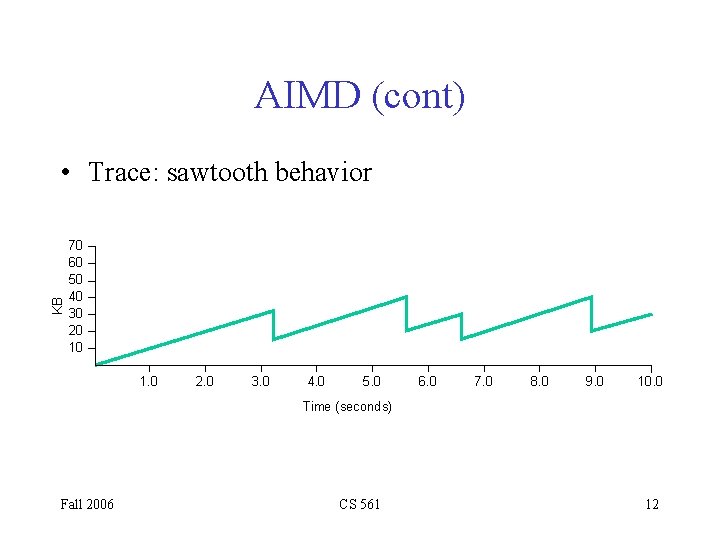

AIMD (cont)
- Algorithm
  - increment `CongestionWindow` by one packet per RTT (linear increase)
  - divide `CongestionWindow` by two whenever a timeout occurs (multiplicative decrease)
- In practice: increment a little for each ACK
  - `Increment = MSS * (MSS / CongestionWindow)`
  - `CongestionWindow += Increment`

(Figure: packets in transit from source to destination during additive increase, with one packet added each RTT.)
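The per-ACK increment spreads roughly one MSS of growth across a window's worth of ACKs. A minimal sketch, with `CongestionWindow` in bytes; the 1460-byte MSS and the one-segment floor on the decrease are assumptions, not values from the slide.

```python
MSS = 1460  # bytes; hypothetical maximum segment size

def on_ack(cwnd: float, mss: int = MSS) -> float:
    """Additive increase: Increment = MSS * (MSS / CongestionWindow)."""
    return cwnd + mss * (mss / cwnd)

def on_timeout(cwnd: float, mss: int = MSS) -> float:
    """Multiplicative decrease: halve, but keep at least one segment."""
    return max(mss, cwnd / 2)

cwnd = 10 * MSS
for _ in range(10):           # one RTT's worth of ACKs for a 10-segment window
    cwnd = on_ack(cwnd)
print(cwnd / MSS)             # a little under 11 segments
cwnd = on_timeout(cwnd)       # back to roughly 5.5 segments
```

Because each ACK's increment shrinks as the window grows, one RTT adds slightly less than one full segment; that is the usual approximation of "one packet per RTT".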

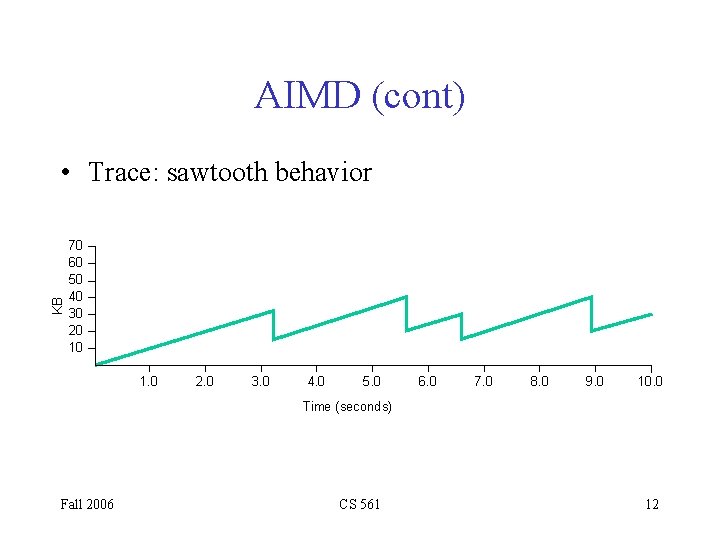

AIMD (cont)
- Trace: sawtooth behavior

(Figure: `CongestionWindow` in KB, 0 to 70, plotted against time in seconds, 0 to 10.)

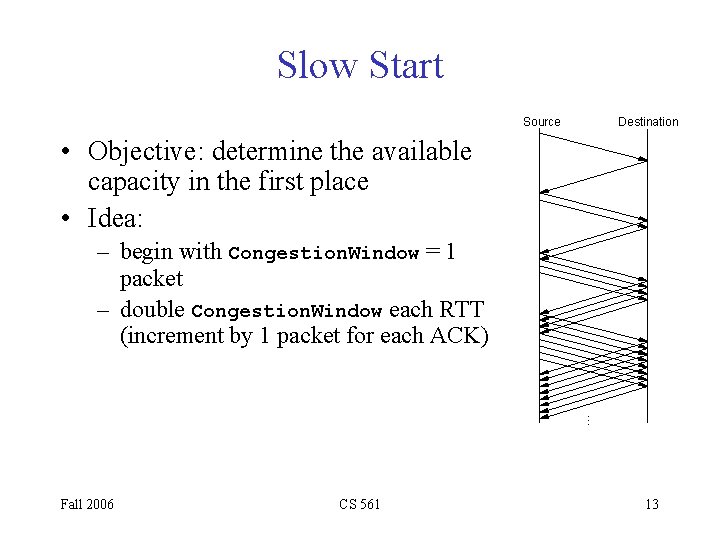

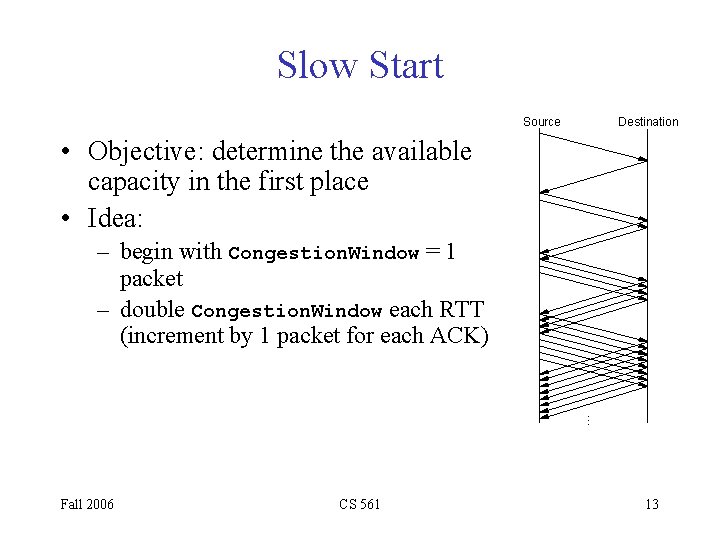

Slow Start
- Objective: determine the available capacity in the first place
- Idea:
  - begin with `CongestionWindow` = 1 packet
  - double `CongestionWindow` each RTT (increment by 1 packet for each ACK)

(Figure: packets in transit from source to destination during slow start, with the number in flight doubling each RTT.)
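Why does "+1 packet per ACK" double the window each RTT? A window of w packets produces w ACKs in the next RTT, so the window grows by w. A minimal sketch counting in packets and assuming no losses; the function name is hypothetical.

```python
from typing import List

def slow_start_rtts(rtts: int) -> List[int]:
    """CongestionWindow (packets) at the start of each RTT, starting from 1."""
    cwnd = 1
    history = [cwnd]
    for _ in range(rtts):
        acks = cwnd            # one ACK per packet sent this RTT (no losses)
        for _ in range(acks):
            cwnd += 1          # slow start: +1 packet per ACK
        history.append(cwnd)
    return history

print(slow_start_rtts(4))  # [1, 2, 4, 8, 16]
```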

Slow Start (cont)
- Exponential growth, but slower than all at once
- Used…
  - when first starting the connection
  - when the connection goes dead waiting for a timeout
- Trace

(Figure: `CongestionWindow` in KB, 0 to 70, plotted against time in seconds, 0 to 9.)

- Problem: lose up to half a `CongestionWindow`’s worth of data

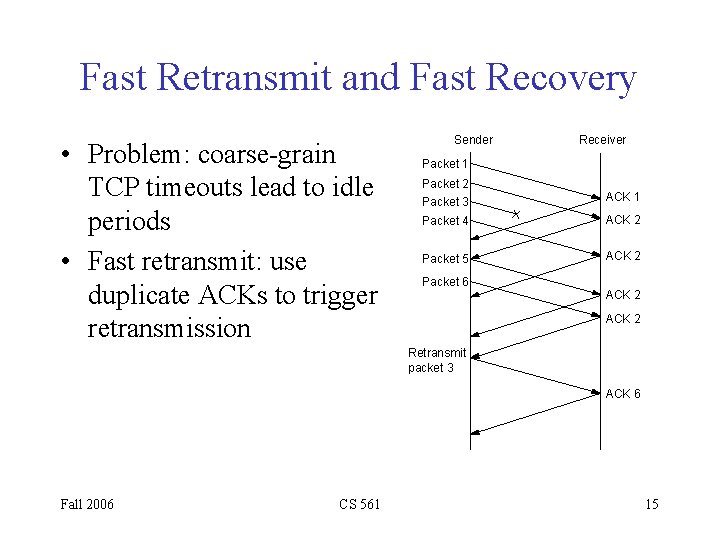

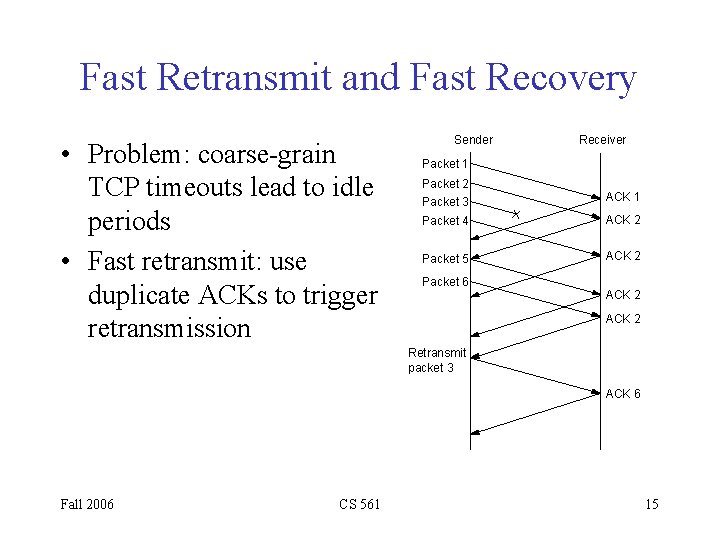

Fast Retransmit and Fast Recovery
- Problem: coarse-grained TCP timeouts lead to idle periods
- Fast retransmit: use duplicate ACKs to trigger retransmission

(Figure: the sender transmits packets 1 to 6 and packet 3 is lost; the receiver returns ACK 1 and then repeated ACK 2s as packets 4 to 6 arrive; the duplicate ACKs trigger a retransmission of packet 3, after which the receiver returns ACK 6.)
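A sender can detect the pattern on this slide by counting repeated cumulative ACKs. The slide does not say how many duplicates to wait for; this sketch assumes a threshold of three, the value standard TCP uses. Here ACK n means "everything through packet n has arrived", so the packet to resend is n + 1. The class name is hypothetical.

```python
from typing import Optional

class DupAckDetector:
    """Counts duplicate cumulative ACKs; signals fast retransmit at a threshold."""

    def __init__(self, threshold: int = 3) -> None:
        self.threshold = threshold            # assumed; not given on the slide
        self.last_ack: Optional[int] = None
        self.dup_count = 0

    def on_ack(self, ack: int) -> bool:
        """Return True when packet ack + 1 should be retransmitted."""
        if ack == self.last_ack:
            self.dup_count += 1
            return self.dup_count == self.threshold   # fire once per loss
        self.last_ack = ack                           # new data acknowledged
        self.dup_count = 0
        return False

d = DupAckDetector()
print([d.on_ack(a) for a in [1, 2, 2, 2, 2]])  # [False, False, False, False, True]
```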

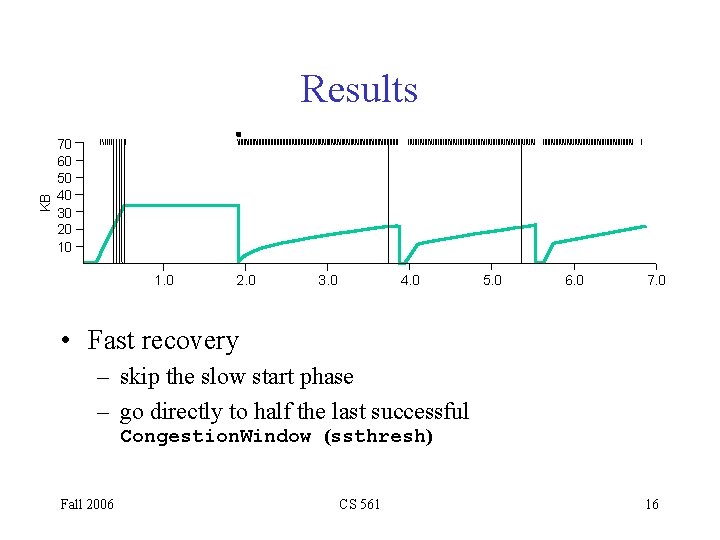

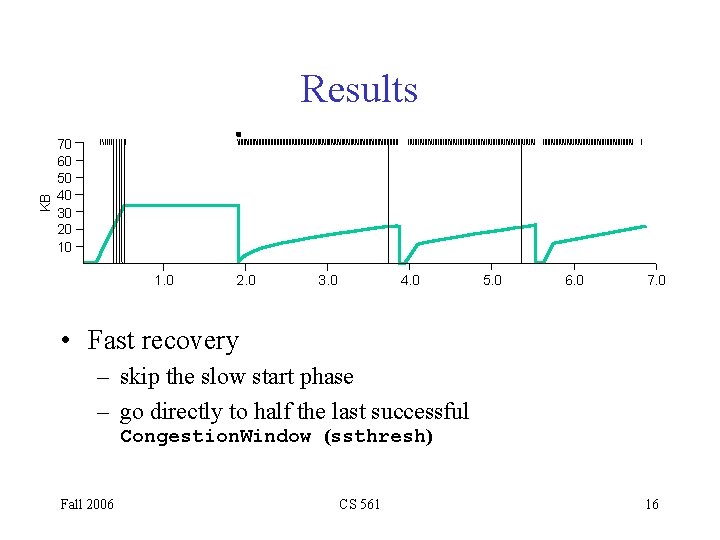

Results

(Figure: `CongestionWindow` in KB, 0 to 70, plotted against time in seconds, 0 to 7, for TCP with fast retransmit.)

- Fast recovery
  - skip the slow start phase
  - go directly to half the last successful `CongestionWindow` (ssthresh)
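The difference between reacting to a timeout and reacting to duplicate ACKs with fast recovery fits in one function. This is a simplified sketch that follows the slide's description rather than the full TCP specification (which, for example, also temporarily inflates the window during recovery). The two-segment floor on ssthresh is borrowed from standard TCP and is an assumption here; the function name is hypothetical.

```python
from typing import Tuple

def on_loss(cwnd: int, mss: int, timeout: bool) -> Tuple[int, int]:
    """Return (new_cwnd, ssthresh) in bytes after a loss is detected."""
    ssthresh = max(2 * mss, cwnd // 2)   # half the last window, at least 2 segments
    if timeout:
        return mss, ssthresh             # restart slow start from one segment
    return ssthresh, ssthresh            # fast recovery: skip slow start

print(on_loss(16000, 1000, timeout=True))   # (1000, 8000)
print(on_loss(16000, 1000, timeout=False))  # (8000, 8000)
```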

Congestion Avoidance
- TCP’s strategy
  - control congestion once it happens
  - repeatedly increase load in an effort to find the point at which congestion occurs, and then back off
- Alternative strategy
  - predict when congestion is about to happen
  - reduce rate before packets start being discarded
  - call this congestion avoidance, instead of congestion control
- Two possibilities
  - router-centric: DECbit and RED Gateways
  - host-centric: TCP Vegas

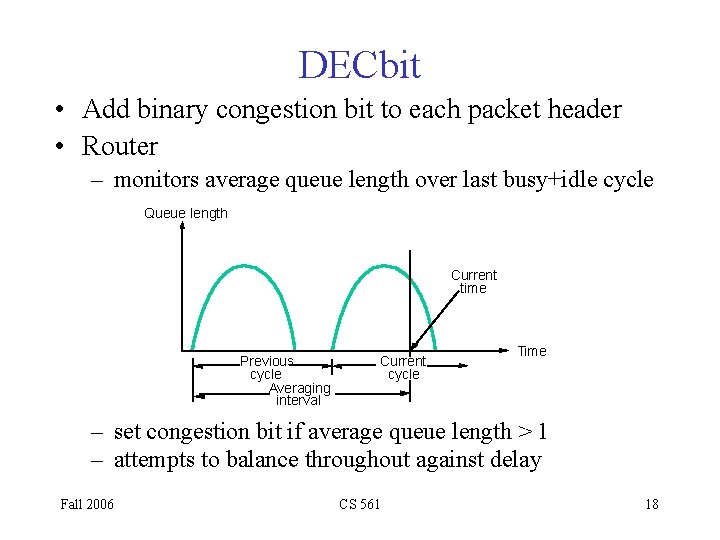

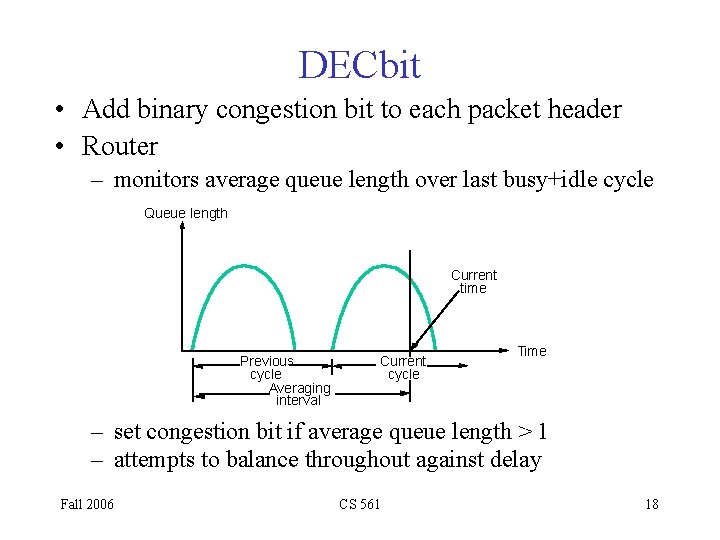

DECbit
- Add a binary congestion bit to each packet header
- Router
  - monitors average queue length over the last busy+idle cycle
  - sets the congestion bit if average queue length > 1
  - attempts to balance throughput against delay

(Figure: queue length plotted against time; the averaging interval spans the previous busy+idle cycle and the current cycle up to the current time.)
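The router's average can be computed as a time-weighted mean of a queue length that only changes at discrete events. In this sketch the event-list representation and function names are hypothetical, the caller is assumed to pass the start of the previous busy+idle cycle as `start`, and the threshold follows the slide's "> 1".

```python
from typing import List, Tuple

def average_queue_length(changes: List[Tuple[float, int]],
                         start: float, now: float) -> float:
    """Time-weighted mean queue length over [start, now].
    changes: (time, new_queue_length) events sorted by time; the length stays
    constant until the next event. Needs an event at or before start."""
    area = 0.0
    for k, (t, q) in enumerate(changes):
        t_next = changes[k + 1][0] if k + 1 < len(changes) else now
        lo, hi = max(t, start), min(t_next, now)
        if hi > lo:
            area += q * (hi - lo)       # area under the piecewise-constant curve
    return area / (now - start)

def congestion_bit(changes: List[Tuple[float, int]], start: float,
                   now: float, threshold: float = 1.0) -> bool:
    return average_queue_length(changes, start, now) > threshold

events = [(0.0, 0), (1.0, 2), (3.0, 0), (4.0, 1)]
print(average_queue_length(events, 0.0, 5.0))  # 1.0
print(congestion_bit(events, 0.0, 5.0))        # False: not strictly above 1
```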

DECbit (cont)
- Destination echoes the bit back to the source
- Source records how many packets resulted in a set bit
- If less than 50% of the last window’s worth had the bit set
  - increase `CongestionWindow` by 1 packet
- If 50% or more of the last window’s worth had the bit set
  - decrease `CongestionWindow` to 0.875 times its previous value
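The source's rule is additive increase with a gentler multiplicative decrease than TCP's halving. A minimal sketch with the window in packets; the list of echoed bits for the last window is a hypothetical input format.

```python
from typing import List

def decbit_update(cwnd: float, bits: List[bool]) -> float:
    """bits: congestion bits echoed for the last window's worth of packets."""
    if not bits:
        return cwnd
    fraction_set = sum(bits) / len(bits)
    if fraction_set < 0.5:
        return cwnd + 1          # additive increase by one packet
    return cwnd * 0.875          # multiplicative decrease

print(decbit_update(8, [False] * 8))              # 9
print(decbit_update(8, [True] * 4 + [False] * 4)) # 7.0
```

Exactly half the bits set counts as congestion, matching "50% or more".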

Random Early Detection (RED)
- Notification is implicit
  - just drop the packet (TCP will time out)
  - could make explicit by marking the packet
- Early random drop
  - rather than wait for the queue to become full, drop each arriving packet with some drop probability whenever the queue length exceeds some drop level

RED Details
- Compute average queue length
  - `AvgLen = (1 - Weight) * AvgLen + Weight * SampleLen`
  - 0 < Weight < 1 (usually 0.002)
  - `SampleLen` is the queue length each time a packet arrives

(Figure: a FIFO queue with the MinThreshold, MaxThreshold, and AvgLen levels marked.)
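The averaging step is an exponentially weighted moving average. With the usual weight of 0.002, a short burst barely moves `AvgLen`, which is the point: RED reacts to sustained queues, not transient ones. A minimal sketch; the function name is hypothetical.

```python
def update_avg_len(avg_len: float, sample_len: int, weight: float = 0.002) -> float:
    """RED's moving average of the queue length, updated on each arrival."""
    if not 0 < weight < 1:
        raise ValueError("weight must be in (0, 1)")
    return (1 - weight) * avg_len + weight * sample_len

# 100 arrivals that each see a queue of 50 packets, starting from an empty average.
avg = 0.0
for _ in range(100):
    avg = update_avg_len(avg, 50)
print(round(avg, 2))  # about 9.07
```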

RED Details (cont)
- Two queue length thresholds
  - if `AvgLen <= MinThreshold` then enqueue the packet
  - if `MinThreshold < AvgLen < MaxThreshold` then calculate probability P and drop the arriving packet with probability P
  - if `MaxThreshold <= AvgLen` then drop the arriving packet
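The three regions map onto a short admit/drop decision. In this sketch the probability P is supplied by a caller-provided hook (`drop_probability`, hypothetical) because its formula is introduced separately; a seeded `random.Random` keeps runs reproducible.

```python
import random
from typing import Callable

def red_admit(avg_len: float, min_th: float, max_th: float,
              drop_probability: Callable[[], float],
              rng: random.Random) -> bool:
    """Return True to enqueue the arriving packet, False to drop it."""
    if avg_len <= min_th:
        return True                           # below MinThreshold: always enqueue
    if avg_len >= max_th:
        return False                          # at or above MaxThreshold: always drop
    return rng.random() >= drop_probability()  # drop with probability P

rng = random.Random(0)
print(red_admit(2, 5, 15, lambda: 0.5, rng))   # True
print(red_admit(20, 5, 15, lambda: 0.0, rng))  # False
```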

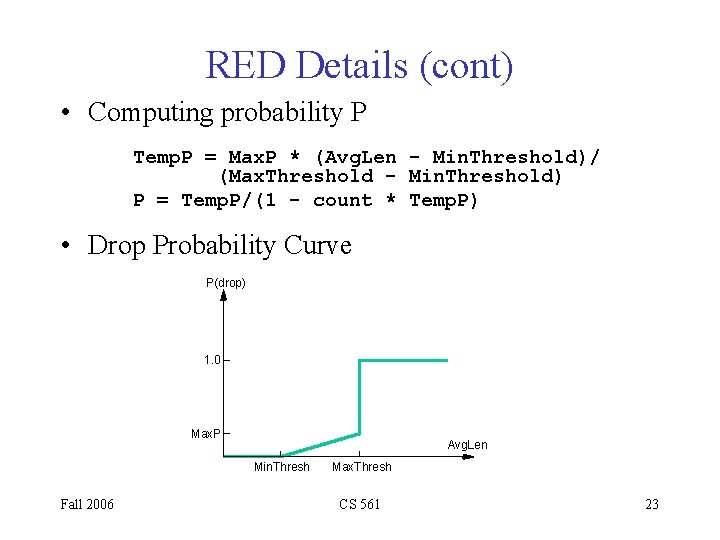

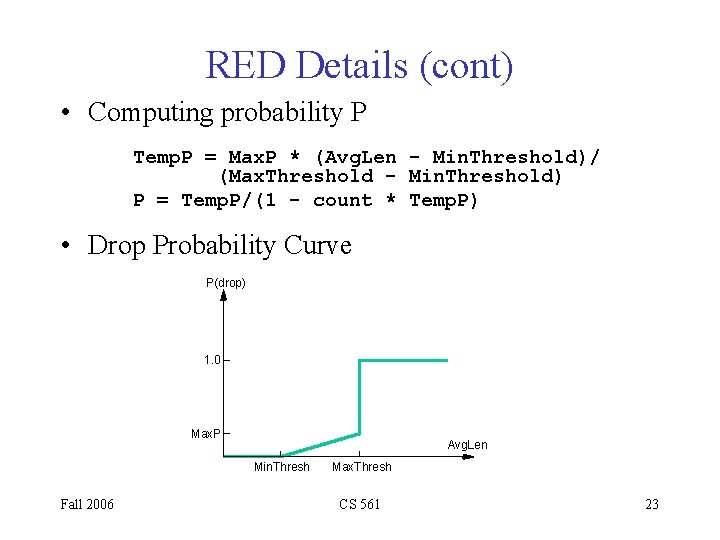

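RED Details (cont)
- Computing probability P
  - `TempP = MaxP * (AvgLen - MinThreshold) / (MaxThreshold - MinThreshold)`
  - `P = TempP / (1 - count * TempP)`
  - `count` is the number of newly arriving packets that have been queued (not dropped) since the last drop while `AvgLen` has been between the two thresholds
- Drop probability curve

(Figure: P(drop) plotted against AvgLen: zero up to MinThresh, rising linearly to MaxP at MaxThresh, then jumping to 1.0.)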
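The two formulas can be evaluated directly. The `count` term makes P rise the longer RED goes without dropping; once `count * TempP` gets close enough to 1 that P would reach 1, this sketch returns exactly 1.0 instead of dividing by zero or returning a negative value. The function name is hypothetical, and it is only meant for `AvgLen` strictly between the thresholds.

```python
def red_drop_probability(avg_len: float, min_th: float, max_th: float,
                         max_p: float, count: int) -> float:
    """P = TempP / (1 - count * TempP), capped at 1."""
    temp_p = max_p * (avg_len - min_th) / (max_th - min_th)
    denom = 1 - count * temp_p
    if denom <= temp_p:          # P would be >= 1 (or undefined): drop for certain
        return 1.0
    return temp_p / denom

# Halfway between the thresholds with MaxP = 0.02, so TempP = 0.01.
print(red_drop_probability(10, 5, 15, 0.02, count=0))   # about 0.01
print(red_drop_probability(10, 5, 15, 0.02, count=50))  # about 0.02
```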

Tuning RED
- The probability of dropping a particular flow’s packet(s) is roughly proportional to the share of the bandwidth that the flow is currently getting
- MaxP is typically set to 0.02, meaning that when the average queue size is halfway between the two thresholds, the gateway drops roughly one out of 50 packets (there TempP = 0.01, and the `count` correction spreads the drops out so that the gap between them is roughly uniform between 1 and 100 packets, about 50 on average)
- If traffic is bursty, then MinThreshold should be sufficiently large to allow link utilization to be maintained at an acceptably high level
- The difference between the two thresholds should be larger than the typical increase in the calculated average queue length in one RTT; setting MaxThreshold to twice MinThreshold is reasonable for traffic on today’s Internet
- Penalty box for offenders
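The "one out of 50" figure can be checked with a quick simulation that holds `AvgLen` fixed at the halfway point (so TempP = 0.01) and applies the `count` correction. The seed and packet count are arbitrary choices for illustration; the function name is hypothetical.

```python
import random

def simulate_drop_fraction(temp_p: float, packets: int, seed: int = 1) -> float:
    """Fraction of arrivals dropped with P = TempP / (1 - count * TempP)."""
    rng = random.Random(seed)
    count = 0      # packets queued since the last drop
    drops = 0
    for _ in range(packets):
        denom = 1 - count * temp_p
        p = 1.0 if denom <= temp_p else temp_p / denom
        if rng.random() < p:
            drops += 1
            count = 0
        else:
            count += 1
    return drops / packets

print(simulate_drop_fraction(temp_p=0.01, packets=200_000))  # close to 1/50
```

Without the `count` term the fraction would be about TempP = 0.01, or one in 100; the correction roughly doubles it while making drops more evenly spaced.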

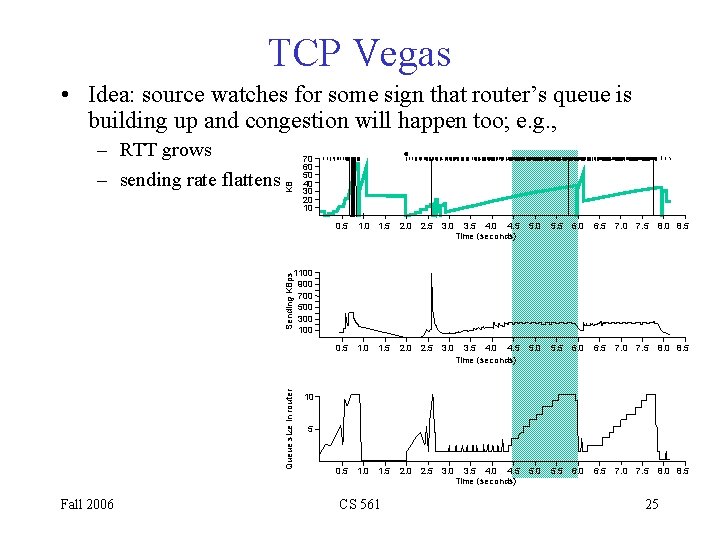

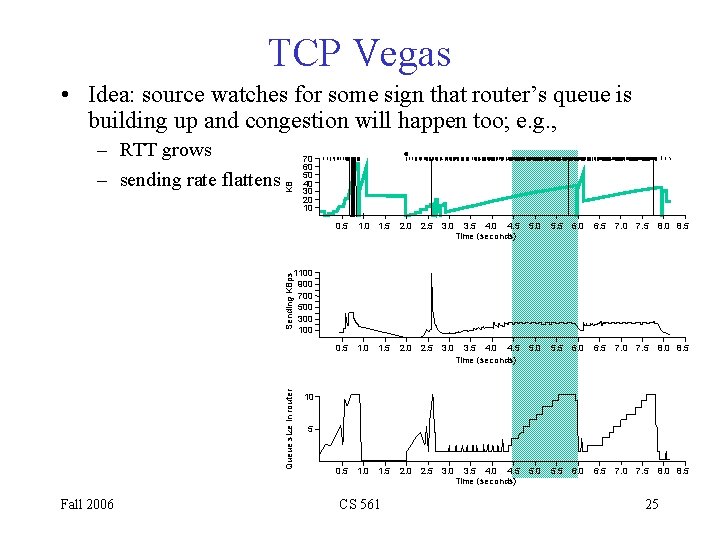

TCP Vegas
- Idea: the source watches for some sign that a router’s queue is building up and congestion will happen soon; e.g.,
  - sending rate flattens
  - RTT grows

(Figure: three traces over 0 to 8.5 seconds: congestion window in KB, sending rate in KBps, and queue size in the router.)

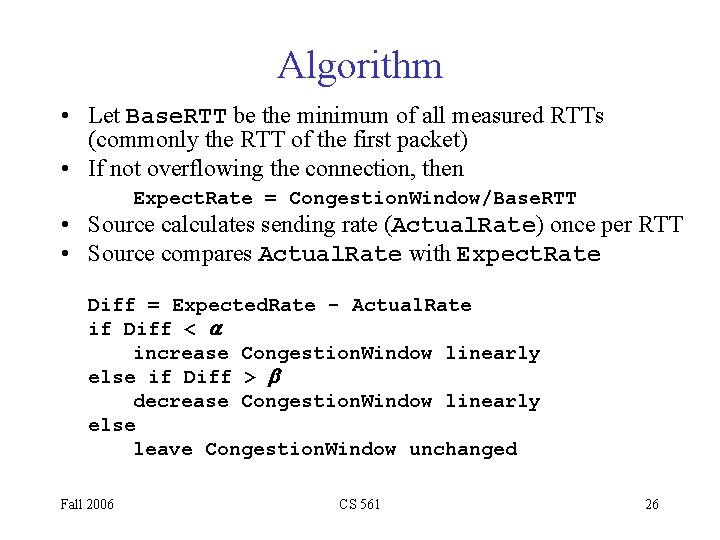

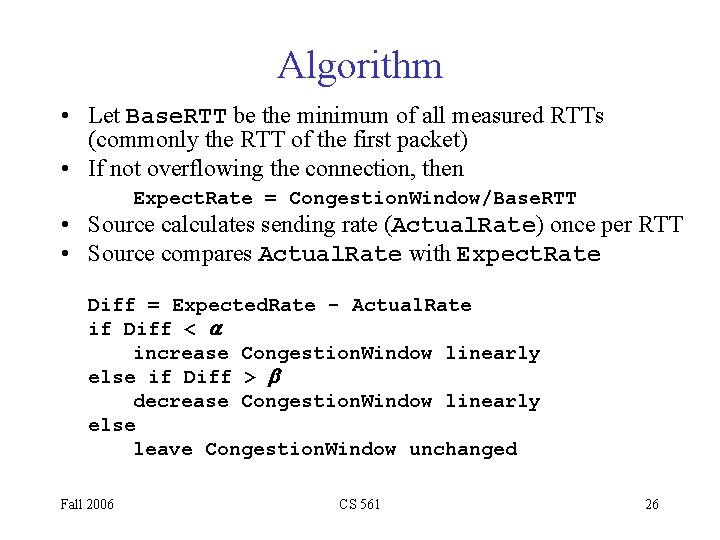

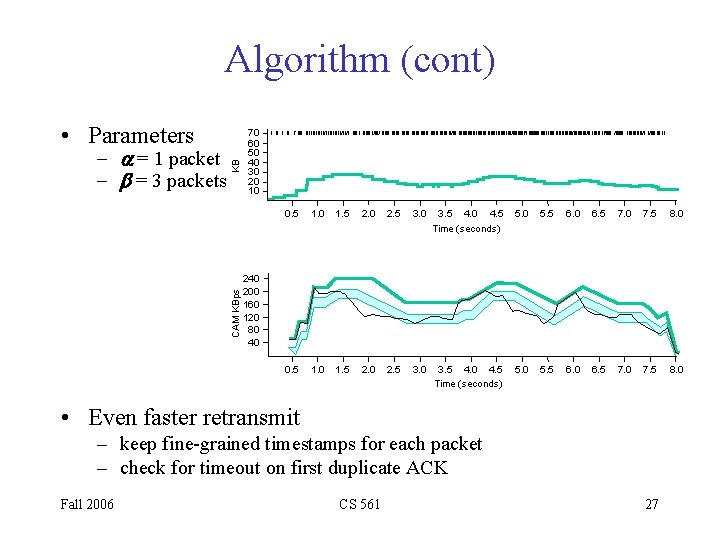

Algorithm
- Let `BaseRTT` be the minimum of all measured RTTs (commonly the RTT of the first packet)
- If not overflowing the connection, then `ExpectedRate = CongestionWindow / BaseRTT`
- Source calculates its sending rate (`ActualRate`) once per RTT
- Source compares `ActualRate` with `ExpectedRate`
  - `Diff = ExpectedRate - ActualRate`
  - if Diff < a, increase `CongestionWindow` linearly
  - else if Diff > b, decrease `CongestionWindow` linearly
  - else leave `CongestionWindow` unchanged
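The once-per-RTT comparison can be sketched as follows, with the window in packets and rates in packets per second. Since a and b are given in packets while `Diff` is a rate, this sketch assumes `Diff` is converted to "extra packets in the network" by multiplying by `BaseRTT`; the floor of one packet on the decrease is also an assumption. The function name is hypothetical.

```python
def vegas_update(cwnd: float, base_rtt: float, actual_rate: float,
                 a: float = 1.0, b: float = 3.0) -> float:
    """One Vegas adjustment; returns the new CongestionWindow in packets."""
    expected_rate = cwnd / base_rtt        # ExpectedRate, packets per second
    diff = expected_rate - actual_rate     # Diff
    extra = diff * base_rtt                # assumed conversion to packets
    if extra < a:
        return cwnd + 1                    # linear increase
    if extra > b:
        return max(1.0, cwnd - 1)          # linear decrease
    return cwnd                            # leave unchanged

print(vegas_update(10, 0.5, actual_rate=20))  # 11: no queuing detected
print(vegas_update(10, 0.5, actual_rate=10))  # 9: about 5 extra packets queued
print(vegas_update(10, 0.5, actual_rate=16))  # 10: within [a, b]
```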

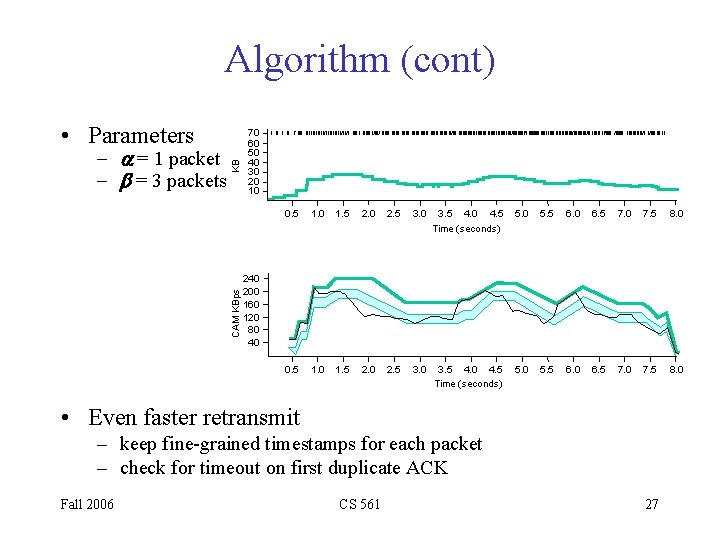

Algorithm (cont)
- Parameters
  - a = 1 packet
  - b = 3 packets

(Figure: TCP Vegas traces: congestion window in KB over 0 to 8 seconds, and below it throughput in KBps over 0 to 4.5 seconds.)

- Even faster retransmit
  - keep fine-grained timestamps for each packet
  - check for timeout on first duplicate ACK
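The "even faster retransmit" idea needs only a per-packet send timestamp and a check on the first duplicate ACK. In this sketch the timeout value is supplied by the caller (assumed to be computed elsewhere), ACK n means "everything through packet n has arrived", and the class name is hypothetical.

```python
from typing import Dict, Optional

class FineGrainedRetransmit:
    """On the first duplicate ACK, retransmit if the missing packet has
    been outstanding longer than the timeout."""

    def __init__(self, rto: float) -> None:
        self.rto = rto
        self.sent_at: Dict[int, float] = {}   # packet number -> send time
        self.last_ack: Optional[int] = None
        self.dups = 0

    def on_send(self, packet: int, now: float) -> None:
        self.sent_at[packet] = now

    def on_ack(self, ack: int, now: float) -> bool:
        """Return True if packet ack + 1 should be retransmitted now."""
        if ack != self.last_ack:
            self.last_ack, self.dups = ack, 0
            return False
        self.dups += 1
        if self.dups != 1:            # only the first duplicate triggers the check
            return False
        sent = self.sent_at.get(ack + 1)
        return sent is not None and now - sent > self.rto

r = FineGrainedRetransmit(rto=0.2)
for p in (1, 2, 3):
    r.on_send(p, now=0.0)
print(r.on_ack(1, now=0.05))  # False: new ACK
print(r.on_ack(1, now=0.30))  # True: packet 2 outstanding for 0.3 s > 0.2 s
```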

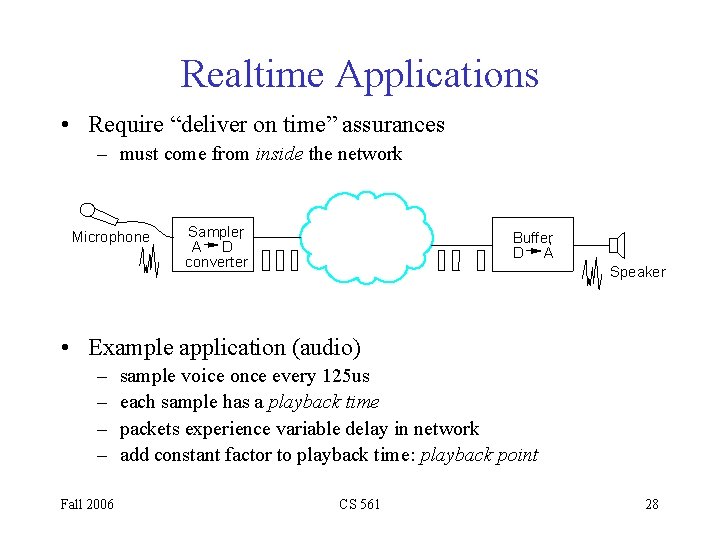

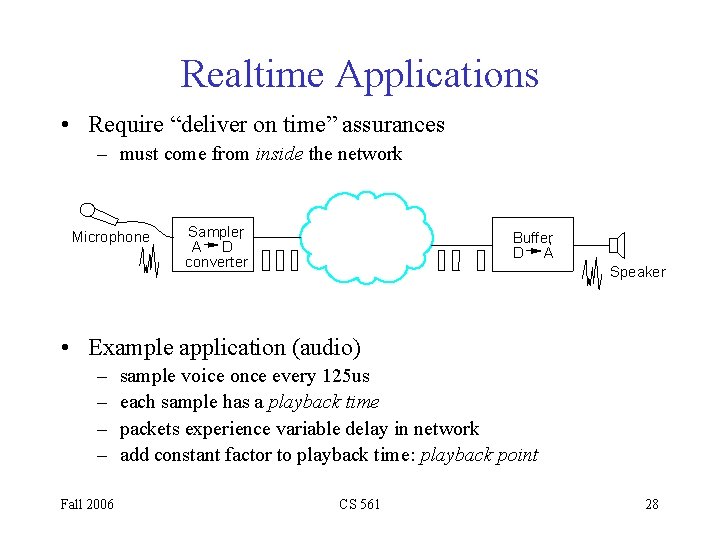

Realtime Applications
- Require “deliver on time” assurances
  - must come from inside the network

(Figure: microphone, sampler and A/D converter, network, buffer and D/A converter, speaker.)

- Example application (audio)
  - sample voice once every 125 µs
  - each sample has a playback time
  - packets experience variable delay in the network
  - add a constant factor to the playback time: playback point

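Playback Buffer

(Figure: sequence number plotted against time, showing packet generation, packet arrival after a variable network delay, and playback at a constant offset; the gap between arrival and playback is held in the buffer.)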
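The playback point is just generation time plus a constant offset, and a packet is useful only if it arrives by then. A minimal sketch with hypothetical timings in milliseconds; the function name is hypothetical.

```python
from typing import List

def late_packets(generated: List[float], arrived: List[float],
                 offset: float) -> List[int]:
    """Indices of packets that arrive after their playback point
    (generated[i] + offset) and are therefore useless to the player."""
    return [i for i, (g, a) in enumerate(zip(generated, arrived)) if a > g + offset]

gen = [0.0, 20.0, 40.0, 60.0]     # generation times, ms
arr = [35.0, 60.0, 150.0, 95.0]   # network delays of 35, 40, 110, 35 ms
print(late_packets(gen, arr, offset=50.0))   # [2]
print(late_packets(gen, arr, offset=120.0))  # []
```

A larger offset rescues more packets at the cost of a longer end-to-end delay, which is the trade-off the delay distribution on the next slide quantifies.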

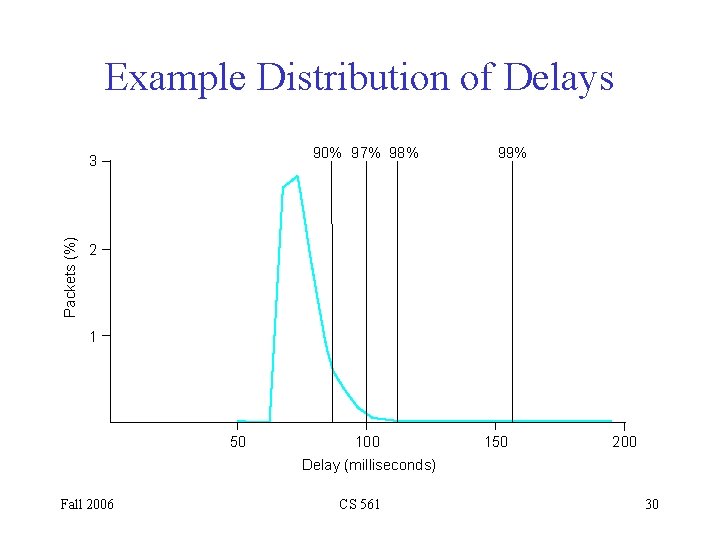

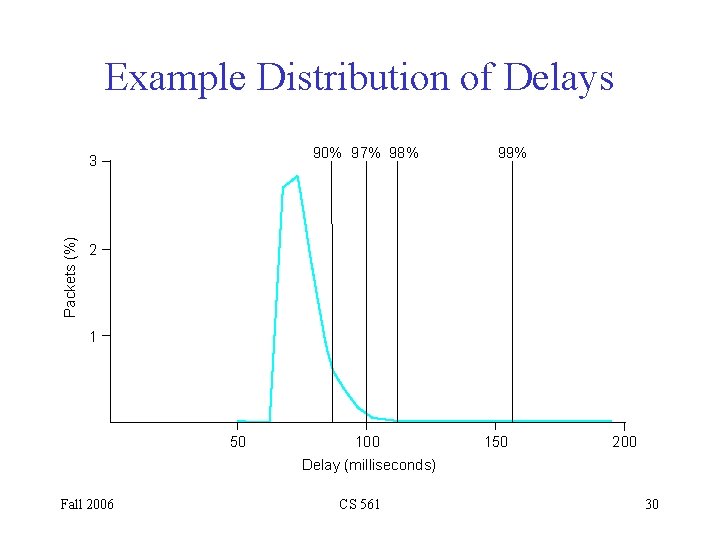

Example Distribution of Delays

(Figure: percentage of packets, 0 to 3%, plotted against delay in milliseconds, 0 to 200, with the 90%, 97%, 98%, and 99% points marked.)
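The marked percentiles suggest one way to choose the playback offset: pick the delay below which a target fraction of packets fall. This sketch uses a nearest-rank percentile over measured delays; the sample data and function name are hypothetical, and the `round` call only guards against floating-point noise in `fraction * n`.

```python
import math
from typing import Sequence

def delay_percentile(delays: Sequence[float], fraction: float) -> float:
    """Smallest observed delay d such that at least `fraction` of the
    samples are <= d (nearest-rank percentile)."""
    if not delays or not 0 < fraction <= 1:
        raise ValueError("need samples and 0 < fraction <= 1")
    ordered = sorted(delays)
    rank = math.ceil(round(fraction * len(ordered), 9))   # 1-based rank
    return ordered[rank - 1]

samples = list(range(1, 101))   # hypothetical delays: 1..100 ms
print(delay_percentile(samples, 0.90))  # 90
print(delay_percentile(samples, 0.99))  # 99
```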

Integrated Services
- Service classes
  - guaranteed
  - controlled-load
- Mechanisms
  - signalling protocol
  - admission control
  - policing
  - packet scheduling

Flowspec
- Rspec: describes the service requested from the network
  - controlled-load: none
  - guaranteed: delay target
- Tspec: describes the flow’s traffic characteristics
  - average bandwidth + burstiness: token bucket filter
    - token rate r
    - bucket depth B
    - must have a token to send a byte
    - must have n tokens to send n bytes
    - start with no tokens
    - accumulate tokens at a rate of r per second
    - can accumulate no more than B tokens
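The token bucket rules on this slide translate almost line for line into a small class. This sketch assumes times are non-decreasing seconds and one token corresponds to one byte; the class name and the values of r and B in the example are hypothetical.

```python
class TokenBucket:
    """Token bucket filter: tokens accrue at rate r per second up to depth B,
    start at zero, and sending n bytes consumes n tokens."""

    def __init__(self, rate: float, depth: float) -> None:
        self.rate = rate        # r, tokens (bytes) per second
        self.depth = depth      # B, maximum tokens
        self.tokens = 0.0       # start with no tokens
        self.last = 0.0         # time of the last update, seconds

    def _refill(self, now: float) -> None:
        self.tokens = min(self.depth, self.tokens + self.rate * (now - self.last))
        self.last = now

    def try_send(self, nbytes: int, now: float) -> bool:
        """Send nbytes at time `now` if enough tokens have accumulated."""
        self._refill(now)
        if self.tokens >= nbytes:
            self.tokens -= nbytes
            return True
        return False

tb = TokenBucket(rate=1000.0, depth=1500.0)
print(tb.try_send(500, now=0.0))   # False: no tokens yet
print(tb.try_send(500, now=1.0))   # True: 1000 tokens accrued
print(tb.try_send(1500, now=5.0))  # True: bucket capped at B = 1500
print(tb.try_send(1, now=5.0))     # False: bucket now empty
```

The depth B bounds the largest burst the flow can send at once, while r bounds its long-run average rate.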

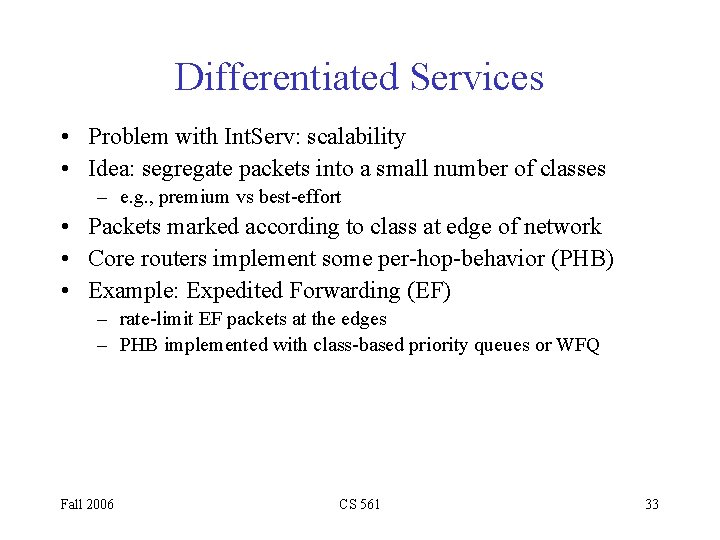

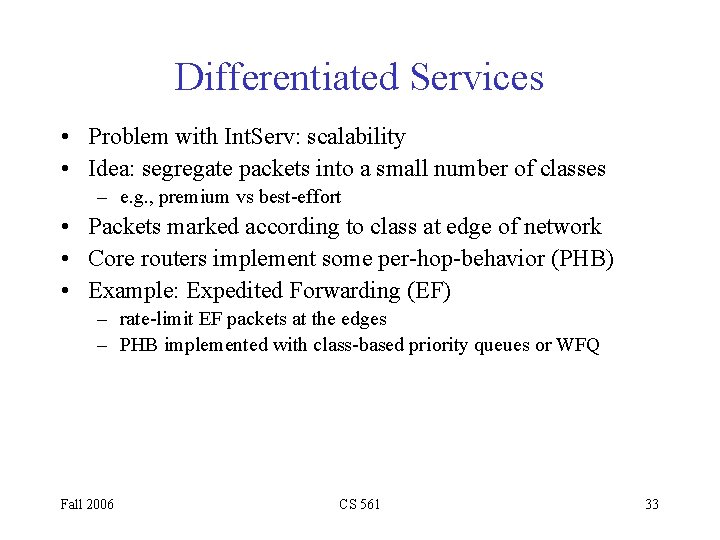

Differentiated Services
- Problem with IntServ: scalability
- Idea: segregate packets into a small number of classes
  - e.g., premium vs. best-effort
- Packets are marked according to class at the edge of the network
- Core routers implement some per-hop behavior (PHB)
- Example: Expedited Forwarding (EF)
  - rate-limit EF packets at the edges
  - PHB implemented with class-based priority queues or WFQ
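A class-based priority queue for EF can be as simple as "always serve EF first". This sketch is only safe because EF traffic is rate-limited at the edges; without that, EF could starve best-effort traffic. The class labels "EF" and "BE" and the packet names are hypothetical.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple

class PriorityPHB:
    """Two-class strict-priority scheduler: EF before best-effort (BE)."""

    def __init__(self) -> None:
        self.queues: Dict[str, Deque[str]] = {"EF": deque(), "BE": deque()}

    def enqueue(self, cls: str, packet: str) -> None:
        self.queues[cls].append(packet)

    def dequeue(self) -> Optional[Tuple[str, str]]:
        for cls in ("EF", "BE"):          # highest priority first
            if self.queues[cls]:
                return cls, self.queues[cls].popleft()
        return None                        # both queues empty

s = PriorityPHB()
for cls, pkt in [("BE", "b1"), ("EF", "e1"), ("BE", "b2"), ("EF", "e2")]:
    s.enqueue(cls, pkt)
print([s.dequeue()[1] for _ in range(4)])  # ['e1', 'e2', 'b1', 'b2']
```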

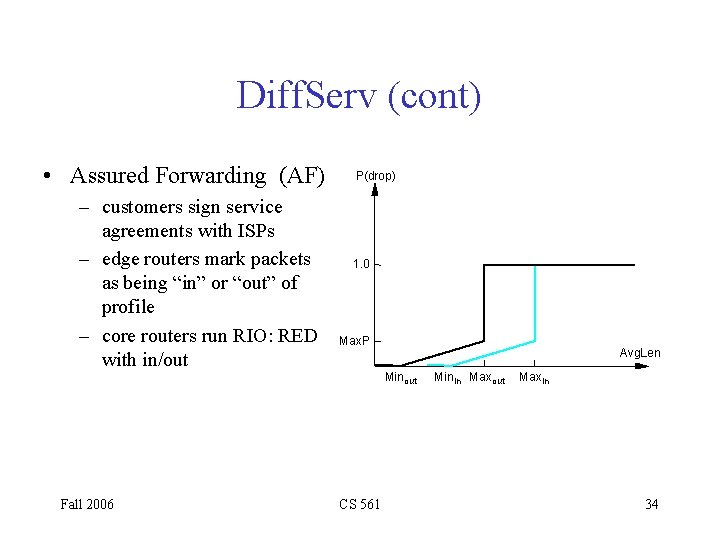

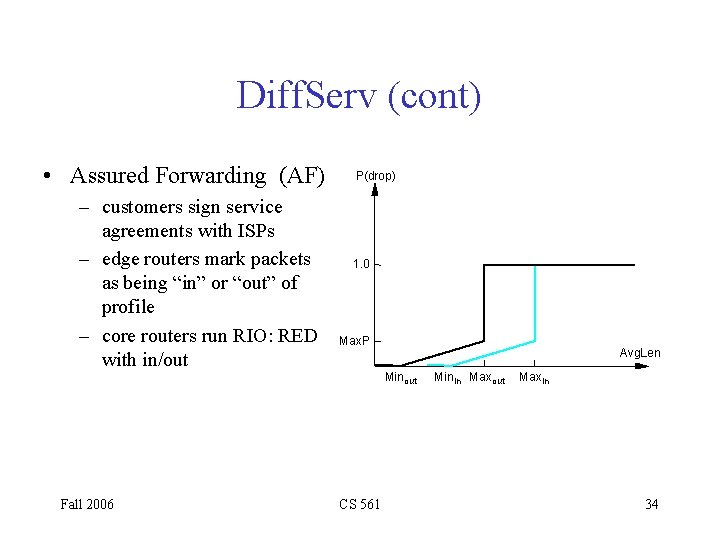

DiffServ (cont)
- Assured Forwarding (AF)
  - customers sign service agreements with ISPs
  - edge routers mark packets as being “in” or “out” of profile
  - core routers run RIO: RED with in/out

(Figure: P(drop), up to 1.0, plotted against AvgLen with two RED curves; the “out” curve begins at Min_out and the “in” curve at a larger Min_in, with thresholds ordered Min_out < Min_in < Max_out < Max_in.)
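RIO runs one queue with two RED drop curves, a more aggressive one for “out” packets and a gentler one for “in” packets. This sketch applies both curves to the same `AvgLen`, as the figure plots them on one axis; real RIO implementations may compute the average differently for each class. All threshold values are hypothetical, chosen only to respect the ordering in the figure, and the `count` correction is omitted for brevity.

```python
from dataclasses import dataclass

@dataclass
class RedCurve:
    min_th: float
    max_th: float
    max_p: float

    def drop_probability(self, avg_len: float) -> float:
        """Base RED curve: 0 below min_th, linear to max_p, then 1."""
        if avg_len <= self.min_th:
            return 0.0
        if avg_len >= self.max_th:
            return 1.0
        return self.max_p * (avg_len - self.min_th) / (self.max_th - self.min_th)

# Hypothetical thresholds ordered Min_out < Min_in < Max_out < Max_in.
OUT = RedCurve(min_th=10, max_th=30, max_p=0.1)
IN = RedCurve(min_th=20, max_th=40, max_p=0.02)

def rio_drop_probability(avg_len: float, in_profile: bool) -> float:
    """Pick the curve by the edge router's in/out marking."""
    return (IN if in_profile else OUT).drop_probability(avg_len)

print(rio_drop_probability(25, in_profile=True))   # small: "in" is protected
print(rio_drop_probability(25, in_profile=False))  # larger: "out" dropped first
```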
