
Computer-marked assessment or learning analytics?

Sally Jordan, Department of Physical Sciences, The Open University

VICE/PHEC, 29th August 2014

Background

- Definitions of learning analytics, e.g. Clow (2013, p. 683): “The analysis and representation of data about learners in order to improve learning”.
- But assessment is sometimes ignored when learning analytics are discussed. Ellis (2013) points out that assessment is ubiquitous in higher education, whilst student interactions in other online environments are not. I will also argue that analysing assessment behaviour enables us to monitor behaviour in depth.
- The assessment literature is also relevant, e.g. Nicol & Macfarlane-Dick (2006) state that good feedback practice “Provides information to teachers that can be used to shape teaching”.

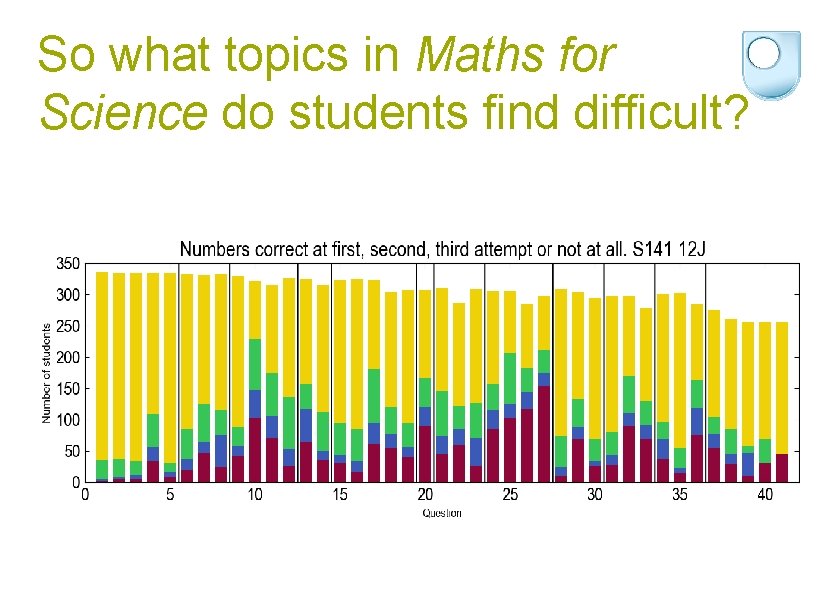

Analysis of student errors

- Errors can be analysed for the individual student or for the whole cohort.
- At the individual level, this can form the basis of diagnostic testing.
- At the cohort level, we can look for questions that students struggle with.
- Looking at responses in more detail tells us more about the errors that students make.
- This can give insight into student misunderstandings.
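As a minimal sketch of the cohort-level step, one might compute each question's facility (the proportion of attempts answered correctly) and flag questions with low facility. The record layout, the function names and the 0.5 threshold are all hypothetical; the slides do not say how this analysis was carried out.

```python
from collections import defaultdict


def question_facility(responses):
    """Return, for each question, the proportion of attempts answered correctly.

    `responses` is an iterable of (student_id, question_id, is_correct) tuples;
    this record layout is hypothetical, chosen only for illustration.
    """
    attempts = defaultdict(int)
    correct = defaultdict(int)
    for _student, question, is_correct in responses:
        attempts[question] += 1
        if is_correct:
            correct[question] += 1
    return {q: correct[q] / attempts[q] for q in attempts}


def hard_questions(facility, threshold=0.5):
    """List questions whose facility is below `threshold`, hardest first.

    The default cut-off of 0.5 is an arbitrary, hypothetical choice.
    """
    flagged = [q for q, f in facility.items() if f < threshold]
    return sorted(flagged, key=lambda q: facility[q])


if __name__ == "__main__":
    # Toy data, invented purely to exercise the functions.
    responses = [
        ("s1", "Q1", True), ("s2", "Q1", True), ("s3", "Q1", False),
        ("s1", "Q2", False), ("s2", "Q2", False), ("s3", "Q2", True),
        ("s1", "Q3", False), ("s2", "Q3", False), ("s3", "Q3", False),
    ]
    facility = question_facility(responses)
    print(hard_questions(facility))  # → ['Q3', 'Q2']
```

The same per-question counts, grouped by student instead of by question, would give the individual-level view used in diagnostic testing.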

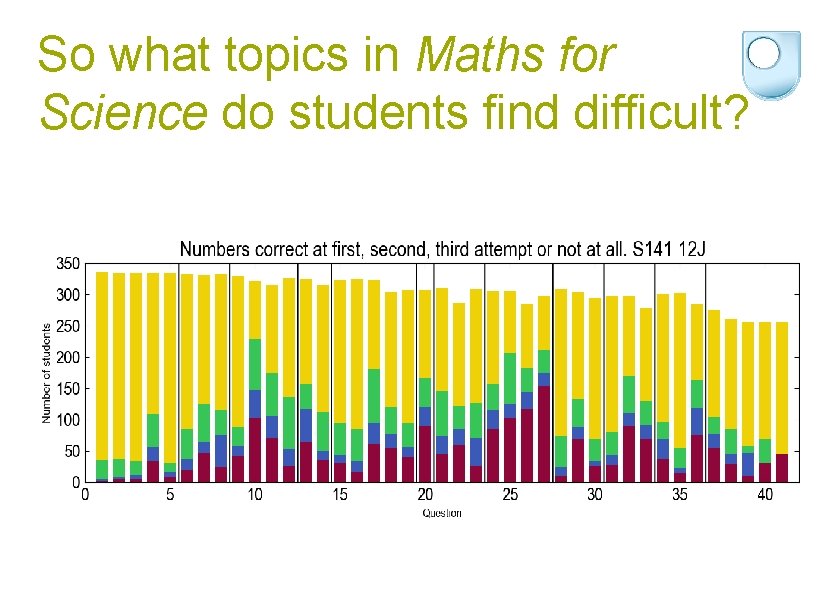

So what topics in Maths for Science do students find difficult?


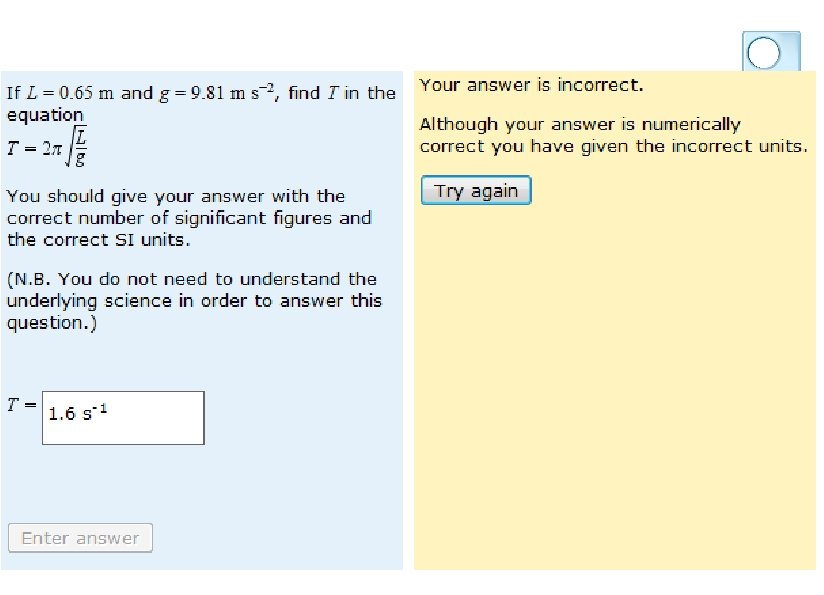

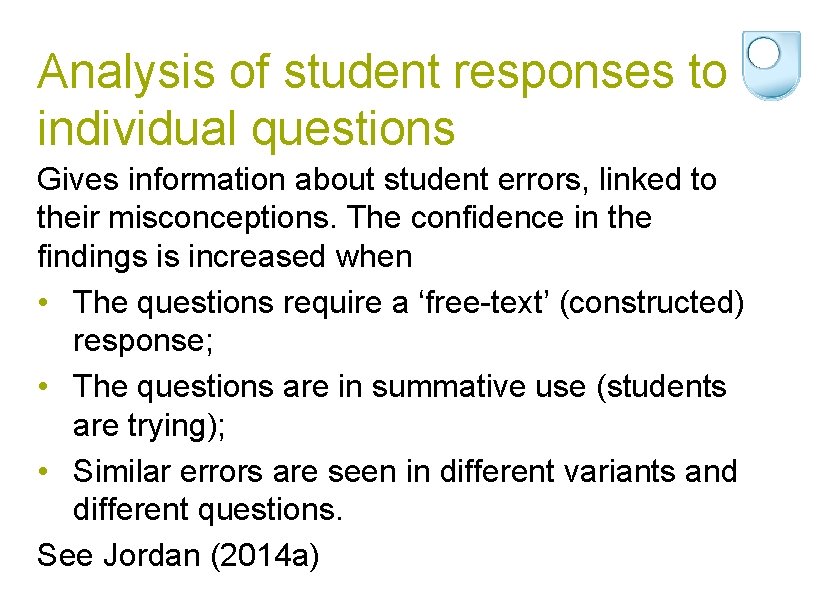

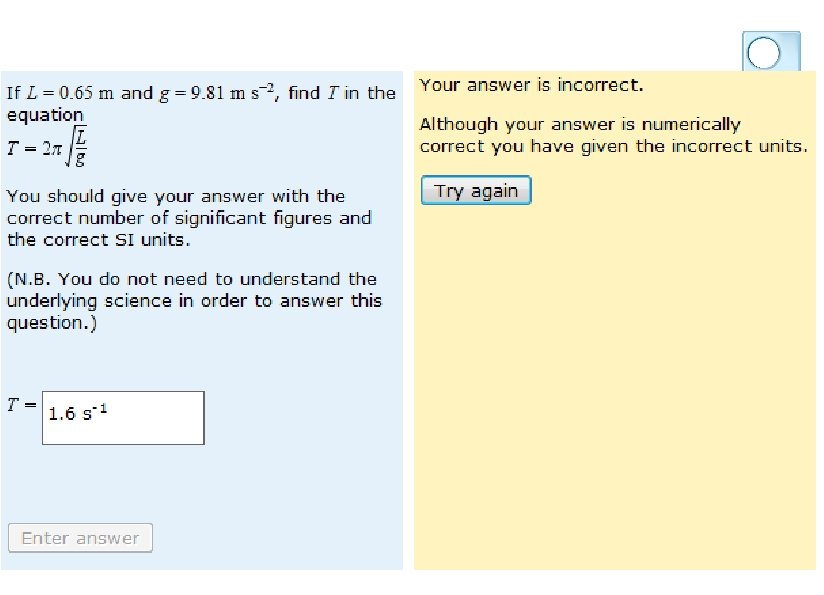

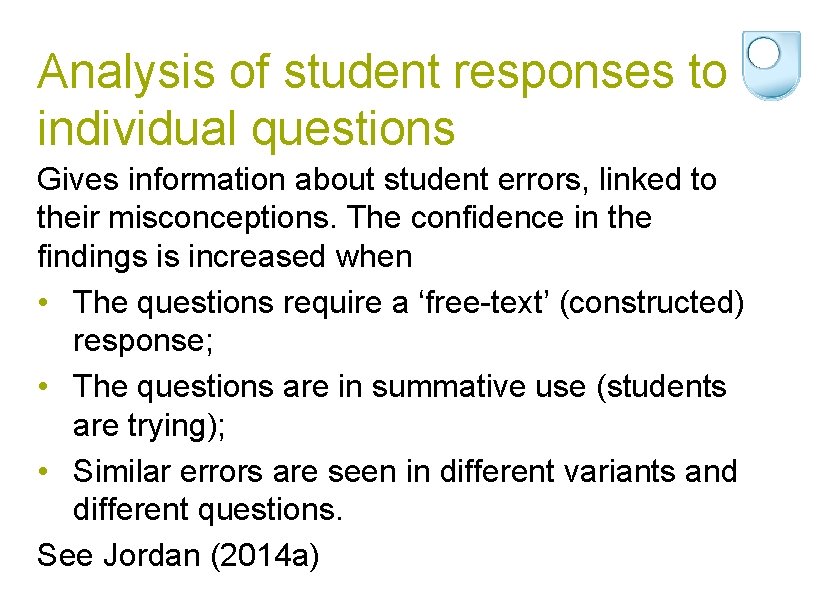

Analysis of student responses to individual questions

This gives information about student errors and the misconceptions linked to them. Confidence in the findings is greater when:

- the questions require a ‘free-text’ (constructed) response;
- the questions are in summative use (so students are trying);
- similar errors are seen in different variants and different questions.

See Jordan (2014a).
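One simple way to check the third condition, that the same error recurs across variants, is to work out the wrong answer each hypothesised misconception would produce in each variant and count the free-text responses that match it. This is a sketch under assumed data layouts, with invented variants and misconception labels; it is not the procedure used in Jordan (2014a).

```python
from collections import defaultdict


def normalise(answer):
    """Crude normalisation of a free-text answer: drop all whitespace, lower-case."""
    return "".join(answer.split()).lower()


def misconception_counts(responses, predicted_errors):
    """Count how often each hypothesised misconception appears, and in which variants.

    responses:        iterable of (variant_id, free_text_answer) pairs
    predicted_errors: {variant_id: {misconception_label: predicted_wrong_answer}}
    Both layouts are hypothetical, chosen only for this sketch.
    Returns {label: (number_of_matching_responses, sorted_list_of_variants)}.
    """
    counts = defaultdict(int)
    variants_seen = defaultdict(set)
    for variant, answer in responses:
        given = normalise(answer)
        for label, wrong in predicted_errors.get(variant, {}).items():
            if given == normalise(wrong):
                counts[label] += 1
                variants_seen[label].add(variant)
    return {label: (counts[label], sorted(variants_seen[label])) for label in counts}


if __name__ == "__main__":
    # Invented variants: v1 asks for 2^3 (correct 8), v2 asks for 5^2 (correct 25).
    # "times" = base multiplied by exponent; "plus" = base added to exponent.
    predicted = {
        "v1": {"times": "6", "plus": "5"},
        "v2": {"times": "10", "plus": "7"},
    }
    answers = [("v1", "6"), ("v1", "8"), ("v2", " 10 "), ("v2", "25"), ("v1", "5")]
    print(misconception_counts(answers, predicted))
    # → {'times': (2, ['v1', 'v2']), 'plus': (1, ['v1'])}
```

A misconception that matches responses in several variants (here the hypothetical "times" label) is stronger evidence of a genuine misunderstanding than one seen in a single variant.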

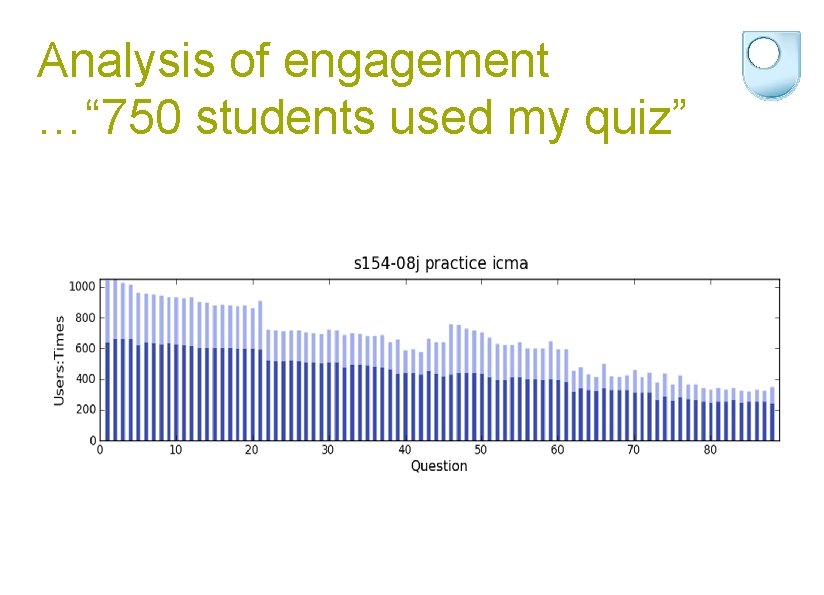

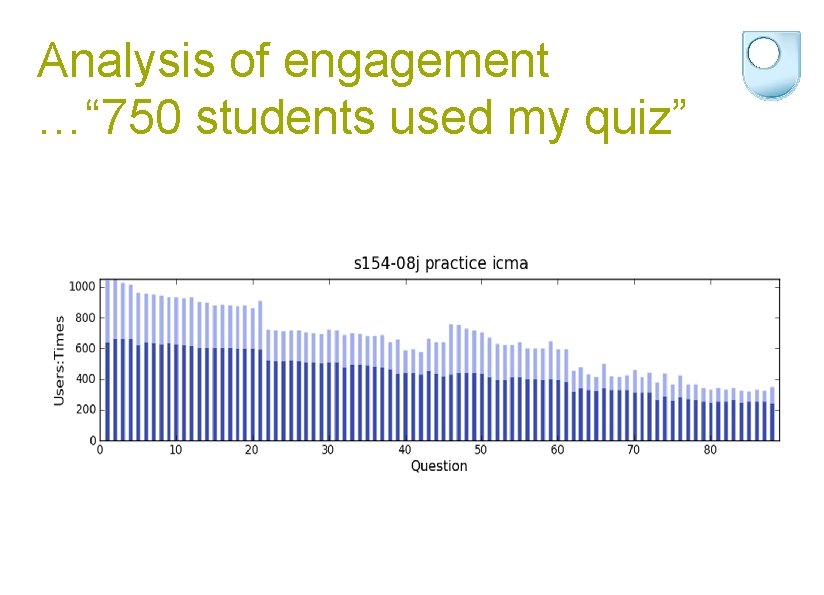

Analysis of engagement … “750 students used my quiz”

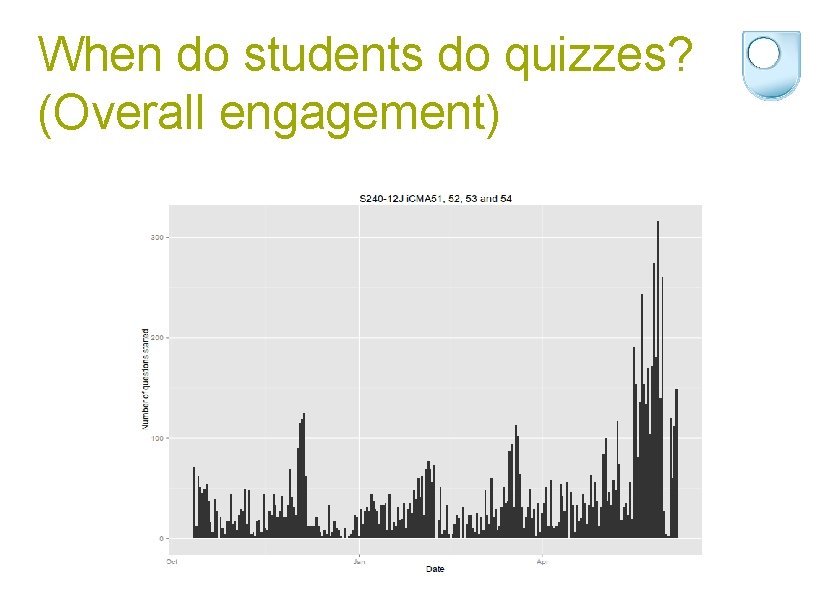

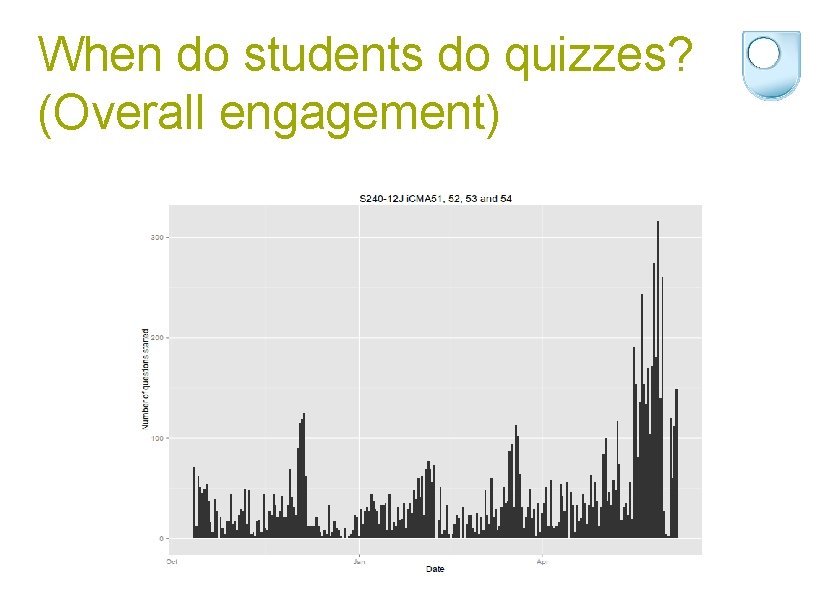

When do students do quizzes? (overall engagement)

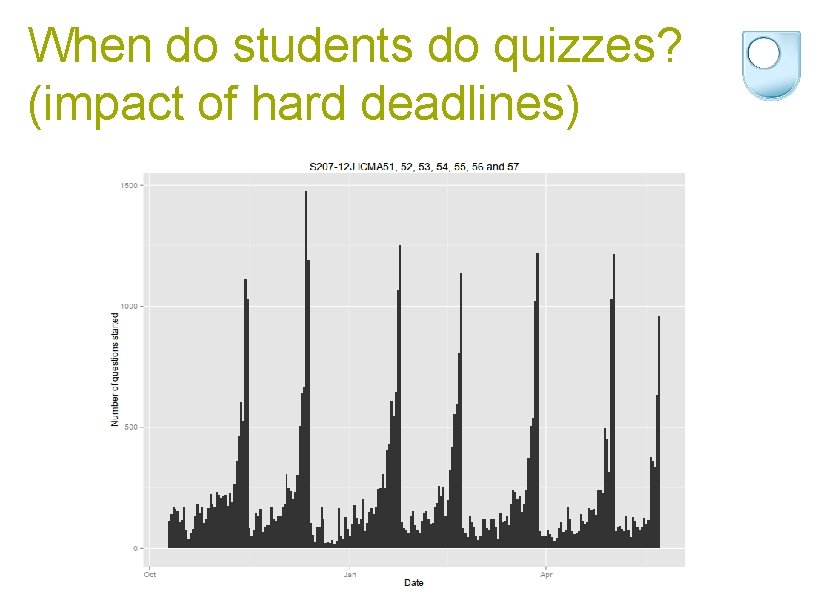

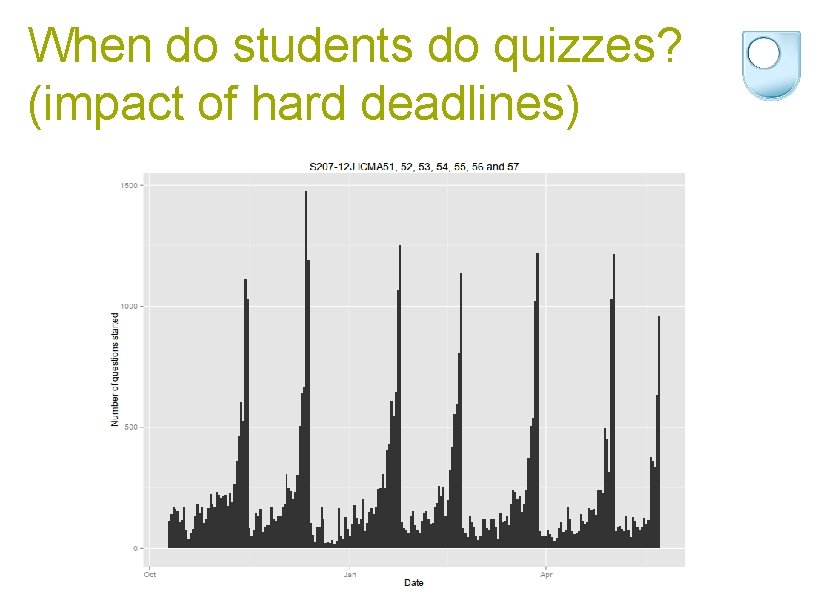

When do students do quizzes? (impact of hard deadlines)

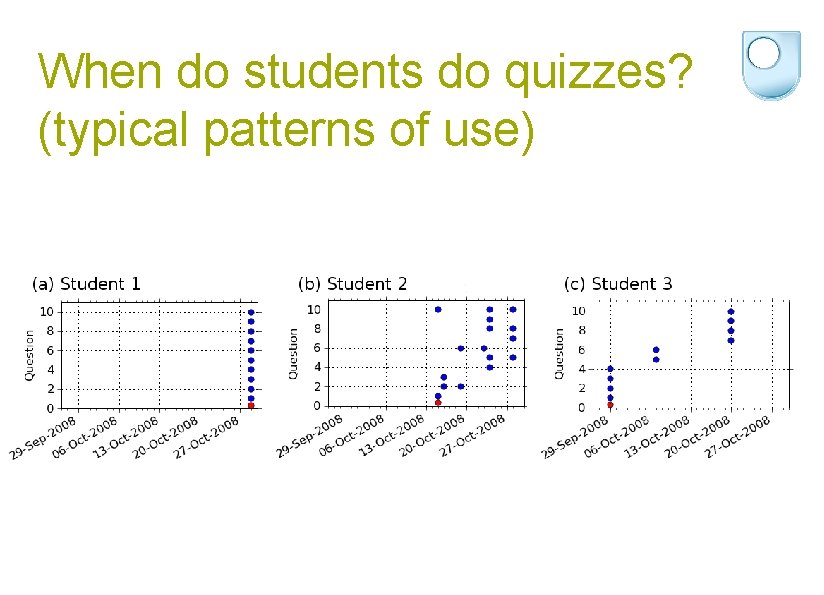

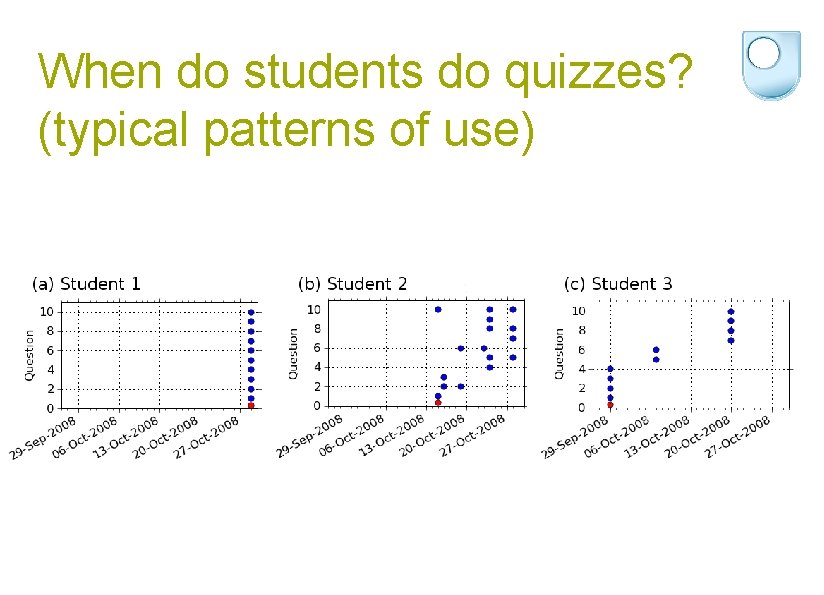
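Plots of when students attempt quizzes can be built from attempt timestamps. The sketch below assumes that each attempt is logged with a timestamp and that the deadline is known (neither is described on the slides). It bins attempts by whole days before the deadline and reports the share made in the final days; all names and dates are hypothetical.

```python
from collections import Counter
from datetime import datetime


def days_before_deadline(attempt_times, deadline):
    """Histogram of quiz attempts by whole days remaining before `deadline`.

    Key 0 covers the final 24 hours, 1 the 24 hours before that, and so on;
    negative keys are attempts made after the deadline.
    """
    counts = Counter()
    for t in attempt_times:
        # timedelta.days rounds towards minus infinity, so 1 hour late gives -1.
        counts[(deadline - t).days] += 1
    return dict(sorted(counts.items()))


def share_in_final_days(histogram, n_days):
    """Fraction of all attempts made in the last `n_days` days before the deadline."""
    total = sum(histogram.values())
    last = sum(c for d, c in histogram.items() if 0 <= d < n_days)
    return last / total if total else 0.0


if __name__ == "__main__":
    deadline = datetime(2014, 3, 10, 12, 0)  # hypothetical deadline
    attempts = [
        datetime(2014, 3, 3, 12, 0),   # 7 days early
        datetime(2014, 3, 9, 11, 0),   # 1 day 1 hour early
        datetime(2014, 3, 9, 13, 0),   # 23 hours early
        datetime(2014, 3, 10, 9, 0),   # 3 hours early
        datetime(2014, 3, 10, 13, 0),  # 1 hour late
    ]
    hist = days_before_deadline(attempts, deadline)
    print(hist)                          # → {-1: 1, 0: 2, 1: 1, 7: 1}
    print(share_in_final_days(hist, 1))  # → 0.4
```

Comparing this share for modules with and without hard deadlines is one possible way to quantify the kind of effect the deadline plots illustrate.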

When do students do quizzes? (typical patterns of use)

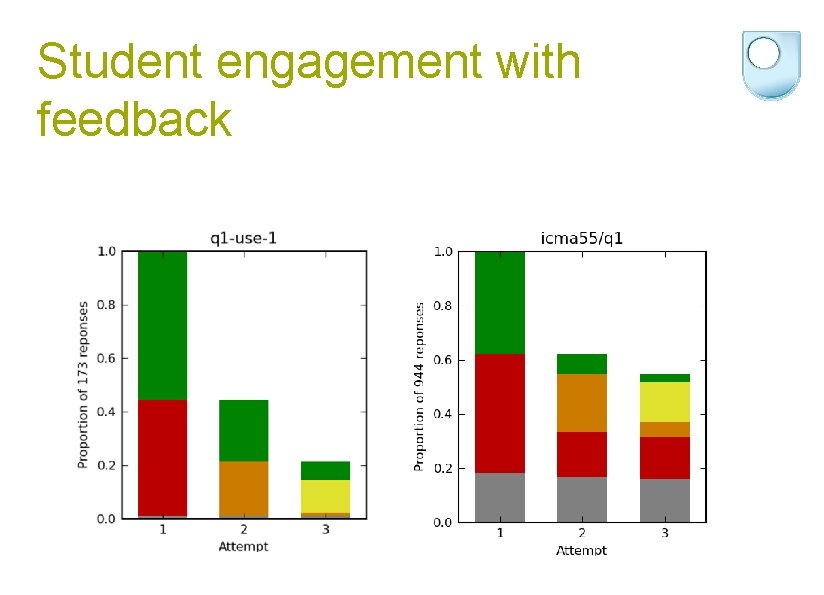

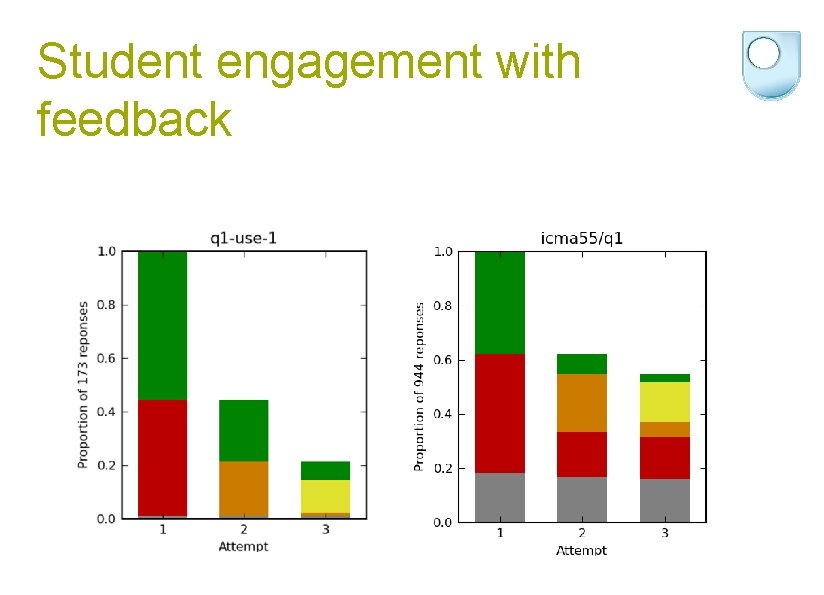

Student engagement with feedback

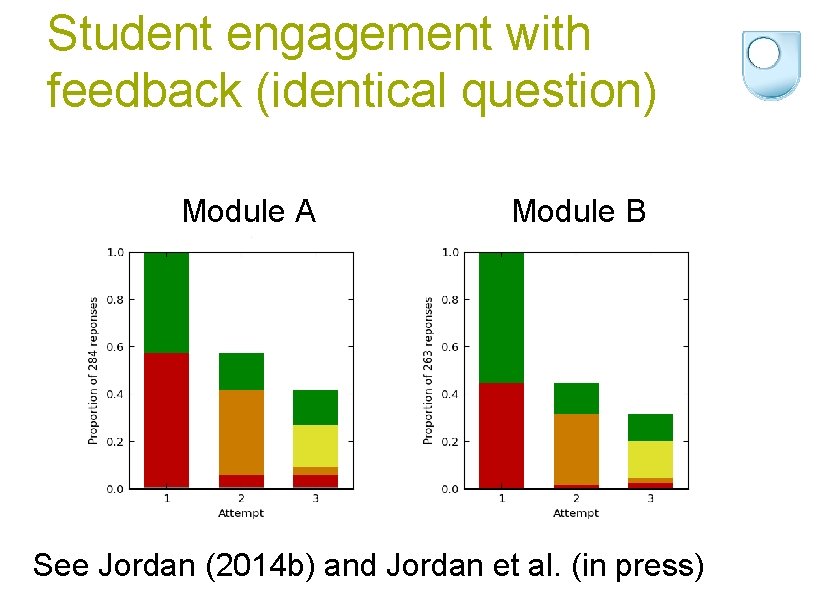

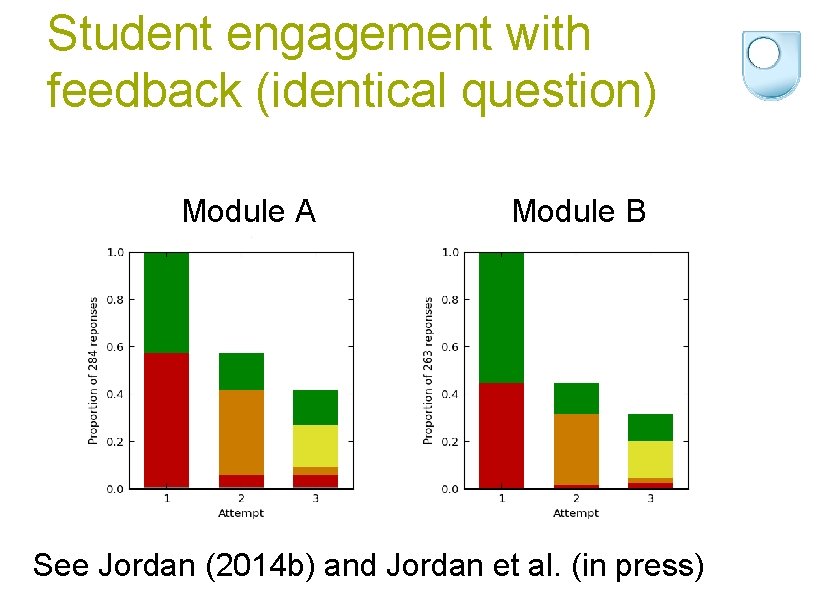

Student engagement with feedback (identical question used in Module A and Module B)

See Jordan (2014b) and Jordan et al. (in press).

The future?

Redecker, Punie and Ferrari (2012, p. 302) suggest that we should “transcend the testing paradigm”; data collected from student interaction in an online environment offers the possibility of assessing students on their actual interactions, rather than adding assessment separately.

References

- Clow, D. (2013). An overview of learning analytics. Teaching in Higher Education, 18(6), 683-695.
- Ellis, C. (2013). Broadening the scope and increasing the usefulness of learning analytics: The case for assessment analytics. British Journal of Educational Technology, 44(4), 662-664.
- Nicol, D., & Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: a model and seven principles of good feedback practice. Studies in Higher Education, 31(2), 199-218.
- Redecker, C., Punie, Y., & Ferrari, A. (2012). eAssessment for 21st Century Learning and Skills. In A. Ravenscroft, S. Lindstaedt, C. D. Kloos & D. Hernandez-Leo (Eds.), 21st Century Learning for 21st Century Skills (pp. 292-305). Berlin: Springer.

For more about what I’ve discussed

- Jordan, S. (2014a). Adult science learners’ mathematical mistakes: an analysis of student responses to computer-marked questions. European Journal of Science and Mathematics Education, 2(2), 63-87.
- Jordan, S. (2014b). Using e-assessment to learn about students and learning. International Journal of eAssessment, 4(1).
- Jordan, S. E., Bolton, J. P. R., Cook, L. J., Datta, S. B., Golding, J. P., Haresnape, J. M., Jordan, R. S., Murphy, K. P. S. J., New, K. J., & Williams, R. T. (in press). Thresholded assessment: Does it work? Report on an eSTEeM Project. Will be available soon from http://www.open.ac.uk/about/teaching-and-learning/esteem/projects/themes/innovative-assessment/thresholded-assessment-does-it-work

Sally Jordan, Senior Lecturer and Staff Tutor, Head of Physics, Department of Physical Sciences, The Open University. Email: sally.jordan@open.ac.uk. Blog: http://www.open.ac.uk/blogs/SallyJordan/