Computer Architecture CS 3330: Systolic Array, Accelerators (Samira Khan)



Computer Architecture CS 3330: Systolic Array, Accelerators

Samira Khan, University of Virginia, Feb 27, 2020

The content and concept of this course are adapted from CMU ECE 447.

AGENDA
- Logistics
- Review from last lecture
- Systolic Arrays
- Why Accelerators?

LOGISTICS
- Lab 2
  - Part 1: Due on Feb 25, 2020
  - Part 2: Due on Mar 3, 2020
  - Even though you have three weeks to finish, please start early
- HW 3
  - Will be out on Friday
  - Due on Mar 6, 2020
  - More than two weeks to get it done
  - Important for the midterm

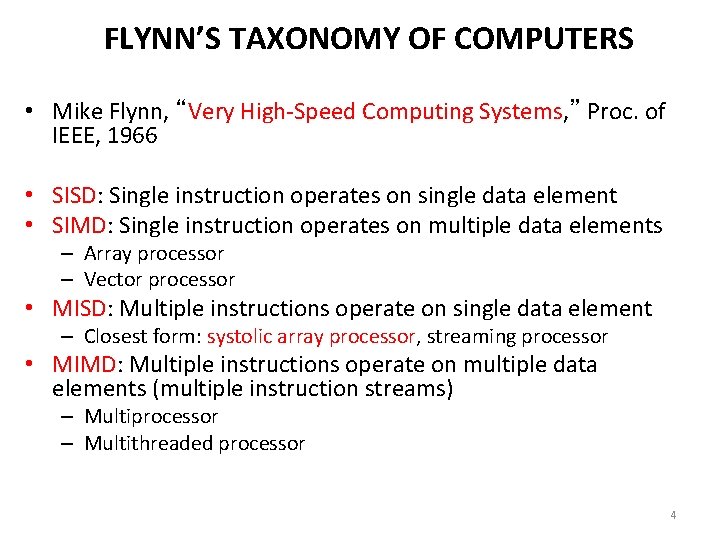

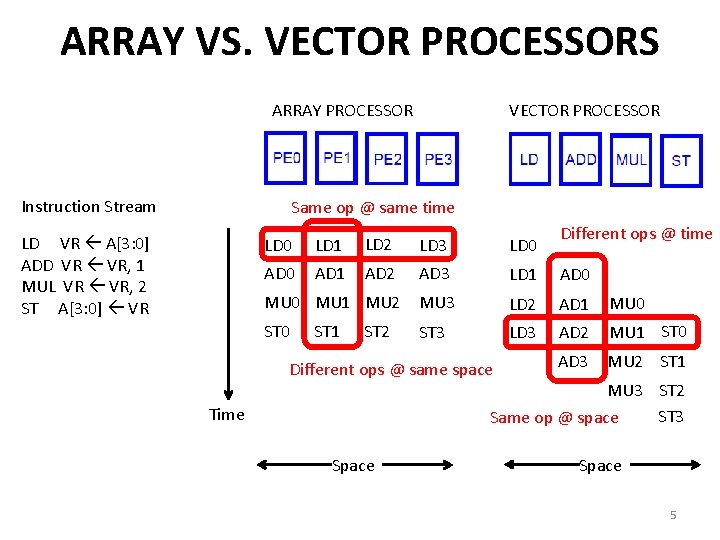

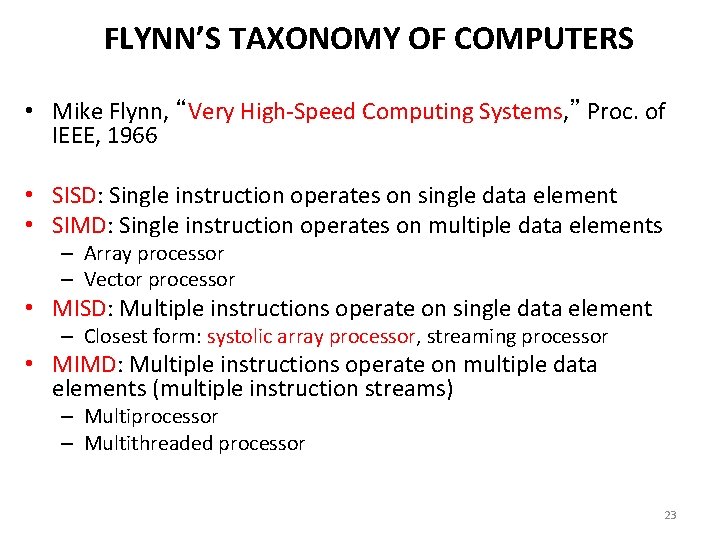

FLYNN’S TAXONOMY OF COMPUTERS
- Mike Flynn, “Very High-Speed Computing Systems,” Proc. of IEEE, 1966
- SISD: Single instruction operates on single data element
- SIMD: Single instruction operates on multiple data elements
  - Array processor
  - Vector processor
- MISD: Multiple instructions operate on single data element
  - Closest form: systolic array processor, streaming processor
- MIMD: Multiple instructions operate on multiple data elements (multiple instruction streams)
  - Multiprocessor
  - Multithreaded processor

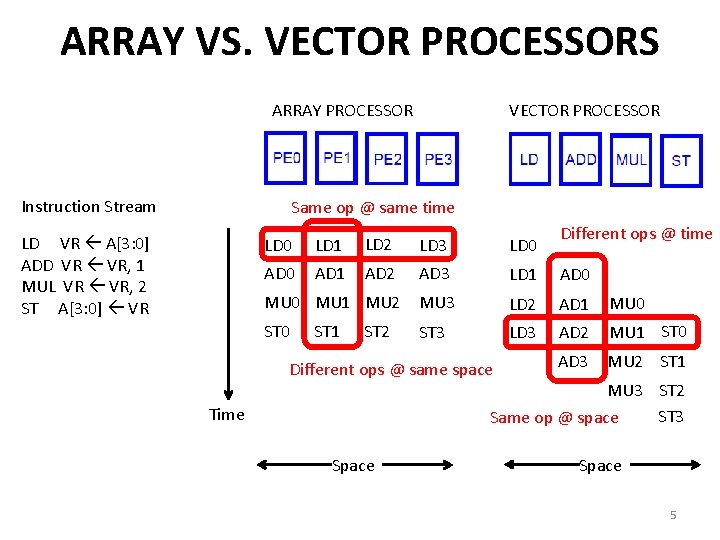

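ARRAY VS. VECTOR PROCESSORS

Instruction stream:

```
LD   VR ← A[3:0]
ADD  VR ← VR, 1
MUL  VR ← VR, 2
ST   A[3:0] ← VR
```

Array processor: same op at the same time; different ops in the same space (each PE runs every op in turn).

| Time | PE 0 | PE 1 | PE 2 | PE 3 |
|---|---|---|---|---|
| 1 | LD0 | LD1 | LD2 | LD3 |
| 2 | AD0 | AD1 | AD2 | AD3 |
| 3 | MU0 | MU1 | MU2 | MU3 |
| 4 | ST0 | ST1 | ST2 | ST3 |

Vector processor: different ops at the same time; same op in the same space (each functional unit always runs the same op).

| Time | LD unit | ADD unit | MUL unit | ST unit |
|---|---|---|---|---|
| 1 | LD0 | | | |
| 2 | LD1 | AD0 | | |
| 3 | LD2 | AD1 | MU0 | |
| 4 | LD3 | AD2 | MU1 | ST0 |
| 5 | | AD3 | MU2 | ST1 |
| 6 | | | MU3 | ST2 |
| 7 | | | | ST3 |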
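The two space-time schedules on this slide can be generated mechanically. A minimal Python sketch, assuming the same four-instruction stream applied to four elements and, for the vector case, one fully pipelined unit per operation; `array_schedule` and `vector_schedule` are hypothetical names, and each returns one list of operations per time step.

```python
# Hypothetical model of the slide: 4 instructions (LD, ADD, MUL, ST) on 4 elements.
OPS = ["LD", "AD", "MU", "ST"]
N_ELEMS = 4


def array_schedule():
    """Array processor: at each time step, every PE runs the same op on its own element."""
    return [[f"{op}{e}" for e in range(N_ELEMS)] for op in OPS]


def vector_schedule():
    """Vector processor: element e reaches the unit for op number s at time step e + s."""
    steps = N_ELEMS + len(OPS) - 1
    schedule = []
    for t in range(steps):
        row = []
        for s, op in enumerate(OPS):
            e = t - s
            if 0 <= e < N_ELEMS:
                row.append(f"{op}{e}")
        schedule.append(row)
    return schedule


if __name__ == "__main__":
    for t, row in enumerate(array_schedule(), start=1):
        print(f"array  t={t}", row)
    for t, row in enumerate(vector_schedule(), start=1):
        print(f"vector t={t}", row)
```

The array version finishes in 4 steps but needs 4 PEs that can each perform every operation; the vector version takes 7 steps with a single unit per operation. That is the time-versus-space trade-off the slide illustrates.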

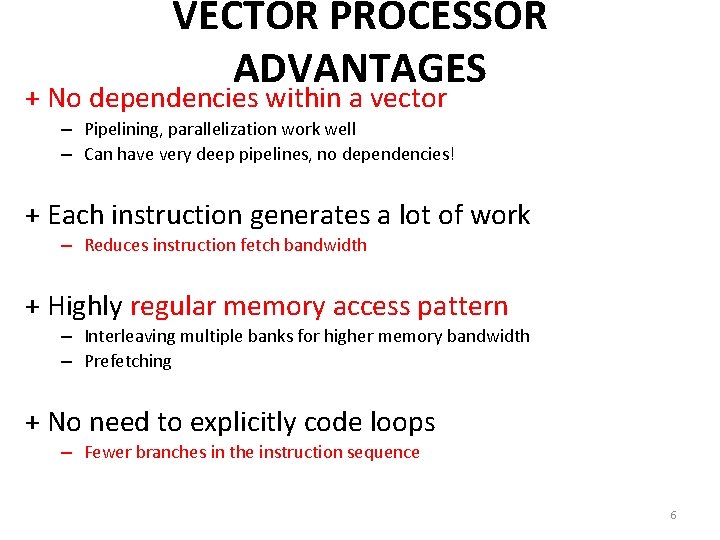

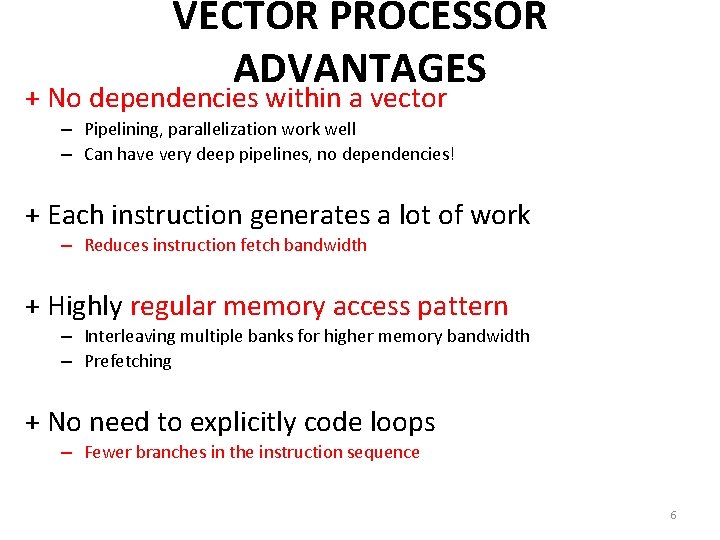

VECTOR PROCESSOR ADVANTAGES
- No dependencies within a vector
  - Pipelining and parallelization work well
  - Can have very deep pipelines, no dependencies!
- Each instruction generates a lot of work
  - Reduces instruction fetch bandwidth
- Highly regular memory access pattern
  - Interleaving multiple banks for higher memory bandwidth
  - Prefetching
- No need to explicitly code loops
  - Fewer branches in the instruction sequence

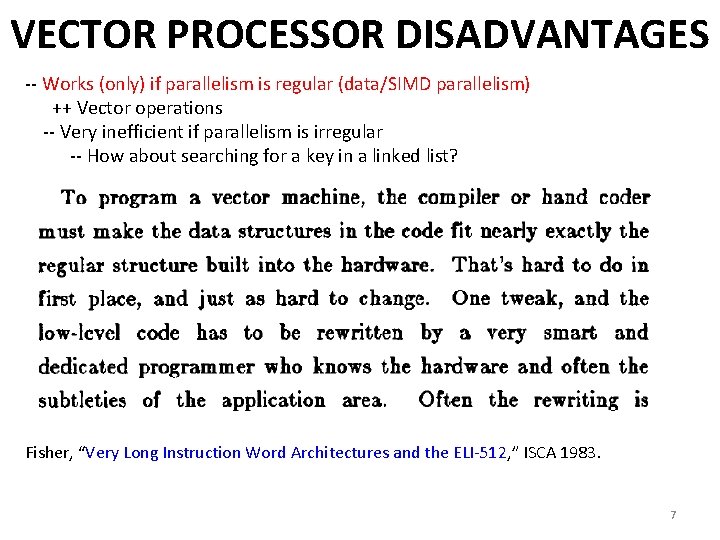

VECTOR PROCESSOR DISADVANTAGES
- Works (only) if parallelism is regular (data/SIMD parallelism)
  - ++ Vector operations
  - -- Very inefficient if parallelism is irregular
  - -- How about searching for a key in a linked list?
- Fisher, “Very Long Instruction Word Architectures and the ELI-512,” ISCA 1983.

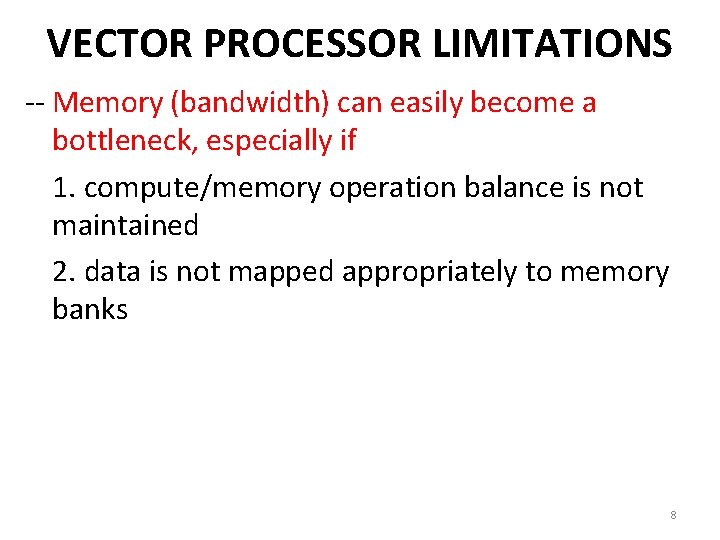

VECTOR PROCESSOR LIMITATIONS
- Memory (bandwidth) can easily become a bottleneck, especially if
  1. compute/memory operation balance is not maintained
  2. data is not mapped appropriately to memory banks

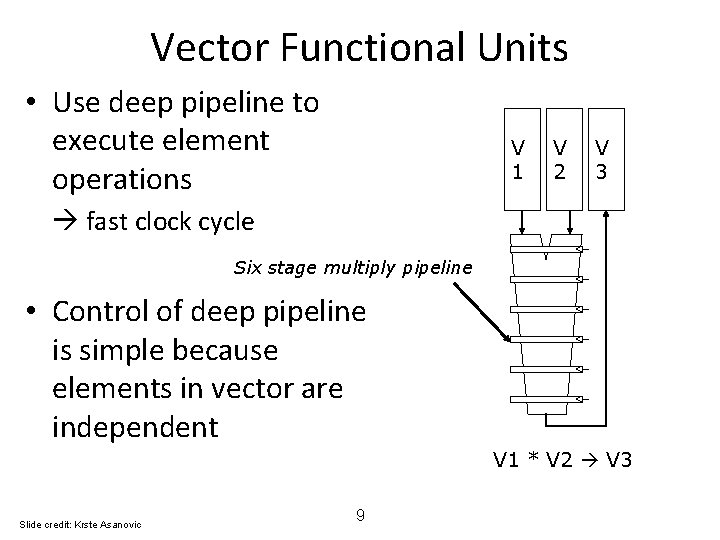

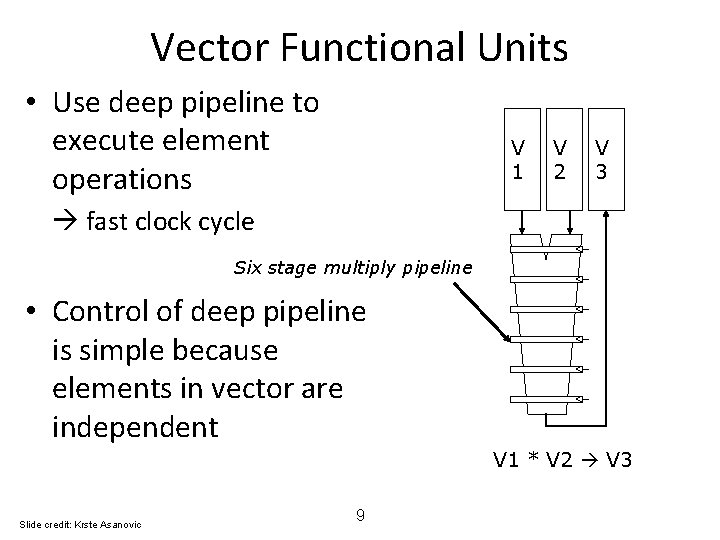

Vector Functional Units
- Use a deep pipeline to execute element operations, which allows a fast clock cycle
  - Figure: a six-stage multiply pipeline computing V3 ← V1 * V2
- Control of a deep pipeline is simple because the elements in a vector are independent

Slide credit: Krste Asanovic

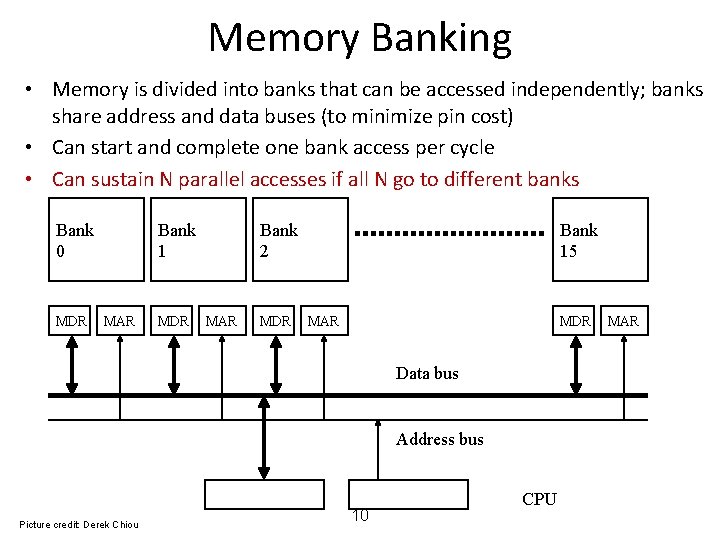

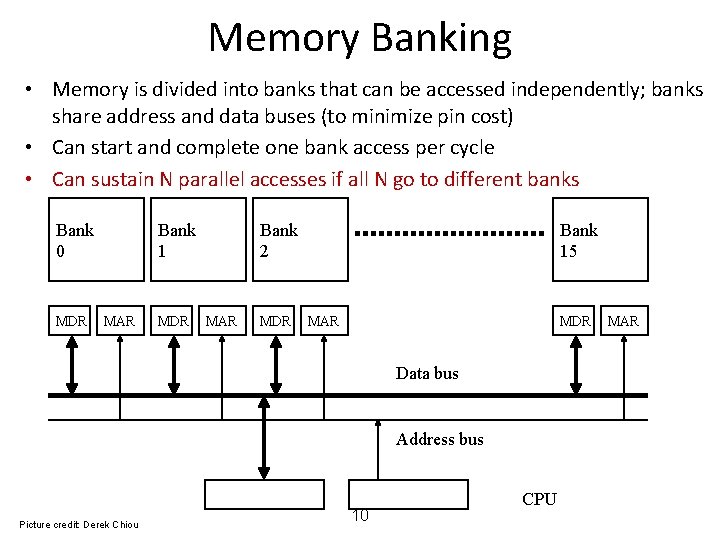

Memory Banking
- Memory is divided into banks that can be accessed independently; banks share address and data buses (to minimize pin cost)
- Can start and complete one bank access per cycle
- Can sustain N parallel accesses if all N go to different banks

Figure: the CPU drives a shared address bus and a shared data bus connected to Bank 0 through Bank 15; each bank has its own memory address register (MAR) and memory data register (MDR). Picture credit: Derek Chiou

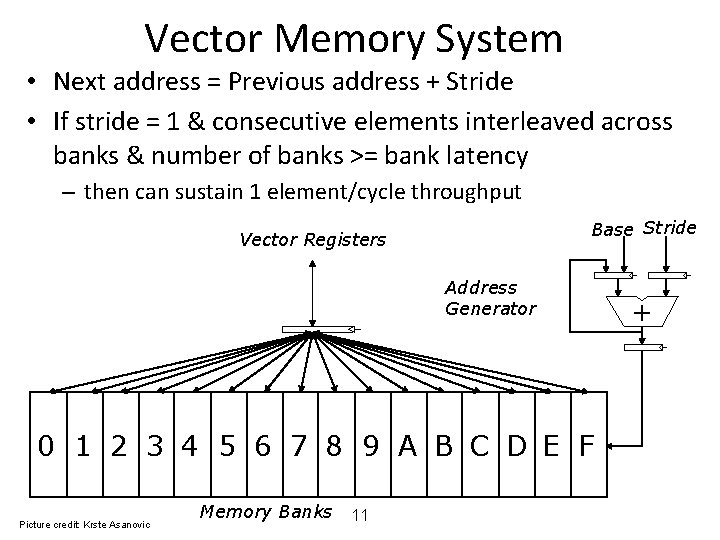

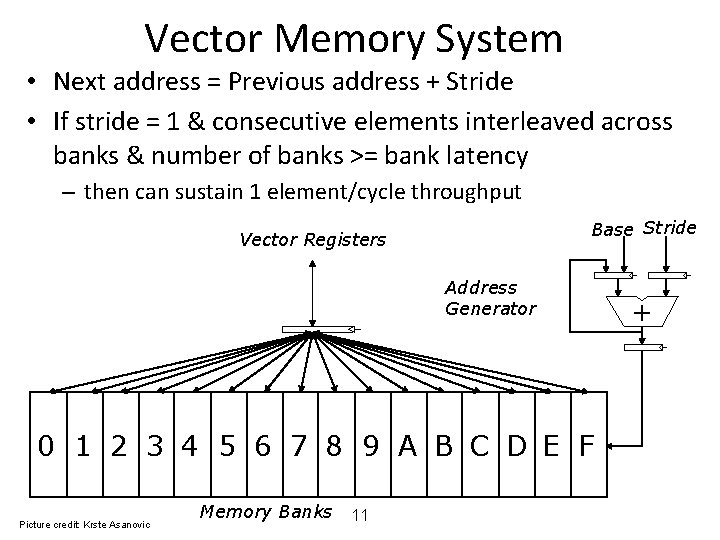

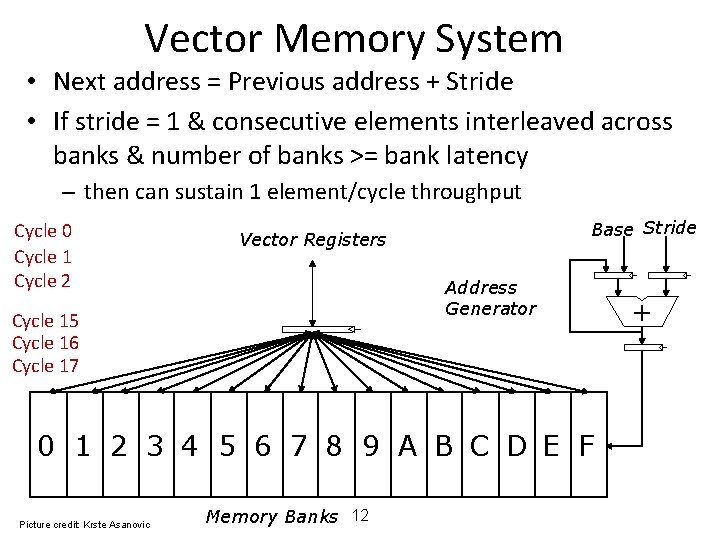

Vector Memory System
- Next address = Previous address + Stride
- If stride = 1, consecutive elements are interleaved across banks, and the number of banks >= bank latency, then the memory system can sustain a throughput of 1 element/cycle

Figure: the Base and Stride registers feed an address generator (+), which sends addresses to 16 memory banks (0 to F) connected to the vector registers. Picture credit: Krste Asanovic

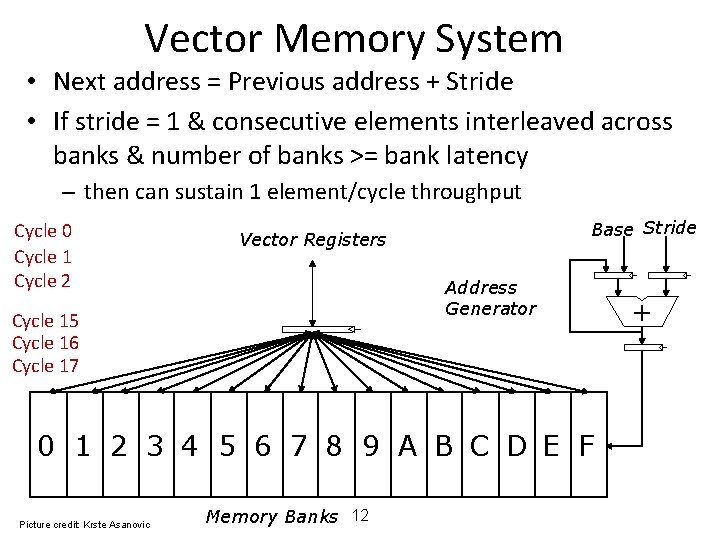

Vector Memory System (continued)
- The same figure stepped through time: with stride 1, consecutive elements are issued to banks 0, 1, 2, … in cycles 0, 1, 2, …, reach bank F in cycle 15, and wrap back to bank 0 in cycle 16 and bank 1 in cycle 17
- The wrap-around does not stall as long as each bank has finished its previous access, which is why the number of banks must be at least the bank latency

Picture credit: Krste Asanovic
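The stride rule can be checked with a small cycle-count model. This is a sketch under stated assumptions, not a description of any specific hardware: word-interleaved banks (bank = address mod number of banks), each access keeps its bank busy for a fixed latency, and the address generator issues at most one address per cycle and stalls while the target bank is busy. The function name `vector_load_cycles` is hypothetical, and the 11-cycle default latency is borrowed from the scalar example in this lecture.

```python
def vector_load_cycles(n, stride, num_banks=16, latency=11, base=0):
    """Cycle at which the last of n strided accesses completes (hypothetical model).

    Assumptions: word-interleaved banks, each access occupies its bank for
    `latency` cycles, and the address generator issues at most one address
    per cycle, stalling while the target bank is still busy.
    """
    bank_free_at = [0] * num_banks  # first cycle each bank can accept a new access
    next_issue = 0                  # earliest cycle the address generator can issue
    done = 0
    addr = base
    for _ in range(n):
        bank = addr % num_banks                       # word-interleaved mapping
        issue = max(next_issue, bank_free_at[bank])   # stall on a busy bank
        bank_free_at[bank] = issue + latency
        done = issue + latency
        next_issue = issue + 1
        addr += stride                                # next address = previous address + stride
    return done


if __name__ == "__main__":
    for stride in (1, 2, 16):
        print(f"stride {stride:2d}: {vector_load_cycles(50, stride)} cycles")
```

With stride 1 the 50 accesses finish at cycle 60, the same 11 + VLN - 1 figure the vector examples use for VLD. With stride 2 only 8 of the 16 banks are used, fewer than the 11-cycle latency, so the generator stalls (78 cycles); with stride 16 every access hits bank 0 and they serialize completely (550 cycles). This is limitation 2 from the earlier slide: data not mapped appropriately to memory banks.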

![Scalar Code Example](https://slidetodoc.com/presentation_image_h2/db0c66e8609ad2853d4c2f537c81d10f/image-13.jpg)

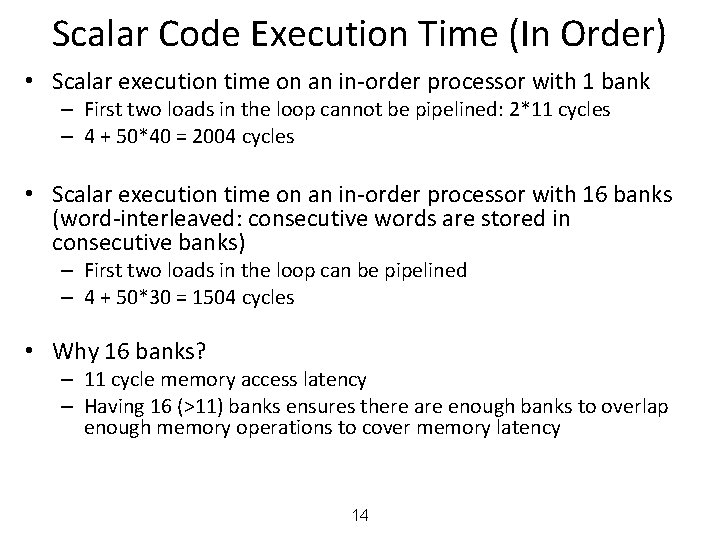

Scalar Code Example
- For I = 0 to 49
  - C[i] = (A[i] + B[i]) / 2
- Scalar code (instruction and its latency):

```
      MOVI R0 = 50          ; 1
      MOVA R1 = A           ; 1
      MOVA R2 = B           ; 1
      MOVA R3 = C           ; 1
X:    LD   R4 = MEM[R1++]   ; 11  autoincrement addressing
      LD   R5 = MEM[R2++]   ; 11
      ADD  R6 = R4 + R5     ; 4
      SHFR R7 = R6 >> 1     ; 1
      ST   MEM[R3++] = R7   ; 11
      DECBNZ --R0, X        ; 2   decrement and branch if NZ
```

304 dynamic instructions
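For reference, a Python sketch of what the loop computes and of where the 304 dynamic instructions come from: the 4 setup instructions run once and the 6 loop-body instructions run 50 times. The names are hypothetical, and the right shift stands in for the division by 2, which it matches for non-negative integers.

```python
SETUP_INSTRUCTIONS = ["MOVI", "MOVA", "MOVA", "MOVA"]    # run once
LOOP_BODY = ["LD", "LD", "ADD", "SHFR", "ST", "DECBNZ"]  # run every iteration


def scalar_loop(a, b):
    """C[i] = (A[i] + B[i]) / 2, computed the way the assembly does it."""
    c = []
    for x, y in zip(a, b):        # LD R4, LD R5 (autoincrement walks the arrays)
        c.append((x + y) >> 1)    # ADD, then SHFR by 1 (== /2 for non-negative ints)
    return c


def dynamic_instruction_count(iterations):
    return len(SETUP_INSTRUCTIONS) + len(LOOP_BODY) * iterations


if __name__ == "__main__":
    print(dynamic_instruction_count(50))  # 304
```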

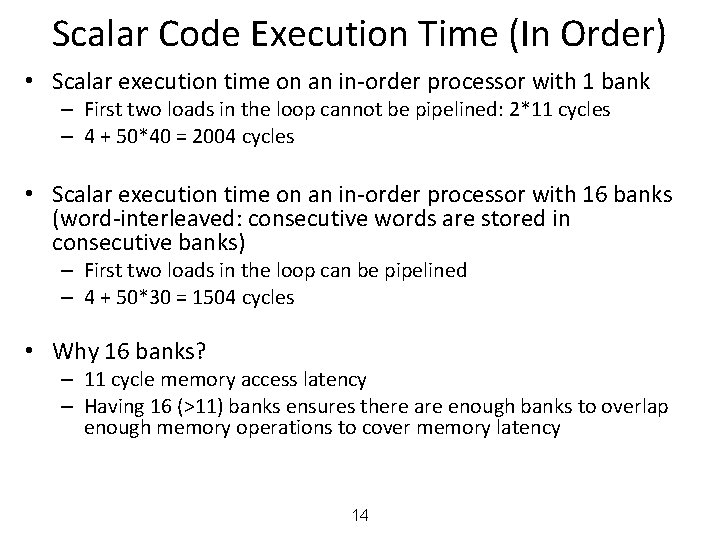

Scalar Code Execution Time (In Order)
- Scalar execution time on an in-order processor with 1 bank
  - The first two loads in the loop cannot be pipelined: 2*11 cycles
  - Per iteration: 11 + 11 + 4 + 1 + 11 + 2 = 40 cycles
  - 4 + 50*40 = 2004 cycles
- Scalar execution time on an in-order processor with 16 banks (word-interleaved: consecutive words are stored in consecutive banks)
  - The first two loads in the loop can be pipelined, so the second load adds 1 cycle instead of 11
  - Per iteration: 11 + 1 + 4 + 1 + 11 + 2 = 30 cycles
  - 4 + 50*30 = 1504 cycles
- Why 16 banks?
  - 11-cycle memory access latency
  - Having 16 (> 11) banks ensures there are enough banks to overlap enough memory operations to cover the memory latency
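Both totals follow from summing the per-instruction latencies of the scalar code. A small Python sketch, assuming each instruction waits for the previous one on the in-order machine and that, with 16 banks, the second load overlaps the first so it adds only 1 cycle instead of 11. The helper name `scalar_cycles` is hypothetical.

```python
# Latencies from the scalar code listing (cycles).
LATENCY = {"MOVI": 1, "MOVA": 1, "LD": 11, "ADD": 4, "SHFR": 1, "ST": 11, "DECBNZ": 2}
SETUP = ["MOVI", "MOVA", "MOVA", "MOVA"]
BODY = ["LD", "LD", "ADD", "SHFR", "ST", "DECBNZ"]


def scalar_cycles(iterations, overlap_loads):
    setup = sum(LATENCY[op] for op in SETUP)      # 4 cycles
    per_iter = sum(LATENCY[op] for op in BODY)    # 11+11+4+1+11+2 = 40 cycles
    if overlap_loads:
        # Assumption: with enough banks the second LD issues one cycle after
        # the first, so it costs 1 cycle instead of a full 11-cycle access.
        per_iter -= LATENCY["LD"] - 1             # 40 - 10 = 30 cycles
    return setup + iterations * per_iter


if __name__ == "__main__":
    print(scalar_cycles(50, overlap_loads=False))  # 2004 (1 bank)
    print(scalar_cycles(50, overlap_loads=True))   # 1504 (16 banks)
```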

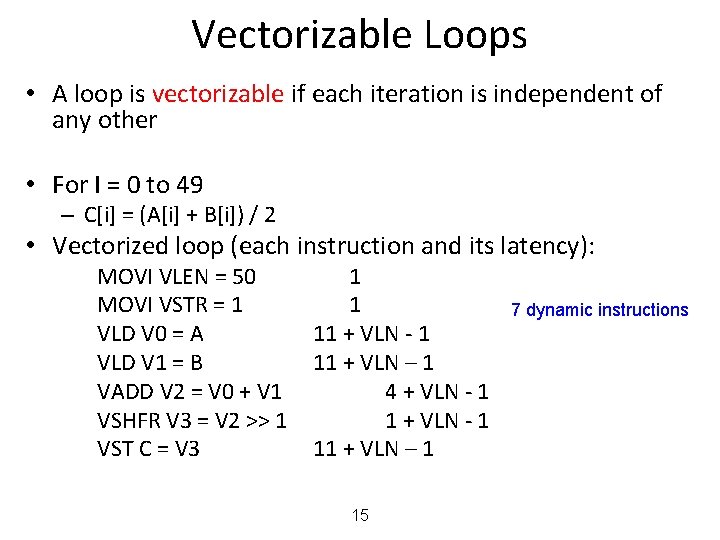

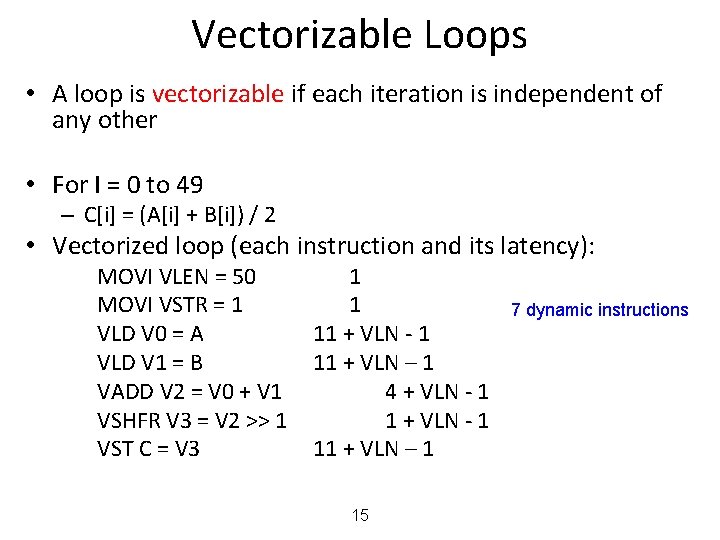

Vectorizable Loops
- A loop is vectorizable if each iteration is independent of any other
- For I = 0 to 49
  - C[i] = (A[i] + B[i]) / 2
- Vectorized loop (each instruction and its latency):

```
MOVI  VLEN = 50       ; 1
MOVI  VSTR = 1        ; 1
VLD   V0 = A          ; 11 + VLN - 1
VLD   V1 = B          ; 11 + VLN - 1
VADD  V2 = V0 + V1    ; 4 + VLN - 1
VSHFR V3 = V2 >> 1    ; 1 + VLN - 1
VST   C = V3          ; 11 + VLN - 1
```

7 dynamic instructions
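To make the vector semantics concrete, here is a Python sketch that emulates each vector instruction as one operation over a whole "register" (a Python list). The flat memory list, the base addresses for A, B, and C, and the helper names (`vld`, `vadd`, `vshfr`, `vst`) are hypothetical stand-ins for the slide's instructions.

```python
def vld(mem, base, vlen, vstr):
    """VLD: load vlen elements; each address = previous address + stride."""
    return [mem[base + i * vstr] for i in range(vlen)]


def vst(mem, base, vreg, vstr):
    """VST: store every element of a vector register with the same stride."""
    for i, value in enumerate(vreg):
        mem[base + i * vstr] = value


def vadd(va, vb):
    """VADD: element-wise add; no element depends on any other."""
    return [x + y for x, y in zip(va, vb)]


def vshfr(va, amount):
    """VSHFR: element-wise right shift."""
    return [x >> amount for x in va]


# Hypothetical memory layout: A at 0, B at 50, C at 100.
A_BASE, B_BASE, C_BASE = 0, 50, 100
mem = list(range(50)) + list(range(100, 150)) + [0] * 50

VLEN, VSTR = 50, 1                  # MOVI VLEN = 50; MOVI VSTR = 1
v0 = vld(mem, A_BASE, VLEN, VSTR)   # VLD V0 = A
v1 = vld(mem, B_BASE, VLEN, VSTR)   # VLD V1 = B
v2 = vadd(v0, v1)                   # VADD V2 = V0 + V1
v3 = vshfr(v2, 1)                   # VSHFR V3 = V2 >> 1
vst(mem, C_BASE, v3, VSTR)          # VST C = V3
```

Each of the 7 instructions covers all 50 elements at once, which is only legal because no iteration reads a value produced by another. A loop such as A[i] = A[i-1] + 1 carries a dependence from one iteration to the next and could not be written as a single VADD.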

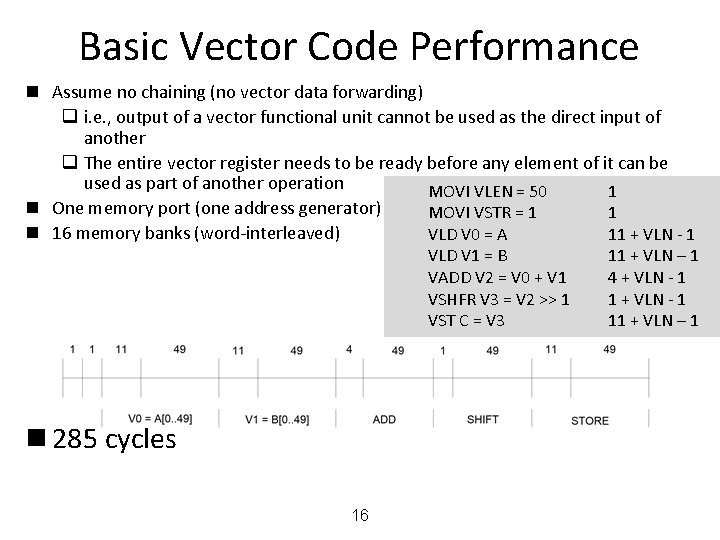

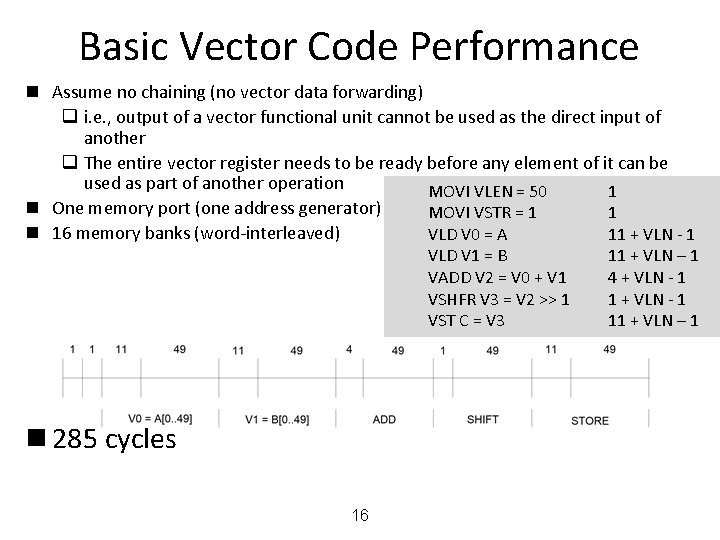

Basic Vector Code Performance
- Assume no chaining (no vector data forwarding)
  - i.e., the output of a vector functional unit cannot be used as the direct input of another
  - The entire vector register needs to be ready before any element of it can be used as part of another operation
- One memory port (one address generator)
- 16 memory banks (word-interleaved)
- Code and latencies: MOVI VLEN = 50 (1); MOVI VSTR = 1 (1); VLD V0 = A (11 + VLN - 1); VLD V1 = B (11 + VLN - 1); VADD V2 = V0 + V1 (4 + VLN - 1); VSHFR V3 = V2 >> 1 (1 + VLN - 1); VST C = V3 (11 + VLN - 1)
- 285 cycles: with VLN = 50, 1 + 1 + 60 + 60 + 53 + 50 + 60 = 285
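Without chaining, every instruction waits for its predecessor's entire result, so the total is the sum of the latencies. A short Python sketch, parameterized by the vector length; it models a pipelined vector operation as its start-up latency for the first element plus one cycle for each remaining element. The helper names `vec_latency` and `no_chaining_cycles` are hypothetical.

```python
VLN = 50  # vector length set by MOVI VLEN = 50


def vec_latency(startup, vln=VLN):
    """First element after `startup` cycles, then one more element per cycle."""
    return startup + vln - 1


def no_chaining_cycles(vln=VLN):
    return sum([
        1,                       # MOVI VLEN = 50
        1,                       # MOVI VSTR = 1
        vec_latency(11, vln),    # VLD V0 = A
        vec_latency(11, vln),    # VLD V1 = B (one memory port: waits for the first VLD)
        vec_latency(4, vln),     # VADD V2 = V0 + V1
        vec_latency(1, vln),     # VSHFR V3 = V2 >> 1
        vec_latency(11, vln),    # VST C = V3
    ])


if __name__ == "__main__":
    print(no_chaining_cycles())  # 285
```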

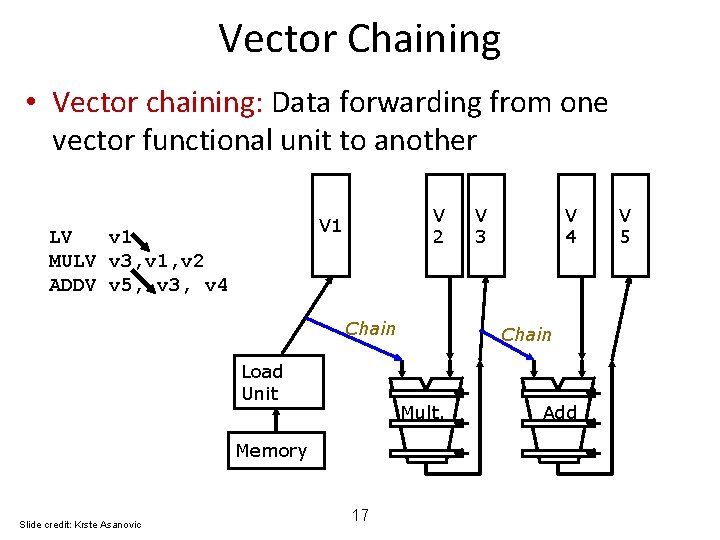

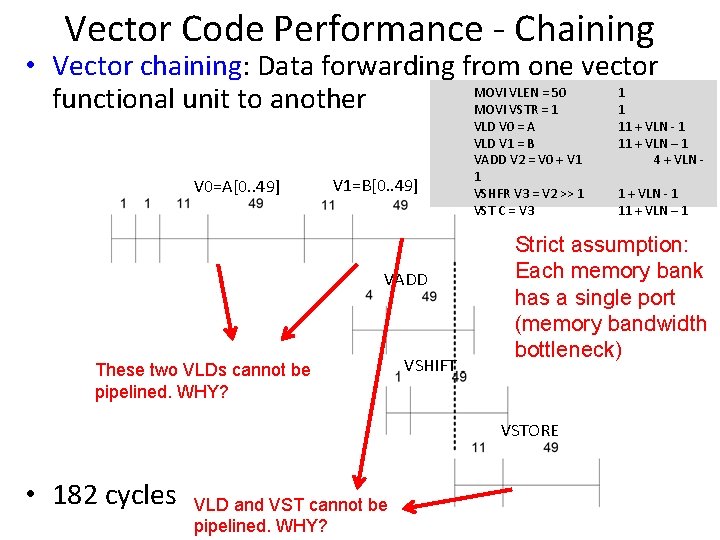

Vector Chaining
- Vector chaining: data forwarding from one vector functional unit to another

```
LV   v1
MULV v3, v1, v2
ADDV v5, v3, v4
```

Figure: the load unit reads v1 from memory and chains it into the multiplier (v1 * v2 → v3), which in turn chains v3 into the adder (v3 + v4 → v5). Slide credit: Krste Asanovic

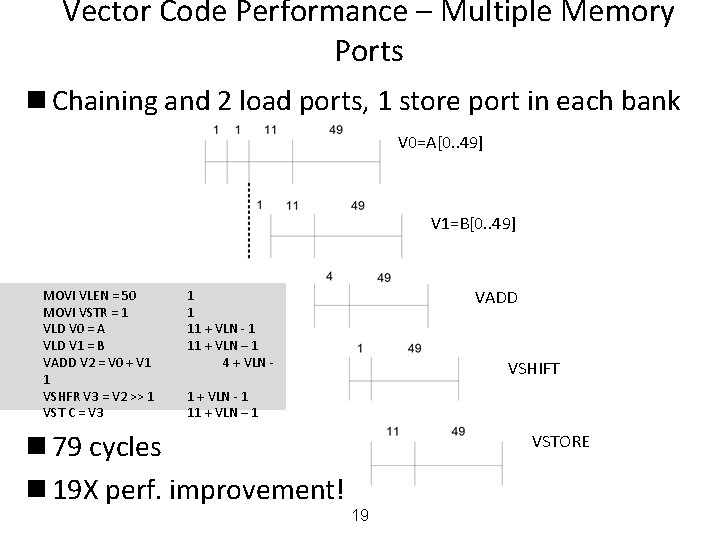

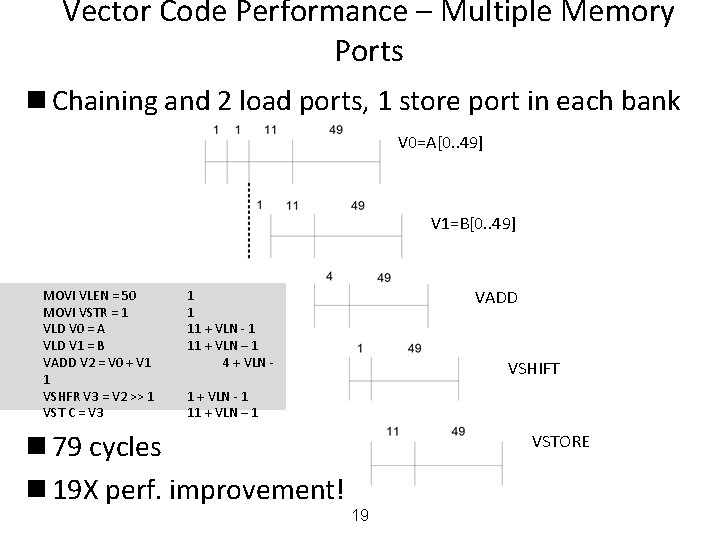

Vector Code Performance - Chaining
- Vector chaining: data forwarding from one vector functional unit to another
- Same code as before: MOVI VLEN = 50; MOVI VSTR = 1; VLD V0 = A; VLD V1 = B; VADD V2 = V0 + V1; VSHFR V3 = V2 >> 1; VST C = V3
- The two VLDs (V0 = A[0..49] and V1 = B[0..49]) cannot be pipelined. Why?
- VLD and VST cannot be pipelined. Why?
- Strict assumption: each memory bank has a single port (memory bandwidth bottleneck)
- 182 cycles: 1 + 1 + (11 + 49) + (11 + 49) + (11 + 49); the chained VADD and VSHFR overlap with the memory operations

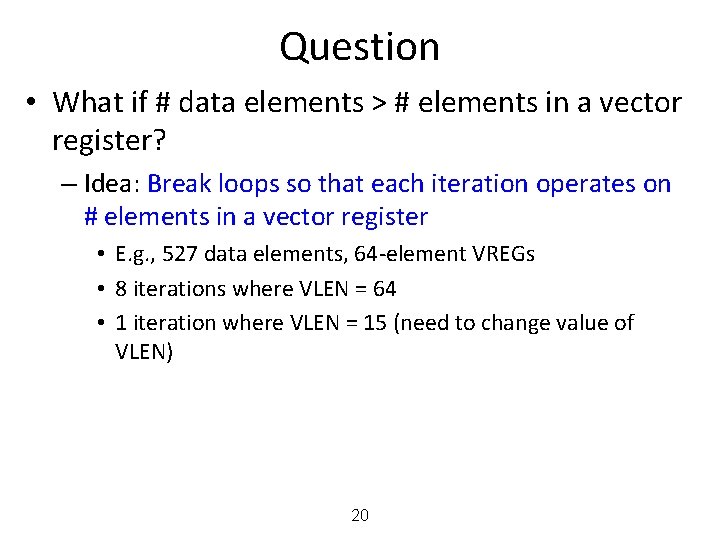

Vector Code Performance – Multiple Memory Ports
- Chaining and 2 load ports, 1 store port in each bank
- Same code as before: MOVI VLEN = 50; MOVI VSTR = 1; VLD V0 = A; VLD V1 = B; VADD V2 = V0 + V1; VSHFR V3 = V2 >> 1; VST C = V3
- The two loads (V0 = A[0..49] and V1 = B[0..49]) now proceed in parallel, and VADD, VSHIFT, and VSTORE follow through chaining
- 79 cycles
- 19X performance improvement over the 1504-cycle scalar loop with 16 banks (1504 / 79 ≈ 19)
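The speedup figure depends on which baseline is used. A quick Python check, using only cycle counts from these slides, shows that the 19X corresponds to the 1504-cycle scalar loop on 16 banks. The dictionary and function names are hypothetical.

```python
SCALAR_16_BANKS = 1504  # in-order scalar loop, 16 word-interleaved banks

VECTOR_CYCLES = {
    "no chaining, 1 memory port": 285,
    "chaining, 1 memory port": 182,
    "chaining, 2 load + 1 store ports": 79,
}


def speedup(baseline_cycles, new_cycles):
    return baseline_cycles / new_cycles


if __name__ == "__main__":
    for config, cycles in VECTOR_CYCLES.items():
        print(f"{config}: {speedup(SCALAR_16_BANKS, cycles):.1f}x")
```

This prints roughly 5.3x, 8.3x, and 19.0x, so each hardware addition (chaining, then extra memory ports) contributes a large share of the final improvement.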

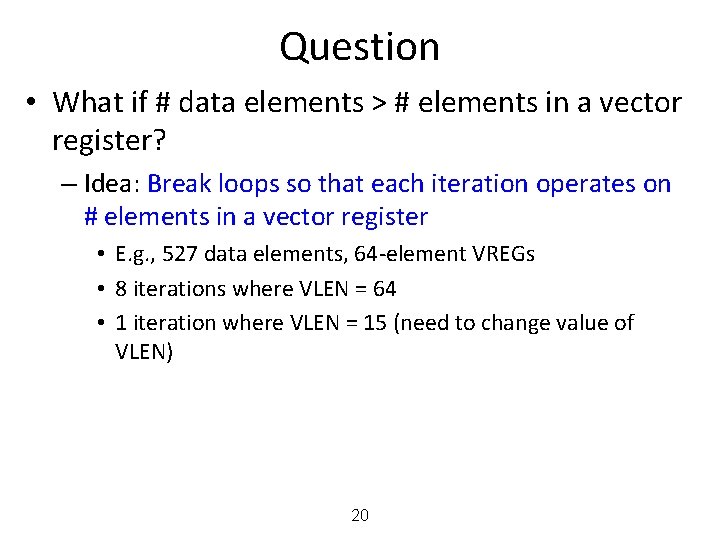

Question
- What if # data elements > # elements in a vector register?
  - Idea: break loops so that each iteration operates on # elements in a vector register
    - E.g., 527 data elements, 64-element VREGs
    - 8 iterations where VLEN = 64 (8 × 64 = 512 elements)
    - 1 iteration where VLEN = 15 for the remaining 527 - 512 = 15 elements (need to change the value of VLEN)
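This technique is usually called strip-mining. A minimal Python sketch, assuming a maximum vector length `mvl` of 64 elements; the function names are hypothetical, and the list comprehension in the loop stands in for one vector instruction executed with the current VLEN.

```python
def strip_lengths(n, mvl):
    """VLEN for each strip: full strips of mvl elements, then the remainder."""
    full, rest = divmod(n, mvl)
    return [mvl] * full + ([rest] if rest else [])


def strip_mined_add(a, b, mvl=64):
    c = [0] * len(a)
    start = 0
    for vlen in strip_lengths(len(a), mvl):   # set VLEN, then run the vector ops
        end = start + vlen
        c[start:end] = [x + y for x, y in zip(a[start:end], b[start:end])]
        start = end
    return c


if __name__ == "__main__":
    print(strip_lengths(527, 64))  # [64, 64, 64, 64, 64, 64, 64, 64, 15]
```

Some implementations run the short remainder strip first instead of last; the total work is the same either way.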

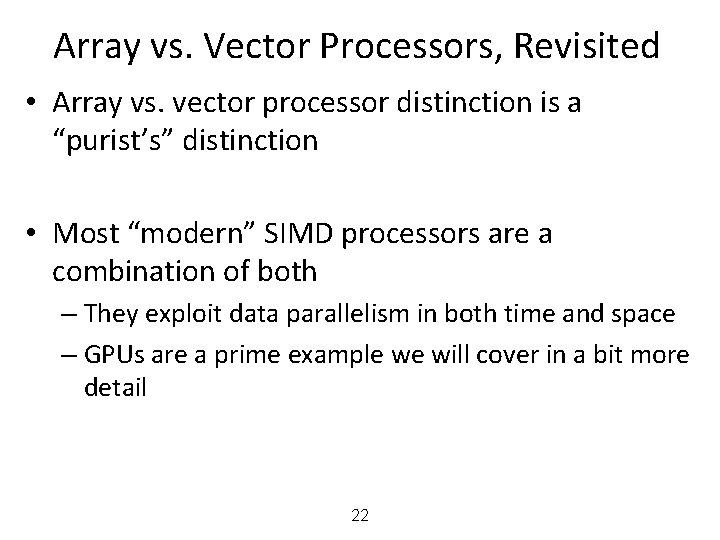

Vector/SIMD Processing Summary
- Vector/SIMD machines are good at exploiting regular data-level parallelism
  - Same operation performed on many data elements
  - Improve performance, simplify design (no intra-vector dependencies)
- Performance improvement limited by vectorizability of code
  - Scalar operations limit vector machine performance
  - Remember Amdahl’s Law
  - CRAY-1 was the fastest SCALAR machine at its time!
- Many existing ISAs include (vector-like) SIMD operations
  - Intel MMX/SSEn/AVX, PowerPC AltiVec, ARM Advanced SIMD
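Amdahl's Law makes the scalar bottleneck quantitative: if a fraction f of the execution time is vectorizable and that part runs s times faster, the overall speedup is 1 / ((1 - f) + f / s), which can never exceed 1 / (1 - f). A Python sketch; the 90% fraction and 19x vector speedup in the example are illustrative numbers, not measurements from these slides.

```python
def amdahl_speedup(f, s):
    """Overall speedup when fraction f of the execution time is sped up by a factor s."""
    return 1.0 / ((1.0 - f) + f / s)


if __name__ == "__main__":
    # Illustrative: 90% vectorizable code, vector part 19x faster.
    print(round(amdahl_speedup(0.9, 19), 2))
    # Upper bound as s grows without limit: 1 / (1 - 0.9) = 10.
    print(round(amdahl_speedup(0.9, 1e9), 2))
```

Even with a 19x faster vector unit, the remaining 10% of scalar code holds the overall speedup to about 6.8x, which is why fast scalar performance mattered for machines like the CRAY-1.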

Array vs. Vector Processors, Revisited
- The array vs. vector processor distinction is a “purist’s” distinction
- Most “modern” SIMD processors are a combination of both
  - They exploit data parallelism in both time and space
  - GPUs are a prime example we will cover in a bit more detail


SYSTOLIC ARRAYS

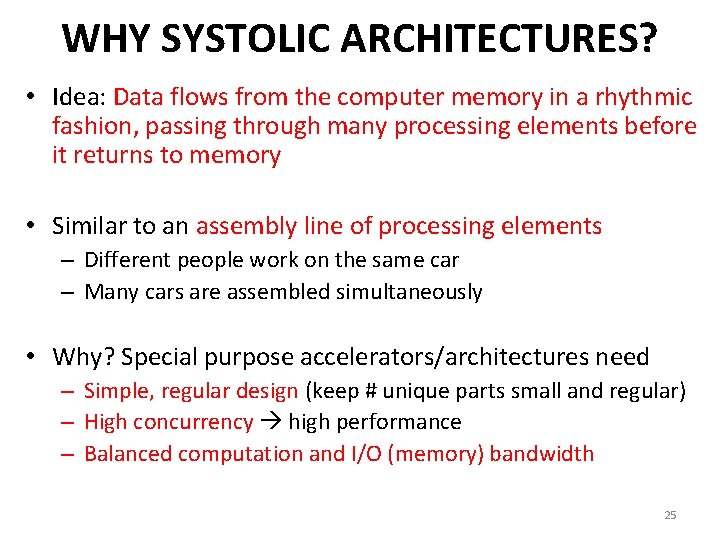

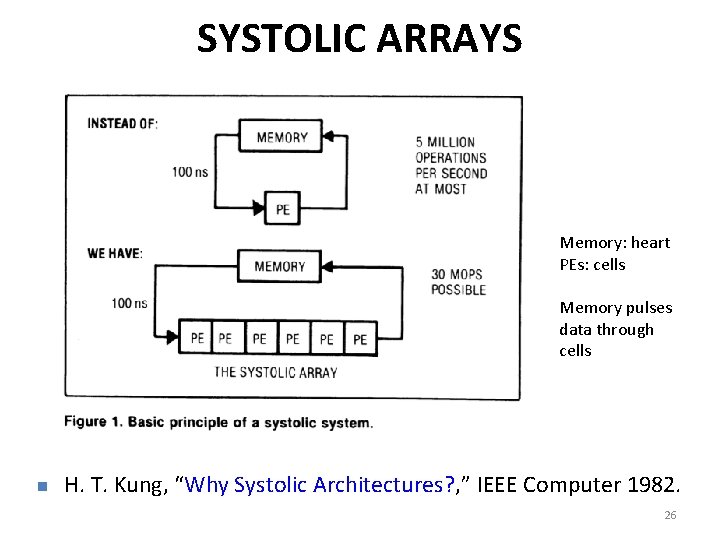

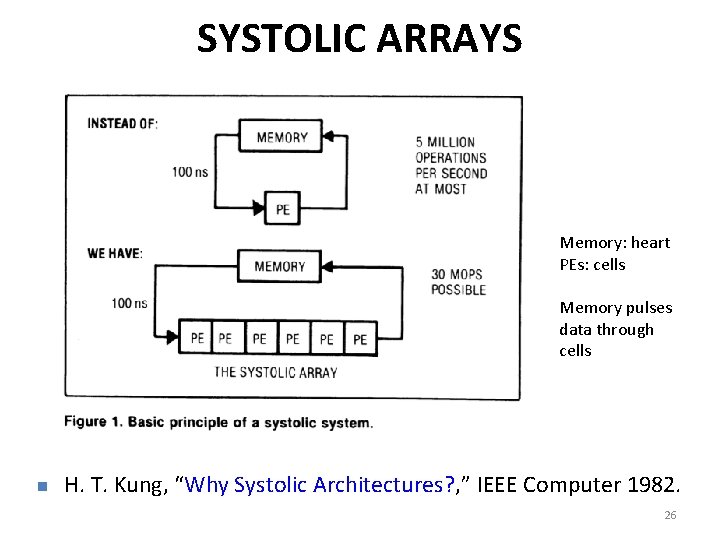

WHY SYSTOLIC ARCHITECTURES?
- Idea: data flows from the computer memory in a rhythmic fashion, passing through many processing elements before it returns to memory
- Similar to an assembly line of processing elements
  - Different people work on the same car
  - Many cars are assembled simultaneously
- Why? Special-purpose accelerators/architectures need
  - Simple, regular design (keep # unique parts small and regular)
  - High concurrency → high performance
  - Balanced computation and I/O (memory) bandwidth

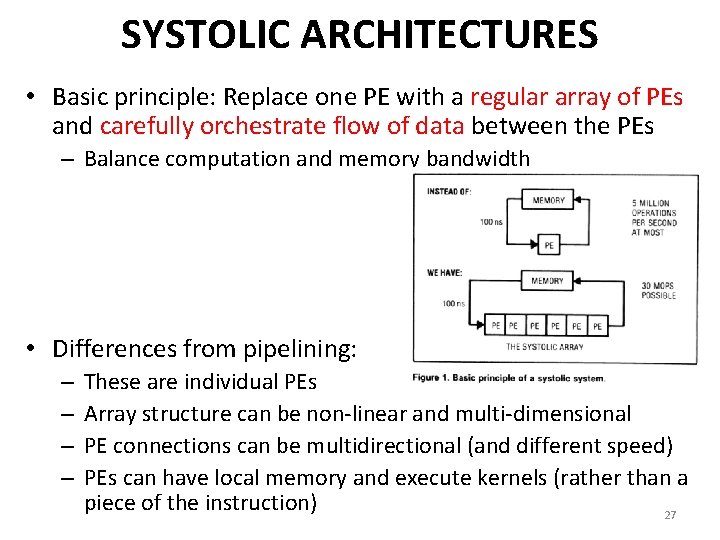

SYSTOLIC ARRAYS
- Memory: heart
- PEs: cells
- Memory pulses data through cells
- H. T. Kung, “Why Systolic Architectures?,” IEEE Computer, 1982.

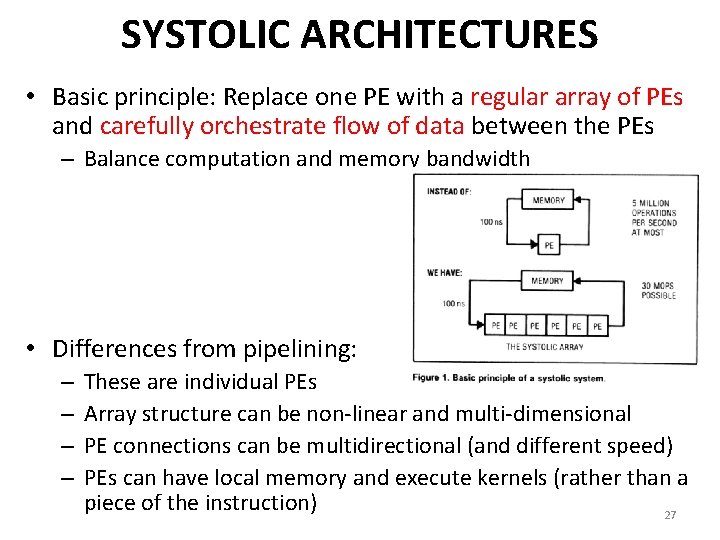

SYSTOLIC ARCHITECTURES
- Basic principle: replace one PE with a regular array of PEs and carefully orchestrate the flow of data between the PEs
  - Balance computation and memory bandwidth
- Differences from pipelining:
  - These are individual PEs
  - Array structure can be non-linear and multi-dimensional
  - PE connections can be multidirectional (and different speed)
  - PEs can have local memory and execute kernels (rather than a piece of the instruction)

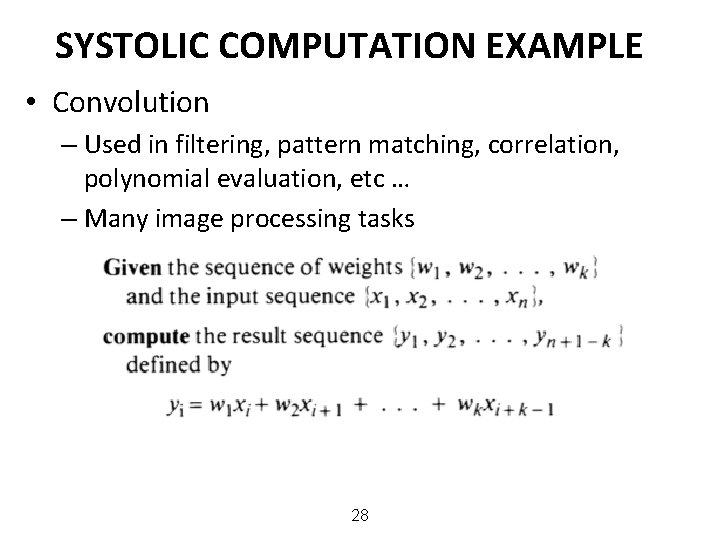

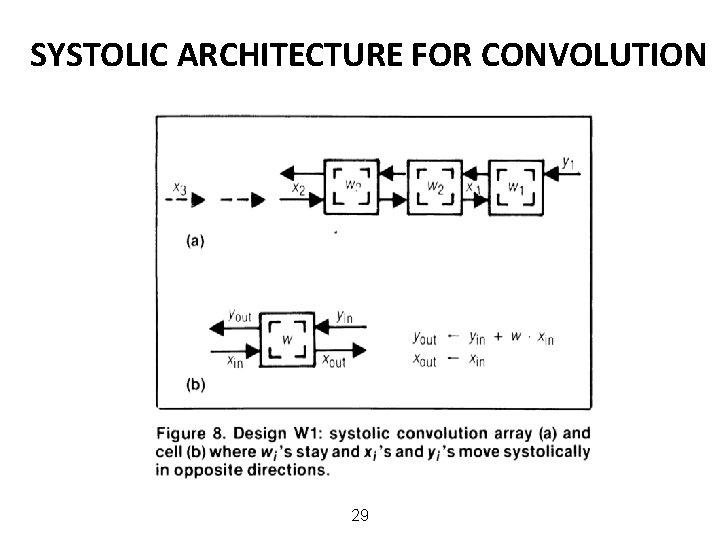

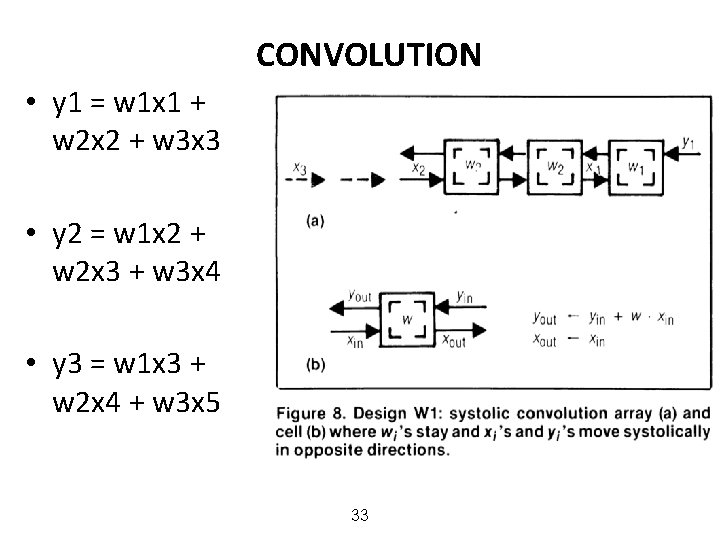

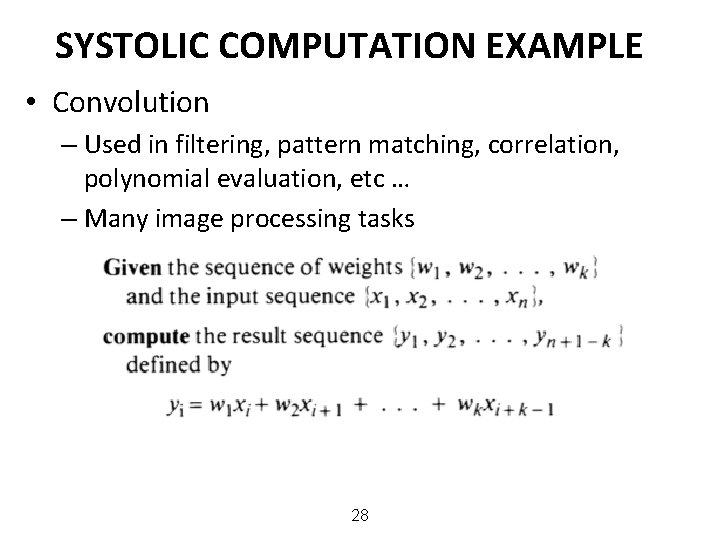

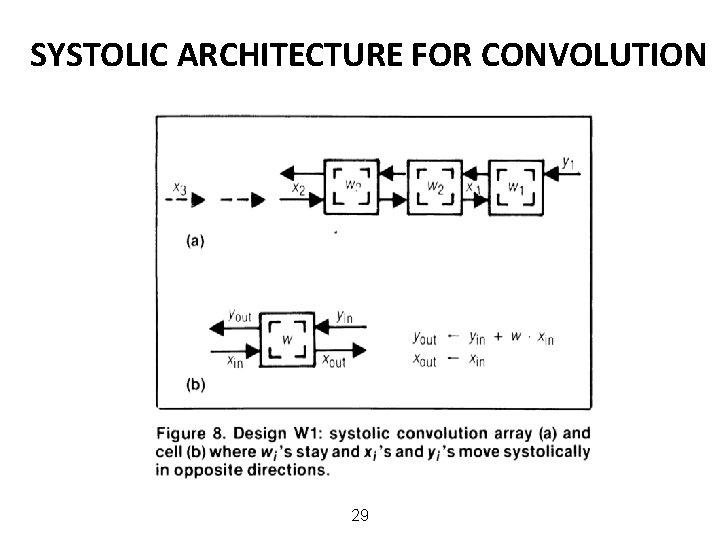

SYSTOLIC COMPUTATION EXAMPLE
- Convolution
  - Used in filtering, pattern matching, correlation, polynomial evaluation, etc.
  - Many image processing tasks

SYSTOLIC ARCHITECTURE FOR CONVOLUTION

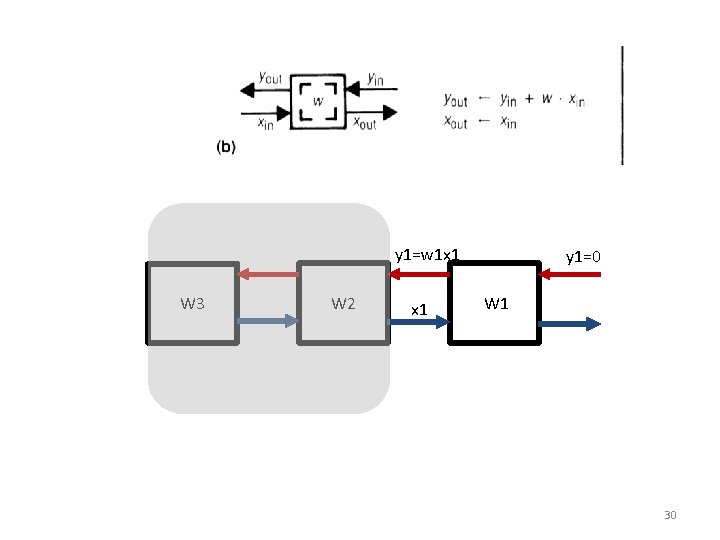

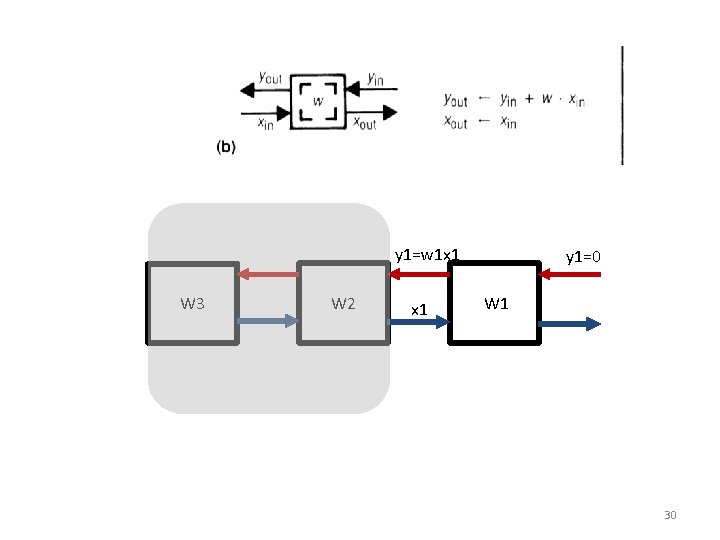

Step 1 (cells hold weights W1, W2, W3): $y_1 = 0$ enters cell W1 together with $x_1$ and leaves as $y_1 = w_1 x_1$.

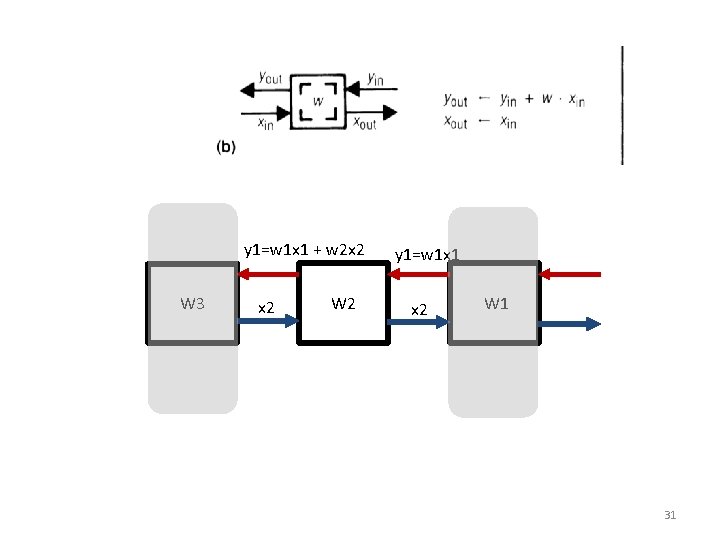

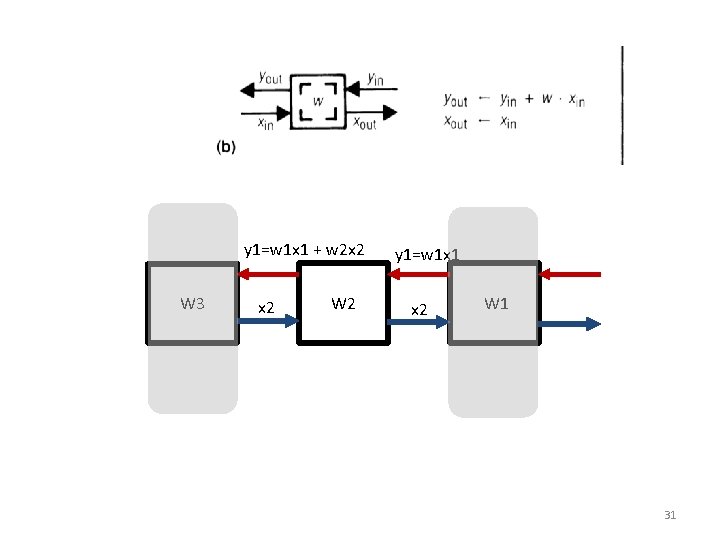

Step 2: $y_1 = w_1 x_1$ moves to cell W2, where $x_2$ arrives (the figure also shows $x_2$ at W1), and leaves as $y_1 = w_1 x_1 + w_2 x_2$.

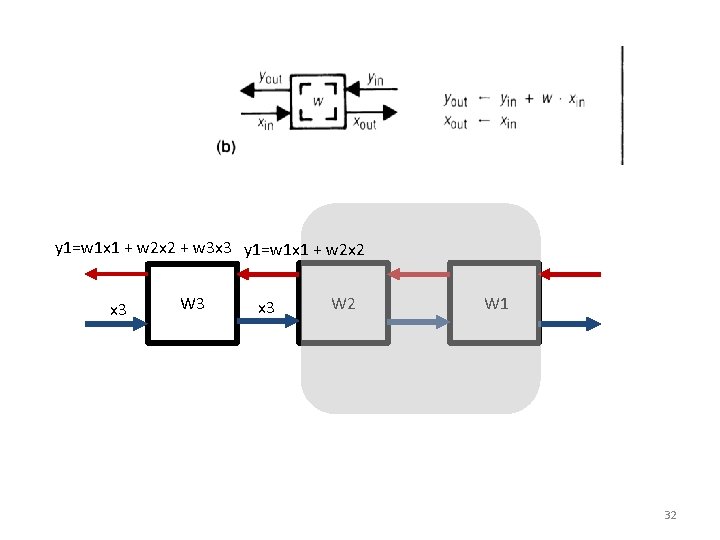

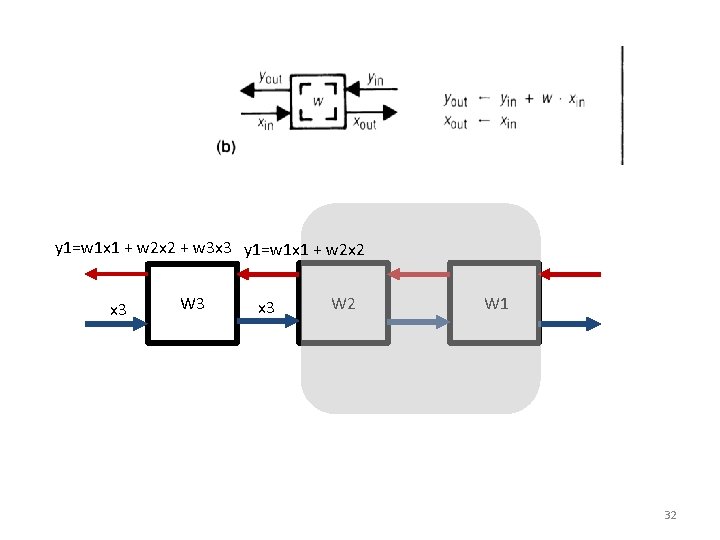

Step 3: $y_1 = w_1 x_1 + w_2 x_2$ moves to cell W3, where $x_3$ arrives (the figure also shows $x_3$ at W2), and leaves as $y_1 = w_1 x_1 + w_2 x_2 + w_3 x_3$.

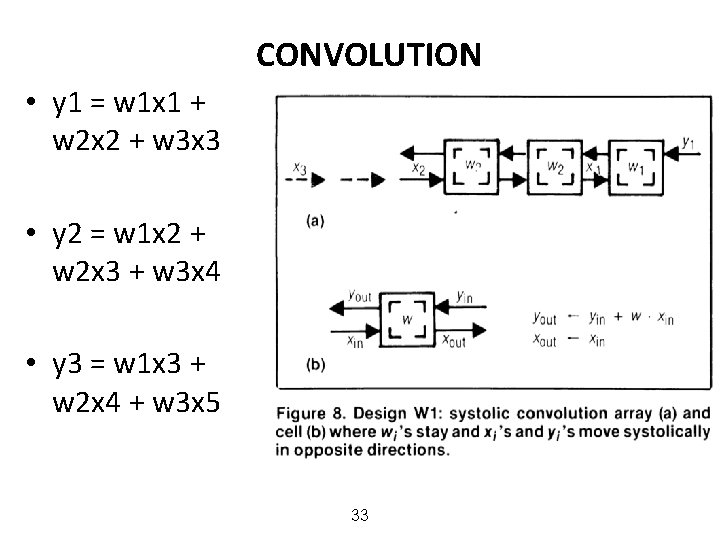

CONVOLUTION

$$
\begin{aligned}
y_1 &= w_1 x_1 + w_2 x_2 + w_3 x_3 \\
y_2 &= w_1 x_2 + w_2 x_3 + w_3 x_4 \\
y_3 &= w_1 x_3 + w_2 x_4 + w_3 x_5
\end{aligned}
$$

In general, $y_i = w_1 x_i + w_2 x_{i+1} + w_3 x_{i+2}$.
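A cycle-by-cycle Python sketch of the array in the figures, checked against the direct formula. It assumes the arrangement the figures suggest: each cell keeps its weight, each partial result moves one cell per cycle (W1, then W2, then W3), and the input for the current cycle is broadcast to every cell. This is only one of several ways to organize a systolic convolution. The function names are hypothetical, and indices are 0-based, so `y[0]` is the slide's $y_1$.

```python
def direct_conv(w, x):
    """y[i] = w[0]*x[i] + w[1]*x[i+1] + ... (0-based version of the slide's y_i)."""
    k = len(w)
    return [sum(w[j] * x[i + j] for j in range(k)) for i in range(len(x) - k + 1)]


def systolic_conv(w, x):
    """Simulate weight-stationary cells with moving results and broadcast inputs."""
    k = len(w)
    n_out = len(x) - k + 1
    cells = [None] * k           # cells[j] = (i, partial y_i) in the cell holding w[j]
    results = [None] * n_out
    for t, xt in enumerate(x):   # cycle t: x[t] is broadcast to every cell
        # Results shift one cell to the right; a fresh y_t = 0 enters the first cell.
        incoming = (t, 0) if t < n_out else None
        cells = [incoming] + cells[:-1]
        for j in range(k):
            if cells[j] is not None:
                i, acc = cells[j]
                cells[j] = (i, acc + w[j] * xt)  # y_i meets x[i + j] in the cell holding w[j]
        if cells[-1] is not None:                # in the last cell: y_i is now complete
            i, acc = cells[-1]
            results[i] = acc
    return results


if __name__ == "__main__":
    w = [1, 2, 3]
    x = [1, 2, 3, 4, 5]
    print(systolic_conv(w, x), direct_conv(w, x))
```

For w = [1, 2, 3] and x = [1, 2, 3, 4, 5] both functions give [14, 20, 26]. Each cycle, every cell performs one multiply-add on data that arrives from a neighbor or the shared input line, so each input is fetched from memory once but used by all three cells.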

More Programmability
- Each PE in a systolic array
  - Can store multiple “weights”
  - Weights can be selected on the fly
  - Eases implementation of, e.g., adaptive filtering
- Taken further
  - Each PE can have its own data and instruction memory
  - Data memory to store partial/temporary results, constants
  - Leads to stream processing, pipeline parallelism
    - More generally, staged execution

SYSTOLIC ARRAYS: PROS AND CONS
- Advantage:
  - Specialized (computation needs to fit PE organization/functions) → improved efficiency, simple design, high concurrency/performance → good to do more with less memory bandwidth requirement
- Downside:
  - Specialized → not generally applicable because computation needs to fit the PE functions/organization

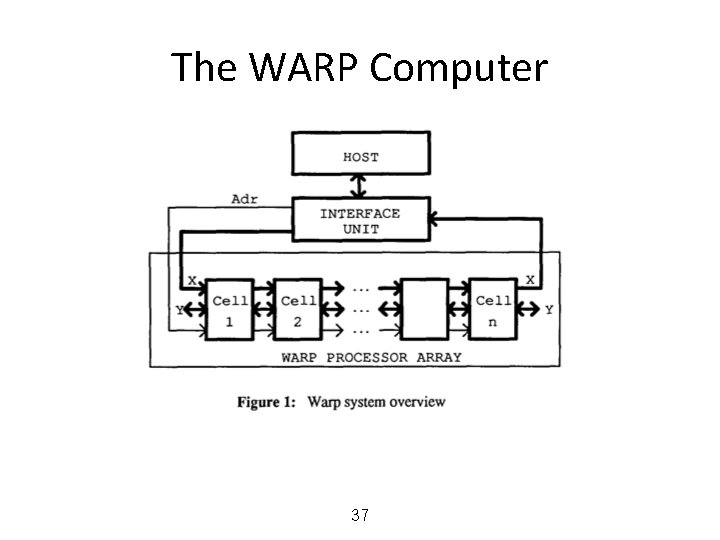

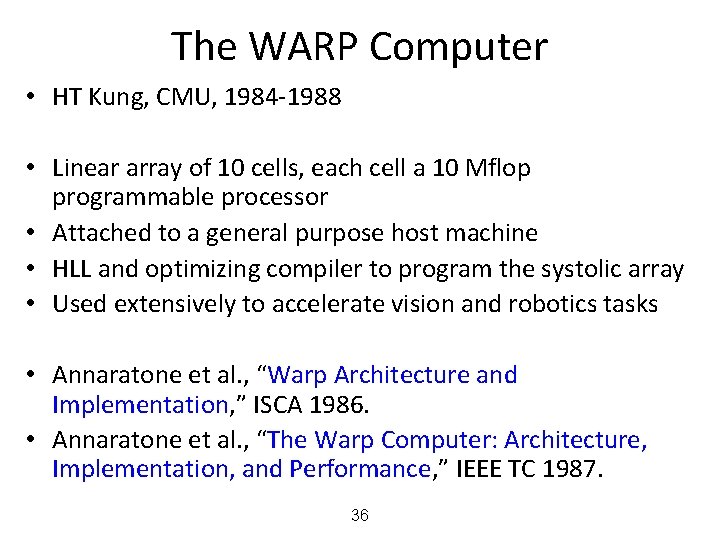

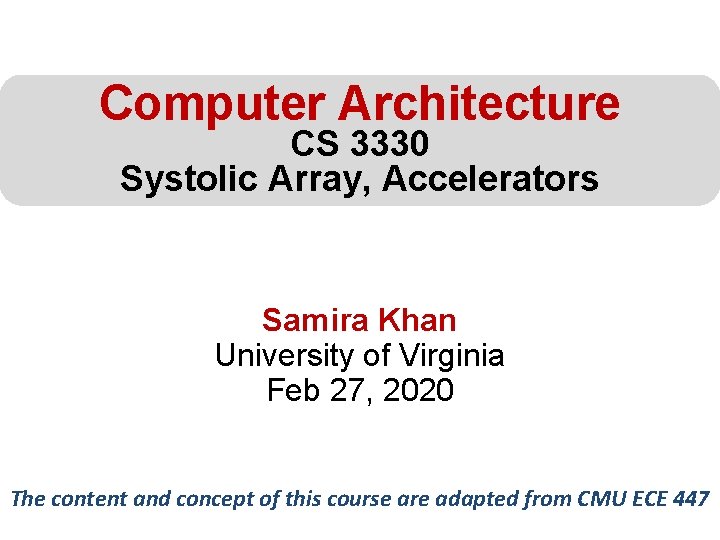

The WARP Computer
- H. T. Kung, CMU, 1984-1988
- Linear array of 10 cells, each cell a 10 Mflop programmable processor
- Attached to a general-purpose host machine
- HLL and optimizing compiler to program the systolic array
- Used extensively to accelerate vision and robotics tasks
- Annaratone et al., “Warp Architecture and Implementation,” ISCA 1986.
- Annaratone et al., “The Warp Computer: Architecture, Implementation, and Performance,” IEEE TC 1987.

The WARP Computer
