Clockless Logic Montek Singh Tue Apr 6 2004

Case Study: An Adaptively-Pipelined Mixed Synchronous-Asynchronous System

- Montek Singh, Univ. of North Carolina at Chapel Hill
- Jose Tierno, Alexander Rylyakov and Sergey Rylov, IBM TJ Watson Research Center
- Steven M. Nowick, Columbia University

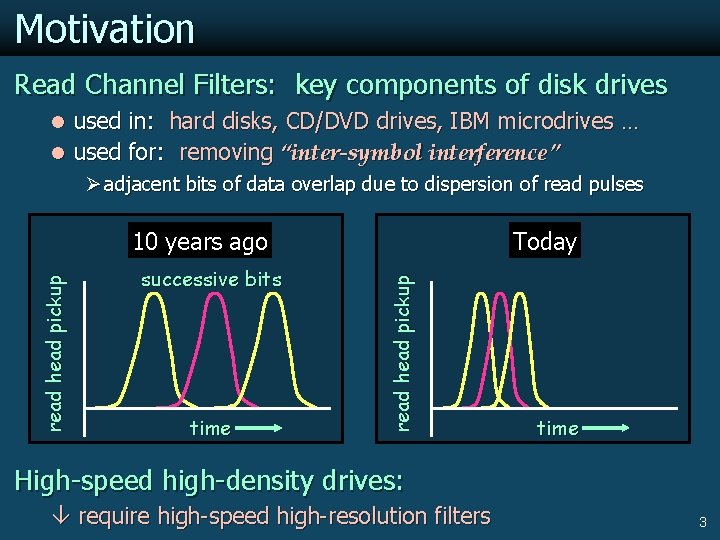

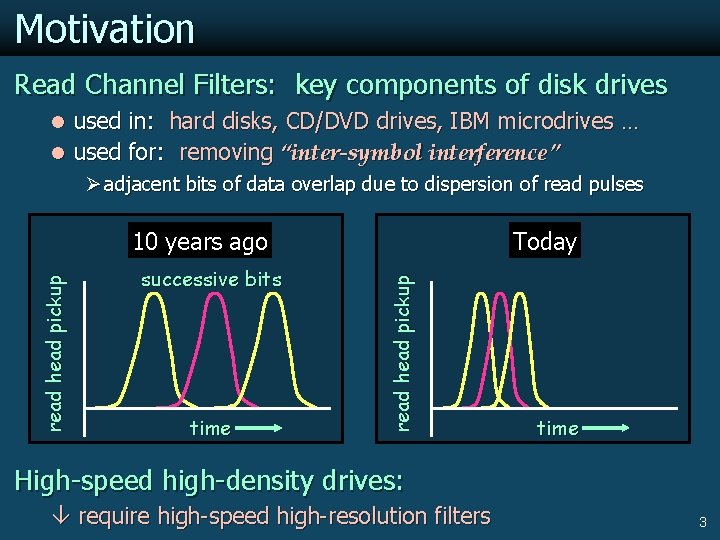

Motivation

Read Channel Filters: key components of disk drives

- used in: hard disks, CD/DVD drives, IBM microdrives …
- used for: removing “inter-symbol interference”
  - adjacent bits of data overlap due to dispersion of read pulses

[Figure: read head pickup of successive bits over time, today vs. 10 years ago]

High-speed high-density drives: require high-speed high-resolution filters

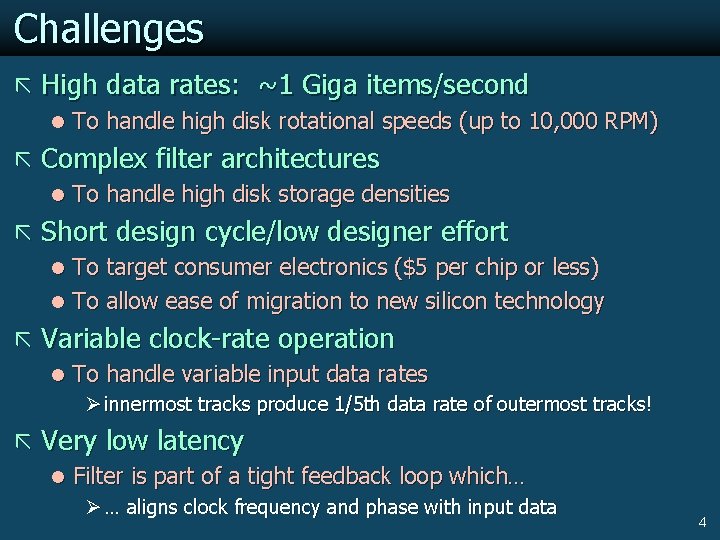

Challenges

- High data rates: ~1 Giga items/second
  - To handle high disk rotational speeds (up to 10,000 RPM)
- Complex filter architectures
  - To handle high disk storage densities
- Short design cycle/low designer effort
  - To target consumer electronics ($5 per chip or less)
  - To allow ease of migration to new silicon technology
- Variable clock-rate operation
  - To handle variable input data rates
    - innermost tracks produce 1/5th the data rate of outermost tracks!
- Very low latency
  - Filter is part of a tight feedback loop which aligns clock frequency and phase with input data

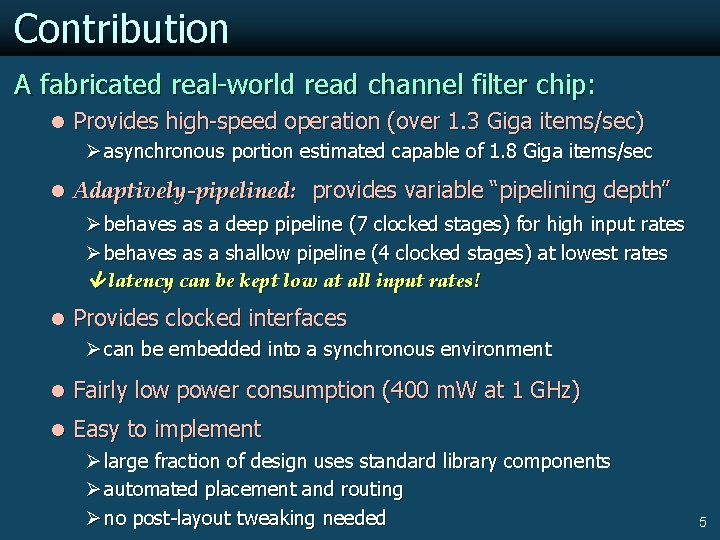

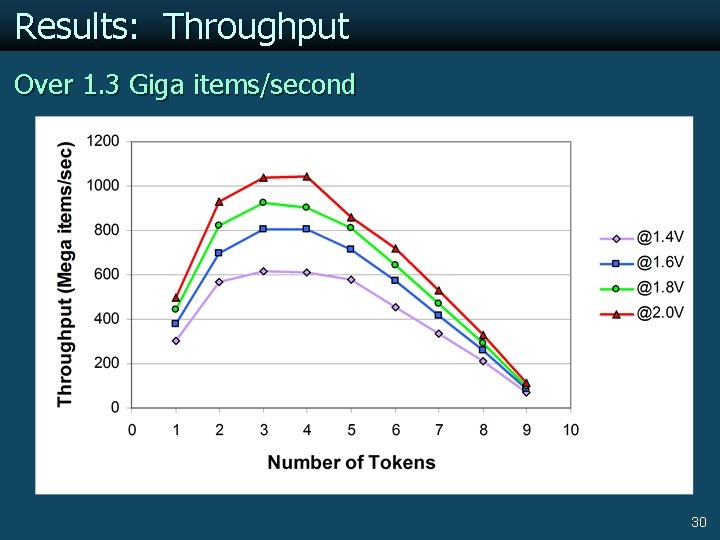

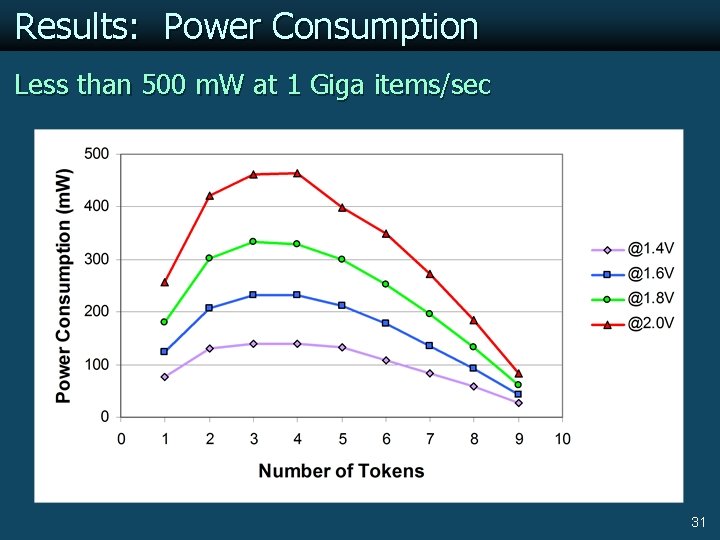

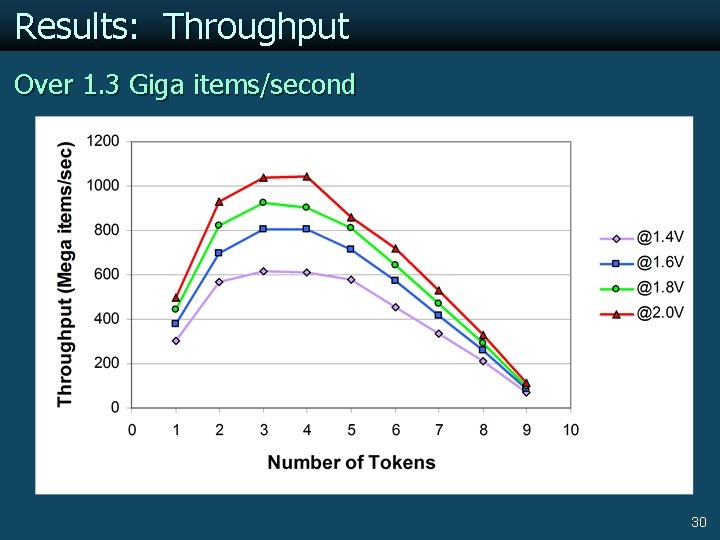

Contribution

A fabricated real-world read channel filter chip:

- Provides high-speed operation (over 1.3 Giga items/sec)
  - asynchronous portion estimated capable of 1.8 Giga items/sec
- Adaptively-pipelined: provides variable “pipelining depth”
  - behaves as a deep pipeline (7 clocked stages) for high input rates
  - behaves as a shallow pipeline (4 clocked stages) at lowest rates
  - latency can be kept low at all input rates!
- Provides clocked interfaces
  - can be embedded into a synchronous environment
- Fairly low power consumption (400 mW at 1 GHz)
- Easy to implement
  - large fraction of design uses standard library components
  - automated placement and routing
  - no post-layout tweaking needed

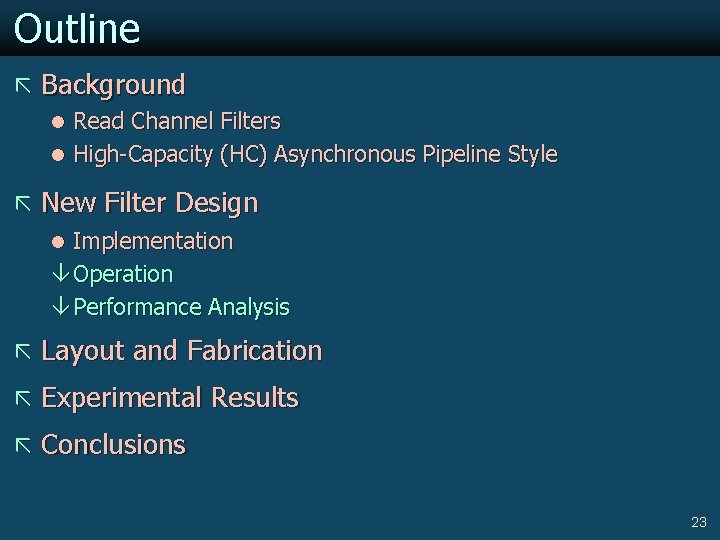

Outline

- Background
  - Read Channel Filters
  - High-Capacity (HC) Asynchronous Pipeline Style
- New Filter Design
  - Implementation
  - Operation
  - Performance Analysis
- Layout and Fabrication
- Experimental Results
- Conclusions

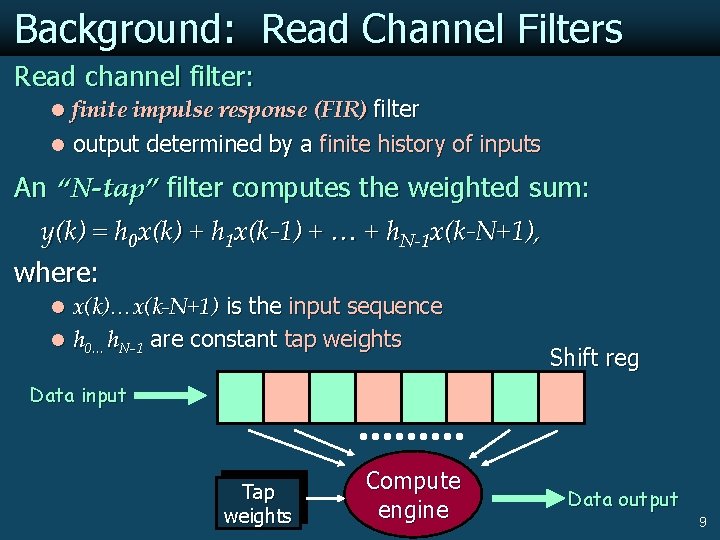

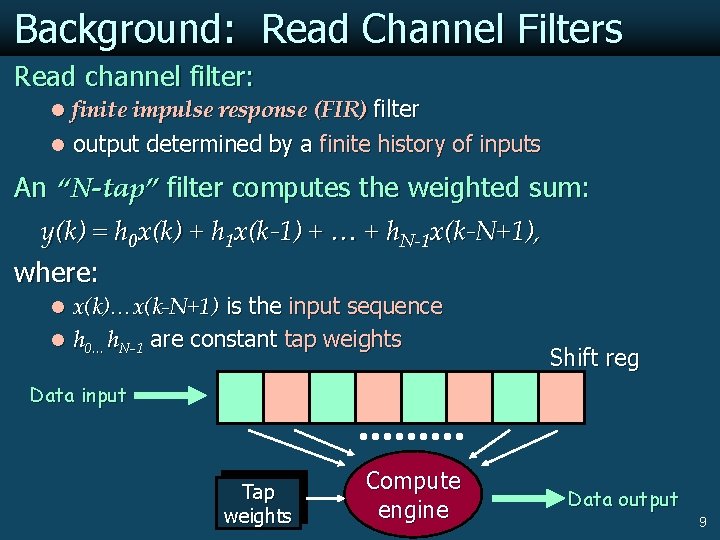

Background: Read Channel Filters

Read channel filter:

- finite impulse response (FIR) filter
- output determined by a finite history of inputs

An “N-tap” filter computes the weighted sum:

$$y(k) = h_0\,x(k) + h_1\,x(k-1) + \dots + h_{N-1}\,x(k-N+1)$$

where:

- $x(k), \dots, x(k-N+1)$ is the input sequence
- $h_0, \dots, h_{N-1}$ are constant tap weights

[Figure: block diagram with data input, shift register, tap weights, compute engine and data output]
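The weighted sum above can be sketched directly in code. This is a minimal illustration of the N-tap formula only, not the chip's implementation; the function name `fir_filter` and the choice to treat inputs before the start of the sequence as zero are assumptions made for the example.

```python
def fir_filter(x, h):
    """Direct-form FIR: y(k) = sum over i of h[i] * x[k - i].

    Assumption for this sketch: samples before the start of the
    sequence (k - i < 0) are treated as zero.
    """
    n_taps = len(h)
    y = []
    for k in range(len(x)):
        acc = 0
        for i in range(n_taps):
            if k - i >= 0:
                acc += h[i] * x[k - i]
        y.append(acc)
    return y


# A 3-tap example: each output mixes the current and two previous inputs.
samples = [1, 2, 3, 4]
weights = [1, 10, 100]
print(fir_filter(samples, weights))  # [1, 12, 123, 234]
```

The inner loop is the "compute engine" of the block diagram, and the indexing `x[k - i]` plays the role of the shift register.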

![Background HC Pipeline Style HighCapacity Pipelines HC SinghNowick WVLSI00 l bundled datapaths dynamic logic Background: HC Pipeline Style High-Capacity Pipelines (HC) [Singh/Nowick WVLSI-00] l bundled datapaths; dynamic logic](https://slidetodoc.com/presentation_image_h2/98226114ea79e1950ca3d691ed7c0cf7/image-8.jpg)

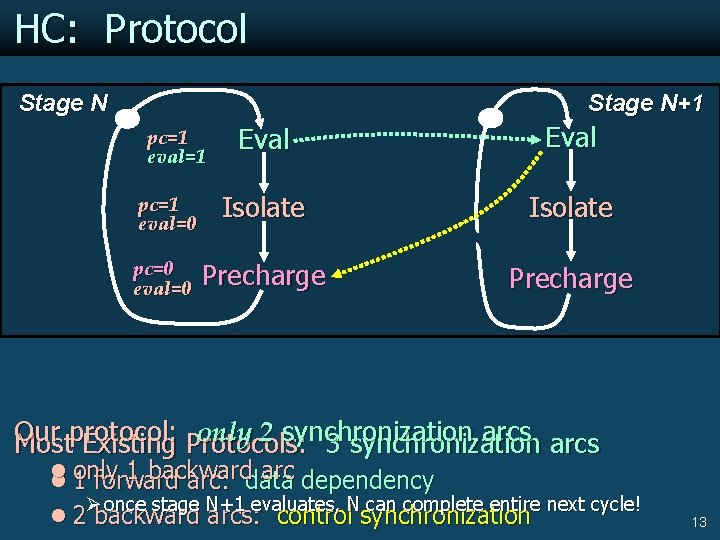

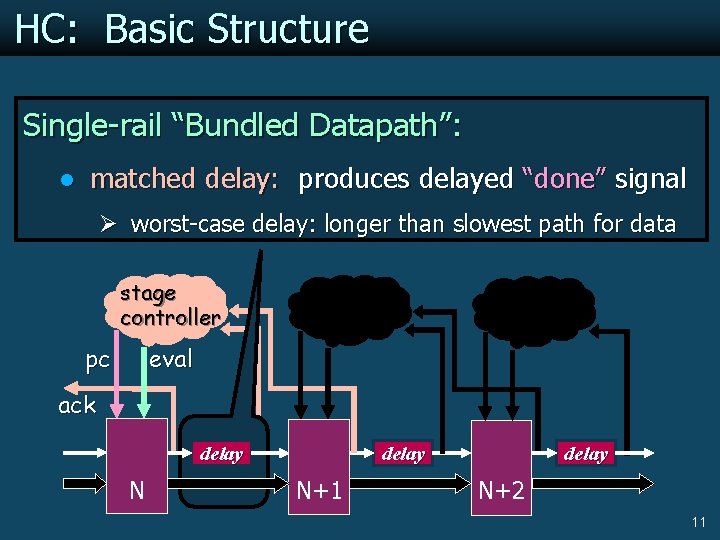

Background: HC Pipeline Style

High-Capacity Pipelines (HC) [Singh/Nowick WVLSI-00]

- bundled datapaths; dynamic logic function blocks
- latch-free: no explicit latches needed
  - dynamic logic provides implicit latching
- novel highly-concurrent protocol maximizes storage capacity
  - traditional latch-free approaches: “spacers” limit capacity to 50%

Key Idea: Obtain greater control of stage’s operation

- separate control of pull-up/pull-down
- result = new “isolate phase”
- stage holds outputs/impervious to input changes

Advantage: Each stage can hold a distinct data item, giving 100% storage capacity

Extra Benefit: Obtain greater concurrency, giving high throughput

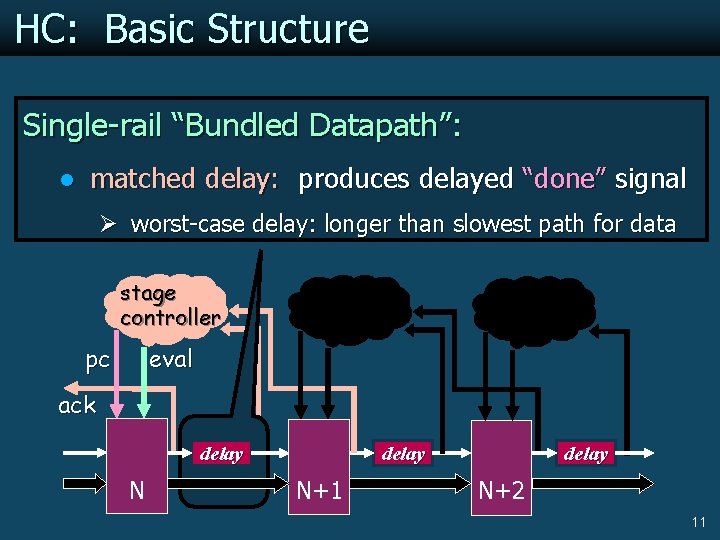

HC: Basic Structure

Key Idea: 2 independent control signals:

- pc: controls precharge
- eval: controls evaluation

Allows novel 3-phase cycle:

- Evaluate
- “Isolate” (hold)
- Precharge

Single-rail “Bundled Datapath”:

- matched delay: produces delayed “done” signal
  - worst-case delay: longer than slowest path for data

[Figure: pipeline stages (N+1 and N+2 labeled), each with a stage controller driving pc and eval, a matched delay element, and an ack signal]

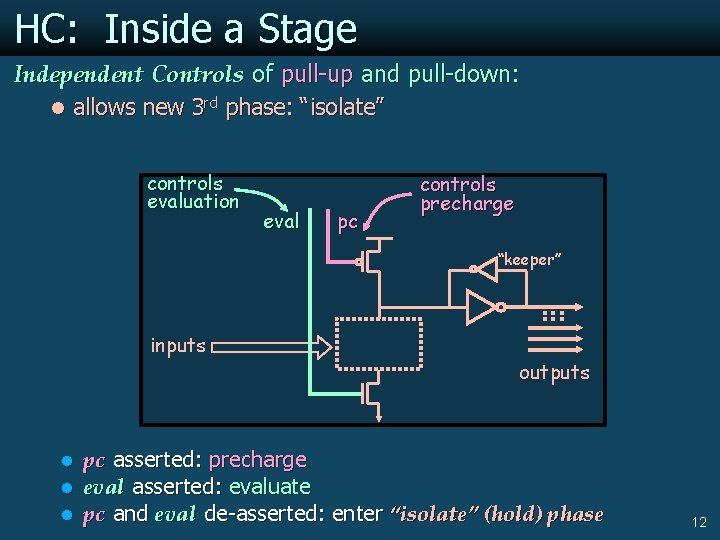

HC: Inside a Stage

Independent controls of pull-up and pull-down:

- allows new 3rd phase: “isolate”

[Figure: dynamic gate with inputs, outputs and “keeper”; pc controls precharge, eval controls evaluation]

- pc asserted: precharge
- eval asserted: evaluate
- pc and eval de-asserted: enter “isolate” (hold) phase

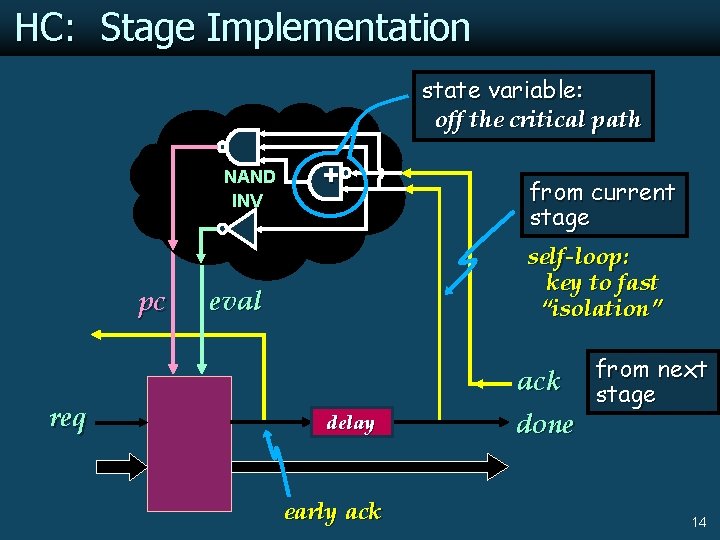

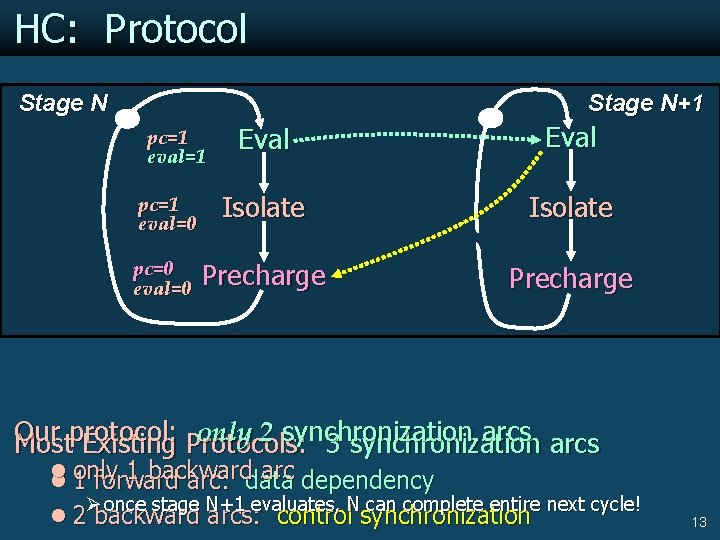

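HC: Protocol

Control signal values in each phase:

- Eval: pc=1, eval=1
- Isolate: pc=1, eval=0
- Precharge: pc=0, eval=0

[Figure: Evaluate, Isolate and Precharge phases of adjacent stages (stage N+1 labeled), with one synchronization arc crossed out]

Most existing protocols: 3 synchronization arcs

- 1 forward arc: data dependency
- 2 backward arcs: control synchronization

Our protocol: only 2 synchronization arcs

- 1 forward arc: data dependency
- only 1 backward arc
  - once stage N+1 evaluates, N can complete entire next cycle!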
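The three-phase cycle can be sketched as a small lookup from the two control signals to a phase. The signal levels are the ones listed on the protocol slide (which implies pc is active-low); the names `Phase`, `phase_of` and `run_cycle` are hypothetical. Treating pc=0 with eval=1 as illegal is an assumption of this sketch, since that combination would enable pull-up and pull-down together.

```python
from enum import Enum


class Phase(Enum):
    EVALUATE = "evaluate"
    ISOLATE = "isolate"
    PRECHARGE = "precharge"


# (pc, eval) -> phase, using the signal levels listed on the protocol slide.
PHASE_TABLE = {
    (1, 1): Phase.EVALUATE,
    (1, 0): Phase.ISOLATE,
    (0, 0): Phase.PRECHARGE,
}

# Order of the three-phase cycle: evaluate, isolate (hold), precharge.
NEXT_PHASE = {
    Phase.EVALUATE: Phase.ISOLATE,
    Phase.ISOLATE: Phase.PRECHARGE,
    Phase.PRECHARGE: Phase.EVALUATE,
}


def phase_of(pc, eval_):
    """Decode the stage phase from its two control signals."""
    try:
        return PHASE_TABLE[(pc, eval_)]
    except KeyError:
        # Assumption: pc=0 with eval=1 is not a legal control state.
        raise ValueError(f"illegal control state pc={pc}, eval={eval_}")


def run_cycle(start, steps):
    """Return the sequence of phases visited, starting from `start`."""
    phases = [start]
    for _ in range(steps):
        phases.append(NEXT_PHASE[phases[-1]])
    return phases


print([p.value for p in run_cycle(Phase.EVALUATE, 3)])
```

This models a single stage in isolation; the forward and backward synchronization arcs between neighbouring stages are not represented.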

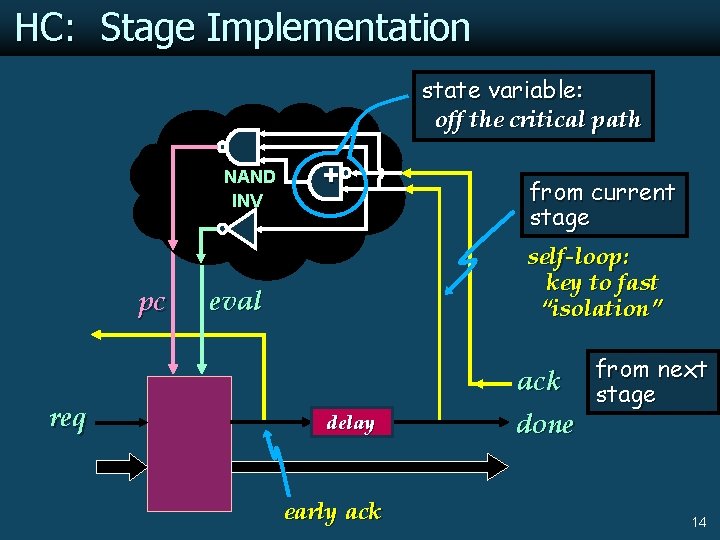

HC: Stage Implementation

[Figure: stage controller built from a NAND gate, an INV and a matched delay; signals: pc, eval, req, done, early ack, and ack from next stage]

- state variable: off the critical path
- self-loop: key to fast “isolation”

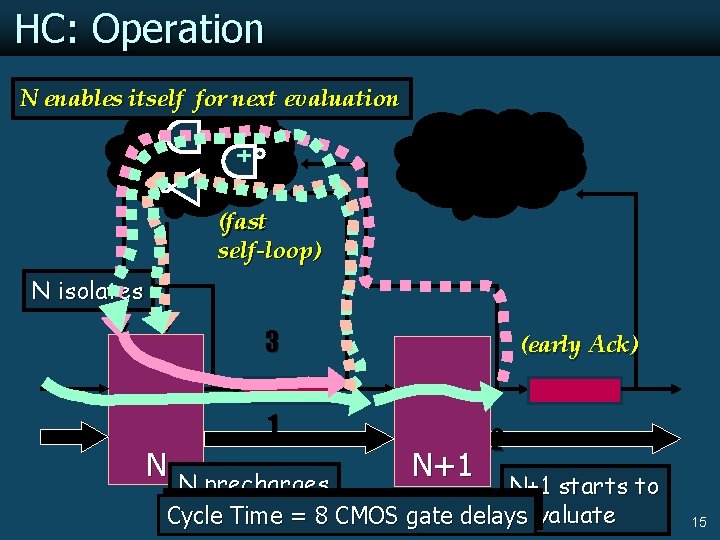

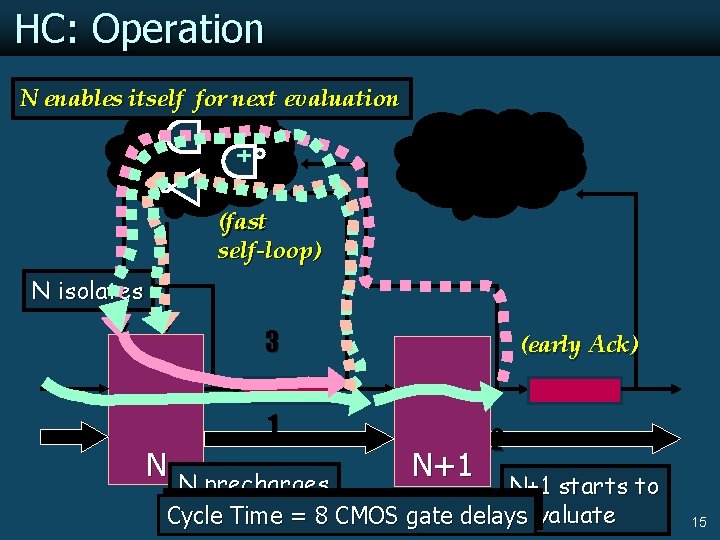

HC: Operation

[Figure: numbered event sequence (1, 2, 3) between stages N and N+1]

- N evaluates
- N+1 starts to evaluate
- N isolates (early ack)
- N precharges
- N enables itself for next evaluation (fast self-loop)

Cycle Time = 8 CMOS gate delays

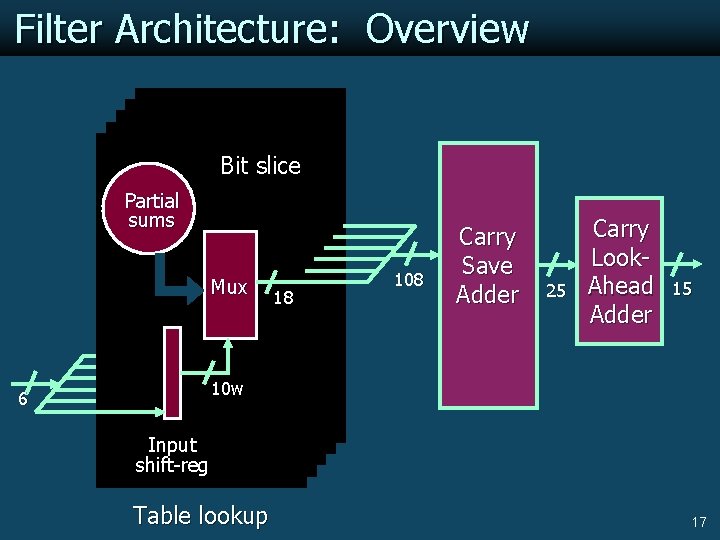

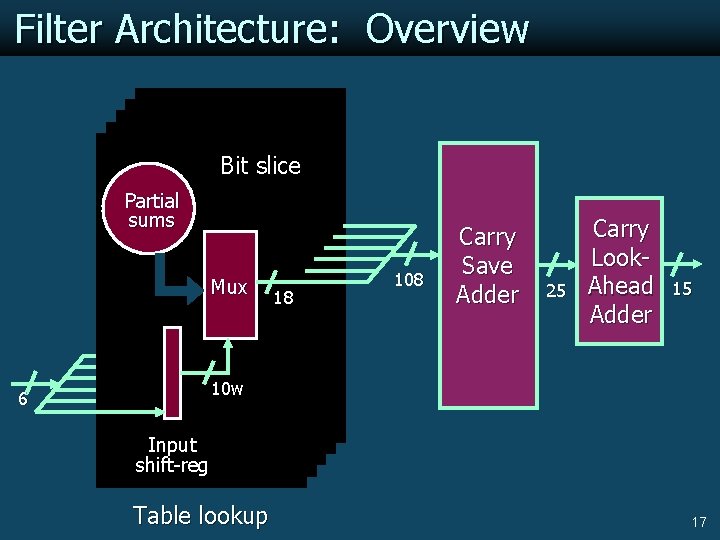

Filter Architecture: Overview

[Figure: datapath from input shift register through table lookup, mux, carry-save adder and carry-lookahead adder; labels: bit slice, partial sums; width annotations: 18, 108, 25, 15, 10 w, 6]

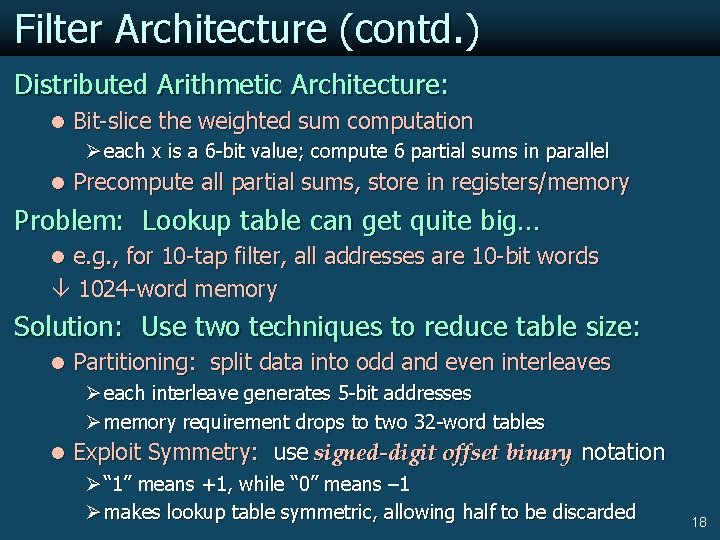

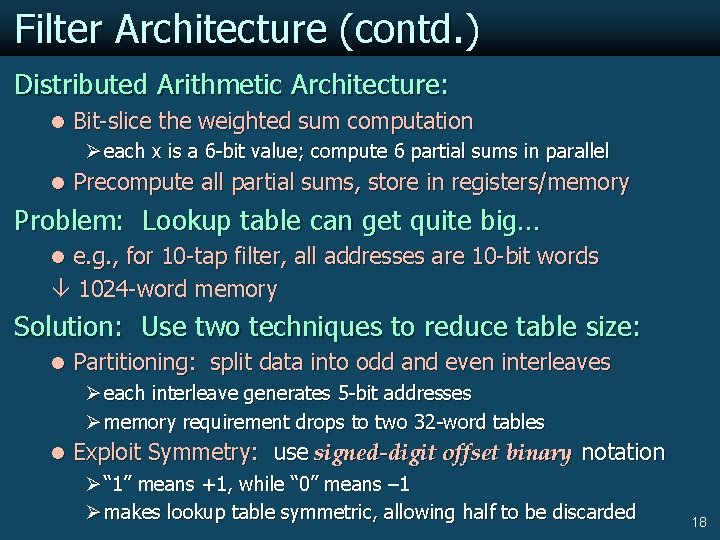

Filter Architecture (contd.)

Distributed Arithmetic Architecture:

- Bit-slice the weighted sum computation
  - each x is a 6-bit value; compute 6 partial sums in parallel
- Precompute all partial sums, store in registers/memory

Problem: Lookup table can get quite big…

- e.g., for a 10-tap filter, all addresses are 10-bit words, i.e. a 1024-word memory

Solution: Use two techniques to reduce table size:

- Partitioning: split data into odd and even interleaves
  - each interleave generates 5-bit addresses
  - memory requirement drops to two 32-word tables
- Exploit Symmetry: use signed-digit offset binary notation
  - “1” means +1, while “0” means –1
  - makes lookup table symmetric, allowing half to be discarded
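The table-size reductions can be illustrated with a small software model of distributed arithmetic. This is a sketch under stated assumptions, not the chip's datapath: all function names are hypothetical, the "odd and even interleaves" are modelled as the even- and odd-indexed taps, and a 6-bit code is decoded as the sum of ±2^j over its bits (bit 1 is +1, bit 0 is −1). Under that encoding a table entry for address a equals the negated entry for the complemented address, so only half of each table is stored.

```python
def decode(code, bits=6):
    """Signed-digit offset binary: bit 1 contributes +2^j, bit 0 contributes -2^j."""
    return sum((1 if (code >> j) & 1 else -1) << j for j in range(bits))


def build_table(weights):
    """Half-size table of partial sums: entry a is sum_i (+w_i if bit i of a else -w_i).

    Only addresses whose top bit is 0 are stored.
    """
    half = 1 << (len(weights) - 1)
    return [sum(w if (a >> i) & 1 else -w for i, w in enumerate(weights))
            for a in range(half)]


def lookup(table, n, addr):
    """Serve all 2**n addresses from the half table via T[~a] = -T[a]."""
    if addr >> (n - 1):  # top bit set: complement the address, negate the entry
        return -table[addr ^ ((1 << n) - 1)]
    return table[addr]


def da_fir_output(codes, weights, bits=6):
    """One filter output; codes[i] is the code of x(k-i), weights[i] is h_i."""
    even_w, odd_w = weights[0::2], weights[1::2]
    even_t, odd_t = build_table(even_w), build_table(odd_w)
    total = 0
    for j in range(bits):  # one bit slice per bit position
        slice_bits = [(c >> j) & 1 for c in codes]
        even_addr = sum(b << i for i, b in enumerate(slice_bits[0::2]))
        odd_addr = sum(b << i for i, b in enumerate(slice_bits[1::2]))
        partial = (lookup(even_t, len(even_w), even_addr)
                   + lookup(odd_t, len(odd_w), odd_addr))
        total += partial << j  # weight the partial sum by 2^j
    return total


# Illustrative 10-tap example with made-up weights and input codes.
weights = [3, -1, 4, 1, -5, 9, 2, -6, 5, 3]
codes = [0, 63, 21, 42, 7, 56, 33, 12, 50, 1]
direct = sum(h * decode(c) for h, c in zip(weights, codes))
print(da_fir_output(codes, weights), direct)
```

For 10 taps each partition has 5 address bits, so a full table would have 32 entries and the stored half has 16. The two printed values agree because the bit-sliced sum is only a reordering of the direct weighted sum.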

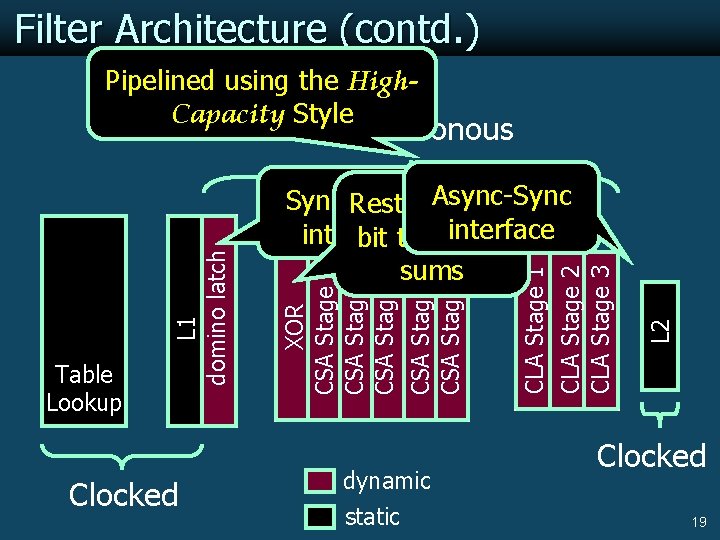

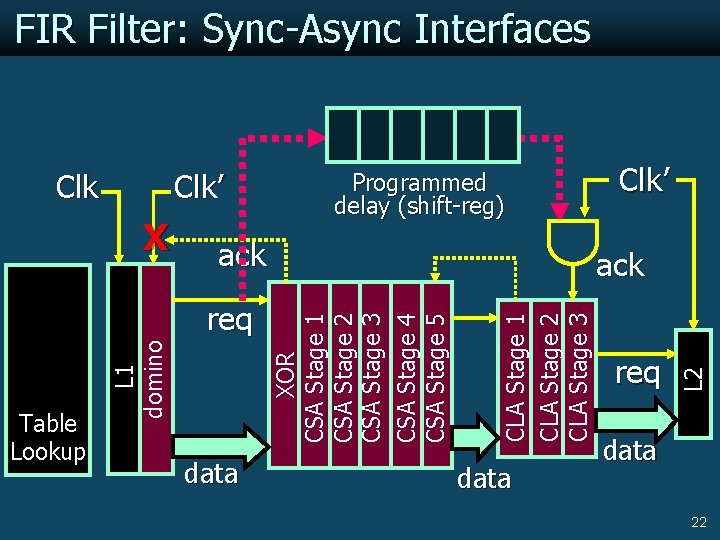

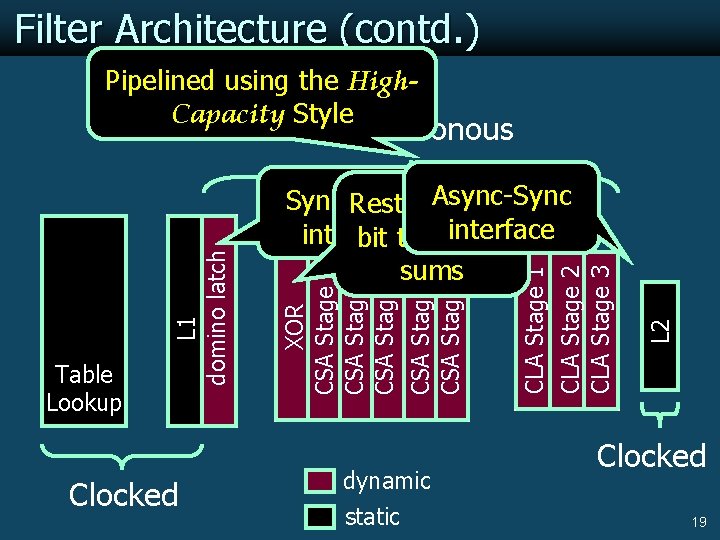

Filter Architecture (contd.)

Pipelined using the High-Capacity Style

[Figure: pipelined datapath with a clocked Table Lookup and L1 domino latch, asynchronous stages numbered 1 to 5 (CSA stages and CLA Stages 1 to 3), an XOR that restores the sign bit to the partial sums, and a clocked L2 latch; sync-async and async-sync interfaces and dynamic/static regions are marked]

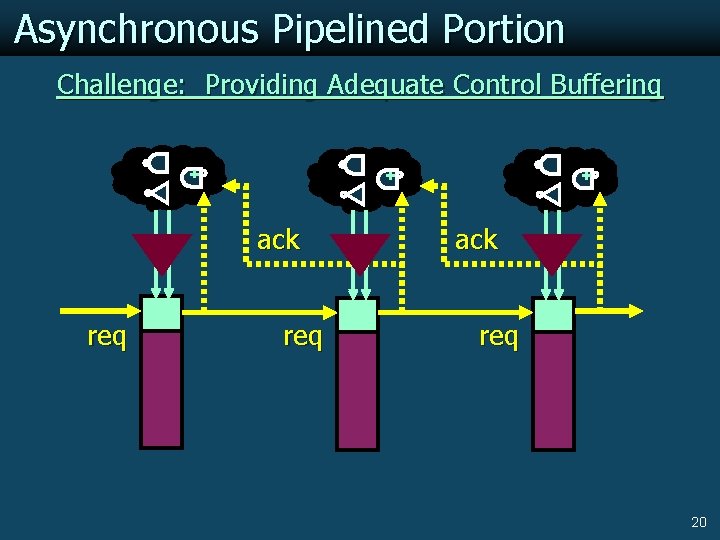

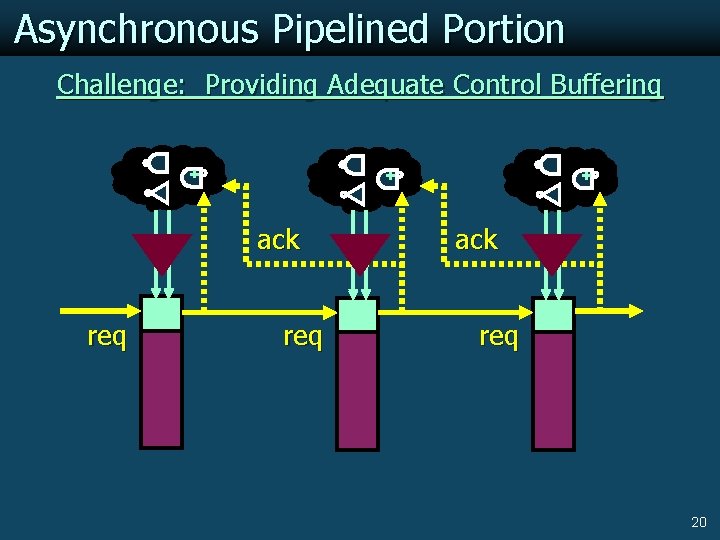

Asynchronous Pipelined Portion

Challenge: Providing Adequate Control Buffering

[Figure: pipeline stages with req and ack control signals]

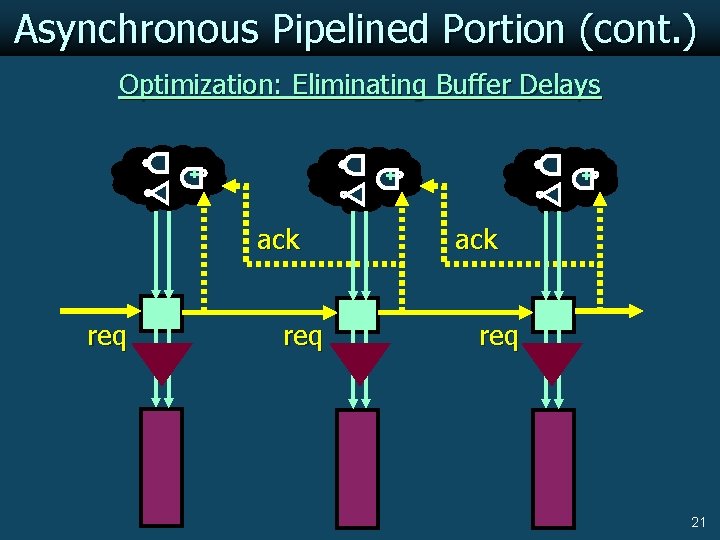

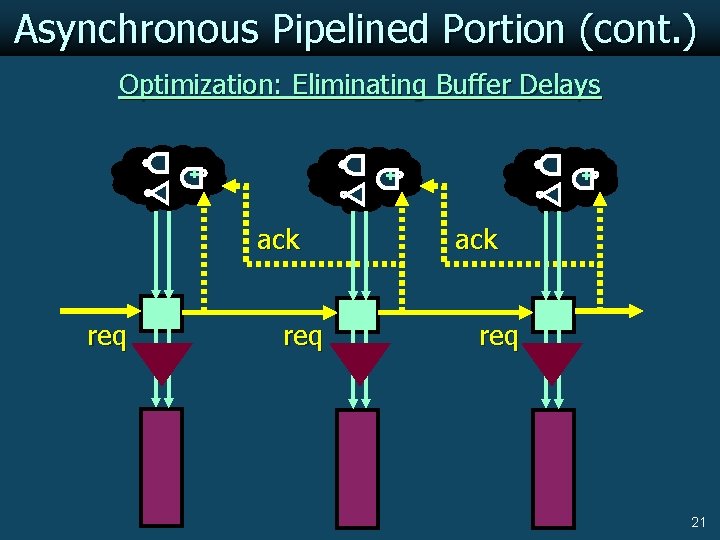

Asynchronous Pipelined Portion (cont.)

Optimization: Eliminating Buffer Delays

[Figure: pipeline stages with req and ack control signals]

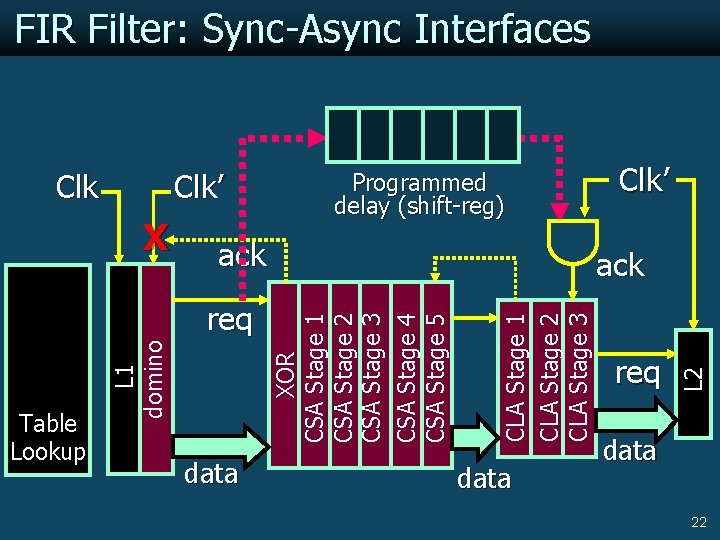

FIR Filter: Sync-Async Interfaces

[Figure: Table Lookup and L1 domino latch (clocked by Clk) feeding asynchronous stages 1 to 5 (XOR, CSA stages, CLA Stages 1 to 3) and the L2 latch (clocked by Clk’); data, req and ack signals at both interfaces; req generated through a programmed delay (shift-reg)]

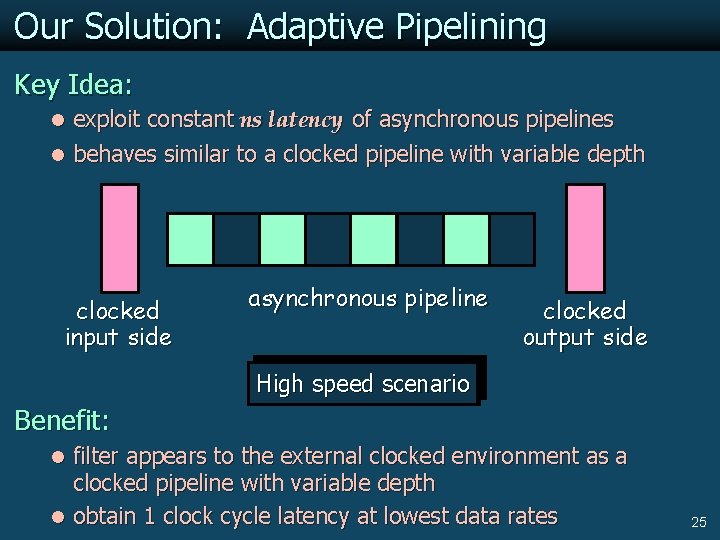

Filter Operation

Performance Goals:

- operation desired over wide range of clock frequencies
  - input data rate to a read channel can vary greatly
  - data rate varies as the read head moves from innermost to outermost track
  - variation up to a factor of 5!
- low filter latency required at all clock frequencies
  - filter is part of closed feedback loop (“clock recovery loop”)
  - low loop latency critical to accurate alignment of clock w.r.t. data

Challenge:

- purely synchronous pipeline cannot easily satisfy above goals
  - deep pipeline design required to meet highest data rates…
  - … but: deep pipelining implies long clock cycle latency
  - at lowest data rates: long clock cycle latency is unacceptable

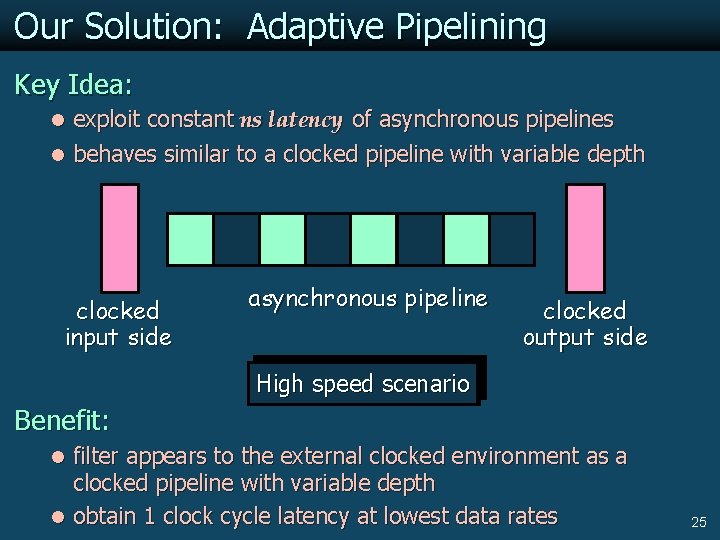

Our Solution: Adaptive Pipelining

Key Idea:

- exploit constant ns latency of asynchronous pipelines
- behaves similar to a clocked pipeline with variable depth

[Figure: clocked input side, asynchronous pipeline, clocked output side; shown for a high-speed scenario and a slow-speed scenario]

Benefit:

- filter appears to the external clocked environment as a clocked pipeline with variable depth
- obtain 1 clock cycle latency at lowest data rates
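The variable-depth effect follows from simple arithmetic: a fixed latency in nanoseconds spans more clock cycles when the clock is fast and fewer when it is slow. The sketch below assumes the asynchronous portion's latency is rounded up to a whole number of clock cycles and added to a fixed number of clocked stages. Both constants are hypothetical, chosen only so that the sketch reproduces the 7-stage and 4-stage depths quoted in the deck; they are not measured values.

```python
import math

# Hypothetical values for illustration only (not from the chip).
ASYNC_LATENCY_NS = 2.9      # assumed constant latency of the asynchronous portion
FIXED_CLOCKED_STAGES = 3    # assumed clocked stages outside the asynchronous portion


def async_cycles(clock_ghz, latency_ns=ASYNC_LATENCY_NS):
    """Clock cycles spanned by a constant-ns latency (assumed rounded up).

    latency / period = latency_ns * clock_ghz, since period_ns = 1 / clock_ghz.
    """
    return math.ceil(latency_ns * clock_ghz)


def effective_depth(clock_ghz):
    """Apparent pipeline depth seen by the clocked environment."""
    return FIXED_CLOCKED_STAGES + async_cycles(clock_ghz)


# Sweep from the top rate down to one fifth of it.
for f in (1.3, 0.65, 0.26):
    print(f"{f:.2f} GHz -> {async_cycles(f)} async cycles, depth {effective_depth(f)}")
```

A purely clocked design would keep the depth fixed at the value needed for the fastest clock, so its latency in cycles would not shrink at low data rates.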

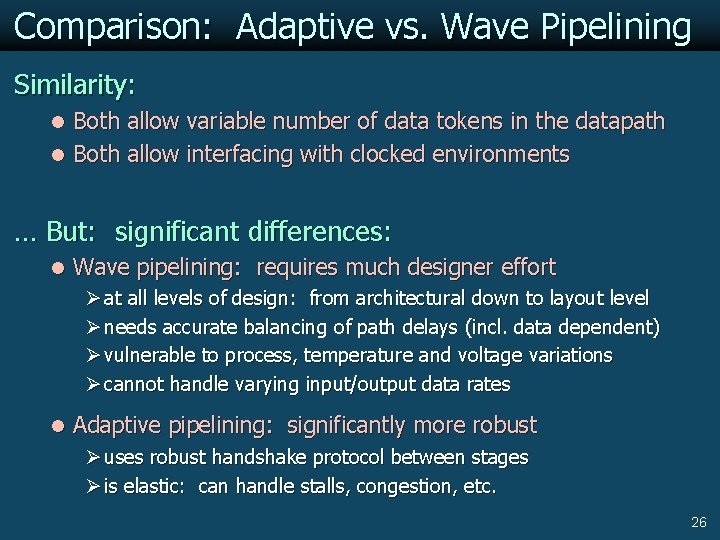

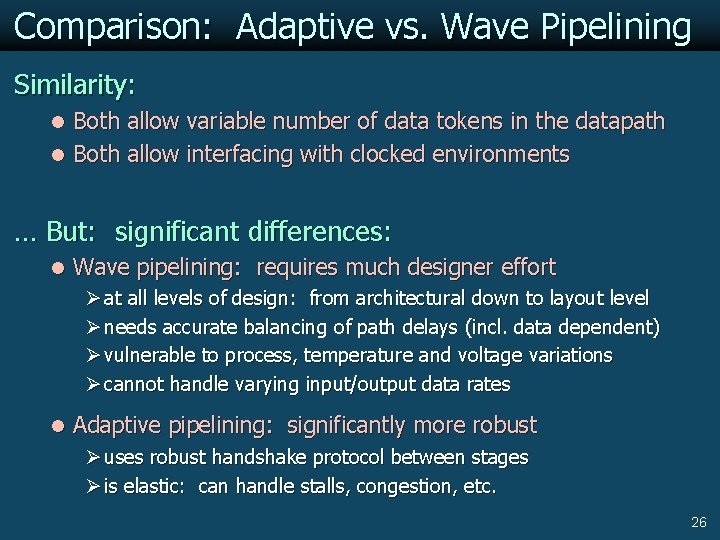

Comparison: Adaptive vs. Wave Pipelining

Similarity:

- Both allow variable number of data tokens in the datapath
- Both allow interfacing with clocked environments

… But: significant differences:

- Wave pipelining: requires much designer effort
  - at all levels of design: from architectural down to layout level
  - needs accurate balancing of path delays (incl. data dependent)
  - vulnerable to process, temperature and voltage variations
  - cannot handle varying input/output data rates
- Adaptive pipelining: significantly more robust
  - uses robust handshake protocol between stages
  - is elastic: can handle stalls, congestion, etc.

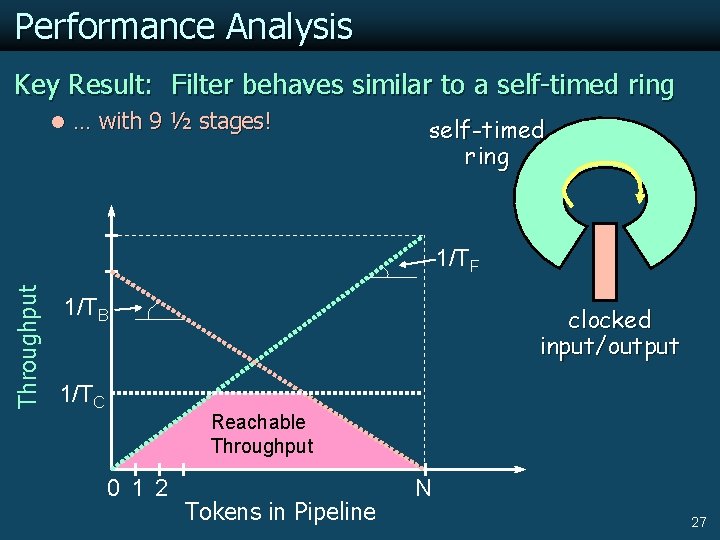

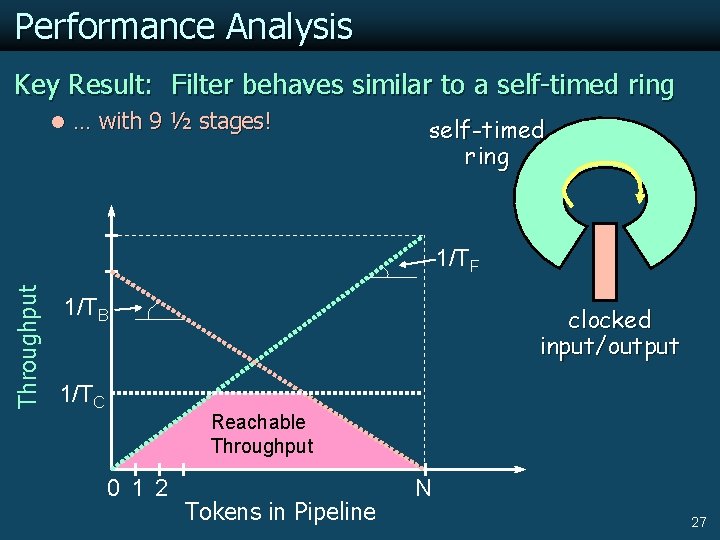

Performance Analysis

Key Result: Filter behaves similar to a self-timed ring

- … with 9½ stages!

[Figure: throughput vs. number of tokens in pipeline (N) for the self-timed ring, with levels 1/TF, 1/TB and 1/TC marked, and the throughput reachable with clocked input/output]

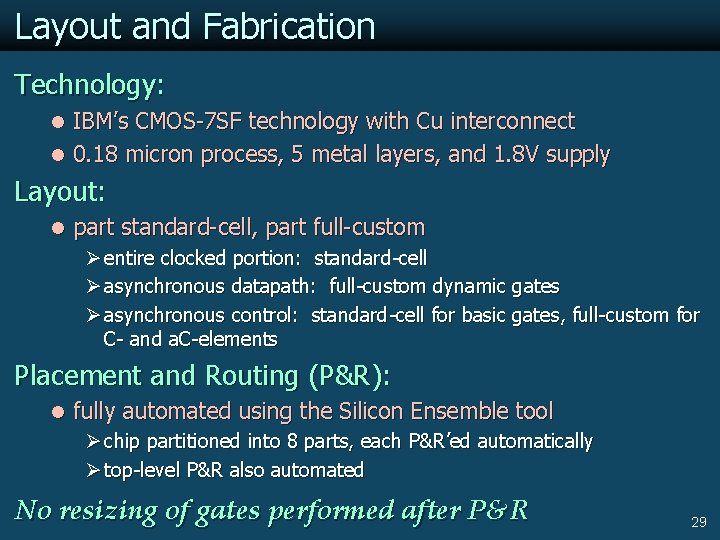

Layout and Fabrication

Technology:

- IBM’s CMOS-7SF technology with Cu interconnect
- 0.18 micron process, 5 metal layers, and 1.8 V supply

Layout:

- part standard-cell, part full-custom
  - entire clocked portion: standard-cell
  - asynchronous datapath: full-custom dynamic gates
  - asynchronous control: standard-cell for basic gates, full-custom for C- and aC-elements

Placement and Routing (P&R):

- fully automated using the Silicon Ensemble tool
  - chip partitioned into 8 parts, each P&R’ed automatically
  - top-level P&R also automated

No resizing of gates performed after P&R

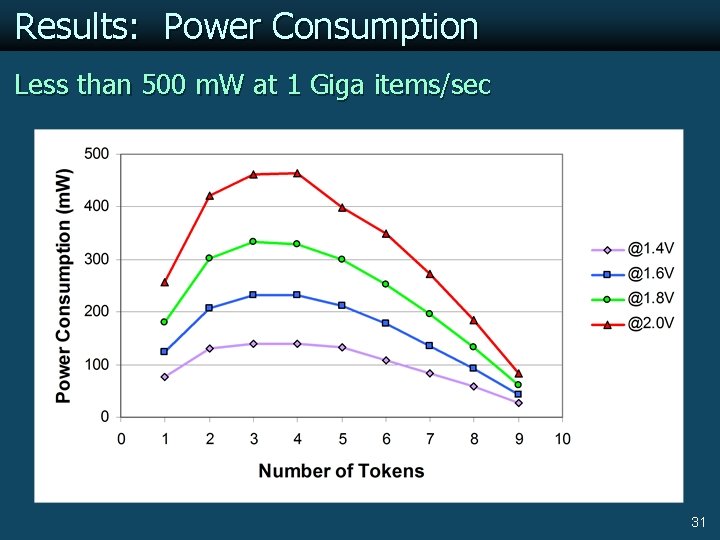

Results: Throughput

Over 1.3 Giga items/second

Results: Power Consumption

Less than 500 mW at 1 Giga items/sec

Conclusions

Designed, fabricated and tested a real-world FIR filter:

- Hybrid synchronous-asynchronous design
- Exhibits adaptive pipelining
  - variable number of tokens in the datapath
  - enables low clock cycle latency operation at all frequencies
- Exceeds all performance specifications:
  - obtains throughput over 1.3 GigaHertz
    - 15% faster than best existing read channel filter
    - asynchronous portion estimated capable of up to 1.8 Giga items/sec
  - obtains latency as low as 4 clock cycles
- Testable
- Required low designer effort:
  - Layout: mostly using library components
  - Placement and routing: fully automated