
CHAPTER 9 Simulation Methods
- Simulation methods
- SimPoints
- Parallel simulations
- Nondeterminism

© Michel Dubois, Murali Annavaram, Per Stenström. All rights reserved.

How to study a computer system
Methodologies:
- Construct a hardware prototype
- Mathematical modeling
- Simulation

Construct a hardware prototype
Advantages:
- Runs fast
Disadvantages:
- Takes a long time to build (e.g., the RPM (Rapid Prototyping engine for Multiprocessors) project at USC took a few graduate students several years)
- Expensive
- Not flexible

Mathematically model the system
Use analytical modeling:
- Probabilistic
- Queuing
- Markov
- Petri net
Advantages:
- Very flexible
- Very quick to develop
- Runs quickly
Disadvantages:
- Cannot capture the effects of system details
- Computer architects are skeptical of models

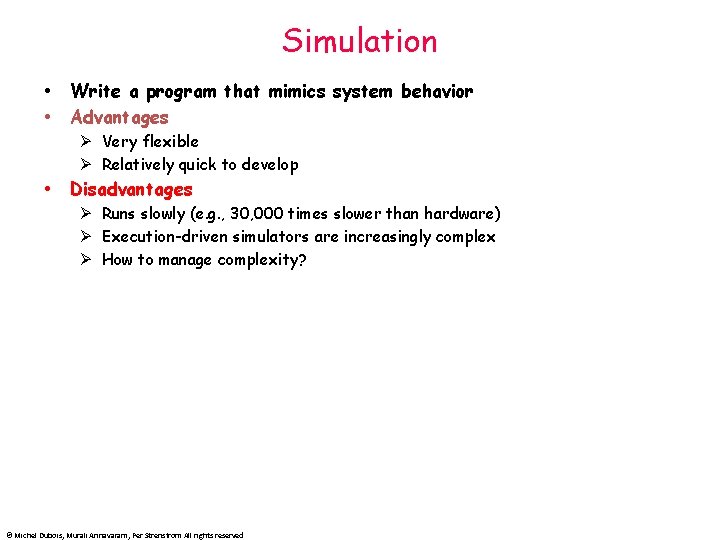

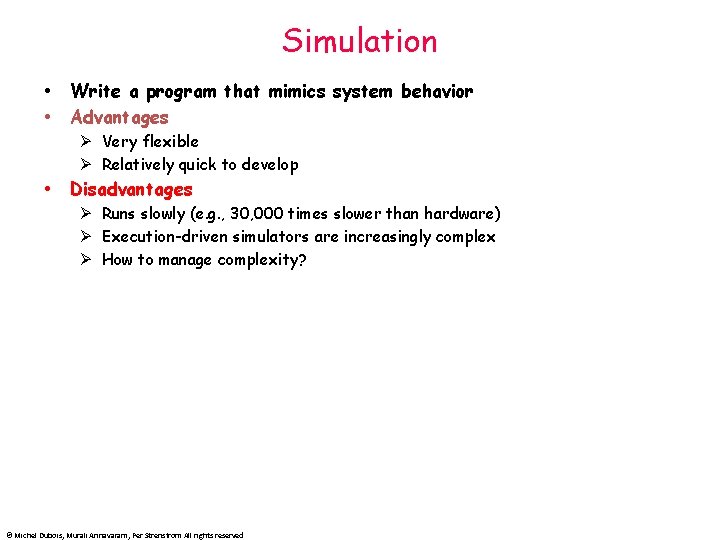

Simulation
Write a program that mimics system behavior.
Advantages:
- Very flexible
- Relatively quick to develop
Disadvantages:
- Runs slowly (e.g., 30,000 times slower than hardware)
- Execution-driven simulators are increasingly complex
- How to manage complexity?

Most popular research method
Simulation is chosen by MOST research projects. Why?
- Mathematical models are NOT accurate
- Building a prototype is too time-consuming and too expensive for academic researchers

Computer architecture simulation
- Study the characteristics of a complicated computer system with a fixed configuration
- Explore the design space of a system
  - With an accurate model, we can make changes and see how they will affect a system

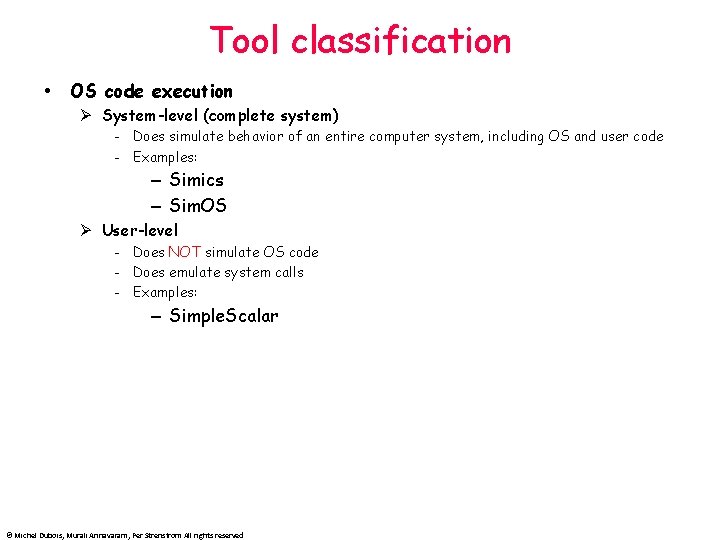

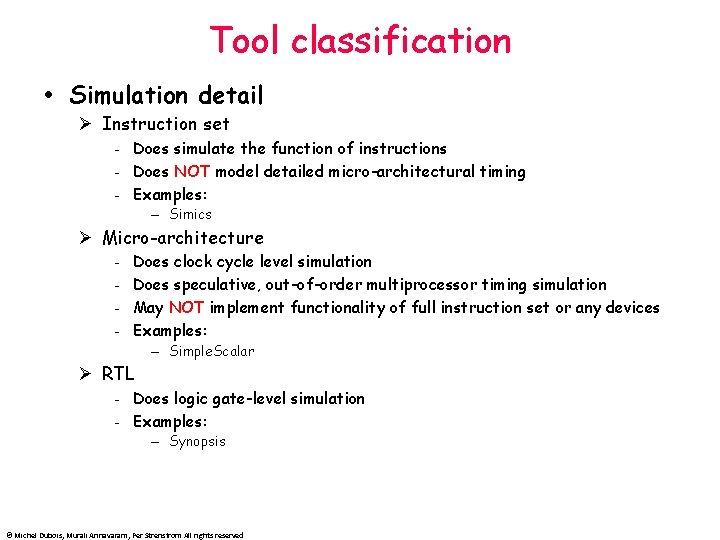

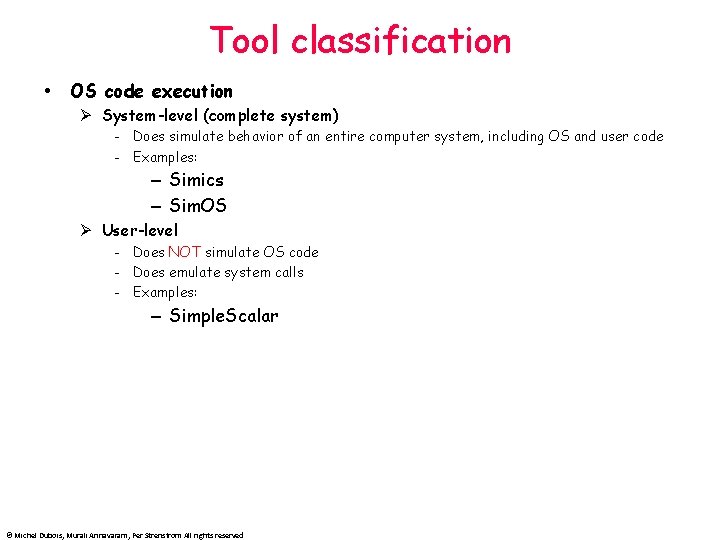

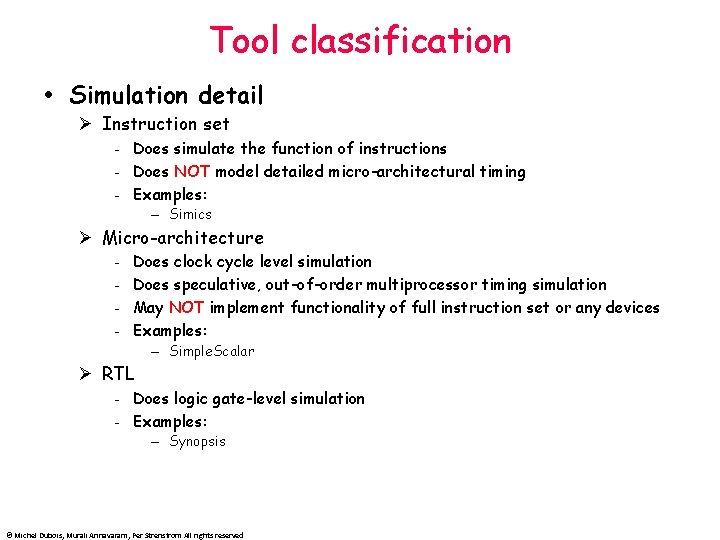

Tool classification: OS code execution
- System-level (complete system)
  - Simulates the behavior of an entire computer system, including OS and user code
  - Examples: Simics, SimOS
- User-level
  - Does NOT simulate OS code
  - Emulates system calls
  - Examples: SimpleScalar

Tool classification: simulation detail
- Instruction set
  - Simulates the function of instructions
  - Does NOT model detailed microarchitectural timing
  - Examples: Simics
- Microarchitecture
  - Clock-cycle-level simulation
  - Speculative, out-of-order multiprocessor timing simulation
  - May NOT implement the functionality of the full instruction set or of any devices
  - Examples: SimpleScalar
- RTL
  - Logic-gate-level simulation
  - Examples: Synopsys

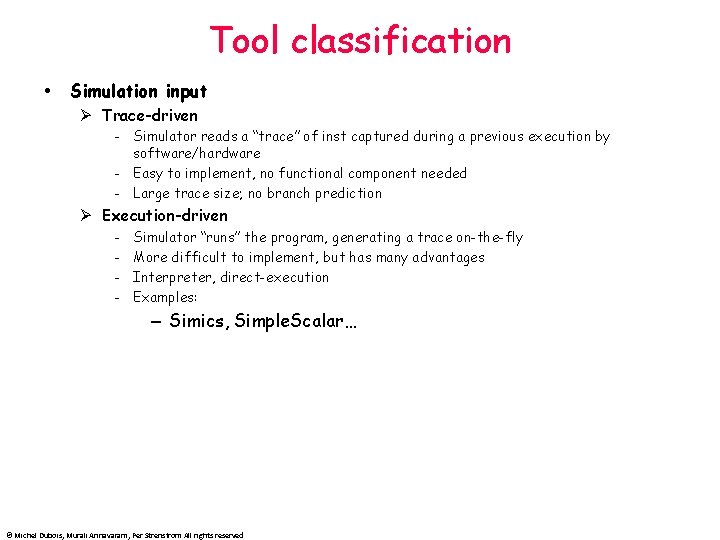

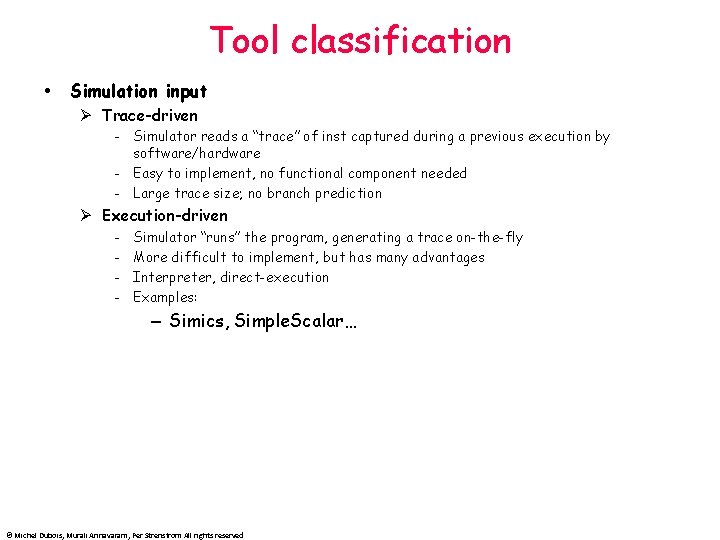

Tool classification: simulation input
- Trace-driven
  - The simulator reads a “trace” of instructions captured during a previous execution by software or hardware
  - Easy to implement; no functional component needed
  - Large trace size; no branch prediction (the trace holds only the committed path, so wrong-path execution after a misprediction cannot be modeled)
- Execution-driven
  - The simulator “runs” the program, generating the trace on the fly
  - More difficult to implement, but has many advantages
  - Interpreter or direct execution
  - Examples: Simics, SimpleScalar…
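To make the trace-driven idea concrete, here is a minimal sketch of a trace-driven direct-mapped cache model. It assumes the recorded trace is a list of memory byte addresses; the function name, cache geometry, and trace are hypothetical. Note that there is no functional simulation at all: addresses are simply replayed in order.

```python
def simulate_direct_mapped(trace, num_sets=4, block_size=16):
    """Trace-driven model of a direct-mapped cache (hypothetical geometry).

    trace: byte addresses recorded during a previous execution.
    Returns (hits, misses).
    """
    tags = [None] * num_sets  # one resident tag per set
    hits = misses = 0
    for addr in trace:
        block = addr // block_size
        index = block % num_sets   # which set the block maps to
        tag = block // num_sets    # identifies the block within that set
        if tags[index] == tag:
            hits += 1
        else:
            misses += 1
            tags[index] = tag      # replace the previous occupant
    return hits, misses

# Hypothetical recorded address trace
trace = [0, 4, 64, 0, 128, 4]
print(simulate_direct_mapped(trace))  # (1, 5): 0, 64 and 128 all map to set 0
```

Changing the cache geometry only requires re-running the same trace, which is why trace-driven simulators are easy to build; the price is that the trace cannot react to the modeled machine.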

Tools introduction and tutorial
- SimpleScalar: http://www.simplescalar.com/
- Simics: http://www.virtutech.com/, https://www.simics.net/
- SimWattchCMP

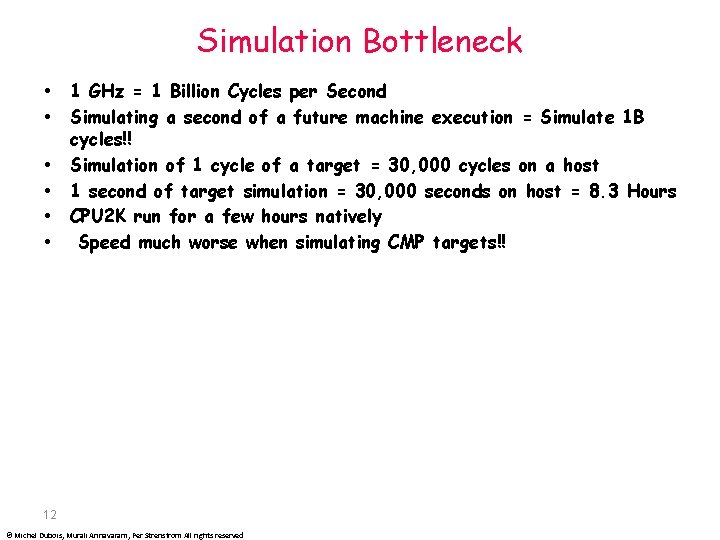

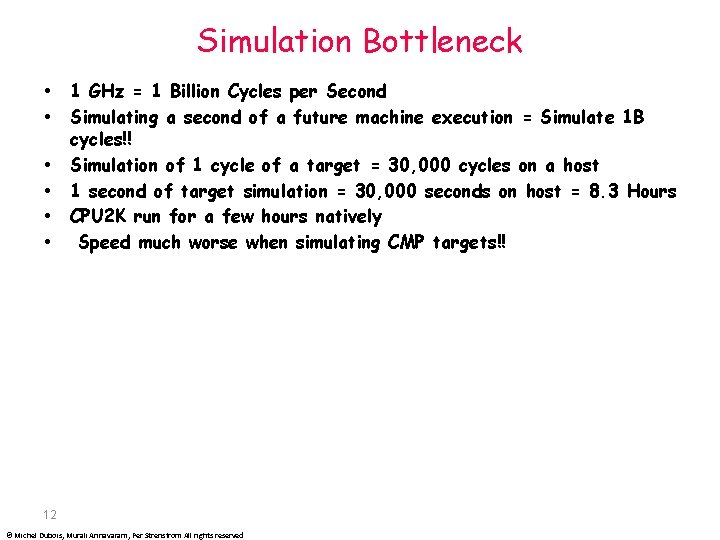

Simulation bottleneck
- 1 GHz = 1 billion cycles per second
- Simulating one second of a future machine's execution = simulating 1B cycles!
- Simulating 1 cycle of the target = 30,000 cycles on the host
- 1 second of target execution = 30,000 seconds on the host = 8.3 hours
- SPEC CPU2000 (CPU2K) benchmarks run for a few hours natively
- Speed is much worse when simulating CMP targets!
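The arithmetic above can be reproduced in a few lines. This sketch assumes, as the slide implicitly does, that host and target both run at 1 GHz and that the slowdown is a constant 30,000 host cycles per target cycle; the 2-hour native run time is a hypothetical example.

```python
SECONDS_PER_HOUR = 3600
SECONDS_PER_YEAR = 365 * 24 * SECONDS_PER_HOUR

def host_seconds(target_seconds, slowdown=30_000, target_hz=1e9, host_hz=1e9):
    """Host wall-clock time needed to simulate `target_seconds` of target execution."""
    target_cycles = target_seconds * target_hz
    host_cycles = target_cycles * slowdown
    return host_cycles / host_hz

print(host_seconds(1) / SECONDS_PER_HOUR)  # ~8.3 hours per simulated second
# Hypothetical benchmark that runs for 2 hours natively:
print(host_seconds(2 * SECONDS_PER_HOUR) / SECONDS_PER_YEAR)  # ~6.8 years
```

This is why full-length simulation of multi-hour benchmarks is impractical and why the next slides focus on choosing what to simulate.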

What to simulate
Simulating the entire application takes too long, so simulate a subsection. But which subsection?
- Random
- Starting point
- Ending point
How do we know that what we selected is good?

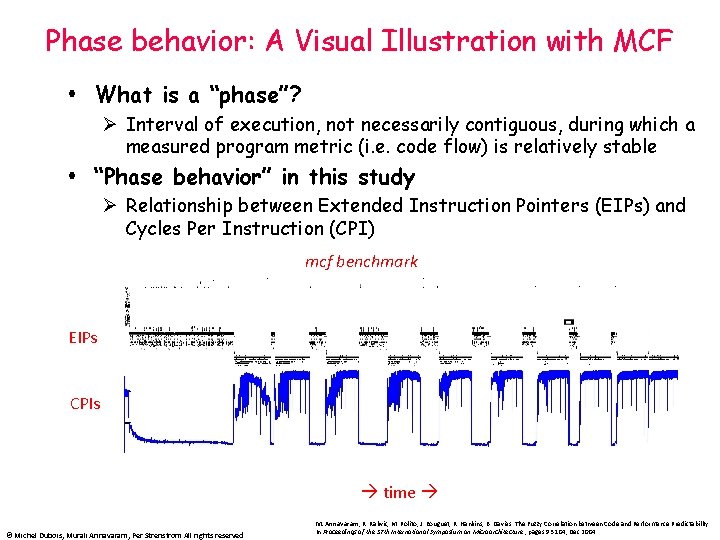

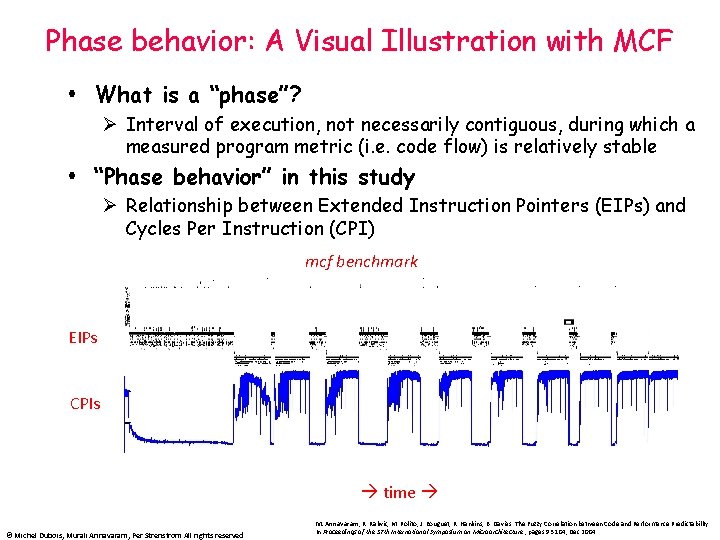

Phase behavior: a visual illustration with MCF
What is a “phase”?
- An interval of execution, not necessarily contiguous, during which a measured program metric (e.g., code flow) is relatively stable
“Phase behavior” in this study:
- The relationship between Extended Instruction Pointers (EIPs) and Cycles Per Instruction (CPI)
[Figure: EIPs and CPIs over time for the mcf benchmark]

M. Annavaram, R. Rakvic, M. Polito, J. Bouguet, R. Hankins, B. Davies. The Fuzzy Correlation between Code and Performance Predictability. In Proceedings of the 37th International Symposium on Microarchitecture, pages 93-104, Dec 2004.

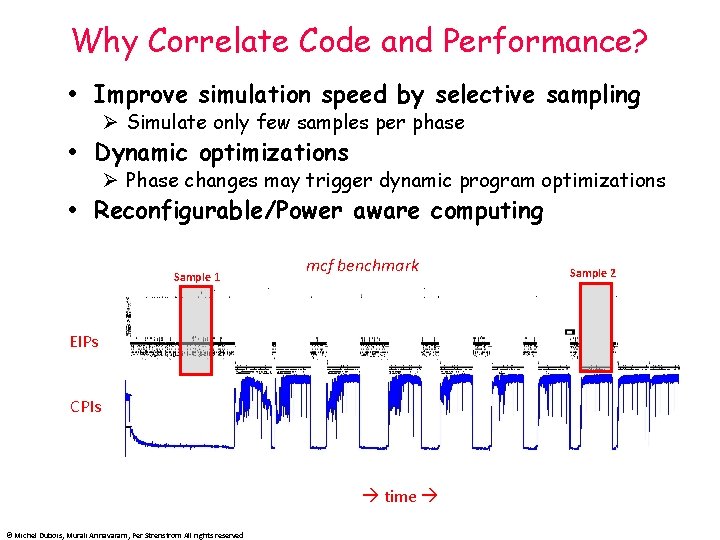

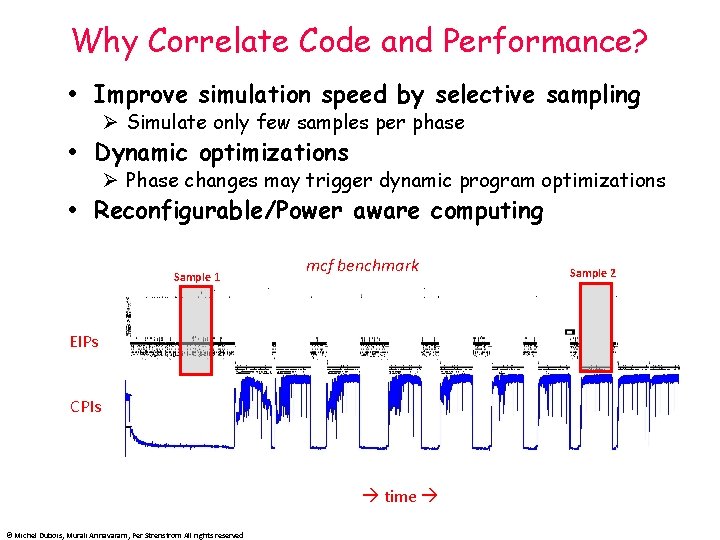

Why correlate code and performance?
- Improve simulation speed by selective sampling
  - Simulate only a few samples per phase
- Dynamic optimizations
  - Phase changes may trigger dynamic program optimizations
- Reconfigurable/power-aware computing
[Figure: EIPs and CPIs over time for the mcf benchmark, with Sample 1 and Sample 2 marked]
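Once each phase is represented by a sample, whole-program CPI can be estimated by weighting each sample's CPI by the share of dynamic instructions its phase covers (total cycles / total instructions reduces to exactly this weighted sum). A minimal sketch; the function name and the phase fractions and CPIs are hypothetical.

```python
def estimate_cpi(phase_samples):
    """phase_samples: (fraction_of_dynamic_instructions, sampled_cpi) per phase.

    Overall CPI = sum_i(insts_i * CPI_i) / sum_i(insts_i) = sum_i(f_i * CPI_i).
    """
    total = sum(frac for frac, _ in phase_samples)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("phase fractions must sum to 1")
    return sum(frac * cpi for frac, cpi in phase_samples)

# Hypothetical: a phase covering 70% of instructions at CPI 1.2, and
# a memory-bound phase covering 30% at CPI 4.0
print(estimate_cpi([(0.7, 1.2), (0.3, 4.0)]))  # ~2.04
```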

Program phase identification
- Must be independent of the architecture
- Must be quick
- Phases must exist in the dimension we are interested in: CPI, cache misses, branch mispredictions…

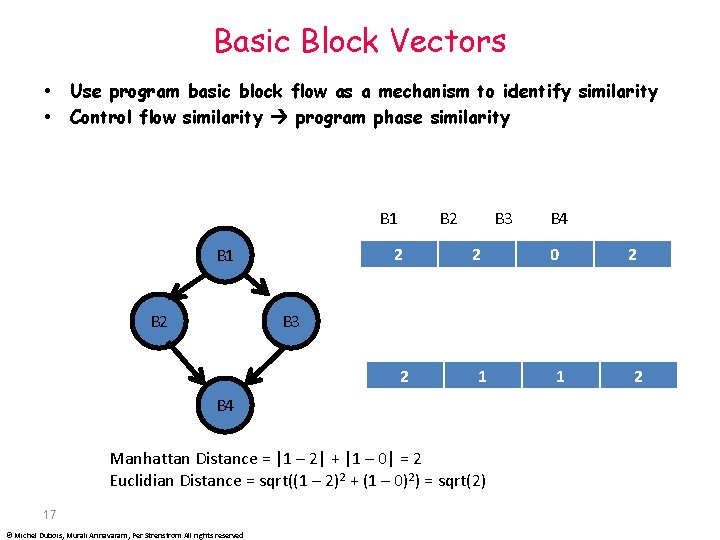

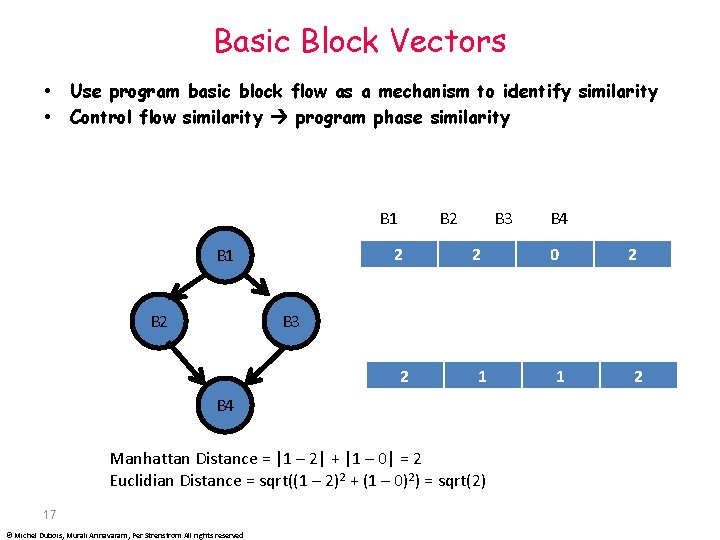

Basic Block Vectors (BBVs)
- Use the program's basic block flow as a mechanism to identify similarity
- Control-flow similarity implies program-phase similarity
[Figure: two example BBVs over basic blocks B1–B4]
Example: two BBVs that differ by 1 in two of their entries:
- Manhattan distance = $|1 - 2| + |1 - 0| = 2$
- Euclidean distance = $\sqrt{(1 - 2)^2 + (1 - 0)^2} = \sqrt{2}$
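Both distance metrics are one-liners. The two BBVs below are hypothetical vectors chosen to reproduce the slide's numbers: they differ by 1 in the B1 and B2 entries and agree elsewhere.

```python
import math

def manhattan(a, b):
    """Sum of absolute per-basic-block differences."""
    assert len(a) == len(b)
    return sum(abs(x - y) for x, y in zip(a, b))

def euclidean(a, b):
    """Square root of the sum of squared per-basic-block differences."""
    assert len(a) == len(b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical BBVs over basic blocks B1..B4
bbv_a = [1, 1, 2, 2]
bbv_b = [2, 0, 2, 2]
print(manhattan(bbv_a, bbv_b))  # 2
print(euclidean(bbv_a, bbv_b))  # 1.4142135623730951, i.e. sqrt(2)
```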

Generating BBVs
- Split the program into 100M-instruction windows
- For each window, compute the BBV
- Compare the similarity of BBVs using a distance metric
- Cluster BBVs with minimum distance between them into groups
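The first two steps can be sketched as a single pass over a stream of executed basic blocks. This sketch assumes the stream is given as `(block_id, num_instructions)` pairs and weights each BBV entry by the instructions executed in that block (SimPoint weights entries by block size in a similar way; plain execution counts would also work). Because blocks are not split, a window may slightly overshoot the window size. All names and the tiny window are hypothetical.

```python
from collections import Counter

def build_bbvs(block_trace, window_size):
    """block_trace: iterable of (block_id, num_instructions) for each executed block.

    Returns one BBV per window: dict block_id -> instructions executed in that block.
    """
    bbvs = []
    current = Counter()
    executed = 0
    for block_id, n_insts in block_trace:
        current[block_id] += n_insts
        executed += n_insts
        if executed >= window_size:  # window full (may overshoot by part of a block)
            bbvs.append(dict(current))
            current = Counter()
            executed = 0
    if current:  # trailing partial window
        bbvs.append(dict(current))
    return bbvs

def normalize(bbv):
    """Scale a BBV so its entries sum to 1, making windows of unequal length comparable."""
    total = sum(bbv.values())
    return {block: count / total for block, count in bbv.items()}

# Hypothetical trace, with a 10-instruction window instead of 100M
trace = [("B1", 4), ("B2", 6), ("B1", 4), ("B3", 6)]
print(build_bbvs(trace, window_size=10))  # [{'B1': 4, 'B2': 6}, {'B1': 4, 'B3': 6}]
```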

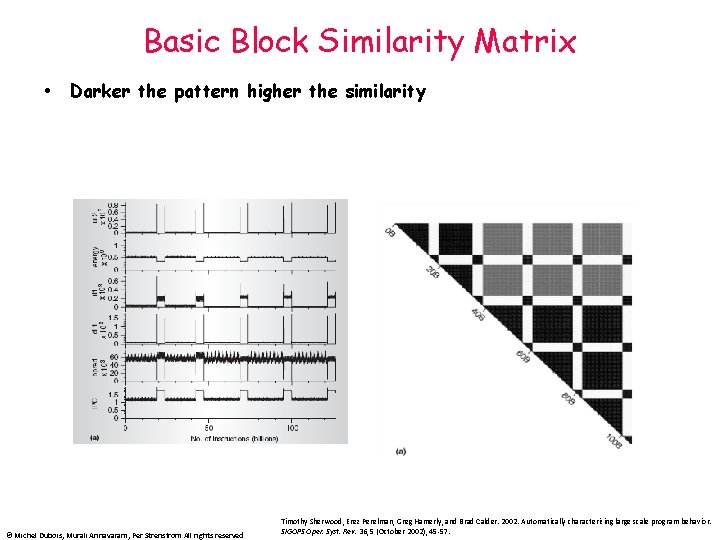

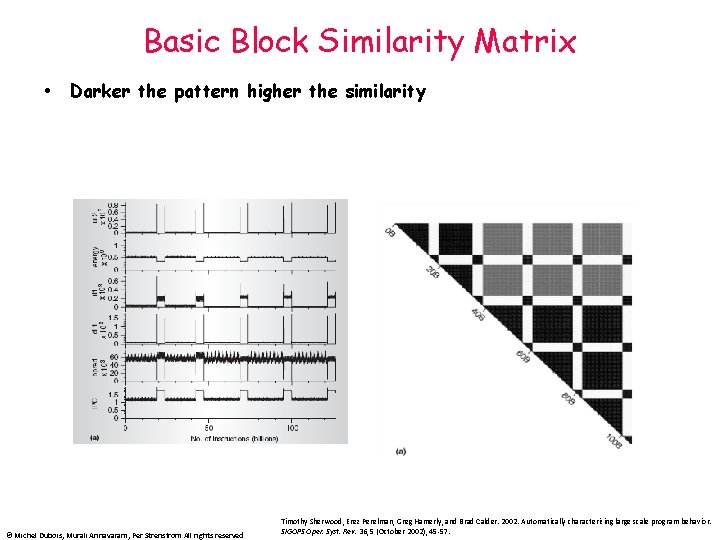

Basic block similarity matrix
The darker the pattern, the higher the similarity.

Timothy Sherwood, Erez Perelman, Greg Hamerly, and Brad Calder. 2002. Automatically characterizing large scale program behavior. SIGOPS Oper. Syst. Rev. 36, 5 (October 2002), 45-57.

Identifying phases from BBVs
- A BBV is a very high-dimensional vector (one entry per unique basic block)
- Clustering in high dimensions is extremely expensive
- Reduce dimensionality using random linear projection
- Cluster the lower-dimensional projected vectors using k-means
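The pipeline above (random projection, then k-means, then one representative interval per cluster weighted by cluster size) can be sketched in plain Python. Assumptions: projection entries are drawn uniformly from [-1, 1], k is given rather than searched for, and k-means uses a deterministic farthest-first seeding chosen here only for reproducibility. All names and the example BBVs are hypothetical.

```python
import random

def random_projection(vectors, dims, seed=0):
    """Reduce each BBV to `dims` entries via a random matrix with entries in [-1, 1]."""
    rng = random.Random(seed)
    n = len(vectors[0])
    matrix = [[rng.uniform(-1.0, 1.0) for _ in range(dims)] for _ in range(n)]
    return [[sum(v[i] * matrix[i][j] for i in range(n)) for j in range(dims)]
            for v in vectors]

def dist2(a, b):
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iters=20):
    # Farthest-first seeding: start at points[0], then add the farthest point.
    centroids = [list(points[0])]
    while len(centroids) < k:
        far = max(points, key=lambda p: min(dist2(p, c) for c in centroids))
        centroids.append(list(far))
    labels = []
    for _ in range(iters):
        # Assignment step: each interval joins its nearest centroid.
        labels = [min(range(k), key=lambda c: dist2(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids

def pick_simpoints(points, labels, centroids):
    """One representative interval per cluster (closest to its centroid),
    weighted by the fraction of all intervals that fall in the cluster."""
    picks = {}
    for c, centroid in enumerate(centroids):
        members = [i for i, lab in enumerate(labels) if lab == c]
        if members:
            rep = min(members, key=lambda i: dist2(points[i], centroid))
            picks[rep] = len(members) / len(points)
    return picks

# Hypothetical BBVs for six intervals forming two obvious phases
bbvs = [[10, 0, 0, 0], [9, 1, 0, 0], [10, 1, 0, 0],
        [0, 0, 10, 0], [0, 0, 9, 1], [1, 0, 10, 0]]
reduced = random_projection(bbvs, dims=2)
labels, centroids = kmeans(reduced, k=2)
print(labels, pick_simpoints(reduced, labels, centroids))
```

Only the chosen representatives are then simulated in detail, and their results are combined using the returned weights.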

Parallel Simulations

Organization
- Why parallel simulation will be critical in the future
- Improving parallel simulation speed using slack
- SlackSim: implementation of our parallel simulator
- Comparison of slack simulation schemes on SlackSim
- Conclusion and future work

CMP simulation: a major design bottleneck
- Era of CMPs
  - CMPs become mainstream (Intel, AMD, Sun…)
  - Increasing core count
- Simulation: a crucial tool of architects
  - Simulate a target design on an existing host system
  - Explore the design space
  - Evaluate the merit of design changes
- Typically, all target CMP cores are simulated in a single host thread (single-threaded CMP simulation)
  - When running the single-threaded simulator on a CMP host, only one core is utilized
  - Increasing gap between the target core count and the simulation speed achievable with one host core

Parallel simulation
- Parallel Discrete Event Simulation (PDES)
  - Conservative
    - Barrier synchronization
    - Lookahead
  - Optimistic (checkpoint and rollback)
    - Time Warp
- WWT and WWT II
  - Multiprocessor simulators
  - Conservative quantum-based synchronization
  - Compared to SlackSim:
    - SlackSim provides higher simulation speed
    - SlackSim provides new trade-offs between simulation speed and accuracy
    - Slack is not limited by the target architecture's critical latency

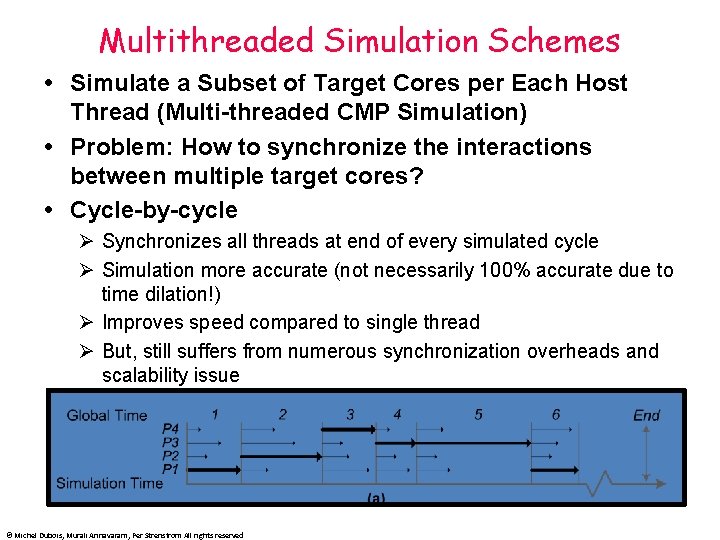

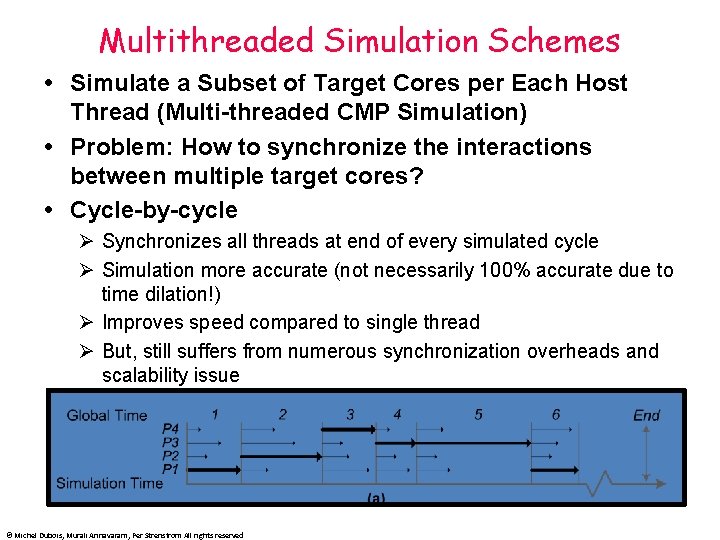

Multithreaded simulation schemes
Simulate a subset of the target cores in each host thread (multithreaded CMP simulation).
Problem: how to synchronize the interactions between multiple target cores?
Cycle-by-cycle:
- Synchronizes all threads at the end of every simulated cycle
- Simulation is more accurate (not necessarily 100% accurate, due to time dilation!)
- Improves speed compared to a single thread
- But still suffers from numerous synchronization overheads and scalability issues
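A minimal sketch of cycle-by-cycle synchronization using a barrier. Python threads stand in for host threads (so this shows the synchronization pattern, not real speedup), and incrementing a local clock is a placeholder for simulating one cycle of a thread's target cores. The function name is hypothetical.

```python
import threading

def run_cycle_by_cycle(num_threads, cycles):
    """Each host thread advances its target cores one cycle, then waits at a
    barrier, so no thread starts cycle c+1 before all threads finish cycle c."""
    barrier = threading.Barrier(num_threads)
    lock = threading.Lock()
    local_clock = [0] * num_threads
    max_skew = 0

    def worker(tid):
        nonlocal max_skew
        for _ in range(cycles):
            with lock:
                local_clock[tid] += 1  # placeholder: simulate one target cycle
                max_skew = max(max_skew, max(local_clock) - min(local_clock))
            barrier.wait()  # global synchronization at the end of every cycle

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return local_clock, max_skew

clocks, skew = run_cycle_by_cycle(num_threads=4, cycles=100)
print(clocks, skew)  # all clocks reach 100; threads never drift more than 1 cycle apart
```

Every simulated cycle costs one barrier crossing per thread, which is the synchronization overhead that limits scalability.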

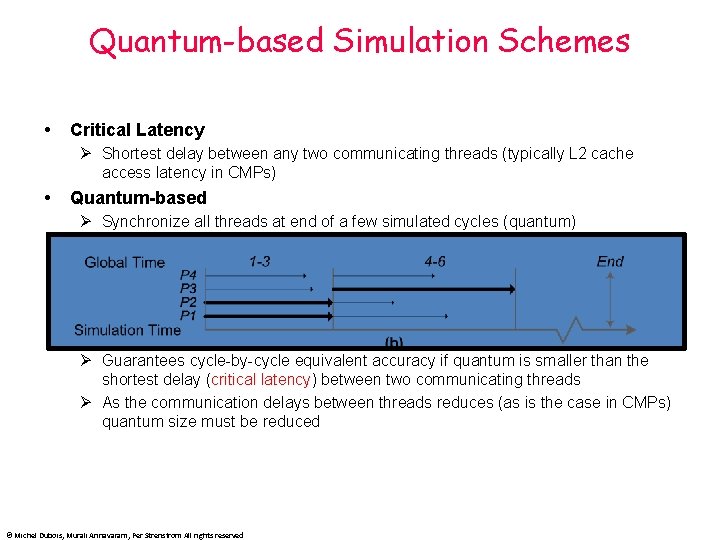

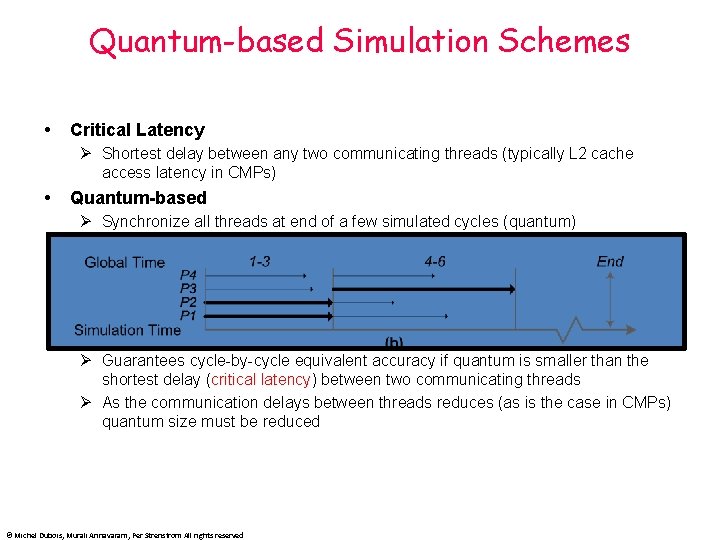

Quantum-based simulation schemes
Critical latency:
- The shortest delay between any two communicating threads (typically the L2 cache access latency in CMPs)
Quantum-based:
- Synchronize all threads at the end of every few simulated cycles (a quantum)
- Guarantees accuracy equivalent to cycle-by-cycle simulation if the quantum is smaller than the shortest delay between two communicating threads (the critical latency)
- As the communication delays between threads shrink (as is the case in CMPs), the quantum size must be reduced
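Why the quantum must not exceed the critical latency can be checked by brute force. In this sketch (an assumed, simplified arrival model), a message sent at cycle t arrives at t + L; it is safe if it lands in a later quantum than the one it was sent in, because the receiver cannot have simulated past it before the next barrier. Under this model a quantum equal to L is also safe. Function names are hypothetical.

```python
import math

def arrives_in_later_quantum(send_cycle, quantum, latency):
    """True if a message sent at `send_cycle` arrives after the next barrier."""
    return (send_cycle + latency) // quantum > send_cycle // quantum

def quantum_is_safe(quantum, critical_latency, horizon=10_000):
    """Check every send cycle in [0, horizon)."""
    return all(arrives_in_later_quantum(t, quantum, critical_latency)
               for t in range(horizon))

def barriers_needed(target_cycles, quantum):
    """Number of global synchronizations for a run of `target_cycles`."""
    return math.ceil(target_cycles / quantum)

print(quantum_is_safe(10, 10), quantum_is_safe(11, 10))  # True False
print(barriers_needed(1_000_000, 1), barriers_needed(1_000_000, 10))  # 1000000 100000
```

A quantum of 1 is cycle-by-cycle simulation; a 10-cycle quantum cuts barrier crossings tenfold but is only exact while the critical latency is at least 10 cycles.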

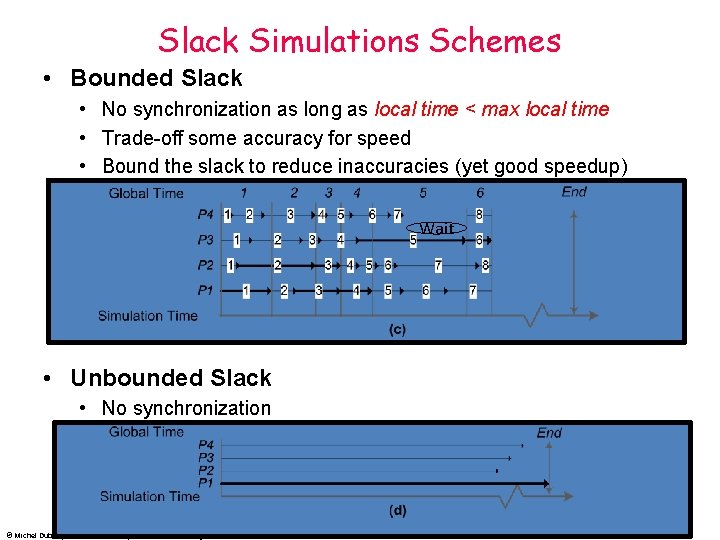

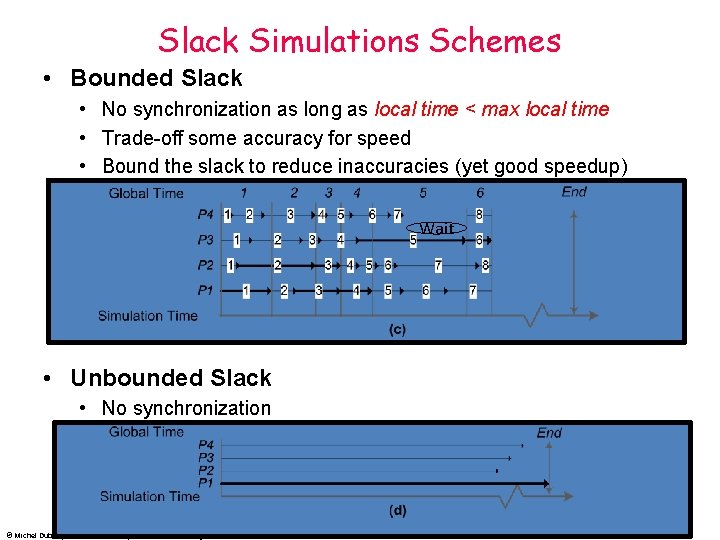

Slack simulation schemes
- Bounded slack
  - No synchronization as long as local time < max local time
  - Otherwise, the thread waits
  - Trades off some accuracy for speed
  - Bound the slack to reduce inaccuracies (while still getting good speedup)
- Unbounded slack
  - No synchronization
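A minimal sketch of bounded slack, assuming "max local time" means the slowest thread's local time (the global time) plus the slack bound. A thread waits only when advancing would exceed that bound; `slack=math.inf` models unbounded slack, where no thread ever waits. Python threads again stand in for host threads, the clock increment is a placeholder for simulating one target cycle, and the function name is hypothetical.

```python
import math
import threading

def run_slack(num_threads, cycles, slack):
    """Bounded slack: no thread may run more than `slack` cycles ahead of the
    slowest thread. slack=math.inf gives unbounded slack."""
    if slack < 1:
        raise ValueError("slack must be at least 1 cycle")
    cond = threading.Condition()
    local_clock = [0] * num_threads
    max_skew = 0

    def worker(tid):
        nonlocal max_skew
        for _ in range(cycles):
            with cond:
                # Max local time = global time (slowest thread) + slack.
                while local_clock[tid] + 1 > min(local_clock) + slack:
                    cond.wait()
                local_clock[tid] += 1  # placeholder: simulate one target cycle
                max_skew = max(max_skew, max(local_clock) - min(local_clock))
                cond.notify_all()  # slower threads advancing may unblock others

    threads = [threading.Thread(target=worker, args=(t,)) for t in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return local_clock, max_skew

print(run_slack(4, 200, slack=3))         # skew never exceeds 3 cycles
print(run_slack(4, 200, slack=math.inf))  # unbounded: threads never wait
```

The slowest thread never blocks, so the scheme cannot deadlock; a larger bound means fewer waits but larger timing skew between target cores, which is the speed/accuracy trade-off described above.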

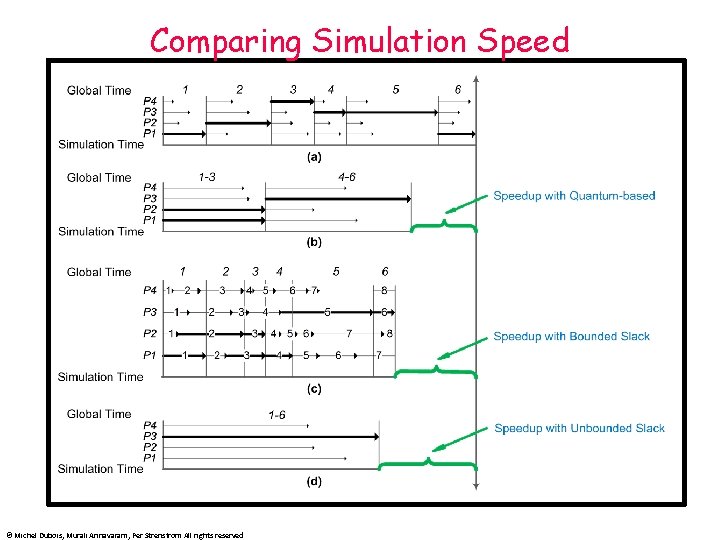

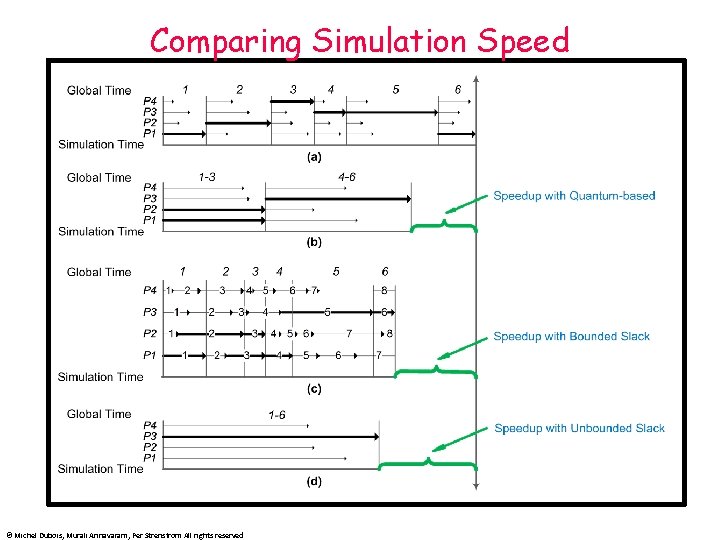

Comparing Simulation Speed

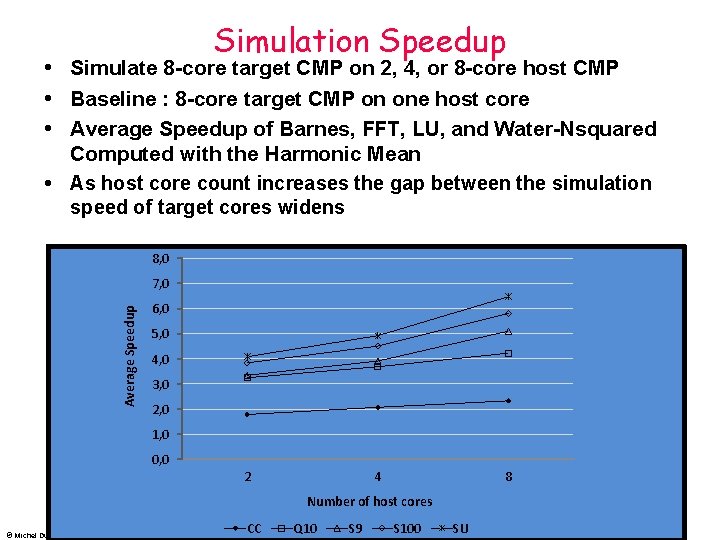

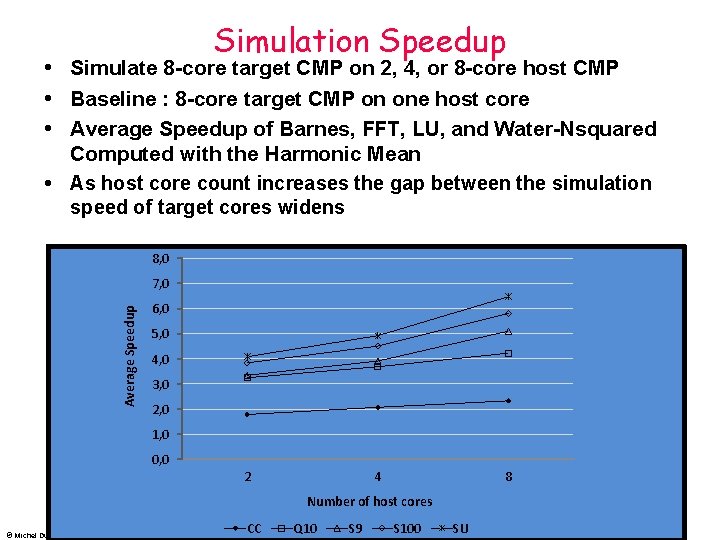

Simulation speedup
- Simulate an 8-core target CMP on a 2-, 4-, or 8-core host CMP
- Baseline: 8-core target CMP on one host core
- Average speedup of Barnes, FFT, LU, and Water-Nsquared, computed with the harmonic mean
- As the host core count increases, the gap between the simulation speed of target cores widens
[Figure: average speedup (0.0 to 8.0) vs. number of host cores (2, 4, 8) for schemes CC, Q10, S9, S100, and SU]
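The harmonic mean of speedups is n / Σ(1/Sᵢ); when every benchmark takes the same time on the baseline, it equals total baseline time divided by total parallel simulation time. A minimal sketch with hypothetical per-benchmark speedups (not measured data), cross-checked against Python's `statistics.harmonic_mean`:

```python
from statistics import harmonic_mean

def hmean(speedups):
    """Harmonic mean: n / sum(1 / s)."""
    if not speedups or any(s <= 0 for s in speedups):
        raise ValueError("speedups must be positive")
    return len(speedups) / sum(1.0 / s for s in speedups)

# Hypothetical speedups over the one-host-core baseline
speedups = {"Barnes": 2.0, "FFT": 4.0, "LU": 4.0, "Water-Nsquared": 4.0}
values = list(speedups.values())
print(hmean(values))                # 3.2
print(harmonic_mean(values))        # same value from the standard library
print(sum(values) / len(values))    # 3.5: the arithmetic mean is pulled up by outliers
```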

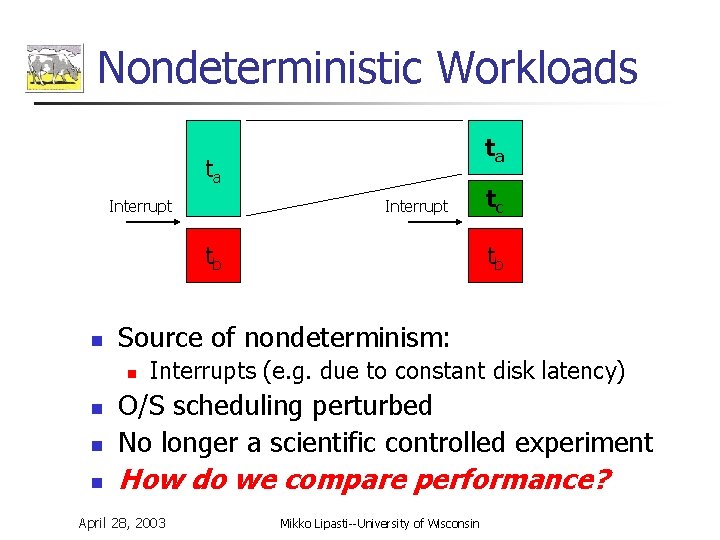

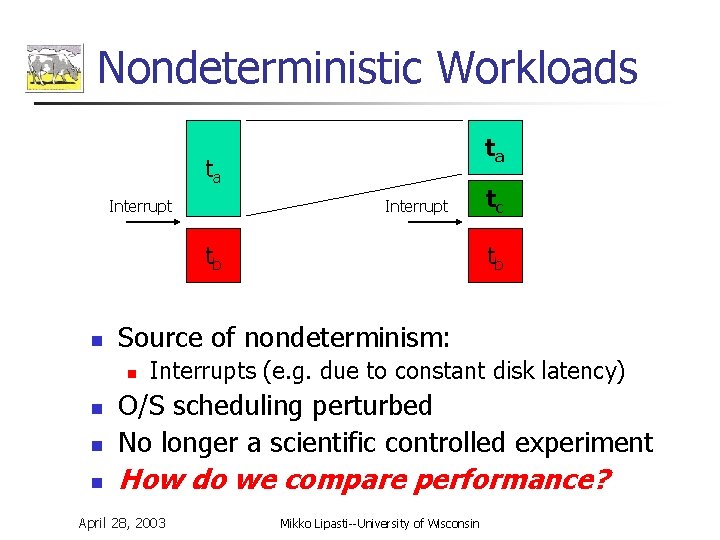

Nondeterministic workloads
[Figure: execution timeline (ta, tb, tc) perturbed by an interrupt]
- Source of nondeterminism: interrupts (e.g., due to constant disk latency)
  - O/S scheduling is perturbed
- No longer a scientifically controlled experiment
  - How do we compare performance?

Mikko Lipasti, University of Wisconsin, April 28, 2003

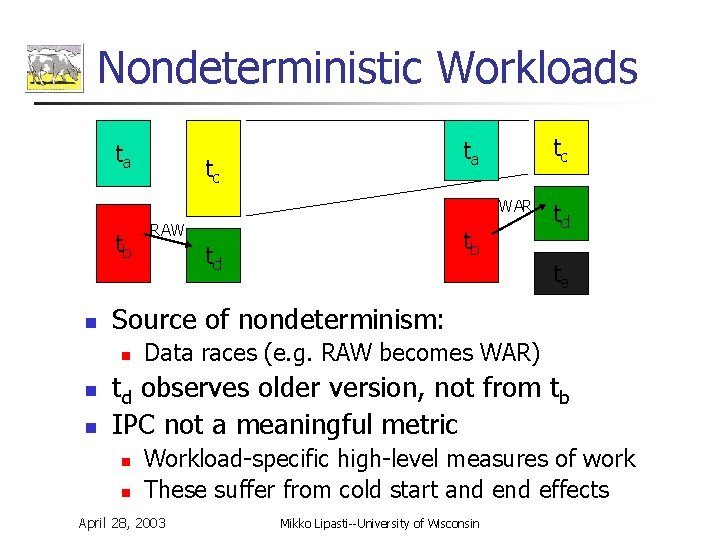

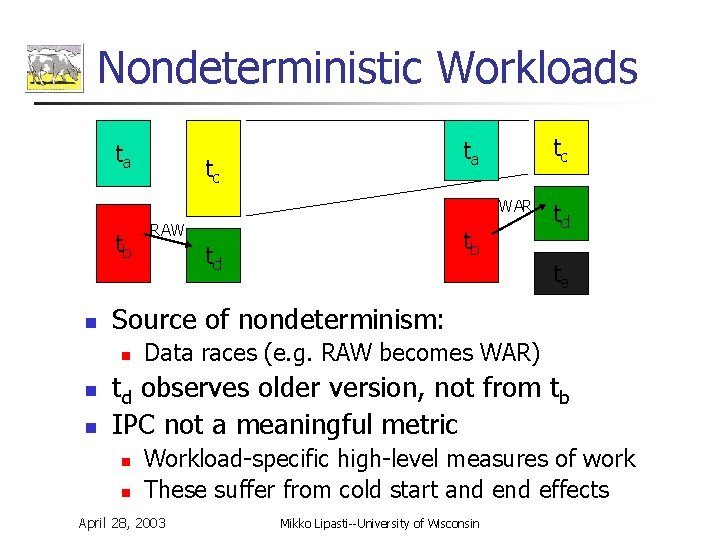

Nondeterministic workloads
[Figure: thread timelines (ta through te) in which a RAW dependence becomes WAR]
- Source of nondeterminism: data races (e.g., RAW becomes WAR)
  - td observes an older version, not the one written by tb
- IPC is not a meaningful metric
  - Use workload-specific high-level measures of work
  - These suffer from cold-start and end effects

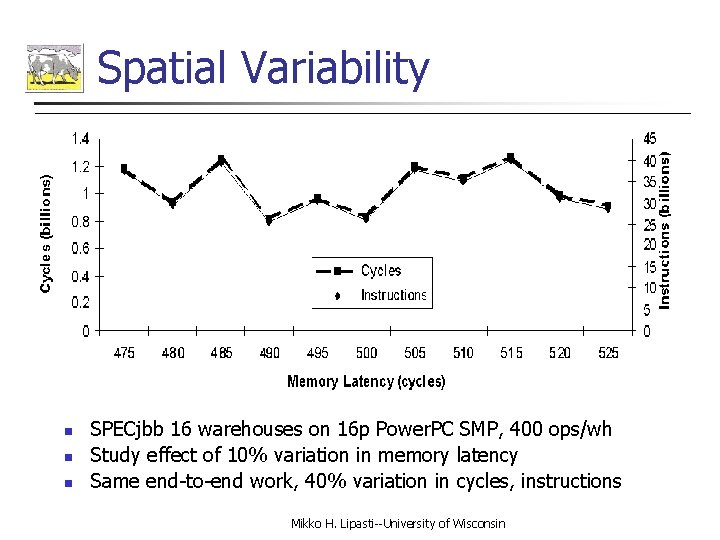

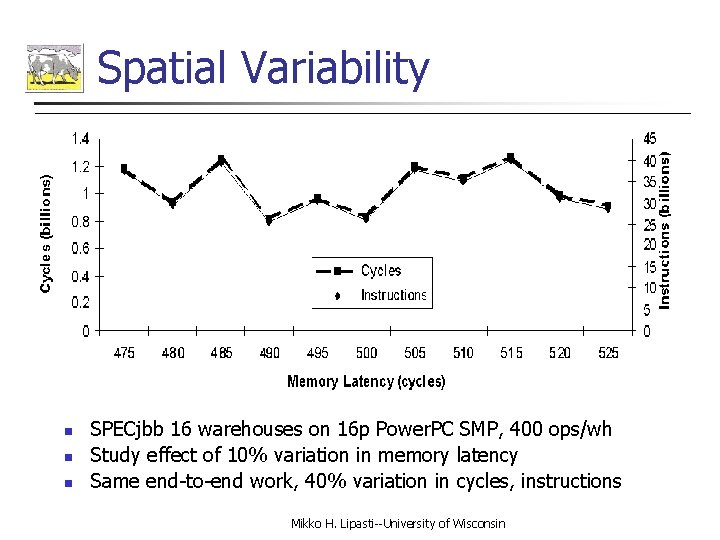

Spatial variability
- SPECjbb, 16 warehouses on a 16p PowerPC SMP, 400 ops/wh
- Study the effect of a 10% variation in memory latency
- Same end-to-end work, but a 40% variation in cycles and instructions

Spatial variability
- The problem: variability due to (minor) machine changes
  - Interrupts and thread synchronization differ in each experiment
  - Result: a different set of instructions retires in every simulation
- Cannot use conventional performance metrics (e.g., IPC, miss rates)
  - Must measure work and count cycles per unit of work
    - Work: transaction, web interaction, database query
  - Modify the workload to count work and signal the simulator when work is complete
  - Simulate billions of instructions to overcome cold-start and end effects
- One solution: statistical simulation [Alameldeen et al., 2003]
  - Simulate the same interval n times with random perturbations
  - n is determined by the coefficient of variation and the desired confidence interval
  - Problem: for a small relative error, n can be very large
  - Simulate n × billions of instructions per experiment
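To see why n grows quickly, here is a sketch using the standard normal-approximation sample-size formula: the confidence-interval half-width on the mean is about z·CoV/√n of the mean, so n = (z·CoV/e)² for relative error e. This is an assumed derivation, not necessarily the exact procedure of Alameldeen et al.; the 10% coefficient of variation is hypothetical.

```python
import math

def runs_needed(cov, rel_error, z=1.96):
    """Perturbed runs needed so that z * cov / sqrt(n) <= rel_error.

    cov: coefficient of variation of the metric across runs.
    z = 1.96 corresponds to ~95% confidence (normal approximation).
    """
    return math.ceil((z * cov / rel_error) ** 2)

print(runs_needed(0.10, 0.02))  # 97
print(runs_needed(0.10, 0.01))  # 385: halving the error roughly quadruples n
```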

![A Better Solution: eliminate spatial variability (Lepak, Cain, Lipasti, PACT 2003)](https://slidetodoc.com/presentation_image_h2/3cf4f822d84322bf0c6f1ceecf9fae9d/image-34.jpg)

A better solution
- Eliminate spatial variability [Lepak, Cain, Lipasti, PACT 2003]
- Force each experiment to follow the same path
  - Record a control “trace”
  - Inject stall time to prevent deviation from the trace
- Bound the sacrifice in fidelity with the injected stall time
- Enable comparisons with a single simulation at each point
- Simulate tens of millions of instructions per experiment
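A toy model of the idea, not the PACT 2003 mechanism itself: record the order of synchronization events in a reference run, then in a perturbed run hold each event back until its recorded predecessor has happened. The accumulated delay is the injected stall time. Event names, cycle numbers, and the function name are hypothetical.

```python
def replay_in_recorded_order(recorded_order, ready_cycle):
    """recorded_order: event ids (e.g. lock acquires) in reference-run order.
    ready_cycle: cycle at which each event becomes ready in the perturbed run.

    Returns (commit cycle per event, total injected stall cycles).
    """
    commit_cycle = {}
    last = 0
    injected = 0
    for ev in recorded_order:
        t = max(ready_cycle[ev], last)  # stall until the predecessor has occurred
        injected += t - ready_cycle[ev]
        commit_cycle[ev] = t
        last = t
    return commit_cycle, injected

# In the perturbed run, thread B becomes ready first, but the recorded
# path had thread A acquire the lock first, so B is stalled.
order = ["A.lock1", "B.lock1", "A.lock2"]
ready = {"A.lock1": 10, "B.lock1": 5, "A.lock2": 12}
print(replay_in_recorded_order(order, ready))
# ({'A.lock1': 10, 'B.lock1': 10, 'A.lock2': 12}, 5)
```

Without the stall, B would acquire the lock first and the run would follow a different path; comparing the injected stall to the total simulated cycles indicates how much fidelity was traded for determinism.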

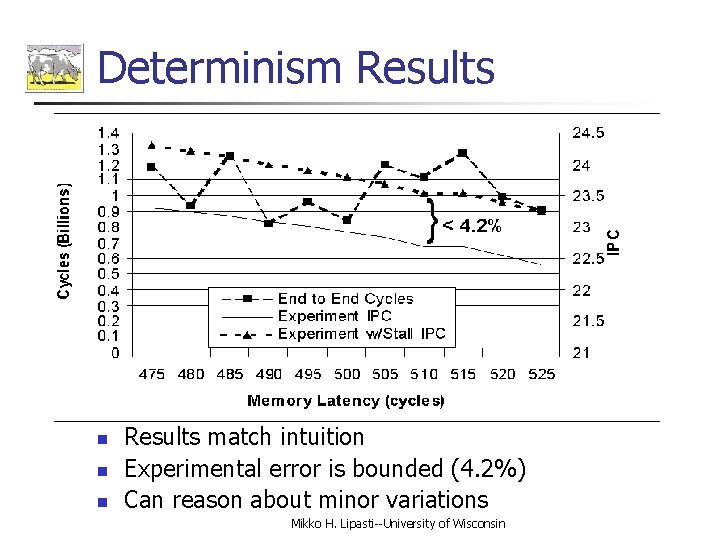

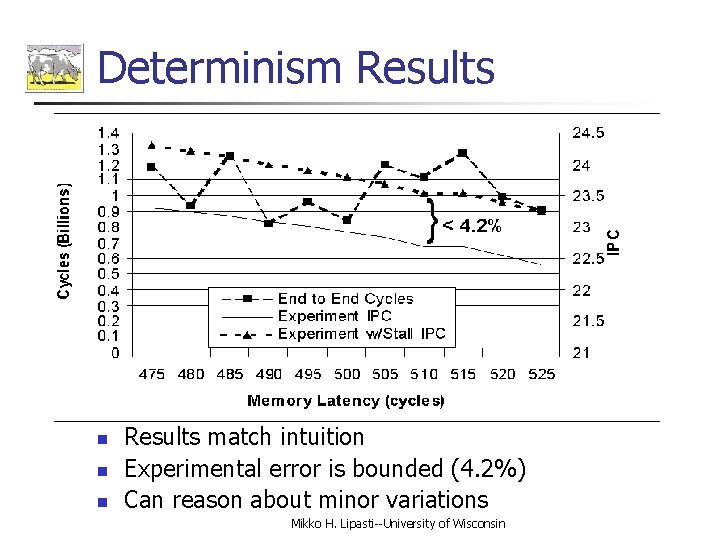

Determinism results
- Results match intuition
- Experimental error is bounded (4.2%)
- Can reason about minor variations

Conclusions
- Spatial variability complicates multithreaded program performance evaluation
- Enforcing determinism enables:
  - Relative comparisons with a single simulation
    - Immunity to start/end effects
    - Use of conventional performance metrics
    - Avoidance of cumbersome workload-specific setup
  - Bounded error with injected delay
- AMD has already adopted determinism
