Categories of Processes Process program in execution Independent

- Slides: 41

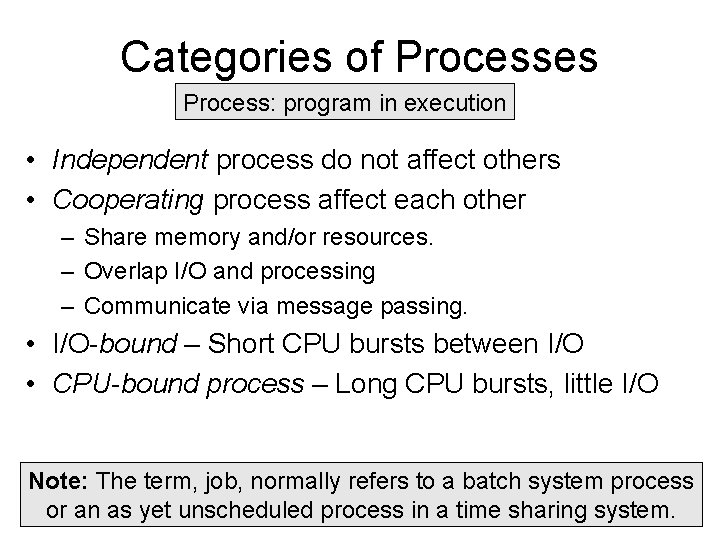

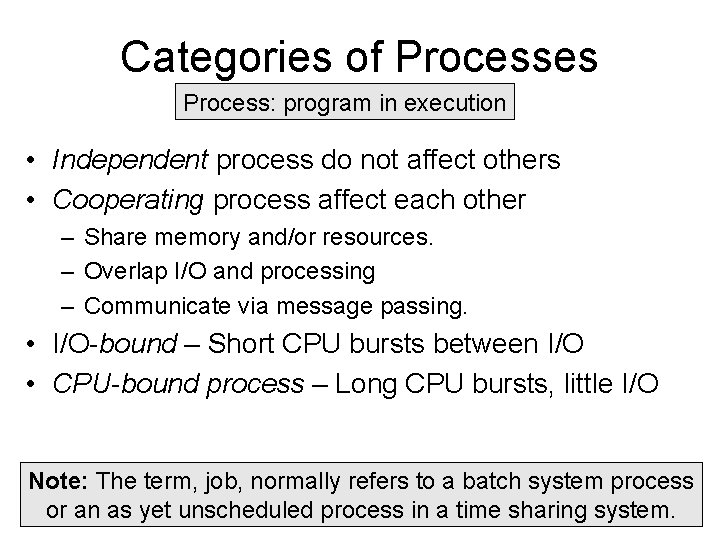

Categories of Processes

Process: a program in execution

- Independent processes do not affect others
- Cooperating processes affect each other
  - Share memory and/or resources
  - Overlap I/O and processing
  - Communicate via message passing
- I/O-bound processes
  - Short CPU bursts between I/O operations
- CPU-bound processes
  - Long CPU bursts, little I/O

Note: The term "job" normally refers to a batch-system process or to an as-yet-unscheduled process in a time-sharing system.

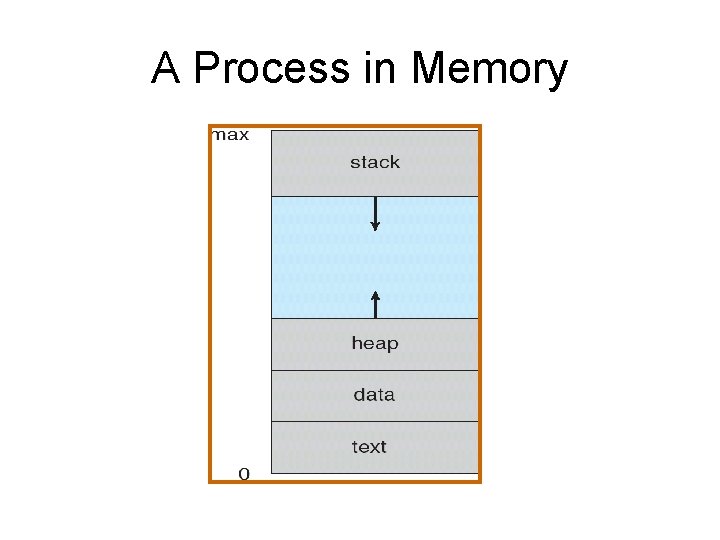

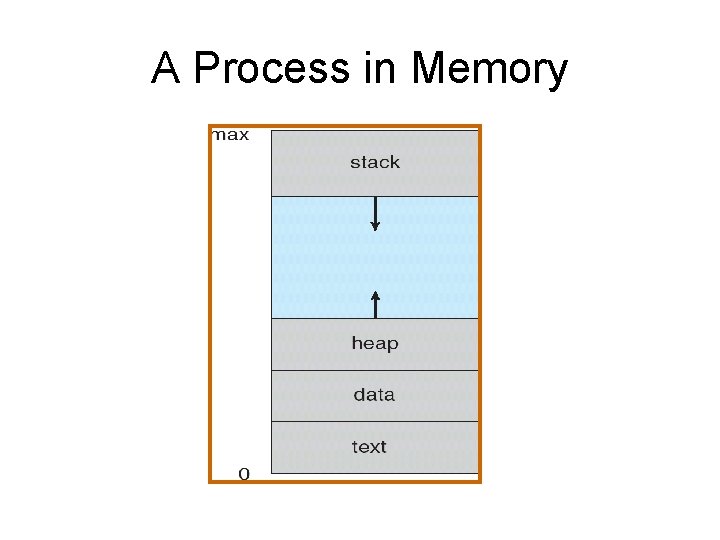

A Process in Memory

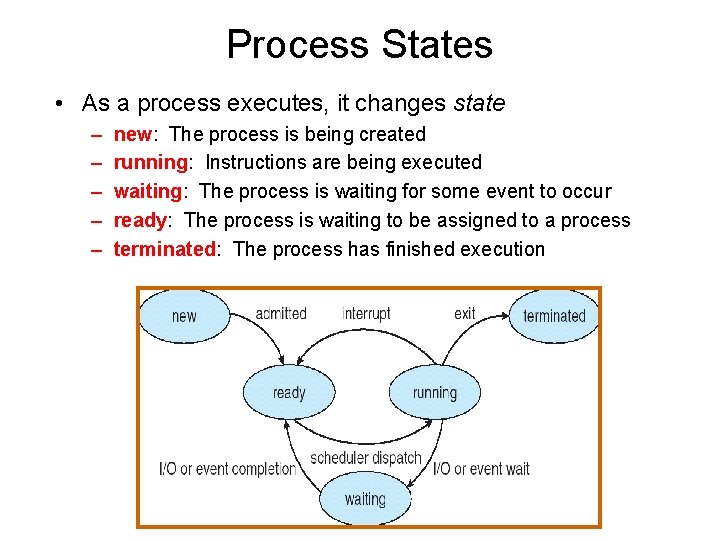

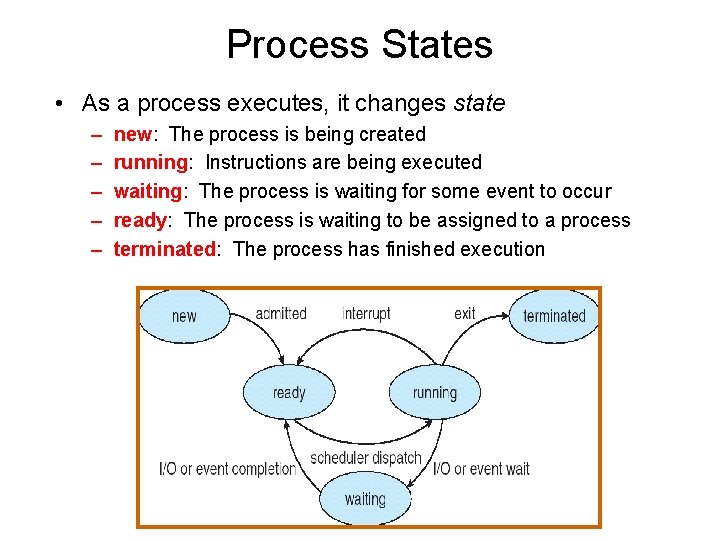

Process States

- As a process executes, it changes state
  - new: The process is being created
  - running: Instructions are being executed
  - waiting: The process is waiting for some event to occur
  - ready: The process is waiting to be assigned to a processor
  - terminated: The process has finished execution
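
The five states can be modelled as a small Java enum. This is only a sketch: the transition table follows the usual textbook state diagram rather than anything stated in the list above, and `ProcessStateDemo`, `State`, `next`, and `canMoveTo` are illustrative names.

```java
import java.util.EnumSet;
import java.util.Set;

class ProcessStateDemo {
    /** The five process states, with the transitions assumed from the usual diagram. */
    enum State {
        NEW, READY, RUNNING, WAITING, TERMINATED;

        /** States reachable from this one in a single step. */
        Set<State> next() {
            switch (this) {
                case NEW:     return EnumSet.of(READY);                      // admitted
                case READY:   return EnumSet.of(RUNNING);                    // dispatched
                case RUNNING: return EnumSet.of(READY, WAITING, TERMINATED); // preempted, blocks, exits
                case WAITING: return EnumSet.of(READY);                      // awaited event occurred
                default:      return EnumSet.noneOf(State.class);            // TERMINATED is final
            }
        }

        boolean canMoveTo(State target) {
            return next().contains(target);
        }
    }

    public static void main(String[] args) {
        System.out.println(State.RUNNING.canMoveTo(State.WAITING)); // prints true
        System.out.println(State.WAITING.canMoveTo(State.RUNNING)); // prints false
    }
}
```

A waiting process cannot go straight back to running in this model: it has to pass through the ready state and be dispatched again.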

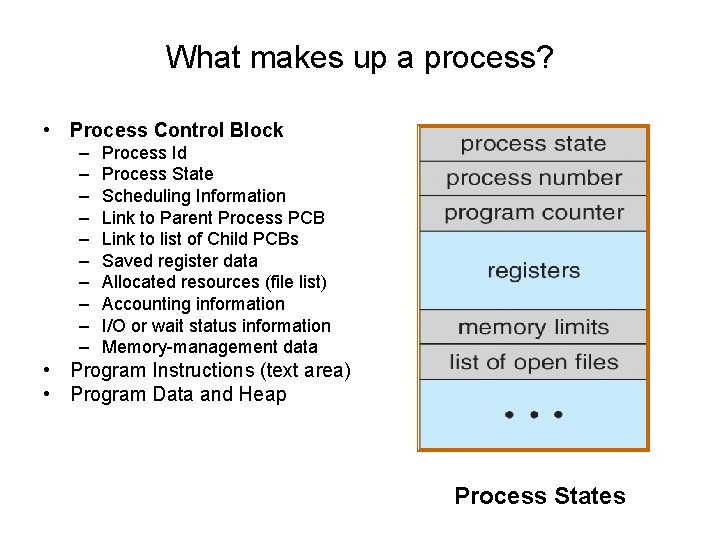

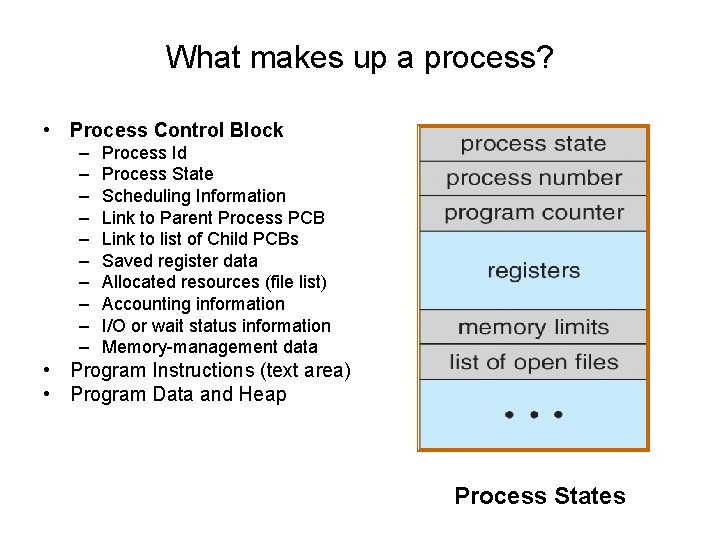

What makes up a process?

- Process Control Block (PCB)
  - Process id
  - Process state
  - Scheduling information
  - Link to parent process PCB
  - Link to list of child PCBs
  - Saved register data
  - Allocated resources (file list)
  - Accounting information
  - I/O or wait status information
  - Memory-management data
- Program instructions (text area)
- Program data and heap

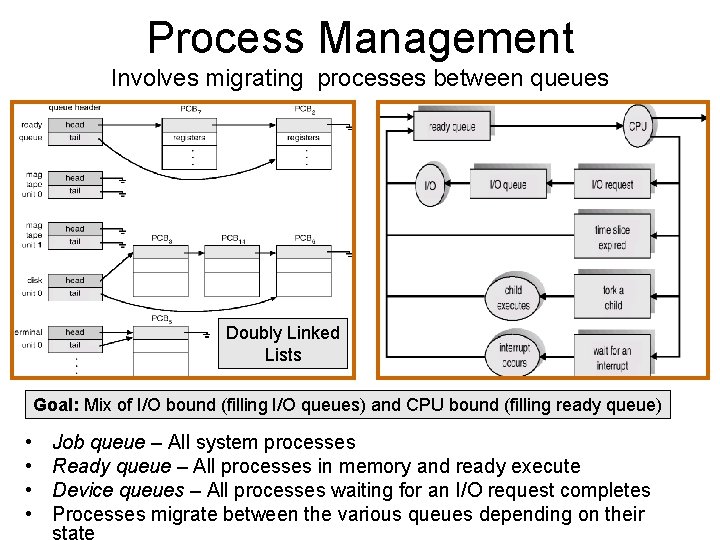

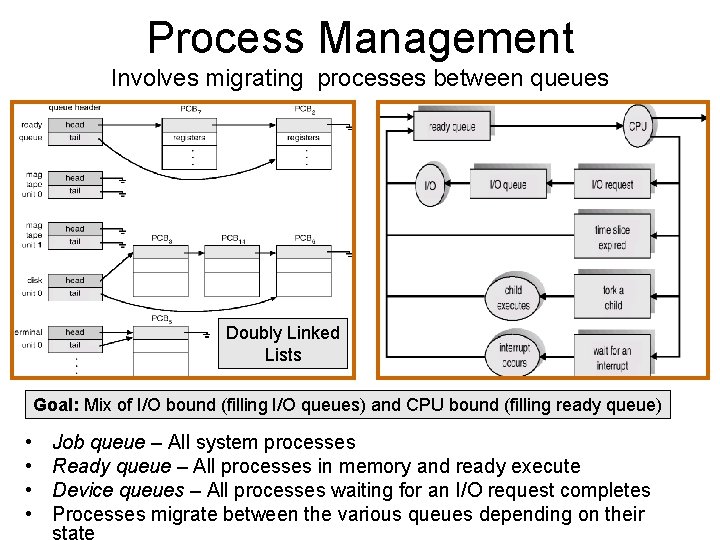

Process Management

Involves migrating processes between queues (doubly linked lists)

Goal: A mix of I/O-bound processes (filling the I/O queues) and CPU-bound processes (filling the ready queue)

- Job queue: All system processes
- Ready queue: All processes in memory and ready to execute
- Device queues: All processes waiting for an I/O request to complete

Processes migrate between the various queues depending on their state
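
The migration between queues can be sketched in Java as follows. This is a toy model under stated assumptions: a single device queue, a `Pcb` that holds only a pid, and hypothetical method names (`dispatch`, `requestIo`, `ioComplete`). `java.util.LinkedList` is used because it is a doubly linked list.

```java
import java.util.Deque;
import java.util.LinkedList;

class QueueMigrationDemo {
    /** Minimal stand-in for a PCB; only the id matters here. */
    static final class Pcb {
        final int pid;
        Pcb(int pid) { this.pid = pid; }
    }

    final Deque<Pcb> readyQueue = new LinkedList<>();
    final Deque<Pcb> deviceQueue = new LinkedList<>();
    Pcb running; // process currently on the CPU, or null

    /** Move the head of the ready queue onto the CPU. */
    void dispatch() {
        running = readyQueue.pollFirst();
    }

    /** The running process issues an I/O request and joins the device queue. */
    void requestIo() {
        if (running != null) {
            deviceQueue.addLast(running);
            running = null;
        }
    }

    /** The oldest I/O request completes; its process becomes ready again. */
    void ioComplete() {
        Pcb done = deviceQueue.pollFirst();
        if (done != null) {
            readyQueue.addLast(done);
        }
    }

    public static void main(String[] args) {
        QueueMigrationDemo os = new QueueMigrationDemo();
        os.readyQueue.addLast(new Pcb(1));
        os.readyQueue.addLast(new Pcb(2));
        os.dispatch();    // pid 1 runs
        os.requestIo();   // pid 1 waits on the device
        os.dispatch();    // pid 2 runs
        os.ioComplete();  // pid 1 is ready again
        System.out.println("running=" + os.running.pid
                + " ready=" + os.readyQueue.peekFirst().pid); // prints "running=2 ready=1"
    }
}
```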

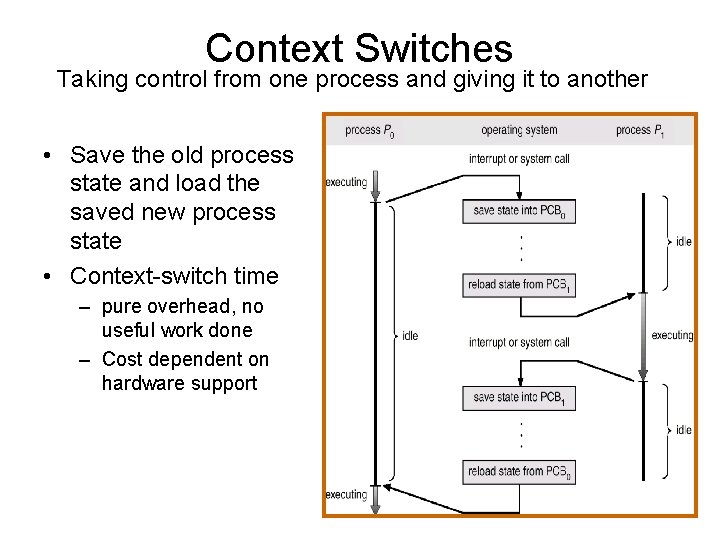

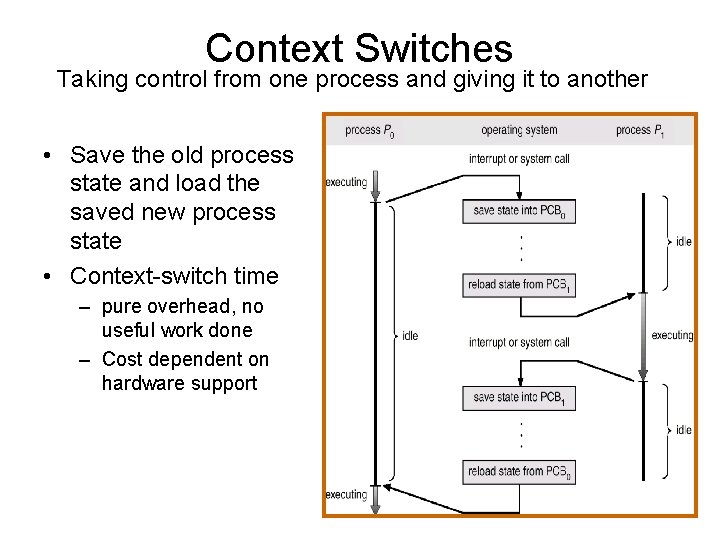

Context Switches

Taking control from one process and giving it to another

- Save the old process state and load the saved state of the new process
- Context-switch time is pure overhead; no useful work is done
  - Cost depends on hardware support
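
The save-and-load step can be illustrated with a toy model. The sketch below assumes a made-up CPU with a program counter and four registers; `Cpu`, `Pcb`, and `contextSwitch` are hypothetical names, and a real kernel does this in hardware-specific code rather than Java.

```java
import java.util.Arrays;

class ContextSwitchDemo {
    /** Hypothetical PCB holding only the saved register data. */
    static final class Pcb {
        final int pid;
        long programCounter;
        long[] registers = new long[4];
        Pcb(int pid) { this.pid = pid; }
    }

    /** Toy CPU: a program counter and four general-purpose registers. */
    static final class Cpu {
        long programCounter;
        long[] registers = new long[4];
    }

    /** Save the old process's CPU state into its PCB, then load the new one's. */
    static void contextSwitch(Cpu cpu, Pcb oldProc, Pcb newProc) {
        oldProc.programCounter = cpu.programCounter;
        oldProc.registers = Arrays.copyOf(cpu.registers, cpu.registers.length);
        cpu.programCounter = newProc.programCounter;
        cpu.registers = Arrays.copyOf(newProc.registers, newProc.registers.length);
    }

    public static void main(String[] args) {
        Cpu cpu = new Cpu();
        Pcb a = new Pcb(1);
        Pcb b = new Pcb(2);
        b.programCounter = 500;    // where B was last suspended
        cpu.programCounter = 120;  // A is currently running
        cpu.registers[0] = 7;
        contextSwitch(cpu, a, b);  // A -> B
        contextSwitch(cpu, b, a);  // B -> A: A's state is restored
        System.out.println(cpu.programCounter + " " + cpu.registers[0]); // prints "120 7"
    }
}
```

Every copy in `contextSwitch` is work done on behalf of neither process, which is why the switch counts as pure overhead.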

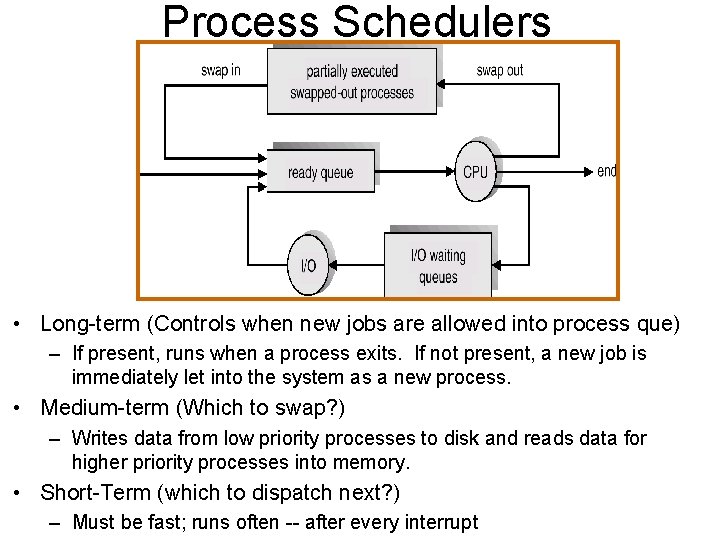

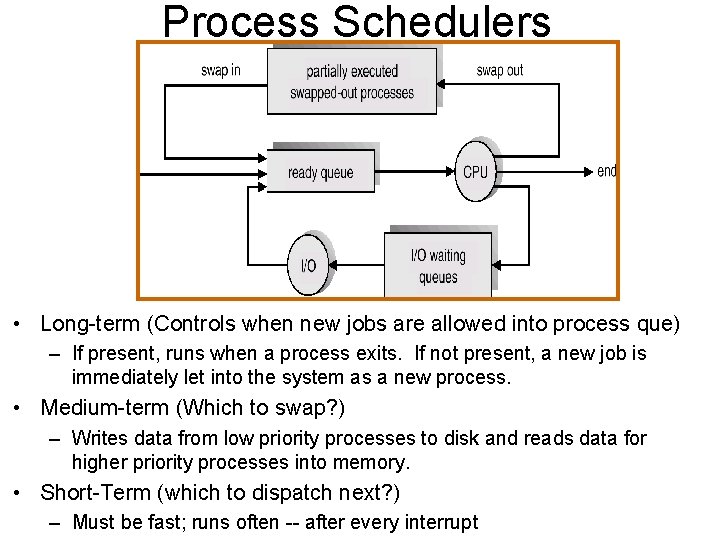

Process Schedulers

- Long-term (controls when new jobs are allowed into the process queue)
  - If present, runs when a process exits. If not present, a new job is immediately let into the system as a new process.
- Medium-term (which to swap?)
  - Writes data from low-priority processes to disk and reads data for higher-priority processes into memory.
- Short-term (which to dispatch next?)
  - Must be fast; runs often, after every interrupt

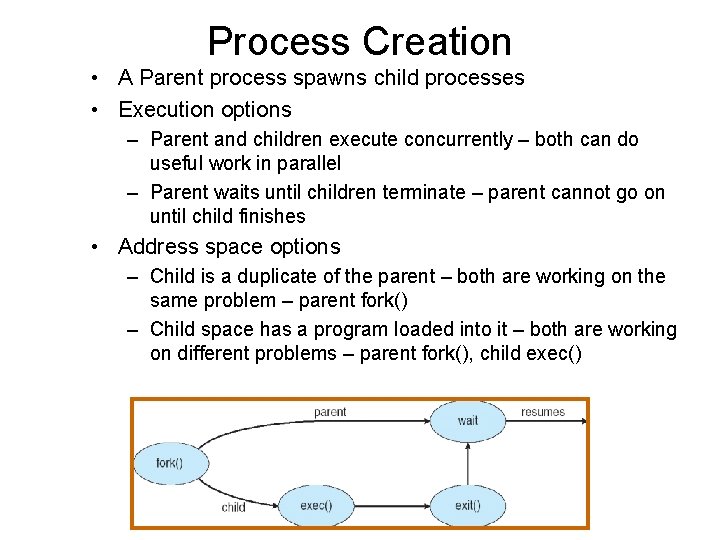

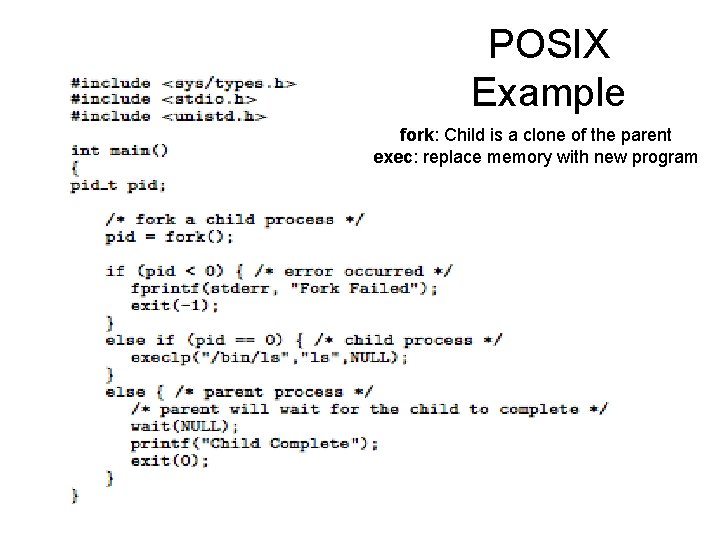

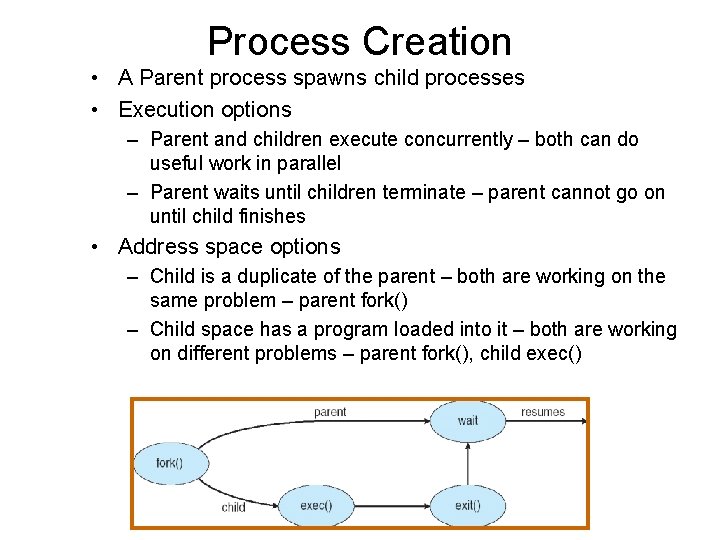

Process Creation

- A parent process spawns child processes
- Execution options
  - Parent and children execute concurrently: both can do useful work in parallel
  - Parent waits until children terminate: the parent cannot go on until the child finishes
- Address space options
  - Child is a duplicate of the parent: both work on the same problem (parent calls fork())
  - Child has a new program loaded into it: they work on different problems (parent calls fork(), child calls exec())

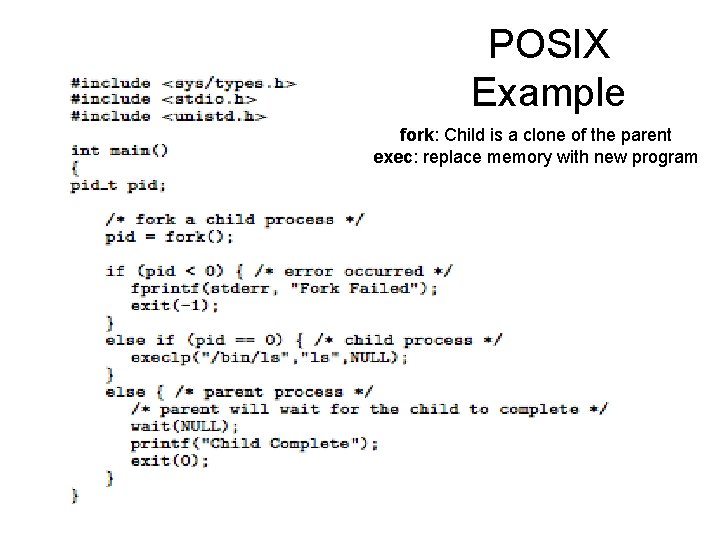

POSIX Example

- fork: the child is a clone of the parent
- exec: replaces the process's memory with a new program

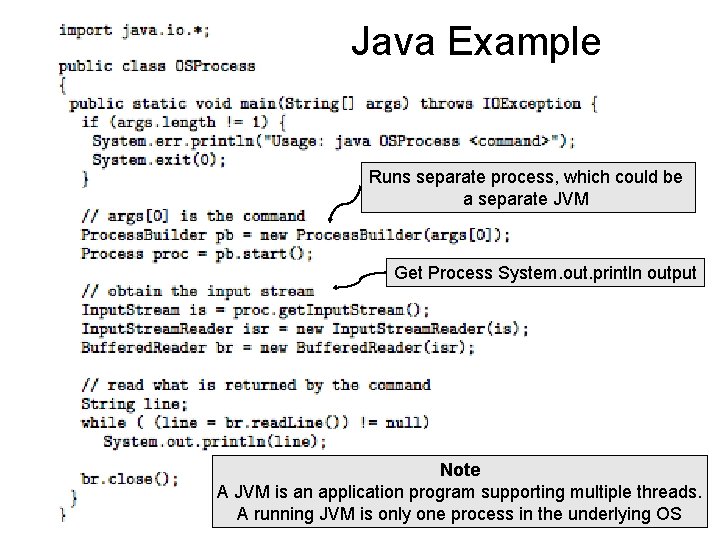

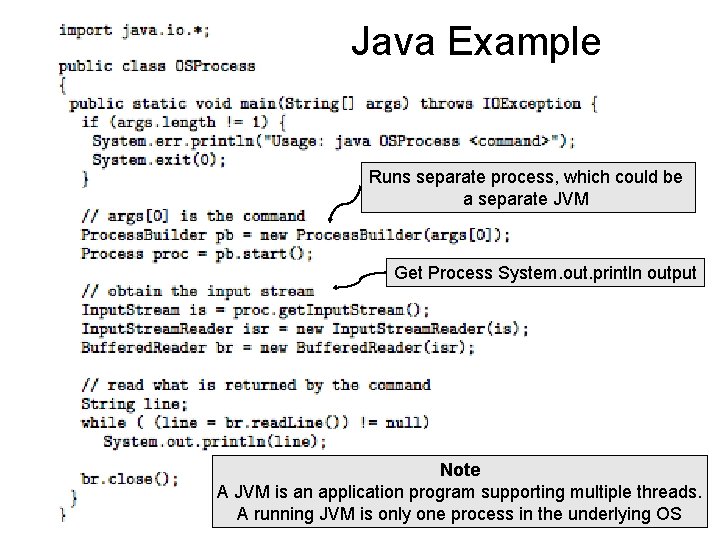

Java Example

- Runs a separate process, which could be a separate JVM
- Gets the process's System.out.println output

Note: A JVM is an application program supporting multiple threads. A running JVM is only one process in the underlying OS.
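
The slide's own code is not reproduced in this text, so the following is a stand-in sketch rather than the original example. It assumes the child is a second JVM started with `-version` (chosen only because it is harmless) and uses the standard `ProcessBuilder` API; `ChildProcessDemo` and `javaBinary` are illustrative names.

```java
import java.io.BufferedReader;
import java.io.File;
import java.io.IOException;
import java.io.InputStreamReader;

class ChildProcessDemo {
    /** Path of the java launcher belonging to the JVM running this program. */
    static String javaBinary() {
        return System.getProperty("java.home") + File.separator + "bin" + File.separator + "java";
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        // Start a second JVM as a separate OS process.
        ProcessBuilder builder = new ProcessBuilder(javaBinary(), "-version");
        builder.redirectErrorStream(true);   // merge the child's stderr into its stdout
        Process child = builder.start();

        // Read whatever the child prints.
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(child.getInputStream()))) {
            String line;
            while ((line = reader.readLine()) != null) {
                System.out.println("child: " + line);
            }
        }

        int status = child.waitFor();        // the parent waits for the child to terminate
        System.out.println("child exit status = " + status);
    }
}
```

Here `start()` plays the role of fork() followed by exec(), and `waitFor()` plays the role of wait(), returning the child's exit status.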

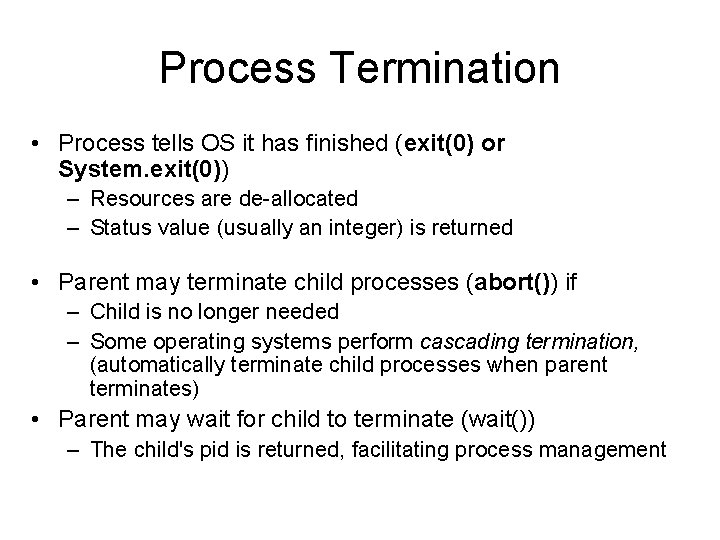

Process Termination

- A process tells the OS it has finished (exit(0) or System.exit(0))
  - Resources are deallocated
  - A status value (usually an integer) is returned
- A parent may terminate child processes (abort()) if
  - The child is no longer needed
  - Some operating systems perform cascading termination (automatically terminating child processes when the parent terminates)
- A parent may wait for a child to terminate (wait())
  - The child's pid is returned, facilitating process management

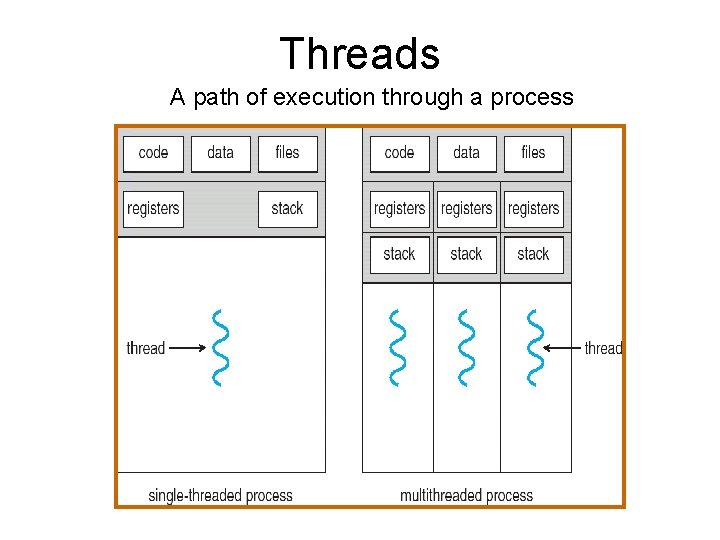

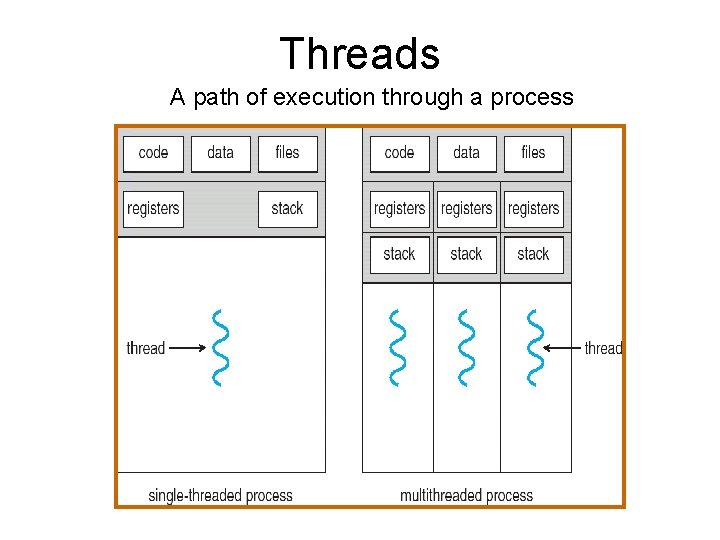

Threads

A path of execution through a process

Motivation for Threads

- Responsiveness: A threaded process can continue executing when a blocking call occurs in one thread
- Economy: Creating new heavyweight processes is expensive in time and consumes extra memory
- Resource sharing: All threads of a process are part of the same memory image
- Parallel processing: Threads can execute simultaneously on different cores

Note: Internal kernel threads concurrently perform OS functions. Servers use threads to handle client requests efficiently.

Java Threads

- Java threads are managed by the JVM and generally use an OS-provided thread library
- Java threads may be created by
  - Implementing the Runnable interface
  - Extending the Thread class
- Java threads start when the start method is called, which
  - Allocates memory for the thread
  - Calls the run method
- Java threads terminate when they leave the run method
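
Both creation options can be shown side by side. In this minimal sketch the class names (`Greeter`, `GreeterThread`) and the shared log are illustrative assumptions; the threads are run one after the other so that the output is deterministic.

```java
class ThreadCreationDemo {
    /** Option 1: implement Runnable and hand it to a Thread. */
    static final class Greeter implements Runnable {
        private final StringBuffer log;
        Greeter(StringBuffer log) { this.log = log; }
        @Override public void run() { log.append("runnable;"); }
    }

    /** Option 2: extend Thread and override run(). */
    static final class GreeterThread extends Thread {
        private final StringBuffer log;
        GreeterThread(StringBuffer log) { this.log = log; }
        @Override public void run() { log.append("subclass;"); }
    }

    /** Runs one thread of each kind, one after the other, and returns what they logged. */
    static String runBoth() {
        StringBuffer log = new StringBuffer();   // StringBuffer methods are synchronized
        Thread first = new Thread(new Greeter(log));
        Thread second = new GreeterThread(log);
        try {
            first.start();   // start() creates the thread, which then calls run()
            first.join();    // wait until it has left run()
            second.start();
            second.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return log.toString();
    }

    public static void main(String[] args) {
        System.out.println(runBoth()); // prints "runnable;subclass;"
    }
}
```

Implementing Runnable is often the more flexible choice because the task class remains free to extend something else.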

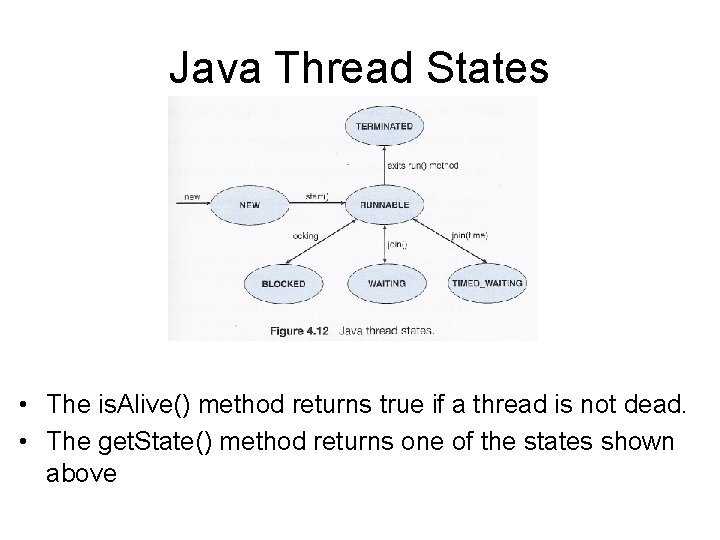

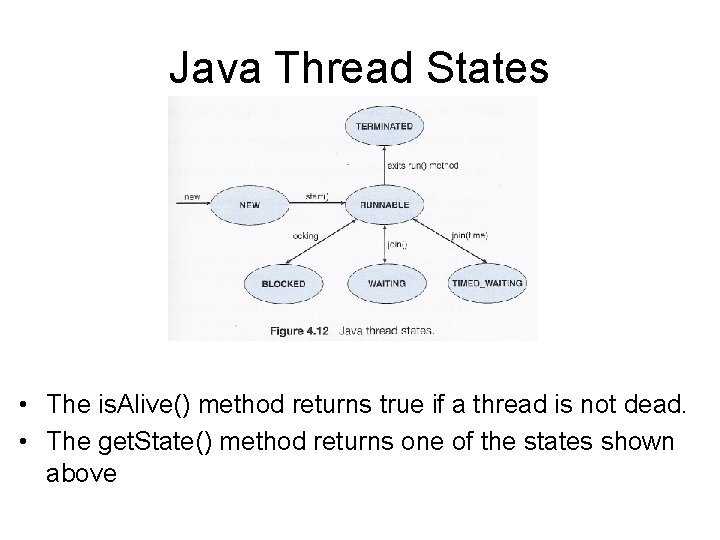

Java Thread States

- The isAlive() method returns true if a thread has been started and has not yet died
- The getState() method returns one of the states shown in the state diagram
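
A short sketch of how these two methods behave over a thread's lifetime. It assumes a worker that parks on a `CountDownLatch` so that the WAITING state can be observed reliably; `ThreadStateDemo` and `observe` are illustrative names.

```java
import java.util.concurrent.CountDownLatch;

class ThreadStateDemo {
    /** Records getState() and isAlive() before start, while blocked, and after join. */
    static String observe() {
        CountDownLatch release = new CountDownLatch(1);
        Thread worker = new Thread(() -> {
            try {
                release.await();   // park until the observing thread releases us
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        StringBuilder seen = new StringBuilder();
        try {
            seen.append(worker.getState()).append(' ').append(worker.isAlive());
            worker.start();
            while (worker.getState() != Thread.State.WAITING) {
                Thread.sleep(1);   // give the worker time to block on the latch
            }
            seen.append(", ").append(worker.getState()).append(' ').append(worker.isAlive());
            release.countDown();
            worker.join();
            seen.append(", ").append(worker.getState()).append(' ').append(worker.isAlive());
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return seen.toString();
    }

    public static void main(String[] args) {
        System.out.println(observe()); // prints "NEW false, WAITING true, TERMINATED false"
    }
}
```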

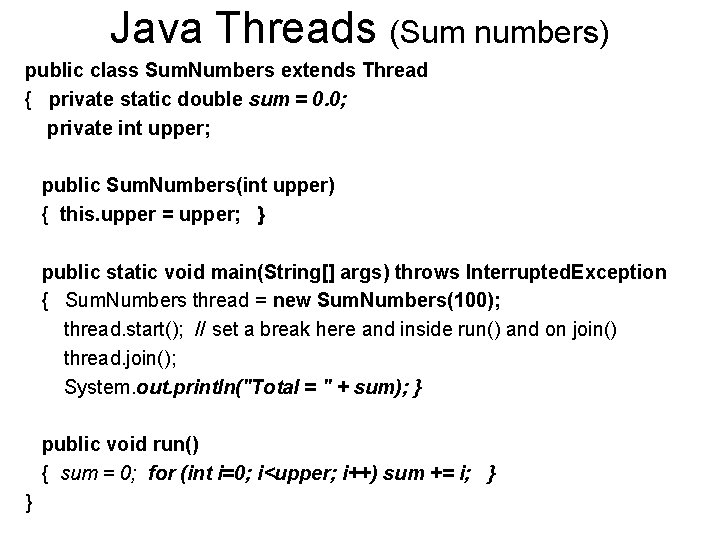

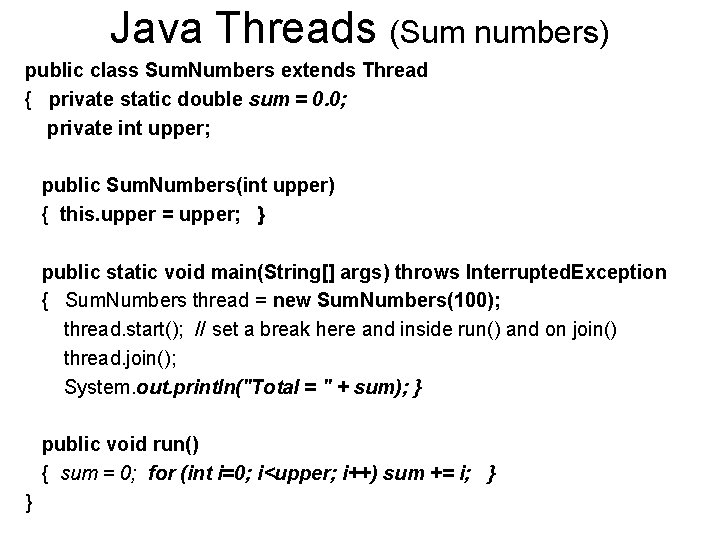

Java Threads (Sum numbers)

```java
public class SumNumbers extends Thread {
    private static double sum = 0.0;
    private int upper;

    public SumNumbers(int upper) {
        this.upper = upper;
    }

    public static void main(String[] args) throws InterruptedException {
        SumNumbers thread = new SumNumbers(100);
        thread.start(); // set a break here and inside run() and on join()
        thread.join();
        System.out.println("Total = " + sum);
    }

    @Override
    public void run() {
        sum = 0;
        for (int i = 0; i < upper; i++) sum += i;
    }
}
```

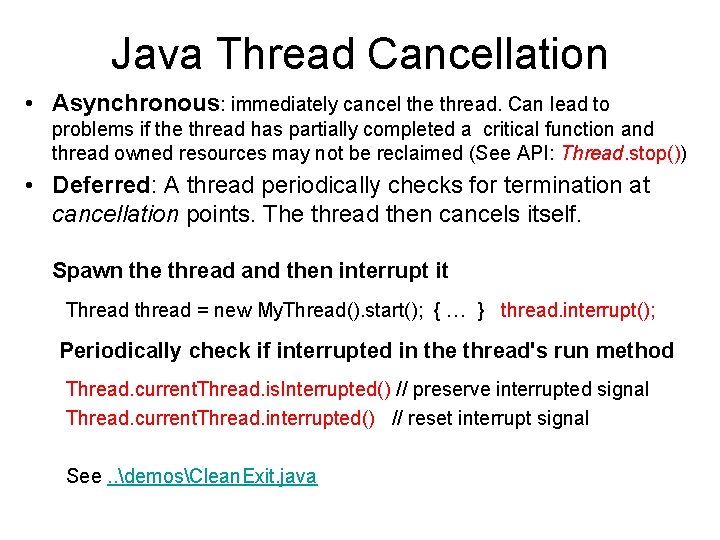

Java Thread Cancellation

- Asynchronous: Immediately cancel the thread. This can lead to problems if the thread has partially completed a critical function, and thread-owned resources may not be reclaimed (see API: Thread.stop())
- Deferred: A thread periodically checks for termination at cancellation points. The thread then cancels itself.

Spawn the thread and then interrupt it (start() returns void, so the thread is created first and started on a separate line):

```java
Thread thread = new MyThread();
thread.start();
// ...
thread.interrupt();
```

Periodically check for an interrupt in the thread's run method:

```java
boolean flagged = Thread.currentThread().isInterrupted(); // preserves the interrupt flag
boolean cleared = Thread.interrupted();                   // returns the flag, then resets it
```

See the CleanExit.java demo.
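
A complete deferred-cancellation cycle might look like the sketch below. The worker class `Counter`, its fields, and the 20 ms delay are assumptions made for illustration; the referenced demo file is not shown here and may be organized differently.

```java
class DeferredCancelDemo {
    /** Worker that counts until it notices an interrupt request. */
    static final class Counter extends Thread {
        volatile long iterations;
        volatile boolean cleanedUp;

        @Override public void run() {
            try {
                // Cancellation point: check the flag once per loop iteration.
                while (!Thread.currentThread().isInterrupted()) {
                    iterations++;
                }
            } finally {
                cleanedUp = true;   // a real worker would release its resources here
            }
        }
    }

    /** Starts a worker, requests cancellation, and waits for the worker to stop itself. */
    static Counter runAndCancel() {
        Counter worker = new Counter();
        worker.start();
        try {
            Thread.sleep(20);       // let it do some work
            worker.interrupt();     // request cancellation
            worker.join();          // wait for it to cancel itself
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return worker;
    }

    public static void main(String[] args) {
        Counter worker = runAndCancel();
        System.out.println("cleaned up: " + worker.cleanedUp + ", alive: " + worker.isAlive());
    }
}
```

Because the worker decides when to stop, it can always run its cleanup code, which is the main advantage over asynchronous cancellation.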

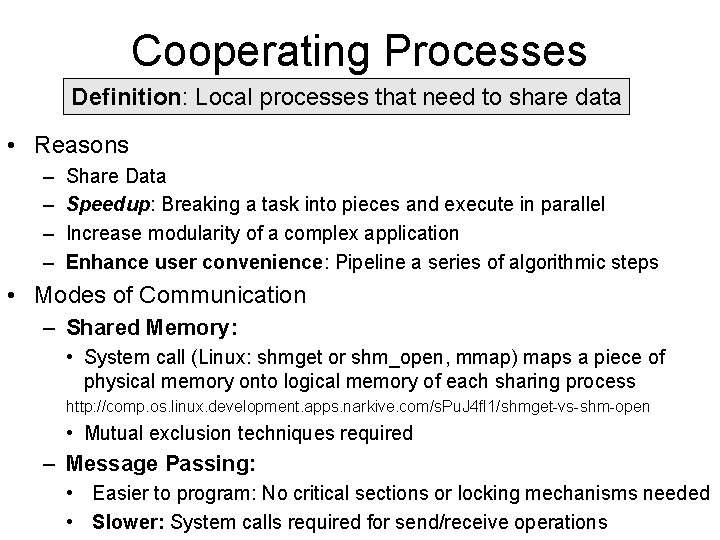

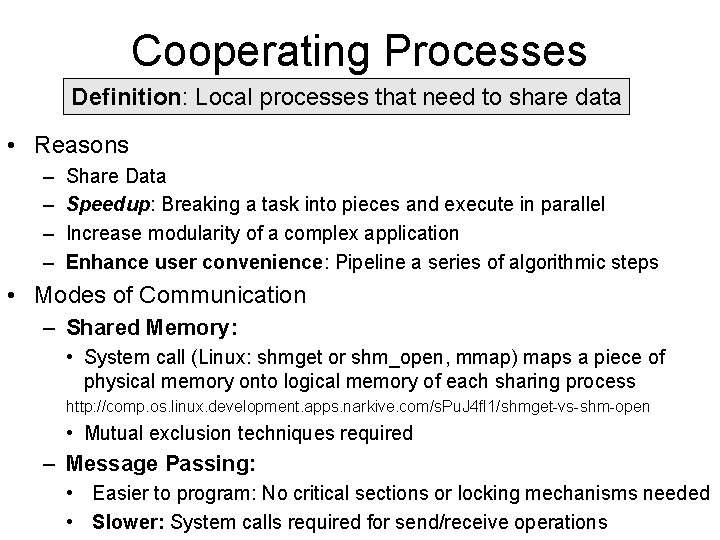

Cooperating Processes

Definition: Local processes that need to share data

- Reasons
  - Share data
  - Speedup: Break a task into pieces and execute them in parallel
  - Increase the modularity of a complex application
  - Enhance user convenience: Pipeline a series of algorithmic steps
- Modes of communication
  - Shared memory
    - A system call (Linux: shmget, or shm_open and mmap) maps a piece of physical memory into the logical memory of each sharing process
      http://comp.os.linux.development.apps.narkive.com/sPuJ4fI1/shmget-vs-shm-open
    - Mutual exclusion techniques are required
  - Message passing
    - Easier to program: No critical sections or locking mechanisms needed
    - Slower: System calls are required for send/receive operations

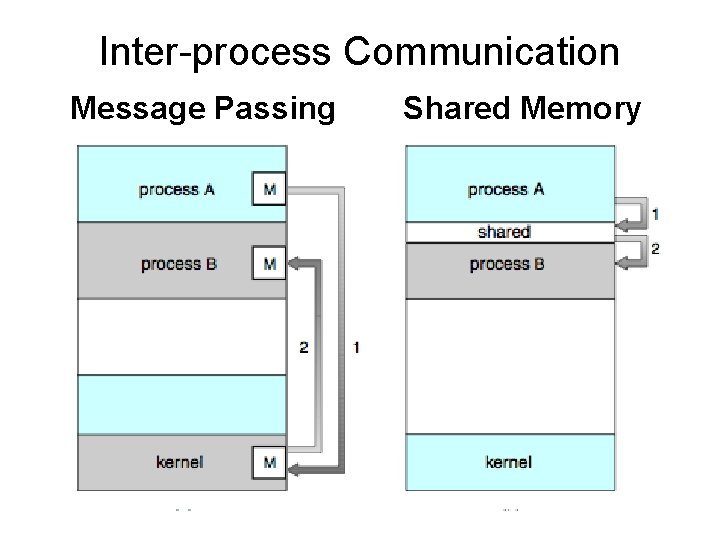

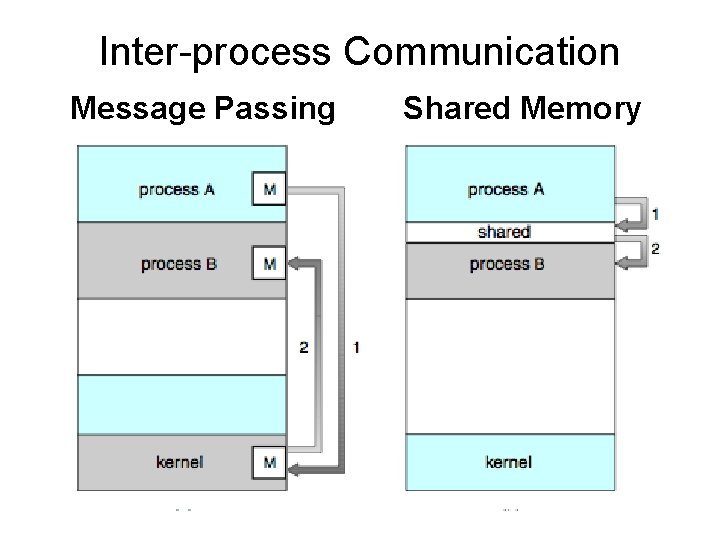

Inter-process Communication

- Message passing
- Shared memory

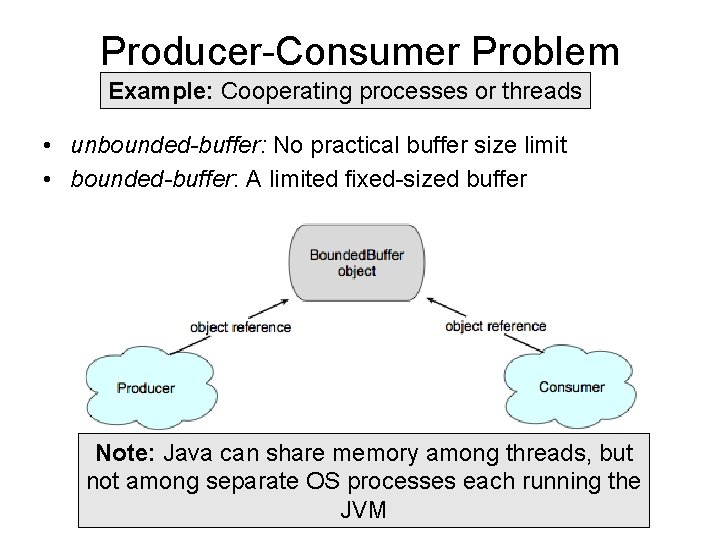

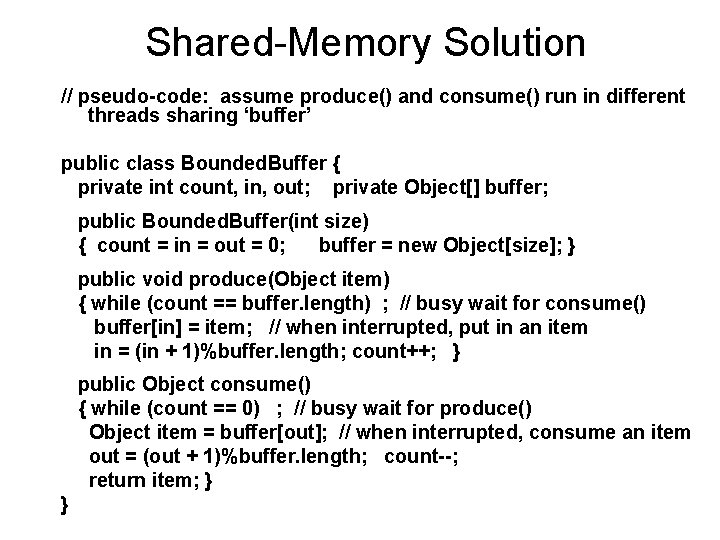

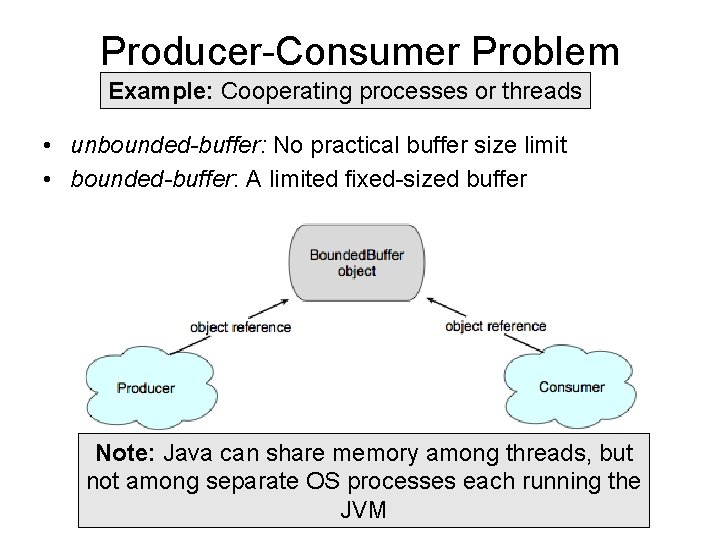

Producer-Consumer Problem

Example: Cooperating processes or threads

- Unbounded buffer: No practical limit on buffer size
- Bounded buffer: A fixed-size buffer

Note: Java can share memory among threads, but not among separate OS processes each running a JVM.

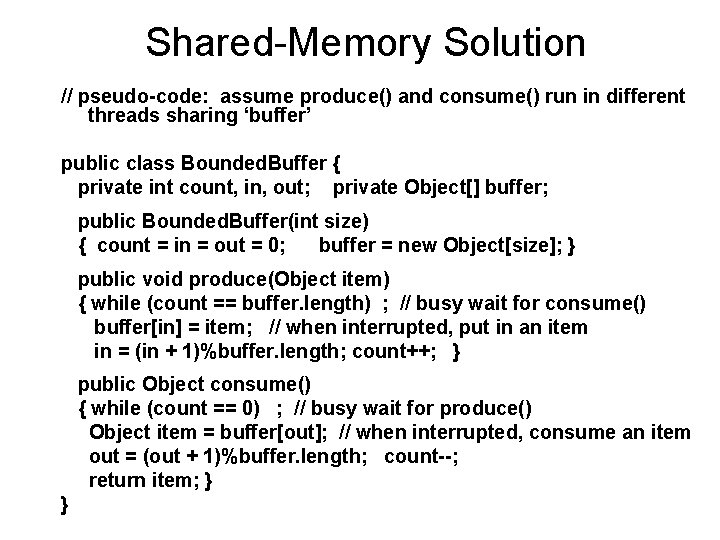

Shared-Memory Solution

```java
// Sketch: assumes produce() and consume() run in different threads
// (one producer, one consumer) sharing 'buffer'
import java.util.concurrent.atomic.AtomicInteger;

public class BoundedBuffer {
    // Atomic so that updates made by one thread are neither lost
    // nor invisible to the other thread
    private final AtomicInteger count = new AtomicInteger(0);
    private int in = 0, out = 0;
    private final Object[] buffer;

    public BoundedBuffer(int size) {
        buffer = new Object[size];
    }

    public void produce(Object item) {
        while (count.get() == buffer.length) ; // busy wait for consume()
        buffer[in] = item;                     // a slot is free: put in an item
        in = (in + 1) % buffer.length;
        count.incrementAndGet();
    }

    public Object consume() {
        while (count.get() == 0) ;             // busy wait for produce()
        Object item = buffer[out];             // an item is available: consume it
        out = (out + 1) % buffer.length;
        count.decrementAndGet();
        return item;
    }
}
```

Java Producer/Consumer

See the ProdConsDemo.java demo. It uses a Vector (unbounded) rather than a bounded buffer.

From the API: It is recommended that applications not use wait(), notify(), or notifyAll() on Thread instances.
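
For comparison with the busy-waiting version, here is a sketch of a bounded buffer that sleeps instead of spinning. It is not the referenced demo: it assumes a fixed-size array rather than a Vector, and it calls wait() and notifyAll() on the buffer object itself, never on a Thread instance. `MonitorBufferDemo` and `transfer` are illustrative names.

```java
class MonitorBufferDemo {
    /** Bounded buffer guarded by its own monitor (synchronized + wait/notifyAll). */
    static final class BoundedBuffer<T> {
        private final Object[] slots;
        private int count, in, out;

        BoundedBuffer(int size) { slots = new Object[size]; }

        synchronized void produce(T item) throws InterruptedException {
            while (count == slots.length) wait();   // full: sleep until a slot is freed
            slots[in] = item;
            in = (in + 1) % slots.length;
            count++;
            notifyAll();                            // wake any waiting consumer
        }

        @SuppressWarnings("unchecked")
        synchronized T consume() throws InterruptedException {
            while (count == 0) wait();              // empty: sleep until an item arrives
            T item = (T) slots[out];
            slots[out] = null;
            out = (out + 1) % slots.length;
            count--;
            notifyAll();                            // wake any waiting producer
            return item;
        }
    }

    /** Sends 1..n through a 4-slot buffer and returns the sum the consumer saw. */
    static int transfer(int n) {
        BoundedBuffer<Integer> buffer = new BoundedBuffer<>(4);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= n; i++) buffer.produce(i);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        int sum = 0;
        try {
            for (int i = 0; i < n; i++) sum += buffer.consume();
            producer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return sum;
    }

    public static void main(String[] args) {
        System.out.println(transfer(100)); // prints 5050
    }
}
```

The `while` loops around wait() matter: a woken thread must recheck the condition, because another thread may have changed the buffer before it reacquired the lock.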

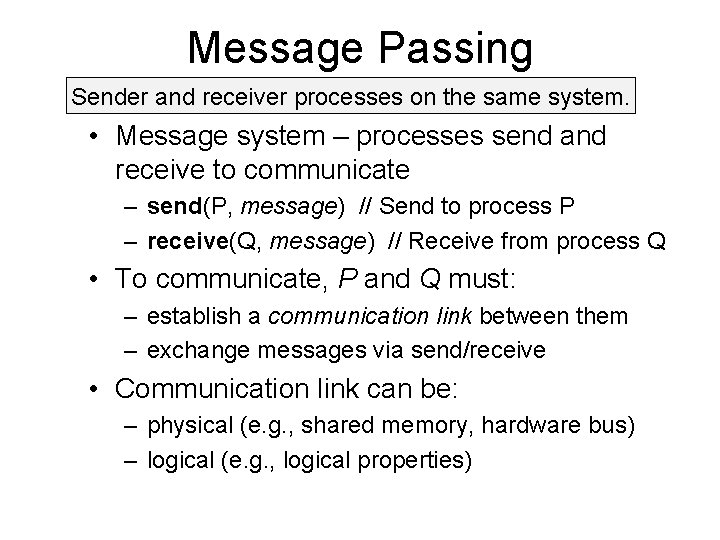

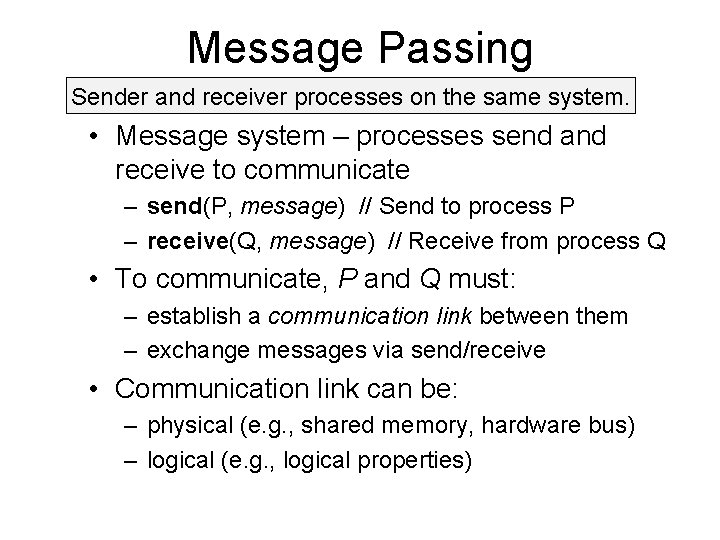

Message Passing

Sender and receiver processes on the same system.

- Message system: processes send and receive to communicate
  - send(P, message) // send to process P
  - receive(Q, message) // receive from process Q
- To communicate, P and Q must
  - Establish a communication link between them
  - Exchange messages via send/receive
- The communication link can be
  - Physical (e.g., shared memory, hardware bus)
  - Logical (e.g., logical properties)

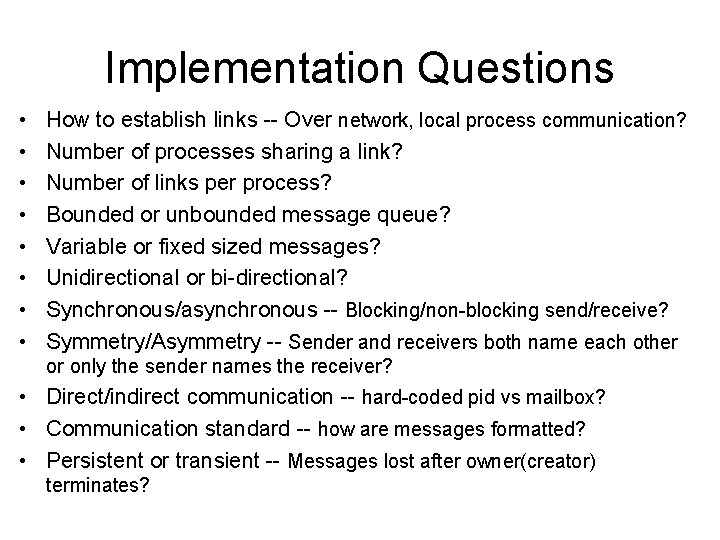

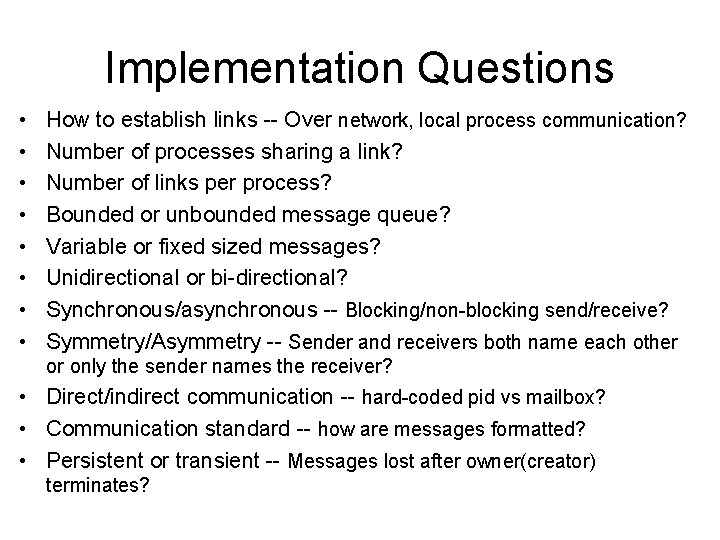

Implementation Questions

- How to establish links: over a network, or local process communication?
- Number of processes sharing a link?
- Number of links per process?
- Bounded or unbounded message queue?
- Variable or fixed-size messages?
- Unidirectional or bidirectional?
- Synchronous/asynchronous: blocking or non-blocking send/receive?
- Symmetry/asymmetry: do sender and receiver both name each other, or does only the sender name the receiver?
- Direct/indirect communication: hard-coded pid vs. mailbox?
- Communication standard: how are messages formatted?
- Persistent or transient: are messages lost after the owner (creator) terminates?

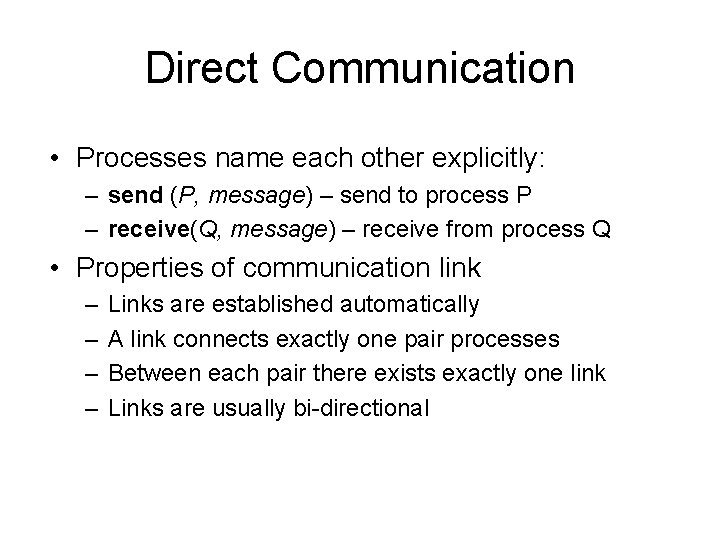

Direct Communication

- Processes name each other explicitly
  - send(P, message): send to process P
  - receive(Q, message): receive from process Q
- Properties of the communication link
  - Links are established automatically
  - A link connects exactly one pair of processes
  - Between each pair there exists exactly one link
  - Links are usually bidirectional

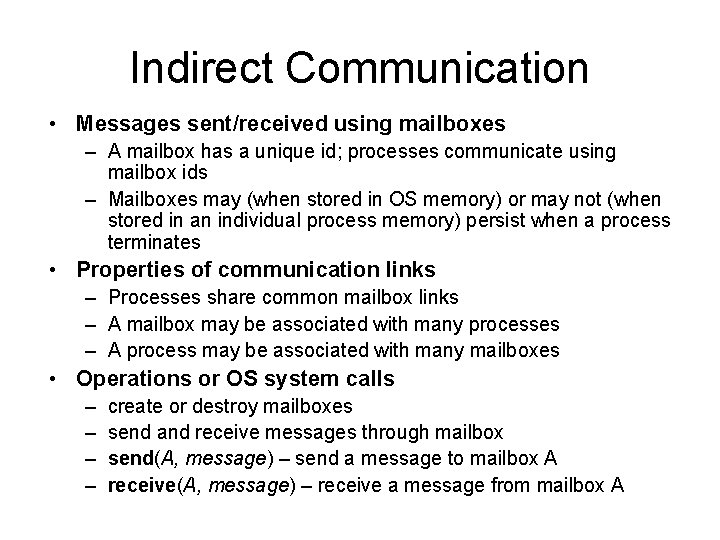

Indirect Communication

- Messages are sent and received using mailboxes
  - A mailbox has a unique id; processes communicate using mailbox ids
  - Mailboxes may persist when a process terminates (when stored in OS memory) or may not (when stored in an individual process's memory)
- Properties of communication links
  - Processes share common mailbox links
  - A mailbox may be associated with many processes
  - A process may be associated with many mailboxes
- Operations (OS system calls)
  - Create or destroy mailboxes
  - Send and receive messages through a mailbox
  - send(A, message): send a message to mailbox A
  - receive(A, message): receive a message from mailbox A
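
The mailbox operations can be imitated inside a single JVM, with threads standing in for processes. This is a sketch under that assumption: `Mailboxes` is a hypothetical class, not an OS or Java API, and each mailbox is simply a named `BlockingQueue`.

```java
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;

class MailboxDemo {
    /** Hypothetical in-process "mailbox service": mailboxes are named queues. */
    static final class Mailboxes {
        private final Map<String, BlockingQueue<String>> boxes = new ConcurrentHashMap<>();

        void create(String id)  { boxes.putIfAbsent(id, new LinkedBlockingQueue<String>()); }
        void destroy(String id) { boxes.remove(id); }

        /** send(A, message): deposit a message in mailbox A without waiting. */
        void send(String id, String message) { box(id).add(message); }

        /** receive(A, message): block until mailbox A holds a message, then remove it. */
        String receive(String id) throws InterruptedException { return box(id).take(); }

        private BlockingQueue<String> box(String id) {
            BlockingQueue<String> queue = boxes.get(id);
            if (queue == null) throw new IllegalArgumentException("no such mailbox: " + id);
            return queue;
        }
    }

    /** One thread sends to mailbox "A"; the caller receives from it. */
    static String demo() {
        Mailboxes os = new Mailboxes();
        os.create("A");
        Thread sender = new Thread(() -> os.send("A", "hello from sender"));
        sender.start();
        try {
            String message = os.receive("A");   // blocks until the message arrives
            sender.join();
            return message;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        } finally {
            os.destroy("A");
        }
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints "hello from sender"
    }
}
```

Neither side names the other: sender and receiver only need to agree on the mailbox id, which is what distinguishes indirect from direct communication.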

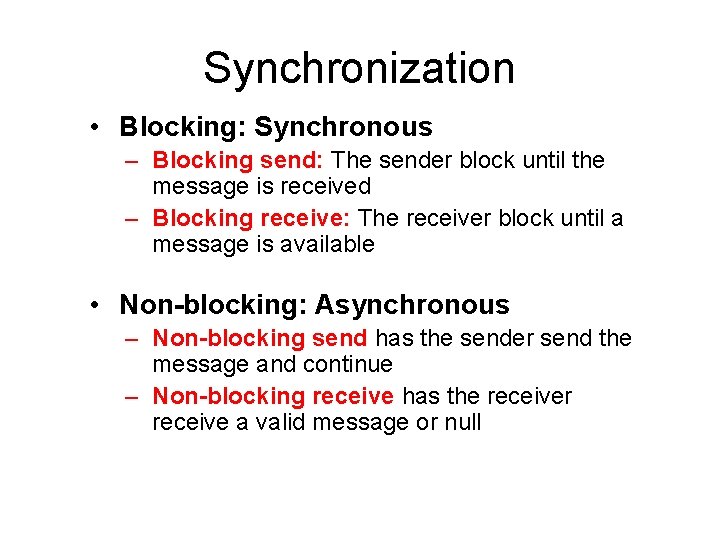

Synchronization

- Blocking (synchronous)
  - Blocking send: The sender blocks until the message is received
  - Blocking receive: The receiver blocks until a message is available
- Non-blocking (asynchronous)
  - Non-blocking send: The sender sends the message and continues
  - Non-blocking receive: The receiver receives either a valid message or null

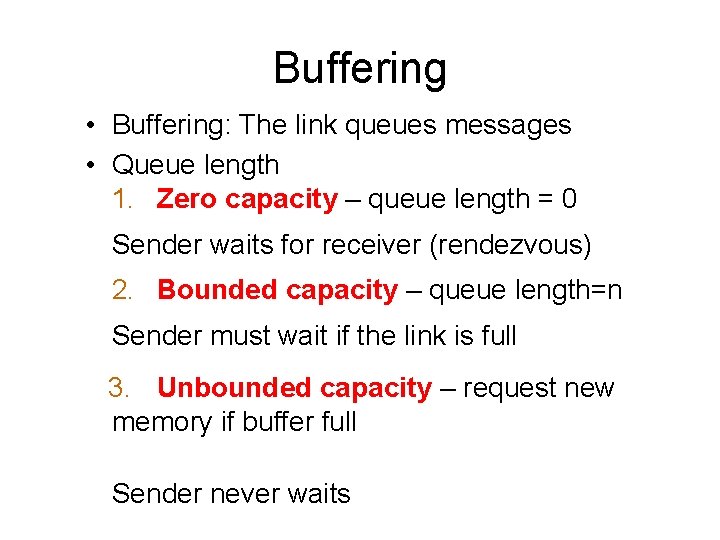

Buffering

- Buffering: The link queues messages
- Queue length
  1. Zero capacity (queue length = 0): The sender waits for the receiver (rendezvous)
  2. Bounded capacity (queue length = n): The sender must wait if the link is full
  3. Unbounded capacity (request new memory if the buffer is full): The sender never waits
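
The three capacities map naturally onto three `java.util.concurrent` queue classes. That mapping is an illustration chosen here, not something the text prescribes. The sketch uses the non-blocking `offer()` and `poll()` calls, so a refused send shows up as `false` and an empty receive as `null`.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.SynchronousQueue;

class BufferingDemo {
    /** Counts how many non-blocking sends the link accepts while no receiver is running. */
    static int accepted(BlockingQueue<Integer> link, int attempts) {
        int ok = 0;
        for (int i = 0; i < attempts; i++) {
            if (link.offer(i)) ok++;   // offer() never blocks; false means a blocking send would wait
        }
        return ok;
    }

    public static void main(String[] args) {
        // Zero capacity: nothing is accepted unless a receiver is already waiting.
        System.out.println(accepted(new SynchronousQueue<Integer>(), 5));     // prints 0
        // Bounded capacity (n = 3): the fourth and fifth sends would have to wait.
        System.out.println(accepted(new ArrayBlockingQueue<Integer>(3), 5));  // prints 3
        // Unbounded capacity: every send is accepted.
        System.out.println(accepted(new LinkedBlockingQueue<Integer>(), 5));  // prints 5
        // Non-blocking receive on an empty link returns null.
        System.out.println(new ArrayBlockingQueue<Integer>(3).poll());        // prints null
    }
}
```

The blocking counterparts are `put()` and `take()`, which wait instead of returning `false` or `null`.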

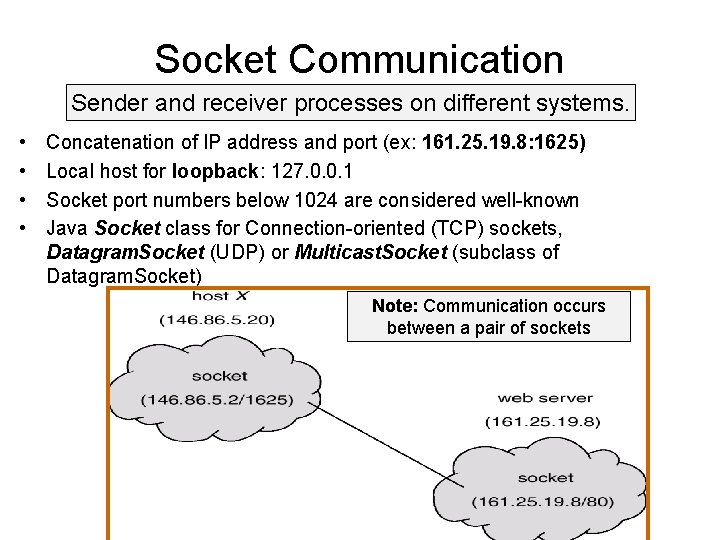

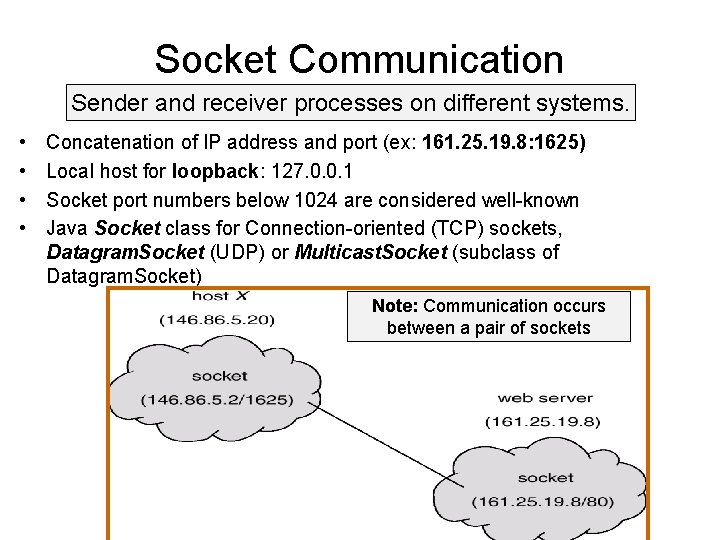

Socket Communication

Sender and receiver processes on different systems.

- A socket is the concatenation of an IP address and a port (e.g., 161.25.19.8:1625)
- Local host for loopback: 127.0.0.1
- Socket port numbers below 1024 are considered well-known
- Java provides the Socket class for connection-oriented (TCP) sockets, DatagramSocket for UDP, and MulticastSocket (a subclass of DatagramSocket)

Note: Communication occurs between a pair of sockets.

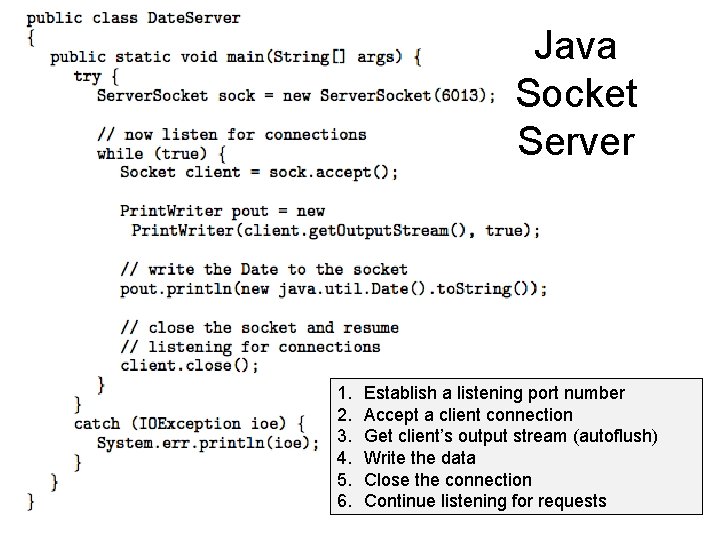

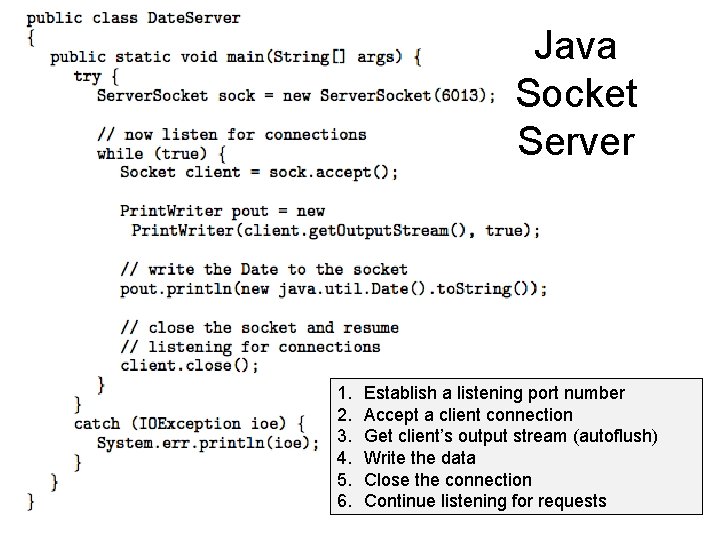

Java Socket Server

1. Establish a listening port number
2. Accept a client connection
3. Get the client's output stream (autoflush)
4. Write the data
5. Close the connection
6. Continue listening for requests

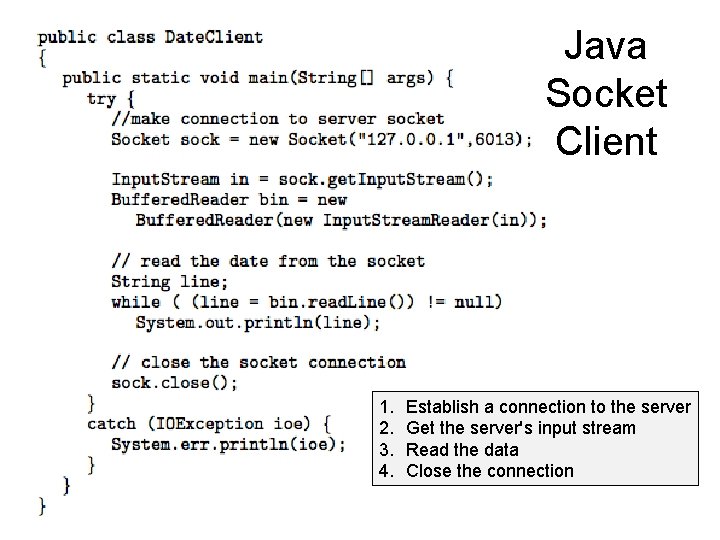

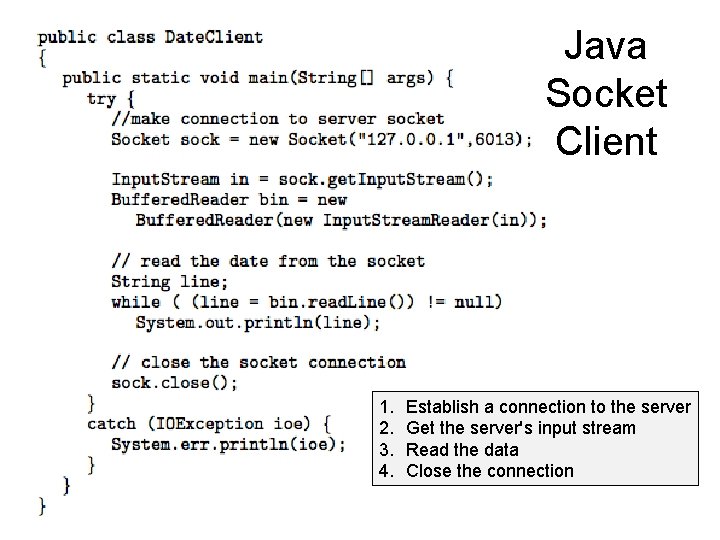

Java Socket Client

1. Establish a connection to the server
2. Get the server's input stream
3. Read the data
4. Close the connection
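
The server and client steps can be combined into one runnable sketch. Several things here are assumptions made for the sake of a self-contained example: both ends run in the same JVM over the loopback address, the server picks any free port, and it serves a single client instead of continuing to listen. `SocketDemo`, `serveOnce`, `fetch`, and `roundTrip` are illustrative names.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

class SocketDemo {
    /** Server side: accept one client, write one line, close the connection. */
    static void serveOnce(ServerSocket listener, String data) {
        try (Socket client = listener.accept();
             PrintWriter out = new PrintWriter(client.getOutputStream(), true)) { // autoflush
            out.println(data);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Client side: connect, read one line from the server, close the connection. */
    static String fetch(InetAddress host, int port) throws IOException {
        try (Socket server = new Socket(host, port);
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(server.getInputStream()))) {
            return in.readLine();
        }
    }

    /** Runs the server in a background thread and the client in the calling thread. */
    static String roundTrip(String data) {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        try (ServerSocket listener = new ServerSocket(0, 1, loopback)) { // port 0 = any free port
            Thread server = new Thread(() -> serveOnce(listener, data));
            server.start();
            String reply = fetch(loopback, listener.getLocalPort());
            server.join();
            return reply;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("hello over TCP")); // prints "hello over TCP"
    }
}
```

A long-running server would wrap the body of `serveOnce` in a loop, which corresponds to step 6 of the server list.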

User and Kernel Threads

- User threads: Thread management is done by a user-level threads library without OS support. Fewer system calls, so more efficient
- Kernel threads: Thread management is directly supported by the kernel. More OS overhead
- Tradeoffs: Kernel thread handling incurs more overhead. With user threads, the whole application stops whenever any thread makes a blocking system call.
- Most modern operating systems support kernel threads to some degree (Windows, Solaris, Linux, UNIX, Mac OS).

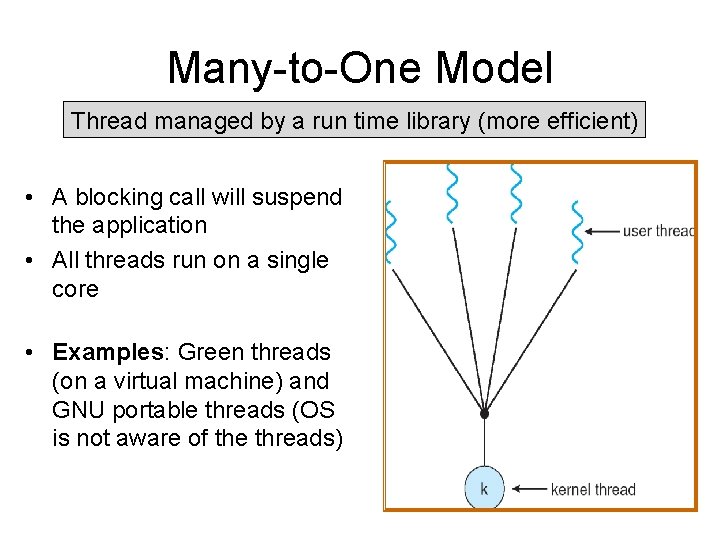

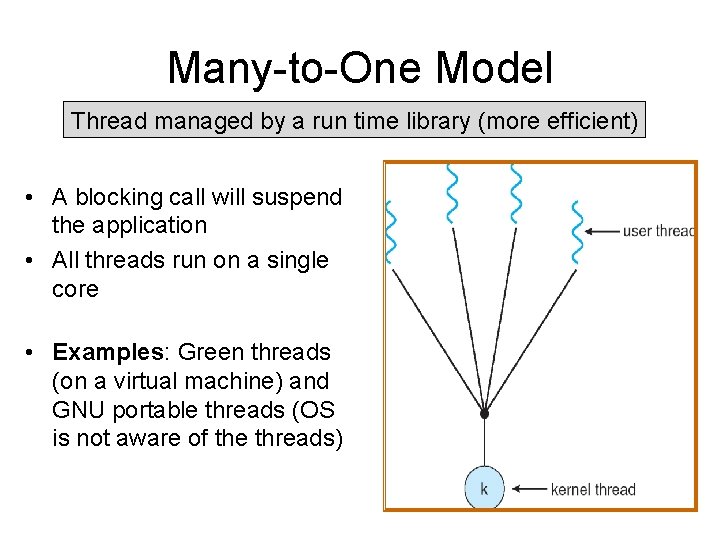

Many-to-One Model

Threads are managed by a run-time library (more efficient)

- A blocking call will suspend the application
- All threads run on a single core
- Examples: Green threads (on a virtual machine) and GNU Portable Threads (the OS is not aware of the threads)

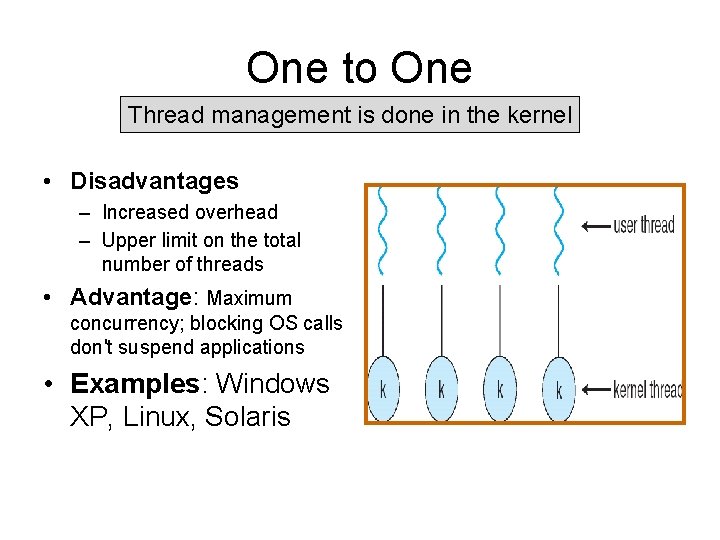

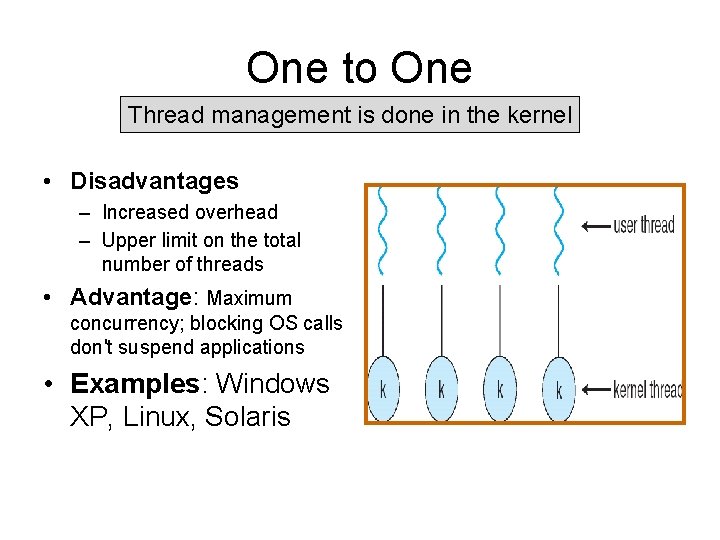

One-to-One

Thread management is done in the kernel

- Disadvantages
  - Increased overhead
  - Upper limit on the total number of threads
- Advantage: Maximum concurrency; blocking OS calls don't suspend applications
- Examples: Windows XP, Linux, Solaris

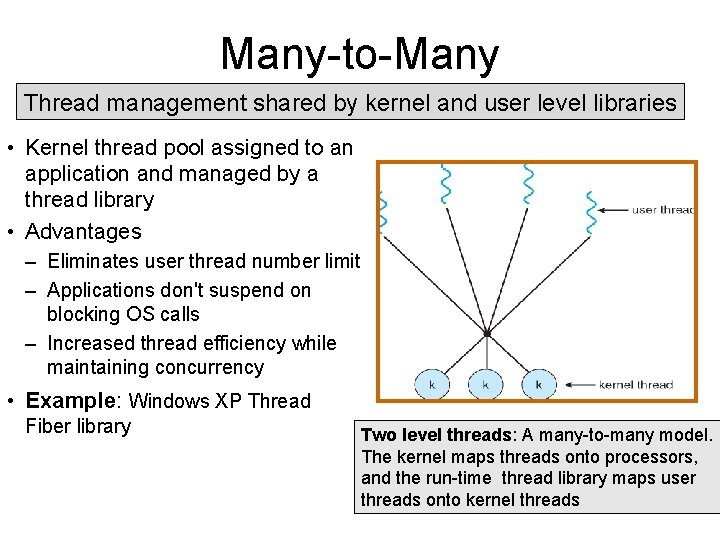

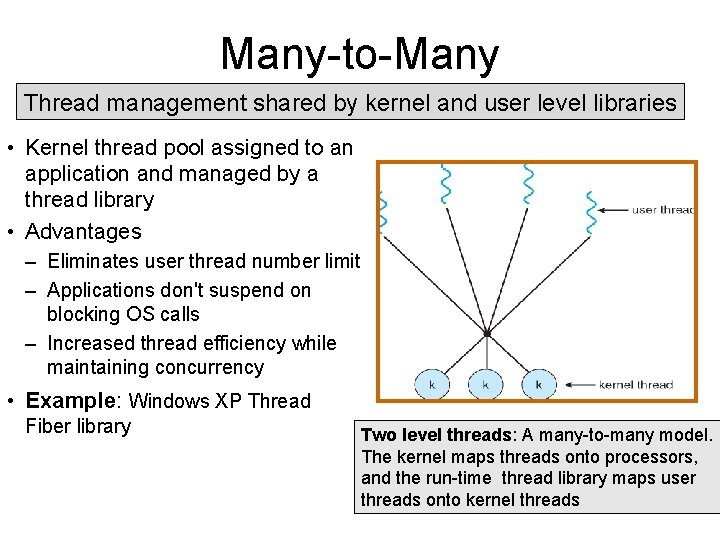

Many-to-Many

Thread management is shared by the kernel and user-level libraries

- A kernel thread pool is assigned to an application and managed by a thread library
- Advantages
  - Eliminates the limit on the number of user threads
  - Applications don't suspend on blocking OS calls
  - Increased thread efficiency while maintaining concurrency
- Example: Windows XP Thread Fiber library

Two-level threads: A many-to-many model. The kernel maps threads onto processors, and the run-time thread library maps user threads onto kernel threads.

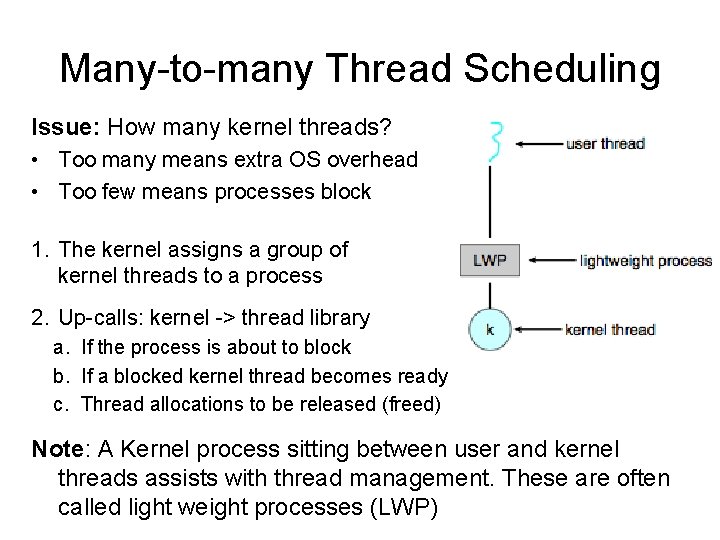

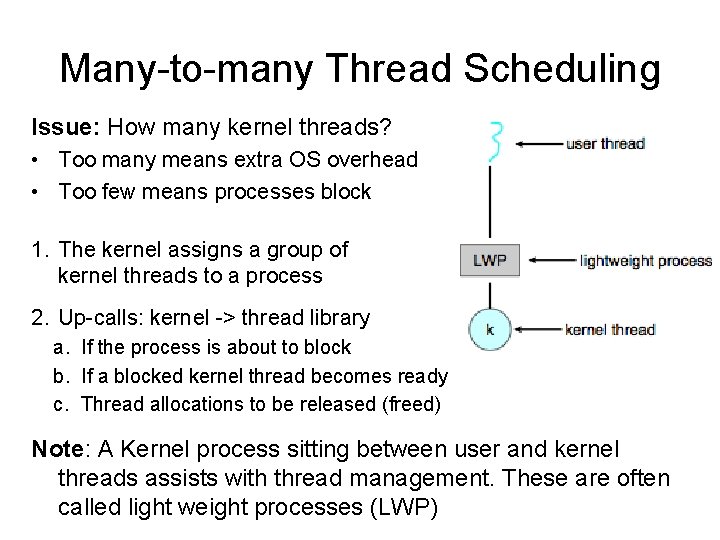

Many-to-Many Thread Scheduling

Issue: How many kernel threads?

- Too many means extra OS overhead
- Too few means processes block

1. The kernel assigns a group of kernel threads to a process
2. Up-calls (kernel -> thread library) occur
   - If the process is about to block
   - If a blocked kernel thread becomes ready
   - If thread allocations are to be released (freed)

Note: A kernel process sitting between user and kernel threads assists with thread management. These are often called lightweight processes (LWPs).

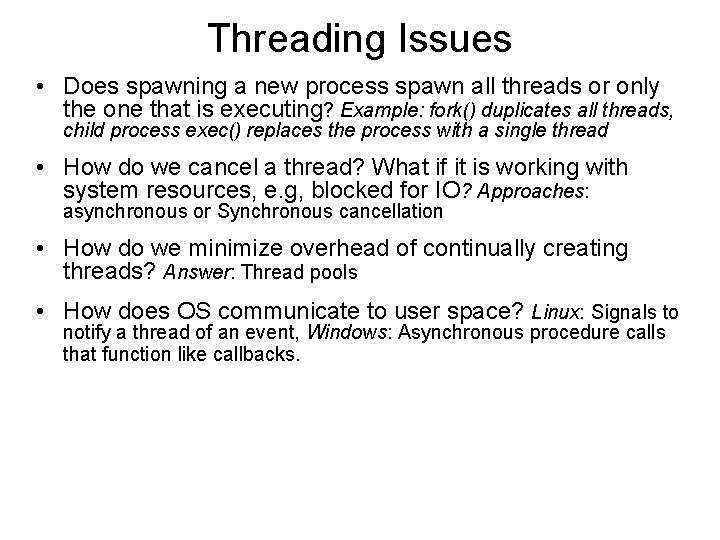

Threading Issues

- Does spawning a new process spawn all threads or only the one that is executing?
  Example: POSIX fork() duplicates only the calling thread (some UNIX systems also offer a variant that duplicates all threads); exec() in the child replaces the entire process with a new single-threaded program
- How do we cancel a thread? What if it is working with system resources, e.g., blocked for I/O?
  Approaches: asynchronous or deferred (synchronous) cancellation
- How do we minimize the overhead of continually creating threads?
  Answer: Thread pools
- How does the OS communicate with user space?
  Linux: Signals notify a thread of an event. Windows: Asynchronous procedure calls function like callbacks.

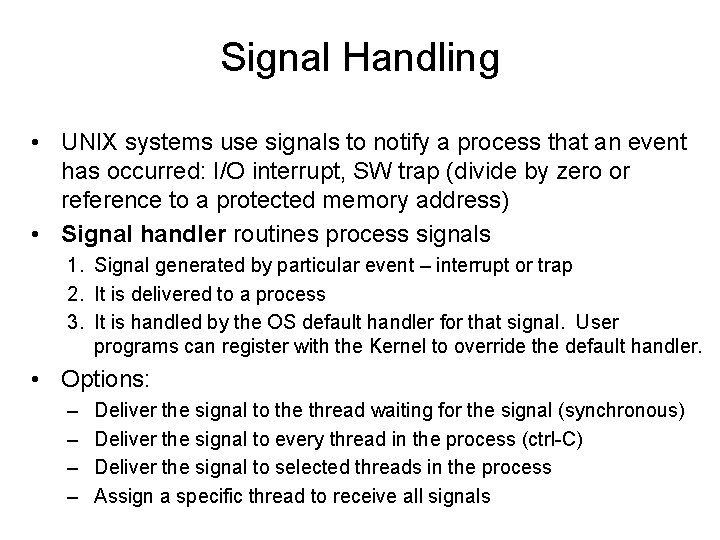

Signal Handling

- UNIX systems use signals to notify a process that an event has occurred: an I/O interrupt or a software trap (divide by zero, or a reference to a protected memory address)
- Signal handler routines process signals
  1. A signal is generated by a particular event (interrupt or trap)
  2. It is delivered to a process
  3. It is handled by the OS default handler for that signal. User programs can register with the kernel to override the default handler.
- Delivery options
  - Deliver the signal to the thread waiting for the signal (synchronous)
  - Deliver the signal to every thread in the process (ctrl-C)
  - Deliver the signal to selected threads in the process
  - Assign a specific thread to receive all signals

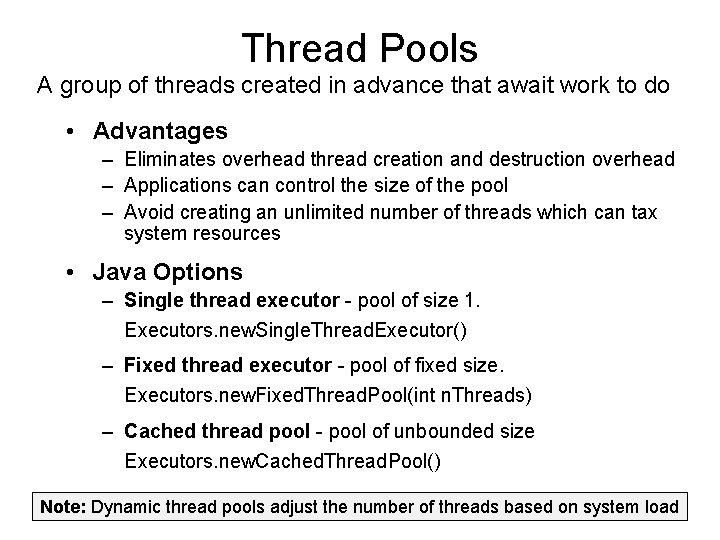

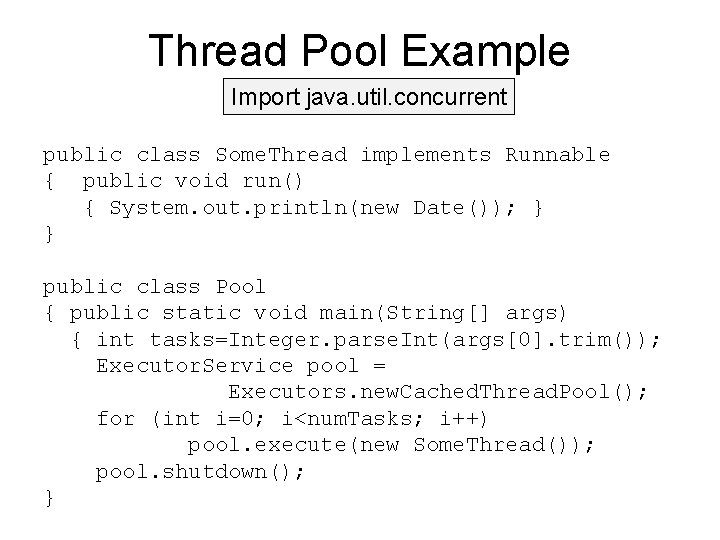

Thread Pools

A group of threads created in advance that await work to do

- Advantages
  - Eliminates the overhead of thread creation and destruction
  - Applications can control the size of the pool
  - Avoids creating an unlimited number of threads, which can tax system resources
- Java options
  - Single thread executor, a pool of size 1: Executors.newSingleThreadExecutor()
  - Fixed thread executor, a pool of fixed size: Executors.newFixedThreadPool(int nThreads)
  - Cached thread pool, a pool of unbounded size: Executors.newCachedThreadPool()

Note: Dynamic thread pools adjust the number of threads based on system load.

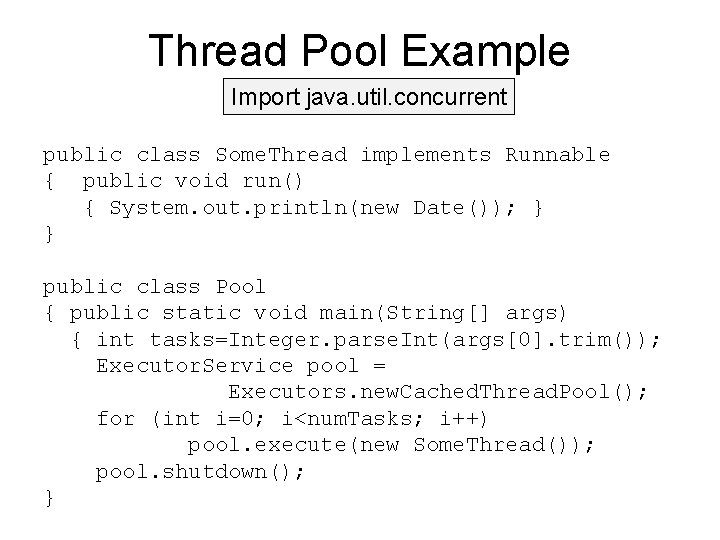

Thread Pool Example

```java
import java.util.Date;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class Pool {
    // The task each pool thread runs: print the current date and time
    static class SomeThread implements Runnable {
        public void run() {
            System.out.println(new Date());
        }
    }

    public static void main(String[] args) {
        int numTasks = Integer.parseInt(args[0].trim());
        ExecutorService pool = Executors.newCachedThreadPool();
        for (int i = 0; i < numTasks; i++)
            pool.execute(new SomeThread());
        pool.shutdown(); // finish the submitted tasks, then let the pool threads exit
    }
}
```
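
A fixed-size pool can also return results to the caller. The sketch below is an illustration rather than part of the original example: it assumes the work is summing 1..n in chunks, and `FixedPoolDemo` and `parallelSum` are made-up names. It uses `Executors.newFixedThreadPool` with `Callable` tasks and `Future` results.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class FixedPoolDemo {
    /** Sums 1..n by splitting the range into tasks that run on a pool of nThreads threads. */
    static long parallelSum(int n, int chunks, int nThreads) {
        ExecutorService pool = Executors.newFixedThreadPool(nThreads);
        try {
            List<Future<Long>> parts = new ArrayList<>();
            int size = (n + chunks - 1) / chunks;              // ceiling division
            for (int start = 1; start <= n; start += size) {
                final int lo = start;
                final int hi = Math.min(n, start + size - 1);
                Callable<Long> task = () -> {
                    long sum = 0;
                    for (int i = lo; i <= hi; i++) sum += i;
                    return sum;
                };
                parts.add(pool.submit(task));                  // queued until a pool thread is free
            }
            long total = 0;
            for (Future<Long> part : parts) total += part.get(); // blocks until that task is done
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(parallelSum(100, 8, 4)); // prints 5050
    }
}
```

With eight tasks and four threads, at most four tasks run at once and the rest wait in the pool's queue, so the thread count stays bounded however many tasks are submitted.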

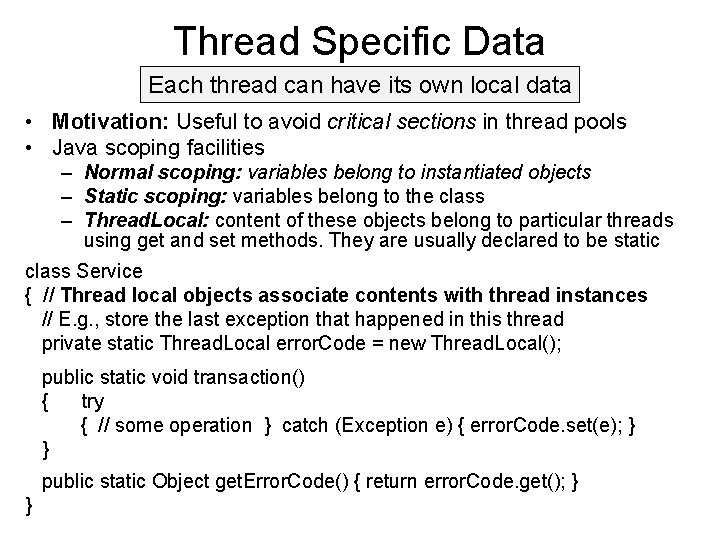

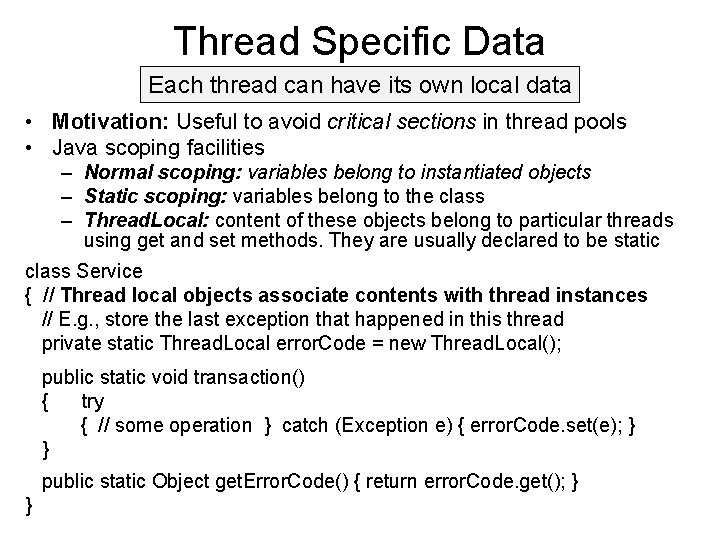

Thread-Specific Data

Each thread can have its own local data

- Motivation: Useful to avoid critical sections in thread pools
- Java scoping facilities
  - Normal scoping: variables belong to instantiated objects
  - Static scoping: variables belong to the class
  - ThreadLocal: the contents of these objects belong to particular threads and are accessed using get and set methods. They are usually declared static.

```java
class Service {
    // Thread-local objects associate contents with thread instances
    // E.g., store the last exception that happened in this thread
    private static final ThreadLocal<Exception> errorCode = new ThreadLocal<>();

    public static void transaction() {
        try {
            // some operation
        } catch (Exception e) {
            errorCode.set(e);
        }
    }

    public static Object getErrorCode() {
        return errorCode.get();
    }
}
```
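
To see that each thread really gets its own copy, consider this small sketch. The variable `name`, the initial value "unset", and the class `ThreadLocalDemo` are assumptions chosen for illustration.

```java
class ThreadLocalDemo {
    // Each thread that touches 'name' gets its own independent slot, initially "unset".
    private static final ThreadLocal<String> name = ThreadLocal.withInitial(() -> "unset");

    /** Sets a value in a worker thread and reports what the worker and the caller each see. */
    static String demo() {
        final String[] seenByWorker = new String[1];
        Thread worker = new Thread(() -> {
            name.set("worker");               // affects only the worker's copy
            seenByWorker[0] = name.get();
        });
        name.set("main");                     // affects only the calling thread's copy
        worker.start();
        try {
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return seenByWorker[0] + "/" + name.get();
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints "worker/main"
    }
}
```

No locking is needed around `name`, because the two threads never touch the same value. In a thread pool, a value left in a ThreadLocal stays with the pooled thread, so tasks generally should clear it with `remove()` when they finish.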