5 Slide Example Gene Chip Data Jude Shavlik

- Slides: 26

5-Slide Example: Gene Chip Data

© Jude Shavlik 2006, David Page 2007 CS 760 – Machine Learning (UW-Madison)

Decision Trees in One Picture

Example: Gene Expression

Decision tree:
• AD_X57809_at <= 20343.4: myeloma (74)
• AD_X57809_at > 20343.4: normal (31)

Leave-one-out cross-validation accuracy estimate: 97.1%

X57809: IGL (immunoglobulin lambda locus)

Problem with Result

• It is easy to predict accurately with genes related to immune function, such as IGL, but this gives us no new insight.
• Eliminate these genes prior to training.
• Spotting the problem is possible because of the comprehensibility of decision trees.

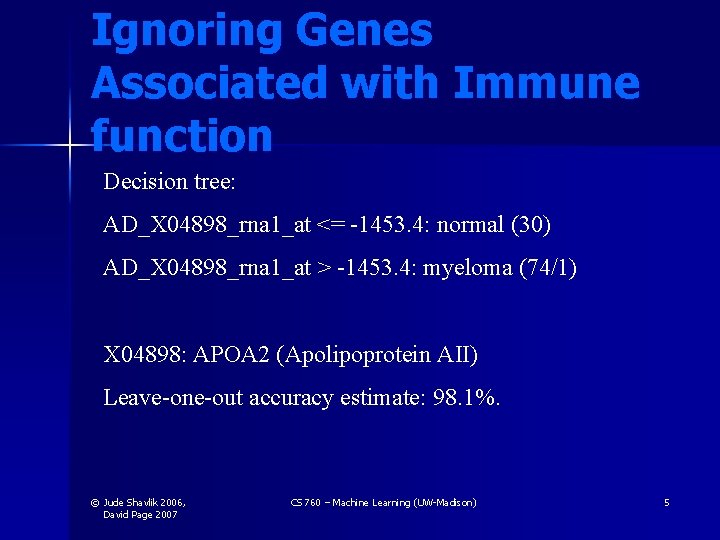

Ignoring Genes Associated with Immune Function

Decision tree:
• AD_X04898_rna1_at <= -1453.4: normal (30)
• AD_X04898_rna1_at > -1453.4: myeloma (74/1)

X04898: APOA2 (Apolipoprotein AII)

Leave-one-out accuracy estimate: 98.1%

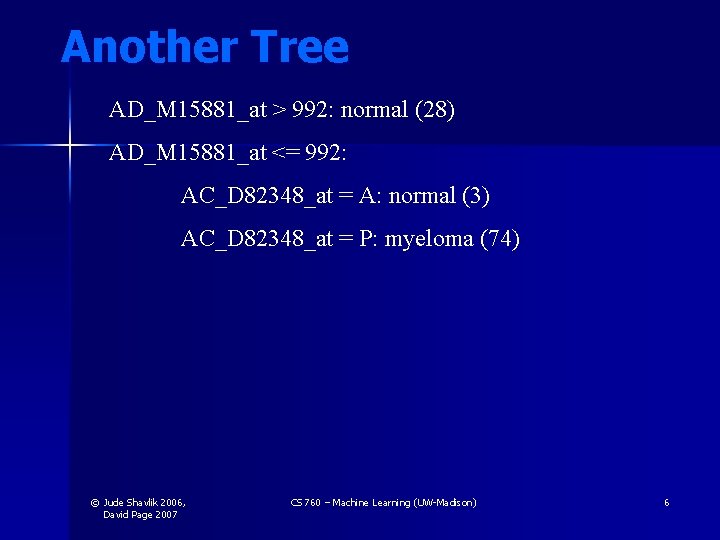

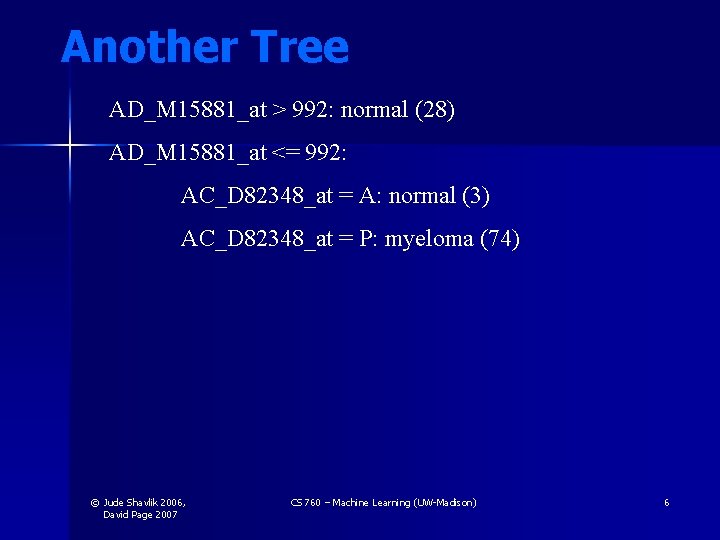

Another Tree

AD_M15881_at > 992: normal (28)
AD_M15881_at <= 992:
|   AC_D82348_at = A: normal (3)
|   AC_D82348_at = P: myeloma (74)

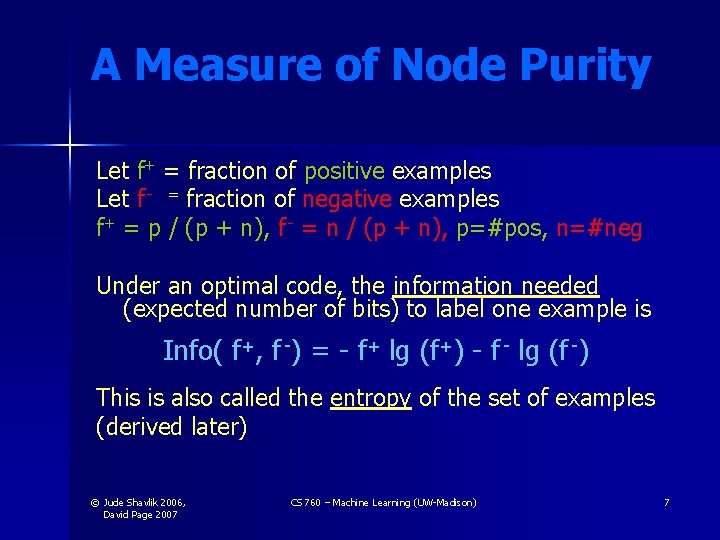

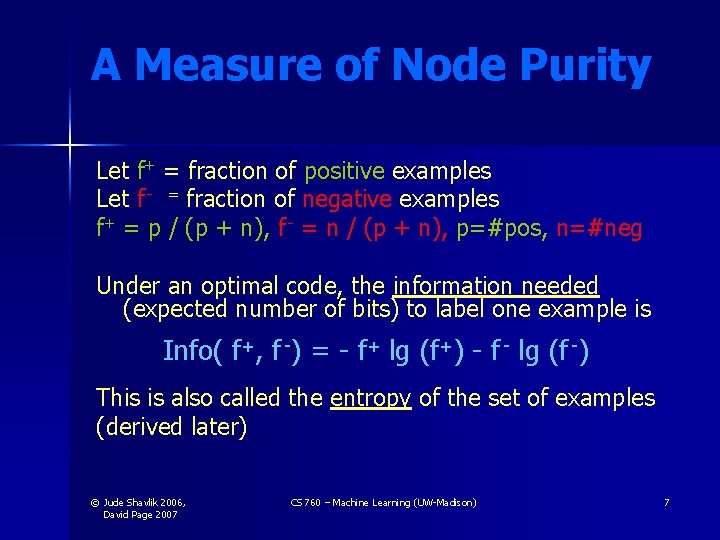

A Measure of Node Purity

Let $f_+$ = fraction of positive examples and $f_-$ = fraction of negative examples:

$f_+ = p / (p + n)$, $f_- = n / (p + n)$, where $p$ = #pos and $n$ = #neg.

Under an optimal code, the information needed (expected number of bits) to label one example is

$\text{Info}(f_+, f_-) = -f_+ \lg(f_+) - f_- \lg(f_-)$

This is also called the entropy of the set of examples (derived later).
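As a minimal sketch of the formula on this slide, the node-purity measure can be computed in a few lines of Python. The function names `info` and `info_from_counts` are illustrative, not from the slides, and the usual convention that 0 lg(0) counts as 0 is assumed.

```python
import math

def info(f_pos: float, f_neg: float) -> float:
    """Expected bits to label one example: -f+ lg(f+) - f- lg(f-)."""
    total = 0.0
    for f in (f_pos, f_neg):
        if f > 0:  # assumed convention: 0 * lg(0) contributes 0
            total -= f * math.log2(f)
    return total

def info_from_counts(p: int, n: int) -> float:
    """Same measure, starting from p positive and n negative examples."""
    return info(p / (p + n), n / (p + n))
```

For instance, `info_from_counts(3, 2)` evaluates the measure for a node holding three positive and two negative examples.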

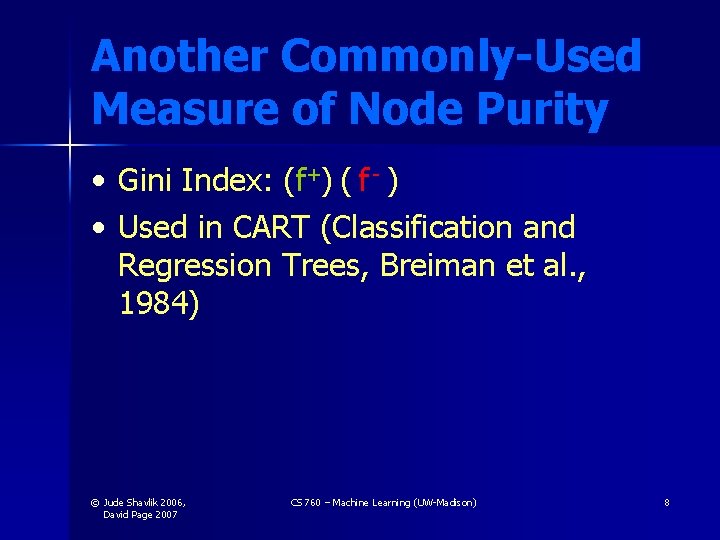

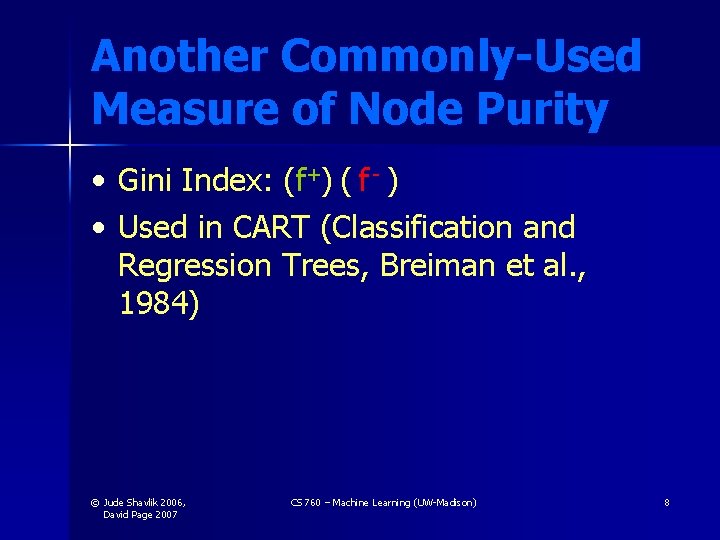

Another Commonly-Used Measure of Node Purity

• Gini Index: $(f_+)(f_-)$
• Used in CART (Classification and Regression Trees, Breiman et al., 1984)
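A small sketch of the two-class Gini measure as written on this slide. For comparison it also includes the form $1 - f_+^2 - f_-^2$ that is often quoted elsewhere; for two classes that form is exactly twice the product, so both rank splits identically. Both function names are hypothetical.

```python
def gini_product(f_pos: float, f_neg: float) -> float:
    """Two-class Gini measure in the slide's form: f+ * f-."""
    return f_pos * f_neg

def gini_impurity(f_pos: float, f_neg: float) -> float:
    """Commonly quoted form: 1 - f+^2 - f-^2 (equals 2 * f+ * f- when f+ + f- = 1)."""
    return 1.0 - f_pos ** 2 - f_neg ** 2
```

Like the entropy-based measure, both are zero for a pure node and largest for a 50-50 mixture.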

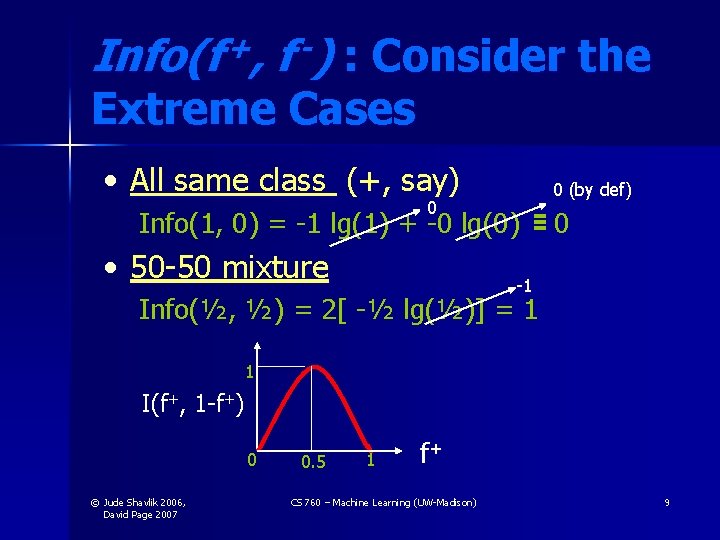

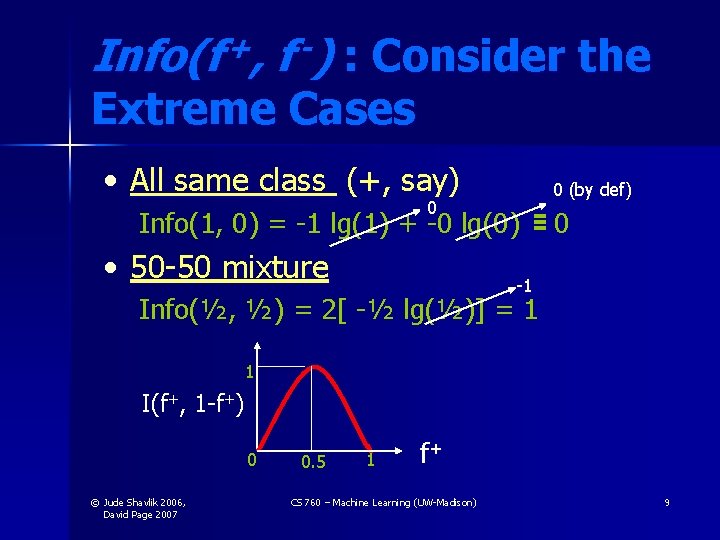

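$\text{Info}(f_+, f_-)$: Consider the Extreme Cases

• All same class (+, say): $\text{Info}(1, 0) = -1 \lg(1) - 0 \lg(0) = 0$, since $\lg(1) = 0$ and $0 \lg(0)$ is 0 by definition
• 50-50 mixture: $\text{Info}(\tfrac{1}{2}, \tfrac{1}{2}) = 2\,[-\tfrac{1}{2} \lg(\tfrac{1}{2})] = 1$, since $\lg(\tfrac{1}{2}) = -1$

Plot: $I(f_+, 1 - f_+)$ versus $f_+$ on $[0, 1]$; the curve is 0 at both ends and peaks at 1 when $f_+ = 0.5$.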

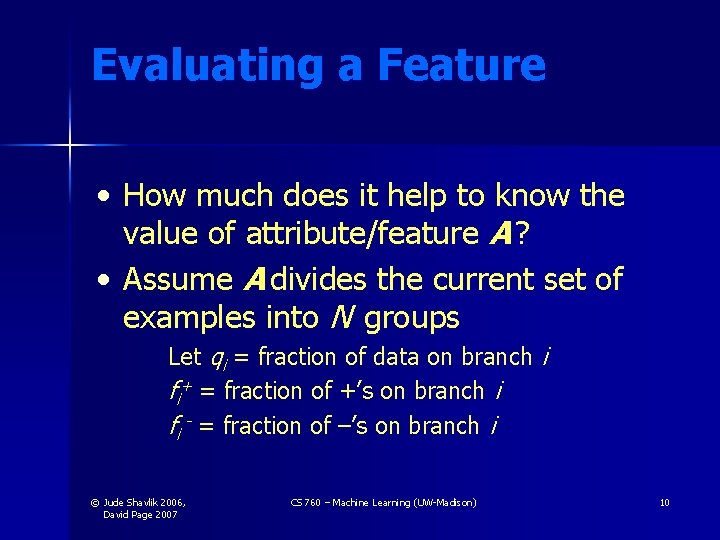

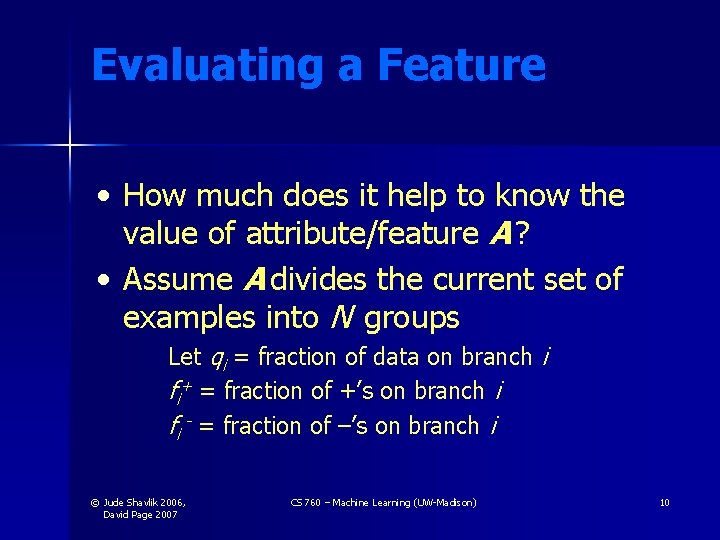

Evaluating a Feature

• How much does it help to know the value of attribute/feature $A$?
• Assume $A$ divides the current set of examples into $N$ groups. Let
  $q_i$ = fraction of data on branch $i$
  $f_{i+}$ = fraction of +'s on branch $i$
  $f_{i-}$ = fraction of −'s on branch $i$

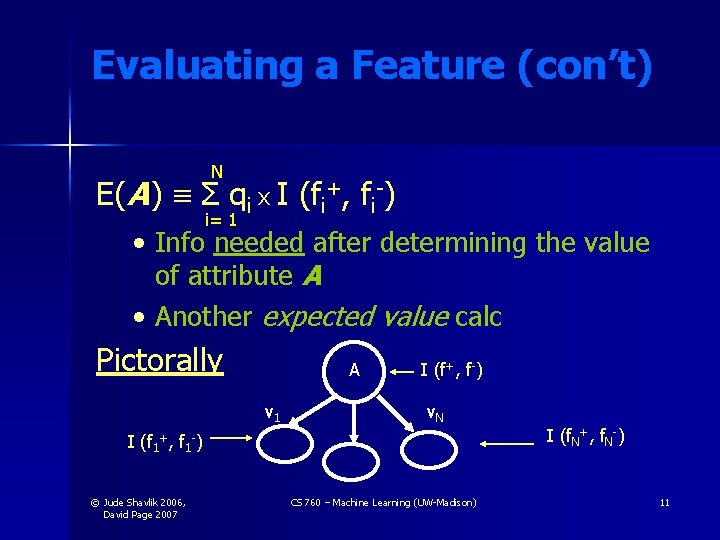

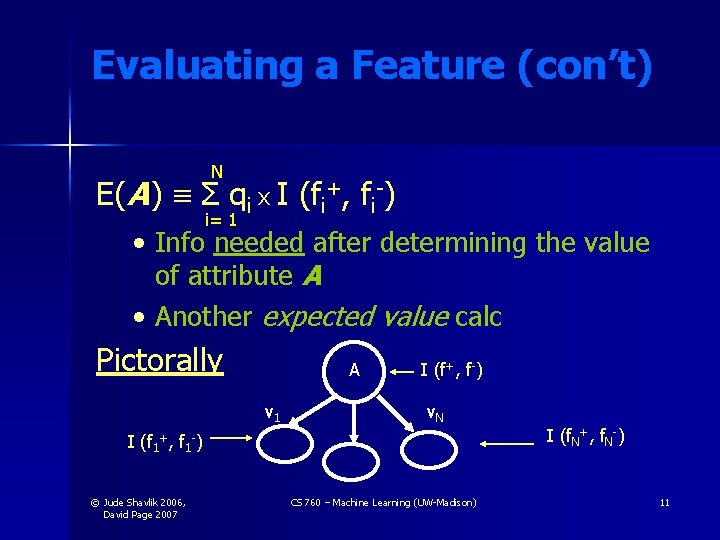

Evaluating a Feature (cont.)

$E(A) \equiv \sum_{i=1}^{N} q_i \times I(f_{i+}, f_{i-})$

• Info needed after determining the value of attribute $A$
• Another expected-value calculation

Pictorially: the node that tests $A$ starts with $I(f_+, f_-)$; its branches $v_1, \ldots, v_N$ lead to subsets with $I(f_{1+}, f_{1-}), \ldots, I(f_{N+}, f_{N-})$.
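The expected-information calculation can be sketched as follows. This is a minimal illustration, not the course's code: `entropy` and `expected_info` are hypothetical names, examples are given as parallel lists of feature values and class labels, and the entropy helper works for any number of classes.

```python
import math
from collections import Counter, defaultdict

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def expected_info(values, labels):
    """E(A): sum over branches i of q_i * I(branch i)."""
    branches = defaultdict(list)
    for v, y in zip(values, labels):
        branches[v].append(y)      # group the labels by feature value
    n = len(labels)
    # len(b) / n is q_i, the fraction of the data on branch i
    return sum(len(b) / n * entropy(b) for b in branches.values())
```

A feature whose branches are all pure gives `expected_info(...) == 0`; a feature that leaves every branch at a 50-50 mixture gives 1 bit.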

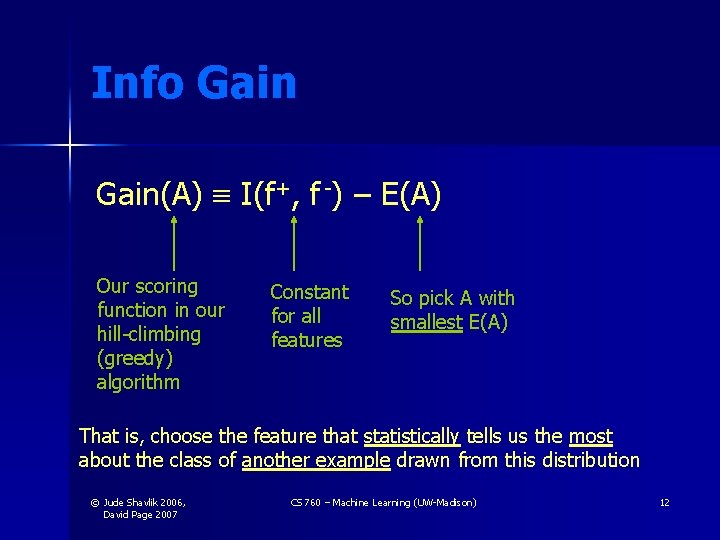

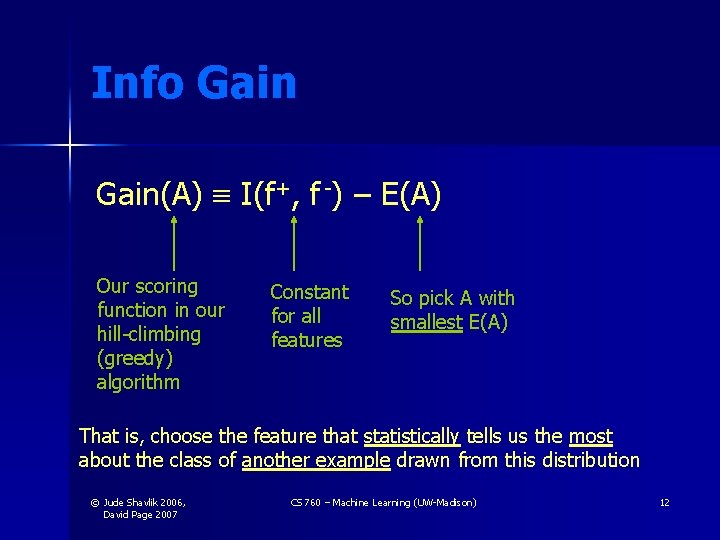

Info Gain

$\text{Gain}(A) \equiv I(f_+, f_-) - E(A)$

• This is the scoring function in our hill-climbing (greedy) algorithm.
• $I(f_+, f_-)$ is constant for all features, so pick the $A$ with the smallest $E(A)$.
• That is, choose the feature that statistically tells us the most about the class of another example drawn from this distribution.

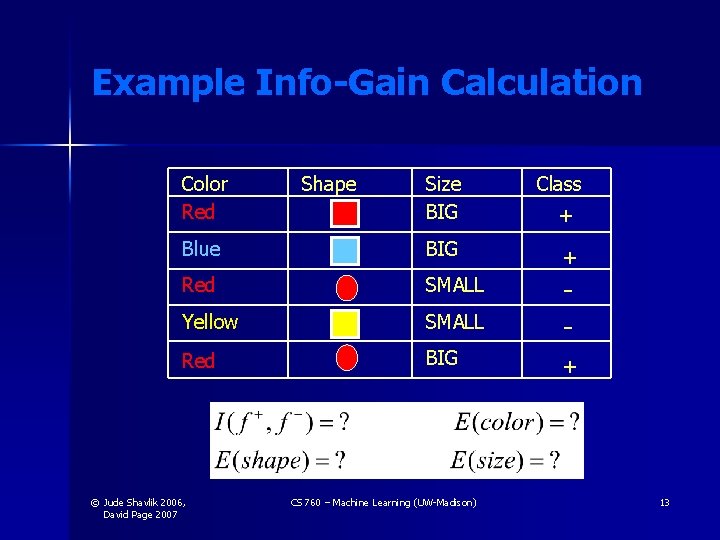

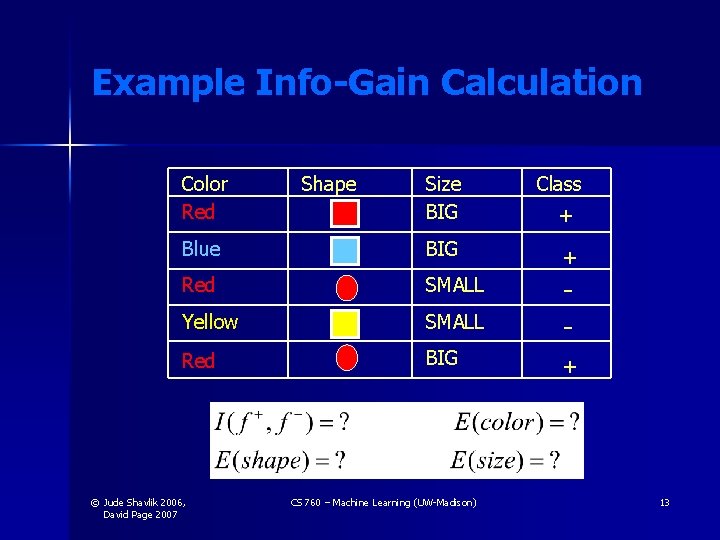

Example Info-Gain Calculation

| Color  | Shape   | Size  | Class |
|--------|---------|-------|-------|
| Red    | (image) | BIG   | +     |
| Blue   | (image) | BIG   | +     |
| Red    | (image) | SMALL | −     |
| Yellow | (image) | SMALL | −     |
| Red    | (image) | BIG   | +     |

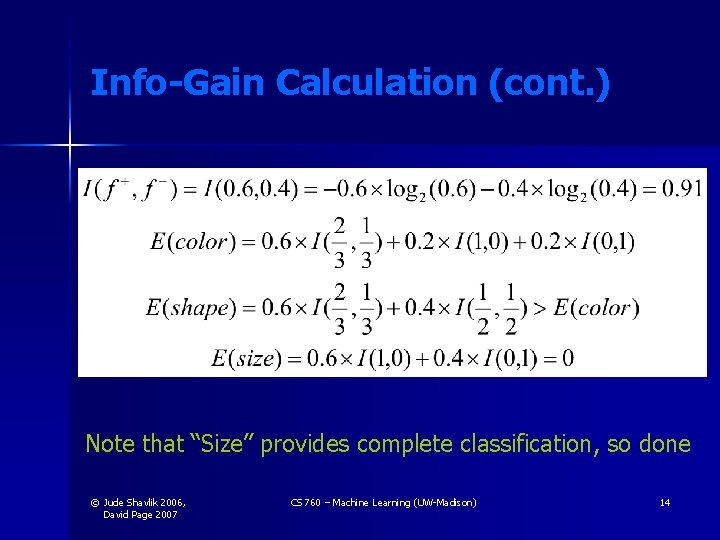

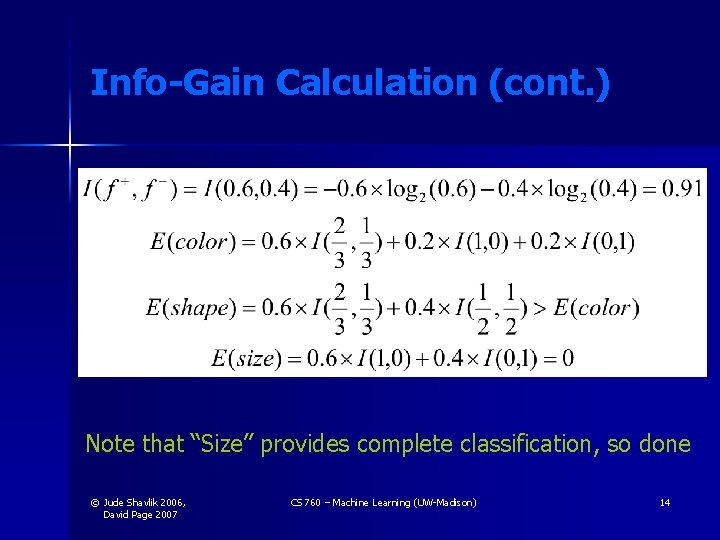

Info-Gain Calculation (cont.)

Note that "Size" provides complete classification, so done.
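The calculation for this example can be reproduced with a short Python sketch. Two assumptions are worth flagging: the Shape column is omitted because its values were pictures, and the class of the Red/SMALL example is taken to be negative, which is what the note that "Size" classifies completely implies. The helper names are illustrative.

```python
import math
from collections import Counter, defaultdict

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def info_gain(values, labels):
    """Gain(A) = I(f+, f-) - E(A)."""
    branches = defaultdict(list)
    for v, y in zip(values, labels):
        branches[v].append(y)
    n = len(labels)
    expected = sum(len(b) / n * entropy(b) for b in branches.values())
    return entropy(labels) - expected

# The five training examples (Shape omitted; third class label is inferred).
color = ["Red", "Blue", "Red", "Yellow", "Red"]
size = ["BIG", "BIG", "SMALL", "SMALL", "BIG"]
label = ["+", "+", "-", "-", "+"]

gain_color = info_gain(color, label)   # roughly 0.42 bits
gain_size = info_gain(size, label)     # roughly 0.97 bits, all of I(3/5, 2/5)
```

Under these assumptions Size removes all of the uncertainty, while Color leaves the three Red examples mixed, so the greedy algorithm would split on Size.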

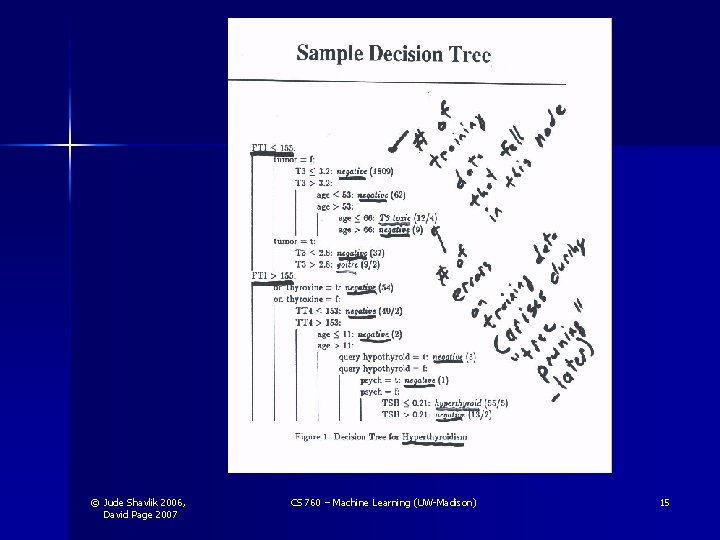

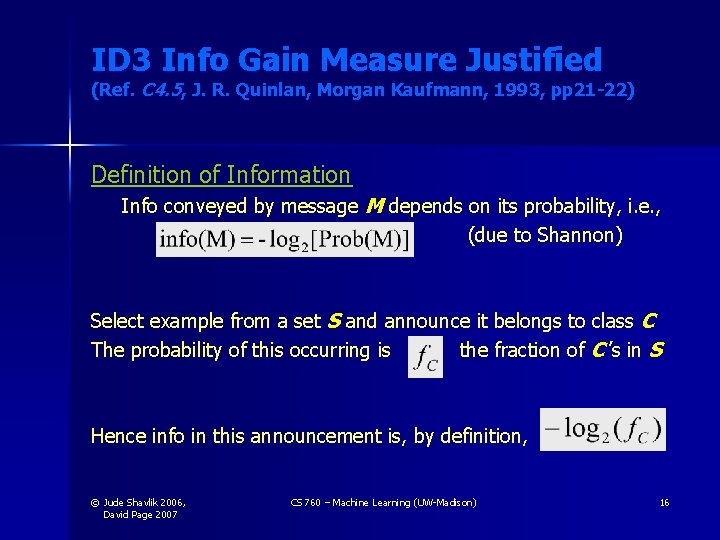

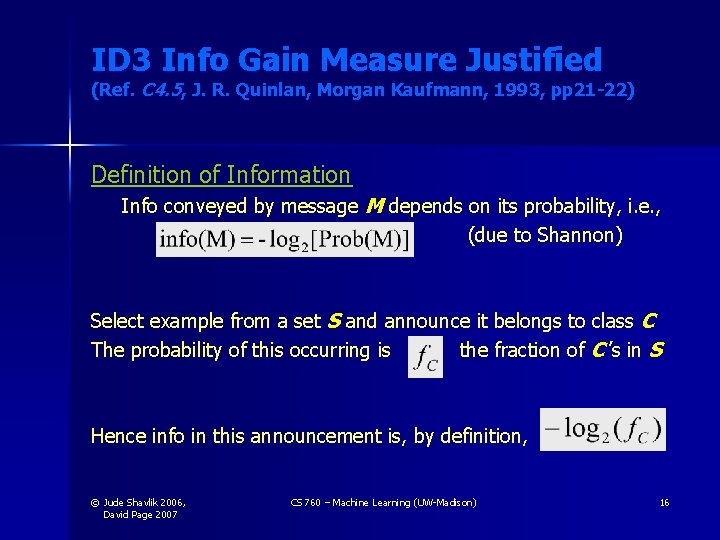

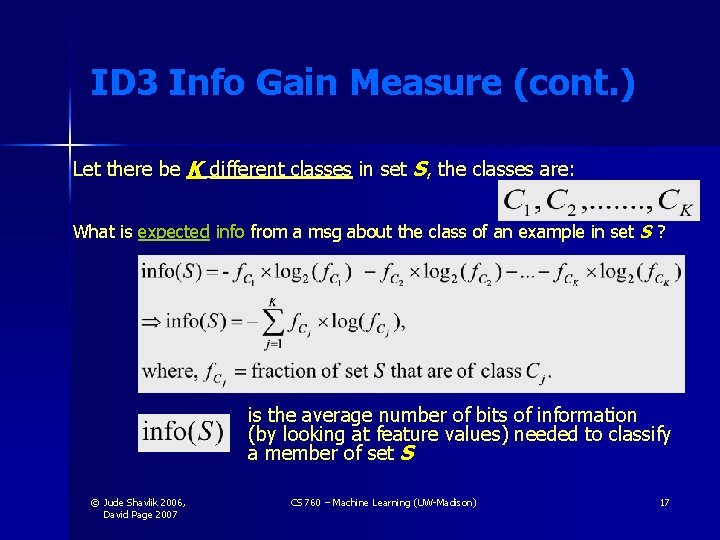

ID3 Info Gain Measure Justified

(Ref. C4.5, J. R. Quinlan, Morgan Kaufmann, 1993, pp. 21-22)

Definition of information: the info conveyed by message $M$ depends on its probability, i.e., $\text{info}(M) \equiv -\lg[\text{Prob}(M)]$ (due to Shannon).

Select an example from a set $S$ and announce that it belongs to class $C$. The probability of this occurring is the fraction of $C$'s in $S$. Hence the info in this announcement is, by definition, $-\lg(\text{fraction of } C\text{'s in } S)$.

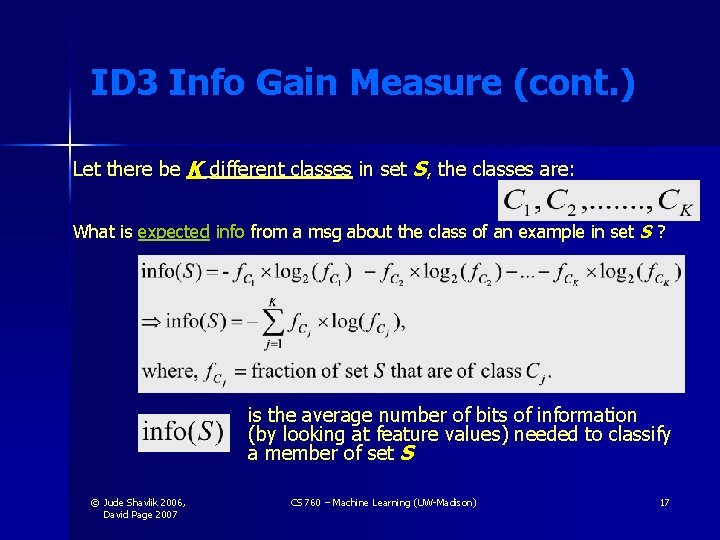

ID3 Info Gain Measure (cont.)

Let there be $K$ different classes in set $S$; the classes are $C_1, C_2, \ldots, C_K$.

What is the expected info from a message about the class of an example in set $S$?

$\text{info}(S) = -\sum_{j=1}^{K} f_j \lg(f_j)$, where $f_j$ is the fraction of $C_j$'s in $S$.

$\text{info}(S)$ is the average number of bits of information (obtained by looking at feature values) needed to classify a member of set $S$.
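As a check on this definition, the expected information can be written out and specialized to two classes. The block below assumes the standard Shannon form, with $f_j$ denoting the fraction of examples in $S$ that belong to class $C_j$ (notation introduced here for illustration); with $K = 2$ it reduces to the node-purity measure used earlier.

```latex
\begin{aligned}
\text{info}(S) &= \sum_{j=1}^{K} f_j \cdot \bigl(-\lg f_j\bigr)
                = -\sum_{j=1}^{K} f_j \lg f_j \\
K = 2:\quad \text{info}(S) &= -f_+ \lg f_+ - f_- \lg f_- = \text{Info}(f_+, f_-)
\end{aligned}
```

The first line is simply the expected value of the per-message information $-\lg f_j$, weighted by how often each class is announced.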

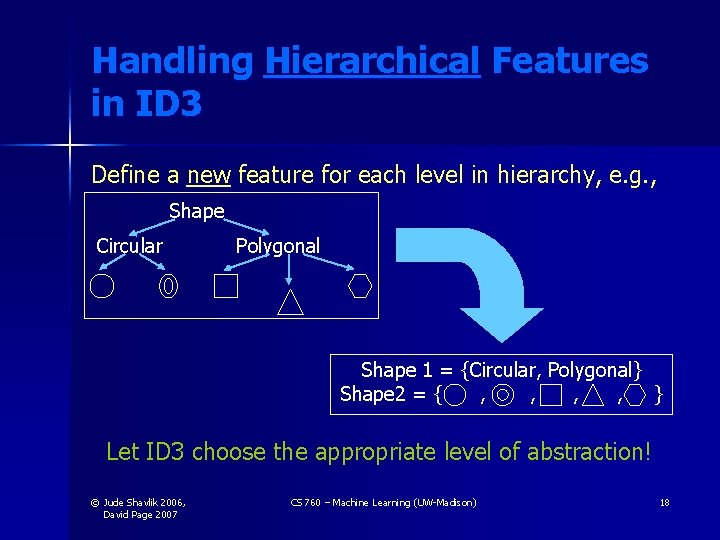

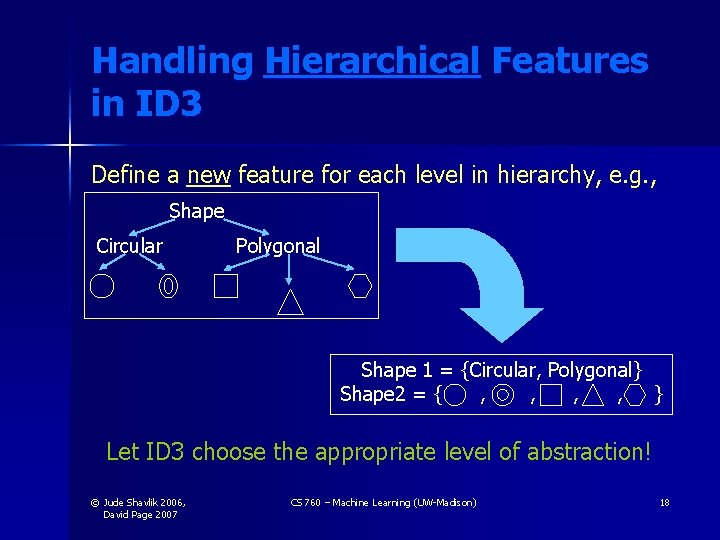

Handling Hierarchical Features in ID3

Define a new feature for each level in the hierarchy, e.g., for a Shape hierarchy whose top level is Circular vs. Polygonal:
• Shape1 = {Circular, Polygonal}
• Shape2 = {the individual shapes pictured at the bottom level of the hierarchy}

Let ID3 choose the appropriate level of abstraction!

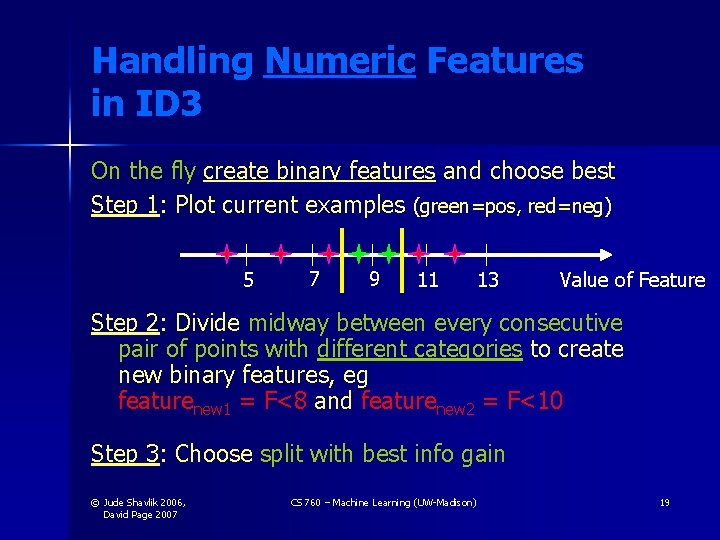

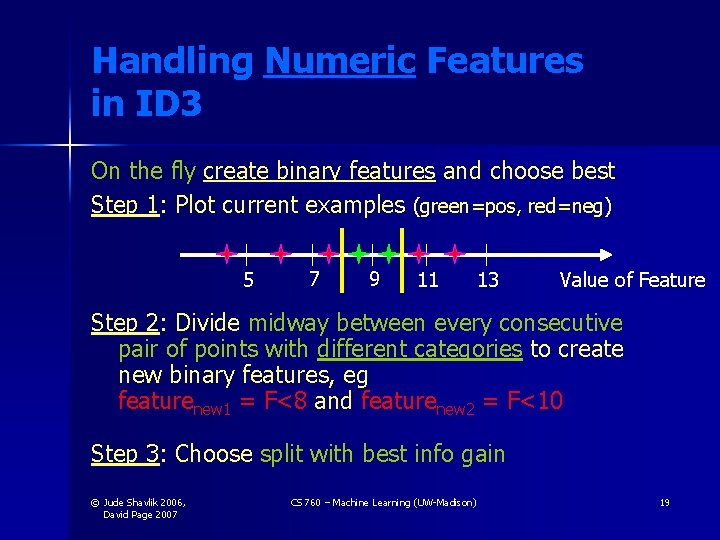

Handling Numeric Features in ID3

On the fly, create binary features and choose the best.

• Step 1: Plot the current examples (green = pos, red = neg) on a number line. (Figure: axis "Value of Feature" with ticks at 5, 7, 9, 11, 13.)
• Step 2: Divide midway between every consecutive pair of points with different categories to create new binary features, e.g., feature_new1 = F<8 and feature_new2 = F<10.
• Step 3: Choose the split with the best info gain.
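Step 2 can be sketched as below. The function name `candidate_thresholds` and the example labels are assumptions: the slide's point colors were not recoverable, so the labels were chosen to reproduce the two thresholds it mentions (8 and 10).

```python
def candidate_thresholds(values, labels):
    """Midpoints between consecutive sorted points whose classes differ."""
    pairs = sorted(zip(values, labels))
    thresholds = []
    for (v1, y1), (v2, y2) in zip(pairs, pairs[1:]):
        # Only a class change between two distinct values yields a useful cut.
        if y1 != y2 and v1 != v2:
            thresholds.append((v1 + v2) / 2)
    return thresholds

# Hypothetical labels for the points at 5, 7, 9, 11, 13.
cuts = candidate_thresholds([5, 7, 9, 11, 13], ["+", "+", "-", "+", "+"])
```

Each returned threshold `t` defines one binary feature `F < t`; Step 3 would then score these features by info gain like any other. Repeated values with mixed classes are skipped in this simplified version.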

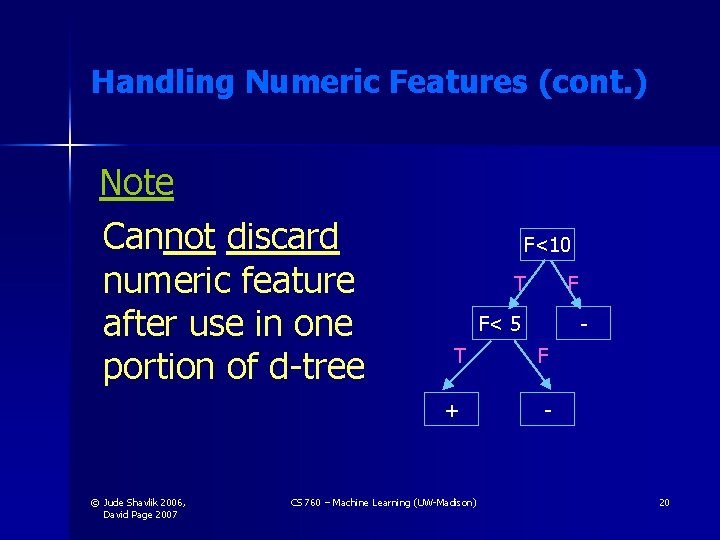

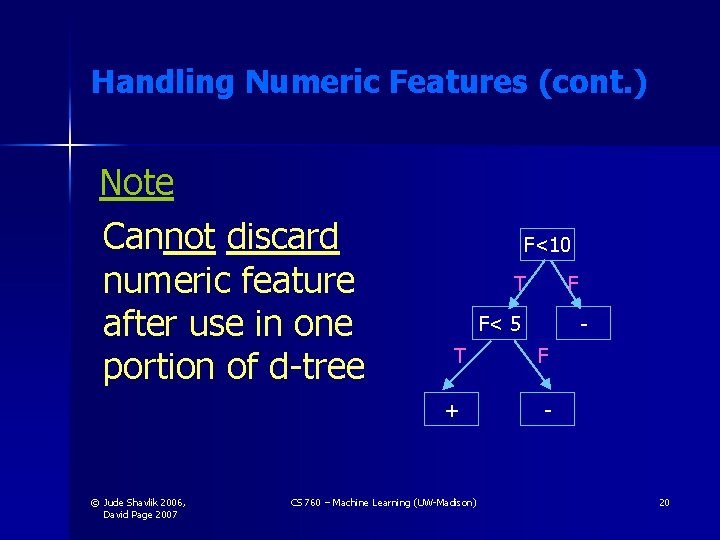

Handling Numeric Features (cont.)

Note: a numeric feature cannot be discarded after it is used in one portion of the d-tree.

(Figure: a tree that tests the same feature twice on one path, with F<10 at the root and F<5 on its T branch, and leaves labeled + and −.)

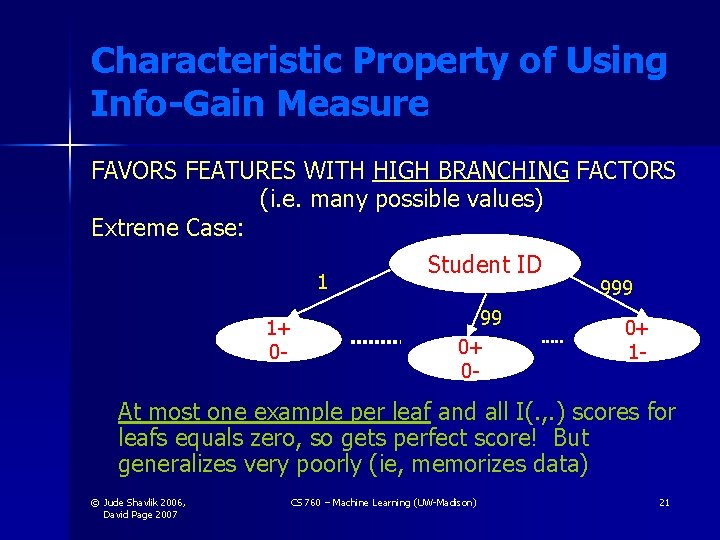

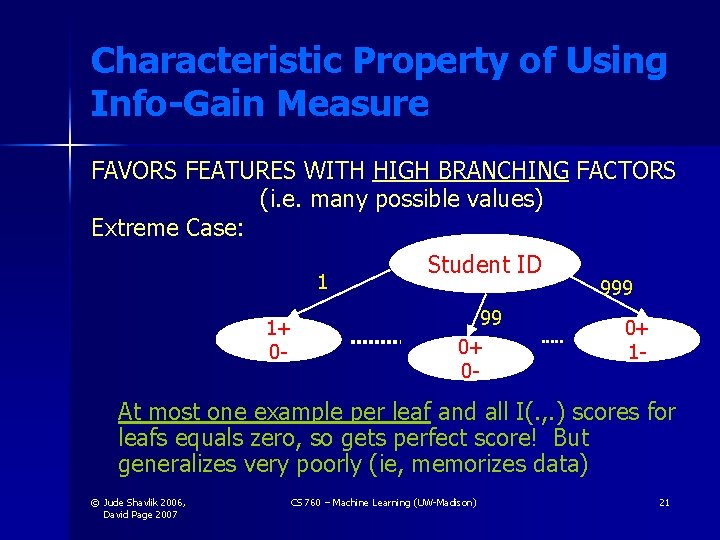

Characteristic Property of Using Info-Gain Measure

FAVORS FEATURES WITH HIGH BRANCHING FACTORS (i.e., many possible values)

Extreme case: split on Student ID, with one branch per ID value; each leaf then holds counts such as 1+ 0−, 0+ 0−, or 0+ 1−.

There is at most one example per leaf and all $I(\cdot, \cdot)$ scores for the leaves equal zero, so the feature gets a perfect score! But it generalizes very poorly (i.e., it memorizes the data).
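The extreme case is easy to confirm numerically. In this sketch (hypothetical data and helper names), a feature with a unique value per example achieves the maximum possible info gain even though it cannot generalize.

```python
import math
from collections import Counter, defaultdict

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def info_gain(values, labels):
    """Gain(A) = I(f+, f-) - E(A)."""
    branches = defaultdict(list)
    for v, y in zip(values, labels):
        branches[v].append(y)
    n = len(labels)
    return entropy(labels) - sum(len(b) / n * entropy(b) for b in branches.values())

labels = ["+", "-", "+", "-", "-", "+"]          # hypothetical classes
student_id = [101, 102, 103, 104, 105, 106]      # one distinct value per example

gain_id = info_gain(student_id, labels)          # equals entropy(labels)
```

Every leaf holds a single example, so E(Student ID) is zero and the gain equals the full $I(f_+, f_-)$ of the training set.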

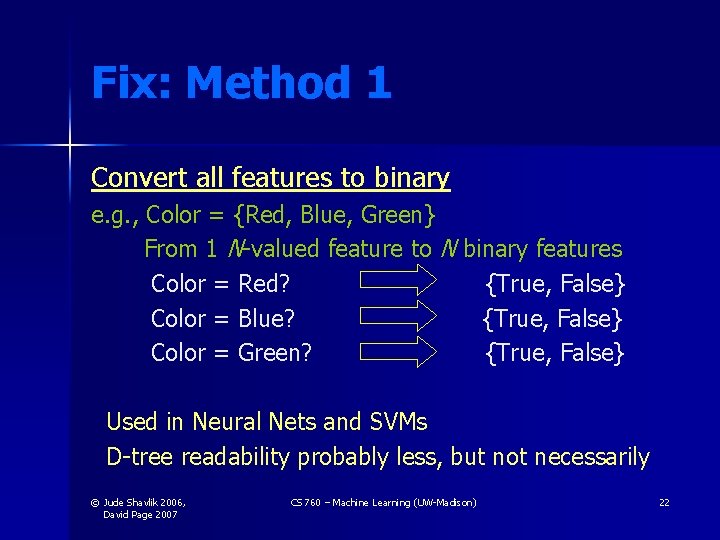

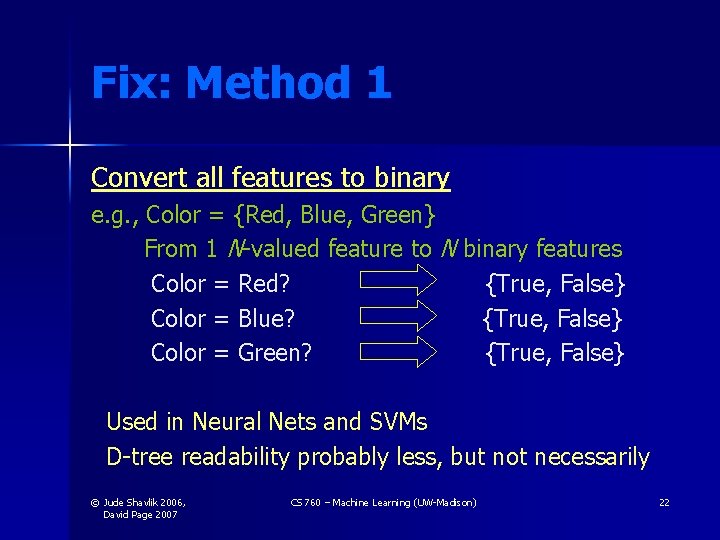

Fix: Method 1

Convert all features to binary, e.g., Color = {Red, Blue, Green}.

From 1 N-valued feature to N binary features:
• Color = Red? {True, False}
• Color = Blue? {True, False}
• Color = Green? {True, False}

Used in neural nets and SVMs.

D-tree readability is probably lower, but not necessarily.
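A minimal sketch of this conversion, assuming a feature is stored as a list of values, one per example. The function name `binarize` and the naming scheme for the new features are illustrative.

```python
def binarize(name, values):
    """Turn one N-valued feature into N True/False features."""
    return {
        f"{name} = {category}?": [v == category for v in values]
        for category in sorted(set(values))
    }

binary_features = binarize("Color", ["Red", "Blue", "Green", "Red"])
```

Each new feature has a branching factor of two, so none of them can reach a perfect score merely by having many values.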

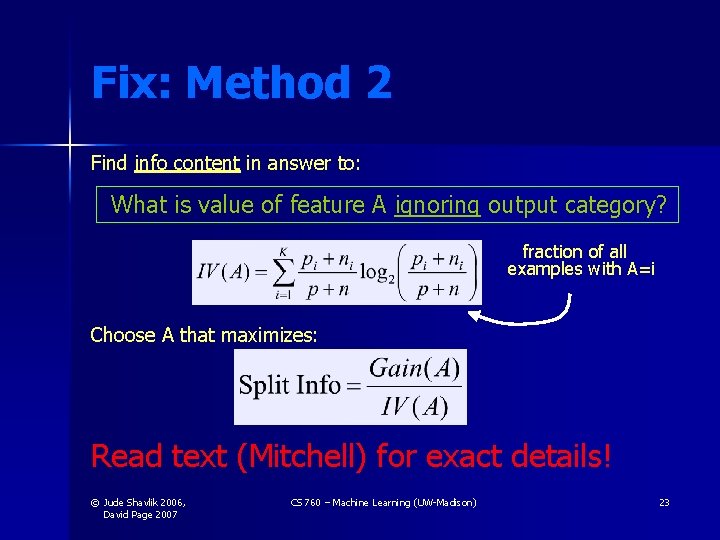

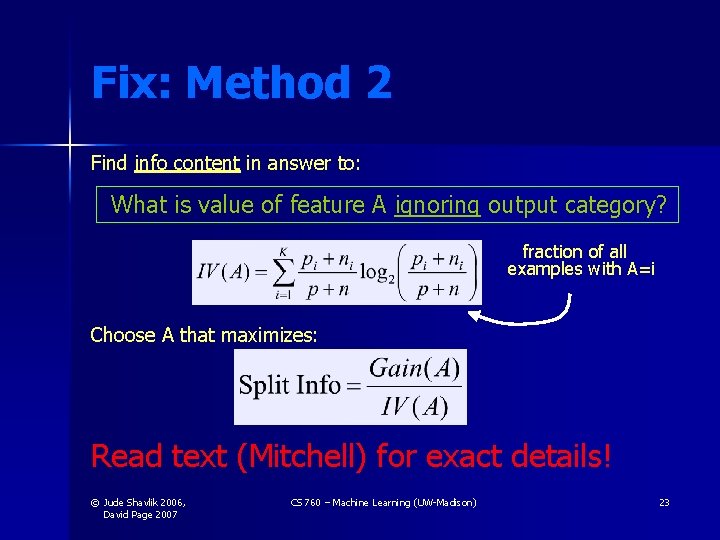

Fix: Method 2

Find the info content in the answer to: what is the value of feature $A$, ignoring the output category?

$\text{SplitInfo}(A) = -\sum_{i} q_i \lg(q_i)$, where $q_i$ = fraction of all examples with $A = i$

Choose the $A$ that maximizes: $\text{Gain}(A) \,/\, \text{SplitInfo}(A)$

Read text (Mitchell) for exact details!
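A hedged sketch of this fix, assuming it is the gain ratio described in Mitchell's text: info gain divided by the entropy of the feature's own value distribution. The function names, the zero-split guard, and the data are illustrative assumptions.

```python
import math
from collections import Counter, defaultdict

def entropy(items):
    """Shannon entropy (in bits) of a list of labels or feature values."""
    n = len(items)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(items).values())

def info_gain(values, labels):
    branches = defaultdict(list)
    for v, y in zip(values, labels):
        branches[v].append(y)
    n = len(labels)
    return entropy(labels) - sum(len(b) / n * entropy(b) for b in branches.values())

def gain_ratio(values, labels):
    """Info gain normalized by the info content of the feature's value itself."""
    split_info = entropy(values)           # ignores the output category
    if split_info == 0:                    # single-valued feature: no split possible
        return 0.0
    return info_gain(values, labels) / split_info

labels = ["+", "-", "+", "-", "-", "+"]
student_id = [101, 102, 103, 104, 105, 106]    # high branching factor
binary = ["x", "y", "x", "y", "y", "x"]        # two values, also a perfect split
```

Both features have the same raw info gain here, but the many-valued one is penalized by its larger split information, so the binary feature wins under the ratio.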

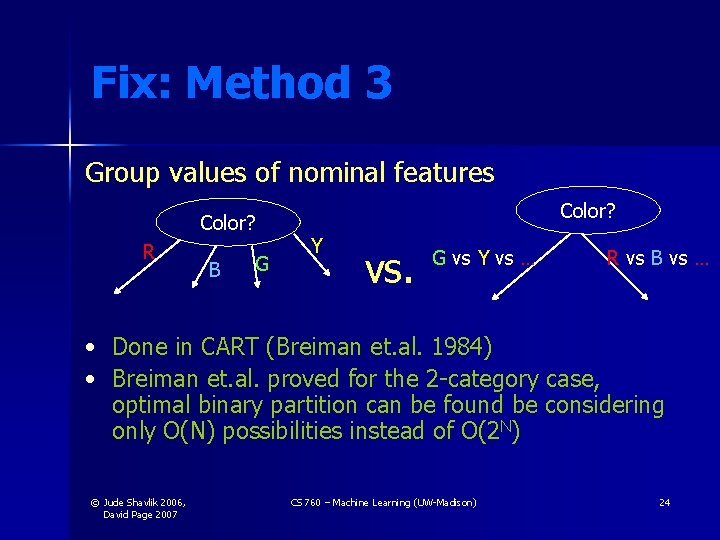

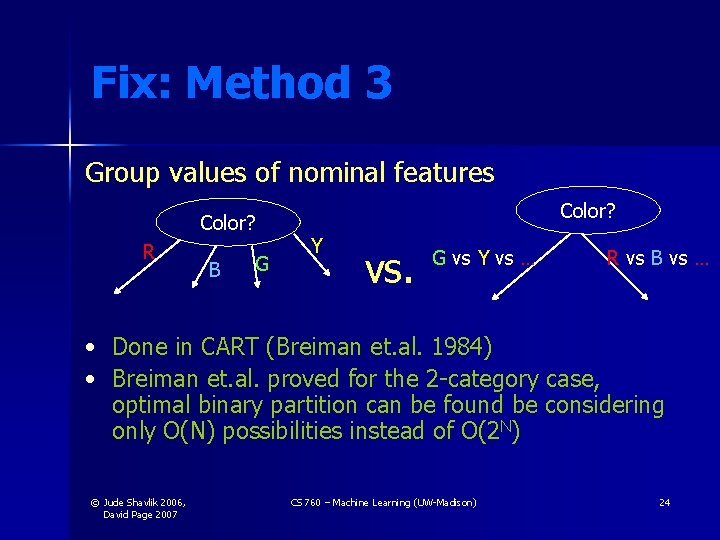

Fix: Method 3

Group values of nominal features: instead of a multiway split on Color? with one branch per value (R, B, G, Y, …), use a binary split between groups of values, e.g., {R, B, …} vs. {G, Y, …}.

• Done in CART (Breiman et al., 1984)
• Breiman et al. proved that, for the 2-category case, the optimal binary partition can be found by considering only $O(N)$ possibilities instead of $O(2^N)$
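One way to realize the $O(N)$ search for the 2-category case is to order the feature's values by their fraction of positive examples and test only the $N - 1$ splits of that ordering. The sketch below follows that idea; the function name, the use of expected entropy as the score, and the data are assumptions, not CART's actual implementation.

```python
import math
from collections import Counter, defaultdict

def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def best_binary_grouping(values, labels, positive="+"):
    """Return (score, left_group, right_group) with the lowest expected entropy."""
    by_value = defaultdict(list)
    for v, y in zip(values, labels):
        by_value[v].append(y)
    # Order the distinct values by their fraction of positive examples.
    ordered = sorted(by_value, key=lambda v: by_value[v].count(positive) / len(by_value[v]))
    n = len(labels)
    best = None
    for k in range(1, len(ordered)):        # only N - 1 candidate partitions
        left_vals = set(ordered[:k])
        left = [y for v, y in zip(values, labels) if v in left_vals]
        right = [y for v, y in zip(values, labels) if v not in left_vals]
        score = len(left) / n * entropy(left) + len(right) / n * entropy(right)
        if best is None or score < best[0]:
            best = (score, left_vals, set(ordered[k:]))
    return best                             # None if the feature has one value

colors = ["R", "R", "B", "B", "G", "G", "Y", "Y"]     # hypothetical data
classes = ["+", "+", "+", "+", "-", "-", "-", "-"]
score, left_group, right_group = best_binary_grouping(colors, classes)
```

On this toy data the search groups G with Y and R with B, giving two pure branches from a single binary split.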

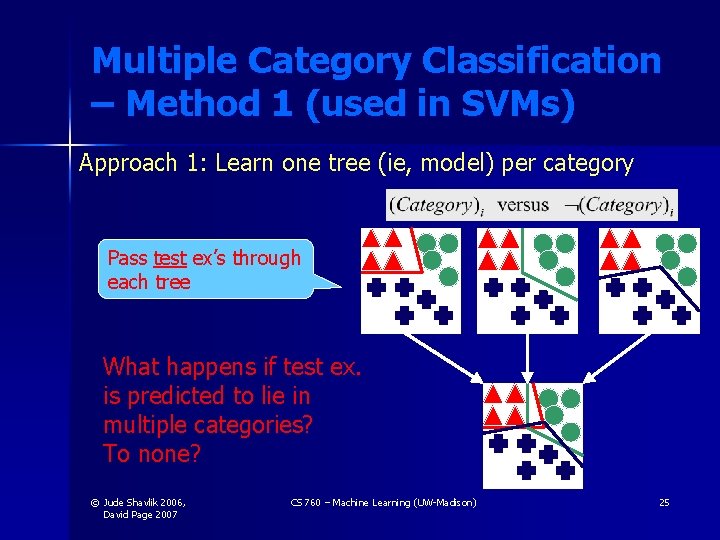

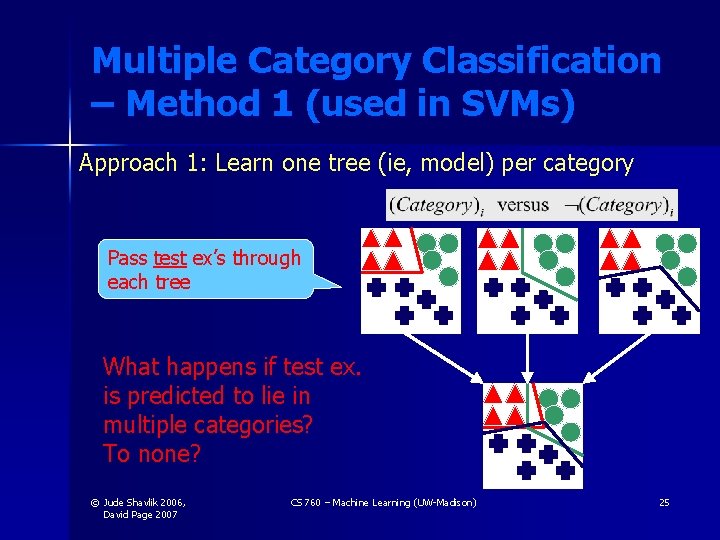

Multiple Category Classification – Method 1 (used in SVMs)

Approach 1: Learn one tree (i.e., model) per category.

• Pass test examples through each tree.
• What happens if a test example is predicted to lie in multiple categories? In none?
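One possible answer to the question on this slide, offered as an assumption rather than the course's prescribed method, is to have each per-category model return a confidence instead of a yes/no and to predict the category with the highest confidence. In the sketch below, `models` is a hypothetical mapping from category name to a scoring function.

```python
def predict_one_vs_rest(models, example):
    """Pick the category whose model is most confident about the example."""
    scores = {category: model(example) for category, model in models.items()}
    return max(scores, key=scores.get)

# Toy stand-ins for three learned per-category models (hypothetical).
models = {
    "a": lambda x: 0.9 if x > 0 else 0.1,
    "b": lambda x: 0.6,
    "c": lambda x: 0.2,
}
```

With decision trees, a confidence could for example be the fraction of training examples of that category at the leaf the test example reaches; taking the maximum covers both the "multiple categories" and the "none" cases.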

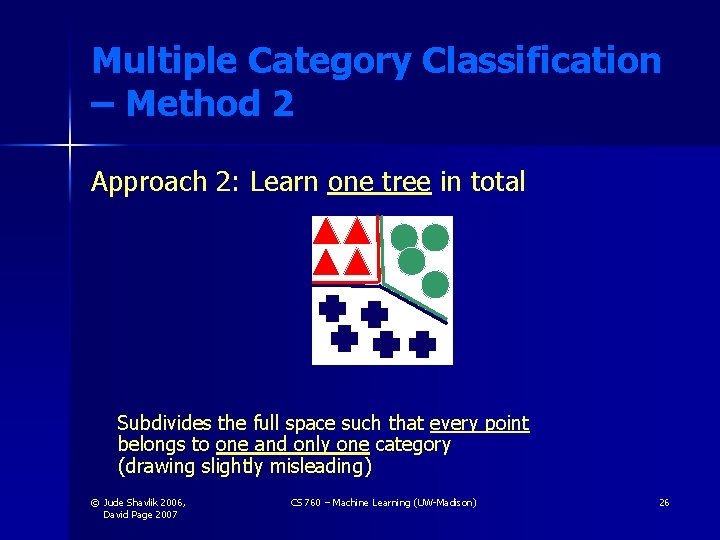

Multiple Category Classification – Method 2

Approach 2: Learn one tree in total.

Subdivides the full space such that every point belongs to one and only one category (drawing slightly misleading).