Machine Learning via Advice Taking

Jude Shavlik


Thanks to: Rich Maclin, Lisa Torrey, Trevor Walker, Prof. Olvi Mangasarian, Glenn Fung, Ted Wild, DARPA

Quote (2002) from DARPA

“Sometimes an assistant will merely watch you and draw conclusions. Sometimes you have to tell a new person, 'Please don't do it this way' or 'From now on when I say X, you do Y.' It's a combination of learning by example and by being guided.”

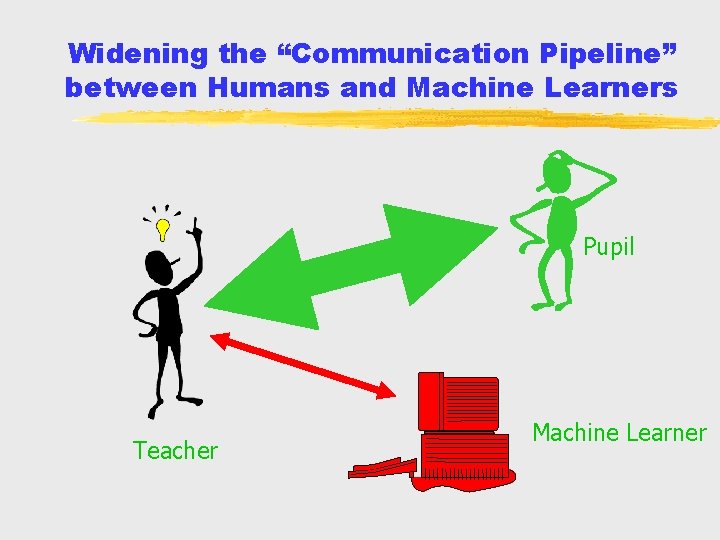

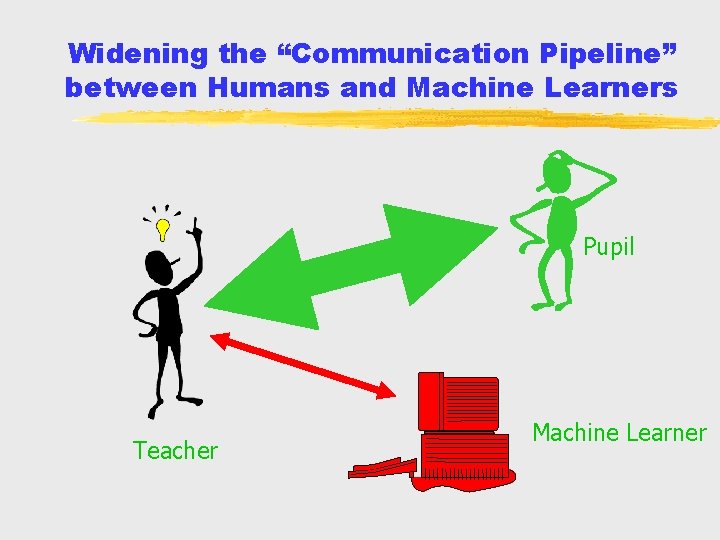

Widening the “Communication Pipeline” between Humans and Machine Learners

[Figure labels: Pupil, Teacher, Machine Learner]

Our Approach to Building Better Machine Learners

• Human partner expresses advice “naturally” and without knowledge of the ML agent’s internals
• Agent incorporates advice directly into the function it is learning
• Additional feedback (rewards, I/O pairs, inferred labels, more advice) is used to refine the learner continually

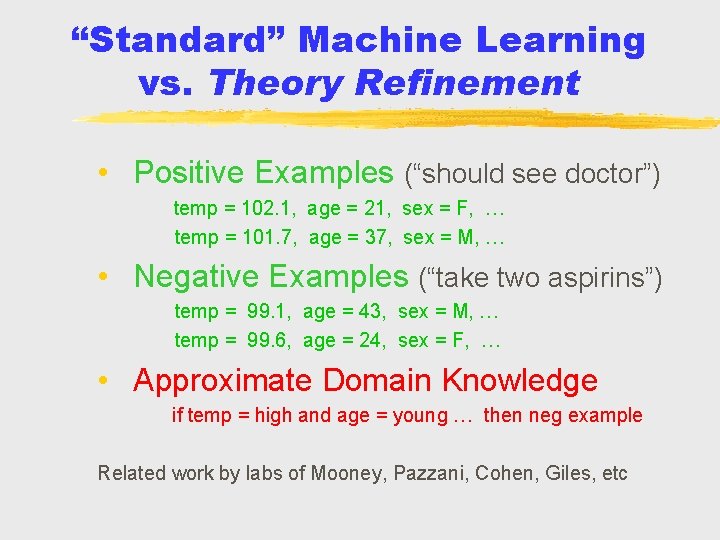

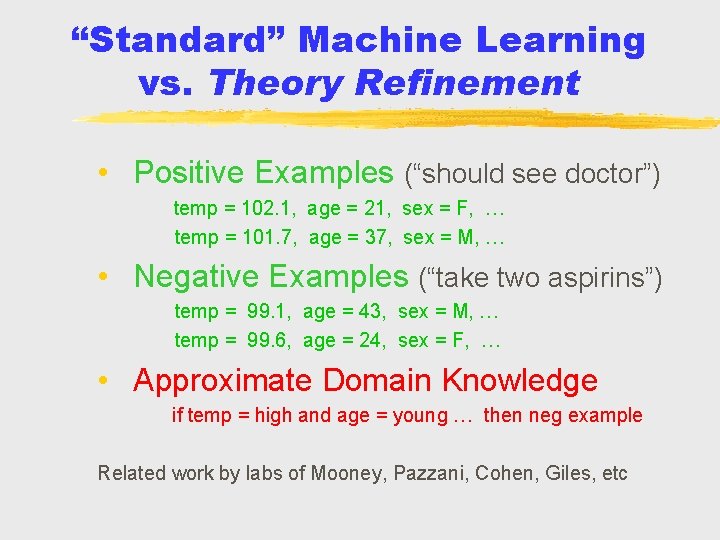

“Standard” Machine Learning vs. Theory Refinement

• Positive Examples (“should see doctor”)
  temp = 102.1, age = 21, sex = F, …
  temp = 101.7, age = 37, sex = M, …
• Negative Examples (“take two aspirins”)
  temp = 99.1, age = 43, sex = M, …
  temp = 99.6, age = 24, sex = F, …
• Approximate Domain Knowledge
  if temp = high and age = young … then neg example

Related work by labs of Mooney, Pazzani, Cohen, Giles, etc.

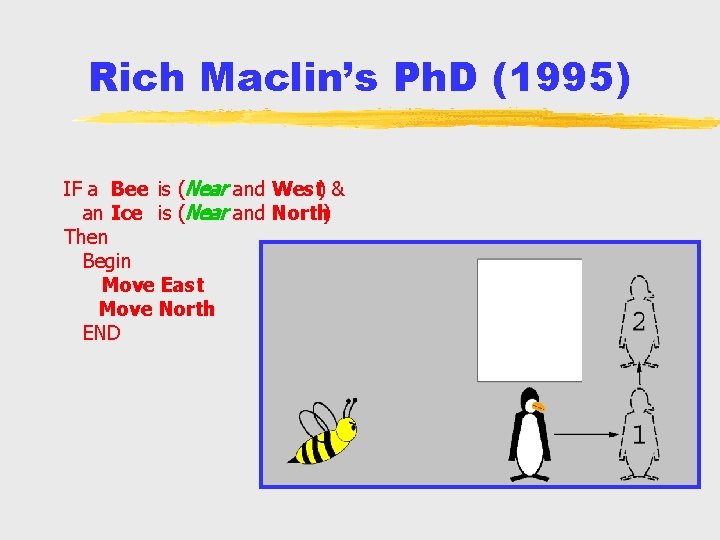

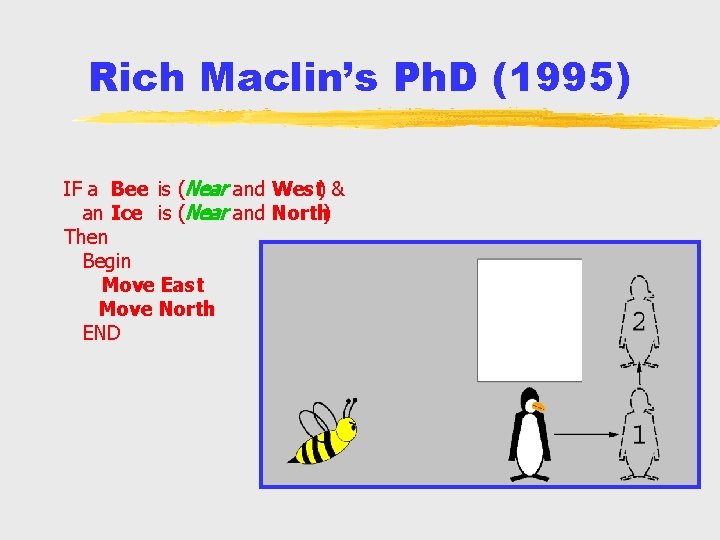

Rich Maclin’s Ph.D. (1995)

IF a Bee is (Near and West) & an Ice is (Near and North)
THEN
BEGIN
  Move East
  Move North
END

Sample Results

[Plot legend: With advice, Without advice]

Our Motto

Give advice rather than commands to your computer

Outline

• Prior Knowledge and Support Vector Machines
  • Intro to SVMs
  • Linear Separation
  • Non-Linear Separation
  • Function Fitting (“Regression”)
• Advice-Taking Reinforcement Learning
• Transfer Learning via Advice Taking

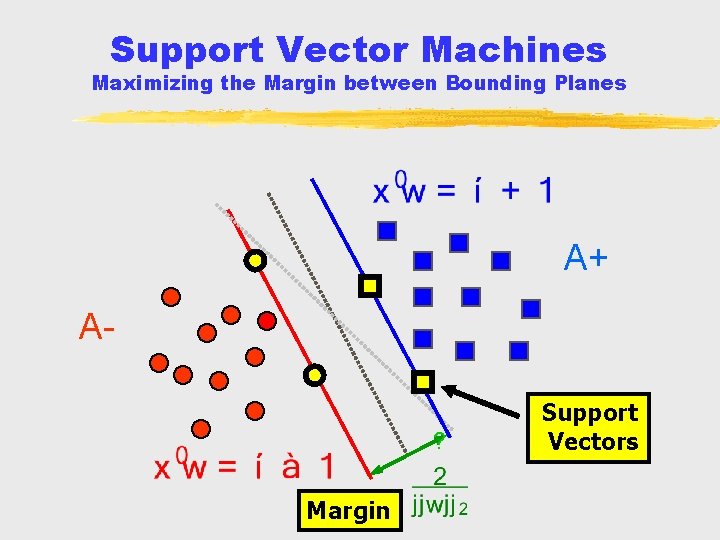

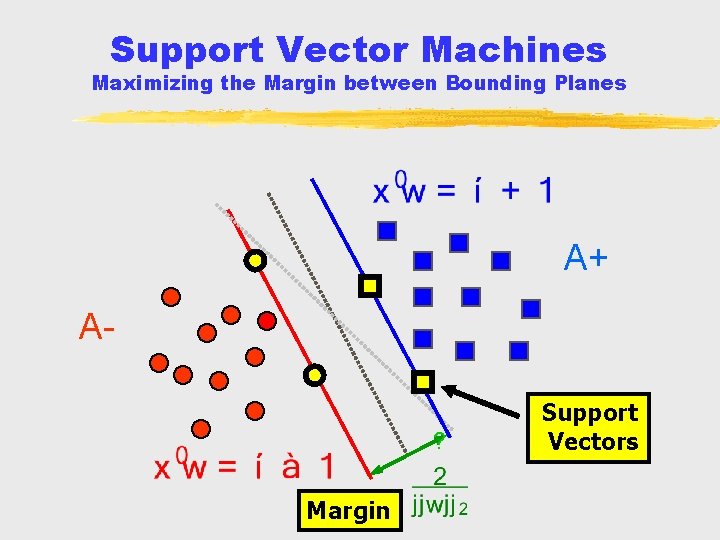

Support Vector Machines: Maximizing the Margin between Bounding Planes

[Figure labels: A+, A-, Margin, Support Vectors]

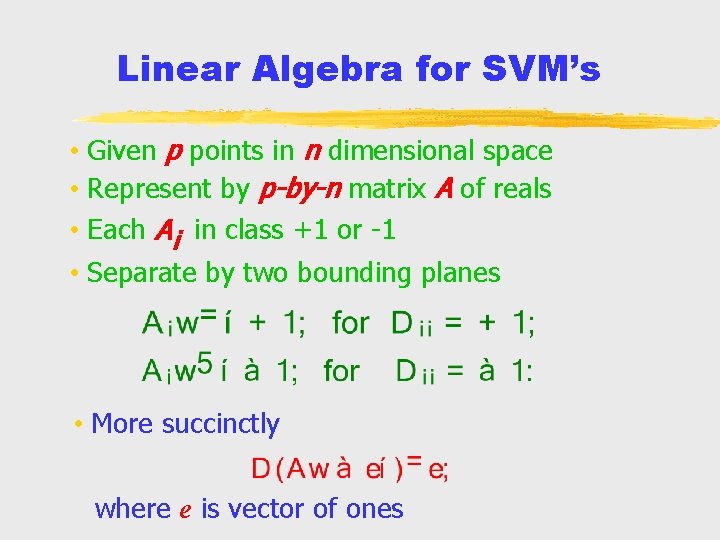

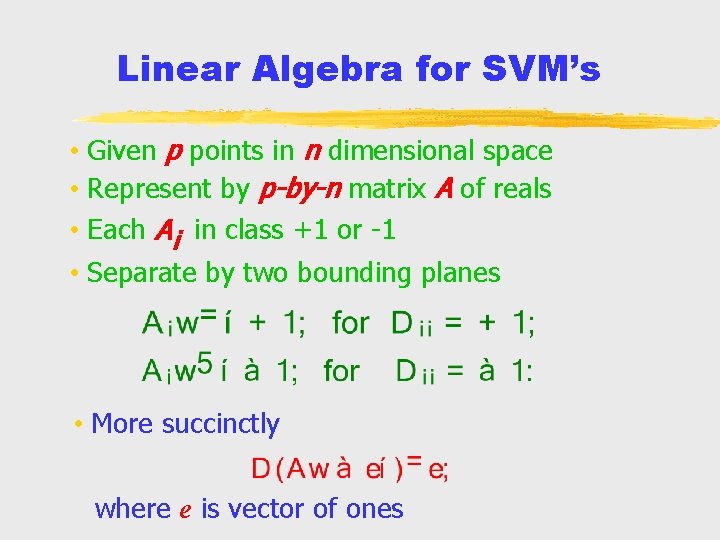

Linear Algebra for SVMs

• Given p points in n-dimensional space
• Represent them by a p-by-n matrix A of reals
• Each row Ai is in class +1 or -1
• Separate the classes by two bounding planes
• These can be written more succinctly using e, a vector of ones

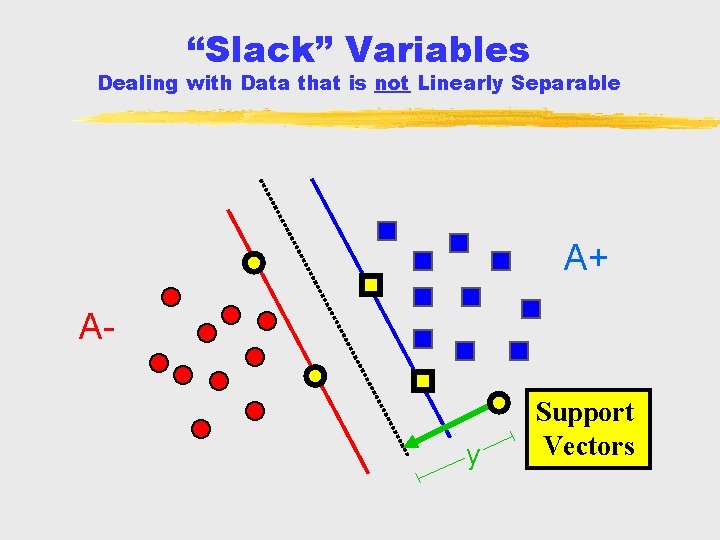

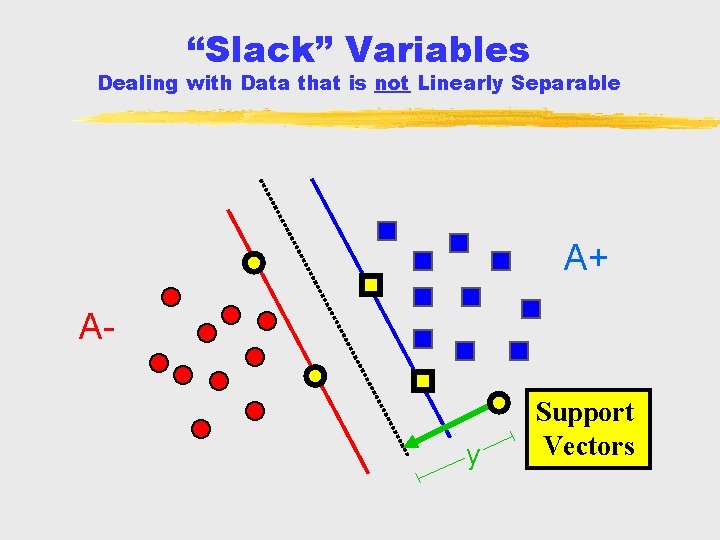
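
As a sketch of the bounding-plane conditions described on this slide, one standard way to write them is given below. The notation is an assumption: $D$ is a $p \times p$ diagonal matrix with $D_{ii} = \pm 1$ holding the class of row $A_i$, and $w$ and $b$ are the weights and offset that appear in $f(x) \approx x'w + b$ later in the talk. The original slide may use different symbols.

```latex
\begin{aligned}
A_i w + b &\ge +1 \quad \text{for } D_{ii} = +1, \\
A_i w + b &\le -1 \quad \text{for } D_{ii} = -1, \\
\text{or, more succinctly:} \quad D(Aw + be) &\ge e .
\end{aligned}
```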

“Slack” Variables: Dealing with Data that is not Linearly Separable

[Figure labels: A+, A-, y, Support Vectors]

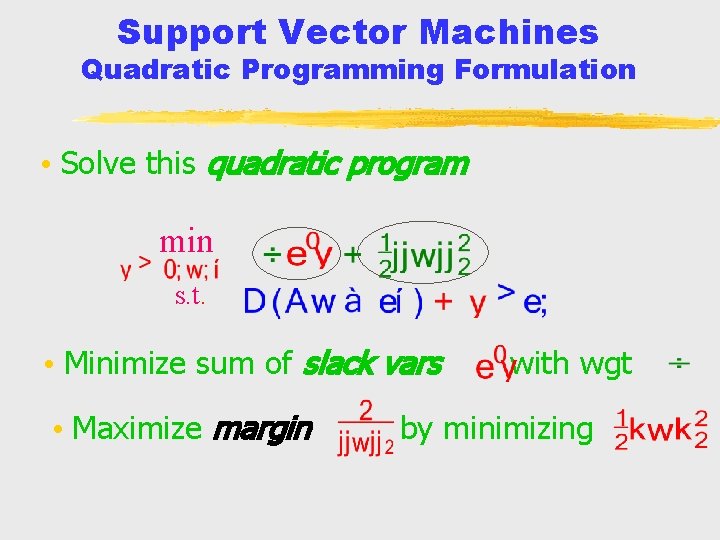

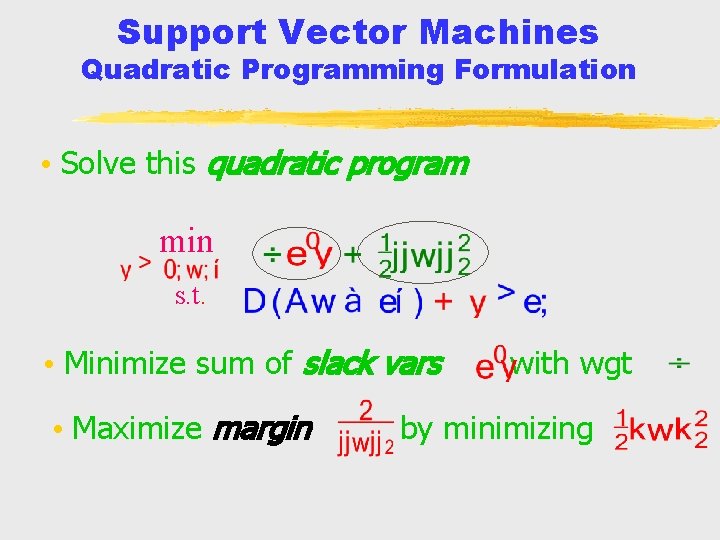

Support Vector Machines: Quadratic Programming Formulation

• Solve this quadratic program: min … s.t. …
• Minimize the (weighted) sum of slack variables
• Maximize the margin by minimizing the norm of w

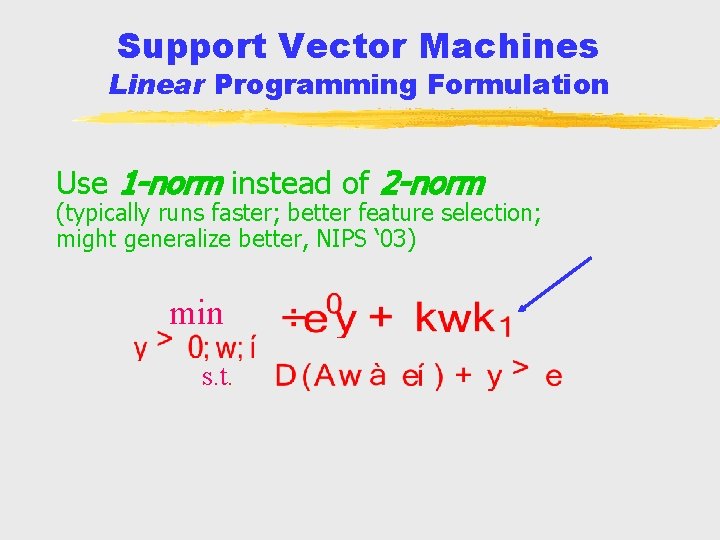

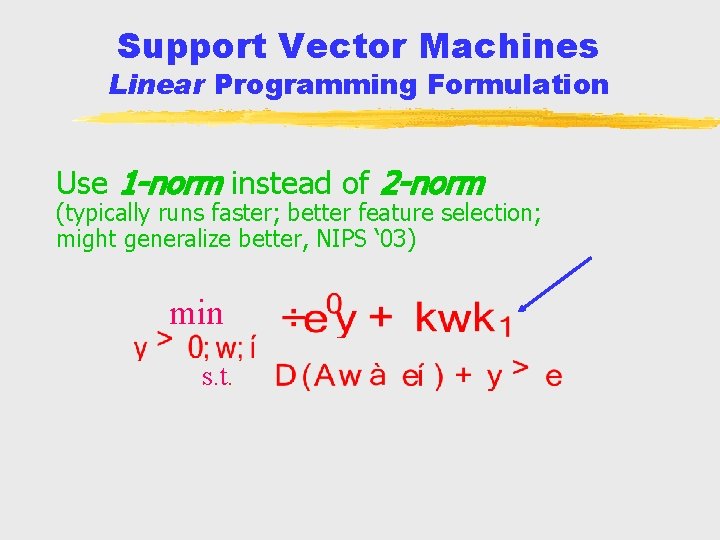
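
The two goals on this slide (small slack, large margin) are commonly combined in the soft-margin quadratic program sketched here. This is the textbook form, not necessarily the exact one shown on the slide; the slack vector $s$, the trade-off weight $C$, and the diagonal label matrix $D$ are assumed names.

```latex
\begin{aligned}
\min_{w,\,b,\,s} \quad & C\, e's + \tfrac{1}{2}\,\|w\|_2^2 \\
\text{s.t.} \quad & D(Aw + be) + s \ge e, \\
& s \ge 0 .
\end{aligned}
```

The distance between the two bounding planes is $2 / \|w\|_2$, which is why minimizing $\|w\|_2$ maximizes the margin.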

Support Vector Machines: Linear Programming Formulation

Use the 1-norm instead of the 2-norm (typically runs faster; better feature selection; might generalize better, NIPS ’03)

min … s.t. …

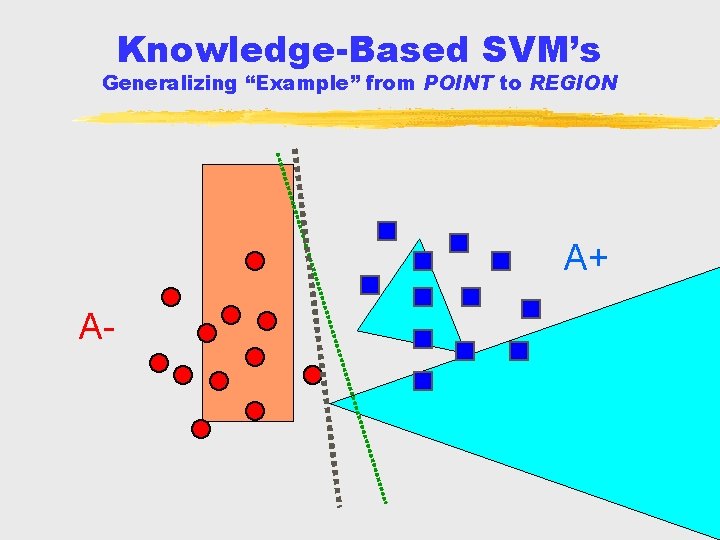

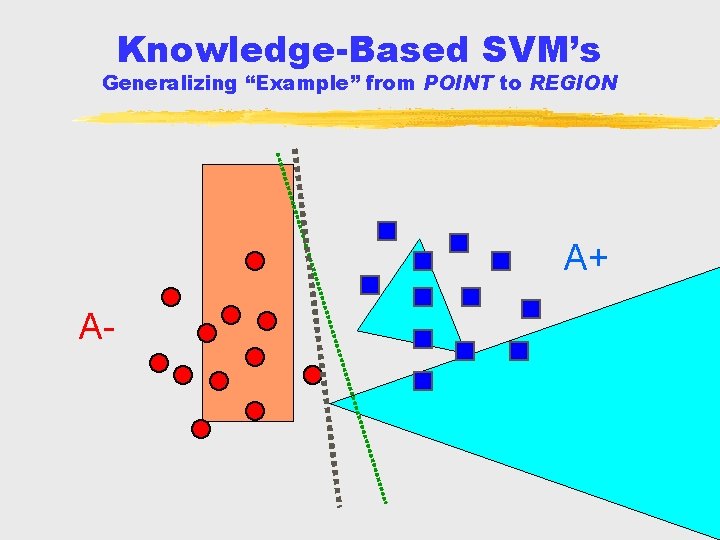
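
To illustrate why switching to the 1-norm yields a linear program, the sketch below replaces $\|w\|_1$ with an auxiliary vector $t$ that bounds $|w|$ componentwise. The symbols $t$, $s$, $C$, and $D$ are assumptions introduced for this example.

```latex
\begin{aligned}
\min_{w,\,b,\,s,\,t} \quad & C\, e's + e't \\
\text{s.t.} \quad & D(Aw + be) + s \ge e, \\
& -t \le w \le t, \\
& s \ge 0 .
\end{aligned}
```

At an optimum $t = |w|$, so $e't = \|w\|_1$, and both the objective and the constraints are linear.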

Knowledge-Based SVMs: Generalizing “Example” from POINT to REGION

[Figure labels: A+, A-]

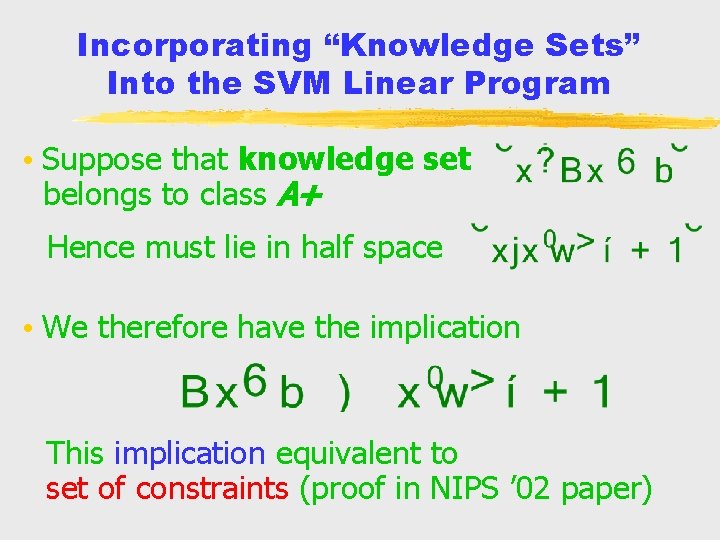

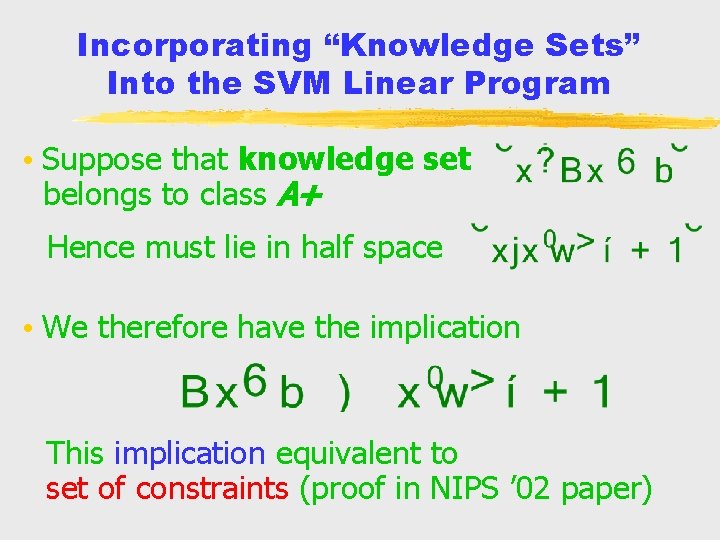

Incorporating “Knowledge Sets” Into the SVM Linear Program

• Suppose that a knowledge set belongs to class A+; hence it must lie in the half space for A+
• We therefore have an implication, and this implication is equivalent to a set of constraints (proof in NIPS ’02 paper)

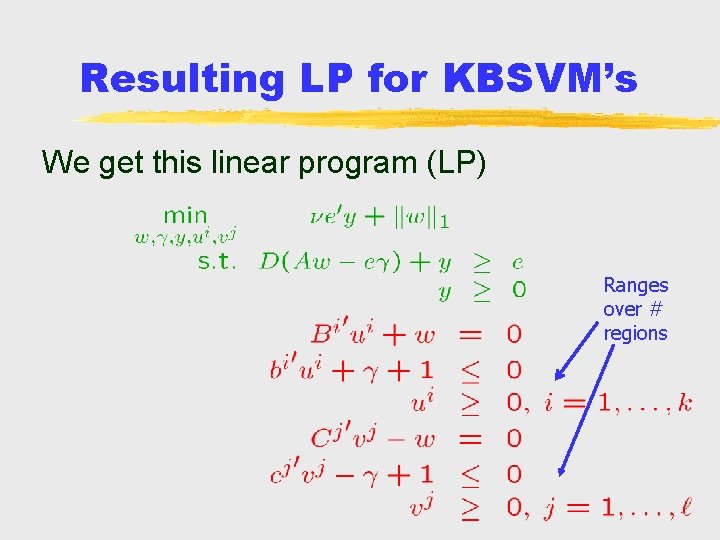

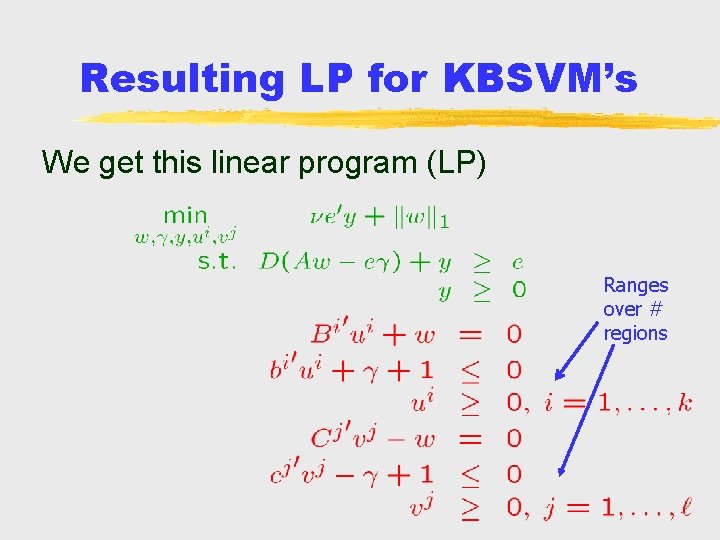
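
A sketch of the implication and its constraint form follows. It assumes the knowledge set is the polyhedron $\{x \mid Bx \le d\}$ (the same $Bx \le d$ form used for advice later in the talk), that the set is nonempty, and that the A+ half space is $\{x \mid x'w + b \ge 1\}$. The multiplier vector $u$ is a name introduced for this example.

```latex
\begin{aligned}
& Bx \le d \;\Longrightarrow\; x'w + b \ge 1 \\
& \text{holds if and only if there exists } u \ge 0 \text{ with} \\
& B'u + w = 0, \qquad d'u - b + 1 \le 0 .
\end{aligned}
```

One direction is easy to check: if such a $u$ exists and $Bx \le d$, then $-x'w = u'Bx \le u'd \le b - 1$, so $x'w + b \ge 1$. Because the conditions are linear in $w$, $b$, and $u$, they can be added directly to the SVM linear program.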

Resulting LP for KBSVMs

We get this linear program (LP); the summation index ranges over the number of regions

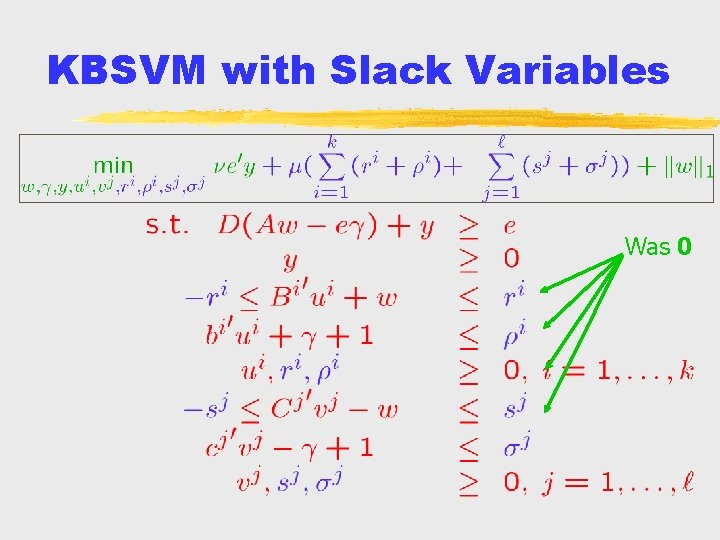

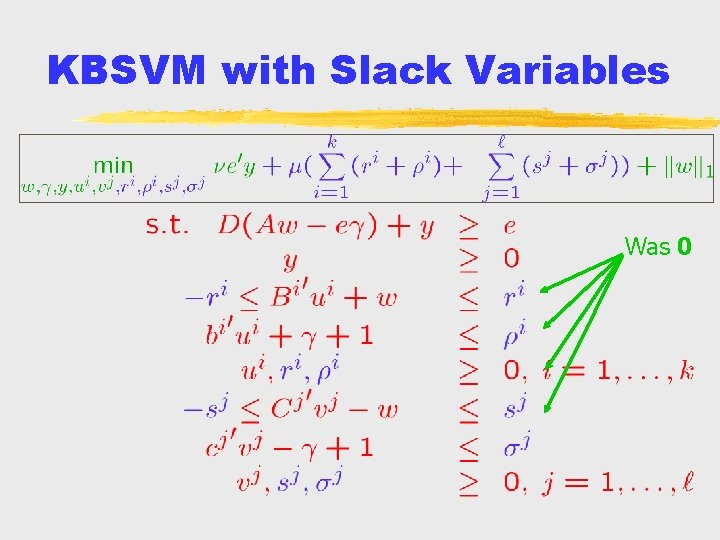

KBSVM with Slack Variables

[Equation callout: Was 0]

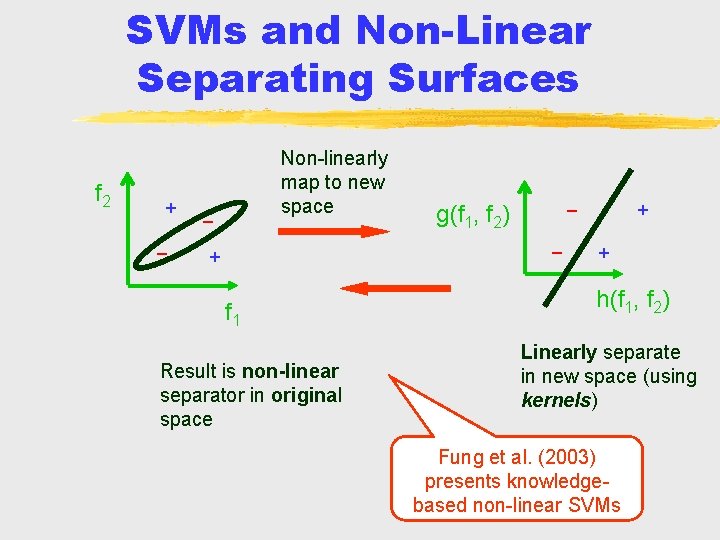

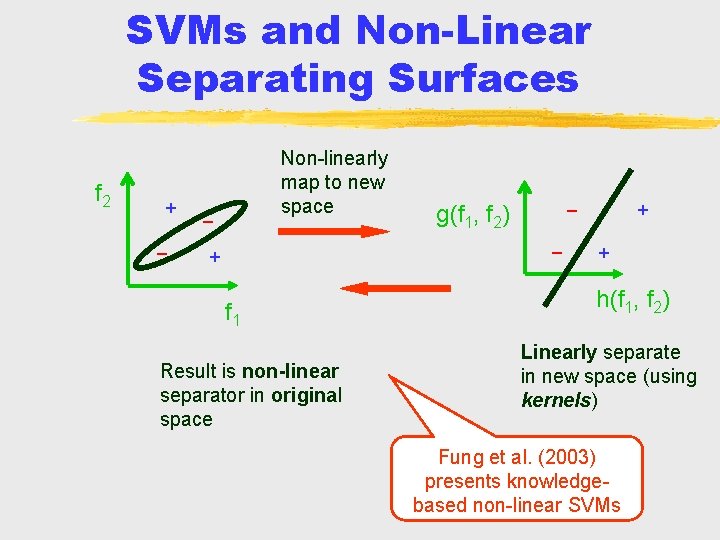

SVMs and Non-Linear Separating Surfaces

• Non-linearly map to a new space
• Linearly separate in the new space (using kernels)
• Result is a non-linear separator in the original space

[Figure: + and _ points plotted on axes f1, f2 (original space) and on axes g(f1, f2), h(f1, f2) (new space)]

Fung et al. (2003) presents knowledge-based non-linear SVMs

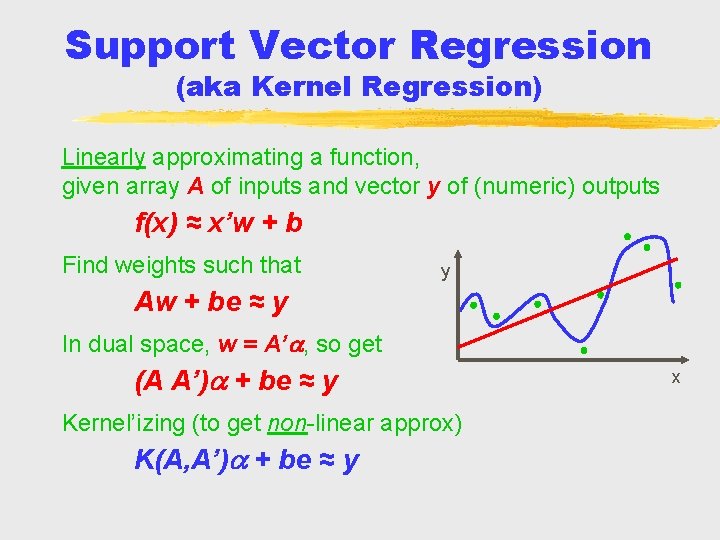

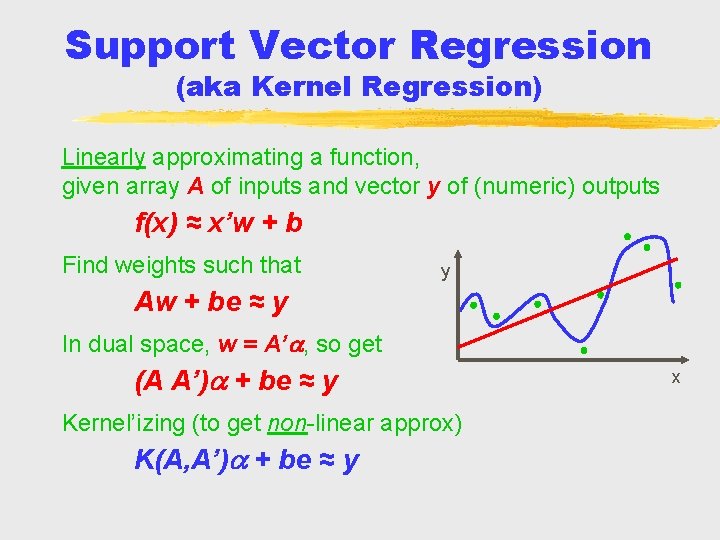

Support Vector Regression (aka Kernel Regression)

Linearly approximating a function, given array A of inputs and vector y of (numeric) outputs:

f(x) ≈ x’w + b

Find weights such that Aw + be ≈ y

In dual space, w = A’α, so we get (A A’)α + be ≈ y

Kernel’izing (to get a non-linear approximation): K(A, A’)α + be ≈ y

[Figure axes: x, y]

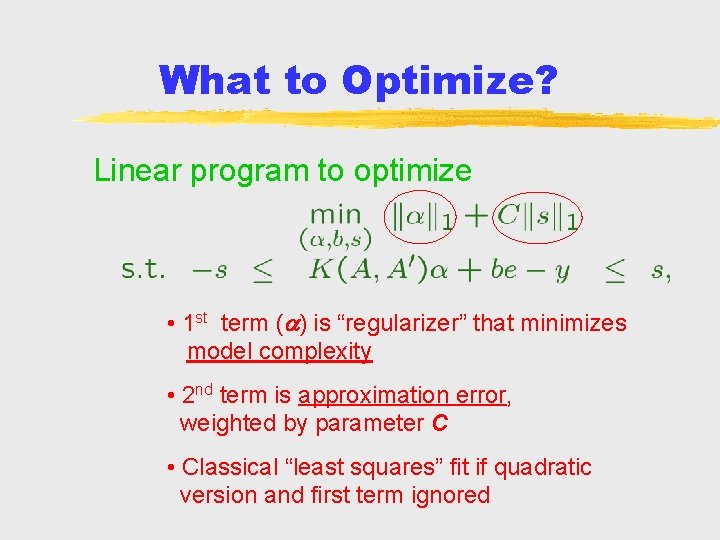

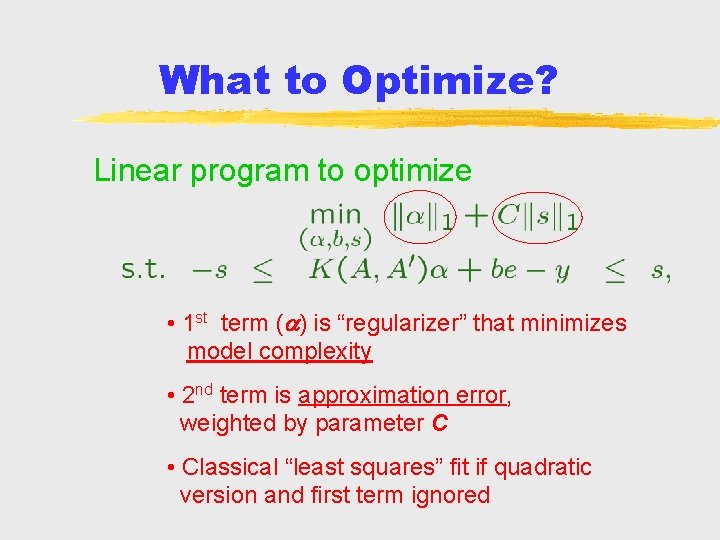

What to Optimize?

Linear program to optimize

• 1st term is the “regularizer” that minimizes model complexity
• 2nd term is the approximation error, weighted by parameter C
• Classical “least squares” fit if the quadratic version is used and the first term is ignored

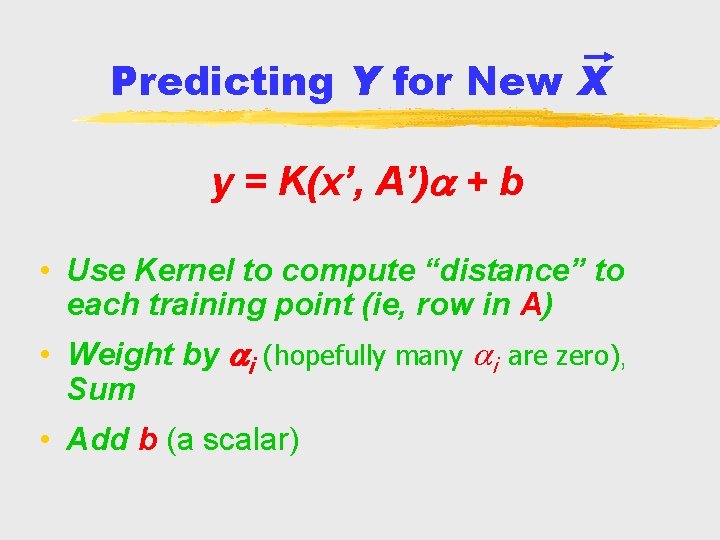

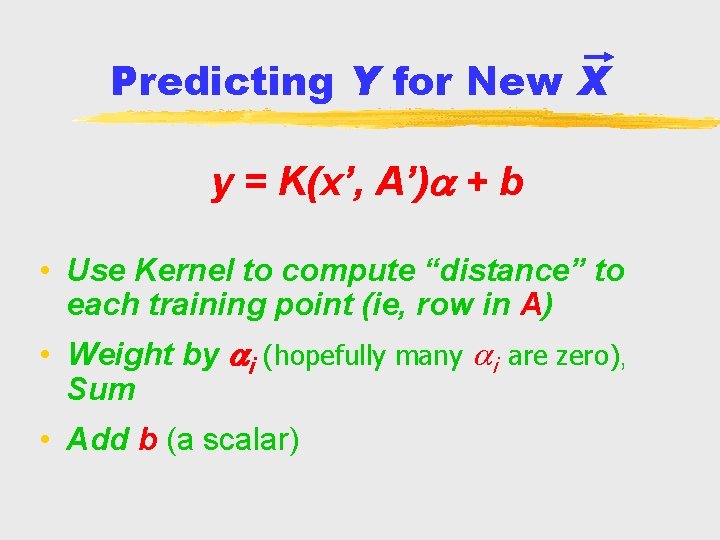

Predicting Y for New X

y = K(x’, A’)α + b

• Use the kernel to compute the “distance” to each training point (i.e., row in A)
• Weight by αi (hopefully many αi are zero), and sum
• Add b (a scalar)

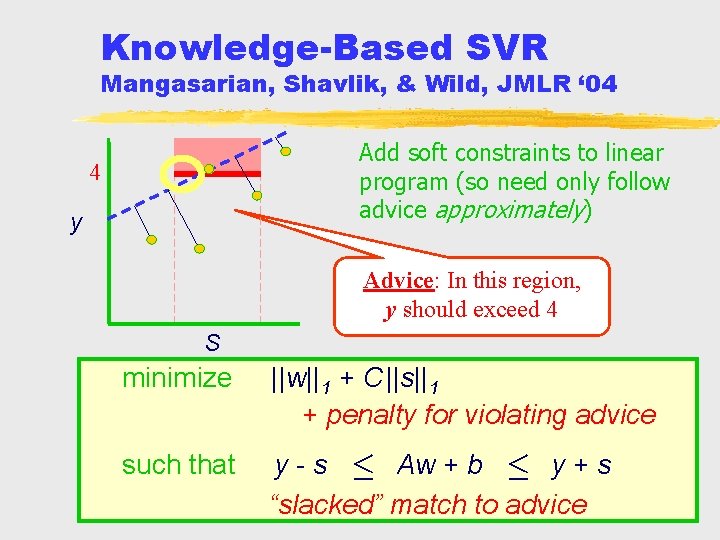

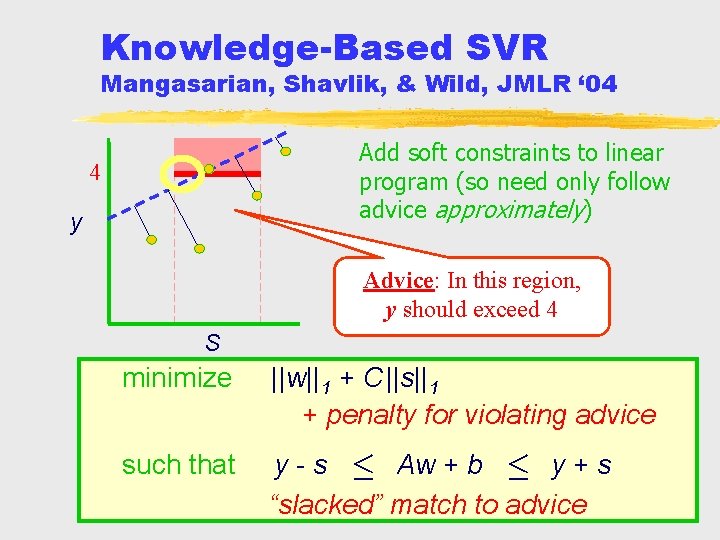
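
The prediction steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the Gaussian kernel, its width `mu`, and the toy values of `A`, `alpha`, and `b` are all assumptions chosen for the example.

```python
import math


def gaussian_kernel(u, v, mu=0.5):
    """Gaussian kernel exp(-mu * ||u - v||^2); mu is a hypothetical width."""
    squared_distance = sum((ui - vi) ** 2 for ui, vi in zip(u, v))
    return math.exp(-mu * squared_distance)


def predict(x, A, alpha, b, kernel=gaussian_kernel):
    """Return K(x', A') alpha + b for a single new input x."""
    # One kernel value per training row, weighted by that row's alpha.
    weighted_sum = sum(a_i * kernel(x, row) for a_i, row in zip(alpha, A))
    return weighted_sum + b


# Toy training rows and dual weights (assumed values; one alpha is zero,
# so that training point does not contribute to the prediction).
A = [[0.0, 0.0], [1.0, 1.0], [4.0, 4.0]]
alpha = [2.0, 0.0, -1.0]
b = 0.5

prediction = predict([0.0, 0.0], A, alpha, b)
```

Rows whose `alpha` entry is zero can be dropped after training, which is why a sparse `alpha` makes prediction cheaper.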

Knowledge-Based SVR (Mangasarian, Shavlik, & Wild, JMLR ’04)

Add soft constraints to the linear program (so it need only follow the advice approximately)

Advice: In this region, y should exceed 4

minimize ||w||1 + C ||s||1 + penalty for violating advice
such that y - s ≤ Aw + be ≤ y + s
plus a “slacked” match to the advice

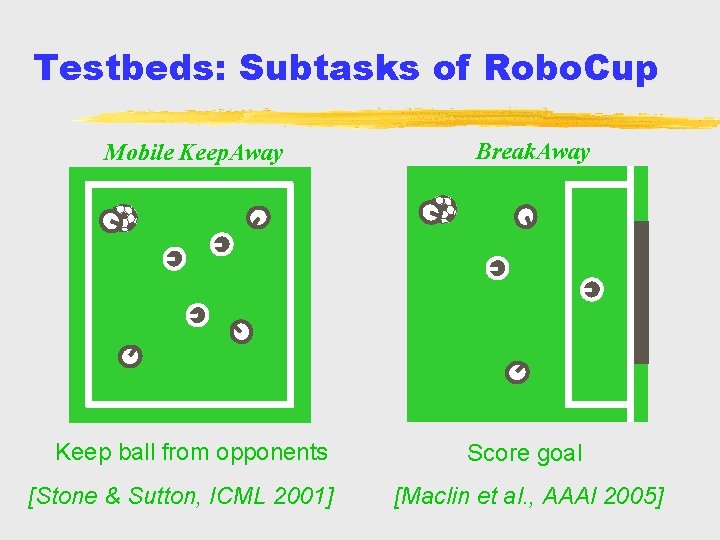

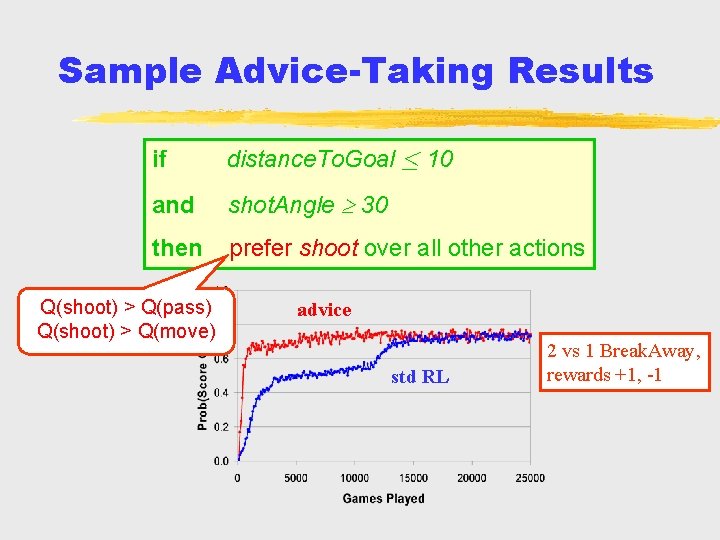

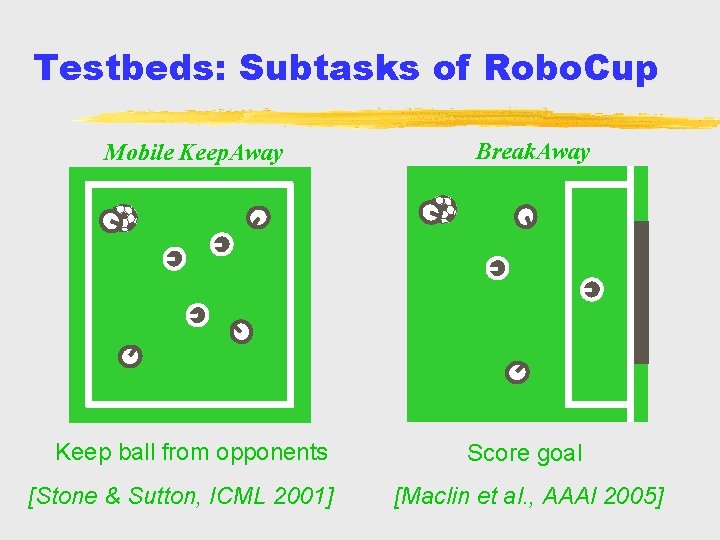

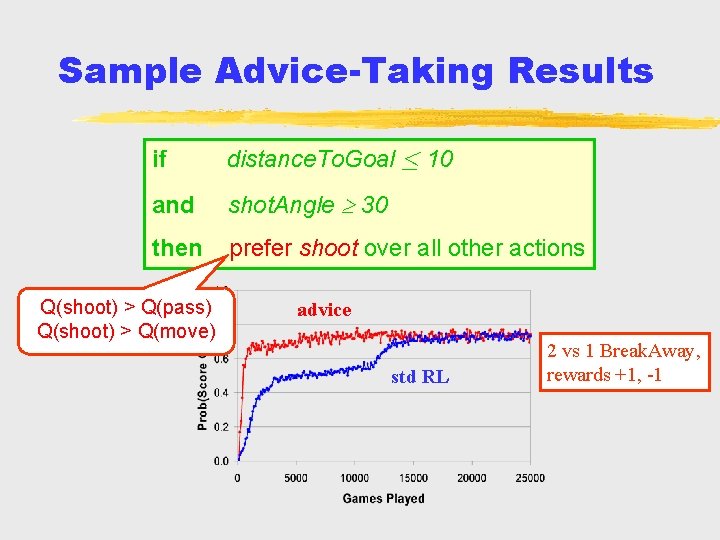

Testbeds: Subtasks of RoboCup

• Mobile KeepAway: keep ball from opponents [Stone & Sutton, ICML 2001]
• BreakAway: score goal [Maclin et al., AAAI 2005]

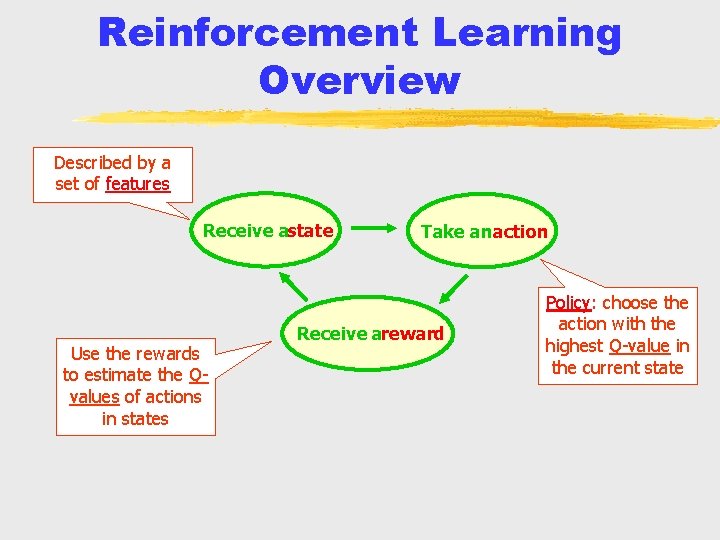

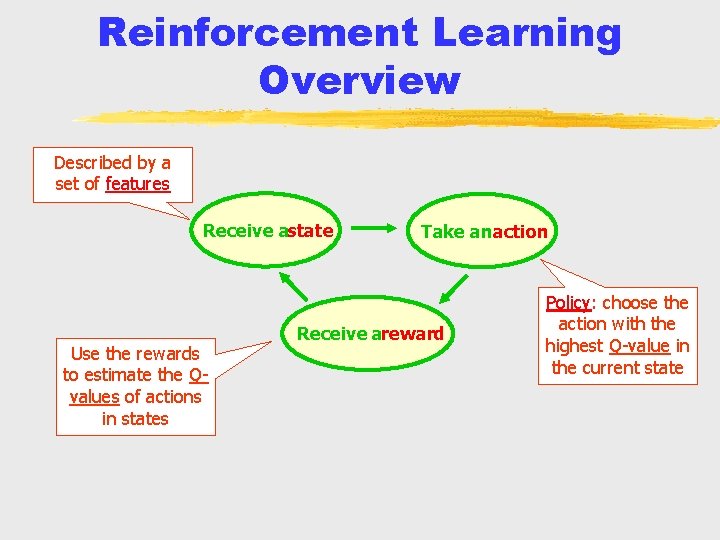

Reinforcement Learning Overview

• Receive a state (described by a set of features)
• Take an action
• Receive a reward
• Use the rewards to estimate the Q-values of actions in states
• Policy: choose the action with the highest Q-value in the current state

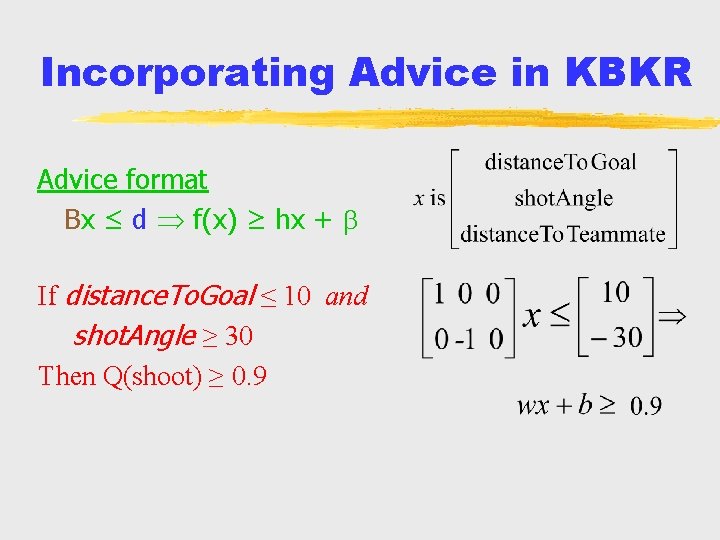

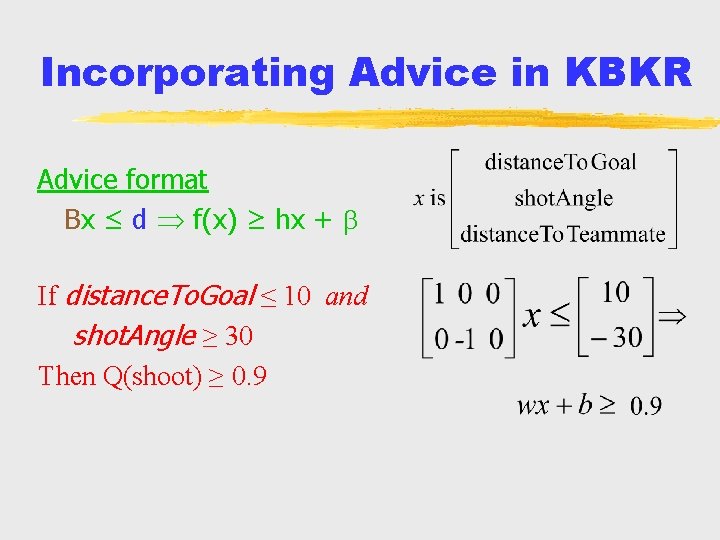
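
The loop above can be illustrated with a small Python sketch. The greedy policy follows the slide directly; the one-step Q-learning update is a standard textbook rule swapped in for illustration (the talk itself estimates Q-values with kernel regression), and the learning rate and discount values are assumptions.

```python
def greedy_action(q_values):
    """Policy: pick the action with the highest Q-value in the current state."""
    return max(q_values, key=q_values.get)


def q_update(q_old, reward, max_next_q, learning_rate=0.1, discount=0.9):
    """One-step Q-learning update (textbook rule, assumed for illustration)."""
    target = reward + discount * max_next_q
    return q_old + learning_rate * (target - q_old)


# Hypothetical Q-values for one BreakAway-style state.
q_in_state = {"shoot": 0.9, "pass": 0.4, "move": 0.1}
chosen = greedy_action(q_in_state)

# Nudge the estimate for the chosen action after observing a reward of +1
# in a terminal state (so the next state's best Q-value is 0).
q_in_state[chosen] = q_update(q_in_state[chosen], reward=1.0, max_next_q=0.0)
```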

Incorporating Advice in KBKR

Advice format: Bx ≤ d ⇒ f(x) ≥ h’x + β

If distanceToGoal ≤ 10 and shotAngle ≥ 30
Then Q(shoot) ≥ 0.9

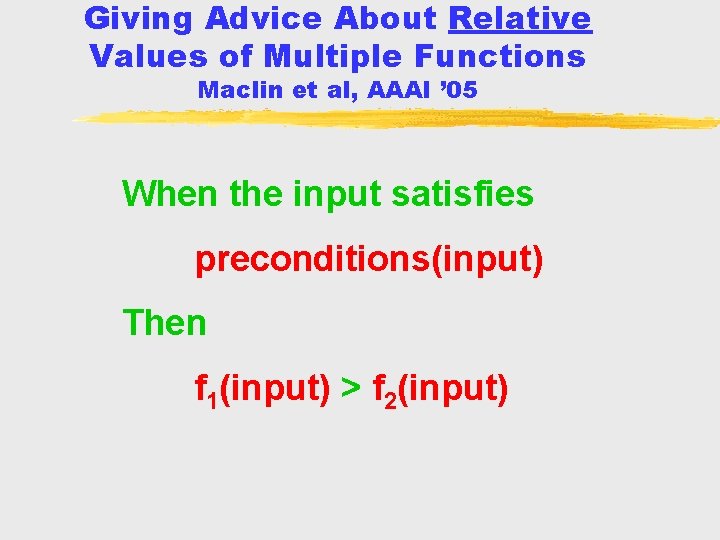

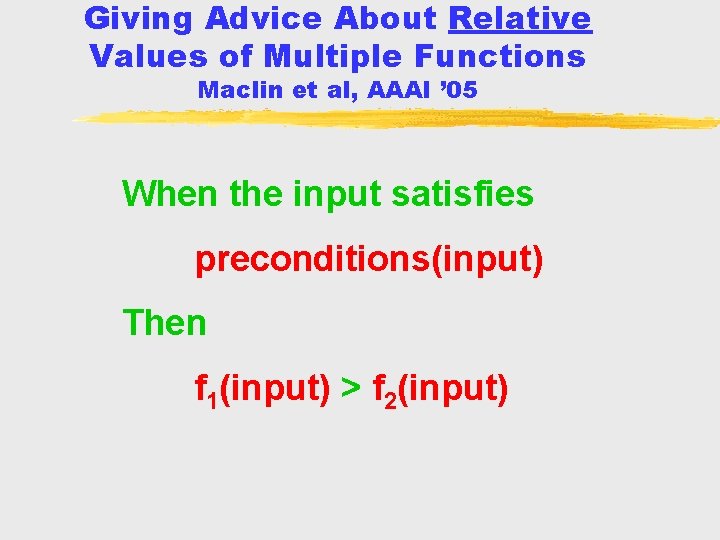
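
To make the advice format concrete, the sketch below encodes the shooting rule as a precondition `Bx <= d` and a lower bound on the function value. It is an illustration under assumptions: the feature order `[distanceToGoal, shotAngle]`, the helper names, and the use of an offset `beta` with a zero vector `h` are choices made for this example, not details confirmed by the slide.

```python
def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(ui * vi for ui, vi in zip(u, v))


def advice_applies(B, d, x):
    """True when every row of Bx <= d holds for state x."""
    return all(dot(row, x) <= d_i for row, d_i in zip(B, d))


def advice_violation(f_x, h, beta, x):
    """How far f(x) falls below the advised bound h'x + beta (0 if satisfied)."""
    return max(0.0, dot(h, x) + beta - f_x)


# x = [distanceToGoal, shotAngle] (assumed feature order).
# distanceToGoal <= 10 is [1, 0] . x <= 10.
# shotAngle >= 30 is rewritten as [0, -1] . x <= -30.
B = [[1.0, 0.0], [0.0, -1.0]]
d = [10.0, -30.0]
h = [0.0, 0.0]   # the bound does not depend on x in this rule
beta = 0.9       # advised lower bound on Q(shoot)

state = [8.0, 45.0]
applies = advice_applies(B, d, state)
shortfall = advice_violation(0.6, h, beta, state)  # current Q(shoot) = 0.6
```

Because the advice is soft, a learner would pay a penalty proportional to `shortfall` rather than being forced to satisfy the bound exactly.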

Giving Advice About Relative Values of Multiple Functions (Maclin et al., AAAI ’05)

When the input satisfies preconditions(input)
Then f1(input) > f2(input)

Sample Advice-Taking Results

if distanceToGoal ≤ 10 and shotAngle ≥ 30
then prefer shoot over all other actions:
Q(shoot) > Q(pass)
Q(shoot) > Q(move)

[Plot: advice vs. std RL; 2 vs 1 BreakAway, rewards +1, -1]

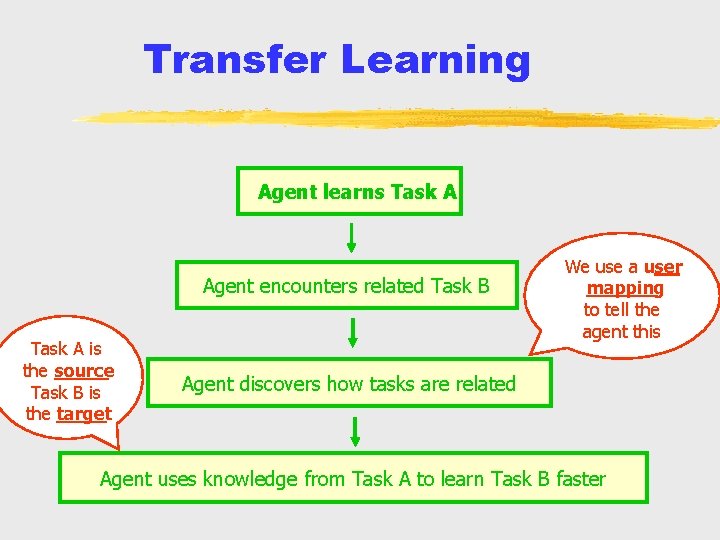

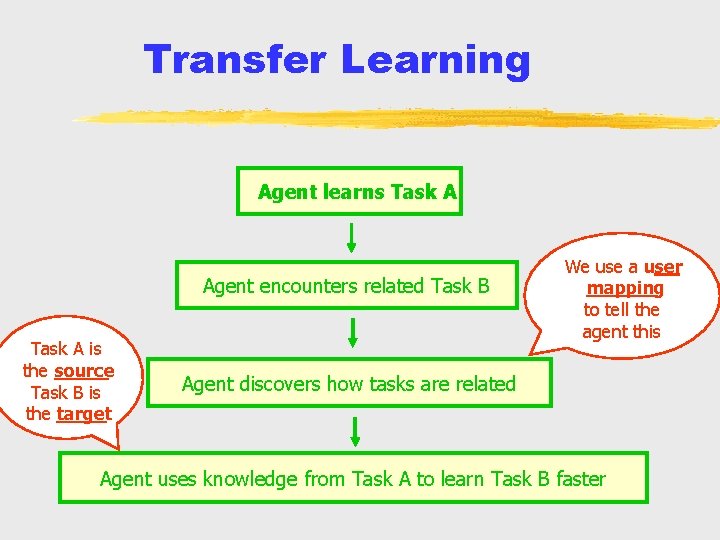

Transfer Learning

• Agent learns Task A (Task A is the source)
• Agent encounters related Task B (Task B is the target)
• Agent discovers how the tasks are related (we use a user mapping to tell the agent this)
• Agent uses knowledge from Task A to learn Task B faster

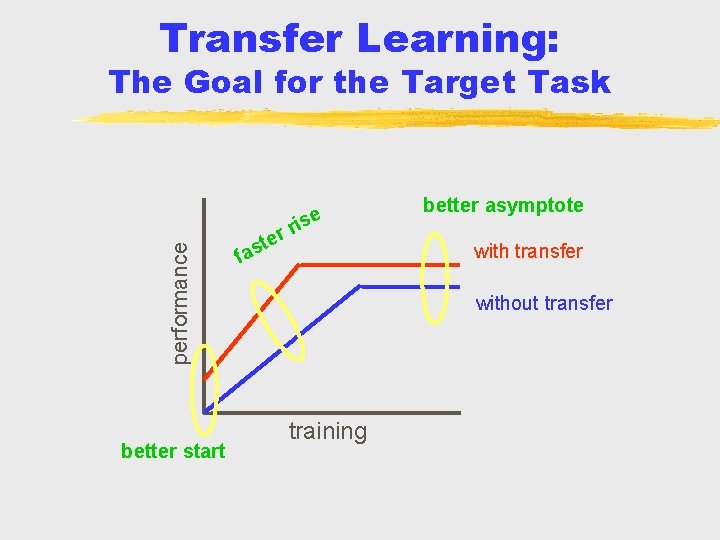

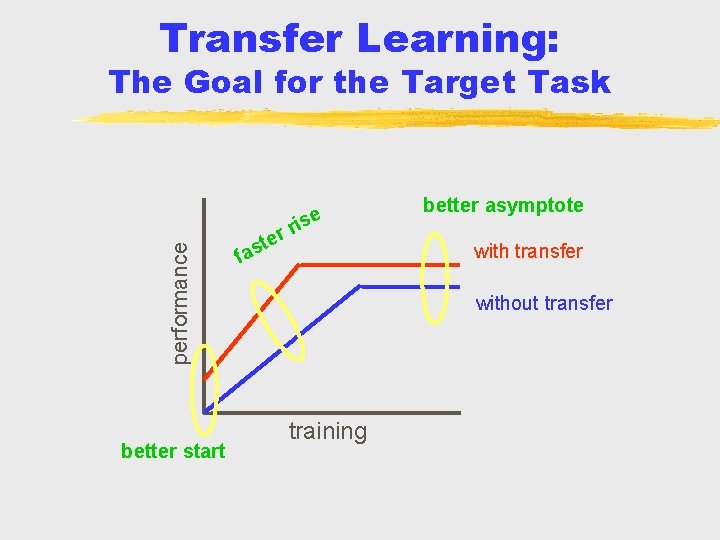

Transfer Learning: The Goal for the Target Task

[Plot: performance vs. training, with transfer and without transfer; annotations: better start, faster rise, better asymptote]

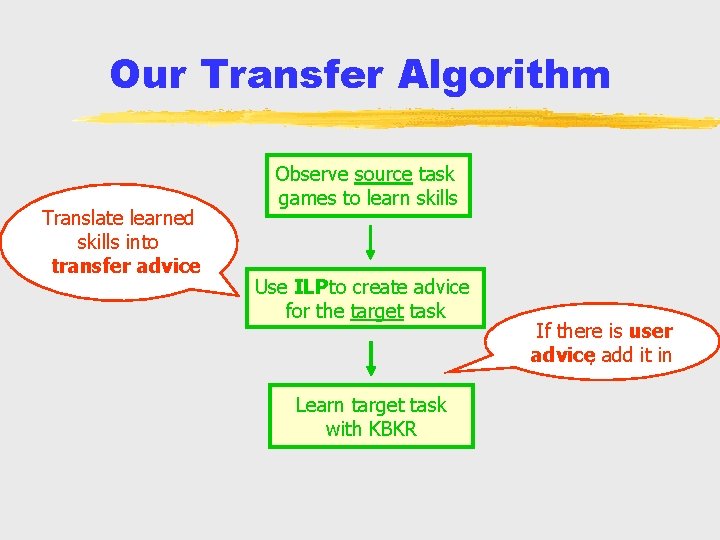

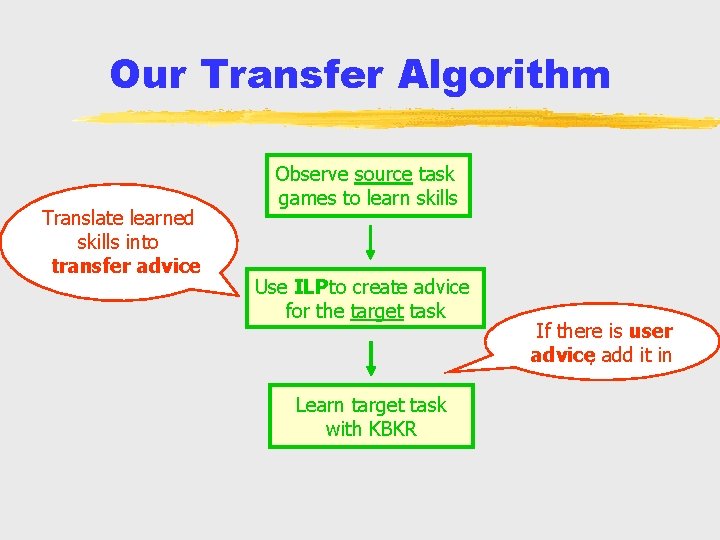

Our Transfer Algorithm

• Observe source task games to learn skills
• Translate learned skills into transfer advice (use ILP to create advice for the target task)
• If there is user advice, add it in
• Learn target task with KBKR

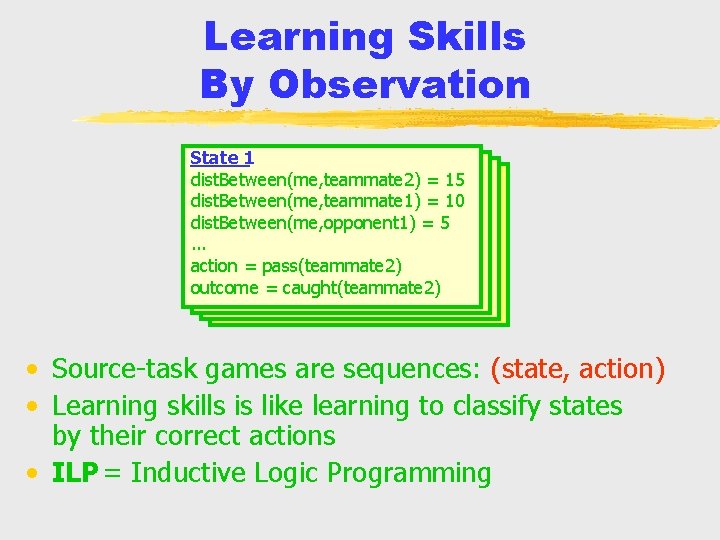

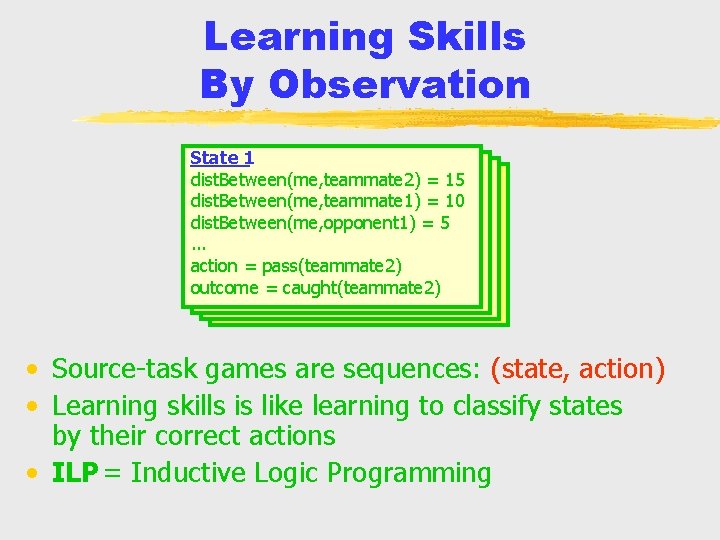

Learning Skills By Observation

State 1:
  distBetween(me, teammate2) = 15
  distBetween(me, teammate1) = 10
  distBetween(me, opponent1) = 5
  ...
  action = pass(teammate2)
  outcome = caught(teammate2)

• Source-task games are sequences: (state, action)
• Learning skills is like learning to classify states by their correct actions
• ILP = Inductive Logic Programming

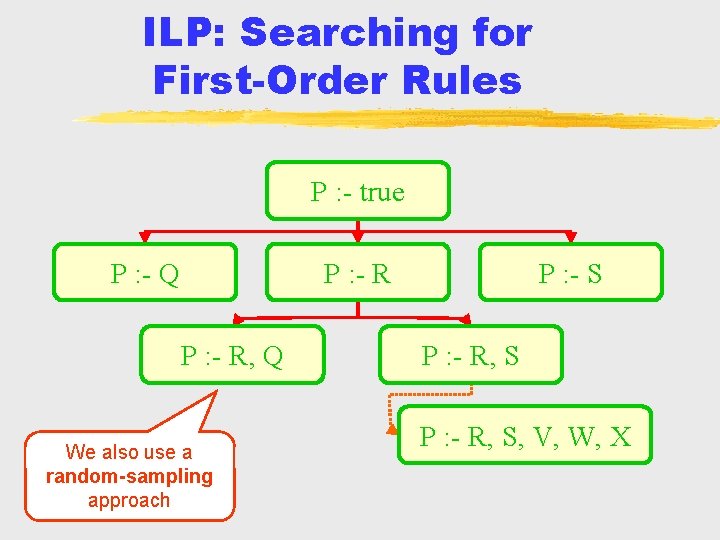

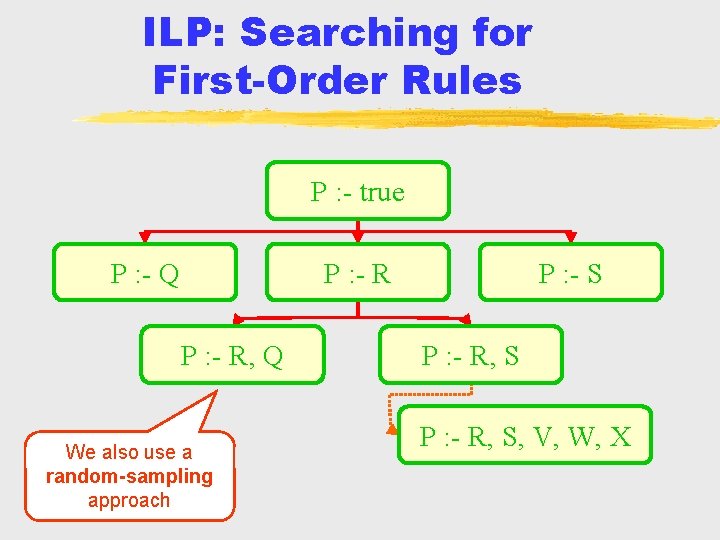
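
Treating skill learning as classification means turning observed game steps into labeled states. The Python sketch below shows one way to do that. The labeling rule is an assumption made for illustration (positives are states where the skill was used and succeeded, negatives are states where a different action was taken, and failed attempts are skipped); the slide does not spell out the rule, and the dictionary layout is hypothetical.

```python
def label_states(trace, skill, success_outcome):
    """Split observed steps into positive and negative states for one skill."""
    positives, negatives = [], []
    for step in trace:
        if step["action"] == skill and step["outcome"] == success_outcome:
            positives.append(step["state"])   # skill used and it worked
        elif step["action"] != skill:
            negatives.append(step["state"])   # some other action was chosen
        # Steps where the skill was tried but failed are left unlabeled.
    return positives, negatives


# A tiny hypothetical source-task trace in the style of the slide's State 1.
trace = [
    {"state": {"distBetween(me, teammate2)": 15, "distBetween(me, opponent1)": 5},
     "action": "pass(teammate2)", "outcome": "caught(teammate2)"},
    {"state": {"distBetween(me, teammate2)": 6, "distBetween(me, opponent1)": 20},
     "action": "hold", "outcome": "kept"},
    {"state": {"distBetween(me, teammate2)": 25, "distBetween(me, opponent1)": 3},
     "action": "pass(teammate2)", "outcome": "intercepted"},
]

positives, negatives = label_states(trace, "pass(teammate2)", "caught(teammate2)")
```

An ILP system would then search for a rule that covers the positive states and excludes the negative ones.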

ILP: Searching for First-Order Rules

Search tree of candidate clauses:

P :- true
P :- Q
P :- R, Q
P :- S
P :- R, S, V, W, X

We also use a random-sampling approach

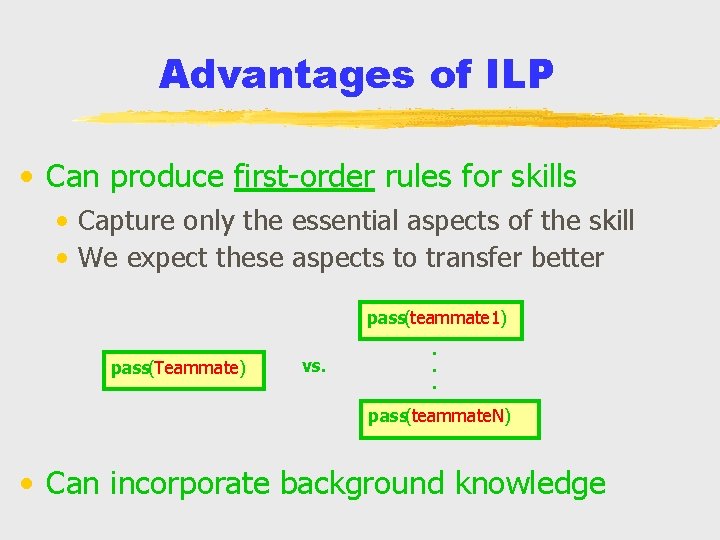

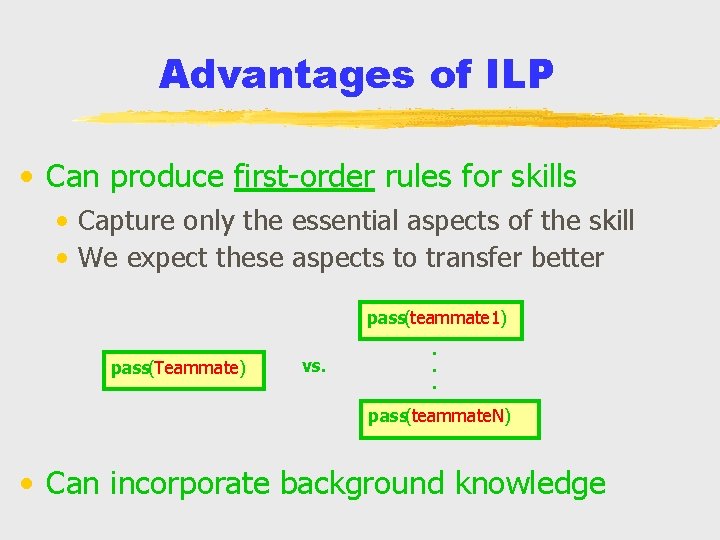

Advantages of ILP

• Can produce first-order rules for skills
  • Capture only the essential aspects of the skill
  • We expect these aspects to transfer better
  • pass(Teammate) vs. pass(teammate1), …, pass(teammateN)
• Can incorporate background knowledge

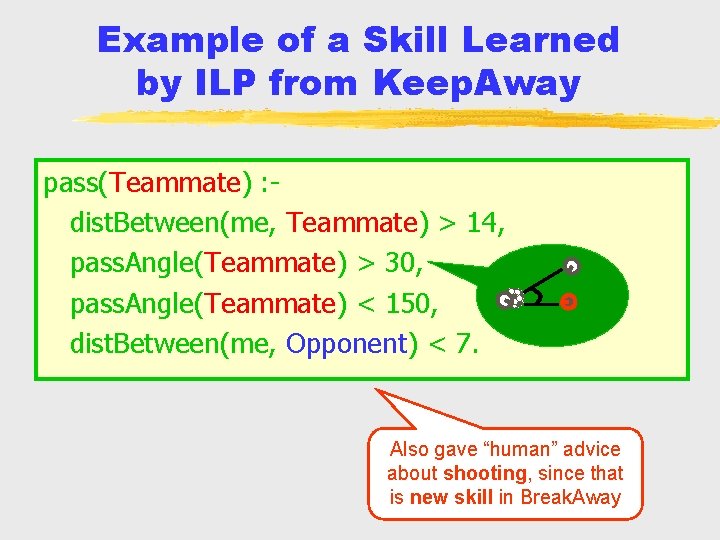

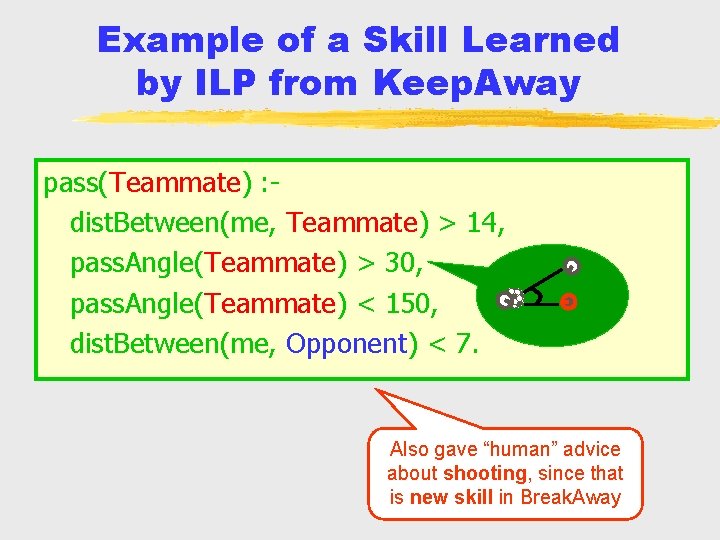

Example of a Skill Learned by ILP from KeepAway

pass(Teammate) :-
    distBetween(me, Teammate) > 14,
    passAngle(Teammate) > 30,
    passAngle(Teammate) < 150,
    distBetween(me, Opponent) < 7.

Also gave “human” advice about shooting, since that is a new skill in BreakAway

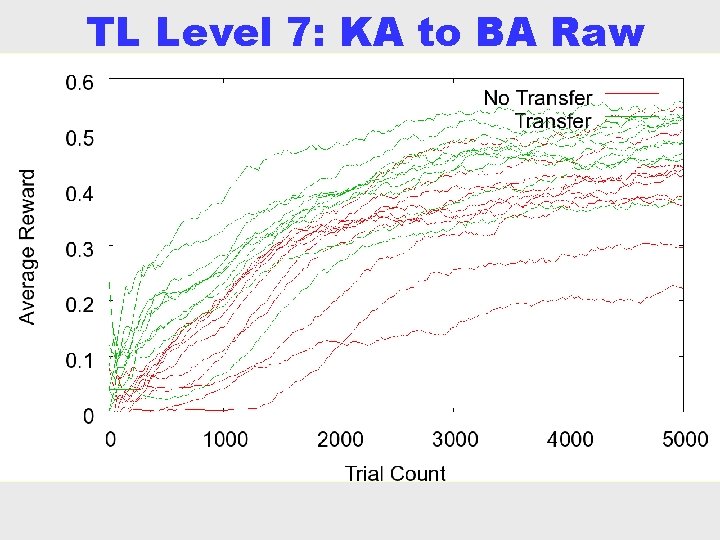

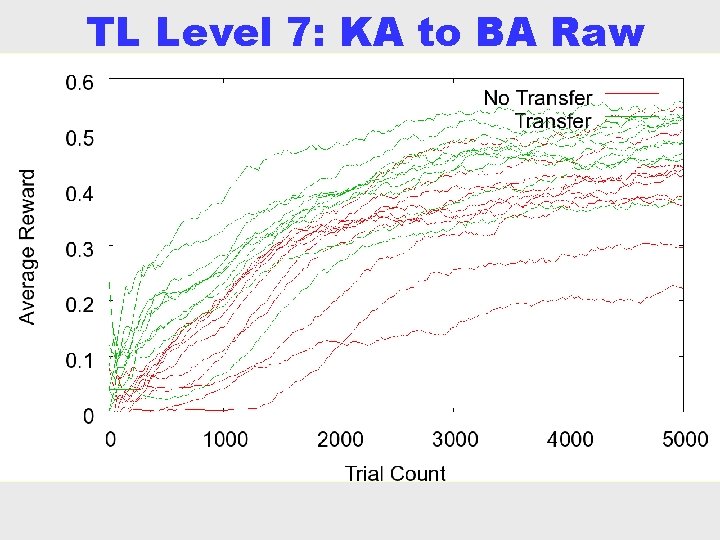
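
To show how the learned rule reads operationally, the sketch below evaluates it on a single state in Python. It assumes that `Opponent` is an existentially quantified variable (some opponent is closer than 7), as is usual for a free variable in a clause body; the dictionary layout and function name are hypothetical.

```python
def pass_targets(state):
    """Return the teammates for which the learned pass rule fires."""
    # distBetween(me, Opponent) < 7 must hold for at least one opponent.
    opponent_close = any(dist < 7 for dist in state["dist_to_opponent"].values())
    if not opponent_close:
        return []
    return [
        teammate
        for teammate, dist in state["dist_to_teammate"].items()
        if dist > 14 and 30 < state["pass_angle"][teammate] < 150
    ]


# Hypothetical state: distances echo the earlier observation example,
# pass angles are made up for illustration.
state = {
    "dist_to_teammate": {"teammate1": 10, "teammate2": 15},
    "pass_angle": {"teammate1": 45, "teammate2": 90},
    "dist_to_opponent": {"opponent1": 5},
}

targets = pass_targets(state)
```

Because the rule is written over the variable `Teammate` rather than a specific player, the same check applies to any number of teammates in the target task.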

TL Level 7: KA to BA Raw Curves

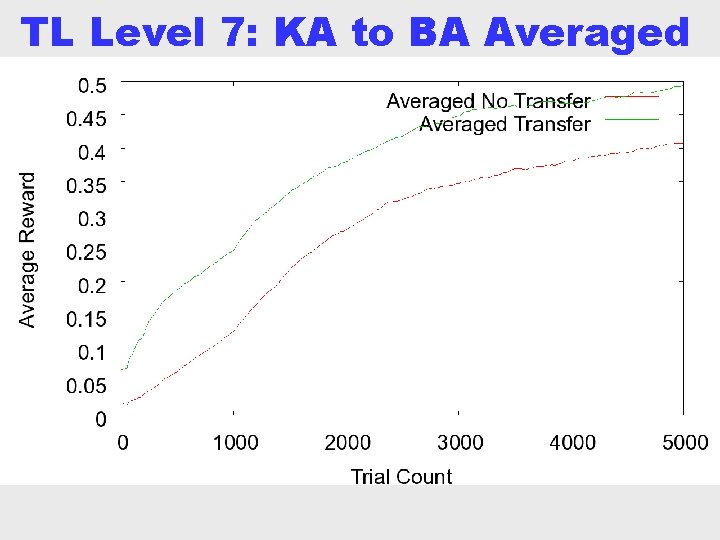

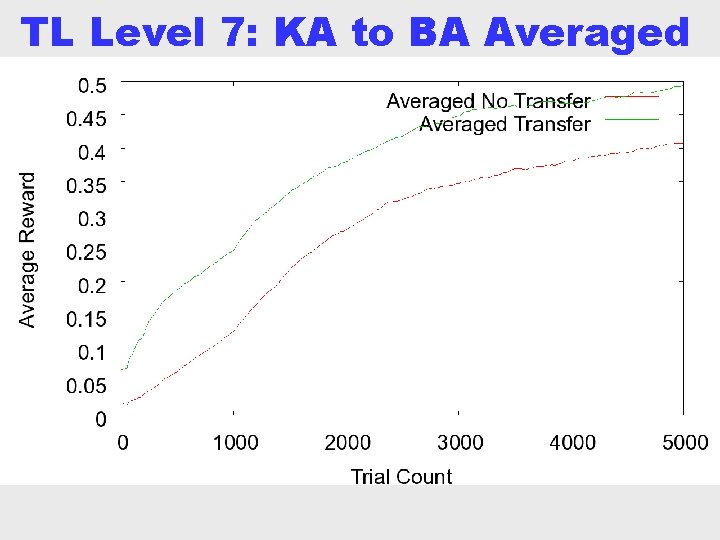

TL Level 7: KA to BA Averaged Curves

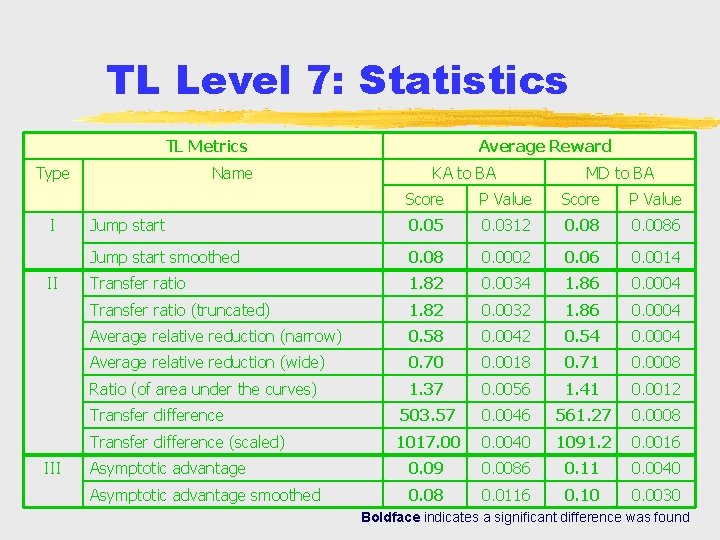

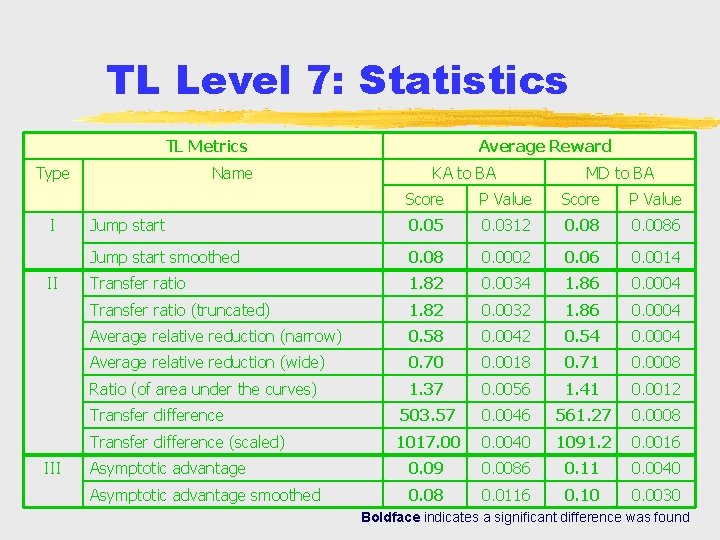

TL Level 7: Statistics

TL metrics (types I, II, III), average reward

| Metric | KA to BA Score | KA to BA P Value | MD to BA Score | MD to BA P Value |
|---|---|---|---|---|
| Jump start | 0.05 | 0.0312 | 0.08 | 0.0086 |
| Jump start smoothed | 0.08 | 0.0002 | 0.06 | 0.0014 |
| Transfer ratio | 1.82 | 0.0034 | 1.86 | 0.0004 |
| Transfer ratio (truncated) | 1.82 | 0.0032 | 1.86 | 0.0004 |
| Average relative reduction (narrow) | 0.58 | 0.0042 | 0.54 | 0.0004 |
| Average relative reduction (wide) | 0.70 | 0.0018 | 0.71 | 0.0008 |
| Ratio (of area under the curves) | 1.37 | 0.0056 | 1.41 | 0.0012 |
| Transfer difference | 503.57 | 0.0046 | 561.27 | 0.0008 |
| Transfer difference (scaled) | 1017.00 | 0.0040 | 1091.2 | 0.0016 |
| Asymptotic advantage | 0.09 | 0.0086 | 0.11 | 0.0040 |
| Asymptotic advantage smoothed | 0.08 | 0.0116 | 0.10 | 0.0030 |

Boldface indicates a significant difference was found
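
Several of these metrics compare a learning curve obtained with transfer against one obtained without it. The Python sketch below computes plausible versions of four of them. The slide does not define the metrics, so the formulas here are assumptions based on the metric names (differences at the start and end of training, and the ratio and difference of areas under the curves), and the toy curves are made up.

```python
def area_under_curve(curve):
    """Trapezoidal area under a curve sampled at unit-spaced training steps."""
    return sum((left + right) / 2.0 for left, right in zip(curve, curve[1:]))


def jump_start(with_transfer, without_transfer):
    """Assumed definition: difference in initial performance."""
    return with_transfer[0] - without_transfer[0]


def asymptotic_advantage(with_transfer, without_transfer):
    """Assumed definition: difference in final performance."""
    return with_transfer[-1] - without_transfer[-1]


def area_ratio(with_transfer, without_transfer):
    """Assumed definition: ratio of the areas under the two curves."""
    return area_under_curve(with_transfer) / area_under_curve(without_transfer)


def transfer_difference(with_transfer, without_transfer):
    """Assumed definition: difference of the areas under the two curves."""
    return area_under_curve(with_transfer) - area_under_curve(without_transfer)


# Made-up average-reward curves, one value per training checkpoint.
with_transfer = [0.2, 0.4, 0.6]
without_transfer = [0.1, 0.2, 0.5]

metrics = {
    "jump_start": jump_start(with_transfer, without_transfer),
    "asymptotic_advantage": asymptotic_advantage(with_transfer, without_transfer),
    "area_ratio": area_ratio(with_transfer, without_transfer),
    "transfer_difference": transfer_difference(with_transfer, without_transfer),
}
```

A ratio above 1 or a positive difference indicates that the transfer curve lies above the baseline on average.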

Conclusion

• Can use much more than I/O pairs in ML
• Give advice to computers; they automatically refine it based on feedback from the user or environment
• Advice is an appealing mechanism for transferring learned knowledge computer-to-computer

Some Papers (on-line, use Google :-)

• Creating Advice-Taking Reinforcement Learners, Maclin & Shavlik, Machine Learning 1996
• Knowledge-Based Support Vector Machine Classifiers, Fung, Mangasarian, & Shavlik, NIPS 2002
• Knowledge-Based Nonlinear Kernel Classifiers, Fung, Mangasarian, & Shavlik, COLT 2003
• Knowledge-Based Kernel Approximation, Mangasarian, Shavlik, & Wild, JMLR 2004
• Giving Advice about Preferred Actions to Reinforcement Learners Via Knowledge-Based Kernel Regression, Maclin, Shavlik, Torrey, Walker, & Wild, AAAI 2005
• Skill Acquisition via Transfer Learning and Advice Taking, Torrey, Shavlik, Walker, & Maclin, ECML 2006

Backups

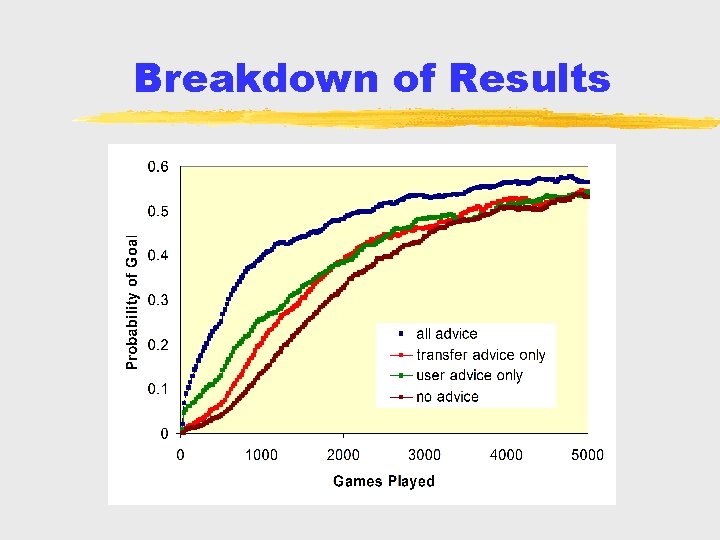

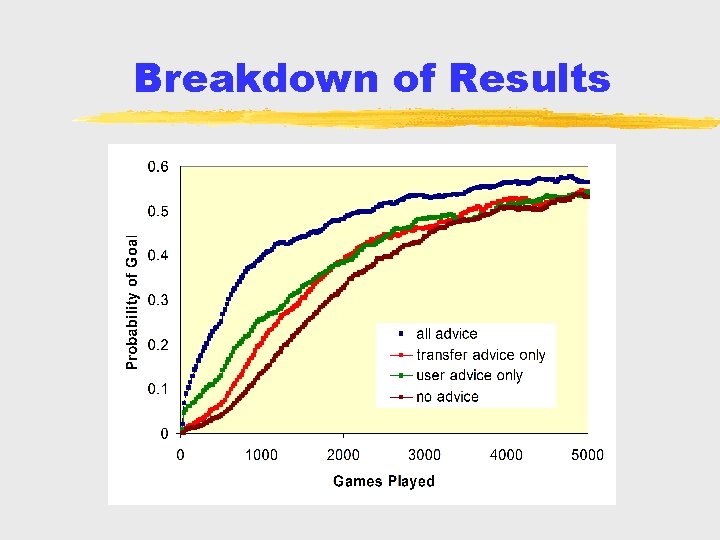

Breakdown of Results

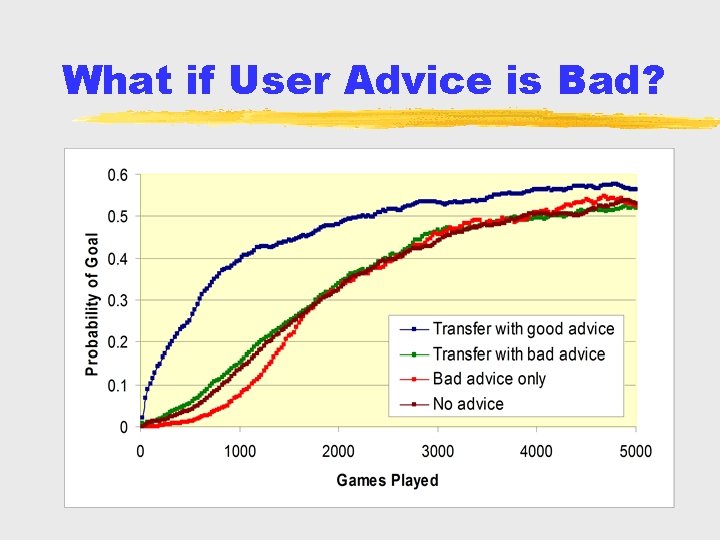

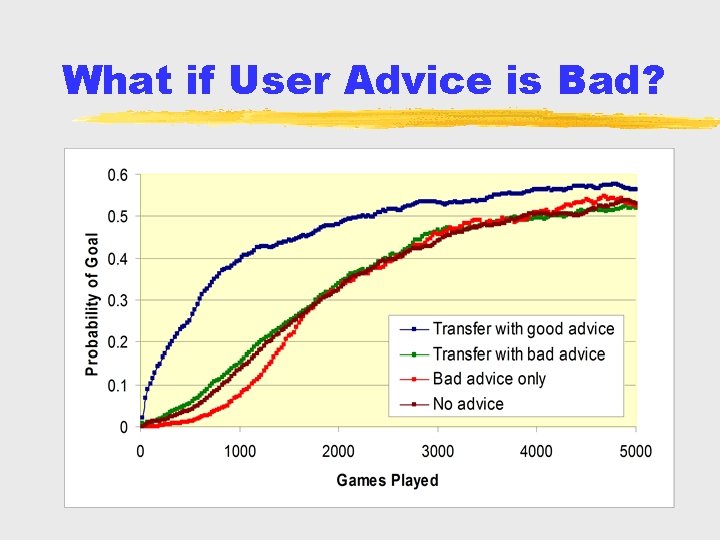

What if User Advice is Bad?

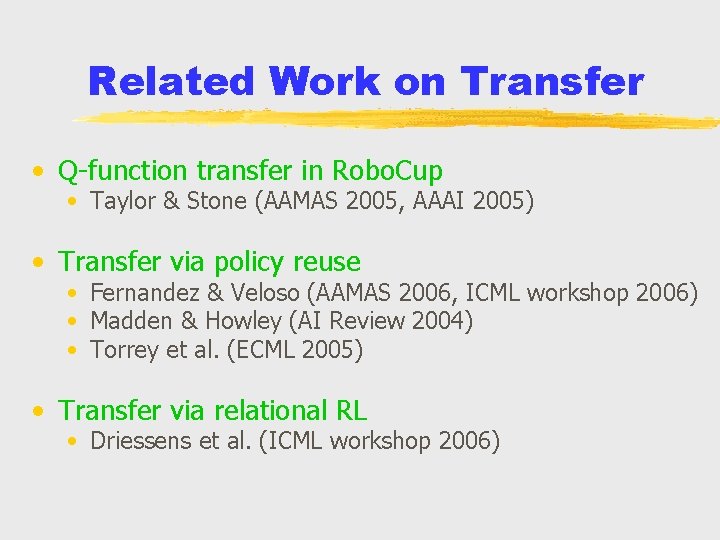

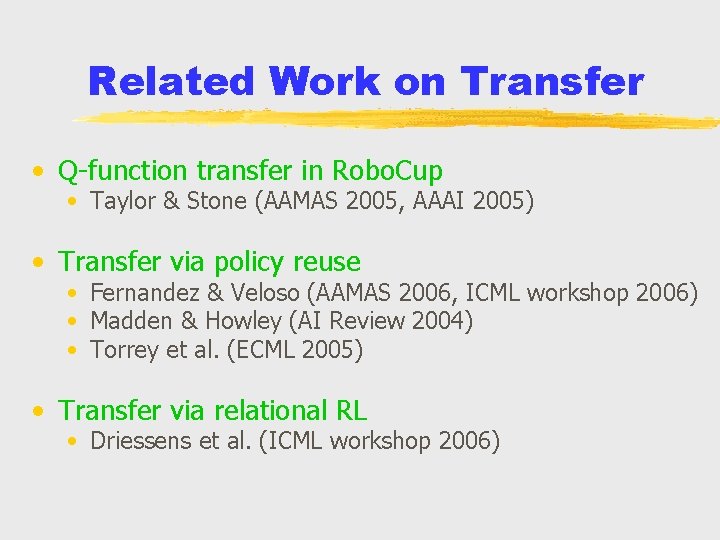

Related Work on Transfer

• Q-function transfer in RoboCup
  • Taylor & Stone (AAMAS 2005, AAAI 2005)
• Transfer via policy reuse
  • Fernandez & Veloso (AAMAS 2006, ICML workshop 2006)
  • Madden & Howley (AI Review 2004)
  • Torrey et al. (ECML 2005)
• Transfer via relational RL
  • Driessens et al. (ICML workshop 2006)