45 Kernel Synchronization Ch 8 9 Kernel Synchronization

- Slides: 41

Lecture 45: Kernel Synchronization (Ch 8, 9)

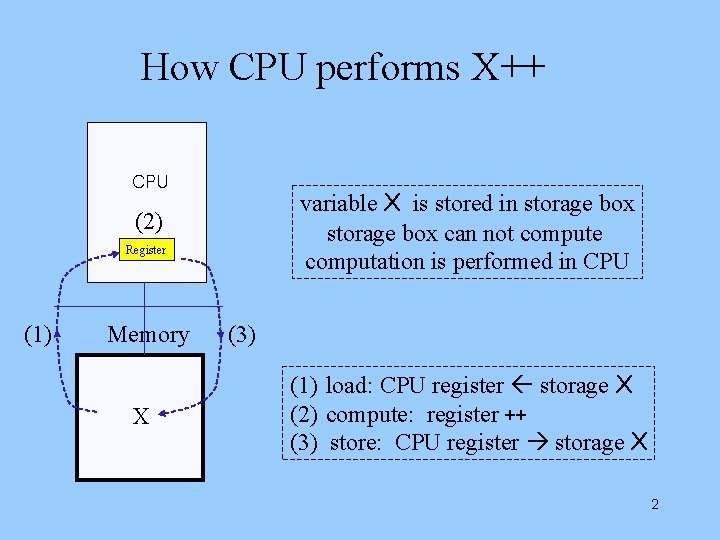

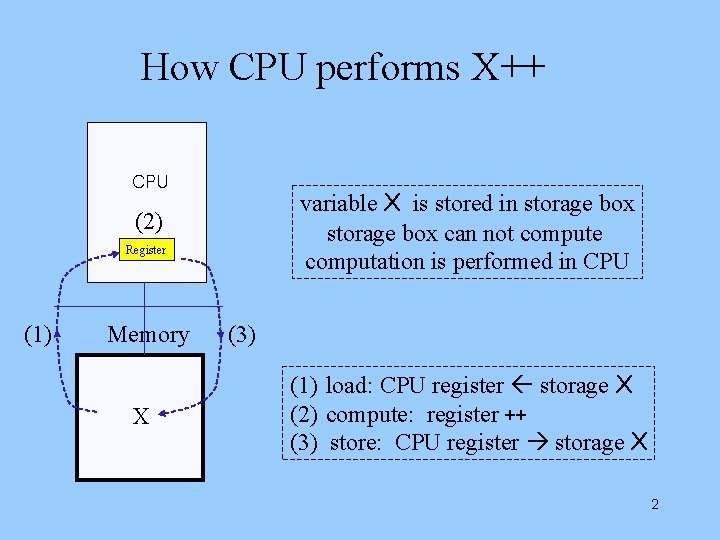

How the CPU performs X++

- Variable X is stored in memory. Memory is only a storage box and cannot compute; computation is performed in the CPU.
- (1) load: memory X → CPU register
- (2) compute: register++
- (3) store: CPU register → memory X

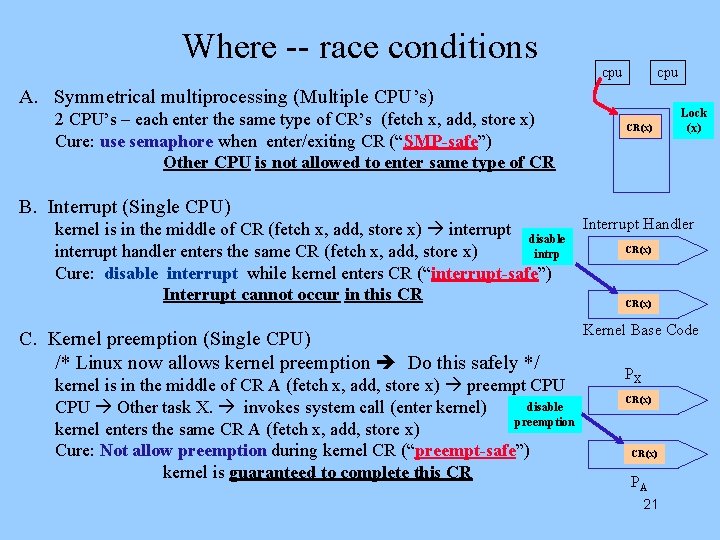

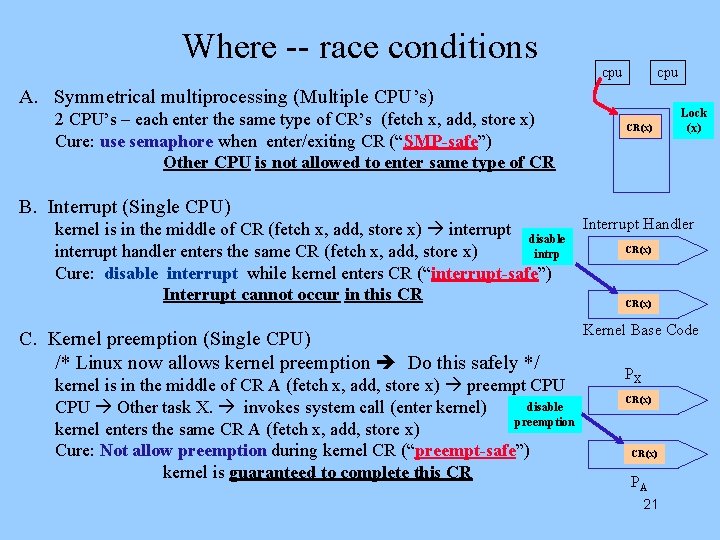

Three Cases of Race

- A. Two CPUs sharing a variable
- B. Kernel base code vs. interrupt handler
- C. Kernel preemption between processes

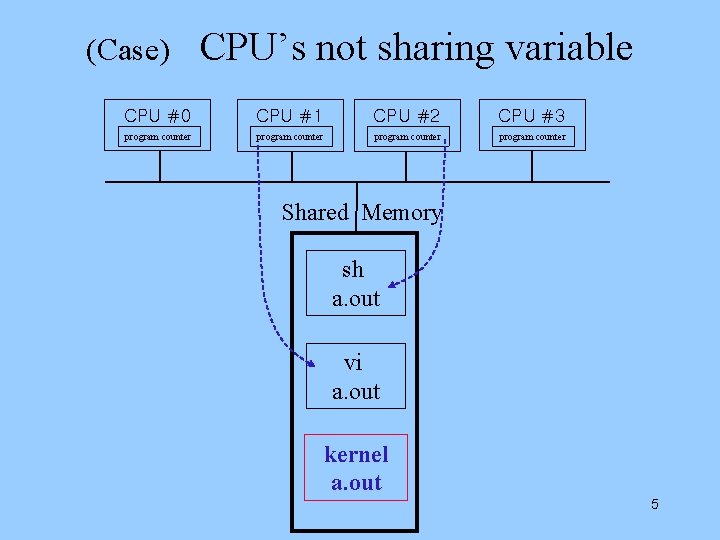

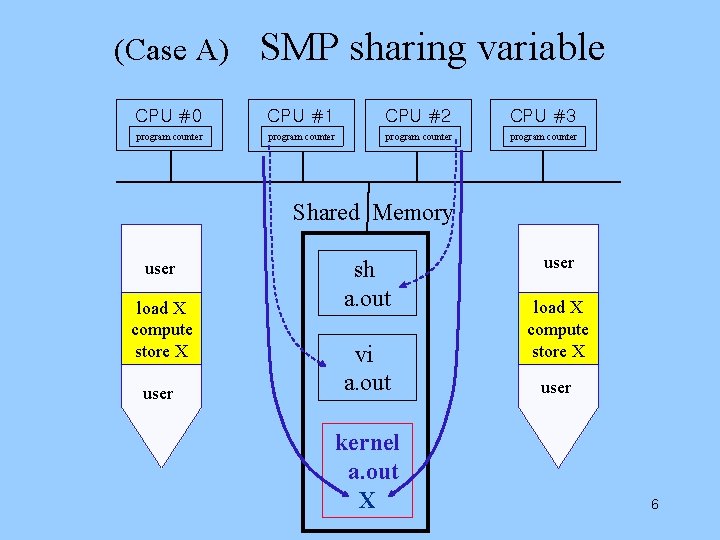

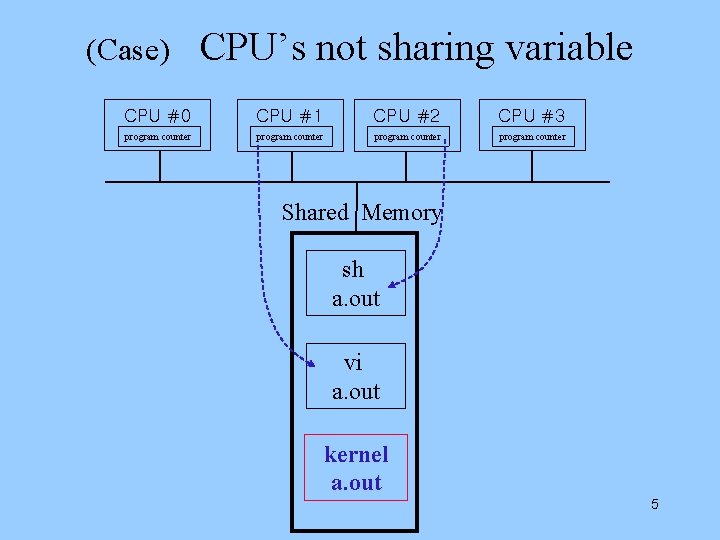

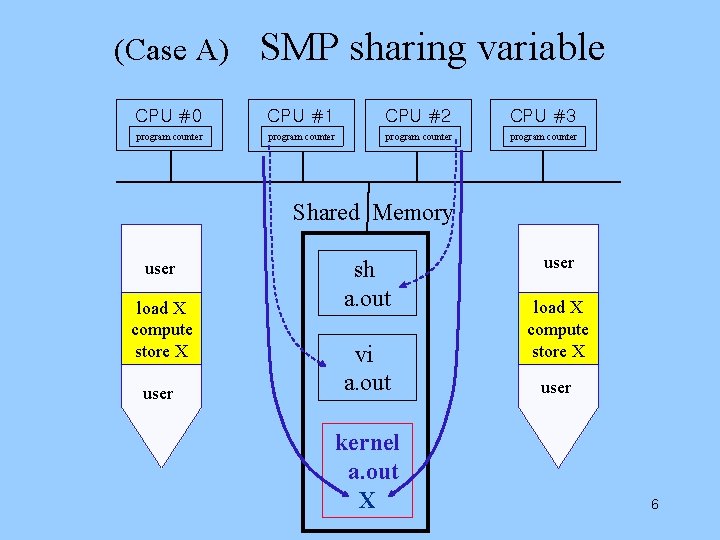

(Case A) Two CPUs sharing a variable

(Case) CPUs not sharing a variable

[Figure: CPU #0 to CPU #3, each with its own program counter, execute different programs (sh, vi, a.out, kernel) held in shared memory.]

(Case A) SMP sharing a variable

[Figure: CPU #0 to CPU #3 with program counters into shared memory (sh, vi, a.out, kernel). Two CPUs leave user code, each run load X, compute, store X on the same variable X, and then return to user code.]

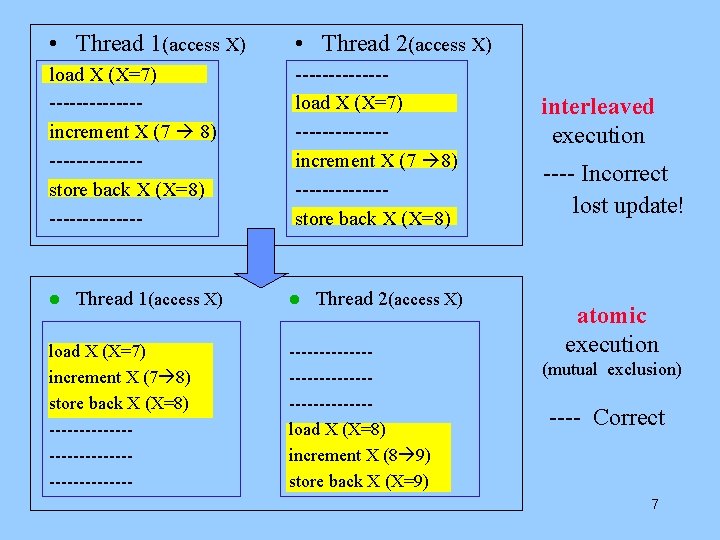

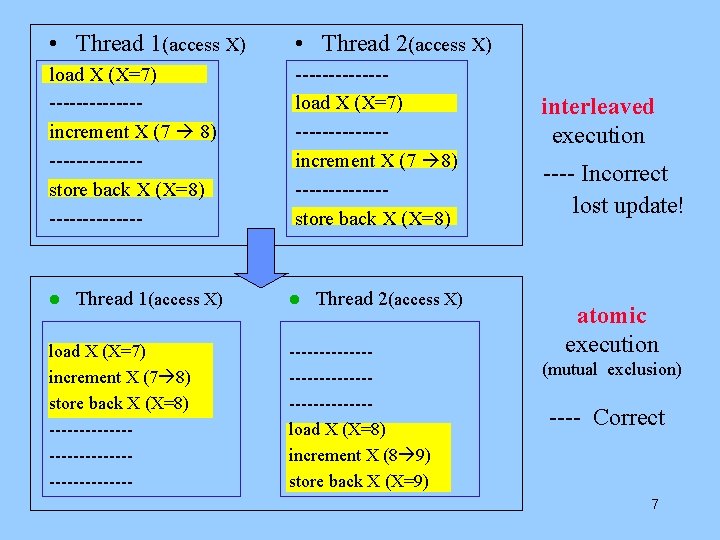

Interleaved execution: incorrect, lost update!

| Thread 1 (access X) | Thread 2 (access X) |
| --- | --- |
| load X (X=7) | |
| | load X (X=7) |
| increment X (7 → 8) | |
| | increment X (7 → 8) |
| store back X (X=8) | |
| | store back X (X=8) |

Atomic execution (mutual exclusion): correct

| Thread 1 (access X) | Thread 2 (access X) |
| --- | --- |
| load X (X=7) | |
| increment X (7 → 8) | |
| store back X (X=8) | |
| | load X (X=8) |
| | increment X (8 → 9) |
| | store back X (X=9) |
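The two schedules above can be replayed deterministically in plain C. This is only a single-threaded simulation, assuming each thread has a private register; the names `struct cpu`, `run_interleaved`, and `run_serialized` are made up for illustration.

```c
/* One thread's private state while it executes X++. */
struct cpu {
    int reg;   /* private register */
};

static void load(struct cpu *c, const int *x)  { c->reg = *x; }  /* (1) */
static void compute(struct cpu *c)             { c->reg++;    }  /* (2) */
static void store(const struct cpu *c, int *x) { *x = c->reg; }  /* (3) */

/* Interleaved schedule: both threads load before either one stores. */
int run_interleaved(int x)
{
    struct cpu t1, t2;

    load(&t1, &x);
    load(&t2, &x);      /* thread 2 reads the same old value */
    compute(&t1);
    compute(&t2);
    store(&t1, &x);
    store(&t2, &x);     /* overwrites thread 1's update */
    return x;
}

/* Serialized schedule: thread 2 starts only after thread 1 has stored. */
int run_serialized(int x)
{
    struct cpu t1, t2;

    load(&t1, &x); compute(&t1); store(&t1, &x);
    load(&t2, &x); compute(&t2); store(&t2, &x);
    return x;
}
```

Starting from X=7, the interleaved schedule ends with X=8 (one increment lost) while the serialized schedule ends with X=9.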

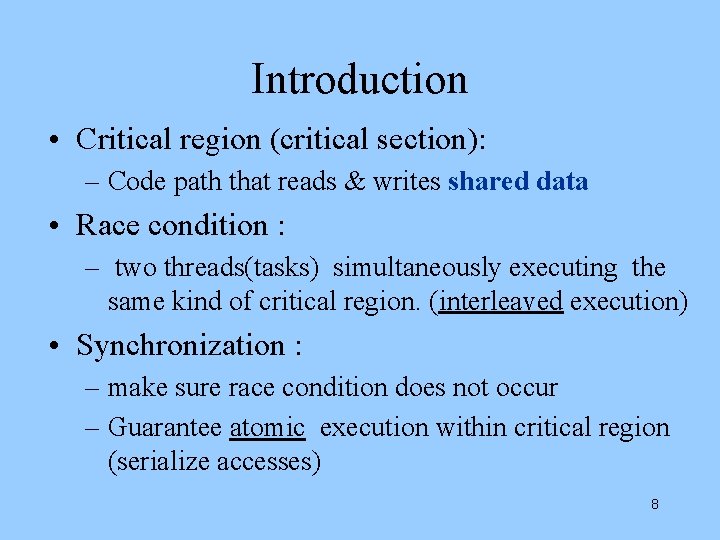

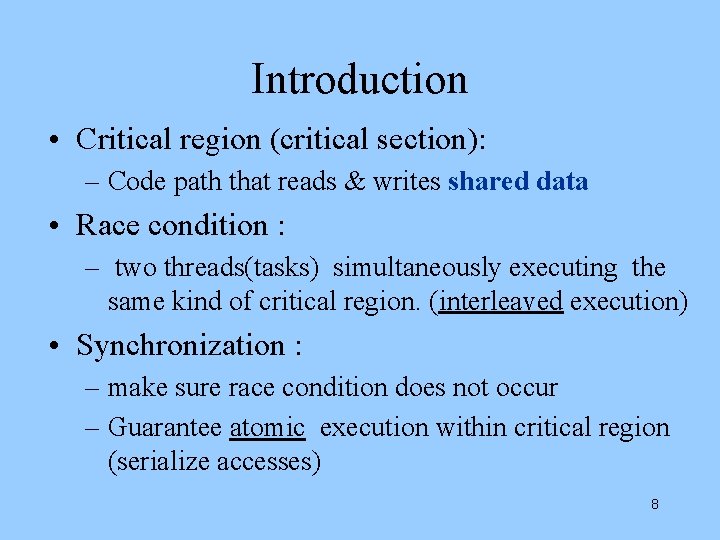

Introduction

- Critical region (critical section): a code path that reads and writes shared data.
- Race condition: two threads (tasks) simultaneously executing the same kind of critical region (interleaved execution).
- Synchronization: make sure that race conditions do not occur by guaranteeing atomic execution within the critical region (serializing accesses).

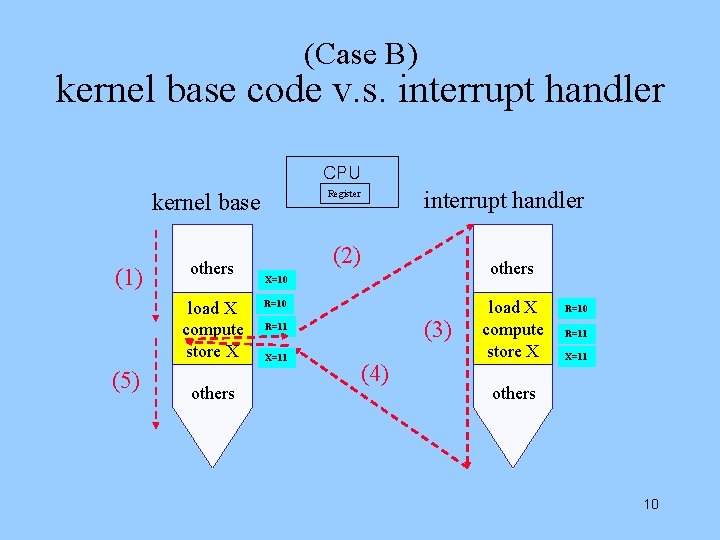

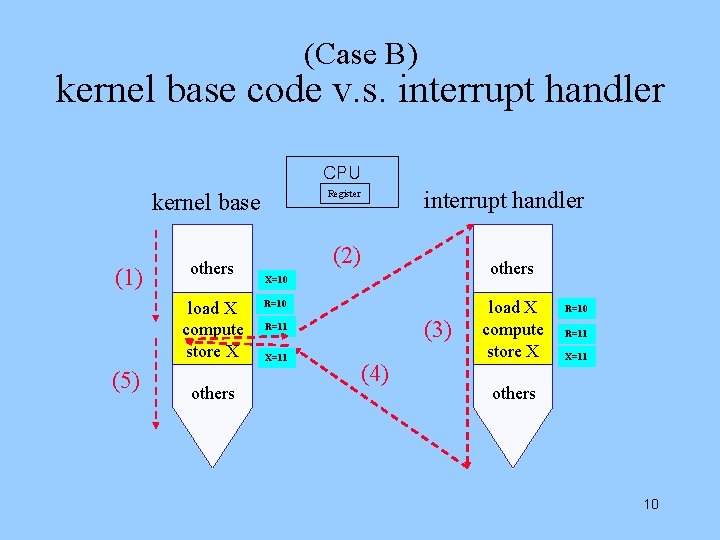

(Case B) Kernel base code vs. interrupt handler

[Figure: on a single CPU, the kernel base code loads X (X=10, R=10) and is then interrupted. The interrupt handler runs load X, compute, store X (R=10, R=11, X=11) and returns. The kernel base code resumes with its saved register, computes R=11, and stores X=11, so one of the two increments is lost.]

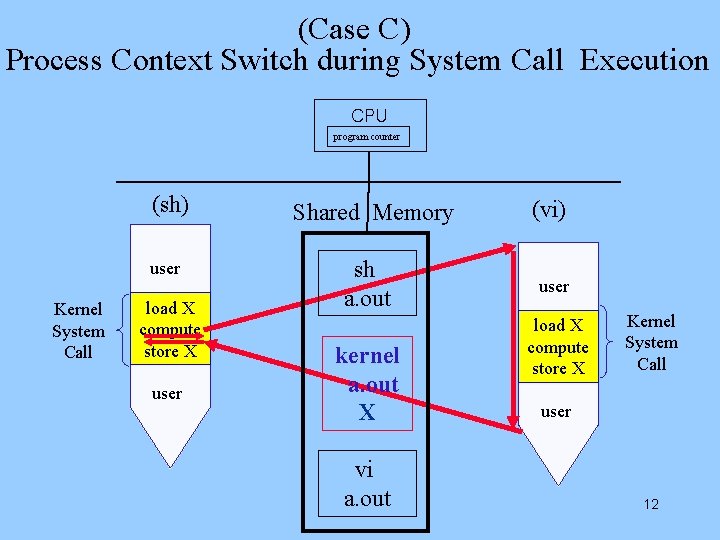

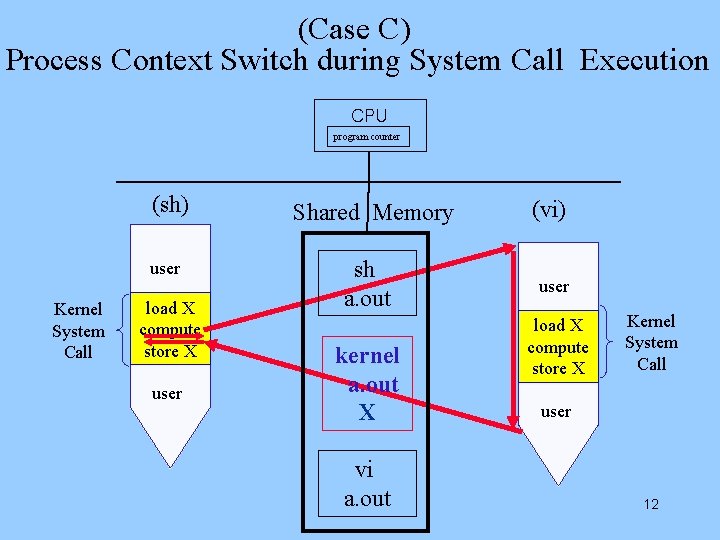

(Case C) Process context switch during system call execution

[Figure: on a single CPU, process sh leaves user code and enters the kernel through a system call that runs load X, compute, store X on kernel variable X. A context switch to process vi happens during that system call, and vi's own system call runs load X, compute, store X on the same X before returning to user code.]

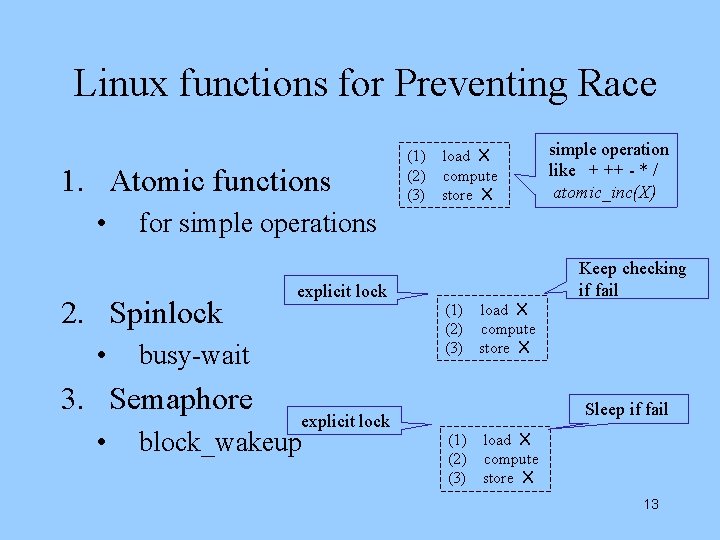

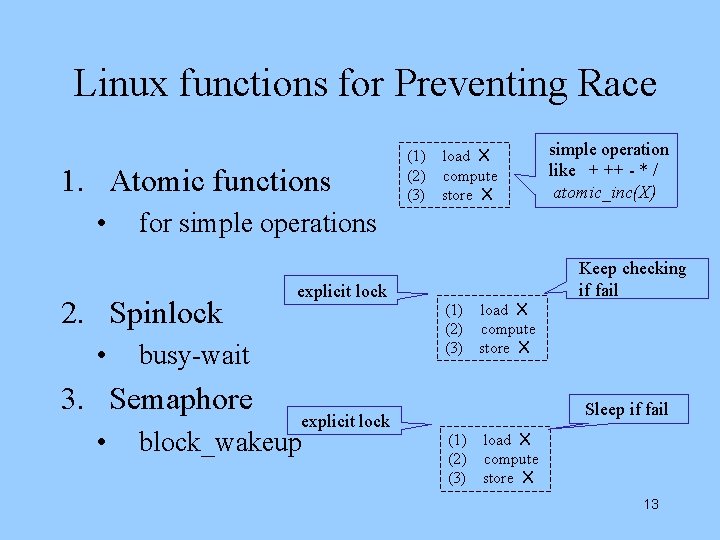

Linux functions for preventing races

1. Atomic functions: for simple operations such as add, subtract, increment, and decrement, e.g. `atomic_inc(X)`.
2. Spinlock: an explicit lock around (1) load X, (2) compute, (3) store X. Busy-wait: keep checking if the lock attempt fails.
3. Semaphore: an explicit lock around (1) load X, (2) compute, (3) store X. Block and wake up: sleep if the lock attempt fails.

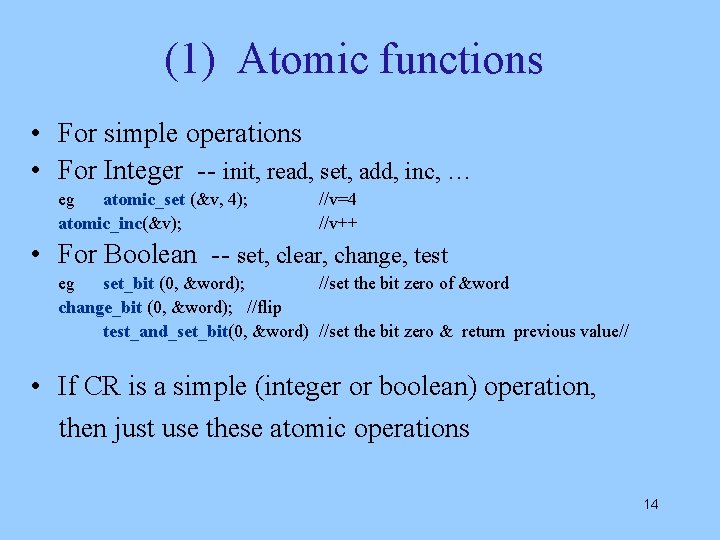

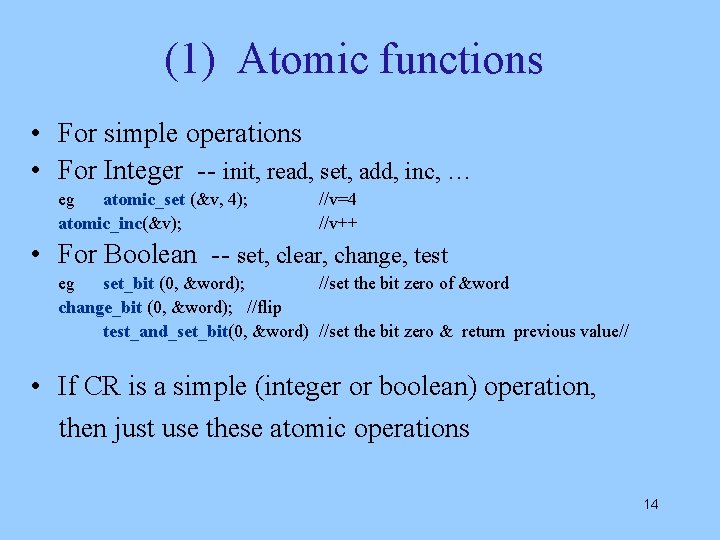

(1) Atomic functions

- For simple operations.
- For integers: init, read, set, add, inc, ... For example:

      atomic_set(&v, 4);   /* v = 4 */
      atomic_inc(&v);      /* v++   */

- For Booleans (bit operations): set, clear, change, test. For example:

      set_bit(0, &word);            /* set bit zero of word */
      change_bit(0, &word);         /* flip bit zero of word */
      test_and_set_bit(0, &word);   /* set bit zero and return its previous value */

- If the CR is a simple (integer or Boolean) operation, just use these atomic operations.
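The kernel calls above cannot be run outside the kernel, so the following is a userspace analogue built on C11 `<stdatomic.h>`. It is a sketch of the same idea, not the kernel API; `counter_demo`, `demo_test_and_set_bit`, and `demo_change_bit` are hypothetical names.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Userspace stand-in for: atomic_set(&v, 4); atomic_inc(&v); */
int counter_demo(void)
{
    atomic_int v;

    atomic_init(&v, 4);         /* v = 4 */
    atomic_fetch_add(&v, 1);    /* v++ as one indivisible step */
    return atomic_load(&v);
}

/* Hypothetical analogue of test_and_set_bit(): set bit nr and
 * report whether it was already set. */
bool demo_test_and_set_bit(unsigned nr, atomic_ulong *word)
{
    unsigned long mask = 1UL << nr;
    unsigned long old = atomic_fetch_or(word, mask);

    return (old & mask) != 0;
}

/* Hypothetical analogue of change_bit(): flip bit nr. */
void demo_change_bit(unsigned nr, atomic_ulong *word)
{
    atomic_fetch_xor(word, 1UL << nr);
}
```

Because each read-modify-write is a single atomic operation, no explicit lock is needed around it, which is the point the slide makes for simple critical regions.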

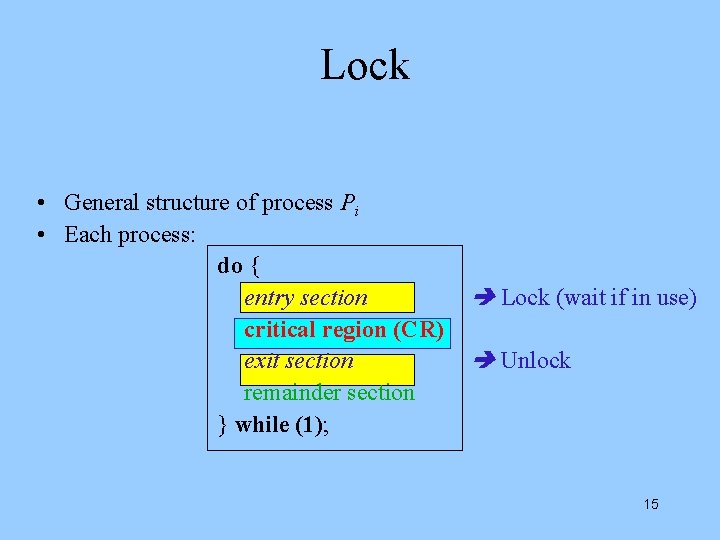

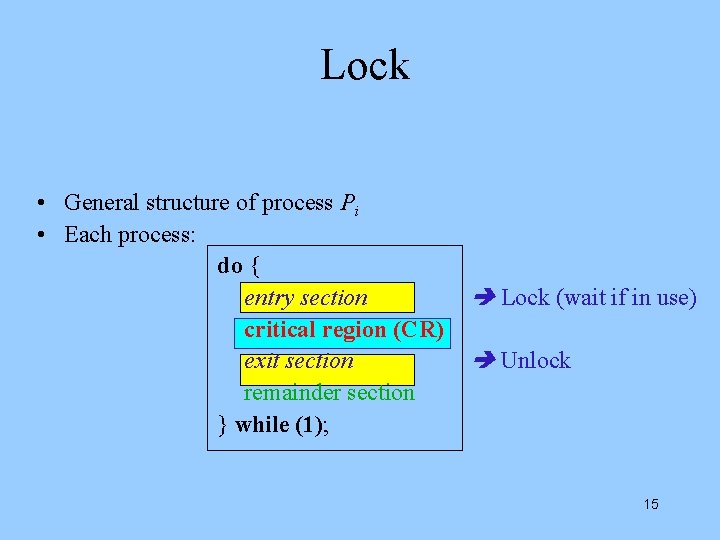

Lock

- General structure of process Pi. Each process runs:

      do {
          entry section          /* Lock (wait if in use) */
          critical region (CR)
          exit section           /* Unlock */
          remainder section
      } while (1);

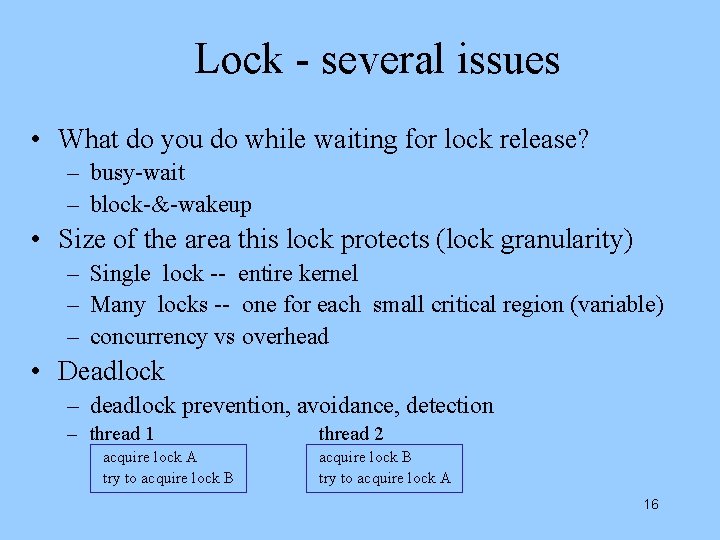

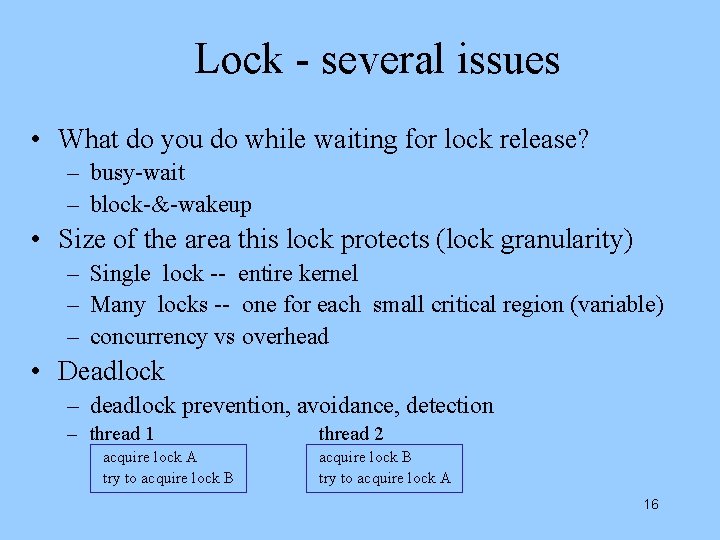

Lock: several issues

- What do you do while waiting for the lock to be released?
  - busy-wait
  - block and wake up
- Size of the area this lock protects (lock granularity)
  - Single lock: the entire kernel
  - Many locks: one for each small critical region (variable)
  - Trade-off: concurrency vs. overhead
- Deadlock
  - deadlock prevention, avoidance, detection
  - Example: thread 1 acquires lock A and then tries to acquire lock B, while thread 2 acquires lock B and then tries to acquire lock A.

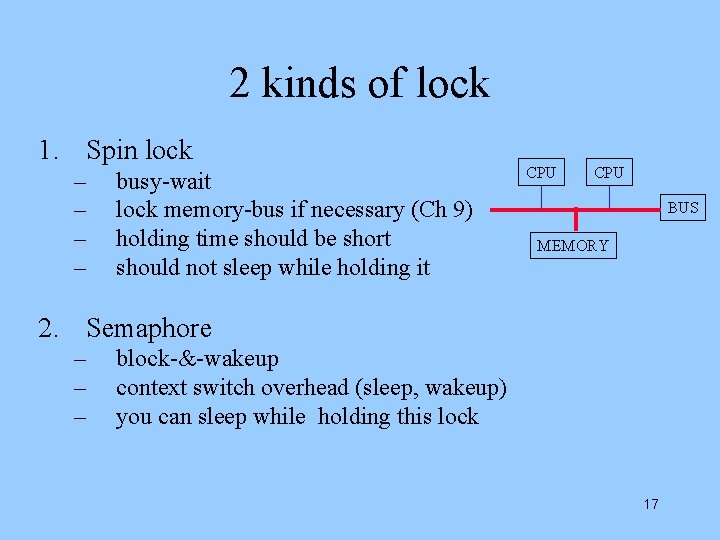

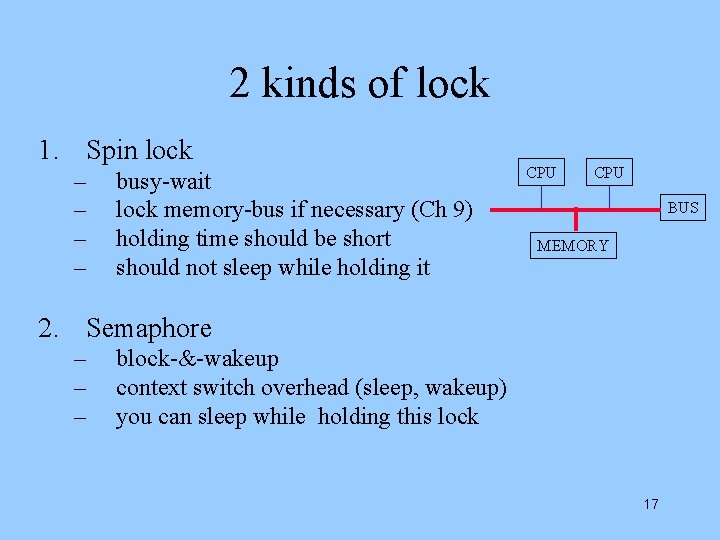

Two kinds of lock

1. Spin lock
   - busy-wait
   - locks the memory bus if necessary (Ch 9)
   - holding time should be short
   - should not sleep while holding it
2. Semaphore
   - block and wake up
   - context switch overhead (sleep, wakeup)
   - you can sleep while holding this lock

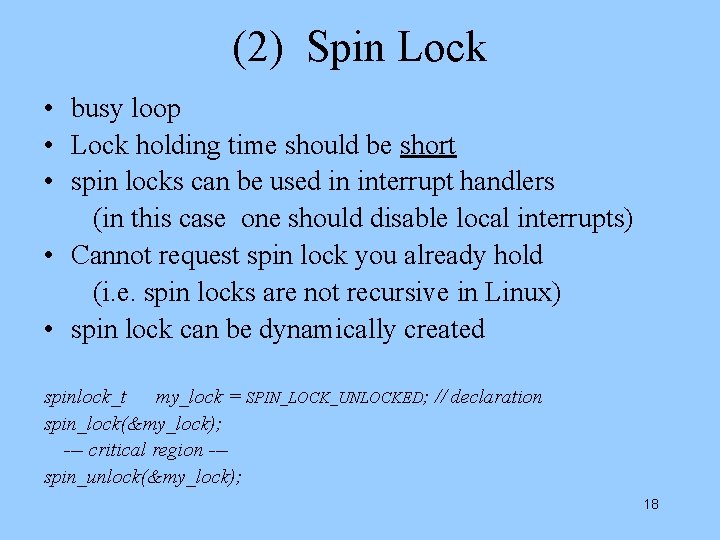

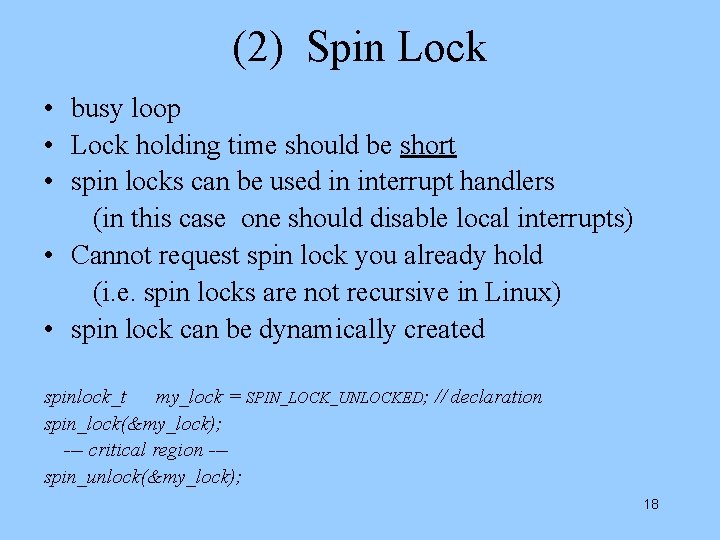

(2) Spin Lock

- Busy loop.
- Lock holding time should be short.
- Spin locks can be used in interrupt handlers (in this case one should disable local interrupts).
- You cannot request a spin lock you already hold (i.e. spin locks are not recursive in Linux).
- A spin lock can be dynamically created.

      spinlock_t my_lock = SPIN_LOCK_UNLOCKED;   /* declaration */

      spin_lock(&my_lock);
      /* critical region */
      spin_unlock(&my_lock);
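To make the busy loop concrete, here is a minimal userspace sketch of a spin lock built on a C11 `atomic_flag`. It is not the kernel's `spinlock_t` (which also deals with preemption and interrupts); the `demo_*` names are invented for this example.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical userspace spin lock; not the kernel's spinlock_t. */
typedef struct {
    atomic_flag locked;
} demo_spinlock;

#define DEMO_SPINLOCK_INIT { ATOMIC_FLAG_INIT }

/* Try once; returns true if the lock was acquired. */
bool demo_spin_trylock(demo_spinlock *l)
{
    /* test_and_set returns the previous value: false means it was free. */
    return !atomic_flag_test_and_set_explicit(&l->locked,
                                              memory_order_acquire);
}

/* Busy-wait ("keep checking") until the lock is acquired. */
void demo_spin_lock(demo_spinlock *l)
{
    while (!demo_spin_trylock(l))
        ;   /* spin */
}

void demo_spin_unlock(demo_spinlock *l)
{
    atomic_flag_clear_explicit(&l->locked, memory_order_release);
}
```

The sketch also shows why such a lock is not recursive: a holder that calls `demo_spin_lock()` again would spin forever, since only an unlock clears the flag.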

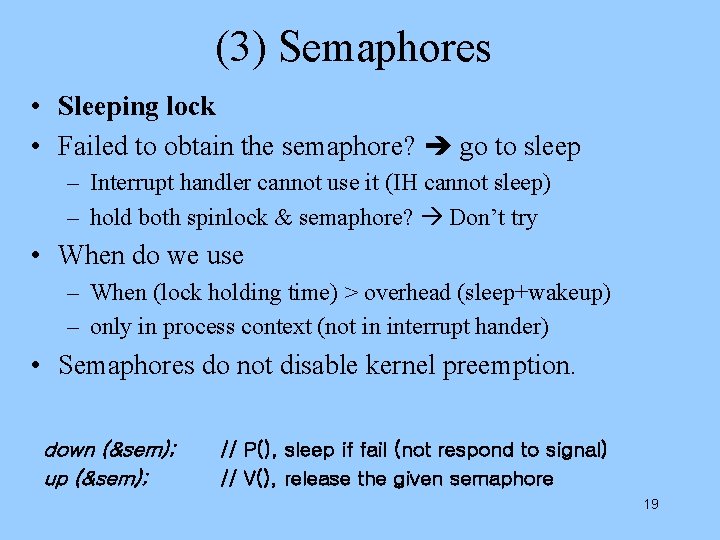

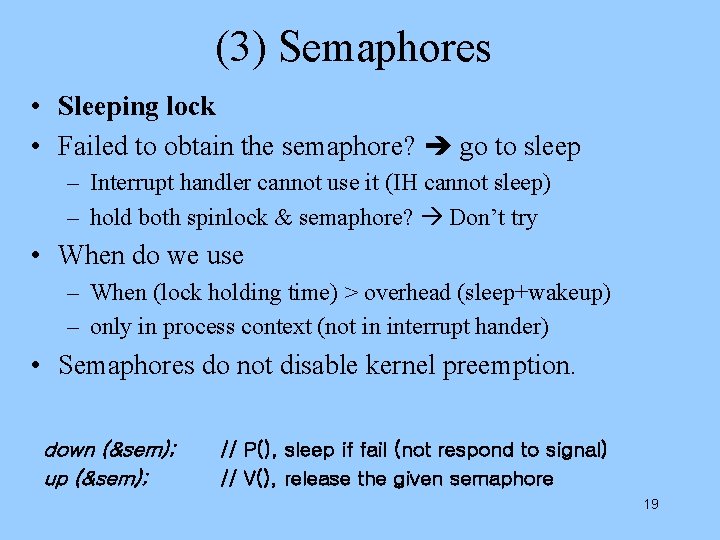

(3) Semaphores

- Sleeping lock.
- Failed to obtain the semaphore? Go to sleep.
  - An interrupt handler cannot use it (an interrupt handler cannot sleep).
  - Hold both a spin lock and a semaphore? Don't try: acquiring a semaphore may sleep, and you should not sleep while holding a spin lock.
- When do we use it?
  - When (lock holding time) > overhead (sleep + wakeup)
  - Only in process context (not in an interrupt handler)
- Semaphores do not disable kernel preemption.

      down(&sem);   /* P(): sleep if it fails (does not respond to signals) */
      up(&sem);     /* V(): release the given semaphore */

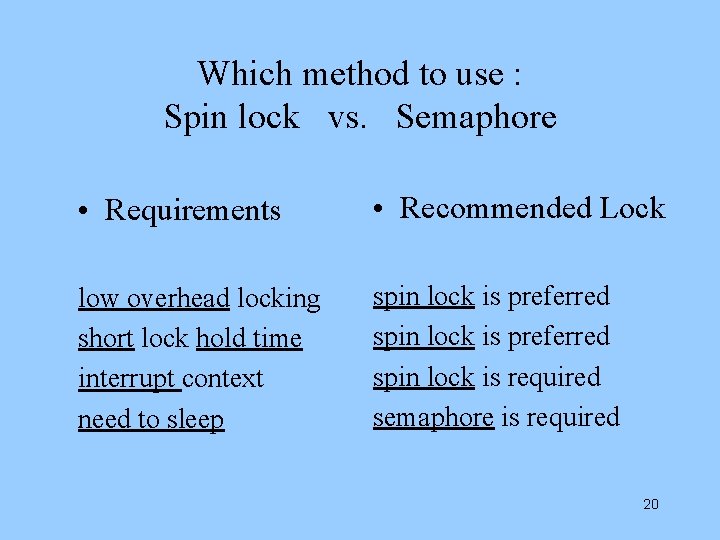

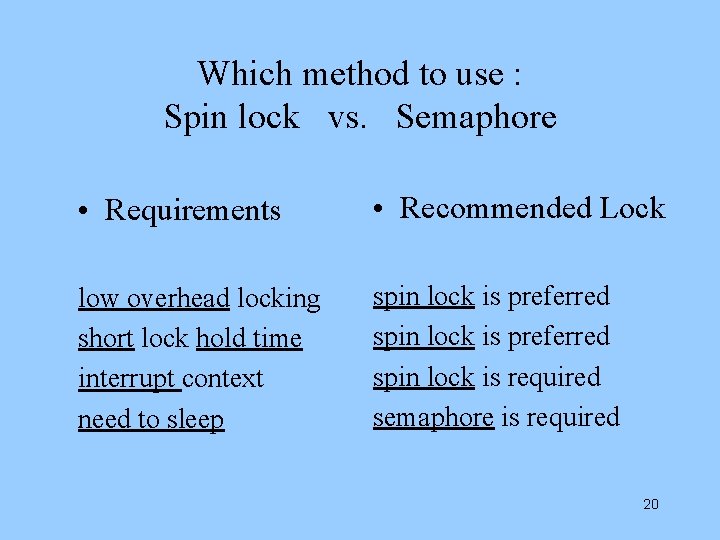

Which method to use: spin lock vs. semaphore

| Requirement | Recommended lock |
| --- | --- |
| Low overhead locking | Spin lock is preferred |
| Short lock hold time | Spin lock is preferred |
| Interrupt context | Spin lock is required |
| Need to sleep | Semaphore is required |

Where race conditions occur

A. Symmetric multiprocessing (multiple CPUs)

- Two CPUs each enter the same type of CR (fetch x, add, store x).
- Cure: acquire a lock (e.g. a semaphore) when entering the CR and release it when exiting ("SMP-safe"). No other CPU is allowed to enter the same type of CR.

B. Interrupt (single CPU)

- The kernel is in the middle of a CR (fetch x, add, store x).
- An interrupt occurs and the interrupt handler enters the same CR (fetch x, add, store x).
- Cure: disable interrupts while the kernel is in the CR ("interrupt-safe"). An interrupt cannot occur in this CR.

C. Kernel preemption (single CPU)

- Linux now allows kernel preemption, so it has to be done safely.
- The kernel is in the middle of CR A (fetch x, add, store x) and is preempted.
- Another task X invokes a system call (enters the kernel), and the kernel enters the same CR A (fetch x, add, store x).
- Cure: do not allow preemption during a kernel CR ("preempt-safe"). The kernel is guaranteed to complete this CR.

Advanced Issues

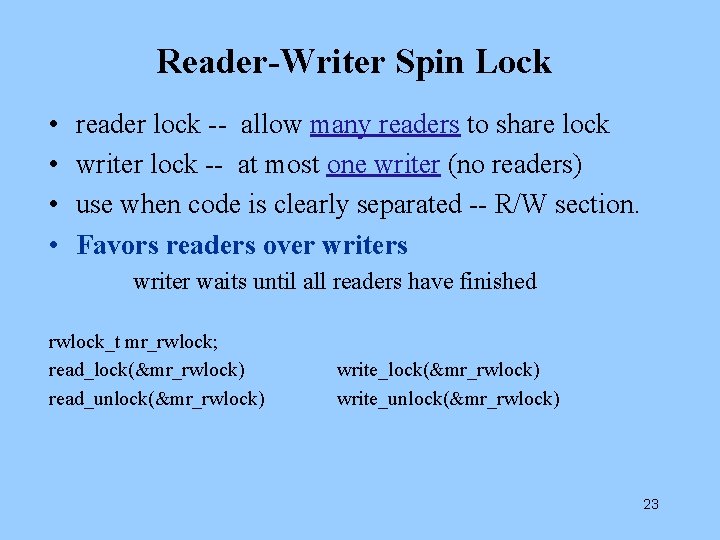

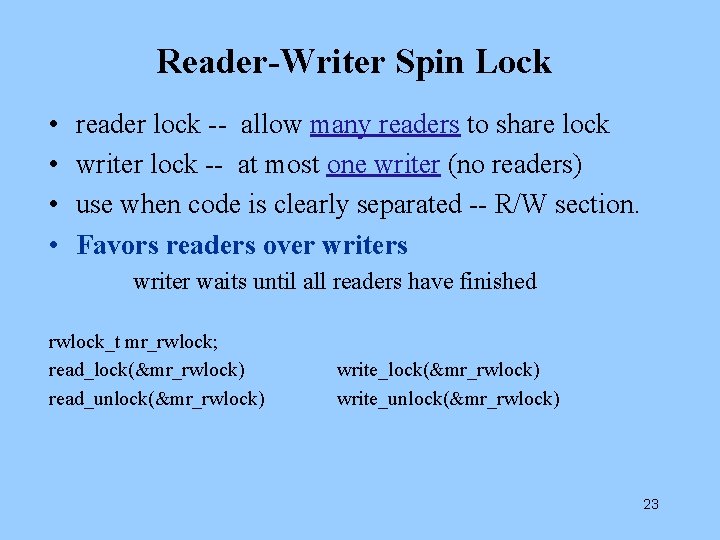

Reader-Writer Spin Lock

- Reader lock: allows many readers to share the lock.
- Writer lock: at most one writer (and no readers).
- Use it when the code is clearly separated into read and write sections.
- Favors readers over writers: a writer waits until all readers have finished.

      rwlock_t mr_rwlock;

      read_lock(&mr_rwlock);
      read_unlock(&mr_rwlock);

      write_lock(&mr_rwlock);
      write_unlock(&mr_rwlock);
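The sharing rule (many readers or exactly one writer) can be modelled with a single atomic counter. The sketch below uses try-lock operations only and is a userspace illustration under that simplification, not the kernel's `rwlock_t`; all `demo_*` names are assumptions.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* state > 0: number of readers, state == 0: free, state == -1: one writer */
typedef struct {
    atomic_int state;
} demo_rwlock;

/* A reader may enter as long as no writer holds the lock. */
bool demo_read_trylock(demo_rwlock *l)
{
    int s = atomic_load(&l->state);

    while (s >= 0) {
        /* On failure, s is reloaded with the current value and we retry. */
        if (atomic_compare_exchange_weak(&l->state, &s, s + 1))
            return true;
    }
    return false;   /* a writer is active */
}

void demo_read_unlock(demo_rwlock *l)
{
    atomic_fetch_sub(&l->state, 1);
}

/* A writer may enter only when there are no readers and no writer. */
bool demo_write_trylock(demo_rwlock *l)
{
    int expected = 0;

    return atomic_compare_exchange_strong(&l->state, &expected, -1);
}

void demo_write_unlock(demo_rwlock *l)
{
    atomic_store(&l->state, 0);
}
```

In this model new readers can keep joining while other readers hold the lock, so a waiting writer makes progress only once the reader count drops to zero, which matches the "favors readers" remark above.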

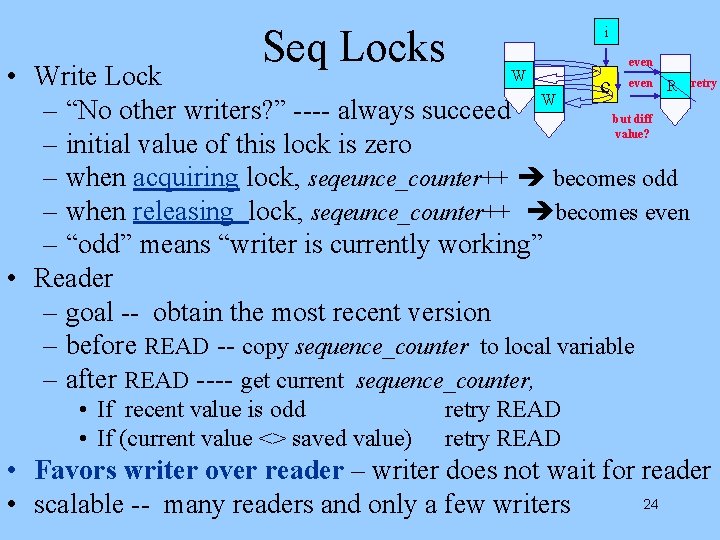

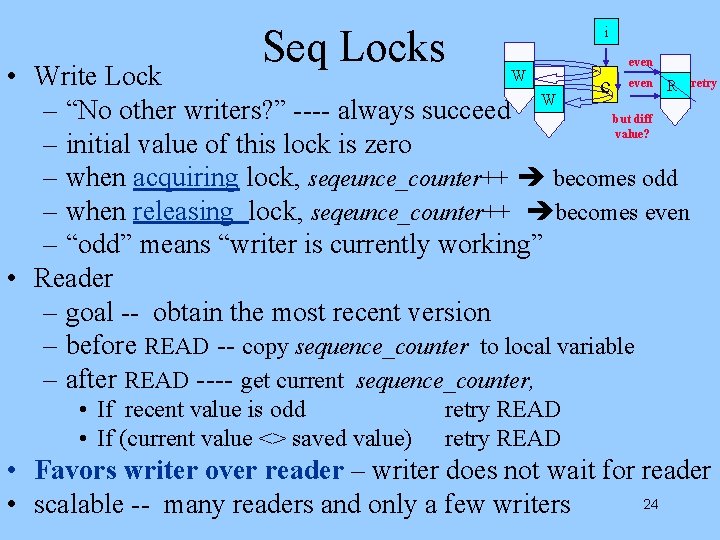

Seq Locks

- Write lock
  - "No other writers?" If so, acquiring the lock always succeeds; readers never block a writer.
  - The initial value of the lock (the sequence counter) is zero.
  - When acquiring the lock, sequence_counter++ makes it odd.
  - When releasing the lock, sequence_counter++ makes it even.
  - "Odd" means "a writer is currently working".
- Reader
  - Goal: obtain the most recent version.
  - Before READ: copy sequence_counter to a local variable.
  - After READ: get the current sequence_counter.
    - If the saved value is odd, or
    - if (current value != saved value), retry READ.
- Favors writers over readers: a writer does not wait for readers.
- Scalable: many readers and only a few writers.
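The reader and writer protocol described above can be sketched in userspace C11. This assumes a single writer at a time (a real seq lock also serializes writers) and uses default sequentially consistent atomics for simplicity; `demo_seq_pair` and its functions are hypothetical names, not the kernel's `seqlock_t` API.

```c
#include <stdatomic.h>

typedef struct {
    atomic_uint seq;   /* even: data stable, odd: writer is working */
    atomic_int  a, b;  /* the protected data */
} demo_seq_pair;

/* Writer: never waits for readers. Assumes no concurrent writers. */
void demo_write_pair(demo_seq_pair *p, int a, int b)
{
    atomic_fetch_add(&p->seq, 1);   /* counter becomes odd */
    atomic_store(&p->a, a);
    atomic_store(&p->b, b);
    atomic_fetch_add(&p->seq, 1);   /* counter becomes even again */
}

/* Reader: retry if a writer was active or the counter changed. */
void demo_read_pair(demo_seq_pair *p, int *a, int *b)
{
    unsigned start;

    do {
        start = atomic_load(&p->seq);   /* save counter before READ */
        *a = atomic_load(&p->a);
        *b = atomic_load(&p->b);
    } while ((start & 1u) || atomic_load(&p->seq) != start);
}
```

Readers take no lock at all, so they cost the writer nothing; the price is that a reader may have to repeat its read.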

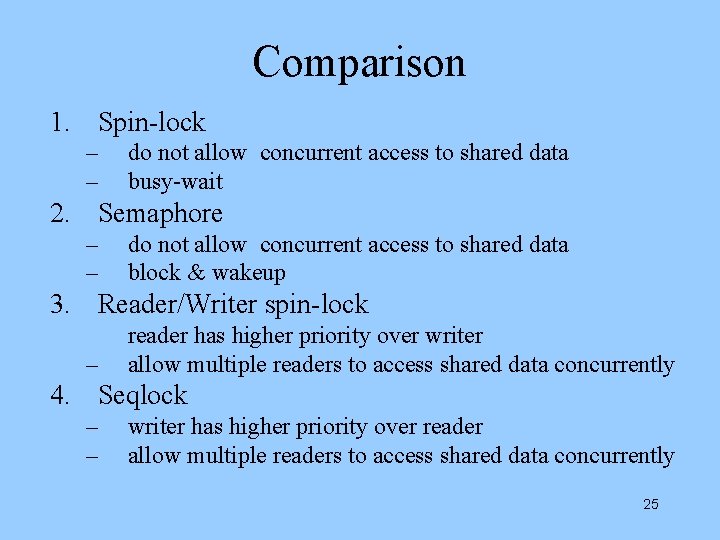

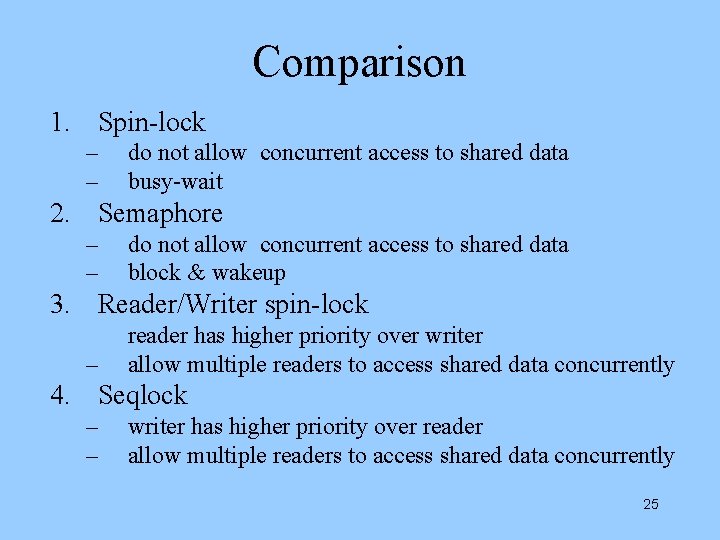

Comparison

1. Spin lock
   - does not allow concurrent access to shared data
   - busy-wait
2. Semaphore
   - does not allow concurrent access to shared data
   - block and wake up
3. Reader/writer spin lock
   - readers have higher priority than writers
   - allows multiple readers to access shared data concurrently
4. Seq lock
   - writers have higher priority than readers
   - allows multiple readers to access shared data concurrently

BKL: The Big Kernel Lock

- The first SMP implementation: a global spin lock, so only one task could be in the kernel at a time.
- Later, fine-grained locking was introduced so that multiple CPUs can execute kernel code concurrently.
- The BKL is not scalable.
- The BKL is obsolete now.

      lock_kernel();
      unlock_kernel();

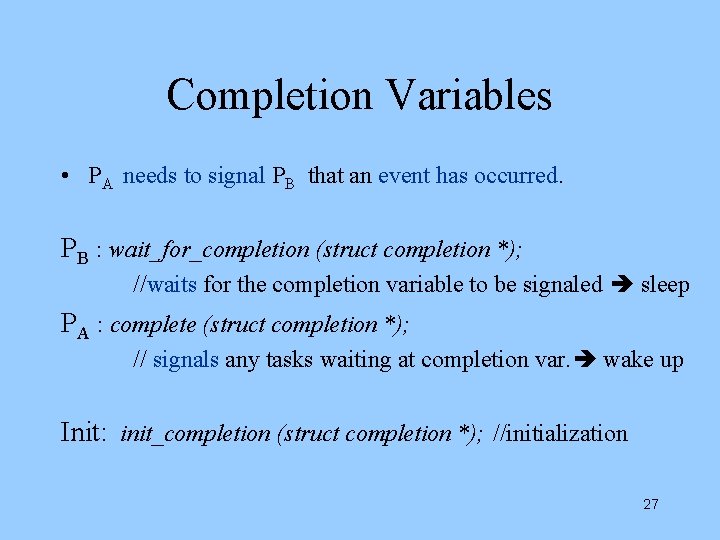

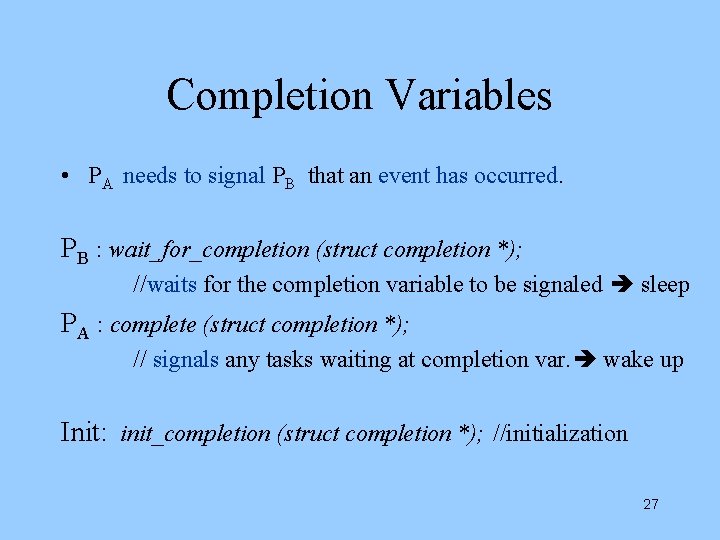

Completion Variables

- PA needs to signal PB that an event has occurred.
- Init: `init_completion(struct completion *);` initializes the completion variable.
- PB: `wait_for_completion(struct completion *);` waits (sleeps) until the completion variable is signaled.
- PA: `complete(struct completion *);` signals (wakes up) any tasks waiting on the completion variable.

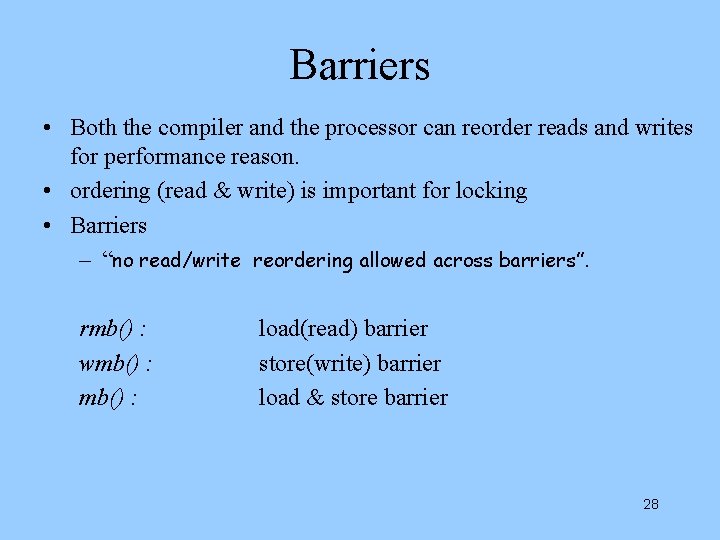

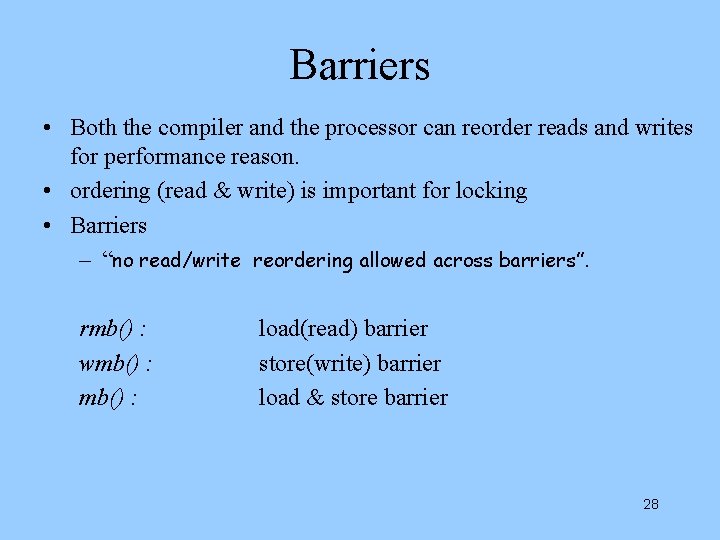

Barriers

- Both the compiler and the processor can reorder reads and writes for performance reasons.
- Ordering of reads and writes is important for locking.
- Barriers: "no read/write reordering allowed across barriers".
  - `rmb()`: load (read) barrier
  - `wmb()`: store (write) barrier
  - `mb()`: load and store barrier

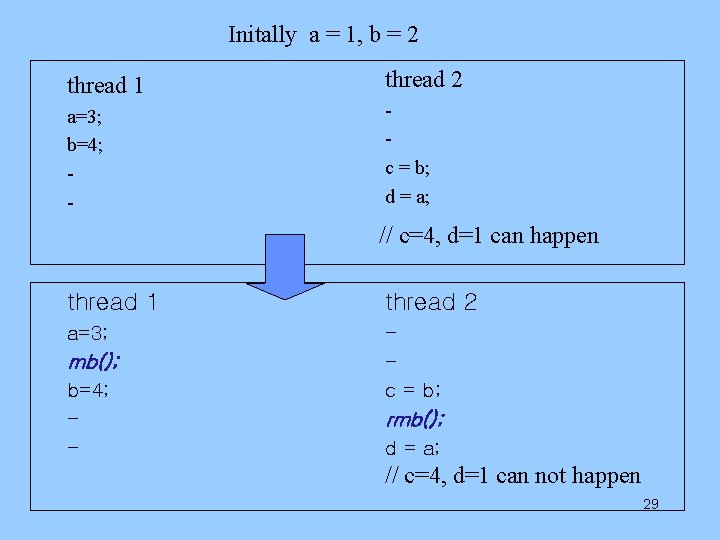

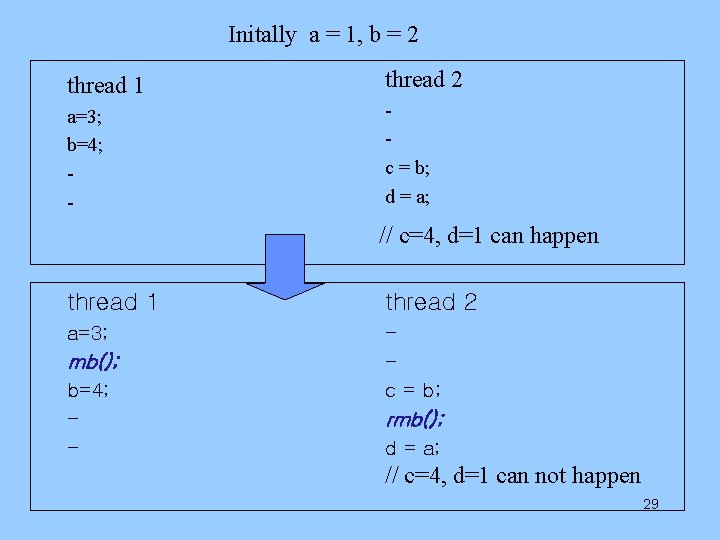

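Initially a = 1, b = 2.

Without barriers, c=4, d=1 can happen:

| Thread 1 | Thread 2 |
| --- | --- |
| a = 3; | - |
| b = 4; | c = b; |
| - | d = a; |

With barriers, c=4, d=1 cannot happen:

| Thread 1 | Thread 2 |
| --- | --- |
| a = 3; | - |
| mb(); | - |
| b = 4; | c = b; |
| - | rmb(); |
| - | d = a; |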
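The barrier example can be approximated in userspace with C11 fences. This is an analogy rather than the kernel primitives: `atomic_thread_fence(memory_order_seq_cst)` stands in for `mb()` and an acquire fence stands in for `rmb()`. The variable and function names are made up.

```c
#include <stdatomic.h>

static atomic_int shared_a = 1;
static atomic_int shared_b = 2;

/* Thread 1 in the slide: a = 3; mb(); b = 4; */
void demo_writer(void)
{
    atomic_store_explicit(&shared_a, 3, memory_order_relaxed);
    atomic_thread_fence(memory_order_seq_cst);   /* plays the role of mb() */
    atomic_store_explicit(&shared_b, 4, memory_order_relaxed);
}

/* Thread 2 in the slide: c = b; rmb(); d = a; */
void demo_reader(int *c, int *d)
{
    *c = atomic_load_explicit(&shared_b, memory_order_relaxed);
    atomic_thread_fence(memory_order_acquire);   /* plays the role of rmb() */
    *d = atomic_load_explicit(&shared_a, memory_order_relaxed);
}
```

With both fences in place, a reader that observes b == 4 is guaranteed to observe a == 3 as well, so the outcome c=4, d=1 is ruled out. Removing either fence would allow it again.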

Ch 10 Timers and Time Management

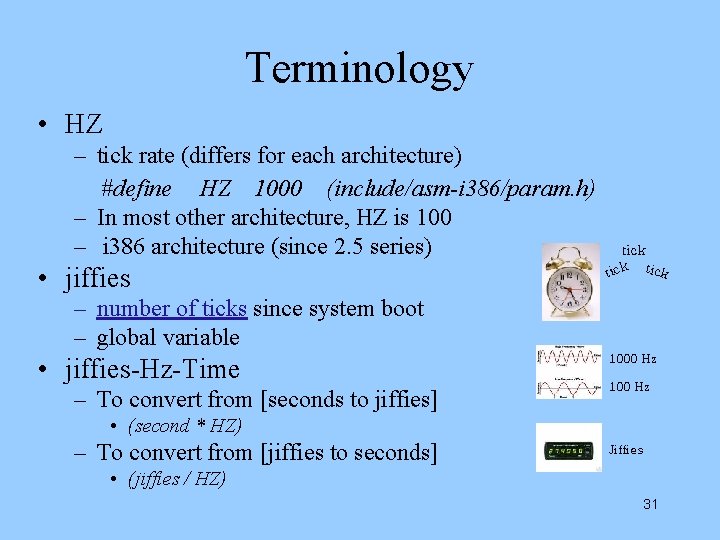

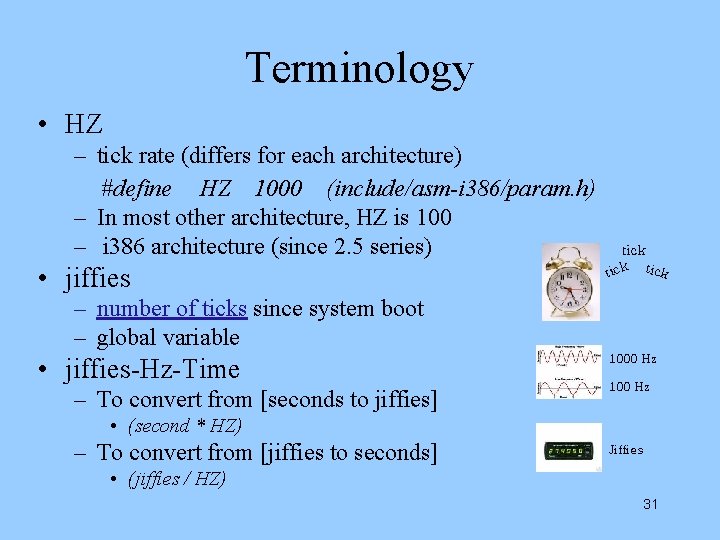

Terminology

- HZ
  - Tick rate (differs for each architecture).
  - On the i386 architecture (since the 2.5 series): `#define HZ 1000` (include/asm-i386/param.h)
  - In most other architectures, HZ is 100.
- jiffies
  - Number of ticks since system boot.
  - Global variable.
- jiffies, HZ, and time
  - To convert from seconds to jiffies: (seconds * HZ)
  - To convert from jiffies to seconds: (jiffies / HZ)
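As a quick worked example of the two conversions, the helpers below take the tick rate as a parameter so that both 100 Hz and 1000 Hz can be tried. They are illustrative userspace functions with invented names, not kernel interfaces.

```c
#include <assert.h>

/* seconds -> ticks: seconds * HZ */
unsigned long demo_secs_to_jiffies(unsigned long secs, unsigned long hz)
{
    return secs * hz;
}

/* ticks -> seconds: jiffies / HZ (integer division drops the fraction) */
unsigned long demo_jiffies_to_secs(unsigned long jiffies, unsigned long hz)
{
    assert(hz > 0);   /* a zero tick rate makes no sense */
    return jiffies / hz;
}
```

For instance, 5 seconds is 5000 ticks at HZ=1000 but only 500 ticks at HZ=100, and 5999 ticks at HZ=1000 converts back to 5 whole seconds.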

Terminology

- Issues on HZ: what if the tick rate is increased from 100 Hz to 1000 Hz?
  - All timed events have a higher resolution,
  - and the accuracy of timed events improves,
  - but the overhead of the timer interrupt increases.
- Issues on jiffies
  - Internal representation of jiffies
  - jiffies wrap-around
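The wrap-around issue is easiest to see with a comparison that survives counter overflow. The sketch below follows the idea behind the kernel's `time_after()` macro (subtract, then interpret the result as signed); the function names are hypothetical, and the cast assumes the usual two's-complement conversion.

```c
/* Wrap-safe: true if tick a is later than tick b, as long as the two
 * are less than half the counter range apart. */
int demo_time_after(unsigned long a, unsigned long b)
{
    return (long)(b - a) < 0;
}

/* Naive comparison: breaks once the counter wraps past zero. */
int demo_naive_after(unsigned long a, unsigned long b)
{
    return a > b;
}
```

If a timeout is set just below the maximum counter value and the counter then wraps to a small number, the naive test claims the timeout has not passed, while the wrap-safe test still gives the right answer.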

Hardware Clocks and Timers

- System timer
  - Drives an interrupt at a periodic rate.
  - Programmable Interval Timer (PIT) on x86.
  - The kernel programs the PIT on boot to drive the timer interrupt at HZ.
- Real-Time Clock (RTC)
  - Nonvolatile device for storing the time.
  - The RTC keeps running even when the system is off (small battery).
  - On boot, the kernel reads the RTC to initialize the wall time (in `struct timespec xtime`).

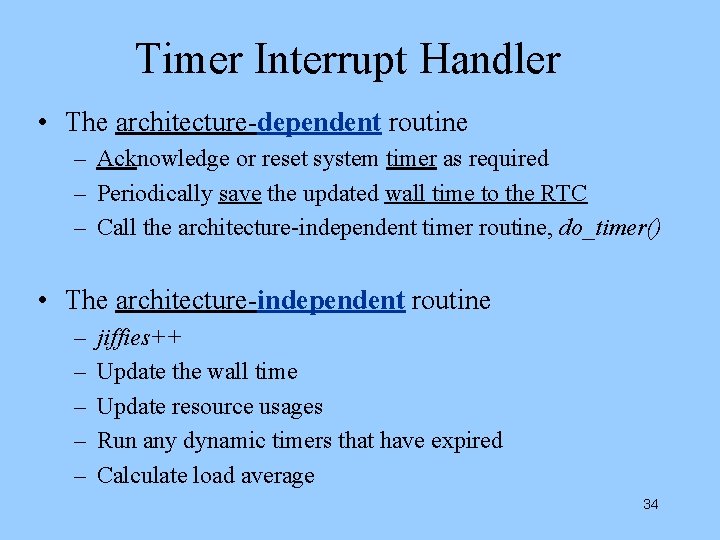

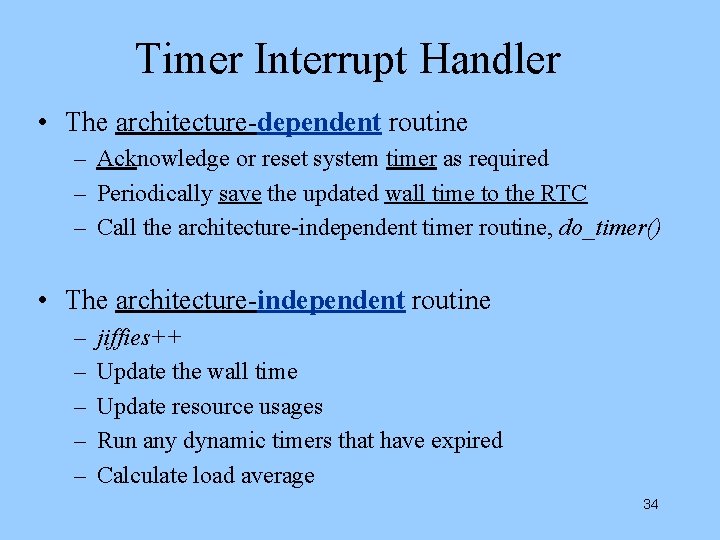

Timer Interrupt Handler

- The architecture-dependent routine
  - Acknowledge or reset the system timer as required.
  - Periodically save the updated wall time to the RTC.
  - Call the architecture-independent timer routine, `do_timer()`.
- The architecture-independent routine
  - jiffies++
  - Update the wall time.
  - Update resource usages.
  - Run any dynamic timers that have expired.
  - Calculate the load average.

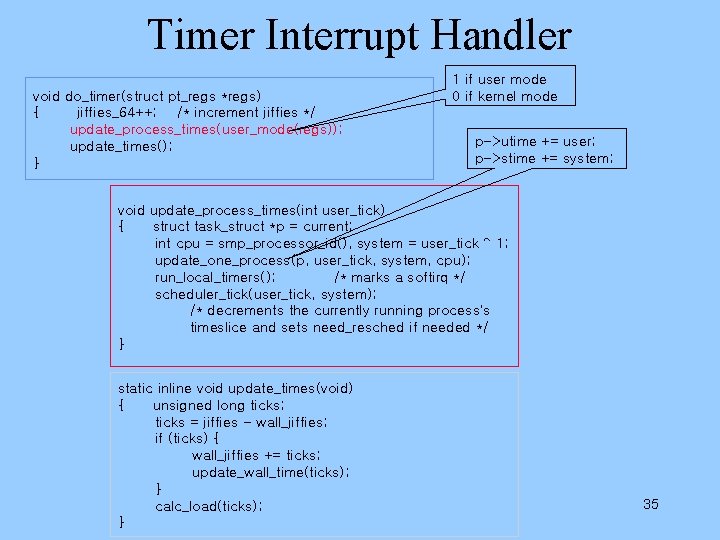

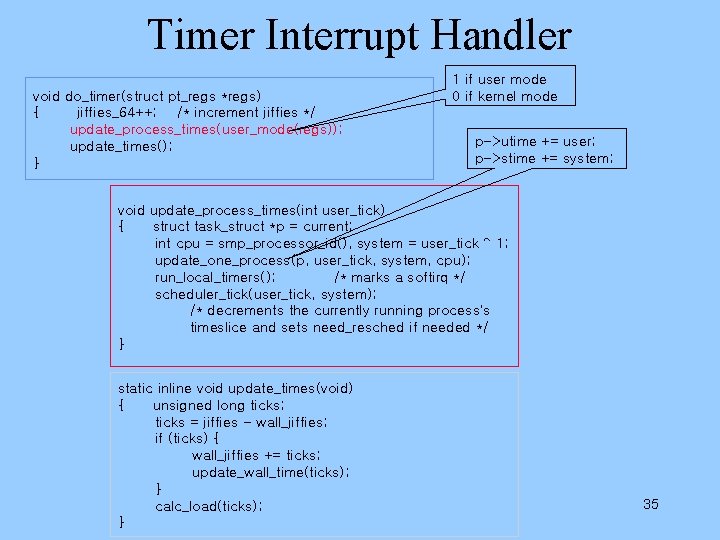

Timer Interrupt Handler

    void do_timer(struct pt_regs *regs)
    {
        jiffies_64++;                            /* increment jiffies */
        update_process_times(user_mode(regs));   /* user_mode(): 1 if user mode, 0 if kernel mode */
        update_times();
    }

    void update_process_times(int user_tick)
    {
        struct task_struct *p = current;
        int cpu = smp_processor_id(), system = user_tick ^ 1;

        update_one_process(p, user_tick, system, cpu);  /* p->utime += user; p->stime += system; */
        run_local_timers();                  /* marks a softirq */
        scheduler_tick(user_tick, system);   /* decrements the currently running process's
                                                timeslice and sets need_resched if needed */
    }

    static inline void update_times(void)
    {
        unsigned long ticks;

        ticks = jiffies - wall_jiffies;
        if (ticks) {
            wall_jiffies += ticks;
            update_wall_time(ticks);
        }
        calc_load(ticks);
    }

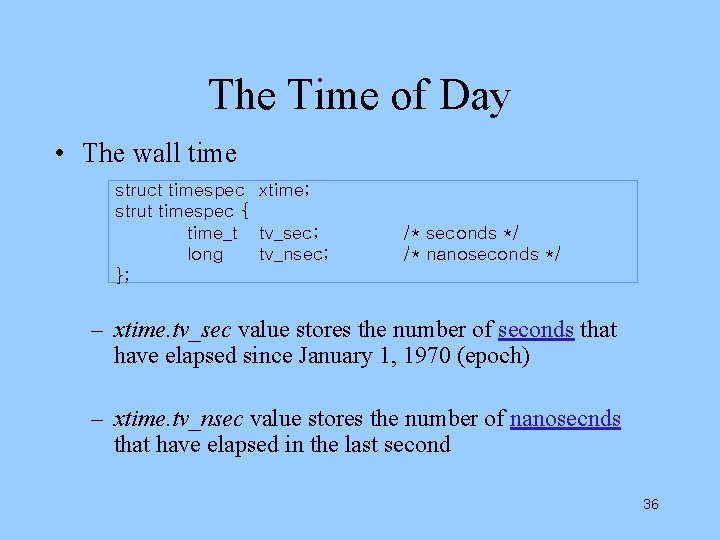

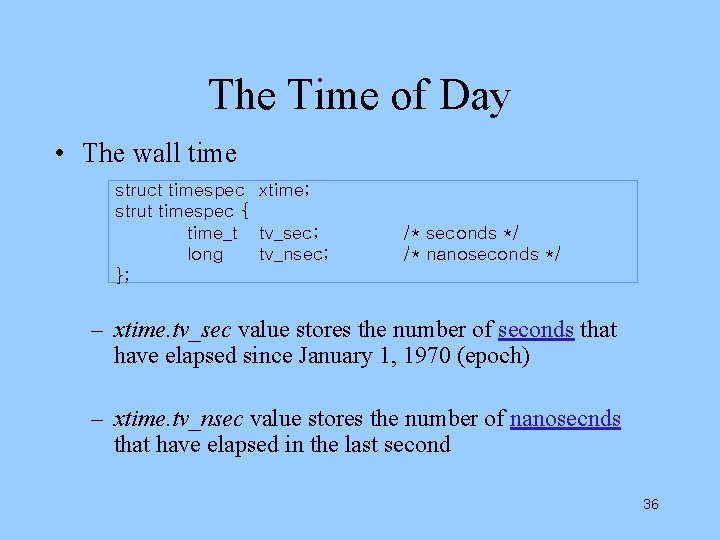

The Time of Day

- The wall time

      struct timespec xtime;

      struct timespec {
          time_t tv_sec;    /* seconds */
          long   tv_nsec;   /* nanoseconds */
      };

  - `xtime.tv_sec` stores the number of seconds that have elapsed since January 1, 1970 (the epoch).
  - `xtime.tv_nsec` stores the number of nanoseconds that have elapsed in the last second.
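Since a `struct timespec` splits time into whole seconds plus nanoseconds, arithmetic on it needs a borrow step. The helper below is a small userspace sketch using the standard `struct timespec` from `<time.h>`; `demo_timespec_sub` and `DEMO_NSEC_PER_SEC` are names chosen for this example, and it assumes both inputs are normalized with `end` not earlier than `start`.

```c
#include <time.h>

#define DEMO_NSEC_PER_SEC 1000000000L

/* Return end - start as a normalized timespec (0 <= tv_nsec < 1e9). */
struct timespec demo_timespec_sub(struct timespec end, struct timespec start)
{
    struct timespec d;

    d.tv_sec  = end.tv_sec  - start.tv_sec;
    d.tv_nsec = end.tv_nsec - start.tv_nsec;
    if (d.tv_nsec < 0) {               /* borrow one second */
        d.tv_sec  -= 1;
        d.tv_nsec += DEMO_NSEC_PER_SEC;
    }
    return d;
}
```

For example, the interval from 1.9 s to 3.1 s comes out as 1 second and 200000000 nanoseconds.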

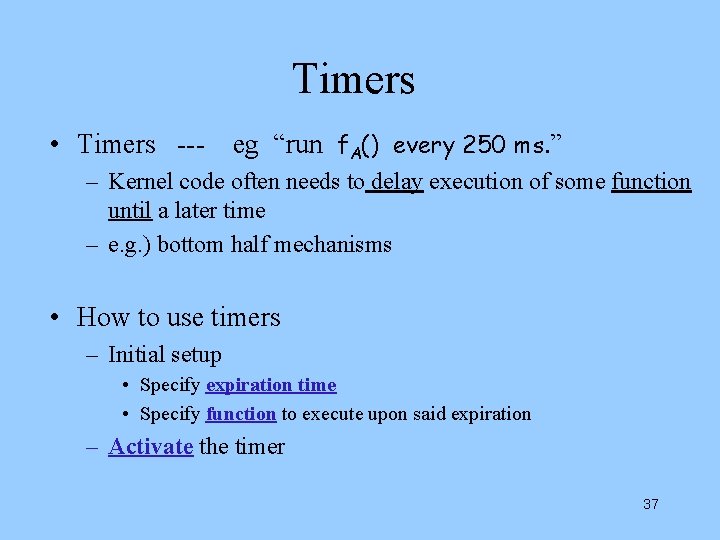

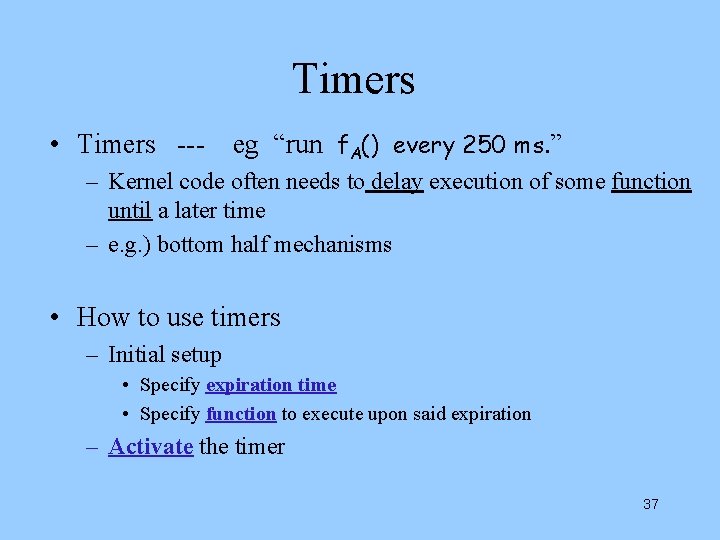

Timers

- Timers, e.g. "run f.A() every 250 ms."
  - Kernel code often needs to delay execution of some function until a later time.
  - E.g. bottom-half mechanisms.
- How to use timers
  - Initial setup
    - Specify the expiration time.
    - Specify the function to execute upon said expiration.
  - Activate the timer.

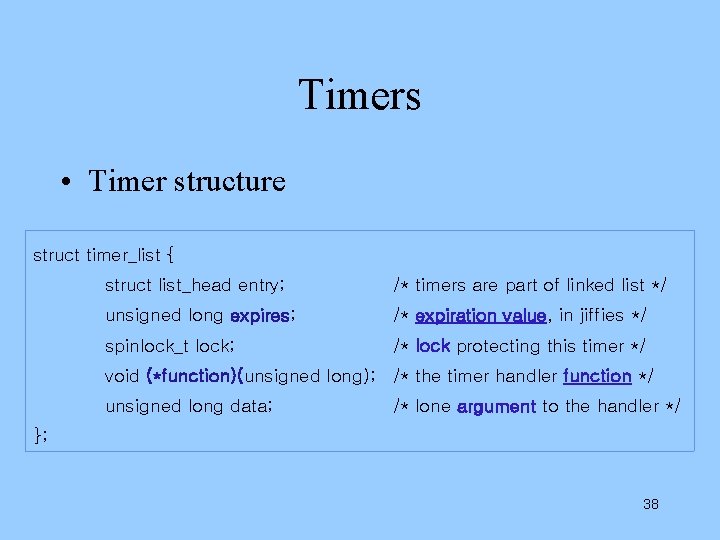

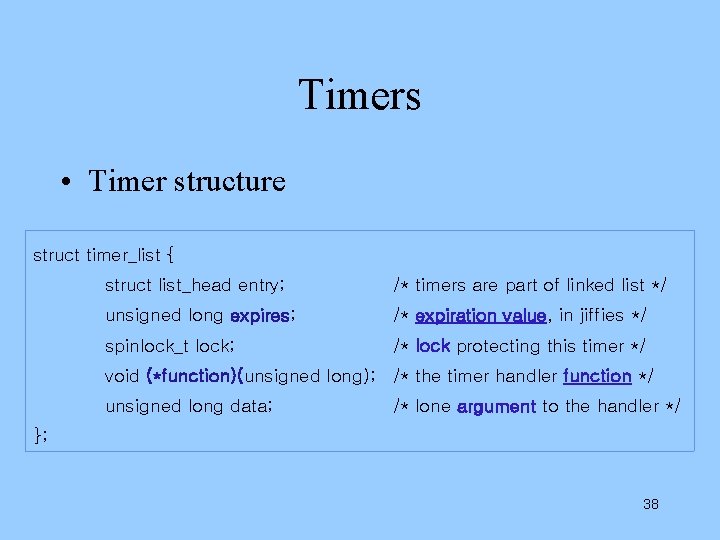

Timers

- Timer structure

      struct timer_list {
          struct list_head entry;            /* timers are part of a linked list */
          unsigned long expires;             /* expiration value, in jiffies */
          spinlock_t lock;                   /* lock protecting this timer */
          void (*function)(unsigned long);   /* the timer handler function */
          unsigned long data;                /* lone argument to the handler */
      };

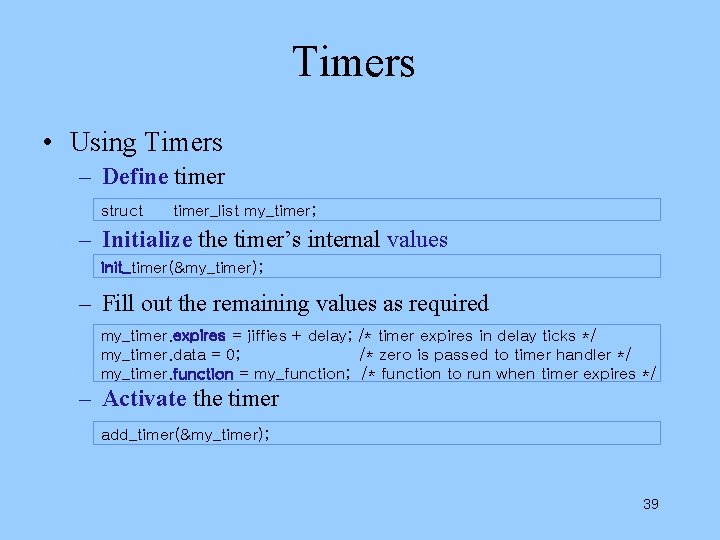

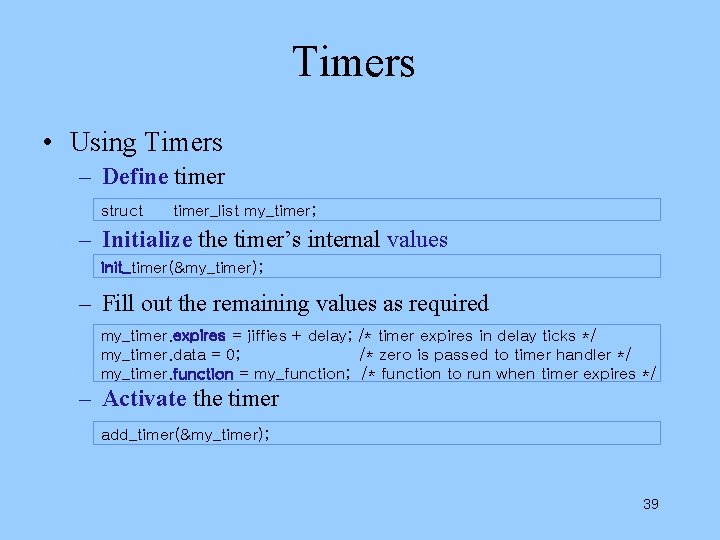

Timers

- Using timers
  - Define the timer:

        struct timer_list my_timer;

  - Initialize the timer's internal values:

        init_timer(&my_timer);

  - Fill out the remaining values as required:

        my_timer.expires = jiffies + delay;   /* timer expires in delay ticks */
        my_timer.data = 0;                    /* zero is passed to the timer handler */
        my_timer.function = my_function;      /* function to run when the timer expires */

  - Activate the timer:

        add_timer(&my_timer);
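The setup, activate, and expire life cycle can be imitated in userspace with a simulated tick counter. Everything here (`struct demo_timer`, `demo_jiffies`, `demo_tick`, and so on) is a simplified stand-in invented for illustration; the real kernel keeps timers in lists and runs them from a softirq.

```c
/* Hypothetical, simplified stand-in for struct timer_list. */
struct demo_timer {
    unsigned long expires;              /* expiration value, in ticks */
    void (*function)(unsigned long);    /* handler to run on expiry */
    unsigned long data;                 /* argument passed to the handler */
    int pending;                        /* 1 while the timer is active */
};

unsigned long demo_jiffies;             /* simulated tick counter */
unsigned long demo_fired_with;          /* last argument seen by the handler */
int demo_fired_count;                   /* how many times the handler ran */

/* Example handler: just records that it ran. */
void demo_handler(unsigned long data)
{
    demo_fired_with = data;
    demo_fired_count++;
}

/* Activate the timer (the analogue of add_timer()). */
void demo_add_timer(struct demo_timer *t)
{
    t->pending = 1;
}

/* One simulated timer interrupt: advance time, run the timer if due. */
void demo_tick(struct demo_timer *t)
{
    demo_jiffies++;
    if (t->pending && (long)(t->expires - demo_jiffies) <= 0) {
        t->pending = 0;                 /* timers are one-shot */
        t->function(t->data);
    }
}
```

A timer set to expire 3 ticks from now stays silent for two ticks, runs its handler once on the third, and does nothing afterwards unless it is activated again.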

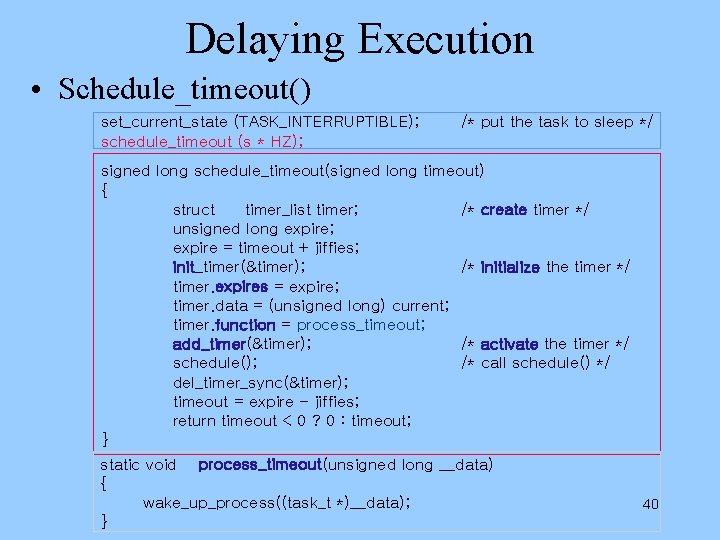

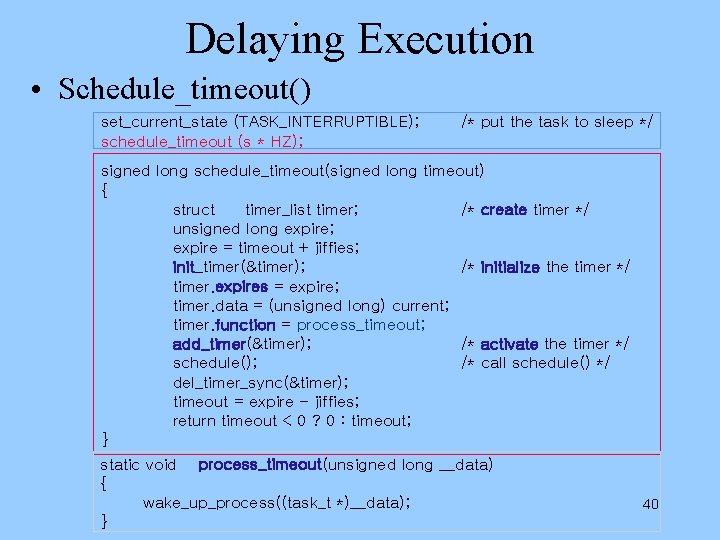

Delaying Execution

- schedule_timeout()

      set_current_state(TASK_INTERRUPTIBLE);
      schedule_timeout(s * HZ);   /* put the task to sleep for s seconds */

      signed long schedule_timeout(signed long timeout)
      {
          struct timer_list timer;        /* create timer */
          unsigned long expire;

          expire = timeout + jiffies;
          init_timer(&timer);             /* initialize the timer */
          timer.expires = expire;
          timer.data = (unsigned long) current;
          timer.function = process_timeout;
          add_timer(&timer);              /* activate the timer */
          schedule();                     /* call schedule() */
          del_timer_sync(&timer);
          timeout = expire - jiffies;
          return timeout < 0 ? 0 : timeout;
      }

      static void process_timeout(unsigned long __data)
      {
          wake_up_process((task_t *)__data);
      }

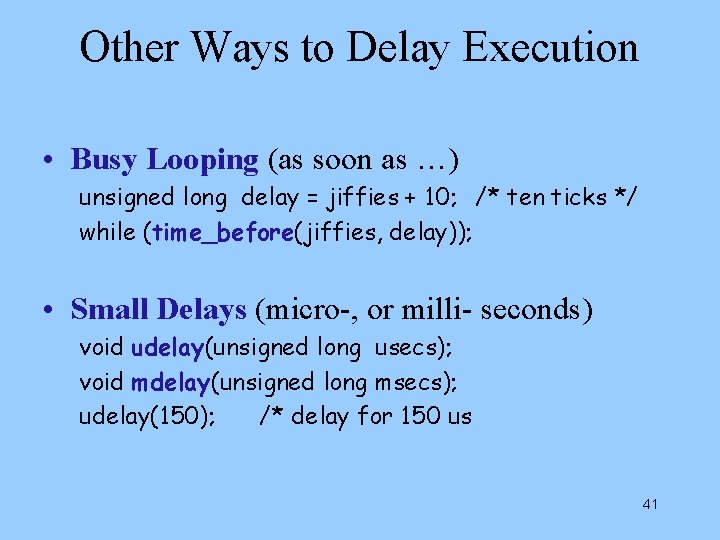

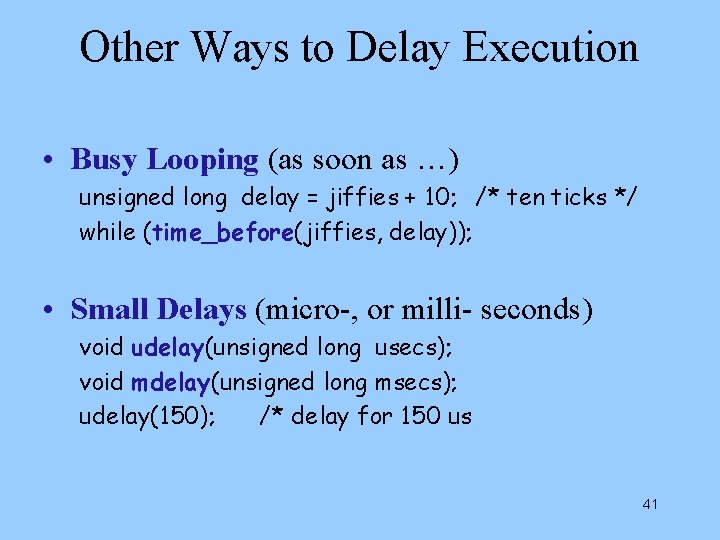

Other Ways to Delay Execution

- Busy looping (as soon as …)

      unsigned long delay = jiffies + 10;   /* ten ticks */
      while (time_before(jiffies, delay))
          ;

- Small delays (microseconds or milliseconds)

      void udelay(unsigned long usecs);
      void mdelay(unsigned long msecs);

      udelay(150);   /* delay for 150 us */