2014 REU Program at ECU Software Testing Foundations



2014 REU Program at ECU

Software Testing - Foundations, Tools, and Applications

Lecture 2, May 27, 2014

Software Testing Research

Dr. Sergiy Vilkomir

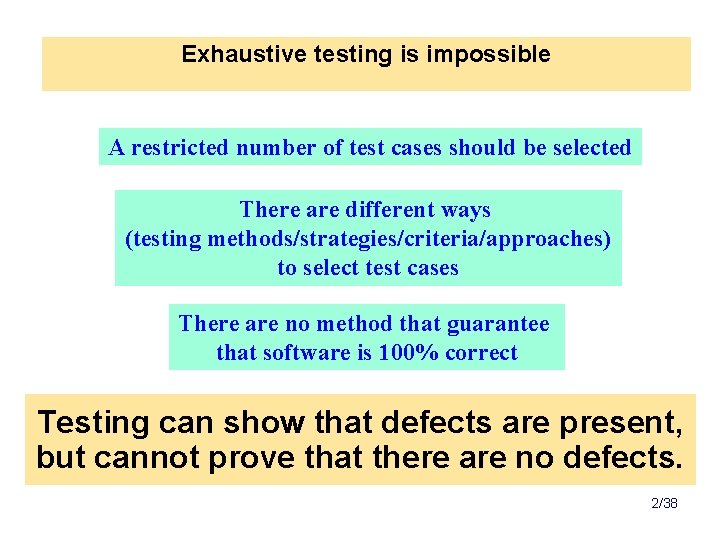

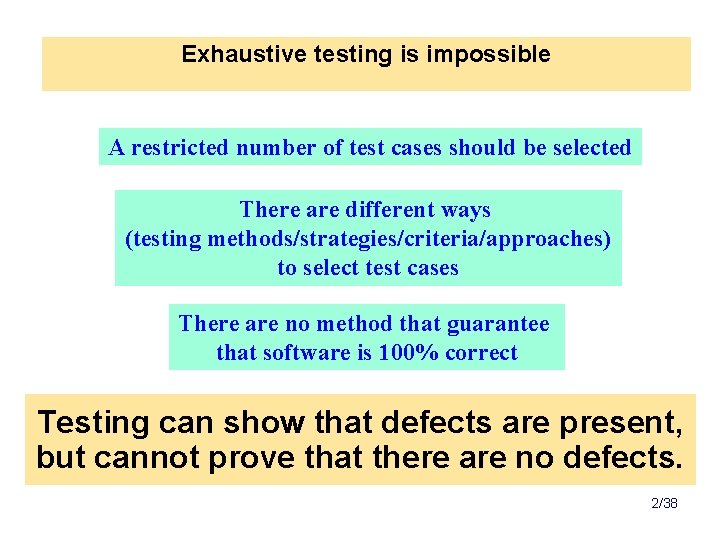

- Exhaustive testing is impossible.
- A restricted number of test cases must be selected.
- There are different ways (testing methods, strategies, criteria, approaches) to select test cases.
- No method guarantees that software is 100% correct.
- Testing can show that defects are present, but cannot prove that there are no defects.

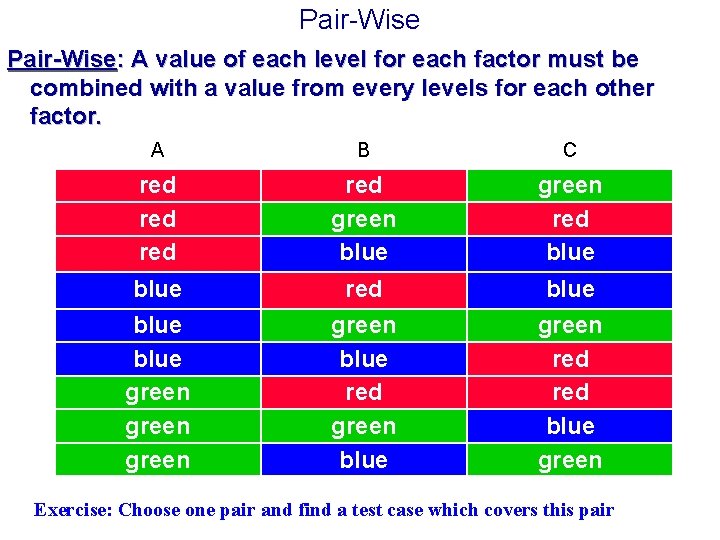

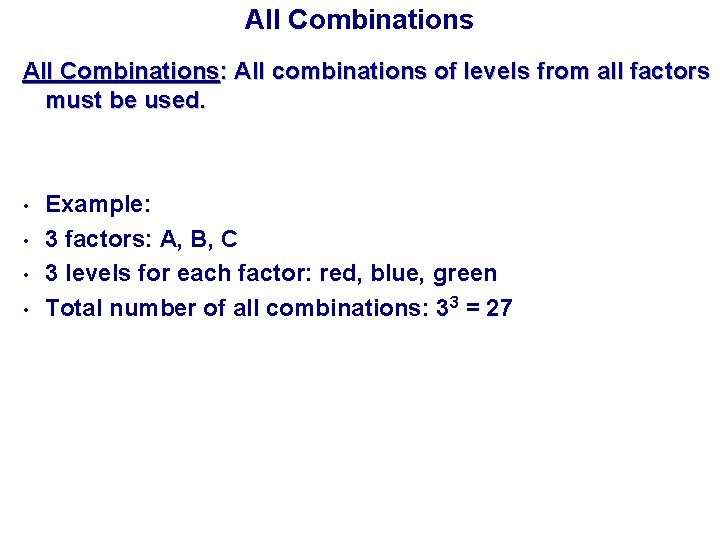

All Combinations: all combinations of levels from all factors must be used.

Example:
- 3 factors: A, B, C
- 3 levels for each factor: red, blue, green
- Total number of all combinations: 3 × 3 × 3 = 3³ = 27
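The count above can be reproduced by enumerating the combinations directly. This is a minimal sketch using the factor and level names from the example; the variable names are only illustrative.

```python
from itertools import product

factors = ["A", "B", "C"]
levels = ["red", "blue", "green"]

# Each test case assigns one level to every factor.
all_combinations = list(product(levels, repeat=len(factors)))

print(len(all_combinations))   # number of test cases under "all combinations"
print(all_combinations[:3])    # first few test cases as (A, B, C) tuples
```

With more factors or levels the list grows multiplicatively, which is why exhaustive enumeration quickly becomes impractical.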

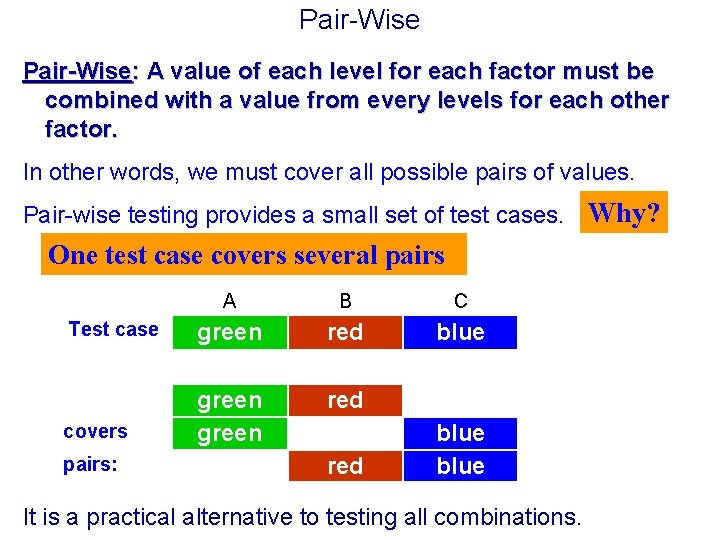

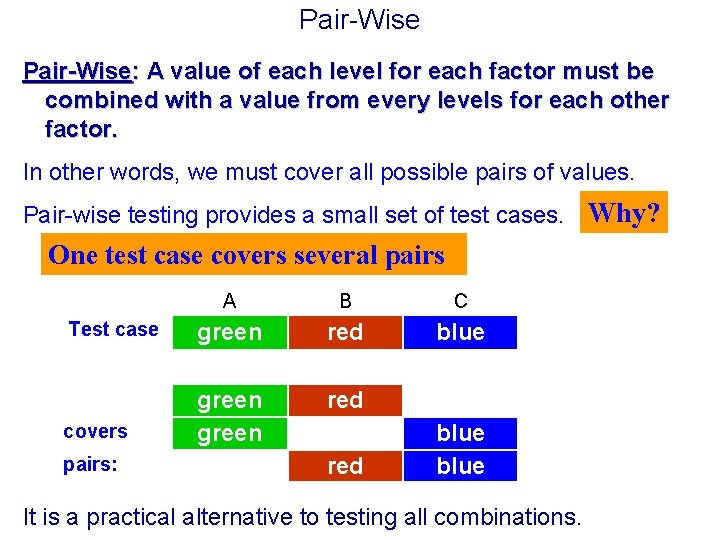

Pair-Wise: each level of each factor must be combined with every level of every other factor. In other words, we must cover all possible pairs of values.

Pair-wise testing provides a small set of test cases. Why? One test case covers several pairs: for example, a test case with A = red, B = green, C = blue covers three pairs at once, (A = red, B = green), (A = red, C = blue), and (B = green, C = blue).

It is a practical alternative to testing all combinations.
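To see why one test case covers several pairs, the pairs can be listed explicitly. The sketch below assumes a test case is represented as a dictionary from factor name to level; the helper name `pairs_covered` is hypothetical.

```python
from itertools import combinations

def pairs_covered(test_case):
    """Return every pair of (factor, level) assignments contained in one test case."""
    assignments = sorted(test_case.items())
    return set(combinations(assignments, 2))

test_case = {"A": "red", "B": "green", "C": "blue"}
covered = pairs_covered(test_case)

for pair in sorted(covered):
    print(pair)
```

A test case over k factors covers k(k-1)/2 pairs, so with three factors each test case contributes three pairs.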

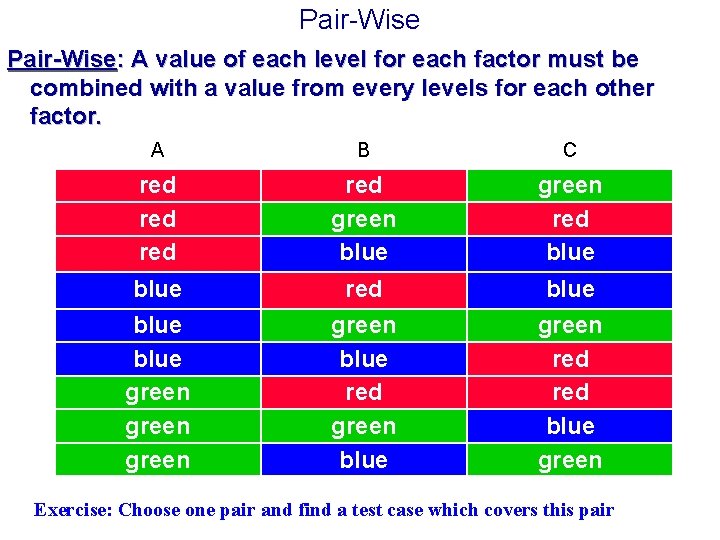

Pair-Wise: each level of each factor must be combined with every level of every other factor.

[Table: pairwise test cases for factors A, B, C with levels red, blue, green]

Exercise: Choose one pair and find a test case which covers this pair
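One way to obtain a pairwise set for three factors with three levels each is a Latin-square construction. The sketch below is an illustration of that idea and is not necessarily the table shown on the slide: the level of C is chosen as (i + j) mod 3, where i and j are the level indices of A and B.

```python
from itertools import combinations, product

levels = ["red", "blue", "green"]
n = len(levels)

# Latin-square construction: A and B take all 9 combinations, C is derived from them.
tests = [(levels[i], levels[j], levels[(i + j) % n])
         for i in range(n) for j in range(n)]

# Every pair of factor positions must see every pair of levels.
required = {(f1, v1, f2, v2)
            for f1, f2 in combinations(range(3), 2)
            for v1, v2 in product(levels, repeat=2)}
covered = {(f1, t[f1], f2, t[f2])
           for t in tests
           for f1, f2 in combinations(range(3), 2)}

print(len(tests), "test cases")
print("all pairs covered:", covered == required)
```

Nine test cases is also the minimum here, because factors A and B alone already require 3 × 3 = 9 distinct pairs.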

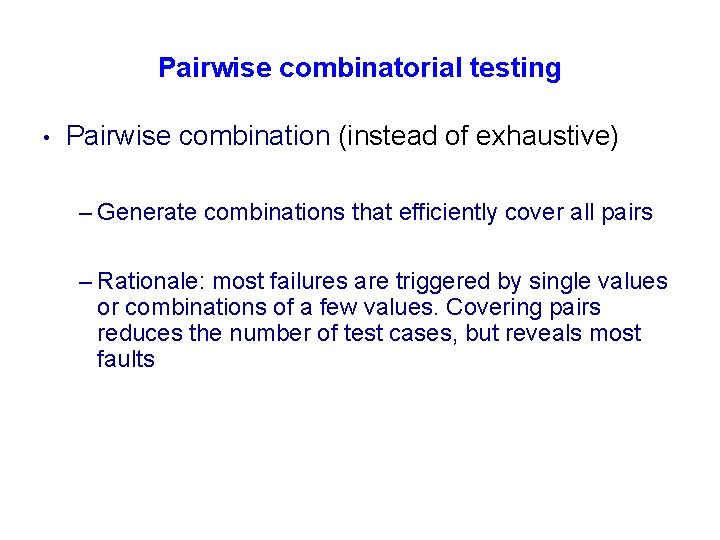

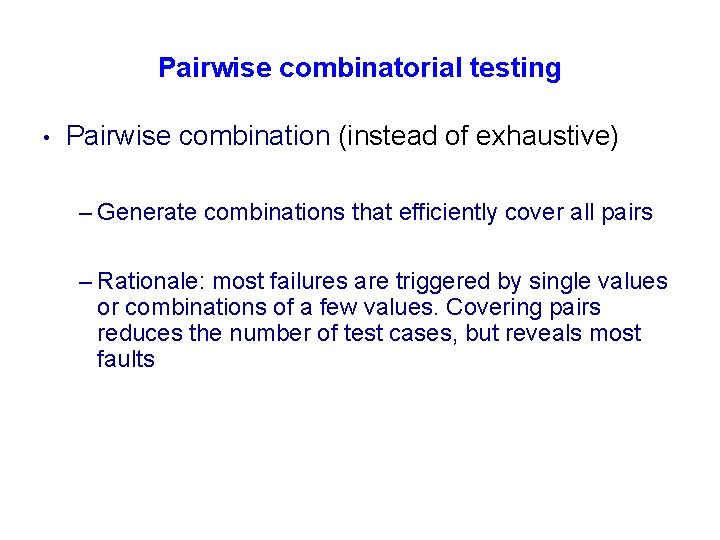

Pairwise combinatorial testing
- Pairwise combination (instead of exhaustive)
  - Generate combinations that efficiently cover all pairs
  - Rationale: most failures are triggered by single values or combinations of a few values. Covering pairs reduces the number of test cases, but reveals most faults

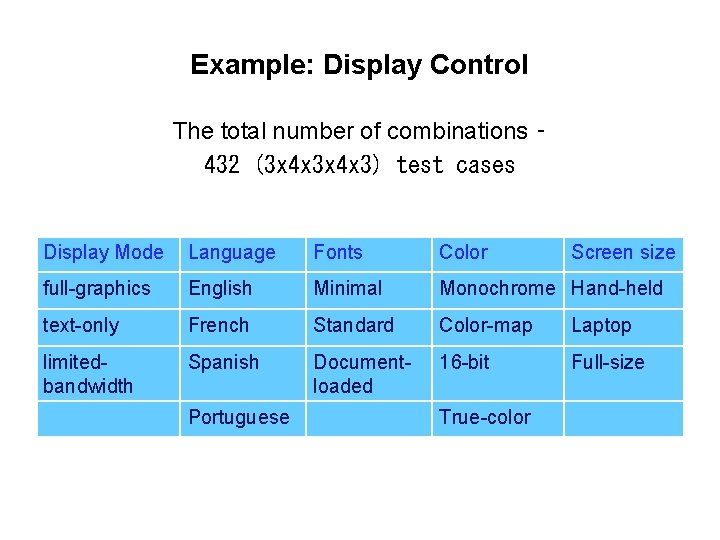

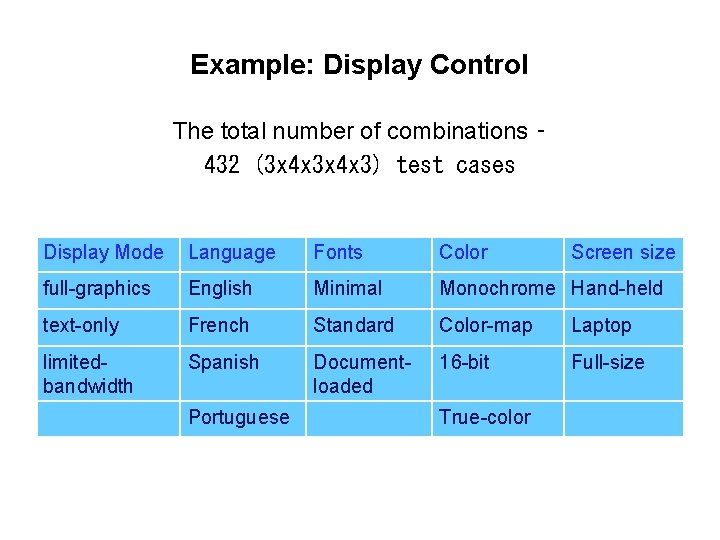

Example: Display Control

The total number of combinations: 3 × 4 × 3 × 4 × 3 = 432 test cases

| Display Mode | Language | Fonts | Color | Screen size |
|---|---|---|---|---|
| full-graphics | English | Minimal | Monochrome | Hand-held |
| text-only | French | Standard | Color-map | Laptop |
| limited-bandwidth | Spanish | Document-loaded | 16-bit | Full-size |
| | Portuguese | | True-color | |

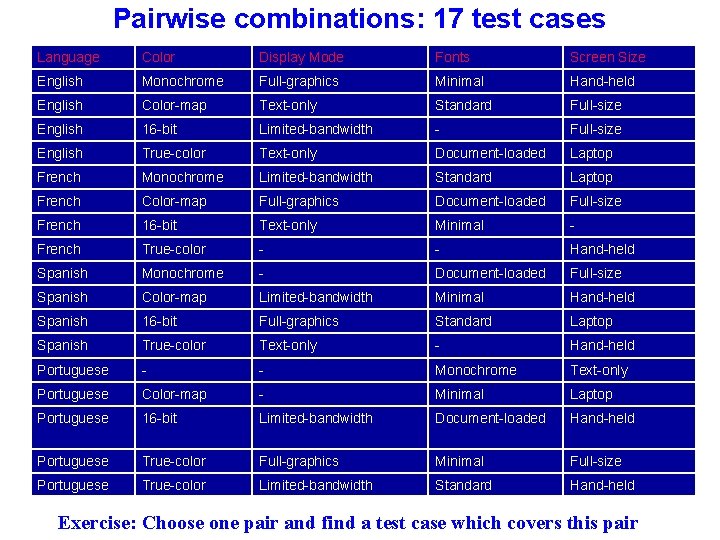

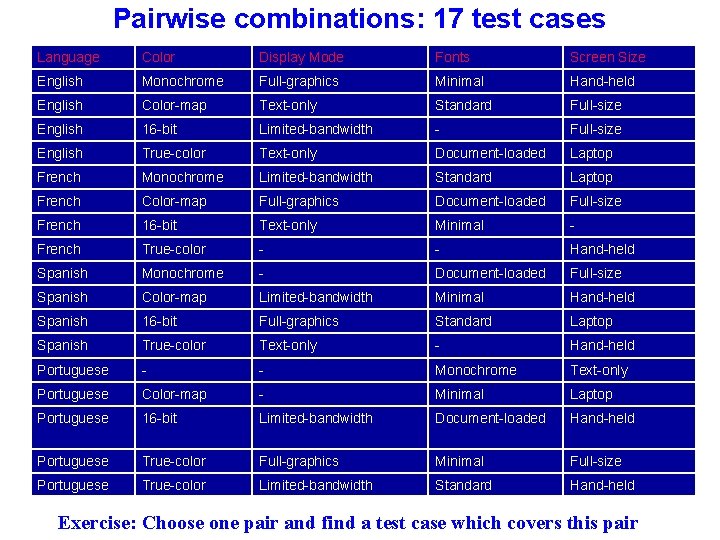

Pairwise combinations: 17 test cases

| Language | Color | Display Mode | Fonts | Screen Size |
|---|---|---|---|---|
| English | Monochrome | Full-graphics | Minimal | Hand-held |
| English | Color-map | Text-only | Standard | Full-size |
| English | 16-bit | Limited-bandwidth | - | Full-size |
| English | True-color | Text-only | Document-loaded | Laptop |
| French | Monochrome | Limited-bandwidth | Standard | Laptop |
| French | Color-map | Full-graphics | Document-loaded | Full-size |
| French | 16-bit | Text-only | Minimal | - |
| French | True-color | - | - | Hand-held |
| Spanish | Monochrome | - | Document-loaded | Full-size |
| Spanish | Color-map | Limited-bandwidth | Minimal | Hand-held |
| Spanish | 16-bit | Full-graphics | Standard | Laptop |
| Spanish | True-color | Text-only | - | Hand-held |
| Portuguese | Monochrome | Text-only | - | - |
| Portuguese | Color-map | - | Minimal | Laptop |
| Portuguese | 16-bit | Limited-bandwidth | Document-loaded | Hand-held |
| Portuguese | True-color | Full-graphics | Minimal | Full-size |
| Portuguese | True-color | Limited-bandwidth | Standard | Hand-held |

Exercise: Choose one pair and find a test case which covers this pair
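The 17 test cases can be checked mechanically for pairwise coverage. The sketch below transcribes the rows in the column order Language, Color, Display Mode, Fonts, Screen Size and treats "-" as a don't-care entry that covers nothing; the row for Portuguese/Monochrome is read as Display Mode = Text-only with the last two columns left open, which is an assumption about the original layout.

```python
from itertools import combinations, product

ROWS = [line.split() for line in """\
English Monochrome Full-graphics Minimal Hand-held
English Color-map Text-only Standard Full-size
English 16-bit Limited-bandwidth - Full-size
English True-color Text-only Document-loaded Laptop
French Monochrome Limited-bandwidth Standard Laptop
French Color-map Full-graphics Document-loaded Full-size
French 16-bit Text-only Minimal -
French True-color - - Hand-held
Spanish Monochrome - Document-loaded Full-size
Spanish Color-map Limited-bandwidth Minimal Hand-held
Spanish 16-bit Full-graphics Standard Laptop
Spanish True-color Text-only - Hand-held
Portuguese Monochrome Text-only - -
Portuguese Color-map - Minimal Laptop
Portuguese 16-bit Limited-bandwidth Document-loaded Hand-held
Portuguese True-color Full-graphics Minimal Full-size
Portuguese True-color Limited-bandwidth Standard Hand-held
""".splitlines()]

n_cols = 5
# Levels of each factor are taken from the table itself ("-" is not a level).
levels = [sorted({row[i] for row in ROWS} - {"-"}) for i in range(n_cols)]

required = {(i, a, j, b)
            for i, j in combinations(range(n_cols), 2)
            for a, b in product(levels[i], levels[j])}
covered = {(i, row[i], j, row[j])
           for row in ROWS
           for i, j in combinations(range(n_cols), 2)
           if row[i] != "-" and row[j] != "-"}

missing = required - covered
print(len(required), "pairs required,", len(missing), "missing")
```

Because the don't-care entries are not needed for coverage, a tester is free to fill them with any valid level.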

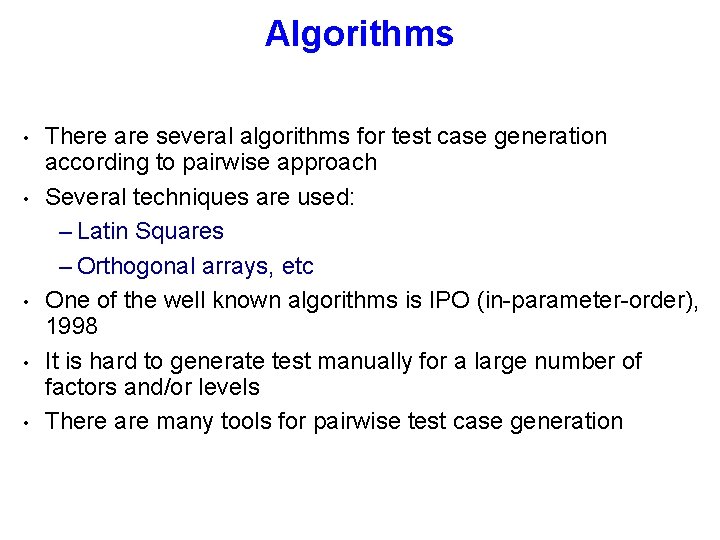

Algorithms
- There are several algorithms for test case generation according to the pairwise approach
- Several techniques are used:
  - Latin squares
  - Orthogonal arrays, etc.
- One of the well-known algorithms is IPO (in-parameter-order), 1998
- It is hard to generate tests manually for a large number of factors and/or levels
- There are many tools for pairwise test case generation
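To give a feel for automated generation, here is a deliberately simple greedy generator. It is not IPO and not the algorithm of any tool named in this lecture: it enumerates every candidate test case and repeatedly picks the one that covers the most still-uncovered pairs, so it only scales to small examples. The function names are hypothetical.

```python
from itertools import combinations, product

def pairs_of(test, names):
    """All pairs of (factor, level) assignments covered by one test case."""
    return set(combinations([(f, test[f]) for f in names], 2))

def greedy_pairwise(factors):
    """Greedy sketch: repeatedly add the candidate covering the most uncovered pairs."""
    names = list(factors)
    candidates = [dict(zip(names, values)) for values in product(*factors.values())]
    uncovered = set().union(*(pairs_of(c, names) for c in candidates))
    suite = []
    while uncovered:
        best = max(candidates, key=lambda c: len(pairs_of(c, names) & uncovered))
        suite.append(best)
        uncovered -= pairs_of(best, names)
    return suite

factors = {f: ["red", "blue", "green"] for f in "ABC"}
suite = greedy_pairwise(factors)
print(len(suite), "test cases instead of 27")
for test in suite:
    print(test)
```

The loop always terminates because every uncovered pair is contained in at least one candidate. A greedy choice does not guarantee the minimum number of test cases, which is one reason dedicated algorithms and tools exist.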

![Pairwise tools](https://slidetodoc.com/presentation_image/b467f056446291b36eb5cad84c79f2e6/image-10.jpg)

Pairwise tools

1. CATS (Constrained Array Test System) [Sherwood], Bell Labs
2. OATS (Orthogonal Array Test System) [Phadke], AT&T
3. AETG, Telcordia: web-based, commercial
4. IPO (PairTest) [Tai/Lei]
5. TConfig [Williams]: Java applet
6. TCG (Test Case Generator), NASA
7. AllPairs, Satisfice: Perl script, free, GPL
8. Pro-Test, SigmaZone: GUI, commercial
9. CTS (Combinatorial Test Services), IBM: free for non-commercial use
10. Jenny [Jenkins]: command-line, free, public domain
11. ReduceArray2, STSC, U.S. Air Force: spreadsheet-based, free
12. TestCover, Testcover.com: web-based, commercial
13. DDA [Colburn/Cohen/Turban]
14. Test Vector Generator: GUI, free
15. OA1, k sharp technology

etc.

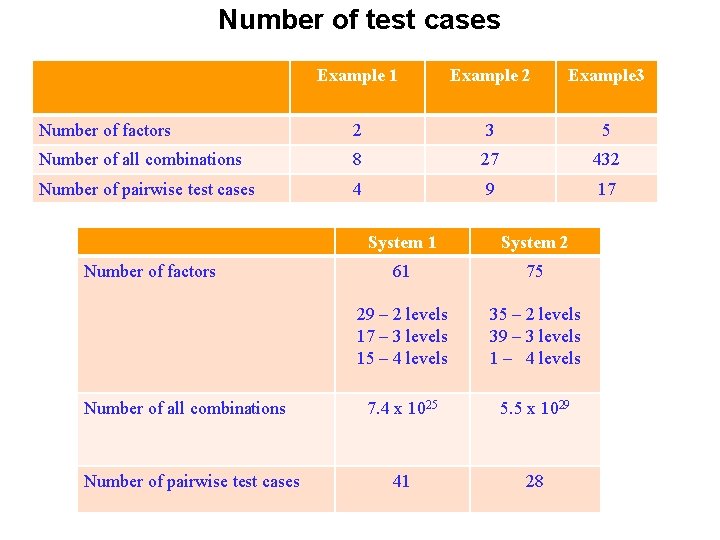

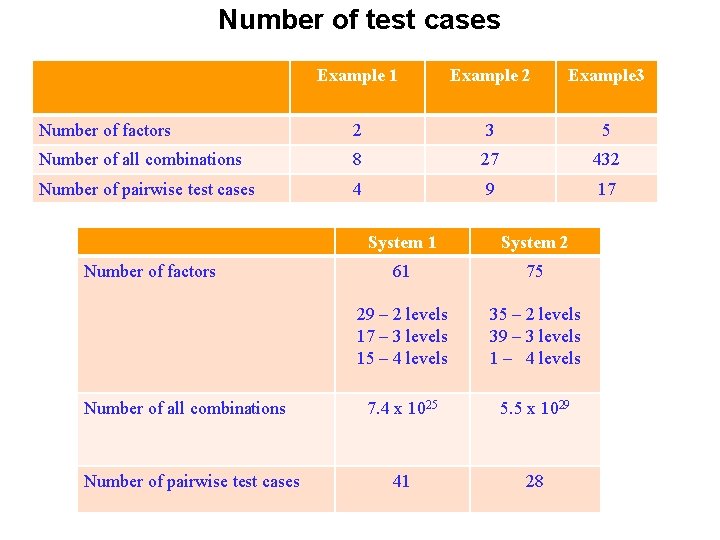

Number of test cases

| | Example 1 | Example 2 | Example 3 |
|---|---|---|---|
| Number of factors | 2 | 3 | 5 |
| Number of all combinations | 8 | 27 | 432 |
| Number of pairwise test cases | 4 | 9 | 17 |

| | System 1 | System 2 |
|---|---|---|
| Number of factors | 61 | 75 |
| Levels | 29 with 2 levels, 17 with 3 levels, 15 with 4 levels | 35 with 2 levels, 39 with 3 levels, 1 with 4 levels |
| Number of all combinations | 7.4 × 10^25 | 5.5 × 10^29 |
| Number of pairwise test cases | 41 | 28 |
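The "all combinations" figures for System 1 and System 2 follow from multiplying the number of levels over all factors. A short sketch of that arithmetic, with the level distributions taken from the table and the dictionary layout chosen only for illustration:

```python
from math import prod

# Mapping: number of levels -> how many factors have that many levels.
systems = {
    "System 1": {2: 29, 3: 17, 4: 15},
    "System 2": {2: 35, 3: 39, 4: 1},
}

totals = {}
for name, spec in systems.items():
    n_factors = sum(spec.values())
    totals[name] = prod(levels ** count for levels, count in spec.items())
    print(f"{name}: {n_factors} factors, {totals[name]:.2e} combinations")
```

The results are on the order of 10^25 and 10^29, consistent with the table once the slide's figures are read as truncated rather than rounded, while the pairwise test sets need only a few dozen test cases.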

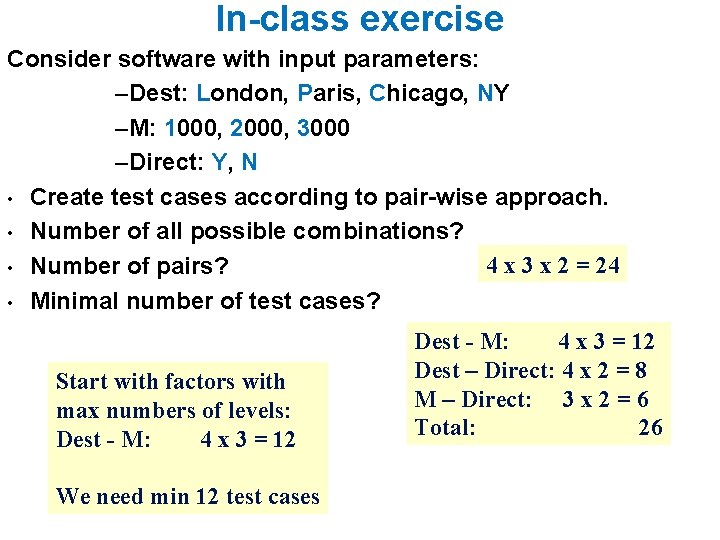

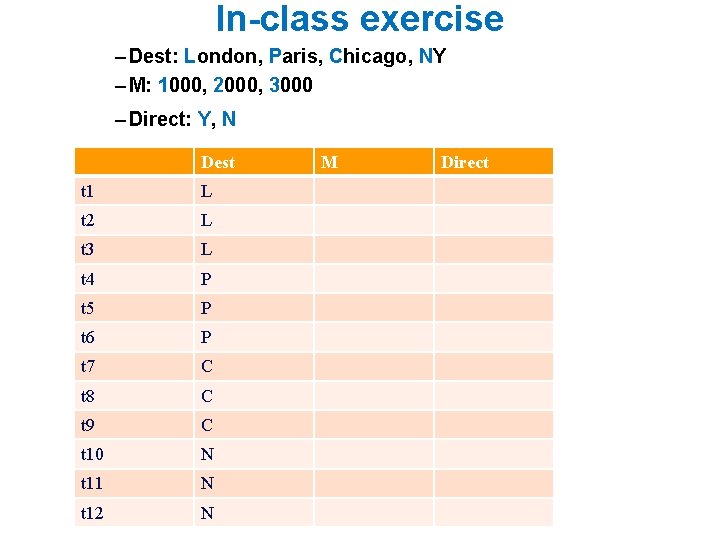

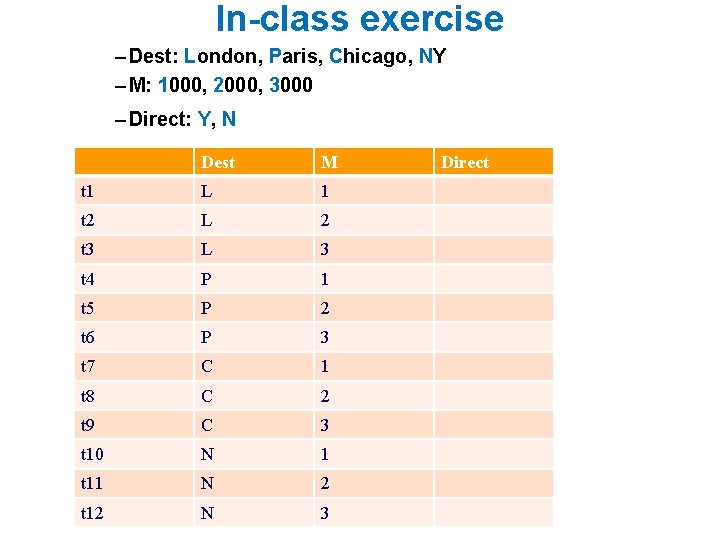

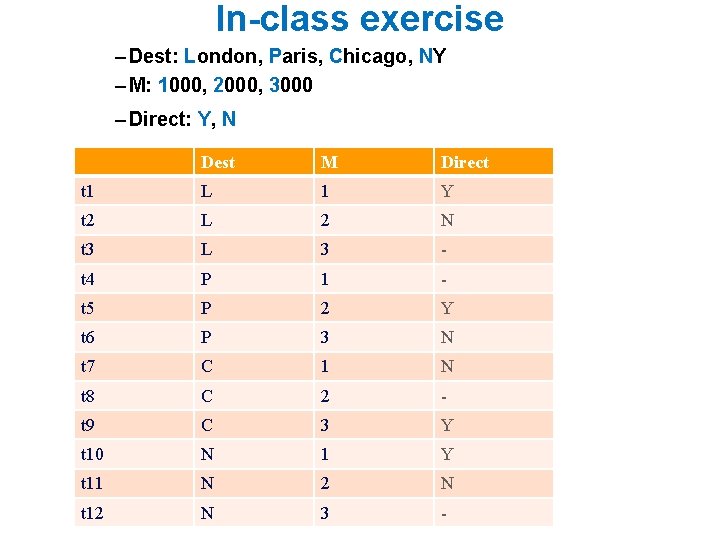

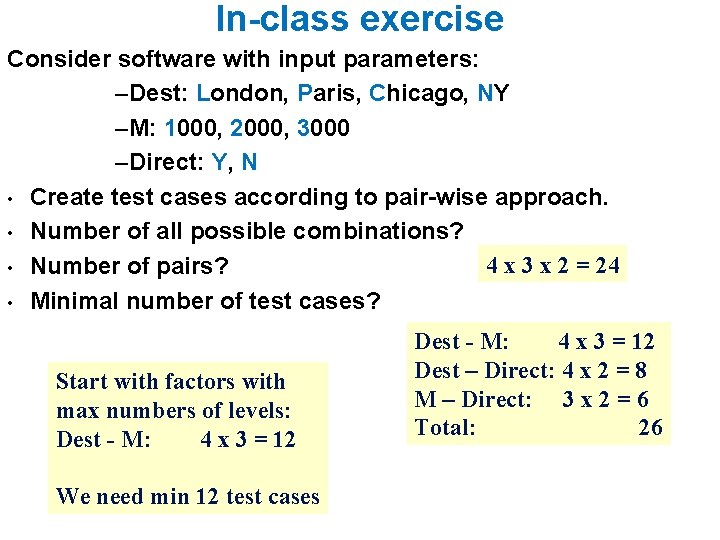

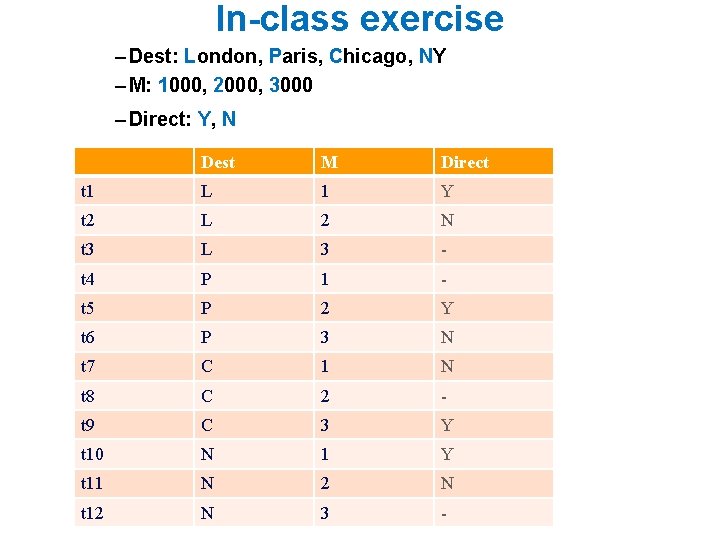

In-class exercise

Consider software with input parameters:
- Dest: London, Paris, Chicago, NY
- M: 1000, 2000, 3000
- Direct: Y, N

Create test cases according to the pair-wise approach.

- Number of all possible combinations? 4 × 3 × 2 = 24
- Number of pairs?
  - Dest - M: 4 × 3 = 12
  - Dest - Direct: 4 × 2 = 8
  - M - Direct: 3 × 2 = 6
  - Total: 26
- Minimal number of test cases? Start with the two factors with the largest numbers of levels: Dest - M gives 4 × 3 = 12 pairs, and each test case covers only one of them, so we need at least 12 test cases.
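The counts in this exercise can be computed from the parameter definitions. The sketch below is only a restatement of the arithmetic; the variable names are illustrative.

```python
from itertools import combinations
from math import prod

params = {
    "Dest": ["London", "Paris", "Chicago", "NY"],
    "M": [1000, 2000, 3000],
    "Direct": ["Y", "N"],
}

all_combinations = prod(len(values) for values in params.values())

# Number of value pairs for each pair of parameters.
pair_counts = {(a, b): len(params[a]) * len(params[b])
               for a, b in combinations(params, 2)}
total_pairs = sum(pair_counts.values())

# Lower bound: the two largest parameters must see all of their value pairs,
# and one test case covers exactly one such pair.
sizes = sorted((len(values) for values in params.values()), reverse=True)
lower_bound = sizes[0] * sizes[1]

print(all_combinations, pair_counts, total_pairs, lower_bound)
```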

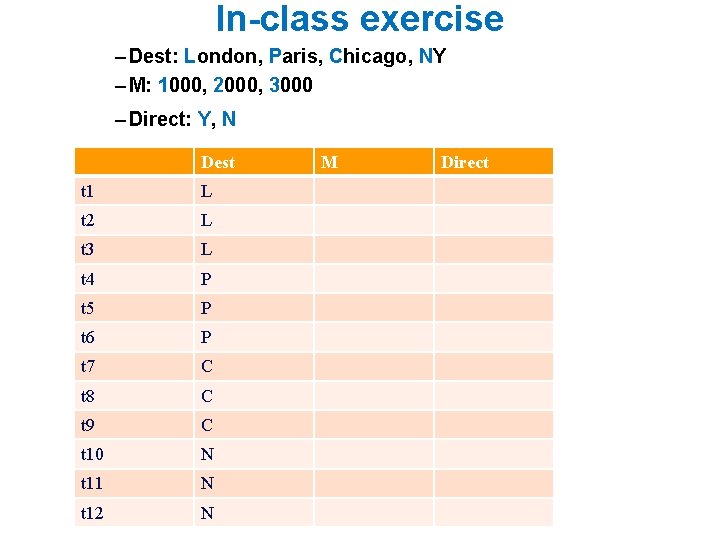

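In-class exercise

- Dest: London, Paris, Chicago, NY
- M: 1000, 2000, 3000
- Direct: Y, N

| | Dest | M | Direct |
|---|---|---|---|
| t1 | L | | |
| t2 | L | | |
| t3 | L | | |
| t4 | P | | |
| t5 | P | | |
| t6 | P | | |
| t7 | C | | |
| t8 | C | | |
| t9 | C | | |
| t10 | N | | |
| t11 | N | | |
| t12 | N | | |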

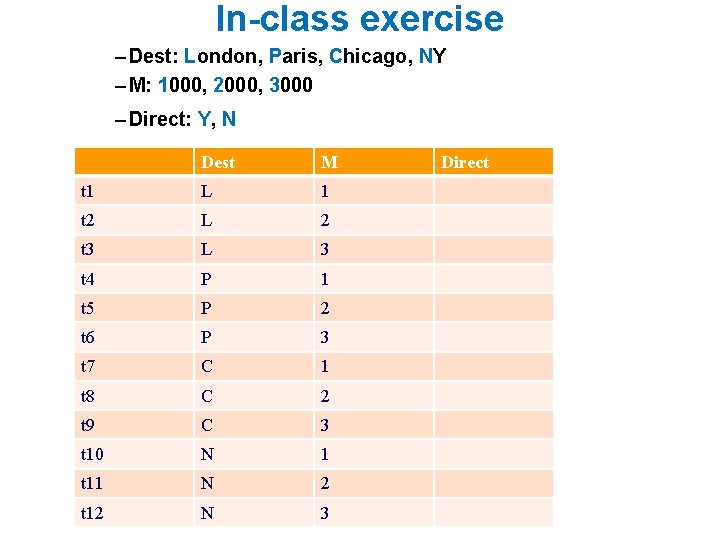

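In-class exercise

- Dest: London, Paris, Chicago, NY
- M: 1000, 2000, 3000
- Direct: Y, N

| | Dest | M | Direct |
|---|---|---|---|
| t1 | L | 1 | |
| t2 | L | 2 | |
| t3 | L | 3 | |
| t4 | P | 1 | |
| t5 | P | 2 | |
| t6 | P | 3 | |
| t7 | C | 1 | |
| t8 | C | 2 | |
| t9 | C | 3 | |
| t10 | N | 1 | |
| t11 | N | 2 | |
| t12 | N | 3 | |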

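In-class exercise

- Dest: London, Paris, Chicago, NY
- M: 1000, 2000, 3000
- Direct: Y, N

| | Dest | M | Direct |
|---|---|---|---|
| t1 | L | 1 | Y |
| t2 | L | 2 | N |
| t3 | L | 3 | - |
| t4 | P | 1 | - |
| t5 | P | 2 | Y |
| t6 | P | 3 | N |
| t7 | C | 1 | N |
| t8 | C | 2 | - |
| t9 | C | 3 | Y |
| t10 | N | 1 | Y |
| t11 | N | 2 | N |
| t12 | N | 3 | - |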
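The completed table can be verified against the 26 required pairs. In this sketch the levels are abbreviated as in the table (L, P, C, N for Dest and 1, 2, 3 for M), and "-" is represented as `None`, meaning any value may be used there.

```python
from itertools import combinations, product

PARAMS = {"Dest": ["L", "P", "C", "N"], "M": [1, 2, 3], "Direct": ["Y", "N"]}

# Test cases t1..t12 as (Dest, M, Direct); None marks a don't-care entry.
TESTS = [
    ("L", 1, "Y"), ("L", 2, "N"), ("L", 3, None),
    ("P", 1, None), ("P", 2, "Y"), ("P", 3, "N"),
    ("C", 1, "N"), ("C", 2, None), ("C", 3, "Y"),
    ("N", 1, "Y"), ("N", 2, "N"), ("N", 3, None),
]

names = list(PARAMS)
# Pairs are keyed by column index so that Dest "N" (NY) and Direct "N" stay distinct.
required = {((i, a), (j, b))
            for i, j in combinations(range(len(names)), 2)
            for a, b in product(PARAMS[names[i]], PARAMS[names[j]])}
covered = {((i, t[i]), (j, t[j]))
           for t in TESTS
           for i, j in combinations(range(len(names)), 2)
           if t[i] is not None and t[j] is not None}

missing = required - covered
print(len(required), "pairs required,", len(missing), "missing")
```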

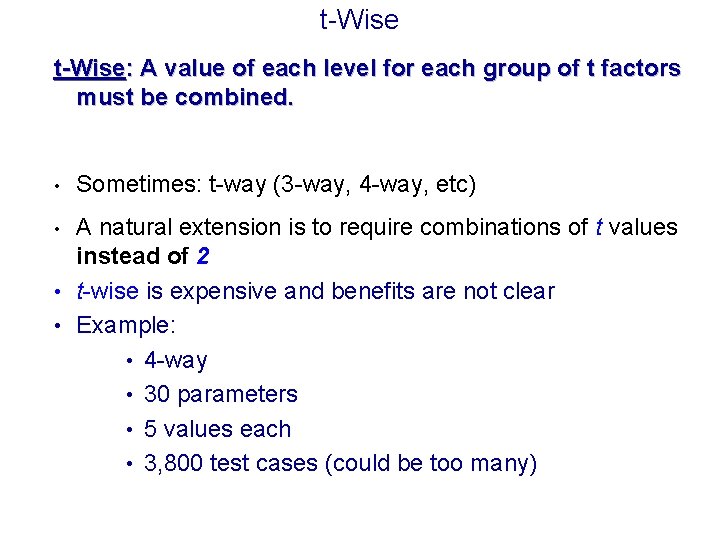

t-Wise: each combination of levels for each group of t factors must be covered.

- Sometimes called t-way (3-way, 4-way, etc.)
- A natural extension is to require combinations of t values instead of 2
- t-wise is expensive and the benefits are not clear
- Example:
  - 4-way
  - 30 parameters
  - 5 values each
  - 3,800 test cases (could be too many)
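The cost of raising t can be estimated by counting how many t-way value combinations must be covered. The sketch below assumes all parameters have the same number of values, as in the example; the function name is hypothetical.

```python
from math import comb

def t_way_combinations(n_params, n_values, t):
    """Number of distinct t-way value combinations that a t-wise test set must cover."""
    return comb(n_params, t) * n_values ** t

n_params, n_values = 30, 5
for t in (2, 3, 4):
    print(t, t_way_combinations(n_params, n_values, t))

# For comparison: exhaustive testing of the same system.
exhaustive = n_values ** n_params
print(f"exhaustive: {exhaustive:.1e}")
```

Each test case covers comb(30, t) of these combinations at once, which is why a few thousand test cases can cover millions of 4-way combinations, yet the test set is still far larger than a pairwise one.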

Combinatorial Methods in Software Testing

- National Institute of Standards and Technology (NIST): http://csrc.nist.gov/groups/SNS/acts/index.html
- Presentation by Rick Kuhn (NIST) at ECU, March 22, 2012: http://core.ecu.edu/STRG/seminars.html

Software Testing Study

Software Testing is a part of the ECU MSc SE program
- SENG 6265 Foundations of Software Testing
- SENG 6270 Software Verification and Validation

Software Testing Research - ?

- New
- Scientific approach: investigation, analysis, comparison, justification, etc.
- Publications
- Long period of time: BS, MS, PhD, ...

http://core.ecu.edu/STRG/

Project 1: Mobile testing to detect device-specific faults

Device-specific failures

- Device-specific failures are very common for mobile software applications
- An application works reliably on many smartphones and tablets, but does not work properly (i.e., fails) on some specific devices
- Examples:
  - An app normally works under some specific operating system but fails under the latest or an older OS
  - Graphics created for high-resolution screens are not shown properly on mobile devices with extra-high or low screen resolutions
- Factors: OS, screen resolution, screen size, device type (smartphone or tablet), handset manufacturer, RAM, etc.

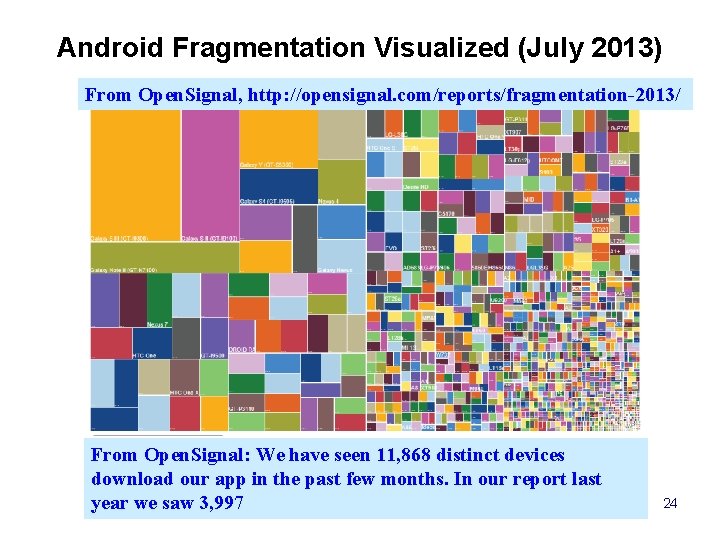

Device-specific failures: how many?

- There are many different mobile devices
- Sufficient testing is required on different mobile devices
- Such testing is expensive and time-consuming

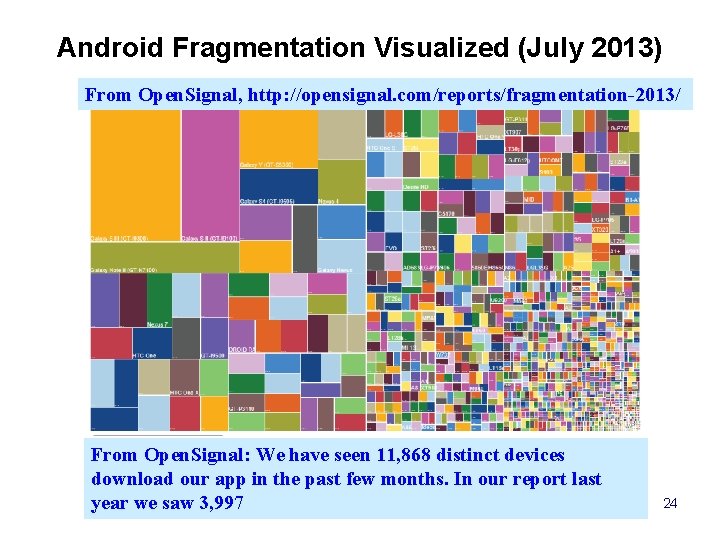

Android Fragmentation Visualized (July 2013)

From OpenSignal, http://opensignal.com/reports/fragmentation-2013/

From OpenSignal: "We have seen 11,868 distinct devices download our app in the past few months. In our report last year we saw 3,997."

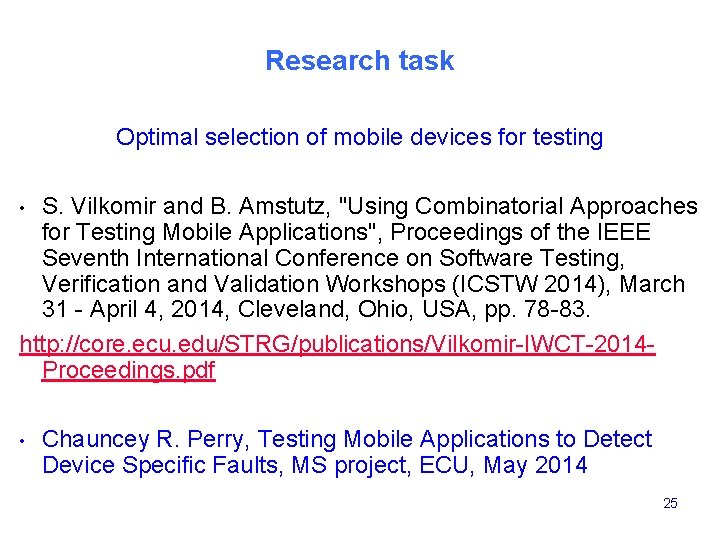

Research task: optimal selection of mobile devices for testing

- S. Vilkomir and B. Amstutz, "Using Combinatorial Approaches for Testing Mobile Applications", Proceedings of the IEEE Seventh International Conference on Software Testing, Verification and Validation Workshops (ICSTW 2014), March 31 - April 4, 2014, Cleveland, Ohio, USA, pp. 78-83. http://core.ecu.edu/STRG/publications/Vilkomir-IWCT-2014Proceedings.pdf
- Chauncey R. Perry, Testing Mobile Applications to Detect Device Specific Faults, MS project, ECU, May 2014
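One possible reading of the device-selection task is: choose a small subset of available devices whose characteristics still cover every pair of characteristic values that occurs in the device pool. The sketch below illustrates that reading with a greedy selection. It is not the method of the cited paper or MS project, and the device catalog, names, and values are entirely hypothetical.

```python
from itertools import combinations

# Hypothetical device catalog: illustrative values only, not measured data.
DEVICES = {
    "phone_a":  {"os": "4.1", "type": "smartphone", "resolution": "hdpi"},
    "phone_b":  {"os": "4.4", "type": "smartphone", "resolution": "xhdpi"},
    "tablet_a": {"os": "4.1", "type": "tablet",     "resolution": "xhdpi"},
    "tablet_b": {"os": "4.4", "type": "tablet",     "resolution": "hdpi"},
    "phone_c":  {"os": "4.1", "type": "smartphone", "resolution": "xhdpi"},
    "phone_d":  {"os": "4.4", "type": "smartphone", "resolution": "hdpi"},
}

def device_pairs(spec):
    """All pairs of (characteristic, value) exhibited by one device."""
    return set(combinations(sorted(spec.items()), 2))

def select_devices(devices):
    """Greedy sketch: keep adding the device that covers the most uncovered pairs."""
    uncovered = set().union(*(device_pairs(s) for s in devices.values()))
    selected = []
    while uncovered:
        best = max(devices, key=lambda name: len(device_pairs(devices[name]) & uncovered))
        selected.append(best)
        uncovered -= device_pairs(devices[best])
    return selected

selected = select_devices(DEVICES)
print(selected)
```

Unlike free-form pairwise generation, only real devices can be chosen here, so the target is the set of pairs that some device in the pool actually exhibits.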

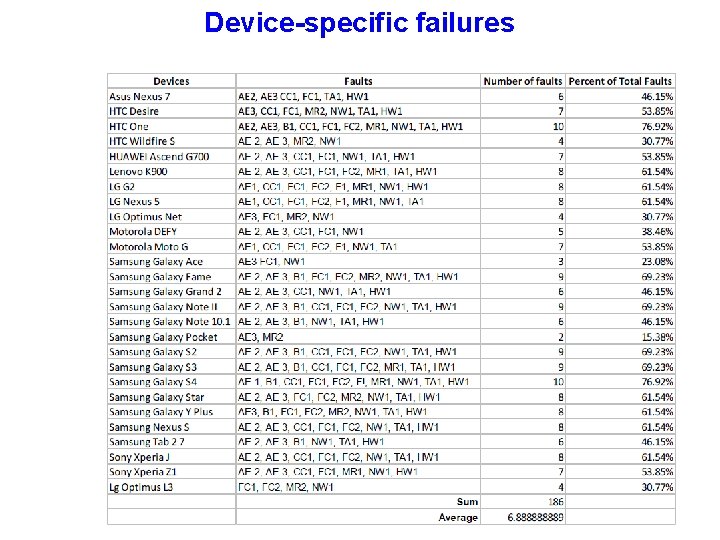

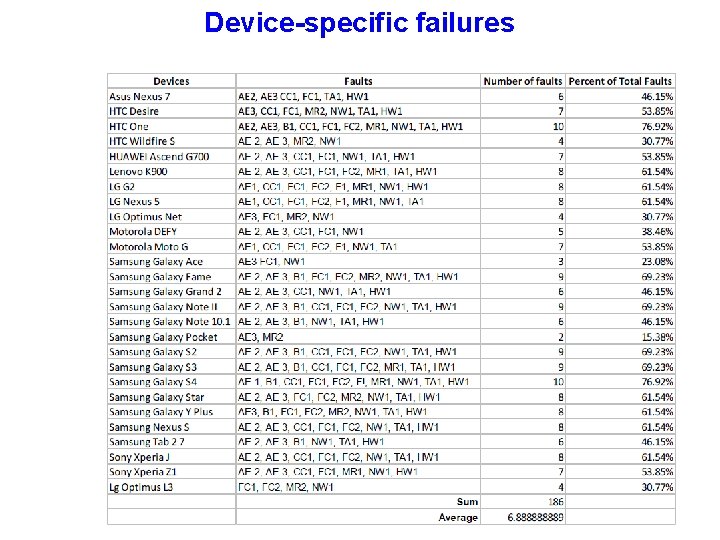

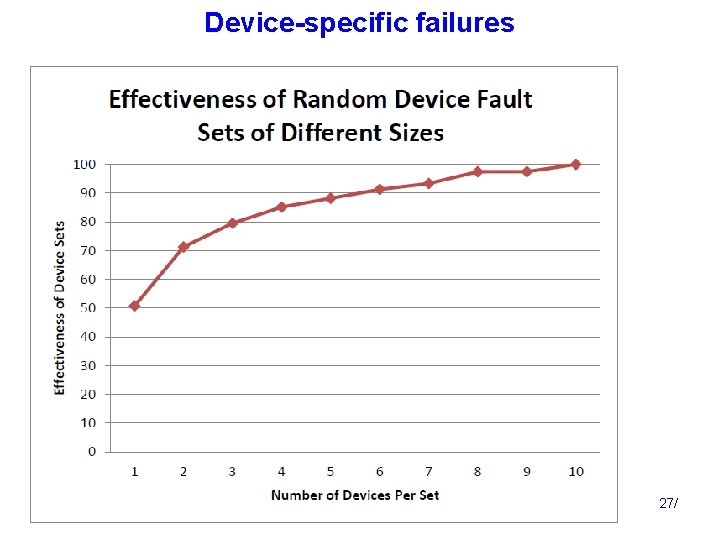

Device-specific failures

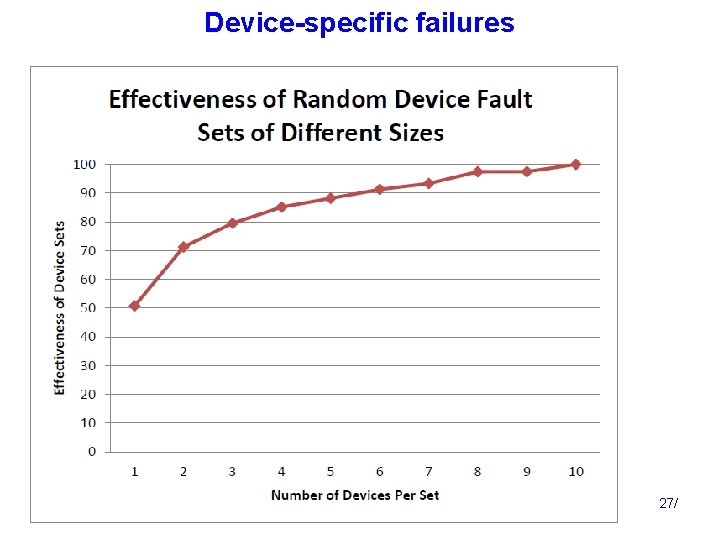

Device-specific failures

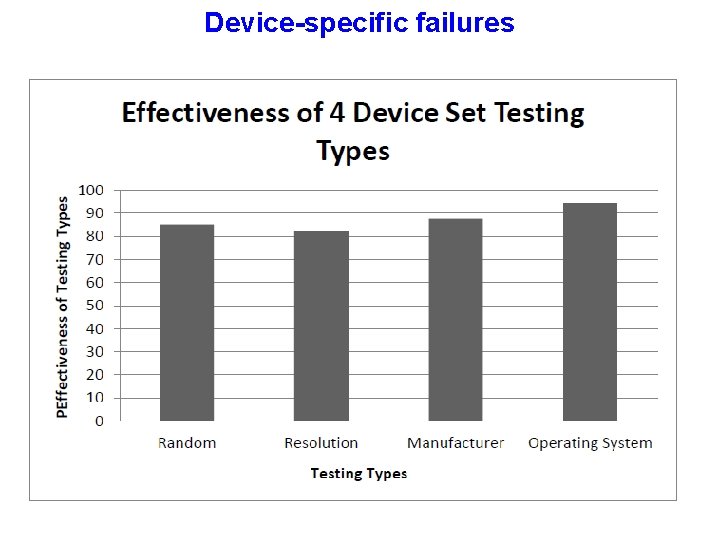

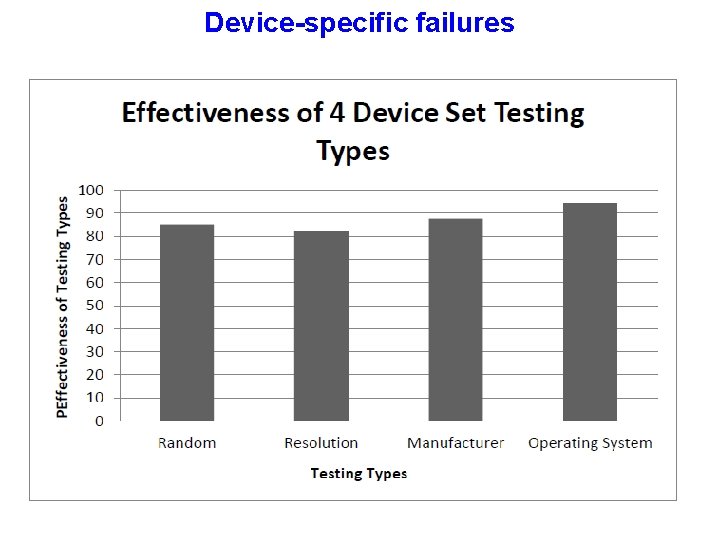

Device-specific failures

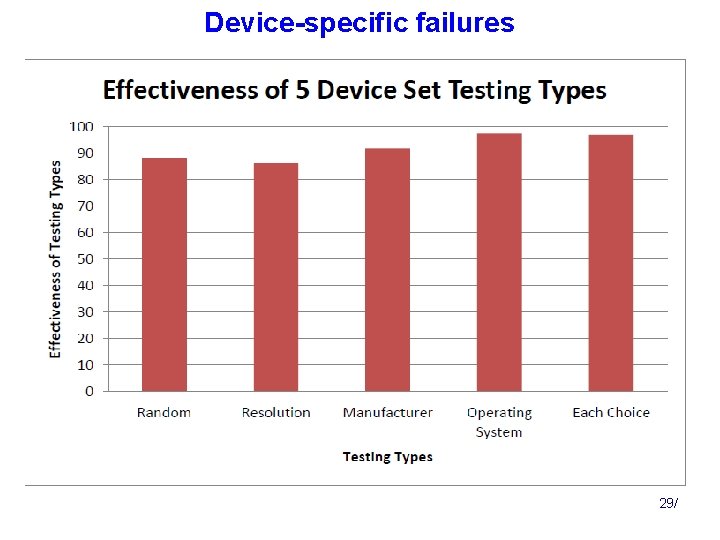

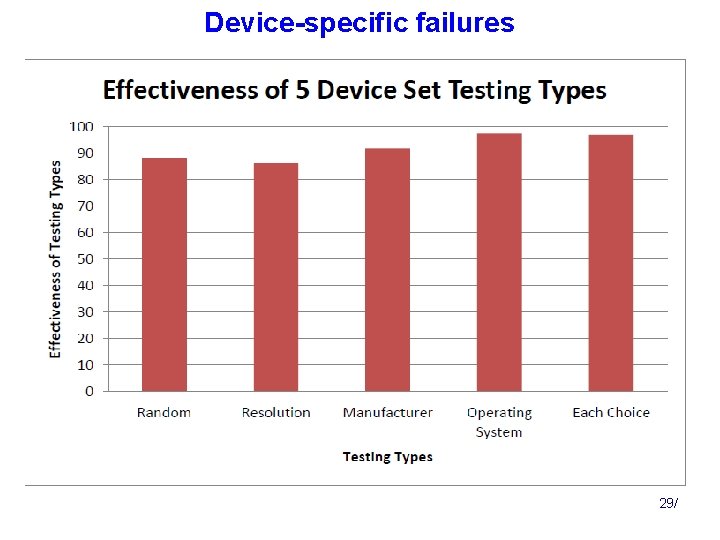

Device-specific failures

Project 2: Effective Test Generation using Combinatorial Design and MCDC

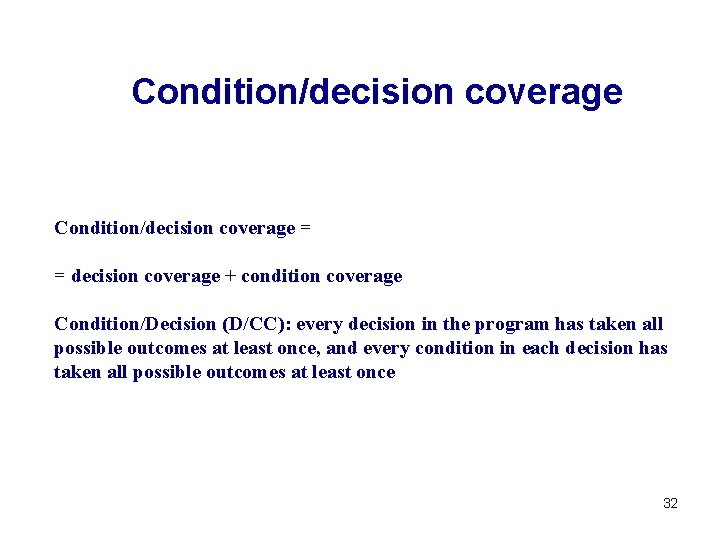

Terminology

- Decision: a logical expression (a program point at which the control flow can divide into various paths)
- Conditions: atomic predicates (elementary Boolean expressions), which form component parts of decisions
- Example: d = A C B D, where d is the decision and A, B, C, D are the conditions
- Values of decisions and conditions are TRUE (1) or FALSE (0)
- Conditions depend on program data, for example: A ↔ x < 7, B ↔ y = 3

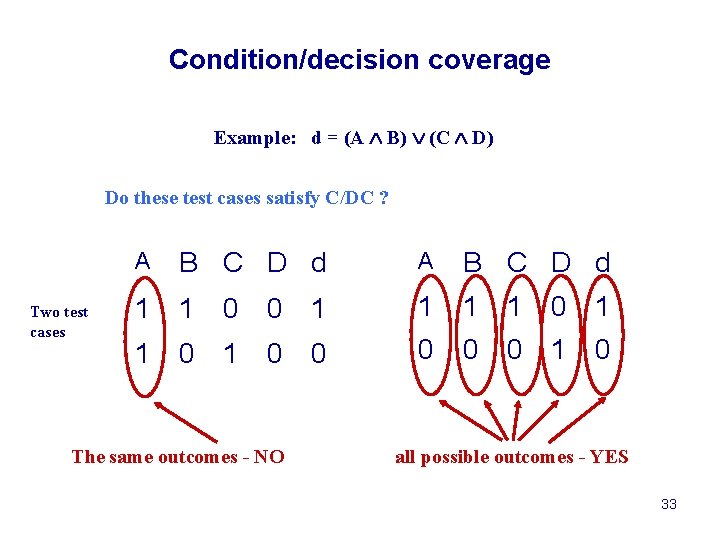

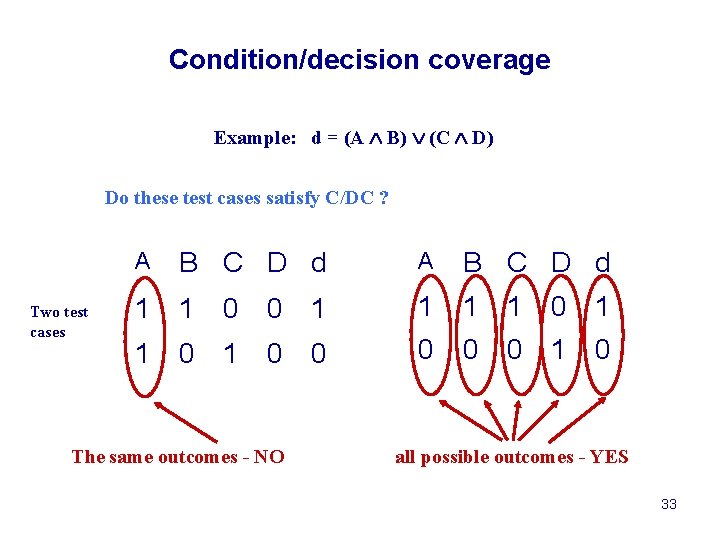

Condition/decision coverage = decision coverage + condition coverage

Condition/Decision Coverage (C/DC): every decision in the program has taken all possible outcomes at least once, and every condition in each decision has taken all possible outcomes at least once.

Condition/decision coverage

Example: d = (A B) (C D)

Do these test cases satisfy C/DC?

[Table: two test cases with columns A, B, C, D, d]

The same outcomes - NO; all possible outcomes - YES
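The C/DC definition translates directly into a check over a test set. Because the operators of the example decision are not legible here, the sketch below assumes d = (A and B) or (C and D) purely for illustration; the function name `satisfies_cdc` is also hypothetical.

```python
def satisfies_cdc(decision, tests):
    """C/DC: the decision and every condition each take both outcomes at least once."""
    n_conditions = len(tests[0])
    decision_ok = {bool(decision(*t)) for t in tests} == {True, False}
    conditions_ok = all({bool(t[i]) for t in tests} == {True, False}
                        for i in range(n_conditions))
    return decision_ok and conditions_ok

# ASSUMPTION: the operators are chosen for illustration only.
def d(A, B, C, D):
    return (A and B) or (C and D)

good = [(1, 1, 1, 1), (0, 0, 0, 0)]   # d is TRUE, then FALSE
bad = [(1, 0, 1, 0), (0, 1, 0, 1)]    # every condition flips, but d stays FALSE

print(satisfies_cdc(d, good))
print(satisfies_cdc(d, bad))
```

The second test set shows why condition coverage alone is not enough: each condition takes both values, yet the decision never changes its outcome.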

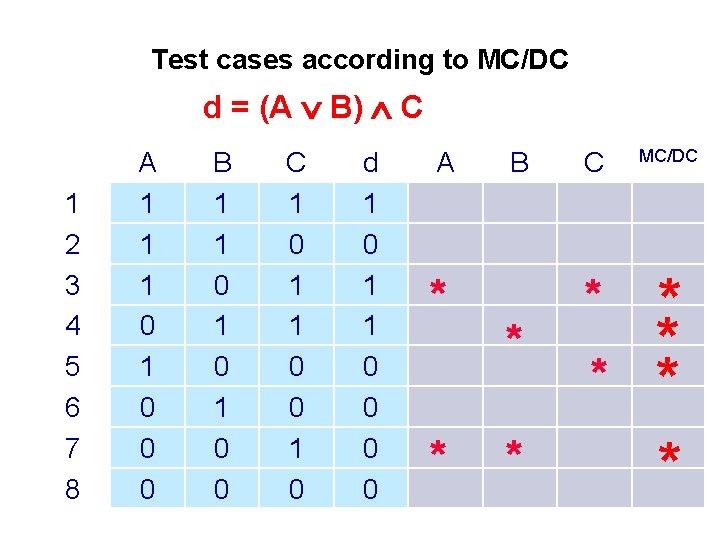

MC/DC

- "Independent effect" is the main idea of Modified Condition/Decision Coverage (MC/DC)
- Suggested in 1992-1994 for avionics software
- DO-178B. Software Considerations in Airborne Systems and Equipment Certification. USA, 1992.
- Chilenski, J. and Miller, S. Software Engineering Journal, 1994

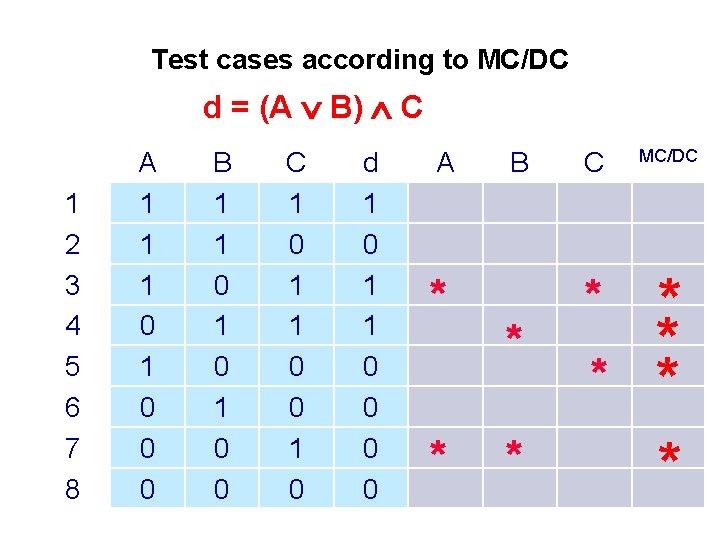

Test cases according to MC/DC

d = (A B) C

[Table: the 8 combinations of A, B, C (test cases 1-8) with the value of d, the pairs of test cases that show the independent effect of A, B, and C marked with *, and the resulting MC/DC test set]
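The idea of independent effect can be made concrete by searching for pairs of test cases that differ in exactly one condition and produce different decision outcomes (the unique-cause form of MC/DC). The operators of the example decision are not legible here, so the sketch assumes d = (A and B) or C; the function names are hypothetical.

```python
from itertools import product

def independence_pairs(decision, n_conditions):
    """For each condition, list test pairs that differ only in it and flip the decision."""
    pairs = {i: [] for i in range(n_conditions)}
    for t in product([0, 1], repeat=n_conditions):
        for i in range(n_conditions):
            if t[i] == 0:
                flipped = t[:i] + (1,) + t[i + 1:]
                if decision(*t) != decision(*flipped):
                    pairs[i].append((t, flipped))
    return pairs

def satisfies_mcdc(decision, n_conditions, tests):
    """True if the test set contains an independence pair for every condition."""
    chosen = set(tests)
    pairs = independence_pairs(decision, n_conditions)
    return all(any(a in chosen and b in chosen for a, b in pairs[i])
               for i in range(n_conditions))

# ASSUMPTION: the operators are chosen for illustration only.
def d(A, B, C):
    return int((A and B) or C)

pairs = independence_pairs(d, 3)
for name, i in zip("ABC", range(3)):
    print(name, pairs[i])

mcdc_tests = [(1, 1, 0), (0, 1, 0), (1, 0, 0), (1, 0, 1)]
print(satisfies_mcdc(d, 3, mcdc_tests))
```

For this assumed decision, four test cases out of eight are enough, which matches the usual observation that MC/DC needs on the order of n + 1 test cases for n conditions.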

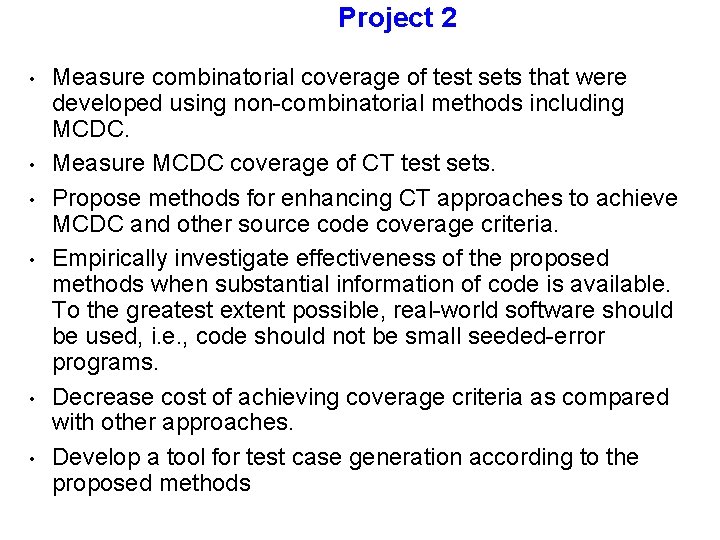

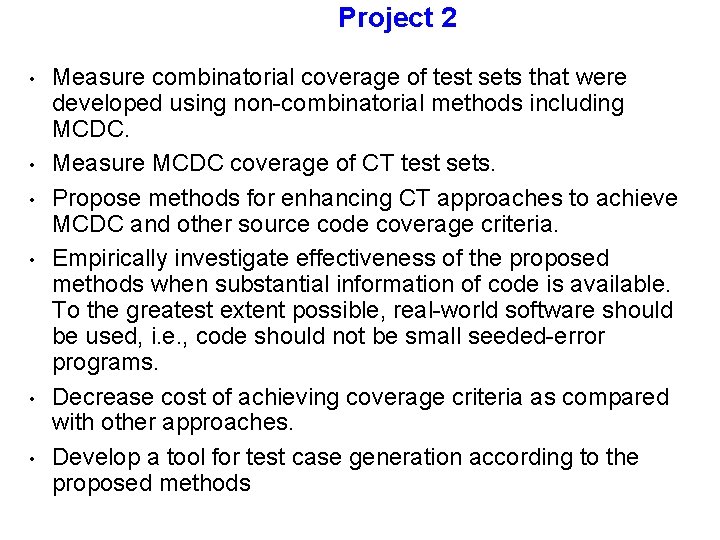

Project 2

- Measure combinatorial coverage of test sets that were developed using non-combinatorial methods, including MCDC.
- Measure MCDC coverage of CT test sets.
- Propose methods for enhancing CT approaches to achieve MCDC and other source code coverage criteria.
- Empirically investigate effectiveness of the proposed methods when substantial information about the code is available. To the greatest extent possible, real-world software should be used, i.e., code should not be small seeded-error programs.
- Decrease the cost of achieving coverage criteria as compared with other approaches.
- Develop a tool for test case generation according to the proposed methods.
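As an illustration of the first goal, pairwise coverage of an existing test set can be measured as the fraction of required value pairs that the set covers. The sketch below is one simple way to define that measure, not the project's tool. The sample test set is an assumption: it is an MC/DC-adequate set for a hypothetical decision (A and B) or C over three Boolean conditions.

```python
from itertools import combinations, product

def pairwise_coverage(tests, levels):
    """Fraction of all value pairs (over all pairs of parameters) covered by the tests."""
    n = len(levels)
    required = {(i, a, j, b)
                for i, j in combinations(range(n), 2)
                for a, b in product(levels[i], levels[j])}
    covered = {(i, t[i], j, t[j])
               for t in tests
               for i, j in combinations(range(n), 2)}
    return len(covered & required) / len(required)

levels = [(0, 1)] * 3

# ASSUMPTION: an MC/DC test set for the hypothetical decision (A and B) or C.
mcdc_tests = [(1, 1, 0), (0, 1, 0), (1, 0, 0), (1, 0, 1)]
exhaustive = list(product((0, 1), repeat=3))

print(pairwise_coverage(mcdc_tests, levels))
print(pairwise_coverage(exhaustive, levels))
```

In this small case the MC/DC set leaves some pairs uncovered, which is the kind of gap between code-based and combinatorial criteria that the project sets out to measure.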