WORD-PREDICTION AS A TOOL TO EVALUATE LOW-LEVEL VISION PROCESSES



Prasad Gabbur, Kobus Barnard, University of Arizona

Overview
- Word-prediction using a translation model for object recognition
- Feature evaluation
- Segmentation evaluation
- Modifications to the Normalized Cuts segmentation algorithm
- Evaluation of color constancy algorithms
- Effects of illumination color change on object recognition
- Strategies to deal with illumination color change

Motivation
- Low-level computer vision algorithms
  - Segmentation, edge detection, feature extraction, etc.
  - Building blocks of computer vision systems
- Is there a generic task to evaluate these algorithms quantitatively?
- Word-prediction using a translation model for object recognition
  - Sufficiently general
  - Quantitative evaluation is possible

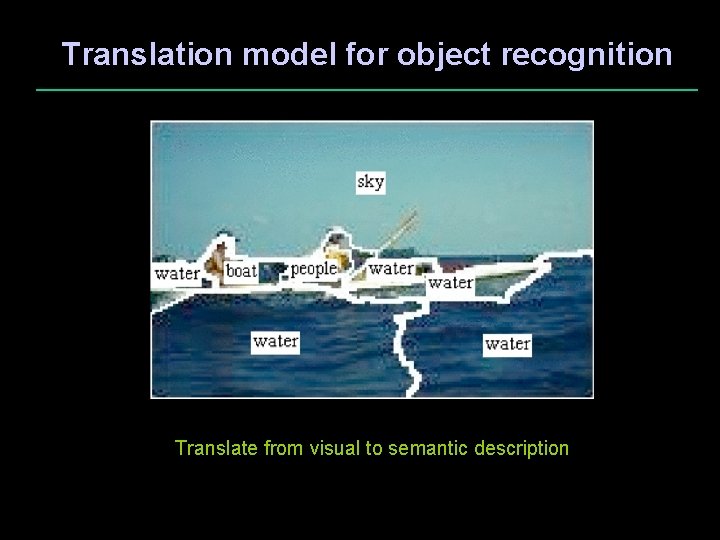

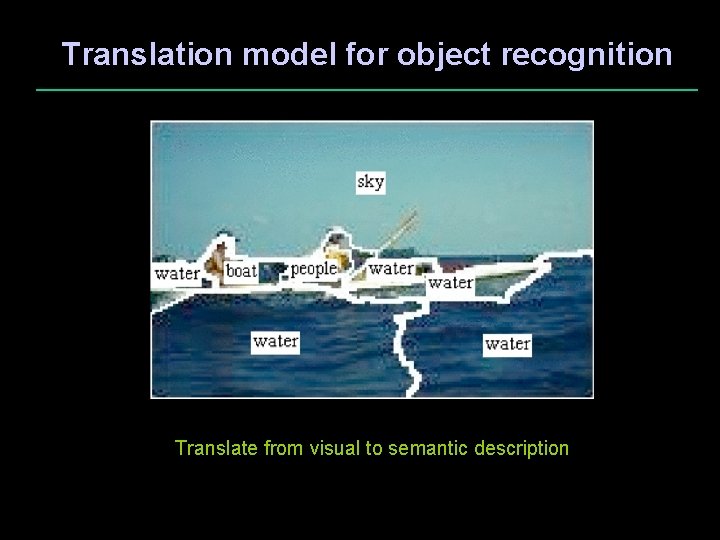

Translation model for object recognition: translate from a visual description to a semantic description.

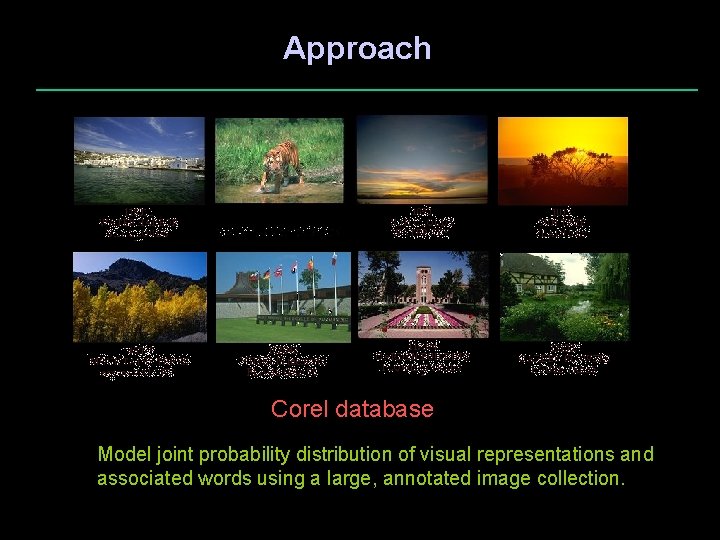

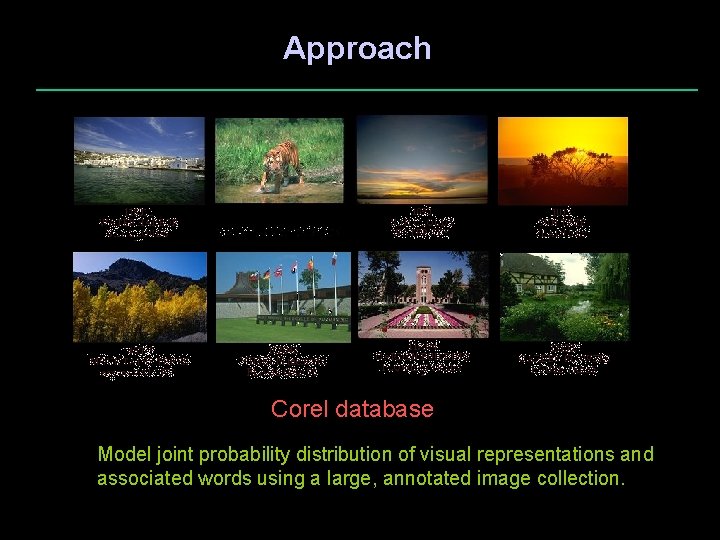

Approach: model the joint probability distribution of visual representations and associated words using a large, annotated image collection (the Corel database).

![Pipeline: image pre-processing, segmentation, visual features f1 … fN](https://slidetodoc.com/presentation_image_h/034d2023820646797ae33eb3096f3ef6/image-6.jpg)

Image pre-processing → segmentation* → visual features [f1 f2 f3 … fN]. The region features and the image's keywords (e.g., sun, sky, waves, sea) are used to model their joint distribution.

*Thanks to the N-Cuts team [Shi, Tal, Malik] for their segmentation algorithm.

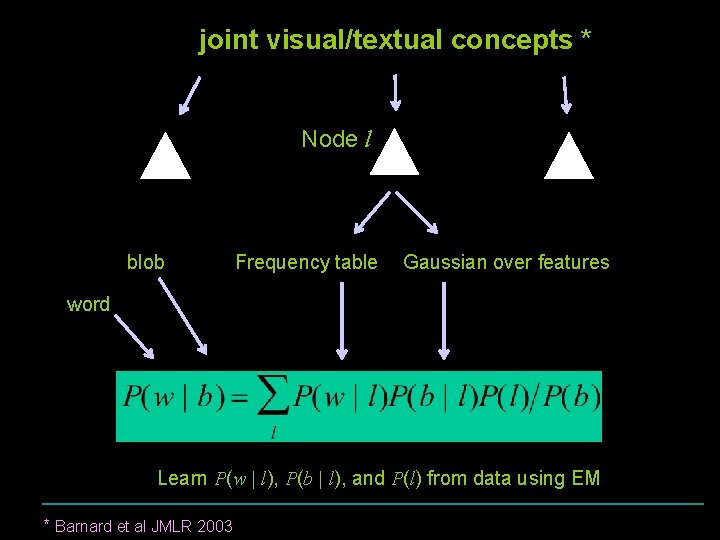

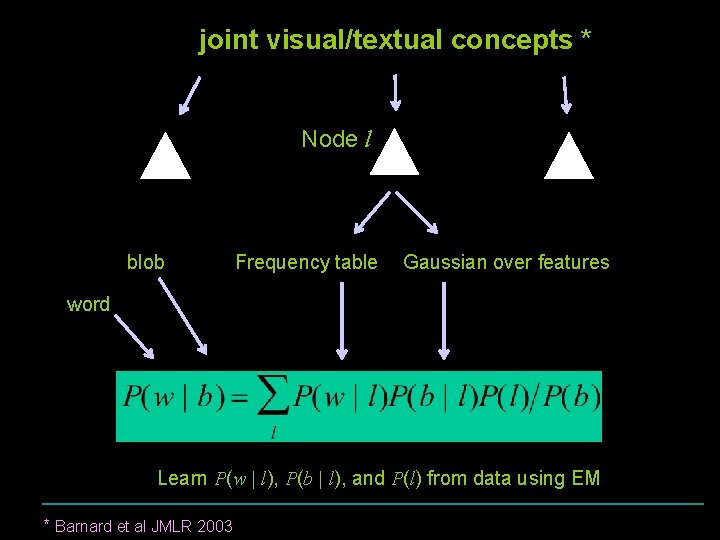

Joint visual/textual concepts*: each node l has a frequency table over words, P(w | l), and a Gaussian over blob features, P(b | l). P(w | l), P(b | l), and P(l) are learned from data using EM.

*Barnard et al., JMLR 2003
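The learning step above can be sketched as expectation-maximization (EM) for a mixture over nodes l. This is a simplified sketch, not the model of Barnard et al.: it assumes each training observation is a single region feature vector b paired with a single word w, that w and b are conditionally independent given l, and that P(b | l) is a Gaussian with diagonal covariance. The function names and parameters are hypothetical.

```python
import numpy as np


def log_gaussian_diag(x, mean, var):
    """Log-density of each row of x under a Gaussian with diagonal covariance."""
    return -0.5 * np.sum(np.log(2.0 * np.pi * var) + (x - mean) ** 2 / var, axis=1)


def fit_joint_model(blobs, words, n_nodes, n_words, n_iter=100, seed=0, eps=1e-6):
    """Hypothetical EM sketch for P(l), P(w | l) and a diagonal-Gaussian P(b | l).

    blobs: (N, D) region feature vectors; words: (N,) integer word ids.
    Each (blob, word) pair is treated as one independent observation.
    """
    rng = np.random.default_rng(seed)
    n = blobs.shape[0]
    one_hot = np.eye(n_words)[words]                             # (N, W)
    p_l = np.full(n_nodes, 1.0 / n_nodes)
    p_w_given_l = rng.dirichlet(np.ones(n_words), size=n_nodes)  # (L, W)
    means = blobs[rng.choice(n, size=n_nodes, replace=False)]    # (L, D)
    variances = np.tile(blobs.var(axis=0) + eps, (n_nodes, 1))   # (L, D)

    for _ in range(n_iter):
        # E-step: log P(l) + log P(w_n | l) + log P(b_n | l), normalized over l.
        log_r = np.log(p_l)[None, :] + np.log(p_w_given_l[:, words].T)
        log_r += np.stack([log_gaussian_diag(blobs, means[l], variances[l])
                           for l in range(n_nodes)], axis=1)
        log_r -= log_r.max(axis=1, keepdims=True)  # numerical stability
        resp = np.exp(log_r)
        resp /= resp.sum(axis=1, keepdims=True)    # (N, L) responsibilities

        # M-step: re-estimate every parameter from the soft counts.
        weight = resp.sum(axis=0) + eps            # (L,)
        p_l = weight / weight.sum()
        counts = resp.T @ one_hot + eps            # (L, W) smoothed word counts
        p_w_given_l = counts / counts.sum(axis=1, keepdims=True)
        means = (resp.T @ blobs) / weight[:, None]
        variances = np.maximum((resp.T @ blobs ** 2) / weight[:, None] - means ** 2, eps)

    return p_l, p_w_given_l, means, variances


# Synthetic data: word 0 co-occurs with blobs near (0, 0), word 1 with blobs near (5, 5).
rng = np.random.default_rng(1)
blobs = np.vstack([rng.normal(0.0, 0.5, (50, 2)), rng.normal(5.0, 0.5, (50, 2))])
words = np.array([0] * 50 + [1] * 50)
p_l, p_w_given_l, means, variances = fit_joint_model(blobs, words, n_nodes=2, n_words=2)
```

Working in log space before exponentiating keeps the responsibilities stable when the Gaussian densities are very small.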

Annotating images: segment the image into regions b1, b2, …; compute P(w | b) for each region; sum P(w | b1) + P(w | b2) + … over the regions to obtain P(w | image).
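Given learned parameters, the annotation step can be sketched as below, using P(l | b) ∝ P(b | l) P(l) and P(w | b) = Σ_l P(w | l) P(l | b). The sketch assumes a diagonal-Gaussian P(b | l), weights every region equally, and normalizes the summed scores; the function names, the `top_k` cutoff, and the toy model parameters are hypothetical.

```python
import numpy as np


def word_posteriors(blobs, p_l, p_w_given_l, means, variances):
    """P(w | b) = sum_l P(w | l) P(l | b), where P(l | b) is proportional to P(b | l) P(l)."""
    log_joint = np.stack([
        np.log(p_l[l]) - 0.5 * np.sum(np.log(2.0 * np.pi * variances[l])
                                      + (blobs - means[l]) ** 2 / variances[l], axis=1)
        for l in range(len(p_l))], axis=1)                  # (R, L): log P(b | l) P(l)
    log_joint -= log_joint.max(axis=1, keepdims=True)
    p_l_given_b = np.exp(log_joint)
    p_l_given_b /= p_l_given_b.sum(axis=1, keepdims=True)   # (R, L)
    return p_l_given_b @ p_w_given_l                         # (R, W)


def annotate_image(blobs, p_l, p_w_given_l, means, variances, top_k=4):
    """Sum P(w | b) over the image's regions; return normalized scores and the top words."""
    p_w_image = word_posteriors(blobs, p_l, p_w_given_l, means, variances).sum(axis=0)
    p_w_image /= p_w_image.sum()
    return p_w_image, np.argsort(-p_w_image)[:top_k]


# Hypothetical two-node model: node 0 near (0, 0) favors word 0, node 1 near (5, 5) favors word 1.
p_l = np.array([0.5, 0.5])
p_w_given_l = np.array([[0.9, 0.1], [0.1, 0.9]])
means = np.array([[0.0, 0.0], [5.0, 5.0]])
variances = np.ones((2, 2))
scores, top_words = annotate_image(np.array([[4.8, 5.1]]), p_l, p_w_given_l,
                                   means, variances, top_k=1)
print(top_words)  # [1]
```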

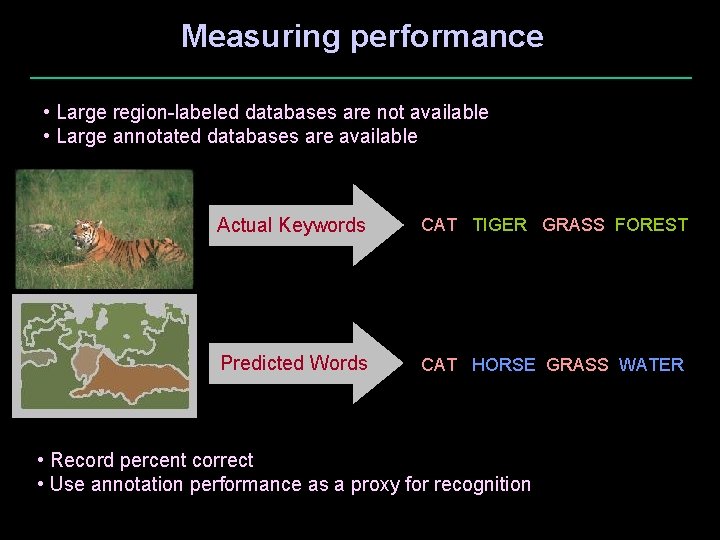

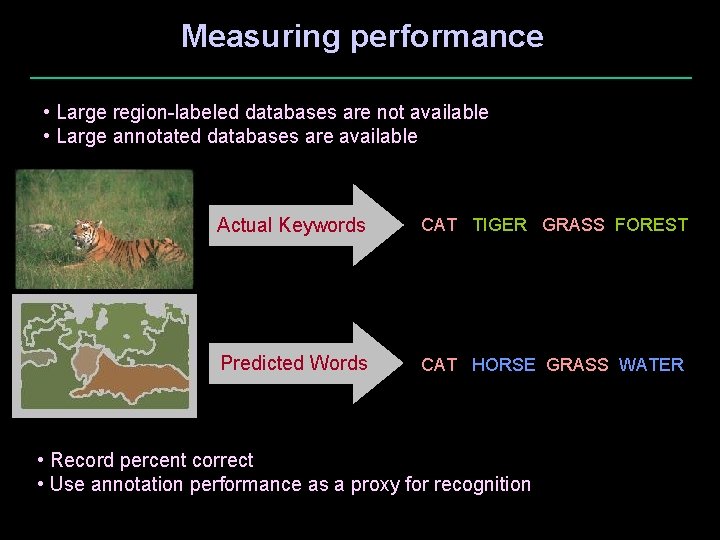

Measuring performance
- Large region-labeled databases are not available.
- Large annotated databases are available.
- Example: actual keywords CAT, TIGER, GRASS, FOREST; predicted words CAT, HORSE, GRASS, WATER.
- Record the percentage of predicted words that are correct.
- Use annotation performance as a proxy for recognition.
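A minimal scoring sketch for the example above, assuming the score is the fraction of predicted words that appear among the actual keywords and that the system predicts as many words as there are keywords. `percent_correct` is a hypothetical helper.

```python
def percent_correct(actual, predicted):
    """Percentage of predicted words that appear among the actual keywords."""
    if not predicted:
        return 0.0
    actual_set = {word.lower() for word in actual}
    hits = sum(1 for word in predicted if word.lower() in actual_set)
    return 100.0 * hits / len(predicted)


actual = ["CAT", "TIGER", "GRASS", "FOREST"]
predicted = ["CAT", "HORSE", "GRASS", "WATER"]
print(percent_correct(actual, predicted))  # 50.0
```

Here CAT and GRASS are correct, so two of the four predictions count.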

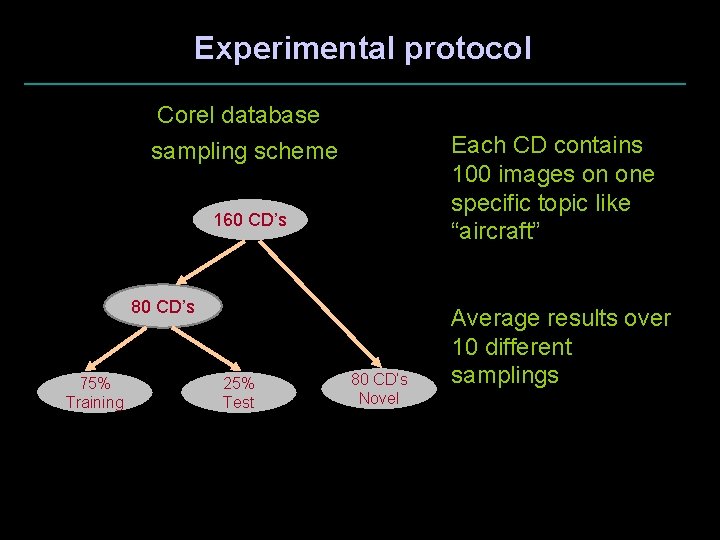

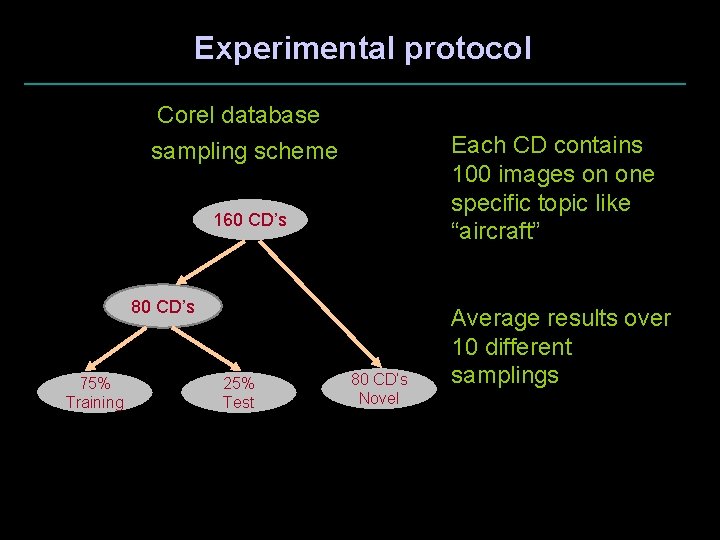

Experimental protocol
- Corel database: each CD contains 100 images on one specific topic, such as "aircraft".
- Sampling scheme: of the 160 CDs, 80 supply images that are split 75% for training and 25% for test; the other 80 CDs supply novel images.
- Results are averaged over 10 different samplings.
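One sampling of the protocol can be sketched as follows. It assumes images are identified by hypothetical `(cd, index)` pairs and that the 75%/25% split is drawn at the image level from the 80 seen CDs; the evaluation that would be averaged over the 10 samplings is omitted.

```python
import random


def sample_split(cd_ids, seed, train_fraction=0.75, images_per_cd=100):
    """One hypothetical sampling: half of the CDs supply training/test images, the rest are novel."""
    rng = random.Random(seed)
    cds = list(cd_ids)
    rng.shuffle(cds)
    half = len(cds) // 2
    seen_cds, novel_cds = cds[:half], cds[half:]

    # Images are (cd, index) pairs; the seen images are split 75% / 25%.
    seen = [(cd, i) for cd in seen_cds for i in range(images_per_cd)]
    rng.shuffle(seen)
    n_train = round(train_fraction * len(seen))
    train, test = seen[:n_train], seen[n_train:]
    novel = [(cd, i) for cd in novel_cds for i in range(images_per_cd)]
    return train, test, novel


# Ten different samplings over 160 CDs; results would be averaged across them.
samplings = [sample_split(range(160), seed=s) for s in range(10)]
train, test, novel = samplings[0]
print(len(train), len(test), len(novel))  # 6000 2000 8000
```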

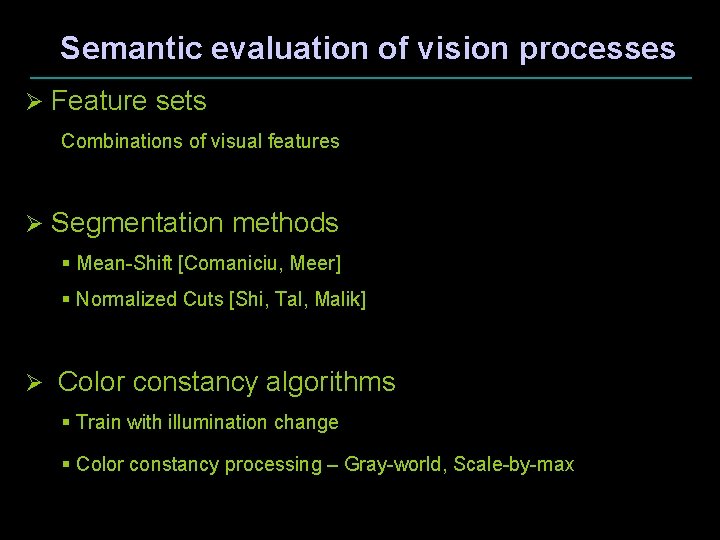

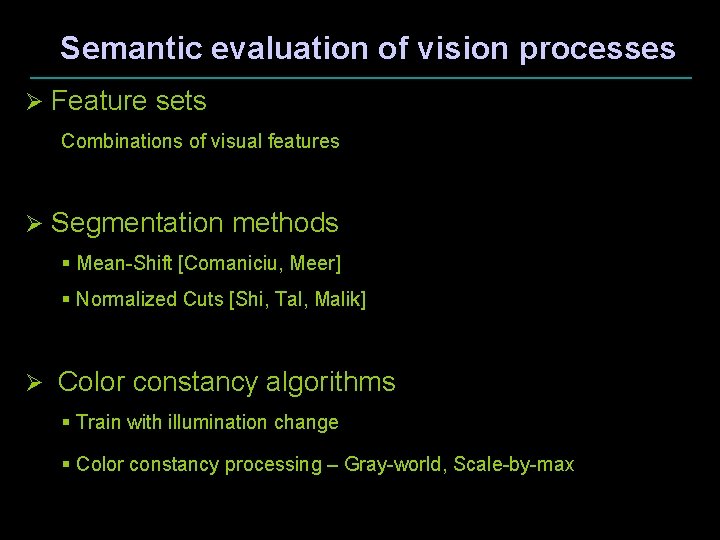

Semantic evaluation of vision processes
- Feature sets: combinations of visual features
- Segmentation methods
  - Mean-Shift [Comaniciu, Meer]
  - Normalized Cuts [Shi, Tal, Malik]
- Color constancy algorithms
  - Train with illumination change
  - Color constancy processing: Gray-world, Scale-by-max

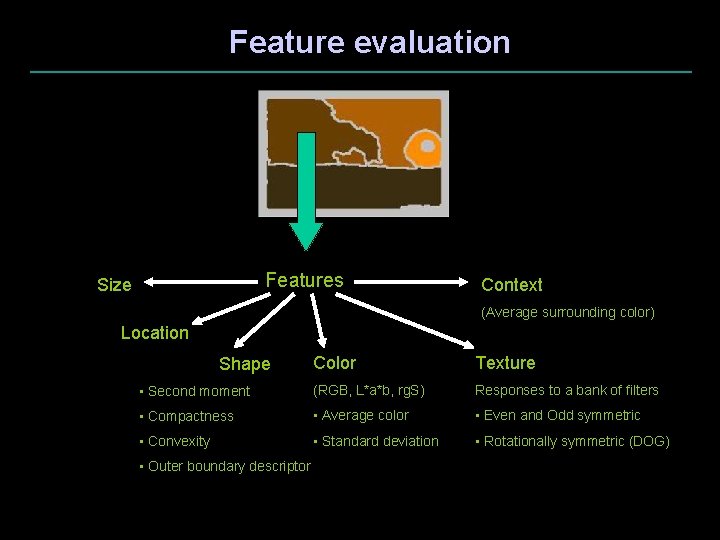

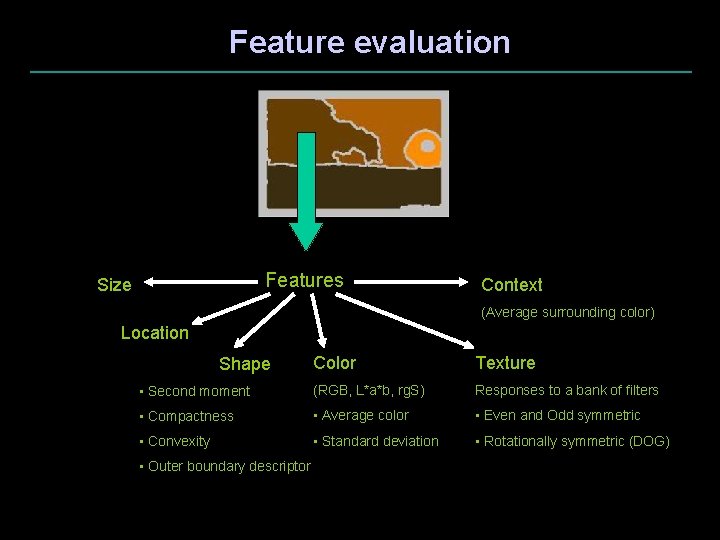

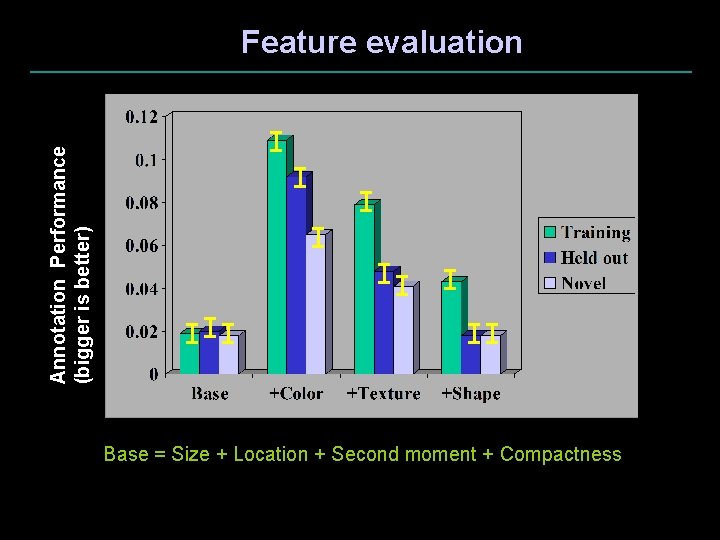

Feature evaluation: features
- Size
- Location
- Context (average surrounding color)
- Color (RGB, L*a*b, rgS): average color, standard deviation
- Texture (responses to a bank of filters): even and odd symmetric, rotationally symmetric (DOG)
- Shape: second moment, compactness, convexity, outer boundary descriptor
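Several of the region features listed above can be computed from a binary region mask, as in the sketch below. The exact definitions used in the study are not given on the slides, so these are assumptions: location is the normalized centroid, the second moment is taken over normalized pixel coordinates, compactness is a simple area/perimeter² ratio with the perimeter counted as boundary pixels, and color statistics are computed in rgS (r = R/S, g = G/S, S = R + G + B). Context, convexity, the outer boundary descriptor, and the texture filters are omitted.

```python
import numpy as np


def to_rgs(image):
    """RGB -> rgS: r = R / S, g = G / S, S = R + G + B (r = g = 0 where S = 0)."""
    s = image.sum(axis=-1)
    safe = np.where(s > 0, s, 1.0)
    return np.stack([image[..., 0] / safe, image[..., 1] / safe, s], axis=-1)


def region_features(image, mask):
    """Illustrative size, location, shape, and color features for one region.

    image: (H, W, 3) float RGB array; mask: (H, W) boolean region mask.
    """
    h, w = mask.shape
    ys, xs = np.nonzero(mask)
    area = ys.size
    cy, cx = ys.mean() / h, xs.mean() / w               # normalized centroid
    yn, xn = ys / h - cy, xs / w - cx
    second_moment = np.array([np.mean(xn ** 2), np.mean(yn ** 2), np.mean(xn * yn)])

    # Perimeter: region pixels with at least one 4-neighbor outside the region.
    padded = np.pad(mask, 1)
    interior = (padded[:-2, 1:-1] & padded[2:, 1:-1]
                & padded[1:-1, :-2] & padded[1:-1, 2:])
    perimeter = np.count_nonzero(mask & ~interior)

    colors = to_rgs(image)[mask]                        # (area, 3) rgS values
    return {
        "size": area / (h * w),
        "location": (cx, cy),
        "second_moment": second_moment,
        "compactness": area / perimeter ** 2,
        "mean_color": colors.mean(axis=0),
        "std_color": colors.std(axis=0),
    }


# A uniformly colored 4x4 square region in a 10x10 image.
image = np.zeros((10, 10, 3))
image[2:6, 2:6] = [0.2, 0.4, 0.4]
mask = np.zeros((10, 10), dtype=bool)
mask[2:6, 2:6] = True
features = region_features(image, mask)
```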

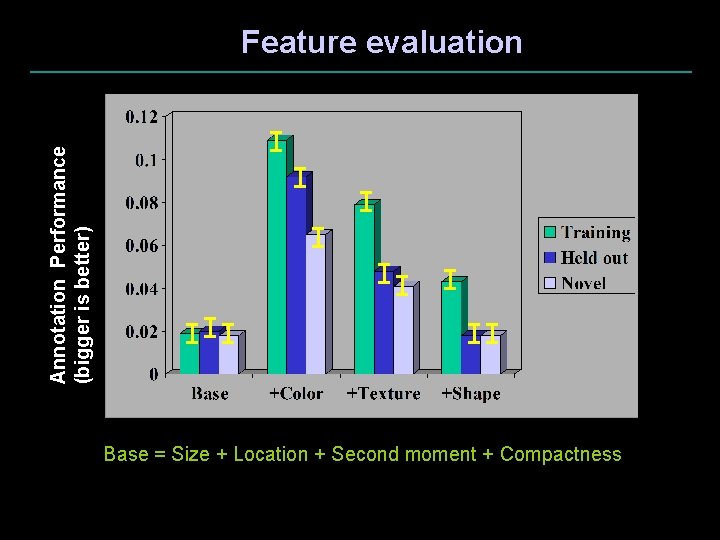

Feature evaluation: annotation performance (bigger is better). Base = Size + Location + Second moment + Compactness.

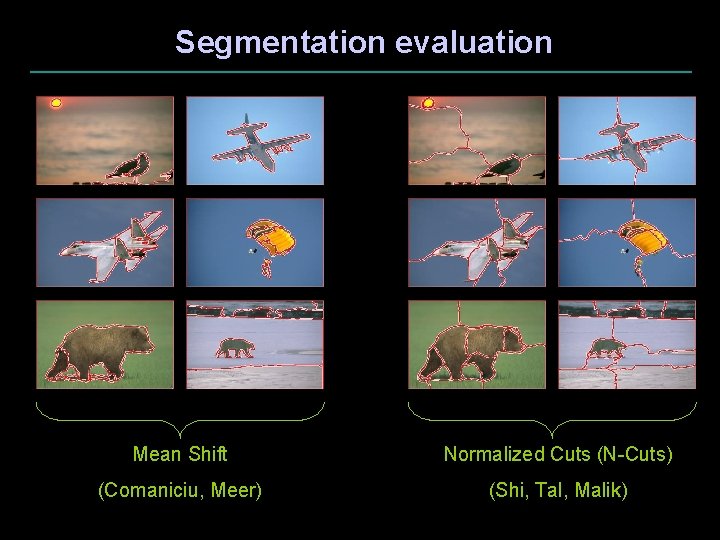

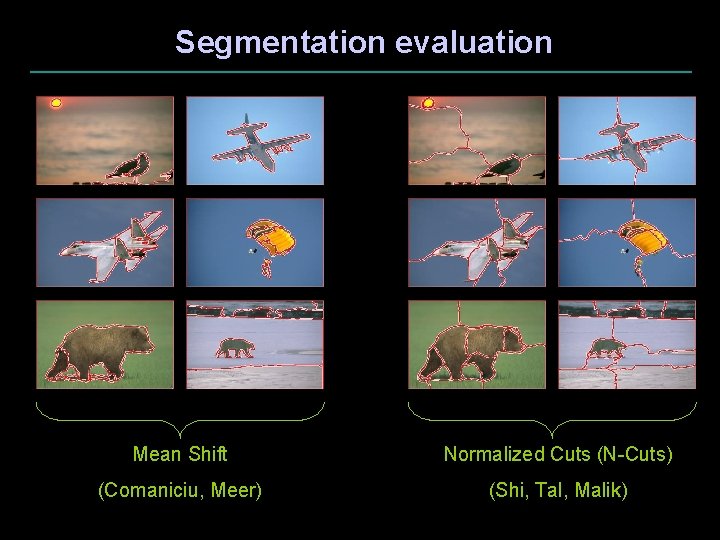

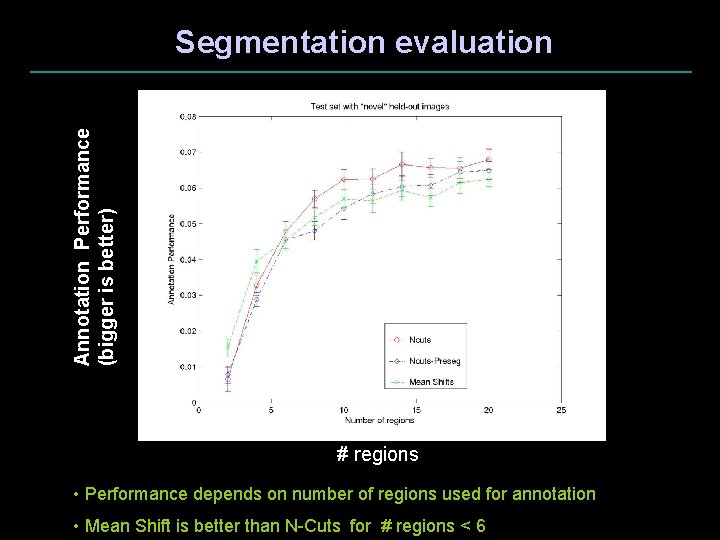

Segmentation evaluation: Mean Shift (Comaniciu, Meer) vs. Normalized Cuts, N-Cuts (Shi, Tal, Malik)

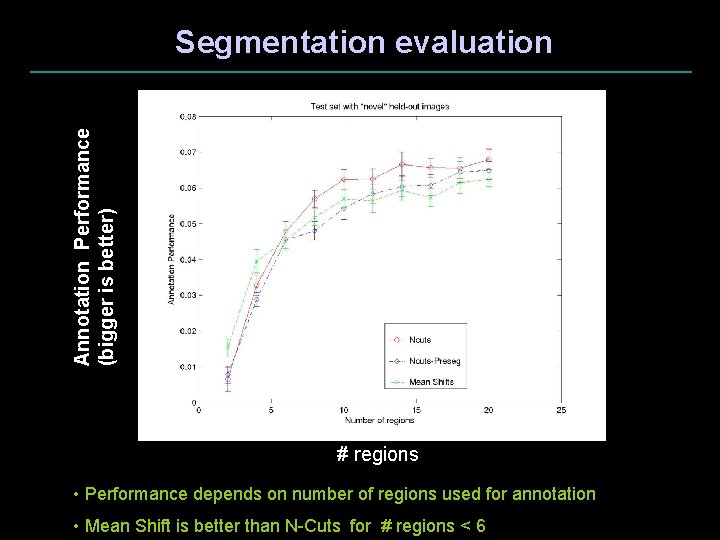

Segmentation evaluation: annotation performance (bigger is better) vs. number of regions
- Performance depends on the number of regions used for annotation.
- Mean Shift is better than N-Cuts when fewer than 6 regions are used.

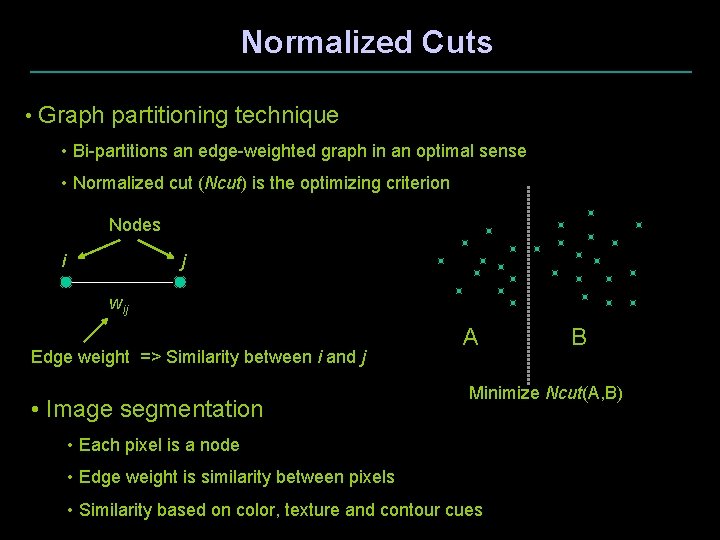

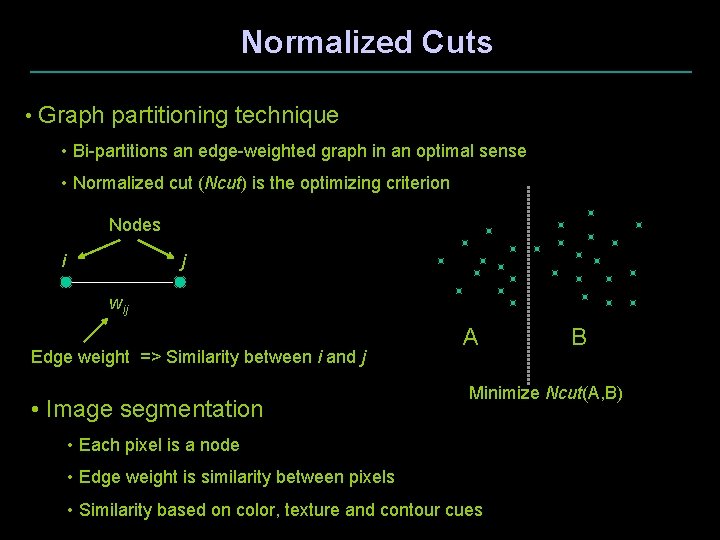

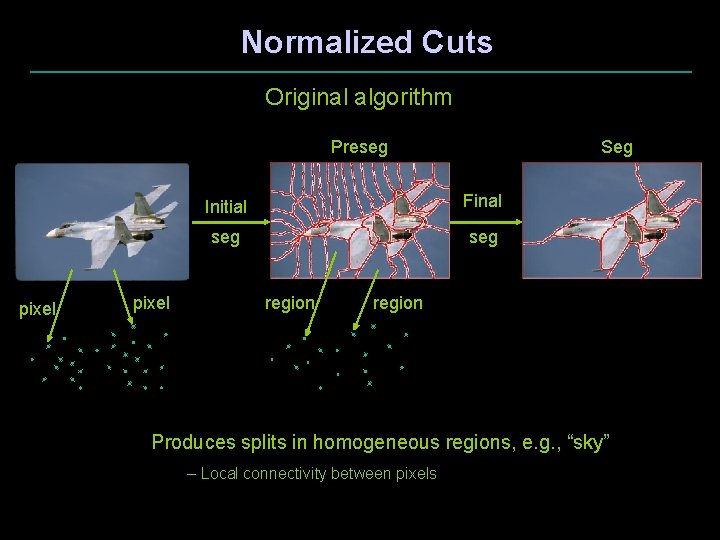

Normalized Cuts
- Graph partitioning technique
- Bi-partitions an edge-weighted graph so as to minimize the normalized cut (Ncut) criterion
- Nodes i and j are joined by an edge with weight w_ij, the similarity between i and j
- Image segmentation: split the pixels into sets A and B that minimize Ncut(A, B)
  - Each pixel is a node
  - The edge weight is the similarity between pixels
  - Similarity is based on color, texture, and contour cues
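The Ncut criterion of Shi and Malik is Ncut(A, B) = cut(A, B)/assoc(A, V) + cut(A, B)/assoc(B, V), where cut(A, B) sums w_ij over i in A and j in B, and assoc(A, V) sums the weights from A to all nodes V. Exact minimization is NP-complete, so Shi and Malik relax it to the generalized eigenproblem (D - W) y = λ D y, with D the diagonal degree matrix. The sketch below splits on the sign of the second-smallest eigenvector, a common simplification of their search over splitting points; the toy weight matrix is hypothetical and stands in for color, texture, and contour similarities.

```python
import numpy as np


def ncut_value(W, in_a):
    """Ncut(A, B) = cut(A, B) / assoc(A, V) + cut(A, B) / assoc(B, V)."""
    in_b = ~in_a
    cut = W[np.ix_(in_a, in_b)].sum()
    return cut / W[in_a].sum() + cut / W[in_b].sum()


def spectral_bipartition(W):
    """Approximate the minimum-Ncut split via the eigenvector relaxation.

    Solves (D - W) y = lambda D y through the symmetric form
    D^(-1/2) (D - W) D^(-1/2) z = lambda z with y = D^(-1/2) z,
    then splits on the sign of the eigenvector for the second-smallest eigenvalue.
    """
    d_inv_sqrt = 1.0 / np.sqrt(W.sum(axis=1))
    laplacian = np.eye(len(W)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    _, eigvecs = np.linalg.eigh(laplacian)   # eigenvalues in ascending order
    y = d_inv_sqrt * eigvecs[:, 1]
    return y > 0


# Hypothetical affinities: two tightly connected groups of three pixels, weakly linked.
W = np.full((6, 6), 0.01)
W[:3, :3] = 1.0
W[3:, 3:] = 1.0
np.fill_diagonal(W, 0.0)
in_a = spectral_bipartition(W)
print(np.flatnonzero(in_a), round(ncut_value(W, in_a), 4))
```

The sign of an eigenvector is arbitrary, so either group may come back as A; the split itself separates the two groups.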

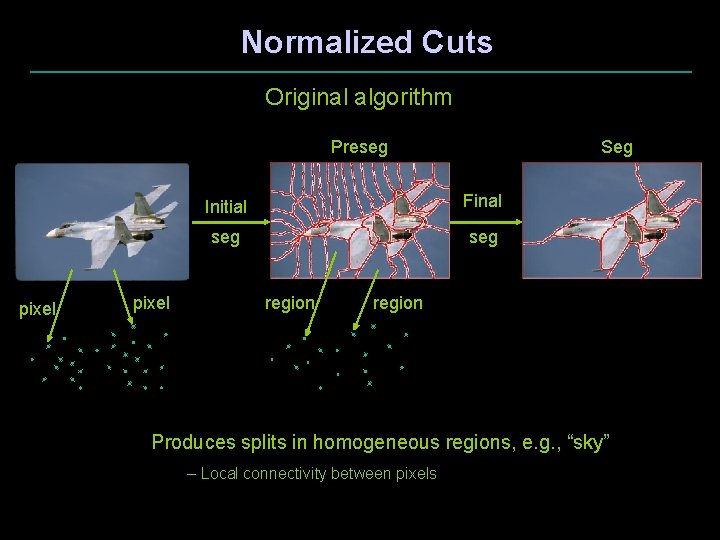

Normalized Cuts, original algorithm: pixels → pre-segmentation (initial regions) → segmentation (final regions). Because connectivity between pixels is only local, it produces splits in homogeneous regions, e.g., “sky”.

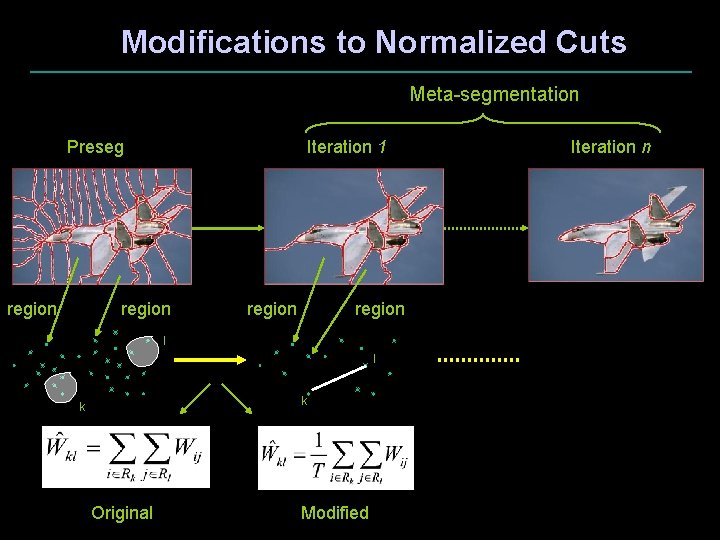

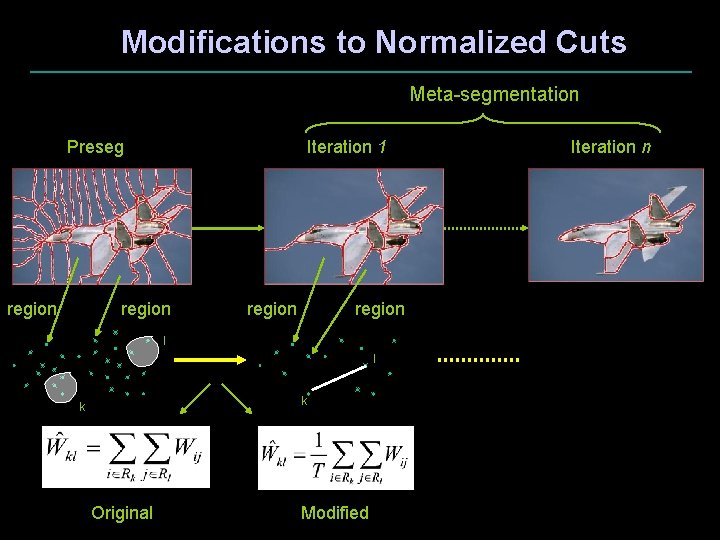

Modifications to Normalized Cuts: meta-segmentation (diagram: pre-segmentation regions k and l under the original and modified schemes, iteration 1 through iteration n)

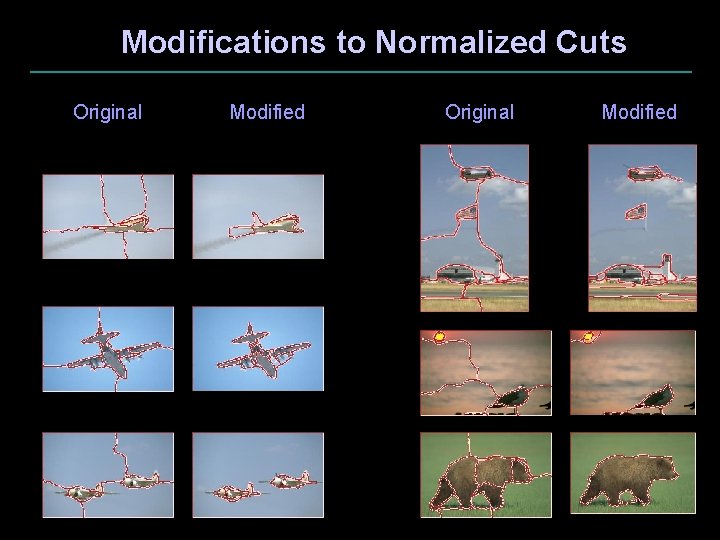

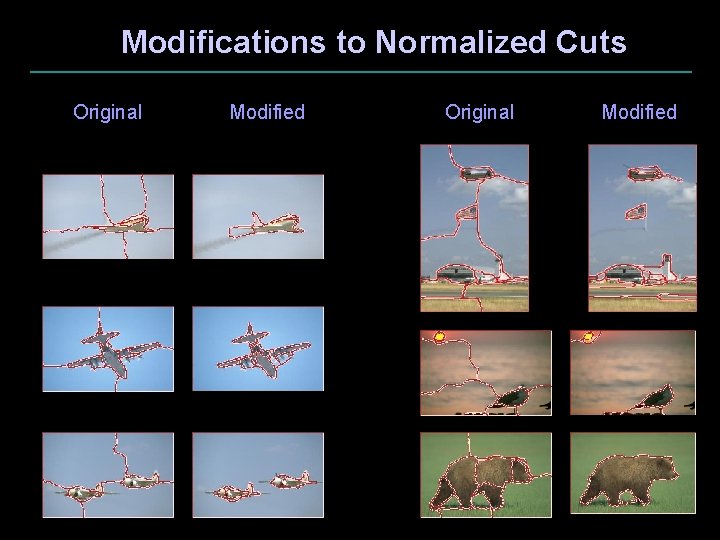

Modifications to Normalized Cuts: original vs. modified

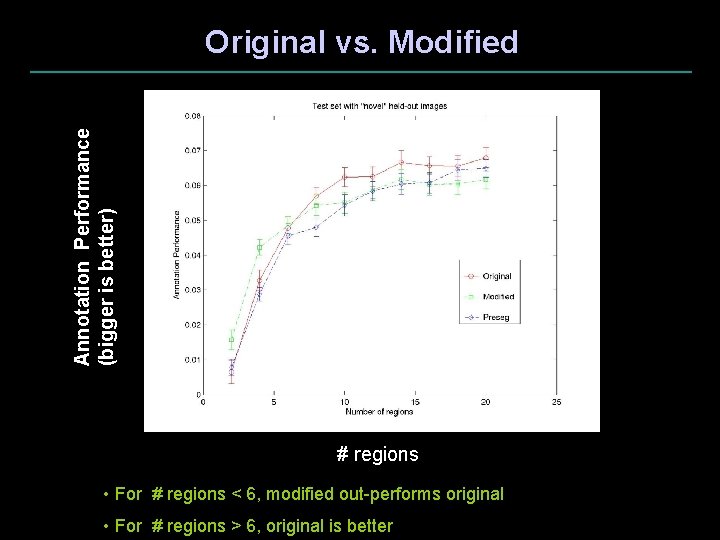

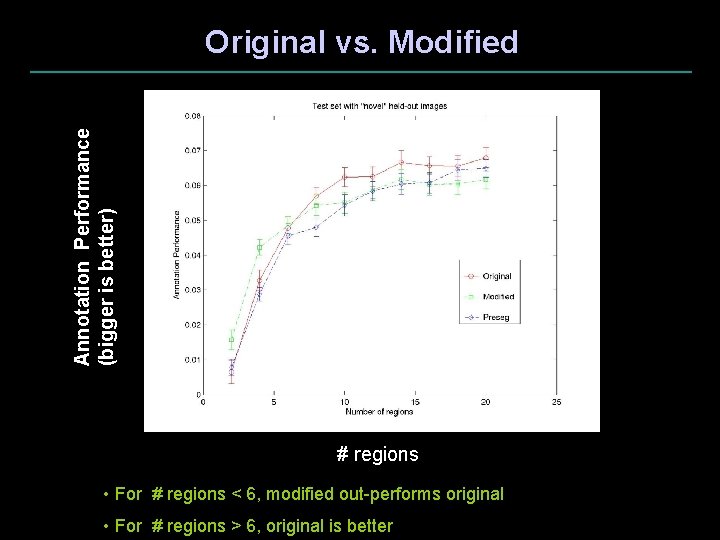

Original vs. modified N-Cuts: annotation performance (bigger is better) vs. number of regions
- For fewer than 6 regions, the modified algorithm outperforms the original.
- For more than 6 regions, the original is better.

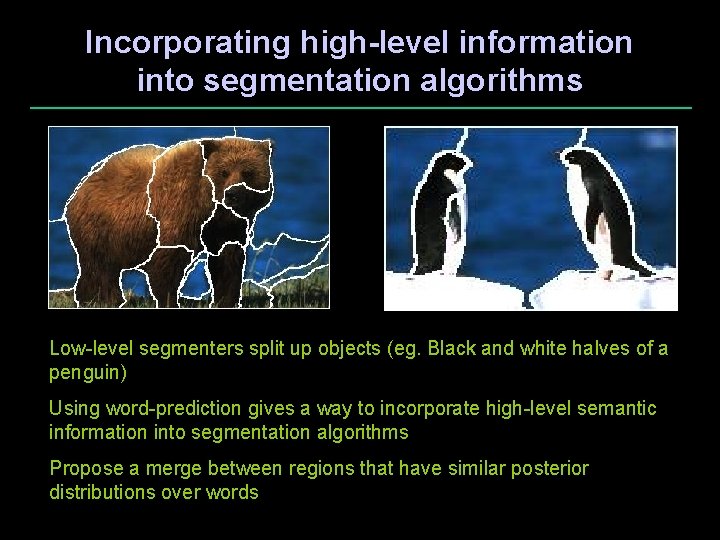

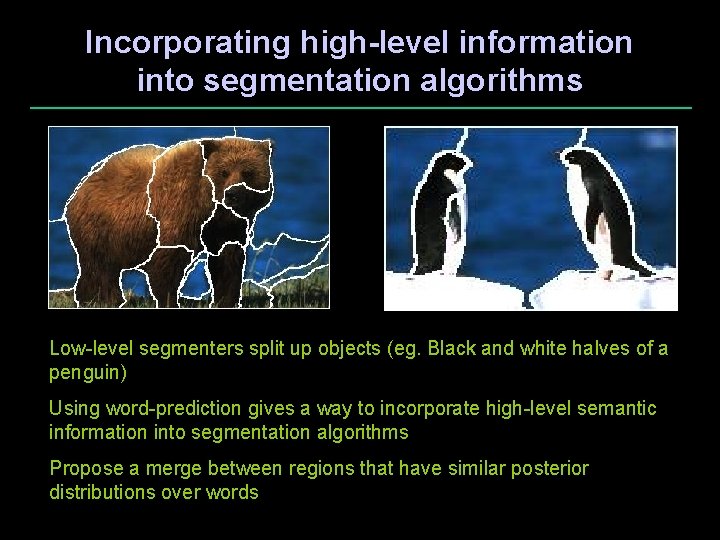

Incorporating high-level information into segmentation algorithms
- Low-level segmenters split up objects (e.g., the black and white halves of a penguin).
- Word prediction gives a way to incorporate high-level semantic information into segmentation algorithms.
- Proposal: merge regions that have similar posterior distributions over words.
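One way to sketch the merge proposal: compare the word posteriors P(w | b) of adjacent regions and propose merging pairs whose distributions are close. The Jensen-Shannon divergence, the `threshold` value, the adjacency list, and the example posteriors over hypothetical words (penguin, snow, sky) are all assumptions for illustration.

```python
import numpy as np


def js_divergence(p, q):
    """Jensen-Shannon divergence (natural log) between two discrete distributions."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    m = 0.5 * (p + q)

    def kl(a, b):
        nz = a > 0  # terms with a = 0 contribute nothing
        return np.sum(a[nz] * np.log(a[nz] / b[nz]))

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def propose_merges(posteriors, adjacent_pairs, threshold=0.1):
    """Propose merging adjacent regions whose word posteriors P(w | b) are similar."""
    proposals = []
    for i, j in adjacent_pairs:
        d = js_divergence(posteriors[i], posteriors[j])
        if d < threshold:
            proposals.append((i, j, d))
    return sorted(proposals, key=lambda t: t[2])  # most similar pairs first


# Hypothetical posteriors over the words (penguin, snow, sky).
posteriors = {
    0: [0.7, 0.2, 0.1],    # black half of the penguin
    1: [0.6, 0.3, 0.1],    # white half of the penguin
    2: [0.05, 0.15, 0.8],  # background sky
}
merges = propose_merges(posteriors, adjacent_pairs=[(0, 1), (1, 2)])
print([(i, j) for i, j, _ in merges])  # [(0, 1)]
```

The two penguin halves differ in color but predict similar words, so only they are proposed for merging.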

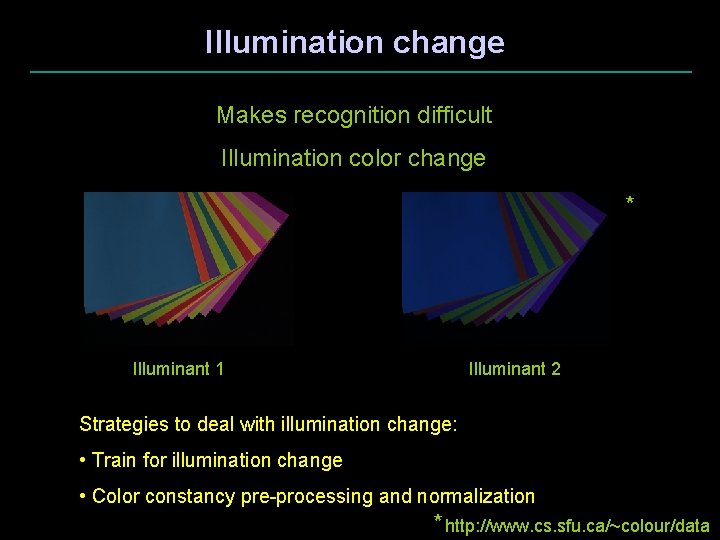

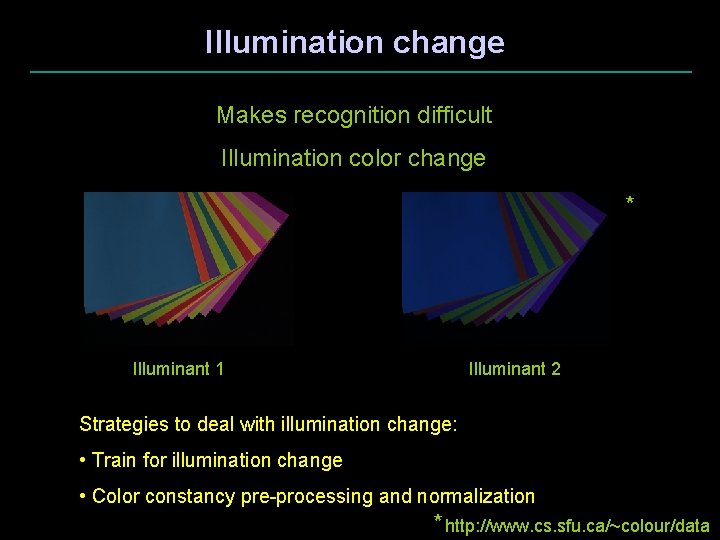

Illumination change makes recognition difficult.
- Illumination color change*: illuminant 1 vs. illuminant 2
- Strategies to deal with illumination change:
  - Train for illumination change
  - Color constancy pre-processing and normalization

*http://www.cs.sfu.ca/~colour/data

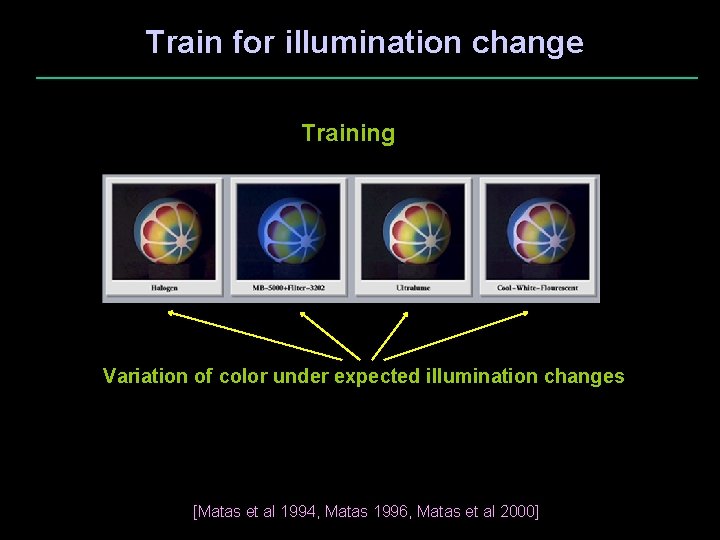

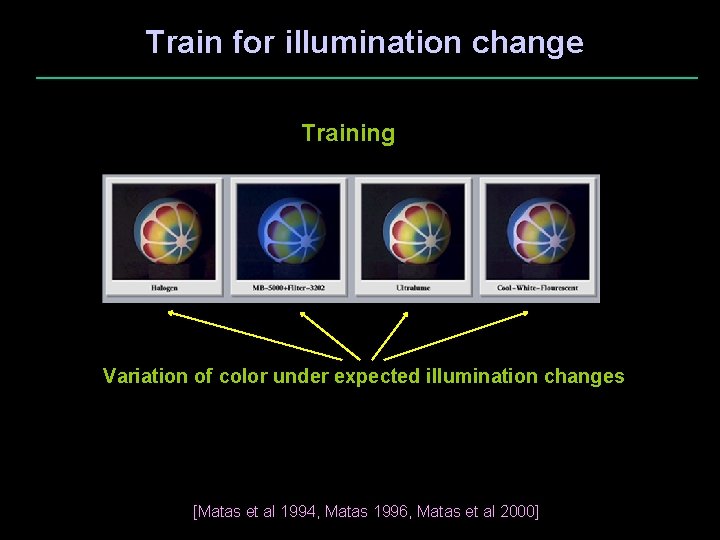

Train for illumination change: the training data includes the variation of color under expected illumination changes [Matas et al. 1994, Matas 1996, Matas et al. 2000].
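Training for illumination change can be sketched as augmenting the training set with each image rendered under several illuminants. This sketch assumes a diagonal (von Kries) model, in which an illuminant change scales each RGB channel independently; the gain values and the helper name are hypothetical, and the slides do not state how the illumination variation was generated.

```python
import numpy as np


def augment_with_illuminants(images, gains):
    """Render each training image under each simulated illuminant (diagonal model).

    images: list of (H, W, 3) float RGB arrays in [0, 1].
    gains: (K, 3) per-channel multipliers, one row per illuminant.
    """
    augmented = []
    for image in images:
        for gain in np.asarray(gains, dtype=float):
            augmented.append(np.clip(image * gain, 0.0, 1.0))
    return augmented


# Hypothetical gains: the first row is the canonical illuminant (no change).
gains = [[1.0, 1.0, 1.0], [1.1, 1.0, 0.8], [0.8, 0.95, 1.2]]
images = [np.full((4, 4, 3), 0.5), np.full((4, 4, 3), 0.25)]
training_set = augment_with_illuminants(images, gains)
print(len(training_set))  # 6
```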

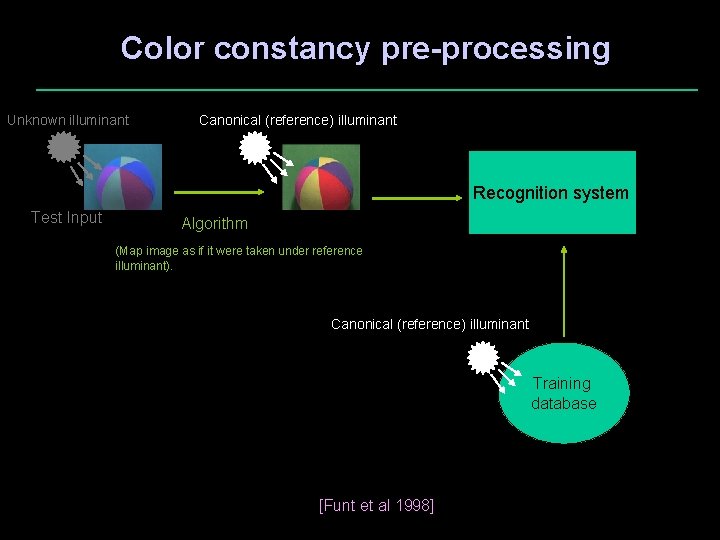

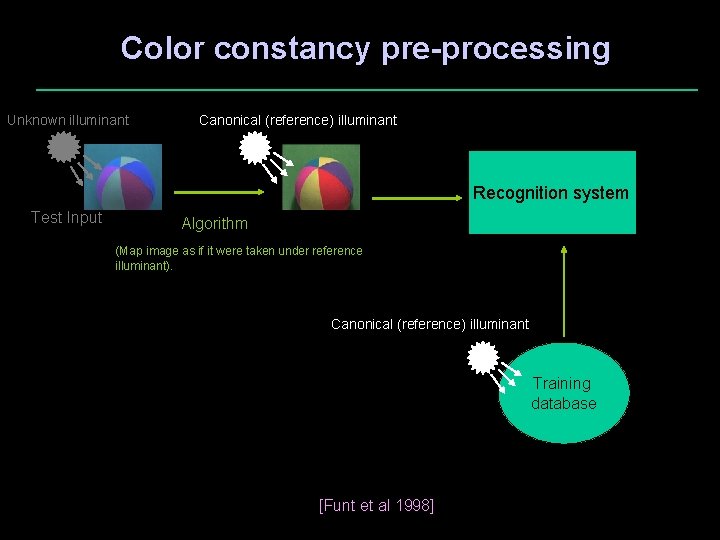

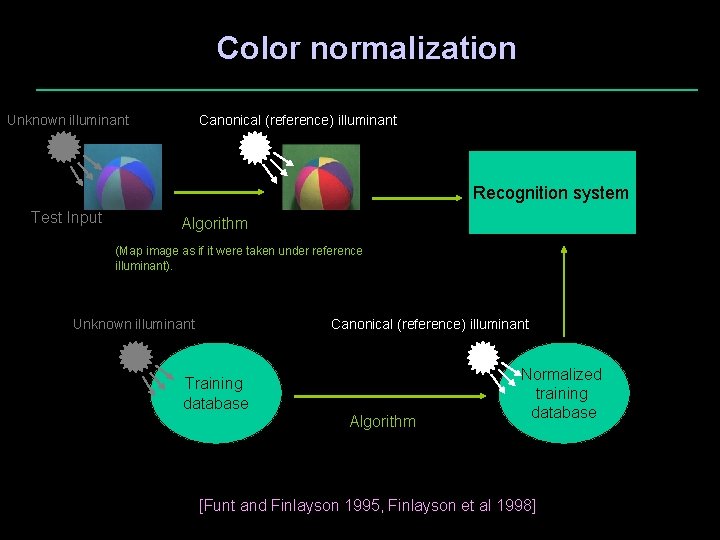

Color constancy pre-processing: the test input, taken under an unknown illuminant, is processed by an algorithm that maps the image as if it were taken under the canonical (reference) illuminant. The result is passed to a recognition system whose training database was taken under the canonical illuminant [Funt et al. 1998].

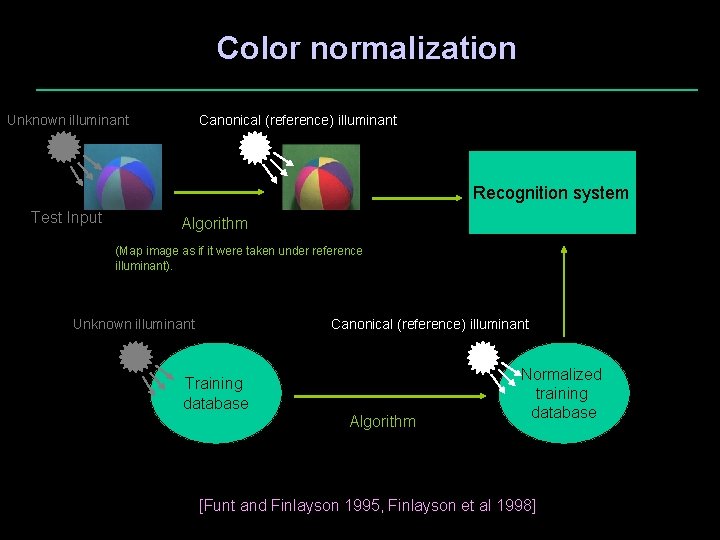

Color normalization: both the test input and the training database are taken under unknown illuminants. The same algorithm maps each image as if it were taken under the canonical (reference) illuminant, and the recognition system is trained on the resulting normalized training database [Funt and Finlayson 1995, Finlayson et al. 1998].

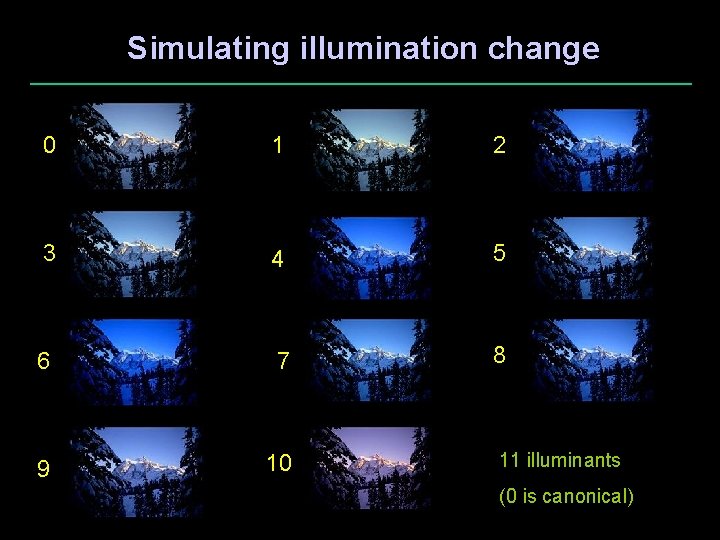

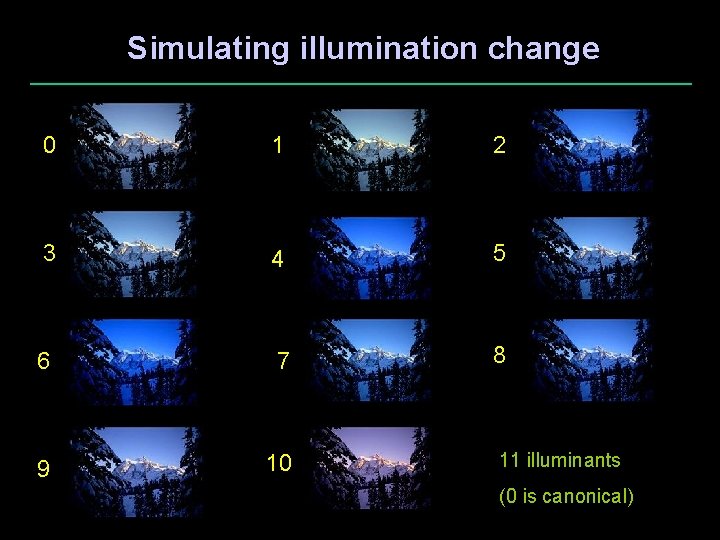

Simulating illumination change: illuminants 0 to 11 (0 is the canonical illuminant).

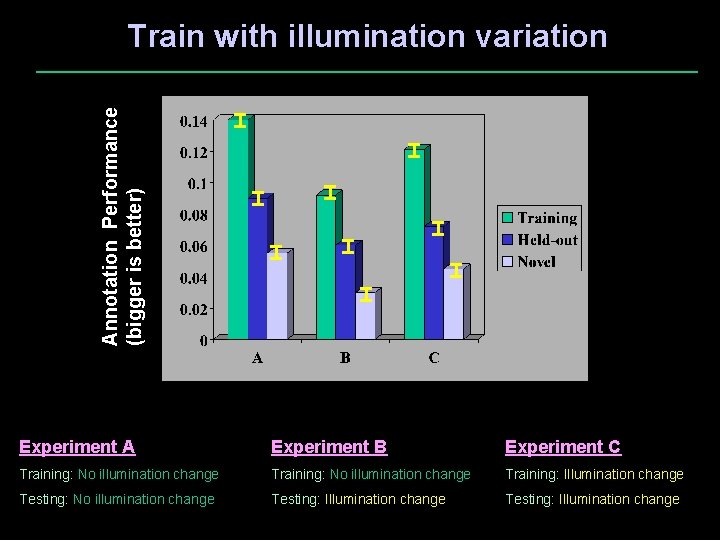

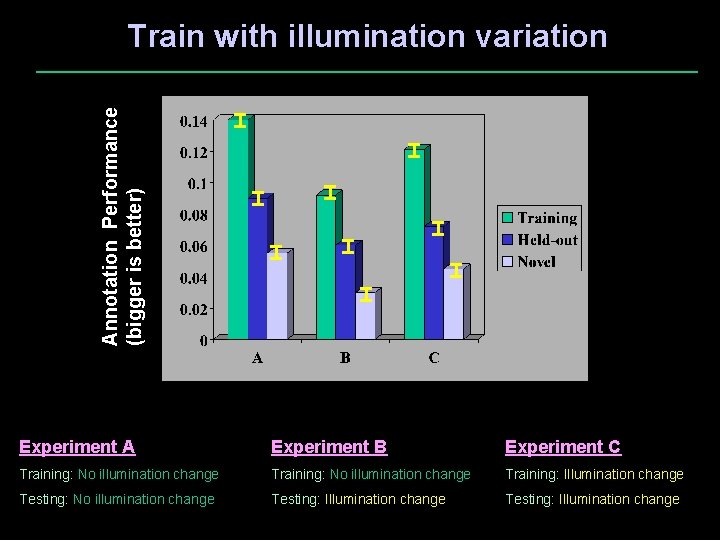

Train with illumination variation: annotation performance (bigger is better)
- Experiment A: training with no illumination change; testing with no illumination change
- Experiment B: training with no illumination change; testing with illumination change
- Experiment C: training with illumination change; testing with illumination change

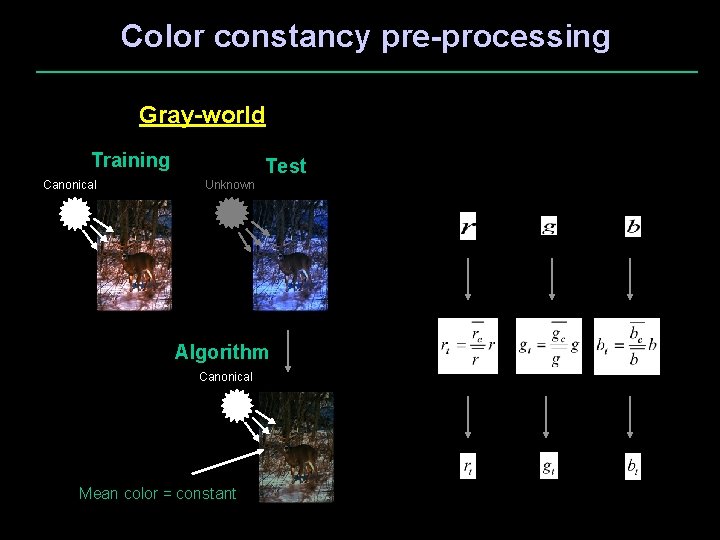

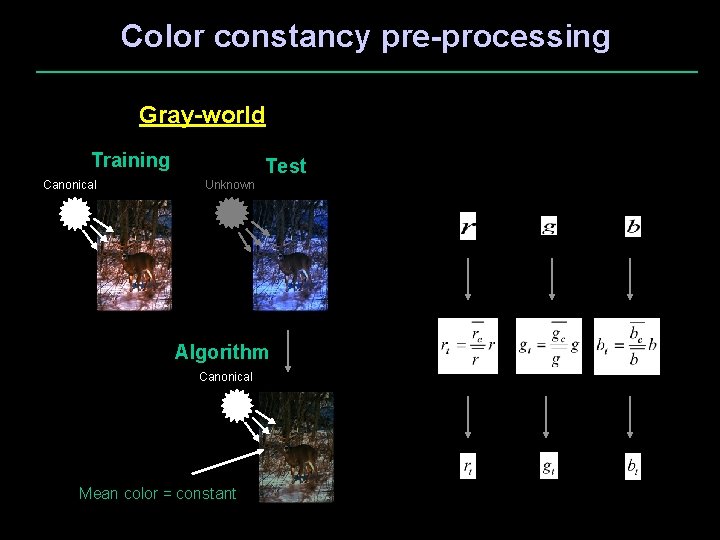

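Color constancy pre-processing with gray-world: training images are taken under the canonical illuminant; test images taken under an unknown illuminant are mapped to the canonical illuminant by the algorithm, which makes the mean color a constant.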
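Gray-world, as summarized above, rescales each channel so that the image's mean color equals a constant. A minimal sketch, assuming a per-channel (diagonal) correction and a hypothetical `canonical_mean`, for example the average color of the training images under the canonical illuminant:

```python
import numpy as np


def gray_world(image, canonical_mean):
    """Scale each channel so the image's mean color equals canonical_mean."""
    mean = image.reshape(-1, 3).mean(axis=0)
    return image * (np.asarray(canonical_mean, dtype=float) / np.maximum(mean, 1e-12))


rng = np.random.default_rng(0)
# Synthetic test image with a color cast from an unknown illuminant.
test_image = rng.uniform(0.0, 1.0, size=(8, 8, 3)) * np.array([1.3, 1.0, 0.6])
canonical_mean = np.array([0.45, 0.45, 0.45])  # hypothetical canonical average color
corrected = gray_world(test_image, canonical_mean)
print(corrected.reshape(-1, 3).mean(axis=0))
```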

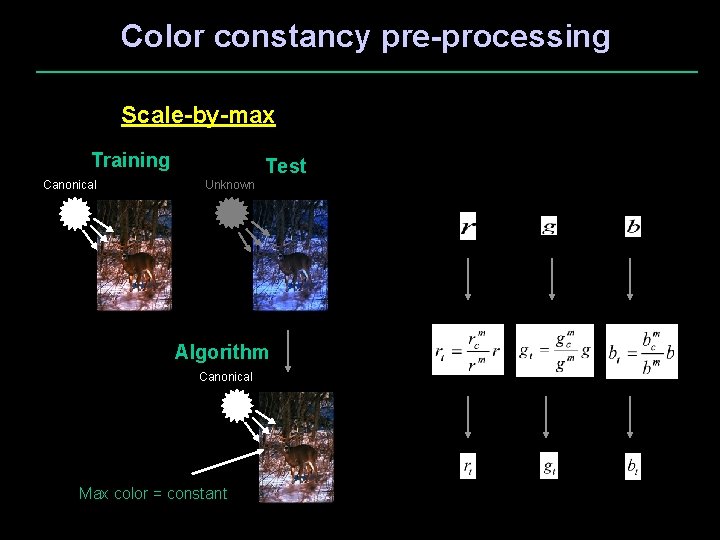

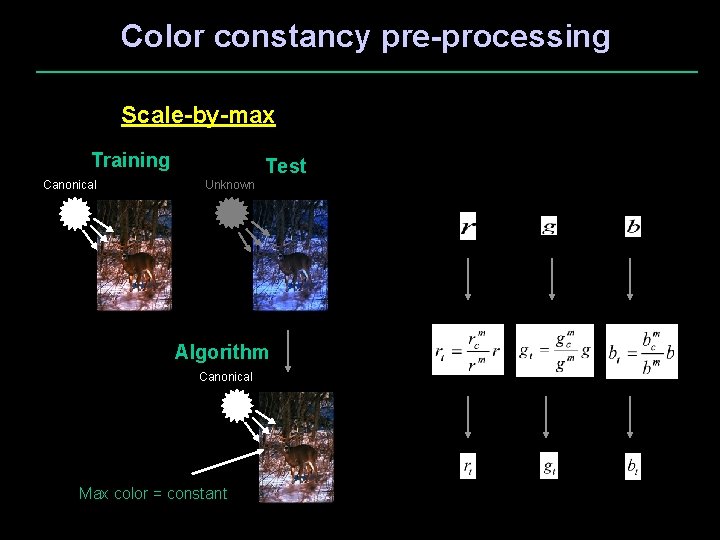

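Color constancy pre-processing with scale-by-max: training images are taken under the canonical illuminant; test images taken under an unknown illuminant are mapped to the canonical illuminant by the algorithm, which makes the max color a constant.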
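Scale-by-max works the same way but uses the per-channel maximum instead of the mean. A minimal sketch, again assuming a diagonal correction and a hypothetical `canonical_max`; note that a single saturated or specular pixel determines the scale factor for its channel.

```python
import numpy as np


def scale_by_max(image, canonical_max):
    """Scale each channel so the image's maximum color equals canonical_max."""
    channel_max = image.reshape(-1, 3).max(axis=0)
    return image * (np.asarray(canonical_max, dtype=float) / np.maximum(channel_max, 1e-12))


rng = np.random.default_rng(0)
# Synthetic test image with a color cast from an unknown illuminant.
test_image = rng.uniform(0.0, 1.0, size=(8, 8, 3)) * np.array([1.3, 1.0, 0.6])
canonical_max = np.array([1.0, 1.0, 1.0])  # hypothetical canonical maximum
corrected = scale_by_max(test_image, canonical_max)
print(corrected.reshape(-1, 3).max(axis=0))
```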

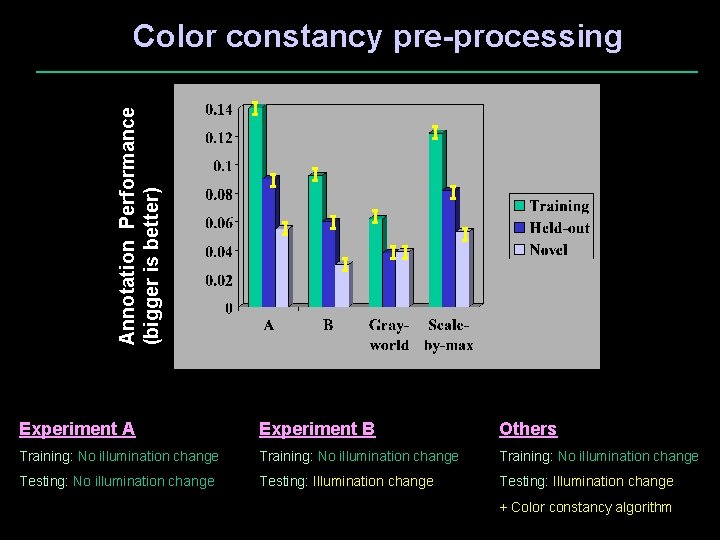

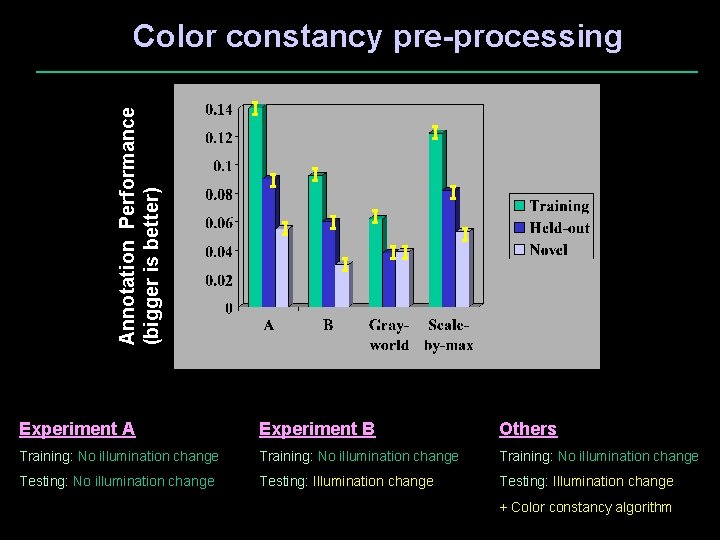

Color constancy pre-processing: annotation performance (bigger is better) for Experiment A, Experiment B, and the others (training: no illumination change; testing: illumination change + color constancy algorithm).

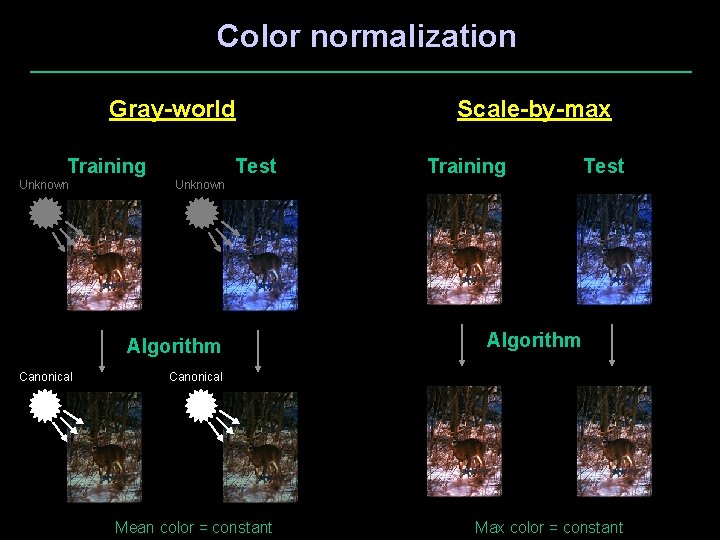

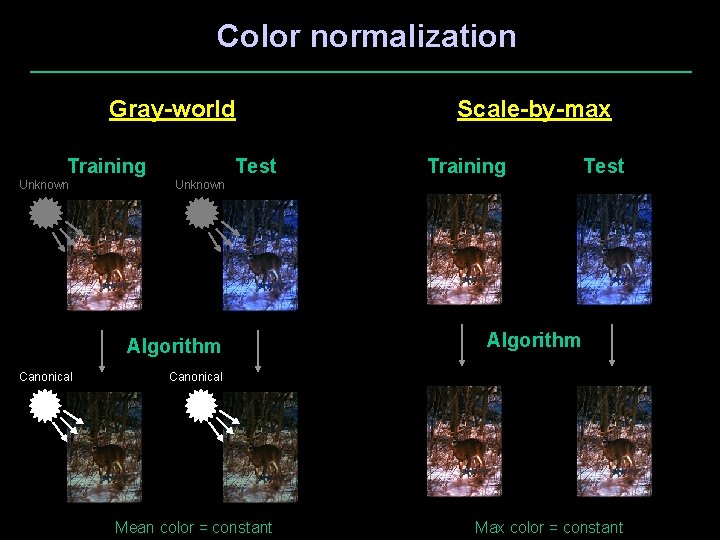

Color normalization with gray-world and scale-by-max: both training and test images, taken under unknown illuminants, are mapped to the canonical illuminant by the algorithm. Gray-world makes the mean color a constant; scale-by-max makes the max color a constant.
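Under a diagonal illumination model, normalizing both training and test images makes the result independent of the illuminant, which is why color normalization applies the same algorithm to both sides. The sketch below checks this for gray-world and scale-by-max; the diagonal model, the `target` value, and the synthetic scene are assumptions, and clipping or saturation in real images would break exact invariance.

```python
import numpy as np


def normalize(image, method="gray_world", target=(0.5, 0.5, 0.5)):
    """Map an image so its per-channel mean (gray-world) or max (scale-by-max) equals target."""
    pixels = image.reshape(-1, 3)
    if method == "gray_world":
        stat = pixels.mean(axis=0)
    elif method == "scale_by_max":
        stat = pixels.max(axis=0)
    else:
        raise ValueError(f"unknown method: {method}")
    return image * (np.asarray(target, dtype=float) / stat)


rng = np.random.default_rng(0)
scene = rng.uniform(0.1, 0.9, size=(8, 8, 3))   # synthetic scene
relit = scene * np.array([1.2, 1.0, 0.7])        # same scene, different illuminant
for method in ("gray_world", "scale_by_max"):
    # Training and test images are normalized the same way, so the illuminant gains cancel.
    print(method, np.allclose(normalize(scene, method), normalize(relit, method)))  # True
```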

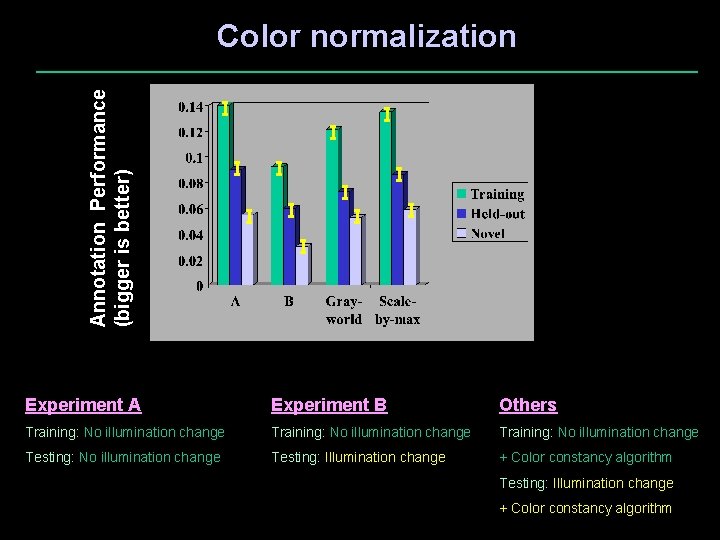

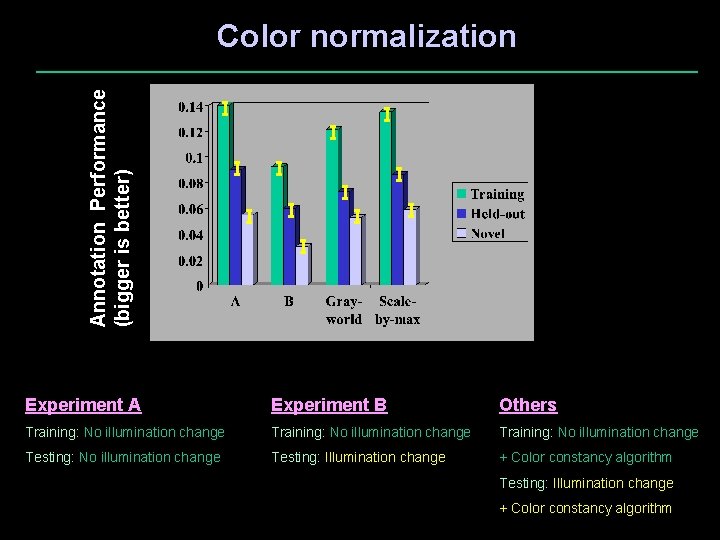

Color normalization: annotation performance (bigger is better) for Experiment A, Experiment B, and the others (training: no illumination change; testing: illumination change + color constancy algorithm).

Conclusions
- Translation (visual to semantic) model for object recognition
- Identify and evaluate low-level vision processes for recognition
- Feature evaluation
  - Color and texture are the most important features, in that order
  - Shape needs better segmentation methods
- Segmentation evaluation
  - Performance depends on the number of regions used for annotation
  - Mean Shift and modified N-Cuts do better than the original N-Cuts for fewer than 6 regions
- Color constancy evaluation
  - Training with illumination change helps
  - Color constancy processing helps (scale-by-max is better than gray-world)

Thank you!