
Windows HPC: Launching to expand grid usage

Windows vs. Linux, or Windows with Linux?

Agenda
• Launching Windows HPC Server 2008
• Windows HPC: who is it for?
• Windows and Linux for the best fit to your needs
• Windows HPC Server 2008: characteristics
• Tools for parallel programming

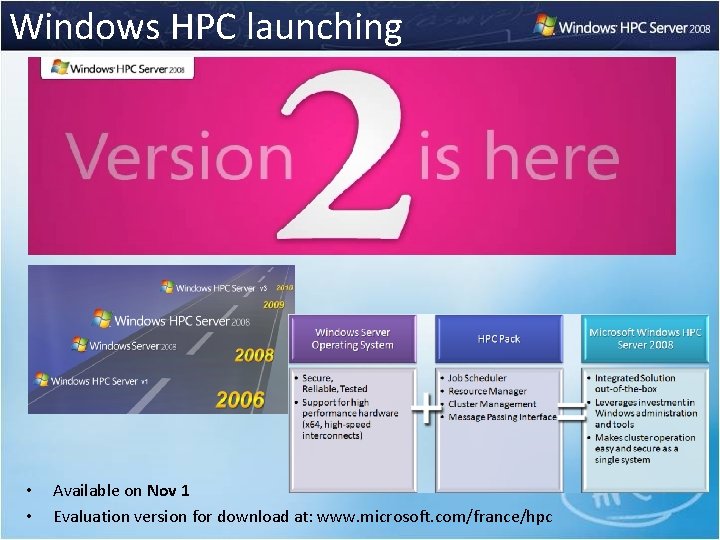

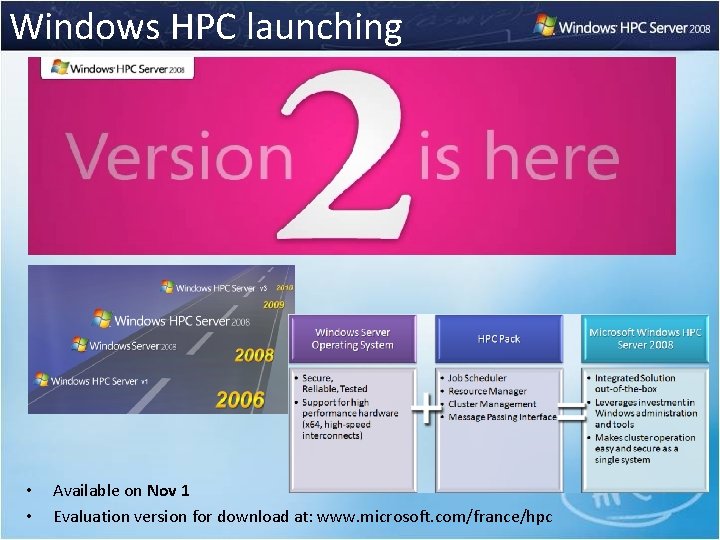

Windows HPC launching
• Available on Nov 1
• Evaluation version for download at: www.microsoft.com/france/hpc

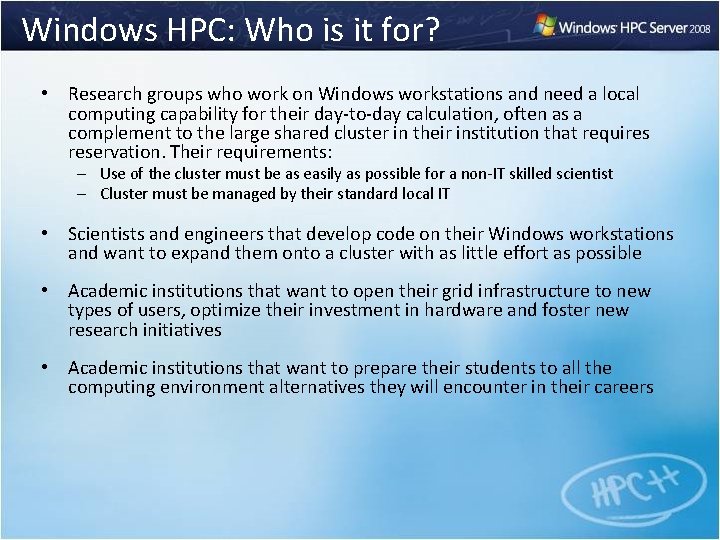

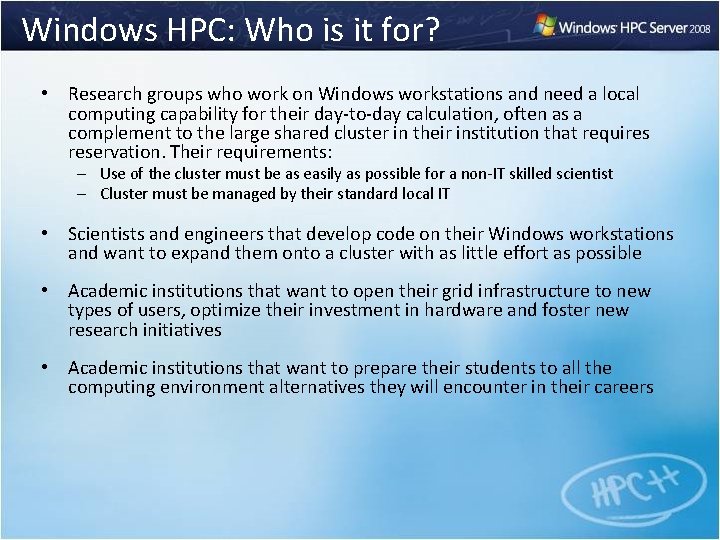

Windows HPC: Who is it for?
• Research groups who work on Windows workstations and need a local computing capability for their day-to-day calculations, often as a complement to the large shared cluster in their institution, which requires reservations. Their requirements:
  – The cluster must be as easy as possible to use for a scientist without IT skills
  – The cluster must be manageable by their standard local IT staff
• Scientists and engineers who develop code on their Windows workstations and want to scale it out to a cluster with as little effort as possible
• Academic institutions that want to open their grid infrastructure to new types of users, optimize their investment in hardware and foster new research initiatives
• Academic institutions that want to prepare their students for all the computing environments they will encounter in their careers

Open approach

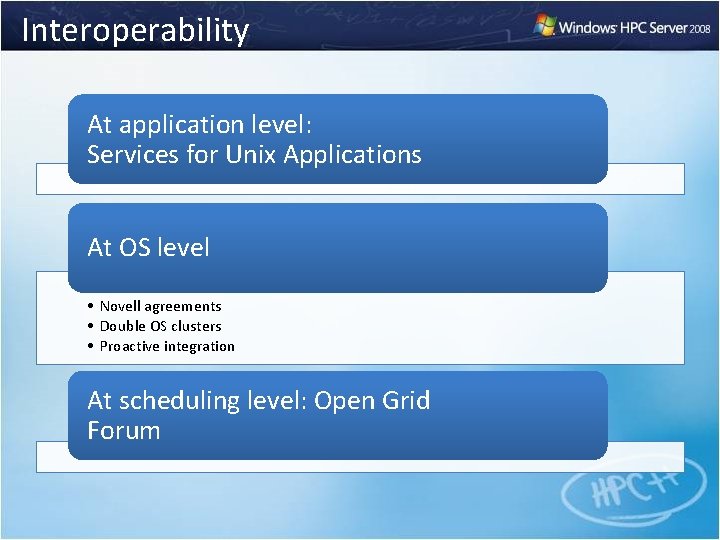

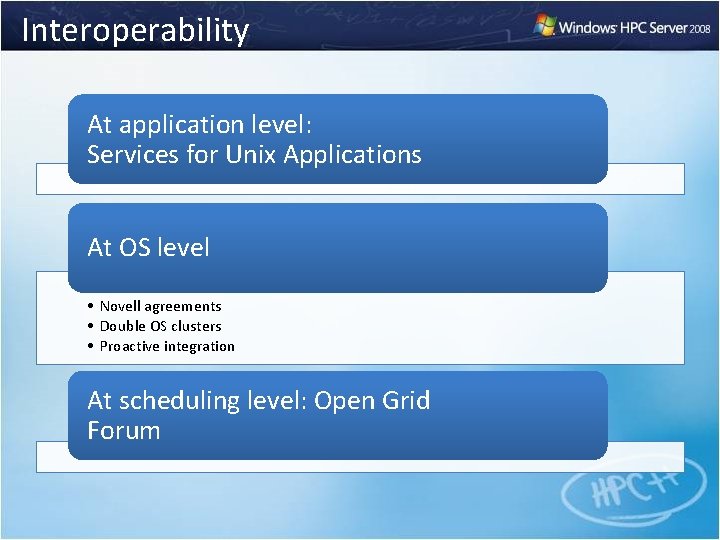

Interoperability
• At application level: Services for Unix Applications
• At OS level:
  – Novell agreements
  – Dual-OS clusters
  – Proactive integration
• At scheduling level: Open Grid Forum

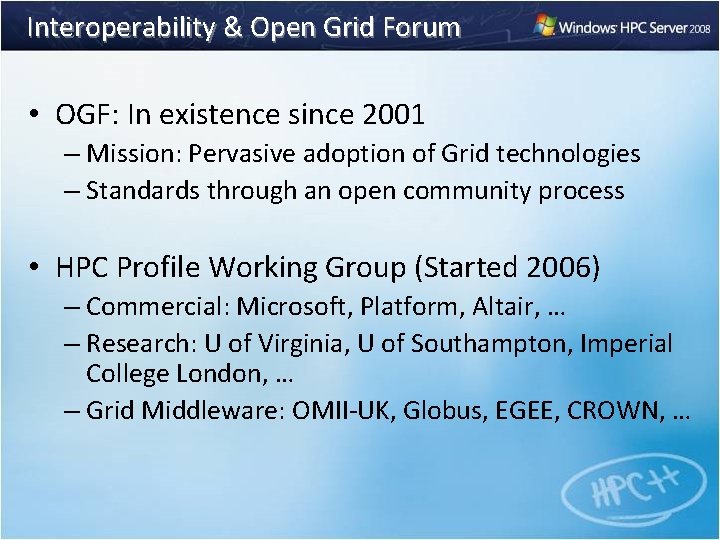

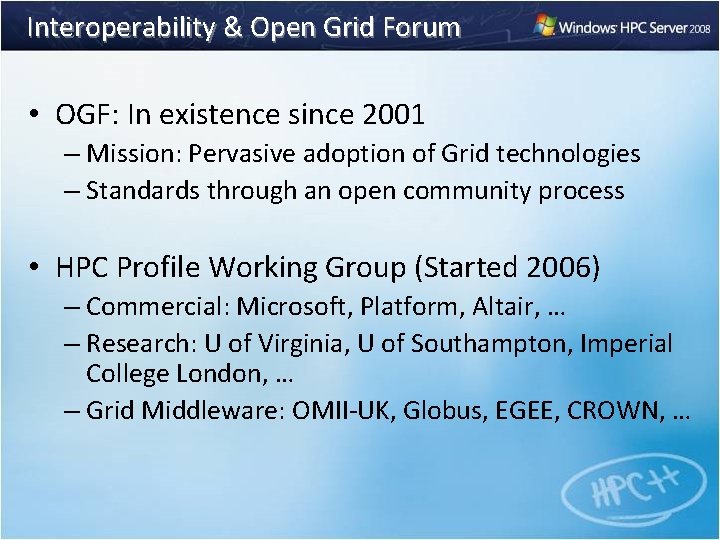

Interoperability & Open Grid Forum
• OGF: in existence since 2001
  – Mission: pervasive adoption of Grid technologies
  – Standards through an open community process
• HPC Profile Working Group (started 2006)
  – Commercial: Microsoft, Platform, Altair, …
  – Research: U of Virginia, U of Southampton, Imperial College London, …
  – Grid middleware: OMII-UK, Globus, EGEE, CROWN, …

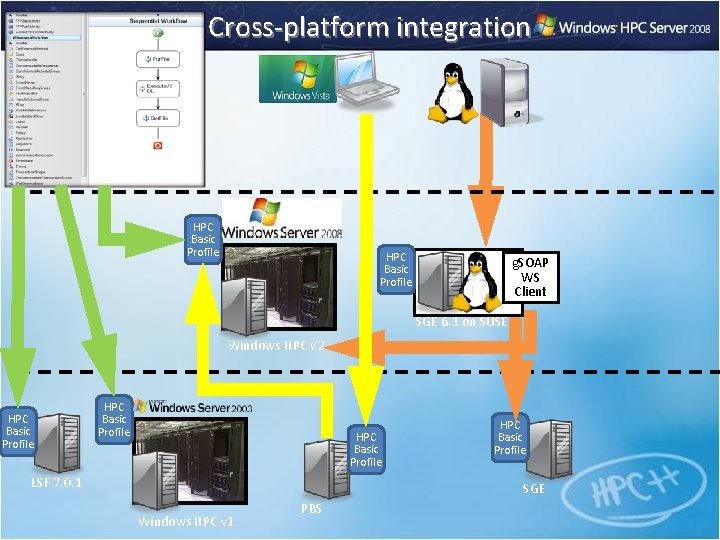

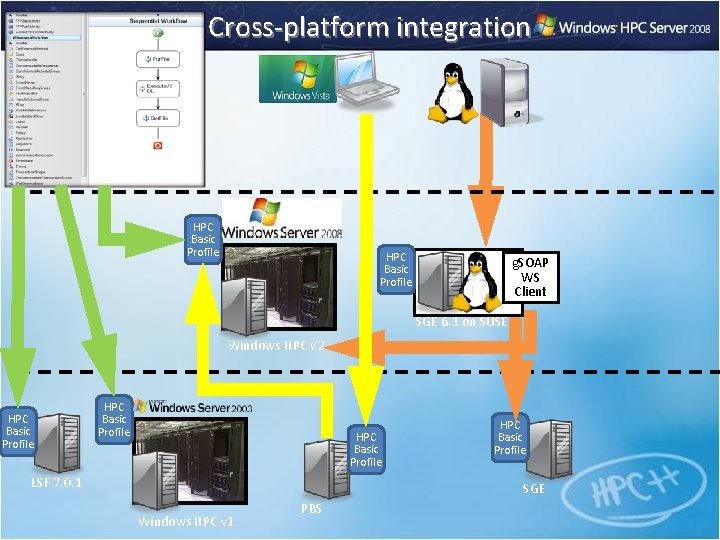

Cross-platform integration
[Diagram: HPC Basic Profile interoperability between a gSOAP WS client, SGE 6.1 on SUSE, LSF 7.0.1, SGE, PBS, Windows HPC v1 and Windows HPC v2]

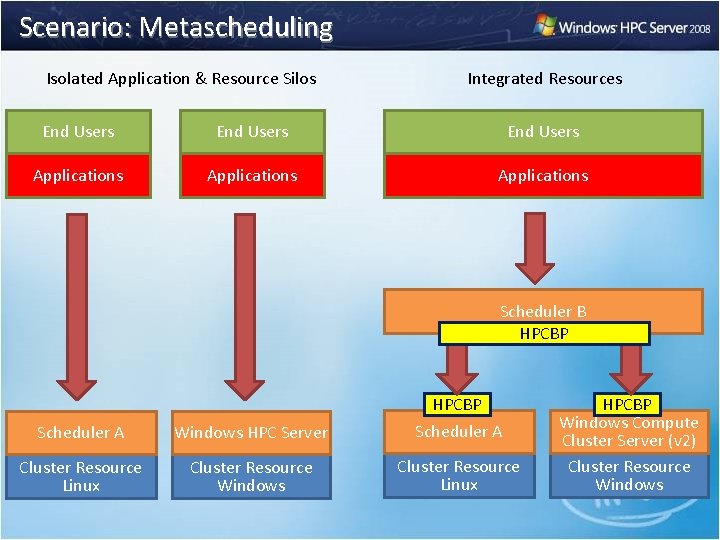

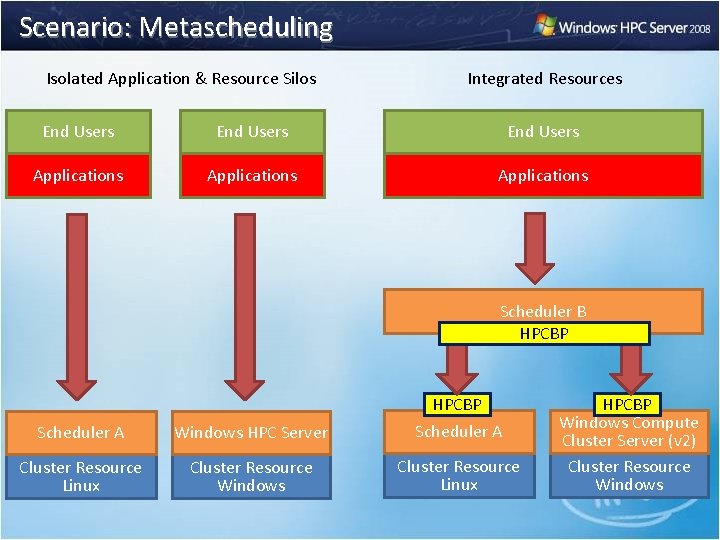

Scenario: Metascheduling
[Diagram: from isolated application and resource silos (each scheduler driving its own Linux or Windows cluster) to integrated resources, where end users and applications reach Linux and Windows cluster resources through HPCBP interfaces on Scheduler A, Scheduler B and Windows HPC Server / Windows Compute Cluster Server (v2)]
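The metascheduling scenario can be sketched in a few lines of Python. Everything here is hypothetical: `Cluster` stands in for one HPCBP endpoint, its `create_activity` and `get_activity_status` methods loosely mirror the OGSA-BES CreateActivity and GetActivityStatuses operations, and `MetaScheduler` routes each job to a cluster running the required OS with the most free cores. A real metascheduler would call the endpoints over SOAP and track far more state.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Cluster:
    """Hypothetical stand-in for one cluster exposed through an HPCBP endpoint."""
    name: str
    os: str
    free_cores: int
    activities: dict = field(default_factory=dict)
    _ids: count = field(default_factory=count, repr=False)

    def create_activity(self, job: dict) -> str:
        # Loosely mirrors BES CreateActivity: reserve cores, return an activity ID.
        if job["cores"] > self.free_cores:
            raise RuntimeError(f"{self.name}: not enough free cores")
        self.free_cores -= job["cores"]
        activity_id = f"{self.name}-{next(self._ids)}"
        self.activities[activity_id] = "Pending"  # initial state in the BES state model
        return activity_id

    def get_activity_status(self, activity_id: str) -> str:
        # Loosely mirrors BES GetActivityStatuses for a single activity.
        return self.activities[activity_id]


class MetaScheduler:
    """Routes each job to the matching cluster with the most free cores."""

    def __init__(self, clusters: list[Cluster]):
        self.clusters = clusters

    def submit(self, job: dict) -> tuple[str, str]:
        candidates = [c for c in self.clusters
                      if c.os == job["os"] and c.free_cores >= job["cores"]]
        if not candidates:
            raise LookupError(f"no {job['os']} cluster with {job['cores']} free cores")
        target = max(candidates, key=lambda c: c.free_cores)
        return target.name, target.create_activity(job)


grid = MetaScheduler([Cluster("linux-a", "linux", 64), Cluster("win-hpc", "windows", 32)])
print(grid.submit({"os": "windows", "cores": 16}))  # ('win-hpc', 'win-hpc-0')
print(grid.submit({"os": "linux", "cores": 8}))     # ('linux-a', 'linux-a-0')
```

Because every cluster answers the same interface, the routing policy (here simply "most free cores") can change without touching the clusters themselves.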

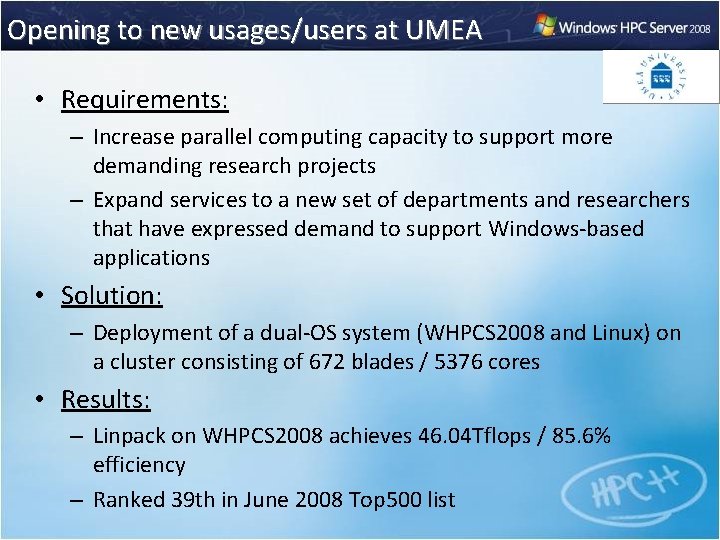

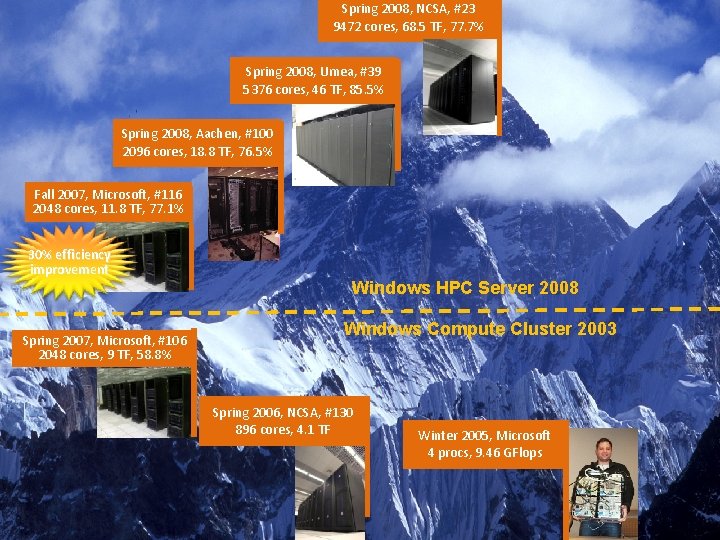

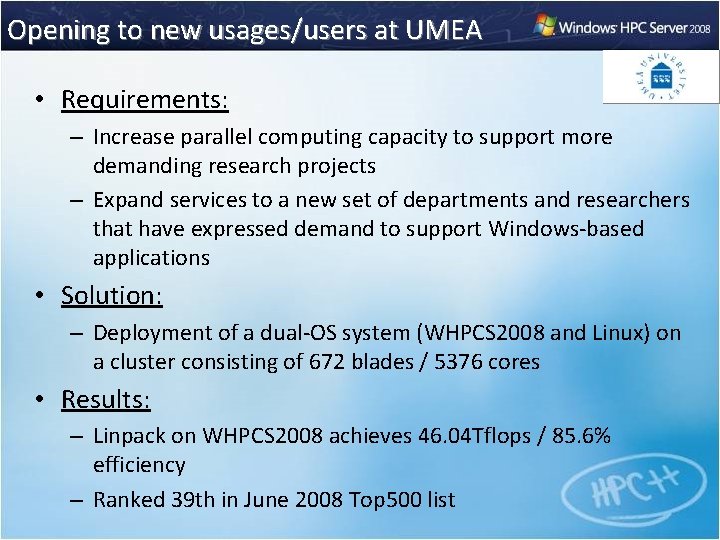

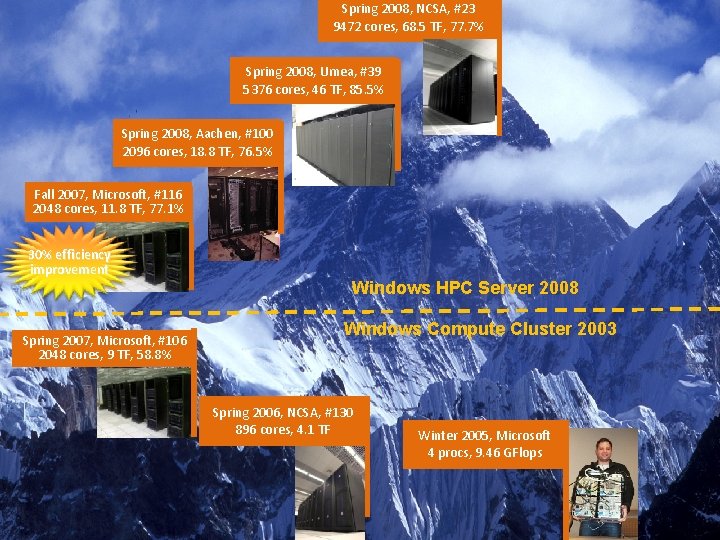

Opening to new usages/users at Umea
• Requirements:
  – Increase parallel computing capacity to support more demanding research projects
  – Expand services to a new set of departments and researchers who have expressed demand for Windows-based applications
• Solution:
  – Deployment of a dual-OS system (WHPCS 2008 and Linux) on a cluster of 672 blades / 5,376 cores
• Results:
  – Linpack on WHPCS 2008 achieves 46.04 TFlops / 85.6% efficiency
  – Ranked 39th in the June 2008 Top 500 list

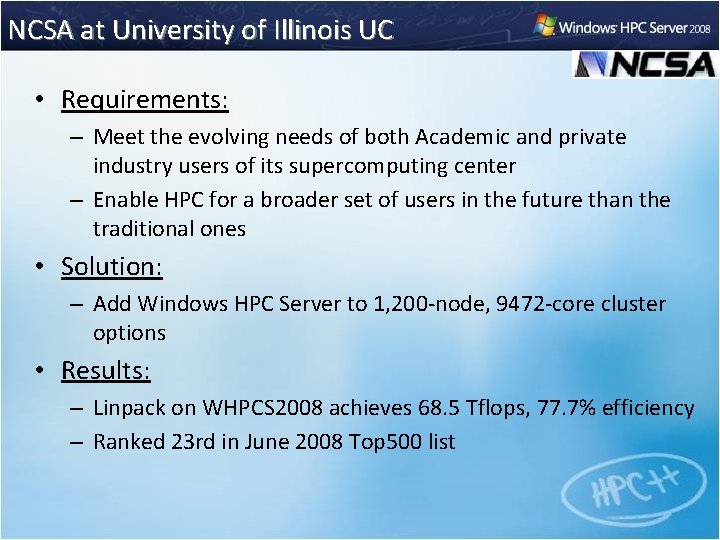

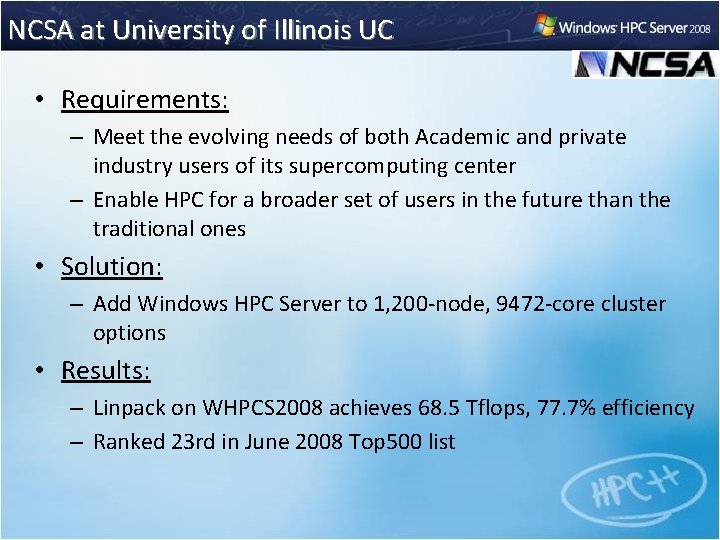

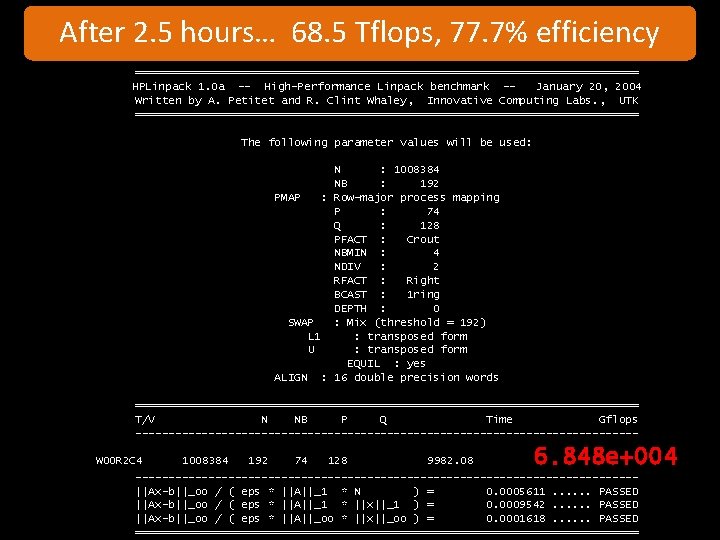

NCSA at the University of Illinois at Urbana-Champaign
• Requirements:
  – Meet the evolving needs of both academic and private-industry users of its supercomputing center
  – Enable HPC, in the future, for a broader set of users than the traditional ones
• Solution:
  – Add Windows HPC Server to the OS options of its 1,200-node, 9,472-core cluster
• Results:
  – Linpack on WHPCS 2008 achieves 68.5 TFlops, 77.7% efficiency
  – Ranked 23rd in the June 2008 Top 500 list
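Linpack efficiency is Rmax / Rpeak, so the Umea and NCSA results above imply each machine's theoretical peak. A small Python check using only the Rmax, efficiency and core counts quoted on these slides (the helper names are ours, and the rounded efficiencies make the implied peaks approximate):

```python
def implied_peak_tflops(rmax_tflops: float, efficiency: float) -> float:
    """Linpack efficiency = Rmax / Rpeak, hence Rpeak = Rmax / efficiency."""
    return rmax_tflops / efficiency


def gflops_per_core(rpeak_tflops: float, cores: int) -> float:
    """Theoretical peak per core, in GFlops."""
    return rpeak_tflops * 1000.0 / cores


# (site, Rmax in TFlops, efficiency, cores) as quoted on the case-study slides
RESULTS = [("Umea", 46.04, 0.856, 5376), ("NCSA", 68.5, 0.777, 9472)]

for site, rmax, eff, cores in RESULTS:
    peak = implied_peak_tflops(rmax, eff)
    print(f"{site}: Rpeak ~ {peak:.1f} TFlops, ~ {gflops_per_core(peak, cores):.2f} GFlops/core")
```

This gives roughly 53.8 TFlops peak (about 10 GFlops per core) for Umea and 88.2 TFlops peak (about 9.3 GFlops per core) for NCSA.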

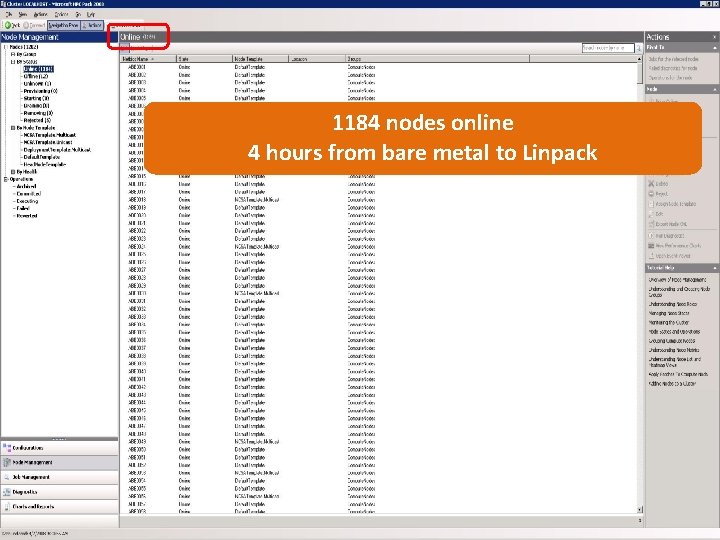

The prize: NCSA’s Abe cluster, #14 on the Nov 2007 Top 500
The goal: unseat the #13 Barcelona cluster at 63.8 TFlops
[Callouts: #23 Top 500, 70% eff.]

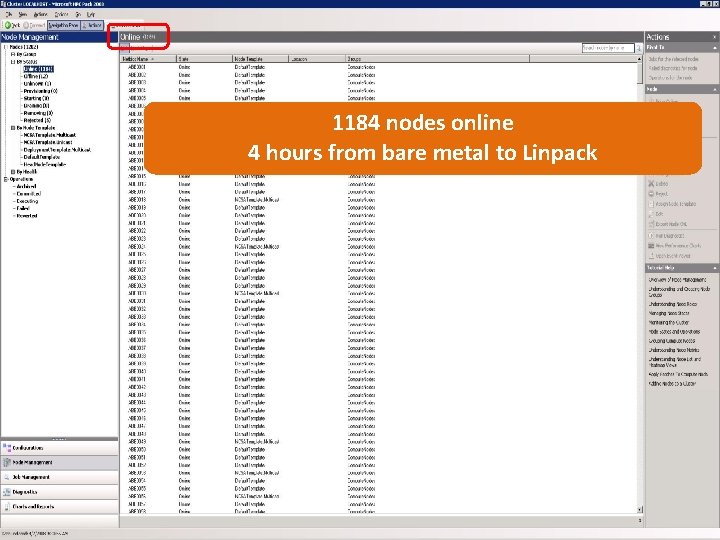

1,184 nodes online
4 hours from bare metal to Linpack

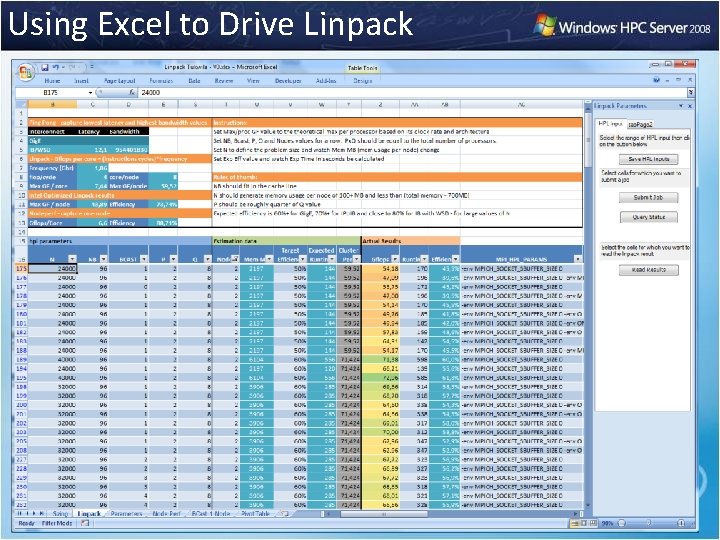

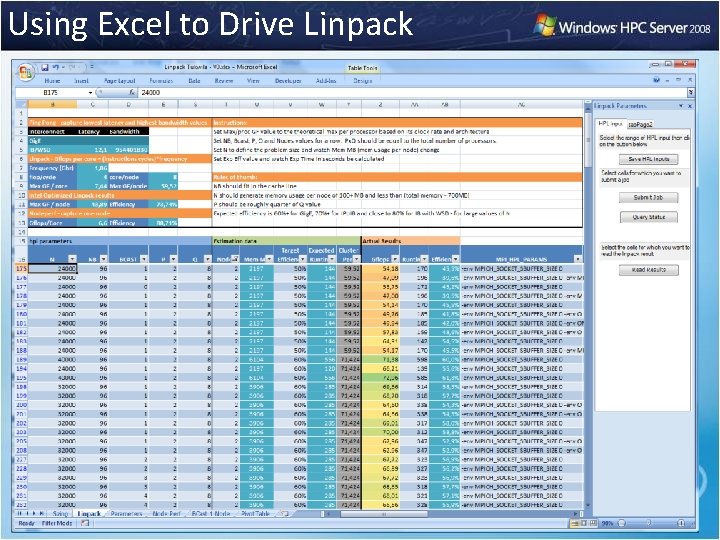

Using Excel to Drive Linpack

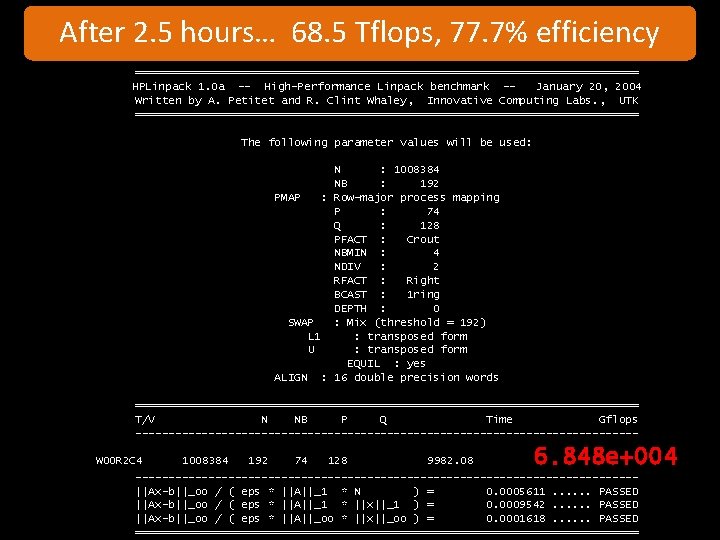

After 2.5 hours… 68.5 TFlops, 77.7% efficiency

======================================
HPLinpack 1.0a -- High-Performance Linpack benchmark -- January 20, 2004
Written by A. Petitet and R. Clint Whaley, Innovative Computing Labs., UTK
======================================
The following parameter values will be used:
N      : 1008384
NB     : 192
PMAP   : Row-major process mapping
P      : 74
Q      : 128
PFACT  : Crout
NBMIN  : 4
NDIV   : 2
RFACT  : Right
BCAST  : 1ring
DEPTH  : 0
SWAP   : Mix (threshold = 192)
L1     : transposed form
U      : transposed form
EQUIL  : yes
ALIGN  : 16 double precision words
======================================
T/V          N        NB    P     Q     Time       Gflops
--------------------------------------
W00R2C4      1008384  192   74    128   9982.08    6.848e+004
--------------------------------------
||Ax-b||_oo / ( eps * ||A||_1 * N ) = 0.0005611 ...... PASSED
||Ax-b||_oo / ( eps * ||A||_1 * ||x||_1 ) = 0.0009542 ...... PASSED
||Ax-b||_oo / ( eps * ||A||_oo * ||x||_oo ) = 0.0001618 ...... PASSED
======================================
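HPL derives its Gflops figure from the problem size and the wall time, counting 2/3·N³ + 3/2·N² operations for the LU factorization and solve. Plugging N and Time from the HPL run above into that formula reproduces the reported 6.848e+004 Gflops; the P × Q process grid accounts for the 9,472 processes (matching the 9,472 cores), and N fixes the size of the dense double-precision matrix. A minimal Python sketch (function name hypothetical):

```python
def hpl_gflops(n: int, seconds: float) -> float:
    """Gflops as HPL computes it: (2/3 n^3 + 3/2 n^2) operations over wall time."""
    ops = (2.0 / 3.0) * n**3 + 1.5 * n**2
    return ops / seconds / 1e9


# Values copied from the HPL output of the NCSA run.
N, P, Q, TIME = 1008384, 74, 128, 9982.08

print(f"{hpl_gflops(N, TIME):.4g} Gflops")          # 6.848e+04, matching the report
print(P * Q, "processes in the P x Q grid")          # 9472
print(f"{8 * N * N / 1e12:.2f} TB for the matrix")   # 8.13 (8-byte doubles)
```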

Windows HPC results in the Top 500 list (chart; series: Windows HPC Server 2008 and Windows Compute Cluster Server 2003)
• Spring 2008, NCSA, #23: 9,472 cores, 68.5 TF, 77.7%
• Spring 2008, Umea, #39: 5,376 cores, 46 TF, 85.5%
• Spring 2008, Aachen, #100: 2,096 cores, 18.8 TF, 76.5%
• Fall 2007, Microsoft, #116: 2,048 cores, 11.8 TF, 77.1%
• Spring 2007, Microsoft, #106: 2,048 cores, 9 TF, 58.8%
• Spring 2006, NCSA, #130: 896 cores, 4.1 TF
• Winter 2005, Microsoft: 4 procs, 9.46 GFlops
Callout: 30% efficiency improvement (Spring 2007 to Fall 2007)
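The “30% efficiency improvement” between Microsoft's two 2,048-core entries (58.8% in Spring 2007, 77.1% in Fall 2007) reads as a relative gain; in absolute terms it is 18.3 percentage points, and Rmax on the same core count rose by a similar fraction. A quick Python check with the chart's numbers (helper name ours):

```python
def relative_gain(before: float, after: float) -> float:
    """Fractional improvement of `after` over `before`."""
    return after / before - 1.0


# Microsoft's 2,048-core entries: Spring 2007 vs. Fall 2007
EFF_BEFORE, EFF_AFTER = 58.8, 77.1    # Linpack efficiency, %
RMAX_BEFORE, RMAX_AFTER = 9.0, 11.8   # Rmax, TFlops

print(f"absolute: {EFF_AFTER - EFF_BEFORE:.1f} points")                          # 18.3 points
print(f"relative efficiency gain: {relative_gain(EFF_BEFORE, EFF_AFTER):.0%}")  # 31%
print(f"Rmax gain: {relative_gain(RMAX_BEFORE, RMAX_AFTER):.0%}")               # 31%
```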

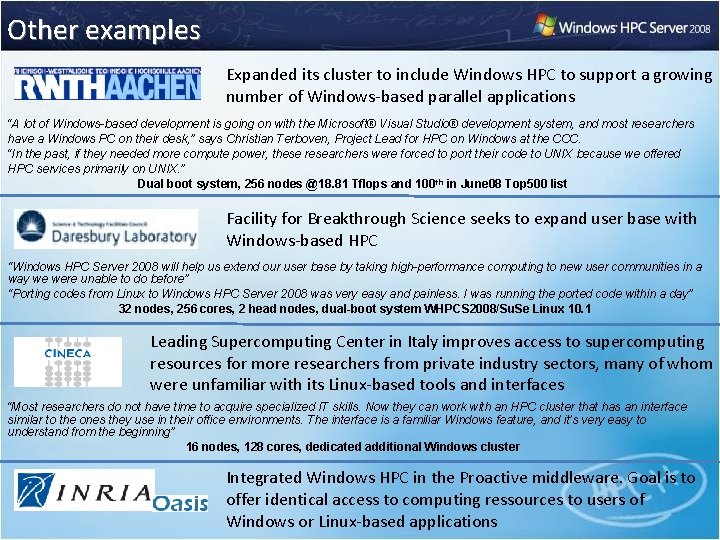

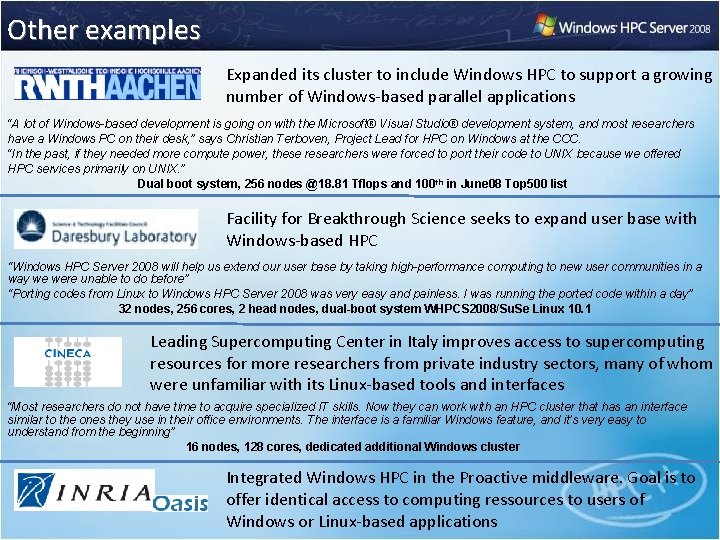

Other examples
• Expanded its cluster to include Windows HPC to support a growing number of Windows-based parallel applications
  “A lot of Windows-based development is going on with the Microsoft® Visual Studio® development system, and most researchers have a Windows PC on their desk,” says Christian Terboven, Project Lead for HPC on Windows at the CCC. “In the past, if they needed more compute power, these researchers were forced to port their code to UNIX because we offered HPC services primarily on UNIX.”
  Dual-boot system, 256 nodes @ 18.81 TFlops, 100th in the June 2008 Top 500 list
• Facility for Breakthrough Science seeks to expand its user base with Windows-based HPC
  “Windows HPC Server 2008 will help us extend our user base by taking high-performance computing to new user communities in a way we were unable to do before”
  “Porting codes from Linux to Windows HPC Server 2008 was very easy and painless. I was running the ported code within a day”
  32 nodes, 256 cores, 2 head nodes, dual-boot system WHPCS 2008/SuSE Linux 10.1
• Leading supercomputing center in Italy improves access to supercomputing resources for more researchers from private industry sectors, many of whom were unfamiliar with its Linux-based tools and interfaces
  “Most researchers do not have time to acquire specialized IT skills. Now they can work with an HPC cluster that has an interface similar to the ones they use in their office environments. The interface is a familiar Windows feature, and it’s very easy to understand from the beginning”
  16 nodes, 128 cores, dedicated additional Windows cluster
• Integrated Windows HPC in the Proactive middleware. The goal is to offer identical access to computing resources to users of Windows- or Linux-based applications

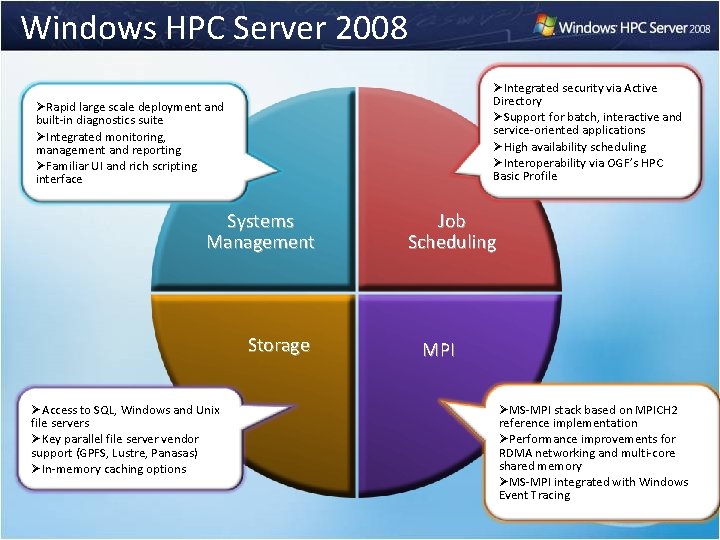

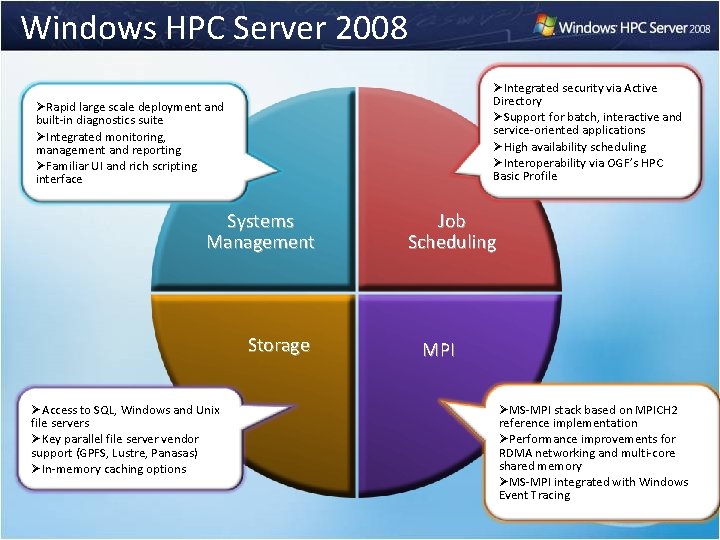

Windows HPC Server 2008
Systems Management
• Rapid large-scale deployment and built-in diagnostics suite
• Integrated monitoring, management and reporting
• Familiar UI and rich scripting interface
Job Scheduling
• Integrated security via Active Directory
• Support for batch, interactive and service-oriented applications
• High-availability scheduling
• Interoperability via OGF’s HPC Basic Profile
Storage
• Access to SQL, Windows and Unix file servers
• Key parallel file server vendor support (GPFS, Lustre, Panasas)
• In-memory caching options
MPI
• MS-MPI stack based on the MPICH2 reference implementation
• Performance improvements for RDMA networking and multi-core shared memory
• MS-MPI integrated with Event Tracing for Windows (ETW)

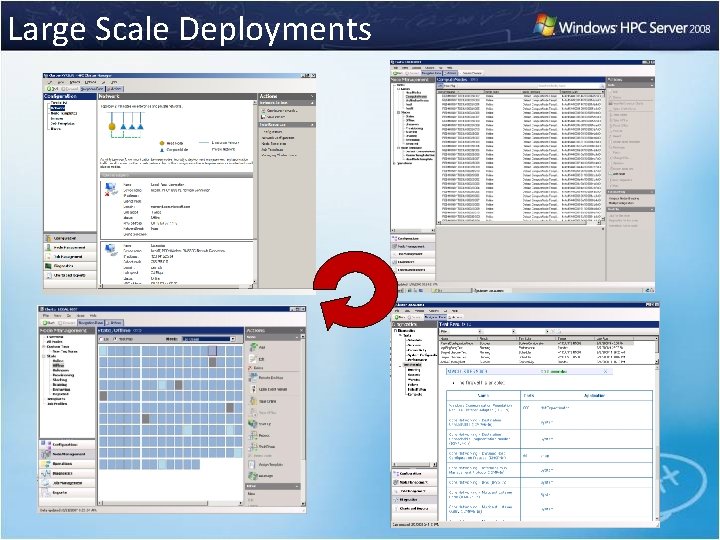

Large Scale Deployments

And an out-of-the-box, integrated solution for smaller environments…

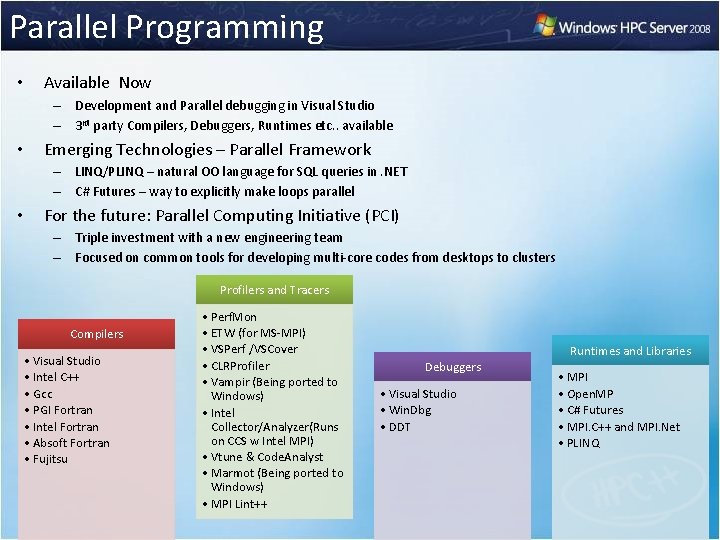

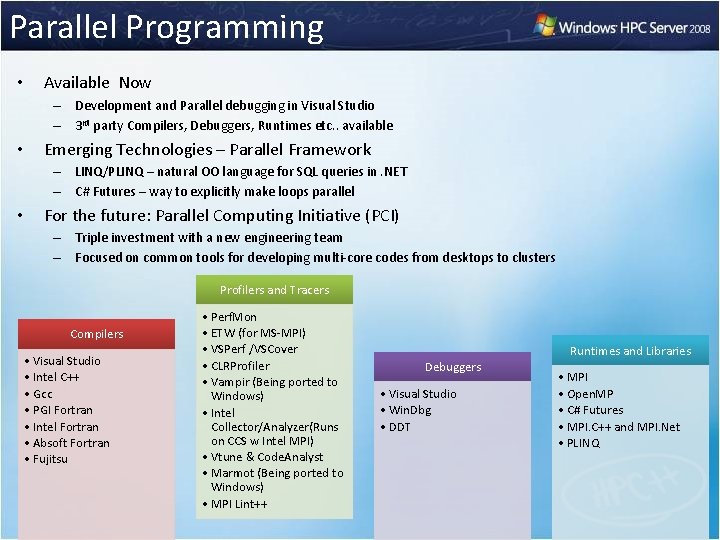

Parallel Programming
• Available now
  – Development and parallel debugging in Visual Studio
  – Third-party compilers, debuggers, runtimes, etc. available
• Emerging technologies
  – Parallel Framework
  – LINQ/PLINQ: natural object-oriented, SQL-like query language in .NET
  – C# Futures: a way to explicitly make loops parallel
• For the future: Parallel Computing Initiative (PCI)
  – Triple investment with a new engineering team
  – Focused on common tools for developing multi-core codes, from desktops to clusters

Compilers
• Visual Studio • Intel C++ • GCC • PGI Fortran • Intel Fortran • Absoft Fortran • Fujitsu
Profilers and Tracers
• PerfMon • ETW (for MS-MPI) • VSPerf/VSCover • CLRProfiler • Vampir (being ported to Windows) • Intel Collector/Analyzer (runs on CCS with Intel MPI) • VTune & CodeAnalyst • Marmot (being ported to Windows) • MPI Lint++
Debuggers
• Visual Studio • WinDbg • DDT
Runtimes and Libraries
• MPI • OpenMP • C# Futures • MPI.C++ and MPI.NET • PLINQ
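The futures-based parallel loop listed under emerging technologies can be illustrated with Python's standard `concurrent.futures` module. This is a Python analogue of the pattern, not the .NET API: the loop range is split into chunks, each chunk is submitted as a future, and the partial results are combined, much as an OpenMP `parallel for` with a reduction would. The function names are hypothetical, and CPython threads do not execute pure-Python code in parallel, so for CPU-bound work `ProcessPoolExecutor` is the drop-in alternative.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum(lo: int, hi: int) -> int:
    """Body of the loop for one chunk: sum of i*i over [lo, hi)."""
    return sum(i * i for i in range(lo, hi))


def parallel_sum_of_squares(n: int, workers: int = 4) -> int:
    """Split range(n) into chunks, run each as a future, then reduce the results."""
    if n <= 0:
        return 0
    step = (n + workers - 1) // workers  # ceiling division so every index is covered
    bounds = [(lo, min(lo + step, n)) for lo in range(0, n, step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(partial_sum, lo, hi) for lo, hi in bounds]
        return sum(f.result() for f in futures)  # reduction over partial results


print(parallel_sum_of_squares(1000))  # 332833500
```

Collecting `f.result()` in submission order keeps the reduction deterministic regardless of which chunk finishes first.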

Resources
• Microsoft HPC web site (download the evaluation version): http://www.microsoft.com/france/hpc
• Windows HPC Community site: http://www.windowshpc.net
• Dual-OS cluster white paper: online soon

© 2008 Microsoft Corporation. All rights reserved. This presentation is for informational purposes only. Microsoft makes no warranties, express or implied, in this summary.