Welcome to the KernelClass My name Max Welling

- Slides: 11

Welcome to the Kernel-Class • • My name: Max (Welling) Book: There will be class-notes/slides. Homework: reading material, some exercises, some MATLAB implementations. • I like: an active attitude in class. ask questions! respond to my questions. • Enjoy. 1

Primary Goal • What is the primary goal of: - Machine Learning - Data Mining - Pattern Recognition - Data Analysis - Statistics Automatic detection of non-coincidental structure in data. 2

Desiderata • Robust algorithms are insensitive to outliers and wrong model assumptions. • Stable algorithms: generalize well to unseen data. • Computationally efficient algorithms are necessary to handle large datasets. 3

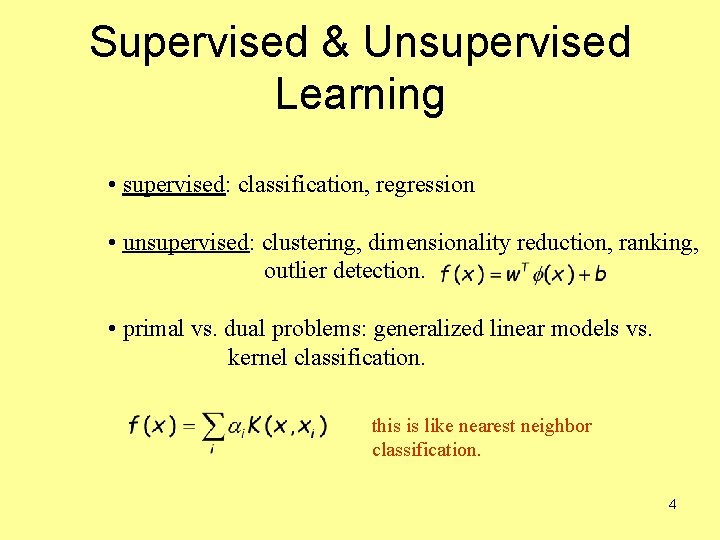

Supervised & Unsupervised Learning • supervised: classification, regression • unsupervised: clustering, dimensionality reduction, ranking, outlier detection. • primal vs. dual problems: generalized linear models vs. kernel classification. this is like nearest neighbor classification. 4

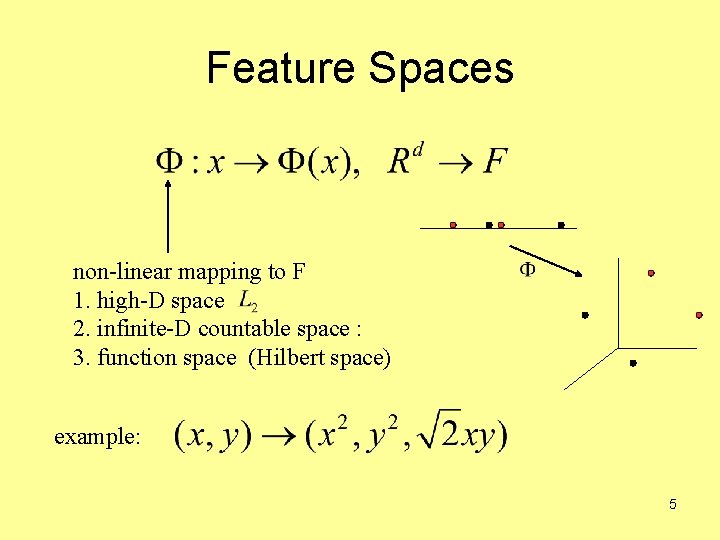

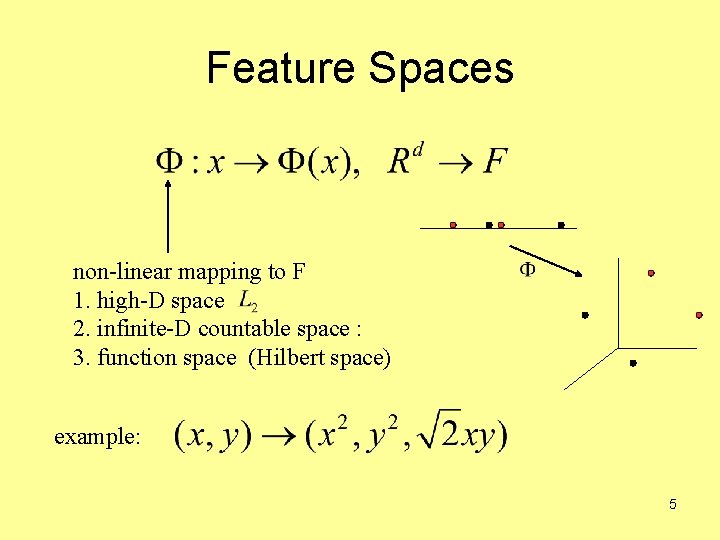

Feature Spaces non-linear mapping to F 1. high-D space 2. infinite-D countable space : 3. function space (Hilbert space) example: 5

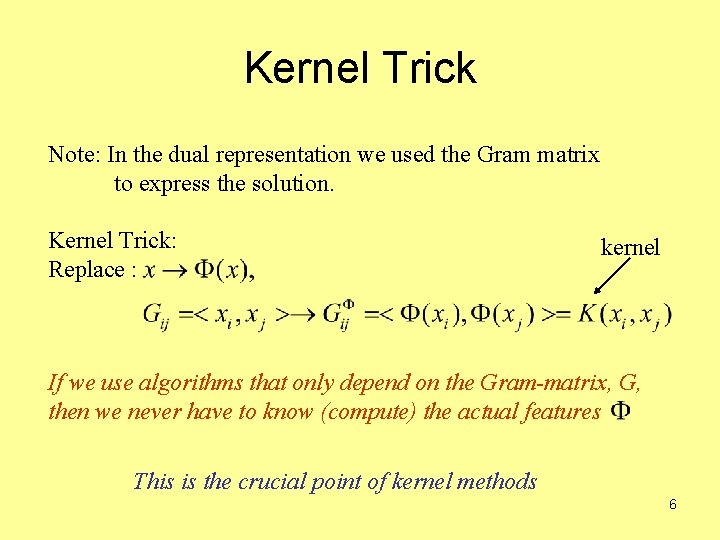

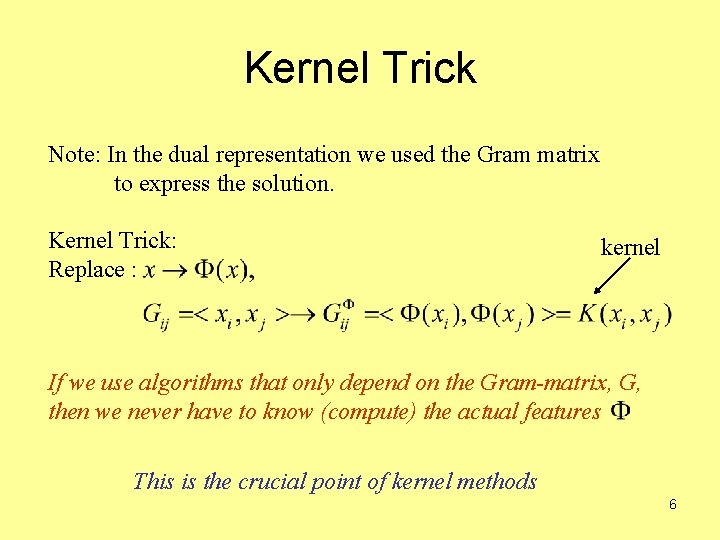

Kernel Trick Note: In the dual representation we used the Gram matrix to express the solution. Kernel Trick: Replace : kernel If we use algorithms that only depend on the Gram-matrix, G, then we never have to know (compute) the actual features This is the crucial point of kernel methods 6

Properties of a Kernel Definition: A finitely positive semi-definite function is a symmetric function of its arguments for which matrices formed by restriction on any finite subset of points is positive semi-definite. Theorem: A function can be written as where is a feature map iff k(x, y) satisfies the semi-definiteness property. Relevance: We can now check if k(x, y) is a proper kernel using only properties of k(x, y) itself, 7 i. e. without the need to know the feature map!

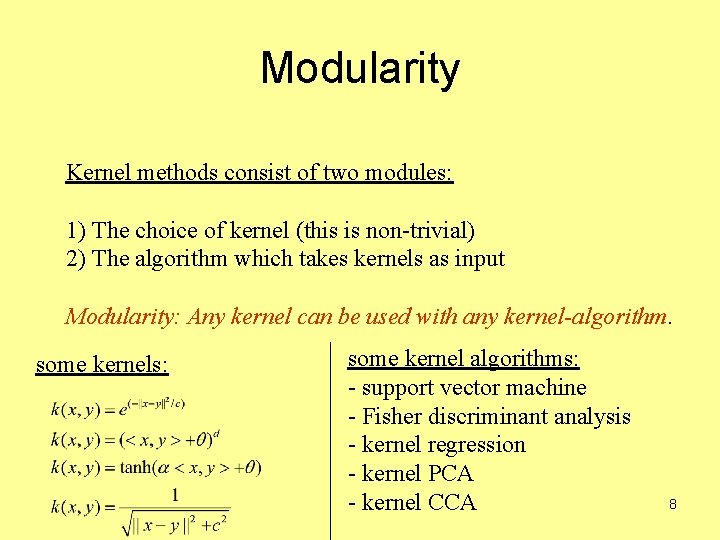

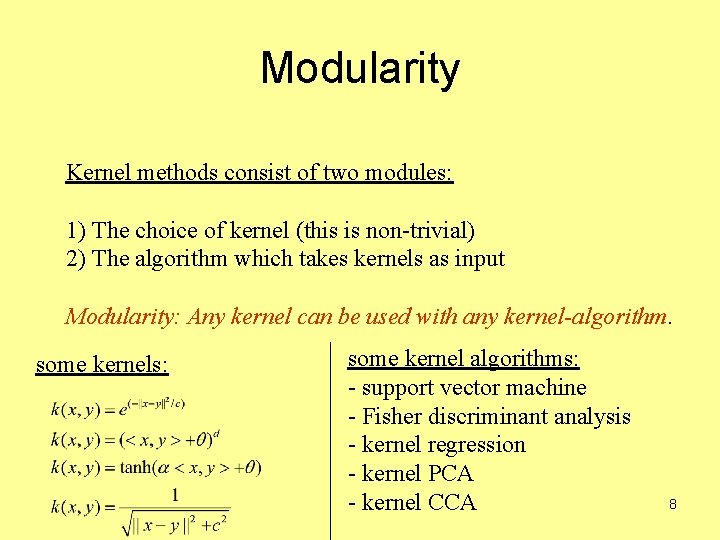

Modularity Kernel methods consist of two modules: 1) The choice of kernel (this is non-trivial) 2) The algorithm which takes kernels as input Modularity: Any kernel can be used with any kernel-algorithm. some kernels: some kernel algorithms: - support vector machine - Fisher discriminant analysis - kernel regression - kernel PCA - kernel CCA 8

Goodies and Baddies Goodies: • Kernel algorithms are typically constrained convex optimization problems solved with either spectral methods or convex optimization tools. • Efficient algorithms do exist in most cases. • The similarity to linear methods facilitates analysis. There are strong generalization bounds on test error. Baddies: • You need to choose the appropriate kernel • Kernel learning is prone to over-fitting • All information must go through the kernel-bottleneck. 9

Regularization • regularization is very important! • regularization parameters determined by out of sample. measures (cross-validation, leave-one-out). Demo Trevor Hastie. 10

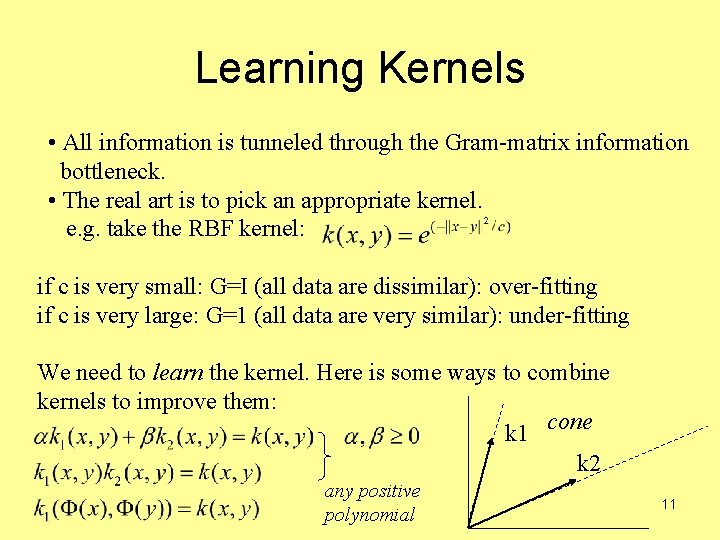

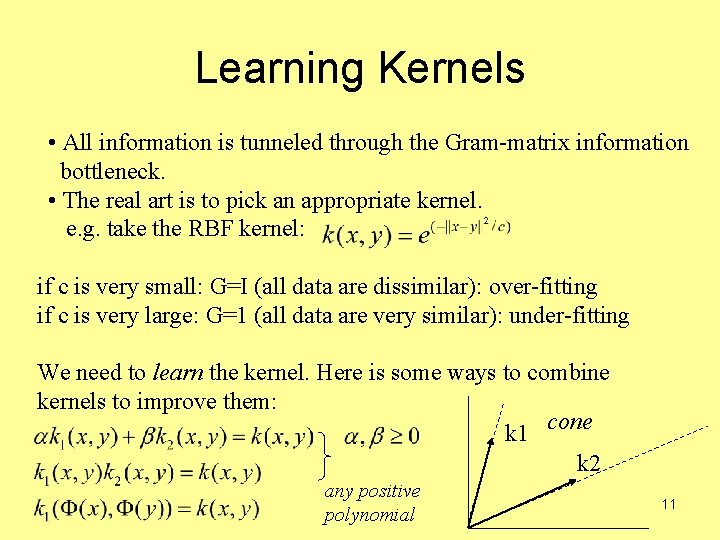

Learning Kernels • All information is tunneled through the Gram-matrix information bottleneck. • The real art is to pick an appropriate kernel. e. g. take the RBF kernel: if c is very small: G=I (all data are dissimilar): over-fitting if c is very large: G=1 (all data are very similar): under-fitting We need to learn the kernel. Here is some ways to combine kernels to improve them: k 1 cone k 2 any positive polynomial 11