Machine Learning ICS 178 Instructor Max Welling What

- Slides: 15

Machine Learning ICS 178 Instructor: Max Welling

What is Expected? • • Class Homework/Projects (40%) Midterm (20%) Final (40%) • For the projects, students should make teams. • This class needs your active participation: please ask questions and participate in discussions (there is no such thing as a dumb question).

Syllabus • 1: Introduction: overview, examples, goals, probability, conditional independence, matrices, eigenvalue decompositions • 2: Optimization and Data Visualization: Stochastic gradient descent, coordinate descent, centering, sphering, histograms, scatter-plots. • 3: Classification I: emprirical Risk Minimization, k-nearest neighbors, decision stumps, decision tree. • 4: Classification II: random forests, boosting. • 5: Neural networks: perceptron, logistic regression, multi-layer networks, backpropagation. • 6: Regression: Least squares regression. • 7: Clustering: k-means, single linkage, agglomorative clustering, MDL penalty. • 8: Dimesionality reduction: principal components analysis, Fisher linear discriminant analysis. • 9: Reinforcement learning: MDPs, TD- and Q-learning, value iteration. • 10: Bayesian methods: Bayes rule, generative models, naive Bayes classifier.

Machine Learning according to • The ability of a machine to improve its performance based on previous results. • The process by which computer systems can be directed to improve their performance over time. Examples are neural networks and genetic algorithms. • Subspecialty of artificial intelligence concerned with developing methods for software to learn from experience or extract knowledge from examples in a database. • The ability of a program to learn from experience — that is, to modify its execution on the basis of newly acquired information. • Machine learning is an area of artificial intelligence concerned with the development of techniques which allow computers to "learn". More specifically, machine learning is a method for creating computer programs by the analysis of data sets. Machine learning overlaps heavily with statistics, since both fields study the analysis of data, but unlike statistics, machine learning is concerned with the algorithmic complexity of computational implementations. . .

Some Examples • ZIP code recognition • Loan application classification • Signature recognition • Voice recognition over phone • Credit card fraud detection • Spam filter • Suggesting other products at Amazone. com • Marketing • Stock market prediction • Expert level chess and checkers systems • biometric identification (fingerprints, DNA, iris scan, face) • machine translation • web-search • document & information retrieval • camera surveillance • robosoccer • and so on. . .

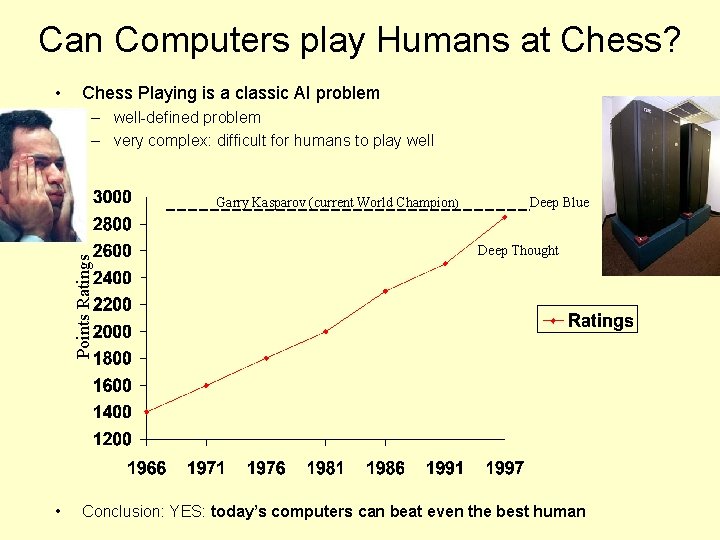

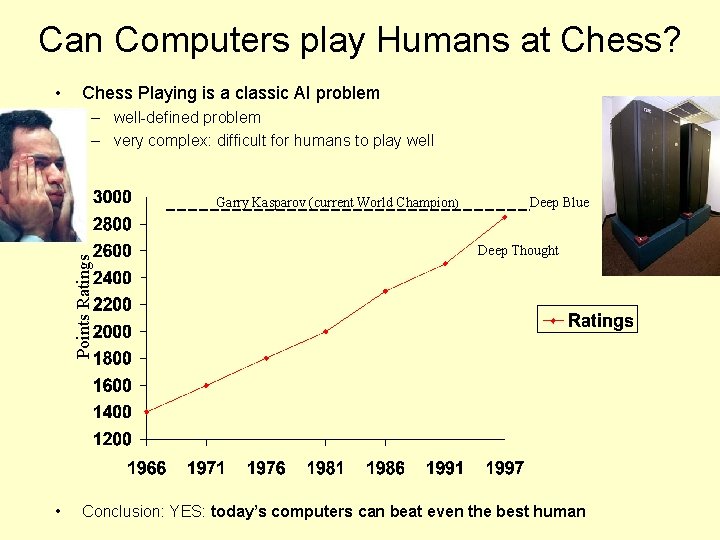

Can Computers play Humans at Chess? • Chess Playing is a classic AI problem – well-defined problem – very complex: difficult for humans to play well Points Ratings Garry Kasparov (current World Champion) • Deep Blue Deep Thought Conclusion: YES: today’s computers can beat even the best human

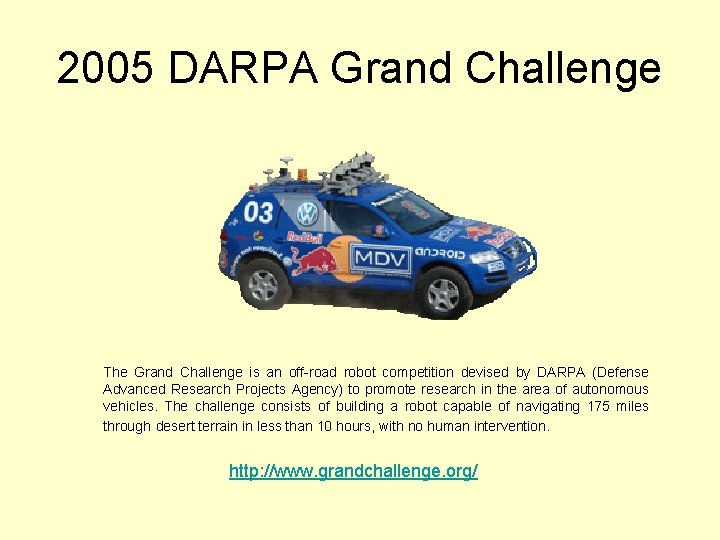

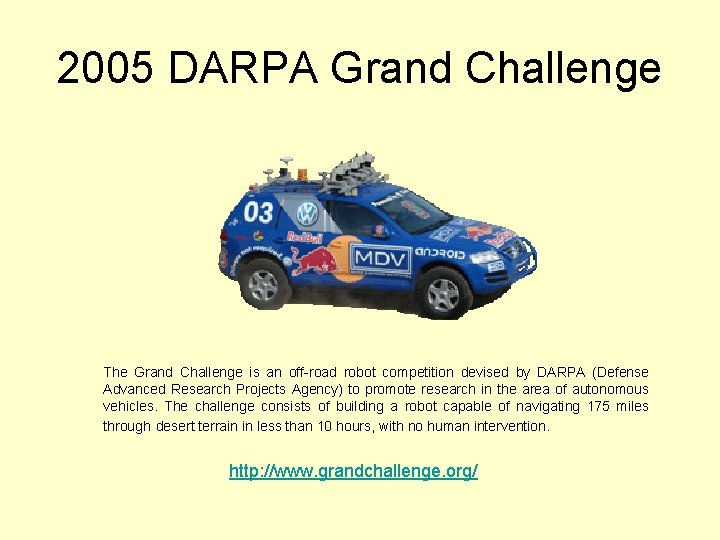

2005 DARPA Grand Challenge The Grand Challenge is an off-road robot competition devised by DARPA (Defense Advanced Research Projects Agency) to promote research in the area of autonomous vehicles. The challenge consists of building a robot capable of navigating 175 miles through desert terrain in less than 10 hours, with no human intervention. http: //www. grandchallenge. org/

2007 Darpa Challenge http: //www. darpa. mil/grandchallenge/overview. asp

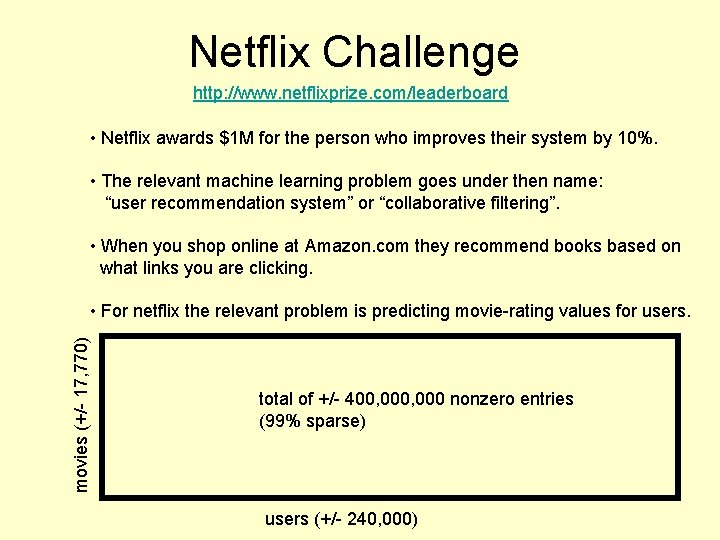

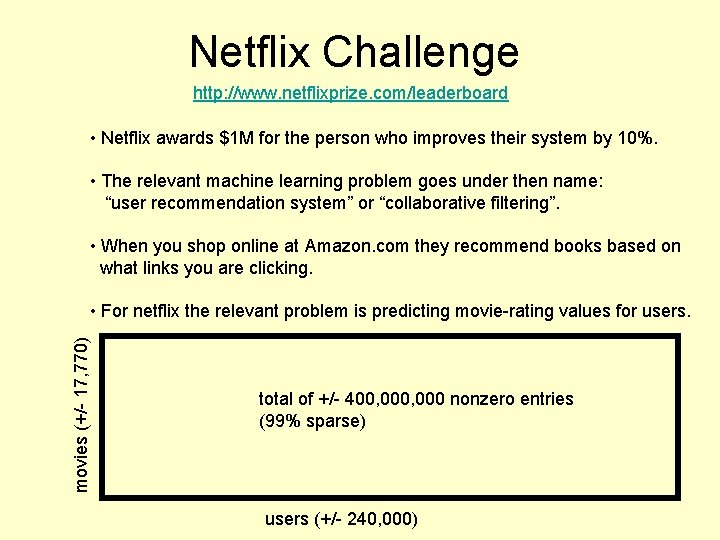

Netflix Challenge http: //www. netflixprize. com/leaderboard • Netflix awards $1 M for the person who improves their system by 10%. • The relevant machine learning problem goes under then name: “user recommendation system” or “collaborative filtering”. • When you shop online at Amazon. com they recommend books based on what links you are clicking. movies (+/- 17, 770) • For netflix the relevant problem is predicting movie-rating values for users. total of +/- 400, 000 nonzero entries (99% sparse) users (+/- 240, 000)

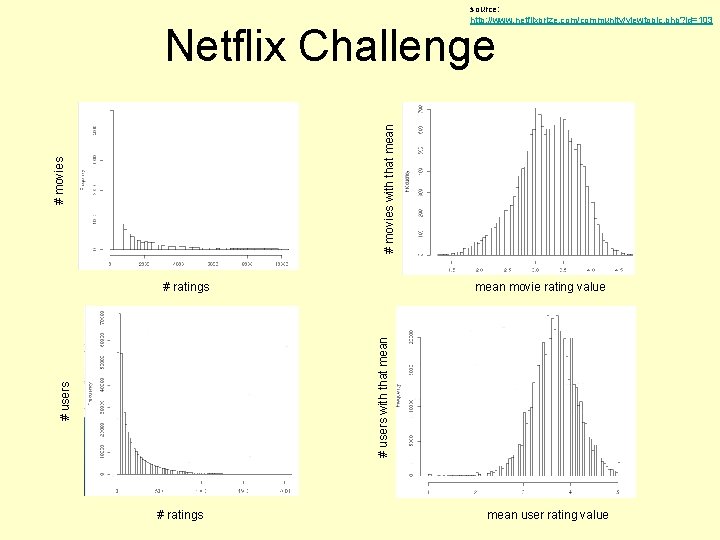

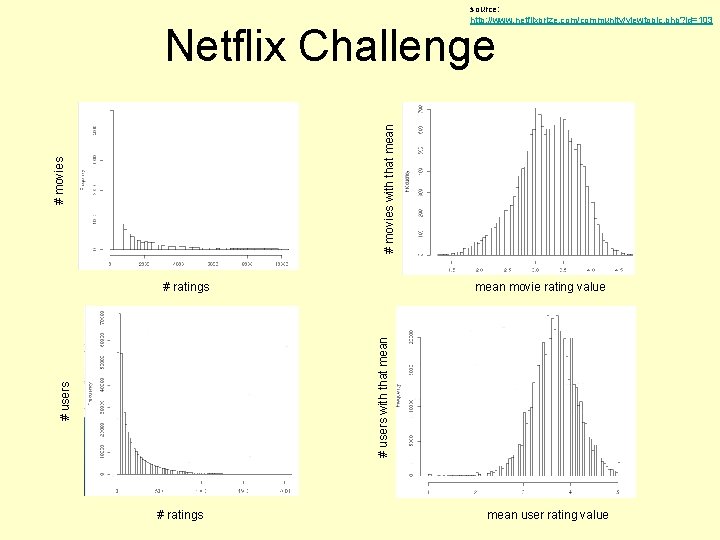

source: http: //www. netflixprize. com/community/viewtopic. php? id=103 # movies with that mean Netflix Challenge mean movie rating value # users with that mean # ratings mean user rating value

The Task • The user-movie matrix has many missing entries: Joe did not happen to rate “ET”. • Netflix wants to recommend unseen movies to users based on movies he/she has seen (and rated!) in the past. • To recommend movies we are being asked to fill in the missing entries for Joe with predicted ratings and pick the movies with the highest predicted ratings. • Where does the information come from? Say we want to predict the rating for Joe and ET. • I: Mary has rated all movies that Joe has seen in the past very similarly. She has also seen ET and rated it with a 5. What would you predict for Joe? • II: Star. Trek that has obtained very similar ratings as ET from all users. Star. Trek was rated 4 by Joe. What would you predict for ET?

Your Homework & Project • You will team up with 1 or more partners and implement algorithms that we discuss in class on the netflix problem. • Our goal is to get high up on the leaderboard • This involves both trying out various learning techniques (machine learning) as well as dealing with the large size of the data (data mining). • Towards the end we will combine all our algorithms to get a final score. • Every class (starting next week) we will have a presentation by 1 team to report on their progress and to share experience. Read this article on how good these systems can be: http: //www. theonion. com/content/node/57311? utm_source=onion_rss_daily

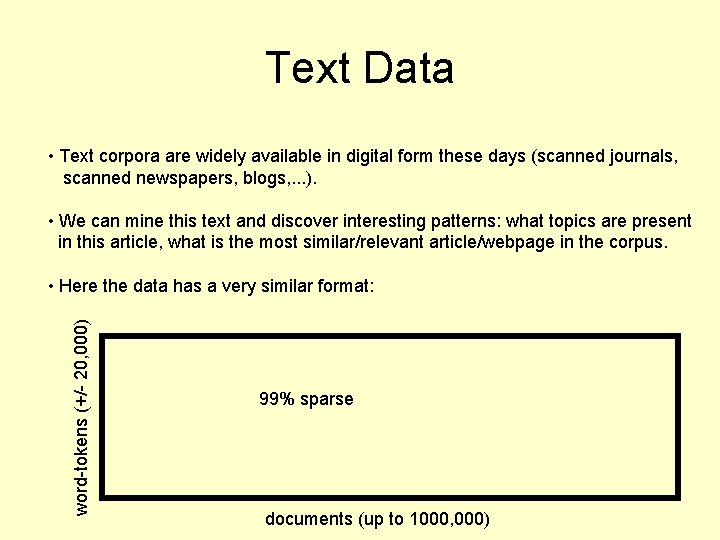

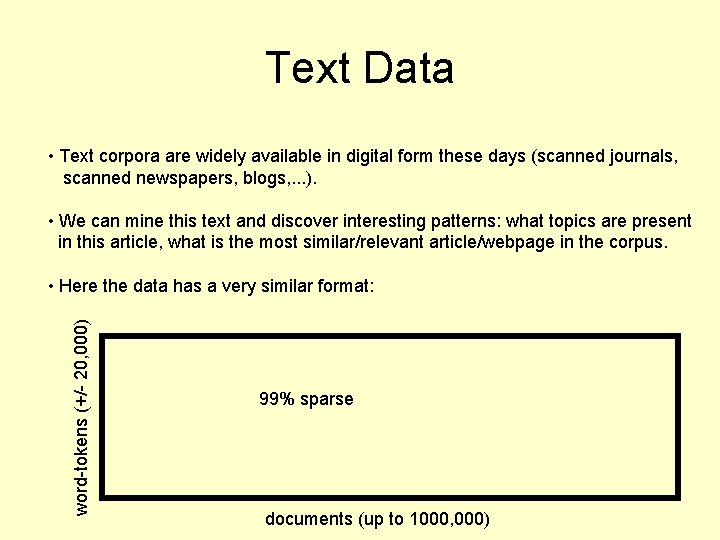

Text Data • Text corpora are widely available in digital form these days (scanned journals, scanned newspapers, blogs, . . . ). • We can mine this text and discover interesting patterns: what topics are present in this article, what is the most similar/relevant article/webpage in the corpus. word-tokens (+/- 20, 000) • Here the data has a very similar format: 99% sparse documents (up to 1000, 000)

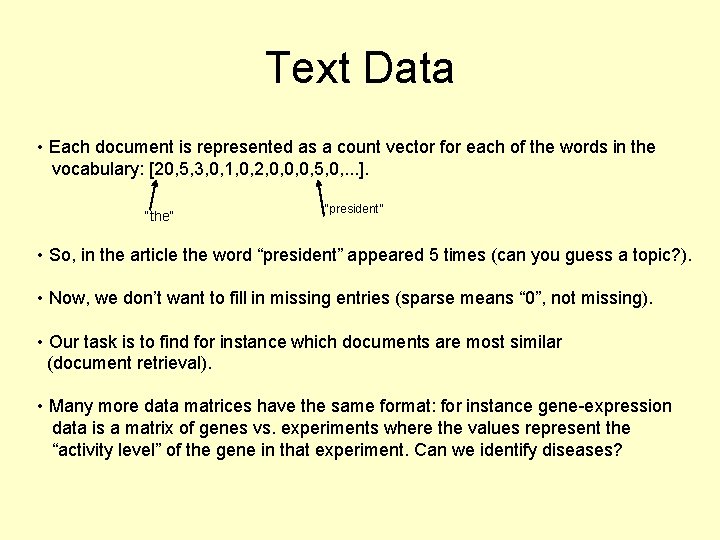

Text Data • Each document is represented as a count vector for each of the words in the vocabulary: [20, 5, 3, 0, 1, 0, 2, 0, 0, 0, 5, 0, . . . ]. “the” “president” • So, in the article the word “president” appeared 5 times (can you guess a topic? ). • Now, we don’t want to fill in missing entries (sparse means “ 0”, not missing). • Our task is to find for instance which documents are most similar (document retrieval). • Many more data matrices have the same format: for instance gene-expression data is a matrix of genes vs. experiments where the values represent the “activity level” of the gene in that experiment. Can we identify diseases?

Why is this cool/important? • Modern technologies generate data at an unprecedented scale. • The amount of data doubles every year. “One petabyte is equivalent to the text in one billion books, yet many scientific instruments, including the Large Synoptic Survey Telescope, will soon be generating several petabytes annually”. (2020 Computing: Science in an exponential world: Nature Published online: 22 March 2006) • Computers dominate our daily lives • Science, industry, army, our social interactions etc. We can no longer “eyeball” the images captured by some satellite for interesting events, or check every webpage for some topic. We need to trust computers to do the work for us.