
Self-adjustable bootstrapping for Named Entity set expansion
Sushant Narsale (JHU), Satoshi Sekine (NYU)
July 30th, 2009, Lexical Knowledge from Ngrams

Nail: Set (NE list) expansion using bootstrapping
• Self-adjustable bootstrapping over ngrams
• Expand Named Entity sets for 150 Named Entity categories

Our Task
• Input: Seeds for 150 Named Entity categories
• Output: More examples like the seeds
• Motivation
  – “Creating lists of Named Entities on Web is critical for query analysis, document categorization and ad matching” (Web Scale Distributional Similarity and Entity Set Expansion, Pantel et al.)

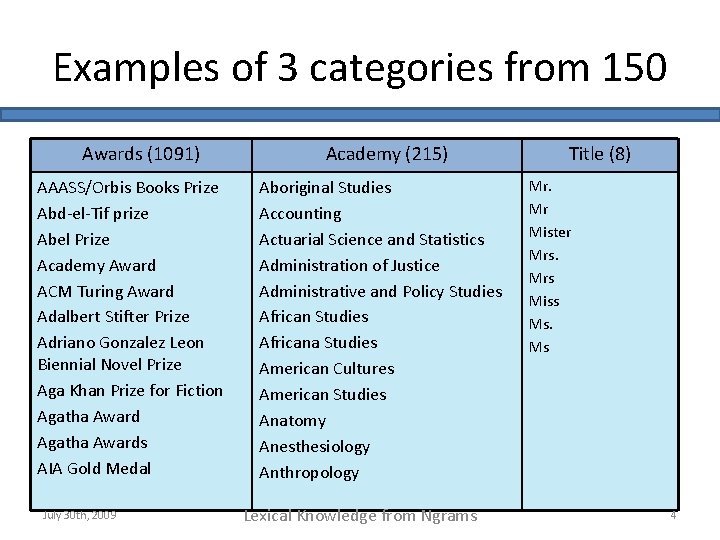

Examples of 3 categories from 150
• Awards (1091): AAASS/Orbis Books Prize, Abd-el-Tif prize, Abel Prize, Academy Award, ACM Turing Award, Adalbert Stifter Prize, Adriano Gonzalez Leon Biennial Novel Prize, Aga Khan Prize for Fiction, Agatha Awards, AIA Gold Medal
• Academy (215): Aboriginal Studies, Accounting, Actuarial Science and Statistics, Administration of Justice, Administrative and Policy Studies, Africana Studies, American Cultures, American Studies, Anatomy, Anesthesiology, Anthropology
• Title (8): Mr., Mr, Mister, Mrs, Miss, Ms., Ms

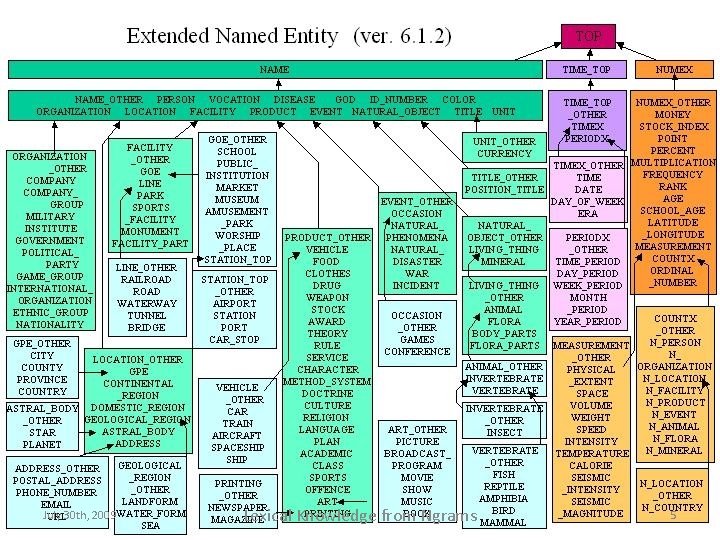

150 Named Entity categories

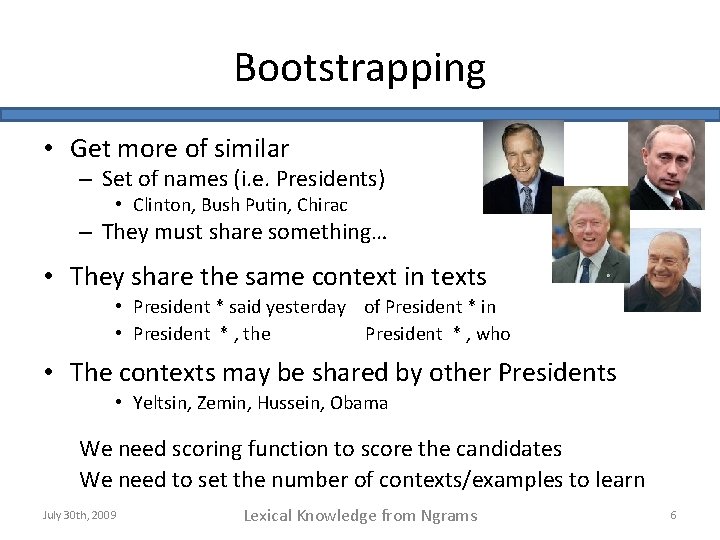

Bootstrapping
• Get more of similar
  – Set of names (e.g. Presidents)
    • Clinton, Bush, Putin, Chirac
  – They must share something…
    • They share the same contexts in texts
    • President * said yesterday; of President * in; President * , the; President * , who
    • The contexts may be shared by other Presidents
    • Yeltsin, Zemin, Hussein, Obama
• We need a scoring function to score the candidates
• We need to set the number of contexts/examples to learn
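One round of the loop described on this slide can be sketched as follows. This is a toy illustration, not the authors' system: the one-word window on each side, the helper names `context_of` and `bootstrap_once`, and the sample sentences are all assumptions made for the example, and candidates are simply counted rather than scored.

```python
from collections import Counter

def context_of(tokens, i):
    """Return the (left word, right word) context around position i."""
    left = tokens[i - 1] if i > 0 else "<s>"
    right = tokens[i + 1] if i + 1 < len(tokens) else "</s>"
    return (left, right)

def bootstrap_once(sentences, seeds, top_contexts=2):
    """One bootstrapping round: seeds -> contexts -> new candidates."""
    tokenized = [s.split() for s in sentences]
    # Step 1: count the contexts in which the seed names occur.
    ctx_counts = Counter()
    for toks in tokenized:
        for i, tok in enumerate(toks):
            if tok in seeds:
                ctx_counts[context_of(toks, i)] += 1
    best = {ctx for ctx, _ in ctx_counts.most_common(top_contexts)}
    # Step 2: collect non-seed tokens that fill the same contexts.
    candidates = Counter()
    for toks in tokenized:
        for i, tok in enumerate(toks):
            if tok not in seeds and context_of(toks, i) in best:
                candidates[tok] += 1
    return candidates

# Hypothetical sentences, for illustration only.
SENTENCES = [
    "President Clinton said yesterday",
    "President Bush said today",
    "President Yeltsin said nothing",
    "President Obama said yes",
    "the dog said woof",
]
candidates = bootstrap_once(SENTENCES, {"Clinton", "Bush"})
print(sorted(candidates))  # ['Obama', 'Yeltsin']
```

The `top_contexts` argument stands in for "the number of contexts to learn"; a real system would also rank the candidates with a scoring function instead of raw counts.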

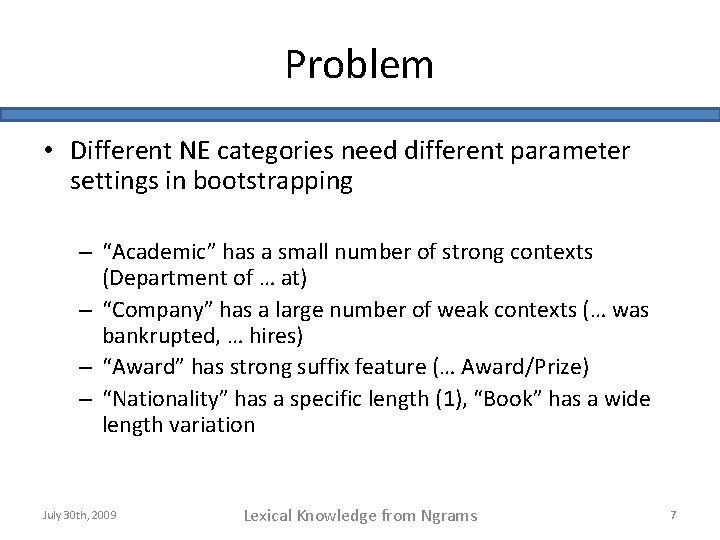

Problem
• Different NE categories need different parameter settings in bootstrapping
  – “Academic” has a small number of strong contexts (Department of … at)
  – “Company” has a large number of weak contexts (… was bankrupted, … hires)
  – “Award” has a strong suffix feature (… Award/Prize)
  – “Nationality” has a specific length (1), “Book” has a wide length variation

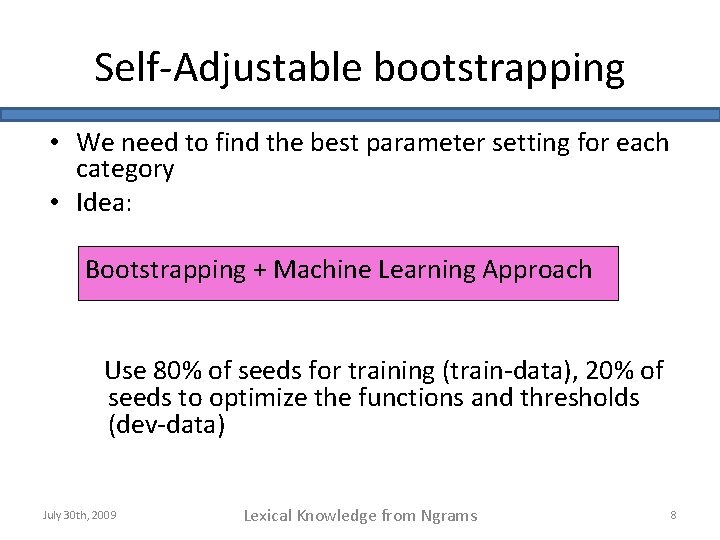

Self-Adjustable bootstrapping
• We need to find the best parameter setting for each category
• Idea: Bootstrapping + Machine Learning approach
  – Use 80% of the seeds for training (train-data)
  – Use 20% of the seeds to optimize the functions and thresholds (dev-data)
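The 80/20 idea can be sketched as a seed split plus a search over candidate settings. The function names, the fixed random seed, and the `evaluate` callback (which would run bootstrapping on the train seeds and score the result on the dev seeds) are hypothetical; the slides do not say how the split or the search was implemented.

```python
import random

def split_seeds(seeds, train_fraction=0.8, rng_seed=0):
    """Shuffle the seeds reproducibly and split them into train and dev parts."""
    items = sorted(seeds)
    random.Random(rng_seed).shuffle(items)
    cut = int(len(items) * train_fraction)
    return items[:cut], items[cut:]

def pick_best_setting(settings, evaluate):
    """Return the setting with the highest dev score.

    `evaluate` is a hypothetical callback: setting -> score on dev-data.
    """
    return max(settings, key=evaluate)

train, dev = split_seeds([f"seed{i}" for i in range(10)])
print(len(train), len(dev))  # 8 2
```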

Our Approach
• Parameters
  1. Context
     • Formulas to score contexts and targets
     • Number of contexts to be used
  2. Suffix/Prefix
     – e.g. Suffix=Awards, for award categories
  3. Length
     – a bias on the lengths of retrieved entities
• Weighted linear interpolation of the three functions
• Optimization function: Total Reciprocal Rank
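A weighted linear interpolation of the three functions might look like the sketch below. The slides do not give the exact form, so the fixed context weight of 1.0 and all parameter names here are assumptions.

```python
def combined_score(context_score, affix_score, length_score,
                   affix_weight, length_weight, context_weight=1.0):
    """Linearly interpolate the context, prefix/suffix, and length scores.

    Assumption: the context score keeps weight 1.0 and the other two
    weights are the per-category parameters being tuned.
    """
    return (context_weight * context_score
            + affix_weight * affix_score
            + length_weight * length_score)

print(combined_score(2.0, 1.0, 0.5, affix_weight=0.5, length_weight=2.0))  # 3.5
```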


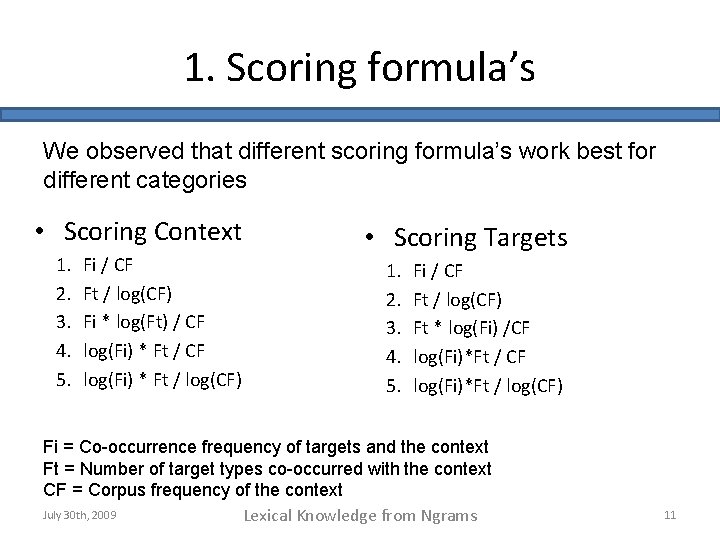

1. Scoring formulas
We observed that different scoring formulas work best for different categories.
• Scoring contexts (options numbered 1 to 5; the formulas preserved in the slide text are listed)
  – Fi / CF
  – Ft / log(CF)
  – Fi * log(Ft) / CF
  – log(Fi) * Ft / log(CF)
• Scoring targets (options numbered 1 to 5; the formulas preserved in the slide text are listed)
  – Fi / CF
  – Ft / log(CF)
  – Ft * log(Fi) / CF
  – log(Fi) * Ft / log(CF)
• Definitions
  – Fi = Co-occurrence frequency of the targets and the context
  – Ft = Number of target types that co-occur with the context
  – CF = Corpus frequency of the context
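The formulas on this slide translate directly into code. In this sketch the dictionary keys are just labels chosen for the example (they are not the slide's #1 to #5 numbering), the natural logarithm is an assumption since the slide does not state a base, and the sample counts are made up.

```python
import math

# Each function takes (fi, ft, cf) as defined on the slide.
# Assumes cf > 1 so that log(cf) is positive, and fi, ft >= 1.
FORMULAS = {
    "fi/cf": lambda fi, ft, cf: fi / cf,
    "ft/log(cf)": lambda fi, ft, cf: ft / math.log(cf),
    "fi*log(ft)/cf": lambda fi, ft, cf: fi * math.log(ft) / cf,
    "ft*log(fi)/cf": lambda fi, ft, cf: ft * math.log(fi) / cf,
    "log(fi)*ft/log(cf)": lambda fi, ft, cf: math.log(fi) * ft / math.log(cf),
}

def rank_contexts(stats, formula_name):
    """Sort contexts by score, best first.

    `stats` maps a context string to its (fi, ft, cf) counts.
    """
    score = FORMULAS[formula_name]
    return sorted(stats, key=lambda ctx: score(*stats[ctx]), reverse=True)

# Hypothetical counts, for illustration only.
stats = {
    "President * said": (20, 5, 100),
    "the * said": (30, 6, 100000),
}
print(rank_contexts(stats, "fi/cf"))
```

With these made-up counts the rarer but more specific context ranks first under `fi/cf`, because the frequent generic context is penalized by its large corpus frequency.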


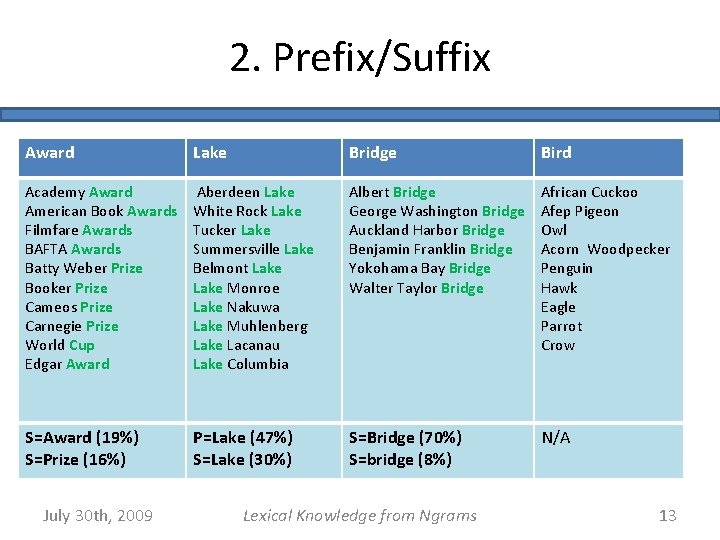

2. Prefix/Suffix
• Award: Academy Award, American Book Awards, Filmfare Awards, BAFTA Awards, Batty Weber Prize, Booker Prize, Cameos Prize, Carnegie Prize, World Cup, Edgar Award
  – S=Award (19%), S=Prize (16%)
• Lake: Aberdeen Lake, White Rock Lake, Tucker Lake, Summersville Lake, Belmont Lake, Monroe Lake, Nakuwa Lake, Muhlenberg Lake, Lacanau Lake Columbia
  – P=Lake (47%), S=Lake (30%)
• Bridge: Albert Bridge, George Washington Bridge, Auckland Harbor Bridge, Benjamin Franklin Bridge, Yokohama Bay Bridge, Walter Taylor Bridge
  – S=Bridge (70%), S=bridge (8%)
• Bird: African Cuckoo, Afep Pigeon, Owl, Acorn Woodpecker, Penguin, Hawk, Eagle, Parrot, Crow
  – N/A
(S = suffix, P = prefix)
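Prefix and suffix statistics of this kind can be computed by counting first and last tokens over the seed names. The sketch below assumes whitespace tokenization, case-sensitive matching (the slide lists S=Bridge and S=bridge separately), and that single-word names contribute no prefix or suffix; none of these choices is confirmed by the slides.

```python
from collections import Counter

def affix_shares(names):
    """Return the share of names carrying each prefix (P) or suffix (S) token."""
    counts = Counter()
    for name in names:
        tokens = name.split()
        if len(tokens) < 2:
            continue  # assumption: a one-word name has no prefix/suffix
        counts[("P", tokens[0])] += 1
        counts[("S", tokens[-1])] += 1
    total = len(names)
    return {affix: count / total for affix, count in counts.items()}

shares = affix_shares(["Academy Award", "Edgar Award", "Booker Prize", "World Cup"])
print(shares[("S", "Award")])  # 0.5
print(shares[("S", "Prize")])  # 0.25
```

A category such as Bird, where the seeds share no dominant first or last token, would end up with only small shares, which matches the N/A entry on the slide.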


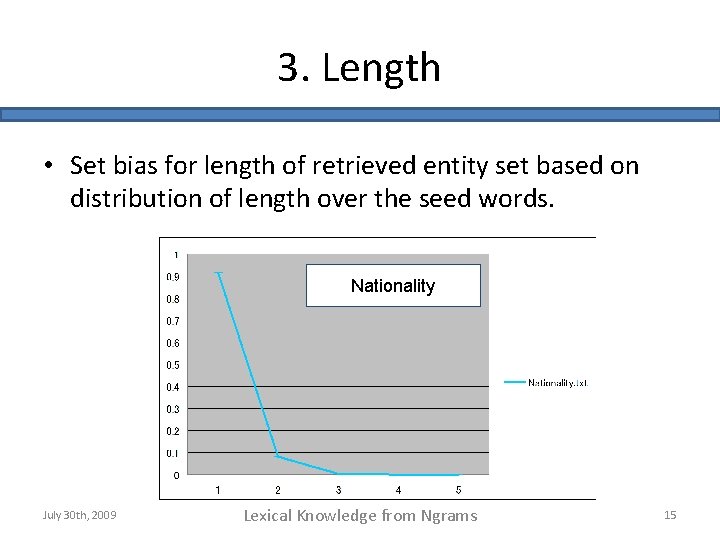

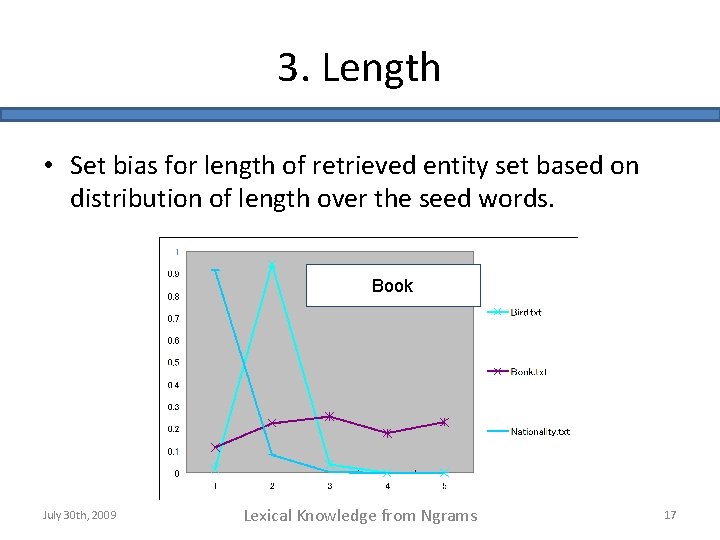

3. Length
• Set a bias on the length of retrieved entities based on the distribution of lengths over the seed words.
• Seed length distributions shown for: Nationality, Bird, Book
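A length bias of this kind can be sketched by estimating the length distribution from the seeds and scoring each candidate by the probability of its length. Measuring length in whitespace-separated tokens and giving unseen lengths a score of 0.0 are assumptions of this example; the seed list is made up.

```python
from collections import Counter

def length_distribution(seeds):
    """Relative frequency of each name length (in tokens) among the seeds."""
    counts = Counter(len(seed.split()) for seed in seeds)
    total = sum(counts.values())
    return {length: count / total for length, count in counts.items()}

def length_score(candidate, distribution, floor=0.0):
    """Score a candidate by how common its length is among the seeds."""
    return distribution.get(len(candidate.split()), floor)

# Hypothetical Nationality seeds, for illustration only.
dist = length_distribution(["Japanese", "French", "American", "South African"])
print(length_score("German", dist))             # 0.75
print(length_score("Bank of America", dist))    # 0.0
```

For a category like Nationality the distribution is sharply peaked, so the bias is strong; for a category like Book it would be nearly flat and contribute little.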


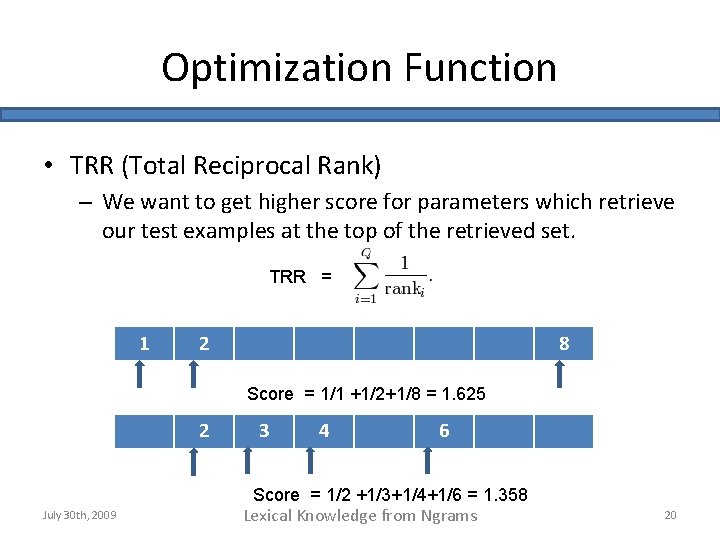

Optimization Function
• TRR (Total Reciprocal Rank)
  – We want a higher score for parameter settings that retrieve our test examples near the top of the retrieved set.
  – TRR = sum of 1/rank over the test examples found in the retrieved set
  – Test examples retrieved at ranks 1, 2, 8: Score = 1/1 + 1/2 + 1/8 = 1.625
  – Test examples retrieved at ranks 2, 3, 4, 6: Score = 1/2 + 1/3 + 1/4 + 1/6 = 1.25
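The first worked example on this slide can be reproduced with a few lines of code. The function name and the placeholder item labels are made up for the illustration; the definition used is the sum of reciprocal ranks of the held-out examples, as the slide's arithmetic implies.

```python
def total_reciprocal_rank(ranked, held_out):
    """Sum 1/rank over the held-out examples that appear in the ranked list."""
    held = set(held_out)
    return sum(1.0 / rank
               for rank, item in enumerate(ranked, start=1)
               if item in held)

# Placeholder items "a".."h"; the held-out examples sit at ranks 1, 2 and 8.
ranked = list("abcdefgh")
print(total_reciprocal_rank(ranked, {"a", "b", "h"}))  # 1.625
```

Because each term shrinks quickly with rank, a setting that places even one held-out example at rank 1 can outscore a setting that retrieves more examples slightly lower down.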

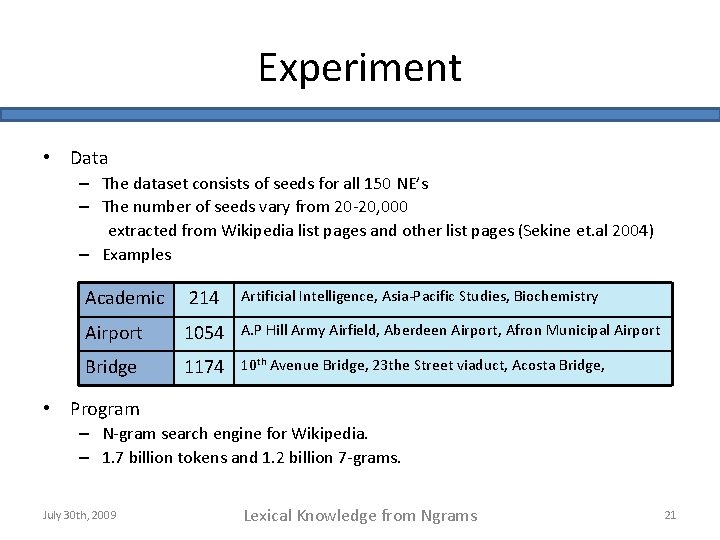

Experiment
• Data
  – The dataset consists of seeds for all 150 NE categories
  – The number of seeds varies from 20 to 20,000, extracted from Wikipedia list pages and other list pages (Sekine et al. 2004)
  – Examples
    • Academic (214): Artificial Intelligence, Asia-Pacific Studies, Biochemistry
    • Airport (1054): A.P. Hill Army Airfield, Aberdeen Airport, Afron Municipal Airport
    • Bridge (1174): 10th Avenue Bridge, 23rd Street viaduct, Acosta Bridge
• Program
  – N-gram search engine for Wikipedia
  – 1.7 billion tokens and 1.2 billion 7-grams

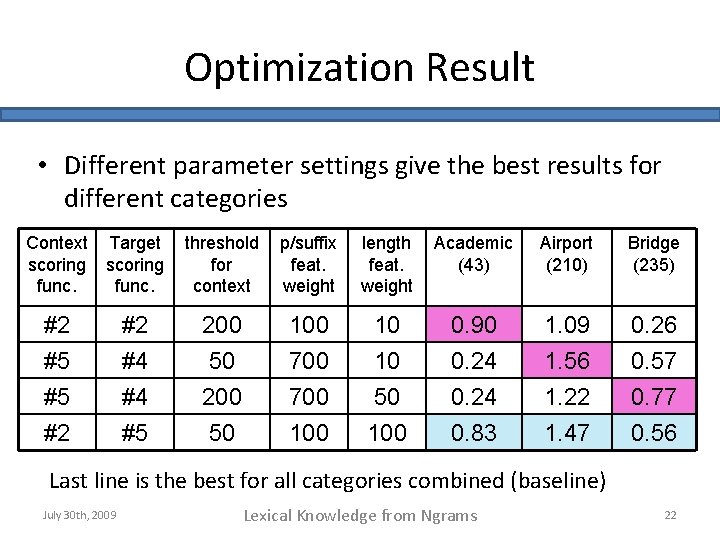

Optimization Result
• Different parameter settings give the best results for different categories
• Parameters: context scoring func., target scoring func., threshold for context, p/suffix feat. weight, length feat. weight
• Categories: Academic (43), Airport (210), Bridge (235); the last line is the best setting for all categories combined (baseline)
• Values as they appear in the slide text: #2 #5 #2 #4 200 50 100 700 10 10 0.90 0.24 1.09 1.56 0.26 0.57 #5 #2 #4 #5 200 50 700 100 50 100 0.24 0.83 1.22 1.47 0.77 0.56

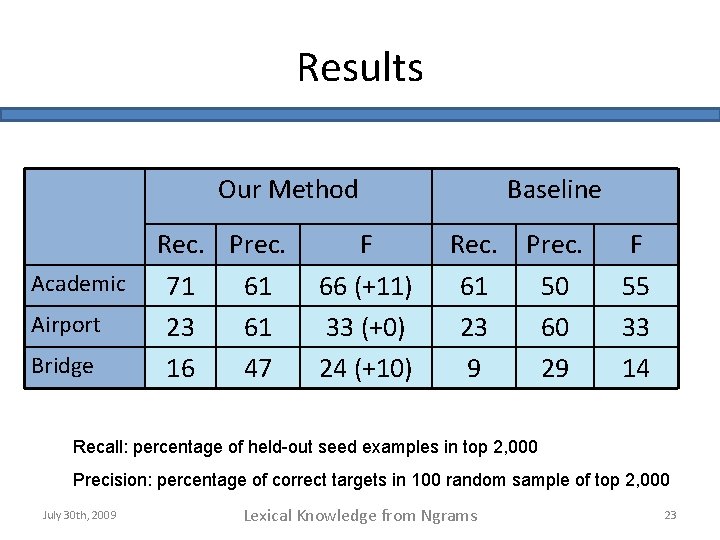

Results

| Category | Our Method Rec. | Our Method Prec. | Our Method F | Baseline Rec. | Baseline Prec. | Baseline F |
|---|---|---|---|---|---|---|
| Academic | 71 | 61 | 66 (+11) | 61 | 50 | 55 |
| Airport | 23 | 61 | 33 (+0) | 23 | 60 | 33 |
| Bridge | 16 | 47 | 24 (+10) | 9 | 29 | 14 |

• Recall: percentage of held-out seed examples in the top 2,000
• Precision: percentage of correct targets in a random sample of 100 from the top 2,000
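The F values on this slide are consistent with the balanced F-measure (harmonic mean of recall and precision), rounded to the nearest integer. The slide does not state which F is used, so treating it as F1 is an assumption; the sketch below checks it against the reported recall and precision figures.

```python
def f_measure(recall, precision):
    """Balanced F-measure (harmonic mean); inputs and output in percent."""
    if recall + precision == 0:
        return 0.0
    return 2 * recall * precision / (recall + precision)

# (recall, precision) pairs as reported on the slide.
ours = {"Academic": (71, 61), "Airport": (23, 61), "Bridge": (16, 47)}
baseline = {"Academic": (61, 50), "Airport": (23, 60), "Bridge": (9, 29)}

for category in ours:
    f_ours = round(f_measure(*ours[category]))
    f_base = round(f_measure(*baseline[category]))
    print(category, f_ours, f_base, f"(+{f_ours - f_base})")
# Academic 66 55 (+11)
# Airport 33 33 (+0)
# Bridge 24 14 (+10)
```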

Future Work
• More features
  – Phrase clustering
  – Genre information
  – Longer dependency
• Better optimization
• Start with a smaller number of seeds
• Other targets (e.g. relation)
• Make a tool (like Google Sets)

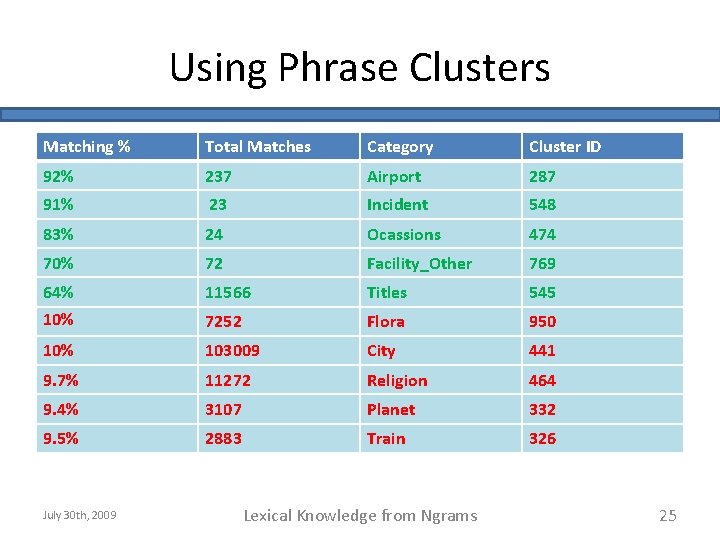

Using Phrase Clusters

| Matching % | Total Matches | Category | Cluster ID |
|---|---|---|---|
| 92% | 237 | Airport | 287 |
| 91% | 23 | Incident | 548 |
| 83% | 24 | Occasions | 474 |
| 70% | 72 | Facility_Other | 769 |
| 64% | 11566 | Titles | 545 |
| 10% | 7252 | Flora | 950 |
| 10% | 103009 | City | 441 |
| 9.7% | 11272 | Religion | 464 |
| 9.4% | 3107 | Planet | 332 |
| 9.5% | 2883 | Train | 326 |

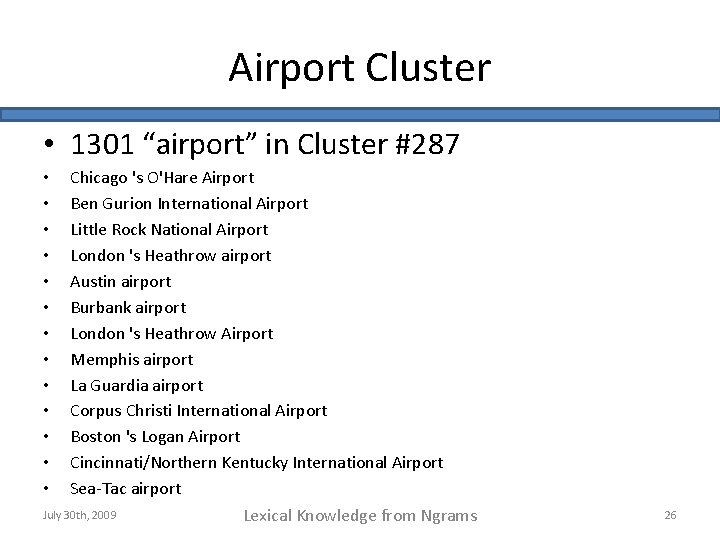

Airport Cluster
• 1301 “airport” in Cluster #287
  – Chicago's O'Hare Airport
  – Ben Gurion International Airport
  – Little Rock National Airport
  – London's Heathrow airport
  – Austin airport
  – Burbank airport
  – London's Heathrow Airport
  – Memphis airport
  – La Guardia airport
  – Corpus Christi International Airport
  – Boston's Logan Airport
  – Cincinnati/Northern Kentucky International Airport
  – Sea-Tac airport

Conclusion
• A solution for “Different methods work for different categories”
• Large dictionary of Named Entities in 150 categories
sushant@jhu.edu, sekine@cs.nyu.edu
