Named Entity Recognition in Tweets: An Experimental Study

- Slides: 21

Named Entity Recognition in Tweets: An Experimental Study
Chuanhao Ma, Yiqi Tang, Ziyan Yang

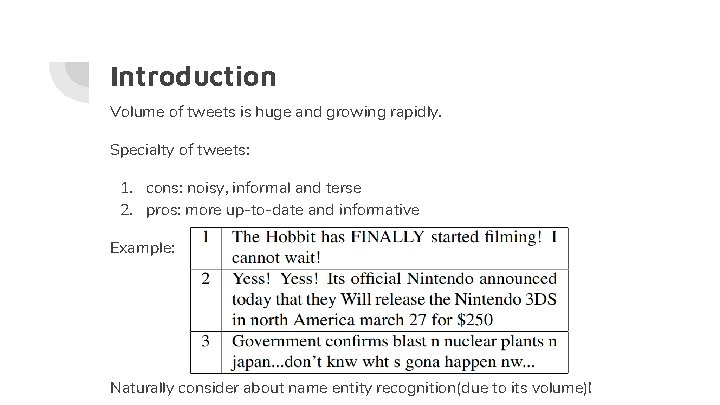

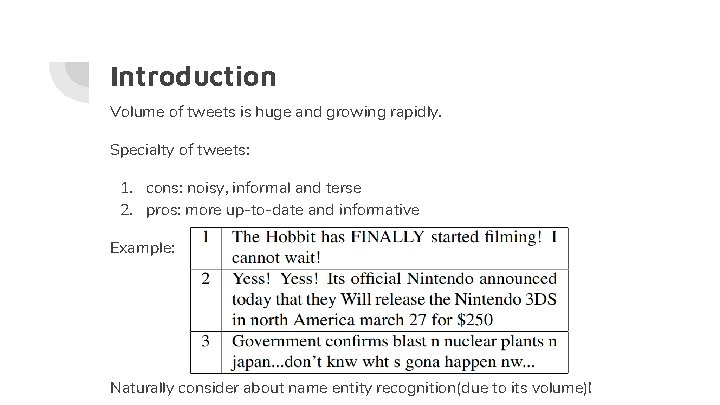

Introduction
The volume of tweets is huge and growing rapidly.
Characteristics of tweets:
1. Cons: noisy, informal, and terse
2. Pros: more up-to-date and informative
Given this volume, named entity recognition is a natural task to consider.

Introduction
Two reasons why classifying named entities in tweets is difficult:
1. Tweets contain many distinctive named entity types, most of them infrequent.
2. Tweets often lack sufficient context because of the 140-character limit.
Solution:
● A distantly supervised approach.
● Apply LabeledLDA to exploit large amounts of unlabeled data together with large dictionaries of entities gathered from Freebase.
● Combine information about an entity from the contexts across its mentions.

Feature extraction
❖ Two fundamental NLP tasks for shallow syntax in tweets:
  ➢ Part-of-speech tagging
  ➢ Noun-phrase chunking
❖ Capitalization
The outputs of all these classifiers are used in feature generation for named entity recognition.

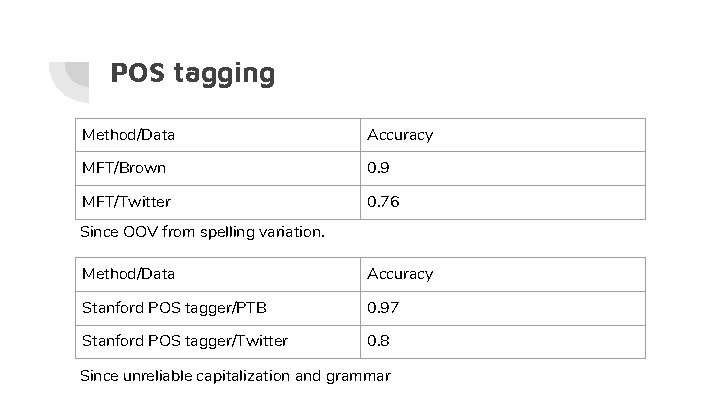

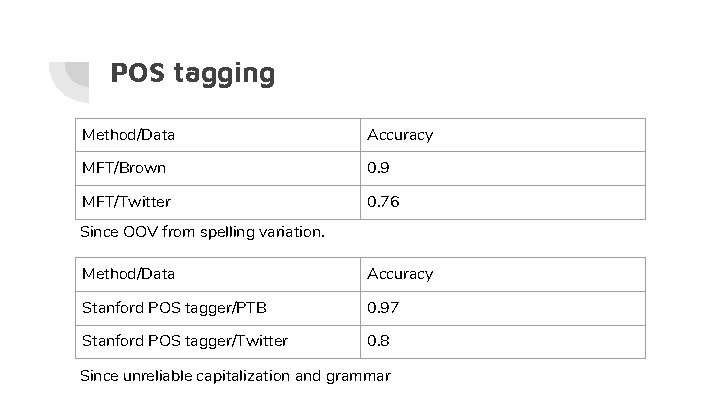

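POS tagging

| Method / Data | Accuracy |
| --- | --- |
| MFT / Brown | 0.9 |
| MFT / Twitter | 0.76 |

The drop is due to out-of-vocabulary (OOV) words arising from spelling variation.

| Method / Data | Accuracy |
| --- | --- |
| Stanford POS tagger / PTB | 0.97 |
| Stanford POS tagger / Twitter | 0.8 |

The drop is due to unreliable capitalization and grammar.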

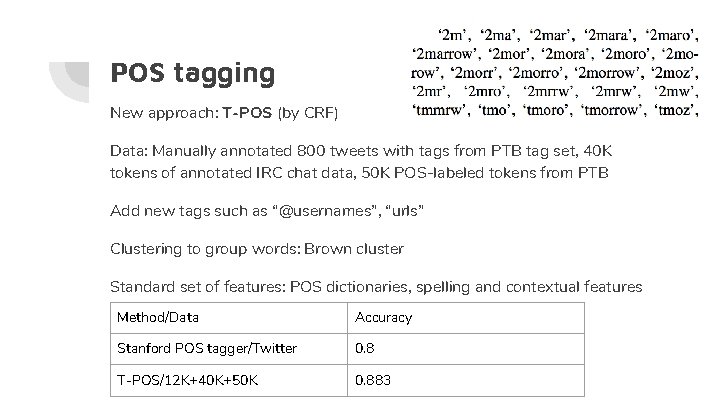

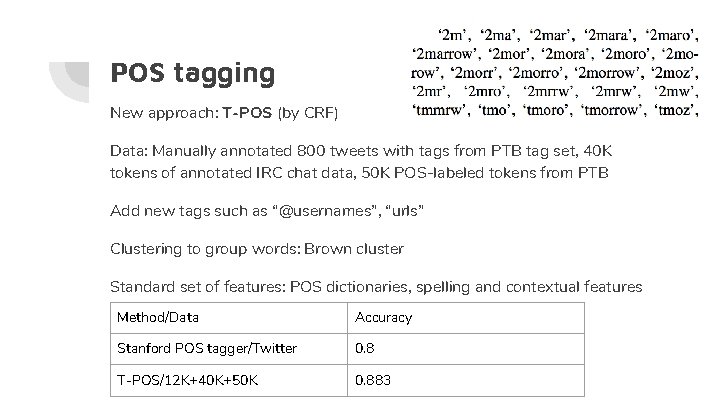

POS tagging
New approach: T-POS, a CRF-based tagger
Data: 800 manually annotated tweets (12K tokens) with tags from the PTB tag set, 40K tokens of annotated IRC chat data, and 50K POS-labeled tokens from the PTB
New tags are added for Twitter-specific tokens such as @usernames and URLs
Clustering to group words: Brown clusters
Standard set of features: POS dictionaries, spelling features, and contextual features

| Method / Data | Accuracy |
| --- | --- |
| Stanford POS tagger / Twitter | 0.8 |
| T-POS / 12K + 40K + 50K | 0.883 |

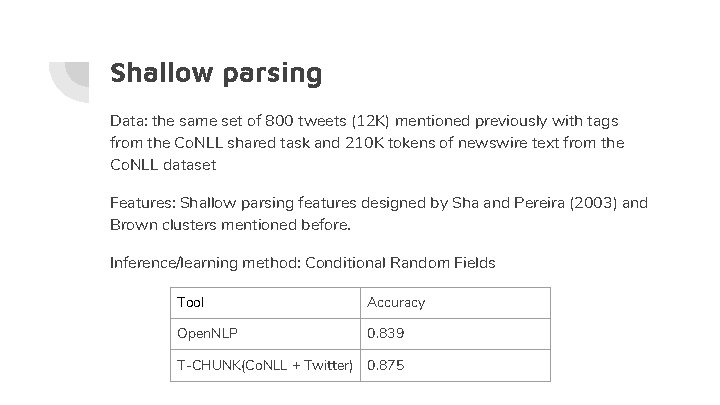

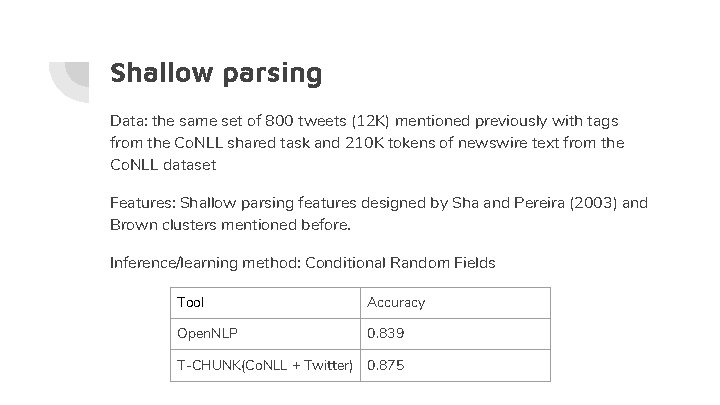

Shallow parsing
Data: the same 800 tweets (12K tokens) mentioned previously, annotated with tags from the CoNLL shared task, plus 210K tokens of newswire text from the CoNLL dataset
Features: the shallow parsing features designed by Sha and Pereira (2003), plus the Brown clusters mentioned before
Inference/learning method: Conditional Random Fields

| Tool | Accuracy |
| --- | --- |
| OpenNLP | 0.839 |
| T-CHUNK (CoNLL + Twitter) | 0.875 |

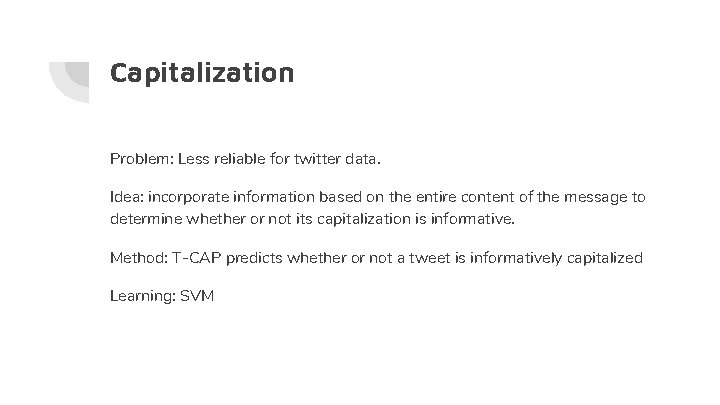

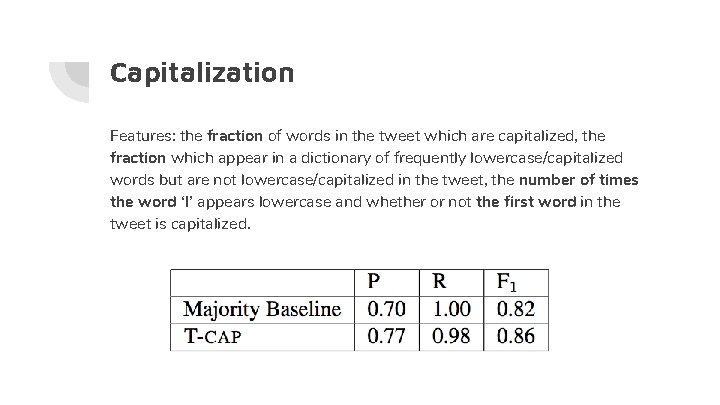

Capitalization
Problem: capitalization is less reliable in Twitter data.
Idea: use information from the entire content of the message to determine whether its capitalization is informative.
Method: T-CAP predicts whether a tweet is informatively capitalized.
Learning: SVM

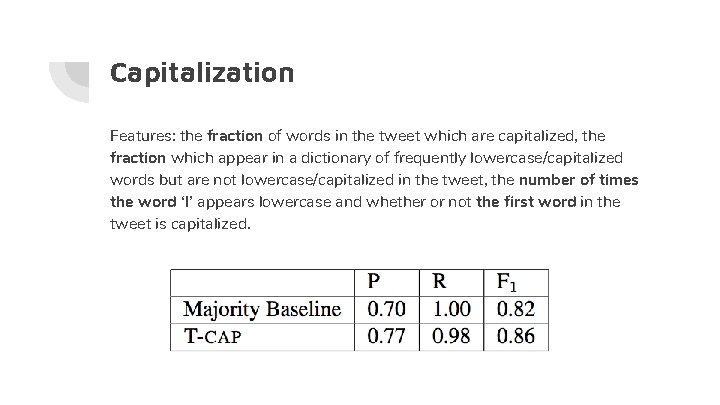

Capitalization
Features:
● the fraction of words in the tweet that are capitalized
● the fraction that appear in a dictionary of frequently lowercase/capitalized words but are not lowercase/capitalized in the tweet
● the number of times the word ‘I’ appears in lowercase
● whether the first word in the tweet is capitalized
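The features listed above can be sketched in a few lines of Python. This is only an illustration: the function name `tcap_features` and the two word sets `usually_lower` and `usually_capitalized` are hypothetical stand-ins for the dictionaries of frequently lowercase/capitalized words, and the resulting feature dictionary would still need to be fed to an SVM.

```python
def tcap_features(tokens, usually_lower, usually_capitalized):
    """Compute tweet-level capitalization features for one tokenized tweet.

    usually_lower / usually_capitalized are hypothetical sets of lowercased
    words that normally appear lowercase / capitalized in clean text.
    """
    # Keep only tokens that start with a letter (skips punctuation, numbers).
    words = [t for t in tokens if t[:1].isalpha()]
    n = len(words) or 1  # avoid division by zero on tweets with no words

    def is_cap(w):
        return w[0].isupper()

    return {
        "frac_capitalized": sum(is_cap(w) for w in words) / n,
        # Normally lowercase according to the dictionary, but capitalized here.
        "frac_lower_dict_violated": sum(
            w.lower() in usually_lower and is_cap(w) for w in words) / n,
        # Normally capitalized according to the dictionary, but lowercase here.
        "frac_cap_dict_violated": sum(
            w.lower() in usually_capitalized and not is_cap(w) for w in words) / n,
        "lowercase_i_count": sum(w == "i" for w in words),
        "first_word_capitalized": bool(words) and is_cap(words[0]),
    }


features = tcap_features(
    "i saw justin bieber in london".split(),
    usually_lower={"saw", "in"},
    usually_capitalized={"justin", "bieber", "london"},
)
print(features)
```

A tweet like the one in the usage example has no capitalized words at all, and half of its words violate the "usually capitalized" dictionary, which is the kind of signal that suggests its capitalization is uninformative.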

Segmenting Named Entities
2,400 tweets (34K tokens) were exhaustively annotated with named entities.
T-SEG models named entity segmentation as a sequence-labeling task with IOB encoding, using a CRF.
Its features include the outputs of T-POS and T-CHUNK.
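As a small illustration of IOB encoding, the sketch below turns entity spans into per-token labels: B marks the first token of an entity, I marks a token inside one, and O marks everything else. The function name `iob_encode` and the example tweet are made up for illustration; a CRF would be trained to predict these labels from the token features.

```python
def iob_encode(tokens, entity_spans):
    """Label each token as B (begin), I (inside), or O (outside).

    entity_spans: list of (start, end) token indices, end exclusive.
    """
    labels = ["O"] * len(tokens)
    for start, end in entity_spans:
        labels[start] = "B"
        for i in range(start + 1, end):
            labels[i] = "I"
    return labels


tokens = ["Heading", "to", "New", "York", "with", "Obama"]
labels = iob_encode(tokens, [(2, 4), (5, 6)])
print(list(zip(tokens, labels)))
# labels == ['O', 'O', 'B', 'I', 'O', 'B']
```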

Classifying Named Entities
Some tweets do not contain enough context.
We can use the redundancy in our data: we can leverage large lists of entities and their types gathered from an open-domain ontology (Freebase).
However, 35% of the entities in our data appear in more than one of our (mutually exclusive) Freebase dictionaries. Additionally, 30% of entities mentioned on Twitter do not appear in any Freebase dictionary.

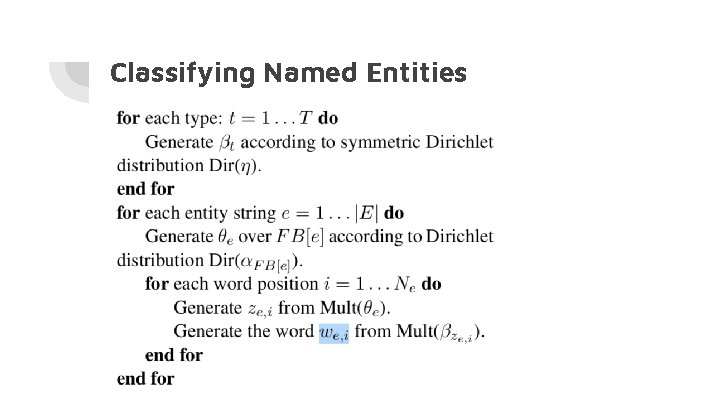

Classifying Named Entities
We apply LabeledLDA to model unlabeled entities and their possible types from a topic-model view. LabeledLDA models each entity string as a mixture of types.

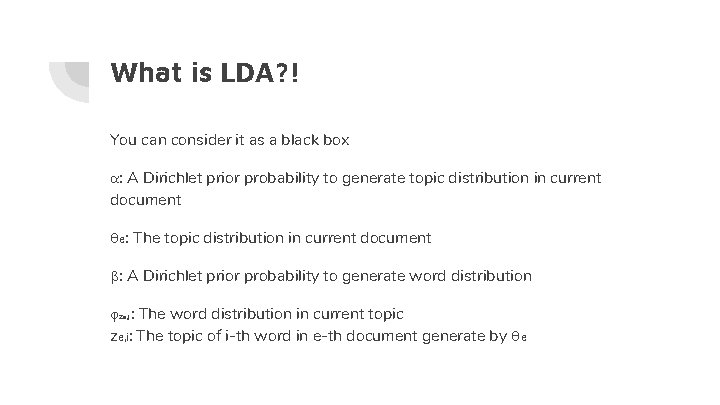

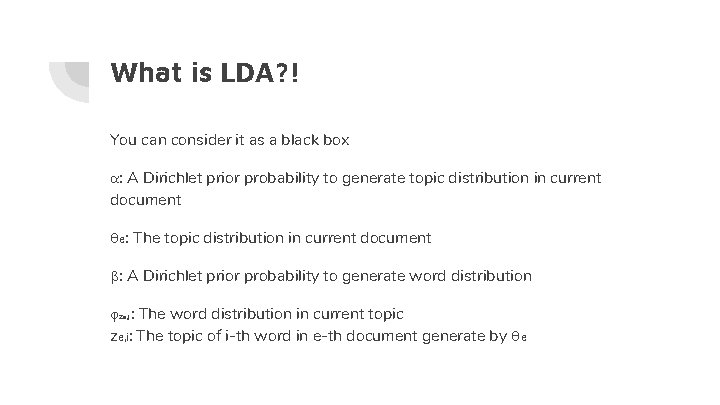

What is LDA?
For now, you can treat it as a black box.
α: the Dirichlet prior from which each document's topic distribution is drawn
θ_e: the topic distribution of document e
β: the Dirichlet prior from which each topic's word distribution is drawn
φ_z: the word distribution of topic z
z_{e,i}: the topic of the i-th word in the e-th document, drawn from θ_e
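To make the roles of these variables concrete, here is a minimal sketch of the standard LDA generative story, assuming symmetric Dirichlet priors and using only the Python standard library. The names `sample_dirichlet` and `generate_corpus`, and all parameter values, are illustrative choices rather than anything from the slides.

```python
import random


def sample_dirichlet(alpha, k, rng):
    """Draw a k-dimensional sample from a symmetric Dirichlet(alpha)."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]


def generate_corpus(n_docs, doc_len, n_topics, vocab, alpha, beta, seed=0):
    """Generate documents by following the LDA generative process."""
    rng = random.Random(seed)
    # phi[z]: word distribution of topic z, drawn from Dirichlet(beta).
    phi = [sample_dirichlet(beta, len(vocab), rng) for _ in range(n_topics)]
    docs = []
    for _ in range(n_docs):
        # theta: topic distribution of this document, drawn from Dirichlet(alpha).
        theta = sample_dirichlet(alpha, n_topics, rng)
        words = []
        for _ in range(doc_len):
            # z: topic of the current word, drawn from theta.
            z = rng.choices(range(n_topics), weights=theta)[0]
            # The word itself is drawn from the chosen topic's distribution.
            words.append(rng.choices(vocab, weights=phi[z])[0])
        docs.append(words)
    return docs


docs = generate_corpus(n_docs=3, doc_len=5, n_topics=2,
                       vocab=["game", "win", "album", "tour"],
                       alpha=0.5, beta=0.5)
print(docs)
```

Training reverses this process: given only the words, inference (for example Gibbs sampling) estimates the hidden topic assignments and the two families of distributions.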

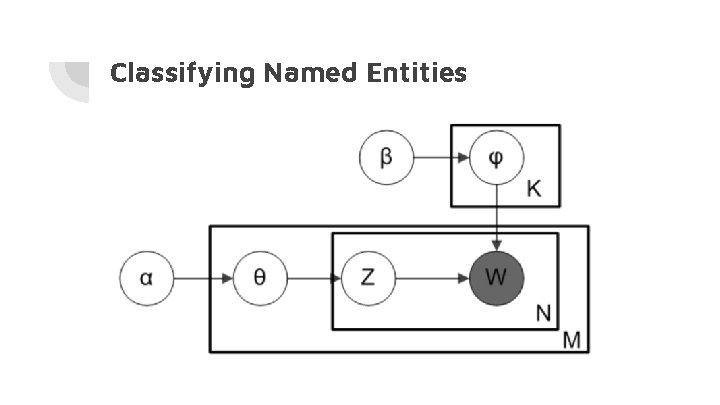

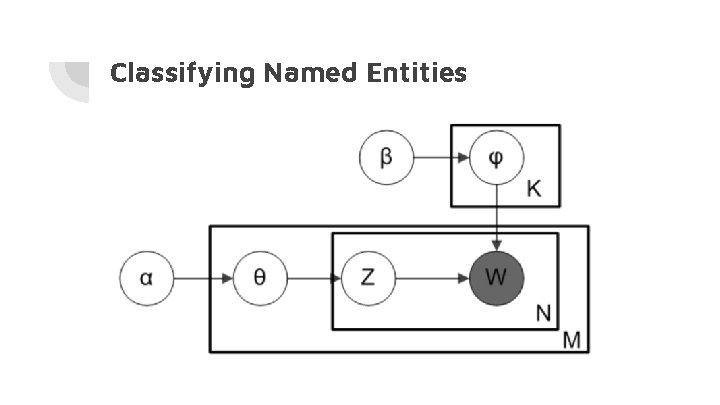

Classifying Named Entities

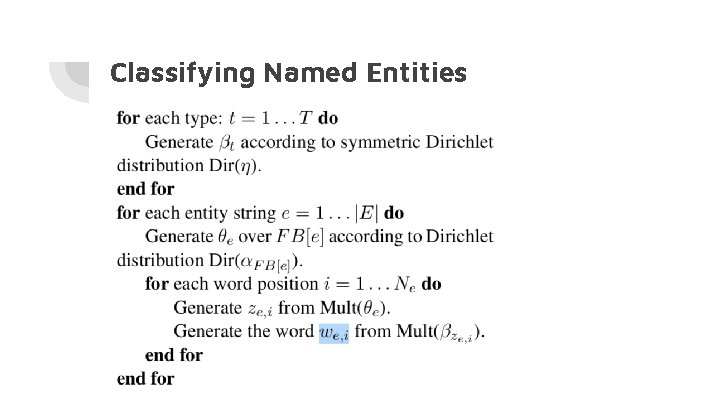

Classifying Named Entities
What is special about LabeledLDA:
1. It constrains the distribution θ to the types (topics) that Freebase lists for the entity.
2. It assumes all word distributions follow the same Dirichlet prior.
Gibbs sampling is used to infer the hidden variables (that is, to train the model).
Bayes' rule is applied to make predictions.
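The slide text does not reproduce the prediction formula, so the following is only a generic sketch of Bayes-rule classification with an optional restriction to allowed types, not the authors' exact method. It scores each candidate type by P(type) multiplied by the product of P(word | type) over the context words. The function name `predict_type`, the smoothing floor, the type name LOCATION, and all probabilities are assumptions made for illustration.

```python
import math


def predict_type(context_words, type_prior, word_given_type, allowed_types=None):
    """Return the type maximizing P(type) * prod_w P(w | type).

    allowed_types optionally restricts the candidates, for example to the
    dictionaries that contain the entity; None means all types are allowed.
    """
    candidates = allowed_types or list(type_prior)
    scores = {}
    for t in candidates:
        # Work in log space to avoid underflow on long contexts.
        log_score = math.log(type_prior[t])
        for w in context_words:
            # 1e-6 is a hypothetical floor for words unseen with this type.
            log_score += math.log(word_given_type[t].get(w, 1e-6))
        scores[t] = log_score
    return max(scores, key=scores.get)


# Toy probabilities, made up for illustration.
prior = {"PERSON": 0.5, "LOCATION": 0.5}
likelihood = {
    "PERSON": {"said": 0.3, "in": 0.05},
    "LOCATION": {"in": 0.3, "said": 0.02},
}
print(predict_type(["in"], prior, likelihood))
print(predict_type(["in"], prior, likelihood, allowed_types=["PERSON"]))
```

The second call shows the effect of the constraint: even though the context favors LOCATION, only the allowed type can be predicted.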

Classifying Named Entities

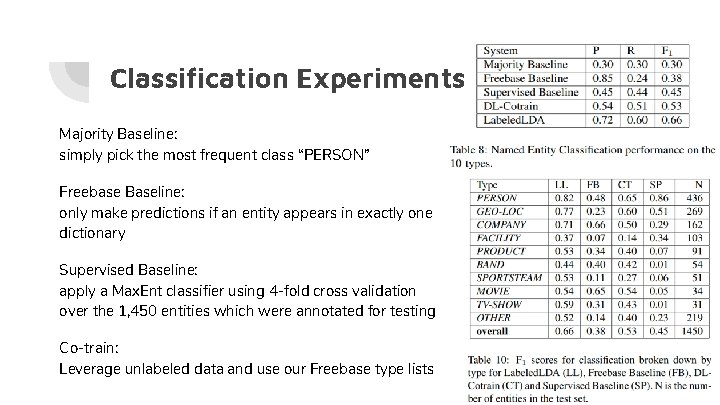

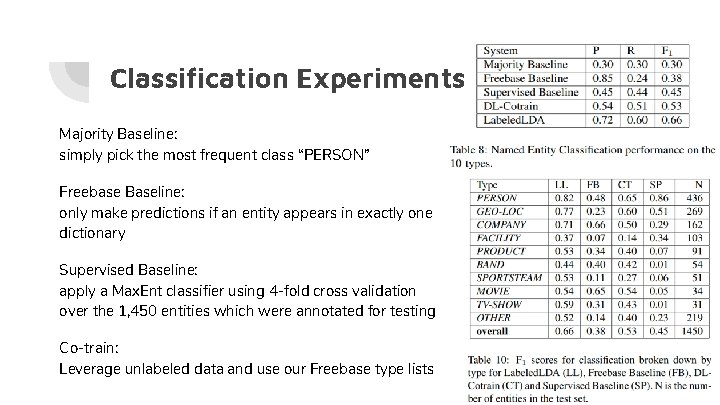

Classification Experiments
Goal: evaluate the ability of T-CLASS to classify named entities.
Evaluation data: 2,400 tweets annotated manually with 10 types that are popular on both Twitter and Freebase. Some types are formed by combining multiple Freebase types.
Training:
● Run T-SEG on 60M tweets and keep the entities that appear more than 100 times, giving 23,651 distinct named entity strings.
● For each string, collect the words within a 3-word context window from all of its mentions in the data.
● Run Gibbs sampling for 1,000 iterations.
● Use the last sample to estimate the entity-type distribution as well as the type-word distribution.
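The context-collection step can be sketched as follows, assuming the window covers up to three tokens on each side of a mention (the slide does not say whether the window is one-sided or two-sided). The function name `collect_contexts` and the input layout are hypothetical.

```python
from collections import defaultdict


def collect_contexts(tweets, window=3):
    """Pool context words across all mentions of each entity string.

    tweets: list of (tokens, spans) pairs, where spans are (start, end)
    token indices of segmented entities, end exclusive.
    """
    contexts = defaultdict(list)
    for tokens, spans in tweets:
        for start, end in spans:
            entity = " ".join(tokens[start:end]).lower()
            left = tokens[max(0, start - window):start]
            right = tokens[end:end + window]
            contexts[entity].extend(w.lower() for w in left + right)
    return contexts


tweets = [
    (["watching", "Lost", "tonight"], [(1, 2)]),
    (["Lost", "season", "finale", "was", "great"], [(0, 1)]),
]
print(dict(collect_contexts(tweets)))
```

Pooling the words from every mention gives each entity string one bag of context words, which is what lets a sparse individual tweet borrow evidence from other tweets mentioning the same entity.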

Classification Experiments
Majority Baseline: always pick the most frequent class, “PERSON”.
Freebase Baseline: make a prediction only if an entity appears in exactly one dictionary.
Supervised Baseline: apply a MaxEnt classifier using 4-fold cross-validation over the 1,450 entities that were annotated for testing.
Co-train: leverage unlabeled data and use our Freebase type lists.
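The Freebase baseline is simple enough to sketch directly. The function name `freebase_baseline` and the toy dictionaries below are illustrative assumptions, not actual Freebase data.

```python
def freebase_baseline(entity, dictionaries):
    """Predict a type only if the entity is in exactly one type dictionary.

    dictionaries: hypothetical mapping from type name to a set of
    lowercased entity strings. Returns None for ambiguous or unseen entities.
    """
    matches = [t for t, names in dictionaries.items() if entity.lower() in names]
    return matches[0] if len(matches) == 1 else None


# Toy dictionaries, made up for illustration.
dictionaries = {
    "PERSON": {"obama"},
    "BAND": {"china"},
    "LOCATION": {"china"},
}
print(freebase_baseline("Obama", dictionaries))   # PERSON
print(freebase_baseline("China", dictionaries))   # None (two dictionaries)
print(freebase_baseline("Xyzzy", dictionaries))   # None (no dictionary)
```

The two `None` cases correspond to the ambiguous and unseen entities that this baseline cannot handle.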

Classification Experiments
Entity String vs. Entity Mention
LabeledLDA groups words across all mentions of an entity and infers a distribution over its possible types.
Co-training considers mentions separately and predicts a type for each one independently.
Both methods are tested on both forms of the unlabeled data (grouped and separate) to identify the reason for the difference in performance.
DL-Cotrain-entity: discriminative learning algorithm, trained on entities and tested on mentions
LabeledLDA-mention: lack of context

Classification Experiments
End-to-end performance on segmentation and classification (T-NER):
● T-NER outperforms co-training.
● Compared against the Stanford Named Entity Recognizer on the 3 MUC types, T-NER doubles the F1 score.

Conclusion
● Demonstrated that existing tools for POS tagging, chunking, and named entity recognition perform poorly when applied to tweets.
● Annotated tweets and built tools trained on unlabeled, in-domain, and out-of-domain data, which showed improvement over news-trained tools.
● Showed the benefits of features generated from T-POS and T-CHUNK in segmenting named entities.
● Presented and evaluated a distantly supervised approach based on LabeledLDA.