Real-time Scheduling Review: Research Seminar on Software Systems

February 2, 2004, Venkita Subramonian (venkita@cs.wustl.edu)

Main Topics for Discussion
§ Single Processor Scheduling
§ End-to-end Scheduling
§ Holistic Scheduling

What is a Real-time System?
§ Real-time systems have been defined as “those systems in which the correctness of the system depends not only on the logical result of the computation, but also on the time at which the results are produced.”
  § J. Stankovic, "Misconceptions About Real-Time Computing," IEEE Computer, 21(10), October 1988.
§ Real-time does not necessarily mean “real fast”.
§ Predictability is key in real-time systems.
§ “There was a man who drowned crossing a stream with an average depth of six inches.” – J. Stankovic

Real-time Scheduling
§ Job ($J_{ij}$): unit of work, scheduled and executed by the system. Jobs repeated at regular or semi-regular intervals are modeled as periodic.
§ Task ($T_i$): set of related jobs.
§ Jobs are scheduled and allocated resources according to a set of scheduling algorithms and resource access-control protocols.
§ Scheduler: module implementing the scheduling algorithms.
§ Schedule: assignment of all jobs to the available processors, produced by the scheduler.
§ Valid schedule: respects release times, precedence and resource constraints; feasible schedule: a valid schedule in which all jobs meet their deadlines.
§ Clock-driven scheduling vs. event (priority)-driven scheduling
§ Fixed-priority vs. dynamic-priority assignment

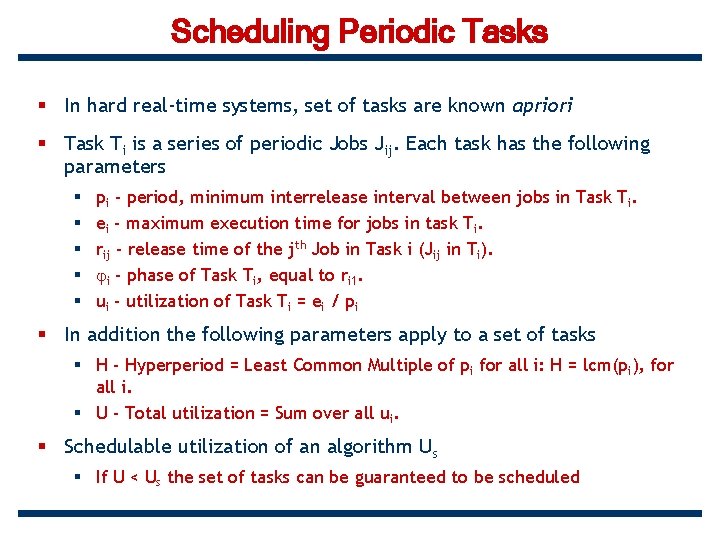

Scheduling Periodic Tasks
§ In hard real-time systems, the set of tasks is known a priori.
§ Task $T_i$ is a series of periodic jobs $J_{ij}$. Each task has the following parameters:
  § $p_i$: period, the minimum inter-release interval between jobs in $T_i$
  § $e_i$: maximum execution time of jobs in $T_i$
  § $r_{ij}$: release time of the $j$th job $J_{ij}$ in $T_i$
  § $\phi_i$: phase of $T_i$, equal to $r_{i1}$
  § $u_i = e_i / p_i$: utilization of $T_i$
§ In addition, the following parameters apply to a set of tasks:
  § $H$: hyperperiod, the least common multiple of all periods, $H = \mathrm{lcm}_i(p_i)$
  § $U$: total utilization, $U = \sum_i u_i$
§ Schedulable utilization of an algorithm, $U_S$: if $U \le U_S$, the set of tasks is guaranteed to be schedulable by that algorithm.
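
As a quick illustration of these definitions, the following Python sketch computes $u_i$, $U$ and $H$ for a task set given as $(p_i, e_i)$ pairs. The task values and function names are hypothetical; exact `Fraction` arithmetic is used so that utilization sums are not distorted by floating-point rounding.

```python
from fractions import Fraction
from functools import reduce
from math import lcm  # math.lcm requires Python 3.9+


def utilizations(tasks):
    """Per-task utilization u_i = e_i / p_i for tasks given as (p_i, e_i) pairs."""
    return [Fraction(e, p) for p, e in tasks]


def total_utilization(tasks):
    """U = sum of u_i over all tasks."""
    return sum(utilizations(tasks), Fraction(0))


def hyperperiod(tasks):
    """H = lcm of all periods p_i."""
    return reduce(lcm, (p for p, _ in tasks))


tasks = [(4, 1), (5, 2), (20, 5)]  # illustrative (p_i, e_i) values
print(utilizations(tasks))       # [Fraction(1, 4), Fraction(2, 5), Fraction(1, 4)]
print(total_utilization(tasks))  # 9/10
print(hyperperiod(tasks))        # 20
```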

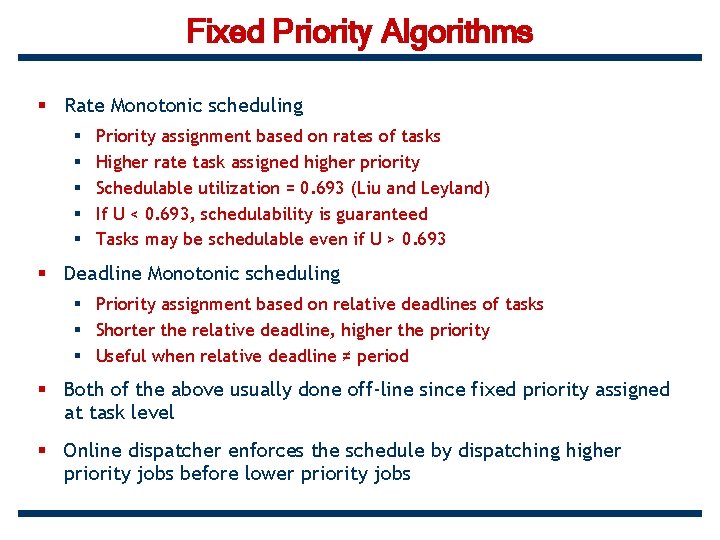

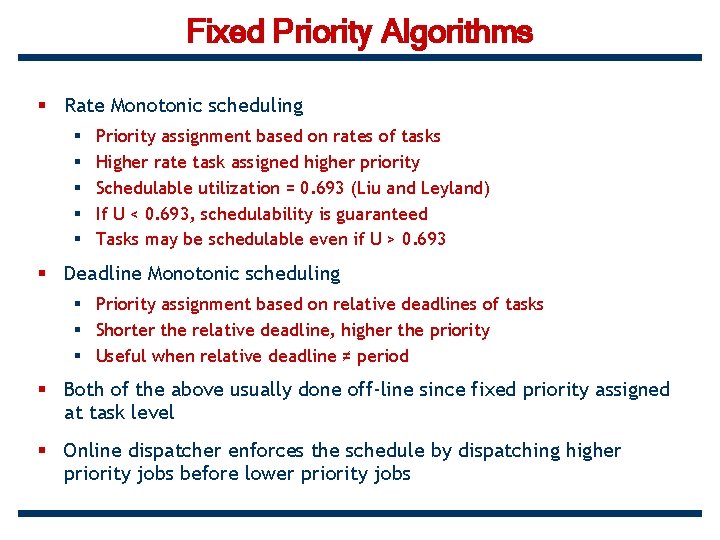

Fixed Priority Algorithms
§ Rate Monotonic (RM) scheduling
  § Priority assignment based on the rates of tasks: a higher-rate (shorter-period) task is assigned a higher priority
  § Schedulable utilization for $n$ tasks is $n(2^{1/n} - 1)$ (Liu and Layland), which decreases toward $\ln 2 \approx 0.693$ as $n$ grows
  § If $U \le 0.693$, schedulability is guaranteed
  § Tasks may be schedulable even if $U > 0.693$
§ Deadline Monotonic (DM) scheduling
  § Priority assignment based on the relative deadlines of tasks: the shorter the relative deadline, the higher the priority
  § Useful when relative deadline ≠ period
§ Both are usually done off-line, since a fixed priority is assigned at the task level
§ An online dispatcher enforces the schedule by dispatching higher-priority jobs before lower-priority jobs
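
The sketch below, assuming independent, preemptive periodic tasks with relative deadline equal to period and synchronous release, checks a task set against the Liu and Layland bound and then applies exact time-demand (response-time) analysis, a standard test that is not itself part of these slides. Function names are hypothetical. The two-task example has $U = 1$, above the $n = 2$ bound of about 0.828, yet both tasks meet their deadlines, which illustrates why tasks may be schedulable even when $U$ exceeds the bound.

```python
def liu_layland_bound(n):
    """RM schedulable utilization for n independent periodic tasks (deadline = period)."""
    return n * (2 ** (1.0 / n) - 1)


def rm_order(tasks):
    """Rate-monotonic priority order: shortest period (highest rate) first."""
    return sorted(tasks, key=lambda task: task[0])


def response_times(tasks):
    """Exact fixed-priority time-demand analysis.

    tasks: (p_i, e_i) pairs already in priority order, index 0 highest.
    Assumes integer parameters and relative deadline = period.
    Returns each task's worst-case response time, or None if it exceeds the period.
    """
    results = []
    for i, (p_i, e_i) in enumerate(tasks):
        r = e_i
        while r <= p_i:
            # Time demand up to r: own execution plus preemption by higher-priority
            # tasks; -(-r // p_k) is ceil(r / p_k) in integer arithmetic.
            demand = e_i + sum(-(-r // p_k) * e_k for p_k, e_k in tasks[:i])
            if demand == r:
                break
            r = demand
        results.append(r if r <= p_i else None)
    return results


tasks = rm_order([(4, 2), (2, 1)])        # [(2, 1), (4, 2)]
U = sum(e / p for p, e in tasks)          # 1.0
print(U > liu_layland_bound(len(tasks)))  # True: above the ~0.828 bound for n = 2
print(response_times(tasks))              # [1, 4]: both meet their deadlines anyway
```

Deadline Monotonic would reuse `response_times` unchanged; only the ordering key (relative deadline instead of period) and the deadline check would differ.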

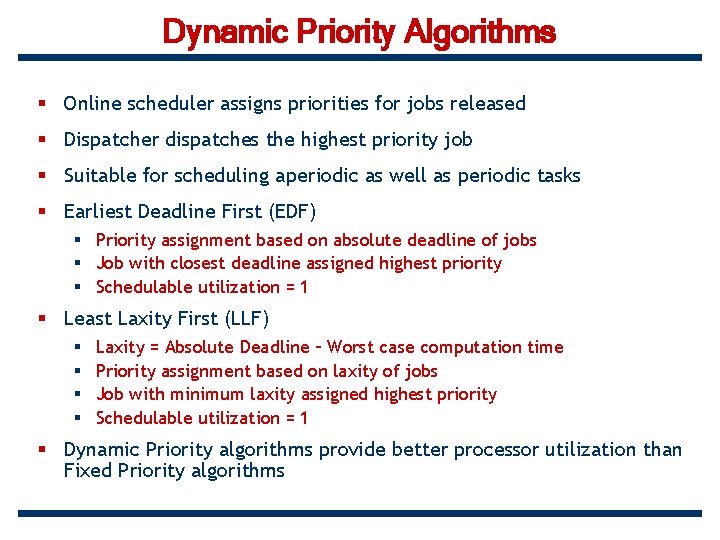

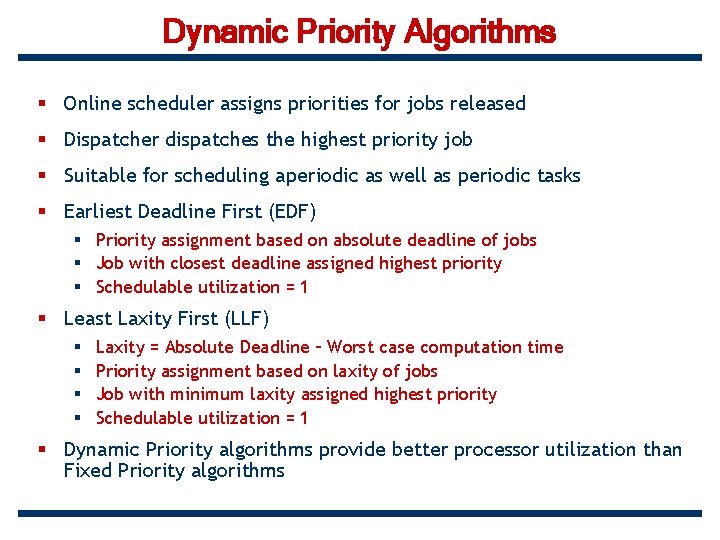

Dynamic Priority Algorithms
§ Online scheduler assigns priorities to jobs as they are released
§ Dispatcher dispatches the highest-priority job
§ Suitable for scheduling aperiodic as well as periodic tasks
§ Earliest Deadline First (EDF)
  § Priority assignment based on the absolute deadlines of jobs
  § The job with the closest deadline is assigned the highest priority
  § Schedulable utilization = 1
§ Least Laxity First (LLF)
  § Laxity at time $t$: $d - t - e_{\text{rem}}$, where $d$ is the job's absolute deadline and $e_{\text{rem}}$ its remaining execution time
  § Priority assignment based on the laxity of jobs
  § The job with the minimum laxity is assigned the highest priority
  § Schedulable utilization = 1
§ Dynamic-priority algorithms can achieve higher schedulable utilization than fixed-priority algorithms
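
To illustrate EDF's schedulable utilization of 1, here is a minimal unit-step EDF simulator, assuming integer periods and execution times, zero phases, and deadlines equal to periods. The function name is hypothetical, and a job that misses its deadline is simply dropped, which is a simplification. The first task set has $U = 1$ and is not RM-schedulable (time-demand analysis gives the (6, 3) task a worst-case response time of 7), yet EDF meets every deadline; the second has $U > 1$ and misses.

```python
from functools import reduce
from math import lcm  # Python 3.9+


def simulate_edf(tasks, horizon=None):
    """Unit-step, preemptive EDF simulation.

    tasks: (p_i, e_i) pairs with integer values, phase 0 and relative deadline = period.
    Simulates one hyperperiod by default; returns (task_index, release_time)
    for every job that is still unfinished at its absolute deadline.
    """
    if horizon is None:
        horizon = reduce(lcm, (p for p, _ in tasks))
    active = []  # unfinished jobs: [absolute_deadline, task_index, release, remaining]
    misses = []
    for t in range(horizon + 1):
        # Any job still active at its deadline has missed it (and is dropped).
        misses += [(job[1], job[2]) for job in active if job[0] <= t]
        active = [job for job in active if job[0] > t]
        if t == horizon:
            break
        for i, (p, e) in enumerate(tasks):
            if t % p == 0:
                active.append([t + p, i, t, e])
        if active:
            job = min(active)  # earliest absolute deadline; ties go to the lower task index
            job[3] -= 1
            if job[3] == 0:
                active.remove(job)
    return misses


print(simulate_edf([(4, 2), (6, 3)]))  # U = 1.0 -> []
print(simulate_edf([(2, 1), (3, 2)]))  # U > 1 -> [(1, 3)]
```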

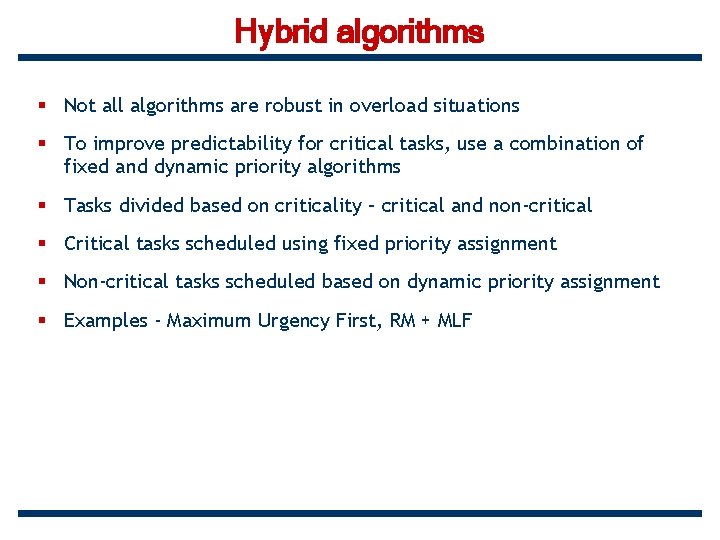

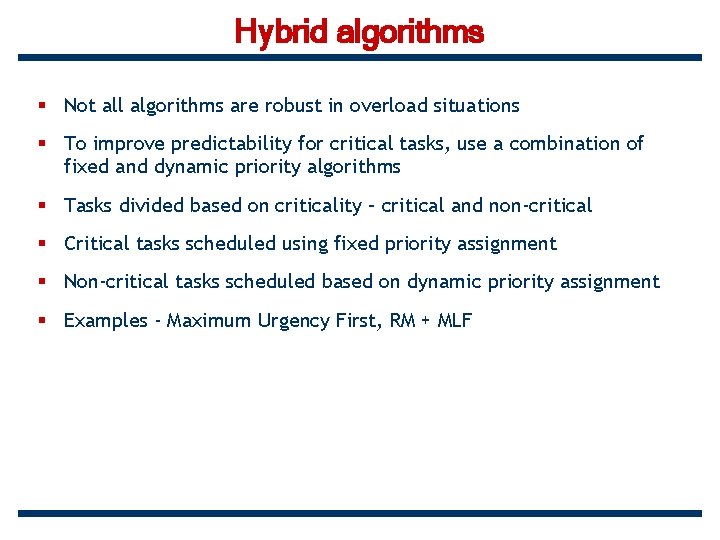

Hybrid algorithms
§ Not all algorithms are robust in overload situations
§ To improve predictability for critical tasks, use a combination of fixed- and dynamic-priority algorithms
§ Tasks are divided by criticality into critical and non-critical tasks
  § Critical tasks are scheduled using fixed-priority assignment
  § Non-critical tasks are scheduled using dynamic-priority assignment
§ Examples: Maximum Urgency First (MUF), RM + MLF (Minimum Laxity First)
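
One way to picture the hybrid idea is a dispatcher key that ranks critical jobs by a fixed priority and non-critical jobs by laxity, in the spirit of RM + MLF. This is a simplified sketch, not the exact definition of MUF or any published variant; the `Job` fields and the use of an RM rank as `static_priority` are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    critical: bool            # hypothetical criticality flag (critical vs. non-critical)
    deadline: int             # absolute deadline
    remaining: int            # remaining execution time
    static_priority: int = 0  # for critical jobs, e.g. an RM rank (lower = higher priority)


def laxity(job, now):
    """Laxity = absolute deadline - current time - remaining execution time."""
    return job.deadline - now - job.remaining


def pick_next(ready, now):
    """Critical jobs always precede non-critical ones and are ordered by fixed priority;
    non-critical jobs are ordered by least laxity (dynamic part)."""
    def key(job):
        if job.critical:
            return (0, job.static_priority)
        return (1, laxity(job, now))
    return min(ready, key=key)


ready = [Job("a", False, 5, 1), Job("b", True, 20, 2, 1),
         Job("c", False, 4, 3), Job("d", True, 30, 1, 0)]
print(pick_next(ready, now=0).name)                             # d (critical, rank 0)
print(pick_next([j for j in ready if not j.critical], 0).name)  # c (laxity 1 < 4)
```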

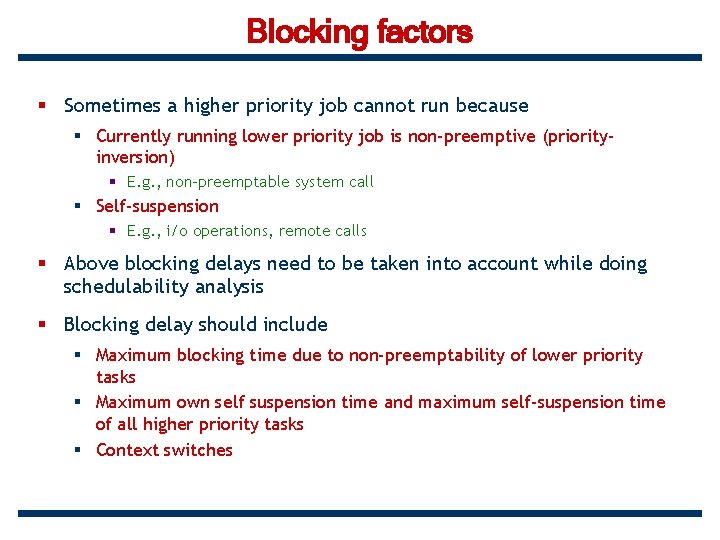

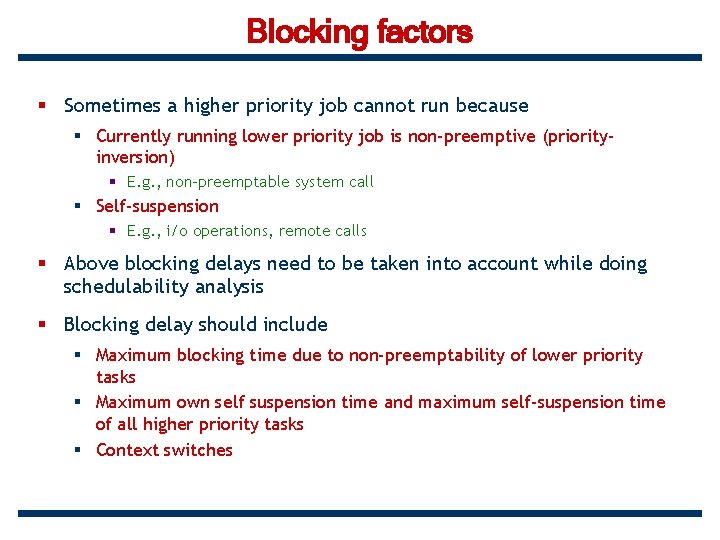

Blocking factors
§ Sometimes a higher-priority job cannot run because:
  § The currently running lower-priority job is non-preemptable (priority inversion), e.g., a non-preemptable system call
  § The job self-suspends, e.g., for I/O operations or remote calls
§ These blocking delays must be taken into account in schedulability analysis
§ The blocking delay should include:
  § The maximum blocking time due to non-preemptability of lower-priority tasks
  § The job's own maximum self-suspension time and the maximum self-suspension times of all higher-priority tasks
  § Context switches
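
A sketch of how the blocking components listed above might be totalled and folded into time-demand analysis, assuming tasks indexed in priority order with integer parameters and deadline equal to period. The helper names are hypothetical, summing the higher-priority self-suspension times is a conservative simplification, and charging two context switches per job is an assumed cost model.

```python
def blocking_time(i, nonpreemptable, self_suspension, context_switch=0):
    """Total blocking term b_i for task i (tasks indexed in priority order, 0 highest).

    nonpreemptable[k]: longest non-preemptable section of task k
    self_suspension[k]: maximum self-suspension time of task k
    context_switch: cost of one context switch; each job is charged two (assumed model)
    """
    b_np = max(nonpreemptable[i + 1:], default=0)         # lower-priority tasks only
    b_ss = self_suspension[i] + sum(self_suspension[:i])  # own + all higher-priority
    return b_np + b_ss + 2 * context_switch


def response_time_with_blocking(i, tasks, b_i):
    """Time demand r = e_i + b_i + sum(ceil(r / p_k) * e_k) over higher-priority tasks.
    tasks: (p, e) pairs in priority order. Returns None if r exceeds the period."""
    p_i, e_i = tasks[i]
    r = e_i + b_i
    while r <= p_i:
        demand = e_i + b_i + sum(-(-r // p_k) * e_k for p_k, e_k in tasks[:i])
        if demand == r:
            return r
        r = demand
    return None


tasks = [(4, 1), (10, 3)]  # illustrative (p, e) pairs in priority order
nonpreemptable = [0, 1]    # the lower-priority task has a 1-unit non-preemptable section
self_suspension = [0, 1]
for i in range(len(tasks)):
    b = blocking_time(i, nonpreemptable, self_suspension)
    print(i, b, response_time_with_blocking(i, tasks, b))
# 0 1 2
# 1 1 6
```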

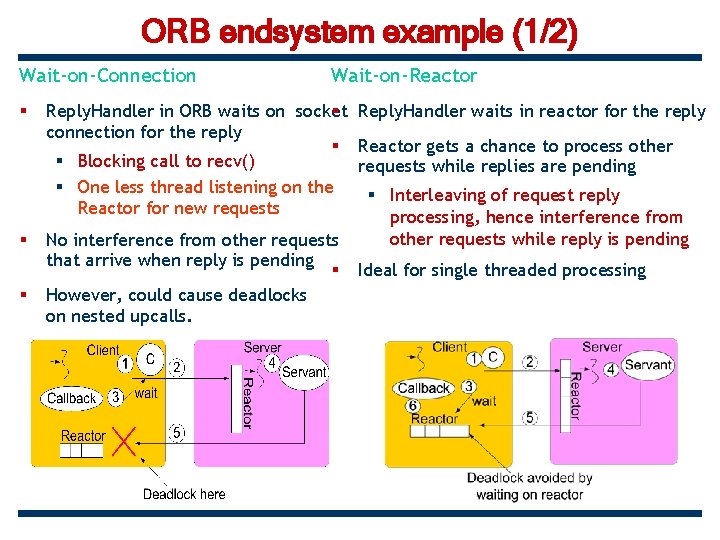

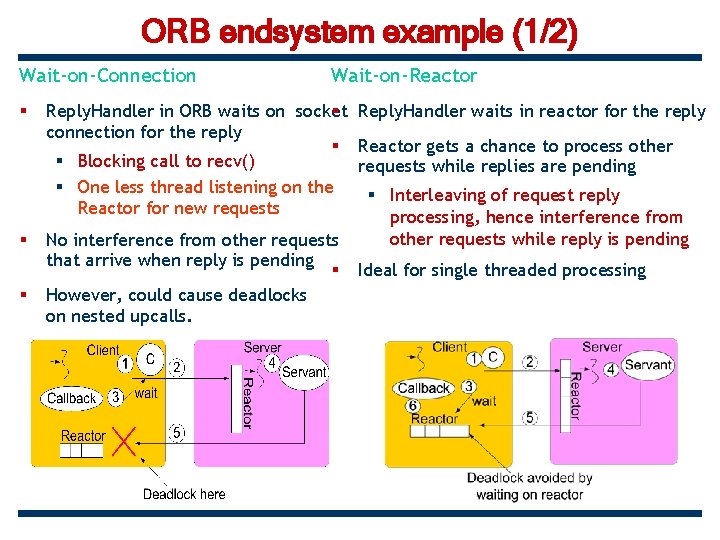

ORB endsystem example (1/2)
§ Wait-on-Connection
  § ReplyHandler in the ORB waits on the socket connection for the reply
  § Blocking call to recv()
  § One less thread listening on the Reactor for new requests
  § No interference from other requests that arrive while the reply is pending
  § However, could cause deadlocks on nested upcalls
§ Wait-on-Reactor
  § ReplyHandler waits in the reactor for the reply
  § Reactor gets a chance to process other requests while replies are pending
  § Interleaving of request/reply processing, hence interference from other requests while a reply is pending
  § Ideal for single-threaded processing

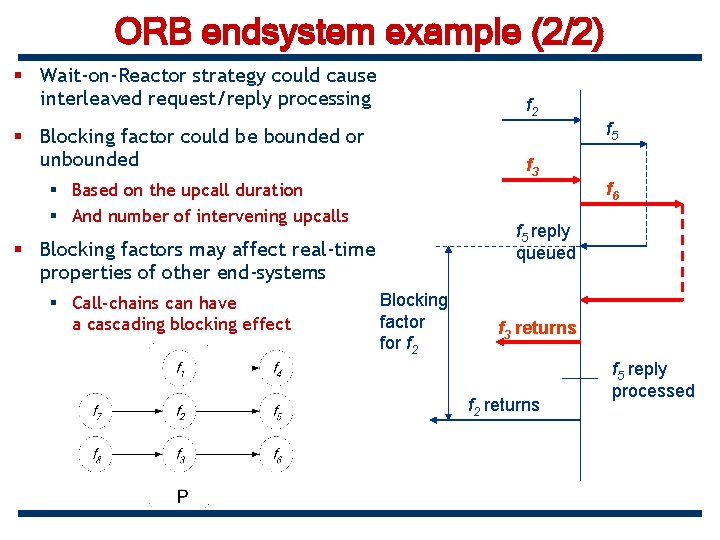

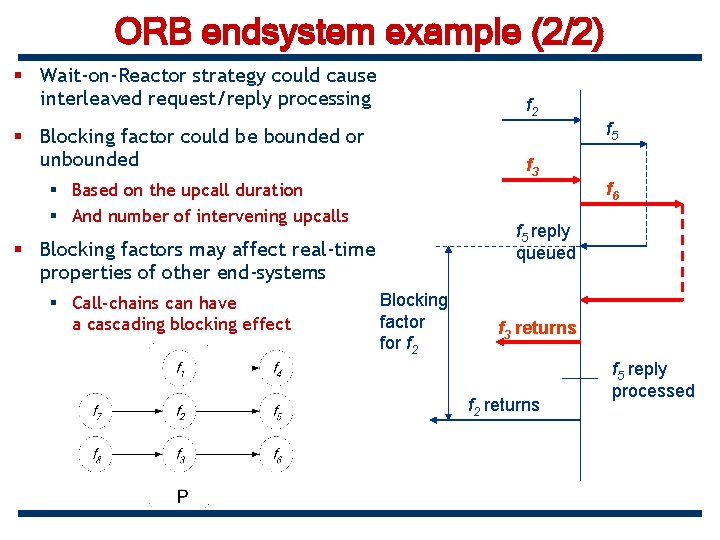

ORB endsystem example (2/2)
§ The Wait-on-Reactor strategy can cause interleaved request/reply processing
§ The blocking factor can be bounded or unbounded, depending on
  § The upcall duration
  § The number of intervening upcalls
§ Blocking factors may affect the real-time properties of other endsystems
  § Call chains can have a cascading blocking effect
[Figure: nested upcalls f2, f3, f5, f6; f5's reply is queued, f3 returns, f2 returns, then f5's reply is processed; the delay is marked as the blocking factor]

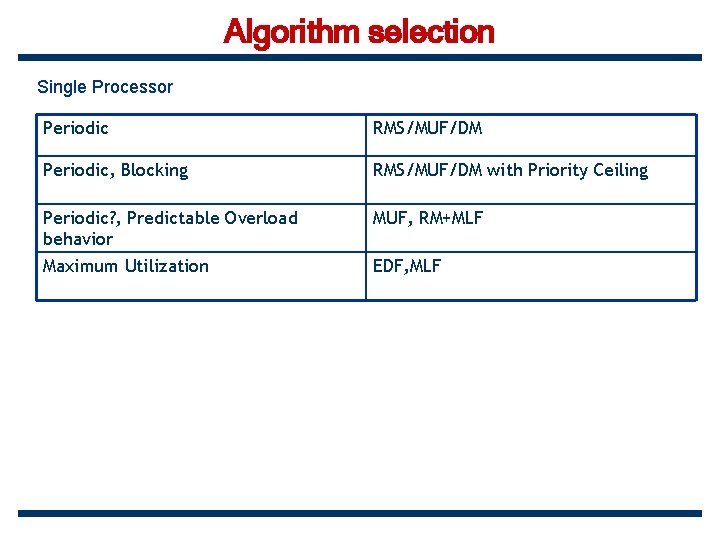

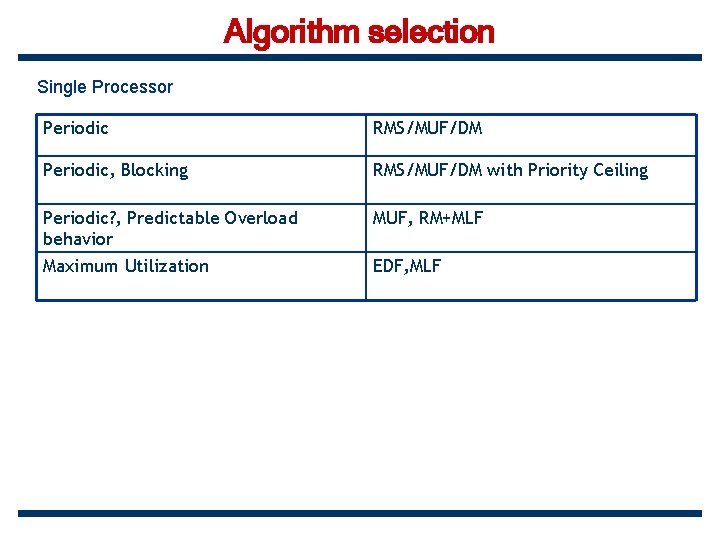

Algorithm selection (single processor)

| Requirements | Algorithms |
|---|---|
| Periodic | RMS / MUF / DM |
| Periodic, blocking | RMS / MUF / DM with Priority Ceiling |
| Periodic?, predictable overload behavior | MUF, RM + MLF |
| Maximum utilization | EDF, MLF |

End-to-End Scheduling

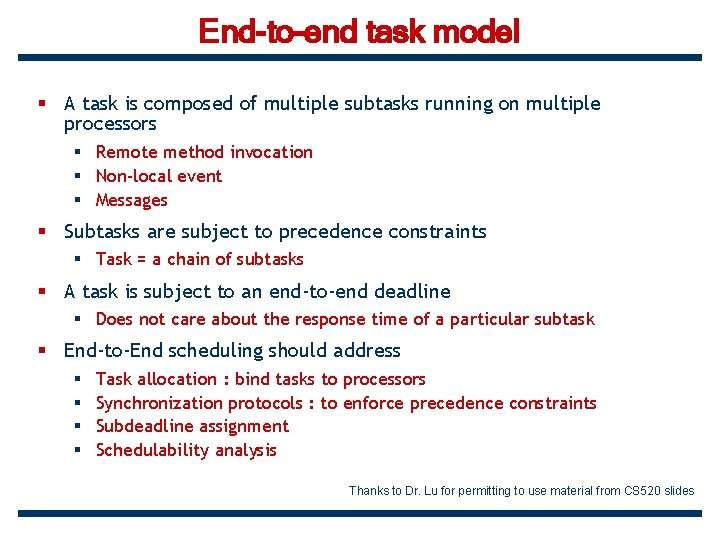

End-to-end task model
§ A task is composed of multiple subtasks running on multiple processors, interacting through
  § Remote method invocations
  § Non-local events
  § Messages
§ Subtasks are subject to precedence constraints
  § Task = a chain of subtasks
§ A task is subject to an end-to-end deadline
  § The response time of an individual subtask does not matter in itself
§ End-to-end scheduling should address
  § Task allocation: binding tasks to processors
  § Synchronization protocols: enforcing precedence constraints
  § Subdeadline assignment
  § Schedulability analysis
Thanks to Dr. Lu for permission to use material from the CS 520 slides.

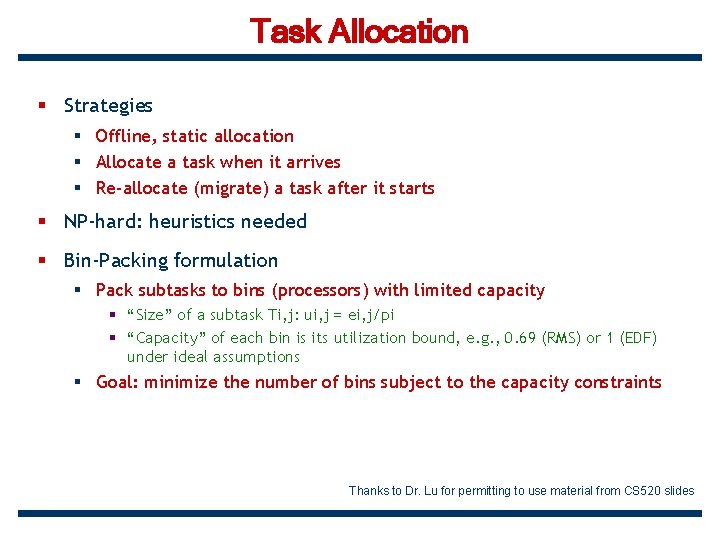

Task Allocation
§ Strategies
  § Offline, static allocation
  § Allocate a task when it arrives
  § Re-allocate (migrate) a task after it starts
§ Optimal allocation is NP-hard: heuristics are needed
§ Bin-packing formulation
  § Pack subtasks into bins (processors) with limited capacity
  § "Size" of subtask $T_{i,j}$: $u_{i,j} = e_{i,j}/p_i$
  § "Capacity" of each bin is its utilization bound, e.g., 0.69 (RMS) or 1 (EDF) under ideal assumptions
  § Goal: minimize the number of bins subject to the capacity constraints
Thanks to Dr. Lu for permission to use material from the CS 520 slides.
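
The bin-packing formulation can be sketched with first-fit decreasing, one common heuristic chosen here for illustration (the slides do not name a specific one). Subtasks are given as (period, execution time) pairs; the example reuses the four (sub)tasks T1 (4, 2), T2,1 (6, 2), T2,2 (6, 2) and T3 (6, 3) from the synchronization example. The function name is hypothetical.

```python
from fractions import Fraction


def first_fit_decreasing(subtasks, capacity):
    """Bin-packing heuristic for subtask allocation.

    subtasks: dict mapping a subtask name to (p_i, e_ij)
    capacity: per-processor utilization bound, e.g. Fraction(1) for EDF
    Returns a list of processors, each a list of subtask names.
    """
    # Largest utilization first; sorted() keeps input order among equal sizes.
    by_size = sorted(subtasks.items(),
                     key=lambda kv: Fraction(kv[1][1], kv[1][0]), reverse=True)
    bins = []  # each entry: [current load, [subtask names]]
    for name, (p, e) in by_size:
        u = Fraction(e, p)
        for b in bins:
            if b[0] + u <= capacity:  # first processor with enough room
                b[0] += u
                b[1].append(name)
                break
        else:
            bins.append([u, [name]])  # no room anywhere: open a new processor
    return [names for _, names in bins]


example = {"T1": (4, 2), "T2,1": (6, 2), "T2,2": (6, 2), "T3": (6, 3)}
print(first_fit_decreasing(example, Fraction(1)))  # [['T1', 'T3'], ['T2,1', 'T2,2']]
print(len(first_fit_decreasing(example, 0.69)))    # 3
```

The conservative 0.69 RMS capacity needs one more processor than the EDF capacity of 1 for the same subtasks.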

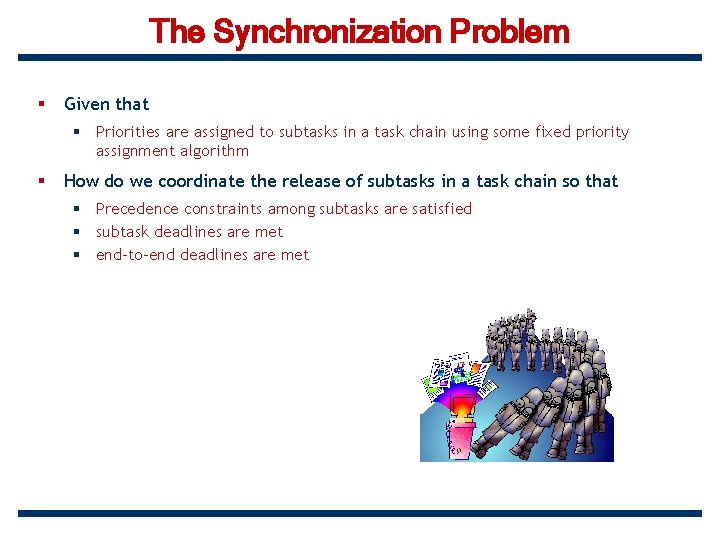

The Synchronization Problem
§ Given that
  § Priorities are assigned to subtasks in a task chain using some fixed-priority assignment algorithm
§ How do we coordinate the release of subtasks in a task chain so that
  § Precedence constraints among subtasks are satisfied
  § Subtask deadlines are met
  § End-to-end deadlines are met?

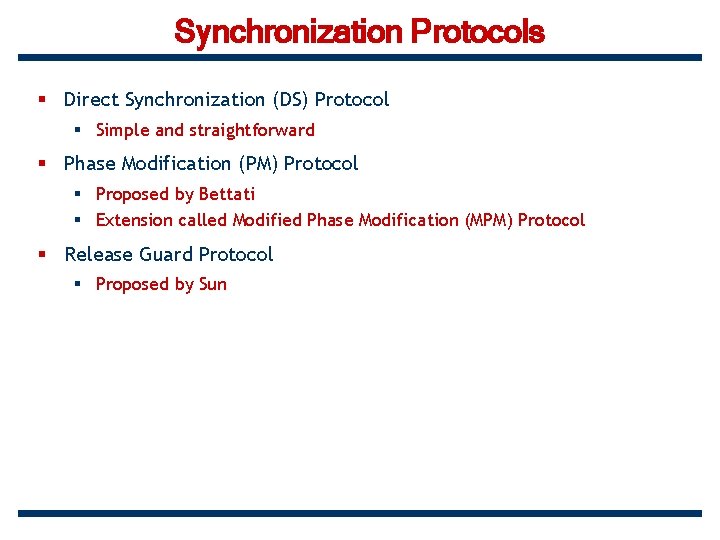

Synchronization Protocols
§ Direct Synchronization (DS) Protocol
  § Simple and straightforward
§ Phase Modification (PM) Protocol
  § Proposed by Bettati
  § Extension called the Modified Phase Modification (MPM) Protocol
§ Release Guard (RG) Protocol
  § Proposed by Sun

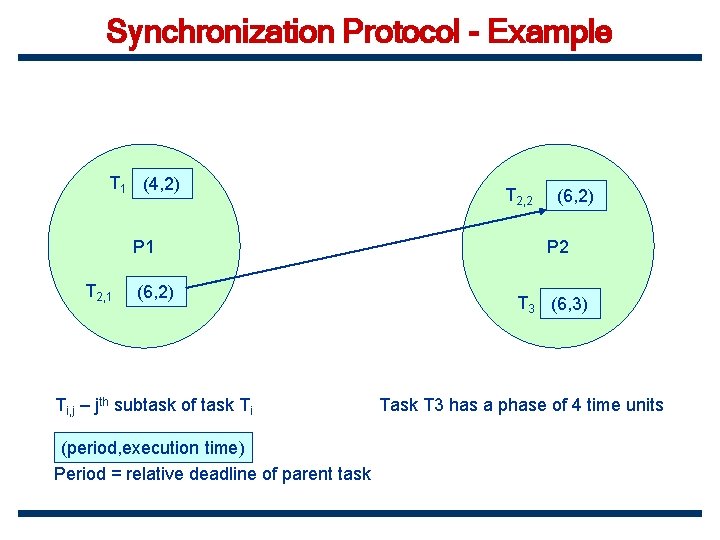

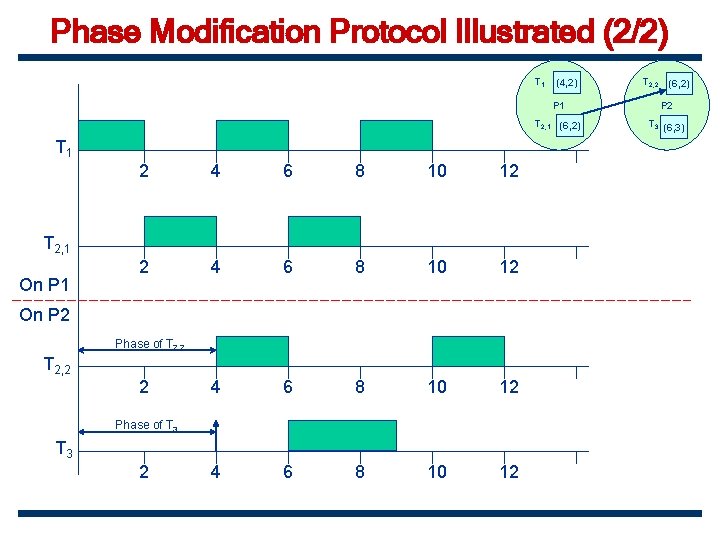

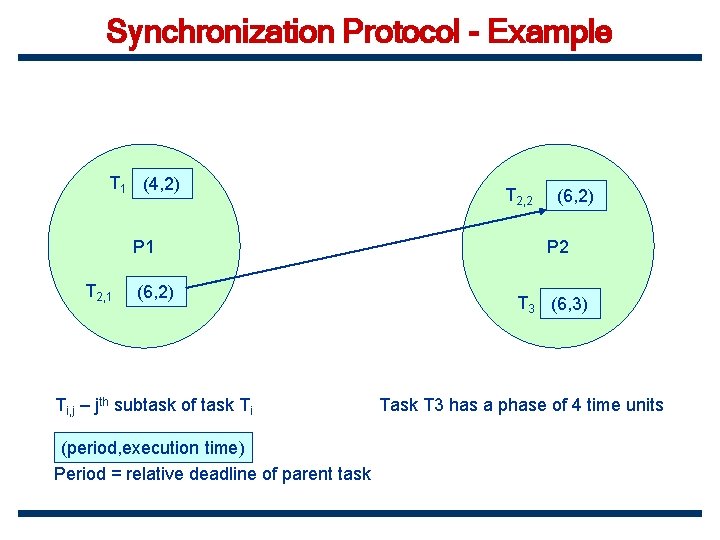

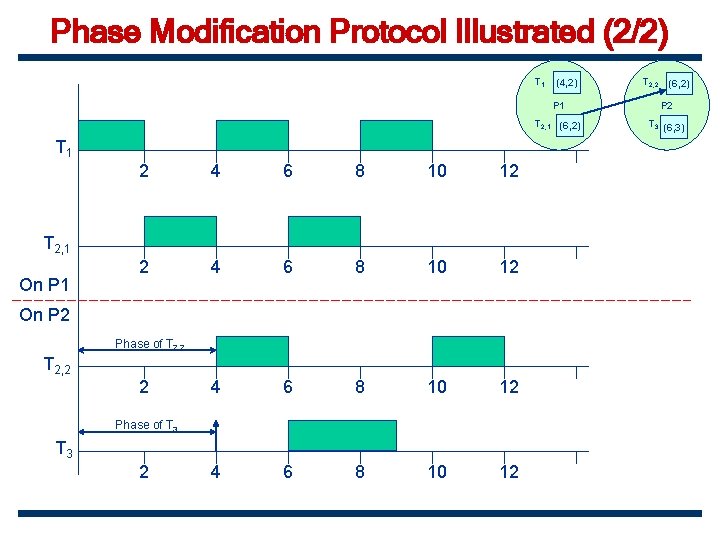

Synchronization Protocol - Example
§ P1 runs T1 (4, 2) and T2,1 (6, 2); P2 runs T2,2 (6, 2) and T3 (6, 3)
§ Ti,j: jth subtask of task Ti; parameters are (period, execution time)
§ Period = relative deadline of the parent task
§ Task T3 has a phase of 4 time units

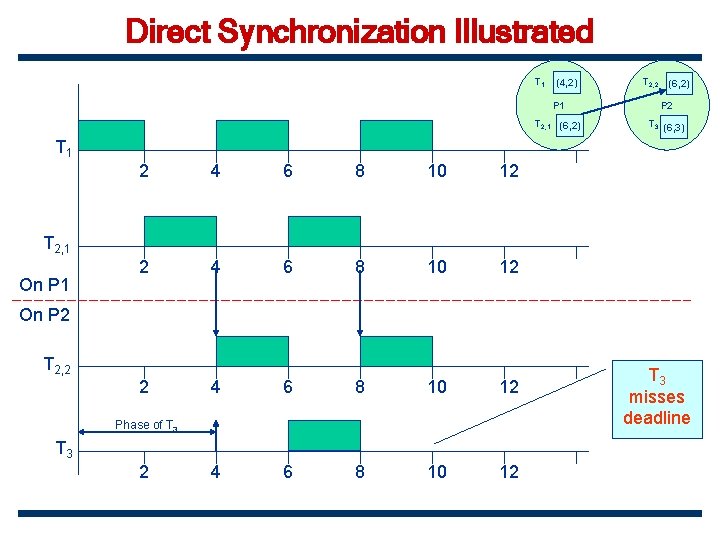

Direct Synchronization Protocol
§ Greedy strategy
§ On completion of a subtask
  § A synchronization signal is sent to the next processor
  § The successor subtask is released and competes with other tasks/subtasks on the next processor

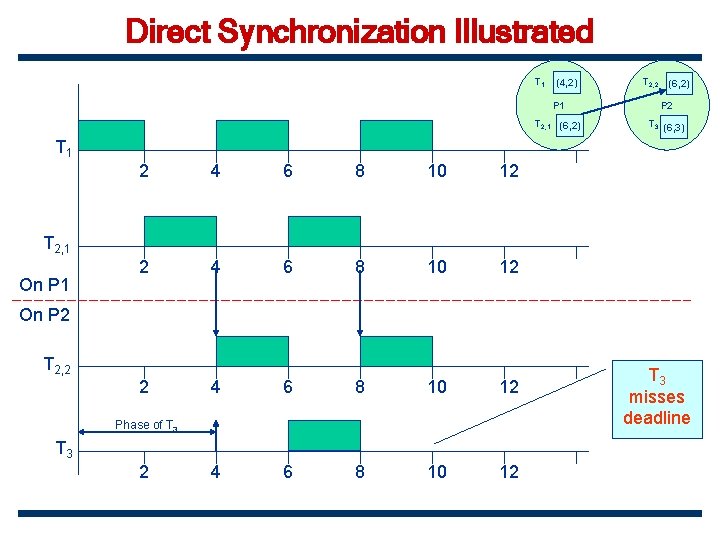

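Direct Synchronization Illustrated
[Figure: the example task set under DS on a 0 to 12 timeline; T1 and T2,1 on P1, T2,2 and T3 (phase 4) on P2]
§ T2,1 completes at 4 and at 8, so T2,2 is released at 4 and again only 4 time units later; with T2,2 running ahead of T3 on P2, T3 misses its deadline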

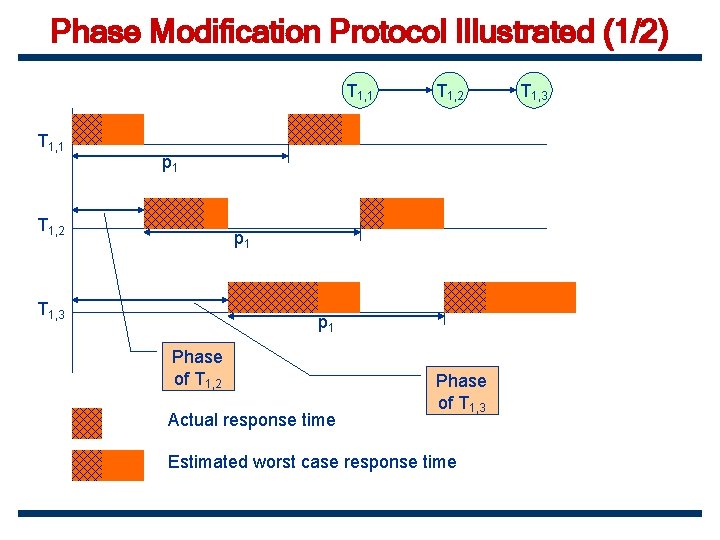

Phase Modification Protocol
§ Proposed by Bettati
§ Release subtasks periodically
  § According to the periods of their parent tasks
§ Each subtask is given its own phase
  § Phase determined by subtask precedence constraints (the estimated worst-case response times of its predecessors)

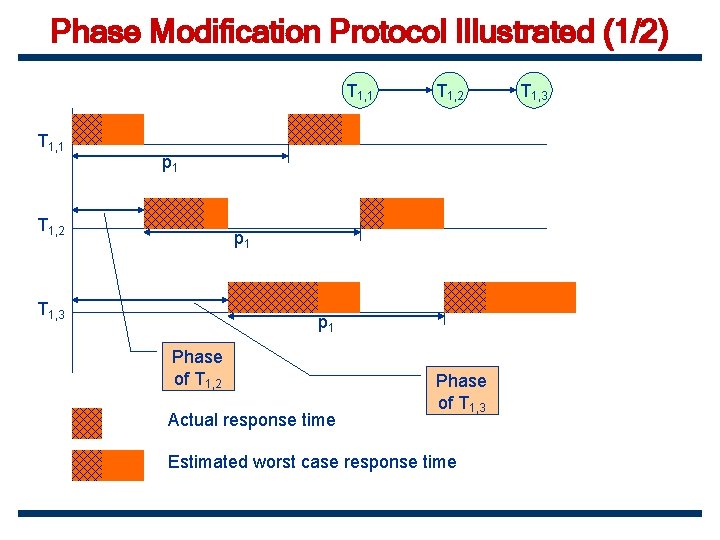

Phase Modification Protocol Illustrated (1/2)
[Figure: chain T1,1 → T1,2 → T1,3, each released every p1; the phases of T1,2 and T1,3 are set from the estimated worst-case response times of their predecessors, which can exceed the actual response times]

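Phase Modification Protocol Illustrated (2/2)
[Figure: the example task set under PM on a 0 to 12 timeline; T1 and T2,1 on P1; on P2, T2,2 is released with its own phase and T3 with phase 4]
§ T2,2's phase is 4, the worst-case response time of T2,1, so its jobs are released exactly one period apart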

Phase Modification Protocol - Analysis
§ Requires periodic timer interrupts to release subtasks
§ Requires a centralized clock or strict clock synchronization
§ Task overruns can cause precedence-constraint violations

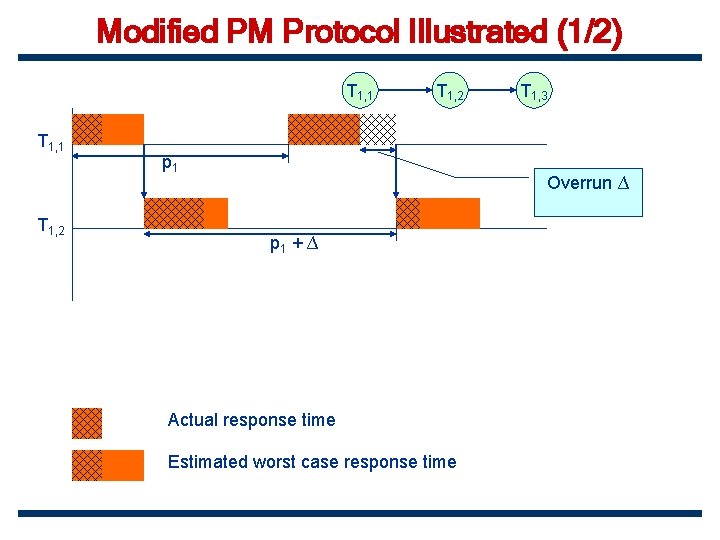

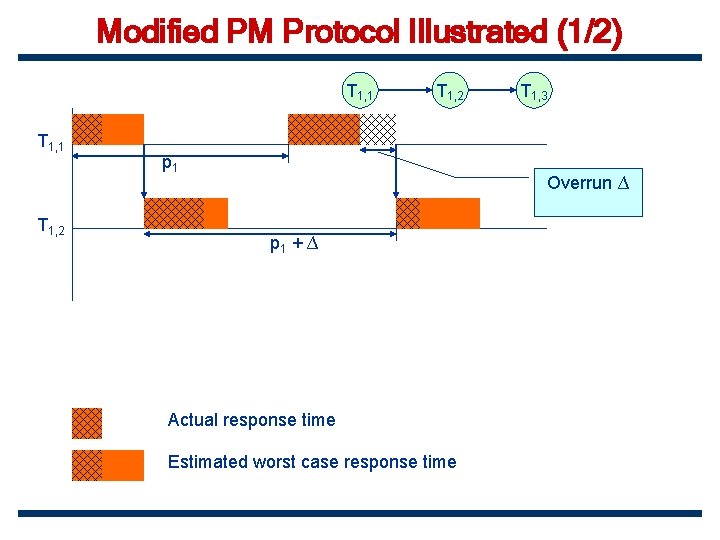

Modified PM Protocol Illustrated (1/2)
[Figure: chain T1,1, T1,2, T1,3 with period p1; an overrun ∆ delays a successor's release to p1 + ∆; actual and estimated worst-case response times marked]

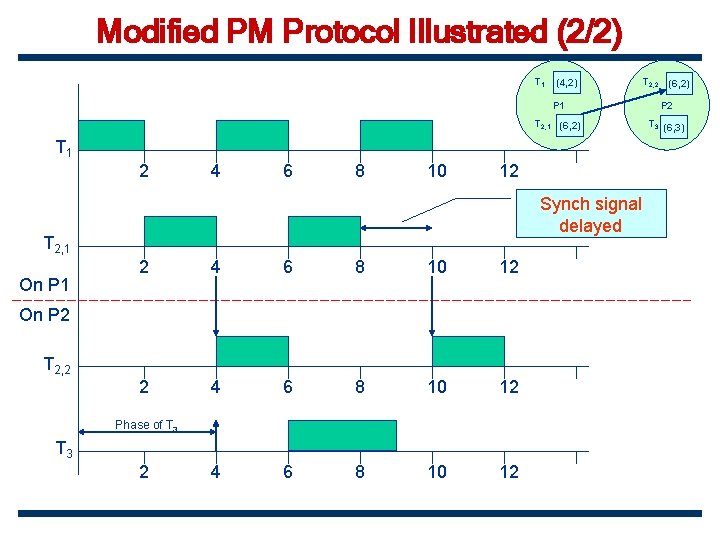

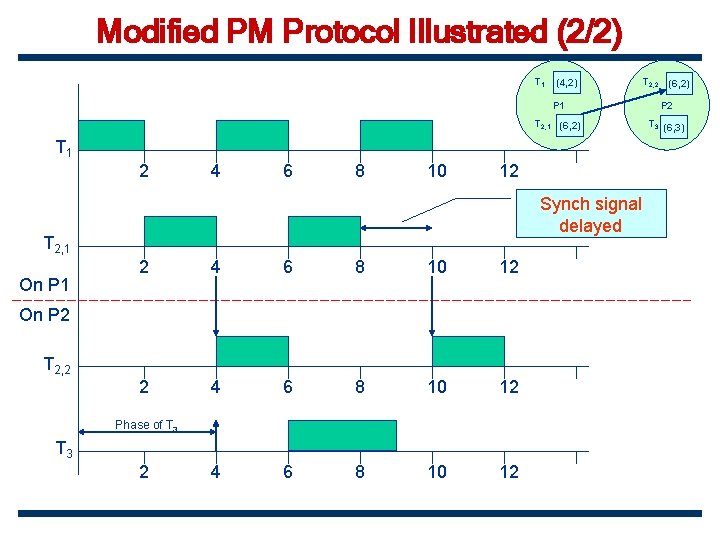

Modified PM Protocol Illustrated (2/2)
[Figure: the example task set (T1 and T2,1 on P1; T2,2 and T3 on P2) under MPM on a 0 to 12 timeline; the synchronization signal from T2,1 is delayed]

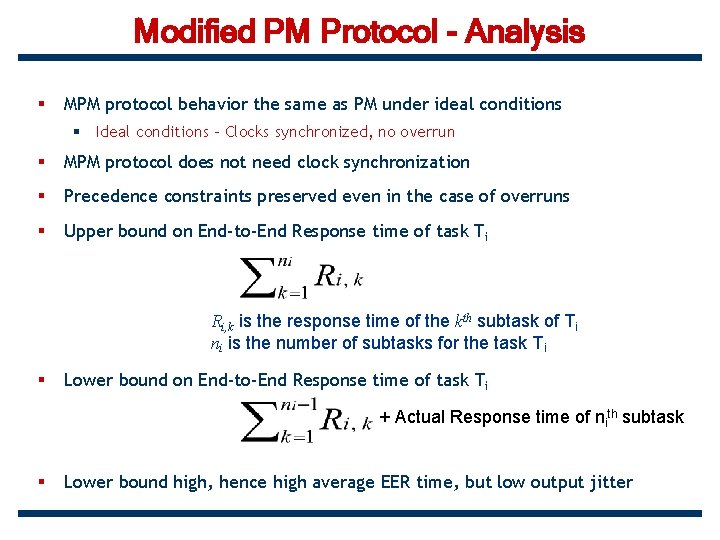

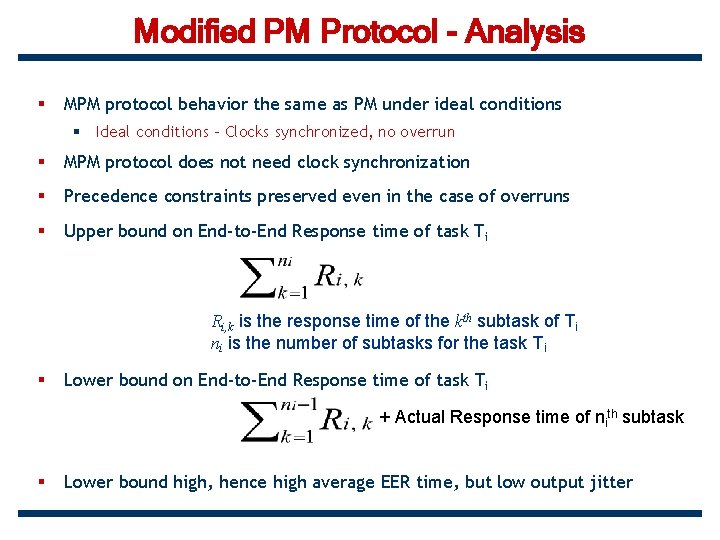

Modified PM Protocol - Analysis
§ MPM behaves the same as PM under ideal conditions
  § Ideal conditions: clocks synchronized, no overruns
§ MPM does not need clock synchronization
§ Precedence constraints are preserved even in the case of overruns
§ Upper bound on the end-to-end response (EER) time of task $T_i$: $\sum_{k=1}^{n_i} R_{i,k}$
  § $R_{i,k}$ is the response time of the $k$th subtask of $T_i$
  § $n_i$ is the number of subtasks of $T_i$
§ Lower bound on the EER time of task $T_i$: $\sum_{k=1}^{n_i - 1} R_{i,k}$ + actual response time of the $n_i$th subtask
§ The lower bound is high, hence a high average EER time, but low output jitter
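
To make the DS, PM and MPM comparison concrete, this sketch replays the synchronization example (T1 and T2,1 on P1, T2,2 and T3 with phase 4 on P2) in unit time steps. Assumptions: RM on P1, T2,2 given priority over T3 on P2, the worst-case response time of T2,1 (4) known offline, and MPM modeled as delaying each synchronization signal until the predecessor's release plus that worst-case response time (or its completion, if later). All names are hypothetical.

```python
def fp_simulate(jobs, horizon):
    """Preemptive fixed-priority scheduling of explicit jobs in unit time steps.

    jobs: (priority, release, wcet, deadline, name) tuples; a lower priority value
    wins, ties go to the earlier release. Returns each job's completion time,
    or None if it is unfinished at the horizon.
    """
    remaining = [job[2] for job in jobs]
    finish = [None] * len(jobs)
    for t in range(horizon):
        ready = [k for k, job in enumerate(jobs) if job[1] <= t and remaining[k] > 0]
        if ready:
            k = min(ready, key=lambda k: (jobs[k][0], jobs[k][1]))
            remaining[k] -= 1
            if remaining[k] == 0:
                finish[k] = t + 1
    return finish


def periodic(name, prio, period, wcet, phase, horizon):
    """Jobs of a periodic task whose relative deadline equals its period."""
    return [(prio, r, wcet, r + period, name) for r in range(phase, horizon, period)]


HORIZON = 24
# P1: T1 (4, 2) outranks T2,1 (6, 2) under RM.
p1_jobs = periodic("T1", 0, 4, 2, 0, HORIZON) + periodic("T2,1", 1, 6, 2, 0, HORIZON)
p1_finish = fp_simulate(p1_jobs, HORIZON)
# (release, completion) of each T2,1 job; all complete within the horizon here.
t21 = [(job[1], f) for job, f in zip(p1_jobs, p1_finish) if job[4] == "T2,1"]
R21 = 4  # worst-case response time of T2,1 on P1, assumed known offline


def p2_misses(t22_jobs):
    """Run P2 (T2,2 above T3, T3 with phase 4) and list missed (name, release) pairs.
    t22_jobs: (release, end-to-end deadline) pairs for T2,2."""
    jobs = [(0, r, 2, d, "T2,2") for r, d in t22_jobs]
    jobs += periodic("T3", 1, 6, 3, 4, HORIZON)
    finish = fp_simulate(jobs, HORIZON)
    return [(job[4], job[1]) for job, f in zip(jobs, finish)
            if job[3] <= HORIZON and (f is None or f > job[3])]


# The end-to-end deadline of each T2 job is its release on P1 plus the period 6.
ds = [(f, r + 6) for r, f in t21]                 # DS: release on the completion signal
pm = [(r + R21, r + 6) for r, _ in t21]           # PM: release at phase R21 each period
mpm = [(max(f, r + R21), r + 6) for r, f in t21]  # MPM: signal delayed to r + R21
print(p2_misses(ds))  # [('T3', 4), ('T3', 16)]
print(p2_misses(pm))  # []
print(mpm == pm)      # True when nothing overruns
```

Under DS, T2,2 arrives at 4 and again at 8, and the burst makes T3 miss; PM and MPM release T2,2 exactly one period apart and nothing misses. With no overruns, MPM's releases coincide with PM's, as the ideal-conditions bullet states.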

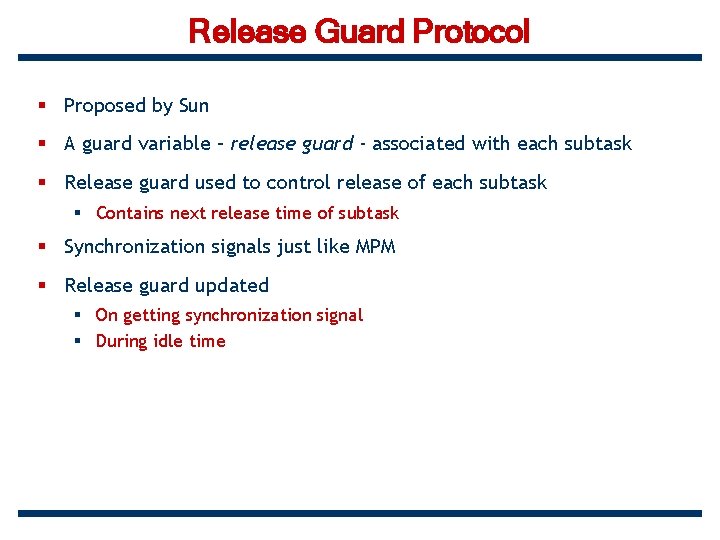

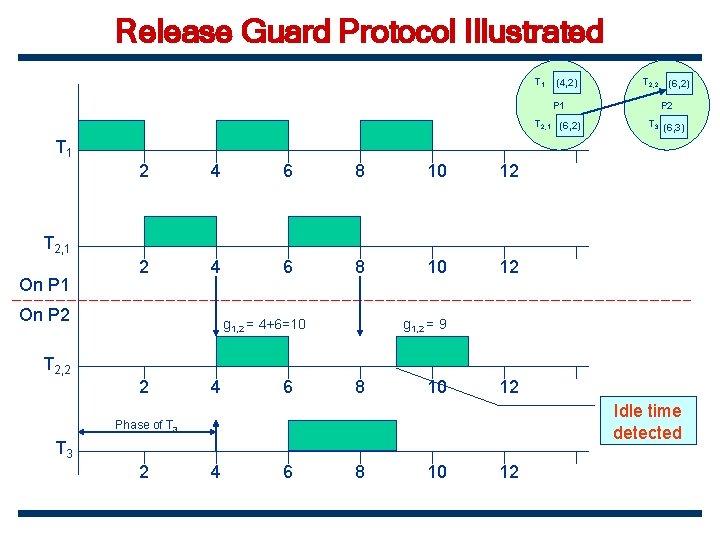

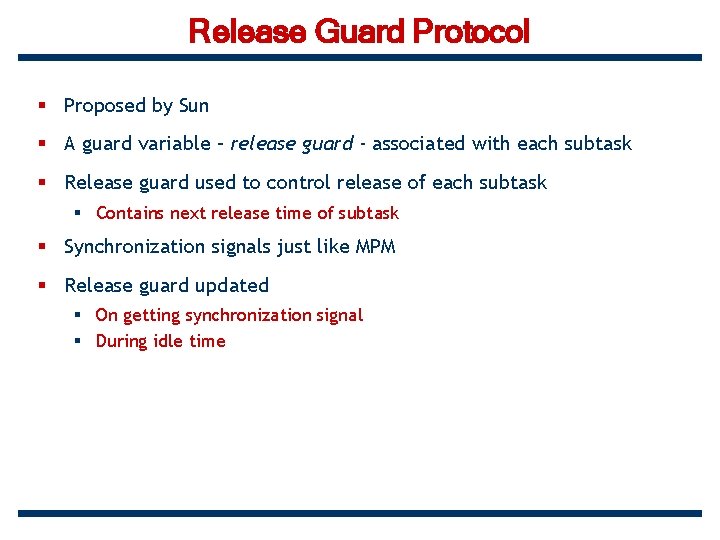

Release Guard Protocol
§ Proposed by Sun
§ A guard variable, the release guard, is associated with each subtask
  § Used to control the release of the subtask
  § Contains the next release time of the subtask
§ Uses synchronization signals, just like MPM
§ The release guard is updated
  § On getting a synchronization signal (when the job is released: guard = current time + period)
  § During idle time (guard = current time)

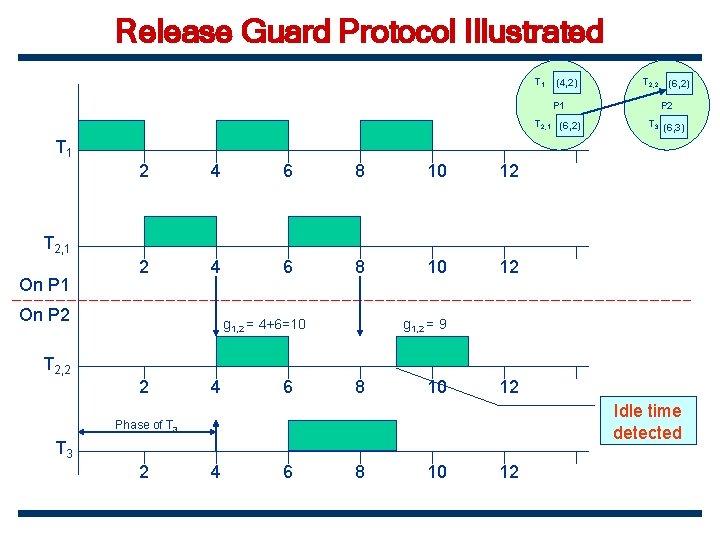

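Release Guard Protocol Illustrated
[Figure: the example task set under RG on a 0 to 12 timeline; T1 and T2,1 on P1, T2,2 and T3 on P2]
§ When T2,2 is released at 4, its release guard is set to 4 + 6 = 10
§ Idle time is detected on P2 at 9, so the guard is lowered to 9 and the pending T2,2 job (signaled at 8) is released at 9 rather than 10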
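
The release-guard rules can be sketched for P2 of the same example. Assumptions: T2,1 signals P2 on completion at 4, 8, 16 and 20 (its RM schedule on P1), T2,2 has priority over T3, and an idle point is approximated as a time step with no unfinished job on P2. The function and its parameters are hypothetical.

```python
def simulate_rg(sync_times, horizon=24, period=6, e22=2, e3=3, t3_phase=4):
    """Release-guard sketch for P2 of the example: T2,2 (priority 0) and T3 (priority 1).

    sync_times: sorted times at which T2,1 completes on P1 and signals P2.
    Returns (release times of T2,2, missed (name, release) pairs for T3).
    """
    pending = list(sync_times)  # signals, consumed in order
    guard = 0                   # release guard of T2,2
    jobs = []                   # [priority, release, remaining, deadline, name, finish]
    releases = []
    for t in range(horizon):
        if t >= t3_phase and (t - t3_phase) % period == 0:
            jobs.append([1, t, e3, t + period, "T3", None])
        # Idle point: nothing ready on P2, so the guard drops to the current time.
        if not any(job[2] > 0 for job in jobs):
            guard = t
        # Release T2,2 once its signal has arrived and the guard has been reached.
        if pending and pending[0] <= t and guard <= t:
            pending.pop(0)
            releases.append(t)
            guard = t + period
            jobs.append([0, t, e22, None, "T2,2", None])
        ready = [job for job in jobs if job[2] > 0]
        if ready:
            job = min(ready, key=lambda j: (j[0], j[1]))
            job[2] -= 1
            if job[2] == 0:
                job[5] = t + 1
    misses = [(j[4], j[1]) for j in jobs if j[4] == "T3" and j[3] <= horizon
              and (j[5] is None or j[5] > j[3])]
    return releases, misses


print(simulate_rg([4, 8, 16, 20]))  # ([4, 9, 16, 21], [])
```

The second T2,2 release happens at 9, when the idle point lowers the guard from 10, matching the illustration; every T3 job with a deadline inside the horizon is met.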

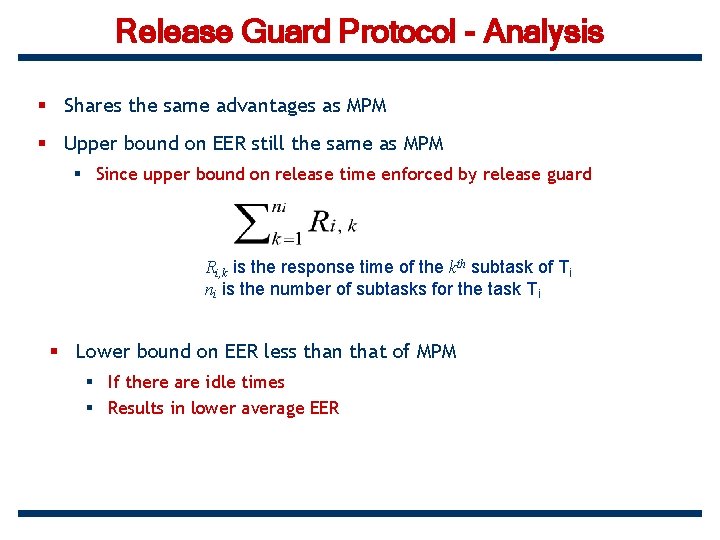

Release Guard Protocol - Analysis
§ Shares the same advantages as MPM
§ Upper bound on EER is still the same as for MPM, $\sum_{k=1}^{n_i} R_{i,k}$
  § Since the upper bound on release times is enforced by the release guard
  § $R_{i,k}$ is the response time of the $k$th subtask of $T_i$; $n_i$ is the number of subtasks of $T_i$
§ Lower bound on EER is less than that of MPM
  § If there are idle times
  § Results in lower average EER

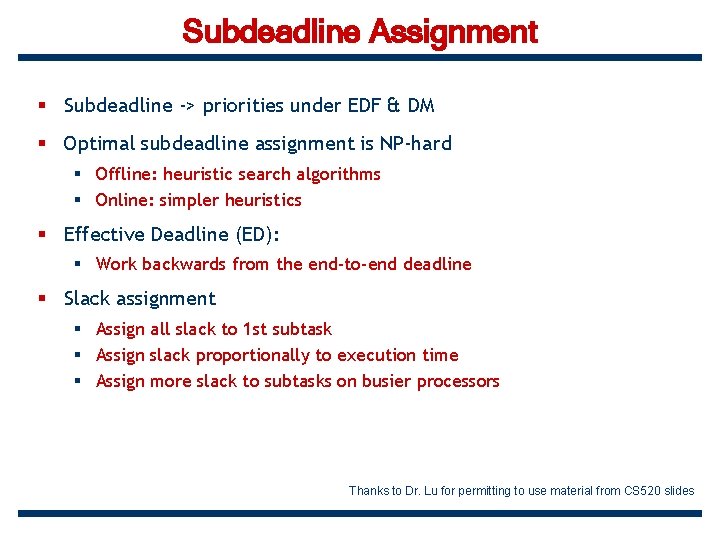

Subdeadline Assignment
§ Subdeadlines determine priorities under EDF and DM
§ Optimal subdeadline assignment is NP-hard
  § Offline: heuristic search algorithms
  § Online: simpler heuristics
§ Effective Deadline (ED)
  § Work backwards from the end-to-end deadline
§ Slack assignment
  § Assign all slack to the 1st subtask
  § Assign slack proportionally to execution time
  § Assign more slack to subtasks on busier processors
Thanks to Dr. Lu for permission to use material from the CS 520 slides.
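
Two of the heuristics listed above, sketched for a single chain of subtasks with execution times `execs` and end-to-end deadline `D` (both hypothetical inputs). Both return cumulative subdeadlines measured from the task's release. In this formulation ED gives the first subtask all the slack: its window is $e_1$ plus the full slack.

```python
from fractions import Fraction


def effective_deadlines(execs, D):
    """ED: work backwards from the end-to-end deadline D. Subtask k must finish
    by D minus the execution times of all subtasks after it."""
    return [D - sum(execs[k + 1:]) for k in range(len(execs))]


def proportional_deadlines(execs, D):
    """Share the slack D - sum(execs) in proportion to execution time, so each
    subtask gets a window of e_k * D / sum(execs). Returns cumulative deadlines."""
    total = sum(execs)
    deadlines, acc = [], Fraction(0)
    for e in execs:
        acc += Fraction(e * D, total)
        deadlines.append(acc)
    return deadlines


execs, D = [1, 2, 3], 12  # illustrative chain: slack = 12 - 6 = 6
print(effective_deadlines(execs, D))                       # [7, 9, 12]
print([int(d) for d in proportional_deadlines(execs, D)])  # [2, 6, 12]
```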

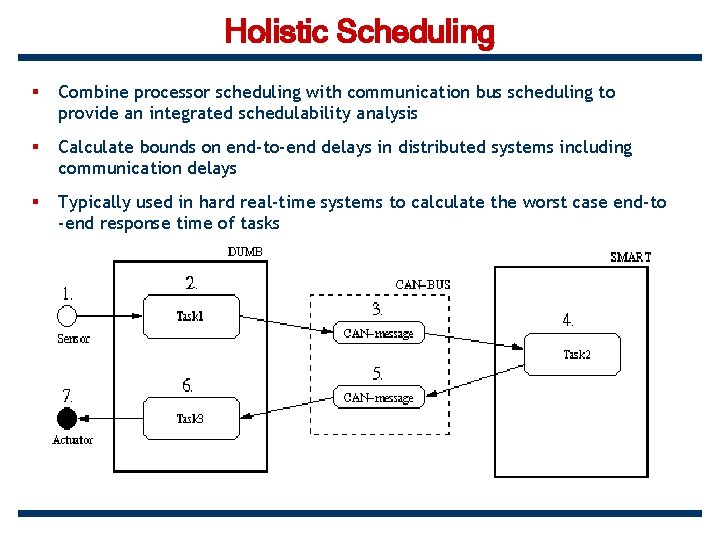

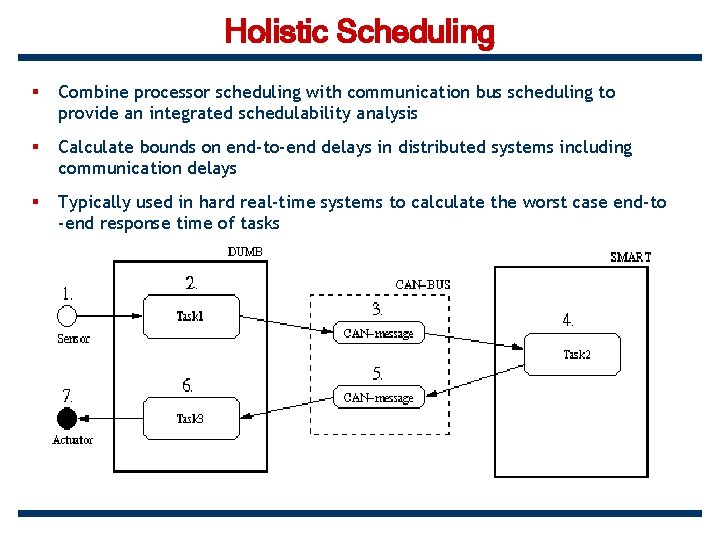

Holistic Scheduling
§ Combines processor scheduling with communication bus scheduling to provide an integrated schedulability analysis
§ Calculates bounds on end-to-end delays in distributed systems, including communication delays
§ Typically used in hard real-time systems to calculate the worst-case end-to-end response time of tasks

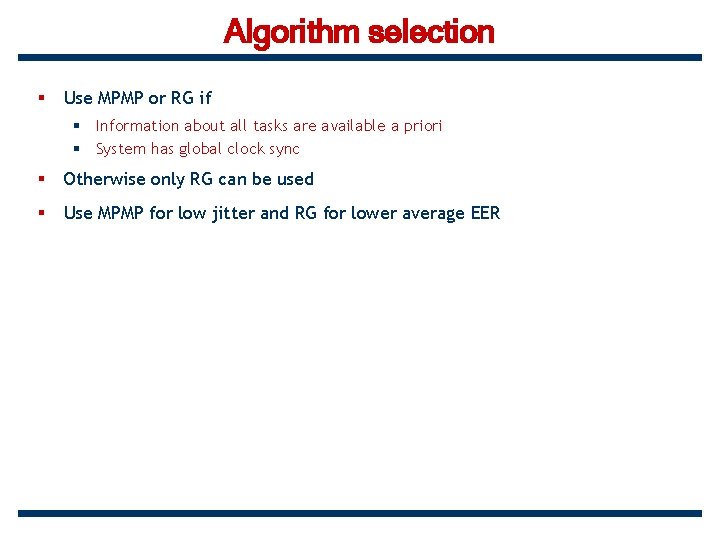

Algorithm selection
§ Use MPM or RG if
  § Information about all tasks is available a priori
  § The system has global clock synchronization
§ Otherwise, only RG can be used
§ Use MPM for low jitter and RG for lower average EER

References
§ Jun Sun, Jane Liu, "Synchronization Protocols in Distributed Real-Time Systems," ICDCS 1996.
§ Jane Liu, Real-Time Systems.
§ Ken Tindell, John Clark, "Holistic Schedulability Analysis for Distributed Hard Real-Time Systems," Microprocessing and Microprogramming (Euromicro Journal), Special Issue on Parallel Embedded Real-Time Systems, 1994.
§ Stankovic, Lu, et al., "VEST: An Aspect-Based Composition Tool for Real-Time Systems," RTAS 2003.

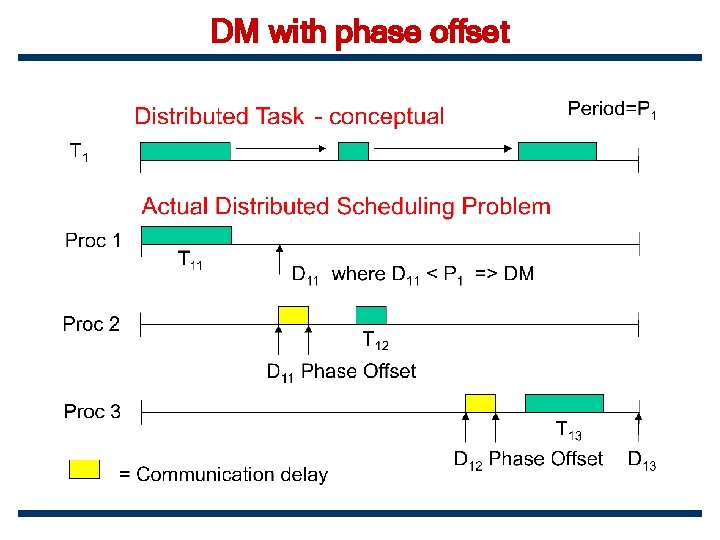

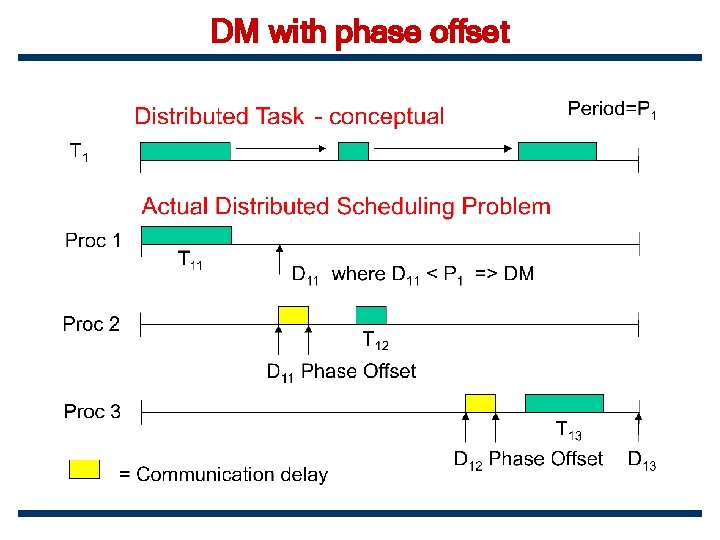

DM with phase offset