Privacy without Noise Yitao Duan NetEase Youdao


Privacy without Noise. Yitao Duan, NetEase Youdao R&D, Beijing, China, duan@rd.netease.com, CIKM 2009

The Problem • Given a database d consisting of records about individual users, we wish to release some statistical information f(d) without compromising individuals' privacy

Our Results • The mainstream approach relies on additive noise. We show that this alone is neither sufficient nor, for some types of queries, necessary for privacy • The inherent uncertainty associated with unknown quantities is enough to provide the same privacy without external noise • We provide the first mathematical proof, and conditions, for the widely accepted heuristic that aggregates are private

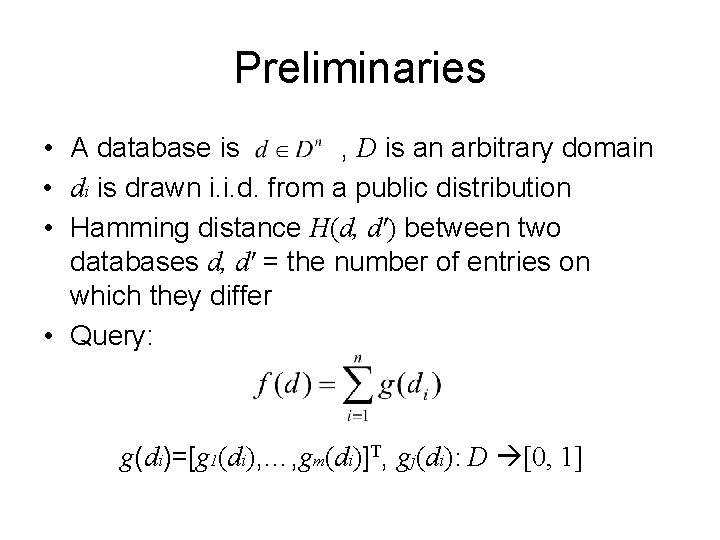

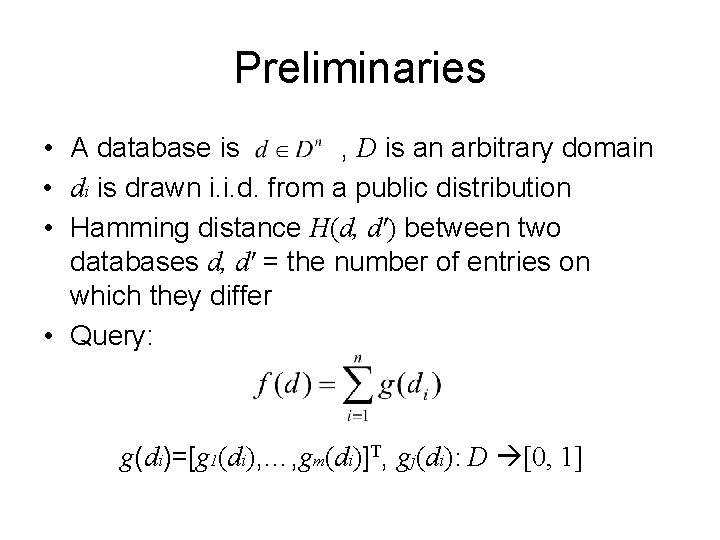

Preliminaries • A database is $d = (d_1, \ldots, d_n) \in D^n$, where $D$ is an arbitrary domain • Each $d_i$ is drawn i.i.d. from a public distribution • The Hamming distance $H(d, d')$ between two databases $d, d'$ is the number of entries on which they differ • Query: $g(d_i) = [g_1(d_i), \ldots, g_m(d_i)]^T$, where $g_j: D \to [0, 1]$
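The definitions above can be made concrete with a small sketch. The record domain (age, income pairs), the uniform "public distribution", and the particular per-record query `g` are all hypothetical choices made for illustration; only the shape of the objects (a size-n database, Hamming distance, an m-dimensional query with components in [0, 1]) comes from the slide.

```python
import random

# Hypothetical record domain D: (age, income) pairs. The "public distribution"
# below (uniform age and income) is an illustrative assumption.
def sample_record(rng):
    return (rng.randint(18, 90), rng.uniform(0.0, 200_000.0))

def sample_database(n, rng):
    """d = (d_1, ..., d_n), each d_i drawn i.i.d. from the public distribution."""
    return [sample_record(rng) for _ in range(n)]

def hamming(d, d_prime):
    """H(d, d'): the number of entries on which two size-n databases differ."""
    if len(d) != len(d_prime):
        raise ValueError("databases must have the same size n")
    return sum(1 for a, b in zip(d, d_prime) if a != b)

def g(record):
    """Hypothetical per-record query g(d_i) = [g_1(d_i), g_2(d_i)]^T with m = 2
    and every component in [0, 1], as the slide requires."""
    age, income = record
    return [1.0 if age >= 65 else 0.0, income / 200_000.0]

rng = random.Random(0)
d = sample_database(5, rng)
d_neighbor = list(d)
d_neighbor[2] = (d[2][0] + 1, d[2][1])   # change exactly one record
print(hamming(d, d_neighbor))             # 1
```

Databases at Hamming distance 1, like `d` and `d_neighbor`, are exactly the pairs that the privacy definitions later in the talk compare.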

The Power of Addition • A large number of popular algorithms can be run with addition-only steps – Linear algorithms: voting and summation; nonlinear algorithms: regression, classification, SVD, PCA, k-means, ID3, EM, etc. – All algorithms in the statistical query model – Many other gradient-based numerical algorithms • The addition-only framework has very efficient private implementations in cryptography and admits efficient zero-knowledge proofs (ZKPs)
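Ordinary least-squares regression illustrates the "addition-only" claim. This is a sketch of one well-known reduction, not the authors' implementation: each user computes local statistics, the only cross-user operation is a vector sum, and the final solve runs once on the aggregate.

```python
import numpy as np

def user_contribution(x, y):
    """Local statistics one user contributes: the outer product x x^T and the vector x*y."""
    x = np.asarray(x, dtype=float)
    return np.outer(x, x), x * y

def fit_least_squares_by_addition(X, y):
    """OLS where the only cross-user operation is addition:
    w = (sum_i x_i x_i^T)^{-1} (sum_i x_i y_i)."""
    dim = X.shape[1]
    A, b = np.zeros((dim, dim)), np.zeros(dim)
    for x_i, y_i in zip(X, y):
        a_i, b_i = user_contribution(x_i, y_i)
        A += a_i   # aggregation: addition only
        b += b_i
    return np.linalg.solve(A, b)  # computed once, on the aggregate

rng = np.random.default_rng(0)
x = rng.uniform(size=50)
X = np.column_stack([np.ones_like(x), x])  # intercept column plus one feature
w = fit_least_squares_by_addition(X, 2.0 + 3.0 * x)
print(w)  # recovers intercept 2 and slope 3
```

Because the sums `A` and `b` are the only values that depend on more than one user, they are the natural place to plug in a private summation protocol.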

Notions of Privacy • But what do we mean by privacy? • I don't know how much you weigh, but I can find out that its highest digit is 2 • Or, I don't know whether you drink or not, but I can find out that drinking people are happier • The definition must meet people's expectations • And allow for rigorous mathematical reasoning

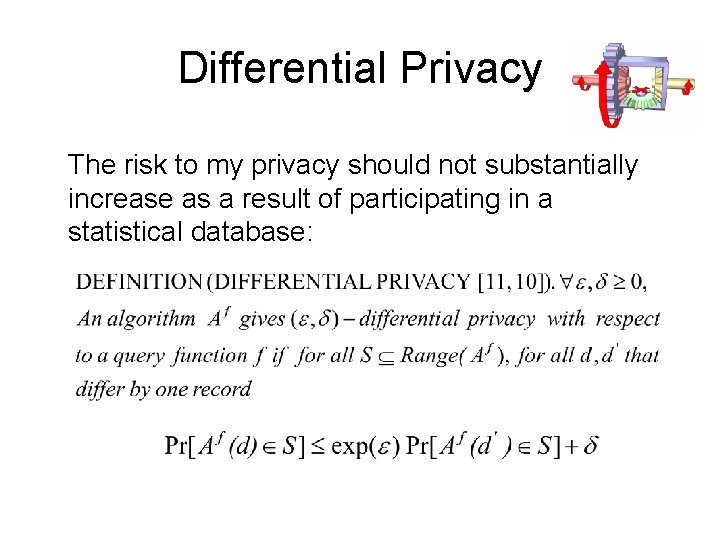

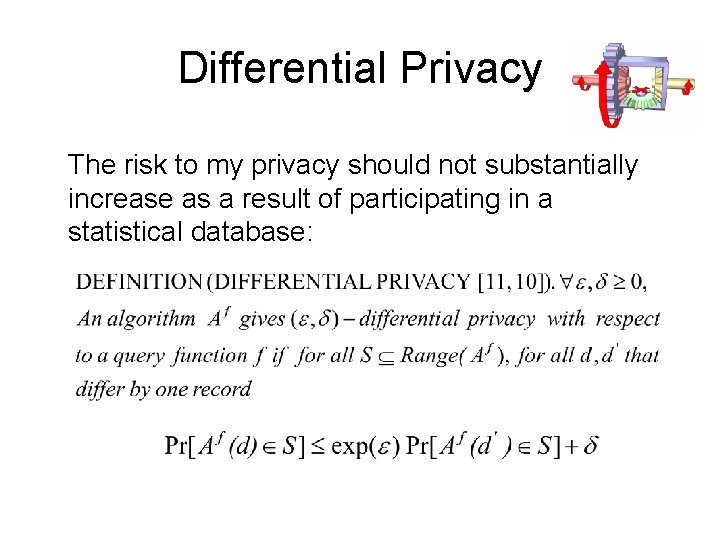

Differential Privacy The risk to my privacy should not substantially increase as a result of participating in a statistical database:

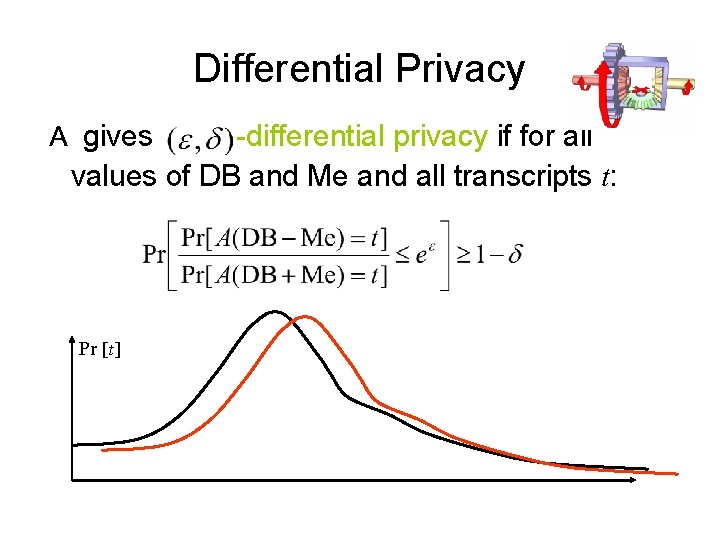

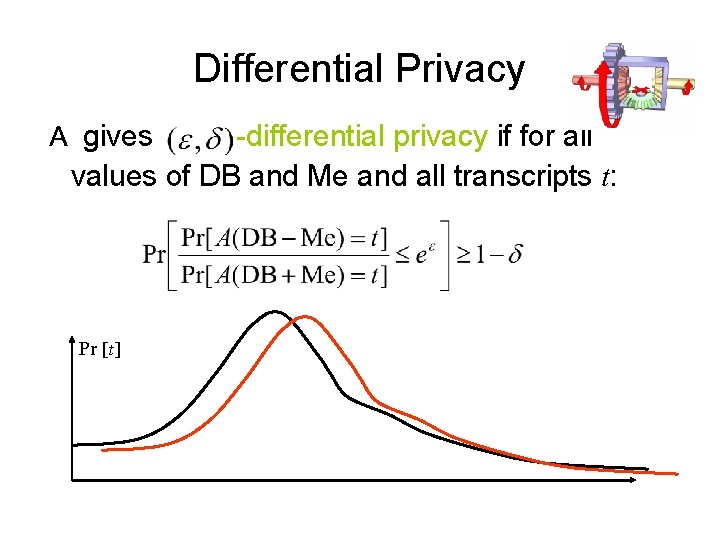

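Differential Privacy A gives $\varepsilon$-differential privacy if for all values of DB and Me and all transcripts t: $\frac{\Pr[A(DB - Me) = t]}{\Pr[A(DB + Me) = t]} \le e^{\varepsilon} \approx 1 + \varepsilon$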
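The ratio condition can be checked numerically for the standard Laplace mechanism on a counting query. This is a sketch under assumptions: the mechanism A(DB) = count(DB) + Laplace(0, 1/ε) and the grid of transcripts are illustrative choices, and densities stand in for Pr[t] because the output is continuous.

```python
import math

def laplace_density(t, mu, scale):
    """Density of Laplace(mu, scale) at t."""
    return math.exp(-abs(t - mu) / scale) / (2.0 * scale)

def worst_log_ratio(count_without_me, count_with_me, epsilon, transcripts):
    """max over t of |ln(p(t | DB - Me) / p(t | DB + Me))| for the mechanism
    A(DB) = count(DB) + Laplace(0, 1/epsilon)."""
    scale = 1.0 / epsilon  # a count changes by at most 1 when Me joins or leaves
    return max(abs(math.log(laplace_density(t, count_without_me, scale)
                            / laplace_density(t, count_with_me, scale)))
               for t in transcripts)

grid = [i / 100.0 for i in range(-1000, 2001)]  # candidate outputs t in [-10, 20]
print(worst_log_ratio(10, 11, epsilon=0.5, transcripts=grid))  # about 0.5, i.e. ratio <= e^0.5
```

The worst case is attained for every t outside the interval between the two counts, so the bound e^ε is tight for this mechanism.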

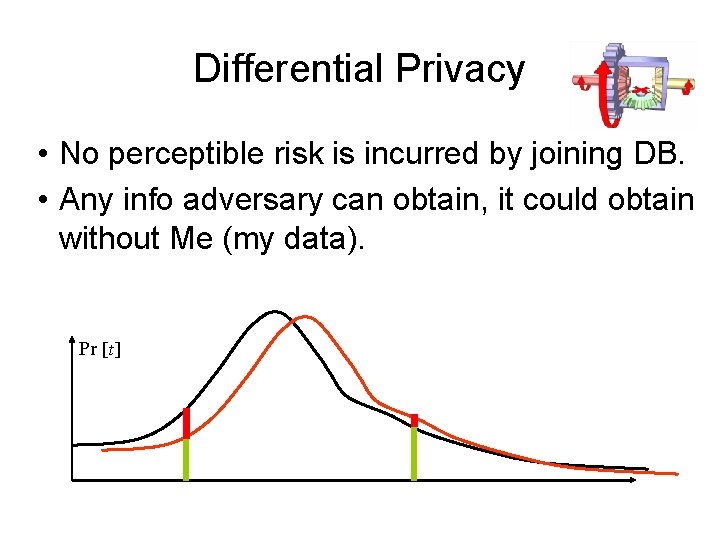

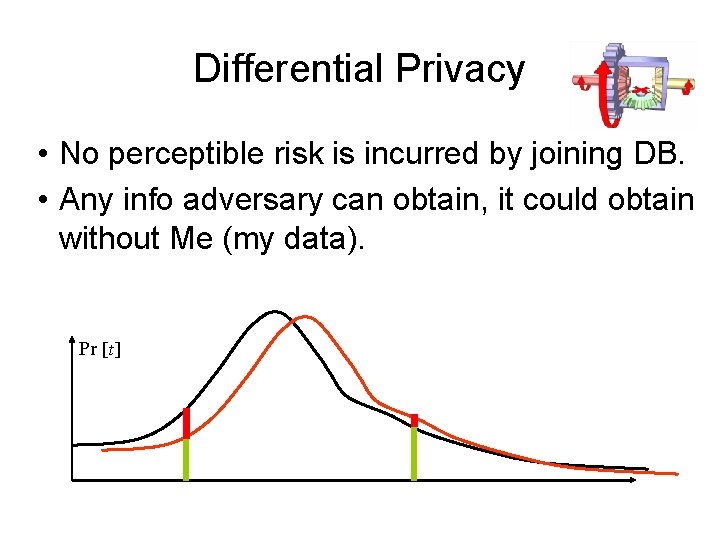

Differential Privacy • No perceptible risk is incurred by joining DB. • Any info the adversary can obtain, it could obtain without Me (my data).

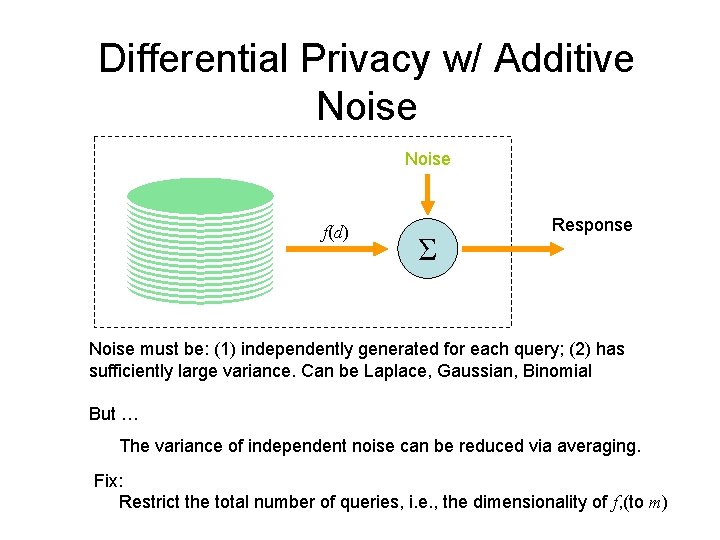

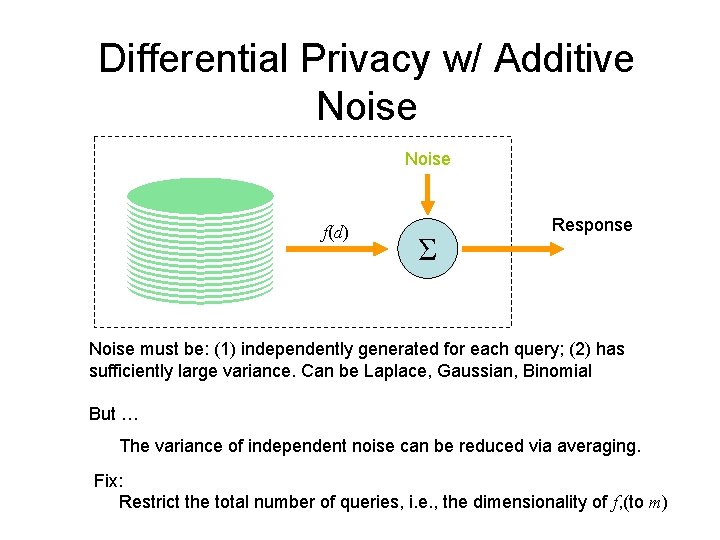

Differential Privacy w/ Additive Noise • Response = f(d) + noise • Noise must be: (1) independently generated for each query; (2) of sufficiently large variance. It can be Laplace, Gaussian, or Binomial • But … the variance of independent noise can be reduced via averaging • Fix: restrict the total number of queries, i.e., the dimensionality of f (to m)
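Both the averaging weakness and the query-budget fix can be shown in a few lines. This sketch assumes a counting query with Laplace noise and a hypothetical class name `BudgetedLaplaceCounter`; it is an illustration of the idea on the slide, not a production mechanism.

```python
import random

class BudgetedLaplaceCounter:
    """Answers counting queries as f(d) + Laplace(0, 1/epsilon) noise, with fresh
    independent noise per query, and refuses once m queries have been answered."""

    def __init__(self, data, epsilon, m, seed=None):
        self.data = list(data)
        self.scale = 1.0 / epsilon
        self.remaining = m
        self.rng = random.Random(seed)

    def _laplace_noise(self):
        # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
        rate = 1.0 / self.scale
        return self.rng.expovariate(rate) - self.rng.expovariate(rate)

    def count(self, predicate):
        if self.remaining <= 0:
            raise RuntimeError("query budget m exhausted")
        self.remaining -= 1
        return sum(1 for r in self.data if predicate(r)) + self._laplace_noise()

db = BudgetedLaplaceCounter([1] * 30 + [0] * 70, epsilon=1.0, m=400, seed=1)
answers = [db.count(lambda r: r == 1) for _ in range(400)]
print(sum(answers) / len(answers))  # close to the true count 30: averaging removed most noise
```

Each answer has noise variance 2/ε², but the mean of 400 answers has variance 2/(400 ε²), which is why the total number of queries m must be capped.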

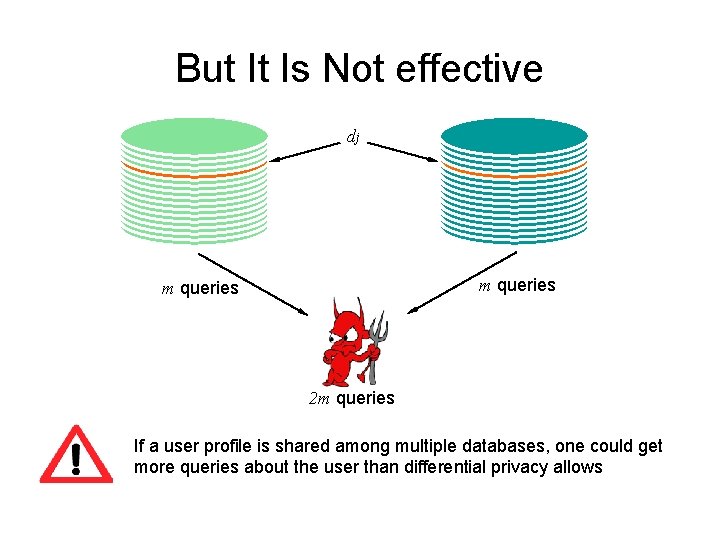

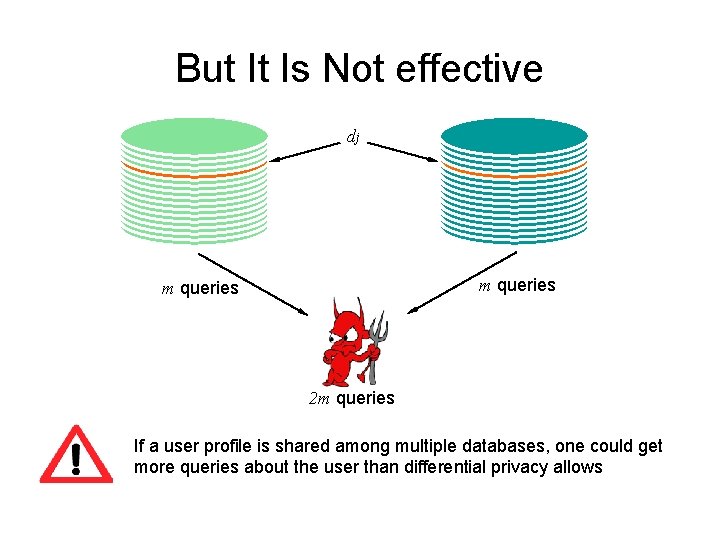

But It Is Not Effective • If a user profile $d_j$ is shared among multiple databases, each answering m queries, one could get more queries about the user (e.g., 2m from two databases) than differential privacy allows
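A small simulation shows why per-database budgets do not compose. It assumes, hypothetically, that the adversary can isolate the shared user's contribution (for example by differencing), so each answer is d_j's value plus independent Laplace noise; pooling m answers from each of two databases halves the noise variance.

```python
import random
import statistics

def noisy_answers(true_value, scale, m, rng):
    """m answers about the shared user d_j from one database, each with independent
    Laplace(0, scale) noise (difference of two exponentials)."""
    return [true_value + rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
            for _ in range(m)]

def adversary_variance(num_databases, m=10, scale=2.0, trials=4000, seed=0):
    """Empirical variance of the adversary's estimate of d_j when it pools all
    num_databases * m answers and averages them."""
    rng = random.Random(seed)
    true_value = 1.0  # hypothetical sensitive value of d_j
    estimates = []
    for _ in range(trials):
        pooled = [a for _ in range(num_databases)
                  for a in noisy_answers(true_value, scale, m, rng)]
        estimates.append(sum(pooled) / len(pooled))
    return statistics.variance(estimates)

one_db, two_dbs = adversary_variance(1), adversary_variance(2)
print(one_db, two_dbs)  # roughly 0.8 and 0.4: 2m queries halve the noise variance
```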

And It Is Not Necessary Either • There is another source of randomness that could provide protection similar to external noise – the data itself • Some functions are insensitive to small perturbations of the input

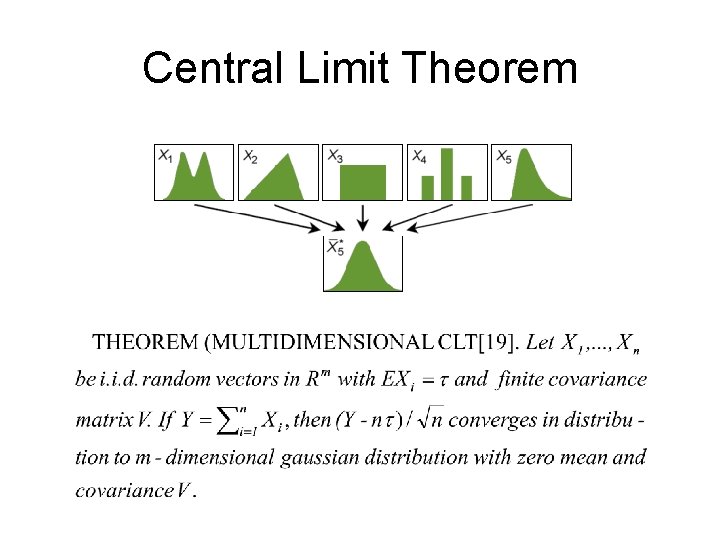

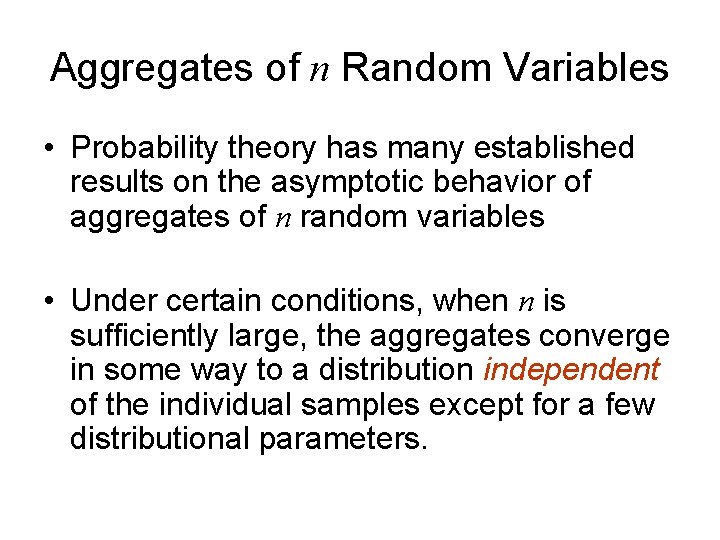

Aggregates of n Random Variables • Probability theory has many established results on the asymptotic behavior of aggregates of n random variables • Under certain conditions, when n is sufficiently large, the aggregates converge (for example, in distribution) to a distribution that does not depend on the individual samples except through a few distributional parameters.

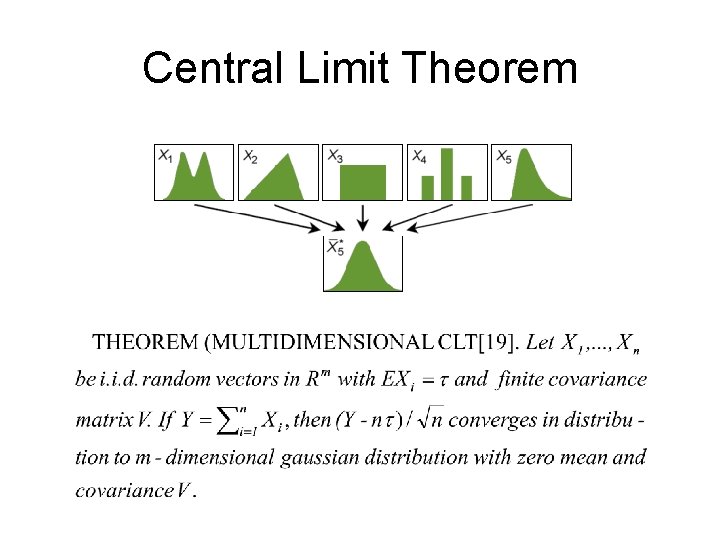

Central Limit Theorem

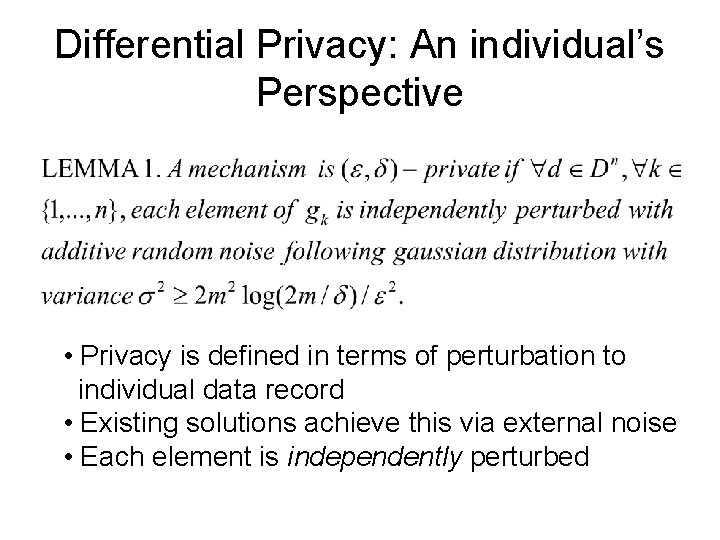

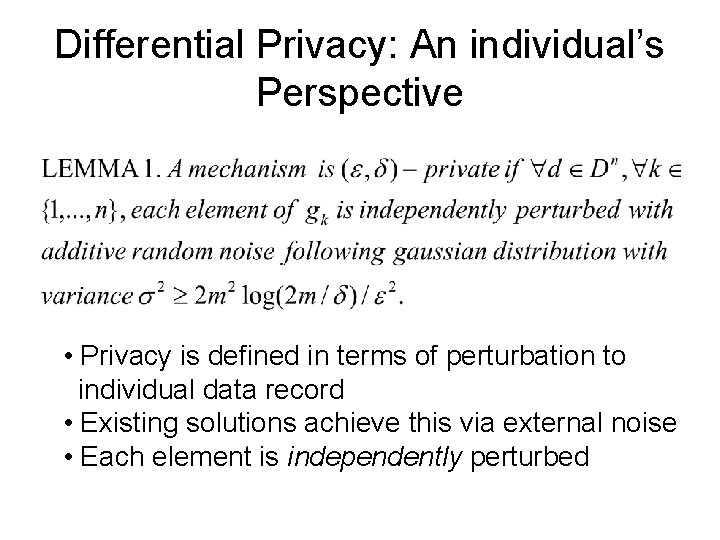
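The Central Limit Theorem can be observed directly. This sketch assumes uniform U(0, 1) summands (an arbitrary choice) and measures the Kolmogorov-Smirnov distance between the standardized sum and the standard normal CDF for n = 1 and n = 50.

```python
import math
import random

def standardized_sum(n, rng):
    """(S_n - n*mu) / (sigma * sqrt(n)) for S_n = sum of n i.i.d. U(0, 1) draws."""
    mu, sigma = 0.5, math.sqrt(1.0 / 12.0)
    s = sum(rng.random() for _ in range(n))
    return (s - n * mu) / (sigma * math.sqrt(n))

def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def max_cdf_gap(n, samples=20000, seed=0):
    """Kolmogorov-Smirnov distance between the empirical CDF of the standardized
    sum and the standard normal CDF Phi."""
    rng = random.Random(seed)
    zs = sorted(standardized_sum(n, rng) for _ in range(samples))
    return max(max((i + 1) / samples - normal_cdf(z), normal_cdf(z) - i / samples)
               for i, z in enumerate(zs))

print(max_cdf_gap(1), max_cdf_gap(50))  # the gap shrinks markedly as n grows
```

Only the mean and variance of the summands enter the limit, which is the "few distributional parameters" mentioned above.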

Differential Privacy: An Individual's Perspective • Privacy is defined in terms of perturbations to an individual data record • Existing solutions achieve this via external noise • Each element is independently perturbed

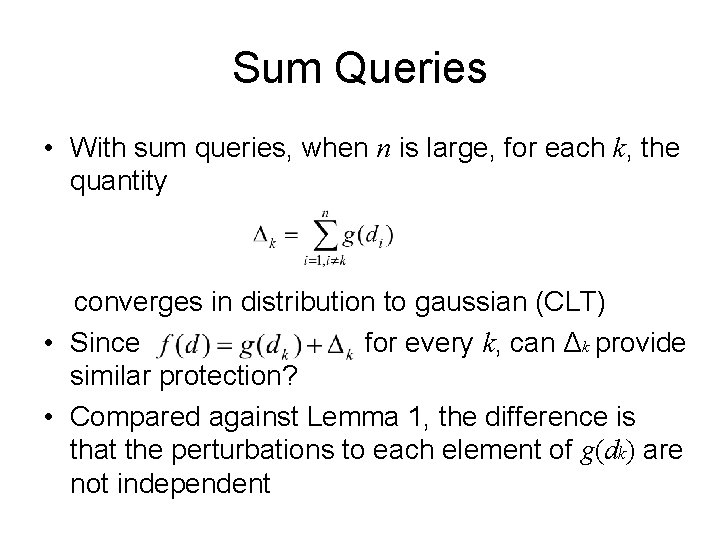

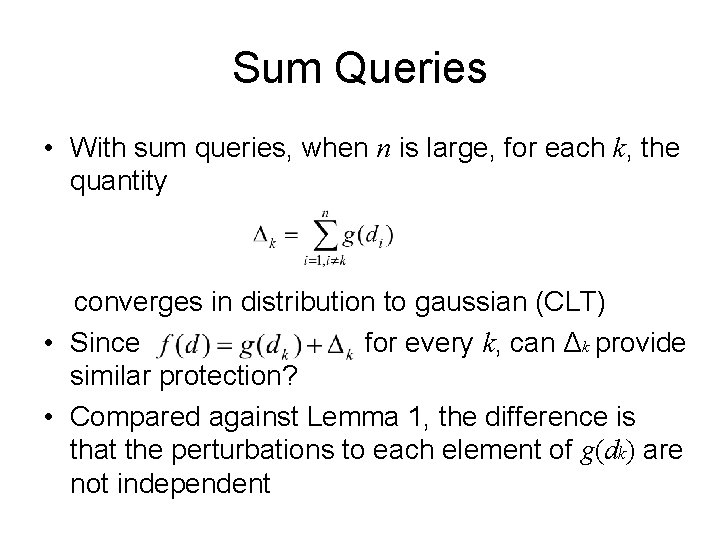

Sum Queries • For a sum query $f(d) = \sum_{i=1}^{n} g(d_i)$ with large $n$, for each $k$ the quantity $\Delta_k = \sum_{i \neq k} g(d_i)$ converges in distribution to a Gaussian (CLT) • Since $f(d) = g(d_k) + \Delta_k$ for every $k$, can $\Delta_k$ provide protection similar to additive noise? • Compared with Lemma 1, the difference is that the perturbations to the elements of $g(d_k)$ are not independent

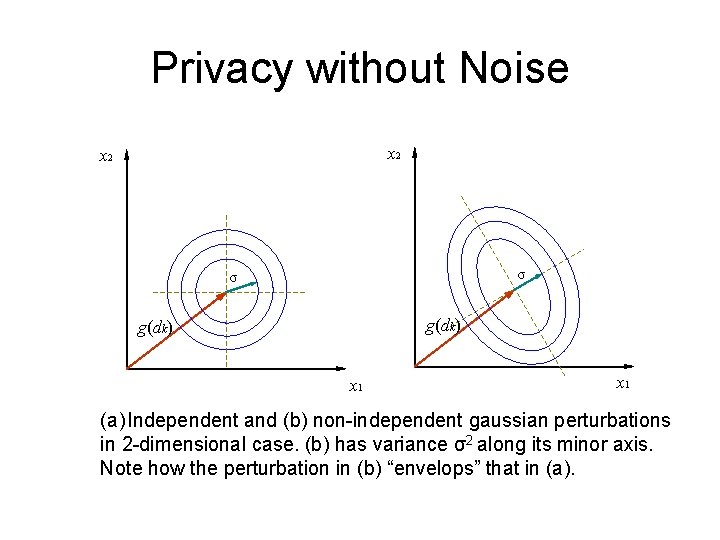

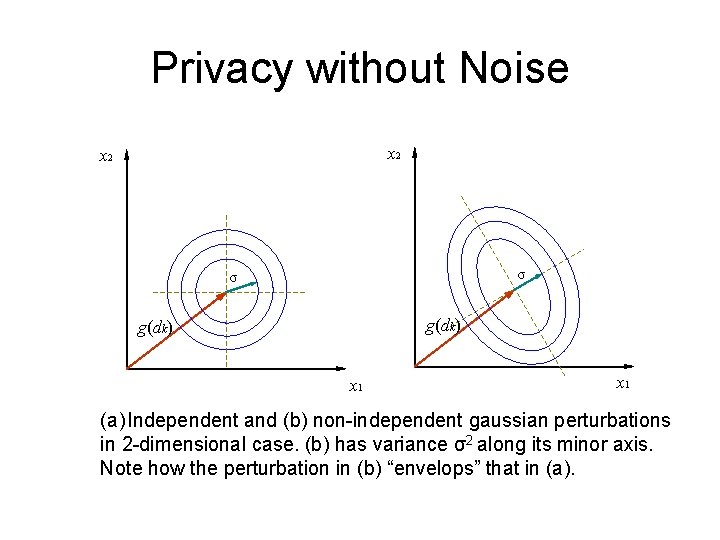
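The lack of independence is easy to see numerically. This sketch assumes that Δ_k is the sum of g(d_i) over all records other than d_k, and uses a hypothetical 2-dimensional query g(x) = [x, x²] with x ~ U(0, 1). The components of Δ_k come out strongly correlated, unlike the independent per-coordinate noise of additive mechanisms.

```python
import numpy as np

def g(x):
    """Hypothetical 2-dimensional query g(d_i) = [x_i, x_i^2]^T; both components lie in [0, 1]."""
    return np.stack([x, x ** 2], axis=-1)

def sample_delta_k(n, trials, rng):
    """Draws `trials` databases whose records x_i ~ U(0, 1) (assumed public distribution)
    and returns Delta_k = sum over the n - 1 records other than d_k of g(d_i)."""
    others = rng.uniform(0.0, 1.0, size=(trials, n - 1))
    return g(others).sum(axis=1)  # shape (trials, 2)

rng = np.random.default_rng(0)
delta = sample_delta_k(n=200, trials=5000, rng=rng)
print(np.corrcoef(delta, rowvar=False)[0, 1])  # about 0.97: the two components move together
```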

Privacy without Noise • Figure: (a) independent and (b) non-independent Gaussian perturbations of $g(d_k)$ in the 2-dimensional case (axes $x_1$, $x_2$; scale $\sigma$). (b) has variance $\sigma^2$ along its minor axis. Note how the perturbation in (b) "envelops" that in (a).

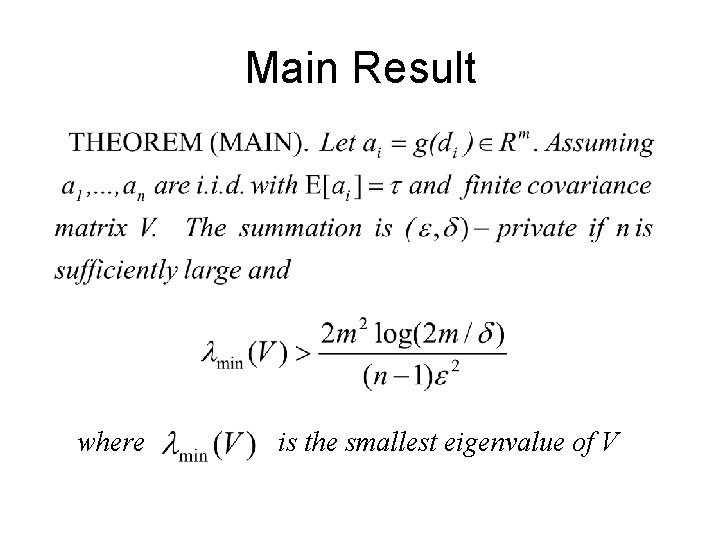

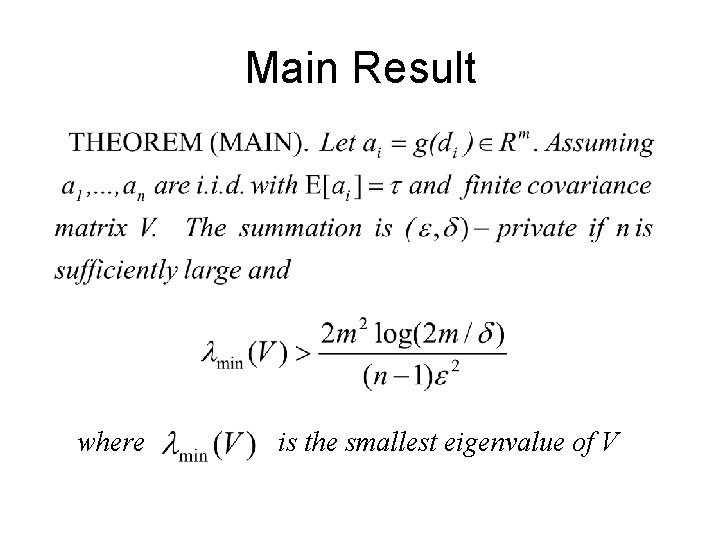
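The "envelope" picture has a precise linear-algebra reading: a Gaussian with covariance V has variance uᵀVu along a unit direction u, and that is at least σ² in every direction exactly when the smallest eigenvalue of V is at least σ². The sketch below checks this for an example matrix chosen for illustration.

```python
import numpy as np

def directional_variances(V, num_directions=2000, seed=0):
    """u^T V u for random unit vectors u: the variance of N(0, V) along each direction."""
    rng = np.random.default_rng(seed)
    U = rng.normal(size=(num_directions, V.shape[0]))
    U /= np.linalg.norm(U, axis=1, keepdims=True)
    return np.einsum("ij,jk,ik->i", U, V, U)

def envelops(V, sigma2, tol=1e-9):
    """True if N(0, V) has variance >= sigma2 in every direction, i.e. V - sigma2*I is
    positive semidefinite, which holds exactly when the smallest eigenvalue of V >= sigma2."""
    return np.linalg.eigvalsh(V).min() >= sigma2 - tol

V = np.array([[5.0, 3.0], [3.0, 5.0]])       # correlated perturbation, eigenvalues 2 and 8
print(envelops(V, 2.0), envelops(V, 2.5))    # True False
print(directional_variances(V).min())        # never below 2, the minor-axis variance
```

This is why a condition phrased through the smallest eigenvalue of V lets correlated aggregate noise stand in for independent noise of variance σ².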

Main Result where is the smallest eigenvalue of V

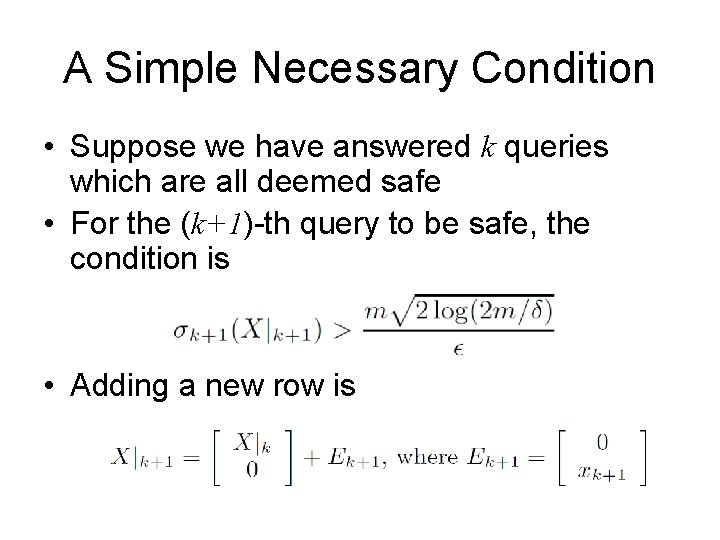

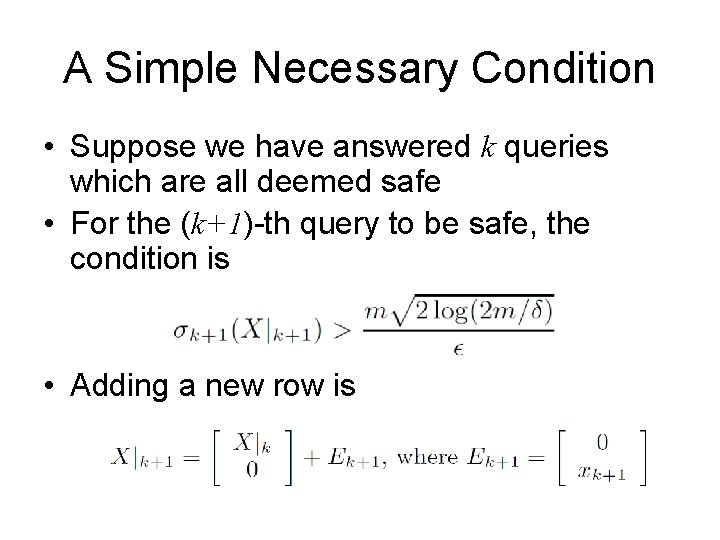

A Simple Necessary Condition • Suppose we have answered k queries which are all deemed safe • For the (k+1)-th query to be safe, the condition is • Adding a new row is

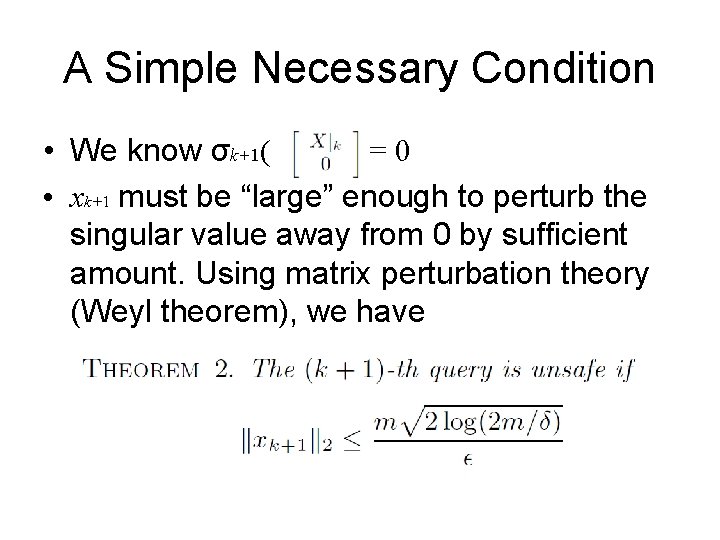

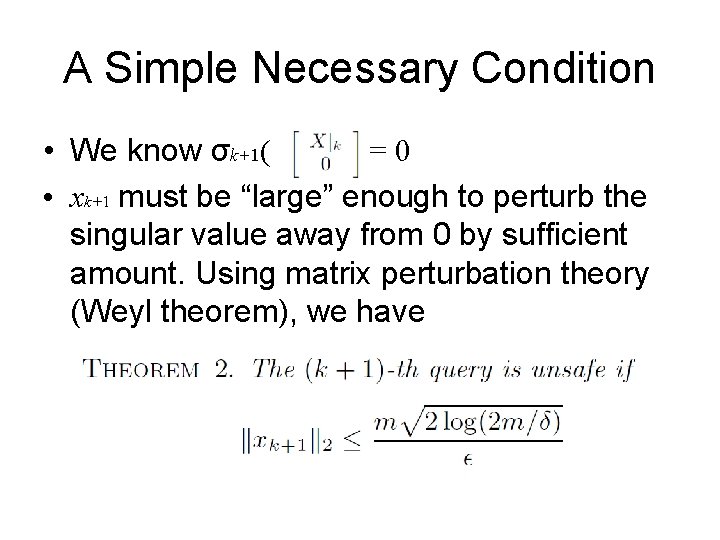

A Simple Necessary Condition • We know σk+1( )=0 • xk+1 must be "large" enough to perturb this singular value away from 0 by a sufficient amount. Using matrix perturbation theory (Weyl's theorem), we have

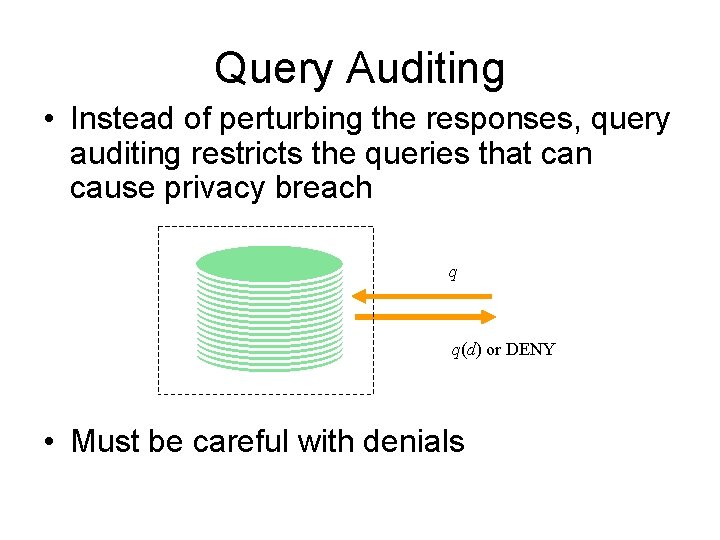
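The Weyl step can be checked numerically under one assumption about the setup: the k answered queries are stacked as rows of a k×m matrix X with k < m, and the new query x_{k+1} is appended as a row. Appending a row is a perturbation of spectral norm ‖x_{k+1}‖, so σ_{k+1} can move away from 0 by at most that much. The helper name `next_singular_value` is hypothetical.

```python
import numpy as np

def next_singular_value(X, x_new):
    """sigma_{k+1} of the query matrix after appending row x_new to the k x m matrix X (k < m).
    Before the append this singular value is 0, since X has rank at most k."""
    s = np.linalg.svd(np.vstack([X, x_new]), compute_uv=False)  # descending order
    return s[X.shape[0]]

rng = np.random.default_rng(0)
X = rng.uniform(size=(3, 6))            # k = 3 answered queries over m = 6 coordinates
x_new = rng.uniform(size=6)

# Weyl: appending x_new is a perturbation of spectral norm ||x_new||, so it can move
# sigma_{k+1} away from 0 by at most ||x_new||.
print(next_singular_value(X, x_new) <= np.linalg.norm(x_new))   # True
print(next_singular_value(X, np.array([1.0, 2.0, 0.0]) @ X))    # ~0: a combination of old queries adds nothing
```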

Query Auditing • Instead of perturbing the responses, query auditing restricts the queries that can cause a privacy breach: for a query q, the auditor returns either q(d) or DENY • We must be careful with denials
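A classic example of an online auditor for subset-sum queries is sketched below; it is not the paper's auditor. It denies a query if, together with previously answered queries, the query would pin down a single private value exactly (some standard basis vector enters the row space). Its decision depends only on the queries, never on the data, which is one simple way to keep denials from leaking information. The class name `SumQueryAuditor` is hypothetical.

```python
import numpy as np

class SumQueryAuditor:
    """Hypothetical online auditor: a query is a 0/1 vector q and its answer is q @ data.
    A query is DENIED if the answered queries plus q would reveal any single value exactly."""

    def __init__(self, data):
        self.data = np.asarray(data, dtype=float)
        self.answered = np.zeros((0, len(self.data)))

    def _reveals_some_entry(self, Q):
        # Is some standard basis vector e_j a linear combination of the rows of Q?
        n = Q.shape[1]
        for j in range(n):
            e_j = np.zeros(n)
            e_j[j] = 1.0
            coeffs, *_ = np.linalg.lstsq(Q.T, e_j, rcond=None)
            if np.allclose(Q.T @ coeffs, e_j, atol=1e-9):
                return True
        return False

    def ask(self, q):
        q = np.asarray(q, dtype=float)
        candidate = np.vstack([self.answered, q])
        if self._reveals_some_entry(candidate):  # uses the queries only, not self.data
            return "DENY"
        self.answered = candidate
        return float(q @ self.data)

aud = SumQueryAuditor([3, 5, 7, 11])
print(aud.ask([1, 1, 1, 0]))   # 15.0
print(aud.ask([1, 1, 0, 0]))   # DENY: the difference of the two queries would reveal 7
print(aud.ask([0, 1, 1, 1]))   # 23.0
```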

Simulatability • Key idea: if the adversary can simulate the output of the auditor using only public information, then nothing more is leaked • Denials: if the decision to deny or grant query answers is based on information that can be approximated by the adversary, then the decision itself does not reveal more info

Simulatable Query Auditing • Previous schemes achieve simulatability by not using the data • Online query auditing that uses our condition to verify privacy is simulatable • Even though the data is used in the decision-making process, the information is still simulatable

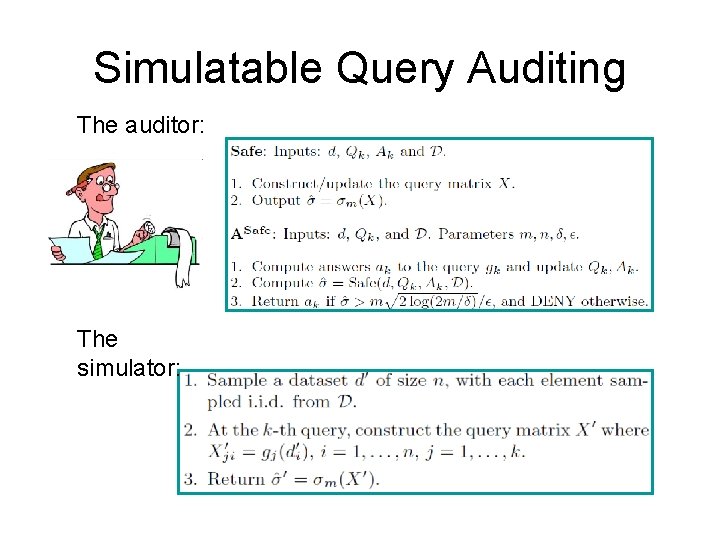

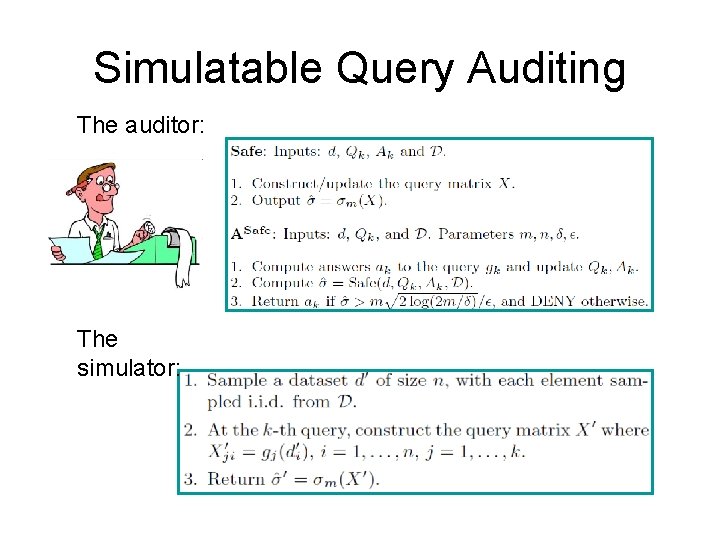

Simulatable Query Auditing The auditor: The simulator:

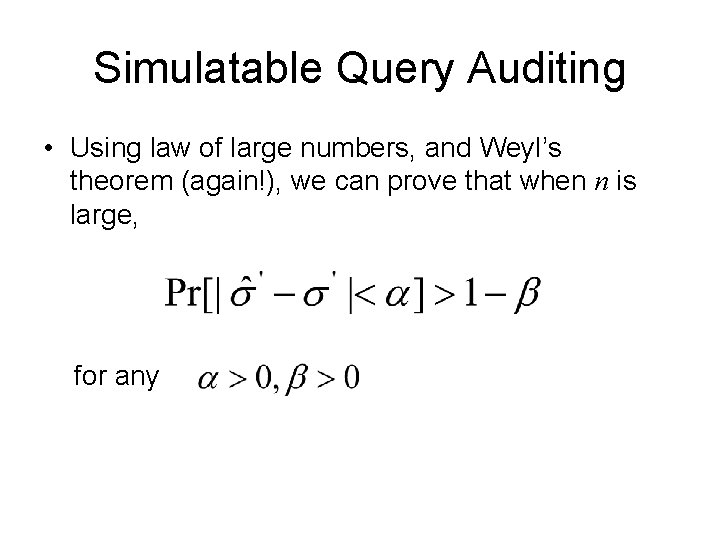

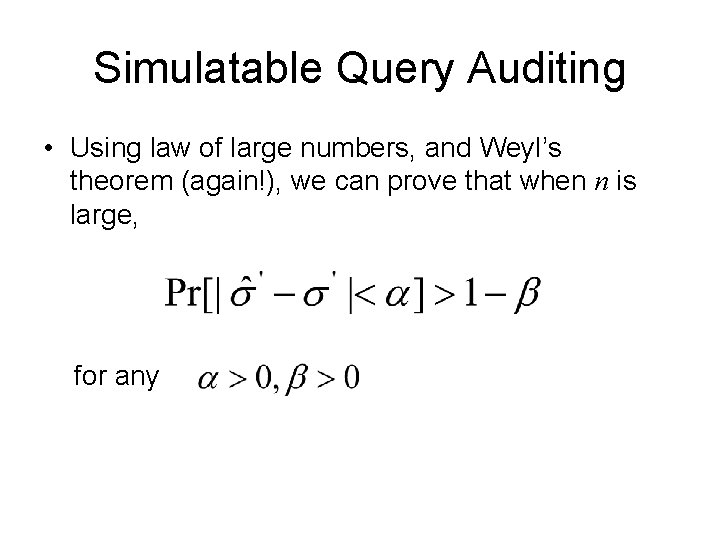

Simulatable Query Auditing • Using the law of large numbers and Weyl's theorem (again!), we can prove that when n is large, for any
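The intuition behind the simulation argument can be sketched with a hypothetical decision rule: answer only if the smallest eigenvalue of the empirical covariance of g(d_i) clears a threshold τ. The auditor evaluates the rule on the real data; the simulator evaluates it on fresh samples from the public distribution. Since the real records are themselves i.i.d. draws from that distribution, the law of large numbers makes the two covariance matrices close, and Weyl's theorem turns that into close eigenvalues and hence the same decision. The distribution, query, and threshold here are all assumptions, not the paper's exact condition.

```python
import numpy as np

def sample_public(n, rng):
    """Assumed public distribution: x ~ U(0, 1), per-record query g(x) = [x, x^2]."""
    x = rng.uniform(0.0, 1.0, size=n)
    return np.column_stack([x, x ** 2])

def cov_and_lambda_min(rows):
    C = np.cov(rows, rowvar=False)
    return C, np.linalg.eigvalsh(C).min()

def decide(lambda_min, tau):
    """Hypothetical rule: answer only if the smallest eigenvalue clears threshold tau."""
    return "ANSWER" if lambda_min >= tau else "DENY"

rng = np.random.default_rng(0)
n, tau = 100_000, 0.002
C_private, lam_private = cov_and_lambda_min(sample_public(n, rng))  # auditor: real data
C_sim, lam_sim = cov_and_lambda_min(sample_public(n, rng))          # simulator: fresh public samples
print(decide(lam_private, tau), decide(lam_sim, tau))                # ANSWER ANSWER
# Weyl: |lambda_min(A) - lambda_min(B)| <= ||A - B||_2, and the law of large numbers
# drives ||C_private - C_sim||_2 toward 0 as n grows.
print(abs(lam_private - lam_sim) <= np.linalg.norm(C_private - C_sim, 2))  # True
```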

Issue of Shared Records • We are not totally immune to this vulnerability, but our privacy condition is actually stronger than simply restricting the number of queries, even though we do not add noise • An adversary gets less information about individual records from the same number of queries

More info: duan@rd.netease.com Full version of the paper: http://bid.berkeley.edu/projects/p4p/papers/pwn-full.pdf
