
Presenter: Alireza Ahadi, Faculty of Human Sciences, Department of Educational Studies, Macquarie University, 17 June 2019.

- Introduction
- Classic Success Factors in Education
- Learning Analytics (LA) and Educational Data Mining (EDM)
- Machine Learning (ML) in LA/EDM
- An example of Learning Analytics for CS1 courses

- Student needs help but is not seeking help.
- Student is not sure if they need help.
- The million-dollar question: who is going to pass the exam?

- Static predictors:
  - Maths score
  - Intelligence Quotient (IQ)
  - Gender (context specific)
  - Past academic performance
  - Prior knowledge
  - Work style (full time vs part time)
- Behavioural factors (somewhat static):
  - Confusion
  - Boredom
  - Focus
  - Discussion

- Extremely context-dependent:
  - Gender (r = 0.08, r = 0.72, r = 0.93)
  - Academic performance (r = -0.01, r = 0.06, r = 0.24)
  - Behaviour in labs and lectures (r between -0.38 and 0.34)
- Not strong enough
- Not easy to collect
- Relatively subject to change

- Dynamic predictors of students' success:
  - Level of engagement
  - Discussion boards
  - Forums
  - Wikis
  - Attendance
  - Attempts on different exercises
  - Time spent on tasks
  - Practice time

- Learning Analytics (LA)
  - LA is the measurement, collection, analysis and reporting of data about learners and their contexts, for the purposes of understanding and optimizing learning and the environments in which it occurs [1].
- Educational Data Mining (EDM)
  - An emerging discipline concerned with developing methods for exploring the unique types of data that come from educational settings, and using those methods to better understand students and the settings in which they learn [2].
- LA is statistics-based, whereas EDM is computer-science-based.

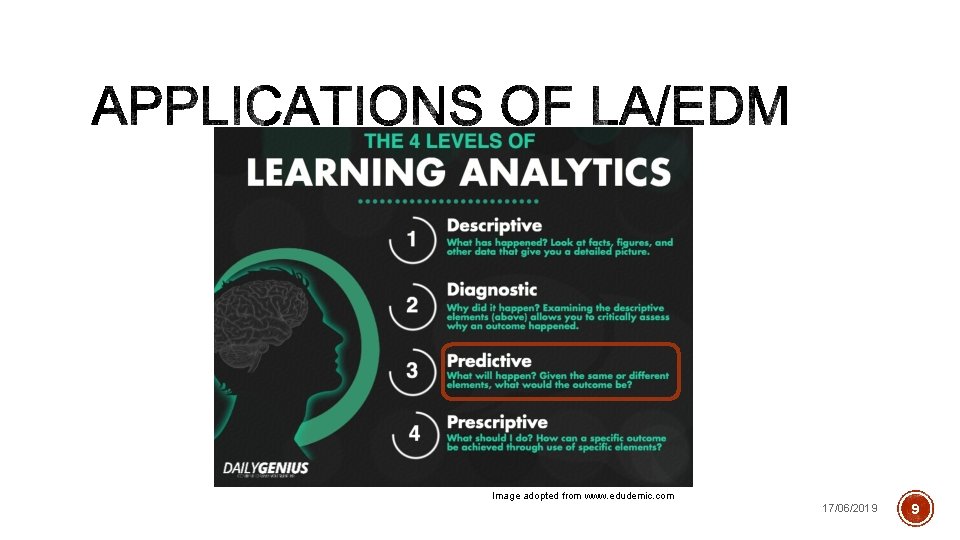

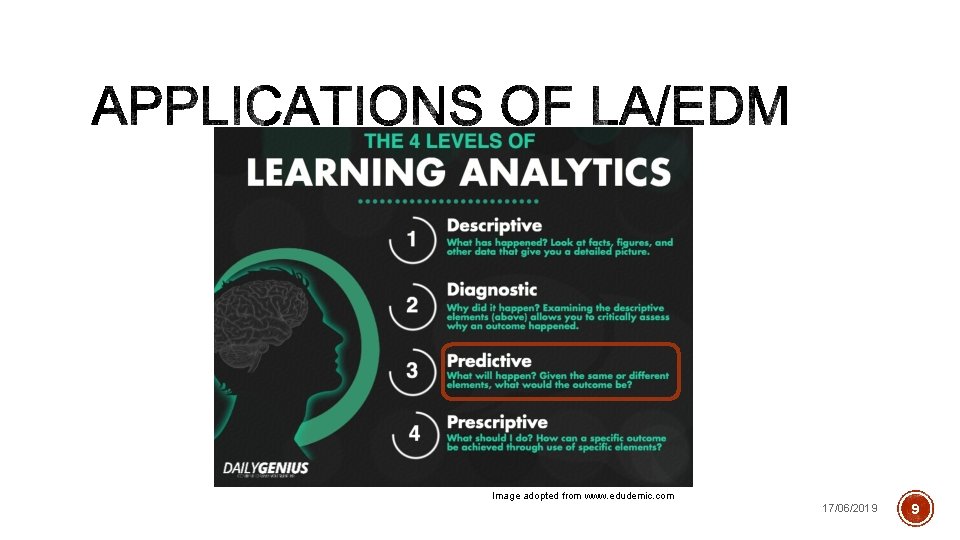

Image adapted from www.edudemic.com

- Student modelling
  - Knowledge
  - Skills
  - Motivation
  - Satisfaction
  - Meta-cognition
  - Attitudes
  - Experience
  - Learning process
- Generating recommendations
- Learners' behaviour analysis
- Domain structure analysis

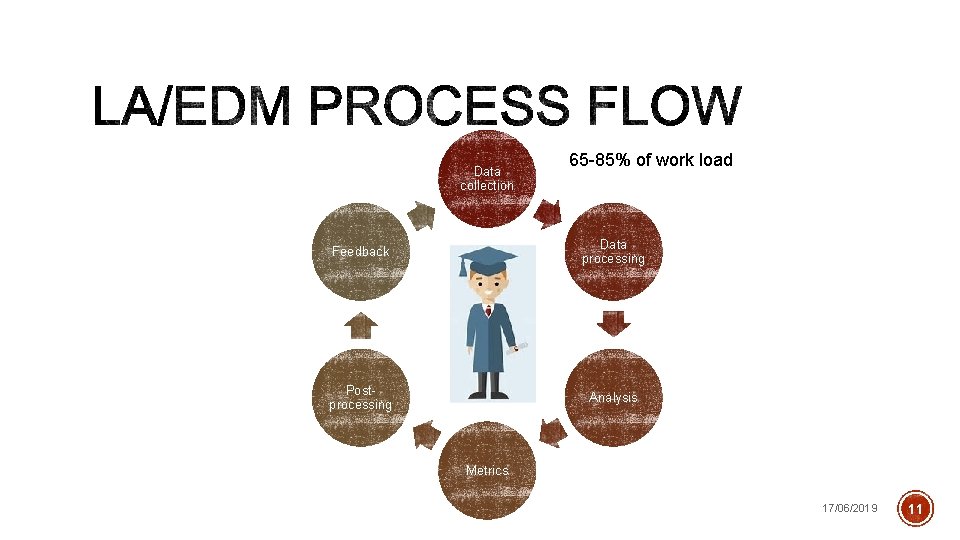

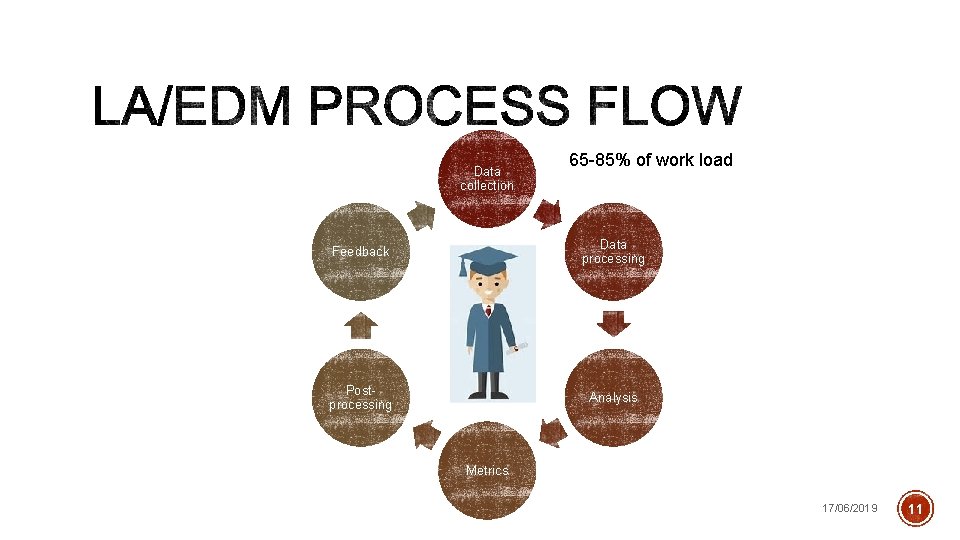

Pipeline stages shown in the diagram: Data collection (65-85% of the workload), Feedback, Data processing, Postprocessing, Analysis, Metrics.

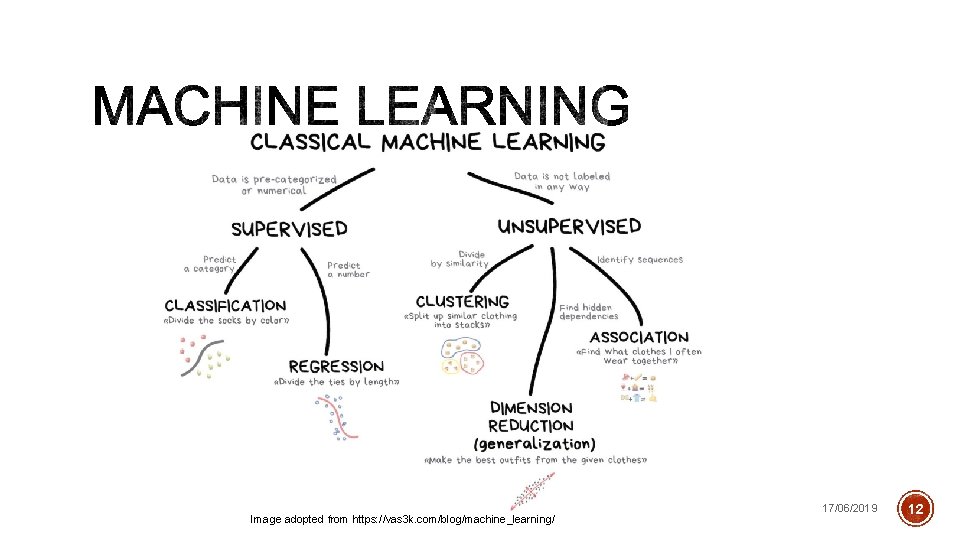

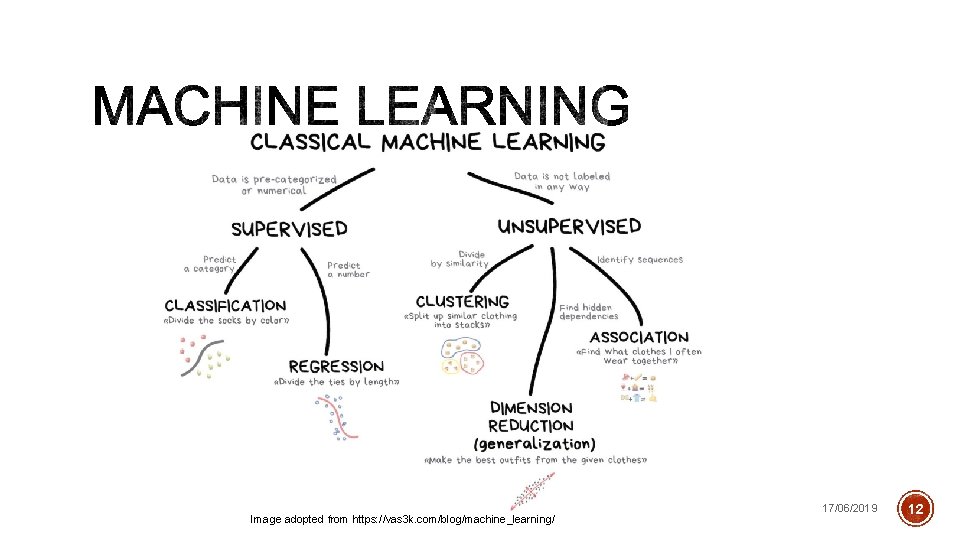

Image adapted from https://vas3k.com/blog/machine_learning/

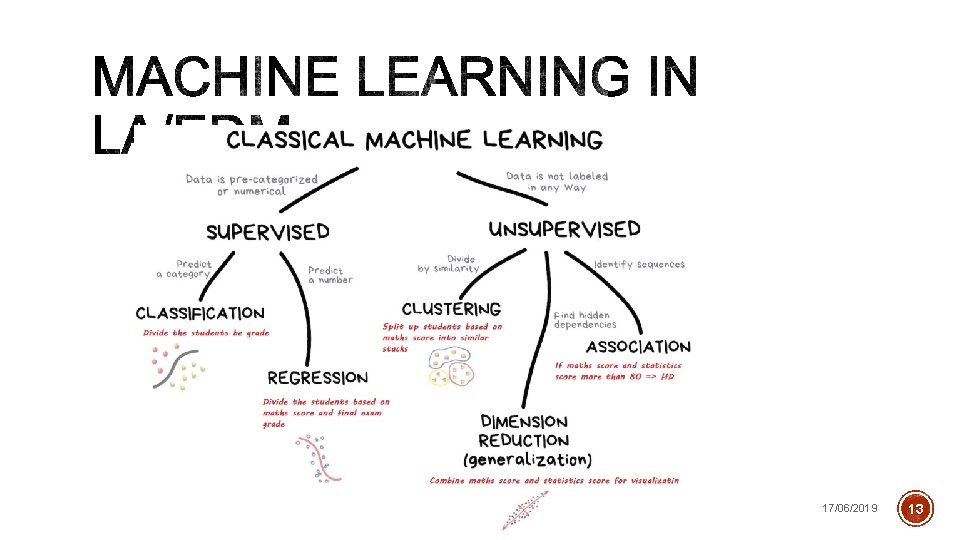

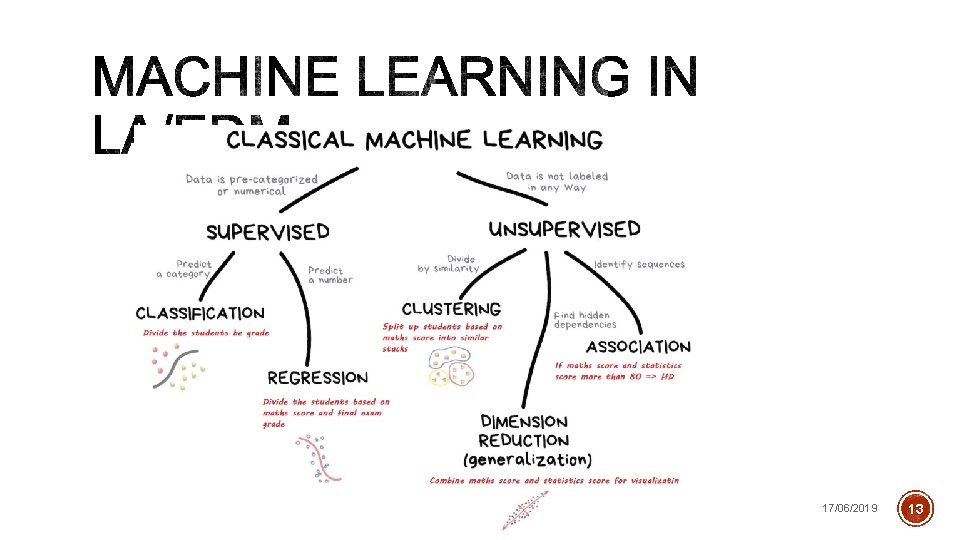


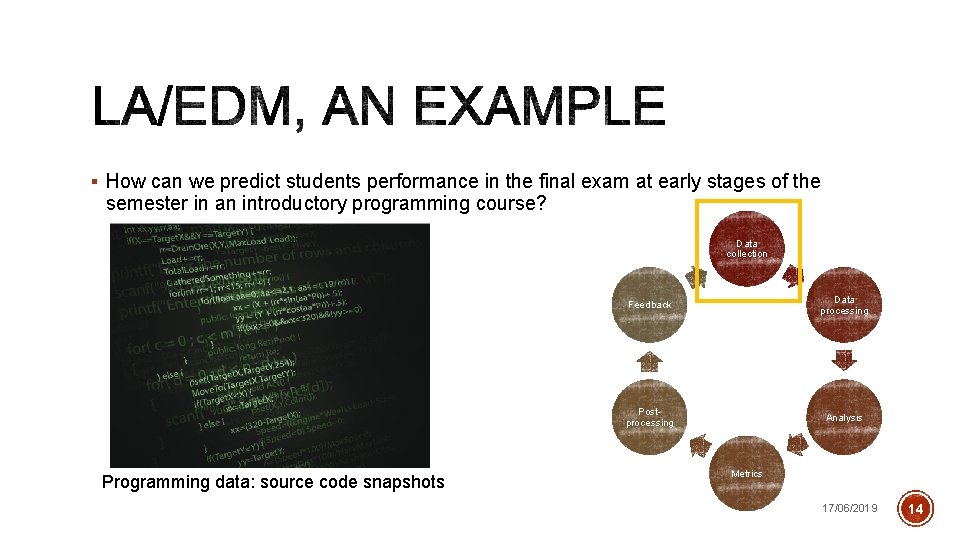

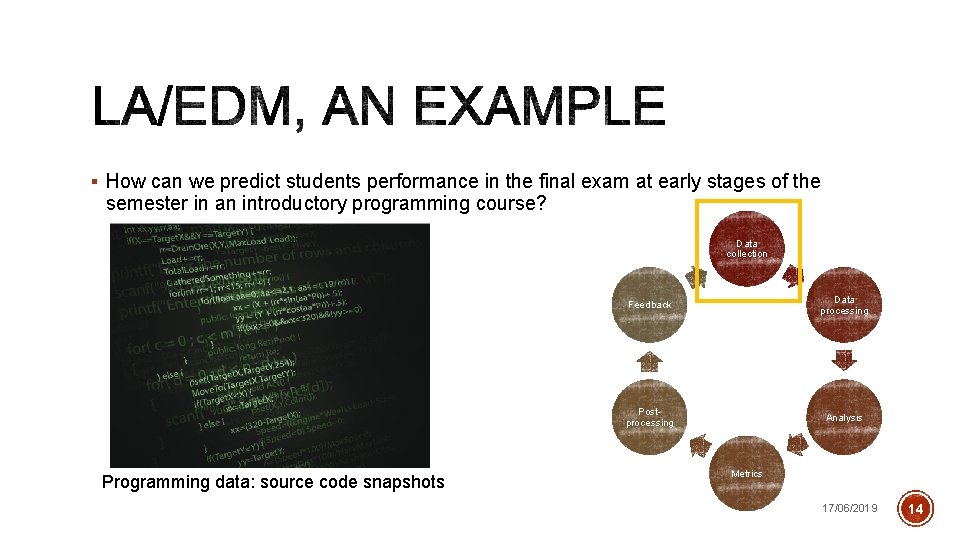

- How can we predict students' performance in the final exam at an early stage of the semester in an introductory programming course?
- Data collection: programming data (source code snapshots)

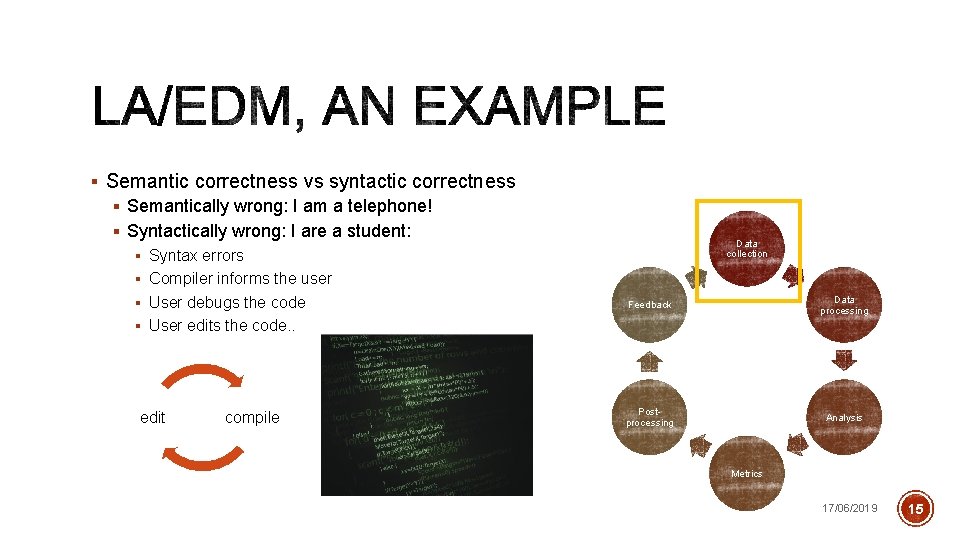

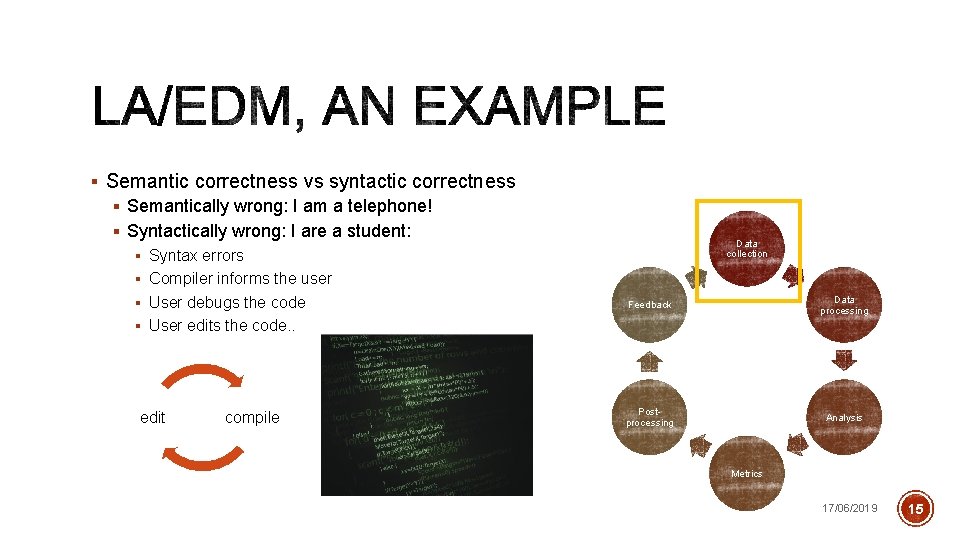

- Semantic correctness vs syntactic correctness
  - Semantically wrong: "I am a telephone!"
  - Syntactically wrong: "I are a student"
- Syntax errors
  - Compiler informs the user
  - User debugs the code
  - User edits the code (edit-compile cycle)

![Where does the data come from? [7]](https://slidetodoc.com/presentation_image/14d7a23f58bd5d6831f7fa9e465d0e07/image-16.jpg)

- Where does the data come from? [7]

![Data collection granularity [7]](https://slidetodoc.com/presentation_image/14d7a23f58bd5d6831f7fa9e465d0e07/image-17.jpg)

- Data collection granularity [7]

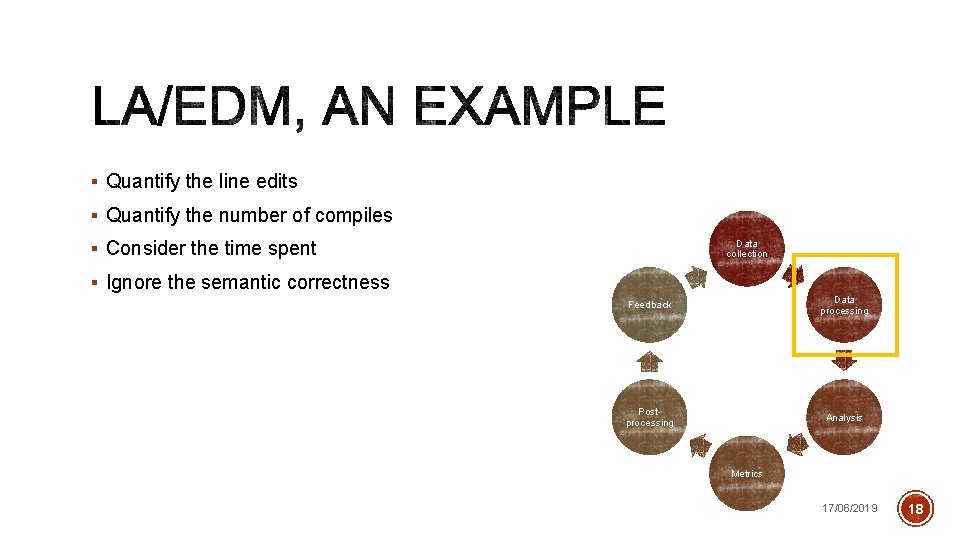

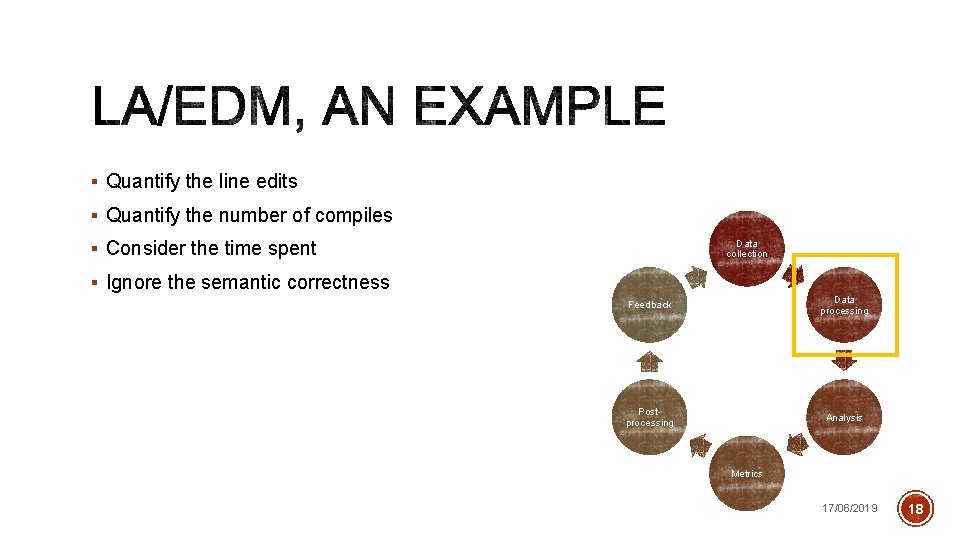

- Quantify the line edits
- Quantify the number of compiles
- Consider the time spent
- Ignore the semantic correctness
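The three quantities above can be derived from a sequence of source code snapshots. The following is a minimal sketch, not the tooling used in the cited studies: the `Snapshot` schema, its field names, and the choice of `difflib` to count added and removed lines are all assumptions made for illustration.

```python
import difflib
from dataclasses import dataclass


@dataclass
class Snapshot:
    """One recorded state of a student's code (hypothetical schema)."""
    timestamp: float  # seconds since the student started the exercise
    source: str       # full source text at this moment
    compiled: bool    # True if the snapshot was taken at a compile event


def count_line_edits(before: str, after: str) -> int:
    """Count lines added or removed between two snapshots.

    A modified line shows up as one removal plus one addition, so it counts twice.
    """
    diff = difflib.ndiff(before.splitlines(), after.splitlines())
    return sum(1 for line in diff if line.startswith(("+ ", "- ")))


def summarise(snapshots):
    """Return line edits, compile count and elapsed time for one exercise attempt."""
    ordered = sorted(snapshots, key=lambda s: s.timestamp)
    if not ordered:
        return {"line_edits": 0, "compiles": 0, "time_spent": 0.0}
    edits = sum(
        count_line_edits(a.source, b.source)
        for a, b in zip(ordered, ordered[1:])
    )
    compiles = sum(1 for s in ordered if s.compiled)
    time_spent = ordered[-1].timestamp - ordered[0].timestamp
    return {"line_edits": edits, "compiles": compiles, "time_spent": time_spent}
```

Semantic correctness is deliberately not inspected here: the function only looks at how much the text changed, how often it was compiled, and how long the attempt took.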

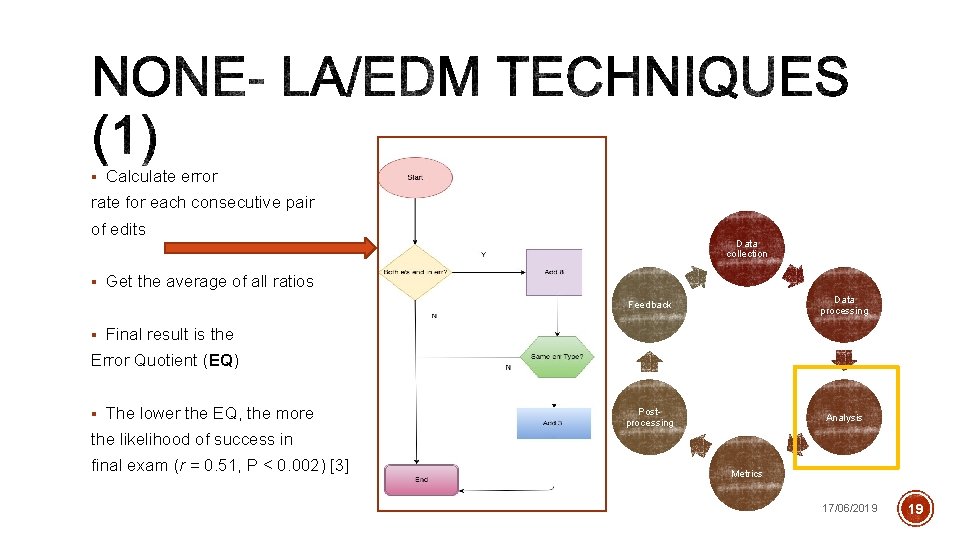

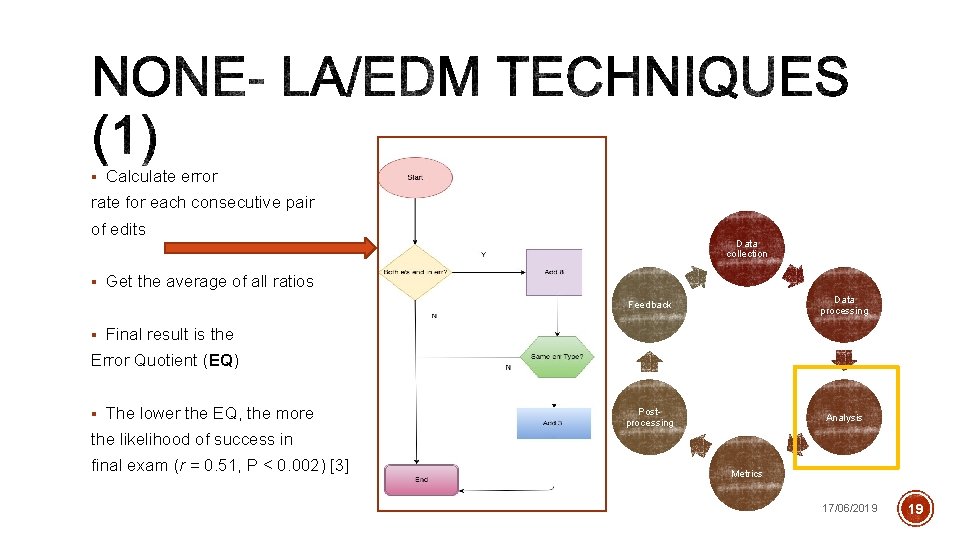

- Calculate the error rate for each consecutive pair of edits
- Take the average of all ratios
- The final result is the Error Quotient (EQ)
- The lower the EQ, the higher the likelihood of success in the final exam (r = 0.51, p < 0.002) [3]
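The pair-then-average structure of the EQ can be sketched as follows. This is an illustration under assumptions, not the reference implementation: the `CompileEvent` type is hypothetical, and the scoring weights (8 when both events in a pair end in an error, a further 3 when the error type repeats, normalised by their sum) follow a commonly cited formulation that should be checked against [3] before use.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class CompileEvent:
    """Outcome of one compilation (hypothetical schema)."""
    error_type: Optional[str]  # None means the code compiled without error


def pair_score(first: CompileEvent, second: CompileEvent,
               both_error_weight: int = 8, same_type_weight: int = 3) -> float:
    """Score one consecutive pair of compile events, normalised to [0, 1].

    The default weights are an assumption; see the lead-in.
    """
    score = 0
    if first.error_type is not None and second.error_type is not None:
        score += both_error_weight
        if first.error_type == second.error_type:
            score += same_type_weight
    return score / (both_error_weight + same_type_weight)


def error_quotient(events: Sequence[CompileEvent]) -> float:
    """Average the pair scores over all consecutive pairs of compile events."""
    pairs = list(zip(events, events[1:]))
    if not pairs:
        return 0.0  # fewer than two compiles: no pair to score
    return sum(pair_score(a, b) for a, b in pairs) / len(pairs)
```

A session in which every compile fails with the same error scores 1.0, and a session with no two consecutive failing compiles scores 0.0.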

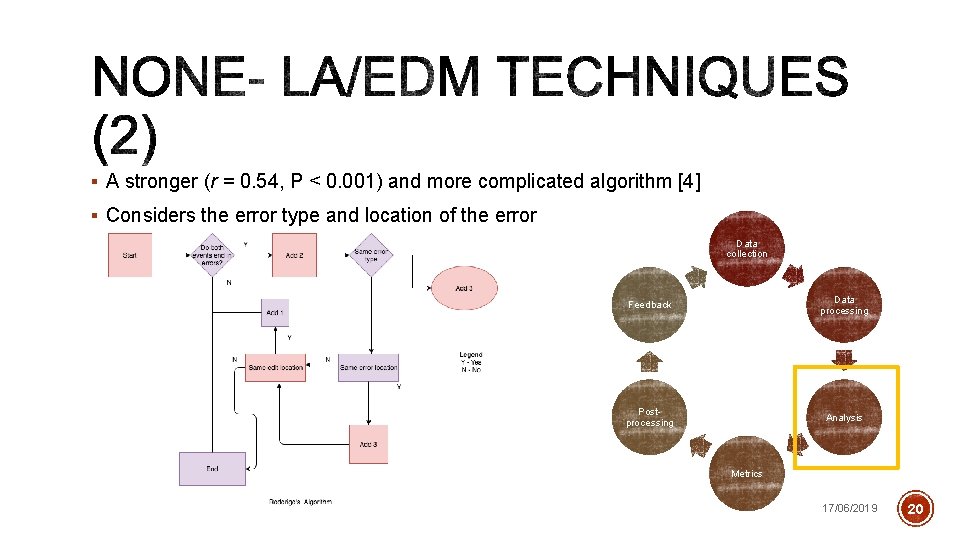

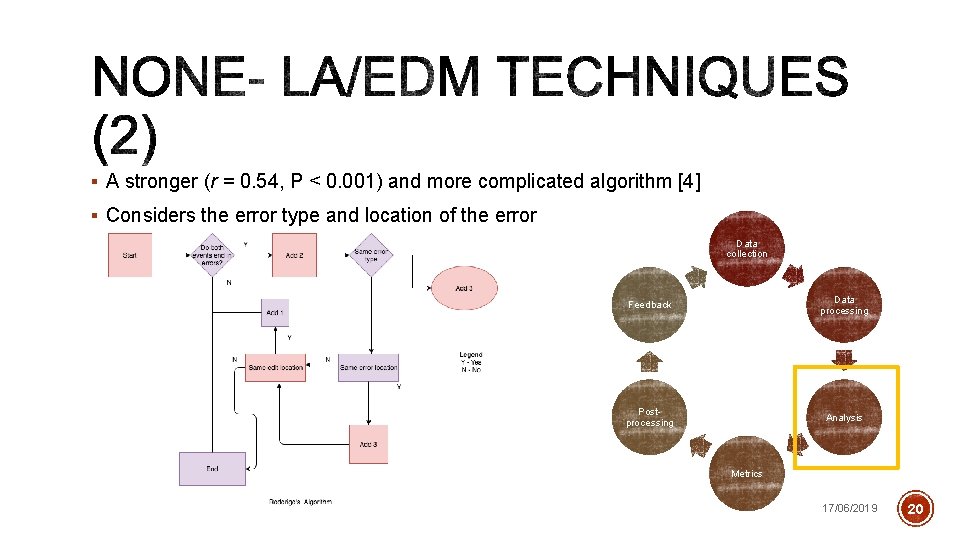

- A stronger (r = 0.54, p < 0.001) and more complicated algorithm [4]
- Considers the error type and the location of the error

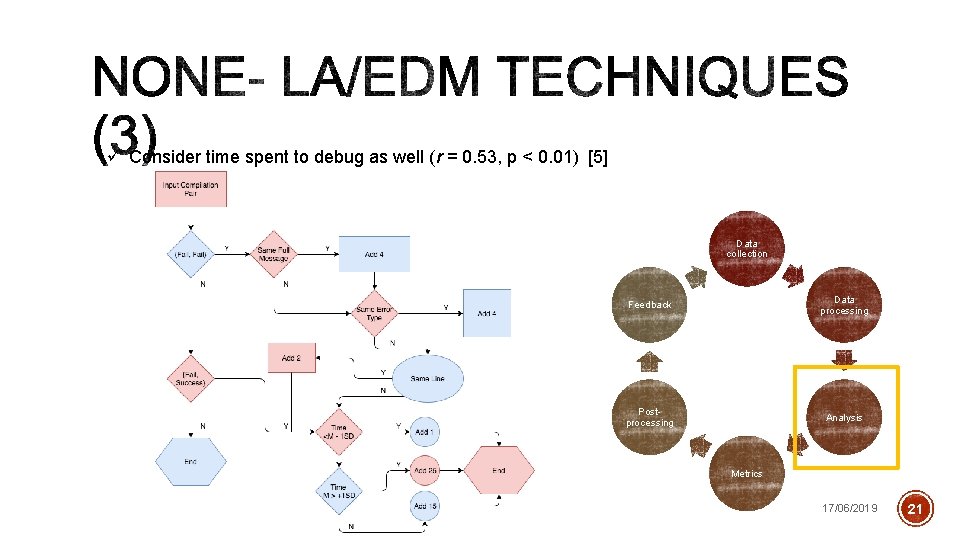

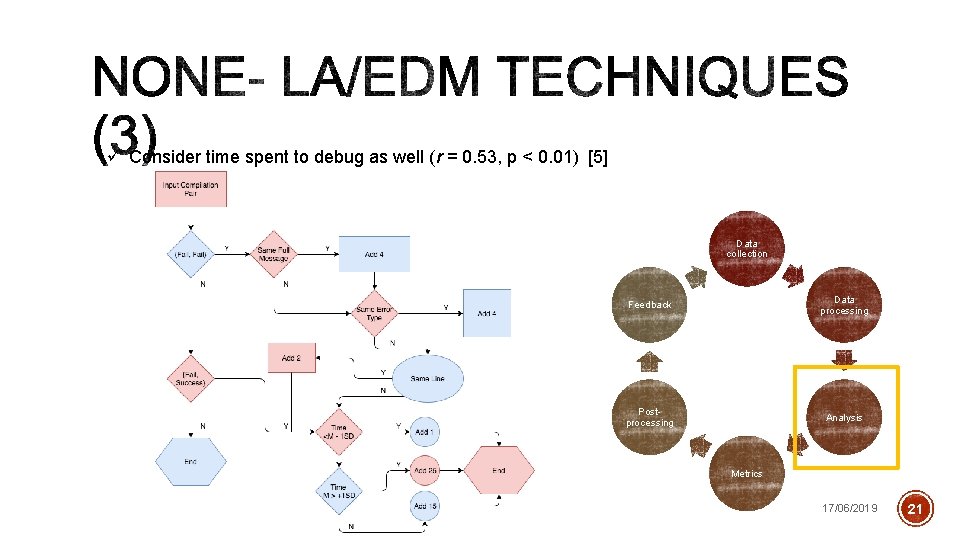

- Consider the time spent debugging as well (r = 0.53, p < 0.01) [5]

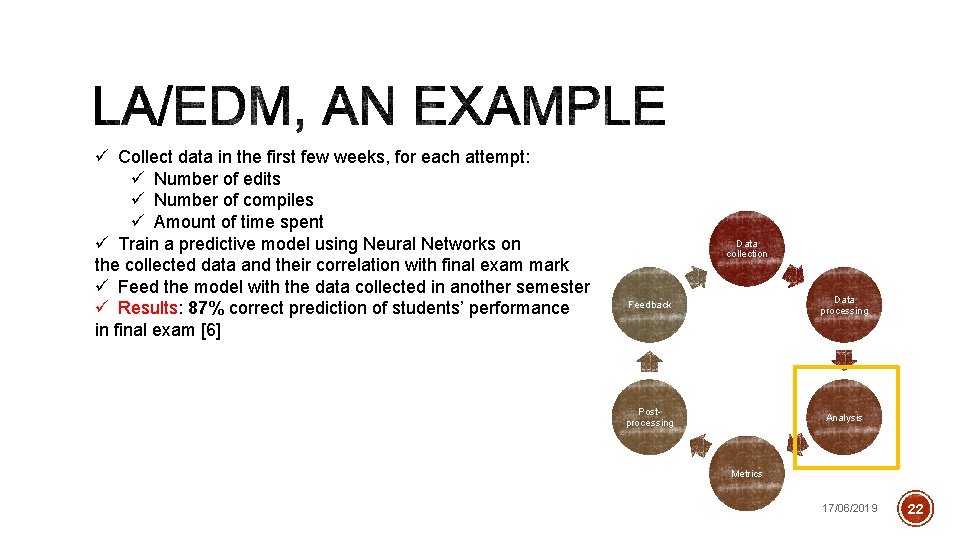

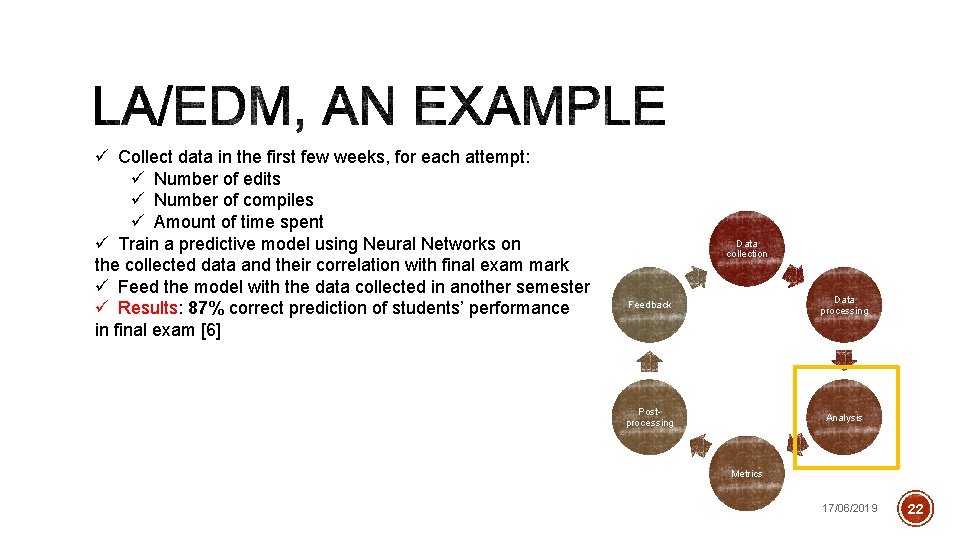

- Collect data in the first few weeks; for each attempt:
  - Number of edits
  - Number of compiles
  - Amount of time spent
- Train a predictive model using neural networks on the collected data and its correlation with the final exam mark
- Feed the model with data collected in another semester
- Result: 87% correct prediction of students' performance in the final exam [6]
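The train-on-one-semester, test-on-another workflow can be sketched as below. As a stand-in for the neural networks mentioned on the slide, the sketch uses a single logistic unit trained by stochastic gradient descent, which is a much simpler model than the one in [6]. All function names, hyperparameters, and the pass/fail encoding (1 = pass, 0 = fail) are assumptions for illustration.

```python
import math


def fit_scaler(rows):
    """Compute per-feature mean and standard deviation on the training semester."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    stds = [
        math.sqrt(sum((v - m) ** 2 for v in c) / len(c)) or 1.0  # avoid dividing by 0
        for c, m in zip(cols, means)
    ]
    return means, stds


def scale(rows, means, stds):
    """Standardise rows with statistics learned from the training semester only."""
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def sigmoid(z):
    z = max(-60.0, min(60.0, z))  # clamp to keep math.exp within range
    return 1.0 / (1.0 + math.exp(-z))


def train(rows, labels, epochs=500, lr=0.1):
    """Fit one logistic unit on [edits, compiles, time spent] feature rows."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            p = sigmoid(bias + sum(w * v for w, v in zip(weights, x)))
            err = p - y  # gradient of the log loss with respect to the pre-activation
            weights = [w - lr * err * v for w, v in zip(weights, x)]
            bias -= lr * err
    return weights, bias


def predict(rows, weights, bias):
    """Predict 1 (pass) or 0 (fail) for each feature row."""
    return [
        1 if sigmoid(bias + sum(w * v for w, v in zip(weights, x))) >= 0.5 else 0
        for x in rows
    ]


def accuracy(predicted, actual):
    """Fraction of students whose outcome was predicted correctly."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)
```

The scaler is fitted on the training semester and reused unchanged on the second semester, so the evaluation does not leak information from the cohort being predicted.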

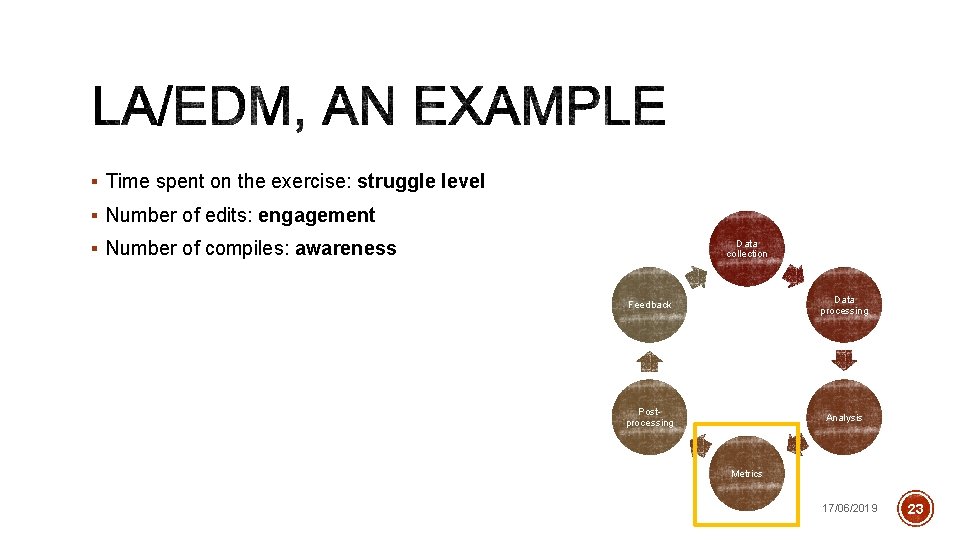

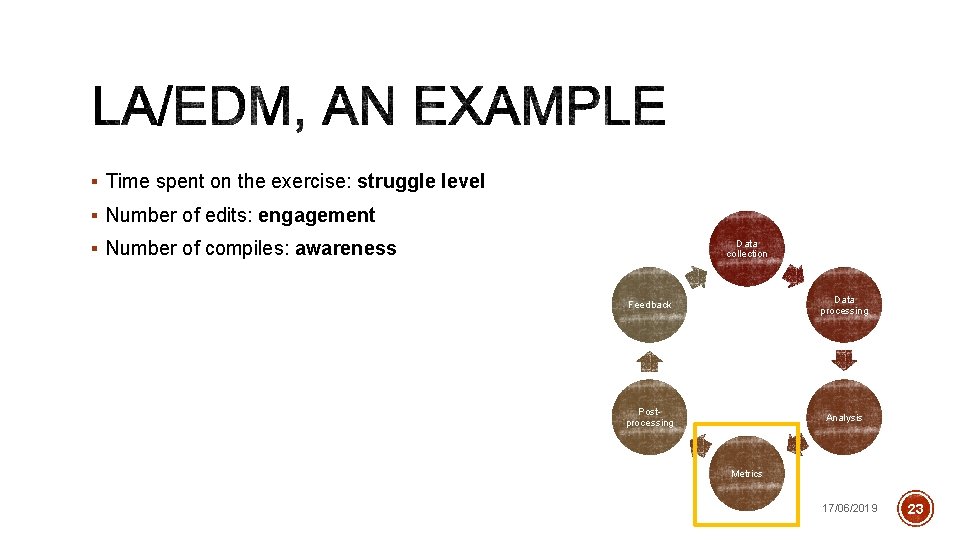

- Time spent on the exercise: struggle level
- Number of edits: engagement
- Number of compiles: awareness

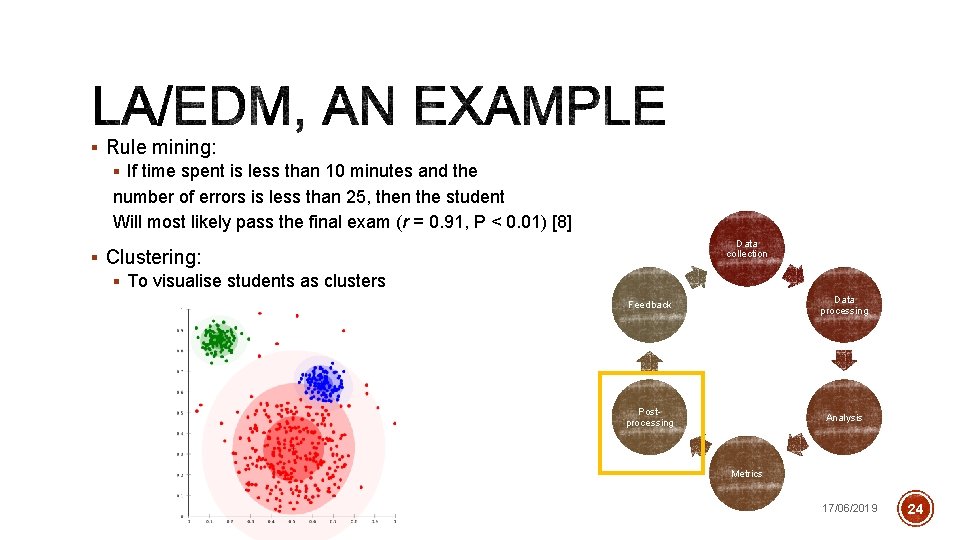

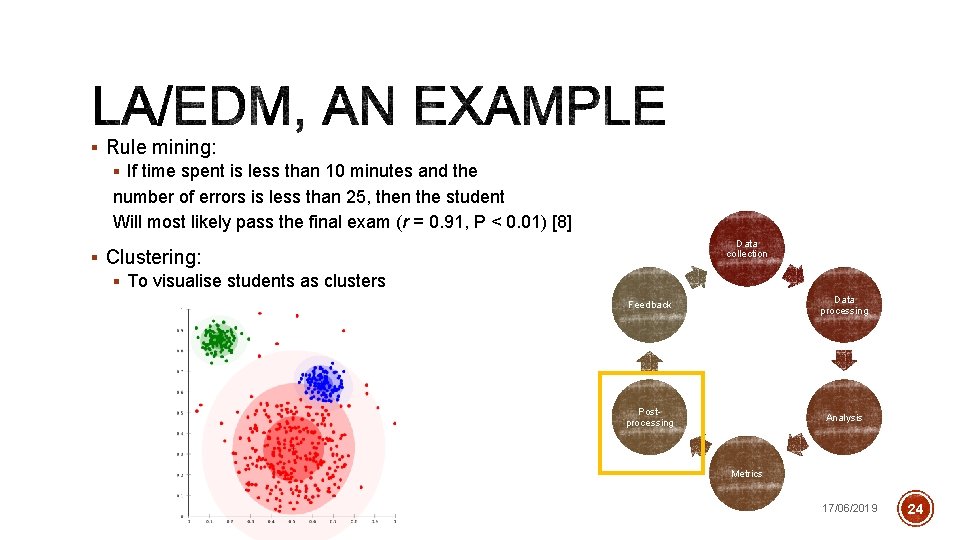

- Rule mining:
  - If the time spent is less than 10 minutes and the number of errors is less than 25, then the student will most likely pass the final exam (r = 0.91, p < 0.01) [8]
- Clustering:
  - To visualise students as clusters
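A mined rule of this form can be expressed as a predicate and checked against student records. The sketch below assumes a hypothetical record layout (`minutes`, `errors`, `passed`) and evaluates the rule with support and confidence, two standard association-rule measures that the slide itself does not mention; the thresholds are the ones quoted above.

```python
def likely_to_pass(minutes, errors, max_minutes=10, max_errors=25):
    """Rule from the slide: under 10 minutes and under 25 errors."""
    return minutes < max_minutes and errors < max_errors


def rule_quality(records, rule=likely_to_pass):
    """Return (support, confidence) of a rule over a list of student records.

    support    = share of all students the rule applies to
    confidence = share of those students who actually passed
    """
    if not records:
        return 0.0, 0.0
    covered = [r for r in records if rule(r["minutes"], r["errors"])]
    support = len(covered) / len(records)
    if not covered:
        return support, 0.0
    confidence = sum(1 for r in covered if r["passed"]) / len(covered)
    return support, confidence
```

High confidence with very low support would mean the rule is accurate but applies to few students, which limits its value for early intervention.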

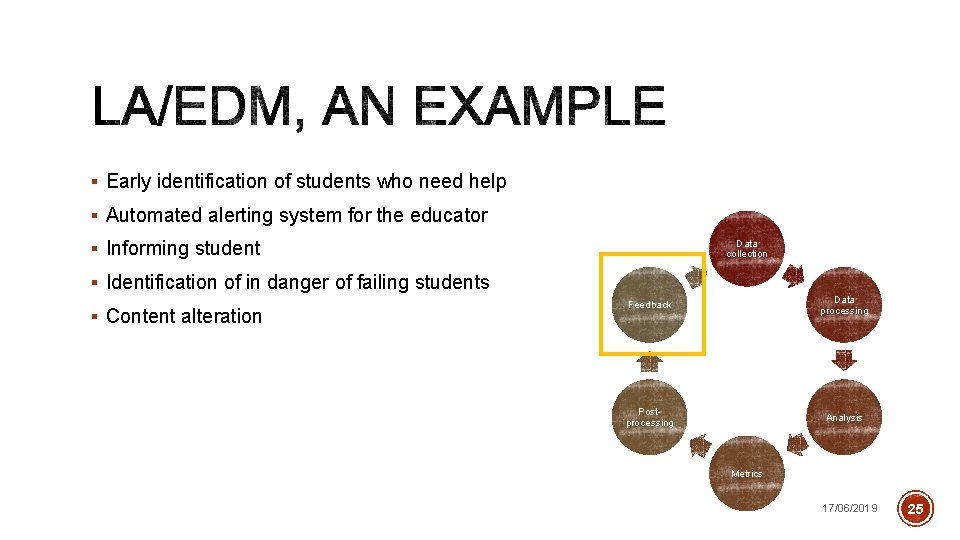

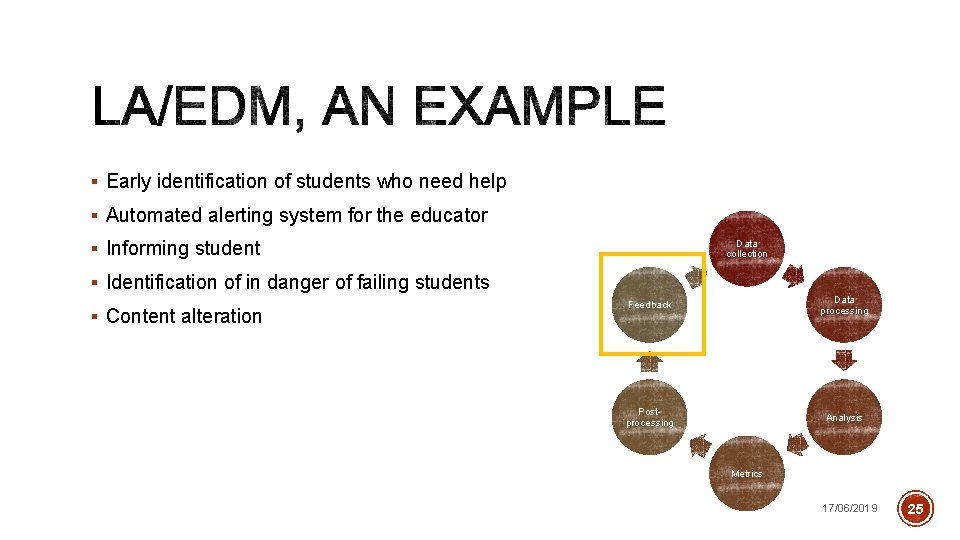

- Early identification of students who need help
- Automated alerting system for the educator
- Informing students
- Identification of students in danger of failing
- Content alteration

1. Chatti, M. A., Dyckhoff, A. L., Schroeder, U., Thüs, H.: A reference model for learning analytics. Int. J. Technol. Enhanced Learn. 4(5-6), 318-331 (2012)
2. Romero, C., Ventura, S.: Data mining in education. Wiley Interdisc. Rev.: Data Min. Knowl. Discovery 3(1), 12-27 (2013)
3. Jadud, M. C., 2006, September. Methods and tools for exploring novice compilation behaviour. In Proceedings of the second international workshop on Computing education research (pp. 73-84). ACM.
4. Tabanao, E. S., Rodrigo, M. M. T. and Jadud, M. C., 2011, August. Predicting at-risk novice Java programmers through the analysis of online protocols. In Proceedings of the seventh international workshop on Computing education research (pp. 85-92). ACM.
5. Watson, C., Li, F. W. and Godwin, J. L., 2013, July. Predicting performance in an introductory programming course by logging and analyzing student programming behavior. In 2013 IEEE 13th International Conference on Advanced Learning Technologies (pp. 319-323). IEEE.
6. Ahadi, A., Lister, R., Haapala, H. and Vihavainen, A., 2015, July. Exploring machine learning methods to automatically identify students in need of assistance. In Proceedings of the eleventh annual International Conference on International Computing Education Research (pp. 121-130). ACM.
7. Ihantola, P., Vihavainen, A., Ahadi, A., Butler, M., Börstler, J., Edwards, S. H., Isohanni, E., Korhonen, A., Petersen, A., Rivers, K. and Rubio, M. Á., 2015, July. Educational data mining and learning analytics in programming: Literature review and case studies. In Proceedings of the 2015 ITiCSE on Working Group Reports (pp. 41-63). ACM.
8. Ahadi, A., Lister, R., Lal, S., Leinonen, J. and Hellas, A., 2017, January. Performance and consistency in learning to program. In ACM International Conference Proceeding Series.
