PGAS Languages and Halo Updates
Will Sawyer, CSCS

- Slides: 17

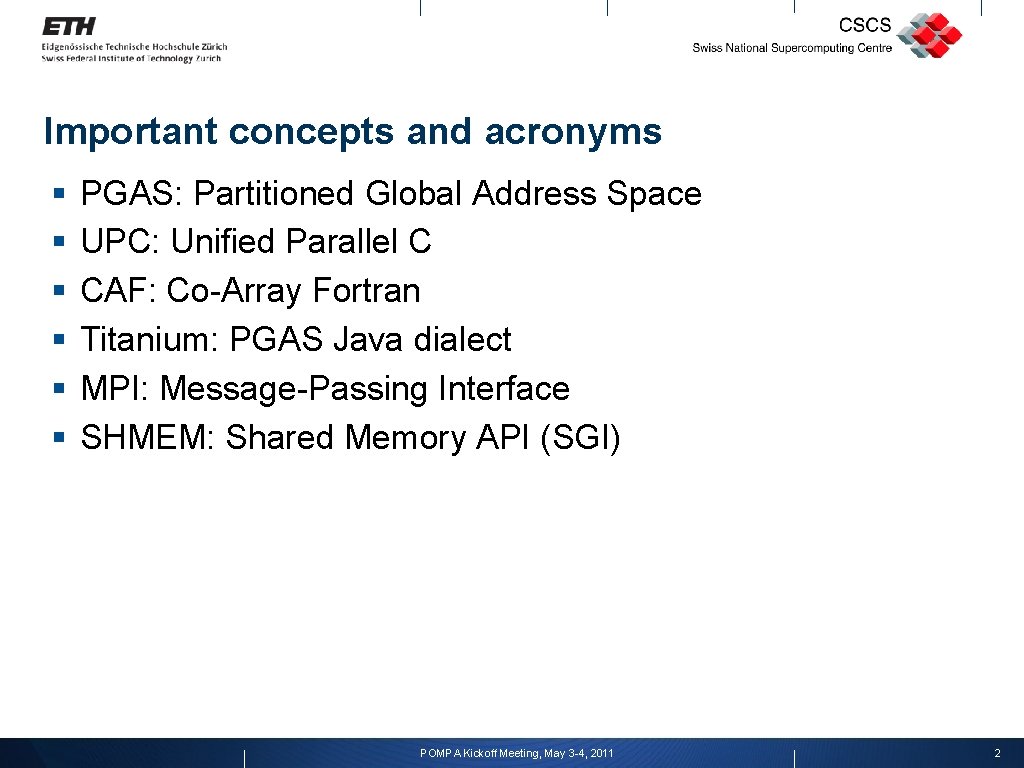

Important concepts and acronyms
- PGAS: Partitioned Global Address Space
- UPC: Unified Parallel C
- CAF: Co-Array Fortran
- Titanium: PGAS Java dialect
- MPI: Message-Passing Interface
- SHMEM: Shared Memory API (SGI)

POMPA Kickoff Meeting, May 3-4, 2011

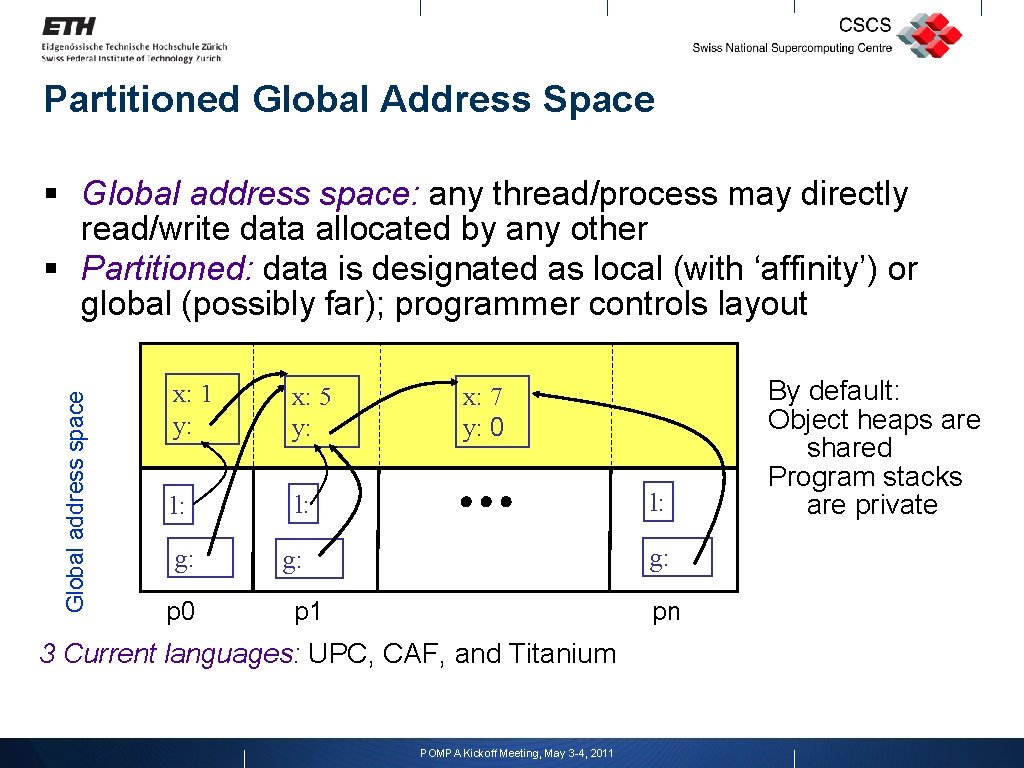

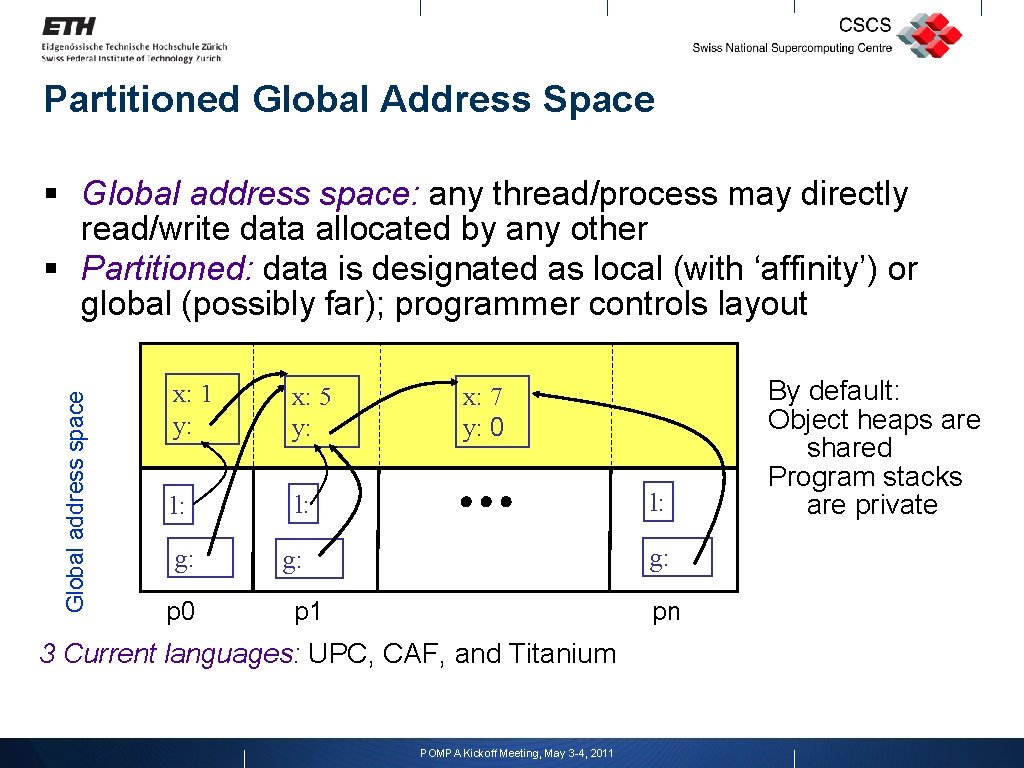

Partitioned Global Address Space
- Global address space: any thread/process may directly read/write data allocated by any other
- Partitioned: data is designated as local (with ‘affinity’) or global (possibly far); the programmer controls the layout
- By default, object heaps are shared and program stacks are private
- Current languages: UPC, CAF, and Titanium

[Figure: the global address space partitioned across processes p0, p1, …, pn; each holds private variables (x, y) and local (l) and global (g) pointers.]
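The ‘affinity’ rule can be made concrete. In UPC, an array declared `shared [B] double a[N]` is dealt out in blocks of B consecutive elements, round-robin over the THREADS threads, so element i has affinity to thread (i / B) mod THREADS. The plain-C sketch below (hypothetical names `upc_layout` and `locate`; no UPC runtime involved) computes the owner, the phase within the block, and the element's position in the owner's local storage, assuming each thread keeps its own blocks contiguously.

```c
#include <assert.h>
#include <stddef.h>

/* Where element i of a UPC-style array "shared [B] double a[...]" lives.
   Mimics the UPC layout rule in plain C; no UPC runtime is involved. */
typedef struct {
    size_t thread;  /* owning thread: (i / B) mod THREADS               */
    size_t phase;   /* position inside its block: i mod B               */
    size_t local;   /* index in the owner's local storage, assuming the
                       owner keeps its blocks contiguously               */
} upc_layout;

upc_layout locate(size_t i, size_t B, size_t threads)
{
    assert(B > 0 && threads > 0);
    size_t block = i / B;                        /* global block number         */
    upc_layout L;
    L.thread = block % threads;                  /* blocks dealt round-robin    */
    L.phase  = i % B;
    L.local  = (block / threads) * B + L.phase;  /* owner's k-th block, + phase */
    return L;
}
```

With 4 threads and B = 3, element 7 sits in block 2, so it belongs to thread 2 at local index 1; element 13 sits in block 4 and wraps back to thread 0 at local index 4. For `shared[NBLOCK] double vtmp[THREADS*NBLOCK]`, element `i + t*NBLOCK` (with i < NBLOCK) always lands on thread t, which is why `vtmp[i+MYTHREAD*NBLOCK]` is a local access.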

Potential strengths of a PGAS language
- Interprocess communication is intrinsic to the language
- Explicit support for distributed data structures (private and shared data)
- Conceptually, the parallel formulation can be more elegant
- One-sided shared-memory communication
  - Values are either ‘put’ to or ‘got’ from remote images
  - Support for bulk messages and synchronization
  - Could be implemented with a message-passing library or through RDMA (remote direct memory access)
- PGAS hardware support is available
  - The Cray Gemini (XE6) interconnect supports RDMA
- Potential interoperability with existing C/Fortran/Java code

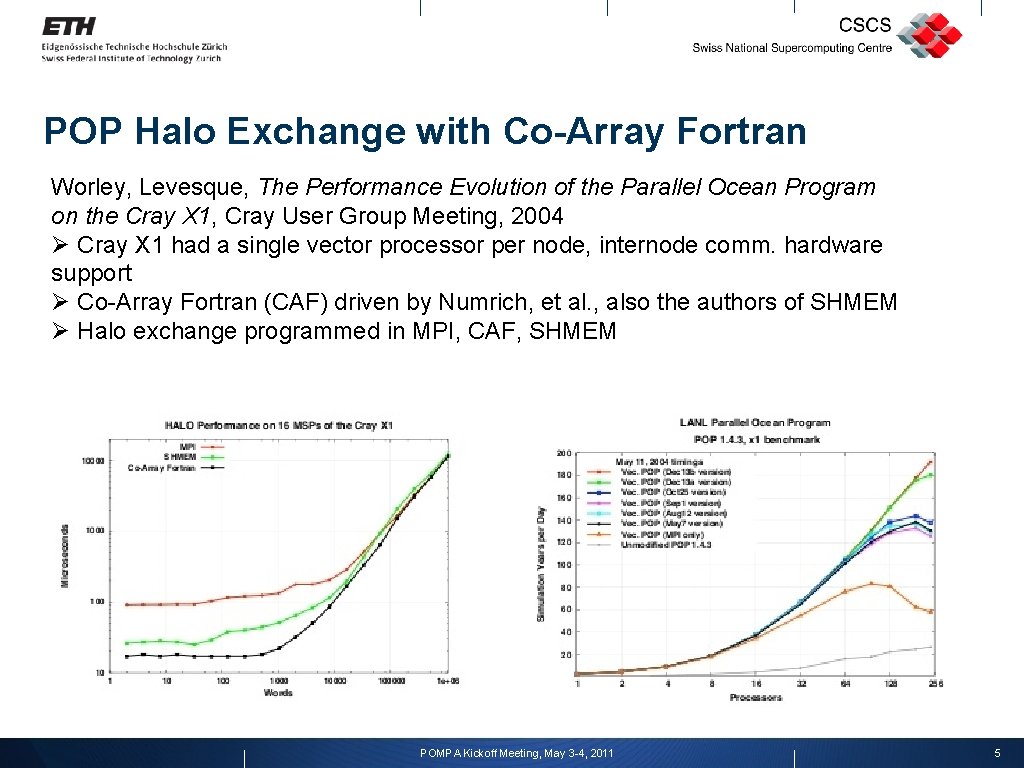

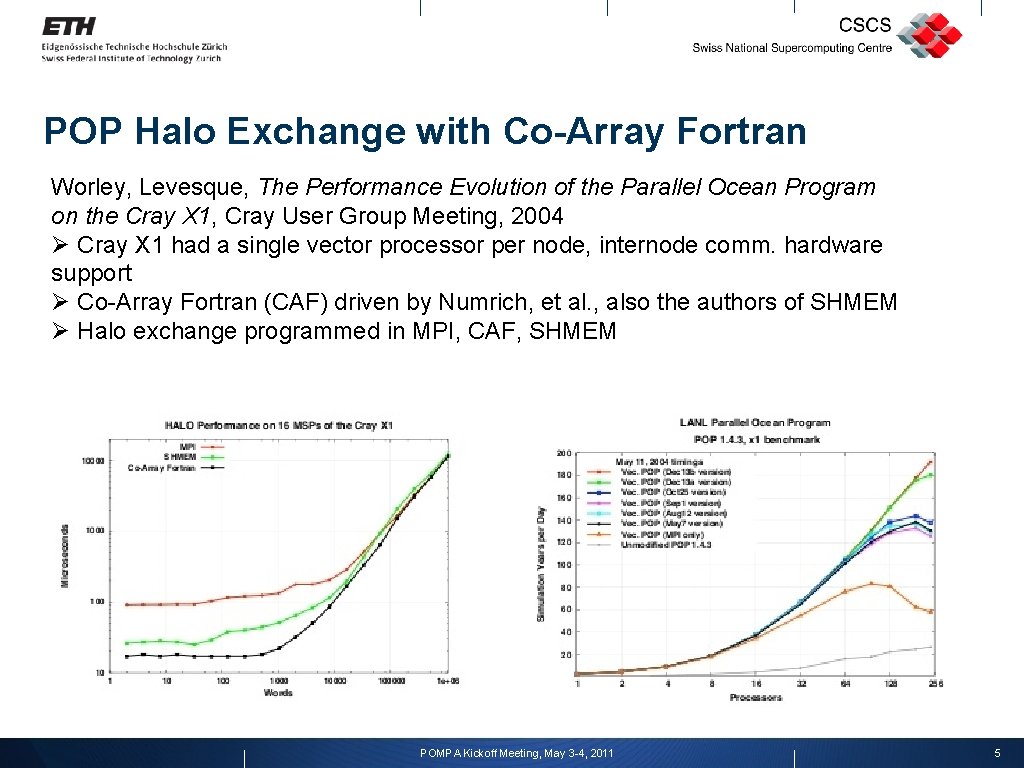

POP Halo Exchange with Co-Array Fortran
Worley, Levesque, "The Performance Evolution of the Parallel Ocean Program on the Cray X1", Cray User Group Meeting, 2004
- The Cray X1 had a single vector processor per node and hardware support for internode communication
- Co-Array Fortran (CAF) was driven by Numrich et al., also the authors of SHMEM
- The halo exchange was programmed in MPI, CAF, and SHMEM

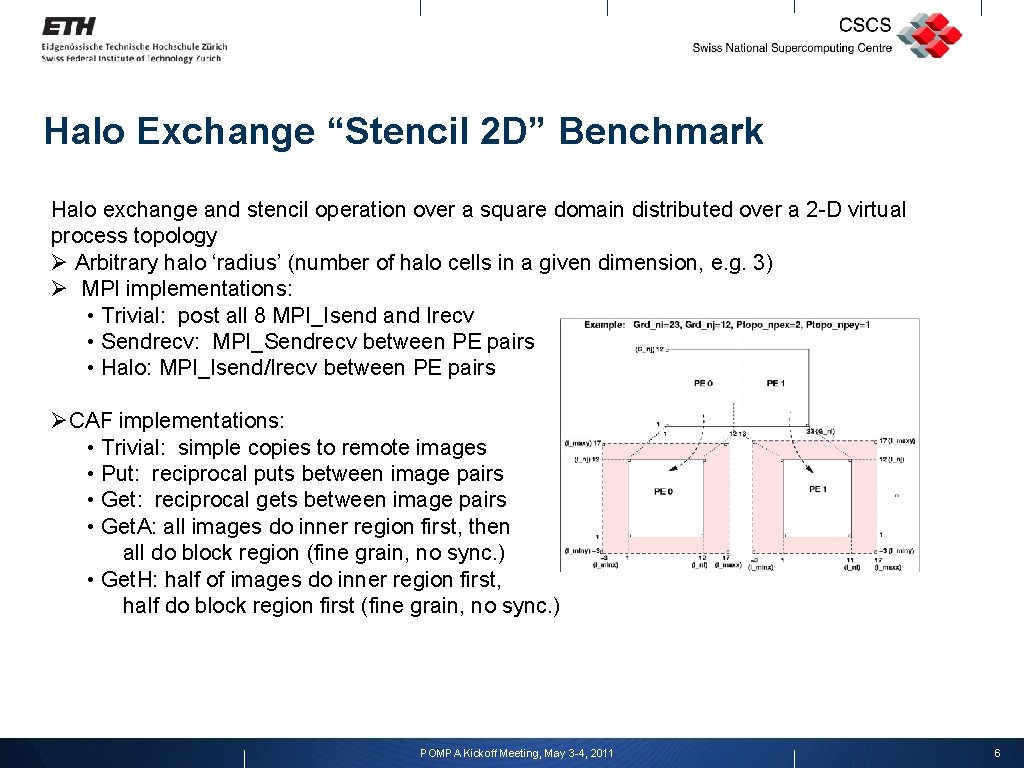

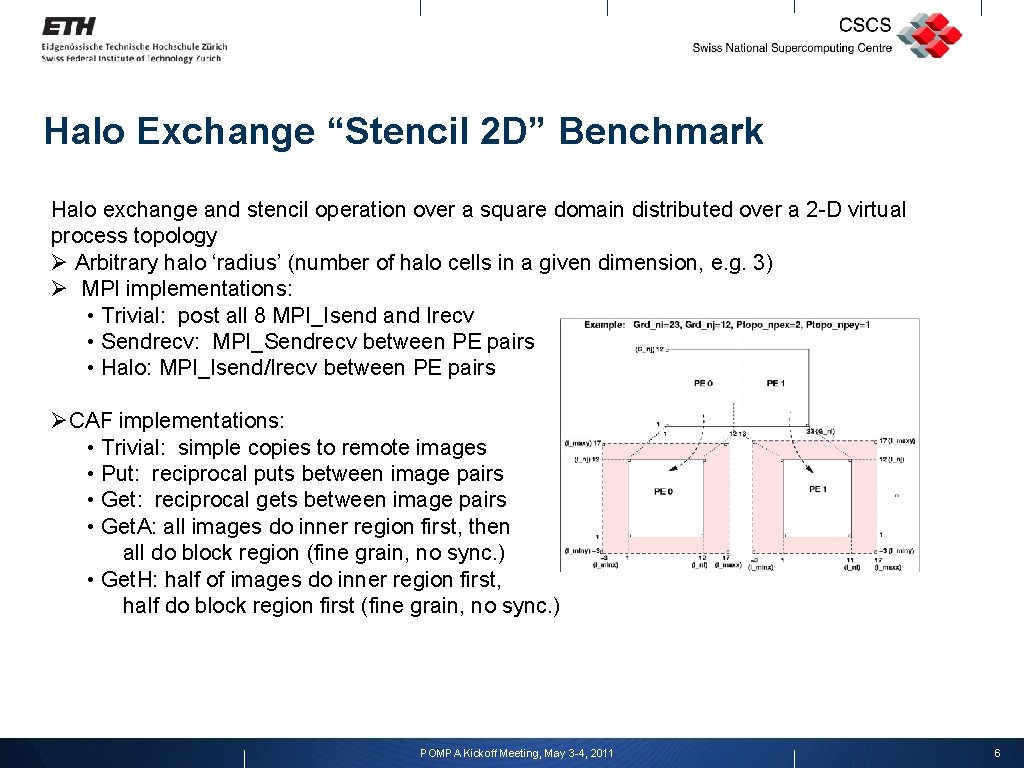

Halo Exchange “Stencil 2D” Benchmark
Halo exchange and stencil operation over a square domain distributed over a 2-D virtual process topology
- Arbitrary halo ‘radius’ (number of halo cells in a given dimension, e.g. 3)
- MPI implementations:
  - Trivial: post all 8 MPI_Isend and MPI_Irecv
  - Sendrecv: MPI_Sendrecv between PE pairs
  - Halo: MPI_Isend/MPI_Irecv between PE pairs
- CAF implementations:
  - Trivial: simple copies to remote images
  - Put: reciprocal puts between image pairs
  - Get: reciprocal gets between image pairs
  - GetA: all images do the inner region first, then all do the block region (fine grain, no sync.)
  - GetH: half of the images do the inner region first, half do the block region first (fine grain, no sync.)
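To see how the halo radius drives message volume: for an m x n local block with halo width h, the 8-neighbour “Trivial” scheme sends two east/west strips of h x n values, two north/south strips of m x h values, and four h x h corners. The helper below (hypothetical name `halo_cells`) is a minimal sketch of that count, assuming the strip orientation of the `V(i,j)` example code.

```c
#include <assert.h>
#include <stddef.h>

/* Values one process sends per halo update in the 8-neighbour "Trivial"
   scheme: E/W strips are h x n, N/S strips are m x h, and the four
   diagonal corners are h x h each. */
size_t halo_cells(size_t m, size_t n, size_t h)
{
    assert(h <= m && h <= n);  /* wider halos need data beyond the immediate neighbours */
    size_t east_west   = 2 * h * n;
    size_t north_south = 2 * m * h;
    size_t corners     = 4 * h * h;
    return east_west + north_south + corners;
}
```

For a 2048 x 2048 local block this gives 8196 values for halo = 1 and 24612 values (about 197 KB in double precision) for halo = 3, always spread over 8 messages; the message count stays fixed while the volume grows linearly in h.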

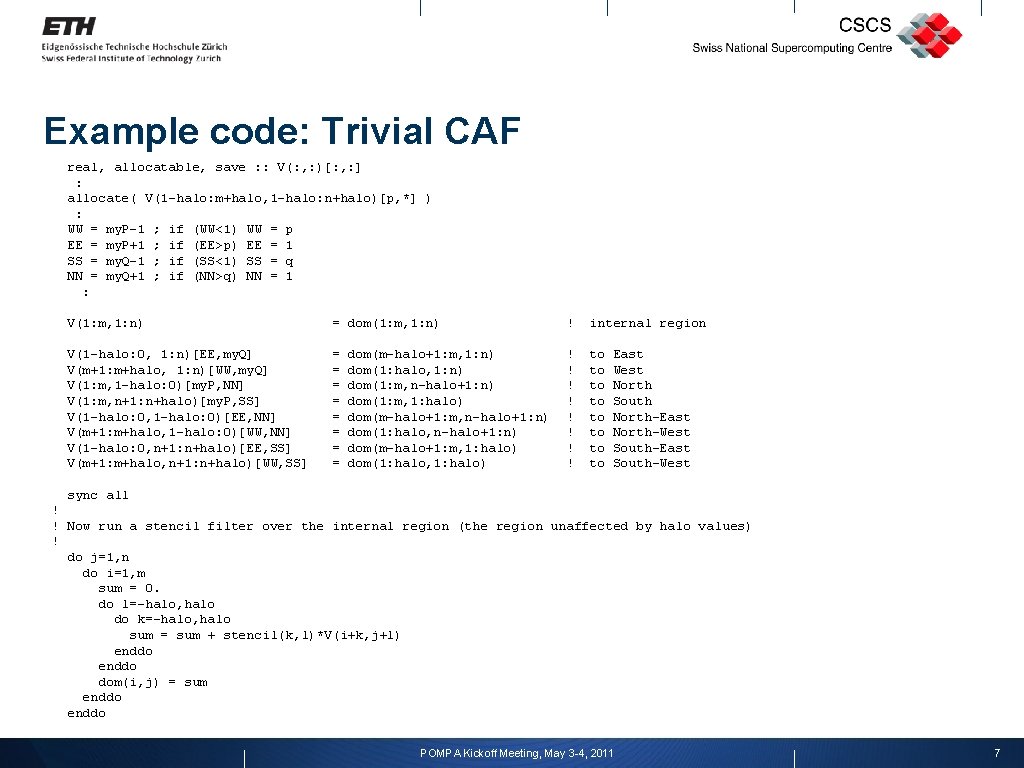

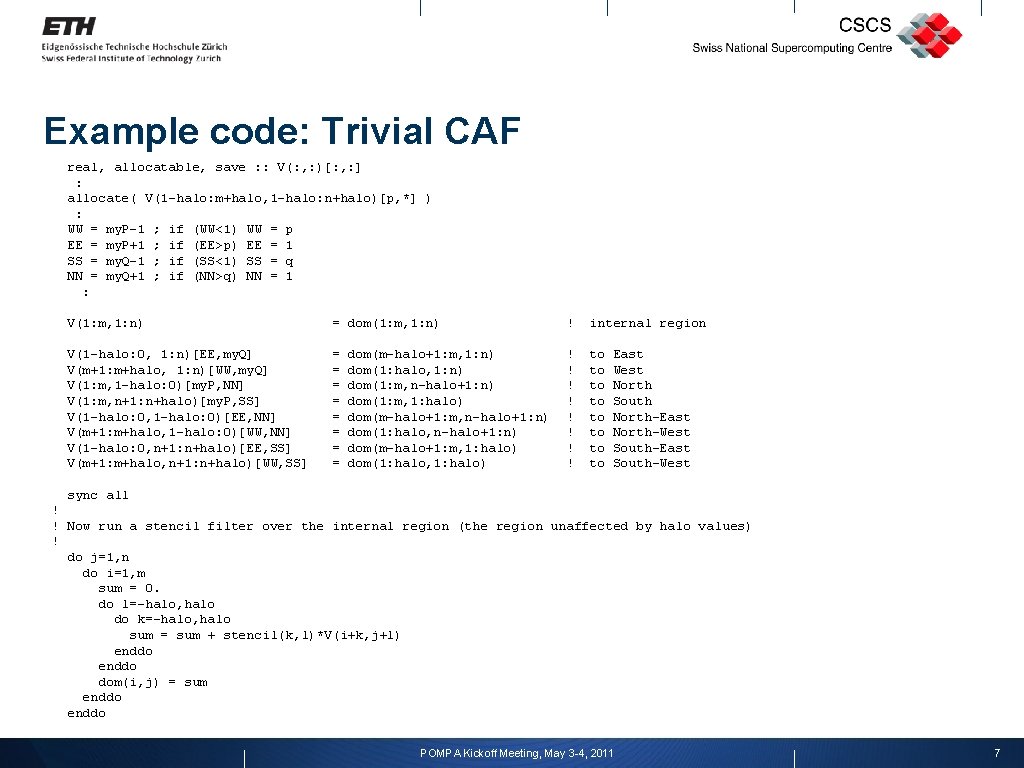

Example code: Trivial CAF

```fortran
real, allocatable, save :: V(:,:)[:,:]
! ...
allocate( V(1-halo:m+halo, 1-halo:n+halo)[p,*] )
! ...
WW = myP-1 ; if (WW<1) WW = p
EE = myP+1 ; if (EE>p) EE = 1
SS = myQ-1 ; if (SS<1) SS = q
NN = myQ+1 ; if (NN>q) NN = 1
! ...
V(1:m,1:n) = dom(1:m,1:n)                                      ! internal region
V(1-halo:0,   1:n)[EE,myQ]         = dom(m-halo+1:m, 1:n)         ! to East
V(m+1:m+halo, 1:n)[WW,myQ]         = dom(1:halo, 1:n)             ! to West
V(1:m, 1-halo:0)[myP,NN]           = dom(1:m, n-halo+1:n)         ! to North
V(1:m, n+1:n+halo)[myP,SS]         = dom(1:m, 1:halo)             ! to South
V(1-halo:0,   1-halo:0)[EE,NN]     = dom(m-halo+1:m, n-halo+1:n)  ! to North-East
V(m+1:m+halo, 1-halo:0)[WW,NN]     = dom(1:halo, n-halo+1:n)      ! to North-West
V(1-halo:0,   n+1:n+halo)[EE,SS]   = dom(m-halo+1:m, 1:halo)      ! to South-East
V(m+1:m+halo, n+1:n+halo)[WW,SS]   = dom(1:halo, 1:halo)          ! to South-West
sync all
!
! Now run a stencil filter over the internal region (the region unaffected by halo values)
!
do j=1,n
  do i=1,m
    sum = 0.
    do l=-halo,halo
      do k=-halo,halo
        sum = sum + stencil(k,l)*V(i+k,j+l)
      enddo
    enddo
    dom(i,j) = sum
  enddo
enddo
```
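One way to sanity-check the index ranges of the eight remote assignments is to run them on a single image (p = q = 1): every neighbour is then the image itself, so the padded array must come out as the periodic extension of `dom`. The serial C sketch below transliterates the Fortran ranges using hypothetical `V(i,j)`/`DOM(i,j)` macros that map Fortran bounds onto column-major 0-based C arrays; `put_block`, `trivial_halo_self`, and the sizes M = 4, N = 3, H = 1 are illustrative assumptions, and no coarrays are involved.

```c
#include <assert.h>

#define M 4                       /* local block size in i */
#define N 3                       /* local block size in j */
#define H 1                       /* halo width            */
#define LD (M + 2 * H)            /* leading dimension of v */

/* Fortran-style accessors on arrays named v and dom (column-major):
   V(i,j) for i in 1-H..M+H, j in 1-H..N+H; DOM(i,j) for i in 1..M, j in 1..N */
#define V(i, j)   v[((j) + H - 1) * LD + ((i) + H - 1)]
#define DOM(i, j) dom[((j) - 1) * M + ((i) - 1)]

/* V(vi0:vi0+ni-1, vj0:vj0+nj-1) = dom(di0:di0+ni-1, dj0:dj0+nj-1) */
static void put_block(double *v, const double *dom,
                      int vi0, int vj0, int di0, int dj0, int ni, int nj)
{
    for (int j = 0; j < nj; ++j)
        for (int i = 0; i < ni; ++i)
            V(vi0 + i, vj0 + j) = DOM(di0 + i, dj0 + j);
}

/* The nine "Trivial CAF" assignments with every neighbour equal to self */
void trivial_halo_self(double *v, const double *dom)
{
    put_block(v, dom, 1, 1, 1, 1, M, N);                         /* internal   */
    put_block(v, dom, 1 - H, 1, M - H + 1, 1, H, N);             /* East       */
    put_block(v, dom, M + 1, 1, 1, 1, H, N);                     /* West       */
    put_block(v, dom, 1, 1 - H, 1, N - H + 1, M, H);             /* North      */
    put_block(v, dom, 1, N + 1, 1, 1, M, H);                     /* South      */
    put_block(v, dom, 1 - H, 1 - H, M - H + 1, N - H + 1, H, H); /* North-East */
    put_block(v, dom, M + 1, 1 - H, 1, N - H + 1, H, H);         /* North-West */
    put_block(v, dom, 1 - H, N + 1, M - H + 1, 1, H, H);         /* South-East */
    put_block(v, dom, M + 1, N + 1, 1, 1, H, H);                 /* South-West */
}
```

Filling `dom` with distinct values and comparing every `V(i,j)` against `DOM` at the wrapped indices checks all eight ranges at once; a wrong bound shows up as a mismatch in exactly one strip or corner.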

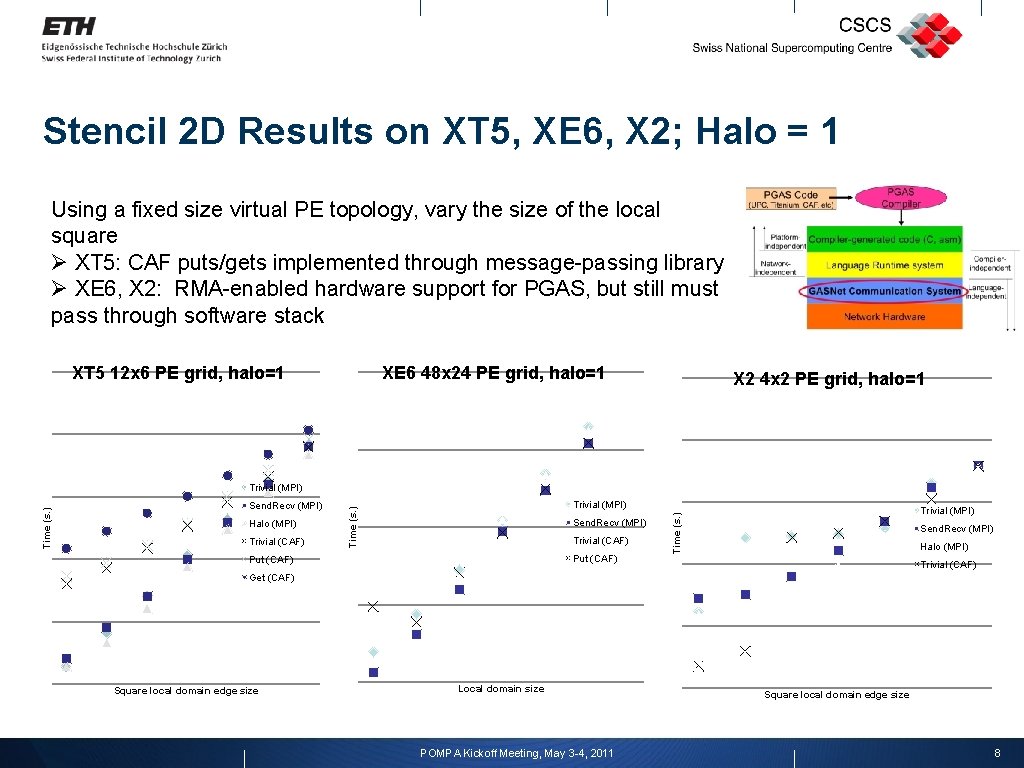

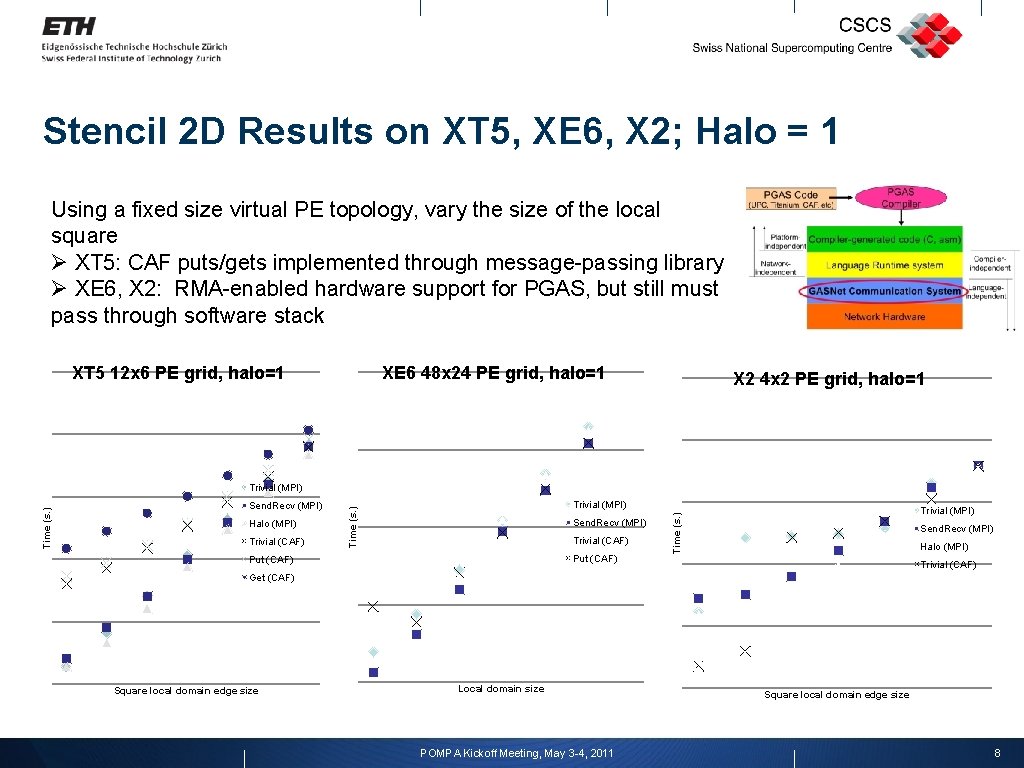

Stencil 2D Results on XT5, XE6, X2; Halo = 1
Using a fixed-size virtual PE topology, vary the size of the local square.
- XT5: CAF puts/gets are implemented through a message-passing library
- XE6, X2: RMA-enabled hardware support for PGAS, but communication must still pass through the software stack

[Figure: three panels, "XT5, 12x6 PE grid, halo=1", "XE6, 48x24 PE grid, halo=1" and "X2, 4x2 PE grid, halo=1"; time (s) vs. square local domain edge size; series include Trivial, SendRecv and Halo (MPI) and Trivial, Put and Get (CAF).]

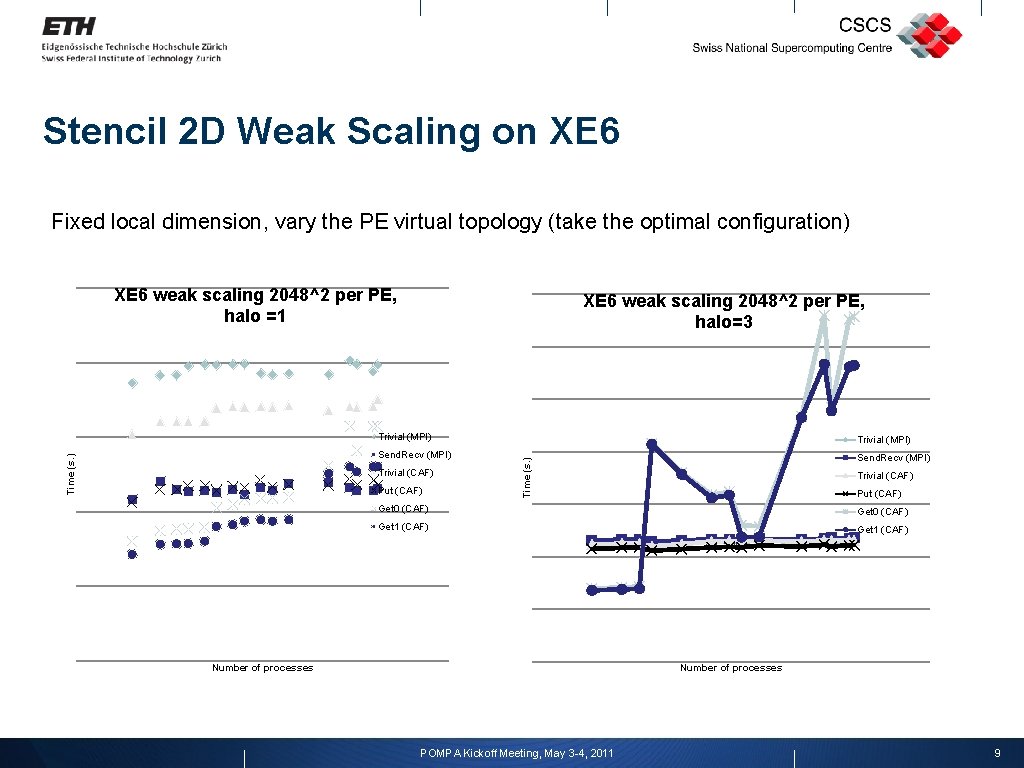

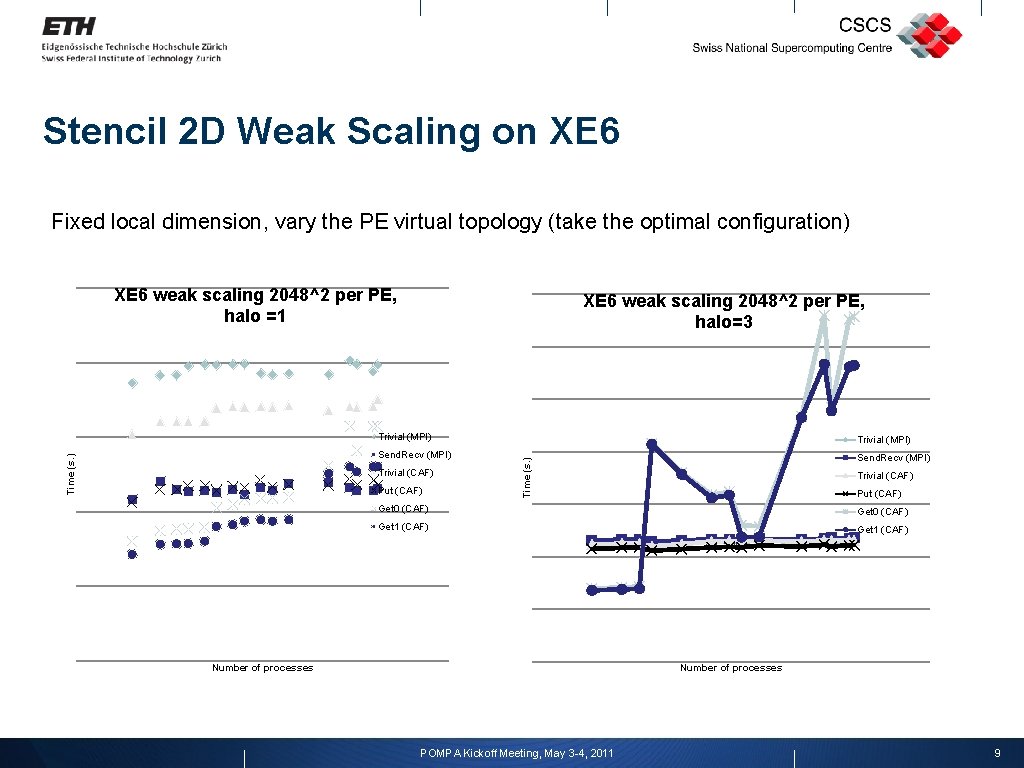

Stencil 2D Weak Scaling on XE6
Fixed local dimension; vary the PE virtual topology (taking the optimal configuration).

[Figure: two panels, "XE6 weak scaling, 2048^2 per PE, halo=3" and "XE6 weak scaling, 2048^2 per PE, halo=1"; time (s) vs. number of processes; series include Trivial (MPI), SendRecv (MPI), Trivial (CAF), Put (CAF), Get0 (CAF) and Get1 (CAF).]

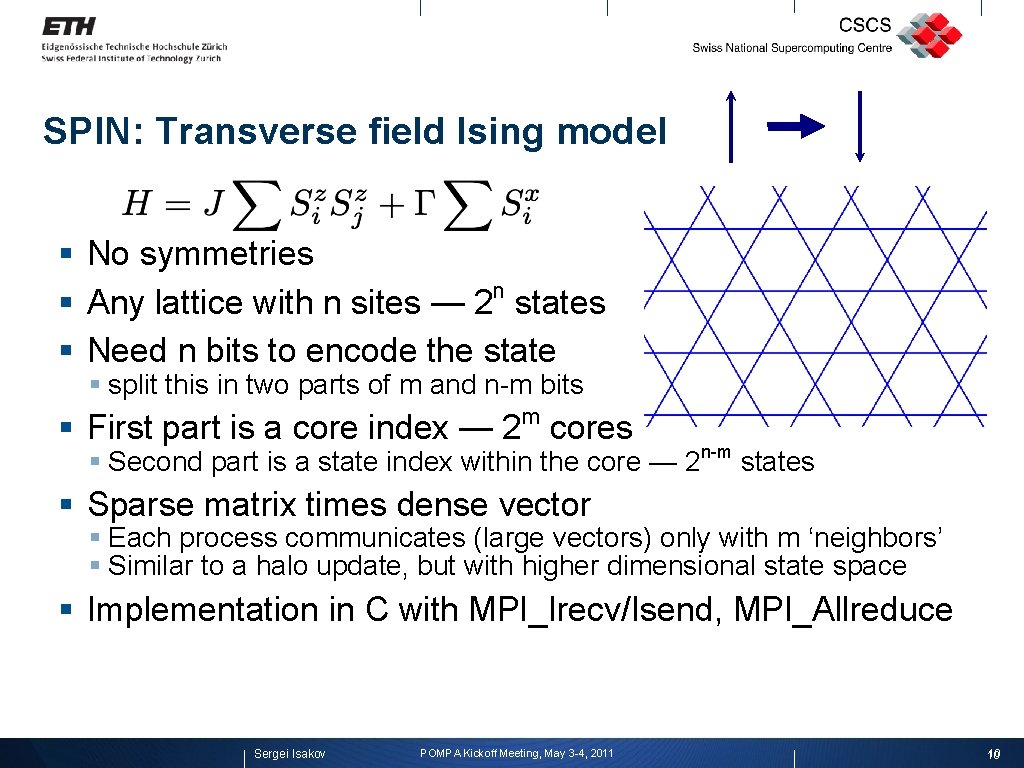

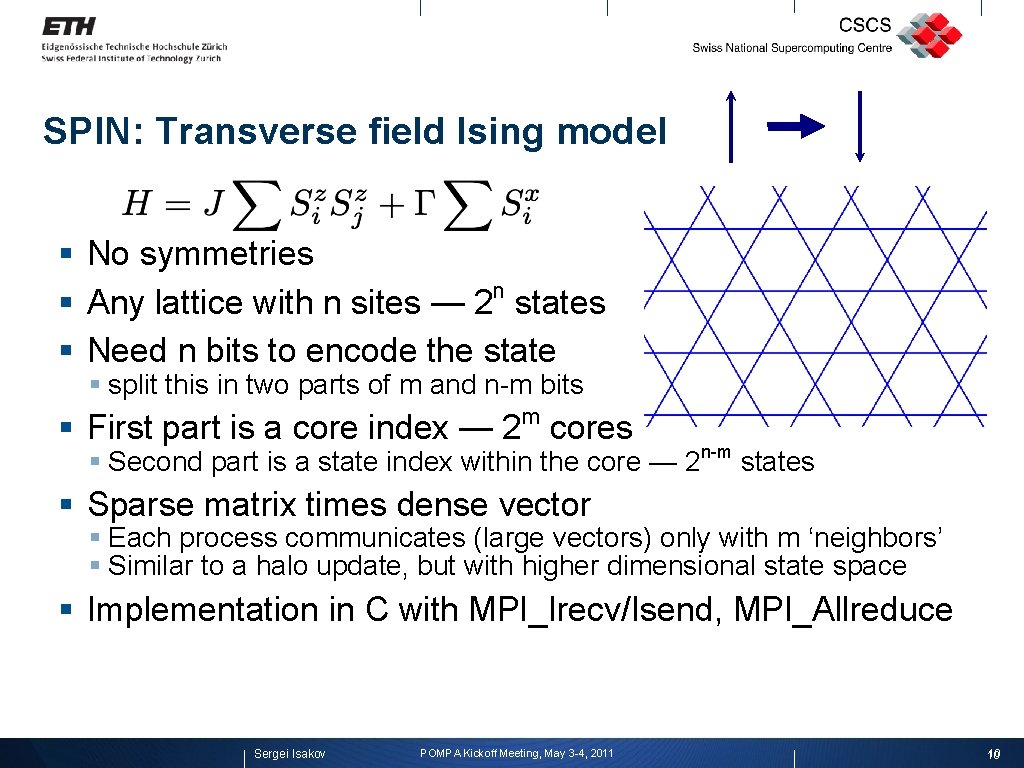

SPIN: Transverse-field Ising model (Sergei Isakov)
- No symmetries
- Any lattice with $n$ sites: $2^n$ states
  - Need $n$ bits to encode the state
  - Split this into two parts of $m$ and $n-m$ bits
  - First part is a core index: $2^m$ cores
  - Second part is a state index within the core: $2^{n-m}$ states
- Sparse matrix times dense vector
  - Each process communicates (large vectors) only with $m$ ‘neighbors’
  - Similar to a halo update, but with a higher-dimensional state space
- Implementation in C with MPI_Irecv/MPI_Isend and MPI_Allreduce
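Why exactly $m$ neighbours? A minimal sketch, assuming (for illustration only) that the top $m$ bits of an $n$-bit state hold the core index and the low $n-m$ bits the local index: flipping spin k either stays on the same core (k < n-m) or moves to the core whose index differs in a single bit. The names `split_state`, `split`, and `flip_partner` are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* An n-bit basis state s = (core index: m bits | local index: n-m bits).
   Assumption for this sketch: the core index occupies the high bits. */
typedef struct { uint64_t core, local; } split_state;

split_state split(uint64_t s, unsigned n, unsigned m)
{
    assert(m <= n && n < 64);
    unsigned nloc = n - m;                         /* bits per local index */
    split_state r = { s >> nloc, s & ((UINT64_C(1) << nloc) - 1) };
    return r;
}

/* Core holding the state reached by flipping spin k (0 <= k < n) from a
   state stored on `core`. Local flips stay on the same core; flips of the
   top m bits go to the core whose index differs in exactly one bit. */
uint64_t flip_partner(uint64_t core, unsigned k, unsigned n, unsigned m)
{
    unsigned nloc = n - m;
    assert(m <= n && k < n);
    return (k < nloc) ? core : core ^ (UINT64_C(1) << (k - nloc));
}
```

For n = 5 and m = 2 (4 cores with 8 states each), state 22 = 0b10110 lives on core 2 at local index 6; flips of spins 0 to 2 stay on core 2, while spins 3 and 4 lead to cores 3 and 0. Each core therefore exchanges whole vectors with exactly m partners, the analogue of halo neighbours in a hypercube of cores.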

![UPC Version “Elegant”](https://slidetodoc.com/presentation_image_h2/a615dcc8c51e41aa861872a28b0524ff/image-11.jpg)

UPC Version “Elegant”

```c
shared double *dotprod;            /* on thread 0 */
shared double shared_a[THREADS];
shared double shared_b[THREADS];
struct ed_s {
  /* ... */
  shared double *v0, *v1, *v2;     /* vectors */
  shared double *swap;             /* for swapping vectors */
};
/* ... */
for (iter = 0; iter < ed->max_iter; ++iter) {
  shared_b[MYTHREAD] = b;          /* calculate beta */
  upc_all_reduceD( dotprod, shared_b, UPC_ADD, THREADS, 1, NULL,
                   UPC_IN_ALLSYNC | UPC_OUT_ALLSYNC );
  ed->beta[iter] = sqrt(fabs(dotprod[0]));
  ib = 1.0 / ed->beta[iter];
  /* normalize v1 */
  upc_forall (i = 0; i < ed->nlstates; ++i; &(ed->v1[i]) )
    ed->v1[i] *= ib;
  upc_barrier(0);
  /* matrix vector multiplication */
  upc_forall (s = 0; s < ed->nlstates; ++s; &(ed->v1[s]) ) {
    /* v2 = A * v1, over all threads */
    ed->v2[s] = diag(s, ed->n, ed->j) * ed->v1[s];   /* diagonal part */
    for (k = 0; k < ed->n; ++k) {                    /* offdiagonal part */
      s1 = flip_state(s, k);
      ed->v2[s] += ed->gamma * ed->v1[s1];
    }
  }
  a = 0.0;
  /* Calculate local conjugate term */
  upc_forall (i = 0; i < ed->nlstates; ++i; &(ed->v1[i]) ) {
    a += ed->v1[i] * ed->v2[i];
  }
  shared_a[MYTHREAD] = a;
  upc_all_reduceD( dotprod, shared_a, UPC_ADD, THREADS, 1, NULL,
                   UPC_IN_ALLSYNC | UPC_OUT_ALLSYNC );
  ed->alpha[iter] = dotprod[0];
  b = 0.0;
  /* v2 = v2 - v0 * beta1 - v1 * alpha1 */
  upc_forall (i = 0; i < ed->nlstates; ++i; &(ed->v2[i]) ) {
    ed->v2[i] -= ed->v0[i] * ed->beta[iter] + ed->v1[i] * ed->alpha[iter];
    b += ed->v2[i] * ed->v2[i];
  }
  swap01(ed); swap12(ed);          /* "shift" vectors */
}
}   /* closes the enclosing (elided) function */
```
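Stripped of the UPC distribution, the body of the matrix-vector `upc_forall` is an ordinary sparse product: a diagonal term plus one off-diagonal entry `gamma` per single-spin flip. The serial C sketch below assumes that `flip_state(s, k)` toggles bit k and uses a hypothetical `diag` for a periodic chain with coupling j; the slide shows neither function, so both (and their sign conventions) are illustrative only.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed: flipping spin k toggles bit k of the basis state. */
static uint64_t flip_state(uint64_t s, unsigned k) { return s ^ (UINT64_C(1) << k); }

/* Hypothetical diagonal term: periodic chain of n spins with coupling j,
   contributing -j per aligned neighbour pair and +j per anti-aligned pair. */
static double diag(uint64_t s, unsigned n, double j)
{
    double e = 0.0;
    for (unsigned k = 0; k < n; ++k) {
        unsigned a = (unsigned)((s >> k) & 1u);
        unsigned b = (unsigned)((s >> ((k + 1) % n)) & 1u);
        e += (a == b) ? -j : j;
    }
    return e;
}

/* v2 = A * v1 over all 2^n states, mirroring the upc_forall body serially */
void tfim_matvec(const double *v1, double *v2, unsigned n, double j, double gamma)
{
    assert(n >= 3 && n < 32);
    size_t nstates = (size_t)1 << n;
    for (size_t s = 0; s < nstates; ++s) {
        v2[s] = diag(s, n, j) * v1[s];            /* diagonal part     */
        for (unsigned k = 0; k < n; ++k)          /* off-diagonal part */
            v2[s] += gamma * v1[flip_state(s, k)];
    }
}
```

Applied to the all-up state of a 3-spin chain with j = 1 and gamma = 0.5, the product gives -3 on that state and 0.5 on each of the three single-flip states. In the UPC version, `v1[s1]` is remote whenever spin k falls in the core-index bits, and each such access is a separate fine-grained read; this is one plausible source of the ‘elegant’ formulation's poor performance.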

![UPC “Inelegant 1”: reproduce existing messaging](https://slidetodoc.com/presentation_image_h2/a615dcc8c51e41aa861872a28b0524ff/image-12.jpg)

UPC “Inelegant 1”: reproduce existing messaging

MPI:

```c
MPI_Isend(ed->v1, ed->nlstates, MPI_DOUBLE, ed->to_nbs[0], k,
          MPI_COMM_WORLD, &req_send2);
MPI_Irecv(ed->vv1, ed->nlstates, MPI_DOUBLE, ed->from_nbs[0], ed->nm-1,
          MPI_COMM_WORLD, &req_recv);
/* ... */
MPI_Isend(ed->v1, ed->nlstates, MPI_DOUBLE, ed->to_nbs[neighb], k,
          MPI_COMM_WORLD, &req_send2);
MPI_Irecv(ed->vv2, ed->nlstates, MPI_DOUBLE, ed->from_nbs[neighb], k,
          MPI_COMM_WORLD, &req_recv2);
/* ... */
```

UPC:

```c
shared[NBLOCK] double vtmp[THREADS*NBLOCK];
/* ... */
for (i = 0; i < NBLOCK; ++i) vtmp[i+MYTHREAD*NBLOCK] = ed->v1[i];
upc_barrier(1);
for (i = 0; i < NBLOCK; ++i) ed->vv1[i] = vtmp[i+(ed->from_nbs[0]*NBLOCK)];
/* ... */
for (i = 0; i < NBLOCK; ++i) ed->vv2[i] = vtmp[i+(ed->from_nbs[neighb]*NBLOCK)];
upc_barrier(2);
/* ... */
```
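The UPC half replaces each message with a write into the writer's own block of a shared staging array, a barrier, and element-wise reads of the neighbour's block. The serial C sketch below plays every thread's role in turn so the two phases are explicit: all writes must finish before any read starts, which is the ordering `upc_barrier(1)` enforces. `NTHREADS`, `NBLOCK`, `exchange`, and the XOR neighbour rule in `from_nb` are illustrative assumptions, not taken from the original code.

```c
#include <assert.h>

#define NTHREADS 4
#define NBLOCK   3

/* Stand-in for: shared[NBLOCK] double vtmp[THREADS*NBLOCK];
   block t (elements t*NBLOCK .. t*NBLOCK+NBLOCK-1) has affinity to thread t */
static double vtmp[NTHREADS * NBLOCK];

/* Assumed neighbour rule for this sketch: partner differs in bit 0 */
static int from_nb(int t) { return t ^ 1; }

/* v1[t] is thread t's local vector; vv1[t] receives its neighbour's vector */
void exchange(double v1[NTHREADS][NBLOCK], double vv1[NTHREADS][NBLOCK])
{
    /* Phase 1: every thread writes its own block (a local write in UPC) */
    for (int t = 0; t < NTHREADS; ++t)
        for (int i = 0; i < NBLOCK; ++i)
            vtmp[i + t * NBLOCK] = v1[t][i];

    /* upc_barrier(1): all writes are complete before any read starts */

    /* Phase 2: every thread reads its neighbour's block (remote reads in UPC) */
    for (int t = 0; t < NTHREADS; ++t)
        for (int i = 0; i < NBLOCK; ++i)
            vv1[t][i] = vtmp[i + from_nb(t) * NBLOCK];
}
```

Interleaving the two loops per thread would let a thread read a neighbour's block before the neighbour has written it, which is exactly the race the barrier rules out.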

![UPC “Inelegant 3”: use only PUT operations](https://slidetodoc.com/presentation_image_h2/a615dcc8c51e41aa861872a28b0524ff/image-13.jpg)

UPC “Inelegant 3”: use only PUT operations

```c
shared[NBLOCK] double vtmp1[THREADS*NBLOCK];
shared[NBLOCK] double vtmp2[THREADS*NBLOCK];
/* ... */
upc_memput( &vtmp1[ed->to_nbs[0]*NBLOCK], ed->v1, NBLOCK*sizeof(double) );
upc_barrier(1);
/* ... */
if ( mode == 0 ) {
  upc_memput( &vtmp2[ed->to_nbs[neighb]*NBLOCK], ed->v1, NBLOCK*sizeof(double) );
} else {
  upc_memput( &vtmp1[ed->to_nbs[neighb]*NBLOCK], ed->v1, NBLOCK*sizeof(double) );
}
/* ... */
if ( mode == 0 ) {
  for (i = 0; i < ed->nlstates; ++i) { ed->v2[i] += ed->gamma * vtmp1[i+MYTHREAD*NBLOCK]; }
  mode = 1;
} else {
  for (i = 0; i < ed->nlstates; ++i) { ed->v2[i] += ed->gamma * vtmp2[i+MYTHREAD*NBLOCK]; }
  mode = 0;
}
upc_barrier(2);
```
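The `mode` flag implements double buffering: while a thread consumes the neighbour data that arrived in one buffer, the next `upc_memput` already targets the other, so one barrier per step is enough to keep a put from overwriting data that is still being read. The serial C sketch below tracks only the buffer choice, with a single value standing in for each block; `dbuf`, `dbuf_init`, and `dbuf_step` are hypothetical names, and in a real run the incoming value is put by a neighbouring thread.

```c
#include <assert.h>

/* Two receive buffers, standing in for vtmp1 (buf[0]) and vtmp2 (buf[1]) */
typedef struct { double buf[2]; int mode; } dbuf;

/* The initial put before the loop goes into vtmp1 */
void dbuf_init(dbuf *d, double first) { d->buf[0] = first; d->mode = 0; }

/* One loop iteration: put `next` into the buffer not being read,
   consume the other one, then flip mode (upc_barrier(2) would follow). */
double dbuf_step(dbuf *d, double next)
{
    int put_into  = (d->mode == 0) ? 1 : 0;   /* mode 0: put into vtmp2 */
    int read_from = d->mode;                  /* mode 0: read vtmp1     */
    d->buf[put_into] = next;
    double consumed = d->buf[read_from];
    d->mode ^= 1;
    return consumed;
}
```

Starting from an initial put of 10 and then putting 11, 12, 13 in successive steps, the consumer sees 10, 11, 12: every value is read exactly one step after it arrives, and no put lands on the buffer currently being consumed.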

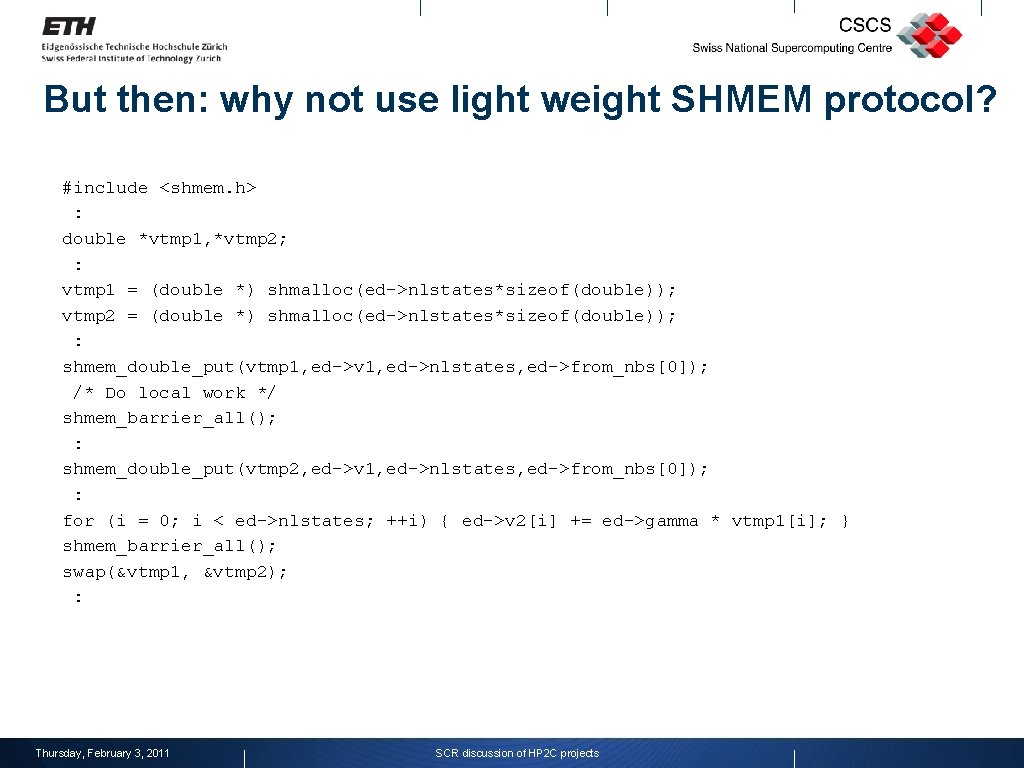

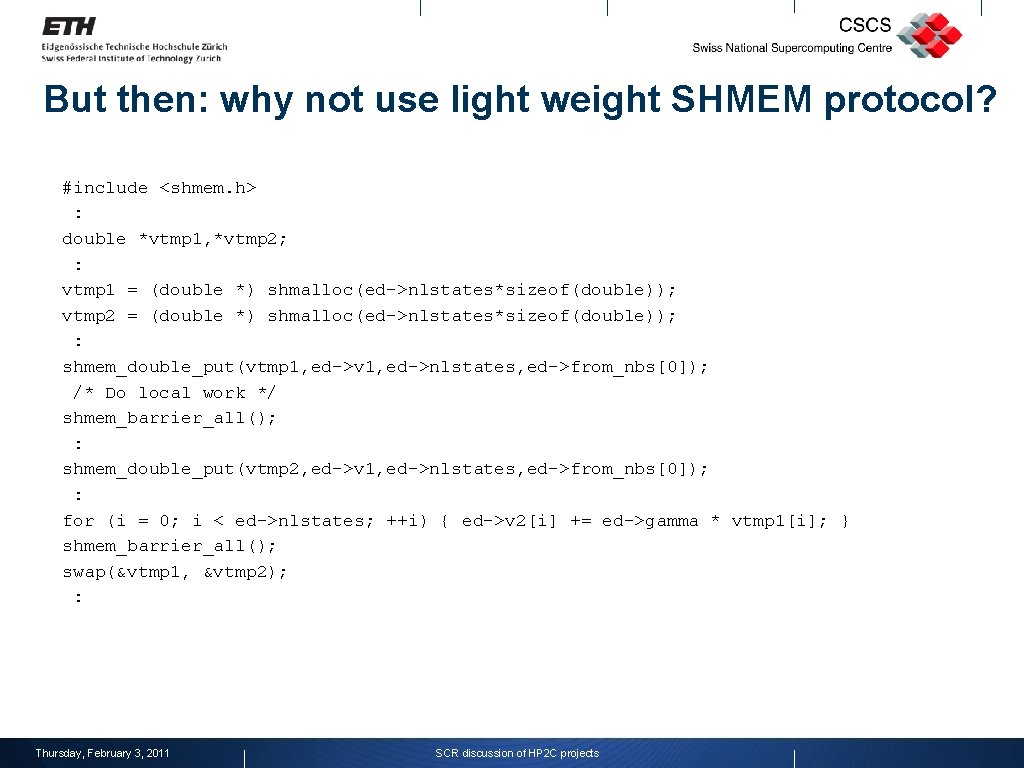

But then: why not use the lightweight SHMEM protocol?

```c
#include <shmem.h>
/* ... */
double *vtmp1, *vtmp2;
/* ... */
/* symmetric allocation; OpenSHMEM 1.2 and later call this shmem_malloc */
vtmp1 = (double *) shmalloc(ed->nlstates*sizeof(double));
vtmp2 = (double *) shmalloc(ed->nlstates*sizeof(double));
/* ... */
shmem_double_put(vtmp1, ed->v1, ed->nlstates, ed->from_nbs[0]);
/* Do local work */
shmem_barrier_all();
/* ... */
shmem_double_put(vtmp2, ed->v1, ed->nlstates, ed->from_nbs[0]);
/* ... */
for (i = 0; i < ed->nlstates; ++i) { ed->v2[i] += ed->gamma * vtmp1[i]; }
shmem_barrier_all();
swap(&vtmp1, &vtmp2);
/* ... */
```

Thursday, February 3, 2011, SCR discussion of HP2C projects
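The slide calls `swap(&vtmp1, &vtmp2)` without showing it. A minimal version, assuming it simply exchanges the two pointers, is below; no data is copied. Because `shmem_double_put` needs a symmetric destination, this works only if every PE performs the same swap in the same iteration, so that `vtmp1` names the same symmetric buffer on all PEs.

```c
#include <assert.h>

/* Hypothetical helper matching the slide's call swap(&vtmp1, &vtmp2):
   exchanges which buffer each pointer refers to; no data is copied. */
void swap(double **a, double **b)
{
    double *tmp = *a;
    *a = *b;
    *b = tmp;
}
```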

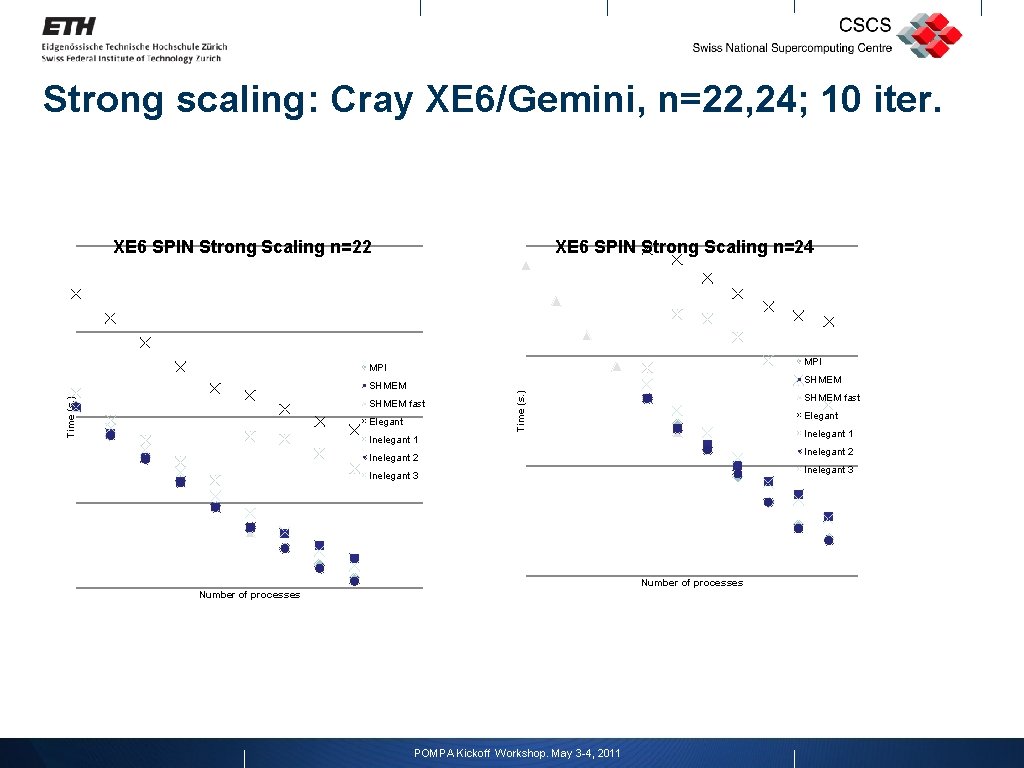

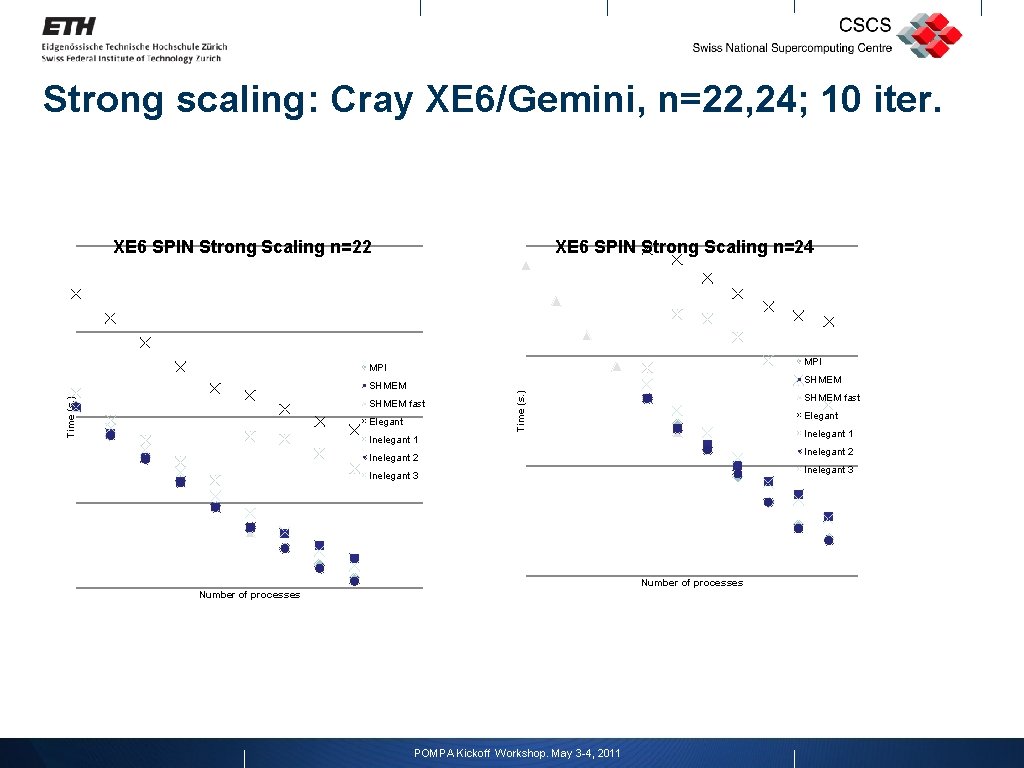

Strong scaling: Cray XE6/Gemini, n=22 and n=24, 10 iterations

[Figure: two panels, "XE6 SPIN Strong Scaling n=22" and "XE6 SPIN Strong Scaling n=24"; time (s) vs. number of processes; series: MPI, SHMEM fast, Elegant, Inelegant 1, Inelegant 2, Inelegant 3.]

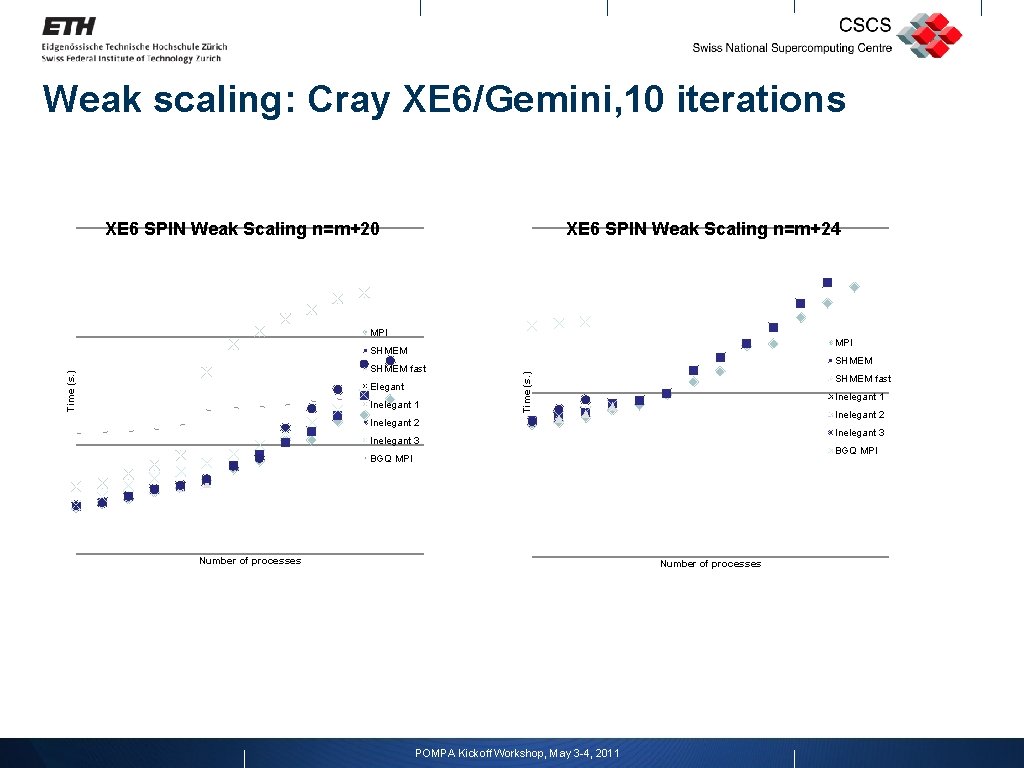

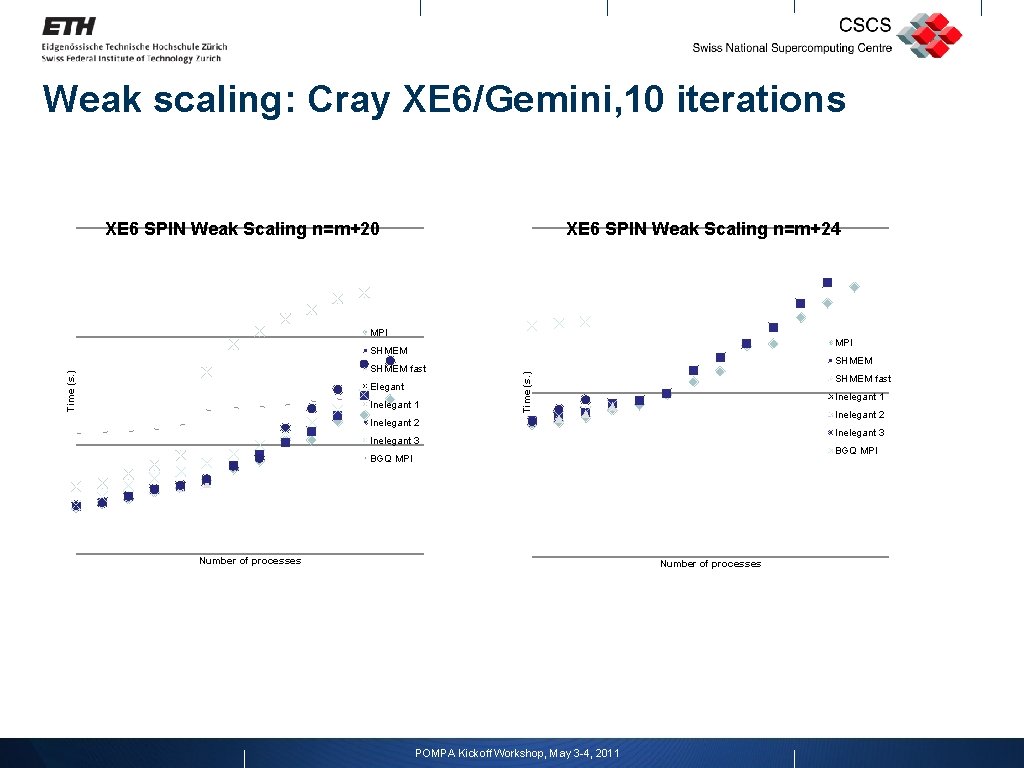

Weak scaling: Cray XE6/Gemini, 10 iterations

[Figure: two panels, "XE6 SPIN Weak Scaling n=m+20" and "XE6 SPIN Weak Scaling n=m+24"; time (s) vs. number of processes; series include MPI, SHMEM, SHMEM fast, Elegant, Inelegant 1, Inelegant 2, Inelegant 3 and BGQ MPI.]

Conclusions
- One-way communication has conceptual benefits and can have real ones (e.g., Cray T3E, X1, perhaps X2)
- On the XE6, a CAF/UPC formulation can achieve SHMEM performance, but only by using puts and gets; ‘elegant’ implementations perform poorly
- If the domain decomposition is already properly formulated, why not use a simple, lightweight protocol like SHMEM?
- For the XE6 Gemini interconnect, a study of one-sided communication primitives (Tineo et al.) indicates that two-sided MPI communication is still the most effective. To do: test MPI-2 one-sided primitives
- Still, the PGAS path should be kept open; possible task: a PGAS (CAF or SHMEM) implementation of the COSMO halo update?