Paper Review: Inherently Lower-Power High-Performance Superscalar Architectures

Rami Abielmona, Prof. Maitham Shams, 95.575, March 4, 2002

![Flynn’s Classifications (1972) [1]](https://slidetodoc.com/presentation_image_h2/eeef8ebb413348140d32d951f6e4fc86/image-2.jpg)

Flynn’s Classifications (1972) [1]

- SISD – Single Instruction stream, Single Data stream
  - Conventional sequential machines
  - Program executed is the instruction stream, and data operated on is the data stream
- SIMD – Single Instruction stream, Multiple Data streams
  - Vector machines (superscalar)
  - Processors execute the same program, but operate on different data streams
- MIMD – Multiple Instruction streams, Multiple Data streams
  - Parallel machines
  - Independent processors execute different programs, using unique data streams
- MISD – Multiple Instruction streams, Single Data stream
  - Systolic array machines
  - Common data structure is manipulated by separate processors, executing different instruction streams (programs)

Pipelined Execution

- Effective way of organizing concurrent activity in a computer system
- Makes it possible to execute instructions concurrently
- Maximum throughput of a pipelined processor is one instruction per clock cycle
- Shown in Figure 1 is a two-stage pipeline, with buffer B1 receiving new information at the end of each clock cycle (Figure 1, courtesy [2])
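The throughput limit above can be checked with a small cycle count. This is a minimal sketch that assumes an ideal pipeline with no stalls or hazards; the function names are illustrative and do not come from the slides.

```python
def pipelined_cycles(n_instructions: int, n_stages: int) -> int:
    """Cycles needed to finish n instructions on an ideal pipeline (no stalls)."""
    if n_instructions == 0:
        return 0
    # The first instruction needs n_stages cycles; each later one completes
    # one cycle after its predecessor.
    return n_stages + n_instructions - 1


def throughput(n_instructions: int, n_stages: int) -> float:
    """Average instructions completed per cycle."""
    return n_instructions / pipelined_cycles(n_instructions, n_stages)


if __name__ == "__main__":
    for n in (1, 10, 1000):
        print(n, "instructions:", round(throughput(n, n_stages=2), 3), "per cycle")
```

Under these assumptions the throughput approaches, but never exceeds, one instruction per cycle as the instruction count grows.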

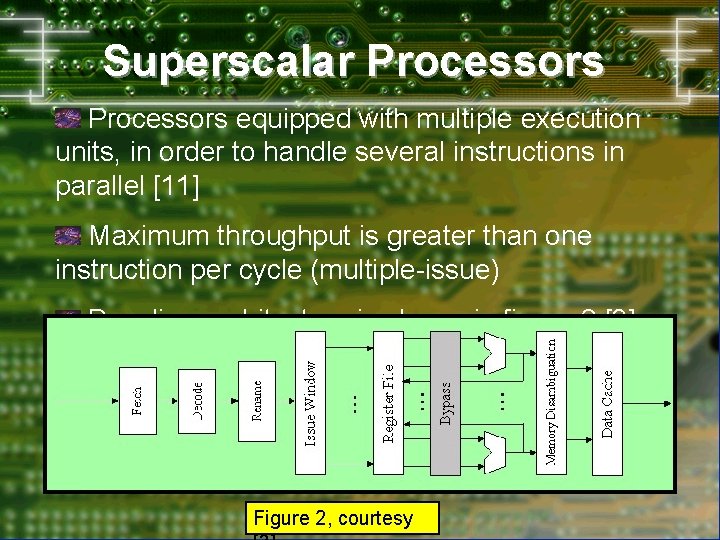

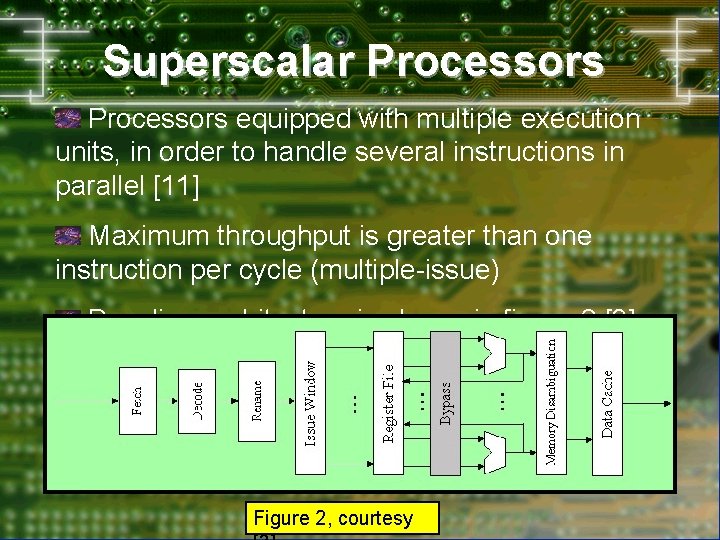

Superscalar Processors

- Equipped with multiple execution units, in order to handle several instructions in parallel [11]
- Maximum throughput is greater than one instruction per cycle (multiple-issue)
- Baseline architecture is shown in Figure 2 (Figure 2, courtesy [3])

![Important Terminology [2] [4]](https://slidetodoc.com/presentation_image_h2/eeef8ebb413348140d32d951f6e4fc86/image-5.jpg)

Important Terminology [2] [4]

| Term | Definition |
| --- | --- |
| Issue Width | The metric designating how many instructions are issued per cycle |
| Issue Window | Comprises the last n entries of the instruction buffer |
| Register File | Set of n-byte, dual read, single write banks of registers |
| Register Renaming | Technique used to prevent stalling the processor on false data dependencies between instructions |
| Instruction Steering | Technique used to send decoded instructions to appropriate memory banks |
| Memory Disambiguation Unit | Mechanism for enforcing data dependencies through memory |

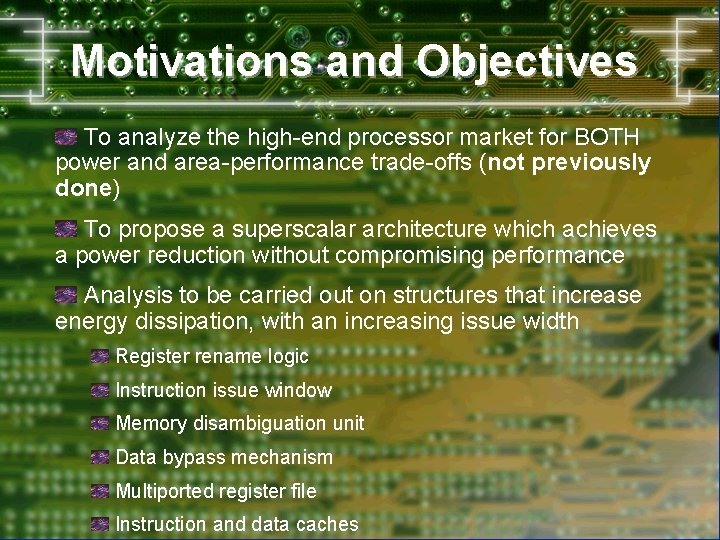

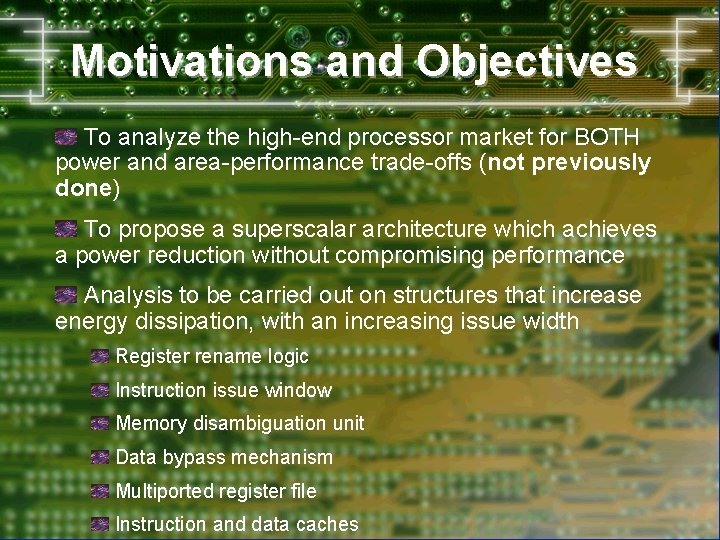

Motivations and Objectives

- To analyze the high-end processor market for BOTH power and area-performance trade-offs (not previously done)
- To propose a superscalar architecture which achieves a power reduction without compromising performance
- Analysis to be carried out on structures whose energy dissipation increases with increasing issue width
  - Register rename logic
  - Instruction issue window
  - Memory disambiguation unit
  - Data bypass mechanism
  - Multiported register file
  - Instruction and data caches

![Energy Models [5]](https://slidetodoc.com/presentation_image_h2/eeef8ebb413348140d32d951f6e4fc86/image-7.jpg)

Energy Models [5]

- Model 1 – Multiported RAM
  - Access energy (R or W) = $E_{decode} + E_{array} + E_{SA} + E_{ctlSA} + E_{pre} + E_{control}$
  - Word line energy = $V_{dd}^{2} \, N_{bits} \left( C_{gate} W_{pass,r} + (2 N_{write} + N_{read}) \, W_{pitch} \, C_{metal} \right)$
  - Bit line energy = $V_{dd} \, M_{margin} \, V_{sense} \, C_{bl,read} \, N_{bits}$
- Model 2 – CAM (Content-Addressable Memory)
  - Using IW write word lines and IW write bitline pairs
- Model 3 – Pipeline latches and clocking tree
  - Assume balanced clocking tree (less power dissipation than grids)
  - Assume lower-power single-phase clocking scheme
  - Near-minimum transistor sizes used in latches
- Model 4 – Functional Units
  - $E_{avg} = E_{const} + N_{change} \times E_{change}$
  - Energy complexity is independent of issue width
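To make the port dependence of Model 1 concrete, the word line and functional unit formulas can be written as plain functions. This is only a sketch: the parameter names mirror the symbols on the slide, and any numeric values passed in are placeholders chosen by the caller, not figures from the paper.

```python
def word_line_energy(vdd, n_bits, c_gate, w_pass, n_write, n_read, w_pitch, c_metal):
    """Word line energy of a multiported RAM, following the Model 1 formula.

    Each write port contributes two wire tracks and each read port one,
    so the metal term grows with the number of ports.
    """
    wire_tracks = 2 * n_write + n_read
    return vdd ** 2 * n_bits * (c_gate * w_pass + wire_tracks * w_pitch * c_metal)


def functional_unit_energy(e_const, n_change, e_change):
    """Average functional unit energy, following the Model 4 formula."""
    return e_const + n_change * e_change


if __name__ == "__main__":
    # Placeholder unit values, used only to show the trend with port count.
    few_ports = word_line_energy(1.0, 64, 1.0, 1.0, n_write=1, n_read=2,
                                 w_pitch=1.0, c_metal=1.0)
    many_ports = word_line_energy(1.0, 64, 1.0, 1.0, n_write=4, n_read=8,
                                  w_pitch=1.0, c_metal=1.0)
    print("energy ratio, 12 ports vs 3 ports:", many_ports / few_ports)
```

Because wider issue needs more register file ports, the metal term in the word line energy is one place where issue width feeds directly into energy per access.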

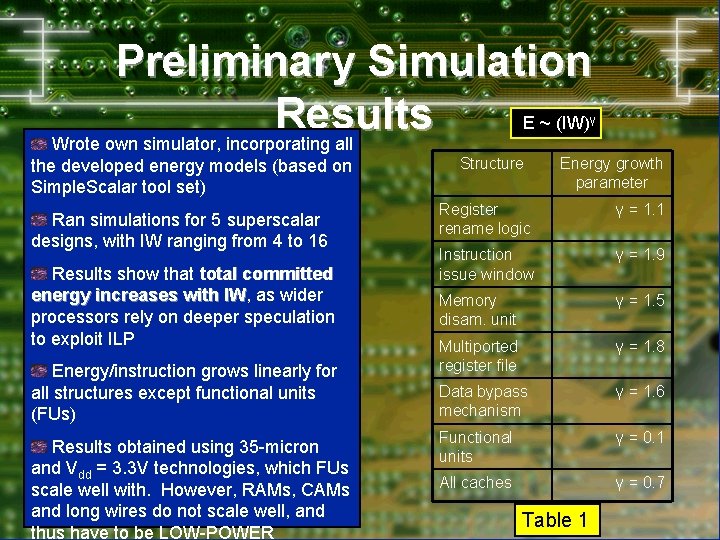

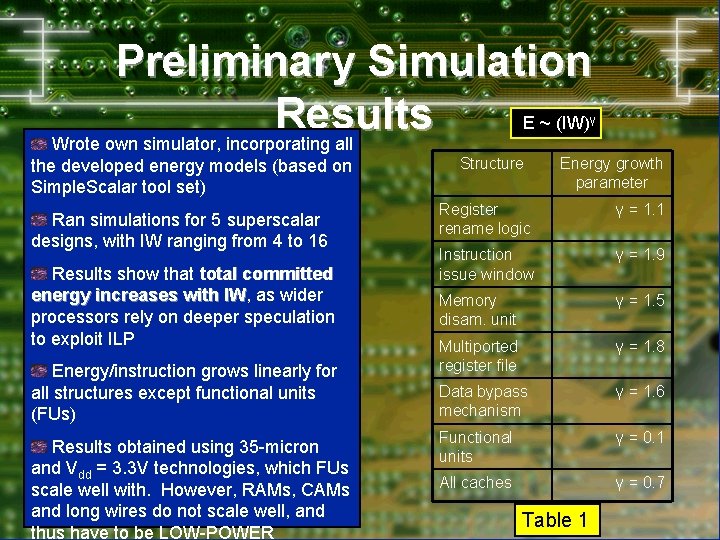

Preliminary Simulation Results

- Wrote own simulator, incorporating all the developed energy models (based on the SimpleScalar tool set)
- Ran simulations for 5 superscalar designs, with IW ranging from 4 to 16
- Results show that total committed energy increases with IW, as wider processors rely on deeper speculation to exploit ILP
- Energy/instruction grows linearly for all structures except functional units (FUs)
- Results obtained using 0.35-micron and Vdd = 3.3 V technologies, which FUs scale well with. However, RAMs, CAMs and long wires do not scale well, and thus have to be LOW-POWER
- Energy growth is modeled as $E \sim (IW)^{\gamma}$, with the growth parameter γ of each structure listed in Table 1

| Structure | Energy growth parameter |
| --- | --- |
| Register rename logic | γ = 1.1 |
| Instruction issue window | γ = 1.9 |
| Memory disambiguation unit | γ = 1.5 |
| Multiported register file | γ = 1.8 |
| Data bypass mechanism | γ = 1.6 |
| Functional units | γ = 0.1 |
| All caches | γ = 0.7 |

Table 1
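The growth parameters in Table 1 can be turned into relative energy factors. The sketch below takes the scaling relation E ~ (IW)^γ at face value and compares the two ends of the simulated range (IW = 4 and IW = 16); the dictionary and function names are illustrative.

```python
# Growth parameters as listed in Table 1.
GAMMA = {
    "Register rename logic": 1.1,
    "Instruction issue window": 1.9,
    "Memory disambiguation unit": 1.5,
    "Multiported register file": 1.8,
    "Data bypass mechanism": 1.6,
    "Functional units": 0.1,
    "All caches": 0.7,
}


def energy_ratio(gamma: float, iw_from: int, iw_to: int) -> float:
    """Relative energy growth when issue width goes from iw_from to iw_to."""
    return (iw_to / iw_from) ** gamma


if __name__ == "__main__":
    for name, gamma in GAMMA.items():
        print(f"{name:28s} x{energy_ratio(gamma, 4, 16):.1f}")
```

Structures with γ well above 1, such as the issue window and the register file, dominate the growth, while the functional units stay almost flat.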

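Problem Formulation

Energy-Delay Product

- $E \times D = \text{energy/operation} \times \text{cycles/operation}$
- $E \times D = (\text{energy/cycle}) / IPC^{2}$
- $E \times D = [IPC \times (IW)^{\gamma}] / IPC^{2} \sim (IW)^{\gamma} / IPC$
- With $IPC \sim (IW)^{\alpha}$: $E \times D \sim (IW)^{\gamma - \alpha} \sim (IPC)^{(\gamma - \alpha)/\alpha}$

Problem Definition

- If $\alpha = 1$, then $E \times D \sim (IPC)^{\gamma - 1} \sim (IW)^{\gamma - 1}$
- If $\alpha = 0.5$, then $E \times D \sim (IW)^{\gamma - 1/2} \sim (IPC)^{2\gamma - 1}$
- Need new techniques to achieve more ILP with conventional superscalar design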
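The exponents in the energy-delay relation follow mechanically from γ and α. As a quick check, the sketch below assumes IPC ~ (IW)^α, as the derivation implies, and evaluates both exponents; the function name is illustrative.

```python
def ed_exponents(gamma: float, alpha: float) -> tuple[float, float]:
    """Exponents of the energy-delay product.

    Returns (exponent when E x D is expressed in IW,
             exponent when E x D is expressed in IPC),
    assuming energy/instruction ~ IW**gamma and IPC ~ IW**alpha.
    """
    iw_exponent = gamma - alpha
    ipc_exponent = (gamma - alpha) / alpha
    return iw_exponent, ipc_exponent


if __name__ == "__main__":
    gamma = 1.9  # instruction issue window growth parameter
    for alpha in (1.0, 0.5):
        iw_exp, ipc_exp = ed_exponents(gamma, alpha)
        print(f"alpha={alpha}: E x D ~ IW^{iw_exp:.2f} ~ IPC^{ipc_exp:.2f}")
```

For α = 0.5 the IPC exponent is 2γ - 1, so any structure with γ above 0.5 makes each extra unit of IPC more expensive in energy-delay terms.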

Intermediary Recap

We have discussed

- Superscalar processor design and terminology
- Energy modeling of microarchitecture structures
- Analysis of energy-delay metric
- Preliminary simulation results

We will introduce

- General solution methodology
- Previous decentralization schemes
- Proposed strategy
- Simulation results of multicluster architecture
- Conclusions

General Design Solution

- Decentralization of microarchitecture
- Replace tightly coupled CPU with a set of clusters, each capable of superscalar processing
- Can ideally reduce γ to zero, with good cluster partitioning techniques
- Solution introduces the following issues
  1. Additional paths for intercluster communication
  2. Need for cluster assignment algorithms
  3. Interaction of cluster with common memory system

![Previous Decentralized Solutions](https://slidetodoc.com/presentation_image_h2/eeef8ebb413348140d32d951f6e4fc86/image-12.jpg)

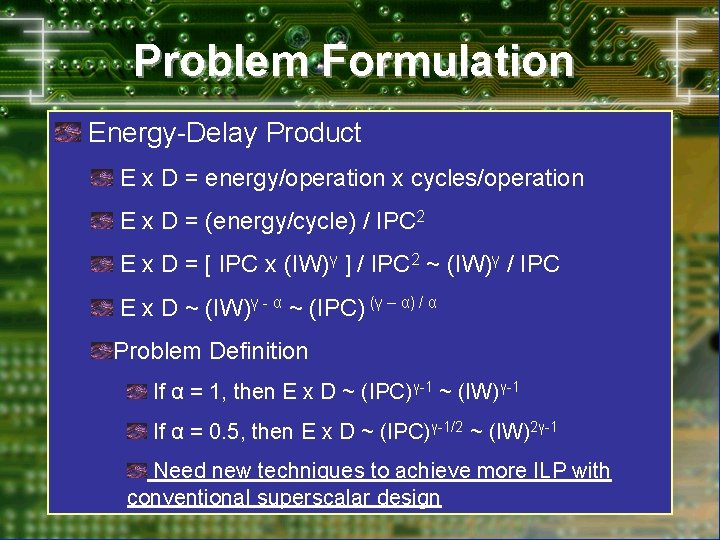

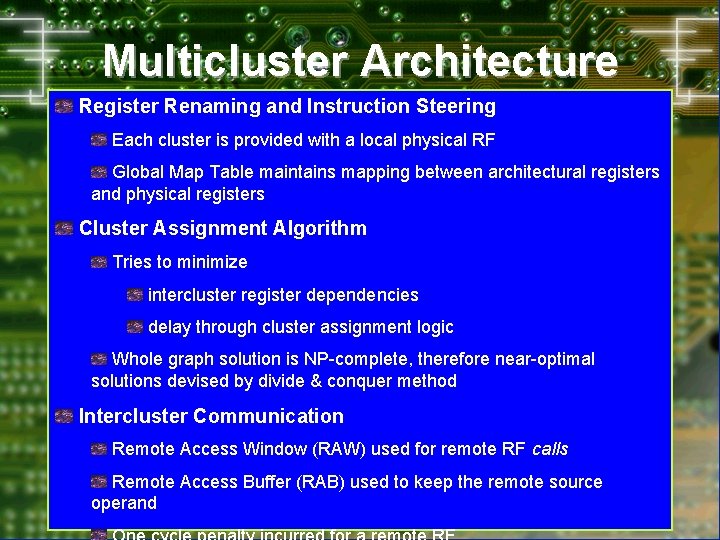

Previous Decentralized Solutions

| Particular Solution | Main Features |
| --- | --- |
| Limited Connectivity VLIWs [6] | RF is partitioned into banks; every operation specifies a destination bank |
| Multiscalar Architecture [7] | PEs organized in a circular chain; RF is decentralized |
| Trace Window Architecture [8] | RF and issue window are partitioned; all instructions must be buffered |
| Multicluster Architecture [9] | RF, issue window and FUs are decentralized; special instruction used for intercluster communication |
| Dependence-Based Architecture [10] | Contains instruction dispatch intelligence |
| 2-cluster Alpha 21264 Processor [11] | Both clusters contain copy of RF |

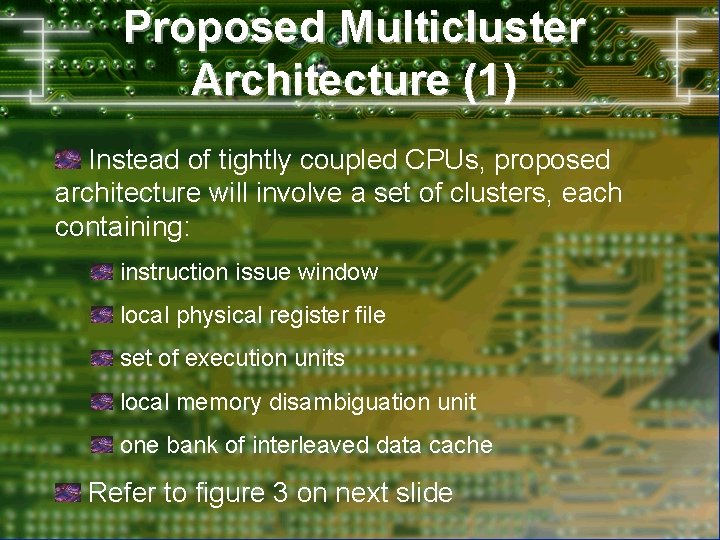

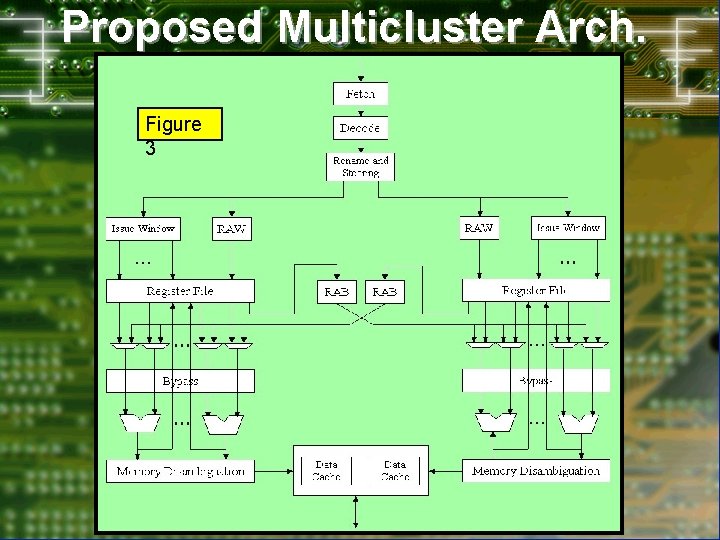

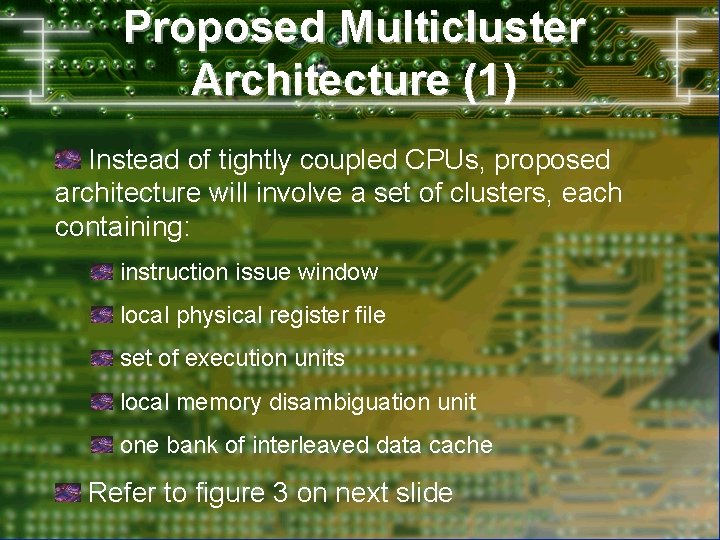

Proposed Multicluster Architecture (1)

- Instead of tightly coupled CPUs, proposed architecture will involve a set of clusters, each containing:
  - instruction issue window
  - local physical register file
  - set of execution units
  - local memory disambiguation unit
  - one bank of interleaved data cache
- Refer to Figure 3 on next slide

Proposed Multicluster Arch. (2) Figure 3

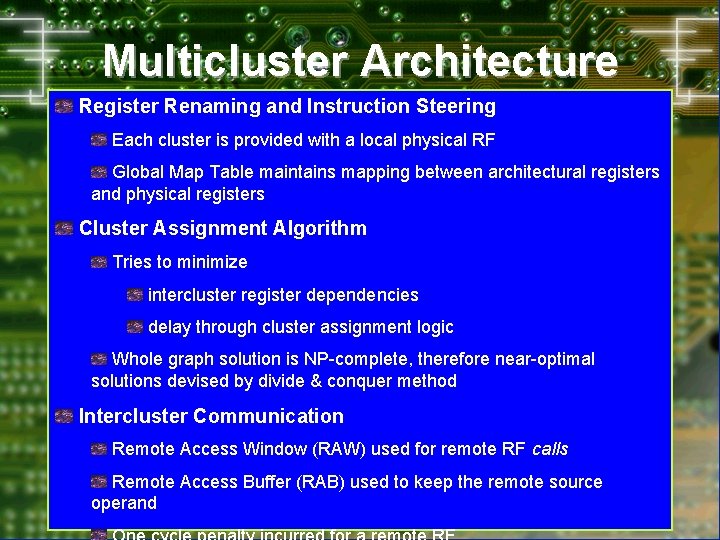

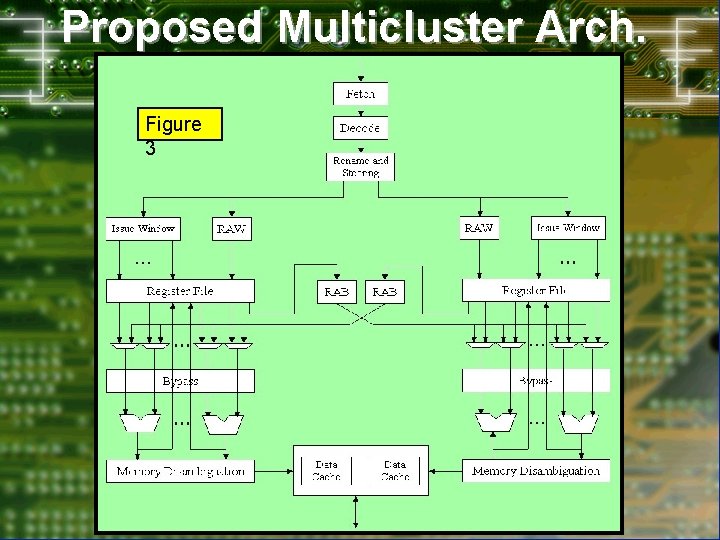

Multicluster Architecture Details

Register Renaming and Instruction Steering

- Each cluster is provided with a local physical RF
- Global Map Table maintains mapping between architectural registers and physical registers

Cluster Assignment Algorithm

- Tries to minimize intercluster register dependencies and the delay through the cluster assignment logic
- Whole graph solution is NP-complete, therefore near-optimal solutions are devised by a divide & conquer method

Intercluster Communication

- Remote Access Window (RAW) used for remote RF calls
- Remote Access Buffer (RAB) used to keep the remote source operand
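The steering goal, keeping dependent instructions in the same cluster, can be illustrated with a simple greedy heuristic. This is not the divide & conquer algorithm from the paper, whose details the slides do not give; it is a swapped-in sketch in which the map table tracks only which cluster holds each register's latest value, and all names are hypothetical.

```python
from collections import Counter


def steer(instructions, n_clusters):
    """Greedy steering: place each instruction where most of its sources live.

    instructions: list of (dest_reg, [source_regs]) in program order.
    Returns (cluster assignment per instruction, intercluster operand reads).
    """
    map_table = {}            # register name -> cluster holding its latest value
    load = [0] * n_clusters   # instructions assigned to each cluster so far
    assignment = []
    remote_reads = 0
    for dest, sources in instructions:
        votes = Counter(map_table[s] for s in sources if s in map_table)
        if votes:
            best = max(votes.values())
            candidates = [c for c, v in votes.items() if v == best]
        else:
            candidates = list(range(n_clusters))
        # Break ties by picking the least loaded cluster, then the lowest index.
        cluster = min(candidates, key=lambda c: (load[c], c))
        remote_reads += sum(
            1 for s in sources if s in map_table and map_table[s] != cluster
        )
        map_table[dest] = cluster
        load[cluster] += 1
        assignment.append(cluster)
    return assignment, remote_reads


if __name__ == "__main__":
    program = [("r1", []), ("r2", []), ("r3", ["r1", "r2"]), ("r4", ["r3"])]
    print(steer(program, n_clusters=2))
```

Each remote read counted here corresponds to an operand that would have to travel between clusters, which is the traffic the RAW and RAB exist to handle.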

Multicluster Architecture Details (Cont’d)

Memory Dataflow

- Centralized memory disambiguation unit does not scale with increasing issue width and bigger sizes of the load/store window
- Proposed scheme: every cluster is provided with a local load/store window that is hardwired to a particular data cache bank
- Developed a bank predictor, because the cluster an instruction should be routed to is not yet known at the decode stage

Stack Pointer (SP) References

- Realized an eager mechanism for handling SP references
  - With a new reference to SP, an entry is allocated in RAB
  - Upon instruction completion, results are written into RF and RAB
  - RAB entry is not freed after instruction reads contents
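The slides do not say how the bank predictor is built, so the following is only a sketch of one plausible design: a last-value predictor indexed by instruction address, on top of a line-interleaved cache. The class name, table size, and line size are assumptions made for illustration.

```python
class LastBankPredictor:
    """Predicts the data cache bank of a memory instruction from its PC.

    Assumed design: the cache is interleaved by line, and the predictor
    remembers the bank each instruction accessed last time.
    """

    def __init__(self, n_banks: int, line_size: int = 32, table_size: int = 1024):
        self.n_banks = n_banks
        self.line_size = line_size
        self.table_size = table_size
        self.table = {}  # table index -> last observed bank

    def bank_of(self, address: int) -> int:
        """Actual bank of an address under line interleaving."""
        return (address // self.line_size) % self.n_banks

    def predict(self, pc: int) -> int:
        """Predicted bank at decode time; defaults to bank 0 when untrained."""
        return self.table.get(pc % self.table_size, 0)

    def update(self, pc: int, address: int) -> bool:
        """Train with the resolved address; returns True if the prediction hit."""
        actual = self.bank_of(address)
        hit = self.predict(pc) == actual
        self.table[pc % self.table_size] = actual
        return hit


if __name__ == "__main__":
    predictor = LastBankPredictor(n_banks=4)
    print(predictor.update(0x40, 64))  # first access, untrained
    print(predictor.update(0x40, 68))  # same cache line as before
```

A misprediction in such a scheme means the instruction sits in the wrong cluster's load/store window, which is the case the intercluster transfer buses have to cover.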

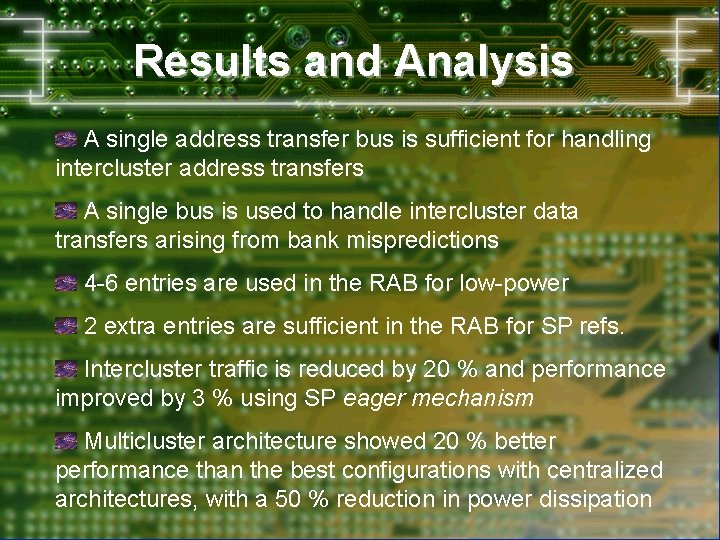

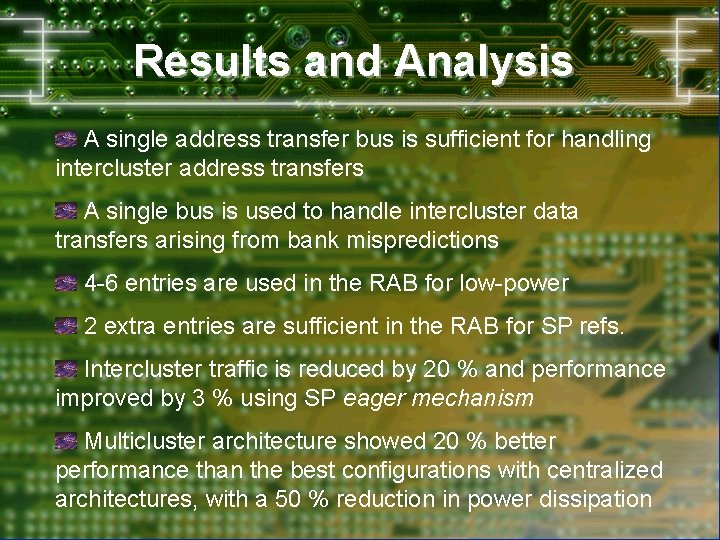

Results and Analysis

- A single address transfer bus is sufficient for handling intercluster address transfers
- A single bus is used to handle intercluster data transfers arising from bank mispredictions
- 4-6 entries are used in the RAB for low power
- 2 extra entries are sufficient in the RAB for SP references
- Intercluster traffic is reduced by 20% and performance improved by 3% using the SP eager mechanism
- Multicluster architecture showed 20% better performance than the best configurations with centralized architectures, with a 50% reduction in power dissipation

Conclusions

Main Result of Work

- Using this architecture will allow the development of high-performance processors while keeping the microarchitecture energy-efficient, as proven by the energy-delay product

Main Contribution of Work

- A methodology for doing energy-efficiency analysis was derived for use with the next generation of high-performance decentralized superscalar processors

Other Major Contributions

- Opened analysts’ eyes to the 3-D IPC-area-energy space
- A roadmap for future high-performance low-power microprocessor development has been proposed
- Coined the energy-efficient family concept, composed of equally optimal energy-efficient configurations

![References (1)](https://slidetodoc.com/presentation_image_h2/eeef8ebb413348140d32d951f6e4fc86/image-19.jpg)

References (1)

- [1] M. J. Flynn, “Very High-Speed Computing Systems,” Proceedings of the IEEE, vol. 54, December 1966, pp. 1901-1909.
- [2] C. Hamacher, Z. Vranesic and S. Zaky, “Computer Organization,” fifth edition, McGraw-Hill: New York, 2002.
- [3] V. Zyuban and P. Kogge, “Inherently Lower-Power High-Performance Superscalar Architectures,” IEEE Transactions on Computers, vol. 50, no. 3, March 2001, pp. 268-285.
- [4] E. Rotenberg, “AR-SMT: A Microarchitectural Approach to Fault Tolerance in Microprocessors,” Proceedings of the 29th Fault-Tolerant Computing Symposium, June 1999.
- [5] V. Zyuban, “Inherently Lower-Power High-Performance Superscalar Architectures,” Ph.D. thesis, Univ. of Notre Dame, Mar. 2000.
- [6] R. Colwell et al., “A VLIW Architecture for a Trace Scheduling Compiler,” IEEE Trans. Computers, vol. 37, no. 8, pp. 967-979, Aug. 1988.
- [7] M. Franklin and G. S. Sohi, “The Expandable Split Window

![References (2)](https://slidetodoc.com/presentation_image_h2/eeef8ebb413348140d32d951f6e4fc86/image-20.jpg)

References (2)

- [8] S. Vajapeyam and T. Mitra, “Improving Superscalar Instruction Dispatch and Issue by Exploiting Dynamic Code Sequences,” Proc. 24th Ann. Int’l Symp. Computer Architecture, June 1997.
- [9] K. Farkas, P. Chow, N. Jouppi, and Z. Vranesic, “The Multicluster Architecture: Reducing Cycle Time through Partitioning,” Proc. 30th Ann. Int’l Symp. Microarchitecture, Dec. 1997.
- [10] S. Palacharla, N. Jouppi and J. Smith, “Complexity-Effective Superscalar Processors,” Proc. 24th Ann. Int’l Symp. Computer Architecture, pp. 206-218, June 1997.
- [11] K. Hwang, “Advanced Computer Architecture: Parallelism, Scalability, Programmability,” McGraw-Hill: New York, 1993.

Questions/Comments?
