Measurement, Modeling, and Analysis of a Peer-2-Peer File-Sharing Workload

- Slides: 19

Measurement, Modeling, and Analysis of a Peer-2-Peer File-Sharing Workload

Presented for CS 294-4, Fall 2003, by Jon Hess

- Goal (overview)
  - Determine whether the KaZaA search space is queried in such a way that a group of 25,000 clients can satisfy most of their own requests.

- Goals (details)
  - Capture an extensive trace
  - Use that trace to understand file-sharing traffic flows
  - Model user and object activity
  - Determine inefficiencies in the distribution model
  - Propose solutions to those inefficiencies

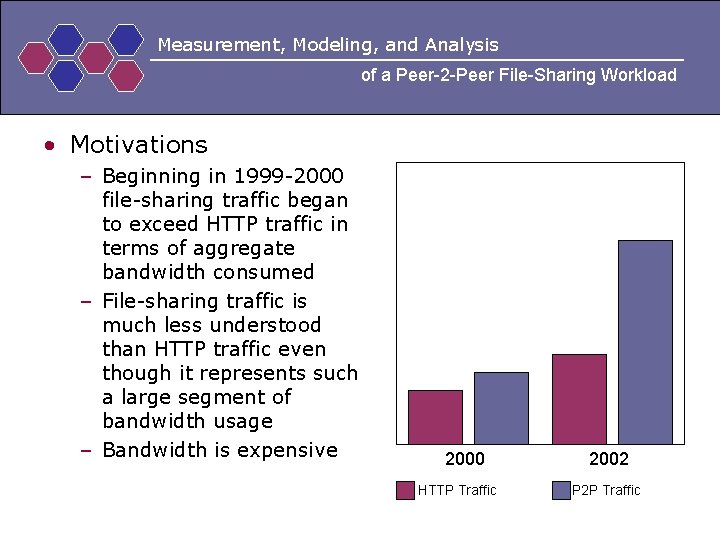

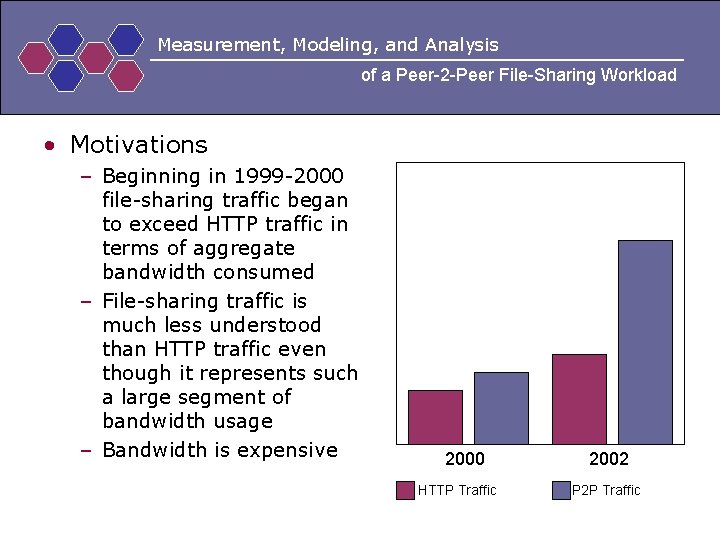

- Motivations
  - Around 1999-2000, file-sharing traffic began to exceed HTTP traffic in aggregate bandwidth consumed.
  - File-sharing traffic is much less understood than HTTP traffic, even though it represents such a large share of bandwidth usage.
  - Bandwidth is expensive.

Figure: HTTP traffic in 2000 and P2P traffic in 2002

- The trace
  - 2 machines
  - 203 days, 5 hours, and 6 minutes
  - 22.7 TB of KaZaA file-transfer traffic
  - Captured seasonal variations:
    - End of spring
    - Summer
    - Fall semester

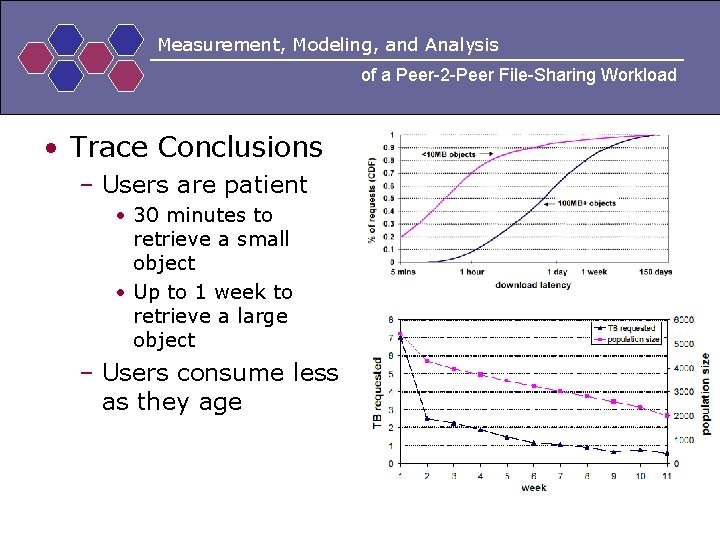

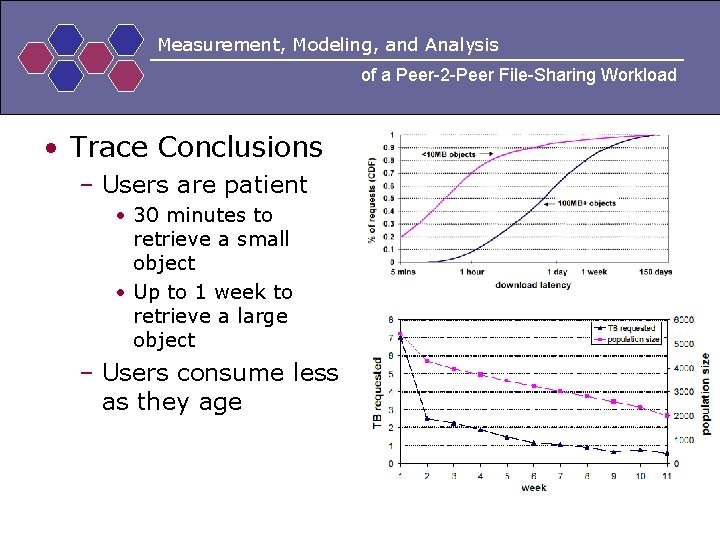
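As a rough sanity check on the trace's scale, the duration and volume above imply an average throughput. The sketch below assumes decimal terabytes (1 TB = 10^12 bytes), which the slide does not specify, and averages over the whole trace period, so it says nothing about peak load.

```python
# Back-of-envelope average throughput for the trace.
# Assumption: 1 TB = 10**12 bytes (the slide does not say decimal or binary).
SECONDS_PER_DAY = 24 * 60 * 60

def trace_seconds(days: int, hours: int, minutes: int) -> int:
    """Total trace duration in seconds."""
    return days * SECONDS_PER_DAY + hours * 3600 + minutes * 60

def average_mbps(total_bytes: float, seconds: int) -> float:
    """Mean bit rate in megabits per second over the whole period."""
    return total_bytes * 8 / seconds / 1e6

duration = trace_seconds(203, 5, 6)
print(f"{duration} s, {average_mbps(22.7e12, duration):.1f} Mbit/s")
# prints: 17557560 s, 10.3 Mbit/s
```

Under that assumption the trace averages roughly 10.3 Mbit/s of KaZaA transfer traffic; reading the figure as binary terabytes would raise it by about 10%.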

- Trace conclusions
  - Users are patient:
    - 30 minutes to retrieve a small object
    - Up to 1 week to retrieve a large object
  - Users consume less as they age.

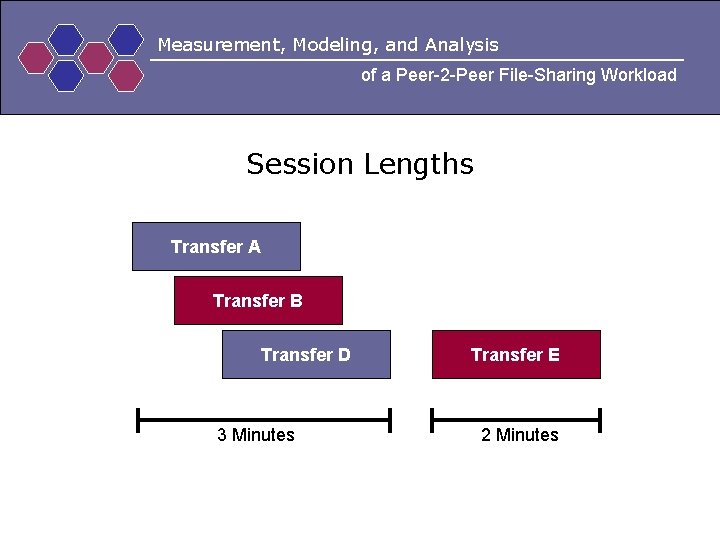

- Trace conclusions
  - Users' machines are not very active.
    - A session is an unbroken length of time during which a client has one or more file transfers in progress.
    - Average sessions last only 2 minutes; the 90th percentile is 28 minutes.
    - Over the life of a client, it is active only 5.54% of the time, or 0.20% of the trace period.
    - 90th-percentile clients are active for most of their life, and for 4.15% of the trace.
  - Without control-traffic analysis, is this meaningful?

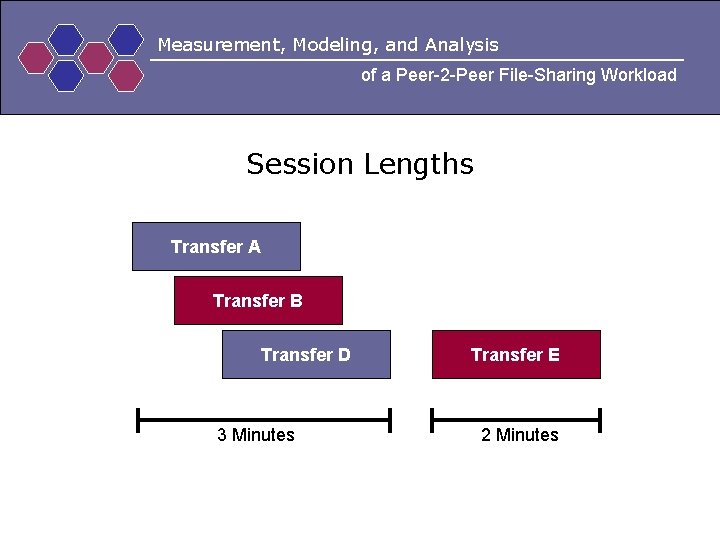

Figure: Session lengths (transfers A, B, D, and E; sessions of 3 minutes and 2 minutes)
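The session definition amounts to merging overlapping transfer intervals. A minimal sketch, assuming each of one client's transfers is recorded as a (start, end) pair in minutes; the function names are hypothetical, not the authors' tooling, and transfers that merely touch are treated as one session.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (start, end) of one transfer, in minutes

def sessions(transfers: List[Interval]) -> List[Interval]:
    """Merge one client's transfer intervals into sessions.

    A session is an unbroken stretch of time with at least one transfer
    in progress, so overlapping (or touching) transfers join one session.
    """
    merged: List[Interval] = []
    for start, end in sorted(transfers):
        if merged and start <= merged[-1][1]:
            # Overlaps the current session: extend its end if needed.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged

def active_fraction(transfers: List[Interval], lifetime: float) -> float:
    """Fraction of a client's lifetime spent inside sessions."""
    busy = sum(end - start for start, end in sessions(transfers))
    return busy / lifetime
```

With made-up timings A = (0, 2), B = (1, 3), D = (2.5, 3), and E = (10, 12), `sessions` yields one 3-minute session and one 2-minute session, and `active_fraction(..., 100)` gives 0.05.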

- Trace conclusions: objects
  - Most requests are for small objects (91%).
  - Most bytes transferred are part of large objects (65%).
  - There are many small objects.
  - There are few large objects.
  - Small objects' popularity is subject to heavy churn:
    - No small object was in the top 10 for all 6 months.
    - Only 1 large object stayed in the top 10 for all 6 months.
    - 44 large files remained in the top 100 for all 6 months.
    - The most popular small objects are new objects.
  - Most requests are for old objects.

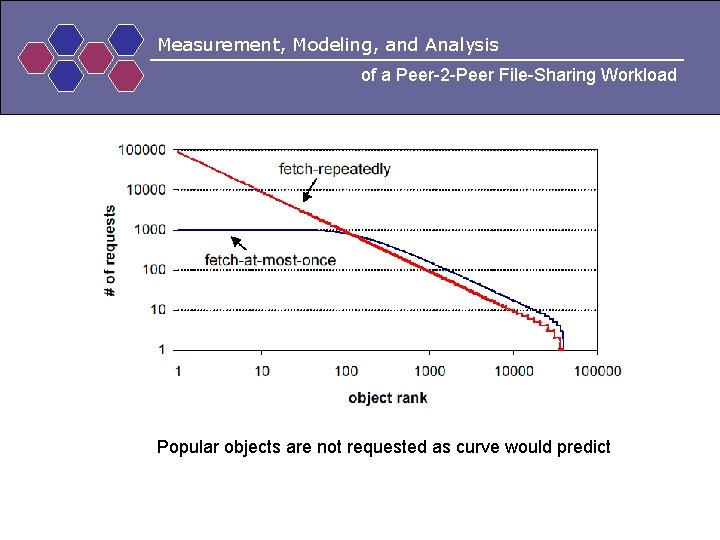
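One way to measure popularity churn is to rank objects by request count within each month and intersect the per-month top-N sets. This is a sketch under assumptions: requests are given as one list of object IDs per month, and ties in request counts fall back to first appearance (the documented ordering of `Counter.most_common`), which a real analysis would need to handle deliberately.

```python
from collections import Counter
from typing import Iterable, List, Set

def top_n(requests: Iterable[str], n: int) -> Set[str]:
    """Object IDs among the n most-requested in one period."""
    return {obj for obj, _ in Counter(requests).most_common(n)}

def persistent_top_n(months: List[List[str]], n: int) -> Set[str]:
    """Objects that appear in the top n of every month."""
    tops = [top_n(month, n) for month in months]
    return set.intersection(*tops) if tops else set()
```

For example, if "a" stays moderately popular every month while "b", "c", and "d" each peak briefly, only "a" survives the intersection of monthly top-2 sets, and the top-1 intersection is empty.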

- Fetch-at-most-once
  - Once a KaZaA user obtains an object, they do not need to retrieve another copy.
    - 94% of objects are fetched once per user.
    - 99% are fetched at most twice per user.
  - This stems from the fact that media files are immutable and never ‘stale’.
    - You may refresh ‘slashdot.org’ three times a day, but there is no point downloading ‘thriller.mpeg’ seventeen times.
  - This keeps the KaZaA workload from following a Zipf curve, even though object popularity does.

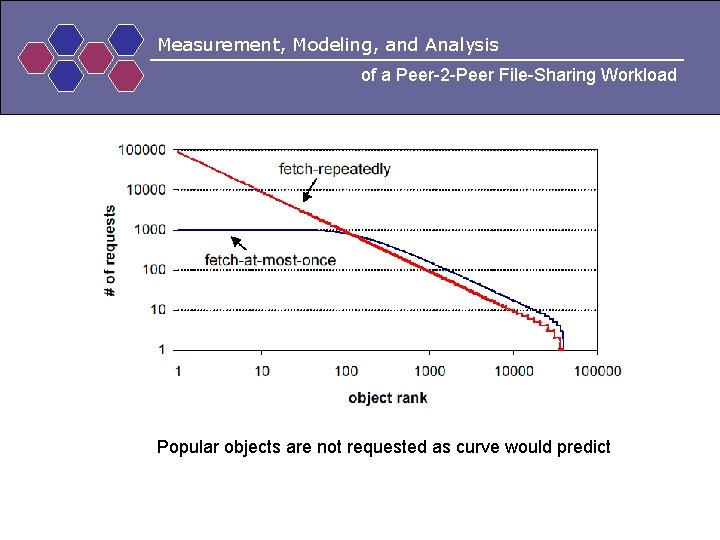
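The per-user fetch statistics can be computed by counting repeat downloads of each (user, object) pair. The sketch assumes a list of hypothetical (user_id, object_id) download records and reports the share of pairs fetched exactly once and at most twice.

```python
from collections import Counter
from typing import Iterable, Tuple

def fetch_count_shares(fetches: Iterable[Tuple[str, str]]) -> Tuple[float, float]:
    """Share of (user, object) pairs fetched exactly once and at most twice."""
    per_pair = Counter(fetches)          # (user, object) -> number of downloads
    total = len(per_pair)
    if total == 0:
        return 0.0, 0.0
    once = sum(1 for c in per_pair.values() if c == 1)
    at_most_twice = sum(1 for c in per_pair.values() if c <= 2)
    return once / total, at_most_twice / total
```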

- Workload modeling
  - Create a set of objects and give them popularity based on a Zipf distribution.
  - Create a set of clients that request objects in proportion to their popularity.
  - Have each client fetch at most once.
  - Measure the distribution of transfers:
    - Does it follow a Zipf curve?
    - How many big-object requests can a population of size N satisfy?
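The modeling steps above can be sketched as a small simulation. All choices here are illustrative assumptions rather than the paper's parameters: object i has Zipf weight 1/(i+1)^alpha, every client issues the same number of requests, and a client that draws an object it already owns simply redraws.

```python
import itertools
import random
from collections import Counter

def simulate(num_clients: int, num_objects: int, requests_per_client: int,
             alpha: float = 1.0, seed: int = 0) -> Counter:
    """Count downloads per object under Zipf popularity and fetch-at-most-once.

    Object i (0-based) has weight 1 / (i + 1) ** alpha. Each client draws
    objects by that weight; a draw for an object it already owns is
    discarded and redrawn, so no client downloads any object twice.
    """
    rng = random.Random(seed)
    objects = range(num_objects)
    cum = list(itertools.accumulate(1.0 / (i + 1) ** alpha for i in objects))
    downloads: Counter = Counter()
    for _ in range(num_clients):
        owned = set()
        for _ in range(min(requests_per_client, num_objects)):
            obj = rng.choices(objects, cum_weights=cum)[0]
            while obj in owned:  # fetch-at-most-once: redraw
                obj = rng.choices(objects, cum_weights=cum)[0]
            owned.add(obj)
            downloads[obj] += 1
    return downloads
```

Because no client downloads an object twice, no object can be downloaded more than `num_clients` times. With 200 clients, 1,000 objects, and 50 requests each (10,000 downloads), a pure Zipf curve with alpha = 1 would send roughly 1,340 of them to the top object, but fetch-at-most-once caps it at 200, which flattens the head of the distribution.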

Popular objects are not requested as often as the Zipf curve would predict.

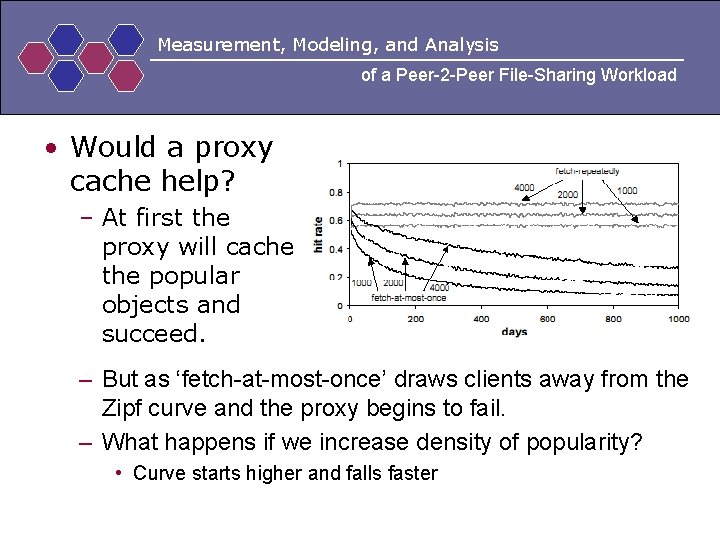

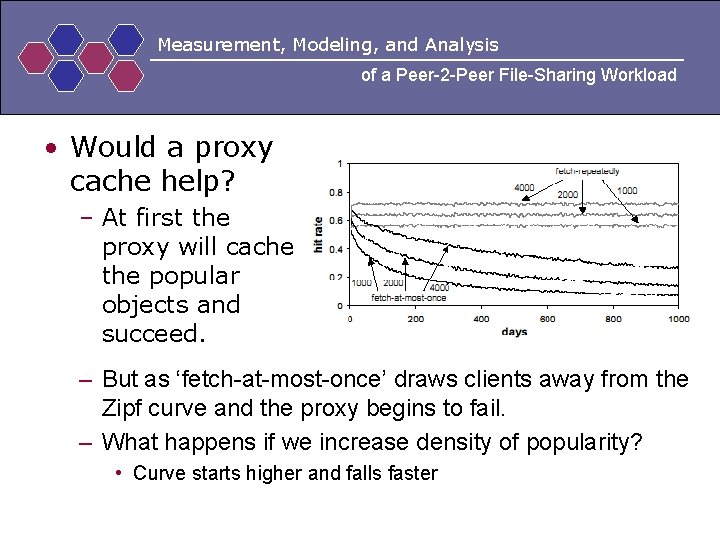

- Would a proxy cache help?
  - At first, the proxy caches the popular objects and succeeds.
  - But as fetch-at-most-once draws clients away from the Zipf curve, the proxy begins to fail.
  - What happens if we increase the density of popularity?
    - The curve starts higher and falls faster.

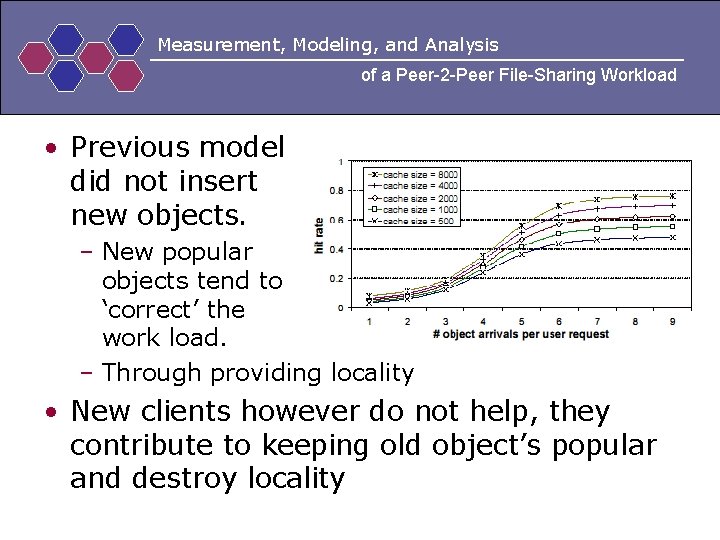

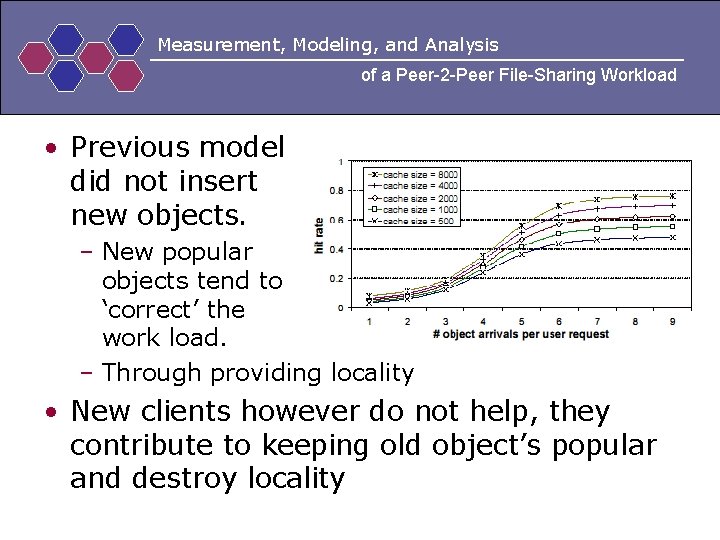
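The proxy question can be explored by tracking an ideal shared cache in the same kind of model. A sketch under stated assumptions: the cache has unlimited capacity, every client makes one fetch-at-most-once request per round drawn by Zipf weight, and a request is a hit if any client fetched that object earlier. How the per-round hit rate evolves depends on the parameters, so treat the output as exploratory.

```python
import itertools
import random
from typing import List

def proxy_hit_rates(num_clients: int, num_objects: int, rounds: int,
                    alpha: float = 1.0, seed: int = 0) -> List[float]:
    """Per-round hit rate of an ideal (infinite, shared) proxy cache."""
    rng = random.Random(seed)
    objects = range(num_objects)
    cum = list(itertools.accumulate(1.0 / (i + 1) ** alpha for i in objects))
    owned = [set() for _ in range(num_clients)]   # what each client already has
    cache = set()                                 # everything fetched so far
    rates = []
    for _ in range(rounds):
        hits = requests = 0
        for mine in owned:
            if len(mine) == num_objects:
                continue  # nothing left for this client to fetch
            obj = rng.choices(objects, cum_weights=cum)[0]
            while obj in mine:  # fetch-at-most-once: redraw
                obj = rng.choices(objects, cum_weights=cum)[0]
            mine.add(obj)
            requests += 1
            hits += obj in cache
            cache.add(obj)
        rates.append(hits / requests if requests else 0.0)
    return rates
```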

- The previous model did not insert new objects.
  - New popular objects tend to ‘correct’ the workload by providing locality.
  - New clients, however, do not help: they contribute to keeping old objects popular and destroy locality.

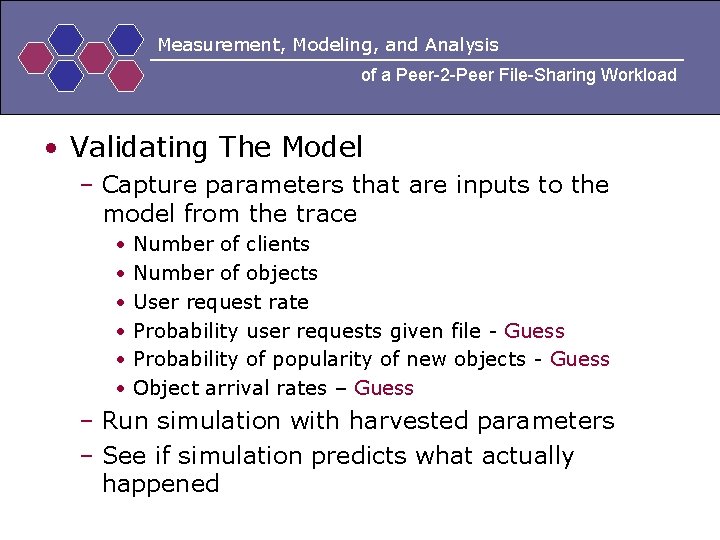

- Validating the model
  - Capture parameters that are inputs to the model from the trace:
    - Number of clients
    - Number of objects
    - User request rate
    - Probability that a user requests a given file (guess)
    - Probability of popularity of new objects (guess)
    - Object arrival rates (guess)
  - Run the simulation with the harvested parameters.
  - See whether the simulation predicts what actually happened.

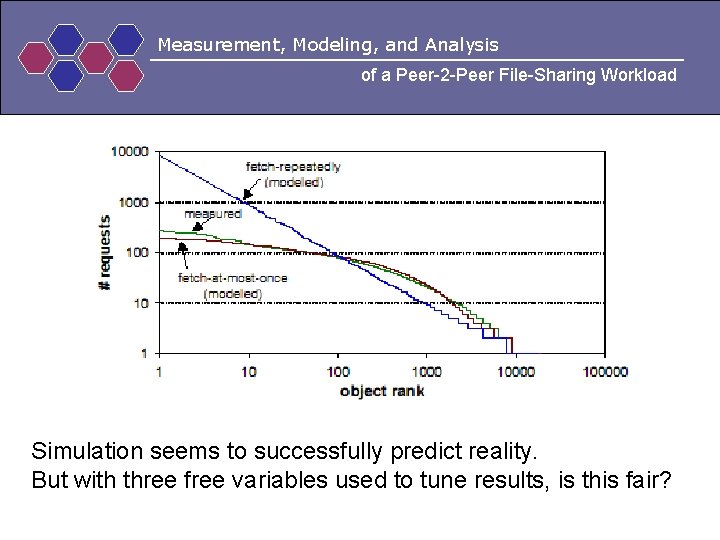

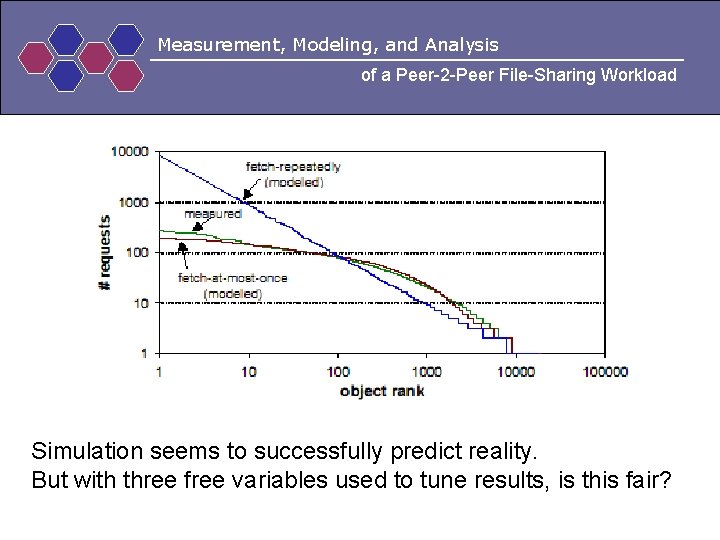

The simulation seems to predict reality successfully. But with three free variables used to tune the results, is this fair?

- What inefficiencies can we eliminate?
  - Analysis against the trace shows:
    - 86% of object transfers were from external sources when an internal source already possessed the object.
    - A traditional proxy, given the resources, could therefore cut external bandwidth use by 86%.
  - But a proxy would have to host pirated data.
    - A proxy redirector could be used instead, but it must know the availability of objects, and control traffic is obfuscated.
  - Alternatively, build locality into the protocol.
    - Does this sacrifice anonymity?

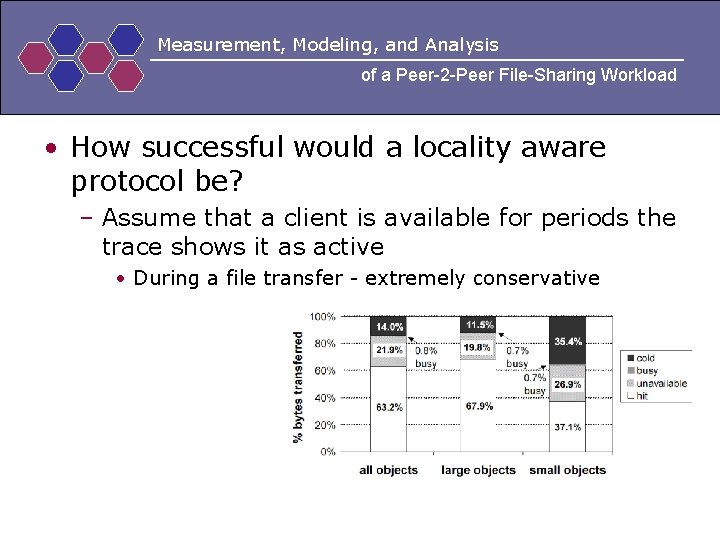

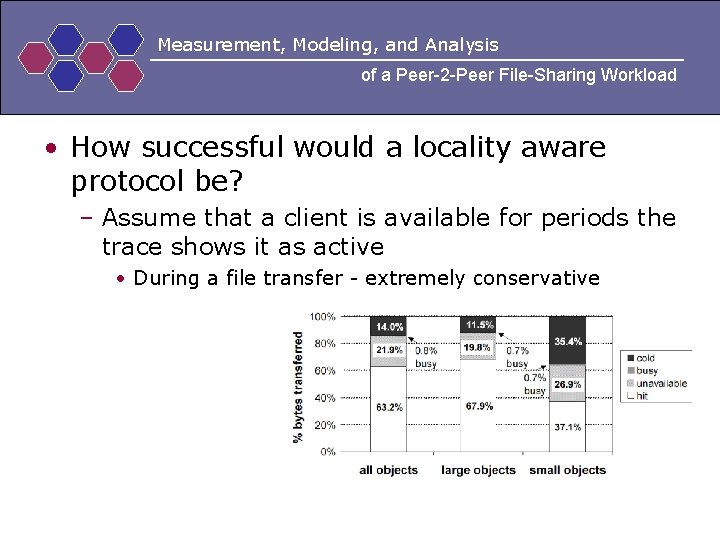
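The 86% figure is essentially the share of external transfers for an object that some internal client had already downloaded. A sketch of that computation, assuming hypothetical records of (timestamp, client_id, object_id) for each external download, with the timestamp taken as the moment the object became available inside the network; like the ideal proxy, it ignores whether the earlier downloader is still online.

```python
from typing import Iterable, Tuple

def internal_hit_fraction(transfers: Iterable[Tuple[float, str, str]]) -> float:
    """Fraction of external downloads whose object was already held internally."""
    seen = set()            # objects some internal client has already fetched
    redundant = total = 0
    for _, _, obj in sorted(transfers):  # chronological order
        total += 1
        if obj in seen:
            redundant += 1  # an internal copy existed; external fetch was avoidable
        seen.add(obj)
    return redundant / total if total else 0.0
```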

- How successful would a locality-aware protocol be?
  - Assume a client is available only during the periods the trace shows it as active, i.e. during a file transfer.
  - This is extremely conservative.
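The conservative availability assumption can be expressed as a filter: a request counts as locally satisfiable only if some other internal client obtained the object earlier and is inside one of its active periods at request time. A sketch with hypothetical names, assuming per-client active periods as (start, end) pairs and, as a simplification, that an object is available from the moment its holder requested it.

```python
from typing import Dict, List, Tuple

Interval = Tuple[float, float]

def is_active(periods: List[Interval], t: float) -> bool:
    """True if time t falls inside any of a client's active periods."""
    return any(start <= t <= end for start, end in periods)

def locality_hit_fraction(requests: List[Tuple[float, str, str]],
                          active_periods: Dict[str, List[Interval]]) -> float:
    """Fraction of (time, client, object) requests an active internal peer could serve."""
    holders: Dict[str, List[str]] = {}   # object -> clients that fetched it earlier
    served = 0
    for t, client, obj in sorted(requests):
        if any(peer != client and is_active(active_periods.get(peer, []), t)
               for peer in holders.get(obj, [])):
            served += 1
        holders.setdefault(obj, []).append(client)
    return served / len(requests) if requests else 0.0
```

For instance, with client a active over (0, 10) and b over (0, 5), a request for x by b at t = 2 could be served by a, but a later request for x at t = 12 could not, because neither earlier holder is active then.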

- Questions?
  - Will increasing efficiency decrease load, as the authors would like, or simply increase the work achieved per dollar?
  - Do clients have insatiable appetites?
  - Are you worried that a large number of queries might already have been satisfied locally?

Features of peer to peer network and client server network

Features of peer to peer network and client server network Skype reviews pros and cons

Skype reviews pros and cons Modeling and role modeling theory

Modeling and role modeling theory Annotazioni sulla verifica effettuata peer to peer

Annotazioni sulla verifica effettuata peer to peer Peer-to-peer

Peer-to-peer Peer to peer transactional replication

Peer to peer transactional replication Peer to peer transactional replication

Peer to peer transactional replication Gambar topologi peer to peer

Gambar topologi peer to peer Esempi di peer to peer compilati

Esempi di peer to peer compilati Scheda osservazione tutor compilata

Scheda osservazione tutor compilata Registro peer to peer compilato

Registro peer to peer compilato Peer to peer l

Peer to peer l Peer to peer merupakan jenis jaringan… *

Peer to peer merupakan jenis jaringan… * Bitcoin: a peer-to-peer electronic cash system

Bitcoin: a peer-to-peer electronic cash system Ambiti operativi da supportare

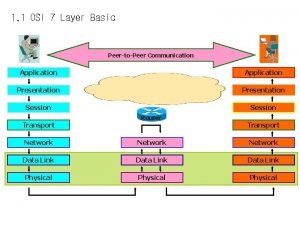

Ambiti operativi da supportare Peer-to-peer communication in osi model

Peer-to-peer communication in osi model Peer to p

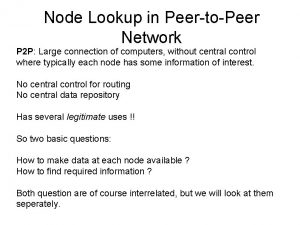

Peer to p Node lookup in peer to peer network

Node lookup in peer to peer network Peer-to-peer o que é

Peer-to-peer o que é Peer to peer computing environment

Peer to peer computing environment