Low-Energy High-Performance Computing via High-Level Synthesis on FPGAs

- Slides: 16

Low-Energy High-Performance Computing via High-Level Synthesis on FPGAs Luciano Lavagno

Objectives and approach

- Provide HW efficiency with SW-like non-recurring engineering (NRE) cost
  - Exploit recent advances in High-Level Synthesis (HLS) to enable a compilation flow for HW
  - Reduce HW design time (in particular verification time) by using High-Level Synthesis from a variety of concurrent models
  - Improve Quality of Results by means of manual and automated Design Space Exploration (DSE)
  - Reduce energy consumption while retaining reprogrammability, by implementing SW on FPGAs

Electronics Group

Multi-language synthesis

- No single winner in the domain of specification languages for HLS (synthesizing a high-level model into RTL)
- C, C++, SystemC, Simulink/Stateflow and CUDA/OpenCL have all been proposed, and have all been successful to some extent
- Avoid the need to learn a new language
- Speed up development by enabling verification using a domain-specific language
- C++/SystemC can act as a common "intermediate language" between domain-specific modeling and HLS

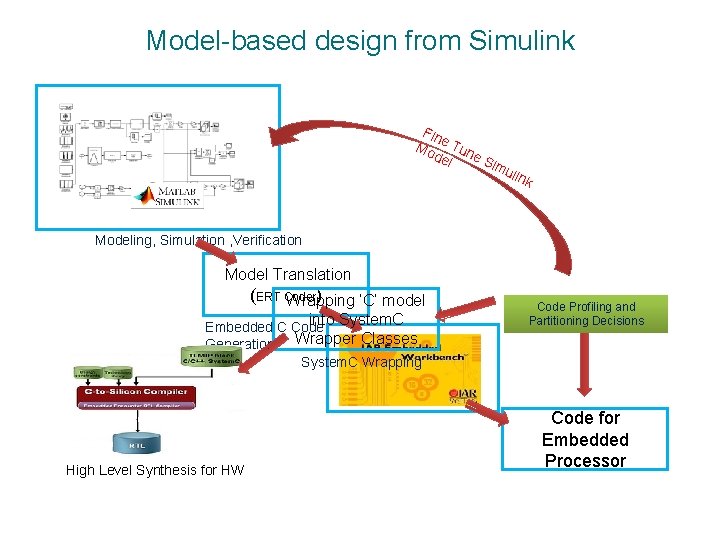

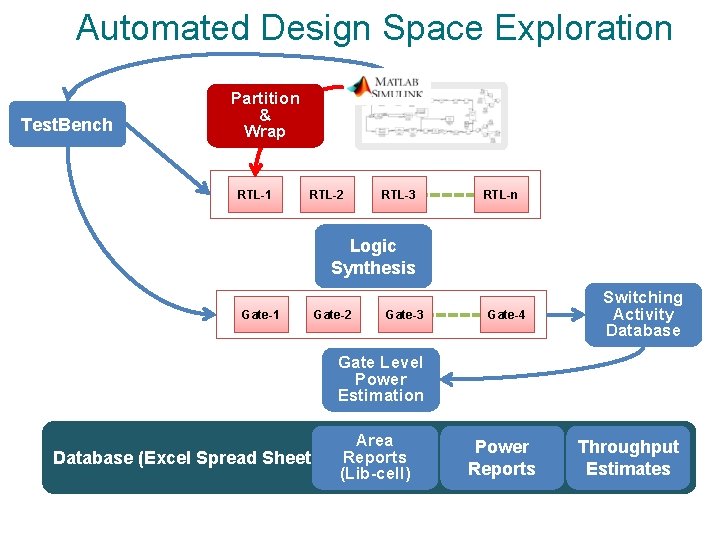

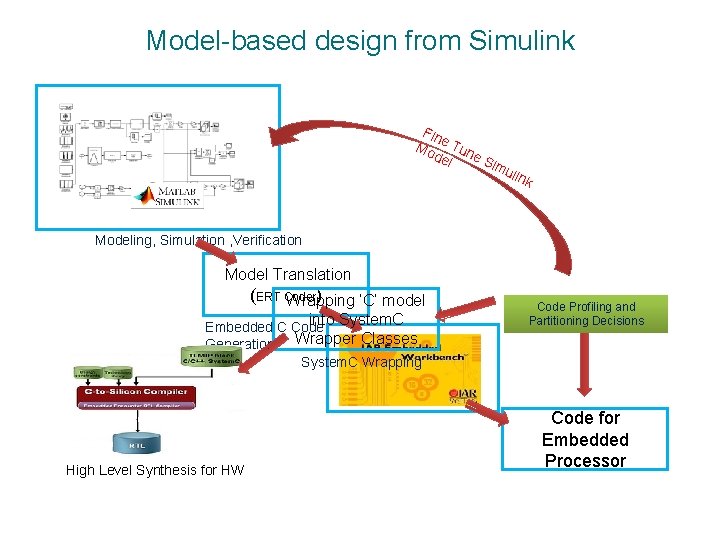

HLS from Simulink models

- Simulink/Stateflow is an industry-standard model-based design tool for:
  - Algorithmic modeling and simulation
  - Code generation for both SW and HW
- HW implementation generation is limited to:
  - One or a few architectures (cost/performance points)
  - One or a few platforms (Xilinx- or Altera-specific tools)
- Our approach: exploit well-established SW code generation in C
  - Customize code generation for efficient HW implementation
  - Perform automated Design Space Exploration, without designer input
  - For broadly used blocks (e.g. FFT), write HLS-specific models for HW DSE

Model-based design from Simulink

[Flow diagram labels: Simulink Model; Fine Tune; Modeling, Simulation, Verification; Model Translation (ERT Coder); Embedded C Code Generation; Code Profiling and Partitioning Decisions; Wrapping 'C' model into SystemC; Wrapper Classes; SystemC Wrapping; High-Level Synthesis for HW; Code for Embedded Processor]

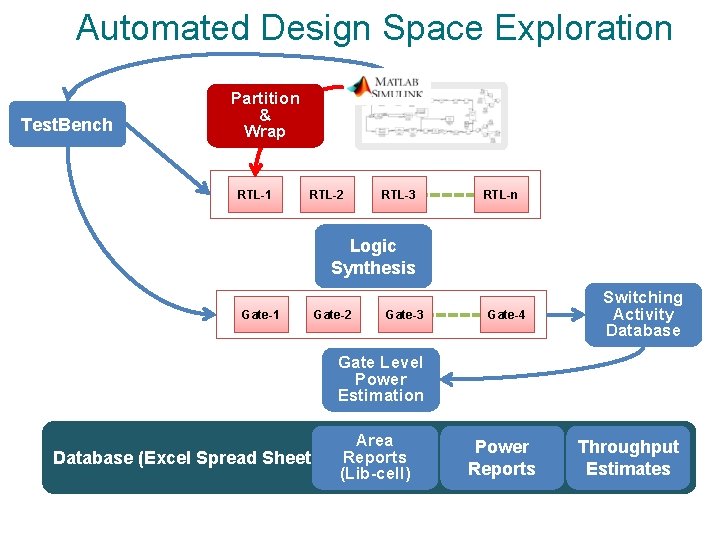

Automated Design Space Exploration

[Flow diagram labels: TestBench; Partition & Wrap; RTL-1, RTL-2, RTL-3, ..., RTL-n; Logic Synthesis; Gate-1, Gate-2, Gate-3, Gate-4; Switching Activity Database; Gate-Level Power Estimation; Database (Excel spreadsheet); Area Reports (lib-cell); Power Reports; Throughput Estimates]
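The exploration flow on this slide ends with area, power and throughput figures for every RTL candidate. As a minimal sketch of how such a database could be filtered automatically, the snippet below keeps only the Pareto-optimal candidates. The `DesignPoint` class, the `pareto_front` function and all numbers are hypothetical, chosen for illustration and not taken from the slides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignPoint:
    """One synthesized candidate (all values are made-up illustrations)."""
    name: str
    area: float        # e.g. library-cell area, lower is better
    power: float       # e.g. mW, lower is better
    throughput: float  # e.g. samples per second, higher is better


def dominates(a: DesignPoint, b: DesignPoint) -> bool:
    """True if a is no worse than b on every metric and better on at least one."""
    no_worse = (a.area <= b.area and a.power <= b.power
                and a.throughput >= b.throughput)
    better = (a.area < b.area or a.power < b.power
              or a.throughput > b.throughput)
    return no_worse and better


def pareto_front(points):
    """Keep the candidates that no other candidate dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical results for four RTL candidates.
candidates = [
    DesignPoint("RTL-1", area=1000, power=50, throughput=10),
    DesignPoint("RTL-2", area=2000, power=80, throughput=25),
    DesignPoint("RTL-3", area=2200, power=90, throughput=20),  # dominated by RTL-2
    DesignPoint("RTL-4", area=4000, power=150, throughput=40),
]

front = pareto_front(candidates)
print([p.name for p in front])
```

The quadratic comparison is adequate for the handful of candidates a flow like this produces; a designer would then pick one point from the front according to the cost/performance target.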

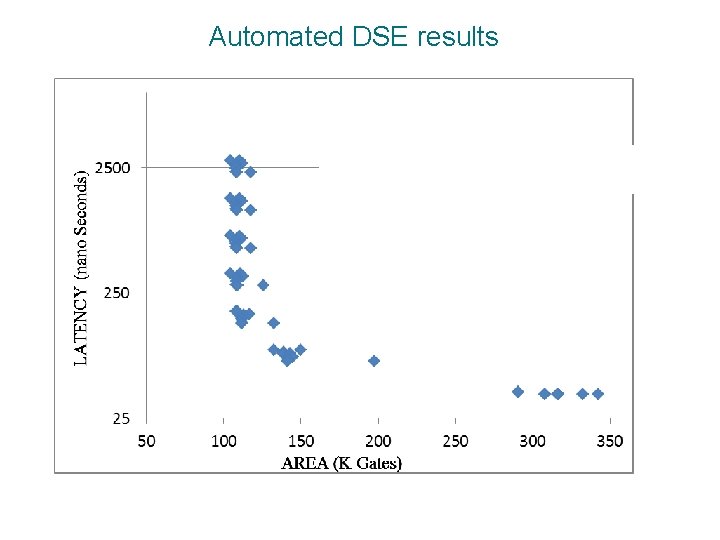

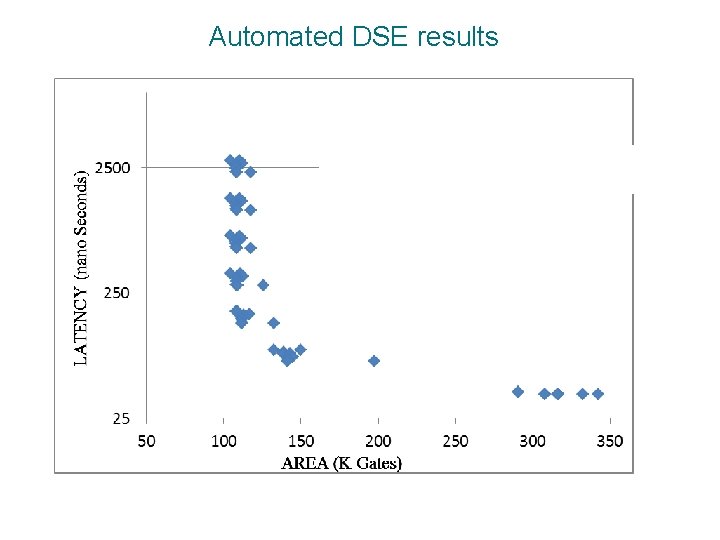

Automated DSE results

HLS from OpenCL to FPGAs

- A significant portion of the cost of data centers is due to energy consumption (both power and cooling costs)
- A large number of data center algorithms (e.g. search, image recognition, speech recognition) are "embarrassingly parallel"
  - Efficient code written in parallel languages is available
- FPGA implementation provides an attractive alternative to fully programmable implementation on CPUs or GP-GPUs
  - Low energy
  - Reconfiguration enables use for different applications
  - Good performance

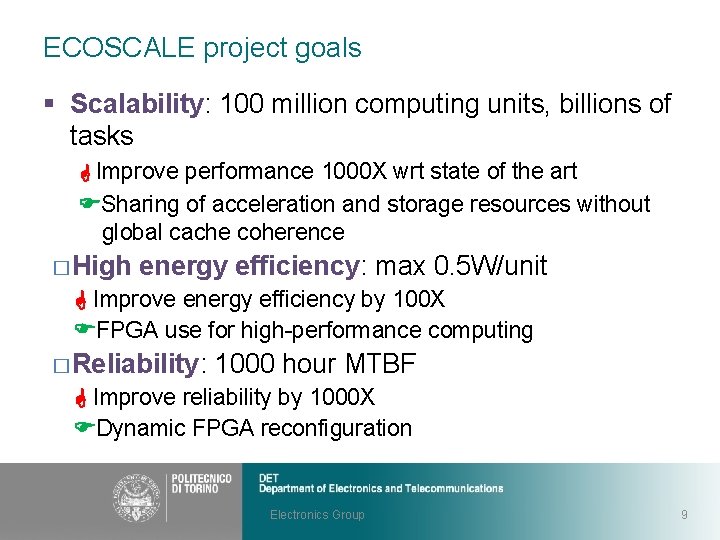

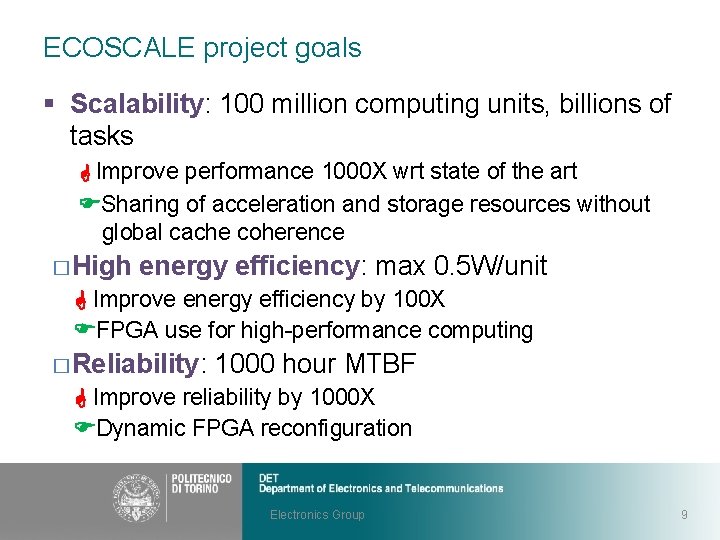

ECOSCALE project goals

- Scalability: 100 million computing units, billions of tasks
  - Improve performance 1000x with respect to the state of the art
  - Sharing of acceleration and storage resources without global cache coherence
- High energy efficiency: max 0.5 W/unit
  - Improve energy efficiency by 100x
  - FPGA use for high-performance computing
- Reliability: 1000-hour MTBF
  - Improve reliability by 1000x
  - Dynamic FPGA reconfiguration

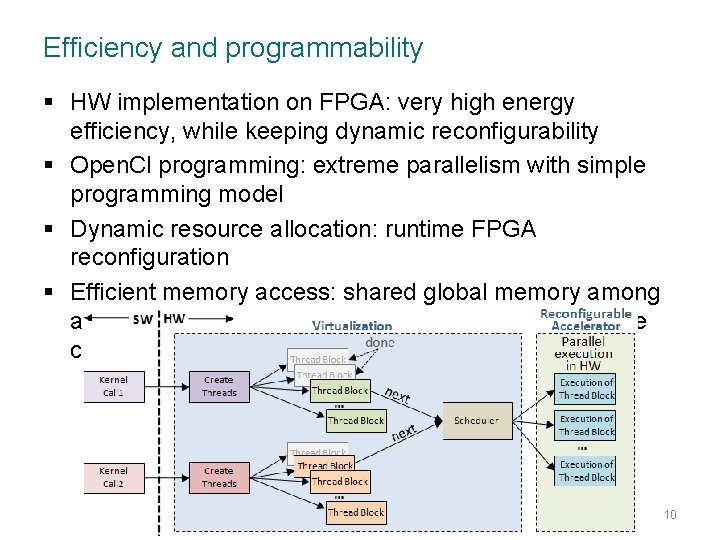

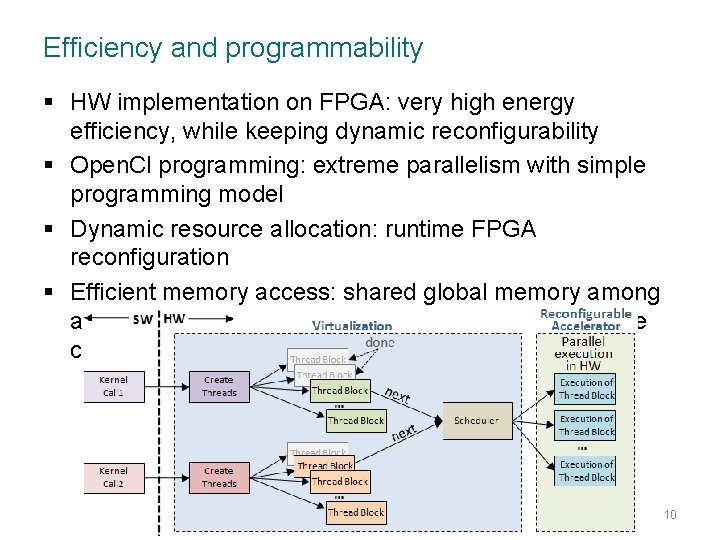

Efficiency and programmability

- HW implementation on FPGA: very high energy efficiency, while keeping dynamic reconfigurability
- OpenCL programming: extreme parallelism with a simple programming model
- Dynamic resource allocation: runtime FPGA reconfiguration
- Efficient memory access: global memory shared among all CPUs and FPGAs in a cluster, without global cache coherence

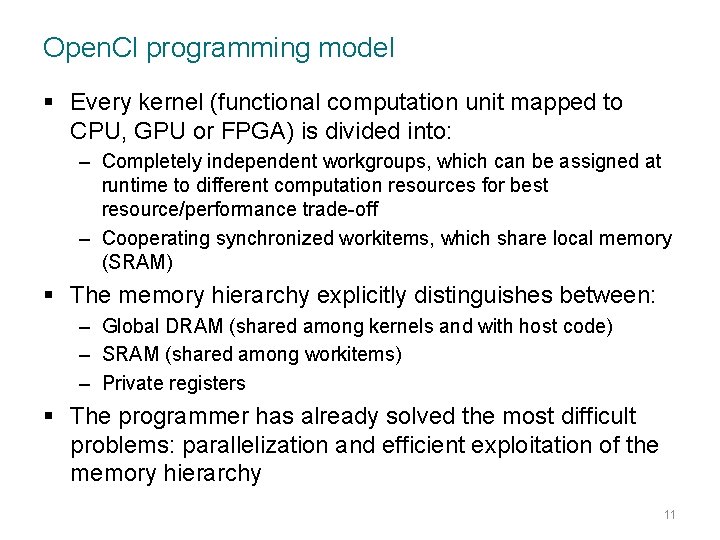

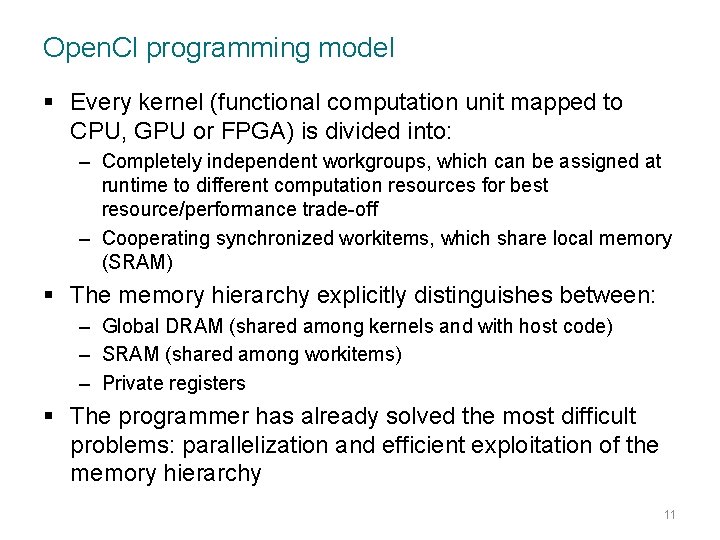

OpenCL programming model

- Every kernel (functional computation unit mapped to CPU, GPU or FPGA) is divided into:
  - Completely independent workgroups, which can be assigned at runtime to different computation resources for the best resource/performance trade-off
  - Cooperating, synchronized workitems within each workgroup, which share local memory (SRAM)
- The memory hierarchy explicitly distinguishes between:
  - Global DRAM (shared among kernels and with host code)
  - Local SRAM (shared among the workitems of a workgroup)
  - Private registers
- The programmer has already solved the most difficult problems: parallelization and efficient exploitation of the memory hierarchy
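The decomposition described on this slide can be illustrated with a sequential emulation in plain Python. This is only a sketch of the indexing and memory scopes, not OpenCL code: real workitems run concurrently, and the function name `run_sum_kernel` and the per-workgroup sum it computes are assumptions made for the example.

```python
def run_sum_kernel(global_mem, local_size):
    """Emulate a 1-D kernel in which each workgroup sums its slice of the input.

    global_mem stands in for global DRAM and local_mem for the per-workgroup
    SRAM. Loop variables play the role of private registers.
    """
    is_power_of_two = local_size > 0 and (local_size & (local_size - 1)) == 0
    if not is_power_of_two or len(global_mem) % local_size != 0:
        raise ValueError("local_size must be a power of two dividing the input")

    num_groups = len(global_mem) // local_size
    results = [0] * num_groups  # output buffer, also in "global memory"

    # Workgroups are independent: any order or any device would do.
    for group_id in range(num_groups):
        local_mem = [0] * local_size  # visible only inside this workgroup

        # Phase 1: every workitem copies one element from global to local memory.
        for local_id in range(local_size):
            global_id = group_id * local_size + local_id
            local_mem[local_id] = global_mem[global_id]

        # Finishing the loop above stands in for a workgroup barrier.

        # Phase 2: tree reduction in local memory, halving the active workitems.
        stride = local_size // 2
        while stride > 0:
            for local_id in range(stride):
                local_mem[local_id] += local_mem[local_id + stride]
            stride //= 2

        results[group_id] = local_mem[0]
    return results


print(run_sum_kernel(list(range(8)), local_size=4))  # prints [6, 22]
```

Because no workgroup reads another workgroup's local memory, the outer loop can be split across devices freely, which is the property the slide relies on.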

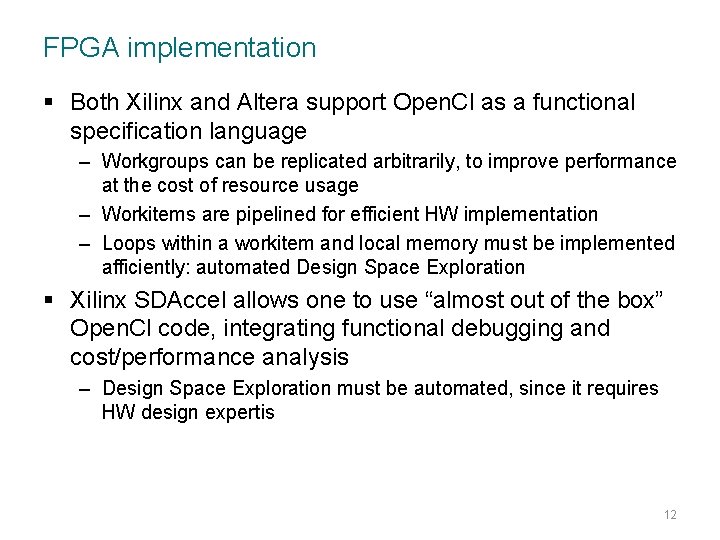

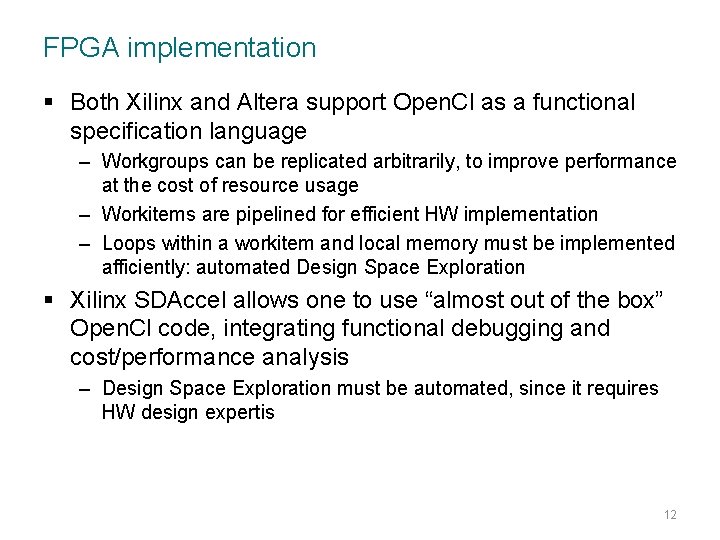

FPGA implementation

- Both Xilinx and Altera support OpenCL as a functional specification language
  - Workgroups can be replicated arbitrarily, to improve performance at the cost of resource usage
  - Workitems are pipelined for efficient HW implementation
  - Loops within a workitem and local memory must be implemented efficiently: automated Design Space Exploration
- Xilinx SDAccel allows one to use OpenCL code "almost out of the box", integrating functional debugging and cost/performance analysis
  - Design Space Exploration must be automated, since doing it manually requires HW design expertise

Application examples

- Financial algorithms: e.g. Black-Scholes and Heston
  - Parallel Monte Carlo simulations: no local or global memory
  - FPGA is much more efficient than GPU, in terms of both performance and energy per computation
- Machine learning: e.g. K-nearest-neighbors
  - Limited by global memory bandwidth (GPU is typically better)
  - FPGA consumes much less energy, and can exploit streaming communication
- Sorting: e.g. bitonic sorting
  - Limited by global memory bandwidth (GPU is typically better)
  - FPGA consumes much less energy, and can exploit streaming communication
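To make the "no local or global memory" remark about Monte Carlo financial kernels concrete, here is a minimal sketch of Monte Carlo pricing of a European call under the Black-Scholes model, checked against the closed-form price. It is not the implementation benchmarked in the slides; the function names and the parameter values are assumptions for illustration.

```python
import math
import random


def black_scholes_call(s0, strike, rate, sigma, maturity):
    """Closed-form Black-Scholes price of a European call option."""
    sqrt_t = math.sqrt(maturity)
    d1 = (math.log(s0 / strike)
          + (rate + 0.5 * sigma ** 2) * maturity) / (sigma * sqrt_t)
    d2 = d1 - sigma * sqrt_t

    def cdf(x):
        # Standard normal cumulative distribution function.
        return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

    return s0 * cdf(d1) - strike * math.exp(-rate * maturity) * cdf(d2)


def monte_carlo_call(s0, strike, rate, sigma, maturity, paths, seed=0):
    """Monte Carlo estimate of the same price.

    Each path needs only a random draw and a few scalars, so paths are
    independent and could be mapped to separate workitems.
    """
    rng = random.Random(seed)
    drift = (rate - 0.5 * sigma ** 2) * maturity
    vol = sigma * math.sqrt(maturity)
    total = 0.0
    for _ in range(paths):
        z = rng.gauss(0.0, 1.0)
        terminal_price = s0 * math.exp(drift + vol * z)
        total += max(terminal_price - strike, 0.0)
    return math.exp(-rate * maturity) * total / paths


# Illustrative parameters (assumed, not from the slides).
exact = black_scholes_call(100.0, 100.0, 0.05, 0.2, 1.0)
estimate = monte_carlo_call(100.0, 100.0, 0.05, 0.2, 1.0, paths=100_000)
print(f"closed form: {exact:.4f}, Monte Carlo: {estimate:.4f}")
```

The only shared state is the running sum, which a parallel implementation would replace with a final reduction over per-workitem partial sums.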

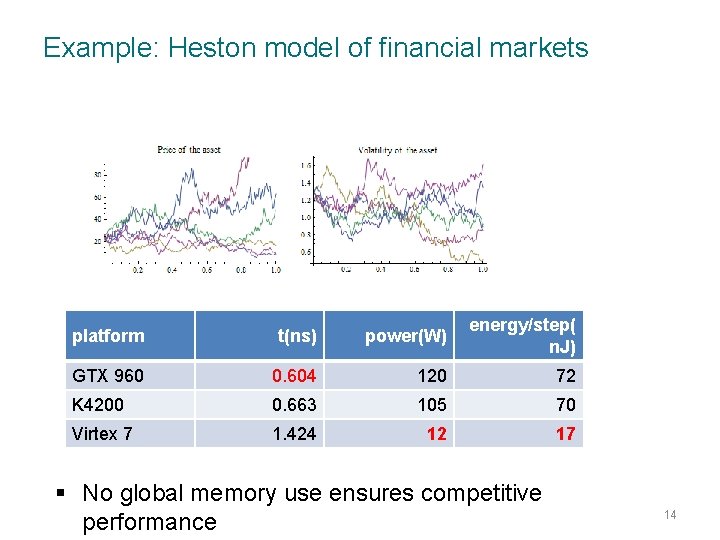

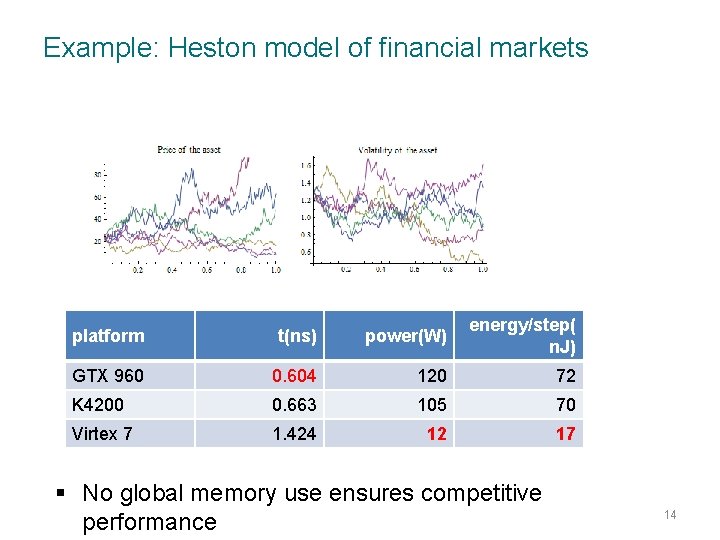

Example: Heston model of financial markets

| Platform | t (ns) | Power (W) | Energy/step (nJ) |
|----------|--------|-----------|------------------|
| GTX 960  | 0.604  | 120       | 72               |
| K4200    | 0.663  | 105       | 70               |
| Virtex 7 | 1.424  | 12        | 17               |

- No global memory use ensures competitive performance
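The energy column in the Heston results follows from the other two columns, since watts times nanoseconds gives nanojoules. The short sketch below recomputes it from the reported time and power figures; the variable and function names are illustrative.

```python
# (time per step in ns, power in W) as reported for the Heston example.
platforms = {
    "GTX 960": (0.604, 120),
    "K4200": (0.663, 105),
    "Virtex 7": (1.424, 12),
}


def energy_per_step_nj(time_ns, power_w):
    """Energy per step in nJ (W * ns = nJ)."""
    return time_ns * power_w


energy = {name: energy_per_step_nj(t, p) for name, (t, p) in platforms.items()}
for name, value in energy.items():
    print(f"{name}: {value:.1f} nJ/step")

# How much less energy the FPGA uses per step than each GPU.
fpga_energy = energy["Virtex 7"]
savings = {name: value / fpga_energy
           for name, value in energy.items() if name != "Virtex 7"}
print(savings)
```

With these figures the FPGA spends roughly four times less energy per step than either GPU, even though each step takes a little over twice as long.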

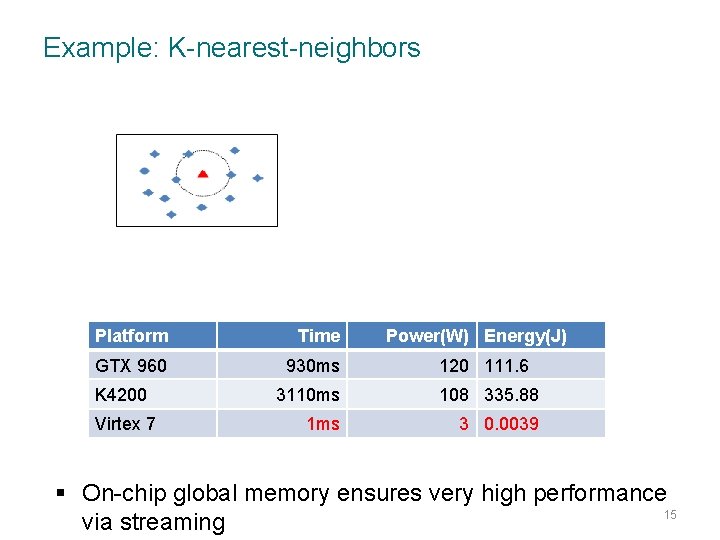

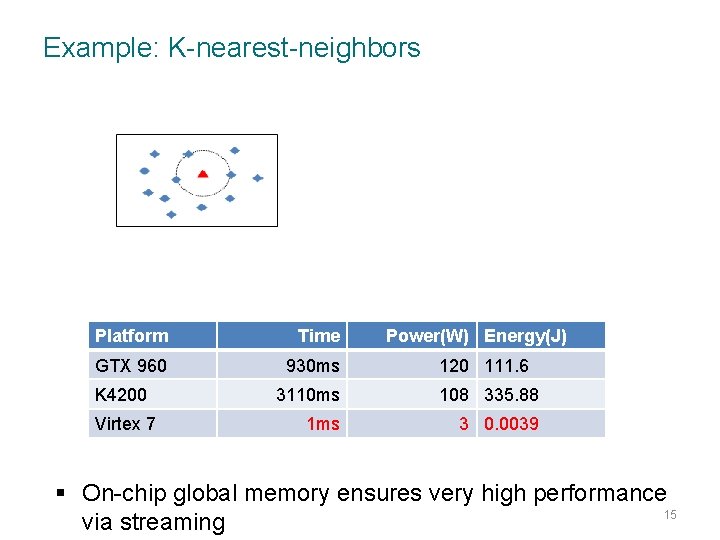

Example: K-nearest-neighbors

| Platform | Time    | Power (W) | Energy (J) |
|----------|---------|-----------|------------|
| GTX 960  | 930 ms  | 120       | 111.6      |
| K4200    | 3110 ms | 108       | 335.88     |
| Virtex 7 | 1 ms    | 3         | 0.0039     |

- On-chip global memory ensures very high performance via streaming
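K-nearest-neighbors is bound by memory bandwidth because every query reads the whole reference set once, in order, while doing little arithmetic per element. The brute-force sketch below shows that access pattern. It is an illustration under assumed names and toy data, not the kernel measured in the slides.

```python
import heapq
from collections import Counter


def knn_classify(query, references, labels, k):
    """Brute-force K-nearest-neighbors majority vote.

    The reference points are consumed as a single sequential stream,
    which is the access pattern that suits streaming on an FPGA.
    """
    # Squared Euclidean distance is enough for ranking neighbors.
    distances = (
        (sum((q - r) ** 2 for q, r in zip(query, ref)), label)
        for ref, label in zip(references, labels)
    )
    nearest = heapq.nsmallest(k, distances, key=lambda pair: pair[0])
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy data set (hypothetical): two well-separated clusters.
references = [(0, 0), (0, 1), (1, 0), (5, 5), (6, 5), (5, 6)]
labels = ["a", "a", "a", "b", "b", "b"]

print(knn_classify((0.5, 0.5), references, labels, k=3))
print(knn_classify((5.5, 5.5), references, labels, k=3))
```

Only the `k` best candidates are kept at any time, so the state per query stays small while the reference data streams past.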

Summary

- OpenCL and FPGAs provide an almost ideal platform for highly parallel software for data centers
- Excellent energy-per-computation savings, with good performance
- Some FPGA-specific high-level optimization is required
  - E.g. to exploit global memory access bursts
- Several examples from different application domains provide encouraging results
  - Design space exploration is much easier than with other (less embarrassingly parallel) models
  - Dynamic resource management is key to data center and high-performance computing applicability