Lecture 5: Monte-Carlo method for Two-Stage SLP

- Slides: 28

Lecture 5. Monte-Carlo method for Two-Stage SLP. Leonidas Sakalauskas, Institute of Mathematics and Informatics, Vilnius, Lithuania. EURO Working Group on Continuous Optimization.

Content
- Introduction
- Monte Carlo estimators
- Stochastic differentiation
- ε-feasible gradient approach for two-stage SLP
- Interior-point method for two-stage SLP
- Testing optimality
- Convergence analysis
- Counterexample

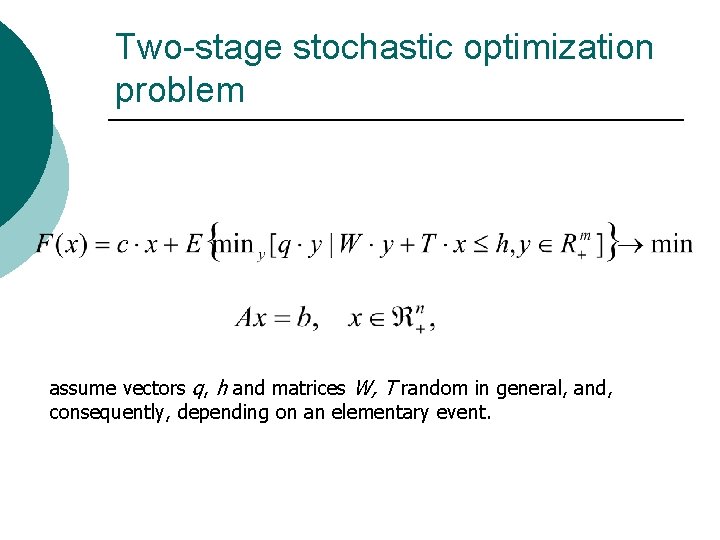

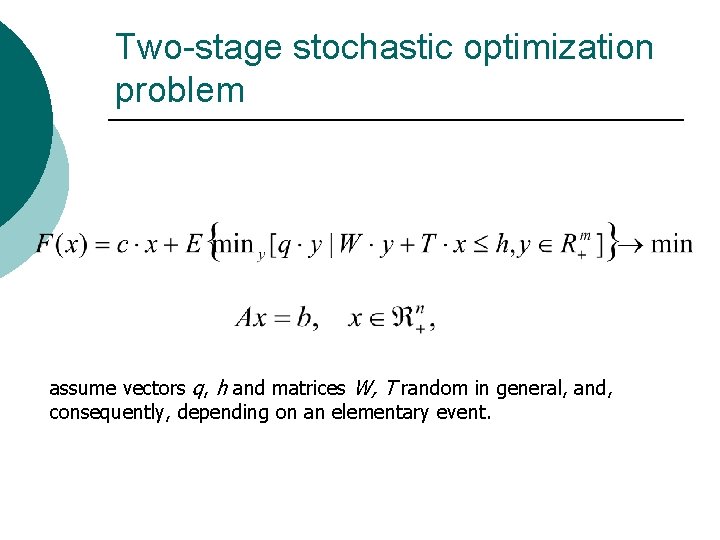

Two-stage stochastic optimization problem. Assume that the vectors q, h and the matrices W, T are random in general and, consequently, depend on an elementary event.

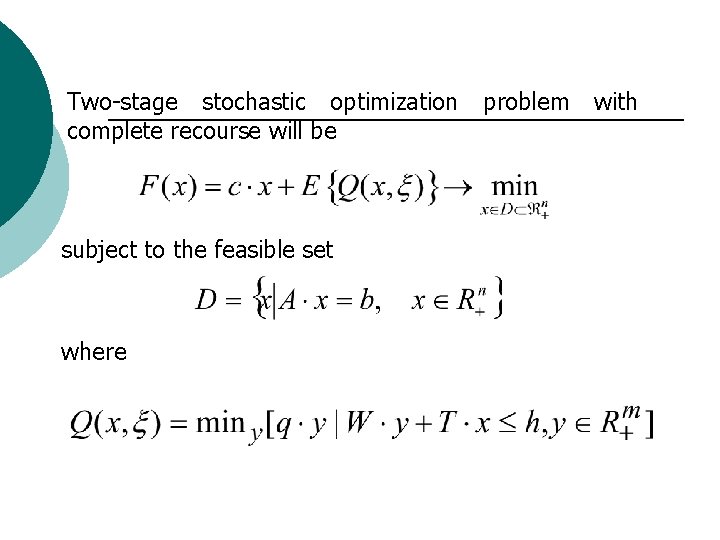

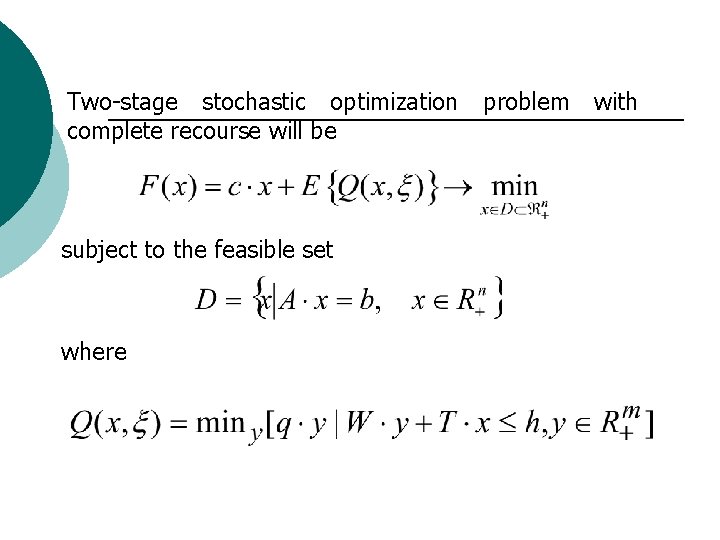

Two-stage stochastic optimization problem with complete recourse. The problem will be subject to the feasible set

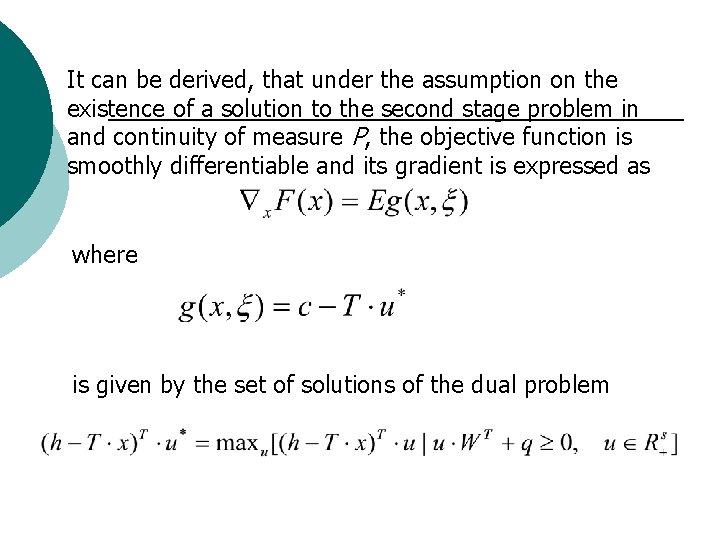

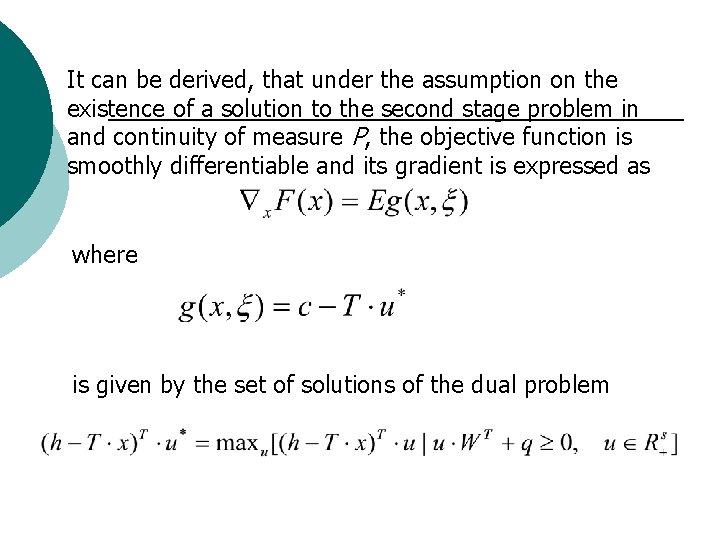

It can be derived that, under the assumption that a solution to the second-stage problem exists and that the measure P is continuous, the objective function is smoothly differentiable, and its gradient is expressed through the set of solutions of the dual problem.
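For orientation, a common textbook form of the two-stage problem and of the gradient formula is sketched below. This is an assumption rather than a copy of the slide: the equality-constrained second stage, the first-stage data c, A, b, and the dual solution symbol u* are standard choices that may differ from the notation used in the lecture.

```latex
\begin{aligned}
F(x) &= c^{\top}x + \mathbf{E}\,Q(x,\omega) \to \min_{x \in D},
  \qquad D = \{\, x \ge 0 : Ax = b \,\},\\
Q(x,\omega) &= \min_{y \ge 0}\,\{\, q^{\top}y : Wy = h - Tx \,\},\\
\nabla F(x) &= \mathbf{E}\,g(x,\omega),
  \qquad g(x,\omega) = c - T^{\top}u^{*},\\
u^{*} &\in \arg\max_{u}\,\{\, (h - Tx)^{\top}u : W^{\top}u \le q \,\}.
\end{aligned}
```

In this form the random gradient g(x, ω) is obtained from one dual solve per scenario, which is what makes the analytical estimator cheap relative to difference schemes.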

Monte-Carlo samples. We assume here that Monte-Carlo samples of a certain size N are provided for any point x, so that the sampling estimator of the objective function can be computed. The sampling variance can be computed as well, which is useful for evaluating the accuracy of the estimator.
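A minimal sketch of these two estimators follows. The integrand `sample_objective` is a hypothetical simple-recourse toy (cost `c`, penalty `q`, exponential random element), chosen only so that the example runs without an LP solver; in the two-stage setting each value would come from solving the second-stage problem.

```python
import math
import random

def sample_objective(x, omega, c=1.0, q=3.0):
    """Toy integrand f(x, omega): first-stage cost plus a simple-recourse penalty."""
    return c * x + q * max(omega - x, 0.0)

def mc_estimate(x, sample):
    """Return the sample mean and the unbiased sample variance of f(x, omega)."""
    values = [sample_objective(x, w) for w in sample]
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / (n - 1)
    return mean, var

rng = random.Random(0)
N = 1000
sample = [rng.expovariate(1.0) for _ in range(N)]  # hypothetical distribution
F_hat, D2_hat = mc_estimate(1.0, sample)
std_error = math.sqrt(D2_hat / N)  # standard error of the estimator F_hat
```

The standard error shrinks as 1/sqrt(N), which is why the sample size becomes a tuning parameter later in the lecture.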

Gradient. The gradient is evaluated using the same random sample:

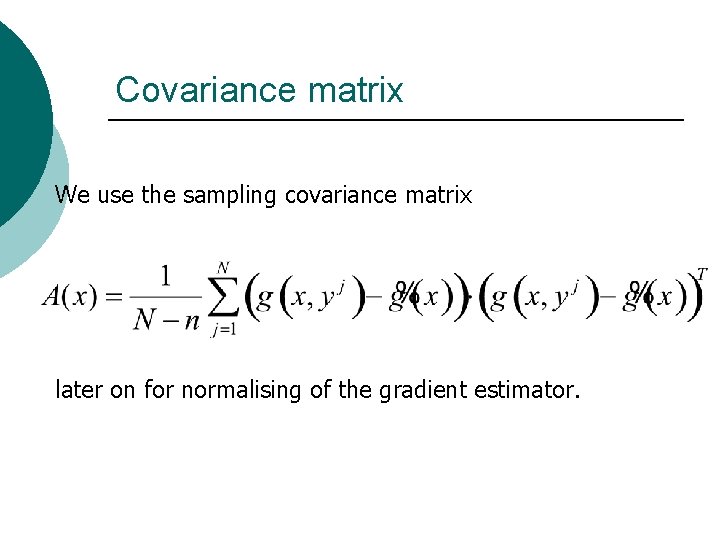

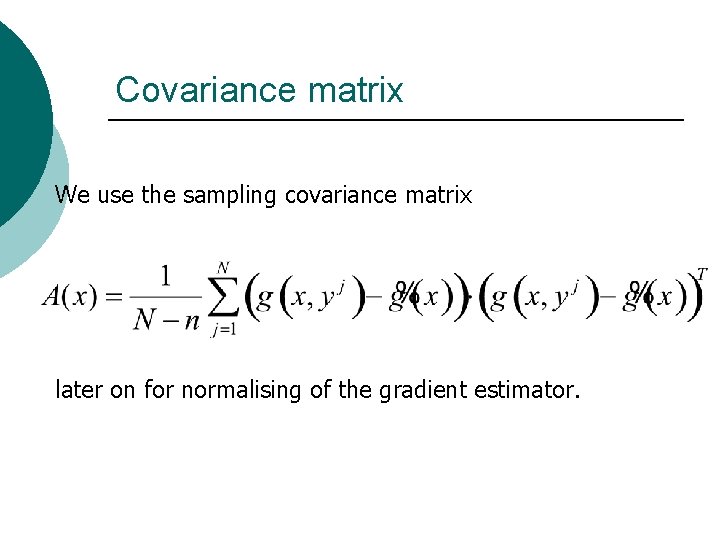

Covariance matrix. The sampling covariance matrix is used later on to normalise the gradient estimator.
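The gradient estimator and its sampling covariance matrix can be sketched as below. The per-scenario gradient `sample_gradient` belongs to a hypothetical separable simple-recourse toy with costs `C` and penalties `Q`; it stands in for the dual-based gradient of the two-stage problem and is not taken from the lecture.

```python
import random

C = [1.0, 1.0]  # hypothetical first-stage costs
Q = [3.0, 2.0]  # hypothetical recourse penalties

def sample_gradient(x, omega):
    """Gradient in x of sum_j (C[j] * x[j] + Q[j] * max(omega[j] - x[j], 0))."""
    return [C[j] - (Q[j] if omega[j] > x[j] else 0.0) for j in range(len(x))]

def gradient_and_covariance(x, sample):
    """Sample mean of the gradients and their unbiased sample covariance matrix."""
    grads = [sample_gradient(x, w) for w in sample]
    n_obs, dim = len(grads), len(x)
    mean = [sum(g[j] for g in grads) / n_obs for j in range(dim)]
    cov = [[sum((g[i] - mean[i]) * (g[j] - mean[j]) for g in grads) / (n_obs - 1)
            for j in range(dim)] for i in range(dim)]
    return mean, cov

rng = random.Random(1)
sample = [[rng.expovariate(1.0), rng.expovariate(1.0)] for _ in range(500)]
g_hat, A_hat = gradient_and_covariance([1.0, 0.5], sample)
```

Both quantities are computed from the same scenarios, so no extra sampling is needed beyond what the objective estimate already uses.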

Approaches to the stochastic gradient. We examine several estimators of the stochastic gradient:
- Analytical approach (AA);
- Finite difference approach (FD);
- Simultaneous perturbation stochastic approximation (SPSA);
- Likelihood ratio approach (LR).

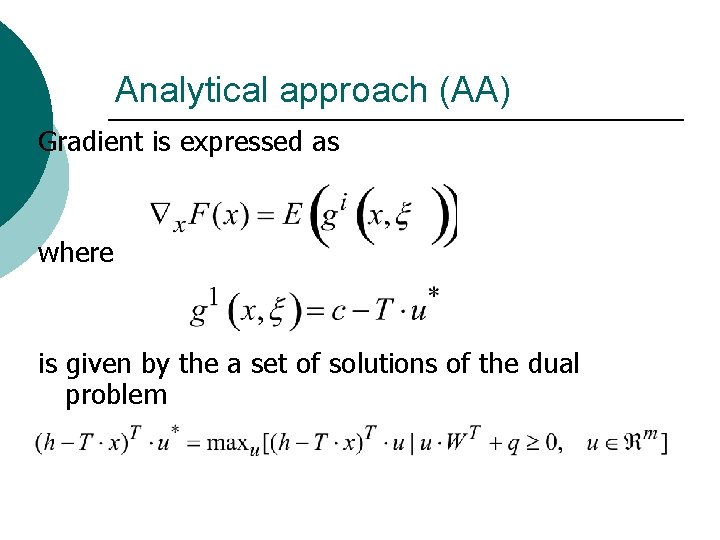

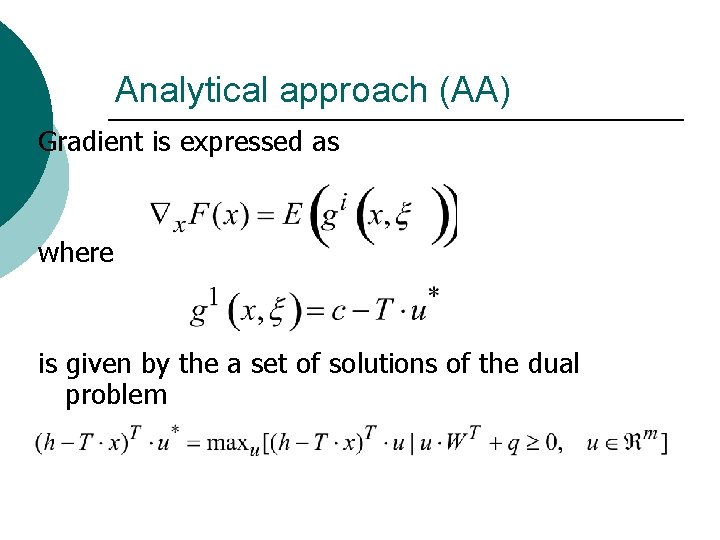
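To make the difference between the FD and SPSA estimators concrete, here is a small sketch under stated assumptions: the integrand `f` is a hypothetical quadratic toy, the step `h` is arbitrary, and both estimators reuse one sample for the perturbed evaluations (common random numbers).

```python
import random

def f(x, omega):
    """Toy integrand (an assumption): squared distance to the random element."""
    return sum((xj - wj) ** 2 for xj, wj in zip(x, omega))

def sample_mean(x, sample):
    """Monte-Carlo estimate of the objective at x."""
    return sum(f(x, w) for w in sample) / len(sample)

def fd_gradient(x, sample, h=1e-3):
    """Central finite differences: two objective estimates per coordinate."""
    grad = []
    for j in range(len(x)):
        up = list(x)
        up[j] += h
        down = list(x)
        down[j] -= h
        grad.append((sample_mean(up, sample) - sample_mean(down, sample)) / (2 * h))
    return grad

def spsa_gradient(x, sample, rng, h=1e-3):
    """One SPSA draw: all coordinates are perturbed at once with random signs."""
    delta = [rng.choice((-1.0, 1.0)) for _ in x]
    up = [xj + h * dj for xj, dj in zip(x, delta)]
    down = [xj - h * dj for xj, dj in zip(x, delta)]
    diff = sample_mean(up, sample) - sample_mean(down, sample)
    return [diff / (2 * h * dj) for dj in delta]

sample = [[0.0, 0.0], [2.0, 4.0]]
g_fd = fd_gradient([3.0, 1.0], sample)
g_spsa = spsa_gradient([3.0, 1.0], sample, random.Random(0))
```

FD needs 2n objective estimates for an n-dimensional x, whereas a single SPSA draw needs only two; the price is that one SPSA draw is noisy and is normally averaged over several draws or iterations.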

Analytical approach (AA). The gradient is expressed through the set of solutions of the dual problem.

Gradient search procedure. Let some initial point be given, let a random sample of a certain initial size N0 be generated at this point, and let the Monte-Carlo estimates be computed. The iterative stochastic gradient search procedure can then be applied:

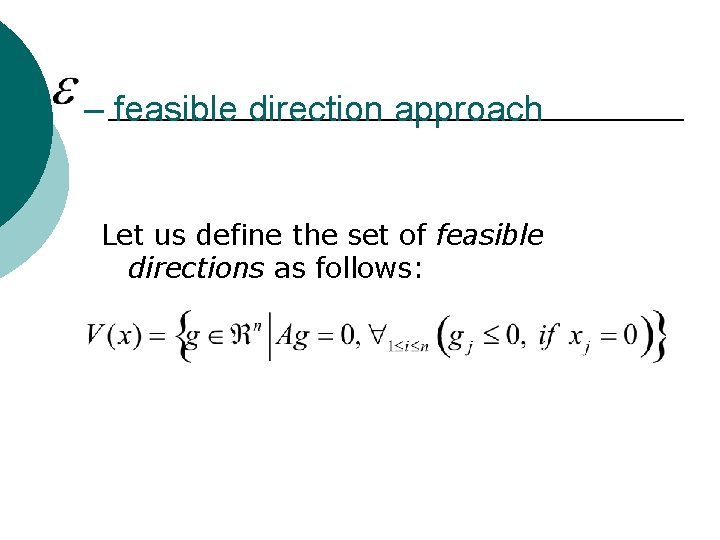

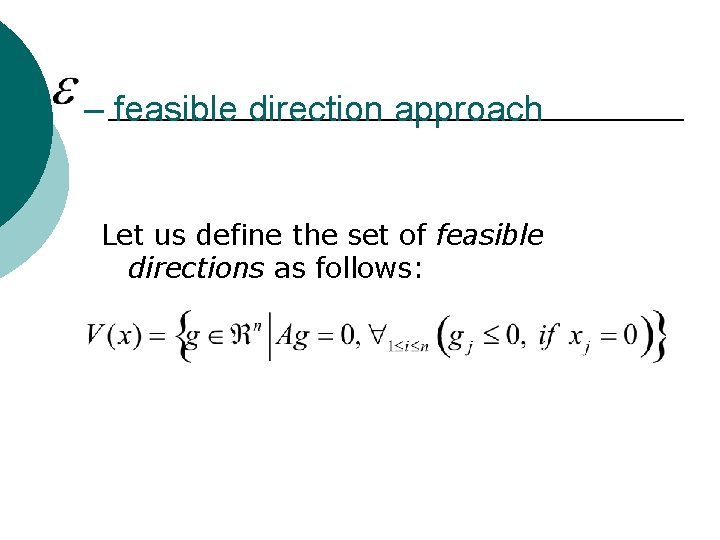
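A bare-bones version of such an iteration is sketched below for an unconstrained toy problem, minimising E[(x - ω)^2] with ω drawn from a normal distribution with mean 2. The objective, the fixed step `rho`, and the fixed sample size are all assumptions made for illustration; constraints and adaptive sample sizes are left out.

```python
import random

def gradient_search(x0, rho=0.1, n_samples=200, iters=100, seed=0):
    """Iterate x <- x - rho * g_hat(x), re-estimating g_hat from a fresh sample."""
    rng = random.Random(seed)
    x = x0
    for _ in range(iters):
        sample = [rng.gauss(2.0, 1.0) for _ in range(n_samples)]
        # Monte-Carlo estimate of the gradient of E[(x - w)^2]
        g_hat = sum(2.0 * (x - w) for w in sample) / n_samples
        x -= rho * g_hat
    return x

x_final = gradient_search(x0=10.0)
```

With a fixed sample size the iterate keeps fluctuating around the minimiser x = 2 instead of settling, which motivates regulating the sample size during the search.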

ε-feasible direction approach. Let us define the set of feasible directions as follows:

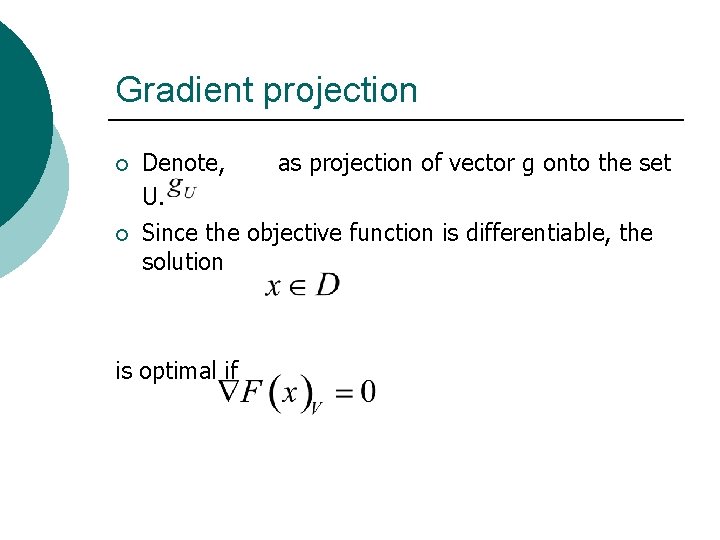

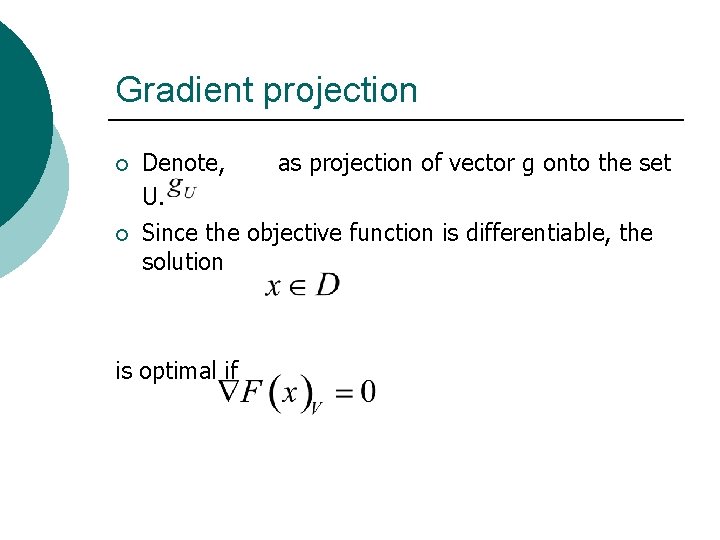

Gradient projection
- Consider the projection of the vector g onto the set U.
- Since the objective function is differentiable, the solution is optimal if

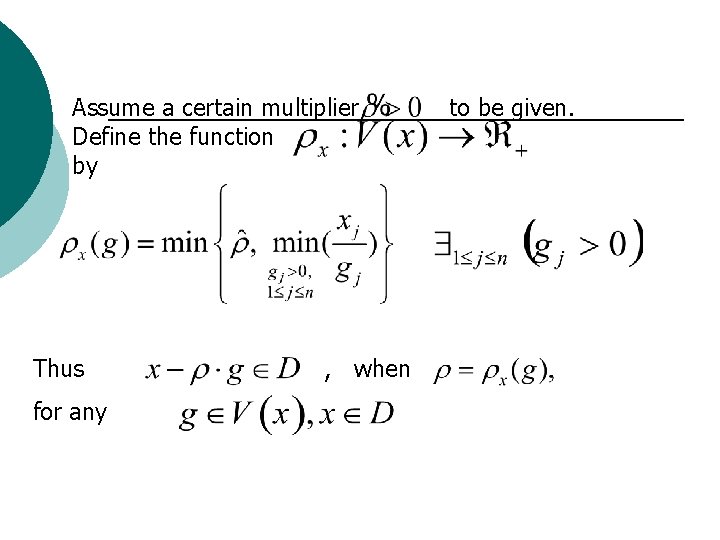

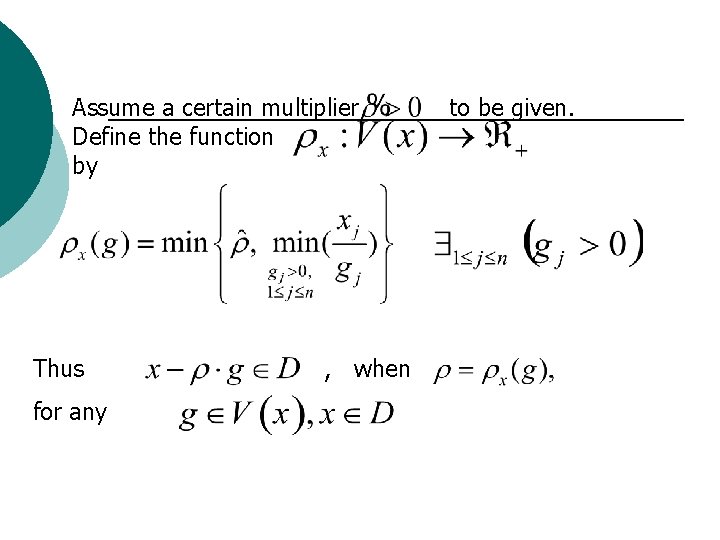

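Assume that a certain multiplier is given, and define from it a function that bounds the step length along a direction g. Thus, for any step within this bound, the next point remains feasible.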

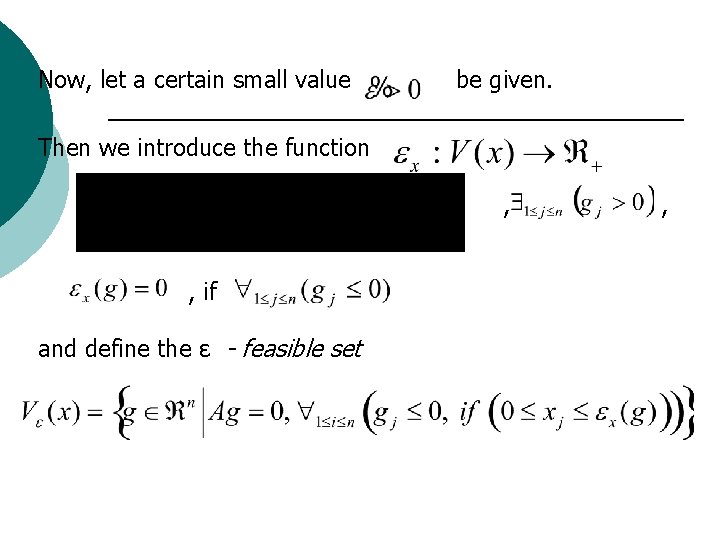

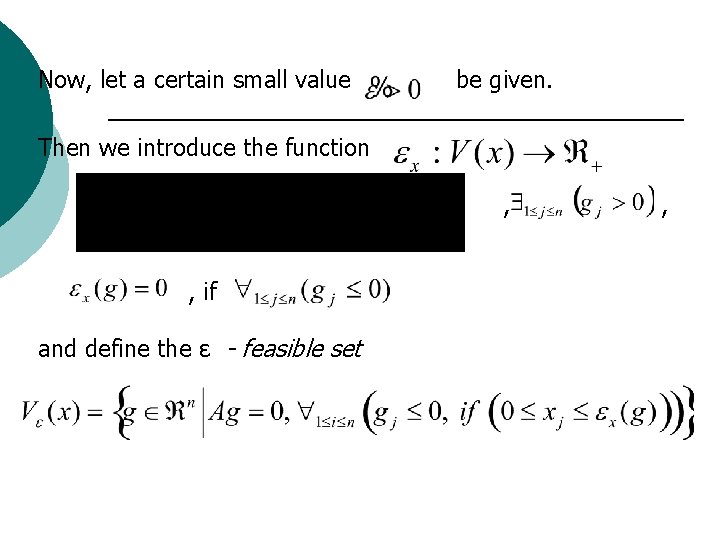
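One plausible reading of this step-length bound is sketched here. It assumes the update has the form x - ρ·g with the constraint x ≥ 0, a maximal multiplier `rho_max`, and the bound min(rho_max, min over g_j > 0 of x_j / g_j); the formula on the slide itself is not legible, so these are assumptions.

```python
def step_bound(x, g, rho_max):
    """Largest step rho <= rho_max that keeps x - rho * g non-negative."""
    ratios = [xj / gj for xj, gj in zip(x, g) if gj > 0]
    return min([rho_max] + ratios)

x = [2.0, 0.0, 1.0]
g = [4.0, -1.0, 0.5]  # g_j <= 0 wherever x_j == 0, so the direction is feasible
rho = step_bound(x, g, rho_max=10.0)
x_next = [xj - rho * gj for xj, gj in zip(x, g)]
```

Only coordinates with g_j > 0 can reach zero when moving against g, so only those enter the ratio test.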

Now, let a certain small value be given. We then introduce an auxiliary function and use it to define the ε-feasible set.

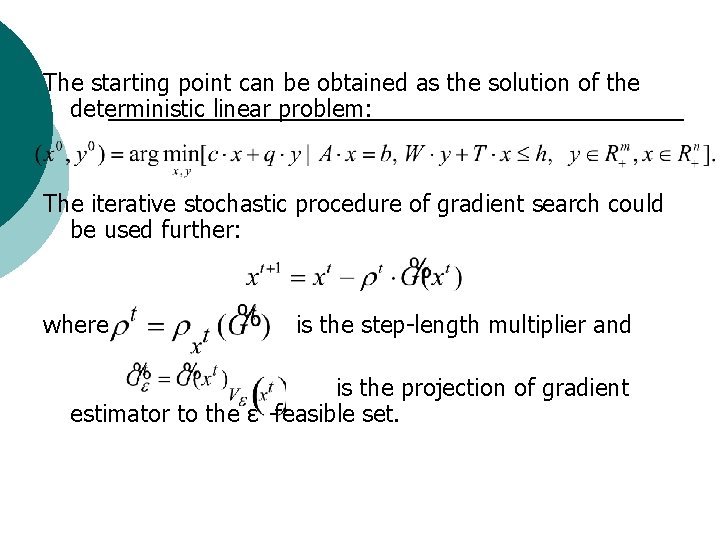

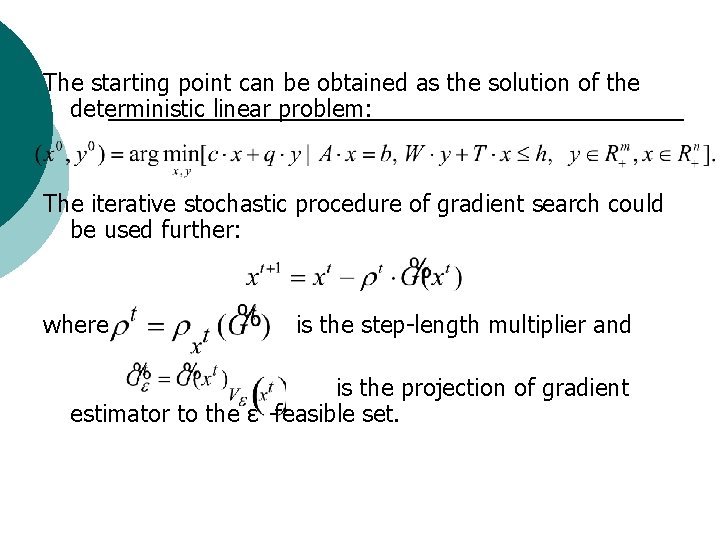

The starting point can be obtained as the solution of the deterministic linear problem. The iterative stochastic gradient search procedure can then be applied, using a step-length multiplier and the projection of the gradient estimator onto the ε-feasible set.

Monte-Carlo sample size problem. There is no great need to compute estimators with high accuracy at the start of the optimisation, because it then suffices to evaluate only approximately the direction leading to the optimum. Therefore, one can use fairly small samples at the beginning of the search and later increase the sample size, so as to obtain the estimate of the objective function with the desired accuracy just at the moment of deciding whether the solution to the optimisation problem has been found.

We propose the following rule for regulating the sample size in practice:
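A simplified sketch of such a rule is given below. It uses only the property stated in the conclusions, that the sample size is inversely proportional to the squared norm of the gradient estimate, together with the bounds Nmin = 100 and Nmax = 10000 quoted in the experiment. The constant `c_const` and the plain Euclidean norm are assumptions; the rule on the slide may normalise the gradient differently.

```python
def next_sample_size(grad, c_const, n_min=100, n_max=10000):
    """Sample size inversely proportional to the squared gradient norm, clipped."""
    norm_sq = sum(gj * gj for gj in grad)
    if norm_sq == 0.0:
        return n_max  # a vanishing gradient estimate calls for the largest sample
    return int(min(max(c_const / norm_sq, n_min), n_max))

far_from_optimum = next_sample_size([10.0, 0.0], c_const=1000.0)
near_optimum = next_sample_size([0.01, 0.0], c_const=1000.0)
```

Far from the optimum the gradient is large and small samples suffice; as the gradient estimate shrinks, the rule automatically requests larger samples.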

Statistical testing of the optimality hypothesis. The optimality hypothesis can be accepted for some point x^t at a given significance level if the following condition is satisfied. Next, we can use asymptotic normality again and decide that the objective function is estimated with a permissible accuracy if its confidence bound does not exceed this value:
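The two checks can be sketched as follows. The Hotelling-type statistic is an assumption suggested by the "Hotelling statistics" plotted later in the deck; the helper names, the normalisation (N - n) / n, and the confidence level `beta` are hypothetical. The Fisher critical value is left to the caller because the Python standard library has no F-distribution quantile.

```python
import math
from statistics import NormalDist

def solve(a, b):
    """Solve the linear system a z = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    z = [0.0] * n
    for i in reversed(range(n)):
        z[i] = (m[i][n] - sum(m[i][k] * z[k] for k in range(i + 1, n))) / m[i][i]
    return z

def hotelling_statistic(g_mean, cov, n_obs):
    """Statistic (N - n) / n * g' A^{-1} g for the hypothesis E[g] = 0."""
    n = len(g_mean)
    z = solve(cov, g_mean)
    return (n_obs - n) / n * sum(gi * zi for gi, zi in zip(g_mean, z))

def confidence_half_width(variance, n_obs, beta=0.95):
    """Half-width of the two-sided normal confidence interval of the sample mean."""
    eta = NormalDist().inv_cdf(0.5 + beta / 2)
    return eta * math.sqrt(variance / n_obs)

t2 = hotelling_statistic([1.0, 1.0], [[2.0, 0.0], [0.0, 4.0]], n_obs=12)
half_width = confidence_half_width(variance=4.0, n_obs=100)
```

A stopping rule would then accept the point when `t2` does not exceed the chosen Fisher quantile and `half_width` does not exceed the admissible accuracy.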

Computer simulation
- Two-stage stochastic linear optimisation problem.
- Dimensions of the task are as follows:
  - the first stage has 10 rows and 20 variables;
  - the second stage has 20 rows and 30 variables.
- Test data: http://www.math.bme.hu/~deak/twostage/l1/20x20.1/ (2006-01-20).

Two-stage stochastic programming
- The estimate of the optimal value of the objective function given in the database is 182.94234 ± 0.066.
- Initial data were as follows: N0 = Nmin = 100, Nmax = 10000. The maximal number of iterations was limited, and the generation of trials was stopped when the estimated confidence interval of the objective function exceeded the admissible value.

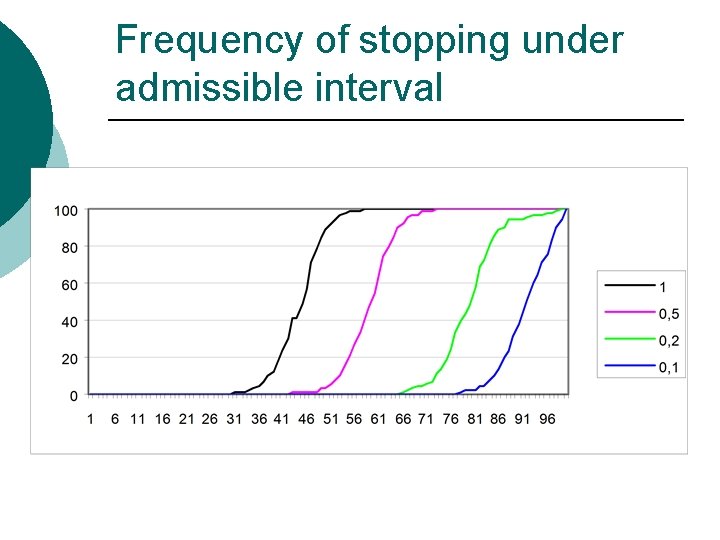

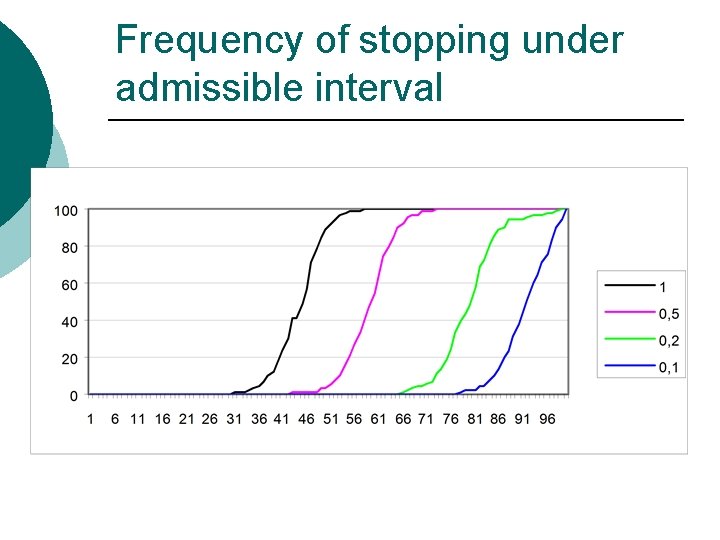

Frequency of stopping under admissible interval

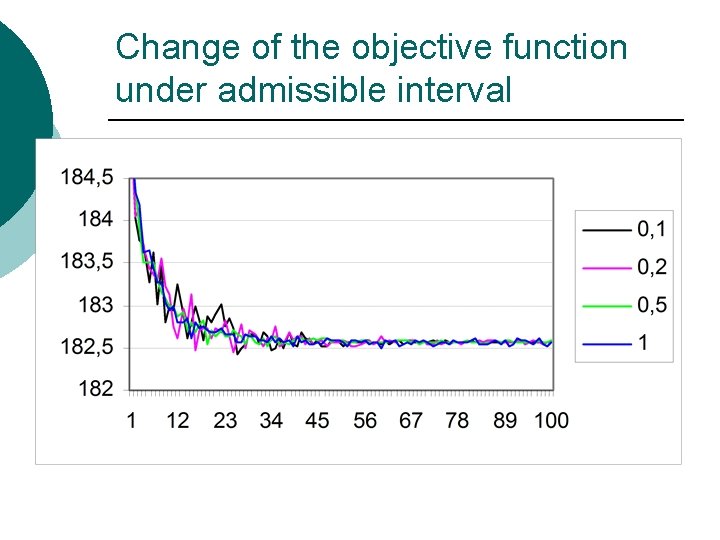

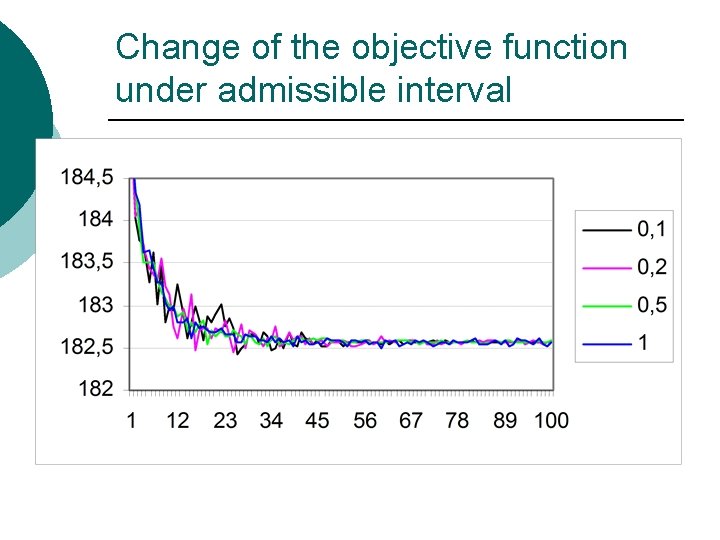

Change of the objective function under admissible interval

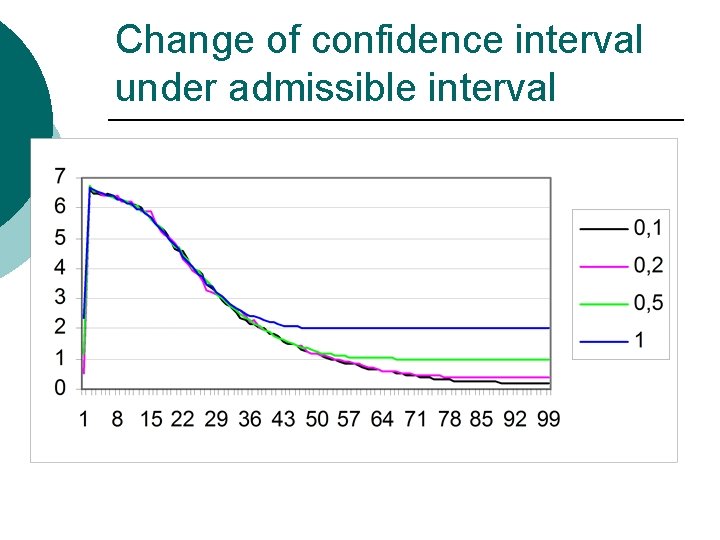

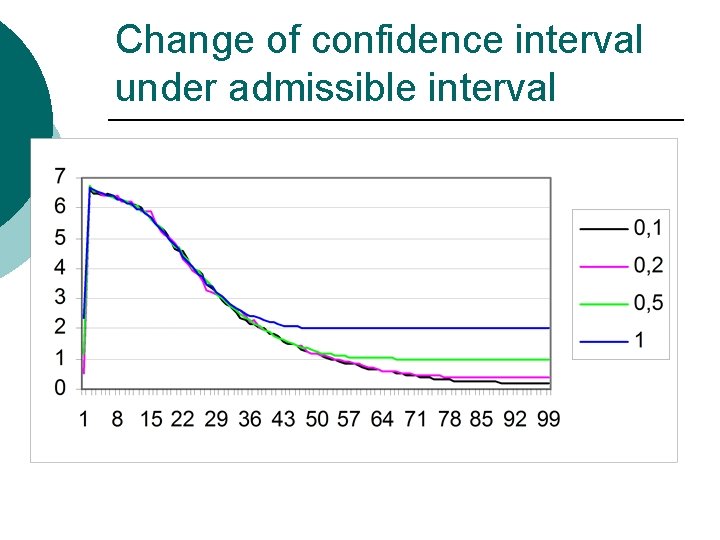

Change of confidence interval under admissible interval

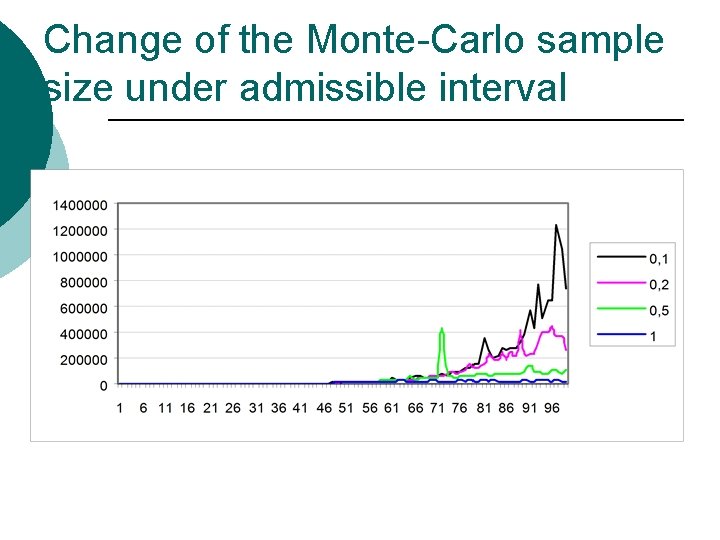

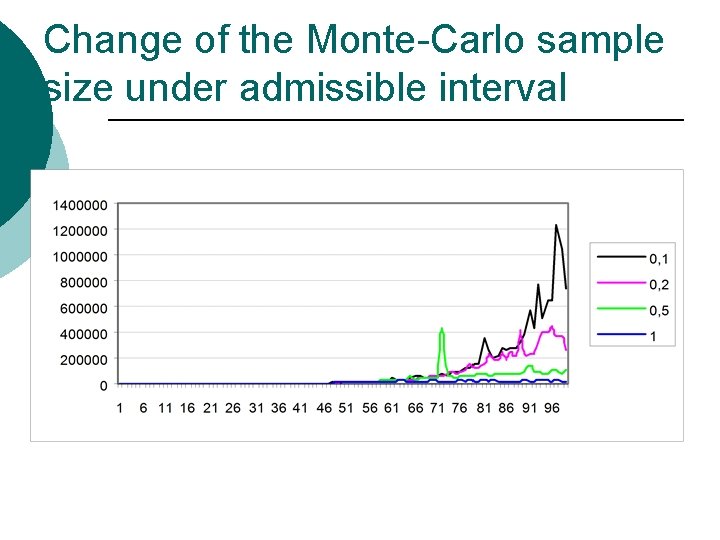

Change of the Monte-Carlo sample size under admissible interval

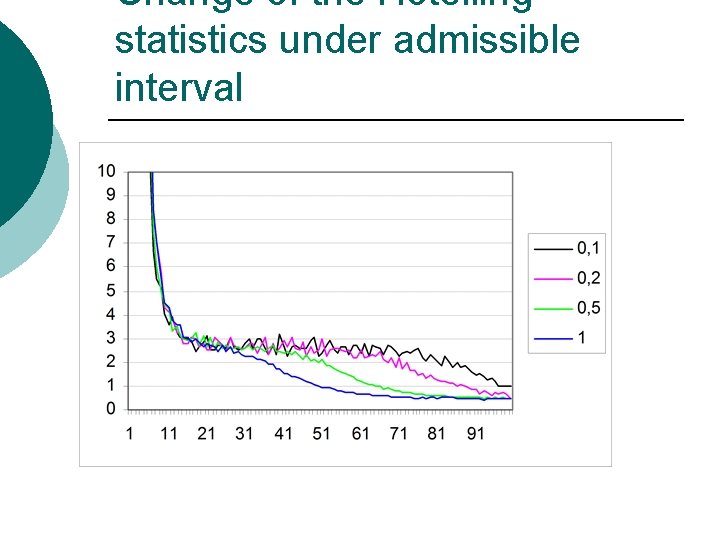

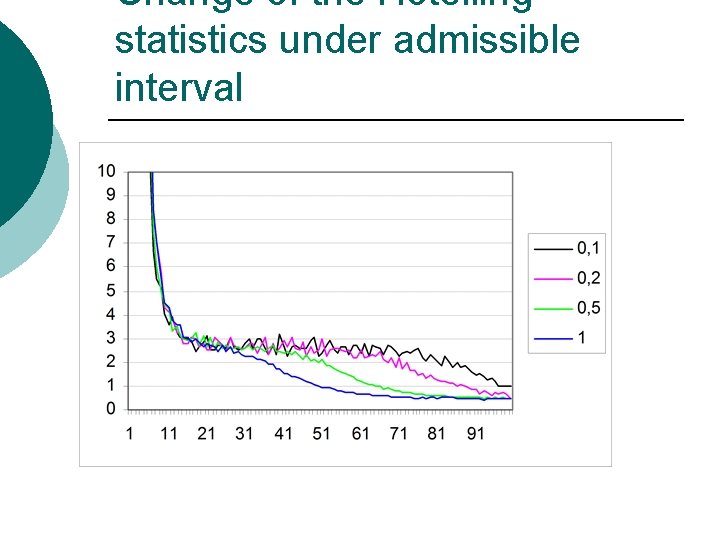

Change of the Hotelling statistics under admissible interval

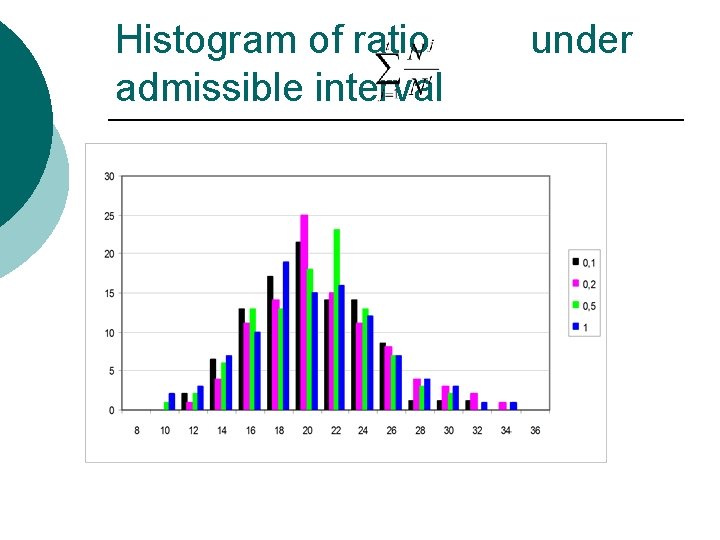

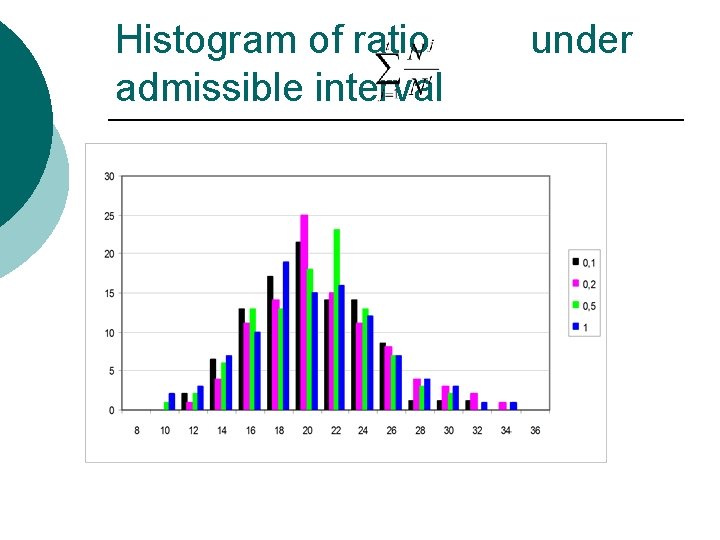

Histogram of ratio under admissible interval

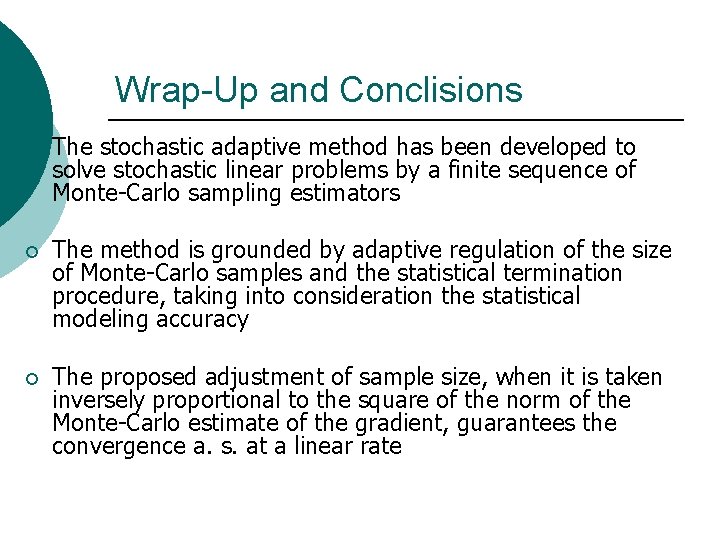

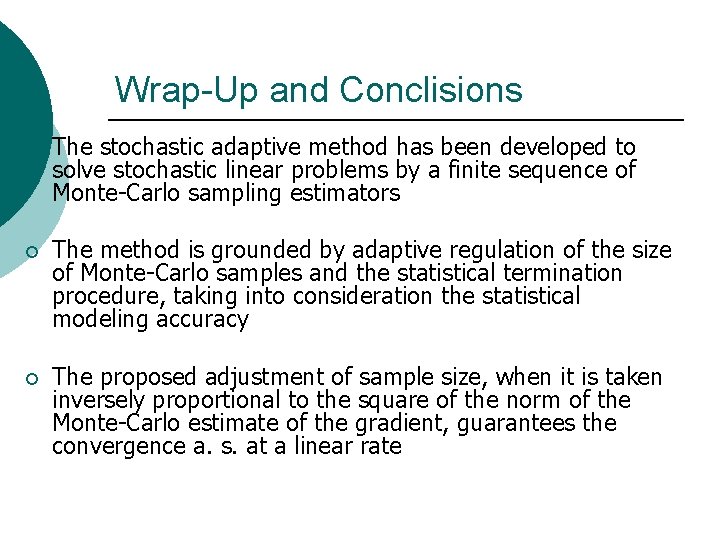

Wrap-Up and Conclusions
- A stochastic adaptive method has been developed to solve stochastic linear problems by a finite sequence of Monte-Carlo sampling estimators.
- The method is based on adaptive regulation of the Monte-Carlo sample size and on a statistical termination procedure that takes the statistical modelling accuracy into account.
- The proposed adjustment of the sample size, taken inversely proportional to the square of the norm of the Monte-Carlo estimate of the gradient, guarantees convergence a.s. at a linear rate.