Lecture 16 Higher Level Parallelism The PRAM Model


- Slides: 24

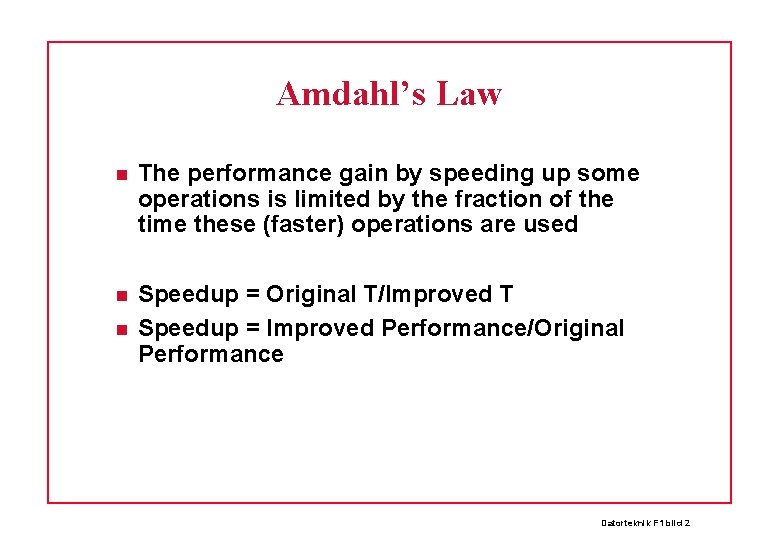

Lecture 16: Higher Level Parallelism

- The PRAM Model
- Vector Processors
- Flynn Classification
- Connection Machine CM-2 (SIMD)
- Communication Networks
- Memory Architectures
- Synchronization

Datorteknik F1, slide 1

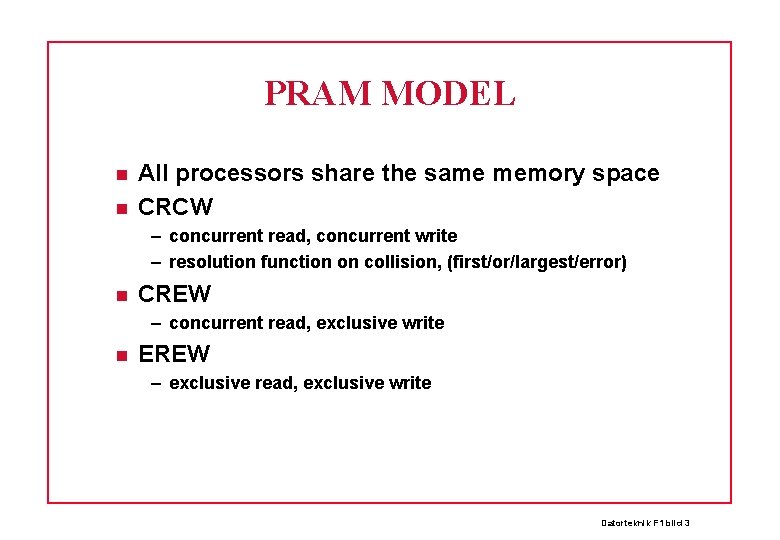

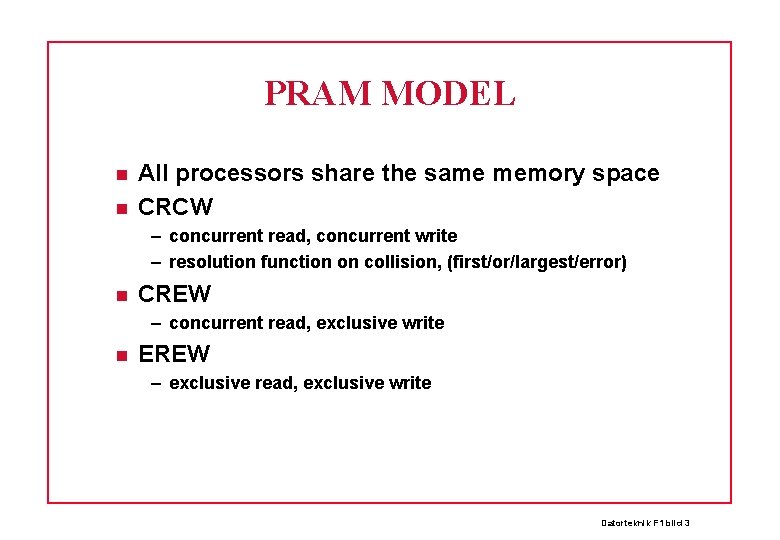

Amdahl’s Law

The performance gain from speeding up some operations is limited by the fraction of the time those (faster) operations are used.

- Speedup = Original T / Improved T
- Speedup = Improved Performance / Original Performance
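
As a minimal sketch of the limit stated above, the usual closed form of Amdahl's Law can be evaluated directly. The function and parameter names (`amdahl_speedup`, `fraction_enhanced`, `speedup_enhanced`) are illustrative and do not come from the slides; the original time is normalized to 1.

```python
def amdahl_speedup(fraction_enhanced: float, speedup_enhanced: float) -> float:
    """Overall speedup when `fraction_enhanced` of the original run time
    is made `speedup_enhanced` times faster (illustrative names)."""
    if not 0.0 <= fraction_enhanced <= 1.0:
        raise ValueError("fraction_enhanced must be in [0, 1]")
    if speedup_enhanced <= 0:
        raise ValueError("speedup_enhanced must be positive")
    original_time = 1.0
    # The untouched part keeps its time; the enhanced part shrinks.
    improved_time = (1.0 - fraction_enhanced) + fraction_enhanced / speedup_enhanced
    return original_time / improved_time
```

For example, `amdahl_speedup(0.8, 10.0)` is about 3.57: making 80% of the run time ten times faster gives far less than a tenfold gain, because the remaining 20% is unchanged.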

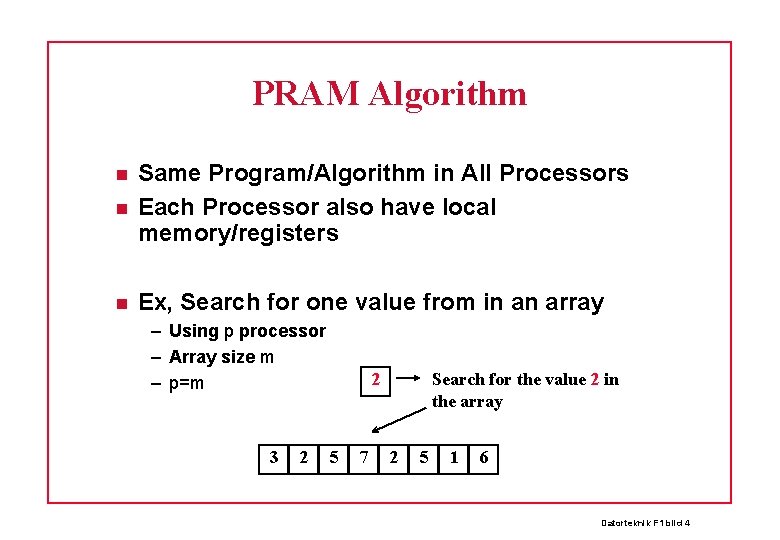

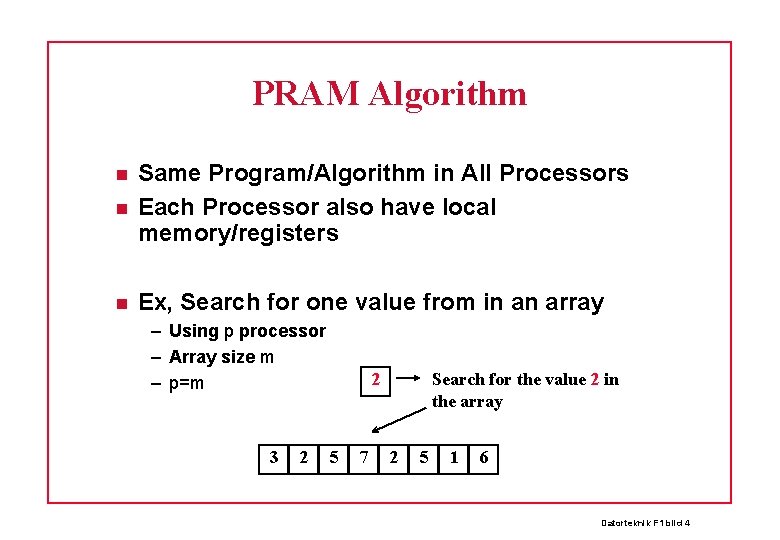

PRAM Model

All processors share the same memory space.

- CRCW: concurrent read, concurrent write. A resolution function is applied on collision (first / or / largest / error).
- CREW: concurrent read, exclusive write
- EREW: exclusive read, exclusive write

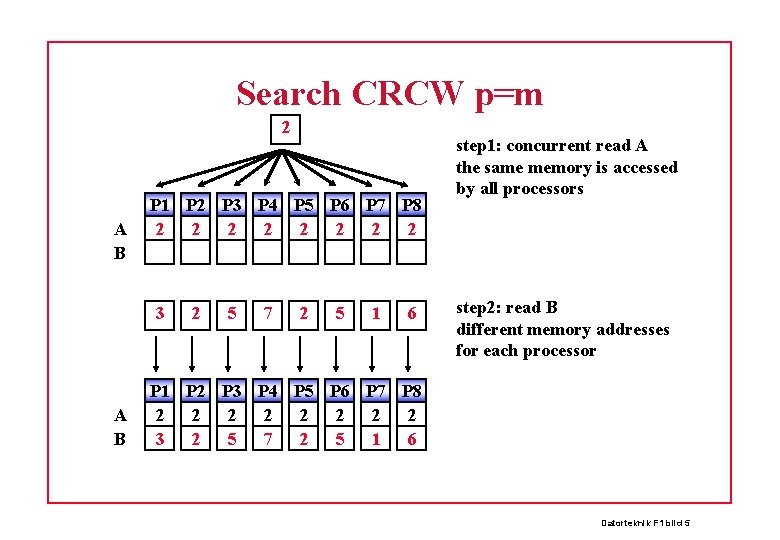

PRAM Algorithm

- The same program/algorithm runs in all processors.
- Each processor also has local memory/registers.

Example: search for one value in an array
- Using p processors
- Array size m
- p = m

Search for the value 2 in the array 3 2 5 7 2 5 1 6.

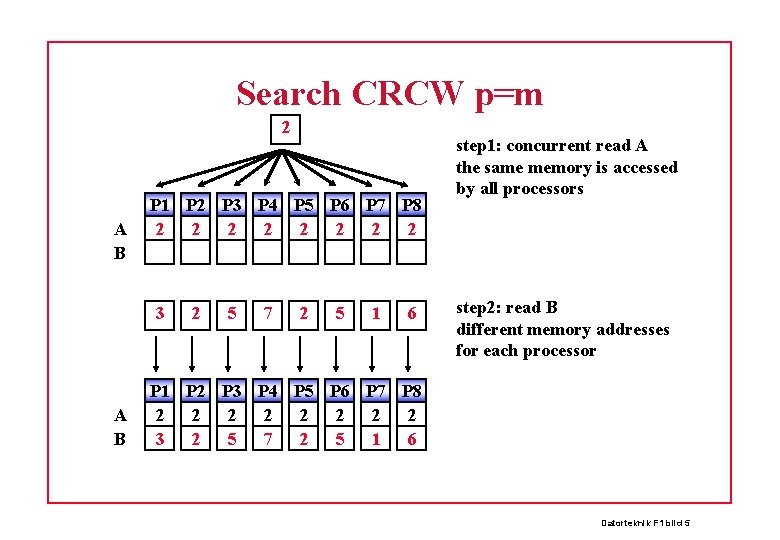

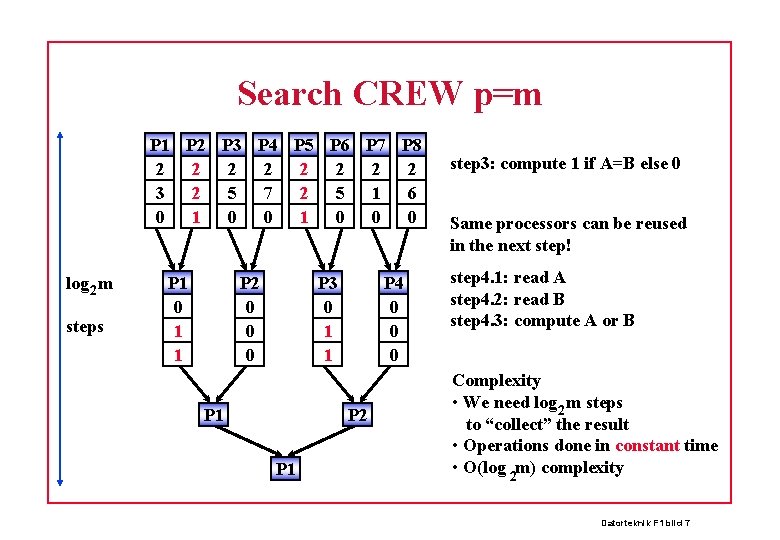

Search CRCW, p=m

Figure: the sought value A = 2 and the array B = 3 2 5 7 2 5 1 6, with processors P1 to P8.

- Step 1: concurrent read of A. The same memory location is accessed by all processors.
- Step 2: read B. Each processor reads a different memory address.

Search CRCW, p=m (continued)

- Step 3: concurrent write of 1 if A = B, else 0. We use the “or” resolution: 1 means value found, 0 means value not found.

Complexity
- All operations are performed in constant time.
- Count only the cost of communication steps.
- In this case the number of steps is independent of m (given enough processors).
- Search is done in constant time, O(1), for CRCW and p = m.
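
The three CRCW steps can be sketched as a sequential simulation with one virtual processor per array element. This is only an illustration of the model: the function name `crcw_search` and the list-based "memory" are assumptions, and a real PRAM would perform each step in all processors at once.

```python
def crcw_search(array, target):
    """Simulate the CRCW search with p = m virtual processors.

    Returns (found, steps), where found is 1 or 0 and steps is the number
    of PRAM steps, which does not depend on len(array).
    """
    m = len(array)
    # Step 1: every processor reads the same cell A (concurrent read).
    a_local = [target for _ in range(m)]
    # Step 2: processor i reads its own cell B[i] (distinct addresses).
    b_local = [array[i] for i in range(m)]
    # Step 3: every processor writes 1 or 0 to one shared result cell;
    # the "or" resolution function combines the colliding writes.
    writes = [1 if a_local[i] == b_local[i] else 0 for i in range(m)]
    result = 0
    for w in writes:
        result |= w
    return result, 3
```

With the array from the slides, `crcw_search([3, 2, 5, 7, 2, 5, 1, 6], 2)` reports the value as found after three steps.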

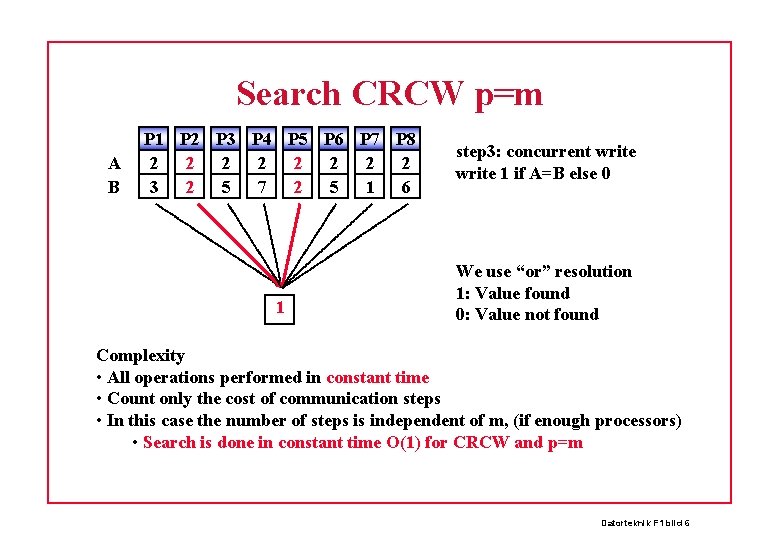

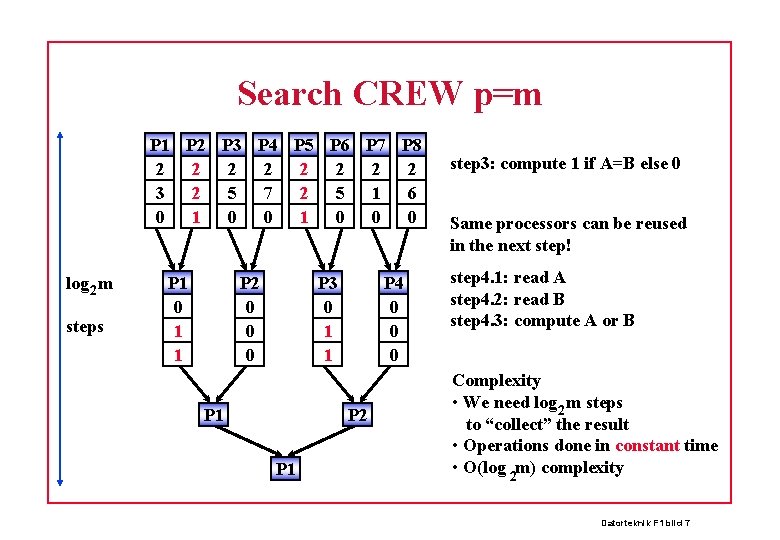

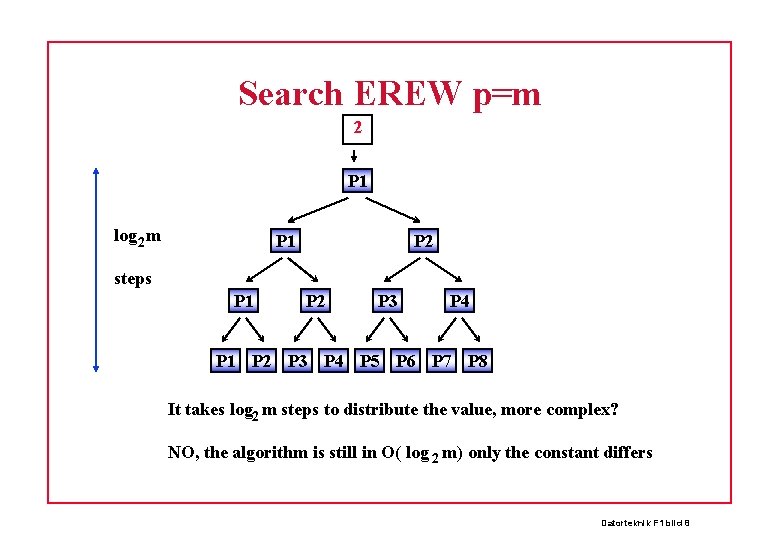

Search CREW, p=m

Figure: reduction tree over P1 to P8, with fewer active processors in each step.

- Step 3: compute 1 if A = B, else 0.
- The partial results are then combined pairwise in log2 m steps. The same processors can be reused in the next step! In each combining step, A and B denote the two partial results being merged:
  - Step 4.1: read A
  - Step 4.2: read B
  - Step 4.3: compute A or B

Complexity
- We need log2 m steps to “collect” the result.
- Operations are done in constant time.
- O(log2 m) complexity
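
The collecting phase can be sketched as a pairwise OR reduction in which each processor writes only its own cell, so no write ever collides. The pairing used here (cell `i` with cell `i + half`) is an assumption made for simplicity and may differ from the pairing drawn on the slide; `crew_search` is an illustrative name.

```python
def crew_search(array, target):
    """Simulate the CREW search: compare, then OR-reduce in a tree.

    Returns (found, reduction_steps). For m a power of two the number of
    reduction steps is log2(m).
    """
    m = len(array)
    if m == 0:
        return 0, 0
    # Step 3: each processor computes its own comparison result.
    flags = [1 if x == target else 0 for x in array]
    active, steps = m, 0
    while active > 1:
        half = (active + 1) // 2
        new_flags = flags[:]  # all processors act on the old values
        for i in range(active - half):
            # Processor i reads two cells and writes only cell i.
            new_flags[i] = flags[i] | flags[i + half]
        flags = new_flags
        active = half
        steps += 1
    return flags[0], steps
```

For the eight-element example this needs three combining steps, matching log2 8 = 3.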

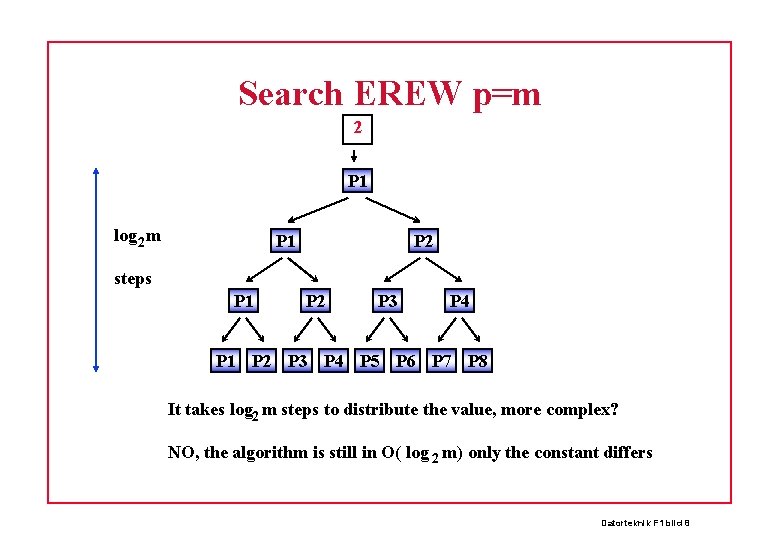

Search EREW, p=m

Figure: the sought value 2 starts in P1 and is copied to more processors in each step until P1 to P8 all hold it (log2 m steps).

It takes log2 m steps to distribute the value. Is the algorithm more complex? No, it is still O(log2 m); only the constant differs.
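
The distribution phase can be sketched as a doubling broadcast: in every step each processor that already holds the value copies it to one processor that does not, so no cell is read or written by two processors in the same step. The name `erew_broadcast` and the list of per-processor cells are assumptions for illustration.

```python
def erew_broadcast(value, p):
    """Distribute `value` to p >= 1 processors with exclusive reads and writes.

    Returns (cells, steps); the number of holders doubles in every step.
    """
    if p < 1:
        raise ValueError("need at least one processor")
    cells = [None] * p
    cells[0] = value
    have, steps = 1, 0
    while have < p:
        # Holder i reads its own cell and writes cell i + have.
        for i in range(min(have, p - have)):
            cells[i + have] = cells[i]
        have = min(2 * have, p)
        steps += 1
    return cells, steps
```

For p = 8 the broadcast finishes in three steps, so the EREW search adds a log2 m distribution phase in front of the log2 m collection phase.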

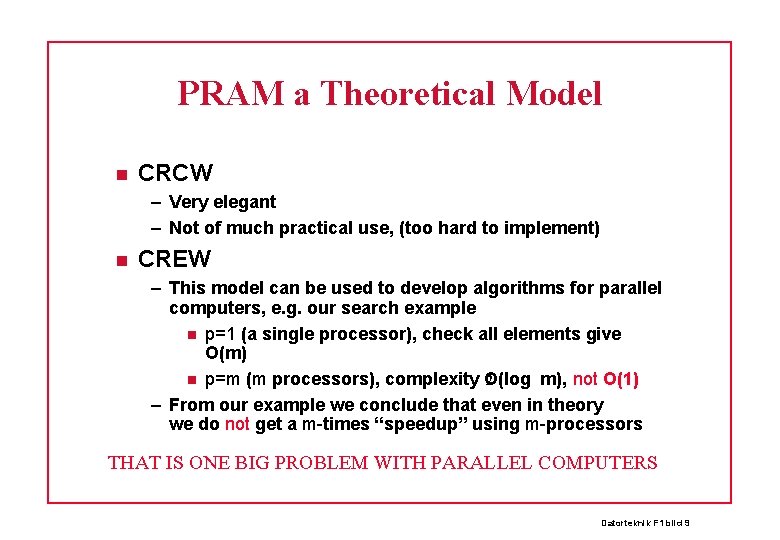

PRAM, a Theoretical Model

CRCW
- Very elegant
- Not of much practical use (too hard to implement)

CREW
- This model can be used to develop algorithms for parallel computers, e.g. our search example:
  - p = 1 (a single processor): checking all elements gives O(m)
  - p = m (m processors): complexity O(log2 m), not O(1)
- From our example we conclude that even in theory we do not get an m-times “speedup” using m processors.

THAT IS ONE BIG PROBLEM WITH PARALLEL COMPUTERS

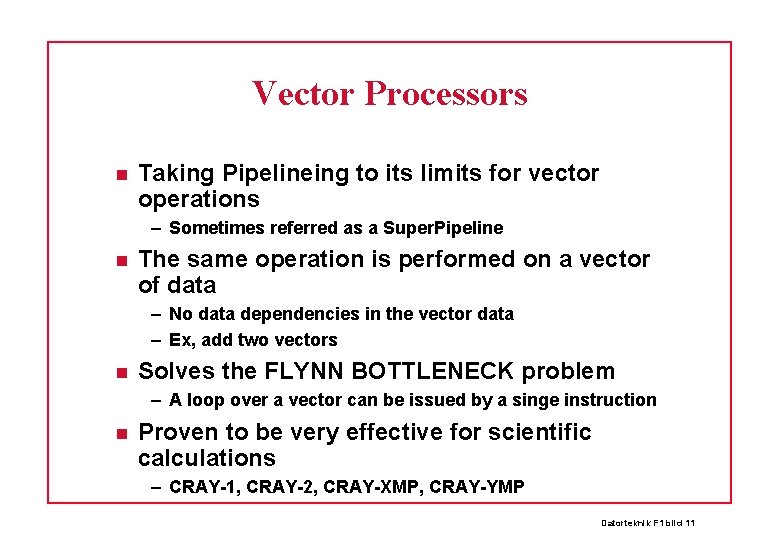

Parallelism so far

By pipelining, several instructions (at different stages) are executed simultaneously
- Pipeline depth is limited by hazards

Superscalar designs provide parallel execution units
- Limited by instruction-level and machine-level parallelism
- VLIW might improve over hardware instruction issuing

All are limited by the instruction fetch mechanism
- Called the FLYNN BOTTLENECK
- Only a very limited number of instructions can be fetched each cycle
- That makes vector operations ineffective

Vector Processors

Taking pipelining to its limits for vector operations
- Sometimes referred to as a superpipeline

The same operation is performed on a vector of data
- No data dependencies in the vector data
- Example: add two vectors

Solves the FLYNN BOTTLENECK problem
- A loop over a vector can be issued by a single instruction

Proven to be very effective for scientific calculations
- CRAY-1, CRAY-2, CRAY X-MP, CRAY Y-MP

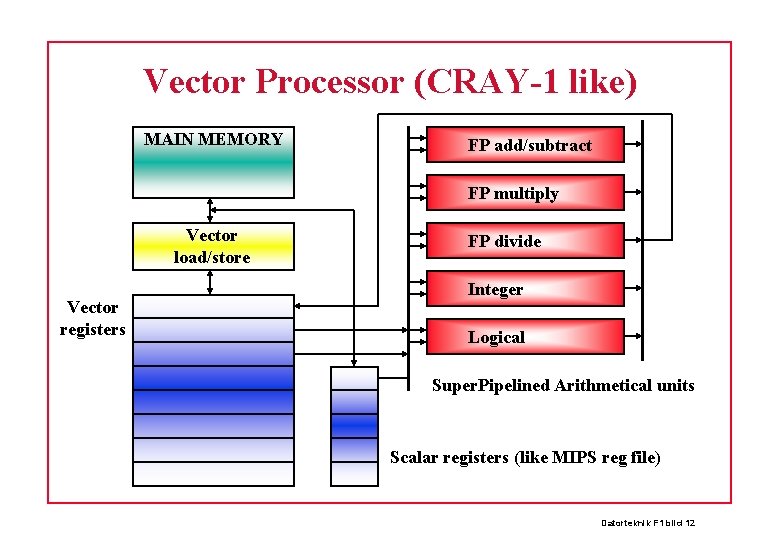

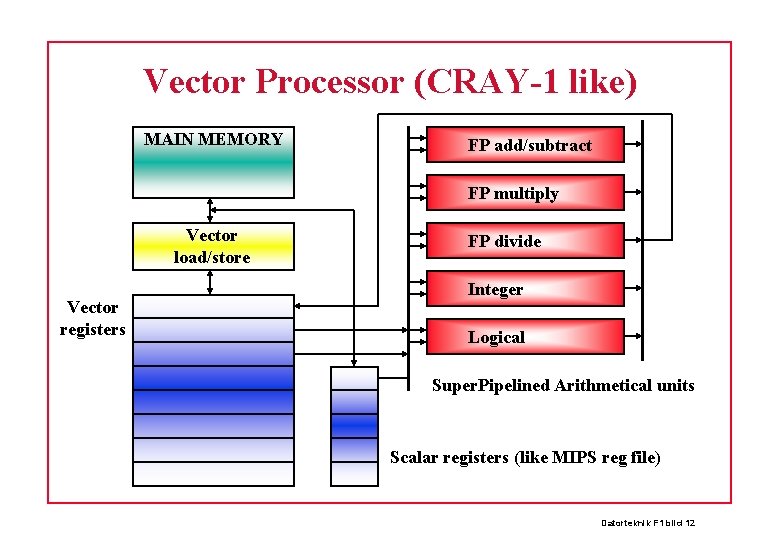

Vector Processor (CRAY-1 like)

Figure: block diagram with the following components.
- Main memory
- Vector load/store
- Vector registers
- Scalar registers (like the MIPS register file)
- Superpipelined arithmetic units: FP add/subtract, FP multiply, FP divide, integer, logical

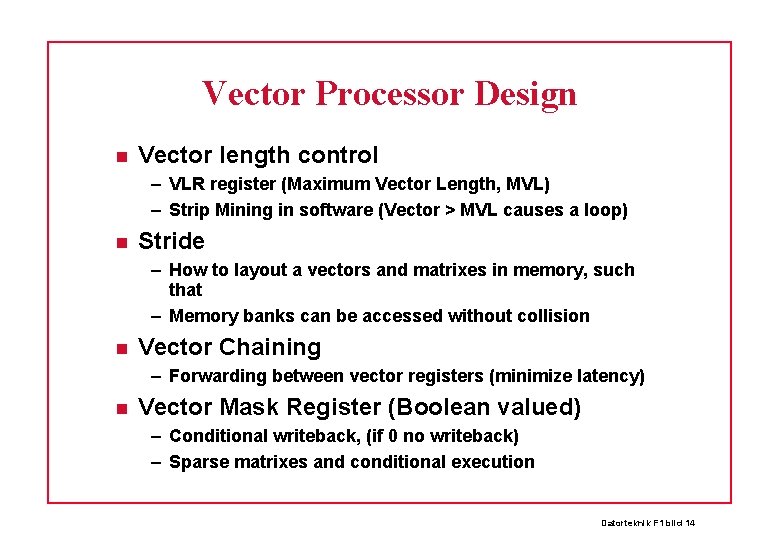

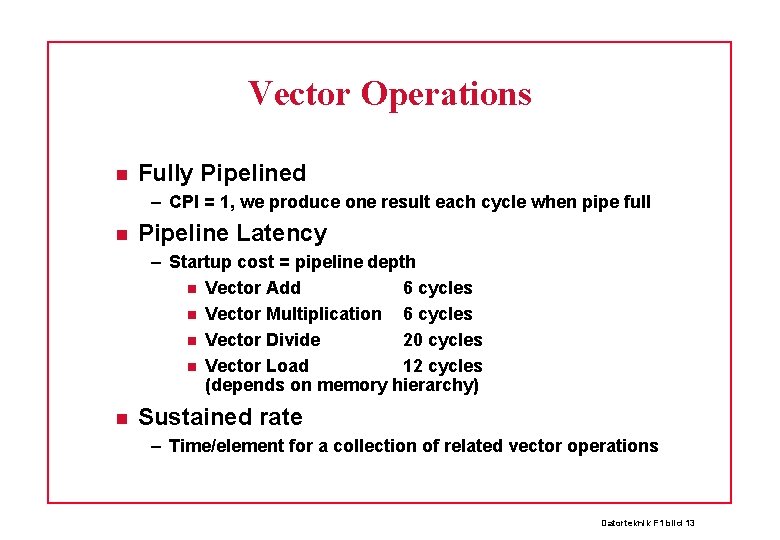

Vector Operations

Fully pipelined
- CPI = 1: we produce one result each cycle when the pipe is full

Pipeline latency
- Startup cost = pipeline depth
  - Vector add: 6 cycles
  - Vector multiplication: 6 cycles
  - Vector divide: 20 cycles
  - Vector load: 12 cycles (depends on memory hierarchy)

Sustained rate
- Time per element for a collection of related vector operations
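
To see how the startup cost is amortized, the sketch below uses a deliberately simple timing model: total cycles = startup cost + one cycle per element. That model, and the names `STARTUP_CYCLES`, `vector_op_cycles` and `cycles_per_element`, are assumptions for illustration; only the startup values are taken from the slide.

```python
# Startup costs in cycles, as listed on the slide.
STARTUP_CYCLES = {"add": 6, "multiply": 6, "divide": 20, "load": 12}


def vector_op_cycles(op: str, n: int) -> int:
    """Cycles for one vector operation on n elements (assumed model:
    pay the startup cost once, then one result per cycle)."""
    return STARTUP_CYCLES[op] + n


def cycles_per_element(op: str, n: int) -> float:
    """Average cost per element; approaches 1 as n grows."""
    return vector_op_cycles(op, n) / n
```

Under this model a 64-element add takes 70 cycles (about 1.09 cycles per element), while a 64-element divide takes 84 cycles (about 1.31 per element), so longer vectors hide the startup cost better.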

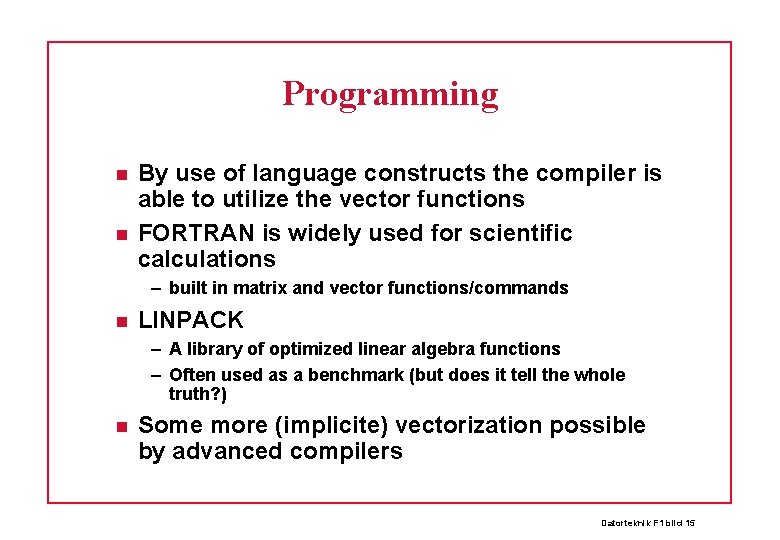

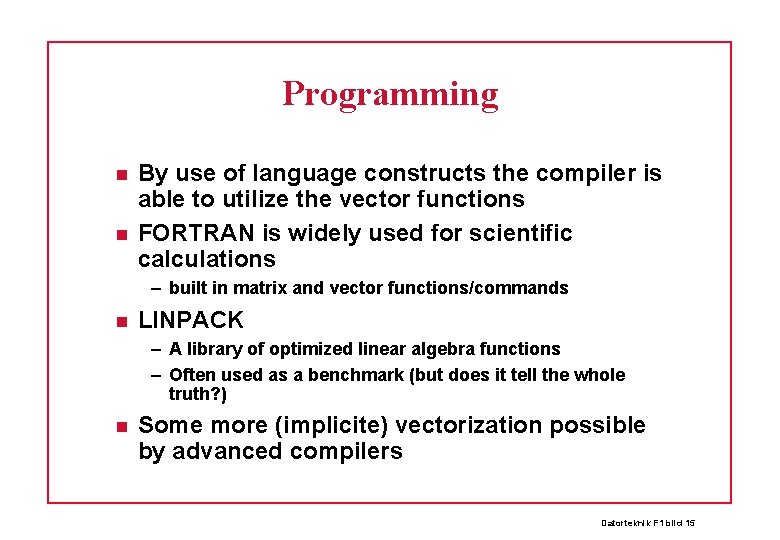

Vector Processor Design

Vector length control
- VLR register (holds the current vector length, at most the Maximum Vector Length, MVL)
- Strip mining in software (a vector longer than MVL causes a loop)

Stride
- How to lay out vectors and matrices in memory such that memory banks can be accessed without collision

Vector chaining
- Forwarding between vector registers (minimizes latency)

Vector mask register (Boolean valued)
- Conditional writeback (if 0, no writeback)
- Sparse matrices and conditional execution
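
Strip mining can be sketched as a loop that processes at most MVL elements per iteration, with the length of each strip playing the role of the value loaded into VLR. The value `MVL = 64` and the name `strip_mined_add` are assumptions for illustration; handling the short strip last rather than first is also an arbitrary choice.

```python
MVL = 64  # assumed maximum vector length of the hypothetical machine


def strip_mined_add(x, y, mvl=MVL):
    """Add two equal-length vectors in strips of at most mvl elements.

    Returns (result, strip_lengths); each strip stands for one vector
    instruction executed with VLR set to that strip length.
    """
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    n = len(x)
    result = [0] * n
    strip_lengths = []
    start = 0
    while start < n:
        vl = min(mvl, n - start)  # value that would be loaded into VLR
        for i in range(start, start + vl):  # one vector add of length vl
            result[i] = x[i] + y[i]
        strip_lengths.append(vl)
        start += vl
    return result, strip_lengths
```

A 150-element vector is processed as strips of 64, 64 and 22 elements, so three vector instructions replace 150 scalar additions.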

Programming

Through language constructs, the compiler is able to utilize the vector functions.

FORTRAN is widely used for scientific calculations
- Built-in matrix and vector functions/commands

LINPACK
- A library of optimized linear algebra functions
- Often used as a benchmark (but does it tell the whole truth?)

Some more (implicit) vectorization is possible with advanced compilers.

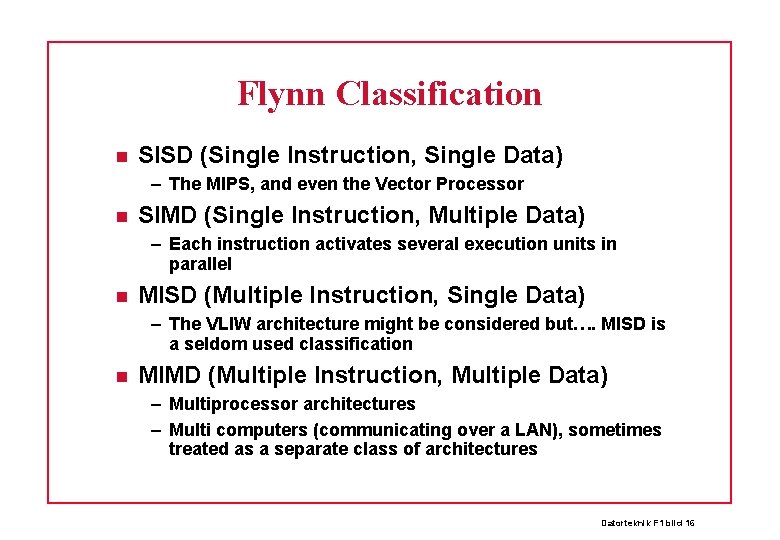

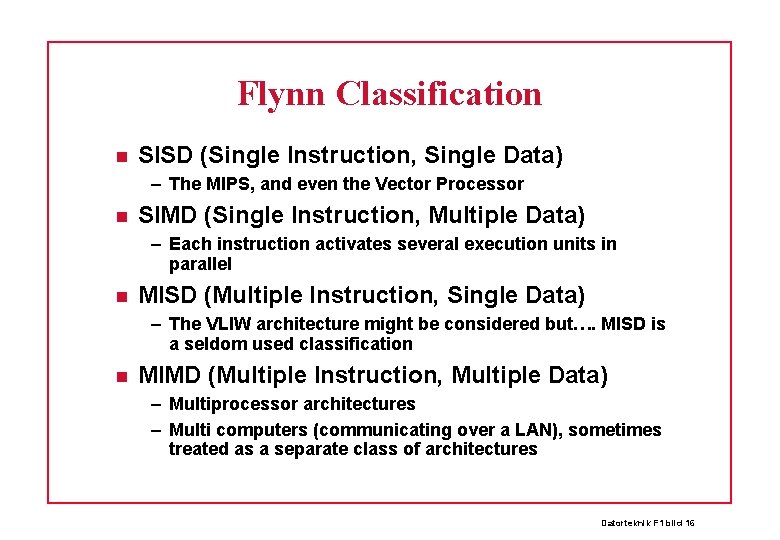

Flynn Classification

SISD (Single Instruction, Single Data)
- The MIPS, and even the vector processor

SIMD (Single Instruction, Multiple Data)
- Each instruction activates several execution units in parallel

MISD (Multiple Instruction, Single Data)
- The VLIW architecture might be considered, but MISD is a seldom-used classification

MIMD (Multiple Instruction, Multiple Data)
- Multiprocessor architectures
- Multicomputers (communicating over a LAN), sometimes treated as a separate class of architectures

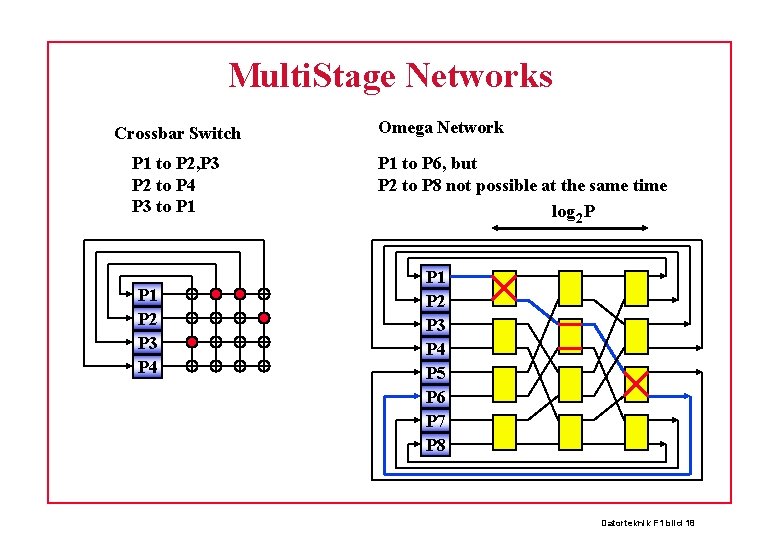

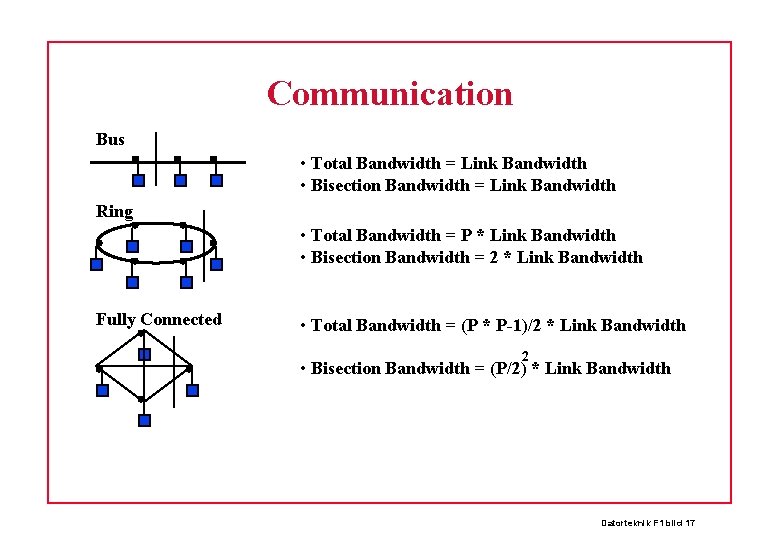

Communication

Bus
- Total bandwidth = link bandwidth
- Bisection bandwidth = link bandwidth

Ring
- Total bandwidth = P * link bandwidth
- Bisection bandwidth = 2 * link bandwidth

Fully connected
- Total bandwidth = (P * (P - 1))/2 * link bandwidth
- Bisection bandwidth = (P/2)^2 * link bandwidth
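
The formulas above can be tabulated for a concrete machine size. The helper below is a small sketch: the name `network_bandwidth` and the topology keys are illustrative, and P is assumed to be even so that the bisection splits the processors into two equal halves.

```python
def network_bandwidth(topology: str, p: int, link: float = 1.0) -> dict:
    """Total and bisection bandwidth for p processors, in units of `link`.

    Uses the formulas from the slide; p is assumed to be even.
    """
    if topology == "bus":
        return {"total": link, "bisection": link}
    if topology == "ring":
        return {"total": p * link, "bisection": 2 * link}
    if topology == "fully_connected":
        return {
            "total": p * (p - 1) / 2 * link,
            "bisection": (p / 2) ** 2 * link,
        }
    raise ValueError(f"unknown topology: {topology}")
```

For P = 8 and unit link bandwidth this gives a total of 28 and a bisection of 16 for the fully connected network, against 8 and 2 for the ring, which shows how quickly the cost and capacity of full connectivity grow.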

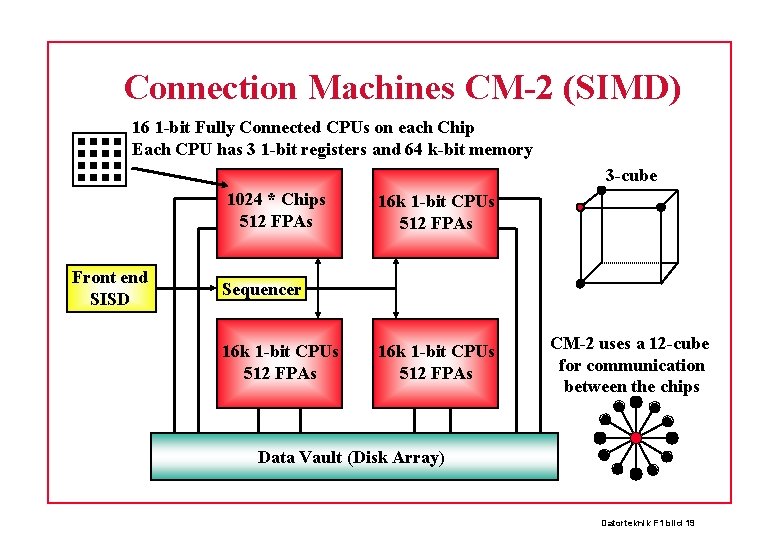

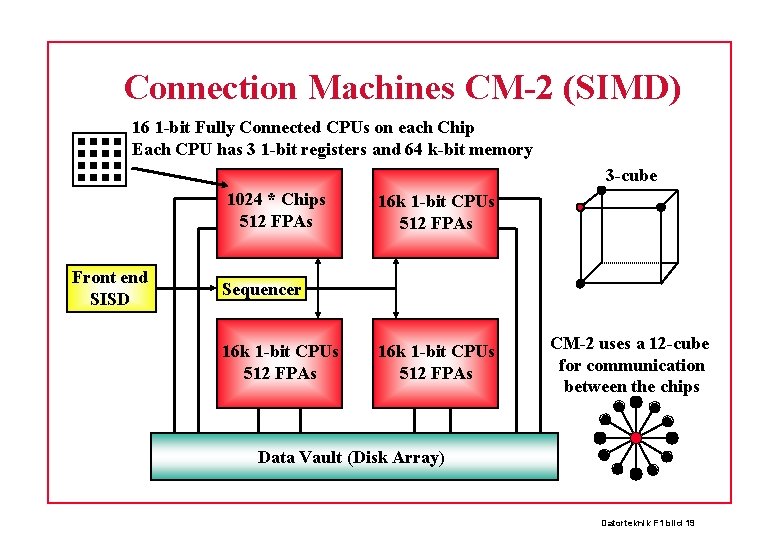

Multistage Networks

Crossbar switch
- Figure: P1 to P4 on both sides of the switch, with example connections P1 to P2 and P3, P2 to P4, P3 to P1

Omega network
- Figure: P1 to P8 connected through log2 P switch stages
- P1 to P6 is possible, but P2 to P8 is not possible at the same time
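
Blocking in an Omega network can be sketched with destination-tag routing. The sketch assumes the common textbook wiring (a perfect shuffle before each stage of 2x2 switches) and 0-based port numbers; the figure on the slide may be wired or numbered differently, so which specific pairs block can differ from the slide's example. The names `omega_route` and `conflicts` are illustrative.

```python
def omega_route(src: int, dst: int, n_bits: int = 3) -> list:
    """Links used after each stage when routing src -> dst.

    Assumed wiring: perfect shuffle (rotate the port number left by one
    bit), then a 2x2 switch that sets the low bit to the next destination
    bit, most significant bit first.
    """
    mask = (1 << n_bits) - 1
    pos = src
    path = []
    for stage in range(n_bits):
        pos = ((pos << 1) | (pos >> (n_bits - 1))) & mask  # perfect shuffle
        bit = (dst >> (n_bits - 1 - stage)) & 1            # destination tag
        pos = (pos & ~1) | bit                             # switch output
        path.append(pos)
    return path


def conflicts(conn_a, conn_b, n_bits: int = 3) -> bool:
    """True if the two (src, dst) connections need the same link in some stage."""
    path_a = omega_route(conn_a[0], conn_a[1], n_bits)
    path_b = omega_route(conn_b[0], conn_b[1], n_bits)
    return any(a == b for a, b in zip(path_a, path_b))
```

Under these assumptions, connecting port 0 to port 0 blocks a simultaneous connection from port 4 to port 1, because both need the same switch output in the first stage. A crossbar has no such restriction, at the cost of far more switching hardware.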

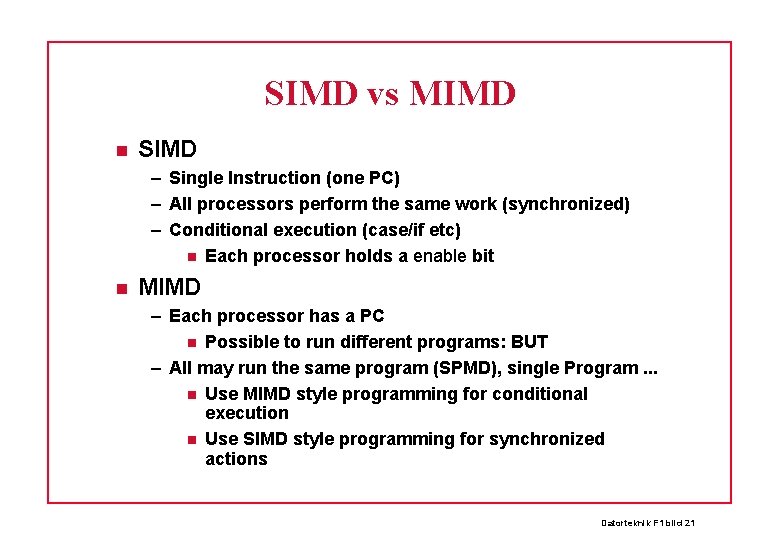

Connection Machine CM-2 (SIMD)

- 16 fully connected 1-bit CPUs on each chip
- Each CPU has 3 1-bit registers and 64 k-bit memory
- CM-2 uses a 12-cube for communication between the chips

Figure labels: 3-cube; 1024 * chips; front end (SISD); sequencer; 16 k 1-bit CPUs and 512 FPAs (repeated per section); Data Vault (disk array).

![SIMD Programming, Parallel sum](https://slidetodoc.com/presentation_image_h/2e09c52629f43f52cf5d4651076fce68/image-20.jpg)

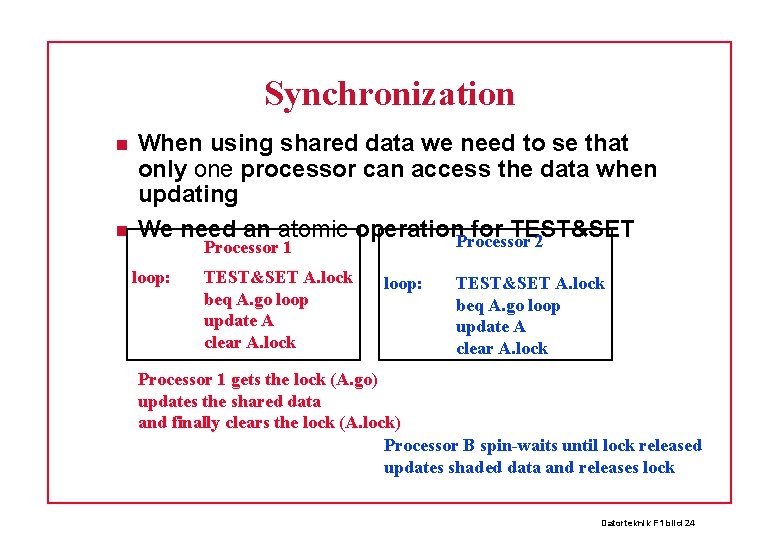

SIMD Programming, Parallel

    sum = 0;
    for (i = 0; i < 65536; i = i + 1)   /* Loop over 65 k elements */
        sum = sum + A[Pn, i];           /* Pn is the processor number */

    limit = 8192; half = limit;         /* Collect sum from 8192 processors */
    repeat
        half = half / 2;                /* Split into sender/receiver */
        if (Pn >= half && Pn < limit) send(Pn - half, sum);
        if (Pn < half) sum = sum + receive();
        limit = half;
    until (half == 1);                  /* final sum */

Figure: the last two steps of the reduction.
- limit = 4, half = 2: P3 does send(1, sum) and P2 does send(0, sum); P1 and P0 do sum = sum + R
- limit = 2, half = 1: P1 does send(0, sum); P0 does sum = sum + R and then holds the final sum
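
The collection phase can be checked with a small sequential simulation. The sketch assumes each sender Pn delivers its sum to processor Pn - half, as in the figure, and that the number of processors is a power of two; the dictionary standing in for `send`/`receive` and the name `simd_tree_sum` are illustrative only.

```python
def simd_tree_sum(local_sums):
    """Combine per-processor partial sums as in the repeat/until loop.

    local_sums[Pn] is the partial sum held by processor Pn.
    Returns (final_sum_in_P0, communication_steps).
    """
    sums = list(local_sums)
    limit = len(sums)
    if limit == 0 or limit & (limit - 1):
        raise ValueError("number of processors must be a power of two")
    half = limit
    steps = 0
    while half > 1:
        half //= 2
        # Senders: Pn in [half, limit) send their sum to Pn - half.
        mailbox = {pn - half: sums[pn] for pn in range(half, limit)}
        # Receivers: Pn in [0, half) add the received value.
        for pn in range(half):
            sums[pn] += mailbox[pn]
        limit = half
        steps += 1
    return sums[0], steps
```

With 8192 processors the loop needs 13 steps (log2 8192), after which processor 0 holds the total.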

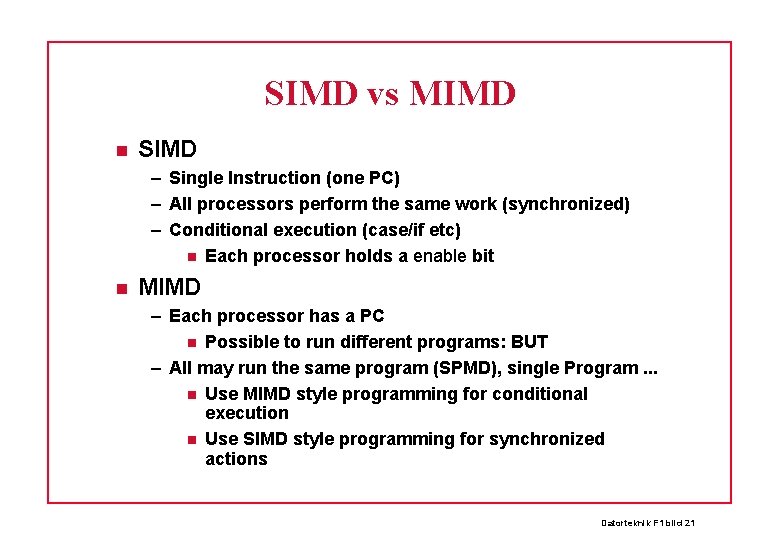

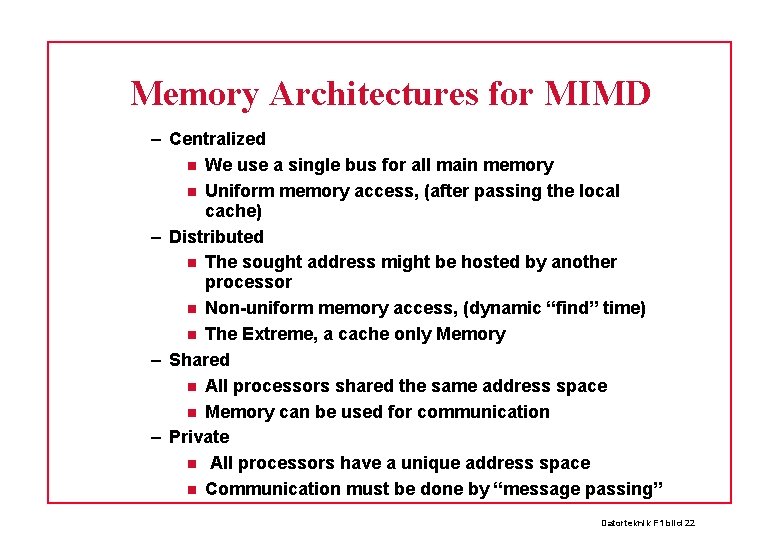

SIMD vs MIMD

SIMD
- Single instruction stream (one PC)
- All processors perform the same work (synchronized)
- Conditional execution (case/if etc.): each processor holds an enable bit

MIMD
- Each processor has a PC
- Possible to run different programs, BUT all may run the same program (SPMD, Single Program...)

Use MIMD-style programming for conditional execution.
Use SIMD-style programming for synchronized actions.

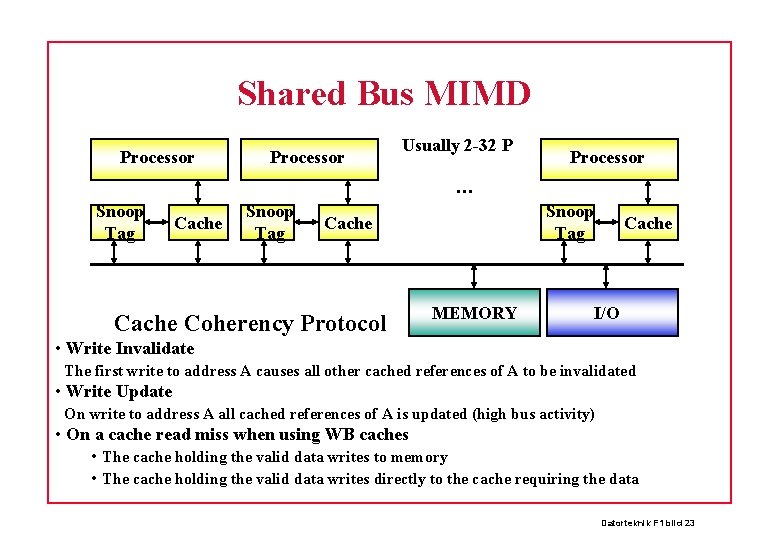

Memory Architectures for MIMD

Centralized
- We use a single bus for all main memory
- Uniform memory access (after passing the local cache)

Distributed
- The sought address might be hosted by another processor
- Non-uniform memory access (dynamic “find” time)
- The extreme: a cache-only memory

Shared
- All processors share the same address space
- Memory can be used for communication

Private
- Each processor has its own address space
- Communication must be done by “message passing”

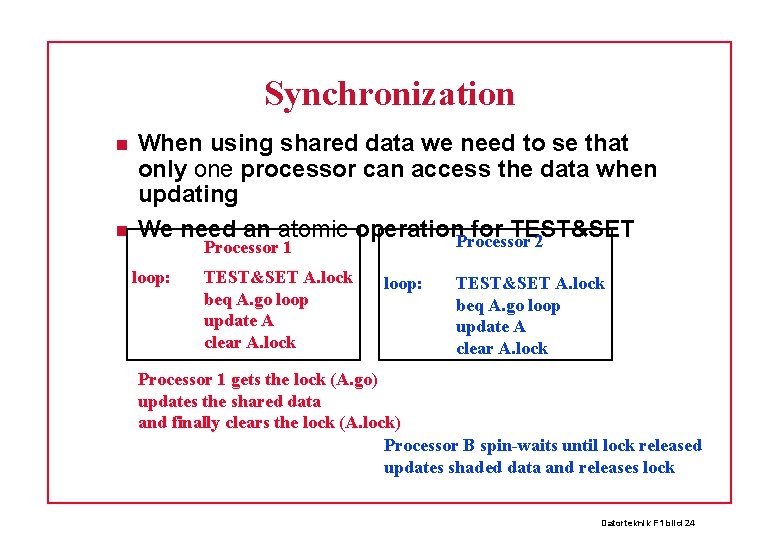

Shared Bus MIMD

Figure: processors (usually 2 to 32), each with a cache and snoop tag, connected by a shared bus to MEMORY and I/O.

Cache coherency protocol
- Write invalidate: the first write to address A causes all other cached copies of A to be invalidated
- Write update: on a write to address A, all cached copies of A are updated (high bus activity)
- On a cache read miss when using write-back (WB) caches, either:
  - the cache holding the valid data writes to memory, or
  - the cache holding the valid data writes directly to the cache requesting the data
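
The write-invalidate policy with write-back caches can be illustrated with a toy model. This is a sketch under strong simplifications: one word per cache line, no capacity limits, and a read miss served by having the dirty cache write to memory first (the first of the two options listed above). The class name `WriteInvalidateBus` and its methods are hypothetical.

```python
class WriteInvalidateBus:
    """Toy snooping bus; each cache maps address -> (value, dirty)."""

    def __init__(self, n_caches: int):
        self.memory = {}
        self.caches = [{} for _ in range(n_caches)]

    def write(self, cpu: int, addr, value) -> None:
        # The write is seen on the bus: all other cached copies are invalidated.
        for other, cache in enumerate(self.caches):
            if other != cpu:
                cache.pop(addr, None)
        # Write-back cache: only the local copy is updated and marked dirty.
        self.caches[cpu][addr] = (value, True)

    def read(self, cpu: int, addr):
        cache = self.caches[cpu]
        if addr in cache:  # cache hit
            return cache[addr][0]
        # Read miss: a cache holding dirty data writes it back to memory first.
        for other in self.caches:
            if addr in other and other[addr][1]:
                value = other[addr][0]
                self.memory[addr] = value
                other[addr] = (value, False)
        value = self.memory.get(addr, 0)  # unwritten memory reads as 0 here
        cache[addr] = (value, False)
        return value
```

After processor 0 writes to A, processor 1's copy is gone, so its next read misses and picks up the new value through memory instead of returning stale data.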

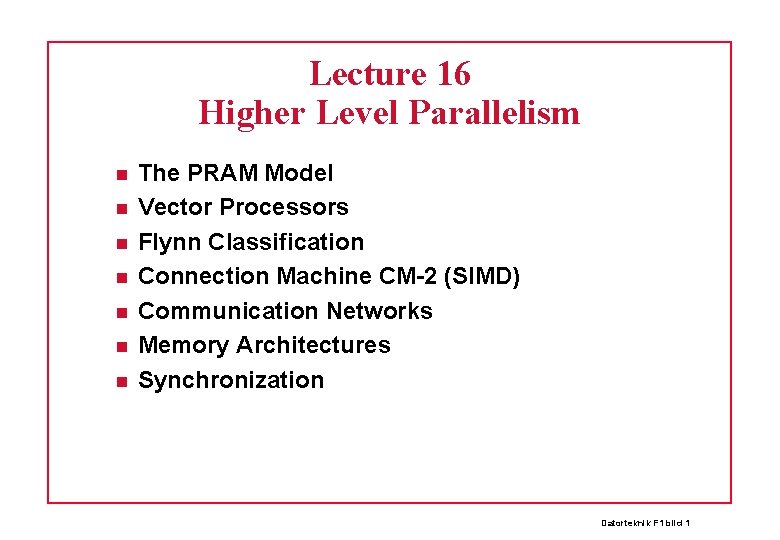

Synchronization

When using shared data we need to see that only one processor can access the data when updating. We need an atomic operation for TEST&SET.

Processor 1 and Processor 2 both run:

    loop: TEST&SET A.lock
          beq A.go loop
          update A
          clear A.lock

Processor 1 gets the lock (A.go), updates the shared data and finally clears the lock (A.lock). Processor 2 spin-waits until the lock is released, then updates the shared data and releases the lock.
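
The spin-wait protocol can be sketched with threads. Python has no hardware TEST&SET, so the sketch emulates its atomicity with an internal `threading.Lock`; that emulation, and the names `TestAndSetLock` and `run_counter`, are assumptions for illustration rather than how a real processor implements the instruction.

```python
import threading
import time


class TestAndSetLock:
    """Spin lock built on an emulated atomic test-and-set."""

    def __init__(self):
        self._flag = False
        self._atomic = threading.Lock()  # stands in for hardware atomicity

    def test_and_set(self) -> bool:
        """Atomically set the flag and return its previous value."""
        with self._atomic:
            old = self._flag
            self._flag = True
            return old

    def acquire(self) -> None:
        while self.test_and_set():  # old value True: someone else holds it
            time.sleep(0)           # spin-wait, yielding to other threads

    def release(self) -> None:
        self._flag = False          # "clear A.lock"


def run_counter(n_threads: int = 4, increments: int = 1000) -> int:
    """Have several threads update one shared value under the lock."""
    lock = TestAndSetLock()
    shared = {"A": 0}

    def worker():
        for _ in range(increments):
            lock.acquire()
            shared["A"] += 1        # "update A" in the critical section
            lock.release()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["A"]
```

Because every update happens while the lock is held, `run_counter(4, 1000)` returns 4000; the spinning threads waste cycles while they wait, which is the cost of this simple scheme.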