Learning with Noise: Relation Extraction with Dynamic Transition Matrix

- Slides: 26

Learning with Noise: Relation Extraction with Dynamic Transition Matrix

Bingfeng Luo, Yansong Feng, Zheng Wang, Zhanxing Zhu, Songfang Huang, Rui Yan and Dongyan Zhao

2017/04/22

About Dataset Noise
- Noise is common in datasets
  - Humans can make erroneous annotations
  - Noise is significant in automatically constructed datasets
- Relation Extraction
  - Relies heavily on automatically constructed datasets

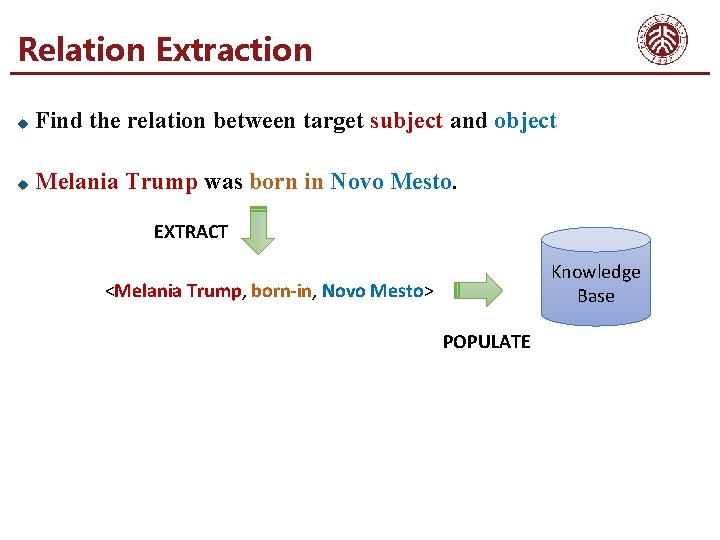

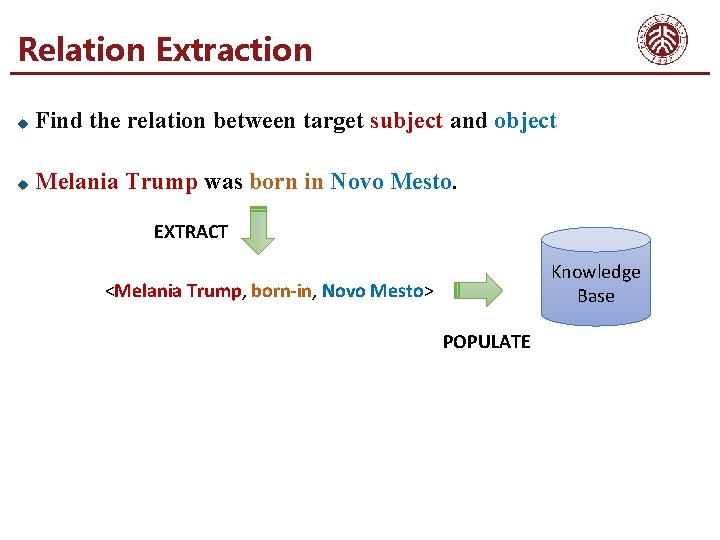

Relation Extraction
- Find the relation between a target subject and object
- Example: "Melania Trump was born in Novo Mesto."
  - EXTRACT: <Melania Trump, born-in, Novo Mesto>
  - POPULATE: add the triple to the Knowledge Base

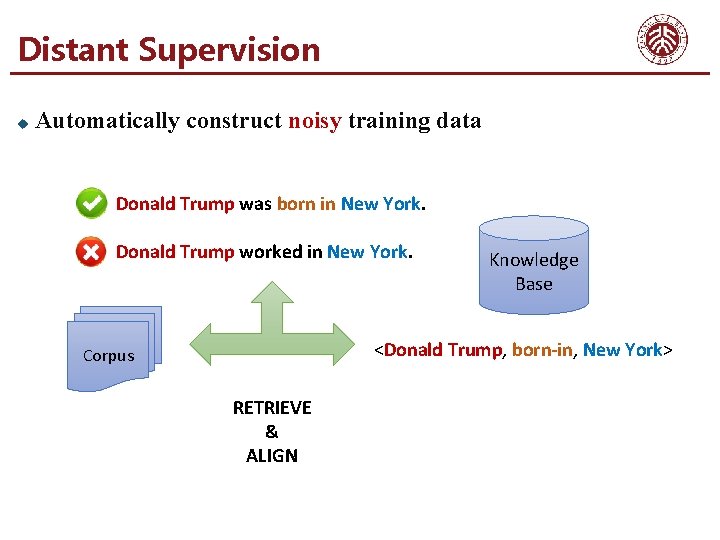

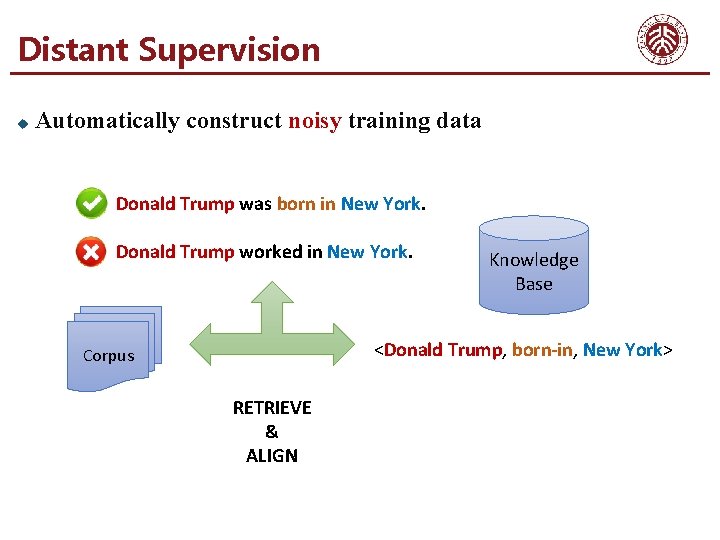

Distant Supervision
- Automatically construct noisy training data
- RETRIEVE & ALIGN: match Knowledge Base triples against the Corpus
  - Knowledge Base: <Donald Trump, born-in, New York>
  - Corpus: "Donald Trump was born in New York." and "Donald Trump worked in New York."

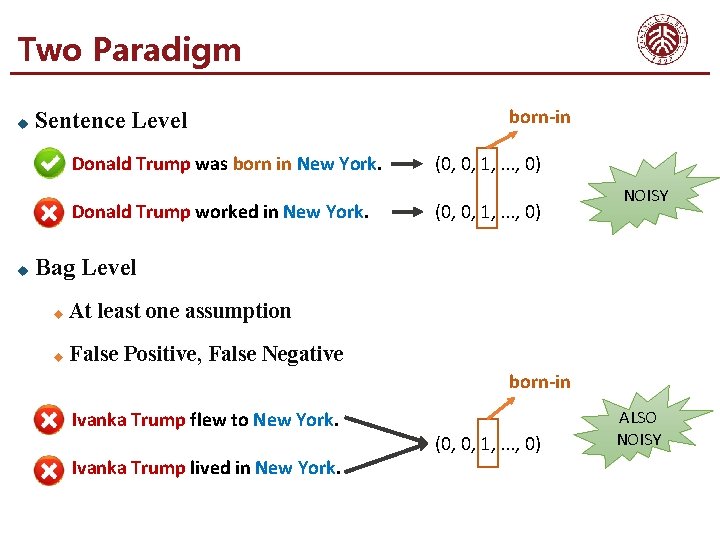

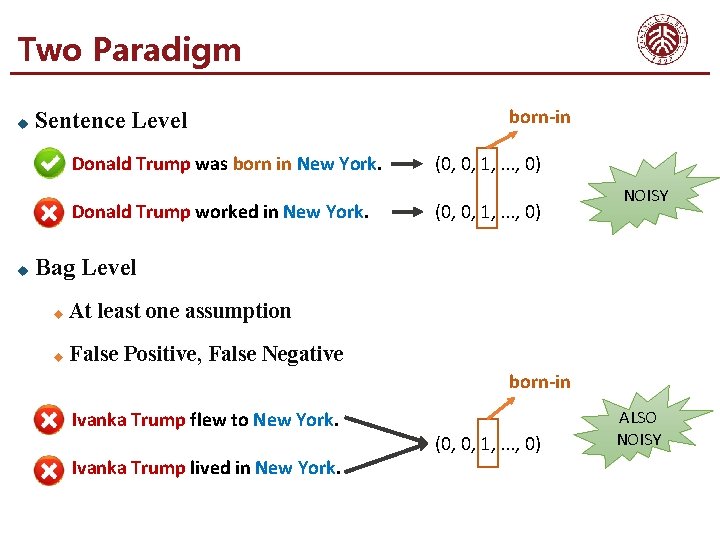
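The retrieve-and-align step can be sketched in a few lines. This is only an illustration under simplifying assumptions: entities are matched by plain substring search, and the function name `distant_supervision` and the tuple layout are hypothetical, not taken from the talk.

```python
def distant_supervision(kb_triples, sentences):
    """Label every sentence that mentions both entities of a KB triple."""
    labeled = []
    for subject, relation, obj in kb_triples:
        for sentence in sentences:
            # Naive substring match (assumption); a real pipeline would
            # rely on entity linking instead.
            if subject in sentence and obj in sentence:
                labeled.append((sentence, subject, obj, relation))
    return labeled


kb = [("Donald Trump", "born-in", "New York")]
corpus = [
    "Donald Trump was born in New York.",
    "Donald Trump worked in New York.",
]
labeled = distant_supervision(kb, corpus)
for example in labeled:
    print(example)
```

Both sentences receive the label born-in, although only the first one expresses it. The second is exactly the kind of noise the rest of the talk models.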

Two Paradigms
- Sentence Level
  - "Donald Trump was born in New York." and "Donald Trump worked in New York." are each labeled born-in (0, 0, 1, ..., 0): NOISY
- Bag Level
  - At-least-one assumption
  - False positives, false negatives
  - The bag "Ivanka Trump flew to New York." and "Ivanka Trump lived in New York." is labeled born-in (0, 0, 1, ..., 0): ALSO NOISY

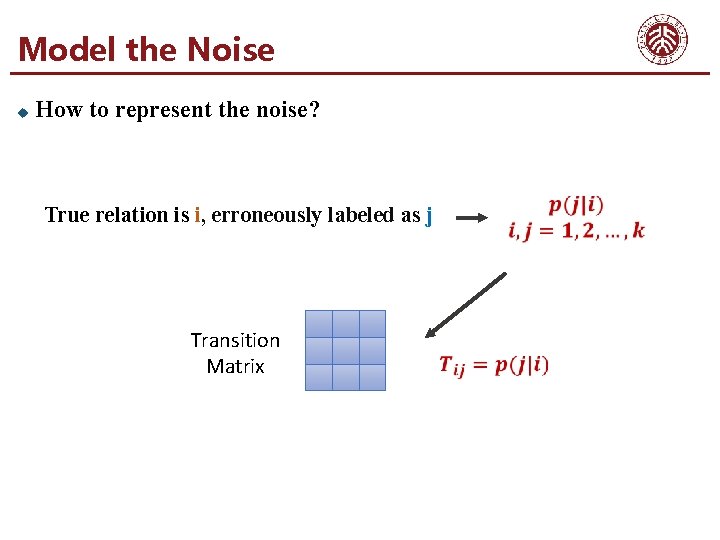

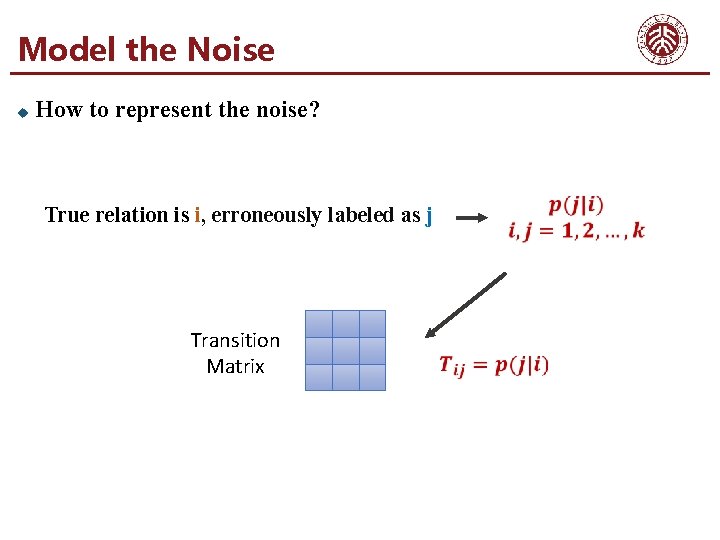

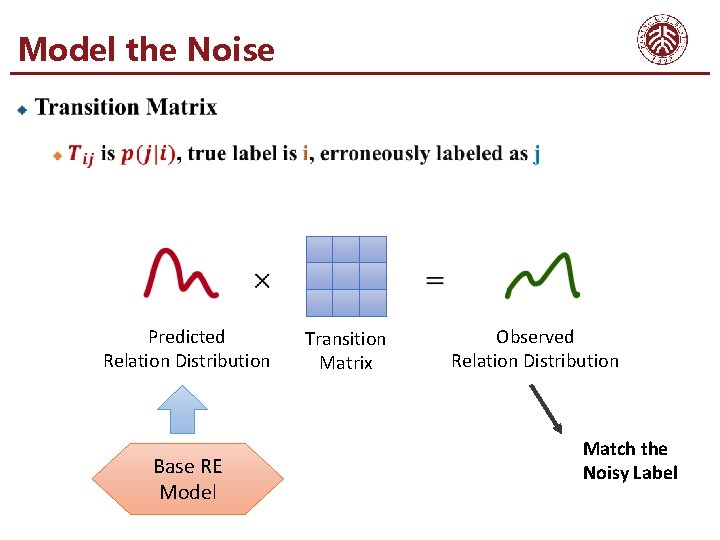
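To make the bag-level setting concrete, here is a small sketch that groups distantly labeled sentences into bags keyed by entity pair and relation. The helper `build_bags` and the tuple layout are assumptions for illustration only.

```python
from collections import defaultdict


def build_bags(labeled_sentences):
    """Group sentences sharing the same (subject, object, relation) label."""
    bags = defaultdict(list)
    for sentence, subject, obj, relation in labeled_sentences:
        bags[(subject, obj, relation)].append(sentence)
    return dict(bags)


labeled_sentences = [
    ("Ivanka Trump flew to New York.", "Ivanka Trump", "New York", "born-in"),
    ("Ivanka Trump lived in New York.", "Ivanka Trump", "New York", "born-in"),
]
bags = build_bags(labeled_sentences)
for key, sentences in bags.items():
    print(key, sentences)
```

The at-least-one assumption says that some sentence in the bag expresses the bag's relation. In this bag neither sentence does, so the bag label itself is a false positive.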

Model the Noise
- How to represent the noise?
  - True relation is i, erroneously labeled as j
  - Transition Matrix

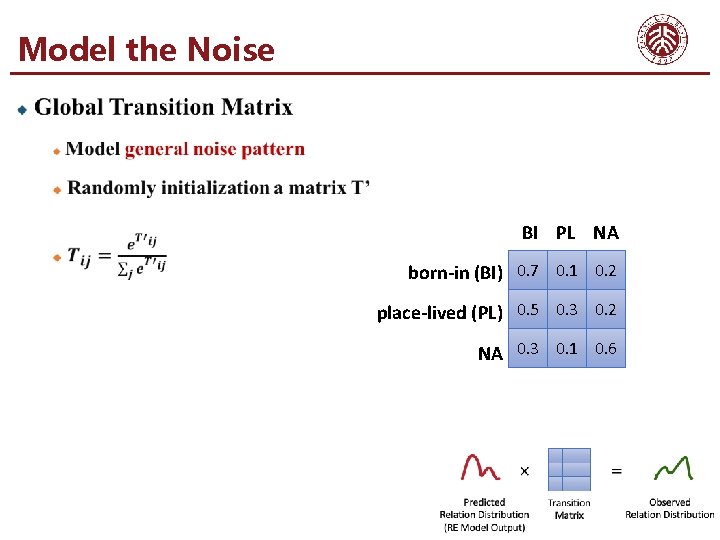

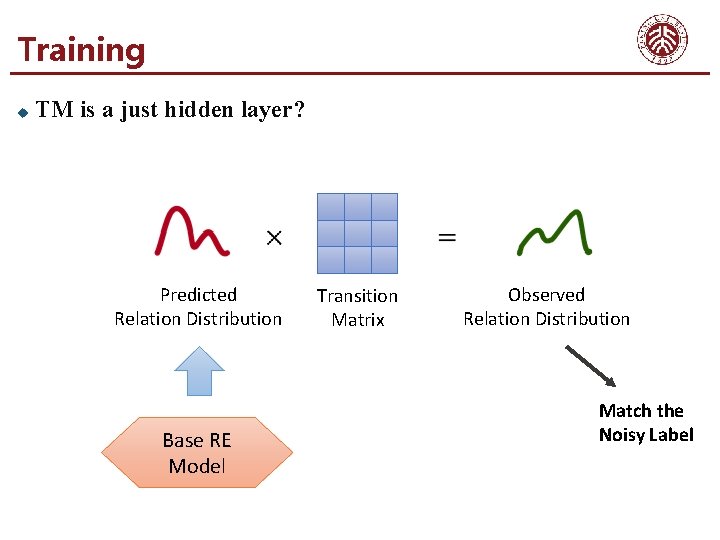
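Written out, the slide's description of an entry of the transition matrix reads as a conditional probability. The notation below is an assumed rendering of "true relation is i, labeled as j"; the symbols on the original slide image may differ.

```latex
T_{ij} = p(\text{observed label} = j \mid \text{true relation} = i),
\qquad \sum_{j} T_{ij} = 1 \quad \text{for every } i .
```

Row i is therefore a probability distribution over the labels that an instance with true relation i may end up with.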

Model the Noise
- Base RE Model → Predicted Relation Distribution → Transition Matrix → Observed Relation Distribution
- The Observed Relation Distribution is trained to match the noisy label

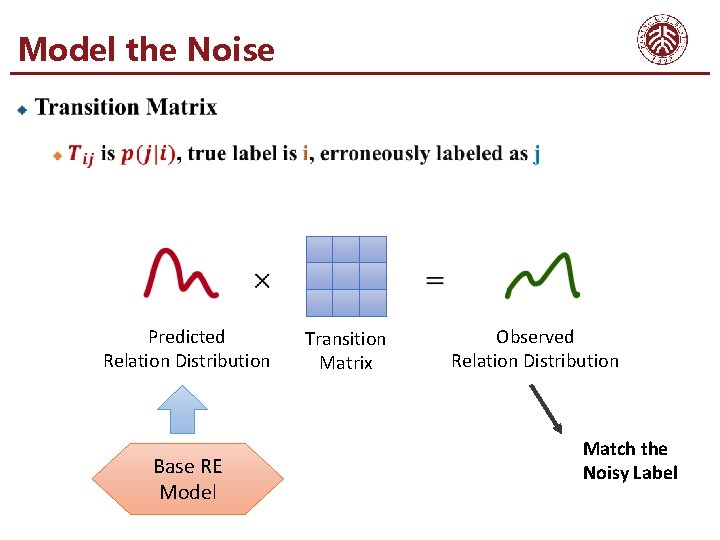

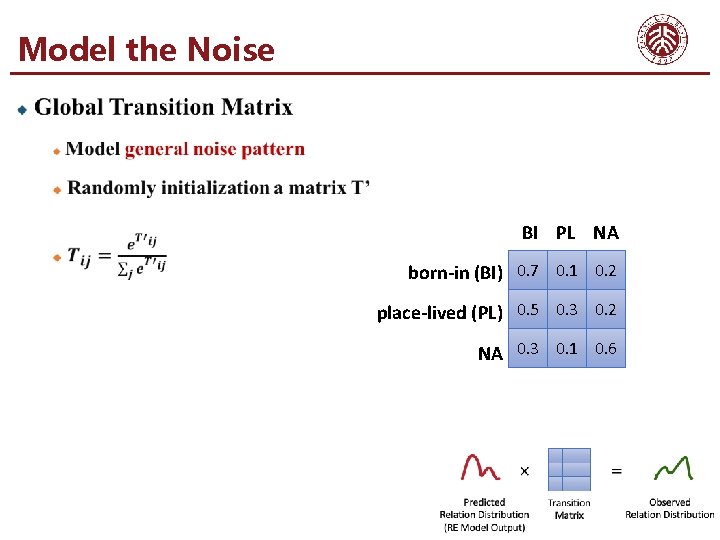

Model the Noise

| True relation | BI | PL | NA |
| --- | --- | --- | --- |
| born-in (BI) | 0.7 | 0.1 | 0.2 |
| place-lived (PL) | 0.5 | 0.3 | 0.2 |
| NA | 0.3 | 0.1 | 0.6 |

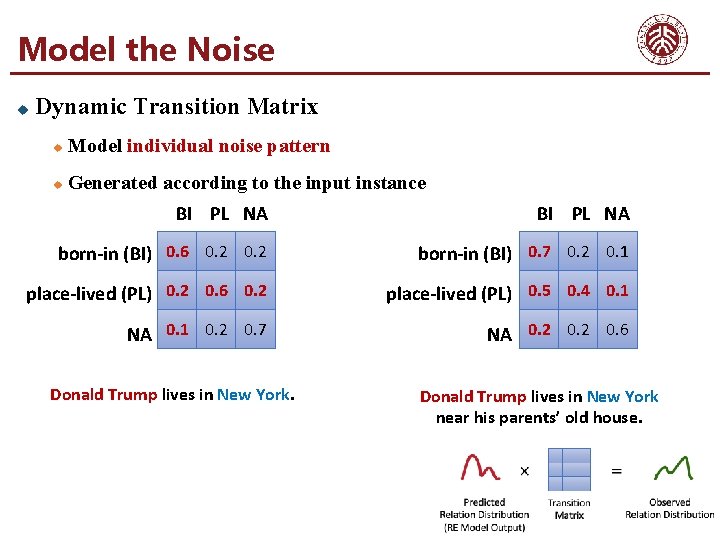

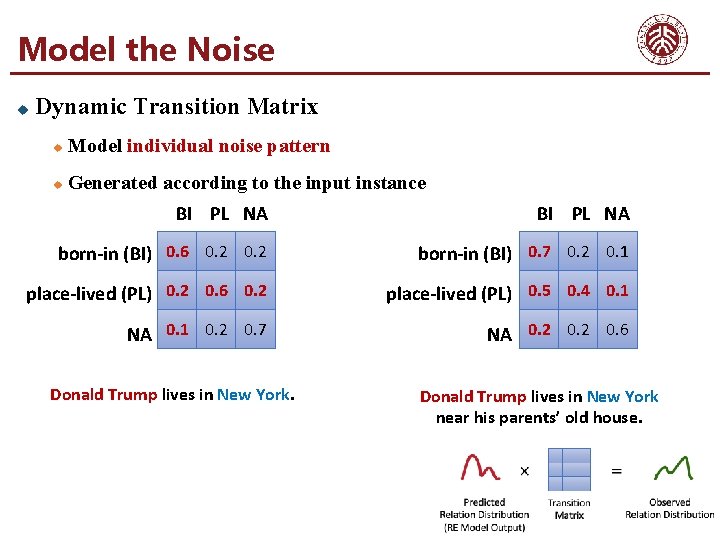
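A short worked example shows how such a matrix turns a predicted distribution into an observed one. It assumes rows index the true relation and columns the observed label, and it uses a hypothetical predicted distribution of (0.8, 0.1, 0.1) over (BI, PL, NA) together with the matrix values from the slide.

```python
def observed_distribution(predicted, transition):
    """Compute o_j = sum_i p_i * T[i][j]."""
    num_relations = len(predicted)
    return [
        sum(predicted[i] * transition[i][j] for i in range(num_relations))
        for j in range(num_relations)
    ]


# Rows: true relation (BI, PL, NA). Columns: observed label (BI, PL, NA).
T = [
    [0.7, 0.1, 0.2],
    [0.5, 0.3, 0.2],
    [0.3, 0.1, 0.6],
]
predicted = [0.8, 0.1, 0.1]  # hypothetical output of the base RE model
observed = observed_distribution(predicted, T)
print([round(value, 2) for value in observed])
```

The observed distribution comes out at roughly (0.64, 0.12, 0.24): the model stays confident about born-in internally, while the distribution that is compared with the noisy label is flatter.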

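Model the Noise
- Dynamic Transition Matrix
  - Models the individual noise pattern
  - Generated according to the input instance
- Example instance: "Donald Trump lives in New York near his parents’ old house."
- Two example matrices (columns: BI, PL, NA), as far as the values survive in the source:
  - First matrix
    - born-in (BI): 0.6, 0.2 (one value missing)
    - place-lived (PL): 0.2, 0.6, 0.2
    - NA: 0.1, 0.2, 0.7
  - Second matrix
    - born-in (BI): 0.7, 0.2, 0.1
    - place-lived (PL): 0.5, 0.4, 0.1
    - NA: 0.2, 0.6 (one value missing)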

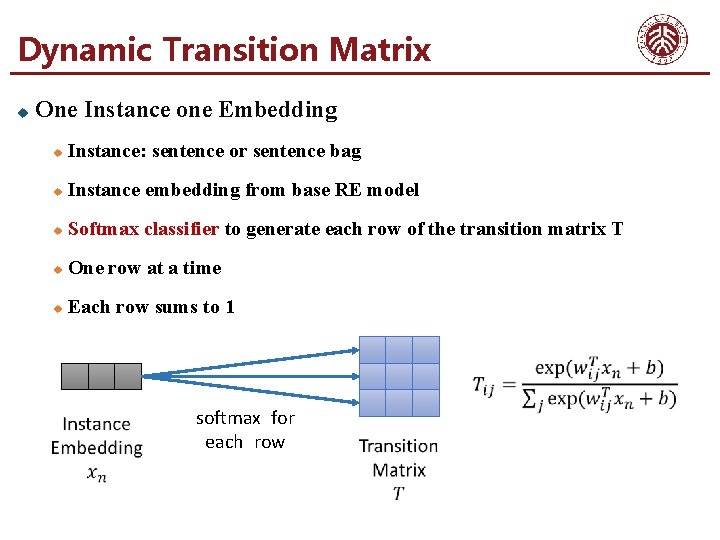

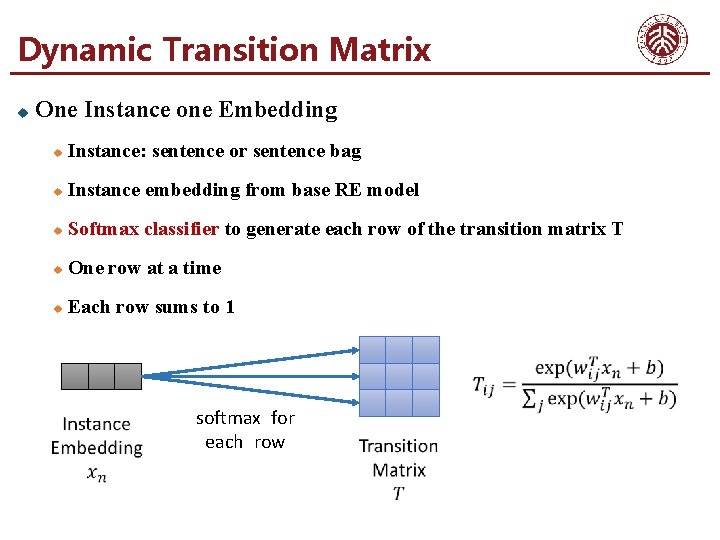

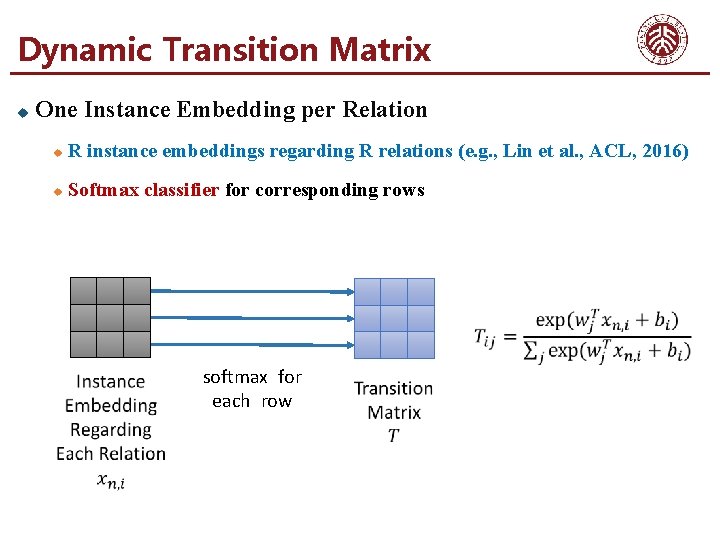

Dynamic Transition Matrix
- One instance, one embedding
  - Instance: sentence or sentence bag
  - Instance embedding from the base RE model
- Softmax classifier to generate each row of the transition matrix T
  - One row at a time (a softmax for each row)
  - Each row sums to 1

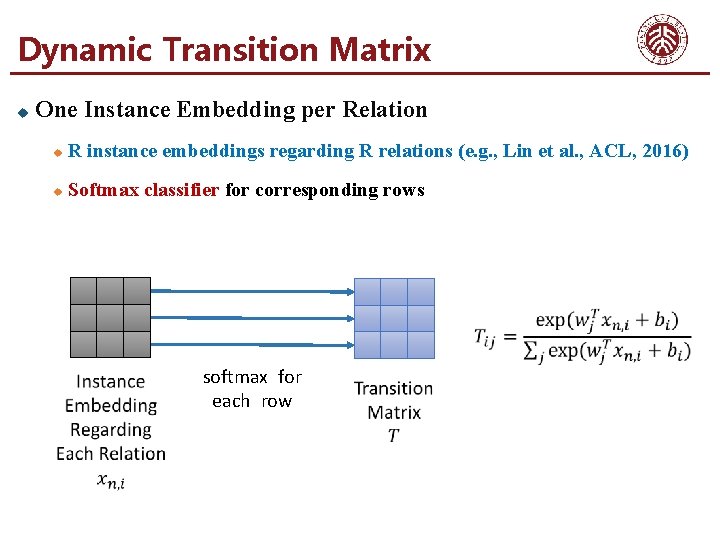
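The row-wise softmax can be sketched as follows. This is a minimal illustration, not the authors' implementation: the parameter layout (`weights[i][j]` as one weight vector per matrix entry, `biases[i][j]` as a scalar) and the random initialisation are assumptions.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_transition_matrix(embedding, weights, biases):
    """Build one transition matrix for one instance, one softmax per row."""
    matrix = []
    for w_row, b_row in zip(weights, biases):
        scores = [
            sum(w * x for w, x in zip(w_ij, embedding)) + b_ij
            for w_ij, b_ij in zip(w_row, b_row)
        ]
        matrix.append(softmax(scores))
    return matrix


rng = random.Random(0)
num_relations, dim = 3, 4
weights = [
    [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(num_relations)]
    for _ in range(num_relations)
]
biases = [[0.0] * num_relations for _ in range(num_relations)]

# Two hypothetical instance embeddings yield two different matrices.
matrix_a = dynamic_transition_matrix([0.5, -0.2, 0.1, 0.9], weights, biases)
matrix_b = dynamic_transition_matrix([-0.3, 0.8, 0.4, 0.0], weights, biases)
for row in matrix_a:
    print([round(value, 3) for value in row])
```

Because every row goes through its own softmax, each row sums to 1 by construction, and the matrix changes with the input embedding, which is what makes it dynamic rather than global.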

Dynamic Transition Matrix
- One instance embedding per relation
  - R instance embeddings for the R relations (e.g., Lin et al., ACL 2016)
  - Softmax classifier for the corresponding row (a softmax for each row)

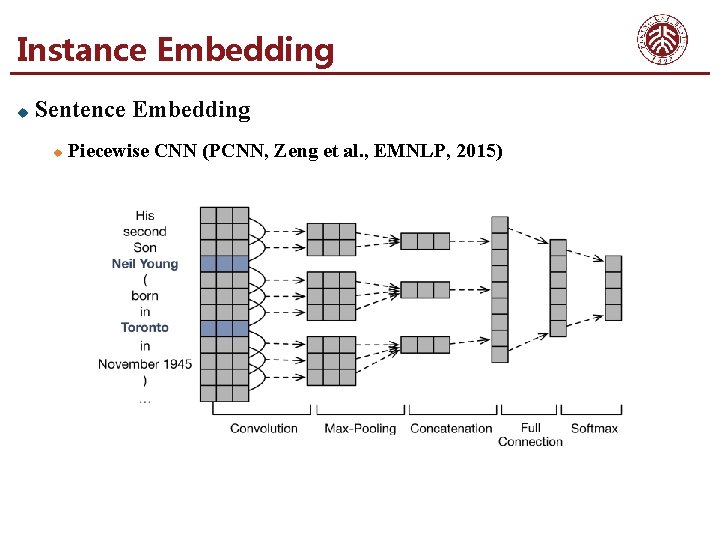

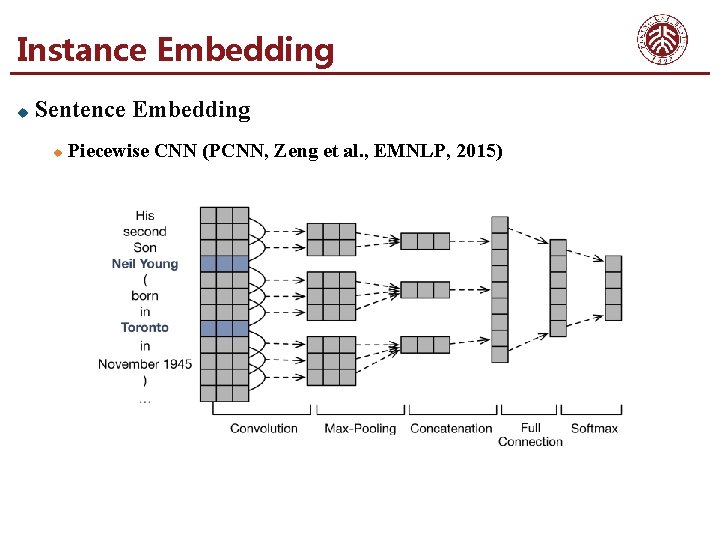

Instance Embedding
- Sentence Embedding
  - Piecewise CNN (PCNN, Zeng et al., EMNLP 2015)

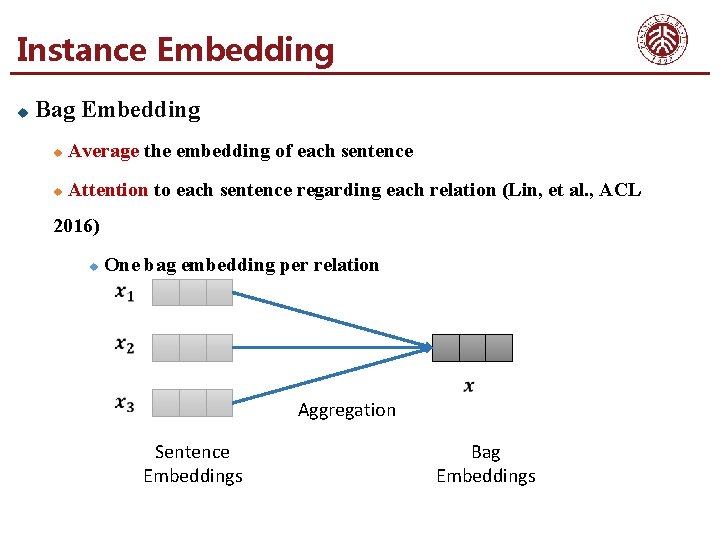

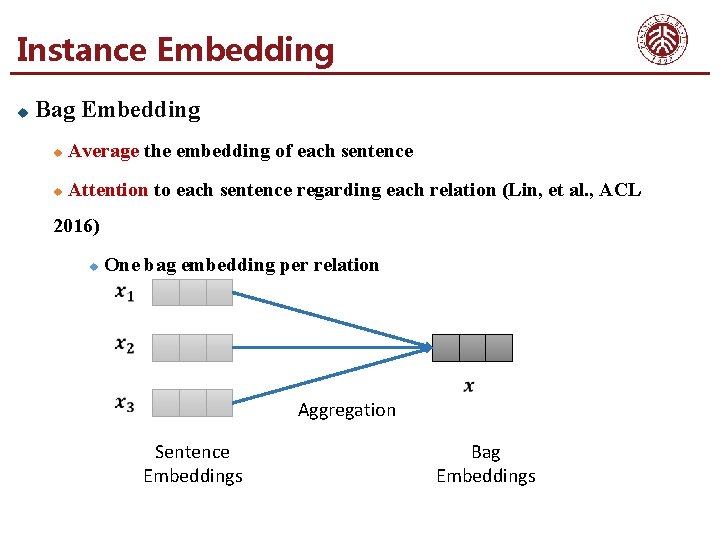

Instance Embedding
- Bag Embedding
  - Average the embedding of each sentence
  - Attention over each sentence with respect to each relation (Lin et al., ACL 2016)
    - One bag embedding per relation
- Sentence Embeddings → Aggregation → Bag Embeddings

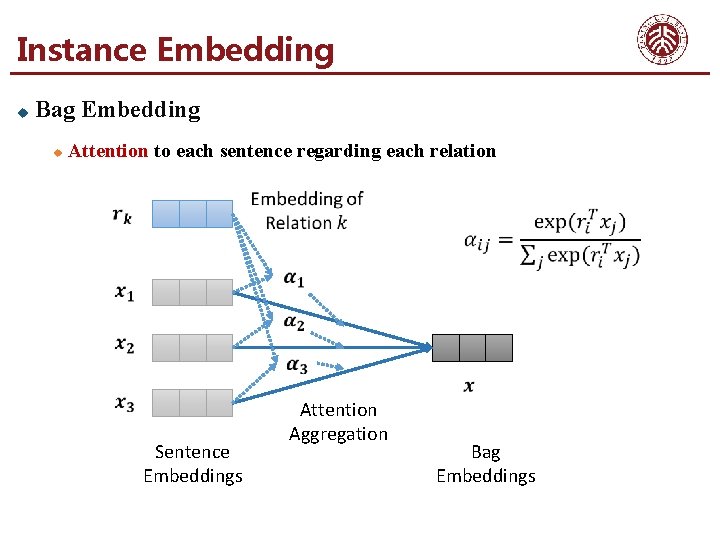

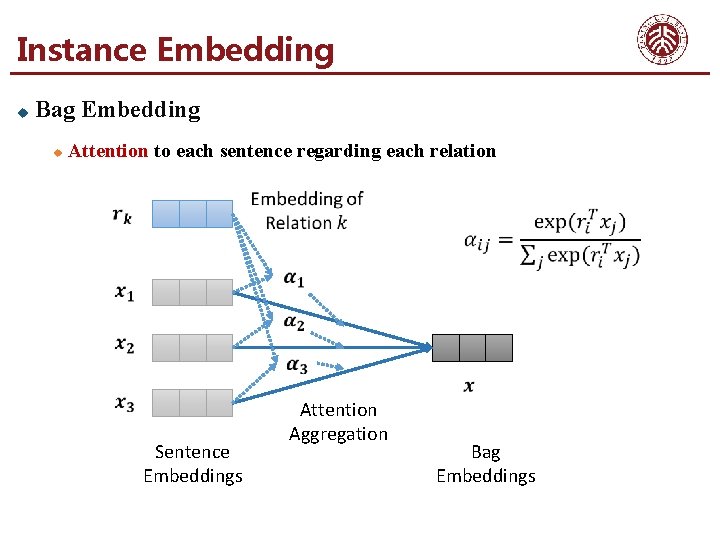

Instance Embedding
- Bag Embedding
  - Attention over each sentence with respect to each relation
- Sentence Embeddings → Attention → Aggregation → Bag Embeddings

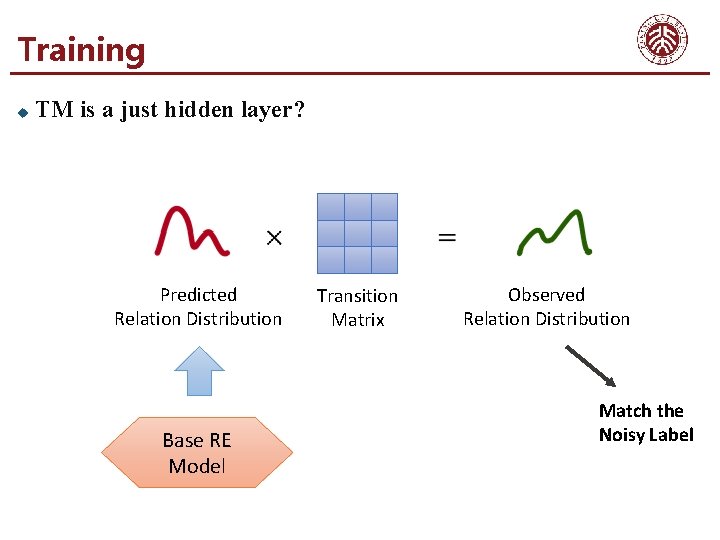
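The two aggregation schemes can be contrasted in a small sketch. The attention score here is a plain dot product between a sentence embedding and a per-relation query vector, which is a simplifying assumption standing in for whatever scoring function the cited attention model uses. All names and numbers are hypothetical.

```python
import math


def average_bag(sentence_embeddings):
    """Bag embedding as the element-wise mean of the sentence embeddings."""
    count = len(sentence_embeddings)
    return [sum(column) / count for column in zip(*sentence_embeddings)]


def attention_bag(sentence_embeddings, relation_query):
    """Bag embedding as an attention-weighted sum for one relation."""
    scores = [
        sum(x * q for x, q in zip(sentence, relation_query))
        for sentence in sentence_embeddings
    ]
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    attention = [e / total for e in exps]
    dim = len(sentence_embeddings[0])
    return [
        sum(a * sentence[d] for a, sentence in zip(attention, sentence_embeddings))
        for d in range(dim)
    ]


sentences = [[1.0, 0.0], [0.0, 1.0]]  # two toy sentence embeddings
avg = average_bag(sentences)
att = attention_bag(sentences, relation_query=[10.0, 0.0])
print(avg)
print([round(value, 3) for value in att])
```

Averaging treats both sentences equally, while the attention variant concentrates almost all weight on the sentence that matches the relation query. Calling `attention_bag` once per relation gives one bag embedding per relation.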

Training
- Is the TM just a hidden layer?
- Base RE Model → Predicted Relation Distribution → Transition Matrix → Observed Relation Distribution
- The Observed Relation Distribution is trained to match the noisy label

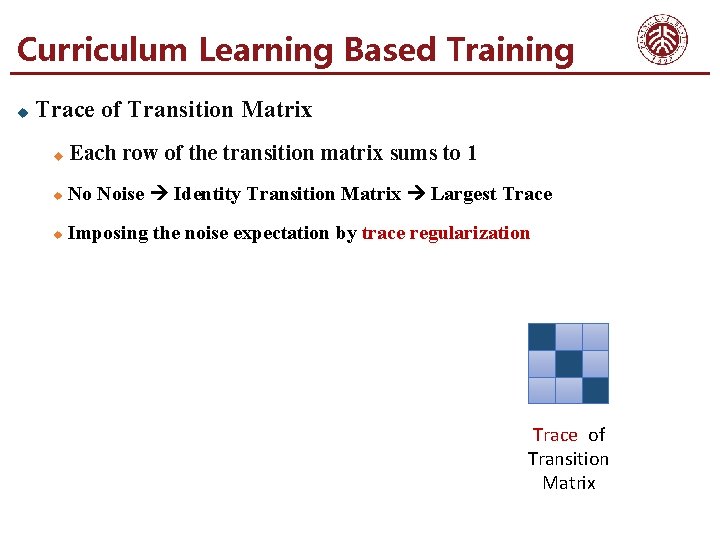

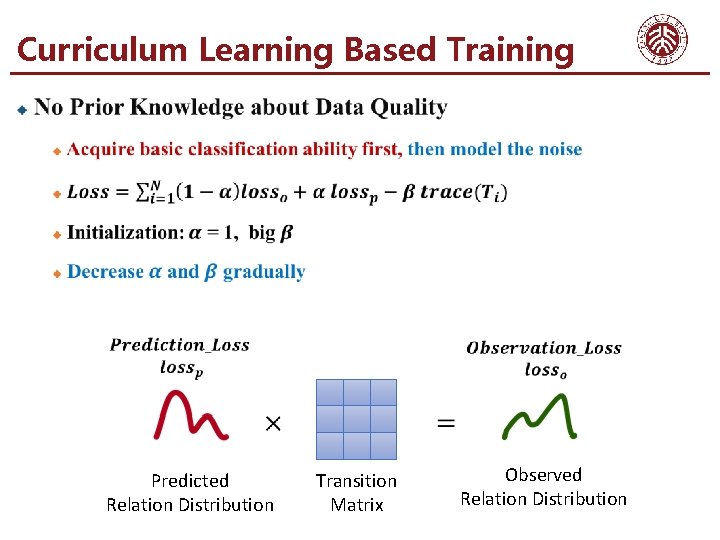

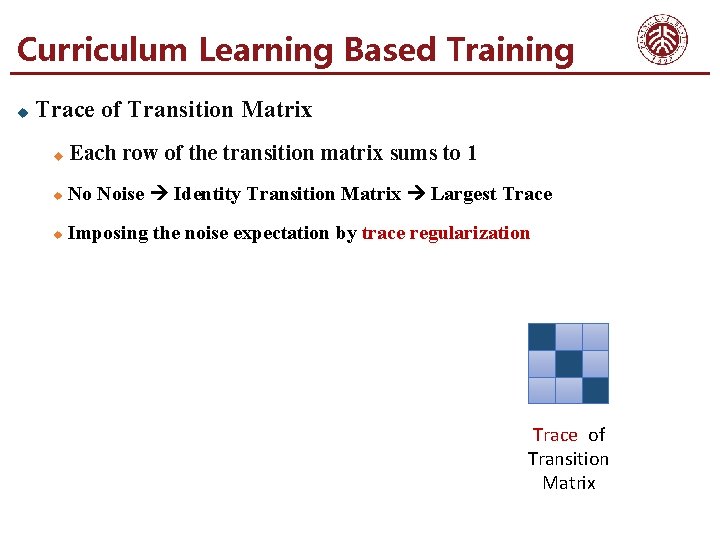

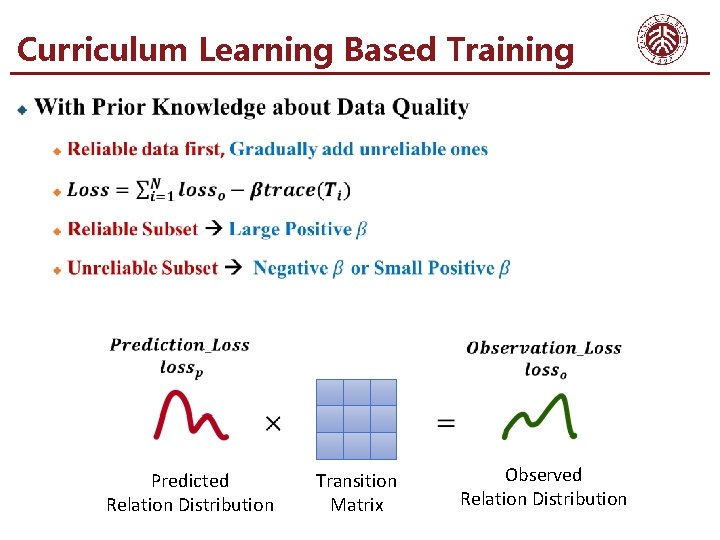

Curriculum Learning Based Training
- Trace of the Transition Matrix
  - Each row of the transition matrix sums to 1
  - No noise → identity transition matrix → largest trace
- Impose the noise expectation by trace regularization

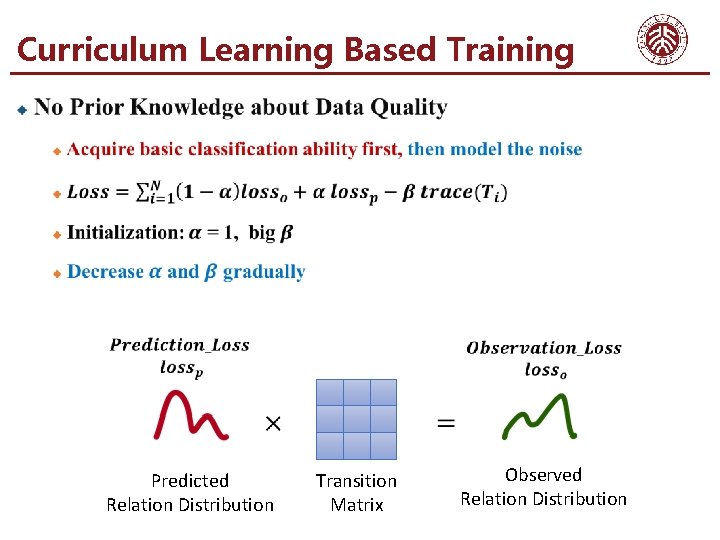
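The link between trace and noise level follows from the row constraint: every entry is a probability, so each diagonal entry is at most 1 and the trace is at most the number of relations R, with equality only for the identity matrix. A small check, using the identity and a noisy 3 × 3 matrix as illustrative inputs:

```python
def trace(matrix):
    """Sum of the diagonal entries of a square matrix."""
    return sum(matrix[i][i] for i in range(len(matrix)))


identity = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
noisy = [
    [0.7, 0.1, 0.2],
    [0.5, 0.3, 0.2],
    [0.3, 0.1, 0.6],
]
print(trace(identity))
print(round(trace(noisy), 2))
```

A larger trace means more probability mass stays on the true relation, so rewarding or penalising the trace is a way to tell the model how much noise to expect.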

Curriculum Learning Based Training
- Predicted Relation Distribution → Transition Matrix → Observed Relation Distribution

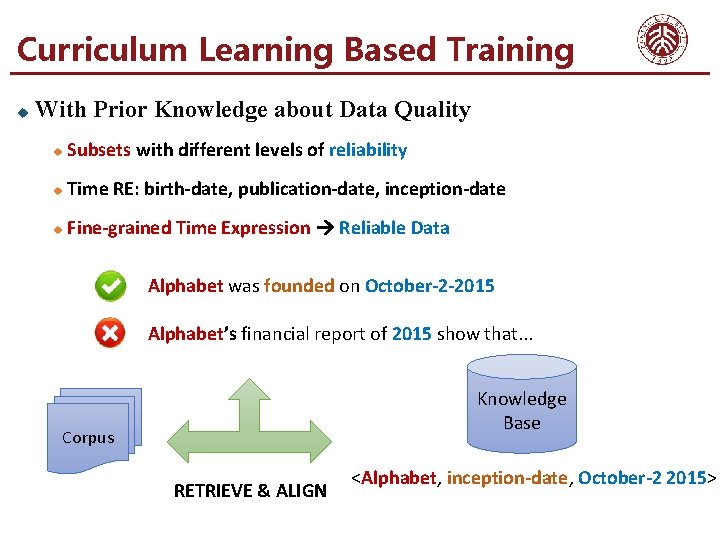

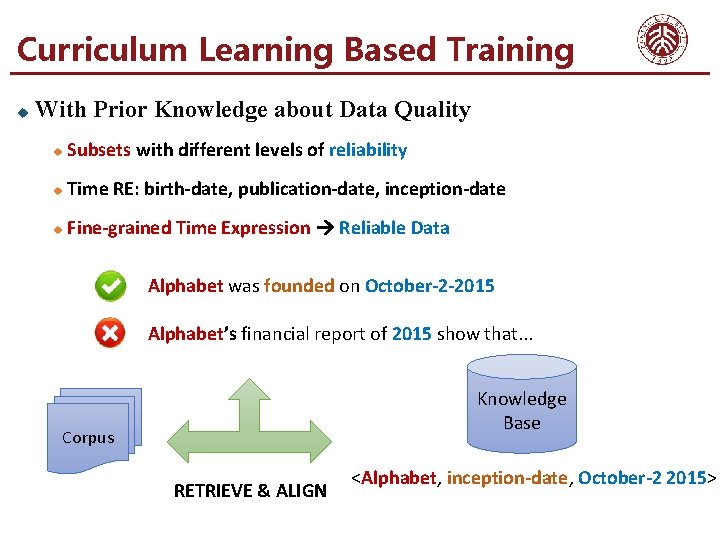
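The exact objective is on the slide image and cannot be recovered from the text, so the following is only one plausible form, stated here as an assumption: a weighted mix of the log-likelihoods of the predicted and observed distributions, minus a trace reward. The names `alpha` and `beta` and the schedule described afterwards are hypothetical.

```python
import math


def curriculum_loss(predicted, observed, transition, label, alpha, beta):
    """Assumed per-instance loss: mixed negative log-likelihood minus beta * trace."""
    fit = -(
        (1.0 - alpha) * math.log(observed[label])
        + alpha * math.log(predicted[label])
    )
    matrix_trace = sum(transition[i][i] for i in range(len(transition)))
    return fit - beta * matrix_trace


T = [
    [0.7, 0.1, 0.2],
    [0.5, 0.3, 0.2],
    [0.3, 0.1, 0.6],
]
predicted = [0.8, 0.1, 0.1]   # hypothetical base-model output
observed = [0.64, 0.12, 0.24]  # predicted pushed through T

# alpha = 1 trains only the base model; lowering alpha shifts weight to
# the observed distribution, and beta > 0 rewards a large trace.
loss_warmup = curriculum_loss(predicted, observed, T, label=0, alpha=1.0, beta=0.0)
loss_later = curriculum_loss(predicted, observed, T, label=0, alpha=0.5, beta=0.1)
print(round(loss_warmup, 4), round(loss_later, 4))
```

Under this assumed form, a curriculum would start with `alpha` near 1 so the base model learns first, then lower `alpha` so the transition matrix gradually takes over the job of explaining the noisy labels.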

Curriculum Learning Based Training
- With prior knowledge about data quality
  - Subsets with different levels of reliability
- Time RE: birth-date, publication-date, inception-date
  - Fine-grained time expression → reliable data
- RETRIEVE & ALIGN: match Knowledge Base triples against the Corpus
  - Knowledge Base: <Alphabet, inception-date, October-2-2015>
  - Corpus: "Alphabet was founded on October-2-2015" and "Alphabet’s financial report of 2015 shows that ..."

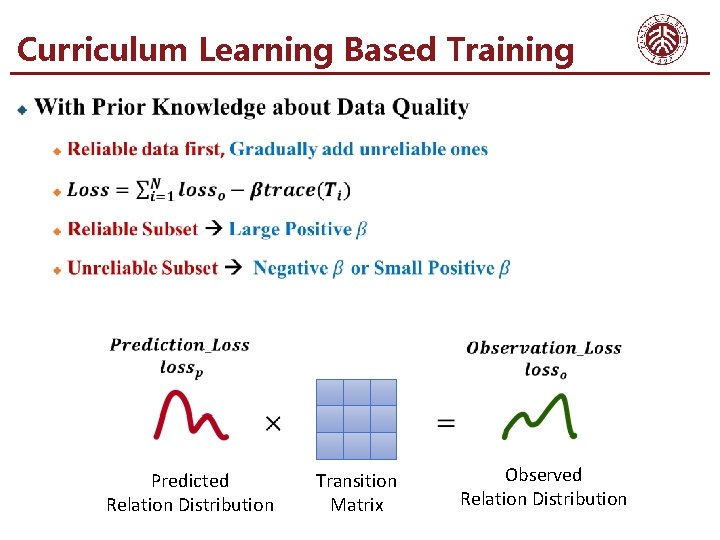

Curriculum Learning Based Training
- Predicted Relation Distribution → Transition Matrix → Observed Relation Distribution

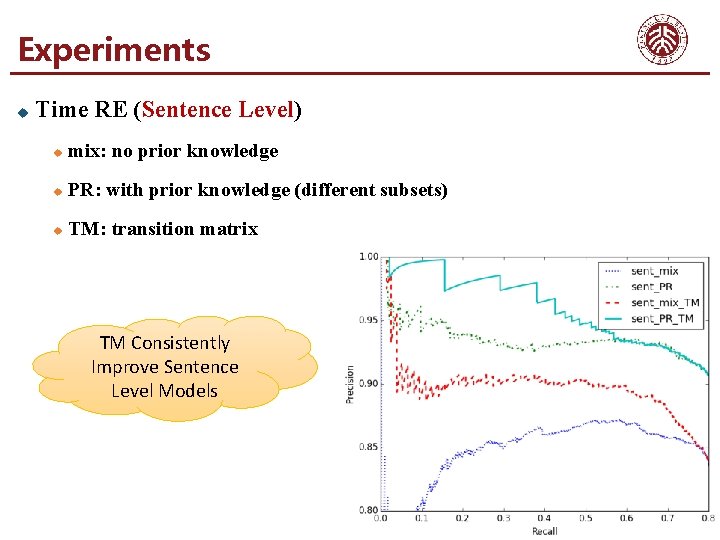

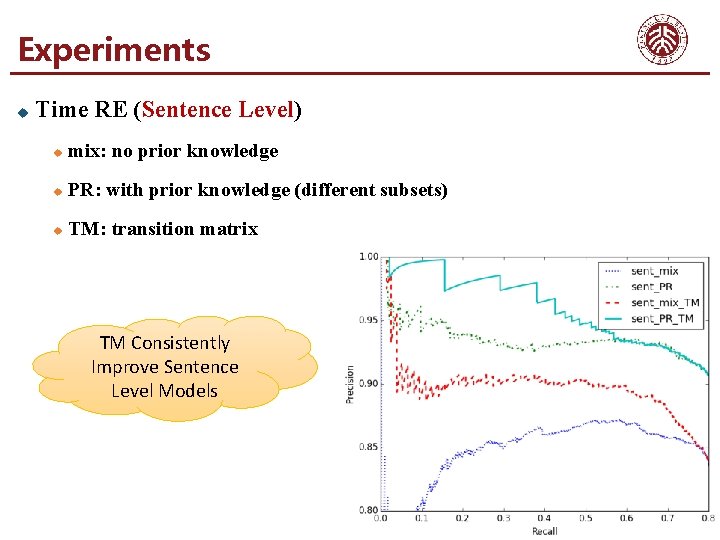

Experiments
- Time RE (Sentence Level)
  - mix: no prior knowledge
  - PR: with prior knowledge (different subsets)
  - TM: transition matrix
- TM consistently improves sentence-level models

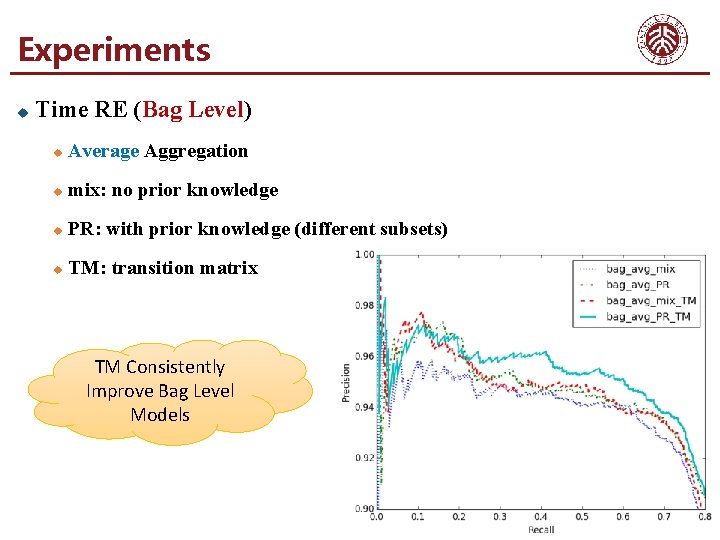

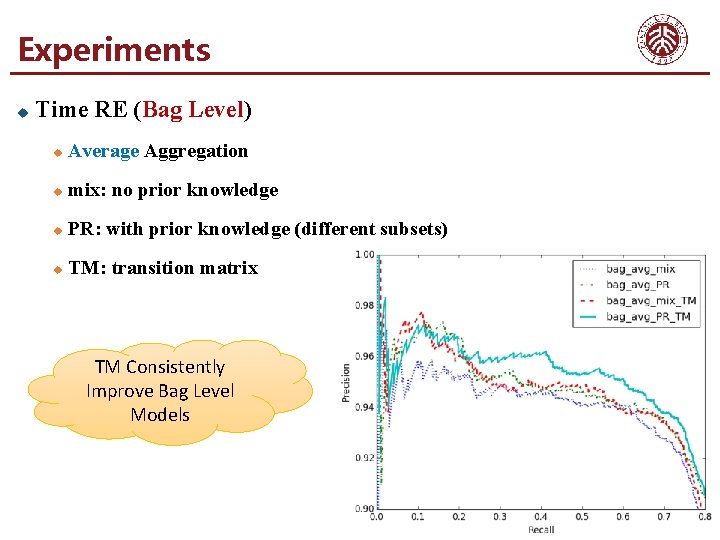

Experiments
- Time RE (Bag Level), average aggregation
  - mix: no prior knowledge
  - PR: with prior knowledge (different subsets)
  - TM: transition matrix
- TM consistently improves bag-level models

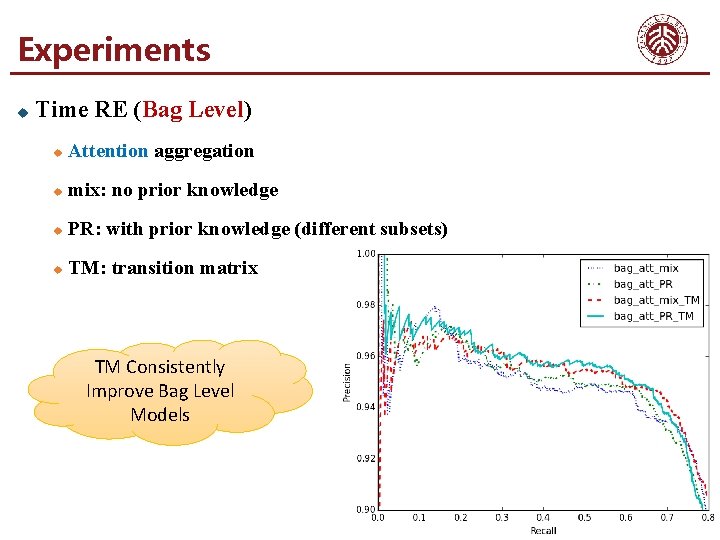

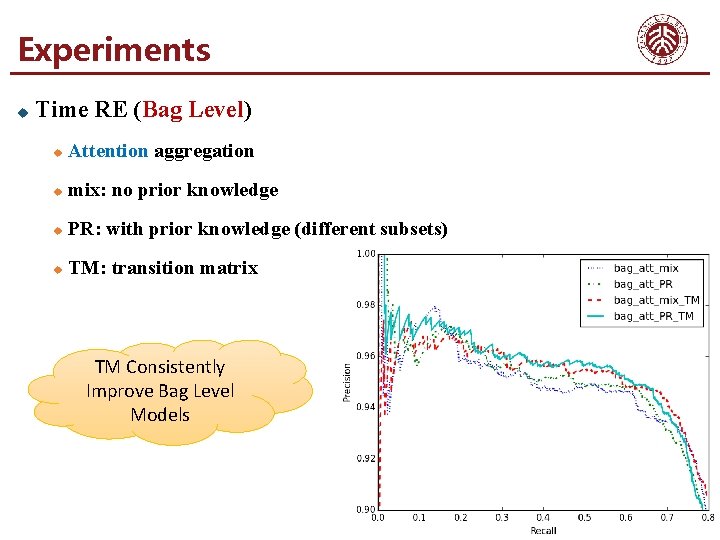

Experiments
- Time RE (Bag Level), attention aggregation
  - mix: no prior knowledge
  - PR: with prior knowledge (different subsets)
  - TM: transition matrix
- TM consistently improves bag-level models

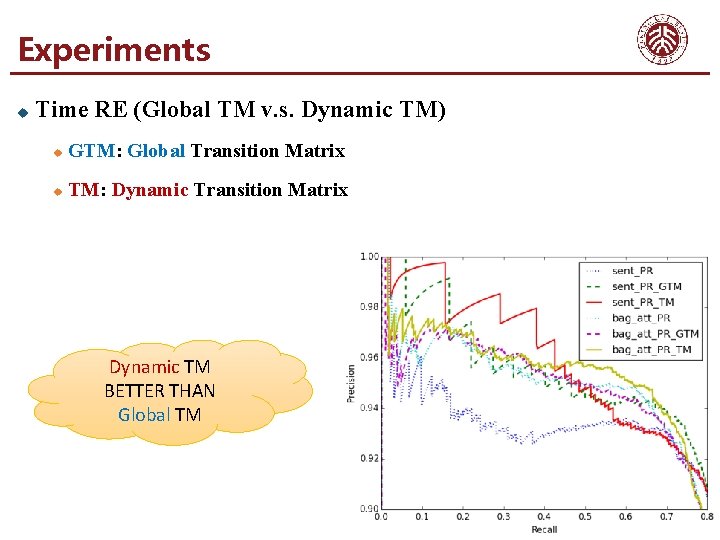

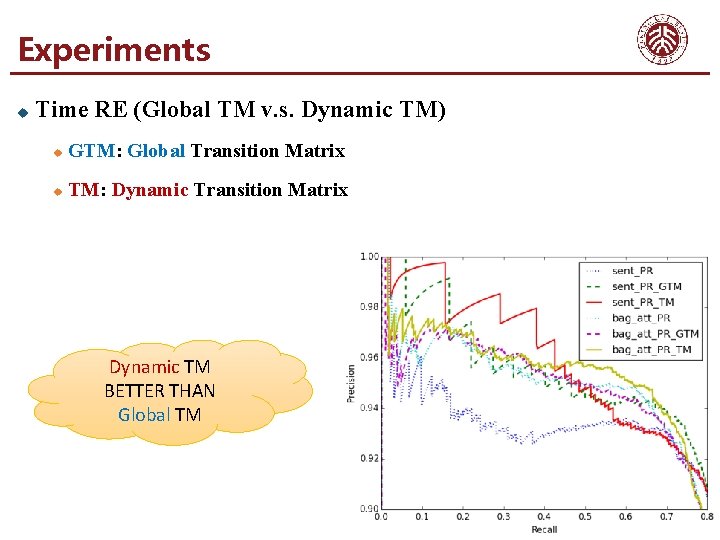

Experiments
- Time RE (Global TM vs. Dynamic TM)
  - GTM: global transition matrix
  - TM: dynamic transition matrix
- Dynamic TM is better than global TM

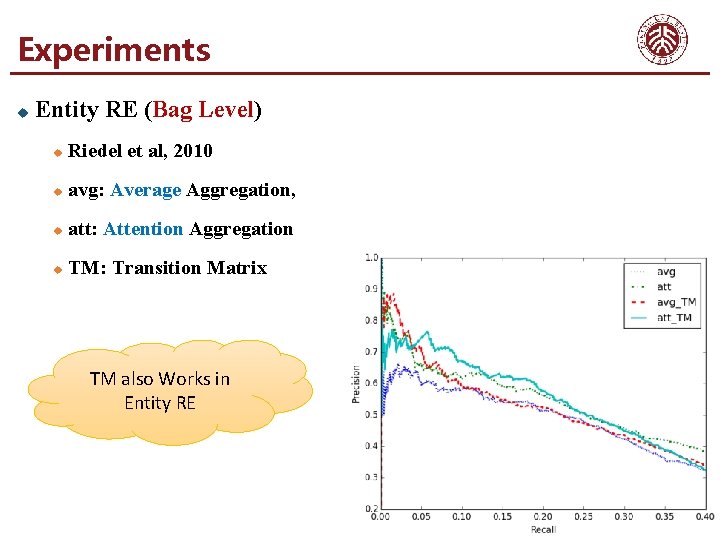

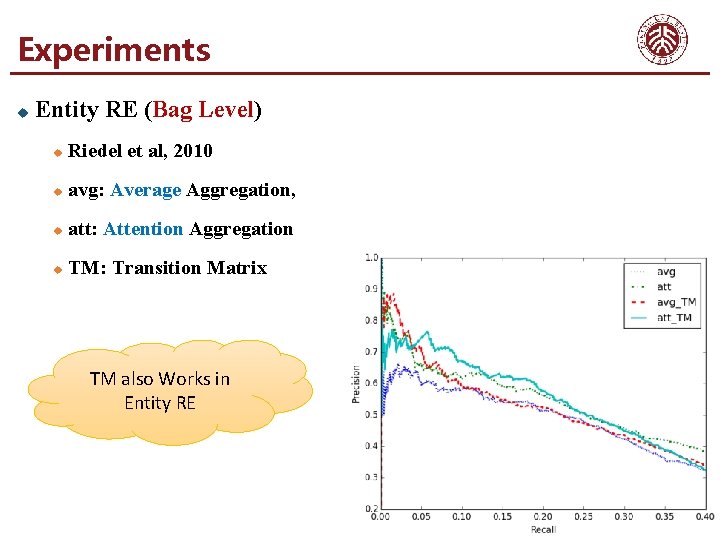

Experiments
- Entity RE (Bag Level)
  - Dataset: Riedel et al., 2010
  - avg: average aggregation
  - att: attention aggregation
  - TM: transition matrix
- TM also works in Entity RE

Conclusion
- Modeling noise benefits RE results
- A dynamic or global transition matrix can model noise
- Dynamic TM is better than global TM
- Curriculum learning can train the transition matrix
- Curriculum learning can incorporate prior knowledge about data quality

Q&A